
Intel® NetStructure™ ZT 4901

High Availability Software

Technical Product Specification

April 2003

Order Number: 273856-002


INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, life sustaining applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

The Intel® NetStructure™ ZT 4901 High Availability Software may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

This document and the software described in it are furnished under license and may only be used or copied in accordance with the terms of the license. The information in this document is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this document or any software that may be provided in association with this document. Except as permitted by such license, no part of this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means without the express written consent of Intel Corporation.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an ordering number and are referenced in this document, or other Intel literature may be obtained by calling 1-800-548-4725 or by visiting Intel's website at http://www.intel.com.

AlertVIEW, AnyPoint, AppChoice, BoardWatch, BunnyPeople, CablePort, Celeron, Chips, CT Connect, CT Media, Dialogic, DM3, EtherExpress, ETOX, FlashFile, i386, i486, i960, iCOMP, InstantIP, Intel, Intel logo, Intel386, Intel486, Intel740, IntelDX2, IntelDX4, IntelSX2, Intel Create & Share, Intel GigaBlade, Intel InBusiness, Intel Inside, Intel Inside logo, Intel NetBurst, Intel NetMerge, Intel NetStructure, Intel Play, Intel Play logo, Intel SingleDriver, Intel SpeedStep, Intel StrataFlash, Intel TeamStation, Intel Xeon, Intel XScale, IPLink, Itanium, LANDesk, LanRover, MCS, MMX, MMX logo, Optimizer logo, OverDrive, Paragon, PC Dads, PC Parents, PDCharm, Pentium, Pentium II Xeon, Pentium III Xeon, Performance at Your Command, RemoteExpress, Shiva, SmartDie, Solutions960, Sound Mark, StorageExpress, The Computer Inside., The Journey Inside, TokenExpress, Trillium, VoiceBrick, Vtune, and Xircom are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

*Other names and brands may be claimed as the property of others.

Copyright © Intel Corporation, 2003

2 High Availability Software for the Intel® NetStructure™ ZT 4901 Technical Product Specification


Contents

1 Document Organization................................................................................................................9

2 Introduction..................................................................................................................................11

2.1 Terminology........................................................................................................................11

2.2 High Availability Hardware Approach .................................................................................14

2.2.1 Processor Boards ..................................................................................................15

2.2.2 Bridge Mezzanine..................................................................................................16

2.2.3 Backplane..............................................................................................................17

2.3 High-Availability Software Approach...................................................................................18

2.3.1 Host Application.....................................................................................................18

2.3.2 System Management.............................................................................................19

2.3.3 Backplane Device Drivers......................................................................................20

3 Host Application Software..........................................................................................................21

3.1 Goals of the Host Application .............................................................................................21

3.1.1 Serviceability..........................................................................................................21

3.1.2 Portability...............................................................................................................21

3.1.3 Redundancy...........................................................................................................21

3.2 Division of Labor.................................................................................................................22

3.3 Development Issues.........................................................................................................23

3.3.1 Redundancy...........................................................................................................23

3.3.2 Graceful Switchover...............................................................................................24

3.3.3 Hardened Applications...........................................................................................24

3.3.4 Code Modularity.....................................................................................................24

4 System Management...................................................................................................................25

4.1 Redundant Host API...........................................................................................................25

4.1.1 IPMI API.................................................................................................................25

4.1.2 Hot Swap API..................................................................................................................26

4.1.2.1 Slot Control API .....................................................................................26

4.2 Baseboard Management Controller Firmware Enhancements...........................................26

4.2.1 Fault Configuration ................................................................................................26

4.2.2 Isolation Strategies ................................................................................................27

4.2.3 IPMI RH Channel Commands................................................................................28

4.2.3.1 RH Channel Enabled .............................................................................28

4.2.3.2 RH Channel Get RH BMC Address .......................................................28

5 High Availability CompactPCI Device Drivers ..........................................................................31

5.1 Device Driver Design ..........................................................................................................31

5.1.1 Device Driver States...........................................................................................................32

5.1.1.1 Initialization ............................................................................................32

5.1.1.2 Quiesced................................................................................................32

5.1.1.3 Activation ...............................................................................................32

5.1.2 Adding High-Availability Functionality....................................................................33

5.1.2.1 Add Device.............................................................................................34

5.1.2.2 Resume Operations...............................................................................34

5.1.2.3 Suspend Operations ..............................................................................35


5.1.2.4 Remove Device......................................................................................35

5.1.2.5 Driver Synchronization...........................................................................35

5.2 Summary ............................................................................................................................36

6 Redundant Host API....................................................................................................................37

6.1 Intel-Specific APIs...............................................................................................................37

6.1.1 RhSetHostName....................................................................................................37

6.1.1.1 RhGetHwDestinationHostAndReset ......................................................37

6.2 Redundant Host PICMG* 2.12 APIs...................................................................................38

6.2.1 Definitions and Types ............................................................................................39

6.2.2 Initialization/Termination........................................................................................42

6.2.2.1 RhEnumerateInstances .........................................................................42

6.2.2.2 RhOpen..................................................................................................43

6.2.2.3 RhClose.................................................................................................44

6.2.2.4 RhGetInstanceID ...................................................................................44

6.2.3 Domain and Host Information API .........................................................................45

6.2.3.1 RhGetDomainCount...............................................................................45

6.2.3.2 RhGetDomainNumbers..........................................................................46

6.2.3.3 RhGetDomainOwnership.......................................................................47

6.2.3.4 RhGetDomainSlotPath...........................................................................47

6.2.3.5 RhGetDomainSlotCount ........................................................................49

6.2.3.6 RhGetDomainSlots................................................................................49

6.2.3.7 RhGetSlotDomain..................................................................................50

6.2.3.8 RhGetCurrentHostNumber ....................................................................51

6.2.3.9 RhGetHostCount....................................................................................51

6.2.3.10 RhGetHostNumbers...............................................................................52

6.2.3.11 RhGetHostName....................................................................................53

6.2.3.12 RhSetHostAvailability.............................................................................54

6.2.3.13 RhGetHostAvailability............................................................................55

6.2.3.14 RhGetDomainAvailabilityToHost............................................................56

6.2.4 Slot Information API...............................................................................................56

6.2.4.1 RhGetPhysicalSlotInformation...............................................................56

6.2.4.2 RhGetSlotChildInformation ....................................................................58

6.2.5 Switchover API.................................................................................................................61

6.2.5.1 Switchover Scenarios and Theory of Operation ....................................61

6.2.5.2 RhPrepareForSwitchover.......................................................................63

6.2.5.3 RhCancelPrepareForSwitchover ...........................................................65

6.2.5.4 RhGetDomainSwConnectionStatus.......................................................66

6.2.5.5 RhGetSlotSwConnectionStatus.............................................................67

6.2.5.6 RhPerformSwitchover............................................................................67

6.2.5.7 RhSetHwDestinationHost ......................................................................68

6.2.5.8 RhGetHwDestinationHost......................................................................70

6.2.6 Notification, Reporting and Alarms ........................................................................70

6.2.6.1 RhEnableDomainStateNotification.........................................................70

6.2.6.2 RhEnableSwitchoverNotification............................................................71

6.2.6.3 RhEnableSwitchoverRequestNotification ..............................................72

6.2.6.4 RhEnableUnsafeSwitchoverNotification ................................................73

6.2.6.5 RhDisableNotification.............................................................................75

7 Hot Swap API...............................................................................................................................77

8 IPMI API........................................................................................................................................79

8.1 imbOpenDriver....................................................................................................................79


8.2 imbCloseDriver ...................................................................................................................79

8.3 imbDeviceIoControl ............................................................................................................79

8.4 imbSendTimedI2cRequest .................................................................................................80

8.5 imbSendIpmiRequest .........................................................................................................81

8.6 imbGetAsyncMessage........................................................................................................81

8.7 imbIsAsyncMessageAvailable ............................................................................................82

8.8 imbRegisterForAsyncMsgNotification.................................................................................82

8.9 imbUnregisterForAsyncMsgNotification..............................................................................82

8.10 imbGetLocalBmcAddr.........................................................................................................83

8.11 imbSetLocalBmcAddr .........................................................................................................83

8.12 imbGetIpmiVersion .............................................................................................................84

9 Slot Control API...........................................................................................................................85

9.1 HsiOpenSlotControl............................................................................................................85

9.2 HsiCloseSlotControl............................................................................................................85

9.3 HsiGetSlotCount.................................................................................................................86

9.4 HsiGetBoardPresent...........................................................................................................86

9.5 HsiGetBoardHealthy...........................................................................................................87

9.6 HsiGetSlotPower ................................................................................................................88

9.7 HsiSetSlotPower.................................................................................................................89

9.8 HsiGetSlotReset .................................................................................................................89

9.9 HsiSetSlotReset..................................................................................................................90

9.10 HsiGetSlotM66Enable ........................................................................................................91

9.11 HsiSetSlotM66Enable.........................................................................................................92

9.12 HsiSetSlotEventCallback....................................................................................................93

10 Demonstration Utilities ...............................................................................................................95

10.1 Functional Description ........................................................................................................95

10.1.1 User Interface ........................................................................................................95

10.1.2 RH Interface...........................................................................................................95

10.1.2.1 Software Initiated Handovers.................................................................96

10.1.2.2 Hardware Initiated Failovers........................................................................96

10.1.2.3 Multiple Mode Capabilities..........................................................................96

10.1.2.4 Switchover Functions.............................................................................97

10.1.2.5 Host Domain Enumeration and Association ..........................................97

10.1.2.6 Slot Information......................................................................................97

10.1.2.7 Notification, Reporting and Alarms ........................................................97

10.1.3 IPMI Interface ........................................................................................................98

10.1.3.1 Fault Configuration.................................................................................98

10.1.3.2 Isolation Strategy ...................................................................................98

10.1.4 Hot Swap Interface.........................................................................................................99

10.1.4.1 HS Functional Description .....................................................................99

10.1.4.2 Slot Information Structure....................................................................100

10.1.4.3 Slot State .............................................................................................101

10.1.5 Slot Control Interface...........................................................................................101

Index.....................................................................................................................................................133


Figures

1 High-Availability CPU Architecture .............................................................................................11

2 RSS Processor Board Block Diagram........................................................................................16

3 RSS Host with Bridge Mezzanine Block Diagram ......................................................................17

4 High-Availability System Backplane Architecture.......................................................................18

5 Layered Host Application Diagram.............................................................................................22

6 Multi-Stated Driver Flowchart.....................................................................................................33

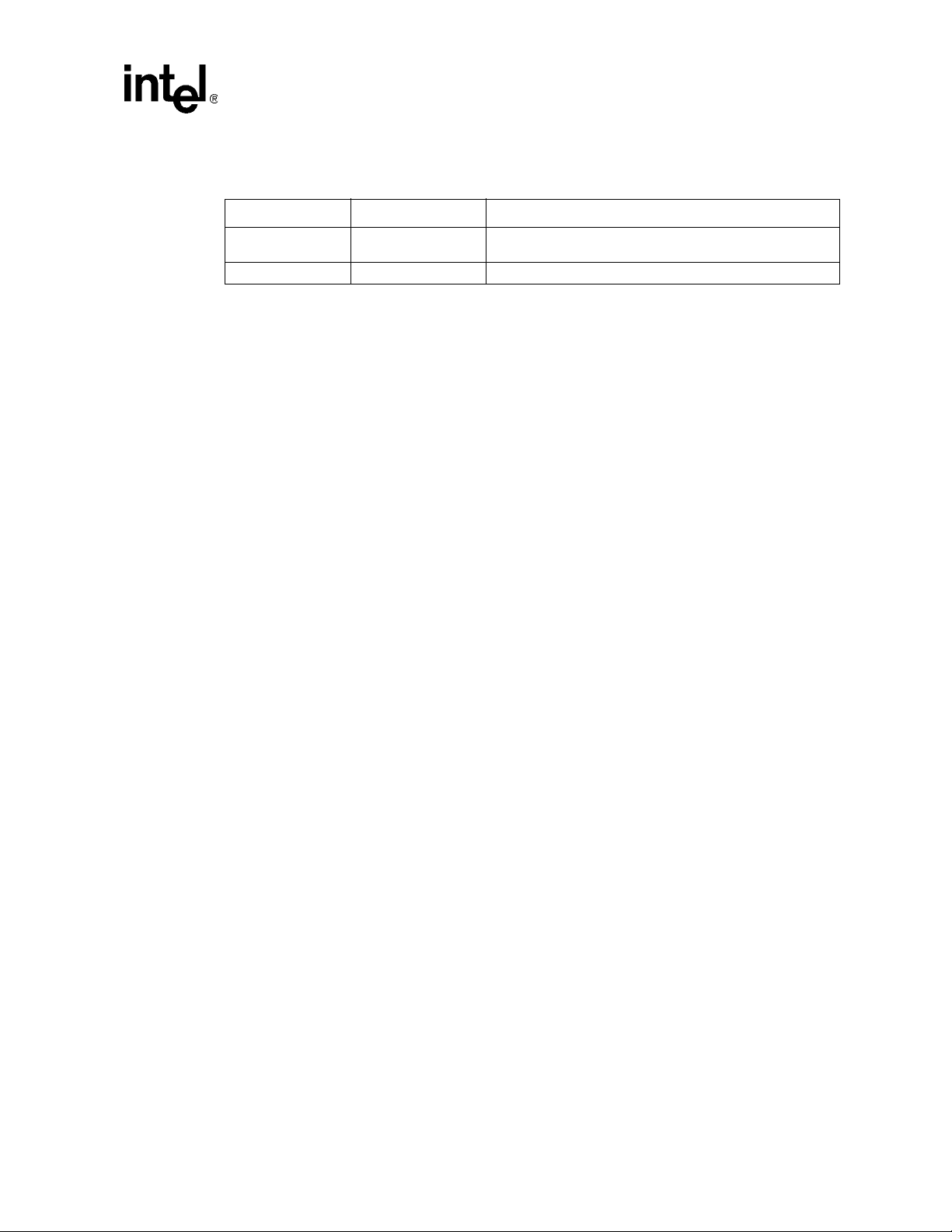

Tables

1 Channel Definitions for ZT 5524.................................................................................................27

2 RH Channel Alert Destinations...................................................................................................28

3 PCI Tree Information Retrieval Flags .......................................................................................100

4 Events that Generate Notification Messages..........................................................................100

5 Slot State Flags ........................................................................................................................101


Revision History

| Date | Revision | Description |
|---|---|---|
| April 2003 | 002 | Removed three demonstration utilities from 10.1.2.7 and removed the Interhost Communication section. |
| January 2003 | 001 | Initial release of this document. |


1 Document Organization

This document describes the High Availability Software Development Kit for the Intel® NetStructure™ ZT 4901 I/O Mezzanine Card. The following is a summary of the contents.

Chapter 2, “Introduction,” provides an overview of the hardware and software subsystems

supported by Intel’s High Availability Software Development Kit.

Chapter 3, “Host Application Software,” covers the basic requirements that applications must meet to properly leverage the Redundant Host architecture.

Chapter 4, “System Management,” describes the philosophy behind system management, which relies on monitoring onboard and chassis-located devices, and the importance placed on logging other system resources.

Chapter 5, “High Availability CompactPCI Device Drivers,” describes the requirements placed on

a device driver in order to operate in a Redundant Host framework.

Chapter 6, “Redundant Host API,” presents a detailed description of the Redundant Host

Application Programming Interfaces. These function interfaces provide programmatic control of

takeover configurations and event notifications.

Chapter 7, “Hot Swap API,” outlines system configuration and event notification using the Hot Swap API functions.

Chapter 8, “IPMI API,” describes system monitoring and alarming functions.

Chapter 9, “Slot Control API,” describes the interface for High Availability control of individual CompactPCI slots.

Chapter 10, “Demonstration Utilities,” describes interactive utilities used to configure and monitor

the High Availability attributes of the system.

Appendix A, “Software Installation,” includes the procedures for installing the software

components that make up the High Availability platform architecture for systems running the

VxWorks* and Linux* operating environments.

Appendix B, “Redundant Host Function Return Values,” provides an extensive table of the values returned by the Redundant Host APIs.

Appendix C, “HSK Device Driver Interface for VxWorks* 5.4,” details how a VxWorks 5.4

backplane device driver functions within a Redundant Host environment.

Appendix D, “RH Device Driver Interface for Linux* 2.4,” details how a Linux 2.4 backplane

device driver functions within a Redundant Host environment.

Appendix E, “Design Guideline for Peripheral Vendors,” offers important information for designing a device driver for use in the Intel® NetStructure™ Redundant Host environment.

Appendix F, “Porting ZT 5550 HA Applications to PICMG 2.12,” provides information for porting applications that were written for the Intel® NetStructure™ ZT 5550 to a PICMG* 2.12-based system.


Appendix G, “RH Switchover on OS Crash,” describes how the High-Availability Redundant Host

architecture enables the system master board to perform a switchover to the backup host in the

event of a system crash under the Linux and VxWorks operating systems.

Appendix H, “Data Sheet Reference,” provides links to specifications and user documentation

relevant to the High Availability Software Development Kit.


2 Introduction

Intel® High Availability (HA) systems feature built-in redundancy for active system components such as power supplies, system master processor boards, and system alarms. Redundant Host (RH) systems are HA systems with an architecture that allows the Active Host system master processor board to hand over control of its bus segment to a Standby Host system master processor board.

This chapter gives an overview of the hardware and software used in systems supported by Intel’s High Availability Software Development Kit (HASDK).

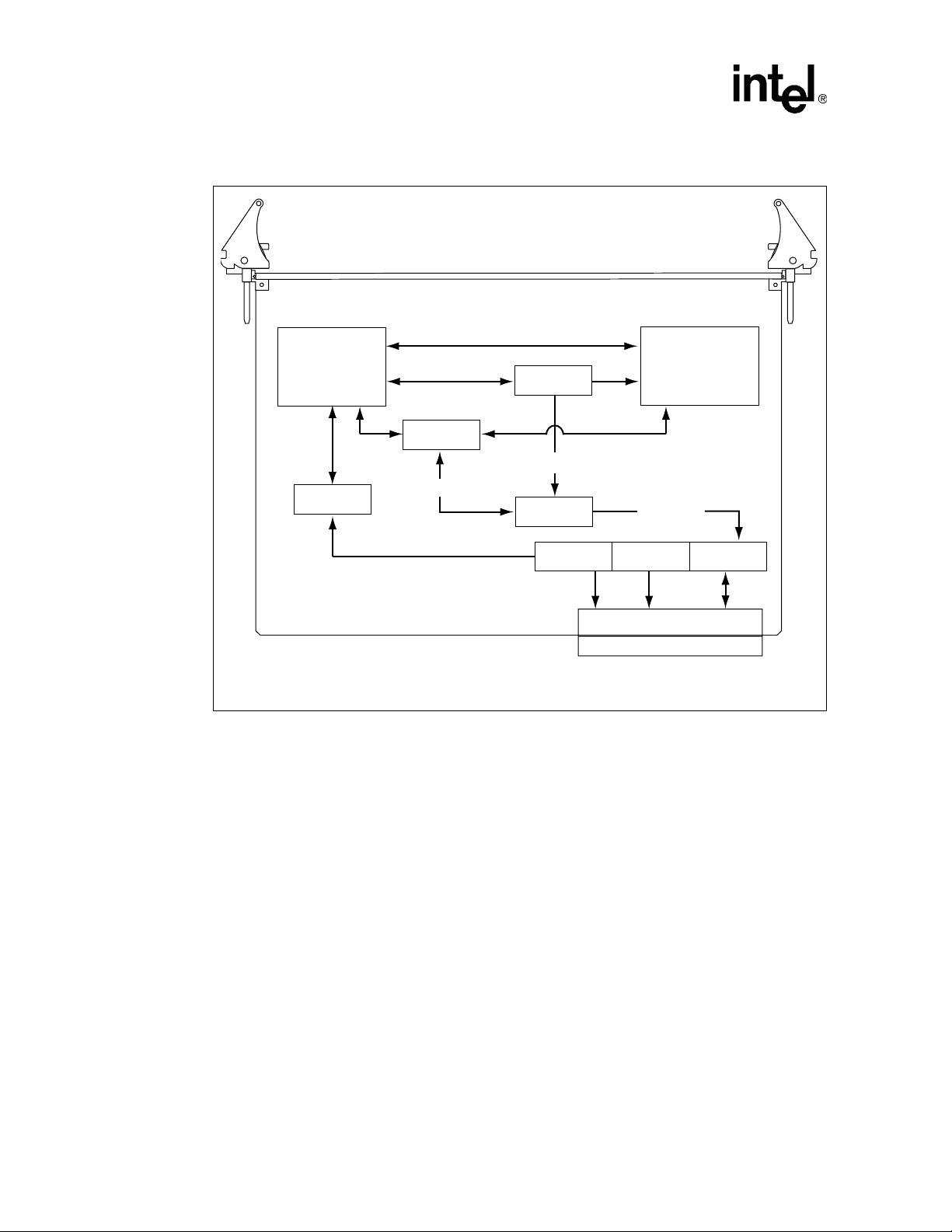

The following figure shows how the basic elements in an HASDK system are related.

Figure 1. High-Availability CPU Architecture

[Diagram: a Current Host and an Available Host. Component labels include Application, OS/Drivers, Hardware, RH Driver, RH Comm Driver, RH Comm Controller, Comm Driver, CompactPCI Interface Controller, Host Driver, Hot Swap Driver, Backplane Driver, Slot Control Driver, Slot Controller, PCI-to-PCI Host Bridge, and CompactPCI Bus.]

2.1 Terminology

The following terms are commonly used in this document:

Active Host (also known as Current Host, owner, or bus segment owner)—A board is said to

be Active or the Active Host if it is providing System Host functions to the peripherals in a

CompactPCI backplane. This means that it is the Owner of at least one bus segment.


Application—Application-specific code, not including application-specific device drivers.

Arbitration—Hardware process of a bus master using the hardware REQ# signal to request the

PCI bus from the Active Host and then being granted access to the bus with the hardware GNT#

signal.

Available Host—A Host operating in Owner mode that can own domains and communicate with

the rest of the RH system; for example, a Host that is not switched off and is not in a special mode that isolates it from the rest of the RH system.

BP Driver—A backplane device driver is the executable object residing in kernel space that

controls interaction between an application and an instance of a device. For a device driver to be

considered High Availability Aware, it must conform to specific requirements detailed in this

document.

Bus Interface Mode—The mode of the bus segment interface from the CPU base board or the

bridge mezzanine board. The possible bus interface modes are owner mode, drone mode, and

peripheral mode.

Bus master—Any device given peer-to-peer access across the PCI bus to any other master or

target. A bus master must have been granted access to the PCI bus through arbitration.

CIC—The CompactPCI Interface Controller is responsible for coordinating switchovers. It is

generally implemented in programmable logic.

Cluster mode—When two or more Hosts in an HA system are operating in Cluster mode, each

owns a domain and switchover of domain ownership is not allowed.

CMM—Chassis Management Module. The CMM maintains status for, and provides control over, the management devices located inside the chassis.

Cold Switchover— During a cold switchover, bus ownership is transferred from a system master

Host to a receiving Host. The Host receiving bus ownership is then reset, which in turn resets all

the devices that are owned by that Host.

COMM—Communication interfaces such as Ethernet, the Media Independent Interface (MII), and so on.

DDK—Driver Development Kit. Software development tools that enable developers to create

device drivers.

Destination Host—The Host that receives the specified domains owned by an Active Host if a

hardware-initiated switchover takes place on the Active Host.

Domain—A collection of peripheral PCI slots that is a Host’s unit of ownership. PCI-to-PCI

bridges can populate these slots, so the domain is generally a collection of PCI trees.

Drone mode (also known as Isolated mode)—A Host operating in Drone mode is isolated from

the backplane.

Failover—A type of switchover that is initiated by the Active Host, resulting from a failure that

leaves the domain in an unknown state and requires a bus segment reset to recover.

Fault-tolerant system—Hardware and software designed with redundancy to achieve very high

availability. Typically, this is a high-cost High Availability solution.


Handover—A type of switchover that is initiated by the Active Host, resulting from a software command or a fault detected by the Baseboard Management Controller, in which the bus segment is quiesced before the system slot functions are transferred.

HA SDK—High Availability Software Development Kit

High-Availability (HA) system—Constructed from standard components with redundancy to

reduce the probability of interruptions. Typically, “five nines” (99.999%) of availability is expected.

Host—A Host is a CPU board that is capable of providing system slot functions and System Host

functions to the peripherals in a CompactPCI backplane. This can include any number of bus

segments.

Hot Pluggable—Hot pluggable in the context of this document refers to the driver model used by

devices on the backplane; this model allows drivers to be suspended and resumed asynchronously.

Hot Swap—The term Hot Swap refers to the ability of the hardware and software to work in

conjunction to support the insertion and removal of peripheral boards without requiring the chassis

to be powered off during the operation.

Hot Switchover—A hot switchover refers to the state of the bus segment that is being inherited by a newly Active Host. On a hot switchover, bus ownership is transferred and, once backplane interrupts are unmasked and bus grants are enabled, the bus operates without any recovery actions.

Intelligent Platform Management Interface (IPMI)—A platform management specification whose Intelligent Platform Management Bus (IPMB) is a two-wire electrical bus through which system- and power-management-related chips can communicate with the rest of the system.

Management Controller—System Management Controller. This may be a Baseboard

Management Controller (BMC), a Satellite Management Controller (SMC) or a Dual Domain

Controller.

Mode Change—A mode change is a change in Host domain ownership characteristics,

specifically, when Hosts change between Active/Active, Active/Standby, or Cluster modes. A

mode change can only occur when all operating Hosts agree through negotiation to change modes.

Owner Mode—A Host operating in Owner mode owns one or more domains. At any given moment, a domain can be owned by no more than one Host. If a Host owns the domain, software on the Host has access to PCI devices in (or behind) the PCI slots of the domain.

Redundant Host (RH) system—Two or more Hosts that control one or more domains. At any given instant, no more than one Host can own a domain. If a Host owns the domain, software on the Host has access to PCI devices in (or behind) the PCI slots of the domain.

Redundant System Slot (RSS) board—Any CompactPCI board that meets the RSS bus interface

requirements in the CompactPCI Hot Swap Infrastructure Interface Specification, PICMG 2.12.

This includes CPU boards and bridge mezzanine boards.

Segment A Interface—The CompactPCI bus segment interface on the base CPU board.

Segment B Interface—The CompactPCI bus segment interface on the bridge mezzanine.


Split Mode—Split Mode refers to a system operating with multiple system master Host boards that each own a single bus segment. Split Mode may refer to either Active/Active or Cluster mode. In Active/Active mode, either of the two Hosts can inherit the other Host's bus segment. In Cluster mode, each Host's bus segment is locked to that Host and ownership cannot be transferred to the other Host.

Standby Host (also known as the standby system master)—System board in a High Availability

system that is currently operating in Drone Mode and therefore not the Active Host. The Standby

Host has no visibility of the devices on the other side of the PCI-to-PCI bridge.

Switchover—Changing ownership of a domain from one Host to another.

System Host functions—Central functions provided to a CompactPCI bus segment, including hot swap event response, bus enumeration, and interrupt service. The system slot board provides these functions.

System slot—Slot occupied by a System Master that performs arbitration for secondary bus masters, responds to interrupts from peripheral boards, and drives a clock signal to each backplane slot.

Takeover—A type of switchover that is initiated by the Standby Host in a High Availability

system. A takeover may be hostile or friendly.

Warm Switchover—A warm switchover refers to the state of the domain that is being inherited by the Host taking ownership. On a warm switchover, domain ownership is transferred and, before any bus actions or operations are allowed to occur, the bus segment is toggled through reset. This in effect resets all the devices that reside in the reset domain.
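The switchover vocabulary defined above can be summarized as a small decision function. This is an illustrative sketch only: the enum and function names are hypothetical and not part of the HASDK API. The rule that a quiesced bus may be inherited hot while anything else is inherited warm is also an assumption, because the definitions do not tie each switchover type to hot or warm inheritance.

```c
#include <stdbool.h>

/* Which Host starts the switchover (hypothetical names). */
typedef enum { INIT_ACTIVE_HOST, INIT_STANDBY_HOST } initiator_t;

typedef enum { SW_HANDOVER, SW_FAILOVER, SW_TAKEOVER } switchover_t;

/* How the Host taking ownership inherits the domain. */
typedef enum { INHERIT_HOT, INHERIT_WARM } inherit_t;

/* Handover: started by the Active Host, bus quiesced first.
 * Failover: started by the Active Host, domain left in an unknown state.
 * Takeover: started by the Standby Host (hostile or friendly). */
switchover_t classify_switchover(initiator_t who, bool bus_quiesced)
{
    if (who == INIT_STANDBY_HOST)
        return SW_TAKEOVER;
    return bus_quiesced ? SW_HANDOVER : SW_FAILOVER;
}

/* Assumption: a quiesced bus can be inherited hot; otherwise the bus
 * segment is toggled through reset first (warm switchover). */
inherit_t inheritance_for(bool bus_quiesced)
{
    return bus_quiesced ? INHERIT_HOT : INHERIT_WARM;
}
```

For example, a failure that leaves the domain in an unknown state classifies as `SW_FAILOVER` and, under the stated assumption, is inherited with `INHERIT_WARM`, matching the requirement that a failover needs a bus segment reset to recover.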

2.2 High Availability Hardware Approach

In an RH system the Redundant System Slot (RSS) subsystem is spread across several building blocks. These include:

• Processor boards (such as the Intel® NetStructure™ ZT 5524 System Master Processor Board)

• Bridge mezzanine (such as the Intel® NetStructure™ ZT 4901 Mezzanine Expansion Card)

• Backplane (such as the Intel® NetStructure™ ZT 4103 Redundant Host Backplane)

Other building blocks and subsystems may be required to support the RSS subsystem. These include:

• System management

• Storage

• Power distribution

• Cooling

• Media

• Packet switching


Intel's RH software runs on system master processor boards with bridge mezzanine cards in a PICMG 2.13 compliant RSS backplane to provide redundant system master functionality. This allows control of redundant PCI buses to fail over from one Host to another. The supporting hardware is also PICMG 2.9 and PICMG 2.16 compliant. The system uses the IPMI infrastructure for fault detection and correction.

2.2.1 Processor Boards

The Host processor board is a CompactPCI system master processor board, such as the ZT 5524,

that can operate in Owner Mode or Drone Mode, and may operate in Peripheral Mode.

Additionally, it must be able to gracefully transition between modes by coordinating with a

Redundant Host (RH). The processor board must also support hot swap when it is in Drone Mode.

The key elements that allow RSS functionality are shown in Figure 2, “RSS Processor Board Block

Diagram” on page 16 and are described below.

PCI The PCI interface to the backplane. This may be a PCI-to-PCI bridge like

the Intel 21154, or some other PCI interface.

Iso/Term CompactPCI termination and isolation. Isolation is required to ensure that the PCI interface does not affect the backplane bus segment when the board interface is in Drone Mode. Termination is required when the board interface is in Owner Mode. The isolation may be integrated into the PCI interface device.


Clk The clock generator for the CompactPCI bus segment when the board

interface is in Owner Mode.

CIC The CompactPCI Interface Controller is responsible for coordinating

switchovers.

Arb The bus arbiter for the CompactPCI bus segment when the board interface is in Owner Mode.

HC The Host Controller provides the software-accessible registers for control and status of the CIC.

xMC The IPMI Management Controller may operate as a Baseboard

Management Controller (BMC) or Satellite Management Controller

(SMC). This device is responsible for detecting faults and notifying the

CIC so that it may make the appropriate response. Additionally, the xMC

is responsible for power-on negotiation of bus ownership with a

redundant board.
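As a rough model of the Owner/Drone distinction in the element descriptions above, the following sketch maps an interface mode to the bus-segment functions the board provides. The type and field names are hypothetical, and treating each function as strictly on in one mode and strictly off in the other is a simplifying assumption; on real hardware these functions are switched by the CIC and exposed through the HC registers.

```c
#include <stdbool.h>

typedef enum { MODE_OWNER, MODE_DRONE } iface_mode_t;

/* Bus-segment functions provided by the board interface (hypothetical). */
typedef struct {
    bool isolated;      /* Iso: PCI interface kept off the backplane segment */
    bool terminated;    /* Term: segment terminated by this board */
    bool drives_clock;  /* Clk: board generates the segment clock */
    bool arbitrates;    /* Arb: board runs the segment arbiter */
} iface_functions_t;

/* Owner Mode: terminate, clock, and arbitrate the segment.
 * Drone Mode: isolate from the segment and provide none of those. */
iface_functions_t functions_for_mode(iface_mode_t mode)
{
    bool owner = (mode == MODE_OWNER);
    iface_functions_t f = {
        .isolated     = !owner,
        .terminated   = owner,
        .drives_clock = owner,
        .arbitrates   = owner,
    };
    return f;
}
```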


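Figure 2. RSS Processor Board Block Diagram

[Diagram: Base CPU Board with CPU/chipset, PCI interface, HC, xMC, and CIC blocks (Control/Status, Control, and Xreq signals), an interboard connector, and Iso/Term, Clk., and Arb. connecting through CompactPCI J1/J2 to Bus Segment A.]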

2.2.2 Bridge Mezzanine

The HASDK driver set works in single and dual bus segment configurations. To support the dual bus segment configuration, a bridge mezzanine must be mounted on the processor board.


The bridge mezzanine is a board that is physically attached to the base processor board. The processor board and bridge mezzanine are stacked such that they occupy two adjacent CompactPCI slots.

Like the base processor board, the bridge mezzanine has a CompactPCI bus segment interface that can operate in Owner Mode or Drone Mode. The bus interface mode of the bridge mezzanine is independent of the processor board's mode.

The bridge mezzanine contains elements that are identical to the base processor board in order to

create a second CompactPCI interface for connection to a different bus segment, as shown in

Figure 3, “RSS Host with Bridge Mezzanine Block Diagram” on page 17.


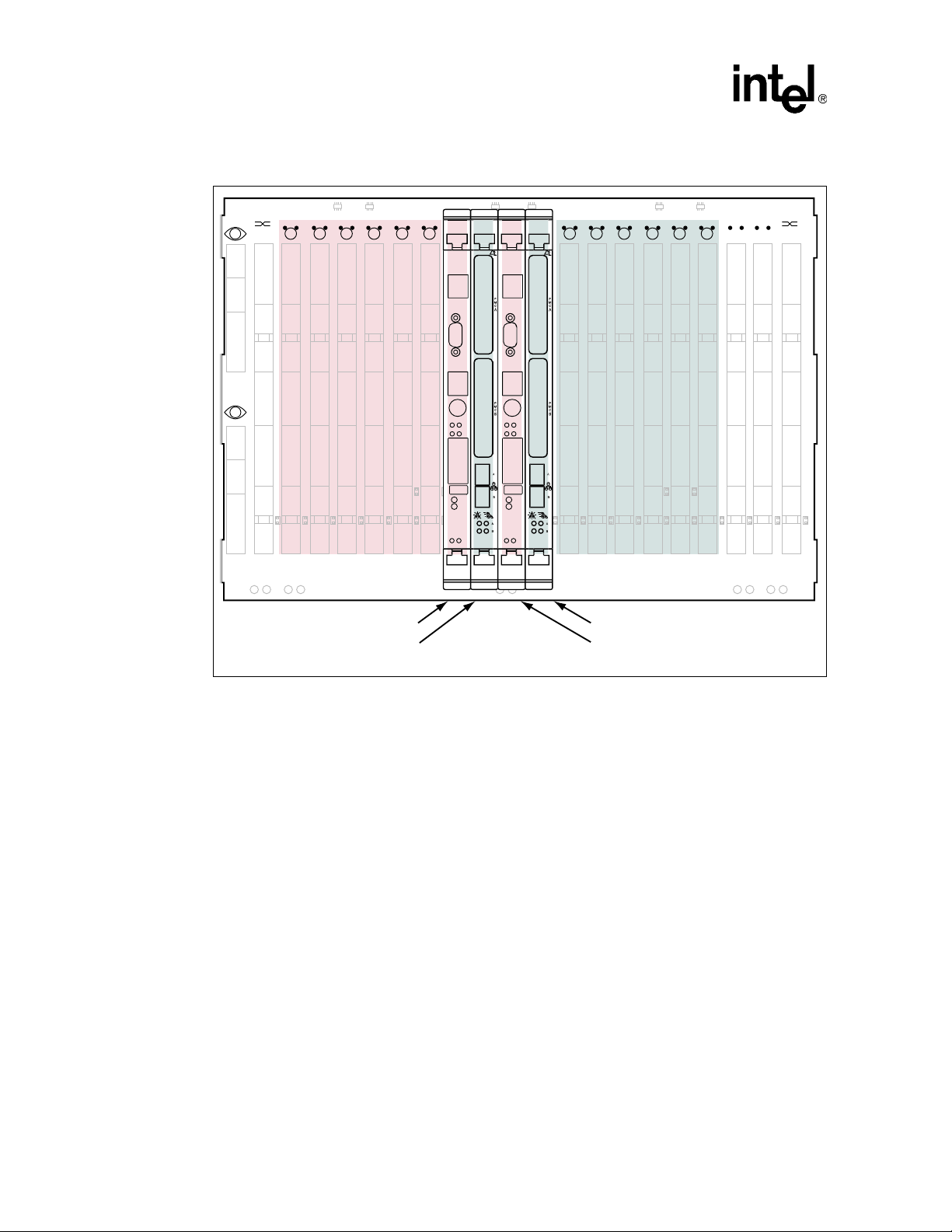

Figure 3. RSS Host with Bridge Mezzanine Block Diagram


2.2.3 Backplane

The RSS system backplane supports two CompactPCI buses accessible by both Redundant Hosts. In Active-Standby mode, the active processor board (Active Host) controls the buses and the standby processor board is isolated from the backplane (Drone mode). By using Active/Active-capable processor boards such as the ZT 5524, the system can be configured so that each processor board has access to one backplane bus (Cluster mode). The backplane has separate buses for active-to-standby processor board communication (COMM) and Host Controller functions. See Figure 4, "High-Availability System Backplane Architecture" on page 18 for an example of a typical High-Availability backplane.
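The ownership rules for the two backplane bus segments can be sketched as a table that stores exactly one owner per segment, which by construction enforces the rule that no more than one Host owns a domain. Host IDs 0 and 1, the function names, and the choice to move both segments together on an Active-Standby switchover are assumptions made for illustration.

```c
#include <stdbool.h>

#define NUM_SEGMENTS 2   /* Bus Segment A (0) and Bus Segment B (1) */

typedef enum { MODE_ACTIVE_STANDBY, MODE_ACTIVE_ACTIVE, MODE_CLUSTER } rh_mode_t;

typedef struct {
    rh_mode_t mode;
    int owner[NUM_SEGMENTS];  /* one Host ID per segment, never two */
} backplane_t;

void backplane_init(backplane_t *bp, rh_mode_t mode)
{
    bp->mode = mode;
    if (mode == MODE_ACTIVE_STANDBY) {
        bp->owner[0] = bp->owner[1] = 0;  /* Host 0 Active, Host 1 in Drone mode */
    } else {
        bp->owner[0] = 0;                 /* each Host owns one segment */
        bp->owner[1] = 1;
    }
}

/* Transfer a segment to new_host. Returns false if the request is invalid
 * or the mode forbids it (Cluster mode locks each segment to its Host). */
bool backplane_switchover(backplane_t *bp, int segment, int new_host)
{
    if (segment < 0 || segment >= NUM_SEGMENTS || (new_host != 0 && new_host != 1))
        return false;
    if (bp->mode == MODE_CLUSTER)
        return false;
    if (bp->mode == MODE_ACTIVE_STANDBY) {
        for (int s = 0; s < NUM_SEGMENTS; s++)
            bp->owner[s] = new_host;      /* assumption: both buses move together */
    } else {
        bp->owner[segment] = new_host;    /* Active/Active: inherit one segment */
    }
    return true;
}
```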


Figure 4. High-Availability System Backplane Architecture

[Diagram: a Host Processor Board and a Redundant Host Processor Board, each paired with a Bridge Mezzanine Board, on a backplane with numbered peripheral slots.]

2.3 High-Availability Software Approach

As shown in Figure 1, "High-Availability CPU Architecture" on page 11, there are three High-Availability software components:

• Host application

• System Management

• Backplane Device Drivers

2.3.1 Host Application

The host application serves as the central control mechanism for the platform. For a host application to function in an RH environment, it must be able to relinquish or receive control of the system in a controlled manner. Dynamically transferring bus segment ownership between the active and backup Hosts requires the application to keep data synchronized between the applications on the redundant Hosts.
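One common way to keep redundant application instances synchronized, not necessarily the approach Intel intends, is sequence-numbered checkpointing. The active instance sends each state change with an incrementing sequence number, and the backup applies only the next expected update. The sketch assumes a hypothetical one-field application state and leaves the transport between Hosts unspecified.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical application state mirrored from the active to the backup Host. */
typedef struct {
    uint32_t seq;        /* sequence number of the last applied update */
    int32_t  sessions;   /* example application data */
} app_state_t;

/* One checkpoint message (transport not shown). */
typedef struct {
    uint32_t seq;
    int32_t  sessions;
} checkpoint_t;

/* Active side: change local state and produce a checkpoint for the peer. */
checkpoint_t active_update(app_state_t *s, int32_t sessions)
{
    s->sessions = sessions;
    s->seq++;
    checkpoint_t cp = { s->seq, s->sessions };
    return cp;
}

/* Backup side: apply a checkpoint only if it is the next one in sequence.
 * A gap or a duplicate is rejected, so the backup never applies updates
 * out of order. */
bool backup_apply(app_state_t *s, const checkpoint_t *cp)
{
    if (cp->seq != s->seq + 1)
        return false;
    s->seq = cp->seq;
    s->sessions = cp->sessions;
    return true;
}
```

A rejected checkpoint signals that the backup has missed an update; in this scheme it would request a full state transfer before it could safely take over.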


The application should be designed to be as portable as possible. This requires a modular design that isolates the system management requirements from the host application. This division of responsibilities can be achieved through a layered implementation. See Chapter 3, "Host Application Software" for more information.

In addition to taking a modular approach, the application designer should recognize the importance

of producing a hardened application. A hardened application must at least provide a capable

logging mechanism that allows for application faults to be reconstructed and corrected. It should

also adhere to good coding practices such as validating all input parameters and return statuses. A

more proactive approach is to implement fault recovery mechanisms. This could include the

capturing of faults and the isolation of faulted application components.

2.3.2 System Management

System management is the mechanism by which system configuration and fault characteristics are established, ensuring that system health is maintained. In the Intel® Redundant Host architecture there are extensive sets of APIs that provide the developer with a fine level of control of the platform.

The API described in Chapter 6, "Redundant Host API" deals with the management of redundant hosts that reside in a single chassis. To manage such a configuration, a number of function calls are required so that predetermined default actions can be prescribed depending on the desired switchover strategy. The required functions are based on the Hot Swap Infrastructure Interface Specification, PICMG 2.12, specifically its Redundant Host API chapter. The supplied APIs provide the following abilities:

• Enumerate the hosts, domains, and slots in the system

• Get information about devices in slots

• Initiate domain switchovers among hosts

• Enable and disable notifications regarding switchover operations

• Specify actions that result from hardware-initiated alarms and control notifications about

alarms.

Chassis management is achieved using the IPMI infrastructure. The IPMI interface exposes the embedded monitoring devices such as temperature and voltage sensors. Currently there is no industry-standard API for managing IPMI devices, primarily because the devices used may vary significantly between chassis configurations. Since the drivers supplied for use in the Redundant Host architecture are operating system dependent, the interfaces used to access the IPMI devices are not necessarily portable between the supported operating systems.

The supplied Hot Swap API provides a mechanism to identify the topology and Hot Swap state

within a specified chassis. By using this API the system management application is able to identify

which slots are populated and the power states of the occupying boards. There are additional APIs

that allow for simulated backplane peripheral insertion and extraction. In addition, this API

provides for notification of Hot Swap events.

The Slot Control Interface is independent of the Redundant Host driver. This separation of functionality is designed to allow for slot control functionality in a chassis without full hot swap or redundant host capabilities. The Slot Control API is based on the PICMG 2.12 High Availability Slot Control Interface functions. It interacts with the Slot Control Driver to create IPMI messages through which a finer granularity of board control can be achieved than was found in previous generations of High Availability systems. Using the Slot Control API, the application can retrieve information regarding "Board Present Detection", "Board Healthy", and "Board Reset" capability.


2.3.3 Backplane Device Drivers

Backplane device drivers are a critical component of a High Availability system. The drivers need to be robust in their operation as well as dynamic, given the "stated" nature of a Hot Swap architecture.

The ability of a driver to remain loaded and initialized even though the Host may not have visibility of the device is critical when Host ownership can transfer almost instantaneously. For a driver to function in this environment, the designer should implement the driver in a stated fashion. This means that the driver must be able to be started and stopped asynchronously.
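A minimal sketch of a stated driver, assuming a hypothetical `drv_t` type and `drv_start`/`drv_stop` entry points (these are not HASDK names). The driver stays loaded across ownership changes and gates hardware access on an atomic state flag that can be changed asynchronously.

```c
#include <stdatomic.h>

/* Hypothetical driver states; not HASDK identifiers. */
typedef enum { DRV_STOPPED, DRV_RUNNING } drv_state_t;

typedef struct {
    _Atomic int state;       /* drv_state_t, may change asynchronously */
    unsigned long io_done;   /* driver data survives stop/start cycles */
} drv_t;

/* Loaded and initialized, but not yet touching hardware. */
void drv_init(drv_t *d)
{
    atomic_init(&d->state, DRV_STOPPED);
    d->io_done = 0;
}

/* Called when this Host gains ownership of the device's domain. */
void drv_start(drv_t *d) { atomic_store(&d->state, DRV_RUNNING); }

/* Called when ownership is lost: the driver stays loaded but stops
 * accessing a device the Host can no longer see. */
void drv_stop(drv_t *d) { atomic_store(&d->state, DRV_STOPPED); }

/* I/O path: returns 0 on success, -1 while the driver is stopped.
 * Assumes a single I/O thread, so io_done is not atomic. */
int drv_io(drv_t *d)
{
    if (atomic_load(&d->state) != DRV_RUNNING)
        return -1;
    d->io_done++;            /* stand-in for real device access */
    return 0;
}
```

The sketch only rejects new requests after a stop. A production driver would also need to drain in-flight requests before `drv_stop` returns and protect its data if several threads issue I/O.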

Another important factor when designing a driver that will function in a Redundant Host environment is the ability to maintain synchronization between redundant device drivers that reside on separate Hosts. To provide an easily implemented communication mechanism, the Intel HASDK provides a single callback definition and API call. Because of its simplicity, this driver communication mechanism offers both a simple interface and a very robust synchronization tool.
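The HASDK's actual callback definition and API call are given in Appendix C and Appendix D. The sketch below only illustrates the general one-callback, one-call pattern; `peer_register`, `peer_send`, and `peer_rx_cb` are hypothetical names, and the direct loopback delivery stands in for the real inter-Host transport.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical callback invoked on the peer Host when a message arrives. */
typedef void (*peer_rx_cb)(void *ctx, const void *msg, size_t len);

typedef struct {
    peer_rx_cb cb;   /* registered by the driver on the redundant Host */
    void *ctx;
} peer_link_t;

void peer_register(peer_link_t *link, peer_rx_cb cb, void *ctx)
{
    link->cb = cb;
    link->ctx = ctx;
}

/* Hypothetical send call; loopback stand-in for the real transport.
 * Returns 0 on delivery, -1 if nothing is registered or args are bad. */
int peer_send(peer_link_t *link, const void *msg, size_t len)
{
    if (link == NULL || link->cb == NULL || (msg == NULL && len > 0))
        return -1;
    link->cb(link->ctx, msg, len);
    return 0;
}

/* Example standby-side callback: mirror one configuration word. */
typedef struct { unsigned config; } mirror_t;

void mirror_rx(void *ctx, const void *msg, size_t len)
{
    mirror_t *m = ctx;
    if (len == sizeof m->config)
        memcpy(&m->config, msg, len);
}
```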

The Intel Redundant Host architecture also provides support for those devices that require a domain reset. The domain can be reset by using either of the following methods:

• The default IPMI settings. These can be configured using the IPMI API, described in Chapter 8, "IPMI API."

• The Redundant Host API, using either the Switchover or Slot Information APIs, as described in Chapter 6, "Redundant Host API."

For more information regarding the Hot Swap and Redundant Host CompactPCI device driver

design model see Chapter 5, “High Availability CompactPCI Device Drivers.” Redundant Host

APIs and callback definitions for specific operating systems are in Appendix C, “HSK Device

Driver Interface for VxWorks* 5.4,” and Appendix D, “RH Device Driver Interface for Linux*

2.4.”


3 Host Application Software

Through thoughtful design and the use of a layered development approach, an application can be developed that meets the implied robustness of a highly available system and is also portable. In addition to covering the details of developing an application that runs in a High Availability environment, this chapter provides a foundation for understanding the issues that a developer needs to be aware of when deploying in a multi-host architecture.

3.1 Goals of the Host Application

Design goals that should be achieved for your application to perform successfully in a High

Availability environment are:

• Serviceability

• Portability

• Redundancy

3.1.1 Serviceability

The first, and probably most important, attribute of an application is its ability to maintain a constant level of service. This ability to provide a minimum level of functionality is referred to as serviceability. The concept of serviceability should not be restricted to performing the required functionality within the domain of a single Host, but should be considered at a much higher level. An application is the service or set of services that need to be performed within the domain of a platform. By domain we are referring to the system that is providing the service. The system could be as simple as a system master processor board, but more likely it will contain peripheral boards, chassis management modules, various system sensors, and, in the case of a redundant host architecture, multiple system master boards.

3.1.2 Portability

Another goal is to design and implement a portable Host application. Some of the largest investments that a provider makes are in application development and maintenance. To preserve as much of the initial investment as possible, it is important to design the application so that it is separated from specific platform components that may be enhanced or changed. Portability can be achieved by isolating the application as much as possible from the system management responsibilities required for High Availability. This separation of functionality can be achieved through a combination of modular design and a layered software approach. This topic is covered in more detail in Section 3.2, "Division of Labor" on page 22.

3.1.3 Redundancy

To achieve a high level of serviceability within a Redundant Host environment, it is assumed that the host application has the ability to fail over to another application. This backup application should be a mirrored copy of the original application that will likely reside on another


System Host in the same chassis. For a host application to maintain the system's serviceability, these redundant applications should maintain some level of synchronization. The level of synchronization and the sophistication of the system's peripherals determine the failover characteristics of your system. Synchronization issues, in addition to other implementation concerns, are covered in Section 3.3, "Development Issues" on page 23.

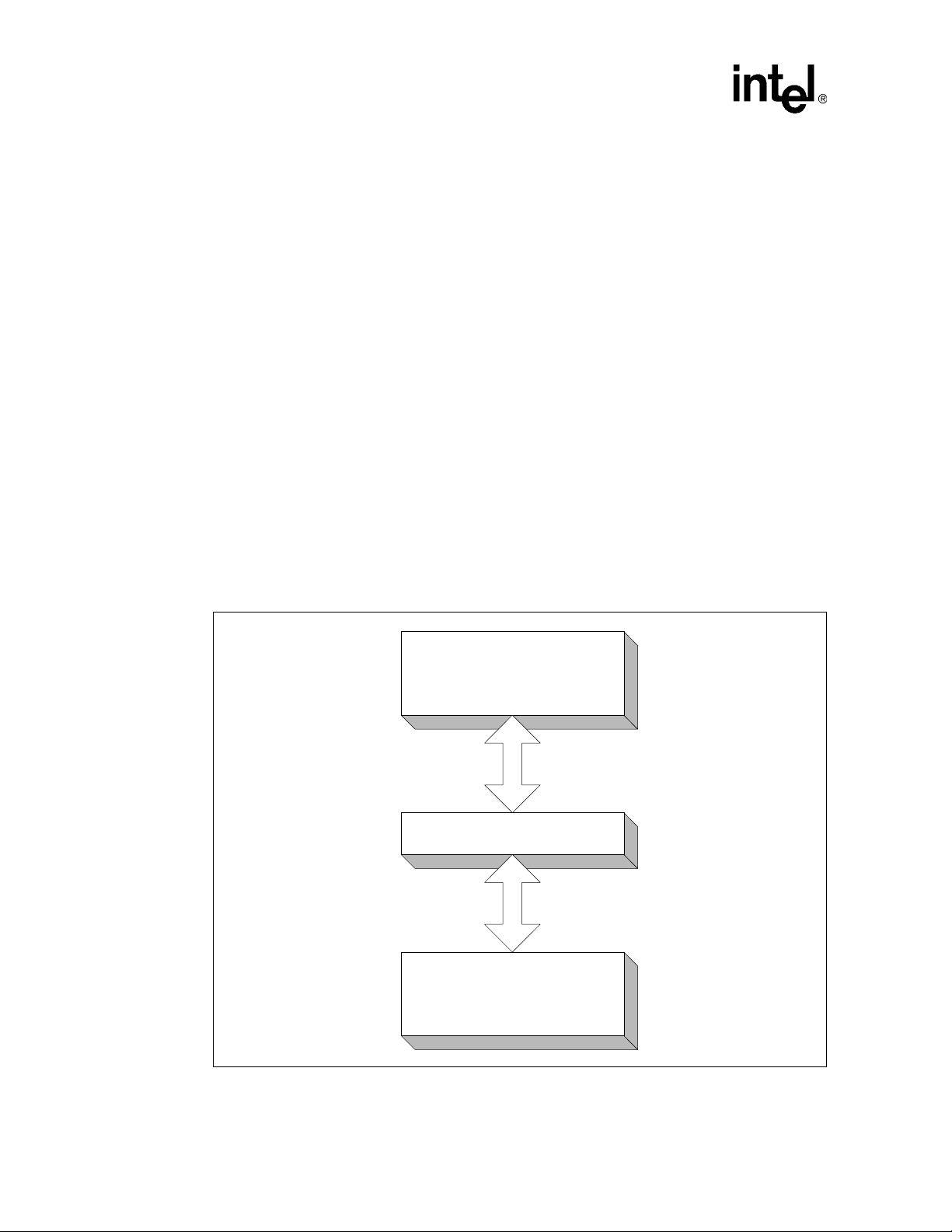

3.2 Division of Labor

Historically, embedded application developers have integrated the management of the system with the host application. This tight integration meant it was unlikely that much of the host application could be ported when the application was rehosted on a new platform. This topic presents a possible architecture that allows the host application to remain aware of system performance and degradation while maintaining a loose coupling with the system management aspects of the architecture.

One of the keys to portability in application design is to maintain a modular design. This goal is often complicated by system management routines that place particular requirements on the implementation of the application. One way to reduce the application's dependence on a particular implementation is to take a layered approach to its design. In this way you can reduce dependence on specific implementation features without unnecessarily isolating the application from the underlying performance of the system. See the "Layered Host Application Diagram" below.

Figure 5. Layered Host Application Diagram

[Diagram: three stacked layers, from top to bottom: Host Application, Platform Interface, System Management Modules.]


The diagram shows that the host application’s need to understand the particular implementation

aspects of the platform’s system management is reduced by placing an intermediary layer that in

effect interfaces and translates only the system management information that the host application

cares about. The host application should usually care about only those issues that would degrade

performance or cease operations, such as:

• Access to peripherals

• System performance

• Integrity of operations

The platform interface can be more than a wrapper around exposed system functionality: it could act as a filter with a level of intelligence. The platform interface could be designed to monitor system health and take proactive actions, like initiating a handover, when circumstances dictate. The platform interface might also be responsible for translating system-specific messages and alerts into a normalized format that the application understands. The events that a host application most likely requires notification of are:

• Switchover situations

• Warnings of system failures

• The availability of system resources

All these events should be handled first by the platform interface and relayed to the host

application only when they might impede performance.
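A platform interface that filters and normalizes events might look like the following sketch. Both event enumerations and the mapping between them are hypothetical. The point is that non-critical events are absorbed by the platform interface, while switchover situations, failure warnings, and resource changes reach the host application in one normalized form.

```c
/* Hypothetical raw events from the system management modules. */
typedef enum {
    RAW_TEMP_NONCRITICAL,
    RAW_TEMP_CRITICAL,
    RAW_FAN_OK,
    RAW_SWITCHOVER_PENDING,
    RAW_PERIPHERAL_REMOVED
} raw_event_t;

/* Normalized events the host application understands. */
typedef enum {
    APP_EVT_NONE,             /* handled inside the platform interface */
    APP_EVT_SWITCHOVER,
    APP_EVT_FAILURE_WARNING,
    APP_EVT_RESOURCE_CHANGE
} app_event_t;

/* Translate a raw event and decide whether the application needs it. */
app_event_t platform_filter(raw_event_t e)
{
    switch (e) {
    case RAW_SWITCHOVER_PENDING: return APP_EVT_SWITCHOVER;
    case RAW_TEMP_CRITICAL:      return APP_EVT_FAILURE_WARNING;
    case RAW_PERIPHERAL_REMOVED: return APP_EVT_RESOURCE_CHANGE;
    case RAW_TEMP_NONCRITICAL:   /* logged locally, not forwarded */
    case RAW_FAN_OK:
    default:                     return APP_EVT_NONE;
    }
}
```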

3.3 Development Issues

There are several issues that an application developer of a High Availability system architecture

must be aware of:

• Redundancy

• Graceful switchover

• Hardened applications

• Code modularity

3.3.1 Redundancy

Redundancy, or at least the awareness of redundancy, must be designed into the application. This requires that data be constantly normalized (kept consistent between the redundant Hosts). The term data could mean anything from state information to an entire database. The ultimate goal is to have a system that responds appropriately to a switchover while maintaining the integrity of all system data.

The trade-off for maintaining a high level of synchronization is the overhead it requires. The amount of bandwidth required for data normalization can be effectively reduced by:

• Utilizing intelligent peripherals that internally maintain state

• Creating innovative methods of database sharing through shared RAID architectures

These are just examples; there are numerous ways to share data, depending on your actual implementation.


3.3.2 Graceful Switchover

In a Redundant Host environment a graceful switchover is only secondary in importance to data

integrity. An effective mechanism is required in order for an application to seamlessly pick up the

functionality of a faulted application. The Intel Redundant Host environment has an infrastructure

in place to help facilitate such control transitions. This architecture supplies:

• Multiple communication paths

• A capable fault detection interface

• Embedded firmware that can be configured for multiple failover scenarios

In addition to providing a fine level of granularity on the type of switchovers provided, this

platform also exposes these switchover events to an application or platform interface module so

that the software can act upon the events appropriately.

3.3.3 Hardened Applications

In almost all environments it is important to develop applications in a hardened manner, but in a highly available embedded environment it is especially important. The definition of the term "hardened" may vary depending on the type of system that is being developed and the accessibility of various system-level software components. In the context of this Redundant Host architecture, the term hardened means that all function return codes are handled appropriately, function parameters are validated, and the system maintains a logging mechanism sufficient to monitor system performance and to help diagnose fault conditions when present. Code hardening should be part of any standard development effort, but a disciplined approach to code hardening must be maintained in an HA environment.
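The three hardening rules above (validate parameters, handle every return code, log failures) are illustrated by the sketch below. The status codes, the `read_sensor` stand-in, and the log format are hypothetical placeholders for real system calls and a real logging facility.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical status codes for the example. */
typedef enum { ST_OK = 0, ST_BAD_PARAM = -1, ST_IO = -2 } status_t;

/* Minimal logging hook: record where and why an operation failed. */
static void log_fault(const char *func, status_t st)
{
    fprintf(stderr, "[%s] failed with status %d\n", func, (int)st);
}

/* Stand-in for a lower-level call whose status must be checked. */
static status_t read_sensor(int id, int *out)
{
    if (id < 0 || id > 15)
        return ST_IO;
    *out = 40 + id;              /* fake reading */
    return ST_OK;
}

/* Hardened wrapper: validate inputs, check every status, log failures,
 * and never return a result computed from partial data. */
status_t get_average(const int *ids, size_t n, int *avg)
{
    if (ids == NULL || avg == NULL || n == 0) {
        log_fault(__func__, ST_BAD_PARAM);
        return ST_BAD_PARAM;
    }
    long sum = 0;
    for (size_t i = 0; i < n; i++) {
        int v;
        status_t st = read_sensor(ids[i], &v);
        if (st != ST_OK) {
            log_fault(__func__, st);
            return st;           /* propagate the failure to the caller */
        }
        sum += v;
    }
    *avg = (int)(sum / (long)n);
    return ST_OK;
}
```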

3.3.4 Code Modularity

Code modularity is also considered a common implementation characteristic, but it is often overlooked during the implementation portion of a project. To achieve some level of application portability, the designers need to make a conscious effort to move away from typical monolithic embedded designs.

One approach to modular design in an HA architecture is to decouple the services provided by the system from the entities responsible for system management. Since system management is heavily dependent on the hardware configuration of the host platform, implementing a platform interface module helps to abstract the host application away from the platform on which it resides. The Platform Interface Module achieves platform abstraction by handling most hardware-level monitoring and exposing platform-specific interfaces only through non-proprietary APIs. One of the advantages of the Intel High Availability Redundant Host System is its reliance on industry-standard, non-proprietary interfaces. These interfaces allow for future portability of the developed code base.
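One way to build such a platform interface module in C is a table of function pointers that the host application calls instead of calling platform APIs directly. The `platform_ops_t` structure and the simulated backend are hypothetical; a real backend would wrap the IPMI or Redundant Host APIs, and porting the application would mean supplying a new table rather than changing application code.

```c
#include <stddef.h>

/* Hypothetical platform-interface operations used by the application. */
typedef struct {
    const char *name;
    int (*read_temperature)(int sensor, int *celsius);
    int (*request_handover)(void);
} platform_ops_t;

/* Simulated backend; a real one would call the IPMI or RH APIs. */
static int sim_read_temperature(int sensor, int *celsius)
{
    if (celsius == NULL || sensor < 0)
        return -1;
    *celsius = 35;
    return 0;
}

static int sim_request_handover(void) { return 0; }

const platform_ops_t sim_platform = {
    .name             = "simulated",
    .read_temperature = sim_read_temperature,
    .request_handover = sim_request_handover,
};

/* Application logic written only against platform_ops_t.
 * Returns 0 if within limit, 1 if a handover was requested, -1 on error. */
int app_check_thermal(const platform_ops_t *p, int sensor, int limit)
{
    int t;
    if (p == NULL || p->read_temperature(sensor, &t) != 0)
        return -1;
    if (t <= limit)
        return 0;
    return p->request_handover() == 0 ? 1 : -1;
}
```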


4 System Management

System Management is an all-encompassing term whose definition can vary drastically depending on the type of system that is being developed. System Management can indicate anything from system configuration all the way to active reporting, proactive fault remediation, and comprehensive system security. In a relatively closed system with limited external interaction, system management could be limited to chassis management, event logging, and resource management. In systems that require a more sophisticated external interface and a finer granularity of control, system management mechanisms can provide a myriad of APIs and system services for administering a system.

The intent of this section is to give a developer an overview of the application programming interfaces supplied by the High Availability SDK (HASDK).

The HASDK provides System Management APIs. These APIs enable Redundant Host configuration and administration, IPMI infrastructure communication and administration, Hot Swap device detection and management, and Slot Control for controlling and accessing backplane slot attributes.

Most of the details for creating and administering a telco-based solution are beyond the scope of this document.

4.1 Redundant Host API

Among these APIs is a PICMG* 2.12 compliant Redundant Host Programming Interface. This

interface allows a client to perform the following operations:

• Initialize and terminate an instance of this interface

• Enumerate the Hosts, domains and slots in the system

• Get information about devices in slots

• Initiate domain switchovers among Hosts

• Enable and disable notifications regarding switchover operations

• Specify actions that result from hardware-initiated alarms and control notifications about alarms

See Chapter 6, “Redundant Host API,” for more information.

4.1.1 IPMI API

Platform management is a major component of a comprehensive system management architecture. Platform management allows for status and event notification of all exposed interfaces such as temperature sensors, voltage monitors, and other sensory devices. These status and communications capabilities need to be as extensible as possible.


The next-generation, high-availability architecture provides this system management infrastructure

using IPMI. Through the IPMI API the developer is able to access the status of individual sensors,

various management controllers, and to configure the system to initiate switchovers based on

events or threshold excursions. See Chapter 8, “IPMI API,” for details.

4.1.2 Hot Swap API

A critical feature of any system that claims to be Highly Available is the capability to perform peripheral insertions and extractions without requiring that the system be powered off. To provide this functionality, a kernel-level Hot Swap infrastructure should be integrated into the operating system. This infrastructure allows for dynamic resource allocation for peripheral slot cards. Given the dynamic nature of a Highly Available platform, the system management needs to remain aware of the system's topology. A PICMG 2.12 compliant Hot Swap API accomplishes this. The Hot Swap API includes functions to return the state and population of the CompactPCI bus, to simulate unlatching a particular board's hot swap extractor, and to permit software connection and disconnection. See Chapter 6, "Redundant Host API," for more information.

4.1.2.1 Slot Control API

Another part of system management is the ability to control individual peripheral cards. Under normal circumstances, when a system is operating properly, little card control needs to be performed. However, some events require actions to place the peripheral cards into a known state. It is the responsibility of the slot control driver and the accompanying API to provide this quiescing and peripheral shutdown functionality. This API provides control at the card level, as well as several functions that report the operational state of the peripheral card. See Chapter 9, "Slot Control API," for more information.

4.2 Baseboard Management Controller Firmware Enhancements

The HASDK takes advantage of the system master processor board’s capability for board

management provided through its resident Baseboard Management Controller (BMC). The

standard capabilities of the BMC provide a high level of system management. To support RH functionality, the BMC firmware adds some extensions for bus segment control to its IPMI v1.5 specification support. These extensions include:

• Fault Configuration

• Isolation Strategies

• CompactPCI Interface Controller interaction

• Non-Volatile Storage of RH Parameters

• IPMI RH Channel Commands

4.2.1 Fault Configuration

The BMC handles the following event triggering mechanisms for each entry in its Sensor Data

Record (SDR):

• Upper/Lower non-critical threshold


• Upper/Lower critical threshold

• Upper/Lower non-recoverable threshold

Each range can be set independently for each sensor and the ranges can overlap. This area of

configuration is used only to trigger events. These events appear in the System Event Log.

Platform Event Filtering (PEF) determines the actions that occur as a result of these events. Only

the Upper/Lower non-recoverable threshold is typically configured using the PEF to cause a

hardware-initiated takeover to occur.
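The relationship between the six SDR thresholds and the typical PEF policy described above can be sketched as follows. The structure layout is hypothetical, and treating a reading that reaches a threshold as crossing it is a simplifying assumption; the BMC applies its own threshold and hysteresis rules. Because the checks run from most to least severe, overlapping ranges resolve to the most severe match.

```c
/* Severity of a reading relative to the six SDR thresholds. */
typedef enum { SEV_OK, SEV_NONCRITICAL, SEV_CRITICAL, SEV_NONRECOVERABLE } severity_t;

/* Hypothetical threshold set for one sensor. */
typedef struct {
    int lnr, lc, lnc;   /* lower non-recoverable, critical, non-critical */
    int unc, uc, unr;   /* upper non-critical, critical, non-recoverable */
} thresholds_t;

/* Most severe threshold first, so overlapping ranges pick the worst case. */
severity_t classify_reading(const thresholds_t *t, int v)
{
    if (v >= t->unr || v <= t->lnr) return SEV_NONRECOVERABLE;
    if (v >= t->uc  || v <= t->lc)  return SEV_CRITICAL;
    if (v >= t->unc || v <= t->lnc) return SEV_NONCRITICAL;
    return SEV_OK;
}

/* Typical PEF policy: only non-recoverable events cause a hardware-initiated
 * takeover; other events are simply recorded in the System Event Log. */
int causes_takeover(severity_t s)
{
    return s == SEV_NONRECOVERABLE;
}
```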

4.2.2 Isolation Strategies

The BMC handles the following event actions in its PEF Table:

• Alert

• Power Off

• Reset

• Power Cycle

• Diagnostic Interrupt (NMI)

These options can be set independently for each event.


Support for a Handover action allows the takeover/handover process to be initiated from the BMC. This action triggers the CompactPCI Interface Controller (CIC) to initiate the handover sequence. A virtual RH channel facilitates this switchover request.

Table 1. Channel Definitions for ZT 5524

Channel #   Description
0x0         IPMB 0
0x1         EMP
0x2         ICMB
0x3         PCI
0x4         SMM
0x5         RH Virtual Channel
0x6         LAN Interface 2
0x7         LAN Interface 1
0x8         IPMB 1
0x9         RESERVED
0xA         RESERVED
0xB         RESERVED
0xC         Internal
0xD         RESERVED
0xE         Self
0xF         SMS


Table 2. RH Channel Alert Destinations

Destination #   Description
0x00            RESERVED
0x01            RH_CHAN_SET_ALL_MC_FD (Sets CIC Fault Detection Lines)
0x02            RH_CHAN_CLEAR_ALL_MC_FD (Clears CIC Fault Detection Lines)

The RH channel acts as a virtual channel that can respond to Alert Actions, and it supports IPMI commands such as Alert Immediate. To have a sensor event set the CIC fault detection lines, configure the BMC as follows:

• In the Alert Policy Table: Create an entry with a unique policy number, the channel set to the RH virtual channel, and the destination set to RH_CHAN_SET_ALL_MC_FD.

• In the Platform Event Filter Table: Create an entry with the Alert action selected, the Alert Policy Number set to the policy created above, and the event data mask set to match the sensor thresholds that should trigger the alert.
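The two configuration steps above can be sketched in C as packing an Alert Policy Table entry that points at the RH virtual channel. The channel (0x5) and destination values come from Table 1 and Table 2; the byte layout is an assumption based on the IPMI v1.5 Alert Policy Table entry and should be checked against the specification, and `make_rh_alert_policy` is a hypothetical helper. Writing the entry to the BMC (for example, with a Set PEF Configuration Parameters request) is not shown.

```c
/* Channel and destination values from Table 1 and Table 2. */
#define RH_VIRTUAL_CHANNEL        0x5
#define RH_CHAN_SET_ALL_MC_FD     0x01
#define RH_CHAN_CLEAR_ALL_MC_FD   0x02

/* ASSUMED IPMI v1.5 Alert Policy Table entry layout:
 *   byte 1: [7:4] policy number, [3] enable, [2:0] policy
 *   byte 2: [7:4] channel number, [3:0] destination selector
 *   byte 3: alert string information */
typedef struct {
    unsigned char policy;       /* byte 1 */
    unsigned char chan_dest;    /* byte 2 */
    unsigned char alert_string; /* byte 3, left at 0 in this sketch */
} alert_policy_entry;

/* Hypothetical helper: build an enabled entry that routes an alert to one
 * of the RH channel destinations. */
alert_policy_entry make_rh_alert_policy(unsigned policy_number, unsigned destination)
{
    alert_policy_entry e;
    e.policy = (unsigned char)(((policy_number & 0x0Fu) << 4)
                               | 0x08u   /* entry enabled */
                               | 0x00u); /* policy 0 (assumed: always send) */
    e.chan_dest = (unsigned char)((RH_VIRTUAL_CHANNEL << 4) | (destination & 0x0Fu));
    e.alert_string = 0;
    return e;
}
```

A matching event filter entry would then select the Alert action and reference the same policy number.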

4.2.3 IPMI RH Channel Commands

The following RH commands are present in the ZT 5524 processor board BMC firmware. They are accessible only by sending the corresponding command and net function to the RH channel (0x05).
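As a sketch of how a request to one of these commands might be described in C, the code below fills in the target channel, the NetFn/LUN byte, and the command code. The constants come from this section; the `rh_request` structure and both helpers are hypothetical, and how the target channel is selected in practice depends on the host interface, which this section does not specify. The NetFn/LUN packing (NetFn in bits 7:2) and the odd response NetFn follow the general IPMI message format.

```c
/* Values from Section 4.2.3. */
#define RH_CHANNEL                  0x05
#define INTEL_RH_SPECIFIC_REQUEST   0x36
#define RH_CHAN_ENABLED             0x00
#define RH_CHAN_GET_RH_BMC_ADDR     0x05

/* Hypothetical request descriptor. */
typedef struct {
    unsigned char channel;   /* must be the RH channel for these commands */
    unsigned char netfn_lun; /* NetFn in bits [7:2], LUN in bits [1:0] */
    unsigned char cmd;
} rh_request;

rh_request rh_make_request(unsigned char cmd)
{
    rh_request r;
    r.channel = RH_CHANNEL;
    r.netfn_lun = (unsigned char)(INTEL_RH_SPECIFIC_REQUEST << 2); /* LUN 0 */
    r.cmd = cmd;
    return r;
}

/* IPMI responses carry the request NetFn plus one (an odd NetFn). */
int rh_is_response_netfn(unsigned char netfn_lun)
{
    return (netfn_lun >> 2) == INTEL_RH_SPECIFIC_REQUEST + 1;
}
```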

4.2.3.1 RH Channel Enabled

This IPMI command reports whether the board has RH features enabled. The board operates in non-RH mode if no IOX is present or if the board is in a slot that is not RH capable. Standard IPMI completion codes are returned.

IPMI Command: RH_CHAN_ENABLED (0x00)

Net Function: INTEL_RH_SPECIFIC_REQUEST (0x36)

           Byte   Data Fields
Request    -      (no data bytes)
Response   1      Completion Code
           2      1h = RSS enabled
                  0h = RSS disabled
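A caller might decode the RH_CHAN_ENABLED response as follows. This C sketch assumes the two-byte response layout shown in the table above; `rh_parse_enabled` is a hypothetical helper, and treating any value other than 0h or 1h as an error is a choice made for illustration.

```c
#include <stddef.h>

/* Standard IPMI completion code for "command completed normally". */
#define IPMI_CC_OK 0x00

/* Parse an RH_CHAN_ENABLED response.
 * Returns 1 (RSS enabled), 0 (RSS disabled), or -1 if the completion code
 * reports an error or the response is short or holds an undefined value. */
int rh_parse_enabled(const unsigned char *resp, size_t len)
{
    if (len < 1 || resp[0] != IPMI_CC_OK)
        return -1;
    if (len < 2)
        return -1;
    if (resp[1] == 0x01)
        return 1;
    if (resp[1] == 0x00)
        return 0;
    return -1;
}
```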

4.2.3.2 RH Channel Get RH BMC Address

This command gets the IPMB 1 address of the redundant host’s BMC. Standard IPMI completion

codes are returned.

IPMI Command: RH_CHAN_GET_RH_BMC_ADDR (0x05)

Net Function: INTEL_RH_SPECIFIC_REQUEST (0x36)

           Byte   Data Fields
Request    -      (no data bytes)
Response   1      Completion Code
           2      RH BMC Address
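The address response can be handled the same way. In this hypothetical C helper, a zero return means the redundant host BMC's IPMB 1 address was stored in `*addr`; a non-zero completion code is passed back to the caller unchanged, and a truncated response yields -1. The function name and return convention are illustrative assumptions.

```c
#include <stddef.h>

/* Parse an RH_CHAN_GET_RH_BMC_ADDR response: byte 1 is the completion code,
 * byte 2 is the redundant host BMC's IPMB 1 address. */
int rh_parse_bmc_addr(const unsigned char *resp, size_t len, unsigned char *addr)
{
    if (len < 1)
        return -1;          /* no completion code at all */
    if (resp[0] != 0x00)
        return resp[0];     /* pass the IPMI completion code back */
    if (len < 2)
        return -1;          /* success reported but address missing */
    *addr = resp[1];
    return 0;
}
```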


5 High Availability CompactPCI Device Drivers

This chapter describes the characteristics of highly available software drivers for CompactPCI

peripherals in a Redundant Host environment.

To fully utilize the High Availability SDK, you must write a peripheral driver that can be started and stopped repeatedly and that can be loaded and initialized even when the device it is servicing is not physically visible to the operating system.

5.1 Device Driver Design

Historically, device drivers have had relatively simple high-level requirements. The operating system detects a hardware component and loads a software module that allows software-initiated interaction with the hardware. It was assumed that the hardware configuration would not change over the life of the system, or at least would remain static between power cycles.

With the advent of CompactPCI, these assumptions no longer hold. One of the primary advantages of a CompactPCI architecture is the ability to insert and extract peripherals without powering down the chassis. This system attribute is referred to as Hot Swap. Because of it, system configurations can no longer be assumed to be static, and this dynamic configuration capability places new requirements on the operating system and on Hot Swap-capable device drivers.

The operating system kernel now needs to handle system resources dynamically, both when allocating them and when reclaiming them. Intel supplies a Hot Swap manager for operating systems supported by the Intel® High Availability architecture. This manager is a component of the operating system kernel that manages dynamic bus and resource allocations. Since this is a kernel-level function that is transparent to the developer, this document does not describe the details of this module.

To function in a Hot Swap environment, a device driver must implement what is known as a Stated Driver Model. A stated device driver is constructed so that it can transition gracefully between multiple operational modes.

The specifics of stated device driver design vary for each supported operating system, because each operating system uses its own Hot Swap implementation. If an operating system natively supports Hot Swap events, the driver implementation leverages the driver model that the operating system supports.

This is the case with Linux* kernel version 2.4. Refer to Appendix D, "RH Device Driver Interface for Linux* 2.4," for more information.

VxWorks* version 5.4 does not natively support a stated driver model, so Intel has provided enhancements to this operating system. The specifics of the VxWorks CompactPCI driver model can be found in Appendix C, "HSK Device Driver Interface for VxWorks* 5.4."


5.1.1 Device Driver States

Driver functionality varies with power modes, operating system Hot Swap implementations, and device characteristics. For a device driver to function in this High Availability architecture, however, the required driver states can be generalized into three distinct states:

• Initialization

• Quiesced

• Activation

5.1.1.1 Initialization

During initialization, the driver is loaded and starts up. The driver cannot "talk" directly to the hardware devices it controls, except through PCI configuration cycles. Intel provides the ability to perform PCI configuration cycles to any backplane device even when the device driver resides on the Standby Host.

5.1.1.2 Quiesced

A quiesced device driver is completely initialized, with all internal allocations and instantiations of device information completed, and is ready to perform direct device operations. A quiesced device driver waits for notification from the Hot Swap Manager, through a Start or Resume callback, that it is free to access the device directly. The driver must be designed to transition between the Quiesced and Active states at any time.

5.1.1.3 Activation

Device activation notifies the driver that the system master is in the Active state and that direct device interaction is permitted. When a device driver receives a Stop or Suspend callback, the driver must clean up any device-specific state information and transition to a known, Quiesced state.
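The three states and the callbacks that move a driver between them can be summarized as a small state machine. The C sketch below is hypothetical: the enum names and `drv_handle` are illustrative only, and the real Start, Resume, Stop, and Suspend callbacks are defined by each operating system's Hot Swap implementation.

```c
#include <stdbool.h>

/* Hypothetical driver states mirroring Section 5.1.1. */
typedef enum { DRV_INITIALIZATION, DRV_QUIESCED, DRV_ACTIVE } drv_state;

/* Hypothetical notifications from the Hot Swap Manager. */
typedef enum { EV_INIT_DONE, EV_START, EV_RESUME, EV_STOP, EV_SUSPEND } drv_event;

typedef struct {
    drv_state state;
    bool device_access_allowed; /* direct (non-configuration-cycle) access */
} drv_ctx;

/* Apply one event; returns false if the event is not valid in this state. */
bool drv_handle(drv_ctx *d, drv_event ev)
{
    switch (d->state) {
    case DRV_INITIALIZATION:
        /* Internal allocations only; PCI configuration cycles are the
           only hardware access permitted here. */
        if (ev == EV_INIT_DONE) { d->state = DRV_QUIESCED; return true; }
        return false;
    case DRV_QUIESCED:
        if (ev == EV_START || ev == EV_RESUME) {
            d->state = DRV_ACTIVE;
            d->device_access_allowed = true;
            return true;
        }
        return false;
    case DRV_ACTIVE:
        if (ev == EV_STOP || ev == EV_SUSPEND) {
            /* Clean up device-specific state, then quiesce. */
            d->device_access_allowed = false;
            d->state = DRV_QUIESCED;
            return true;
        }
        return false;
    }
    return false;
}
```

Keeping the Quiesced state fully initialized is what allows the Active transition to be nothing more than granting device access.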


Figure 6. Multi-Stated Driver Flowchart

[Flowchart not reproduced. Elements shown: Driver Not Loaded, Find Suitable Driver, Device Not Present, Device Suspended, Device Running, and Device Removed, linked by AddDevice, StartDevice, StopDevice, and RemoveDevice / SurpriseRemoval.]

5.1.2 Adding High-Availability Functionality

Operating in a Redundant Host architecture places responsibilities on device drivers beyond those required to function in a normal Hot Swap environment. This section covers the particular issues that a Redundant Host device driver designer needs to be aware of.

The Redundant Host architecture leverages the Hot Swap driver interface to enable Ultra-Quick switchovers. To do this, the Hot Swap Manager treats a domain ownership change as a multi-card Hot Swap event. When a Host loses ownership of a bus segment, its Hot Swap Manager issues a stream of Stop Device messages that attempt to place the backplane devices into a known, quiesced state. The device drivers on the Destination Host are already in a known (Quiesced) state. By using the High Availability driver model described above, the Destination Host device drivers can assume control almost instantaneously.
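The switchover sequence above can be sketched from the Hot Swap Manager's side: the Host losing the segment delivers Stop to each device driver, and the Destination Host, whose drivers are already initialized and quiesced, only needs to deliver Start. `segment_device`, `segment_release`, and `segment_acquire` are hypothetical names, and the interrupt and bus-mastering protection that the kernel performs is not modeled. The `demo_*` callbacks exist only to make the sketch testable.

```c
#include <stddef.h>

/* Hypothetical per-device record on one bus segment. */
typedef struct {
    int active;               /* 1 while the driver may access the device */
    void (*stop)(void *ctx);  /* driver's Stop/Suspend callback */
    void (*start)(void *ctx); /* driver's Start/Resume callback */
    void *ctx;
} segment_device;

/* Host losing the segment: stop every active device so each driver
 * falls back to its Quiesced state. */
void segment_release(segment_device *devs, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if (devs[i].active) {
            devs[i].stop(devs[i].ctx);
            devs[i].active = 0;
        }
    }
}

/* Destination Host gaining the segment: drivers are already initialized,
 * so only Start has to be delivered. */
void segment_acquire(segment_device *devs, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        if (!devs[i].active) {
            devs[i].start(devs[i].ctx);
            devs[i].active = 1;
        }
    }
}

/* Demo callbacks that only count invocations. */
typedef struct { int stops, starts; } demo_counts;
void demo_stop(void *ctx)  { ((demo_counts *)ctx)->stops++; }
void demo_start(void *ctx) { ((demo_counts *)ctx)->starts++; }
```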

Additional measures are needed to protect against inadvertent backplane interrupts and bus-mastering activity by devices on the affected segment. These measures are transparent to the device drivers because the Hot Swap Manager in the kernel handles them. A device driver needs only to be able to gracefully suspend and resume interaction with the device or devices it controls.

Each operating system that supports Hot Swap does so in its own way. The specific callback functions, the number of callbacks, and the level of control vary between operating system implementations. However, all Hot Swap implementations are based on the stated driver model described in Section 5.1.1, "Device Driver States" on page 32. The transitions between driver states can be grouped into the following generic functions:


• Add Device

• Resume Operations

• Suspend Operations

• Remove Device
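One way to picture these four generic functions is as a table of callbacks that the Hot Swap Manager invokes. The C sketch below is OS-neutral and hypothetical: the structure, function names, and return conventions are illustrative only, and each supported operating system defines its own names and signatures (see Appendix C and Appendix D).

```c
/* Hypothetical per-device state used by the example driver. */
typedef struct {
    int added;  /* internal structures exist */
    int active; /* direct device access permitted */
} hs_device;

/* Hypothetical OS-neutral view of the four generic entry points. */
typedef struct {
    int  (*add_device)(hs_device *dev);    /* set up internal structures only */
    int  (*resume)(hs_device *dev);        /* Quiesced -> Active */
    int  (*suspend)(hs_device *dev);       /* Active -> Quiesced */
    void (*remove_device)(hs_device *dev); /* release internal structures */
} hs_driver_ops;

static int ex_add(hs_device *d)     { d->added = 1; d->active = 0; return 0; }
static int ex_resume(hs_device *d)  { if (!d->added) return -1; d->active = 1; return 0; }
static int ex_suspend(hs_device *d) { d->active = 0; return 0; }
static void ex_remove(hs_device *d) { d->active = 0; d->added = 0; }

/* Example driver registered through the callback table. */
const hs_driver_ops example_ops = { ex_add, ex_resume, ex_suspend, ex_remove };
```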

5.1.2.1 Add Device

Add Device is the driver callback that the Hot Swap Manager invokes either when it detects an asserted ENUM signal or at kernel load time. The Add Device callback indicates to a device driver that an instance of a device it can control has been detected. The driver should initialize its internal structures but should not attempt to initialize the device.

This is where driver design for a Redundant Host-capable system departs from common device driver practice. Normally, the driver initializes the device during the Add Device callback. In a Redundant Host architecture, however, the device cannot be assumed to be physically visible to the Host making the Add Device call.
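A minimal sketch of an RH-aware Add Device routine in C follows. It allocates per-device bookkeeping and reads only PCI configuration space (Vendor ID at offset 0x00, Device ID at offset 0x02), deferring hardware initialization to a later Start or Resume callback. The `cfg_read16_fn` accessor type, `rh_add_device`, and the `fake_cfg_read16` stub are hypothetical; the real configuration-access mechanism is the one Intel provides for backplane devices.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical accessor for 16-bit PCI configuration reads. */
typedef uint16_t (*cfg_read16_fn)(unsigned bus, unsigned devfn, unsigned offset);

typedef struct {
    unsigned bus, devfn;
    uint16_t vendor_id, device_id;
    int hw_initialized; /* stays 0 until a Start/Resume callback */
} my_dev_ctx;

/* Add Device for an RH-aware driver: allocate bookkeeping and use
 * configuration cycles only; the device itself is left untouched. */
my_dev_ctx *rh_add_device(unsigned bus, unsigned devfn, cfg_read16_fn cfg_read16)
{
    my_dev_ctx *ctx = calloc(1, sizeof *ctx);
    if (ctx == NULL)
        return NULL;
    ctx->bus = bus;
    ctx->devfn = devfn;
    ctx->vendor_id = cfg_read16(bus, devfn, 0x00); /* PCI Vendor ID */
    ctx->device_id = cfg_read16(bus, devfn, 0x02); /* PCI Device ID */
    ctx->hw_initialized = 0; /* device initialization is deferred */
    return ctx;
}

/* Stand-in accessor for illustration only. */
uint16_t fake_cfg_read16(unsigned bus, unsigned devfn, unsigned offset)
{
    (void)bus;
    (void)devfn;
    return offset == 0x00 ? 0x8086 : 0x1234;
}
```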

The Intel

that provides the Host with the ability to query the configuration of backplane devices, whether or