Page 1

Intel® PXA27x Processor Family

Optimization Guide

April, 2004

Order Number: 280004-001

Page 2

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTELR PRODUCTS. EXCEPT AS PROVIDED IN INTEL’S TERMS

AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS

OR IMPLIED WARRANTY RELATING TO SALE AND/OR USE OF INTEL PRODUCTS, INCLUDING LIABILITY OR WARRANTIES RELATING TO

FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT, OR OTHER

INTELLECTUAL PROPERTY RIGHT.

Intel Corporation may have patents or pending patent applications, trademarks, copyrights, or other intellectual property rights that relate to the

presented subject matter. The furnishing of documents and other materials and information does not provide any license, express or implied, by

estoppel or otherwise, to any such patents, trademarks, copyrights, or other intellectual property rights.

Intel products are not intended for use in medical, life saving, life sustaining, critical control or safety systems, or in nuclear facility applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for

future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to th em.

The Intel® PXA27x Processor Family may contain design defects or errors known as errata which may cause the product to deviate from published

specifications. Current characterized errata are available on request.

MPEG is an international standard for video compression/decompression promoted by ISO. Implementations of MPEG CODECs, or MPEG enabled

platforms may require licenses from various entities, including Intel Corporation.

This document and the software described in it are furnished under license and may only be used or copied in accordance with the terms of the

license. The information in this document is furnished for informational use only, is subject to change without notice, and should not be construed as a

commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this

document or any software that may be provided in association with this document. Except as permitted by such license, no part of this document may

be reproduced, stored in a retrieval system, or transmitted in any form or by any means without the express written consent of Intel Corporation.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an ordering number and are referenced in this document, or other Intel literature may be obtained by calling

1-800-548-4725 or by visiting Intel's website at http://www.intel.com.

Copyright © Intel Corporation, 2004

AlertVIEW, i960, AnyPoint, AppChoice, BoardWatch, BunnyPeople, CablePort, Celeron, Chips, Commerce Cart, CT Connect, CT Media, Dialogic,

DM3, EtherExpress, ETOX, FlashFile, GatherRound, i386, i486, iCat, iCOMP, Insight960, InstantIP, Intel, Intel logo, Intel386, Intel486, Intel740,

IntelDX2, IntelDX4, IntelSX2, Intel Cha tPad, I ntel Create&Share, Intel D ot.Statio n, Intel GigaBlade, Intel I nBusiness, Intel Inside, Intel Inside logo, Intel

NetBurst, Intel NetStructure, Intel Play, Intel Play logo, Intel Pocket Concert, Intel SingleDriver, Intel SpeedStep, Intel StrataFlash, Intel TeamStation,

Intel WebOutfitter, Intel Xeon, Intel XScale, Itanium, JobAnalyst, LANDesk, LanRover, MCS, MMX, MMX logo , NetPort, NetportExpress, Optimizer

logo, OverDrive, Paragon, PC Dads, PC Parents, Pentium, Pentium II Xeon, Pentium III Xeon, Performance at Your Command, ProShare,

RemoteExpress, Screamline, Shiva, SmartDie, Solutions960, Sound Mark, StorageExpress, The Computer Inside, The Journey Inside, This Way In,

TokenExpress, Trillium, Vivonic, and VTune are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and

other countries.

*Other names and brands may be claimed as the property of others.

ii Intel® PXA27x Processor Family Optimization Guide

Page 3

Contents

Contents

1 Introduction.................................................................................................................................1-1

1.1 About This Document ........................................................................................................1-1

1.2 High-Level Overview..........................................................................................................1-2

1.2.1 Intel XScale® Microarchitecture and Intel XScale® core......................................1-3

1.2.2 Intel XScale® Microarchitecture Features ............................................................1-4

1.2.3 Intel® Wireless MMX™ technology ......................................................................1-4

1.2.4 Memory Architecture.............................................................................................1-5

1.2.4.1 Caches..................................................................................................1-5

1.2.4.2 Internal Memories .................................................................................1-5

1.2.4.3 External Memory Controller ..................................................................1-5

1.2.5 Processor Internal Communications.....................................................................1-5

1.2.5.1 System Bus...........................................................................................1-5

1.2.5.2 Peripheral Bus ......................................................................................1-6

1.2.5.3 Peripherals in the Processor.................................................................1-6

1.2.6 Wireless Intel Speedstep® technology .................................................................1-7

1.3 Intel XScale® Microarchitecture Compatibility...................................................................1-8

1.3.1 PXA27x Processor Performance Features...........................................................1-8

2 Microarchitecture Overview ......................................................................................................2-1

2.1 Introduction ........................................................................................................................2-1

2.2 Intel XScale® Microarchitecture Pipeline...........................................................................2-1

2.2.1 General Pipeline Characteristics...........................................................................2-1

2.2.1.1 Pipeline Organization............................................................................2-1

2.2.1.2 Out of Order Completion .......................................................................2-2

2.2.1.3 Use of Bypassing ..................................................................................2-2

2.2.2 Instruction Flow Through the Pipeline ..................................................................2-2

2.2.2.1 ARM* V5TE Instruction Execution ........................................................2-3

2.2.2.2 Pipeline Stalls .......................................................................................2-3

2.2.3 Main Execution Pipeline .......................................................................................2-3

2.2.3.1 F1 / F2 (Instruction Fetch) Pipestages..................................................2-3

2.2.3.2 Instruction Decode (ID) Pipestage........................................................2-4

2.2.3.3 Register File / Shifter (RF) Pipestage ...................................................2-4

2.2.3.4 Execute (X1) Pipestages ......................................................................2-4

2.2.3.5 Execute 2 (X2) Pipestage .....................................................................2-5

2.2.3.6 Write-Back (WB) ...................................................................................2-5

2.2.4 Memory Pipeline ...................................................................................................2-5

2.2.4.1 D1 and D2 Pipestage............................................................................2-5

2.2.5 Multiply/Multiply Accumulate (MAC) Pipeline........................................................2-5

2.2.5.1 Behavioral Description ..........................................................................2-6

2.2.5.2 Perils of Superpipelining .......................................................................2-6

2.3 Intel® Wireless MMX™ Technology Pipeline ....................................................................2-7

2.3.1 Execute Pipeline Thread.......................................................................................2-7

2.3.1.1 ID Stage ................................................................................................2-7

2.3.1.2 RF Stage...............................................................................................2-7

2.3.1.3 X1 Stage ...............................................................................................2-8

2.3.1.4 X2 Stage ...............................................................................................2-8

2.3.1.5 XWB Stage ...........................................................................................2-8

2.3.2 Multiply Pipeline Thread .......................................................................................2-8

Intel® PXA27x Processor Family Optimization Guide iii

Page 4

Contents

2.3.2.1 M1 Stage...............................................................................................2-8

2.3.2.2 M2 Stage...............................................................................................2-8

2.3.2.3 M3 Stage...............................................................................................2-8

2.3.2.4 MWB Stage...........................................................................................2-8

2.3.3 Memory Pipeline Thread.......................................................................................2-9

2.3.3.1 D1 Stage ...............................................................................................2-9

2.3.3.2 D2 Stage ...............................................................................................2-9

2.3.3.3 DWB Stage ...........................................................................................2-9

3 System Level Optimization ........................................................................................................3-1

3.1 Optimizing Frequency Selection........................................................................................3-1

3.2 Memory System Optimization............................................................................................3-1

3.2.1 Optimal Setting for Memory Latency and Bandwidth............................................3-1

3.2.2 Alternate Memory Clock Setting ...........................................................................3-2

3.2.3 Page Table Configuration.....................................................................................3-3

3.2.3.1 Page Attributes For Instructions............................................................3-3

3.2.3.2 Page Attributes For Data Access..........................................................3-3

3.3 Optimizing for Instruction and Data Caches......................................................................3-4

3.3.1 Increasing Instruction Cache Performance...........................................................3-4

3.3.1.1 Round Robin Replacement Cache Policy.............................................3-5

3.3.1.2 Code Placement to Reduce Cache Misses ..........................................3-5

3.3.1.3 Locking Code into the Instruction Cache..............................................3-5

3.3.2 Increasing Data Cache Performance....................................................................3-5

3.3.2.1 Cache Configuration .............................................................................3-6

3.3.2.2 Creating Scratch RAM in the Internal SRAM........................................3-6

3.3.2.3 Creating Scratch RAM in Data Cache ..................................................3-7

3.3.2.4 Reducing Memory Page Thrashing ......................................................3-7

3.3.2.5 Using Mini-Data Cache.........................................................................3-8

3.3.2.6 Reducing Cache Conflicts, Pollution and Pressure ..............................3-8

3.3.3 Optimizing TLB (Translation Lookaside Buffer) Usage.........................................3-8

3.4 Optimizing for Internal Memory Usage ..............................................................................3-9

3.4.1 LCD Frame Buffer.................................................................................................3-9

3.4.2 Buffer for Capture Interface ..................................................................................3-9

3.4.3 Buffer for Context Switch....................................................................................3-10

3.4.4 Scratch Ram.......................................................................................................3-10

3.4.5 OS Acceleration..................................................................................................3-10

3.4.6 Increasing Preloads for Memory Performance ...................................................3-10

3.5 Optimization of System Components ..............................................................................3-10

3.5.1 LCD Controller Optimization...............................................................................3-11

3.5.1.1 Bandwidth and Latency Requirements for LCD..................................3-11

3.5.1.2 Frame Buffer Placement for LCD Optimization...................................3-13

3.5.1.3 LCD Display Frame Buffer Setting......................................................3-14

3.5.1.4 LCD Color Conversion HW.................................................................3-14

3.5.1.5 Arbitration Scheme Tuning for LCD....................................................3-14

3.5.2 Optimizing Arbiter Settings .................................................................................3-15

3.5.2.1 Arbiter Functionality............................................................................3-15

3.5.2.2 Determining the Optimal Weights for Clients ......................................3-15

3.5.2.3 Taking Advantage of Bus Parking.......................................................3-16

3.5.2.4 Dynamic Adaptation of Weights..........................................................3-16

3.5.3 Usage of DMA ....................................................................................................3-17

3.5.4 Peripheral Bus Split Transactions.......................................................................3-17

iv Intel® PXA27x Processor Family Optimization Guide

Page 5

Contents

4 Intel XScale® Microarchitecture & Intel® Wireless MMX™ Technology Optimization ........4-1

4.1 Introduction........................................................................................................................4-1

4.2 General Optimization Techniques .....................................................................................4-1

4.2.1 Conditional Instructions and Loop Control............................................................4-1

4.2.2 Program Flow and Branch Instructions.................................................................4-2

4.2.3 Optimizing Complex Expressions .........................................................................4-5

4.2.3.1 Bit Field Manipulation............................................................................4-6

4.2.4 Optimizing the Use of Immediate Values..............................................................4-6

4.2.5 Optimizing Integer Multiply and Divide..................................................................4-7

4.2.6 Effective Use of Addressing Modes......................................................................4-8

4.3 Instruction Scheduling for Intel XScale® Microarchitecture and Intel® Wireless

MMX™ Technology ...........................................................................................................4-8

4.3.1 Instruction Scheduling for Intel XScale® Microarchitecture..................................4-8

4.3.1.1 Scheduling Loads .................................................................................4-8

4.3.1.2 Increasing Load Throughput ...............................................................4-11

4.3.1.3 Increasing Store Throughput ..............................................................4-12

4.3.1.4 Scheduling Load Double and Store Double (LDRD/STRD)................4-13

4.3.1.5 Scheduling Load and Store Multiple (LDM/STM)................................4-14

4.3.1.6 Scheduling Data-Processing...............................................................4-15

4.3.1.7 Scheduling Multiply Instructions..........................................................4-15

4.3.1.8 Scheduling SWP and SWPB Instructions...........................................4-16

4.3.1.9 Scheduling the MRA and MAR Instructions (MRRC/MCRR)..............4-17

4.3.1.10 Scheduling MRS and MSR Instructions..............................................4-17

4.3.1.11 Scheduling Coprocessor 15 Instructions ............................................4-18

4.3.2 Instruction Scheduling for Intel® Wireless MMX™ Technology .........................4-18

4.3.2.1 Increasing Load Throughput on Intel® Wireless MMX™ Technology 4-18

4.3.2.2 Scheduling the WMAC Instructions ....................................................4-19

4.3.2.3 Scheduling the TMIA Instruction .........................................................4-20

4.3.2.4 Scheduling the WMUL and WMADD Instructions...............................4-21

4.4 SIMD Optimization Techniques .......................................................................................4-21

4.4.1 Software Pipelining .............................................................................................4-21

4.4.1.1 General Remarks on Software Pipelining...........................................4-23

4.4.2 Multi-Sample Technique .....................................................................................4-23

4.4.2.1 General Remarks on Multi-Sample Technique ...................................4-25

4.4.3 Data Alignment Techniques................................................................................4-25

4.5 Porting Existing Intel® MMX™ Technology Code to Intel® Wireless MMX™

Technology ......................................................................................................................4-26

4.5.1 Intel® Wireless MMX™ Technology Instruction Mapping...................................4-27

4.5.2 Unsigned Unpack Example ................................................................................4-28

4.5.3 Signed Unpack Example ....................................................................................4-29

4.5.4 Interleaved Pack with Saturation Example .........................................................4-29

4.6 Optimizing Libraries for System Performance .................................................................4-29

4.6.1 Case Study 1: Memory-to-Memory Copy............................................................4-29

4.6.2 Case Study 2: Optimizing Memory Fill................................................................4-30

4.6.3 Case Study 3: Dot Product .................................................................................4-31

4.6.4 Case Study 4: Graphics Object Rotation ............................................................4-32

4.6.5 Case Study 5: 8x8 Block 1/2X Motion Compensation ........................................4-33

4.7 Intel® Performance Primitives .........................................................................................4-34

4.8 Instruction Latencies for Intel XScale® Microarchitecture ...............................................4-35

4.8.1 Performance Terms ............................................................................................4-35

4.8.2 Branch Instruction Timings .................................................................................4-37

Intel® PXA27x Processor Family Optimization Guide v

Page 6

Contents

4.8.3 Data Processing Instruction Timings ..................................................................4-38

4.8.4 Multiply Instruction Timings ................................................................................4-39

4.8.5 Saturated Arithmetic Instructions........................................................................4-40

4.8.6 Status Register Access Instructions ...................................................................4-41

4.8.7 Load/Store Instructions.......................................................................................4-41

4.8.8 Semaphore Instructions......................................................................................4-42

4.8.9 CP15 and CP14 Coprocessor Instructions.........................................................4-42

4.8.10 Miscellaneous Instruction Timing........................................................................4-42

4.8.11 Thumb* Instructions............................................................................................4-43

4.9 Instruction Latencies for Intel® Wireless MMX™ Technology.........................................4-43

4.10 Performance Hazards......................................................................................................4-45

4.10.1 Data Hazards......................................................................................................4-45

4.10.2 Resource Hazard................................................................................................4-45

4.10.2.1 Execution Pipeline ..............................................................................4-46

4.10.2.2 Multiply Pipeline ..................................................................................4-47

4.10.2.3 Memory Control Pipeline.....................................................................4-48

4.10.2.4 Coprocessor Interface Pipeline...........................................................4-49

4.10.2.5 Multiple Pipelines................................................................................4-49

5 High Level Language Optimization...........................................................................................5-1

5.1 C and C++ Level Optimization...........................................................................................5-1

5.1.1 Efficient Usage of Preloading ...............................................................................5-1

5.1.1.1 Preload Considerations.........................................................................5-1

5.1.1.2 Preload Loop Limitations ......................................................................5-3

5.1.1.3 Coding Technique with Preload............................................................5-4

5.1.2 Array Merging .......................................................................................................5-6

5.1.3 Cache Blocking.....................................................................................................5-8

5.1.4 Loop Interchange..................................................................................................5-8

5.1.5 Loop Fusion..........................................................................................................5-9

5.1.6 Loop Unrolling.......................................................................................................5-9

5.1.7 Loop Conditionals...............................................................................................5-11

5.1.8 If-else versus Switch Statements........................................................................5-12

5.1.9 Nested If-Else and Switch Statements ...............................................................5-12

5.1.10 Locality in Source Code......................................................................................5-12

5.1.11 Choosing Data Types .........................................................................................5-12

5.1.12 Data Alignment For Maximizing Cache Usage...................................................5-12

5.1.13 Placing Literal Pools ...........................................................................................5-14

5.1.14 Global versus Local Variables ............................................................................5-14

5.1.15 Number of Parameters in Functions...................................................................5-14

5.1.16 Other General Optimizations ..............................................................................5-14

6 Power Optimization ....................................................................................................................6-1

6.1 Introduction ........................................................................................................................6-1

6.2 Optimizations for Core Power............................................................................................6-1

6.2.1 Code Optimization for Power Consumption..........................................................6-1

6.2.2 Switching Modes for Saving Power......................................................................6-1

6.2.2.1 Normal Mode ........................................................................................6-1

6.2.2.2 Idle Mode ..............................................................................................6-2

6.2.2.3 Deep Idle Mode.....................................................................................6-2

6.2.2.4 Standby Mode.......................................................................................6-2

6.2.2.5 Sleep Mode...........................................................................................6-2

vi Intel® PXA27x Processor Family Optimization Guide

Page 7

Contents

6.2.2.6 Deep-Sleep Mode .................................................................................6-2

6.2.3 Wireless Intel Speedstep® Technology Power Manager .....................................6-3

6.2.4 System Bus Frequency Selection .........................................................................6-3

6.2.4.1 Fast-Bus Mode......................................................................................6-4

6.2.4.2 Half-Turbo Mode ...................................................................................6-4

6.3 Optimizations for Memory and Peripheral Power ..............................................................6-5

6.3.1 Improved Caching and Internal Memory Usage ...................................................6-5

6.3.2 SDRAM Auto Power Down (APD) ........................................................................6-5

6.3.3 External Memory Bus Buffer Strength Registers..................................................6-5

6.3.4 Peripheral Clock Gating........................................................................................6-5

6.3.5 LCD Subsystem....................................................................................................6-5

6.3.6 Voltage and Regulators ........................................................................................6-6

6.3.7 Operating Mode Recommendations for Power Savings.......................................6-6

6.3.7.1 Normal Mode ........................................................................................6-6

6.3.7.2 Idle Mode ..............................................................................................6-6

6.3.7.3 Deep-Idle Mode ....................................................................................6-7

6.3.7.4 Standby Mode.......................................................................................6-7

6.3.7.5 Sleep Mode...........................................................................................6-7

6.3.7.6 Deep-Sleep Mode .................................................................................6-7

A Performance Checklist.............................................................................................................. A-1

A.1 Performance Optimization Tips ........................................................................................ A-1

A.2 Power Optimization Guidelines......................................................................................... A-2

Glossary ................................................................................................................................... Glossary-1

Figures

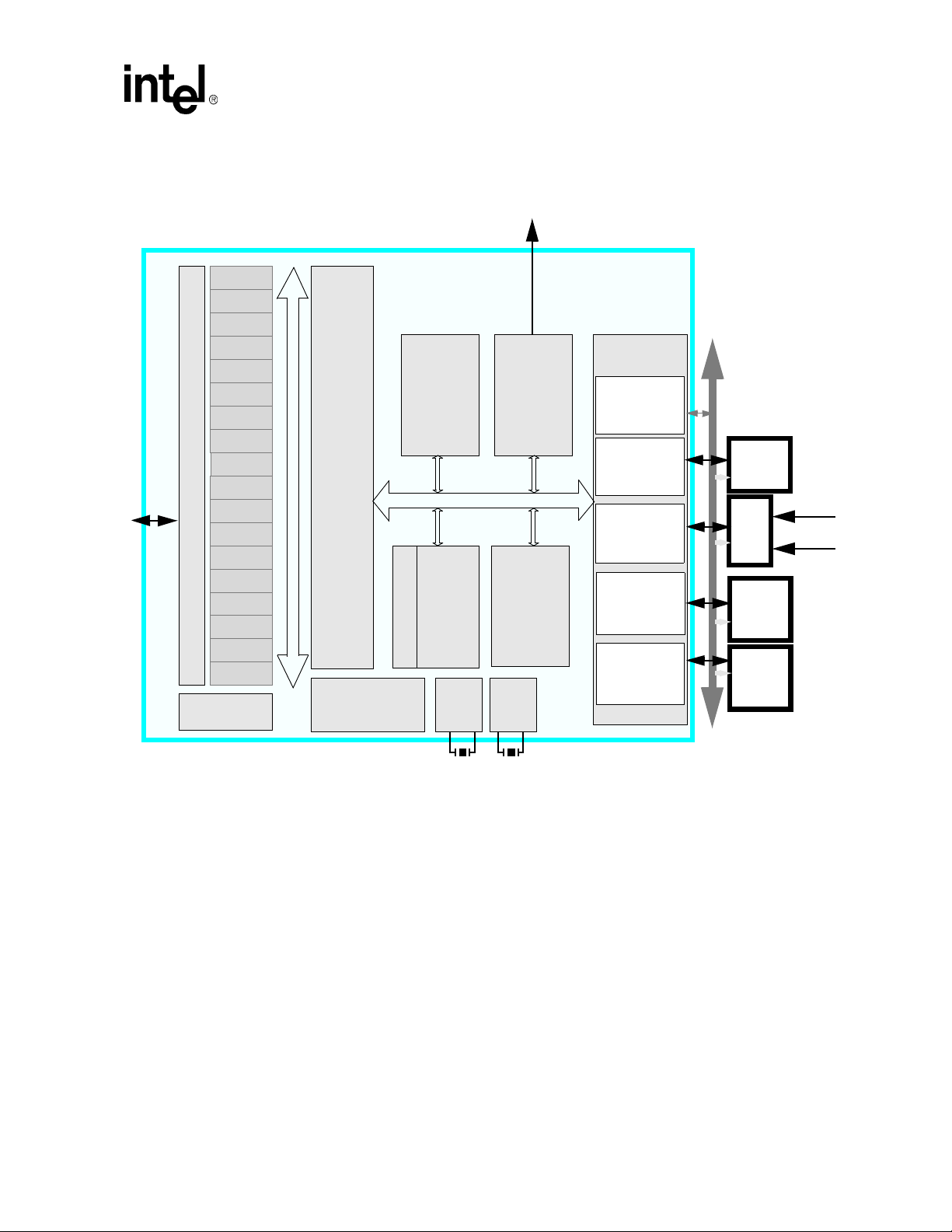

1-1 PXA27x Processor Block Diagram ............................................................................................1-3

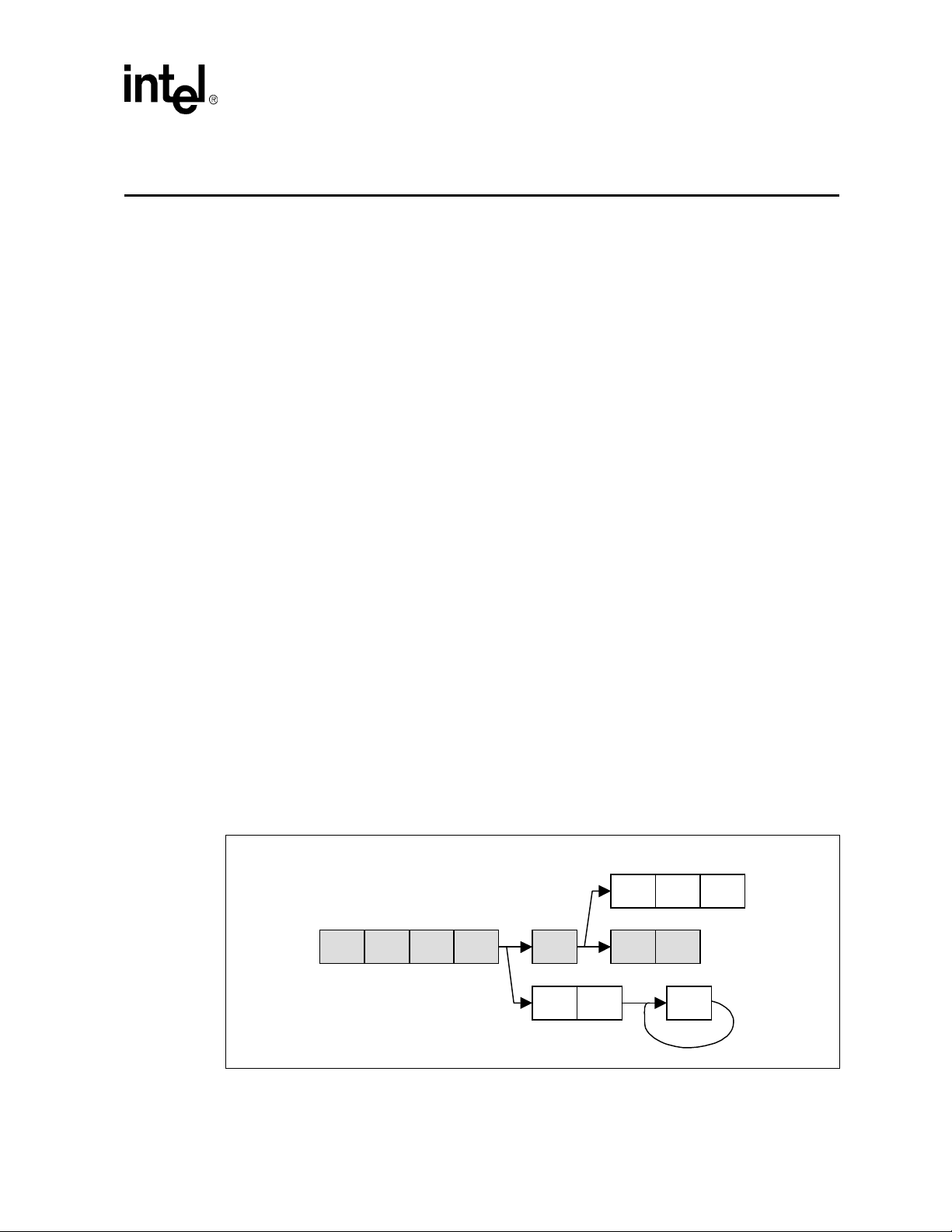

2-1 Intel XScale® Microarchitecture RISC Superpipeline................................................................2-1

2-2 Intel® Wireless MMX™ Technology Pipeline Threads and relation with Intel XScale®

Microarchitecture Pipeline .........................................................................................................2-7

4-1 High-Level Pipeline Organization ............................................................................................4-46

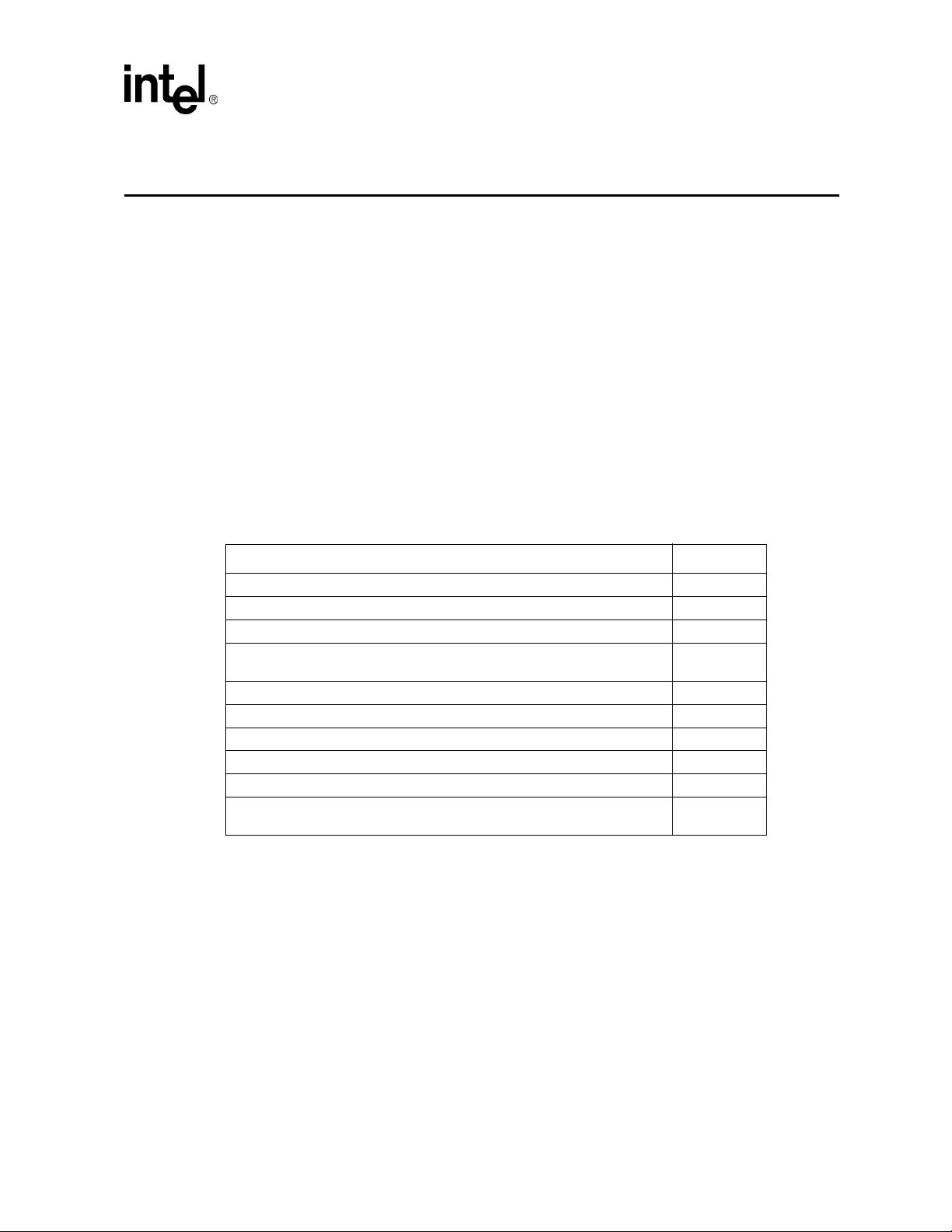

Tables

1-1 Related Documentation .............................................................................................................1-1

2-1 Pipelines and Pipe Stages.........................................................................................................2-2

3-1 External SDRAM Access Latency and Throughput for Different Frequencies (Silicon

Measurement Pending) .............................................................................................................3-1

3-2 Internal SRAM Access Latency and Throughput for Different Frequencies (Silicon

Measurement Pending) .............................................................................................................3-2

3-3 Data Cache and Buffer Behavior when X = 0............................................................................3-3

3-4 Data Cache and Buffer Behavior when X = 1............................................................................3-3

3-5 Data Cache and Buffer operation comparison for Intel® SA-1110 and Intel XScale®

Microarchitecture, X=0...............................................................................................................3-4

3-6 Sample LCD Configurations with Latency and Peak Bandwidth Requirements......................3-13

3-7 Memory to Memory Performance Using DMA for Different Memories and Frequencies.........3-17

4-1 PXA27x processor Mapping to Intel® Wireless MMX™ Technology and SSE .......................4-27

Intel® PXA27x Processor Family Optimization Guide vii

Page 8

Contents

4-2 Latency Example .....................................................................................................................4-37

4-3 Branch Instruction Timings (Those Predicted By the BTB (Branch Target Buffer)) ................4-37

4-4 Branch Instruction Timings (Those Not Predicted By the BTB)...............................................4-37

4-5 Data Processing Instruction Timings .......................................................................................4-38

4-6 Multiply Instruction Timings .....................................................................................................4-39

4-7 Multiply Implicit Accumulate Instruction Timings .....................................................................4-40

4-8 Implicit Accumulator Access Instruction Timings.....................................................................4-40

4-9 Saturated Data Processing Instruction Timings ......................................................................4-40

4-10 Status Register Access Instruction Timings ............................................................................4-41

4-11 Load and Store Instruction Timings.........................................................................................4-41

4-12 Load and Store Multiple Instruction Timings ...........................................................................4-41

4-13 Semaphore Instruction Timings...............................................................................................4-42

4-14 CP15 Register Access Instruction Timings .............................................................................4-42

4-15 CP14 Register Access Instruction Timings .............................................................................4-42

4-16 Exception-Generating Instruction Timings...............................................................................4-42

4-17 Count Leading Zeros Instruction Timings................................................................................4-42

4-18 Issue Cycle and Result Latency of the PXA27x processor Instructions ..................................4-43

4-19 Resource Availability Delay for the Execution Pipeline ...........................................................4-46

4-20 Multiply pipe instruction classes ..............................................................................................4-48

4-21 Resource Availability Delay for the Multiplier Pipeline.............................................................4-48

4-22 Resource Availability Delay for the Memory Pipeline ..............................................................4-48

4-23 Resource Availability Delay for the Coprocessor Interface Pipeline........................................4-49

6-1 Power Modes and Typical Power Consumption Summary .......................................................6-3

viii Intel® PXA27x Processor Family Optimization Guide

Page 9

Revision History

Date Revision Description

April 2004 -001 Initial release

Contents

Intel® PXA27x Processor Family Optimization Guide ix

Page 10

Contents

x Intel® PXA27x Processor Family Optimization Guide

Page 11

Introduction 1

1.1 About This Document

This document is a guide to optimizing software, the operating system, and system configuration to

best use the Intel® PXA27x Processor Family (PXA27x processor) feature set. The Intel®

PXA27x Processor Family consists of:

• Intel® PXA270 Processor – discrete processor

• Intel® PXA271 Processor – 32 MBytes of Intel StrataFlash® Memory and 32 MBytes of Low

Power SDRAM

• Intel® PXA272 Processor – 64 MBytes of Intel StrataFlash® Memory

• Intel® PXA273 Processor – 32 MBytes of Intel StrataFlash® Memory

This document assumes users are familiar with the documentation shown in Tab le 1-1.

Table 1-1. Related Documentation

Document Title Order Number

Intel® PXA27x Processor Family Developer’s Manual 280000

Intel® PXA27x Processor Family Design Guide 280001

Intel® PXA270 Processor Electrical, Mechanical, and Thermal Specificiation 280002

Intel® PXA27x Processor Family Electrical, Mechanical, and Thermal

Specificiation

Intel XScale® Microarchitecture for the PXA27x processor 11465

Intel® PXA27x Processor Family User’s Manual 11466

Intel® PXA250 and PXA210 Application Processors Optimization Guide 278552

Intel XScale® Core Developer’s Manual 273473

The Complete Guide to Intel® Wireless MMX™ Technology 278626

Programming with Intel® Wireless MMX™ technology: A Developer's Guide to

Mobile Multimedia Applications

280003

ISBN:

0974364916

This guide is organized into these sections:

• Chapter 2, “Microarchitecture Overview” presents an overview of the Intel XScale®

Microarchitecture and Intel® Wireless MMX™ technology media co-processor.

• Chapter 3, “System Level Optimization” discusses configuration of the PXA27x processor to

achieve optimal performance at the system level.

• Chapter 4, “Intel XScale® Microarchitecture & Intel® Wireless MMX™ Technology

Optimization” discusses how to optimize software (mostly at the assembly programming

level) to take advantage of the Intel XScale® Microarchitecture and Intel® Wireless MMX™

technology media co-processor.

• Chapter 5, “High Level Language Optimization” is a set of guidelines for C and C++ code

developers to maximize the performance by making the best use of the system resources.

Intel® PXA27x Processor Family Optimization Guide 1-1

Page 12

Introduction

• Chapter 6, “Power Optimization” discusses the trade-offs between performance and power

using the PXA27x processor.

• Appendix A, “Performance Checklist” is a set of guidelines for system level optimizations

which allow for obtain greater performance when using the Intel XScale® Microarchitecture

and the PXA27x processor.

1.2 High-Level Overview

Mobile and wireless devices simplify our lives, keep us entertained, increase productivity and

maximize our responsiveness. Enterprise and individual consumers alike realize the potential and

are integrating these products at a rapid rate into their everyday life. Customer expectations exceed

what is being delivered today. The desire to communicate and compute wirelessly - to have access

to information anytime, anywhere, is the expectation. Manufacturers require technologies that

deliver high-performance, flexibility and robust functionality-all in the small-size, low-power

framework of mobile handheld, battery-powered devices. The Intel® Personal Internet Client

Architecture (Intel® PCA) processors with Intel XScale® Microarchitecture help drive wireless

handheld device functionality to new heights to meet customer demand. Combining low-power,

high-performance, compelling new features and second generation memory stacking, Intel PCA

processors help to redefine what a mobile device can do to meet many of the performance demands

of Enterprise-class wireless computing and feature-hungry technology consumers.

Targeted at wireless handhelds and handsets such as cell phones and PDAs with full featured

operating systems, the Intel PXA27x processor family is the next generation of ultra-low-power

applications with industry leading multimedia performance for wireless clients. The Intel PXA27x

processor is a highly integrated solution that includes Wireless Intel Speedstep® technology for

ultra-low-power, Intel® Wireless MMX™ technology and up to 624

multimedia capabilites, and Intel® Quick Capture Interface to give customers the ability to capture

high quality images and video.

The PXA27x processor incorporates a comprehensive set of system and peripheral functions that

make it useful in a variety of low-power applications. The block diagram in

the PXA27x processor system-on-a-chip, and shows a primary system bus with the Intel XScale®

Microarchitecture core (Intel XScale® core) attached along with an LCD controller, USB Host

controller and 256

controller to allow communication to a variety of external memory or companion-chip devices, and

it is also connected to a DMA/bridge to allow communication with the on-chip peripherals. The

key features of all the sub-blocks are described in this section, with more detail provided in

subsequent sections.

KBytes of internal memory. The system bus is connected to a memory

MHz for advanced

Figure 1-1 illustrates

1-2 Intel® PXA27x Processor Family Optimization Guide

Page 13

Figure 1-1. PXA27x Processor Block Diagram

RTC

OS Timers

4 x PWM

Interrupt C

3 x SSP

USIM

I2S

AC97

Full Function

Uart

Bluetooth

Uart

Fast IrDA

Slow IrDA

I2C

USB

Client

General Purpose I/O

Baseband

Interface

Keypad

Interface

SDcard/MMC

Interface

Mem Stick

Interface

Primary GPIO

DMA

Controller

And

Peripheral Bus

Bridge

Power Management

Clock Control

Internal

Intel® Wireless

MMX™ Technology

SRAM

System Bus

Intel

XScale®

Core

13

MHz

Osc

LCD

LCD

Controller

USB

HOST

32.768

KHz

Osc

Memory

Controller

Address

and

Data

Variabl e

Latency I/O

Control

PCMCIA & CF

Control

Dynamic

Memory

Control

Stati c

Memory

Control

Introduction

Address and Data

ASIC

XCVR

SDRAM/

SMROM

4 banks

ROM/

Flash/

SRAM

4 Banks

Socket 0

Socket 1

1.2.1 Intel XScale® Microarchitecture and Intel XScale® core

The Intel XScale® Microarchitecture is based on a core that is ARM* version 5TE compliant. The

microarchitecture surrounds the core with instruction and data memory management units;

instruction, data, and mini-data caches; write, fill, pend, and branch-target buffers; power

management, performance monitoring, debug, and JTAG units; coprocessor interface; 32K caches;

MMUs; BTB; MAC coprocessor; and core memory bus.

The Intel XScale® Microarchitecture can be combined with peripherals to provide applicationspecific standard products (ASSPs) targeted at selected market segments. For example, the RISC

core can be integrated with peripherals such as an LCD controller, multimedia controllers, and an

external memory interface to empower OEMs to develop smaller, more cost-effective handheld

devices with long battery life, with the performance to run rich multimedia applications. Or the

microarchitecture could be surrounded by high-bandwidth PCI interfaces, memory controllers, and

networking micro-engines to provide a highly integrated, low-power, I/O or network processor.

Intel® PXA27x Processor Family Optimization Guide 1-3

Page 14

Introduction

1.2.2 Intel XScale® Microarchitecture Features

• Superpipelined RISC technology achieves high speed and low power

• Wireless Intel Speedstep® technology allows on-the-fly voltage and frequency scaling to

enable applications to use the right blend of performance and power

• Media processing technology enables the MAC coprocessor perform two simultaneous 16-bit

SIMD multiplies with 64-bit accumulation for efficient media processing

• Power management unit provides power savings via multiple low-power modes

• 128-entry Branch Target buffer keeps pipeline filled with statistically correct branch choices

• 32-Kbyte instruction cache (I-cache) keeps local copy of important instructions to enable high

performance and low power

• 32-Kbyte data cache (D-cache) keeps local copy of important data to enable high performance

and low power

• 2-Kbyte mini-data cache avoids “thrashing” of the D-cache for frequently changing data

streams

• 32-entry instruction memory management unit enables logical-to-physical address translation,

access permissions, I-cache attributes

• 32-entry data memory management unit enables logical-to-physical address translation, access

permissions, D-cache attributes

• 4-entry Fill and Pend buffers promote core efficiency by allowing “hit-under-miss” operation

with data caches

• Performance monitoring unit furnishes two 32-bit event counters and one 32-bit cycle counter

for analysis of hit rates

• Debug unit uses hardware breakpoints and 256-entry Trace History buffer (for flow change

messages) to debug programs

• 32-bit coprocessor interface provides high performance interface between core and

coprocessors

• 8-entry Write buffer allows the core to continue execution while data is written to memory

See the Intel XScale® Microarchitecture Users Guide for additional information.

1.2.3 Intel® Wireless MMX™ technology

The Intel XScale® Microarchitecture has attached to it a coprocessor to accelerate multimedia

applications. This coprocessor, characterized by a 64-bit Single Instruction Multiple Data (SIMD)

architecture and compatibility with the integer functionality of the

technology

technology

and SSE instruction sets, is known by its Intel project name, Intel® Wireless MMX™

. The key features of this coprocessor are:

• 30 new media processing instructions

• 64-bit architecture up to eight-way SIMD

• 16 x 64-bit register file

• SIMD PSR flags with group conditional execution support

• Instruction support for SIMD, SAD, and MAC

• Instruction support for alignment and video

• Intel® Wireless MMX™ technology and SSE integer compatibility

1-4 Intel® PXA27x Processor Family Optimization Guide

Intel® Wireless MMX™

Page 15

• Superset of existing Intel XScale® Microarchitecture media processing instructions

See the Intel® Wireless MMX™ technology Coprocessor EAS for more details.

1.2.4 Memory Architecture

1.2.4.1 Caches

There are two caches:

• Data cache – The PXA27x processor supports 32 Kbytes of data cache.

• Instruction Cache – The PXA27x processor supports 32 Kbytes of instruction cache.

1.2.4.2 Internal Memories

The key features of the PXA27x processor internal memory are:

• 256 Kbytes of on-chip SRAM arranged as four banks of 64 Kbytes

• Bank-by-bank power management with automatic power management for reduced power

consumption

• Byte write support

Introduction

1.2.4.3 External Memory Controller

The PXA27x processor supports a memory controller for external memory which can access:

• SDRAM up to 100 MHz at 1.8 Volts.

• Flash memories

• Synchronous ROM

• SRAM

• Variable latency input/output (VLIO) memory

• PC card and compact flash expansion memory

1.2.5 Processor Internal Communications

The PXA27x processor supports a hierarchical bus architecture. A system bus supports high

bandwidth peripherals, and a slower peripheral bus supports peripherals with lower data

throughputs.

1.2.5.1 System Bus

• Interconnection between the major key components is through the system bus.

• 64-bit wide, address and data multiplexed bus.

• The system bus allows split transactions, increasing the maximum data-throughput in the

system.

• Different burst sizes are allowed; up to 4 data phases per transactions (that is, 32 bytes). The

burst size is set in silicon for each peripheral and is not configurable.

Intel® PXA27x Processor Family Optimization Guide 1-5

Page 16

Introduction

• The system bus can operate at different frequency ratios with respect to the Intel XScale® core

(up to 208 MHz). The frequency control of the system bus is pivotal to striking a balance

between the desired performance and power consumption.

1.2.5.2 Peripheral Bus

The peripheral bus is a single master bus. The bus master arbitrates between the Intel XScale® core

and the DMA controller with a pre-defined priority scheme between them. The peripheral bus is

used by the low-bandwidth peripherals; the peripheral bus runs at 26

1.2.5.3 Peripherals in the Processor

The PXA27x processor has a rich set of peripherals. The list of peripherals and key features are

described in the subsections below.

1.2.5.3.1 LCD Display Controller

The LCD controller supports single- or dual-panel LCD displays. Color panels without internal

frame buffers up to 262144 colors (18

up to 16777216 colors (24

(8

bits) are supported.

bits) are supported. Monochrome panels up to 256 gray-scale levels

bits) are supported. Color panels with internal frame buffers

MHz.

1.2.5.3.2 DMA Controller

The PXA27x processor has a high performance DMA controller supporting memory-to-memory

transfers, peripheral-to-memory and memory-to-peripheral device transfers. It has support for

32

channels and up to 63-peripheral devices. The controller can perform descriptor chaining. DMA

supports descriptor-fetch, no-descriptor-fetch and descriptor-chaining.

1.2.5.3.3 Other Peripherals

The PXA27x processor offers this peripheral support:

• USB Client Controller with 23 programmable endpoints (compliant with USB Revision 1.1).

• USB Host controller (USB Rev. 1.1 compatible), which supports both low-speed and full-

speed USB devices through a built-in DMA controller.

• Intel® Quick Capture Interface which provides a connection between the processor and a

camera image sensor.

• Infrared Communication Port (ICP) which supports 4 Mbps data rate compliant with Infrared

Data Association (IrDA) standard.

2

• I

C Serial Bus Port, which is compliant with I2C standard (also supports arbitration between

multiple-masters).

• AC97 CODEC Interface (compliant with AC97 2.0) supporting multiple independent channels

(different channels are used for stereo PCM In, stereo PCM Out, MODEM Out, MODEM-In

and mono Mic-in).

2

• I

S Audio CODEC Interface.

• Three flexible synchronous serial ports.

• Multimedia Card Controller supports the MMC, SD and SDIO protocols.

• The PXA27x processor supports three UARTs compatible with 16550 and 16750 standard.

1-6 Intel® PXA27x Processor Family Optimization Guide

Page 17

Introduction

• Memory Stick Host Controller - compliant with Memory Stick V1.3 standard.

• USIM card interface (compliant with ISO standard 7816-3 and 3G TS 31.101)

• MSL – the physical interface of communication subsystems for mobile or wireless platforms.

The operating system and application software uses this to communicate between each other.

• Keypad interface supports both direct key as well as matrix key.

• Real-time clock (RTC) controller which provides a general-purpose, real-time reference clock

for use by the system.

• The pulse width modulator (PWM) controller generates four independent PWM outputs.

• Interrupt controller identifiesand controls the interrupt sources available to the processor.

• The OS timers controller provides a set of timer channels that allow software to generate timed

interrupts or wake-up events.

• General-purpose I/O (GPIO) controller for use in generating and capturing application-

specific input and output signals. Each of the 121

an input (or as bidirectional for certain alternate functions).

1

GPIOs may be programmed as an output,

1.2.6 Wireless Intel Speedstep® technology

Wireless Intel Speedstep® technology advances the capabilities of Intel® Dynamic Voltage

Management - a function already built into the Intel XScale® Microarchitecture - by incorporating

three new low-power states: deep idle, standby and deep sleep. The technology is able to change

both voltage and frequency on-the-fly by intelligently switching the processor into the various low

power modes, saving additional power while still providing the necessary performance to run rich

applications.

The PXA27x processor integrated microprocessor provides a rich set of flexible powermanagement controls for a wide range of usage models, while enabling very low-power operation.

The key features include:

• Five reset sources:

—Power-on

— Hardware

— Watchdog

—GPIO

— Exit from sleep mode

• Three clock-speed controls to adjust frequency:

— Turbo mode

— Divisor mode

—Fast Bus mode

• Switchable clock source

• Functional clock gating

• Programmable frequency-change capability

1. 121 GPIOs are available on the PXA271 processor, PXA271 processor, and PXA271 processor. The PXA270 processor only has 119 GPIOs

bonded out.

Intel® PXA27x Processor Family Optimization Guide 1-7

Page 18

Introduction

• Six power modes to control power consumption:

—Normal

—Idle

— Deep idle

— Standby

— Sleep

— Deep sleep

• Programmable I

See the Intel® PXA27x Processor Family Developer’s Manual for more details.

2

C-based external regulator interface to support voltage changing.

1.3 Intel XScale® Microarchitecture Compatibility

The Intel XScale® Microarchitecture is ARM*Version 5 (V5TE) architecture compliant. The

PXA27x processor implements the integer instruction set architecture of ARM*V5TE.

Backward compatibility for user-mode applications is maintained with the earlier generations of

StrongARM* and Intel XScale® Microarchitecture processors. Operating systems may require

modifications to match the specific Intel XScale® Microarchitecture hardware features, and to take

advantage of the performance enhancements added to this core.

Memory map and register locations are backward-compatible with the previous Intel XScale®

Microarchitecture hand-held products.

The Intel® Wireless MMX™ technology instruction set is compatible with the standard ARM*

coprocessor instruction format (See

for more details).

The Complete Guide to Intel® Wireless MMX™ Technology

1.3.1 PXA27x Processor Performance Features

Performance features of the PXA27x processor are:

• 32-Kbyte instruction cache

• 32-Kbyte data cache

• Intel® Wireless MMX™ technology with sixteen 64-bit registers, optimized instructions for

video, and multi-media applications.

• The PXA27x processor has an internal SRAM of 256 KBytes.

• Capability of locking entries in the instruction or data caches

• 2-Kbyte mini-data cache, separate from the data cache

• L1 caches and the mini-data cache use virtual address indices (or tags)

• Separate instruction and data Translation Lookaside buffers (TLBs), each with 32 entries

• Capability of locking entries in the TLBs

• 16-channel DMA engine with transfer-size control and descriptor chaining

1-8 Intel® PXA27x Processor Family Optimization Guide

Page 19

Introduction

• PID register for fast virtual address remapping

• Vect o r r emap

• Interrupt controller offers faster interrupt latency with the help of programmable priority

sorting mechanism.

• Extensions to the exception model to include imprecise data and instruction preload aborts

• Access control to other coprocessors

• Enhanced set of supported cache-control options

• A branch target buffer for dynamic-branch prediction

• Performance monitoring unit

• Software-debug support, including instruction and data breakpoints, a serial debug link via the

JTAG interface and a 256-entry trace buffer

• Integrated memory controller with support for SDRAM, flash memory, synchronous ROM,

SRAM, variable latency I/O (VLIO) memory, PC card, and compact flash expansion memory.

• Six power-management modes

Intel® PXA27x Processor Family Optimization Guide 1-9

Page 20

Introduction

1-10 Intel® PXA27x Processor Family Optimization Guide

Page 21

Microarchitecture Overview 2

2.1 Introduction

This chapter contains an overview of Intel XScale® Microarchitecture and Intel® Wireless

MMX™ Technology

architecture with an enhanced memory pipeline. The Intel XScale® Microarchitecture instruction

set is based on ARM* V5TE architecture; however, the Intel XScale® Microarchitecture includes

new instructions. Code developed for the Intel® StrongARM* SA-110 (SA-110), Intel®

StrongARM* SA-1100 (SA-1100), and Intel® StrongARM* SA-1110 (SA-1110) microprocessors

is portable to Intel XScale® Microarchitecture based processors. However, to obtain the maximum

performance, the code should be optimized for the Intel XScale® Microarchitecture using the

techniques presented in this document.

2.2 Intel XScale® Microarchitecture Pipeline

This section provides a brief description of the structure and behavior of Intel XScale®

Microarchitecture pipeline.

2.2.1 General Pipeline Characteristics

. The Intel XScale® Microarchitecture includes a superpipelined RISC

The following sections discuss general pipeline characteristics.

2.2.1.1 Pipeline Organization

The Intel XScale® Microarchitecture has a 7-stage pipeline operating at a higher frequency than its

predecessors allowing for greater overall performance. The Intel XScale® Microarchitecture

single-issue superpipeline consists of a main execution pipeline, a multiply-accumulate {MAC}

pipeline, and a memory access pipeline.

execution pipeline shaded.

Figure 2-1. Intel XScale® Microarchitecture RISC Superpipeline

Main execution pipeline

F1 F2 ID RF X1 X2

Figure 2-1 shows the pipeline organization with the main

MAC pipeline

M1 M2 Mx

Memory pipeline

D1 D2

XWB

DWB

Intel® PXA27x Processor Family Optimization Guide 2-1

Page 22

Microarchitecture Overview

Tab le 2-1 gives a brief description of each pipe stage and a reference for further information.

Table 2-1. Pipelines and Pipe Stages

Pipe / Pipestage Description For More Information

Main Execution Pipeline

• IF1/IF2

•ID

•RF

•X1

•X2

•XWB

Memory Pipeline

•D1/D2

•DWB

MAC Pipeline

•M1-M5

• MWB (not shown)

Handles data processing instructions

Instruction Fetch

Instruction Decode

Register File / Operand Shifter

ALU Execute

State Execute

Write-back

Handles load/store instructions

Data cache access

Data cache writeback

Handles all multiply instructions

Multiplier stages

MAC write-back occurs during M2-M5

2.2.1.2 Out of Order Completion

While the pipeline is scalar and single-issue, instructions occupy all three pipelines at once. The

main execution pipeline, memory, and MAC pipelines have different execution times because they

are not lock-stepped. Sequential consistency of instruction execution relates to two aspects: first,

the order instructions are completed and second, the order memory is accessed due to load and

store instructions. The Intel XScale® Microarchitecture only preserves a weak processor

consistency because instructions complete out of order (assuming no data dependencies exist).

The Intel XScale® Microarchitecture can buffer up to four outstanding reads. If load operations

miss the data cache, subsequent instructions complete independently. This operation is called a

hit-under-miss operation.

Section 2.2.3

Section 2.2.3.1

Section 2.2.3.2

Section 2.2.3.3

Section 2.2.3.4

Section 2.2.3.5

Section 2.2.3.6

Section 2.2.4

Section 2.2.4.1

Section 2.2.5.1

Section 2.2.5

Section 2.2.5

Section 2.2.5

2.2.1.3 Use of Bypassing

The pipeline makes extensive use of bypassing to minimize data hazards. To eliminate the need to

stall the pipeline, bypassing allows results forwarding from multiple sources.

In certain situations, the pipeline must stall because of register dependencies between instructions.

A register dependency occurs when a previous MAC or load instruction is about to modify a

register value that has not returned to the register file. Core bypassing allows the current instruction

to execute when the previous instruction’s results are available without waiting for the register file

to update.

2.2.2 Instruction Flow Through the Pipeline

With the exception of the MAC unit, the pipeline issues one instruction per clock cycle. Instruction

execution begins at the F1 pipestage and completes at the WB pipestage.

Although a single instruction is issued per clock cycle, all three pipelines are processing

instructions simultaneously. If there are no data hazards, each instruction complete independently

of the others.

2-2 Intel® PXA27x Processor Family Optimization Guide

Page 23

2.2.2.1 ARM* V5TE Instruction Execution

Figure 2-1 uses arrows to show the possible flow of instructions in the pipeline. Instruction

execution flows from the F1 pipestage to the RF pipestage. The RF pipestage issues a single

instruction to either the X1 pipestage or the MAC unit (multiply instructions go to the MAC, while

all others continue to X1). This means that M1 or X1 are idle.

After calculating the effective addresses in XI, all load and store instructions route to the memory

pipeline.

The ARM* V5TE branch and exchange (BX) instruction (used to branch between ARM* and

THUMB* code) causes the entire pipeline to be flushed. If the processor is in THUMB* mode the

ID pipestage dynamically expands each THUMB* instruction into a normal ARM* V5TE RISC

instruction and normal execution resumes.

2.2.2.2 Pipeline Stalls

Pipeline stalls can seriously degrade performance. The primary reasons for stalls are register

dependencies, load dependencies, multiple-cycle instruction latency, and unpredictable branches.

To help maximize performance, it is important to understand some of the ways to avoid pipeline

stalls. The following sections provide more detail on the nature of the pipeline and ways of

preventing stalls.

Microarchitecture Overview

2.2.3 Main Execution Pipeline

2.2.3.1 F1 / F2 (Instruction Fetch) Pipestages

The job of the instruction fetch stages F1 and F2 is to present the next instruction to be executed to

the ID stage. Two important functional units residing within the F1 and F2 stages are the BTB and

IFU.

• Branch Target Buffer (BTB)

The BTB provides a 128-entry dynamic branch prediction buffer. An entry in the BTB is

created when a B or BL instruction branch is taken for the first time. On sequential executions

of the branch instruction at the same address, the next instruction loaded into the pipeline is

predicted by the BTB. Once the branch type instruction reaches the X1 pipestage, its target

address is known. Execution continues without stalling if the target address is the same as the

BTB predicted address. If the address is different from the address that the BTB predicted, the

pipeline is flushed, execution starts at the new target address, and the branch’s history is

updated in the BTB.

• Instruction Fetch Unit (IFU)

The IFU is responsible for delivering instructions to the instruction decode (ID) pipestage. It

delivers one instruction word each cycle (if possible) to the ID. The instruction could come

from one of two sources: instruction cache or fetch buffers.

Intel® PXA27x Processor Family Optimization Guide 2-3

Page 24

Microarchitecture Overview

2.2.3.2 Instruction Decode (ID) Pipestage

The ID pipestage accepts an instruction word from the IFU and sends register decode information

to the RF pipestage. The ID is able to accept a new instruction word from the IFU on every clock

cycle in which there is no stall. The ID pipestage is responsible for:

• General instruction decoding (extracting the opcode, operand addresses, destination addresses

and the offset).

• Detecting undefined instructions and generating an exception.

• Dynamic expansion of complex instructions into sequence of simple instructions. Complex

instructions are defined as ones that take more than one clock cycle to issue, such as LDM,

STM, and SWP.

2.2.3.3 Register File / Shifter (RF) Pipestage

The main function of the RF pipestage is to read and write to the register file unit (RFU). It

provides source data to:

• X1 for ALU operations

• MAC for multiply operations

• Data cache for memory writes

• Coprocessor interface

The ID unit decodes the instruction and specifies the registers accessed in the RFU. Based on this

information, the RFU determines if it needs to stall the pipeline due to a register dependency. A

register dependency occurs when a previous instruction is about to modify a register value that has

not been returned to the RFU and the current instruction needs to access that same register. If no

dependencies exist, the RFU selects the appropriate data from the register file and passes it to the

next pipestage. When a register dependency does exist, the RFU keeps track of the unavailable

register. The RFU stops stalling the pipe when the result is returned.

The ARM* architecture specifies one of the operands for data processing instructions as the shifter

operand. A 32-bit shift can be performed on a value before it is used as an input to the ALU. This

shifter is located in the second half of the RF pipestage.

2.2.3.4 Execute (X1) Pipestages

The X1 pipestage performs these functions:

• ALU calculations – the ALU performs arithmetic and logic operations, as required for data

processing instructions and load/store index calculations.

• Determine conditional instruction executions – the instruction’s condition is compared to the

CPSR prior to execution of each instruction. Any instruction with a false condition is

cancelled and does not cause any architectural state changes, including modifications of

registers, memory, and PSR.

• Branch target determinations – the X1 pipestage flushes all instructions in the previous

pipestages and sends the branch target address to the BTB if a branch is mispredicted by the

BTB. The flushing of these instructions restarts the pipeline.

2-4 Intel® PXA27x Processor Family Optimization Guide

Page 25

2.2.3.5 Execute 2 (X2) Pipestage

The X2 pipestage contains the program status registers (PSR). This pipestage selects the data to be

written to the RFU in the WB cycle including the following items.

The X2 pipestage contains the current program status register (CPSR). This pipestage selects what

is written to the RFU in the WB cycle including program status registers.

2.2.3.6 Write-Back (WB)

When an instruction reaches the write-back stage it is considered complete. Instruction results are

written to the RFU.

2.2.4 Memory Pipeline

The memory pipeline consists of two stages, D1 and D2. The data cache unit (DCU) consists of the

data cache array, mini-data cache, fill buffers, and write buffers. The memory pipeline handles load

and store instructions.

2.2.4.1 D1 and D2 Pipestage

Microarchitecture Overview

Operation begins in D1 after the X1 pipestage calculates the effective address for loads and stores.

The data cache and mini-data cache return the destination data in the D2 pipestage. Before data is

returned in the D2 pipestage, sign extension and byte alignment occurs for byte and half-word

loads.

2.2.4.1.1 Write Buffer Behavior

The Intel XScale® Microarchitecture has enhanced write performance by the use of write

coalescing. Coalescing is combining a new store operation with an existing store operation already

resident in the write buffer. The new store is placed in the same write buffer entry as an existing

store when the address of new store falls in the 4-word aligned address of the existing entry.

The core can coalesce any of the four entries in the write buffer. The Intel XScale®

Microarchitecture has a global coalesce disable bit located in the Control register (CP15, register 1,

opcode_2=1).

2.2.4.1.2 Read Buffer Behavior

The Intel XScale® Microarchitecture has four fill buffers that allow four outstanding loads to the

cache and external memory. Four outstanding loads increases the memory throughput and the bus

efficiency. This feature can also be used to hide latency. Page table attributes affect the load

behavior; for a section with C=0, B=0 there is only one outstanding load from the memory. Thus,

the load performance for a memory page with C=0, B=1 is significantly better compared to a

memory page with C=0, B=0.

2.2.5 Multiply/Multiply Accumulate (MAC) Pipeline

The multiply-accumulate (MAC) unit executes the multiply and multiply-accumulate instructions

supported by the Intel XScale® Microarchitecture. The MAC implements the 40-bit Intel XScale®

Microarchitecture accumulator register acc0 and handles the instructions which transfers its value

to and from general-purpose ARM* registers.

Intel® PXA27x Processor Family Optimization Guide 2-5

Page 26

Microarchitecture Overview

These are important characteristics about the MAC:

• The MAC is not a true pipeline. The processing of a single instruction requires use of the same

data-path resources for several cycles before a new instruction is accepted. The type of

instruction and source arguments determine the number of required cycles.

• No more than two instructions can concurrently occupy the MAC pipeline.

• When the MAC is processing an instruction, another instruction cannot enter M1 unless the

original instruction completes in the next cycle.

• The MAC unit can operate on 16-bit packed signed data. This reduces register pressure and

memory traffic size. Two 16-bit data items can be loaded into a register with one LDR.

• The MAC can achieve throughput of one multiply per cycle when performing a 16-by-32-bit

multiply.

• ACC registers in the Intel XScale® Microarchitecture can be up to 64 bits in future

implementations. Code should be written to depend on the 40-bit nature of the current

implementation.

2.2.5.1 Behavioral Description

The execution of the MAC unit starts at the beginning of the M1 pipestage. At this point, the MAC

unit receives two 32-bit source operands. Results are completed N cycles later (where N is

dependent on the operand size) and returned to the register file. For more information on MAC

instruction latencies, refer to

Microarchitecture”.

Section 4.8, “Instruction Latencies for Intel XScale®

An instruction occupying the M1 or M2 pipestages occupies the X1 and X2 pipestage, respectively.

Each cycle, a MAC operation progresses for M1 to M5. A MAC operation may complete anywhere

from M2-M5.

2.2.5.2 Perils of Superpipelining

The longer pipeline has several consequences worth considering:

• Larger branch misprediction penalty (four cycles in the Intel XScale® Microarchitecture

instead of one in StrongARM* Architecture).

• Larger load use delay (LUD) — LUDs arise from load-use dependencies. A load-use

dependency gives rise to a LUD if the result of the load instruction cannot be made available

by the pipeline in time for the subsequent instruction. To avoid these penalties, an optimizing

compiler should take advantage of the core’s multiple outstanding load capability (also called

hit-under-miss) as well as finding independent instructions to fill the slot following the load.

• Certain instructions incur a few extra cycles of delay with the Intel XScale® Microarchitecture

as compared to StrongARM* processors (LDM, STM).

• Decode and register file lookups are spread out over two cycles with the Intel XScale®

Microarchitecture, instead of one cycle in predecessors.

2-6 Intel® PXA27x Processor Family Optimization Guide

Page 27

Microarchitecture Overview

2.3 Intel® Wireless MMX™ Technology Pipeline

As the Intel® Wireless MMX™ Technology is tightly coupled with the Intel XScale®

Microarchitecture; the

structure as the Intel XScale® Microarchitecture.

Technology pipeline, which contains three independent pipeline threads:

• X pipeline - Execution pipe

• M pipeline - Multiply pipe

• D pipeline - Memory pipe

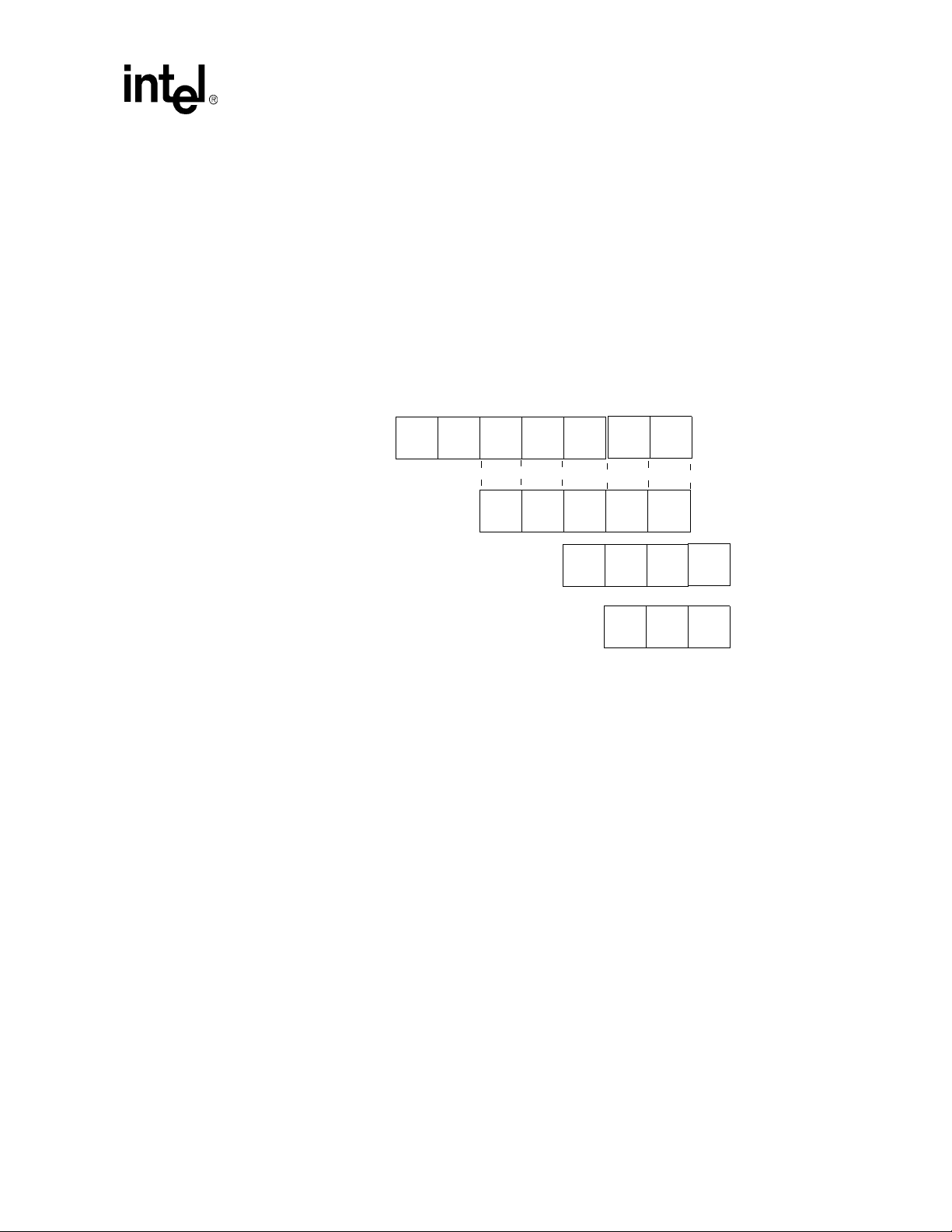

Figure 2-2. Intel® Wireless MMX™ Technology Pipeline Threads and relation with Intel

XScale® Microarchitecture Pipeline

Intel® Wireless MMX™ Technology pipeline follows the similar pipeline

Figure 2-2 shows the Intel® Wireless MMX™

Intel

XScale®

Pipeline

IF1 IF2

ID

RF

X1

X2 XWB

X pipeline

M pipeline

D pipeline

2.3.1 Execute Pipeline Thread

2.3.1.1 ID Stage

The ID pipe stage is where decoding of Intel® Wireless MMX™ Technology instructions

commences. Because of the significance of the transit time from Intel XScale® Microarchitecture

in the ID pipe stage, only group decoding is performed in the ID stage, with the remainder of the

decoding being completed in the RF stage. However, it is worth noting that the register address

decoding is fully completed in the ID stage because the register file needs to be accessed at the

beginning of the RF stage.

All instructions are issued in a single cycle, and they pass through the ID stage in one cycle if no

pipeline stall occurs.

2.3.1.2 RF Stage

ID

RF X1

M1

X2 XWB

M2

D1 D2 DWB

MWBM3

The RF stage controls the reading/writing of the register file, and determines if the pipeline has to

stall due to data or resource hazards. Instruction decoding also continues at the RF stage and

completes at the end of the RF stage. The register file is accessed for reads in the high phase of the

clock and accessed for writes in the low phase.If data or resource hazards are detected, the

Intel® PXA27x Processor Family Optimization Guide 2-7

Intel®

Page 28

Microarchitecture Overview

Wireless MMX™ Technology stalls Intel XScale® Microarchitecture. Note that control hazards

are detected in the Intel XScale® Microarchitecture, and a flush signal is sent from the core to the

Intel® Wireless MMX™ Technology.

2.3.1.3 X1 Stage

The X1 stage is also known as the execution stage, which is where most instructions begin being

executed. All instructions are conditionally executed and that determination occurs at the X1 stage

in the Intel XScale® Microarchitecture. A signal from the core is required to indicate whether the

instruction being executed is committed. In other words, an instruction being executed at the X1

stage may be canceled by a signal from the core. This signal is available to the

MMX™ Technology

2.3.1.4 X2 Stage

The Intel® Wireless MMX™ Technology supports saturated arithmetic operations. Saturation

detection is completed in the X2 pipe stage.

If the Intel XScale® Microarchitecture detects exceptions and flushes in the X2 pipe stage, Intel®

Wireless MMX™ Technology

Intel® Wireless

in the middle of the X1 pipe stage.

also flushes all the pipeline stages.

2.3.1.5 XWB Stage

The XWB stage is the last stage of the X pipeline, where a final result calculated in the X pipeline

is written back to the register file.

2.3.2 Multiply Pipeline Thread

2.3.2.1 M1 Stage

The M pipeline is separated from the X pipeline. The execution of multiply instructions starts at the

beginning of the M1 stage, which aligns with the X1 stage of the X pipeline. While the issue cycle

for multiply operations is one clock cycle, the result latency is at least three cycles. Certain

instructions such as TMIA, WMAC, WMUL, WMADD spend two M1 cycles since the

Wireless MMX™ Technology

level compression occur in the M1 pipe stage.

2.3.2.2 M2 Stage

Additional compression occurs in the M2 pipe stage, and the lower 32 bits of the result are

calculated with a 32 bit adder.

2.3.2.3 M3 Stage

The upper 32 bits of the result are calculated with a 32-bit adder.

has only two 16x16 multiplier arrays. Booth encoding and first-

Intel®

2.3.2.4 MWB Stage

The MWB stage is the last stage of the M pipeline, which is where a final result calculated in the M

pipeline is written back to the register file.

2-8 Intel® PXA27x Processor Family Optimization Guide

Page 29