
Intel® Ethernet Adapters and Devices User Guide


Overview

Welcome to the User's Guide for Intel® Ethernet Adapters and Devices. This guide covers hardware and software installation, setup procedures, and troubleshooting tips for Intel network adapters, connections, and other devices.

Installing the Network Adapter

If you are installing a network adapter, follow this procedure from step 1.

If you are upgrading the driver software, start with step 5.

1. Make sure that you are installing the latest driver software for your adapter. Visit Intel's support website to download the latest drivers.

2. Review system requirements.

3. Insert the adapter(s) in the computer.

4. Attach the copper or fiber network cable(s).

5. Install the driver.

6. For Windows systems, install the Intel® PROSet software.

If you have any problems with basic installation, see Troubleshooting.

You can now set up advanced features, if necessary. The available features and the configuration process vary with the adapter and your operating system.

Supported Devices

For help identifying your network device and finding supported devices, visit the following site:

http://www.intel.com/support

Compatibility Notes

For an adapter based on the XL710 controller to reach its full potential, you must install it in a PCIe Gen3 x8 slot. Installing it in a narrower slot (fewer lanes), or in a Gen2 or Gen1 slot, will limit the throughput of the adapter.
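To see why slot generation and width matter, the usable bandwidth of a PCIe link can be estimated from its per-lane signaling rate and line encoding. The following minimal Python sketch uses the published PCIe rates (2.5, 5, and 8 GT/s for Gen1 to Gen3) and encodings (8b/10b for Gen1 and Gen2, 128b/130b for Gen3). It ignores protocol overhead such as packet headers and flow control, so real throughput is lower; the function name `pcie_bandwidth_gbps` is illustrative only.

```python
# Per-lane signaling rate (GT/s) and line-encoding efficiency for PCIe Gen1-Gen3.
# Gen1 and Gen2 use 8b/10b encoding; Gen3 uses 128b/130b.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
}


def pcie_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in Gb/s, before protocol overhead."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt_s * efficiency * lanes


if __name__ == "__main__":
    for gen, lanes in [(3, 8), (3, 4), (2, 8), (1, 8)]:
        print(f"Gen{gen} x{lanes}: {pcie_bandwidth_gbps(gen, lanes):.1f} Gb/s")
```

A Gen3 x8 link works out to roughly 63 Gb/s per direction, while a Gen3 x4 or Gen2 x8 link provides about half of that, which is why a narrower or older slot limits the adapter.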

Some older Intel® Ethernet Adapters do not have full software support for the most recent versions of Microsoft Windows*. Many older Intel Ethernet Adapters have base drivers supplied by Microsoft Windows.

Lists of supported devices per OS are available at

http://www.intel.com/support/go/network/adapter/nicoscomp.htm

Some Intel® 10 Gigabit Network Adapters and Connections support SFP+ pluggable optical modules. For details, see SFP+ Devices with Pluggable Optics.

System Requirements

Before installing the network adapter, check your system for the following minimum configuration requirements.


Hardware Compatibility

l The latest BIOS for your computer

l One of the following slot types, depending on your adapter:

l One open PCI bus master slot, 32-bit or 64-bit, operating at 33 or 66 MHz

l One open PCI-X slot operating at 66, 100 or 133 MHz (recommended)

l One open PCI-Express slot (v1.0a or newer), x4, x8, or x16.

NOTES:

l The PRO/1000 MT Quad Port Server adapter requires a 3.3-volt-only slot. It cannot be installed in a 5.0-volt or universal slot.

l The Intel® 10 Gigabit AT Server Adapter will only fit into x8 or larger PCI Express slots. Some systems have physical x8 PCI Express slots that actually support lower speeds. Please check your system manual to identify the slot.

Supported 32-bit Operating Systems

NOTE: Intel 10GbE Adapters do not support Microsoft* Windows* 32-bit operating systems. They support 32-bit versions of Linux* and FreeBSD*.

Basic software and drivers are supported on the following operating systems:

l DOS

l SunSoft* Solaris* (drivers and support are provided by the operating system vendor)

Advanced software and drivers are supported on the following operating systems:

l Microsoft* Windows* 7

l Microsoft Windows 8

l Microsoft Windows 8.1

l Microsoft Windows 10

l Linux*, v2.4 kernel or higher

l FreeBSD*

Supported Intel® 64 Architecture Operating Systems

l Microsoft Windows 7

l Microsoft Windows 8

l Microsoft Windows 8.1

l Microsoft Windows 10

l Microsoft Windows Server 2008 R2

l Microsoft Windows Server 2012

l Microsoft Windows Server 2012 R2

l Red Hat Linux

l SUSE Linux

l FreeBSD


Supported Operating Systems for Itanium-based Systems

l Linux, v2.x kernel and higher, except v2.6



Hardware Installation

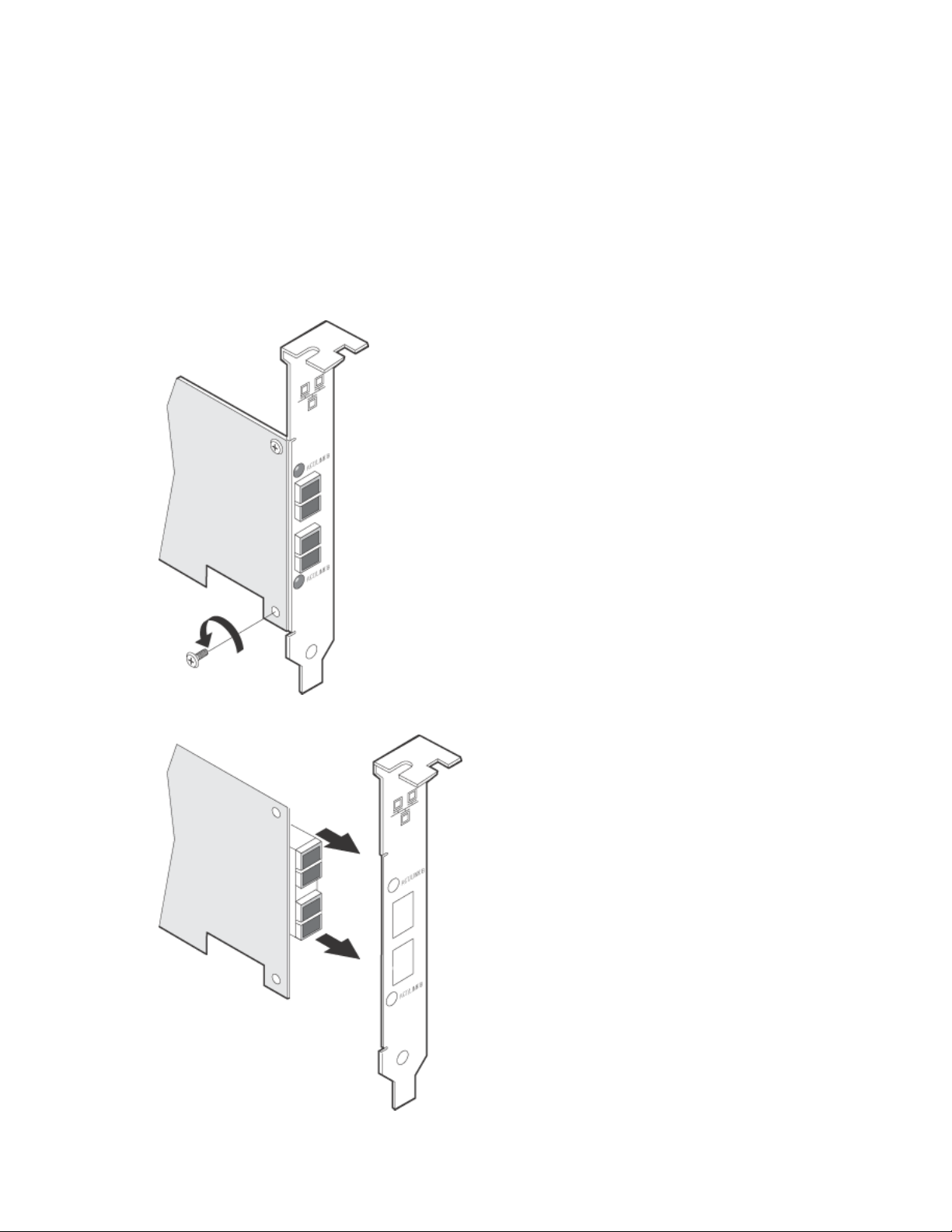

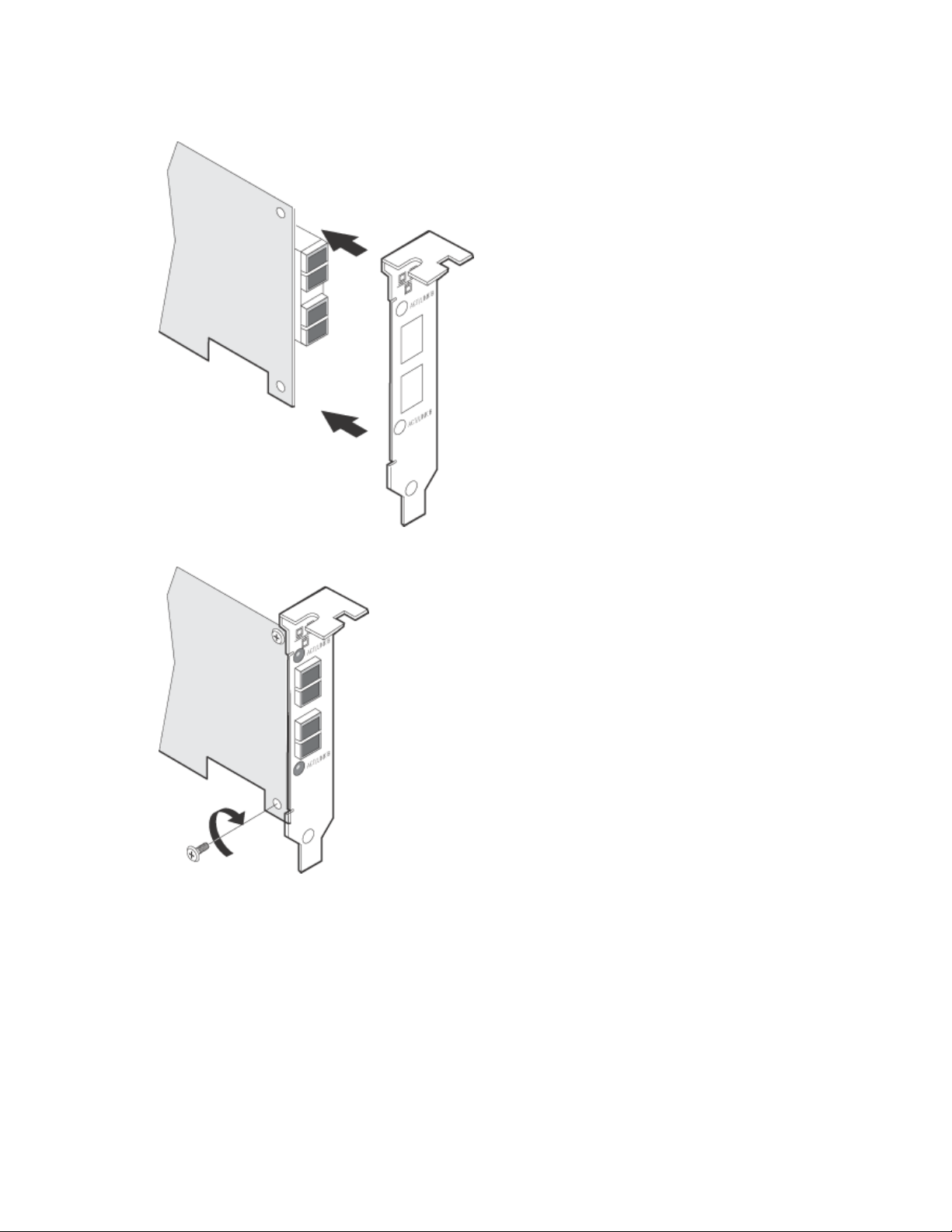

Attaching the Low Profile Bracket (Optional)

Some adapter models include a low-profile bracket in the product package. If you need to install the adapter in a low-profile slot, follow these instructions:

1. Before handling the adapter, ground yourself to dissipate any static charge.

2. Remove the two screws securing the standard bracket to the back side of the adapter.

3. Slide the bracket away from the adapter.


4. Slide the low profile bracket onto the adapter.

5. Attach the low profile bracket to the adapter using the screws you removed in step 2.

6. Carefully tighten the screws until they are seated. Do not overtighten.

You may re-attach the standard sized bracket in the future if necessary.

Installing the Adapter

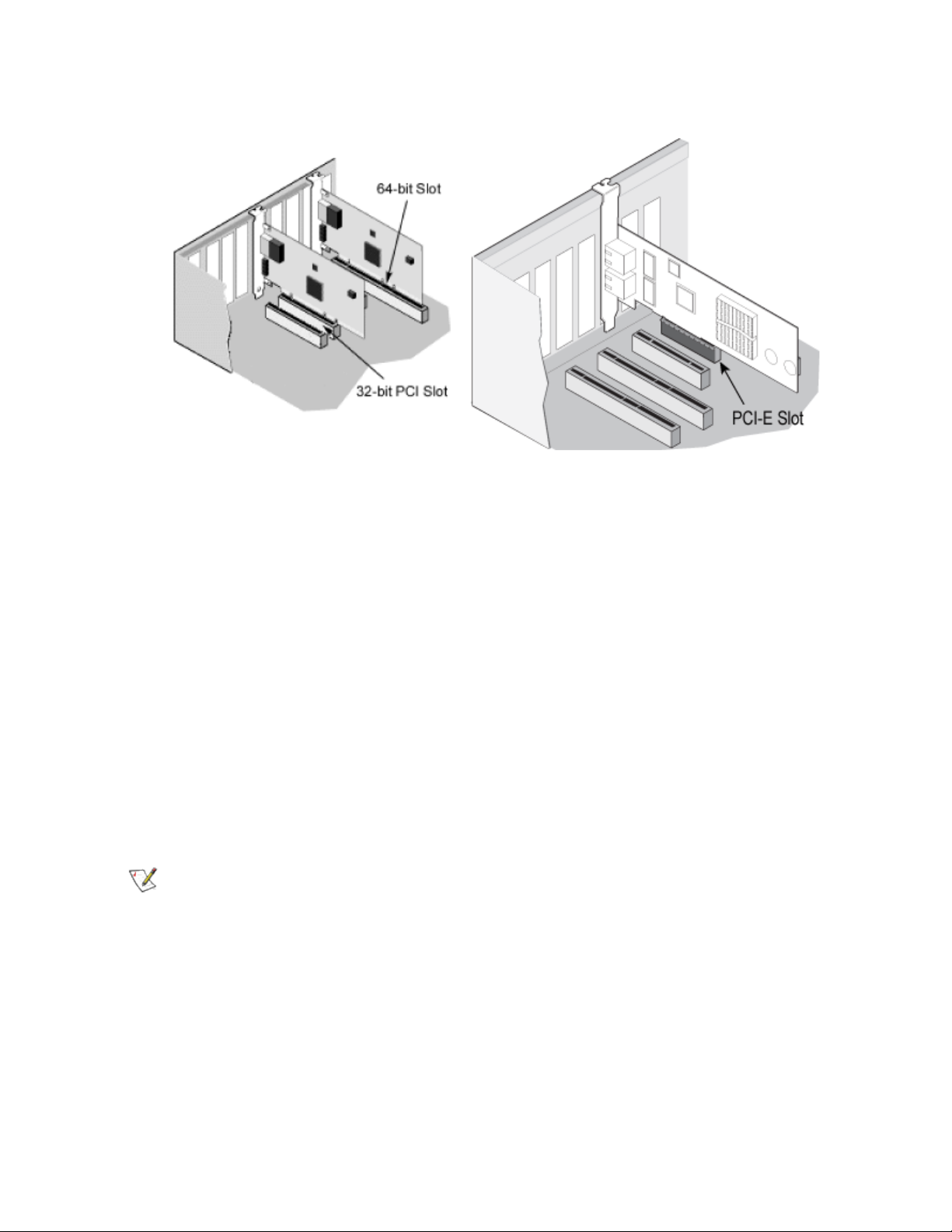

Selecting the Correct Slot

PCI-Express Adapters

l One open PCI-Express slot (v1.0a or newer), x4, x8, or x16.


NOTE: The Intel® 10 Gigabit AT Server Adapter will only fit into x8 or larger PCI Express slots. Some systems have physical x8 PCI Express slots that actually support lower speeds. Please check your system manual to identify the slot.

PCI and PCI-X Adapters

One of the following slot types, depending on your adapter:

l One open PCI bus master slot, 32-bit or 64-bit, operating at 33 or 66 MHz

l One open PCI-X slot operating at 66, 100 or 133 MHz (recommended)

NOTE: The PRO/1000 MT Quad Port Server adapter requires a 3.3-volt-only slot. It cannot be installed in a 5.0-volt or universal slot.

If you have configuration problems, see your computer's documentation to determine whether the PCI slots are bus master-enabled. If you get a PCI or PCI-X configuration error, see Troubleshooting.

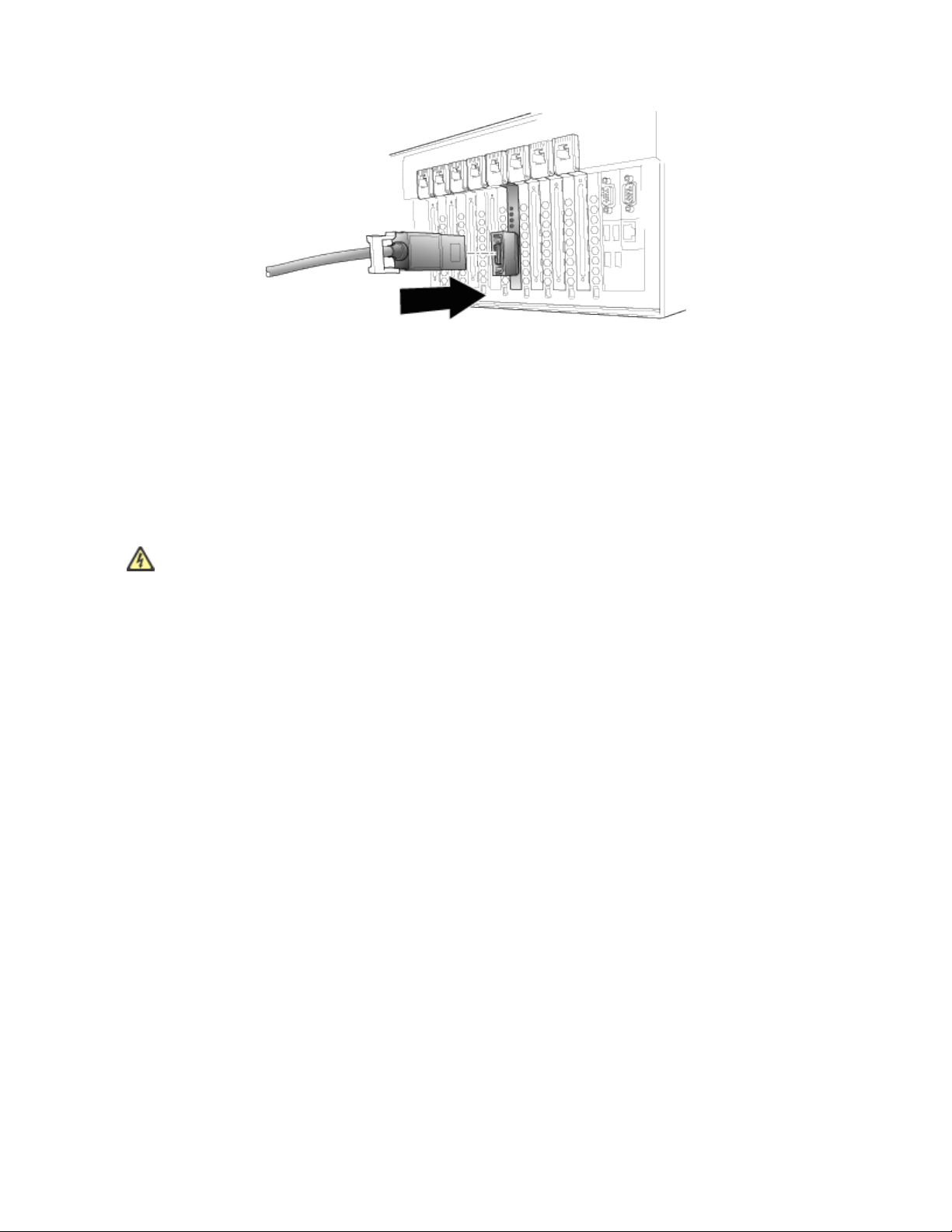

Insert the Adapter into the Computer

1. If your computer supports PCI Hot Plug, see your computer documentation for special installation instructions.

2. Shut down the operating system (if it's running).

3. Turn off and unplug your computer. Then remove the cover.

CAUTION: Turn off and unplug the power before removing the computer's cover. Failure to do so could endanger you and may damage the adapter or computer.

4. Remove the cover bracket from an appropriate slot.

5. Insert the adapter, pushing it into the slot until the adapter is firmly seated.

l If you install a 64-bit PCI adapter in a 32-bit slot, the adapter will still function, but the end of the edge connector will be exposed and not connected. If this is the case, make sure the exposed contacts do not touch anything that would cause an electrical problem.

l If you are installing a PCI-Express adapter, be aware that you can install a smaller PCI-Express adapter in a larger PCI-Express slot.


6. Secure the adapter bracket with a screw, if required.

7. Repeat steps 4 through 6 for each additional adapter to be installed.

8. Replace the computer cover and plug in the power cord.

9. Turn the power on and start your operating system.

Attaching Cables

Intel® Gigabit and 10 Gigabit Network Adapters that use copper connections automatically accommodate either MDI or MDI-X connections. The auto-MDI-X feature of Intel Gigabit Network Adapters allows you to connect two adapters directly without using a crossover cable.

To ensure compliance with CISPR 24 and the EU's EN55024, Intel® 10 Gigabit Server Adapters and Connections should be used only with CAT 6a shielded cables that are properly terminated according to the recommendations in EN50174-2.

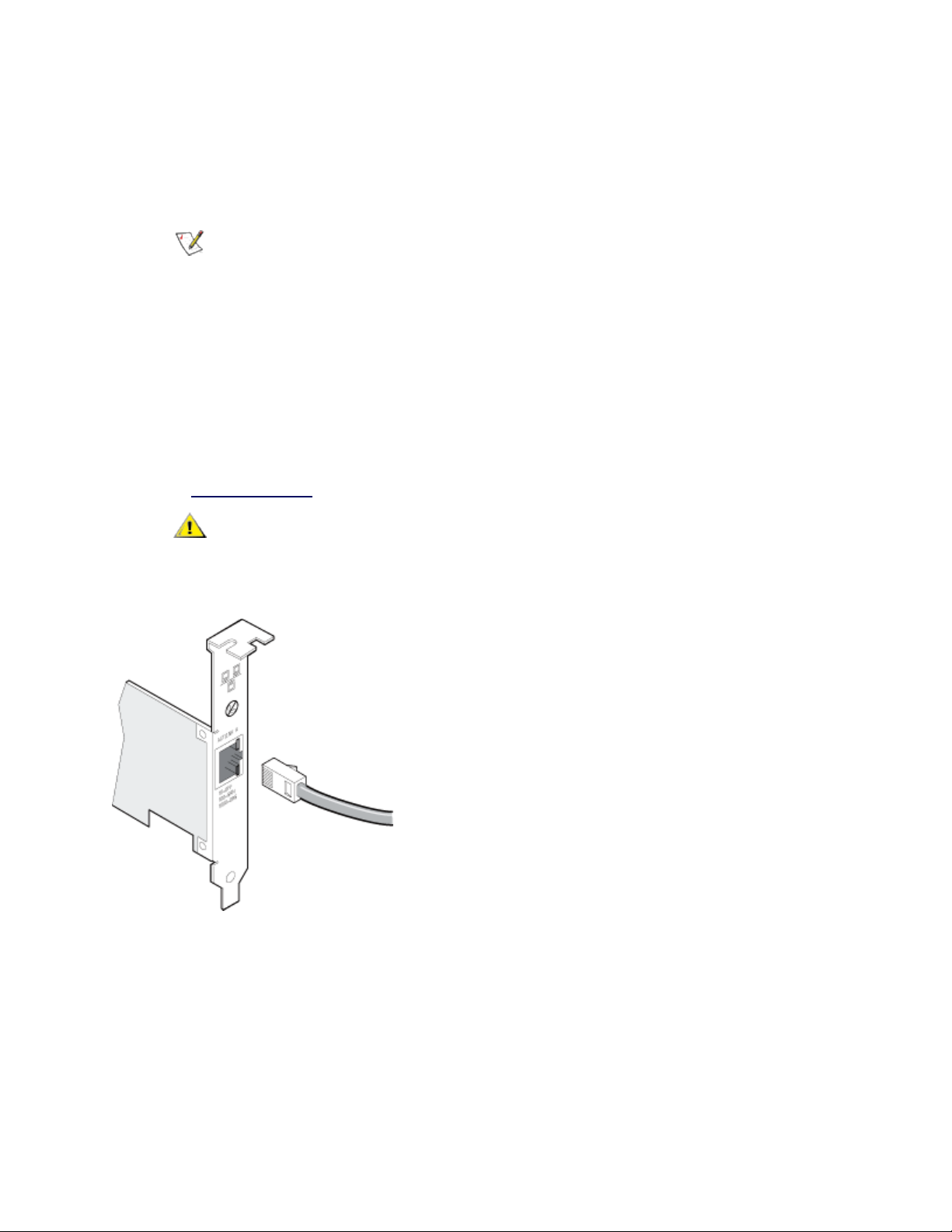

Attach an RJ45 Network Cable

Cable types

Use one of the following types of network cabling, depending on your adapter and the intended use:

NOTE: If you are using this adapter in a residential environment (at any speed), use Category 5 or better wiring. If the cable runs between rooms or through walls and/or ceilings, it should be plenum-rated for fire safety.

l Maximum lengths for Intel® 10 Gigabit Server Adapters and Connections that use 10GBASE-T on Category 6, Category 6a, or Category 7 wiring, twisted 4-pair copper:

l Maximum length for Category 6 is 55 meters.

l Maximum length for Category 6a is 100 meters.

l Maximum length for Category 7 is 100 meters.


l For 1000 Mbps operation (1000BaseT), use Category 5 or better (must be 4-pair wiring). Make sure you use Category 5 cable that complies with the TIA-568 wiring specification.

NOTE: To ensure compliance with CISPR 24 and the EU’s EN55024, devices based on the 82576 controller should be used only with CAT 5E shielded cables that are properly terminated according to the recommendations in EN50174-2.

l For 100 Mbps operation (100BaseTX), use Category 5 wiring or better.

l For 10 Mbps operation (10BaseT), use Category 3 wiring or better.

l In all cases:

l Segment length is limited to 100 meters (328 feet, 1 inch).

l The adapter must be connected to a compatible link partner, and may be set to auto-negotiate speed and duplex.

l For more information on this specification, see the Telecommunications Industry Association's website: www.tiaonline.org.

CAUTION: If you use cabling with fewer than four pairs, you must manually configure the speed and duplex setting of the adapter and the link partner. In addition, with 2- and 3-pair cabling the adapter can only achieve speeds of up to 100 Mbps.

Insert the twisted pair, RJ45 network cable as shown.
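The copper cabling rules above can be summarized as a single check. The following Python sketch encodes only the limits stated in this section; the function name, the category labels (such as "6a"), and the ranking of "5e" between Category 5 and 6 are illustrative assumptions, not part of any Intel tool.

```python
# Maximum run length in meters for 10GBASE-T, by cable category.
TEN_GBASE_T_MAX_M = {"6": 55, "6a": 100, "7": 100}

# Minimum cable category for the slower speeds (keys are Mbps).
MIN_CATEGORY = {1000: "5", 100: "5", 10: "3"}

# Ordering used to compare categories ("5e" assumed to rank between 5 and 6).
CATEGORY_RANK = {"3": 0, "5": 1, "5e": 2, "6": 3, "6a": 4, "7": 5}

SEGMENT_LIMIT_M = 100  # segment length limit that applies in all cases


def copper_link_ok(speed_mbps: int, category: str, length_m: float, pairs: int = 4) -> bool:
    """Return True if the cable meets the stated requirements for the given speed."""
    if length_m > SEGMENT_LIMIT_M:
        return False
    if pairs < 4 and speed_mbps > 100:
        # With 2- or 3-pair cabling the adapter can reach at most 100 Mbps.
        return False
    if speed_mbps == 10000:
        limit = TEN_GBASE_T_MAX_M.get(category)
        return limit is not None and length_m <= limit
    required = MIN_CATEGORY.get(speed_mbps)
    if required is None:
        raise ValueError(f"unsupported speed: {speed_mbps} Mbps")
    return CATEGORY_RANK[category] >= CATEGORY_RANK[required]
```

For example, `copper_link_ok(10000, "6", 60)` returns False because Category 6 is limited to 55 meters at 10 Gbps. A True result for 2- or 3-pair cabling at 100 Mbps still requires you to set speed and duplex manually on both ends, as the caution above states.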

CX4

Physical cabling characteristics for the Intel® CX4 Dual Port Server Adapter must meet IEEE Std 802.3ak, Clause 54 specifications. The required cable connector is an MDI latch-type receptacle, as defined by IEC 61076-3-113.

The IEEE standard defines a worst-case cable length of 15 meters, based on 100 Ω twinaxial cable characteristics. However, commercial cable manufacturers may offer enhanced products that will permit successful operation over greater distances.


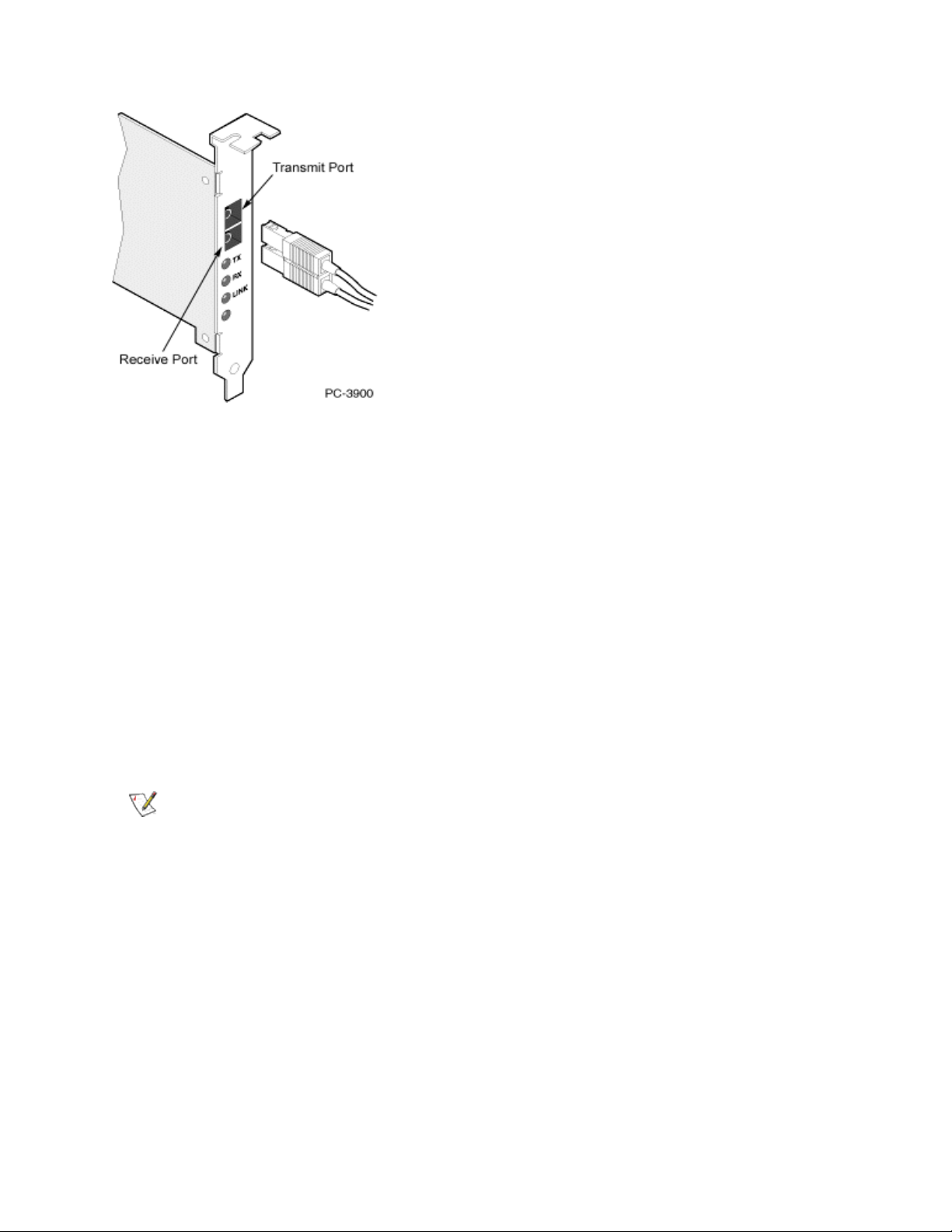

Fiber Optic Cabling

Remove and save the fiber optic connector cover. Insert a fiber optic cable into the ports on the network adapter bracket as shown.

Most connectors and ports are keyed for proper orientation. If the cable you are using is not keyed, check to be sure the connector is oriented properly (transmit port connected to receive port on the link partner, and vice versa).

The adapter must be connected to a compatible link partner that is operating at the same laser wavelength as the adapter.

CAUTION: The fiber optic ports contain a Class 1 laser device. When the ports are disconnected, always cover them with the provided plug. If an abnormal fault occurs, skin or eye damage may result if you are in close proximity to the exposed ports.

Connecting Fiber Optic SC Cables

The connection requirements are as follows:

l Laser wavelength: 850 nanometer (not visible).

l SX Cable type:

l Multi-mode fiber with 50 micron core diameter; maximum length is 550 meters.

l Multi-mode fiber with 62.5 micron core diameter; maximum length is 275 meters.

l Connector type: SC.


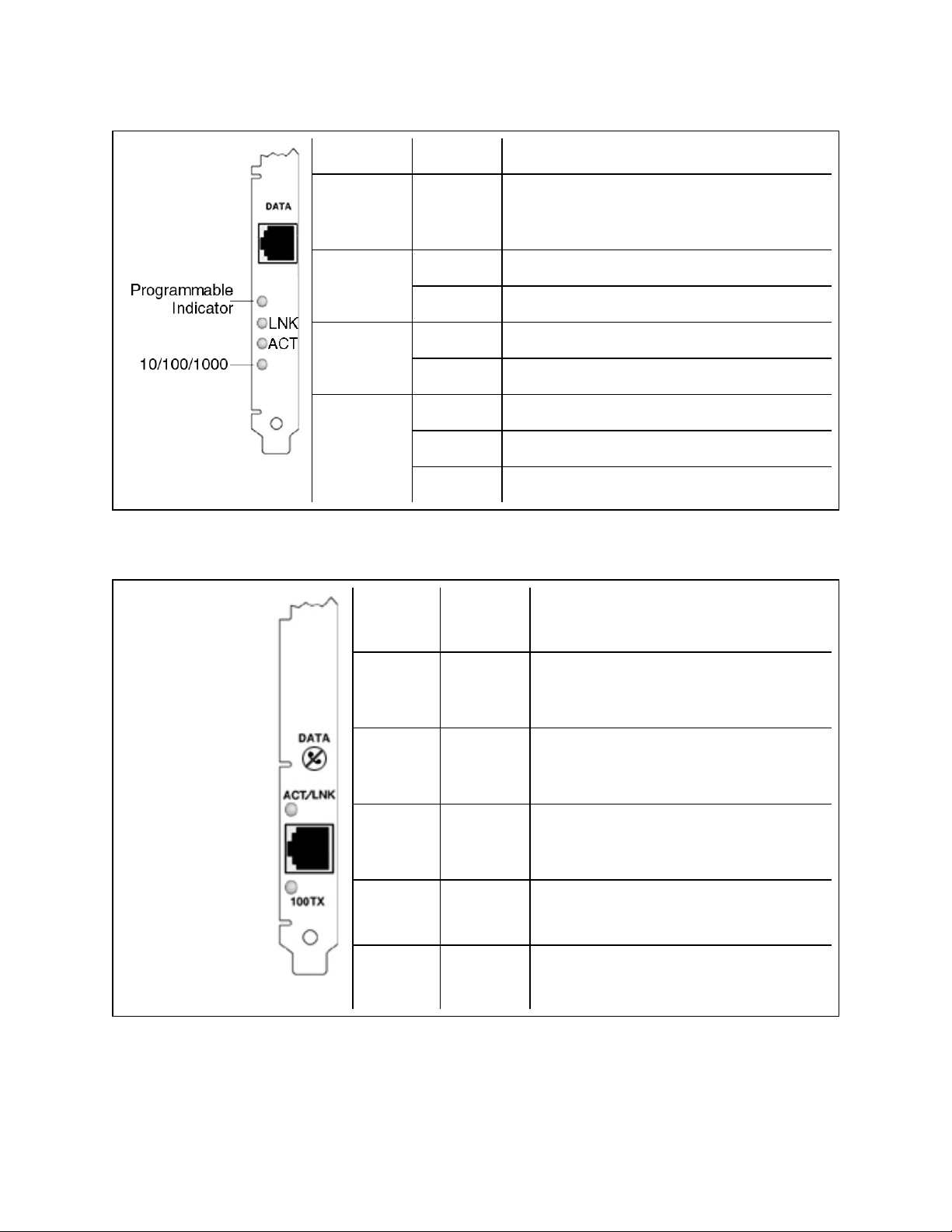

Single-port fiber adapter with SC connector

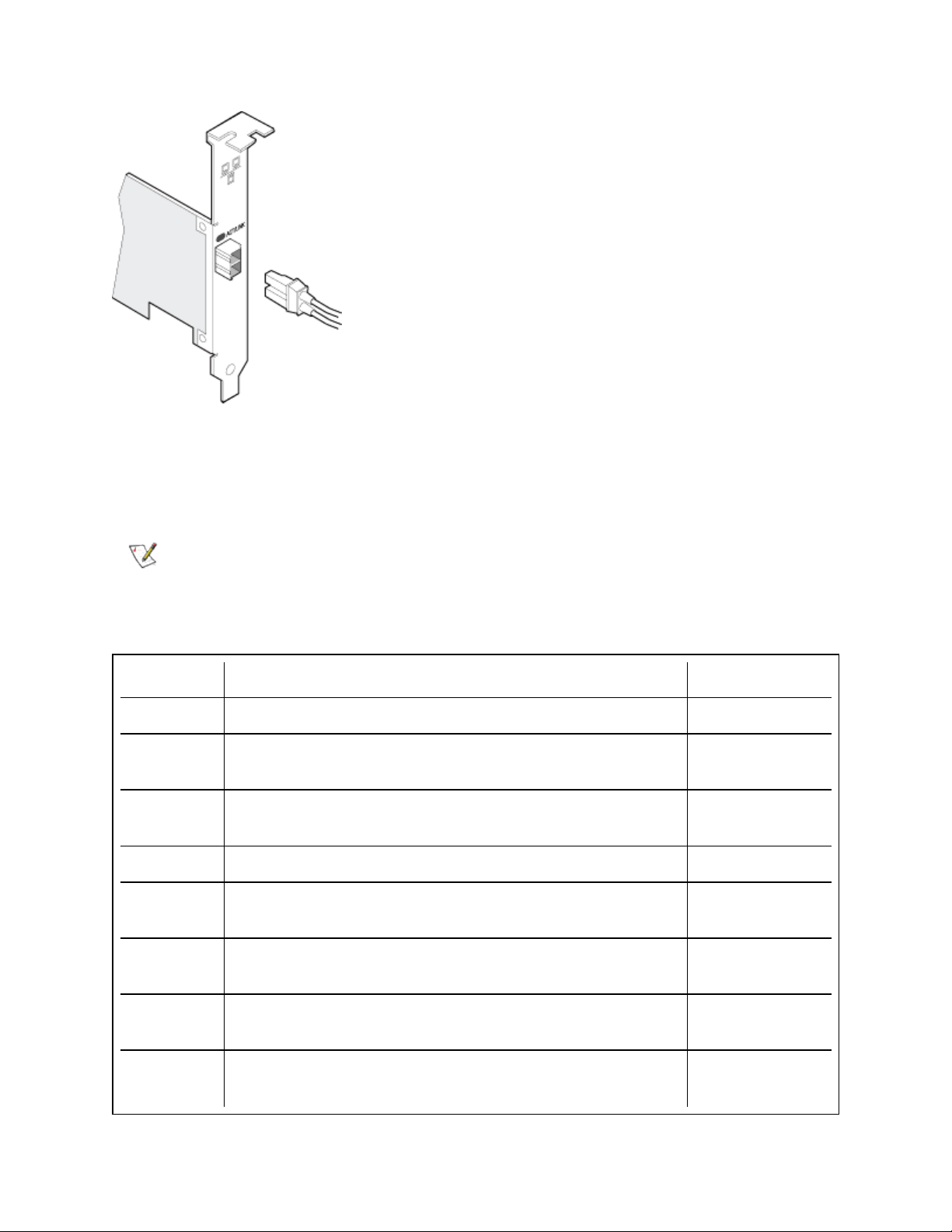

Connecting Fiber Optic LC Cables

l Laser type: 850 nanometer wavelength (not visible).

l SX Cabling:

l Multi-mode fiber with 50 micron core diameter. Maximum length is 550 meters.

l Multi-mode fiber with 62.5 micron core diameter. Maximum length is 275 meters.

l Connector type: LC.

"LX" Adapters

Adapters with an "LX" marking on the bracket support the 1000Base-LX connectivity standard. They are designed for long-haul operation, and have different connectivity requirements:

l Laser type: 1310 nanometer wavelength (not visible).

l LX Cabling: single-mode fiber with 9.0 micron core diameter.

l Maximum length: 10 kilometers.

l Connector type: LC.

NOTES:

l Although LX adapters are designed for use with long-haul single-mode fiber, they can also be used with short-haul multi-mode fiber. Maximum cable length is 550 meters. Must be connected to compatible LX ports.

l If needed, an LC-to-SC adapter cable may be used to mate with SC connectors.
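The SX and LX fiber requirements above reduce to a small lookup of maximum lengths plus a wavelength match between link partners. The following Python sketch encodes only the figures given in this section; the table layout, the string labels, and the function names are illustrative assumptions.

```python
from typing import Optional

# Maximum cable length in meters by (adapter type, fiber mode, core diameter in microns).
# A core diameter of None means this guide gives no diameter for that combination.
FIBER_MAX_M = {
    ("SX", "multi-mode", 50.0): 550,
    ("SX", "multi-mode", 62.5): 275,
    ("LX", "single-mode", 9.0): 10_000,
    ("LX", "multi-mode", None): 550,
}

# Laser wavelength in nanometers; both ends of a link must use the same wavelength.
WAVELENGTH_NM = {"SX": 850, "LX": 1310}


def max_fiber_length_m(adapter: str, mode: str, core_um: Optional[float] = None) -> Optional[int]:
    """Return the stated maximum length, or None if the combination is not listed."""
    exact = FIBER_MAX_M.get((adapter, mode, core_um))
    if exact is not None:
        return exact
    # Fall back to an entry that does not specify a core diameter.
    return FIBER_MAX_M.get((adapter, mode, None))


def partners_compatible(adapter_a: str, adapter_b: str) -> bool:
    """True if both link partners operate at the same laser wavelength."""
    return WAVELENGTH_NM[adapter_a] == WAVELENGTH_NM[adapter_b]
```

A None result means the guide does not list that combination, which you should treat as unsupported rather than unlimited.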


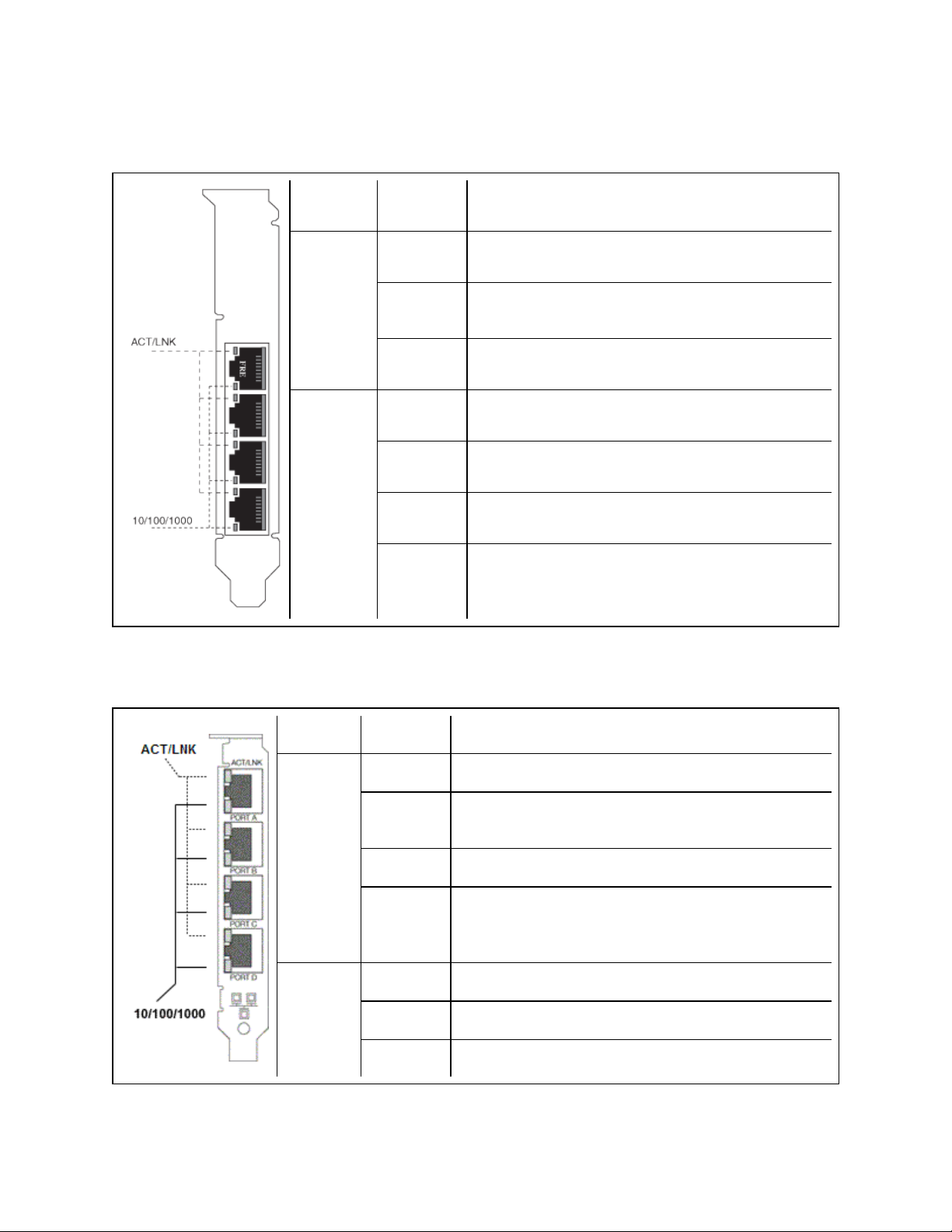

Single-port fiber adapter with LC connector

SFP+ Devices with Pluggable Optics

X710 and XL710-Based Adapters

NOTES:

l If your Intel® Network Adapter came with Intel SFP+ optics, or is an Intel® Ethernet Converged Network Adapter X710 type of adapter, then it only supports Intel optics and/or the direct attach cables listed below.

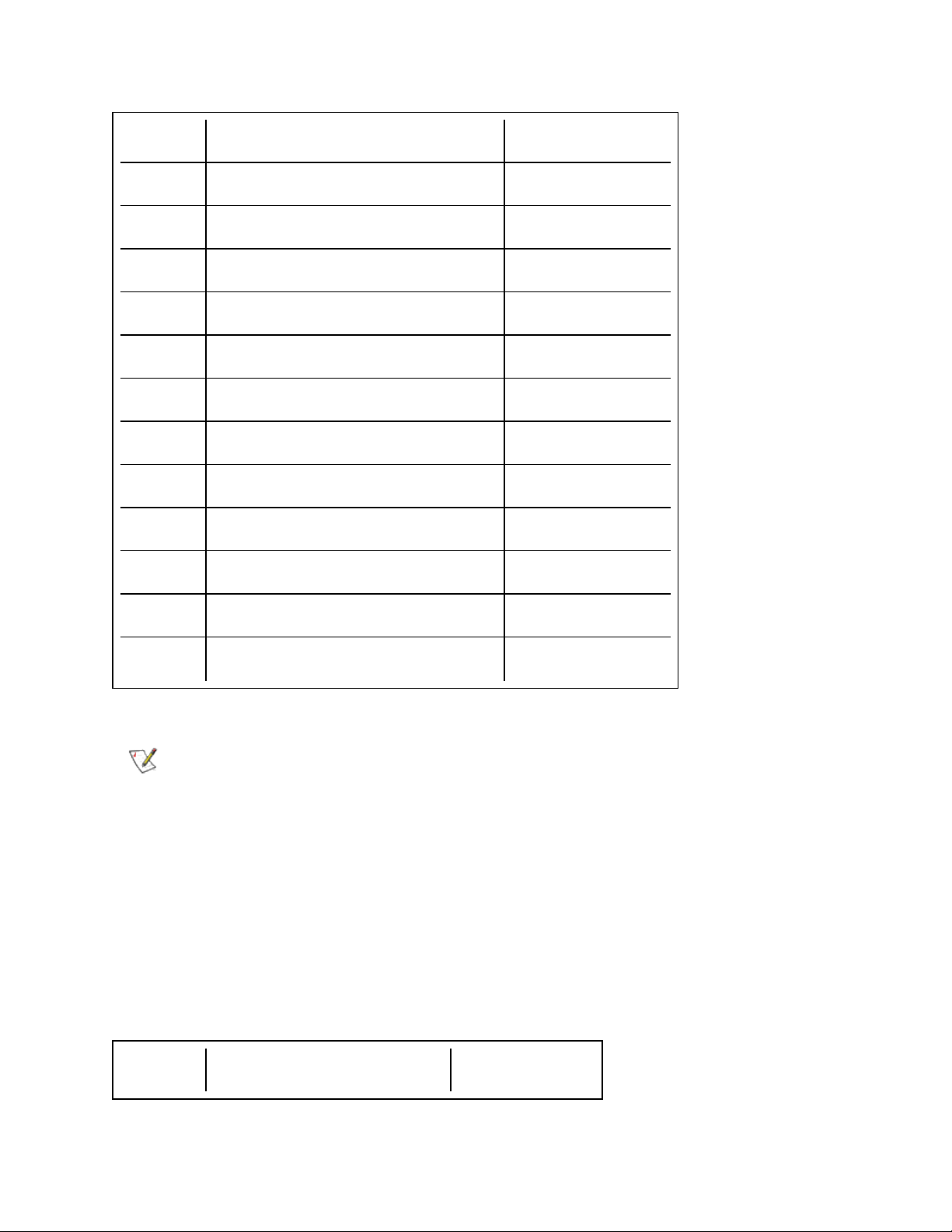

| Supplier | Type | Part Numbers |
|---|---|---|
| SR Modules | | |
| Intel | DUAL RATE 1G/10G SFP+ SR (bailed) | FTLX8571D3BCV-IT |
| Intel | DUAL RATE 1G/10G SFP+ SR (bailed) | AFBR-703SDDZ-IN1 |
| LR Modules | | |
| Intel | DUAL RATE 1G/10G SFP+ LR (bailed) | FTLX1471D3BCV-IT |
| Intel | DUAL RATE 1G/10G SFP+ LR (bailed) | AFCT-701SDZ-IN2 |
| QSFP Modules | | |
| Intel | TRIPLE RATE 1G/10G/40G QSFP+ SR (bailed) (1G not supported on XL710) | E40GQSFPSR |
| Intel | TRIPLE RATE 1G/10G/40G QSFP+ LR (bailed) (1G not supported on XL710) | E40GQSFPLR |

82599-Based Adapters

NOTES:

l If your 82599-based Intel® Network Adapter came with Intel optics, or is an Intel® Ethernet Server Adapter X520-2, then it only supports Intel optics and/or the direct attach cables listed below.

l 82599-Based adapters support all passive and active limiting direct attach cables that comply with SFF-8431 v4.1 and SFF-8472 v10.4 specifications.

| Supplier | Type | Part Numbers |
|---|---|---|
| SR Modules | | |
| Intel | DUAL RATE 1G/10G SFP+ SR (bailed) | AFBR-703SDZ-IN2 |
| Intel | DUAL RATE 1G/10G SFP+ SR (bailed) | FTLX8571D3BCV-IT |
| Intel | DUAL RATE 1G/10G SFP+ SR (bailed) | AFBR-703SDDZ-IN1 |
| LR Modules | | |
| Intel | DUAL RATE 1G/10G SFP+ LR (bailed) | FTLX1471D3BCV-IT |
| Intel | DUAL RATE 1G/10G SFP+ LR (bailed) | AFCT-701SDZ-IN2 |
| Intel | DUAL RATE 1G/10G SFP+ LR (bailed) | AFCT-701SDDZ-IN1 |
| QSFP Modules | | |
| Intel | TRIPLE RATE 1G/10G/40G QSFP+ SR (bailed) (40G not supported on 82599) | E40GQSFPSR |

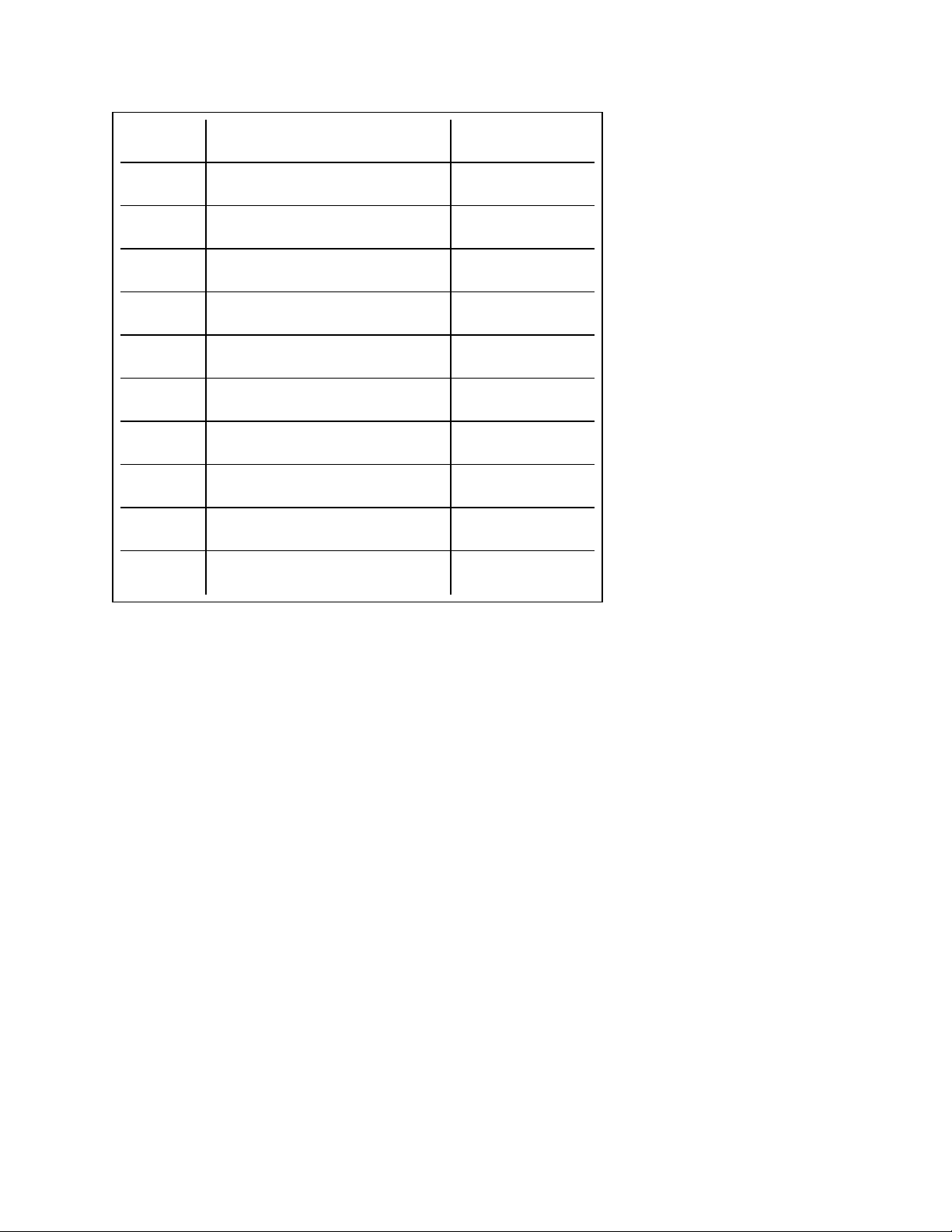

The following is a list of third-party SFP+ modules that have received some testing. Not all modules are applicable to all devices.

| Supplier | Type | Part Numbers |
|---|---|---|
| Finisar | SFP+ SR bailed, 10g single rate | FTLX8571D3BCL |
| Avago | SFP+ SR bailed, 10g single rate | AFBR-700SDZ |
| Finisar | SFP+ LR bailed, 10g single rate | FTLX1471D3BCL |
| Finisar | DUAL RATE 1G/10G SFP+ SR (No Bail) | FTLX8571D3QCV-IT |
| Avago | DUAL RATE 1G/10G SFP+ SR (No Bail) | AFBR-703SDZ-IN1 |
| Finisar | DUAL RATE 1G/10G SFP+ LR (No Bail) | FTLX1471D3QCV-IT |
| Avago | DUAL RATE 1G/10G SFP+ LR (No Bail) | AFCT-701SDZ-IN1 |
| Finisar | 1000BASE-T SFP | FCLF8522P2BTL |
| Avago | 1000BASE-T SFP | ABCU-5710RZ |
| HP | 1000BASE-SX SFP | 453153-001 |

82598-Based Adapters

NOTES:

l Intel® Network Adapters that support removable optical modules only support their original module type (i.e., the Intel® 10 Gigabit SR Dual Port Express Module only supports SR optical modules). If you plug in a different type of module, the driver will not load.

l 82598-Based adapters support all passive direct attach cables that comply with SFF-8431 v4.1 and SFF-8472 v10.4 specifications. Active direct attach cables are not supported.

l Hot swapping/hot plugging optical modules is not supported.

l Only single-speed, 10 gigabit modules are supported.

l LAN on Motherboard (LOM) devices may support DA, SR, or LR modules. Other module types are not supported. Please see your system documentation for details.

The following is a list of SFP+ modules and direct attach cables that have received some testing. Not all modules are applicable to all devices.

| Supplier | Type | Part Numbers |
|---|---|---|
| Finisar | SFP+ SR bailed, 10g single rate | FTLX8571D3BCL |
| Avago | SFP+ SR bailed, 10g single rate | AFBR-700SDZ |
| Finisar | SFP+ LR bailed, 10g single rate | FTLX1471D3BCL |
| Molex | 1m Twin-ax cable | 74752-1101 |
| Molex | 3m Twin-ax cable | 74752-2301 |
| Molex | 5m Twin-ax cable | 74752-3501 |
| Molex | 10m Twin-ax cable | 74752-9004 |
| Tyco | 1m Twin-ax cable | 2032237-2 |
| Tyco | 3m Twin-ax cable | 2032237-4 |
| Tyco | 5m Twin-ax cable | 2032237-6 |
| Tyco | 10m Twin-ax cable | 1-2032237-1 |

THIRD PARTY OPTIC MODULES AND CABLES REFERRED TO ABOVE ARE LISTED ONLY FOR THE PURPOSE OF HIGHLIGHTING THIRD PARTY SPECIFICATIONS AND POTENTIAL COMPATIBILITY, AND ARE NOT RECOMMENDATIONS OR ENDORSEMENT OR SPONSORSHIP OF ANY THIRD PARTY'S PRODUCT BY INTEL. INTEL IS NOT ENDORSING OR PROMOTING PRODUCTS MADE BY ANY THIRD PARTY AND THE THIRD PARTY REFERENCE IS PROVIDED ONLY TO SHARE INFORMATION REGARDING CERTAIN OPTIC MODULES AND CABLES WITH THE ABOVE SPECIFICATIONS. THERE MAY BE OTHER MANUFACTURERS OR SUPPLIERS, PRODUCING OR SUPPLYING OPTIC MODULES AND CABLES WITH SIMILAR OR MATCHING DESCRIPTIONS. CUSTOMERS MUST USE THEIR OWN DISCRETION AND DILIGENCE TO PURCHASE OPTIC MODULES AND CABLES FROM ANY THIRD PARTY OF THEIR CHOICE. CUSTOMERS ARE SOLELY RESPONSIBLE FOR ASSESSING THE SUITABILITY OF THE PRODUCT AND/OR DEVICES AND FOR THE SELECTION OF THE VENDOR FOR PURCHASING ANY PRODUCT. THE OPTIC MODULES AND CABLES REFERRED TO ABOVE ARE NOT WARRANTED OR SUPPORTED BY INTEL. INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF SUCH THIRD PARTY PRODUCTS OR SELECTION OF VENDOR BY CUSTOMERS.

PCI Hot Plug Support

Intel® PRO/100 Server Adapters and most Intel® Gigabit Server Adapters are enabled for use in selected servers equipped with Hot Plug support. Exception: Intel Gigabit Quad Port Server adapters do not support Hot Plug operations.

If you replace an adapter in a Hot Plug slot, do not place the removed adapter back into the same network until the server has rebooted (unless you return it to the same slot and same team as before). This prevents a conflict caused by two adapters with the same Ethernet address on the same network.

The system will require a reboot if you:

l Change the primary adapter designator.

l Add a new adapter to an existing team and make the new adapter the primary adapter.

l Remove the primary adapter from the system and replace it with a different type of adapter.
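The reboot conditions above, and the duplicate-address risk described at the start of this section, can be checked with a few lines of code. The following Python sketch is illustrative: the function names and the port-to-MAC mapping are hypothetical inputs you would collect yourself (for example from your inventory), not output of any Intel tool.

```python
from collections import Counter
from typing import Dict, List


def reboot_required(primary_designator_changed: bool,
                    new_member_made_primary: bool,
                    primary_replaced_with_other_type: bool) -> bool:
    """Any one of the three listed Hot Plug changes requires a system reboot."""
    return (primary_designator_changed
            or new_member_made_primary
            or primary_replaced_with_other_type)


def duplicate_macs(mac_by_port: Dict[str, str]) -> List[str]:
    """Return MAC addresses seen on more than one port, normalized to lowercase colon form."""
    counts = Counter(mac.lower().replace("-", ":") for mac in mac_by_port.values())
    return sorted(mac for mac, n in counts.items() if n > 1)
```

For example, if a removed adapter is reconnected elsewhere while the replacement still carries its address, `duplicate_macs` reports that shared address.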


NOTE: To replace an existing SLA-teamed adapter in a Hot Plug slot, first unplug the adapter cable. When the adapter is replaced, reconnect the cable.

PCI Hot Plug Support for Microsoft* Windows* Operating Systems

Intel® network adapters are enabled for use in selected servers equipped with PCI Hot Plug support and running Microsoft* Windows* operating systems. For more information on setting up and using PCI Hot Plug support in your server, see your hardware and/or Hot Plug support documentation. PCI Hot Plug only works when you hot plug an identical Intel network adapter.

NOTES:

l The MAC address and driver from the removed adapter will be used by the replacement adapter unless you remove the adapter from the team and add it back in. If you do not remove and restore the replacement adapter from the team, and the original adapter is used elsewhere on your network, a MAC address conflict will occur.

l For SLA teams, ensure that the replacement NIC is a member of the team before connecting it to the switch.

Getting the Most From Your Intel® 10 Gigabit Server Adapter

NOTE: These adjustments should be performed by a highly skilled network administrator. They are not guaranteed to improve performance. Not all settings shown here may be available through your BIOS, operating system or network driver configuration.

1. Install the adapter in a PCIe x8 slot.

NOTE: Some PCIe x8 slots are actually configured as x4 slots. These slots have insufficient bandwidth for full 10GbE line rate with dual port 10GbE devices. The driver can detect this situation and will write the following message in the system log: “PCI-Express bandwidth available for this card is not sufficient for optimal performance. For optimal performance a x8 PCI-Express slot is required.”

If this error occurs, moving your adapter to a true x8 slot will resolve the issue.

2. Use the proper cabling for the adapter you have. See Attaching Cables for details.

3. Enable Jumbo Frames, if your other network components can also be configured for it.

4. If your BIOS has an MMRBC (Maximum Memory Read Byte Count) adjustment, change it from its default (usually 512) to 4096 (maximum).

NOTE: Some systems may set the adapter's MMRBC to 4096 by default.

5. Increase the number of TCP and Socket resources from the default value.

6. Increase the allocation size of Driver Resources (transmit/receive buffers). However, most TCP traffic patterns work best with the transmit buffer set to its default value, and the receive buffer set to its minimum value.
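On Linux, besides watching for the driver message described in step 1, you can compare the negotiated and maximum PCIe link widths that the kernel exposes for each device as the sysfs attributes `current_link_width` and `max_link_width` under `/sys/bus/pci/devices/<address>/`. The following Python sketch assumes those attributes are present on your kernel; the function names are illustrative, and the check supplements rather than replaces the driver's own log message.

```python
from pathlib import Path
from typing import Tuple

SYSFS_PCI = Path("/sys/bus/pci/devices")


def read_link_widths(device_dir: Path) -> Tuple[int, int]:
    """Return (current, maximum) PCIe link width for one device directory."""
    current = int((device_dir / "current_link_width").read_text().strip())
    maximum = int((device_dir / "max_link_width").read_text().strip())
    return current, maximum


def link_is_degraded(device_dir: Path) -> bool:
    """True if the slot gave the device fewer lanes than it is able to use."""
    current, maximum = read_link_widths(device_dir)
    return current < maximum
```

Usage example: `link_is_degraded(SYSFS_PCI / "0000:03:00.0")`, where the PCI address is a placeholder; find your adapter's address with `lspci`. A result of True suggests moving the adapter to a true x8 slot.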


Advanced Features

NOTE: The options available on the Advanced tab are adapter and system dependent. Not all adapters will display all options.

Jumbo Frames

Jumbo Frames are Ethernet frames that are larger than 1518 bytes. You can use Jumbo Frames to reduce server CPU utilization and increase throughput. However, additional latency may be introduced.

NOTES:

l Jumbo Frames are supported at 1000 Mbps and higher. Using Jumbo Frames at 10 or 100 Mbps is not supported and may result in poor performance or loss of link.

l End-to-end network hardware must support this capability; otherwise, packets will be dropped.

Jumbo Frames can be implemented simultaneously with VLANs and teaming.

To configure Jumbo Frames at the switch, consult your network administrator or switch user's guide.

Jumbo Frame Restrictions:

l Jumbo Frames are not supported in multi-vendor team configurations.

l Supported protocols are limited to IP (TCP, UDP).

l Jumbo Frames require compatible switch connections that forward Jumbo Frames. Contact your switch vendor for more information.

l The Jumbo Frame setting inside a virtual machine must be the same as, or lower than, the setting on the physical port.

l When standard-sized Ethernet frames (64 to 1518 bytes) are used, there is no benefit to configuring Jumbo Frames.

l The Jumbo Frames setting on the switch must be set to at least 8 bytes larger than the adapter setting for Microsoft* Windows* operating systems, and at least 22 bytes larger for all other operating systems.

l The Intel® PRO/1000 PL Network Connection supports Jumbo Frames in Microsoft* Windows* operating systems only when Intel® PROSet for Windows Device Manager is installed.

l The following devices do not support Jumbo Frames larger than 4096 bytes:

l Intel® 82577LM Gigabit Network Connection

l Intel® 82578DM Gigabit Network Connection

l The following devices do not support Jumbo Frames:

l Intel® 82567V-4 Gigabit Network Connection

l Intel® 82578DC Gigabit Network Connection

l Intel® 82577LC Gigabit Network Connection

l Intel® 82567LF-3 Gigabit Network Connection

l Intel® 82567V-2 Gigabit Network Connection


l Intel® 82567LF-2 Gigabit Network Connection

l Intel® 82567V Gigabit Network Connection

l Intel® 82567LF Gigabit Network Connection

l Intel® PRO/1000 Gigabit Server Adapter

l Intel® PRO/1000 PM Network Connection


l Intel® 82562V 10/100 Network Connection

l Intel® 82562GT 10/100 Network Connection

l Intel® 82566DM Gigabit Network Connection

l Intel® 82566DC Gigabit Network Connection

l Intel® 82566DC-2 Gigabit Network Connection

l Intel® 82562V-2 10/100 Gigabit Network Connection

l Intel® 82562G-2 10/100 Gigabit Network Connection

l Intel® 82562GT-2 10/100 Gigabit Network Connection

l Intel® 82552 10/100 Network Connection
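The restrictions above can be checked before you apply a setting. The following Python sketch records the two 4096-byte devices and an abridged subset of the devices without Jumbo Frame support, and applies the switch-margin rule (8 bytes on Windows, 22 bytes elsewhere). The dictionary, set, and function names are illustrative; devices not listed are treated as having no cap stated in this section, which does not mean they have no hardware limit.

```python
# Devices limited to Jumbo Frames of at most 4096 bytes.
JUMBO_MAX_BYTES = {
    "Intel® 82577LM Gigabit Network Connection": 4096,
    "Intel® 82578DM Gigabit Network Connection": 4096,
}

# Abridged subset of the devices listed above that do not support Jumbo Frames.
NO_JUMBO = {
    "Intel® 82567V-4 Gigabit Network Connection",
    "Intel® 82578DC Gigabit Network Connection",
    "Intel® 82577LC Gigabit Network Connection",
    "Intel® PRO/1000 Gigabit Server Adapter",
}

STANDARD_FRAME_BYTES = 1518  # largest standard-sized Ethernet frame


def jumbo_setting_allowed(device: str, frame_bytes: int) -> bool:
    """Check a requested frame size against the per-device restrictions listed here."""
    if frame_bytes <= STANDARD_FRAME_BYTES:
        return True  # not a Jumbo Frame, so no restriction applies
    if device in NO_JUMBO:
        return False
    # Unlisted devices fall back to the requested size (no cap stated in this section).
    return frame_bytes <= JUMBO_MAX_BYTES.get(device, frame_bytes)


def min_switch_setting(adapter_setting: int, windows: bool) -> int:
    """Smallest switch Jumbo Frame setting: adapter + 8 bytes on Windows, + 22 otherwise."""
    return adapter_setting + (8 if windows else 22)
```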

Setting Up Jumbo Frames in Microsoft* Windows*

NOTE: Jumbo Frames are not supported in multi-vendor team configurations.

1. Open Microsoft* Windows* Device Manager.

2. Open Properties on your adapter.

3. Click the Advanced tab.

4. Select Jumbo Frames from the list of advanced features.

5. Set your desired packet size (based on network capability).

6. Click OK to apply the changes.

All equipment on the network must also support the larger frame size. When setting up Jumbo Frames on other network devices, be aware that different network devices calculate Jumbo Frame size differently. Some devices include the header information in the frame size while others do not. Intel adapters do not include header information in the frame size. When configuring Jumbo Frames on a switch, set the frame size four bytes higher for CRC, plus four bytes if you are using VLANs or QoS packet tagging.
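The switch-side arithmetic in the paragraph above is simple but easy to get wrong by four bytes. The following Python sketch applies it; the constant and function names are illustrative.

```python
CRC_BYTES = 4        # Ethernet frame check sequence
VLAN_TAG_BYTES = 4   # 802.1Q tag, also carried for QoS packet tagging


def switch_frame_size(adapter_frame_size: int, tagged: bool) -> int:
    """Frame size to configure on the switch: adapter size + CRC, + tag if VLAN/QoS tagging."""
    return adapter_frame_size + CRC_BYTES + (VLAN_TAG_BYTES if tagged else 0)
```

For an adapter set to 9014 bytes, this gives 9018 without tagging and 9022 with tagging; the tagged case matches the 8-byte Windows margin given in the restrictions above.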

Advanced Network Services Teaming

Advanced Network Services (ANS) Teaming, a feature of the Advanced Network Services component, lets you take advantage of multiple adapters in a system by grouping them together. ANS teaming can use features like fault tolerance and load balancing to increase throughput and reliability. Before you can set up ANS teaming in Microsoft* Windows*, you must install Intel® PROSet software. For more information, see Intel PROSet.


NOTES:

l Be sure to use the latest available drivers on all adapters.

l NDIS 6.2 introduced new RSS data structures and interfaces. Because of this, you cannot enable RSS on teams that contain a mix of adapters that support NDIS 6.2 RSS and adapters that do not.

l If a team is bound to a Hyper-V virtual NIC, you cannot change the Primary or Secondary adapter.

l Intel adapters that do not support Intel PROSet may still be included in a team. However, they are restricted in the same way non-Intel adapters are. See Multi-Vendor Teaming for more information.

l To ensure a common feature set, some advanced features, including hardware offloading, are automatically disabled when an adapter that does not support Intel PROSet is added to a team.

l Hot Plug operations in a Multi-Vendor Team may cause system instability. We recommend that you restart the system or reload the team after performing Hot Plug operations with a Multi-Vendor Team.

l Spanning Tree Protocol (STP) should be disabled on switch ports connected to teamed adapters in order to prevent data loss when the primary adapter is returned to service (failback). Alternatively, an activation delay may be configured on the adapters to prevent data loss when spanning tree is used. Set the Activation Delay on the Advanced tab of team properties.

l ANS teaming of Virtual Function devices inside a Windows 2008 R2 guest running on an open source hypervisor is supported.

l Fibre Channel over Ethernet/Data Center Bridging will be automatically disabled when an adapter is added to a team with non-FCoE/DCB capable adapters.

Supported Adapters

Teaming options are supported on Intel® PRO/100, Intel® Gigabit, and Intel® 10GbE adapters. Selected adapters from other manufacturers are also supported.

Conditions that may prevent you from teaming a device

During team creation or modification, the list of available team types or list of available devices may not include all team types or devices. This may be caused by any of several conditions, including:

l The operating system does not support the desired team type.

l The device does not support the desired team type or does not support teaming at all.

l The devices you want to team together use different driver versions.

l You are trying to team an Intel PRO/100 device with an Intel 10GbE device.

l TOE (TCP Offload Engine) enabled devices cannot be added to an ANS team and will not appear in the list of available adapters.

l You can add Intel® Active Management Technology (Intel® AMT) enabled devices to Adapter Fault Tolerance (AFT), Switch Fault Tolerance (SFT), and Adaptive Load Balancing (ALB) teams. All other team types are not supported. The Intel AMT enabled device must be designated as the primary adapter for the team.


l The device's MAC address is overridden by the Locally Administered Address advanced setting.

l Fibre Channel over Ethernet (FCoE) Boot has been enabled on the device.

l The device has “OS Controlled” selected on the Data Center tab.

l The device has a virtual NIC bound to it.

l The device is part of a Microsoft* Load Balancing and Failover (LBFO) team.
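The conditions above amount to a set of pre-checks on the candidate devices. The following Python sketch models each device as a small record and reports which listed conditions block teaming. The `Device` fields and the family labels are hypothetical and do not correspond to any Intel PROSet API; operating-system and team-type support checks are left out because they depend on information not given here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Device:
    name: str
    family: str                      # hypothetical label: "PRO/100", "Gigabit", or "10GbE"
    driver_version: str
    toe_enabled: bool = False
    laa_override: bool = False       # MAC overridden by Locally Administered Address
    fcoe_boot: bool = False
    dcb_os_controlled: bool = False  # "OS Controlled" selected on the Data Center tab
    has_virtual_nic: bool = False
    in_lbfo_team: bool = False


def teaming_blockers(devices: List[Device]) -> List[str]:
    """Return readable reasons why the given devices cannot be teamed together."""
    reasons = []
    for d in devices:
        if d.toe_enabled:
            reasons.append(f"{d.name}: TOE-enabled devices cannot join an ANS team")
        if d.laa_override:
            reasons.append(f"{d.name}: MAC overridden by Locally Administered Address")
        if d.fcoe_boot:
            reasons.append(f"{d.name}: FCoE Boot is enabled")
        if d.dcb_os_controlled:
            reasons.append(f"{d.name}: 'OS Controlled' selected on the Data Center tab")
        if d.has_virtual_nic:
            reasons.append(f"{d.name}: a virtual NIC is bound to it")
        if d.in_lbfo_team:
            reasons.append(f"{d.name}: member of a Microsoft LBFO team")
    if len({d.driver_version for d in devices}) > 1:
        reasons.append("devices use different driver versions")
    if {"PRO/100", "10GbE"} <= {d.family for d in devices}:
        reasons.append("cannot team a PRO/100 device with a 10GbE device")
    return reasons
```

An empty list means none of the modeled conditions apply; it does not guarantee that the team wizard will offer every team type.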

Microsoft* Load Balancing and Failover (LBFO) teams

Intel ANS teaming and VLANs are not compatible with Microsoft's LBFO teams. Intel® PROSet will block a member of an LBFO team from being added to an Intel ANS team or VLAN. You should not add a port that is already part of an Intel ANS team or VLAN to an LBFO team, as this may cause system instability. If you use an ANS team member or VLAN in an LBFO team, perform the following procedure to restore your configuration:

1. Reboot the machine.

2. Remove the LBFO team. Even though LBFO team creation failed, after a reboot Server Manager will report that LBFO is Enabled, and the LBFO interface is present in the ‘NIC Teaming’ GUI.

3. Remove the ANS teams and VLANs involved in the LBFO team and recreate them. This step is optional (all bindings are restored when the LBFO team is removed), but strongly recommended.

NOTE: If you add an Intel AMT enabled port to an LBFO team, do not set the port to Standby in the LBFO team. If you set the port to Standby you may lose AMT functionality.

ANS Teaming Types

l Adapter Fault Tolerance (AFT) - provides automatic redundancy for a server's network connection. If the primary adapter fails, the secondary adapter takes over. Adapter Fault Tolerance supports two to eight adapters per team. This teaming type works with any hub or switch. All team members must be connected to the same subnet.

l Switch Fault Tolerance (SFT) - provides failover between two adapters connected to separate switches. Switch Fault Tolerance supports two adapters per team. Spanning Tree Protocol (STP) must be enabled on the switch when you create an SFT team. When SFT teams are created, the Activation Delay is automatically set to 60 seconds. This teaming type works with any switch or hub. All team members must be connected to the same subnet.

l Adaptive Load Balancing (ALB) - provides load balancing of transmit traffic and adapter fault tolerance. In Microsoft* Windows* operating systems, you can also enable or disable receive load balancing (RLB) in ALB teams (by default, RLB is enabled).

l Virtual Machine Load Balancing (VMLB) - provides transmit and receive traffic load balancing across Virtual Machines bound to the team interface, as well as fault tolerance in the event of switch port, cable, or adapter failure. This teaming type works with any switch.

l Static Link Aggregation (SLA) - provides increased transmission and reception throughput in a team of two to eight adapters. This team type replaces the following team types from prior software releases: Fast EtherChannel*/Link Aggregation (FEC) and Gigabit EtherChannel*/Link Aggregation (GEC). This type also includes adapter fault tolerance and load balancing (only routed protocols). This teaming type requires a switch with Intel Link Aggregation, Cisco* FEC or GEC, or IEEE 802.3ad Static Link Aggregation capability.


All adapters in a Link Aggregation team running in static mode must run at the same speed and must be

connected to a Static Link Aggregation capable switch. If the speed capability of adapters in a Static

Link Aggregation team are different, the speed of the team is dependent on the lowest common

denominator.

l IEEE 802.3ad Dynamic Link Aggregation - creates one or more teams using Dynamic Link Aggregation

with mixed-speed adapters. Like the Static Link Aggregation teams, Dynamic 802.3ad teams increase

transmission and reception throughput and provide fault tolerance. This teaming type requires a switch

that fully supports the IEEE 802.3ad standard.

• Multi-Vendor Teaming (MVT) - adds the capability to include adapters from selected other vendors in a team. If you are using a Windows-based computer, you can team adapters that appear in the Intel PROSet teaming wizard.

IMPORTANT:

• Be sure to use the latest available drivers on all adapters.
• Before creating a team, adding or removing team members, or changing advanced settings of a team member, make sure each team member has been configured similarly. Settings to check include VLANs and QoS Packet Tagging, Jumbo Frames, and the various offloads. These settings are available in Intel PROSet's Advanced tab. Pay particular attention when using different adapter models or adapter versions, as adapter capabilities vary.
• If team members implement Advanced features differently, failover and team functionality will be affected. To avoid team implementation issues:
  • Create teams that use similar adapter types and models.
  • Reload the team after adding an adapter or changing any Advanced features. One way to reload the team is to select a new preferred primary adapter. Although there will be a temporary loss of network connectivity as the team reconfigures, the team will maintain its network addressing schema.

NOTES:

• Hot Plug operations for an adapter that is part of a team are only available in Windows Server.
• For SLA teams, all team members must be connected to the same switch. For AFT, ALB, and RLB teams, all team members must belong to the same subnet. Each member of an SFT team must be connected to a different switch.
• Teaming only one adapter port is possible, but provides no benefit.

Primary and Secondary Adapters

If the primary adapter fails, another adapter will take over its duties. If you are using more than two adapters and you want a specific adapter to take over if the primary fails, you must specify a secondary adapter. If an Intel AMT enabled device is part of a team, it must be designated as the primary adapter for the team.

There are two types of primary and secondary adapters:

• Default primary adapter: If you do not specify a preferred primary adapter, the software will choose an adapter of the highest capability (model and speed) to act as the default primary. If a failover occurs, another adapter becomes the primary. When the original adapter is restored, however, it rejoins the team as a non-primary adapter.
• Preferred Primary/Secondary adapters: You can specify a preferred adapter in Intel PROSet. Under normal conditions, the Primary adapter handles all non-TCP/IP traffic. The Secondary adapter takes over that traffic if the primary fails. If the Preferred Primary adapter fails but is later restored to an active status, control is automatically switched back to the Preferred Primary adapter.

To specify a preferred primary or secondary adapter

1. From Device Manager, open the properties of a team.

2. Click the Settings tab.

3. Click the Modify Team button.

4. Select the adapter you want to be the primary adapter and click the Set Primary button.

The adapter's preferred setting appears in the Priority column.

Failover and Failback

When a link fails, either because of port or cable failure, team types that provide fault tolerance will continue to send and receive traffic. Failover is the initial transfer of traffic from the failed link to a good link. Failback occurs when the original adapter regains link. You can use the Activation Delay setting (located on the Advanced tab of the team's properties in Device Manager) to specify how long the failover adapter waits before becoming active. If you don't want your team to fail back when the original adapter regains link, set Allow Failback to disabled (also located on the Advanced tab of the team's properties in Device Manager).
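The failover and failback rules above can be modeled as a small selection function. This is a minimal sketch under stated assumptions, not Intel's driver logic. `Member` and `select_active` are hypothetical names, link state is reduced to a boolean, and the Activation Delay timer is left out for brevity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Member:
    name: str
    link_up: bool  # hypothetical: link state reduced to up/down


def select_active(members: List[Member], current: Optional[str],
                  preferred: Optional[str] = None,
                  allow_failback: bool = True) -> Optional[str]:
    """Pick the team member that should carry traffic (illustrative model)."""
    by_name = {m.name: m for m in members}
    # Failback: a restored Preferred Primary takes control again,
    # unless Allow Failback is disabled.
    if allow_failback and preferred is not None and by_name[preferred].link_up:
        return preferred
    # No failover is needed while the current member still has link.
    if current is not None and by_name[current].link_up:
        return current
    # Failover: move traffic to the first member that still has link.
    for m in members:
        if m.link_up:
            return m.name
    return None  # every member has lost link
```

Without a preferred primary, the sketch keeps traffic on whichever adapter took over. This matches the default-primary behavior, where a restored adapter rejoins as a non-primary.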

Adapter Fault Tolerance

Adapter Fault Tolerance (AFT) provides the safety of an additional backup link between the server and switch. In the case of switch port, cable, or adapter failure, network connectivity is maintained.

Adapter Fault Tolerance is implemented with a primary adapter and one or more backup, or secondary, adapters. During normal operation, the backup adapters are in standby. If the link to the primary adapter fails, the link to the secondary adapter automatically takes over. For more information, see Primary and Secondary Adapters.

To use Adapter Fault Tolerance, all adapters must be connected to the same subnet.

Switch Fault Tolerance

Switch Fault Tolerance (SFT) teaming allows you to connect each of two teamed adapters to a separate switch.

Switch Fault Tolerance can detect failures when they occur:

• on either teamed adapter
• on either cable connecting a teamed adapter to its switch
• on switch ports connected to the adapters, if link is lost

In SFT teams, one adapter is the primary adapter and one adapter is the secondary adapter. During normal operation, the secondary adapter is in standby. In standby, the adapter is inactive and waiting for failover to occur. It does not transmit or receive other network traffic. If the primary adapter loses connectivity, the secondary adapter automatically takes over.

In SFT teams, each adapter in the team can operate at a different speed than the other.

Configuration Monitoring

You can set up monitoring between an SFT team and up to five IP addresses. This allows you to detect link failure beyond the switch, so you can ensure connection availability for several clients that you consider critical. If the connection between the primary adapter and all of the monitored IP addresses is lost, the team will fail over to the secondary adapter.
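A minimal sketch of the monitoring rule follows. `should_fail_over` is a hypothetical helper. It receives, for each monitored IP address, whether the primary adapter can still reach it. How reachability is probed is not described in this guide, so that part is left out.

```python
from typing import Dict

MAX_MONITORED = 5  # the guide allows up to five monitored IP addresses


def should_fail_over(reachable: Dict[str, bool]) -> bool:
    """Return True when the primary has lost contact with every monitored address.

    `reachable` maps each monitored IP address to whether the primary adapter
    can currently reach it (hypothetical input; probing is not shown).
    """
    if len(reachable) > MAX_MONITORED:
        raise ValueError("an SFT team can monitor at most five IP addresses")
    if not reachable:
        return False  # monitoring not configured: rely on link state only
    # Fail over only if *all* monitored addresses are unreachable.
    return not any(reachable.values())
```

Losing one critical client is not enough to trigger failover. The team switches only when the primary can reach none of the monitored addresses.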

Adaptive Load Balancing

Adaptive Load Balancing (ALB) uses software to balance routable traffic among a team of two to eight adapters or LOMs (the team must include at least one server adapter) connected to the same subnet. The software analyzes the transmit load on each adapter and balances the rate across the adapters based on destination address. Adapter teams configured for ALB also provide the benefits of fault tolerance.

NOTES:

• ALB does not load balance non-routed protocols such as NetBEUI and some IPX* traffic.
• You can create an ALB team with mixed-speed adapters. The load is balanced according to the lowest common denominator of adapter capabilities and the bandwidth of the channel.
• On Windows systems, Receive Load Balancing is enabled by default.
• Receive Load Balancing is not supported on Microsoft Hyper-V*.
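This guide does not describe the exact balancing algorithm ALB uses. As an illustration only, the sketch below swaps in a simple least-loaded assignment. Each new destination address is pinned to the member that has sent the fewest bytes so far, and non-routed traffic stays on the primary. `TransmitBalancer` and its methods are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, List, Optional


class TransmitBalancer:
    """Illustrative per-destination transmit balancer (not Intel's algorithm)."""

    def __init__(self, members: List[str]) -> None:
        self.members = members
        self.primary = members[0]  # assume the first member is the primary
        self.assignment: Dict[str, str] = {}  # destination address -> member
        self.sent: Dict[str, int] = defaultdict(int)  # bytes sent per member

    def pick(self, dest: Optional[str], nbytes: int, routed: bool = True) -> str:
        if not routed or dest is None:
            # Non-routed protocols (for example NetBEUI) are not balanced.
            member = self.primary
        else:
            member = self.assignment.get(dest)
            if member is None:
                # New destination: pin it to the least-loaded member.
                member = min(self.members, key=lambda m: self.sent[m])
                self.assignment[dest] = member
        self.sent[member] += nbytes
        return member
```

Pinning each destination to a single member keeps packets of one conversation in order. The trade-off is that a single busy destination cannot use more than one adapter's bandwidth.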

Virtual Machine Load Balancing

Virtual Machine Load Balancing (VMLB) provides transmit and receive traffic load balancing across Virtual Machines bound to the team interface, as well as fault tolerance in the event of switch port, cable, or adapter failure.

The driver analyzes the transmit and receive load on each member adapter and balances the traffic across member adapters. In a VMLB team, each Virtual Machine is associated with one team member for its TX and RX traffic.

If only one virtual NIC is bound to the team, or if Hyper-V is removed, the VMLB team will act like an ALB team.

NOTES:

• VMLB does not load balance non-routed protocols such as NetBEUI and some IPX* traffic.
• VMLB supports from two to eight adapter ports per team.
• You can create a VMLB team with mixed-speed adapters. The load is balanced according to the lowest common denominator of adapter capabilities and the bandwidth of the channel.
• You cannot use an Intel AMT enabled adapter in a VMLB team.

Static Link Aggregation

Static Link Aggregation (SLA) is a performance technology developed by Cisco to increase throughput between switches. This team type works with:

• Cisco EtherChannel-capable switches with channeling mode set to 'ON'
• Intel switches capable of Link Aggregation
• Other switches capable of static 802.3ad

The transmission speed to any single address will never exceed the adapter base speed (per specification). Teams can contain two to eight adapters, but must match the capability of the switch. Adapter teams configured for Static Link Aggregation also provide the benefits of fault tolerance and load balancing.

NOTE: You cannot use an Intel AMT enabled adapter in an SLA team.

IEEE 802.3ad Dynamic Link Aggregation

802.3ad is an adopted IEEE standard. Teams can contain two to eight adapters, and you can have a maximum of two IEEE 802.3ad dynamic teams per server. You must use 802.3ad switches (in dynamic mode, aggregation can go across switches). Adapter teams configured for IEEE 802.3ad also provide the benefits of fault tolerance and load balancing. Under 802.3ad, all protocols can be load balanced.

Dynamic mode supports multiple aggregators. Aggregators are formed by port speed and by the switch the ports are connected to. For example, a team can contain adapters running at 1 Gbps and 10 Gbps, but two aggregators will be formed, one for each speed. Also, if a team contains 1 Gbps ports connected to one switch, and a combination of 1 Gbps and 10 Gbps ports connected to a second switch, three aggregators would be formed: one containing all the ports connected to the first switch, one containing the 1 Gbps ports connected to the second switch, and a third containing the 10 Gbps ports connected to the second switch.
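The aggregator examples above amount to grouping ports by the pair (switch, port speed). The following is a minimal sketch. It assumes each port is described by a hypothetical `(port_name, switch_id, speed_mbps)` tuple, and `form_aggregators` is an illustrative name rather than part of any Intel tool.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Port = Tuple[str, str, int]  # (port_name, switch_id, speed_mbps), hypothetical layout


def form_aggregators(ports: List[Port]) -> Dict[Tuple[str, int], List[str]]:
    """Group team ports into one aggregator per (switch, speed) pair."""
    aggregators: Dict[Tuple[str, int], List[str]] = defaultdict(list)
    for name, switch, speed in ports:
        aggregators[(switch, speed)].append(name)
    return dict(aggregators)


# The second example from the text: 1 Gbps ports on switch1,
# a mix of 1 Gbps and 10 Gbps ports on switch2.
team = [
    ("p1", "switch1", 1000),
    ("p2", "switch1", 1000),
    ("p3", "switch2", 1000),
    ("p4", "switch2", 10000),
]
for (switch, speed), members in form_aggregators(team).items():
    print(switch, speed, members)
```

The loop prints three aggregators, matching the text: `switch1 1000 ['p1', 'p2']`, `switch2 1000 ['p3']`, and `switch2 10000 ['p4']`.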

NOTES:

• Once you choose an aggregator, it remains in force until all adapters in that aggregator lose link.
• On some switches, copper and fiber adapters cannot belong to the same aggregator in an IEEE 802.3ad configuration. If there are copper and fiber adapters installed in a system, the switch might configure the copper adapters in one aggregator and the fiber-based adapters in another. If you experience this behavior, for best performance use either copper or fiber-based adapters in a system, but not both.
• If multiple switches are used, all team members connected to the same switch must operate at the same speed.
• You cannot use an Intel AMT enabled adapter in a DLA team.

Before you begin

• Verify that the switch fully supports the IEEE 802.3ad standard.
• Check your switch documentation for port dependencies. Some switches require pairing to start on a primary port.
• Check your speed and duplex settings to ensure the adapter and switch are running at full duplex, either forced or set to auto-negotiate. Both the adapter and the switch must have the same speed and duplex configuration. The full duplex requirement is part of the IEEE 802.3ad specification: http://standards.ieee.org/

  If needed, change your speed or duplex setting before you link the adapter to the switch. Although you can change speed and duplex settings after the team is created, Intel recommends you disconnect the cables until settings are in effect. In some cases, switches or servers might not appropriately recognize modified speed or duplex settings if settings are changed when there is an active link to the network.
• If you are configuring a VLAN, check your switch documentation for VLAN compatibility notes. Not all switches support simultaneous dynamic 802.3ad teams and VLANs. If you choose to set up VLANs, configure teaming and VLAN settings on the adapter before you link the adapter to the switch. Setting up VLANs after the switch has created an active aggregator affects VLAN functionality.

Multi-Vendor Teaming

MVT allows teaming with a combination of Intel adapters that support Intel PROSet, Intel adapters that do not support Intel PROSet, and non-Intel adapters. This feature is currently available under Windows Server. All adapters that appear in the Intel PROSet teaming wizard can be included in a team.

MVT Design Considerations

• In order to activate MVT, you must have at least one Intel adapter or integrated connection that supports Intel PROSet in the team. That adapter or connection must be designated as the primary adapter.
• A multi-vendor team can be created for any team type.
• All members in an MVT must operate on a common feature set (lowest common denominator).
• Manually verify that the frame setting for all adapters in the team is the same.
• Verify that the RSS settings for all adapters in the team are the same.
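The design considerations above can be collected into a pre-flight check. The following is a minimal sketch under stated assumptions. `Adapter` and `check_mvt_team` are hypothetical names. The frame setting is represented as a byte count, and RSS is reduced to on or off, although real adapters expose more RSS parameters than this.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Adapter:
    name: str
    supports_proset: bool  # Intel adapter or connection that supports Intel PROSet
    frame_size: int        # jumbo frame setting in bytes (hypothetical representation)
    rss_enabled: bool      # RSS reduced to on/off for this sketch


def check_mvt_team(members: List[Adapter], primary: str) -> List[str]:
    """Return a list of problems; an empty list means the checks passed."""
    problems: List[str] = []
    by_name = {a.name: a for a in members}
    if not any(a.supports_proset for a in members):
        problems.append("no Intel PROSet-capable adapter in the team")
    elif primary not in by_name or not by_name[primary].supports_proset:
        problems.append("primary must be an Intel PROSet-capable adapter")
    if len({a.frame_size for a in members}) > 1:
        problems.append("frame settings differ")
    if len({a.rss_enabled for a in members}) > 1:
        problems.append("RSS settings differ")
    return problems
```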

Virtual LANs

The term VLAN (Virtual Local Area Network) refers to a collection of devices that communicate as if they were on the same physical LAN. Any set of ports (including all ports on the switch) can be considered a VLAN. LAN segments are not restricted by the hardware that physically connects them.

VLANs offer the ability to group computers together into logical workgroups. This can simplify network administration when connecting clients to servers that are geographically dispersed across the building, campus, or enterprise network.

Typically, VLANs consist of co-workers within the same department but in different locations, groups of users running the same network protocol, or a cross-functional team working on a joint project.

By using VLANs on your network, you can:

• Improve network performance
• Limit broadcast storms
• Improve LAN configuration updates (adds, moves, and changes)
• Minimize security problems
• Ease your management task

Other Considerations

• To set up IEEE VLAN membership (multiple VLANs), the adapter must be attached to a switch with IEEE 802.1Q VLAN capability.
• A maximum of 64 VLANs per network port or team is supported by Intel software.
• VLANs can co-exist with teaming (if the adapter supports both). If you do this, the team must be defined first, then you can set up your VLAN.
• The Intel PRO/100 VE and VM Desktop Adapters and Network Connections can be used in a switch-based VLAN but do not support IEEE Tagging.
• You can set up only one untagged VLAN per adapter or team. You must have at least one tagged VLAN before you can set up an untagged VLAN.
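The VLAN limits above can be checked before you configure anything. The following is a minimal sketch with a hypothetical `check_vlans` helper. Two points are assumptions. First, whether the untagged VLAN counts toward the 64-VLAN limit is not stated, and the sketch assumes it does. Second, the 1-4094 ID range comes from IEEE 802.1Q, not from this guide. A boolean flag enforces "only one untagged VLAN".

```python
from typing import List, Optional

MAX_VLANS = 64  # per network port or team, per this guide


def check_vlans(tagged_ids: List[int], untagged: bool = False) -> Optional[str]:
    """Return an error message, or None if the VLAN plan follows the rules."""
    # Assumption: the untagged VLAN counts toward the 64-VLAN limit.
    if len(tagged_ids) + (1 if untagged else 0) > MAX_VLANS:
        return "more than 64 VLANs on one port or team"
    if untagged and not tagged_ids:
        return "an untagged VLAN needs at least one tagged VLAN first"
    if len(set(tagged_ids)) != len(tagged_ids):
        return "duplicate VLAN ID"
    for vid in tagged_ids:
        # IEEE 802.1Q reserves VID 0 (priority-only) and 4095.
        if not 1 <= vid <= 4094:
            return f"VLAN ID {vid} is outside 1-4094"
    return None
```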

CAUTION: When using IEEE 802 VLANs, settings must match between the switch and those adapters using the VLANs.

NOTE: Intel ANS VLANs are not compatible with Microsoft's Load Balancing and Failover (LBFO) teams. Intel® PROSet will block a member of an LBFO team from being added to an Intel ANS VLAN. You should not add a port that is already part of an Intel ANS VLAN to an LBFO team, as this may cause system instability.

Configuring VLANs in Microsoft* Windows*

In Microsoft* Windows*, you must use Intel® PROSet to set up and configure VLANs. For more information, select Intel PROSet in the Table of Contents (left pane) of this window.

NOTES:

• If you change a setting under the Advanced tab for one VLAN, it changes the settings for all VLANs using that port.
• In most environments, a maximum of 64 VLANs per network port or team is supported by Intel PROSet.
• ANS VLANs are not supported on adapters and teams that have VMQ enabled. However, VLAN filtering with VMQ is supported via the Microsoft Hyper-V VLAN interface. For more information, see Microsoft Hyper-V virtual NICs on teams and VLANs.
• You can have different VLAN tags on a child partition and its parent. Those settings are separate from one another, and can be different or the same. The only instance where the VLAN tag on the parent and child MUST be the same is if you want the parent and child partitions to be able to communicate with each other through that VLAN. For more information, see Microsoft Hyper-V virtual NICs on teams and VLANs.

Power Management

The Intel® PROSet Power Management tab replaces the standard Microsoft* Windows* Power Management tab in Device Manager. It includes the Power Saver and Wake on LAN* (WoL*) options that were previously included on the Advanced tab. The standard Windows power management functionality is incorporated on the Intel PROSet tab.

NOTES:

• The options available on the Power Management tab are adapter- and system-dependent. Not all adapters will display all options.
• The following adapters support WoL only on Port A:
  • Intel® Ethernet Server Adapter I350-T2
  • Intel® Ethernet Server Adapter I350-T4
  • Intel® Ethernet Server Adapter I340-T2
  • Intel® Ethernet Server Adapter I340-T4
  • Intel® Ethernet Server Adapter I340-F4
  • Intel® Gigabit ET2 Quad Port Server Adapter
  • Intel® PRO/1000 PF Quad Port Server Adapter
  • Intel® PRO/1000 PT Quad Port LP Server Adapter
  • Intel® PRO/1000 PT Quad Port Server Adapter
  • Intel® PRO/1000 PT Dual Port Network Connection
  • Intel® PRO/1000 PT Dual Port Server Connection
  • Intel® PRO/1000 PT Dual Port Server Adapter
  • Intel® PRO/1000 PF Dual Port Server Adapter
  • Intel® Gigabit PT Quad Port Server ExpressModule
• The following adapters do not support WoL:
  • Intel® PRO/1000 MT Quad Port Server Adapter
  • Intel® Gigabit VT Quad Port Server Adapter
  • Intel® Ethernet Server Adapter X520-2
  • Intel® Ethernet Server Adapter X520-1
  • Intel® Ethernet Server Adapter X540-T1
  • Intel® Ethernet Converged Network Adapter X540-T2
  • Intel® Ethernet Converged Network Adapter X540-T1

NOTE: If your system has a Manageability Engine, the Link LED may stay lit even if WoL is disabled.

Power Options

The Intel PROSet Power Management tab includes several settings that control the adapter's power consumption. For example, you can set the adapter to reduce its power consumption if the cable is disconnected.

If Reduce speed during standby is enabled, then Wake on Magic Packet and/or Wake on directed packet must be enabled. If both of these options are disabled, power is removed from the adapter during standby. Wake on Magic Packet from power off state has no effect on this option.
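The dependency between these options can be summarized in a few lines. This is a hypothetical model, not PROSet behavior read from a driver. `standby_state` is an illustrative name, and the "normal link speed" outcome is an assumption for the case where a wake option is on but speed reduction is off. Wake on Magic Packet from power off state is deliberately not a parameter, because it has no effect on this option.

```python
def standby_state(reduce_speed: bool, wake_on_magic: bool, wake_on_directed: bool) -> str:
    """Describe the adapter's state during standby (hypothetical model)."""
    if not (wake_on_magic or wake_on_directed):
        # Neither wake option is armed, so power is removed in standby and
        # "Reduce speed during standby" has nothing to act on.
        return "powered off"
    if reduce_speed:
        return "powered, reduced link speed"
    return "powered, normal link speed"
```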


Energy Efficient Ethernet

The Energy Efficient Ethernet (EEE) feature allows a capable device to enter Low Power Idle between bursts of network traffic. Both ends of a link must have EEE enabled for any power to be saved. Both ends of the link will resume full power when data needs to be transmitted. This transition may introduce a small amount of network latency.

NOTES:

• Both ends of the EEE link must auto-negotiate link speed.
• EEE is not supported at 10 Mbps.

Intel® Auto Connect Battery Saver

The Intel® Auto Connect Battery Saver (ACBS) feature turns off the adapter when link is down or the network cable is disconnected. The adapter powers off after a timeout period. When the network cable is reconnected and link is restored, the NIC powers up and functionality is fully restored.

ACBS only functions when the system is on battery power. If the power cable is connected, ACBS is automatically disabled. If ACBS is active, the adapter will appear to be powered off. If you have Intel® PROSet installed, the Link Status on the Link Speed tab will indicate Speed: Not connected. Power off.

NOTE: ACBS will not function on an adapter if the adapter has forced speed or duplex settings. ACBS will only function if the adapter is set to auto-detect or auto-negotiate.

Intel® System Idle Power Saver

The Intel® System Idle Power Saver feature sets the adapter to negotiate the lowest possible speed setting when the system and network are idle. When system activity is detected, the link will be renegotiated to a higher speed.

To support this feature, the adapter must be:

• configured to Auto Detect speed, and
• connected to a link partner that can auto-negotiate speed

Remote Wake-Up and Wake on LAN*

Microsoft Windows and Windows Server do not support wake from a power-off (S5) state, only from standby (S3) or hibernate (S4). When shutting down the system, they shut down ACPI devices, including Intel adapters. This disarms the adapters' remote wake-up capability. However, in some ACPI-capable computers, the BIOS may have a setting that allows you to override the operating system and wake from an S5 state anyway. If there is no support for wake from S5 state in your BIOS settings, you are limited to Wake From Standby when using these operating systems.

Wake on Intel® Ready Access

Intel® Ready Access keeps your network connection active when the rest of your system is in sleep or standby mode, so that content on your system is readily accessible. Requests from other computers will wake up your computer.

Quality of Service

Quality of Service (QoS) allows the adapter to send and receive IEEE 802.3ac tagged frames. 802.3ac tagged frames include 802.1p priority-tagged frames and 802.1Q VLAN-tagged frames. In order to implement QoS, the adapter must be connected to a switch that supports and is configured for QoS. Priority-tagged frames allow programs that deal with real-time events to make the most efficient use of network bandwidth. High-priority packets are processed before lower-priority packets.

Tagging is enabled and disabled in Microsoft* Windows* Server* using the "QoS Packet Tagging" field in the Advanced tab in Intel® PROSet. For other versions of the Windows operating system, tagging is enabled using the "Priority/VLAN Tagging" setting on the Advanced tab in Intel® PROSet.

Once QoS is enabled in Intel PROSet, you can specify levels of priority based on IEEE 802.1p/802.1Q frame tagging.
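To show what a priority tag actually carries on the wire, the sketch below builds the 4-byte IEEE 802.1Q tag. The tag consists of the TPID 0x8100 followed by a 16-bit Tag Control Information field. That field holds the 3-bit 802.1p priority, a 1-bit DEI flag, and the 12-bit VLAN ID. A VLAN ID of 0 marks a priority-tagged frame. The field layout comes from IEEE 802.1Q. The function name `dot1q_tag` is hypothetical, and this is not how Intel PROSet configures tagging.

```python
import struct

TPID_8021Q = 0x8100  # Tag Protocol Identifier for IEEE 802.1Q


def dot1q_tag(priority: int, vlan_id: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag that follows the source MAC address.

    A VLAN ID of 0 produces a priority-tagged (802.1p only) frame.
    """
    if not 0 <= priority <= 7:
        raise ValueError("802.1p priority is a 3-bit field (0-7)")
    if dei not in (0, 1):
        raise ValueError("DEI is a single bit")
    if not 0 <= vlan_id <= 0xFFF:
        raise ValueError("VLAN ID is a 12-bit field")
    # Tag Control Information: PCP (3 bits) | DEI (1 bit) | VID (12 bits)
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


print(dot1q_tag(5, 100).hex())  # 8100a064
print(dot1q_tag(3).hex())       # 81006000
```

The 3-bit priority field is why 802.1p, and DCB, which reuses it, work with exactly eight priority levels.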

The supported operating systems, including Microsoft* Windows Server*, have a utility for 802.1p packet prioritization. For more information, see the Windows system help and Microsoft's knowledge base.

NOTE: The first generation Intel® PRO/1000 Gigabit Server Adapter (PWLA 8490) does not support QoS frame tagging.

Data Center Bridging

Data Center Bridging (DCB) is a collection of standards-based extensions to classical Ethernet. It provides a lossless data center transport layer that enables the convergence of LANs and SANs onto a single unified fabric.

Furthermore, DCB is a hardware implementation of Quality of Service configuration. It uses the VLAN priority tag (802.1p) to filter traffic. Because the priority field is three bits wide, traffic can be filtered into eight different priorities. DCB also enables priority flow control (802.1Qbb), which can limit or eliminate the number of dropped packets during network stress. Bandwidth can be allocated to each of these priorities, and the allocation is enforced at the hardware level (802.1Qaz).

Adapter firmware implements LLDP and DCBX protocol agents as per 802.1AB and 802.1Qaz respectively. The firmware-based DCBX agent runs in willing mode only and can accept settings from a DCBX-capable peer. Software configuration of DCBX parameters via dcbtool/lldptool is not supported.

NOTE: On Microsoft Windows, Data Center Bridging (DCB) is only supported on NVM version 4.52 and newer. Older NVM versions must be updated before the adapter is capable of DCB support in Windows.

Microsoft* Hyper-V* Overview

Microsoft* Hyper-V* makes it possible for one or more operating systems to run simultaneously on the same physical system as virtual machines. This allows you to consolidate several servers onto one system, even if they are running different operating systems. Intel® Network Adapters work with, and within, Microsoft Hyper-V virtual machines with their standard drivers and software.

NOTES:

• Some virtualization options are not available on some adapter/operating system combinations.
• The jumbo frame setting inside a virtual machine must be the same as, or lower than, the setting on the physical port.
• See http://www.intel.com/technology/advanced_comm/virtualization.htm for more information on using Intel Network Adapters in virtualized environments.

Using Intel® Network Adapters in a Hyper-V Environment

When a Hyper-V Virtual NIC (VNIC) interface is created in the parent partition, the VNIC takes on the MAC address of the underlying physical NIC. The same is true when a VNIC is created on a team or VLAN. Since the VNIC uses the MAC address of the underlying interface, any operation that changes the MAC address of the interface (for example, setting LAA on the interface or changing the primary adapter on a team) will cause the VNIC to lose connectivity. In order to prevent this loss of connectivity, Intel® PROSet will not allow you to change settings that change the MAC address.

NOTES:

• If Fibre Channel over Ethernet (FCoE)/Data Center Bridging (DCB) is present on the port, configuring the device in Virtual Machine Queue (VMQ) + DCB mode reduces the number of VMQ VPorts available for guest OSes. This does not apply to Intel® Ethernet Controller X710-based devices.
• When sent from inside a virtual machine, LLDP and LACP packets may be a security risk. The Intel® Virtual Function driver blocks the transmission of such packets.
• The Virtualization setting on the Advanced tab of the adapter's Device Manager property sheet is not available if the Hyper-V role is not installed.
• While Microsoft supports Hyper-V on the Windows* 8 client OS, Intel® Ethernet adapters do not support virtualization settings (VMQ, SR-IOV) on Windows 8 client.
• ANS teaming of VF devices inside a Windows Server 2008 R2 guest running on an open source hypervisor is supported.

The Virtual Machine Switch

The virtual machine switch is part of the network I/O data path. It sits between the physical NIC and the virtual machine NICs and routes packets to the correct MAC address. Enabling Virtual Machine Queue (VMQ) offloading in Intel® PROSet will automatically enable VMQ in the virtual machine switch. For driver-only installations, you must manually enable VMQ in the virtual machine switch.

Using ANS VLANs

If you create ANS VLANs in the parent partition, and you then create a Hyper-V Virtual NIC interface on an ANS VLAN, then the Virtual NIC interface *must* have the same VLAN ID as the ANS VLAN. Using a different VLAN ID or not setting a VLAN ID on the Virtual NIC interface will result in loss of communication on that interface.

Virtual Switches bound to an ANS VLAN will have the same MAC address as the VLAN, which will have the same address as the underlying NIC or team. If you have several VLANs bound to a team and bind a virtual switch to each VLAN, all of the virtual switches will have the same MAC address. Clustering the virtual switches together will cause a network error in Microsoft’s cluster validation tool. In some cases, ignoring this error will not impact the performance of the cluster. However, such a cluster is not supported by Microsoft. Using Device Manager to give each of the virtual switches a unique address will resolve the issue. See the Microsoft Technet article Configure MAC Address Spoofing for Virtual Network Adapters for more information.

Virtual Machine Queues (VMQ) and SR-IOV cannot be enabled on a Hyper-V Virtual NIC interface bound to a

VLAN configured using the VLANs tab in Windows Device Manager.

Using an ANS Team or VLAN as a Virtual NIC

If you want to use a team or VLAN as a virtual NIC, you must follow these steps:

NOTES:

• This applies only to virtual NICs created on a team or VLAN. Virtual NICs created on a physical adapter do not require these steps.
• Receive Load Balancing (RLB) is not supported in Hyper-V. Disable RLB when using Hyper-V.

1. Use Intel® PROSet to create the team or VLAN.
2. Open the Network Control Panel.
3. Open the team or VLAN.
4. On the General Tab, uncheck all of the protocol bindings and click OK.
5. Create the virtual NIC. (If you check the "Allow management operating system to share the network adapter." box, you can do the following step in the parent partition.)
6. Open the Network Control Panel for the Virtual NIC.
7. On the General Tab, check the protocol bindings that you desire.

   NOTE: This step is not required for the team. When the Virtual NIC is created, its protocols are correctly bound.

Command Line for Microsoft Windows Server* Core

Microsoft Windows Server* Core does not have a GUI. If you want to use an ANS Team or VLAN as a Virtual NIC, you must use the prosetcl.exe utility to set up the configuration. Use the prosetcl.exe utility to create the team or VLAN. See the prosetcl.txt file for installation and usage details.

NOTE: Support for the Intel PROSet command line utilities (prosetcl.exe and crashdmp.exe) is being removed. This functionality will be replaced by the Intel Netcmdlets for Microsoft* Windows PowerShell*. Please transition all of your scripts and processes to use the Intel Netcmdlets for Microsoft Windows PowerShell.

The following is an example of how to set up the configuration using prosetcl.exe.

1. Use prosetcl.exe to create a team.

   prosetcl.exe Team_Create 1,2,3 TeamNew VMLB

   (VMLB is a dedicated teaming mode for load balancing under Hyper-V.)

2. Create the virtual NIC by running a remote Hyper-V manager on a different machine. Please see Microsoft's documentation for instructions on how to do this.

The following is an example of how to set up the configuration using Microsoft* Windows PowerShell*.

1. Get all the adapters on the system and store them into a variable.

   $a = Get-IntelNetAdapter

2. Create a team by referencing the indexes of the stored adapter array.

   New-IntelNetTeam -TeamMembers $a[1],$a[2] -TeamMode VirtualMachineLoadBalancing -TeamName "Team1"

Virtual Machine Queue Offloading

Enabling VMQ offloading increases receive and transmit performance, as the adapter hardware is able to perform these tasks faster than the operating system. Offloading also frees up CPU resources. Filtering is based on MAC and/or VLAN filters. For devices that support it, VMQ offloading is enabled in the host partition on the adapter's Device Manager property sheet, under Virtualization on the Advanced Tab.

Each Intel® Ethernet Adapter has a pool of virtual ports that are split between the various features, such as VMQ Offloading, SR-IOV, Data Center Bridging (DCB), and Fibre Channel over Ethernet (FCoE). Increasing the number of virtual ports used for one feature decreases the number available for other features. On devices that support it, enabling DCB reduces the total pool available for other features to 32. Enabling FCoE further reduces the total pool to 24.

NOTE: This does not apply to devices based on the Intel® Ethernet X710 or XL710 controllers.

Intel PROSet displays the number of virtual ports available for virtual functions under Virtualization properties on the device's Advanced Tab. It also allows you to set how the available virtual ports are distributed between VMQ and SR-IOV.
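The pool arithmetic above can be sketched as two small functions. This is a sketch under several assumptions. The function names are hypothetical. The starting pool size is device-specific and not given here, so it is passed in as a parameter. The sketch also assumes that FCoE is used together with DCB. Only the caps of 32 and 24, and the X710/XL710 exception, come from the text.

```python
from typing import Tuple


def available_vports(base_pool: int, dcb: bool, fcoe: bool,
                     x710_family: bool = False) -> int:
    """Virtual ports left for VMQ and SR-IOV after DCB/FCoE (sketch)."""
    if x710_family:
        return base_pool  # the DCB/FCoE reduction does not apply to X710/XL710
    pool = base_pool
    if dcb:
        pool = min(pool, 32)  # DCB caps the pool at 32
    if fcoe:
        pool = min(pool, 24)  # assumption: FCoE runs together with DCB
    return pool


def split_vports(pool: int, sriov_ports: int) -> Tuple[int, int]:
    """Give `sriov_ports` to SR-IOV and the rest to VMQ; returns (vmq, sriov)."""
    if not 0 <= sriov_ports <= pool:
        raise ValueError("cannot assign more virtual ports than the pool holds")
    return pool - sriov_ports, sriov_ports
```

For example, with a placeholder starting pool of 64, enabling DCB and FCoE leaves 24 virtual ports. Giving 8 of them to SR-IOV leaves 16 for VMQ.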

Teaming Considerations

• If VMQ is not enabled for all adapters in a team, VMQ will be disabled for the team.
• If an adapter that does not support VMQ is added to a team, VMQ will be disabled for the team.
• Virtual NICs cannot be created on a team with Receive Load Balancing enabled. Receive Load Balancing is automatically disabled if you create a virtual NIC on a team.
• If a team is bound to a Hyper-V virtual NIC, you cannot change the Primary or Secondary adapter.

SR-IOV (Single Root I/O Virtualization)

SR-IOV lets a single network port appear to be several virtual functions in a virtualized environment. If you have an SR-IOV capable NIC, each port on that NIC can assign a virtual function to several guest partitions. The virtual functions bypass the Virtual Machine Manager (VMM), allowing packet data to move directly to a guest partition's memory, resulting in higher throughput and lower CPU utilization. SR-IOV support was added in Microsoft Windows Server 2012. See your operating system documentation for system requirements.

For devices that support it, SR-IOV is enabled in the host partition on the adapter's Device Manager property sheet, under Virtualization on the Advanced Tab. Some devices may need to have SR-IOV enabled in a preboot environment.

NOTES:

• You must enable VMQ for SR-IOV to function.
• SR-IOV is not supported with ANS teams.

Network Virtualization using Generic Routing Encapsulation (NVGRE)

Network Virtualization using Generic Routing Encapsulation (NVGRE) makes the routing of network traffic within a virtualized or cloud environment more efficient. Some Intel® Ethernet Network devices perform NVGRE processing, offloading it from the operating system. This reduces CPU utilization.

Intel® Active Management Technology

Intel® Active Management Technology (Intel® AMT) is a hardware-based solution that uses out-of-band communication for management access to networked client computers. Even when the client computer is powered down or has a non-operational OS, Intel AMT enables you to access and manage the system over the network. You can easily get accurate platform information and perform remote updating, diagnostics, debugging, and repair of a system, regardless of the state of the OS and the power state of the system. For example, if an OS has failed, you can establish a remote-control session to the failed system and then remotely reboot it to a known good OS image on a remote drive. After the system reboots, you can remotely manage the OS reinstallation or repair.

For more information on Intel AMT features, see the documentation that came with your system.

NOTE: Intel Active Management Technology drivers are installed as part of the base driver installation on Intel AMT capable systems.

Locally Administered Address (LAA)

The Locally Administered Address feature allows you to override a device’s MAC address and assign an address of your choice. The address is a 12-digit hexadecimal number in the range 0000 0000 0001 – FEFF FFFF FFFF.

CAUTION: Make sure no other systems on the network use this address.

NOTES:

l Do not use a multicast address (least significant bit of the high byte = 1). For example, in the address 0y123456789A, "y" cannot be an odd number; y must be 0, 2, 4, 6, 8, A, C, or E.

l To restore the default MAC address, click Use Default.

l You cannot change the address if the adapter is part of a team.

l Changing this setting may cause a momentary loss of connectivity.

The LAA setting is disabled and cannot be changed if one or more of the following is true:

l The device is configured as the primary or secondary iSCSI boot port.

l The device or team is bound to the Microsoft Virtual Switch Protocol.
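The address rules above (12 hex digits, the 0000 0000 0001 – FEFF FFFF FFFF range, and no multicast bit in the high byte) can be checked before you type an address into the LAA field. The following is a minimal sketch; `is_valid_laa` is a hypothetical helper, not part of Intel PROSet, and it cannot check the CAUTION above (that no other system on the network already uses the address).

```python
import string

# Range stated in this guide: 0000 0000 0001 through FEFF FFFF FFFF.
LAA_MIN = 0x000000000001
LAA_MAX = 0xFEFFFFFFFFFF


def is_valid_laa(address: str) -> bool:
    """Return True if `address` satisfies the LAA format rules in this section."""
    # Accept common separators, e.g. "0A 12 34 56 78 9A" or "0A-12-34-56-78-9A".
    digits = address.replace(" ", "").replace("-", "").replace(":", "")
    if len(digits) != 12 or not all(c in string.hexdigits for c in digits):
        return False
    value = int(digits, 16)
    if not LAA_MIN <= value <= LAA_MAX:
        return False
    # The high byte is the first two hex digits; if its least significant
    # bit is 1, the address is multicast and must not be used.
    high_byte = value >> 40
    return high_byte & 0x01 == 0


print(is_valid_laa("0A123456789A"))  # True: y = A (even)
print(is_valid_laa("03123456789A"))  # False: y = 3 (odd, multicast)
```

Checking the multicast bit separately from the range is necessary because many multicast addresses (for example, 01xx xxxx xxxx) fall inside the allowed numeric range.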


Low Latency Interrupts (LLI)

The LLI feature enables the network device to bypass the configured interrupt moderation scheme based on the type of data being received. It configures which arriving TCP packets trigger an immediate interrupt, enabling the system to handle the packet more quickly. Reduced data latency enables some applications to gain faster access to network data.

NOTE: When LLI is enabled, system CPU utilization may increase.

LLI Options:

You may invoke LLI for data packets containing a TCP PSH flag in the header or for specified TCP ports.

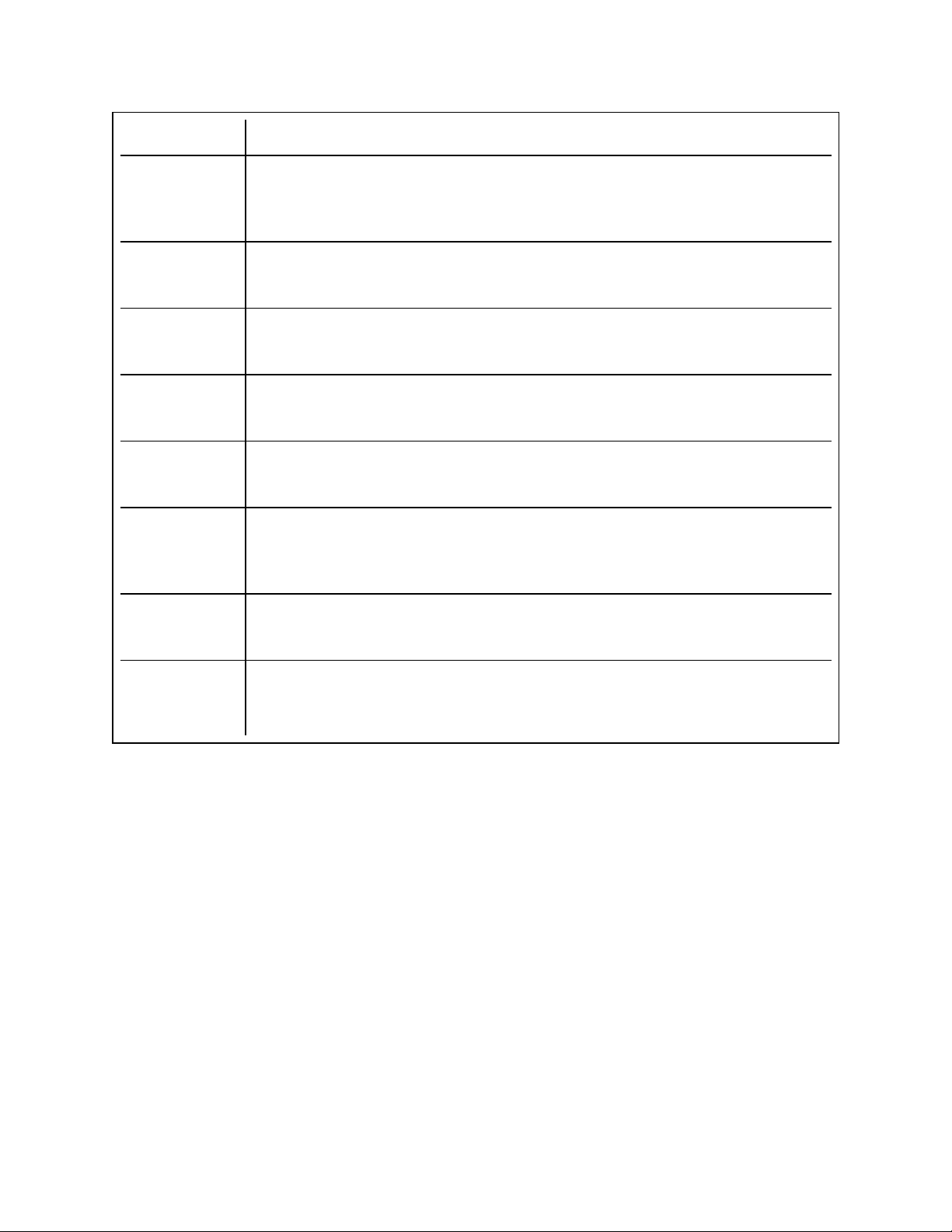

l Use for packets with TCP PSH flag: Any incoming packet with the TCP PSH flag will trigger an immediate interrupt. The PSH flag is set by the sending device.

l Use for these TCP ports: Every packet received on the specified ports will trigger an immediate interrupt. Up to 8 ports may be specified.
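The two LLI options can be modeled as a simple per-packet decision. This is a conceptual sketch only, not the driver or hardware logic: `LliSettings` and `triggers_immediate_interrupt` are hypothetical names, and treating "received on the specified ports" as matching the TCP destination port is an assumption. The PSH bit value (0x08 in the TCP flags byte) comes from the TCP specification.

```python
from dataclasses import dataclass

TCP_FLAG_PSH = 0x08  # PSH bit in the TCP header flags byte
MAX_LLI_PORTS = 8    # this guide allows up to 8 ports


@dataclass(frozen=True)
class LliSettings:
    use_psh_flag: bool = False       # "Use for packets with TCP PSH flag"
    ports: frozenset = frozenset()   # "Use for these TCP ports"

    def __post_init__(self):
        if len(self.ports) > MAX_LLI_PORTS:
            raise ValueError(f"at most {MAX_LLI_PORTS} LLI ports may be specified")


def triggers_immediate_interrupt(settings: LliSettings, tcp_flags: int, dst_port: int) -> bool:
    """Model whether a received TCP packet bypasses interrupt moderation."""
    if settings.use_psh_flag and tcp_flags & TCP_FLAG_PSH:
        return True
    return dst_port in settings.ports


# Example configuration: PSH-based LLI plus one port (3260 chosen arbitrarily).
cfg = LliSettings(use_psh_flag=True, ports=frozenset({3260}))
print(triggers_immediate_interrupt(cfg, tcp_flags=0x18, dst_port=80))    # True: PSH|ACK
print(triggers_immediate_interrupt(cfg, tcp_flags=0x10, dst_port=3260))  # True: listed port
print(triggers_immediate_interrupt(cfg, tcp_flags=0x10, dst_port=80))    # False
```

Packets that do not match either rule fall back to the normal interrupt moderation scheme, which is why enabling LLI broadly can raise CPU utilization.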

Direct Cache Access (DCA)

Direct Cache Access enables a capable I/O device such as a network controller to activate a pre-fetch engine in the CPU that loads arriving packet data into the CPU cache for immediate access by the network stack process. This reduces the number of memory access operations required to process each packet, reducing CPU load and increasing throughput.

DCA takes advantage of high-speed cache and eliminates processor cycles required to read packet headers and descriptors from system memory.

NOTE: DCA requires support from the I/O device, system chipset, and CPU.

Direct Memory Access (DMA) Coalescing

DMA (Direct Memory Access) allows the network device to move packet data directly to the system's memory, reducing CPU utilization. However, the frequent, randomly timed arrival of packets prevents internal system components from entering energy-saving states. DMA Coalescing allows the NIC to collect packets before it initiates a DMA event. This may increase network latency but also increases the chance that the system will consume less energy.

Higher DMA Coalescing values result in more energy saved but may increase your system's network latency.
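The trade-off between energy and latency can be illustrated with a toy timer model. This sketch is an assumption, not the NIC's actual coalescing policy: the first packet of a batch starts a timer of `window_us` microseconds (a hypothetical parameter, not one of the driver's DMA Coalescing values), and all packets arriving before it expires are written to memory in a single DMA event.

```python
def coalesce(arrivals_us, window_us):
    """Group packet arrival times (microseconds) into DMA events.

    Returns (dma_events, worst_added_latency_us) for the toy timer model
    described above.
    """
    events = 0
    worst_latency = 0
    flush_at = None  # time at which the current batch will be DMA'd
    for t in sorted(arrivals_us):
        if flush_at is None or t > flush_at:
            events += 1              # start a new batch, i.e. a new DMA event
            flush_at = t + window_us
        # This packet waits in the NIC until the batch is flushed.
        worst_latency = max(worst_latency, flush_at - t)
    return events, worst_latency


arrivals = [0, 10, 20, 300, 310]
print(coalesce(arrivals, 0))   # (5, 0): one DMA event per packet, no added delay
print(coalesce(arrivals, 50))  # (2, 50): fewer events, up to 50 us extra latency
```

Fewer DMA events give the platform longer idle gaps in which it may enter energy-saving states; the larger window is what adds the latency.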

If you enable DMA Coalescing, you should also set the Interrupt Moderation Rate to 'Minimal'. This minimizes the latency impact imposed by DMA Coalescing and results in better peak network throughput performance.

You must enable DMA Coalescing on all active ports in the system. You may not gain any energy savings if it is enabled only on some of the ports in your system.

See Direct Memory Access (DMA) Coalescing for a list of supported devices, and information on BIOS, platform, and application settings that will affect your potential energy savings.


Receive Side Scaling

The phrase “Receive Side Scaling” (RSS) refers to the idea that all receive data processing is shared (scaled) across multiple processors or processor cores. Without RSS, all receive data processing is performed by a single processor, resulting in less efficient system cache utilization.

RSS can be enabled for a LAN or for FCoE. In the first case, it is called “LAN RSS”. In the second, it is called “FCoE RSS”.

LAN RSS

LAN RSS applies to a particular TCP connection. Note the following:

l RSS has no effect if your system has only one processing unit.

l RSS must be enabled for Intel® I/O Acceleration Technology to function.

l RSS is not supported on some adapters configured to use Virtual Machine Queues (VMQ). On these adapters, VMQ takes precedence over RSS, and RSS will appear disabled.

Configuration

LAN RSS is enabled on the Advanced tab of the adapter property sheet. If your adapter or operating system does not support it, the RSS settings will not be displayed. If your adapter does support it, the following settings will be displayed:

l Port NUMA Node: the NUMA node number of a device.

l Receive Side Scaling Queues: allocates queue space to buffer transactions between the network adapter and CPU(s). Range:

1 queue is used when low CPU utilization is required.

2 queues are used when good throughput and low CPU utilization are required.

4 or more queues are used for applications that demand maximum throughput and transactions per second.

l Starting RSS CPU: allows you to set the preferred starting LAN RSS processor. Change this setting if the current processor is dedicated to other processes. The setting range is from 0 to the number of logical CPUs - 1. In Microsoft* Windows Server* 2008 R2, LAN RSS will only use CPUs in group 0 (CPUs 0 through 63).

l Preferred NUMA Node: allows you to choose the preferred NUMA (Non-Uniform Memory Access) node to be used for memory allocations made by the network adapter. In addition, the system will attempt to use the CPUs from the preferred NUMA node first for the purposes of LAN RSS. On NUMA platforms, memory access latency is dependent on the memory location. Allocation of memory from the closest node helps improve performance. The Windows Task Manager shows the NUMA Node ID for each processor. Note that this setting only affects NUMA systems; it will have no effect on non-NUMA systems.
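The statement that LAN RSS applies to a particular TCP connection, together with the Receive Side Scaling Queues and Starting RSS CPU settings, can be pictured with the toy model below. It is a sketch under stated assumptions, not the Windows or Intel driver algorithm: `rss_cpu` is a hypothetical helper, CRC32 stands in for the Toeplitz hash defined in Microsoft's RSS specification (which also uses an indirection table), and mapping queues to consecutive CPUs from the starting CPU is a simplification.

```python
import zlib


def rss_cpu(src_ip, src_port, dst_ip, dst_port, num_queues, starting_cpu, num_cpus):
    """Toy model of which logical CPU handles a TCP connection's receive work."""
    if not 0 <= starting_cpu <= num_cpus - 1:
        raise ValueError("Starting RSS CPU must be between 0 and num_cpus - 1")
    # Stand-in hash over the connection 4-tuple (real RSS uses a Toeplitz hash).
    flow = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    queue = zlib.crc32(flow) % num_queues
    # Assumed placement: queues occupy consecutive CPUs from the starting CPU.
    return (starting_cpu + queue) % num_cpus


# Every packet of the same connection hashes to the same queue and CPU.
a = rss_cpu("10.0.0.5", 50123, "10.0.0.1", 445, num_queues=4, starting_cpu=2, num_cpus=16)
b = rss_cpu("10.0.0.5", 50123, "10.0.0.1", 445, num_queues=4, starting_cpu=2, num_cpus=16)
print(a == b, 2 <= a <= 5)  # True True
```

Hashing per connection keeps each connection's packets in order on one processor while different connections spread across the configured queues. With one queue, or one logical CPU, everything lands on a single processor, which matches the note that RSS has no effect on a single-processor system.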

Teaming

If RSS is not enabled for all adapters in a team, RSS will automatically be disabled for the team. If an adapter that does not support RSS is added to a team, RSS will automatically be disabled for the team. If you create a Multi-Vendor Team, you must manually verify that the RSS settings for all adapters in the team are the same.
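The team rules above reduce to an "every member" check, plus a manual comparison of settings for Multi-Vendor Teams. The sketch below only illustrates that logic; `TeamMember`, its fields, and both functions are hypothetical and do not correspond to an Intel PROSet or Windows API. The same all-members pattern matches the VMQ rules under Teaming Considerations.

```python
from dataclasses import dataclass, field


@dataclass
class TeamMember:
    name: str
    supports_rss: bool
    rss_enabled: bool
    # Hypothetical snapshot of the adapter's RSS settings, e.g. {"queues": 4}.
    rss_settings: dict = field(default_factory=dict)


def team_rss_enabled(members):
    """RSS stays enabled for the team only if every member supports and enables it."""
    return all(m.supports_rss and m.rss_enabled for m in members)


def mismatched_rss_settings(members):
    """Names of members whose RSS settings differ from the first member's."""
    reference = members[0].rss_settings
    return [m.name for m in members[1:] if m.rss_settings != reference]


team = [
    TeamMember("Intel port 1", True, True, {"queues": 4, "starting_cpu": 0}),
    TeamMember("Other vendor NIC", True, True, {"queues": 2, "starting_cpu": 0}),
]
print(team_rss_enabled(team))         # True
print(mismatched_rss_settings(team))  # ['Other vendor NIC']
```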

FCoE RSS

If FCoE is installed, FCoE RSS is enabled and applies to FCoE receive processing that is shared across processor cores.


Configuration

If your adapter supports FCoE RSS, the following configuration settings can be viewed and changed on the Performance Options of the Advanced tab of the Network Adapter device properties:

l FCoE NUMA Node Count: The number of consecutive NUMA Nodes where the allocated FCoE queues will be evenly distributed.

l FCoE Starting NUMA Node: The NUMA Node representing the first node within the FCoE NUMA Node Count.

l FCoE Starting Core Offset: The offset to the first NUMA Node CPU core that will be assigned to an FCoE queue.

l FCoE Port NUMA Node: An indication from the platform of the optimal (closest) NUMA Node to the physical port, if available. NOTE: This item is read only.
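To see how the FCoE NUMA Node Count, FCoE Starting NUMA Node, and FCoE Starting Core Offset settings interact, consider the toy placement model below. The exact driver algorithm is not documented here, so this is an assumption: `fcoe_queue_affinity` is a hypothetical helper, queues are dealt round-robin across the consecutive nodes ("evenly distributed"), and cores on each node are taken in order from the offset, wrapping within the node.

```python
def fcoe_queue_affinity(num_queues, numa_node_count, starting_numa_node,
                        starting_core_offset, cores_per_node):
    """Return a (numa_node, core_within_node) pair for each FCoE queue."""
    placement = []
    for q in range(num_queues):
        # Spread queues round-robin over the consecutive NUMA nodes.
        node = starting_numa_node + q % numa_node_count
        # How many queues this node has already received.
        nth_on_node = q // numa_node_count
        core = (starting_core_offset + nth_on_node) % cores_per_node
        placement.append((node, core))
    return placement


# Dual-port NIC on a two-socket platform with 8 cores per socket, 8 queues per port.
port0 = fcoe_queue_affinity(8, 1, 0, 0, 8)  # default-like: socket 0, cores 0-7
port1 = fcoe_queue_affinity(8, 1, 1, 0, 8)  # tuned: second port moved to socket 1
print(port0[:2], port1[:2])  # [(0, 0), (0, 1)] [(1, 0), (1, 1)]
```

If both ports kept a Starting NUMA Node of 0, they would compete for the same eight cores; moving the second port's starting node to 1 gives each port its own socket.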

Performance Tuning

The Intel Network Controller provides a set of advanced FCoE performance tuning options. These options direct how FCoE transmit/receive queues are allocated in NUMA platforms. Specifically, they determine the set of NUMA node CPUs from which individual queue affinity can be assigned. Selecting a specific CPU has two main effects: it sets the desired interrupt location for processing queue packet indications, and it sets the locality of the queue relative to available memory.

As indicated, these are intended as advanced tuning options for situations where you wish to maximize system performance. They are generally expected to be used to maximize performance for multi-port platform configurations. Because all ports share the same default installation directives ("Inf" file and so forth), the FCoE queues for every port will be associated with the same set of NUMA CPUs, which may result in CPU contention.

The software that exposes these tuning options defines a NUMA node as equivalent to an individual processor (socket). Platform ACPI information presented by the BIOS to the OS helps to indicate the relation of PCI devices to individual processors. However, this detail is not currently fully supported on all platforms, so using the tuning options may produce unexpected results.

The performance tuning options are listed in the "Configuration" section above.

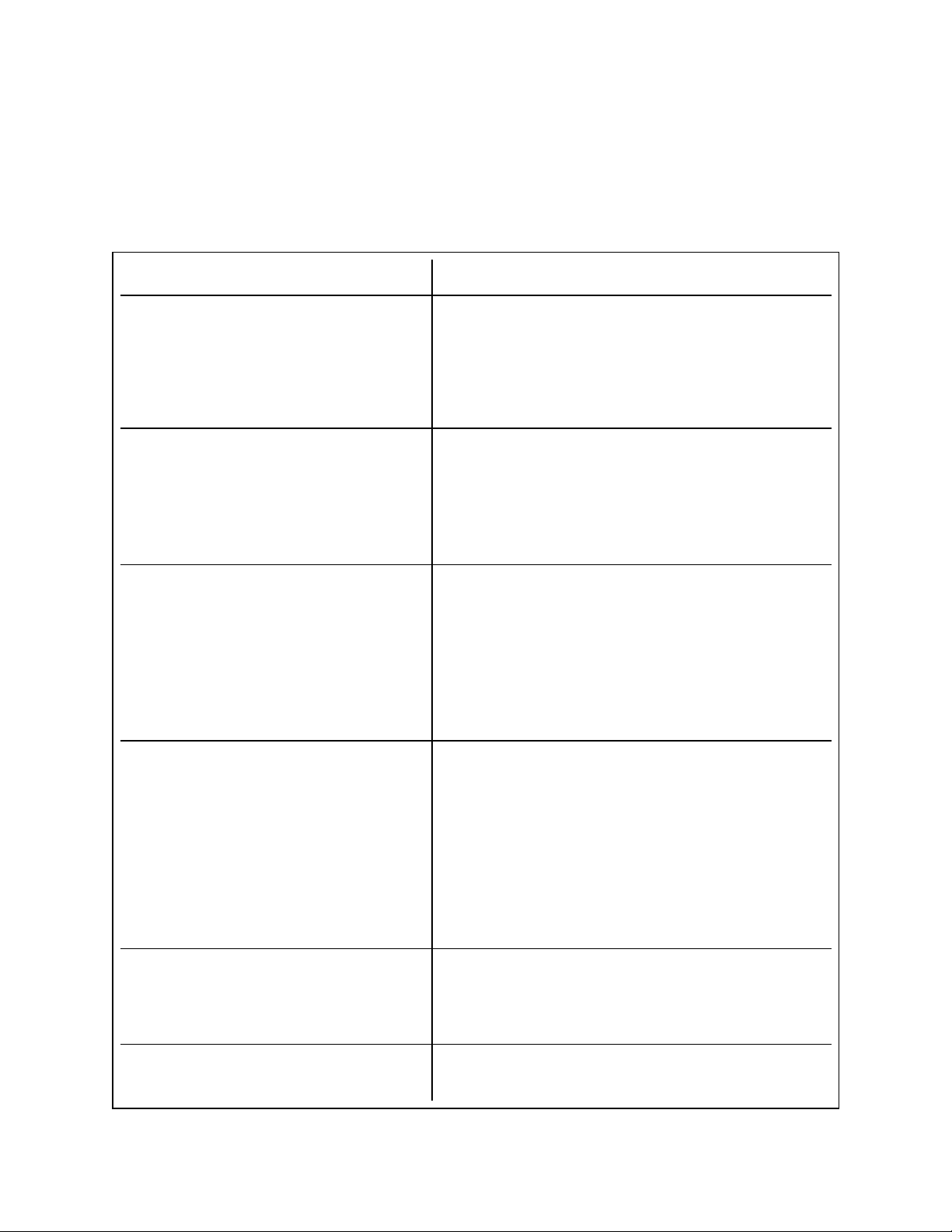

Example 1:

A platform has two physical sockets, each socket processor providing 8 CPU cores (16 when hyper-threading is enabled). You have also installed a dual-port Intel NIC with FCoE enabled.

By default, 8 FCoE queues will be allocated per NIC port. Also by default, the first (non-hyper-thread) CPU cores of the first processor will be assigned affinity to these queues, resulting in the allocation model pictured below. Both ports would then be competing for CPU cycles from the same set of CPUs on socket 0.


Socket Queue to CPU Allocation

Using the performance tuning options, you can direct association of the FCoE queues for the second port to a