Page 1

Intel® Ethernet Controller X540

Datasheet

PRODUCT FEATURES

Host Interface

General

Serial Flash interface

Configurable LED operation for software or OEM customization of LED displays

Device disable capability

Package size - 25 mm x 25 mm

Networking

10 GbE/1 GbE/100 Mb/s copper PHYs integrated on-chip

Support for jumbo frames of up to 15.5 KB

Flow control support: send/receive pause frames and receive FIFO thresholds

Statistics for management and RMON

802.1q VLAN support

TCP segmentation offload: up to 256 KB

IPv6 support for IP/TCP and IP/UDP receive checksum offload

Fragmented UDP checksum offload for packet reassembly

Message Signaled Interrupts (MSI)

Message Signaled Interrupts (MSI-X)

Interrupt throttling control to limit maximum interrupt rate and improve CPU usage

Flow Director (16 x 8 and 32 x 4)

128 transmit queues

Receive packet split header

Receive header replication

Dynamic interrupt moderation

DCA support

TCP timer interrupts

No snoop

Relaxed ordering

Support for 64 virtual machines per port (64 VMs x 2 queues)

Support for Data Center Bridging (DCB) (802.1Qaz, 802.1Qbb, 802.1p)

PCIe base specification 2.1 (2.5GT/s or 5GT/s)

Bus width — x1, x2, x4, x8

64-bit address support for systems using more than 4 GB of physical memory

MAC Functions

Descriptor ring management hardware for transmit and receive

ACPI register set and power down functionality supporting D0 and D3 states

A mechanism for delaying/reducing transmit interrupts

Software-controlled global reset bit (resets everything except the configuration registers)

Four Software-Definable Pins (SDP) per port

Wake-up

IPv6 wake-up filters

Configurable flexible filter (through NVM)

LAN function disable capability

Programmable memory transmit buffers (160 KB/port)

Default configuration by NVM for all LEDs for pre-driver functionality

Manageability

SR-IOV support

Eight VLAN L2 filters

16 Flex L3 port filters

Four Flexible TCO filters

Four L3 address filters (IPv4)

Advanced pass through-compatible management packet transmit/receive support

SMBus interface to an external Manageability Controller (MC)

NC-SI interface to an external MC

Four L3 address filters (IPv6)

Four L2 address filters

Revision Number: 2.7

March 2014


X540 — Revisions

INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT.

A "Mission Critical Application" is any application in which failure of the Intel Product could result, directly or indirectly, in personal injury or death.

SHOULD YOU PURCHASE OR USE INTEL'S PRODUCTS FOR ANY SUCH MISSION CRITICAL APPLICATION, YOU SHALL INDEMNIFY AND HOLD INTEL AND ITS SUBSIDIARIES, SUBCONTRACTORS AND AFFILIATES, AND THE DIRECTORS, OFFICERS, AND EMPLOYEES OF EACH, HARMLESS AGAINST ALL CLAIMS COSTS, DAMAGES, AND EXPENSES AND REASONABLE ATTORNEYS' FEES ARISING OUT OF, DIRECTLY OR INDIRECTLY, ANY CLAIM OF PRODUCT LIABILITY, PERSONAL INJURY, OR DEATH ARISING IN ANY WAY OUT OF SUCH MISSION CRITICAL APPLICATION, WHETHER OR NOT INTEL OR ITS SUBCONTRACTOR WAS NEGLIGENT IN THE DESIGN, MANUFACTURE, OR WARNING OF THE INTEL PRODUCT OR ANY OF ITS PARTS.

Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined". Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The information here is subject to change without notice.

Do not finalize a design with this information.

The products described in this document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or go to: http://www.intel.com/design/literature.htm.

Intel and Intel logo are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

*Other names and brands may be claimed as the property of others.

Copyright © 2014, Intel Corporation. All Rights Reserved.


Revision History — X540

Revisions

Rev Date Notes

• Added MCTP footnote to table 1-7.

• Added a note to section 1.4.2 (MCTP Over SMBus).

• Revised section 3.6.3.2 (Auto-Negotiation and Link Setup).

• Revised section 4.6.3.2 (Global Reset and General Configuration)

2.7 March 2014

2.6 December 2013

2.5 September 2013

• Revised section 8.2.4.1.3 (Device Status Register; bit 7).

• Added a note to section 11.6.1.1 (NC-SI over MCTP)

• Revised section 11.6.3 (MCTP over SMBus; Message Type = 0x02 instead of 0x05).

• Removed all Simplified MCTP Mode references (not supported).

• Revised table 12-1 (Notes for Power On/Off Sequence Diagram; note 5).

• Added section 16 (Packets Format).

Revised sections/tables/figures:

• Table 1-2 (Support of non Auto-Negotiation Partner).

• 3.6.3.2 (Auto-Negotiation and Link Setup).

• 5.2.3 (removed).

• Notes below Figure 5.4 (first paragraph).

• 5.3.3.1 (last paragraph).

• Figure 5.3 and 5.4 (removed AN enabled block).

• 6.4.4.13 (bits 10:5).

• 7.2.3.2.2 (RS; bit 3 description).

• Table 11-1 (Select Package/Deselect Package commands).

• Tables 12-2 through 12-4 (Current Consumption).

• 12.4.2 (new section - Peak Current Consumption).

• 13.0 (added design considerations and guidelines for integrated magnetics).

Revised sections/tables/figures:

• 2.1.6 (changed NC-SI_CRS_DV pull up/pull down value).

• 2.1.10 (changed note 6 to note 5; last row of table).

• 4.4.1 (revised note concerning single-port NVM).

• 4.6.11.3.1 (updated 8 TCs mode and 4 TCs mode description).

• 4.6.11.4.3 (removed MTQC.DCB_Ena set to 1b sub-bullet).

• 7.2.3.1 (added Rate-Scheduler to second paragraph).

• 7.2.3.2.4 (removed “in IOV mode” under Check Context Bit description).

• 7.6 (revised LINK/ACTIVITY description).

• 8.1.1.2 (revised Memory-Mapped Accesses to Flash description).

• Table 8-4 (changed SECRXSAECC and SECRXAESECC offset values).

• 8.2.4.1.5 (changed NC-SI Configuration 1 word to NC-SI Configuration 2 word).

• 8.2.4.1.11 (changed bits 21:20, 22, and 23 initial values).

• 8.2.4.29.20 and 8.2.4.29.21 (removed).

• 8.2.4.29.55 and 8.2.4.29.56 (changed offset values).

• 12.1.4 (added note after tables 12-2, 12-3, and 12-4).

• Table 12-4 (added X540-BT2 Dual-Port Current Consumption using Single-port NVM power values).

• 12.3.9 (changed min/max values; threshold for 0.8 Vdc supply).

• Table 13-5 (added Integrated Magnetics vendor information).


Changed single port SKU to single port configuration.

Revised sections:

• 3.2.5.1 (changed flags off to flags on).

• 3.4.8 (added 82575).

• 3.5 (removed old table 3.15, added new SDP settings table, and added new signal names).

• 4.6.9 (added new text; last bullet).

• 4.6.11.4.3 (changed INT[13:0] to DEC[13:0]).

• 5.3.5 (new section).

• 6.1 (removed note).

• 6.4.4.8 (revised SDP_FUNC_OFF_EN bit description).

• 7.2.3.2.3 (added new EOF Codes in TSO table and new table references; also revised HEADLEN description).

• 7.7.2.4.1 (User Priority (UP) description).

• 7.9.3 (changed MAC reset to Master reset).

• 7.9.4 (revised table note).

2.4 April 2013

2.3 November 2012 • Added single-port SKU information.

2.2 July 2012 • Revised footnote to table 1.5 (LAN Performance Features).

• 7.13.1.1 (revised FC Frame description).

• 7.13.3.3.6 (SEQ_ID (8 bit) and SEQ_CNT (16 bit) descriptions).

• 8.2.4.1.5 (revised table note 2).

• 8.2.4.5.1 (revised FLOW_DIRECTOR bit description).

• 8.2.4.7.9 and 8.2.4.7.10 (revised notes at the end of register tables).

• 8.2.4.9.10 (revised WTHRESH bit description).

• 8.2.4.10.1 (changed bits 18:16 to reserved).

• 8.2.4.21.1 through 8.2.4.21.4 (new sections).

• 8.2.4.22.10 and 8.2.4.22.11 (revised bit descriptions)

• 8.2.4.29.44 through 8.2.4.29.51;8.2.4.29.73 and 8.2.4.29.73 (removed).

• 8.2.4.29.73 and 8.2.4.29.74 (removed register RXFECCSTATC and RXFECCSTATUC).

• 10.5.8 (changed 100BASE-TX Test Mode [1:0] bit setting 11b to reserved).

• 10.6.12 (revised F bit default setting).

• 12.4.1 (added new current consumption tables).

• 12.7.4 (added new mechanical package diagram).

• 16.0 (revised MDC and MDI descriptions).


• Added footnote to table 1.5 (LAN Performance Features).

• Revised section 4.6.7.2 (Replaced "ITR Interval bit" with "ITR_INTERVAL bit" and "RSC Delay field" with "RSC_DELAY field").

• Revised sections 4.6.11.3.1, 4.6.11.3.3, and 4.6.11.3.4 (removed LLTC references).

• Section 5.3.2 (removed the statement directly above section 5.3.3).

• Revised table 5.4 (Start-up and Power-State Transition Timing Parameters; tppg, tfl, and tpgres values).

• Revised section 7.1.2.4 (replaced text “The receive packet is parsed and the OX_ID or RX_ID . . .” ).

• Revised section 8.2.4.22.20 (Flow Director Filters VLAN and FLEX bytes - FDIRVLAN (0x0000EE24) DBU-RX; bits 15:0).

• Revised section 8.2.4.9.10 (Transmit Descriptor Control - TXDCTL[n] (0x00006028 + 0x40*n, n=0...127) DMATX; revised note from bit 25 description).

• Revised section 7.1.2.7.11 (Query Filter Flow table).

2.1 July 2012

2.0 March 2012

1.9 January 2012 Initial public release.

• Revised section 7.1.2.3 (ETQF flow)

• Revised section 7.3.2.1.1 (Replaced "RSC Delay field" with "RSC_DELAY field”).

• Revised figures 7.6 and 7.7.

• Revised section 10.6.14 (Global Reserved Provisioning 1: Address 1E.C470; updated bits E:D description).

• Revised section 10.4.21 (Auto-Negotiation Reserved Vendor Provisioning 1: Address 7.C410; updated bits F:E, A:8, and bits 7:6).

• Revised section 10.6.19 (Global Cable Diagnostic Status 2: Address 1E.C801; bits 7:0).

• Revised section 10.6.33 (Global Reserved Status 1: Address 1E.C885; removed XENPAK references).

• Revised section 10.6.38 (Global Interrupt Mask 1: Address 1E.D400; changed bit E default to 1b).

• Added new section 10.2.35 (PMA Receive Reserved Vendor State 1: Address 1.E810).

• Added new section 10.2.36 (PMA Receive Reserved Vendor State 2: Address 1.E811).

• Revised section 12.3.9 (Power On Reset).

• Revised section 13.5.3.3 (Special Delay Requirements).

• Revised section 13.8.1 (LAN Disable).

• Revised section 2.1.10 (Miscellaneous; GPIO_7 description).

• Revised section 3.5 (2nd bullet after Table 3.15; lowest SDP pins (SDP0_0 or SDP1_0) description).

• Revised section 6.1 (added note about reserved fields).

• Revised section 6.3.7.1 (PXE Setup Options PCI Function 0 — Word Address 0x30; bits 12:10 description).

• Revised section 6.5.5.7 (NC-SI Configuration 1 - Offset 0x06; bits 4:0 description).

• Revised section 6.5.5.8 (NC-SI Configuration 2 - Offset 0x07; bit 15 description).

• Revised section 8.2.4.28.4 (Software Status Register; bit 8 description).

• Revised section 8.2.4.25.13 (Priority XON Transmitted Count; bits 15:0 description).

• Revised section 8.2.4.25.14 (Priority XON Received Count; bits 15:0 description).

• Revised section 8.2.4.25.15 (Priority XOFF Transmitted Count; bits 15:0 description).

• Revised section 8.2.4.25.16 (Priority XOFF Received Count; bits 15:0 description).

• Revised table note references in section 11.7.2.2.3 (Read Status Command).

• Revised section 6.2.1 (NVM Organization).

• Revised section 8.2.4.23.1 (Core Control 0 Register; bit 1 description).

• Revised section 8.2.4.4.14 (PCIe Control Extended Register; bit 30 description).

• Revised section 8.2.4.8.9 (PCIe Control Extended Register; bit 1 description).

• Revised section 8.2.4.23.10 (MAC Control Register; bits 7:5).

• Removed PSRTYPE from note 11 in section 4.2.3.


Introduction—X540 10GBase-T Controller

1.0 Introduction

1.1 Scope

This document describes the external architecture (including device operation, pin descriptions, register definitions, etc.) for the Intel® Ethernet Controller X540, a single- or dual-port 10GBASE-T Network Interface Controller.

This document is intended as a reference for logic design groups, architecture validation teams, firmware developers, software device driver developers, board designers, test engineers, or anyone else who might need specific technical or programming information about the X540.

1.2 Product Overview

The X540 is a derivative of the 82599, the Intel 10 GbE Network Interface Controller (NIC) targeted for blade servers. Many features of its predecessor remain intact; however, some have been removed or modified, and new features have been introduced.

The X540 includes two integrated 10GBASE-T copper Physical Layer Transceivers (PHYs). A standard MDIO interface, accessible to software via MAC control registers, is used to configure and monitor the operation of each PHY.

The X540 also supports a single port configuration.


1.2.1 System Configurations

The X540 is targeted for system configurations such as rack-mounted or pedestal servers, where it can be used as an add-on NIC or LAN on Motherboard (LOM). It can also be used in high-end workstations.


Figure 1-1 Typical Rack / Pedestal System Configuration


1.2.2 External Interfaces

Figure 1-2 X540 External Interfaces Diagram (Dual Port)

Figure 1-3 X540 External Interfaces Diagram (Single Port Configuration)


1.2.3 PCIe* Interface

The X540 supports PCIe v2.1 (2.5GT/s or 5GT/s). See Section 2.1.2 for full pin

description and Section 12.4.7 for interface timing characteristics.
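As a rough sizing aid (not part of this datasheet), the raw PCIe bandwidth can be compared with the traffic of the two 10 GbE ports. PCIe at 2.5 GT/s and 5 GT/s uses 8b/10b encoding, so the sketch below simply multiplies the transfer rate by the lane count and by 0.8. It deliberately ignores TLP/DLLP and flow-control overhead, so real throughput is lower; the function names are hypothetical.

```c
/* Raw per-direction data rate, in Gb/s, of a PCIe link whose lanes use
 * 8b/10b encoding (2.5 GT/s and 5 GT/s). Every 10 bits on the wire carry
 * 8 data bits, so a lane delivers 80% of its transfer rate. Protocol
 * overhead (TLP headers, DLLPs, flow control) is ignored. */
static double pcie_raw_gbps(double gt_per_s, unsigned lanes)
{
    return gt_per_s * (double)lanes * 8.0 / 10.0;
}

/* Returns 1 if the raw link rate covers `ports` ports running at
 * `port_gbps` each in one direction, 0 otherwise. */
static int pcie_link_covers(double gt_per_s, unsigned lanes,
                            unsigned ports, double port_gbps)
{
    return pcie_raw_gbps(gt_per_s, lanes) >= (double)ports * port_gbps;
}
```

Under these assumptions, a 5 GT/s x8 link offers 32 Gb/s per direction, which covers two ports at 10 Gb/s each (20 Gb/s), whereas a 5 GT/s x4 link (16 Gb/s) does not.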

1.2.4 Network Interfaces

Two independent 10GBASE-T interfaces (10GBASE-T_0 and 10GBASE-T_1) connect the two X540 ports to external devices. Each 10GBASE-T interface can operate at any of the following speeds:

• 10 Gb/s, 10GBASE-T mode

• 1 Gb/s, 1000BASE-T mode

• 100 Mb/s, 100BASE-TX mode

Refer to Section 2.1.3 for full pin descriptions. For the timing characteristics of these interfaces, refer to the relevant external specifications listed in Section 12.4.8.


1.2.5 Serial Flash Interface

The X540 provides an external SPI serial interface to a Flash device, also referred to as Non-Volatile Memory (NVM). The X540 supports serial Flash devices with up to 16 Mb (2 MB) of memory.

1.2.6 SMBus Interface

SMBus is an optional interface for pass-through and/or configuration traffic between an

external Manageability Controller (MC) and the X540.

The X540's SMBus interface supports a standard SMBus, up to a frequency of 400 kHz. Refer to Section 2.1.5 for full pin descriptions and Section 12.4.6.3 for the timing characteristics of this interface.

1.2.7 NC-SI Interface

NC-SI is an optional interface for pass-through traffic to and from an MC. The X540

meets the NC-SI version 1.0.0 specification.

Refer to Section 2.1.6 for the pin descriptions, and Section 11.7.1 for NC-SI

programming.


1.2.8 Software-Definable Pins (SDP) Interface (General-Purpose I/O)

The X540 has four SDP pins per port that can be used for miscellaneous hardware or software-controllable purposes. Each pin can be individually configured as an input or an output. Via the SDP pins, the X540 can support IEEE 1588 auxiliary device connections and other functionality. For more details on the SDPs, see Section 3.5 and the ESDP register section.
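The per-pin input/output configuration described above can be sketched as bit manipulation on an ESDP register value. The layout used here is an ASSUMPTION borrowed from the 82599-family driver convention (data bit n for SDP n, direction bit 8 + n with 1 meaning output) and must be checked against the ESDP register section; the helper names are hypothetical, and the sketch only computes the value software would write, it does not access hardware.

```c
#include <stdbool.h>
#include <stdint.h>

/* ASSUMED ESDP layout (verify against the ESDP register section):
 *   bit n     = SDP n data value
 *   bit 8 + n = SDP n direction (1 = output, 0 = input), n = 0..3 */
#define SDP_COUNT 4u

/* Returns a new ESDP value with pin `n` set as an output driving `level`.
 * Other bits are preserved; an out-of-range pin leaves the value unchanged. */
static uint32_t esdp_set_output(uint32_t esdp, unsigned n, bool level)
{
    if (n >= SDP_COUNT)
        return esdp;
    esdp |= 1u << (8 + n);          /* direction: output */
    if (level)
        esdp |= 1u << n;            /* drive high */
    else
        esdp &= ~(1u << n);         /* drive low */
    return esdp;
}

/* Returns a new ESDP value with pin `n` set as an input. */
static uint32_t esdp_set_input(uint32_t esdp, unsigned n)
{
    if (n < SDP_COUNT)
        esdp &= ~(1u << (8 + n));
    return esdp;
}

/* Reads the data bit of pin `n` from an ESDP value. */
static bool esdp_get(uint32_t esdp, unsigned n)
{
    return n < SDP_COUNT && ((esdp >> n) & 1u) != 0;
}
```

A driver would read ESDP, apply one of these helpers, and write the result back, so that the pins it does not own keep their configuration.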

1.2.9 LED Interface

The X540 implements four output drivers per port intended for driving external LED circuits. Each of the four LED outputs can be individually configured to select the particular event, state, or activity indicated on that output. In addition, each LED can be individually configured for output polarity and for blinking versus non-blinking (steady-state) indication.

The configuration for LED outputs is specified via the LEDCTL register. In addition, the

hardware-default configuration for all LED outputs can be specified via an NVM field (see

Section 6.4.6.3), thereby supporting LED displays configured to a particular OEM

preference.
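The per-LED settings described above (event selection, polarity, and blinking) map naturally onto a packed register field. The sketch below ASSUMES an 82599-style LEDCTL layout with one byte per LED (mode in bits 3:0, invert in bit 6, blink in bit 7); this layout and the function name are illustrative assumptions, so consult the LEDCTL register description for the real bit positions and mode encodings.

```c
#include <stdbool.h>
#include <stdint.h>

/* ASSUMED LEDCTL layout (illustrative only): LED i (0..3) uses byte i,
 *   bits [3:0] = mode (which event/state drives the LED)
 *   bit  6     = invert (output polarity)
 *   bit  7     = blink
 * Mode encodings are not modeled; bits 5:4 of each byte are preserved. */
static uint32_t ledctl_set(uint32_t ledctl, unsigned led, uint8_t mode,
                           bool invert, bool blink)
{
    if (led > 3)
        return ledctl;
    const unsigned shift = 8u * led;
    uint32_t field = (uint32_t)(mode & 0x0Fu);
    if (invert)
        field |= 1u << 6;
    if (blink)
        field |= 1u << 7;
    /* Clear this LED's mode, invert, and blink bits, then insert the field. */
    ledctl &= ~((uint32_t)0xCFu << shift);
    return ledctl | (field << shift);
}
```

Because each LED is updated with a read-modify-write on its own byte, reconfiguring one LED leaves the other three untouched.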

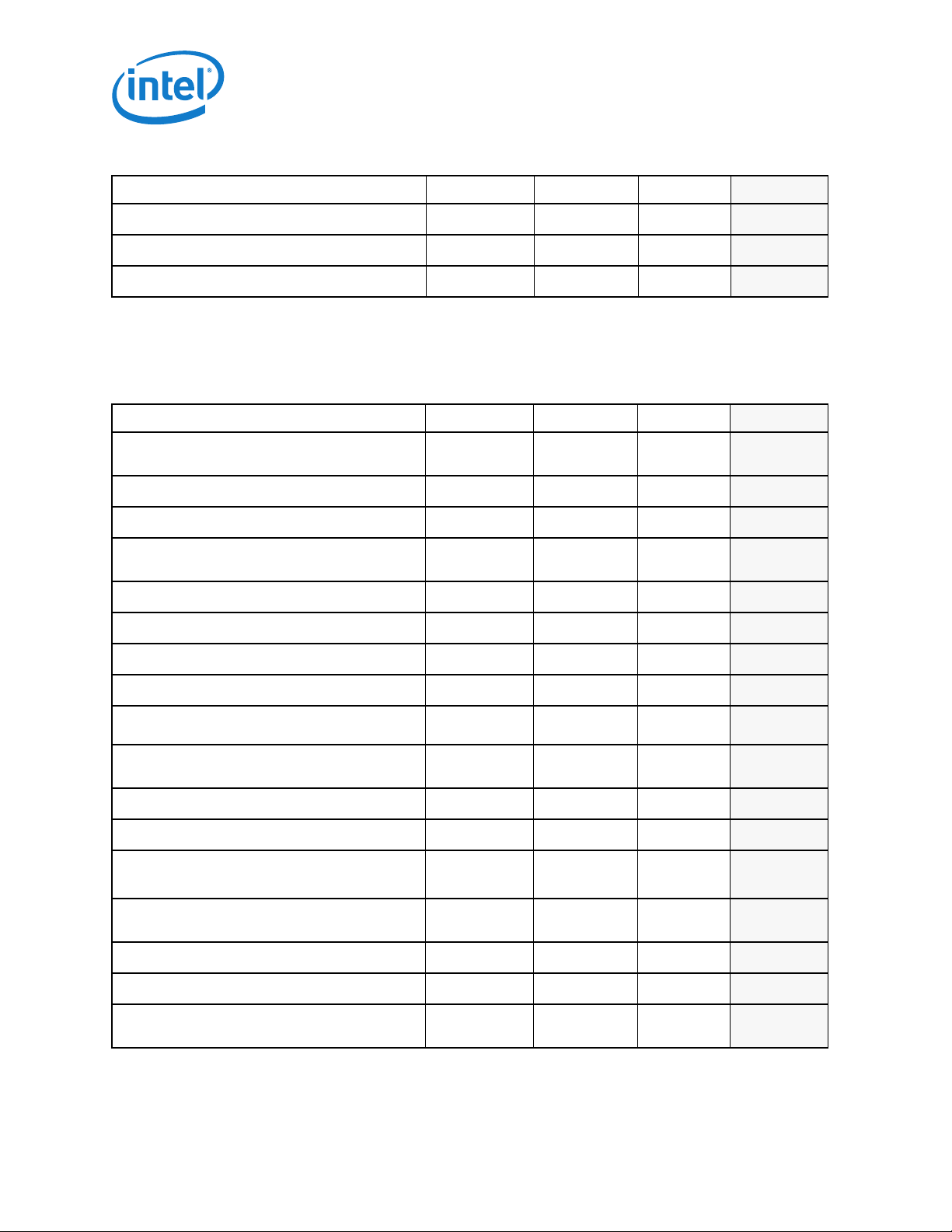

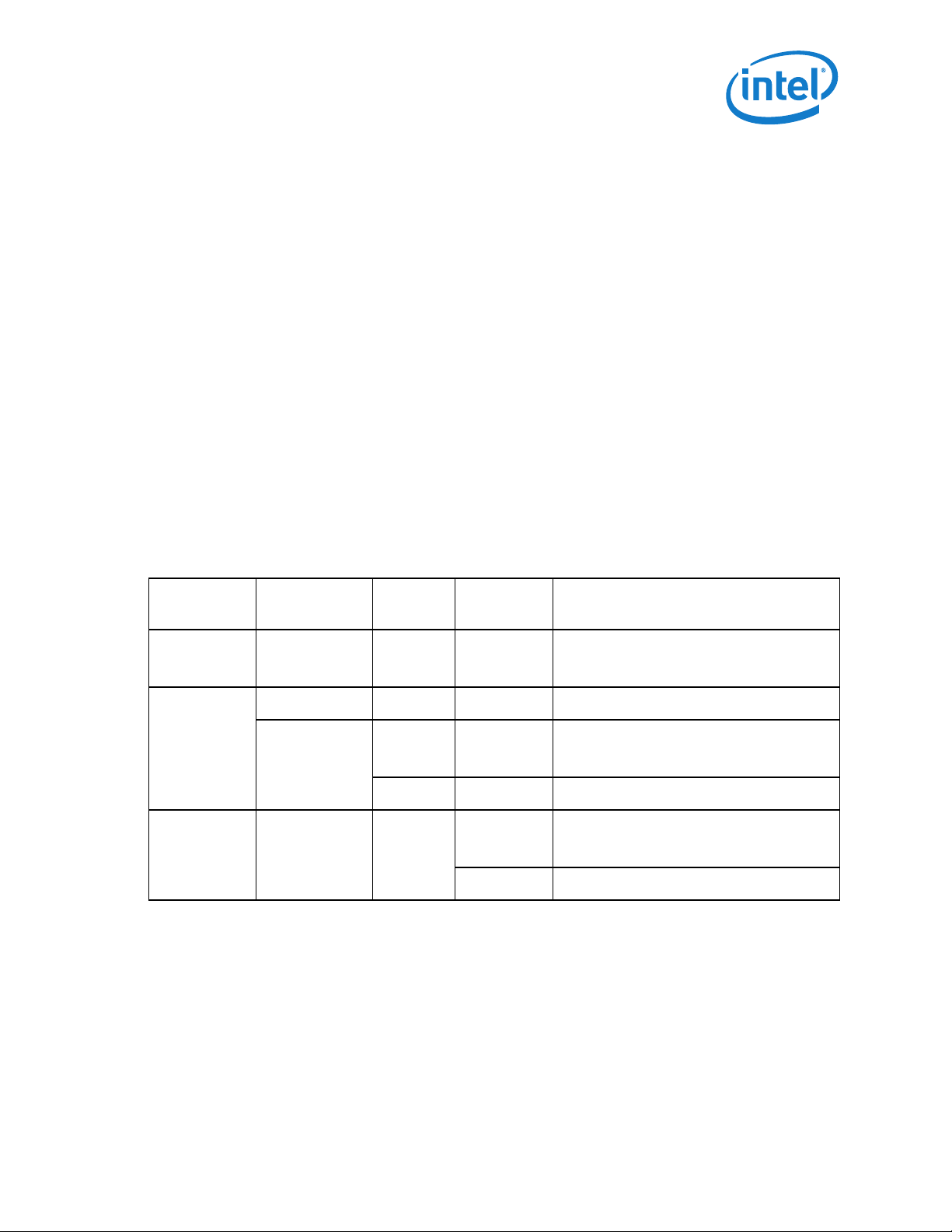

1.3 Features Summary

Table 1-1 to Table 1-7 list the X540's features in comparison to previous dual-port 10 GbE Ethernet controllers.

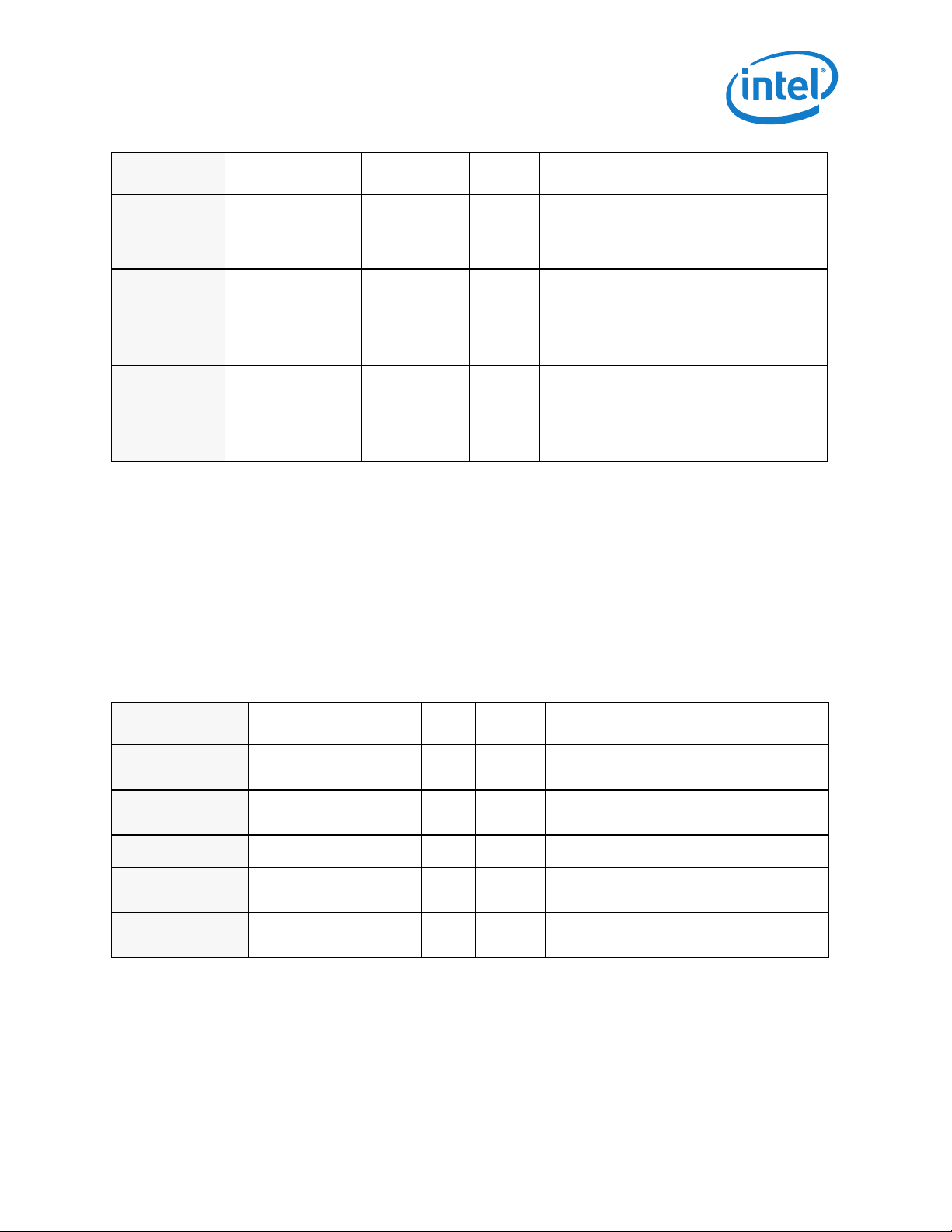

Table 1-1 General Features

| Feature | X540 | 82599 | 82598 |
|---|---|---|---|
| Serial Flash Interface | Y | Y | Y |
| 4-wire SPI EEPROM Interface | N | Y | Y |
| Configurable LED Operation for Software or OEM Customization of LED Displays | Y | Y | Y |
| Protected EEPROM/NVM¹ Space for Private Configuration | Y | Y | Y |
| Device Disable Capability | Y | Y | Y |
| Package Size | 25 mm x 25 mm | 25 mm x 25 mm | 31 mm x 31 mm |
| Embedded Thermal Diode | Y | N | Y |
| Watchdog Timer | Y | Y | N |
| Time Sync (IEEE 1588) | Y² | Y | N |

1. X540 Only.

2. Time sync not supported at 100 Mb/s link speed.

Table 1-2 Network Features

| Feature | X540 | 82599 | 82598 |
|---|---|---|---|
| Compliant with the 10 GbE and 1 GbE Ethernet/802.3ap (KX/KX4) Specification | N | Y | Y |
| Compliant with the 10 GbE 802.3ap (KR) Specification | N | Y | N |
| Support of 10GBASE-KR FEC | N | Y | N |
| Compliant with the 10 GbE Ethernet/802.3ae (XAUI) Specification | N | Y | Y |
| Compliant with XFI Interface | N | Y | N |
| Compliant with SFI Interface | N | Y | N |
| Support for EDC | N | N | N |
| Compliant with the 1000BASE-BX Specification | N | Y | Y |
| Auto-Negotiation/Full-Duplex at 100 Mb/s Operation | Y (100 Mb/s FDX) | Y (100 Mb/s FDX) | NA |
| 10000/1000/100 Mb/s Copper PHYs Integrated On-Chip | Y | N | N |
| Support Jumbo Frames of up to 15.5 KB | Y¹ | Y¹ | Y |
| Auto-Negotiation Clause 73 for Supported Modes | N | Y | Y |
| MDIO Interface Clause 45 | Y (internally) | Y | Y |
| Flow Control Support: Send/Receive Pause Frames and Receive FIFO Thresholds | Y | Y | Y |
| Statistics for Management and RMON | Y | Y | Y |
| 802.1q VLAN Support | Y | Y | Y |
| SerDes Interface for External PHY Connection or System Interconnect | N | Y | Y |
| SGMII Interface | N | Y (100 Mb/s and 1 GbE only) | N |
| Support of non Auto-Negotiation Partner | N | Y | Y |
| Double VLAN | Y | Y | N |

1. The X540 and 82599 support full-size 15.5 KB jumbo packets in a basic mode of operation. When DCB mode, the security engines, virtualization, or OS2BMC is enabled, the X540 supports 9.5 KB jumbo packets. Packets to or from the MC that are longer than 2 KB are filtered out.

Table 1-3 Host Interface Features

| Feature | X540 | 82599 | 82598 |
|---|---|---|---|
| PCIe* Version (Speed) | PCIe v2.1 (5GT/s) | PCIe v2.0 (2.5GT/s & 5GT/s) | PCIe Gen 1 v2.0 (2.5GT/s) |
| Number of Lanes | x1, x2, x4, x8 | x1, x2, x4, x8 | x1, x2, x4, x8 |
| 64-bit Address Support for Systems Using More Than 4 GB of Physical Memory | Y | Y | Y |
| Outstanding Requests for Tx Data Buffers | 16 | 16 | 16 |
| Outstanding Requests for Tx Descriptors | 8 | 8 | 8 |
| Outstanding Requests for Rx Descriptors | 8 | 8 | 4 |
| Credits for P-H/P-D/NP-H/NP-D (shared for the two ports) | 16/16/4/4 | 16/16/4/4 | 8/16/4/4 |
| Max Payload Size Supported | 512 Bytes | 512 Bytes | 256 Bytes |
| Max Request Size Supported | 2 KB | 2 KB | 256 Bytes |
| Link Layer Retry Buffer Size (shared for the two ports) | 3.4 KB | 3.4 KB | 2 KB |
| Vital Product Data (VPD) | Y | Y | N |
| End to End CRC (ECRC) | Y | Y | N |
| TLP Processing Hints (TPH) | N | N | N |
| Latency Tolerance Reporting (LTR) | N | N | N |
| ID-Based Ordering (IDO) | N | N | N |
| Access Control Services (ACS) | Y | N | N |
| ASPM Optional Compliance Capability | Y | N | N |
| PCIe Functions Off Via Pins, While LAN Ports Are On | Y | N | N |


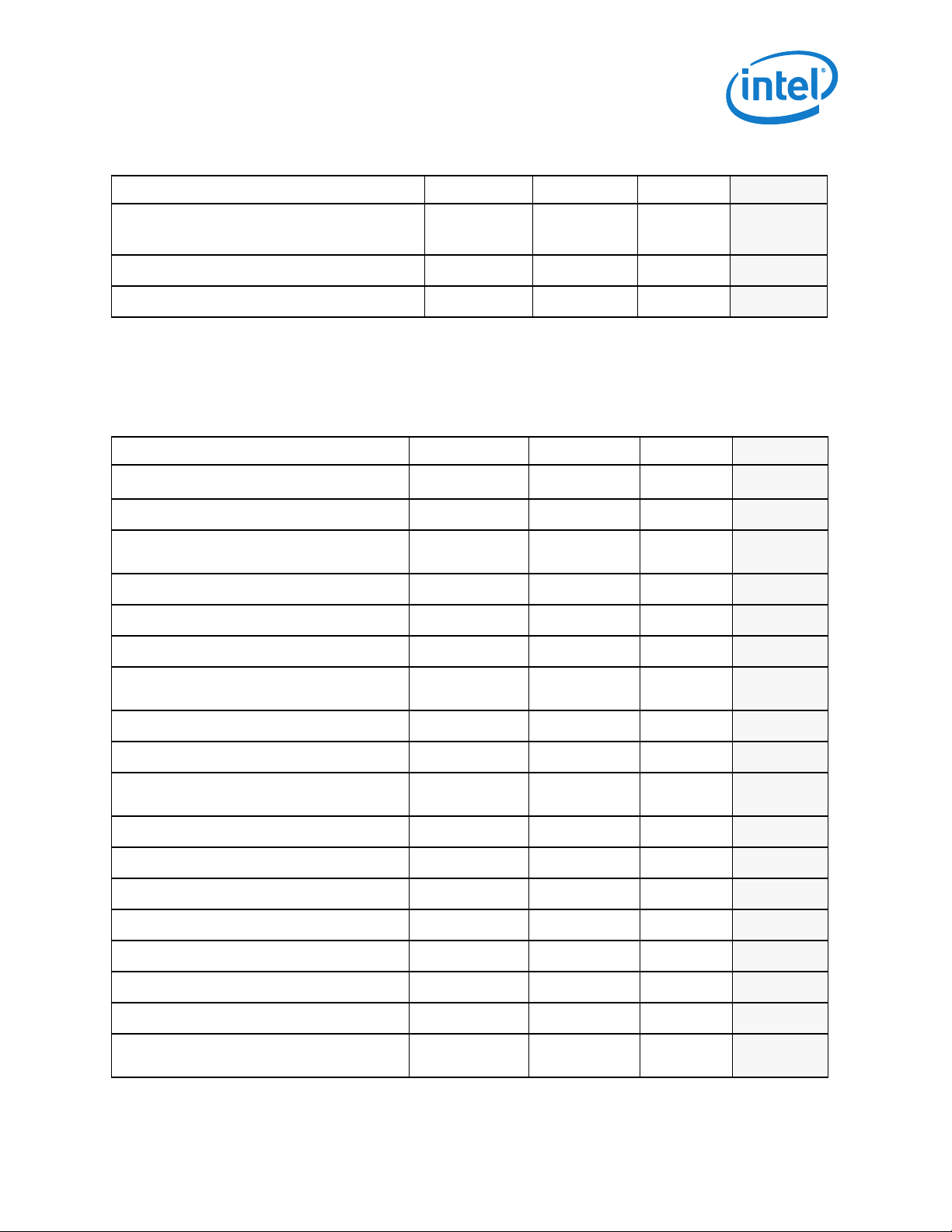

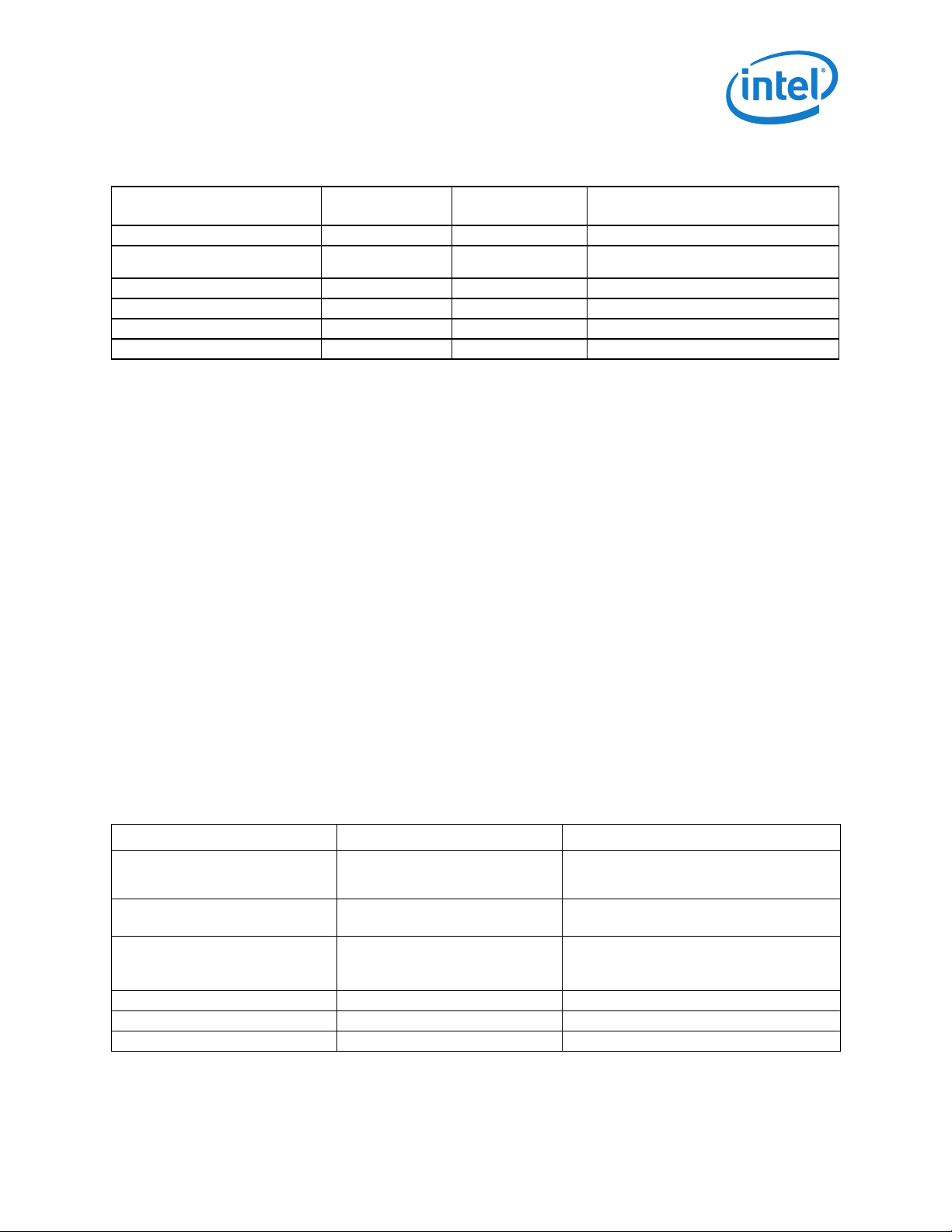

Table 1-4 LAN Functions Features

| Feature | X540 | 82599 | 82598 |
|---|---|---|---|
| Programmable Host Memory Receive Buffers | Y | Y | Y |
| Descriptor Ring Management Hardware for Transmit and Receive | Y | Y | Y |
| ACPI Register Set and Power Down Functionality Supporting D0 & D3 States | Y | Y | Y |
| Integrated MACsec, 802.1AE Security Engines: AES-GCM 128-bit; Encryption + Authentication; One SC x 2 SA Per Port; Replay Protection with Zero Window | Y | Y | N |
| Integrated IPsec Security Engines: AES-GCM 128-bit; AH or ESP Encapsulation; IPv4 and IPv6 (no option or extended headers) | 1024 SA / port | 1024 SA / port | N |
| Software-Controlled Global Reset Bit (Resets Everything Except the Configuration Registers) | Y | Y | Y |
| Software-Definable Pins (SDP) (per port) | 4 | 8 | 8 |
| Four SDP Pins can be Configured as General Purpose Interrupts | Y | Y | Y |
| Wake-on-LAN (WoL) | Y | Y | Y |
| IPv6 Wake-up Filters | Y | Y | Y |
| Configurable (through EEPROM/Flash¹) Wake-up Flexible Filters |  |  |  |
| Default Configuration by EEPROM/Flash¹ for all LEDs for Pre-Driver Functionality |  |  |  |
| LAN Function Disable Capability | Y | Y | Y |
| Programmable Memory Transmit Buffers | 160 KB / port | 160 KB / port | 320 KB / port |
| Programmable Memory Receive Buffers | 384 KB / port | 512 KB / port | 512 KB / port |

1. X540 Only.

Table 1-5 LAN Performance Features

Feature X540 82599 82598 Reserved

TCP/UDP Segmentation Offload 256 KB in all

TSO Interleaving for Reduced Latency Y Y N

TCP Receive Side Coalescing (RSC) 32 flows / port 32 flows / port N


YYY

YYY

1

modes

256 KB in all

modes

256 KB in

legacy mode,

32 KB in DCB


Table 1-5 LAN Performance Features

Feature X540 82599 82598 Reserved

Data Center Bridging (DCB), IEEE Compliance to

Enhanced Transmission Selection (ETS) -

802.1Qaz

Priority-based Flow Control (PFC) - 802.1Qbb

Rate Limit VM Tx Traffic per TC (per TxQ) Y Y N

IPv6 Support for IP/TCP and IP/UDP Receive

Checksum Offload

Fragmented UDP Checksum Offload for Packet

Reassembly

FCoE Tx / Rx CRC Offload Y Y N

FCoE Transmit Segmentation 256 KB 256 KB N

FCoE Coalescing and Direct Data Placement 512 outstanding

Message Signaled Interrupts (MSI) Y Y Y

Message Signaled Interrupts (MSI-X) Y Y Y

Interrupt Throttling Control to Limit Maximum

Interrupt Rate and Improve CPU Use

1

Y (up to 8)

Y (up to 8)

YYY

YYY

Read — Write

requests / port

YYY

Y (up to 8)

Y (up to 8)

512 outstanding

Read — Write

requests / port

Y (up to 8)

Y (up to 8)

N

N

Rx Packet Split Header Y Y Y

Multiple Rx Queues (RSS) Y (multiple

Flow Director Filters: up to 32 KB -2 Flows by Hash

Filters or up to 8 KB -2 Perfect Match Filters

Number of Rx Queues (per port) 128 128 64

Number of Tx Queues (per port) 128 128 32

Low Latency Interrupts

DCA Support

TCP Timer Interrupts

No Snoop

Relax Ordering

Rate Control of Low Latency Interrupts Y Y N

1. The X540 performance features are focused on 10 GbE performance improvement whereas 1 GbE was optimized for power

saving.

modes)

YYN

Yes to all Yes to all Yes to all

Y (multiple

modes)

8x8

16x4


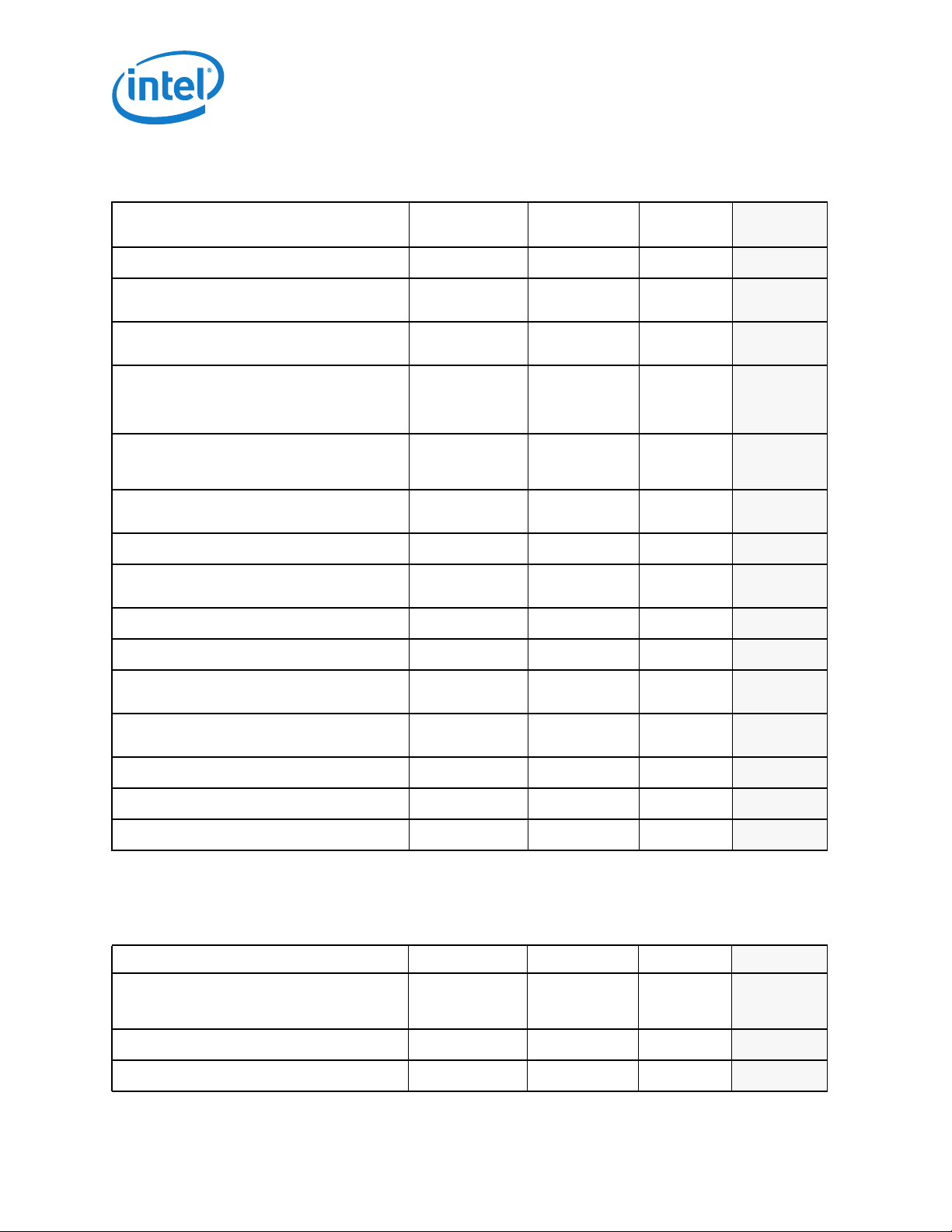

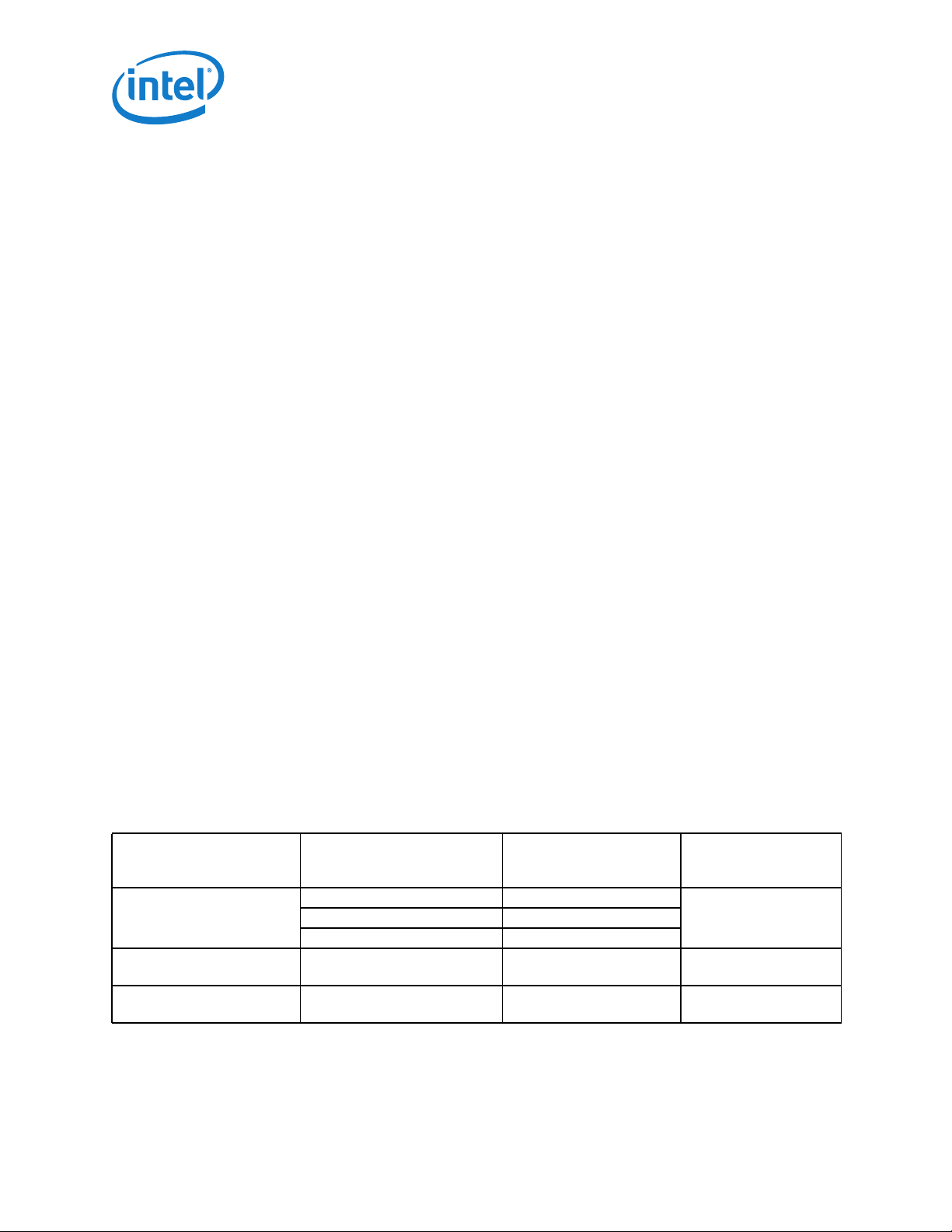

Table 1-6 Virtualization Features

| Feature | X540 | 82599 | 82598 |
|---|---|---|---|
| Support for Virtual Machine Device Queues (VMDq1 and Next Generation VMDq) | 64 | 64 | 16 |
| L2 Ethernet MAC Address Filters (unicast and multicast) | 128 | 128 | 16 |
| L2 VLAN Filters | 64 | 64 | - |
| PCI-SIG SR-IOV | Y | Y | N |
| Multicast and Broadcast Packet Replication | Y | Y | N |
| Packet Mirroring | Y | Y | N |
| Packet Loopback | Y | Y | N |
| Traffic Shaping | Y | Y | N |

Table 1-7 Manageability Features

| Feature | X540 | 82599 | 82598 |
|---|---|---|---|
| Advanced Pass Through-Compatible Management Packet Transmit/Receive Support | Y | Y | Y |
| SMBus Interface to an External MC | Y | Y | Y |
| NC-SI Interface to an External MC | Y | Y | Y |
| New Management Protocol Standards Support (NC-SI) | Y | Y | Y |
| L2 Address Filters | 4 | 4 | 4 |
| VLAN L2 Filters | 8 | 8 | 8 |
| Flex L3 Port Filters | 16 | 16 | 16 |
| Flexible TCO Filters | 4 | 4 | 4 |
| L3 Address Filters (IPv4) | 4 | 4 | 4 |
| L3 Address Filters (IPv6) | 4 | 4 | 4 |
| Host-Based Application-to-BMC Network Communication Patch (OS2BMC) | Y | N | N |
| Flexible MAC Address | Y | N | N |
| MC Inventory of LOM Device Information | Y | N | N |
| iSCSI Boot Configuration Parameters via MC | Y | N | N |
| MC Monitoring | Y | N | N |
| NC-SI to MC | Y | N | N |
| NC-SI Arbitration | Y | N | N |
| MCTP over SMBus | Y¹ | N | N |
| NC-SI Package ID Via SDP Pins | Y | N | N |

1. The X540's MCTP protocol implementation is based on an early draft of the DSP0261 Standard and it includes a Payload Type field that was removed in the final release of the standard.

1.4 Overview of New Capabilities Beyond 82599

1.4.1 OS-to-BMC Management Traffic Communication (OS2BMC)

OS2BMC is a filtering method that enables server management software to communicate with an MC¹ via standard networking protocols such as TCP/IP instead of a chipset-specific interface. Functionality includes:

• A single PCI function (for multi-port devices, each LAN function enables communication to the MC)

• One or more IP address(es) for the host along with a single (and separate) IP address for the MC

• One or more host MAC address(es) along with a single (and separate) MAC address for the MC

• ARP/RARP/ICMP protocols supported in the MC
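The filtering idea can be illustrated with a simplified decision on a frame transmitted by the host, keyed only on its destination MAC address. This is a hypothetical sketch, not the X540's actual OS2BMC filter logic: it ASSUMES the MC owns exactly one MAC address, that unicast frames addressed to it are diverted to the MC instead of the wire, and that broadcast frames (such as ARP requests) go to both. All names are invented for illustration.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical destinations for a host-transmitted frame. */
enum os2bmc_dest { TO_NETWORK, TO_MC, TO_BOTH };

/* Illustrative OS2BMC-style classification of a host-transmitted Ethernet
 * frame by destination MAC (the first 6 bytes of the frame).
 * ASSUMPTIONS: `mc_mac` is the single MC MAC address; unicast to it goes to
 * the MC only; broadcast goes to both the MC and the network. */
static enum os2bmc_dest os2bmc_classify(const uint8_t *frame, size_t len,
                                        const uint8_t mc_mac[6])
{
    static const uint8_t bcast[6] = {0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF};

    if (len < 14)                        /* shorter than an Ethernet header */
        return TO_NETWORK;
    if (memcmp(frame, mc_mac, 6) == 0)
        return TO_MC;
    if (memcmp(frame, bcast, 6) == 0)
        return TO_BOTH;
    return TO_NETWORK;
}
```

A real implementation would also consider the MC's IP address and the enabled protocol filters (ARP/RARP/ICMP), which this sketch leaves out.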

1.4.2 MCTP Over SMBus

MCTP over SMBus allows an MC to report and control, in NIC devices, all the information that is exposed in LOM devices via NC-SI.

MCTP is a transport protocol that does not provide a way to control a device. In order to

allow a consistent interface for both LOM and NIC devices, it is planned to implement an

NC-SI over MCTP protocol.

1. Also referred to as Baseboard Management Controller (BMC).


An Intel NIC can connect to an MC through MCTP. The MC uses the MCTP interface to control the NIC, not for pass-through traffic.

Note: The X540's MCTP protocol implementation is based on an early draft of the

DSP0261 Standard and it includes a Payload Type field that was removed in

the final release of the standard.
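To make the transport layering concrete, the sketch below builds the header of a single-packet MCTP message over SMBus following the general layout of the DMTF MCTP base (DSP0236) and SMBus binding (DSP0237) specifications: SMBus destination address, command code 0x0F, byte count, and source address, followed by the 4-byte MCTP transport header and the message-type byte (0x02 for NC-SI, as in Section 11.6.3). It is a generic, hedged sketch: it does NOT model the X540-specific Payload Type field noted above, omits the SMBus PEC byte, and its function and parameter names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MCTP_SMBUS_CMD     0x0Fu  /* SMBus command code used for MCTP */
#define MCTP_HDR_VERSION   0x01u
#define MCTP_MSG_TYPE_NCSI 0x02u  /* NC-SI over MCTP message type */

/* Builds a single-packet (SOM = EOM = 1) MCTP-over-SMBus frame in `out`.
 * `msg` is the message body that follows the message-type byte.
 * Returns bytes written, or 0 if `out` is too small. PEC is not appended;
 * the X540's extra Payload Type field is not modeled. */
static size_t mctp_smbus_build(uint8_t *out, size_t cap,
                               uint8_t dst_addr7, uint8_t src_addr7,
                               uint8_t dst_eid, uint8_t src_eid,
                               uint8_t tag, uint8_t msg_type,
                               const uint8_t *msg, size_t msg_len)
{
    const size_t total = 9 + msg_len;  /* 4 SMBus + 4 MCTP + 1 type byte */
    if (total > cap || total - 3 > 255)
        return 0;

    out[0] = (uint8_t)(dst_addr7 << 1);        /* write: R/W bit = 0 */
    out[1] = MCTP_SMBUS_CMD;
    out[2] = (uint8_t)(total - 3);             /* bytes after byte count */
    out[3] = (uint8_t)((src_addr7 << 1) | 1u); /* bit 0 set per the binding */
    out[4] = MCTP_HDR_VERSION;
    out[5] = dst_eid;
    out[6] = src_eid;
    /* SOM (bit 7), EOM (bit 6), sequence 0, tag owner (bit 3), tag [2:0]. */
    out[7] = (uint8_t)(0x80u | 0x40u | 0x08u | (tag & 0x07u));
    out[8] = (uint8_t)(msg_type & 0x7Fu);      /* IC bit clear */
    if (msg_len > 0)
        memcpy(&out[9], msg, msg_len);
    return total;
}
```

Only the byte layout is shown; multi-packet fragmentation, sequence numbering, and the PEC calculation are left to the relevant DMTF specifications.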

1.4.3 PCIe v2.1 Features

1.4.3.1 Access Control Services (ACS)

The X540 supports ACS Extended Capability structures on all functions. The X540 reports no support for the various ACS capabilities in the ACS Extended Capability structure. Further information can be found in Section 9.4.5.
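Because the ACS structure is present but advertises no capabilities, software that locates it will read an ACS Capability register of zero. The sketch below walks the PCIe extended capability list (starting at offset 0x100; header bits 15:0 = ID, 19:16 = version, 31:20 = next offset) in a 4 KB configuration-space snapshot and returns the ACS Capability field. The snapshot buffer and function names are assumptions for illustration; a real driver would use its platform's config-space accessors.

```c
#include <stddef.h>
#include <stdint.h>

#define PCIE_EXT_CAP_START  0x100u
#define PCIE_EXT_CAP_ID_ACS 0x000Du
#define PCIE_CFG_SIZE       4096u

/* Reads a little-endian dword from a config-space snapshot. */
static uint32_t cfg_read32(const uint8_t *cfg, size_t off)
{
    return (uint32_t)cfg[off] | ((uint32_t)cfg[off + 1] << 8) |
           ((uint32_t)cfg[off + 2] << 16) | ((uint32_t)cfg[off + 3] << 24);
}

/* Returns the offset of extended capability `id`, or 0 if absent. */
static size_t find_ext_cap(const uint8_t *cfg, uint16_t id)
{
    size_t off = PCIE_EXT_CAP_START;
    /* The guard bounds the walk in case of a malformed, looping list. */
    for (unsigned guard = 0; guard < 1024; guard++) {
        if (off < PCIE_EXT_CAP_START || off + 4 > PCIE_CFG_SIZE)
            return 0;
        uint32_t hdr = cfg_read32(cfg, off);
        if (hdr == 0 || hdr == 0xFFFFFFFFu)
            return 0;               /* empty list or device not present */
        if ((hdr & 0xFFFFu) == id)
            return off;
        off = (hdr >> 20) & 0xFFCu; /* next offset, dword aligned */
    }
    return 0;
}

/* ACS Capability register: bits 15:0 of the dword at offset +4.
 * A value of 0 means no ACS controls are supported. */
static uint16_t acs_capabilities(const uint8_t *cfg, size_t acs_off)
{
    return (uint16_t)(cfg_read32(cfg, acs_off + 4) & 0xFFFFu);
}
```

On a function that behaves as described above, `find_ext_cap(cfg, PCIE_EXT_CAP_ID_ACS)` returns a non-zero offset while `acs_capabilities()` returns 0.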

1.4.3.2 ASPM Optionality Compliance Capability

A new capability bit, the ASPM (Active State Power Management) Optionality Compliance bit, has been added to the X540. Software can use this bit to help determine whether to enable ASPM or whether to run ASPM compliance tests. The bit indicates that the X540 can optionally support entry to L0s. Further information can be found in Section 9.3.11.7.
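Software typically reads this information from the PCIe Link Capabilities register. The sketch below decodes a raw Link Capabilities value using the field positions of the PCI Express Base Specification (ASPM Support in bits 11:10, with bit 10 = L0s and bit 11 = L1; ASPM Optionality Compliance in bit 22). How the value is read from configuration space is left out, and the helper names are hypothetical; confirm the field positions against Section 9.3.11.7.

```c
#include <stdbool.h>
#include <stdint.h>

/* Link Capabilities fields (PCI Express Base Specification). */
#define LNKCAP_ASPM_SUPPORT_SHIFT 10u
#define LNKCAP_ASPM_SUPPORT_MASK  (0x3u << LNKCAP_ASPM_SUPPORT_SHIFT)
#define LNKCAP_ASPM_OPTIONALITY   (1u << 22) /* ASPM Optionality Compliance */

/* ASPM Support field: bit 0 = L0s, bit 1 = L1. */
static unsigned lnkcap_aspm_support(uint32_t lnkcap)
{
    return (lnkcap & LNKCAP_ASPM_SUPPORT_MASK) >> LNKCAP_ASPM_SUPPORT_SHIFT;
}

/* True when the link advertises L0s entry support. */
static bool lnkcap_l0s_supported(uint32_t lnkcap)
{
    return (lnkcap_aspm_support(lnkcap) & 0x1u) != 0;
}

/* True when the device sets the ASPM Optionality Compliance bit, which
 * software may use when deciding whether to enable ASPM or run ASPM
 * compliance tests. */
static bool lnkcap_aspm_optionality(uint32_t lnkcap)
{
    return (lnkcap & LNKCAP_ASPM_OPTIONALITY) != 0;
}
```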

1.5 Conventions

1.5.1 Terminology and Acronyms

See Section 17.0.

This section defines the organization of registers and memory transfers, as it relates to

information carried over the network:

• Any register defined in Big Endian notation can be transferred as is to/from Tx and Rx

buffers in the host memory. Big Endian notation is also referred to as being in

network order or ordering.

• Any register defined in Little Endian notation must be swapped before it is transferred to/from Tx and Rx buffers in the host memory. Registers in Little Endian order are referred to as being in host order or ordering.

Tx and Rx buffers are defined as being in network ordering; they are transferred as is

over the network.

Note: Registers not transferred on the wire are defined in Little Endian notation.

Registers transferred on the wire are defined in Big Endian notation, unless

specified differently.
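The ordering rules above can be expressed with explicit byte shifts, which behave the same on any CPU regardless of its native endianness. In this sketch (helper names are illustrative, not from this document), a value is written into a Tx buffer in network (Big Endian) order, and a Little Endian register image is decoded in host order; reading the same four bytes both ways shows the byte swap the rules require.

```c
#include <stdint.h>

/* Write a 16-bit value into a buffer in network (Big Endian) order. */
static void put_be16(uint8_t *p, uint16_t v)
{
    p[0] = (uint8_t)(v >> 8);
    p[1] = (uint8_t)v;
}

/* Write a 32-bit value into a buffer in network (Big Endian) order. */
static void put_be32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}

/* Read a 32-bit value stored in network (Big Endian) order. */
static uint32_t get_be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Read a 32-bit value stored in host register (Little Endian) order. */
static uint32_t get_le32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}
```

For example, after `put_be32(b, 0x0A0B0C0D)` the buffer holds `0A 0B 0C 0D` on the wire, and interpreting those bytes as a Little Endian register yields `0x0D0C0B0A`.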


1.6 References

The X540 implements features from the following specifications:

IEEE Specifications

• 10GBASE-T as per the IEEE 802.3an standard.

• 1000BASE-T and 100BASE-TX as per the IEEE standard 802.3-2005 (Ethernet). Incorporates various IEEE Standards previously published separately. Institute of Electrical and Electronics Engineers (IEEE).

• IEEE 1149.6 standard for Boundary Scan (MDI pins excluded)

• IEEE standard 802.3ap, draft D3.2.

• IEEE standard 1149.1, 2001 Edition (JTAG). Institute of Electrical and Electronics Engineers (IEEE).

• IEEE standard 802.1Q for VLAN.

• IEEE 1588 International Standard, Precision clock synchronization protocol for networked measurement and control systems, 2004-09.

• IEEE P802.1AE/D5.1, Media Access Control (MAC) Security, January 19, 2006.

PCI-SIG Specifications

• PCI Express® Base Specification Revision 2.1, March 4, 2009

• PCI Express 2.1 Card Electromechanical Specification

• PCI Express 2.0 Base specification, 12/20/2006.

• PCI Express™ 2.0 Card Electromechanical Specification, Revision 0.9, January 19, 2007.

• PCI Bus Power Management Interface Specification, Rev. 1.2, March 2004.

• PICMG3.1 Ethernet/Fibre Channel Over PICMG 3.0 Draft Specification January 14, 2003 Version D1.0.

• Single Root I/O Virtualization and Sharing Specification Revision 1.1, September 8, 2009.

IETF Specifications

• IPv4 specification (RFC 791)

• IPv6 specification (RFC 2460)

• TCP specification (RFC 793)

• UDP specification (RFC 768)

• ARP specification (RFC 826)

• RFC4106 — The Use of Galois/Counter Mode (GCM) in IPsec Encapsulating Security Payload (ESP).

• RFC4302 — IP Authentication Header (AH)

• RFC4303 — IP Encapsulating Security Payload (ESP)

• RFC4543 — The Use of Galois Message Authentication Code (GMAC) in IPsec ESP and AH.

• IETF Internet Draft, Marker PDU Aligned Framing for TCP Specification.

• IETF Internet Draft, Direct Data Placement over Reliable Transports.

• IETF Internet Draft, RDMA Protocol Specification.

Other

• Advanced Configuration and Power Interface Specification, Rev 2.0b, October 2002

• RDMA Consortium, RDMA Protocol Verbs Specification

• Network Controller Sideband Interface (NC-SI) Specification, Version cPubs-0.1, 2/18/2007.

• System Management Bus (SMBus) Specification, SBS Implementers Forum, Ver. 2.0, August 2000.

• EUI-64 specification, http://standards.ieee.org/regauth/oui/tutorials/EUI64.html.

• Backward Congestion Notification Functional Specification, 11/28/2006.

• Definition for new PAUSE function, Rev. 1.2, 12/26/2006.

• GCM spec — McGrew, D. and J. Viega, “The Galois/Counter Mode of Operation (GCM)”, Submission to NIST. http://csrc.nist.gov/CryptoToolkit/modes/proposedmodes/gcm/gcm-spec.pdf, January 2004.

• FRAMING AND SIGNALING-2 (FC-FS-2) Rev 1.00

• Fibre Channel over Ethernet Draft Presented at the T11 on May 2007

• Per Priority Flow Control (by Cisco Systems) — Definition for new PAUSE function, Rev 1.2, EDCS-472530

In addition, the following document provides application information:

• 82563EB/82564EB Gigabit Ethernet Physical Layer Device Design Guide, Intel Corporation.

1.7 Architecture and Basic Operation

1.7.1 Transmit (Tx) Data Flow

Tx data flow provides a high-level description of all data/control transformation steps

needed for sending Ethernet packets over the wire.
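The host side of the flow in Table 1-8 (ring setup, filling descriptors, and advancing the tail pointer) follows the classic producer/consumer descriptor ring pattern. The sketch below models that pattern in plain memory: the descriptor structure is simplified and hypothetical (it is NOT the X540 descriptor format), the ring size is arbitrary, and `device_process()` merely stands in for the DMA consuming descriptors between head and tail and writing them back.

```c
#include <stdbool.h>
#include <stdint.h>

#define RING_SIZE 8u   /* hypothetical ring length, in descriptors */

/* Simplified, hypothetical descriptor (not the X540 layout). */
struct tx_desc {
    uint64_t buf_addr;  /* physical address of the data buffer */
    uint16_t len;       /* buffer length in bytes */
    bool     done;      /* set on write-back when processed */
};

struct tx_ring {
    struct tx_desc desc[RING_SIZE];
    uint32_t head;      /* next descriptor the device processes (TDH) */
    uint32_t tail;      /* next free slot for software (written to TDT) */
};

/* One slot stays empty so that head == tail always means "ring empty". */
static bool ring_full(const struct tx_ring *r)
{
    return ((r->tail + 1) % RING_SIZE) == r->head;
}

/* Steps 3-4: fill a descriptor, then advance the tail pointer. */
static bool ring_post(struct tx_ring *r, uint64_t addr, uint16_t len)
{
    if (ring_full(r))
        return false;
    r->desc[r->tail] = (struct tx_desc){ .buf_addr = addr, .len = len,
                                         .done = false };
    r->tail = (r->tail + 1) % RING_SIZE;  /* hardware: write TDT here */
    return true;
}

/* Stand-in for steps 5-14: consume descriptors from head up to tail and
 * mark them done (write-back). Returns the number processed. */
static unsigned device_process(struct tx_ring *r)
{
    unsigned n = 0;
    while (r->head != r->tail) {
        r->desc[r->head].done = true;
        r->head = (r->head + 1) % RING_SIZE;
        n++;
    }
    return n;
}
```

Keeping one slot empty is a common design choice that avoids a separate count field: a ring of `RING_SIZE` entries holds at most `RING_SIZE - 1` outstanding descriptors.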


Table 1-8 Tx Data Flow

Step Description

1 The host creates a descriptor ring and configures one of the X540's transmit queues (one of 128 available Tx queues) with the address location, length, head, and tail pointers of the ring.

2 When the TCP/IP stack requests the host to transmit a packet, the host gets the packet data within one or more data buffers.

3 The host initializes the descriptor(s) that point to the data buffer(s) and carry additional control parameters describing the needed hardware functionality. The host places the descriptor(s) in the correct location in the appropriate Tx ring.

4 The host updates the appropriate Queue Tail Pointer (TDT).

5 The X540's DMA senses a change of a specific TDT and, as a result, sends a PCIe request to fetch the descriptor(s) from host memory.

6 The descriptor content is received in a PCIe read completion and is written to the appropriate location in the descriptor queue.

7 The DMA fetches the next descriptor and processes its content. As a result, the DMA sends PCIe requests to fetch the packet data from system memory.

8 The packet data is received from PCIe completions and passes through the transmit DMA, which performs all programmed data manipulations (CPU offload tasks such as checksum offload, TSO offload, etc.) on the packet data on the fly.

9 While the packet passes through the DMA, it is stored in the transmit FIFO. After the entire packet is stored in the transmit FIFO, it is forwarded to the transmit switch module.

10 The transmit switch arbitrates between host and management packets and eventually forwards the packet to the MAC.

11 The MAC appends the L2 CRC to the packet and delivers the packet to the integrated PHY.

12 The PHY performs the PCS encoding, scrambling, Low-Density Parity-Check (LDPC) encoding, and the other manipulations required to deliver the packet over the copper wires at the selected speed.

13 When all the PCIe completions for a given packet are complete, the DMA updates the appropriate descriptor(s).

14 The descriptors are written back to host memory using PCIe posted writes. The head pointer is updated in host

memory as well.

15 An interrupt is generated to notify the host driver that the specific packet has been read to the X540 and the

driver can then release the buffer(s).
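Steps 1 through 15 describe a producer/consumer ring: the driver fills descriptors and advances the tail (TDT), and the device consumes them, writes back status, and advances the head. The C sketch below models only that handshake in software. The descriptor layout (`struct tx_desc`), the `done` flag standing in for the step 14 write-back, the 8-entry ring, and every function name are hypothetical; they do not describe the X540's actual descriptor formats or register interface.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define RING_SIZE 8u /* hypothetical ring length, in descriptors */

/* Hypothetical, simplified Tx descriptor (not the X540 hardware layout). */
struct tx_desc {
    uint64_t buf_addr; /* host address of the packet data buffer */
    uint16_t length;   /* bytes to transmit from that buffer */
    bool done;         /* stands in for the status written back in step 14 */
};

struct tx_ring {
    struct tx_desc desc[RING_SIZE];
    uint32_t tail;          /* driver-owned; models the TDT register (step 4) */
    uint32_t hw_head;       /* device-owned head pointer (step 14) */
    uint32_t next_to_clean; /* oldest descriptor the driver has not reclaimed */
};

/* Free slots; one slot stays unused so that tail == next_to_clean means empty. */
uint32_t tx_free_slots(const struct tx_ring *r)
{
    return (r->next_to_clean + RING_SIZE - r->tail - 1u) % RING_SIZE;
}

/* Steps 3-4: fill the descriptor at the tail, then advance the tail. */
bool tx_post(struct tx_ring *r, uint64_t addr, uint16_t len)
{
    assert(len > 0u);
    if (tx_free_slots(r) == 0u)
        return false; /* ring full: wait for completions (step 15) */
    struct tx_desc *d = &r->desc[r->tail];
    d->buf_addr = addr;
    d->length = len;
    d->done = false;
    r->tail = (r->tail + 1u) % RING_SIZE; /* the "TDT write" */
    return true;
}

/* Steps 5-14 collapsed: the device consumes every descriptor up to the tail,
 * marks each one done, and advances its head pointer. */
void device_process(struct tx_ring *r)
{
    while (r->hw_head != r->tail) {
        r->desc[r->hw_head].done = true;
        r->hw_head = (r->hw_head + 1u) % RING_SIZE;
    }
}

/* Step 15: reclaim descriptors (and their buffers) the device has finished. */
uint32_t tx_clean(struct tx_ring *r)
{
    uint32_t freed = 0u;
    while (r->next_to_clean != r->hw_head && r->desc[r->next_to_clean].done) {
        r->desc[r->next_to_clean].done = false;
        r->next_to_clean = (r->next_to_clean + 1u) % RING_SIZE;
        freed++;
    }
    return freed;
}
```

Leaving one slot unused lets the driver tell "empty" from "full" without a separate counter; this is a common ring convention assumed here, not something this datasheet specifies.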


1.7.2 Receive (Rx) Data Flow

Rx data flow provides a high-level description of all data/control transformation steps needed for receiving Ethernet packets.

Table 1-9 Rx Data Flow

Step Description

1 The host creates a descriptor ring and configures one of the X540's receive queues with the address location, length, head, and tail pointers of the ring (one of 128 available Rx queues).

2 The host initializes descriptor(s) that point to empty data buffer(s). The host places these descriptor(s) in the correct location at the appropriate Rx ring.

3 The host updates the appropriate Queue Tail Pointer (RDT).

4 A packet enters the PHY through the copper wires.

5 The PHY performs the required manipulations on the incoming signal such as LDPC decoding, descrambling, PCS decoding, etc.

6 The PHY delivers the packet to the Rx MAC.

7 The MAC forwards the packet to the Rx filter.

8 If the packet matches the pre-programmed criteria of the Rx filtering, it is forwarded to an Rx FIFO.

9 The receive DMA fetches the next descriptor from the appropriate host memory ring to be used for the next received packet.

10 After the entire packet is placed into an Rx FIFO, the receive DMA posts the packet data to the location indicated by the descriptor through the PCIe interface. If the packet size is greater than the buffer size, more descriptor(s) are fetched and their buffers are used for the received packet.

11 When the packet is placed into host memory, the receive DMA updates all the descriptor(s) that were used by the packet data.

12 The receive DMA writes back the descriptor content along with status bits that indicate the packet information, including what offloads were done on that packet.

13 The X540 initiates an interrupt to the host to indicate that a new received packet is ready in host memory.

14 The host reads the packet data and sends it to the TCP/IP stack for further processing. The host releases the associated buffer(s) and descriptor(s) once they are no longer in use.
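Steps 10 through 12 note that a packet larger than one receive buffer spills into additional descriptors. The C sketch below shows that bookkeeping under stated assumptions: `struct rx_wb` and its `eop` (end of packet) and `dd` (descriptor done) flags are hypothetical stand-ins for the status bits mentioned in step 12, not the X540's write-back format, and buffer sizes are assumed to fit in 16 bits.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified Rx write-back record (not the X540 layout). */
struct rx_wb {
    uint16_t length; /* bytes placed in this descriptor's buffer */
    bool eop;        /* set on the last descriptor of the packet */
    bool dd;         /* write-back for this descriptor has completed */
};

/* Number of buffers a packet of pkt_len bytes occupies (ceiling division). */
size_t rx_desc_needed(size_t pkt_len, size_t buf_size)
{
    assert(buf_size > 0);
    return (pkt_len + buf_size - 1) / buf_size;
}

/* Models steps 10-12: split one packet across consecutive descriptors and
 * record per-descriptor status. Returns the number of descriptors used,
 * or 0 if fewer than needed are available. */
size_t rx_place_packet(struct rx_wb *wb, size_t avail, size_t pkt_len, size_t buf_size)
{
    size_t need = rx_desc_needed(pkt_len, buf_size);
    if (need == 0 || need > avail)
        return 0;
    size_t remaining = pkt_len;
    for (size_t i = 0; i < need; i++) {
        size_t chunk = remaining < buf_size ? remaining : buf_size;
        wb[i].length = (uint16_t)chunk;
        wb[i].eop = (i == need - 1);
        wb[i].dd = true;
        remaining -= chunk;
    }
    return need;
}

/* Models step 14: the driver sums buffer lengths up to the EOP descriptor. */
size_t rx_packet_length(const struct rx_wb *wb, size_t used)
{
    size_t total = 0;
    for (size_t i = 0; i < used && wb[i].dd; i++) {
        total += wb[i].length;
        if (wb[i].eop)
            break;
    }
    return total;
}
```

For example, a 5,000-byte packet with 2,048-byte buffers uses three descriptors holding 2,048, 2,048, and 904 bytes.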


2.0 Pin Interface

2.1 Pin Assignments

2.1.1 Signal Type Definition

Signal | Definition | DC Specification

In | Standard 2.5V I/O buffer, functions as input-only signal. | 3.3V tolerance.

Out (O) | Standard 2.5V I/O buffer, functions as output-only signal. | 3.3V tolerance.

T/s | Tri-state is a 2.5V bi-directional, tri-state input/output pin. | 3.3V tolerance.

O/d | Open drain enables multiple devices to share as a wire-OR. | Section 12.4.3

A-in | Analog input signals. | Section 12.4.6 and Section 12.4.7

A-out | Analog output signals. | Section 12.4.6 and Section 12.4.7

A-Inout | Bi-directional analog signals.

B | Input BIAS.

NCSI-in | NC-SI 3.3V input signal. | Section 12.4.4

NCSI-out | NC-SI 3.3V output signal. | Section 12.4.4

In-1p2 | 1.2V input-only signal. | 3.3V tolerance.

In-Only | Standard 2.5V buffer input-only signal. | 3.3V tolerance.

Out-Only | Standard 2.5V buffer output-only signal.

LVDS-O | Low voltage differential signal - output.

Pup | Pull up.

Pdn | Pull down.

2.1.2 PCIe

See AC/DC specifications in Section 12.4.6.

Pin Name | Ball # | Type | Pup/Pdn | Name and Function

PET_0_p, PET_0_n | AC3, AD3 | A-Out | - | PCIe Serial Data Output. A serial differential output pair running at 5 Gb/s or 2.5 Gb/s. This output carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PET_1_p, PET_1_n | AC4, AD4 | A-Out | - | PCIe Serial Data Output. A serial differential output pair running at 5 Gb/s or 2.5 Gb/s. This output carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PET_2_p, PET_2_n | AC9, AD9 | A-Out | - | PCIe Serial Data Output. A serial differential output pair running at 5 Gb/s or 2.5 Gb/s. This output carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PET_3_p, PET_3_n | AC10, AD10 | A-Out | - | PCIe Serial Data Output. A serial differential output pair running at 5 Gb/s or 2.5 Gb/s. This output carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PET_4_p, PET_4_n | AC15, AD15 | A-Out | - | PCIe Serial Data Output. A serial differential output pair running at 5 Gb/s or 2.5 Gb/s. This output carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PET_5_p, PET_5_n | AC16, AD16 | A-Out | - | PCIe Serial Data Output. A serial differential output pair running at 5 Gb/s or 2.5 Gb/s. This output carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PET_6_p, PET_6_n | AC21, AD21 | A-Out | - | PCIe Serial Data Output. A serial differential output pair running at 5 Gb/s or 2.5 Gb/s. This output carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PET_7_p, PET_7_n | AC22, AD22 | A-Out | - | PCIe Serial Data Output. A serial differential output pair running at 5 Gb/s or 2.5 Gb/s. This output carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PER_0_p, PER_0_n | AB2, AB1 | A-In | - | PCIe Serial Data Input. A serial differential input pair running at 5 Gb/s or 2.5 Gb/s. This input carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PER_1_p, PER_1_n | AD6, AC6 | A-In | - | PCIe Serial Data Input. A serial differential input pair running at 5 Gb/s or 2.5 Gb/s. This input carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PER_2_p, PER_2_n | AD7, AC7 | A-In | - | PCIe Serial Data Input. A serial differential input pair running at 5 Gb/s or 2.5 Gb/s. This input carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PER_3_p, PER_3_n | AD12, AC12 | A-In | - | PCIe Serial Data Input. A serial differential input pair running at 5 Gb/s or 2.5 Gb/s. This input carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PER_4_p, PER_4_n | AD13, AC13 | A-In | - | PCIe Serial Data Input. A serial differential input pair running at 5 Gb/s or 2.5 Gb/s. This input carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PER_5_p, PER_5_n | AD18, AC18 | A-In | - | PCIe Serial Data Input. A serial differential input pair running at 5 Gb/s or 2.5 Gb/s. This input carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PER_6_p, PER_6_n | AD19, AC19 | A-In | - | PCIe Serial Data Input. A serial differential input pair running at 5 Gb/s or 2.5 Gb/s. This input carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PER_7_p, PER_7_n | AB23, AB24 | A-In | - | PCIe Serial Data Input. A serial differential input pair running at 5 Gb/s or 2.5 Gb/s. This input carries both data and an embedded 5 GHz or 2.5 GHz clock that is recovered along with data at the receiving end.

PE_CLK_p, PE_CLK_n | Y2, Y1 | A-In | - | PCIe Differential Reference Clock In (a 100 MHz differential clock input). This clock is used as the reference clock for the PCIe Tx/Rx circuitry and by the PCIe core PLL to generate clocks for the PCIe core logic.

PE_RBIAS0 | V1 | A-Inout | - | Connection point for the band-gap reference resistor. This should be a precision 1% 3.01 kΩ resistor tied to ground.

PE_RBIAS1 | V2 | A-Inout | - | Connection point for the band-gap reference resistor. This should be a precision 1% 3.01 kΩ resistor tied to ground.

PE_WAKE_N | W1 | O/d | Pup (note 1) | Wake. Pulled low to indicate that a Power Management Event (PME) is pending and the PCIe link should be restored. Defined in the PCIe specifications.

PE_RST_N | W2 | In | - | Power and Clock Good Indication. Indicates that power and the PCIe reference clock are within specified values. Defined in the PCIe specifications. Also called PCIe Reset.

1. Pup value should be considered as 10 kΩ.


2.1.3 MDI

See AC/DC specifications in Section 12.4.7.

Pin Name | Ball # | Type | Name and Function

MDI0_p_0 | A3 | A-Inout | Port 0 pair A+ of the line interface. Connects to the Pair A+ input of the transformer. On reset, set to high impedance.

MDI0_n_0 | B3 | A-Inout | Port 0 pair A- of the line interface. Connects to the Pair A- input of the transformer. On reset, set to high impedance.

MDI0_p_1 | A5 | A-Inout | Port 0 pair B+ of the line interface. Connects to the Pair B+ input of the transformer. On reset, set to high impedance.

MDI0_n_1 | B5 | A-Inout | Port 0 pair B- of the line interface. Connects to the Pair B- input of the transformer. On reset, set to high impedance.

MDI0_p_2 | A7 | A-Inout | Port 0 pair C+ of the line interface. Connects to the Pair C+ input of the transformer. On reset, set to high impedance.

MDI0_n_2 | B7 | A-Inout | Port 0 pair C- of the line interface. Connects to the Pair C- input of the transformer. On reset, set to high impedance.

MDI0_p_3 | A9 | A-Inout | Port 0 pair D+ of the line interface. Connects to the Pair D+ input of the transformer. On reset, set to high impedance.

MDI0_n_3 | B9 | A-Inout | Port 0 pair D- of the line interface. Connects to the Pair D- input of the transformer. On reset, set to high impedance.

MDI0_p_4 | A11 | A-Inout | Port 0 Analog Test+. Connects to the pair E+ input of the transformer.

MDI0_n_4 | B11 | A-Inout | Port 0 Analog Test-. Connects to the pair E- input of the transformer.

MDI1_p_0 (note 1) | A22 | A-Inout | Port 1 pair A+ of the line interface. Connects to the Pair A+ input of the transformer. On reset, set to high impedance.

MDI1_n_0 (note 1) | B22 | A-Inout | Port 1 pair A- of the line interface. Connects to the Pair A- input of the transformer. On reset, set to high impedance.

MDI1_p_1 (note 1) | A20 | A-Inout | Port 1 pair B+ of the line interface. Connects to the Pair B+ input of the transformer. On reset, set to high impedance.

MDI1_n_1 (note 1) | B20 | A-Inout | Port 1 pair B- of the line interface. Connects to the Pair B- input of the transformer. On reset, set to high impedance.

MDI1_p_2 (note 1) | A18 | A-Inout | Port 1 pair C+ of the line interface. Connects to the Pair C+ input of the transformer. On reset, set to high impedance.

MDI1_n_2 (note 1) | B18 | A-Inout | Port 1 pair C- of the line interface. Connects to the Pair C- input of the transformer. On reset, set to high impedance.

MDI1_p_3 (note 1) | A16 | A-Inout | Port 1 pair D+ of the line interface. Connects to the Pair D+ input of the transformer. On reset, set to high impedance.

MDI1_n_3 (note 1) | B16 | A-Inout | Port 1 pair D- of the line interface. Connects to the Pair D- input of the transformer. On reset, set to high impedance.

MDI1_p_4 (note 1) | A14 | A-Inout | Port 1 Analog Test+. Connects to the pair E+ input of the transformer.

MDI1_n_4 (note 1) | B14 | A-Inout | Port 1 Analog Test-. Connects to the pair E- input of the transformer.

BG_REXT | D12 | A-Inout | Connection point for the band-gap reference resistor. Should be a precision 1% 2 kΩ resistor tied to ground.

TM_REXT | C12 | A-Inout | Connection point for the band-gap reference resistor. Should be a precision 1% 140 Ω resistor tied to 2.5V.

XTAL_I | D23 | A-In | Positive 50.0 MHz crystal oscillator input.

XTAL_O | D24 | A-Out | Positive 50.0 MHz crystal oscillator output.

1. These pins are a No Connect for the X540 single port configuration.


2.1.4 Serial Flash

See AC/DC specifications in Section 12.4.5.4.

Pin Name | Ball # | Type | Pup/Pdn | Name and Function

FLSH_SI | K2 | Out | - | Serial data output to the Flash.

FLSH_SO | K1 | In | Pup (note 1) | Serial data input from the Flash.

FLSH_SCK | J1 | Out | - | Flash serial clock. Operates at a maximum frequency of 25 MHz.

FLSH_CE_N | J2 | Out | Pup (note 1) | Flash chip select output.

1. Pup value should be considered as 3.3 kΩ.

2.1.5 SMBus

See the AC/DC specifications in Section 12.4.5.3.

Pin Name | Ball # | Type | Pup/Pdn | Name and Function

SMBCLK | L2 | O/d | Pup (note 1) | SMBus Clock. One clock pulse is generated for each data bit transferred.

SMBD | L1 | O/d | Pup (note 1) | SMBus Data. Stable during the high period of the clock (unless it is a start or stop condition).

SMBALRT_N | M2 | O/d | Pup (note 1) | SMBus Alert. Acts as an interrupt pin of a slave device on the SMBus.

1. Pup value should be considered as 10 kΩ.

Note: If the SMBus is disconnected, use the external pull-up value listed.
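SMBCLK, SMBD, and SMBALRT_N are open-drain (O/d) lines: each device can only pull the line low or release it, and the pull-up (10 kΩ per note 1) supplies the high level. The C sketch below models how such a shared line resolves, which is why an alert raised by any one device is visible on SMBALRT_N. The enum and function names are hypothetical, and the model covers logic levels only, not electrical timing.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* An open-drain output can only sink current (pull the line low) or
 * release it (high impedance); it never drives the line high. */
enum od_drive { OD_RELEASE, OD_PULL_LOW };

/* Logic level of a shared open-drain line with a pull-up resistor:
 * high (true) only when every attached device has released it. */
bool od_line_level(const enum od_drive *devices, size_t n)
{
    assert(devices != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        if (devices[i] == OD_PULL_LOW)
            return false; /* any single device wins by pulling low */
    }
    return true; /* the pull-up holds the released line high */
}

/* SMBALRT_N is active low, so the alert is asserted whenever at least one
 * device pulls the shared line low. */
bool smbus_alert_asserted(const enum od_drive *devices, size_t n)
{
    return !od_line_level(devices, n);
}
```

The same resolution rule explains the note above: with the SMBus disconnected, only the pull-up defines the idle level.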


2.1.6 NC-SI

See AC specifications in Section 12.4.5.5.

Pin Name | Ball # | Type | Pup/Pdn | Name and Function

NCSI_CLK_IN | G2 | NCSI-In | Pdn (note 1) | NC-SI Reference Clock Input. Synchronous clock reference for receive, transmit, and control interface. It is a 50 MHz clock ± 100 ppm.

NCSI_TX_EN | G4 | NCSI-In | Pdn (note 1) | MC Transmit Enable. Indicates that received data from MC is valid.

NCSI_TXD0, NCSI_TXD1 | H2, G3 | NCSI-In | Pup (note 2) | MC Transmit Data. Data signals from the MC to the X540.

NCSI_CRS_DV | H1 | NCSI-Out | Pdn (note 1) | Carrier Sense/Receive Data Valid (CRS/DV) to MC. Indicates that the data transmitted from the X540 to MC is valid.

NCSI_RXD0, NCSI_RXD1 | H3, G1 | NCSI-Out | Pup (note 2) | MC Receive Data. Data signals from the X540 to the MC.

NCSI_ARB_IN | F1 | NCSI-In | Pdn (note 1) | NC-SI Arbitration In.

NCSI_ARB_OUT | F2 | NCSI-Out | - | NC-SI Arbitration Out.

1. Pdn value should be considered as 10 kΩ.

2. Pup value should be considered as 10 kΩ.

Note: If NC-SI is disconnected, use the external pull-up or pull-down values listed.
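The ± 100 ppm tolerance on NCSI_CLK_IN works out to ± 5 kHz around 50 MHz (50,000,000 × 100 / 1,000,000 = 5,000 Hz), so the allowed range is 49.995 MHz to 50.005 MHz. The C sketch below performs that check in integer arithmetic and also computes the raw rate of the two-bit data path, assuming one two-bit transfer per reference clock cycle (the RMII-style scheme NC-SI is based on), which gives 100 Mb/s. The function and macro names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NCSI_CLK_NOMINAL_HZ 50000000u /* 50 MHz reference clock */
#define NCSI_CLK_TOL_PPM 100u         /* +/- 100 ppm */
#define NCSI_DATA_BITS 2u             /* NCSI_TXD0/1 or NCSI_RXD0/1 */

/* Allowed deviation in Hz: 50,000,000 * 100 / 1,000,000 = 5,000 Hz. */
uint32_t ncsi_clk_tolerance_hz(void)
{
    return (uint32_t)((uint64_t)NCSI_CLK_NOMINAL_HZ * NCSI_CLK_TOL_PPM / 1000000u);
}

/* True if a measured clock frequency lies within 50 MHz +/- 100 ppm. */
bool ncsi_clk_in_spec(uint32_t measured_hz)
{
    uint32_t tol = ncsi_clk_tolerance_hz();
    return measured_hz >= NCSI_CLK_NOMINAL_HZ - tol &&
           measured_hz <= NCSI_CLK_NOMINAL_HZ + tol;
}

/* Raw bit rate of the two-bit data path, assuming one transfer per clock. */
uint32_t ncsi_raw_bit_rate(uint32_t clk_hz)
{
    assert(clk_hz > 0u);
    return clk_hz * NCSI_DATA_BITS;
}
```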

2.1.7 Software Defined Pins (SDPs)

See AC specifications in Section 12.4.5.1.

See Section 3.5 for more details on configurable SDPs.


Pin Name | Ball # | Type | Name and Function

SDP0_0, SDP0_1, SDP0_2, SDP0_3 | R4, P3, T4, R3 | T/s | General Purpose SDPs. 2.5V I/Os for function 0. Can be used to support IEEE1588 auxiliary devices. Input for external interrupts, PCIe function disablement, etc. See Section 1.6 for possible usages of the pins.

SDP1_0, SDP1_1, SDP1_2, SDP1_3 (note 2) | T21, T22, U21, U22 | T/s | General Purpose SDPs. 2.5V I/Os for function 1. Can be used to support IEEE1588 auxiliary devices. Input for external interrupts, PCIe function disablement, etc. See Section 1.6 for possible usages of the pins.

1. SDP pins should have external Pup/Pdn or other board connectivity according to board implementation.

2. These pins are reserved and should be left as No Connect for the X540 single port configuration.


2.1.8 LEDs

See AC specifications in Section 12.4.5.1.

Pin Name | Ball # | Type | Pup/Pdn | Name and Function

LED0_0 | H4 | Out | Pdn | Port 0 LED0. Programmable LED. By default, indicates link up.

LED0_1 | J3 | Out | Pdn | Port 0 LED1. Programmable LED. By default, indicates 10 Gb/s link.

LED0_2 | J4 | Out | Pdn | Port 0 LED2. Programmable LED. By default, indicates link/activity.

LED0_3 | K4 | Out | Pdn | Port 0 LED3. Programmable LED. By default, indicates 1 Gb/s link.

LED1_0 (note 1) | J21 | Out | Pdn | Port 1 LED0. Programmable LED. By default, indicates link up.

LED1_1 (note 1) | J22 | Out | Pdn | Port 1 LED1. Programmable LED. By default, indicates 10 Gb/s link.

LED1_2 (note 1) | K21 | Out | Pdn | Port 1 LED2. Programmable LED. By default, indicates link/activity.

LED1_3 (note 1) | K22 | Out | Pdn | Port 1 LED3. Programmable LED. By default, indicates 1 Gb/s link.

1. These pins are reserved and should be left as No Connect for the X540 single port configuration.
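The default LED functions repeat per port, and each pin name encodes both the port and the LED index (`LED<port>_<index>`). The C sketch below captures that mapping as a lookup table; the function name is hypothetical, and the strings reflect only the reset defaults in the table above, not any later reprogramming by software or the NVM.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Reset-default function of LED index 0-3; the same on port 0 and port 1. */
static const char *const led_default_function[4] = {
    "link up",       /* LEDx_0 */
    "10 Gb/s link",  /* LEDx_1 */
    "link/activity", /* LEDx_2 */
    "1 Gb/s link",   /* LEDx_3 */
};

/* Writes the pin name for (port, led), e.g. (1, 2) -> "LED1_2", into name
 * and returns that LED's default function, or NULL if out of range.
 * Port 1 LEDs are No Connect on single-port parts (note 1 above). */
const char *led_pin_default(unsigned port, unsigned led, char *name, size_t name_len)
{
    if (port > 1u || led > 3u)
        return NULL;
    int n = snprintf(name, name_len, "LED%u_%u", port, led);
    assert(n > 0 && (size_t)n < name_len); /* name buffer was large enough */
    return led_default_function[led];
}
```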


2.1.9 RSVD and No Connect Pins

Connect RSVD pins according to their naming convention:

• NC – pin is not connected in the package

• RSVD_NC – reserved pin. Should be left unconnected.

• RSVD_VSS – reserved pin. Should be connected to GND.

• RSVD_VCC – reserved pin. Should be connected to VCC3P3.
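Because the required board connection follows from each reserved pin's name suffix, a schematic or netlist check can apply the convention mechanically. The C sketch below classifies pin names that way; the enum and function names are hypothetical. Names that match none of the listed suffixes (for example RSVDT2_VCC2P5) fall back to the per-pin table, and where a table entry lists explicit pull resistors (for example RSVDK3_VSS), that entry should take precedence over the suffix rule.

```c
#include <assert.h>
#include <string.h>

enum rsvd_action {
    RSVD_NOT_RESERVED, /* name does not start with "RSVD" */
    RSVD_LEAVE_OPEN,   /* RSVD..._NC: leave unconnected */
    RSVD_TIE_GND,      /* RSVD..._VSS: connect to GND */
    RSVD_TIE_VCC3P3,   /* RSVD..._VCC: connect to VCC3P3 */
    RSVD_SEE_PIN_TABLE /* any other suffix: use the per-pin table entry */
};

/* True if s ends with suffix. */
static int has_suffix(const char *s, const char *suffix)
{
    size_t ls = strlen(s), lx = strlen(suffix);
    return ls >= lx && strcmp(s + ls - lx, suffix) == 0;
}

/* Map a pin name to the board action implied by its suffix. */
enum rsvd_action rsvd_board_action(const char *pin)
{
    assert(pin != NULL);
    if (strncmp(pin, "RSVD", 4) != 0)
        return RSVD_NOT_RESERVED;
    if (has_suffix(pin, "_NC"))
        return RSVD_LEAVE_OPEN;
    if (has_suffix(pin, "_VSS"))
        return RSVD_TIE_GND;
    if (has_suffix(pin, "_VCC"))
        return RSVD_TIE_VCC3P3;
    return RSVD_SEE_PIN_TABLE;
}
```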

Pin Name | Ball # | Type | Name and Function

RSVDH22_VSS | H22 | In-Only | Reserved/VSS pin.

RSVDD14_NC | D14 | A-Inout | Reserved/no connect pin.

RSVDG24_VSS | G24 | In | Reserved/VSS pin.

RSVDF4_NC, RSVDF3_NC, RSVDD1_NC, RSVDE24_NC, RSVDE1_NC, RSVDE23_NC, RSVDC1_NC, RSVDF24_NC, RSVDV3_NC, RSVDV4_NC | F4, F3, D1, E24, E1, E23, C1, F24, V3, V4 | A-Inout | Reserved/no connect pins.

RSVDL4_NC, RSVDL3_NC, RSVDL21_NC, RSVDM21_NC, RSVDL22_NC, RSVDN21_NC, RSVDM22_NC, RSVDP21_NC, RSVDN22_NC, RSVDR21_NC, RSVDP22_NC, RSVDM4_NC, RSVDM3_NC, RSVDN4_NC, RSVDN3_NC, RSVDP4_NC | L4, L3, L21, M21, L22, N21, M22, P21, N22, R21, P22, M4, M3, N4, N3, P4 | Out | Reserved/no connect pins.

RSVDR22_NC | R22 | Out | Reserved/no connect pin.

RSVDAA6_NC, RSVDAA8_NC, RSVDAA10_NC, RSVDAA14_NC, RSVDAA16_NC, RSVDAA18_NC | AA6, AA8, AA10, AA14, AA16, AA18 | PWR | Reserved/no connect pins.

RSVDU4_NC | U4 | PWR | Reserved/no connect pin.

RSVDG11_NC | G11 | PWR | Reserved/no connect pin.

RSVDU3_NC, RSVDN2_NC, RSVDU2_NC, RSVDV24_NC, RSVDU24_NC, RSVDU23_NC, RSVDR1_NC, RSVDP1_NC, RSVDU1_NC, RSVDT1_NC | U3, N2, U2, V24, U24, U23, R1, P1, U1, T1 | T/s | Reserved/no connect pins.

RSVDT23_VSS | T23 | In-Only | Reserved VSS pin.

RSVDP23_VSS | P23 | In-Only | Reserved VSS pin.

RSVDA24_VSS | A24 | In-Only | Reserved VSS pin.

RSVDAD24_VSS | AD24 | In-Only | Reserved VSS pin.

RSVDN23_VSS, RSVDN24_VSS, RSVDP24_VSS | N23, N24, P24 | In-Only | Reserved VSS pins.

RSVDY21_VSS | Y21 | In-Only | Reserved VSS pin.

RSVDT24_VSS | T24 | In-Only | Reserved VSS pin.

RSVDL23_NC | L23 | O/d | Reserved/no connect pin.

RSVDJ23_VSS | J23 | In-Only | Reserved VSS pin.

RSVDJ24_VSS | J24 | In | Reserved VSS pin.

RSVDW4_VSS | W4 | In | Reserved VSS pin.

RSVDH24_VSS | H24 | In | Reserved VSS pin.

RSVDG23_VSS | G23 | In-Only | Reserved VSS pin.

RSVDY4_VSS | Y4 | In | Reserved VSS pin.

RSVDAA24_VSS | AA24 | In-Only | Reserved VSS pin.

RSVDAD1_VSS | AD1 | In | Reserved VSS pin.

RSVDD13_NC | D13 | A-Inout | Reserved/no connect pin.

RSVDC13_NC | C13 | A-Inout | Reserved/no connect pin.

RSVDC11_NC | C11 | A-Out | Reserved/no connect pin.

RSVDD11_NC | D11 | A-Out | Reserved/no connect pin.

RSVDA1_NC | A1 | In-Only | Reserved/no connect pin.

RSVDV21_VSS | V21 | In-Only | Reserved VSS pin.

RSVDW24_VSS | W24 | A-Inout | Reserved VSS pin.

RSVDY20_NC | Y20 | A-Inout | Reserved/no connect pin.

RSVDY5_NC | Y5 | PWR-O | Reserved/no connect pin.

RSVDN13_NC | N13 | PWR-O | Reserved/no connect pin.

RSVDM12_NC | M12 | PWR-O | Reserved/no connect pin.

RSVDR24_NC | R24 | LVDS-O | Reserved/no connect pin.

RSVDR23_NC | R23 | LVDS-O | Reserved/no connect pin.

RSVDN1_NC | N1 | Out-Only | Reserved/no connect pin.

RSVDM24_VSS | M24 | In | Reserved VSS pin.

Pin Name | Ball # | Type | Internal Pup/Pdn | External Pup/Pdn | Name and Function

RSVDT2_VCC2P5 | T2 | In | Pdn | Pup (note 1) | Reserved VCC2P5 pin.

RSVDM23_VCC2P5 | M23 | In | Pup | Pup (note 1) | Reserved VCC2P5 pin.

RSVDK3_VSS | K3 | In | Pdn | Pdn (note 1) | Reserved VSS pin.

1. Pup value should be considered as 3.3 kΩ.

2.1.10 Miscellaneous

See AC/DC specifications in Section 12.4.5.1.


Pin Name | Ball # | Type | Internal Pup/Pdn | External Pup/Pdn | Name and Function

LAN_PWR_GOOD | L24 | In-1p2 | Pup | Pup (note 1) | LAN Power Good. A transition from low to high initializes the X540 into operation.

BYPASS_POR | H23 | In | Pdn | Pdn (note 2) | Reserved. Must be connected to a pull-down resistor.

AUX_PWR | P2 | In | - | Note 3 | Auxiliary Power Available. When set, indicates that auxiliary power is available and the X540 should support the D3cold power state if enabled to do so. This pin is latched at the rising edge of LAN_PWR_GOOD.

MAIN_PWR_OK | R2 | In | - | Note 4 | Main Power Good. Indicates that platform main power is up. Must be connected externally.

LAN1_DIS_N | K24 | In | Pup | Pup (note 1) | This pin is a strapping pin latched at the rising edge of LAN_PWR_GOOD, PE_RST_N, or In-Band PCIe Reset. If this pin is not connected or driven high during initialization, LAN 1 is enabled. If this pin is driven low during initialization, the LAN 1 port is disabled.

LAN0_DIS_N | K23 | In | Pup | Pup (note 1) | This pin is a strapping option pin latched at the rising edge of LAN_PWR_GOOD, PE_RST_N, or In-Band PCIe Reset. If this pin is not connected or driven high during initialization, LAN 0 is enabled. If this pin is driven low during initialization, the LAN 0 port is disabled. When the LAN 0 port is disabled, manageability is not functional and it must not be enabled in NVM Control Word 1.

SEC_EN | M1 | In | Pup | Pup (note 1) | Enable/Disable for the internal MACsec/IPsec engines.

THERM_D1_P, THERM_D1_N | G21, G22 | A-Inout | - | - | Thermal Diode Reference. Can be used to measure on-die temperature.

PHY0_RVSL | T3 | T/s | Pup | Note 5 | Pin change order of MDI lanes, port 0: 0b = Lane order A, B, C, D. 1b = Lane order D, C, B, A.

PHY1_RVSL | V23 | T/s | Pup | Note 5 | Pin change order of MDI lanes, port 1: 0b = Lane order A, B, C, D. 1b = Lane order D, C, B, A.

1. Pup value should be considered as 10 kΩ.

2. Pdn value should be considered as 10 kΩ.

3. Connect the AUX_PWR signal to Pup if AUX power is available. Connect it to Pdn if AUX power is not available. Pup/Pdn value should be considered as 10 kΩ.

4. Connect the MAIN_PWR_OK signal to Main Power through a Pup resistor. Pup value should be considered as 10 kΩ.

5. For pin change order A, B, C, and D, connect the PHY_RVSL signal to Pdn. For pin change order D, C, B, and A, connect the PHY_RVSL signal to Pup. Pup value should be considered as 10 kΩ. Pdn value should be considered as 3.3 kΩ.

2.1.11 JTAG

See AC specifications in Section 12.4.5.2.

Pin Name | Ball # | Type | Internal Pup/Pdn | External Pup/Pdn | Name and Function

TCK | Y22 | In-Only | Pup | Pdn (note 1) | JTAG Clock Input.

TDI | W22 | In-Only | Pup | Pup (note 2) | JTAG Data Input.

TDO | V22 | Out | - | Pup (note 3) | JTAG Data Output.

TMS | W21 | In-Only | Pup | Pup (note 2) | JTAG TMS Input.

TRST_N | W23 | In-Only | Pup | Pdn (note 1) | JTAG Reset Input. Active low reset for the JTAG port.

1. Pdn value should be considered as 470 Ω.

2. Pup value should be considered as 10 kΩ.

3. Pup value should be considered as 3.3 kΩ.

Note: If the JTAG is disconnected, use the external pull-up or pull-down values listed.


2.1.12 Power Supplies

See AC specifications in Section 12.3.1.

Pin Name | Ball # | Type | Name and Function

RSVDH21_VSS | H21 | PWR | Reserved power pin.

VSS | B1, B10, B12, B13, B15, B17, B19, B2, B21, B23, B24, B4, B6, B8, C14, C16, C18, C20, C22, C3, C5, C7, C9, D15, D17, D19, D2, D21, D22, D3, D5, D7, D9, E10, E12, E14, E16, E18, E2, E20, E22, E4, E6, E8, F21, F22, F23 | PWR_ALG | Ground

VSS | AA1, AA11, AA12, AA13, AA15, AA17, AA19, AA2, AA20, AA21, AA22, AA23, AA3, AA4, AA5, AA7, AA9, AB10, AB12, AB13, AB15, AB16, AB18, AB19, AB21, AB4, AB6, AB7, AB9, AC1, AC11, AC14, AC17, AC2, AC20, AC23, AC24, AC5, AC8, AD11, AD14, AD17, AD2, AD20, AD23, AD5, AD8, F10, F11, F12, F13, F14, F15, F16, F17, F18, F19, F20, F5, F6, F7, F8, F9, G10, G12, G14, G16, G18, G20, G6, G8, H11, H13, H15, H17, H19, H5, H7, H9, J10, J12, J14, J16, J18, J20, J6, J8, K11, K13, K15, K17, K19, K5, K7, K9, L10, L12, L14, L16, L18, L20, L6, L8, M11, M13, M15, M17, M19, M5, M7, M9, N10, N12, N14, N16, N18, N20, N6, N8, P11, P13, P15, P17, P19, P5, P7, P9, R10, R12, R14, R16, R18, R20, R6, R8, T11, T13, T15, T17, T19, T5, T7, T9, U10, U12, U14, U16, U18, U20, U6, U8, V11, V13, V15, V17, V19, V5, V7, V9, W10, W12, W14, W16, W18, W20, W3, W6, W8, Y11, Y13, Y15, Y17, Y19, Y23, Y24, Y3, Y7, Y9 | PWR | Ground

VCC0P67 | G5, G7, G9, H10, H12, H6, H8, J11, J5, J7, J9, K10, K12, K6, K8, L11, L7, L9, M10, M8, N11, N7, N9, P10, P12, P8, R11, R7, R9, T10, T12, T8, U11, U7, U9, V10, V12, V8, W11, W7, W9 | PWR | 0.67V

VCC0P8 | G13, G15, G17, G19, H14, H16, H18, H20, J13, J15, J17, J19, K14, K16, K18, K20, L13, L15, L17, L19, M14, M16, M18, N15, N17, P14, P16, P18, R13, R15, R17, T14, T16, T18, U13, U15, U17, V14, V16, V18, W13, W15, W17 | PWR | 0.8V

VCC1P2 | D10, D4, D8, E11, E7, E9, D6, E5 | PWR_ALG | 1.2V

VCC1P2 | E19, D20, D16, D18, E13, E15, E17 | PWR_ALG | 1.2V

VCC2P5 | A2, C2, A4, C4, A6, C6, A8, C8, A10, C10, A12 | PWR_ALG | 2.5V

VCC2P5 | A13, A15, C15, A17, C17, A19, C19, A21, C21, A23, C23 | PWR_ALG | 2.5V

VCC1P2 | E21 | PWR_ALG | 1.2V

VCC1P2 | E3 | PWR_ALG | 1.2V

VCC3P3 | L5, M6, N5 | PWR | 3.3V

VCC1P2 | Y10, Y12, Y14, Y16, Y18, Y8, Y6 | PWR | 1.2V

VCC2P5 | AB11, AB14, AB17, AB20, AB3, AB5, AB8, AB22 | PWR | 2.5V

VCC2P5 | C24 | PWR_ALG | 2.5V

VCC2P5 | M20, N19, V20, P20, P6, R19, R5, T20, T6, U19, U5, V6, W19, W5 | PWR | 2.5V
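The supply names encode their voltage, with `P` standing for the decimal point: every name in the table above matches its listed level (VCC0P67 at 0.67V, VCC2P5 at 2.5V, and so on). The C sketch below parses that convention into millivolts, which can help cross-check a schematic's rail assignments. The function name is hypothetical, and the rule is inferred from this table rather than stated by it.

```c
#include <assert.h>
#include <ctype.h>
#include <string.h>

/* Parse a rail name of the form VCC<int>P<frac> into millivolts, treating
 * 'P' as the decimal point (VCC0P67 -> 670, VCC2P5 -> 2500).
 * Returns -1 if the name does not follow that pattern. */
int rail_millivolts(const char *name)
{
    assert(name != NULL);
    if (strncmp(name, "VCC", 3) != 0)
        return -1;
    const char *p = name + 3;
    if (!isdigit((unsigned char)*p))
        return -1;
    int whole = 0;
    while (isdigit((unsigned char)*p))
        whole = whole * 10 + (*p++ - '0');
    if (*p++ != 'P')
        return -1;
    int frac = 0, scale = 1000; /* scale: millivolts per unit of the current digit */
    int digits = 0;
    while (isdigit((unsigned char)*p) && digits < 3) {
        scale /= 10;
        frac += (*p++ - '0') * scale;
        digits++;
    }
    if (digits == 0 || *p != '\0')
        return -1;
    return whole * 1000 + frac;
}
```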

37

Page 40

X540 10GBase-T Controller—Pin Interface

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

A

RSVDA1_NC VCC2P5 MDI0_p_0 VCC2P5 MDI0_p_1 VCC2P5 MDI0_p_2 VCC2P5 MDI0_p_3 VCC2P5 MDI0_p_4 VCC2P5 VCC2P5 MDI1_p_4 VCC2P5 MDI1_p_3 VCC2P5 MDI1_p_2 VCC2P5 MDI1_p_1 VCC2P5 MDI1_p_0 VCC2P5

RSVDA24_

VSS

A

B

VSS VSS MDI0_n_0 VSS MDI0_n_1 VSS MDI0_n_2 VSS MDI0_n_3 VSS MDI0_n_4 VSS VSS MDI1_n_4 VSS MDI1_n_3 VSS MDI1_n_2 VSS MDI1_n_1 VSS MDI1_n_0 VSS VSS

B

C

RSVDC1_NC VCC2P5 VSS VCC2P5 VSS VCC2P5 VSS VCC2P5 VSS VCC2P5

RSVDC11_

NC

TM_REXT

RSVDC13_

NC

VSS VCC2P5 VSS VCC2P5 VSS VCC2P5 VSS VCC2P5 VSS VCC2P5 VCC2P5

C

D

RSVDD1_NC VSS VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2

RSVDD11_

NC

BG_REXT

RSVDD13_NCRSVDD14_

NC

VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VSS X TAL_I XT AL_O

D

E

RSVDE1_NC VSS V CC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS

RSVDE23_NCRSVDE24_

NC

E

F

NCSI_ARB_

IN

NCSI_ARB_

OUT

RSVDF3_

NC

RSVDF4_

NC

VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS VSS

RSVDF24_

NC

F

G

NCSI_RXD1

NCSI_CLK_

IN

NCSI_TXD1

NCSI_TX_

EN

VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS

RSVDG11_

NC

VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS

Therm_

D1_P

Therm_

D1_N

RSVDG23_

VSS

RSVDG24_

VSS

G

H

NCSI_CRS_

DV

NCSI_TXD0 NCSI_RXD0 LED0_0 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8

RSVDH21_

VSS

RSVDH22_

VSS

RSVDH23

RSVDH24_

VSS

H

J

FLSH_SCK

FLSH_CE_

N

LED0_1 LED0_2 VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS LED1_0 LED1_1

RSVDAJ23_

VSS

RSVDJ24_

VSS

J

K

FLSH_SO FLSH_SI

RSVDK3_

VSS

LED0_3 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 LED1_2 LED1_3

LAN0_DIS_NLAN1_DIS_

N

K

L

SMBD SMBCLK

RSVDL3_

NC

RSVDL4_

NC

VCC3P3 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS

RSVDL21_

NC

RSVDL22_

NC

RSVDL23_

NC

LAN_PWR_

GOOD

L

M

SEC_EN

SMBALRT_N RSVDM3_NC RSVDM4_NC VSS VCC3P3 VSS VCC0P67 VSS VCC0P67 VSS RSVDM12_NC VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC2P5 RSVDM21_NC RSVDM22_NC RSVDM23_VCC2P5 RSVDM24_VSS M

N RSVDN1_NC RSVDN2_NC RSVDN3_NC RSVDN4_NC VCC3P3 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS RSVDN13_NC VSS VCC0P8 VSS VCC0P8 VSS VCC2P5 VSS RSVDN21_NC RSVDN22_NC RSVDN23_VSS RSVDN24_VSS N

P RSVDP1_NC AUX_PWR SDP0_1 RSVDP4_NC VSS VCC2P5 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC2P5 RSVDP21_NC RSVDP22_NC RSVDP23_VSS RSVDP24_VSS P

R RSVDR1_NC MAIN_PWR_OK SDP0_3 SDP0_0 VCC2P5 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC2P5 VSS RSVDR21_NC RSVDR22_NC RSVDR23_NC RSVDR24_NC R

T RSVDT1_NC RSVDT2_VCC2P5 PHY0_RVSL SDP0_2 VSS VCC2P5 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC2P5 SDP1_0 SDP1_1 RSVDT23_VSS RSVDT24_VSS T

U RSVDU1_NC RSVDU2_NC RSVDU3_NC RSVDU4_NC VCC2P5 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC2P5 VSS SDP1_2 SDP1_3 RSVDU23_NC RSVDU24_NC U

V PE_RBIAS0 PE_RBIAS1 RSVDV3_NC RSVDV4_NC VSS VCC2P5 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC2P5 RSVDV21_VSS TDO PHY1_RVSL RSVDV24_NC V

W PE_WAKE_N PE_RST_N VSS RSVDW4_VSS VCC2P5 VSS VCC0P67 VSS VCC0P67 VSS VCC0P67 VSS VCC0P8 VSS VCC0P8 VSS VCC0P8 VSS VCC2P5 VSS TMS TDI TRST_N RSVDW24_VSS W

Y PE_CLK_n PE_CLK_p VSS RSVDY4_VSS RSVDY5_NC VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS VCC1P2 VSS RSVDY20_NC RSVDY21_VSS TCK VSS VSS Y

AA VSS VSS VSS VSS VSS RSVDAA6_NC VSS RSVDAA8_NC VSS RSVDAA10_NC VSS VSS RSVDAA14_NC VSS RSVDAA16_NC VSS RSVDAA18_NC VSS VSS VSS VSS VSS RSVDAA24_VSS AA

AB PER_0_n PER_0_p VCC2P5 VSS VCC2P5 VSS VSS VCC2P5 VSS VSS VCC2P5 VSS VSS VCC2P5 VSS VSS VCC2P5 VSS VSS VCC2P5 VSS VCC2P5 PER_7_p PER_7_n AB

AC VSS VSS PET_0_p PET_1_p VSS PER_1_n PER_2_n VSS PET_2_p PET_3_p VSS PER_3_n PER_4_n VSS PET_4_p PET_5_p VSS PER_5_n PER_6_n VSS PET_6_p PET_7_p VSS VSS AC

AD RSVDAD1_VSS VSS PET_0_n PET_1_n VSS PER_1_p PER_2_p VSS PET_2_n PET_3_n VSS PER_3_p PER_4_p VSS PET_4_n PET_5_n VSS PER_5_p PER_6_p VSS PET_6_n PET_7_n VSS RSVDAD24_VSS AD

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24

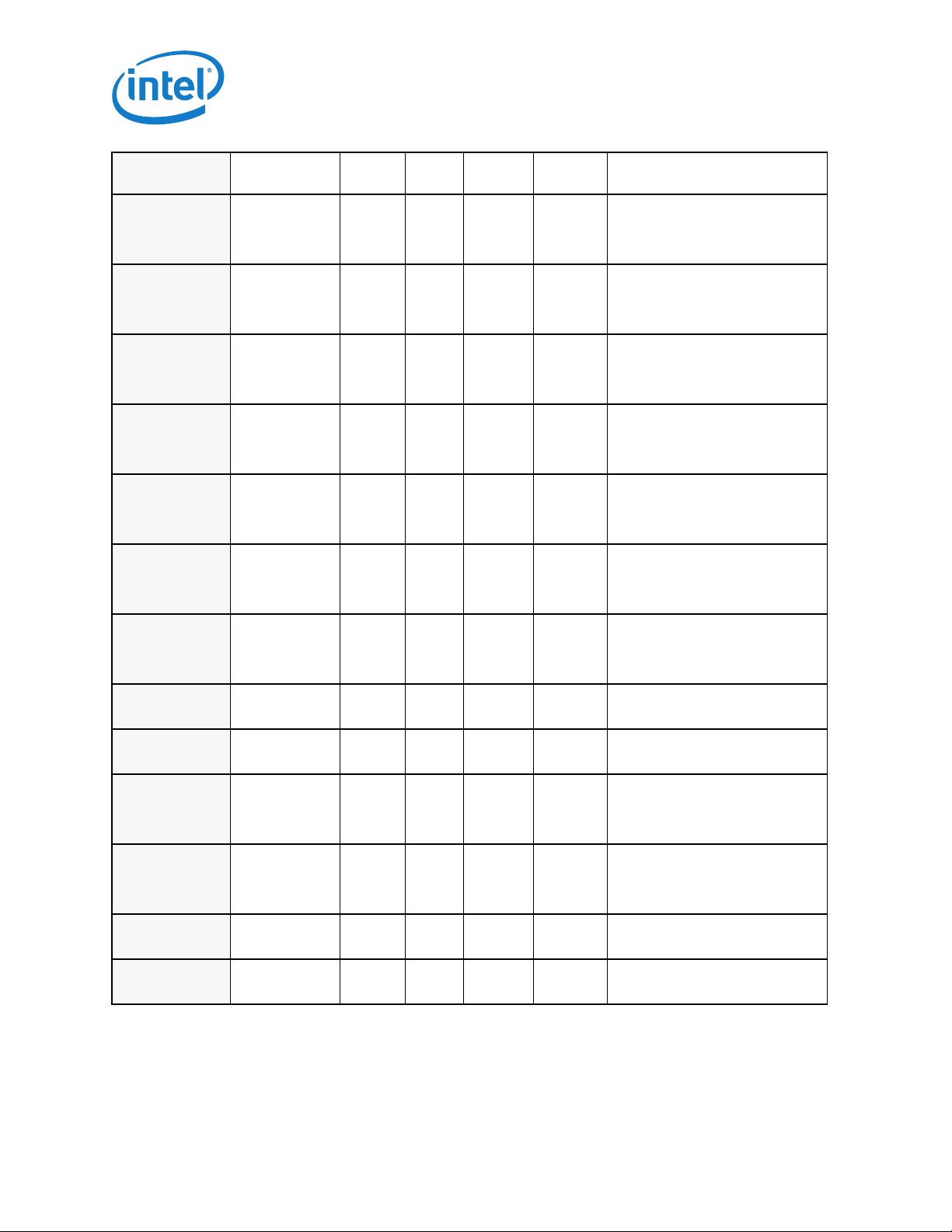

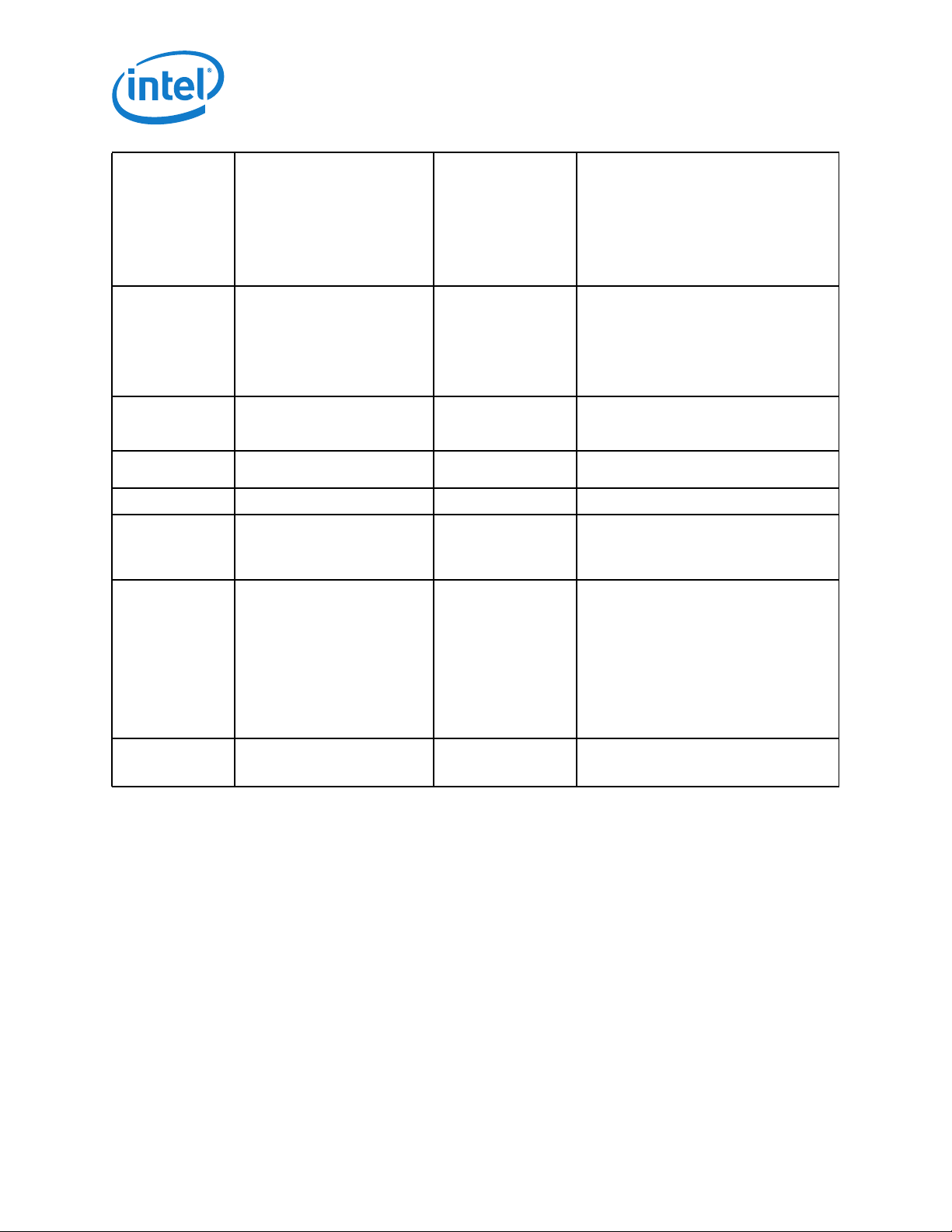

2.2 Ball Out — Top View Through Package

Figure 2-1 X540 Package Layout



Interconnects—X540 10GBase-T Controller

3.0 Interconnects

3.1 PCI Express* (PCIe*)

3.1.1 Overview

PCIe is an I/O architecture that enables cost-competitive solutions while providing industry-leading price/performance and a rich feature set. It is an industry-driven specification.

PCIe defines a basic set of requirements that addresses the majority of the targeted application classes. The requirements of higher-end applications (enterprise-class servers and high-end communication platforms) are addressed by a set of advanced extensions that complement the baseline requirements.

To guarantee headroom for future applications, PCIe provides a software-managed mechanism for introducing new, enhanced capabilities.
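A concrete example of such a software-managed mechanism is the PCIe extended capability list in configuration space, which the property list later in this section also mentions. The sketch below walks that list in a 4 KB configuration-space image. The header field layout (capability ID in bits 15:0, version in bits 19:16, next-capability offset in bits 31:20) follows the PCIe Base Specification; the function name and the synthetic AER/SR-IOV layout are assumptions made for illustration and do not describe the X540's actual register map.

```python
import struct

EXT_CAP_BASE = 0x100  # PCIe extended capabilities start at config offset 0x100


def walk_ext_caps(cfg: bytes) -> list[tuple[int, int, int]]:
    """Return (offset, capability ID, version) for every entry in the
    extended capability list of a configuration-space image."""
    caps = []
    offset = EXT_CAP_BASE
    visited = set()  # guards against a malformed, looping list
    # Offsets below 0x100 are not valid here; a next pointer of 0 ends the list.
    while offset >= EXT_CAP_BASE and offset + 4 <= len(cfg) and offset not in visited:
        visited.add(offset)
        (header,) = struct.unpack_from("<I", cfg, offset)
        if header in (0x00000000, 0xFFFFFFFF):  # empty list or absent device
            break
        cap_id = header & 0xFFFF          # bits [15:0]
        version = (header >> 16) & 0xF    # bits [19:16]
        caps.append((offset, cap_id, version))
        offset = (header >> 20) & 0xFFC   # bits [31:20], dword aligned
    return caps


# Hypothetical image: AER (ID 0x0001) at 0x100 linking to SR-IOV (ID 0x0010)
# at 0x140. The offsets are illustrative only.
cfg = bytearray(4096)
struct.pack_into("<I", cfg, 0x100, (0x140 << 20) | (1 << 16) | 0x0001)
struct.pack_into("<I", cfg, 0x140, (0x000 << 20) | (1 << 16) | 0x0010)

for off, cap_id, ver in walk_ext_caps(bytes(cfg)):
    print(f"0x{off:03X}: cap ID 0x{cap_id:04X}, version {ver}")
```

Because new capabilities are simply appended as additional list entries, software that does not recognize an ID can skip it by following the next pointer.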

Figure 3-1 shows the PCIe architecture.

Figure 3-1 PCIe Stack Structure


At the physical layer, each PCIe lane consists of a differential transmit pair and a differential receive pair. Full-duplex data on these two point-to-point connections is self-clocked, so no dedicated clock signals are required. The bandwidth of this interface increases in direct proportion to the signaling frequency.
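The proportionality can be made concrete with the signaling rates and link widths in the X540 feature summary (2.5 GT/s or 5 GT/s, x1 to x8) and the 8b/10b encoding listed among the link properties in this section. This is a minimal sketch that counts only line-coding overhead; the function name is hypothetical, and packet framing and header overhead are deliberately not subtracted.

```python
def link_bandwidth_gbps(rate_gt_s: float, lanes: int) -> float:
    """Per-direction data rate in Gb/s after 8b/10b line coding
    (8 payload bits per 10 transmitted bits). TLP/DLLP framing and
    header overhead are NOT subtracted, so usable throughput is lower."""
    return rate_gt_s * lanes * 8 / 10


for rate in (2.5, 5.0):          # PCIe 2.1 signaling rates
    for lanes in (1, 2, 4, 8):   # supported link widths
        gbps = link_bandwidth_gbps(rate, lanes)
        print(f"{rate} GT/s x{lanes}: {gbps:g} Gb/s = {gbps / 8:g} GB/s per direction")
```

For example, 5 GT/s on a x8 link gives 32 Gb/s (4 GB/s) in each direction, and because the link is full duplex both directions carry that rate simultaneously.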

The packet is the fundamental unit of information exchange, and the protocol includes a message space that replaces a variety of side-band signals found on previous interconnects. Moving these hard-wired signals from the physical layer into messages within the transaction layer makes it easy to expand the physical layer width linearly for increased bandwidth.
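Legacy interrupts are a typical side-band signal moved into the message space: instead of driving a dedicated INTx pin, a device sends Assert_INTx and Deassert_INTx messages. The sketch below models one such "virtual wire". The message code values (0x20 to 0x27) follow the INTx message table of the PCIe Base Specification; the class and method names are hypothetical.

```python
from enum import IntEnum


class IntxMsg(IntEnum):
    """Message codes for the legacy INTx virtual wires."""
    ASSERT_INTA = 0x20
    ASSERT_INTB = 0x21
    ASSERT_INTC = 0x22
    ASSERT_INTD = 0x23
    DEASSERT_INTA = 0x24
    DEASSERT_INTB = 0x25
    DEASSERT_INTC = 0x26
    DEASSERT_INTD = 0x27


class VirtualIntxWire:
    """Hypothetical model of one legacy interrupt pin carried in-band.

    A physical INTx pin holds a level; over PCIe, only level changes
    are transmitted, as Assert/Deassert messages."""

    def __init__(self, pin: str = "A") -> None:
        idx = "ABCD".index(pin)
        self._assert_msg = IntxMsg(0x20 + idx)
        self._deassert_msg = IntxMsg(0x24 + idx)
        self._level = False
        self.sent: list[IntxMsg] = []

    def set_level(self, asserted: bool) -> None:
        # Emit a message only on a transition; a repeated level sends nothing.
        if asserted != self._level:
            self.sent.append(self._assert_msg if asserted else self._deassert_msg)
            self._level = asserted


wire = VirtualIntxWire("A")
for level in (True, True, False, True):
    wire.set_level(level)
print([m.name for m in wire.sent])
```

INTx emulation is only one interrupt option; MSI and MSI-X, also listed in the X540 feature summary, signal interrupts with memory writes instead.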

The common base protocol uses split transactions, along with several mechanisms that eliminate wait states and optimize the reordering of transactions, to further improve system performance.
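In a split transaction, a read request and its completion are separate packets, so the link stays free for other traffic while the completer fetches the data, and completions for different requests may return in a different order. The following is a minimal sketch of requester-side bookkeeping under a simple tag-pool assumption. The class name, method names, and 32-tag default are illustrative. Real completions are also matched on Requester ID and may be split into several completion packets, and this sketch ignores both.

```python
from dataclasses import dataclass, field


@dataclass
class ReadTagPool:
    """Hypothetical requester-side tracker for outstanding read requests."""
    max_tags: int = 32                                          # illustrative
    outstanding: dict[int, int] = field(default_factory=dict)   # tag -> address
    _next_tag: int = 0

    def issue_read(self, address: int) -> int:
        """Allocate a free tag for a new read request and return it."""
        if len(self.outstanding) >= self.max_tags:
            raise RuntimeError("all tags in use: requester must wait for a completion")
        # At least one tag is free, so this probe loop always terminates.
        while self._next_tag in self.outstanding:
            self._next_tag = (self._next_tag + 1) % self.max_tags
        tag = self._next_tag
        self._next_tag = (self._next_tag + 1) % self.max_tags
        self.outstanding[tag] = address
        return tag

    def complete(self, tag: int) -> int:
        """Retire the request that a completion with this tag answers."""
        return self.outstanding.pop(tag)


pool = ReadTagPool(max_tags=4)
t_a = pool.issue_read(0x1000)
t_b = pool.issue_read(0x2000)
# The completion for the second request arrives first; tags keep them apart.
print(hex(pool.complete(t_b)), hex(pool.complete(t_a)))
```

The requester never blocks between request and completion; it stalls only when every tag is in use.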

3.1.1.1 Architecture, Transaction and Link Layer Properties

• Split transaction, packet-based protocol

• Common flat address space for load/store access (for example, the PCI addressing model)

— 32-bit memory address space to enable a compact packet header (must be used to access addresses below 4 GB)

— 64-bit memory address space using an extended packet header

• Transaction layer mechanisms:

— PCI-X style relaxed ordering

— Optimizations for no-snoop transactions

• Credit-based flow control

• Packet sizes/formats:

— Maximum packet size: 512 bytes

— Maximum read request size: 2 KB

• Reset/initialization:

— Frequency/width/profile negotiation performed by hardware

• Data integrity support:

— CRC-32 for Transaction Layer Packets (TLPs), with Link Layer Retry (LLR) for recovery following error detection

— CRC-16 for Link Layer (LL) messages, with no retry following error detection

— 8b/10b encoding with running disparity


• Software configuration mechanism:

— Uses PCI configuration and bus enumeration model

— PCIe-specific configuration registers mapped via the PCI extended capability mechanism

• Baseline messaging:

— In-band messaging of formerly side-band legacy signals (interrupts, etc.)

— System-level power management supported via messages

• Power management:

— Full support for PCIm

— Wake capability from D3cold state

— Compliant with ACPI, PCIm software model