Intel® Xeon™ Processor and Intel® 860 Chipset Platform

Design Guide

Document Number: 298252-004

Information in this document is provided in connection with Intel® products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel’s Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not intended for use in medical, life saving, or life sustaining applications.

Intel may make changes to specifications and product descriptions at any time, without notice.

Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

The Intel® Xeon™ processor and Intel® 860 chipset may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

I2C is a two-wire communications bus/protocol developed by Philips. SMBus is a subset of the I2C bus/protocol and was developed by Intel. Implementations of the I2C bus/protocol may require licenses from various entities, including Philips Electronics N.V. and North American Philips Corporation.

Alert on LAN is a result of the Intel-IBM Advanced Manageability Alliance and a trademark of IBM.

Copies of documents which have an ordering number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725 or by visiting Intel’s website at http://www.intel.com.

Intel, Intel logo, Pentium, Intel NetBurst, and Intel Xeon are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

*Other names and brands may be claimed as the property of others.

Copyright © Intel Corporation 2001-2002

Contents

1 Introduction ........................................................................................................................19

1.1 Related Documentation ........................................................................................20

1.2 Conventions and Terminology ..............................................................................22

1.3 System Overview ..................................................................................................25

1.3.1 Processor Overview ..............................................................................25

1.3.2 Intel® 860 Chipset..................................................................................26

1.3.2.1 Memory Controller Hub (MCH) ............................................26

1.3.2.2 I/O Controller Hub 2 (Intel® ICH2)........................................27

1.3.2.3 Memory Repeater Hub for Direct RDRAM* Device

1.3.2.4 PCI 64-Bit Hub (Intel® P64H) ...............................................28

1.3.3 Bandwidth Summary .............................................................................28

1.3.4 System Configurations ..........................................................................29

1.4 Platform Initiatives................................................................................................. 30

1.4.1 Memory Expansion Card (MEC) and Connector (MECC).....................30

1.4.2 Intel® 860 Chipset MCH.........................................................................30

1.4.2.1 Direct RDRAM* Device Interface .........................................30

1.4.2.2 Accelerated Graphics Port (AGP) ........................................30

1.4.3 Intel® ICH2.............................................................................................31

1.4.3.1 Integrated LAN Controller ....................................................31

1.4.3.2 Audio Codec ’97 (AC’97) 6-Channel Support ......................32

1.4.3.3 Low Pin Count (LPC) Interface ............................................33

1.4.3.4 Ultra ATA .............................................................................33

1.4.3.5 Universal Serial Bus (USB) ..................................................34

1.4.4 Manageability ........................................................................................34

1.5 Platform Compliance ............................................................................................35

1.5.1 PC 99/2001............................................................................................35

®

MRH-R) .....................................................................28

(Intel

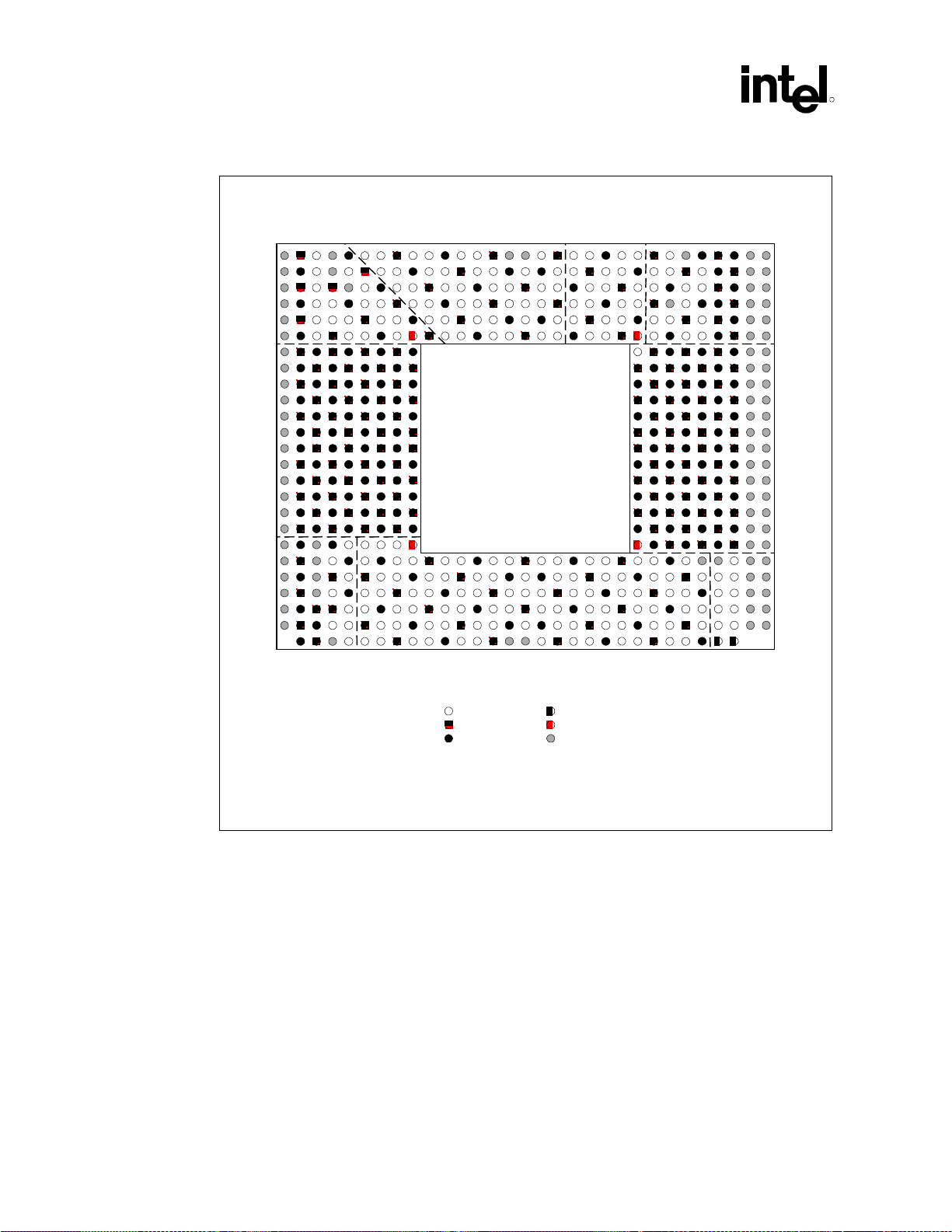

2 Component Layout ............................................................................................................37

2.1 Intel® Xeon™ Processor Component Quadrant Layout........................................37

2.2 Intel® 860 Chipset Component Quadrant Layout ..................................................40

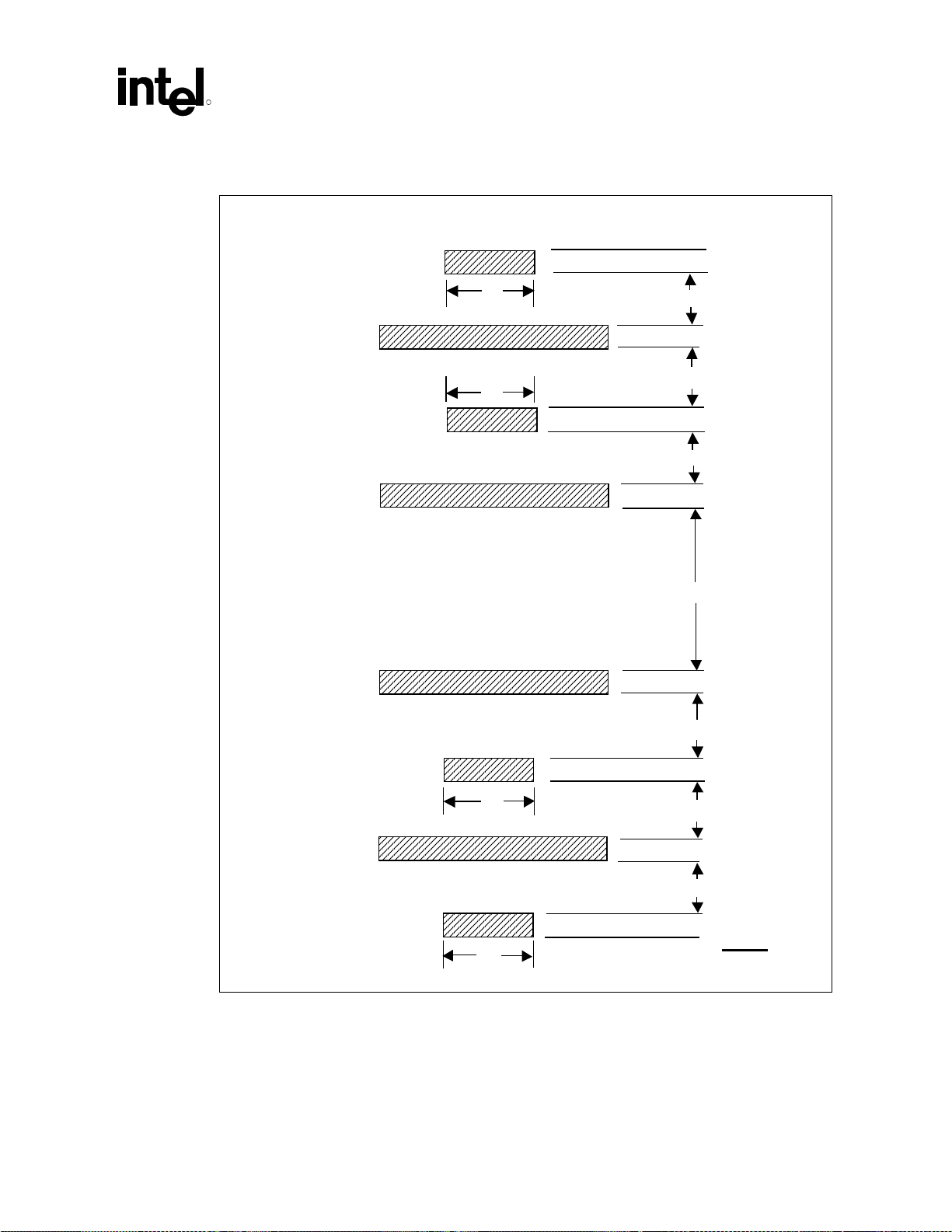

3 Platform Stack-Up and Placement Overview ....................................................................43

3.1 Platform Component Placement...........................................................................43

3.2 Two-Way System Stack-Up ..................................................................................45

3.2.1 Design Recommendations ....................................................................45

3.2.1.1 Stack-Up Option A ...............................................................45

3.2.1.2 Stack-Up Option B ...............................................................46

3.2.2 Design Considerations ..........................................................................46

3.2.2.1 Stack-Up Example A ............................................................47

3.2.2.2 Stack-Up Example B ............................................................48

4 Platform Clock Routing Guidelines....................................................................................51

4.1 Routing Guidelines for Host Clocks ......................................................................52

4.2 Routing Guidelines for Direct Rambus* Clock Generators (DRCG Devices) .......57

4.2.1 CKx_SKS to DRCG: Reference Clocks ................................................57

4.2.2 MCH to DRCG* Device: Phase Aligning Clocks ...................................58

4.2.3 DRCG* Device Signals to Rambus* Channels (300 MHz/400 MHz Clocks) ..................................................................58

4.2.3.1 Trace Length Recommendations.........................................59

4.2.3.2 Topology Considerations .....................................................61

4.2.3.3 Clock Termination ................................................................61

4.2.4 DRCG* Device Impedance Matching Circuit.........................................62

4.2.5 DRCG* Device Layout Example............................................................64

4.3 Routing Guidelines for 66 MHz and 33 MHz Clocks.............................................65

4.3.1 66 MHz/33 MHz Clock Relationships ....................................................65

4.3.2 66 MHz Clock Routing Length Guidelines.............................................65

4.3.3 33 MHz Clock Routing Length Guidelines.............................................67

4.3.4 Intel® P64H PCI Clock Routing Guidelines............................................68

4.3.4.1 Intel® P64H PCI 33 MHz Clock Routing Guidelines.............68

4.3.4.2 Intel® P64H PCI 66 MHz Clock Routing Guidelines.............69

5 System Bus Routing ..........................................................................................................71

5.1 Return Path...........................................................................................................72

5.2 Serpentine Routing ...............................................................................................73

5.3 System Bus Decoupling Requirements ................................................................73

5.3.1 Processor I/O Decoupling Requirements..............................................74

5.4 Dual Processor Configuration ...............................................................................75

5.4.1 Routing Guidelines for Source Synchronous Signals............................77

5.4.1.1 4X Group (DSTBN[3:0]#, DSTBP[3:0]#, D[63:0]#, DBI[3:0]#):............................................................................79

5.4.1.2 2X Group (ADSTB[1:0]#, A[35:3]#, REQ[4:0]#) ...................81

5.4.1.3 Common Clock ....................................................................81

5.4.1.4 Wired-OR Signals (BINIT#, BNR#, HIT#, HITM#, MCERR#).............................................................................81

5.4.2 Routing Guidelines for Asynchronous GTL+ and Other System Bus Signals...................................................................................................83

5.4.2.1 Topology 1: Asynchronous GTL+ Signals Driven by the Processor.............................................................................84

5.4.2.2 Topology 2: Asynchronous GTL+ Signals Driven by the Chipset.................................................................................87

5.4.2.3 Topology 3: VID[4:0] ............................................................87

5.4.2.4 Topology 4: SMBus Signals .................................................88

5.4.2.5 Topology 5: BR[3:0]# Signals...............................................90

5.4.2.6 Topology 6: COMP[1:0] Signals...........................................90

5.4.2.7 Topology 7: ODTEN Signal.................................................. 91

5.4.2.8 Topology 8: TESTHI[6:0] Signals.........................................91

5.4.2.9 Topology 9: SKTOCC# Signal .............................................92

5.5 MCH System Bus Interface...................................................................................93

5.5.1 MCH System Bus I/O Decoupling Recommendations ..........................94

6 Memory Interface Routing .................................................................................................97

6.1 Rambus* Channel Overview.................................................................................97

6.2 Memory Design with MEC/MECC (Inner Layer Routing)......................................98

6.3 Rambus* Channel Routing Guidelines .................................................................99

6.3.1 Rambus* Signaling Level (RSL) Signals ............................................. 100

6.3.2 Rambus Signaling Level (RSL) Channel Compensation.....................102

6.3.2.1 Package Trace Compensation (RSL and Clocking Signals) ...............................................102

6.3.2.2 Via Compensation.............................................................. 104

6.3.2.3 Differential Clock Compensation .......................................105

6.3.2.4 Signal Layer Alteration for RIMM* Connector Pin Compensation....................................................................105

6.3.2.5 RIMM* Connector Impedance Compensation ...................106

6.3.3 RSL Signal Termination ......................................................................110

6.3.4 Direct RDRAM* Device Reference Voltage.........................................111

6.3.5 High Speed CMOS Routing.................................................................112

6.3.6 SIO Routing.........................................................................................113

6.3.7 Suspend-to-RAM Shunt Transistor .....................................................114

6.4 1.8 V RAC Isolation Solution............................................................................... 116

7 AGP Interface Routing.....................................................................................................119

7.1 AGP Routing Guidelines .....................................................................................120

7.1.1 1X Timing Domain Signal Routing Guidelines ....................................120

7.1.2 2X/4X Timing Domain Signal Routing Guidelines ............................... 121

7.1.2.1 Trace Lengths Less Than 6 Inches ...................................121

7.1.2.2 Trace Lengths Greater Than 6 Inches and Less Than 7.25 Inches.......................................................122

7.1.3 AGP Interfaces Trace Length Summary .............................................123

7.1.4 I/O Decoupling Guidelines...................................................................124

7.1.5 Signal Power/Ground Referencing Recommendations.......................125

7.1.6 VDDQ and TYPEDET# .......................................................................125

7.1.7 VREF Generation...................................................................................125

7.1.8 MCH AGP Interface Buffer Compensation..........................................126

7.1.9 AGP Pull-Ups/Pull-Downs on AGP Signals.........................................127

7.1.10 AGP Signal Voltage Tolerance List .....................................................129

7.1.11 AGP Connector ...................................................................................129

7.2 AGP Universal Retention Mechanism (RM)........................................................130

8 Hub Interface ...................................................................................................................133

8.1 PCI 33 MHz Guidelines.......................................................................................134

8.2 PCI 66 MHz Guidelines.......................................................................................135

8.3 8-Bit Hub Interface Routing Guidelines...............................................................136

8.3.1 8-Bit Hub Interface Data Signals .........................................................136

8.3.2 8-Bit Hub Interface Strobe Signals ......................................................136

8.3.3 8-Bit Hub Interface HIREF Generation/Distribution ............................. 137

8.3.4 8-Bit Hub Interface Compensation ......................................................139

8.3.5 8-Bit Hub Interface Decoupling Guidelines .........................................139

8.4 16-Bit Hub Interface Routing Guidelines.............................................................139

8.4.1 16-Bit Hub Interface Data Signals .......................................................139

8.4.2 16-Bit Hub Interface Strobe Signals ....................................................140

8.4.3 16-Bit Hub Interface HIREF Generation/Distribution ........................... 140

8.4.4 16-Bit Hub Interface Compensation Reference Voltage Generation/Distribution........................................................................141

8.4.5 16-Bit Hub Interface Resistive Compensation.....................................142

8.4.6 16-Bit Hub Interface Decoupling Guidelines .......................................142

8.4.7 16-Bit Trace Length Compensation..................................................... 142

8.4.8 Unused 16-Bit Hub Interfaces .............................................................143

9 I/O Controller Hub 2 (Intel® ICH2)....................................................................................145

9.1 IDE Interface .......................................................................................................145

9.1.1 IDE Cable ............................................................................................145

9.1.2 Cable Detection for Ultra ATA/66 and Ultra ATA/100 .........................146

9.1.2.1 Host/Device-Side Detection ...............................................146

9.1.2.2 Device-Side Cable Detection .............................................148

9.1.3 Primary IDE Connector Requirements ................................................149

9.1.4 Secondary IDE Connector Requirements ...........................................150

9.2 Audio Codec ’97 (AC’97) ....................................................................................151

9.2.1 AC’97 Audio Codec Detect Circuit and Configuration Options............156

9.2.2 Valid Codec Configurations ................................................................. 160

9.3 USB Guidelines................................................................................................... 160

9.4 I/O APIC Design Recommendations ..................................................................162

9.5 SMBus/SMLink Interface ....................................................................................162

9.5.1 SMBus Architecture and Design Considerations ................................164

9.5.1.1 SMBus Design Considerations ..........................................164

9.6 PCI ......................................................................................................................167

9.7 RTC.....................................................................................................................168

9.7.1 RTC Crystal.........................................................................................168

9.7.2 External Capacitors .............................................................................169

9.7.3 RTC Layout Considerations ................................................................169

9.7.4 RTC External Battery Connection .......................................................169

9.7.5 RTC External RTCRST Circuit ............................................................171

9.7.6 RTC Routing Guidelines...................................................................... 172

9.7.7 VBIAS DC Voltage and Noise Measurements ....................................172

9.7.8 Power-Well Isolation Control ...............................................................173

9.7.9 Power Supply PS_ON Considerations ................................................174

9.8 LAN Layout Guidelines .......................................................................................175

9.8.1 Intel® ICH2—LAN Interconnect Guidelines .........................................176

9.8.1.1 Bus Topologies ..................................................................176

9.8.1.2 Point-to-Point Interconnect ................................................177

9.8.1.3 Signal Routing and Layout ................................................. 177

9.8.1.4 Crosstalk Consideration.....................................................178

9.8.1.5 Impedances .......................................................................178

9.8.1.6 Line Termination ................................................................178

9.8.2 General LAN Routing Guidelines and Considerations ........................179

9.8.2.1 General Trace Routing Considerations..............................179

9.8.2.2 Trace Geometry and Length ..............................................180

9.8.2.3 Power and Ground Connections........................................181

9.8.2.4 Common Physical Layout Issues .......................................182

9.8.3 Intel® 82562EH Home/PNA Guidelines ...............................................184

9.8.3.1 Power and Ground Connections........................................184

9.8.3.2 Guidelines for Intel® 82562EH Component Placement......184

9.8.3.3 Crystals and Oscillators .....................................................184

9.8.3.4 Phoneline HPNA Termination ............................................185

9.8.3.5 Critical Dimensions ............................................................186

9.8.4 Intel® 82562ET/EM Guidelines ............................................................187

9.8.4.1 Guidelines for Intel® 82562ET/EM Component Placement ..........................................................................187

9.8.4.2 Crystals and Oscillators .....................................................187

9.8.4.3 Intel® 82562ET/EM Termination Resistors ........................188

9.8.4.4 Critical Dimensions ............................................................188

9.8.4.5 Reducing Circuit Inductance ..............................................190

9.8.4.6 Terminating Unused Connections......................................190

9.8.5 Intel® 82562ET/EM Disable Guidelines ..............................................191

9.8.6 Intel® 82562ET / Intel® 82562EH Dual Footprint Guidelines ...............192

9.9 FWH Guidelines..................................................................................................193

9.9.1 FWH Decoupling .................................................................................194

9.9.2 In Circuit FWH Programming ..............................................................194

9.9.3 FWH Vpp Design Guidelines .............................................................. 194

9.10 Intel® ICH2 Decoupling Recommendations ........................................................195

9.11 Glue Chip 3 (Intel® ICH2 Glue Chip)...................................................................196

9.12 SPKR Pin Consideration .....................................................................................197

9.13 1.8 V and 3.3 V Power Sequence Requirement .................................................198

9.14 ICH2 V5REF and VCC3_3 Sequencing Requirement ........................................199

9.15 PIRQ Routing......................................................................................................200

10 EMI Design Guidelines ....................................................................................................203

10.1 Terminology ........................................................................................................203

10.2 Basic EMI Theory................................................................................................203

10.3 EMI Regulations and Certifications .....................................................................204

10.4 EMI Design Considerations ................................................................................205

10.4.1 Spread Spectrum Clocking (SSC).......................................................205

10.4.2 Differential Clocking ............................................................................206

10.4.3 PCI Bus Clock Control.........................................................................207

10.4.4 Heat Sink Effects.................................................................................207

10.4.5 Faraday Cages....................................................................................208

10.4.6 EMI Test Capabilities ..........................................................................208

11 Mechanical and EMI Design Considerations ...................................................................211

11.1 Retention Mechanism Placement and Keepouts................................................211

11.2 Electromagnetic Interference Considerations.....................................................214

12 Intel® Xeon™ Processor Power Distribution Guidelines ..................................................217

12.1 Introduction .........................................................................................................217

12.2 Terminology ........................................................................................................217

12.3 Power Delivery Overview....................................................................................218

12.4 Processor Power Delivery Ingredients................................................................219

12.5 System Design....................................................................................................219

12.5.1 Multiple Voltages .................................................................................219

12.5.2 Voltage Sequencing ............................................................................220

12.5.3 Block Diagrams with Voltage Regulator Modules ...............................220

12.5.4 Block Diagram with Embedded Voltage Regulator .............................221

12.6 Processor Load...................................................................................................222

12.6.1 Processor Current Requirements........................................................222

12.6.2 Processor Voltage Tolerance ..............................................................225

12.6.3 Voltage Regulation Load Lines............................................................226

12.6.4 Voltage Regulation Load Line Equations ............................................226

12.7 Voltage Regulator ...............................................................................................234

12.8 Voltage Regulator Design ...................................................................................234

12.9 Voltage Regulator System Matching................................................................... 234

12.9.1 Voltage Regulator Output ....................................................................234

12.10 Voltage Regulator Input ......................................................................................234

12.11 Voltage Regulator Cooling ..................................................................................235

12.12 Voltage Regulator Remote Sense Connection ...................................................235

12.13 Voltage Regulator Module ISHARE Connection .................................................235

12.14 Voltage Regulator Module OUTEN Connection.................................................. 235

12.15 Power Planes......................................................................................................236

12.15.1 Layer Stack-Up....................................................................................236

12.15.2 Sheet Inductance/Resistance and Emission Effects of Power Plane ........................................................................................237

12.16 Decoupling Capacitors........................................................................................239

12.16.1 Decoupling Technology and Transient Response...............................239

12.16.2 Location of High-Frequency Decoupling .............................................240

12.16.3 Location of Bulk Decoupling ................................................................ 240

12.16.4 Decoupling Recommendation .............................................................241

12.16.5 Component Placement and Modeling .................................................241

12.16.6 Component Models .............................................................................242

12.16.7 Processor Socket-Package Lump Model ............................................242

12.17 Validation Testing ...............................................................................................252

12.17.1 Generating and Distributing GTLREF[3:0] ..........................................253

12.17.2 GTLREF [3:0] ......................................................................................254

12.17.3 Filter Specifications for VCCA, VCCIOPLL, and VSSA.......................255

13 Chipset Power Distribution Guidelines ............................................................................261

13.1 Definitions ...........................................................................................................261

13.2 Power Management............................................................................................261

13.2.1 ACPI Hardware Model.........................................................................262

13.2.2 Thermal Design Power........................................................................263

13.3 Intel® 860 Chipset MCH Power Sequencing Requirement .................................264

13.4 Vterm/Vdd Power Sequencing Requirement ......................................................265

13.5 ICH2 5VREF and VCC3.3 Sequencing Requirement .........................................265

14 Methodology for Determining Topology and Routing Guidelines ....................................267

14.1 Timing Methodology............................................................................................268

14.1.1 Source Synchronous ...........................................................................269

14.1.1.1 Setup Time......................................................................... 269

14.1.1.2 Hold Time........................................................................... 270

14.1.2 Common Clock ....................................................................................272

14.1.2.1 Setup Margin...................................................................... 272

14.1.2.2 Hold Margin........................................................................ 273

14.1.3 Timing Spreadsheet ............................................................................274

14.2 Simulation Methodology...................................................................................... 275

14.2.1 Design Optimization ............................................................................275

14.2.2 Signal Categories and Topology Options ............................................275

14.2.3 Sensitivity Analysis ..............................................................................275

14.2.4 Signal Quality Metrics..........................................................................276

14.2.4.1 Noise Margin......................................................................276

14.2.4.2 Ringback ............................................................................277

14.2.5 Timing Metrics .....................................................................................277

14.2.5.1 Setup Flight Time ...............................................................277

14.2.5.2 Calculating Flight Time for Signals with Corrupt Signal Quality ................................................................................277

14.2.5.3 Incorporating Package Effects into the Flight Time ...........279

14.2.6 Parameter Sweeps and Monte Carlo Analysis .................................... 280

14.2.6.1 Parameter Sweeps ............................................................280

14.2.6.2 Final Solution Space ..........................................................282

15 System Theory.................................................................................................................283

15.1 AGTL+.................................................................................................................283

15.2 Inter-Symbol Interference ...................................................................................283

15.3 Crosstalk .............................................................................................................285

16 Debug Port Routing Guidelines .......................................................................................289

17 Schematic Checklist ........................................................................................................291

17.1 Intel® Xeon™ Processor and Intel Xeon Processor with 512 KB L2 Cache

Checklist .............................................................................................................291

17.2 CKx_SKS(DP)/Direct Rambus* Clock Generator Checklist ...............................298

17.3 Intel® 860 Chipset MCH Checklist ......................................................................301

17.4 AGP Checklist.....................................................................................................303

17.5 Intel® P64H Checklist .......................................................................................... 305

17.6 RIMM* Modules Down/ Intel® MRH-R Checklist .................................................307

17.7 I/O Controller Hub 2 (Intel® ICH2).......................................................................310

17.7.1 PCI Interface .......................................................................................310

17.7.2 Hub Interface.......................................................................................311

17.7.3 LAN Interface ......................................................................................311

17.7.4 EEPROM Interface..............................................................................312

17.7.5 FWH/LPC Interface .............................................................................312

17.7.6 Interrupt Interface ................................................................................313

17.7.7 GPIO ...................................................................................................315

17.7.8 USB .....................................................................................................316

17.7.9 Power Management ............................................................................316

17.7.10 Processor Signals ...............................................................................317

17.7.11 System Management ..........................................................................317

17.7.12 RTC ...........................................................................................318

17.7.13 AC’97 ...........................................................................................318

17.7.14 Miscellaneous Signals .........................................................................319

17.7.15 Power ...........................................................................................319

17.7.16 IDE Checklist .......................................................................................320

18 Layout Checklist ..............................................................................................................323

18.1 Processor and System Bus.................................................................................323

18.1.1 AGTL+ Signals .................................................................................... 323

18.1.2 Asynchronous GTL+ and Other Signals..............................................325

18.1.3 CK-SKS Clocking ................................................................................326

18.1.4 Processor Decoupling .........................................................................328

18.1.5 Chipset Decoupling .............................................................................328

18.1.6 AGTL+ (VREF, HDVREF [3:0], HAVREF [1:0], and CCVREF) .............329

18.2 Rambus* Routing Guidelines..............................................................................330

18.2.1 RSL Signals......................................................................................... 330

18.2.2 Ground Isolation: .................................................................................332

18.2.3 Vterm Layout .......................................................................................333

18.2.4 Direct Rambus* Clock Generation (DRCG* Device)

Recommendations ..............................................................................334

18.2.5 DRCG* Device Layout- Clean Power Supply......................................335

18.2.6 DRCG - CTM/CTM# Output Network Layout ......................................336

18.2.7 RAMREF Routing................................................................................336

18.3 AGP Guidelines ..................................................................................................337

18.3.1 All 1X Signals ......................................................................................337

18.3.2 2X/4X Signals ......................................................................................337

18.3.3 MCH AGP Decoupling......................................................................... 340

18.3.4 AGP Connector Decoupling ................................................................340

18.4 8-Bit Hub Interface ..............................................................................................341

18.4.1 Hub Decoupling...................................................................................341

18.5 16-Bit Hub Interface ............................................................................................342

18.5.1 Hub Decoupling...................................................................................342

18.6 IDE Interface .......................................................................................................343

18.7 AC’97 ..................................................................................................................343

18.8 USB.....................................................................................................................343

18.9 Intel® ICH2 Decoupling .......................................................................................344

18.10 RTC .............................................................................................................344

18.11 LAN Connect I/F .................................................................................................345

18.12 Miscellaneous Routing Guidelines ...................................................................... 346

Appendix A: Customer Reference Board Schematics ............................................................................347

Figures

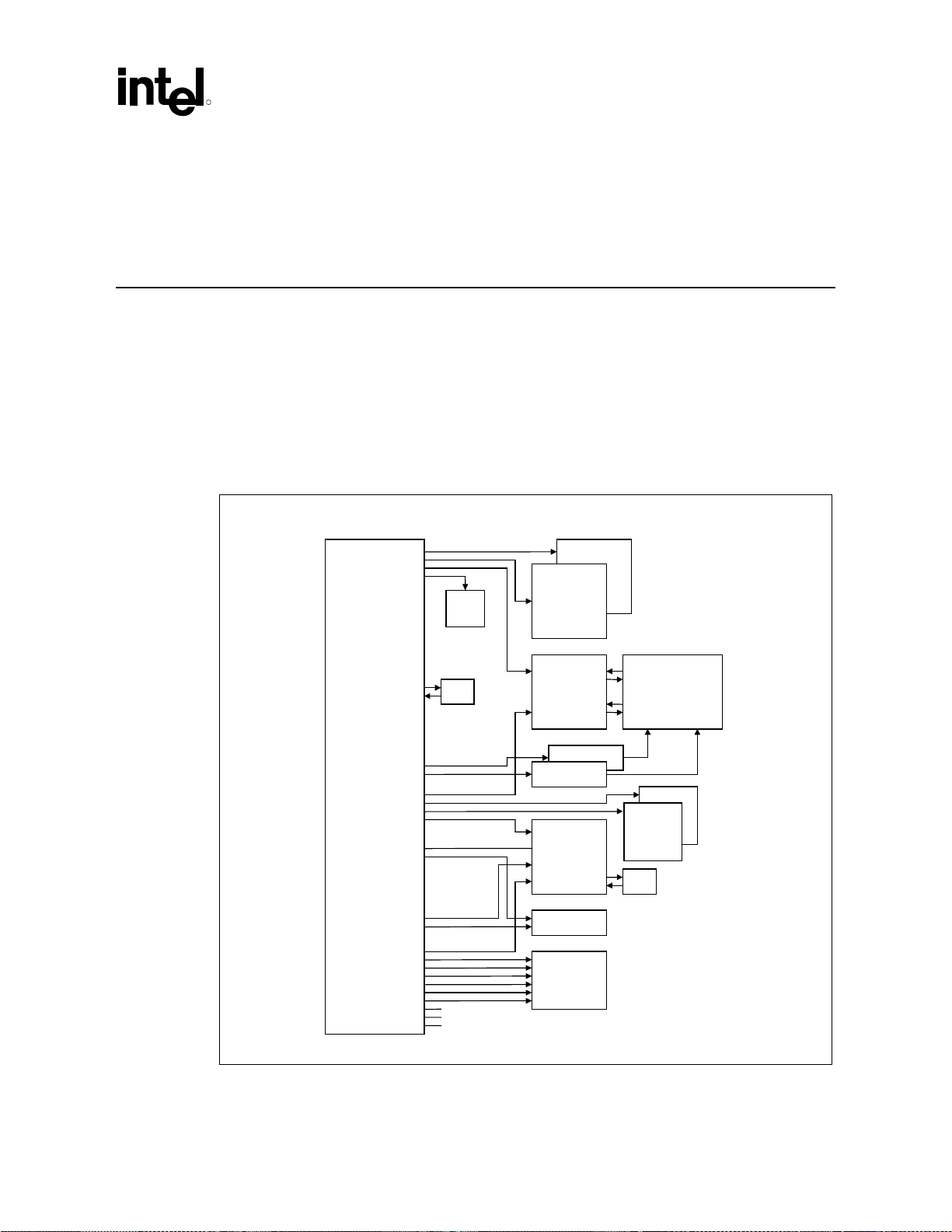

Figure 1. Workstation System Configuration Using the Intel® Xeon™ Processor/

Intel Xeon Processor with 512 KB L2 Cache and Intel® 860 Chipset.................29

Figure 2. TOP VIEW—Intel® Xeon™ Processor Socket Quadrant Layout .......................38

Figure 3. TOP VIEW—Intel® Xeon™ Processor with 512 KB L2 Cache

Socket Quadrant Layout.....................................................................................39

Figure 4. TOP VIEW—MCH Quadrant Layout ..................................................................40

Figure 5. TOP VIEW—Intel® ICH2 Quadrant Layout.........................................................40

Figure 6. TOP VIEW—Intel® P64H Quadrant Layout........................................................41

Figure 7. TOP VIEW— Intel® MRH-R Quadrant Layout.................................................... 41

Figure 8. DP Workstation Component Placement Example in an Extended ATX

Form Factor........................................................................................................44

Figure 9. Eight Layer Stack-Up for DP Configurations—Option A ....................................45

Figure 10. Eight Layer Stack-Up for DP Configurations—Option B ..................................46

Figure 11. Dual Processor Stack-Up Example..................................................................47

Figure 12. Dual Processor Example Stack-Up B ..............................................................48

Figure 13. Stack-Up Example B Thickness Summary ......................................................49

Figure 14. Platform Clocking Architecture Using the CKx_SKS........................................ 51

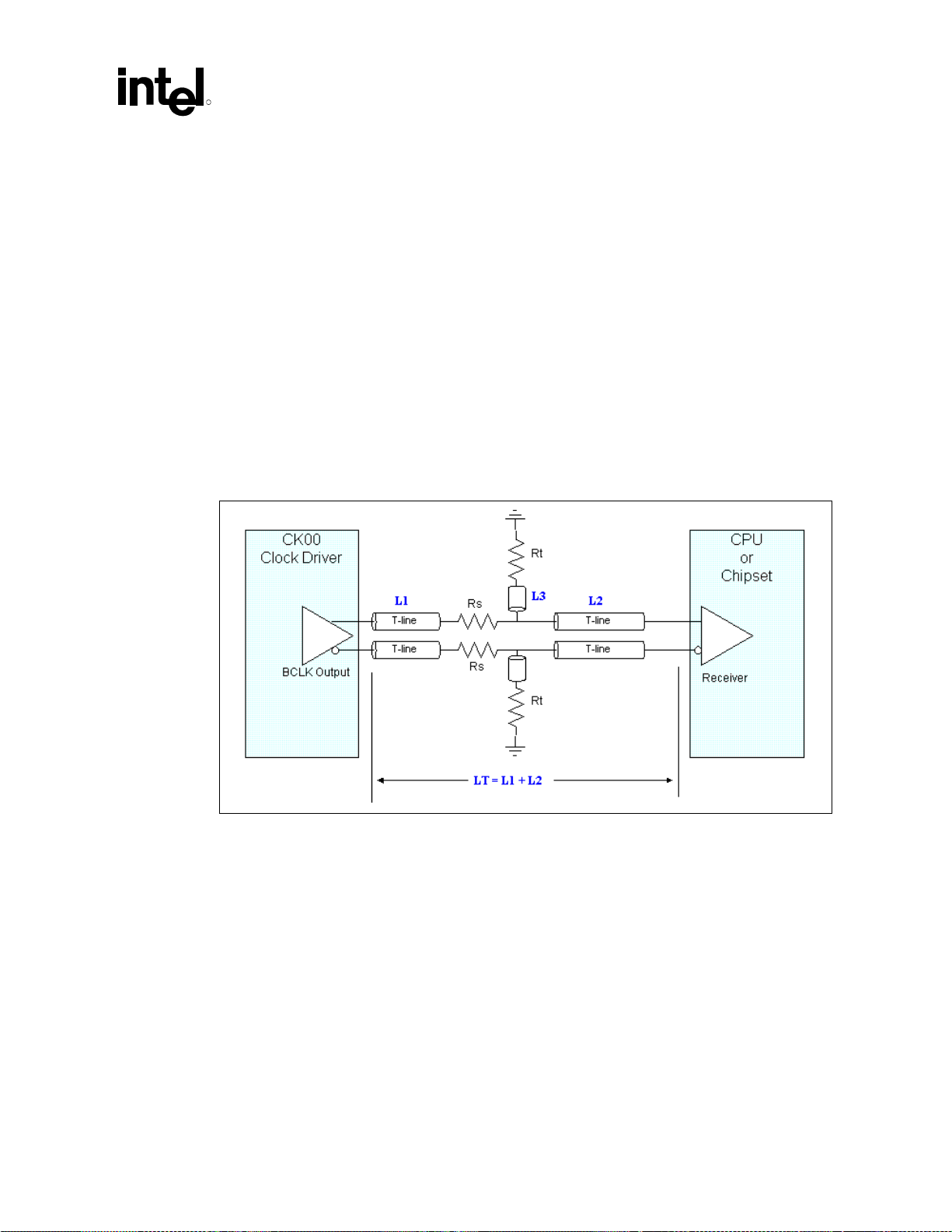

Figure 15. Dual Processor BCLK Topology.......................................................................52

Figure 16. Source Shunt Termination................................................................................53

Figure 17. Clock Skew as Measured from Agent-to-Agent ...............................................56

Figure 18. Trace Spacing ..................................................................................................56

Figure 19. VddIR and 3VMRef or 3VMRef# Routing ......................................................... 57

Figure 20. MCH to DRCG* Device Routing Diagram ........................................................58

Figure 21. Direct Rambus* Clock Generator Routing Dimension .....................................59

Figure 22. Differential Clock Routing Diagram ..................................................................60

Figure 23. Non-Differential Clock Routing Diagram ..........................................................60

Figure 24. CFM/CFM# Termination...................................................................................61

Figure 25. DRCG* Device Impedance Matching Network.................................................62

Figure 26. DRCG* Device Layout Example.......................................................................64

Figure 27. 66 MHz/33 MHz Clock Relationships ...............................................................65

Figure 28. AGP_66 Clock Routing Topology.....................................................................66

Figure 29. CLK_66 Clock Routing Topology .....................................................................66

Figure 30. PCI_33 Clock Routing Topology ......................................................................67

Figure 31. CLK_33 Clock Routing Topology .....................................................................67

Figure 32. Intel® P64H PCI 33 MHz Clock Routing ...........................................................68

Figure 33. Intel® P64H PCI 33 MHz Clock Routing ...........................................................68

Figure 34. Intel® P64H PCI 66 MHz Clock Routing ...........................................................69

Figure 35. 66 MHz PCI Clock Routing...............................................................................69

Figure 36. Serpentine Spacing – Diagram of Spacing to Reference Plane

Height Ratio...................................................................................................... 73

Figure 37. I/O Decoupling Guidelines for the Processor ...................................................74

Figure 38. Dual Processor System Bus Topology .............................................................77

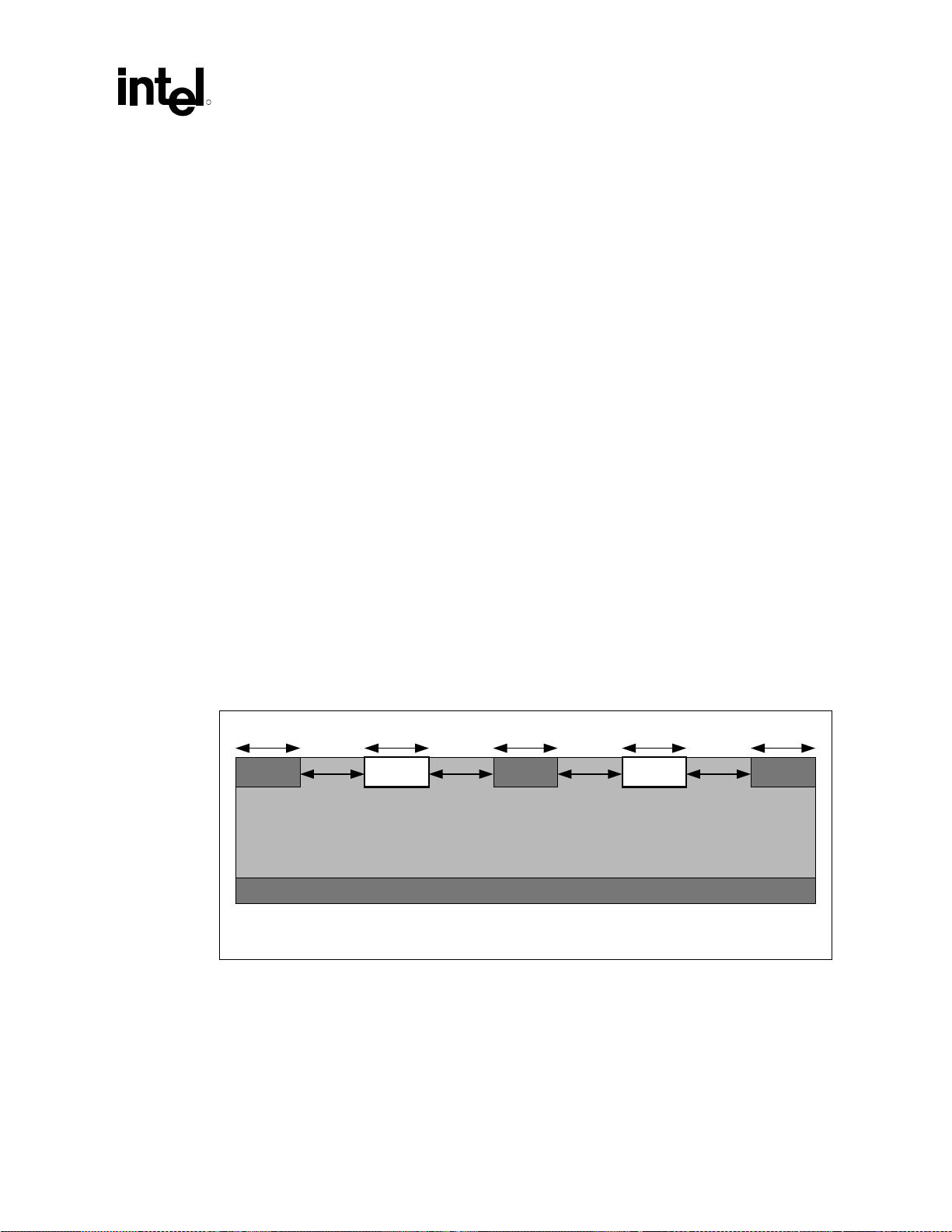

Figure 39. Cross Sectional View of 3:1 Ratio for Stripline (Edge-to-Edge

Trace Spacing vs. Trace to Reference Plane Height) ......................................78

Figure 40. Wired OR Topology for Dual Processor Based Systems .................................82

Figure 41. Via Dimensions and Routing Path....................................................................82

Figure 42. Topology 1 for DP Configuration ......................................................................85

Figure 43. Recommended THERMTRIP# Circuit.............................................................. 86

Figure 44. Topology 2 for DP Configuration ......................................................................87

Figure 45. BR[3:0]# Connection for DP Configuration.......................................................90

Figure 46. SKTOCC# Connection to Chipset BUSPARK Signal .......................................92

Figure 47. Voltage Divider Network for Reference Voltage Generation ............................93

Figure 48. Pull-Down Circuit .............................................................................................. 93

Figure 49. I/O Decoupling Guidelines for the MCH ...........................................................95

Figure 50. Rambus* Channel Signal Groups ....................................................................98

Figure 51. Intel® 860 Chipset MCH Rambus* Channel Routing Example.........................99

Figure 52. Example Rambus* Channel Routing..............................................................100

Figure 53. RSL Routing Diagram Showing Ground Isolation Traces with Via

Around Signals ...............................................................................................101

Figure 54. Rambus* Channel Trace Length Matching Example .....................................102

Figure 55. “Dummy” vs. “Real” Vias ................................................................................104

Figure 56. RSL and Clocking Signal Layer Alteration......................................................106

Figure 57. Top Layer CTAB with RSL Signal Routed on the Same Layer

(Ceff = 0.8 pF) ................................................................................................108

Figure 58. Bottom Layer CTAB with RSL Signal Routed on the Same Layer

(Ceff = 1.35 pF) ..............................................................................................109

Figure 59. Bottom Layer CTABs Split Across the Top and Bottom Layer to

Achieve Ceff ~1.35 pF...................................................................................109

Figure 60. Direct RDRAM* Device Termination Example ...............................................110

Figure 61. RAMREF Generation Example Circuit ...........................................................111

Figure 62. High Speed CMOS RC Termination...............................................................112

Figure 63. SIO Routing .................................................................................................... 113

Figure 64. Direct RDRAM* Device CMOS Shunt Transistor ...........................................115

Figure 65. Inductor-Capacitor Filter Circuit......................................................................116

Figure 66. Ferrite Bead Filter Circuit................................................................................116

Figure 67. RAC Isolation Filter Component Locations on Customer Reference Board ..118

Figure 68. AGP 2X/4X Routing Example for Interfaces < 6 Inches.................................122

Figure 69. AGP I/O Decoupling Example with a VSS Flood to Improve Power

Delivery to the MCH .......................................................................................124

Figure 70. AGP 2.0 VREF Generation and Distribution for 1.5 V Cards............................126

Figure 71. AGP Left-Handed Retention Mechanism Drawing ......................................... 130

Figure 72. AGP Left-Handed Retention Mechanism Keepout Information......................131

Figure 73. 8-Bit Hub Interface Routing Example .............................................................133

Figure 74. 16-Bit Hub Interface Routing Example ...........................................................133

Figure 75. PCI 33 MHz Bus Layout Example ..................................................................134

Figure 76. PCI 66 MHz — 2 Slots Only ...........................................................................135

Figure 77. PCI 66 MHz—2 Slots with 1 Device Down .....................................................135

Figure 78. 8-Bit Hub Interface with a Single Reference Divider Circuit (Normal Mode) ..137

Figure 79. 8-Bit Hub Interface with Locally Generated Reference Divider Circuits

(Normal) ..........................................................................................................138

Figure 80. 8-Bit Hub Interface with Locally Generated Reference Divider

Circuits (Enhanced Mode) ...............................................................................138

Figure 81. 16-Bit Hub Interface with a Shared Reference Divider Circuit .......................140

Figure 82. 16-Bit Hub Interface with Locally Generated Reference Divider Circuits .......140

Figure 83. MCH 16-Bit Hub Interface HLSWNG Generated Reference Divider Circuit ..141

Figure 84. Trace Length Matching Example from MCH to Intel® P64H ..........................143

Figure 85. Host/Device-Side Detection Layout................................................................147

Figure 86. Device-Side Only Cable Detection .................................................................148

Figure 87. Connection Requirements for Primary IDE Connector...................................149

Figure 88. Connection Requirements for Secondary IDE Connector..............................150

Figure 89. Intel® ICH2 AC’97–Codec Connection ...........................................................151

Figure 90. Audio Codec ................................................................................................... 152

Figure 91. Modem Codec ................................................................................................152

Figure 92. Audio/Modem Codec ...................................................................................... 153

Figure 93. Modem Codecs ..............................................................................................153

Figure 94. Audio and Modem Codecs .............................................................................154

Figure 95. Audio Codecs .................................................................................................154

Figure 96. Audio and Audio/Modem Codecs ...................................................................155

Figure 97. CDC_DN_ENAB# Support Circuitry for a Single Codec Motherboard...........157

Figure 98. CDC_DN_ENAB# Support Circuitry for Multi-Channel Audio Upgrade..........158

Figure 99. CDC_DN_ENAB# Support Circuitry for Two-Codecs on

Motherboard / One-Codec on CNR................................................................158

Figure 100. CDC_DN_ENAB# Support for Two-Codecs on Motherboard /

Two-Codecs on CNR ...................................................................................159

Figure 101. USB Data Line Schematic ............................................................................161

Figure 102. SMBUS/SMLink Interface.............................................................................163

Figure 103. Unified VCC_Suspend Architecture .............................................................165

Figure 104. Unified VCC_CPU Architecture ....................................................................165

Figure 105. Mixed VCC_Suspend/ VCC_CPU Architecture............................................166

Figure 106. PCI Bus Layout Example..............................................................................167

Figure 107. Intel® ICH2 Oscillator Circuitry......................................................................168

Figure 108. Diode Circuit to Connect RTC External Battery............................................170

Figure 109. RTCRST External Circuit for the Intel® ICH2 RTC ....................................... 171

Figure 110. RTC Power-Well Isolation Control................................................................173

Figure 111. Intel® ICH2 / LAN Connect Section ..............................................................175

Figure 112. Single Solution Interconnect.........................................................................177

Figure 113. LAN_CLK Routing Example .........................................................................178

Figure 114. Trace Routing ............................................................................................... 180

Figure 115. Ground Plane Separation .............................................................................181

Figure 116. Intel® 82562EH Termination .........................................................................185

Figure 117. Critical Dimensions for Component Placement............................................186

Figure 118. Intel® 82562ET/EM Termination ...................................................................188

Figure 119. Critical Dimensions for Component Placement............................................188

Figure 120. Termination Plane ........................................................................................191

Figure 121. Intel® 82562ET/EM Disable Circuit...............................................................191

Figure 122. Dual Footprint LAN Connect Interface .........................................................192

Figure 123. Dual Footprint Analog Interface....................................................................192

Figure 124. FWH VPP Isolation Circuitry ........................................................................194

Figure 125. SPKR Circuit.................................................................................................197

Figure 126. Example Power-On 3.3 V / 1.8 V Sequencing Circuit .................................. 198

Figure 127. Example 3.3V/V5REF Sequencing Circuitry ................................................199

Figure 128. Example PCI IRQ Routing............................................................................200

Figure 129. Spread Spectrum Modulation Profile............................................................205

Figure 130. Impact of Spread Spectrum Clocking on Radiated Emissions.....................206

Figure 131. Cancellation of H-fields Through Inverse Currents ......................................206

Figure 132. Intel® Xeon™ Processor Ground Frame ......................................................208

Figure 133. Retention Mechanism Keepout Outline ........................................................212

Figure 134. Retention Mechanism Placement and Keepout Overview ...........................213

Figure 135. EMI Ground Pad Size and Locations ...........................................................215

Figure 136. Retention Mechanism Ground Ring (Optional) ............................................216

Figure 137. Power Distribution Block Diagram for Dual Processor System

Motherboard with Voltage Regulator Modules ..............................................220

Figure 138. Power Distribution Block Diagram for Dual Processor System

Motherboard with Single Embedded Voltage Regulator...............................221

Figure 139. Current Load Step Shape at Socket.............................................................223

Figure 140. Piecewise Linear Tabulation of Load Step at Socket Composite.................224

Figure 141. Intel® Xeon Processor with 512 KB L2 Cache Piecewise Linear

Socket Current .............................................................................................225

Figure 142. Load Step Transient Voltage ........................................................................227

Figure 143. Intel® Xeon™ Processor Socket and Regulator Voltage Limits ...................230

Figure 144. Intel® Xeon™ Processor with 512 KB L2 Cache Socket and

Regulator Voltage Limits ..............................................................................232

Figure 145. Suggested Eight Layer Stack-Up for Dual Processor Systems....................236

Figure 146. Loop Inductance ........................................................................................... 237

Figure 147. 1206 Capacitor Pad and Via Layouts ...........................................................240

Figure 148. Connections to Via Patterns .........................................................................240

Figure 149. Processor Lump Model Schematic ..............................................................242

Figure 150. Processor Lump Model Drawing ..................................................................244

Figure 151. “L” Pattern with Embedded Voltage Regulator .............................................245

Figure 152. “L” Pattern with Embedded Voltage Regulator Schematic ...........................246

Figure 153. “Row” Pattern with Voltage Regulator Module .............................................248

Figure 154. “Row” Pattern with Voltage Regulator Module Schematic............................249

Figure 155. “Row” Pattern with Embedded Voltage Regulator........................................250

Figure 156. “Row” Pattern with Embedded Voltage Regulator Schematic ......................251

Figure 157. GTLREF Divider ...........................................................................................253

Figure 158. Suggested Processor GTLREF Design........................................................255

Figure 159. Processor PLL Filter Topology .....................................................................255

Figure 160. Processor PLL Filter Specification ...............................................................256

Figure 161. Filter Circuit with Discrete Resistors.............................................................258

Figure 162. Filter Circuit without Discrete Resistors........................................................258

Figure 163. Example of Decoupling for a Microstrip Baseboard Design .........................259

Figure 164. Global System Power States and Transition ................................................262

Figure 165. Desired Mode of Power Sequencing ............................................................264

Figure 166. Optional Mode of Power Sequencing ...........................................................264

Figure 167. 1.8 V and 2.5 V Power Sequence (Schottky Diode).....................................265

Figure 168. 5VREF Sequencing Circuit...........................................................................266

Figure 169. Simulation Methodology Flowchart...............................................................267

Figure 170. Source Synchronous Timing Diagram for Setup Time ................................. 270

Figure 171. Source Synchronous Timing Diagram for Hold Time ................................... 271

Figure 172. Circuit Used to Develop the Common Clock Timing Equations ................... 272

Figure 173. Timing Diagram Used to Determine the Common Clock Setup

Timing Equations.......................................................................................... 273

Figure 174. Timing Diagram Used to Determine the Common Clock Hold

Timing Equations.......................................................................................... 274

Figure 175. Calculating Rising Edge to Rising Edge Flight Time (Traditional Method) ... 278

Figure 176. Calculating Setup Flight Time (Receiver Edge Rate is Slower than

Specified Edge Rate) ...................................................................................278

Figure 177. Calculating Flight Time (Nonlinear Edge from VIL through VIH at the

Receiver) ......................................................................................................279

Figure 178. Calculating Flight Time (Ringback Violation from VIL through VIH

at the Receiver) ............................................................................................279

Figure 179. Example of Sweeps Used to Evaluate the Length Limits of Trace L2

and L3............................................................................................................281

Figure 180. Monte Carlo Analysis....................................................................................281

Figure 181. Targeted Monte Carlo (TMC) Analysis (Phase 2 Solution Space

for Variables L2 and L3) ...............................................................................282

Figure 182. Example of ISI Impact on Timing and Signal Integrity..................................284

Figure 183. Propagation on Aggressor Network .............................................................285

Figure 184. Aggressor and Victim Networks ...................................................................285

Figure 185. Transmission Line Geometry of Microstrip and Stripline..............................286

Tables

Table 1. Reference Documentation ...................................................................................20

Table 2. Platform Conventions and Terminology ..............................................................22

Table 3. Processor Feature Set Overview.........................................................................25

Table 4. Platform Bandwidth Summary .............................................................................28

Table 5. Intel® ICH2 Codec Options ..................................................................................32

Table 6. Placement Assumptions for Workstation Configurations ....................................43

Table 7. BCLK[1:0]# Routing Guidelines Summary ..........................................................55

Table 8. Direct Rambus* Clock Generator Routing Guidelines.........................................59

Table 9. DRCG* Device Impedance Matching Network Values........................................63

Table 10. 66 MHz Clock Routing Length Guidelines......................................................... 65

Table 11. 33 MHz Clock Routing Guidelines .....................................................................67

Table 12. System Bus Routing Summary..........................................................................71

Table 13. System Bus Signals...........................................................................................76

Table 14. Source Synchronous Signals and the Associated Strobes ...............................79

Table 15. Asynchronous GTL+ and Miscellaneous Signals ..............................................83

Table 16. Reference Voltage Network Values...................................................................94

Table 17. Rambus* Channel Signal Groups......................................................................99

Table 18. Rambus* Channel RSL Signal Lengths for RIMM* Connectors

on Motherboard ................................................................................................100

Table 19. RSL and Clocking Signal RIMM* Connector Capacitance Requirement.........106

Table 20. Copper Tab Area Calculation ..........................................................................108

Table 21. Single and Dual Package Sample Transistors ................................................ 114

Table 22. 82860 MCH 1.8 V RAC Pinout ........................................................................117

Table 23. AGP 2.0 Signal Groups ...................................................................................120

Table 24. AGP 2.0 Routing Summary .............................................................................123

Table 25. AGP Pull-Up/Pull-Down Resistors ...................................................................128

Table 26. 3.3 V and 5 V Tolerant Signals During 1.5 V Operation ..................................129

Table 27. List of Vendors for Retention Mechanism .......................................................131

Table 28. 8-Bit Hub Interface Buffer Configuration Setting .............................................136

Table 29. 8-Bit Hub Interface HIREF Generation Circuit Specifications..........................137

Table 30. 8-Bit Hub Interface RCOMP Resistor Values ..................................................139

Table 31. 16-Bit Hub Interface HUBREF Generation Circuit Specifications....................140

Table 32. 16-Bit Hub Interface HLSWNG Generation Circuit Specifications ..................141

Table 33. 16-Bit Hub Interface RCOMP Resistor Values ................................................142

Table 34. AC’97 SDIN Pull-Down Resistors .................................................................... 156

Table 35. Signal Descriptions ..........................................................................................159

Table 36. Codec Configurations ......................................................................................160

Table 37. Recommended USB Trace Characteristics.....................................................161

Table 38. SMBus/SMLink Requirements.........................................................................163

Table 39. Integrated LAN Options ...................................................................................175

Table 40. LAN Design Guide Section Reference ............................................................176

Table 41. Length Requirements for Single Solution Interconnect ................................... 177

Table 42. Critical Dimension Values................................................................................186

Table 43. Critical Dimension Values................................................................................189

Table 44. Decoupling Capacitor Recommendation ......................................................... 195

Table 45. IOAPIC Interrupt Inputs 16 Through 23 Usage ...............................................200

Table 46. Processor Current Step Parameters ...............................................................222

Table 47. Piecewise Linear Tabulation of Load Step at Socket by Percentage ..............223

Table 48. Piecewise Linear Tabulation of Load Step at Socket Composite ....................224

Table 49. Load Line Equation Parameters ......................................................................227


Table 50. Intel® Xeon™ Processor Dual Processor Load Step Transient Requirements ................228

Table 51. Intel® Xeon™ Processor with 512 KB L2 Cache Dual Processor Load Step Transient Requirements ................229

Table 52. Intel® Xeon™ Processor Socket and Regulator Voltage Limits.......................231

Table 53. Intel® Xeon™ Processor with 512 KB L2 Cache Socket and Regulator Voltage Limits ................233

Table 54. Various Component Models Used at Intel (Not Vendor Specifications) ..........242

Table 55. Processor Lump Model Component Values .................................................... 243

Table 56. “L” Pattern with Embedded Voltage Regulator Schematic Values ..................247

Table 57. “Row” Pattern with Voltage Regulator Module Schematic Values...................249

Table 58. “Row” Pattern with Embedded Voltage Regulator Schematic Values .............252

Table 59. Component Recommendation — Inductor ......................................................257

Table 60. Component Recommendation — Capacitor.................................................... 257

Table 61. Intel® 860 Chipset MCH and Intel® ICH2 Thermal Design Power ...................263

Table 62. System Variables to Consider for Sensitivity Analysis..................................... 276

Table 63. Example Backward Crosstalk Coupling Factors with ε_r = 4.5, V_OH_MAX = 1.5 V, and Z_0 = 65 Ω ................288

Table 64. BR0# (I/O) and BR1# Signals Rotating Interconnect ......................................297


Revision History

Revision -001 (May 2001)
• Initial Release.

Revision -002 (October 2001)
• 2nd Release. Updated document references in Table 1 and throughout document.
• Added additional THERMTRIP# information to Section 5.4.2.1.
• Updated BR[3:0] routing recommendations in Section 5.4.2.5.
• Rotated and resized Figure 133, Figure 134, and Figure 135 to make more readable.
• Updated Table 50 and Table 51 with Intel Xeon processor at 2 GHz information.
• Updated TESTHI[6:0] and ODTEN recommendations in Sections 5.4.2.7 and 5.4.2.8, along with associated entries in processor schematic checklist in Section 17.1.
• Updated processor PLL filter, TESTHI[6:0], and ODTEN implementations in Customer Reference Board Schematics in Appendix A.

Revision -003 (January 2002)
• 3rd release. Updated document with Intel® Xeon™ processor with 512 KB L2 cache content.
• Updated Section 12 with additional design details pertaining to processor decoupling, loadlines, voltage regulators, and power simulation/modeling.
• Replaced Figure 107 in Section 9.7.1, RTC Crystal
• Replaced Section 9.14, Power-Well Isolation Control Strap Requirements, and moved to Section 9.7.8, Power-well Isolation Control
• Added Section 9.7.9, Power Supply PS_ON Consideration
• Replaced Figure 114, Trace Routing in Section 9.8.2.1, General Trace Routing Considerations
• Added Section 9.8.5, Intel® 82562 ET/EM Disable Guidelines
• Added Section 9.9, FWH Guidelines
• Reordered the following sections: Glue Chip, SPKR Pin Considerations, and 1.8V and 3.3V Power Sequence Requirement
• Revised Section 13.5, ICH2 5VREF and VCC3.3 Sequencing Requirement
• Revised checklist item RSMRST# in Section 17.7.9, Power Management
• Revised checklist item RTCX1/RTCX2 in Section 17.7.12, RTC
• Added checklist item SUSCLK to RTC checklist in Section 17.7.12

Revision -004 (March 2002)
• Revised paragraph 3 in Section 9.14 ICH2 V5REF and VCC3_3 Sequencing Requirement
• Revised APIC in Section 17.7.6 Interrupt Interface in the Schematics Checklist
• Revised V5REF_SUS in Section 17.7.15 Power in the Schematics Checklist


1 Introduction

This design guide documents Intel’s design recommendations for systems based on the Intel® Xeon™ processor and/or Intel® Xeon™ processor with 512 KB L2 cache with the Intel® 860 chipset. In addition to providing motherboard design recommendations such as layout and routing guidelines, this document also addresses possible system design issues such as EMI design impacts and system bus decoupling. Thermal considerations are addressed by specific thermal documentation for the processor and the Intel 860 chipset listed in Section 1.1. Unless otherwise noted, references to “processor” throughout this document apply to both the Intel Xeon processor and Intel Xeon processor with 512 KB L2 cache.

Carefully follow the design information, board schematics, debug recommendations, and system checklist presented in this document. These design guidelines have been developed to ensure maximum flexibility for board designers while reducing the risk of board-related issues. The design information provided in this document falls into one of two categories:

• Design Recommendations are based on Intel’s simulations and lab experience to date, and are strongly recommended, if not necessary, to meet the timing and signal quality specifications.

• Design Considerations are suggestions for platform design that provide one way to meet the design recommendations. They are based on the reference platforms designed by Intel. They should be used as examples, and may not apply to your particular design.

Note: The guidelines recommended in this document are based on experience, simulation, and validation work completed at Intel during development of systems based on the Intel Xeon processor or the Intel Xeon processor with 512 KB L2 cache and the Intel 860 chipset. This work is ongoing, and the recommendations are subject to change.

The platform schematics in Appendix A can be used as a reference for board designers. While the schematics may cover a specific design, the core schematics remain the same for most platforms. The schematic sets provide a reference schematic for each platform component, and common motherboard options. Additional flexibility is possible through permutations of these options and components.


1.1 Related Documentation

Table 1. Reference Documentation

Document

IA-32 Intel® Architecture Software Developer’s Manual
• Volume 1: Basic Architecture
• Volume 2: Instruction Set Reference
• Volume 3: System Programming Guide

CK00 Clock Synthesizer/Driver Design Guidelines

VRM 9.0 DC-DC Converter Design Guidelines

Intel® 860 Chipset: 82860 Memory Controller Hub (MCH) Datasheet 290713

Intel® Xeon™ Processor Thermal Design Guidelines

603 Pin Socket Design Guidelines

Intel® Xeon™ Processor at 1.40 GHz, 1.50 GHz, 1.7 GHz and 2 GHz Datasheet

Intel® Xeon™ Processor with 512 KB L2 Cache at 1.8 GHz, 2 GHz and 2.2 GHz Datasheet

Intel® Xeon™ Processor with 512 KB L2 Cache Compatibility Guidelines for Intel® Xeon™ Processor-Based Platforms

Intel® Xeon™ Processor Thermal Solution Functional Specification

Intel® Xeon™ Processor Signal Integrity Models (IBIS format) http://developer.intel.com

Intel® Xeon™ Processor with 512 KB L2 Cache Signal Integrity Models (IBIS format)

Intel® Xeon™ Processor Overshoot Checker Tool http://developer.intel.com

Intel® Xeon™ Processor with 512 KB L2 Cache Overshoot Checker Tool http://developer.intel.com

Intel® Xeon™ Processor Enabled Components Models (ProE and IGES format)

Intel® Xeon™ Processor with 512 KB L2 Cache Mechanical Models (ProE and IGES format)

Intel® Xeon™ Processor Thermal Model (Flotherm and Icepak format) http://developer.intel.com

Intel® Xeon™ Processor with 512 KB L2 Cache Thermal Models (Flotherm

Document Number/Source

245470

245471

245472

http://developer.intel.com/design/pentium4/guides/249206.htm

http://developer.intel.com/design/pentium4/guides/249205.htm

http://developer.intel.com/design/Xeon/guides/298348.htm

http://developer.intel.com/design/Xeon/guides/249672.htm

http://developer.intel.com/design/xeon/datashts/249665.htm