ibm.com/redbooks

IBM Eserver p5 590 and 595

System Handbook

Peter Domberg

Nia Kelley

TaiJung Kim

Ding Wei

Component-based description of the hardware architecture

A guide for machine type 9119 models 590 and 595

Capacity on Demand explained

IBM Eserver p5 590 and 595 System Handbook

March 2005

International Technical Support Organization

SG24-9119-00

© Copyright International Business Machines Corporation 2005. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

First Edition (March 2005)

This edition applies to the IBM Eserver p5 9119 Models 590 and 595.

Note: Before using this information and the product it supports, read the information in “Notices” on page xv.

Contents

Figures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

Tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvi

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

The team that wrote this redbook. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xvii

Become a published author . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xix

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xx

Chapter 1. System overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.2 What’s new . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.3 General overview and characteristics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.3.1 Microprocessor technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3.2 Memory subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.3.3 I/O subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.3.4 Media bays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

1.3.5 Virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.4 Features summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.5 Operating systems support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.5.1 AIX 5L . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.5.2 Linux . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Chapter 2. Hardware architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.1 Server overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

2.2 The POWER5 microprocessor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

2.2.1 Simultaneous multi-threading . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.2.2 Dynamic power management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.2.3 The POWER chip evolution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.2.4 CMOS, copper, and SOI technology. . . . . . . . . . . . . . . . . . . . . . . . . 24

2.2.5 Processor books . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.2.6 Processor clock rate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

2.3 Memory subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.3.1 Memory cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.3.2 Memory placement rules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.4 Central electronics complex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.4.1 CEC backplane . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2.5 System flash memory configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

2.6 Vital product data and system smart chips . . . . . . . . . . . . . . . . . . . . . . . . 36

2.7 I/O drawer. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

2.7.1 EEH adapters and partitioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.7.2 I/O drawer attachment. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.7.3 Full-drawer cabling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

2.7.4 Half-drawer cabling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2.7.5 Blind-swap hot-plug cassette. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

2.7.6 Logical view of a RIO-2 drawer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

2.7.7 I/O drawer RAS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

2.7.8 Supported I/O adapters in p5-595 and p5-590 systems . . . . . . . . . . 47

2.7.9 Expansion units 5791, 5794, and 7040-61D . . . . . . . . . . . . . . . . . . . 50

2.7.10 Configuration of I/O drawer ID and serial number. . . . . . . . . . . . . . 54

Chapter 3. POWER5 virtualization capabilities. . . . . . . . . . . . . . . . . . . . . . 57

3.1 Virtualization features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

3.2 Micro-Partitioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

3.2.1 Shared processor partitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

3.2.2 Types of shared processor partitions . . . . . . . . . . . . . . . . . . . . . . . . 62

3.2.3 Typical usage of Micro-Partitioning technology. . . . . . . . . . . . . . . . . 64

3.2.4 Limitations and considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

3.3 Virtual Ethernet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

3.3.1 Virtual LAN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

3.3.2 Virtual Ethernet connections . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

3.3.3 Dynamic partitioning for virtual Ethernet devices . . . . . . . . . . . . . . . 72

3.3.4 Limitations and considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

3.4 Shared Ethernet Adapter. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

3.4.1 Connecting a virtual Ethernet to external networks. . . . . . . . . . . . . . 73

3.4.2 Using Link Aggregation (EtherChannel) to external networks . . . . . 77

3.4.3 Limitations and considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

3.5 Virtual I/O Server. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

3.6 Virtual SCSI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

3.6.1 Limitations and considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Chapter 4. Capacity on Demand . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

4.1 Capacity on Demand overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

4.2 What’s new in Capacity on Demand? . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

4.3 Preparing for Capacity on Demand . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

4.3.1 Step 1. Plan for future growth with inactive resources . . . . . . . . . . . 87

4.3.2 Step 2. Choose the amount and desired level of activation . . . . . . . 88

4.4 Types of Capacity on Demand . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

4.5 Capacity BackUp. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

4.6 Capacity on Demand activation procedure . . . . . . . . . . . . . . . . . . . . . . . . 91

4.7 Using Capacity on Demand. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

4.7.1 Using Capacity Upgrade on Demand . . . . . . . . . . . . . . . . . . . . . . . . 93

4.7.2 Using On/Off Capacity on Demand . . . . . . . . . . . . . . . . . . . . . . . . . 94

4.7.3 Using Reserve Capacity on Demand . . . . . . . . . . . . . . . . . . . . . . . . 98

4.7.4 Using Trial Capacity on Demand . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

4.8 HMC Capacity on Demand menus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

4.8.1 HMC command line functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

4.9 Capacity on Demand configuration rules . . . . . . . . . . . . . . . . . . . . . . . . 103

4.9.1 Processor Capacity Upgrade on Demand configuration rules . . . . 103

4.9.2 Memory Capacity Upgrade on Demand configuration rules . . . . . . 104

4.9.3 Trial Capacity on Demand configuration rules . . . . . . . . . . . . . . . . 104

4.9.4 Dynamic processor sparing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

4.10 Software licensing considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

4.10.1 License entitlements for permanent processor activations . . . . . . 106

4.10.2 License entitlements for temporary processor activations . . . . . . 107

4.11 Capacity on Demand feature codes . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Chapter 5. Configuration tools and rules . . . . . . . . . . . . . . . . . . . . . . . . . 111

5.1 Configuration tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

5.1.1 IBM Configurator for e-business . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

5.1.2 LPAR Validation Tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

5.2 Configuration rules for p5-590 and p5-595 . . . . . . . . . . . . . . . . . . . . . . . 117

5.2.1 Minimum configuration for the p5-590 and p5-595 . . . . . . . . . . . . . 118

5.2.2 LPAR considerations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

5.2.3 Processor configuration rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

5.2.4 Memory configuration rules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

5.2.5 Advanced POWER Virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . 126

5.2.6 I/O subsystem configuration rules . . . . . . . . . . . . . . . . . . . . . . . . . 126

5.2.7 Disks, boot devices, and media devices . . . . . . . . . . . . . . . . . . . . . 128

5.2.8 PCI and PCI-X slots and adapters . . . . . . . . . . . . . . . . . . . . . . . . . 129

5.2.9 Keyboards and displays . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

5.2.10 Frame, power, and battery backup configuration rules . . . . . . . . . 130

5.2.11 HMC configuration rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

5.2.12 Cluster 1600 considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

5.3 Capacity planning considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

5.3.1 p5-590 and p5-595 general considerations. . . . . . . . . . . . . . . . . . . 135

5.3.2 Further capacity planning considerations . . . . . . . . . . . . . . . . . . . . 137

Chapter 6. Reliability, availability, and serviceability. . . . . . . . . . . . . . . . 139

6.1 What’s new in RAS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

6.2 RAS overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

6.3 Predictive functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 142

6.3.1 First Failure Data Capture (FFDC) . . . . . . . . . . . . . . . . . . . . . . . . . 143

6.3.2 Predictive failure analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

6.3.3 Component reliability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

6.3.4 Extended system testing and surveillance . . . . . . . . . . . . . . . . . . . 145

6.4 Redundancy in components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

6.4.1 Power and cooling redundancy. . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

6.4.2 Memory redundancy mechanisms . . . . . . . . . . . . . . . . . . . . . . . . . 146

6.4.3 Service processor and clocks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 149

6.4.4 Multiple data paths . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

6.5 Fault recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

6.5.1 PCI bus error recovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

6.5.2 Dynamic CPU Deallocation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

6.5.3 CPU Guard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

6.5.4 Hot-plug components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

6.5.5 Hot-swappable boot disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

6.5.6 Blind-swap, hot-plug PCI adapters . . . . . . . . . . . . . . . . . . . . . . . . . 156

6.6 Serviceability features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

6.6.1 Converged service architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

6.6.2 Hardware Management Console . . . . . . . . . . . . . . . . . . . . . . . . . . 158

6.6.3 Error analyzing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

6.6.4 Service processors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

6.6.5 Service Agent . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

6.6.6 Service Focal Point . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

6.7 AIX 5L RAS features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

6.8 Linux RAS features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 168

Chapter 7. Service processor. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 169

7.1 Service processor functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

7.1.1 Firmware binary image . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

7.1.2 Platform initial program load . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

7.1.3 Error handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

7.2 Service processor cabling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

7.3 Advanced System Management Interface . . . . . . . . . . . . . . . . . . . . . . . 175

7.3.1 Accessing ASMI using HMC Service Focal Point utility . . . . . . . . . 175

7.3.2 Accessing ASMI using a Web browser . . . . . . . . . . . . . . . . . . . . . . 177

7.3.3 ASMI login window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

7.3.4 ASMI user accounts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

7.3.5 ASMI menu functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

7.3.6 Power On/Off tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

7.3.7 System Service Aids tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

7.3.8 System Configuration ASMI menu . . . . . . . . . . . . . . . . . . . . . . . . . 183

7.3.9 Network Services ASMI menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

7.3.10 Performance Setup ASMI menu . . . . . . . . . . . . . . . . . . . . . . . . . . 185

7.4 Firmware updates . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

7.5 System Management Services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

Chapter 8. Hardware Management Console overview . . . . . . . . . . . . . . . 195

8.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

8.1.1 Desktop HMC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

8.1.2 Rack-mounted HMC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

8.1.3 HMC characteristics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

8.2 HMC setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

8.2.1 The HMC logical communications. . . . . . . . . . . . . . . . . . . . . . . . . . 199

8.3 HMC network interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

8.3.1 Private and open networks in the HMC environment . . . . . . . . . . . 201

8.3.2 Using the HMC as a DHCP server . . . . . . . . . . . . . . . . . . . . . . . . . 202

8.3.3 HMC connections . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

8.3.4 Predefined HMC user accounts . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

8.4 HMC login . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

8.4.1 Required setup information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

8.5 HMC Guided Setup Wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

8.6 HMC security and user management . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

8.6.1 Server security . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

8.6.2 Object manager security . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

8.6.3 HMC user management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

8.7 Inventory Scout services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

8.8 Service Agent and Service Focal Point . . . . . . . . . . . . . . . . . . . . . . . . . . 237

8.8.1 Service Agent . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

8.8.2 Service Focal Point . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

8.9 HMC service utilities and tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

8.9.1 HMC boot up fails with fsck required. . . . . . . . . . . . . . . . . . . . . . . . 243

8.9.2 Determining HMC serial number. . . . . . . . . . . . . . . . . . . . . . . . . . . 244

Appendix A. Facts and features reference . . . . . . . . . . . . . . . . . . . . . . . . 245

Appendix B. PCI adapter placement guide. . . . . . . . . . . . . . . . . . . . . . . . 253

Expansion unit back view PCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

PCI-X slot description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 254

Recommended system unit slot placement and maximums . . . . . . . . . . . 255

Appendix C. Installation planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 259

Doors and covers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 260

Enhanced acoustical cover option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 260

Slimline cover option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 260

Raised-floor requirements and preparation . . . . . . . . . . . . . . . . . . . . . . . . . . 260

Securing the frame . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 261

Considerations for multiple system installations . . . . . . . . . . . . . . . . . . . . 261

Moving the system to the installation site . . . . . . . . . . . . . . . . . . . . . . . . . 262

Dual power installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

Planning and installation documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

Appendix D. System documentation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

IBM Eserver Hardware Information Center . . . . . . . . . . . . . . . . . . . . . . . . . 266

What is the Hardware Information Center?. . . . . . . . . . . . . . . . . . . . . . . . 266

How do I get it? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 266

How do I get updates? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 267

How do I use the application? . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 268

Abbreviations and acronyms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 273

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 277

IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 277

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 277

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 278

How to get IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 279

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 279

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 281

Figures

1-1 Primary system frame organization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1-2 Powered and bolt on frames . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1-3 POWER4 and POWER5 architecture comparison . . . . . . . . . . . . . . . . . 7

1-4 POWER4 and POWER5 memory structure comparison . . . . . . . . . . . . . 9

1-5 p5-590 and p5-595 I/O drawer organization . . . . . . . . . . . . . . . . . . . . . 10

2-1 POWER4 and POWER5 system structures. . . . . . . . . . . . . . . . . . . . . . 19

2-2 The POWER chip evolution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2-3 p5-590 and p5-595 16-way processor book diagram . . . . . . . . . . . . . . 25

2-4 Memory flow diagram for MCM0 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2-5 Memory card with four DIMM slots . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2-6 Memory placement for the p5-590 and p5-595 . . . . . . . . . . . . . . . . . . . 29

2-7 p5-595 and p5-590 CEC logic diagram . . . . . . . . . . . . . . . . . . . . . . . . . 32

2-8 p5-595 CEC (top view). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2-9 p5-590 CEC (top view). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2-10 CEC backplane (front side view) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

2-11 Single loop 7040-61D . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2-12 Dual loop 7040-61D . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

2-13 I/O drawer RIO-2 ports. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

2-14 Blind-swap hot-plug cassette . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

2-15 I/O drawer top view - logical layout . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

2-16 Hardware Information Center search for PCI placement . . . . . . . . . . . . 48

2-17 Select Model 590 or 595 placement . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

2-18 PCI-X slots of the I/O drawer (rear view) . . . . . . . . . . . . . . . . . . . . . . . . 50

2-19 PCI placement guide on IBM Eserver Information Center . . . . . . . . . 51

2-20 Minimum to maximum I/O configuration . . . . . . . . . . . . . . . . . . . . . . . . 53

2-21 I/O frame configuration example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

3-1 POWER5 partitioning concept . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

3-2 Capped shared processor partitions . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

3-3 Uncapped shared processor partitions . . . . . . . . . . . . . . . . . . . . . . . . . 63

3-4 Example of a VLAN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

3-5 VLAN configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

3-6 Logical view of an inter-partition VLAN . . . . . . . . . . . . . . . . . . . . . . . . . 71

3-7 Connection to external network using AIX routing . . . . . . . . . . . . . . . . . 74

3-8 Shared Ethernet Adapter configuration . . . . . . . . . . . . . . . . . . . . . . . . . 75

3-9 Multiple Shared Ethernet Adapter configuration . . . . . . . . . . . . . . . . . . 76

3-10 Link Aggregation (EtherChannel) pseudo device . . . . . . . . . . . . . . . . . 78

3-11 IBM p5-590 and p5-595 Virtualization Technologies . . . . . . . . . . . . . . . 80

3-12 AIX 5L Version 5.3 Virtual I/O Server and client partitions . . . . . . . . . . 82

4-1 HMC Capacity on Demand Order Selection panel . . . . . . . . . . . . . . . . 92

4-2 Enter CoD Enablement Code (HMC window) . . . . . . . . . . . . . . . . . . . . 92

4-3 HMC Billing Selection Wizard . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

4-4 Manage On/Off CoD Processors HMC Activation window . . . . . . . . . . 97

4-5 Manage On/Off CoD HMC Confirmation Panel and Legal Statement . . 98

4-6 HMC Reserve CoD Processor Activation window . . . . . . . . . . . . . . . . . 99

4-7 CoD Processor Capacity Settings Overview HMC window . . . . . . . . . 101

4-8 CoD Processor Capacity Settings On/Off CoD HMC window . . . . . . . 102

4-9 CoD Processor Capacity Settings Reserve CoD HMC window . . . . . . 102

4-10 CoD Processor Capacity Settings “Trial CoD” HMC window . . . . . . . . 103

5-1 LPAR Validation Tool - creating a new partition . . . . . . . . . . . . . . . . . 114

5-2 LPAR Validation Tool - System Selection dialog . . . . . . . . . . . . . . . . . 114

5-3 LPAR Validation Tool - System Selection processor feature selection 115

5-4 LPAR Validation Tool - Partition Specifications dialog. . . . . . . . . . . . . 115

5-5 LPAR Validation Tool - Memory Specifications dialog. . . . . . . . . . . . . 116

5-6 LPAR Validation Tool - slot assignments . . . . . . . . . . . . . . . . . . . . . . . 117

6-1 IBM's RAS philosophy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

6-2 FFDC error checkers and fault isolation registers . . . . . . . . . . . . . . . . 143

6-3 Memory error recovery mechanisms . . . . . . . . . . . . . . . . . . . . . . . . . . 147

6-4 EEH on POWER5 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

6-5 Blind-swap hot-plug cassette . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

6-6 Error reporting structure of POWER5 . . . . . . . . . . . . . . . . . . . . . . . . . 161

6-7 Service focal point overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

7-1 Service processor (front view) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

7-2 Bulk power controller connections . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

7-3 Oscillator and service processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

7-4 Select service processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

7-5 Select ASMI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

7-6 OK to launch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

7-7 ASMI login . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

7-8 ASMI menu: Welcome (as admin) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

7-9 ASMI menu: Error/Event Logs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

7-10 ASMI menu: Detailed Error Log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

7-11 ASMI menu: Factory Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

7-12 ASMI menu: Firmware Update Policy . . . . . . . . . . . . . . . . . . . . . . . . . 184

7-13 ASMI menu: Network Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . 185

7-14 ASMI menu: Logical Memory Block Size . . . . . . . . . . . . . . . . . . . . . . . 186

7-15 Potential system components that require fixes . . . . . . . . . . . . . . . . . 187

7-16 Getting fixes from the IBM Eserver Hardware Information Center . . 188

7-17 Partition profile power-on properties . . . . . . . . . . . . . . . . . . . . . . . . . . 189

7-18 System Management Services (SMS) main menu . . . . . . . . . . . . . . . 190

7-19 Select Boot Options menu options. . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

7-20 Configure Boot Device Order menu. . . . . . . . . . . . . . . . . . . . . . . . . . . 192

7-21 Current boot sequence menu (default boot list). . . . . . . . . . . . . . . . . . 193

8-1 Private direct network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

8-2 HMC with hub/switch attachment. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 204

8-3 HMC attached to both private and public network . . . . . . . . . . . . . . . . 205

8-4 Primary and secondary HMC to BPC connections . . . . . . . . . . . . . . . 206

8-5 First screen after login as hscroot user . . . . . . . . . . . . . . . . . . . . . . . . 208

8-6 Guided Setup Wizard. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 210

8-7 Date and Time settings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

8-8 The hscroot password . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

8-9 The root password . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213

8-10 First part of Guided Setup Wizard is done . . . . . . . . . . . . . . . . . . . . . . 213

8-11 Select LAN adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

8-12 Speed selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

8-13 Network type . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

8-14 Configure eth0 DHCP range . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

8-15 Second LAN adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 218

8-16 Host name and domain name . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

8-17 Default gateway IP address . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

8-18 DNS IP address . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 220

8-19 End of network configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 220

8-20 Client contact information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

8-21 Client contact information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

8-22 Remote support information. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

8-23 Callhome connection type . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 224

8-24 License agreement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 224

8-25 Modem configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

8-26 Country or region . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

8-27 Select phone number for modem. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

8-28 Dial-up configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

8-29 Authorized user for ESA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

8-30 The e-mail notification dialog . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

8-31 Communication interruptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

8-32 Summary screen . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

8-33 Status screen . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

8-34 Inventory Scout . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

8-35 Select server to get VPD data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

8-36 Store data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

8-37 PPP or VPN connection. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

8-38 Open serviceable events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 240

8-39 Manage service events . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

8-40 Detail view of a service event . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

8-41 Exchange parts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

B-1 7040-61D expansion unit back view with numbered slots . . . . . . . . . . 254


C-1 Search for planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

C-2 Select 9119-590 and 9119-595 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

C-3 Planning information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 264

D-1 Information Center . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 268

D-2 Search field . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 269

D-3 Navigation bar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 270

D-4 Toolbar with start off call . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 271

D-5 Previous pSeries documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 272


Tables

1-1 p5-590 and p5-595 features summary. . . . . . . . . . . . . . . . . . . . . . . . . . 12

1-2 p5-590 and p5-595 operating systems compatibility . . . . . . . . . . . . . . . 13

2-1 Memory configuration table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2-2 Types of available memory cards for p5-590 and p5-595 . . . . . . . . . . . 29

2-3 Number of possible I/O loop connections . . . . . . . . . . . . . . . . . . . . . . . 39

3-1 Micro-Partitioning overview on p5 systems . . . . . . . . . . . . . . . . . . . . . . 60

3-2 Interpartition VLAN communication . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

3-3 VLAN communication to external network . . . . . . . . . . . . . . . . . . . . . . . 70

3-4 EtherChannel and Link Aggregation . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

4-1 CoD feature comparisons . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

4-2 Types of Capacity on Demand (functional categories) . . . . . . . . . . . . . 90

4-3 Permanently activated processors by MCM . . . . . . . . . . . . . . . . . . . . 104

4-4 License entitlement example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

4-5 p5-590 and p5-595 CoD Feature Codes . . . . . . . . . . . . . . . . . . . . . . . 109

5-1 p5-590 minimum system configuration . . . . . . . . . . . . . . . . . . . . . . . . 118

5-2 p5-595 minimum system configuration . . . . . . . . . . . . . . . . . . . . . . . . 119

5-3 Configurable memory-to-default memory block size . . . . . . . . . . . . . . 125

5-4 p5-590 I/O drawers quantity with different loop mode . . . . . . . . . . . . . 128

5-5 p5-595 I/O drawers quantity with different loop mode . . . . . . . . . . . . . 128

5-6 Hardware Management Console usage . . . . . . . . . . . . . . . . . . . . . . . 134

6-1 Hot-swappable FRUs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

7-1 Table of service processor card location codes. . . . . . . . . . . . . . . . . . 173

7-2 Summary of BPC Ethernet hub port connectors . . . . . . . . . . . . . . . . . 173

7-3 ASMI user accounts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

7-4 ASMI user-level access (menu options) . . . . . . . . . . . . . . . . . . . . . . . 180

8-1 HMC user passwords. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

A-1 Facts and Features for p5-590 and p5-595 . . . . . . . . . . . . . . . . . . . . . 246

A-2 System unit details . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 248

A-3 Server I/O attachment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

A-4 Peak bandwidth . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

A-5 Standard warranty in United States, other countries may vary . . . . . . 250

A-6 Physical planning characteristics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

A-7 Racks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

A-8 I/O device options list . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 252

B-1 Model 61D expansion unit slot location description (PHB 1 and 2) . . . 254

B-2 Model 61D expansion unit slot location description (PHB 3) . . . . . . . . 254

B-3 p5-590 and p5-595 PCI adapter placement table . . . . . . . . . . . . . . . . 255


Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult

your local IBM representative for information on the products and services currently available in your area.

Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM

product, program, or service may be used. Any functionally equivalent product, program, or service that

does not infringe any IBM intellectual property right may be used instead. However, it is the user's

responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document.

The furnishing of this document does not give you any license to these patents. You can send license

inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions

are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES

THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED,

INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer

of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made

to the information herein; these changes will be incorporated in new editions of the publication. IBM may

make improvements and/or changes in the product(s) and/or the program(s) described in this publication at

any time without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any

manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the

materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without

incurring any obligation to you.

Any performance data contained herein was determined in a controlled environment. Therefore, the results

obtained in other operating environments may vary significantly. Some measurements may have been made

on development-level systems and there is no guarantee that these measurements will be the same on

generally available systems. Furthermore, some measurement may have been estimated through

extrapolation. Actual results may vary. Users of this document should verify the applicable data for their

specific environment.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm

the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on

the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them

as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business

enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrates programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in


any form without payment to IBM, for the purposes of developing, using, marketing or distributing application

programs conforming to the application programming interface for the operating platform for which the

sample programs are written. These examples have not been thoroughly tested under all conditions. IBM,

therefore, cannot guarantee or imply reliability, serviceability, or function of these programs. You may copy,

modify, and distribute these sample programs in any form without payment to IBM for the purposes of

developing, using, marketing, or distributing application programs conforming to IBM's application

programming interfaces.

Trademarks

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

Eserver®

eServer™

ibm.com®

iSeries™

i5/OS™

pSeries®

xSeries®

zSeries®

AIX 5L™

AIX®

AS/400®

BladeCenter™

Chipkill™

Electronic Service Agent™

Enterprise Storage Server®

Extreme Blue™

ESCON®

Hypervisor™

HACMP™

IBM®

Micro Channel®

Micro-Partitioning™

OpenPower™

OS/400®

Power Architecture™

PowerPC®

POWER™

POWER2™

POWER4™

POWER4+™

POWER5™

PS/2®

PTX®

Redbooks™

Redbooks (logo)™

RS/6000®

S/390®

SP

TotalStorage®

Versatile Storage Server™

Virtualization Engine™

The following terms are trademarks of other companies:

Java and all Java-based trademarks and logos are trademarks or registered trademarks of Sun

Microsystems, Inc. in the United States, other countries, or both.

Microsoft, Windows, Windows NT, and the Windows logo are trademarks of Microsoft Corporation in the

United States, other countries, or both.

Intel, Intel Inside (logos), MMX, and Pentium are trademarks of Intel Corporation in the United States, other

countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Other company, product, and service names may be trademarks or service marks of others.


Preface

This IBM® Redbook explores the IBM eServer® p5 models 590 and 595 (9119-590, 9119-595), a new level of UNIX® servers providing world-class performance, availability, and flexibility. Ideal for on demand computing environments, data center implementations, application service providers, and high performance computing, this new class of high-end servers includes mainframe-inspired self-management and security designed to meet your most demanding needs. The IBM eServer p5 590 and 595 provide an expandable, high-end enterprise solution for managing the computing requirements necessary to become an on demand business.

This publication includes the following topics:

p5-590 and p5-595 overview

p5-590 and p5-595 hardware architecture

Virtualization overview

Capacity on Demand overview

Reliability, availability, and serviceability (RAS) overview

Hardware Management Console (HMC) features and functions

This publication is an ideal desk-side reference for IBM professionals, IBM

Business Partners, and technical specialists who support the p5-590 and p5-595

systems, and for those who want to learn more about this radically new server in

a clear, single-source handbook.

The team that wrote this redbook

This redbook was produced by a team of specialists from around the world

working at the International Technical Support Organization, Austin Center.

Peter Domberg (Domi) is a Technical Support Specialist in Germany. He has

27 years of experience in the ITS hardware service. His areas of expertise

include pSeries®, RS/6000®, networking, and SSA storage. He is also an AIX 5L

Certified Specialist and Hardware Support Specialist for the North and East

regions in Germany.

Nia Kelley is a Staff Software Engineer based in IBM Austin with over four years of experience in the pSeries firmware development field. She holds a bachelor’s degree in Electrical Engineering from the University of Maryland at College Park. Her areas of expertise include system bring-up and firmware development, in which she has led several project teams. She has also held various architectural positions for existing and future pSeries products. Ms. Kelley is an alumna of the IBM Extreme Blue™ program and has filed numerous patents for IBM.

TaiJung Kim is a pSeries Systems Product Engineer at the pSeries post-sales

Technical Support Team in IBM Korea. He has three years of experience working

on RS/6000 and pSeries products. He is an IBM Certified Specialist in pSeries

systems and AIX 5L. He provides clients with technical support on pSeries

systems, AIX 5L, and system management.

Ding Wei is an Advisory IT Specialist working for IBM China ATS. He has eight

years of experience in the Information Technology field. His areas of expertise

include pSeries® and storage products and solutions. He has been working for

IBM for six years.

Thanks to the following people for their contributions to this project:

International Technical Support Organization, Austin Center

Scott Vetter

IBM Austin

Anis Abdul, George Ahrens, Doug Bossen, Pat Buckland, Mark Dewalt,

Bob Foster, Iggy Haider, Dan Henderson, Richard (Jamie) Knight,

Andy McLaughlin, Jim Mitchell, Cathy Nunez, Jayesh Patel, Craig Shempert,

Guillermo Silva, Joel Tendler

IBM Endicott

Brian Tolan

IBM Raleigh

Andre Metelo

IBM Rochester

Salim Agha, Diane Knipfer, Dave Lewis, Matthew Spinler, Stephanie Swanson

IBM Poughkeepsie

Doug Baska

IBM Boca Raton

Arthur J. Prchlik

IBM Somers

Bill Mihaltse, Jim McGaughan


IBM UK

Derrick Daines, Dave Williams

IBM France

Jacques Noury

IBM Germany

Hans Mozes, Wolfgang Seiwald

IBM Australia

Cameron Ferstat

IBM Italy

Carlo Costantini

IBM Redbook “Partitioning Implementations for IBM eServer p5 and pSeries Servers” Team

Nic Irving (CSC Corporation - Australia), Matthew Jenner (IBM Australia),

Arsi Kortesnemi (IBM Finland)

Become a published author

Join us for a two- to six-week residency program! Help write an IBM Redbook

dealing with specific products or solutions, while getting hands-on experience

with leading-edge technologies. You'll team with IBM technical professionals,

Business Partners and/or clients.

Your efforts will help increase product acceptance and client satisfaction. As a

bonus, you'll develop a network of contacts in IBM development labs, and

increase your productivity and marketability.

Find out more about the residency program, browse the residency index, and

apply online at:

ibm.com/redbooks/residencies.html


Comments welcome

Your comments are important to us!

We want our Redbooks™ to be as helpful as possible. Send us your comments

about this or other Redbooks in one of the following ways:

Use the online Contact us review redbook form found at:

ibm.com/redbooks

Send your comments in an email to:

redbook@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. JN9B Building 905

11501 Burnet Road

Austin, Texas 78758-3493


Chapter 1. System overview

In this chapter we provide a basic overview of the p5-590 and p5-595 servers,

highlighting the new features, marketing position, main features, and operating

systems.

Section 1.1, “Introduction” on page 2

Section 1.2, “What’s new” on page 2

Section 1.3, “General overview and characteristics” on page 4

Section 1.4, “Features summary” on page 12

Section 1.5, “Operating systems support” on page 13


1.1 Introduction

The IBM eServer p5 590 and IBM eServer p5 595 are the servers redefining the IT economics of enterprise UNIX and Linux® computing. The p5-595 server, which scales up to 64-way, is the new flagship of the product line, with nearly three times the commercial performance (based on rPerf estimates) and twice the capacity of its predecessor, the IBM eServer pSeries 690. Accompanying the p5-595 is the p5-590, which scales up to 32-way and offers enterprise-class function and more performance than the pSeries 690 at a significantly lower price for comparable configurations.

Both systems are powered by IBM's most advanced 64-bit Power Architecture™ microprocessor, the IBM POWER5™ microprocessor, with simultaneous multi-threading that makes each processor appear as two logical processors to the operating system, thus increasing commercial performance and system utilization over servers without this capability. The p5-595 features a choice of IBM's fastest POWER5 processors running at 1.90 GHz or 1.65 GHz, while the p5-590 offers 1.65 GHz processors.

These servers come standard with mainframe-inspired reliability, availability,

and serviceability (RAS) capabilities and IBM Virtualization Engine™ systems

technology with breakthrough innovations such as Micro-Partitioning™.

Micro-Partitioning allows as many as ten logical partitions (LPARs) per processor

to be defined. Both systems can be configured with up to 254 virtual servers with

a choice of AIX 5L™, Linux, and i5/OS™ operating systems in a single server,

opening the door to vast cost-saving consolidation opportunities.

1.2 What’s new

The p5-590 and p5-595 bring the following features:

POWER5 microprocessor

Designed to provide excellent application performance and high reliability.

Includes simultaneous multi-threading to help increase commercial system

performance and processor utilization. See 1.3.1, “Microprocessor

technology” on page 6 and 2.2, “The POWER5 microprocessor” on page 18

for more information.

High memory / I/O bandwidth

Fast processors wait less for data to be moved through the system. Delivers

data faster for the needs of high performance computing and other

memory-intensive applications. See 2.3, “Memory subsystem” on page 26 for

more information.


Flexibility in packaging

High-density 24-inch system frame for maximum growth. See 1.3, “General

overview and characteristics” on page 4 for more information.

Shared processor pool

Provides the ability to transparently share processing power between

partitions. Helps balance processing power and ensures that high-priority

partitions receive the processor cycles they need. See 3.2.1, “Shared

processor partitions” on page 59 for more information.

Micro-Partitioning

Allows each processor in the shared processor pool to be split into as many

as ten partitions, so that processing power can be fine-tuned to match

workloads. See 3.2, “Micro-Partitioning” on page 58 for more information.

Virtual I/O

Shares expensive resources to help reduce costs. See 3.6, “Virtual SCSI” on

page 81 for more information.

Virtual LAN

Provides the capability for TCP/IP communication between partitions without

the need for additional network adapters. See 3.3, “Virtual Ethernet” on

page 65 for more information.

Dynamic logical partitioning

Allows reallocation of system resources without rebooting affected partitions.

Offers greater flexibility in using available capacity and more rapidly matching

resources to changing business requirements.

Mainframe-inspired RAS

Delivers exceptional system availability using features usually found on much

more expensive systems including service processor, Chipkill™ memory, First

Failure Data Capture, dynamic deallocation of selected system resources,

dual system clocks, and more. See Chapter 6, “Reliability, availability, and

serviceability” on page 139 for more information.

Broad range of Capacity on Demand (CoD) offerings

Provides temporary access to processors and memory to meet predictable

business spikes. Prepaid access to processors to meet intermittent or

seasonal demands. Offers a one-time 30-day trial to test increased processor

or memory capacity before permanent activation. Allows processors and

memory to be permanently added to meet long-term workload increases. See

Chapter 4, “Capacity on Demand” on page 85 for more information.


Grid Computing support

Allows sharing of a wide range of computing and data resources across

heterogeneous, geographically dispersed environments.

Scaling through Cluster Systems Management (CSM) support

Allows for more granular growth so end-user demands can be readily

satisfied. Provides centralized management of multiple interconnected

systems. Provides ability to handle unexpected workload peaks by sharing

resources.

Multiple operating system support

Allows clients the flexibility to select the right operating system and the right

application to meet their needs. Provides the ability to expand application

choices to include many open source applications. See 1.5, “Operating

systems support” on page 13 for more information.

1.3 General overview and characteristics

The p5-590 and p5-595 servers are designed with a basic server configuration that starts with a single frame (Figure 1-1) and includes both required and optional components.

Figure 1-1 Primary system frame organization

[Figure: the 24-inch, 42U primary system frame. Components shown: the IBM Hardware Management Console (HMC), which is required; the Bulk Power Assembly, with a second, fully redundant Bulk Power Assembly on the rear; the Central Electronics Complex (CEC), which holds up to four 16-way books, each containing two Multichip Modules (MCMs), up to 512 GB of memory, and six RIO-2 I/O hub adapters; two hot-plug redundant blowers, with two more on the rear of the CEC; the first I/O drawer (required); and positions for optional I/O drawers, two of which can hold internal batteries instead.]
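The CEC grows by adding books, so the upper limits of a configuration follow directly from the per-book figures in Figure 1-1. The following Python sketch multiplies those figures out. It is an illustration under stated assumptions, not a configurator. The names `cec_capacity` and `CecCapacity` are hypothetical. The memory value is the per-book maximum from the figure, not an installed amount. The sketch also ignores model-specific limits, such as the 32-way maximum of the p5-590.

```python
from dataclasses import dataclass

# Per-book figures taken from Figure 1-1 (memory is an upper bound, not a default).
PROCESSORS_PER_BOOK = 16
MCMS_PER_BOOK = 2
MAX_MEMORY_GB_PER_BOOK = 512
RIO2_HUB_ADAPTERS_PER_BOOK = 6
MAX_BOOKS = 4  # "Up to four 16-way books"


@dataclass(frozen=True)
class CecCapacity:
    """Hypothetical summary of the maximum CEC resources for a book count."""
    books: int
    processors: int
    mcms: int
    max_memory_gb: int
    rio2_hub_adapters: int


def cec_capacity(books: int) -> CecCapacity:
    """Return upper-bound CEC resources for the given number of installed books."""
    if not 1 <= books <= MAX_BOOKS:
        raise ValueError(f"books must be between 1 and {MAX_BOOKS}, got {books}")
    return CecCapacity(
        books=books,
        processors=books * PROCESSORS_PER_BOOK,
        mcms=books * MCMS_PER_BOOK,
        max_memory_gb=books * MAX_MEMORY_GB_PER_BOOK,
        rio2_hub_adapters=books * RIO2_HUB_ADAPTERS_PER_BOOK,
    )


if __name__ == "__main__":
    for n in range(1, MAX_BOOKS + 1):
        print(cec_capacity(n))
```

With four books, the sketch reaches the 64-way maximum of the p5-595, with eight MCMs, up to 2048 GB of memory, and 24 RIO-2 hub adapters. Two books correspond to the 32-way maximum of the p5-590.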


Both systems are powered by IBM's most advanced 64-bit Power Architecture

microprocessor, the POWER5 microprocessor, with simultaneous multi-threading

that makes each processor logically appear as two to the operating system, thus

increasing commercial throughput and system utilization over servers without

this capability. The p5-595 features a choice of IBM's fastest POWER5

microprocessors running at 1.9 GHz or 1.65 GHz, while the p5-590 offers

1.65 GHz processors.

For additional capacity, either a powered or non-powered frame can be

configured for a p5-595, as shown in Figure 1-2.

Figure 1-2 Powered and bolt-on frames

[Figure: expansion frames for the p5-595. Powered frame: required for a 48- or 64-way server with more than four I/O drawers; consider this frame when anticipating rapid future I/O growth that the CEC frame cannot power; a bolt-on frame can be added later for more capacity. Bolt-on frame: can be used for a 16- or 32-way server with more than four I/O drawers, drawing power from the primary CEC frame; a powered frame can be added later.]

The p5-590 can be expanded by an optional bolt-on frame.

Every p5-590 and p5-595 server comes standard with Advanced POWER™ Virtualization, providing Micro-Partitioning, Virtual I/O Server, and Partition Load Manager (PLM) for AIX 5L.

Micro-Partitioning enables system configurations with more partitions than processors. Processing resources can be allocated in units as small as 1/10th of a processor and fine-tuned in increments of 1/100th of a processor. As a result, a p5-590 or p5-595 system can define up to ten virtual servers per processor (maximum of 254 per system), controlled in a shared processor pool for automatic, nondisruptive resource balancing. Virtualization features of the p5-590 and the p5-595 are introduced in Chapter 3, “POWER5 virtualization capabilities” on page 57.
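The Micro-Partitioning limits described above can be expressed as simple arithmetic. The following Python sketch models only the numbers stated in this section: a minimum allocation of 0.10 processor, tuning in steps of 0.01 processor, at most ten partitions per processor, and at most 254 partitions per system. The function names are hypothetical. The HMC and the hypervisor enforce further rules that the sketch does not model, such as the total entitlement available in the pool. Values are passed as decimal strings so that the 0.01 step is checked exactly, without floating-point rounding.

```python
from decimal import Decimal

MIN_ENTITLEMENT = Decimal("0.10")  # smallest allocation: 1/10th of a processor
INCREMENT = Decimal("0.01")        # fine-tuning granularity: 1/100th of a processor
PARTITIONS_PER_PROCESSOR = 10      # up to ten virtual servers per processor
MAX_PARTITIONS_PER_SYSTEM = 254    # system-wide ceiling


def max_micro_partitions(pool_processors: int) -> int:
    """Upper bound on micro-partitions for a shared pool of the given size."""
    if pool_processors < 1:
        raise ValueError("the shared pool needs at least one processor")
    return min(pool_processors * PARTITIONS_PER_PROCESSOR, MAX_PARTITIONS_PER_SYSTEM)


def is_valid_entitlement(units: str) -> bool:
    """True if an entitlement is at least 0.10 processor and a multiple of 0.01."""
    value = Decimal(units)
    return value >= MIN_ENTITLEMENT and value % INCREMENT == 0


if __name__ == "__main__":
    for pool in (16, 25, 26, 64):
        print(pool, "processors ->", max_micro_partitions(pool), "partitions")
```

For example, a 16-processor pool allows up to 160 micro-partitions. Any pool of 26 or more processors reaches the 254-partition system limit, so a 64-way system cannot use the full ten-per-processor ratio.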

The ability to communicate between partitions using virtual Ethernet is part of

Advanced POWER Virtualization and it is extended with the Virtual I/O Server to

include shared Ethernet adapters. Also part of the Virtual I/O Server is virtual

SCSI for sharing SCSI adapters and the attached disk drives.

The Virtual I/O Server requires APAR IY62262 and is supported by AIX 5L

Version 5.3 with APAR IY60349, as well as by SLES 9 and RHEL AS 3. Also

included in Advanced POWER Virtualization is PLM, a powerful policy-based tool

for automatically managing resources among LPARs running AIX 5L Version 5.3

or AIX 5L Version 5.2 with the 5200-04 Recommended Maintenance package.

IBM eServer p5 590 and 595 servers also offer optional Capacity on Demand

(CoD) capability for processors and memory. CoD functionality is outlined in

Chapter 4, “Capacity on Demand” on page 85.

IBM eServer p5 590 and 595 servers provide significant extensions to the

mainframe-inspired reliability, availability, and serviceability (RAS) capabilities

found in IBM eServer p5 and pSeries systems. They come equipped with

multiple resources to identify and help resolve system problems rapidly. During

ongoing operation, error checking and correction (ECC) checks data for errors

and can correct them in real time. First Failure Data Capture (FFDC) capabilities

log both the source and root cause of problems to help prevent the recurrence of

intermittent failures that diagnostics cannot reproduce. Meanwhile, Dynamic

Processor Deallocation and dynamic deallocation of PCI bus slots help to

reallocate resources when an impending failure is detected so applications can

continue to run unimpeded. RAS function is discussed in Chapter 6, “Reliability,

availability, and serviceability” on page 139.

Power options for these systems are described in 5.2.10, “Frame, power, and

battery backup configuration rules” on page 130.
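The expansion-frame guidance shown in Figure 1-2 can be written as a small rule check. The following Python sketch is a simplified illustration, not the configuration rules in 5.2.10. The function name `expansion_frame_options` is hypothetical. The sketch also makes one assumption that the figures only imply: the primary frame holds up to four I/O drawers, so an expansion frame is needed only beyond four. It does not model the trade-off between optional I/O drawers and internal batteries.

```python
# Assumption: the primary frame has four I/O drawer positions (Figure 1-1).
PRIMARY_FRAME_IO_DRAWERS = 4
MAX_PROCESSORS = {"p5-590": 32, "p5-595": 64}


def expansion_frame_options(model: str, processors: int, io_drawers: int) -> list[str]:
    """Return the expansion-frame types allowed by Figure 1-2 (empty if none needed)."""
    if model not in MAX_PROCESSORS:
        raise ValueError(f"unknown model: {model}")
    if not 1 <= processors <= MAX_PROCESSORS[model]:
        raise ValueError(f"{model} supports at most {MAX_PROCESSORS[model]} processors")
    if io_drawers < 1:
        raise ValueError("the first I/O drawer is required")
    if io_drawers <= PRIMARY_FRAME_IO_DRAWERS:
        return []  # everything fits in the primary frame
    if model == "p5-590":
        return ["bolt-on"]  # the p5-590 expands only with a bolt-on frame
    if processors > 32:
        # 48- and 64-way p5-595 systems require a powered frame.
        return ["powered"]
    # 16- and 32-way p5-595 systems: the bolt-on frame draws power from the
    # primary CEC frame; a powered frame suits anticipated rapid I/O growth.
    return ["bolt-on", "powered"]


if __name__ == "__main__":
    print(expansion_frame_options("p5-595", 64, 6))
    print(expansion_frame_options("p5-595", 32, 6))
    print(expansion_frame_options("p5-590", 32, 8))
```

Returning a list rather than a single answer reflects the figure's wording: for smaller p5-595 systems, both frame types are valid, and the choice depends on expected I/O growth.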

A description of RAS features, such as redundant power and cooling, can be found

in 6.4, “Redundancy in components” on page 146.

The following sections detail some of the technologies behind the p5-590 and

p5-595.

1.3.1 Microprocessor technology

The IBM POWER4™ microprocessor, which was introduced in 2001, was the result of advanced research technologies developed by IBM to create a high-performance, high-scalability chip design to power future IBM eServer systems. The POWER4 design integrates two processor cores on a single chip, a shared second-level (L2) cache, a directory for an off-chip third-level (L3) cache, and the necessary circuitry to connect it to other POWER4 chips to form a system. The dual-processor chip provides natural thread-level parallelism at the chip level.

The POWER5 microprocessor is IBM's second-generation dual-core

microprocessor and extends the POWER4 design by introducing enhanced

performance and support for a more granular approach to computing. The

POWER5 chip features single- and multi-threaded execution and higher

performance in the single-threaded mode than the POWER4 chip at equivalent

frequencies.

The primary design objectives of the POWER5 microprocessor are:

Maintain binary and structural compatibility with existing POWER4 systems

Enhance and extend symmetric multiprocessing (SMP) scalability

Continue to provide superior performance

Deliver a power efficient design

Enhance reliability, availability, and serviceability

POWER4 to POWER5 comparison

There are several major differences between the POWER4 and POWER5 chip designs. They are summarized in Figure 1-3 and discussed in the following sections.

Figure 1-3 POWER4 and POWER5 architecture comparison

POWER4+ to POWER5 comparison

| Feature | POWER4+ design | POWER5 design | Benefit |
|---|---|---|---|
| L1 cache | 2-way associative | 4-way associative | Improved L1 cache performance |
| L2 cache | 8-way associative, 1.5 MB | 10-way associative, 1.9 MB | Fewer L2 cache misses; better performance |
| L3 cache | 32 MB, 8-way associative, 118 clock cycles | 36 MB, 12-way associative, reduced latency | Better cache performance |
| Simultaneous multi-threading | No | Yes | Better processor utilization; 30%* system improvement |
| Partitioning support | 1 processor | 1/10th of processor | Better usage of processor resources |
| Chip interconnect: type | Distributed switch | Enhanced distributed switch | Better systems throughput; better performance |
| Chip interconnect: intra-MCM data bus | ½ processor speed | Processor speed | |
| Chip interconnect: inter-MCM data bus | ½ processor speed | ½ processor speed | |
| Size | 412 mm² | 389 mm² | 50% more transistors in the same space |
| Floating-point rename registers | 72 | 120 | Better performance |

* Based on IBM rPerf projections
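To make the bus ratios in Figure 1-3 concrete, the following Python sketch converts a processor clock into intra-MCM and inter-MCM data bus clocks. It is illustrative arithmetic only: the ratios come from the figure, the 1.90 GHz example clock is one of the speeds listed in Table 1-1, and the helper name `bus_clocks_ghz` is hypothetical.

```python
# Data bus clock expressed as a fraction of the processor clock (from Figure 1-3).
BUS_RATIOS = {
    "POWER4+": {"intra_mcm": 0.5, "inter_mcm": 0.5},
    "POWER5": {"intra_mcm": 1.0, "inter_mcm": 0.5},
}


def bus_clocks_ghz(design: str, cpu_ghz: float) -> dict:
    """Return the intra- and inter-MCM data bus clocks (GHz) for a processor clock.

    Hypothetical helper: it only applies the ratios listed in Figure 1-3.
    """
    ratios = BUS_RATIOS[design]
    return {bus: cpu_ghz * ratio for bus, ratio in ratios.items()}


if __name__ == "__main__":
    # Compare both designs at the same processor clock.
    for design in ("POWER4+", "POWER5"):
        print(design, bus_clocks_ghz(design, 1.90))
```

At 1.90 GHz, the POWER5 intra-MCM bus runs at the full 1.90 GHz, whereas a POWER4+ design at the same clock would run it at 0.95 GHz; the inter-MCM bus runs at 0.95 GHz in both cases.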


Introduction to simultaneous multi-threading

Simultaneous multi-threading is a hardware design enhancement in the POWER5 architecture that allows two separate instruction streams (threads) to execute simultaneously on the processor. It combines the capabilities of superscalar processors with the latency-hiding abilities of hardware multi-threading.

Using multiple on-chip thread contexts, the simultaneous multi-threading processor executes instructions from multiple threads each cycle. By duplicating portions of logic in the instruction pipeline and increasing the capacity of the register rename pool, the POWER5 processor can execute several elements of two instruction streams, or threads, concurrently. Through hardware and software thread prioritization, greater utilization of the hardware resources can be realized without an impact on application performance.
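The latency-hiding idea can be illustrated with a deliberately simplified toy model in Python. It is not a model of the POWER5 pipeline. It assumes, hypothetically, that each thread alternates between a fixed number of cycles with ready instructions and a fixed number of stall cycles, that the core has a fixed number of issue slots per cycle, and that threads never contend for cache or memory. The names `ToyThread` and `issue_slot_utilization` are illustrative only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ToyThread:
    """A hypothetical thread that alternates between ready cycles and stall cycles."""

    ready_cycles: int      # consecutive cycles in which the thread has instructions to issue
    stall_cycles: int      # consecutive cycles spent waiting, for example on a cache miss
    ilp: int               # most instructions this thread can issue in one cycle
    phase_offset: int = 0  # shifts the schedule so threads stall at different times

    def is_ready(self, cycle: int) -> bool:
        period = self.ready_cycles + self.stall_cycles
        return (cycle + self.phase_offset) % period < self.ready_cycles


def issue_slot_utilization(threads: List[ToyThread], issue_width: int, cycles: int) -> float:
    """Fraction of issue slots filled when every ready thread may issue in the same cycle."""
    issued = 0
    for cycle in range(cycles):
        free_slots = issue_width
        # Rotate which thread is offered slots first, a crude stand-in for thread prioritization.
        start = cycle % len(threads)
        for thread in threads[start:] + threads[:start]:
            if free_slots == 0:
                break
            if thread.is_ready(cycle):
                used = min(thread.ilp, free_slots)
                issued += used
                free_slots -= used
    return issued / (issue_width * cycles)


if __name__ == "__main__":
    a = ToyThread(ready_cycles=6, stall_cycles=4, ilp=2)
    b = ToyThread(ready_cycles=6, stall_cycles=4, ilp=2, phase_offset=5)
    print("single thread:", issue_slot_utilization([a], issue_width=4, cycles=1000))
    print("two threads:  ", issue_slot_utilization([a, b], issue_width=4, cycles=1000))
```

With these made-up parameters, the single thread fills 30% of the issue slots and the pair fills 60%, because each thread issues during the other's stall cycles. Because the toy model ignores cache and memory contention, it cannot show the case in which a well-tuned application slows down.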

The benefit of simultaneous multi-threading is greater in commercial environments than in numeric-intensive environments, because in commercial workloads the number of transactions performed matters more than the speed of any single transaction. For example, a simultaneous multi-threading environment is much better suited to a Web server or database server than to a Fortran weather prediction application. In the rare case that applications are already tuned for optimal use of processor resources, there may be a decrease in performance due to increased contention for cache and memory. For this reason, simultaneous multi-threading can be disabled.

Although the operating system determines whether simultaneous multi-threading is used, simultaneous multi-threading is otherwise completely transparent to the applications and operating system and is implemented entirely in hardware. Simultaneous multi-threading is not supported on AIX 5L Version 5.2.

1.3.2 Memory subsystem

With the enhanced architecture of larger 7.6 MB L2 and 144 MB L3 caches, each multichip module (MCM) can stage information more effectively from processor memory to applications. These caches allow the p5-590 and p5-595 to run workloads significantly faster than predecessor servers.
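The per-MCM cache figures follow from the per-chip POWER5 values in Figure 1-3. The short Python sketch below assumes four dual-core POWER5 chips per 8-way MCM, which is consistent with the 16-way processor books containing two MCMs listed in Table 1-1. The constant and function names are illustrative, and the 1.9 MB per-chip L2 size is the rounded value from the figure.

```python
# Per-chip POWER5 cache sizes from Figure 1-3.
L2_PER_CHIP_MB = 1.9
L3_PER_CHIP_MB = 36

# Assumption: an 8-way MCM holds four dual-core POWER5 chips
# (a 16-way processor book contains two MCMs).
CHIPS_PER_MCM = 4


def mcm_cache_totals(chips: int = CHIPS_PER_MCM) -> tuple:
    """Return (total L2 in MB, total L3 in MB) for one MCM."""
    return round(L2_PER_CHIP_MB * chips, 1), L3_PER_CHIP_MB * chips


if __name__ == "__main__":
    l2, l3 = mcm_cache_totals()
    print(f"L2 per MCM: {l2} MB, L3 per MCM: {l3} MB")
```

Four chips give 7.6 MB of L2 and 144 MB of L3 per MCM, matching the figures quoted above.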

The differences in memory hierarchy between POWER4 and POWER5 systems are shown in Figure 1-4.


Figure 1-4 POWER4 and POWER5 memory structure comparison

Two memory technologies are offered: DDR1 and DDR2. With 8 GB of memory in its minimum configuration, the p5-590 can be scaled to 1 TB using DDR1 266 MHz memory, or from 8 GB to 128 GB using DDR2 533 MHz memory, which is useful for high-performance applications. The p5-595 can be scaled from 8 GB to 2 TB of DDR1 266 MHz memory, or from 8 GB to 256 GB of DDR2 533 MHz memory.
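The stated ranges can be captured as a simple lookup table. The Python sketch below is a hypothetical planning helper (`memory_in_range` is not an IBM tool). It treats 1 TB as 1024 GB and 2 TB as 2048 GB, matching Table 1-1, and checks only the overall range; the actual rules are in 5.2.4, "Memory configuration rules".

```python
# Documented memory ranges in GB: (minimum, maximum) per model and memory technology.
MEMORY_LIMITS_GB = {
    ("p5-590", "DDR1"): (8, 1024),  # DDR1 266 MHz, up to 1 TB
    ("p5-590", "DDR2"): (8, 128),   # DDR2 533 MHz
    ("p5-595", "DDR1"): (8, 2048),  # DDR1 266 MHz, up to 2 TB
    ("p5-595", "DDR2"): (8, 256),   # DDR2 533 MHz
}


def memory_in_range(model: str, technology: str, size_gb: int) -> bool:
    """Return True if size_gb lies within the documented range for this model and technology.

    Memory card sizes, placement rules, and the interim limits noted under
    Table 1-1 are not modeled.
    """
    low, high = MEMORY_LIMITS_GB[(model, technology)]
    return low <= size_gb <= high


if __name__ == "__main__":
    print(memory_in_range("p5-590", "DDR2", 256))  # beyond the p5-590 DDR2 range
    print(memory_in_range("p5-595", "DDR2", 256))  # top of the p5-595 DDR2 range
```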

Additional information about memory can be found in 2.3, “Memory subsystem”

on page 26 and 5.2.4, “Memory configuration rules” on page 123.

1.3.3 I/O subsystem

Using the RIO-2 ports in the processor books, up to eight I/O drawers can be attached to a p5-590 and up to twelve I/O drawers to a p5-595, providing up to 9.3 TB and 14 TB of 15K rpm disk storage, respectively. Each 4U (4 EIA unit) drawer provides 20 hot-plug, blind-swap PCI-X I/O adapter slots, 16 or 8 front-accessible, hot-swappable disk drive bays, and four or two integrated Ultra3 SCSI controllers. I/O drawers can be installed in the primary 24-inch frame or in an optional expansion frame. Attachment to a wide range of IBM TotalStorage® storage system offerings, including disk storage subsystems, storage area network (SAN) components, tape libraries, and external media drives, is also supported.


A minimum of one I/O drawer (FC 5791 or FC 5794) is required per system. I/O drawer FC 5791 contains 20 PCI-X slots and 16 disk bays, and FC 5794 contains 20 PCI-X slots and 8 disk bays. Existing 7040-61D I/O drawers may also be attached to p5-590 or p5-595 servers as additional I/O drawers (when correctly featured). For more information about the I/O system, refer to 2.7, “I/O drawer” on page 37. The I/O features are shown in Figure 1-5.

Figure 1-5 p5-590 and p5-595 I/O drawer organization
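The system-wide slot and bay maximums in Table 1-1 follow from the per-drawer figures above. The Python sketch below is a hypothetical planning helper (`io_totals` and its data layout are illustrative). It applies only the drawer-count limits stated in this section and ignores existing 7040-61D drawers.

```python
# Per-drawer resources for the I/O drawer features described above.
DRAWER_FEATURES = {
    "FC 5791": {"pci_x_slots": 20, "disk_bays": 16},
    "FC 5794": {"pci_x_slots": 20, "disk_bays": 8},
}

# Maximum number of I/O drawers per model.
MAX_DRAWERS = {"p5-590": 8, "p5-595": 12}


def io_totals(model: str, drawers: list) -> tuple:
    """Return (PCI-X slots, disk bays) for a list of drawer feature codes.

    Raises ValueError if the drawer count is outside 1..MAX_DRAWERS[model].
    """
    if not 1 <= len(drawers) <= MAX_DRAWERS[model]:
        raise ValueError(f"{model} supports 1 to {MAX_DRAWERS[model]} I/O drawers")
    slots = sum(DRAWER_FEATURES[d]["pci_x_slots"] for d in drawers)
    bays = sum(DRAWER_FEATURES[d]["disk_bays"] for d in drawers)
    return slots, bays


if __name__ == "__main__":
    print(io_totals("p5-590", ["FC 5791"] * 8))   # fully populated p5-590
    print(io_totals("p5-595", ["FC 5791"] * 12))  # fully populated p5-595
```

Fully populated with FC 5791 drawers, the p5-590 yields 160 slots and 128 bays, and the p5-595 yields 240 slots and 192 bays, matching Table 1-1.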

1.3.4 Media bays

You can configure your IBM Eserver p5 595 (9119-595) and p5 590 (9119-590) systems to include a storage device media drawer. This media drawer can be mounted in the CEC rack and provides three media bays, two in the front and one in the rear. New storage devices for the media bays include:

16X/48X IDE DVD-ROM drive

4.7 GB, SCSI DVD-RAM drive

36/72 GB, 4 mm internal tape drive

This offering is an alternative to the 7212-102 Storage Device Enclosure, which

cannot be mounted in the 590 and 595 CEC rack.

Figure 1-5 highlights the following I/O drawer features:

- Up to 16 hot-swappable disks, structured into four 4-packs of 36.4 GB or 73.4 GB 15K rpm disk drives (1.1 TB maximum per I/O drawer)
- Feature options for two or four backplanes
- Existing IBM 7040-61D I/O drawers may be moved to these servers
- Twenty hot-plug PCI-X slots per drawer
- Maximum of 160 (p5-590) or 240 (p5-595) hot-plug slots per system
- Hot-plug slots with blind-swap cassettes, which permit PCI-X adapters to be added or replaced without extending the I/O drawer while the system remains available
- Any slot can be assigned to any partition


1.3.5 Virtualization

The IBM Virtualization Engine can help simplify IT infrastructure by reducing management complexity and providing integrated virtualization technologies and systems services for a single IBM Eserver p5 server or across multiple server platforms. The Virtualization Engine systems technologies added to or enhanced in systems using the POWER5 architecture are as follows. A detailed description of these features can be found in Chapter 3, “POWER5 virtualization capabilities” on page 57.

POWER™ Hypervisor™

Is responsible for time slicing and dispatching the logical partition workload across the physical processors, enforces partition security, and can provide virtual LAN channels between partitions, reducing the need for physical Ethernet adapters that occupy I/O adapter slots.

Simultaneous multi-threading

Allows two separate instruction streams (threads) to run concurrently on the same physical processor, improving overall throughput and hardware resource utilization.

LPAR

Allows processors, memory, I/O adapters, and attached devices to be grouped logically into separate systems within the same server.

Micro-Partitioning

Allows processor resources to be allocated to partitions in units as small as

1/10th of a processor, with increments in units of 1/100th of a processor.

Virtual I/O

Includes virtual SCSI for sharing SCSI attached disks and virtual networking

to enable sharing of Ethernet adapters.

Virtual LAN (VLAN)

Enables high-speed, secure, partition-to-partition communications using the

TCP/IP protocol to help improve performance.

Capacity on Demand

Allows system resources such as processors and memory to be made

available on an as-needed basis.

Multiple operating system support

The POWER5 processor-based Eserver p5 products support IBM AIX 5L Version 5.2, IBM AIX 5L Version 5.3, SUSE Linux Enterprise Server 9 (SLES 9), and Red Hat Enterprise Linux AS 3 (RHEL AS 3). IBM i5/OS V5R3 is also available on Eserver p5 models 570, 590, and 595.
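The Micro-Partitioning granularity described above, together with the maximum micro-partition count in Table 1-1 (10 times the number of processors, 254 maximum), can be expressed as two simple checks. This is a hedged Python sketch: the function names are hypothetical, allocations are passed as decimal strings to avoid binary floating-point rounding, and only the rules stated in this chapter are checked.

```python
from decimal import Decimal

MIN_UNITS = Decimal("0.10")  # smallest allocation: 1/10th of a processor
INCREMENT = Decimal("0.01")  # allocation granularity: 1/100th of a processor
MAX_MICRO_PARTITIONS = 254   # system-wide cap from Table 1-1


def valid_allocation(units: str) -> bool:
    """Return True if a processor allocation (as a decimal string) meets the stated granularity rules."""
    value = Decimal(units)
    return value >= MIN_UNITS and value % INCREMENT == 0


def max_micro_partitions(processors: int) -> int:
    """Ten micro-partitions per processor, capped at 254."""
    return min(10 * processors, MAX_MICRO_PARTITIONS)


if __name__ == "__main__":
    for units in ("0.10", "0.05", "1.25", "0.125"):
        print(units, valid_allocation(units))
    for processors in (16, 32, 64):
        print(processors, max_micro_partitions(processors))
```

Under these rules, 0.10 and 1.25 processors are valid allocations, 0.05 is below the minimum, and 0.125 is not a multiple of 1/100th. A 16-processor system can host up to 160 micro-partitions, while 32- and 64-processor systems reach the 254 cap.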


1.4 Features summary

Table 1-1 summarizes the major features of the p5-590 and p5-595 servers. For

more information, see Appendix A, “Facts and features reference” on page 245.

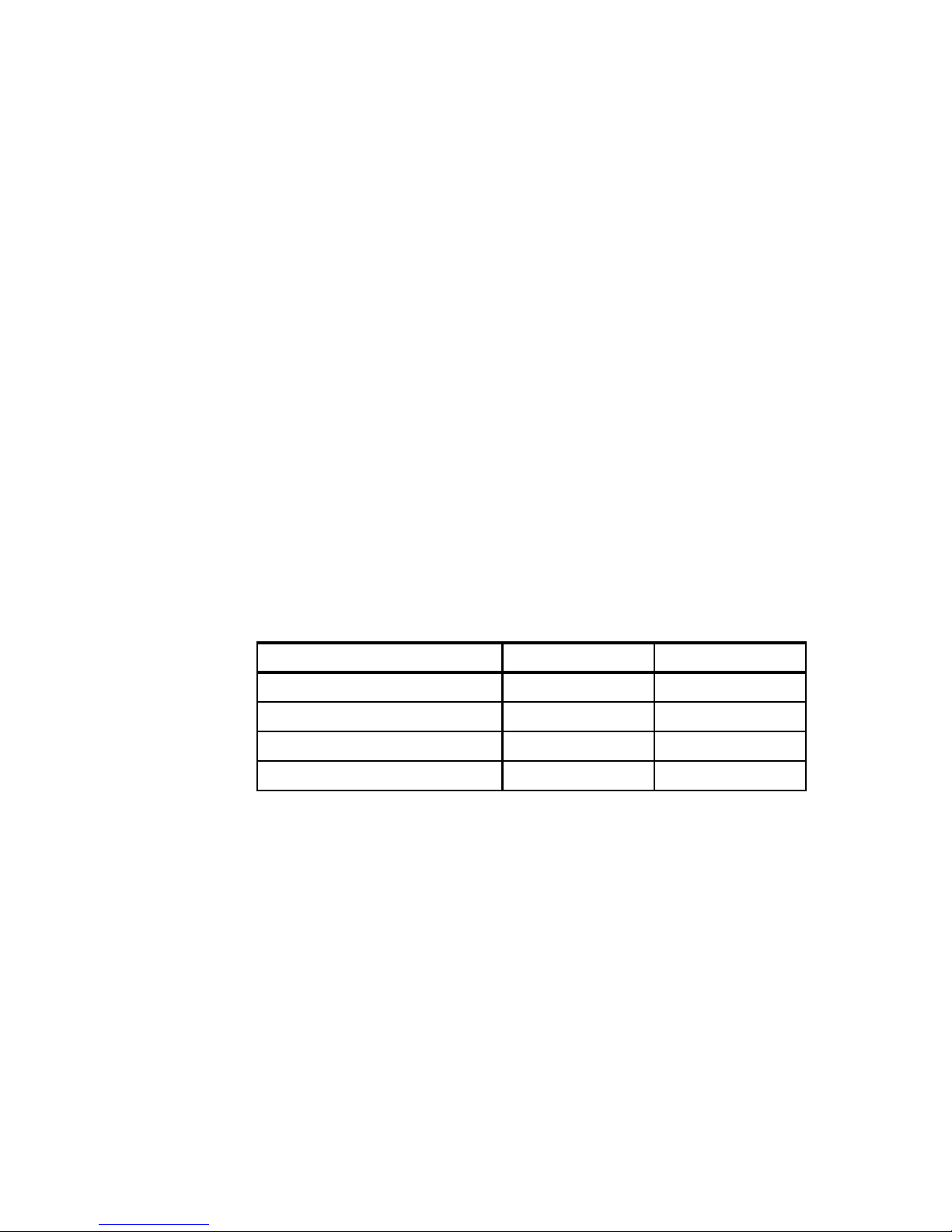

Table 1-1 p5-590 and p5-595 features summary

| IBM Eserver p5 system | p5-590 | p5-595 |
|---|---|---|
| Machine type-model | 9119-590 | 9119-595 |
| Packaging | 24-inch system frame | 24-inch system frame |
| Number of expansion racks | 1 | 1 or 2 |
| Number of processors per system | 8 to 32 | 16 to 64 |
| POWER5 processor speed | 1.65 GHz | 1.65 or 1.90 GHz |
| Number of 16-way processor books (2 MCMs per book) | 1 or 2 | 1, 2, 3, or 4 |
| Memory | 8 GB to 1024 GB* | 8 GB to 2048 GB* |
| CoD features (all memory CoD features apply to DDR1 memory only) | Processor/Memory CUoD, Reserve CoD, On/Off Processor/Memory CoD, Trial Processor/Memory CoD, Capacity BackUp | Processor/Memory CUoD, Reserve CoD, On/Off Processor/Memory CoD, Trial Processor/Memory CoD, Capacity BackUp |
| Maximum micro-partitions | 10 times the number of processors (254 maximum) | 10 times the number of processors (254 maximum) |
| PCI-X slots | 20 per I/O drawer | 20 per I/O drawer |
| Media bays | Optional | Optional |
| Disk bays | 8 or 16 per I/O drawer | 8 or 16 per I/O drawer |
| Optional I/O drawers | Up to 8 | Up to 12 |
| Maximum PCI-X slots with maximum I/O drawers | 160 | 240 |
| Maximum disk bays with maximum I/O drawers | 128 | 192 |
| Maximum disk storage with maximum I/O drawers | 9.3 TB | 14.0 TB |

* 32 GB memory cards to enable maximum memory are planned for availability April 8, 2005. Until that time, maximum memory is half as much (512 GB on p5-590 and 1024 GB on p5-595).


1.5 Operating systems support

All new POWER5 processor-based servers are capable of running IBM AIX 5L Version 5.3 or AIX 5L Version 5.2 for POWER and support appropriate versions of Linux. Both of these AIX 5L versions have been specifically developed and enhanced to exploit and support the extensive RAS features on IBM Eserver pSeries systems. Table 1-2 lists operating system compatibility.

Table 1-2 p5-590 and p5-595 operating systems compatibility

1 Many of the features are operating system dependent and may not be available on Linux. For more information, check:

http://www.ibm.com/servers/eserver/pseries/linux/whitepapers/linux_pseries.html

1.5.1 AIX 5L

The p5-590 and p5-595 require AIX 5L Version 5.3 or AIX 5L Version 5.2 Maintenance Package 5200-04 (IY56722) or later.

The system requires the following media:

| Operating system (note 1) | p5-590 | p5-595 |
|---|---|---|
| AIX 5L V5.1 | No | No |
| AIX 5L V5.2 (5765-E62) | Yes | Yes |
| AIX 5L V5.3 (5765-G03) | Yes | Yes |