SUSE Linux Enterprise Server 10 SP1 EAL4

High-Level Design

Version 1.2.1

| Version | Author | Date | Comments |
|---------|--------|------|----------|
| 1.0 | EJR | 3/15/07 | First draft based on RHEL5 HLD |
| 1.1 | EJR | 4/19/07 | Updates based on comments from Stephan Mueller and Klaus Weidner |
| 1.2 | GCW | 4/26/07 | Incorporated Stephan's comment to remove racoon |
| 1.2.1 | GCW | 10/27/08 | Added legal matter missing from final draft. |

Novell, the Novell logo, the N logo, and SUSE are registered trademarks of Novell, Inc. in the United States and other countries.

IBM, IBM logo, BladeCenter, eServer, iSeries, i5/OS, OS/400, PowerPC, POWER3, POWER4, POWER4+, POWER5+, pSeries, S390, System p, System z, xSeries, zSeries, zArchitecture, and z/VM are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both.

Linux is a registered trademark of Linus Torvalds.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Intel and Pentium are trademarks of Intel Corporation in the United States, other countries, or both. Other company, product, and service names may be trademarks or service marks of others.

This document is provided “AS IS” with no express or implied warranties. Use the information in this document at your own risk.

This document may be reproduced or distributed in any form without prior permission provided the copyright notice is retained on all copies. Modified versions of this document may be freely distributed provided that they are clearly identified as such, and this copyright is included intact.

Copyright © 2003, 2007 IBM Corporation or its wholly owned subsidiaries.

Table of Contents

1 Introduction

1.1 Purpose of this document

1.2 Document overview

1.3 Conventions used in this document

1.4 Terminology

2 System Overview

2.1 Product history

2.1.1 SUSE Linux Enterprise Server

2.1.2 eServer systems

2.2 High-level product overview

2.2.1 eServer host computer structure

2.2.2 eServer system structure

2.2.3 TOE services

2.2.4 Security policy

2.2.5 Operation and administration

2.2.6 TSF interfaces

2.3 Approach to TSF identification

3 Hardware architecture

3.1 System x

3.1.1 System x hardware overview

3.1.2 System x hardware architecture

3.2 System p

3.2.1 System p hardware overview

3.2.2 System p hardware architecture

3.3 System z

3.3.1 System z hardware overview

3.3.2 System z hardware architecture

3.4 eServer 326

3.4.1 eServer 326 hardware overview

3.4.2 eServer 326 hardware architecture

4 Software architecture

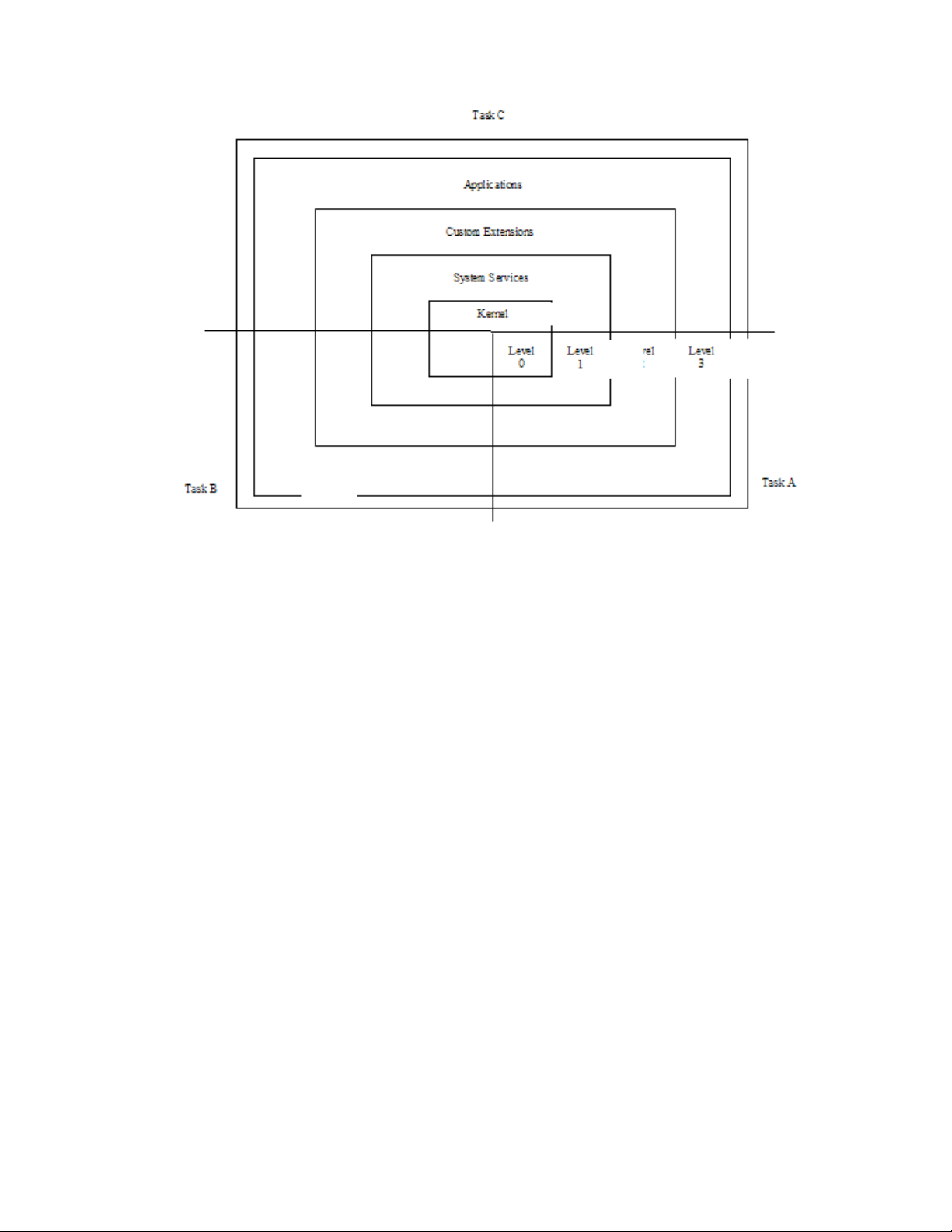

4.1 Hardware and software privilege

4.1.1 Hardware privilege

4.1.1.1 Privilege level

4.1.2 Software privilege

4.1.2.1 DAC

4.1.2.2 AppArmor

4.1.2.3 Programs with software privilege

4.2 TOE Security Functions software structure

4.2.1 Kernel TSF software

4.2.1.1 Logical components

4.2.1.2 Execution components

4.2.2 Non-kernel TSF software

4.3 TSF databases

4.4 Definition of subsystems for the CC evaluation

4.4.1 Hardware

4.4.2 Firmware

4.4.3 Kernel subsystems

4.4.4 Trusted process subsystems

4.4.5 User-level audit subsystem

5 Functional descriptions

5.1 File and I/O management

5.1.1 Virtual File System

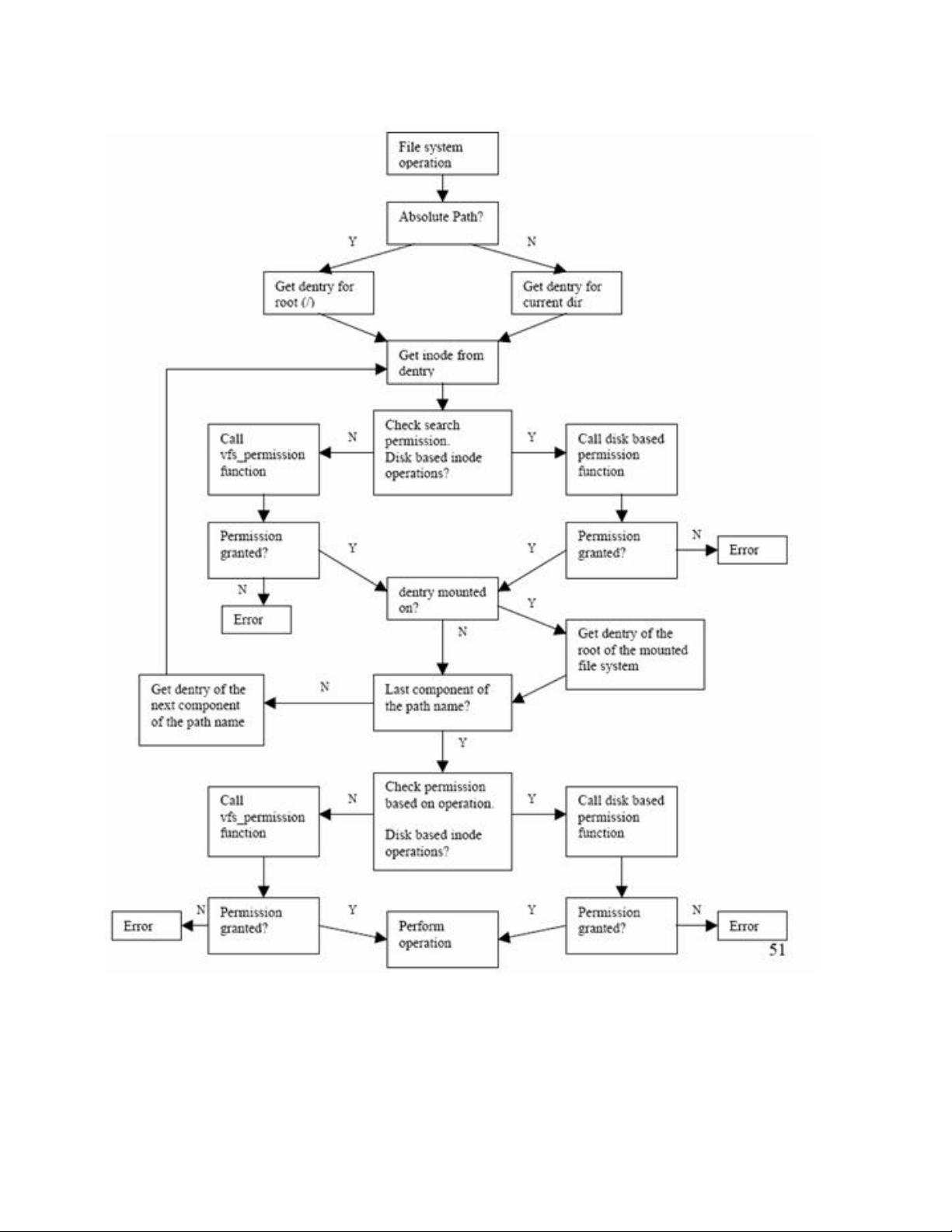

5.1.1.1 Pathname translation

5.1.1.2 open()

5.1.1.3 write()

5.1.1.4 mount()

5.1.1.5 Shared subtrees

5.1.2 Disk-based file systems

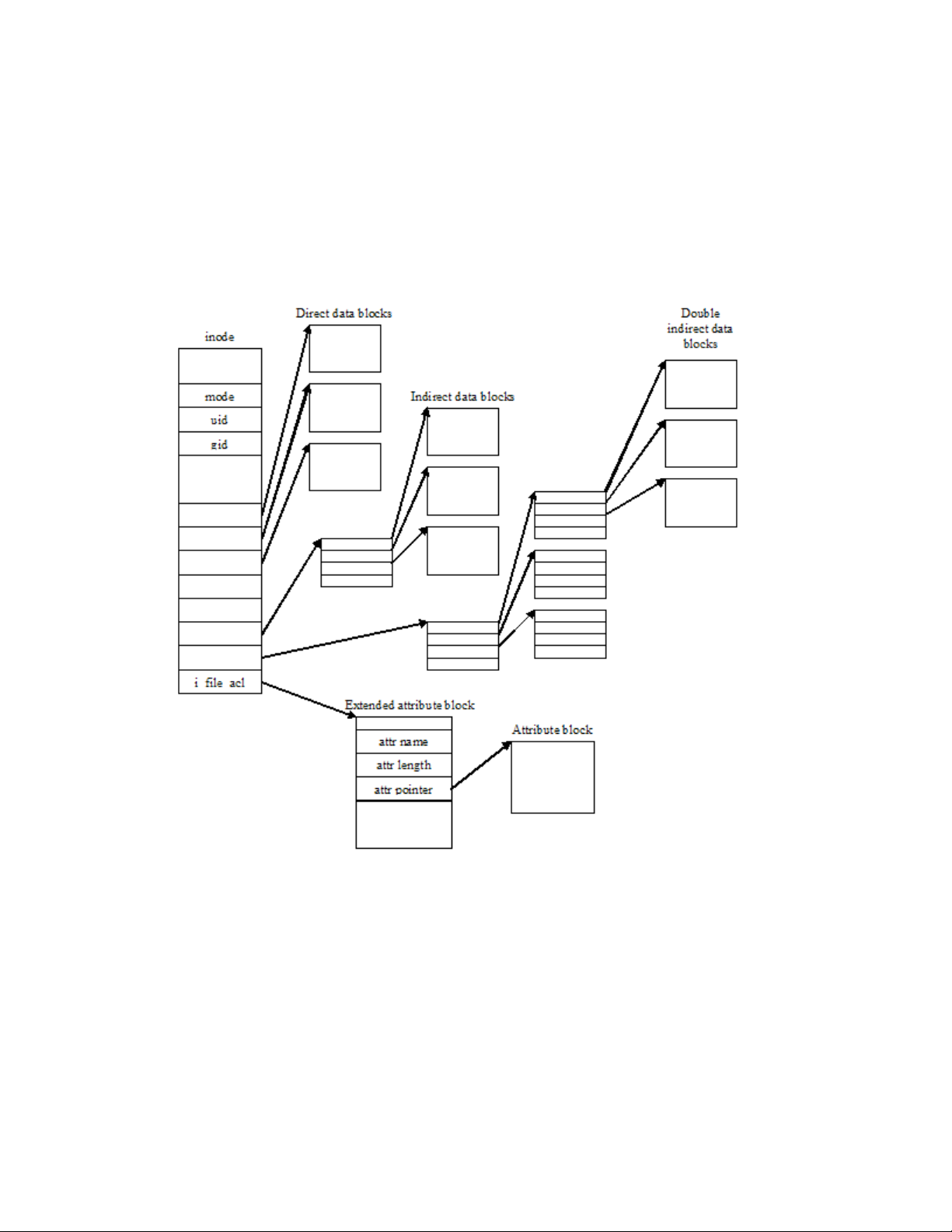

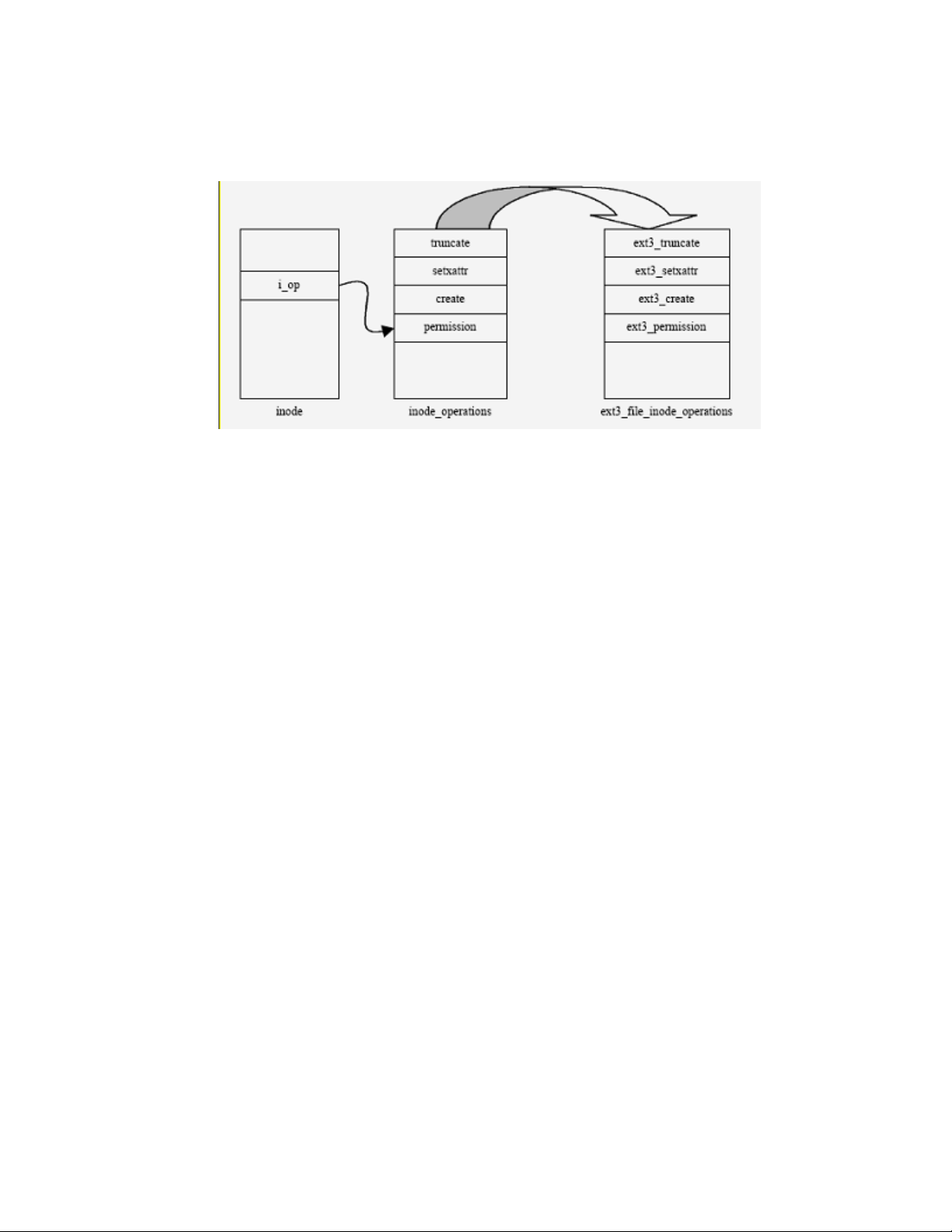

5.1.2.1 Ext3 file system

5.1.2.2 ISO 9660 file system for CD-ROM

5.1.3 Pseudo file systems

5.1.3.1 procfs

5.1.3.2 tmpfs

5.1.3.3 sysfs

5.1.3.4 devpts

5.1.3.5 rootfs

5.1.3.6 binfmt_misc

5.1.3.7 securityfs

5.1.3.8 configfs

5.1.4 inotify

5.1.5 Discretionary Access Control (DAC)

5.1.5.1 Permission bits

5.1.5.2 Access Control Lists

5.1.6 Asynchronous I/O

5.1.7 I/O scheduler

5.1.7.1 Deadline I/O scheduler

5.1.7.2 Anticipatory I/O scheduler

5.1.7.3 Completely Fair Queuing scheduler

5.1.7.4 Noop I/O scheduler

5.1.8 I/O interrupts

5.1.8.1 Top halves

5.1.8.2 Bottom halves

5.1.8.3 Softirqs

5.1.8.4 Tasklets

5.1.8.5 Work queue

5.1.9 Processor interrupts

5.1.10 Machine check

5.2 Process control and management

5.2.1 Data structures

5.2.2 Process creation and destruction

5.2.2.1 Control of child processes

5.2.2.2 DAC controls

5.2.2.3 execve()

5.2.2.4 do_exit()

5.2.3 Process switch

5.2.4 Kernel threads

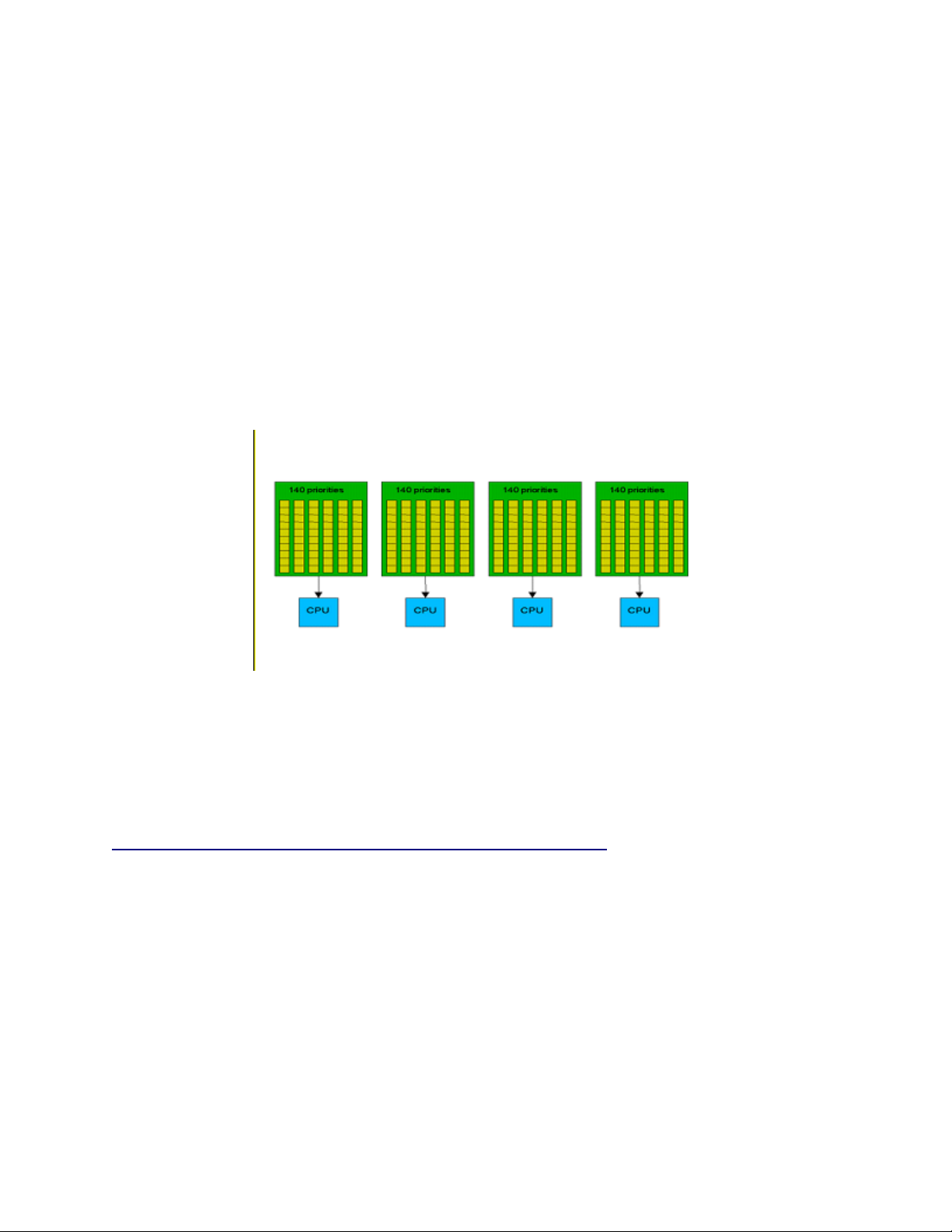

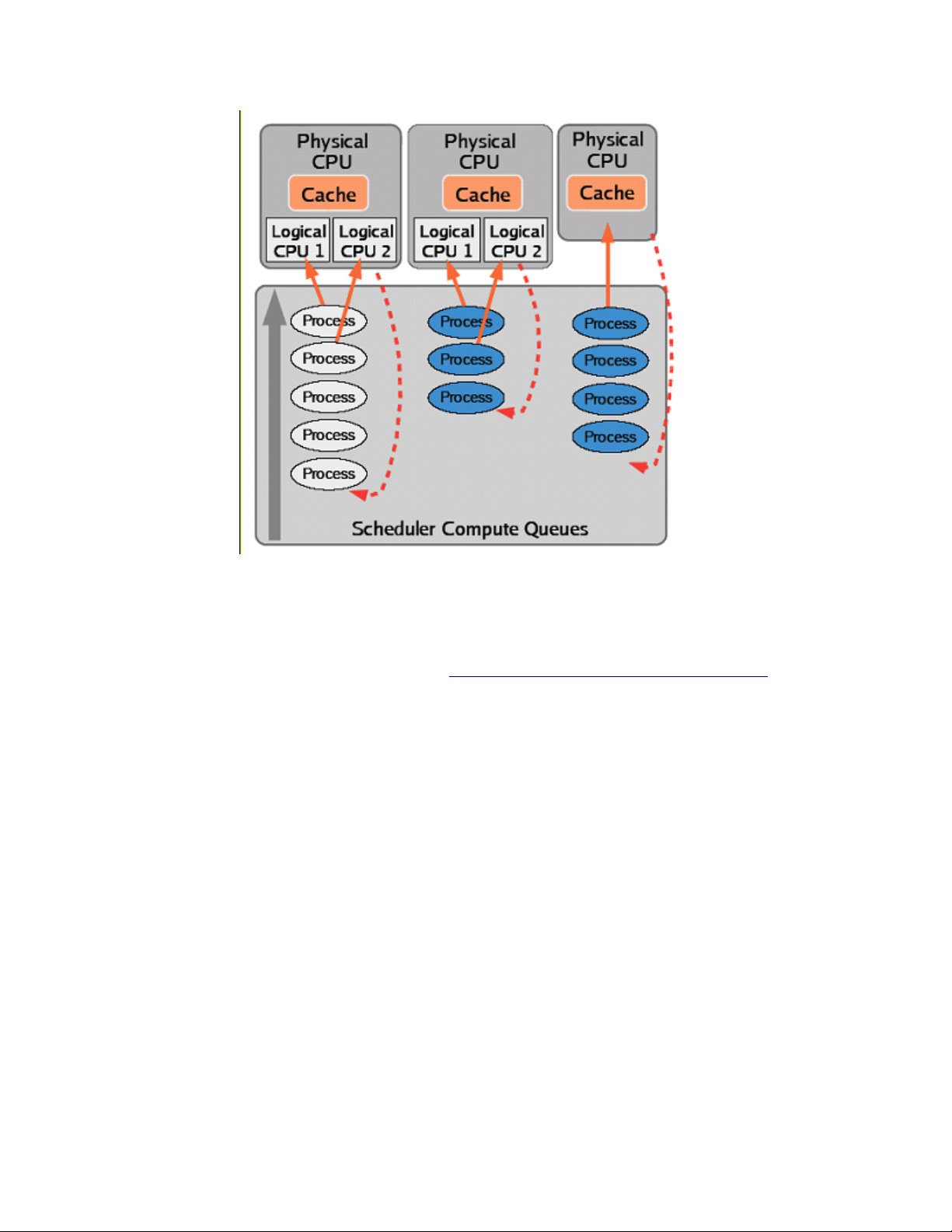

5.2.5 Scheduling

5.2.6 Kernel preemption

5.3 Inter-process communication

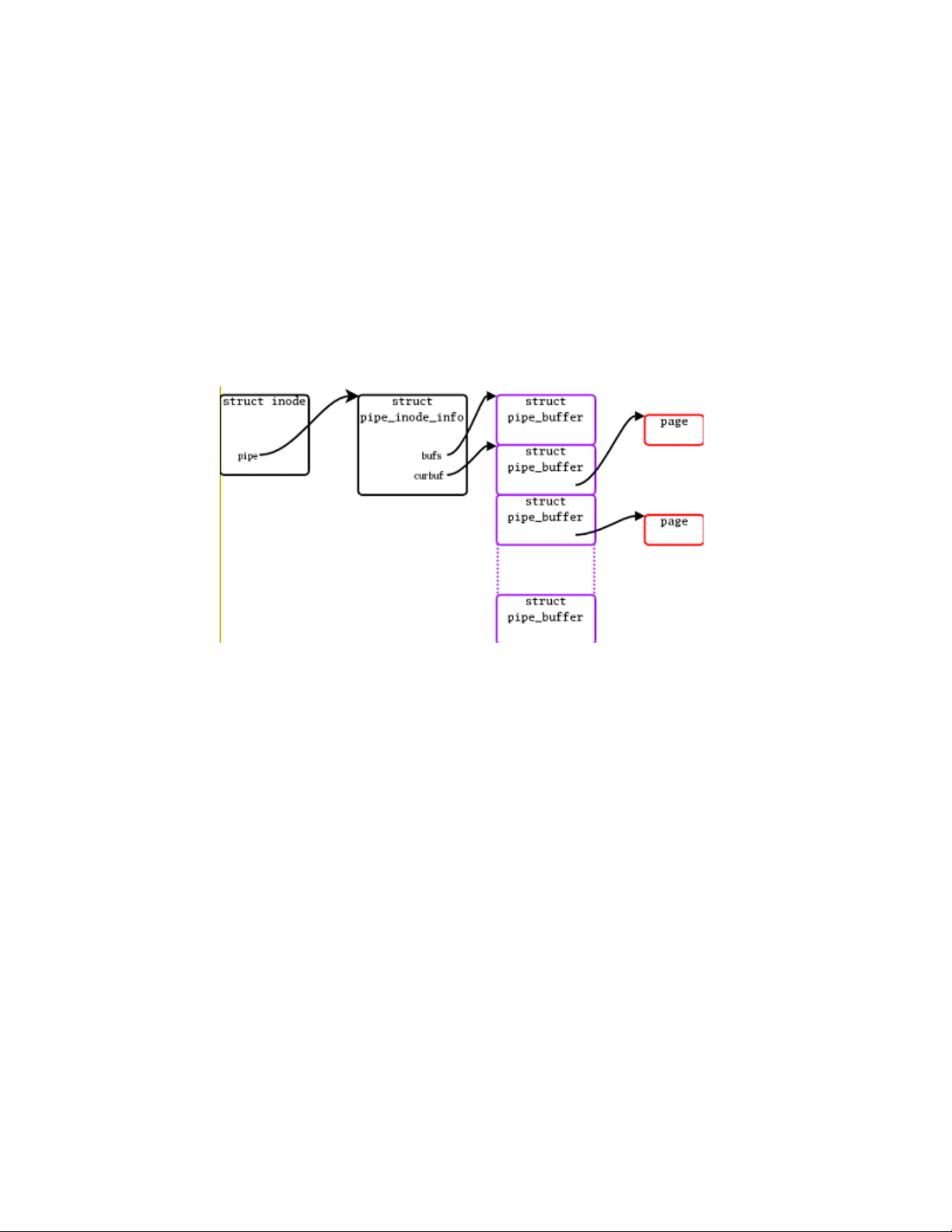

5.3.1 Pipes

5.3.1.1 Data structures and algorithms

5.3.2 First-In First-Out Named pipes

5.3.2.1 FIFO creation

5.3.2.2 FIFO open

5.3.3 System V IPC

5.3.3.1 Common data structures

5.3.3.2 Common functions

5.3.3.3 Message queues

5.3.3.4 Semaphores

5.3.3.5 Shared memory regions

5.3.4 Signals

5.3.4.1 Data structures

5.3.4.2 Algorithms

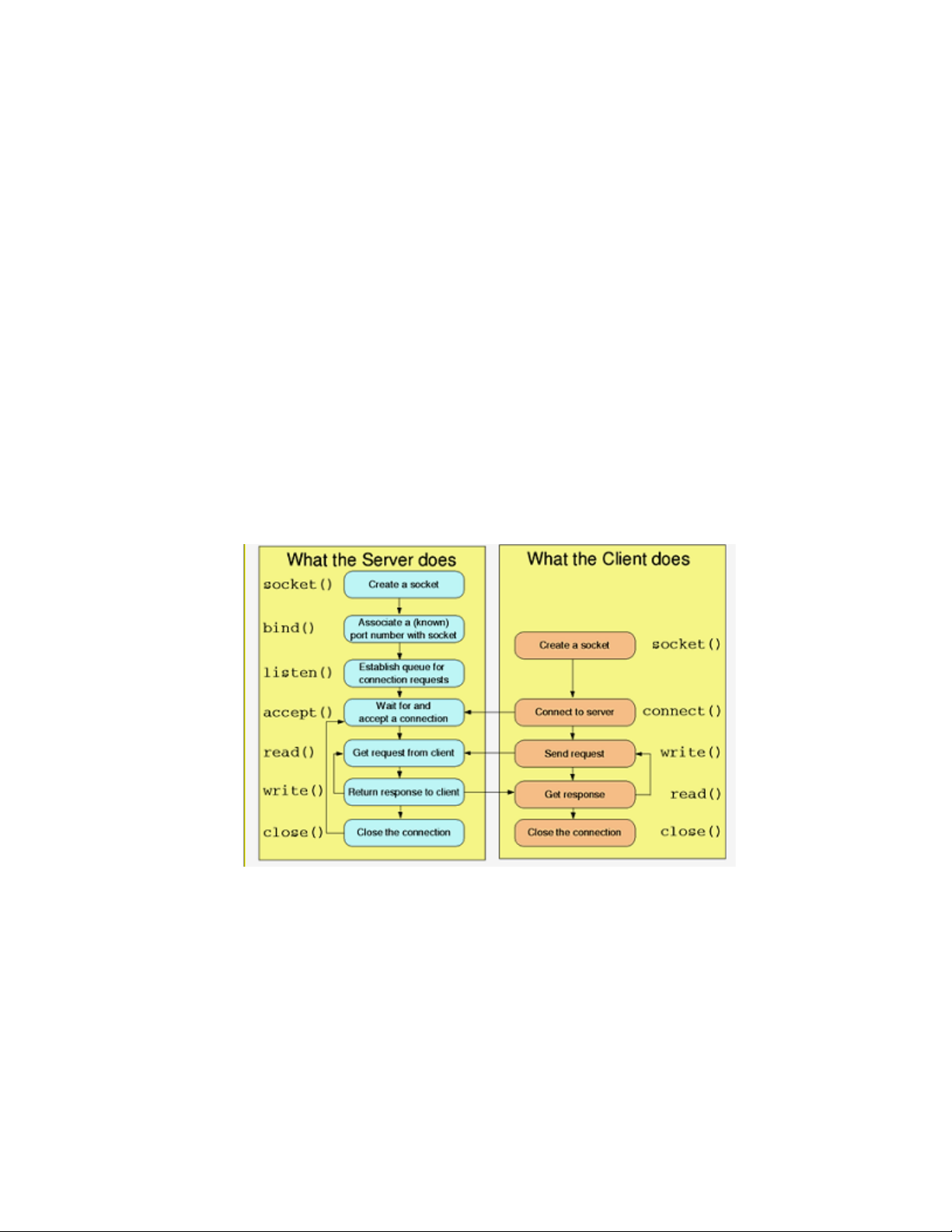

5.3.5 Sockets

5.4 Network subsystem

5.4.1 Overview of the network protocol stack

5.4.2 Transport layer protocols

5.4.2.1 TCP

5.4.2.2 UDP

5.4.3 Network layer protocols

5.4.3.1 Internet Protocol Version 4 (IPv4)

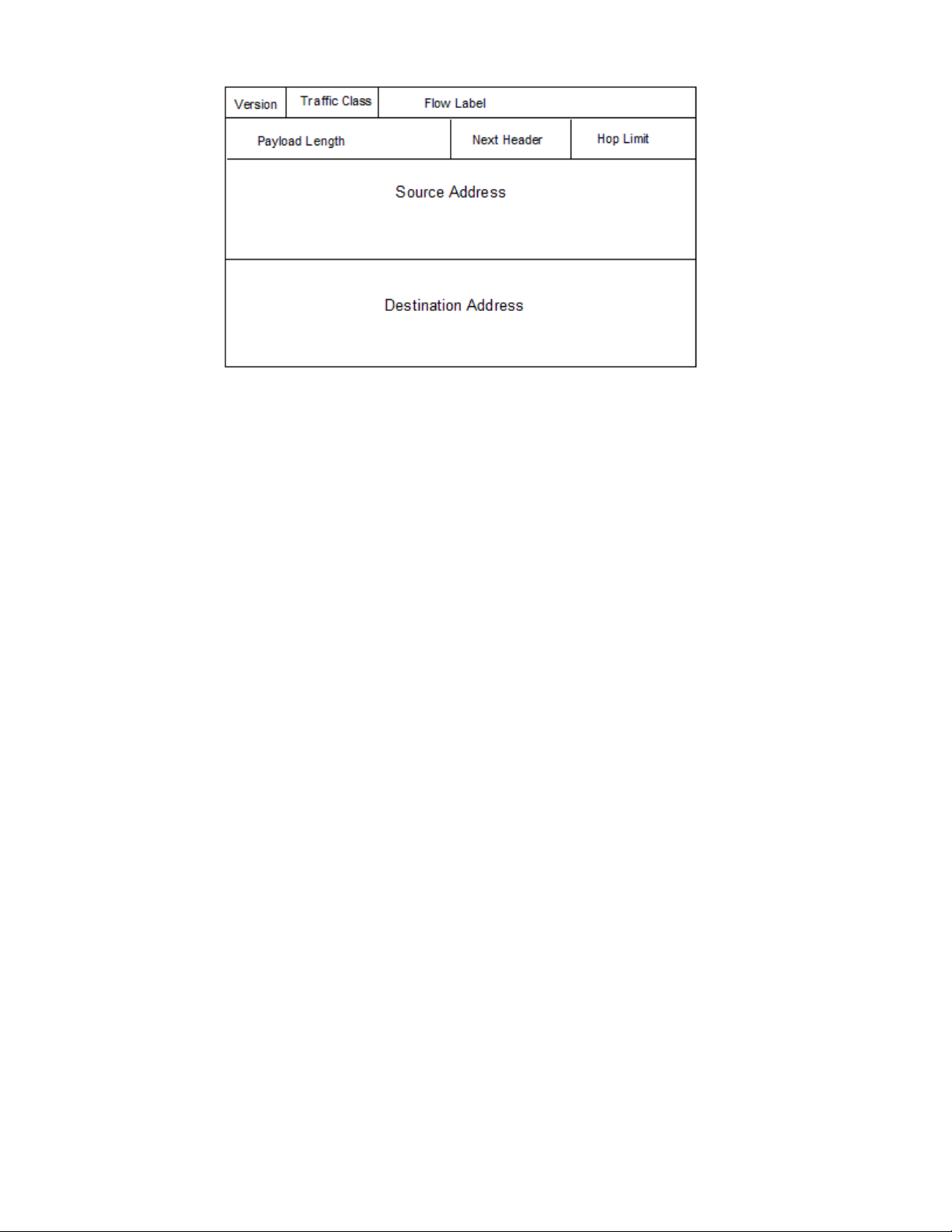

5.4.3.2 Internet Protocol Version 6 (IPv6)

5.4.3.3 Transition between IPv4 and IPv6

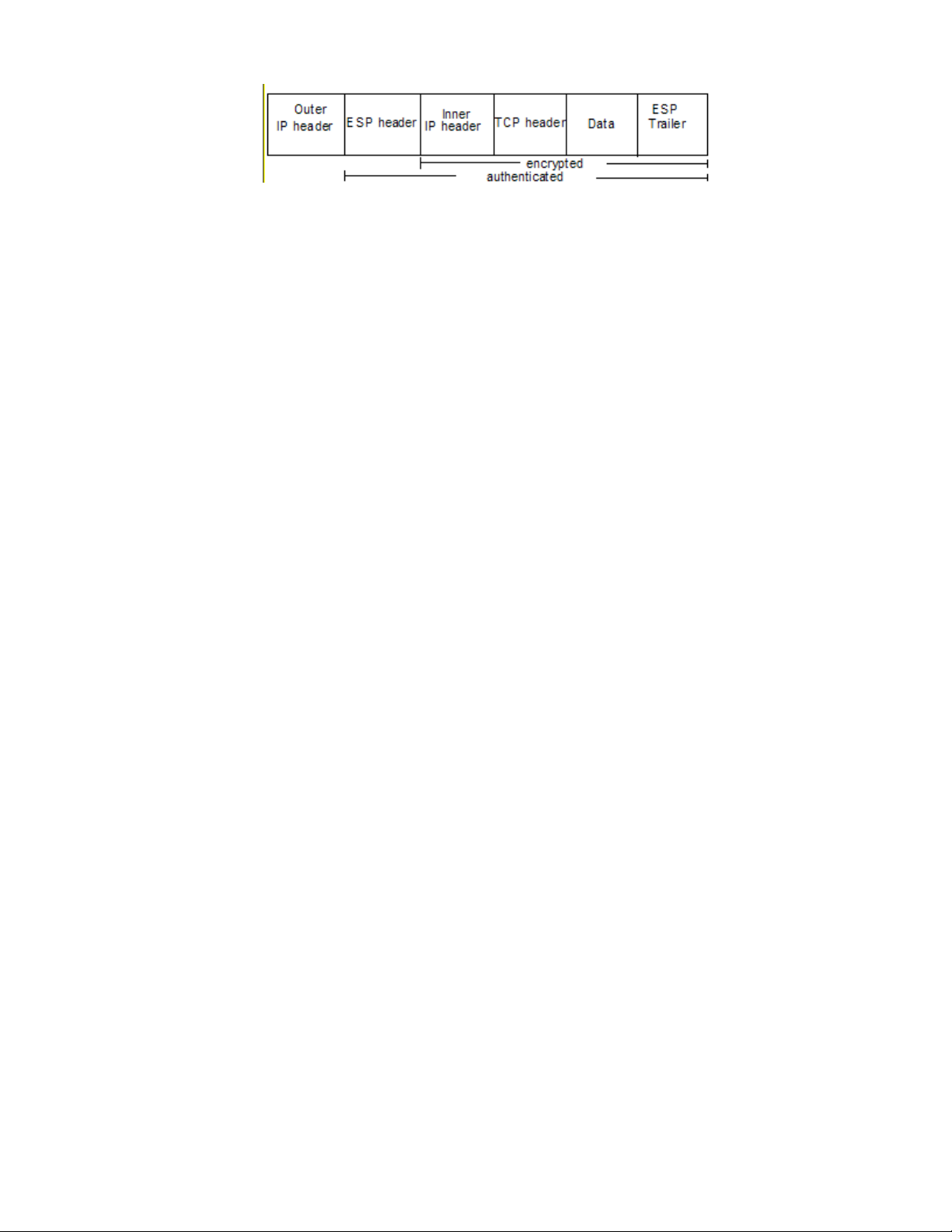

5.4.3.4 IP Security (IPsec)

5.4.4 Internet Control Message Protocol (ICMP)

5.4.4.1 Link layer protocols

5.4.5 Network services interface

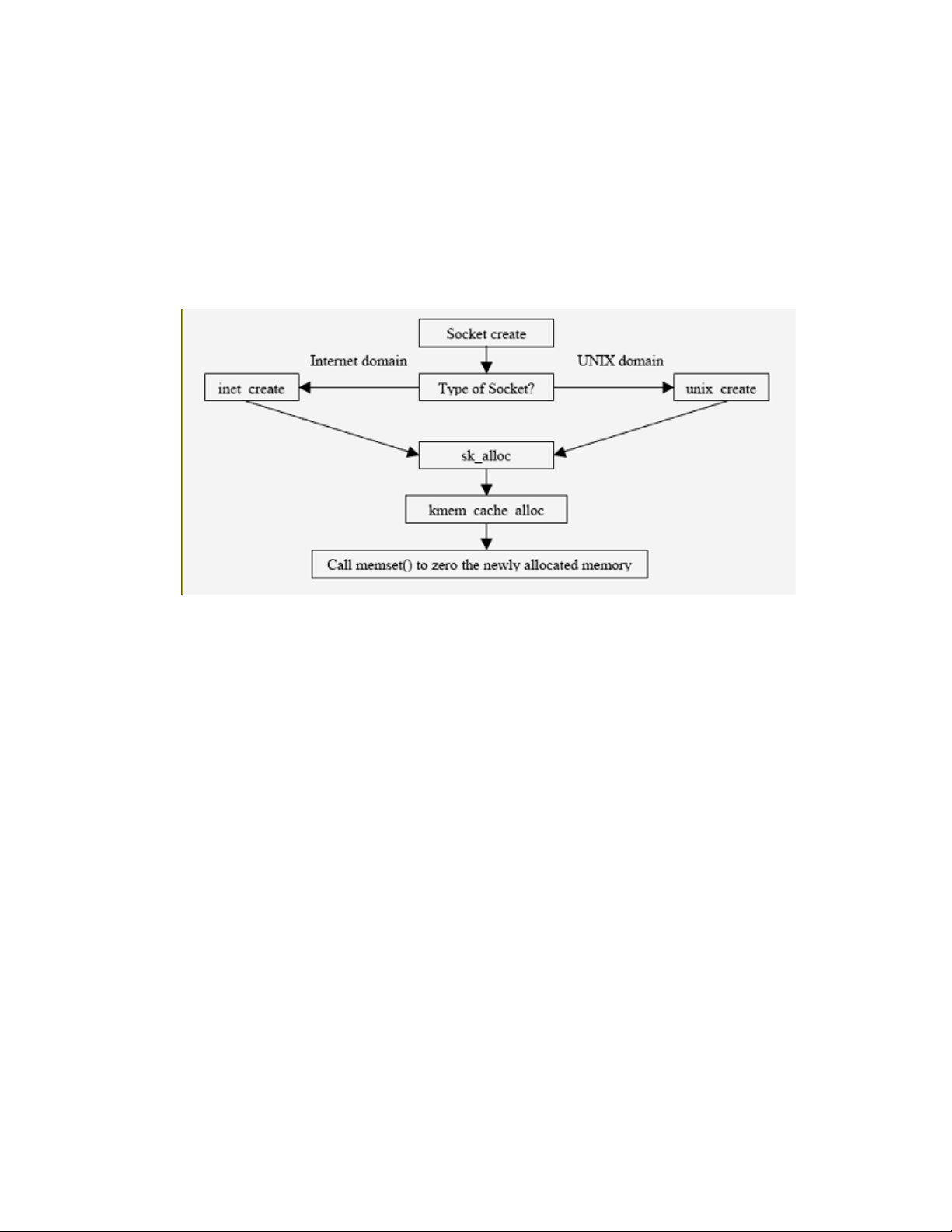

5.4.5.1 socket()

5.4.5.2 bind()

5.4.5.3 listen()

5.4.5.4 accept()

5.4.5.5 connect()

5.4.5.6 Generic calls

5.4.5.7 Access control

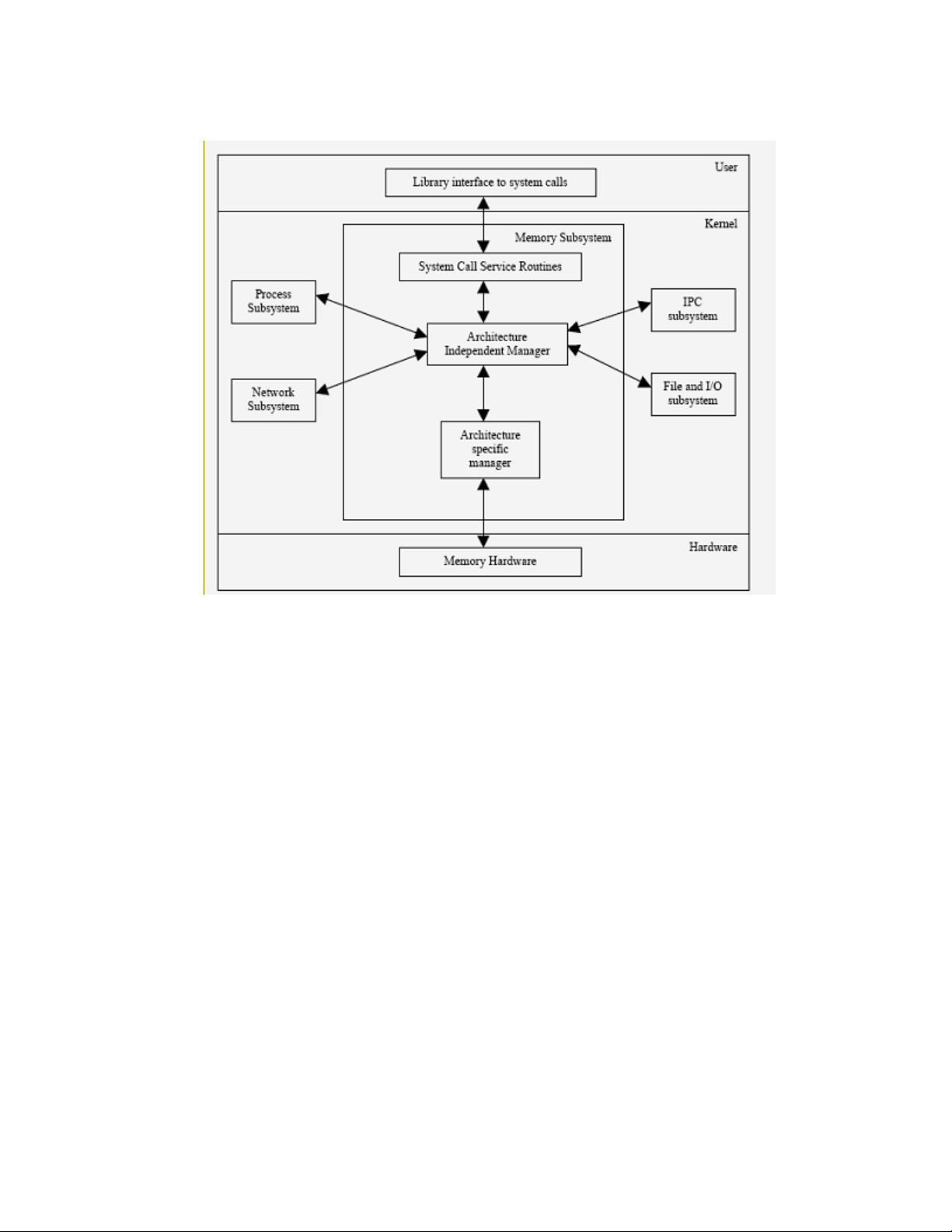

5.5 Memory management

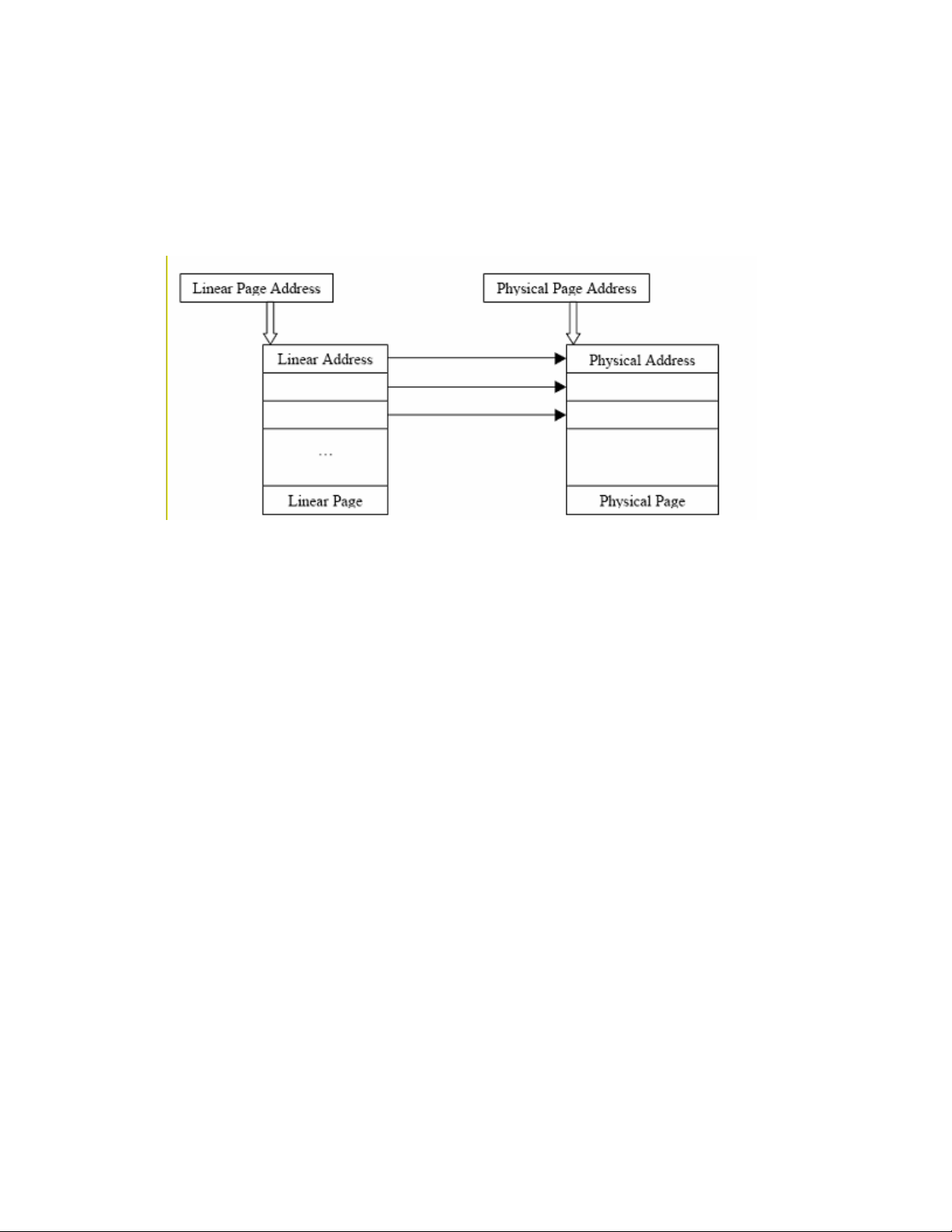

5.5.1 Four-Level Page Tables

5.5.2 Memory addressing

5.5.2.1 System x

5.5.2.2 System p

5.5.2.3 System p native mode

5.5.2.4 System z

5.5.2.5 eServer 326

5.5.3 Kernel memory management

5.5.3.1 Support for NUMA servers

5.5.3.2 Reverse map Virtual Memory

5.5.3.3 Huge Translation Lookaside Buffers

5.5.3.4 Remap_file_pages

5.5.3.5 Page frame management

5.5.3.6 Memory area management

5.5.3.7 Noncontiguous memory area management

5.5.4 Process address space

5.5.5 Symmetric multiprocessing and synchronization

5.5.5.1 Atomic operations

5.5.5.2 Memory barriers

5.5.5.3 Spin locks

5.5.5.4 Kernel semaphores

5.6 Audit subsystem

5.6.1 Audit components

5.6.1.1 Audit kernel components

5.6.1.2 File system audit components

5.6.1.3 User space audit components

5.6.2 Audit operation and configuration options

5.6.2.1 Configuration

5.6.2.2 Operation

5.6.3 Audit records

5.6.3.1 Audit record generation

5.6.3.2 Audit record format

5.6.4 Audit tools

5.6.4.1 auditctl

5.6.4.2 ausearch

5.6.5 Login uid association

5.7 Kernel modules

5.7.1 Linux Security Module framework

5.7.2 LSM capabilities module

5.7.3 LSM AppArmor module

5.8 AppArmor

5.8.1 AppArmor administrative utilities

5.8.2 AppArmor access control functions

5.8.3 securityfs

5.9 Device drivers

5.9.1 I/O virtualization on System z

5.9.1.1 Interpretive-execution facility

5.9.1.2 State description

5.9.1.3 Hardware virtualization and simulation

5.9.2 Character device driver

5.9.3 Block device driver

5.10 System initialization

5.10.1 init

5.10.2 System x

5.10.2.1 Boot methods

5.10.2.2 Boot loader

5.10.2.3 Boot process

5.10.3 System p

5.10.3.1 Boot methods

5.10.3.2 Boot loader

5.10.3.3 Boot process

5.10.4 System p in LPAR

5.10.4.1 Boot process

5.10.5 System z

5.10.5.1 Boot methods

5.10.5.2 Control program

5.10.5.3 Boot process

5.10.6 eServer 326

5.10.6.1 Boot methods

5.10.6.2 Boot loader

5.10.6.3 Boot process

5.11 Identification and authentication

5.11.1 Pluggable Authentication Module

5.11.1.1 Overview

5.11.1.2 Configuration terminology

5.11.1.3 Modules

5.11.2 Protected databases

5.11.2.1 Access control rules

5.11.3 Trusted commands and trusted processes


5.11.3.1 agetty................................................................................................................................203

5.11.3.2 gpasswd............................................................................................................................203

5.11.3.3 login.................................................................................................................................203

5.11.3.4 mingetty...........................................................................................................................204

5.11.3.5 newgrp..............................................................................................................................205

5.11.3.6 passwd..............................................................................................................................206

5.11.3.7 su......................................................................................................................................206

5.11.4 Interaction with audit...............................................................................................................207

5.12 Network applications........................................................................................................................207

5.12.1 OpenSSL Secure socket-layer interface...................................................................................207

5.12.1.1 Concepts...........................................................................................................................209

5.12.1.2 SSL architecture...............................................................................................................213

5.12.1.3 OpenSSL algorithms........................................................................................................217

5.12.1.4 Symmetric ciphers............................................................................................................217

5.12.2 Secure Shell .............................................................................................................................218

5.12.2.1 SSH client........................................................................................................................220

5.12.2.2 SSH server daemon.........................................................................................................220

5.12.3 Very Secure File Transfer Protocol daemon.............................................................................220

5.12.4 CUPS.......................................................................................................................................221

5.12.4.1 cupsd................................................................................................................................222

5.12.4.2 ping..................................................................................................................................224

5.12.4.3 ping6................................................................................................................................224

5.12.4.4 openssl..............................................................................................................................224

5.12.4.5 stunnel..............................................................................................................................224

5.12.4.6 xinetd...............................................................................................................................225

5.13 System management.........................................................................................................................226

5.13.1 Account Management..............................................................................................................226

5.13.1.1 chage................................................................................................................................226

5.13.1.2 chfn..................................................................................................................................226

5.13.1.3 chsh..................................................................................................................................227

5.13.2 User management.....................................................................................................................228

5.13.2.1 useradd.............................................................................................................................228

5.13.2.2 usermod............................................................................................................................228

5.13.2.3 userdel..............................................................................................................................229

5.13.3 Group management..................................................................................................................231

5.13.3.1 groupadd..........................................................................................................................231


5.13.3.2 groupmod.........................................................................................................................232

5.13.3.3 groupdel...........................................................................................................................232

5.13.4 System Time management.......................................................................................................234

5.13.4.1 date...................................................................................................................................234

5.13.4.2 hwclock............................................................................................................................234

5.13.5 Other System Management......................................................................................................235

5.13.5.1 AMTU..............................................................................................................................235

5.13.5.2 star....................................................................................................................................238

5.13.6 I&A support.............................................................................................................................240

5.13.6.1 pam_tally..........................................................................................................................240

5.13.6.2 unix_chkpwd....................................................................................................................240

5.14 Batch processing..............................................................................................................................240

5.14.1 Batch processing user commands.............................................................................................241

5.14.1.1 crontab..............................................................................................................................241

5.14.1.2 at......................................................................................................................................241

5.14.2 Batch processing daemons.......................................................................................................242

5.14.2.1 cron..................................................................................................................................242

5.14.2.2 atd.....................................................................................................................................243

5.15 User-level audit subsystem...............................................................................................................243

5.15.1 Audit daemon...........................................................................................................................243

5.15.2 Audit utilities ...........................................................................................................................244

5.15.2.1 aureport ...........................................................................................................................244

5.15.2.2 ausearch............................................................................................................................245

5.15.2.3 autrace..............................................................................................................................245

5.15.3 Audit configuration files..........................................................................................................245

5.15.4 Audit logs.................................................................................................................................245

5.16 Supporting functions........................................................................................................................245

5.16.1 TSF libraries.............................................................................................................................246

5.16.2 Library linking mechanism.......................................................................................................248

5.16.3 System call linking mechanism................................................................................................249

5.16.3.1 System x...........................................................................................................................249

5.16.3.2 System p...........................................................................................................................249

5.16.3.3 System z..........................................................................................................................250

5.16.3.4 eServer 326.....................................................................................................................250

5.16.4 System call argument verification............................................................................................250

6 Mapping the TOE summary specification to the High-Level Design.........................................................251


6.1 Identification and authentication.......................................................................................................251

6.1.1 User identification and authentication data management (IA.1).................................................251

6.1.2 Common authentication mechanism (IA.2)................................................................................251

6.1.3 Interactive login and related mechanisms (IA.3)........................................................................251

6.1.4 User identity changing (IA.4).....................................................................................................251

6.1.5 Login processing (IA.5).............................................................................................................251

6.2 Audit..................................................................................................................................................251

6.2.1 Audit configuration (AU.1)........................................................................................................252

6.2.2 Audit processing (AU.2)............................................................................................................252

6.2.3 Audit record format (AU.3) .......................................................................................................252

6.2.4 Audit post-processing (AU.4)....................................................................................................252

6.3 Discretionary Access Control............................................................................................................252

6.3.1 General DAC policy (DA.1).......................................................................................................252

6.3.2 Permission bits (DA.2)...............................................................................................................252

6.3.3 ACLs (DA.3)..............................................................................................................................252

6.3.4 DAC: IPC objects (DA.4)..........................................................................................................252

6.4 Object reuse........................................................................................................................................253

6.4.1 Object reuse: file system objects (OR.1)....................................................................................253

6.4.2 Object reuse: IPC objects (OR.2)...............................................................................................253

6.4.3 Object reuse: memory objects (OR.3)........................................................................................253

6.5 Security management.........................................................................................................................253

6.5.1 Roles (SM.1)..............................................................................................................................253

6.5.2 Access control configuration and management (SM.2)..............................................................253

6.5.3 Management of user, group and authentication data (SM.3)......................................................253

6.5.4 Management of audit configuration (SM.4)...............................................................................253

6.5.5 Reliable time stamps (SM.5)......................................................................................................254

6.6 Secure communications......................................................................................................................254

6.6.1 Secure protocols (SC.1)..............................................................................................................254

6.7 TSF protection....................................................................................................................................254

6.7.1 TSF invocation guarantees (TP.1)..............................................................................................254

6.7.2 Kernel (TP.2).............................................................................................................................254

6.7.3 Kernel modules (TP.3)...............................................................................................................254

6.7.4 Trusted processes (TP.4)............................................................................................................254

6.7.5 TSF Databases (TP.5)................................................................................................................254

6.7.6 Internal TOE protection mechanisms (TP.6)..............................................................................255

6.7.7 Testing the TOE protection mechanisms (TP.7).........................................................................255


6.8 Security enforcing interfaces between subsystems.............................................................................255

6.8.1 Summary of kernel subsystem interfaces ..................................................................................256

6.8.1.1 Kernel subsystem file and I/O............................................................................................257

6.8.1.2 Kernel subsystem process control and management...........................................................259

6.8.1.3 Kernel subsystem inter-process communication.................................................................260

6.8.1.4 Kernel subsystem networking............................................................................................263

6.8.1.5 Kernel subsystem memory management............................................................................264

6.8.1.6 Kernel subsystem audit......................................................................................................264

6.8.1.7 Kernel subsystem device drivers........................................................................................266

6.8.1.8 Kernel subsystems kernel modules.....................................................................................268

6.8.2 Summary of trusted processes interfaces....................................................................................268

7 References..................................................................................................................................................269


1 Introduction

This document describes the High Level Design (HLD) for the SUSE® Linux® Enterprise Server 10 Service

Pack 1 operating system. For ease of reading, this document uses the phrase SUSE Linux Enterprise Server and

the abbreviation SLES as a synonym for SUSE Linux Enterprise Server 10 SP1.

This document summarizes the design and Target of Evaluation Security Functions (TSF) of the SUSE Linux

Enterprise Server (SLES) operating system. Used within the Common Criteria evaluation of SUSE Linux

Enterprise Server at Evaluation Assurance Level (EAL) 4, it describes the security functions defined in the

Common Criteria Security Target document.

1.1 Purpose of this document

The SLES distribution is designed to provide a secure and reliable operating system for a variety of purposes.

This document describes the high-level design of the product and provides references to other, more detailed

design documentation that describes the structure and functions of the system. This document is consistent with

additional high-level design documents, as well as with the supporting detailed design documents for the system.

There are pointers to those documents in this document.

The SLES HLD is intended as a source of information about the architecture of the system for any evaluation

team.

1.2 Document overview

This HLD contains the following chapters:

Chapter 2 presents an overview of the IBM® eServer™ systems, including product history, system architecture,

and TSF identification.

Chapter 3 summarizes the eServer hardware subsystems, characterizes the subsystems with respect to security

relevance, and provides pointers to detailed hardware design documentation.

Chapter 4 expands on the design of the TSF software subsystems, particularly the kernel, which is identified in

Chapter 2.

Chapter 5 addresses functional topics and describes the functionality of individual subsystems, such as memory

management and process management.

Chapter 6 maps the Target of Evaluation (TOE) summary specification from the SUSE Linux Enterprise Server

Security Target to specific sections in this document.

1.3 Conventions used in this document

The following font conventions are used in this document:

Constant Width (Monospace) shows code or output from commands, and indicates source-code keywords

that appear in the code, as well as file and directory names, program and command names, and command-line

options.

Italic indicates URLs and book titles, and introduces new terms.

1.4 Terminology

For definitions of technical terms and phrases that have specific meaning for Common Criteria evaluation, please

refer to the Security Target.


2 System Overview

The Target of Evaluation (TOE) is SUSE Linux Enterprise Server (SLES) running on an IBM eServer host

computer. The SLES product is available on a wide range of hardware platforms. This evaluation covers the

SLES product on the IBM eServer System x™, System p™, System z™, and eServer 326 (Opteron).

(Throughout this document, SLES refers only to the specific evaluation platforms).

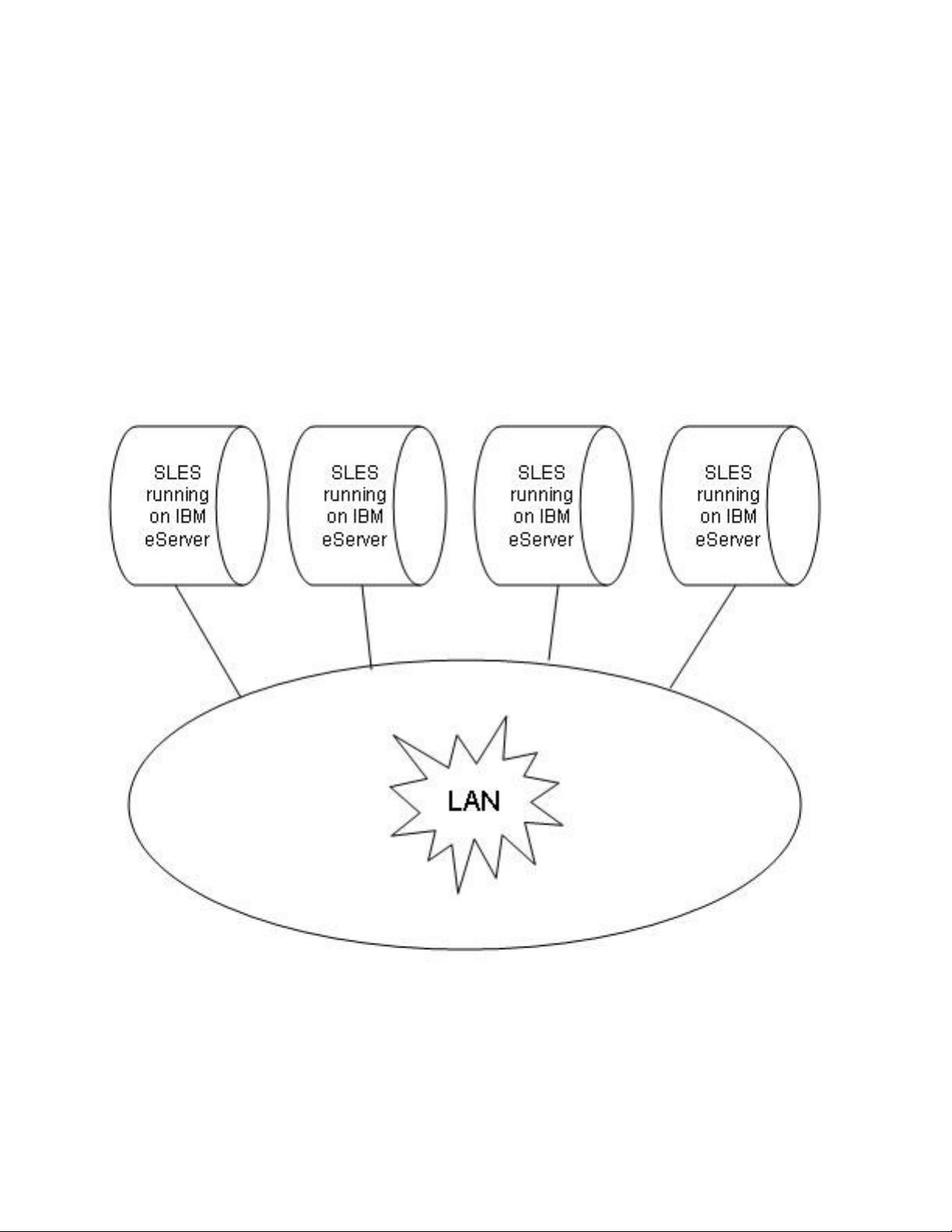

Multiple TOE systems can be connected via a physically-protected Local Area Network (LAN). The IBM

eServer line consists of Intel processor-based System x systems, POWER5™ and POWER5+™ processor-based System p systems, IBM mainframe System z systems, and AMD Opteron processor-based systems that

are intended for use as networked workstations and servers.

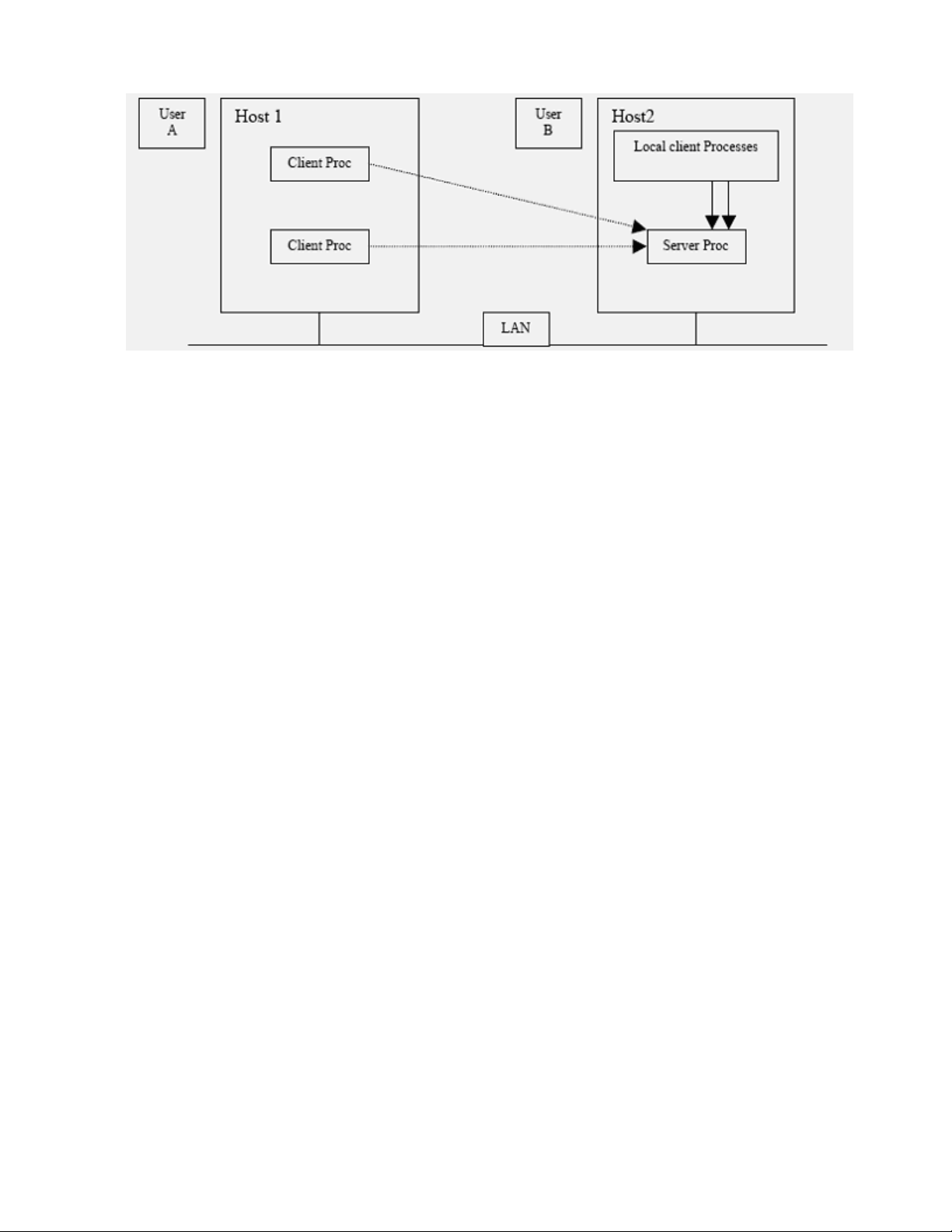

Figure 2-1 shows a series of interconnected TOE systems. Each TOE system is running the SLES operating

system on an eServer computer. Each computer provides the same set of local services, such as file, memory,

and process management. Each computer also provides network services, such as remote secure shells and

file transfers, to users on other computers. A user logs in to a host computer and requests services from the

local host and also from other computers within the LAN.

Figure 2-1: Series of TOE systems connected by a physically protected LAN

User programs issue network requests by sending Transmission Control Protocol (TCP) or User Datagram

Protocol (UDP) messages to another computer. Some network protocols, such as Secure Shell (ssh), can start

a shell process for the user on another computer, while others are handled by trusted server daemon processes.
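The request/response pattern described above can be sketched with a few lines of Python. This is only an illustration, assuming a loopback address and a throwaway server; the function names `serve_once` and `tcp_request` are hypothetical and are not part of SLES or of any TOE daemon.

```python
import socket
import threading


def serve_once(listener: socket.socket) -> None:
    """Accept a single connection and reply with the request in upper case."""
    conn, _addr = listener.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(data.upper())


def tcp_request(payload: bytes) -> bytes:
    """Send payload over TCP to a temporary loopback server and return the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0: the kernel picks a free port
        listener.listen(1)
        port = listener.getsockname()[1]

        # The "server" runs in a thread here; in the TOE it would be a
        # separate daemon process, possibly on another host.
        worker = threading.Thread(target=serve_once, args=(listener,))
        worker.start()

        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(payload)
            reply = client.recv(1024)

        worker.join()
    return reply
```

In a real deployment the client and server would typically run on different hosts in the protected LAN, and the server side would be one of the trusted daemons rather than an ad hoc thread.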


The TOE system provides a user Identification and Authentication (I&A) mechanism by requiring each user to

log in with the proper password at the local workstation, and also at any remote computer where the user can

enter commands to a shell program (for example, remote ssh sessions). Each computer enforces a coherent

Discretionary Access Control (DAC) policy, based on UNIX®-style mode bits and an optional Access

Control List (ACL) for the named objects under its control.
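As a rough illustration of UNIX-style mode bits, the following sketch evaluates a permission request against the owner, group, and other classes. It is a simplified model, not the kernel's implementation: it ignores ACL entries and any privileged override, and the function name `mode_bits_allow` and all numeric IDs are hypothetical.

```python
def mode_bits_allow(mode, owner_uid, owner_gid, uid, gids, want):
    """Return True if the mode bits grant every permission in `want`.

    `want` is a string drawn from "rwx". Exactly one class applies:
    owner if the UIDs match, else group if the object's group is among
    the caller's groups, else other.
    """
    if uid == owner_uid:
        shift = 6          # owner bits (rwx------)
    elif owner_gid in gids:
        shift = 3          # group bits (---rwx---)
    else:
        shift = 0          # other bits (------rwx)

    granted = (mode >> shift) & 0o7
    needed = sum({"r": 4, "w": 2, "x": 1}[c] for c in want)
    return granted & needed == needed
```

Note that only one class is consulted: an owner who is denied by the owner bits is not rescued by more permissive group or other bits.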

This chapter documents the SUSE Linux Enterprise Server and IBM eServer product histories, provides an

overview of the TOE system, and identifies the portion of the system that constitutes the TOE Security

Functions (TSF).

2.1 Product history

This section gives a brief history of SLES and the IBM eServer series systems.

2.1.1 SUSE Linux Enterprise Server

SUSE Linux Enterprise Server is based on version 2.6 of the Linux kernel. Linux is a UNIX-like open-source

operating system originally created in 1991 by Linus Torvalds of Helsinki, Finland. SUSE was founded in

1992 by four German software engineers, and is the oldest major Linux solutions provider.

2.1.2 eServer systems

IBM eServer systems were introduced in 2000. The IBM eServer product line brings technological

innovation, application flexibility, and autonomic capabilities for managing the heterogeneous mix of servers

required to support dynamic on-demand business. It enables customers to meet their business needs by

providing unlimited scalability, support for open standards, and mission-critical qualities of service.

Following are systems in the IBM eServer product line that are included in the TOE:

• System z: Mainframe-class servers running mission-critical applications.

• System p: UNIX servers, technologically advanced POWER5 and POWER5+ processor-based

servers for commercial and technical computing applications.

• System x: Intel-based servers with high performance and outstanding availability.

• eServer 326: AMD Opteron-based servers with outstanding value in high performance computing in

both 32-bit and 64-bit environments.

• BladeCenter®: Intel Xeon, AMD Opteron, PowerPC, POWER5, and POWER5+ processor-based

servers.

Since the introduction of eServers in 2000, new models with more powerful processors have been added to the

System x, System p, and System z lines. The AMD Opteron processor-based eServer 325 was added to the

eServer series in 2003; the eServer 326 is the next iteration of that model with updated components. The

AMD Opteron eServer 326 is designed for powerful scientific and technical computing. The Opteron

processor supports both 32-bit and 64-bit architectures, thus allowing easy migration to 64-bit computing.

2.2 High-level product overview

The TOE consists of SLES running on an eServer computer. The TOE system can be connected to other

systems by a protected LAN. SLES provides a multi-user, multi-processing environment, where users

interact with the operating system by issuing commands to a command interpreter, by running system

utilities, or by developing their own software to run in their own protected environments.


The Common Criteria for Information Technology Security Evaluation [CC] and the Common Methodology

for Information Technology Security Evaluation [CEM] demand breaking the TOE into logical subsystems

that can be either (a) products, or (b) logical functions performed by the system.

The approach in this section is to break the system into structural hardware and software subsystems that

include, for example, pieces of hardware such as planars and adapters, or collections of one or more software

processes such as the base kernel and kernel modules. Chapter 4 explains the structure of the system in terms

of these architectural subsystems. Although the hardware is also described in this document, the reader should

be aware that while the hardware itself is part of the TOE environment, it is not part of the TOE.

The following subsections present a structural overview of the hardware and software that make up an

individual eServer host computer. This single-computer architecture is one of the configurations permitted

under this evaluation.

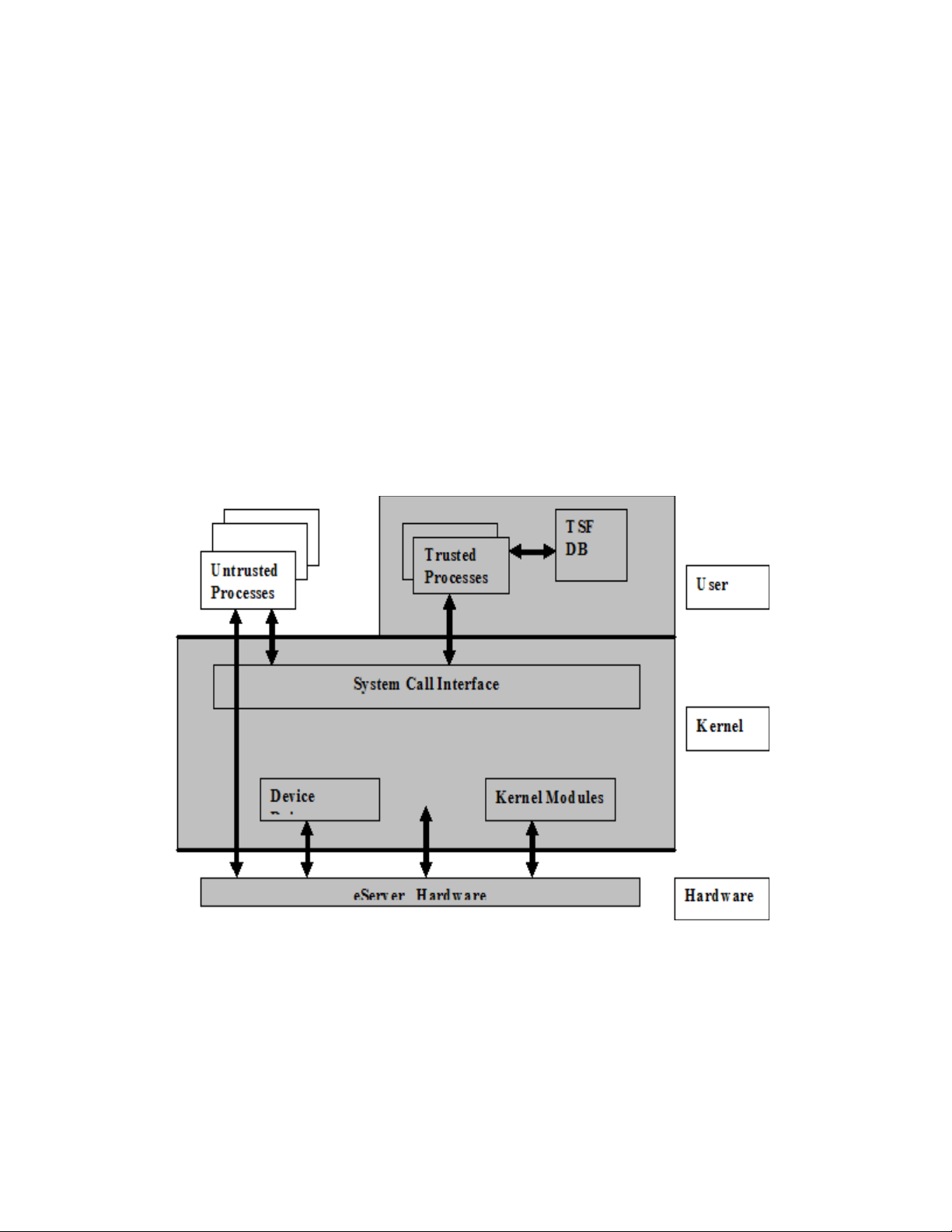

2.2.1 eServer host computer structure

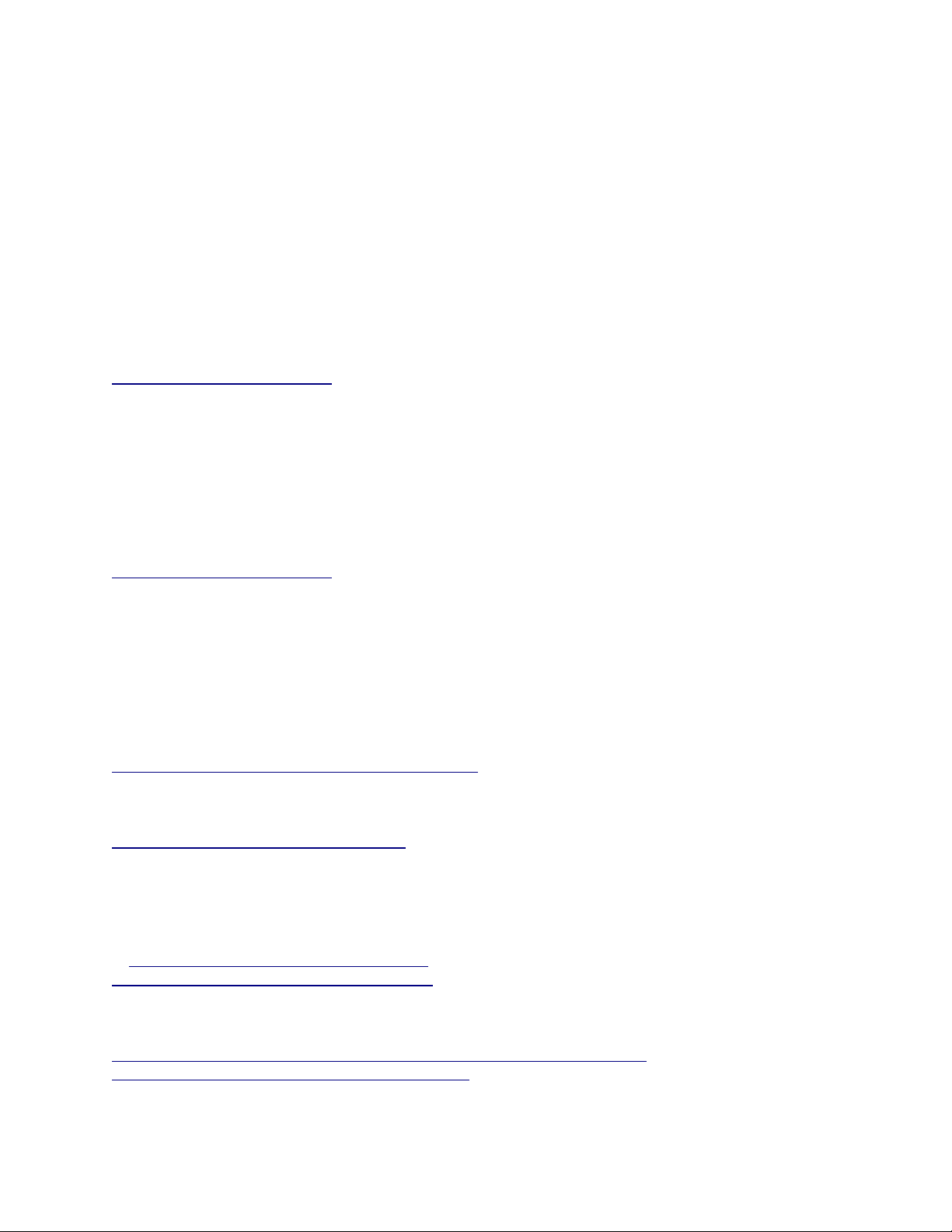

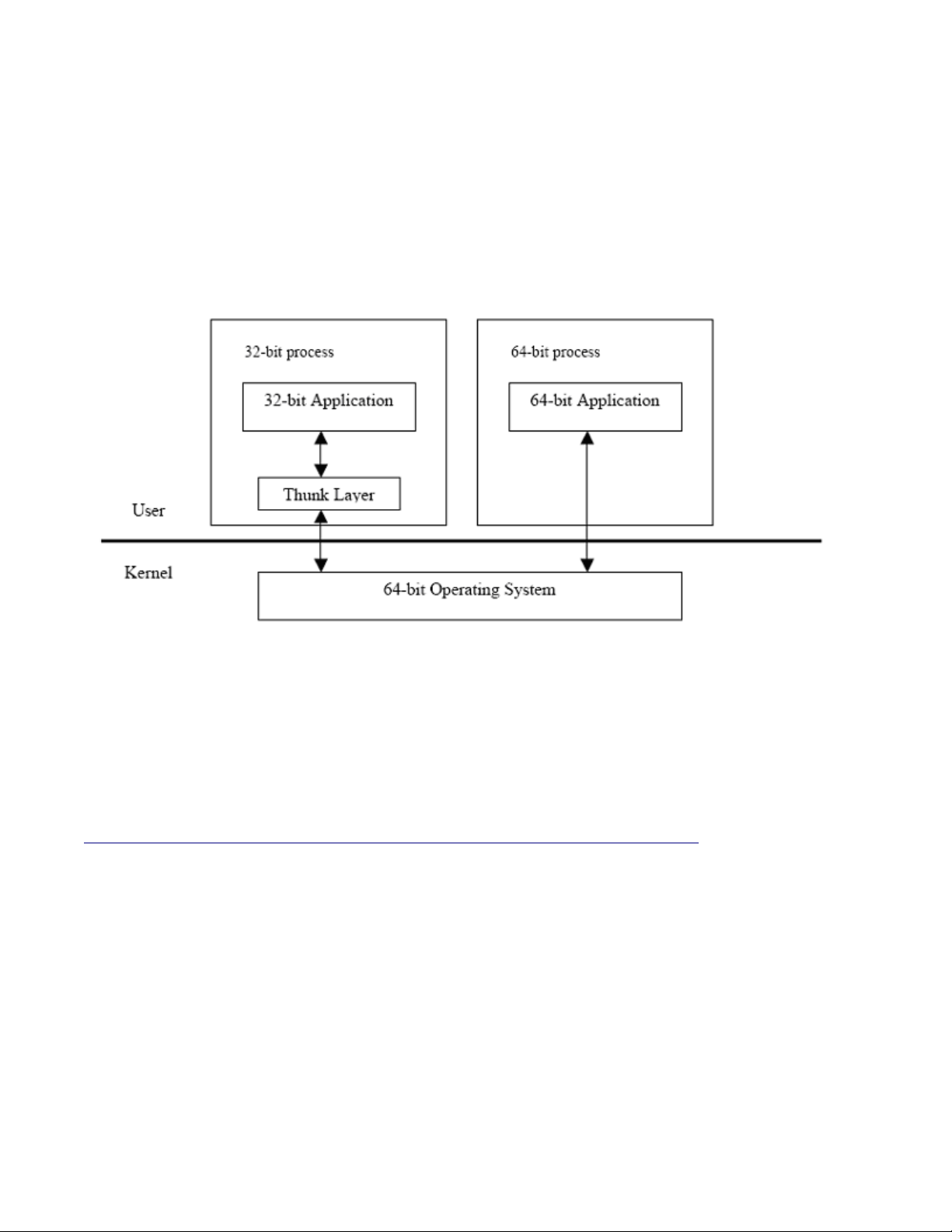

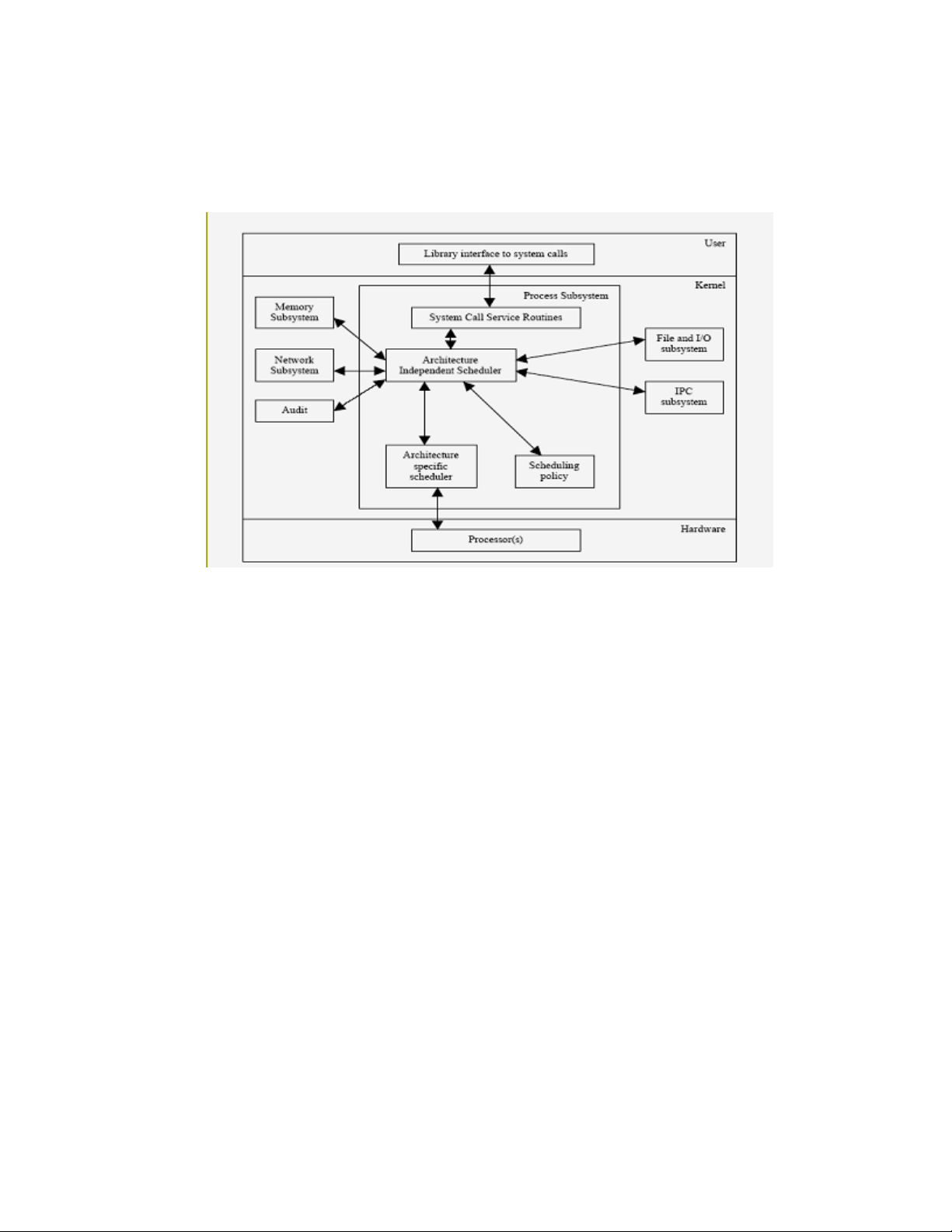

This section describes the structure of SLES for an individual eServer host computer. As shown in Figure 2-2,

the system consists of eServer hardware, the SLES kernel, trusted non-kernel processes, TSF databases, and

untrusted processes. In this figure, the TOE itself consists of Kernel Mode software, User Mode software,

and hardware. The TOE Security Functions (TSF) are shaded in gray. Details such as interactions within the

kernel, inter-process communications, and direct user access to the hardware are omitted.

Figure 2-2: Overall structure of the TOE

The planar components, including CPUs, memory, buses, on board adapters, and support circuitry; additional

adapters, including LAN and video; and, other peripherals, including storage devices, monitors, keyboards,

and front-panel hardware, constitute the hardware.


The SLES kernel includes the base kernel and separately-loadable kernel modules and device drivers. (Note

that a device driver can also be a kernel module.) The kernel consists of the bootable kernel image and its

loadable modules. The kernel implements the system call interface, which provides system calls for file

management, memory management, process management, networking, and other TSF (logical subsystems)

functions addressed in the Functional Descriptions chapter of this document. The structure of the SLES kernel

is described further in the Software Architecture chapter of this paper.
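To make the system call interface concrete, the sketch below uses Python's `os` module, whose low-level functions are thin wrappers around the corresponding kernel calls on Linux. The helper name `roundtrip` is hypothetical, and the example is only meant to show a user-mode program requesting file management services from the kernel.

```python
import os
import tempfile


def roundtrip(payload: bytes) -> bytes:
    """Write payload to a temporary file and read it back via file descriptors."""
    fd, path = tempfile.mkstemp()           # creates and opens a private file
    try:
        os.write(fd, payload)                # write request to the kernel
        os.lseek(fd, 0, os.SEEK_SET)         # reposition the file offset
        return os.read(fd, len(payload))     # read request to the kernel
    finally:
        os.close(fd)                         # release the descriptor
        os.unlink(path)                      # remove the directory entry
```

Every step in the helper crosses from user mode into kernel mode, where the kernel applies its access checks before carrying out the request.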

Non-kernel TSF software includes programs that run with administrative privilege, such as the sshd,

cron, atd, and vsftpd daemons. The TSF also includes the configuration files that define authorized

users, groups of users, services provided by the system, and other configuration data. Not included as TSF

are shells used by administrators, and standard utilities invoked by administrators.

The SLES system, which includes hardware, kernel-mode software, non-kernel programs, and databases,

provides a protected environment in which users and administrators run programs, or sequences of CPU

instructions. Programs execute as processes with the identity of the users that started them (with some

exceptions defined in this paper), and with privileges as dictated by the system security policy. Programs are

subject to the access control and accountability processes of the system.


2.2.2 eServer system structure

The system is an eServer computer, which permits one user at a time to log in to the computer console.

Several virtual consoles can be mapped to a single physical console. Different users can log in through

different virtual consoles simultaneously. The system can be connected to other computers via physically and

logically protected LANs. The eServer hardware and the physical LAN connecting the different systems

running SLES are not included within the evaluation boundary of this paper. External routers, bridges, and

repeaters are also not included in the evaluation boundary of this paper.

A standalone host configuration operates as a CC-evaluated system, which can be used by multiple users at a

time. Users can operate by logging in at the virtual consoles or serial terminals of a system, or by setting up

background execution jobs. Users can request local services, such as file, memory, and process management,

by making system calls to the kernel. Even though interconnection of different systems running SLES is not

included in the evaluation boundary, the networking software is loaded. This allows a user to request

network services (for example, FTP) from server processes on the same host.

Another configuration is a networked one, in which a user can log in to the console of

any of the eServer host computers, request local services at that computer, and also request network services

from any of the other computers. For example, a user can use ssh to log into one host from another, or scp

to transfer files from one host to another. The configuration extends the single LAN architecture to show that

SLES provides Internet Protocol (IP) routing from one LAN segment to another. For example, a user can log

in at the console of a host in one network segment and establish an ssh connection to a host in another

network segment. Packets on the connection travel across a LAN segment, and they are routed by a host in

that segment to a host on another LAN segment. The packets are eventually routed by the host in the second

LAN segment to a host on a third LAN segment, and from there are routed to the target host. The number of

hops from the client to the server is irrelevant to the security provided by the system, and is transparent to

the user.

The hosts that perform routing functions have statically-configured routing tables. When the hosts use other

components for routing (for example, a commercial router or switches), those components are assumed to

perform the routing functions correctly and not to alter the data part of the packets.
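A statically configured routing table can be modeled as a fixed list of prefix and next-hop pairs, with the most specific matching prefix winning. The sketch below illustrates that lookup under stated assumptions: the addresses, the `ROUTES` table, and the `next_hop` function are hypothetical examples and do not describe the actual routing configuration of any evaluated host.

```python
import ipaddress

# Hypothetical static table: (destination prefix, next hop).
ROUTES = [
    ("10.0.1.0/24", "direct"),       # local LAN segment
    ("10.0.2.0/24", "10.0.1.254"),   # second segment, via a routing host
    ("0.0.0.0/0", "10.0.1.1"),       # default route
]


def next_hop(dest, routes=ROUTES):
    """Return the next hop for dest using longest-prefix match, or None."""
    addr = ipaddress.ip_address(dest)
    best = None
    for prefix, hop in routes:
        net = ipaddress.ip_network(prefix)
        # Keep the matching route with the longest (most specific) prefix.
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    return best[1] if best else None
```

Because the table is fixed at configuration time, the path a packet takes depends only on its destination address and not on any information learned from the network at run time.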

If other systems are to be connected to the network, with multiple TOE systems connected via a physically

protected LAN, then they need to be configured and managed by the same authority using an appropriate

security policy not conflicting with the security policy of the TOE.

2.2.3 TOE services

Each host computer in the system is capable of providing the following types of services:

• Local services to the users who are currently logged in to the system using a local computer console,

virtual consoles, or terminal devices connected through physically protected serial lines.

• Local services to the previous users via deferred jobs; an example is the cron daemon.

• Local services to users who have accessed the local host via the network using a protocol such as

ssh, which starts a user shell on the local host.

• Network services to potentially multiple users on either the local host or on remote hosts.

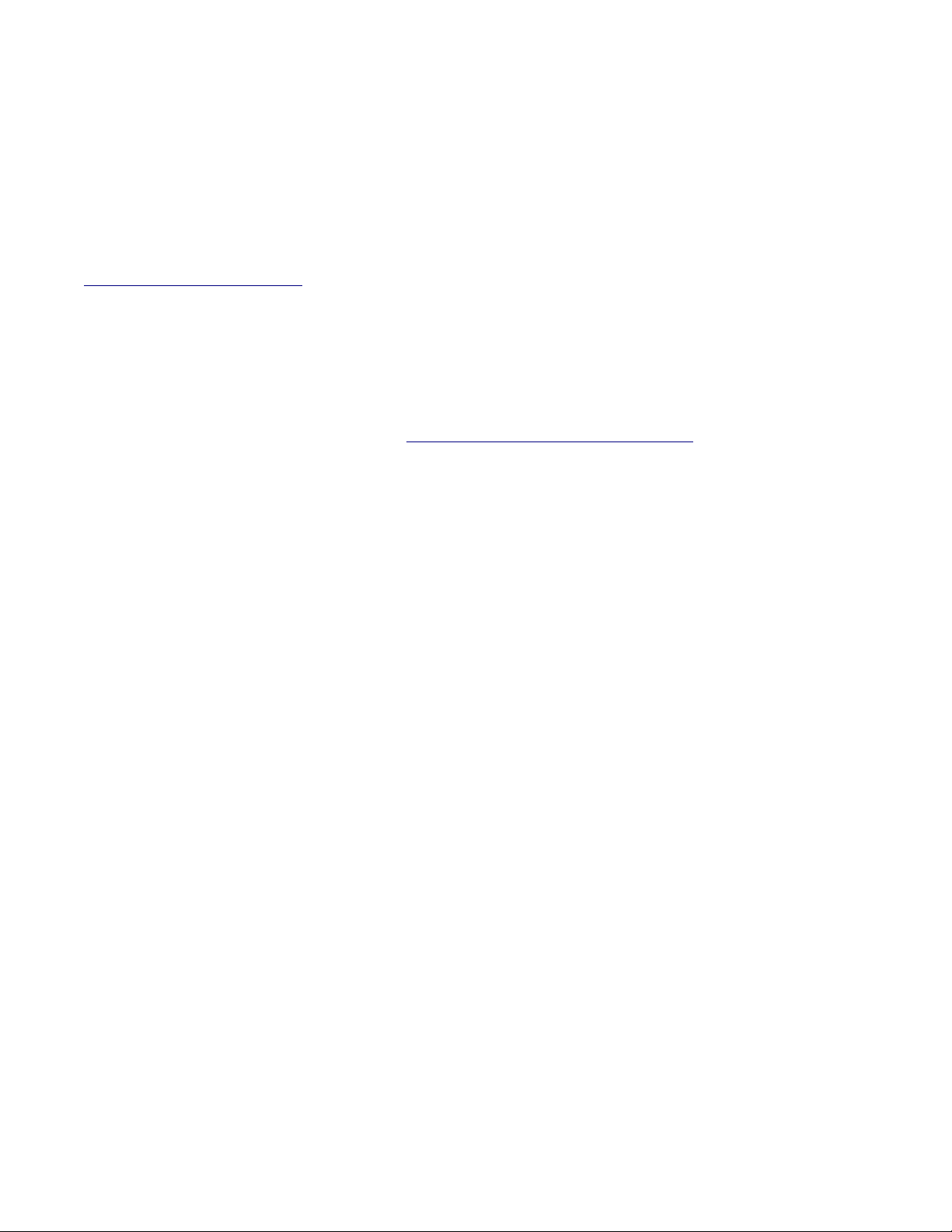

Figure 2-3 illustrates the difference between local services that take place on each local host computer, versus

network services that involve client-server architecture and a network service layer protocol. For example, a

user can log in to the local host computer and make file system requests or memory management requests for

services via system calls to the kernel of the local host. All such local services take place solely on the local

host computer and are mediated solely by trusted software on that host.

Figure 2-3: Local and network services provided by SLES

Network services, such as ssh or ftp, involve client-server architecture and a network service-layer

protocol. The client-server model splits the software that provides a service into a client portion that makes

the request, and a server portion that carries out the request, usually on a different computer. The service

protocol is the interface between the client and server. For example, User A can log in at Host 1, and then use

ssh to log in to Host 2. On Host 2, User A is logged in from a remote host.

On Host 1, when User A uses ssh to log in to Host 2, the ssh client on Host 1 makes protocol requests to an

ssh server process on Host 2. The server process mediates the request on behalf of User A, carries out the

requested service, if possible, and returns the results to the requesting client process.

Also, note that the network client and server can be on the same host system. For example, when User B, who is

logged in at Host 2, uses ssh to log in to Host 2, the user's client process opens an ssh connection to the ssh server process on Host 2.

Although this process takes place on the local host computer, it is distinguished from local services because it

involves networking protocols.
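
The client-server split described above, including the case where both ends run on the same host, can be sketched with a plain TCP exchange over the loopback interface. This is not ssh; the upper-casing "service" and the function names are hypothetical, chosen only to show a client request, a server that carries it out, and a reply travelling over a networking protocol.

```python
import socket
import threading


def serve_once(listener):
    """Accept one connection, carry out the request, and return the result."""
    conn, _peer = listener.accept()
    with conn:
        request = conn.recv(1024)
        # Hypothetical "service": upper-case the request text.
        conn.sendall(request.upper())


def request_service(message):
    """Run a one-shot server and a client on the same host over loopback."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0: let the kernel pick a free port
        listener.listen(1)
        port = listener.getsockname()[1]
        server = threading.Thread(target=serve_once, args=(listener,))
        server.start()
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(message)
            reply = client.recv(1024)
        server.join()
    return reply
```

Even though both ends share one host, the request passes through the network stack rather than being served by a direct system call, which is what distinguishes it from a local service.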

2.2.4 Security policy

A user is an authorized individual with an account. Users can use the system in one of three ways:

1. By interacting directly with the system through a session at a computer console (in which case the

user can use the graphical display provided as the console), or

2. By interacting directly with the system through a session at a serial terminal, or

3. Through deferred execution of jobs using the cron and atd utilities.

A user must log in at the local system in order to access the protected resources of the system. Once a user is

authenticated, the user can access files or execute programs on the local computer, or make network requests

to other computers in the system.

The only subjects in the system are processes. A process consists of an address space with an execution

context. The process is confined to a computer; there is no mechanism for dispatching a process to run

remotely (across TCP/IP) on another host. Every process has a process ID (PID) that is unique on its local

host computer, but PIDs are not unique throughout the system. As an example, each host in the system has an

init process with PID 1. Section 5.2 of this document explains how a parent process creates a child by making

a clone(), fork() or a vfork() system call; the child can then call execve() to load a new program.
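
The fork-then-execve pattern can be sketched in Python, whose os module wraps the corresponding system calls. The spawn helper is a hypothetical name; the sketch assumes a Linux host and shows only the fork() variant.

```python
import os
import sys


def spawn(program, argv):
    """Fork a child, replace its image with program, and wait for it.

    Returns (child_pid, exit_status).
    """
    pid = os.fork()
    if pid == 0:
        # Child: still running the parent's program until execve() replaces it.
        try:
            os.execve(program, argv, os.environ.copy())
        finally:
            # Reached only if execve() failed.
            os._exit(127)
    # Parent: fork() returned the child's PID.
    _, status = os.waitpid(pid, 0)
    return pid, os.WEXITSTATUS(status)


if __name__ == "__main__":
    child_pid, code = spawn(sys.executable, [sys.executable, "-c", "pass"])
    print(f"parent {os.getpid()} ran child {child_pid}, exit status {code}")
```

The child receives its own PID, which is unique only on the host where it was created.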

Objects are passive repositories of data. The TOE defines three types of objects: named objects, storage

objects, and public objects. Named objects are resources, such as files and IPC objects, which can be

manipulated by multiple users using a naming convention defined at the TSF interface. A storage object is an

object that supports both read and write access by multiple non-trusted subjects. Consistent with these

definitions, all named objects are also categorized as storage objects, but not all storage objects are named

objects. A public object is an object that can be publicly read by non-trusted subjects and can be written only

by trusted subjects.

SLES enforces a DAC policy for all named objects under its control, and an object reuse policy for all storage

objects under its control. Additional access control checks are possible, if an optional kernel module is

loaded, such as AppArmor. If AppArmor is loaded, DAC policy is enforced first, and the additional access

control checks are made only if DAC would allow the access. The additional checks are non-authoritative;

that is, a DAC policy denial cannot be overridden by the additional access control checks in the kernel

module.
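
The ordering of the two kinds of checks can be sketched as follows. The function and parameter names are hypothetical, and the additional checks are modeled as simple callables rather than as a real kernel module interface.

```python
def access_decision(dac_allows, extra_checks):
    """Combine DAC with additional, restrictive-only checks.

    dac_allows   -- result of the DAC check (bool)
    extra_checks -- zero-argument callables returning bool
    """
    if not dac_allows:
        # A DAC denial is final; the additional checks are never consulted.
        return False
    # The additional checks can only restrict further, never grant.
    return all(check() for check in extra_checks)
```

With this structure, no combination of additional checks can turn a DAC denial into a grant.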

While the DAC policy that is enforced varies among different object classes, in all cases it is based on user

identity and on group membership associated with the user identity. To allow for enforcement of the DAC

policy, all users must be identified, and their identities must be authenticated. The TOE uses both hardware

and software protection mechanisms.
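
For file system objects, a DAC decision based on user identity and group membership can be sketched with the traditional UNIX permission bits. This is a simplified illustration under stated assumptions: it ignores ACLs and any privileged override, and the function name and parameters are hypothetical.

```python
import stat


def dac_permits(mode, owner_uid, owner_gid, uid, gids, want):
    """Check permission bits for a file-like object.

    want is a subset of "rwx". Exactly one class (owner, group, or other)
    is selected, in that order; privileged overrides are ignored here.
    """
    if uid == owner_uid:
        bits = {"r": stat.S_IRUSR, "w": stat.S_IWUSR, "x": stat.S_IXUSR}
    elif owner_gid in gids:
        bits = {"r": stat.S_IRGRP, "w": stat.S_IWGRP, "x": stat.S_IXGRP}
    else:
        bits = {"r": stat.S_IROTH, "w": stat.S_IWOTH, "x": stat.S_IXOTH}
    return all(mode & bits[ch] for ch in want)
```

Note that only the first matching class is consulted: an owner whose own bits deny access is not granted access through the group bits.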

The hardware mechanisms used by SLES to provide a protected domain for its own execution include a

multistate processor, memory segment protection, and memory page protection. The TOE software relies on

these hardware mechanisms to implement TSF isolation, non-circumventability, and process address-space

separation.

A user can log in at the console, at other directly attached terminals, or through a network connection.

Authentication is based on a password entered by the user and authentication data stored in a protected file.

Users must log in to a host before they can access any named objects on that host. Some services, such as

ssh to obtain a shell prompt on another host, or ftp to transfer files between hosts in the distributed system,

require the user to re-enter authentication data to the remote host. SLES permits the user to change passwords

(subject to TOE enforced password guidelines), change identity, submit batch jobs for deferred execution, and

log out of the system. The Strength of Function Analysis [VA] shows that the probability of guessing a

password is sufficiently low given the minimum password length and maximum password lifetime.
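
The relationship between password length, password lifetime, and guessing probability can be sketched as a simple upper bound. The model and every parameter below are assumptions made for illustration; they are not the figures or the method used in [VA].

```python
def guess_probability(alphabet_size, min_length, guesses_per_second, lifetime_seconds):
    """Upper bound on the chance of guessing one password within its lifetime.

    Assumes uniformly random passwords of at least min_length characters and
    an attacker limited only by the guess rate.
    """
    password_space = alphabet_size ** min_length
    guesses = guesses_per_second * lifetime_seconds
    return min(1.0, guesses / password_space)
```

Under this model, lengthening the minimum password shrinks the bound exponentially, while shortening the maximum lifetime shrinks it only linearly.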

The system architecture provides TSF self-protection and process isolation mechanisms.

2.2.5 Operation and administration

The eServer networks can be composed of one, several, or many different host computers, each of which can

be in various states of operation, such as being shut down, initializing, being in single-user mode, or online in

a secure state. Thus, administration involves the configuration of multiple computers and the interactions of

those computers, as well as the administration of users, groups, files, printers, and other resources for each

eServer system.

The TOE provides the useradd, usermod, and userdel commands to add, modify, and delete a user

account. It provides the groupadd, groupmod, and groupdel commands to add, modify, and delete a

group from the system. These commands accept options to set up or modify various parameters for accounts

and groups. The commands modify the appropriate TSF databases and provide a safer way than manual

editing to update authentication databases. Refer to the appropriate command man pages for detailed

information about how to set up and maintain users and groups.
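
As a sketch of how an administrative script might invoke these commands instead of editing the databases by hand, the following hypothetical helper builds a useradd argument vector. It assumes the commonly available -m (create home directory), -g (primary group), and -c (comment) options; confirm them against the man page on the target system before use.

```python
def useradd_argv(login, primary_group=None, comment=None, create_home=True):
    """Build an argument vector for useradd without running it."""
    argv = ["useradd"]
    if create_home:
        argv.append("-m")
    if primary_group is not None:
        argv += ["-g", primary_group]
    if comment is not None:
        argv += ["-c", comment]
    argv.append(login)
    return argv
```

An administrator could pass the resulting list to subprocess.run(argv, check=True); building the list separately keeps the example runnable without administrative privileges.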

2.2.6 TSF interfaces

The TSF interfaces include local interfaces provided by each host computer, and the network client-server

interfaces provided by pairs of host computers.

The local TSF interfaces provided by an individual host computer include:

• Files that are part of the TSF database that define the configuration parameters used by the security

functions.

• System calls made by trusted and untrusted programs to the privileged kernel-mode software. As

described separately in this document, system calls are exported by the base SLES kernel and by

kernel modules.

• Interfaces to trusted processes and trusted programs

• Interfaces to the SLES kernel through the /proc and the /sys pseudo file systems
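
As an example of the /proc interface listed above, the following sketch reads the user IDs of a process from its status file. It assumes a Linux host where /proc/<pid>/status contains a "Uid:" line with the real, effective, saved, and file system UIDs; the helper name is hypothetical.

```python
import os


def proc_status_uids(pid="self"):
    """Return the (real, effective, saved, filesystem) UIDs from /proc."""
    with open(f"/proc/{pid}/status") as status:
        for line in status:
            if line.startswith("Uid:"):
                return tuple(int(field) for field in line.split()[1:5])
    raise LookupError("no Uid: line found")


if __name__ == "__main__":
    print(proc_status_uids(), os.getuid())
```

The values reported by the kernel through the pseudo file system should agree with those returned by the corresponding system calls.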

External TSF interfaces provided by pairs of host computers include SSH v2 and SSL v3.

For more detailed information about these interfaces, refer to:

• SSH v2 Proposed Standard RFC 4819 Secure Shell Public Key Subsystem

http://www.ietf.org/rfc/rfc4819.txt

• SSLv3 Draft http://wp.netscape.com/eng/ssl3/draft302.txt

• RFC 3268 Advanced Encryption Standard (AES) Ciphersuites for Transport Layer Security (TLS)

http://www.ietf.org/rfc/rfc3268.txt

The following are interfaces that are not viewed as TSF interfaces:

• Interfaces between non-TSF processes and the underlying hardware. Typically, user processes do not

interface directly with the hardware; exceptions are processor and graphics hardware. User processes

interact with the processor by executing CPU instructions, reading and modifying CPU registers, and

modifying the contents of physical memory assigned to the process. User processes interact with

graphics hardware by modifying the contents of registers and memory on the graphics adapter.

Unprivileged processor instructions are externally visible interfaces. However, the unprivileged

processor instructions do not implement any security functionality, and the processor restricts these

instructions to the bounds defined by the processor. Therefore, this interface is not considered as part

of the TSF.

• Interfaces between different parts of the TSF that are invisible to normal users (for example, between

subroutines within the kernel) are not considered to be TSF interfaces. This is because the interface is

internal to the trusted part of the TOE and cannot be invoked outside of those parts. Those interfaces

are therefore not part of the functional specification, but are explained in this HLD.

• The firmware (PR/SM™, z/VM™, P5-LPAR), while part of the TOE, are not considered as providing

TSF interfaces because they do not allow direct unprivileged operations to them.

• System z processor exceptions reflected to the firmware, including z/VM, PR/SM, and LPAR, are not

considered to be TSF interfaces. They are not relevant to security because they provide access to the

z/VM kernel, which does not implement any security functionality.

• The System z z/VM DIAGNOSE code interface is not considered a TSF interface because it is not

accessible by unprivileged processes in the problem state, and does not provide any security

functionality.

TSF interfaces include any interface that is possible between untrusted software and the TSF.

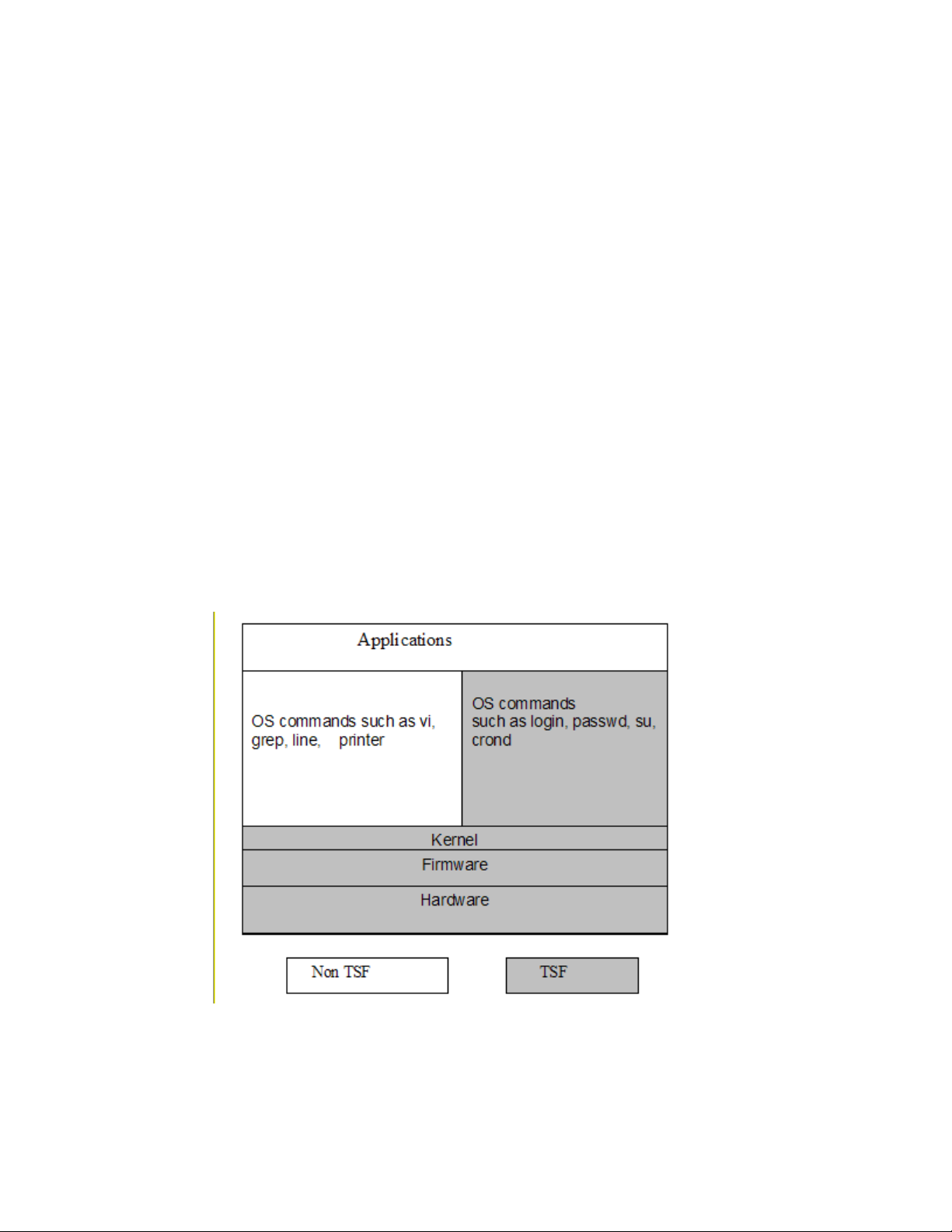

2.3 Approach to TSF identification

This section summarizes the approach to identification of the TSF.

As stated in Section 2.2.6, while the hardware and firmware (z/VM, PR/SM, LPAR) are part of the TOE, they

are not considered as providing TSF interfaces. The SLES operating system, on the other hand, does provide

TSF interfaces.

The SLES operating system is distributed as a collection of packages. A package can include programs,

configuration data, and documentation for the package. Analysis is performed at the file level, except where a

particular package can be treated collectively. A file is included in the TSF for one or more of the following

reasons:

• It contains code, such as the kernel, kernel modules, and device drivers, that runs in a privileged

hardware state.

• It enforces the security policy of the system.

• It allows setuid or setgid to a privileged user (for example, root) or group.

• It is started as a privileged daemon; an example is one started by /etc/init.d.

• It is software that must function correctly to support the system security mechanisms.

• It is required for system administration.

• It consists of TSF data or configuration files.

• It consists of libraries linked to TSF programs.
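
One of the criteria above, the setuid or setgid attribute, can be read directly from file metadata. The following sketch shows how a file-level survey might test for those bits; the helper name is hypothetical, and the other criteria in the list require analysis that metadata alone cannot provide.

```python
import os
import stat


def setid_bits(path):
    """Return (setuid, setgid) booleans for the file at path."""
    info = os.stat(path)
    return bool(info.st_mode & stat.S_ISUID), bool(info.st_mode & stat.S_ISGID)
```

A survey would additionally compare the file owner and group against the privileged users and groups of the system before treating a flagged file as a TSF candidate.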

There is a distinction between non-TSF user-mode software that can be loaded and run on the system, and

software that must be excluded from the system. The following methods are used to ensure that excluded

software cannot be used to violate the security policies of the system:

• The installation software will not install any device drivers except those required for the installed

hardware. Consequently, excluded device drivers will not be installed even if they are on the

installation media.