IBM NeXtScale System, NeXtScale n1200, NeXtScale nx360 M4 Planning And Implementation Manual

ibm.com/redbooks

IBM NeXtScale System Planning and Implementation Guide

David Watts

Jordi Caubet

Duncan Furniss

David Latino

Introduces the new high density x86 solution for scale-out environments

Covers the n1200 Enclosure and nx360 M4 Compute Node

Addresses power, cooling, racking, and management

Front cover

IBM NeXtScale System Planning and Implementation Guide

July 2014

International Technical Support Organization

SG24-8152-01

© Copyright International Business Machines Corporation 2013, 2014. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Second Edition (July 2014)

This edition applies to:

IBM NeXtScale n1200 Enclosure, machine type 5456

IBM NeXtScale nx360 M4, machine type 5455

Note: Before using this information and the product it supports, read the information in “Notices” on page vii.

© Copyright IBM Corp. 2013, 2014. All rights reserved. iii

Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vii

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

Authors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

Now you can become a published author, too! . . . . . . . . . . . . . . . . . . . . . . . . xii

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xii

Stay connected to IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xiii

Summary of changes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

July 2014 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

April 2014, Second Edition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xv

Chapter 1. Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Evolution of data centers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.1.1 Density . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.1.2 Scale out applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.2 Executive Summary of IBM NeXtScale System . . . . . . . . . . . . . . . . . . . . . 3

1.2.1 IBM NeXtScale n1200 Enclosure . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2.2 IBM NeXtScale nx360 M4 compute node . . . . . . . . . . . . . . . . . . . . . . 4

1.3 Design points of the system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.4 This book. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Chapter 2. Positioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

2.1 Market positioning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

2.1.1 Three key messages with NeXtScale . . . . . . . . . . . . . . . . . . . . . . . . 11

2.1.2 Optimized for workloads . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

2.2 IBM System x overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

2.3 NeXtScale System versus iDataPlex . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

2.4 NeXtScale System versus Flex System . . . . . . . . . . . . . . . . . . . . . . . . . . 19

2.5 NeXtScale System versus rack-mounted servers . . . . . . . . . . . . . . . . . . . 20

2.6 Ordering and fulfillment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Chapter 3. IBM NeXtScale n1200 Enclosure . . . . . . . . . . . . . . . . . . . . . . . . 23

3.1 Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

3.1.1 Front components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

3.1.2 Rear components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

3.1.3 Fault tolerance features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

3.2 Standard chassis models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

3.3 Supported compute nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

3.4 Power supplies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

3.5 Fan modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

3.6 Midplane . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3.7 Fan and Power Controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.7.1 Ports and connectors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3.7.2 Internal USB memory key . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

3.7.3 Overview of functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

3.7.4 Web GUI interface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

3.8 Power management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

3.8.1 Power Restore policy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

3.8.2 Power capping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

3.8.3 Power supply redundancy modes . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

3.8.4 Power supply oversubscription . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

3.8.5 Acoustic mode. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

3.9 Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

3.9.1 Physical specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

3.9.2 Supported environment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Chapter 4. IBM NeXtScale nx360 M4 compute node . . . . . . . . . . . . . . . . . 59

4.1 Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

4.1.1 Physical design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

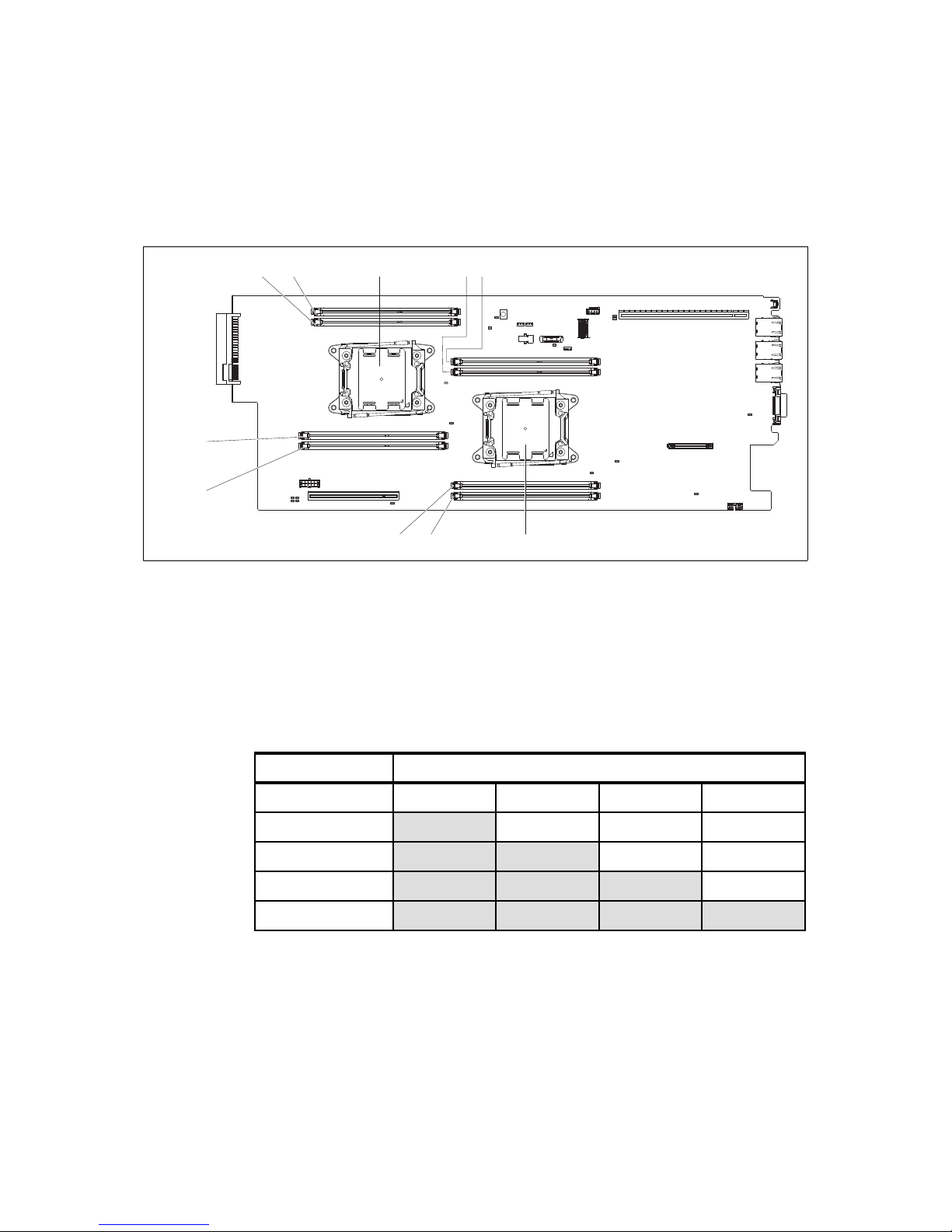

4.2 System architecture. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

4.3 Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

4.4 Standard models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

4.5 Processor options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

4.6 Memory options. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

4.6.1 DIMM installation order . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

4.7 Internal disk storage options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

4.7.1 Controllers for internal storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

4.7.2 Using the ServeRAID C100 with 1.8-inch SSDs . . . . . . . . . . . . . . . . 84

4.7.3 HDDs and SSDs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

4.8 IBM NeXtScale Storage Native Expansion Tray . . . . . . . . . . . . . . . . . . . . 86

4.9 IBM NeXtScale PCIe Native Expansion Tray . . . . . . . . . . . . . . . . . . . . . . 90

4.10 GPU and coprocessor adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

4.11 Embedded 1 Gb Ethernet controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

4.12 PCI Express I/O adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

4.12.1 Mezzanine adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

4.12.2 Single-slot riser card . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

4.12.3 Network adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

4.12.4 Storage host bus adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

4.13 Integrated virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

4.14 Local server management. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

4.15 Remote server management. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

4.16 External disk storage expansion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

4.17 Physical specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

4.18 Operating systems support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

Chapter 5. Rack planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

5.1 Power planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

5.1.1 Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

5.1.2 PDUs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

5.1.3 UPS units . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

5.2 Cooling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

5.3 Density . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

5.4 Racks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

5.4.1 The IBM 42U 1100mm Enterprise V2 Dynamic Rack . . . . . . . . . . . 125

5.4.2 Installing NeXtScale System in other racks . . . . . . . . . . . . . . . . . . 131

5.4.3 Rack options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

5.5 Rear Door Heat Exchanger. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

5.6 Top of rack switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

5.6.1 Ethernet switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

5.6.2 InfiniBand switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

5.6.3 Fibre Channel switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

5.7 Rack-level networking: Sample configurations . . . . . . . . . . . . . . . . . . . . 146

5.7.1 Non-blocking InfiniBand . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

5.7.2 50% blocking InfiniBand . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 148

5.7.3 10 Gb Ethernet, one port per node . . . . . . . . . . . . . . . . . . . . . . . . . 149

5.7.4 10 Gb Ethernet, two ports per node . . . . . . . . . . . . . . . . . . . . . . . . 150

5.7.5 Management network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Chapter 6. Factory integration and testing . . . . . . . . . . . . . . . . . . . . . . . . 153

6.1 IBM standards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

6.2 Testing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

6.3 Documentation that is provided. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

6.3.1 HPLinpack testing results: Supplied on request . . . . . . . . . . . . . . . 157

Chapter 7. Hardware management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

7.1 Managing compute nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

7.1.1 Integrated Management Module II . . . . . . . . . . . . . . . . . . . . . . . . . 160

7.1.2 Unified Extendible Firmware Interface . . . . . . . . . . . . . . . . . . . . . . 164

7.1.3 ASU. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

7.1.4 Firmware upgrade . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

7.2 Managing the chassis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

7.2.1 FPC web browser interface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

7.2.2 FPC IPMI interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

7.3 ServeRAID C100 drivers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

7.4 VMware vSphere Hypervisor (ESXi) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

Chapter 8. Software stack. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213

8.1 eXtreme Cloud Administration Toolkit (xCAT). . . . . . . . . . . . . . . . . . . . . 214

8.2 IBM Platform Cluster Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

8.3 IBM General Parallel File System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 220

8.3.1 IBM GPFS FPO. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

8.3.2 IBM System x GPFS Storage Server . . . . . . . . . . . . . . . . . . . . . . . 224

8.4 IBM Platform LSF family . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 227

8.5 IBM Platform HPC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

8.6 IBM Platform Symphony family . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

8.7 IBM Parallel Environment for x86 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

8.7.1 IBM Parallel Environment Runtime for x86 . . . . . . . . . . . . . . . . . . . 234

8.7.2 IBM Parallel Environment Developer Edition for x86 . . . . . . . . . . . 236

Abbreviations and acronyms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 244

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 244

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your

local IBM representative for information on the products and services currently available in your area. Any

reference to an IBM product, program, or service is not intended to state or imply that only that IBM product,

program, or service may be used. Any functionally equivalent product, program, or service that does not infringe

any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and

verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The

furnishing of this document does not grant you any license to these patents. You can send license inquiries, in

writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such

provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION

PROVIDES THIS PUBLICATION “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR

IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of

express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the

information herein; these changes will be incorporated in new editions of the publication. IBM may make

improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time

without notice.

Any references in this information to non-IBM websites are provided for convenience only and do not in any

manner serve as an endorsement of those websites. The materials at those websites are not part of the materials

for this IBM product and use of those websites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any

obligation to you.

Any performance data contained herein was determined in a controlled environment. Therefore, the results

obtained in other operating environments may vary significantly. Some measurements may have been made on

development-level systems and there is no guarantee that these measurements will be the same on generally

available systems. Furthermore, some measurements may have been estimated through extrapolation. Actual

results may vary. Users of this document should verify the applicable data for their specific environment.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm the

accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the

capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them as

completely as possible, the examples include the names of individuals, companies, brands, and products. All of

these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is

entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any

form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs

conforming to the application programming interface for the operating platform for which the sample programs are

written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or

imply reliability, serviceability, or function of these programs. You may copy, modify, and distribute these sample

programs in any form without payment to IBM for the purposes of developing, using, marketing, or distributing

application programs conforming to IBM's application programming interfaces.

Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business

Machines Corporation in the United States, other countries, or both. These and other IBM trademarked

terms are marked on their first occurrence in this information with the appropriate symbol (® or ™),

indicating US registered or common law trademarks owned by IBM at the time this information was

published. Such trademarks may also be registered or common law trademarks in other countries. A current

list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

AIX®

BladeCenter®

Blue Gene®

GPFS™

IBM®

IBM Flex System™

iDataPlex®

Intelligent Cluster™

LoadLeveler®

LSF®

POWER®

Power Systems™

PowerPC®

PureFlex™

RackSwitch™

Redbooks®

Redpaper™

Redbooks (logo) ®

ServerProven®

Symphony®

System Storage®

System x®

The following terms are trademarks of other companies:

Evolution, and Kenexa device are trademarks or registered trademarks of Kenexa, an IBM Company.

Intel, Intel Xeon, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks

of Intel Corporation or its subsidiaries in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Microsoft, Windows, and the Windows logo are trademarks of Microsoft Corporation in the United States,

other countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Other company, product, or service names may be trademarks or service marks of others.

Preface

IBM® NeXtScale System is a new, dense offering from IBM. It is based on our experience with IBM iDataPlex® and IBM BladeCenter®, with a tight focus on emerging and future client requirements. The IBM NeXtScale n1200 Enclosure and IBM NeXtScale nx360 M4 Compute Node are designed to optimize density and performance within typical data center infrastructure limits.

The 6U NeXtScale n1200 Enclosure fits in a standard 19-inch rack, and up to 12 compute nodes can be installed into the enclosure. With more computing power per watt and the latest Intel Xeon processors, you can reduce costs while maintaining speed and availability.

This IBM Redbooks® publication is for customers who want to understand and implement an IBM NeXtScale System solution. It introduces the offering and the innovations in its design, outlines its benefits, and positions it with other IBM x86 servers. The book provides details about NeXtScale System components and the supported options. It also provides rack and power planning considerations and describes the ways that you can manage the system.

Authors

This book was produced by a team of specialists from around the world working at the IBM Taiwan Systems & Technology Lab (TSTL) in Taipei, Taiwan.

David Watts is a Consulting IT Specialist at the IBM ITSO Center in Raleigh. He manages residencies and produces IBM Redbooks® publications about hardware and software topics that are related to IBM Flex System™, IBM System x®, and BladeCenter servers and associated client platforms. He has authored over 250 books, papers, and Product Guides. He holds a Bachelor of Engineering degree from the University of Queensland (Australia), and has worked for IBM in the United States and Australia since 1989. David is an IBM Certified IT Specialist, and a member of the IT Specialist Certification Review Board.

Jordi Caubet is an IT Specialist with IBM in Spain. He has seven years of experience with IBM and several years of experience in high-performance computing (HPC), ranging from systems design and development to systems support. He holds a degree in Computer Science from the Technical University of Catalonia (UPC). His areas of expertise include Linux, cluster management, parallel programming, storage, and hardware solutions, such as iDataPlex, BladeCenter, and System x products.

Duncan Furniss is a Consulting IT Specialist for IBM in Canada. He currently provides technical sales support for PureFlex™, iDataPlex, BladeCenter, and System x products, and has co-authored several IBM Redbooks publications. Duncan has designed and provided oversight for the implementation of many large-scale solutions for HPC, distributed databases, and rendering of computer generated images. He is an IBM Certified IT Specialist and member of the IT Specialist Certification Review Board.

David Latino is a Senior HPC Solutions Architect for IBM Middle East & Africa. He has 10 years of experience in the HPC field. He has led a wide spectrum of consulting projects, working with HPC users in academic research and industry sectors. His work has covered many aspects of the HPC arena, and he was technical leader for the design and implementation of multiple large HPC systems that appeared in the TOP500 list. He has worked extensively on HPC application development, optimization, scaling, and performance benchmark evaluation, which resulted in several highly optimized application software packages. He also spent several years based at customer sites to train system administrators, users, and developers to manage and efficiently use IBM Blue Gene® systems.

Thanks to the following people for their contributions to this project:

From IBM Marketing:

Mathieu Bordier

Jill Caugherty

Gaurav Chaudhry

Kelly Chiu

Jimmy Chou

Chuck Fang

Andrew Huang

Camille Lee

Brendan Paget

Scott Tease

Swarna Tsai

Matt Ziegler

From IBM Development:

David Brenchley

Vincent Chao

Kelly Chen

Jason Cheng

Marty Crippen

Chris Hsieh

Christina Hsu

Jim Huang

Cathy Lin

Bruce Smith

Brad Taylor

Giant Tu

Harold Wynkoop

From IBM Redbooks:

Tamikia Barrow

Rich Conway

From IBMers around the world:

Bill Champion

Rick Koopman

A special thank you to Mathieu Bordier for hosting the team during our stay in Taipei.

Now you can become a published author, too!

Here is an opportunity to spotlight your skills, grow your career, and become a published author, all at the same time! Join an ITSO residency project and help write a book in your area of expertise, while honing your experience using leading-edge technologies. Your efforts will help to increase product acceptance and customer satisfaction, as you expand your network of technical contacts and relationships. Residencies run from two to six weeks in length, and you can participate either in person or as a remote resident working from your home base.

Find out more about the residency program, browse the residency index, and apply online at this website:

http://www.ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our books to be as helpful as possible. Send us your comments about this book or other IBM Redbooks publications in one of the following ways:

Use the online Contact us review Redbooks form found at this website:

http://www.ibm.com/redbooks

Send your comments in an email to:

redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400

Stay connected to IBM Redbooks

Find us on Facebook:

http://www.facebook.com/IBMRedbooks

Follow us on Twitter:

http://twitter.com/ibmredbooks

Look for us on LinkedIn:

http://www.linkedin.com/groups?home=&gid=2130806

Explore new Redbooks publications, residencies, and workshops with the IBM Redbooks weekly newsletter:

https://www.redbooks.ibm.com/Redbooks.nsf/subscribe?OpenForm

Stay current on recent Redbooks publications with RSS Feeds:

http://www.redbooks.ibm.com/rss.html

Summary of changes

This section describes the technical changes made in this edition of the book and in previous editions. This edition might also include minor corrections and editorial changes that are not identified.

Summary of Changes for SG24-8152-01 for IBM NeXtScale System Planning and Implementation Guide as created or updated on July 7, 2014.

July 2014

This revision reflects the addition, deletion, or modification of new and changed information described below.

New information

Added 1300W power supply efficiency values, Table 3-10 on page 40

Added tables showing quantities of compute nodes supported based on processor selection, power supply selection, and input voltage, 3.3, “Supported compute nodes” on page 29

Additional information on GPUs, 4.10, “GPU and coprocessor adapters” on page 92

April 2014, Second Edition

This revision reflects the addition, deletion, or modification of new and changed information described below.

New information

New PCIe Native Expansion Tray supporting GPUs and coprocessors

New Intel Xeon Phi coprocessor and NVIDIA GPU adapter options

New Intel Xeon E5-2600 v2 processor options

New 1300W power supply option

New models of the NeXtScale n1200 chassis with 1300W power supplies

New RDIMM memory options

Support for 2.5-inch SSD options and other drive options

Support for ServeRAID M5120 RAID controller for external SAS storage expansion

Chapter 1. Introduction

IBM is introducing our next generation of scale out x86 servers, called NeXtScale System. In this chapter, we describe the client requirements that led us to its design, the computing environment it is meant to work in, and how this architecture was created to meet current and future business and technical challenges.

This chapter includes the following topics:

1.1, “Evolution of data centers” on page 2

1.2, “Executive Summary of IBM NeXtScale System” on page 3

1.3, “Design points of the system” on page 6

1.1 Evolution of data centers

An increasing number of computational workloads can be run on groups of servers, which are often referred to by such names as clusters, farms, or pools. This type of computing can be described as scale-out, although, as a convention, we refer to these groups as clusters. As the computing community’s proficiency with implementing and managing clusters has improved, there is a trend to create large clusters, which are becoming known as hyper-scale environments.

In the past, when the number of servers in a computing environment was lower, considerable hardware engineering effort and server cost were expended to create highly reliable servers and so reduce application downtime. With clusters of servers, we strive to balance the high availability technologies that are built in to every server against reducing the cost and complexity of the servers, which allows more of them to be provisioned.

1.1.1 Density

As the number of servers in clusters grows and as data center real estate cost increases, the number of servers in a unit of space (also known as the compute density) becomes an increasingly important consideration. IBM NeXtScale System is designed to optimize density while addressing other objectives, such as providing the best performing processors, minimizing the amount of energy that is used to cool the servers, and providing a broad range of configuration options.

1.1.2 Scale out applications

The following applications are among those that lend themselves to clusters of

servers:

High performance computing (HPC)

HPC is a general category of applications that are computationally complex,

can deal with large data sets, or consist of vast numbers of programs that

need to be run. Examples of computationally complex workloads include

weather modeling or simulating chemical reactions. Comparing gene

sequences is an example of a workload that involves large data sets. Image

rendering for animated movies and Monte Carlo analysis for particle physics

are examples of workloads where there are vast numbers of programs that

need to be run. The use of several HPC clusters in a Grid architecture is an
approach that has gained popularity.


Cloud services

Cloud services that are privately owned and those that are publicly available

from managed service providers provide standardized computing resources

from pools of homogeneous servers. If a consumer requires more or less

server capacity, the servers are provisioned from or returned to the pools.

This paradigm typically also includes consumer self service, and usage

metering with some form of show back, charge back, or billing.

Analytics

The use of distributed databases and extensive data mining, or analytics, is
another use case that is increasing in prevalence and is being applied to a
greater range of business and technical challenges.

1.2 Executive Summary of IBM NeXtScale System

NeXtScale System is IBM’s next generation dense, scalable computing system.

The major components are the NeXtScale n1200 Enclosure and NeXtScale

nx360 M4 compute node.

1.2.1 IBM NeXtScale n1200 Enclosure

The IBM NeXtScale System is based on a six-rack unit (6U) high chassis with 12

half-width bays, as shown in Figure 1-1.

Figure 1-1 Front of NeXtScale n1200 chassis, with 12 half-wide servers


Chassis power and cooling

The IBM NeXtScale n1200 Enclosure includes 10 hot swappable fans and six hot

swappable power supplies. These are installed in the rear of the chassis, as

shown in Figure 1-2.

Figure 1-2 Rear of IBM NeXtScale n1200 Enclosure

Six single-phase power supplies were chosen to enable power feeds from one or

two sources of three-phase power.

Also, in the rear of the chassis is the Fan and Power Controller, which controls

power and cooling aspects of the chassis.

1.2.2 IBM NeXtScale nx360 M4 compute node

The first server that is available for NeXtScale System is the nx360 M4 compute

node. It fits in a half-width bay in the n1200 Enclosure, as shown in Figure 1-3 on

page 5. On the front are the power button, status LEDs, and connectors. There is a
full-height, half-length PCI Express card slot, and a PCI Express mezzanine card
slot that uses the same mezzanine card type as our rack mount servers.


Figure 1-3 IBM NeXtScale nx360 M4

Inside, the nx360 M4 supports two Intel Xeon E5-2600 v2 series processors,

eight DDR3 DIMMs, and a hard drive carrier. Hard disk drive carrier options

include one 3.5-inch drive, two 2.5-inch drives, or four 1.8-inch solid-state drives.

The server is shown in Figure 1-4.

Figure 1-4 IBM NeXtScale nx360 M4 with one 3.5-inch hard disk drive


1.3 Design points of the system

This section introduces some of the design points that went into IBM
NeXtScale System:

The system is designed for flexibility.

The power supplies and fans, in the back of the chassis, are modular and hot

swappable. The servers slide in and out of the front and have their cable

connections at the front.

The chassis is engineered to support devices that span multiple bays.

Compute node designs are not limited to a single 1U-high half-wide server. As

with iDataPlex, servers can be augmented with trays that enable more
features, such as adapters or drives. Further systems that extend the design

are in development.

Fits in a standard rack

It is possible to install the IBM NeXtScale n1200 Enclosure into many

standard 19-inch racks (which might require more cable routing brackets) and

the rack can have a mixture of NeXtScale and other components. It is

possible to start with a few servers and grow incrementally. Alternatively, you

can have IBM install them into racks with switches and power distribution

units and connect all the cables.

IBM factory integration

Further configuration and testing are done when the systems are factory

integrated. For more information about IBM factory integration, see Chapter 6,

“Factory integration and testing” on page 153.

The IBM NeXtScale System is focused on computational density.

Compared to an iDataPlex system with 84 servers in each iDataPlex rack,
NeXtScale System with six chassis in each 42U standard rack (which leaves 6U
per rack for more components) fits 28% more servers in the same floor tile
configuration. With standard racks, clients should be able to design compact
data center floor layouts for all their equipment.

The system is also designed for simplicity.

Cable access to the server is from the front. The servers are directly

accessed for their local console, management, and data networks, which

eliminates contention.

Another design objective was to use standard components.

The servers support standard PCI Express (Generation 3) adapters, and

have RJ-45 copper Ethernet interfaces on board. The chipsets that are used

in the server were selected with broad industry acceptance in mind.


1.4 This book

In this book, we compare NeXtScale to other systems, raising points to help you

select the right systems for your applications. We then take an in-depth look at

the chassis, servers, and fan and power controller (FPC). Next, we take a

broader view, covering implementations at scale, reviewing racks and cooling.

We then describe IBM’s process for assembling and testing complete systems
into Intelligent Cluster™ solutions. Next, we provide information about managing

the NeXtScale chassis and nodes. We finish by covering some of the software

that is available from IBM that is commonly used in a solution with NeXtScale

servers.


Chapter 2. Positioning

NeXtScale is ideal for the fastest-growing workloads, such as social media,

analytics, technical computing, and cloud delivery, which are putting increased

demands on data centers.

This chapter describes how IBM NeXtScale System is positioned in the

marketplace compared with other systems that are equipped with Intel

processors. The information helps you to understand the NeXtScale target

audience and the types of workloads for which it is intended.

This chapter includes the following topics:

2.1, “Market positioning” on page 10

2.2, “IBM System x overview” on page 16

2.3, “NeXtScale System versus iDataPlex” on page 17

2.4, “NeXtScale System versus Flex System” on page 19

2.5, “NeXtScale System versus rack-mounted servers” on page 20

2.6, “Ordering and fulfillment” on page 21


2.1 Market positioning

The IBM NeXtScale System is a new x86 offering that introduces a new category

of dense computing into the marketplace. IBM NeXtScale System includes the

following key characteristics:

Strategically, this is the next generation dense system from System x:

– A building block design that is based on a low function/low cost chassis.

– Flexible compute node configurations that are based around a 1U
half-wide compute node and support various application workloads.

– A standard rack platform.

Built for workloads that require density

Not a replacement for, but complementary to, iDataPlex

NeXtScale performs well in scale-out applications, such as cloud, HPC, grid,
and analytics

Is central in OpenStack initiatives for public clouds

Available in standard System x configurator tools, including SSCT, Blue

Horizon, and x-config

IBM NeXtScale System includes the following key features:

Supports up to seven chassis (see footnote 1) in a 42U rack, which means up to
a total of 84 systems and 2,016 processor cores in a standard 19-inch rack.

High-value software stack with powerful scheduling, management, and
optimization tools.

Industry-standard components for flexibility, ease of maintenance, and

adoption.

Approved for 40°C data centers, which lowers cooling costs.

Available as single node, an empty or configured chassis, or in full racks.

Can be configured as part of the IBM Intelligent Cluster process for
complete pre-testing, configuration, and arrival ready to plug in.

Compute nodes offer the fastest Intel Xeon processors (top-bin 130 W) with

new 1866 MHz memory.

Supports 100 - 127 V and 200 - 240 V power.

Standard form factor and components make it ideal for Business Partners.

Footnote 1: Six chassis per rack are recommended because this leaves rack space
for switches and cable routing. The use of seven chassis in a rack might require
removal of the rack doors.
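The rack-level figures in the key features list can be checked with simple arithmetic. The following Python sketch is illustrative only: the 12 bays per chassis and two processors per node come from this guide, while the value of 12 cores per processor is an assumption inferred from the quoted total of 2,016 cores.

```python
# Rack capacity arithmetic for the key-features figures above.
NODES_PER_CHASSIS = 12   # half-wide bays per n1200 Enclosure (from the text)
SOCKETS_PER_NODE = 2     # processors per nx360 M4 (from the text)
CORES_PER_SOCKET = 12    # assumption: inferred from the 2,016-core total


def rack_capacity(chassis_per_rack: int) -> tuple[int, int]:
    """Return (compute nodes, processor cores) for one rack."""
    nodes = chassis_per_rack * NODES_PER_CHASSIS
    cores = nodes * SOCKETS_PER_NODE * CORES_PER_SOCKET
    return nodes, cores


# Seven chassis is the maximum; six is the recommended count in footnote 1.
for chassis in (7, 6):
    nodes, cores = rack_capacity(chassis)
    print(f"{chassis} chassis: {nodes} nodes, {cores} cores")
```

With the recommended six chassis per rack, the same arithmetic gives 72 nodes, which matches the "72 with space for switches" figure that is used elsewhere in this chapter.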


The customer who benefits the most from NeXtScale is an enterprise looking for

a low-cost, high-performance computing system to start or optimize cloud, big

data, Internet, and technical computing applications, which include the following

uses:

Large datacenters that require efficiency, density, scale, and scalability.

Public, private, and hybrid cloud infrastructures.

Data analytics applications, such as customer relationship management,
operational optimization, risk and financial management, and enabling new
business models.

Internet media applications, such as online gaming and video streaming.

High-resolution imaging for applications ranging from medicine to oil and gas

exploration.

“Departmental” uses in which a small solution can increase the speed of

outcome prediction, engineering analysis, and design and modeling.

2.1.1 Three key messages with NeXtScale

The three key messages about IBM NeXtScale System are that it is flexible,
simple, and scalable, as shown in Figure 2-1.

Figure 2-1 IBM NeXtScale System key messages

[Figure 2-1 content, three panels:
1 SIMPLE: Single architecture featuring Native Expansion; optimized shared infrastructure without compromising performance; the back is now the front; "Simply Silver": the polish is its essentials-only design.
2 FLEXIBLE: Delivered ready to run; channel and box ship capable; optimized for your data center today and ready for it tomorrow; built on open standards for seamless adoption; one part number unlocks IBM's service and support with Intelligent Cluster.
3 SCALE: Even a small cluster can change the outcome; architected for performance, scale, and scalability; maximum impact/$; optimized software stack with Platform Computing, the power beyond the hardware.]


IBM NeXtScale System is flexible in the following ways:

Ordering and delivery

The question of how you want your NeXtScale System configuration to be
ordered and delivered is complex because there are many choices. Some
clients want everything in parts so that they can mix and match to build what
they want. Others want systems that they have tested and approved as
configured to their liking. Still others want complete solutions of racks to
arrive ready to plug in. With NeXtScale, the choice is yours.

A hardware design that allows for a mix of compute nodes.

The NeXtScale nx360 M4 compute node is a 1U half-wide server but is
designed to be extended with the addition of various trays (what we call
Native Expansion), with which you can select the systems that you need based
on the applications that you run.

Fit it into your datacenter seamlessly in an IBM rack or most 19-inch standard

racks.

The IBM NeXtScale n1200 Enclosure is designed to be installed in the IBM

42U 1100mm Enterprise V2 Dynamic Rack because it provides the best

cabling features. However, the chassis can also be installed in many

third-party, four-post, 19-inch racks. This ensures maximum flexibility when it

comes to deploying NeXtScale System into your data center.

IBM NeXtScale System is backed by leading IBM service and support no

matter how you buy it, where you use it, or what task you have it running.

Support for open standards.

A client needs more than hardware to use IT. We designed NeXtScale to
support an open stack of industry standard tools to allow clients that have
existing protocols and tools to migrate easily to NeXtScale System.

The nx360 M4 compute node offers the Integrated Management Module II

service processor and the n1200 Enclosure has the Fan and Power

Controller. Both support the IPMI protocol for flexible and standards-based
systems management.
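Because both the Integrated Management Module II and the Fan and Power Controller support IPMI, generic IPMI clients can be used against them. The following Python sketch builds an invocation of the open-source ipmitool utility to query power status over the LAN. It is an illustration under assumptions: the address and user name are hypothetical, ipmitool must be installed separately, and which IPMI commands each controller accepts is not described in this section.

```python
import os
import subprocess


def ipmi_power_status_cmd(host: str, user: str) -> list[str]:
    """Build an ipmitool command that queries chassis power status over LAN."""
    return [
        "ipmitool",
        "-I", "lanplus",   # IPMI v2.0 LAN interface
        "-H", host,        # address of the management controller
        "-U", user,
        "-E",              # read the password from the IPMI_PASSWORD variable
        "chassis", "power", "status",
    ]


def query_power_status(host: str, user: str, password: str) -> str:
    """Run the query; requires ipmitool and network access to the controller."""
    env = dict(os.environ, IPMI_PASSWORD=password)
    result = subprocess.run(
        ipmi_power_status_cmd(host, user),
        env=env, capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    # Hypothetical address and user name, for illustration only.
    print(" ".join(ipmi_power_status_cmd("192.0.2.10", "admin")))
```

Passing the password through the environment rather than on the command line keeps it out of the process list, which matters when the same script is run against many nodes.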

IBM NeXtScale System is simple in the following ways:

A design that is based on the half-wide compute node.

The architecture of NeXtScale System revolves around a low-function

chassis that hosts compute nodes. The design supports Native Expansion

that allows seamless upgrades to add common functionality, such as storage,

graphics acceleration, or co-processing at the time of shipment or in the

future.


A chassis that includes shared fans and cooling.

The n1200 Enclosure supplies the cooling and power to maximize energy

efficiency, but leaves management and connectivity to the compute nodes,

which minimizes cost.

Cables, connectors, and controls at the front.

With the exception of the power cords, all cabling is at the front of the chassis.

All controls and indicators also are at the front. This configuration makes

access, server swap-outs, and overall systems management easier.

Each compute node has a front connector for a local console for use if you

need crash-cart access. Also, each compute node has a pull-out tab at the

front for system and customer labeling needs, as shown in Figure 2-2.

Figure 2-2 Front of the IBM NeXtScale nx360 M4

Because the cables do not clog up the back of the rack, air flow is improved
and thus energy efficiency also is improved. The harder the fans work to
move air through the server, the more power they use.

Your support staff who work in the data center can tell you that the front of
the rack is a much more enjoyable environment to spend time in because it might
easily be 30°F (about 17°C) cooler at the front than at the back. People cannot
stay at the rear of the rack for long before it is no longer comfortable. Also,
the front of the rack is less noisy than the rear because of fan noise.

[Figure 2-2 callouts: KVM port; pull-out label tab]


It is also difficult to locate a single dense server in a row of dense racks and
then go around the back to service the cabling. Having all of the cabling on the
front simplifies servicing and reduces the chances of mis-cabling or pulling the
wrong server.

Installation in a three-phase power data center.

The design of six power supplies per chassis allows seamless installation into
data centers with three-phase power. With six supplies and two three-phase
feeds, power delivery is balanced across the phases, with no waste or
inefficiency.

The compute nodes are unpainted metal.

Unlike every other x86 server IBM offers, these do not have a black front to

them, which indicates simplicity and efficiency.
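The three-phase point in the list above can be illustrated with a small model. The following Python sketch spreads single-phase supplies across the phases of two three-phase feeds and checks whether the result is balanced. The feed labels, phase labels, and bay-to-phase mapping are hypothetical; the actual wiring of an n1200 Enclosure is not described in this section.

```python
from collections import Counter
from itertools import product

FEEDS = ("A", "B")            # two three-phase feeds (hypothetical labels)
PHASES = ("L1", "L2", "L3")   # the three phases of each feed


def assign_supplies(num_supplies: int = 6) -> dict[int, tuple[str, str]]:
    """Map each power supply bay to one (feed, phase) pair, round-robin."""
    slots = list(product(FEEDS, PHASES))   # 2 feeds x 3 phases = 6 slots
    return {
        bay: slots[(bay - 1) % len(slots)]
        for bay in range(1, num_supplies + 1)
    }


def is_balanced(assignment: dict[int, tuple[str, str]]) -> bool:
    """True when every (feed, phase) pair carries the same number of supplies."""
    counts = Counter(assignment.values())
    all_slots = set(product(FEEDS, PHASES))
    return set(counts) == all_slots and len(set(counts.values())) == 1


if __name__ == "__main__":
    for bay, (feed, phase) in assign_supplies().items():
        print(f"PSU bay {bay}: feed {feed}, phase {phase}")
    print("balanced:", is_balanced(assign_supplies()))
```

In this model, six supplies fill every phase of both feeds exactly once, whereas a count that is not a multiple of six leaves at least one phase more lightly loaded than the others.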

IBM NeXtScale System is scalable in the following ways:

Scaling is for everyone

As we describe scale, do not assume that it is only for massive deployments;
even a small one-chassis solution can change what users believe can be done.

No matter whether you start small and grow or start huge and grow

enormously, NeXtScale is designed to be run and managed at scale as a

single solution.

NeXtScale System is built on what we learned about the financial aspects of

scale-out.

Every decision that we made about the product was aimed at improving our
clients’ impact per dollar, whether that meant removing components that are
not required or selecting more energy-efficient parts to reduce power
usage and, therefore, power costs.

The hardware can be used on its own or paired with a leadership stack that is
optimized for the workload.

Whether you are running a high-performance computing (HPC) application
that is optimized with Platform Computing or that runs on top of an
industry-standard, open-source tool, NeXtScale System makes it easy. The same
is true for cloud: open stacks (OpenStack and other industry-standard stacks)
and IBM SmartCloud are both viable and bring value.

Scalable to the container level.

IBM NeXtScale System can meet the needs of clients who want to add IT at

the rack level or even at the container level. Racks can be fully configured,

cabled, labeled, and programmed before they are shipped. IBM also can take

configured racks and assemble them into complete, containerized solutions

with power, cooling, and infrastructure delivered ready to go.


2.1.2 Optimized for workloads

IBM NeXtScale System is best-suited for the following primary workloads:

Public and private cloud

HPC and technical computing

Although these areas share much in common, they have unique needs that
are served with NeXtScale System, as shown in Figure 2-3.

Figure 2-3 IBM NeXtScale System: Optimized for cloud computing and HPC solutions

For cloud, the important factor is that we support the entire processor stack;
no matter what the client’s goal is, we can support it with the right processor
performance and cost point. The same is true of memory; we have cost-optimized
and power-optimized choices and performance-optimized alternatives. The nodes
contain the right onboard networking, with 1 Gb NICs embedded and options for
up to four other high-speed fabric ports. With these features, the entire
solution is designed to scale to any size.

HPC and technical computing have many of the same attributes as cloud; a key
factor is the need for the top-bin 130 W processors. NeXtScale System can
support top-bin 130 W processors, which means more cores and higher
frequencies than systems that are limited to lower-power processors.

[Figure 2-3 content: workload fine-tuned server characteristics for private cloud, public cloud, and high performance computing:
• Processor and memory performance and choice: full Intel stack support with memory for performance and/or cost optimization
• Standard rack optimized: fits into client data centers seamlessly
• Right-sized I/O: choice of networking options (1 Gb, 10 Gb, or InfiniBand), all SDN ready
• Infinitely scalable, from small to enormous grid deployments, all built on open standards
• High energy efficiency means more impact/watt
• Top-bin Intel Xeon processors, large memory bandwidth, and high IOPS for rapid transaction processing and analytics
• Workload-optimized software stack with Platform Computing and IBM xCAT
• Architected for low latency with choice of high-speed fabric support
• Supported as one part number, no matter the size of the solution and content, with Intelligent Cluster]


In addition to rock-solid hardware, IBM provides a powerful software stack to
run on top of NeXtScale that is based on open standards and IBM added-value
offerings. The stack includes IBM General Parallel File System, GPFS™ Storage
Server, xCAT, and Platform Computing, which provide scheduling, management,
and optimization tools.

2.2 IBM System x overview

The world is evolving, and the way that our clients do business is evolving with it.

That is why IBM has the broadest x86 portfolio in our history and is expanding

even further to meet the needs of our clients, whatever those needs might be.

As shown in Figure 2-4 on page 17, the x86 server market is segmented into the
following key product areas:

High-end systems

IBM dominates this space with enterprise-class eX5 systems of four sockets and
larger that offer unprecedented x86 performance, resiliency, and security.

Blades and integrated systems

Integrated systems are a fast-growing market where IBM adds value by
packaging IBM software and hardware assets in a way that helps our clients
optimize value from IBM systems.

Dense systems

Dense systems, such as NeXtScale or iDataPlex, are a fast-growing segment
that is driven by data center limitations and new workloads that require a
scale-out architecture. These systems transformed how clients optimize
space-constrained data centers with extreme performance and energy
efficiency.

High volume systems

The volume space is over half the total x86 server market and IBM has a

broad portfolio of rack and tower servers to meet a wide range of client needs,

from infrastructure to technical computing.

IBM solutions are aligned in a way to capture the value clients need from cloud,

analytics, and technical computing. Supporting all of this are the IBM technology
assets, such as software and services, and the deep integration that is necessary
to support the entire portfolio.


Figure 2-4 System x Strategy delivers leadership products and solutions

2.3 NeXtScale System versus iDataPlex

Although iDataPlex and NeXtScale look different, many of the ideas and

innovations we pioneered with iDataPlex remain in the new NeXtScale System.

When IBM introduced iDataPlex in 2008, we introduced a chassis that was
dedicated to powering and cooling independent nodes. With NeXtScale System, we
reuse the same principle, but we are extending it to bring more flexibility to
the users.

The IBM NeXtScale n1200 Enclosure can now support up to 12 1U half-wide
compute nodes, while the iDataPlex chassis can house only two 1U half-deep
compute nodes. This allows IBM NeXtScale System to provide more flexibility to
the user, who can mix different types of nodes with different form factors in
the same chassis.

The IBM NeXtScale n1200 Enclosure is designed to fit in the IBM 42U 1100mm

Enterprise V2 Dynamic Rack, but it also fits in many standard 19-inch racks.

Although iDataPlex can also be installed in a standard 19-inch rack, the use of

iDataPlex racks and different floor layouts was required to make use of its

high-density capability. IBM NeXtScale System brings more flexibility by allowing
users to use standard 19-inch racks. It does not require a special data center
layout, so customer best practices and policies are not affected.

[Figure 2-4 content, four market segments:
High-end systems (IBM eX5 Systems): 4 socket+ enterprise-class x86 performance, resiliency, and security.
Blades/Integrated systems (IBM PureSystems, IBM Flex System, IBM BladeCenter): integration across IBM assets in systems and SW for maximum client optimization and value.
Dense systems (IBM NeXtScale, IBM iDataPlex): optimize space-constrained data centers with extreme performance and energy efficiency.
Volume systems (IBM System x Rack & Tower): broad portfolio to meet a wide range of client needs from infrastructure to technical computing.]


As with iDataPlex servers, NeXtScale servers support S3 mode. S3 allows
systems to come back into full production from a low-power state much quicker
than a traditional power-on. In fact, a cold boot normally takes about 270
seconds; with S3, it takes only 45 seconds. When you know that a system will not
be used because of the time of day or the state of the job flow, you can send it
into a low-power state to save power and, when needed, bring it back online
quickly.
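To put the S3 timings in perspective, the following Python sketch computes the speed-up and the time that is saved when a group of nodes is woken from S3 instead of cold booted. The two durations are the approximate values quoted above; the 72-node rack is only an example size.

```python
COLD_BOOT_S = 270   # approximate cold boot time from the text, in seconds
S3_RESUME_S = 45    # approximate resume time from S3, in seconds


def resume_savings(nodes: int) -> tuple[float, int]:
    """Return (speed-up factor, node-seconds saved) for one wake-up of `nodes`."""
    speedup = COLD_BOOT_S / S3_RESUME_S
    saved = (COLD_BOOT_S - S3_RESUME_S) * nodes
    return speedup, saved


speedup, saved = resume_savings(72)
print(f"{speedup:.0f}x faster; {saved} node-seconds saved per 72-node wake-up")
```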

In Table 2-1, the features of IBM NeXtScale System are compared to those

features of IBM iDataPlex.

Table 2-1 Comparing IBM NeXtScale System to IBM iDataPlex

| Feature | iDataPlex | NeXtScale | Comments |
|---|---|---|---|
| Form factor | Unique rack, 1200 mm x 600 mm | Standard rack, 600 mm x 1100 mm | NeXtScale System allows for lower-cost racks and, perhaps, racks customers already have. |
| Density in a standard 42U rack | Up to 42 servers | Up to 84 servers (72 with space for switches) | NeXtScale System can provide up to twice the server density when both types of servers are installed in a standard 42U rack. |
| Density in two consecutive floor tiles (note a) | 84 servers, 8 ToR switches (iDataPlex rack) | 144 servers, 12 ToR switches (two standard racks next to each other) | NeXtScale can provide up to 71% more servers per row when using top-of-rack (ToR) switches. |
| Density/400 sq. ft. (with Rear Door Heat Exchanger) | 1,680 servers; 84 servers/iDataPlex rack; four rows of five racks | 2,160 servers; 72 servers/standard rack; three rows of 10 racks | 10x10 floor tiles. 28% density increase because of standard rack layout. |
| Power/tile (front) | 22 kW maximum; 15 kW typical; 42 servers + switches | 37 kW maximum; 25 kW typical; 72 servers + switches | Similar power/server. |
| GPU support | Two GPUs per server in 2U | Two GPUs per server in 1U effective space (GPU support planned) | GPU tray + base node = 1U. NeXtScale System has twice the density of iDataPlex. |
| Direct attached storage | None (note b) | Other storage-rich offerings that are planned include eight drives in 1U effective space | More flexibility with NeXtScale storage plan. |
| Direct water cooling | Available now | NeXtScale System design supports water cooling | Opportunity to optimize cost with NeXtScale. |

a. Here we compare the density of servers that can be fitted in a single row of racks while using top-of-rack switches. We use a single iDataPlex rack for iDataPlex servers that is 1200 mm wide, and we compare it with two standard racks for NeXtScale servers that also are 1200 mm wide.

b. None available with Intel E5-2600 v2 series processor.
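The density and power figures in Table 2-1 can be reproduced from the server counts that the table quotes. The following Python sketch is a worked check rather than a sizing tool. All inputs are taken from the table, and the per-server power values are rough because the per-tile figures also include switches.

```python
def percent_more(new: float, old: float) -> float:
    """Percentage by which `new` exceeds `old`."""
    return (new - old) / old * 100


# Density in two consecutive floor tiles (servers per 1200 mm of row).
row_gain = percent_more(144, 84)

# Density per 400 sq. ft.: servers per rack x rows x racks per row.
idataplex_room = 84 * 4 * 5     # four rows of five iDataPlex racks
nextscale_room = 72 * 3 * 10    # three rows of 10 standard racks
room_gain = percent_more(nextscale_room, idataplex_room)

# Maximum power per tile divided by servers per tile (switches included).
idataplex_w = 22_000 / 42
nextscale_w = 37_000 / 72

print(f"Row density gain:  {row_gain:.1f}%")
print(f"Room density gain: {room_gain:.1f}%")
print(f"Max W/server: iDataPlex {idataplex_w:.0f}, NeXtScale {nextscale_w:.0f}")
```

The quoted 71% and 28% figures correspond to these two gains with the fractional part dropped, and the per-server power values differ by only a few watts, which is consistent with the "similar power/server" comment.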


2.4 NeXtScale System versus Flex System

Although NeXtScale System and Flex System are both “blade” architectures,

they are different in their approach, as shown in Figure 2-5 and Table 2-2 on

page 20. The key here is to understand the client philosophy. Features and

approaches in Flex System and NeXtScale System appeal to different kinds of

users.

Flex System has a wide ecosystem of compatible servers (x86 and POWER®),

switches, and storage offerings. It is aimed at clients that are looking for an

optimized and integrated solution that delivers better performance than

competitive offerings.

NeXtScale System is an x86-only architecture and its aim is to be the perfect

server for clients that require a scale-out infrastructure. NeXtScale System uses

industry-standard components, including I/O cards and top-of-rack networking

switches, for flexibility of choice and ease of adoption.

Figure 2-5 Comparing Flex System with NeXtScale System

Systems with integrated expertise to

deliver lower management costs and a

simplified user experience

Client needs: A pre-integrated, pre-

optimized, complete hardware and

software platform with built-in stack

management to develop and deliver new

business applications quickly and cost

effectively.

Designed for scale-out applications

such as Technical Computing,

Cloud, Grid and Analytics

Client needs: For clients looking to

build a customized solution that is

delivered pre-integrated leveraging

their existing management and

networking stacks.

IBM PureFlex and Flex System

IBM NeXtScale System


Table 2-2 IBM Flex System and NeXtScale System Differentiations

2.5 NeXtScale System versus rack-mounted servers

Although the NeXtScale System compute nodes are included in a chassis, this

chassis is only there to provide shared power and cooling.

As a consequence, the approach to design, buy, or upgrade a solution that is

based on IBM NeXtScale System or regular 1U/2U rack-mounted servers is

similar. In both cases, the full solution relies on separate networking components

and can integrate seamlessly in an existing infrastructure.

The choice between IBM NeXtScale System and rack-mountable servers is first

driven by application requirements because each form factor brings various

advantages and limitations for particular workloads.

| IBM Flex System differentiation | IBM NeXtScale System differentiation |
|---|---|
| Integrated design offers flexibility (Flex System) and factory-integration (PureFlex System). | Flexibility of customization and integration of hardware. |
| 10U chassis holding 14 nodes with shared power and cooling that is designed for multiple generations of technology. | 6U chassis holding 12 servers with shared power and cooling designed for multiple generations of technology. |
| Heterogeneous node support: x86 and POWER. | x86 node support. |
| Full hardware redundancy; no single point of failure. | Designed for software redundancy; clustered approach. |
| Integrated switching, storage, and management in the chassis. All network connections are made via the midplane. Support for 1 Gb/10 Gb Ethernet, FCoE, 8 Gb/16 Gb FC, and QDR/FDR InfiniBand. Custom form factor switches and adapters. | No integrated switching in the chassis. Cables are routed to top of rack. Multiple brands of rack-based switches are supported: 1 Gb/10 Gb/40 Gb Ethernet, InfiniBand QDR/FDR, and 8 Gb/16 Gb Fibre Channel, all installed external to the chassis. Standard PCI adapters and ToR switches. |
| Integrated shared storage optional with the Flex System V7000 Storage Node. | Integrated direct attached storage optional with the native expansion tray (planned). |
| Unified management via the Flex System Manager for all physical and virtual resources in chassis. | No unified management tools; management of node/storage/switching handled via independent open standard, vendor-independent toolkits. |


The 1U/2U rack-mounted servers and the IBM NeXtScale System differ mainly in
the following capabilities:

Quantity of memory per node

Quantity of PCIe slots per node

Quantity of drives per node

Support for adapters with high power consumption, such as GPUs and
co-processors

For small installations or for servers that require a large amount of memory or
a large number of PCIe adapters, rack-mounted servers are the systems of choice.

For medium to large installations of nodes that require up to 128 GB of memory,
IBM NeXtScale System should be the system of choice. It allows users to reduce
their initial cost of acquisition and their operating cost through higher
density (up to 4X compared to 2U servers) and higher energy efficiency (because
of the shared power and cooling infrastructure).
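The "up to 4X" density figure follows from counting servers per rack unit. The following Python sketch works through that arithmetic. The chassis values (12 nodes in 6U) come from this guide, and the comparison assumes one server per 1U or 2U rack-mounted system.

```python
from fractions import Fraction


def servers_per_u(servers: int, rack_units: int) -> Fraction:
    """Servers per rack unit for a given enclosure or server form factor."""
    return Fraction(servers, rack_units)


nextscale = servers_per_u(12, 6)   # 12 half-wide nodes in a 6U n1200 Enclosure
rack_1u = servers_per_u(1, 1)      # one traditional 1U rack server
rack_2u = servers_per_u(1, 2)      # one traditional 2U rack server

print(f"NeXtScale vs 1U servers: {nextscale / rack_1u}x")
print(f"NeXtScale vs 2U servers: {nextscale / rack_2u}x")
```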

2.6 Ordering and fulfillment

We paid careful attention to channel enablement for NeXtScale System. This
allows not only IBM sellers but also our valued partners to sell NeXtScale.
This was not the case with iDataPlex; the product was not optimized for the
partner’s use, sale, pricing, and so on. This is all different with NeXtScale
because the product is set up the same as an HS23 or x3650: fully channel
enabled and part of the Customer Transition Plan (CTP), xREF, and all the
normal bid grids.

The IBM NeXtScale System can be fulfilled through regular channels or as a fully
integrated solution with Intelligent Cluster. For more information, see Chapter 6,
“Factory integration and testing” on page 153.


IBM NeXtScale System is as easy to configure, order, and price as standard

System x servers such as the x3650 M4 server, as shown in Table 2-3.

Table 2-3 Comparing configuration, ordering, and pricing tools

| Tool | System x | iDataPlex | NeXtScale |
|---|---|---|---|
| Presence in SSCT, Blue Horizon, x-config | Yes | Yes | Yes |
| Special bid in leads | Yes | Yes | Yes |
| Available in IBM Intelligent Cluster | Yes | Yes | Yes |
| Business Partners and distributors (Channel stocked) | Yes | No | Yes |
| Grid level pricing | Yes | No | Yes |
| Available to buy at ibm.com | Yes | No | Yes |
| Reference Configurations - starter kits | Yes | No | Yes |
| Platform-specific sales plays | Yes | No | Yes |


Chapter 3. IBM NeXtScale n1200 Enclosure

The foundation on which IBM NeXtScale System is built is the IBM NeXtScale

n1200 Enclosure.

Designed to provide shared, high-efficiency power and cooling for up to 12

compute nodes, this chassis scales with your business needs. Adding compute,

storage, or acceleration capability is as simple as adding nodes to the chassis.

There are no built-in networking or switching capabilities, so no chassis-level management is required beyond power and cooling.

This chapter includes the following topics:

3.1, “Overview” on page 24

3.2, “Standard chassis models” on page 29

3.3, “Supported compute nodes” on page 29

3.4, “Power supplies” on page 38

3.5, “Fan modules” on page 42

3.6, “Midplane” on page 45

3.7, “Fan and Power Controller” on page 47

3.9, “Specifications” on page 57


3.1 Overview

The IBM NeXtScale n1200 Enclosure is a 6U next-generation dense server platform with an integrated Fan and Power Controller. The n1200 is designed to efficiently power and cool up to 12 1U half-wide compute nodes. In a standard 42U 19-inch rack, clients can therefore install twice the number of servers per U of rack space compared to traditional 1U rack servers.
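The density claim can be checked with simple arithmetic. The following Python sketch is illustrative only: the function names are hypothetical, and the per-rack figures assume that the whole 42U rack is filled with one form factor, with no space reserved for switches or PDUs.

```python
from fractions import Fraction

RACK_UNITS = 42  # standard 42U rack, as stated in the text


def servers_per_u(servers: int, height_u: int) -> Fraction:
    """Servers housed per U of rack space for a given form factor."""
    return Fraction(servers, height_u)


def servers_per_rack(servers: int, height_u: int,
                     rack_units: int = RACK_UNITS) -> int:
    """Servers in a rack filled only with this form factor.

    Assumption: no rack space is set aside for switches or PDUs.
    """
    return (rack_units // height_u) * servers


n1200 = servers_per_u(12, 6)   # 12 half-wide nodes in one 6U enclosure
rack_1u = servers_per_u(1, 1)  # traditional 1U rack server
rack_2u = servers_per_u(1, 2)  # traditional 2U rack server

print(n1200 / rack_1u)           # density relative to 1U servers: 2
print(n1200 / rack_2u)           # density relative to 2U servers: 4
print(servers_per_rack(12, 6))   # 7 enclosures x 12 nodes = 84
print(servers_per_rack(1, 1))    # 42
```

In practice, a rack usually also holds top-of-rack switches, so the achievable node count per rack is lower than this upper bound.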

Figure 3-1 IBM NeXtScale n1200 Enclosure with 12 compute nodes

The founding principle behind IBM NeXtScale System is to allow clients to adopt

this new hardware with minimal or no changes to their existing data center

infrastructure, management tools, protocols, and best practices.

The enclosure looks similar to an IBM BladeCenter or IBM Flex System chassis, but it differs in that there is no consolidated management interface or integrated switching. An exploded view of the components of the chassis is shown in Figure 3-2 on page 25.


Figure 3-2 IBM NeXtScale n1200 Enclosure components

The IBM NeXtScale n1200 Enclosure includes the following components:

Up to 12 compute nodes

Six power supplies, each separately powered

A total of 10 fan modules in two cooling zones

One Fan and Power Controller

Figure 3-2 callouts: shipping bracket kit, midplane, top cover, fan modules, Fan and Power Controller, power supplies, rail kit, node filler, lift handles


3.1.1 Front components

The IBM NeXtScale n1200 Enclosure supports up to 12 1U half-wide compute

nodes, as shown in Figure 3-3.

All compute nodes are front accessible with front cabling, as shown in Figure 3-3. From this angle, the chassis looks simple because it was designed to be simple, low cost, and efficient.

Figure 3-3 IBM NeXtScale n1200 Enclosure Front View with 12 compute nodes

This new enclosure supports not only dense, high-performance compute nodes, but also expanded compute nodes with more I/O slots for adapters, GPUs, and coprocessors, or with additional drive bays.

By using this capability, clients can start with a simple and cost-effective base compute node and expand it to create rich and dense storage or acceleration solutions without the need for any exotic components, midplanes, or high-cost connectors.

Figure 3-3 callouts: Bays 1, 3, 5, 7, 9, and 11; Bays 2, 4, 6, 8, 10, and 12


3.1.2 Rear components

The n1200 provides shared high-efficiency power supplies and fan modules. As

with IBM BladeCenter and IBM Flex System, the NeXtScale System compute

nodes connect to a midplane, but this connection is for power and control only;

the midplane does not provide any I/O connectivity.

Figure 3-4 shows the major components that are accessible from the rear of the

chassis.

Figure 3-4 IBM NeXtScale n1200 Enclosure rear view

At the rear of the chassis, the following types of components are accessible:

Power supplies

The IBM NeXtScale n1200 Enclosure has a six-power supply design, all in

one power domain. This configuration allows clients with 100 V - 240 V utility

power (in single or three-phase) to power up the chassis by using the

infrastructure they already have. For three-phase power, the phases are split

in the PDU for single phase input to the chassis power supplies.

For more information about the power supplies, see 3.4, “Power supplies” on

page 38.

Figure 3-4 callouts: 6x power supplies, Fan and Power Controller, 10x fan modules


Fan modules

Also shared in the chassis are ten 80 mm fan modules, five in each of the two cooling zones.

The fan modules and PSUs in the chassis provide shared power and cooling

for all the installed nodes by using fewer components and with less power

than traditional systems.

For more information about the fan modules, see 3.5, “Fan modules” on

page 42.

Fan and Power Controller

The Fan and Power Controller (FPC) module is the management device for

the chassis and, as its name implies, controls the power and cooling features

of the enclosure.

For more information about the FPC, see 3.7, “Fan and Power Controller” on

page 47.

You might notice that the enclosure does not contain space for network switches.

All I/O is routed directly out of the servers to top-of-rack switches. This

configuration provides choice and flexibility and keeps the IBM NeXtScale n1200

Enclosure flexible and low cost.

3.1.3 Fault tolerance features

The chassis implements a fault-tolerant design. The following components in the chassis enable continued operation if one of the components fails:

Power supplies

The power supplies support a single power domain that provides DC power to

all of the chassis components. If a power supply fails, the other power

supplies can continue to provide power.

Fan modules

The fan modules provide cooling to all of the chassis components. The power supplies have their own fans for cooling. Each fan module has a dual-rotor (blade), dual-motor fan. If one of the motors within a fan module fails, the remaining motor continues to operate. If a fan fails, the chassis continues operating and the remaining fans increase in speed to compensate.

Power policies: The power management policy that you implemented for the chassis determines the effect on chassis operation if there is a power supply failure. Power policies can be N+N, N+1, or no redundancy. Power policies are managed by the FPC.


FPC

The FPC enables the Integrated Management Module to monitor the fans and

control fan speed. If the FPC fails, the enclosure fans ramp up to maximum,

but all systems continue to operate by using the existing power management

policy.
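To illustrate how the power policies named in this section (N+N, N+1, or no redundancy) trade capacity for fault tolerance, the following Python sketch applies the generic definitions of those schemes to a six-supply chassis. It is a simplified assumption, not the FPC's actual algorithm: it ignores oversubscription, the dependence of supply output on AC input voltage, and fan or chassis overhead. The function names and the 400 W per-node draw are hypothetical values chosen for illustration.

```python
def usable_supplies(installed: int, policy: str) -> int:
    """Supplies whose capacity can be budgeted to the load.

    Generic redundancy definitions (assumed, not taken from the FPC):
      N+N  - half of the supplies are held in reserve
      N+1  - one supply is held in reserve
      none - every supply contributes to the budget
    """
    if policy == "N+N":
        return installed // 2
    if policy == "N+1":
        return installed - 1
    if policy == "none":
        return installed
    raise ValueError(f"unknown power policy: {policy!r}")


def power_budget_w(installed: int, rated_w: int, policy: str) -> int:
    """Power budget in watts that survives the failures the policy covers."""
    return usable_supplies(installed, policy) * rated_w


def max_nodes(budget_w: int, per_node_w: int, bays: int = 12) -> int:
    """Nodes that fit in the budget, capped at the 12 chassis bays."""
    return min(bays, budget_w // per_node_w)


# Six 1300 W supplies; the 400 W per-node draw is a hypothetical figure.
for policy in ("N+N", "N+1", "none"):
    budget = power_budget_w(6, 1300, policy)
    print(policy, budget, max_nodes(budget, 400))
# N+N 3900 9
# N+1 6500 12
# none 7800 12
```

For real sizing, use the supported-node tables in this chapter and the IBM Power Configurator, which account for the factors that this sketch leaves out.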

3.2 Standard chassis models

The standard chassis models are listed in Table 3-1. The chassis is also available via the configure-to-order (CTO) process.

Table 3-1 Standard enclosure models

| Model | Description | Fan modules (standard/max) | Power supplies (standard/max) |
|---|---|---|---|
| 5456-A2x | IBM NeXtScale n1200 Enclosure | 10x 80 mm / 10 | 6x 900 W / 6 |
| 5456-A3x | IBM NeXtScale n1200 Enclosure | 10x 80 mm / 10 | 2x 1300 W / 6 |
| 5456-A4x | IBM NeXtScale n1200 Enclosure | 10x 80 mm / 10 | 6x 1300 W / 6 |

The IBM NeXtScale n1200 Enclosure ships with the following items:

Rail kit

One console breakout cable, also known as a KVM dongle (00Y8366)

A Torx-8 (T8) screwdriver for use with components such as the drive cage, which is mounted on the rear of the chassis

One AC power cord for each power supply installed, 1.5 m, 10 A, IEC320 C14 to C13 (part number 39Y7937)

3.3 Supported compute nodes

The IBM NeXtScale nx360 M4 is the only compute node that is supported in the n1200 enclosure. However, the number of compute nodes that can be powered on depends on the following factors:

The power policy that is selected (N+N, N+1, or no redundancy)

The AC input voltage

The components that are installed in each compute node (such as processor, memory, drives, and PCIe adapters)


UEFI settings

To size for a specific configuration, you can use the IBM Power Configurator that

is available at this website:

http://ibm.com/systems/bladecenter/resources/powerconfig.html

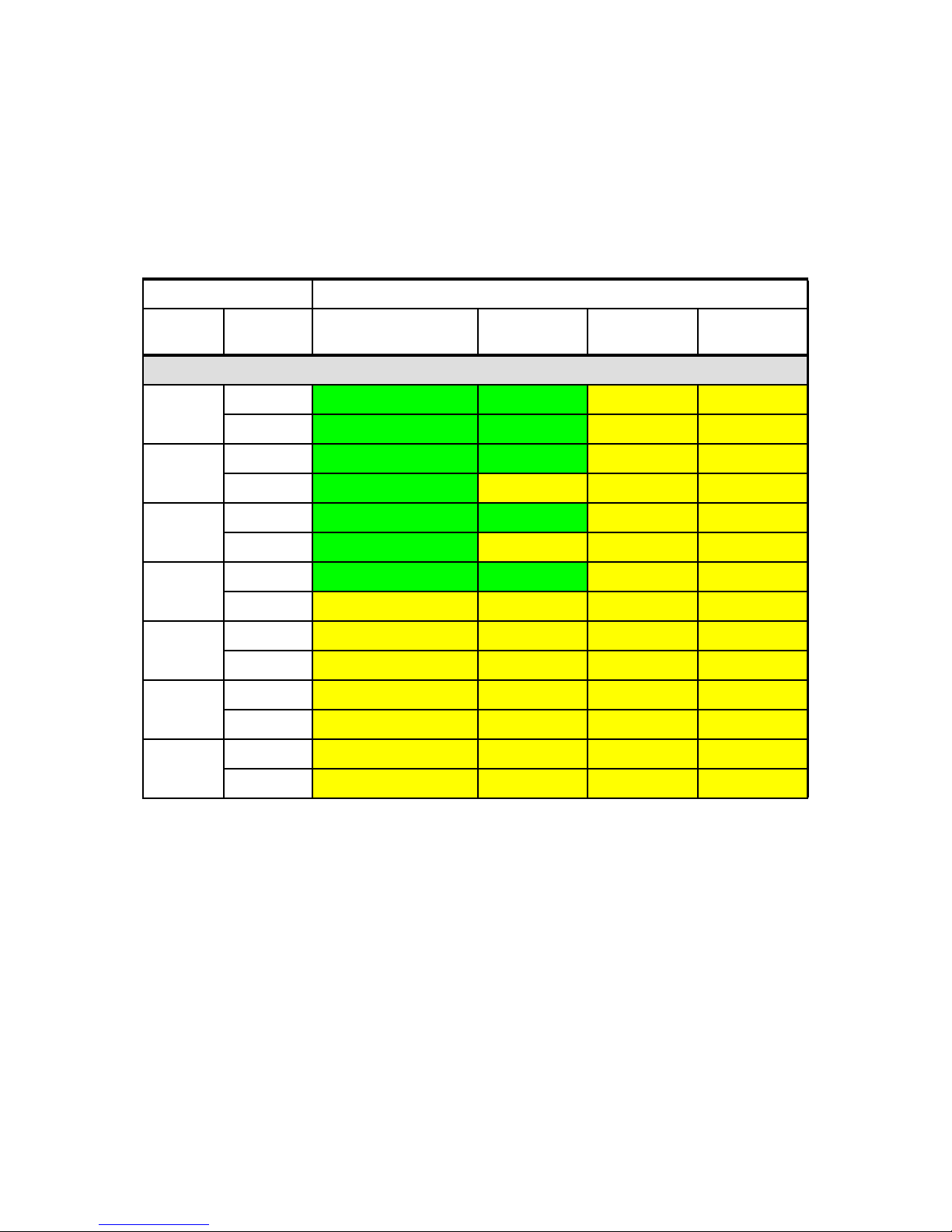

The following tables show the number of nodes that can be operated within the chassis with no performance compromise, depending on the power policy that is required:

1300 W power supplies:

– 200-240V AC input, no GPU Trays: Table 3-2

– 200-240V AC input, GPU Trays with 130 W GPUs: Table 3-3 on page 32