
IBM i Virtualization and Open Storage Read-me First

Vess Natchev

Cloud | Virtualization | Power Systems

IBM Rochester, MN

vess@us.ibm.com

July 9th, 2010


This “read-me first” document provides detailed instructions on using IBM i 6.1 virtualization and connecting open storage to IBM i. It covers prerequisites, supported hardware and software, planning considerations, and installation and post-installation tasks such as backups. The document also contains links to many additional information sources.

Table of Contents

1. IBM i virtualization solutions

1.1. IBM i logical partition (LPAR) hosting another IBM i partition

1.2. IBM i using open storage as a client of the Virtual I/O Server (VIOS)

1.3. IBM i on a Power blade

2. IBM i hosting IBM i supported configurations

2.1. Hardware

2.2. Software and firmware

3. IBM i hosting IBM i concepts

3.1. Virtual SCSI and Ethernet adapters

3.2. Storage virtualization

3.3. Optical virtualization

3.4. Network virtualization

4. Prerequisites for implementing IBM i hosted LPARs

4.1. Storage planning

4.2. Performance

4.3. Dual hosting

5. Implementing IBM i client LPARs with an IBM i host

6. Post-install tasks and considerations

6.1. Configure IBM i networking

6.2. How to perform IBM i operator panel functions

6.3. How to display the IBM i partition System Reference Code (SRC) history

6.4. Client IBM i LPARs considerations and limitations

6.5. Configuring Electronic Customer Support (ECS) over LAN

6.6. Copying storage spaces

6.7. Backups

7. IBM i using open storage supported configurations

7.1. Hardware

7.2. Software and firmware

8. IBM i using open storage through VIOS concepts

8.1. Virtual SCSI and Ethernet adapters

8.2. Storage virtualization

8.3. Optical virtualization

8.4. Network virtualization

9. Prerequisites for attaching open storage to IBM i through VIOS

9.1. Storage planning

9.2. Performance

9.3. Dual hosting and multi-path I/O (MPIO)

9.3.1. Dual VIOS LPARs with IBM i mirroring

9.3.2. Path redundancy to a single set of LUNs


9.3.2.1. Path redundancy with a single VIOS

9.3.2.2. Redundant VIOS LPARs with client-side MPIO

9.3.3. Subsystem Device Driver – Path Control Module (SDDPCM)

10. Attaching open storage to IBM i through VIOS

10.1. Open storage configuration

10.2. VIOS installation and configuration

10.3. IBM i installation and configuration

10.4. HMC provisioning of open storage in VIOS

10.5. End-to-end LUN device mapping

11. Post-install tasks and considerations

11.1. Configure IBM i networking

11.2. How to perform IBM i operator panel functions

11.3. How to display the IBM i partition System Reference Code (SRC) history

11.4. Client IBM i LPARs considerations and limitations

11.5. Configuring Electronic Customer Support (ECS) over LAN

11.6. Backups

12. DS4000 and DS5000 Copy Services and IBM i

12.1. FlashCopy and VolumeCopy

12.2. Enhanced Remote Mirroring (ERM)

13. IBM i using SAN Volume Controller (SVC) storage through VIOS

13.1. IBM i and SVC concepts

13.2. Attaching SVC storage to IBM i

14. SVC Copy Services and IBM i

14.1. FlashCopy

14.1.1. Test scenario

14.1.2. FlashCopy statements

14.2. Metro and Global Mirror

14.2.1. Test scenario

14.2.2. Metro and Global Mirror support statements

15. XIV Copy Services and IBM i

16. DS5000 direct attachment to IBM i

16.1. Overview

16.2. Supported hardware and software

16.3. Best practices, limitations and performance

16.4. Sizing and configuration

16.5. Copy Services support

17. N_Port ID Virtualization (NPIV) for IBM i

17.1. Overview

17.2. Supported hardware and software

17.3. Configuration

17.4. Copy Services support

18. Additional resources

19. Trademarks and disclaimers


1. IBM i virtualization solutions

IBM i 6.1 introduces three significant virtualization capabilities that allow faster deployment of IBM i workloads within a larger heterogeneous IT environment. This section introduces and differentiates these new technologies.

1.1. IBM i logical partition (LPAR) hosting another IBM i partition

An IBM i 6.1 LPAR can host one or more additional IBM i LPARs, known as virtual client LPARs. Virtual client partitions need not have any physical I/O hardware assigned. Instead, they can leverage virtual I/O resources from the host IBM i partition. The host LPAR can virtualize three types of hardware resources: disk, optical and networking. The capability of IBM i to provide virtual I/O resources has been used successfully for several years to integrate AIX®, Linux® and Windows® workloads on the same platform. The same virtualization technology, which is part of the IBM i operating system, can now be used to host IBM i LPARs. IBM i hosting IBM i is the focus of the first half of this document.

1.2. IBM i using open storage as a client of the Virtual I/O Server (VIOS)

IBM i virtual client partitions can also be hosted by VIOS. VIOS is virtualization software that runs in a separate partition and provides virtual storage, optical, tape and networking resources to one or more client partitions. The most immediate benefit VIOS brings to an IBM i client partition is the ability to expand its storage portfolio to include 512-byte/sector open storage. Open storage volumes (logical units, or LUNs) are physically attached to VIOS through a Fibre Channel or Serial-Attached SCSI (SAS) connection and then made available to IBM i. In this case IBM i does not attach directly to the SAN. However, once open storage LUNs become available through VIOS, they are managed the same way as integrated disks or LUNs from a directly attached storage system. IBM i using open storage through VIOS is the focus of the second half of this read-me first guide.

1.3. IBM i on a Power blade

The third major virtualization enhancement with IBM i 6.1 is the ability to run an IBM i LPAR and its applications on a Power blade server, such as IBM BladeCenter JS12 or JS22. Running IBM i on a Power blade is beyond the scope of this document. See the IBM i on a Power Blade Read-me First for a complete technical overview and implementation instructions:

http://www.ibm.com/systems/power/hardware/blades/ibmi.html

2. IBM i hosting IBM i supported configurations

2.1. Hardware

One of the most significant benefits of this solution is the broad hardware support. Any storage,

network and optical adapters and devices supported by the host IBM i partition on a POWER6

processor-based server can be virtualized to the client IBM i partition. Virtualization of tape

devices from an IBM i host to an IBM i client is not supported. The following table lists the

supported hardware:

.

4

Page 5

Hardware type Supported for

IBM i hosting

IBM i

IBM Power servers Yes Includes IBM Power 520 Express, IBM

Power 550 Express, IBM Power 560

Express, IBM Power 570 and IBM Power 595

Does not include IBM POWER6 processorbased blade servers, such as IBM

BladeCenter JS12 and JS22

IBM Power 575 No

POWER5-based systems or

earlier

Storage adapters (Fibre

Channel, SAS, SCSI)

Storage devices and

subsystems

Network adapters Yes Must be supported by IBM i 6.1 or later and

Optical devices Yes Must be supported by IBM i 6.1 or later and

Tape devices No

To determine the storage, network and optical devices supported on each IBM Power server

model, refer to the Sales Manual for each model: http://www.ibm.com/common/ssi/index.wss

To determine the storage, network and optical devices supported only by IBM i 6.1, refer to the

upgrade planning Web site: https://www-

304.ibm.com/systems/support/i/planning/upgrade/futurehdwr.html.

2.2. Software and firmware

Software or firmware type Supported for

IBM i 6.1 or later Yes Required on both host and client IBM i

IBM i 5.4 or earlier No Not supported on host or client partition

IBM Power server system

firmware 320_040_031 or later

HMC firmware HMC V7 R3.2.0

or later

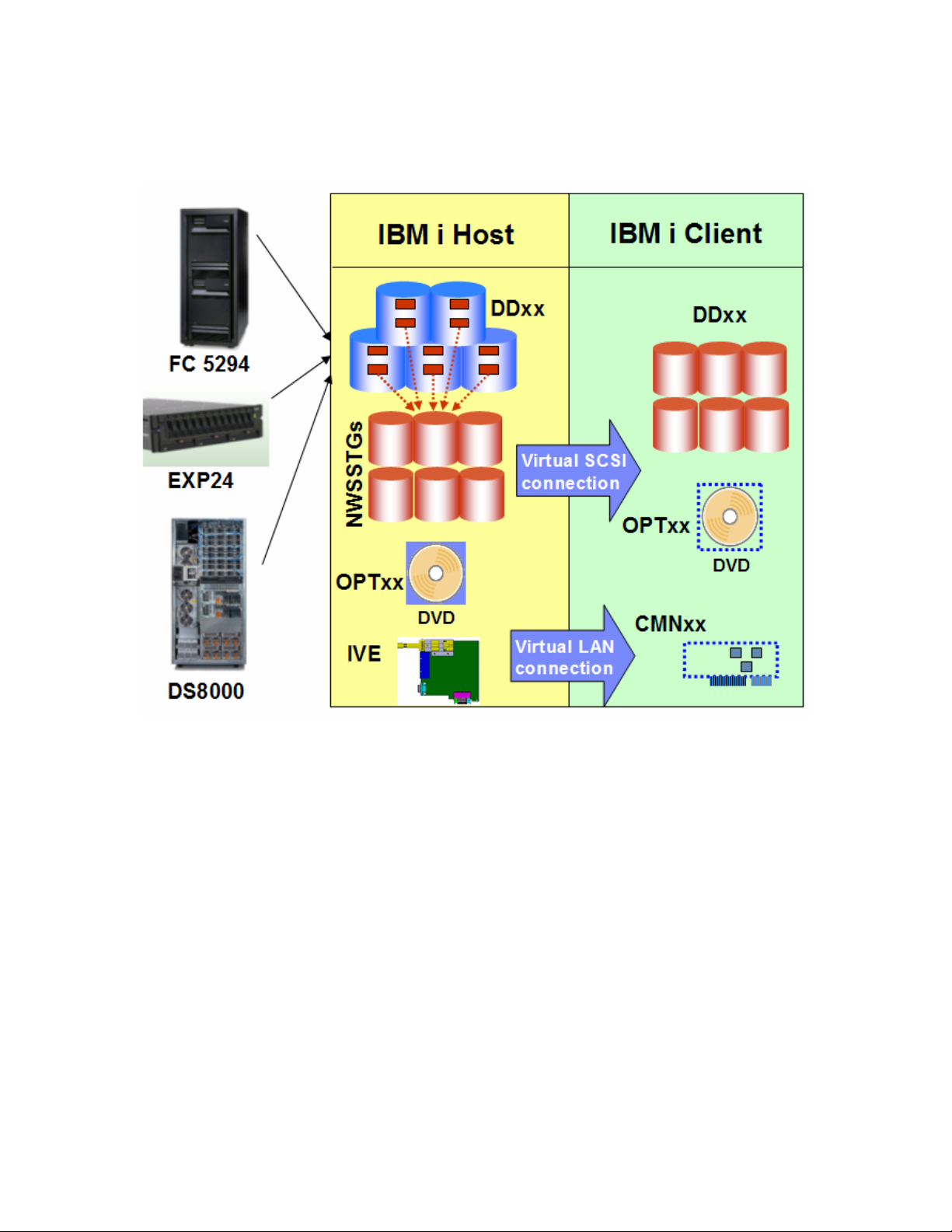

3. IBM i hosting IBM i concepts

The capability of an IBM i partition to host another IBM i partition involves hardware and

virtualization components. The hardware components are the storage, optical and network

adapters and devices physically assigned to the host IBM i LPAR. The virtualization components

No

Yes Must be supported by IBM i 6.1 or later and

supported on POWER6-based IBM Power

server

Yes Must be supported by IBM i 6.1 or later and

supported on POWER6-based IBM Power

server

supported on POWER6-based IBM Power

server

supported on POWER6-based IBM Power

server

IBM i hosting

IBM i

partition

Yes This is the minimum system firmware level

required

Yes This is the minimum HMC firmware level

required

Notes

.

Notes

5

Page 6

are the system firmware and IBM i operating system objects necessary to virtualize the physical

I/O resources to client partitions. The following diagram shows the full solution and its

components:

3.1. Virtual SCSI and Ethernet adapters

IBM i hosting IBM i uses an existing function of the system firmware, or Power Hypervisor: the capability to create virtual SCSI and Ethernet adapters in a partition. Virtual adapters are created for each LPAR in the Hardware Management Console (HMC). Virtual SCSI adapters are used for storage and optical virtualization. Virtual Ethernet adapters are used for network virtualization.

Using virtual I/O resources from a host partition does not prevent an IBM i client partition from owning physical hardware. IBM i supports a mix of virtual and physical hardware in the same partition in this environment. To set this up, assign both types of adapters to the partition in the HMC.

3.2. Storage virtualization

To virtualize integrated disk (SCSI, SAS or SSD) or LUNs from a SAN system to an IBM i client partition, both HMC and IBM i objects must be created. In the HMC, the minimum required configuration is:

• One virtual SCSI server adapter in the host partition
• One virtual SCSI client adapter in the client partition

This virtual SCSI adapter pair allows the client partition to send read and write I/O operations to the host partition. More than one virtual SCSI pair can exist for the same client partition in this environment. To minimize performance overhead on the host partition, the virtual SCSI connection carries I/O requests but not the actual data. The Power Hypervisor provides Logical Remote Direct Memory Access (LRDMA), which transfers the data directly from the physical adapter assigned to the host partition to a buffer in memory of the client partition.

No additional IBM i configuration is required in the virtual client partition. In the host partition, the minimum required IBM i setup consists of the following:

• One Network Server Description (NWSD) object
• One Network Server Storage Space (NWSSTG) object

The NWSD object associates a virtual SCSI server adapter in IBM i with one or more NWSSTG objects. That server adapter is in turn connected, through the HMC configuration, to a virtual SCSI client adapter in the client partition. At least one NWSD object must be created in the host for each client, though more are supported.

The NWSSTG objects are the virtual disks provided to the client IBM i partition. They are created from available physical storage in the host partition. In the client, they are recognized and managed as standard DDxx disk devices (with a different type and model). The following screenshot shows several storage spaces for a client partition in an IBM i 6.1 host partition:

The next screenshot shows several storage spaces in an IBM i 6.1 client partition:

Storage spaces for an IBM i client partition do not have to match physical disk sizes. They can be created from 160 MB to 1 TB in size, as long as there is available storage in the host. The 160 MB minimum is a requirement of the storage management Licensed Internal Code (LIC) on the client partition. For an IBM i client partition, up to 16 NWSSTGs can be linked to a single NWSD, and therefore to a single virtual SCSI connection. IBM i clients support up to 32 outstanding I/O operations to each storage space. Storage spaces can be created in any existing Auxiliary Storage Pool (ASP) on the host, including Independent ASPs. Through the use of NWSSTGs, any physical storage supported in the IBM i host partition on a POWER6-based system can be virtualized to a client partition.
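The sizing limits above can be checked before any objects are created on the host. The following Python sketch is illustrative only. It is not an IBM tool, and the function names are hypothetical. It assumes that 1 TB means 1,048,576 MB; the exact upper bound the host enforces may differ. The sketch validates proposed storage space sizes, groups them into sets of at most 16 per NWSD, and computes the resulting ceiling on outstanding client I/O for each NWSD.

```python
# Illustrative planning sketch; not an IBM utility. Limits are those quoted above.
MIN_SPACE_MB = 160           # minimum required by the client's storage management LIC
MAX_SPACE_MB = 1024 * 1024   # 1 TB, assuming binary units (an assumption)
SPACES_PER_NWSD = 16         # NWSSTGs linkable to one NWSD / virtual SCSI connection
IO_PER_SPACE = 32            # outstanding client I/O operations per storage space


def plan_nwsds(sizes_mb):
    """Validate storage space sizes (in MB) and split them into per-NWSD groups."""
    for size in sizes_mb:
        if not MIN_SPACE_MB <= size <= MAX_SPACE_MB:
            raise ValueError(f"{size} MB is outside the 160 MB to 1 TB range")
    return [sizes_mb[i:i + SPACES_PER_NWSD]
            for i in range(0, len(sizes_mb), SPACES_PER_NWSD)]


def max_outstanding_io(group):
    """Upper bound on concurrent client I/O requests to the spaces linked to one NWSD."""
    return len(group) * IO_PER_SPACE


if __name__ == "__main__":
    groups = plan_nwsds([70_000] * 20)    # twenty hypothetical 70,000 MB storage spaces
    print([len(g) for g in groups])       # [16, 4]
    print(max_outstanding_io(groups[0]))  # 512
```

Filling NWSDs in order is only one possible policy. Storage spaces can be linked to whichever NWSD suits the administrator, provided no NWSD exceeds 16.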

3.3. Optical virtualization

Any optical drive supported in the host IBM i LPAR can be virtualized to an IBM i client LPAR. An existing virtual SCSI connection can be used, or a new connection can be created explicitly for optical I/O traffic. By default, if a virtual SCSI connection exists between host and client, all physical OPTxx optical drives in the host are available to the client, where they are also recognized as OPTxx devices. The NWSD parameter Restricted device resources can be used to specify which optical devices in the host a client partition cannot access.
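The default-allow behavior described above amounts to a set difference. Every OPTxx drive in the host is visible to the client unless it is listed in the NWSD's Restricted device resources parameter. This minimal Python sketch models only that rule. The device names are hypothetical, and nothing here queries a real system.

```python
def client_visible_optical(host_optical, restricted):
    """Return the host OPTxx drives a client can use, preserving host order.

    Models the default described above: all host optical drives are shared over
    an existing virtual SCSI connection unless restricted in the NWSD.
    """
    restricted_set = set(restricted)
    return [dev for dev in host_optical if dev not in restricted_set]


if __name__ == "__main__":
    host = ["OPT01", "OPT02", "OPT03"]              # hypothetical host drives
    print(client_visible_optical(host, []))         # ['OPT01', 'OPT02', 'OPT03']
    print(client_visible_optical(host, ["OPT02"]))  # ['OPT01', 'OPT03']
```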

A virtualized optical drive in the host partition can be used for a D-mode Initial Program Load (IPL) and installation of the client partition. It can also be used later to install Program Temporary Fixes (PTFs) or applications. If the optical drive is writable, the client partition can write to the physical media in the drive.

3.4. Network virtualization

Virtualizing a network adapter and using a virtual LAN (VLAN) for partition-to-partition communication within a system are existing IBM i capabilities. For a client to use a host’s physical network adapter, a virtual Ethernet adapter must be created in the HMC in both partitions. To be on the same VLAN, the two virtual Ethernet adapters must have the same Port Virtual LAN ID (PVID). IBM i recognizes this type of adapter as a communications port (CMNxx) with a different type. In the host partition, the virtual Ethernet adapter is then associated with the physical network adapter through a routing configuration, either Proxy ARP or Network Address Translation (NAT). This allows the client partition to send network packets over the VLAN and through the physical adapter to the outside LAN. The physical adapter can be any network adapter supported by IBM i 6.1, including Integrated Virtual Ethernet (IVE) ports, also known as Host Ethernet Adapter (HEA) ports.
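The PVID rule can be illustrated with a short Python sketch. Partitions share a VLAN only when their virtual Ethernet adapters have the same PVID. The partition names and PVID values are hypothetical. The sketch deliberately ignores the Proxy ARP or NAT routing that the host performs to reach the outside LAN.

```python
from collections import defaultdict


def vlan_membership(adapters):
    """Map each PVID to the set of partitions with a virtual Ethernet adapter on it.

    `adapters` is an iterable of (partition_name, pvid) pairs, one per adapter.
    """
    vlans = defaultdict(set)
    for partition, pvid in adapters:
        vlans[pvid].add(partition)
    return dict(vlans)


def share_vlan(adapters, a, b):
    """True if partitions a and b have virtual Ethernet adapters with a common PVID."""
    return any({a, b} <= members for members in vlan_membership(adapters).values())


if __name__ == "__main__":
    adapters = [("IBMIHOST", 1), ("IBMICLNT1", 1), ("IBMICLNT2", 2)]
    print(share_vlan(adapters, "IBMIHOST", "IBMICLNT1"))  # True
    print(share_vlan(adapters, "IBMIHOST", "IBMICLNT2"))  # False
```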

4. Prerequisites for implementing IBM i hosted LPARs

4.1. Storage planning

Because virtual disks for the IBM i client LPAR are NWSSTG objects in the host LPAR, the main prerequisite for installing a new client LPAR is sufficient capacity in the host to create those objects. Note that the host partition cannot detect what percentage of the virtual storage is used in the client. For example, a 500-GB storage space occupies 500 GB of physical storage in the host IBM i LPAR, even if the client LPAR uses only 50% of that disk capacity.
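The following Python sketch makes the point concrete. It contrasts what the host allocates with what the client actually uses. The StorageSpace class and its fields are hypothetical modeling aids, not IBM i objects or APIs. The 500 GB and 50% figures reuse the example above.

```python
from dataclasses import dataclass


@dataclass
class StorageSpace:
    name: str                    # hypothetical NWSSTG name
    size_gb: float               # size chosen when the storage space was created
    client_used_fraction: float  # known only inside the client; the host cannot see it


def host_allocated_gb(spaces):
    """Physical host capacity consumed: always the full size of every storage space."""
    return sum(s.size_gb for s in spaces)


def client_used_gb(spaces):
    """Capacity actually holding data, as reported inside the client partition."""
    return sum(s.size_gb * s.client_used_fraction for s in spaces)


if __name__ == "__main__":
    spaces = [StorageSpace("CLNT1D1", 500, 0.5)]  # the 500 GB example above
    print(host_allocated_gb(spaces))  # 500
    print(client_used_gb(spaces))     # 250.0
```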

It is recommended that the total size of the storage spaces for each client partition closely match its initial disk requirements. As the storage needs of the client partition grow, additional storage spaces can be dynamically created and linked to it on the host partition. On the client, each new virtual disk is automatically recognized as a non-configured drive and can be added to any existing ASP. The only restriction to consider in this case is the maximum number of storage spaces allowed per virtual SCSI connection for an IBM i client partition, which is 16. If a client LPAR needs more than 16 NWSSTGs, additional virtual SCSI connections can be created dynamically in the HMC.
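When planning growth, it helps to know in advance whether linking more storage spaces will require another virtual SCSI connection. This Python sketch applies the 16-per-connection limit. It assumes, as a simplification, that existing connections are filled to 16 storage spaces before a new one is used. The function names are hypothetical.

```python
import math

SPACES_PER_CONNECTION = 16  # NWSSTG limit per virtual SCSI connection for an IBM i client


def connections_needed(num_spaces):
    """Minimum number of virtual SCSI connections (NWSDs) for num_spaces storage spaces."""
    return math.ceil(num_spaces / SPACES_PER_CONNECTION)


def additional_connections(current_spaces, new_spaces):
    """Extra virtual SCSI connections to create in the HMC before linking new_spaces.

    Assumes existing connections are filled to 16 before a new one is used.
    """
    return connections_needed(current_spaces + new_spaces) - connections_needed(current_spaces)


if __name__ == "__main__":
    print(additional_connections(14, 2))  # 0: the first connection now holds 16
    print(additional_connections(14, 3))  # 1: the 17th space needs a second connection
```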

4.2. Performance

As described in section 3.2, disk I/O operations in an IBM i virtual client partition result in I/O requests to the physical disk adapter(s) and drives assigned to the host partition. The best way to ensure good disk performance in the client LPAR is therefore to create a well-performing disk configuration in the host LPAR. Because the host partition is a standard IBM i partition, all the recommendations in the Performance Capabilities Reference manual (http://www.ibm.com/systems/i/solutions/perfmgmt/resource.html) apply to it. Use the manual’s suggestions for maximizing IBM i disk performance for the type of physical storage used in the host, whether integrated disk or SAN.

If only the System ASP exists on the host partition, NWSSTG objects are created on the same physical disk units as all other objects. If the host partition runs production applications in addition to providing virtual storage to client partitions, there will be disk I/O contention. Both the client partitions and the IBM i workloads in the host will send I/O requests to those disk units. To minimize disk I/O contention, create storage space objects in a separate ASP on the host (Independent ASPs are supported). Performance on the client(s) then depends on the disk adapter and disk configuration used for that ASP. If the host partition provides virtual storage to more than one client partition, consider using a separate ASP for each client's storage space objects. Weigh this recommendation against the risk of ending up with too few physical disk arms in each ASP to provide good performance.

Disk contention between IBM i workloads in the host LPAR and virtual client LPARs can be eliminated by using a separate IBM i LPAR solely to host client LPARs. An additional benefit of this configuration is that an application or OS problem stemming from a different workload on the host cannot negatively affect client partitions. These benefits should be weighed against:

• The license cost associated with a separate IBM i partition
• The maintenance time required for another partition, such as applying Program Temporary Fixes (PTFs)
• The ability to create well-performing physical disk configurations in both partitions that meet the requirements of their workloads

Consider the case where the host partition runs a heavy-I/O workload and the client partitions also have high disk response requirements. In that case a separate hosting partition is strongly recommended, unless the storage space objects are placed in separate ASPs on the host. If the host partition's workload is light to moderate with respect to disk requirements, and the client partitions are used mostly for development, test or quality assurance (QA), one IBM i partition can be used for both tasks.

4.3. Dual hosting

An IBM i client partition depends on its host: if the host partition fails, IBM i on the client loses contact with its disk units. The virtual disks also become unavailable if the host partition is brought down to restricted state or shut down for scheduled maintenance or to apply PTFs. To remove this dependency, two host partitions can be used to simultaneously provide virtual storage to one or more client partitions.

A configuration with two hosts for the same client partition uses the same concepts as the single-host configuration described in section 3.2. In addition, a second virtual SCSI client adapter exists in the client LPAR, connected to a virtual SCSI server adapter in the second host LPAR. The IBM i configuration of the second host mirrors that of the first host: it has the same number of NWSD and NWSSTG objects, and its NWSSTG objects are the same sizes. As a result, the client partition recognizes a second set of virtual disks of the same number and size. To achieve redundancy, adapter-level mirroring is used between the two sets of storage spaces from the two hosts. If a host partition fails or is taken down for maintenance, mirroring is suspended, but the client partition continues to operate. When the inactive host is recovered or restarted, mirroring can be resumed.
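The requirement that the second host's storage spaces match the first host's can be checked before mirroring is started on the client. This Python sketch is hypothetical and only compares size lists. IBM i on the client performs the actual pairing of mirrored units; this code does not.

```python
def mirror_pairs(host_a_gb, host_b_gb):
    """Pair storage spaces of equal size from two hosts for client-side mirroring.

    Raises ValueError unless both hosts provide the same number of storage
    spaces with the same sizes, as described above.
    """
    a, b = sorted(host_a_gb), sorted(host_b_gb)
    if a != b:
        raise ValueError("hosts must provide the same number of equally sized storage spaces")
    return list(zip(a, b))


def mirrored_capacity_gb(host_a_gb, host_b_gb):
    """Usable client capacity: each mirrored pair contributes the size of one unit."""
    return sum(size for size, _ in mirror_pairs(host_a_gb, host_b_gb))


if __name__ == "__main__":
    print(mirror_pairs([200, 100], [100, 200]))          # [(100, 100), (200, 200)]
    print(mirrored_capacity_gb([200, 100], [100, 200]))  # 300
```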

5. Implementing IBM i client LPARs with an IBM i host

Installing IBM i in a client LPAR with an IBM i host consists of two main phases:

• Creating the virtual SCSI configuration in the HMC
• Creating the NWSSTG and NWSD objects in the IBM i host partition, and activating the new client partition

The implementation steps are described in detail in the topic Creating an IBM i logical partition that uses IBM i virtual I/O resources using the HMC in the Power Systems Logical Partitioning Guide: http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf. Note that the IBM i host partition must have sufficient available capacity to create the storage space objects. When following the detailed implementation instructions, keep in mind the performance recommendations in section 4.2 of this document.

6. Post-install tasks and considerations

6.1. Configure IBM i networking

Once the IBM i client partition is installed and running, the first system management step is to configure networking. Three types of network adapters can be assigned to an IBM i client partition:

• A standard physical network adapter in a PCI slot
• A logical port on a Host Ethernet Adapter (HEA)
• A virtual Ethernet adapter

Both physical and virtual I/O resources can be assigned to an IBM i virtual client partition. If a physical network adapter was not assigned to the IBM i client partition when it was first created, see the topic Managing physical I/O devices and slots dynamically using the HMC in the Power Systems Logical Partitioning Guide (http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf) to assign an available adapter.

An IBM i client partition can also use the new HEA capability of POWER6 processor-based servers. To assign a logical port (LHEA) on an HEA to an IBM i client partition, see the topic Creating a Logical Host Ethernet Adapter for a running logical partition using the HMC in the Power Systems Logical Partitioning Guide: http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf.

Lastly, a virtual Ethernet adapter can also provide network connectivity to an IBM i client partition. To create one, consult the topic Configuring a virtual Ethernet adapter using the HMC in the Power Systems Logical Partitioning Guide: http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf.

In all three cases, IBM i recognizes the assigned network adapter as a communications port (CMNxx). The type of communications port depends on the network adapter: for example, 5706 for a Gigabit Ethernet adapter, 5623 for an LHEA and 268C for a virtual Ethernet adapter. For a standard PCI network adapter or an LHEA, configure networking by following the process described in the IBM i networking topic in the Information Center: http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/topic/rzajy/rzajyoverview.htm

If the IBM i client partition uses a virtual Ethernet adapter for networking, additional configuration is required on the IBM i host. The virtual Ethernet adapter allows the client partition to communicate only with other partitions whose virtual Ethernet adapters have the same Port Virtual LAN ID (PVID), that is, partitions on the same virtual LAN within the system. A routing configuration can be created in the IBM i host partition to forward network packets from the outside LAN to the client partition on the virtual LAN. This type of virtual network configuration has been used successfully for several years to provide networking to Linux client partitions with an IBM i host. There are two methods for routing traffic from the physical LAN to a client partition on a virtual LAN: Proxy ARP and Network Address Translation (NAT). To configure either method in the IBM i host partition, follow the instructions in section 5.2 of the Redbook Implementing POWER Linux on IBM System i Platform (http://www.redbooks.ibm.com/redbooks/pdfs/sg246388.pdf).

6.2. How to perform IBM i operator panel functions

Operator panel functions in an IBM i client partition are performed in the HMC:

• Sign on to the HMC with a profile with sufficient authority to manage the IBM i client partition
• Select the partition
• Use the open-in-context arrow to select Serviceability → Control Panel Functions, then the desired function

6.3. How to display the IBM i partition System Reference Code (SRC) history

• Sign on to the HMC with a profile with sufficient authority to manage the IBM i client partition
• Select the partition
• Use the open-in-context arrow to select Serviceability → Reference Code History
• To display words 2 through 9 of a reference code, click the radio button for that code

6.4. Client IBM i LPARs considerations and limitations

Consult the topic Considerations and limitations for i5/OS client partitions on systems managed by the Integrated Virtualization Manager (IVM) in the Information Center: http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/topic/rzahc/rzahcbladei5limits.htm

In this case the IBM i client partition is not managed by IVM. However, it does use virtual I/O resources, so the limitations outlined in the topic above apply to it.

6.5. Configuring Electronic Customer Support (ECS) over LAN

A supported WAN adapter can be assigned to the IBM i client partition for ECS. Alternatively, ECS over LAN can be configured. Consult the topic Setting up a connection to IBM in the Information Center: http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/topic/rzaji/rzaji_setup.htm

6.6. Copying storage spaces

An IBM i client partition is installed into one or more storage space objects in the IBM i host partition. New client partitions can therefore be deployed rapidly by copying those storage spaces. Each IBM i partition, client or host, must have valid operating system and licensed program product licenses for the number of processors it uses.

To copy one or more storage spaces that contain an installed IBM i client partition, first shut down the partition during an available period of downtime. Next, sign on to the host IBM i partition with a security officer-level profile and perform the following steps:

• Enter WRKNWSSTG
• Enter 3 next to the storage space you are going to copy, then press Enter
• Enter a name of up to 10 characters for the new storage space
• The size of the original storage space is entered automatically. The new storage space can be the same size or larger (up to 1 TB), but not smaller
• Enter the correct ASP ID. The default is the ASP where the original storage space exists
• Optionally, enter a text description
• Press Enter
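The name and size constraints in the steps above can be checked before the copy is submitted. The following Python sketch checks only those constraints: a name of up to 10 characters, and a size no smaller than the original and no larger than 1 TB. It is a hypothetical helper, not part of WRKNWSSTG. It does not model other IBM i object naming rules, and it assumes 1 TB means 1,048,576 MB.

```python
MAX_NAME_LENGTH = 10        # storage space names are limited to 10 characters
MAX_SIZE_MB = 1024 * 1024   # 1 TB, assuming binary units (an assumption)


def copy_errors(new_name, original_size_mb, new_size_mb):
    """List the problems with a proposed storage space copy; empty if none were found."""
    errors = []
    if not 1 <= len(new_name) <= MAX_NAME_LENGTH:
        errors.append("name must be 1 to 10 characters")
    if new_size_mb < original_size_mb:
        errors.append("copy cannot be smaller than the original")
    if new_size_mb > MAX_SIZE_MB:
        errors.append("copy cannot exceed 1 TB")
    return errors


if __name__ == "__main__":
    print(copy_errors("CLNT2D1", 70_000, 70_000))       # []
    print(copy_errors("CLIENT2DISK1", 70_000, 60_000))  # ['name must be 1 to 10 characters', 'copy cannot be smaller than the original']
```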

To deploy the new client partition, follow the instructions in section 5 to create the necessary virtual SCSI configuration in the HMC and the NWSD object in the host IBM i partition.

6.7. Backups

As mentioned above, an IBM i client partition with an IBM i host can use a mix of virtual and physical I/O resources. The simplest backup and restore approach is therefore to assign an available tape adapter on the system to the client partition and treat it as a standard IBM i partition. The tape adapter can be any adapter supported by IBM i on IBM Power servers and can be shared with other partitions. To assign an available tape adapter to the IBM i client partition, consult the topic Managing physical I/O devices and slots dynamically using the HMC in the Logical Partitioning Guide (http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf).

Once a tape adapter connected to a tape drive or library is available to the client partition, use the Backup and Recovery topic in the Information Center to manage backups: http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/index.jsp?topic=/rzahg/rzahgbackup.htm&tocNode=int_215989

The IBM i host partition can also be used for system-level backups of the client partition. See the topic Saving IBM i server objects in IBM i in the Logical Partitioning Guide (http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf).

7. IBM i using open storage supported configurations

IBM i connects to open storage in one of three general ways:

• Directly through the SAN fabric without VIOS
• As a client of VIOS, with VIOS connecting to a storage subsystem through the SAN fabric
• As a client of VIOS, with VIOS using storage from a SAN Volume Controller (SVC). SVC in turn connects to one or more storage subsystems through the SAN fabric

The first set of support tables in the hardware and software sections below applies to the first connection method (IBM i direct attachment). The second set of tables in both sections applies to the second method (IBM i → VIOS → storage subsystem), and its support statements cover the end-to-end solution of IBM i using open storage as a client of VIOS. This document does not attempt to list the full device support of VIOS, or of any other VIOS clients such as AIX and Linux. For the general VIOS support statements, including other clients, see the VIOS Datasheet at: http://www14.software.ibm.com/webapp/set2/sas/f/vios/documentation/datasheet.html

When the third connection method is used (IBM i → VIOS → SVC → storage subsystem), the support statement for IBM i follows that of SVC. For a list of environments supported by SVC, see the data sheet on the SVC overview Web site: http://www.ibm.com/systems/storage/software/virtualization/svc. Note that IBM i cannot connect directly to SVC; it must do so as a client of VIOS.

IBM i as a client of VIOS is also supported on POWER6 or later processor-based blade servers. IBM i cannot attach directly to open storage when running on Power blades. For the full supported configurations statement for the IBM i on Power blade solution, see the Supported Environments document at: http://www.ibm.com/systems/power/hardware/blades/ibmi.html

7.1. Hardware

| Hardware type | Supported by IBM i for direct attachment | Notes |
|---|---|---|
| IBM Power servers | Yes | Includes IBM Power 520 Express, IBM Power 550 Express, IBM Power 560 Express, IBM Power 570 and IBM Power 595 |
| IBM Power 575 | No | |
| POWER5-based systems or earlier | No | |
| DS5100 using Fibre Channel or SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| DS5300 using Fibre Channel or SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| EXP810 expansion unit with Fibre Channel or SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| EXP5000 expansion unit with Fibre Channel or SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| EXP5060 expansion unit with Fibre Channel or SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| DS6800 | Yes | |
| DS8100 using Fibre Channel or FATA drives | Yes | For performance reasons, it is strongly recommended that FATA drives be used only for test or archival IBM i applications |
| DS8300 using Fibre Channel or FATA drives | Yes | For performance reasons, it is strongly recommended that FATA drives be used only for test or archival IBM i applications |
| DS8700 using Fibre Channel or FATA drives | Yes | For performance reasons, it is strongly recommended that FATA drives be used only for test or archival IBM i applications |

| Hardware type | Supported by IBM i as a client of VIOS | Notes |
|---|---|---|
| IBM Power servers | Yes | Includes IBM Power 520 Express, IBM Power 550 Express, IBM Power 560 Express, IBM Power 570 and IBM Power 595 |
| IBM Power 575 | No | |
| POWER5-based systems or earlier | No | |
| DS3950 using Fibre Channel or SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| DS4800 using Fibre Channel drives | Yes | Supported by IBM i as a client of VIOS on both IBM Power servers and IBM Power blade servers |
| DS4800 using SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| DS4700 using Fibre Channel drives | Yes | Supported by IBM i as a client of VIOS on both IBM Power servers and IBM Power blade servers |
| DS4700 using SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| DS3400 using SAS or SATA drives | Yes | For performance reasons, it is strongly recommended that SATA drives be used only for test or archival IBM i applications |
| DS4200 and earlier DS4000 and FAStT models | No | |
| EXP810 expansion unit attached to DS4800 or DS4700 | Yes | See supported drives comments above |
| EXP710 expansion unit attached to DS4800 or DS4700 | Yes | See supported drives comments above |
| SAN Volume Controller (SVC) | Yes | SVC is not supported for direct connection to IBM i |
| SVC Entry Edition (SVC EE) | Yes | SVC EE is not supported for direct connection to IBM i |

15

Page 16

Storage subsystems

connected to SVC

DS5020 using Fibre Channel

or SATA drives

DS5100 using Fibre Channel

or SATA drives

DS5300 using Fibre Channel

or SATA drives

DS8100 using Fibre Channel

or FATA drives

DS8300 using Fibre Channel

or FATA drives

DS8700 using Fibre Channel

or FATA drives

XIV Storage System Yes

Fibre Channel adapters Yes Must be supported by VIOS*

*For a list of Fibre Channel adapters supported by VIOS, see the VIOS Datasheet at:

http://www14.software.ibm.com/webapp/set2/sas/f/vios/documentation/datasheet.html

7.2. Software and firmware

Software or firmware type Supported by

IBM i 6.1 with LIC 6.1.1 or later Yes

IBM i 6.1 without LIC 6.1.1 No

IBM i 5.4 or earlier No

DS5000 Copy Services Yes See Section 16.5 of this document for details

IBM i host kit for DS5000 Yes This license is required in order to attach

Controller firmware

07.60.26.00 or later

DS Storage Manager

10.60.x5.16 or later

Software or firmware type Supported by

IBM i 6.1 or later Yes

IBM i 5.4 or earlier No

PowerVM Standard Edition or

higher

VIOS 1.5 with Fix Pack 10.1 or Yes This is the minimum level of VIOS required

Yes The supported list for IBM i as a client of

VIOS follows that for SVC

Yes It is strongly recommended that SATA

drives are used only for test or archival IBM i

applications for performance reasons

Yes It is strongly recommended that SATA

drives are used only for test or archival IBM i

applications for performance reasons

Yes It is strongly recommended that SATA

drives are used only for test or archival IBM i

applications for performance reasons

Yes It is strongly recommended that FATA

drives are used only for test or archival IBM i

applications for performance reasons

Yes It is strongly recommended that FATA

drives are used only for test or archival IBM i

applications for performance reasons

Yes It is strongly recommended that FATA

drives are used only for test or archival IBM i

applications for performance reasons

.

Notes

IBM i for direct

attachment

DS5000 LUNs directly to IBM i

Yes

Yes

Notes

IBM i as a

client of VIOS

Yes Required for VIOS media and activation code

16

Page 17

later

IBM Power server system

firmware 320_040_031 or later

HMC firmware HMC V7 R3.2.0

or later

DS4000 controller firmware

6.60.08 or later

DS4000 Storage Manager 9.x

or later

DS4000 Copy Services Yes See Section 12 of this document for details

DS5000 Copy Services Yes See Section 12 of this document for details

AIX/VIOS host kit license for

DS4000

SVC code level 4.3.1 Yes This is the minimum SVC code level required

SVC Copy Services Yes See Section 14 of this document for details

XIV firmware 10.0.1b Yes This is the minimum XIV firmware level

XIV Copy Services Yes See Section 15 of this document for details

8. IBM i using open storage through VIOS concepts

The capability to use open storage through VIOS extends the IBM i storage portfolio to include

512-byte-per-sector storage subsystems. The existing IBM i storage portfolio includes integrated

SCSI, SAS or Solid State disk, as well as Fibre Channel-attached storage subsystems that

support 520 bytes per sector, such as the IBM DS8000 product line. IBM i cannot currently

attach directly to 512-B open storage, because its Fibre Channel adapter code requires 520 bytes

per sector. Therefore, logical units (LUNs) created on open storage are physically connected

through the Fibre Channel fabric to VIOS. Once recognized by VIOS, the LUNs are virtualized to

one or more IBM i virtual client partitions. The following diagram illustrates both the physical and

virtualization components of the solution:

Yes This is the minimum system firmware level

required

Yes This is the minimum HMC firmware level

required

Yes This is the minimum DS4000 controller

firmware level required. Controller

firmware 7.10.23 or later is supported.

Yes Storage Manager 10.10.x or later is

supported

Yes This license is required in order to attach

DS4000 LUNs to VIOS, and therefore

virtualize them to IBM i

required

17

VIOS has been used successfully for several years to virtualize storage, optical and networking resources to AIX and Linux client partitions. IBM i now joins this virtualization environment, gaining the ability to use open storage. From a VIOS perspective, IBM i is another client partition; the host-side configuration steps are the same as for AIX and Linux clients. While code changes were made in VIOS to accommodate IBM i client partitions, there is no special version of VIOS for IBM i. If you have existing skills in attaching open storage to VIOS and virtualizing I/O resources to client partitions, they will continue to prove useful when creating a configuration for an IBM i client partition.

The hardware and virtualization components for attaching open storage to IBM i illustrated in the diagram above also apply to using DS5000, XIV and other subsystems supported for this solution, as listed in section 7.

Two management interfaces are available for virtualizing I/O resources through VIOS to IBM i: the Hardware Management Console (HMC) and the Integrated Virtualization Manager (IVM). Both provide logical partitioning and virtualization management functions; however, their support on Power servers and Power blades differs:

• The HMC is supported with all Power servers, from Power 520 Express to Power 595, but not with Power blades, such as IBM BladeCenter JS12 or JS23

• IVM is supported with all Power blades, from JS12 to JS43, but among Power servers only with Power 520 and Power 550

There is another significant difference between HMC and IVM when managing I/O virtualization for IBM i: when the Power server is IVM-managed, IBM i partitions must be purely virtual. They are not allowed to own any physical I/O adapters. Therefore, Power servers are managed with the HMC most of the time, with IVM used only occasionally, and only on Power 520 and 550. When managing Power blades, IVM must always be used.
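The support rules above can be condensed into a small decision function. This is a minimal sketch, not an IBM tool: the function name, the `ibm_i_needs_physical_io` flag and the model sets are hypothetical, and the sets contain only the models named in this section (extend them for other Power servers or blades).

```python
# Hypothetical helper encoding the HMC/IVM rules described above for IBM i.
# Model names are illustrative strings, not values read from any IBM tool.

POWER_BLADES = {"JS12", "JS23", "JS43"}
HMC_SERVERS = {"Power 520", "Power 550", "Power 560", "Power 570", "Power 595"}
IVM_SERVERS = {"Power 520", "Power 550"}  # the only Power servers IVM supports


def management_options(system: str, ibm_i_needs_physical_io: bool) -> list[str]:
    """Return the interfaces that can manage an IBM i client on `system`."""
    if system in POWER_BLADES:
        # Blades are IVM-only, and IVM-managed IBM i partitions must be purely virtual.
        return [] if ibm_i_needs_physical_io else ["IVM"]
    options = []
    if system in HMC_SERVERS:
        options.append("HMC")
    if system in IVM_SERVERS and not ibm_i_needs_physical_io:
        # IVM forbids IBM i partitions from owning physical I/O adapters.
        options.append("IVM")
    return options


print(management_options("Power 550", ibm_i_needs_physical_io=True))  # ['HMC']
```

An empty result means the requested combination (for example, a blade-hosted IBM i partition that owns physical adapters) is not possible under the rules in this section.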

8.1. Virtual SCSI and Ethernet adapters

VIOS providing storage to an IBM i client LPAR uses an existing function of the system firmware, or Power Hypervisor: the capability to create virtual SCSI and Ethernet adapters in a partition. Virtual adapters are created for each LPAR in the HMC. Virtual SCSI adapters are used for storage and optical virtualization; virtual Ethernet adapters are used for network virtualization.

Note that using virtual I/O resources from VIOS does not preclude an IBM i client partition from owning physical hardware when the server is HMC-managed. A mix of virtual and physical hardware in the same partition is supported for IBM i in this environment, by assigning both types of adapters to the partition in the HMC.

As mentioned above, using IVM for LPAR and virtualization management mandates using only virtual resources for IBM i partitions. However, IVM provides the benefit of automatically creating Virtual SCSI server (in VIOS) and client adapters (in IBM i) as virtual disk, optical and tape resources are assigned to IBM i. For more information on LPAR management with IVM, consult the IVM topic in the PowerVM Editions Guide, available at:

http://publib.boulder.ibm.com/infocenter/systems/scope/hw/index.jsp?topic=/arecu/arecukickoff.htm

Note that IVM will automatically create only the first pair of Virtual SCSI server and client adapters when disk and optical resources are virtualized to IBM i. Additional adapter pairs must be created by using the IVM command line, available via a Telnet or SSH session to VIOS. Consult the VIOS topic in the PowerVM Editions Guide for more information on using the IVM/VIOS command line. IVM will automatically create a new Virtual SCSI adapter pair for each tape resource assigned to IBM i.

8.2. Storage virtualization

8.2.1. HMC and IVM configuration

For VIOS to virtualize LUNs created on open storage to an IBM i client partition, configuration takes place both in the HMC (or IVM) and in VIOS. As mentioned in the previous section, in most cases no explicit Virtual SCSI configuration is necessary in IVM.

In the HMC, the minimum required configuration is:

• One virtual SCSI server adapter in the host partition

• One virtual SCSI client adapter in the client partition

This virtual SCSI adapter pair allows the client partition to send read and write I/O operations to the host partition. More than one virtual SCSI pair can exist for the same client partition in this environment. To minimize performance overhead in VIOS, the virtual SCSI connection is used to send I/O requests, but not for the actual transfer of data. Using the capability of the Power Hypervisor for Logical Remote Direct Memory Access (LRDMA), data are transferred directly from the Fibre Channel adapter in VIOS to a buffer in memory of the IBM i client partition.

In an IBM i client partition, a virtual SCSI client adapter is recognized as a type 290A DCxx storage controller device. The screenshot below depicts the virtual SCSI client adapter, as well as several open storage LUNs and an optical drive virtualized by VIOS:

In VIOS, a virtual SCSI server adapter is recognized as a vhostX device:

8.2.2. VIOS configuration and HMC enhancements

No additional configuration in the IBM i client partition is necessary. In VIOS, however, a new object must be created for each open storage LUN that will be virtualized to IBM i: a virtual target SCSI device, or vtscsiX. A vtscsiX device makes a storage object in VIOS available to IBM i as a standard DDxxx disk unit. There are three types of VIOS storage objects that can be virtualized to IBM i:

• Physical disk units or volumes (hdiskX), which are open storage LUNs in this case

• Logical volumes (hdX and other)

• Files in a directory

For both simplicity and performance reasons, it is recommended to virtualize open storage LUNs to IBM i directly as physical devices (hdiskX), and not through the use of logical volumes or files. (See section 9.2 for a detailed performance discussion.) A vtscsiX device links a LUN available in VIOS (hdiskX) to a specific virtual SCSI adapter (vhostX). In turn, the virtual SCSI adapter in VIOS is already connected to a client SCSI adapter in the IBM i client partition. Thus, the hdiskX LUN is made available to IBM i through a vtscsiX device. What IBM i storage management recognizes as a DDxxx disk unit is not the open storage LUN itself, but the corresponding vtscsiX device. The vtscsiX device correctly reports the parameters of the LUN, such as size, to the virtual storage code in IBM i, which in turn passes them on to storage management.

Multiple vtscsiX devices, corresponding to multiple open storage LUNs, can be linked to a single vhostX virtual SCSI server adapter and made available to IBM i. Up to 16 LUNs can be virtualized to IBM i through a single virtual SCSI connection. Each LUN typically uses multiple physical disk arms in the open storage subsystem. If more than 16 LUNs are required in an IBM i client partition, an additional pair of virtual SCSI server (VIOS) and client (IBM i) adapters must be created in the HMC or on the IVM/VIOS command line. Additional LUNs available in VIOS can then be linked to the new vhostX device through vtscsiX devices, making them available to IBM i.
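The 16-LUN limit determines how many virtual SCSI adapter pairs an IBM i client needs. The following hypothetical Python helper, not an IBM tool, computes that count and builds one `mkvdev -vdev ... -vadapter ... -dev ...` command string per LUN, filling each vhostX adapter before moving to the next. The hdisk, vhost and vtscsi names are illustrative, the sketch assumes no vtscsiX devices already exist, and the generated lines should be checked against the VIOS command reference for your VIOS level before use.

```python
# Minimal sketch, assuming the 16-LUN-per-virtual-SCSI-adapter limit stated above.
# Device names are hypothetical; this only builds command strings, it runs nothing.

MAX_LUNS_PER_VHOST = 16


def adapters_needed(lun_count: int) -> int:
    """Number of virtual SCSI server/client adapter pairs needed for lun_count LUNs."""
    return -(-lun_count // MAX_LUNS_PER_VHOST)  # ceiling division


def plan_mappings(hdisks: list[str], vhosts: list[str]) -> list[str]:
    """Assign hdisks to vhost adapters, 16 per adapter, and emit mkvdev commands."""
    needed = adapters_needed(len(hdisks))
    if len(vhosts) < needed:
        raise ValueError(
            f"{len(hdisks)} LUNs need {needed} vhost adapters, only {len(vhosts)} given"
        )
    commands = []
    for i, hdisk in enumerate(hdisks):
        vhost = vhosts[i // MAX_LUNS_PER_VHOST]
        # One virtual target device (vtscsiN) links each hdisk to its vhost adapter.
        commands.append(f"mkvdev -vdev {hdisk} -vadapter {vhost} -dev vtscsi{i}")
    return commands


for line in plan_mappings([f"hdisk{n}" for n in range(2, 22)], ["vhost0", "vhost1"]):
    print(line)
```

With 20 LUNs, the first 16 map to vhost0 and the remaining 4 to vhost1, which is why a second adapter pair must exist before the mapping can succeed.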

Prior to May 2009, when the server was HMC-managed, creating vtscsiX devices (and thus virtualizing open storage LUNs to IBM i) could be done only on the IVM/VIOS command line. When the server is IVM-managed, virtual resources are assigned using the IVM browser-based interface. Since May 2009, the HMC interface has been significantly enhanced, making use of the IVM/VIOS command line rare. Once available in VIOS, open storage LUNs and optical devices can be assigned to IBM i using the following steps in the HMC:

• Select the correct managed server

• In the menu below, expand Configuration, then Virtual Resources

• Click Virtual Storage Management

• Select the correct VIOS in the drop-down menu and click Query VIOS

• Click the Physical Volumes tab (or the Optical Devices tab for optical resources)

• Select the correct LUN(s) or optical device(s) and click Modify assignment… to assign them to IBM i

If the QAUTOCFG system value in IBM i is set to “1” (which is the default), the new virtual resources will become available in IBM i immediately. No steps are required on the IVM/VIOS command line.

8.2.3. Adding devices to VIOS

VIOS does not automatically scan for new devices added after the initial boot is complete. When a new device, such as a LUN on a storage subsystem, is added to VIOS, prompt VIOS to scan for new devices by connecting with Telnet or SSH and running the following command:

• cfgdev

Once it has detected the device, VIOS will autoconfigure it and make it available for use. It can then be virtualized to the IBM i client partition using the HMC, as described in the previous section. Note that if the SAN configuration is performed before the VIOS partition boots, this step is not necessary, as VIOS will recognize all available devices at boot time.

8.3. Optical virtualization

8.3.1. Physical optical devices

CD and DVD drives supported by VIOS can be virtualized to IBM i directly. Optical drives are recognized in VIOS as cdX devices. A cdX device is linked to a vhostX virtual SCSI server adapter and made available to IBM i through a virtual target optical device, vtoptX. The same optical drive can be virtualized to multiple IBM i client partitions by creating separate vtoptX devices, linked to different vhostX adapters, for the same cdX device. However, only one IBM i client partition can use the physical optical drive at a time. If the physical optical drive is writeable, IBM i will be able to write to it. As with LUNs, optical devices can be virtualized to IBM i using the enhanced functions of the HMC; it is not necessary to use the IVM/VIOS command line to create the vtoptX devices.


8.3.2. VIOS media repository

VIOS provides a capability similar to that of an image catalog (IMGCLG) in IBM i: a repository of media images on disk. Unlike IBM i, which can have multiple image catalogs, VIOS allows only a single media repository. The media repository allows file-backed virtual optical volumes to be made available to the IBM i client partition through a separate vtoptX device. One immediate benefit to IBM i is the ability to import the IBM i install media into VIOS as ISO images, then install the client partition from the ISO images instead of switching real media in a physical DVD drive.

8.4. Network virtualization

Virtualizing a network adapter and using a virtual LAN (VLAN) for partition-to-partition communication within a system are existing Power server capabilities. In order for an IBM i client to use a physical network adapter in VIOS, a virtual Ethernet adapter must be created in both partitions in the HMC. To be on the same VLAN, the two virtual Ethernet adapters must have the same Port Virtual LAN ID (PVID).

A virtual Ethernet adapter is recognized by IBM i as a communications port (CMNxx) of type 268C:

In VIOS, the same Ethernet type of device, entX, is used for logical Host Ethernet ports, physical and virtual Ethernet adapters:

VIOS provides virtual networking to client partitions, including IBM i, by bridging a physical Ethernet adapter and one or more virtual Ethernet adapters. The virtualization object that provides this Ethernet bridge is called a Shared Ethernet Adapter (SEA). The SEA forwards network packets from any client partitions on a VLAN to the physical LAN through the physical Ethernet adapter. Because the SEA creates a Layer-2 bridge, the original MAC address of the virtual Ethernet adapter in IBM i is used on the physical LAN. The CMNxx communications port that represents the virtual Ethernet adapter in IBM i is configured with an externally routable IP address, and a standard network configuration is used. The physical adapter bridged by the SEA can be any network adapter supported by VIOS, including Integrated Virtual Ethernet (IVE) ports, also known as Host Ethernet Adapter (HEA) ports.

The enhanced virtualization management functions of the HMC also allow for network virtualization without using the IVM/VIOS command line. Follow these steps in the HMC:

• Select the correct managed server

• In the menu below, expand Configuration, then Virtual Resources

• Click Virtual Network Management

• Use the drop-down menu to create or modify the settings of a VLAN (referred to as a VSwitch), including which SEA it uses to access the external network

9. Prerequisites for attaching open storage to IBM i through VIOS

9.1. Storage planning

The first storage planning consideration is having enough available capacity in the open storage subsystem to create the AIX/VIOS LUNs that will be virtualized to IBM i by VIOS. As mentioned in the supported hardware section (7.1), it is strongly recommended that only Fibre Channel or SAS physical drives are used to create LUNs for IBM i as a client of VIOS. The reason is the performance and reliability requirements of IBM i production workloads. For non-I/O-intensive workloads or nearline storage, SATA or FATA drives may also be used. This recommendation is not meant to preclude the use of SATA or FATA drives for other clients of VIOS or other host servers; it applies only to production IBM i workloads.

If the storage subsystem requires a host kit for AIX/VIOS to be installed before attaching LUNs to these hosts (as is the case with DS5000 and DS4000), that host kit is also required when virtualizing LUNs to IBM i. Because the LUNs are virtualized by VIOS, they do not have to match IBM i integrated disk sizes. The technical minimum for any disk unit in IBM i is 160 MB and the maximum is 2 TB, as measured in VIOS. Actual LUN size will be based on the capacity and performance requirements of each IBM i virtual client partition.
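Checking planned LUN sizes against these limits before creating them can save a rework cycle. This is a hypothetical sketch: it assumes sizes are expressed in MB as reported in VIOS, treats 2 TB as 2 * 1024 * 1024 MB, and treats both limits as inclusive; confirm the exact unit convention for your VIOS level.

```python
# Minimal sketch checking planned LUN sizes against the IBM i limits quoted above.
# Assumptions: sizes in MB as reported in VIOS, 1 TB = 1024 * 1024 MB, limits inclusive.

MIN_MB = 160
MAX_MB = 2 * 1024 * 1024  # 2 TB under the binary-unit assumption


def invalid_luns(sizes_mb: dict[str, int]) -> dict[str, str]:
    """Return a problem description for each LUN outside the supported size range."""
    problems = {}
    for name, size in sizes_mb.items():
        if size < MIN_MB:
            problems[name] = f"{size} MB is below the {MIN_MB} MB minimum"
        elif size > MAX_MB:
            problems[name] = f"{size} MB exceeds the 2 TB maximum"
    return problems


plan = {"hdisk2": 70_000, "hdisk3": 100, "hdisk4": 3 * 1024 * 1024}
for lun, reason in invalid_luns(plan).items():
    print(lun, reason)
```

Staying within these technical limits is necessary but not sufficient; as section 9.2 explains, the physical layout behind each LUN largely determines performance.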

9.2. Performance

When creating an open storage LUN configuration for IBM i as a client of VIOS, it is crucial to plan for both capacity and performance. It may seem that because LUNs are virtualized for IBM i by VIOS instead of being directly connected, the virtualization layer will necessarily add a significant performance overhead. However, internal IBM performance tests clearly show that the VIOS layer adds a negligible amount of overhead to each I/O operation. Instead, our tests demonstrate that when IBM i uses open storage LUNs virtualized by VIOS, performance is almost entirely determined by the physical and logical configuration of the storage subsystem.

The IBM Rochester, MN, performance team has run a significant number of tests with IBM i as a client of VIOS using open storage. The resulting recommendations on configuring both the open storage and VIOS are available in the latest Performance Capabilities Reference manual (PCRM) at http://www.ibm.com/systems/i/solutions/perfmgmt/resource.html. Chapter 14.6 focuses on virtualized storage for IBM i. In most cases, an existing IBM i partition using physical storage will be migrated to open storage LUNs virtualized by VIOS. The recommended approach here is to start with the partition’s original physical disk configuration, then create a similar setup with the physical drives in the open storage subsystem on which LUNs are created, while following the suggestions in the PCRM chapter referenced above.

The commonly used SAN disk sizing tool Disk Magic can also be used to model the projected IBM i performance of different physical and logical drive configurations on supported subsystems. Work with IBM Techline or your IBM Business Partner for a Disk Magic analysis. The latest version of Disk Magic includes support for multiple open storage subsystems and IBM i as a virtual client of VIOS.

9.3. Dual hosting and multi-path I/O (MPIO)

An IBM i client partition in this environment has a dependency on VIOS: if the VIOS partition fails, IBM i on the client will lose contact with the virtualized open storage LUNs. The LUNs would also become unavailable if VIOS is brought down for scheduled maintenance or a release upgrade. To remove this dependency, two or more VIOS partitions can be used to simultaneously provide virtual storage to one or more IBM i client partitions.

9.3.1. Dual VIOS LPARs with IBM i mirroring

Prior to the availability of redundant VIOS LPARs with client-side MPIO for IBM i, the only method to achieve VIOS redundancy was to use mirroring within IBM i. This configuration uses the same concepts as that for a single VIOS described in section 8.2. In addition, at least one additional virtual SCSI client adapter exists in the client LPAR, connected to a virtual SCSI server adapter in the second VIOS on the same Power server. A second set of LUNs of the same number and size is created on the same or a different open storage subsystem and connected to the second VIOS. The host-side configuration of the second VIOS mimics that of the first host, with the same number of LUNs (hdisks), vtscsiX and vhostX devices. As a result, the client partition recognizes a second set of virtual disks of the same number and size. To achieve redundancy, adapter-level mirroring in IBM i is used between the two sets of virtualized LUNs from the two hosts. Thus, if a VIOS partition fails or is taken down for maintenance, mirroring will be suspended, but the IBM i client will continue to operate. When the inactive VIOS is either recovered or restarted, mirroring can be resumed in IBM i.

9.3.2. Path redundancy to a single set of LUNs

Note that the dual-VIOS solution above provides a level of redundancy by attaching two separate sets of open storage LUNs to the same IBM i client through separate VIOS partitions. It is not an MPIO solution that provides redundant paths to a single set of LUNs. There are two MPIO scenarios possible with VIOS that remove the requirement for two sets of LUNs:

• A single VIOS partition using two Fibre Channel adapters to connect to the same set of LUNs

• Two VIOS partitions providing redundant paths to the same set of LUNs on a single open storage subsystem

9.3.2.1. Path redundancy with a single VIOS

If a VIOS LPAR has two or more Fibre Channel adapters assigned and the correct host configuration is created in the open storage subsystem, VIOS will have redundant paths to the LUNs connected to the Fibre Channel adapters. VIOS includes a basic MPIO driver, which has been the default instead of the RDAC (Redundant Disk Array Controller) driver since November 2008. The MPIO driver can be used with any storage subsystem that VIOS supports and is included in a default install. In this case, configuration is required only on the storage subsystem in order to connect a single set of LUNs to both ports on a Fibre Channel adapter owned by VIOS.

This multi-path method can be configured in two ways: round-robin or failover. For storage systems like XIV and DS8000 that support active-active multi-path connections, either method is allowed. Path failover uses one path and leaves the other idle until the first path fails, while round-robin always attempts to use both paths, if available, to maximize throughput. For systems that only support active-passive connections, such as IBM’s DS3000, DS4000 and DS5000 subsystems, failover is the only method allowed.

The access method can be configured on a per-LUN basis from the VIOS command line via a Telnet or SSH session. To show the current multi-path algorithm:

• lsdev -dev hdiskX -attr algorithm

To change the multi-path algorithm:

• chdev -dev hdiskX -attr algorithm=round_robin, or

• chdev -dev hdiskX -attr algorithm=fail_over

These commands must be repeated for each hdisk.
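Because the per-hdisk command has to be repeated, it can help to generate the list and to encode the rule that active-passive subsystems allow only failover. This is a hypothetical Python helper: it only builds `chdev` command strings and runs nothing, and the subsystem family names are taken from the paragraphs above.

```python
# Sketch encoding the rule above: round_robin is allowed only on active-active
# subsystems; active-passive subsystems must use fail_over. Helper is hypothetical.

ACTIVE_ACTIVE = {"XIV", "DS8000"}
ACTIVE_PASSIVE = {"DS3000", "DS4000", "DS5000"}
ALGORITHMS = {"round_robin", "fail_over"}


def algorithm_commands(subsystem: str, hdisks: list[str],
                       prefer: str = "round_robin") -> list[str]:
    """Build one chdev command per hdisk with an algorithm the subsystem allows."""
    if prefer not in ALGORITHMS:
        raise ValueError(f"unknown algorithm {prefer!r}")
    if subsystem in ACTIVE_PASSIVE:
        algorithm = "fail_over"  # the only method allowed for active-passive
    elif subsystem in ACTIVE_ACTIVE:
        algorithm = prefer
    else:
        raise ValueError(f"subsystem {subsystem!r} is not covered by this section")
    return [f"chdev -dev {d} -attr algorithm={algorithm}" for d in hdisks]


for cmd in algorithm_commands("DS5000", ["hdisk2", "hdisk3"]):
    print(cmd)  # fail_over is forced even though round_robin was preferred
```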

9.3.2.2. Redundant VIOS LPARs with client-side MPIO

Beginning with IBM i 6.1 with Licensed Internal Code (LIC) 6.1.1, the IBM i Virtual SCSI (VSCSI) client driver supports MPIO through two or more VIOS partitions to a single set of LUNs (up to a maximum of 8 VIOS partitions). This multi-path configuration allows a VIOS partition to fail or be brought down for service without IBM i losing access to the disk volumes, since the other VIOS partition(s) remain active. The following diagram illustrates the new capability for IBM i:

Note that, as with dual VIOS LPARs and IBM i mirroring, a VSCSI connection from IBM i to both VIOS partitions is required. On the storage subsystem, it is recommended to create a host for each VIOS containing the World-wide Port Names (WWPNs) of all Fibre Channel adapters in that LPAR, and then create a host group comprising those VIOS hosts. A single set of LUNs for IBM i can then be created and mapped to the host group, giving both VIOS LPARs access to the LUNs.

Configuring redundant VIOS LPARs with client-side MPIO does not preclude using MPIO within a VIOS for a higher level of resiliency, as described in the previous section.

For storage systems such as DS8000 and XIV, these connections are typically shared using round-robin access, as described above, so it is good practice to have two or more connections to each VIOS partition. For DS3000, DS4000 and DS5000 with dual controllers, a connection must be made to both controllers to allow an active and a failover path. When the volumes are created on these systems, the host OS type should be set to DEFAULT or AIX (not AIX ADT/AVT); otherwise, failover/failback oscillations could occur. For all storage systems, it is recommended that the fabric configuration use separate dedicated zones and FC cables for each connection.

In addition to the VSCSI and storage subsystem configuration, several VIOS settings must be changed. These must be set via a Telnet or SSH session on both VIOS partitions.

The first group of settings is applied to each of the fscsiX devices being used. To show the current fscsiX devices:

• lspath

Set the fast fail and dynamic tracking attributes on these devices:

• chdev -dev fscsiX -attr fc_err_recov=fast_fail -perm

• chdev -dev fscsiX -attr dyntrk=yes -perm

Next, restart VIOS. The pair of chdev commands must be repeated for each fscsiX device, but only one restart is required after all fscsiX attributes have been set.

To verify the settings have been changed successfully:

• lsdev -dev fscsiX -attr fc_err_recov

• lsdev -dev fscsiX -attr dyntrk
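The ordering matters here: both attributes for every fscsiX device, then a single restart, then verification. The sketch below builds that sequence for one VIOS partition and would be run once per VIOS. It is a hypothetical helper that only produces command strings; the device names are illustrative, and `shutdown -restart` is assumed to be the VIOS restart command, so confirm it for your VIOS level.

```python
# Sketch of the sequence above for one VIOS partition: two chdev commands per
# fscsiX device, a single restart after all of them, then verification with lsdev.


def fscsi_plan(fscsi_devices: list[str]) -> list[str]:
    plan = []
    for dev in fscsi_devices:
        plan.append(f"chdev -dev {dev} -attr fc_err_recov=fast_fail -perm")
        plan.append(f"chdev -dev {dev} -attr dyntrk=yes -perm")
    plan.append("shutdown -restart")  # one restart covers every device changed above
    for dev in fscsi_devices:
        plan.append(f"lsdev -dev {dev} -attr fc_err_recov")
        plan.append(f"lsdev -dev {dev} -attr dyntrk")
    return plan


for step in fscsi_plan(["fscsi0", "fscsi1"]):
    print(step)
```

Generating the whole sequence up front makes it easy to confirm that no device was missed before the restart, since the -perm changes take effect only after it.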

Importantly, the SCSI reserve policy for each LUN (or hdisk) on both VIOS LPARs must be set to no_reserve to allow disk sharing. Some storage subsystems, such as XIV, default to no_reserve and do not require a change, while others, such as DS4000 and DS5000, default to single_path. The change must be made before mapping the LUNs to IBM i, and it does not require a restart of VIOS.

To show the current reserve policy settings:

• lsdev -dev hdiskX -attr reserve_policy

To set the no_reserve attribute if necessary:

• chdev -dev hdiskX -attr reserve_policy=no_reserve
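Since only disks that do not already use no_reserve need changing, the chdev calls can be derived from the values reported by `lsdev -dev hdiskX -attr reserve_policy`. In this hypothetical sketch the current policies are supplied by hand as a dictionary; collecting them from VIOS is left out, and the same list would be applied on both VIOS partitions.

```python
# Sketch: emit chdev only for hdisks whose reserve_policy is not yet no_reserve.
# The input mapping (hdisk name -> current policy) is hypothetical example data.


def reserve_policy_fixes(current: dict[str, str]) -> list[str]:
    return [
        f"chdev -dev {hdisk} -attr reserve_policy=no_reserve"
        for hdisk, policy in current.items()
        if policy != "no_reserve"
    ]


observed = {"hdisk2": "no_reserve", "hdisk3": "single_path", "hdisk4": "single_path"}
for cmd in reserve_policy_fixes(observed):
    print(cmd)
```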

Once the VSCSI client driver in IBM i detects a second path to the same set of LUNs through a different VIOS LPAR, the disk names change from DDxxx to DMPxxx. This is identical to multipath I/O to a directly attached DS8000 subsystem. If a path is lost, the disk names do not change back to DDxxx, but a path failure message is sent to the QSYSOPR message queue in IBM i. Once the path is restored, an informational message is sent to the same queue. To monitor the status of the paths in IBM i:

• Start System Service Tools (STRSST) and sign on

• Work with disk units

• Display disk configuration

• Display disk path status

Note that while the HMC allows a single VSCSI client adapter to be tagged as an IPL device, it is not necessary to change the tagged resource if that path is lost. As long as the alternate path through the second VIOS is active, the IBM i LPAR will be able to IPL from the same load source LUN. The alternate path must exist before the original IPL path is lost and before the IBM i LPAR is powered off.

9.3.3. Subsystem Device Driver – Path Control Module (SDDPCM)

VIOS also supports the Subsystem Device Driver – Path Control Module (SDDPCM) for certain storage subsystems. Examples of supported subsystems include the SAN Volume Controller (SVC) and IBM DS8000. To find out whether a particular storage system supports SDDPCM for VIOS, consult its interoperability matrix on the SDDPCM Web site: http://www.ibm.com/support/docview.wss?uid=ssg1S4000201. Note that there are separate support statements for AIX and VIOS. If SDDPCM is supported on your storage subsystem for VIOS, download and install the driver following the instructions in the Multi-path Subsystem Device Driver User's Guide at the same location.

10. Attaching open storage to IBM i through VIOS

As described in section 8, IBM i joins the VIOS virtualization environment, allowing it to use open storage. The setup process involves three main steps:

• Open storage configuration

• VIOS installation and configuration

• IBM i installation and configuration

The VIOS and open storage configuration steps are the same as for existing clients of VIOS, such as AIX and Linux. These steps are well documented, and any existing skills in those areas apply to IBM i as a client partition as well. The only significant difference with IBM i is the specific open storage configuration required to achieve good performance, as referenced in section 9.2.

10.1. Open storage configuration

All open storage subsystems follow similar general configuration steps:

• Perform physical disk and RAID configuration

• Create volumes (LUNs)

• Attach those volumes to a host (VIOS in this case)

Naturally, the detailed steps and graphical interfaces used vary by subsystem. Locate the correct documentation for your supported subsystem at http://www.redbooks.ibm.com. Importantly, follow the instructions for creating and attaching LUNs to an AIX host.

10.2. VIOS installation and configuration

Consult the VIOS planning, installation and configuration topics in the PowerVM Editions Guide, available at:

http://publib.boulder.ibm.com/infocenter/systems/scope/hw/index.jsp?topic=/arecu/arecukickoff.htm

As described in section 8.2.2, once VIOS is installed and physical I/O resources are assigned to it,

those resources can be virtualized to IBM i using the HMC. It is not necessary to use the

IVM/VIOS command line to assign virtual disk, optical and network resources to IBM i.

10.3. IBM i installation and configuration

Configuring IBM i as a client of VIOS is the same as configuring it as a client of an IBM i 6.1 host partition. Consult the topic Creating an IBM i logical partition that uses IBM i virtual I/O resources using the HMC in the Logical Partitioning Guide:

http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf

10.4. HMC provisioning of open storage in VIOS

In May 2009, IBM enhanced the HMC’s capabilities to allow mapping of storage available in VIOS

to client LPARs, such as IBM i. This significant improvement enables graphical provisioning of

open storage for IBM i through VIOS and removes the requirement to use the VIOS command

line. If the storage configuration is performed while VIOS is down or not yet installed, new

storage will be discovered upon boot and the HMC can then be used to assign the correct LUNs

to IBM i. If storage changes are made while VIOS and IBM i are running, it will still be necessary

to Telnet to VIOS and run the cfgdev command to discover newly added storage prior to using

the HMC for assigning it to IBM i.

For HMC provisioning of VIOS storage to work, the VIOS LPAR must have an active RMC

(Resource Monitoring and Control) connection to the HMC. The same connection is required for

performing Dynamic LPAR (DLPAR) changes to the VIOS LPAR and it generally involves

successful TCP/IP communication between the LPAR and the HMC. If the HMC displays an

RMC error while attempting VIOS storage provisioning, consult the DLPAR Checklist at:

http://www.ibm.com/developerworks/systems/articles/DLPARchecklist.html
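The two paragraphs above amount to a small decision: whether `cfgdev` must be run depends on whether VIOS was running when the storage changed, and an active RMC connection is needed before the HMC can assign anything. The following is a minimal sketch of that decision under those stated rules only; the function name and step wording are hypothetical, and the code just returns text rather than contacting VIOS or the HMC.

```python
def provisioning_steps(vios_running: bool) -> list[str]:
    """Return the ordered steps for making newly attached LUNs assignable from the HMC.

    vios_running: True if the new LUNs were attached while VIOS was up.
    """
    steps = []
    if vios_running:
        # LUNs added to a running VIOS are not seen until device discovery is rerun.
        steps.append("Telnet to VIOS and run cfgdev to discover the new LUNs")
    else:
        # LUNs attached while VIOS was down (or not yet installed) are found at boot.
        steps.append("Boot VIOS; the new LUNs are discovered at boot")
    # HMC provisioning of VIOS storage requires an active RMC connection.
    steps.append("Confirm VIOS has an active RMC connection to the HMC")
    steps.append("Assign the LUNs to IBM i in Virtual Storage Management")
    return steps


for step in provisioning_steps(vios_running=True):
    print(step)
```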

To provision open storage in VIOS to IBM i in the HMC, use the following steps:

• Sign onto the HMC as hscroot or another superadministrator-level userid

• Expand Systems Management

• Click Servers

• Select the correct managed system (server) by using the checkbox

• Use the launch-in-context arrow and select Configuration → Virtual Resources → Virtual Storage Management

• Select the correct VIOS in the drop-down menu and click Query VIOS

• Click Physical Volumes

• Select the first correct LUN to be assigned to IBM i and click Modify assignment…

• Select the correct IBM i LPAR in the drop-down menu and click OK

• Repeat for all LUNs to be assigned to IBM i

Note that this sequence assumes the existence of the correct VSCSI server and client adapters in

VIOS and IBM i, respectively. Once the assignment is performed, the new LUNs are immediately

available in IBM i as non-configured drives.
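The HMC panel above removes the need for the VIOS command line, but when reviewing a configuration it can help to know the equivalent VIOS operation: mapping a physical volume to a VSCSI server adapter with `mkvdev -vdev <hdisk> -vadapter <vhost>`. The sketch below only builds those command strings for a list of LUNs and executes nothing; it assumes the usual `hdiskN`/`vhostN` device naming, and the device names in the example are hypothetical. It is not a description of what the HMC does internally.

```python
import re


def mkvdev_commands(hdisks: list[str], vhost: str) -> list[str]:
    """Build one VIOS mkvdev command per LUN, mapping it to the given vhost adapter.

    The commands are only returned for review; nothing is executed.
    """
    # Name checks are a sanity filter for this sketch, not rules enforced by VIOS.
    if not re.fullmatch(r"vhost\d+", vhost):
        raise ValueError(f"unexpected VSCSI server adapter name: {vhost}")
    commands = []
    for hdisk in hdisks:
        if not re.fullmatch(r"hdisk\d+", hdisk):
            raise ValueError(f"unexpected physical volume name: {hdisk}")
        commands.append(f"mkvdev -vdev {hdisk} -vadapter {vhost}")
    return commands


# Hypothetical names: two LUNs for the IBM i client served by vhost0.
for cmd in mkvdev_commands(["hdisk2", "hdisk3"], "vhost0"):
    print(cmd)
```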


10.5. End-to-end LUN device mapping

In October 2009, IBM enhanced both the HMC and VIOS to allow end-to-end device mapping for

LUNs assigned to client LPARs, such as IBM i. The new function enables administrators to quickly identify which LUN reported in VIOS (hdisk) corresponds to which DDxxx disk device in IBM i. This in turn makes it easier to troubleshoot disk-related problems and safer to change a virtualized

disk configuration. In order to correctly perform the mapping, the HMC requires an active RMC

connection to VIOS. Such a connection is not required for the IBM i client LPAR, because IBM i

does not use RMC for DLPAR operations. However, if the client is an AIX LPAR, an active RMC

connection to AIX is also required for a complete end-to-end mapping.

To perform end-to-end LUN device mapping, use the following steps:

• Sign onto the HMC as hscroot or another superadministrator-level userid

• Expand Systems Management

• Expand Servers

• Click the correct managed system (server)

• Select the correct VIOS by using the checkbox

• Use the launch-in-context arrow and select Hardware Information → Virtual I/O Adapters → SCSI
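The HMC performs this correlation itself. Purely to illustrate the idea of an end-to-end mapping, the sketch below joins two hypothetical inventories, one from VIOS and one from IBM i, on an assumed common key: the paired virtual SCSI adapter slots plus the virtual LUN address of each mapping. The record fields, slot numbers and LUN values are illustrative assumptions, not the HMC's actual data model.

```python
from typing import NamedTuple


class ViosDisk(NamedTuple):
    hdisk: str        # disk name in VIOS, e.g. "hdisk4"
    server_slot: int  # slot of the VSCSI server adapter it is mapped to
    lun: str          # virtual LUN address of the mapping


class IbmiDisk(NamedTuple):
    unit: str         # disk resource name in IBM i, e.g. "DD001"
    client_slot: int  # slot of the VSCSI client adapter that reports it
    lun: str          # virtual LUN address as seen by the client


def end_to_end_map(vios_disks, ibmi_disks, slot_pairs):
    """Correlate VIOS hdisks with IBM i DDxxx units.

    slot_pairs maps each VSCSI server adapter slot to its partner client slot.
    Disks are matched on (client slot, virtual LUN address).
    """
    by_key = {(d.client_slot, d.lun): d.unit for d in ibmi_disks}
    mapping = {}
    for d in vios_disks:
        key = (slot_pairs.get(d.server_slot), d.lun)
        if key in by_key:
            mapping[d.hdisk] = by_key[key]
    return mapping


# Hypothetical inventories: server slot 11 in VIOS is paired with client slot 2 in IBM i.
vios = [ViosDisk("hdisk4", 11, "0x8100000000000000"), ViosDisk("hdisk5", 11, "0x8200000000000000")]
ibmi = [IbmiDisk("DD001", 2, "0x8100000000000000"), IbmiDisk("DD002", 2, "0x8200000000000000")]
print(end_to_end_map(vios, ibmi, {11: 2}))  # {'hdisk4': 'DD001', 'hdisk5': 'DD002'}
```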

11. Post-install tasks and considerations

11.1. Configure IBM i networking

Once the IBM i client partition is installed and running, the first system management step is to

configure networking. There are three types of network adapters that can be assigned to an IBM

i client partition:

• A standard physical network adapter in a PCI slot

• A logical port on a Host Ethernet Adapter (HEA)

• A virtual Ethernet adapter

Note that both physical and virtual I/O resources can be assigned to an IBM i virtual client

partition. If a physical network adapter was not assigned to the IBM i client partition when it was first created, see the topic Managing physical I/O devices and slots dynamically using the HMC in the Logical Partitioning Guide (http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf) to assign an available adapter.

An IBM i client partition can also use the new HEA capability of POWER6 processor-based servers. To assign a logical port (LHEA) on an HEA to an IBM i client partition, see the topic Creating a Logical Host Ethernet Adapter for a running logical partition using the HMC in the Logical Partitioning Guide:

http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf

Lastly, a virtual Ethernet adapter can also provide network connectivity to an IBM i client partition. To create one, consult the topic Configuring a virtual Ethernet adapter using the HMC in the Logical Partitioning Guide:

http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf

In all three cases, the assigned network adapter will be recognized as a communications port

(CMNxx) in IBM i. The type of communications port will depend on the network adapter: for

example, 5706 for a Gigabit Ethernet adapter, 5623 for an LHEA and 268C for a virtual Ethernet

adapter. In the case of a standard PCI network adapter or an LHEA, networking can be

configured following the process described in the IBM i networking topic in the Information

Center: http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/topic/rzajy/rzajyoverview.htm

If the IBM i client partition is using a virtual Ethernet adapter for networking, an SEA must be

created in VIOS to bridge the internal virtual LAN (VLAN) to the external LAN. Use the HMC and

the instructions in section 8.4 to perform the SEA configuration.
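The example port types above can be captured in a small lookup that also flags when an SEA in VIOS is needed. This is a sketch covering only the three example types quoted in this section; other adapters report other type numbers, and the helper names are hypothetical.

```python
# Example communications port (CMNxx) types quoted in this section only.
CMN_PORT_TYPES = {
    "5706": "Gigabit Ethernet adapter (standard PCI)",
    "5623": "logical port on a Host Ethernet Adapter (LHEA)",
    "268C": "virtual Ethernet adapter",
}


def describe_port(port_type: str) -> str:
    """Return the adapter kind for a CMNxx type, or a note that it is not listed here."""
    return CMN_PORT_TYPES.get(port_type.upper(), "not one of the example types")


def needs_sea(port_type: str) -> bool:
    """A virtual Ethernet port needs an SEA in VIOS to reach the external LAN."""
    return port_type.upper() == "268C"


for t in ("5706", "5623", "268c"):
    print(t, describe_port(t), "| SEA needed:", needs_sea(t))
```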

11.2. How to perform IBM i operator panel functions

If the system is HMC-managed, follow these steps:

• Sign onto the HMC with a profile with sufficient authority to manage the IBM i client

partition

• Select the partition

• Use the open-in-context arrow to select Serviceability → Control Panel Functions, then the desired function.

If the system is IVM-managed, follow these steps:

• In IVM, click View/Modify Partitions

• Select the IBM i partition

• Use the More Tasks drop-down menu and select Operator panel service functions

• Select the function you wish to perform and click OK

11.3. How to display the IBM i partition System Reference Code (SRC) history

If the system is HMC-managed, follow these steps:

• Sign onto the HMC with a profile with sufficient authority to manage the IBM i client

partition

• Select the partition

• Use the open-in-context arrow to select Serviceability → Reference Code History

• To display words 2 through 9 of a reference code, click the radio button for that code.

If the system is IVM-managed, follow these steps:

• In IVM, click View/Modify Partitions

• Select the IBM i partition

• Use the More Tasks drop-down menu and select Reference Codes

• Click an SRC to display all words.
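Because the HMC and IVM paths for the operator panel and SRC history tasks differ, operators who keep runbooks may find a lookup keyed by console and task convenient. This hypothetical helper simply records the menu paths given in sections 11.2 and 11.3; it does not interact with either console.

```python
# Menu paths from sections 11.2 and 11.3, keyed by (console, task).
NAV_PATHS = {
    ("HMC", "operator panel"): ["Select the partition", "Serviceability", "Control Panel Functions"],
    ("HMC", "SRC history"): ["Select the partition", "Serviceability", "Reference Code History"],
    ("IVM", "operator panel"): ["View/Modify Partitions", "Select the IBM i partition",
                                "More Tasks", "Operator panel service functions"],
    ("IVM", "SRC history"): ["View/Modify Partitions", "Select the IBM i partition",
                             "More Tasks", "Reference Codes"],
}


def navigation(console: str, task: str) -> str:
    """Return the documented menu path as a single ' > '-separated string."""
    try:
        return " > ".join(NAV_PATHS[(console, task)])
    except KeyError:
        raise ValueError(f"no documented path for {task!r} on {console!r}") from None


print(navigation("IVM", "SRC history"))
```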

11.4. Client IBM i LPARs considerations and limitations

Consult the topic Considerations and limitations for i5/OS client partitions on systems

managed by the Integrated Virtualization Manager (IVM) in the Information Center:

http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/topic/rzahc/rzahcbladei5limits.htm


11.5. Configuring Electronic Customer Support (ECS) over LAN

A supported WAN adapter can be assigned to the IBM i client partition for ECS. Alternatively,

ECS over LAN can be configured. Consult the topic Setting up a connection to IBM in the

Information Center:

http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/topic/rzaji/rzaji_setup.htm

11.6. Backups

As mentioned above, IBM i as a client of VIOS can use a mix of virtual and physical I/O resources.

Therefore, the simplest backup and restore approach is to assign an available tape adapter on the system to it and treat it as a standard IBM i partition. The tape adapter can be any adapter supported by IBM i on IBM Power servers and can be shared with other partitions. To assign an available tape adapter to the IBM i client partition, consult the topic Managing physical I/O devices and slots dynamically using the HMC in the Logical Partitioning Guide:

http://publib.boulder.ibm.com/infocenter/systems/scope/hw/topic/iphat/iphat.pdf

Once a tape adapter connected to a tape drive or library is available to the client partition, use the

Backup and Recovery topic in the Information Center to manage backups:

http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/index.jsp?topic=/rzahg/rzahgbackup.htm&tocNode=int_215989

12. DS4000 and DS5000 Copy Services and IBM i

IBM has conducted basic functional testing of DS4000 and DS5000 Copy Services with IBM i as a client of VIOS. Below, you will find information on the scenarios tested and the resulting statements of support for using DS4000 and DS5000 Copy Services with IBM i.

12.1. FlashCopy and VolumeCopy