
IBM i™ on a Power Blade Read-me First

Vess Natchev

Cloud | Virtualization | Power Systems

IBM Rochester, MN

vess@us.ibm.com

July 14th, 2010


This “read-me first” document provides detailed instructions on using IBM i on a Power blade. It

covers prerequisites, supported configurations, preparation for install, hardware and software

install, firmware updates and post-install tasks such as backups. The document also contains

links to many additional information sources.

Table of Contents

1. Prerequisites

1.1. Review IBM i on Power blade concepts

1.1.1. IBM BladeCenter PS701 Express

1.1.2. IBM BladeCenter PS700 Express

1.1.3. IBM BladeCenter PS702 Express

1.1.4. IBM BladeCenter JS23 Express

1.1.5. IBM BladeCenter JS43 Express

1.1.6. IBM BladeCenter JS22

1.1.7. IBM BladeCenter JS12

1.1.8. Logical Partitioning (LPAR)

1.1.9. Overview of I/O concepts for IBM i on blade

1.2. Review terminology

1.3. Supported environments

1.4. Plan for necessary IP addresses

1.5. Install System i Access for Windows

1.6. Install PuTTY

2. Prepare for the hardware installation

2.1. Verify or obtain BladeCenter hardware

2.2. Verify or obtain I/O switch modules

3. Hardware installation and BladeCenter firmware updates

3.1. Assemble the BladeCenter and blade server hardware

3.2. Configure the Advanced Management Module (AMM)

3.2.1. Initial AMM configuration

3.2.2. AMM user profiles

3.2.3. AMM LAN configuration

3.3. Download BladeCenter firmware

3.4. Download BladeCenter management module firmware

3.5. Download the BladeCenter Fibre Channel I/O module firmware

3.6. Download the BladeCenter Ethernet I/O module firmware

3.7. Download the BladeCenter SAS I/O module firmware

3.8. Download the BladeCenter S DSM firmware

3.9. Update the BladeCenter management module firmware

3.10. Update Firmware on the BladeCenter I/O modules

3.10.1. Assigning an IP address to I/O modules

3.11. Update the firmware on the BladeCenter S DSM(s)

3.12. Installing and configuring an Intelligent Copper Pass-through Module (ICPM)

4. Storage management

4.1. Storage concepts for IBM i on Power blade in BladeCenter H

4.1.1. Storage concepts for JS12 and JS22 in BladeCenter H

4.1.2. Storage concepts for JS23, JS43, PS700, PS701 and PS702 in BladeCenter H

4.2. Best practices for BladeCenter H and Fibre Channel storage

4.3. Create LUNs for the IBM i partition(s) in BladeCenter H

4.4. Multi-path I/O (MPIO) for IBM i in BladeCenter H

4.4.1. MPIO drivers

4.5. Storage concepts for IBM i on Power blade in BladeCenter S


4.5.1. Storage concepts for JS12 and JS22 in BladeCenter S

4.5.2. Storage concepts for JS23, JS43, PS700, PS701 and PS702 in BladeCenter S

4.6. Best practices for BladeCenter S and SAS storage

4.6.1. Best practices when using the SAS Connectivity Module

4.6.2. Best practices when using the SAS RAID Controller Module

4.7. Configuring storage in BladeCenter S using the SAS Connectivity Module

4.7.1. SAS I/O modules configurations

4.7.2. Activate a pre-defined SAS I/O module configuration

4.7.3. Create a custom SAS I/O module configuration

4.8. Configuring storage in BladeCenter S using the SAS RAID Controller Module

4.8.1. SAS zoning for RSSM

4.8.2. Configuring RSSM with SCM

4.9. Using the DVD drive in the BladeCenter with VIOS and IBM i

4.9.1. USB 2.0 access

4.9.2. Writing to DVD-RAM media in BladeCenter H

4.10. Creating multiple Virtual SCSI adapters per IBM i partition

4.10.1. Mapping storage to new Virtual SCSI adapters

4.10.2. Removing Virtual SCSI adapters

4.10.4. Multiple Virtual SCSI adapters and virtual tape

4.11. Fibre Channel over Converged Enhanced Ethernet (FCoCEE)

4.11.1. Requirements for FCoCEE

4.11.2. Implementing FCoCEE

5. Software installation

5.1. Obtain the VIOS and IBM i installation media and fixes

5.2. Install VIOS

5.2.1. Prerequisites in the AMM

5.2.2. Opening a console for VIOS

5.2.3. Powering on the blade

5.2.4. Accessing the SMS menus

5.2.5. Installing VIOS from DVD

5.2.6. Installing VIOS from NIM

5.2.7. Completing the install

5.2.8. Mirroring of VIOS

5.3. Configure networking in VIOS (if necessary)

5.4. Update the system firmware on the SP of the Power blade (if necessary)

5.5. Update VIOS (if necessary)

5.6. Update the microcode on the I/O expansion cards on the blade (if necessary)

5.6.1. Displaying the current microcode level of the expansion adapters on the blade

5.6.2. Downloading the latest available level of expansion adapter microcode

5.6.3. Updating the adapter microcode

5.7. Verify disks for IBM i are reporting in VIOS

5.8. Configure the Virtual Ethernet bridge for IBM i LAN Console

5.9. Memory recommendations for VIOS and the Hypervisor

5.9.1. CPU recommendations for VIOS

5.10. Create the IBM i partition(s) using IVM

5.11. Create the LAN Console connection on the console PC

5.12. Install IBM i

5.13. Configure mirroring in IBM i (if necessary)

5.13.1. Disk protection for IBM i in BladeCenter H

5.13.2. IBM i mirroring in BladeCenter S

5.13.3. Identifying SAS disk units in different DSMs

5.13.4. Configuring mirroring

5.14. Install IBM i PTFs (if necessary)


6. Post-install tasks and considerations

6.1. Configure IBM i networking

6.2. Configure Electronic Customer Support (ECS) over LAN

6.3. How to perform IBM i operator panel functions

6.4. How to display the IBM i partition System Reference Code (SRC) history

6.5. IBM i on Power blade considerations and limitations

6.6. Moving the BladeCenter DVD drive to another Power blade

7. Backup and restore

7.1. Overview of backup and restore for IBM i on a Power blade

7.2. Save and restore with a single LTO4 SAS tape drive

7.2.1. Technical overview

7.2.2. Support statements and requirements for a single LTO4 SAS tape drive

7.2.3. Making the tape drive available to VIOS

7.2.4. Sharing a tape drive among multiple blades

7.2.5. Assigning the tape drive to IBM i

7.2.6. Error logs for tape virtualization

7.2.7. Performing an IBM i save

7.2.8. Performing an IBM i restore

7.2.9. Performing a D-mode IPL from virtual tape

7.3. Save and restore with a Fibre Channel-attached tape library

7.3.1. Technical overview

7.3.2. Support statements and requirements for FC tape libraries

7.3.3. Creating the VFC configuration

7.3.4. Making the tape library available to IBM i

7.3.5. Performing an IBM i save

7.3.6. Performing an IBM i restore

7.3.7. Performing a D-mode IPL from a FC tape library

7.4. Backup and restore of VIOS and IVM

7.4.1. Backing up VIOS to tape

7.4.2. Restoring VIOS from tape

8. i Edition Express for BladeCenter S

8.1. Overview

9. IBM i preinstall on BladeCenter S

9.1. IBM i preinstall overview

9.1.1. IBM i preinstall with the SAS Connectivity Module

9.1.2. IBM i preinstall with the SAS RAID Controller Module

9.2. Requirements

9.2.1. Requirements for NSSM

9.2.2. Requirements for RSSM

9.3. Installation steps that must be performed by the implementing party

10. DS4000 or DS5000 Copy Services and IBM i

10.1. FlashCopy and VolumeCopy

10.1.1. Test scenario

10.1.2. FlashCopy and VolumeCopy support statements

10.2. Enhanced Remote Mirroring (ERM)

10.2.1. Test scenario

10.2.2. ERM support statements

11. Additional resources

12. Trademarks and disclaimers


1. Prerequisites

1.1. Review IBM i on Power blade concepts

1.1.1. IBM BladeCenter PS701 Express

The IBM BladeCenter PS701 Express is a single-wide blade server based on the POWER7™

processor. The PS701 contains 1 socket and 8 POWER7™ cores, which use IBM’s 45-nm

lithography and operate at 3 GHz. The PS701 also includes 4 MB of on-chip eDRAM (enhanced

Dynamic RAM) L3 cache per core (total of 32 MB per socket) and 256 KB of L2 cache per core.

Up to 16 DDR3 memory DIMMs are supported for a maximum of 128 GB. The blade server

includes 2 embedded 1Gb Ethernet ports, onboard SAS and USB controllers, EnergyScale™

power management and an FSP-1 service processor. A maximum of one onboard Serial-attached SCSI (SAS) drive is supported. The PS701 supports 2 types of I/O expansion adapters:

Combination Form Factor – Horizontal (CFFh) and Combination I/O Form Factor – Vertical

(CIOv). For IBM i, the PS701 is supported in IBM BladeCenter H and BladeCenter S. The

following picture shows the PS701, identifying the major components:

1.1.2. IBM BladeCenter PS700 Express

The IBM BladeCenter PS700 Express is a single-wide blade server based on the POWER7™

processor. The PS700 is similar to the PS701, with only the following differences:

• The POWER7™ socket contains only 4 active processor cores. The remaining 4 cores

cannot be activated via Capacity on Demand

• Two memory interface modules are present instead of 4

• Up to 8 DDR3 memory DIMMs are supported for a maximum of 64 GB

• Two SAS drives are supported

• The blade SMP connector is not present


The I/O options and chassis support for IBM i for the PS700 are the same as those for the

PS701.

1.1.3. IBM BladeCenter PS702 Express

The IBM BladeCenter PS702 Express is a double-wide blade server based on the POWER7™

processor. The PS702 consists of the PS701 main blade unit and a Symmetric Multiprocessing

(SMP) expansion unit, which occupies an adjacent blade bay in an IBM BladeCenter. The PS702

has the following combined hardware characteristics:

• 2 sockets and 16 POWER7™ cores operating at 3 GHz

• 4 MB of L3 cache per core (total of 32 MB per socket) and 256 KB of L2 cache per

core

• Up to 32 DDR3 memory DIMMs for a maximum of 256 GB

• 4 embedded 1Gb Ethernet ports

• Onboard SAS and USB controllers, EnergyScale™ power management and FSP-1

service processor on the main blade unit

• Up to 2 SAS onboard drives

• Up to 2 CFFh and 2 CIOv I/O expansion adapters

For IBM i, the PS702 is supported in BladeCenter H and BladeCenter S.

Note that the I/O adapter options for IBM i on PS700, PS701 and PS702 are the same as

those on JS23 and JS43.

1.1.4. IBM BladeCenter JS23 Express

The IBM BladeCenter JS23 Express is a single-wide blade server based on the POWER6™

processor. The JS23 contains 2 sockets and 4 POWER6™ cores, which use IBM’s enhanced

65-nm lithography and operate at 4.2 GHz. The JS23 also includes 32 MB of shared L3 cache

per socket and 4 MB of dedicated L2 cache per core. Up to 8 DDR2 memory DIMMs are

supported for a maximum of 64 GB. The blade server includes 2 embedded 1Gb Ethernet ports,

onboard SAS and USB controllers, EnergyScale™ power management and an FSP-1 service

processor. A maximum of one onboard Serial-attached SCSI (SAS) or Solid State Disk (SSD)

drive is supported. The JS23 supports 2 types of I/O expansion adapters: Combination Form

Factor – Horizontal (CFFh) and the new Combination I/O Form Factor – Vertical (CIOv). For IBM

i, the JS23 is supported in IBM BladeCenter H and BladeCenter S. The following picture shows

the JS23, identifying the major components:


1.1.5. IBM BladeCenter JS43 Express

The IBM BladeCenter JS43 Express is a double-wide blade server based on the POWER6™

processor. The JS43 consists of the JS23 main blade unit and a Symmetric Multiprocessing

(SMP) expansion unit, which occupies an adjacent blade bay in an IBM BladeCenter. The JS43

has the following hardware characteristics:

• 4 sockets and 8 POWER6™ cores operating at 4.2 GHz

• 32 MB of L3 cache per socket and 4 MB of L2 cache per core

• Up to 16 DDR2 memory DIMMs for a maximum of 128 GB

• 4 embedded 1Gb Ethernet ports

• Onboard SAS and USB controllers, EnergyScale™ power management and FSP-1

service processor on the main blade unit

• Up to 2 SAS or SSD onboard drives

• Up to 2 CFFh and 2 CIOv I/O expansion adapters

For IBM i, the JS43 is supported in BladeCenter H and BladeCenter S. The picture below shows

only the SMP expansion unit of the JS43, identifying the major components:


1.1.6. IBM BladeCenter JS22

The JS22 Power blade is a 4-core blade server based on the POWER6™ processor. The JS22

fits in a standard IBM BladeCenter chassis and has an integrated Service Processor (SP), two

Gigabit Ethernet ports, SAS and USB controllers and a SAS disk drive. The embedded Ethernet

ports are Integrated Virtual Ethernet (IVE) ports, also present on other POWER6-based servers.

Additional I/O is provided by CFFh and CFFv expansion cards, which allow connections to

external storage and tape via switches in the BladeCenter chassis. IBM i on the JS22 is

supported in BladeCenter H and BladeCenter S. The following picture shows the JS22,

identifying the major components:


1.1.7. IBM BladeCenter JS12

The JS12 Power blade is a 2-core blade server based on the POWER6™ processor. Its

hardware is very similar to that of the JS22, with several important differences:

• There is a single POWER6 socket with 2 processor cores

• The processor cores operate at 3.8 GHz, instead of the JS22’s 4 GHz

• Two integrated SAS drives are supported on the blade

• Four additional memory DIMM slots are supported, for a total of eight slots

The JS12 includes the same SP, embedded Gigabit Ethernet (IVE) ports, CFFv and CFFh I/O

expansion slots, and embedded SAS and USB controllers. The JS12 is capable of supporting

twice the number of memory DIMMs because of the new, shorter DIMM design, which allows the

DIMMs to be plugged in vertically. IBM i on the JS12 is supported in BladeCenter H and

BladeCenter S. The following picture shows the JS12, identifying the major components:


Note that all implementation instructions in the rest of the document apply to JS12, JS22,

JS23, JS43, PS700, PS701 and PS702 unless explicitly stated otherwise.
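The blade characteristics listed in sections 1.1.1 through 1.1.5 can be collected into a small comparison table. The following is a minimal Python sketch, not an IBM tool: the `BladeSpec` class and its field names are illustrative assumptions, and only figures stated in the sections above are used. The JS12 and JS22 are omitted because their maximum memory is not stated in this document.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BladeSpec:
    """Hypothetical record type holding figures quoted in sections 1.1.1-1.1.5."""
    model: str
    processor: str
    sockets: int
    cores: int
    max_dimms: int
    max_memory_gb: int

    @property
    def gb_per_dimm(self) -> float:
        # Largest DIMM size implied by the stated maximums.
        return self.max_memory_gb / self.max_dimms


BLADES = [
    BladeSpec("PS700", "POWER7", sockets=1, cores=4, max_dimms=8, max_memory_gb=64),
    BladeSpec("PS701", "POWER7", sockets=1, cores=8, max_dimms=16, max_memory_gb=128),
    BladeSpec("PS702", "POWER7", sockets=2, cores=16, max_dimms=32, max_memory_gb=256),
    BladeSpec("JS23", "POWER6", sockets=2, cores=4, max_dimms=8, max_memory_gb=64),
    BladeSpec("JS43", "POWER6", sockets=4, cores=8, max_dimms=16, max_memory_gb=128),
]

for blade in BLADES:
    print(
        f"{blade.model} ({blade.processor}): {blade.sockets} socket(s), "
        f"{blade.cores} cores, up to {blade.max_memory_gb} GB in "
        f"{blade.max_dimms} DIMMs"
    )
```

Dividing the stated maximum memory by the stated DIMM count gives the same per-DIMM size for all five models, which is a quick consistency check on the figures above.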

1.1.8. Logical Partitioning (LPAR)

Like other Power systems, Power blades can be partitioned into separate environments, or logical

partitions (LPARs). Power blades support IBM i, AIX and Linux partitions. Any physical hardware

the blade has access to is owned by a Virtual I/O Server (VIOS) LPAR, which virtualizes storage,

optical and network resources to the other LPARs. An IBM i LPAR on the blade does not have

direct access to any physical hardware on the blade or outside the BladeCenter chassis. IBM i is

a client to VIOS, using a Virtual SCSI (VSCSI) connection in the Hypervisor firmware residing on

the SP.

VIOS is always the first LPAR installed on a partitioned Power blade. Once VIOS is installed,

other LPARs can be created using the Integrated Virtualization Manager (IVM). IVM is part of

VIOS and provides a browser interface to the blade for managing LPARs and I/O virtualization.

The Power blade does not support a Hardware Management Console (HMC).

1.1.9. Overview of I/O concepts for IBM i on blade

IBM i LPARs on a Power blade can use Fibre Channel or SAS storage. The type of storage

used is determined by the I/O modules available in the BladeCenter chassis and the I/O

expansion adapters present on the blade, and not by the blade machine type and model.

All POWER6™ and POWER7™ processor-based blades are capable of connecting to both Fibre

Channel and SAS storage. The JS23, JS43, PS700, PS701 and PS702 would use one of several

CIOv adapters and/or the QLogic CFFh Fibre Channel/Ethernet adapter to connect to Fibre

Channel storage; they would use the CIOv SAS passthrough adapter to connect to SAS storage.

The JS12 and JS22 would use the QLogic CFFh Fibre Channel/Ethernet adapter to connect to


Fibre Channel storage and the CFFv SAS adapter to connect to SAS storage. IBM i on a blade

supports Fibre Channel and/or SAS storage (IBM DS3200) with BladeCenter H, and BladeCenter

S internal SAS storage and/or DS3200 with BladeCenter S. IBM i supports the SAS RAID

Controller I/O Module in BladeCenter S. Fibre Channel storage is not supported with

BladeCenter S.
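The adapter and chassis rules in the paragraph above can be expressed as a lookup. This is a minimal Python sketch for planning purposes only: the function name `storage_adapters` and the chassis codes "H" and "S" are illustrative assumptions, and the supported environments document remains the authoritative source.

```python
from __future__ import annotations

# Blade groupings as described in section 1.1.9.
CIOV_BLADES = {"JS23", "JS43", "PS700", "PS701", "PS702"}
CFFV_BLADES = {"JS12", "JS22"}

QLOGIC_CFFH = "QLogic CFFh Fibre Channel/Ethernet adapter"


def storage_adapters(blade: str, chassis: str) -> dict[str, list[str]]:
    """Return the adapter types usable per storage type.

    chassis is "H" (BladeCenter H) or "S" (BladeCenter S); both codes are
    shorthand chosen for this sketch.
    """
    if chassis not in ("H", "S"):
        raise ValueError(f"unknown chassis: {chassis!r}")

    if blade in CIOV_BLADES:
        fibre_channel = ["CIOv Fibre Channel adapter", QLOGIC_CFFH]
        sas = ["CIOv SAS passthrough adapter"]
    elif blade in CFFV_BLADES:
        fibre_channel = [QLOGIC_CFFH]
        sas = ["CFFv SAS adapter"]
    else:
        raise ValueError(f"unknown blade: {blade!r}")

    options = {"SAS": sas}
    # Fibre Channel storage is not supported with BladeCenter S.
    if chassis == "H":
        options["Fibre Channel"] = fibre_channel
    return options


print(storage_adapters("PS701", "H"))
print(storage_adapters("JS22", "S"))
```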

The storage is physically connected to VIOS using a supported expansion adapter for that blade.

Once the Fibre Channel or SAS LUNs, or SAS drives in the BladeCenter S are recognized by

VIOS, they are directly virtualized to IBM i, so that each LUN or SAS drive appears as one drive

within IBM i. IBM i is installed using the DVD drive in the chassis (virtualized by VIOS) or a media

image file in VIOS.

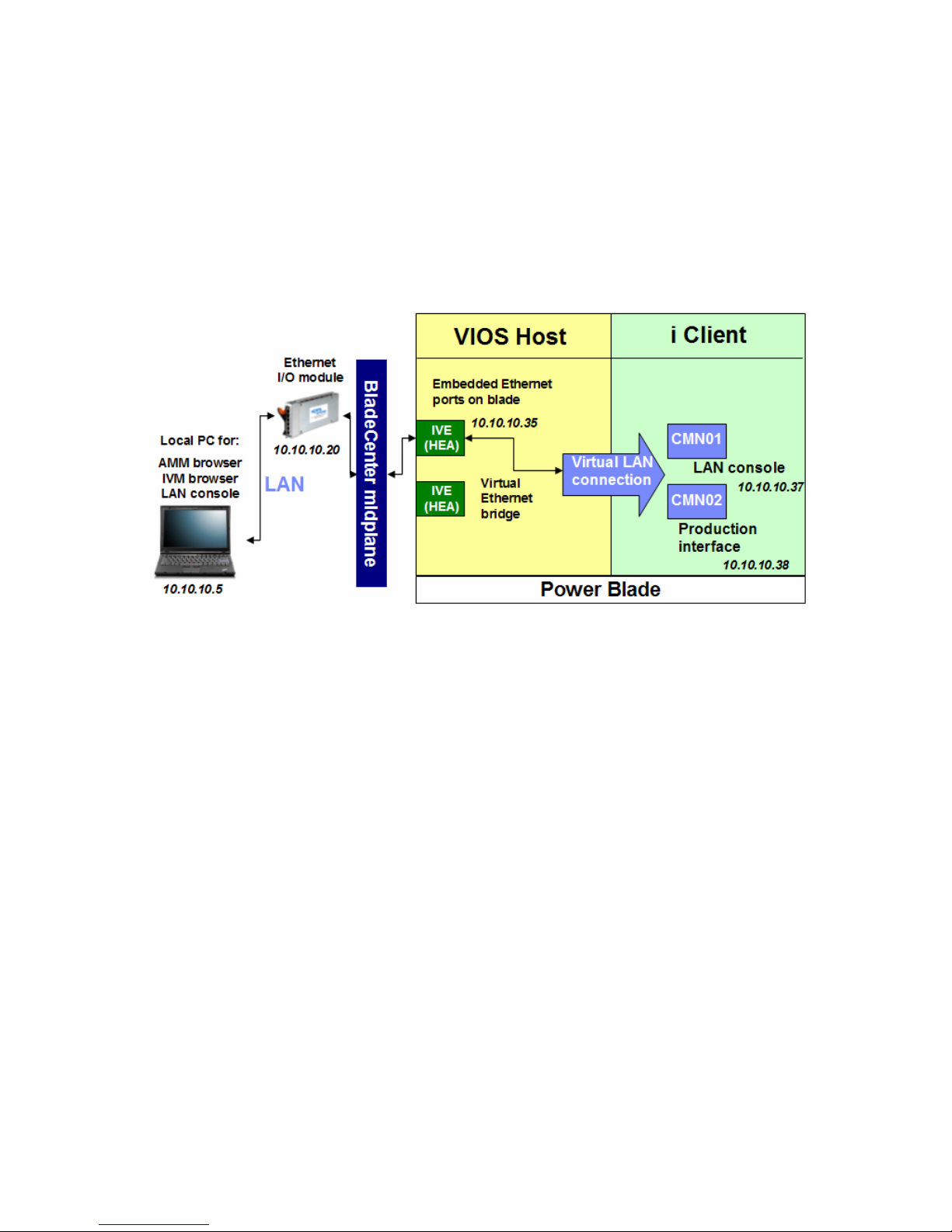

IBM i LPARs have two different 1Gb Ethernet connectivity options, both using VIOS virtualization.

The first option is the embedded Host Ethernet Adapter (HEA) ports on the blade. HEA ports are

not directly assigned to IBM i; instead they are physically assigned to VIOS, which provides a

Virtual Ethernet Bridge for client partitions. The second option is the Ethernet ports on the

QLogic CFFh Fibre Channel/Ethernet adapter. Once again, the ports are physically attached to

VIOS, which bridges them to IBM i. In order to take advantage of the Virtual Ethernet bridge, IBM

i must have at least one Virtual Ethernet adapter. One such adapter is created for each new IBM

i partition by default.

Operations Console (LAN) is the only console option. HMC console, Operations Console – Direct

Attached, thin clients or twinaxial connections are not supported. IBM i partitions use the same

network virtualization framework explained above for both LAN console and production TCP/IP

traffic. It is recommended that two separate Virtual Ethernet adapters be created for those

functions in each IBM i partition. The two adapters can then reside on the same Virtual LAN

(VLAN), and therefore connect to the outside network using the same HEA or CFFh port.

Alternatively, each Virtual Ethernet adapter can be on a separate VLAN and use a different

HEA or CFFh port to reach the external network.

See section 7 of this document for an explanation of the save and restore options for IBM i on

blade, as well as the procedures for saving and recovering VIOS.

The following diagram shows an example Power blade environment with 2 IBM i, 1 AIX and 1

Linux LPAR as clients of VIOS:


1.2. Review terminology

BladeCenter: The chassis containing the blade servers, I/O modules, AMM, DVD-ROM drive,

power and fan modules.

Advanced Management Module (AMM): A control module residing in a special I/O bay in the

BladeCenter. The AMM provides browser and command-line interfaces into the BladeCenter and

can also provide KVM (keyboard, video, mouse) functions to blade servers. The KVM functions

are not used for Power blades.

I/O bay: A slot for an I/O module (switch) inside the BladeCenter. A BladeCenter can have a mix

of standard and high-speed switch bays.

I/O module (switch): A switch residing in the BladeCenter which provides connectivity between

the blade servers and external I/O devices, using wiring in the BladeCenter midplane.

Multi-switch Interconnect Module (MSIM): A module which occupies both high-speed I/O bays

7 and 8 or 9 and 10. By placing a standard “vertical” module (normally residing in I/O bays 1-4) in

an MSIM, the module can use the BladeCenter’s high-speed fabric. This allows a “horizontal”

high-speed expansion card (CFFh) to connect through a “vertical” module.

Blade server: A standalone server residing in a blade slot in the BladeCenter.


Service Processor (SP): The SP on the Power blade is similar to the SP (sometimes called

“FSP”) on other POWER6-based systems. It contains firmware to manage the hardware on the

blade; the Power Hypervisor; and Partition Firmware (PFW).

System firmware: As with other POWER6-based systems, this is the firmware on the SP (see

above).

I/O expansion card: Sometimes called “daughter card.” An I/O adapter that fits into a PCIe or

PCI-X slot on the blade and allows connectivity to external I/O devices through the BladeCenter

midplane and I/O modules.

Combination Form Factor – Horizontal (CFFh) I/O expansion adapter: A PCIe I/O adapter

that allows access to external resources through I/O bays 7 – 10 in BladeCenter H, and bay 2 in

BladeCenter S.

Combination I/O Form Factor – Vertical (CIOv) I/O expansion adapter: A PCIe I/O adapter

that allows access to external resources through I/O bays 3 and 4 in both BladeCenter H and

BladeCenter S.

Combination Form Factor – Vertical (CFFv) I/O expansion adapter: A PCI-X I/O adapter that

allows access to external resources through I/O bays 3 and 4 in both BladeCenter H and

BladeCenter S.

Adapter firmware: The firmware on the I/O expansion cards on the blade.

Integrated Virtual Ethernet (IVE) ports: Like other POWER6-based systems, the POWER6

blade includes 2 embedded Gigabit Ethernet ports on the system I/O bridge chip.

Host Ethernet Adapter (HEA) ports: Another name for the IVE ports, more commonly used in

technical documentation.

Virtual I/O Server (VIOS): The Virtual I/O Server is software that is located in a logical partition.

This software facilitates the sharing of physical I/O resources between client logical partitions

within the system.

Integrated Virtualization Manager (IVM): A browser interface installed with VIOS. It provides

LPAR and virtualization management functions.

Virtual Ethernet adapter: A virtual network adapter created in the Power Hypervisor that is part

of an LPAR’s hardware resources. On Power blade, IBM i cannot be assigned physical network

adapters.

Virtual SCSI adapter: A virtual storage adapter created in the Power Hypervisor that is part of

an LPAR’s hardware resources. On Power blade, a Virtual SCSI (VSCSI) client adapter is

created in IBM i and a VSCSI server adapter in VIOS for storage virtualization.

Virtual Ethernet bridge: A VIOS function that allows Layer-2 bridging of a Virtual LAN to an

outside physical LAN. It is required on Power blade in order to provide both LAN console and

standard networking to IBM i.

Logical Unit (LUN): A volume created on a SAN system that appears as a single disk device to

a server.

Disk Drive Module (DDM): A physical disk unit in a SAN system.

SDDPCM (Subsystem Device Driver – Path Control Module): A Multi-path I/O (MPIO) driver

for certain storage subsystems installed on top of VIOS.

RDAC (Redundant Disk Array Controller): A Multi-path I/O (MPIO) driver for DS4000 or

DS5000, which is included with VIOS.

SAS (Serial-attached SCSI): A storage access protocol, which is the next generation of the

[parallel] SCSI protocol.

DSM (Disk Storage Module): A disk bay in the BladeCenter S, currently capable of supporting

six SAS or SATA drives. Two DSMs are supported in the BladeCenter S.

1.3. Supported environments

For a complete list of supported hardware, firmware and software for the IBM i on Power blade

environment, see http://www.ibm.com/systems/i/advantages/v6r1/blades/index.html.


1.4. Plan for necessary IP addresses

You should plan to assign IP addresses to the following components for a minimum IBM i on

blade configuration. All the addresses below are typically configured on the same subnet.

• AMM (this IP address is already assigned on an existing BladeCenter)

o The AMM IP address is a physical LAN IP address. It will be used to remotely

manage the BladeCenter and blade servers

• Ethernet I/O module (this IP address is already assigned on an existing BladeCenter)

o This IP address will be used to connect the Ethernet I/O module to the physical

LAN, allowing any blades in the BladeCenter access to the LAN

• VIOS/IVM

o An IP address on the external LAN that will be used to connect to both IVM and

the VIOS command line

• IBM i LAN console

o An IP address on the external LAN that will be used to provide 5250 console

access to IBM i via a PC with the System i Access for Windows software. The

address will be assigned to the IBM i partition when the LAN console connection

is first established. Note that this IP address is different from the VIOS IP

address and the IP address later used for IBM i production TCP/IP traffic.

• IBM i production interface

o An IP address on the external LAN that will be used for IBM i production network

traffic. This address will be configured after IBM i is installed using LAN console.

It is recommended that the IBM i LAN console and production network interface

use two separate Virtual Ethernet adapters in the IBM i partition

• PC for LAN console and IVM browser access

o When the IBM i LAN console connection is first established, the console PC must

be on the same subnet as the IBM i partition. Once the console is established,

this restriction is removed

• SAS I/O module 1

o An IP address on the external LAN that will be used to connect to the SAS I/O

module. This IP address is required in order to manage the SAS module

configuration and assign SAS drives in the chassis to blades.

• SAS I/O module 2

o An IP address on the external LAN that will be used to connect to the SAS I/O

module. This IP address is required in order to manage the SAS module

configuration and assign SAS drives in the chassis to blades. A second SAS I/O

module is optional in the BladeCenter S.

• SAS RAID controller module 1

o This applies only to BladeCenter S. An IP address on the external LAN that

will be used to communicate specifically with the RAID subsystem in the module.

When a pair of these I/O modules is used, an IP address is also required to be

assigned to the RAID subsystem in addition to assigning an IP address to the

SAS switch component of the module.

• SAS RAID controller module 2

o This applies only to BladeCenter S. An IP address on the external LAN that

will be used to communicate specifically with the RAID subsystem in the module.

Two such modules must always be used; therefore, this IP address is required if

the RAID SAS modules are installed.

• Fibre Channel switch modules

o This applies only to BladeCenter H. An IP address on the external LAN that

will be used to communicate with a Fibre Channel switch module through the

AMM. This IP address is required for switch configuration and firmware updates.
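Before assigning the addresses listed above, it can help to check the plan for duplicates and for addresses that fall outside the intended subnet. The following is a minimal Python sketch using the standard `ipaddress` module. The function name `check_ip_plan`, the component labels and all addresses are hypothetical; the addresses come from the 192.0.2.0/24 documentation range and must be replaced with site values.

```python
from __future__ import annotations

import ipaddress


def check_ip_plan(plan: dict[str, str], subnet: str) -> list[str]:
    """Return a list of problems found in the plan (empty if none)."""
    network = ipaddress.ip_network(subnet)
    problems = []
    seen = {}
    for component, address in plan.items():
        ip = ipaddress.ip_address(address)
        # The addresses are typically, but not necessarily, on one subnet.
        if ip not in network:
            problems.append(f"{component}: {ip} is outside {network}")
        # The LAN console, VIOS and production addresses must all differ.
        if ip in seen:
            problems.append(f"{component}: {ip} is already planned for {seen[ip]}")
        else:
            seen[ip] = component
    return problems


# Hypothetical plan for a minimum configuration.
example_plan = {
    "AMM": "192.0.2.10",
    "Ethernet I/O module": "192.0.2.11",
    "VIOS/IVM": "192.0.2.20",
    "IBM i LAN console": "192.0.2.21",
    "IBM i production interface": "192.0.2.22",
    "Console PC": "192.0.2.50",
}

for problem in check_ip_plan(example_plan, "192.0.2.0/24"):
    print(problem)
```

An address outside the subnet is reported only as something to review, since a single subnet is typical rather than mandatory; the console PC only needs to share the IBM i partition's subnet when the LAN console connection is first established.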


From a network perspective, one significant difference between the BladeCenter H and

BladeCenter S is that in the BladeCenter H, each embedded HEA port on the Power blade

connects to the outside LAN through a separate Ethernet module in the chassis. The first HEA

port connects through I/O module bay 1 and the second one through I/O module bay 2. In the

BladeCenter S, both HEA ports on the blade connect through I/O module bay 1. If the network

ports on the QLogic CFFh Fibre Channel/Ethernet adapter are used in a BladeCenter H, the first

one connects through I/O module bay 7 and the second through I/O module bay 9. If that

expansion adapter is used in BladeCenter S, both ports connect through I/O module bay 2. The

following diagram shows a sample network configuration for a basic IBM i on blade installation:
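The port-to-bay routing described in the paragraph above can also be written as a lookup table, which is useful when deciding which Ethernet I/O modules must be installed and cabled. This is a minimal Python sketch; the names `ETHERNET_PORT_BAYS` and `io_bay` and the chassis codes "H" and "S" are illustrative assumptions.

```python
# (chassis, adapter) -> I/O module bay used by port 1 and port 2,
# as stated in the paragraph above.
ETHERNET_PORT_BAYS = {
    ("H", "HEA"): (1, 2),
    ("S", "HEA"): (1, 1),
    ("H", "CFFh"): (7, 9),
    ("S", "CFFh"): (2, 2),
}


def io_bay(chassis: str, adapter: str, port: int) -> int:
    """Return the I/O module bay an Ethernet port connects through.

    chassis: "H" or "S"; adapter: "HEA" or "CFFh"; port: 1 or 2.
    """
    if port not in (1, 2):
        raise ValueError("port must be 1 or 2")
    return ETHERNET_PORT_BAYS[(chassis, adapter)][port - 1]


for (chassis, adapter), bays in ETHERNET_PORT_BAYS.items():
    print(f"BladeCenter {chassis}, {adapter}: port 1 -> bay {bays[0]}, port 2 -> bay {bays[1]}")
```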

1.5. Install System i Access for Windows

As mentioned above, IBM i on Power blade uses LAN console for a system console. LAN

console necessitates having a PC (initially on the same subnet as VIOS and IBM i) with the

System i Access for Windows software at version 6.1 or later. The same PC can be used for

the browser connection to IVM and Telnet sessions to the AMM or VIOS. Obtain the System i

Access software version 6.1 or later by visiting this Web site:

http://www.ibm.com/systems/i/software/access/caorder.html.

Complete the PC preparations for LAN console and install the software as described in this

section of the IBM i Information Center:

http://publib.boulder.ibm.com/infocenter/systems/scope/i5os/topic/rzajr/rzajrlcnetworksu.htm.

Make sure to install the Operations Console component.

1.6. Install PuTTY

As mentioned above, IVM is used for both LPAR and virtual resource management in this

environment. IVM requires networking to be configured in VIOS, which is discussed in section

5.3. To install and configure VIOS, and later to save or restore it, a Telnet session to the AMM or

to VIOS is required. Use the PuTTY application any time a Telnet session is mentioned in this

document. PuTTY provides better terminal functionality than the Telnet client included with

Windows and can be downloaded at no cost from

http://www.chiark.greenend.org.uk/~sgtatham/putty/download.html. Install PuTTY on the same

PC used for LAN console and the browser connection to IVM.


2. Prepare for the hardware installation

2.1. Verify or obtain BladeCenter hardware

If you have already purchased a BladeCenter chassis, verify it is supported for IBM i on a Power

blade by consulting the supported environments document:

http://www.ibm.com/systems/i/advantages/v6r1/blades/index.html.

If you have not already obtained a BladeCenter chassis, work with your local sales channel or

use the IBM BladeCenter chassis Web site at

http://www.ibm.com/systems/bladecenter/hardware/chassis/.

2.2. Verify or obtain I/O switch modules

If you have already obtained the BladeCenter switch modules, verify that:

• They are supported for IBM i on Power blade

• They meet the requirements of the minimum configuration for IBM i on Power blade

Consult the supported environments document at

http://www.ibm.com/systems/i/advantages/v6r1/blades/index.html.

NOTE: If the Power blade(s) is/are going to co-exist with another type of blade server, such as

Intel-based blades, verify that the I/O switch module configuration of the chassis meets the I/O

requirements of all blades.

3. Hardware installation and BladeCenter firmware updates

3.1. Assemble the BladeCenter and blade server hardware

The first step in preparing the BladeCenter is to install the BladeCenter and blade server

hardware. This may include installing any management modules, power modules, and I/O

modules in the BladeCenter. The BladeCenter might have these components already installed if

an additional blade server is being added to an already functioning BladeCenter. Before installing

the blade servers in the BladeCenter, any blade server options must be installed. This may

include additional processors (if x86 blades), additional memory and I/O expansion cards. For

Power blade servers, any required CFFh, CIOv and/or CFFv expansion adapters are installed at

this time, depending on the chassis and storage used. Refer to the blade server and expansion

card documentation that came with the option for details on installing each one. Once the blade

server options have been installed, the blade server can be installed in the BladeCenter. Refer to

BladeCenter and blade server documentation for details on how to install the BladeCenter and

blade server components. After installing all the blade server options, installing the blade servers

into the BladeCenter, and installing the BladeCenter modules, the BladeCenter can be connected

to the power outlets.


3.2. Configure the Advanced Management Module (AMM)

3.2.1. Initial AMM configuration

At this time, the AMM must have an Ethernet cable plugged into its Ethernet port. Plug the other

end of this cable into the Ethernet connector of a computer where you will open a browser

session to the AMM. In some cases, a switch or hub may also be necessary to connect. The

following steps are performed on the computer connected to the AMM and not on the

BladeCenter console.

Set the IP address to one in the same subnet as the AMM’s default IP address of

192.168.70.125 such as 192.168.70.101 and set the subnet mask to 255.255.255.0.

Ensure the BladeCenter’s AC Power cords are plugged into an appropriate Po wer source to

provide Power for the management module. Allow about 30 seconds after performing

this step for the management module to boot

Open a Web browser on the computer connected to the AMM. In the address or URL field,

type the IP address (192.168.70.125 is the default) of the AMM to which you want to

connect

The Enter Password window will open. Type the user name and password on the Enter

Password window. The management module has a default user name of USERID and

password of PASSW0RD (where 0 is a zero, not the letter O). It is recommended to

change the password during this initial configuration

Select a timeout value on the next screen and click continue
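The addressing step above can be sanity-checked before opening the browser session. The following is a minimal sketch, assuming Python is available on the connected computer; the function name in_amm_subnet is illustrative and not part of any IBM tool. It only confirms that a candidate address for the computer falls in the same subnet as the AMM's default address and does not collide with it.

```python
import ipaddress

AMM_DEFAULT_IP = "192.168.70.125"  # default AMM address given in this document
SUBNET_MASK = "255.255.255.0"      # subnet mask given in this document


def in_amm_subnet(pc_ip, amm_ip=AMM_DEFAULT_IP, mask=SUBNET_MASK):
    """Return True if pc_ip is a usable host address in the AMM's subnet."""
    network = ipaddress.ip_network(f"{amm_ip}/{mask}", strict=False)
    address = ipaddress.ip_address(pc_ip)
    # The computer must not reuse the AMM's own address, nor the
    # network or broadcast address of the subnet.
    reserved = (
        ipaddress.ip_address(amm_ip),
        network.network_address,
        network.broadcast_address,
    )
    return address in network and address not in reserved


print(in_amm_subnet("192.168.70.101"))  # True: the example address from the text
print(in_amm_subnet("192.168.71.101"))  # False: different subnet
```

If the check returns False, the browser on the connected computer will most likely be unable to reach the AMM at its default address.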

3.2.2. AMM user profiles

To create additional user profiles on the AMM, click Login Profiles under MM Control

Click a login ID currently marked as “not used”

Enter the user profile and password (twice), select the user profile’s desired role and click

Save

3.2.3. AMM LAN configuration

To configure the AMM so that it is accessible on the local network, click Network Interfaces

under MM Control

Enter a hostname for the AMM. If the AMM is going to use DHCP, choose the option

Enabled – Obtain IP Config from DHCP server in the DHCP drop-down menu

If the AMM is going to use a static IP address, choose the option Disable – Use static IP

configuration, then enter the IP address, subnet mask and gateway IP address

Click Save.

3.3. Download BladeCenter firmware

The procedures below are performed on a computer using a common web browser, while

accessing the Web page: http://www.ibm.com/systems/i/advantages/v6r1/blades/index.html (the

IBM i on Power Blade Supported Environments document).

Start by locating the BladeCenter chassis model

Click on the Download firmware link

The Support for BladeCenter - Downloads page will then be displayed.

Alternatively, access the Support for BladeCenter - Downloads site at this URL:

http://www-947.ibm.com/systems/support/supportsite.wss/brandmain?brandind=5000020.


3.4. Download BladeCenter management module firmware

• From the Support for BladeCenter - Downloads page, in the Refine results field,

select Advanced Management Module and click the Go button

• Note that both functional and maintenance releases of the AMM firmware are

available for download. A functional release enables new functions in the

BladeCenter; whereas a maintenance release fixes known problems since the last

functional release

• Download both the latest functional and maintenance firmware releases. Verify that

the Power blade(s) and x86 blade(s) you are installing are in the Supported

systems list

• On the firmware update page, click on the link for the README text file and print a

copy for use as a reference when actually performing the update

• Click on the browser’s Back button to return to the previous page

• Click on the link for the .zip file containing the firmware updates to download the file.

This file will be used to later update the firmware

3.5. Download BladeCenter Fibre Channel I/O module firmware

• From the Support for BladeCenter - Downloads page, in the Refine results field, select

Networking and click the Go button

• Find and select the appropriate link for the Fibre Channel I/O module installed in the

BladeCenter chassis (most often Brocade or QLogic)

• On the firmware update page, click on the link for the README text file and print a copy

for use as a reference when performing the update

• Click on the browser’s Back button to return to the previous page

• Next, click on the link of the firmware update to download the file. This file will be used

later to update the firmware. This link may lead to the I/O module vendor’s Web site:

o For Brocade, fill in the export compliance form and accept the user’s license.

Then download the file marked similar to Fabric OS v5.3.0a for PC

o For Cisco, click on the latest available release, named similar to Cisco MDS

9000 SAN-OS Software Release 3.2, then click the Download Software link. A

registration with Cisco is required to download the update file

o For QLogic, find the table named Fibre Channel Switch Module Firmware and

download the latest version of the firmware marked similar to QLogic 4Gb 6-

Port Fibre Channel Switch Module for IBM eServer BladeCenter Firmware

3.6. Download BladeCenter Ethernet I/O module firmware

• From the Support for BladeCenter - Downloads page, in the Refine results field,

select Networking

• Find and select the appropriate link for the Ethernet I/O module installed in the

BladeCenter chassis

• On the firmware update page, click on the link for the README text file and print a

copy for use as a reference when performing the update

• Click on the browser’s Back button to return to the previous page

• Next, click on the link of the firmware update to download the file. This file will be

used later to update the firmware

3.7. Download BladeCenter SAS I/O module firmware

• Start at http://www.ibm.com/support

and press the Go button


• Choose BladeCenter under Choose support type and click the right arrow

• Select BladeCenter S under Product family and click Go. This applies even if you are

using one or both SAS I/O modules in a BladeCenter H

• If using the standard SAS I/O module, find the link to the most recent SAS Connectivity

Module firmware and click it

o Download the .ZIP file containing the firmware update and the corresponding

README file

• If using the SAS RAID I/O module, find the link to the most recent SAS RAID Controller

Module Matrix and click it

o Click the Storage Configuration Manager (SCM) Firmware Update Package

link

o Download the .ZIP and README files

o Go back to the SAS RAID Controller Module Matrix page and also download

the latest version of the Storage Configuration Manager software; you will

employ it later to configure RAID arrays and volumes

3.8. Download the BladeCenter S DSM firmware

• Start at http://www.ibm.com/support

• Choose BladeCenter under Choose support type and click the right arrow

• Select BladeCenter S under Product family and click Go

• Find the link to the most recent Disk Storage Module (DSM) Firmware and click it

• Download the .ZIP file containing the firmware update and the corresponding

README file

3.9. Update the BladeCenter management module firmware

• You can begin this procedure from any AMM Web browser window

• Click Firmware Update under MM Control on the navigation pane on the left

• On the Update MM Firmware window, select Browse and navigate to the location

(usually on a local PC) where you downloaded the management module firmware update.

The file or files will have an extension of .PKT

• Begin with the update file for the functional AMM firmware release. Highlight the file

and click the Open button

• The README text may specify a particular order to select these files. If so, follow the

README file instructions

• The full path of the selected file is displayed in the Browse field

• To start the update process, click Update

• A progress indicator opens as the file is transferred to temporary storage on the AMM. A

confirmation window will be displayed when the file transfer is complete

• Verify that the file shown on the Confirm Firmware Update window is the one you want

to update. If not, click Cancel

• To complete the update process, click Continue. A progress indicator opens as the

firmware on the AMM is flashed. A confirmation window will be displayed when the

update has successfully completed

• The README file text might direct you to restart the AMM after completing the .PKT file

update. If so, click Restart MM on the navigation pane on the left side of the window

• Click OK to confirm the reset. The Web browser window will then close. A new Web

browser window will have to be started and signed onto to continue

• Repeat this process using the update file for the latest maintenance firmware release


3.10. Update the firmware on the BladeCenter I/O modules

The software on each I/O module needs to be updated at this time. The procedure varies

depending on the manufacturer of the I/O module. Refer to the README file downloaded earlier

along with the I/O module to complete this task. Make sure you are using the instructions for

VIOS or AIX in each README; avoid instructions that refer to SANsurfer on Linux or Windows.

3.10.1. Assigning an IP address to I/O modules

Instructions in the README files assume that the I/O module you are updating is accessible on

the network. To assign an IP address to an I/O module:

• Log into the AMM browser UI with an administrator ID

• Click I/O Module Tasks

• Click Configuration

• Scroll down to the correct I/O module, change the network settings and click Save

• If using the SAS RAID module, make sure to change the network settings for both the

SAS switch component and the RAID subsystems

3.11. Update the firmware on the BladeCenter S DSM(s)

Use the instructions in the README file you downloaded in section 3.8. If the BladeCenter S

contains two DSMs, make sure to update the firmware on both. Note that to update the DSM

firmware, you log into one of the SAS I/O modules. The default userid for a SAS I/O module is

USERID and the default password is PASSW0RD (note the number zero). To log into a SAS I/O

module, start a browser session to the IP address assigned to it. If an IP address has not been

assigned to a SAS I/O module yet, follow these instructions to do so:

• Start a browser session to the IP address of the AMM

• Log in with an administrator userid

• Under I/O module Tasks, click Configuration

• Locate the SAS I/O module and assign an IP address, subnet mask and gateway. Click

Save

• Repeat for the second SAS module, if it is installed

• Continue by following the DSM firmware update instructions in the README file you

downloaded in section 3.8

3.12. Installing and configuring an Intelligent Copper Pass-through Module (ICPM)

Use the instructions in the ICPM Installation Guide

(http://publib.boulder.ibm.com/infocenter/bladectr/documentation/topic/com.ibm.bladecenter.io_44

W4483.doc/44r5248.pdf).

4. Storage management

As mentioned previously, the type of storage IBM i can use on a Power blade depends on the

BladeCenter in which the blade is installed, the I/O modules available in the chassis and the

expansion adapters present on the blade. IBM i on all Power blades supports Fibre Channel and

SAS storage (DS3200) connected to BladeCenter H and SAS storage only in BladeCenter S


(internal drives and/or DS3200). IBM i on all Power blades supports the SAS RAID Controller

module in BladeCenter S; that module is not supported in BladeCenter H. Fibre Channel storage

in BladeCenter S is not supported for IBM i. This section will examine storage concepts for

BladeCenter H first, followed by those for BladeCenter S.

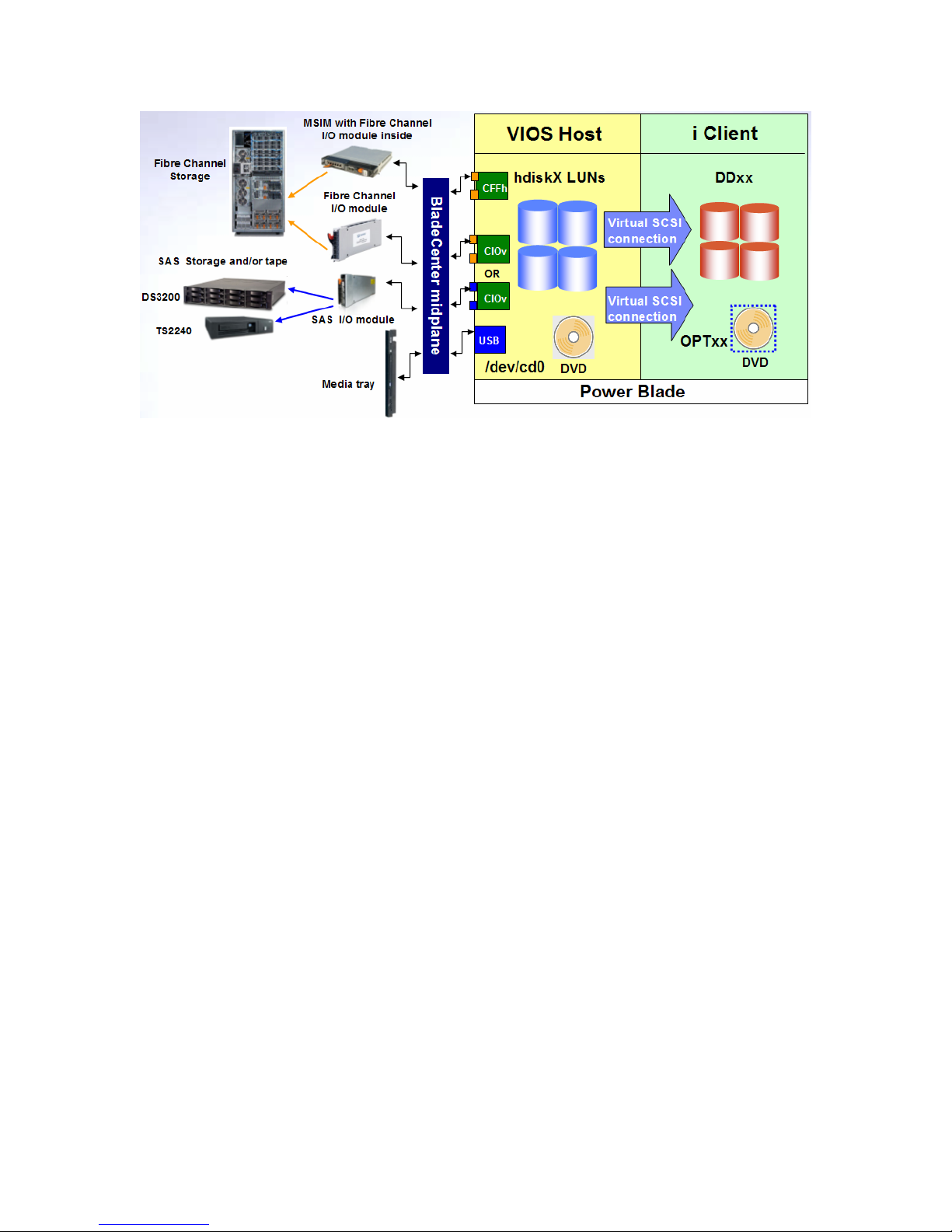

4.1. Storage concepts for IBM i on Power blade in BladeCenter H

In the Power blade environment, IBM i partitions do not have direct access to any physical I/O

hardware on the blade, in the chassis or outside the BladeCenter. In BladeCenter H, disk storage

is provided by attaching LUNs on a Fibre Channel or SAS storage subsystem to VIOS, then

directly virtualizing them to IBM i using the Integrated Virtualization Manager (IVM). DVD access

for IBM i installation is provided by assigning the DVD-ROM drive in the BladeCenter to a blade,

which makes the optical drive available to VIOS. The drive is then directly virtualized to IBM i by

assigning it in IVM. Direct virtualization of the TS2240 LTO4 SAS-attached tape drive is also

supported: the drive is attached to a SAS I/O module in the chassis. Once available in VIOS, the

tape drive is assigned to an IBM i partition in IVM.

4.1.1. Storage concepts for JS12 and JS22 in BladeCenter H

The following diagram presents an overview of storage, optical and tape virtualization for IBM i on

JS12 and JS22 in BladeCenter H:

VIOS accesses Fibre Channel storage via the CFFh expansion card on the blade. Presently, the

QLogic CFFh card has 2 x 4Gb Fibre Channel ports and 2 x 1Gb Ethernet ports. Before reaching

the external SAN, disk I/O operations first travel through the BladeCenter midplane; then through

a Multi-switch Interconnect Module (MSIM) and a SAN I/O module inside the MSIM. The MSIM

resides in slots 7 and 8, or 9 and 10 in the BladeCenter H chassis. The MSIM allows the

standard, or “vertical,” SAN module inside it to connect to a high-speed, or “horizontal,” CFFh

card on the blade. With the 2-port CFFh card, 2 MSIMs with 1 SAN module in each are

supported in the BladeCenter H for redundancy in the SAN connection.

When configuring Fibre Channel LUNs for IBM i (via VIOS) in this environment, the host

connection on the SAN system must include the World-wide Port Name (WWPN) of one or both

ports on the CFFh card. If the Power blade is inserted in the chassis, the WWPNs can be

observed in the AMM using the following steps:


• Log into the AMM browser UI with an administrator ID

• Click Hardware VPD

• Locate the blade with which you are working

• The CFFh card will appear as a High Speed Expansion Card with a description of

CFFH_EF HSEC. Click it

• Click the Ports tab

• Under High Speed Expansion Card Unique IDs, ID 2 is the WWPN of the first FC port

and ID 4 is the WWPN of the second FC port
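When copying the WWPNs from the AMM into the host connection definition on the storage subsystem, the notation expected by each tool can differ. The following is a small sketch, not an IBM utility, that normalizes a WWPN into colon-separated bytes. It assumes only that a WWPN is a 64-bit value written as 16 hexadecimal digits; the sample value is hypothetical, and the notation your storage subsystem expects should be confirmed in its own documentation.

```python
import re


def normalize_wwpn(raw):
    """Return a WWPN as 16 lowercase hex digits in colon-separated bytes."""
    # Remove only the common separators; anything else is treated as an error.
    digits = re.sub(r"[:\-\s]", "", raw)
    if not re.fullmatch(r"[0-9A-Fa-f]{16}", digits):
        raise ValueError(f"not a 16-hex-digit WWPN: {raw!r}")
    digits = digits.lower()
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))


# Hypothetical value, for illustration only.
print(normalize_wwpn("21-00-00-1B-32-0A-1B-2C"))  # 21:00:00:1b:32:0a:1b:2c
```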

When configuring LUNs for IBM i (virtualized by VIOS), they should be created as 512-byte,

“AIX” LUNs, not as 520-byte “IBM i” LUNs. VIOS accesses the 512-byte LUNs as described

above and then virtualizes them to IBM i via a Virtual SCSI connection between the 2 partitions.

The Virtual SCSI server adapter in VIOS and Virtual SCSI client adapter in IBM i are created

automatically when the LUNs are assigned to IBM i in IVM. The Virtual SCSI client adapter driver

allows IBM i to access 512-byte virtual disks. For each 4-kilobyte memory page, nine 512-byte

sectors are used, instead of eight; the ninth sector is used to store the 8-byte headers from the

preceding eight sectors.
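The sector arithmetic in the paragraph above can be worked through as follows. This is a rough sketch based only on the figures stated in the text (4-kilobyte pages, 512-byte sectors, 8-byte headers); the helper name approx_usable_gb is illustrative, and the result is an approximation that ignores any other overhead.

```python
PAGE_BYTES = 4096    # one 4-kilobyte memory page
SECTOR_BYTES = 512   # sector size of the 512-byte "AIX" LUN
HEADER_BYTES = 8     # header IBM i keeps for each data sector

DATA_SECTORS = PAGE_BYTES // SECTOR_BYTES      # sectors holding page data
HEADERS_TOTAL = DATA_SECTORS * HEADER_BYTES    # header bytes stored in the extra sector
SECTORS_PER_PAGE = DATA_SECTORS + 1            # data sectors plus one header sector


def approx_usable_gb(lun_gb):
    """Approximate capacity left for data when 8 of every 9 sectors hold data."""
    return lun_gb * DATA_SECTORS / SECTORS_PER_PAGE


print(DATA_SECTORS, HEADERS_TOTAL, SECTORS_PER_PAGE)  # 8 64 9
print(approx_usable_gb(90))                           # 80.0
```

The 64 header bytes fit comfortably in the ninth 512-byte sector, which is why one extra sector per page is sufficient. When sizing LUNs, it may be worth allowing for roughly one ninth of the raw capacity not being available to IBM i.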

VIOS accesses SAS storage (DS3200) using the CFFv SAS expansion adapter. The CFFv card

requires at least one SAS I/O module in bay 3, but can use two for redundancy, in bays 3 and 4.

Similar to Fibre Channel, the host connection on the SAS storage subsystem must include the

SAS IDs of the two ports on the expansion adapter. To find out those IDs in the AMM, follow

these steps:

• Log into the AMM browser UI with an administrator ID

• Click Hardware VPD

• Locate the blade with which you are working

• The CFFv SAS card will appear as an Expansion Card with a description of SAS

Expansion Option. Click it

• Click the Ports tab

• Under Expansion Card Unique IDs, ID 1 is the SAS ID of the first port and ID 2 is the

SAS ID of the second port

There is at least one Virtual SCSI connection between VIOS and each IBM i partition, which is

also used for IBM i access to the USB DVD-ROM drive in the chassis. The IVM Web interface

creates a single Virtual SCSI client adapter for each IBM i partition. The Virtual SCSI connection

allows a maximum of 16 disk and 16 optical devices in IBM i. This means that by default, a

maximum of 16 LUNs can be virtualized by VIOS per IBM i partition using only the IVM Web

interface. Additional Virtual SCSI client adapters can be created in an IBM i partition using the

VIOS command line. Note that even if only 16 LUNs are assigned to an IBM i partition, each LUN

does not represent a single physical disk arm. IBM i (via VIOS) takes advantage of the SAN

system’s ability to create a LUN using a RAID rank with multiple physical drives (DDMs).
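As a planning aid, the number of Virtual SCSI client adapters needed for a given number of LUNs can be estimated from the 16-disk limit described above. This is a sketch only: it assumes that each additional adapter created from the VIOS command line carries the same 16-disk limit as the one IVM creates, and the function name is illustrative.

```python
import math

MAX_DISKS_PER_VSCSI_ADAPTER = 16  # limit stated in this document


def vscsi_adapters_needed(lun_count):
    """Estimate how many Virtual SCSI client adapters a partition needs."""
    if lun_count < 0:
        raise ValueError("lun_count must not be negative")
    # IVM always creates one adapter, even before any LUN is assigned.
    return max(1, math.ceil(lun_count / MAX_DISKS_PER_VSCSI_ADAPTER))


for luns in (8, 16, 17, 40):
    print(luns, "LUNs ->", vscsi_adapters_needed(luns), "adapter(s)")
```

Any result greater than 1 means the additional adapters would have to be created on the VIOS command line, because the IVM Web interface creates only one per partition.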

4.1.2. Storage concepts for JS23, JS43, PS700, PS701 and PS702 in BladeCenter H

The following diagram presents an overview of storage, optical and tape virtualization for IBM i on

JS23, JS43, PS700, PS701 and PS702 in BladeCenter H:


Both Fibre Channel and SAS storage concepts for JS23, JS43, PS700, PS701 and PS702 in

BladeCenter H are similar to those for JS12 and JS22, with the following differences:

• To connect to Fibre Channel storage, there are several CIOv and one CFFh expansion

adapter options. CIOv and CFFh Fibre Channel cards can be used together on the same

blade to provide adapter redundancy. CIOv adapters connect to I/O modules in bays 3

and 4

• CIOv Fibre Channel cards appear as Expansion Card with a description of Fibre

Channel EC under Hardware VPD in the AMM

• CIOv Fibre Channel adapters’ WWPNs are identified as IDs 1 and 2 on the Ports tab of

the Hardware VPD page for the adapter

• To connect to SAS storage, JS23, JS43, PS700, PS701 and PS702 use the CIOv SAS

passthrough adapter. As with Fibre Channel CIOv cards, it connects through I/O

modules in bays 3 and 4

• The CIOv SAS passthrough card appears as Expansion Card with a description of SAS

Conn Card under Hardware VPD in the AMM

4.2. Best practices for BladeCenter H and Fibre Channel storage

When configuring LUNs for IBM i (virtualized by VIOS), follow the best practices outlined in

chapter 14.5 of the latest Performance Capabilities Reference manual, available here:

http://www.ibm.com/systems/i/solutions/perfmgmt/resource.html. Note that some of its

recommendations apply only to IBM i using virtual storage outside of the blade environment.

In addition to the guidelines in the Performance Capabilities Reference manual, follow these

additional recommendations:

• Use Fibre Channel (FC) or SAS drives (and not SATA or FATA) to create the RAID ranks

for production IBM i workloads

• Use 15K RPM drives for medium and heavy I/O IBM i workloads, and 10K RPM drives for

low I/O workloads

• When creating a host connection to the WWPN of the Fibre Channel card on the blade,

specify at most 2 specific host ports. Do not create the connection so that the Fibre

Channel adapter can connect to all host ports on the storage subsystem, which is the

default for some subsystems.


• Once the LUNs that will be virtualized to IBM i have reported in VIOS, change their queue

depth to improve performance. Start a Telnet session to VIOS and log in as padmin. Use

the following command for each LUN (hdisk1 in this example):

o chdev -dev hdisk1 -perm -attr queue_depth=32

• Another parameter that can improve performance is the number of I/O commands to

send to the QLogic CFFh Fibre Channel adapter. The recommended value is 512. Be

sure to change the value for both ports of the CFFh adapter:

o chdev -dev fcs0 -attr num_cmd_elems=512 -perm

o chdev -dev fcs1 -attr num_cmd_elems=512 -perm

• To improve reliability, enable dynamic tracking for the LUNs virtualized to IBM i. Do so

for both ports of the CFFh adapter:

o chdev -dev fscsi0 -attr dyntrk=yes -perm

o chdev -dev fscsi1 -attr dyntrk=yes -perm

Using the -perm option in the above commands means that the value will be updated only in the

VIOS device configuration database (ODM). To make the change effective, reboot VIOS when

there is downtime available for all client partitions.
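When many LUNs are involved, typing the commands above one by one is error-prone. The following sketch generates the same chdev command lines for a list of devices so they can be reviewed and then pasted into the padmin session. It runs on a workstation, not on VIOS; the function name and the hdisk names in the example are hypothetical, and only the attributes and values given in this section are used.

```python
def vios_tuning_commands(hdisks, fc_ports=(0, 1), queue_depth=32, num_cmd_elems=512):
    """Build the chdev command lines from this section for the given devices."""
    commands = []
    # Queue depth for every LUN that will be virtualized to IBM i.
    for disk in hdisks:
        commands.append(f"chdev -dev {disk} -perm -attr queue_depth={queue_depth}")
    # Number of I/O commands for each port of the Fibre Channel adapter.
    for port in fc_ports:
        commands.append(f"chdev -dev fcs{port} -attr num_cmd_elems={num_cmd_elems} -perm")
    # Dynamic tracking for each port of the Fibre Channel adapter.
    for port in fc_ports:
        commands.append(f"chdev -dev fscsi{port} -attr dyntrk=yes -perm")
    return commands


# Hypothetical device names; list the actual hdisk devices in VIOS first.
for line in vios_tuning_commands(["hdisk1", "hdisk2", "hdisk3"]):
    print(line)
```

Because every generated command uses -perm, the changes still take effect only after VIOS is rebooted.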

4.3. Create LUNs for the IBM i partition(s) in BladeCenter H

To create LUNs for IBM i (virtualized by VIOS) on DS3400, follow the instructions in chapter 8 of

the Redbook IBM System Storage DS3000: Introduction and Implementation Guide (SG247065),

available here: http://www.redbooks.ibm.com/redpieces/abstracts/sg247065.html?Open.

To create LUNs for IBM i (virtualized by VIOS) on DS3950, DS4700, DS4800, DS5020, DS5100

or DS5300, follow the instructions in chapter 3 of the Redbook IBM Midrange System Storage

Implementation and Best Practices Guide (SG246363), available here:

http://www.redbooks.ibm.com/redbooks/pdfs/sg246363.pdf

To create LUNs for IBM i (virtualized by VIOS) on DS8000, follow the instructions in section 3 of

the Redbook IBM System Storage DS8000 Series: Architecture and Implementation (SG246786),

available here: http://www.redbooks.ibm.com/redpieces/abstracts/sg246786.html?Open.

To create LUNs for IBM i (virtualized by VIOS) on the SAN Volume Controller (SVC), follow the

instructions in section 10 of the Redbook Implementing the IBM System Storage SAN Volume

Controller V4.3 (SG246423), available here:

http://www.redbooks.ibm.com/abstracts/sg246423.html?Open.

To create LUNs for IBM i (virtualized by VIOS) on the XIV storage subsystem, follow the

instructions in Chapter 4 of the Redbook IBM XIV Storage System: Architecture, Implementation,

and Usage (SG247659), available here:

http://www.redbooks.ibm.com/abstracts/sg247659.html?Open.

4.4. Multi-path I/O (MPIO) for IBM i in BladeCenter H

MPIO refers to the capability of an operating system to use two separate I/O paths to access

storage, typically Fibre Channel or SAS. For IBM i on blade, that capability resides with VIOS,

because IBM i is not directly accessing the SAN. Additionally, IBM i does not currently support

having two paths to the virtual disk units (LUNs) in VIOS from a virtual client/server perspective.


JS12 and JS22 can achieve a level of redundancy with Fibre Channel storage by using both ports

on the CFFh adapter and a pair of MSIMs and Fibre Channel switch modules. The I/O

redundancy from the two ports on the adapter can be extended by connecting to separate

external Fibre Channel switches or directly to separate ports on the storage subsystem. Similarly,

both ports on the CFFv SAS adapter and two SAS modules can be used to achieve a level of

redundancy with SAS storage.

JS23, JS43, PS700, PS701 and PS702 support this level of MPIO by using both ports on a CFFh

or CIOv adapter. These blade servers can also achieve adapter-level redundancy with Fibre

Channel storage by employing both a CFFh and a CIOv card simultaneously. To complete such

a configuration, two MSIMs and two Fibre Channel modules in I/O bays 8 and 10 would be

required, as well as two Fibre Channel modules in bays 3 and 4.

4.4.1. MPIO drivers

VIOS includes a basic MPIO driver, which has been the default instead of the RDAC driver since

November 2008. The MPIO driver can be used with any storage subsystem which VIOS

supports and is included in a default install. In this case, configuration is required only on the

storage subsystem in order to connect a single set of LUNs to both ports on a Fibre Channel

adapter owned by VIOS.

VIOS also supports the Subsystem Device Driver – Path Control Module (SDDPCM) for certain

storage subsystems. Examples of supported subsystems include the SAN Volume Controller

(SVC) and IBM DS8000. To find out whether a particular storage system supports SDDPCM for

VIOS, consult its interoperability matrix on the SDDPCM Web site:

http://www.ibm.com/support/docview.wss?uid=ssg1S4000201. Note that there are separate

support statements for AIX and VIOS. If SDDPCM is supported on your storage subsystem for

VIOS, download and install the driver following the instructions in the Multipath Subsystem

Device Driver User's Guide at the same location.

4.5. Storage concepts for IBM i on Power blade in BladeCenter S

As with the BladeCenter H, IBM i does not have physical access to storage when running in the

BladeCenter S. In this case, storage is provided in the chassis itself in the form of one or two

Disk Storage Modules (DSMs), each containing up to 6 SAS drives and/or SAS-attached external

storage in the IBM DS3200. While SATA drives can also be placed in the DSMs and are

supported for IBM i, they are not recommended because of their performance and reliability

characteristics. The minimum SAS configuration in the BladeCenter S is one DSM with one SAS

drive or one SAS Connectivity Module and DS3200.

IBM i supports both types of SAS I/O modules that can be placed in BladeCenter S: the SAS

Connectivity Module (switch functionality only) and the SAS RAID Controller Module (switch and

RAID functionality). However, the two types of SAS modules allow for different storage support:

• The SAS Connectivity Module does not support hardware RAID for the drives in the

chassis and supports attachment to DS3200, which provides its own RAID functions

• The SAS RAID Controller Module supports hardware RAID for the drives in the chassis,

but does not support attachment to DS3200

Note that whether RAID functionality is provided by DS3200 through the non-RAID SAS module

or by the RAID SAS module, it is independent of the SAS expansion adapter on the blade.

Neither the CFFv SAS expansion card nor the CIOv SAS expansion card supports RAID itself.

Neither card has any read or write cache.


4.5.1. Storage concepts for JS12 and JS22 in BladeCenter S

The following diagram presents an overview of storage, optical and tape virtualization for IBM i on

JS12 and JS22 in BladeCenter S:

JS12 and JS22 use the CFFv SAS expansion adapter to access the following storage resources:

• SAS drives in BCS and/or LUNs from DS3200 if there is at least one non-RAID SAS

module present in the chassis

• LUNs from one or more RAID arrays internal to the chassis, which requires both RAID SAS

modules to be present

Once SAS drives or LUNs have been assigned to the Power blade, they become available in

VIOS as hdiskX devices. VIOS then virtualizes each hdiskX disk directly to the IBM i client

partition(s), exactly as with LUNs in the BladeCenter H. Each virtualized SAS drive or LUN is

recognized and used in IBM i as a DDxx physical drive. If both internal drives are ordered on the

JS12, they will be recognized by VIOS as hdisk0 and hdisk1. The internal drives on the blade are

used for VIOS and are not virtualized to IBM i client LPARs. Access to the DVD-ROM drive in the

BladeCenter S is also provided by VIOS, as with the BladeCenter H.

4.5.2. Storage concepts for JS23, JS43, PS700, PS701 and PS702 in BladeCenter S

JS23, JS43, PS700, PS701 and PS702 follow the same storage concepts as the JS12 and JS22

in BladeCenter S, with the exception of the CFFv expansion adapter; instead, they use the CIOv

expansion card. The following diagram presents an overview of storage, optical and tape

virtualization for IBM i on JS23, JS43, PS700, PS701 and PS702 in BladeCenter S:


4.6. Best practices for BladeCenter S and SAS storage

For performance information on IBM i in BladeCenter S, see section 14.5 of the latest

Performance Capabilities Reference manual, available here:

http://www.ibm.com/systems/i/solutions/perfmgmt/resource.html.

4.6.1. Best practices when using the SAS Connectivity Module

Because the SAS Connectivity Module does not support RAID, there are two possibilities for disk

protection once SAS drives in the chassis have been assigned to the Power blade and are

available in VIOS:

• Use Logical Volume Manager (LVM) mirroring in VIOS, create a volume group from the

available SAS drives, then create logical volumes and present those to IBM i

• Directly virtualize each SAS drive to IBM i and use mirroring in IBM i

The recommendation is to use mirroring in IBM i. Using logical volumes extends the path of

each I/O request in VIOS by involving the LVM layer. Mirroring in IBM i allows the use of existing

IBM i disk management skills.

For a higher level of redundancy, it is strongly recommended to assign the same number of SAS

drives in each DSM and mirror between them in IBM i. SAS drives in the BladeCenter S are

assigned to blades by changing the configuration of the SAS I/O module.
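The reason for assigning the same number of drives in each DSM can be illustrated with a short planning sketch. Note that IBM i chooses the actual mirrored pairs itself when mirroring is started; the code below only shows that an equal split lets every drive have a partner in the other DSM. The function name and drive labels are hypothetical.

```python
def plan_mirrored_pairs(dsm1_drives, dsm2_drives):
    """Pair each drive in one DSM with a drive in the other, for planning only."""
    if len(dsm1_drives) != len(dsm2_drives):
        raise ValueError("assign the same number of drives in each DSM")
    # Each pair spans both DSMs, so losing one DSM leaves one copy of every pair.
    return list(zip(dsm1_drives, dsm2_drives))


# Hypothetical labels for three drives assigned to the blade in each DSM.
dsm1 = ["DSM1-drive1", "DSM1-drive2", "DSM1-drive3"]
dsm2 = ["DSM2-drive1", "DSM2-drive2", "DSM2-drive3"]
for first, second in plan_mirrored_pairs(dsm1, dsm2):
    print(first, "<->", second)
```

With an unequal split, at least one mirrored pair would have both halves in the same DSM, which removes the protection against a DSM failure for that pair.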

LUNs from DS3200 already benefit from RAID protection in the external storage subsystem.

Each such LUN should once again be virtualized directly to IBM i in VIOS.

4.6.2. Best practices when using the SAS RAID Controller Module

The recommendation to directly virtualize LUNs to IBM i instead of using storage pools in VIOS

applies in this case, as well. Furthermore, it is strongly recommended that LUNs for IBM i on

a Power blade are created on a separate RAID array in the chassis. The goal is to avoid disk

arm contention between IBM i and other production workloads on the same or other blades in the

BladeCenter S. While this approach lessens the flexibility of RAID array and LUN creation using

the RAID SAS module, it helps ensure the necessary response time and throughput demanded


by IBM i production workloads. As with other storage subsystems, the number of physical drives

in the RAID array also plays a significant role, with a higher number of drives improving

performance.

4.7. Configuring storage in BladeCenter S using the SAS Connectivity Module

4.7.1. SAS I/O modules configurations

The configuration of the SAS I/O module(s) in the BladeCenter S determines which SAS drives in

the DSM(s) are assigned to which blades. This configuration is kept on non-volatile storage in

the module(s). If two modules are present, it is necessary to change the SAS drive assignment in

only one of them; the changes will be replicated to the second one.

8 pre-defined configurations and 4 user-defined configurations are stored on a SAS I/O module.

To use a pre-defined configuration, it has to be activated by interacting with the module. User-defined configurations are blank until explicitly created. The following tables summarize the pre-defined configurations.

Pre-defined configurations for a single SAS I/O module:

Pre-defined configurations for two SAS I/O modules:

Notice that the same four configurations of drives and blades are used for both a single SAS I/O

module and two modules. Any of the four configurations can be used for IBM i in BladeCenter S.

However, keep in mind the further mirroring required in IBM i. Therefore, pre-defined

configuration #02/#03 will likely not be applicable to the majority of IBM i in BladeCenter S

implementations, because it provides for only one mirrored pair of drives in IBM i.


The choice among the remaining configurations depends on the number of blades (Power and

x86) in the chassis. For example, configuration #04/#05 provides the greatest number of arms for

a single IBM i partition on the blade (12); however, it does not leave any drives in the chassis for

other blades. One approach to addressing the disk requirement for x86 blades in this case is to

use the two supported embedded drives on the x86 blade, provided they allow for sufficient disk

performance for the x86 application. If ordered, the two embedded SAS drives on the Power blade

are used to install and mirror VIOS.

Tape virtualization does not impact the choice of a pre-defined zone configuration: all pre-defined

configurations assign all external ports to all blades. Therefore, an administrator can connect a

TS2240 tape drive to any external port on a SAS I/O module and VIOS will recognize and

virtualize the drive to IBM i; provided that the blade has a CFFv or CIOv SAS expansion adapter.

4.7.2. Activate a pre-defined SAS I/O module configuration

If one of the pre-defined drive configurations fits the storage requirements of the IBM i in

BladeCenter S implementation, the only required action to assign drives to the blade is to activate

that configuration. The simplest method is to use an already familiar interface – a browser

session to the AMM:

• Start a browser session to the AMM of the BladeCenter S and sign on with USERID or

another administrator ID

• Click Configuration under Storage Tasks

• Click the SAS I/O module you want to configure. If two modules are present, it is

necessary to activate the selected configuration on only one

• Click the radio button next to the pre-defined configuration you wish to activate

• Click the Activate Selected Configuration button at the bottom of the right screen pane

The selected number of SAS drives is now available to VIOS on the Power blade to virtualize to

IBM i. There are three other methods to activate a pre-defined configuration:

• A Telnet command-line interface to the SAS I/O module

• A browser session directly to the module

• The Storage Configuration Manager GUI from a PC

However, using the AMM is the most straightforward way to just activate a pre-defined

configuration. The AMM does not have the capability to create a custom user configuration for

the SAS drives in the chassis.

4.7.3. Create a custom SAS I/O module configuration

If none of the pre-defined configurations meets the storage requirements of the IBM i in

BladeCenter S implementation, a custom drive configuration must be created by changing one of

the four available user-defined configuration templates on the SAS I/O module(s). Two interfaces

are available for creating a custom configuration:

• A Telnet command-line interface to the SAS I/O module

• The Storage Configuration Manager GUI from a PC

It is recommended to use the Storage Configuration Manager (SCM), unless you are already

familiar with the SAS I/O module command line. SCM can be downloaded here:

https://www-304.ibm.com/systems/support/supportsite.wss/docdisplay?lndocid=MIGR5502070&brandind=5000008.

To install SCM and create a custom SAS I/O module configuration, follow the instructions in

chapter 4.4 of the Redpiece Implementing the IBM BladeCenter S Chassis (REDP-4357),

available here: http://www.redbooks.ibm.com/abstracts/redp4357.html?Open. When installing

SCM, choose to install only the SAS Module in BCS option.

Because custom SAS zone configurations are created from a blank slate, special care must be

taken if VIOS is going to virtualize tape to IBM i. All blades that require access to the tape drive

must specifically be configured to access the external port on the I/O module to which the tape

drive is connected.
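
The tape zoning check described above can be sketched in Python. This is only a planning illustration: the dictionary layout, the port names and the function name are hypothetical and are not part of SCM or the SAS I/O module command line.

```python
def blades_missing_tape_access(zones, tape_port, tape_blades):
    """Return the blade bays that need the tape drive but are not zoned to its port.

    zones       -- dict mapping blade bay number -> set of external port names
                   the planned custom zone configuration lets that bay access
                   (hypothetical representation of a zone plan)
    tape_port   -- name of the external port the tape drive is cabled to
    tape_blades -- bays of the blades that must reach the tape drive
    """
    return sorted(
        bay for bay in tape_blades
        if tape_port not in zones.get(bay, set())
    )


# Example plan: bay 1 is zoned to the tape port, bay 2 is not,
# and bay 4 has no zone entry at all.
planned_zones = {1: {"ext1"}, 2: {"ext2"}, 3: set()}
print(blades_missing_tape_access(planned_zones, "ext1", [1, 2, 4]))
```

Any bay reported by such a check would need the external tape port added to its zone before VIOS on that blade can virtualize the tape drive.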

Note that if the SAS I/O module configuration is changed after VIOS has been installed on a

Power blade, the cfgdev command must be run on the VIOS command line to detect any

changes in the drive configuration.

4.8. Configuring storage in BladeCenter S using the SAS RAID Controller Module

The SAS RAID Controller Module, also known as the RAID SAS Switch Module (RSSM),

provides RAID support for IBM i when using only the drives in a BladeCenter S. Before

continuing with the configuration steps, review the best practices for this environment in section

4.6.2. There are two general steps involved in assigning RAID storage in the chassis to a Power

blade; once available to the blade and VIOS, the LUNs are virtualized to IBM i as described in

section 5.10. The two high-level steps are:

• Use the correct SAS switch zoning configuration so that the Power blade is allowed

access to the RAID subsystem in the RSSM

• Create the appropriate configuration in the RAID subsystem and assign the new LUNs to

the Power blade

4.8.1. SAS zoning for RSSM

With a new BladeCenter S, the correct SAS zone to allow all blades access to the RAID

subsystem in the RSSM is already in place: the default pre-defined zone configuration gives all 6

blade slots access to the RAID controller and to all external SAS ports. As with the non-RAID

SAS switch modules (NSSMs), the SAS zone configuration is replicated between the two RSSMs.

If a change is required, it need be made only to one of the two RSSMs. To check whether the

default zone configuration is active on the RSSMs, use the AMM browser interface:

• Log into the AMM with an administrator ID

• Click Storage Tasks and then Configuration

• Pre-defined Config 10 should be active on both RSSMs

A user-defined configuration can also be used to limit which blades can access the RAID

subsystem or a certain external SAS port. As with NSSM, the Storage Configuration Manager

(SCM) is used to manage zoning in the RSSM. Additionally, SCM is the interface used to create

the RAID configuration. See the next section on using SCM with RSSM.

4.8.2. Configuring RSSM with SCM

Download the latest version of SCM from this URL:

https://www-304.ibm.com/systems/support/supportsite.wss/docdisplay?lndocid=MIGR5502070&brandind=5000008.

Install the interface using the instructions in chapter 4.4 of the

Redpiece Implementing the IBM BladeCenter S Chassis (REDP-4357), available here:

http://www.redbooks.ibm.com/abstracts/redp4357.html?Open. Make sure to perform a full

installation with all the management options.

Once SCM is started, follow these steps to add your RSSMs to the interface:

• Sign on with a local Windows ID and password. You are not signing onto the RSSMs yet

• Expand BC-S SAS RAID Module and then Health

• Click All Resources

• Click Add SAS RAID Modules

• Enter the IP address of the SAS switch component of the RSSM in I/O bay 3

o Both the SAS switch and the RAID subsystem in both RSSMs must have

external IP addresses assigned. See section 3.10.1 for instructions

• Enter an ID and password for both the SAS switch and the RAID subsystem of the RSSM

in I/O bay 3

o The defaults for the SAS switch are USERID and PASSW0RD

o The defaults for the RAID subsystem are USERID1 and PASSW0RD

• Optionally, enter a nickname for these RSSMs

• Click OK

In most cases, it is not necessary to create a custom SAS zone configuration. If you do need to

create one, use the steps immediately below (otherwise, skip to the next set of bullets):

• Use the radio button to select the SAS switch in bay 3

• In the drop-down menu above, select Configuration, then SAS Zoning…

• Follow the instructions in chapter 4.4 of the Redpiece Implementing the IBM BladeCenter

S Chassis (REDP-4357), available here:

http://www.redbooks.ibm.com/abstracts/redp4357.html?Open

To create the RAID array and LUN configuration, follow these steps:

• On the All Resources screen, select the RAID subsystem

• In the drop-down menu above, select Configuration, then Storage…

• Click the Storage Pools tab. Storage pools are the RAID arrays you can create in the

chassis using the RSSM

• Click Create Storage Pool…

• Select Manually choose drives (advanced) and click Next

• Select the correct SAS drives (preferably in both DSMs) and RAID level for this storage

pool, then click Next

• Create the correct number of LUNs (volumes) with the correct capacity and add them to

the list of new volumes. Then click Next

• All blades that contain a CFFv or CIOv SAS expansion card and are allowed to access

the RAID subsystem will be identified in the Select Hosts area

o If your blade is not identified, click Discover Hosts

o If the blade still does not appear, check the SAS zoning configuration and

whether the SAS adapter on the blade is installed and operational

• Click your blade in the Select Hosts area

• Click on all LUNs that should be attached to that blade in the Select Volumes area

• Click Map Volumes. This action will map the selected LUNs to both ports on the SAS

expansion card. The maximum number of LUNs per blade is 8

• Click Next and then Finish

If VIOS is already running on the blade, use the cfgdev command on the VIOS command line to

recognize the new LUNs.
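
Before mapping volumes in SCM, it can help to check a planned LUN layout against the limit of 8 LUNs per blade stated above. The following Python sketch does that. The blade and LUN names, the list-of-pairs input format and the function name are assumptions made for illustration; SCM does not provide or consume this format.

```python
from collections import defaultdict

# Limit stated in this section: at most 8 LUNs can be mapped to one blade.
MAX_LUNS_PER_BLADE = 8


def luns_over_limit(plan):
    """Return {blade: [LUNs beyond the limit]} for a planned mapping.

    plan -- list of (blade_name, lun_name) pairs (hypothetical planning
            format, in the order the LUNs would be mapped)
    """
    luns_by_blade = defaultdict(list)
    for blade, lun in plan:
        luns_by_blade[blade].append(lun)
    return {
        blade: luns[MAX_LUNS_PER_BLADE:]
        for blade, luns in luns_by_blade.items()
        if len(luns) > MAX_LUNS_PER_BLADE
    }


# Example: nine LUNs planned for blade1, two for blade2.
plan = [("blade1", f"lun{i}") for i in range(9)]
plan += [("blade2", "lunA"), ("blade2", "lunB")]
print(luns_over_limit(plan))
```

An empty result means every blade in the plan stays within the limit; otherwise the listed LUNs would have to be consolidated into larger volumes or assigned elsewhere.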

4.9. Using the DVD drive in the BladeCenter with VIOS and IBM i

As mentioned above, VIOS virtualizes the DVD drive in the media tray (MT) of the BladeCenter to

IBM i. When the MT is assigned to the blade using the steps described in section 5.2.1, the DVD

drive becomes physically available only to VIOS. It is then assigned to an IBM i partition using the

IVM GUI as described in section 7.3.2 (cd0 is the name of the physical DVD drive).

When the DVD drive must be reassigned to another IBM i LPAR on the same blade, the OPTxx

device in IBM i should first be varied off. Then the cd0 device can be assigned to the second

LPAR using the same method described in section 7.3.2.

When the DVD drive must be reassigned to another IBM i LPAR on a different blade, or after it

has been used by a different blade and must be assigned back to the same LPAR, use the

following process:

• Vary off the OPTxx device in IBM i (if it was assigned to a Power blade and an IBM i

LPAR)

• Use the AMM browser interface to assign the MT to the correct blade, as described in

section 5.2.1

• Telnet to VIOS on the correct blade and sign in with padmin

• Enter cfgdev and press Enter

• Assign the DVD drive in the MT (cd0) to the correct IBM i partition on the blade, as

described in section 7.3.2.

• Vary on the OPTxx device in IBM i

Note that you must run the cfgdev command every time the DVD drive is assigned to a Power

blade, in order for VIOS to recognize that the device is present again.

4.9.1. USB 2.0 access

In October 2009, IBM enhanced the capabilities of Power blades when using the DVD drive in the

Media Tray (MT) in BladeCenter S and BladeCenter H. VIOS now uses a USB 2.0 driver for

physical access to the DVD drive. As a result, all client LPARs using the DVD drive as a virtual

optical device from VIOS can perform faster reads and writes. One benefit of this enhancement

is shorter install times for the IBM i Licensed Internal Code (LIC) and operating environment, as

well as Program Temporary Fixes (PTFs).

4.9.2. Writing to DVD-RAM media in BladeCenter H

Also in October 2009, VIOS gained the capability to write to DVD-RAM media when using

Feature code 4154, UltraSlim Enhanced SATA DVD-RAM Drive, in BladeCenter H or

BladeCenter S. For IBM i as a client of VIOS, all DVD-RAM operations that are supported by IBM i

are available when using this drive. This improvement allows IBM i to perform small saves and

restores (up to 4.7 GB) using this DVD drive.

4.10. Creating multiple Virtual SCSI adapters per IBM i partition

With the availability of VIOS 2.1 in November 2008, it is possible to create multiple Virtual SCSI

client adapters per IBM i partition on a Power blade (Power blades are always IVM-managed).

Multiple adapters allow for increased flexibility in configuring storage and optical devices for IBM i

in the blade environment:

• More than 16 disk and 16 optical devices can be virtualized by VIOS per IBM i partition

• Disk and optical devices can be configured on separate Virtual SCSI adapters
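
As a planning aid, the number of Virtual SCSI client adapters a partition needs can be estimated with a short Python sketch. It assumes, based on the first bullet above, that a single adapter carries at most 16 disk and 16 optical devices; the function name and parameters are hypothetical.

```python
import math

# Assumption drawn from the text above: one Virtual SCSI client adapter
# supports up to 16 disk devices and up to 16 optical devices.
DEVICES_PER_ADAPTER = 16


def vscsi_adapters_needed(disks, opticals, separate=False):
    """Estimate how many Virtual SCSI client adapters an IBM i partition needs.

    disks, opticals -- number of virtual disk and optical devices planned
    separate        -- True if disk and optical devices should be placed
                       on different adapters
    """
    disk_adapters = math.ceil(disks / DEVICES_PER_ADAPTER)
    optical_adapters = math.ceil(opticals / DEVICES_PER_ADAPTER)
    if separate:
        return disk_adapters + optical_adapters
    # Shared adapters: each one can hold both device types, so the larger
    # of the two requirements determines the total.
    return max(disk_adapters, optical_adapters)


print(vscsi_adapters_needed(17, 1))                  # disks spill onto a second adapter
print(vscsi_adapters_needed(20, 2, separate=True))   # two for disk, one for optical
```

The result is only an estimate of how many adapters to create on the VIOS command line; it does not account for any other constraints of a specific configuration.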

As mentioned above, the IVM Web browser interface creates a single Virtual SCSI client adapter

per client partition and a corresponding Virtual SCSI server adapter in VIOS. The exception is for

tape virtualization, as described in section 4.1.4. To create additional Virtual SCSI client adapters,

you must use the VIOS command line:

• Log into VIOS with padmin or another administrator userid

• If the IBM i partition is not activated, follow the example below, which adds a new Virtual

SCSI client adapter in slot 5 of IBM i partition “test,” connecting to a server adapter in

the next available slot (chosen automatically by IVM) in partition “VIOS:”

o chsyscfg -r prof -i "name=test,virtual_scsi_adapters+=5/client/1/VIOS//1"