
IBM OpenPower 720 Technical Overview and Introduction

Deskside and rack-mount server for managing e-business

Outstanding performance based on POWER5 processor technology

From Web servers to integrated cluster solutions

Giuliano Anselmi

Gregor Linzmeier

Wolfgang Seiwald

Philippe Vandamme

Scott Vetter

ibm.com/redbooks

Redpaper


International Technical Support Organization

IBM eServer OpenPower 720 Technical Overview and Introduction

October 2004


Note: Before using this information and the product it supports, read the information in “Notices” on page v.

Second Edition (October 2004)

This edition applies to IBM eServer OpenPower 720.

© Copyright International Business Machines Corporation 2004. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.


Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .v

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vi

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vii

The team that wrote this Redpaper . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vii

Become a published author . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

Chapter 1. General description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 System specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2 Physical package . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2.1 IBM eServer OpenPower 720 deskside . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.2.2 IBM eServer OpenPower 720 rack-mounted . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.3 Minimum and optional features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.3.1 Processor card features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3.2 Memory features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3.3 Disk and media features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.3.4 USB diskette drive . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.3.5 I/O drawers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.3.6 Hardware Management Console models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.4 Express Product Offerings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

1.5 System racks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.5.1 IBM RS/6000 7014 Model T00 Enterprise Rack . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.5.2 IBM RS/6000 7014 Model T42 Enterprise Rack . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.5.3 AC Power Distribution Unit and rack content . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.5.4 Rack-mounting rules for OpenPower 720 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.5.5 Additional options for rack. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.5.6 OEM rack . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Chapter 2. Architecture and technical overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

2.1 The POWER5 chip . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.1.1 Simultaneous multi-threading . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.1.2 Dynamic power management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.1.3 POWER chip evolution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

2.1.4 CMOS, copper, and SOI technology. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

2.2 Processor cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

2.2.1 Available processor speeds . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.3 Memory subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.3.1 Memory placement rules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.3.2 Memory throughput . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

2.3.3 Memory restrictions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

2.4 System buses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.4.1 RIO buses and GX card . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.5 Internal I/O subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.5.1 PCI-X slots and adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.5.2 LAN adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.5.3 Graphic accelerators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.5.4 SCSI adapters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.6 Internal service processor communications ports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

© Copyright IBM Corp. 2004. All rights reserved. iii


2.7 Internal storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.7.1 Internal media devices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

2.7.2 Internal SCSI disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.7.3 RAID options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.8 External I/O subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.8.1 I/O drawers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.8.2 7311 Model D20 I/O drawer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

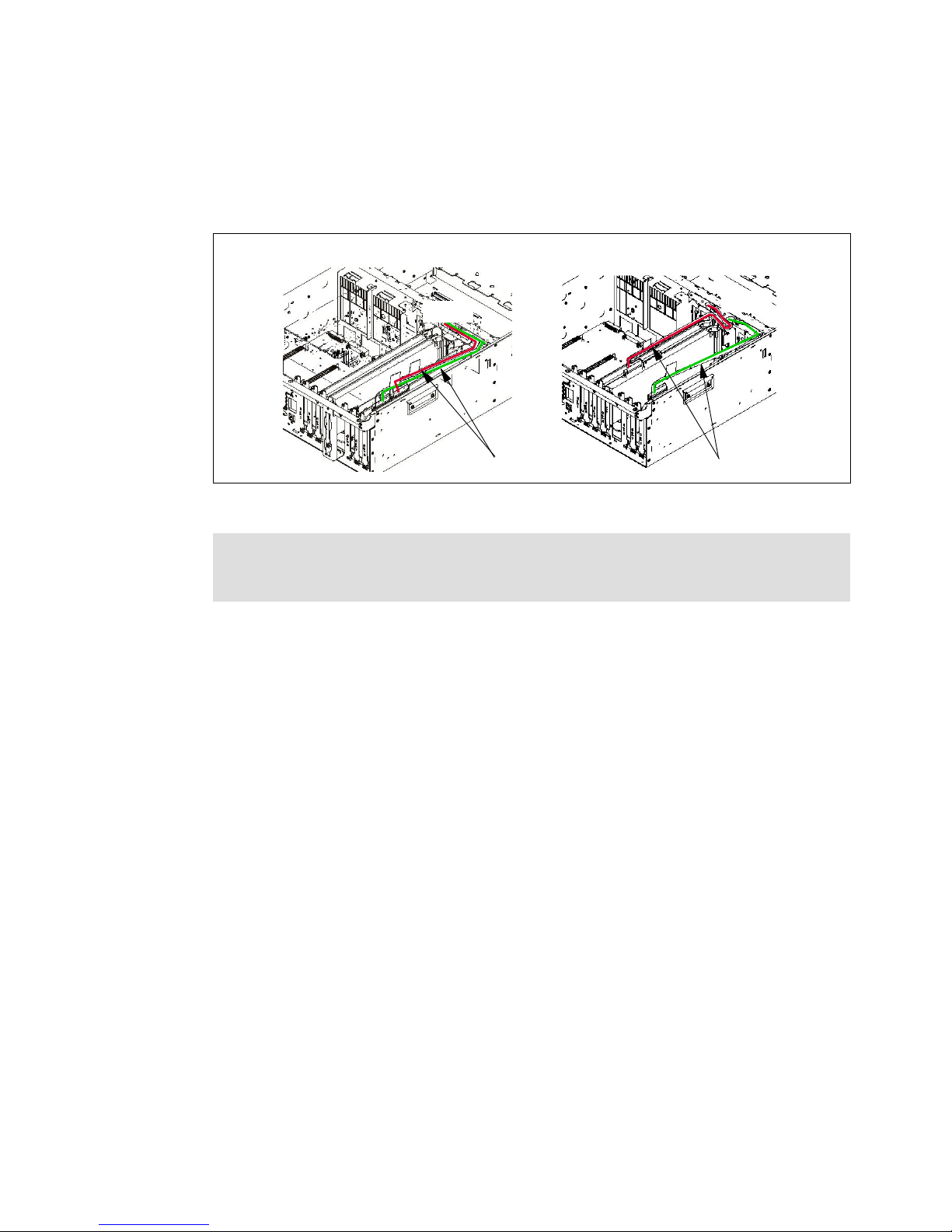

2.8.3 7311 I/O drawer’s RIO-2 cabling. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.8.4 7311 Model D20 I/O drawer SPCN cabling. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

2.8.5 External disk subsystems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2.9 Advanced OpenPower Virtualization. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

2.9.1 Logical partitioning and dynamic logical partitioning . . . . . . . . . . . . . . . . . . . . . . . 34

2.9.2 Virtualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

2.10 Service processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

2.10.1 Service processor base . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.10.2 Service processor extender . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.11 Boot process . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.11.1 IPL flow without an HMC attached to the system . . . . . . . . . . . . . . . . . . . . . . . . 38

2.11.2 Hardware Management Console . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

2.11.3 IPL flow with an HMC attached to the system. . . . . . . . . . . . . . . . . . . . . . . . . . . 40

2.11.4 Definitions of partitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2.11.5 Hardware requirements for partitioning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

2.11.6 Specific partition definitions used for Micro-Partitioning technology . . . . . . . . . . 42

2.11.7 System Management Service . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

2.11.8 Boot options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

2.11.9 Additional boot options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

2.11.10 Security . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

2.12 Operating system requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

2.12.1 Linux . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Chapter 3. RAS and manageability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.1 Reliability, availability, and serviceability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3.1.1 Fault avoidance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3.1.2 First Failure Data Capture. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

3.1.3 Permanent monitoring. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

3.1.4 Self-healing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

3.1.5 N+1 redundancy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

3.1.6 Fault masking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

3.1.7 Resource deallocation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

3.1.8 Serviceability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

3.2 Manageability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

3.2.1 Service processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

3.2.2 Service Agent . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

3.2.3 OpenPower Customer-Managed Microcode. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

3.3 Cluster 1600 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

How to get IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

iv OpenPower 720 Technical Overview and Introduction


Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult

your local IBM representative for information on the products and services currently available in your area.

Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM

product, program, or service may be used. Any functionally equivalent product, program, or service that does

not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to

evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The

furnishing of this document does not give you any license to these patents. You can send license inquiries, in

writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions

are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS

PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED,

INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of

express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made

to the information herein; these changes will be incorporated in new editions of the publication. IBM may make

improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time

without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any

manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the

materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without

incurring any obligation to you.

Any performance data contained herein was determined in a controlled environment. Therefore, the results

obtained in other operating environments may vary significantly. Some measurements may have been made

on development-level systems and there is no guarantee that these measurements will be the same on

generally available systems. Furthermore, some measurements may have been estimated through

extrapolation. Actual results may vary. Users of this document should verify the applicable data for their

specific environment.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm the

accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the

capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them

as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business

enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in

any form without payment to IBM, for the purposes of developing, using, marketing or distributing application

programs conforming to the application programming interface for the operating platform for which the sample

programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore,

cannot guarantee or imply reliability, serviceability, or function of these programs. You may copy, modify, and

distribute these sample programs in any form without payment to IBM for the purposes of developing, using,

marketing, or distributing application programs conforming to IBM's application programming interfaces.


Trademarks

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

eServer®

ibm.com®

iSeries™

pSeries®

xSeries®

AIX 5L™

AIX/L®

AIX®

Chipkill™

DB2 Universal Database™

DB2®

Enterprise Storage Server®

Hypervisor™

IBM®

Micro-Partitioning™

OpenPower™

PowerPC®

POWER™

POWER4™

POWER4+™

POWER5™

PS/2®

Redbooks™

RS/6000®

Service Director™

TotalStorage®

UltraNav®

The following terms are trademarks of other companies:

UNIX is a registered trademark of The Open Group in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Other company, product, and service names may be trademarks or service marks of others.


Preface

This document is a comprehensive guide covering the IBM eServer® OpenPower 720 Linux servers. We introduce major hardware offerings and discuss their prominent functions.

Professionals wishing to acquire a better understanding of IBM eServer OpenPower products should consider reading this document. The intended audience includes:

Customers

Sales and marketing professionals

Technical support professionals

IBM Business Partners

Independent software vendors

This document expands the current set of IBM eServer documentation by providing a desktop reference that offers a detailed technical description of the OpenPower 720 system.

This publication does not replace the latest IBM eServer marketing materials and tools. It is intended as an additional source of information that, together with existing sources, can be used to enhance your knowledge of IBM server solutions.

The team that wrote this Redpaper

This Redpaper was produced by a team of specialists from around the world working at the

International Technical Support Organization, Austin Center.

Giuliano Anselmi is a certified pSeries Presales Technical Support Specialist working in the Field Technical Sales Support group based in Rome, Italy. For seven years, he was an IBM eServer pSeries Systems Product Engineer, supporting the Web Server Sales Organization in EMEA, IBM Sales, IBM Business Partners, Technical Support Organizations, and IBM Dublin eServer Manufacturing. Giuliano has worked for IBM for 12 years, devoting himself to RS/6000® and pSeries systems, and has in-depth knowledge of the related hardware and solutions.

Gregor Linzmeier is an IBM Advisory IT Specialist for RS/6000 and pSeries workstations and entry servers in the Systems and Technology Group in Mainz, Germany, supporting IBM sales, Business Partners, and customers with pre-sales consultation and implementation of client/server environments. He has worked for more than 13 years as an infrastructure specialist for RT, RS/6000, and AIX® in large CATIA client/server projects.

Wolfgang Seiwald is an IBM Presales Technical Support Specialist working for the System Sales Organization in Salzburg, Austria. He holds a Diplomingenieur degree in Telematik from the Technical University of Graz. For the past five years, the main focus of his work for IBM has been the IBM eServer pSeries systems and the IBM AIX operating system.

Philippe Vandamme is an IT Specialist working in pSeries Field Technical Support in Paris, France, EMEA West region. With 15 years of experience in semiconductor fabrication and manufacturing and associated technologies, he is now in charge of pSeries Pre-Sales Support. In his daily role, he supports and delivers training to the IBM and Business Partner sales force.


The project that produced this document was managed by:

Scott Vetter

IBM U.S.

Thanks to the following people for their contributions to this project:

Ron Arroyo, Barb Hewitt, Thoi Nguyen, Jan Palmer, Charlie Reeves, Craig Shempert, Scott

Smylie, Joel Tendler, Ed Toutant, Jane Arbeitman, Tenley Jackson, Andy McLaughlin, Kathy

Bennett, Raymond Harney, David Boutcher.

IBM U.S.

Volker Haug

IBM Germany

Become a published author

Join us for a two- to six-week residency program! Help write an IBM Redbook dealing with

specific products or solutions, while getting hands-on experience with leading-edge

technologies. You'll team with IBM technical professionals, Business Partners and/or

customers.

Your efforts will help increase product acceptance and customer satisfaction. As a bonus,

you'll develop a network of contacts in IBM development labs, and increase your productivity

and marketability.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our papers to be as helpful as possible. Send us your comments about this

Redpaper or other Redbooks in one of the following ways:

Use the online Contact us review redbook form found at:

ibm.com/redbooks

Send your comments in an Internet note to:

redbook@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. JN9B Building 905 Internal Zip 9053D004

11501 Burnet Road

Austin, Texas 78758-3493


Chapter 1. General description

The IBM eServer OpenPower 720 deskside and rack-mount servers are designed for greater application flexibility, with innovative technology designed to help you become an on demand business. With POWER5 microprocessor technology, the OpenPower 720 is a high-performance entry Linux server that, as an orderable option, includes the next development of the IBM partitioning concept, Micro-Partitioning.

Through the optional POWER Hypervisor feature (FC 1965), logical partitioning (LPAR) on a 4-way OpenPower 720 (9124-720) allows up to four dedicated partitions. In addition, the POWER Hypervisor feature enables support for up to 40 Micro-Partitions on a 4-way system. Micro-Partitioning technology enables multiple partitions to share a physical processor, and the POWER Hypervisor controls the dispatching of the physical processors to each of the partitions. In addition to Micro-Partitioning and Virtual Ethernet, which are provided by the POWER Hypervisor, the optional Virtual I/O Server feature allows sharing of physical network adapters and enables the virtualization of SCSI storage. Together, these two features are known as Advanced OpenPower Virtualization.
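The 40-partition figure is consistent with each micro-partition needing at least one tenth of a physical processor. The sketch below reproduces it under that assumption; the 0.10 processing-unit minimum is a general POWER5 Micro-Partitioning limit that this section does not state, and `max_micro_partitions` is a hypothetical helper, not an IBM tool.

```python
from fractions import Fraction

# Assumption: every micro-partition must be entitled to at least 0.10
# processing units (one tenth of a physical processor).
MIN_ENTITLEMENT = Fraction(1, 10)


def max_micro_partitions(physical_processors: int) -> int:
    """Upper bound on micro-partitions that a shared pool of this size can host."""
    # Fraction keeps the division exact, avoiding binary floating-point
    # rounding of 0.1.
    return int(Fraction(physical_processors) / MIN_ENTITLEMENT)


for n in (1, 2, 4):
    print(f"{n}-way: up to {max_micro_partitions(n)} micro-partitions")
```

The result is only an upper bound on the shared pool: processors assigned to dedicated partitions are not available for micro-partitions.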


To dynamically manage the resources on a system, and to configure and use Virtual Ethernet, Shared Ethernet adapters, and Virtual SCSI, a Hardware Management Console (HMC) is required.

Simultaneous multi-threading (SMT) is a standard feature of POWER5 technology that allows two threads to be executed at the same time on a single processor. SMT can be used with dedicated processors and with processors from a shared pool using Micro-Partitioning technology.

The symmetric multiprocessor (SMP) OpenPower 720 system features 64-bit, copper-based POWER5 microprocessors in base 1-way or 2-way (1.5 GHz) and 2-way or 4-way (1.65 GHz) configurations, with 36 MB of off-chip Level 3 cache standard on the 2-way and 4-way models. Main memory starts at 512 MB and can be expanded up to 64 GB, depending on the available DIMMs, for higher performance and exploitation of 64-bit addressing to meet the demands of enterprise computing, such as large database applications.

Included with the OpenPower 720 are five hot-plug PCI-X slots with Enhanced Error Handling (EEH), one embedded Ultra320 SCSI dual-channel controller, one 10/100/1000 Mbps integrated dual-port Ethernet controller, two service processor communications ports, two USB 2.0 capable ports, two HMC ports, two RIO-2 ports, and two System Power Control Network (SPCN) ports.

The OpenPower 720 includes four front-accessible, hot-swap-capable disk bays in a minimum configuration, with an additional four hot-swap-capable disk bays orderable as an optional feature. The eight disk bays can accommodate up to 2.4 TB of disk storage using 300 GB Ultra320 SCSI disk drives. Three non-hot-swappable media bays accommodate additional devices: two bays accept only slim-line media devices, such as DVD-ROM or DVD-RAM drives, and one half-height bay is used for a tape drive. The OpenPower 720 also has I/O expansion capability using the RIO-2 bus, which allows attachment of 7311 Model D20 I/O drawers.
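The 2.4 TB maximum is straightforward multiplication over the two 4-pack enclosures. The hypothetical helper below makes the arithmetic explicit; it assumes decimal units (1 TB = 1000 GB), as disk capacities are usually quoted, and reports raw capacity before any RAID overhead.

```python
BAYS_PER_4PACK = 4
LARGEST_DRIVE_GB = 300  # 300 GB Ultra320 SCSI drive cited in the text


def internal_capacity_tb(four_packs: int, drive_gb: int = LARGEST_DRIVE_GB) -> float:
    """Raw internal disk capacity in decimal terabytes (no RAID overhead)."""
    # One 4-pack is standard; a second 4-pack is an optional feature.
    if four_packs not in (1, 2):
        raise ValueError("the OpenPower 720 holds one or two 4-pack enclosures")
    return four_packs * BAYS_PER_4PACK * drive_gb / 1000


print(internal_capacity_tb(1))  # minimum configuration
print(internal_capacity_tb(2))  # with the optional second 4-pack
```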

Additional reliability and availability features include redundant hot-plug cooling fans and

redundant power supplies. Along with these hot-plug components, the OpenPower 720 is

designed to provide an extensive set of reliability, availability, and serviceability (RAS)

features that include improved fault isolation, recovery from errors without stopping the

system, avoidance of recurring failures, and predictive failure analysis.


1.1 System specifications

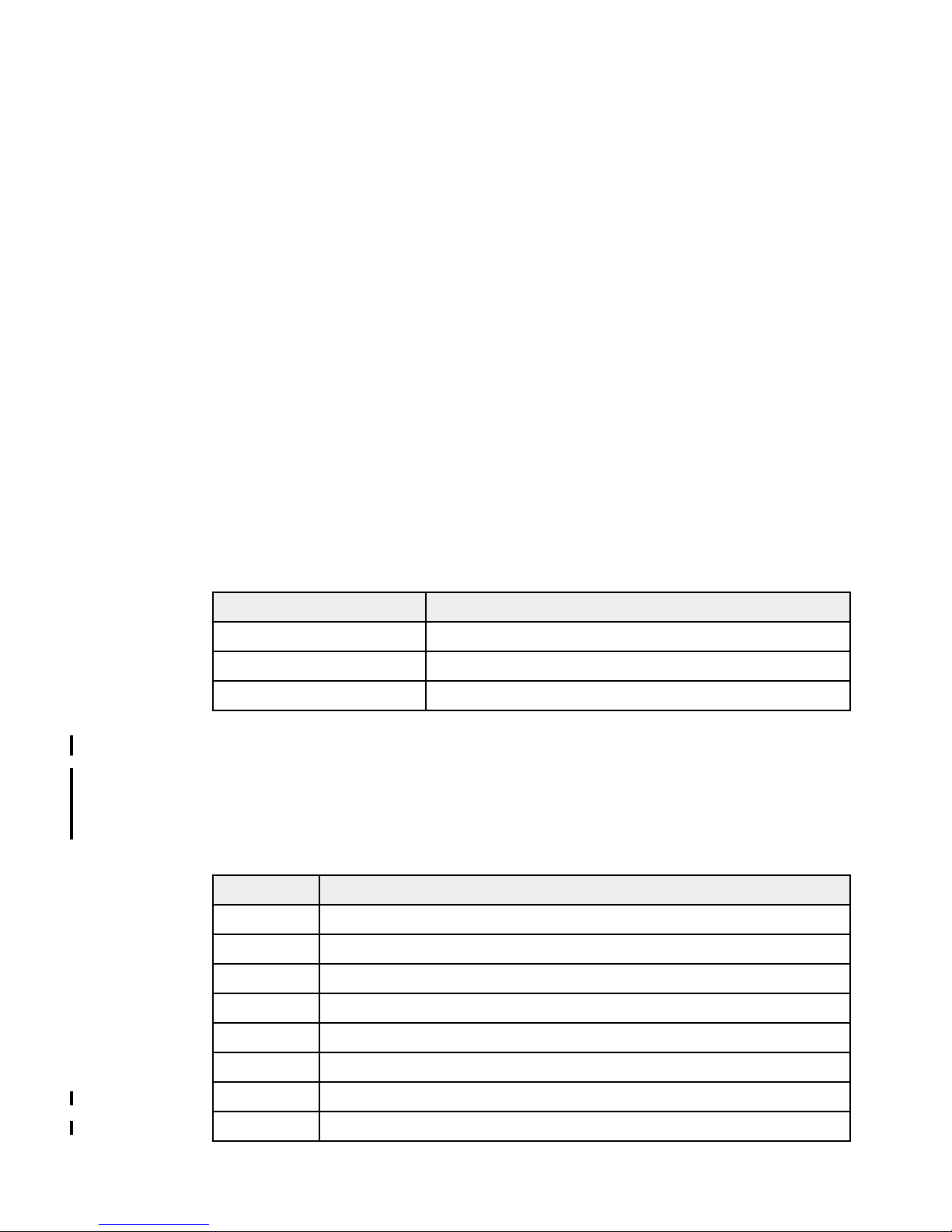

Table 1-1 lists the general system specifications of the OpenPower 720 system.

Table 1-1 OpenPower 720 specifications

| Description | Range |
|---|---|
| Operating temperature | 5 to 35 degrees Celsius (41 to 95 degrees Fahrenheit) |
| Relative humidity | 8% to 80% |
| Operating voltage | 1-way and 2-way: 100 to 127 or 200 to 240 V AC (auto-ranging); 4-way: 200 to 240 V AC |
| Operating frequency | 47 to 63 Hz |
| Maximum power consumption | 1100 watts |
| Maximum thermal output | 3754 Btu (British Thermal Units) per hour |

1.2 Physical package

The following sections discuss the major physical attributes of the rack-mounted and deskside versions of the OpenPower 720 (Figure 1-1 on page 4). Table 1-2 lists their dimensions and weights. The OpenPower 720 is a 4U, 19-inch rack-mounted system or a deskside system, depending on the feature code selected.

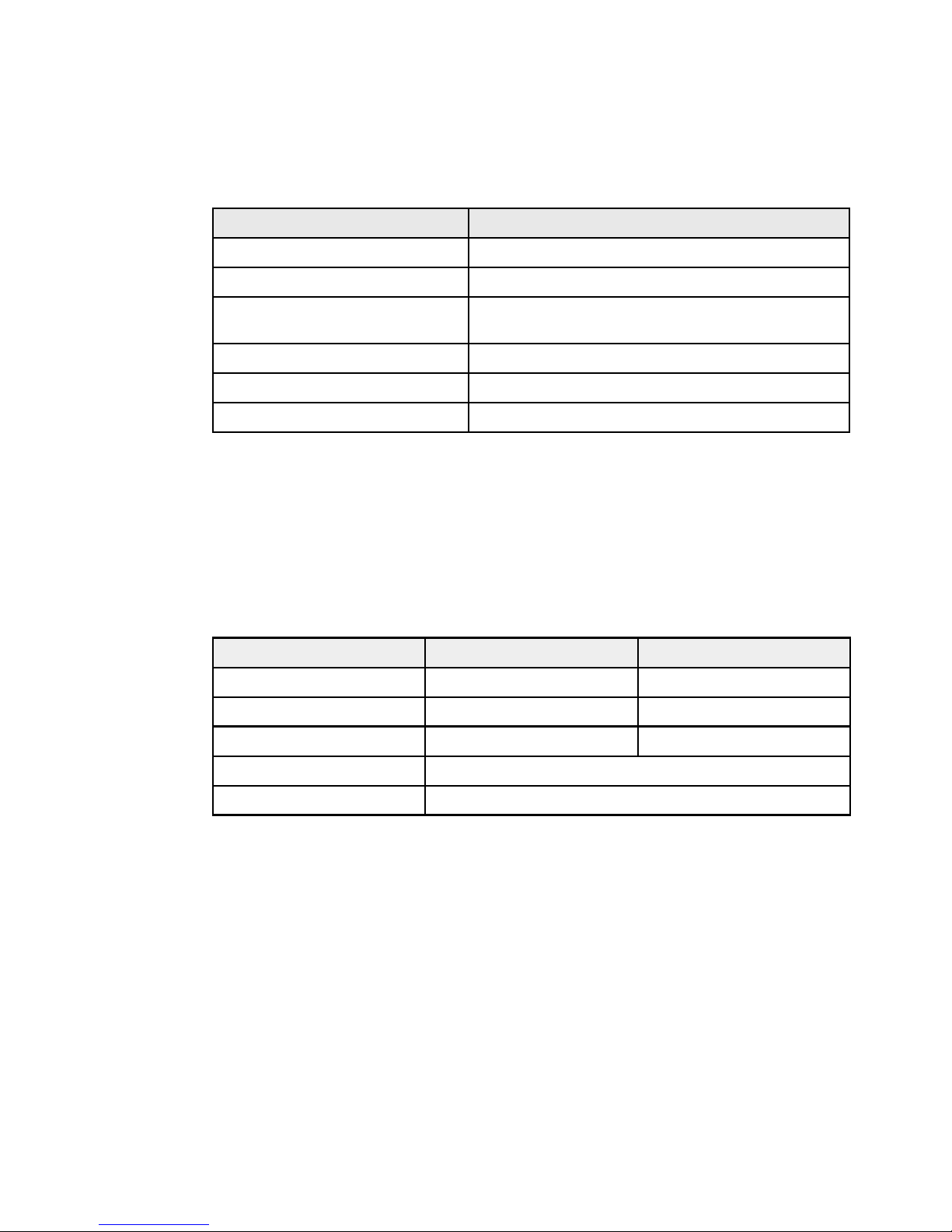

Table 1-2 Physical packaging of the OpenPower 720

Dimension Rack (FC 7914) Deskside (FC 7912)

Height 178 mm (7.0 inches) 533 mm (21.1 inches)

Width 437 mm (17.2 inches) 201 mm (7.9 inches)

Depth 731 mm (28.8 inches) 779 mm (30.7 inches)

Minimum configuration 41.4 kg (91 pounds)

Maximum configuration 57.0 kg (125 pounds)

Chapter 1. General description 3


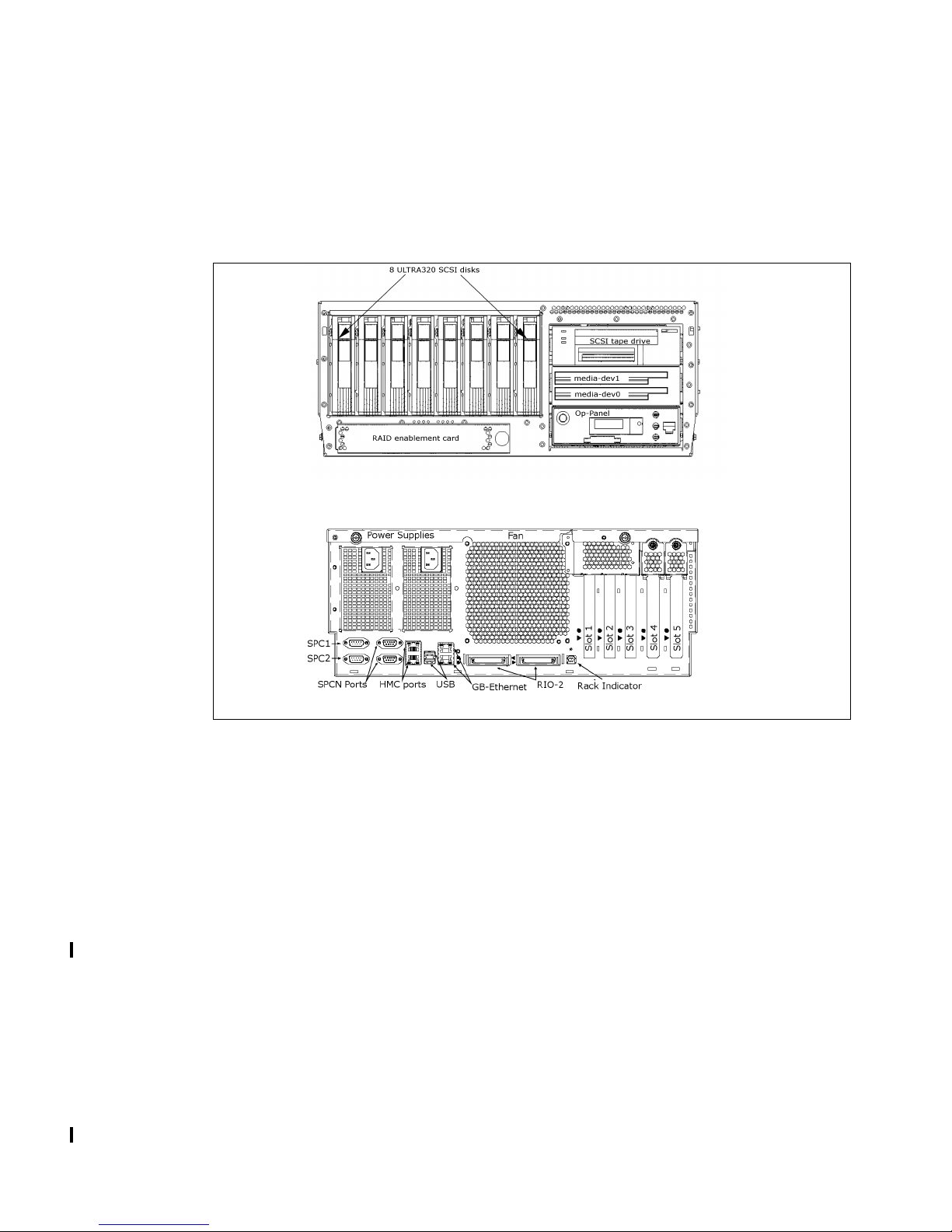

Figure 1-1 Rack-mount and deskside versions of the OpenPower 720

1.2.1 IBM eServer OpenPower 720 deskside

The OpenPower 720 is available as a deskside server that is ideal for environments requiring

the user to have local access to the hardware. A typical example of this would be applications

requiring a native graphics display.

To order an OpenPower 720 system as a deskside version, FC 7912 is required. The system

is designed to be set up by the customer and, in most cases, will not require the use of any

tools. Full set-up instructions are included with the system.

The GXT135P 2D graphics accelerator with analog and digital interfaces (FC 2849) is

available and is supported for SMS, firmware menus, and other low-level functions, as well as

when AIX or Linux starts the X11-based graphical user interface. Graphical AIX system tools

are usable for configuration management if the adapter is connected to the primary console,

such as the IBM L200p Flat-Panel Monitor (FC 3636), the IBM T541H 15-inch TFT Color

Monitor (FC 3637), or others.

1.2.2 IBM eServer OpenPower 720 rack-mounted

The OpenPower 720 is available as a 4U rack-mounted server and is intended to be installed

in a 19-inch rack, thereby enabling efficient use of computer room floor space. If the IBM

7014-T42 rack is used to mount the OpenPower 720, it is possible to place up to 10 systems

in an area of 644 mm (25.5 inches) x 1147 mm (45.2 inches).
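The ten-system figure follows from the drawer height. The sketch below assumes the 7014-T42 offers 42 EIA units of mounting space (1U = 44.45 mm); that rack height is an assumption here, not a figure stated in this section, and `systems_per_rack` is a hypothetical helper.

```python
EIA_UNIT_MM = 44.45  # height of one rack unit (1U = 1.75 inches)


def systems_per_rack(rack_units: int, system_units: int = 4, reserved_units: int = 0) -> int:
    """How many drawers of a given height fit in a rack after reserving space."""
    usable = rack_units - reserved_units
    if usable < 0:
        raise ValueError("reserved space exceeds the rack height")
    return usable // system_units


# Assumed: the 7014-T42 provides 42U of mounting space.
count = systems_per_rack(42)
leftover = 42 - count * 4
print(f"{count} systems, {leftover}U ({leftover * EIA_UNIT_MM:.1f} mm) left over")
```

The `reserved_units` parameter shows how the count drops if rack space is set aside for other equipment, such as a console or I/O drawers.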

To order an OpenPower 720 system as a rack-mounted version, FC 7914 is required. Because the OpenPower 720 can be installed in IBM or OEM racks, you must also select one of the following features:

IBM Rack-mount Drawer Rail Kit (FC 7162)

OEM Rack-mount Drawer Rail Kit (FC 7163)

Included with the OpenPower 720 rack-mounted server packaging are all of the components

and instructions necessary to enable installation in a 19-inch rack using suitable tools.


The GXT135P 2D graphics accelerator with analog and digital interfaces (FC 2849) is

available and is supported for SMS, firmware menus, and other low-level functions, as well as

when Linux starts the X11-based graphical user interface. Graphical system tools are usable

for configuration management if the adapter is connected to a common maintenance console,

such as the 7316-TF3 Rack-Mounted Flat-Panel display.

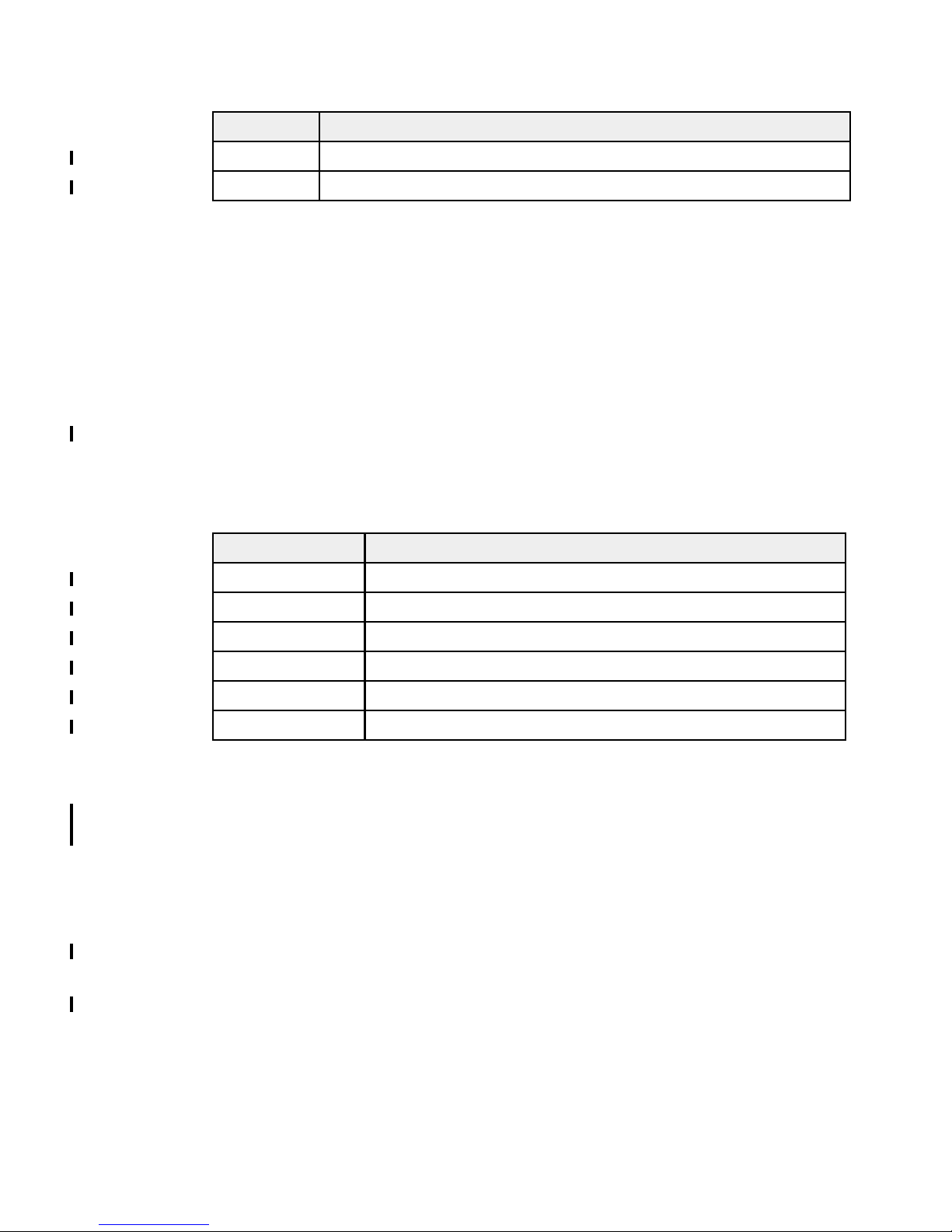

Figure 1-2 shows the basic ports available on the OpenPower 720.

Figure 1-2 Detailed views of the OpenPower 720 rack-mount system

1.3 Minimum and optional features

The OpenPower 720 system is based on a flexible, modular design, and it features:

Up to two processor cards using the POWER5 chip, for 1-way, 2-way, or 4-way configurations

From 512 MB to 64 GB of total system memory capacity using DDR1 DIMM technology

Four SCSI disk bays in a minimum configuration, or eight with an optional second 4-pack enclosure, for an internal storage capacity of up to 2.4 TB using 300 GB drives

Five PCI-X slots, or four PCI-X slots and one GX+ slot

Two slim-line media bays for optional storage devices

One half-height bay for an optional tape device

The OpenPower 720, including the service processor (SP) described in 2.10.1, “Service

processor base” on page 38, supports the following native ports:

Two 10/100/1000 Ethernet ports

Two service processor communications ports


Two USB 2.0 ports

Two HMC ports

Two remote I/O (RIO-2) ports

Two SPCN ports

In addition, the OpenPower 720 features one internal Ultra320 SCSI dual-channel controller, an optional redundant hot-swap power supply, cooling fans, and up to two processor power regulators.

The system supports 32-bit and 64-bit applications.

1.3.1 Processor card features

The OpenPower 720 accommodates 1 and 2-way processor cards with state-of-the-art,

64-bit, copper-based, POWER5 microprocessors running at 1.5 GHz on 1-way, 1.5 GHz

2-way, and 1.65 GHz 2-way cards that share 1.9 MB of L2 on chip cache and eight slots for

memory DIMMS using DDR1 technology. 36 MB of L3 cache is available on the 2-way cards.

Capacity on Demand (CoD) is not available on the OpenPower 720. For a list of available cards, see Table 1-3.

An initial order must have at least one processor card. Only a single 1-way card may be

present on any system, and cards with mixed clock rates cannot be installed on the same

system.

Table 1-3 Processor card and feature codes

| Processor card FC | Description |
|---|---|
| 1960 | 1-way 1.5 GHz, no L3 cache, eight DDR1 DIMM sockets |
| 1961 | 2-way 1.5 GHz, 36 MB L3 cache, eight DDR1 DIMM sockets |
| 5262 | 2-way 1.65 GHz, 36 MB L3 cache, eight DDR1 DIMM sockets |
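The ordering rules above can be checked mechanically. The following is a minimal sketch and is not an IBM configurator. It assumes the feature codes in Table 1-3 and the two-card maximum stated at the start of section 1.3. The names `PROCESSOR_CARDS`, `check_processor_cards`, and `total_cores` are hypothetical. The sketch encodes only the stated rules: at least one card, at most one 1-way card, and no mixed clock rates.

```python
# Sketch: check a processor-card selection against the ordering rules in
# this section. The data mirrors Table 1-3; all names are hypothetical.

# Feature code -> (active cores, clock in GHz, L3 cache in MB)
PROCESSOR_CARDS = {
    1960: (1, 1.5, 0),
    1961: (2, 1.5, 36),
    5262: (2, 1.65, 36),
}

MAX_CARDS = 2  # assumption: up to two processor cards per system


def check_processor_cards(feature_codes):
    """Return a list of rule violations; an empty list means no stated rule is broken."""
    problems = []
    if not feature_codes:
        problems.append("at least one processor card is required")
    if len(feature_codes) > MAX_CARDS:
        problems.append(f"at most {MAX_CARDS} processor cards are supported")
    unknown = [fc for fc in feature_codes if fc not in PROCESSOR_CARDS]
    if unknown:
        problems.append(f"unknown feature codes: {unknown}")
        return problems
    one_way_cards = [fc for fc in feature_codes if PROCESSOR_CARDS[fc][0] == 1]
    if len(one_way_cards) > 1:
        problems.append("only a single 1-way card may be present")
    clock_rates = {PROCESSOR_CARDS[fc][1] for fc in feature_codes}
    if len(clock_rates) > 1:
        problems.append("cards with mixed clock rates cannot be combined")
    return problems


def total_cores(feature_codes):
    """Number of active processor cores across the selected cards."""
    return sum(PROCESSOR_CARDS[fc][0] for fc in feature_codes)
```

For example, `check_processor_cards([1961, 5262])` reports the mixed-clock-rate rule, because FC 1961 runs at 1.5 GHz and FC 5262 at 1.65 GHz.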

1.3.2 Memory features

The processor cards used in the OpenPower 720 system have eight sockets for memory

DIMMs. Memory can be installed in pairs (feature numbers 1936, 1949, 1950, 1951, and

1952) or quads (feature numbers 1937, 1938, 1939, 1940, 1942, and 1945). Table 1-4 lists

the available memory features.

Table 1-4 Memory feature codes

| Feature code | Description |
|---|---|
| 1936 | 0.5 GB (2x 256 MB) DIMMs, 250 MHz, DDR1 SDRAM |
| 1937 | 1 GB (4x 256 MB) DIMMs, 250 MHz, DDR1 SDRAM |
| 1938 | 2 GB (4x 512 MB) DIMMs, 250 MHz, DDR1 SDRAM |
| 1940 | 4 GB (4x 1024 MB) DIMMs, 250 MHz, DDR1 SDRAM |
| 1942 | 8 GB (4x 2048 MB) DIMMs, 250 MHz, DDR1 SDRAM |
| 1945 | 16 GB (4x 4096 MB) DIMMs, 250 MHz, DDR1 SDRAM |
| 1949 | 1 GB (2x 512 MB) DIMMs, 266 MHz, DDR1 SDRAM |
| 1950 | 2 GB (2x 1024 MB) DIMMs, 266 MHz, DDR1 SDRAM |
| 1951 | 4 GB (2x 2048 MB) DIMMs, 266 MHz, DDR1 SDRAM |
| 1952 | 8 GB (2x 4096 MB) DIMMs, 266 MHz, DDR1 SDRAM |

If more than one processor card is present, it is recommended that each card have an equal amount of memory installed. Balancing memory across the installed processor cards allows distributed memory accesses that provide optimal performance. The memory controller detects a variety of memory configurations, including mixed DIMM sizes and DIMMs installed in pairs.
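The capacity and balance rules above amount to simple arithmetic over Table 1-4. The following is a minimal sketch with hypothetical helper names. It assumes eight DIMM sockets per processor card and includes only the feature codes listed in Table 1-4. It computes the memory on each card, rejects a card with more DIMMs than sockets, and tests whether the cards are balanced.

```python
# Sketch: per-card memory from Table 1-4 feature codes, the eight-socket
# limit per processor card, and the equal-memory recommendation.
# Helper names are hypothetical.

# Feature code -> (number of DIMMs, size of each DIMM in MB)
MEMORY_FEATURES = {
    1936: (2, 256), 1937: (4, 256), 1938: (4, 512), 1940: (4, 1024),
    1942: (4, 2048), 1945: (4, 4096), 1949: (2, 512), 1950: (2, 1024),
    1951: (2, 2048), 1952: (2, 4096),
}
SOCKETS_PER_CARD = 8


def card_memory_mb(feature_codes):
    """Return (total MB, DIMMs used) for the memory features on one card."""
    dimms = 0
    total_mb = 0
    for fc in feature_codes:
        count, size_mb = MEMORY_FEATURES[fc]
        dimms += count
        total_mb += count * size_mb
    if dimms > SOCKETS_PER_CARD:
        raise ValueError(f"{dimms} DIMMs exceed {SOCKETS_PER_CARD} sockets")
    return total_mb, dimms


def is_balanced(cards):
    """True if every processor card carries the same amount of memory."""
    totals = {card_memory_mb(card)[0] for card in cards}
    return len(totals) <= 1
```

Two cards that each hold two FC 1945 quads fill all 16 sockets with 4096 MB DIMMs, which gives 65,536 MB (64 GB). That matches the maximum stated in section 1.3.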

1.3.3 Disk and media features

The minimum OpenPower 720 configuration includes a 4-pack disk drive enclosure. A second 4-pack disk drive enclosure can be installed by ordering FC 6592 (integrated controller driven) or FC 6593 (additional adapter driven). With both enclosures installed, the maximum internal storage capacity is 2.4 TB (using the disk drive features available at the time of writing). The OpenPower 720 also features two slim-line media device bays and one half-height media bay.

The minimum configuration requires at least one disk drive. Table 1-5 shows the disk drive

feature codes that each bay can contain.

Table 1-5 Disk drive feature code description

| Feature code | Description |
|---|---|
| 1970 | 36.4 GB 15K RPM Ultra3 SCSI disk drive assembly |
| 1968 | 73.4 GB 10K RPM Ultra3 SCSI disk drive assembly |
| 1971 | 73.4 GB 15K RPM Ultra3 SCSI disk drive assembly |
| 1969 | 146.8 GB 10K RPM Ultra3 SCSI disk drive assembly |
| 1972 | 146.8 GB 15K RPM Ultra3 SCSI disk drive assembly |
| 1973 | 300 GB 10K RPM Ultra3 SCSI disk drive assembly |
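The 2.4 TB maximum follows from the bay count and the largest drive in Table 1-5. The following is a minimal sketch with a hypothetical function name. It assumes four bays per 4-pack enclosure, one enclosure in the minimum configuration, and two when FC 6592 or FC 6593 is ordered. It also assumes at least one drive, as the text requires.

```python
# Sketch: internal disk capacity from Table 1-5 feature codes.
# Assumptions: four bays per 4-pack enclosure; one or two enclosures;
# at least one drive installed. The function name is hypothetical.

DISK_FEATURES_GB = {
    1970: 36.4, 1968: 73.4, 1971: 73.4,
    1969: 146.8, 1972: 146.8, 1973: 300.0,
}
BAYS_PER_ENCLOSURE = 4


def internal_capacity_gb(drive_fcs, enclosures=1):
    """Sum the raw capacity of the listed drives after checking bay limits."""
    if enclosures not in (1, 2):
        raise ValueError("the OpenPower 720 holds one or two 4-pack enclosures")
    bays = enclosures * BAYS_PER_ENCLOSURE
    if not 1 <= len(drive_fcs) <= bays:
        raise ValueError(f"between 1 and {bays} drives are supported")
    return sum(DISK_FEATURES_GB[fc] for fc in drive_fcs)
```

Eight FC 1973 drives in two enclosures give `internal_capacity_gb([1973] * 8, enclosures=2) == 2400.0` GB, which is the 2.4 TB quoted above.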

Any combination of the following DVD-ROM and DVD-RAM drives can be installed in the two slim-line bays:

DVD-RAM drive, FC 1993

DVD-ROM drive, FC 1994

A logical partition running a supported release of the Linux operating system requires a

DVD-ROM drive or DVD-RAM drive to provide a way to boot hardware diagnostics from CD.

Devices such as the following can be installed in the half-height media bay:

IBM 80/160 GB Internal Tape Drive with VXA Technology, FC 1992

60/150 GB 16-bit 8 mm Internal Tape Drive, FC 6134

36/72 GB 4 mm Internal Tape Drive, FC 1991

1.3.4 USB diskette drive

For today’s administration tasks, an internal diskette drive is not state-of-the-art. In some situations, however, the external USB 1.44 MB diskette drive for OpenPower 720 systems (FC 2591) is helpful. This super-slim-line, lightweight, USB 2.0-attached diskette drive draws its power from the USB port. A USB cable is provided. The drive can be attached to the integrated USB ports or to a USB adapter (FC 2738). A maximum of one USB diskette drive is supported per integrated controller or adapter. The same controller can also serve a USB mouse and keyboard.

1.3.5 I/O drawers

The OpenPower 720 has five internal PCI-X slots: four long slots and one short slot. If more PCI-X slots are needed, for example to increase the number of LPARs and Micro-Partitions, up to eight 7311 Model D20 I/O drawers can be attached to the rack-mount OpenPower system. Up to four Model D20 drawers can be connected to the two RIO-2 ports on the rear of the system, which are provided in the minimum configuration. An additional four Model D20 drawers can be connected by ordering the Remote I/O expansion card (FC 1806). This card provides two RIO-2 ports on an interposer card that occupies the short PCI-X slot.

Note: 7311-D20 is the only supported I/O drawer for the OpenPower 720.

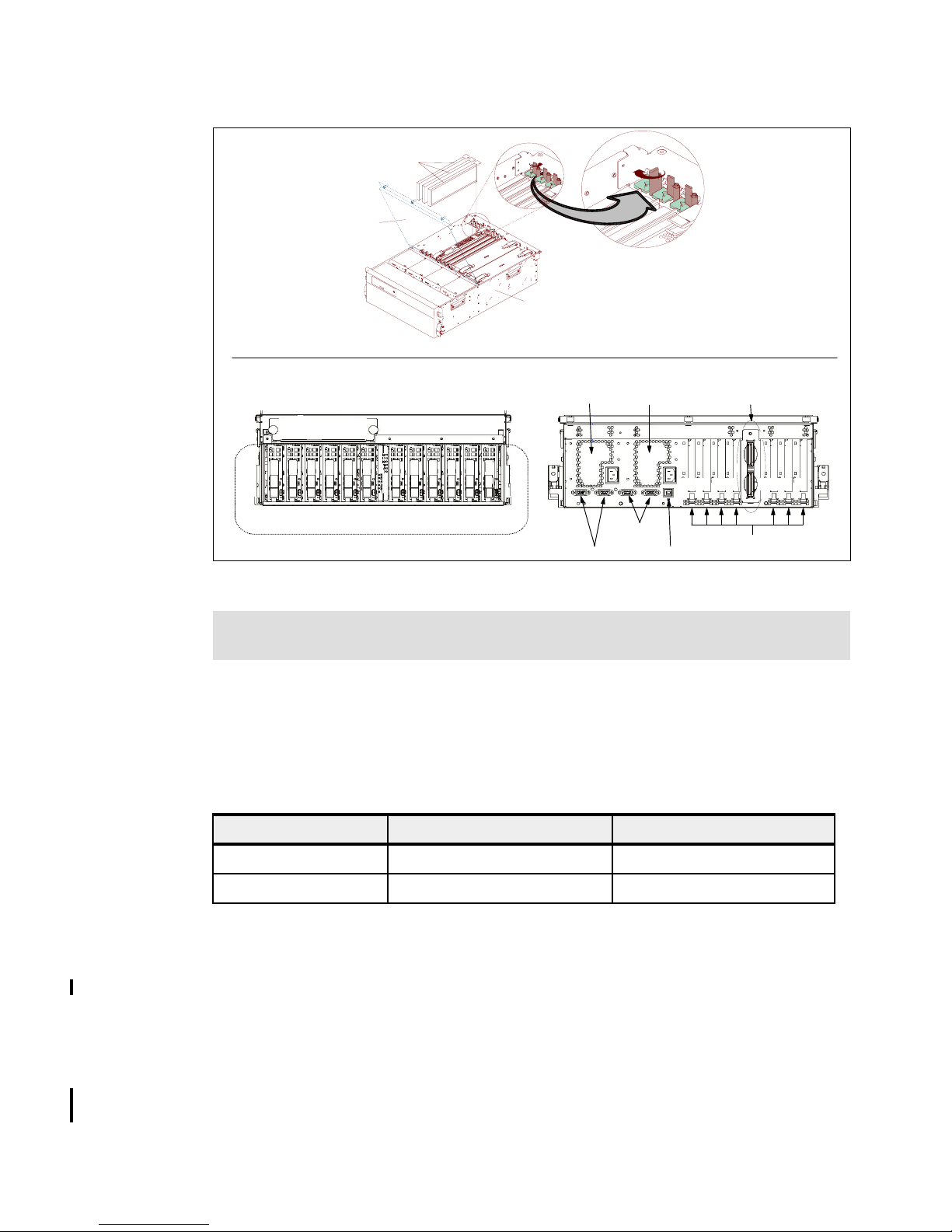

7311 Model D20 I/O drawer

The 7311 Model D20 I/O drawer is a 4U full-size drawer, which must be mounted in a rack. It

features seven hot-pluggable PCI-X slots and optionally up to 12 hot-swappable disks

arranged in two 6-packs. Redundant, concurrently maintainable power and cooling is an

optional feature (FC 6268). The 7311 Model D20 I/O drawer offers a modular growth path for OpenPower 720 systems with increasing I/O requirements. When an OpenPower 720 is fully configured with eight attached 7311 Model D20 drawers, the combined system supports up to 61 PCI-X adapters (a full configuration requires the Remote I/O expansion card) and 104 hot-swappable SCSI disks.

PCI-X and PCI cards are inserted from the top of the I/O drawer down into the slot. The

installed adapters are protected by plastic separators, designed to prevent grounding and

damage when adding or removing adapters.

The drawer has the following attributes:

4U rack-mount enclosure assembly

Seven PCI-X slots: 3.3 volt, keyed, 133 MHz, hot-pluggable

Two 6-packs of hot-swappable SCSI devices

Optional redundant hot-plug power

Two RIO-2 ports and two SPCN ports

Note: The 7311 Model D20 I/O drawer initial order, or an existing 7311 Model D20 I/O

drawer that is migrated from another system, must have the RIO-2 ports available

(FC 6417).

7311 Model D20 I/O drawer physical package

The I/O drawer has the following physical characteristics:

Width: 482 mm (19.0 inches)

Depth: 610 mm (24.0 inches)

Height: 178 mm (7.0 inches)

Weight: 45.9 kg (101 pounds)

Figure 1-3 on page 9 shows the different views of the 7311-D20 I/O drawer.


Front and rear views: operator panel, adapters, service access, power supplies 1 and 2, RIO ports, SPCN ports, rack indicator, reserved ports, PCI-X slots 1 to 7, and SCSI disk locations with IDs 8 to D in each 6-pack.

Figure 1-3 7311-D20 I/O drawer

Note: The 7311 Model D20 I/O drawer is designed to be installed by an IBM service

representative.

I/O drawers and usable PCI slots

Only the 7311 Model D20 I/O drawer is supported on an OpenPower 720 system. The maximum number of supported I/O drawers depends on the system configuration. Table 1-6 summarizes the maximum number of I/O drawers supported and the total number of PCI-X slots available.

Table 1-6 Maximum number of I/O drawers supported and total number of PCI slots

| OpenPower 720 | Max number of I/O drawers | Total number of PCI-X slots |
|---|---|---|
| Minimum configuration | 4 | 28 + 5 |
| Additional FC 1806 | 8 | 56 + 4 (excluding used slot) |
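The slot totals in Table 1-6 come from a short calculation. The following is a minimal sketch with a hypothetical function name. It assumes seven PCI-X slots per 7311-D20 and five internal slots. It also assumes that the Remote I/O expansion card (FC 1806) doubles the drawer limit from four to eight while occupying the short internal slot.

```python
# Sketch reproducing the Table 1-6 arithmetic. Assumptions: seven PCI-X
# slots per 7311-D20, five internal slots, and FC 1806 occupying one
# internal slot. The function name is hypothetical.

D20_SLOTS = 7
INTERNAL_SLOTS = 5


def pci_x_slots(with_fc1806=False):
    """Return (max drawers, usable internal slots, total PCI-X slots)."""
    max_drawers = 8 if with_fc1806 else 4
    internal = INTERNAL_SLOTS - 1 if with_fc1806 else INTERNAL_SLOTS
    return max_drawers, internal, max_drawers * D20_SLOTS + internal
```

`pci_x_slots()` returns `(4, 5, 33)` and `pci_x_slots(with_fc1806=True)` returns `(8, 4, 60)`. These match the "28 + 5" and "56 + 4" entries in the table.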

1.3.6 Hardware Management Console models


The Hardware Management Console (HMC) provides a set of functions that are necessary to manage the OpenPower system when dynamic LPAR, Micro-Partitioning, Virtual Ethernet, Virtual SCSI, inventory and remote microcode management, and remote power control functions are needed. These functions include the handling of the partition profiles that define the processor, memory, and I/O resources allocated to an individual partition. The HMC is required when the Virtual I/O Server or the POWER Hypervisor features are ordered.

The 7310 Model CR3 and the 7310 Model C04 HMCs are designed specifically for POWER5 processor-based systems. However, an existing 7315 HMC for POWER4 processor-based systems can be converted for use with POWER5 processor-based systems by loading it with the HMC software required for POWER5 processor-based systems (FC 0961).

To upgrade an existing POWER4 HMC:

Order FC 0961 for your existing HMC. Please contact your IBM Sales Representative for

help.

Call your local IBM Service Center and order APAR MB00691.

Order the CD online by selecting Version 4.4 machine code updates on the Hardware Management Console Support for IBM eServer i5, p5, pSeries, iSeries, and OpenPower Web page at:

https://techsupport.services.ibm.com/server/hmc/power5

Note: You must have an IBM ID to use this freely available service. Registration

information and online registration form can be found at the Web page mentioned above.

POWER5 processor-based system HMCs require Ethernet connectivity. Ensure that sufficient

Ethernet adapters are available to enable public and private networks if you need both. The

7310 Model C04 is a desktop model with only one native 10/100/1000 Ethernet port, but three

additional PCI slots. The 7310 Model CR3 is a 1U, 19-inch rack mountable drawer that has

two native Ethernet ports and two additional PCI slots.

When an HMC is connected to the OpenPower 720, the integrated service processor

communications ports are disabled. If you need serial connections, you need to provide an

async adapter.

Note: It is not possible to connect POWER4 and POWER5 processor-based systems

simultaneously to the same HMC.

1.4 Express Product Offerings

New specially priced Express Product Offerings are now available for the OpenPower 720

servers. These Express Product Offerings feature popular, easy-to-order preconfigured

servers with attractive financial incentives. Express Product Offerings are available only as an

initial order.

OpenPower Express servers are complemented by pre-tested solutions that provide

recommended system configurations with installation and sizing aids, for a range of business

requirements. Built on the solid base of OpenPower servers, the Linux operating system, and popular application software packages, these offerings are designed to help smaller and mid-sized companies solve a variety of business problems: application consolidation, e-mail security, and infrastructure for Enterprise Resource Planning (ERP).

Available solutions include:

IBM eServer OpenPower Network E-Mail Security Express Solution

IBM eServer OpenPower and IBM DB2 Universal Database™ for SAP Solution

IBM eServer OpenPower Consolidation Express Solution

Express Product Offerings require one of the following processor configurations, plus a defined minimum configuration: a two-way POWER5 1.5 GHz processor (FC 1943), a two-way POWER5 1.65 GHz processor (FC 1944), or two two-way POWER5 1.65 GHz processors (2 x FC 1944). You can make changes to the standard features as needed and still qualify. However, selecting features smaller than the defined minimums disqualifies the order as an Express Product Offering. If any of the features in an Express Product Offering is changed, the Express Product Offering identification feature (FC 935X) is removed from the order.

Table 1-7 lists the available Express Product Offerings configurations and minimum

requirements.

Table 1-7 Express Product Offerings configurations

| Express Offering | Identifier | SKU Number (a) | Processors | Memory (MB) | Disk configuration |
|---|---|---|---|---|---|
| Entry Deskside | 9351 | 91241D1 | 2-way, FC 1943, 1.5 GHz | 1 x 2048 (FC 1950) | 2 x 73.4 GB disk drive, FC 1968 |
| Entry Rack | 9352 | 91241D2 | 2-way, FC 1943, 1.5 GHz | 2 x 2048 (FC 1950) | 2 x 73.4 GB disk drive, FC 1968 |
| Value Rack | 9353 | 91241D3 | 2-way, FC 1944, 1.65 GHz | 2 x 2048 (FC 1950) | 2 x 73.4 GB disk drive, FC 1968 |
| Performance Rack | 9354 | 91241D4 | 2 x 2-way, FC 1943, 1.5 GHz | 4 x 2048 (FC 1950) | 4 x 73.4 GB disk drive, FC 1968 |
| Consolidation Rack (b) | 9355 | 91241D5 | 2 x 2-way, FC 1944, 1.65 GHz | 2 x 4096 (FC 1951) | 4 x 73.4 GB disk drive, FC 1971 |

a. SKU Identifier = Stock Keeping Unit Identifier

b. The Consolidation Offering includes 4 x FC 1965 (POWER Hypervisor and Virtual I/O Server) as a minimum requirement.

The Express Product Offerings also include:

DVD-ROM (FC 1994)

IBM Rack-mount drawer bezel and hardware (FC 7998)

Rack-mount drawer rail kit (FC 7166)

Power supply, 700 watt (FC 7989)

Language group specify (FC 9300 or 97xx)

Power cord

Note: Keyboard, mouse, operating system (OS) license, and OS media are not included in

these configurations.

Note: If a build-to-order (BTO) configuration meets all the requirements of an OpenPower

Express configuration, Express configuration pricing will be applied.

When an OpenPower Express configuration is ordered, the nine-digit reference number called a SKU Identifier is printed on the packing list and on a label (readable and barcode) on the outside of the box. It also appears on invoices and billing statements. The SKU Identifier helps improve IBM Distributors’ inventory management. The SKU Identifier numbers for OpenPower Express configurations are listed in Table 1-7 above.

Note: Only Express Product Offerings configurations have SKU Identifiers. No BTO configuration has a SKU Identifier, even if it meets the definition of an Express Product Offering configuration. Any modification to an Express hardware configuration suppresses the SKU Identifier.
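The qualification rule, under which an order may exceed but not fall below the defined minimums, can be expressed as a comparison. The following is a minimal sketch. It transcribes three rows of Table 1-7 (memory converted to total MB). The names `Minimum`, `EXPRESS_MINIMUMS`, and `qualifies` are hypothetical. It also assumes that the processor selection must match the offering exactly. The sketch models only whether the order still qualifies. It does not model the removal of the FC 935X identifier when a feature changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Minimum:
    processors: tuple  # processor feature codes, e.g. (1944, 1944)
    memory_mb: int     # minimum total memory
    disks: int         # minimum number of disk drives


# Three rows transcribed from Table 1-7 (identifier FC -> minimums).
EXPRESS_MINIMUMS = {
    9351: Minimum((1943,), 1 * 2048, 2),        # Entry Deskside
    9353: Minimum((1944,), 2 * 2048, 2),        # Value Rack
    9355: Minimum((1944, 1944), 2 * 4096, 4),   # Consolidation Rack
}


def qualifies(offering, processors, memory_mb, disks):
    """True if the order meets or exceeds the offering's minimums.

    Assumption: processors must match the offering exactly, while memory
    and disk count may be larger than the minimum.
    """
    m = EXPRESS_MINIMUMS[offering]
    return (tuple(sorted(processors)) == tuple(sorted(m.processors))
            and memory_mb >= m.memory_mb
            and disks >= m.disks)
```

Under these assumptions, a Value Rack order with 8192 MB and four disks still qualifies. The same order with only 2048 MB falls below the 4096 MB minimum and does not qualify.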

1.5 System racks

The Enterprise Rack Models T00 and T42 are 19-inch wide racks for general use with IBM eServer OpenPower, p5, pSeries, and RS/6000 rack-based or rack drawer-based systems. The racks provide increased capacity, greater flexibility, and improved floor space utilization.

The OpenPower 720 uses a 4U rack-mounted server drawer.

If an OpenPower 720 system is to be installed in a non-IBM rack or cabinet, ensure that the rack conforms to the EIA¹ standard EIA-310-D (see 1.5.6, “OEM rack” on page 16).

Note: It is the customer’s responsibility to ensure that the installation of the drawer in the

preferred rack or cabinet results in a configuration that is stable, serviceable, safe, and

compatible with the drawer requirements for power, cooling, cable management, weight,

and rail security.

1.5.1 IBM RS/6000 7014 Model T00 Enterprise Rack

The 1.8-meter (71-inch) Model T00 is compatible with past and present OpenPower, p5, pSeries, and RS/6000 racks and is designed for use in all situations that previously used the older rack models R00 and S00. The T00 rack has the following features:

36 EIA units (36U) of usable space.

Optional removable side panels.

Optional highly perforated front door.

Optional side-to-side mounting hardware for joining multiple racks.

Standard black or optional white color in OEM format.

Increased power distribution and weight capacity.

An optional reinforced (ruggedized) rack feature (FC 6080) provides added earthquake

protection with modular rear brace, concrete floor bolt-down hardware, and bolt-in steel

front filler panels.

Support for both AC and DC configurations.

DC rack height is increased to 1926 mm (75.8 inches) if a power distribution panel is fixed

to the top of the rack.

Up to four Power Distribution Units (PDUs) can be mounted in the proper bays, but others

can fit inside the rack. See 1.5.3, “AC Power Distribution Unit and rack content” on

page 13.

Optional rack status beacon (FC 4690). This beacon is designed to be placed on top of a rack and cabled to servers, such as an OpenPower 720, and other components, such as a 7311 I/O drawer, inside the rack. Servers can be programmed to illuminate the beacon in response to a detected problem or changes in system status.

A rack status beacon junction box (FC 4693) should be used to connect multiple servers and I/O drawers to the beacon. This feature provides six input connectors and one output connector for the rack. To connect the servers or other components to the junction box, or the junction box to the rack, status beacon cables (FC 4691) are necessary. Multiple junction boxes can be linked together in a series using daisy chain cables (FC 4692).

Weight:

– T00 base empty rack: 244 kg (535 pounds)

– T00 full rack: 816 kg (1795 pounds)

¹ Electronic Industries Alliance (EIA). Accredited by the American National Standards Institute (ANSI), EIA provides a forum for industry to develop standards and publications throughout the electronics and high-tech industries.

1.5.2 IBM RS/6000 7014 Model T42 Enterprise Rack

The 2.0-meter (79.3-inch) Model T42 addresses the special requirements of customers who want a tall enclosure to house the maximum amount of equipment in the smallest possible floor space. The Model T42 differs from the Model T00 in the following features:

42 EIA units (42U) of usable space

AC power support only

Weight:

– T42 base empty rack: 261 kg (575 pounds)

– T42 full rack: 930 kg (2045 pounds)

1.5.3 AC Power Distribution Unit and rack content

For rack models T00 and T42, 12-outlet PDUs (FC 9188 and FC 7188) are available.

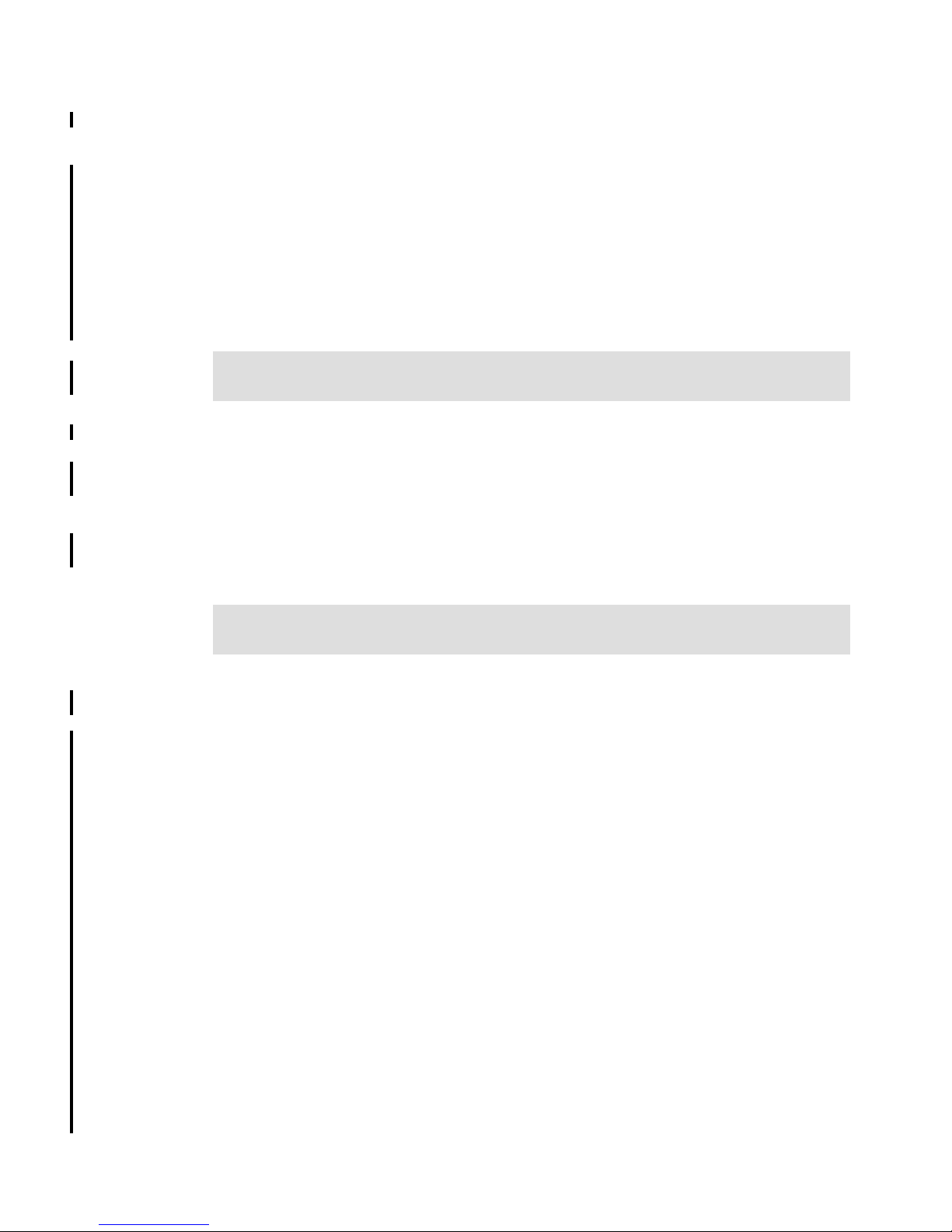

Four PDUs can be mounted vertically in the 7014 racks. See Figure 1-4 for placement of the

four vertically mounted PDUs. In the rear of the rack, two additional PDUs can be installed

horizontally in the T00 and three in the T42 rack. The four vertical mounting locations will be

filled first. Mounting PDUs horizontally consumes 1U per PDU and reduces the space

available for other racked components. When mounting PDUs horizontally, it is recommended

that fillers be used in the EIA units occupied by these PDUs to facilitate proper air-flow and

ventilation in the rack.

For detailed power cord requirements and power cord feature codes, see the publication IBM eServer Planning Information, SA38-0508. An online copy can be found at Maps of pSeries books to the information center → Planning → Printable PDFs → Planning:

http://publib16.boulder.ibm.com/infocenter/eserver/v1r2s/en_US/index.htm

Note: Ensure the appropriate power cord feature is configured to support the power being

supplied.

The Base/Side Mount Universal PDU (FC 9188) and the optional additional Universal PDU (FC 7188) support a wide range of country requirements and electrical power specifications. Each PDU receives power through a UTG0247 connector and requires one PDU-to-wall power cord. Nine power cord features are available for different countries and applications, and the PDU-to-wall power cord must be ordered separately. Each power cord has the design characteristics needed for its particular power requirements. To match new power requirements and protect previous investments, these power cords can be requested with an initial order of the rack or with a later upgrade of the rack features.

The PDU has twelve customer-usable IEC 320-C13 outlets, arranged in six groups of two outlets fed by six circuit breakers. Each outlet is rated up to 10 amps, but each group of two outlets is fed from one 15 amp circuit breaker.
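Because each pair of outlets shares one 15 amp breaker, two outlets in the same group cannot both draw their full 10 amp rating. The following is a minimal sketch of a load check under stated assumptions. The outlets are numbered 0 to 11 and paired as (0, 1), (2, 3), and so on. The function name is hypothetical. The sketch ignores any derating rules that site planning guidance may impose.

```python
# Sketch: check planned per-outlet currents against the PDU ratings
# stated above. Outlet numbering/pairing and the function name are
# assumptions for illustration.

OUTLETS = 12
OUTLET_LIMIT_A = 10.0
GROUP_LIMIT_A = 15.0  # one breaker per pair of outlets


def pdu_violations(loads):
    """loads: 12 per-outlet currents in amps (0.0 for unused outlets)."""
    if len(loads) != OUTLETS:
        raise ValueError(f"expected {OUTLETS} outlet loads")
    problems = []
    for i, amps in enumerate(loads):
        if amps > OUTLET_LIMIT_A:
            problems.append(f"outlet {i}: {amps} A exceeds {OUTLET_LIMIT_A} A")
    for first in range(0, OUTLETS, 2):
        group_amps = loads[first] + loads[first + 1]
        if group_amps > GROUP_LIMIT_A:
            problems.append(
                f"breaker group {first // 2}: {group_amps} A exceeds {GROUP_LIMIT_A} A")
    return problems
```

Two 9 A loads on outlets 0 and 1 are each within the outlet rating, but together they exceed the 15 A breaker for group 0.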

Figure 1-4 PDU placement and PDU view

1.5.4 Rack-mounting rules for OpenPower 720

The primary rules that should be followed when mounting the OpenPower 720 into a rack are:

The system is designed to be placed at any location in the rack. For rack stability, it is

advisable to start filling a rack from the bottom.

Any remaining space in the rack can be used to install other systems or peripherals,

provided that the maximum permissible weight of the rack is not exceeded and the

installation rules for these devices are followed.

Before placing an OpenPower 720 into the service position, it is essential that the rack

manufacturer’s safety instructions have been followed regarding rack stability.

Because the number of 7311 Model D20 drawers connected to an OpenPower 720 can grow with future enhancements, Table 1-8 shows examples of the minimum and maximum configurations for different combinations of servers and attached 7311 Model D20 I/O drawers. Each entry gives the number of such combinations that fit in one rack.

Table 1-8 Minimum and maximum configurations for 720s and 7311-D20s

| Rack | Only 720s | One 720, one 7311-D20 | One 720, four 7311-D20s | One 720, eight 7311-D20s |
|---|---|---|---|---|
| 7014-T00 rack | 9 | 4 | 1 | 1 |
| 7014-T42 rack | 10 | 5 | 2 | 1 |
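The counts in Table 1-8 follow from rack height alone. Both the OpenPower 720 and the 7311-D20 are 4U, so a set of one server plus n drawers occupies 4 × (1 + n) EIA units. The following is a minimal sketch with a hypothetical function name. It assumes 36U for the T00 and 42U for the T42, and it ignores horizontally mounted PDUs, fillers, and other equipment, which would reduce the counts.

```python
# Sketch reproducing Table 1-8 from rack heights. Ignores PDUs, fillers,
# and other racked equipment. The function name is hypothetical.

RACK_UNITS = {"7014-T00": 36, "7014-T42": 42}
SYSTEM_U = 4  # OpenPower 720 rack drawer
D20_U = 4     # 7311 Model D20 I/O drawer


def sets_per_rack(rack, drawers_per_system):
    """How many 'one 720 plus n drawers' sets fit in the given rack."""
    set_u = SYSTEM_U + drawers_per_system * D20_U
    return RACK_UNITS[rack] // set_u
```

For the T00, `[sets_per_rack("7014-T00", n) for n in (0, 1, 4, 8)]` gives `[9, 4, 1, 1]`. For the T42 the same call gives `[10, 5, 2, 1]`. Both results match the table rows.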


1.5.5 Additional options for rack

This section highlights some solutions that provide a single point of management for environments composed of multiple OpenPower 720 servers or other IBM eServer OpenPower, p5, pSeries, and RS/6000 systems.

IBM 7212 Model 102 IBM TotalStorage Storage device enclosure

The IBM 7212 Model 102 is designed to provide efficient and convenient storage expansion capabilities for select IBM eServer p5, pSeries, and RS/6000 servers. The IBM 7212 Model 102 is a 1U rack-mountable option that is installed in a standard 19-inch rack using an optional rack-mount hardware feature kit. The 7212 Model 102 has two bays that can accommodate any of the following storage drive features:

Digital Data Storage (DDS) Gen 5 DAT72 Tape Drive provides physical storage capacity of

36 GB (72 GB with 2:1 compression) per data cartridge

VXA-2 Tape Drive provides up to 80 GB (160 GB with 2:1 compression) of physical data storage capacity per cartridge

Digital Data Storage (DDS-4) tape drive with 20 GB native data capacity per tape cartridge

and a native physical data transfer rate of up to 3 MB/sec that uses a 2:1 compression so

that a single tape cartridge can store up to 40 GB of data

DVD-ROM drive is a 5 1/4-inch, half-high device. It can read 640 MB CD-ROM and 4.7 GB DVD-RAM media. It can be used for Alternate IPL² (IBM-distributed CD-ROM media only) and program distribution

DVD-RAM drive with up to 2.7 MB/sec throughput. Using 3:1 compression, a single disk

can store up to 28 GB of data. Supported DVD disk native capacities on a single

DVD-RAM disk are as follows: up to 2.6 GB, 4.7 GB, 5.2 GB, and 9.4 GB

Flat panel display options

The IBM 7316-TF3 Flat Panel Console Kit can be installed in the system rack. This 1U console uses a 15-inch thin film transistor (TFT) LCD with a viewable area of 304.1 mm x 228.1 mm and a resolution of 1024 x 768 pels³. The 7316-TF3 Flat Panel Console Kit has the following attributes:

Flat panel color monitor.

Rack tray for keyboard, monitor, and optional VGA switch with mounting brackets.

IBM Travel Keyboard® mounts in the rack keyboard tray (Integrated Trackpoint® and UltraNav®).

IBM PS/2 Travel Keyboards are supported on the 7316-TF3 for use in configurations where only PS/2 keyboard ports are available.

The IBM 7316-TF3 Flat Panel Console Kit provides an option for the IBM USB Travel Keyboards with UltraNav. The USB keyboard allows the 7316-TF3 to be connected to systems that do not have PS/2 keyboard ports. The IBM USB Travel Keyboard may be attached directly to an available integrated USB port or to a supported USB adapter (FC 2738) on IBM eServer OpenPower servers, or on IBM 7310-CR3 and 7315-CR3 Hardware Management Consoles.

The Netbay® LCM (Keyboard/Video/Mouse) Switch (FC 4202) gives users single-point access to and control of up to 64 servers from a single console. The Netbay LCM Switch has a maximum video resolution of 1600 x 1280 and mounts in a 1U drawer behind the 7316-TF3 monitor. A minimum of one LCM cable (FC 4268) or USB cable (FC 4269) is required with a Netbay LCM Switch (FC 4202). When connecting to an OpenPower 720, FC 4269 provides connection to the POWER5 USB ports. Only the PS/2 keyboard is supported when attaching the 7316-TF3 to the LCM Switch.

² Initial Program Load

³ Picture elements

The following should be considered when selecting the LCM Switch:

The KCO cable (FC 4268) is used with systems with PS/2 style keyboard, display, and

mouse ports

The USB cable (FC 4269) is used with systems with USB keyboard or mouse ports.

The switch offers four ports for server connections. Each port in the switch can connect a

maximum of 16 systems

– One KCO cable (FC 4268) or USB cable (FC 4269) is required for every four systems

supported on the switch

– A maximum of 16 KCO cables or USB cables per port may be used with the Netbay

LCM Switch (FC 4202) to connect up to 64 servers

Note: A server microcode update may be required on installed systems for boot-time SMS

menu support of the USB keyboards. The update may also be required for the LCM switch

on the 7316-TF3 console (FC 4202). Microcode updates are located at the URL below.

http://techsupport.services.ibm.com/server/mdownload

For ease of use, we recommend installing the 7316-TF3 between EIA units 20 and 25 of the rack. The 7316-TF3, or any other graphics monitor, requires a POWER GXT135P graphics accelerator (FC 2849), or another supported graphics accelerator, to be installed in the server.

Hardware Management Console 7310 Model CR3

The 7310 Model CR3 Hardware Management Console (HMC) is a 1U, 19-inch

rack-mountable drawer supported in the 7014 Model T00 and T42 racks. The 7310 Model CR3 provides one serial port, two integrated Ethernet ports, and two additional PCI slots. The HMC 7310 Model CR3 also has USB ports to connect USB keyboard and mouse devices.

Note: The HMC serial ports can be used for external modem attachment if the Service

Agent call-home function is implemented, and the Ethernet ports are used to communicate

with the service processor in OpenPower 720 systems. An Ethernet cable (FC 7801 or 7802)

is required to attach the HMC to the OpenPower 720 system it controls.

1.5.6 OEM rack

The OpenPower 720 can be installed in a suitable OEM rack, provided that the rack conforms to the EIA-310-D standard. This standard is published by the Electronic Industries Alliance, and a summary of it is available in the publication Site and Hardware Planning Information, SA38-0508.

The key points mentioned in this standard are as follows:

Any rack used must be capable of supporting 15.9 kg (35 pounds) per EIA unit

(44.5 mm [1.75 inches] of rack height).

To ensure proper rail alignment, the rack must have mounting flanges that are at least

494 mm (19.45 inches) across the width of the rack and 719 mm (28.3 inches) between

the front and rear rack flanges.

It might be necessary to supply additional hardware, such as fasteners, for use in some

manufacturer’s racks.
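The key points above can serve as a checklist for an OEM rack. The following is a minimal sketch, not a substitute for the full EIA-310-D standard or the planning publication. The function and parameter names are hypothetical. The sketch treats both flange dimensions as minimums, following the "at least" wording above. The `rack_height_mm` helper applies the 44.5 mm EIA unit. For example, a 4U drawer comes to 178 mm, the height quoted for the 7311-D20.

```python
# Sketch: compare an OEM rack against the EIA-310-D key points listed
# above. Function and parameter names are hypothetical; both flange
# dimensions are treated as minimums.

EIA_UNIT_MM = 44.5
MIN_LOAD_KG_PER_U = 15.9
MIN_FLANGE_WIDTH_MM = 494
MIN_FLANGE_DEPTH_MM = 719


def oem_rack_issues(load_kg_per_u, flange_width_mm, flange_depth_mm):
    """Return the key points the rack fails; an empty list means none."""
    issues = []
    if load_kg_per_u < MIN_LOAD_KG_PER_U:
        issues.append("rack must support at least 15.9 kg per EIA unit")
    if flange_width_mm < MIN_FLANGE_WIDTH_MM:
        issues.append("mounting flanges must be at least 494 mm across")
    if flange_depth_mm < MIN_FLANGE_DEPTH_MM:
        issues.append("front-to-rear flange spacing must be at least 719 mm")
    return issues


def rack_height_mm(units):
    """Height of the given number of EIA units in millimeters."""
    return units * EIA_UNIT_MM
```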


Figure 1-5 shows the drawing specifications for OEM racks.

Figure 1-5 Reference drawing for OEM rack specifications


Chapter 2. Architecture and technical overview

This chapter discusses the overall system architecture represented by Figure 2-1. The major

components of this diagram are described in the following sections. The bandwidths provided

throughout this section are theoretical maximums provided for reference. It is always

recommended to obtain real-world performance measurements using production workloads.

Block diagram components: two POWER5 dual-chip modules (DCM), each with two cores, a shared L2 cache, a distributed switch, a memory controller with two SMI-II buffers and DDR1 DIMMs, and an L3 cache; a vertical fabric bus; elastic interfaces at 2 (Proc Clk):1, 8 bytes each direction; GX+ buses at 3 (Proc Clk):1, 4 bytes each direction; Enterprise RIO hubs; RIO-2 buses at 2 bytes (differential) each direction, 1 GB/sec, including the RIO-2 expansion card ports; GX+ slot; PCI-X host bridge and PCI-X to PCI-X bridges 0 and 2 feeding PCI-X slots #1 to #5, dual Gigabit Ethernet, dual Ultra320 SCSI (buses 0 and 1) with RAID enablement card and two 4-pack disk drive backplanes, an IDE controller for the slim-line media devices and tape, and USB; service processor (SP) with two SP communication ports, two HMC Ethernet ports, and two SPCN ports; operator panel.

Figure 2-1 OpenPower 720 logic data flow
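Figure 2-1 labels the GX+ bus as "3 (Proc Clk):1" with 4 bytes in each direction. The theoretical peak per direction can therefore be estimated from the processor clock. The following is a minimal sketch. It assumes one transfer of the stated width per bus clock and decimal GB/s (10^9 bytes per second), and the function name is hypothetical. Like all bandwidths in this chapter, the results are theoretical maximums, not measured throughput.

```python
def bus_bandwidth_gbs(proc_ghz, clock_ratio, bytes_per_direction):
    """Theoretical peak bandwidth per direction in GB/s.

    Assumes the bus runs at proc_ghz / clock_ratio and moves
    bytes_per_direction bytes per bus clock in each direction.
    """
    bus_ghz = proc_ghz / clock_ratio
    return bus_ghz * bytes_per_direction


# GX+ bus: one third of the processor clock, 4 bytes each direction.
for ghz in (1.5, 1.65):
    per_dir = bus_bandwidth_gbs(ghz, clock_ratio=3, bytes_per_direction=4)
    print(f"GX+ at {ghz} GHz: {per_dir:.1f} GB/s per direction")
```

Under these assumptions, the loop prints 2.0 GB/s per direction for the 1.5 GHz cards and 2.2 GB/s per direction for the 1.65 GHz cards.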


2.1 The POWER5 chip

The POWER5 chip features single-threaded and multi-threaded execution, providing higher performance in single-threaded mode than its POWER4 predecessor at equivalent frequencies. POWER5 maintains both binary and architectural compatibility with

existing POWER4 systems to ensure that binaries continue executing properly and all

application optimizations carry forward to newer systems. The POWER5 provides additional

enhancements such as virtualization, reliability, availability, and serviceability at both chip and

system levels, and it has been designed to support speeds up to 3 GHz.

Figure 2-2 shows the high-level structures of POWER4 and POWER5 processor-based

systems. The POWER4 scales up to a 32-way symmetric multiprocessor. Going beyond 32

processors increases interprocessor communication, resulting in high traffic on the

interconnection fabric bus. This contention negatively affects system scalability.

POWER4 and POWER5 system structures, side by side: each shows processors, L2 cache, fabric controller, fabric bus, L3 cache, memory controller, and memory.

Figure 2-2 POWER4 and POWER5 system structures

Moving the L3 cache from the memory side to the processor side of the fabric bus (2-way processor cards only) provides significantly more cache on the processor side than previously available. This reduces traffic on the fabric bus and allows POWER5 processor-based systems to scale to higher levels of symmetric multiprocessing.

The POWER5 supports a 1.9 MB on-chip L2 cache, implemented as three identical slices

with separate controllers for each. Either processor core can independently access each L2

controller. The L3 cache, with a capacity of 36 MB, operates as a backdoor with separate

buses for reads and writes that operate at half processor speed.

Because of the higher transistor density of the POWER5 130 nm technology, it was possible

to move the memory controller on chip and eliminate a chip previously needed for the

memory controller function. These changes in the POWER5 also have the significant side

benefits of reducing latency to the L3 cache and main memory and reducing the number of

chips necessary to build a system.

The POWER5 processor supports the 64-bit PowerPC architecture. A single die contains two

identical processor cores, each supporting two logical threads. Only a single core is active on

a 1-way processor card. Activation of the second core is not possible.

This architecture makes the chip appear as a 4-way symmetric multiprocessor to the

operating system. The POWER5 processor core has been designed to support both

enhanced simultaneous multi-threading (SMT) and single threaded (ST) operation modes.
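The thread arithmetic above can be made concrete with a small sketch that counts the logical processors the operating system sees. It uses only figures from this paper: two hardware threads per core in SMT mode, one in ST mode, a single active core on the 1-way card, and up to two 2-way cards per system. The function name is illustrative.

```python
def logical_processors(active_cores: int, smt_enabled: bool = True) -> int:
    """Logical processors visible to the OS: each POWER5 core runs two
    hardware threads in SMT mode and one thread in ST mode."""
    threads_per_core = 2 if smt_enabled else 1
    return active_cores * threads_per_core


# Processor card configurations described for the OpenPower 720.
configs = {
    "one 1-way card": 1,   # second core cannot be activated
    "one 2-way card": 2,
    "two 2-way cards": 4,
}
for name, cores in configs.items():
    print(f"{name}: {logical_processors(cores)} logical CPUs with SMT, "
          f"{logical_processors(cores, smt_enabled=False)} in ST mode")
```

A single 2-way chip therefore appears as the 4-way symmetric multiprocessor described above.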


2.1.1 Simultaneous multi-threading

Because application-level performance must keep improving, simultaneous multi-threading (SMT) functionality is built into the POWER5 chip. Developers are familiar with process-level parallelism (multi-tasking) and thread-level parallelism (multi-threading). SMT is the next step in saturating the processor for throughput-oriented applications: it lets instructions from multiple threads be issued to the processor's pipelines in the same cycle.

SMT is activated by default. On a 2-way POWER5 processor-based system, the operating system views the available processors as a 4-way system. To achieve higher performance, SMT can also be used in Micro-Partitioning (capped or uncapped) and dedicated partition environments.

Simultaneous multi-threading is supported on POWER5 systems running a Linux operating system based on the 2.6 kernel, which is required. For Linux, an additional boot option must be set to activate SMT after a reboot.

SMT mode maximizes the usage of the execution units. The POWER5 chip provides more rename registers than before (for floating-point operations, the number of rename registers increased to 120). Rename registers are essential for out-of-order execution and are therefore vital for SMT.

Enhanced SMT features

To improve SMT performance for various workload mixes and provide robust quality of

service, POWER5 provides two features:

Dynamic resource balancing

– The objective of dynamic resource balancing is to ensure that the two threads

executing on the same processor flow smoothly through the system.

– Depending on the situation, the POWER5 processor resource balancing logic has

different thread throttling mechanisms.

Adjustable thread priority

– Adjustable thread priority lets software determine when one thread should have a

greater (or lesser) share of execution resources.

– POWER5 supports eight software-controlled priority levels for each thread.
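To illustrate how a priority difference might translate into a share of execution resources, the following sketch computes each thread's fraction of decode cycles. The formula is an assumption drawn from our understanding of published POWER5 microarchitecture descriptions, not from this paper: with priorities X and Y, R = 2^(|X - Y| + 1), the higher-priority thread receives R - 1 of every R decode cycles, and the lower-priority thread receives 1. The numbering of the eight levels as 0 to 7 is also an assumption, and special cases at the lowest levels are not modeled.

```python
from __future__ import annotations


def decode_share(prio_a: int, prio_b: int) -> tuple[float, float]:
    """Fraction of decode cycles for threads A and B under an ASSUMED model.

    R = 2 ** (|X - Y| + 1); the higher-priority thread gets R - 1 of every
    R decode cycles and the lower-priority thread gets 1. Special behavior at
    the lowest priority levels (such as the low-power mode) is not modeled.
    """
    for p in (prio_a, prio_b):
        if not 0 <= p <= 7:  # eight software-controlled levels, numbered 0..7 here
            raise ValueError(f"priority {p} outside 0..7")
    r = 2 ** (abs(prio_a - prio_b) + 1)
    high, low = (r - 1) / r, 1 / r
    # Equal priorities give r == 2, so each thread gets half of the cycles.
    return (high, low) if prio_a >= prio_b else (low, high)


print(decode_share(4, 4))  # equal priority
print(decode_share(6, 4))  # thread A favored
```

Under this model, each additional step of priority difference roughly halves the lower-priority thread's share, which gives software coarse but predictable control.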

ST operation

Not all applications benefit from SMT. Running two threads on the same processor does not increase the performance of applications whose performance is limited by the execution units, or of applications that consume all of the chip's memory bandwidth. For this reason, the POWER5

supports the ST execution mode. In this mode, the POWER5 processor gives all the physical

resources to the active thread, allowing it to achieve higher performance than a POWER4

processor-based system at equivalent frequencies. Highly optimized scientific codes are one

example where ST operation is ideal.
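The reasoning above can be illustrated with a deliberately simplified throughput model, which is hypothetical and not taken from this paper. Threads together can never complete more instructions per cycle than the core can execute, so a thread that already saturates the execution units gains nothing from a second thread. The capacity of 4 instructions per cycle is an arbitrary example value.

```python
from __future__ import annotations


def core_ipc(thread_demands: list[float], core_capacity: float) -> float:
    """Instructions per cycle a core completes in a toy model: the combined
    demand of all threads is capped by the core's execution capacity."""
    return min(sum(thread_demands), core_capacity)


CAPACITY = 4.0  # hypothetical execution capacity, instructions per cycle

# Latency-bound threads leave units idle, so a second thread helps.
print(core_ipc([1.5], CAPACITY), core_ipc([1.5, 1.5], CAPACITY))  # 1.5 3.0
# An execution-unit-limited thread already saturates the core.
print(core_ipc([4.0], CAPACITY), core_ipc([4.0, 4.0], CAPACITY))  # 4.0 4.0
```

The same capping argument applies when memory bandwidth, rather than execution width, is the limit. The model does not capture the additional benefit of ST mode described above, where the single active thread also receives all of the physical resources.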

2.1.2 Dynamic power management

In current Complementary Metal Oxide Semiconductor (CMOS) technologies, chip power is

one of the most important design parameters. With the introduction of SMT, more instructions

execute per cycle per processor core, thus increasing the core’s and the chip’s total switching

power. To reduce switching power, POWER5 chips use a fine-grained, dynamic clock gating

mechanism extensively. This mechanism gates off clocks to a local clock buffer if dynamic

power management logic knows the set of latches driven by the buffer will not be used in the


next cycle. This allows substantial power saving with no performance impact. In every cycle,


the dynamic power management logic determines whether a local clock buffer that drives a

set of latches can be clock gated in the next cycle.
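The per-cycle decision described above can be sketched as follows. This is a minimal model under stated assumptions: the buffer names, latch names, and usage schedule are hypothetical, and real gating logic is implemented in hardware rather than software. For each cycle, a local clock buffer is gated off only if none of the latches it drives will be used in the next cycle.

```python
from __future__ import annotations


def gate_plan(next_cycle_use: list[set[str]],
              buffers: dict[str, set[str]]) -> list[set[str]]:
    """Return, for each cycle, the local clock buffers that can be gated off.

    next_cycle_use[c] is the set of latches that will be used in the cycle
    after cycle c; buffers maps each local clock buffer to the latches it drives.
    """
    plan = []
    for used in next_cycle_use:
        # A buffer is gated only if none of the latches it drives is needed.
        plan.append({buf for buf, latches in buffers.items()
                     if not latches & used})
    return plan


# Hypothetical buffers and latch names, for illustration only.
buffers = {"fpu_buf": {"fpr_a", "fpr_b"}, "lsu_buf": {"load_q", "store_q"}}
upcoming = [{"load_q"}, {"fpr_a", "store_q"}, set()]
plan = gate_plan(upcoming, buffers)
gated = sum(len(p) for p in plan)
print(plan)
print(f"{gated} of {len(buffers) * len(upcoming)} buffer-cycles gated")
```

Because a buffer is gated only when its latches are known to be idle, no needed latch ever misses a clock edge, which is why the text can claim the savings come with no performance impact.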

In addition to the switching power, leakage power has become a performance limiter. To

reduce leakage power, the POWER5 chip uses transistors with low threshold voltage only in

critical paths. The POWER5 chip also has a low-power mode, enabled when the system

software instructs the hardware to execute both threads at the lowest available priority. In
low-power mode, instructions dispatch once every 32 cycles at most, further reducing switching

power. The POWER5 chip uses this mode only when there is no ready task to run on either

thread.
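The low-power mode described above can be summarized in a short sketch that bounds how many cycles can dispatch instructions in a window. It assumes a hypothetical numeric encoding for the lowest priority level (the constant `LOWEST_PRIORITY`), and it treats "once every 32 cycles at most" as one dispatch in the first cycle of the window and then one every 32 cycles.

```python
LOW_POWER_DISPATCH_INTERVAL = 32  # at most one dispatch every 32 cycles
LOWEST_PRIORITY = 1               # hypothetical encoding of the lowest priority


def in_low_power_mode(prio_a: int, prio_b: int) -> bool:
    """Low-power mode applies when both threads run at the lowest priority."""
    return prio_a == LOWEST_PRIORITY and prio_b == LOWEST_PRIORITY


def max_dispatch_cycles(window_cycles: int, low_power: bool) -> int:
    """Upper bound on the number of cycles in which instructions can dispatch."""
    if not low_power:
        return window_cycles
    # Ceiling division: a dispatch in the first cycle, then one every 32 cycles.
    return -(-window_cycles // LOW_POWER_DISPATCH_INTERVAL)


# System software lowers both threads only when neither has a ready task.
idle = in_low_power_mode(LOWEST_PRIORITY, LOWEST_PRIORITY)
print(max_dispatch_cycles(1000, low_power=idle))
```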

2.1.3 POWER chip evolution

The OpenPower system complies with the RS/6000 platform architecture, which is an

evolution of the PowerPC Common Hardware Reference Platform (CHRP) specifications.

Figure 2-3 shows the POWER evolution.

Figure 2-3 POWER chip evolution (diagram: progression from 32-bit to 64-bit processors, from the 604e, POWER3, and RS64 families through POWER4 (2001, 0.18 microns, 1.0 to 1.3 GHz), POWER4+ (2002-3, 0.13 microns, 1.2 to 1.9 GHz), and POWER5 (2004, 0.13 microns, 1.5 to 1.9 GHz), using copper and SOI technology. 2001 features: chip multiprocessing, distributed switch, shared L2, LPAR, autonomic computing. 2002-3 features: larger L2, more LPARs, high-speed switch. 2004 features: larger L2 and L3 caches, Micro-Partitioning, enhanced distributed switch, enhanced core parallelism, improved floating-point performance, faster memory environment. Note: not all processor speeds are available on all models.)

2.1.4 CMOS, copper, and SOI technology

The POWER5 processor design is the result of close collaboration between the IBM Systems and Technology Group and IBM Microelectronics Technologies, which enables IBM eServer OpenPower systems to give customers improved performance, reduced power consumption, and a smaller IT footprint through logical partitioning. The POWER5 processor chip takes advantage of IBM leadership technology: it is made using IBM 0.13-µm-lithography CMOS technology. The POWER5 processor also uses copper and silicon-on-insulator (SOI) technology, which allows a higher operating frequency for improved performance while reducing power consumption and improving reliability compared to processors that do not use this technology.

2.2 Processor cards

In the OpenPower system, the POWER5 chip has been packaged with the L3 cache chip (on

2-way cards) into a cost-effective Dual Chip Module (DCM) package. The storage structure

for the POWER5 chip is a distributed memory architecture, which provides high memory

bandwidth. Each processor can address all memory and sees a single shared memory resource. A single DCM and its associated L3 cache and memory are packaged on a single processor card, and access to memory behind another processor is accomplished through the fabric buses. The OpenPower 720 supports up to two processor cards (each card is a 2-way) or a single 1-way card. Each 2-way processor card has a single DCM containing a POWER5 processor chip and a 36 MB L3 module. On all cards, I/O connects to the Central Electronic Complex (CEC) subsystem through the GX+ bus. Each DCM provides a single GX+ bus, for a total system capability of two GX+ buses. The GX+ bus provides an interface to a single device, such as the RIO-2 buses, as shown in Figure 2-4.
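The card rules above can be captured in a small configuration sketch that totals cores, L3 capacity, and GX+ buses for each supported processor card layout. The class and function names are hypothetical. The 1-way card is modeled with no L3 cache, because the text places the L3 cache on 2-way cards only, and with one GX+ bus, which is an assumption based on the statement that I/O connects through the GX+ bus on all cards.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessorCard:
    active_cores: int  # 1 on the 1-way card, 2 on a 2-way card
    l3_mb: int         # 36 MB L3 module on 2-way cards only
    gx_buses: int      # one GX+ bus per card (assumed for the 1-way card)


ONE_WAY = ProcessorCard(active_cores=1, l3_mb=0, gx_buses=1)
TWO_WAY = ProcessorCard(active_cores=2, l3_mb=36, gx_buses=1)

# Supported layouts: one 1-way card, or one or two 2-way cards.
SUPPORTED = ([ONE_WAY], [TWO_WAY], [TWO_WAY, TWO_WAY])


def system_totals(cards: list[ProcessorCard]) -> dict[str, int]:
    """Sum cores, L3 capacity, and GX+ buses for a processor card layout."""
    if cards not in SUPPORTED:
        raise ValueError("unsupported processor card configuration")
    return {
        "cores": sum(c.active_cores for c in cards),
        "l3_mb": sum(c.l3_mb for c in cards),
        "gx_buses": sum(c.gx_buses for c in cards),
    }


print(system_totals([TWO_WAY, TWO_WAY]))
```

A fully populated system therefore has four cores, 72 MB of L3 cache in total, and the two GX+ buses mentioned in the text.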

Figure 2-4 OpenPower 720 DCM diagram (diagram: two POWER5 cores, 1.9 MB L2 cache, data unit, GX+ controller, memory controller, and 36 MB L3 cache)

Each processor card contains a single DCM and the local memory storage subsystem for that

DCM. The processor card also contains LEDs for each FRU

CPU card itself. See Figure 2-5 on page 24 for a processor card layout view.
