
IBM BladeCenter PS703 and PS704 Technical Overview and Introduction

Features the POWER7 processor providing advanced multi-core technology

Details the follow-on to the BladeCenter PS700, PS701 and PS702

Describes management using the new Systems Director Management Console

David Watts

Kerry Anders

David Harlow

Joe Shipman II

ibm.com/redbooks

Redpaper


International Technical Support Organization

IBM BladeCenter PS703 and PS704 Technical Overview and Introduction

May 2011

REDP-4744-00


Note: Before using this information and the product it supports, read the information in “Notices” on

page vii.

First Edition (May 2011)

This edition applies to:

IBM BladeCenter PS703, 7891-73X

IBM BladeCenter PS704, 7891-74X

© Copyright International Business Machines Corporation 2011. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule

Contract with IBM Corp.


Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vii

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

The team who wrote this paper . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

Now you can become a published author, too! . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Stay connected to IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . xi

Chapter 1. Introduction and general description. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Overview of PS703 and PS704 blade servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.2 Comparison between the PS70x blade servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.3 IBM BladeCenter chassis support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.3.1 Supported BladeCenter chassis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.3.2 Number of PS703 and PS704 blades in a chassis . . . . . . . . . . . . . . . . . . . . . . . . 12

1.4 Operating environment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.5 Physical package . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.6 System features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.6.1 PS703 system features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.6.2 PS704 system features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

1.6.3 Minimum features for the POWER7 processor-based blade servers . . . . . . . . . . 18

1.6.4 Power supply features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.6.5 Processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.6.6 Memory features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

1.6.7 I/O features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

1.6.8 Disk features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

1.6.9 Standard onboard features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

1.7 Supported BladeCenter I/O modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

1.7.1 Ethernet switch and intelligent pass-through modules . . . . . . . . . . . . . . . . . . . . . 28

1.7.2 SAS I/O modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

1.7.3 Fibre Channel switch and pass-through modules . . . . . . . . . . . . . . . . . . . . . . . . . 30

1.7.4 Converged networking I/O modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

1.7.5 InfiniBand switch module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

1.7.6 Multi-switch Interconnect Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

1.7.7 Multi-switch Interconnect Module for BladeCenter HT . . . . . . . . . . . . . . . . . . . . . 34

1.8 Building to order . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

1.9 Model upgrades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Chapter 2. Architecture and technical overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

2.1 Architecture . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

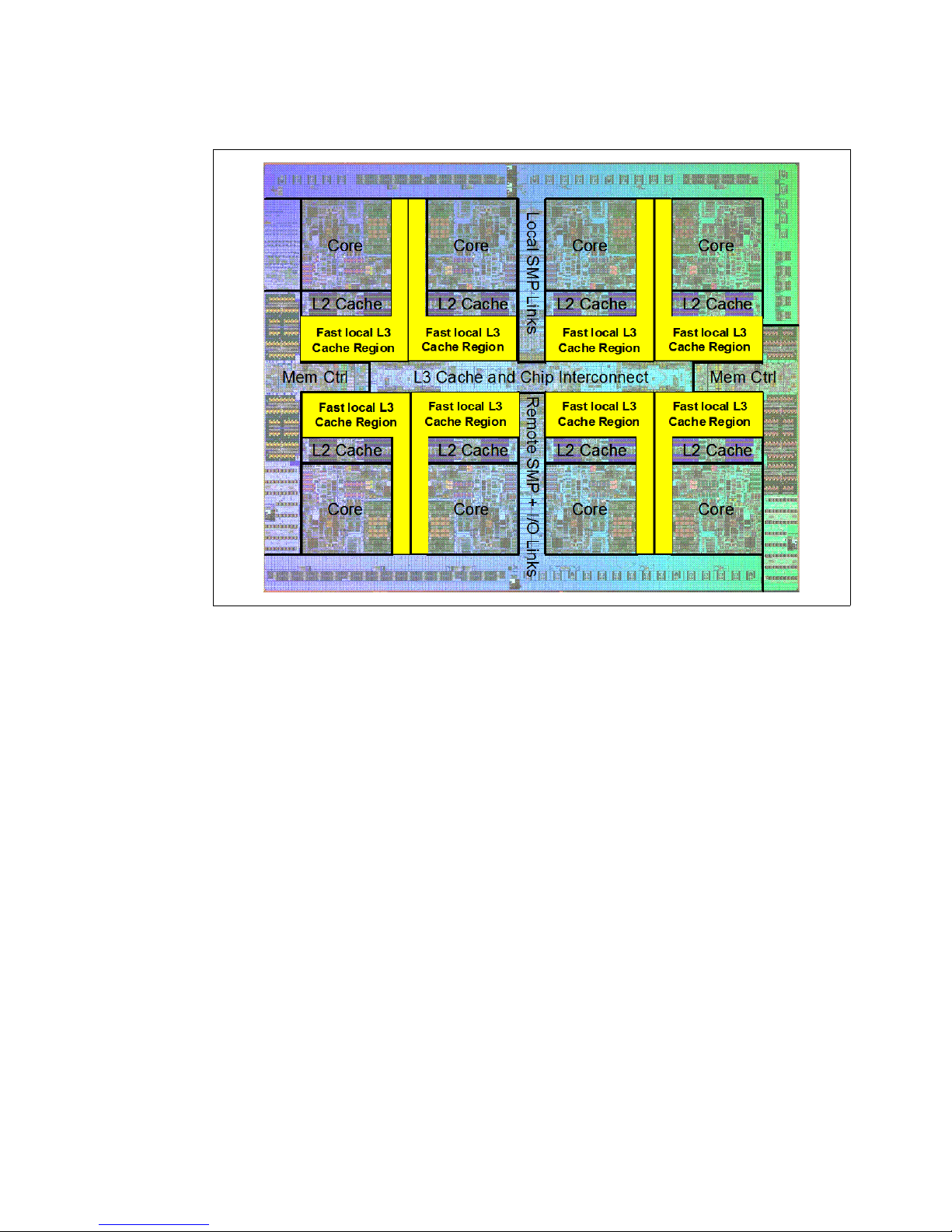

2.2 The IBM POWER7 processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

2.2.1 POWER7 processor overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

2.2.2 POWER7 processor core . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2.2.3 Simultaneous multithreading. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

2.2.4 Memory access . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

2.2.5 Flexible POWER7 processor packaging and offerings . . . . . . . . . . . . . . . . . . . . . 43

2.2.6 On-chip L3 intelligent cache . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

2.2.7 POWER7 processor and intelligent energy. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

2.2.8 Comparison of the POWER7 and POWER6 processors . . . . . . . . . . . . . . . . . . . 46


2.3 POWER7 processor-based blades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

2.4 Memory subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

2.4.1 Memory placement rules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

2.5 Active Memory Expansion. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

2.6 Technical comparison . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

2.7 Internal I/O subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

2.7.1 PCI Express bus . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

2.7.2 PCIe slots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

2.7.3 I/O expansion cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

2.7.4 Embedded SAS Controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

2.7.5 Embedded Ethernet Controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

2.7.6 Embedded USB controller . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

2.8 Service processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

2.8.1 Server console access by SOL. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

2.8.2 Anchor card. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

2.9 Internal storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

2.9.1 Hardware RAID function . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

2.9.2 External SAS connections . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

2.10 External disk subsystems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

2.10.1 IBM BladeCenter S integrated storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

2.10.2 IBM System Storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

2.11 IVM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

2.12 Operating system support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

2.13 IBM EnergyScale . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

2.13.1 IBM EnergyScale technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

2.13.2 EnergyScale device . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Chapter 3. Virtualization. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

3.1 PowerVM Version 2.2 enhancements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

3.2 POWER Hypervisor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

3.3 POWER processor modes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

3.4 PowerVM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

3.4.1 PowerVM editions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

3.4.2 Logical partitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

3.4.3 Multiple Shared-Processor Pools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

3.4.4 VIOS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

3.4.5 PowerVM Lx86 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

3.4.6 PowerVM Live Partition Mobility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

3.4.7 Active Memory Sharing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

3.4.8 Suspend/Resume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

3.4.9 N_Port ID Virtualization (NPIV). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

3.4.10 Supported PowerVM features by operating system . . . . . . . . . . . . . . . . . . . . . 117

Chapter 4. Continuous availability and manageability . . . . . . . . . . . . . . . . . . . . . . . . 119

4.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

4.2 Reliability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

4.2.1 Designed for reliability. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

4.2.2 Placement of components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

4.2.3 Redundant components and concurrent repair. . . . . . . . . . . . . . . . . . . . . . . . . . 121

4.3 Availability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

4.3.1 Partition availability priority . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

4.3.2 General detection and deallocation of failing components . . . . . . . . . . . . . . . . . 122

4.3.3 Memory protection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124


4.3.4 Cache protection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

4.3.5 Special uncorrectable error handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

4.3.6 PCI extended error handling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

4.4 Serviceability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

4.4.1 Detecting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

4.4.2 Diagnosing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

4.4.3 Reporting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

4.4.4 Notifying the client. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

4.4.5 Locating and servicing parts requiring service . . . . . . . . . . . . . . . . . . . . . . . . . . 137

4.5 Manageability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

4.5.1 Service user interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

4.5.2 IBM Power Systems firmware maintenance . . . . . . . . . . . . . . . . . . . . . . . . . . . . 144

4.5.3 Electronic Service Agent and Service and Support Manager . . . . . . . . . . . . . . . 147

4.5.4 BladeCenter Service Advisor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 148

Chapter 5. Systems Director Management Console . . . . . . . . . . . . . . . . . . . . . . . . . . 151

5.1 SDMC Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

5.1.1 Hardware appliance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

5.1.2 Software appliance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

5.1.3 IVM, HMC, and SDMC support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

5.1.4 Terminology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

5.2 Using the web interface. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 154

5.2.1 Resources tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

5.2.2 Settings tab . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

5.3 POWER-based blades management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

5.3.1 IVM characteristics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

5.3.2 SDMC added capability for POWER-based blades . . . . . . . . . . . . . . . . . . . . . . 158

5.4 IVM to SDMC transition. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

5.4.1 IVM to SDMC transition process. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

5.4.2 Steps to SDMC management of POWER-based blades . . . . . . . . . . . . . . . . . . 160

5.4.3 FSP access configuration from BladeCenter AMM. . . . . . . . . . . . . . . . . . . . . . . 160

5.4.4 Discovery . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

5.4.5 Request Access to Server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

5.4.6 Updating ASM passwords. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

5.4.7 Systems Director inventory collection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

5.5 SDMC basic management of POWER-based blades . . . . . . . . . . . . . . . . . . . . . . . . . 165

5.5.1 Virtual server creation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

5.5.2 Multiple profiles for POWER-based blades. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

5.5.3 Dual VIOS on POWER-based blades. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

5.5.4 Virtual server Suspend and Resume . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

5.5.5 Active Memory Expansion (AME) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

5.5.6 Virtual consoles from the SDMC. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

Abbreviations and acronyms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

IBM Redbooks documents . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

How to get Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

Help from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182


Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult

your local IBM representative for information on the products and services currently available in your area. Any

reference to an IBM product, program, or service is not intended to state or imply that only that IBM product,

program, or service may be used. Any functionally equivalent product, program, or service that does not

infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to

evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The

furnishing of this document does not give you any license to these patents. You can send license inquiries, in

writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such

provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION

PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR

IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT,

MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of

express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made

to the information herein; these changes will be incorporated in new editions of the publication. IBM may make

improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time

without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any

manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the

materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring

any obligation to you.

Information concerning non-IBM products was obtained from the suppliers of those products, their published

announcements or other publicly available sources. IBM has not tested those products and cannot confirm the

accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the

capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them

as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business

enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming

techniques on various operating platforms. You may copy, modify, and distribute these sample programs in

any form without payment to IBM, for the purposes of developing, using, marketing or distributing application

programs conforming to the application programming interface for the operating platform for which the sample

programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore,

cannot guarantee or imply reliability, serviceability, or function of these programs.


Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines

Corporation in the United States, other countries, or both. These and other IBM trademarked terms are

marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US

registered or common law trademarks owned by IBM at the time this information was published. Such

trademarks may also be registered or common law trademarks in other countries. A current list of IBM

trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States,

other countries, or both:

Active Memory™

AIX 5L™

AIX®

AS/400®

BladeCenter®

DS4000®

DS8000®

Electronic Service Agent™

EnergyScale™

FlashCopy®

Focal Point™

IBM Systems Director Active Energy Manager™

IBM®

iSeries®

Micro-Partitioning™

POWER Hypervisor™

Power Systems™

Power Systems Software™

POWER4™

POWER5™

POWER6+™

POWER6®

POWER7™

PowerVM™

POWER®

pSeries®

Redbooks®

Redpaper™

Redbooks (logo) ®

ServerProven®

Solid®

System i®

System p5®

System Storage®

System x®

System z®

Tivoli®

Workload Partitions Manager™

XIV®

The following terms are trademarks of other companies:

BNT, and Server Mobility are trademarks or registered trademarks of Blade Network Technologies, Inc., an

IBM Company.

SnapManager, and the NetApp logo are trademarks or registered trademarks of NetApp, Inc. in the U.S. and

other countries.

Microsoft, Windows, and the Windows logo are trademarks of Microsoft Corporation in the United States,

other countries, or both.

Intel, Intel logo, Intel Inside logo, and Intel Centrino logo are trademarks or registered trademarks of Intel

Corporation or its subsidiaries in the United States and other countries.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Other company, product, or service names may be trademarks or service marks of others.


Preface

The IBM® BladeCenter® PS703 and PS704 are premier blades for 64-bit applications. They

are designed to minimize complexity, improve efficiency, automate processes, reduce energy

consumption, and scale easily. These blade servers are based on the IBM POWER7™

processor and support AIX®, IBM i, and Linux® operating systems. Their ability to coexist in

the same chassis with other IBM BladeCenter blade servers enhances the ability to deliver

the rapid return on investment demanded by clients and businesses.

This IBM Redpaper™ document is a comprehensive guide covering the IBM BladeCenter

PS703 and PS704 servers. The goal of this paper is to introduce the offerings and their

prominent features and functions.

The team who wrote this paper

This paper was produced by a team of specialists from around the world working at the

International Technical Support Organization, Raleigh Center.

David Watts is a Consulting IT Specialist at the IBM ITSO Center in Raleigh. He manages

residencies and produces IBM Redbooks® publications for hardware and software topics that

are related to IBM System x® and IBM BladeCenter servers, and associated client platforms.

He has authored over 80 books, papers, and web documents. He holds a Bachelor of

Engineering degree from the University of Queensland (Australia) and has worked for IBM

both in the U.S. and Australia since 1989. David is an IBM Certified IT Specialist and a

member of the IT Specialist Certification Review Board.

Kerry Anders is a Consultant for POWER® systems and PowerVM™ in Lab Services for the

IBM Systems and Technology Group, based in Austin, Texas. He supports clients in

implementing IBM Power Systems™ blades using Virtual I/O Server, Integrated Virtualization

Manager, and AIX. Kerry’s prior IBM Redbooks publication projects include IBM BladeCenter

JS12 and JS22 Implementation Guide, SG24-7655, IBM BladeCenter JS23 and JS43

Implementation Guide, SG24-7740, and IBM BladeCenter PS700, PS701, and PS702

Technical Overview and Introduction, REDP-4655. Previously, he was the Systems

Integration Test Team Lead for the IBM BladeCenter JS21 blade with IBM SAN storage using

AIX and Linux. His prior work includes test experience with the JS20 blade, also using AIX

and Linux in SAN environments. Kerry began his career with IBM in the Federal Systems

Division supporting NASA at the Johnson Space Center as a Systems Engineer. He

transferred to Austin in 1993.

David Harlow is a Senior Systems Engineer with business partner Mainline Information

Systems, Inc., which is located in Tallahassee, Florida. He is based in Raleigh, North Carolina. His

areas of expertise include Power Systems and Power Blade Servers using the IBM i

operating system. He has 19 years of experience with the AS/400®, iSeries®, System i®,

IBM i architecture, and IBM i operating systems. He has worked with the Power blade servers

with VIOS hosting IBM i partitions since the POWER6® JS12 and JS22 entered marketing.

He currently has several IBM certifications including the IBM Certified Technical Sales Expert

- Power Systems with POWER7 and the IBM Certified Sales Expert - Power Systems with

POWER7.

Joe Shipman II is a BladeCenter and System x Subject Matter Expert for the IBM Technical

Support Center in Atlanta, Georgia. He has 7 years of experience working with servers and


has worked at IBM for 5 years. His areas of expertise include IBM BladeCenter, System x,

BladeCenter Fibre Channel fabrics, BladeCenter Networking, and Power Blade Servers.

Previously he worked as an Electrical and Environmental Systems Specialist for the US Air

Force for 10 years.

The team (l-r): Joe, David Harlow, Kerry, and David Watts

Thanks to the following people for their contributions to this project:

From IBM Power Systems development:

Chris Austen

Larry Cook

John DeHart

Kaena Freitas

Bob Galbraith

Jim Gallagher

Seth Lewis

Hoa Nguyen

Amartey Pearson

From IBM Power Systems marketing:

John Biebelhausen

From IBM Linux Technology Center:

Jeff Scheel

This paper is based in part on IBM BladeCenter PS700, PS701, and PS702 Technical

Overview and Introduction, REDP-4655. Thanks to the authors of that document:

David Watts

Kerry Anders

Berjis Patel

Portions of this paper are from the book Systems Director Management Console Introduction

and Overview, SG24-7860. Thanks to the authors of that document:

Thomas Libor

Allen Oh

Lakshmikanthan Selvarajan

Peter Wuestefeld


Now you can become a published author, too!

Here's an opportunity to spotlight your skills, grow your career, and become a published

author—all at the same time! Join an ITSO residency project and help write a book in your

area of expertise, while honing your experience using leading-edge technologies. Your efforts

will help to increase product acceptance and customer satisfaction, as you expand your

network of technical contacts and relationships. Residencies run from two to six weeks in

length, and you can participate either in person or as a remote resident working from your

home base.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our papers to be as helpful as possible. Send us your comments about this paper or

other IBM Redbooks publications in one of the following ways:

Use the online Contact us review Redbooks form found at:

ibm.com/redbooks

Send your comments in an email to:

redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400

Stay connected to IBM Redbooks

Find us on Facebook:

http://www.facebook.com/IBMRedbooks

Follow us on Twitter:

http://twitter.com/ibmredbooks

Look for us on LinkedIn:

http://www.linkedin.com/groups?home=&gid=2130806

Explore new Redbooks publications, residencies, and workshops with the IBM Redbooks

weekly newsletter:

https://www.redbooks.ibm.com/Redbooks.nsf/subscribe?OpenForm

Stay current on recent Redbooks publications with RSS Feeds:

http://www.redbooks.ibm.com/rss.html


Chapter 1. Introduction and general description

This chapter introduces and provides a general description of the new IBM BladeCenter

POWER7 processor-based blade servers. These new blades offer processor scalability from

16 cores to 32 cores:

IBM BladeCenter PS703: single-wide blade with two 8-core processors

IBM BladeCenter PS704: double-wide blade with four 8-core processors

The new PS703 and PS704 blades are premier blades for 64-bit applications. They are

designed to minimize complexity, improve efficiency, automate processes, reduce energy

consumption, and scale easily.

The POWER7 processor-based PS703 and PS704 blades support AIX, IBM i, and Linux

operating systems. Their ability to coexist in the same chassis with other IBM BladeCenter

blade servers enhances the ability to deliver the rapid return on investment demanded by

clients and businesses.

This chapter covers the following topics:

1.1, “Overview of PS703 and PS704 blade servers” on page 2

1.2, “Comparison between the PS70x blade servers” on page 3

1.3, “IBM BladeCenter chassis support” on page 4

1.4, “Operating environment” on page 12

1.5, “Physical package” on page 13

1.6, “System features” on page 14

1.7, “Supported BladeCenter I/O modules” on page 28

1.8, “Building to order” on page 34

1.9, “Model upgrades” on page 35

© Copyright IBM Corp. 2011. All rights reserved. 1

1.1 Overview of PS703 and PS704 blade servers

Figure 1-1 shows the IBM BladeCenter PS703 and PS704 blade servers.

Figure 1-1 The IBM BladeCenter PS703 (right) and BladeCenter PS704 (left)

The PS703 blade server

The IBM BladeCenter PS703 (7891-73X) is a single-wide blade server with two eight-core

POWER7 processors with a total of 16 cores. The processors are 64-bit 8-core 2.4 GHz

processors with 256 KB L2 cache per core and 4 MB L3 cache per core.

The PS703 blade server has 16 DDR3 memory DIMM slots. The industry standard VLP DDR3 memory DIMMs are 4 GB, 8 GB, or 16 GB and run at 1066 MHz. The minimum memory required for a PS703 blade server is 16 GB. The maximum memory that can be supported is 256 GB (16 x 16 GB DIMMs).

The PS703 blade server supports optional Active Memory™ Expansion, which is a POWER7

technology that allows the effective maximum memory capacity to be much larger than the

true physical memory. Innovative compression/decompression of memory content using

processor cycles can allow memory expansion up to 100%. This can allow an AIX 6.1 or later

partition to do significantly more work with the same physical amount of memory, or a server

to run more partitions and do more work with the same physical amount of memory.

The PS703 blade server has two onboard 1 Gb integrated Ethernet ports that are connected to the BladeCenter chassis fabric (midplane). The PS703 also has an integrated SAS controller that supports local (on-board) storage, an integrated USB controller, and Serial over LAN console access through the service processor and the BladeCenter Advanced Management Module.

The PS703 has one on-board disk drive bay. The on-board storage can be one 2.5-inch SAS

HDD or two 1.8-inch SATA SSD drives (with the addition of an SSD interposer tray). The

PS703 also supports one PCIe CIOv expansion card slot and one PCIe CFFh expansion card

slot. See 1.6.7, “I/O features” on page 21 for supported I/O expansion cards.

The PS704 blade server

The IBM BladeCenter PS704 (7891-74X) is a double-wide blade server with four eight-core

POWER7 processors with a total of 32 cores. The processors are 64-bit 8-core 2.4 GHz

processors with 256 KB L2 cache per core and 4 MB L3 cache per core.

The PS704 is a double-wide blade, meaning that it occupies two adjacent slots in the IBM

BladeCenter chassis.

The PS704 blade server has 32 DDR3 memory DIMM slots. The industry standard VLP DDR3 memory DIMMs are 4 GB, 8 GB, or 16 GB and run at 1066 MHz. The minimum memory required for a PS704 blade server is 32 GB. The maximum memory that can be supported is 512 GB (32 x 16 GB DIMMs).

The PS704 blade server supports optional Active Memory Expansion, which is a POWER7

technology that allows the effective maximum memory capacity to be much larger than the

true physical memory. Innovative compression/decompression of memory content using

processor cycles can allow memory expansion up to 100%. This can allow an AIX 6.1 or later

partition to do significantly more work with the same physical amount of memory, or a server

to run more partitions and do more work with the same physical amount of memory.

The PS704 blade server has four onboard 1 Gb integrated Ethernet ports that are connected to the BladeCenter chassis fabric (midplane). The PS704 also has an integrated SAS controller that supports local (on-board) storage, an integrated USB controller, and Serial over LAN console access through the service processor and the BladeCenter Advanced Management Module.

The PS704 blade server has two disk drive bays, one on the base blade and one on the expansion unit. The on-board storage can be one or two 2.5-inch SAS HDDs or up to four 1.8-inch SSDs. The integrated SAS controller supports RAID 0, 10, 5, or 6, depending on the number of HDDs or SSDs installed.

The PS704 supports two PCIe CIOv expansion card slots and two PCIe CFFh expansion

card slots. See 1.6.7, “I/O features” on page 21 for supported I/O expansion cards.

Note: For the PS704 blade server, the service processor (FSP or just SP) in the expansion

blade is set to IO mode, which provides control busses from IOs, but does not provide

redundancy and backup operational support to the SP in the base blade.

1.2 Comparison between the PS70x blade servers

This section describes the differences among the five POWER7 blade servers:

The PS700 is a single-wide blade with one 4-core 64-bit POWER7 3.0 GHz processor.

The PS701 is a single-wide blade with one 8-core 64-bit POWER7 3.0 GHz processor.

The PS702 is a double-wide blade with two 8-core 64-bit POWER7 3.0 GHz processors.

The PS703 is a single-wide blade with two 8-core 64-bit POWER7 2.4 GHz processors.

The PS704 is a double-wide blade with four 8-core 64-bit POWER7 2.4 GHz processors.

The POWER7 processor has 4 MB L3 cache per core and 256 KB L2 cache per core.
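The differences listed above can be captured in a small data structure. The following Python sketch is illustrative only: the class name, field names, and the `BLADES` dictionary are assumptions made for this example and are not part of any IBM tool. It derives the total core count, the L3 cache per processor, and the chassis slots occupied from the figures stated in this section.

```python
from dataclasses import dataclass

# Per-core cache sizes stated in the text for the POWER7 processor.
L2_KB_PER_CORE = 256
L3_MB_PER_CORE = 4


@dataclass(frozen=True)
class Power7Blade:
    """Illustrative record for one blade model (not an IBM data format)."""
    model: str
    processors: int
    cores_per_processor: int
    clock_ghz: float
    double_wide: bool

    @property
    def total_cores(self) -> int:
        return self.processors * self.cores_per_processor

    @property
    def l3_mb_per_processor(self) -> int:
        return self.cores_per_processor * L3_MB_PER_CORE

    @property
    def chassis_slots(self) -> int:
        # A double-wide blade occupies two adjacent chassis slots.
        return 2 if self.double_wide else 1


# Values transcribed from the list in section 1.2.
BLADES = {
    blade.model: blade
    for blade in (
        Power7Blade("PS700", 1, 4, 3.0, False),
        Power7Blade("PS701", 1, 8, 3.0, False),
        Power7Blade("PS702", 2, 8, 3.0, True),
        Power7Blade("PS703", 2, 8, 2.4, False),
        Power7Blade("PS704", 4, 8, 2.4, True),
    )
}
```

For example, `BLADES["PS704"].total_cores` evaluates to 32, which matches the four 8-core processors described for the PS704.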

Chapter 1. Introduction and general description 3

Page 18

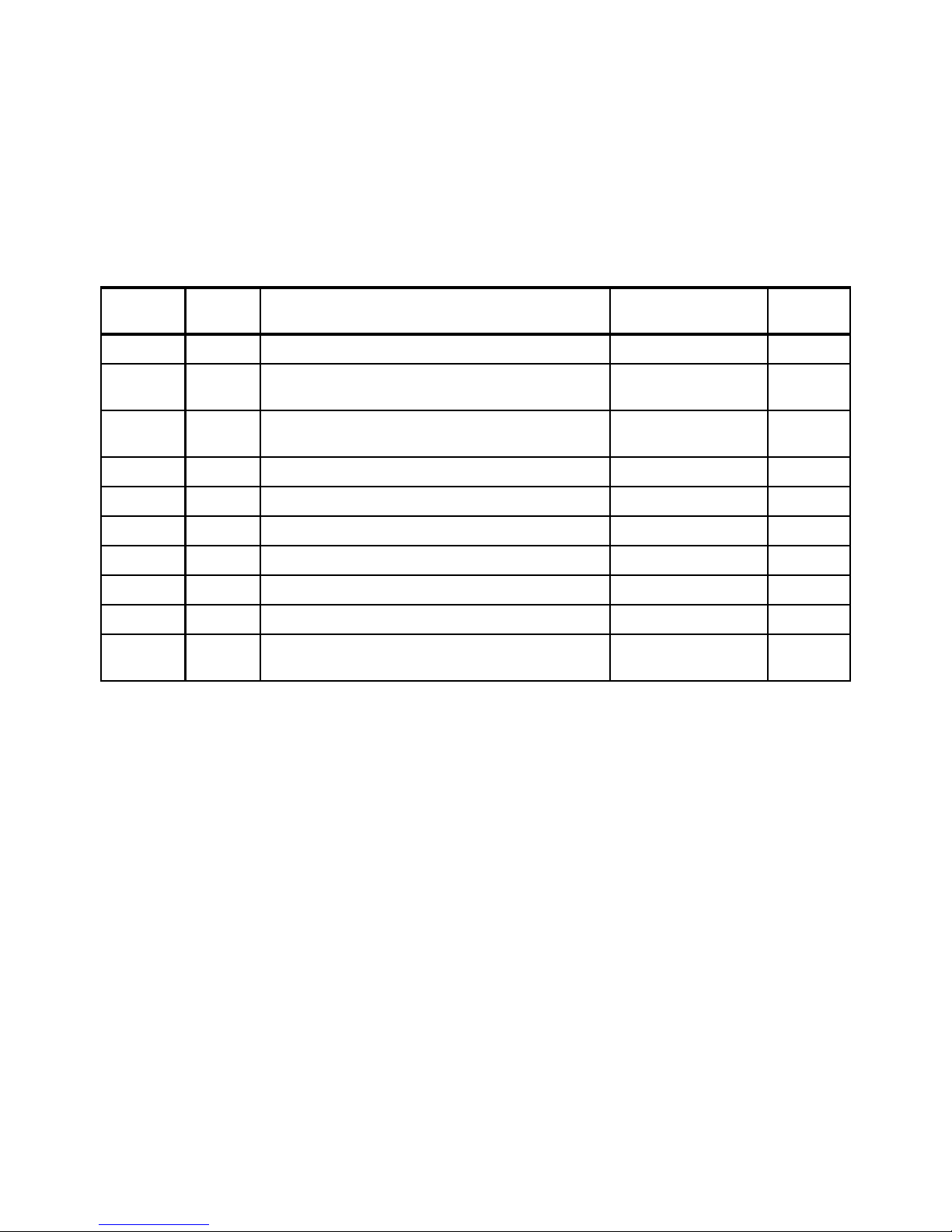

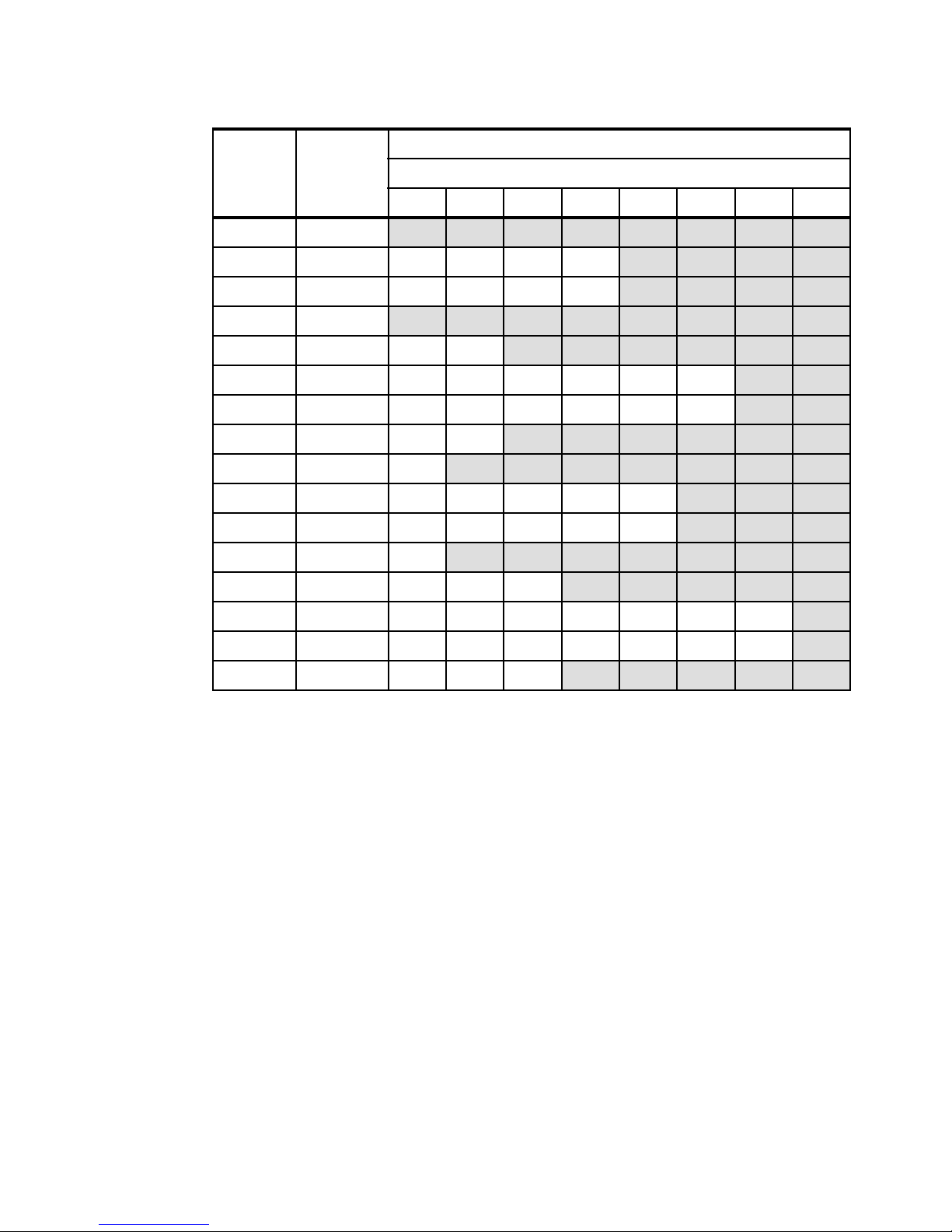

Table 1-1 compares the processor core options and frequencies, and L3 cache sizes of the

POWER7 blade servers.

Table 1-1 Comparison of POWER7 blade servers

System        Number of    Cores per   Core        L3 cache        Minimum / Maximum   Form factor
              processors   processor   frequency   per processor   memory

PS700 blade   1            4           3.0 GHz     16 MB           4 GB / 64 GB        Single-wide

PS701 blade   1            8           3.0 GHz     32 MB           16 GB / 128 GB      Single-wide

PS702 blade   2            8           3.0 GHz     32 MB           32 GB / 256 GB      Double-wide

PS703 blade   2            8           2.4 GHz     32 MB           16 GB / 256 GB      Single-wide

PS704 blade   4            8           2.4 GHz     32 MB           32 GB / 512 GB      Double-wide

For a detailed comparison, see 2.6, “Technical comparison” on page 54.

Full details about the PS700, PS701, and PS702 can be found in the IBM Redpaper, IBM

BladeCenter PS700, PS701, and PS702 Technical Overview and Introduction, REDP-4655

available from:

http://www.redbooks.ibm.com/abstracts/redp4655.html

1.3 IBM BladeCenter chassis support

Blade servers are thin servers that insert into a single rack-mounted chassis that supplies

shared power, cooling, and networking infrastructure. Each server is an independent server

with its own processors, memory, storage, network controllers, operating system, and

applications. The IBM BladeCenter chassis is the container for the blade servers and shared

infrastructure devices.

The IBM BladeCenter chassis can contain a mix of POWER, Intel®, Cell, and AMD

processor-based blades. Depending on the IBM BladeCenter chassis selected, combinations

of Ethernet, SAS, Fibre Channel, and FCoE I/O fabrics can also be shared within the same

chassis.

All chassis can offer full redundancy for all shared infrastructure, network, and I/O fabrics.

Having multiple power supplies, network switches, and I/O switches contained within a

BladeCenter chassis eliminates single points of failure in these areas.

The following sections describe the BladeCenter chassis that support the PS703 and PS704

blades. For a comprehensive look at all aspects of BladeCenter products see the IBM

Redbooks publication, IBM BladeCenter Products and Technology, SG24-7523, available

from the following web page:

http://www.redbooks.ibm.com/abstracts/sg247523.html

Refer to the BladeCenter Interoperability Guide for complete coverage of the compatibility

information. The latest version can be downloaded from the following address:

http://ibm.com/support/entry/portal/docdisplay?lndocid=MIGR-5073016

1.3.1 Supported BladeCenter chassis

The PS703 and PS704 blades are supported in the IBM BladeCenter chassis as listed in Table 1-2.

Table 1-2 The blade servers supported in each BladeCenter chassis

Blade   Machine      Blade    BC S   BC E   BC T   BC T   BC H   BC HT   BC HT
        type-model   width    8886   8677   8720   8730   8852   8740    8750

PS703   7891-73X     1 slot   Yes    No     No     No     Yes    Yes     Yes

PS704   7891-74X     2 slot   Yes    No     No     No     Yes    Yes     Yes

IBM BladeCenter H delivers high performance, extreme reliability, and ultimate flexibility for the most demanding IT environments. See “BladeCenter H” on this page.

IBM BladeCenter HT models are designed for high-performance flexible telecommunications environments by supporting high-speed networking technologies (such as 10G Ethernet). They provide a robust platform for NGNs. See “BladeCenter HT” on page 7.

IBM BladeCenter S combines the power of blade servers with integrated storage, all in an easy-to-use package designed specifically for the office and distributed enterprise environments. See “BladeCenter S” on page 10.

Note: The number of blade servers that can be installed in a chassis depends on the power supply configuration, the power supply input (110V/208V, BladeCenter S only), and the power domain configuration options. See 1.3.2, “Number of PS703 and PS704 blades in a chassis” on page 12 for more information.

BladeCenter H

IBM BladeCenter H delivers high performance, extreme reliability, and ultimate flexibility to even the most demanding IT environments. In 9 U of rack space, the BladeCenter H chassis can contain up to 14 blade servers, 10 switch modules, and four power supplies to provide the necessary I/O network switching, power, cooling, and control panel information to support the individual servers.

The chassis supports up to four traditional fabrics using networking switches, storage

switches, or pass-through devices. The chassis also supports up to four high-speed fabrics

for support of protocols such as 4X InfiniBand or 10 Gigabit Ethernet. The built-in media tray

includes light path diagnostics, two front USB 2.0 inputs, and an optical drive.

Figure 1-2 displays the front view of an IBM BladeCenter H and Figure 1-3 displays the rear

view.

Figure 1-2 BladeCenter H front view

Figure 1-3 BladeCenter H rear view

The key features of the IBM BladeCenter H chassis are as follows:

A rack-optimized, 9 U modular design enclosure for up to 14 hot-swap blades.

A high-availability mid-plane that supports hot-swap of individual blades.

Two 2,900 watt or 2,980 watt hot-swap power modules and support for two optional 2,900

watt or 2,980 watt power modules, offering redundancy and power for robust

configurations (cannot mix power module types).

Power supply requirements: BladeCenter H model 8852-4TX has 2,980 watt power supplies. Other models have 2,900 watt power supplies, and the 2,980 watt supplies are optional.

The PS703 and PS704 do not require the 2,980 watt power supply. They are designed

to fully function with both the 2,900 watt and 2,980 watt power supplies.

Two hot-swap redundant blowers. Two additional hot-swap fan modules are included with the additional power module option.

Blower requirements: BladeCenter H model 8852-4TX has enhanced blowers

compared with standard blowers in model 8852-4SX and earlier models. The enhanced

blowers are optional in the model 8852-4SX and earlier models.

The PS700, PS701, PS702, PS703, and PS704 do not require the enhanced blowers.

They are designed to fully function with both the standard and the enhanced blowers.

An Advanced Management Module that provides chassis-level solutions, simplifying

deployment and management of your installation.

Support for up to four network or storage switches or pass-through modules.

Support for up to four bridge modules.

A light path diagnostic panel, and two USB 2.0 ports.

Serial port breakout connector.

Support for UltraSlim Enhanced SATA DVD-ROM and multi-burner drives.

IBM Systems Director and Tivoli® Provisioning Manager for OS Deployments for easy

installation and management.

Energy-efficient design and innovative features to maximize productivity and reduce

power usage.

Density and integration to ease data center space constraints.

Help in protecting your IT investment through IBM BladeCenter family longevity,

compatibility, and innovation leadership in blades.

Support for the latest generation of IBM BladeCenter blades, helping provide investment

protection.

BladeCenter HT

The IBM BladeCenter HT is a 12-server blade chassis designed for high-density server

installations, typically for telecommunications use. It offers high performance with the support

of 10 Gb Ethernet installations. This 12 U high chassis with DC or AC power supplies

provides a cost-effective, high performance, high availability solution for telecommunication

networks and other rugged non-telecommunications environments. The IBM BladeCenter HT

chassis is positioned for expansion, capacity, redundancy, and carrier-grade NEBS level

3/ETSI compliance in DC models.

BladeCenter HT provides a solid foundation for next-generation networks (NGN), enabling service providers to become on demand providers. IBM's technological expertise in the enterprise data center, coupled with the industry know-how of key business partners, delivers added value within service provider networks.

Figure 1-4 shows the front view of the BladeCenter HT.

Figure 1-4 BladeCenter HT front view

Figure 1-5 shows the rear view of the BladeCenter HT.

Figure 1-5 BladeCenter HT rear view

BladeCenter HT delivers rich telecommunications features and functionality, including

integrated servers, storage and networking, fault-tolerant features, optional hot-swappable

redundant DC or AC power supplies and cooling, and built-in system management resources.

The result is a Network Equipment-Building System (NEBS) Level 3 and ETSI-compliant server platform optimized for next-generation networks.

The BladeCenter HT is well suited for the following applications:

Network management and security

– Network management engine

– Internet cache engine

– RSA encryption

– Gateways

– Intrusion detection

Network infrastructure

– Softswitch

– Unified messaging

– Gateway/Gatekeeper/SS7 solutions

– VOIP services and processing

– Voice portals

– IP translation database

The key features of the BladeCenter HT are as follows:

Support for up to 12 blade servers, compatible with the other chassis in the BladeCenter

family

Four standard and four high-speed I/O module bays, compatible with the other chassis in

the BladeCenter family

A media tray at the front with light path diagnostics, two USB 2.0 ports, and optional

compact flash memory module support

Two hot-swap management-module bays (one management module standard)

Four hot-swap power-module bays (two power modules standard)

New serial port for direct serial connection to installed blades

Compliance with the NEBS 3 and ETSI core network specifications

BladeCenter S

The BladeCenter S chassis can hold up to six blade servers, and up to 12 hot-swap 3.5-inch

SAS or SATA disk drives in just 7 U of rack space. It can also include up to four C14

950-watt/1450-watt power supplies. The BladeCenter S offers the necessary I/O network

switching, power, cooling, and control panel information to support the individual servers.

The IBM BladeCenter S is one of five chassis in the BladeCenter family. The BladeCenter S

provides an easy IT solution to the small and medium office and to the distributed enterprise.

Figure 1-6 shows the front view of the IBM BladeCenter S.

Figure 1-6 The front of the BladeCenter S chassis

Figure 1-7 shows the rear view of the chassis.

Figure 1-7 The rear of the BladeCenter S chassis

The key features of IBM BladeCenter S chassis are as follows:

A rack-optimized, 7 U modular design enclosure for up to six hot-swap blades

Two optional Disk Storage Modules for HDDs, six 3.5-inch SAS/SATA drives each

High-availability mid-plane that supports hot-swap of individual blades

Two 950-watt/1450-watt, hot-swap power modules and support for two optional

950/1450-watt power modules, offering redundancy and power for robust configurations

Four hot-swap redundant blowers, plus one fan in each power supply

An Advanced Management Module that provides chassis-level solutions, simplifying

deployment and management of your installation

Support for up to four network or storage switches or pass-through modules

A light path diagnostic panel, and two USB 2.0 ports

Support for optional UltraSlim Enhanced SATA DVD-ROM and Multi-Burner Drives

Support for SAS RAID Controller Module to make it easy for clients to buy the all-in-one

BladeCenter S solution

IBM Systems Director, Storage Configuration Manager (SCM), Start Now Advisor, and

Tivoli Provisioning Manager for OS Deployments support for easy installation and

management

Energy-efficient design and innovative features to maximize productivity and reduce

power usage

Help in protecting your IT investment through IBM BladeCenter family longevity,

compatibility, and innovation leadership in blades

Support for the latest generation of IBM BladeCenter blades, helping provide investment

protection

1.3.2 Number of PS703 and PS704 blades in a chassis

The number of POWER7 processor-based blades that can be installed in a BladeCenter

chassis depends on several factors:

BladeCenter chassis type

Number of power supplies installed

Power supply voltage option (BladeCenter S only)

BladeCenter power domain configuration

Table 1-3 shows the maximum number of PS703 and PS704 blades running in a maximum

configuration (memory, disk, expansion cards) for each supported BladeCenter chassis that

can be installed with fully redundant power and without performance reduction. IBM blades

that are based on processor types other than POWER7 might reduce these numbers.

Tip: As shown in Table 1-3, there is no restriction to the number of POWER7 blade servers

that you can install in a BladeCenter chassis other than the number of power supplies

installed in the chassis.

Table 1-3 PS703 and PS704 blades per chassis type

          BladeCenter H       BladeCenter HT      BladeCenter S (6 slots total)
          (14 slots total)    (12 slots total)    110VAC            208VAC

Server    2 PS     4 PS       2 PS     4 PS       2 PS     4 PS     2 PS     4 PS

PS703     7        14         6        12         2        6        2        6

PS704     3        7          3        6          1        3        1        3

PS = power supplies installed in the chassis.

When mixing blades of different processor types in the same BladeCenter, the BladeCenter Power Configurator tool helps determine whether the combination desired is valid. It is expected that this tool will be updated to include the PS703 and PS704 blade configurations. For more information about this update, see the following web page:

http://www.ibm.com/systems/bladecenter/powerconfig
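The limits in Table 1-3 can be expressed as a simple lookup. The following Python sketch is a convenience illustration only: the dictionary layout, the chassis labels ("H", "HT", "S"), and the function name `max_blades` are assumptions made for this example. It does not replace the BladeCenter Power Configurator, which remains the reference for mixed configurations.

```python
# Maximum blades per chassis, transcribed from Table 1-3.
# Key: (chassis, input voltage, number of power supplies).
# The voltage is None for BladeCenter H and HT because the table
# distinguishes input voltage only for BladeCenter S.
MAX_BLADES = {
    "PS703": {
        ("H", None, 2): 7, ("H", None, 4): 14,
        ("HT", None, 2): 6, ("HT", None, 4): 12,
        ("S", "110VAC", 2): 2, ("S", "110VAC", 4): 6,
        ("S", "208VAC", 2): 2, ("S", "208VAC", 4): 6,
    },
    "PS704": {
        ("H", None, 2): 3, ("H", None, 4): 7,
        ("HT", None, 2): 3, ("HT", None, 4): 6,
        ("S", "110VAC", 2): 1, ("S", "110VAC", 4): 3,
        ("S", "208VAC", 2): 1, ("S", "208VAC", 4): 3,
    },
}


def max_blades(model, chassis, power_supplies, voltage=None):
    """Return the Table 1-3 limit, or raise ValueError if there is no entry."""
    key = (chassis, voltage, power_supplies)
    try:
        return MAX_BLADES[model][key]
    except KeyError:
        raise ValueError(f"no Table 1-3 entry for {model} with {key}") from None
```

For example, `max_blades("PS704", "S", 2, "110VAC")` returns 1, and an unsupported chassis such as BladeCenter E raises `ValueError`.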

1.4 Operating environment

In this section, we list the operating environment specifications for the PS703 and PS704 blade servers and the BladeCenter H, S, and HT chassis.

PS703 and PS704

Operating temperature:

– 10°C - 35°C (50°F - 95°F) at 0 - 914 m (0 - 3000 ft.)

– 10°C - 32°C (50°F - 90°F) at 914 - 2133 m (3000 - 7000 ft.)

Relative humidity: 8% - 80%

Maximum altitude: 2133 meters (7000 ft.)

IBM BladeCenter H

Operating temperature:

– 10°C - 35°C (50°F - 95°F) at 0 - 914 m (0 - 3000 ft.)

– 10°C - 32°C (50°F - 90°F) at 914 - 2133 m (3000 - 7000 ft.)

Relative humidity: 8% - 80%

Maximum altitude: 2133 meters (7000 ft.)

IBM BladeCenter S

Operating temperature:

– 10°C - 35°C (50°F - 95°F) at 0 - 914 m (0 - 3000 ft.)

– 10°C - 32°C (50°F - 90°F) at 914 - 2133 m (3000 - 7000 ft.)

Relative humidity: 8% - 80%

Maximum altitude: 2133 meters (7000 ft.)

IBM BladeCenter HT

Operating temperature:

– 5°C - 40°C (41°F - 104°F) at -60 - 1800 m (-197 - 6000 ft.)

– 5°C - 30°C (41°F - 86°F) at 1800 - 4000 m (6000 - 13000 ft.)

Relative humidity: 5% - 85%

Maximum altitude: 4000 meters (13000 ft.)
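The temperature and altitude limits above can be checked programmatically. The following Python sketch is a minimal illustration; the names `Band`, `ENVELOPES`, and `within_operating_range` are assumptions made for this example. It covers only temperature and altitude, not relative humidity, and it treats a boundary altitude (for example, exactly 914 m) as belonging to the lower band, which is an assumption because the specification does not say.

```python
from typing import NamedTuple


class Band(NamedTuple):
    alt_min_m: float
    alt_max_m: float
    temp_min_c: float
    temp_max_c: float


# Limits transcribed from section 1.4 (metric values only).
_STANDARD = (Band(0, 914, 10, 35), Band(914, 2133, 10, 32))
ENVELOPES = {
    "PS703/PS704": _STANDARD,
    "BladeCenter H": _STANDARD,
    "BladeCenter S": _STANDARD,
    "BladeCenter HT": (Band(-60, 1800, 5, 40), Band(1800, 4000, 5, 30)),
}


def within_operating_range(system: str, altitude_m: float, temp_c: float) -> bool:
    """Return True if the temperature is allowed at the given altitude."""
    for band in ENVELOPES[system]:
        # The first matching band wins, so a boundary altitude uses the lower band.
        if band.alt_min_m <= altitude_m <= band.alt_max_m:
            return band.temp_min_c <= temp_c <= band.temp_max_c
    return False  # Altitude is outside every specified band.
```

For example, 34°C is within range for a BladeCenter H at 500 m but not at 1500 m, where the upper limit drops to 32°C.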

1.5 Physical package

The PS703 and PS704 blade servers are supported in BladeCenter H, HT, and S.

This section describes the physical dimensions of the POWER7 blade servers and the

supported BladeCenter chassis only. Table 1-4 shows the physical dimensions of the PS703

and PS704 blade servers.

Table 1-4 Physical dimensions of PS703 and PS704 blade servers

Dimension   PS703 blade server     PS704 blade server

Height      9.65 inch (245 mm)     9.65 inch (245 mm)

Width       1.14 inch (29 mm)      2.32 inch (59 mm)
            Single-wide blade      Double-wide blade

Depth       17.55 inch (445 mm)    17.55 inch (445 mm)

Weight      9.6 lbs (4.35 kg)      19.2 lbs (8.7 kg)

Table 1-5 shows the physical dimensions of the BladeCenter chassis that support the POWER7 processor-based blade servers.

Table 1-5 Physical dimensions of supported BladeCenter chassis

Dimension   BladeCenter H          BladeCenter S         BladeCenter HT

Height      15.75 inch (400 mm)    12 inch (305 mm)      21 inch (528 mm)

Width       17.4 inch (442 mm)     17.5 inch (445 mm)    17.4 inch (442 mm)

Depth       28 inch (711 mm)       28.9 inch (734 mm)    27.8 inch (706 mm)

Chapter 1. Introduction and general description 13

Page 28

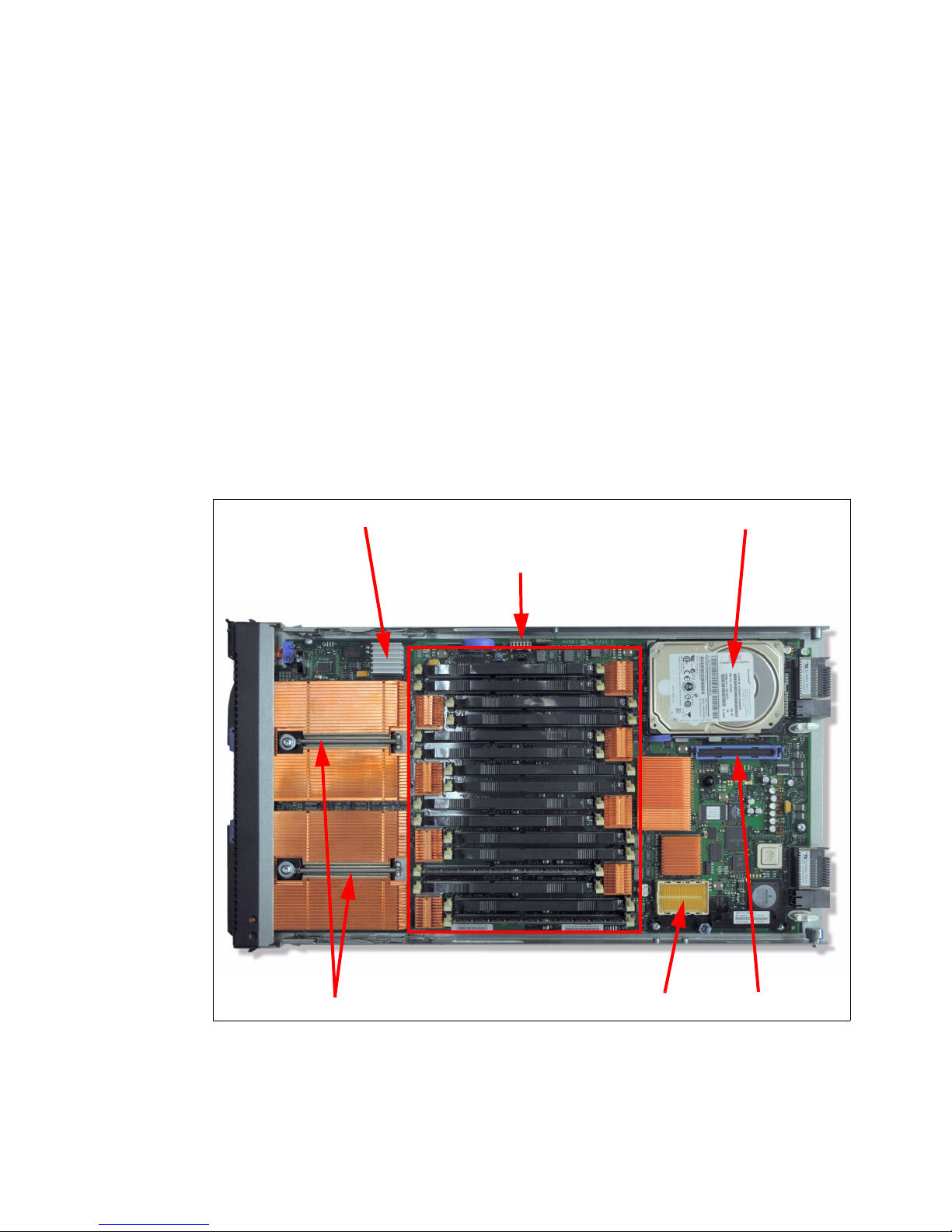

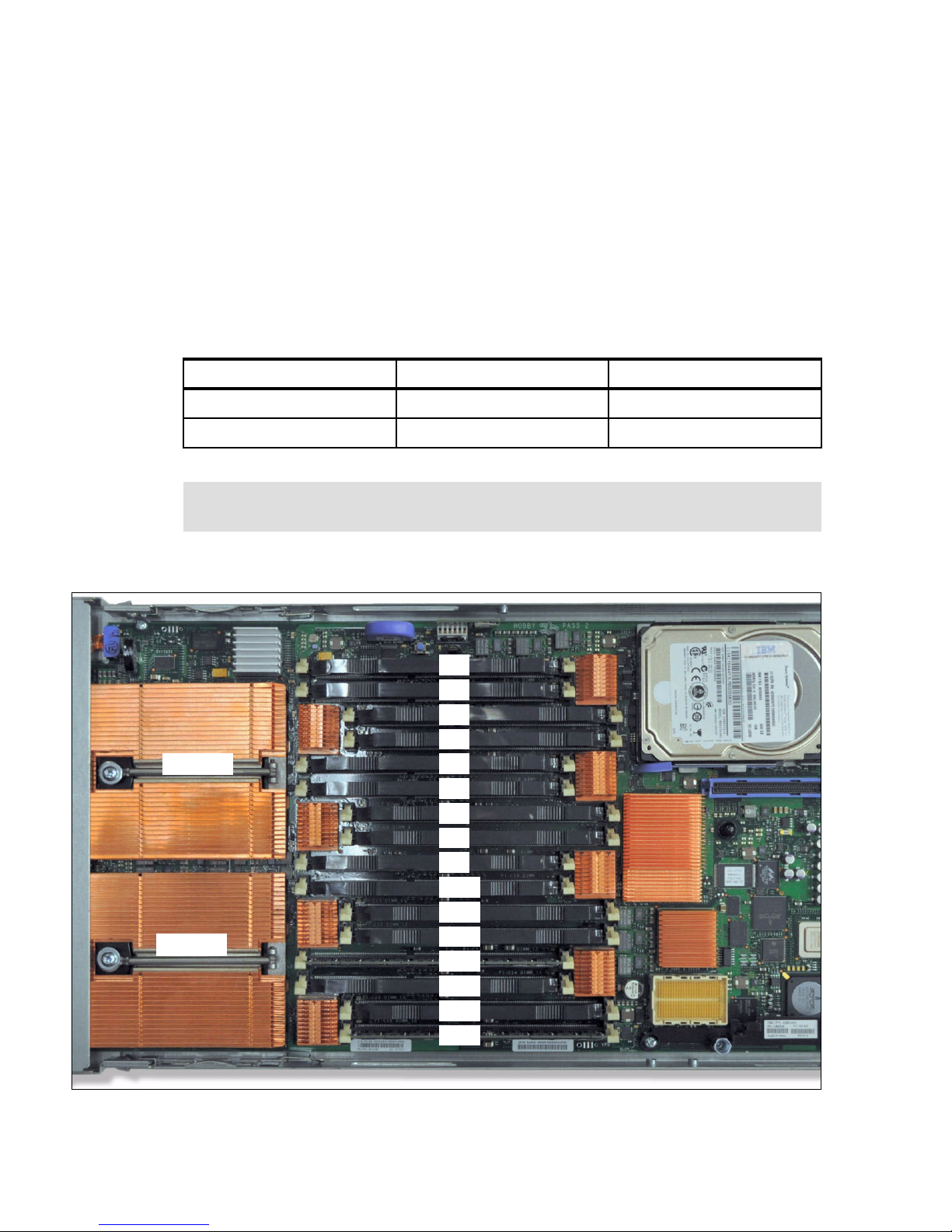

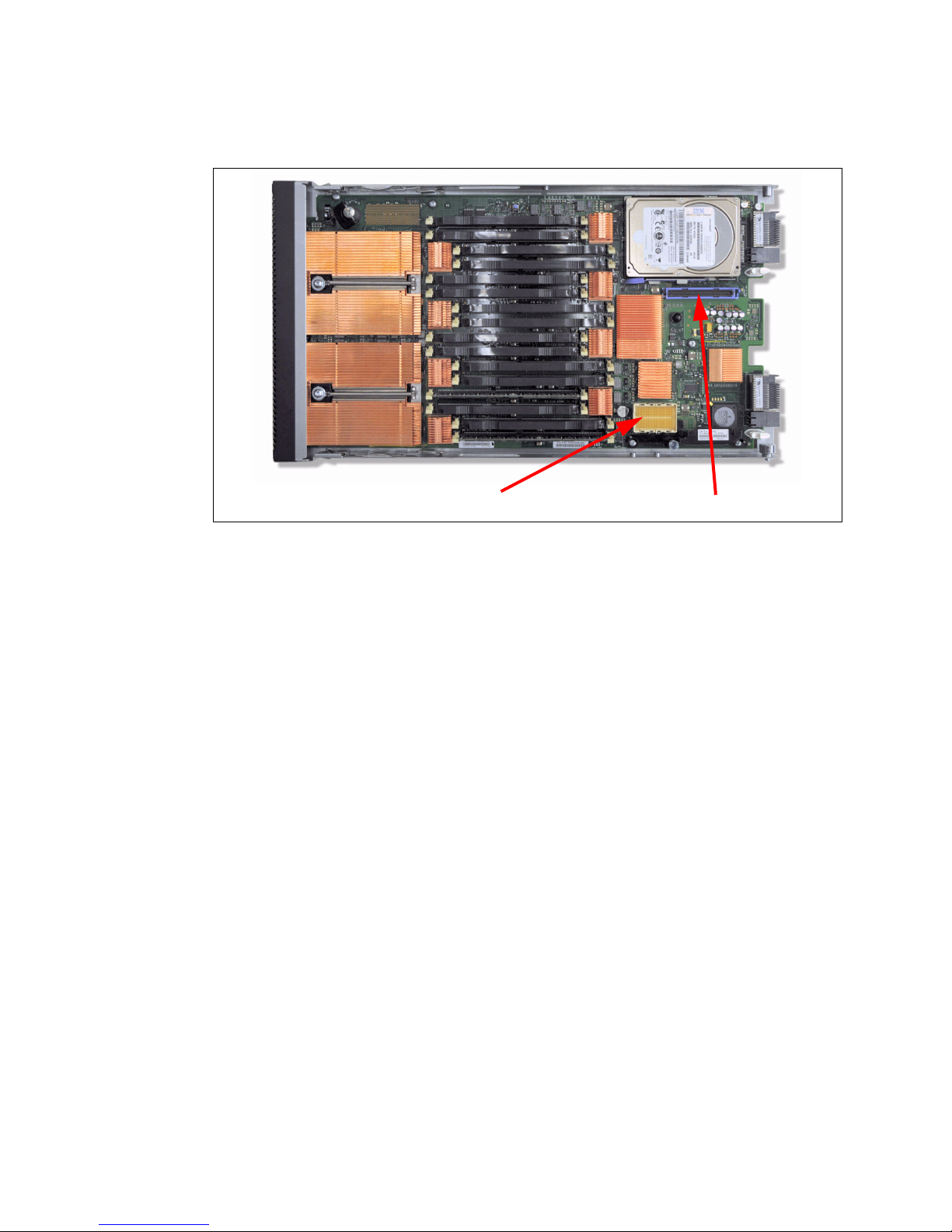

1.6 System features

The PS703 and PS704 blade servers are 16-core and 32-core POWER7 processor-based blade servers. This section describes the features on each of the POWER7 blade servers.

The following topics are covered:

1.6.1, “PS703 system features” on page 14

1.6.2, “PS704 system features” on page 16

1.6.3, “Minimum features for the POWER7 processor-based blade servers” on page 18

1.6.4, “Power supply features” on page 19

1.6.5, “Processor” on page 20

1.6.6, “Memory features” on page 20

1.6.7, “I/O features” on page 21

1.6.8, “Disk features” on page 26

1.6.9, “Standard onboard features” on page 26

1.6.1 PS703 system features

The BladeCenter PS703 is shown in Figure 1-8.

Figure 1-8 Top view of the PS703 blade server (callouts: two 8-core processors, disk drive bay, CIOv connector, CFFh connector, SAS disk controller, 16 memory DIMM sockets)

The features of the server are as follows:

Machine type and model number

7891-73X

Form factor

Single-wide (30 mm) blade

Processors:

– Two eight-core 64-bit POWER7 processors operating at a 2.4 GHz clock speed for a

total of 16 cores in the blade server

– Based on CMOS 12S 45 nm SOI (silicon-on-insulator) technology

– Power consumption is 110 W per socket

– Single-wide (SW) Blade package

Memory

– 16 DIMM slots

– Minimum 16 GB, maximum capacity 256 GB (using 16 GB DIMMs)

– Industry standard VLP DDR3 DIMMs

– Optional Active Memory Expansion

Disk

– 3 Gb SAS disk storage controller

– One disk drive bay which supports one 2.5-inch SAS HDD (hard disk drive) or two

1.8-inch SATA SSD (solid state drive)

– Hardware mirroring:

• One HDD: RAID 0

• One SSD: RAID 0

• Two SSDs: RAID 0 or RAID 10

On-board integrated features:

– Service processor (SP)

– Two 1 Gb Ethernet ports

– One SAS Controller

– USB Controller which routes to the USB 2.0 port on the media tray

– 1 Serial over LAN (SOL) Console through SP

Expansion Card I/O Options:

– One CIOv expansion card slot (PCIe)

– One CFFh expansion card slot (PCIe)

Chapter 1. Introduction and general description 15

Page 30

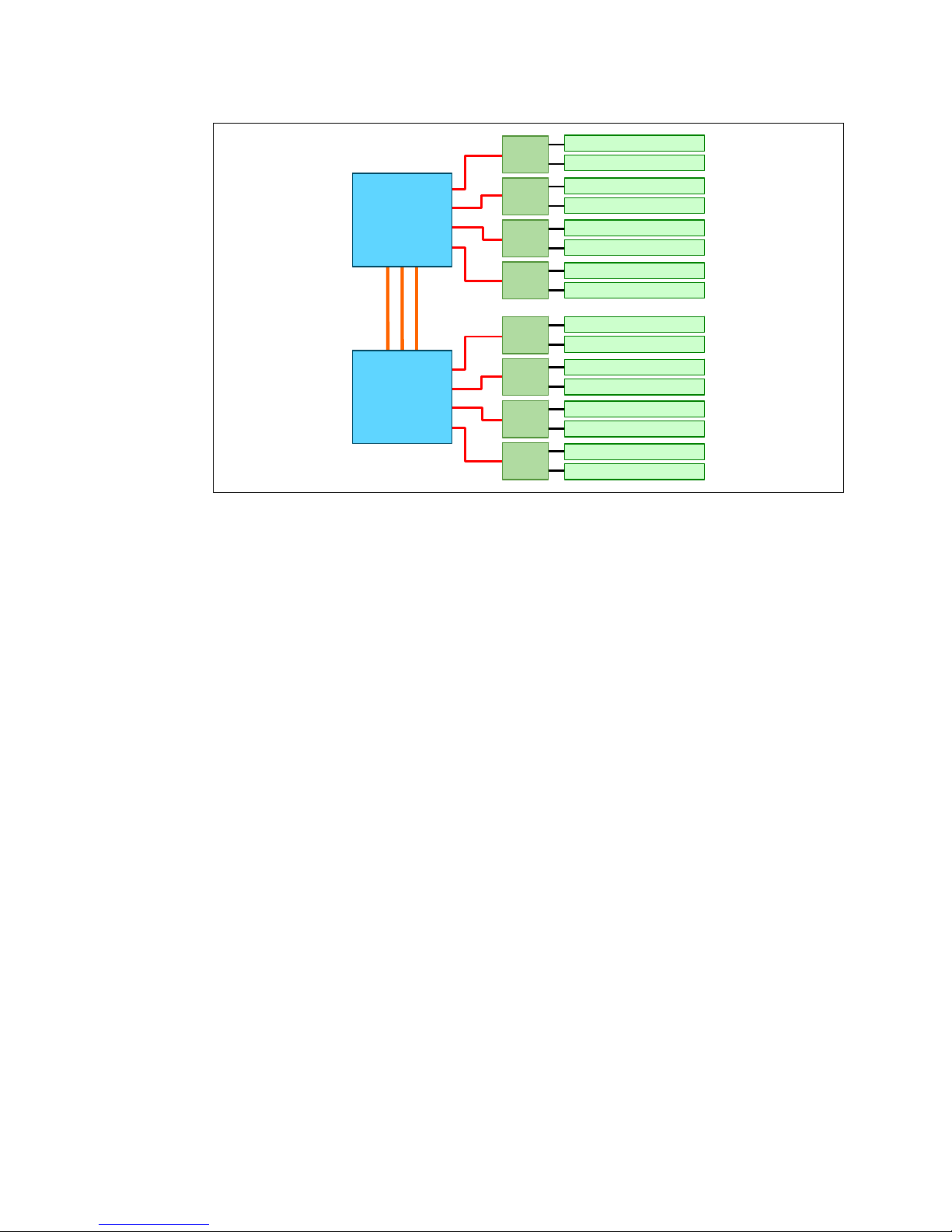

1.6.2 PS704 system features

Two 8-core processors

Drive bay

CIOv connectorCFFh connector

16 DIMM sockets

Thumb-screw

sockets

SMP connector to join the

PS704 base blade and SMP

blade together

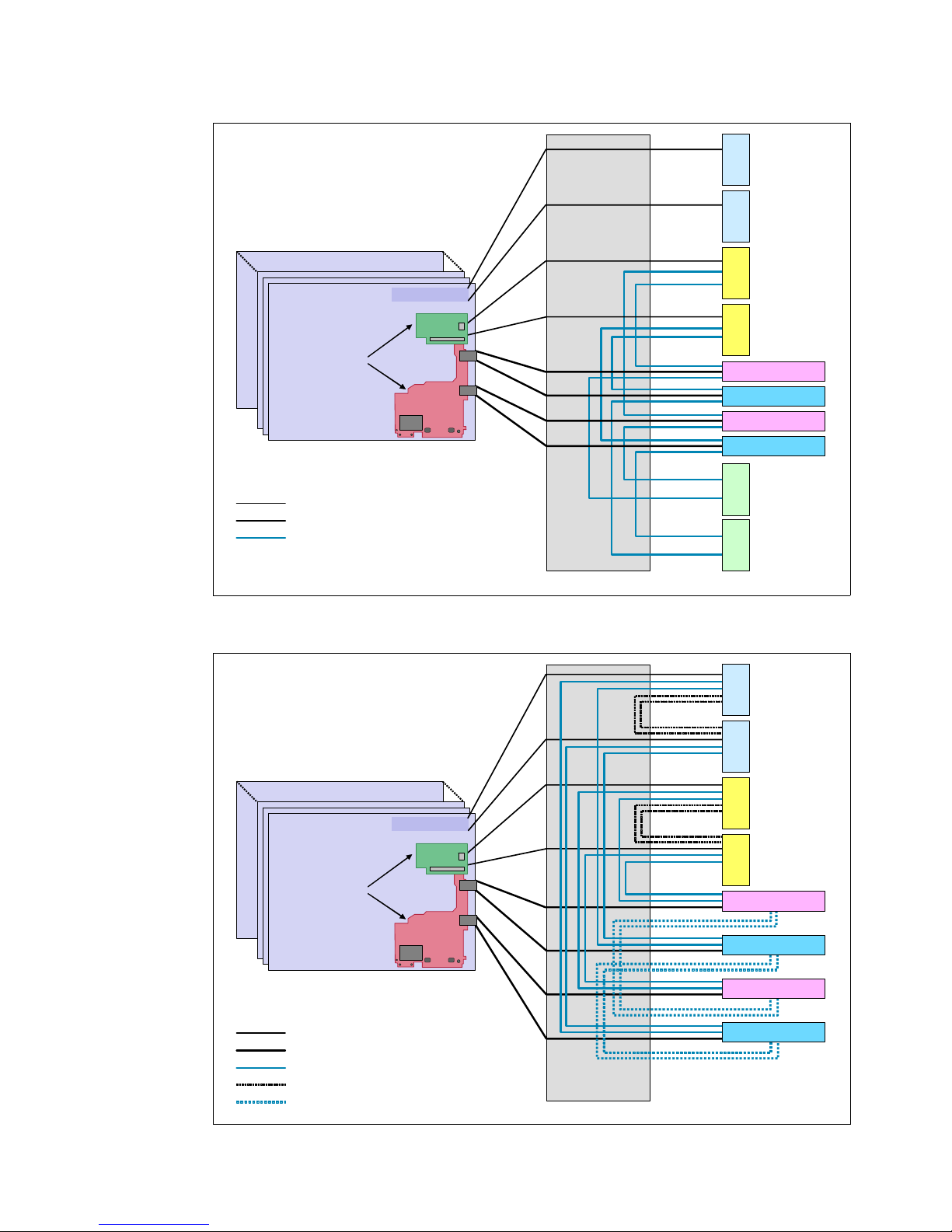

The PS704 is a double-wide server. The two halves of the BladeCenter PS704 are shown in

Figure 1-9 on this page and Figure 1-10 on page 17.

Figure 1-9 Top view of PS704 blade server base unit

16 IBM BladeCenter PS703 and PS704 Technical Overview and Introduction

Page 31

Figure 1-10 Top view of PS704 blade server SMP unit

Two 8-core processors

Disk drive bay

CIOv connectorCFFh connector

SAS disk controller

16 DIMM sockets

Thumb screw to

attach to PS704 base

blade

SMP connector (on

the underside)

The features of the server are as follows:

Machine type and model number

7891-74X

Form factor

Double-wide (60 mm) blade

Processors:

– Four eight-core 64-bit POWER7 processors operating at a 2.4 GHz clock speed for a

total of 32 cores in the blade server

– Based on CMOS 12S 45 nm SOI (silicon-on-insulator) technology

– Power consumption is 110W per socket

Memory

– 32 DIMM slots

– Minimum 32 GB, maximum capacity 512 GB (using 16 GB DIMMs)

– Industry standard VLP DDR3 DIMMs

– Optional Active Memory Expansion

Disk

– 3 Gb SAS disk storage controller which is located in the SMP unit

– Two disk drive bays supporting up to two 2.5-inch SAS HDDs (hard disk drives) or up to four 1.8-inch SATA SSDs (solid state drives)

– Hardware mirroring:

• One HDD: RAID 0

• One SSD: RAID 0

• Two HDDs: RAID 0 or RAID 10

• One HDD and one SSD: RAID 0 on each disk; combining HDD and SSD in one

RAID configuration is not allowed.

• Two SSDs: RAID 0 or RAID 10

• Three SSDs: RAID 0, RAID 5, or RAID 10 (RAID 10 with only two disks)

• Four SSDs: RAID 0, RAID 5, RAID 6, or RAID 10

On-board integrated features:

– Service processor (one on each blade; see footnote 1)

– Four 1 Gb Ethernet ports

– One SAS Controller

– USB Controller which routes to the USB 2.0 port on the media tray

– 1 Serial over LAN (SOL) Console through FSP

Expansion Card I/O Options:

– Two CIOv expansion card slots (PCIe)

– Two CFFh expansion card slots (PCIe)
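The hardware mirroring options listed above for the PS704 can be summarized as a lookup keyed by the number of installed drives. The following Python sketch is illustrative only; the table name and function name are assumptions made for this example, and it covers only the drive combinations that the list explicitly names.

```python
from typing import Tuple

# (number of HDDs, number of SSDs) -> supported RAID levels,
# transcribed from the PS704 hardware mirroring list.
PS704_RAID_LEVELS = {
    (1, 0): (0,),
    (0, 1): (0,),
    (2, 0): (0, 10),
    (1, 1): (0,),          # RAID 0 on each disk; HDD and SSD cannot share an array
    (0, 2): (0, 10),
    (0, 3): (0, 5, 10),    # RAID 10 uses only two of the three SSDs
    (0, 4): (0, 5, 6, 10),
}


def ps704_raid_levels(hdds: int, ssds: int) -> Tuple[int, ...]:
    """Return the RAID levels listed for this drive combination."""
    try:
        return PS704_RAID_LEVELS[(hdds, ssds)]
    except KeyError:
        raise ValueError(
            f"combination not listed: {hdds} HDD(s), {ssds} SSD(s)"
        ) from None
```

For example, `ps704_raid_levels(0, 4)` returns `(0, 5, 6, 10)`. Combinations that the list does not mention raise `ValueError` rather than guessing at support.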

1.6.3 Minimum features for the POWER7 processor-based blade servers

At a minimum, a PS703 requires a BladeCenter chassis, two eight-core 2.4 GHz processors, 16 GB of memory, zero or one disks, and a Language Group Specify (mandatory to order voltage nomenclature/language).

At a minimum, a PS704 requires a BladeCenter chassis, four eight-core 2.4 GHz processors, 32 GB of memory, zero or one disks, and a Language Group Specify (mandatory to order voltage nomenclature/language).

Each system has a minimum feature set to be valid. The minimum system configuration for

PS703 and PS704 blade servers is shown in Table 1-6 on page 19.

Footnote 1: The service processor (or flexible service processor) on the expansion unit provides control but does not offer redundancy with the SP on the base unit.

Table 1-6 Minimum features for PS703 and PS704 blade server

Category              Minimum features required

BladeCenter chassis   Supported BladeCenter chassis
                      Refer to 1.3.1, “Supported BladeCenter chassis” on page 5

Processor             Two 8-core 2.4 GHz processors in a PS703 blade (7891-73X)
                      Four 8-core 2.4 GHz processors in a PS704 blade (7891-74X)

Memory                DDR3 memory DIMMs
                      For PS703:
                      16 GB - two 8 GB (2 x 4 GB DIMMs) DDR3 1066 MHz (#8196) or
                      one 16 GB (2 x 8 GB DIMMs) DDR3 1066 MHz (#8199)
                      For PS704:
                      32 GB - four 8 GB (2 x 4 GB DIMMs) DDR3 1066 MHz (#8196) or
                      two 16 GB (2 x 8 GB DIMMs) DDR3 1066 MHz (#8199)

Storage               AIX/Linux/Virtual I/O Server/IBM i (required VIOS partition):
                      300 GB SAS 2.5-inch HDD (#8274) or
                      600 GB SAS 2.5-inch HDD (#8276) or
                      177 GB SATA SSD (#8207)
                      If Boot from SAN is selected, an 8 Gb Fibre Channel HBA
                      (FC #8240, #8242, or #8271) or a Fibre Channel over Ethernet
                      adapter (FC #8275) must be ordered.
                      FC #8207 requires FC #4539 - Interposer for 1.8-inch Solid State Drives

1x Language Group     Country specific (selected by the customer)

Operating system      1x primary operating system (one of the following):
                      AIX (#2146)
                      Linux (#2147)
                      IBM i (#2145) plus IBM i 6.1.1 (#0566)
                      IBM i (#2145) plus IBM i 7.1 (#0567)

1.6.4 Power supply features

The peak power consumption is 428 W for the PS703 and 848 W for the PS704 blade server;

power is provided by the BladeCenter power supply modules. The maximum measured value

is the worst case power consumption expected from a fully populated server under intensive

workload. The maximum measured value also takes into account component tolerance and

non-ideal operating conditions. Power consumption and heat load vary greatly by server

configuration and use.

Use the IBM Systems Energy Estimator to obtain a heat output estimate based on a specific

configuration. The Estimator is available from the following web page:

http://www-912.ibm.com/see/EnergyEstimator

For information about power supply requirements for each of the BladeCenter chassis

supported by POWER7 blade servers and the number of POWER7 blades supported, see

1.3.2, “Number of PS703 and PS704 blades in a chassis” on page 12.
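As a rough planning aid, the peak figures above can be summed for a given blade population. The following Python sketch is an illustration under stated assumptions: the function names are invented for this example, the result covers the blades only (not chassis modules such as switches or blowers), and the watt to BTU/hour factor of 3.412 is the standard unit conversion rather than a value from this paper. For configuration-specific estimates, the IBM Systems Energy Estimator remains the appropriate tool.

```python
# Peak power consumption per blade, from section 1.6.4.
PEAK_WATTS = {"PS703": 428, "PS704": 848}

# Standard unit conversion: 1 watt is approximately 3.412 BTU per hour.
BTU_PER_HOUR_PER_WATT = 3.412


def peak_blade_power_w(ps703: int = 0, ps704: int = 0) -> int:
    """Worst-case power draw in watts for the blades alone."""
    if ps703 < 0 or ps704 < 0:
        raise ValueError("blade counts must not be negative")
    return ps703 * PEAK_WATTS["PS703"] + ps704 * PEAK_WATTS["PS704"]


def peak_heat_btu_per_hour(ps703: int = 0, ps704: int = 0) -> float:
    """Worst-case heat load in BTU/hour, assuming all power becomes heat."""
    return peak_blade_power_w(ps703, ps704) * BTU_PER_HOUR_PER_WATT
```

For example, 14 PS703 blades in a BladeCenter H give an upper bound of 14 x 428 W = 5992 W for the blades alone. Actual consumption is typically lower because the peak figure assumes a fully populated server under intensive workload.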

1.6.5 Processor

The processors used in the PS703 and PS704 are 64-bit POWER7 processors operating at

2.4 GHz. They are optimized to achieve maximum performance for both the system and its

virtual machines. Couple that performance with PowerVM and you are now enabled for

massive workload consolidation to drive maximum system use, predictable performance, and

cost efficiency.

POWER7 Intelligent Threads Technology enables workload optimization by selecting the

most suitable threading mode (Single thread (per core) or Simultaneous Multi-thread 2 or 4

modes, also called 2-SMT and 4-SMT). The Intelligent Threads Technology can provide

improved application performance. In addition, POWER7 processors can maximize cache

access to cores, improving performance, using Intelligent Cache technology.

EnergyScale™ Technology offers Intelligent Energy management features, which can

dramatically and dynamically conserve power and further improve energy efficiency. These

Intelligent Energy features enable the POWER7 processor to operate at a higher frequency if

environmental conditions permit, for increased performance per watt. Alternatively, if user

settings permit, these features allow the processor to operate at a reduced frequency for

significant energy savings.

The PS703 and PS704 come with a standard processor configuration. There are no optional

processor configurations for the PS703 and PS704. The PS703 and PS704 processor

configurations are as follows:

The PS703 blade server is a single-wide blade that contains two eight-core, 64-bit

POWER7 2.4 GHz processors with 256 KB per processor core L2 cache and 4 MB per

processor core L3 cache. No processor options are available.

The PS704 blade server is a double-wide blade that contains four eight-core, 64-bit POWER7 2.4 GHz processors with 256 KB per processor core L2 cache and 4 MB per processor core L3 cache. No processor options are available.
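The threading modes described in this section determine how many hardware threads a blade presents. The following Python sketch shows the arithmetic; the mode labels and the function name are assumptions made for this example, and the mode actually in effect is selected by the system, not by this calculation.

```python
# Threads per core for each POWER7 threading mode named in the text:
# single thread, 2-SMT, and 4-SMT.
THREADS_PER_CORE = {"ST": 1, "SMT2": 2, "SMT4": 4}


def hardware_threads(processors: int, cores_per_processor: int, mode: str) -> int:
    """Hardware threads = processors x cores per processor x threads per core."""
    if mode not in THREADS_PER_CORE:
        raise ValueError(f"unknown threading mode: {mode}")
    return processors * cores_per_processor * THREADS_PER_CORE[mode]
```

For example, a PS703 (two 8-core processors) in 4-SMT mode presents 2 x 8 x 4 = 64 hardware threads, and a PS704 (four 8-core processors) presents 128.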

1.6.6 Memory features

The PS703 and PS704 blade servers use industry standard VLP DDR3 memory DIMMs. Memory DIMMs must be installed in matched pairs with the same size and speed. For details about the memory subsystem and layout, see 2.4, “Memory subsystem” on page 47.

The PS703 and PS704 blade servers have 16 and 32 DIMM slots, respectively. Memory is available in 4 GB, 8 GB, or 16 GB DIMMs, all operating at a memory speed of 1066 MHz. The memory sizes can be mixed within a system.

The POWER7 DDR3 memory uses a new memory architecture to provide greater bandwidth

and capacity. This enables operating at a higher data rate for larger memory configurations.

For details, see 2.4, “Memory subsystem” on page 47. Table 1-7 shows the DIMM features.

Table 1-7 Memory DIMM options

| Feature code | Total memory size | Package includes | Speed |
|---|---|---|---|
| 8196 | 8 GB | Two 4 GB DIMMs | 1066 MHz |
| 8199 | 16 GB | Two 8 GB DIMMs | 1066 MHz |
| EM34 | 32 GB | Two 16 GB DIMMs | 1066 MHz |
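
As a rough illustration of how the DIMM features and slot counts combine, the sketch below totals the memory for an order expressed as feature-code quantities. It relies only on the values in Table 1-7 and the slot counts stated above; the function and constant names are hypothetical. Because each feature ships as a matched pair, the matched-pair rule is satisfied by construction.

```python
from typing import Mapping

# Feature code -> size of each DIMM in GB (each feature includes two DIMMs).
DIMM_GB_BY_FEATURE = {"8196": 4, "8199": 8, "EM34": 16}
DIMMS_PER_FEATURE = 2
DIMM_SLOTS = {"PS703": 16, "PS704": 32}


def total_memory_gb(model: str, features: Mapping[str, int]) -> int:
    """Return total memory in GB for a mapping of feature code -> quantity.

    Raises ValueError for unknown feature codes or if the order needs
    more DIMM slots than the blade model provides.
    """
    unknown = sorted(set(features) - set(DIMM_GB_BY_FEATURE))
    if unknown:
        raise ValueError(f"unknown feature codes: {unknown}")
    dimms = DIMMS_PER_FEATURE * sum(features.values())
    if dimms > DIMM_SLOTS[model]:
        raise ValueError(f"{model} has {DIMM_SLOTS[model]} slots, order needs {dimms}")
    return sum(
        DIMMS_PER_FEATURE * DIMM_GB_BY_FEATURE[code] * qty
        for code, qty in features.items()
    )


# All 16 slots of a PS703 filled with 16 GB DIMMs.
print(total_memory_gb("PS703", {"EM34": 8}))
# Mixed DIMM sizes in a PS704, which the text above permits.
print(total_memory_gb("PS704", {"8196": 4, "8199": 4}))
```

With every slot populated by 16 GB DIMMs, this arithmetic gives 256 GB for the PS703 and 512 GB for the PS704.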


Notes:

The DDR2 DIMMs used in JS23 and JS43 blade servers are not supported in the

POWER7 blade servers.

The DDR3 DIMMs used in PS700, PS701, and PS702 blade servers are not supported

in the PS703 and PS704 blade servers.

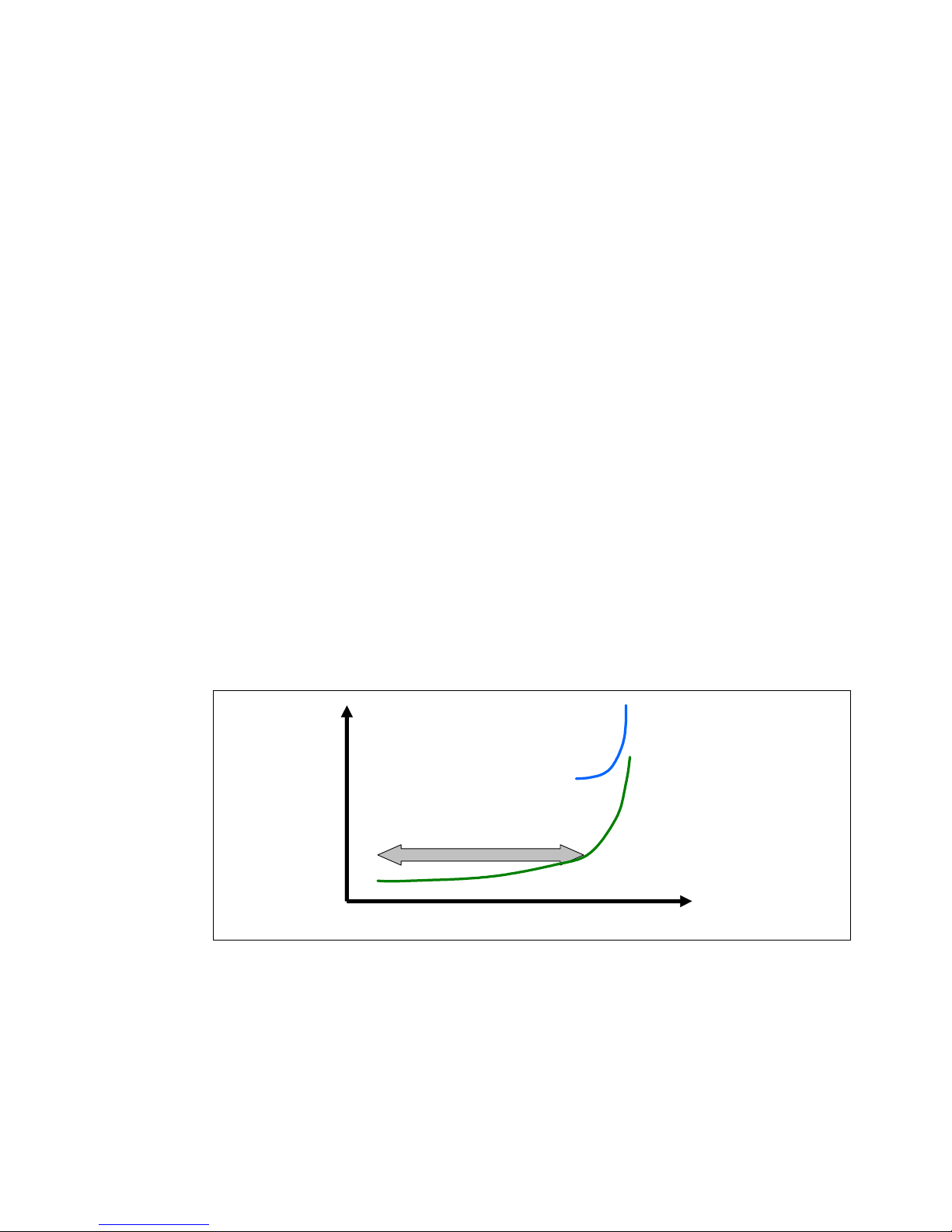

The optional Active Memory Expansion is a POWER7 technology that allows the effective

maximum memory capacity to be much larger than the true physical memory. Compression

and decompression of memory content using processor cycles can allow memory expansion

up to 100%. This can allow an AIX 6.1 (or later) partition to do significantly more work with the

same physical amount of memory or a server to run more partitions and do more work with

the same physical amount of memory. For more information, see 2.5, “Active Memory

Expansion” on page 52.
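
The relationship between physical memory and effective memory can be written as a one-line calculation. The sketch below assumes the expansion is expressed as a percentage and capped at the 100% figure quoted above; the function name is hypothetical, and the expansion actually achieved depends on the workload, which this sketch does not model.

```python
MAX_EXPANSION_PERCENT = 100  # "up to 100%" per the text above


def effective_memory_gb(physical_gb: float, expansion_percent: float) -> float:
    """Effective memory capacity for a given physical size and expansion percentage."""
    if physical_gb <= 0:
        raise ValueError("physical memory must be positive")
    if not 0 <= expansion_percent <= MAX_EXPANSION_PERCENT:
        raise ValueError("expansion must be between 0 and 100 percent")
    return physical_gb * (1 + expansion_percent / 100)


# A partition with 64 GB of physical memory at the maximum expansion.
print(effective_memory_gb(64, 100))
# The same calculation at a more modest 50% expansion on 32 GB.
print(effective_memory_gb(32, 50))
```

At 100% expansion, the effective capacity is twice the physical memory.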

1.6.7 I/O features

The PS703 has one CIOv PCIe expansion card slot and one CFFh PCIe high-speed

expansion card slot. The PS704 blade server has two CIOv expansion card slots and two

CFFh expansion card slots.

Table 1-8 shows the CIOv and CFFh expansion cards supported in the PS703 and PS704

servers.

Table 1-8 I/O expansion cards supported in the PS703 and PS704

| Type | Card description | Feature code |
|---|---|---|
| CIOv | QLogic 8 Gb Fibre Channel Expansion Card (CIOv) | 8242 |
| CIOv | QLogic 4 Gb Fibre Channel Expansion Card (CIOv) | 8241 |
| CIOv | Emulex 8 Gb Fibre Channel Expansion Card (CIOv) | 8240 |
| CIOv | 3 Gb SAS Passthrough Expansion Card (CIOv) | 8246 |
| CIOv | Broadcom 2-Port Gb Ethernet Expansion Card (CIOv) | 8243 |
| CFFh | QLogic 1 Gb Ethernet and 8 Gb Fibre Channel Expansion Card (CFFh) | 8271 |
| CFFh | QLogic 1 Gb Ethernet and 4 Gb Fibre Channel Expansion Card (CFFh) | 8252 |
| CFFh | QLogic 2-port 10 Gb Converged Network Adapter (CFFh) | 8275 |
| CFFh | 2-Port QDR 40 Gb/s InfiniBand Expansion Card (CFFh) | 8272 |
| CFFh | Broadcom 2/4-Port Ethernet Expansion Card (CFFh) | 8291 |
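
The slot counts and Table 1-8 together determine how many cards of each form factor fit in a blade. The following sketch checks a list of feature codes against those slot counts. The names are illustrative only, and the check covers slot counts alone; it does not consider chassis or I/O module compatibility.

```python
from collections import Counter

# Feature code -> form factor, transcribed from Table 1-8.
CARD_FORM_FACTOR = {
    "8242": "CIOv", "8241": "CIOv", "8240": "CIOv", "8246": "CIOv", "8243": "CIOv",
    "8271": "CFFh", "8252": "CFFh", "8275": "CFFh", "8272": "CFFh", "8291": "CFFh",
}

# Expansion slots per blade model, as stated in this section.
EXPANSION_SLOTS = {
    "PS703": {"CIOv": 1, "CFFh": 1},
    "PS704": {"CIOv": 2, "CFFh": 2},
}


def slots_used(model, feature_codes):
    """Return slots used per form factor, or raise ValueError if the cards do not fit."""
    unknown = [code for code in feature_codes if code not in CARD_FORM_FACTOR]
    if unknown:
        raise ValueError(f"unknown feature codes: {unknown}")
    used = Counter(CARD_FORM_FACTOR[code] for code in feature_codes)
    for form_factor, available in EXPANSION_SLOTS[model].items():
        if used[form_factor] > available:
            raise ValueError(
                f"{model} has {available} {form_factor} slot(s), "
                f"but {used[form_factor]} card(s) were requested"
            )
    return dict(used)


# One CIOv Fibre Channel card plus one CFFh converged network adapter in a PS703.
print(slots_used("PS703", ["8242", "8275"]))
```

Requesting two CIOv cards for a PS703, for example, raises an error in this sketch, whereas the same request is accepted for a PS704.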

QLogic 8 Gb Fibre Channel Expansion Card (CIOv)

The QLogic 8 Gb Fibre Channel Expansion Card (CIOv) for IBM BladeCenter, feature #8242,

enables high-speed access for IBM blade servers to connect to a Fibre Channel storage area

network (SAN). When compared to the previous-generation 4 Gb adapters, the new adapter

doubles the throughput speeds for Fibre Channel traffic. As a result, you can manage

increased amounts of data and possibly benefit from a reduced hardware cost.


The card has the following features:

CIOv form factor

QLogic 2532 8 Gb ASIC

PCI Express 2.0 host interface

Support for two full-duplex Fibre Channel ports at 8 Gbps maximum per channel

Support for Fibre Channel Protocol Small Computer System Interface (FCP-SCSI) and

Fibre Channel Internet Protocol (FC-IP)

Support for Fibre Channel service (class 3)

Support for switched fabric, point-to-point, and Fibre Channel Arbitrated Loop (FC-AL)

connections

Support for NPIV

For more information, see the IBM Redbooks at-a-glance guide at the following web page:

http://www.redbooks.ibm.com/abstracts/tips0692.html?Open

QLogic 4 Gb Fibre Channel Expansion Card (CIOv)

The QLogic 4 Gb Fibre Channel Expansion Card (CIOv) for BladeCenter, feature #8241,

enables you to connect the BladeCenter servers with CIOv expansion slots to a Fibre

Channel SAN. Pick any Fibre Channel storage solution from the IBM System Storage®

DS3000, DS4000®, DS5000, and DS8000® series, and begin accessing data over a

high-speed interconnect. This card is installed into the PCI Express CIOv slot of a supported

blade server. It provides connections to Fibre Channel-compatible modules located in bays 3

and 4 of a supported BladeCenter chassis. A maximum of one QLogic 4 Gb Fibre Channel

Expansion Card (CIOv) is supported per single-wide (30 mm) blade server.

The card has the following features:

CIOv form factor

PCI Express 2.0 host interface

Support for two full-duplex Fibre Channel ports at 4 Gbps maximum per channel

Support for Fibre Channel Protocol SCSI (FCP-SCSI) and Fibre Channel Internet Protocol

(FC-IP)

Support for Fibre Channel service (class 3)

Support for switched fabric, point-to-point, and Fibre Channel Arbitrated Loop (FC-AL)

connections

For more information, see the IBM Redbooks at-a-glance guide at the following web page:

http://www.redbooks.ibm.com/abstracts/tips0695.html?Open

Emulex 8 Gb Fibre Channel Expansion Card (CIOv)

The Emulex 8 Gb Fibre Channel Expansion Card (CIOv) for IBM BladeCenter, feature #8240,

enables high-performance connection to a SAN. The innovative design of the IBM

BladeCenter midplane enables this Fibre Channel adapter to operate without the need for an

optical transceiver module. This saves significant hardware costs. Each adapter provides

dual paths to the SAN switches to ensure full redundancy. The exclusive firmware-based

architecture allows firmware and features to be upgraded without taking the server offline or

rebooting, and without the need to upgrade the driver.

The card has the following features:

Support of the 8 Gbps Fibre Channel standard

Use of the Emulex “Saturn” 8 Gb Fibre Channel I/O Controller (IOC) chip

Enablement of high-speed and dual-port connection to a Fibre Channel SAN

Can be combined with a CFFh card on the same blade server


Comprehensive virtualization capabilities with support for N_Port ID Virtualization (NPIV)

and Virtual Fabric

Simplified installation and configuration using common HBA drivers

Efficient administration by using HBAnyware for HBAs anywhere in the SAN

Common driver model that eases management and enables upgrades independent of

HBA firmware

Support of BladeCenter Open Fabric Manager

Support for NPIV when installed in the PS703 and PS704 blade servers

For more information, see the IBM Redbooks at-a-glance guide at the following web page:

http://www.redbooks.ibm.com/abstracts/tips0703.html?Open

3 Gb SAS Passthrough Expansion Card (CIOv)

This card, feature #8246, is an expansion card that offers the ideal way to connect the

supported BladeCenter servers to a wide variety of SAS storage devices. The SAS

connectivity card can connect to the Disk Storage Modules in the BladeCenter S. The card

routes the pair of SAS channels from the blade’s onboard SAS controller to the SAS switches

installed in the BladeCenter chassis.

Tip: This card is also known as the SAS Connectivity Card (CIOv) for IBM BladeCenter.

This card is installed into the CIOv slot of the supported blade server. It provides connections

to SAS modules located in bays 3 and 4 of a supported BladeCenter chassis.

The card has the following features:

CIOv form factor

Provides external connections for the two SAS ports of the blade server's onboard SAS

controller

Support for two full-duplex SAS ports at 3 Gbps maximum per channel

Support for SAS, SSP, and SMP protocols

Connectivity to SAS storage devices

For more information, see the IBM Redbooks at-a-glance guide at the following web page:

http://www.redbooks.ibm.com/abstracts/tips0701.html?Open

Broadcom 2-Port Gb Ethernet Expansion Card (CIOv)

The Broadcom 2-Port Gb Ethernet Expansion Card (CIOv), feature #8243, is an Ethernet expansion card with two 1 Gb Ethernet ports designed for BladeCenter servers with CIOv expansion slots.

The card has the following features:

PCI Express host interface

Broadcom BCM5709S communication module

BladeCenter Open Fabric Manager (BOFM) support

Connection to 1000BASE-X environments using BladeCenter Ethernet switches

Full-duplex (FDX) capability, enabling simultaneous transmission and reception of data on

the Ethernet local area network (LAN)

TCP/IP checksum offload

TCP segmentation offload

For more details, see the IBM Redbooks publication IBM BladeCenter Products and

Technology, SG24-7523, available at the following web page:

http://www.redbooks.ibm.com/abstracts/sg247523.html?Open


QLogic 1 Gb Ethernet and 8 Gb Fibre Channel Expansion Card (CFFh)

The QLogic 1 Gb Ethernet and 8 Gb Fibre Channel Expansion Card, feature #8271, is a CFFh high-speed blade server expansion card with two 8 Gb Fibre Channel ports and two 1 Gb Ethernet ports. It provides a QLogic 2532 PCI Express ASIC for 2-port 8 Gb Fibre Channel and a Broadcom 5709S ASIC for 2-port 1 Gb Ethernet. This card is used in conjunction with the Multi-Switch Interconnect Module (MSIM), with an Ethernet-capable I/O module installed in the left position of the MSIM and a Fibre Channel-capable I/O module installed in the right position. The two switches do not both need to be present at the same time because the Fibre Channel and Ethernet networks are separate and distinct. The card can be combined with a CIOv I/O card on the same high-speed blade server.

The card has the following features:

Broadcom 5709S ASIC with two 1 Gb Ethernet ports

PCI Express host interface

BladeCenter Open Fabric Manager (BOFM) support

TCP/IP checksum offload

TCP segmentation offload

Full-duplex (FDX) capability

QLogic 2532 ASIC with two 8 Gb Fibre Channel ports

Support for FCP-SCSI and FC-IP

Support for point-to-point fabric connection (F-port fabric login)

Support for Fibre Channel service (classes 2 and 3)

Support for NPIV when installed in PS703 and PS704 blade servers

Support for remote startup (boot) operations

Support for BladeCenter Open Fabric Manager

Support for Fabric Device Management Interface (FDMI) standard

Fibre Channel 8 Gbps, 4 Gbps, or 2 Gbps auto-negotiation
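
The auto-negotiation item in the list above can be pictured as choosing the highest link speed that both ends support. The sketch below is a deliberately simplified model of that idea, not the actual Fibre Channel link speed negotiation procedure; the peer speed sets are hypothetical examples.

```python
# Link speeds (in Gbps) listed for this card's Fibre Channel ports.
CARD_SPEEDS_GBPS = frozenset({8, 4, 2})


def negotiate_speed(peer_speeds_gbps):
    """Return the highest speed common to the card and the peer, or None if there is none."""
    common = CARD_SPEEDS_GBPS & set(peer_speeds_gbps)
    return max(common) if common else None


# Hypothetical peers: an older 4 Gb switch port and a newer 16 Gb switch port.
print(negotiate_speed({4, 2, 1}))
print(negotiate_speed({16, 8, 4}))
```

In this model, a peer limited to 4 Gbps brings the link down to 4 Gbps, while a faster peer that still supports 8 Gbps lets the card run at its maximum speed.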