Page 1

IBM Power Systems

Performance Capabilities Reference

IBM i operating system Version 6.1

January/April/October 2008

This

document is intended for use by qualified performance related programmers or analysts from

IBM, IBM Business Partners and IBM customers using the IBM Power

running IBM i operating system. Information in this document may be readily shared with

IBM i customers to understand the performance and tuning factors in IBM i operating system

6.1 and earlier where applicable. For the latest updates and for the latest on IBM i

performance information, please refer to the Performance Management Website:

http://www.ibm.com/systems/i/advantages/perfmgmt/index.html

Requests for use of performance information by the technical trade press or consultants should

be directed to Systems Performance Department V3T, IBM Rochester Lab, in Rochester, MN.

55901 USA.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 1

TM

Systems platform

Page 2

Note!

Before using this information, be sure to read the general information under “Special Notices.”

Twenty Fifth Edition (January/April/October 2008) SC41-0607-13

This edition applies to IBM i operating System V6.1 running on IBM Power Systems.

You can request a copy of this document by download from IBM i Center via the System i Internet site at:

http://www.ibm.com/systems/i/

available on the IBM iSeries Internet site in the "On Line Library", at:

http://publib.boulder.ibm.com/pubs/html/as400/online/chgfrm.htm.

Documents are viewable/downloadable in Adobe Acrobat (.pdf) format. Approximately 1 to 2 MB download. Adobe Acrobat

reader plug-in is available at: http://www.adobe.com

To request the CISC version (V3R2 and earlier), enter the following command on VM:

REQUEST V3R2 FROM FIELDSIT AT RCHVMW2 (your name)

To request the IBM iSeries Advanced 36 version, enter the following command on VM:

TOOLCAT MKTTOOLS GET AS4ADV36 PACKAGE

© Copyright International Business Machines Corporation 2008. All rights reserved.

Note to U.S. Government Users -- Documentation related to restricted rights -- Use, duplication, or disclosure is subject to

restrictions set forth in GSA ADP Schedule Contract with IBM Corp.

. The Version 5 Release 1 and Version 4 Release 5 Performance Capabilities Guides are also

.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 2

Page 3

Table of Contents

2.1 Overview

2.1.1 Interactive Indicators and Metrics

2.1.2 Disclaimer and Remaining Sections

2.1.3 V5R3

2.1.4 V5R2 and V5R1

2.2 Server Model Behavior

2.2.1 In V4R5 - V5R2

2.2.2 Choosing Between Similarly Rated Systems

2.2.3 Existing Older Models

2.3 Server Model Differences

2.4 Performance Highlights of Model 7xx Servers

2.5 Performance Highlights of Model 170 Servers

2.6 Performance Highlights of Custom Server Models

2.7 Additional Server Considerations

2.8 Interactive Utilization

2.9 Server Dynamic Tuning (SDT)

2.10 Managing Interactive Capacity

2.11 Migration from Traditional Models

2.12 Upgrade Considerations for Interactive Capacity

2.13 iSeries for Domino and Dedicated Server for Domino Performance Behavior

2.13.1 V5R2 iSeries for Domino & DSD Performance Behavior updates

2.13.2 V5R1 DSD Performance Behavior

3.1 Effect of CPU Speed on Batch

3.2 Effect of DASD Type on Batch

3.3 Tuning Parameters for Batch

4.1 New for i5/OS V6R1

i5/OS V6R1 SQE Query Coverage

4.2 DB2 i5/OS V5R4 Highlights

i5/OS V5R4 SQE Query Coverage

4.3 i5/OS V5R3 Highlights

i5/OS V5R3 SQE Query Coverage

Partitioned Table Support

4.4 V5R2 Highlights - Introduction of the SQL Query Engine

4.5 Indexing

4.6 DB2 Symmetric Multiprocessing feature

4.7 DB2 for i5/OS Memory Sharing Considerations

4.8 Journaling and Commitment Control

4.9 DB2 Multisystem for i5/OS

4.10 Referential Integrity

4.11 Triggers

4.12 Variable Length Fields

4.13 Reuse Deleted Record Space

4.14 Performance References for DB2

.....................................................................

.............................................

............................................

....................................................................

...........................................................

..........................................................

............................................................

......................................

.......................................................

........................................................

........................................

........................................

.....................................

..................................................

...........................................................

....................................................

...................................................

................................................

.....................................

....................

............................................

.....................................................

....................................................

......................................................

.............................................................

..................................................

......................................................

..................................................

...........................................................

..................................................

........................................................

...............................

......................................................................

.............................................

.......................................

................................................

.......................................................

............................................................

.....................................................................

..........................................................

.....................................................

.................................................

.............

10Special Notices ....................................................................

12Purpose of this Document ...........................................................

13Chapter 1. Introduction ............................................................

14Chapter 2. iSeries and AS/400 RISC Server Model Performance Behavior .................

14

14

15

15

16

16

16

17

17

19

21

22

23

23

24

25

28

31

33

34

34

34

38Chapter 3. Batch Performance ......................................................

38

38

39

41Chapter 4. DB2 for i5/OS Performance ...............................................

41

41

44

44

45

45

47

49

51

52

53

53

56

57

58

59

61

62

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 3

Page 4

5.2 Communication Performance Test Environment

5.5 TCP/IP Secure Performance

5.6 Performance Observations and Tips

5.7 APPC, ICF, CPI-C, and Anynet

.......................................................

.................................................

....................................................

5.8 HPR and Enterprise extender considerations

5.9 Additional Information

6.1 HTTP Server (powered by Apache)

6.2 PHP - Zend Core for i

6.3 WebSphere Application Server

6.4 IBM WebFacing

...........................................................

.................................................

............................................................

....................................................

..............................................................

.......................................

..........................................

6.5 WebSphere Host Access Transformation Services (HATS)

6.6 System Application Server Instance

6.7 WebSphere Portal

6.8 WebSphere Commerce

..............................................................

..........................................................

6.9 WebSphere Commerce Payments

6.10 Connect for iSeries

7.1 Introduction

...................................................................

7.2 What’s new in V6R1

...........................................................

............................................................

7.3 IBM Technology for Java (32-bit and 64-bit)

Native Code

Garbage Collection

7.4 Classic VM (64-bit)

JIT Compiler

Garbage Collection

Bytecode Verification

..................................................................

............................................................

............................................................

..................................................................

............................................................

...........................................................

7.5 Determining Which JVM to Use

7.6 Capacity Planning

General Guidelines

.............................................................

.............................................................

7.7 Java Performance – Tips and Techniques

Introduction

..................................................................

i5/OS Specific Java Tips and Techniques

Classic VM-specific Tips

........................................................

Java Language Performance Tips

Java i5/OS Database Access Tips

Resources

.......................................................................

8.1 System i Cryptographic Solutions

8.2 Cryptography Performance Test Environment

8.3 Software Cryptographic API Performance

8.4 Hardware Cryptographic API Performance

...............................................

.................................................

.........................................

..................................................

...........................................

............................................

.................................................

.................................................

..................................................

........................................

..........................................

..........................................

8.5 Cryptography Observations, Tips and Recommendations

8.6 Additional Information

9.1 iSeries NetServer File Serving Performance

10.1 DB2 for i5/OS access with JDBC

JDBC Performance Tuning Tips

References for JDBC

.........................................................

.........................................

.................................................

..................................................

..........................................................

..............................

...............................

63Chapter 5. Communications Performance .............................................

65

68

71

73

75

77

78Chapter 6. Web Server and WebSphere Performance ..................................

79

88

93

107

117

119

121

121

122

122

126Chapter 7. Java Performance ......................................................

126

126

127

128

128

129

129

131

132

133

135

135

136

136

137

137

138

141

142

143Chapter 8. Cryptography Performance ..............................................

143

144

145

146

148

149

150Chapter 9. iSeries NetServer File Serving Performance ................................

150

153Chapter 10. DB2 for i5/OS JDBC and ODBC Performance .............................

153

153

154

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 4

Page 5

10.2 DB2 for i5/OS access with ODBC

References for ODBC

...........................................................

11.1 Domino Workload Descriptions

11.2 Domino 8

11.3 Domino 7

11.4 Domino 6

...................................................................

...................................................................

...................................................................

Notes client improvements with Domino 6

Domino Web Access client improvements with Domino 6

11.5 Response Time and Megahertz relationship

11.6 Collaboration Edition and Domino Edition offerings

11.7 Performance Tips / Techniques

11.8 Domino Web Access

..........................................................

11.9 Domino Subsystem Tuning

11.10 Performance Monitoring Statistics

11.11 Main Storage Options

........................................................

11.12 Sizing Domino on System i

11.13 LPAR and Partial Processor Considerations

11.14 System i NotesBench Audits and Benchmarks

12.1 Introduction

.................................................................

................................................

.................................................

...........................................

...............................

.......................................

.................................

..................................................

.....................................................

...............................................

...................................................

.......................................

......................................

12.2 Performance Improvements for WebSphere MQ V5.3 CSD6

12.3 Test Description and Results

12.4 Conclusions, Recommendations and Tips

13.1 Summary

Key Ideas

....................................................................

....................................................................

13.2 Basic Requirements -- Where Linux Runs

13.3 Linux on iSeries Technical Overview

Linux on iSeries Architecture

Linux on iSeries Run-time Support

13.4 Basic Configuration and Performance Questions

13.5 General Performance Information and Results

Computational Performance -- C-based code

Computational Performance -- Java

Web Serving Performance

Network Operations

............................................................

Gcc and High Optimization (gcc compiler option -O3)

The Gcc Compiler, Version 3

13.6 Value of Virtual LAN and Virtual Disk

Virtual LAN

Virtual Disk

..................................................................

..................................................................

13.7 DB2 UDB for Linux on iSeries

13.8 Linux on iSeries and IBM eServer Workload Estimator

13.9 Top Tips for Linux on iSeries Performance

14.1 Internal (Native) Attachment.

14.1.0 Direct Attach (Native)

14.1.1 Hardware Characteristics

14.1.2 iV5R2 Direct Attach DASD

14.1.3 571B

.................................................................

....................................................

..........................................

..........................................

..............................................

.....................................................

.................................................

.....................................

.......................................

........................................

...............................................

.......................................................

.................................

.....................................................

............................................

...................................................

...............................

.........................................

...................................................

........................................................

...............................................

...................................................

...........................

155

157

158Chapter 11. Domino on i ..........................................................

159

160

160

161

161

162

163

164

164

167

168

168

169

172

173

174

175Chapter 12. WebSphere MQ for iSeries .............................................

175

175

176

176

178Chapter 13. Linux on iSeries Performance ...........................................

178

178

178

179

179

180

181

182

182

183

183

184

184

185

185

185

185

186

187

187

191Chapter 14. DASD Performance ...................................................

191

192

192

193

195

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 5

Page 6

14.1.3.1 571B RAID5 vs RAID6 - 10 15K 35GB DASD

14.1.3.2 571B IOP vs IOPLESS - 10 15K 35GB DASD

14.1.4 571B, 5709, 573D, 5703, 2780 IOA Comparison Chart

................................

.................................

..........................

14.1.5 Comparing Current 2780/574F with the new 571E/574F and 571F/575B

NOTE: iV5R3 has support for the features in this section but all of our

performance measurements were done on iV5R4 systems. For information on the

supported features see the IBM Product Announcement Letters. ..........................

14.1.6 Comparing 571E/574F and 571F/575B IOP and IOPLess

14.1.7 Comparing 571E/574F and 571F/575B RAID5 and RAID6 and Mirroring

14.1.8 Performance Limits on the 571F/575B

.......................................

14.1.9 Investigating 571E/574F and 571F/575B IOA, Bus and HSL limitations.

14.1.10 Direct Attach 571E/574F and 571F/575B Observations

14.2 New in iV5R4M5

14.2.1 9406-MMA CEC vs 9406-570 CEC DASD

14.2.2 RAID Hot Spare

14.2.3 12X Loop Testing

14.3 New in iV6R1M0

14.3.1 Encrypted ASP

14.3.2 57B8/57B7 IOA

14.3.3 572A IOA

14.4 SAN - Storage Area Network (External)

14.5.1 General VIOS Considerations

14.5.1.1 Generic Concepts

14.5.1.2 Generic Configuration Concepts

.............................................................

....................................

..........................................................

.........................................................

.............................................................

...........................................................

..........................................................

...............................................................

...........................................

..................................................

.......................................................

..............................................

.........................

...........

............

.........................

14.5.1.3 Specific VIOS Configuration Recommendations -- Traditional (non-blade)

Machines ........................................................................

14.5.1.3 VIOS and JS12 Express and JS22 Express Considerations

14.5.1.3.1 BladeCenter H JS22 Express running IBM i operating system/VIOS

14.5.1.3.2 BladeCenter S and JS12 Express

.........................................

14.5.1.3.3 JS12 Express and JS22 Express Configuration Considerations

14.5.1.3.4 DS3000/DS4000 Storage Subsystem Performance Tips

14.6 IBM i operating system 5.4 Virtual SCSI Performance

14.6.1 Introduction

14.6.2 Virtual SCSI Performance Examples

14.6.2.1 Native vs. Virtual Performance

................................................................

.........................................

...........................................

14.6.2.2 Virtual SCSI Bandwidth-Multiple Network Storage Spaces

14.6.2.3 Virtual SCSI Bandwidth-Network Storage Description (NWSD) Scaling

14.6.2.4 Virtual SCSI Bandwidth-Disk Scaling

14.6.3 Sizing

....................................................................

14.6.3.1 Sizing when using Dedicated Processors

14.6.3.2 Sizing when using Micro-Partitioning

14.6.3.3 Sizing memory

.........................................................

14.6.4 AIX Virtual IO Client Performance Guide

14.6.5 Performance Observations and Tips

14.6.6 Summary

.................................................................

15.1 Supported Backup Device Rates

.............................................

.................................................

15.2 Save Command Parameters that Affect Performance

Use Optimum Block Size (USEOPTBLK)

Data Compression (DTACPR)

Data Compaction (COMPACT)

....................................................

...................................................

.......................................

....................................

......................................

........................................

.................................

............................................

..........................

..........

.................

.......................

.............................

......................

............

195

195

196

198

199

200

202

203

205

206

206

207

208

209

209

211

213

214

216

216

217

220

222

222

227

228

229

231

233

234

235

235

236

237

238

238

240

241

242

242

242

243Chapter 15. Save/Restore Performance ..............................................

243

244

244

244

244

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 6

Page 7

15.3 Workloads

15.4 Comparing Performance Data

15.5 Lower Performing Backup Devices

15.6 Medium & High Performing Backup Devices

15.7 Ultra High Performing Backup Devices

15.8 The Use of Multiple Backup Devices

15.9 Parallel and Concurrent Library Measurements

15.9.1 Hardware (2757 IOAs, 2844 IOPs, 15K RPM DASD)

15.9.2 Large File Concurrent

15.9.3 Large File Parallel

15.9.4 User Mix Concurrent

15.10 Number of Processors Affect Performance

15.11 DASD and Backup Devices Sharing a Tower

15.12 Virtual Tape

15.13 Parallel Virtual Tapes

15.14 Concurrent Virtual Tapes

15.15 Save and Restore Scaling using a Virtual Tape Drive.

..................................................................

...................................................

...............................................

......................................

...........................................

.............................................

.....................................

...........................

....................................................

.......................................................

.....................................................

........................................

......................................

................................................................

.........................................................

......................................................

................................

15.16 Save and Restore Scaling using 571E IOAs and U320 15K DASD units to a

3580 Ultrium 3 Tape Drive. .........................................................

15.17 High-End Tape Placement on System i

..........................................

15.18 BRMS-Based Save/Restore Software Encryption and DASD-Based ASP

Encryption ......................................................................

15.19 5XX Tape Device Rates

.......................................................

15.20 5XX Tape Device Rates with 571E & 571F Storage IOAs and 4327 (U320)

Disk Units .......................................................................

15.21 5XX DVD RAM and Optical Library

15.23 9406-MMA DVD RAM

.....................................................

15.24 9406-MMA 576B IOPLess IOA

16.1 IPL Performance Considerations

16.2 IPL Test Description

...........................................................

16.3 9406-MMA System Hardware Information

16.3.1 Small system Hardware Configuration

16.3.2 Large system Hardware Configurations

16.4 9406-MMA IPL Performance Measurements (Normal)

16.5 9406-MMA IPL Performance Measurements (Abnormal)

16.6 NOTES on MSD

..............................................................

16.6.1 MSD Affects on IPL Performance Measurements

16.7 5XX System Hardware Information

16.7.1 5XX Small system Hardware Configuration

16.7.2 5XX Large system Hardware Configuration

16.8 5XX IPL Performance Measurements (Normal)

16.9 5XX IPL Performance Measurements (Abnormal)

16.10 5XX IOP vs IOPLess effects on IPL Performance (Normal)

16.11 IPL Tips

17.1 Introduction

...................................................................

..................................................................

17.2 Effects of Windows and Linux loads on the host system

17.2.1 IXS/IXA Disk I/O Operations:

17.2.2 iSCSI Disk I/O Operations:

17.2.3 iSCSI virtual I/O private memory pool

............................................

..............................................

.................................................

.........................................

.......................................

......................................

...............................

..............................

................................

...............................................

....................................

....................................

......................................

....................................

...........................

...............................

...............................................

.................................................

........................................

245

246

247

247

247

248

249

249

250

251

252

253

254

255

257

258

259

260

262

263

265

267

268

270

271

273Chapter 16 IPL Performance ......................................................

273

273

274

274

274

275

275

276

276

277

277

277

278

278

279

279

280Chapter 17. Integrated BladeCenter and System x Performance .........................

280

281

281

282

283

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 7

Page 8

17.2.4 Virtual Ethernet Connections:

17.2.5 IXS/IXA IOP Resource:

17.3 System i memory rules of thumb for IXS/IXA and iSCSI attached servers.

17.3.1 IXS and IXA attached servers:

17.3.2 iSCSI attached servers:

17.4 Disk I/O CPU Cost

............................................................

....................................................

17.4.1 Further notes about IXS/IXA Disk Operations

17.5 Disk I/O Throughput

...........................................................

17.6 Virtual Ethernet CPU Cost and Capacities

17.6.1 VE Capacity Comparisons

17.6.2 VE CPW Cost

17.6.3 Windows CPU Cost

...........................................................

.......................................................

17.7 File Level Backup Performance

17.8 Summary

....................................................................

17.9 Additional Sources of Information

18.1 Introduction

V5R3 Information

V5R2 Additions

General Tips

V5R1 Additions

18.2 Considerations

..................................................................

..............................................................

................................................................

..................................................................

................................................................

................................................................

18.3 Performance on a 12-way system

18.4 LPAR Measurements

18.5 Summary

....................................................................

..........................................................

..............................................

...................................................

................

...............................................

..................................

..........................................

.................................................

..................................................

................................................

.................................................

19.1 Public Benchmarks (TPC-C, SAP, NotesBench, SPECjbb2000, VolanoMark)

19.2 Dynamic Priority Scheduling

19.3 Main Storage Sizing Guidelines

19.4 Memory Tuning Using the QPFRADJ System Value

19.5 Additional Memory Tuning Techniques

19.6 User Pool Faulting Guidelines

19.7 AS/400 NetFinity Capacity Planning

20.1 Adjusting Your Performance Tuning for Threads

20.2 General Performance Guidelines -- Effects of Compilation

....................................................

.................................................

.................................

...........................................

...................................................

..............................................

....................................

.............................

20.3 How to Design for Minimum Main Storage Use (especially with Java, C, C++)

Theory -- and Practice

System Level Considerations

Typical Storage Costs

A Brief Example

Which is more important?

A Short but Important Tip about Data Base

A Final Thought About Memory and Competitiveness

20.4 Hardware Multi-threading (HMT)

HMT Described

HMT and SMT Compared and Contrasted

Models With/Without HMT

20.5 POWER6 520 Memory Considerations

20.6 Aligning Floating Point Data on Power6

..........................................................

.....................................................

...........................................................

...............................................................

.......................................................

..........................................

..................................

................................................

...............................................................

...........................................

.......................................................

............................................

...........................................

.............

.............

284

285

285

285

285

286

287

288

289

290

291

291

292

293

293

295Chapter 18. Logical Partitioning (LPAR) ............................................

295

295

295

295

296

296

297

300

301

302Chapter 19. Miscellaneous Performance Information ..................................

302

304

307

307

308

310

311

314Chapter 20. General Performance Tips and Techniques ...............................

314

316

317

317

318

318

319

320

321

321

322

322

323

323

324

325

327Chapter 21. High Availability Performance ...........................................

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 8

Page 9

21.1 Switchable IASP’s

21.2 Geographic Mirroring

22.1 Overview

....................................................................

22.2 Merging PM for System i data into the Estimator

22.3 Estimator Access

22.4 What the Estimator is Not

A.1 Commercial Processing Workload - CPW

A.2 Compute Intensive Workload - CIW

B.1 Performance Data Collection Services

B.2 Batch Modeling Tool (BCHMDL).

C.1 V6R1 Additions (October 2008)

C.2 V6R1 Additions (August 2008)

C.3 V6R1 Additions (April 2008)

C.4 V6R1 Additions (January 2008)

C.5 V5R4 Additions (July 2007)

............................................................

..........................................................

....................................

..............................................................

.......................................................

..........................................

..............................................

.............................................

.............................................

.................................................

..................................................

....................................................

..................................................

....................................................

C.6 V5R4 Additions (January/May/August 2006 and January/April 2007)

C.7 V5R3 Additions (May, July, August, October 2004, July 2005)

C.7.1 IBM

~®

C.8 V5R2 Additions (February, May, July 2003)

C.8.1 iSeries Model 8xx Servers

i5 Servers

..................................................

........................................

..................................................

C.8.2 Model 810 and 825 iSeries for Domino (February 2003)

C.9 V5R2 Additions

C.9.1 Base Models 8xx Servers

C.9.2 Standard Models 8xx Servers

C.10 V5R1 Additions

C.10.1 Model 8xx Servers

C.10.2 Model 2xx Servers

C.10.3 V5R1 Dedicated Server for Domino

C.10.4 Capacity Upgrade on-demand Models

...............................................................

..................................................

................................................

..............................................................

.......................................................

.......................................................

.........................................

.......................................

C.10.4.1 CPW Values and Interactive Features for CUoD Models

C.11 V4R5 Additions

C.11.1 AS/400e Model 8xx Servers

C.11.2 Model 2xx Servers

C.11.3 Dedicated Server for Domino

C.11.4 SB Models

C.12 V4R4 Additions

C.12.1 AS/400e Model 7xx Servers

C.12.2 Model 170 Servers

C.13 AS/400e Model Sxx Servers

C.14 AS/400e Custom Servers

C.15 AS/400 Advanced Servers

C.16 AS/400e Custom Application Server Model SB1

C.17 AS/400 Models 4xx, 5xx and 6xx Systems

C.18 AS/400 CISC Model Capacities

..............................................................

................................................

.......................................................

..............................................

.............................................................

..............................................................

................................................

......................................................

...................................................

.......................................................

.....................................................

....................................

.........................................

.................................................

.........................

..............................

.......................

....................

327

329

334Chapter 22. IBM Systems Workload Estimator ......................................

334

335

335

335

337Appendix A. CPW and CIW Descriptions ............................................

337

339

341Appendix B. System i Sizing and Performance Data Collection Tools ....................

342

343

345Appendix C. CPW and MCU Relative Performance Values for System i ..................

346

347

347

348

349

349

351

351

353

353

354

354

354

354

355

356

357

357

357

358

360

360

361

361

362

362

362

363

365

365

365

366

367

368

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 9

Page 10

Special Notices

DISCLAIMER NOTICE

Performance is based on measurements and projections using standard IBM benchmarks in a controlled

environment. This information is presented along with general recommendations to assist the reader to

have a better understanding of IBM(*) products. The actual throughput or performance that any user will

experience will vary depending upon considerations such as the amount of multiprogramming in the

user's job stream, the I/O configuration, the storage configuration, and the workload processed.

Therefore, no assurance can be given that an individual user will achieve throughput or performance

improvements equivalent to the ratios stated here.

All performance data contained in this publication was obtained in the specific operating environment and

under the conditions described within the document and is presented as an illustration. Performance

obtained in other operating environments may vary and customers should conduct their own testing.

Information is provided "AS IS" without warranty of any kind.

The use of this information or the implementation of any of these techniques is a customer responsibility

and depends on the customer's ability to evaluate and integrate them into the customer's operational

environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there

is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt

these techniques to their own environments do so at their own risk.

All statements regarding IBM future direction and intent are subject to change or withdrawal without

notice, and represent goals and objectives only. Contact your local IBM office or IBM authorized reseller

for the full text of the specific Statement of Direction.

Some information addresses anticipated future capabilities. Such information is not intended as a

definitive statement of a commitment to specific levels of performance, function or delivery schedules

with respect to any future products. Such commitments are only made in IBM product announcements.

The information is presented here to communicate IBM's current investment and development activities

as a good faith effort to help with our customers' future planning.

IBM may have patents or pending patent applications covering subject matter in this document. The

furnishing of this document does not give you any license to these patents. You can send license

inquiries, in writing, to the IBM Director of Commercial Relations, IBM Corporation, Purchase, NY

10577.

Information concerning non-IBM products was obtained from a supplier of these products, published

announcement material, or other publicly available sources and does not constitute an endorsement of

such products by IBM. Sources for non-IBM list prices and performance numbers are taken from

publicly available information, including vendor announcements and vendor worldwide homepages. IBM

has not tested these products and cannot confirm the accuracy of performance, capability, or any other

claims related to non-IBM products. Questions on the capability of non-IBM products should be

addressed to the supplier of those products.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 10

Page 11

The following terms, which may or may not be denoted by an asterisk (*) in this publication, are trademarks of the

IBM Corporation.

Operating System/400System/370iSeries or AS/400

i5/OS IPDSC/400

Application System/400COBOL/400OS/400

OfficeVisionRPG/400System i5

Facsimile Support/400CallPathSystem i

Distributed Relational Database ArchitectureDRDAPS/2

Advanced Function PrintingSQL/400OS/2

Operational AssistantImagePlusDB2

Client SeriesVTAMAFP

Workstation Remote IPL/400APPNIBM

Advanced Peer-to-Peer NetworkingSystemViewSQL/DS

OfficeVision/400ValuePoint400

iSeries Advanced Application ArchitectureDB2/400CICS

ADSTAR Distributed Storage Manager/400ADSM/400S/370

IBM Network StationAnyNet/400RPG IV

Lotus, Lotus Notes, Lotus Word Pro, Lotus 1-2-3AIX

POWER4+POWER4Micro-partitioning

TM

Systems

POWER5+POWER5POWER

POWER6+POWER6Power

PowerTM Systems SoftwarePowerTM Systems SoftwarePowerPC

The following terms, which may or may not be denoted by a double asterisk (**) in this publication, are trademarks

or registered trademarks of other companies as follows:

Transaction Processing Performance CouncilTPC Benchmark

Transaction Processing Performance CouncilTPC-A, TPC-B

Transaction Processing Performance CouncilTPC-C, TPC-D

Microsoft CorporationODBC, Windows NT Server, Access

Microsoft CorporationVisual Basic, Visual C++

Adobe Systems IncorporatedAdobe PageMaker

Borland International IncorporatedBorland Paradox

Corel CorporationCorelDRAW!

Borland InternationalParadox

Satelite Software InternationalWordPerfect

BGS Systems, Inc.BEST/1

NovellNetWare

Compaq Computer CorporationCompaq

Compaq Computer CorporationProliant

Business Application Performance CorporationBAPCo

Gaphics Software Publishing CorporationHarvard

Hewlett Packard CorporationHP-UX

Hewlett Packard CorporationHP 9000

Intersolve, Inc.INTERSOLV

Intersolve, Inc.Q+E

Novell, Inc.Netware

Systems Performance Evaluation CooperativeSPEC

UNIX Systems LaboratoriesUNIX

WordPerfect CorporationWordPerfect

Powersoft CorporationPowerbuilder

Gupta CorporationSQLWindows

Ziff-Davis Publishing CompanyNetBench

Digital Equipment CorporationDEC Alpha

Microsoft, Windows, Windows 95, Windows NT, Internet Explorer, Word, Excel, and Powerpoint, and the Windows logo are

trademarks of Microsoft Corporation in the United States, other countries, or both.

Intel, Intel Inside (logos), MMX and Pentium are trademarks of Intel Corporation in the United States, other countries, or both.

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Java and all Java-based trademarks are trademarks of Sun Microsystems, Inc. in the United States, other countries, or both.

Other company, product or service names may be trademarks or service marks of others

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 11

.

Page 12

Purpose of this Document

The intent of this document is to help provide guidance in terms of IBM i operating system

performance, capacity planning information, and tips to obtain optimal performance on IBM i

operating system. This document is typically updated with each new release or more often if needed.

This October 2008 edition of the IBM i V6.1 Performance Capabilities Reference Guide is an update to

the April 2008 edition to reflect new product functions announced on October 7, 2008.

This edition includes performance information on newly announced IBM Power Systems including

Power 520 and Power 550, utilizing POWER6 processor technology. This document further includes

information on IBM System i 570 using POWER6 processor technology, IBM i5/OS running on IBM

BladeCenter JS22 using POWER6 processor technology, recent System i5 servers (model 515, 525, and

595) featuring new user-based licensing for the 515 and 525 models and a new 2.3GHz model 595, DB2

UDB for iSeries SQL Query Engine Support, Websphere Application Server including WAS V6.1 both

with the Classic VM and the IBM Technology for Java (32-bit) VM, WebSphere Host Access

Transformation Services (HATS) including the IBM WebFacing Deployment Tool with HATS

Technology (WDHT), PHP - Zend Core for i, Java including Classic JVM (64-bit), IBM Technology for

Java (32-bit), IBM Technology for Java (64-bit) and bytecode verification, Cryptography, Domino 7,

Workplace Collaboration Services (WCS), RAID6 versus RAID5 disk comparisons, new internal storage

adapters, Virtual Tape, and IPL Performance.

The wide variety of applications available makes it extremely difficult to describe a "typical" workload.

The data in this document is the result of measuring or modeling certain application programs in very

specific and unique configurations, and should not be used to predict specific performance for other

applications. The performance of other applications can be predicted using a system sizing tool such as

IBM Systems Workload Estimator (refer to Chapter 22 for more details on Workload Estimator).

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 IBM i Performance Capabilities 12

Page 13

Chapter 1. Introduction

IBM System i and IBM System p platforms unified the value of their servers into a single,

powerful lineup of servers based on industry leading POWER6 processor technology with

support for IBM i operating system (formerly known as i5/OS), IBM AIX and Linux for Power.

Following along with this exciting unification are a number of naming changes to the formerly

named i5/OS, now officially called IBM i operating system. Specifically, recent versions of the

operating system are referred to by IBM i operating system 6.1 and IBM i operating system 5.4,

formerly i5/OS V6R1 and i5/OS V5R4 respectively. Shortened forms of the new operating

system name are IBM i 6.1, i 6.1, i V6.1 iV6R1, and sometimes simply ‘i’. As always,

references to legacy hardware and software will commonly use the naming conventions of the

time.

The Power 520 Express Edition is the entry member of the Power Systems portfolio, supporting

both IBM i 5.4 and IBM i 6.1. The System i 570 is enhanced to enable medium and large

enterprises to grow and extend their IBM i business applications more affordably and with more

granularity, while offering effective and scalable options for deploying Linux and AIX

applications on the same secure, reliable system.

The IBM Power 570 running IBM i offers IBM's fastest POWER6 processors in 2 to 16-way

configurations, plus an array of other technology advances. It is designed to deliver outstanding

price/performance, mainframe-inspired reliability and availability features, flexible capacity

upgrades, and innovative virtualization technologies. New 5.0GHz and 4.4GHz POWER6

processors use the very latest 64-bit IBM POWER processor technology. Each 2-way 570

processor card contains one two-core chip (two processors) and comes with 32 MB of L3 cache

and 8 MB of L2 cache.

The CPW ratings for systems with POWER6 processors are approximately 70% higher than

equivalent POWER5 systems and approximately 30% higher than equivalent POWER5+

systems. For some compute-intensive applications, the new System i 570 can deliver up to twice

the performance of the original 570 with 1.65 GHz POWER5 processors.

The 515 and 525 models introduced in April 2007, introduce user-based licensing for IBM i. For

assistance in determining the required number of user licenses, see

ttp://www.ibm.com/systems/i/hardware/515 (model 515) or

h

http://www.ibm.com/systems/i/hardware/525 (model 525). User-based licensing is not a

replacement for system sizing; instead, user-based licensing enables appropriate user

connectivity to the system. Application environments require different amounts of system

resources per user. See Chapter 22 (IBM Systems Workload Estimator) for assistance in system

sizing.

Customers who wish to remain with their existing hardware but want to move to IBM i 6.1 may

find functional and performance improvements. IBM i 6.1 continues to help protect the

customer's investment while providing more function and better price/performance over previous

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 1- Introduction 13

Page 14

versions. The primary public performance information web site is found at:

http://www.ibm.com/systems/i/advantages/perfmgmt/index.html

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 1- Introduction 14

Page 15

Chapter 2. iSeries and AS/400 RISC Server Model Performance Behavior

2.1 Overview

iSeries and AS/400 servers are intended for use primarily in client/server or other non-interactive work

environments such as batch, business intelligence, network computing etc. 5250-based interactive work

can be run on these servers, but with limitations. With iSeries and AS/400 servers, interactive capacity

can be increased with the purchase of additional interactive features. Interactive work is defined as any

job doing 5250 display device I/O. This includes:

All 5250 sessions

Any green screen interface

Telnet or 5250 DSPT workstations

5250/HTML workstation gateway

PC's using 5250 emulation

Interactive program debugging

RUMBA/400

Screen scrapers

Interactive subsystem monitors

Twinax printer jobs

BSC 3270 emulation

5250 emulation

PC Support/400 work station function

Note that printer work that passes through twinax media is treated as interactive, even though there is no

“user interface”. This is true regardless of whether the printer is working in dedicated mode or is printing

spool files from an out queue. Printer activity that is routed over a LAN through a PC print controller are

not considered to be interactive.

This explanation is different than that found in previous versions of this document. Previous versions

indicated that spooled work would not be considered to be interactive and were in error.

As of January 2003, 5250 On-line Transaction Processing (OLTP) replaces the term “interactive” when

referencing interactive CPW or interactive capacity. Also new in 2003, when ordering a iSeries server, the

customer must choose between a Standard Package and an Enterprise Package in most cases. The

Standard Packages comes with zero 5250 CPW and 5250 OLTP workloads are not supported. However,

the Standard Package does support a limited 5250 CPW for a system administrator to manage various

aspects of the server. Multiple administrative jobs will quickly exceed this capability. The Enterprise

Package does not have any limits relative to 5250 OLTP workloads. In other words, 100% of the server

capacity is available for 5250 OLTP applications whenever you need it.

5250 OLTP applications can be run after running the WebFacing Tool of IBM WebSphere Development

Studio for iSeries and will require no 5250 CPW if on V5R2 and using model 800, 810, 825, 870, or 890

hardware.

2.1.1 Interactive Indicators and Metrics

Prior to V4R5, there were no system metrics that would allow a customer to determine the overall

interactive feature capacity utilization. It was difficult for the customer to determine how much of the

total interactive capacity he was using and which jobs were consuming interactive capacity. This got

much easier with the system enhancements made in V4R5 and V5R1.

Starting with V4R5, two new metrics were added to the data generated by Collection Services to report

the system's interactive CPU utilization (ref file QAPMSYSCPU). The first metric (SCIFUS) is the

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 15

Page 16

interactive utilization - an average for the interval. Since average utilization does not indicate potential

problems associated with peak activity, a second metric (SCIFTE) reports the amount of interactive

utilization that occurred above threshold. Also, interactive feature utilization was reported when printing

a System Report generated from Collection Services data. In addition, Management Central now

monitors interactive CPU relative to the system/partition capacity.

Also in V4R5, a new operator message, CPI1479, was introduced for when the system has consistently

exceeded the purchased interactive capacity on the system. The message is not issued every time the

capacity is reached, but it will be issued on an hourly basis if the system is consistently at or above the

limit. In V5R2, this message may appear slightly more frequently for 8xx systems, even if there is no

change in the workload. This is because the message event was changed from a point that was beyond the

purchased capacity to the actual capacity for these systems in V5R2.

In V5R1, Collection Services was enhanced to mark all tasks that are counted against interactive capacity

(ref file QAPMJOBMI, field JBSVIF set to ‘1’). It is possible to query this file to understand what tasks

have contributed to the system’s interactive utilization and the CPU utilized by all interactive tasks. Note:

the system’s interactive capacity utilization may not be equal to the utilization of all interactive tasks.

Reasons for this are discussed in Section 2.10, Managing Interactive Capacity.

With the above enhancements, a customer can easily monitor the usage of interactive feature and decide

when he is approaching the need for an interactive feature upgrade.

2.1.2 Disclaimer and Remaining Sections

The performance information and equations in this chapter represent ideal environments. This

information is presented along with general recommendations to assist the reader to have a better

understanding of the iSeries server models. Actual results may vary significantly.

This chapter is organized into the following sections:

y Server Model Behavior

y Server Model Differences

y Performance Highlights of New Model 7xx Servers

y Performance Highlights of Current Model 170 Servers

y Performance Highlights of Custom Server Models

y Additional Server Considerations

y Interactive Utilization

y Server Dynamic Tuning (SDT)

y Managing Interactive Capacity

y Migration from Traditional Models

y Migration from Server Models

y Dedicated Server for Domino (DSD) Performance Behavior

2.1.3 V5R3

Beginning with V5R3, the processing limitations associated with the Dedicated Server for Domino (DSD)

models have been removed. Refer to section 2.13, “Dedicated Server for Domino Performance

Behavior”, for additional information.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 16

Page 17

2.1.4 V5R2 and V5R1

There were several new iSeries 8xx and 270 server model additions in V5R1 and the i890 in V5R2.

However, with the exception of the DSD models, the underlying server behavior did not change from

V4R5. All 27x and 8xx models, including the new i890 utilize the same server behavior algorithm that

was announced with the first 8xx models supported by V4R5. For more details on these new models,

please refer to Appendix C, “CPW, CIW and MCU Values for iSeries”.

Five new iSeries DSD models were introduced with V5R1. In addition, V5R1 expanded the capability of

the DSD models with enhanced support of Domino-complementary workloads such as Java Servlets and

WebSphere Application Server. Please refer to Section 2.13, Dedicated Server for Domino Performance

Behavior, for additional information.

2.2 Server Model Behavior

2.2.1 In V4R5 - V5R2

Beginning with V4R5, all 2xx, 8xx and SBx model servers utilize an enhanced server algorithm that

manages the interactive CPU utilization. This enhanced server algorithm may provide significant user

benefit. On prior models, when interactive users exceed the interactive CPW capacity of a system,

additional CPU usage visible in one or more CFINT tasks, reduces system capacity for all users including

client/server. New in V4R5, the system attempts to hold interactive CPU utilization below the threshold

where CFINT CPU usage begins to increase. Only in cases where interactive demand exceeds the

limitations of the interactive capacity for an extended time (for example: from long-running,

CPU-intensive transactions), will overhead be visable via the CFINT tasks. Highlights of this new

algorithm include the following:

y As interactive users exceed the installed interactive CPW capacity, the response times of those

applications may significantly lengthen and the system will attempt to manage these interactive

excesses below a level where CFINT CPU usage begins to increase. Generally, increased CFINT

may still occur but only for transient periods of time. Therefore, there should be remaining system

capacity available for non-interactive users of the system even though the interactive capacity has

been exceeded. It is still a good practice to keep interactive system use below the system interactive

CPW threshold to avoid long interactive response times.

y Client/server users should be able to utilize most of the remaining system capacity even though the

interactive users have temporarily exceeded the maximum interactive CPW capacity.

y The iSeries Dedicated Server for Domino models behave similarly when the Non Domino CPW

capacity has been exceeded (i.e. the system attempts to hold Non Domino CPW capacity below the

threshold where CFINT overhead is normally activated). Thus, Domino users should be able to run in

the remaining system capacity available.

y With the advent of the new server algorithm, there is not a concept known as the interactive knee or

interactive cap. The system just attempts to manage the interactive CPU utilization to the level of the

interactive CPW capacity.

y Dynamic priority adjustment (system value QDYNPTYADJ) will not have any effect managing the

interactive workloads as they exceed the system interactive CPW capacity. On the other hand, it

won’t hurt to have it activated.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 17

Page 18

y The new server algorithm only applies to the new hardware available in V4R5 (2xx, 8xx and SBx

models). The behavior of all other hardware, such as the 7xx models is unchanged (see section 2.2.3

Existing Model section for 7xx algorithm).

2.2.2 Choosing Between Similarly Rated Systems

Sometimes it is necessary to choose between two systems that have similar CPW values but different

processor megahertz (MHz) values or L2 cache sizes. If your applications tend to be compute intensive

such as Java, WebSphere, EJBs, and Domino, you may want to go with the faster MHz processors

because you will generally get faster response times. However, if your response times are already

sub-second, it is not likely that you will notice the response time improvements. If your applications tend

to be L2 cache friendly such as many traditional commercial applications are, you may want to choose the

system that has the larger L2 cache. In either case, you can use the IBM eServer Workload Estimator to

help you select the correct system (see URL: http://

www.ibm.com/iseries/support/estimator ) .

2.2.3 Existing Older Models

Server model behavior applies to:

y AS/400 Advanced Servers

y AS/400e servers

y AS/400e custom servers

y AS/400e model 150

y iSeries model 170

y iSeries model 7xx

Relative performance measurements are derived from commercial processing workload (CPW) on iSeries

and AS/400. CPW is representative of commercial applications, particularly those that do significant

database processing in conjunction with journaling and commitment control.

Traditional (non-server) AS/400 system models had a single CPW value which represented the maximum

workload that can be applied to that model. This CPW value was applicable to either an interactive

workload, a client/server workload, or a combination of the two.

Now there are two CPW values. The larger value represents the maximum workload the model could

support if the workload were entirely client/server (i.e. no interactive components). This CPW value is for

the processor feature of the system. The smaller CPW value represents the maximum workload the model

could support if the workload were entirely interactive. For 7xx models this is the CPW value for the

interactive feature of the system.

The two CPW values are NOT additive - interactive processing will reduce the system's

client/server processing capability. When 100% of client/server CPW is being used, there is no CPU

available for interactive workloads. When 100% of interactive capacity is being used, there is no CPU

available for client/server workloads.

For model 170s announced in 9/98 and all subsequent systems, the published interactive CPW represents

the point (the "knee of the curve") where the interactive utilization may cause increased overhead on the

system. (As will be discussed later, this threshold point (or knee) is at a different value for previously

announced server models). Up to the knee the server/batch capacity is equal to the processor capacity

(CPW) minus the interactive workload. As interactive requirements grow beyond the knee, overhead

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 18

Page 19

grows at a rate which can eventually eliminate server/batch capacity and limit additional interactive

growth. It is best for interactive workloads to execute below (less than) the knee of the curve.

(However, for those models having the knee at 1/3 of the total interactive capacity, satisfactory

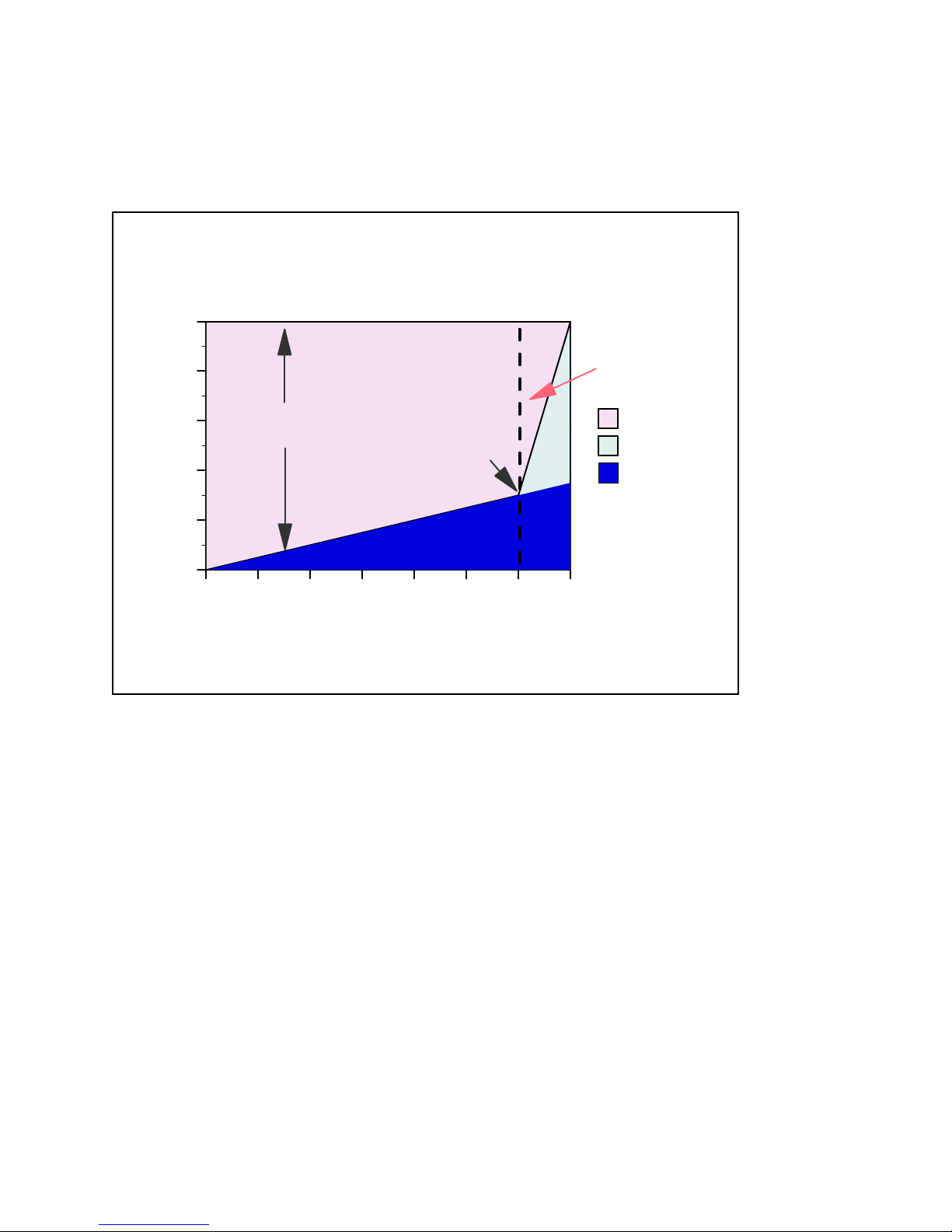

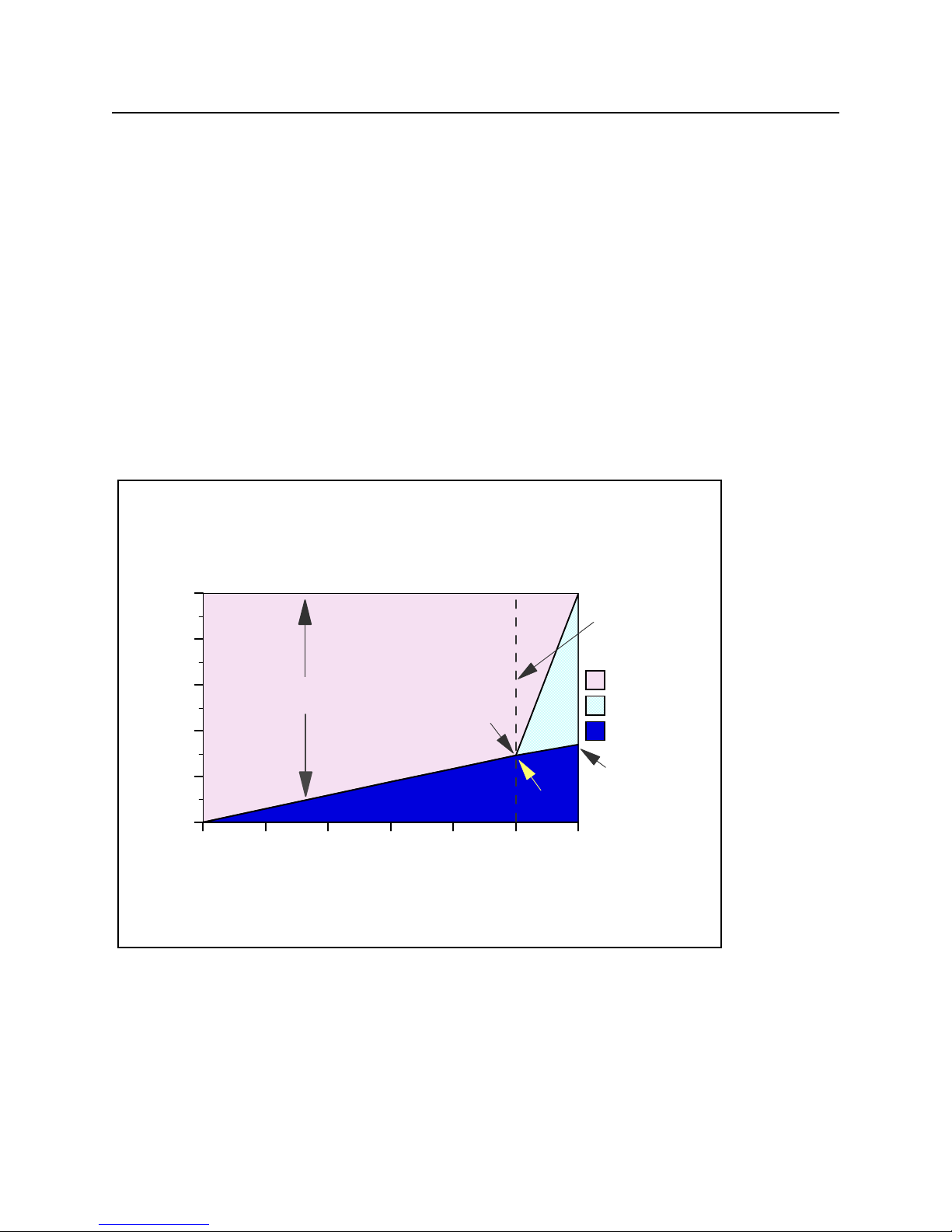

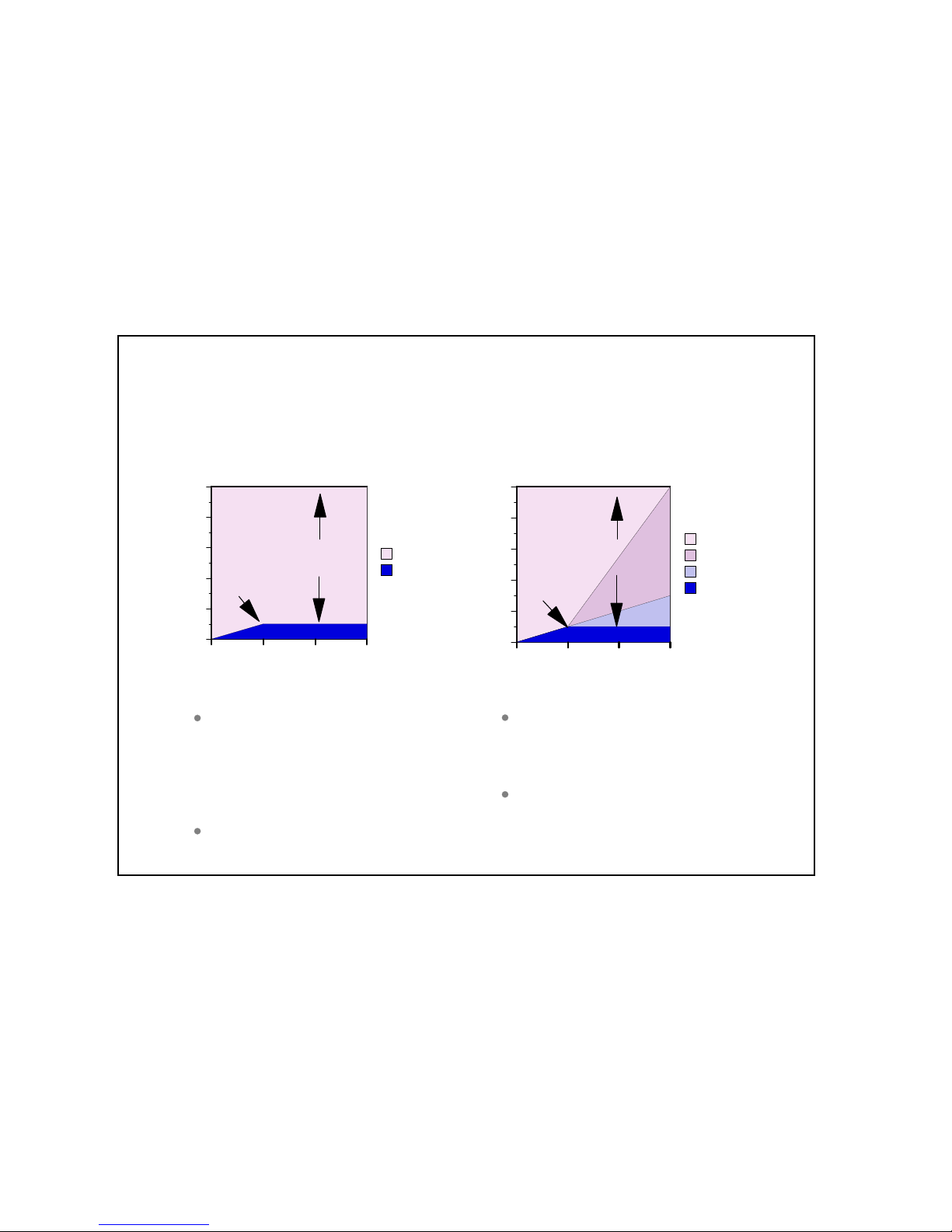

performance can be achieved.) The following graph illustrates these points.

Model 7xx and 9/98 Model 170 CPU

CPU Distribution vs. Interactive Utilization

100

Announced

Capacities

Stop Here!

80

60

Available for

Client/Server

40

Available CPU %

20

Knee

available

overhead

interactive

0

0 Full7/6

Fraction of Interactive CPW

Applies to: Model 170 anno un ce d in 9/98 and ALL systems announce d o n or after 2/99

Figure 2.1. Server Model behavior

The figure above shows a straight line for the effective interactive utilization. Real/customer

environments will produce a curved line since most environments will be dynamic, due to job initiation,

interrupts, etc.

In general, a single interactive job will not cause a significant impact to client/server performance

Microcode task CFINTn, for all iSeries models, handles interrupts, task switching, and other similar

system overhead functions. For the server models, when interactive processing exceeds a threshold

amount, the additional overhead required will be manifest in the CFINTn task. Note that a single

interactive job will not incur this overhead.

There is one CFINTn task for each processor. For example, on a single processor system only CFINT1

will appear. On an 8-way processor, system tasks CFINT1 through CFINT8 will appear. It is possible to

see significant CFINT activity even when server/interactive overhead does not exist. For example if there

are lots of synchronous or communication I/O or many jobs with many task switches.

The effective interactive utilization (EIU) for a server system can be defined as the useable interactive

utilization plus the total of CFINT utilization.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 19

Page 20

2.3 Server Model Differences

Server models were designed for a client/server workload and to accommodate an interactive workload.

When the interactive workload exceeds an interactive CPW threshold (the “knee of the curve”) the

client/server processing performance of the system becomes increasingly impacted at an accelerating rate

beyond the knee as interactive workload continues to build. Once the interactive workload reaches the

maximum interactive CPW value, all the CPU cycles are being used and there is no capacity available for

handling client/server tasks.

Custom server models interact with batch and interactive workloads similar to the server models but the

degree of interaction and priority of workloads follows a different algorithm and hence the knee of the

curve for workload interaction is at a different point which offers a much higher interactive workload

capability compared to the standard server models.

For the server models the knee of the curve is approximately:

y 100% of interactive CPW for:

y iSeries model 170s announced on or after 9/98

y 7xx models

y 6/7 (86%) of interactive CPW for:

y AS/400e custom servers

y 1/3 of interactive CPW for:

y AS/400 Advanced Servers

y AS/400e servers

y AS/400e model 150

y iSeries model 170s announced in 2/98

For the 7xx models the interactive capacity is a feature that can be sized and purchased like any other

feature of the system (i.e. disk, memory, communication lines, etc.).

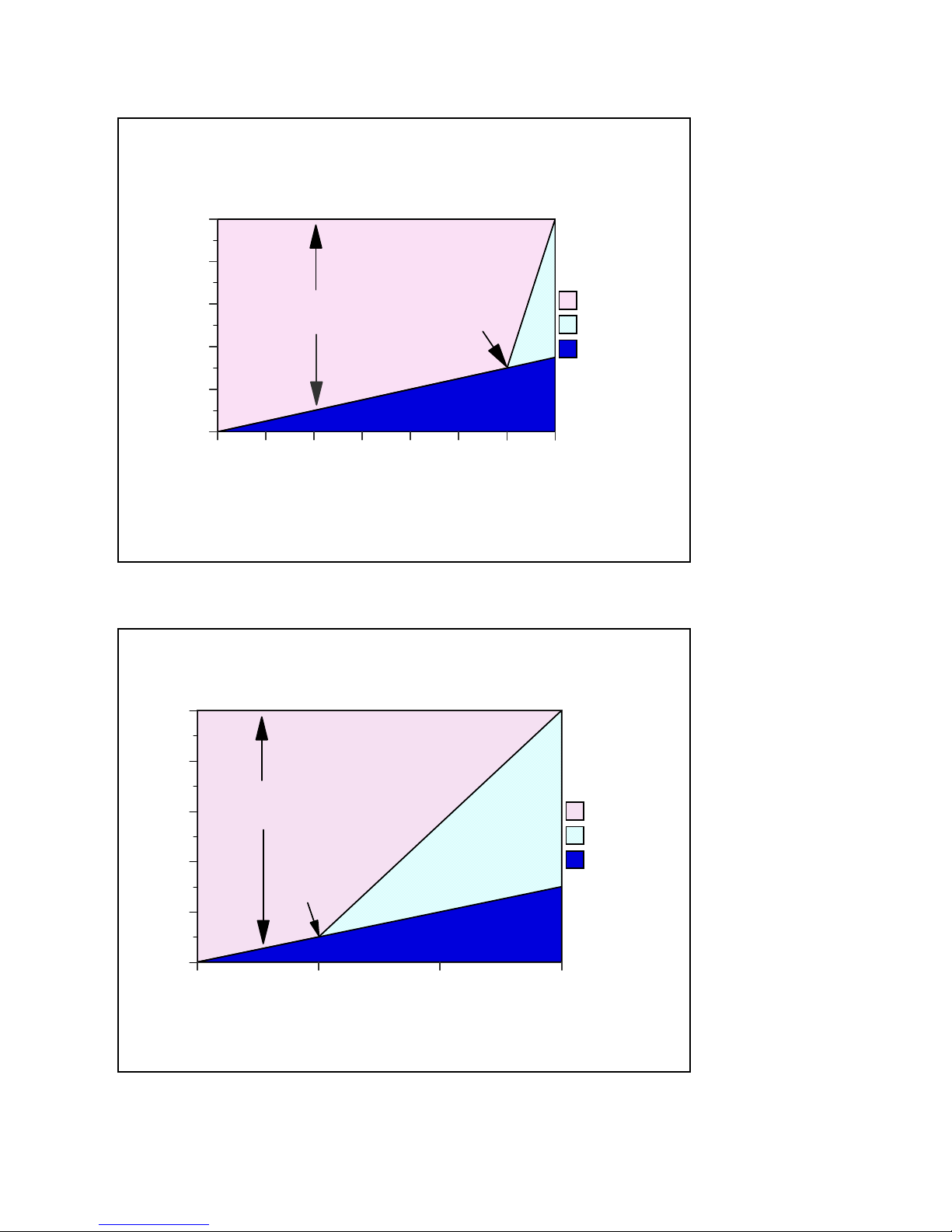

The following charts show the CPU distribution vs. interactive utilization for Custom Server and pre-2/99

Server models.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 20

Page 21

Custom Server Model

CPU Distribution vs. Interactive Utilization

100

80

60

Available for

Client/Server

Knee

40

20

Available CPU

0

0 6/7 Full

Fraction of Interactive CPW

Applies to: AS/400e Custom Servers, AS/400e Mixed Mode Servers

Figure 2.2. Custom Server Model behavior

available

CFINT

interactive

Server Model

CPU Distribution vs. Interactive Utilization

100

80

Available for

60

40

Available CPU

20

Figure 2.3. Server Model behavior

Client/Server

0

0 1/3 Int-CPW Full Int-CPW

Fraction of Interactive CPW

Applies to: AS/400 Advanced Servers, AS/400e servers,

Model 150, Model 170s announced in 2/98

Knee

available

CFINT

interactive

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 21

Page 22

2.4 Performance Highlights of Model 7xx Servers

7xx models were designed to accommodate a mixture of traditional “green screen” applications and more

intensive “server” environments. Interactive features may be upgraded if additional interactive capacity is

required. This is similar to disk, memory, or other features.

Each system is rated with a processor CPW which represents the relative performance (maximum

capacity) of a processor feature running a commercial processing workload (CPW) in a client/server

environment. Processor CPW is achievable when the commercial workload is not constrained by main

storage or DASD.

Each system may have one of several interactive features. Each interactive feature has an interactive

CPW associated with it. Interactive CPW represents the relative performance available to perform

host-centric (5250) workloads. The amount of interactive capacity consumed will reduce the available

processor capacity by the same amount. The following example will illustrate this performance capacity

interplay:

Model 7xx and 9/98 Model 170

CPU Distribution vs. Interactive Utilization

Model 7xx Processor FC 206B (240 / 70 CPW)

100

80

60

Available for

Client/Server

40

Available CPU %

20

Knee

29.2%

Announced

Capacities

Stop Here!

available

CFINT

interactive

34%

0

0 20 40 60 80 100 117

% of Published Interactive CPU

Applies to: Mo del 170 announced in 9/98 and ALL systems announced on or after 2/99

Figure 2.4. Model 7xx Utilization Example

(7/6)

At 110% of percent of the published interactive CPU, or 32.1% of total CPU, CFINT will use an

additional 39.8% (approximate) of the total CPU, yielding an effective interactive CPU utilization of

approximately 71.9%. This leaves approximately 28.1% of the total CPU available for client/server

work. Note that the CPU is completely utilized once the interactive workload reaches about 34%.

(CFINT would use approximately 66% CPU). At this saturation point, there is no CPU available for

client/server.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 22

Page 23

2.5 Performance Highlights of Model 170 Servers

iSeries Dedicated Server for Domino models will be generally available on September 24, 1999. Please

refer to Section 2.13, iSeries Dedicated Server for Domino Performance Behavior, for additional

information.

Model 170 servers (features 2289, 2290, 2291, 2292, 2385, 2386 and 2388) are significantly more

powerful than the previous Model 170s announced in Feb. '98. They have a faster processor (262MHz vs.

125MHz) and more main memory (up to 3.5GB vs. 1.0GB). In addition, the interactive workload

balancing algorithm has been improved to provide a linear relationship between the client/server (batch)

and published interactive workloads as measured by CPW.

The CPW rating for the maximum client/server workload now reflects the relative processor capacity

rather than the "system capacity" and therefore there is no need to state a "constrained performance"

CPW. This is because some workloads will be able to run at processor capacity if they are not DASD,

memory, or otherwise limited.

Just like the model 7xx, the current model 170s have a processor capacity (CPW) value and an

interactive capacity (CPW) value. These values behave in the same manner as described in the

Performance highlights of new model 7xx servers section.

As interactive workload is added to the current model 170 servers, the remaining available client/server

(batch) capacity available is calculated as: CPW (C/S batch) = CPW(processor) - CPW(interactive)

This is valid up to the published interactive CPW rating. As long as the interactive CPW workload does

not exceed the published interactive value, then interactive performance and client/server (batch)

workloads will be both be optimized for best performance. Bottom line, customers can use the entire

interactive capacity with no impacts to client/server (batch) workload response times.

On the current model 170s, if the published interactive capacity is exceeded, system overhead grows

very quickly, and the client/server (batch) capacity is quickly reduced and becomes zero once the

interactive workload reaches 7/6 of the published interactive CPW for that model.

The absolute limit for dedicated interactive capacity on the current models can be computed by

multiplying the published interactive CPW rating by a factor of 7/6. The absolute limit for dedicated

client/server (batch) is the published processor capacity value. This assumes that sufficient disk and

memory as well as other system resources are available to fit the needs of the customer's programs, etc.

Customer workloads that would require more than 10 disk arms for optimum performance should not be

expected to give optimum performance on the model 170, as 10 disk access arms is the maximum

configuration.

When the model 170 servers are running less than the published interactive workload, no Server Dynamic

Tuning (SDT) is necessary to achieve balanced performance between interactive and client/server (batch)

workloads. However, as with previous server models, a system value (QDYNPTYADJ - Server Dynamic

Tuning ) is available to determine how the server will react to work requests when interactive workload

exceeds the "knee". If the QDYNPTYADJ value is turned on, client/server work is favored over

additional interactive work. If it is turned off, additional interactive work is allowed at the expense of

low-priority client/server work. QDYNPTYADJ only affects the server when interactive requirements

exceed the published interactive capacity rating. The shipped default value is for QDYNPTYADJ to be

turned on.

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008 Chapter 2 - Server Performance Behavior 23

Page 24

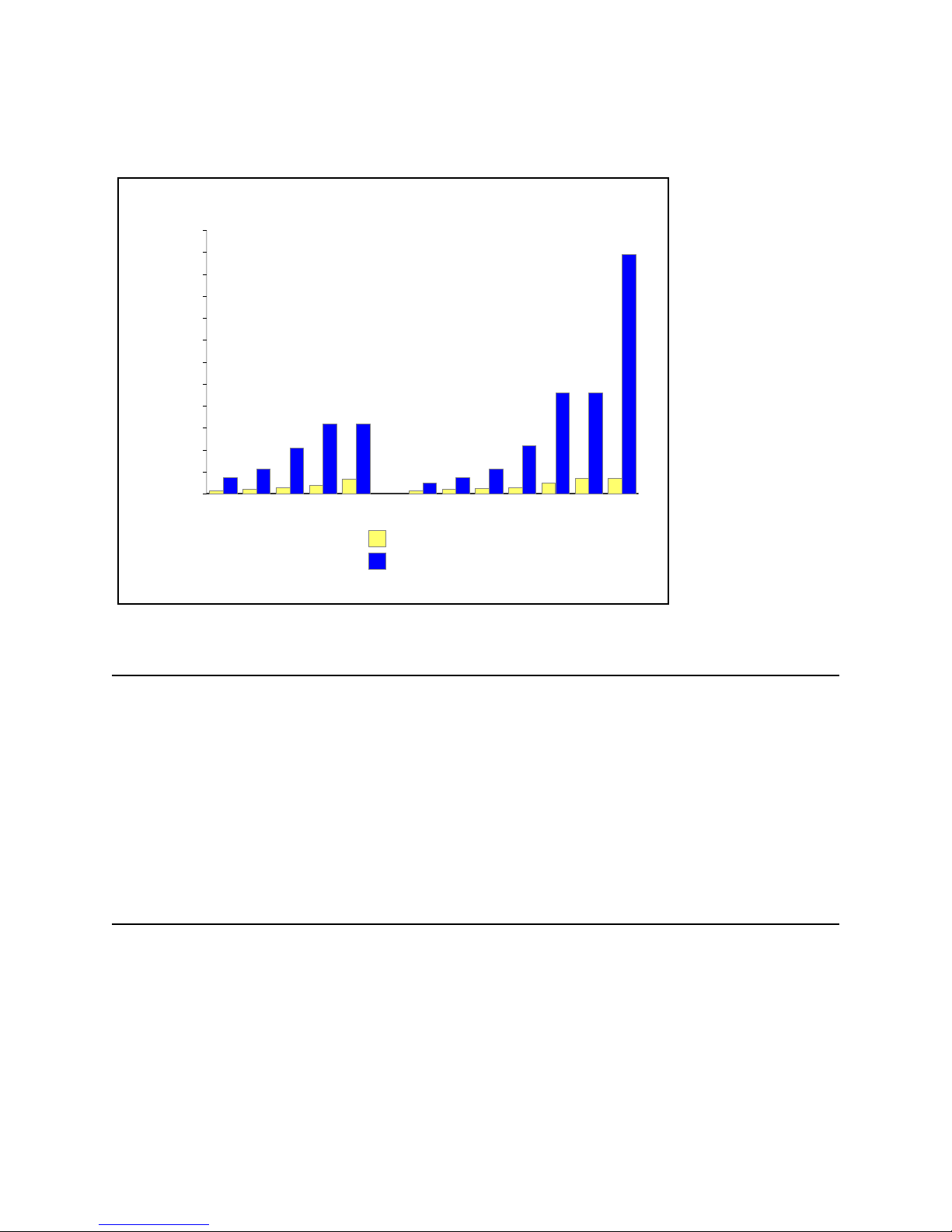

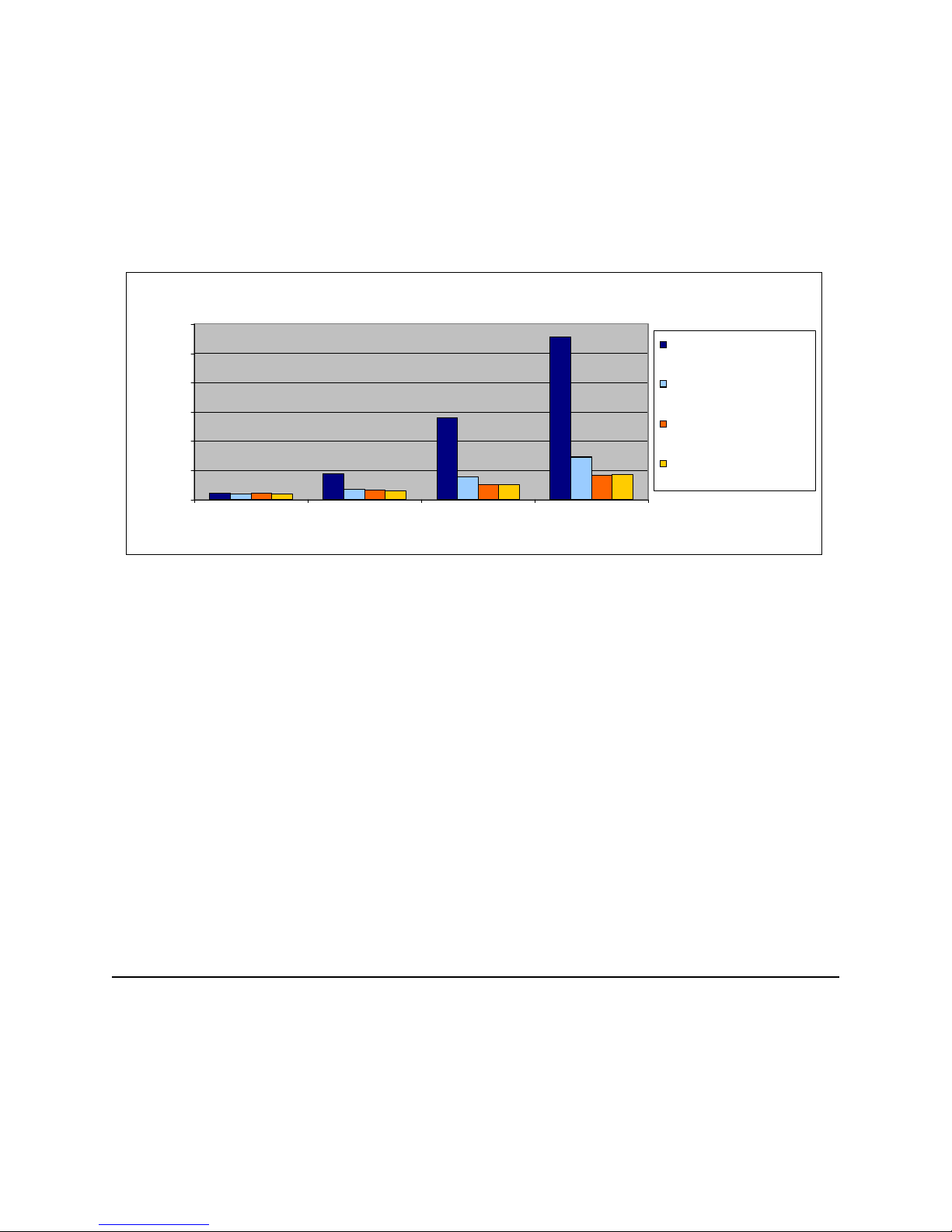

The next chart shows the performance capacity of the current and previous Model 170 servers.

Previous vs. Current AS/400e server 170 Performance

1200

1090

1000

800

600

400

CPW Values

200

0

114

73

23

16