
AS/400e

IBM

OS/400 Network File System Support

Version 4

SC41-5714-01


Note

Before using this information and the product it supports, be sure to read the information in “Notices” on page 99.

Second Edition (May 1999)

This edition replaces SC41-5714-00.

© Copyright International Business Machines Corporation 1997, 1999. All rights reserved.

Note to U.S. Government Users — Documentation related to restricted rights — Use, duplication or disclosure is

subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp.


Contents

Figures ........................... vii

Tables ........................... ix

About OS/400 Network File System Support (SC41-5714) ....... xi

Who should read this book .................... xi

AS/400 Operations Navigator ................... xi

Installing Operations Navigator.................. xii

Prerequisite and related information ................. xii

How to send your comments ...................xiii

Summary of Changes .....................xv

Chapter 1. What is the Network File System? ............ 1

Introduction.......................... 1

A Brief History ......................... 3

The Network File System as a File System .............. 3

Stateless Network Protocol .................... 4

Overview of the TULAB Scenario.................. 4

Chapter 2. The Network File System Client/Server Model........ 7

Network File System Client/Server Communication Design ........ 7

Network File System Process Layout ............... 8

Network File System Stack Description .............. 8

AS/400 as a Network File System Server ............... 9

Network File System Server-Side Daemons ............. 9

AS/400 as a Network File System Client ...............11

Network File System Client-Side Daemons .............12

NFS Client-Side Caches ....................12

Chapter 3. NFS and the User-Defined File System (UDFS) .......15

User File System Management ..................15

Create a User-Defined File System ................15

Display a User-Defined File System ................17

Delete a User-Defined File System ................18

Mount a User-Defined File System ................19

Unmount a User-Defined File System ...............20

Saving and Restoring a User-Defined File System ..........21

Graphical User Interface ....................21

User-Defined File System Functions in the Network File System ......22

Using User-Defined File Systems with Auxiliary Storage Pools ......23

Chapter 4. Server Exporting of File Systems ............25

What is Exporting? .......................25

Why Should I Export? ......................26

TULAB Scenario .......................26

What File Systems Can I Export?..................27

How Do I Export File Systems? ..................28

Rules for Exporting File Systems .................28

CHGNFSEXP (Change Network File System Export) Command .....30

Exporting from Operations Navigator ...............33

Finding out what is exported ..................36

Exporting Considerations ....................38


Chapter 5. Client Mounting of File Systems .............39

What Is Mounting? .......................39

Why Should I Mount File Systems? .................41

What File Systems Can I Mount?..................42

Where Can I Mount File Systems? .................42

Mount Points ........................45

How Do I Mount File Systems? ..................45

ADDMFS (Add Mounted File System) Command ...........45

RMVMFS (Remove Mounted File System) Command .........48

DSPMFSINF (Display Mounted File System Information) Command ....50

Chapter 6. Using the Network File System with AS/400 File Systems ...55

“Root” File System (/) ......................55

Network File System Differences .................56

Open Systems File System (QOpenSys) ...............56

Network File System Differences .................56

Library File System (QSYS.LIB) ..................57

Network File System Differences .................57

Document Library Services File System (QDLS) ............60

Network File System Differences .................60

Optical File System (QOPT)....................61

Network File System Differences .................61

User-Defined File System (UDFS) .................62

Network File System Differences .................63

Administrators of UNIX Clients ...................63

Network File System Differences .................63

Chapter 7. NFS Startup, Shutdown, and Recovery ..........65

Configuring TCP/IP .......................65

Implications of Improper Startup and Shutdown ............66

Proper Startup Scenario .....................66

STRNFSSVR (Start Network File System Server) Command.......67

Proper Shutdown Scenario ....................70

Shutdown Consideration ....................70

ENDNFSSVR (End Network File System Server) Command .......70

Starting or stopping NFS from Operations Navigator...........72

Locks and Recovery ......................74

Why Should I Lock a File? ...................74

How Do I Lock A File?.....................74

Stateless System Versus Stateful Operation .............74

RLSIFSLCK (Release Integrated File System Locks) Command .....75

Chapter 8. Integrated File System APIs and the Network File System ...77

Error Conditions ........................77

ESTALE Error Condition ....................77

EACCES Error Condition ....................77

API Considerations .......................77

User Datagram Protocol (UDP) Considerations............77

Client Timeout Solution ....................78

Network File System Differences ..................78

open(), create(), and mkdir() APIs ................79

fcntl() API .........................79

Unchanged APIs ........................79

Chapter 9. Network File System Security Considerations........81

The Trusted Community .....................81


Network Data Encryption ....................82

User Authorities ........................83

User Identifications (UIDs) ...................83

Group Identifications (GIDs)...................83

Mapping User Identifications ..................84

Proper UID Mapping .....................86

Securely Exporting File Systems ..................87

Export Options .......................88

Appendix A. Summary of Common Commands ...........91

Appendix B. Understanding the /etc Files..............93

Editing files within the /etc directory .................93

Editing stream files by using the Edit File (EDTF) command .......93

Editing stream files by using a PC based editor ...........94

Editing stream files by using a UNIX editor via NFS ..........94

/etc/exports File ........................94

Formatting Entries in the /etc/exports File..............94

Examples of Formatting /etc/exports with HOSTOPT Parameter .....96

/etc/netgroup File........................96

/etc/rpcbtab File ........................97

/etc/statd File .........................97

Notices ...........................99

Programming Interface Information .................101

Trademarks..........................101

Bibliography .........................103

Index ............................105

Readers’ Comments — We’d Like to Hear from You..........113


Figures

1. AS/400 Operations Navigator Display ..............xii

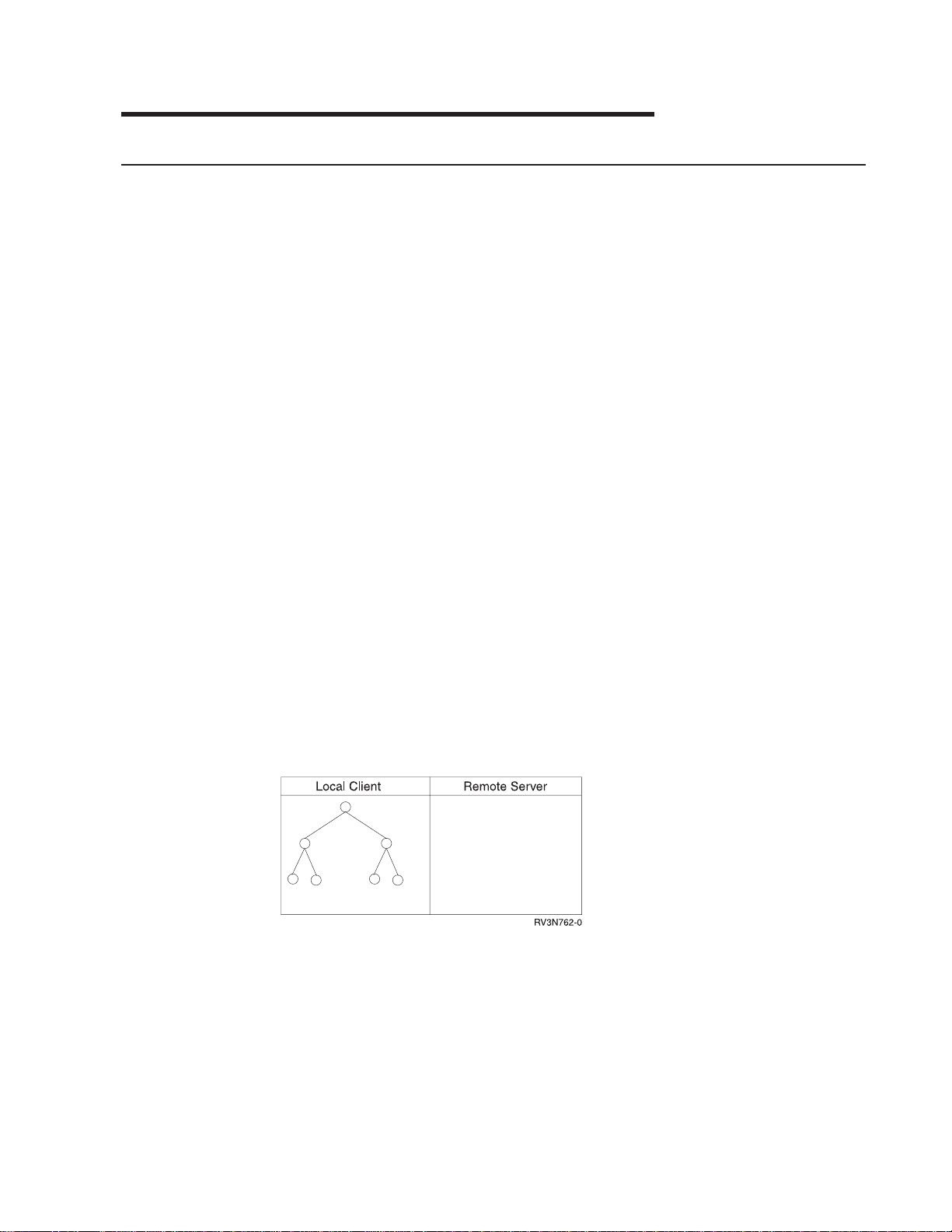

2. The local client and its view of the remote server before exporting data . . 1

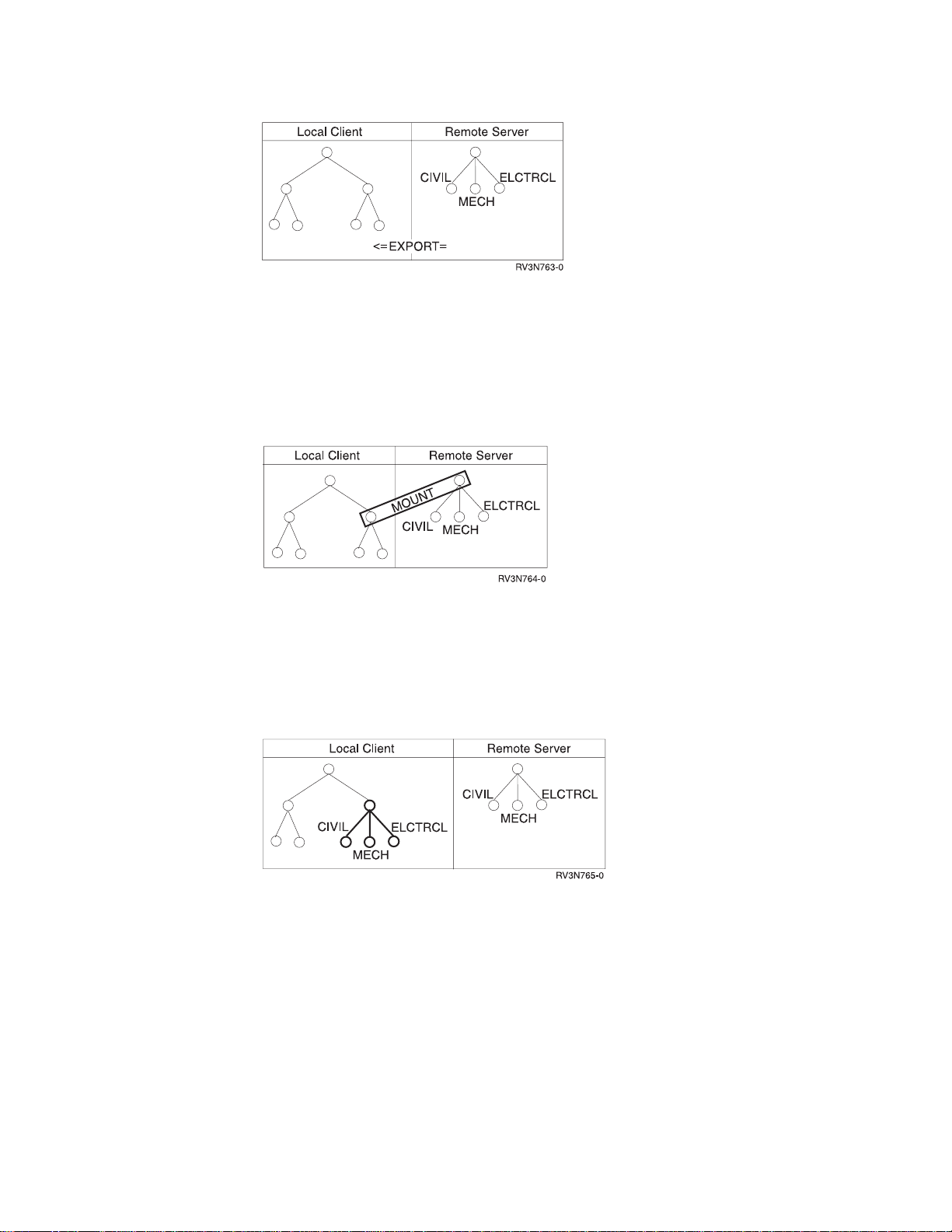

3. The local client and its view of the remote server after exporting data . . 2

4. The local client mounts data from a remote server ......... 2

5. Remote file systems function on the client............. 2

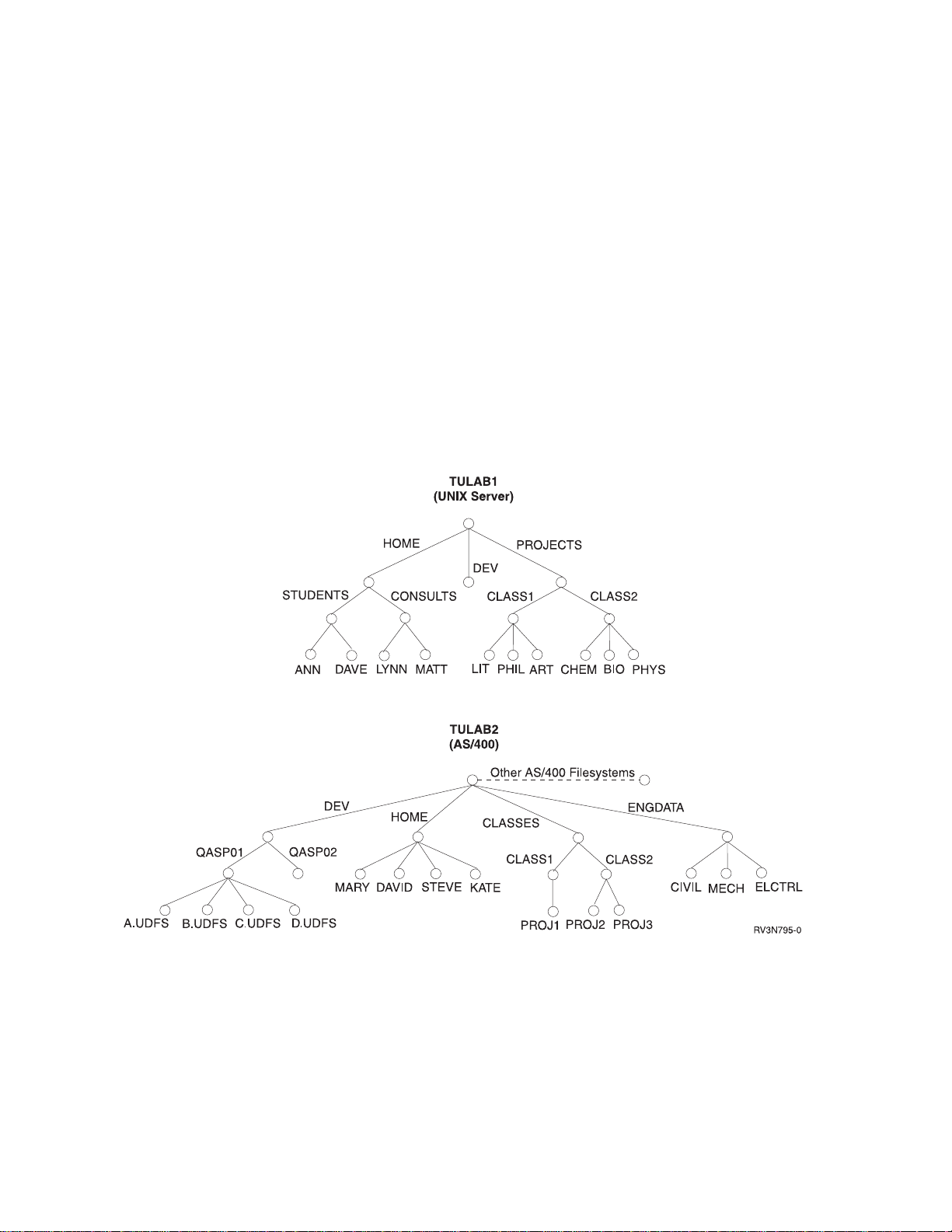

6. The TULAB network namespace ................ 5

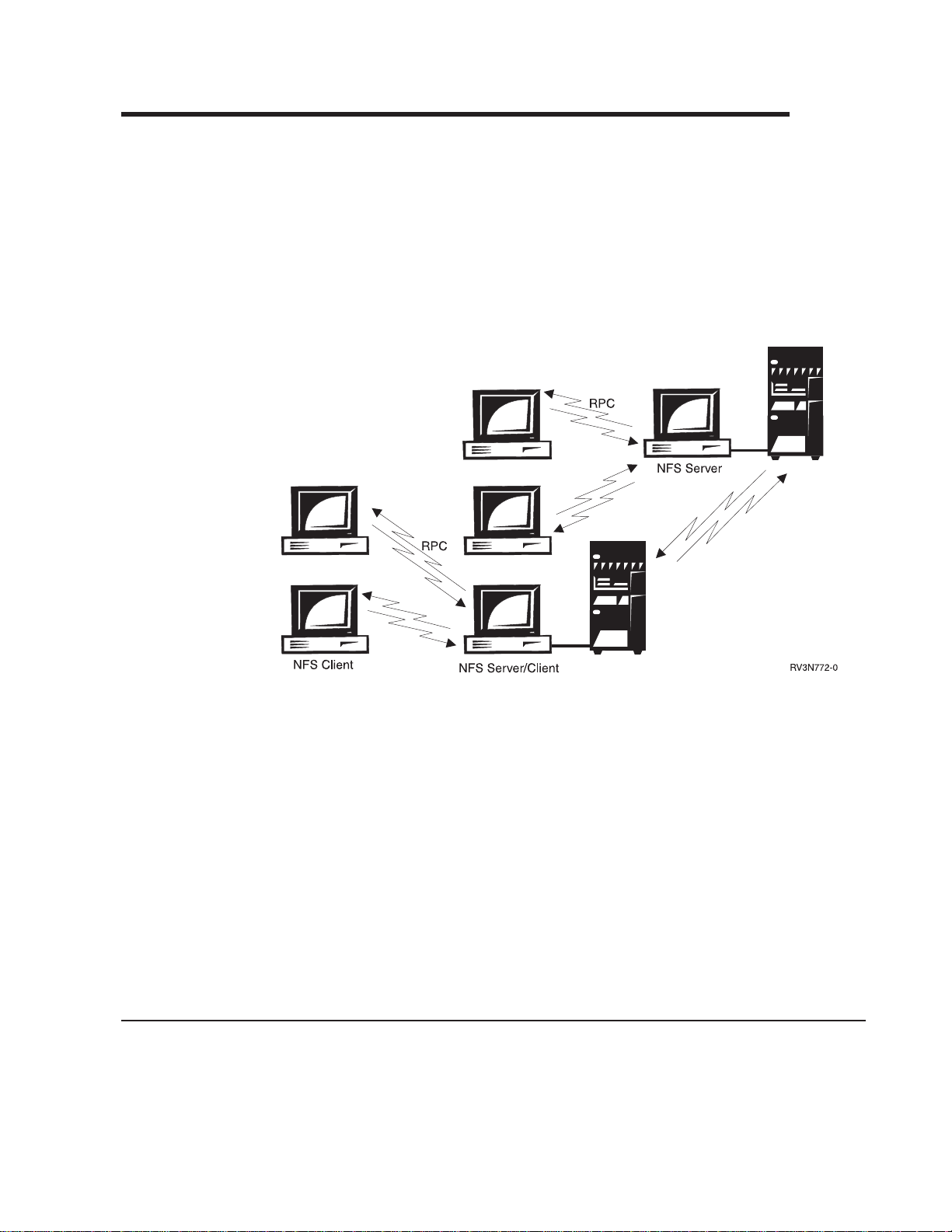

7. The NFS Client/Server Model ................. 7

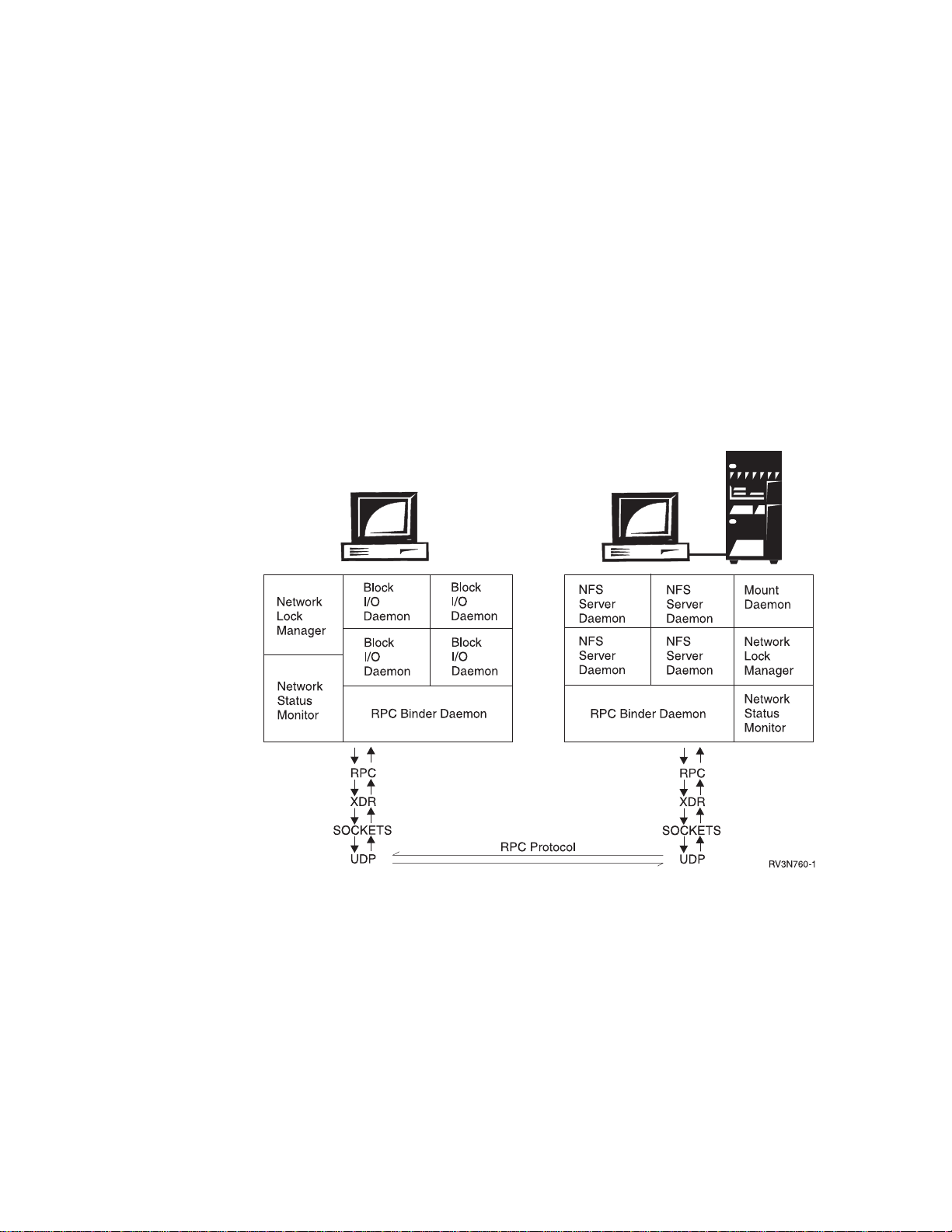

8. A breakdown of the NFS client/server protocol ........... 8

9. The NFS Server ......................10

10. The NFS Client ......................12

11. Using the Create User-Defined FS (CRTUDFS) display........16

12. Display User-Defined FS (DSPUDFS) output (1/2)..........17

13. Display User-Defined FS (DSPUDFS) output (2/2)..........18

14. Using the Delete User-Defined FS (DLTUDFS) display ........19

15. A Windows 95 view of using the CRTUDFS (Create UDFS) command . . 21

16. A Windows 95 view of using the DSPUDFS (Display UDFS) command . . 22

17. Exporting file systems with the /etc/exports file ...........25

18. Dynamically exporting file systems with the “-I” option ........26

19. Before the server has exported information ............27

20. After the server has exported /classes/class2 ...........28

21. A directory tree before exporting on TULAB2............29

22. The exported directory branch /classes on TULAB2 .........29

23. The exported directory branch /classes/class1 on TULAB2 ......29

24. Using the Change NFS Export (CHGNFSEXP) display ........31

25. The Operations Navigator interface. ...............34

26. The NFS Export dialog box. ..................34

27. The Add Host/Netgroup dialog box. ...............35

28. The Customize NFS Clients Access dialog box............36

29. The NFS Exports dialog box. .................37

30. A local client and remote server with exported file systems ......39

31. A local client mounting file systems from a remote server .......40

32. The mounted file systems cover local client directories ........40

33. The local client mounts over a high-level directory..........41

34. The local client mounts over the /2 directory ............41

35. Views of the local client and remote server ............43

36. The client mounts /classes/class1 from TULAB2 ..........43

37. The /classes/class1 directory covers /user/work...........43

38. The remote server exports /engdata ...............44

39. The local client mounts /engdata over a mount point .........44

40. The /engdata directory covers /user/work .............44

41. Using the Add Mounted FS (ADDMFS) display ...........46

42. A Windows 95 view of Mounting a user-defined file system ......47

43. Using the Remove Mounted FS (RMVMFS) display .........49

44. Using the Display Mounted FS Information (DSPMFSINF) display ....51

45. Display Mounted FS Information (DSPMFSINF) output (1/2) ......52

46. Display Mounted FS Information (DSPMFSINF) output (2/2) ......52

47. The “Root” (/) file system accessed through the NFS Server ......55

48. The QOpenSys file system accessed through the NFS Server .....56

49. The QSYS.LIB file system accessed through the NFS Server .....57

50. The QDLS file system accessed through the NFS Server .......60

51. The QOPT file system accessed through the NFS Server .......61

52. The UDFS file system accessed through the NFS Server .......62

53. Using the Start NFS Server (STRNFSSVR) display .........69


54. Using the End NFS Server (ENDNFSSVR) display .........71

55. Starting or stopping NFS server daemons. ............73

56. NFS Properties dialog box. ..................73

57. Using the Release File System Locks (RLSIFSLCK) display ......76

58. Client outside the trusted community causing security breaches ....82


Tables

1. CL Commands Used in Network File System Applications .......91


About OS/400 Network File System Support (SC41-5714)

The purpose of this book is to explain what the Network File System is, what it

does, and how it works on AS/400. The book shows real-world examples of how

you can use NFS to create a secure, useful integrated file system network. The

intended audiences for this book are:

• System administrators developing a distributed network using the Network File System.

• Users or programmers working with the Network File System.

Chapters one and two introduce NFS by giving background and conceptual

information on its protocol, components, and architecture. This is background

information for users who understand how AS/400 works, but do not understand

NFS.

The rest of the book (chapters three through nine) shows detailed examples of what

NFS can do and how you can best use it. The overall discussion topic of this book

is how to construct a secure, user-friendly distributed namespace. Included are

in-depth examples and information regarding mounting, exporting, and the following

topics:

• How NFS functions in the client/server relationship

• NFS exceptions for AS/400 file systems

• NFS startup, shutdown, and recovery

• File locking

• New integrated file system error conditions and how NFS affects them

• Troubleshooting procedures for NFS security considerations

It is assumed that the reader has experience with the AS/400 client/server model, though not necessarily with the Network File System.

Who should read this book

This book is for AS/400 users, programmers, and administrators who want to know

about the Network File System on AS/400. This book contains:

• Background theory and concepts regarding NFS and how it functions

• Examples of commands, AS/400 displays, and other operations you can use with NFS

• Techniques on how to construct a secure, efficient namespace with NFS

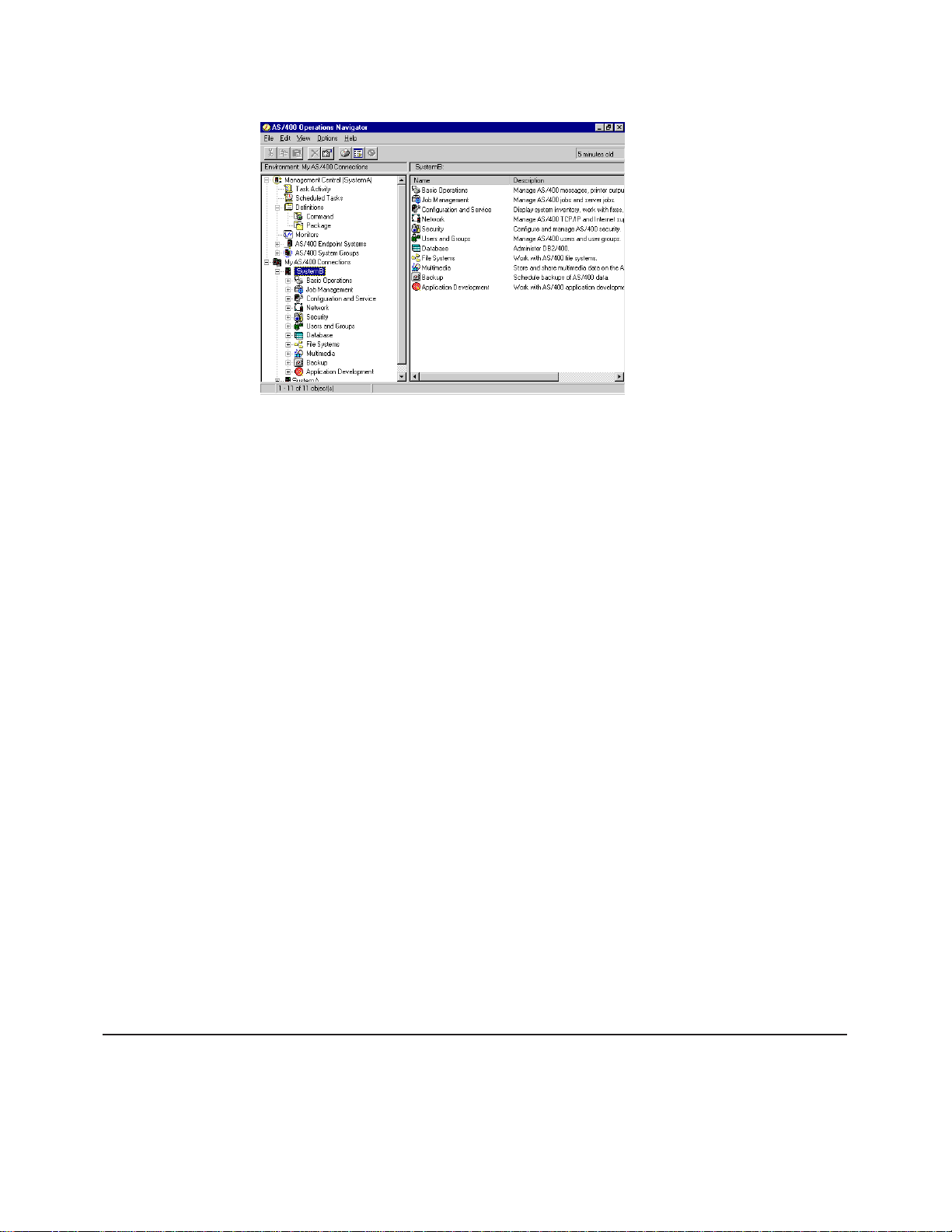

AS/400 Operations Navigator

AS/400 Operations Navigator is a powerful graphical interface for Windows clients.

With AS/400 Operations Navigator, you can manage and administer your AS/400

systems from your Windows desktop.

You can use Operations Navigator to manage communications, printing, database,

security, and other system operations. Operations Navigator includes Management

Central for managing multiple AS/400 systems centrally.

Figure 1 on page xii shows an example of the Operations Navigator display:


Figure 1. AS/400 Operations Navigator Display

This new interface has been designed to make you more productive and is the only

user interface to new, advanced features of OS/400. Therefore, IBM recommends

that you use AS/400 Operations Navigator, which has online help to guide you.

While this interface is being developed, you may still need to use a traditional

emulator such as PC5250 to do some of your tasks.

Installing Operations Navigator

To use AS/400 Operations Navigator, you must have Client Access installed on your Windows PC. For help in connecting your Windows PC to your AS/400 system, consult Client Access Express for Windows - Setup, SC41-5507-00.

AS/400 Operations Navigator is a separately installable component of Client Access that contains many subcomponents. If you are installing for the first time and you use the Typical installation option, the following options are installed by default:

• Operations Navigator base support

• Basic operations (messages, printer output, and printers)

To select the subcomponents that you want to install, select the Custom installation option. (After Operations Navigator has been installed, you can add subcomponents by using Client Access Selective Setup.)

1. Display the list of currently installed subcomponents in the Component Selection window of Custom installation or Selective Setup.

2. Select AS/400 Operations Navigator.

3. Select any additional subcomponents that you want to install and continue with Custom installation or Selective Setup.

After you install Client Access, double-click the AS400 Operations Navigator icon

on your desktop to access Operations Navigator and create an AS/400 connection.

Prerequisite and related information

Use the AS/400 Information Center as your starting point for looking up AS/400 technical information. You can access the Information Center from the AS/400e Information Center CD-ROM (English version: SK3T-2027) or from one of these Web sites:

http://www.as400.ibm.com/infocenter

http://publib.boulder.ibm.com/pubs/html/as400/infocenter.htm

The AS/400 Information Center contains important topics such as logical

partitioning, clustering, Java, TCP/IP, Web serving, and secured networks. It also

contains Internet links to Web sites such as the AS/400 Online Library and the

AS/400 Technical Studio. Included in the Information Center is a link that describes

at a high level the differences in information between the Information Center and

the Online Library.

For a list of related publications, see the “Bibliography” on page 103.

How to send your comments

Your feedback is important in helping to provide the most accurate and high-quality

information. If you have any comments about this book or any other AS/400

documentation, fill out the readers’ comment form at the back of this book.

• If you prefer to send comments by mail, use the readers’ comment form with the address that is printed on the back. If you are mailing a readers’ comment form from a country other than the United States, you can give the form to the local IBM branch office or IBM representative for postage-paid mailing.

• If you prefer to send comments by FAX, use either of the following numbers:

  – United States and Canada: 1-800-937-3430

  – Other countries: 1-507-253-5192

• If you prefer to send comments electronically, use one of these e-mail addresses:

  – Comments on books:

    RCHCLERK@us.ibm.com

    IBMMAIL, to IBMMAIL(USIB56RZ)

  – Comments on the AS/400 Information Center:

    RCHINFOC@us.ibm.com

Be sure to include the following:

• The name of the book.

• The publication number of the book.

• The page number or topic to which your comment applies.


Summary of Changes

This manual includes changes made since Version 4 Release 1 of the OS/400

licensed program on the AS/400 system. This edition includes information that has

been added to the system to support Version 4 Release 4.

Changes made to this book include the following items:

• Updated graphic files.

• Updated examples.

• Updated NFS to FSS/400 comparisons.

• Added information about short and long names.

• Added a new section about editing files within the /etc directory.


Chapter 1. What is the Network File System?

Introduction

OS/400 Network File System Support introduces a system function for AS/400 that aids users and administrators who work with network applications and file systems. You can use the Network File System (NFS**) to construct a distributed network system where all users can access the data they need. Furthermore, the Network File System provides a method of transmitting data in a client/server relationship.

The Network File System makes remote objects stored in file systems appear to be local, as if they reside in the local host. With NFS, all the systems in a network can share a single set of files. This eliminates the need for duplicate file copies on every network system. Using NFS aids in the overall administration and management of users, systems, and data.

NFS gives users and administrators the ability to distribute data across a network by:

• Exporting local file systems from a local server for access by remote clients. This allows centralized administration of file system information. Instead of duplicating common directories on every system, NFS shares a single copy of a directory with all the proper clients from a single server.

• Mounting remote server file systems over local client directories. This allows AS/400 client systems to work with file systems that have been exported from a remote server. The mounted file systems will act and perform as if they exist on the local system.

The following figures show the process of a remote NFS server exporting directories to a local client. Once the client is aware of the exported directories, the client then mounts the directories over local directories. The remote server directories will now function locally on the client.

Figure 2. The local client and its view of the remote server before exporting data

Before the server exports information, the client does not know that any file systems or objects exist on the server.


Figure 3. The local client and its view of the remote server after exporting data

After the server exports information, the proper client (the client with the proper authorities) can see the file systems that exist on the server. The client can then mount the exported file systems, directories, or objects from the server.

Figure 4. The local client mounts data from a remote server

The mount command makes a certain file system, directory, or object accessible on the client. Mounting does not copy or move objects from the server to the client. Rather, it makes remote objects available for use locally.

Figure 5. Remote file systems function on the client

When remote objects are mounted locally, they cover up any local objects that they are placed over. Mounted objects also cover any objects that are downstream of the mount point, the place on the client where the mount to the server begins. The mounted objects will function locally on the client just as they do remotely on the server.

For more information on these aspects of NFS, see the following sections:

• “Chapter 4. Server Exporting of File Systems” on page 25

• “Chapter 5. Client Mounting of File Systems” on page 39
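The covering behavior of a mount point can be illustrated with a small path-resolution sketch. It is a hypothetical model, not OS/400 code: the mount table, the `resolve` function, and the ("host", path) result are assumptions made for illustration. The example entry mirrors the TULAB case in which TULAB2's /classes/class1 directory covers the client's /user/work directory. Any path at or below the mount point is served by the remote server, so the local objects underneath are hidden until the file system is unmounted.

```python
from typing import Dict, Tuple

# Hypothetical client mount table: local mount point -> (server, exported path).
MountTable = Dict[str, Tuple[str, str]]


def resolve(path: str, mounts: MountTable) -> Tuple[str, str]:
    """Return the (host, path) pair that actually serves `path` on the client.

    The longest mount point that is a prefix of `path` wins, so anything at
    or downstream of a mount point is served by the remote server, and the
    local objects underneath that mount point are covered up.
    """
    best = ""
    for point in mounts:
        # Compare whole path components: /user/work covers /user/work/a,
        # but not /user/workshop.
        if path == point or path.startswith(point.rstrip("/") + "/"):
            if len(point) > len(best):
                best = point
    if not best:
        return ("local", path)  # not under any mount point
    server, exported = mounts[best]
    rest = path[len(best):].lstrip("/")
    joined = exported.rstrip("/") + ("/" + rest if rest else "")
    return (server, joined or "/")


# Mirrors the TULAB example: TULAB2's /classes/class1 mounted over /user/work.
tulab_mounts: MountTable = {"/user/work": ("TULAB2", "/classes/class1")}

print(resolve("/user/work/notes.txt", tulab_mounts))
print(resolve("/user/workshop/plan", tulab_mounts))
```

The first call is redirected to TULAB2 because it lies downstream of /user/work; the second stays local because /user/workshop is a different directory that merely shares a prefix.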


A Brief History

OS/400 Network File System Support is the replacement for the TCP/IP File Server Support/400 (FSS/400) system application. Users who are accustomed to working with FSS/400 will notice many similarities between FSS/400 and NFS. It is important to note, however, that FSS/400 and NFS are not compatible with each other. The FSS/400 system application can exist on the same AS/400 with OS/400 Network File System Support, but they cannot operate together. On any given system, do not start or use FSS/400 and NFS at the same time.

Sun Microsystems, Inc.** released NFS in 1984. Sun introduced NFS Version 2 in 1985. In 1989, the Request For Comments (RFC) standard 1094, describing NFS Version 2, was published. In 1992, X/Open published a compatible version as a standard for NFS. Sun published the NFS Version 3 protocol in 1993.

Sun developed NFS in a UNIX** environment, and therefore many UNIX concepts (for example, UNIX authentication) were integrated into the final protocol. Yet the NFS protocol remains platform independent. Today, almost all UNIX platforms use NFS, as do many PCs, mainframes, and workstations.

Most implementations of NFS are Version 2, although a number of vendors are

offering products that combine Version 2 and Version 3. The AS/400 implementation

of the Network File System supports both Version 2 and Version 3 of the protocol.

The Network File System as a File System

AS/400 file systems provide the support that allows users and applications to access specific segments of storage. These logical units of storage are the following:

• libraries

• directories

• folders

The logical storage units can contain different types of data:

• objects

• files

• documents

Each file system has a set of logical structures and rules for interacting with information in storage. These structures and rules may be different from one file system to another, depending on the type of file system. The OS/400 support for accessing database files and various other object types through libraries can be thought of as a file system. Similarly, the OS/400 support for accessing documents through folders can be thought of as a separate file system. For more information on AS/400 file systems, please see the Integrated File System Introduction, SC41-4711.

The Network File System provides seemingly “transparent” access to remote files. This means that local client files and files that are accessed from a remote server operate and function similarly and are indistinguishable to the user. This removes many complex steps for users who need a set of files and directories that act in a consistent manner across many network clients. A long-term goal of system administrators is to design a network transparent enough that users believe all data exists and is processed on their local workstations. An efficient NFS network also gives the right people access to the right amount of data at the right times.

Files and directories can be made available to clients by exporting from the server

and mounting on clients through a pervasive NFS client/server relationship. An

NFS client can also, at the same time, function as an NFS server, just as an NFS

server can function as a client.

Stateless Network Protocol

NFS uses the Remote Procedure Call (RPC) protocol for client/server communication. RPC is a higher-level network protocol that runs on top of simpler transport protocols, such as Transmission Control Protocol (TCP) and User Datagram Protocol (UDP).

NFS is a stateless protocol: the server does not save information about client/server communications from one request to the next. State is the information about a request that describes exactly what the request does. A stateful protocol saves information about users and requests for use across many procedures. Statelessness is a condition where no information is retained about users and their requests. This condition demands that the information surrounding a request be sent with every single request. Because NFS is stateless, each RPC request contains all the information that the client and server need to process the user's request.

By using NFS, AS/400 users can bypass the details of the network interface. NFS

isolates applications from the physical and logical elements of data communications

and allows applications to use a variety of different transports.

In short, the NFS protocol is useful for applications that need to transfer information

over a client/server network. For more information about RPC and NFS, see

“Network File System Stack Description” on page 8.

Overview of the TULAB Scenario

This book uses the fictional namespace TULAB to describe detailed applications of

NFS concepts. A namespace is a distributed network space where one or more

servers look up, manage, and share ordered, deliberate object names.

TULAB exists only in a hypothetical computer-networked environment at a fictitious

Technological University. It is run by a network administrator, a person who

defines the network configuration and other network-related information. This

person controls how an enterprise or system uses its network resources. The

TULAB administrator, Chris Admin, is trying to construct an efficient, transparent,

and otherwise seamless distributed namespace for the diverse people who use the

TULAB:

• Engineering undergraduate students

• Humanities undergraduate students

• Engineering graduate students

• TULAB consultants


Each group of users works on sets of clients that need different file systems from the TULAB server. Each group of users has different permissions and authorities, which poses a challenge to establishing a safe, secure NFS namespace.

Chris Admin will encounter common problems that administrators of NFS

namespaces face every day. Chris Admin will also work through some uncommon

and unique NFS situations. As this book describes each command and parameter,

Chris Admin will give a corresponding example from TULAB. As this book explains

applications of NFS, Chris Admin will show exactly how he configures NFS for

TULAB.

The network namespace of TULAB is complex and involves two NFS server

systems:

1. TULAB1 — A UNIX server system

2. TULAB2 — An AS/400 server system

The following figure describes the layout of the TULAB namespace.

Figure 6. The TULAB network namespace


Chapter 2. The Network File System Client/Server Model

To understand how the Network File System works on AS/400, you must first

understand the communication relationship between a server and various clients.

The client/server model involves a local host (the client) that makes a procedure call that is usually processed on a different, remote network system (the server). To the client, the procedure appears to be a local one, even though another system processes the request. In some cases, however, a single computer can act as both an NFS client and an NFS server.

Figure 7. The NFS Client/Server Model

The server has resources that are not available on the client, hence the need for such a communication relationship. The host that owns the needed resource acts as the server; it communicates with the host that initiates the original call for the resource, the client. In the case of NFS, this resource is usually a shared file system, a directory, or an object.

RPC is the mechanism for establishing such a client/server relationship within NFS.

RPC bundles up the arguments intended for a procedure call into a packet of data

called a network datagram. The NFS client creates an RPC session with an NFS

server by connecting to the proper server for the job and transmitting the datagram

to that server. The arguments are then unpacked and decoded on the server. The

operation is processed by the server and a return message (should one exist) is

sent back to the client. On the client, this reply is transformed into a return value for

NFS. The user’s application is re-entered as if the process had taken place on a

local level.
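The round trip described above can be sketched in a few lines. This is an illustration only: the names `client_stub`, `send`, and `server_dispatch` and the single addition procedure are hypothetical, and a direct function call stands in for the network. Arguments are packed into a byte string (the datagram), unpacked and processed on the server, and the packed reply is turned back into an ordinary return value, so the caller sees what looks like a local call. Integers are packed as 4-byte big-endian values, as XDR does.

```python
import struct
from typing import Callable, Dict

# Hypothetical procedure table on the server: procedure number -> handler.
PROCEDURES: Dict[int, Callable[[int, int], int]] = {
    1: lambda a, b: a + b,
}


def server_dispatch(datagram: bytes) -> bytes:
    # Unpack the procedure number and two signed 32-bit arguments (big-endian).
    proc, a, b = struct.unpack(">Iii", datagram)
    result = PROCEDURES[proc](a, b)
    return struct.pack(">i", result)  # pack the reply message


def send(datagram: bytes) -> bytes:
    # Stand-in for the network: a real client would write to a socket
    # and wait for the reply.
    return server_dispatch(datagram)


def client_stub(proc: int, a: int, b: int) -> int:
    datagram = struct.pack(">Iii", proc, a, b)  # bundle the arguments
    reply = send(datagram)                      # transmit and block
    (value,) = struct.unpack(">i", reply)       # turn the reply into a return value
    return value


# To the caller, this looks like an ordinary local function call.
total = client_stub(1, 40, 2)
```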

Network File System Client/Server Communication Design

The logical layout of the Network File System on the client and server involves

numerous daemons, caches, and the NFS protocol breakdown. An overview of

each type of process follows.


A daemon is a process that performs continuous or system-wide functions, such as

network control. NFS uses many different types of daemons to complete user

requests.

A cache is a type of high-speed buffer storage that contains frequently accessed

instructions and data. Caches are used to reduce the access time for this

information. Caching is the act of writing data to a cache.

For information about NFS server daemons, see “Network File System Server-Side Daemons” on page 9. For information about NFS client daemons, see “Network File System Client-Side Daemons” on page 12. For information about client-side caches, see “NFS Client-Side Caches” on page 12. Detailed information about the NFS protocol can be found in “Network File System Stack Description”.

Network File System Process Layout

Figure 8. A breakdown of the NFS client/server protocol

Local processes that are known as daemons are required on both the client and the server. These daemons process both local and remote requests and handle client/server communication. Both the NFS client and server have a set of daemons that carry out user tasks. In addition, the NFS client has data caches that store specific types of data locally on the client. For more information about the NFS client data caches, see “NFS Client-Side Caches” on page 12.

Network File System Stack Description

NFS is a complex, high-level protocol built on top of simpler, lower-level protocols. For an NFS client command to reach the server, it must first use the Remote Procedure Call (RPC) protocol. The request is encoded in External Data Representation (XDR) and then sent to the server through a socket. The simple User Datagram Protocol (UDP) carries the actual communication between client and server. Some aspects of NFS use the Transmission Control Protocol (TCP) as the base communication protocol.
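To make the layering concrete, the following Python sketch builds the XDR encoding of an RPC CALL message for the NFS NULL procedure (procedure 0), the simplest request a client can send. It follows the ONC RPC message layout and uses the well-known NFS program number 100003; the transaction ID, the use of NFS version 2, and the empty AUTH_NONE credentials are illustrative assumptions. The sketch stops short of opening a UDP socket, and it is not the AS/400 implementation.

```python
import struct

# ONC RPC values and the well-known NFS program number.
CALL = 0             # msg_type of a call message
RPC_VERSION = 2      # version of the RPC protocol itself
AUTH_NONE = 0        # credential flavor that carries no authentication data
NFS_PROGRAM = 100003


def xdr_uint(value: int) -> bytes:
    """XDR encodes an unsigned integer as 4 big-endian bytes."""
    return struct.pack(">I", value)


def xdr_opaque(data: bytes) -> bytes:
    """Variable-length opaque data: length word, bytes, zero padding to a 4-byte boundary."""
    padding = (4 - len(data) % 4) % 4
    return xdr_uint(len(data)) + data + b"\x00" * padding


def rpc_call(xid: int, prog: int, vers: int, proc: int, args: bytes = b"") -> bytes:
    """Build an RPC CALL message with empty AUTH_NONE credentials and verifier."""
    header = b"".join(xdr_uint(v) for v in (xid, CALL, RPC_VERSION, prog, vers, proc))
    cred = xdr_uint(AUTH_NONE) + xdr_opaque(b"")
    verf = xdr_uint(AUTH_NONE) + xdr_opaque(b"")
    return header + cred + verf + args


# Procedure 0 (NULL) takes no arguments; the whole message fits in one UDP datagram.
message = rpc_call(xid=0x1234, prog=NFS_PROGRAM, vers=2, proc=0)
print(len(message), message.hex())
```

Every field lands on a 4-byte boundary, which is why XDR pads strings and opaque data; the NFS procedure arguments, when present, are appended in the same encoding.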

The operation of NFS can be seen as a logical client-to-server communications

system that specifically supports network applications. The typical NFS flow

includes the following steps:

1. The server waits for requests from one or more clients.

2. The client sends a request to the server and blocks (waits for a response).

3. When a request arrives, the server calls a dispatch routine.

4. The dispatch routine performs the requested service and returns with the results

of the request. The dispatch routine can also call a sub-routine to handle the

specific request. Sometimes the sub-routine will return results to the client by

itself, and other times it will report back to the dispatch routine.

5. The server sends those results back to the client.

6. The client then de-blocks.

The overhead of running more than one request at the same time is too heavy for an NFS server, so it is designed to be single-threaded. This means that an NFS server can only process one request per session. The requests from the multiple clients that use the NFS server are put into a queue and processed in the order in which they were received. To improve throughput, multiple NFS servers can process requests from the same queue.
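The request flow and the first-in, first-out queue described above can be modelled in a few lines of Python. This is a single-threaded sketch, not AS/400 code: the procedure names, the dispatch table, and the in-memory file contents are hypothetical stand-ins for the dispatch routine and its sub-routines, and the client-side blocking (steps 2 and 6) is not shown.

```python
from collections import deque

# Hypothetical server-side data: file name -> contents.
FILES = {"readme": b"hello"}


def proc_getattr(name):
    return {"size": len(FILES[name])}


def proc_read(name):
    return FILES[name]


# The dispatch routine's table: requested procedure -> sub-routine that performs it.
DISPATCH = {"GETATTR": proc_getattr, "READ": proc_read}


def serve(pending):
    """Single-threaded server: handle queued requests one at a time, oldest first."""
    replies = []
    while pending:
        client, proc, arg = pending.popleft()     # next request, in arrival order
        result = DISPATCH[proc](arg)              # dispatch and perform the service
        replies.append((client, result))          # the result goes back to that client
    return replies


pending_requests = deque([("clientA", "GETATTR", "readme"), ("clientB", "READ", "readme")])
print(serve(pending_requests))
```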

AS/400 as a Network File System Server

The NFS server is composed of many separate entities that work together to

process remote calls and local requests. These are:

v NFS server daemons. These daemons handle access requests for local files

from remote clients. Multiple instances of particular daemons can operate

simultaneously.

v Export command. This command allows a user to make local directories

accessible to remote clients.

v /etc/exports file. This file contains the local directory names that the NFS server

exports automatically when starting up. The administrator creates and maintains

this file, which is read by the export command. For more discussion about this

file, see “/etc/exports File” on page 94 and “Chapter 4. Server Exporting of File

Systems” on page 25.

v Export table. This table contains all the file systems that are currently exported from the server. The export command builds the export table from the /etc/exports file. Users can dynamically update the export table with the export command. For discussion regarding the CHGNFSEXP (Change Network File System Export) and EXPORTFS (Export File System) commands and how they work with both the /etc/exports file and the export table, see “Chapter 4. Server Exporting of File Systems” on page 25.
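The relationship between the /etc/exports file and the export table can be sketched in Python. The line format assumed here (`/dir -opt1,opt2=value`, with host lists separated by colons as in this book's ACCESS=Prof:1.234.5.6 examples) is a simplified, hypothetical approximation; see “/etc/exports File” on page 94 for the actual syntax.

```python
def parse_exports(text):
    """Build an export table {directory: {option: value}} from exports-style lines.

    Assumed (simplified) line format: '/dir -opt1,opt2=value'; '#' starts a comment.
    Host lists such as 'access=Prof:1.234.5.6' are split on ':'.
    """
    table = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        directory, _, opts = line.partition(" ")
        options = {}
        for item in opts.strip().lstrip("-").split(","):
            if not item:
                continue
            key, _, value = item.partition("=")
            options[key.lower()] = value.split(":") if value else True
        table[directory] = options
    return table


EXPORTS = """
# directories to export at start-up (hypothetical contents)
/usr   -access=Prof:1.234.5.6
/home  -ro
"""
export_table = parse_exports(EXPORTS)
# A dynamic export adds an entry to the table without editing the file.
export_table["/safe"] = {"root": ["TUclient52X"]}
print(export_table)
```

Keeping the table separate from the file is what lets the export command change what is exported while the server runs.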

Network File System Server-Side Daemons


Figure 9. The NFS Server

NFS is similar to other RPC-based services in its use of server-side daemons to

process incoming requests. NFS may also use multiple copies of some daemons to

improve overall performance and efficiency.

RPC Binder Daemon (RPCD)


This daemon is analogous to the port mapper daemon, which many

implementations of NFS use in UNIX. Clients determine the port of a specified RPC

service by using the RPC Binder Daemon. Local services register themselves with

the local RPC binder daemon (port mapper) when initializing. On AS/400, you can

register your own RPC programs with the RPC binder daemon.
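The binder's job is essentially a lookup table, as this toy Python model shows. The program numbers 100003 (NFS), 100005 (mount), and 100021 (network lock manager) and NFS port 2049 are the well-known values; the mount daemon's port is chosen arbitrarily, and on UNIX systems the port mapper itself listens on port 111. The sketch is not the RPCD interface.

```python
class RpcBinder:
    """Toy RPC binder (port mapper): (program, version, protocol) -> port."""

    def __init__(self):
        self._ports = {}

    def register(self, program, version, protocol, port):
        # A local service calls this when it initializes.
        self._ports[(program, version, protocol)] = port

    def getport(self, program, version, protocol):
        # A client calls this before contacting a service; 0 means "not registered".
        return self._ports.get((program, version, protocol), 0)


binder = RpcBinder()
binder.register(100003, 2, "udp", 2049)   # NFS server
binder.register(100005, 1, "udp", 1234)   # mount daemon; port chosen arbitrarily here
print(binder.getport(100003, 2, "udp"), binder.getport(100021, 1, "udp"))
```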

NFS Server Daemons (NFSD)

NFS server daemons exist mainly so that NFS RPC requests can be processed concurrently. Running daemons in user-level processes allows the server to have multiple, independent threads of execution. In this way, the server can handle several NFS requests at once. When a daemon completes the processing of a request, it returns to the end of a line of daemons that wait for new requests. With this scheduling design, a server can always accept new requests as long as at least one server daemon is waiting in the line. Multiple instances of this daemon can perform tasks simultaneously.
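The following Python sketch approximates this design with worker threads that block on a shared `queue.Queue`. The daemon count and the upper-casing "work" are placeholders, and Python's queue does not promise that waiting threads are woken in strict line order, so this is an approximation of the scheduling described above rather than a model of the NFSD jobs.

```python
import queue
import threading


def nfsd(requests, results, lock):
    """One server daemon: take the next request, process it, go back to waiting."""
    while True:
        item = requests.get()            # blocks until a request is queued
        if item is None:                 # shutdown sentinel
            return
        client, operation = item
        with lock:
            results.append((client, operation.upper()))   # placeholder for real work


def run_server(incoming, daemon_count=4):
    requests = queue.Queue()             # the shared queue of pending requests
    results, lock = [], threading.Lock()
    daemons = [threading.Thread(target=nfsd, args=(requests, results, lock))
               for _ in range(daemon_count)]
    for daemon in daemons:
        daemon.start()
    for item in incoming:
        requests.put(item)
    for _ in daemons:
        requests.put(None)               # one sentinel per daemon
    for daemon in daemons:
        daemon.join()
    return results


print(sorted(run_server([("c1", "read"), ("c2", "write"), ("c3", "lookup")])))
```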

Mount Daemon (MNTD)

Each NFS server system runs a mount daemon, which listens for requests from client systems. This daemon acts on mount and unmount requests from clients. When the mount daemon receives a client mount request, it checks the request against the export table to see whether the client is allowed to perform the mount. If the mount is allowed, the mount daemon sends the requesting client an opaque data structure, the file handle. This structure uniquely describes the mount point that the client requested, and the client uses it to represent the root of the mounted file system when making future requests.
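The mount check can be sketched as follows. The export table contents are hypothetical, and the SHA-256 digest merely stands in for a real file handle (which a server builds from file system and object identifiers); it happens to match the 32-byte handle size of NFS version 2. The point is that the client receives bytes it never interprets.

```python
import hashlib

# Hypothetical export table: exported directory -> allowed clients (None = any client).
EXPORT_TABLE = {"/usr": ["Prof", "1.234.5.6"], "/pub": None}


def handle_mount(client, path):
    """Return an opaque 32-byte file handle if the mount is allowed, else None."""
    if path not in EXPORT_TABLE:
        return None                          # directory is not exported
    allowed = EXPORT_TABLE[path]
    if allowed is not None and client not in allowed:
        return None                          # client is not on the access list
    # Stand-in for a real handle; the client never looks inside it.
    return hashlib.sha256(path.encode()).digest()


handle = handle_mount("Prof", "/usr")
print(handle.hex() if handle else "mount refused")
```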


Network Status Monitor Daemon (NSMD)

The Network Status Monitor (NSM) is a stateful NFS service that provides

applications with information about the status of network hosts. The Network Lock

Manager (NLM) daemon heavily uses the NSM to track hosts that have established

locks as well as hosts that maintain such locks.

There is a single NSM server per host. It keeps track of the state of clients and

notifies any interested party when this state changes (usually after recovery from a

crash).
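The notification flow can be sketched with a toy Python model: each host's monitor keeps a notify list of hosts to inform after a state change, and a monitor that receives a notification passes it to its local lock manager, represented here by a callback. The host names come from this book's TULAB scenario; the RPC exchange and the real NSM state-numbering rules are deliberately not modelled.

```python
class StatusMonitor:
    """Toy NSM: one per host, with a state counter and a notify list."""

    def __init__(self, host, lock_manager_callback):
        self.host = host
        self.state = 1                        # bumped on every local state change
        self.notify_list = set()              # hosts to inform after a state change
        self.tell_lock_manager = lock_manager_callback

    def add_to_notify_list(self, peer):
        self.notify_list.add(peer)

    def recover(self, network):
        """After a restart: record the new state and notify every listed host."""
        self.state += 1
        for peer in sorted(self.notify_list):
            network[peer].receive_notification(self.host, self.state)

    def receive_notification(self, peer, new_state):
        # A remote host changed state: pass the news to the local lock manager.
        self.tell_lock_manager(peer, new_state)


events = []
server = StatusMonitor("TULAB2", lambda peer, st: events.append(("TULAB2", peer, st)))
client = StatusMonitor("Prof", lambda peer, st: events.append(("Prof", peer, st)))
network = {"TULAB2": server, "Prof": client}

client.add_to_notify_list("TULAB2")   # Prof holds locks on TULAB2
client.recover(network)               # Prof crashes and comes back up
print(events)                         # TULAB2's lock manager learns of the change
```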

The NSM daemon keeps a notify list that contains information on hosts to be informed after a state change. After a local change of state, the NSM notifies each host in the notify list of the new state of the local NSM. When the NSM receives a state change notification from another host, it notifies the local network lock manager daemon of the state change.

Network Lock Manager Daemon (NLMD)

The Network Lock Manager (NLM) daemon is a stateful service that provides advisory byte-range locking for NFS files. The NLM maintains state across requests, and makes use of the Network Status Monitor (NSM) daemon, which maintains state across crashes (using stable storage).

The NLM supports two types of byte-range locks:

1. Monitored locks. These are reliable and helpful in the event of system failure. When an NLM server crashes and recovers, all the locks it had maintained are reinstated without client intervention. Likewise, NLM servers release all old locks when a client crashes and recovers. A Network Status Monitor (NSM) must be functioning on both the client and the server to create monitored locks.

2. Unmonitored locks. These locks require explicit action to be released after a crash and re-established after startup. They are an alternative to monitored locks, which require the NSM on both the client and the server systems.

AS/400 as a Network File System Client

Several entities work together to communicate with the server and local jobs on the

NFS client. These processes are the following:


v RPC Binder Daemon. This daemon communicates with the local and remote

daemons by using the RPC protocol. Clients look for NFS services through this

daemon.

v Network Status Monitor and Network Lock Manager. These two daemons are

not mandatory on the client. Many client applications, however, establish

byte-range locks on parts of remote files on behalf of the client without notifying

the user. For this reason, it is recommended that the NSM and NLM daemons

exist on both the NFS client and server.

v Block I/O daemon. This daemon manages the data caches and is therefore

stateful in operation. It performs caching, and assists in routing client-side NFS

requests to the remote NFS server. Multiple instances of this daemon can

perform tasks simultaneously.

v Data and attribute caches. These two caches enhance NFS performance by storing information on the client side so that the client does not have to contact the server for it. The attribute cache stores file and directory attribute information locally on the client, while the data cache stores frequently used data on the client.


v Mount and Unmount commands. Users can mount and unmount a file system

in the client namespace with these commands. These are general tools, used not

only in NFS, but also to dynamically mount and unmount other local file systems.

For more information about the ADDMFS (Add Mounted File System) and

RMVMFS (Remove Mounted File System) commands, see “Chapter 5. Client

Mounting of File Systems” on page 39.

Network File System Client-Side Daemons

Figure 10. The NFS Client

Besides the RPC Binder Daemon, the NFS client has only one daemon to process requests and to transfer data to and from the remote server: the block I/O daemon. NFS differs from typical client/server models in that processes on NFS clients make some RPC calls themselves, independently of the client block I/O daemon. An NFS client can optionally use both a Network Lock Manager (NLM) and a Network Status Monitor (NSM) locally, but these daemons are not required for standard operation. It is recommended that you use both the NLM and NSM on your client because user applications often establish byte-range locks without the knowledge of the user.

Block I/O Daemon (BIOD)


The block I/O daemon handles client requests for remote files and for operations on the server. Running only on NFS clients, or on servers that are also clients, this daemon manages the data caches for the user. The block I/O daemon is stateful and routes client application requests either to the caches or on to the NFS server. The user can specify the regular intervals at which all data cached by the block I/O daemon is updated. Users can start multiple daemons to perform different operations simultaneously.
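The routing and periodic update behaviour can be illustrated with a toy Python class. Everything here is a simplification under stated assumptions: a dictionary stands in for files on the NFS server, writes are delayed in the cache, and changed data is written back only when the user-specified flush interval has elapsed. The class and its method names are hypothetical, not the block I/O daemon's interface.

```python
class BlockIODaemon:
    """Toy block I/O daemon: reads through the cache, delays writes, flushes periodically."""

    def __init__(self, server, flush_interval):
        self.server = server              # dict standing in for files on the NFS server
        self.flush_interval = flush_interval
        self.cache = {}
        self.dirty = set()
        self.last_flush = 0.0

    def read(self, name):
        if name not in self.cache:        # cache miss: route the request to the server
            self.cache[name] = self.server[name]
        return self.cache[name]

    def write(self, name, data, now):
        self.cache[name] = data           # delayed write: only the local copy changes
        self.dirty.add(name)
        self.tick(now)

    def tick(self, now):
        """Write every changed file back once the flush interval has elapsed."""
        if now - self.last_flush >= self.flush_interval:
            for name in self.dirty:
                self.server[name] = self.cache[name]
            self.dirty.clear()
            self.last_flush = now


remote = {"a": b"old"}
biod = BlockIODaemon(remote, flush_interval=30.0)
biod.write("a", b"new", now=10.0)     # too early to flush
print(remote["a"], biod.read("a"))
biod.tick(now=31.0)                   # interval elapsed: data goes to the server
print(remote["a"])
```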

NFS Client-Side Caches

Caching file data or attributes gives administrators a way of tuning NFS

performance. The caching of information allows you to delay writes or to read

ahead.


Client-side caching in NFS reduces the number of RPC requests sent to the server.

The NFS client can cache data, which can be read out of local memory instead of

from a remote disk. The caching scheme available for use depends on the file

system being accessed. Some caching schemes are prohibited because they

cannot guarantee the integrity and consistency of data that multiple clients

simultaneously change and update. The standard NFS cache policies ensure that

performance is acceptable while also preventing the introduction of state into the

client/server communication relationship.

There are two types of client caches: the directory and file attribute cache and

the data cache.

Directory and File Attribute Cache

Not all file system operations use the data in files and directories. Many operations

get or set the attributes of the file or directory, such as its length, owner, and

modification time. Because these attribute-only operations are frequent and do not

affect the data in a file or directory, they are prime candidates for using cached

information.


The client-side file and directory attribute cache stores file attributes so that not every operation that gets or sets attributes has to go through the connection to the NFS server. When the system reads a file’s attributes, they remain valid on the client for some minimum period of time, typically 30 seconds. You can set this time period by using the acregmin option on the mount command. If the client changes the file, it updates the file’s attributes. This changes the local copy of the attributes and extends the cache validity period by another minimum time period. The attributes of a file stay in the cache for at most some maximum period, typically sixty seconds; when that period expires, the system deletes the file attributes from the cache and writes any changed attributes back to the server. You can set this time period with the acregmax option on the mount command. Remote attributes are normally refreshed when a file is opened; to suppress this refresh, use the nocto option on the mount command. Specifying the noac option suppresses all local caching of attributes, negating the acregmin, acregmax, acdirmin, and acdirmax options on the mount command.

The same mechanism is used for directory attributes, although they are given a

longer minimum life-span. The minimum and maximum time period for directory

attribute flushing from the cache is set by the acdirmin and acdirmax options on the

mount command.

Attribute caching allows a client to make multiple changes to a file or directory

without having to constantly get and set attributes on the server. Intermediate

attributes are cached, and the sum total of all updates is later written to the server

when the maximum attribute cache period expires. Frequently accessed files and

directories have their attributes cached locally on the client so that some NFS

requests can be performed without having to make an RPC call. By preventing this

type of client/server interaction, caching attributes improves the performance of

NFS.
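The validity rules above can be sketched in Python. The names acmin and acmax stand in for acregmin/acregmax (or acdirmin/acdirmax for directories), the 30- and 60-second defaults are the typical values quoted in the text, and write-back of changed attributes to the server is not modelled. This is an illustration of the timing rules, not the AS/400 client code.

```python
class AttributeCache:
    """Toy client attribute cache with acregmin/acregmax-style limits (seconds)."""

    def __init__(self, fetch, acmin=30.0, acmax=60.0, noac=False):
        self.fetch = fetch                  # asks the server for attributes (an RPC)
        self.acmin, self.acmax, self.noac = acmin, acmax, noac
        self.entries = {}                   # name -> (attrs, loaded_at, valid_until)
        self.server_calls = 0

    def get(self, name, now):
        entry = self.entries.get(name)
        if not self.noac and entry is not None and now < entry[2]:
            return entry[0]                 # still valid: answered locally
        self.server_calls += 1
        attrs = self.fetch(name)
        self.entries[name] = (attrs, now, now + self.acmin)
        return attrs

    def local_update(self, name, attrs, now):
        """A local change extends validity by acmin, capped at acmax after loading."""
        _, loaded_at, _ = self.entries.get(name, (None, now, now))
        valid_until = min(now + self.acmin, loaded_at + self.acmax)
        self.entries[name] = (attrs, loaded_at, valid_until)


cache = AttributeCache(lambda name: {"size": 100})
cache.get("f", now=0.0)
cache.get("f", now=10.0)              # within acmin: no RPC
print(cache.server_calls)
```

The cap in `local_update` is what keeps repeated local changes from holding stale attributes on the client indefinitely.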

For more information on the ADDMFS and MOUNT commands, see “Chapter 5. Client Mounting of File Systems” on page 39. For more information on the options to the ADDMFS and MOUNT commands, see CL Reference, SC41-4722.

Data Cache

The data cache is very similar to the directory and file attribute cache in that it

stores frequently used information locally on the client. The data cache, however,

stores data that is frequently or likely to be used instead of file or directory

attributes. The data cache provides data in cases where the client would have to

access the server to retrieve information that has already been read. This operation

improves the performance of NFS.

Whenever a user makes a request on a remote object, a request is sent to the

server. If the request is to read a small amount of data, for example, 1 byte (B),

then the server returns 4 kilobytes (KB) of data. This “extra” data is stored in the

client caches because, presumably, it will soon be read by the client.

When users access the same data frequently over a given period of time, the client can cache this information to prevent a client/server interaction. This caching also applies to users who use data in one “area” of a file frequently. This is called locality and involves not only the primary data that is retrieved from the server, but also a larger block of data around it. When a user requests data frequently from one area, the entire block of data is retrieved and then cached. There is a high probability that the user will soon want to access this surrounding data. Because this information is already cached locally on the client, the performance of NFS is improved.
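A small Python model shows why locality pays off: any read fetches and keeps the whole 4 KB block around the requested bytes, so nearby reads are answered without another server request. The block size follows the example in the text; the byte string standing in for the remote file and the counter of server reads are illustrative assumptions.

```python
BLOCK_SIZE = 4096   # the 4 KB transfer described above


class BlockCache:
    """Toy client data cache: a read fetches and keeps the whole surrounding block."""

    def __init__(self, remote_file: bytes):
        self.remote_file = remote_file   # stands in for the file on the server
        self.blocks = {}                 # block number -> cached block contents
        self.server_reads = 0

    def _block(self, number):
        if number not in self.blocks:    # miss: one request to the server
            self.server_reads += 1
            start = number * BLOCK_SIZE
            self.blocks[number] = self.remote_file[start:start + BLOCK_SIZE]
        return self.blocks[number]

    def read(self, offset, length):
        out = bytearray()
        while length > 0:
            number, within = divmod(offset, BLOCK_SIZE)
            chunk = self._block(number)[within:within + length]
            if not chunk:                # past end of file
                break
            out += chunk
            offset += len(chunk)
            length -= len(chunk)
        return bytes(out)


data = bytes(range(256)) * 40          # a 10,240-byte remote file
cache = BlockCache(data)
cache.read(0, 1)                       # 1 byte requested, 4 KB fetched
cache.read(100, 50)                    # same area: served from the cache
print(cache.server_reads)
```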

Client Timeout

If the client does not have a cache loaded, then all requests go to the server, which takes extra time for each client operation. With the mount command, users can specify a timeout value for re-sending the command. The client cannot distinguish between a slow server and a server that does not exist, so it retries the command.


The default timeout before a retry is 2 seconds. If the server does not respond within this time, the client keeps retrying the command. In a network environment, this can overload the server with duplicate requests from AS/400 clients. The solution to this difficulty is to increase the timeout value on the mount command to 5-10 seconds.
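The effect of the timeout on duplicate requests can be estimated with a short Python function. The model is deliberately simple and is an assumption, not the AS/400 client's retry algorithm: one retransmission every timeout interval, no backoff and no retry limit, and a server that answers the first copy after a fixed (hypothetical) latency.

```python
def requests_sent(server_latency: float, timeout: float) -> int:
    """Copies of one request a client sends before the first reply arrives.

    Simplified model: a retransmission every `timeout` seconds with no backoff
    and no retry limit; the reply to the first copy arrives after `server_latency`.
    """
    if timeout <= 0:
        raise ValueError("timeout must be positive")
    sends, next_send = 1, timeout          # the original request goes out at time 0
    while next_send < server_latency:      # still no reply, so another copy goes out
        sends += 1
        next_send += timeout
    return sends


for timeout in (2.0, 5.0, 10.0):
    print(timeout, requests_sent(server_latency=4.5, timeout=timeout))
```

With a server that takes 4.5 seconds to answer, the 2-second default produces two duplicates of every request, while a 5-second timeout produces none.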


Chapter 3. NFS and the User-Defined File System (UDFS)


A user-defined file system (UDFS) is a type of file system that you directly manage

through the end user interface. This contrasts with a system-defined file system

(SDFS), which AS/400 system code creates. QDLS, QSYS.LIB, and QOPT are all

examples of SDFSs.

The UDFS introduces a concept on AS/400 that allows you to create and manage

your own file systems on a particular user Auxiliary Storage Pool (ASP). An ASP is

a storage unit that is defined from the disk units or disk unit sub-systems that make

up auxiliary storage. ASPs provide a means for placing certain objects on specific

disk units to prevent the loss of data due to disk media failures on other disk units.

The concept of Block Special Files (*BLKSF objects) allows a user to view a UDFS

as a single entity whose contents become visible only after mounting the UDFS in

the local namespace. An unmounted UDFS appears as a single, opaque entity to

the user. Access to individual objects within a UDFS from the integrated file system

interface is permissible only when the UDFS is mounted.

UDFS support enables you to choose which ASP will contain the file system, as

well as manage file system attributes like case-sensitivity. You can export a

mounted UDFS to NFS clients so that these clients can also share the data that is

stored on your ASP. This chapter explains how to create and work with a UDFS so

that it can be used through NFS.

User File System Management

The UDFS provides new file management strategies to the user and includes

several new and changed CL commands specific to UDFSs.

For more information about the various UDFS CL commands and their associated parameters and options, see CL Reference, SC41-4722.

Create a User-Defined File System

The Create User-Defined File System (CRTUDFS) command creates a file system whose contents can be made visible to the rest of the integrated file system namespace via the ADDMFS (Add Mounted File System) or MOUNT command. A UDFS is represented by the object type *BLKSF, or block special file. Users can create a UDFS in an ASP of their own choice and have the ability to specify case-sensitivity.

Restrictions:

1. You must have *IOSYSCFG special authority to use this command.


CRTUDFS Display

Create User-Defined FS (CRTUDFS)

Type choices, press Enter.

User-defined file system....>'/DEV/QASP02/kate.udfs'

Public authority for data . . . *INDIR Name, *INDIR, *RWX, *RW...

Public authority for object . . *INDIR *INDIR, *NONE, *ALL...

Auditing value for objects... *SYSVAL *SYSVAL, *NONE, *USRPRF...

Case sensitivity........ *MIXED *MIXED, *MONO

Text 'description'....... *BLANK

+ for more values

Additional Parameters

Bottom

F3=Exit F4=Prompt F5=Refresh F12=Cancel F13=How to use this display

F24=More keys

Figure 11. Using the Create User-Defined FS (CRTUDFS) display

When you use the CRTUDFS command, you can specify many parameters and

options:

v The required UDFS parameter determines the name of the new UDFS. This

entry must be of the form /DEV/QASPXX/name.udfs, where the XX is one of the

valid Auxiliary Storage Pool (ASP) numbers on the system, and name is the name

of the user-defined file system. All other parts of the path name must appear as

in the example above. The name part of the path must be unique within the

specified QASPXX directory.

v The DTAAUT parameter on the CRTUDFS command specifies the public data authority given to users for the new UDFS.

v The OBJAUT parameter on the CRTUDFS command specifies the public object

authority given to users for the new UDFS.

v The CRTOBJAUD parameter on the CRTUDFS command specifies the auditing

value of objects created in the new UDFS.

v The CASE parameter on the CRTUDFS command specifies the case-sensitivity

of the new UDFS. You can specify either the *MONO value or the *MIXED value.

Using the *MONO value creates a case-insensitive UDFS. Using the *MIXED

value creates a case-sensitive UDFS.

v The TEXT parameter on the CRTUDFS command specifies the text description for the new UDFS.
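The path rule for the UDFS parameter can be checked mechanically. This Python sketch assumes a two-digit ASP number, as in this book's examples, matches case-insensitively on the assumption that the “root” file system is case-insensitive, and rejects the wildcard characters ’*’ and ’?’. It does not check that the ASP exists or that the name is unique, so it is an illustration of the form only, not the command's validation.

```python
import re

# /DEV/QASPxx/name.udfs, with a two-digit ASP number (an assumption) and no wildcards.
UDFS_PATH = re.compile(r"^/DEV/QASP(\d{2})/([^/*?]+)\.udfs$", re.IGNORECASE)


def parse_udfs_path(path):
    """Return (asp_number, name) for a well-formed UDFS path, or raise ValueError."""
    match = UDFS_PATH.match(path)
    if not match:
        raise ValueError(f"not of the form /DEV/QASPxx/name.udfs: {path!r}")
    return int(match.group(1)), match.group(2)


print(parse_udfs_path("/DEV/QASP02/kate.udfs"))
```

A leading slash is required, so a path such as 'DEV/QASP02/kate.udfs' is rejected.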

Examples

Example 1: Create UDFS in System ASP on TULAB2

CRTUDFS UDFS('/DEV/QASP01/A.udfs') CASE(*MONO)

This command creates a case-insensitive user-defined file system (UDFS) named

A.udfs in the system Auxiliary Storage Pool (ASP), qasp01.

Example 2: Create UDFS in user ASP on TULAB2

CRTUDFS UDFS('/DEV/QASP02/kate.udfs') CASE(*MIXED)

This command creates a case-sensitive user-defined file system (UDFS) named kate.udfs in the user Auxiliary Storage Pool (ASP), qasp02.

Display a User-Defined File System

The Display User-Defined File System (DSPUDFS) command presents the

attributes of an existing UDFS, whether mounted or unmounted.

DSPUDFS Display

Display User-Defined FS (DSPUDFS)

Type choices, press Enter.

User-defined file system.... /DEV/QASP02/kate.udfs

Output............. * *, *PRINT

Bottom

F3=Exit F4=Prompt F5=Refresh F12=Cancel F13=How to use this display

F24=More keys

Figure 12. Display User-Defined FS (DSPUDFS) output (1/2)

When you use the DSPUDFS command, you only have to specify one parameter:

v The UDFS parameter determines the name of the UDFS to display. This entry must be (or resolve to a path name) of the form /DEV/QASPXX/name.udfs, where XX is one of the valid Auxiliary Storage Pool (ASP) numbers on the system, and name is the name of the UDFS. All other parts of the path name must appear as in the example above.

When you use the DSPUDFS command successfully, a screen will appear with

information about your UDFS on it:


Display User-Defined FS

User-defined file system...: /DEV/QASP02/kate.udfs

Owner ............: PATRICK

Code page ..........: 37

Case sensitivity.......: *MIXED

Creation date/time......: 02/26/96 08:00:00

Change date/time.......: 08/30/96 12:30:42

Path where mounted......: Not mounted

Description .........:

Press Enter to continue.

F3=Exit F12=Cancel

(C) COPYRIGHT IBM CORP. 1980, 1996.

Figure 13. Display User-Defined FS (DSPUDFS) output (2/2)

Example

Display UDFS in user ASP on TULAB2

DSPUDFS UDFS('/DEV/QASP02/kate.udfs')

This command displays the attributes of a user-defined file system (UDFS) named

kate.udfs in the user Auxiliary Storage Pool (ASP), qasp02.

Delete a User-Defined File System

The Delete User-Defined File System (DLTUDFS) command deletes an existing, unmounted UDFS and all the objects within it. The command fails if the UDFS is mounted. If the user does not have the necessary authority to delete any one of the objects within a UDFS, none of the objects in the UDFS are deleted.
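The all-or-nothing rule can be pictured as "check every object first, then delete". This Python sketch models that behaviour with a list of hypothetical object names and an authority callback; how the system actually enforces the rule internally is not described here, so treat it as an illustration of the outcome only.

```python
def delete_udfs(objects, can_delete, mounted=False):
    """Delete every object in a UDFS, or none of them.

    objects: list of object names in the UDFS (hypothetical representation)
    can_delete: function(name) -> bool describing the user's authority
    """
    if mounted:
        raise RuntimeError("UDFS is mounted; unmount it before deleting it")
    denied = [name for name in objects if not can_delete(name)]
    if denied:
        # Checked before anything is removed, so nothing has been deleted yet.
        raise PermissionError(f"not authorized to delete: {denied}")
    objects.clear()


udfs_objects = ["1.txt", "2.txt"]
try:
    delete_udfs(udfs_objects, can_delete=lambda name: name != "2.txt")
except PermissionError as err:
    print(err)
print(udfs_objects)                   # both objects are still there
```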


Restrictions:

1. The UDFS being deleted must not be mounted.

2. Only a user with *IOSYSCFG special authority can use this command.


DLTUDFS Display

Delete User-Defined FS (DLTUDFS)

Type choices, press Enter.

User-defined file system....>'/DEV/QASP02/kate.udfs'

Bottom

F3=Exit F4=Prompt F5=Refresh F12=Cancel F13=How to use this display

F24=More keys

Figure 14. Using the Delete User-Defined FS (DLTUDFS) display

When you use the DLTUDFS command, you only have to specify one parameter:

v The UDFS parameter determines the name of the unmounted UDFS to delete.

This entry must be of the form /DEV/QASPXX/name.udfs, where the XX is one of the

valid Auxiliary Storage Pool (ASP) numbers on the system, and name is the name

of the UDFS. All other parts of the path name must appear as in the example

above. Wildcard characters such as ’*’ and ’?’ are not allowed in this parameter.

The command will fail if the UDFS specified is currently mounted.

Example

Unmount and Delete a UDFS in the user ASP on TULAB2

UNMOUNT TYPE(*UDFS) MFS('/DEV/QASP02/kate.udfs')

This command unmounts the user-defined file system (UDFS) named kate.udfs from the integrated file system namespace. A user must unmount a UDFS before deleting it. After unmounting the UDFS, the user can delete it and all objects within it using the DLTUDFS command:

DLTUDFS UDFS('/DEV/QASP02/kate.udfs')

This command deletes the user-defined file system (UDFS) named kate.udfs from

the user Auxiliary Storage Pool (ASP) qasp02.

Mount a User-Defined File System

The Add Mounted File System (ADDMFS) and MOUNT commands make the objects in a file system accessible to the integrated file system namespace. To mount a UDFS, you need to specify TYPE(*UDFS) on the ADDMFS or MOUNT command.

The ADDMFS command (or its alias, MOUNT) allows you to dynamically mount a file system, whether that file system is UDFS, NFS, or NetWare. Use the following steps to allow a successful export of a UDFS to NFS clients:

1. Mount the block special file locally (Type *UDFS).

2. Export the path to the UDFS mount point (the directory you mounted over in Step 1).

These steps ensure that the remote view of the namespace is the same as the local view. Remote NFS clients can then mount the exported UDFS (Type *NFS); this works only because the UDFS has already been mounted on the local namespace.

ADDMFS/MOUNT Display

For a display of the ADDMFS (Add Mounted File System) and MOUNT commands,

please see “RMVMFS/UNMOUNT Display” on page 49.

Example


Mount and Export a UDFS on TULAB2

MOUNT TYPE(*UDFS) MFS('/DEV/QASP02/kate.udfs') MNTOVRDIR('/usr')

This command mounts the user-defined file system (UDFS) that is named

kate.udfs on the integrated file system namespace of TULAB2 over directory /usr.

CHGNFSEXP OPTIONS('-I -O ACCESS=Prof:1.234.5.6') DIR('/usr')

This command exports the directory /usr, and with it the mounted user-defined file system (UDFS) named kate.udfs, making it available to the clients Prof and 1.234.5.6.

For more information about the MOUNT and ADDMFS commands, see “Chapter 5.

Client Mounting of File Systems” on page 39. For more information about the

EXPORTFS and CHGNFSEXP commands, see “Chapter 4. Server Exporting of File

Systems” on page 25.

Unmount a User-Defined File System

The Remove Mounted File System (RMVMFS) or UNMOUNT commands will make

a mounted file system inaccessible to the integrated file system namespace. If any

of the objects in the file system are in use (for example, a file is opened) at the time

of using the unmount command, an error message will be returned to the user. If

the user has mounted over the file system itself, then this file system cannot be

unmounted until it is uncovered.

Note: Unmounting an exported UDFS which has been mounted by a client will

cause the remote client to receive the ESTALE return code for a failed

operation upon the next client attempt at an operation that reaches the

server.

RMVMFS/UNMOUNT Display

For a display of the RMVMFS (Remove Mounted File System) and UNMOUNT

commands, please see “RMVMFS (Remove Mounted File System) Command” on

page 48.

For more information about the UNMOUNT and RMVMFS commands, see

“Chapter 5. Client Mounting of File Systems” on page 39.


Saving and Restoring a User-Defined File System

The user has the ability to save and restore all UDFS objects, as well as their

associated authorities. The Save command (SAV) allows a user to save objects in a

UDFS while the Restore command (RST) allows a user to restore UDFS objects.

Both commands will function whether the UDFS is mounted or unmounted.

Graphical User Interface

A Graphical User Interface (GUI) provides easy and convenient access to UDFSs.

This GUI enables a user to create, delete, mount, and unmount a UDFS from a

Windows 95** client. Following are some examples of what you will see if you are

connected to AS/400 through AS/400 Client Access.

Figure 15. A Windows 95 view of using the CRTUDFS (Create UDFS) command

This window allows you to specify the name of the UDFS, its auditing value,

case-sensitivity, and other options. For more information about the CRTUDFS

command, see “Create a User-Defined File System” on page 15.


Figure 16. A Windows 95 view of using the DSPUDFS (Display UDFS) command

This window displays the properties of a user-defined file system. For more

information about the DSPUDFS command, see “Display a User-Defined File

System” on page 17.

User-Defined File System Functions in the Network File System


To export the contents of a UDFS, you must first mount it on the local namespace. Once the block special file (*BLKSF) is mounted, it behaves like the “root” or QOpenSys file systems. The UDFS contents become visible to remote clients when the server exports them.

It is possible to export an unmounted UDFS (*BLKSF object) or the ASP in which it resides. However, remote NFS clients can make only limited use of such objects: from most UNIX clients you can do little more than mount and view them. You cannot mount a *BLKSF object on AS/400 clients or work with such objects in NFS-mounted directories. For this reason, exporting /DEV or objects within it can cause administrative difficulties. The next sections describe how you can work around one such scenario.

Using User-Defined File Systems with Auxiliary Storage Pools

This scenario involves an eager user, a non-communicative system administrator,

and a solution to an ASP problem through the Network File System.

A user, Jeff, accesses and works with the TULAB2 namespace each time he logs into his account on a remote NFS client. In this namespace exist a number of user-defined file systems (A.udfs, B.udfs, C.udfs, and D.udfs) in an ASP connected to the namespace as /DEV/QASP02/. Jeff is used to working with these directories in their familiar form every day.

One day, the system administrator deletes the UDFSs and physically removes the

ASP02 from the server. The next time Jeff logs in, he can’t find his UDFSs. So,

being a helpful user, Jeff creates a /DEV/QASP02/ directory using the CRTDIR or

MKDIR command and fills the sub-directories with replicas of what he had before.

Jeff replaces A.udfs, B.udfs, C.udfs, and D.udfs with 1.udfs, 2.udfs, 3.udfs, and

4.udfs.

This is a problem for the network because it presents a false impression to the user

and a liability on the server. Because the real ASP directories (/DEV/QASPXX) are

only created during IPL by the system, Jeff’s new directories do not substitute for

actual ASPs. Also, because they are not real ASP directories (/DEV/QASPXX), all of

Jeff’s new entries take up disk space and other resources on the system ASP, not

the QASP02, as Jeff believes.

Furthermore, Jeff’s objects are not UDFSs and may have different properties than

he expects. For example, he cannot use the CRTUDFS command in his false

/DEV/QASP02 directory.

The system administrator then spontaneously decides to add a true ASP without shutting the system down for IPL. At the next IPL, the new ASP will be mounted over Jeff’s false /DEV/QASP02 directory. Jeff and many other users will panic because they suddenly cannot access their directories, which are “covered up” by the system-performed mount. This new ASP cannot be unmounted using either the RMVMFS or UNMOUNT commands. For Jeff and other users at the server, there is no way to access their directories and objects in the false ASP directory (such as 1.udfs, 2.udfs, 3.udfs, and 4.udfs).
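The recovery that follows relies on NFS not crossing mount points. A toy Python namespace makes the difference visible: local lookups on the server follow the system-performed mount and see only the real ASP, while an NFS client of an export above the mount point sees the directory underneath. The contents of the real ASP (new.udfs) are hypothetical, and the model simply ignores every mount when serving NFS lookups.

```python
# Toy namespace for the scenario above (all contents hypothetical).
# Underlying "root" file system, including Jeff's false /DEV/QASP02 directory.
ROOT = {"DEV": {"QASP02": {"1.udfs": {}, "2.udfs": {}, "3.udfs": {}, "4.udfs": {}}}}
# The real ASP directory that the system mounted over /DEV/QASP02 at IPL.
MOUNTS = {"/DEV/QASP02": {"new.udfs": {}}}


def list_dir(path, cross_mounts):
    """List a directory. cross_mounts=True models local access on the server;
    cross_mounts=False models an NFS client of an export above the mount point."""
    node, walked = ROOT, ""
    for part in filter(None, path.split("/")):
        walked += "/" + part
        node = node[part]
        if cross_mounts and walked in MOUNTS:
            node = MOUNTS[walked]          # the mounted file system covers the directory
    return sorted(node)


print(list_dir("/DEV/QASP02", cross_mounts=True))    # users on TULAB2
print(list_dir("/DEV/QASP02", cross_mounts=False))   # NFS client of /DEV
```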

Recovery with the Network File System

The NFS protocol does not cross mount points. This concept is key to understanding the solution to the problem described above. While the users at the server cannot see the false ASP and false UDFSs covered by the system-performed mount, these objects still exist and can be accessed by remote clients using NFS. The recovery process requires action at both the client and the server:

1. The administrator can export a directory above the false ASP (and everything “downstream” of it) with the EXPORTFS command. Exporting /DEV exports the underlying false ASP directory, but not the true ASP directory that is mounted over it. Because NFS does not cross the mount point, NFS recognizes only the underlying directories and objects.

EXPORTFS OPTIONS('-I -O ROOT=TUclient52X') DIR('/DEV')

2. Now the client can mount the exported directory over a convenient directory on the client, such as /tmp.

MOUNT TYPE(*NFS) MFS('TULAB2:/DEV') MNTOVRDIR('/tmp')

3. Using the WRKLNK command on the mounted file system, the client can now access the false ASP directory, and the structure of the connecting directories is preserved.

WRKLNK '/tmp/*'

4. The server then needs to export a convenient directory, such as /safe, to serve as the permanent location of the false ASP directory and its contents.

EXPORTFS OPTIONS('-I -O ROOT=TUclient52X') DIR('/safe')

5. The client can mount the server’s /safe directory (here over /user) to provide a final storage location for the false ASP directory and its contents.

MOUNT TYPE(*NFS) MFS('TULAB2:/safe') MNTOVRDIR('/user')

6. Finally, the client can move the false ASP directory and the false objects 1.udfs, 2.udfs, 3.udfs, and 4.udfs to safety on the server by copying them into the /safe directory, which is mounted on the client as /user.

COPY OBJ('/tmp/*') TODIR('/user')

The false QASP02 directory and the false objects that were created with it are now accessible to users at the server. However, the objects are now located in /safe on the server.
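Everything in this recovery depends on one property: an NFS export does not cross mount points, so it exposes the directory underneath a mount rather than the file system mounted on top of it. The following Python sketch is a toy model of that behavior, not OS/400 or NFS code; the Node class, its mounted_over attribute, and the list_dir function are hypothetical names used only for illustration, and the newly added ASP is assumed to be empty.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A directory in a toy namespace (hypothetical model, not the OS/400 IFS)."""

    name: str
    children: dict[str, Node] = field(default_factory=dict)
    # Root directory of a file system mounted over this directory, if any.
    mounted_over: Optional[Node] = None

    def add(self, child: Node) -> Node:
        self.children[child.name] = child
        return child


def list_dir(node: Node, cross_mounts: bool) -> list[str]:
    """List a directory's entries.

    Local users (cross_mounts=True) see the file system mounted on top.
    An NFS export (cross_mounts=False) does not cross the mount point,
    so it sees the directory that lies underneath.
    """
    if cross_mounts and node.mounted_over is not None:
        node = node.mounted_over
    return sorted(node.children)


# Jeff's false /DEV/QASP02 directory with his replica objects.
dev = Node("DEV")
false_asp = dev.add(Node("QASP02"))
for name in ("1.udfs", "2.udfs", "3.udfs", "4.udfs"):
    false_asp.add(Node(name))

# The real (assumed empty) ASP, mounted over the false directory at IPL.
false_asp.mounted_over = Node("QASP02")

print(list_dir(false_asp, cross_mounts=True))   # prints []: objects covered up
print(list_dir(false_asp, cross_mounts=False))  # prints ['1.udfs', '2.udfs', '3.udfs', '4.udfs']
```

The two views differ only in the cross_mounts flag: the local lookup follows the mount to the new ASP, while the export of /DEV stays in the underlying file system and still reaches 1.udfs through 4.udfs.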

Chapter 4. Server Exporting of File Systems

A key feature of the Network File System is its ability to make various local file systems, directories, and objects available to remote clients through the export command. Exporting is the first major step in setting up a “transparent” relationship between client and server.

Before the server exports a file system, remote clients cannot “see” or access it. In fact, remote clients are completely unaware that file systems exist on the server, and they cannot mount or work with server file systems in any way. After exporting, the clients authorized by the server can mount and then work with server file systems. Exported and mounted file systems perform as if they were located on the local workstation. Exporting gives the NFS server administrator a great deal of control over exactly which file systems are accessible and which clients can access them.

What is Exporting?

Exporting is the process by which users make local server file systems accessible to remote clients. Assuming that remote clients have the proper authorities and access identifications, they can see as well as access exported server file systems.

Using either the CHGNFSEXP or EXPORTFS command, you can add directory names from the /etc/exports file to the export table for export to remote clients. You can also use these commands to export dynamically from the NFS server, bypassing the /etc/exports file.

A host system becomes an NFS server if it has file systems to export across the network. A server does not advertise these file systems to all network systems. Rather, it keeps a list of exported file systems, with their options and associated access authorities and restrictions, in a file, /etc/exports. The export command builds the export table from the /etc/exports file: it reads the export options and applies them to the file systems to be exported at the time the command is used. Another way of exporting file systems is to export them individually with the “-I” option of the export command. When used with “-I”, the command does not process any information stored in the /etc/exports file.

Figure 17. Exporting file systems with the /etc/exports file

Figure 18. Dynamically exporting file systems with the “-I” option

The mount daemon checks the export table each time a client requests to mount an exported file system. Users with the proper authority can export file systems at will by adding, deleting, or changing entries in the /etc/exports file and then using the export command to update the export table.

Most system administrators configure their NFS server so that, as it starts up, it checks for the existence of /etc/exports and immediately processes it. Administrators can accomplish this by specifying *ALL on the STRNFSSVR (Start Network File System Server) command. When the server finds /etc/exports in this way, it uses the export command to create the export table, which makes the file systems immediately available for client use.
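The relationship between /etc/exports, the export table, and the mount daemon described above can be pictured as a toy model. This Python sketch is an illustration under stated assumptions, not the OS/400 implementation: the ExportTable class and its method names are hypothetical, the option strings "RO" and "RW" are placeholders, and /etc/exports is represented as an already-parsed dictionary rather than in its real file syntax. export_all stands for the export command processing every /etc/exports entry, export_one for a single “-I” export that never reads the file, and handle_mount_request for the mount daemon consulting the table on each request.

```python
from __future__ import annotations


class ExportTable:
    """Toy export table mapping each exported path to its option string."""

    def __init__(self) -> None:
        self.entries: dict[str, str] = {}

    def export_all(self, etc_exports: dict[str, str]) -> None:
        """Export every entry read from /etc/exports (modeled as a dict)."""
        for path, options in etc_exports.items():
            self.entries[path] = options

    def export_one(self, path: str, options: str) -> None:
        """Export one path, as with "-I"; /etc/exports is never read."""
        self.entries[path] = options

    def handle_mount_request(self, path: str) -> bool:
        """Mount daemon check, made on every request: is the path exported?

        Simplification: only exact export paths are matched here.
        """
        return path in self.entries


# Hypothetical /etc/exports contents, already parsed into {path: options}.
etc_exports = {"/home": "RO", "/classes": "RW"}

table = ExportTable()
table.export_all(etc_exports)                 # start-up processing of /etc/exports
table.export_one("/DEV", "ROOT=TUclient52X")  # dynamic export that bypasses the file

print(table.handle_mount_request("/classes"))   # True
print(table.handle_mount_request("/DEV"))       # True
print(table.handle_mount_request("/QSYS.LIB"))  # False: never exported
print("/DEV" in etc_exports)                    # False: "-I" left the file alone
```

Keeping the file and the table as separate structures is the point of the sketch: editing /etc/exports changes nothing for clients until the export command runs again, and a “-I” export changes the table without touching the file.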

Why Should I Export?

Exporting gives a system administrator an easy way to make any file system accessible to clients. The administrator can perform an export at will to fulfill the needs of any particular user or group of users, specifying to whom the file system is available and how.

With an efficient system of exporting, a group of client systems needs only one set of configuration and startup files, one set of archives, and one set of applications. The export command can make all of these types of data accessible to the clients at any time.

Although there are many insecure ways to export file systems, the options on the export command allow administrators to export file systems safely. Exported file systems can be limited to a group of systems in a trusted community of a network namespace.

Exporting allows simpler and more effective administration of a namespace, from setting up clients to determining what authority is needed to access a sensitive data set. A properly used /etc/exports file can make your namespace safe and secure while meeting the needs of all your users.

TULAB Scenario

In TULAB, a group of engineering undergraduate students is working with a group of engineering graduate students. Both sets of students have access to their remote home directories and applications on the server through NFS. Their research involves the controversial history of local bridge architecture. The students will be working in different rooms of the same campus building. Chris Admin needs a way to make data available to both groups of computers and students without making all the data available to everyone.

Chris Admin can export a directory containing only the database files with statistics on the bridge construction safety records. This operation can be performed without fear of unknown users accessing the sensitive data, because Chris Admin can use the export command to allow only selected client systems to access the files. This way, both groups of students will be able to mount and access the same data and work with it on their separate workstations.
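Chris Admin’s restriction can be pictured as an export entry that carries the list of client systems allowed to mount it. The Python sketch below is a hypothetical model only: the ExportEntry class, the directory name /bridgedata, and the client host names UGRADWS1, GRADWS1, and UNKNOWNHOST are invented for illustration, and real NFS access checking involves more than a host-name lookup.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ExportEntry:
    """One exported directory plus the client systems allowed to mount it."""

    path: str
    allowed_clients: frozenset[str]

    def may_mount(self, client: str) -> bool:
        # Only client systems named in the export entry are accepted.
        return client in self.allowed_clients


# Hypothetical export of the bridge safety-record database files only,
# limited to one undergraduate and one graduate workstation.
bridge_data = ExportEntry(
    path="/bridgedata",
    allowed_clients=frozenset({"UGRADWS1", "GRADWS1"}),
)

for client in ("UGRADWS1", "GRADWS1", "UNKNOWNHOST"):
    print(client, bridge_data.may_mount(client))
```

Both student groups pass the check and mount the same directory, while any system not named in the entry is refused, which is the property the scenario relies on.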

What File Systems Can I Export?

You can export any of the following file systems:

• “Root” (/)
• QOpenSys
• QSYS.LIB
• QDLS
• QOPT
• UDFS

You cannot export other file systems that have been mounted from remote servers. This includes entire directory trees as well as single files. AS/400 follows the general rules for exporting as detailed in “Rules for Exporting File Systems” on page 28. This set of rules also includes the general NFS “downstream” rule for exporting file systems. Remember that whenever you export a file system, you also export all the directories and objects that are located hierarchically beneath (“downstream” from) that file system.
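The “downstream” rule amounts to a path-prefix test taken one directory component at a time, so exporting /classes/class2 covers /classes/class2/PROJ2 but not a sibling such as /classes/class2old. The Python sketch below illustrates that test together with the restriction on file systems mounted from remote servers. It is a simplified model under stated assumptions: at_or_below, is_exported, can_export, and the remote mount point /nfs/otherserver are hypothetical names, path comparison here is case-sensitive (unlike the “Root” file system), and the complete conditions are the ones given in “Rules for Exporting File Systems”.

```python
from __future__ import annotations

from pathlib import PurePosixPath


def at_or_below(path: str, root: str) -> bool:
    """True if path equals root or lies hierarchically beneath it."""
    p, r = PurePosixPath(path), PurePosixPath(root)
    # Component-wise comparison: /classes/class2 covers /classes/class2/PROJ2,
    # but a sibling such as /classes/class2old is not covered.
    return p == r or r in p.parents


def is_exported(path: str, exports: set[str]) -> bool:
    """Downstream rule: exporting a directory also exports everything below it."""
    return any(at_or_below(path, root) for root in exports)


def can_export(path: str, remote_mounts: set[str]) -> bool:
    """A path inside a file system mounted from a remote server cannot be exported."""
    return not any(at_or_below(path, mount) for mount in remote_mounts)


exports = {"/classes/class2"}
remote_mounts = {"/nfs/otherserver"}  # hypothetical remote NFS mount point

print(is_exported("/classes/class2/PROJ2", exports))       # True
print(is_exported("/classes/class1", exports))             # False
print(can_export("/nfs/otherserver/docs", remote_mounts))  # False
print(can_export("/home", remote_mounts))                  # True
```

Comparing whole path components rather than raw string prefixes is the design choice that keeps an export of one directory from leaking into similarly named neighbors.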

The CHGNFSEXP or EXPORTFS command is the key to making selected portions of the local user view part of the remote client view.

Exported file systems are listed in the export table built from the /etc/exports file. These file systems are available for clients to mount, provided the client has the proper access authorities. Users can mount and access exported data as if it were local to their client systems. The server administrator can also dynamically change the way file systems are exported to present remote clients with a different view of file systems.

Before exporting, a remote client cannot view any local server file systems.

Figure 19. Before the server has exported information

Figure 20. After the server has exported /classes/class2

After exporting, a remote client can view the exported file systems PROJ2 and PROJ3.