IBM zSeries 990

Technical Guide

Structure and design - A scalable server for an on demand world

Processor Unit, memory, and multiple Logical Channel Subsystems

Capacity upgrade options

ibm.com/redbooks

Bill White

Mario Almeida

Dick Jorna

International Technical Support Organization

IBM Eserver zSeries 990 Technical Guide

May 2004

SG24-6947-01

Note: Before using this information and the product it supports, read the information in “Notices” on page vii.

Second Edition (May 2004)

This edition applies to the IBM Eserver zSeries 990 server at hardware Driver Level 55.

© Copyright International Business Machines Corporation 2003, 2004. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

Notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . vii

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . viii

Preface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

The team that wrote this redbook. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ix

Become a published author . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Comments welcome. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .x

Chapter 1. zSeries 990 overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2 z990 models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.3 System functions and features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.3.1 Processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

1.3.2 Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3.3 Self-Timed Interconnect (STI). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3.4 Channel Subsystem (CSS) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3.5 Physical Channel IDs (PCHIDs) and CHPID Mapping Tool . . . . . . . . . . . . . . . . . . 7

1.3.6 Spanned channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.3.7 I/O connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

1.3.8 Cryptographic . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.3.9 Parallel Sysplex support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.3.10 Intelligent Resource Director (IRD) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.3.11 Hardware consoles . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.3.12 Concurrent upgrades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

1.3.13 Performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

1.3.14 Reliability, Availability, and Serviceability (RAS). . . . . . . . . . . . . . . . . . . . . . . . . 17

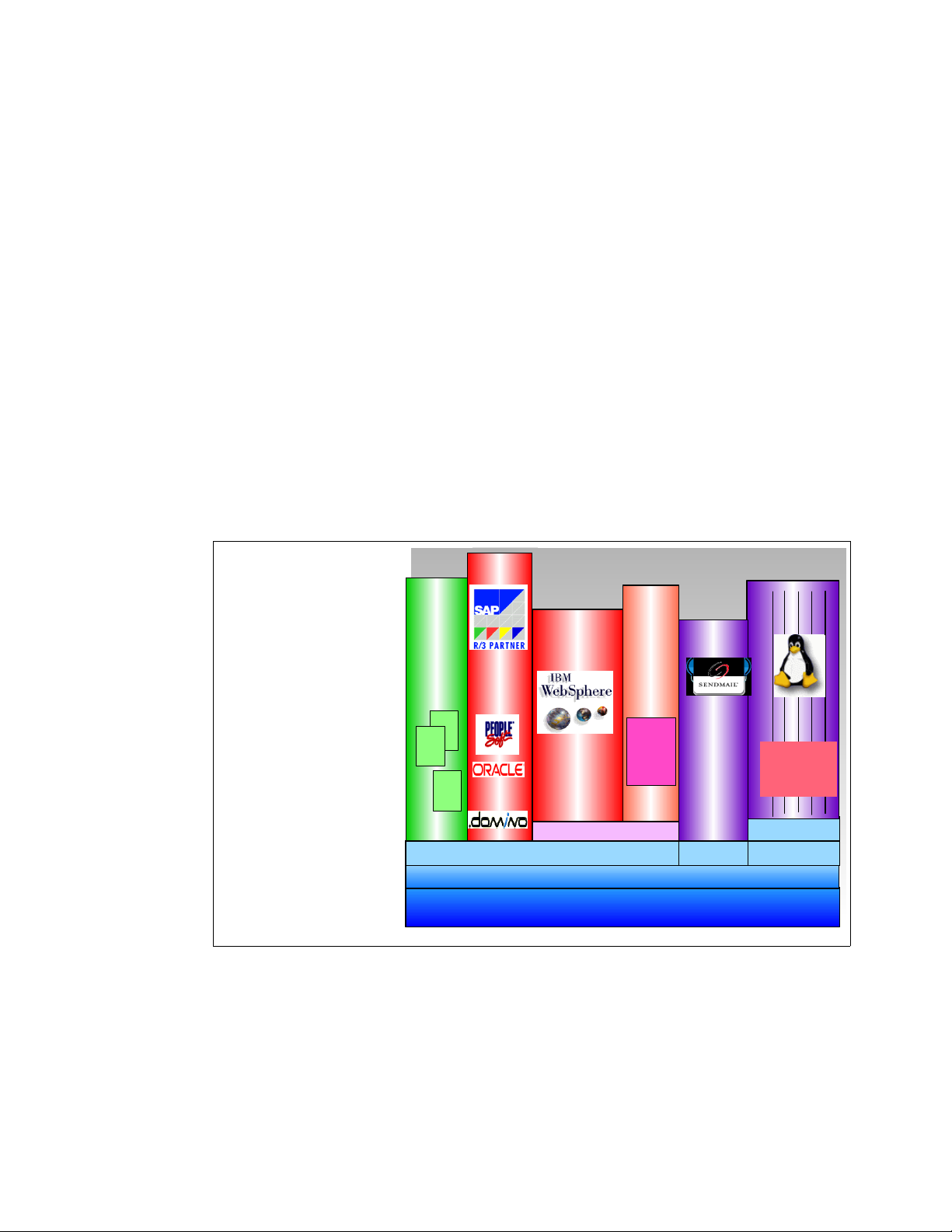

1.3.15 Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

1.3.16 Software support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.3.17 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Chapter 2. System structure and design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

2.1 System structure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.1.1 Book concept . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

2.1.2 Models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.1.3 Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

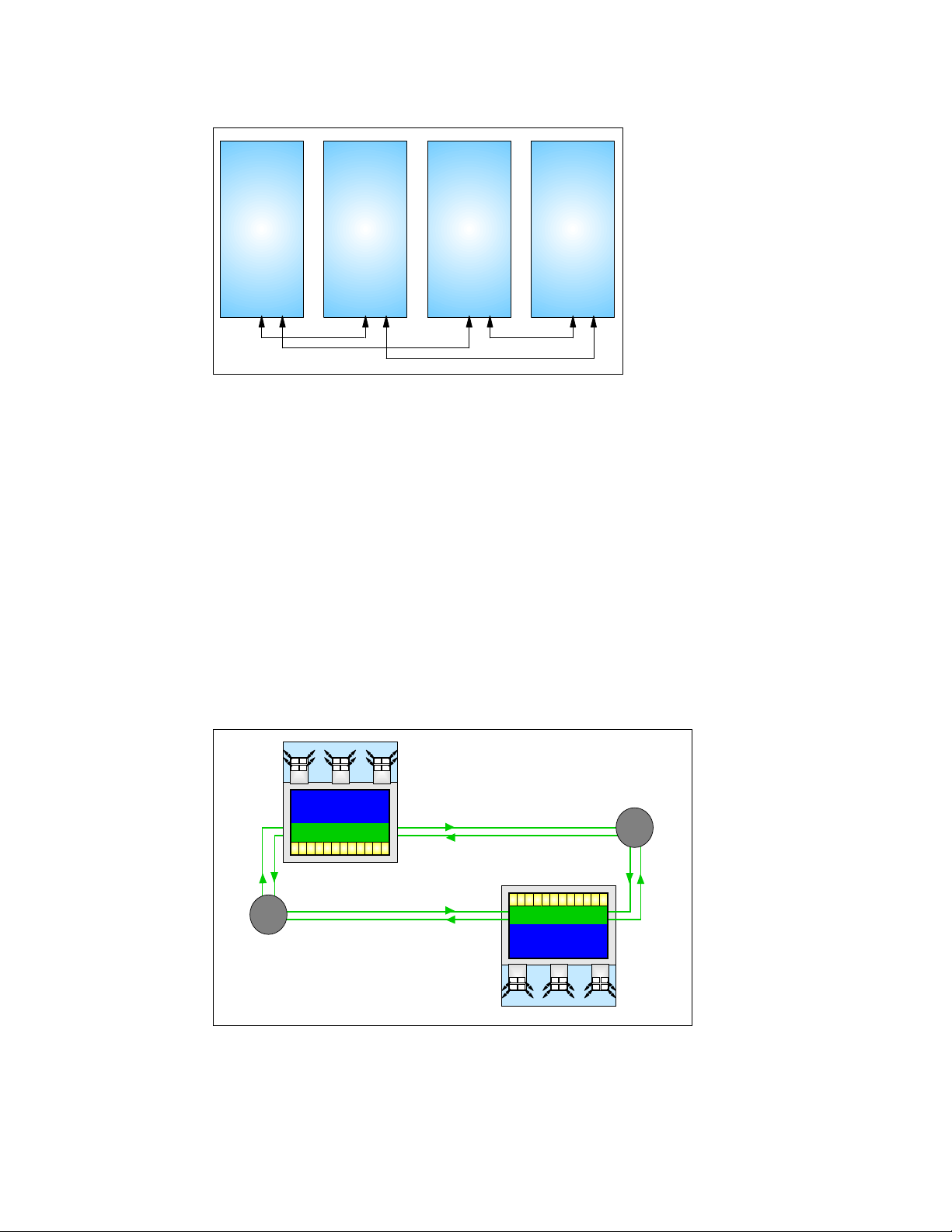

2.1.4 Ring topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.1.5 Connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.1.6 Frames and cages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2.1.7 The MCM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

2.1.8 The PU, SC, and SD chips . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

2.1.9 Summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

2.2 System design. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.2.1 Design highlights. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

2.2.2 Book design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

2.2.3 Processor Unit design. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2.2.4 Processor Unit functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

2.2.5 Memory design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

2.2.6 Modes of operation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

2.2.7 Model configurations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

© Copyright IBM Corp. 2003, 2004. All rights reserved. iii

Page 6

2.2.8 Storage operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

2.2.9 Reserved storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

2.2.10 LPAR storage granularity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

2.2.11 LPAR Dynamic Storage Reconfiguration (DSR). . . . . . . . . . . . . . . . . . . . . . . . . 71

2.2.12 I/O subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

2.2.13 Channel Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

Chapter 3. I/O system structure. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

3.1 Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

3.2 I/O cages. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

3.2.1 Self-Timed Interconnect (STI). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

3.2.2 STIs and I/O cage connections . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

3.2.3 Balancing I/O connections . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

3.3 I/O and cryptographic feature cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

3.3.1 I/O feature cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

3.3.2 Cryptographic feature cards . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

3.3.3 Physical Channel IDs (PCHIDs) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

3.4 Connectivity. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

3.4.1 I/O and cryptographic features support and configuration rules . . . . . . . . . . . . . . 89

3.4.2 ESCON channel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

3.4.3 FICON channel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

3.4.4 OSA-Express adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

3.4.5 Coupling Facility links . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

3.4.6 External Time Reference (ETR) feature . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

3.4.7 Cryptographic features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Chapter 4. Channel Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

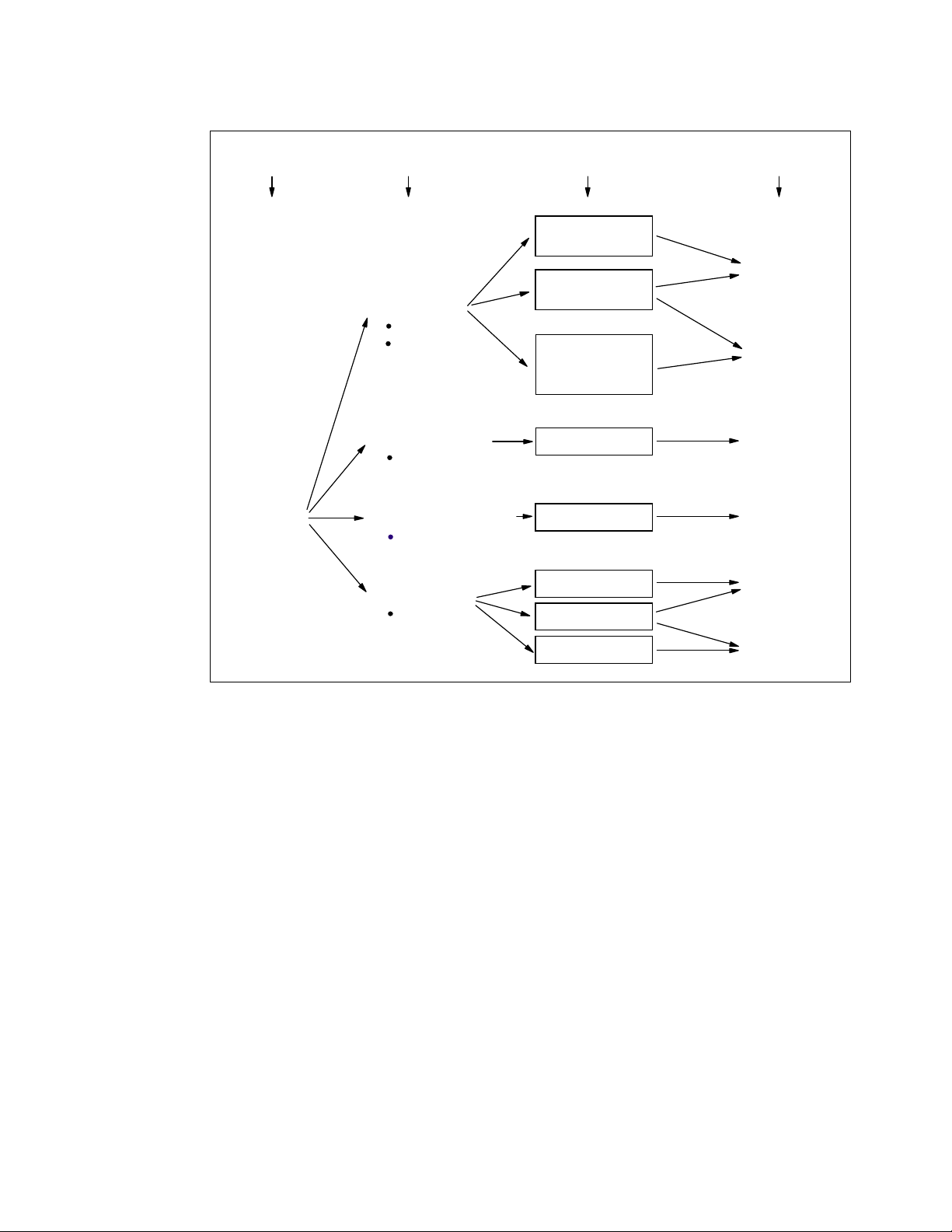

4.1 Multiple Logical Channel Subsystem (LCSS) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

4.1.1 Logical Channel Subsystem structure. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

4.1.2 Physical Channel ID (PCHID) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

4.1.3 Channel spanning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

4.2 LCSS configuration management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

4.2.1 z990 configuration management. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

4.3 LCSS-related numbers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

Chapter 5. Cryptography . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

5.1 Cryptographic function support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

5.1.1 Cryptographic Synchronous functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

5.1.2 Cryptographic Asynchronous functions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

5.2 z990 Cryptographic processors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

5.2.1 CP Assist for Cryptographic Function (CPACF) . . . . . . . . . . . . . . . . . . . . . . . . . 122

5.2.2 PCIX Cryptographic Coprocessor (PCIXCC) . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

5.2.3 PCI Cryptographic Accelerator (PCICA) feature. . . . . . . . . . . . . . . . . . . . . . . . . 124

5.3 Cryptographic hardware features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

5.3.1 PCIX Cryptographic Coprocessor feature. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

5.3.2 The PCICA feature . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

5.3.3 Configuration rules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

5.3.4 z990 cryptographic feature codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

5.3.5 TKE workstation feature . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

5.4 Cryptographic features comparison . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

5.5 Software requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Chapter 6. Software support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

6.1 Operating system support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

iv IBM ^ zSeries 990 Technical Guide

Page 7

6.2 z/OS software support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

6.2.1 Compatibility Support for z/OS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

6.2.2 Exploitation Support for z/OS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

6.2.3 HCD support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

6.2.4 Automation changes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

6.2.5 SMF support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

6.2.6 RMF support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

6.2.7 ICKDSF requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

6.2.8 ICSF support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

6.2.9 Additional Exploitation Support considerations . . . . . . . . . . . . . . . . . . . . . . . . . . 142

6.3 z/VM software support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

6.4 z/VSE and VSE/ESA software support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

6.5 TPF software support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

6.6 Linux software support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

6.7 Summary of software requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 147

6.7.1 Summary of z/OS and OS/390 software requirements. . . . . . . . . . . . . . . . . . . . 147

6.7.2 Summary of z/VM, z/VSE, VSE/ESA, TPF, and Linux software requirements . . 148

6.8 Workload License Charges . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

6.9 Concurrent upgrades considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Chapter 7. Sysplex functions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

7.1 Parallel Sysplex. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

7.1.1 Parallel Sysplex described . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

7.1.2 Parallel Sysplex summary. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

7.2 Sysplex and Coupling Facility considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

7.2.1 Sysplex configurations and Sysplex Timer considerations . . . . . . . . . . . . . . . . . 159

7.2.2 Coupling Facility and CFCC considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

7.2.3 CFCC enhanced patch apply . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

7.2.4 Coupling Facility link connectivity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

7.2.5 Coupling Facility Resource Manager (CFRM) policy considerations . . . . . . . . . 166

7.2.6 ICF processor assignments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

7.2.7 Dynamic CF dispatching and dynamic ICF expansion . . . . . . . . . . . . . . . . . . . . 168

7.3 System-managed CF structure duplexing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 169

7.3.1 Benefits . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 169

7.3.2 CF structure duplexing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

7.3.3 Configuration planning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

7.4 Geographically Dispersed Parallel Sysplex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

7.4.1 GDPS/PPRC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

7.4.2 GDPS/XRC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

7.4.3 GDPS and Capacity Backup (CBU) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

7.5 Intelligent Resource Director . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

7.5.1 LPAR CPU management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

7.5.2 Dynamic Channel Path Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

7.5.3 Channel Subsystem Priority Queueing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

7.5.4 WLM and Channel Subsystem priority . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

7.5.5 Special considerations and restrictions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

7.5.6 References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

Chapter 8. Capacity upgrades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 187

8.1 Concurrent upgrades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

8.2 Capacity Upgrade on Demand (CUoD). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

8.3 Customer Initiated Upgrade (CIU). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

8.4 On/Off Capacity on Demand (On/Off CoD) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Contents v

Page 8

8.5 Capacity BackUp (CBU) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

8.6 Nondisruptive upgrades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 210

8.6.1 Upgrade scenarios . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

8.6.2 Planning for nondisruptive upgrades . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

8.7 Capacity planning considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

8.7.1 Balanced system design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

8.7.2 Superscalar processors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

8.7.3 Integrated hardware and system assists. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

8.8 Capacity measurements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

8.8.1 Large Systems Performance Reference (LSPR) . . . . . . . . . . . . . . . . . . . . . . . . 224

Chapter 9. Environmental requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

9.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

9.1.1 Power and cooling requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

9.1.2 Power consumption . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

9.1.3 Internal Battery Feature . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

9.1.4 Emergency power-off . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

9.1.5 Cooling requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

9.2 Weights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

9.3 Dimensions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

Appendix A. Hardware Management Console (HMC) . . . . . . . . . . . . . . . . . . . . . . . . . 235

z990 Hardware Management Console. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

Token ring only wiring scenario. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

Ethernet only - one-path wiring scenario. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 240

Ethernet only - two-path wiring scenario . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

Token ring and Ethernet wiring scenario. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

Remote operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 244

Support Element . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

z990 HMC enhancements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

Appendix B. Fiber optic cabling services. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

Fiber optic cabling services from IBM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250

Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

Glossary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 253

Related publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 261

IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 261

Other publications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 261

Online resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262

How to get IBM Redbooks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not give you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.

Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products. All of these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrates programming techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs conforming to the application programming interface for the operating platform for which the sample programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or imply reliability, serviceability, or function of these programs. You may copy, modify, and distribute these sample programs in any form without payment to IBM for the purposes of developing, using, marketing, or distributing application programs conforming to IBM's application programming interfaces.

Trademarks

The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both:

CICS®

DB2®

developerWorks®

DRDA®

e-business on demand™

Enterprise Storage Server®

Enterprise Systems Architecture/390®

ECKD™

ES/9000®

ESCON®

Eserver®

eServer™

FlashCopy®

FICON®

Geographically Dispersed Parallel Sysplex™

GDDM®

GDPS®

HiperSockets™

HyperSwap™

IBM®

IMS™

Multiprise®

MQSeries®

MVS™

NetView®

OS/2®

OS/390®

Parallel Sysplex®

Processor Resource/Systems Manager™

PR/SM™

pSeries®

Redbooks™

Redbooks (logo) ™

Resource Link™

RACF®

RETAIN®

RMF™

S/360™

S/370™

S/390®

Sysplex Timer®

System/360™

System/370™

ThinkPad®

Tivoli®

TotalStorage®

VisualAge®

VM/ESA®

VSE/ESA™

VTAM®

Wave®

WebSphere®

z/Architecture™

z/OS®

z/VM®

zSeries®

The following terms are trademarks of other companies:

Java and all Java-based trademarks and logos are trademarks or registered trademarks of Sun Microsystems, Inc. in the United States, other countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Other company, product, and service names may be trademarks or service marks of others.

Preface

The IBM Eserver® zSeries® 990 scalable server provides major extensions to the existing zSeries architecture and capabilities. The concept of Logical Channel Subsystems is added, and the maximum number of Processor Units and logical partitions is increased. These extensions provide the base for much larger zSeries servers.

This IBM® Redbook is intended for IBM systems engineers, consultants, and customers who need to understand the zSeries 990 features, functions, availability, and services.

This publication is part of a series. For a complete understanding of the z990 scalable server capabilities, also refer to our companion Redbooks™:

IBM Eserver zSeries 990 Technical Introduction, SG24-6863

IBM Eserver zSeries Connectivity Handbook, SG24-5444

Note that the information in this book includes features and functions announced on April 7, 2004, and that certain functionality is not available until hardware Driver Level 55 is installed on the z990 server.

The team that wrote this redbook

This redbook was produced by a team of specialists from around the world working at the International Technical Support Organization, Poughkeepsie Center.

Bill White is a Project Leader and Senior Networking Specialist at the International Technical Support Organization, Poughkeepsie Center.

Mario Almeida is a Certified Consulting IT Specialist in Brazil. He has 29 years of experience in IBM Large Systems. His areas of expertise include zSeries and S/390® servers technical support, large systems design, data center and backup site design and configuration, and FICON® channels.

Dick Jorna is a Certified Senior Consulting IT Specialist in the Netherlands. He has 35 years of experience in IBM Large Systems. During this time, he has worked in various roles within IBM, and currently provides pre-sales technical support for the IBM Eserver zSeries product portfolio. In addition, he is a zSeries product manager, and is responsible for all zSeries activities in his country.

Thanks to the following people for their contributions to this project:

Franck Injey

International Technical Support Organization, Poughkeepsie Center

Mike Scoba

zSeries Hardware Product Planning, IBM Poughkeepsie

First Edition authors

Franck Injey, Mario Almeida, Parwez Hamid, Brian Hatfield, Dick Jorna

Become a published author

Join us for a two- to six-week residency program! Help write an IBM Redbook dealing with specific products or solutions, while getting hands-on experience with leading-edge technologies. You'll team with IBM technical professionals, Business Partners and/or customers.

Your efforts will help increase product acceptance and customer satisfaction. As a bonus, you'll develop a network of contacts in IBM development labs, and increase your productivity and marketability.

Find out more about the residency program, browse the residency index, and apply online at:

ibm.com/redbooks/residencies.html

Comments welcome

Your comments are important to us!

We want our Redbooks to be as helpful as possible. Send us your comments about this or

other Redbooks in one of the following ways:

Use the online Contact us review redbook form found at:

ibm.com/redbooks

Send your comments in an Internet note to:

redbook@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYJ Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400

Page 13

Chapter 1. zSeries 990 overview

This chapter gives a high-level view of the IBM Eserver zSeries 990. All the topics

mentioned in this chapter are discussed in greater detail later in this book.

The legacy of zSeries goes back more than 40 years. April 7, 2004, marked the 40th

anniversary of IBM's introduction of the S/360™. Since then, mainframes have followed a path of

innovation with a focus on evolution to help protect investments made through the years.

The proliferation of servers in the last decade or so has increased the complexity of IT

management and operations and decreased the overall efficiency of resource use. At the same

time, business pressures call for solutions that can be delivered on demand. This requires an

on demand operating environment that is supportive, adaptive, and responsive to on demand

business objectives, and that simplifies the infrastructure by building on the values of

mainframe technology, as embodied in the zSeries 990.

The zSeries 990 is designed for any enterprise that needs the qualities of service required to

sustain and expand their on demand computing environment. Customers requiring the ability

to meet mission-critical requirements that include unexpected demands, high numbers of

transactions, a heterogeneous application environment, and the ability to consolidate a

number of servers will find the z990 an attractive solution since it leverages the current

application portfolio with Linux and z/OS®, and simplifies the operation and management of

business applications by consolidating both Linux and mainframe applications onto the same

platform.

Customers with 9672s and z900s should consider using this server to consolidate servers

and workloads, add capacity, or expand their Linux workloads in a more cost-effective

manner. The increased capacity, bandwidth, number of channels, and logical partitions

provide customers with the ability to reduce costs, while positioning them for future

expansion.

The z990 is based on the proven IBM z/Architecture™, which was first introduced with the

z900 family of servers. It is the continuation of the zSeries z/Architecture evolution and

extends key platform characteristics with enhanced dynamic and flexible resource

management, scalability, and partitioning of predictable and unpredictable workload

environments. Additionally, the z990 availability, clustering, and Qualities of Service are built

on the superior foundation of the current zSeries technologies.

Page 14

The z990 servers can be configured in numerous ways to offer outstanding flexibility in the

deployment of e-business on demand™ solutions. Each z990 server can operate

independently, or as part of a Parallel Sysplex® cluster of servers. In addition to z/OS, the

z990 can host tens to hundreds of Linux images running identical or different applications in

parallel, based on z/VM® virtualization technology.

The z990 supports a highly scalable standard of performance and integration by expanding on

the balanced system approach of the IBM z/Architecture. It is designed to eliminate

bottlenecks through its virtually unlimited 64-bit addressing capability, providing plenty of

“headroom” for unpredictable growth in enterprise applications.

The z990 provides a significant increase in system scalability and opportunity for server

consolidation by providing a “multi-book” system structure that supports configurations of one

to four books. Each book consists of 12 Processor Units (PUs) and associated memory, for a

maximum of 48 processors in a four-book system. All books are interconnected with very

high-speed internal communication links via the L2 cache, which allows the system to be

operated and controlled by the PR/SM™ facility as a symmetrical, memory-coherent

multiprocessor. The logical partitioning facility provides the ability to configure and operate as

many as 30 logical partitions, which have processors, memory, and I/O resources assigned

from any of the installed books.

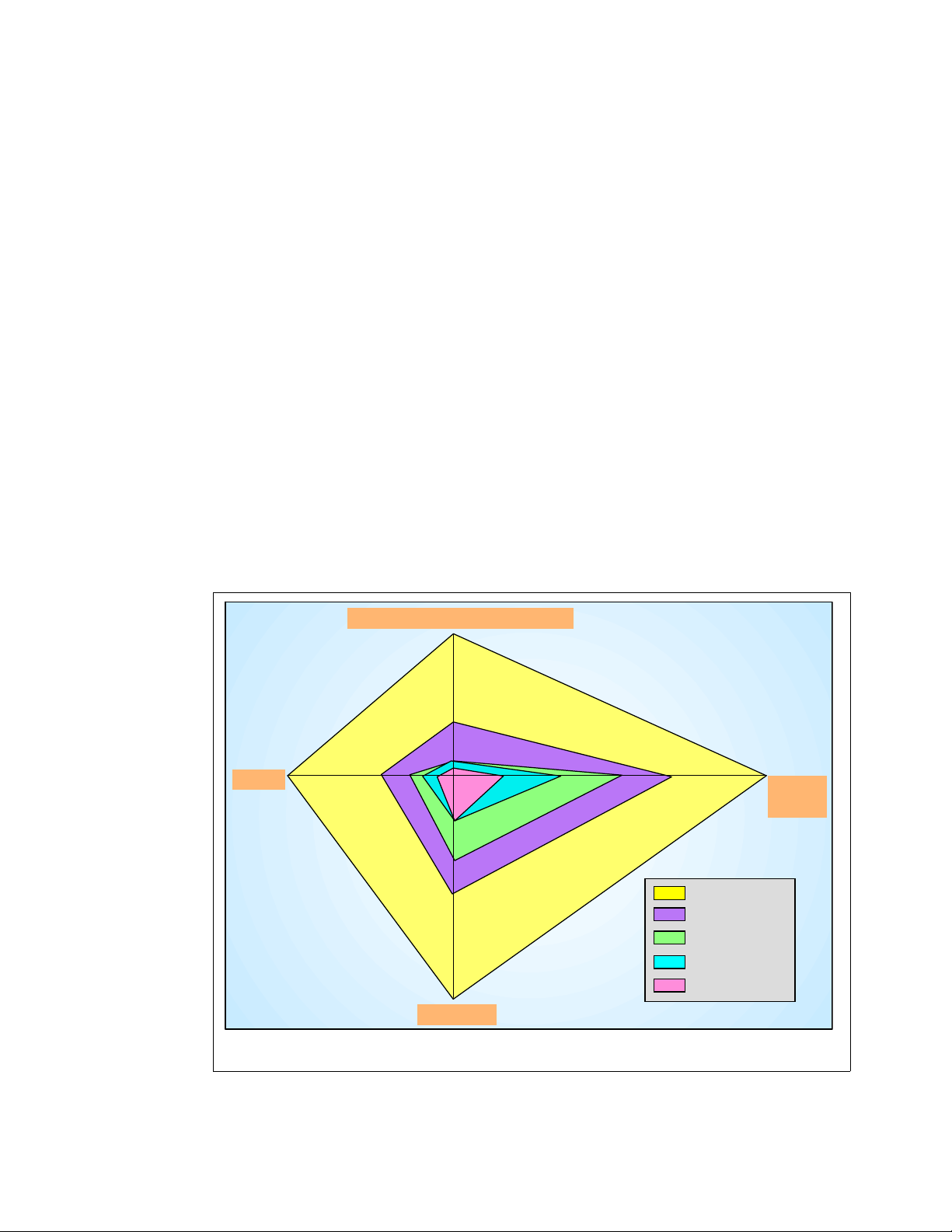

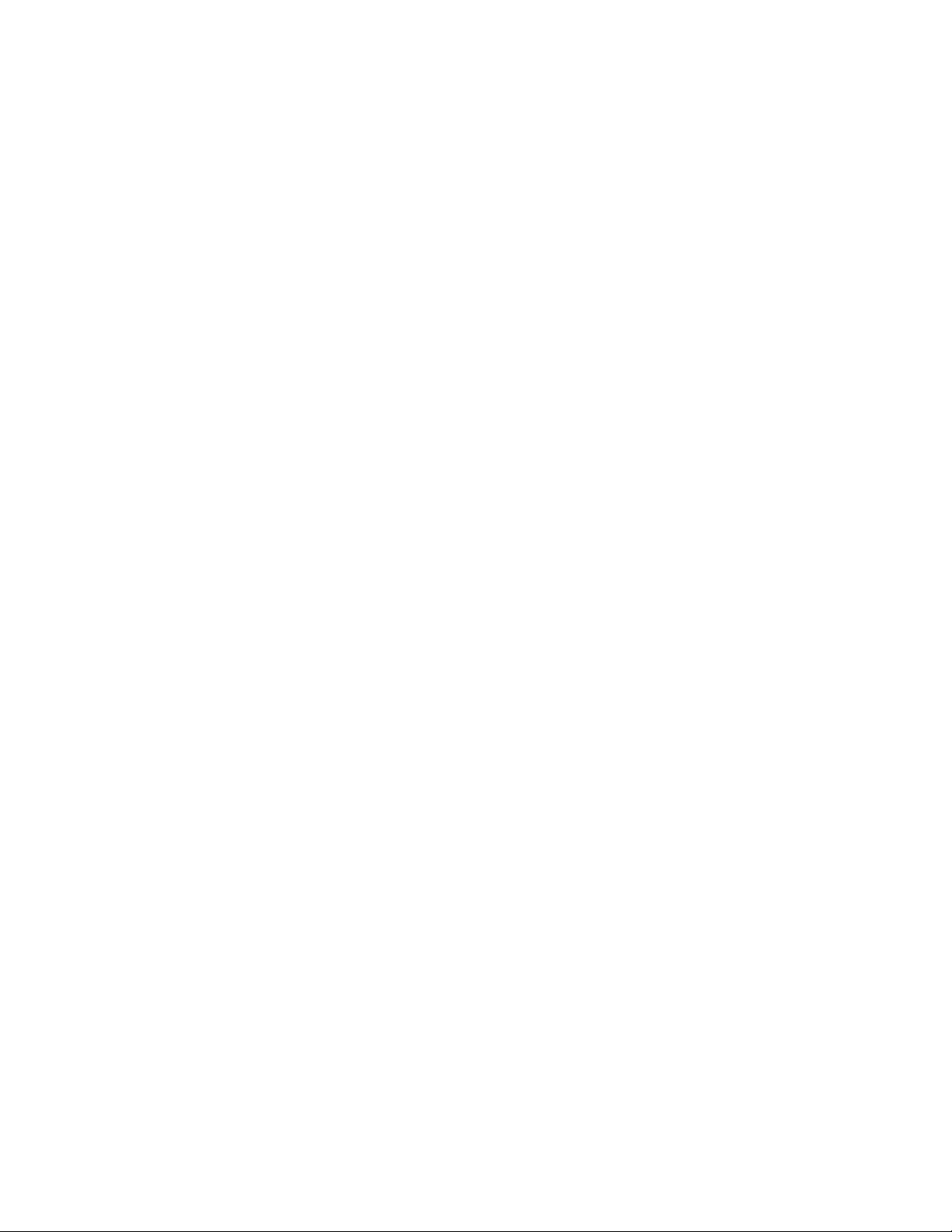

The chart in Figure 1-1 shows growth improvements along all axes. While some of the

previous generation of servers have grown more along one axis for a given family, later

families focus on the other axes. Now, with the z990, the balanced design achieves

improvement equally along all four axes.

(Chart with four axes comparing Generation 4, Generation 5, Generation 6, zSeries z900, and

zSeries z9XX servers. Values shown on the axes: System I/O Bandwidth, 24 GBps and 96 GBps;

Memory, 64 GB and 256 GB; CPUs, 16-way and 32-way; Cycle Time, 1.3 ns and 0.83 ns.)

Note: External I/O or STI bandwidth only (Internal Coupling Channels and HiperSockets not

included). zSeries MCM internal bandwidth is 500 GB/sec. Memory bandwidth not included (not a

system constraint).

Figure 1-1 Balanced system design

Page 15

1.1 Introduction

The z990 further extends and integrates key platform characteristics: dynamic and flexible

partitioning, resource management in mixed and unpredictable workload environments,

availability, scalability, clustering, and systems management with emerging e-business on

demand application technologies (for example, WebSphere®, Java™, and Linux).

The zSeries 990 family provides a significant increase in performance over the previous

zSeries servers. The z990 introduces a different design from its predecessor, the zSeries

900. One noteworthy change is to the CEC cage, which is capable of housing up to four

books. This multi-book design provides enough Processor Units to improve total system

capacity by nearly three times over that provided by z900.

Figure 1-2 Introducing the z990 - internal and external view

The z990 introduced the superscalar microprocessor architecture. This design, and the

exploitation of the CMOS 9SG-SOI technology, improves the uniprocessor performance by

54% to 61%, compared to z900 Model 2C1. However, the true capacity increase of the

system is driven by the increased number of Processor Units per system: from 20 in the z900

to 48 Processor Units in the z990. The 48 Processor Units are packaged in four MCMs with

12 Processor Units each, plus up to 64 GB of memory and 12 STI links per book. All books

are connected via a super-fast redundant ring structure and can be individually upgraded.

The I/O infrastructure has been redesigned to handle the large increase in system

performance. The multiple Logical Channel Subsystems (LCSS) architecture on the z990

allows up to four LCSS, each with 256 channels. Channel types supported on the z990 are:

FICON Express

Coupling Links

OSA-Express

ESCON®

Page 16

The following channel types, or channel cards, are not supported on the z990:

Parallel channels

4-port ESCON cards

OSA-2 cards

OSA-Express ATM cards

Pre-FICON Express cards

PCICC cards

The logical partitioning facility, PR/SM, provides the ability to configure and operate as many

as 30 logical partitions. PR/SM manages all the installed and enabled resources (processors

and memory) of the installed books as a single large SMP. Each logical partition has access

to physical resources (processors, memory, and I/O) in the whole system across multiple

books.

1.2 z990 models

The z990 has a machine type of 2084 and has four models: A08, B16, C24, and D32. The

model naming is representative of the book design of the z990, as it indicates the number of

books and the number of Processor Units available in the configuration. PUs are delivered in

single increments, orderable by feature code. A Processor Unit (PU) can be characterized as

a Central Processor (CP), Integrated Facility for Linux (IFL), Internal Coupling Facility (ICF),

zSeries Application Assist Processor (zAAP), or System Assist Processor (SAP).

The development of a multi-book system provides an opportunity for customers to increase

the capacity and/or requirements of the system in three areas:

You can add capacity by activating more CPs, IFLs, ICFs, or zAAPs on an existing book

concurrently.

You can add a new book concurrently and activate more CPs, IFLs, ICFs, or zAAPs.

You can add a new book to provide additional memory and/or STIs to support increasing

storage and/or I/O requirements. The ability to LICCC-enable more memory concurrently

to existing books is dependent on enough physical memory being present. Upgrades

requiring more memory than physically available are disruptive, requiring a planned

outage.

General rules

All models utilize a 12 PU MCM, of which eight are available for PU characterization. The

remaining four are reserved as two standard SAPs and two standard spares.

Model upgrades, from A08 to B16, from B16 to C24, or from C24 to D32, are achieved by

single book adds.
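The model rules in this section can be sketched in a few lines of Python. This is only an illustration: the constant and function names are hypothetical and do not correspond to any IBM ordering tool. It assumes that each model name maps to a number of books (A08 = 1 through D32 = 4) and that eight PUs per book can be characterized.

```python
# Illustrative sketch of the z990 model rules; names are hypothetical.

PUS_PER_MCM = 12        # each book carries one 12-PU MCM
CHARACTERIZABLE = 8     # PUs per book available for characterization
STANDARD_SAPS = 2       # standard SAPs per book
STANDARD_SPARES = 2     # standard spares per book

# Model name -> number of books
MODELS = {"A08": 1, "B16": 2, "C24": 3, "D32": 4}


def max_characterizable_pus(model):
    """Return the maximum number of PUs that can be ordered for a model."""
    return MODELS[model] * CHARACTERIZABLE


def order_fits(model, **features):
    """Check that the ordered PU features (cp=..., ifl=..., ...) fit the model."""
    total = sum(features.values())
    return 1 <= total <= max_characterizable_pus(model)


for name, books in MODELS.items():
    print(name, "-", books * PUS_PER_MCM, "PUs installed,",
          max_characterizable_pus(name), "characterizable")
```

For example, `order_fits("B16", cp=13, ifl=3)` accepts an order that uses all 16 characterizable PUs of a B16, while `order_fits("A08", cp=9)` rejects an order that exceeds the A08 maximum.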

The model number designates the maximum number of PUs available for an installation to

use. Using feature codes, customers can order CPs, IFLs, ICFs, zAAPs, and optional SAPs,

unassigned CPs, and/or unassigned IFLs up to the maximum number of PUs for that model.

Therefore, an installation may order a model B16 with 13 CP features and three IFL features,

or a model B16 with only one CP feature.

Unlike prior processor model names, which indicate the number of purchased CPs, z990

model names indicate the maximum number of Processor Units potentially orderable, and

not the actual number that have been ordered as CPs, IFLs, ICFs, zAAPs, or additional SAPs.

A software model notation is also used to indicate how many CPs are purchased and

software should be charged for. See “Software models” on page 63 for more information.

Page 17

Model upgrade paths

With the exception of the z900 Model 100, any z900 model may be upgraded to a z990

model. With the advancement of Linux for S/390 and Linux on zSeries, customers may

choose to change the PU characterization of the server they are upgrading. In addition,

customers who are consolidating may not be increasing total capacity, and/or they may wish

to take advantage of the multiple Logical Channel Subsystems offered. z990-to-z990 model

upgrades and feature adds may be completed concurrently.

Model downgrades

There are no model downgrades offered. Customers may purchase unassigned CPs or IFLs

for future use. This avoids the placement of RPQ orders and subsequent sequential MES

activity, and paying software charges for capacity that is not in use.

Concurrent Processor Unit (PU) conversions

z990 servers support concurrent conversion between different PU types, providing flexibility

to meet changing business environments. Assigned CPs, unassigned CPs, assigned IFLs,

unassigned IFLs, and ICFs may be converted to assigned CPs, assigned IFLs or ICFs, or to

unassigned CPs or unassigned IFLs.

1.3 System functions and features

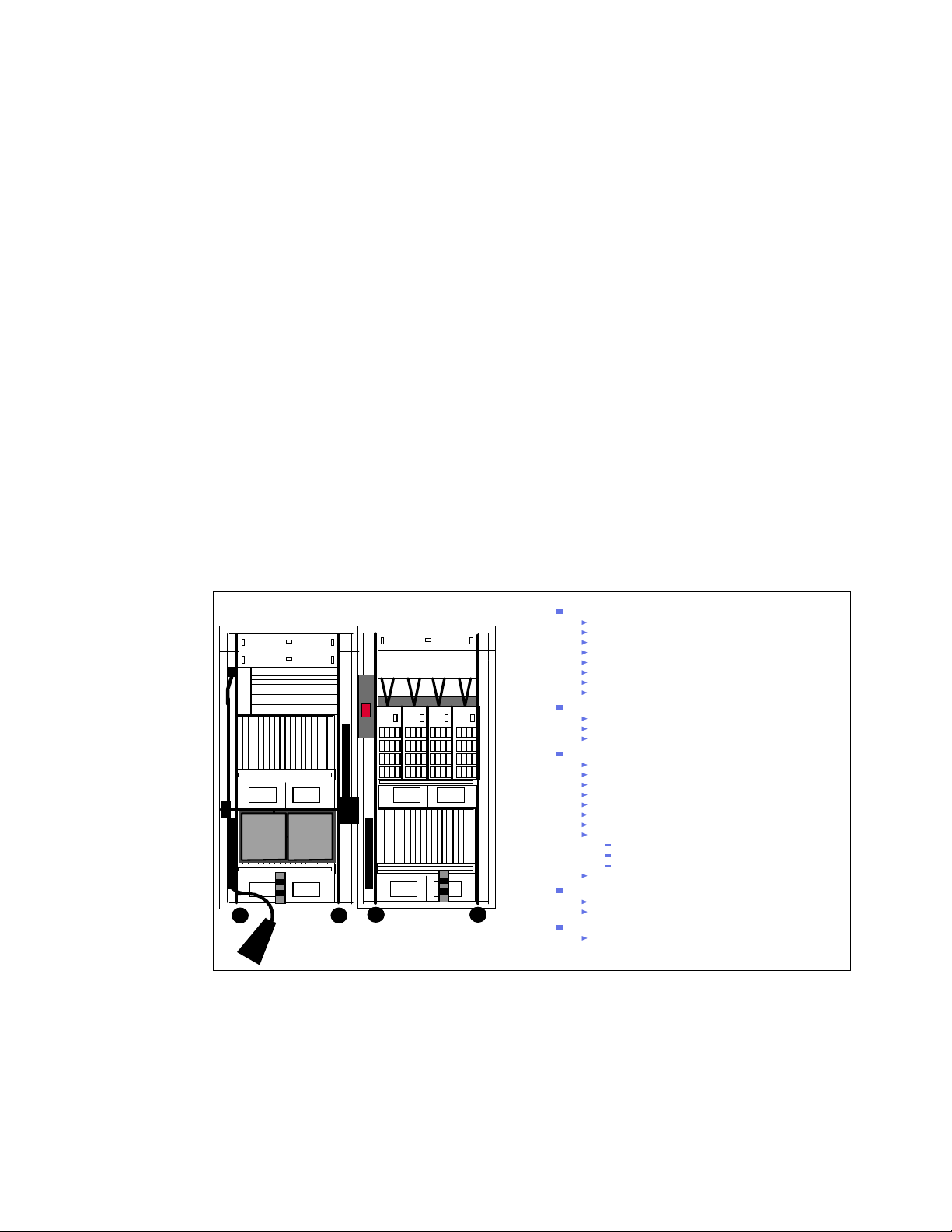

The z990 system offers the following functions and features, as shown in Figure 1-3.

(Frame diagram labels: Z frame, A frame, IBF, BPD, MRU, MDA, Dual SEs, Cargo.)

Processor: 4 flexible Models (A08, B16, C24 & D32); 64-bit Architecture; 32 characterizable

PUs, CMOS9G-SOI Technology; SuperScalar; Capacity Upgrade on Demand including Memory and

I/O; Hybrid Cooling (Air/Liquid); Up to 30 Logical Partitions; Optional ETR feature

Memory: 64 GB per Model, max system memory 256 GB; Card sizes 8, 16, 32 GB (2 cards per

Book); Bi-directional redundant Ring Structure

I/O: 64-bit Architecture (42/48-bit I/O addressing in hardware); Up to 48 x 2 GB/s Self-Timed

Interconnects (STIs); I/O cage with enhanced power; Up to 4 Logical Channel Subsystems

(LCSS); Up to 120 FICON Express™ Channels; FCP SCSI over Fibre Channel; Up to 48

OSA-Express network connectors; Crypto (SSL, PCIXCC; No CHPID number required); Up to 16

HiperSockets

Parallel Sysplex technology: ICB-4 (2 GB/s), ICB-3, ICB-2, ISC-3, IC; CF Duplexing

HMC: Alternate Service Element

Figure 1-3 System overview

1.3.1 Processor

IBM introduced the Processor Resource/Systems Manager™ (PR/SM) feature in February

1988, supporting a maximum of four logical partitions. In June 1992, IBM introduced support

for a maximum of 10 logical partitions and announced the Multiple Image Facility (MIF, also

known as EMIF), which allowed sharing of ESCON channels across logical partitions, and

since that time, has allowed sharing of more channels across logical partitions (such as

Page 18

Coupling Links, FICON, and OSA). In June 1997, IBM announced increased support - up to

15 logical partitions on Generation 3 and Generation 4 servers.

The evolution continues and IBM is announcing support for 30 logical partitions. This support

is exclusive to z990 and z890 models.

MCM technology

The z990 12-PU MCM is smaller and more capable than the z900’s 20-PU MCM. It has

16 chips, compared to 35 for the z900. The total number of transistors is over 3 billion,

compared with approximately 2.5 billion for the z900. This level of technology

integration brings improvements in chip-to-substrate and substrate-to-board connections.

The z990 module uses a connection technology, Land Grid Arrays (LGA), pioneered by the

pSeries® in the p690 and the i890. LGA technology enables the z990 substrate, with only

53% of the surface area of the z900 20 PU MCM substrate, to have 23% more I/Os from the

logic package.

Both the z900 and z990 have 101 layers in the glass ceramic substrate. The z990's substrate

is thinner, shortening the paths that signals must travel to reach their destination (another chip

or exiting the MCM). Inside the low dielectric glass ceramic substrate is 0.4 km of internal

wiring that interconnects the 16 chips that are mounted on the top layer of the MCM. The

internal wiring provides power and signal paths into and out of the MCM.

The MCM on the z990 offers flexibility in enabling spare PUs via the Licensed Internal Code

Configuration Control (LIC-CC) to be used for a number of different functions. These are:

A Central Processor (CP)

A System Assist Processor (SAP)

An Internal Coupling Facility (ICF)

An Integrated Facility for Linux (IFL)

A zSeries Application Assist Processor (zAAP)

The number of CPs and SAPs assigned for particular general purpose models depends on

the configuration. The number of spare PUs is dependent on how many CPs, SAPs, ICFs,

zAAPs, and IFLs are present in a configuration.

1.3.2 Memory

The minimum system memory on any model is 16 GB. Memory size can be increased in 8 GB

increments to a maximum of 64 GB per book or 256 GB for the entire CPC. Each book has

two memory cards, which come in three physical sizes: 8 GB, 16 GB, and 32 GB.

The z990 continues to employ storage size selection by Licensed Internal Code introduced on

the G5 processors. Memory cards installed may have more usable memory than required to

fulfill the machine order. LICCC will determine how much memory is used from each card.
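The memory rules above can be expressed as a small check. The following Python sketch is illustrative only: the function names are hypothetical, and the actual card plugging rules per book are not stated in this section, so the second helper simply compares a requested size with the total capacity of the installed cards.

```python
# Illustrative sketch of the z990 memory sizing rules; names are hypothetical.

MIN_SYSTEM_GB = 16            # minimum system memory on any model
INCREMENT_GB = 8              # memory is ordered in 8 GB increments
MAX_PER_BOOK_GB = 64          # maximum per book (two 32 GB cards)
CARD_SIZES_GB = (8, 16, 32)   # physical card sizes
CARDS_PER_BOOK = 2


def is_orderable_memory(size_gb, books):
    """Return True if size_gb is a valid memory size for the given book count."""
    if not 1 <= books <= 4:
        raise ValueError("a z990 has one to four books")
    return (MIN_SYSTEM_GB <= size_gb <= books * MAX_PER_BOOK_GB
            and size_gb % INCREMENT_GB == 0)


def can_enable_by_liccc(requested_gb, installed_cards_gb):
    """Return True if the installed cards already hold the requested memory.

    Only then can LICCC enable the memory without adding hardware.
    """
    if any(card not in CARD_SIZES_GB for card in installed_cards_gb):
        raise ValueError("unknown card size")
    return requested_gb <= sum(installed_cards_gb)


print(is_orderable_memory(256, books=4))      # largest four-book configuration
print(can_enable_by_liccc(24, [16, 16]))      # 24 GB fits on two 16 GB cards
```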

1.3.3 Self-Timed Interconnect (STI)

An STI is an interface to the Memory Bus Adaptor (MBA), used to gather and send data. Twelve

STIs are supported per z990 physical book. Each of these STIs has a bidirectional bandwidth

of 2 GBps. The maximum instantaneous bandwidth per book is 24 GBps.
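The bandwidth figures follow directly from the STI count. As a quick worked check (a sketch with hypothetical names, using only the numbers given in this chapter):

```python
# Worked arithmetic for STI bandwidth; names are hypothetical.

STIS_PER_BOOK = 12   # STIs supported per physical book
STI_GBPS = 2         # bidirectional bandwidth of one STI, in GBps
MAX_BOOKS = 4


def book_bandwidth_gbps():
    """Maximum instantaneous STI bandwidth of one book."""
    return STIS_PER_BOOK * STI_GBPS


def system_bandwidth_gbps(books):
    """Maximum instantaneous STI bandwidth of a system with the given book count."""
    if not 1 <= books <= MAX_BOOKS:
        raise ValueError("a z990 has one to four books")
    return books * book_bandwidth_gbps()


print(book_bandwidth_gbps())                # 12 x 2 GBps per book
print(system_bandwidth_gbps(MAX_BOOKS))     # four-book system
```

The four-book result matches the 96 GBps system I/O bandwidth shown in Figure 1-1.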

1.3.4 Channel Subsystem (CSS)

A new Channel Subsystem (CSS) structure was introduced with z990 to “break the barrier” of

256 channels. With the introduction of the new system structure and all of its scalability

Page 19

benefits, it was essential that the Channel Subsystem also be scalable and allow “horizontal”

growth. This is facilitated by multiple Logical Channel Subsystems (LCSSs) on a single

zSeries server. The CSS has increased connectivity and is structured to provide the

following:

Four Logical Channel Subsystems (LCSS).

Each LCSS may have from one to 256 channels.

Each LCSS can be configured with 1 to 15 logical partitions.

Each LCSS supports 63K I/O devices.

Note: There is no change to the operating system maximums. One operating system

image continues to support a maximum of 256 channels, and has a maximum of 63K

subchannels available to it.
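The LCSS limits listed above lend themselves to a simple plan check. The sketch below is hypothetical (it is not an IOCP or HCD function) and assumes a plan is described as a mapping from an LCSS label to a pair of channel count and logical partition count. The system-wide limit of 30 logical partitions comes from the PR/SM description earlier in this chapter.

```python
# Illustrative check of a multiple-LCSS plan; names are hypothetical.

MAX_LCSS = 4
MAX_CHANNELS_PER_LCSS = 256
MAX_PARTITIONS_PER_LCSS = 15
MAX_PARTITIONS_PER_SYSTEM = 30


def check_lcss_plan(plan):
    """Return a list of rule violations; an empty list means the plan fits.

    plan maps an LCSS label to a (channel_count, partition_count) tuple.
    """
    problems = []
    if not 1 <= len(plan) <= MAX_LCSS:
        problems.append(f"{len(plan)} LCSSs defined, expected 1 to {MAX_LCSS}")
    for label, (channels, partitions) in sorted(plan.items()):
        if not 1 <= channels <= MAX_CHANNELS_PER_LCSS:
            problems.append(f"{label}: {channels} channels is out of range")
        if not 1 <= partitions <= MAX_PARTITIONS_PER_LCSS:
            problems.append(f"{label}: {partitions} partitions is out of range")
    total = sum(partitions for _, partitions in plan.values())
    if total > MAX_PARTITIONS_PER_SYSTEM:
        problems.append(f"{total} partitions exceeds the system limit")
    return problems


print(MAX_LCSS * MAX_CHANNELS_PER_LCSS)   # total channels across four LCSSs
print(check_lcss_plan({"LCSS0": (256, 15), "LCSS1": (256, 15)}))
```

Note that three LCSSs with 15 partitions each would pass the per-LCSS rule but fail the system-wide limit, which is why the sketch checks both.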

The I/O subsystem continues to be viewed as a single Input/Output Configuration Data Set

(IOCDS) across the entire system with multiple LCSS. Only one Hardware System Area

(HSA) is used for the multiple LCSSs.

A three-digit Physical Channel Identifier (PCHID) is being introduced to accommodate the

mapping of 1024 channels to four LCSSs, with 256 CHPIDs each. CHPIDs continue to exist

and will be associated with PCHIDs. An updated CHPID Mapping Tool (CMT) is being

introduced and the CHPID report from e-config is replaced by a PCHID report. The CHPID

Mapping Tool is available from Resource Link™ as a stand-alone PC-based program.

1.3.5 Physical Channel IDs (PCHIDs) and CHPID Mapping Tool

A z990 can have up to 1024 physical channels, or PCHIDs. In order for an operating system

to make use of a PCHID, it must be mapped to a CHPID within the IOCDS. Each CHPID is

uniquely defined with an LCSS and mapped to an installed PCHID. A PCHID is eligible for

mapping to any CHPID in any LCSS.

The z990 CHPID Mapping Tool (CMT) provides a method of customizing the CHPID

assignments for a z990 system to avoid attaching critical channel paths to single points of

failure. It should be used after the machine order is placed and before the system is delivered

for installation. The tool can also be used to remap CHPIDs after hardware upgrades that

increase the number of channels.

The tool maps the CHPIDs from an IOCP file to Physical Channel Identifiers (PCHIDs) that

are assigned to the I/O ports. The PCHID assignments are fixed and cannot be changed.

A list of PCHID assignments for each hardware configuration is provided in the PCHID Report

available when the z990 hardware is ordered. Unlike previous zSeries systems, there are no

default CHPID assignments. CHPIDs are assigned when the IOCP file is built. When

upgrading an existing zSeries configuration to z990, CHPIDs can be mapped by importing the

IOCP file into the z990 CHPID Mapping Tool.
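The relationship between CHPIDs and PCHIDs can be pictured as a lookup table keyed by LCSS and CHPID. The following Python sketch is a conceptual model only, not the CHPID Mapping Tool or IOCP syntax. The function name, the 0 to 3 LCSS numbering, and the PCHID values used in the example are assumptions made for illustration.

```python
# Conceptual model of CHPID-to-PCHID mapping; names and values are hypothetical.


def check_mapping(mapping, installed_pchids):
    """Return a list of problems found in a CHPID-to-PCHID mapping.

    mapping maps (lcss, chpid) to a PCHID. Because the key is the pair
    (lcss, chpid), each CHPID is unique within its LCSS by construction.
    """
    problems = []
    for (lcss, chpid), pchid in sorted(mapping.items()):
        if not 0 <= lcss <= 3:          # assumed numbering of the four LCSSs
            problems.append(f"LCSS {lcss} does not exist")
        if not 0 <= chpid <= 0xFF:      # 256 CHPIDs per LCSS
            problems.append(f"CHPID {chpid:#x} is out of range")
        if pchid not in installed_pchids:
            problems.append(f"PCHID {pchid:03X} is not installed")
    return problems


# There are no default assignments: every CHPID must be mapped explicitly.
installed = {0x100, 0x101, 0x110}       # hypothetical PCHID report contents
mapping = {
    (0, 0x10): 0x100,                   # CHPID 10 in LCSS 0
    (1, 0x10): 0x101,                   # the same CHPID number reused in LCSS 1
}
print(check_mapping(mapping, installed))
```

The example shows why CHPID numbers alone are no longer sufficient: CHPID 10 exists in both LCSS 0 and LCSS 1, and each instance refers to a different physical channel.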

1.3.6 Spanned channels

As part of the z990 LCSS, the Channel Subsystem is extended to provide the high-speed,

transparent sharing of some channel types in a manner that extends the MIF shared channel

function. Internal channel types such as HiperSockets (IQD) and Internal Coupling Channels

(ICP) can be configured as “spanned” channels. External channels such as FICON channels,

OSA features, and External Coupling Links can be defined as spanned channels. Spanned

channels will allow the channel to be configured to multiple LCSSs, thus enabling them to be

shared by any/all of the configured logical partitions, regardless of the LCSS in which the

partition is configured.

Page 20

Note: Spanned channels are not supported for ESCON channels, FICON conversion

channels (FCV), and Coupling Receiver links (CBR, CFR).
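The difference between a MIF-shared channel and a spanned channel can be sketched as follows. This is a conceptual model with hypothetical function names. The FCV, CBR, and CFR types come from the note above. The ESCON CHPID type mnemonics (CNC, CTC, CVC, CBY) are not given in this section and are included here as an assumption.

```python
# Conceptual model of spanned channels; names are hypothetical.

# Channel types that cannot be spanned. FCV, CBR, and CFR are named in the
# text; the ESCON mnemonics CNC, CTC, CVC, and CBY are an assumption.
NOT_SPANNABLE = {"FCV", "CBR", "CFR", "CNC", "CTC", "CVC", "CBY"}


def define_channel(chpid_type, lcss_ids):
    """Return the set of LCSSs a channel is configured to.

    Raises ValueError if a non-spannable type is configured to several LCSSs.
    """
    lcss_ids = frozenset(lcss_ids)
    if not lcss_ids:
        raise ValueError("a channel must be configured to at least one LCSS")
    if len(lcss_ids) > 1 and chpid_type.upper() in NOT_SPANNABLE:
        raise ValueError(f"{chpid_type} channels cannot be spanned")
    return lcss_ids


def eligible_partitions(channel_lcss, partitions):
    """Return the partitions whose LCSS is one the channel is configured to.

    partitions maps a partition name to the LCSS it is configured in.
    """
    return sorted(name for name, lcss in partitions.items()
                  if lcss in channel_lcss)


partitions = {"LP01": 0, "LP02": 0, "LP16": 1}
shared = define_channel("IQD", {0})        # MIF-shared within LCSS 0 only
spanned = define_channel("IQD", {0, 1})    # spanned across LCSS 0 and LCSS 1
print(eligible_partitions(shared, partitions))
print(eligible_partitions(spanned, partitions))
```

In this model, spanning simply widens the set of LCSSs a channel belongs to, which in turn widens the set of partitions eligible to share it.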

1.3.7 I/O connectivity

Here we discuss I/O connectivity.

I/O cage

Each book provides 12 STI links (48 STI maximum with four books) for I/O and coupling

connectivity, and for cryptographic feature cards. Each of these links can either be configured

for ICBs, or be connected to STI distribution cards in the I/O cage(s). The data rate for the STI

is 2 GBps.

Note: The z900 compatibility I/O cage is not supported on the z990.

The z990 I/O cage contains seven STI domains. Each domain has the capability of four I/O

slots. A subset of previous zSeries 900 I/O and cryptographic cards is supported by the I/O

cages in the z990.

Note: Parallel channels, OSA-2, OSA-Express ATM, pre-FICON Express channels, and

PCICC feature cards are not supported in the z990.

The installation of an I/O cage remains a disruptive MES, so the Plan Ahead feature remains

an important consideration when ordering a z990 system.

The z990 is a two-frame server. The z990 has a minimum of one CEC cage and one I/O cage

in the A frame. The Z frame can accommodate an additional two I/O cages, making a total of

three for the whole system. Figure 1-4 shows the layout of the frames and I/O cages.

(Front view of the frames. Z-Frame: 2nd I/O cage and 3rd I/O cage. A-Frame: CEC cage and

1st I/O cage.)

Supported: ESCON; FICON/FCP (FICON™ Express); Networking (OSA-Express Gigabit Ethernet,

1000BASE-T Ethernet, Token Ring; HiperSockets); Coupling Links (ISC-3, ICB-2, ICB-3, ICB-4,

IC); Crypto (PCICA, PCIXCC)

Not Supported: Parallel; OSA-E ATM and OSA-2; FICON (pre-FICON Express); PCICC

Figure 1-4 I/O cage layout and supported cards and coupling links

Page 21

Up to 1024 ESCON channels

The high density ESCON feature (FC 2323) has 16 ports, of which 15 can be activated for

customer use. One port is always reserved as a spare, in the event of a failure of one of the

other ports.

This is not an orderable feature. The configuration tool will select the quantity of features

based upon the order quantity of ESCON FC2324 ports, distributing the ports across features

for high availability. After the first pair, ESCON FC2323 are installed in increments of one.

ESCON channels are available on a port basis in increments of four. The port quantity is

selected and LICCC is shipped to activate the desired quantity of ports on the 16-port

ESCON FC2323. Each port utilizes a light emitting diode (LED) as the optical transceiver,

and supports use of a 62.5/125-micrometer multimode fiber optic cable terminated with a

small form factor, industry standard MT-RJ connector.
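The relationship between ordered ESCON ports and 16-port features can be estimated with a short calculation. This sketch uses hypothetical names and gives only a lower bound: it assumes 15 usable ports per feature, a minimum of two features (the first pair), and port orders in multiples of four. The configuration tool distributes ports for high availability and may select more features than this minimum.

```python
# Lower-bound estimate of 16-port ESCON features; names are hypothetical.
import math

USABLE_PORTS_PER_FEATURE = 15   # 16 ports, one always reserved as a spare
PORT_INCREMENT = 4              # ports are ordered in increments of four
MIN_FEATURES = 2                # features are installed as a pair at first
MAX_ESCON_CHANNELS = 1024


def min_escon_features(ports):
    """Return the minimum number of 16-port features for an ordered port count."""
    if ports <= 0 or ports > MAX_ESCON_CHANNELS or ports % PORT_INCREMENT:
        raise ValueError("ports must be a multiple of 4 between 4 and 1024")
    return max(MIN_FEATURES, math.ceil(ports / USABLE_PORTS_PER_FEATURE))


for ports in (4, 32, 1024):
    print(ports, "ports ->", min_escon_features(ports), "features")
```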

Up to 120 FICON Express channels

An increased number of FICON Express features per z990 leads the way in distinguishing

this server family, further setting it apart as enterprise class in terms of the number of

simultaneous I/O connections available for FICON Express features. The z990 supports up to 60

FICON Express features, providing a total of 120 available channels. This is a

25% growth over what was available on z900. These channels are available in long wave (LX)

and short wave (SX).

The FICON Express LX and SX channel cards have two ports. LX and SX ports are ordered

in increments of two. The maximum number of FICON Express cards is 60, installed in the

three I/O cages.
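The FICON Express limits can be cross-checked against the I/O cage figures given earlier (three I/O cages, each with seven STI domains of four slots). The sketch below uses hypothetical names. The z900 channel count is not stated in this section. It is derived here from the 25% growth figure and should be read as an implied value.

```python
# Worked arithmetic for FICON Express capacity; names are hypothetical.

PORTS_PER_FICON_CARD = 2
MAX_FICON_CARDS = 60
IO_CAGES = 3
STI_DOMAINS_PER_CAGE = 7
SLOTS_PER_DOMAIN = 4

max_ficon_channels = MAX_FICON_CARDS * PORTS_PER_FICON_CARD
total_io_slots = IO_CAGES * STI_DOMAINS_PER_CAGE * SLOTS_PER_DOMAIN

# Channel count on the z900 implied by "25% growth" (derived, not stated).
implied_z900_channels = max_ficon_channels / 1.25


def ficon_cards_needed(channels):
    """Return the number of two-port cards for an ordered channel count."""
    if channels < 0 or channels > max_ficon_channels or channels % PORTS_PER_FICON_CARD:
        raise ValueError("channels must be an even number from 0 to 120")
    return channels // PORTS_PER_FICON_CARD


print(max_ficon_channels, "channels on", MAX_FICON_CARDS, "cards")
print(total_io_slots, "I/O slots across", IO_CAGES, "cages")
print(implied_z900_channels, "channels implied for the z900")
```

The 60 cards fit within the slots of three I/O cages, leaving the remaining slots for other feature types.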

The same FICON Express channel card used for FICON channels is also used for FCP

channels. FCP channels are enabled on these cards as a microcode load with an FCP mode

of operation and CHPID type definition. As with FICON, FCP is available in long wavelength

(LX) and short wavelength (SX) operation, though the LX and SX cannot be intermixed on a

single card.

zSeries supports FCP channels, switches and FCP/SCSI devices with full fabric connectivity

under Linux on zSeries. Support for FCP devices means that z990 servers will be capable of

attaching to select FCP/SCSI devices, and may access these devices from Linux on zSeries.

This expanded attach ability means that customers have more choices for storage solutions,

or may have the ability to use existing storage devices, thus leveraging existing investments

and lowering total cost of ownership for their Linux implementation.

The 2 Gb capability on the FICON Express channel cards means that 2 Gb link speeds are

available for FCP channels as well.

The Fibre Channel Protocol (FCP) capability, supporting attachment to SCSI devices in Linux

environments, was made generally available in conjunction with IBM TotalStorage®

Enterprise Tape System 3590, IBM TotalStorage Enterprise Tape Drive 3592, and IBM

TotalStorage Enterprise Tape Library 3494. For VM guest mode, z/VM Version 4 Release 3 is

required to support Linux/FCP. When configured as a CHPID type FCP, FICON allows

concurrent patching of Licensed Internal Code without having to configure the channel off and

on.

Page 22

The required Linux level for this function is SLES 8 from SUSE. This support allows a z990

system to access industry standard devices for Linux, using SCSI control block-based

Input/Output (I/O) devices. These industry standard devices utilize Fixed Block rather than

Extended Count Key Data (ECKD™) format. For more information, consult the IBM I/O

Connectivity Web page:

http://www.ibm.com/servers/eserver/zseries/connectivity/#fcp

FICON CTC function

Native FICON channels support CTC on the z990, z890, z900, and z800. G5 and G6 servers

can connect to a zSeries FICON CTC, as well. This FICON CTC connectivity will increase

bandwidth between G5, G6, z990, z890, z900, and z800 systems.

Because the FICON CTC function is included as part of the native FICON (FC) mode of

operation on zSeries, a FICON channel used for FICON CTC is not limited to intersystem

connectivity but will also support multiple device definitions. For example, ESCON channels

that are dedicated as CTC cannot communicate with any other device, whereas native FICON

(FC) channels are not dedicated to CTC only. Native mode can support both device and CTC

mode definition concurrently, allowing for greater connectivity flexibility.

FICON Cascaded Directors

Some time ago, IBM made the FICON Cascaded Director function generally available. This

means that a native FICON (FC) channel or a FICON CTC can connect a server to a device

or other server via two (same vendor) FICON Directors in between.

This type of cascaded support is important for disaster recovery and business continuity

solutions because it can provide high availability and extended distance connectivity, and

(particularly with the implementation of 2 Gb Inter Switch Links) has the potential for fiber

infrastructure cost savings by reducing the number of channels for interconnecting the two

sites.

The following directors and switches are supported:

CNT (INRANGE) FC/9000 64-port and 128-port models (IBM 2042)

McDATA Intrepid 6064 (IBM 2032)

McDATA Intrepid 6140 (IBM 2032)

McDATA Sphereon 4500 Fabric Switch (IBM 2031-224)

IBM TotalStorage SAN Switches 2109-F16, S16, and S08

IBM TotalStorage Director 2109-M12

FICON Cascaded Directors have the added value of ensuring high integrity connectivity.

Transmission data checking, link incident reporting, and error checking are integral to the

FICON architecture, thus providing a true enterprise fabric.

For more information on Cascaded Directors, consult the I/O Connectivity Web page:

http://www.ibm.com/servers/eserver/zseries/connectivity/ficon_cascaded.html

OSA-Express

With the introduction of z990 and its increased processing capacity, and the availability of

multiple LCSSs, the Open Systems Adapter family of local area network (LAN) adapters is

also expanding by offering a maximum of 24 features per system (versus the maximum of 12

features per system on prior generations). The z990 can have 48 ports of LAN connectivity.

Page 23

You can choose any combination of OSA features: the OSA-Express Gigabit Ethernet LX

(FC1364), the OSA-Express Gigabit Ethernet SX (FC1365), the OSA-Express 1000BASE-T

Ethernet (FC1366), or OSA-Express Token Ring (FC2367). You can also carry forward your

current z900 OSA-Express features to z990: OSA-Express Gigabit Ethernet LX (FC 2364),

OSA-Express Gigabit Ethernet SX (FC 2365), OSA-Express Fast Ethernet (FC 2366), and

OSA-Express Token Ring (FC 2367).

Gigabit Ethernet

The OSA-Express GbE features (FC1364 and FC1365) have an LC Duplex connector type,

replacing the current SC Duplex connector. This conforms to the fiber optic connectors

currently in use for ISC-3 and the FICON Express features shipped after October 30, 2001.

1000BASE-T Ethernet

The z990 supports a copper Ethernet feature: 1000BASE-T Ethernet. This feature is offered

on new builds and replaces the current OSA-Express Fast Ethernet (FC 2366), which can be

brought forward to z990 on an upgrade from z900.

1000BASE-T Ethernet is capable of operating at 10, 100, or 1000 Mbps (1 Gbps) using the

same Category-5 copper cabling infrastructure that is utilized for Fast Ethernet. The Gigabit

over copper adapter allows a migration to gigabit speeds wherever there is a copper cabling

infrastructure instead of a fiber optic cabling infrastructure.

OSA-Express Integrated Console Controller (OSA-ICC)

An additional function of the OSA-Express 1000BASE-T Ethernet feature is its support as an

OSA-Express Integrated Console Controller (OSA-ICC). This function supports

TN3270E and non-SNA DFT 3270 emulation, which means that 3270 emulation for console

session connections is integrated in the z990 via a port of the 1000BASE-T Ethernet

feature.

Checksum Offload for Linux and z/OS when in QDIO mode

A function introduced for the Linux on zSeries and z/OS environments, called checksum

offload, provides the capability of calculating the Transmission Control Protocol/User

Datagram Protocol (TCP/UDP) and Internet Protocol (IP) header checksums.

A checksum is used to verify the integrity of transmitted data. By moving the checksum

calculations to a Gigabit or 1000BASE-T Ethernet feature, host CPU cycles are reduced.

Improved performance can be realized by taking advantage of the checksum offload function

of the OSA-Express GbE or 1000BASE-T Ethernet (when operating at 1000 Mbps (1 Gbps))

features. These features offload checksum processing to

OSA-Express (in QDIO mode, CHPID type OSD) for most IPv4 packets. This support is

available with z/OS V1R5 and later as well as Linux on zSeries.
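To illustrate the work that checksum offload removes from the host, the following Python sketch computes the standard Internet checksum (the 16-bit ones' complement sum described in RFC 1071) that is used for IP, TCP, and UDP headers. It is a generic software illustration, not the OSA-Express implementation. The function name and the sample IPv4 header are chosen for the example.

```python
# Generic Internet checksum (RFC 1071); not the OSA-Express implementation.


def internet_checksum(data):
    """Return the 16-bit ones' complement checksum of a byte string."""
    if len(data) % 2:
        data += b"\x00"                      # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]   # add each big-endian 16-bit word
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back into 16 bits
    return ~total & 0xFFFF                   # ones' complement of the folded sum


# Sample 20-byte IPv4 header with its checksum field (bytes 10-11) set to zero.
header = bytes.fromhex("45000073000040004011" "0000" "c0a80001c0a800c7")
checksum = internet_checksum(header)
print(f"{checksum:04x}")

# A receiver recomputes the checksum over the header including the checksum
# field; a result of zero indicates that the header arrived intact.
filled = header[:10] + checksum.to_bytes(2, "big") + header[12:]
print(internet_checksum(filled))
```

Without offload, the host performs this per-packet loop in software. With checksum offload, the calculation is done on the adapter instead.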

Token Ring

The OSA-Express Token Ring feature has two independent ports, each supporting

attachment to either a 4 Mbps, 16 Mbps, or 100 Mbps Token Ring Local Area Network (LAN).

The OSA-Express Token Ring feature supports autosensing as well as any of the following

settings: 4 Mbps half- or full-duplex, 16 Mbps half- or full-duplex, or 100 Mbps full-duplex.

Note: The demand for Token Ring on the mainframe continues to decline. Migration from

Token Ring to an Ethernet infrastructure is recommended as part of long term planning for

Local Area Network support.

Page 24

OSA-Express ATM

The OSA-Express Asynchronous Transfer Mode (ATM) features are not supported on z990.

They are not offered as a new build option and are not offered on an upgrade from z900. This

satisfies the Statement of General Direction in the hardware announcement dated April 30,

2002.

If ATM connectivity is still desired, a multiprotocol switch or router with the appropriate

network interface (for example, 1000BASE-T Ethernet, Gigabit Ethernet) can be used to

provide connectivity between the z990 and an ATM network.

OSA-2 FDDI

The OSA-2 Fiber Distributed Data Interface (FDDI) feature is not supported on z990. It is not

offered as a new build option and is not offered on an upgrade from z900. This satisfies the

Statement of General Direction in the hardware announcement dated October 4, 2001.

If FDDI connectivity is still desired, a multiprotocol switch or router with the appropriate

network interface (for example, 1000BASE-T Ethernet, Gigabit Ethernet) can be used to

provide connectivity between the z990 and a FDDI LAN.

Parallel channels and converters

Parallel channels are not supported on z990. Customers who wish to use parallel-attached

devices with z990 must obtain a parallel channel converter box such as the IBM 9034, which

may be available through IBM Global Services (IGS), or obtain a third-party parallel channel

converter box such as the Optica 34600 FXBT. In both cases, these are connected to an

ESCON channel.

For more information about Optica offerings, contact Optica directly:

http://www.opticatech.com/

1.3.8 Cryptographic

Here we discuss cryptographic functions and features.

CP Assist for cryptographic function

The zSeries cryptography is further advanced with the introduction of the Cryptographic

Assist Architecture implemented on every z990 PU. The z990 processor provides a set of

symmetric cryptographic functions, executed synchronously, that significantly enhance the

performance of the encrypt/decrypt function of SSL, Virtual Private Network (VPN), and data

storing applications that do not require FIPS 140-2 Level 4 security. The on-processor crypto

functions run at z990 processor speed.

These cryptographic functions are implemented in every PU; the affinity problem of pre-z990

systems is eliminated. The Crypto Assist Architecture includes DES and T-DES data

en/decryption, MAC message authentication, and SHA-1 secure hashing. These functions

are directly available to application programs (zSeries Architecture instructions). SHA-1 is

always enabled, but other cryptographic functions are available only when the Crypto

enablement feature (FC 3863) is installed.
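
As a rough illustration of the kind of function the CP Assist makes available, the following Python sketch computes a SHA-1 digest in software with the standard hashlib module. It does not invoke the z990 hardware instructions; it only shows the input and output behavior of SHA-1 (a 160-bit digest). The helper name sha1_hex and the sample message are assumptions made for this example.

```python
import hashlib


def sha1_hex(message: bytes) -> str:
    """Return the 160-bit SHA-1 digest of `message` as 40 hex characters."""
    return hashlib.sha1(message).hexdigest()


# Arbitrary sample input, chosen only for illustration.
digest = sha1_hex(b"abc")
print(digest)       # 40 hexadecimal characters
print(len(digest))  # 40 hex characters * 4 bits = 160 bits
```

An application on the z990 would obtain the same digest value from the hardware-assisted function; only the execution path differs.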

PCI Cryptographic Accelerator feature (PCICA)

The Peripheral Component Interconnect Cryptographic Accelerator (PCICA) feature has two

accelerator cards per feature and is an optional addition, along with the Peripheral

Component Interconnect X Cryptographic Coprocessor (PCIXCC) feature (FC 0868). The PCICA is a

very fast cryptographic processor designed to provide leading-edge performance of the

complex RSA cryptographic operations used with the Secure Sockets Layer (SSL) protocol

supporting e-business. The PCICA feature is designed specifically for maximum speed SSL

acceleration.

Each zSeries PCI Cryptographic Accelerator feature (PCICA) contains two accelerator cards

and can support up to 2100 SSL handshakes per second.

Note: To enable the function of the PCICA feature, the CP Assist feature (feature code

3863) must be installed.
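
The per-feature figure quoted above can be turned into a simple sizing estimate. The sketch below assumes that handshake capacity scales linearly with the number of PCICA features and ignores any limit on how many features a server can hold; both are simplifying assumptions, and the function name is hypothetical.

```python
import math

# Maximum SSL handshakes per second per PCICA feature, as quoted in the text.
HANDSHAKES_PER_FEATURE = 2100


def pcica_features_needed(target_handshakes_per_sec: int) -> int:
    """Estimate how many PCICA features cover a target handshake rate.

    Assumes linear scaling across features (an assumption, not a
    statement about measured z990 behavior).
    """
    if target_handshakes_per_sec < 0:
        raise ValueError("target rate must not be negative")
    return math.ceil(target_handshakes_per_sec / HANDSHAKES_PER_FEATURE)


# Example: a hypothetical workload peaking at 5000 handshakes per second.
print(pcica_features_needed(5000))
```

Under these assumptions, a peak of 5000 handshakes per second would call for three features, because two features cover at most 4200.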

PCI X-Cryptographic Coprocessor (PCIXCC) feature

The Peripheral Component Interconnect X Cryptographic Coprocessor (PCIXCC) feature has

one coprocessor and is an optional addition, containing support to satisfy high-end server

security requirements by providing full checking and fully programmable functions and User

Defined Extension (UDX) support.

The PCIXCC adapter is intended for applications demanding high security. The PCIXCC

feature is designed for the FIPS 140-2 Level 4 compliance rating for secure cryptographic

hardware.

Note: To enable the function of the PCIXCC feature, the CP Assist feature (feature code

3863) must be installed.

1.3.9 Parallel Sysplex support

Here we discuss Parallel Sysplex support.

ISC-3

A 4-port ISC-3 card structure is provided on the z990 family of processors. It consists of a

Mother Card with two Daughter Cards that have two ports each. Each Daughter Card is

capable of operating at 1 Gbps in compatibility mode (HiperLink) or 2 Gbps in peer

mode, at distances of up to 10 km. The mode is selected for each port via the CHPID type in the IOCDS.

InterSystem Coupling Facility-3 (ISC-3) channels provide the connectivity required for data

sharing between the Coupling Facility and the CPCs directly attached to it. ISC-3 channels

are point-to-point connections that require a unique channel definition at each end of the

channel. ISC-3 channels operating in peer mode provide connections between z990, z890,

and z900 general purpose models and z900-based Coupling Facility images. ISC-3 channels

operating in compatibility mode provide connections between z990 models and ISC HiperLink

channels on 9672 G5/G6 models.

ICB-2 (Integrated Cluster Bus 2)

The Integrated Cluster Bus-2 (ICB-2) link is a member of the family of Coupling Link options

available on z990. Like the ISC-3 link, it is used by coupled systems to pass information back

and forth over high speed links in a Parallel Sysplex environment. ICB-2 or ISC-3 links are

used to connect 9672 G5/G6 to z990 servers.

An STI-2 card resides in the I/O cage and provides two output ports to support the ICB-2

connections. The STI-2 card converts the 2 GBps input into two 333 MBps ICBs. The ICB-2 is

defined in compatibility mode and the link speed is 333 MBps.

One feature is required for each end of the link. Ports are ordered in increments of one.

ICB-3 (Integrated Cluster Bus 3)

The Integrated Cluster Bus-3 (ICB-3) link is a member of the family of Coupling Link options

available on z990. Like the ISC-3 link, it is used by coupled systems to pass information back

and forth over high speed links in a Parallel Sysplex environment. ICB-3 or ISC-3 links are

used to connect z900, z800, or z890 servers (2064, 2066, or 2086) to z990 servers.

An STI-3 card resides in the I/O cage and provides two output ports to support the ICB-3

connections. The STI-3 card converts the 2 GBps input into two 1 GBps ICBs. The ICB-3 is

defined in peer mode and the link speed is 1 GBps.

One feature is required for each end of the link. Ports are ordered in increments of one.

ICB-4 (Integrated Cluster Bus 4)

The Integrated Cluster Bus-4 (ICB-4) link is a member of the family of Coupling Link options

available on z990. ICB-4 is a “native” connection used between z990 and/or z890 processors.

An ICB-4 connection consists of one link that attaches directly to an STI port in the system,

does not require connectivity to a card in the I/O cage, and operates at 2 GBps. The ICB-4

works in peer mode and the link speed is 2 GBps.

One feature is required for each end of the link. Ports are ordered in increments of one.
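
The ISC-3 and ICB link options described in this section can be summarized as a small lookup table. The following Python sketch encodes the modes, speeds, and partner servers exactly as stated in the text; the class and function names are hypothetical, and the ISC-3 peer-mode partner list is the union of the servers named in the ISC-3 and ICB-3 descriptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CouplingLink:
    name: str            # link type
    mode: str            # "peer" or "compatibility"
    speed: str           # link speed as quoted in the text
    partners: frozenset  # servers a z990 can reach with this link


# Values transcribed from the link descriptions in this section.
LINKS = (
    CouplingLink("ISC-3", "peer", "2 Gbps",
                 frozenset({"z990", "z890", "z900", "z800"})),
    CouplingLink("ISC-3", "compatibility", "1 Gbps",
                 frozenset({"9672 G5/G6"})),
    CouplingLink("ICB-2", "compatibility", "333 MBps",
                 frozenset({"9672 G5/G6"})),
    CouplingLink("ICB-3", "peer", "1 GBps",
                 frozenset({"z900", "z800", "z890"})),
    CouplingLink("ICB-4", "peer", "2 GBps",
                 frozenset({"z990", "z890"})),
)


def link_options(partner: str) -> list:
    """List the coupling links a z990 can use to reach `partner`."""
    return [f"{link.name} ({link.mode} mode, {link.speed})"
            for link in LINKS if partner in link.partners]


for server in ("9672 G5/G6", "z900", "z990"):
    print(server, "->", link_options(server))
```

Note that ISC-3 speeds are given in gigabits per second (Gbps), while ICB speeds are given in bytes per second (MBps or GBps).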

Internal Coupling (IC)

The Internal Coupling (IC) channel emulates the Coupling Facility functions in LIC between

images within a single system. No hardware is required; however, a minimum of two CHPID

numbers must be defined in the IOCDS for each connection.

System-Managed CF Structure Duplexing

System-Managed Coupling Facility (CF) Structure Duplexing provides a general purpose,

hardware-assisted, easy-to-exploit mechanism for duplexing CF structure data. This provides

a robust recovery mechanism for failures (such as loss of a single structure or CF or loss of

connectivity to a single CF) through rapid failover to the other structure instance of the duplex

pair.
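
The duplexing idea can be pictured with a toy model: every update is applied to both structure instances, so that reads can continue from the surviving instance if one is lost. The Python sketch below is only a conceptual illustration under that assumption; it does not represent the CFCC protocol, and all class and method names are hypothetical.

```python
class DuplexedStructure:
    """Toy model of a duplexed pair of structure instances."""

    def __init__(self):
        # Two copies of the same structure data, one per Coupling Facility.
        self.instances = {"primary": {}, "secondary": {}}
        self.active = "primary"

    def write(self, key, value):
        # Duplexing: apply every update to all surviving instances.
        for data in self.instances.values():
            data[key] = value

    def read(self, key):
        return self.instances[self.active][key]

    def fail(self, name):
        # Simulate loss of one instance (or of connectivity to its CF).
        del self.instances[name]
        if not self.instances:
            raise RuntimeError("both structure instances lost")
        if self.active == name:
            # Fail over to the other instance of the duplex pair.
            self.active = next(iter(self.instances))


structure = DuplexedStructure()
structure.write("lock-17", "held by SYSA")
structure.fail("primary")
print(structure.active, structure.read("lock-17"))
```

Because the secondary already holds a current copy, the failover step is just a switch of the active instance; no data has to be rebuilt.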

The following three structure types can be duplexed using this architecture:

Cache structures

List structures

Locking structures

Support for these extensions is included in Coupling Facility Control Code (CFCC) Levels 11,

12, and 13 and in z/OS V1.2, V1.3, V1.4, and V1.5 and later.

For those CF structures that support the use of System-Managed CF Structure Duplexing,

customers have the ability to dynamically enable or disable, selectively by structure, the use

of System-Managed CF Structure Duplexing.

Customers interested in deploying System-Managed CF Structure Duplexing in their test,

development, or production Parallel Sysplex will need to read the technical paper

System-Managed CF Structure Duplexing, GM13-0103, and analyze their Parallel Sysplex

environment to understand the performance and other considerations of using this function.

System-Managed CF Structure Duplexing, GM13-0103, is available at these Web sites:

http://www.ibm.com/server/eserver/zSeries/pso

http://www.ibm.com/servers/eserver/zSeries/library/techpapers/gm130103.html

1.3.10 Intelligent Resource Director (IRD)

Exclusive to the IBM z/Architecture is Intelligent Resource Director (IRD), a function that

optimizes processor and channel resource utilization across logical partitions based on

workload priorities. IRD combines the strengths of PR/SM, Parallel Sysplex clustering,

and z/OS Workload Manager.

Intelligent Resource Director uses the concept of an “LPAR cluster”, the subset of z/OS

systems in a Parallel Sysplex cluster that are running as logical partitions on the same z990

server. In a Parallel Sysplex environment, Workload Manager directs work to the appropriate

resources, based on business policy. With IRD, resources are directed to the priority work.

Together, Parallel Sysplex technology and IRD provide flexibility and responsiveness to

e-business workloads that are unrivaled in the industry.

IRD has three major functions: LPAR CPU Management, Dynamic Channel Path

Management, and Channel Subsystem Priority Queuing, which are explained in the following

sections.

Channel Subsystem Priority Queuing

Channel Subsystem Priority Queuing on the z990 allows priority queuing of I/O requests

within the Channel Subsystem, and the specification of relative priority among logical

partitions. WLM in goal mode sets priorities for a logical partition, and coordinates this activity

among clustered logical partitions.
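
Priority queuing of I/O requests can be sketched with an ordinary priority queue. The example below is a conceptual model only: the numeric priorities, the convention that a higher number means more important work, and the class name are all assumptions, and the actual channel subsystem algorithm is not described in this text.

```python
import heapq
import itertools


class IoQueue:
    """Toy priority queue for I/O requests (higher priority served first)."""

    def __init__(self):
        self._heap = []
        # Sequence number keeps first-in, first-out order within one priority.
        self._seq = itertools.count()

    def submit(self, priority: int, request: str) -> None:
        # heapq is a min-heap, so negate the priority to pop the highest first.
        heapq.heappush(self._heap, (-priority, next(self._seq), request))

    def next_request(self) -> str:
        return heapq.heappop(self._heap)[2]


queue = IoQueue()
queue.submit(1, "batch LPAR read")      # low-priority partition
queue.submit(5, "online LPAR write")    # high-priority partition
queue.submit(5, "online LPAR read")
order = [queue.next_request() for _ in range(3)]
print(order)
```

In this model, requests from the higher-priority partition are dispatched ahead of the earlier-submitted batch request, which is the effect the relative priority specification is meant to achieve.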

Dynamic Channel Path Management

This feature enables customers to have channel paths that dynamically and automatically

move to those ESCON I/O devices that have a need for additional bandwidth due to high I/O

activity. The benefits are enhanced by the use of goal mode and clustered logical partitions.

LPAR CPU Management

Workload Manager (WLM) dynamically adjusts the number of logical processors within a

logical partition and the processor weight, based on the WLM policy. The ability to move the

CPU weights across an LPAR cluster provides processing power to where it is most needed,

based on WLM goal mode policy.
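
Moving CPU weight across an LPAR cluster can be illustrated with simple arithmetic. The sketch below assumes that weight is shifted from one partition to another while the cluster total stays constant; the partition names, weight values, and function name are hypothetical, and the sketch does not model how WLM decides when to shift.

```python
def shift_weight(weights: dict, donor: str, receiver: str, amount: int) -> dict:
    """Return new LPAR weights after moving `amount` from donor to receiver.

    The cluster total is unchanged (an assumption of this toy model).
    """
    if amount < 0 or amount > weights[donor]:
        raise ValueError("amount must be between 0 and the donor's weight")
    adjusted = dict(weights)  # leave the caller's mapping untouched
    adjusted[donor] -= amount
    adjusted[receiver] += amount
    return adjusted


# Hypothetical two-partition LPAR cluster.
cluster = {"PROD": 60, "TEST": 40}
adjusted = shift_weight(cluster, donor="TEST", receiver="PROD", amount=15)
print(adjusted, sum(adjusted.values()))
```

Here the production partition gains weight at the expense of the test partition, while the sum of the weights in the cluster remains 100.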

1.3.11 Hardware consoles

Here we discuss the Hardware Management Console and Support Element interface.

Hardware Management Console and Support Element interface

On z990 servers, the Hardware Management Console (HMC) provides the platform and user

interface that can control and monitor the status of the system via the two redundant Support

Elements installed in each z990.

The z990 server implements two fully redundant interfaces, known as the Power Service

Control Network (PSCN), between the two Support Elements and the CPC. Error detection

and automatic switchover between the two redundant Support Elements provide enhanced

reliability and availability.

1.3.12 Concurrent upgrades

The z990 servers have concurrent upgrade capability via the Capacity Upgrade on Demand

(CUoD) function. This function is also used by Customer Initiated Upgrades (CIUs) and by the