Page 1

i

IBM SPSS Modeler 15 User’s Guide

Page 2

Note: Before using this information and the product it supports, read the general information

under Notices on p. 249.

This edition applies to IBM SPSS Modeler 15 and to all subsequent releases and modifications

until otherwise indicated in new editions.

Adobe product screenshot(s) reprinted with permission from Adobe Systems Incorporated.

Microsoft product screenshot(s) reprinted with permission from Microsoft Corporation.

Licensed Mate

© Copyright IBM Corporation 1994, 2012.

rials - Property of IBM

U.S. Government Users Restricted Rights - Use, duplication or disclosure restricted by GSA ADP

Schedule Contract with IBM Corp .

Page 3

Preface

IBM® SPSS® Modeler is the IBM Co r p. enterprise-strength data mining workbench. SPSS

Modeler helps organizations to improve customer and citizen relat ionships th r ough an in-depth

understandi

profitable customers, identify cross-selling opportunities, attract new customers, detect f raud,

reduce risk, and improve government service delivery.

SPSS Modeler

leads to more powerful predictive models and shortens time-to-solution . SPSS Modeler offers

many modeling techniques, such as prediction, c lassification, segmentation, and association

detection a

enables t heir deliver y enterprise-wide to decision makers or to a database.

ng of data. Organizations use the insight gained from SPSS M odeler to retain

’s visual interface invites users to apply t heir specific business expertise, which

lgorithms. Once models are created, IBM® SPSS® Modeler Solution Publisher

About IBM Bu

IBM Business Analytics software delivers complete, consistent and accurate information that

decision-makers trust to improve business performance. A comprehensive portfolio of business

intelligence, predictive analytics, financial performance and strategy m anagement, and analytic

applications provides clear, immediate and actionable in sights into current performance and the

ability to predict future outcomes. Combined with rich industry solution s, proven practices and

professional services, organizations of every size can drive the highest productivity, confidently

automate decisions and deliver better results.

As part of

future events and proactively act upon that insight to drive bet ter business outcomes. Commercial,

government and academic customers worldwide rely on IBM SPSS technology as a competitive

advantag

risk. By incorporating IBM SPSS software into their daily operations, organizations become

predictive enterprises – able to direct and automate decisions to meet busin ess goals and achieve

measura

http://www.ibm.com/spss.

Techni

cal support

Technical support is available to maintenance custome r s. Customers may contact Technical

Support for assistance in using IBM Corp. products or for installation help for one of the

supported hardware environments. To reach Technical Support, see the IBM Corp. web site

at http://www.ibm.com/support. Be prepared to identify yourself, your organization, and your

support agreement when requesting assistance.

siness Analytics

this portfolio, IBM SPSS Predictive Analytics software helps organizations predict

e in attracting, retaining and growing customers, while reducing fraud and mitigating

ble competitive advantage. For further information or to reach a representative visit

© Copyright IBM Corporation 1994, 2012.

iii

Page 4

Contents

1 About IBM SPSS Modeler 1

IBM SPSS Modeler Products . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

IBM SPSS Modeler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

IBM SPSS Modeler Server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

IBM SPSS Modeler Administration Console . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

IBM SPSS Modeler Batch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

IBM SPSS Modeler Solution Publisher. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

IBM SPSS Modeler Server Adapters for IBM SPSS Collaboration and Deployment Services . 2

IBM SPSS Modeler Editions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

IBM SPSS Modeler Documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

SPSS Modeler Professional Documentation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

SPSS Modeler Premium Documentation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

Application Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

Demos Folder . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

2 New Features 7

New and Changed Features in IBM SPSS Modeler 15 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

New features in IBM SPSS Modeler Professional . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

New features in IBM SPSS Modeler Premium . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

New Nodes in This Release . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

3 IBM SPSS Modeler Overview 12

Getting Started . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Starting IBM SPSS Modeler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

Launching from the Command Line . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Connecting to IBM SPSS Modeler Server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

Changing the Temp Directory. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

Starting Multiple IBM SPSS Modeler Sessions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

IBM SPSS Modeler Interface at a Glance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

IBM SPSS Modeler Stream Canvas . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Nodes Palette . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

IBM SPSS Modeler Managers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

IBM SPSS Modeler Projects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

IBM SPSS Modeler Toolbar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

Customizing the Toolbar. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Customizing the IBM SPSS Modeler Window. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

© Copyright IBM Corporation 1994, 2012.

iv

Page 5

Changing the icon size for a stream . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

Using the Mous

Using Shortcut Keys . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Printing. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Automating IB

e in IBM SPSS Modeler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

M SPSS Modeler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

4 Understandin

Data Mining Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Assessing the Data. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

A Strategy for Data Mining . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

The CRISP-DM Process Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

Types of Models . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

Data Mining Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

g Data Mining 29

5 Building Streams 41

Stream-Building Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Building Data Streams . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Working with Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Working with Streams . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Stream Descriptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Running Streams . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Working with Models. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

Adding Comments and Annotations to Nodes and Streams . . . . . . . . . . . . . . . . . . . . . . . . . . 78

Saving Data Streams . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Loading Files . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Mapping Data Streams . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Tips and Shortcuts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

6 Handling Missing Values 99

Overview of Missing Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Handling Missing Values. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Handling Records with Missing Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

Handling Fields with Missing Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

Imputing or Filling Missing Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

CLEM Functions for Missing Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

v

Page 6

7 Building CLEM Expressions 105

About CLEM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

CLEM Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Values and Data Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Expressions and Conditions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

Stream, Session, and SuperNode Parameters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

Working with Strings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

Handling Blanks and Missing Values. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

Working with Numbers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

Working with Times and Dates . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 114

Summarizing Multiple Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Working with Multiple-Response Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

The Expression Builder . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

Accessing the Expression Builder . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Creating Expressions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Selecting Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Selecting Fields, Parameters, and Global Variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Viewing or Selecting Values. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

Checking CLEM Expressions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

Find and Replace . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

8 CLEM Language Reference 127

CLEM Reference Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

CLEM Datatypes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

Integers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

Reals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

Characters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

Strings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Lists. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Fields. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Dates. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

CLEM Operators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

Functions Reference. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

Conventions in Function Descriptions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

Information Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Conversion Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

Comparison Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

vi

Page 7

Logical Functions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

Numeric Funct

Trigonometric Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Probability Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

Bitwise Integ

Random Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

String Functions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

SoundEx Functi

Date and Time Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

Sequence Functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

Global Functio

Functions Handling Blanks and Null Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

Special Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

ions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

er Operations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

ons . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

ns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

9 Using IBM SPSS Modeler with a Repository 158

About the IBM SPSS Collaboration and Deployment Services Repository . . . . . . . . . . . . . . . . . . 158

Storing and Deploying Repository Objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

Connecting to the Repository . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

Entering Credentials for the Repository . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

Browsing the Repository Contents . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 162

Storing Objects in the Repository . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

Setting Object Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

Storing Streams. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

Storing Projects. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

Storing Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Storing Output Objects. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Storing Models and Model Palettes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Retrieving Objects from the Repository . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Choosing an Object to Retrieve . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

Selecting an Object Version . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

Searching for Objects in the Repository . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

Modifying Repository Objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

Creating, Renaming, and Deleting Folders . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

Locking and Unlocking Repository Objects. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

Deleting Repository Objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

Managing Properties of Repository Objects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Viewing Folder Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Viewing and Editing Object Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

Managing Object Version Labels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

vii

Page 8

Deploying Streams . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

Stream Deployment Options. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

The Scoring Br

anch. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

10 Exporting to E

About Exporting to External Applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

Opening a Stream in IBM SPSS Modeler Advantage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

Importing and Exporting Models as PMML . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

Model Types Supporting PMML . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

xternal Applications 195

11 Projects and Reports 200

Introduction to Projects . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

CRISP-DM View. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

Classes View . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Building a Project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Creating a New Project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Adding to a Project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

Transferring Projects to the IBM SPSS Collaboration and Deployment Services Repository . 204

Setting Project Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

Annotating a Project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

Object Properties. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

Closing a Project . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

Generating a Report . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

Saving and Exporting Generated Reports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

12 Customizing IBM SPSS Modeler 215

Customizing IBM SPSS Modeler Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

Setting IBM SPSS Modeler Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

System Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

Setting Default Directories. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

Setting User Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

Setting User Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

viii

Page 9

Customizing the Nodes Palette . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

Customizing the Palette Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

Changing a Pal

CEMI Node Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

ette Tab View . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

13 Performance Considerations for Streams and Nodes 230

Order of Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

Node Caches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

Performance: Process Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Performance: Modeling Nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

Performance: CLEM Expressions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

Appendices

A Accessibility in IBM SPSS Modeler 236

Overview of Accessibility in IBM SPSS Modeler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

Types of Accessibility Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

Accessibility for the Visually Impaired . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

Accessibility for Blind Users . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

Keyboard Accessibility . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 238

Using a Screen Reader . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

Tips for Use . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

Interference with Other Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 247

JAWS and Java. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 247

Using Graphs in IBM SPSS Modeler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 247

B Unicode Support 248

Unicode Support in IBM SPSS Modeler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 248

ix

Page 10

C Notices 249

Index 252

x

Page 11

About IBM SPSS Modeler

IBM® SPSS® Modeler is a set of data mining tools that enable you to quickly deve lop predictive

models using business expertise and deploy them into business o pe r ations to improve decision

making. Desi

entire data mining process, from data to better business results.

SPSS Modeler offers a va r iety of modeling methods taken from machine learning, art ificial

intelligence, and statistics. The methods available on the Modeling palette allow you to derive

new information from your data and to develop predictive models. Each method has certain

strengths and is best suited for particular types of problems.

SPSS Modeler can be purchased as a standalone p r oduct, or used as a client in

combination with SPSS Modeler Server. A number of additional options are also

available, as summarized in the following sections. For more information, see

http://www.ibm.com/software/analytics/spss/products/modeler/.

gned aroun d the industry-standard CRISP-DM model, SPSS Modeler supports t he

Chapter

1

1

11

IBM SPSS Modeler Products

The IBM® SPSS® Modeler family of products and associated software comprises the following.

IBM SPSS Modeler

IBM SPSS Modeler Server

IBM SPSS Modeler Administration Console

IBM SPSS Modeler Batch

IBM SPSS Modeler Solution Publisher

IBM SPSS Modeler Server adapters for I BM SPSS Collaboration and Deployment Services

IBM SPSS Modeler

SPSS Modeler is a functionally complete version of the produc t that you install and run on your

personal computer. You can run SPSS Modeler in local mode as a standalone product, or use it

in distributed mode along with IBM® SPSS® Modeler Server for im proved performance on

large data sets.

With SPSS Modeler, you can build a ccurate p r edictive models quickly and intuitively, withou t

programming. Using the un ique visual interface, you can easily visualize the data mining process.

With the support of the advanced analytics embedded in the product, you can discover previously

hidden patterns and trends in your data. You can mode l outcomes and understand the factors that

influence them, enabling you to take advantage of business opportunities and mitigate risks.

SPSS Modeler is available in two editions: SPSS Modeler Professional and SPSS Modeler

Premium. For more information, see the topic IBM SPSS Modeler Editions on p. 3.

© Copyright IBM Corporation 1994, 2012.

1

Page 12

2

Chapter 1

IBM SPSS Modeler Server

SPSS Modeler uses a client/server architecture to distribute requests for resource-intensive

operations to powerful server software, resulting in faster performance on larger data sets.

SPSS Modeler Server is a separately-licensed product that runs continually in distributed analysis

mode on a server host in conjunction with one or more IBM® SPSS® Modeler installations.

In this way, SPSS Modeler Server provides superior performance on large data sets because

memory-intensive operations can be done on the server without downloading data to the client

computer. IBM® SPSS® Modeler S erver also provides support for SQL optimization and

in-database modeling capabilities, delivering further benefits in performance and automation.

IBM SPSS Modeler Administration Console

The Modeler Administration Console is a graphical application for managing many of the SPSS

Modeler Server configuration options, which are also configurable by means of an options file.

The appl ication provides a console user interface to monitor and configure your SPSS Modeler

Server installations, and is available free-of-charge to current SPSS Modeler Server customers.

The applicatio n can be installed only o n Windows computers; however, it can administer a server

installed on any supported platform.

IBM SPSS Modeler Batch

While data mining is usually an interactive process, it is also possible to run SPSS Modeler

from a command line, with out the need for the graphical user interf ace. For example, you might

have long-running or repetitive tasks that you want to perform with no user intervention. SPSS

Modeler Batch is a special ver sion of the product that provides support f or the complete analytical

capabilities of SPSS Modeler without access to t he regular user interface. An SPSS Modeler

Server license is required to use SPSS Modeler Batch.

IBM SPSS Modeler Solution Publisher

SPSS Modeler Solution Pub lisher is a tool that enables you to create a packaged version of an

SPSS Modeler stream that can be run by an external runtime engine or embedded in an external

application. In this way, you can publish and deploy complete SPSS Modeler streams for use in

environments that do not have SPSS Modeler installed. SPSS Modeler Solution Publisher is

distributed as part of the IBM SPSS Collaborat ion and Deployment Services - Scoring service,

for which a separate license is required. With this lice nse, you receive SPSS Modeler Solution

Publisher Runtime, which enables you to execute the published streams.

IBM SPSS Modeler Server Adapters for IBM SPSS Collaboration and Deployment Services

A number of adapters for IBM® SPSS® Collaboration and Deployment Services are available that

enable SPSS Modeler and SPSS Modeler Server to interact with an IBM SPSS Collaboration and

Deployment Services repo sitory. In this way, an SPSS Modeler stream deployed to the repository

Page 13

can be shared by multiple users, or accessed from the thin-cl ient application IBM SPSS Modeler

Advantage. Yo

u install the adapter on the system that hosts the repository.

IBM SPSS Modeler Editions

SPSS Modeler is available in the following editions.

SPSS Modeler Professional

SPSS Modeler Professional provides all the tools you need to work with most types of structured

data, such as behaviors and interactions tracked in C RM systems, demographics, purchasing

behavior and sales data.

SPSS Modeler Premium

3

About IBM SPSS Modeler

SPSS Modeler Premium is a separately-licensed product that extends SPSS Modeler Pro f essional

to work with specialized data such as that us ed for entity analytics or social networkin g, and with

unstructured text data. SPSS Modeler Premium comprises the following components.

IBM® SPSS® Modeler Entity Analytics adds a completely new dimension to IBM® SPSS®

Modeler predictive analytics. Whereas predictive analytics attempts to predict future behavior

from pas t data, entity analytics focuses on improving the cohere nce and consistency of current

data by resolving identity conflicts within the records themselves. An identity can be that of an

individual, an organization, an object, or any other entity for whic h ambiguity might exist. Identity

resolution can be vital in a number of fields, including customer relationship management, fraud

detection, anti-money laundering, and national and international security.

IBM SPSS Modeler Social Network Analysis transforms information about relationships into

fields that characterize the social behavior of individuals and groups. Using data describing

the relati onships underlying social networ ks, IBM® SPSS® Modeler Social Network Analysis

identifies social leaders who influence the behavior of others in the network. In addition, you can

determine which people are most affected by other network participants. By combining these

results with othe r measure s, you can create comprehensive profiles of individuals on which to

base your predictive models. Models that include this social information will perform better than

models that do not.

IBM® SPSS® Modeler Text Analytics uses advanced ling uistic technologies and Natural

Language Processing (NLP) to rapidly process a large variety of unstructured text data, extract

and organize the key concepts, and group th

categories can be combined with existing structured da ta, such as demographics, and applied to

modeling using the full suite of SPSS Modeler data mining tools to yield better and more focu sed

decisions.

ese concepts into categories. Extracted concepts and

Page 14

4

Chapter 1

IBM SPSS Modeler Documentation

Documentation in online help form at is available from the Help menu of SPSS Modeler. This

includes documentation for S PSS Modeler, SPSS Modeler Server, and SPSS Modeler Solution

Publisher, as well as the Applications Guide and other supporting materials.

Complete do cumentation for each product (including installation instructions) is available in PDF

format under the \Documentation folder on each product DVD. Installation documents can also be

downloaded from the web at http://www-01.ibm.com/support/docview.wss?uid=swg27023172.

Documentation in both formats is also available from the SPSS Modeler Information Center at

http://publib.boulder.ibm.com/infocenter/spssmodl/v15r0m0/.

SPSS Modeler Professional Documentation

The SPSS Modeler Professional documentation suite (excluding installation instruc tions) is

as follows.

IBM SPSS Modeler User’s Guide.

to build data streams, handle missing values, build CLEM expressions, work w ith projects and

reports, and package streams for deployment to IBM SPSS Collaboration and Deployment

Services, Predictive Applications, or IBM SPSS Modeler Advantage.

IBM SPSS Modeler Source, Process, and Output Nodes.

read, process, and o utput data in different formats. Effective ly this means all nodes other

than modeling nodes.

IBM SPSS Modeler Modeling Nodes.

models. IBM® SPSS® Modeler offers a v ariety of modeling methods taken from machine

learning, artificial intelligence, and sta tistics.

IBM SPSS Modeler Algorithms Guide.

modeling methods used in SPSS Modeler. This guide is available in P DF format only.

IBM SPSS Modeler Applications Guide.

introductions to specific modeling methods and techniques. An on line version of this guide

is also available from the Help menu. For more information, see the topic Application

Examples on p. 5.

IBM SPSS Modeler Scripting and Automation.

scripting, including the properties that can be used to manipulate nodes and streams.

IBM SPSS Modeler Deployment Guide.

scenarios as steps in processing jobs under IBM® SPSS® Collaboration and Deployment

Services Deployment Manager.

IBM SPSS Modeler CLEF Developer’s Guide.

programs such as data processing routines or modeling algorithms as nodes in SPSS M odeler.

IBM SPSS Modeler In-Database Mining Guide.

database to improve performance and extend the range of analytical capabilities through

third-party algorithms.

IBM SPSS Modeler Server Administration and Performance Guide.

configure and administer IBM® SPSS® Modeler Server.

General introduction to using SPSS Modeler, including how

Descriptions of all the nodes used to create data mining

Descriptions of a ll the nodes used to

Descriptions of the mathematical foundations of the

The examples in this guide provide brief, targeted

Information on automating th e system through

Information on running SPSS Modeler streams and

CLEF provides the ability to integrate third-party

Information on how to use the power of your

Information on how to

Page 15

IBM SPSS Modeler Administration Console User Guide.

console user interface for monitoring and configuring SPSS Modeler Server. The console is

implemented a

IBM SPSS Modeler Solution Publisher Guide.

s a plug-in to the Deployment Manager application.

component that enables organizations to publish streams for use outside of the standard

SPSS Modeler environment.

IBM SPSS Modeler CRISP-DM Guide.

for data mining with SPSS Modeler.

IBM SPSS Mod

eler Batch User’s Guide.

mode, including de tails of batch mode execution and command-line arguments. This guide

is available in PDF format only.

SPSS Modeler Premium Documentation

The SPSS Modeler Premium documentation suite (excluding installation instr uctions) is as

follows.

IBM SPSS Modeler Ent

SPSS Modeler, covering repos itory insta llation and co nfiguration, entity analytics nodes,

and administrative tasks.

IBM SPSS Modeler Social Network Analysis User Guide.

analysis with SPSS Mo

SPSS Modeler Text Analytics User’s Guide.

Modeler, covering the text mining nodes, interactive workbench, templates, and other

resources.

IBM SPSS Modeler Text Analytics Administration Console User Guide.

and using the console us er interface for monitoring and configuring IBM® SPSS® Modeler

Server for use with SPSS Modeler Text Analytics . The cons ole is implemented as a plug-in

to the Deployment Manager application.

ity Analytics User Guide.

deler, including group an alysis and diffusion analysis.

5

About IBM SPSS Modeler

Information on installing and using the

SPSS Modeler Solution Publis her is an add-on

Step-by-step guide to using the CRISP-DM methodology

Complete guide to using IBM SPSS Modeler in batch

Information on using entity analytics with

A guide to perform ing social network

Information on using text analytics with SPSS

Information on installing

Application Examples

While the d ata mining tools in SPS S Modeler can help solve a wide variety of business and

organizational pro blems, t

modeling methods and techniques. The data sets used here are much smaller than the enormous

data stores managed by some data miners, but the concepts and methods involved should be

scalable to real-world app

You can access the examples by clicking

Modeler. The data files and sam ple streams are installed in the Demos folder under the product

installation directory.

Database modeling examples.

Guide.

Scripting examples.

See the examples in the IBM SPSS Modeler Scripting and Automation Guide.

he application examples provide brief, targeted introductions to specific

lications.

Application Examples

on the Help menu in SPSS

For more information, see the topic Demos Folder on p. 6.

See the examples in the IBM SPSS Modeler In-Database Mining

Page 16

6

Chapter 1

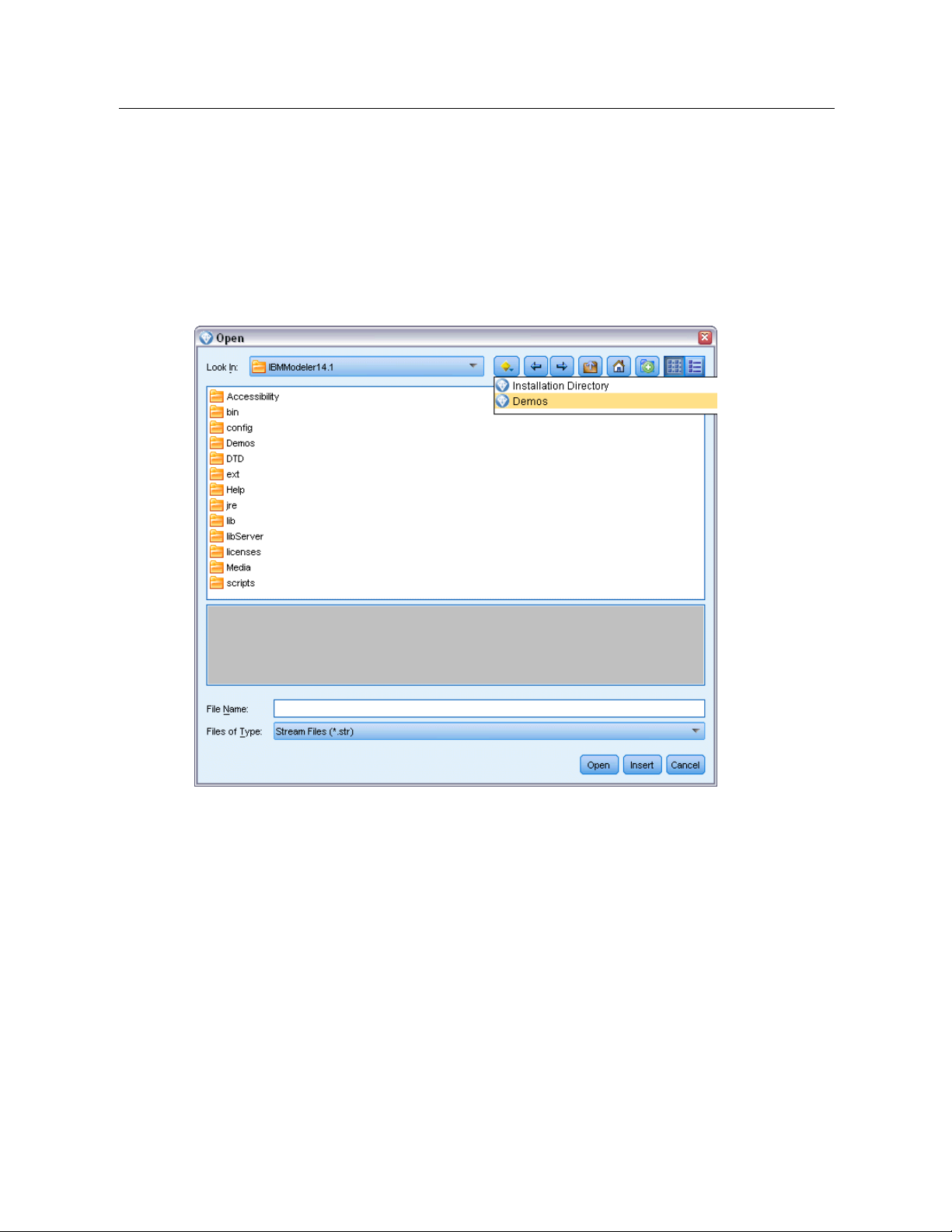

Demos Folder

The data files and sample streams used with the application examples are installed in the Demos

folder under the product installation directory. This folder can also be accessed from the

SPSS Modeler 15

recent directories in the File Open dialog box.

Figure 1-1

Selecting the Demos folder from the list of recently-used directories

IBM

program gr oup on the Windows Start menu, or by clicking Demos on the list of

Page 17

New Features

New and Changed Features in IBM SPSS Modeler 15

From this release onwards, IBM® SPSS® Modeler has the following editions.

IBM® SPSS® Modeler Professional

IBM® SPSS® Modeler Premium

features to those supplied by SPSS Modeler Professional.

The new features for these edit ions are described in the following sections.

New features in IBM SPSS Modeler Professional

The IBM® SPSS® Modeler Professional edition adds the following features in this release.

is the new name for the existing SPSS Modeler product.

is a sepa r ately-licensed product that provides additio nal

Chapter

2

2

22

GLMM modeling node.

that: the target is linearly related to the factors and covariates via a specified link function; the

target can have a non-normal d is tribution; and the observations can be correlated. Generalized

linear mixed models cover a wide variety of models, from simple linear regression to co mplex

multilevel models for non-normal longitudinal data. For more information, see the topic New

Nodes in This Release on p. 10.

Support for maps in the Graphboard node.

number of map types. These include choropleths (wher e regions can be given different colors

or patterns to indicate different values) and point overlay maps (where geospatial points are

overlaid on the map).

IBM® SPSS® Modeler ships with several map file s, but you can use the Map Conversion Utility

to convert your existing map shapefiles for use with the Graphboard Template Chooser.

Netezza Time Series and Generalized Linear nodes.

Netezza® Analytics in - database mining: Time Series and Generalized Linear. For more

information, see the topic New Nodes in This Release on p. 10.

Netezza nodes enabled through Helper Applications.

nodes are now enabled in the same way as the other database modeling nodes.

Generalized linear mixed models (GLMMs) extend the linear model so

The Graphboard node now includes support for a large

Two new nodes are available for IBM®

The Netezza Analytics database modeling

Zooming in and out on the stream view.

from the standard size. This feature is particularly useful for gaining an overall view of a complex

stream, or for minimizing the number of pages needed to pri nt a stream. For more information,

see the topic Changing the icon size for a stream in Chapter 3 on p. 24.

© Copyright IBM Corporation 1994, 2012.

It is now possible to scale the entire stream view up or down

7

Page 18

8

Chapter 2

Default settings for database connections.

and Ora cle dat

abase connections, as we ll as those already supported for IBM DB2 InfoSphere

You can now specify default settings for SQL Server

Warehouse.

Stream properties and optimization redesign.

The Options tab on the Stream Properties dialog box

has been redesigned to group the options into categories. The Optimizatio n options have also

moved from User Options to Stream Properties. For more information, see the topic Setting

Options for Streams in Chapter 5 on p. 54.

Node execution timing.

You can now set an option to display individ ual execution times for

the nodes in a stream. For more information, see the topic Viewing Node Execution Times in

Chapter 5 on p. 67.

You can also set an option (time_ecode_execution_log) in the server configuration file to record

these execution times in the message log.

Stream parameters in SQL queries from Database source node.

You can now include SPSS Modeler

stream parameters in SQL queries that you enter in the Database source node.

Expression Builder supports in-database functions.

If a stream connects to a database through a

Database source node and you use the Expression Builder with a downstream node, you can

include in-database functions from the connected database directly in the expression you are

building. For more in f ormation, see the t opic Selecting Functions in Cha pter 7 on p. 120.

IBM Cognos BI node enhancements.

The Cognos BI source node now supports importing C ognos

list reports as we ll as data, and additionally supports the use of parameters and filters.

For the Cognos BI source a nd export nodes, SPSS Modeler now automatically detects the version

of IBM Cognos BI in use.

Enhancements to Aggregate node.

The Aggregate node n ow supports several new aggregation

modes for aggregate fields: median, count, variance, and first and third quartiles.

Merge node supports conditional merge.

You can now perform input record merges that depend on

satisfying a condition. You can specify the condition directly in the n ode, or build the conditio n

using the Expression Builder.

Enhancements to in-database mining nodes for IBM DB2 InfoSphere Warehouse.

For in-database

mining with IBM DB2 InfoSphere Warehouse, the ISW Clustering node now supports the

Enhanced BIRCH algorithm in addition to demographic and Koho nen clustering. In addition, the

ISW Associ ation node provides a choice of layout for non-transactional (tabular) data.

Table compression for database export.

When exporting to a database, you can now specify table

compression options for SQL Server and Oracle database connections, as well as those already

supported for IBM DB2 InfoSphere Warehouse.

Bulk loading for database export.

Additional help information is available for database bulk loading

using an external loader program.

Page 19

New Features

9

SQL generation enhancements.

timestamp, an

d string data types, in additi on to integer and real. With IBM Netezza databases, the

The Aggregate node now supports SQL generation for date, time,

Sample node supports SQL generation for simple and complex sampling, and the Binn ing node

supports SQL generation for all binning methods except Tiles.

In-database model scoring.

For I B M DB2 for z/OS, IBM Netezza and Teradata da tabases, it is

possible to enable SQL pushback of many of the model nuggets to carry out model scoring (as

opposed to in-database mining) within the database. To do this, you can install a scoring adapter

into the database. When you publish a model for the scoring adapter, the model is enabled to use

the user-defined function (UDF) capabilities of the database to perform the scoring.

A new configuration option, db_udf_enabled in options.cfg, causes the SQL generation option

to generate UDF SQL by default.

New format for database connection in batch mode.

The format for specifying a database connection

in batch mode has changed to a single argument, to be consistent with the way it is specified in

scripting.

Enhancements to SPSS Statistics integration.

are available on the Syntax tab th r ough the

On the Statistics Output node, additional procedures

Select a dialog

button. The Regres sion submenu

now supports Partial Least Squares regression, and there is a new Forecasting submenu with the

following op tions: Spectral Analysis, Sequence Charts, Autocorrelations, and Cross-correlations.

For mor e information, see the SPSS Statistics documentation.

The Syntax tab of the Statistics Output node also has a new option to generate a Statistics File

source node for importing the data that results fr om running a stream containing the node. This is

useful where a procedure writes fields such as scores to the active datas et in addition to displaying

output, as these fields would otherwise not be visible.

Non-root user on UNIX servers.

If you have SPS S Modeler Server installed on a UNIX server, you

can now install, configure, and start and stop SPSS Modeler Server as a non-root user without the

need for a pr ivate password database.

Deployed streams can now access IBM SPSS Collaboration and Deployment Services model

management features.

When a stream is deployed to IBM SPSS Collaboration and Deployment

Services as a str eam, it can now use the same model management features as it could if deployed

as a scenario. These features include evaluation, refresh, score, and champion/challenger.

Improved method of changing ODBC connection for SPSS Modeler stream and scenario job steps.

For

stream and scenario job steps in IBM SPSS Collaboration and Deployment Services, changes to

an ODBC connection and related logon credentials apply to all rela ted job steps. This means that

you no longer have to change the job steps one by one.

Choice of execution branch in deployed streams.

For stream job steps in IBM SPSS Collaboration

and Deployment Services, if the stream contains branches you can now choose one or more

stream branches to execute.

Page 20

10

Chapter 2

New features in IBM SPSS Modeler Premium

IBM® SPSS® Modeler Premium is a separately-licensed product that provides additional features

to those supplied by IBM® SPSS® Modeler Professional. Previously, SPSS Mode ler Premi um

included only IBM® SPSS® Modeler Text Analytics . The full set of SPS S Modeler Premium

features is now as follows.

SPSS Modeler Text Analytics

IBM® SPSS® Modeler Entity Analytics

IBM® SPSS® Modeler Social Network Analysis

SPSS Modeler Text Analytics uses advanc ed linguistic technologies and Natural Language

Processing (NLP) to rapidly process a large variety of unstructured text data, extract and organize

the key concepts, and group these concepts into categories. Extracted concepts and categories

can be combined with existing structured data, such as demographics, and applied to modeling

using the full suite of IBM® SPSS® Modeler data mining tools to yield better and more focused

decisions.

IBM SPSS Modeler Entity Analytics adds a complet ely ne w dimension to SPSS Modeler

predictive analytics. Whereas predictive analytics attempts to predict future behavior from past

data, entity analytics focuses on improving the coherence and consistency of current data by

resolving identity conflicts within the records themselves. An identity can be that of an individual,

an organization, an object, or any other entity for which ambiguity might exist. Identity resolution

can be vital in a number of fields, including customer relationship management, fraud detection,

anti-money laundering, and national and international security.

IBM SPSS Modeler Social Network Analysis transforms information about relationships into

fields that characterize the social behavior of individuals and groups. Using data describing the

relationships underlying social networks, IBM SPSS Modeler Social Network Analysis identifies

social leaders who influence the behavior of others in the network. In addition, you can determine

which people are most affected by other network participants. By combining these results with

other measures, you can create comprehensive profiles of individuals on which to base your

predictive models. Models that inclu de this social information will perform better than models

that do not.

Note: SPSS Modeler Professional must be installed before installing any of the SPSS Modeler

Premium features.

New Nodes in This Release

IBM SPSS Modeler Professional

A generalized linear mixed model (GLMM) extends th e linear model so that the target

can have a non-normal distribution, is linearly related to the factors and covariates via

a specified link function, and so that the observations can be correlated. Generalized

linear mixed models cover a wide variety of models, from simple linear regression to

complex multilevel models for non-normal longitudinal data.

Page 21

The Netezza Time Series node analyzes time series data and can predict future

behavior from past events.

The Netezza Generalized Linear model expands the linear regression model so that

the dependent variable is related to the predictor vari ables by means of a specified

link function. Moreover, the model all ows for the dependent variable to have a

non-normal distribution.

IBM SPSS Modeler Premium

The EA Export node is a terminal node that reads entity data from a data source and

exports the data to a repository for the purpose of entity resolution.

11

New Features

The Entity Analytics(EA) source node reads the resolved entities from th e repository

and passes th

is data to the stream for further processing, such as formatting into

a report.

The Streaming EA node compares new cases against the entity data in the repository.

The SNA Group Analysis node builds a model of a social net w ork based on input

data about the social groupings within the network. This technique identifies links

between the group members, and analyzes the interactions within the groups to

produce key performance indicators (KPIs). The KPIs can be used for purposes such

as churn prediction, anomaly detection, or group leader identification.

The SNA Diffusion Analysis node models the flow of information from a group

member to t heir social environment. A group member is assigned an initial weighting,

which is propagated across the network as a gradually reducing figure. This process

continues until each member of the network has been assigned a weighting relative to

the original group member, according to the amount of information that has reached

them. The individual member scores are then derived directly from these weightings.

In this way, for examp l e, a service provider could identify customers that are at a

higher risk of churn according to their relationship with a recent churner.

Page 22

IBM SPSS Modeler Overview

Getting Started

As a data mining application, IBM® SPSS® Modeler offers a strategic approa ch to finding useful

relationships in large data sets. I n contrast to more traditional statistical methods, you do not

necessarily need to know what you are looking for when you start. You can explore y our data,

fitting different models and investigating different relationships, until you find useful information.

Starting IBM SPSS Modeler

To start the application, cli ck:

Start > [All] Programs > IBM SPSS Modeler15 > IBM SPSS Modeler15

Chapter

3

3

33

The main window is displayed after a few seconds.

Figure 3-1

IBM SPSS Modeler main application window

© Copyright IBM Corporation 1994, 2012.

12

Page 23

Launching from the Command Line

You can use the command li ne of your operating system to launch IBM® SPSS® Modeler

as follows:

E

On a computer where IBM® SPSS® Modeler is installed, open a DOS, or command-prompt,

window.

E

To launch the SPSS Modeler interface in interactive mode, type the modelerclient command

followed by the re quired arguments; for example:

modelerclient -stream report.str -execute

The ava ilable arguments (flags) allow you to connect to a se r ver, load streams, run scripts, or

specify other parameters as needed.

Connecting to IBM SPSS Modeler Server

IBM® SPSS® Modeler can be run as a standalone application, or as a client connected to IBM®

SPSS® Modeler Server directly or to an SPSS Modeler Server or server cluster through the

Coordinator of Processes plug- in from IBM® SPSS® Collaboration and Deployment Services.

The current connection status is displayed at the bottom left of the SPSS Modeler window.

Whenever you want to connect to a server, you can ma nually enter the server name to which

you want to connect or select a name that you have previously defined. How ever, if you have I B M

SPSS Collaboration an d Deployme nt Services, you can search through a list of servers or server

clusters fro m the Server Login dialog box . The ability to browse through the Statistics services

running o n a network is made available through the Coordinator of Processes.

13

IBM SPSS Modeler Overview

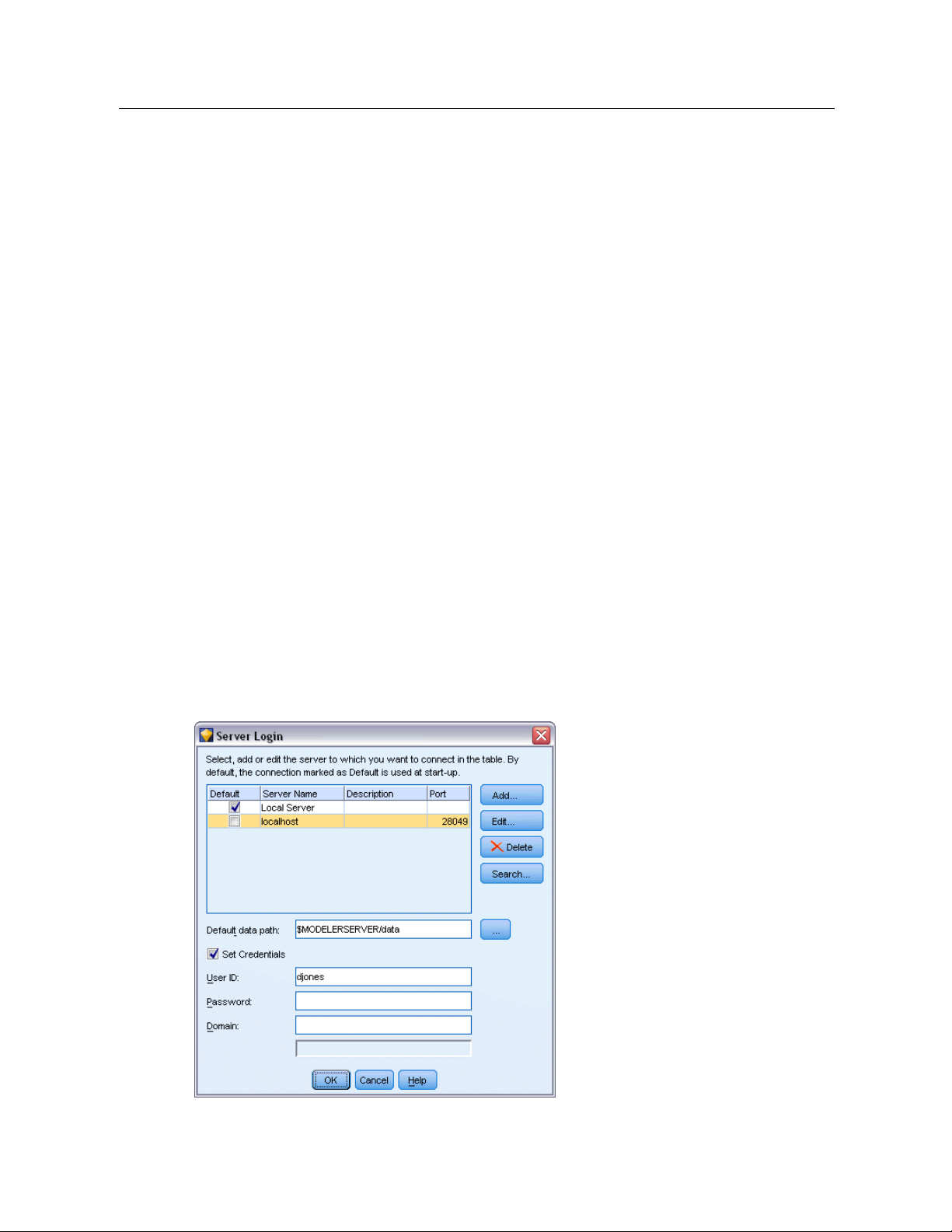

Figure 3-2

Server Login dialog box

Page 24

14

Chapter 3

To Connect to a Server

E

On the Tools menu, click

Server Login

. The Server Login dialog box opens. Alternatively,

double-click the connection status area of the SPSS Modeler window.

E

Using the dialog box, specify options to connect to the local server computer or select a connection

from the table.

Click

AddorEdit

to add or edit a connection . For mor e information, see the topic Adding and

Editing the IBM SPSS Modeler Server Connection on p. 14.

Click

Search

to acces s a server or server cluster in the Coordinator of Processes. For more

information, s ee the topic Searching for Servers in IBM SPSS Collaboration and Deployment

Services on p. 16.

Server table.

This table c ontains the set of defined server connections. The table displays the

default connection, server name, description, and port number. You can manually add a new

connection, as well as select or search for an existing connection. To set a particular server as the

default conne ction, select the check box in the Default column in the table for the connection.

Default data path.

Specify a path used for data on the server com puter. C lick the ellipsis button

to browse to the required location.

Set Credentials.

Leave this box unchecked to enable the single sign-on feature, which attempts

to log you in to the server using your local computer username and password details. If single

sign-on is not possible, or if you check this box to disable single sign-on (for example, to log in to

an administrator account), the following fields are enabled for you to enter your credentials.

User ID.

Password.

Domain.

Enter the user name with which to log on to the server.

Enter the passwo r d associa ted with the specified user name.

Specify the domain used to log on to the server. A domain name is required only when

the server computer is in a different Windows domain th an the client computer.

E

ClickOKto complete the connection.

To Disconnect from a Server

E

On the Tools menu, click

double-click the con

Server Login

. The Server Login dialog box opens. Alternatively,

nection status area of the SPSS Modeler window.

(...)

E

In the dialog box, select the Local Server and clickOK.

Adding and Editing the IBM SPSS Modeler Server Connection

You can manually edit or add a server connection in the Server Login dialog box. By clicking

Add, you can access an empty Add/Edit Server dialog box in which you can enter server

connection details. By selecting an existing connection and clicking Edit in the Server Login

dialog box, the Add/Edit Server dialog box opens with the details for that connection so that

you can make any changes.

Page 25

IBM SPSS Modeler Overview

Note: You cannot edit a server connection that was added f r om IBM® SPSS® Collaboration

and Deploymen

t Services, since the name, port, and other deta ils are defined in IBM SPSS

Collaboration and Deployment Services.

15

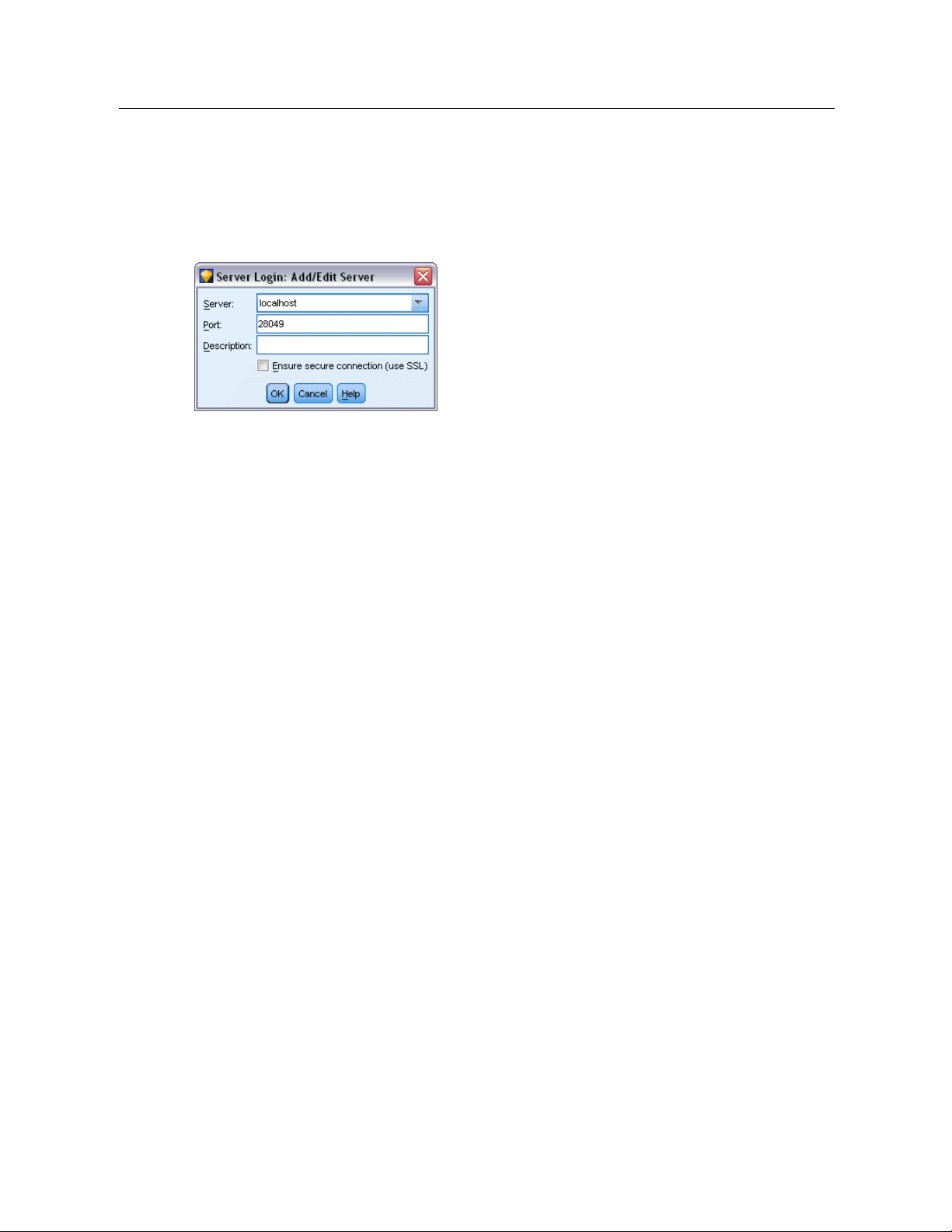

Figure 3-3

Server Login

Add/Edit Server dialog box

To Add Server Connections

E

On the Tools menu, click

E

In this dialog box, click

E

Enter the server connection details and clickOKto save the connection and retu r n to the Server

Server Login

Add

. The Server Login Add/Ed it Server dialog box opens .

. The Server Login dialog box opens.

Login dialog box.

Server.

Specify an ava ilable server or select one from the list. The server computer can be

identified by an alphanumeric name (for example, myserver) or an IP address assigned to the

server computer (for example, 202.123.456.78).

Port.

Give the port number on which the server is listening. If the d efault does not work, ask

your system administrator for the corre ct port number.

Description.

Ensure secure connection (use SSL).

Enter an optional description for this server con ne ction.

Specifies whether an SSL (Secure Sockets Layer)

connection should be used. SSL is a commonly used protocol for securing data sent over a

network. To use this feature, SSL must be enabled on the server hosting IBM® SPSS®

Modeler Server. If necessary, contact your local administrator for details.

To Edit Server Connections

E

On the Tools menu, click

E

In this dialog box, select the connec tion you want to ed it and then click

Server Login

. The Server Login dialog box opens.

Edit

. The Server Login

Add/Edit Server dialog box opens.

E

Change the server connection details and clickOKto save the changes and return to the Server

Login dialog box.

Page 26

16

Chapter 3

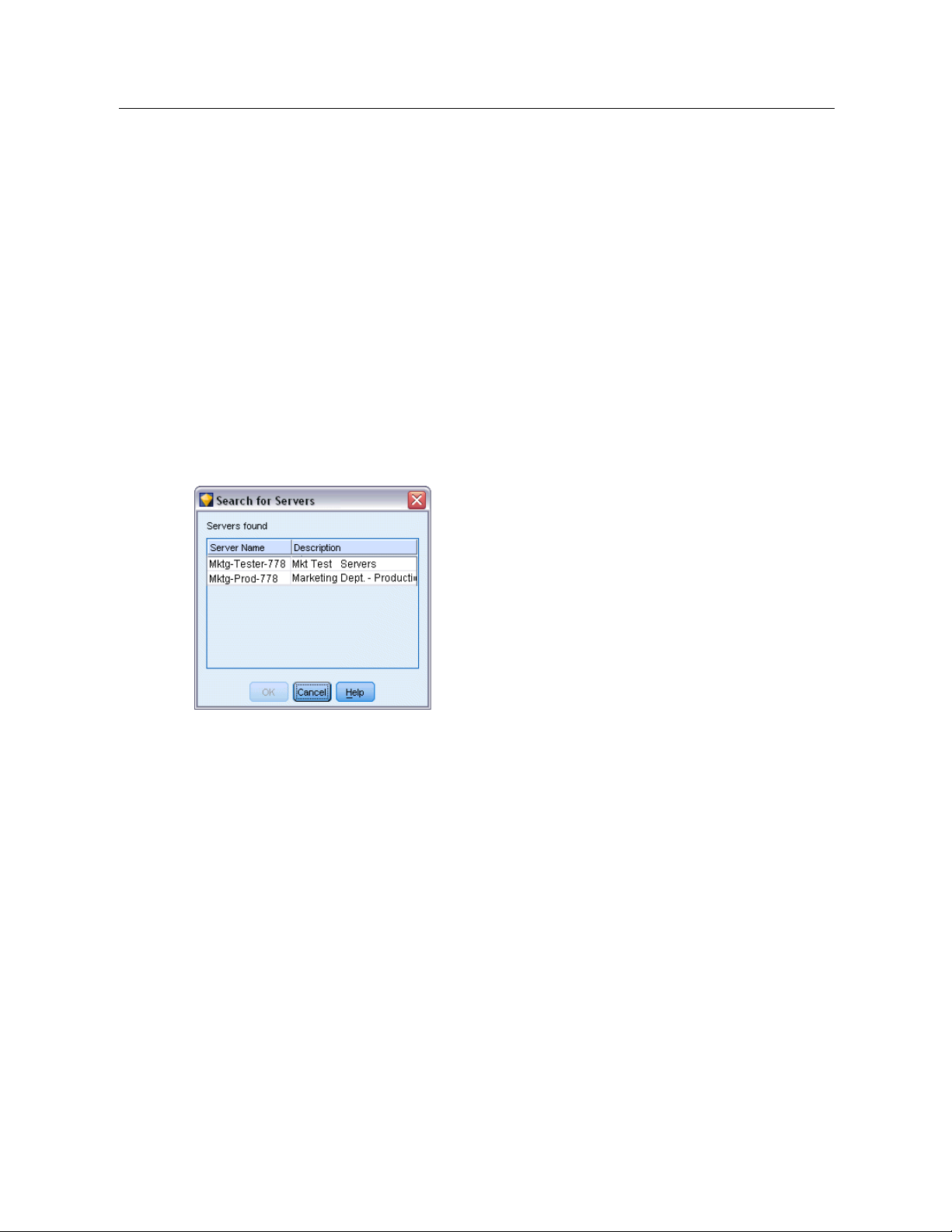

Searching for Servers in IBM SPSS Collaboration and Deployment Services

Instead of entering a server connection manually, you can select a server or server cluster available

on the network through the Coor dinator of Processes, available in IBM® SP SS® Collab oration

and Deployment Services. A server cluster is a group of servers from which the Coordinator of

Processes determines the server best suited to respond to a processing request.

Although you can ma nually add servers in the Ser ver Login dialog box, searching for available

servers lets you connect to servers without requiring that you know the correct server name and

port number. This information is autom atically provided. However, you still need the correct

logon information, such as username, domain, and pass word.

Note: If you do not have access to the Coordinator of Proc esses capability, you can still manually

enter the server name to which you want to connect or select a name that you have previously

defined. For more information, see the topic Adding and Editing the IBM SPSS Modeler Server

Connection on p. 14.

Figure 3-4

Search for Servers dialog box

To search for servers and clusters

E

On the Tools menu, click

E

In this dialog box, click

logged on to IBM SPSS Collaboration and Deployment Services when you attempt to browse

the Coordinator of Processes, you will be prompted to do so. For more information, see th e

topic Connecting to the Repository in Chapter 9 on p. 161.

E

Select the server or server cluster from th e list.

E

ClickOKto close the dialog box and add this connection to the table in the Server Login dialog box.

Changing the Temp Directory

Some operations perfor med by IBM® SPSS® Modeler Server may require temporary files to be

created. By default, IBM® SPSS® Modeler uses the system temporary directory to create temp

files. You can alter the location of the temporary directory using the following steps.

E

Create a new directory called spss and subdirectory called servertemp.

Server Login

Search

to open the Search for Ser ve r s dialog box. If you are not

. The Server Login dialog box opens.

Page 27

E

Edit options.cfg, located in the /config directory of y our SPSS Modeler ins tallation directory. Edit

the temp_dire

E

After doing this, you must restart the SPSS Modeler Server service. You can do this by clicking

the

Services

ctory parameter in this file to read:

tab on your Windows Control Panel. Just stop the service and then start it to activate

the changes you made. Restarting the m achine will also restart the service.

All temp files will now be written to this new directory.

Note: The most common error when you are attempting to do this is to use the wrong type of

slashes. Because of SPSS Modeler’s UNIX history, forward slashes are used.

Starting Multiple IBM SPSS Modeler Sessions

If you need to launch more than one IBM® SPSS® Modeler s ession at a time, you must make

some changes to your IBM® SPSS® Modeler and Windows settings. For example, you may

need to do th is if you have two separate se r ver license s and want to run two streams against two

different servers from the same client machine.

To enable multiple SPSS Modeler sessions:

IBM SPSS Modeler Overview

temp_directory, "C:/spss/servertemp"

17

.

E

Click:

Start > [All] Programs > IBM SPSS Modeler15

E

On the IBM SPSS Modeler15 shortcut (the one with the icon), right-click and select

E

In the

Target

text box , add

E

In Windows Explorer, select:

Tools > Folder Options...

E

On the File Types tab, select the SPSS Modeler Stream option and click

E

In the Edit File Type dialog box, select

E

In the

Application used to perform action

-noshare

to the end of the string.

Open with SPSS Modeler

text box, add

IBM SPSS Modeler Interface at a Glance

At each point in the data mining proce ss, IBM® SPSS® Modeler’s easy-to-use interface invites

your specific business e xpertise. Modeling algorithms, such as prediction, classification,

segmentation, and association detection, ensure powerful and accurate models. Model results

can easily be deployed a nd read into databases, IBM® SPSS® Statistics, and a wide variety

of other applications.

-noshare

and click

before the

Advanced

Edit

.

-stream

Properties

.

argument.

.

Working with SPSS Modeler is a three-step process of working with data.

First, you read data into SPSS Modeler.

Next, you run the data through a series of manipulations.

Finally, you send the data to a destination.

Page 28

18

Chapter 3

This sequ ence of operations is known as a data stream because the data flows record by record

from the sourc

e through each manipulation and, finally, to the destination—either a model or

type of data output.

Figure 3-5

A simple stream

IBM SPSS Modeler Stream Canvas

The stream canvas is the largest area of the IBM® SPSS® Modeler window and is where you will

build and manipulate data streams.

Streams are created by drawing diagrams of data operations relevant t

main canvas in the interface. Each operation is represented by an icon or node, and the nodes are

linked together in a stream representing the flow of data through each operation.

You can work with multiple streams at one time in SPSS Modeler, either i

canvas or by opening a new stream canvas. During a sessi on, streams are stored in the Streams

manager, at the upper right of the SPSS Modeler window.

o your business on the

n the same stream

Nodes Palette

Most of the data and modeling tools in IBM® SPSS® Modeler reside in the Nodes Palette, acr oss

the b ottom o f the window below the stream canvas.

For example, the Record Ops palette tab contains nodes that you can use to perform operations

on the data records, such as selecting, merging, and appending.

To add nodes to the canvas, double-click icons from the Nodes Palette or drag and drop them

onto the canvas. You then connect them to create a stream, representing the flow of data.

Figure 3-6

Record Ops tab on the nodes palette

Each palette tab contains a collection of related nodes used for different p hases of stream

operations, such as :

Sources.

Record Ops.

appending.

Nodes bring data into SPSS Modeler.

Nodes perform operations on data records, such as selecting, merging, an d

Page 29

IBM SPSS Modeler Overview

Field Ops.

Nodes perform operations on data fields, such as filtering, deriving new fields, and

determining the measurement level for given fields.

Graphs.

Nodes graphically display data before and after modeling. Graphs include plots,

histograms, web nodes, and evaluation charts.

Modeling.

des use the modeling algorithms available in SPSS Modeler, such as neural nets ,

No

decision trees, clustering algorithms, and data sequencing.

Database Modeling.

Nodes use the modeling algorithms available in Microsoft SQL Server,

IBM DB2, and Oracle database s.

Output.

Nodes produce a variety of output for d ata, charts, and model results that can be

viewed in SPSS Modeler.

Export.

es prod uce a variety of output that can be viewed in external applications, such

Nod

as IBM® S PSS® Data Collection or Excel.

SPSS Statistics.

Nodes import data from, or export data to, IBM® SPSS® Stat is tics, as well as

running SPSS Statistics procedures.

As you become more familiar with SPSS Modeler, you can customize the palette contents for

your own use. For more information, see the topic Customizing the Nodes Palette in Chapter 12

on p. 223.

Located below the Nodes Palette, a report pane provides feedback on the progress of various

operations, such as when data is being read into t he data stream. Also located below the Nodes

Palette, a status pane provides info r mation on what the application is currently doing, as well as

indications of when user feedback is required.

19

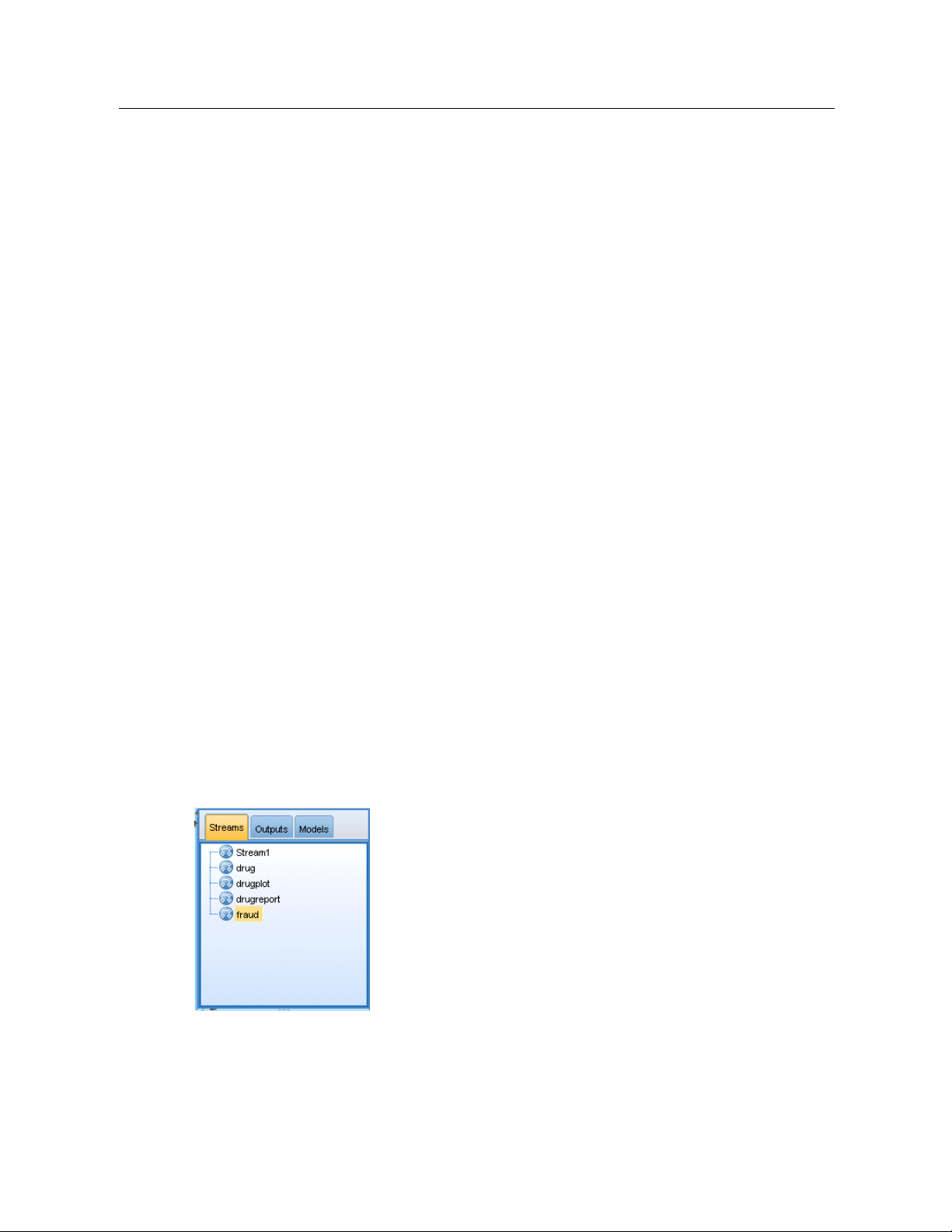

IBM SPSS Modeler Managers

At the top right of the window is the managers pane . This has three tabs, which are used to

manage streams, output and models.

You can use the Streams tab to open, rename, save, and dele te the streams created in a session.

Figure 3-7

Streams tab

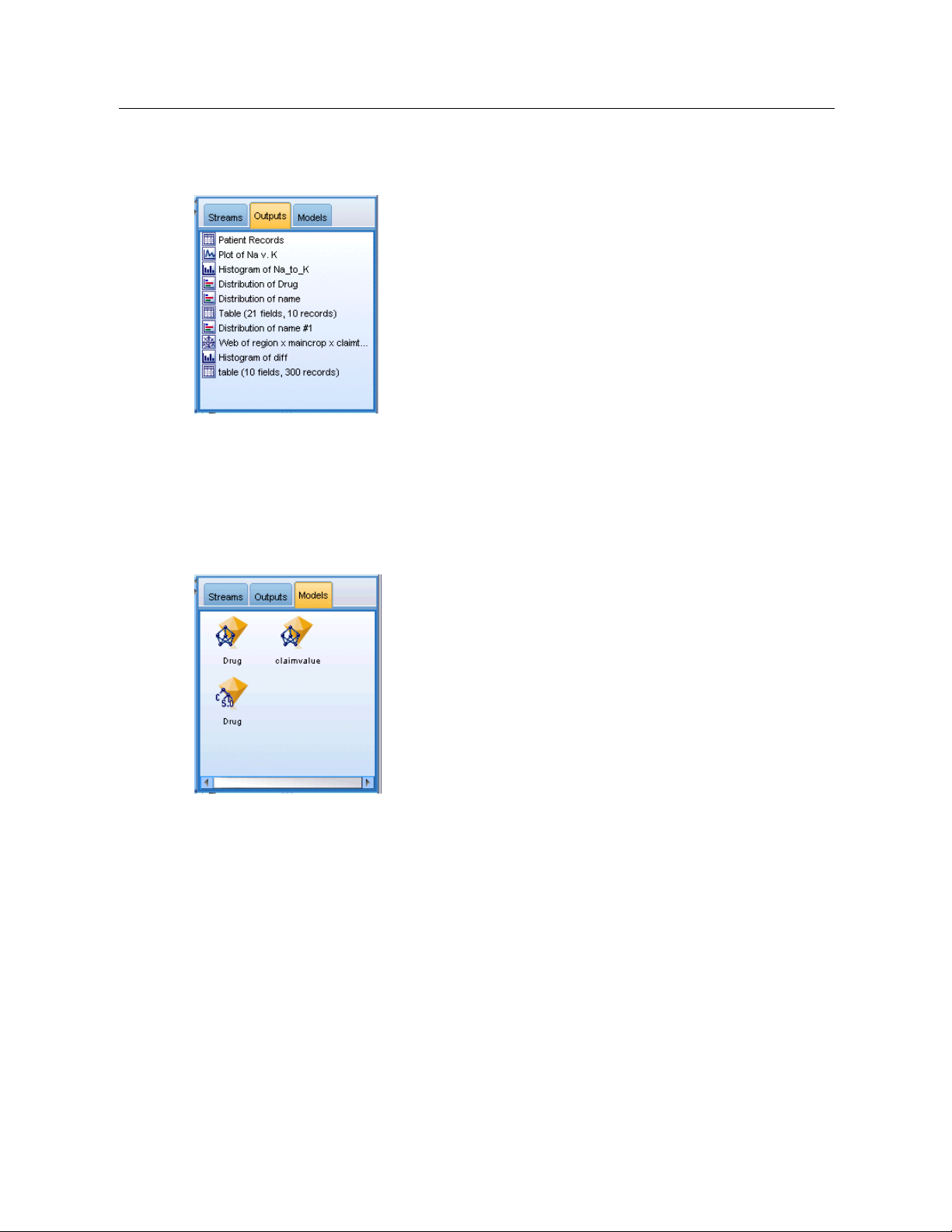

The Outputs tab contains a variety of files , such as graphs and tables, produced by stream

operations in IBM® SPSS® Modeler. You can display, save, rename, and close the tables, graphs,

and reports listed on this tab.

Page 30

20

Chapter 3

Figure 3-8

Outputs tab

The Models tab is the most powerful of the manager tabs. This tab contains all model nuggets,

which contain the models generated in SPSS Modeler, for the current session. These models can

be browsed directly from the Models tab or added to the stream in the c anvas.

Figure 3-9

Models tab containing model nuggets

IBM SPSS Modeler Projects

On the lower right side of the window is the project pane, used to create and manage data mining

projects (groups of files related to a data mining task). There are two ways to view projects you

in IBM® SPSS® Modeler—in the Classes view and the CRISP-DM view.

create

The CRISP-DM tab provides a way to organize projects according to the Cross-Industry

Standard Process for Data Mining, an industry-proven, nonproprietary methodology. For both

enced and first-time data miners, using the CRISP-DM tool will help you to better o rganize

experi

and communicate your efforts.

Page 31

IBM SPSS Modeler Overview

Figure 3-10

CRISP-DM view

The Classes tab provides a way to organize your work in SPSS Modeler categorically—by the

types of objects you create. This view is useful when taking inventory of data, streams, and

models.

Figure 3-11

Classes view

21

IBM SPSS Modeler Toolbar

At the top of the IBM® SPSS® Modeler window, you will find a toolbar of icons that provides a

number of useful functions. Following a r e the toolbar buttons and their functions.

Create new stream Open stream

Save stream

Print current stream

Page 32

22

Chapter 3

Cut & move to clipboard Copy to clipboard

Paste selection Undo last action

Redo Search for nodes

Edit stream properties Preview SQL generation

Run current stream

Stop stream (Active only while

stream is running)

Zoom in (SuperNodes only) Zoom out (SuperNodes only)

No markup in stream

Hide stream markup (if any) Show hidden stream markup

Open stream in IBM® SPSS®

Modeler Advantage

Run stream selection

Add SuperNode

Insert comment

Stream markup consists of stream comments, model links, and scoring branch indications.

For mor e information on stream comments , see Adding Comments and Annotations to Nodes

and Streams on p. 78.

For more i

nformation on scoring branch indications, see The Scoring Branch on p. 188.

Model links are described in the IBM SPSS Modeling Nodes guide.

Page 33

Customizing the Toolbar

You can change various aspects of the toolbar, such as:

Whether it is displayed

Whether the icons have tooltips available

Whether it uses large or small icons

To turn the toolbar display on and off:

E

On the main menu, click:

View > Toolbar > Display

To change the tooltip or icon size settings:

E

On the main menu, click:

View > Toolbar > Customize

23

IBM SPSS Modeler Overview

Click

Show Too

lTipsorLarge Buttons

as required.

Customizing the IBM SPSS Modeler Window

Using the dividers between vario us portions of the IBM® SPSS® Modeler interface, you can

resize or close tools to meet your preferen ces. For example, if you are working with a large

stream, you can use the small arrows located on each divider to close the nodes palette, managers

pane, and project pane. This maximizes the stream canvas, providing enough work space for

large or multiple streams.

Alternativ

ely, on the View menu, click

these items on or off.

Nodes Palette,Managers

, or

Project

to turn the dis play of

Page 34

24

Chapter 3

Figure 3-12

Maximized stream canvas

As an alternative to closing the nodes palette, and the managers and project panes, you can use the