Hitachi Dynamic Link Manager Software

User's Guide (for Linux(R))

3000-3-F04-60(E)

Relevant program products

Hitachi Dynamic Link Manager version 6.6.2

For details about applicable OSs, see the Release Notes.

Trademarks

AIX is a trademark of International Business Machines Corporation in the United States, other countries, or both.

AMD, AMD Opteron, and combinations thereof, are trademarks of Advanced Micro Devices, Inc.

Brocade is a trademark or a registered trademark of Brocade Communications Systems, Inc. in the United States and/or in other countries.

Emulex is a registered trademark of Emulex Corporation.

HP-UX is a product name of Hewlett-Packard Company.

HP StorageWorks is a trademark of Hewlett-Packard Company.

Intel Xeon is a trademark of Intel Corporation in the United States and other countries.

Itanium is a trademark of Intel Corporation in the United States and other countries.

Java is a registered trademark of Oracle and/or its affiliates.

JDK is either a registered trademark or a trademark of Oracle and/or its affiliates.

Linux(R) is the registered trademark of Linus Torvalds in the U.S. and other countries.

Microsoft is either a registered trademark or a trademark of Microsoft Corporation in the United States and/or other countries.

Oracle and Oracle9i are either registered trademarks or trademarks of Oracle and/or its affiliates.

Oracle and Oracle Database 10g are either registered trademarks or trademarks of Oracle and/or its affiliates.

Oracle and Oracle Database 11g are either registered trademarks or trademarks of Oracle and/or its affiliates.

Pentium is a trademark of Intel Corporation in the United States and other countries.

QLogic is a registered trademark of QLogic Corporation.

Red Hat is a trademark or a registered trademark of Red Hat Inc. in the United States and other countries.

Solaris is either a registered trademark or a trademark of Oracle and/or its affiliates.

SteelEye Technology, SteelEye and LifeKeeper are registered trademarks of SteelEye Technology, Inc.

Sun Microsystems is either a registered trademark or a trademark of Oracle and/or its affiliates.

SUSE is a registered trademark of Novell, Inc. in the United States and other countries.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Veritas is a trademark or registered trademark of Symantec Corporation in the U.S. and other countries.

Windows is either a registered trademark or a trademark of Microsoft Corporation in the United States and/or other countries.

Throughout this document Hitachi has attempted to distinguish trademarks from descriptive terms by writing the name with the capitalization used by the manufacturer, or by writing the name with initial capital letters. Hitachi cannot attest to the accuracy of this information. Use of a trademark in this document should not be regarded as affecting the validity of the trademark.

Restrictions

Information in this document is subject to change without notice and does not represent a commitment on the part of Hitachi. The software described in this manual is furnished according to a license agreement with Hitachi. The license agreement contains all of the terms and conditions governing your use of the software and documentation, including all warranty rights, limitations of liability, and disclaimers of warranty.

Material contained in this document may describe Hitachi products or features that are not available in your country.

No part of this material may be reproduced in any form or by any means without permission in writing from the publisher.

Edition history

3000-3-F04-60(E): August 2011

Copyright

All Rights Reserved. Copyright (C) 2008, 2011, Hitachi, Ltd.

Summary of Amendments

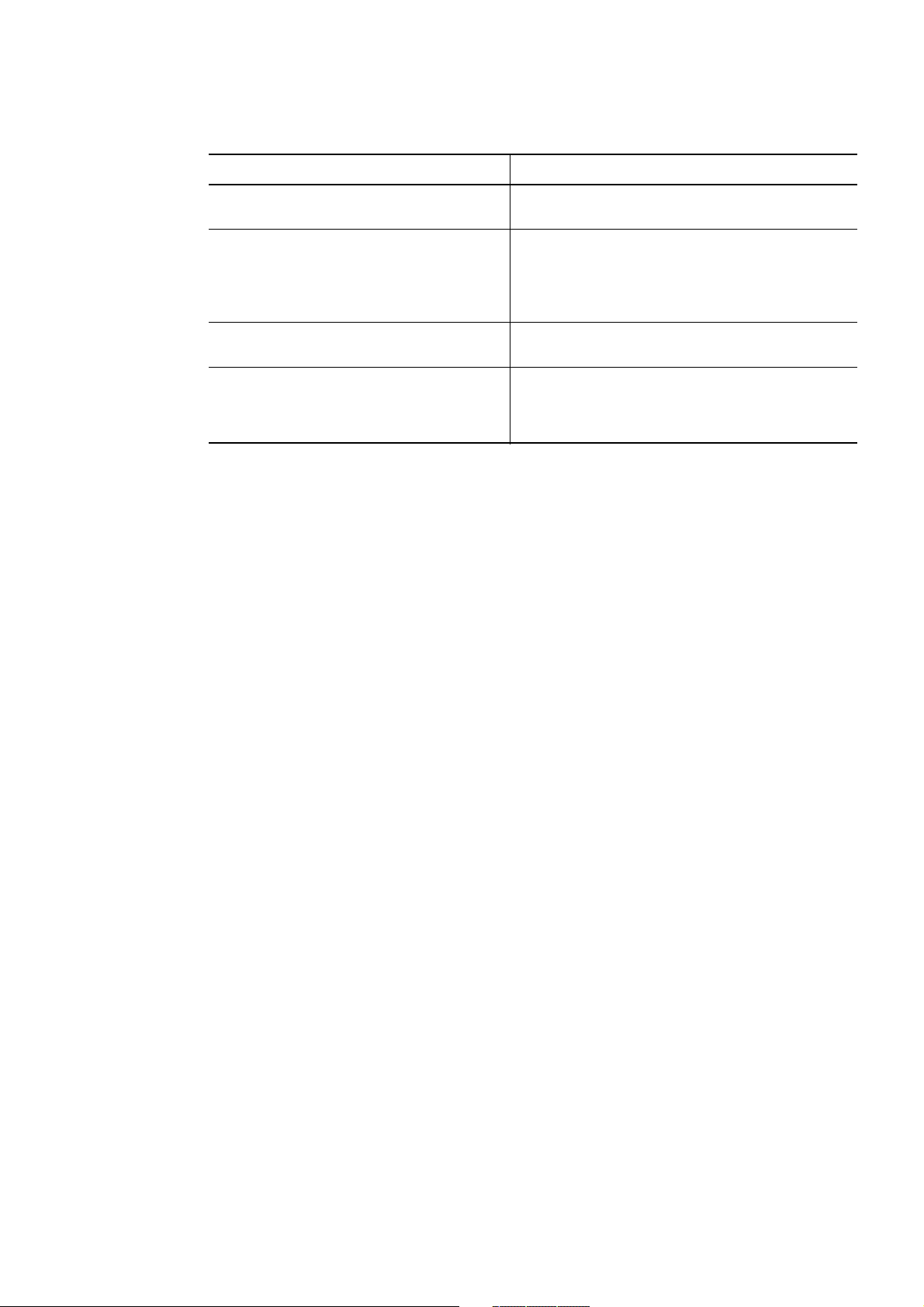

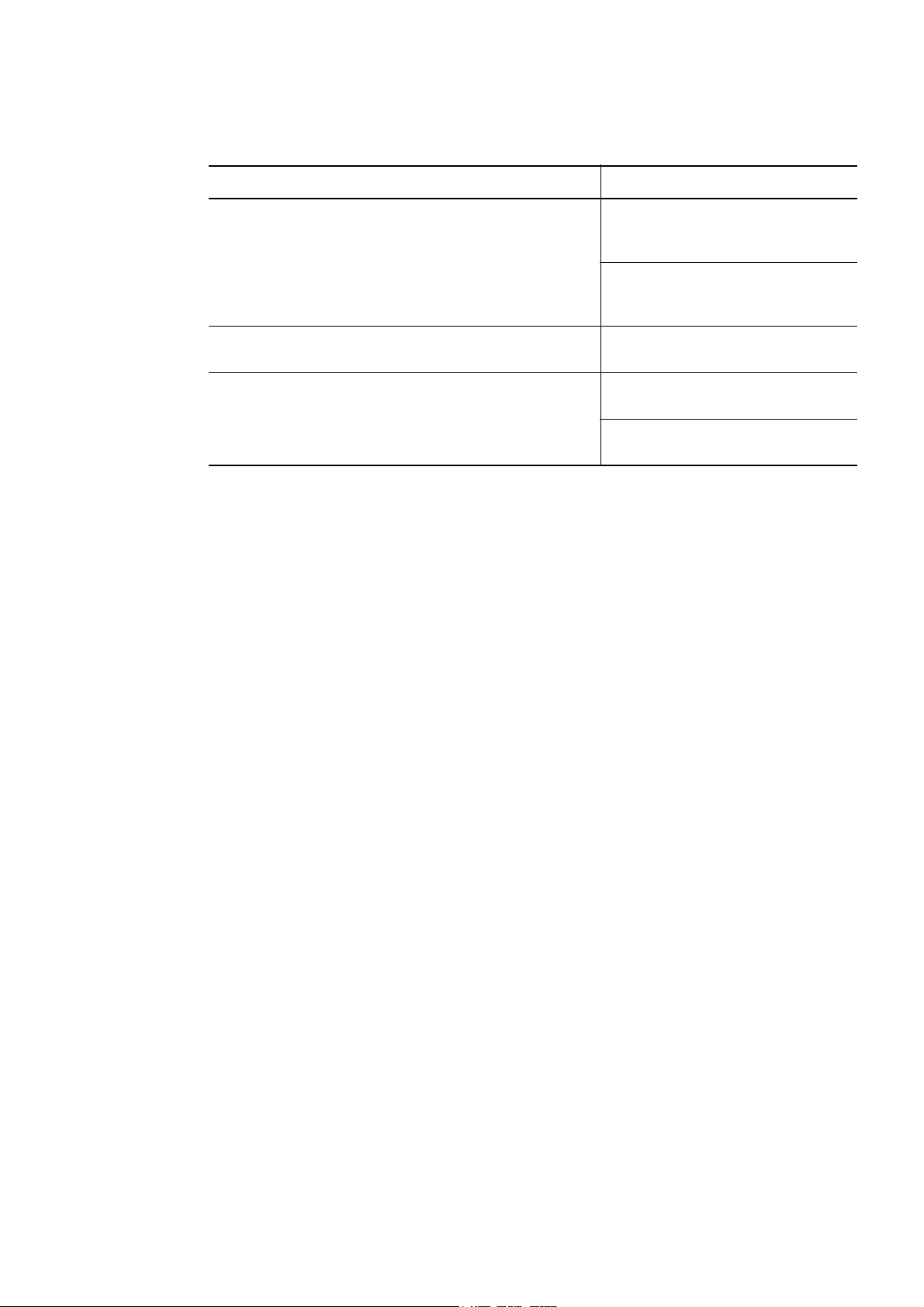

The following table lists changes in this manual (3000-3-F04-60(E)) and product changes related to this manual.

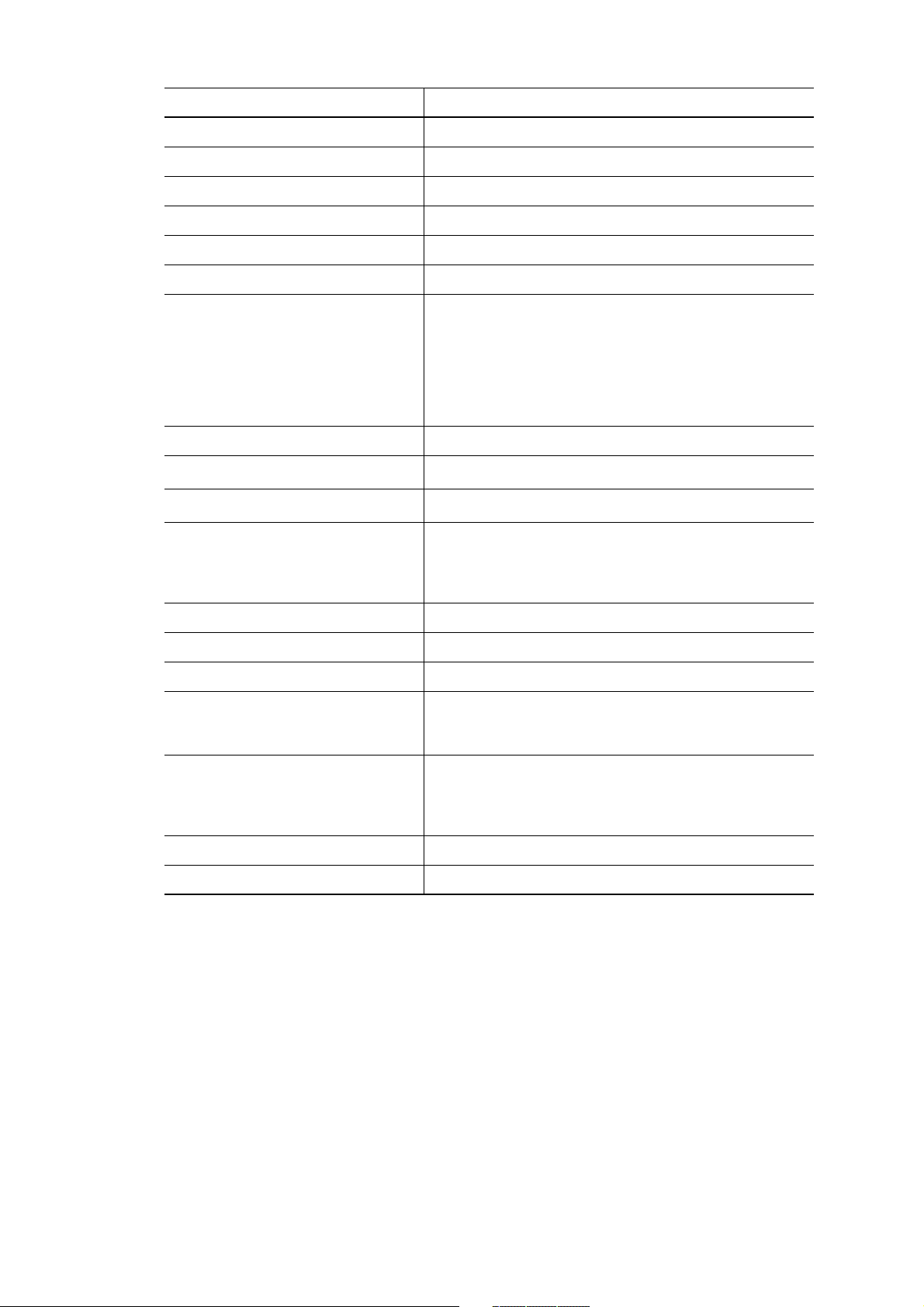

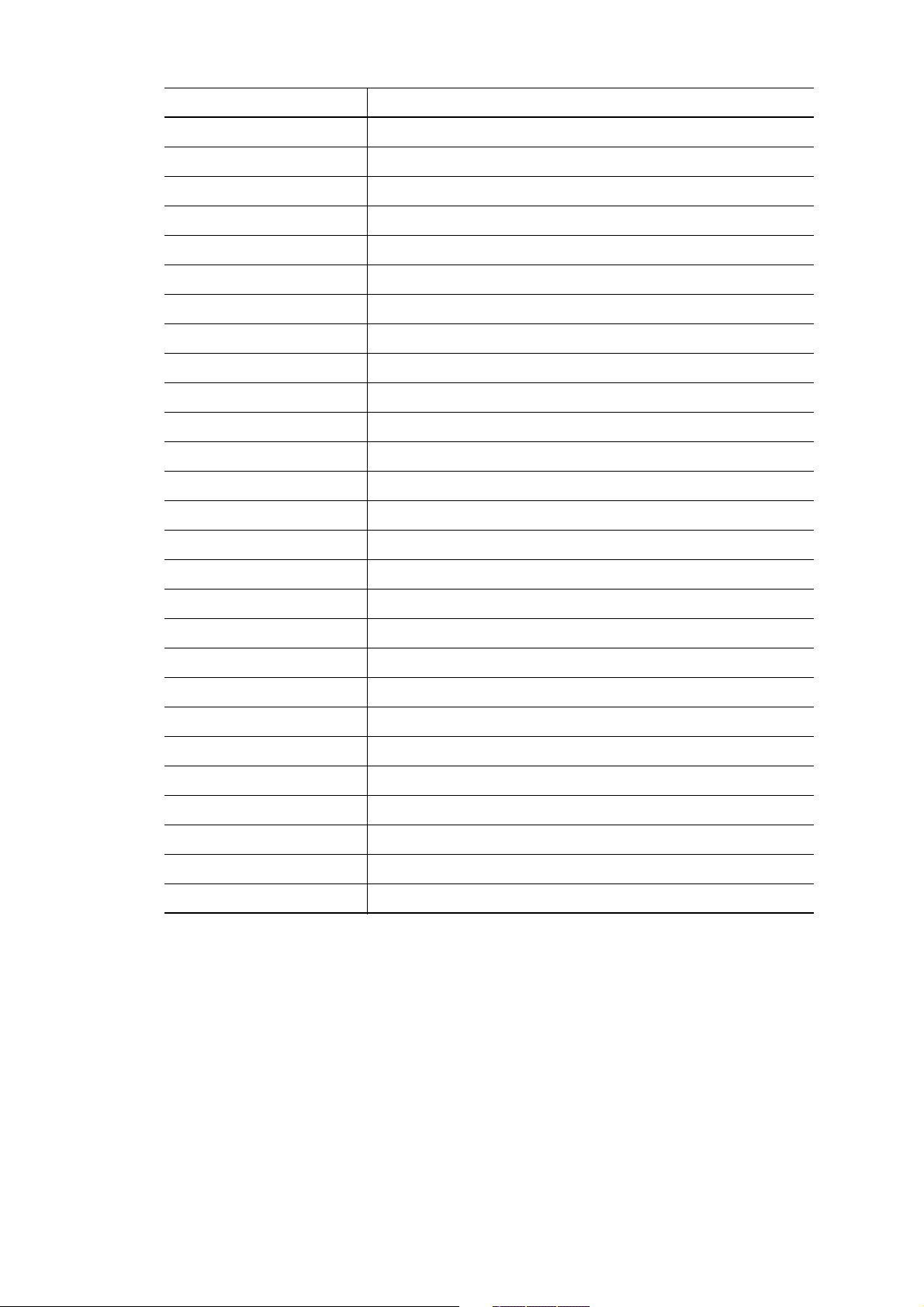

Changes | Location in this manual

Systems that use an IP-SAN are now supported. | 2.2, 2.2.2, 3.1.1, 3.1.2, 3.1.4, 3.1.5, 3.6.3, 3.6.6, 3.22.1, 3.22.2, 4.6.4, 6.7.2, 7.2.3, 7.10.3, Appendix D

The following messages have been added: KAPL04053-W, KAPL05712-I, KAPL10948-W, KAPL10949-E, KAPL15001-I, KAPL15002-E, KAPL15064-I, KAPL15065-E | 2.12.1, 8.4, 8.5, 8.10, 8.14

Red Hat Enterprise Linux AS 4.9 and Red Hat Enterprise Linux ES 4.9 are now supported. | 3.1.1, 3.1.3

It is now possible to specify the number of times the same path can be used for I/O operations when the Round Robin (rr), Least I/Os (lio), or Least Blocks (lbk) algorithm is used for load balancing. | 4.3.7, 6.6.1, 6.6.2, 6.7.1, 6.7.2, 7.2.3, 7.10.3

In addition to the above changes, minor editorial corrections have been made.

Preface

This manual describes the functions and use of the following program product:

• Hitachi Dynamic Link Manager

Intended Readers

This manual is intended for system administrators who use Hitachi Dynamic Link Manager (HDLM) to operate and manage storage systems. The readers of this manual must have a basic knowledge of the following areas:

• Linux and its management functionality

• Storage system management functionality

• Cluster software functionality

• Volume management software functionality

Organization of This Manual

This manual is organized as follows:

1. Overview of HDLM

Chapter 1 gives an overview of HDLM, and describes its features.

2. HDLM Functions

Chapter 2 describes the management targets and system configuration of HDLM, and the basic HDLM terms and functions.

3. Creating an HDLM Environment

Chapter 3 describes the procedures for building an HDLM environment (including installing and setting up HDLM), and describes how to cancel the settings.

4. HDLM Operation

Chapter 4 describes how to use HDLM by using the HDLM commands, and how to manually start and stop the HDLM manager. This chapter also describes how to configure an environment to properly operate HDLM, such as changing the HDLM management-target devices that connect paths or replacing the hardware that makes up a path.

5. Troubleshooting

Chapter 5 explains how to troubleshoot a path error, an HDLM failure, or any other problem that you might encounter.

6. Command Reference

Chapter 6 describes all the HDLM commands.

7. Utility Reference

Chapter 7 describes the HDLM utilities.

8. Messages

Chapter 8 provides information about all the messages that HDLM can output. It lists and explains each message and shows the action to be taken in response to it.

A. Notes on Linux Commands and Files

Appendix A gives notes on Linux commands and files.

B. Troubleshooting Products That Use the Weak-Modules Script

Appendix B explains how to deal with errors that are caused by installing or uninstalling products that use the weak-modules script.

C. Functional Differences Between Versions of HDLM

Appendix C explains the differences in functionality between HDLM versions.

D. Glossary

This glossary explains terms used in this manual.

Related Publications

Manuals related to this manual are listed below. See these manuals when necessary:

• Hitachi Global Link Manager Software Installation and Configuration Guide

• Hitachi Global Link Manager Software Messages

• Hitachi Adaptable Modular Storage Series User's Guide

• Hitachi Simple Modular Storage Series User's Guide

• Hitachi USP Series User's Guide

• Hitachi Workgroup Modular Storage Series User's Guide

• Thunder9580V Series Disk Array Subsystem User's Guide

• Universal Storage Platform V Series User's Guide

• Universal Storage Platform VM Series User's Guide

• Virtual Storage Platform Series User's Guide

• HITACHI Gigabit Fibre Channel Board User's Guide

• ServerConductor/DeploymentManager User's Guide

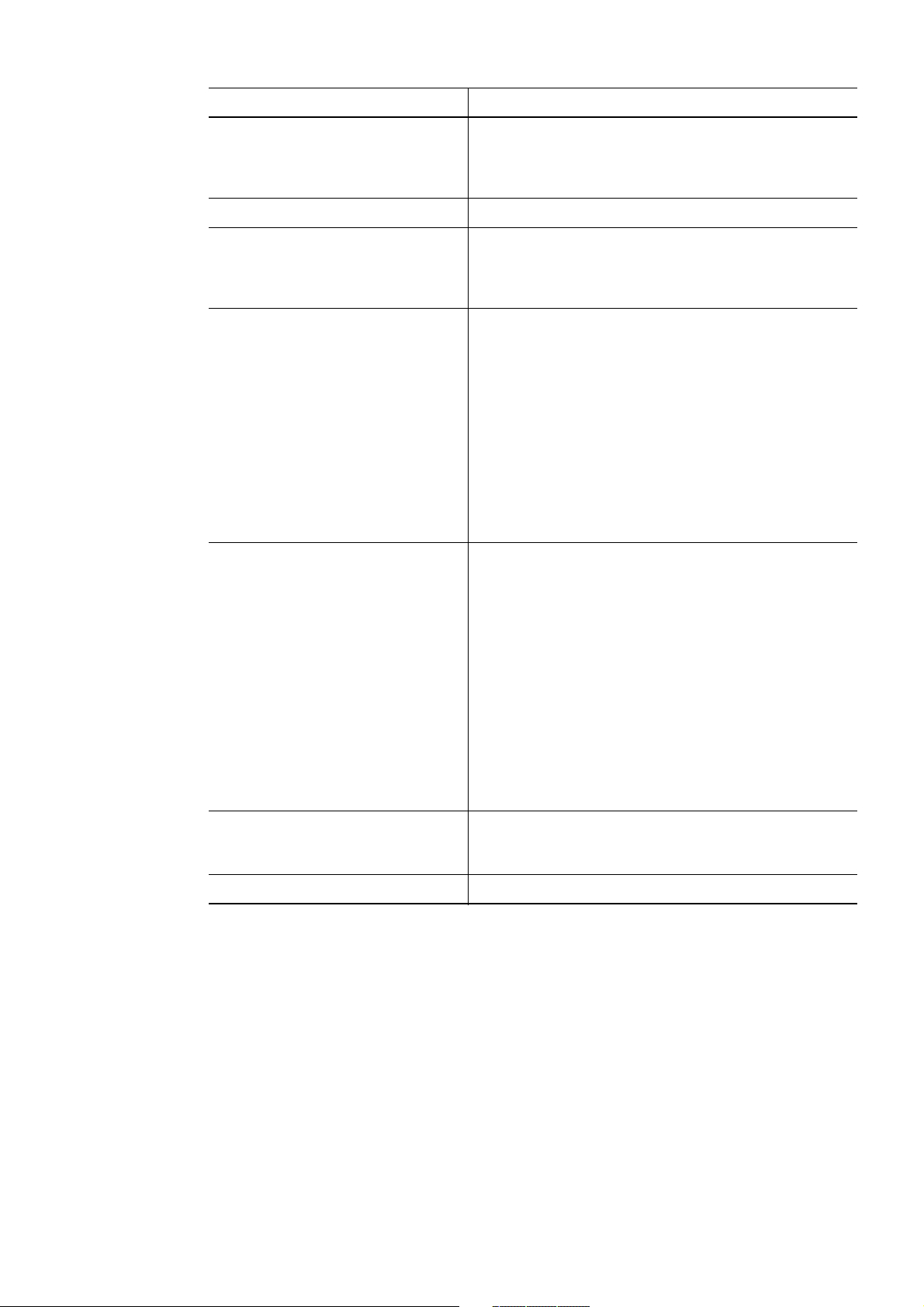

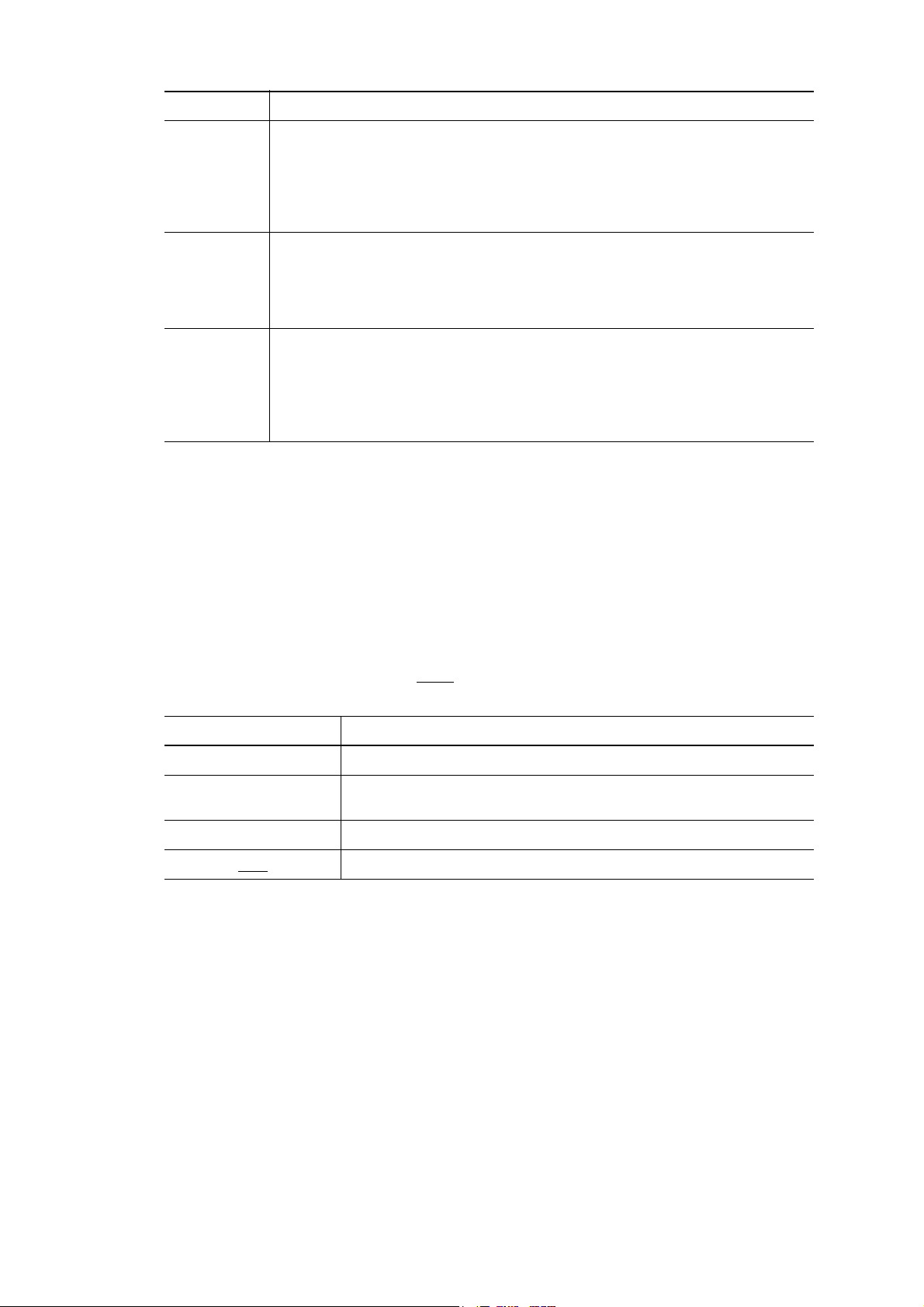

Conventions: Abbreviations

This manual uses the following abbreviations for product names.

Abbreviation Full name or meaning

Device Manager Agent Device Manager Agent included in Hitachi Device Manager

Global Link Manager Hitachi Global Link Manager

HDLM Hitachi Dynamic Link Manager

Hitachi AMS A generic term for:

• Hitachi Adaptable Modular Storage 1000

• Hitachi Adaptable Modular Storage 500

• Hitachi Adaptable Modular Storage 200

Hitachi AMS/WMS series A generic term for:

• Hitachi Adaptable Modular Storage 1000

• Hitachi Adaptable Modular Storage 500

• Hitachi Adaptable Modular Storage 200

• Hitachi Workgroup Modular Storage series

Hitachi AMS2000/AMS/WMS/SMS series A generic term for:

• Hitachi Adaptable Modular Storage 2000 series

• Hitachi Adaptable Modular Storage 1000

• Hitachi Adaptable Modular Storage 500

• Hitachi Adaptable Modular Storage 200

• Hitachi Workgroup Modular Storage series

• Hitachi Simple Modular Storage series

Hitachi AMS2000 series Hitachi Adaptable Modular Storage 2000 series

Hitachi NSC55 Hitachi Network Storage Controller NSC55

Hitachi SMS Hitachi Simple Modular Storage series

Hitachi USP A generic term for:

• Hitachi NSC55

• Hitachi Universal Storage Platform 100

• Hitachi Universal Storage Platform 600

• Hitachi Universal Storage Platform 1100

• HP XP10000

• HP XP12000

• SVS

Hitachi WMS Hitachi Workgroup Modular Storage series

HP XP128 HP StorageWorks XP128 Disk Array

HP XP1024 HP StorageWorks XP1024 Disk Array

HP XP10000 HP StorageWorks XP10000 Disk Array

HP XP12000 HP StorageWorks XP12000 Disk Array

HP XP20000 HP StorageWorks XP20000 Disk Array

HP XP24000 HP StorageWorks XP24000 Disk Array

HP XP series A generic term for:

• HP XP128

• HP XP1024

• HP XP10000

• HP XP12000

• HP XP20000

• HP XP24000

HVM Hitachi Virtualization Manager

JDK Java(TM) 2 SDK, Standard Edition

JRE Java(TM) 2 Runtime Environment, Standard Edition

Lightning 9900V series A generic term for:

• Lightning 9900V series

• HP XP128

• HP XP1024

Linux Linux(R)

LUKS Linux Unified Key Setup

Oracle9i RAC Oracle9i Real Application Clusters

Oracle Enterprise Linux 4 A generic term for:

• Oracle Enterprise Linux 4 Update 5

• Oracle Enterprise Linux 4 Update 6

Oracle Enterprise Linux 5 A generic term for:

• Oracle Enterprise Linux 5 Update 1

• Oracle Enterprise Linux 5 Update 4

• Oracle Enterprise Linux 5 Update 5

Oracle RAC 10g Oracle Real Application Clusters 10g

Oracle RAC 11g Oracle Real Application Clusters 11g

iv

Page 9

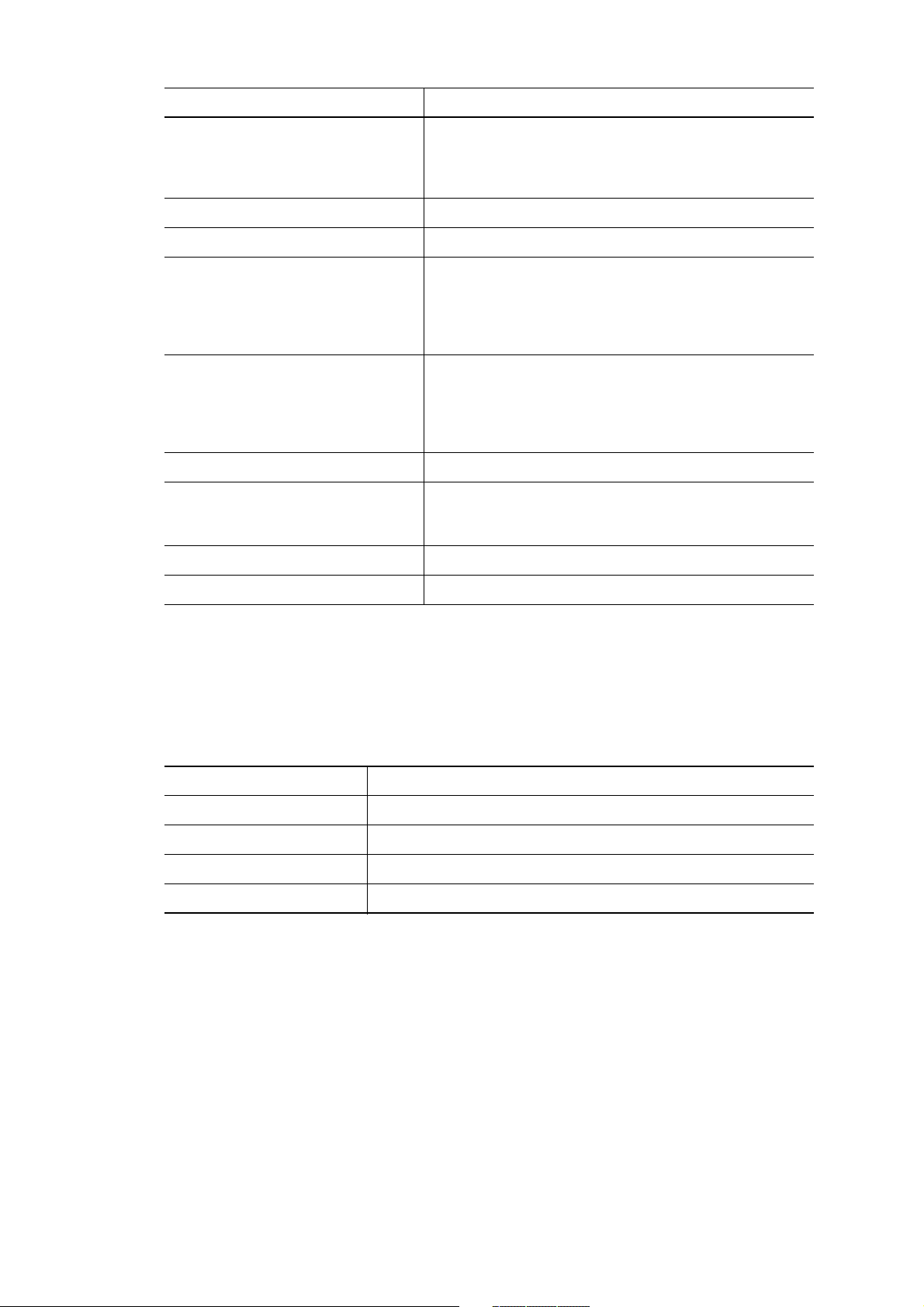

Abbreviation Full name or meaning

Oracle RAC A generic term for:

• Oracle9i Real Application Clusters

• Oracle Real Application Clusters 10g

• Oracle Real Application Clusters 11g

P9500 HP StorageWorks P9500 Disk Array

Red Hat Enterprise Linux A generic term for:

• Red Hat Enterprise Linux(R) AS4/ES4

• Red Hat Enterprise Linux(R) 5

• Red Hat Enterprise Linux(R) 6

Red Hat Enterprise Linux AS4/ES4 A generic term for:

• Red Hat Enterprise Linux(R) AS 4

• Red Hat Enterprise Linux(R) AS 4.5

• Red Hat Enterprise Linux(R) AS 4.6

• Red Hat Enterprise Linux(R) AS 4.7

• Red Hat Enterprise Linux(R) AS 4.8

• Red Hat Enterprise Linux(R) AS 4.9

• Red Hat Enterprise Linux(R) ES 4

• Red Hat Enterprise Linux(R) ES 4.5

• Red Hat Enterprise Linux(R) ES 4.6

• Red Hat Enterprise Linux(R) ES 4.7

• Red Hat Enterprise Linux(R) ES 4.8

• Red Hat Enterprise Linux(R) ES 4.9

Red Hat Enterprise Linux 5 A generic term for:

• Red Hat Enterprise Linux(R) 5

• Red Hat Enterprise Linux(R) 5 Advanced Platform

• Red Hat Enterprise Linux(R) 5.1

• Red Hat Enterprise Linux(R) 5.1 Advanced Platform

• Red Hat Enterprise Linux(R) 5.2

• Red Hat Enterprise Linux(R) 5.2 Advanced Platform

• Red Hat Enterprise Linux(R) 5.3

• Red Hat Enterprise Linux(R) 5.3 Advanced Platform

• Red Hat Enterprise Linux(R) 5.4

• Red Hat Enterprise Linux(R) 5.4 Advanced Platform

• Red Hat Enterprise Linux(R) 5.5

• Red Hat Enterprise Linux(R) 5.5 Advanced Platform

• Red Hat Enterprise Linux(R) 5.6

• Red Hat Enterprise Linux(R) 5.6 Advanced Platform

Red Hat Enterprise Linux 6 A generic term for:

• Red Hat Enterprise Linux(R) 6

• Red Hat Enterprise Linux(R) 6 Advanced Platform

RHCM Red Hat(R) Cluster Manager

SUSE LINUX Enterprise Server A generic term for:

• SUSE LINUX(R) Enterprise Server 9

• SUSE LINUX(R) Enterprise Server 10

• SUSE LINUX(R) Enterprise Server 11

SVS HP StorageWorks 200 Storage Virtualization System

Thunder 9200 Hitachi Freedom Storage Thunder 9200

Universal Storage Platform V/VM A generic term for:

• Hitachi Universal Storage Platform V

• Hitachi Universal Storage Platform VM

• HP XP20000

• HP XP24000

UNIX A generic term for:

• AIX

• Solaris

• Linux

• HP-UX

VCS Veritas Cluster Server

Virtual Storage Platform A generic term for:

• Hitachi Virtual Storage Platform

• HP StorageWorks P9500 Disk Array

VxFS Veritas File System

VxVM Veritas Volume Manager

If a description uses the term Red Hat Enterprise Linux or Red Hat Enterprise Linux AS4/ES4 and does not specifically mention Oracle Enterprise Linux 4, read the term as Oracle Enterprise Linux 4 where necessary. Similarly, if a description uses the term Red Hat Enterprise Linux or Red Hat Enterprise Linux 5 and does not specifically mention Oracle Enterprise Linux 5, read the term as Oracle Enterprise Linux 5 where necessary.

This manual also uses the following abbreviations.

Abbreviation Full name or meaning

API Application Programming Interface

BIOS Basic Input/Output System

CFQ Completely Fair Queuing

CHA Channel Adapter

vi

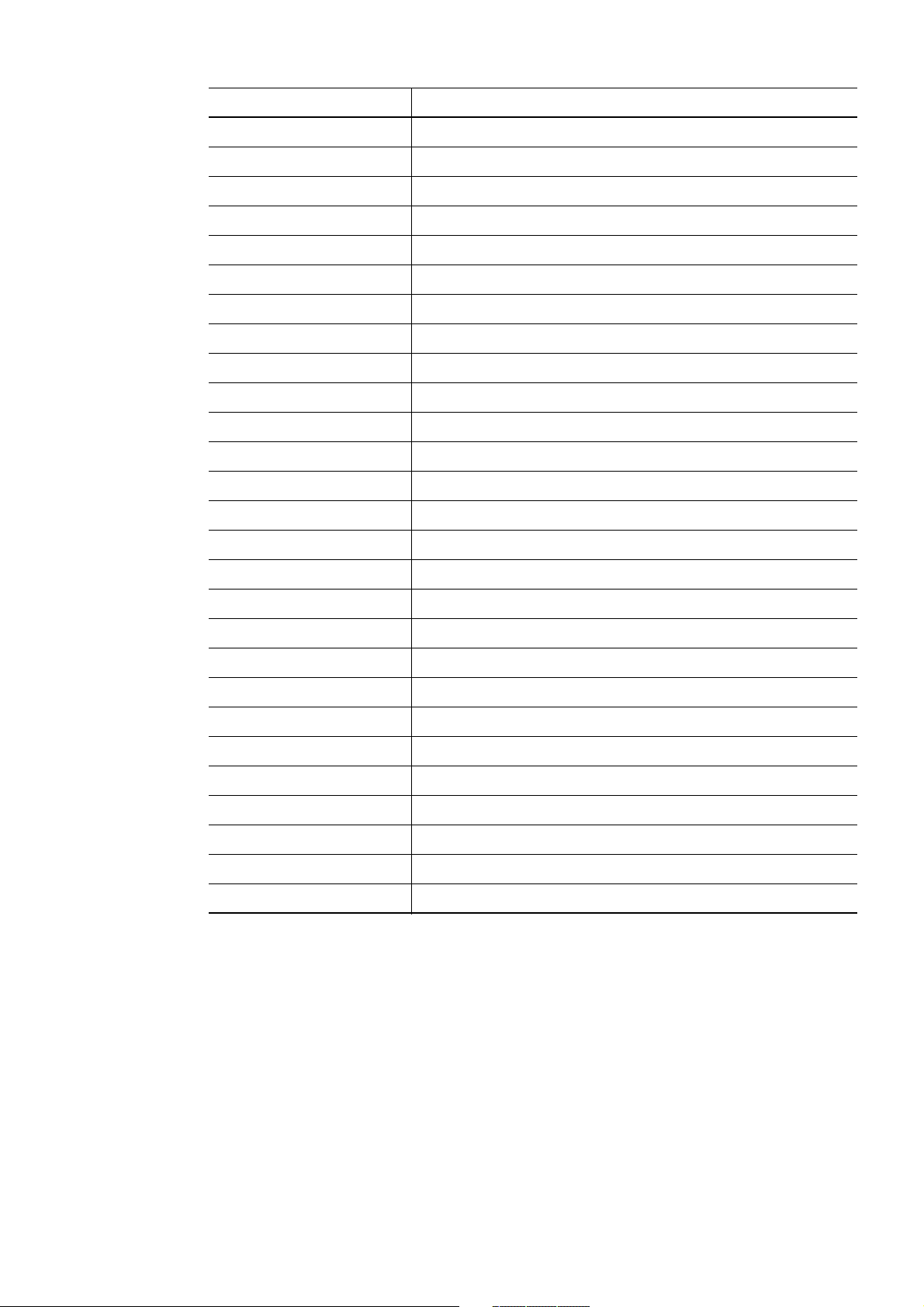

Page 11

Abbreviation Full name or meaning

CLPR Cache Logical Partition

CPU Central Processing Unit

CU Control Unit

DBMS Database Management System

Dev Device

DMI Desktop Management Interface

DNS Domain Name System

DRBD Distributed Replicated Block Device

ELILO Extensible Firmware Interface Linux Loader

EM64T Extended Memory 64 Technology

EVMS Enterprise Volume Management System

ext Extended File System

FC Fibre Channel

FC-SP Fibre Channel Security Protocol

FO Failover

GMT Greenwich Mean Time

GRUB GRand Unified Bootloader

GUI Graphical User Interface

HBA Host Bus Adapter

HDev Host Device

HLU Host Logical Unit

HTTP Hypertext Transfer Protocol

I/O Input/Output

IA32 Intel Architecture 32

IDE Integrated Drive Electronics

IP Internet Protocol

IPC Inter Process Communication

IPF Itanium(R) Processor Family

IRQ Interrupt Request

iSCSI Internet Small Computer System Interface

KVM Kernel-based Virtual Machine

LAN Local Area Network

LDAP Lightweight Directory Access Protocol

LDEV Logical Device

LILO Linux Loader

LU Logical Unit

LUN Logical Unit Number

LVM Logical Volume Manager

md Multiple Devices

NAS Network Attached Storage

NIC Network Interface Card

NTP Network Time Protocol

OS Operating System

P Port

PCI Peripheral Component Interconnect

RADIUS Remote Authentication Dial In User Service

SAN Storage Area Network

SCSI Small Computer System Interface

SLPR Storage Logical Partition

SMTP Simple Mail Transfer Protocol

SNMP Simple Network Management Protocol

SP Service Pack

SSL Secure Sockets Layer

SVP Service Processor

UUID Universally Unique Identifier

VG Volume Group

WWN World Wide Name

Conventions: Diagrams

This manual uses the following conventions in diagrams:

Conventions: Fonts and Symbols

Font and symbol conventions are classified as:

• General font conventions

• Conventions in syntax explanations

These conventions are described below.

General Font Conventions

The following table lists the general font conventions:

Font Convention

Bold Bold type indicates text on a window, other than the window title. Such text includes menus, menu options, buttons, radio box options, or explanatory labels. For example, bold is used in sentences such as the following:
• From the File menu, choose Open.
• Click the Cancel button.
• In the Enter name entry box, type your name.

Italics Italics are used to indicate a placeholder for some actual text provided by the user or system. For example:
• Write the command as follows:
copy source-file target-file
Italics are also used for emphasis. For example:
• Do not delete the configuration file.

Code font A code font indicates text that the user enters without change, or text (such as messages) output by the system. For example:
• At the prompt, enter dir.
• Use the send command to send mail.
• The following message is displayed:
The password is incorrect.

Code examples and messages appear as follows (though there may be some exceptions, such as when the code is part of a diagram):

MakeDatabase
...
StoreDatabase temp DB32

In examples of coding, an ellipsis (...) indicates that one or more lines of coding are not shown for purposes of brevity.

Conventions in Syntax Explanations

Syntax definitions appear as follows:

StoreDatabase [temp|perm] (database-name ...)

The following table lists the conventions used in syntax explanations:

Example font or symbol Convention

StoreDatabase Code-font characters must be entered exactly as shown.

database-name This font style marks a placeholder that indicates where appropriate characters are to be entered in an actual command.

SD Bold code-font characters indicate the abbreviation for a command.

perm Underlined characters indicate the default value.

[ ] Square brackets enclose an item or set of items whose specification is optional. If all items are omitted, the underlined item is applied.

{ } One of the options enclosed in { } must be specified.

| Only one of the options separated by a vertical bar can be specified at a time.

... An ellipsis (...) indicates that the item or items enclosed in ( ) or [ ] immediately preceding the ellipsis may be specified as many times as necessary.

() Parentheses indicate the range of items to which the vertical bar (|) or ellipsis (...) is applicable.

# A prompt on a command-execution window when the OS is UNIX.

Conventions: KB, MB, GB, and TB

This manual uses the following conventions:

• 1 KB (kilobyte) is 1,024 bytes.

• 1 MB (megabyte) is 1,024^2 bytes.

• 1 GB (gigabyte) is 1,024^3 bytes.

• 1 TB (terabyte) is 1,024^4 bytes.

Contents

Preface
Intended Readers
Organization of This Manual
Related Publications
Conventions: Abbreviations
Conventions: Diagrams
Conventions: Fonts and Symbols
Conventions: KB, MB, GB, and TB

1. Overview of HDLM
1.1 What is HDLM?
1.2 HDLM Features

2. HDLM Functions
2.1 Devices Managed by HDLM
2.2 System Configuration
2.2.1 System Configuration Using an FC-SAN
2.2.2 System Configuration Using an IP-SAN
2.3 LU Configuration
2.4 Program Configuration
2.5 Position of the HDLM Driver and HDLM Device
2.6 Logical Device Files for HDLM Devices
2.7 Distributing a Load Using Load Balancing
2.7.1 Paths To Which Load Balancing Is Applied
2.7.2 Load Balancing Algorithms
2.8 Performing Failovers and Failbacks Using Path Switching
2.8.1 Automatic Path Switching
2.8.2 Manual Path Switching
2.8.3 Path Status Transition
2.9 Monitoring Intermittent Errors (Functionality When Automatic Failback Is Used)
2.9.1 Checking Intermittent Errors
2.9.2 Setting Up Intermittent Error Monitoring
2.9.3 Intermittent Error Monitoring Actions
2.9.4 When a User Changes the Intermittent Error Information
2.10 Detecting Errors by Using Path Health Checking
2.11 Error Management
2.11.1 Types of Collected Logs
2.11.2 Filtering of Error Information

2.11.3 Collecting Error Information Using the Utility for Collecting HDLM Error Information (DLMgetras)
2.11.4 Utility for Collecting HDLM Installation Error Information (installgetras)
2.12 Collecting Audit Log Data
2.12.1 Categories and Audit Events that HDLM Can Output to the Audit Log
2.12.2 Requirements for Outputting Audit Log Data
2.12.3 Destination and Filtering of Audit Log Data
2.12.4 Audit Log Data Formats
2.13 Integrated HDLM management using Global Link Manager
2.14 Cluster Support

3. Creating an HDLM Environment
3.1 HDLM System Requirements
3.1.1 Hosts and OSs Supported by HDLM
3.1.2 Storage Systems Supported by HDLM
3.1.3 Related Products When Using Red Hat Enterprise Linux AS4/ES4
3.1.4 Related Products When Using Red Hat Enterprise Linux 5
3.1.5 Related Products When Using Red Hat Enterprise Linux 6
3.1.6 Related Products When Using SUSE LINUX Enterprise Server 9
3.1.7 Related Products When Using SUSE LINUX Enterprise Server 10
3.1.8 Related Products When Using SUSE LINUX Enterprise Server 11
3.1.9 Related Products When Using Oracle Enterprise Linux 4
3.1.10 Related Products When Using Oracle Enterprise Linux 5
3.1.11 Memory and Disk Capacity Requirements
3.1.12 Number of Paths Supported in HDLM
3.1.13 Virtual Environments Supported by HDLM
3.2 Flow for Creating an HDLM Environment
3.3 HDLM Installation Types
3.4 Knowledge Required Before You Install HDLM
3.5 Notes on Creating an HDLM Environment
3.5.1 Notes on Hardware Settings
3.5.2 Notes on Linux
3.5.3 Notes on Installing HDLM
3.5.4 Notes on an Upgrade Installation or Re-installation of HDLM
3.5.5 Notes on a Device Manager Agent
3.6 Installing HDLM
3.6.1 Preparations for a New Installation of HDLM
3.6.2 Performing a New Installation of HDLM
3.6.3 Preparations for an Upgrade Installation or Re-installation of HDLM
3.6.4 Performing a Re-installation of HDLM
3.6.5 Performing an Upgrade Installation of HDLM
3.6.6 Unattended Installation of HDLM
3.7 Installing HDLM for Managing Boot Disks

3.7.1 Notes on Installing HDLM in a Boot Disk Environment
3.7.2 Overview of the Procedure for Installing HDLM in a Boot Disk Environment
3.7.3 Settings for Using an HDLM Device as a Boot Disk
3.7.4 Upgrade Installation in an Environment Where an HDLM Device Is Used as a Boot Disk
3.7.5 Settings for Using a Logical Volume (LVM2) on an HDLM Device as a Boot Disk
3.7.6 Upgrade Installation in an Environment Where a Logical Volume (LVM2) on an HDLM Device Is Used as a Boot Disk
3.7.7 Countermeasures for Unsuccessful Startup of the OS from an HDLM Device
3.8 Settings for LUKS
3.8.1 Notes on Using LUKS
3.8.2 Using HDLM in an LUKS Environment
3.9 Settings for md Devices
3.9.1 Notes on Setting Up md Devices
3.9.2 Creating an md Device
3.9.3 Using HDLM in an md Device Environment
3.10 Settings for LVM2
3.10.1 Notes on Using LVM2
3.10.2 When Using an HDLM Device to Create a New Logical Volume
3.10.3 When Moving a Logical Volume Created on a SCSI Device in a Single-Path Environment to an HDLM Device
3.11 Settings for Xen
3.12 Settings for KVM
3.13 Settings for Heartbeat
3.14 Settings for Oracle RAC
3.15 Settings for the RHCM
3.15.1 Notes on Using RHCM
3.15.2 When Using Red Hat Enterprise Linux AS4/ES4
3.15.3 When Using Red Hat Enterprise Linux 5, or Red Hat Enterprise Linux 6
3.16 Settings for VCS
3.17 Checking the Path Configuration
3.18 Setting Up HDLM
3.18.1 Checking the Current Settings
3.18.2 Setting Up the HDLM Functions
3.18.3 Checking the Updated Settings
3.19 The Process-specific-trace Information File
3.19.1 Notes on Using the Hitachi Network Objectplaza Trace Library
3.20 Creating a Character-Type Device File for an HDLM Device
3.20.1 When Using Red Hat Enterprise Linux 5 or Red Hat Enterprise Linux 6

3.20.2 When Using Red Hat Enterprise Linux AS4/ES4 or SUSE LINUX Enterprise

Server ......................................................................................................... 282

3.21 Creating File Systems for HDLM (When Volume Management Software Is Not

Used)..................................................................................................................... 284

3.21.1 Mounting a File System............................................................................ 284

3.21.2 Creating a File System.............................................................................. 284

3.22 Settings for Automatic Mounting ........................................................................ 286

3.22.1 Setting the HDLM Device for the First Time........................................... 286

3.22.2 Migrating from an Environment Where a SCSI Device Is Already Set... 287

3.23 Canceling the Settings for HDLM....................................................................... 290

3.23.1 Operations on HDLM-Managed Devices................................................. 290

3.23.2 Canceling the Heartbeat Settings.............................................................. 292

3.23.3 Canceling the Settings for Oracle RAC.................................................... 292

3.23.4 Canceling the Settings for RHCM............................................................ 293

3.23.5 Canceling the Settings for VCS................................................................ 295

3.23.6 Canceling the Xen Settings....................................................................... 295

3.23.7 Canceling the KVM Settings.................................................................... 297

3.23.8 Canceling the Settings for LVM2 ............................................................. 298

3.23.9 Canceling the md Device Settings............................................................ 302

3.23.10 Canceling the LUKS Settings................................................................. 310

3.23.11 Uninstalling HDLM.................................................................................311

3.23.12 Uninstalling Hitachi Network Objectplaza Trace Library (HNTRLib2) 326

4. HDLM Operation 329

4.1 Notes on Using HDLM.......................................................................................... 330

4.1.1 Notes Common to OSs ............................................................................... 330

4.1.2 Notes When Using Red Hat Enterprise Linux AS4/ES4, or Oracle Enterprise Linux 4..........332

4.1.3 Notes When Using Red Hat Enterprise Linux 5, Red Hat Enterprise Linux 6, or Oracle Enterprise Linux 5..........333

4.1.4 Notes When Using SUSE LINUX Enterprise Server 9.............................. 334

4.1.5 Notes When Using SUSE LINUX Enterprise Server 10............................ 335

4.1.6 Notes When Using SUSE LINUX Enterprise Server 11............................ 336

4.2 Updating Kernel Packages and Applying OS Update Packages ........................... 339

4.2.1 Environment Where an HDLM Device Is not Used as the Boot Disk....... 339

4.2.2 Environment Where an HDLM Device Is Used as the Boot Disk ............. 339

4.2.3 Environment Where a Logical Volume (LVM2) on an HDLM Device Is Used as the Boot Disk..........340

4.3 HDLM Operations Using Commands ................................................................... 341

4.3.1 Notes on Using Commands ........................................................................ 341

4.3.2 Viewing Path Information .......................................................................... 341

4.3.3 Changing the Status of Paths ...................................................................... 341

4.3.4 Viewing LU Information ............................................................................ 343


4.3.5 Displaying Corresponding Information About an HDLM Device, SCSI Device, and LDEV..........344

4.3.6 Initializing Statistical Information for Paths ...............................................345

4.3.7 Viewing and Setting Up the Operating Environment..................................346

4.3.8 Viewing License Information......................................................................348

4.3.9 Updating the License...................................................................................348

4.3.10 Viewing HDLM Version Information .......................................................349

4.3.11 Viewing HDLM Component Information .................................................350

4.4 Starting and Stopping the HDLM Manager............................................................352

4.4.1 Starting the HDLM Manager ......................................................................352

4.4.2 Stopping the HDLM Manager.....................................................................353

4.5 HDLM Resident Processes.....................................................................................355

4.6 Reconfiguring the HDLM Operating Environment................................................356

4.6.1 Replacing HBAs..........................................................................................356

4.6.2 Replacing a Fiber Cable..............................................................................364

4.6.3 Replacing the Fibre Channel Switch...........................................................367

4.6.4 Changing the HDLM Device Configuration...............................................369

4.6.5 About Creating a New HDLM Device........................................................383

4.6.6 Note on adding a BladeSymphony I/O drawer ...........................................385

5. Troubleshooting 387

5.1 Information Collected by the DLMgetras Utility for Collecting HDLM Error Information..........388

5.2 Checking Error Information in Messages...............................................................389

5.3 What To Do for a Path Error ..................................................................................391

5.3.1 Examining the Messages.............................................................................392

5.3.2 Obtain Path Information..............................................................................392

5.3.3 Identifying the Error Path............................................................................393

5.3.4 Narrowing Down the Hardware That Might Have Caused the Error .........393

5.3.5 Identifying the Error Location and Correcting any Hardware Error...........393

5.3.6 Placing the Path Online...............................................................................393

5.4 What To Do for a Program Error............................................................................394

5.4.1 Examining the Messages.............................................................................394

5.4.2 Obtaining Program Information..................................................................394

5.4.3 What To Do for the Program Error .............................................................395

5.4.4 Contacting your HDLM Vendor or Maintenance Company .......................395

5.5 What To Do for Other Errors..................................................................................396

6. Command Reference 397

6.1 Overview of the HDLM Command dlnkmgr .........................................................398

6.2 clear (Returns the Path Statistics to the Initial Value) ............................................400

6.2.1 Format .........................................................................................................400

6.2.2 Parameters ...................................................................................................400

6.3 help (Displays the Operation Format) ....................................................................402


6.3.1 Format......................................................................................................... 402

6.3.2 Parameter.................................................................................................... 402

6.4 offline (Places Paths Offline)................................................................................. 404

6.4.1 Format......................................................................................................... 404

6.4.2 Parameters .................................................................................................. 404

6.5 online (Places Paths Online).................................................................................. 409

6.5.1 Format......................................................................................................... 409

6.5.2 Parameters .................................................................................................. 409

6.6 set (Sets Up the Operating Environment) .............................................................. 414

6.6.1 Format......................................................................................................... 414

6.6.2 Parameters .................................................................................................. 414

6.7 view (Displays Information).................................................................................. 431

6.7.1 Format......................................................................................................... 431

6.7.2 Parameters (To display program information)............................................ 432

7. Utility Reference 463

7.1 Overview of the Utilities........................................................................................ 464

7.2 DLMgetras Utility for Collecting HDLM Error Information................................ 466

7.2.1 Format......................................................................................................... 466

7.2.2 Parameters .................................................................................................. 466

7.2.3 List of Collected Error Information............................................................ 468

7.3 dlmcfgmgr Utility for Managing the HDLM Configuration ................................. 489

7.3.1 Format......................................................................................................... 489

7.3.2 Parameters .................................................................................................. 489

7.4 dlmmkinitrd Utility for Supporting a Boot Disk ................................................... 500

7.4.1 Format......................................................................................................... 500

7.4.2 Parameters .................................................................................................. 500

7.5 dlmpr Utility for Clearing HDLM Persistent Reservation..................................... 503

7.5.1 Format......................................................................................................... 503

7.5.2 Parameters .................................................................................................. 503

7.6 dlmsetopt Utility for Setting HDLM Driver Options ............................................ 506

7.6.1 Format......................................................................................................... 506

7.6.2 Parameters .................................................................................................. 506

7.7 dlmstart Utility for Starting HDLM....................................................................... 510

7.7.1 Format......................................................................................................... 510

7.8 dlmupdatesysinit Utility for Updating System Scripts ...........................................511

7.8.1 Format..........................................................................................................511

7.9 installgetras Utility for Collecting HDLM Installation Error Information............ 512

7.9.1 Format......................................................................................................... 512

7.9.2 Parameters .................................................................................................. 512

7.9.3 Error Information To Be Collected............................................................. 512

7.10 installhdlm Utility for Installing HDLM ............................................................. 514

7.10.1 Format....................................................................................................... 514

7.10.2 Parameters ................................................................................................ 514


7.10.3 Editing an Installation-Information Settings File......................................514

7.11 installux.sh Utility for HDLM Common Installer................................................521

7.11.1 Format .......................................................................................................521

7.11.2 Parameters .................................................................................................521

7.11.3 Log file ......................................................................................................521

8. Messages 523

8.1 Before Viewing the List of Messages.....................................................................524

8.1.1 Format and Meaning of Message IDs .........................................................524

8.1.2 Terms Used in Messages and Message Explanations .................................524

8.1.3 Components That Output Messages to syslog ............................................524

8.2 KAPL01001 to KAPL02000 ..................................................................................526

8.3 KAPL03001 to KAPL04000 ..................................................................................548

8.4 KAPL04001 to KAPL05000 ..................................................................................551

8.5 KAPL05001 to KAPL06000 ..................................................................................560

8.6 KAPL06001 to KAPL07000 ..................................................................................567

8.7 KAPL07001 to KAPL08000 ..................................................................................571

8.8 KAPL08001 to KAPL09000 ..................................................................................572

8.9 KAPL09001 to KAPL10000 ..................................................................................575

8.10 KAPL10001 to KAPL11000 ................................................................................602

8.11 KAPL11001 to KAPL12000 ................................................................................640

8.12 KAPL12001 to KAPL13000 ................................................................................644

8.13 KAPL13001 to KAPL14000 ................................................................................662

8.14 KAPL15001 to KAPL16000 ................................................................................677

8.15 Return Codes for Hitachi Command Suite Common Agent Component.............684

Appendixes 691

A. Notes on Linux Commands and Files......................................................................692

A.1 Notes on the /proc/partitions File..................................................................692

A.2 Notes on Linux Commands...........................................................................692

A.3 Notes on the iostat Command .......................................................................693

A.4 Notes on the mkfs Command ........................................................................694

A.5 Notes on the fdisk Command ........................................................................694

A.6 Notes on the sar Command ...........................................................................695

A.7 Notes on the fdisk and parted Commands.....................................................695

A.8 Notes on the parted Command ......................................................................695

A.9 Notes on the vgrename and lvrename Commands ........................................696

B. Troubleshooting Products That Use the Weak-Modules Script...............................697

B.1 Installing Products That Use the Weak-Modules Script................................697

B.2 Uninstalling Products That Use the Weak-Modules Script ...........................702

C. Functional Differences Between Versions of HDLM..............................................703

C.1 Functional Differences Between Version 6.2.1 and Versions Earlier Than 6.2.1..........703

C.2 Functional Differences Between Version 6.1 and Versions Earlier Than 6.1..........703


C.3 Functional Differences Between Version 6.0 or Later and Versions Earlier Than 6.0..........703

C.4 Functional Differences Between Version 5.9.4 or Later and Versions Earlier Than 5.9.4..........703

C.5 Functional Differences Between Version 5.9.1 or Later and Versions Earlier Than 5.9.1..........704

C.6 Functional Differences Between Version 5.9 or Later and Versions Earlier Than 5.9..........704

C.7 Functional Differences Between Version 5.8 or Later and Versions Earlier Than 5.8..........704

C.8 Functional Differences Between Version 5.7.1 or Later and Versions Earlier Than 5.7.1..........705

C.9 Functional Differences Between Version 5.7.0-01 or Later and Versions Earlier Than 5.7.0-01..........705

C.10 Functional Differences Between Version 5.7 or Later and Versions Earlier Than 5.7..........705

C.11 Functional Differences Between Version 5.6.3 or Later and Versions Earlier Than 5.6.3..........706

C.12 Functional Differences Between Version 5.4 or Later and Versions Earlier Than 5.4..........706

D. Glossary .................................................................................................................. 707

Index 715


Chapter

1. Overview of HDLM

HDLM is a software package that manages paths between a host and a storage system. HDLM distributes loads across multiple paths and, if the path currently in use fails, switches the load to another path, thus improving system reliability.

This chapter gives an overview of HDLM and describes its features.

1.1 What is HDLM?

1.2 HDLM Features


1.1 What is HDLM?

With the widespread use of data warehousing and the increasing use of multimedia data, the need for high-speed processing of large volumes of data on networks has grown rapidly. To satisfy this need, networks dedicated to data transfer, such as SANs, are now used to provide access to storage systems.

HDLM manages the access paths to these storage systems. It distributes loads across multiple paths and switches to another path if the path currently in use fails, thus improving system availability and reliability.

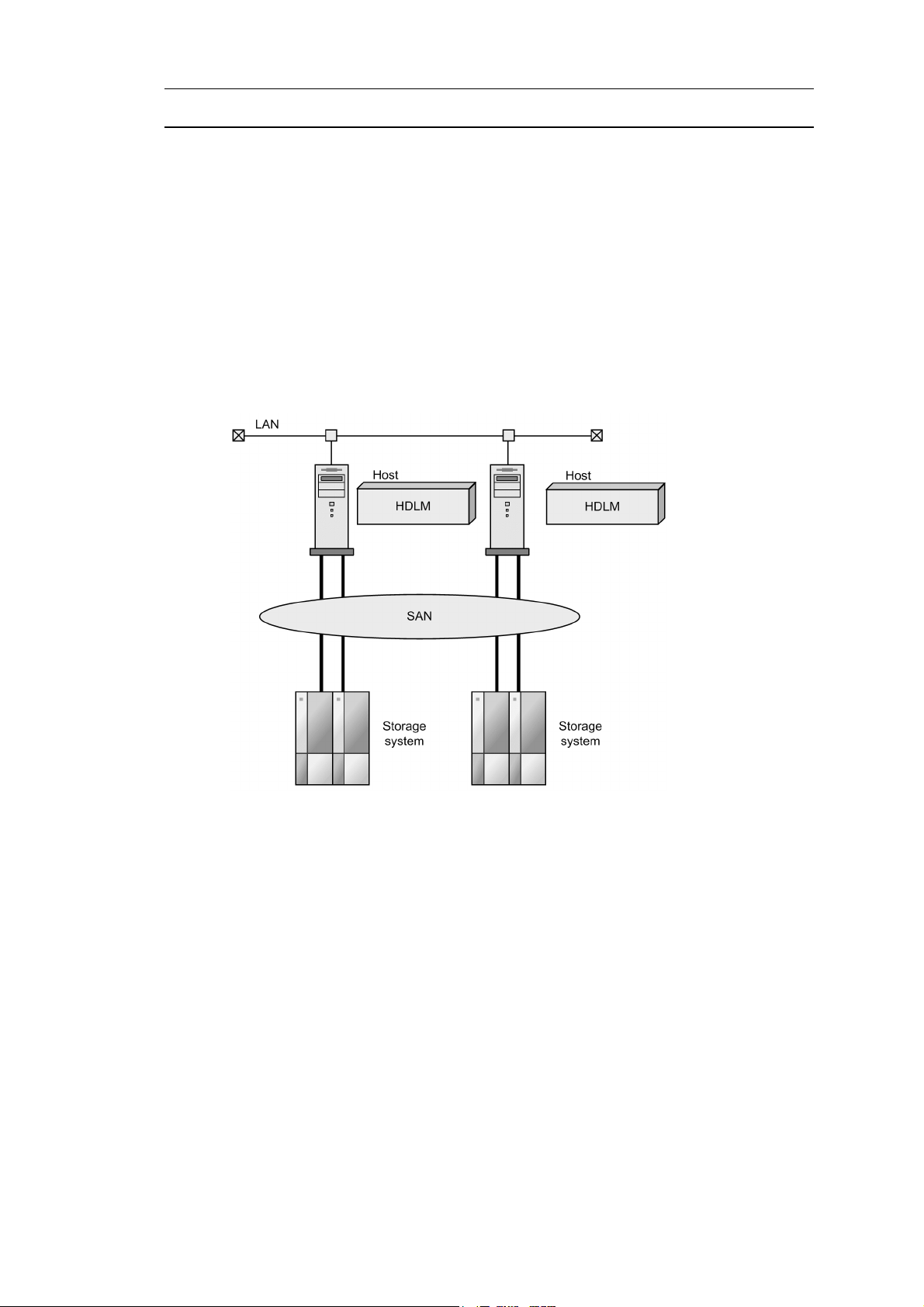

Figure 1-1: Between Hosts and Storage Systems illustrates the connections between various hosts and storage systems. A server on which HDLM is installed is called a host.

Figure 1-1: Between Hosts and Storage Systems

HDLM supports the following storage systems:

• Hitachi AMS2000/AMS/WMS/SMS series

• Hitachi USP

• Lightning 9900 series


• Lightning 9900V series

• Thunder 9500V series

• Universal Storage Platform V/VM

• Virtual Storage Platform


1.2 HDLM Features

HDLM features include the following:

The ability to distribute a load across multiple paths. This is also known as load balancing.

When a host is connected to a storage system via multiple paths, HDLM can distribute the load across all the paths. This prevents a single heavily loaded path from slowing down the entire system.

For details on load balancing, see 2.7 Distributing a Load Using Load Balancing.

The ability to continue running operations between a host and a storage system even if a failure occurs. This is also known as performing a failover.

When a host is connected to a storage system via multiple paths, HDLM can automatically switch to another path if the path currently in use fails. This allows operations between the host and the storage system to continue.

For details on performing failovers, see 2.8 Performing Failovers and Failbacks Using Path Switching.

The ability to bring a path that has recovered from an error back online. This is also known as performing a failback.

When a path recovers from an error, HDLM can bring that path back online. This keeps the maximum possible number of paths available and online, which in turn enables HDLM to distribute the load across multiple paths more effectively.

Failbacks can be performed manually or automatically. In an automatic failback, HDLM restores the path to an active state after the user has corrected the problem on the physical path.

For details on performing failbacks, see 2.8 Performing Failovers and Failbacks Using Path Switching.

The ability to automatically check the status of every path at regular intervals. This is also known as path health checking.

HDLM detects errors by checking the status of paths at user-defined intervals. This allows you to find path errors and resolve them promptly.

For details on setting up and performing path health checking, see 2.10 Detecting Errors by Using Path Health Checking.
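The following Python sketch shows how load balancing, failover, failback, and path health checking fit together conceptually. It uses a generic round-robin scheme and is not HDLM's actual implementation. The `Path` and `PathSelector` names and the `probe` callback are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Path:
    path_id: int         # stands in for the AutoPATH_ID that HDLM assigns
    online: bool = True


class PathSelector:
    """Hypothetical round-robin selector with failover and failback.

    A generic illustration only; HDLM's own load-balancing algorithms may differ.
    """

    def __init__(self, paths: List[Path]) -> None:
        self.paths = paths
        self._next = 0  # index of the next path to try

    def select(self) -> Path:
        # Load balancing: rotate through the paths in order. Offline paths are
        # skipped, so I/O fails over to the remaining online paths.
        for _ in range(len(self.paths)):
            path = self.paths[self._next]
            self._next = (self._next + 1) % len(self.paths)
            if path.online:
                return path
        raise RuntimeError("no online path is available")

    def report_error(self, path: Path) -> None:
        # Failover: a failed path is taken offline and is no longer selected.
        path.online = False

    def health_check(self, probe: Callable[[Path], bool]) -> None:
        # Path health checking: probe every path (a real system would do this at
        # a user-defined interval). Paths that pass are brought back online
        # (automatic failback); paths that fail are taken offline.
        for path in self.paths:
            path.online = probe(path)


if __name__ == "__main__":
    paths = [Path(0), Path(1), Path(2)]
    selector = PathSelector(paths)
    print([selector.select().path_id for _ in range(3)])  # [0, 1, 2]
    selector.report_error(paths[1])
    print([selector.select().path_id for _ in range(4)])  # [0, 2, 0, 2]
    selector.health_check(lambda p: True)
    print([selector.select().path_id for _ in range(3)])  # [0, 1, 2]
```

Round-robin was chosen here only because it is the simplest scheme that shows the load being spread over all online paths.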


Chapter

2. HDLM Functions

This chapter describes the functions built into HDLM. It first explains the HDLM management targets, the system configuration, and the basic terms you need to know to operate HDLM effectively. The rest of the chapter then describes all the HDLM functions, including the main ones: load distribution across paths and path switching.

2.1 Devices Managed by HDLM

2.2 System Configuration

2.3 LU Configuration

2.4 Program Configuration

2.5 Position of the HDLM Driver and HDLM Device

2.6 Logical Device Files for HDLM Devices

2.7 Distributing a Load Using Load Balancing

2.8 Performing Failovers and Failbacks Using Path Switching

2.9 Monitoring Intermittent Errors (Functionality When Automatic Failback Is Used)

2.10 Detecting Errors by Using Path Health Checking

2.11 Error Management

2.12 Collecting Audit Log Data

2.13 Integrated HDLM management using Global Link Manager

2.14 Cluster Support


2.1 Devices Managed by HDLM

The following lists the devices that can and cannot be managed by HDLM. Devices that can be managed by HDLM are called HDLM management-target devices.

HDLM management-target devices:

The following devices of the storage systems listed in Section 1.1 What is HDLM?:

• SCSI devices

• Boot disks

Non-HDLM management-target devices:

• SCSI devices other than those of the storage systems listed in Section 1.1 What is HDLM?

• Devices other than disks (such as tape devices)

• Command devices of the storage systems listed in Section 1.1 What is HDLM? (For example, Hitachi RAID Manager command devices.)
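As an illustration only, the rules above can be written as a predicate. The `Device` fields (`storage`, `is_disk`, `is_command_device`) are hypothetical. HDLM does not expose such an interface; the sketch only restates the lists in this section.

```python
from dataclasses import dataclass

# Storage systems listed in 1.1 What is HDLM?
SUPPORTED_STORAGE = frozenset({
    "Hitachi AMS2000/AMS/WMS/SMS series",
    "Hitachi USP",
    "Lightning 9900 series",
    "Lightning 9900V series",
    "Thunder 9500V series",
    "Universal Storage Platform V/VM",
    "Virtual Storage Platform",
})


@dataclass(frozen=True)
class Device:
    storage: str             # storage system that provides the device (hypothetical field)
    is_disk: bool            # False for tape devices and other non-disk devices
    is_command_device: bool  # True for command devices, e.g. Hitachi RAID Manager ones


def is_hdlm_management_target(dev: Device) -> bool:
    # SCSI disk devices (including boot disks) of a listed storage system are
    # management targets; command devices and non-disk devices are not.
    return dev.storage in SUPPORTED_STORAGE and dev.is_disk and not dev.is_command_device


if __name__ == "__main__":
    print(is_hdlm_management_target(Device("Virtual Storage Platform", True, False)))  # True
    print(is_hdlm_management_target(Device("Virtual Storage Platform", True, True)))   # False
```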


2.2 System Configuration

HDLM manages routes between a host and a storage system by using the SCSI driver. A host and a storage system are connected via an FC-SAN or an IP-SAN.

2.2.1 System Configuration Using an FC-SAN

In an FC-SAN, fiber cables connect hosts to storage systems. The cable port on the host is a host bus adapter (HBA). The cable port on the storage system is a port (P) on a channel adapter (CHA).

A logical unit (LU) contained in a storage system is the target of input to, or output from, the host. You can divide an LU into multiple areas, each of which is called a Dev. A Dev is equivalent to a partition. A route that connects a host and an LU is called a physical path, and a route that connects a host and a Dev is called a path. When an LU has been divided into multiple Devs, the number of paths to the LU equals the number of physical paths multiplied by the number of Devs in the LU.
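The path count is a simple product: every physical path reaches every Dev in the LU, so each path can be listed as a (physical path, Dev) pair. The sketch below shows this. It uses hypothetical labels for the physical paths and Devs, and it assumes that an undivided LU counts as a single Dev.

```python
from itertools import product
from typing import List, Sequence, Tuple


def enumerate_paths(physical_paths: Sequence[str], devs: Sequence[str]) -> List[Tuple[str, str]]:
    """Return every path to an LU as a (physical path, Dev) pair."""
    # Each physical path reaches each Dev, so the paths form a Cartesian product.
    return list(product(physical_paths, devs))


def count_paths(num_physical_paths: int, num_devs: int) -> int:
    """Paths to an LU = physical paths x Devs (an undivided LU is assumed to be one Dev)."""
    if num_physical_paths < 0 or num_devs < 1:
        raise ValueError("need at least 0 physical paths and at least 1 Dev")
    return num_physical_paths * num_devs


if __name__ == "__main__":
    # Hypothetical labels: two physical paths to an LU divided into three Devs.
    pairs = enumerate_paths(["HBA0->P0", "HBA1->P1"], ["Dev0", "Dev1", "Dev2"])
    print(len(pairs), count_paths(2, 3))  # 6 6
```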

HDLM assigns an ID to each physical path and manages paths on a physical-path basis. Because you do not need to distinguish between physical paths and paths when using HDLM, both are hereafter called paths. The ID that HDLM assigns to each physical path is called an AutoPATH_ID. A path might also be called a management target.


Figure 2-1: Configuration of an HDLM System When Using an FC-SAN shows the configuration of an HDLM system using an FC-SAN.


Figure 2-1: Configuration of an HDLM System When Using an FC-SAN

Table 2-1: HDLM System Components When Using an FC-SAN lists the HDLM system components when using an FC-SAN.

Table 2-1: HDLM System Components When Using an FC-SAN

Components      Description
HBA             A host bus adapter. This serves as a cable port on the host.
FC-SAN          A dedicated network that is used for data transfer between the host and storage systems.
CHA             A channel adapter.
P               A port on a CHA. This serves as a cable port on a storage system.
LU              A logical unit (a logical volume defined on the storage system). This serves as the target of input or output operations from the host.
Dev             An area (partition) of a divided LU.
Physical path   A route that connects a host and an LU.
Path            A route that connects a host and a Dev.

2.2.2 System Configuration Using an IP-SAN

In an IP-SAN, LAN cables connect hosts to storage systems. The cable port on the host is called a network interface card (NIC). To use a NIC, the iSCSI software must be installed on the host beforehand. The cable port on the storage system is called a port (P) on a channel adapter (CHA) used for iSCSI connections.

A logical unit (LU) contained in a storage system is the target of input to, or output from, the host. You can divide an LU into multiple areas, each of which is called a Dev. A Dev is equivalent to a partition. A route that connects a host and an LU is called a physical path, and a route that connects a host and a Dev is called a path. When an LU has been divided into multiple Devs, the number of paths to the LU equals the number of physical paths multiplied by the number of Devs in the LU.

HDLM assigns an ID to each physical path and manages paths on a physical-path basis. Because you do not need to distinguish between physical paths and paths when using HDLM, both are hereafter called paths. The ID that HDLM assigns to each physical path is called an AutoPATH_ID. A path might also be called a management target.


Figure 2-2: Configuration of an HDLM System When Using an IP-SAN shows the configuration of an HDLM system using an IP-SAN.


Figure 2-2: Configuration of an HDLM System When Using an IP-SAN

Table 2-2: HDLM System Components When Using an IP-SAN lists the HDLM system components when using an IP-SAN.

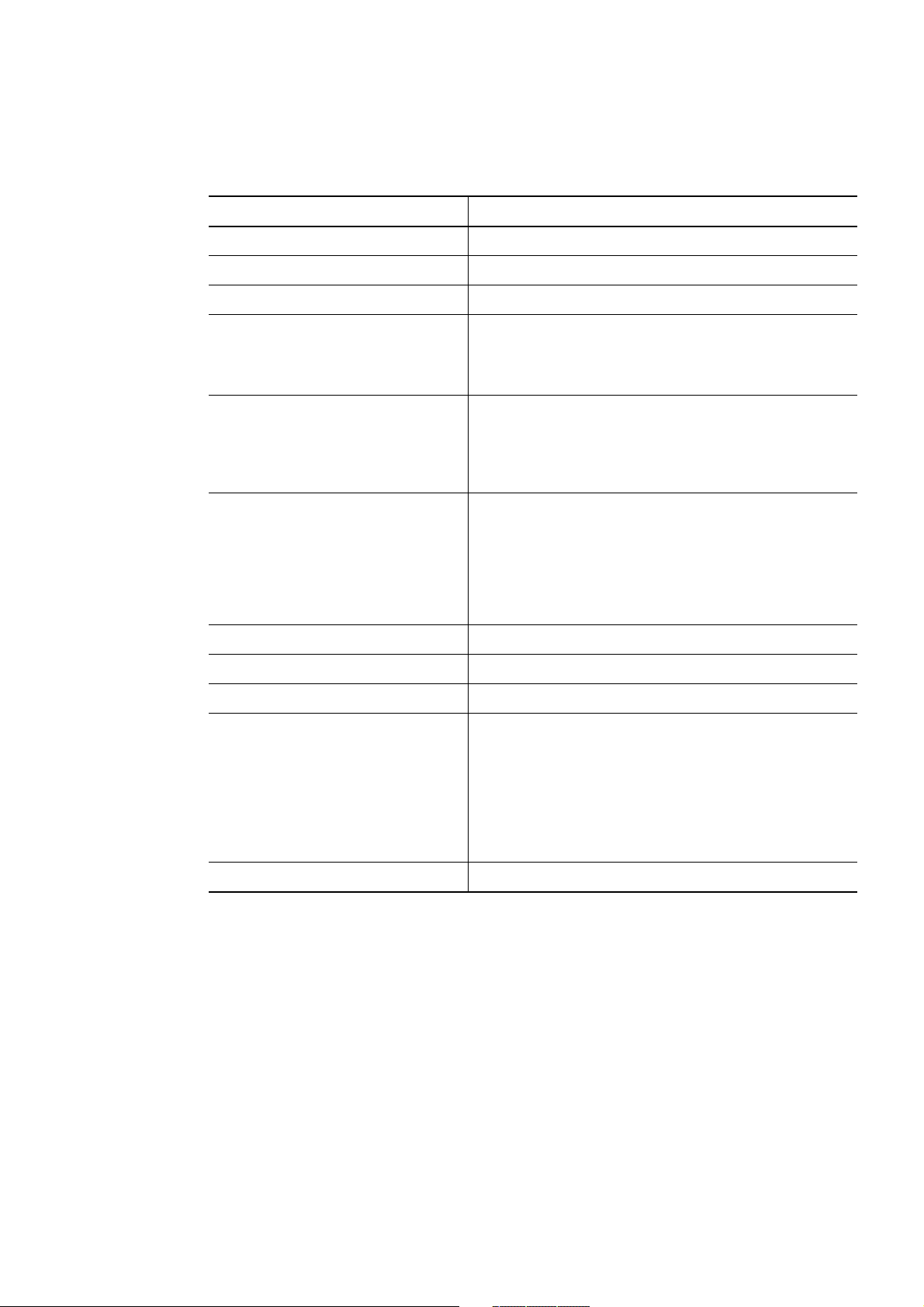

Table 2-2: HDLM System Components When Using an IP-SAN

Components      Description
iSCSI software  The driver software that contains the iSCSI initiator function.
NIC             A network interface card that serves as a cable port on a host. In HDLM commands, the NIC is referred to as an HBA; this manual also sometimes calls it an HBA.
IP-SAN          A data transfer network that connects hosts and storage systems by using the iSCSI standard.
CHA             A channel adapter.
P               A port on a CHA. This serves as a cable port on a storage system.
LU              A logical unit (a logical volume defined on the storage system). This serves as the target of input or output operations from the host.
Dev             An area (partition) of a divided LU.
Physical path   A route that connects a host and an LU.
Path            A route that connects a host and a Dev.

IP-SAN environments supported by HDLM

HDLM supports system configurations that use an IP-SAN in the following environments:

• OS

• Red Hat Enterprise Linux 5.6

• Red Hat Enterprise Linux 5.6 Advanced Platform

• Red Hat Enterprise Linux 6

• iSCSI software


HDLM supports the iSCSI initiator (iscsi-initiator-utils) supplied with the OS.

• NICs

For details on the applicable NICs, see the HDLM Release Notes.

• Storage system

The storage system supported in an IP-SAN is the Hitachi AMS2000 series.

Restrictions on using HDLM in an IP-SAN environment

The following restrictions apply when using HDLM in an IP-SAN environment:

• Use of HDLM in cluster configurations or boot disk environments is not supported.

• The kdump function cannot be used.


2.3 LU Configuration

After you have properly installed HDLM, the LU configuration will change as follows:

Before the installation of HDLM:

The host recognizes that a SCSI device is connected to each path.

Thus, a single LU in the storage system is recognized as though there are as many LUs as there are paths.

After the installation of HDLM:

An HDLM device# corresponding one-to-one with an LU in the storage system is created at a level higher than the SCSI device.

Thus, the host recognizes each LU in the storage system as a single LU, regardless of the number of paths.

#

In addition to the logical device file that represents the entire LU, a logical device file for the HDLM device is created for each partition.

An LU recognized by a host after HDLM installation is called a host LU (HLU). The areas in a host LU that correspond to the Devs (partitions) in a storage system LU are called host devices (HDev).


On a system using HDLM, the logical device file for the HDLM device is used to access the target LU instead of the logical device file for the SCSI device.

Figure 2-3: LU Configuration Recognized by the Host After HDLM Installation shows the LU configuration recognized by the host after HDLM installation.


Figure 2-3: LU Configuration Recognized by the Host After HDLM Installation

Table 2-3: LU Components lists the components recognized by the host.

Table 2-3: LU Components

Components      Description
HDev            A Dev (partition) in an LU that the host recognizes via the HDLM driver. It is called a host device. One host device is recognized for one Dev in the storage system.
HLU             An LU that the host recognizes via the HDLM driver. It is called a host LU. Regardless of how many paths exist, only one host LU is recognized for each LU in the storage system.
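The following minimal Python sketch models the before-and-after view described in this section. Before HDLM, the host sees one SCSI device per path. Grouping those devices by the LU they reach yields one host LU per storage-system LU, regardless of the number of paths. The SCSI device names and LU identifiers are hypothetical. The sketch models the concept only and is not how the HDLM driver discovers devices.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ScsiDevice:
    name: str   # hypothetical SCSI device name seen by the host (one per path)
    lu_id: str  # hypothetical identifier of the storage-system LU behind that path


def group_into_host_lus(scsi_devices: List[ScsiDevice]) -> Dict[str, List[str]]:
    """Map each storage-system LU to the SCSI devices (paths) that reach it.

    Before HDLM, every SCSI device looks like a separate LU to the host.
    Each key of the returned dict stands for exactly one host LU (HLU).
    """
    lus: Dict[str, List[str]] = defaultdict(list)
    for dev in scsi_devices:
        lus[dev.lu_id].append(dev.name)
    return dict(lus)


if __name__ == "__main__":
    devices = [
        ScsiDevice("sdb", "LU-0"), ScsiDevice("sdc", "LU-0"),
        ScsiDevice("sdd", "LU-1"), ScsiDevice("sde", "LU-1"),
    ]
    host_lus = group_into_host_lus(devices)
    print(len(devices), "SCSI devices ->", len(host_lus), "host LUs")  # 4 SCSI devices -> 2 host LUs
```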


2.4 Program Configuration

HDLM is a combination of several programs. Because each program corresponds to a specific HDLM operation, it is important to understand the name and purpose of each program and how the programs are interrelated.

Figure 2-4: Configuration of the HDLM Programs shows the configuration of the HDLM programs.

Figure 2-4: Configuration of the HDLM Programs

Table 2-4: Functionality of HDLM Programs lists and describes the functions of these programs.

Table 2-4: Functionality of HDLM Programs

Program name: Functions

HDLM command: Provides the dlnkmgr command, which enables you to:
• Manage paths
• Display error information
• Set up the HDLM operating environment

HDLM utility: Provides the HDLM utility, which enables you to:
• Collect error information
• Define HDLM device configuration information
• Make an HDLM device available as a boot disk
• Clear HDLM persistent reservation
• Specify settings for the HDLM filter driver
• Perform tasks that are required after the installation of HDLM
• Re-register HDLM information
• Collect information about errors that occurred during the installation of HDLM
• Install HDLM

HDLM manager: Provides the HDLM manager, which enables you to:
• Configure the HDLM operating environment
• Request path health checks and automatic failbacks to be performed
• Collect error log data

HDLM alert driver: Reports the log information collected by the HDLM driver to the HDLM manager. The driver name is sddlmadrv.

HDLM driver: Controls all the HDLM functions, manages paths, and detects errors. The HDLM driver consists of the following:
• Core logic component: Controls the basic functionality of HDLM.
• Filter component: Sends and receives I/O data. The driver name is sddlmfdrv.

2.5 Position of the HDLM Driver and HDLM Device

The HDLM driver is positioned above the SCSI driver. Each application on the host uses the HDLM device (logical device file) created by HDLM to access LUs in the storage system.

Figure 2-5: Position of the HDLM Driver and HDLM Devices shows the position of the HDLM driver and HDLM devices.

Figure 2-5: Position of the HDLM Driver and HDLM Devices


2.6 Logical Device Files for HDLM Devices

The logical device file name of an HDLM device is different from the logical device file name of a SCSI device. When you configure applications such as volume management software to use the logical device file of an HDLM device, these applications can access the LUs that HDLM manages.

Table 2-5: Example of Using the Logical Device File Name of the Device Used When the Application Accesses the LU shows the logical device file names that an application uses to access an LU before and after HDLM installation.

Table 2-5: Example of Using the Logical Device File Name of the Device Used When the Application Accesses the LU

Host status: Device file name that the application uses

Before installing HDLM: The application uses the logical device file name for the SCSI device. Example: sda, sdb

After installing HDLM: The application uses the logical device file name for the HDLM device. Example: sddlmaa

The logical device file name of an HDLM device has the following format:

/dev/sddlm[aa-pap][1-15]

About alphabetic letters used in the logical device file name:

• For the first 256 LUs, two alphabetic letters are assigned. Each of the two characters is in the range from a to p.

• For the 257th and subsequent LUs, three alphabetic letters are assigned. The first and third characters are in the range from a to p, and the second character is always a.

• A major number is required for each of the first characters.

Figure 2-6: About Alphabetic Letters Used in the Logical Device File Name shows information about alphabetic letters used in the logical device file name.


Figure 2-6: About Alphabetic Letters Used in the Logical Device File Name
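The two-letter and three-letter ranges described above can be enumerated with a short function. This Python sketch assumes that names are assigned in lexicographic order with the last letter varying fastest; the actual assignment order is the one shown in Figure 2-6 and might differ. The function name `hdlm_lu_name` is hypothetical. Under this assumption, the last name generated is sddlmpap, which matches the upper bound in /dev/sddlm[aa-pap].

```python
LETTERS = "abcdefghijklmnop"  # the 16 letters a to p


def hdlm_lu_name(index):
    """Return the HDLM logical device name for a zero-based LU index.

    ASSUMPTION: names are handed out in lexicographic order with the last
    letter varying fastest (aa, ab, ..., pp, then aaa, aab, ..., pap).
    """
    if 0 <= index < 256:
        # First 256 LUs: two letters, each a to p (16 x 16 = 256 names).
        first, second = divmod(index, 16)
        return "sddlm" + LETTERS[first] + LETTERS[second]
    if 256 <= index < 512:
        # 257th and later LUs: three letters; the second is always "a".
        first, third = divmod(index - 256, 16)
        return "sddlm" + LETTERS[first] + "a" + LETTERS[third]
    raise ValueError(f"LU index out of range: {index}")


for i in (0, 1, 16, 255, 256, 511):
    print(i, hdlm_lu_name(i))
# 0 sddlmaa
# 1 sddlmab
# 16 sddlmba
# 255 sddlmpp
# 256 sddlmaaa
# 511 sddlmpap
```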

About numeric values used in a logical device file name:

[1-15] indicates a partition number in the applicable LU. For example, if the logical device file name of an HDLM device is sddlmaa1, it indicates partition 1 on sddlmaa. To specify the entire LU, simply use sddlmaa. Note that HDLM creates block device files. The system dynamically selects the major number of the block device that this file uses.
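The naming rules for LUs and partitions can be checked with a small parser. In this Python sketch, the function name `parse_hdlm_device` and the choice to accept names with or without the /dev/ prefix are assumptions made for illustration. The sketch only validates a name against the format described above; it does not check whether the device file actually exists.

```python
import re

# "sddlm", two or three letters from a to p, then an optional partition
# number without a leading zero. The "/dev/" prefix is optional.
_HDLM_NAME = re.compile(r"^(?:/dev/)?(sddlm[a-p]{2,3})([1-9][0-9]?)?$")


def parse_hdlm_device(name):
    """Split an HDLM logical device file name into (LU name, partition).

    partition is None when the name refers to the entire LU.
    """
    match = _HDLM_NAME.match(name)
    if match is None:
        raise ValueError(f"not an HDLM device name: {name!r}")
    lu_name, partition = match.group(1), match.group(2)
    # In three-letter names, the second letter is always "a".
    if len(lu_name) == 8 and lu_name[6] != "a":
        raise ValueError(f"invalid three-letter HDLM name: {name!r}")
    if partition is None:
        return lu_name, None
    number = int(partition)
    if not 1 <= number <= 15:
        raise ValueError(f"partition number out of range 1-15: {name!r}")
    return lu_name, number


print(parse_hdlm_device("/dev/sddlmaa1"))  # ('sddlmaa', 1)
print(parse_hdlm_device("/dev/sddlmaa"))   # ('sddlmaa', None)
```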


2.7 Distributing a Load Using Load Balancing

When the system contains multiple paths to a single LU, HDLM can distribute the load across the paths by transferring I/O data over multiple paths. This function is called load balancing, and it prevents a single, heavily loaded path from affecting the performance of the entire system.

Note that some I/O operations managed by HDLM can be distributed across paths, while others cannot. Therefore, even when the load balancing function is used, I/O operations might not be allocated equally to each path.

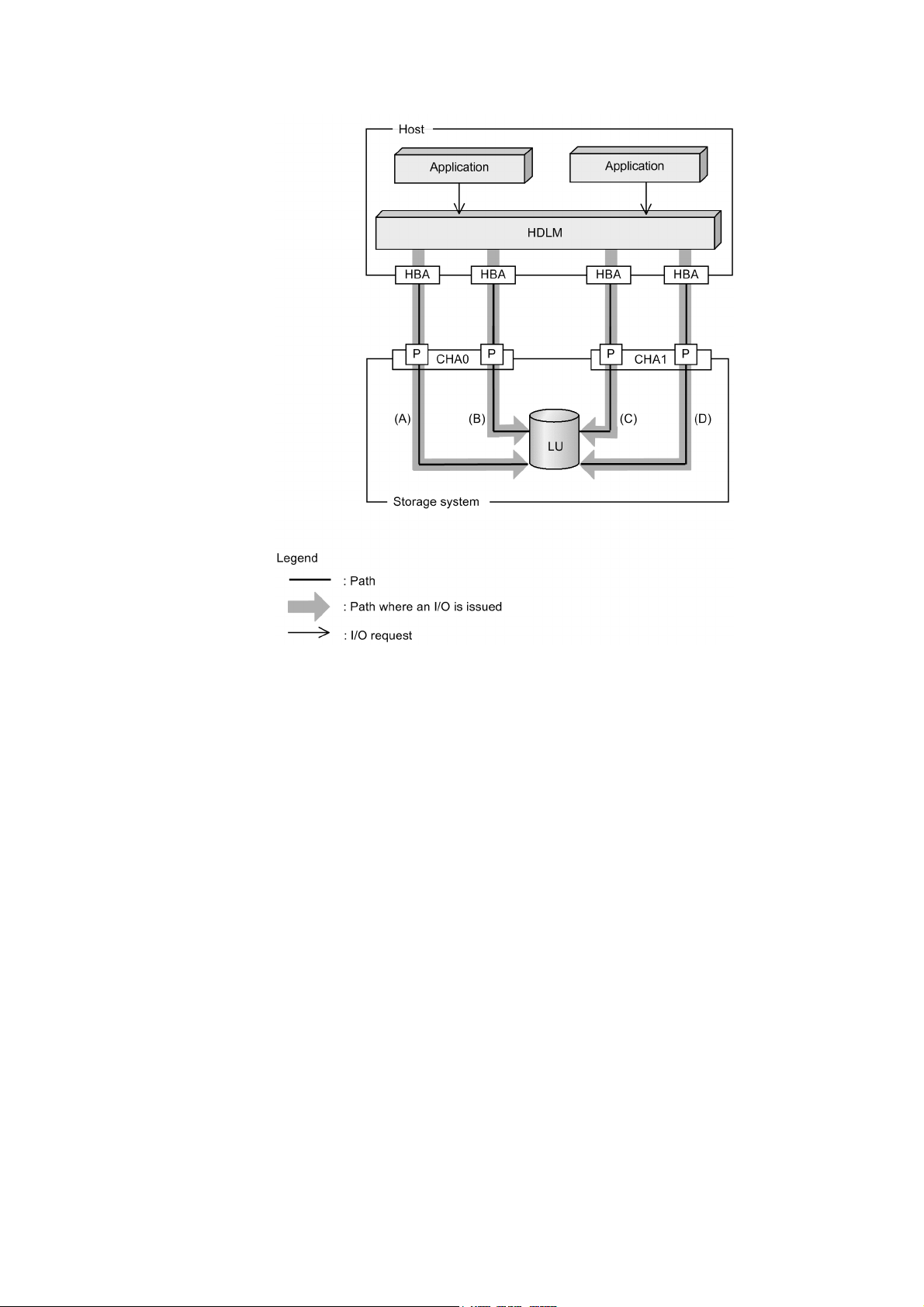

Figure 2-7: Flow of I/O Data When the Load Balancing Function Is Not Used shows the flow of I/O data when the load balancing function is not used. Figure 2-8: Flow of I/O Data When the Load Balancing Function Is Used shows the flow of I/O data when the load balancing function is used. Both figures show examples of I/O operations being issued for the same LU by multiple applications.

Figure 2-7: Flow of I/O Data When the Load Balancing Function Is Not Used

When the load balancing function is not used, I/O operations converge onto a single path (A). The load concentrated on path A can cause a bottleneck, which might degrade system performance.

Page 45

2. HDLM Functions

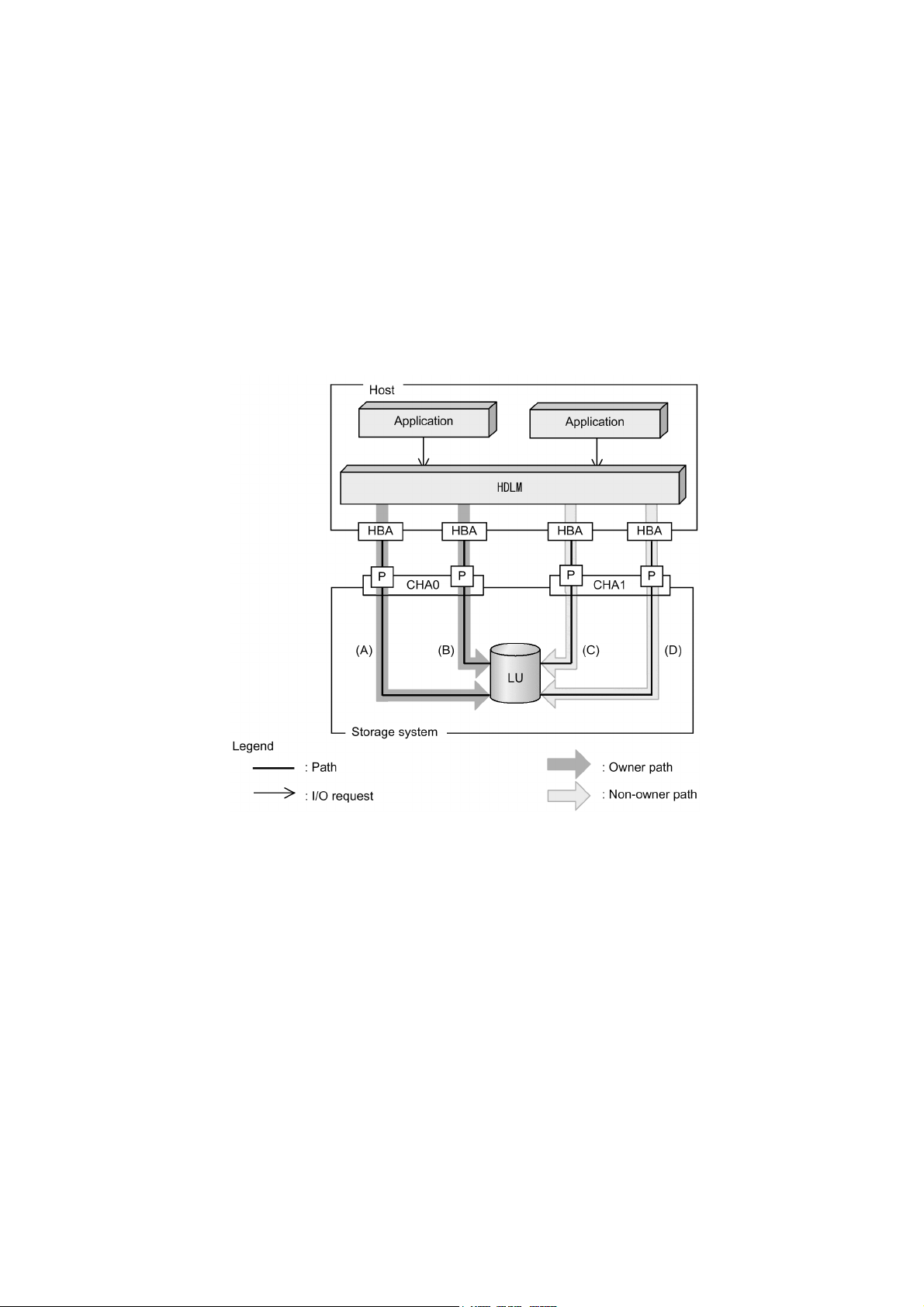

Figure 2-8: Flow of I/O Data When the Load Balancing Function Is Used

When the load balancing function is used, I/O operations are distributed via multiple paths (A, B, C, and D). This helps prevent bottlenecks and the performance problems they cause.

2.7.1 Paths To Which Load Balancing Is Applied

This subsection describes, for each type of storage system, the paths to which the load balancing function is applied.

(1) When Using the Thunder 9500V Series, or Hitachi AMS/WMS series

HDLM performs load balancing either among owner paths or among non-owner paths. An owner path is a path that passes through the CHA that is set as the owner controller of the storage system LU. Because the owner controller varies depending on the LU, the owner path also varies depending on the LU. A non-owner path is a path that uses a CHA other than the owner controller (a non-owner controller). Paths used for load balancing are selected from owner paths first, then from non-owner paths. To prevent performance in the entire system from deteriorating, HDLM does not perform load balancing between owner paths and non-owner paths. When some owner paths cannot be used due to a problem such as a failure, load balancing is performed among the remaining usable owner paths. When none of the owner paths can be used, load balancing is performed among the non-owner paths.

For the example in Figure 2-9: Overview of Load Balancing, suppose that the owner controller of LU0 is CHA0. When the LU is accessed, the load is balanced between the two paths A and B, which are both owner paths. When one of the paths (A) cannot be used, the LU is accessed from the only other owner path (B). When neither owner path (A or B) can be used, the load is balanced between the two non-owner paths (C and D).
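The owner-path rule above can be expressed as a small filter. This Python sketch only models which paths are candidates for load balancing; it does not model how HDLM detects path failures or determines the owner controller, and the `Path` class and the function name `load_balancing_paths` are hypothetical. For storage systems in which all online paths are owner paths, the same function simply returns every usable path.

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str
    owner: bool   # True if the path passes through the LU's owner controller
    usable: bool  # False if the path cannot be used, e.g. after a failure


def load_balancing_paths(paths):
    """Return the names of the paths that load balancing may use for one LU.

    Usable owner paths are preferred. Non-owner paths are used only when no
    owner path is usable, and the two kinds are never mixed.
    """
    owners = [p.name for p in paths if p.usable and p.owner]
    if owners:
        return owners
    return [p.name for p in paths if p.usable and not p.owner]


# Figure 2-9 example: A and B are owner paths of LU0; C and D are not.
paths = [Path("A", True, True), Path("B", True, True),
         Path("C", False, True), Path("D", False, True)]
print(load_balancing_paths(paths))  # ['A', 'B']
paths[0].usable = False
print(load_balancing_paths(paths))  # ['B']
paths[1].usable = False
print(load_balancing_paths(paths))  # ['C', 'D']
```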

Figure 2-9: Overview of Load Balancing

(2) When Using the Lightning 9900 Series, Lightning 9900V Series, Hitachi USP, Universal Storage Platform V/VM, Virtual Storage Platform, Hitachi AMS2000 Series, or Hitachi SMS

All online paths are owner paths. Thus, for the example in Figure 2-8: Flow of I/O Data When the Load Balancing Function Is Used, the load is balanced among the four paths A, B, C, and D. If one of the paths becomes unusable, the load is balanced among the three remaining paths.

2.7.2 Load Balancing Algorithms

HDLM has the following six load balancing algorithms:

• The Round Robin algorithm

• The Extended Round Robin algorithm

• The Least I/Os algorithm

• The Extended Least I/Os algorithm

• The Least Blocks algorithm

• The Extended Least Blocks algorithm

The above algorithms are divided into two categories that differ in their processing method. The following describes both processing methods:

The Round Robin, Least I/Os, and Least Blocks algorithms

These algorithms select the path to use each time a certain number of I/Os has been issued. The path that is used is determined as follows:

• Round Robin

The paths are simply selected in order from among all the connected paths.

• Least I/Os

The path that has the least number of I/Os being processed is selected from

among all the connected paths.

• Least Blocks

The path that has the least number of I/O blocks being processed is selected

from among all the connected paths.
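The selection rules of these three algorithms can be sketched as follows. This Python model assumes that per-path counts of in-flight I/Os and I/O blocks are available as plain numbers (in a running system they would change as I/Os are issued and completed) and that ties go to the first path in the list. The class, field, and parameter names, including `switch_count` for the "certain number of I/Os", are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PathStats:
    name: str
    ios_in_flight: int = 0     # number of I/Os being processed on this path
    blocks_in_flight: int = 0  # number of I/O blocks being processed on this path


class PathSelector:
    """Choose a path again after every `switch_count` I/Os."""

    ALGORITHMS = ("round_robin", "least_ios", "least_blocks")

    def __init__(self, paths, algorithm, switch_count):
        if algorithm not in self.ALGORITHMS:
            raise ValueError(f"unknown algorithm: {algorithm}")
        self.paths = paths
        self.algorithm = algorithm
        self.switch_count = switch_count
        self.current = -1  # index of the path in use; -1 before the first I/O
        self.issued = 0    # I/Os issued on the current path since it was chosen

    def _choose(self):
        indices = range(len(self.paths))
        if self.algorithm == "round_robin":
            # Take the paths in order, wrapping around at the end.
            return (self.current + 1) % len(self.paths)
        if self.algorithm == "least_ios":
            return min(indices, key=lambda i: self.paths[i].ios_in_flight)
        return min(indices, key=lambda i: self.paths[i].blocks_in_flight)

    def next_path(self):
        """Return the name of the path to use for the next I/O."""
        if self.current < 0 or self.issued >= self.switch_count:
            self.current = self._choose()
            self.issued = 0
        self.issued += 1
        return self.paths[self.current].name


paths = [PathStats("A"), PathStats("B"), PathStats("C")]
rr = PathSelector(paths, "round_robin", switch_count=2)
print([rr.next_path() for _ in range(7)])  # ['A', 'A', 'B', 'B', 'C', 'C', 'A']

paths[0].ios_in_flight, paths[1].ios_in_flight, paths[2].ios_in_flight = 5, 1, 3
print(PathSelector(paths, "least_ios", switch_count=2).next_path())  # B
```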

The Extended Round Robin, Extended Least I/Os, and Extended Least Blocks algorithms

These algorithms determine which path to allocate based on whether the I/O to be issued is sequential with the immediately preceding I/O.

If the I/O is sequential with the previous I/O, the path to which the previous I/O was distributed is used. However, if a specified number of I/Os has been issued to that path, processing switches to the next path.
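The sequential-I/O rule can be sketched as follows. This Python model treats an I/O as sequential when it starts at the block immediately after the end of the previous I/O. That definition, the handling of non-sequential I/Os (here, the path is chosen again by the underlying Round Robin, Least I/Os, or Least Blocks rule), and all names, including `switch_count` for the "specified number of I/Os", are assumptions for illustration rather than documented HDLM behavior.

```python
from dataclasses import dataclass


@dataclass
class PathStats:
    name: str
    ios_in_flight: int = 0     # I/Os being processed on this path
    blocks_in_flight: int = 0  # I/O blocks being processed on this path


class ExtendedPathSelector:
    """Keep sequential I/Os on one path, up to `switch_count` I/Os per path."""

    def __init__(self, paths, algorithm, switch_count):
        if algorithm not in ("round_robin", "least_ios", "least_blocks"):
            raise ValueError(f"unknown algorithm: {algorithm}")
        self.paths = paths
        self.algorithm = algorithm
        self.switch_count = switch_count
        self.current = -1       # index of the path in use; -1 before the first I/O
        self.issued = 0         # I/Os issued on the current path since it was chosen
        self.next_block = None  # first block of an I/O that would be sequential

    def _choose(self):
        indices = range(len(self.paths))
        if self.algorithm == "round_robin":
            return (self.current + 1) % len(self.paths)
        if self.algorithm == "least_ios":
            return min(indices, key=lambda i: self.paths[i].ios_in_flight)
        return min(indices, key=lambda i: self.paths[i].blocks_in_flight)

    def next_path(self, start_block, num_blocks):
        """Return the path name for an I/O covering num_blocks from start_block."""
        sequential = self.current >= 0 and start_block == self.next_block
        if not sequential or self.issued >= self.switch_count:
            # ASSUMPTION: a non-sequential I/O is placed by the base algorithm.
            self.current = self._choose()
            self.issued = 0
        self.issued += 1
        self.next_block = start_block + num_blocks
        return self.paths[self.current].name


sel = ExtendedPathSelector([PathStats("A"), PathStats("B")], "round_robin", switch_count=3)
ios = [(0, 8), (8, 8), (16, 8), (24, 8), (100, 8)]
print([sel.next_path(start, count) for start, count in ios])
# ['A', 'A', 'A', 'B', 'A']
```

In this run, the first three sequential I/Os stay on path A, the fourth moves to B because three I/Os have already been issued to A, and the non-sequential I/O at block 100 is placed by the round-robin rule.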