Page 1

HP SureStore E Disk Array FC60

Advanced User’s Guide

This manual was downloaded from http://www.hp.com/support/fc60/


Edition E1200

Printed in U.S.A.

Page 2

Notice

© Hewlett-Packard Company, 1999, 2000. All rights reserved.

Hewlett-Packard Company makes no warranty of any kind with regard to this document, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with the furnishing, performance, or use of this material.

This document contains proprietary information, which is protected by copyright. No part of this document may be photocopied, reproduced, or translated into another language without the prior written consent of Hewlett-Packard. The information contained in this document is subject to change without notice.

Trademark Information

Microsoft, Windows, Windows NT, and Windows 2000 are registered trademarks of the Microsoft Corporation.

Safety Notices

Warning

Weight Exceeds 100 lbs. (45 kg.)

Do NOT lift unassisted. Use a lift device or two people.

To protect against personal injury and product damage, do not attempt to lift the product without the assistance of another person or lift device.

Components bearing this symbol may be hot to touch.

Components bearing this symbol are fragile. Handle with care.

Components bearing this symbol are susceptible to damage by static electricity. ESD precautions are required.

Service

Any servicing, adjustment, maintenance, or repair must be performed only by authorized service-trained personnel.


Page 3

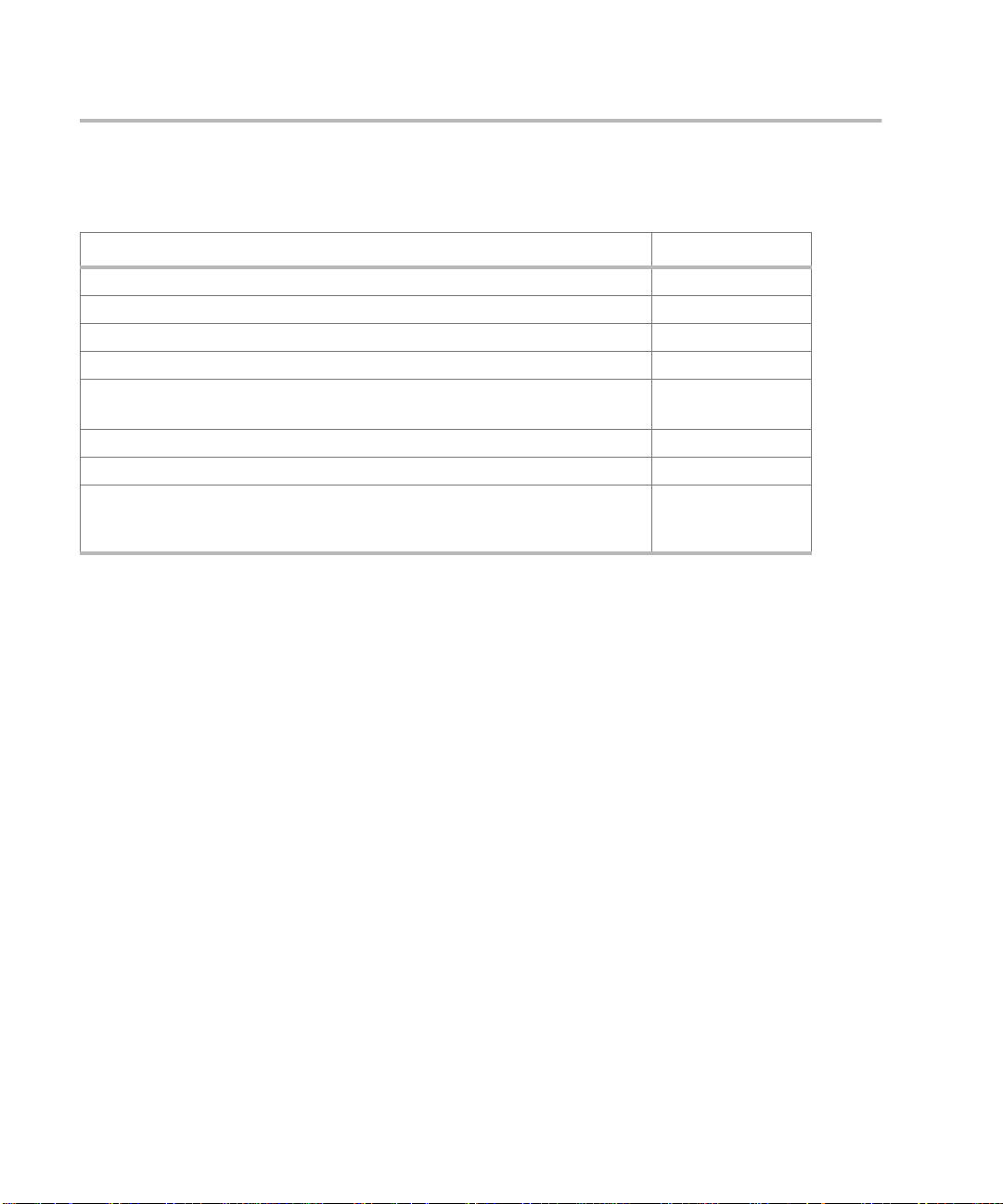

Format Conventions

|  | Denotes |
| --- | --- |
| WARNING | A hazard that can cause personal injury |
| Caution | A hazard that can cause hardware or software damage |
| Note | Significant concepts or operating instructions |
| this font | Text to be typed verbatim: all commands, path names, file names, and directory names |
| this font | Text displayed on the screen |

Printing History

1st Edition - September 1999

2nd Edition - October 1999

3rd Edition - February 2000

4th Edition - July 2000

5th Edition - September 2000

6th Edition - October 2000

7th Edition - December 2000


Page 4

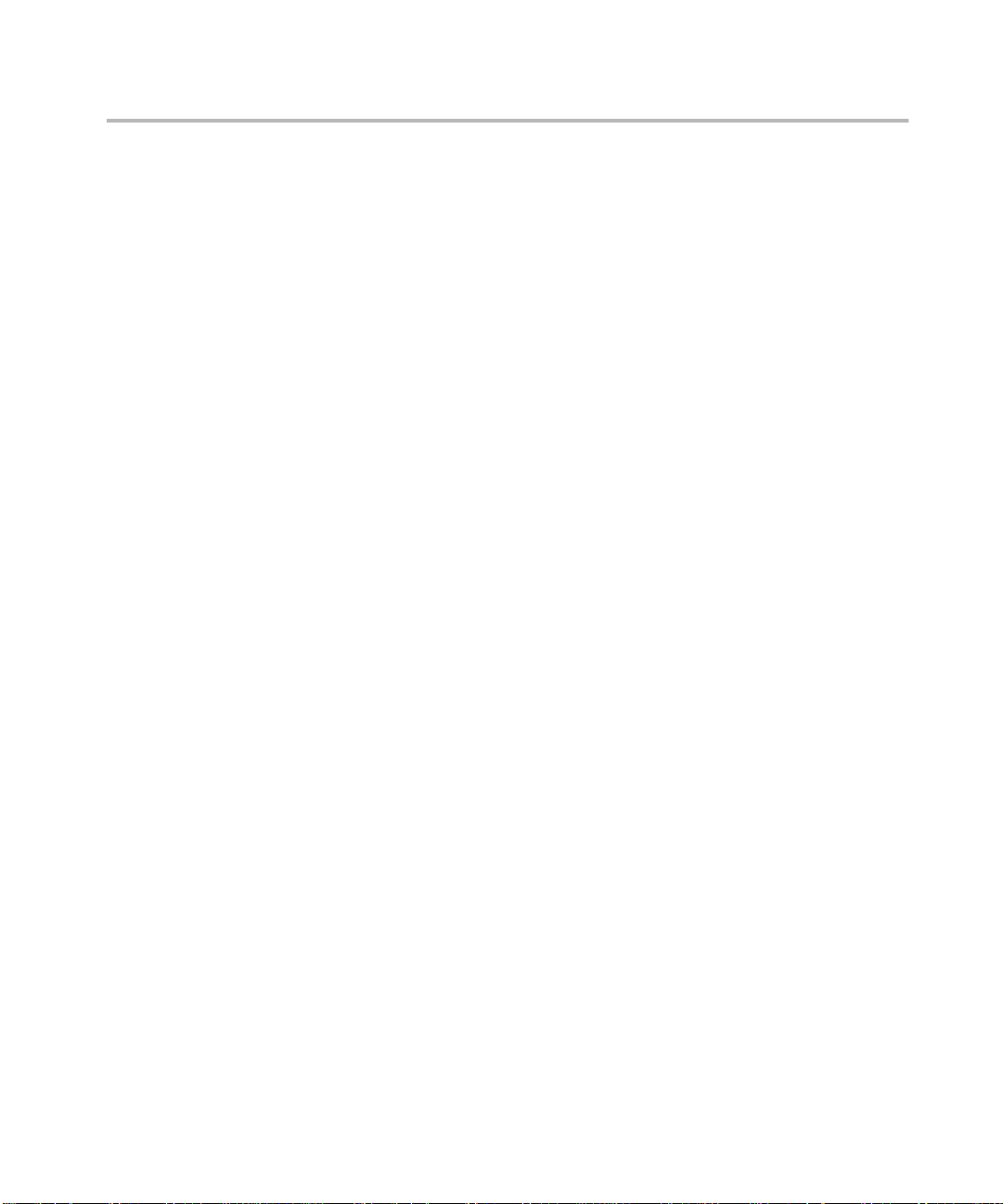

Manual Revision History

December 2000

| Change | Page |
| --- | --- |
| Added Figure 87 to clarify operation of the write cache flush thresholds. | 253 |
| Added note regarding the impact of LUN binding on performance. | 250 |
| Added information on Managing the Universal Transport Mechanism (UTM). | 298 |
| Added information on major event logging available with firmware HP08. | 307 |
| Added Allocating Space for Disk Array Logs section describing use of environment variable AM60_MAX_LOG_SIZE_MB. | 308 |
| Added information on Purging Controller Logs. | 311 |
| Added information for RAID 0 support on HP-UX. | 47 |
| Changed the required minimum number of disk modules per enclosure from 2 to 4 based on power supply requirements for the disk enclosure. | 73 |


Page 5

About This Book

This guide is intended for use by system administrators and others involved in operating and managing the HP SureStore E Disk Array FC60. It is organized into the following chapters and sections.

| Chapter | Description |
| --- | --- |
| Chapter 1, Product Description | Describes the features, controls, and operation of the disk array. |
| Chapter 2, Topology and Array Planning | Guidelines for designing the disk array configuration that best meets your needs. |
| Chapter 3, Installation | Instruction for moving the disk array. |
| Chapter 4, Managing the Disk Array on HP-UX | Complete instructions for managing your disk array using the available management software. |
| Chapter 5, HP-UX Diagnostic Tools | Information on using STM to gather information about disk array status. |
| Chapter 6, Troubleshooting | Instructions for isolating and solving common problems that may occur during disk array operation. |
| Chapter 7, Removal and Replacement | Instructions for removing and replacing all customer-replaceable components. |
| Chapter 8, Reference / Legal / Regulatory | Regulatory, environmental, and other reference information. |
| Glossary | |
| Index | |


Page 6

Related Documents and Information

The following items contain information related to the installation and use of the HP SureStore E Disk Array and its management software.

• HP SureStore E Disk Array FC60 Advanced User’s Guide - this is the expanded version of the book you are reading. Topics that are discussed in more detail in the Advanced User’s Guide are clearly identified throughout this book.
Download: www.hp.com/support/fc60

• HP Storage Manager 60 User’s Guide - this guide describes the features and operation of the disk array management software for Windows NT and Windows 2000. It is included with the A5628A software kit.
Download: www.hp.com/support/fc60

• HP Storage Manager 60 Introduction Guide - this guide introduces the disk array management software for Windows NT and Windows 2000. It is included in electronic format on the Storage Manager 60 CD.
Download: www.hp.com/support/fc60

• Fibre Channel Mass Storage Adapters Service and User Manual (A3636-90002) - describes HP Fibre Channel Mass Storage/9000. It describes installation of the Fibre Channel I/O adaptors into K-, D-, T-, and V-class systems.
Download: www.hp.com/essd/efc/A3636A_documentation.html

• Using EMS HA Monitors (B5735-90001) - contains information about the EMS environment used for hardware monitoring.
Download: http://www.docs.hp.com/hpux/ha/

• EMS Hardware Monitors User’s Guide - describes how to use the EMS Hardware Monitors to protect your system from undetected failures.
Download: http://www.docs.hp.com/hpux/systems/

• Diagnostic/IPR Media User’s Guide (B6191-90015) - provides information on using STM, and enabling the EMS Hardware Event Monitors.
Download: http://www.docs.hp.com/hpux/systems/

• Managing MC/ServiceGuard (B3939-90024) - provides information on creating package dependencies for hardware resources.
Download: http://www.docs.hp.com/hpux/ha/

Page 7

1 Product Description

Product Description . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .18

Operating System Support. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .20

Management Tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .20

Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .20

High Availability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .21

Scalable Storage Capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .21

LED Status Monitoring. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .22

EMS Hardware Event Monitoring (HP-UX Only) . . . . . . . . . . . . . . . . . . . . . . . . .22

Disk Enclosure Components. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .23

Operation Features. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .25

Disk Enclosure SC10 Modules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .27

Array Controller Enclosure Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .34

Front Cover . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .37

Controller Modules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .38

Controller Fan Modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .40

Power Supply Modules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .42

Power Supply Fan Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .43

Battery Backup Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .45

Disk Array High Availability Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .47

RAID Technology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .47

Disk Mirroring . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .47

Data Parity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .48

Data Striping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .49

RAID Levels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .50

RAID Level Comparisons. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .57

Global Hot Spare Disks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .61

Primary and Alternate I/O Paths . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .64

Capacity Management Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .65

LUNs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .65

Contents


Page 8

Disk Groups. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .65

Disk Array Caching. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .66

Dynamic Capacity Expansion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .67

2 Topology and Array Planning

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .70

Array Design Considerations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .71

Array Hardware Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .71

RAID, LUNs, and Global Hot Spares . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .72

High Availability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .72

Performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .73

Storage Capacity. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .75

Expanding Storage Capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .75

Recommended Disk Array Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .77

Configuration Considerations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .77

One Disk Enclosure Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .78

Two Disk Enclosure Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .80

Three Disk Enclosure Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .84

Four Disk Enclosure Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .88

Five Disk Enclosure Configurations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .92

Six Disk Enclosure Configurations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .96

Total Disk Array Capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .100

For high-availability, one disk per SCSI channel is used as a global hot spare.. . 101

Topologies for HP-UX . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .102

Basic Topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .103

Single-System Distance Topology. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .110

High Availability Topology. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .115

High Availability, Distance, and Capacity Topology . . . . . . . . . . . . . . . . . . . . .120

Campus Topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .125

Performance Topology with Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .129

Topologies for Windows NT and Windows 2000 . . . . . . . . . . . . . . . . . . . . . . . . . . .131


Page 9

Non-High Availability Topologies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .133

High Availability Topologies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .137

3 Installation

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .144

Host System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .145

HP-UX. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .145

Windows NT and Windows 2000. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .146

Site Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .147

Environmental Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .147

Electrical Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .147

Power Distribution Units (PDU/PDRU). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .150

Installing PDUs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .152

Recommended UPS Models. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .152

Installing the Disk Array FC60 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .155

Installing the Disk Enclosures. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .160

Step 1: Collect Required Tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .160

Step 2: Unpack the Product . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .160

Step 3: Install Mounting Rails . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .162

Step 4: Install the Disk Enclosure. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .162

Step 5: Install Disks and Fillers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .166

Moving a Disk Enclosure from One Disk Array to Another . . . . . . . . . . . . . . .168

Installing the Controller. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .170

Step 1: Gather Required Tools. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .170

Step 2: Unpack the Product . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .170

Step 3: Install Mounting Rails . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .173

Step 4: Install the Controller Enclosure. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .173

Configuration Switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .176

Disk Enclosure (Tray) ID Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .176

Disk Enclosure DIP Switch . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .176

Fibre Channel Host ID Address Setting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .179


Page 10

Attaching Power Cords . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .183

Attaching SCSI Cables and Configuring the Disk Enclosure Switches. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .187

Full-Bus Cabling and Switch Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . .188

Split-Bus Switch and Cabling Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . .191

Connecting the Fibre Channel Cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .196

Applying Power to the Disk Array . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .198

Verifying Disk Array Connection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .206

On Windows NT and Windows 2000. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .206

On HP-UX. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .206

Interpreting Hardware Paths. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .208

Installing the Disk Array FC60 Software (HP-UX Only) . . . . . . . . . . . . . . . . . . . . .213

System Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .213

Verifying the Operating System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .214

Installing the Disk Array FC60 Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .214

Downgrading the Disk Array Firmware for HP-UX 11.11 Hosts. . . . . . . . . . . .215

Configuring the Disk Array . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .216

HP-UX. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .216

Windows NT and Windows 2000. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .220

Using the Disk Array FC60 as a Boot Device (HP-UX Only). . . . . . . . . . . . . . . . . .222

Solving Common Installation Problems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .223

Adding Disk Enclosures to Increase Capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . .224

General Rules for Adding Disk Enclosures to the Disk Array . . . . . . . . . . . . .224

Step 1. Plan the Expanded Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .225

Step 2. Backup All Disk Array Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .226

Step 3. Prepare the Disk Array for Shut Down . . . . . . . . . . . . . . . . . . . . . . . . . .226

Step 4. Add the New Disk Enclosures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .227

Step 5. Completing the Expansion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .230

Capacity Expansion Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .232


Page 11

4 Managing the Disk Array on HP-UX

Tools for Managing the Disk Array FC60. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .238

System Administration Manager (SAM). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .238

Array Manager 60 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .238

STM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .238

Installing the Array Manager 60 Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .240

AM60Srvr Daemon . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .241

Running Array Manager 60. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .241

Managing Disk Array Capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .242

Configuring LUNs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .242

Selecting Disks for a LUN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .243

Assigning LUN Ownership . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .247

Selecting a RAID Level . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .247

Global Hot Spares . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .248

Setting Stripe Segment Size . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .249

Evaluating Performance Impact . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .250

Adding Capacity to the Disk Array. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .254

Adding More Disk Modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .254

Adding Additional Disk Enclosures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .256

Replacing Disk Modules with Higher Capacity Modules. . . . . . . . . . . . . . . . . .256

Upgrading Controller Cache to 512 Mbytes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .258

Managing the Disk Array Using SAM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .260

Checking Disk Array Status. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .261

Assigning an Alias to the Disk Array. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .264

Locating Disk Modules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .265

Binding a LUN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .267

Unbinding a LUN. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .271

Replacing a LUN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .271

Adding a Global Hot Spare. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .273

Removing a Global Hot Spare . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .275


Page 12

Managing the Disk Array Using Array Manager 60. . . . . . . . . . . . . . . . . . . . . . . . . .276

Command Syntax Conventions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .279

Array Manager 60 man pages. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .279

Quick Help . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .279

Selecting a Disk Array and Its Components . . . . . . . . . . . . . . . . . . . . . . . . . . . .280

Preparing to Manage the Disk Array . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .281

Checking Disk Array Status. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .282

Managing LUNs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .289

Managing Global Hot Spares. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .295

Managing Disk Array Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .297

Managing the Universal Transport Mechanism (UTM) . . . . . . . . . . . . . . . . . . .298

Managing Cache Parameters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .299

Performing Disk Array Maintenance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .304

Managing Disk Array Logs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .307

Upgrading Disk Firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .313

Managing the Disk Array Using STM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .314

Checking Disk Array Status Information. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .314

Binding a LUN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .314

Unbinding a LUN. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .315

Adding a Global Hot Spare. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .315

Removing a Global Hot Spare . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .315

Locating Disk Modules. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .316

Status Conditions and Sense Code Information. . . . . . . . . . . . . . . . . . . . . . . . . . . .317

LUN Status Conditions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .317

Disk Status Conditions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .319

Component Status Conditions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .321

FRU Codes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .322

SCSI Sense Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .327

5 HP-UX Diagnostic Tools

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .346


Page 13

Support Tools Manager . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .347

STM User Interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .347

STM Tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .351

Using the STM Information Tool. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .352

Interpreting the Information Tool Information Log . . . . . . . . . . . . . . . . . . . . . .354

Interpreting the Information Tool Activity Log. . . . . . . . . . . . . . . . . . . . . . . . . .354

Using the STM Expert Tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .355

6 Troubleshooting

Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .360

About Field Replaceable Units (FRUs) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .361

HP-UX Troubleshooting Tools. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .362

Windows NT and Windows 2000 Troubleshooting Tools . . . . . . . . . . . . . . . . .362

EMS Hardware Event Monitoring (HP-UX Only) . . . . . . . . . . . . . . . . . . . . . . . .362

Disk Array Installation/Troubleshooting Checklist . . . . . . . . . . . . . . . . . . . . . . . . . 365

Power-Up Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .366

Controller Enclosure Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .367

Introduction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .367

Controller Enclosure LEDs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .368

Master Troubleshooting Table. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .370

SureStore E Disk System SC10 Troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . .376

Disk Enclosure LEDs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .376

Losing LUN 0 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .376

Interpreting Component Status Values (HP-UX Only). . . . . . . . . . . . . . . . . . . .379

Isolating Causes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .380


7 Removal and Replacement

Overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .384

Disk Enclosure Modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .386

Disk Module or Filler Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .386


Page 14

Disk Enclosure Fan Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .392

Disk Enclosure Power Supply Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .394

Controller Enclosure Modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .396

Front Cover Removal/Replacement . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .397

Controller Fan Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .398

Battery Backup Unit (BBU) Removal/Replacement. . . . . . . . . . . . . . . . . . . . . .400

Power Supply Fan Module Removal/Replacement. . . . . . . . . . . . . . . . . . . . . . .403

Power Supply Module Removal/Replacement . . . . . . . . . . . . . . . . . . . . . . . . . .405

SCSI Cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .407

8 Reference / Legal / Regulatory

System Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .410

Host Systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .410

Supported Operating Systems. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .410

Fibre Channel Host Adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .411

Models and Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .412

A5277A/AZ Controller Enclosure Models and Options . . . . . . . . . . . . . . . . . . .412

A5294A/AZ Disk Enclosure SC10 Models and Options . . . . . . . . . . . . . . . . . . .414

Disk Array FC60 Upgrade and Add-On Products . . . . . . . . . . . . . . . . . . . . . . . .416

PDU/PDRU Products . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .417

Replaceable Parts. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .418

A5277A/AZ Controller Enclosure Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . .420

Dimensions: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .420

Weight: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .420

AC Power: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .421

Heat Output: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .421

Environmental Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .422

Acoustics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .423

A5294A/AZ Disk Enclosure Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .424

Dimensions: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .424

Weight: . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .424


Page 15

AC Power:. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .425

DC Power Output:. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .425

Heat Output:. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .425

Environmental Specifications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .426

Acoustics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .427

Warranty and License Information. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .428

Hewlett-Packard Hardware Limited Warranty . . . . . . . . . . . . . . . . . . . . . . . . . .428

Software Product Limited Warranty. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .429

Limitation of Warranty . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .429

Hewlett-Packard Software License Terms. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .431

Regulatory Compliance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .434

Safety Certifications:. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .434

EMC Compliance. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .434

FCC Statements (USA Only) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .435

IEC Statement (Worldwide). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .435

CSA Statement (For Canada Only). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .435

VCCI Statement (Japan). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .436

Harmonics Conformance (Japan). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .436

Class A Warning Statement (Taiwan). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .436

Spécification ATI Classe A (France Seulement). . . . . . . . . . . . . . . . . . . . . . . . .437

Product Noise Declaration (For Germany Only) . . . . . . . . . . . . . . . . . . . . . . . .437

Geräuschemission (For Germany Only) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .438

Declaration of Conformity. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .439


Page 16


Page 17

1 PRODUCT DESCRIPTION

Product Description. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

Disk Enclosure Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Array Controller Enclosure Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

Disk Array High Availability Features. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Capacity Management Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65


Page 18

Product Description

The HP SureStore E Disk Array FC60 (Disk Array FC60) is a disk storage system that

features high data availability, high performance, and storage scalability. To provide high

availability, the Disk Array FC60 uses redundant, hot swappable modules, which can be

replaced without disrupting disk array operation should they fail.

The Disk Array FC60 consists of two primary components: an FC60 controller enclosure,

and from one to six HP SureStore E Disk System SC10 enclosures (referred to throughout

this document as simply disk enclosures). The controller enclosure is responsible for

providing overall control of the Disk Array FC60 by managing the communication between

the host and the disk enclosures. Host communication is done through dual Fibre Channel

arbitrated loops (when dual controller modules are installed). By using Fibre Channel, the

Disk Array FC60 achieves a high data rate throughput. High data throughput is maintained

to the disks by using up to six Ultra2 SCSI channels to the disk enclosures (one channel for

each disk enclosure).

In addition to increased performance, the use of multiple disk enclosures provides

scalability — simplifying the process of adding storage capacity as needed. Up to six disk

enclosures can be added incrementally as storage demands increase. Each disk enclosure

holds up to ten disk modules in capacities of 9.1 Gbyte, 18.2 Gbyte, 36.4 Gbyte, or 73.4

Gbyte. A fully loaded system comprising six disk enclosures, each populated with ten 73.4-Gbyte disk modules, achieves a capacity of over 3 Tbytes.

The Disk Array FC60 enclosures are designed for installation in HP’s original 19-inch

cabinets, which include the C2785A (1.1 m), C2786A (1.6 m), C2787A (2 m), A1896A (1.1 m),

and A1897A (1.6 m), and in the HP Rack System/E racks, which include the A490xA and

A150xA Rack System/E cabinets. The Disk Array FC60 is also supported in the Rittal 9000

Series racks.


Page 19

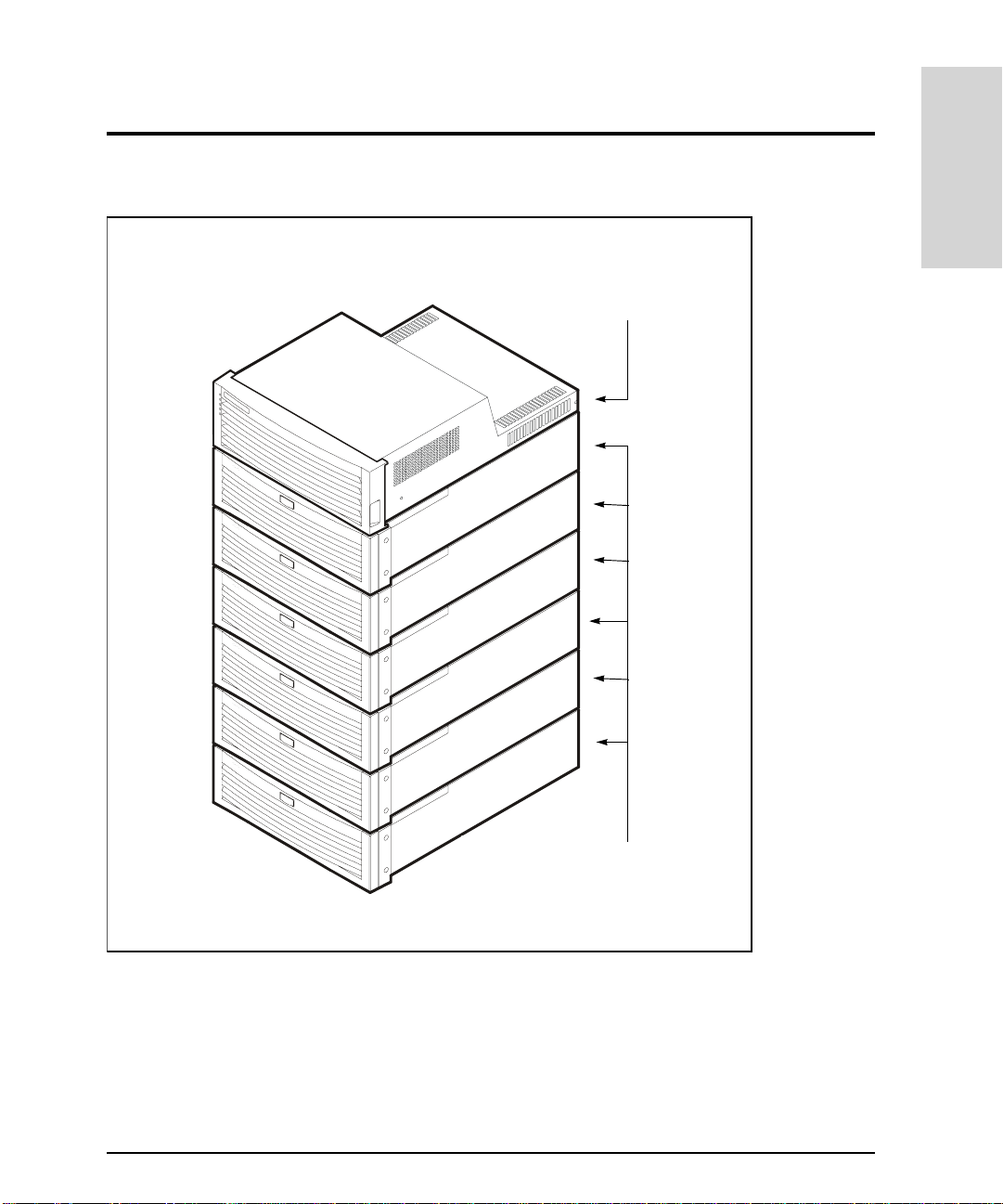

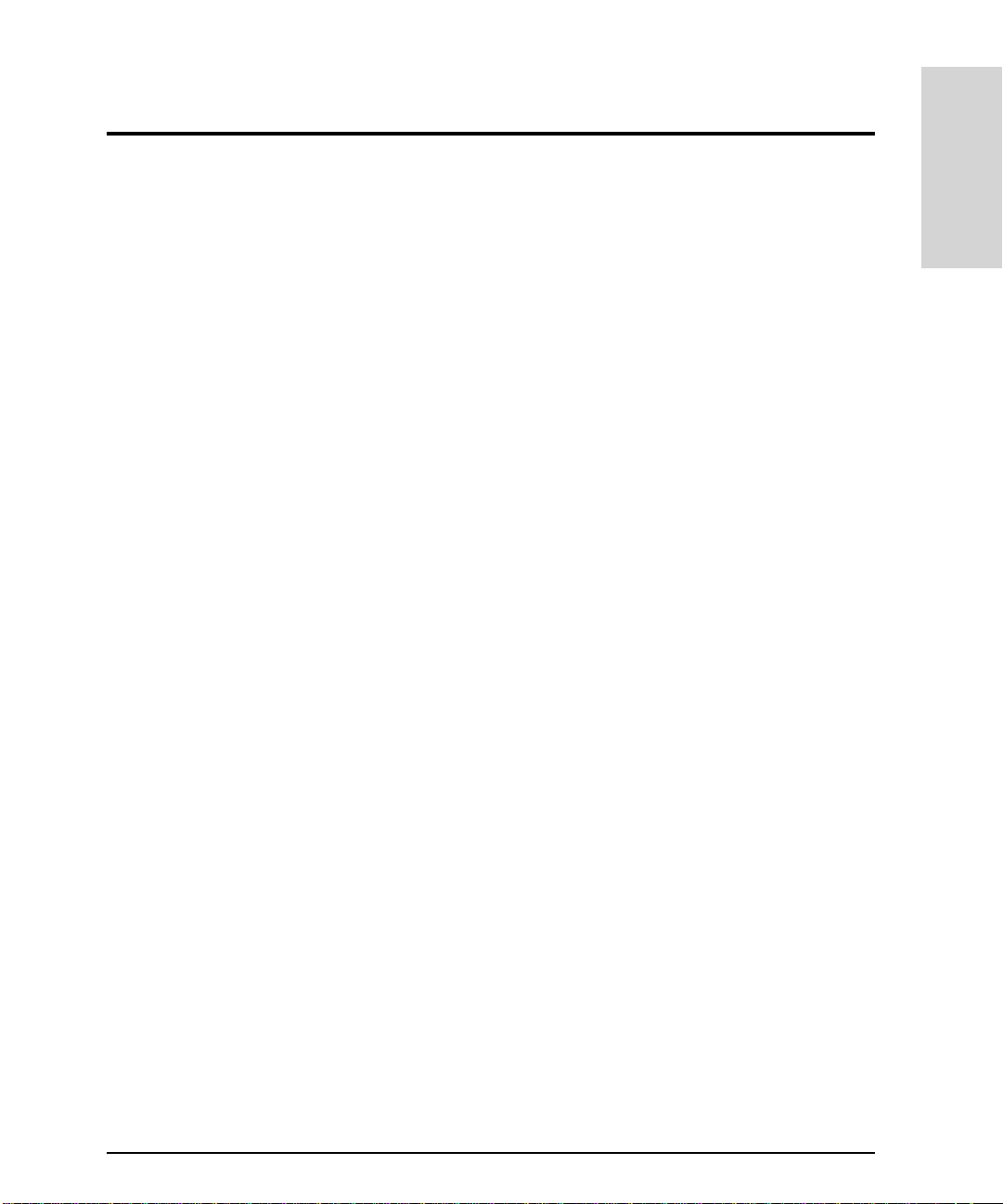

Figure 1

HP SureStore E Disk Array FC60 (Controller with Six Disk Enclosures)

Figure labels: Array Controller FC60; SureStore E Disk System SC10

Page 20

Operating System Support

The Disk Array FC60 is currently supported on the following operating systems:

• HP-UX 11.0, 11.11, and 10.20

• Windows NT 4.0

• Windows 2000

Note

Some disk array features are specific to each operating system. These features

are clearly identified throughout this book.

Management Tools

HP-UX Tools

The following tools are available for managing the disk array on HP-UX. These tools are

included with the disk array.

• Array Manager 60 command line utilities

• SAM

• Support Tools Manager (STM)

Windows NT and Windows 2000

The following tool is used to manage the Disk Array FC60 on Windows NT and Windows

2000. This tool is not included with the disk array, but must be ordered separately as

product A5628A.

• HP Storage Manager 60 (A5628A)

Features

The Disk Array FC60 offers the following features:

• High availability

• Scalable storage capacity

• LED status monitoring

Page 21

• RAID levels 0, 1, 0/1, 3, and 5 (RAID level 3 supported on Windows NT and Windows 2000 only)

• EMS hardware monitoring (HP-UX only)

High Availability

High availability is a general term that describes hardware and software systems that are

designed to minimize system downtime — planned or unplanned. The Disk Array FC60

qualifies as high-availability hardware, achieving 99.99% availability.

The following features enable high availability:

• Hot-swappable, high-capacity, high-speed disk modules

• Dual Fibre Channel arbitrated loops (FC-AL) connection to the host

• Redundant, hot-swappable fans and power supplies

• Support for RAID 1, 0/1, and 5

• Remote monitoring and diagnostics

• EMS Hardware event monitoring and real-time error reporting (HP-UX only)

Note

The Disk Array FC60 is designed to operate with either one or two controller

modules; however, for data integrity and high availability it is highly

recommended that dual controller modules be installed.
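
As a rough illustration of what the 99.99% availability figure quoted above implies, the following Python sketch converts an availability percentage into expected downtime per year. It assumes a 365-day year and treats availability as a simple ratio of uptime to total time; the function name is illustrative and is not part of any HP tool.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: non-leap year


def annual_downtime_minutes(availability_percent: float) -> float:
    """Return the expected downtime, in minutes per year, for a given availability."""
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return unavailable_fraction * HOURS_PER_YEAR * 60


if __name__ == "__main__":
    minutes = annual_downtime_minutes(99.99)
    print(f"99.99% availability allows about {minutes:.1f} minutes of downtime per year")
```

Under these assumptions, 99.99% availability corresponds to a little under one hour of downtime per year.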

Scalable Storage Capacity

The Disk Array FC60 is designed to provide maximum scalability, simplifying the process

of adding storage capacity as required. Storage capacity can be added in three ways:

– By adding additional disk modules to a disk enclosure

– By adding additional disk enclosures to the array

– By replacing existing disk modules with higher capacity modules

The controller enclosure supports up to six disk enclosures. Each disk enclosure holds up

to ten disk modules in capacities of 9.1 Gbyte, 18.2 Gbyte, 36.4 Gbyte, or 73.4 Gbyte. The

minimum configuration for the array is one disk enclosure with two 9.1-Gbyte disk

modules. The maximum configuration is six disk enclosures with ten 73.4-Gbyte disk

Page 22

modules. This provides a storage capacity range from 36 Gbytes to over 3 Tbytes of usable

storage.

LED Status Monitoring

Both the controller enclosure and disk enclosure monitor the status of their internal

components and operations. At least one LED is provided for each swappable module. If an

error is detected in any module, the error is displayed on the appropriate module’s LED.

This allows failed modules to be quickly identified and replaced.

EMS Hardware Event Monitoring (HP-UX Only)

The Disk Array FC60 is fully supported by Hewlett-Packard's EMS Hardware Monitors,

which allow you to monitor all aspects of product operation and be alerted immediately if

any failure or other unusual event occurs. Hardware monitoring is available at no cost to

users running HP-UX 11.0 or 10.20.

Hardware monitoring provides a high level of protection against system hardware failure.

It provides an important tool for implementing a high-availability strategy for your system.

By using EMS Hardware Monitors, you can virtually eliminate undetected hardware

failures that could interrupt system operation or cause data loss.

The EMS Hardware Monitor software with the Disk Array FC60 monitor is distributed on

the HP-UX Support Plus CD-ROM release 9912 and later. Complete information on

installing and using hardware event monitoring is contained in the EMS Hardware

Monitors User's Guide (B6191-90011). A copy of this book can be accessed from the

Systems Hardware, Diagnostics, & Monitoring page of Hewlett-Packard's on-line

documentation web site at http://www.docs.hp.com/hpux/systems/

The minimum supported version of the Disk Array FC60 hardware monitor (fc60mon) is

A.01.04. To verify the version of the monitor installed, type:

what /usr/sbin/stm/uut/bin/tools/monitor/fc60mon
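
Once the version string has been read from the command output, it can be compared against the minimum. The Python sketch below is one way to do that comparison. It assumes the version has the form A.01.04 (a letter prefix followed by numeric fields) and that a later letter prefix denotes a later release; the output format of the command itself is not shown in this guide, so the sketch takes the version string as input rather than parsing command output. The function names are illustrative.

```python
MINIMUM_FC60MON_VERSION = "A.01.04"  # minimum supported version stated in this guide


def parse_version(version: str) -> tuple:
    """Split a version such as 'A.01.04' into ('A', 1, 4) so it can be compared."""
    prefix, *numbers = version.strip().split(".")
    return (prefix.upper(), *(int(n) for n in numbers))


def meets_minimum(installed: str, minimum: str = MINIMUM_FC60MON_VERSION) -> bool:
    """Return True if the installed version is at or above the minimum.

    Assumption: versions order first by letter prefix, then by numeric fields.
    """
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    for candidate in ("A.01.03", "A.01.04", "A.02.00"):
        print(candidate, "supported" if meets_minimum(candidate) else "too old")
```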


Page 23

Disk Enclosure Components

The SureStore E Disk System SC10, or disk enclosure, is a high availability Ultra2 SCSI

storage product. It provides an LVD SCSI connection to the controller enclosure and ten

slots on a single-ended backplane for high-speed, high-capacity LVD SCSI disks. Six disk

enclosures fully populated with 9.1 Gbyte disks provide 0.54 Tbytes of storage in a 2-meter

System/E rack. When fully populated with 73.4 Gbyte disks, the array provides over 3

Tbytes of storage. These values represent maximum storage; usable storage space will vary

depending on the RAID level used.
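
The capacity arithmetic above can be sketched in Python as follows. The raw figures use only the enclosure and slot counts given in this guide. The usable-capacity fractions are the usual textbook RAID overheads and are an assumption here: the number of disks per LUN is a hypothetical parameter, and hot spares and formatting overhead are not modeled.

```python
MAX_ENCLOSURES = 6         # disk enclosures per controller enclosure
SLOTS_PER_ENCLOSURE = 10   # disk slots per disk enclosure


def raw_capacity_gbytes(disk_gbytes: float,
                        enclosures: int = MAX_ENCLOSURES,
                        disks_per_enclosure: int = SLOTS_PER_ENCLOSURE) -> float:
    """Raw capacity of the array: disk size times the number of installed disks."""
    return disk_gbytes * (enclosures * disks_per_enclosure)


def usable_fraction(raid_level: str, disks_per_lun: int) -> float:
    """Approximate fraction of raw capacity left for data (textbook RAID overhead)."""
    if raid_level == "0":
        return 1.0                                   # striping only, no redundancy
    if raid_level in ("1", "0/1"):
        return 0.5                                   # every block is mirrored
    if raid_level in ("3", "5"):
        return (disks_per_lun - 1) / disks_per_lun   # one disk's worth of parity
    raise ValueError(f"unsupported RAID level: {raid_level}")


if __name__ == "__main__":
    for size in (9.1, 73.4):
        raw = raw_capacity_gbytes(size)
        # disks_per_lun=6 is a hypothetical LUN layout chosen for illustration
        usable = raw * usable_fraction("5", disks_per_lun=6)
        print(f"{size} Gbyte disks: {raw:.0f} Gbytes raw, about {usable:.0f} Gbytes in RAID 5")
```

With sixty 9.1-Gbyte disks, the raw figure is 546 Gbytes, which matches the 0.54 Tbytes quoted above.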

The disk enclosure consists of modular, redundant components that are easy to upgrade

and maintain. See Figure 2. Disks, fans, power supplies, and Bus Control Cards (BCCs) are

replaceable parts that plug into individual slots in the front and back of the disk enclosure.

Redundant fans, power supplies, and disk modules can be removed and replaced without

interrupting storage operations. In addition, a single disk within a LUN can be replaced

while the system is on.


Page 24

Figure 2

Disk Enclosure Components, Exploded View (Front Door Not Shown)

Figure labels: BCC Modules; Power Supply Modules; Fan Modules; Disk Modules; Chassis (and Backplane)

Page 25

Operation Features

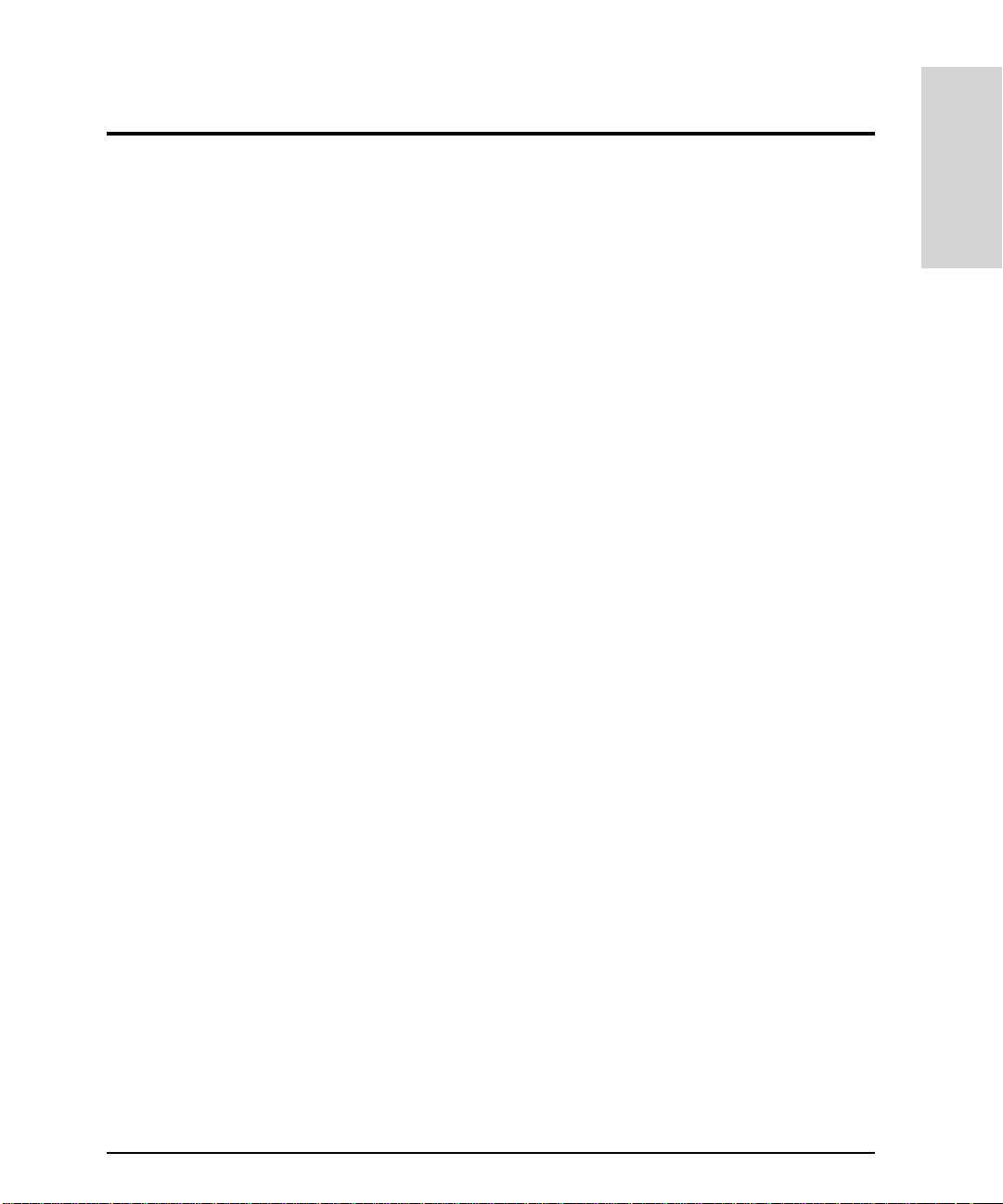

The disk enclosure is designed to be installed in a standard 19-inch rack and occupies 3.5

EIA units (high). Disk drives mount in the front of the enclosure. Also located in the front

of the enclosure are a power switch and status LEDs. A lockable front door shields RFI and

restricts access to the disk drives and power button (Figure 3 on page 26).

BCCs are installed in the back of the enclosure along with redundant power supplies and

fans.

Status Indicators

LEDs on the front and back of the disk enclosure enable you to quickly identify and replace

failed components, thereby preventing or minimizing downtime. "Troubleshooting" on

page 359 provides more detailed information about the operation of these LEDs.

Two system LEDs on the front, top right corner of the disk enclosure (A in Figure 3)

indicate the status of the disk enclosure. The left LED indicates when power is on or off

and the right LED identifies if a fault has occurred. Additional pairs of LEDs above each

disk slot (D in Figure 3) indicate disk activity and a fault condition. The left LED (green)

indicates disk I/O activity and the right LED goes on if the disk module has experienced a

fault. The disk fault LEDs are also used by the management tools to identify a specific disk

module by flashing its fault LED.

On the back of the disk enclosure, the following LEDs (K) indicate the status of replaceable

components and Fibre Channel link:

– Power supply status and fault LEDs

– Fan status and fault LEDs

Bus Controller Card LEDs:

– BCC Fault LED

– Term Power LED (monitors power on the SCSI bus)

– Full Bus Mode LED

– LVD Mode LED

– Bus Free Status LED

For detailed information on LED operation, refer to "Troubleshooting" on page 359.

Page 26

A system LEDs

B power button

C disk module

D disk module LEDs

E door lock

F ESD plug

G mounting ear

H power supply

I BCCs

J fans

K component LEDs

Figure 3

Disk Enclosure Front and Back View

Power Switch

The power switch (B in Figure 3) interrupts power from the power supplies to the disk

enclosure components. Power to the power supplies is controlled by the power cords and

the AC source.


Page 27

Disk Enclosure SC10 Modules

The disk enclosure hot-swappable modules include the following:

• Disks and fillers

• Fans

• Power supplies

Disks and Fillers

Hot-swappable disk modules make it easy to add or replace disks. Fillers are required in all

unused slots to maintain proper airflow within the enclosure.

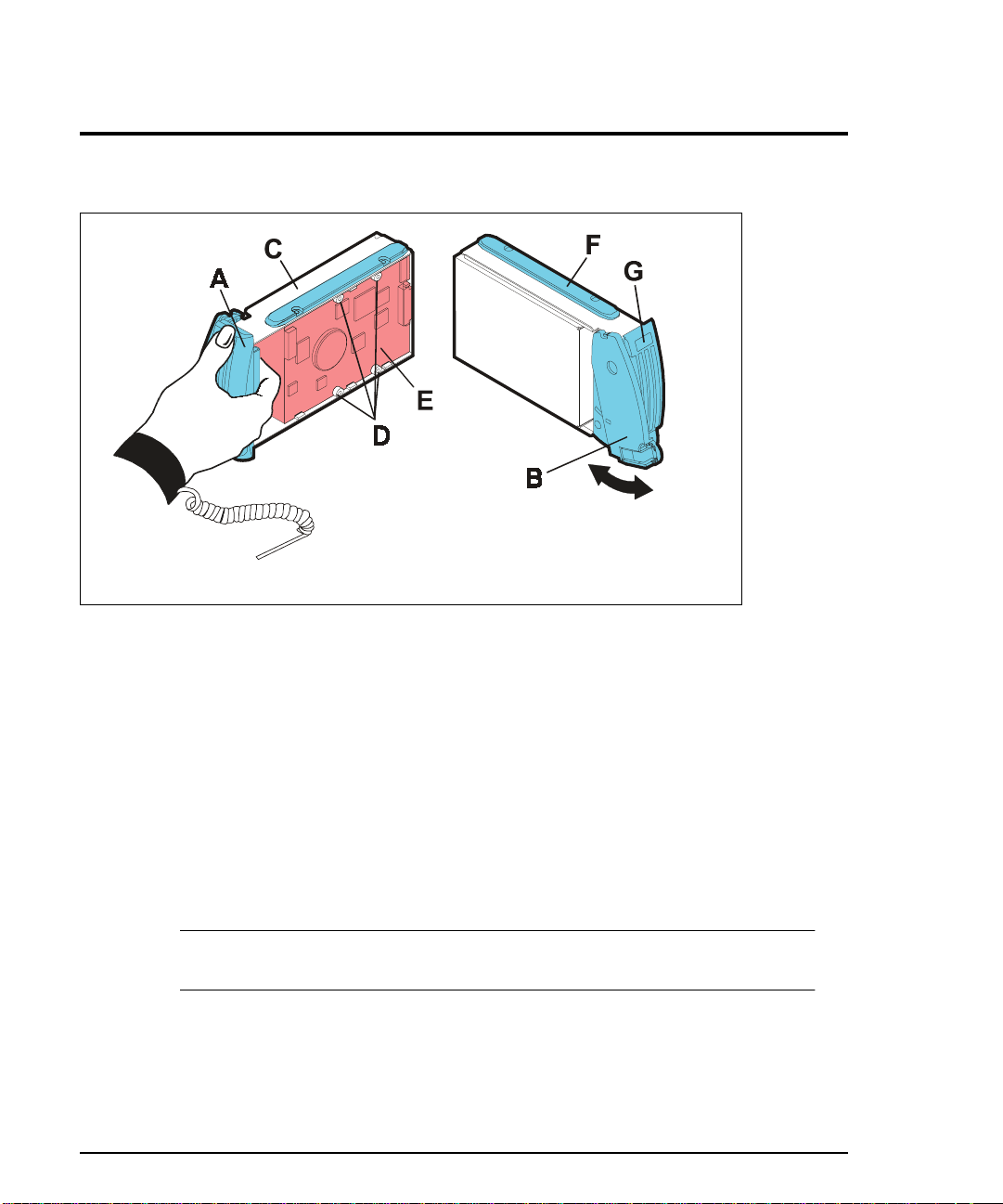

Figure 4 illustrates the 3.5-inch disks in a metal carrier. The open carrier design allows ten

half height (1.6 inch) disks to fit the 19-inch width of a standard rack and meet cooling

needs.

WARNING Touching exposed circuits on the disk module can damage the disk

drive inside. To avoid damage, always handle disks carefully and use

ESD precautions.

The following plastic parts of the disk are safe to touch:

• Bezel-handle (A in Figure 4)

• Cam latch (B)

• Insertion guide (F)

Metal standoffs (D) protect exposed circuits against damage when the disk is laid

circuit-side down on a flat surface.


Page 28

A bezel handle

B cam latch

C carrier frame

D standoffs

E circuit board

F insertion guide

G capacity label

Figure 4

Disk Module

Disks fit snugly in their slots. The cam latch (B in Figure 4) is used to seat and unseat the

connectors on the backplane.

A label (G) on the disk provides the following information:

• Disk mechanism height: 1.6 inch (half height) or 1 inch (low profile)

• Rotational speed: 10K RPM and 15K RPM (18 Gbyte only)

• Capacity: 9.1 Gbyte, 18.2 Gbyte, 36.4 Gbyte, or 73.4 Gbyte

A large zero on the capacity label distinguishes a filler from a disk. Fillers are required in all

unused slots to maintain proper airflow within the enclosure.

CAUTION

Fillers must be installed in unused slots to maintain proper cooling within

the disk enclosure.


Page 29

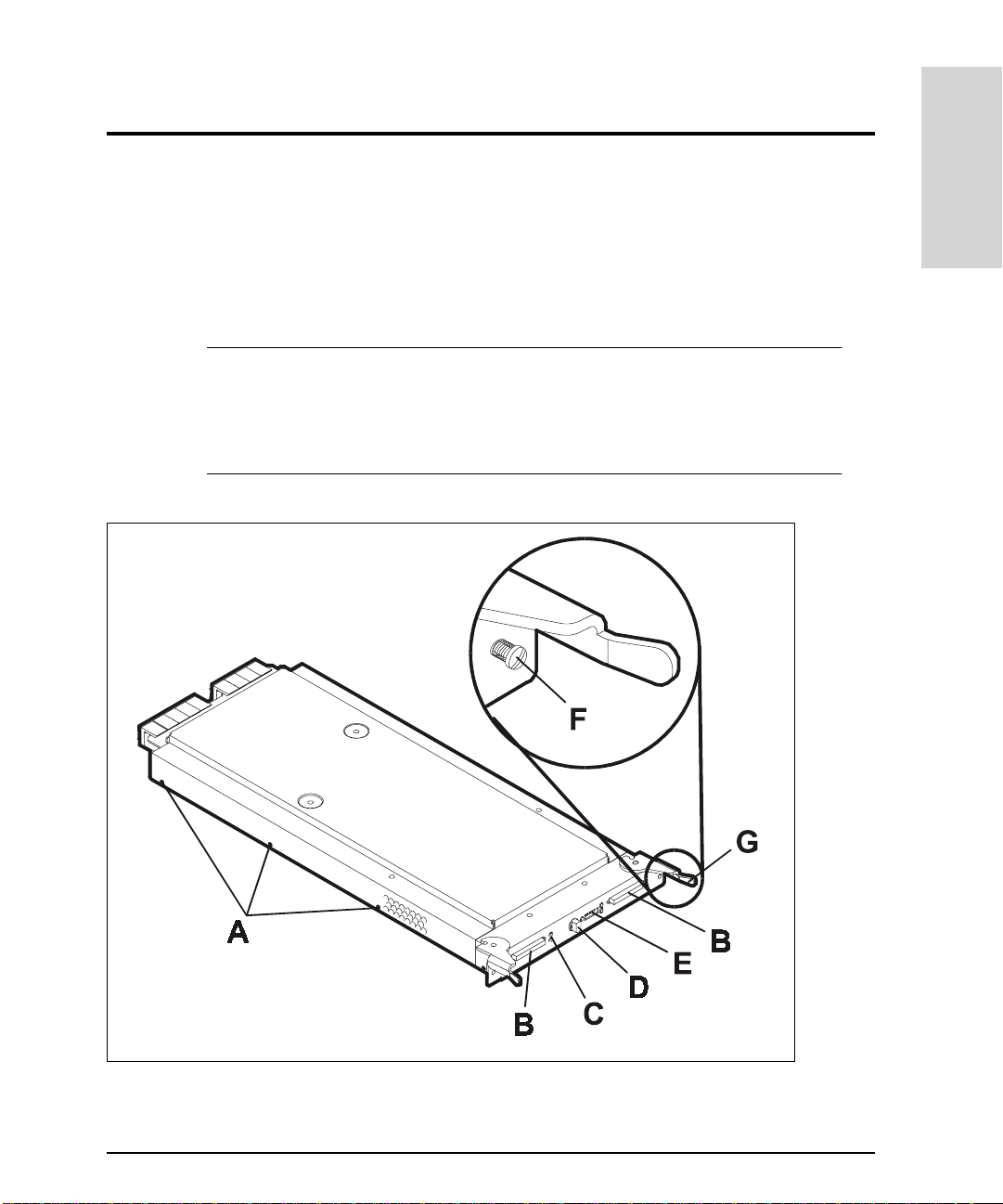

BCCs

Two Backplane Controller Cards, BCCs, control the disks on one or two buses according to

the setting of the Full Bus switch. When the Full Bus switch is set to on, BCC A, in the top

slot, accesses the disks in all ten slots. When the Full Bus switch is off, BCC A accesses

disks in the even-numbered slots and BCC B accesses disks in the odd-numbered slots.

Note

In full bus mode, all ten disks can be accessed through either BCC. However,

internally each BCC still manages five disks. This means that if the BCC that is

not connected to the SCSI channel fails, access to its five disks will be lost.

Failure of the BCC that is connected to the SCSI channel will render all ten

disks inaccessible.
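
The relationship between the Full Bus switch and the slots each BCC can reach can be sketched in Python as follows. This is a minimal model of the behavior described above, not the BCC firmware logic. The slot numbering (0 through 9) and the function name are assumptions made for illustration.

```python
SLOTS = range(10)  # assumption: the ten disk slots are numbered 0 through 9


def accessible_slots(bcc: str, full_bus: bool) -> list:
    """Return the disk slots reachable through the given BCC ('A' or 'B')."""
    if bcc not in ("A", "B"):
        raise ValueError("bcc must be 'A' or 'B'")
    if full_bus:
        # Full bus mode: all ten disks can be accessed through either BCC.
        return list(SLOTS)
    # Split bus mode: BCC A serves even-numbered slots, BCC B odd-numbered slots.
    parity = 0 if bcc == "A" else 1
    return [slot for slot in SLOTS if slot % 2 == parity]


if __name__ == "__main__":
    print("Split bus, BCC A:", accessible_slots("A", full_bus=False))
    print("Split bus, BCC B:", accessible_slots("B", full_bus=False))
    print("Full bus,  BCC A:", accessible_slots("A", full_bus=True))
```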

A alignment guides

B SCSI Ports

C LEDs

D rotary switch

E DIP switches

F locking screw

G cam lever

Figure 5

BCC


Page 30

Each BCC provides two LVD SCSI ports (B in Figure 5) for connection to the controller

enclosure.

The EEPROM on each BCC stores 2 Kbytes of configuration information and user-defined

data, including the manufacturer serial number, World Wide Name, and product number.

The following are additional features of the BCC:

• LEDs (C in Figure 5) show the status of the BCC and the bus.

• A rotary switch (D) used to set the enclosure (tray) ID which is used by internal controller operations, and also by the management tools to identify each enclosure.

• DIP switches set disk enclosure options. The only option used by the Disk Array FC60 is the full-bus/split-bus mode.

• Screws (F) prevent the card from being unintentionally disconnected.

• Cam levers (G) assist in installing and removing the BCC from the enclosure, ensuring a tight connection with the backplane.

BCC functions include drive addressing, fault detection, and environmental services.

Page 31

Fans

Redundant, hot-swappable fans provide cooling for all enclosure components. Each fan has

two internal high-speed blowers (A in Figure 6), an LED (B), a pull tab (C), and two locking

screws (D).

A internal blowers

B LED

C pull tab

D locking screws

Figure 6

Fan

Internal circuitry senses blower motion and triggers a fault when the speed of either blower

falls below a critical level. If a fan failure occurs, the amber fault LED will go on. An alert

should also be generated by EMS Hardware Monitoring when a fan failure occurs.


Page 32

Power Supplies

Redundant, hot-swappable 450-watt power supplies convert wide-ranging AC voltage from

an external main to stable DC output and deliver it to the backplane. Each power supply

has two internal blowers, an AC receptacle (A in Figure 7), a cam handle (B) with locking

screw, and an LED (C). Internal control prevents the rear DC connector from becoming

energized when the power supply is removed from the disk enclosure.

A AC receptacle

B cam handle

C LED

NOTE: LED position varies.

Figure 7

Power Supply

Note

Although it is possible to operate the disk enclosure on one power supply, it is

not recommended. Using only one supply creates a single point of failure. If the

power supply fails, the entire enclosure is inaccessible. To maintain high

availability, both power supplies should be used at all times, and a failed supply

should be replaced as soon as possible.


Page 33

Power supplies share the load reciprocally; that is, each supply automatically increases its

output to compensate for reduced output from the other. If one power supply fails, the

other delivers the entire load.

Internal circuitry triggers a fault when a power supply fan or other power supply part fails.

If a power supply failure occurs, the amber fault LED will go on. An alert should also be

generated by EMS Hardware Monitoring when a power supply failure occurs.


Page 34

Array Controller Enclosure Components

The array controller enclosure, like the disk enclosure, consists of several modules that

can be easily replaced, plus several additional internal assemblies. See Figure 8. Together,

these removable modules and internal assemblies make up the field replaceable units

(FRUs). Many modules can be removed and replaced without disrupting disk array

operation.

The following modules are contained in the controller enclosure:

• Controller modules

• Controller fan module

• Power supply modules

• Power supply fan module

• Battery backup unit

Page 35

Figure 8

Controller Enclosure Exploded View (Front Cover Not Shown)

Figure labels: Controller Fan; Power Supply Fan Module; Power Supply Modules; Controller Chassis; Controller Module A; Controller Module B; BBU

During operation, controller enclosure status is indicated by five LEDs on the front left of

the controller enclosure. Faults detected by the controller module cause the corresponding

controller enclosure fault LED to go on. Additional LEDs on the individual components

identify the failed component. See "Troubleshooting" on page 359 for detailed information

on LED operation.


Page 36

Figure 9

Controller Enclosure Front View

Page 37

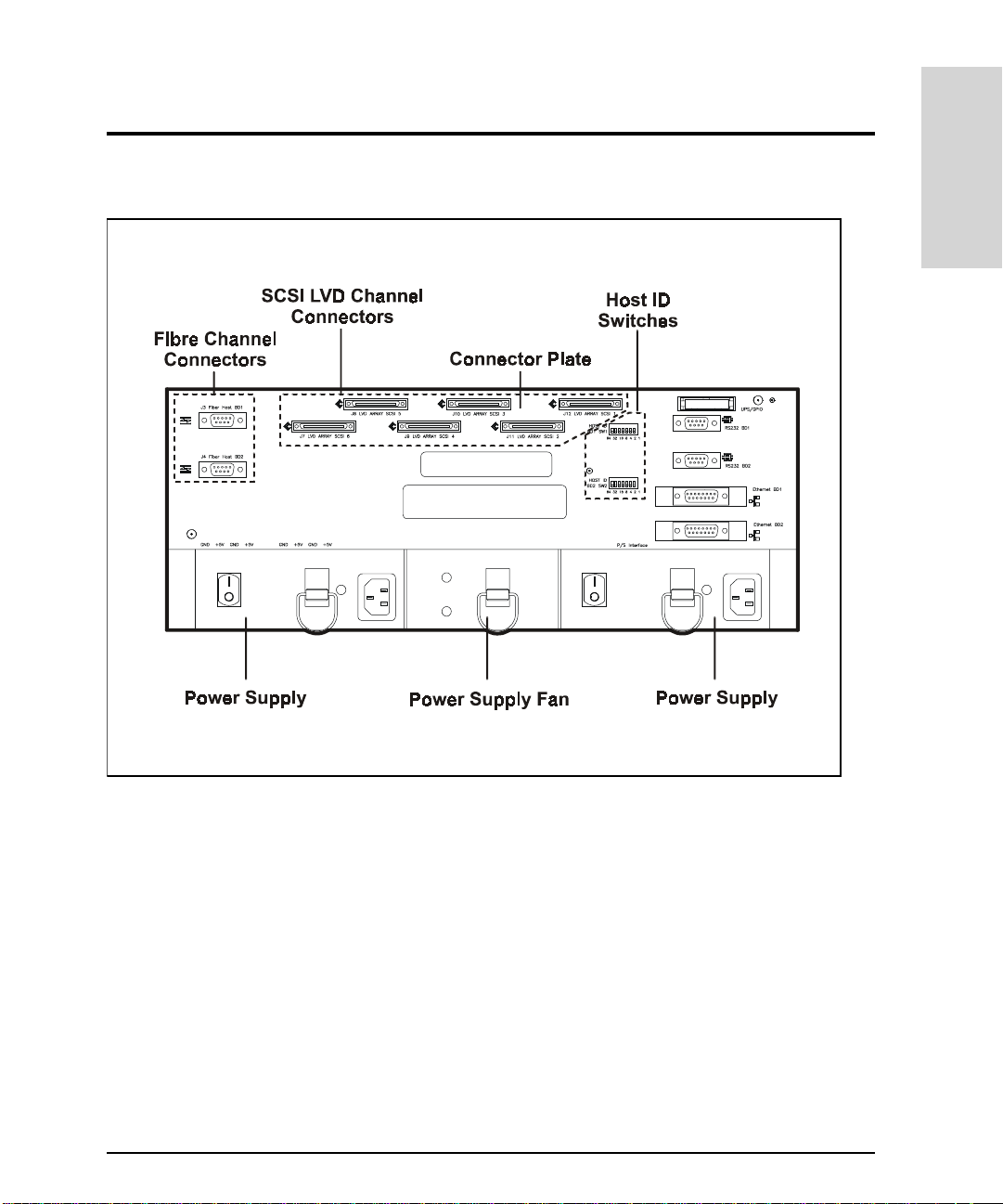


Figure 10

Controller Enclosure Rear View

Front Cover

The controller enclosure has a removable front cover which contains slots for viewing the

main operating LEDs. The cover also contains grills that aid air circulation. The controller

modules, controller fan, and battery backup unit are located behind this cover. This cover

must be removed to gain access to these modules, and also to observe the controller status

and BBU LEDs.


Page 38

Controller Modules

The controller enclosure contains one or two controller modules. See Figure 11. These

modules provide the main data and status processing for the Disk Array FC60. The

controller modules slide into two controller slots (A and B) and plug directly into the

backplane. Two handles lock the modules in place. Each controller slot has a controller

letter that identifies the physical location of the controller in the chassis: controller slot A

or controller slot B (also known as BD1 and BD2, respectively, as referenced on the back of

the controller enclosure).

Figure 11


Controller Modules

Page 39

Each controller module has ten LEDs. See Figure 12. One LED identifies the controller

module’s power status. A second LED indicates when a fault is detected. The remaining

eight LEDs provide detailed fault condition status. The most significant LED, the heartbeat,

flashes approximately every two seconds beginning 15 seconds after power-on.

"Troubleshooting" on page 359 contains additional information on controller LED

operation.

The controller module connects to the host via Fibre Channel, and to the disk enclosures

via LVD SCSI. Each controller must have a unique host fibre ID number assigned using the

ID switches on the back of the controller modules. See "Installation" on page 143 for more

information on setting host IDs.


Figure 12

Controller Module LEDs


Page 40

Controller Memory Modules

Each controller module contains SIMM and DIMM memory modules. Two 16-Mbyte SIMMs

(32 Mbytes total) store controller program and other data required for operation. The

standard controller module includes 256 Mbytes of cache DIMM, which is upgradeable to

512 Mbytes. The cache may be configured as either two 128-Mbyte DIMMs or a single 256-Mbyte DIMM. Cache memory serves as temporary data storage during read and write

operations, improving I/O performance. When cache memory contains write data, the Fast

Write Cache LED on the front of the controller enclosure is on. See Figure 13.

Controller Fan Modules

The controller fan module is a single removable unit containing dual cooling fans and

temperature monitoring logic. See Figure 13. It includes five LEDs that indicate overall

system status and controller fan status. The fans provide cooling by pulling air in through

ventilation holes, moving it across the controller cards, and exhausting it out the

ventilation holes in the fan assembly. The dual fans provide a redundant cooling system to

both controller modules. If one fan fails, the other continues to operate and provide

sufficient air circulation to prevent the controllers from overheating until the fan module is

replaced. The fan module plugs into a slot on the front of the controller enclosure, and has

a handle and captive screw for easy service.


Page 41


Figure 13

Controller Fan Module


Page 42

Power Supply Modules

Two separate power supplies provide electrical power to the internal components by

converting incoming AC voltage to DC voltage. Both power supplies are housed in

removable power supply modules that slide into two slots in the back of the controller and

plug directly into the power interface board. See Figure 14.

Figure 14

Power Supply Modules

Each power supply uses a separate power cord. These two power cords are special ferrite

bead cords (part no. 5064-2482) required for FCC compliance. Both power cords can plug

into a common power source or each cord can plug into a separate circuit (to provide

power source redundancy).


Page 43

Each power supply is equipped with a power switch to disconnect power to the supply.

Turning off both switches turns off power to the controller. This should not be performed

unless I/O activity to the disk array has been stopped, and the write cache has been flushed

as indicated by the Fast Write Cache LED being off.

CAUTION

The controller power switches should not be turned off unless all I/O activity

to the disk array has been suspended from the host. Also, power should not be

turned off if the Fast Write Cache LED is on, indicating that there is data in

the write cache. Wait until the Fast Write Cache LED goes off before shutting

off power to the disk array.

Each power supply is equipped with a power-on LED indicator. If the LED is on (green) the

supply is providing DC power to the controller. If the LED is off, there is a malfunction or

the power has been interrupted. The system Power Fault LED on the front of the controller

enclosure works in conjunction with the Power Supply LEDs. If both power supplies are

on, the system Power Fault LED will be off. If either power supply is off or in a fault state,

the system Power Fault LED goes on. When both power supplies are off or not providing

power to the enclosure, the system power LED on the front of the controller enclosure will

be off.
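
The LED behavior described above can be summarized as a small truth table. The Python sketch below is one reading of that description, not the enclosure's actual logic. It assumes that no front-panel LED can light when neither supply is delivering power, which the text implies but does not state; the function and key names are illustrative.

```python
def front_panel_leds(supply_a_ok: bool, supply_b_ok: bool) -> dict:
    """Model the system power and Power Fault LEDs on the controller enclosure.

    Each argument is True when that supply is on and providing DC power
    (its own power-on LED would be green).
    """
    any_power = supply_a_ok or supply_b_ok
    return {
        # The system power LED is off only when neither supply provides power.
        "power": any_power,
        # The Power Fault LED goes on when exactly one supply is off or faulted.
        # Assumption: with no power at all, the fault LED cannot light either.
        "power_fault": supply_a_ok != supply_b_ok,
    }


if __name__ == "__main__":
    for a in (True, False):
        for b in (True, False):
            print(f"supply A ok={a}, supply B ok={b} -> {front_panel_leds(a, b)}")
```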


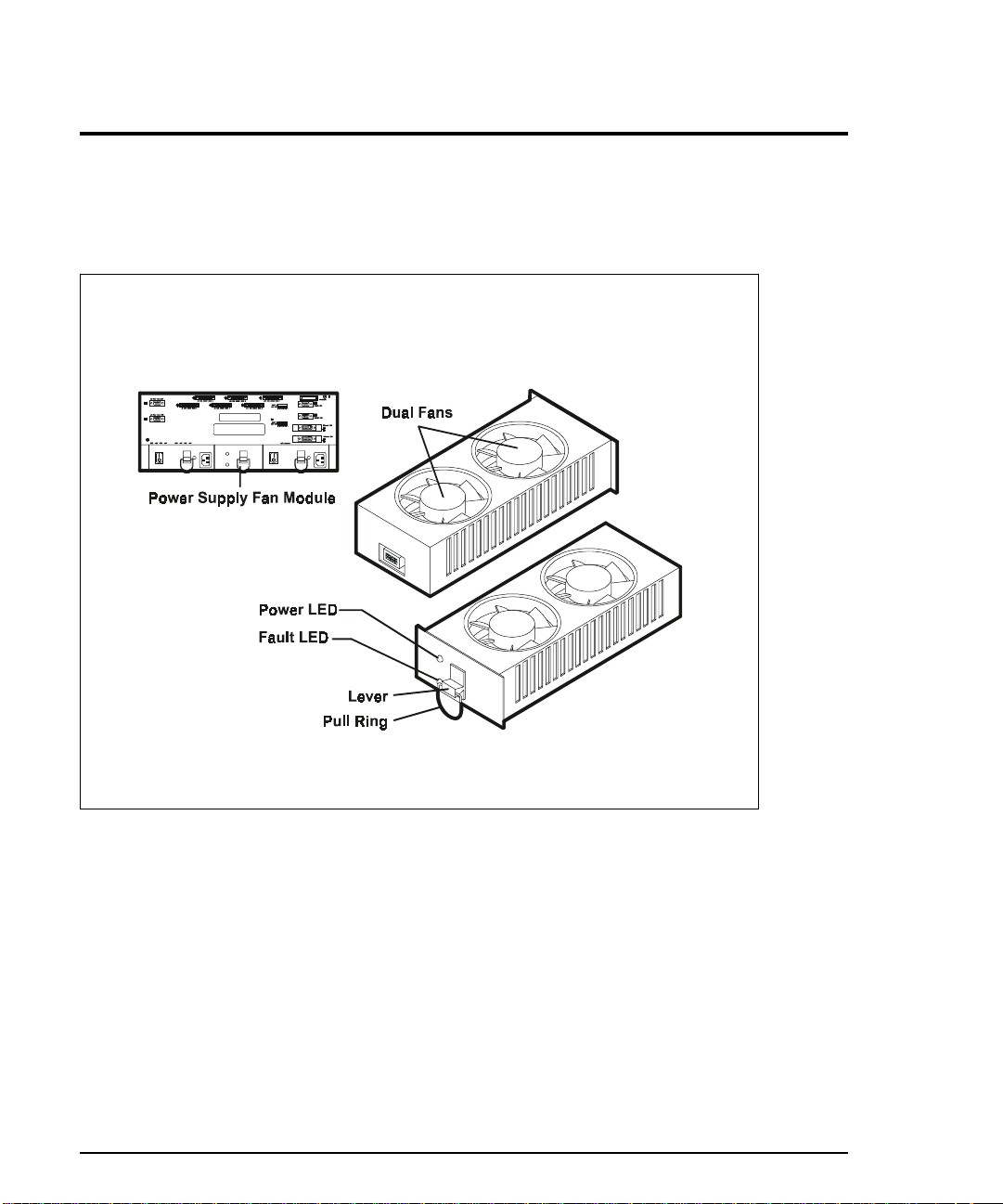

Power Supply Fan Module

Like the controller fan, the power supply fan module (Figure 15) is a single removable unit

that contains dual cooling fans. Dual fans provide a redundant cooling system to both

power supply modules. If one fan fails, the other will continue to operate. A single fan will

provide sufficient air circulation to prevent the power supplies from overheating until the

entire power supply fan module can be replaced. The power supply fan module plugs

directly into a slot on the back of the controller enclosure, between the power supplies. It

has a locking lever that allows it to be unlatched and removed.

The power supply fan can be hot swapped, provided the exchange is performed within 15

minutes. This time limit applies only to the total time during which the fan is out of the

enclosure, beginning when you remove the failed unit and ending when you re-seat the new

one. This does not include the time it takes you to perform this entire procedure (including

checking LEDs).


Page 44

Figure 15

Power Supply Fan Module


Page 45

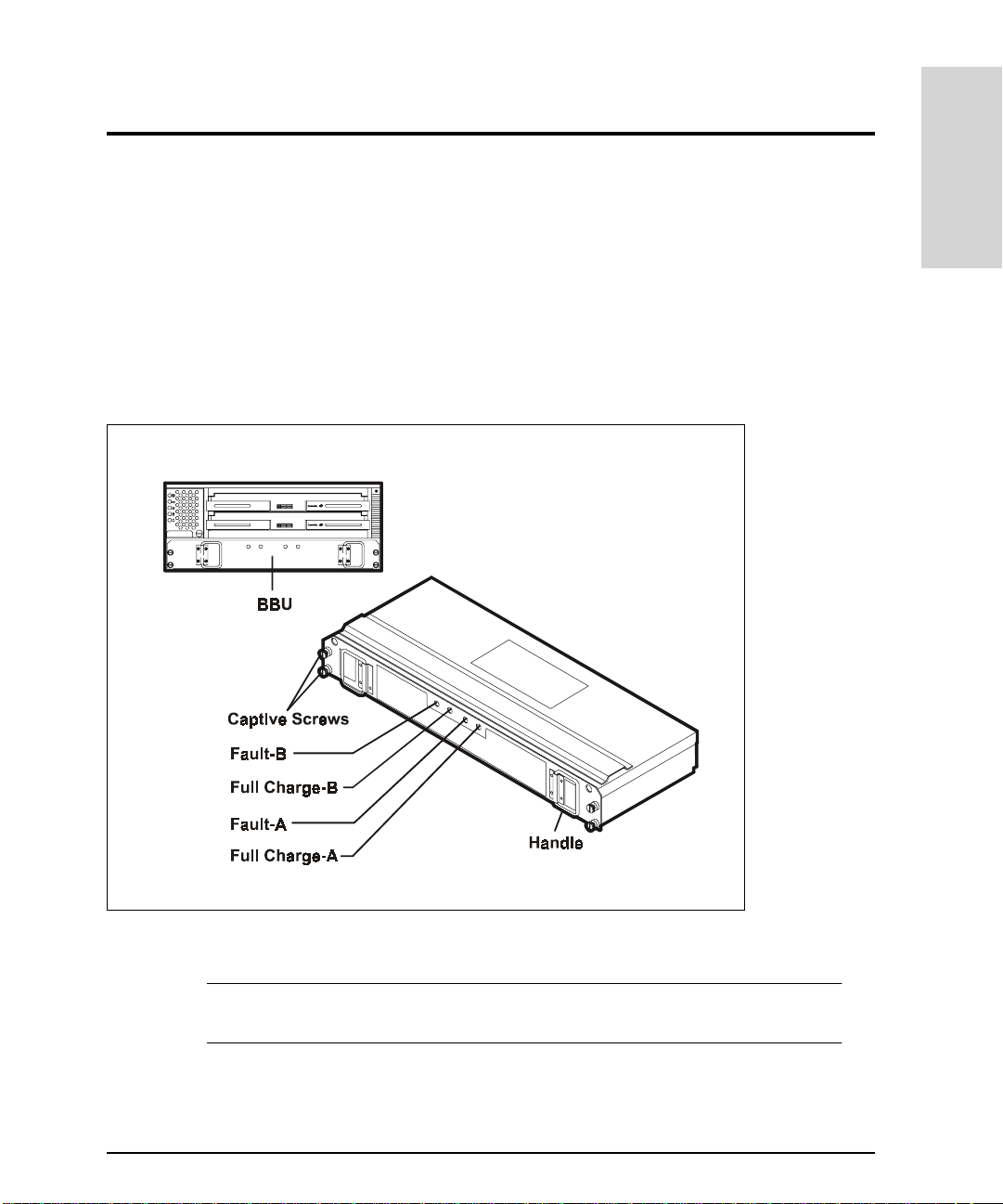

Battery Backup Unit

The controller enclosure contains one removable battery backup unit (BBU) that houses

two rechargeable internal batteries (A and B) and a battery charger board. The BBU plugs

into the front of the controller enclosure where it provides backup power to the

controller’s cache memory during a power outage. The BBU will supply power to the

controllers for up to five days (120 hrs). All data stored in memory will be preserved as long

as the BBU supplies power. When power to the disk array is restored, the cache data will

be written to disk.

Figure 16

Battery Backup Unit

CAUTION

During a power outage, do not remove the controller or the BBU. Removing

either of these modules can compromise data integrity.


Page 46

The BBU contains four LEDs that identify the condition of the battery. Internally, the BBU

consists of two batteries or banks, identified as bank “A” and bank “B.” During normal

operation both of the Full Charge LEDs (Full Charge-A and Full Charge-B) are on and the

two amber Fault LEDs are off. If one or both of the Fault LEDs are on, refer to

"Troubleshooting" on page 359 for information on solving the problem. The Full Charge

LEDs flash while the BBU is charging. It can take up to seven hours to fully charge a new

BBU.

Battery Operation and Replacement

Replace the BBU every two years or whenever it fails to hold a charge, as indicated by the

BBU Fault LEDs. The service label on the BBU provides a line for recording the date on

which the BBU was serviced. Check this label to determine when to replace the BBU.

When a BBU is replaced, it may require up to seven hours to fully charge. The Full Charge

LEDs flash while the BBU is charging, and remain on when the BBU is fully charged.
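
The two-year replacement interval can be tracked with a simple date calculation. The Python sketch below assumes the date recorded on the service label is available as a calendar date; the function names are illustrative, and the handling of a February 29 service date is a choice made for this example.

```python
from datetime import date

REPLACEMENT_INTERVAL_YEARS = 2  # replacement interval stated in this guide


def next_replacement_date(serviced_on: date) -> date:
    """Return the date two years after the BBU was last serviced."""
    target_year = serviced_on.year + REPLACEMENT_INTERVAL_YEARS
    try:
        return serviced_on.replace(year=target_year)
    except ValueError:
        # February 29 has no counterpart in a non-leap year; use February 28.
        return serviced_on.replace(year=target_year, day=28)


def replacement_due(serviced_on: date, today: date) -> bool:
    """Return True once the replacement interval has elapsed."""
    return today >= next_replacement_date(serviced_on)


if __name__ == "__main__":
    label_date = date(2000, 12, 1)  # example service-label date
    print("Replace BBU by:", next_replacement_date(label_date))
```

A BBU whose Fault LEDs indicate that it cannot hold a charge should be replaced regardless of the date.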

If you replace the BBU and still experience battery-related problems (such as a loss of

battery power to the controllers or batteries not charging properly), the controller

enclosure may have some other internal component failure. In this case contact your HP

service engineer.

Battery Operation for No Data Loss

The BBU protects the write cache (data which has not been written to disk) for at least 120

hours (five days) in case of a power failure. When power to the disk array is restored, data

in the cache is written to the disks and no data loss occurs. However, if the system is to be

powered off for more than 120 hours, it is imperative that a proper shutdown procedure be

executed or data may be lost. The following are recommendations:

• Battery status must always be checked, and the BBU replaced when a failure is indicated.

• Never remove the BBU without first performing a proper shutdown procedure.

• For a planned shutdown, make sure that all data has been written to disks before removing power. This is indicated by the Fast Write Cache LED, which will be off when there is no longer any data in write cache. See Figure 13.

• If the BBU is removed, do not shut off power to the array unless the Fast Write Cache LED is off. Data in write cache will be posted to disk 10 seconds after the BBU is removed.
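
The recommendations above reduce to two checks, sketched here in Python. This is a simplified model for planning purposes only. The 120-hour figure comes from this guide; the function names are illustrative, and the treatment of a missing BBU (nothing preserves unwritten cache data) is an assumption drawn from the last recommendation.

```python
BBU_HOLD_HOURS = 120  # write cache retention time stated in this guide


def safe_to_remove_power(fast_write_cache_led_on: bool) -> bool:
    """Power may be removed only when the Fast Write Cache LED is off."""
    return not fast_write_cache_led_on


def cache_data_at_risk(outage_hours: float,
                       cache_held_write_data: bool,
                       bbu_present: bool = True) -> bool:
    """Estimate whether unwritten cache data could be lost during an outage."""
    if not cache_held_write_data:
        return False          # nothing in the write cache to lose
    if not bbu_present:
        return True           # assumption: no BBU means no cache protection
    return outage_hours > BBU_HOLD_HOURS


if __name__ == "__main__":
    print("72-hour outage, data in cache:", cache_data_at_risk(72, True))
    print("200-hour outage, data in cache:", cache_data_at_risk(200, True))
```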


Page 47

Disk Array High Availability Features

High availability systems are designed to provide uninterrupted operation should a

hardware failure occur. Disk arrays contribute to high availability by ensuring that user

data remains accessible even when a disk or other component within the Disk Array FC60

fails. Selecting the proper Fibre Channel topology and system configuration can protect

against the failure of any hardware component in the I/O path to the disk array by providing

an alternate path to all user data.

The Disk Array FC60 provides high availability in the following ways:

• Supported RAID levels 1, 0/1, 3, and 5 all use data redundancy to protect data when a disk

failure occurs. RAID 0 is supported but it does not offer data redundancy and should not

be used in high-availability environments.

• Global hot spare disks serve as automatic replacements for failed disks.

• Alternate hardware paths to user data protect against I/O path failures.

• Redundant, hot-swappable hardware components can be replaced without interrupting

disk array operation.

RAID Technology

RAID technology contributes to high availability through the use of data redundancy,

which ensures that data on the disk array remains available even if a disk or channel failure

occurs. RAID technology uses two techniques to achieve data redundancy: mirroring and

parity. A third characteristic of RAID technology, data striping, enhances I/O performance.

Disk Mirroring

Disk mirroring achieves data redundancy by maintaining a duplicate copy of all data. Disks

are organized into pairs: one disk serves as the data disk, the other as the mirror which

contains an exact image of its data. If either disk in the pair fails or becomes inaccessible,

the remaining disk provides uninterrupted access to the data.

The disk array uses hardware mirroring, in which the disk array automatically

synchronizes the two disk images, without user or operating system involvement. This is

unlike software mirroring, in which the host operating system software (for example,

LVM) synchronizes the disk images.

Disk mirroring is used by RAID 1 and RAID 0/1 LUNs. A RAID 1 LUN consists of exactly

two disks: a primary disk and a mirror disk. A RAID 0/1 LUN consists of an even number of

disks, half of which are primary disks and half of which are mirror disks. If a disk fails or

becomes inaccessible, the remaining disk of the mirrored pair provides uninterrupted data

access. After a failed disk is replaced, the disk array automatically rebuilds a copy of the

data from its companion disk. To protect mirrored data from a channel or internal bus

failure, each disk in the LUN should be in a different enclosure.

Data Parity

Data parity is a second technique used to achieve data redundancy. If a disk fails or

becomes inaccessible, the parity data can be combined with data on the remaining disks in

the LUN to reconstruct the data on the failed disk. Data parity is used for RAID 3 and RAID

5 LUNs.

To ensure high availability, each disk in the LUN should be in a separate enclosure. Parity

cannot be used to reconstruct data if more than one disk in the LUN is unavailable.

Parity is calculated on each write I/O by doing a serial binary exclusive OR (XOR) of the

data segments in the stripe written to the data disks in the LUN. The exclusive OR

algorithm requires an even number of binary 1s to create a result of 0.

Figure 17 illustrates the process for calculating parity on a five-disk LUN. The data written

on the first disk is “XOR’d” with the data written on the second disk. The result is “XOR’d”

with the data on the third disk, which is “XOR’d” with the data on the fourth disk. The

result, which is the parity, is written to the fifth disk. If any bit changes state, the parity also

changes to maintain a result of 0.
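The parity calculation and the reconstruction of a failed disk's data can be sketched in a few lines of Python. This is only an illustration of the XOR technique described above, not the disk array's firmware. The function name xor_parity and the sample byte values are hypothetical.

```python
from functools import reduce

def xor_parity(segments):
    """Return the byte-wise XOR of equal-length segments."""
    if len({len(s) for s in segments}) != 1:
        raise ValueError("all segments must be the same length")
    # zip(*segments) yields one tuple of byte values per byte position.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*segments))

# Four data segments, one per data disk (sample values for illustration only).
data = [b"\x0f\x00", b"\x33\xff", b"\x55\x0f", b"\xf0\xf0"]
parity = xor_parity(data)  # this would be written to the fifth disk

# XOR across the data segments plus the parity segment gives a result of 0.
all_zero = xor_parity(data + [parity]) == bytes(len(parity))

# If the third disk fails, XOR the surviving data with the parity to rebuild it.
survivors = data[:2] + data[3:]
rebuilt = xor_parity(survivors + [parity])
```

The same function serves both purposes because XOR is its own inverse. With two disks missing there is not enough information left to rebuild, which is why parity protects against only a single disk failure per LUN.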

Figure 17 Calculating Data Parity

[Figure: columns of bits labeled Data, Data, Data, Data, and Parity. The bits in each row are combined so that the total equals 0. Callouts: "If this bit is now written as 1..." and "This bit will also be changed to a 1 so the total still equals 0."]

Data Striping

Data striping, which is used on RAID 0, 0/1, 3 and 5 LUNs, is the performance-enhancing

technique of reading and writing data to uniformly sized segments on all disks in a LUN

simultaneously. Collectively, the segments comprise a stripe of data on the LUN. Data

striping enhances performance by allowing multiple sets of read/write heads to execute the

same I/O transaction simultaneously.

The amount of information simultaneously read from or written to each disk is the stripe

segment size. The stripe segment size is configurable to provide optimum performance

under varying sizes of I/O transactions. Stripe segment size is specified in 512-byte blocks

of data.

Stripe segment size can affect disk array performance. The smaller the stripe segment size,

the more efficient the distribution of data read or written across the stripes in the LUN.

However, if the stripe segment is too small for a single I/O operation, the operation requires

access to two stripes. Called a stripe boundary crossing, this action may negatively impact

performance.

The optimum stripe segment size is the smallest size that will rarely force I/Os to a second

stripe. For example, assume your application uses a typical I/O size of 64 KB. If you are

using a 5-disk RAID 5 LUN, a stripe segment size of 32 blocks (16 KB) would ensure that an

entire I/O would fit on a single stripe (16 KB on each of the four data disks).

The total stripe size is the number of disks in a LUN multiplied by the stripe segment size.

For example, if the stripe segment size is 32 blocks and the LUN comprises five disks, the

stripe size is 32 X 5, or 160 blocks (81,920 bytes).
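The arithmetic in the two examples above can be checked with a short Python sketch. The function names are hypothetical, and the calculation assumes that I/Os start on a stripe boundary. It does not account for which stripe segment sizes the disk array actually accepts.

```python
BLOCK_BYTES = 512  # stripe segment size is specified in 512-byte blocks

def stripe_size_blocks(disks, segment_blocks):
    """Total stripe size: number of disks in the LUN times the segment size."""
    return disks * segment_blocks

def smallest_segment_blocks(io_bytes, data_disks):
    """Smallest segment size (in blocks) that lets one I/O fit on one stripe.

    Assumes the I/O is aligned to the start of a stripe.
    """
    per_disk_bytes = -(-io_bytes // data_disks)   # ceiling division
    return -(-per_disk_bytes // BLOCK_BYTES)      # ceiling division

# Five-disk LUN with 32-block segments.
total_blocks = stripe_size_blocks(5, 32)
total_bytes = total_blocks * BLOCK_BYTES
print(total_blocks, total_bytes)

# 64 KB I/O on a 5-disk RAID 5 LUN, which has four data disks per stripe.
print(smallest_segment_blocks(64 * 1024, 4))
```

For RAID 5 only the data disks count toward holding an I/O, so the second call passes four data disks rather than five.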

RAID Levels

RAID technology uses a number of different techniques for storing data and maintaining

data redundancy. These industry-standard RAID levels define the method used for

distributing data on the disks in a LUN. LUNs that use different RAID levels can be created

on the same disk array.

The Disk Array FC60 supports the following RAID levels:

• RAID 0

• RAID 1

• RAID 0/1

• RAID 3 (Windows NT and Windows 2000 only)

• RAID 5

RAID 0

CAUTION RAID 0 does not provide data redundancy. It should only be used in

situations where high performance is more important than data protection.

The failure of any disk within a RAID 0 LUN will cause the loss of all data on

the LUN. RAID 0 should only be used for non-critical data that could be lost

in the event of a hardware failure.

RAID 0 uses disk striping to achieve high performance. Data is striped across all disks in the

LUN. The ability to access all disks in the LUN simultaneously provides a high I/O rate. A

RAID 0 group configuration for a logical disk unit offers fast access, but without the high

availability offered by the other RAID levels.

Unlike other RAID levels, RAID 0 does not provide data redundancy, error recovery, or

other high availability features. Consequently it should not be used in environments where

high availability is critical. All data on a RAID 0 LUN is lost if a single disk within the LUN

fails. RAID 0 provides enhanced performance through simultaneous I/Os to multiple disk

modules. Software mirroring of the RAID 0 group provides high availability.

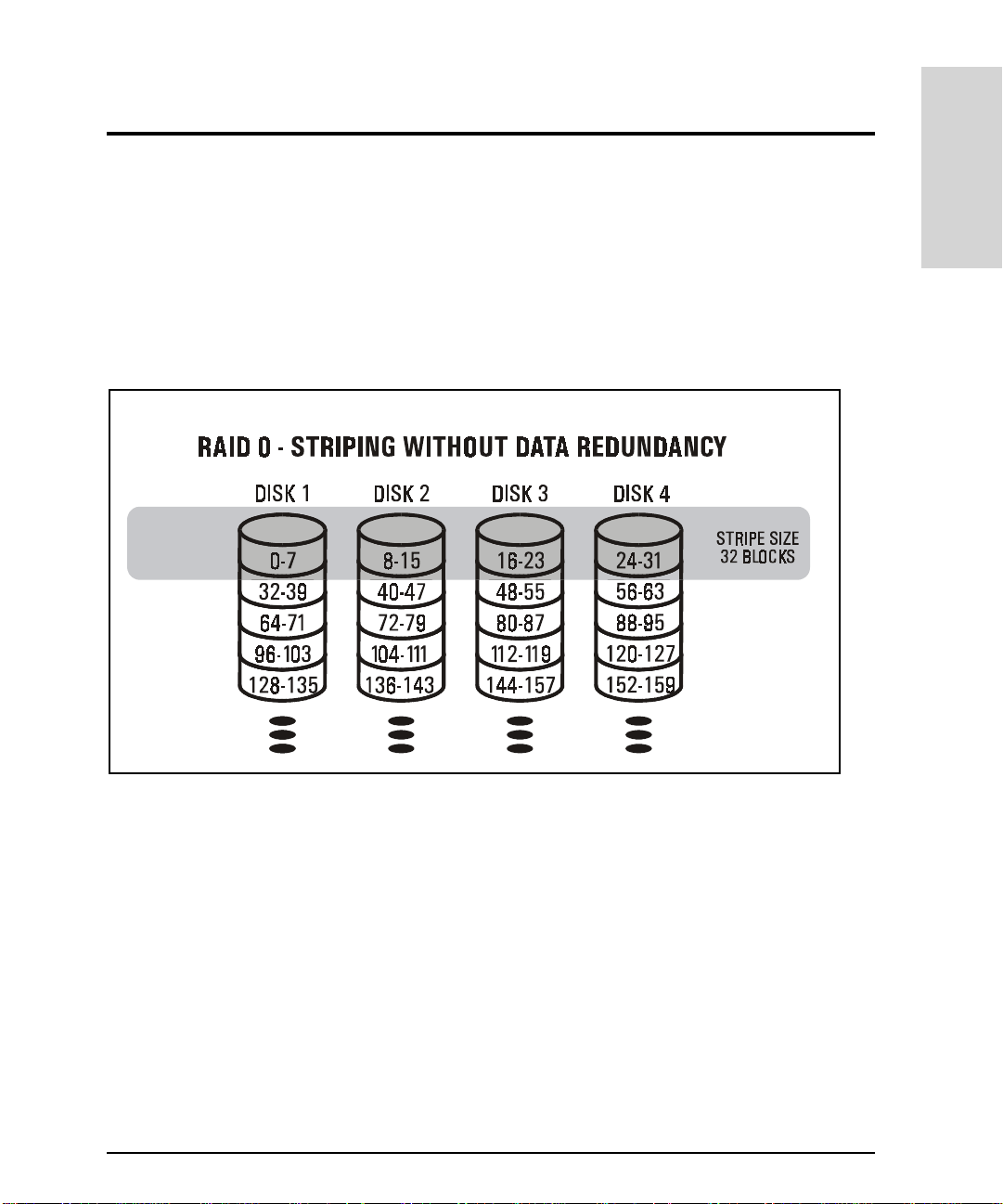

Figure 18 illustrates the distribution of user data in a four-disk RAID 0 LUN. The

stripe segment size is 8 blocks, and the stripe size is 32 blocks (8 blocks times 4 disks).

The disk block addresses in the stripe proceed sequentially from the first disk to the

second, third, and fourth, then back to the first, and so on.
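The sequential addressing described above can be sketched as a mapping from a LUN block address to a disk and a block on that disk. This Python sketch uses the four-disk, 8-block-segment layout of the example. The function name locate_block and the zero-based numbering are assumptions made for illustration. The array's actual internal layout is not documented here.

```python
def locate_block(logical_block, disks=4, segment_blocks=8):
    """Map a LUN block address to (disk index, block address on that disk).

    Disks and blocks are numbered from 0. Addresses fill one segment on the
    first disk, then the next disk, and wrap to the first disk for each new stripe.
    """
    segment_index, offset_in_segment = divmod(logical_block, segment_blocks)
    stripe_index, disk_index = divmod(segment_index, disks)
    disk_block = stripe_index * segment_blocks + offset_in_segment
    return disk_index, disk_block

print(locate_block(0))    # first block of the first stripe, on the first disk
print(locate_block(8))    # first block of the second disk's segment
print(locate_block(31))   # last block of the first stripe, on the fourth disk
print(locate_block(32))   # wraps back to the first disk, second stripe
```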

Figure 18 RAID 0 LUN

RAID 1

RAID 1 uses mirroring to achieve data redundancy. RAID 1 provides high availability and

good performance, but at the cost of storage efficiency. Because all data is mirrored, a

RAID 1 LUN has a storage efficiency of 50%.

A RAID 1 LUN consists of exactly two disks configured as a mirrored pair. One disk is the

data disk and the other is the disk mirror. The disks in a RAID 1 LUN are mirrored by the

disk array hardware, which automatically writes data to both the data disk and the disk

mirror. Once bound into a RAID 1 mirrored pair, the two disks cannot be accessed as

individual disks. For highest data availability, each disk in the mirrored pair must be

located in a different enclosure.

When a data disk or disk mirror in a RAID 1 LUN fails, the disk array automatically uses the

remaining disk for data access. Until the failed disk is replaced (or a rebuild on a global hot

spare is completed), the LUN operates in degraded mode. While in degraded mode the LUN

is susceptible to the failure of the second disk. If both disks fail or become inaccessible

simultaneously, the data on the LUN becomes inaccessible.

Figure 19 shows the distribution of data on a RAID 1 LUN. Note that all data on the data

disk is replicated on the disk mirror.

Figure 19 RAID 1 LUN

RAID 0/1

RAID 0/1 uses mirroring to achieve data redundancy and disk striping to enhance

performance. It combines the speed advantage of block striping with the redundancy

advantage of mirroring. Because all data is mirrored, a RAID 0/1 LUN has a storage

efficiency of 50%.
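As a rough illustration of what 50% storage efficiency means in practice, the following Python sketch computes the usable capacity of a mirrored LUN. The function name and the 18 GB disk size are hypothetical. The disk-count checks follow the limits given in this section for RAID 1 (exactly two disks) and RAID 0/1 (an even number of disks, from four to 30).

```python
def mirrored_usable_capacity(raid_level, disks, disk_gb):
    """Return usable capacity in GB for a RAID 1 or RAID 0/1 LUN."""
    if raid_level == "1":
        if disks != 2:
            raise ValueError("a RAID 1 LUN consists of exactly two disks")
    elif raid_level == "0/1":
        if disks % 2 != 0 or not 4 <= disks <= 30:
            raise ValueError("a RAID 0/1 LUN needs an even number of 4 to 30 disks")
    else:
        raise ValueError("only mirrored RAID levels 1 and 0/1 are handled here")
    # Half of the disks hold mirror copies, so only half the raw space is usable.
    return (disks // 2) * disk_gb

print(mirrored_usable_capacity("1", 2, 18))
print(mirrored_usable_capacity("0/1", 6, 18))
```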

A RAID 0/1 LUN contains an even number of disks, from four to 30. One half of the disks are

primary disks and the other half are disk mirrors. The disks in a RAID 0/1 LUN are mirrored

by the disk array hardware, which automatically writes data to both disks in the mirrored