HP P63x0/P65x0 Enterprise Virtual Array User Guide

Abstract

This document describes the hardware and general operation of the P63x0/P65x0 EVA.

HP Part Number: 5697-2486

Published: September 2013

Edition: 5

© Copyright 2011, 2013 Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Warranty

To obtain a copy of the warranty for this product, see the warranty information website:

http://www.hp.com/go/storagewarranty

Acknowledgments

Microsoft® and Windows® are U.S. registered trademarks of Microsoft Corporation.

Java® and Oracle® are registered U.S. trademarks of Oracle Corporation or its affiliates.

Intel® and Itanium® are registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Contents

1 P63x0/P65x0 EVA hardware....................................................................13

SAS disk enclosures................................................................................................................13

Small Form Factor disk enclosure chassis...............................................................................13

Front view....................................................................................................................13

Rear view.....................................................................................................................14

Drive bay numbering.....................................................................................................14

Large Form Factor disk enclosure chassis...............................................................................14

Front view....................................................................................................................14

Rear view.....................................................................................................................15

Drive bay numbering.....................................................................................................15

Disk drives........................................................................................................................15

Disk drive LEDs.............................................................................................................15

Disk drive blanks...........................................................................................................16

Front status and UID module................................................................................................16

Front UID module LEDs...................................................................................................16

Unit identification (UID) button........................................................................................17

Power supply module..........................................................................................................17

Power supply LED..........................................................................................................17

Fan module.......................................................................................................................17

Fan module LED............................................................................................................18

I/O module......................................................................................................................18

I/O module LEDs..........................................................................................................19

Rear power and UID module...............................................................................................19

Rear power and UID module LEDs...................................................................................20

Unit identification (UID) button........................................................................................21

Power on/standby button...............................................................................................21

SAS cables.......................................................................................................................21

Controller enclosure................................................................................................................21

Controller status indicators..................................................................................................24

Controller status LEDs.....................................................................................................25

Power supply module..........................................................................................................26

Battery module..................................................................................................................27

Fan module.......................................................................................................................27

Management module.........................................................................................................28

iSCSI and iSCSI/FCoE recessed maintenance button..............................................................28

Reset the iSCSI or iSCSI/FCoE module and boot the primary image....................................29

Reset iSCSI or iSCSI/FCoE MGMT port IP address.............................................................29

Enable iSCSI or iSCSI/FCoE MGMT port DHCP address....................................................29

Reset the iSCSI or iSCSI/FCoE module to factory defaults...................................................29

HSV controller cabling............................................................................................................29

Storage system racks ..............................................................................................................30

Rack configurations............................................................................................................30

Power distribution units............................................................................................................31

PDU 1..............................................................................................................................31

PDU 2..............................................................................................................................31

PDMs...............................................................................................................................32

Rack AC power distribution.................................................................................................33

Moving and stabilizing a rack..................................................................................................33

2 P63x0/P65x0 EVA operation....................................................................36

Best practices.........................................................................................................................36

Operating tips and information................................................................................................36

Reserving adequate free space............................................................................................36

Using SAS-midline disk drives..............................................................................................36

Failback preference setting for HSV controllers.......................................................................36

Changing virtual disk failover/failback setting..................................................................38

Implicit LUN transition.........................................................................................................38

Recovery CD.....................................................................................................................39

Adding disk drives to the storage system...............................................................................39

Handling fiber optic cables.................................................................................................39

Storage system shutdown and startup........................................................................................40

Powering on disk enclosures................................................................................................40

Powering off disk enclosures................................................................................................41

Shutting down the storage system from HP P6000 Command View...........................................41

Shutting down the storage system from the array controller......................................................41

Starting the storage system..................................................................................................41

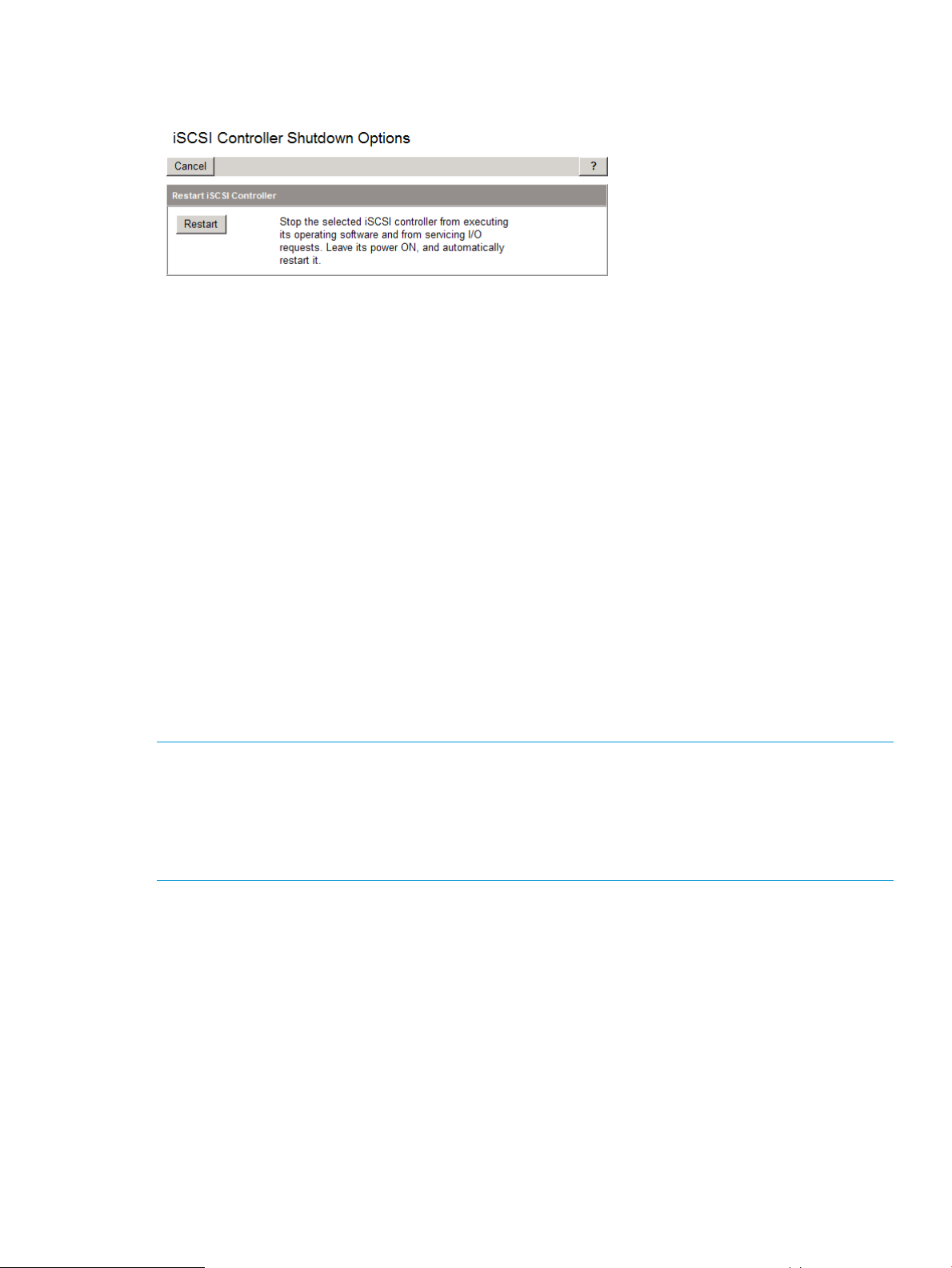

Restarting the iSCSI or iSCSI/FCoE module ..........................................................................42

Using the management module................................................................................................43

Connecting to the management module................................................................................43

Connecting through a public network...............................................................................44

Connecting through a private network..............................................................................45

Accessing HP P6000 Command View on the management module..........................................45

Changing the host port default operating mode.....................................................................45

Saving storage system configuration data...................................................................................46

Saving or restoring the iSCSI or iSCSI/FCoE module configuration...........................................48

3 Configuring application servers..................................................................50

Overview..............................................................................................................................50

Clustering..............................................................................................................................50

Multipathing..........................................................................................................................50

Installing Fibre Channel adapters..............................................................................................50

Testing connections to the array................................................................................................51

Adding hosts..........................................................................................................................51

Creating and presenting virtual disks.........................................................................................52

Verifying virtual disk access from the host...................................................................................52

Configuring virtual disks from the host.......................................................................................52

HP-UX...................................................................................................................................52

Scanning the bus...............................................................................................................52

Creating volume groups on a virtual disk using vgcreate.........................................................53

IBM AIX................................................................................................................................54

Accessing IBM AIX utilities..................................................................................................54

Adding hosts.....................................................................................................................54

Creating and presenting virtual disks....................................................................................54

Verifying virtual disks from the host.......................................................................................54

Linux.....................................................................................................................................55

Driver failover mode...........................................................................................................55

Installing a QLogic driver....................................................................................................55

Upgrading Linux components..............................................................................................56

Upgrading qla2x00 RPMs..............................................................................................56

Detecting third-party storage...........................................................................................56

Compiling the driver for multiple kernels...........................................................................57

Uninstalling the Linux components........................................................................................57

Using the source RPM.........................................................................................................57

HBA drivers.......................................................................................................................58

Verifying virtual disks from the host.......................................................................................58

OpenVMS.............................................................................................................................58

Updating the AlphaServer console code, Integrity Server console code, and Fibre Channel FCA firmware...........................................................................................................................58

Verifying the Fibre Channel adapter software installation........................................................58

Console LUN ID and OS unit ID...........................................................................................59

Adding OpenVMS hosts.....................................................................................................59

Scanning the bus...............................................................................................................60

Configuring virtual disks from the OpenVMS host...................................................................61

Setting preferred paths.......................................................................................................61

Oracle Solaris........................................................................................................................61

Loading the operating system and software...........................................................................62

Configuring FCAs with the Oracle SAN driver stack...............................................................62

Configuring Emulex FCAs with the lpfc driver....................................................................62

Configuring QLogic FCAs with the qla2300 driver.............................................................64

Fabric setup and zoning.....................................................................................................65

Oracle StorEdge Traffic Manager (MPxIO)/Oracle Storage Multipathing..................................65

Configuring with Veritas Volume Manager............................................................................66

Configuring virtual disks from the host...................................................................................67

Verifying virtual disks from the host..................................................................................68

Labeling and partitioning the devices...............................................................................69

VMware................................................................................................................................70

Configuring the EVA with VMware host servers......................................................................70

Configuring an ESX server ..................................................................................................70

Setting the multipathing policy........................................................................................71

Verifying virtual disks from the host.......................................................................................73

HP P6000 EVA Software Plug-in for VMware VAAI.................................................................73

System prerequisites......................................................................................................73

Enabling vSphere Storage API for Array Integration (VAAI).................................................73

Installing the VAAI Plug-in...............................................................................................74

Installation overview.................................................................................................74

Installing the HP EVA VAAI Plug-in using ESX host console utilities...................................75

Installing the HP VAAI Plug-in using vCLI/vMA.............................................................76

Installing the VAAI Plug-in using VUM.........................................................................78

Uninstalling the VAAI Plug-in...........................................................................................80

Uninstalling VAAI Plug-in using the automated script (hpeva.pl).......................................80

Uninstalling VAAI Plug-in using vCLI/vMA (vihostupdate)...............................................80

Uninstalling VAAI Plug-in using VMware native tools (esxupdate)....................................81

4 Replacing array components......................................................................82

Customer self repair (CSR).......................................................................................................82

Parts-only warranty service..................................................................................................82

Best practices for replacing hardware components......................................................................82

Component replacement videos...........................................................................................82

Verifying component failure.................................................................................................82

Identifying the spare part....................................................................................................82

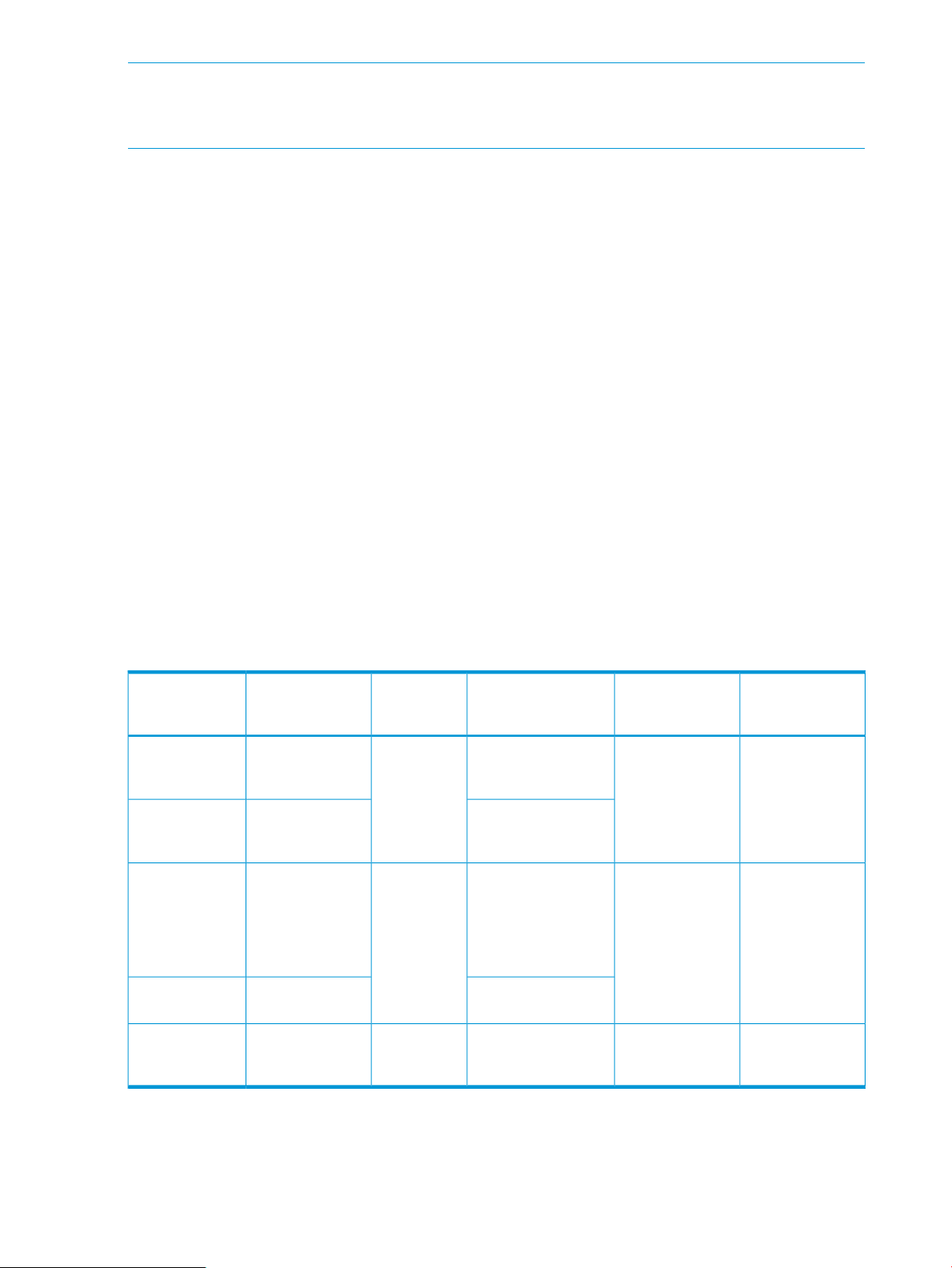

Replaceable parts...................................................................................................................83

Replacing the failed component................................................................................................85

Replacement instructions..........................................................................................................85

5 iSCSI or iSCSI/FCoE configuration rules and guidelines................................87

iSCSI or iSCSI/FCoE module rules and supported maximums ......................................................87

HP P6000 Command View and iSCSI or iSCSI/FCoE module management rules and guidelines......87

HP P63x0/P65x0 EVA storage system software..........................................................................87

Fibre Channel over Ethernet switch and fabric support.................................................................87

Operating system and multipath software support.......................................................................90

iSCSI initiator rules, guidelines, and support ..............................................................................91

General iSCSI initiator rules and guidelines ..........................................................................91

Apple Mac OS X iSCSI initiator rules and guidelines..............................................................91

Microsoft Windows iSCSI Initiator rules and guidelines...........................................................91

Linux iSCSI Initiator rules and guidelines ..............................................................................92

Solaris iSCSI Initiator rules and guidelines.............................................................................92

VMware iSCSI Initiator rules and guidelines..........................................................................93

Supported IP network adapters ................................................................................................93

IP network requirements ..........................................................................................................93

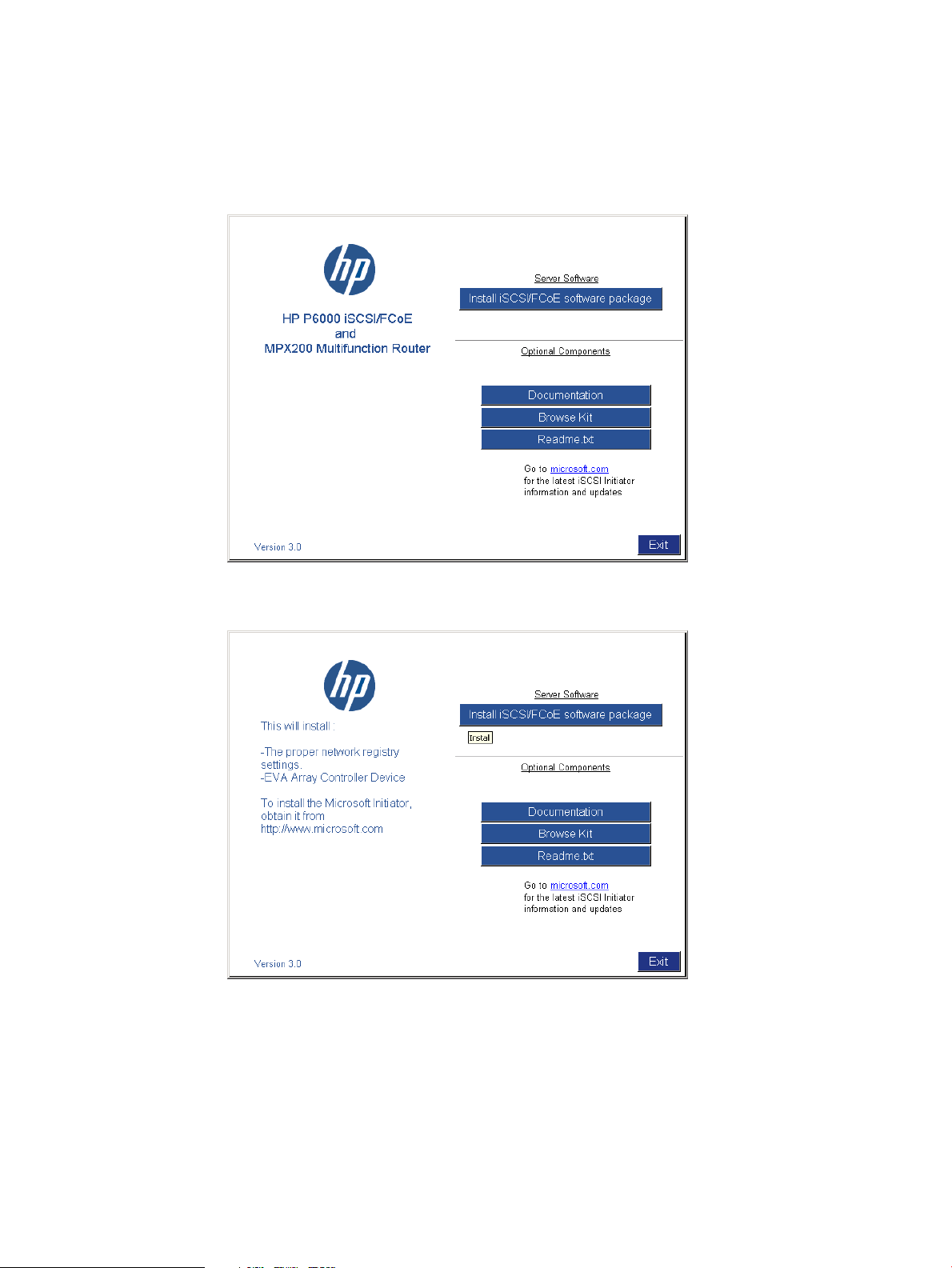

Set up the iSCSI Initiator..........................................................................................................94

Windows..........................................................................................................................94

Multipathing.....................................................................................................................99

Installing the MPIO feature for Windows Server 2012...........................................................100

Installing the MPIO feature for Windows Server 2008..........................................................103

Installing the MPIO feature for Windows Server 2003..........................................................104

About Microsoft Windows Server 2003 scalable networking pack.........................................105

SNP setup with HP NC 3xxx GbE multifunction adapter...................................................105

iSCSI Initiator version 3.10 setup for Apple Mac OS X (single-path)........................................105

Set up the iSCSI Initiator for Apple Mac OS X.................................................................106

Storage setup for Apple Mac OS X................................................................................109

iSCSI Initiator setup for Linux.............................................................................................109

Installing and configuring the SUSE Linux Enterprise 10 iSCSI driver...................................109

Installing and configuring for Red Hat 5....................................................................111

Installing and configuring for Red Hat 4 and SUSE 9..................................................112

Installing the initiator for Red Hat 3 and SUSE 8.........................................................112

Assigning device names...............................................................................................112

Target bindings...........................................................................................................113

Mounting file systems...................................................................................................114

Unmounting file systems...............................................................................................114

Presenting EVA storage for Linux....................................................................................115

Setting up the iSCSI Initiator for VMware............................................................................115

Configuring multipath with the Solaris 10 iSCSI Initiator........................................................117

MPxIO overview.........................................................................................................118

Preparing the host system........................................................................................118

Enabling MPxIO for HP P63x0/P65x0 EVA...............................................................118

Enable iSCSI target discovery...................................................................................120

Modify target parameter MaxRecvDataSegLen...........................................................121

Monitor Multipath devices.......................................................................................122

Managing and Troubleshooting Solaris iSCSI Multipath devices...................................123

Configuring Microsoft MPIO iSCSI devices..........................................................................123

Load balancing features of Microsoft MPIO for iSCSI............................................................124

Microsoft MPIO with QLogic iSCSI HBA..............................................................................125

Installing the QLogic iSCSI HBA....................................................................................125

Installing the Microsoft iSCSI Initiator services and MPIO..................................................125

Configuring the QLogic iSCSI HBA................................................................................125

Adding targets to QLogic iSCSI Initiator.........................................................................126

Presenting LUNs to the QLogic iSCSI Initiator..................................................................127

Installing the HP MPIO Full Featured DSM for EVA...........................................................128

Microsoft Windows Cluster support....................................................................................129

Microsoft Cluster Server for Windows 2003...................................................................129

Requirements..............................................................................................................129

Setting the Persistent Reservation registry key...................................................................129

Microsoft Cluster Server for Windows 2008...................................................................130

Requirements.........................................................................................................130

Setting up authentication ..................................................................................................131

CHAP restrictions ............................................................................................................131

Microsoft Initiator CHAP secret restrictions ..........................................................................131

Linux version...................................................................................................................132

ATTO Macintosh CHAP restrictions .....................................................................................132

Recommended CHAP policies ...........................................................................................132

iSCSI session types ..........................................................................................................132

The iSCSI or iSCSI/FCoE controller CHAP modes ................................................................132

Enabling single-direction CHAP during discovery and normal session....................................132

Enabling CHAP for the iSCSI or iSCSI/FCoE module-discovered iSCSI initiator entry ................134

Enable CHAP for the Microsoft iSCSI Initiator.......................................................................135

Enable CHAP for the open-iscsi iSCSI Initiator .....................................................................135

Enabling single-direction CHAP during discovery and bi-directional CHAP during normal session.....................................................................................................................................136

Enabling bi-directional CHAP during discovery and single-direction CHAP during normal session...........................................................................................................................138

Enabling bi-directional CHAP during discovery and bi-directional CHAP during normal session...140

Enable CHAP for the open-iscsi iSCSI Initiator......................................................................142

iSCSI and FCoE thin provision handling..............................................................................144

6 Single path implementation.....................................................................149

Installation requirements........................................................................................................149

Recommended mitigations.....................................................................................................149

Supported configurations.......................................................................................................150

General configuration components.....................................................................................150

Connecting a single path HBA server to a switch in a fabric zone..........................................150

HP-UX configuration..............................................................................................................152

Requirements...................................................................................................................152

HBA configuration............................................................................................................152

Risks..............................................................................................................................152

Limitations.......................................................................................................................152

Windows Server 2003 (32-bit), Windows Server 2008 (32-bit), and Windows Server 2012 (32-bit) configurations......................................................................................................................153

Requirements...................................................................................................................153

HBA configuration............................................................................................................153

Risks..............................................................................................................................153

Limitations.......................................................................................................................154

Windows Server 2003 (64-bit) and Windows Server 2008 (64-bit) configurations.......................154

Requirements...................................................................................................................154

HBA configuration............................................................................................................154

Risks..............................................................................................................................155

Limitations.......................................................................................................................155

Oracle Solaris configuration...................................................................................................155

Requirements...................................................................................................................155

HBA configuration............................................................................................................156

Risks..............................................................................................................................156

Limitations.......................................................................................................................156

OpenVMS configuration........................................................................................................157

Requirements...................................................................................................................157

HBA configuration............................................................................................................157

Risks..............................................................................................................................157

Limitations.......................................................................................................................158

Xen configuration.................................................................................................................158

Requirements...................................................................................................................158

HBA configuration............................................................................................................158

Risks..............................................................................................................................159

Limitations.......................................................................................................................159

Linux (32-bit) configuration.....................................................................................................159

Page 8

Requirements...................................................................................................................159

HBA configuration............................................................................................................160

Risks..............................................................................................................................160

Limitations.......................................................................................................................160

Linux (Itanium) configuration...................................................................................................160

Requirements...................................................................................................................160

HBA configuration............................................................................................................161

Risks..............................................................................................................................161

Limitations.......................................................................................................................161

IBM AIX configuration...........................................................................................................162

Requirements...................................................................................................................162

HBA configuration............................................................................................................162

Risks..............................................................................................................................162

Limitations.......................................................................................................................162

VMware configuration...........................................................................................................163

Requirements...................................................................................................................163

HBA configuration............................................................................................................163

Risks..............................................................................................................................163

Limitations.......................................................................................................................164

Mac OS configuration...........................................................................................................164

Failure scenarios...................................................................................................................164

HP-UX.............................................................................................................................164

Windows Servers.............................................................................................................165

Oracle Solaris.................................................................................................................165

OpenVMS......................................................................................................................165

Linux..............................................................................................................................166

IBM AIX..........................................................................................................................167

VMware.........................................................................................................................167

Mac OS.........................................................................................................................168

7 Troubleshooting......................................................................................169

If the disk enclosure does not initialize.....................................................................................169

Diagnostic steps...................................................................................................................169

Is the enclosure front fault LED amber?................................................................................169

Is the enclosure rear fault LED amber?.................................................................................169

Is the power on/standby button LED amber?.......................................................................170

Is the power supply LED amber?........................................................................................170

Is the I/O module fault LED amber?....................................................................................170

Is the fan LED amber?.......................................................................................................171

Effects of a disk drive failure...................................................................................................171

Compromised fault tolerance.............................................................................................171

Factors to consider before replacing disk drives........................................................................171

Automatic data recovery (rebuild)...........................................................................................172

Time required for a rebuild................................................................................................172

Failure of another drive during rebuild................................................................................173

Handling disk drive failures...............................................................................................173

iSCSI module diagnostics and troubleshooting..........................................................................173

iSCSI and iSCSI/FCoE diagnostics.....................................................................................173

Locate the iSCSI or iSCSI/FCoE module.........................................................................174

iSCSI or iSCSI/FCoE module's log data.........................................................................175

iSCSI or iSCSI/FCoE module statistics............................................................................175

Troubleshoot using HP P6000 Command View................................................................175

Issues and solutions..........................................................................................................175

Issue: HP P6000 Command View does not discover the iSCSI or iSCSI/FCoE modules.........175

Issue: Initiator cannot login to iSCSI or iSCSI/FCoE module target.....................................176

Page 9

Issue: Initiator logs in to iSCSI or iSCSI/FCoE controller target but EVA assigned LUNs are not

appearing on the initiator............................................................................................176

Issue: EVA presented virtual disk is not seen by the initiator...............................................176

Issue: Windows initiators may display Reconnecting if NIC MTU changes after connection has logged in...................................................................................................................177

Issue: When communication between HP P6000 Command View and iSCSI or iSCSI/FCoE module is down, use following options:..........................................................................177

HP P6000 Command View issues and solutions...................................................................178

8 Error messages.......................................................................................180

9 Support and other resources....................................................................197

Contacting HP......................................................................................................................197

HP technical support........................................................................................................197

Subscription service..........................................................................................................197

Documentation feedback..................................................................................................197

Related documentation..........................................................................................................197

Documents......................................................................................................................197

Websites........................................................................................................................197

Typographic conventions.......................................................................................................198

Customer self repair..............................................................................................................198

Rack stability........................................................................................................................199

A Regulatory compliance notices.................................................................200

Regulatory compliance identification numbers..........................................................................200

Federal Communications Commission notice............................................................................200

FCC rating label..............................................................................................................200

Class A equipment......................................................................................................200

Class B equipment......................................................................................................200

Declaration of Conformity for products marked with the FCC logo, United States only...............201

Modification...................................................................................................................201

Cables...........................................................................................................................201

Canadian notice (Avis Canadien)...........................................................................................201

Class A equipment...........................................................................................................201

Class B equipment...........................................................................................................201

European Union notice..........................................................................................................201

Japanese notices..................................................................................................................202

Japanese VCCI-A notice....................................................................................................202

Japanese VCCI-B notice....................................................................................................202

Japanese VCCI marking...................................................................................................202

Japanese power cord statement.........................................................................................202

Korean notices.....................................................................................................................202

Class A equipment...........................................................................................................202

Class B equipment...........................................................................................................203

Taiwanese notices.................................................................................................................203

BSMI Class A notice.........................................................................................................203

Taiwan battery recycle statement........................................................................................203

Turkish recycling notice..........................................................................................................203

Vietnamese Information Technology and Communications compliance marking.............................203

Laser compliance notices.......................................................................................................204

English laser notice..........................................................................................................204

Dutch laser notice............................................................................................................204

French laser notice...........................................................................................................204

German laser notice.........................................................................................................205

Italian laser notice............................................................................................................205

Japanese laser notice.......................................................................................................205

Page 10

Spanish laser notice.........................................................................................................206

Recycling notices..................................................................................................................206

English recycling notice....................................................................................................206

Bulgarian recycling notice.................................................................................................206

Czech recycling notice......................................................................................................206

Danish recycling notice.....................................................................................................206

Dutch recycling notice.......................................................................................................207

Estonian recycling notice...................................................................................................207

Finnish recycling notice.....................................................................................................207

French recycling notice.....................................................................................................207

German recycling notice...................................................................................................207

Greek recycling notice......................................................................................................207

Hungarian recycling notice...............................................................................................208

Italian recycling notice......................................................................................................208

Latvian recycling notice.....................................................................................................208

Lithuanian recycling notice................................................................................................208

Polish recycling notice.......................................................................................................208

Portuguese recycling notice...............................................................................................209

Romanian recycling notice................................................................................................209

Slovak recycling notice.....................................................................................................209

Spanish recycling notice...................................................................................................209

Swedish recycling notice...................................................................................................209

Battery replacement notices...................................................................................................210

Dutch battery notice.........................................................................................................210

French battery notice........................................................................................................210

German battery notice......................................................................................................211

Italian battery notice........................................................................................................211

Japanese battery notice....................................................................................................212

Spanish battery notice......................................................................................................212

B Non-standard rack specifications..............................................................213

Internal component envelope..................................................................................................213

EIA310-D standards..............................................................................................................213

EVA cabinet measures and tolerances.....................................................................................213

Weights, dimensions and component CG measurements...........................................................214

Airflow and Recirculation.......................................................................................................214

Component Airflow Requirements.......................................................................................214

Rack Airflow Requirements................................................................................................214

Configuration Standards........................................................................................................214

UPS Selection.......................................................................................................................214

Shock and vibration specifications..........................................................................................215

C Command reference...............................................................................217

Command syntax..................................................................................................................217

Command line completion................................................................................................217

Authority requirements......................................................................................................217

Commands..........................................................................................................................217

Admin............................................................................................................................218

Beacon...........................................................................................................................218

Clear.............................................................................................................................218

Date..............................................................................................................................219

Exit................................................................................................................................219

FRU................................................................................................................................220

Help..............................................................................................................................220

History...........................................................................................................................222

Image............................................................................................................................222

Page 11

Initiator...........................................................................................................................223

Logout............................................................................................................................225

Lunmask.........................................................................................................................225

Passwd...........................................................................................................................228

Ping...............................................................................................................................229

Quit...............................................................................................................................230

Reboot...........................................................................................................................230

Reset..............................................................................................................................230

Save..............................................................................................................................231

Set.................................................................................................................................231

Set alias.........................................................................................................................232

Set CHAP.......................................................................................................................233

Set FC............................................................................................................................233

Set features.....................................................................................................................234

Set iSCSI........................................................................................................................235

Set iSNS.........................................................................................................................236

Set Mgmt........................................................................................................................236

Set NTP..........................................................................................................................237

Set properties..................................................................................................................237

Set SNMP.......................................................................................................................238

Set system.......................................................................................................................239

Set VPGroups..................................................................................................................239

Show.............................................................................................................................240

Show CHAP....................................................................................................................242

Show FC........................................................................................................................242

Show features..................................................................................................................244

Show initiators.................................................................................................................244

Show initiators LUN mask.................................................................................................246

Show iSCSI.....................................................................................................................247

Show iSNS.....................................................................................................................249

Show logs.......................................................................................................................249

Show LUNinfo.................................................................................................................250

Show LUNs.....................................................................................................................251

Show lunmask.................................................................................................................252

Show memory.................................................................................................................252

Show mgmt.....................................................................................................................253

Show NTP......................................................................................................................253

Show perf.......................................................................................................................254

Show presented targets.....................................................................................................255

Show properties..............................................................................................................258

Show SNMP...................................................................................................................259

Show stats......................................................................................................................259

Show system...................................................................................................................261

Show targets...................................................................................................................262

Show VPGroups...............................................................................................................262

Shutdown.......................................................................................................................263

Target............................................................................................................................263

Traceroute.......................................................................................................................264

D Using the iSCSI CLI.................................................................................265

Logging on to an iSCSI or iSCSI/FCoE module.........................................................................265

Understanding the guest account............................................................................................265

Working with iSCSI or iSCSI/FCoE module configurations.........................................................266

Modifying a configuration.................................................................................................267

Saving and restoring iSCSI or iSCSI/FCoE controller configurations........................................267

Page 12

Restoring iSCSI or iSCSI/FCoE module configuration and persistent data................................267

E Simple Network Management Protocol......................................................269

SNMP parameters................................................................................................................269

SNMP trap configuration parameters.......................................................................................269

Management Information Base...............................................................................................270

Network port table...........................................................................................................270

FC port table...................................................................................................................272

Initiator object table.........................................................................................................273

LUN table.......................................................................................................................275

VP group table................................................................................................................277

Sensor table....................................................................................................................278

Notifications........................................................................................................................279

System information objects................................................................................................280

Notification objects..........................................................................................................280

Agent startup notification..................................................................................................281

Agent shutdown notification..............................................................................................281

Network port down notification..........................................................................................281

FC port down notification..................................................................................................281

Target device discovery....................................................................................................282

Target presentation (mapping)...........................................................................................282

VP group notification........................................................................................................282

Sensor notification...........................................................................................................283

Generic notification..........................................................................................................283

F iSCSI and iSCSI/FCoE module log messages.............................................284

Glossary..................................................................................................298

Index.......................................................................................................311


Page 13

1 P63x0/P65x0 EVA hardware

The P63x0/P65x0 EVA contains the following components:

• EVA controller enclosure — Contains HSV controllers, power supplies, cache batteries, and

fans. Available in FC and iSCSI options.

NOTE: Compared to older models, the HP P6350 and P6550 employ newer batteries and

a performance-enhanced management module. They require XCS Version 11000000 or later

on the P6350 and P6550 and HP P6000 Command View Version 10.1 or later on the

management module. The P6300 and P6350 use the HSV340 controller while the P6500

and P6550 use the HSV360 controller.

• SAS disk enclosure — Contains disk drives, power supplies, fans, midplane, and I/O modules.

• Y-cables — Provides dual-port connectivity to the EVA controller.

• Rack — Several free standing racks are available.
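The model and version details in the note above can be summarized as a small lookup. This is only an illustrative sketch: the function name is hypothetical, and representing the XCS version as an integer and the Command View version as a (major, minor) tuple is an assumption made for the example, not something the guide specifies.

```python
# Controller used by each array model, as stated in the note above.
CONTROLLER_BY_MODEL = {
    "P6300": "HSV340",
    "P6350": "HSV340",
    "P6500": "HSV360",
    "P6550": "HSV360",
}

# Minimum versions the note gives for the P6350 and P6550.
MIN_XCS = 11000000          # XCS version, treated as an integer (assumption)
MIN_COMMAND_VIEW = (10, 1)  # HP P6000 Command View, as (major, minor) (assumption)


def meets_p6350_p6550_minimums(xcs_version, command_view_version):
    """Return True if both versions satisfy the minimums given for the P6350/P6550.

    Hypothetical helper: xcs_version is an int, command_view_version is a
    (major, minor) tuple.
    """
    return xcs_version >= MIN_XCS and command_view_version >= MIN_COMMAND_VIEW
```

For example, `meets_p6350_p6550_minimums(11000000, (10, 1))` is true, while an array reporting Command View 10.0 would fail the check.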

SAS disk enclosures

6 Gb SAS disk enclosures are available in two models:

• Small Form Factor (SFF): Supports 25 SFF (2.5 inch) disk drives

• Large Form Factor (LFF): Supports 12 LFF (3.5 inch) disk drives

• The SFF model is M6625; the LFF model is M6612.

Small Form Factor disk enclosure chassis

Front view

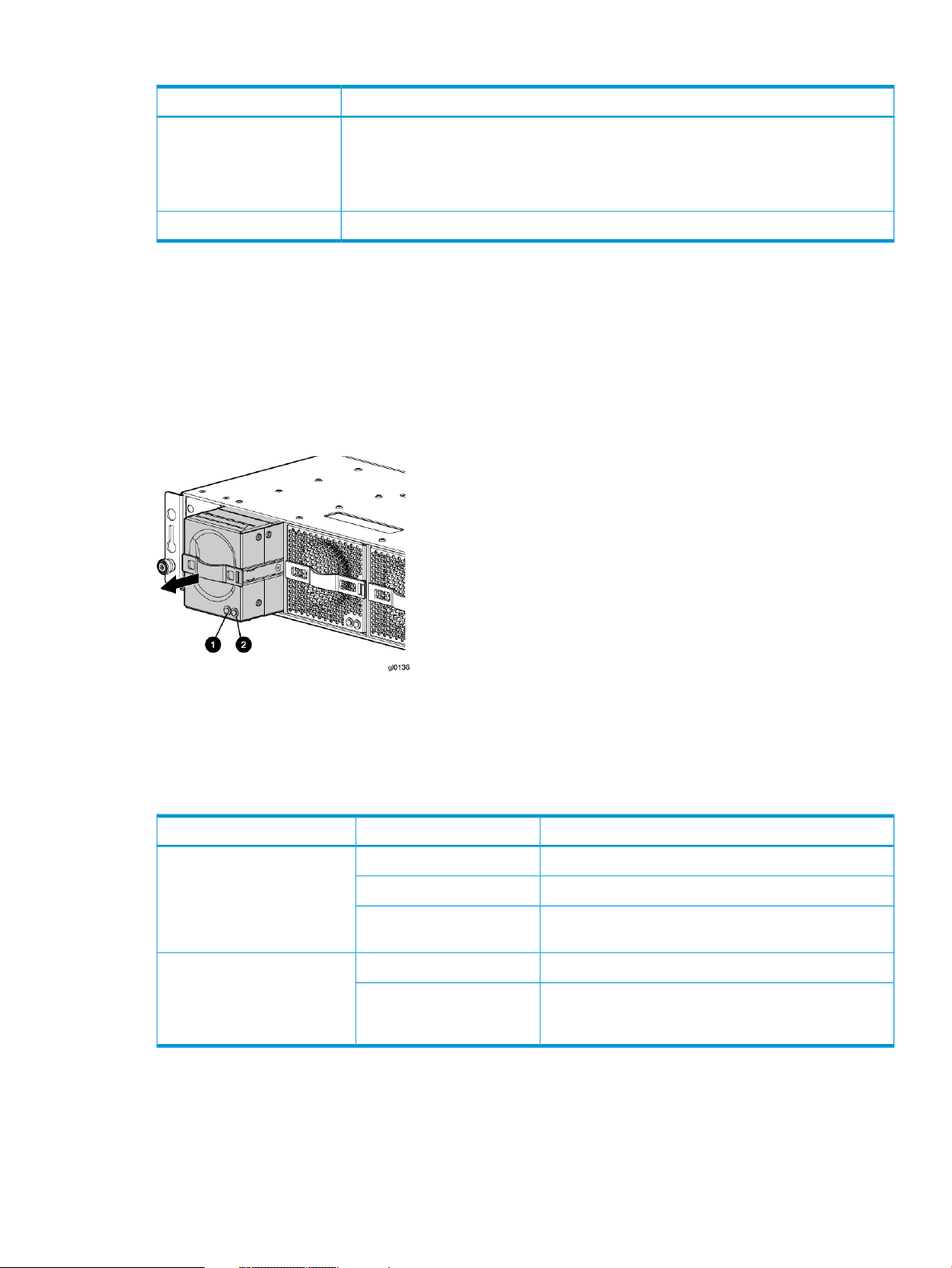

1. Rack-mounting thumbscrew
2. Disk drive in bay 9
3. UID push button and LED
4. Enclosure status LEDs


Page 14

Rear view

1. Power supply 1
2. Power supply 2
3. Fan 1
4. I/O module A
5. I/O module B
6. Fan 2
7. UID push button and LED
8. Enclosure status LEDs
9. Power push button and LED

Drive bay numbering

Disk drives mount in bays on the front of the enclosure. Bays are numbered sequentially from top to bottom and left to right. Bay numbers are indicated on the left side of each drive bay.

Large Form Factor disk enclosure chassis

Front view

1. Rack-mounting thumbscrew
2. Disk drive in bay 6
3. UID push button and LED
4. Enclosure status LEDs


Page 15

Rear view

1. Power supply 1
2. Power supply 2
3. Fan 1
4. I/O module A
5. I/O module B
6. Fan 2
7. UID push button and LED
8. Enclosure status LEDs
9. Power push button and LED

Drive bay numbering

Disk drives mount in bays on the front of the enclosure. Bays are numbered sequentially from top to bottom and left to right. A drive-bay legend is included on the left bezel.
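The "top to bottom and left to right" bay numbering used by both enclosure models can be sketched as a small function. This is one reading of the rule, in which each column is numbered top to bottom before moving to the next column on the right. The function name is hypothetical, and the grid shapes in the usage note are assumptions, since this section does not state how many rows each enclosure has.

```python
def bay_number(row, column, rows_per_column):
    """Return the 1-based bay number for a 0-based (row, column) position.

    Assumes bays are numbered down each column first, then left to right
    across columns. rows_per_column is the number of bays stacked in one
    column of the enclosure front (an assumption supplied by the caller).
    """
    if rows_per_column < 1:
        raise ValueError("rows_per_column must be at least 1")
    if not 0 <= row < rows_per_column or column < 0:
        raise ValueError("position is outside the bay grid")
    return column * rows_per_column + row + 1
```

Assuming an LFF enclosure with three bays per column, `bay_number(2, 1, 3)` gives bay 6. Assuming an SFF enclosure with a single row of drives, `bay_number(0, 8, 1)` gives bay 9.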

Disk drives

Disk drives are hot-pluggable. A variety of disk drive models are supported for use.

Disk drive LEDs

Two LEDs indicate drive status.

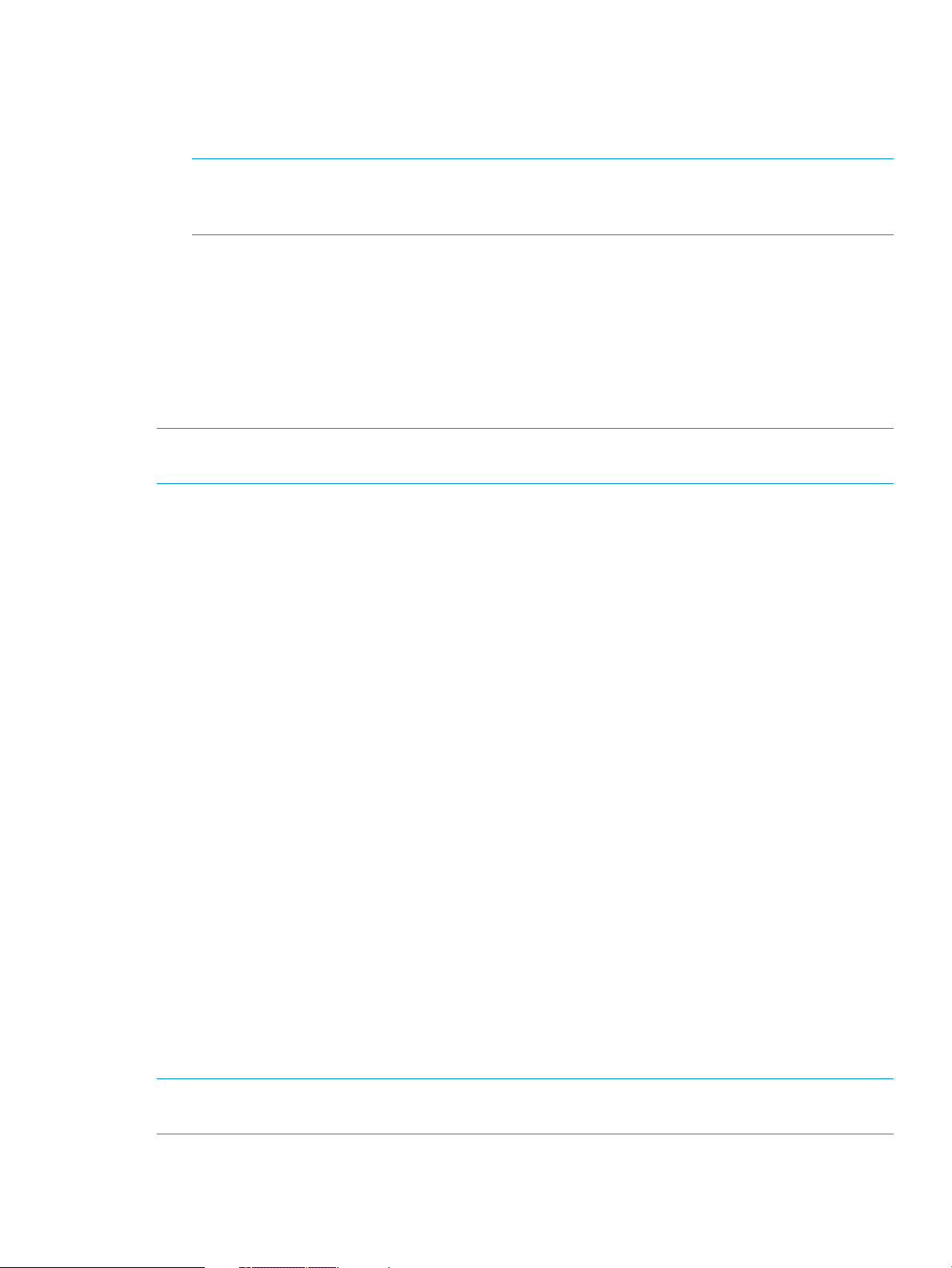

NOTE: The following image shows a Small Form Factor (SFF) disk drive. LED patterns are the

same for SFF and LFF disk drives.


Page 16

| LED | LED color | LED status | Description |
|---|---|---|---|
| 1. Locate/Fault | Blue | Slow blinking (0.5 Hz) | Locate drive |
| 1. Locate/Fault | Amber | Solid | Drive fault |
| 2. Status | Green | Blinking (1 Hz) | Drive is spinning up or down and is not ready |
| 2. Status | Green | Fast blinking (4 Hz) | Drive activity |
| 2. Status | Green | Solid | Ready for activity |

Disk drive blanks

To maintain the proper enclosure air flow, a disk drive or a disk drive blank must be installed in each drive bay. The disk drive blank maintains proper airflow within the disk enclosure.

Front status and UID module

The front status and UID module includes status LEDs and a unit identification (UID) button.

Front UID module LEDs

Blinking

Blinking

Solid

Blinking

Solid

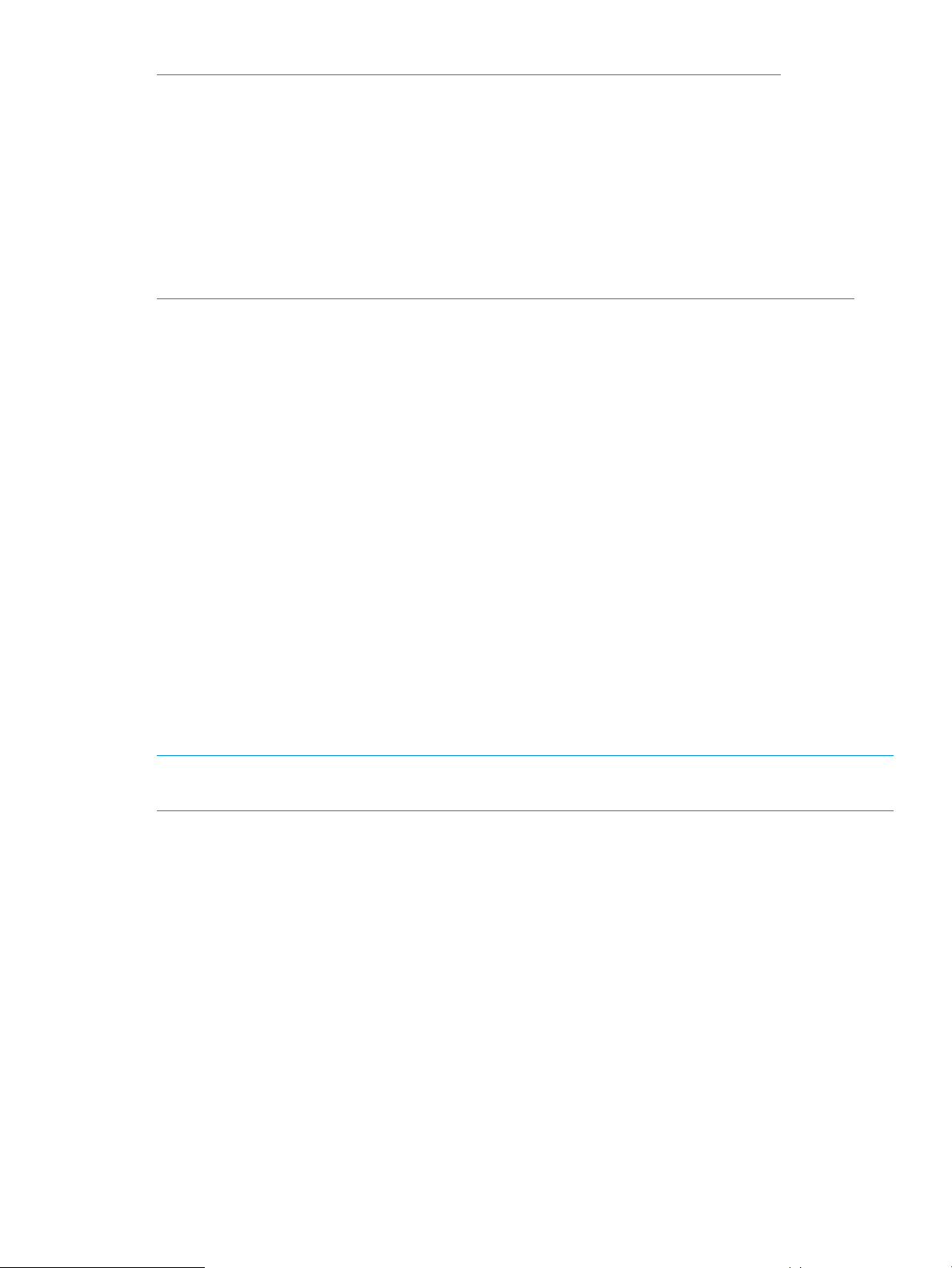

DescriptionLED statusLED colorLED iconLED

No powerOffGreen1. Health

Enclosure is starting up and not ready,

performing POST

Normal, power is onSolid

Normal, no fault conditionsOffAmber2. Fault

A fault of lesser importance was detected in the

enclosure chassis or modules

A fault of greater importance was detected in

the enclosure chassis or modules

Not being identified or power is offOffBlue3. UID

Unit is being identified from the management

utility

Unit is being identified from the UID button

being pushed
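The front UID module LED states lend themselves to a lookup table, which can be handy when scripting a visual inspection checklist. The sketch below transcribes the states from the Front UID module LEDs table. The dictionary and function names are hypothetical, and nothing here reads LED state from the hardware.

```python
# (LED, status) -> meaning, transcribed from the front UID module LED table.
FRONT_LED_MEANINGS = {
    ("Health", "Off"): "No power",
    ("Health", "Blinking"): "Enclosure is starting up and not ready, performing POST",
    ("Health", "Solid"): "Normal, power is on",
    ("Fault", "Off"): "Normal, no fault conditions",
    ("Fault", "Blinking"): "A fault of lesser importance was detected",
    ("Fault", "Solid"): "A fault of greater importance was detected",
    ("UID", "Off"): "Not being identified or power is off",
    ("UID", "Blinking"): "Unit is being identified from the management utility",
    ("UID", "Solid"): "Unit is being identified from the UID button being pushed",
}


def describe(led, status):
    """Return the documented meaning of an observed LED state."""
    return FRONT_LED_MEANINGS.get((led, status), "State not listed in the table")


def has_fault(observed):
    """Return True if the Fault LED is anything other than Off.

    observed maps an LED name to its status, for example
    {"Health": "Solid", "Fault": "Off", "UID": "Off"}.
    """
    return observed.get("Fault", "Off") != "Off"
```

An operator noting a solid green Health LED and a blinking amber Fault LED would get `has_fault(...) == True` and could look up the lesser-importance fault description with `describe("Fault", "Blinking")`.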

Page 17

Unit identification (UID) button

The unit identification (UID) button helps locate an enclosure and its components. When the UID

button is activated, the UID lights on the front and rear of the enclosure are illuminated.

NOTE: A remote session from the management utility can also illuminate the UID.

• To turn on the UID light, press the UID button. The UID light on the front and the rear of the

enclosure will illuminate solid blue. (The UID lights on cascaded storage enclosures are not

illuminated.)

• To turn off an illuminated UID light, press the UID button. The UID light on the front and the

rear of the enclosure will turn off.

Power supply module

Two power supplies provide the necessary operating voltages to all disk enclosure components.

If one power supply fails, the remaining power supply is capable of operating the enclosure.

(Replace any failed component as soon as possible.)

NOTE: If one of the two power supply modules fails, it can be hot-replaced.

Power supply LED

One LED provides module status information.

| LED status | Description |
|---|---|
| Off | No power |
| On | Normal, no fault conditions |

Fan module

Fan modules provide cooling necessary to maintain proper operating temperature within the disk enclosure. If one fan fails, the remaining fan is capable of cooling the enclosure. (Replace any failed component as soon as possible.)

NOTE: If one of the two fan modules fails, it can be hot-replaced.


Page 18

Fan module LED

One bi-color LED provides module status information.

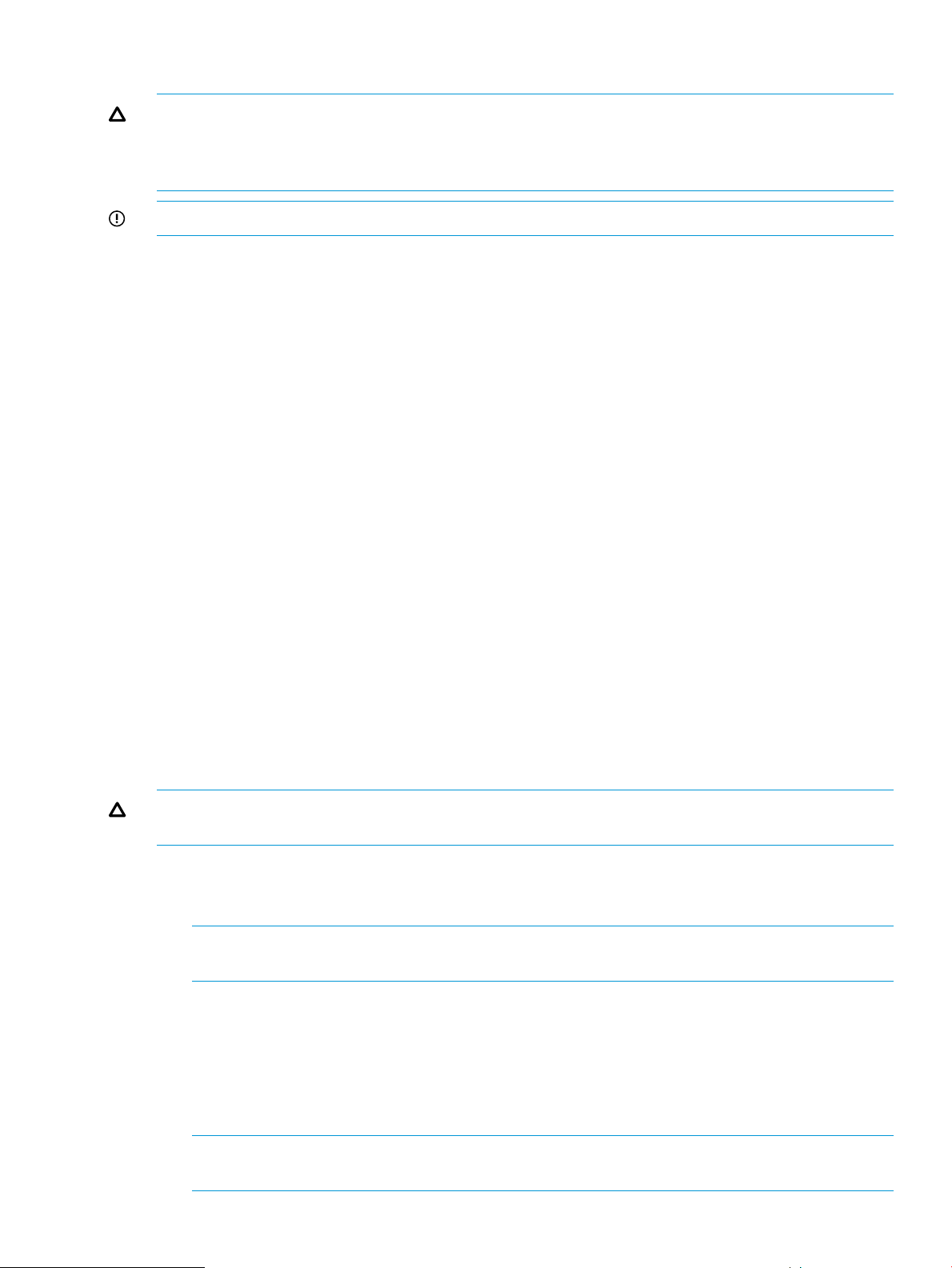

| LED color | LED status | Description |
|---|---|---|
| Off | Off | No power |
| Green | Blinking | The module is being identified |
| Green | Solid | Normal, no fault conditions |
| Amber | Blinking | Fault conditions detected |
| Amber | Solid | Problems detecting the module |

I/O module

The I/O module provides the interface between the disk enclosure and the host.

Each I/O module has two ports that can transmit and receive data for bidirectional operation.

1. Manufacturing diagnostic port
2. SAS Port 1
3. SAS Port 2
4. Double 7-segment display
5. I/O module LEDs

Page 19

I/O module LEDs

LEDs on the I/O module provide status information about each I/O port and the entire module.

NOTE: The following image illustrates LEDs on the Small Form Factor I/O module.

| LED | LED icon | LED color | LED status | Description |
|---|---|---|---|---|
| 1. SAS Port Link | n/a | Green | Off | No cable, no power, or port not connected |
| 1. SAS Port Link | n/a | Green | Blinking | The port is being identified by an application client |
| 1. SAS Port Link | n/a | Green | Solid | Healthy, active link |
| 2. SAS Port Error | n/a | Amber | Off | Normal, no errors detected |
| 2. SAS Port Error | n/a | Amber | Blinking | Error detected by application client |
| 2. SAS Port Error | n/a | Amber | Solid | Error, fault conditions detected on the port by the I/O module |
| 3. 7-segment display | n/a | n/a | Off | No cable, no power, enclosure not detected |
| 3. 7-segment display | n/a | n/a | Number | The enclosure box number |
| 4. UID | | Blue | Off | Not being identified or no power |
| 4. UID | | Blue | Solid | Module is being identified, from the management utility |
| 5. Health | | Green | Off | No power or firmware malfunction |
| 5. Health | | Green | Blinking | Enclosure is starting up and not ready, performing POST |
| 5. Health | | Green | Solid | Normal, power is on |
| 6. Fault | | Amber | Off | Normal, no fault conditions |
| 6. Fault | | Amber | Blinking | A fault of lesser importance |
| 6. Fault | | Amber | Solid | A fault of greater importance, I/O failed to start |

Rear power and UID module

The rear power and UID module includes status LEDs, a unit identification (UID) button, and the power on/standby button.

Page 20

Rear power and UID module LEDs

| LED | LED color | Status | Description |
|---|---|---|---|
| 1. UID | Blue | Off | Not being identified or no power |
| 1. UID | Blue | On | Unit is being identified, either from the UID button being pushed or from the management utility |
| 2. Health | Green | Off | No power |
| 2. Health | Green | Blinking | Enclosure is starting up and not ready, performing POST |
| 2. Health | Green | Solid | Normal, power is on |
| 3. Fault | Amber | Off | Normal, no fault conditions |
| 3. Fault | Amber | Blinking | A fault of lesser importance |
| 3. Fault | Amber | Solid | A fault of greater importance |
| 4. On/Standby | Green | Solid | Power is on |
| 4. On/Standby | Amber | Solid | Standby power |


Page 21

Unit identification (UID) button

The unit identification (UID) button helps locate an enclosure and its components. When the UID

button is activated, the UID lights on the front and rear of the enclosure are illuminated.

NOTE: A remote session from the management utility can also illuminate the UID.

• To turn on the UID light, press the UID button. The UID light on the front and the rear of the

enclosure will illuminate solid blue. (The UID lights on cascaded storage enclosures are not

illuminated.)

• To turn off an illuminated UID light, press the UID button. The UID light on the front and the

rear of the enclosure will turn off.

Power on/standby button

The power on/standby button applies either full or partial power to the enclosure chassis.

• To initially power on the enclosure, press and hold the on/standby button for a few seconds,

until the LEDs begin to illuminate.

• To place an enclosure in standby, press and hold the on/standby button for a few seconds,

until the on/standby LED changes to amber.

NOTE: System power to the disk enclosure does not completely shut off with the power on/standby

button. The standby position removes power from most of the electronics and components, but

portions of the power supply and some internal circuitry remain active. To completely remove

power from the system, disconnect all power cords from the device.
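The on/standby behavior described above can be modeled as a three-state machine. This is a minimal sketch for illustration only: the state and function names are hypothetical, and treating the button as having no effect when all power cords are disconnected is an assumption rather than something the guide states. The LED colors come from the rear power and UID module LED table.

```python
from enum import Enum


class Power(Enum):
    UNPLUGGED = "all power cords disconnected"
    STANDBY = "standby"
    ON = "on"


def hold_button(state):
    """Press and hold the on/standby button for a few seconds."""
    if state is Power.STANDBY:
        return Power.ON
    if state is Power.ON:
        return Power.STANDBY
    # Assumption: with no power cords attached the button does nothing.
    return state


def disconnect_cords(state):
    """Disconnecting all power cords is the only way to remove power completely."""
    return Power.UNPLUGGED


def on_standby_led(state):
    """Return the On/Standby LED appearance, or None when no state is documented."""
    return {Power.ON: "solid green", Power.STANDBY: "solid amber"}.get(state)
```

Note that no sequence of button presses reaches `Power.UNPLUGGED`; only `disconnect_cords` does, which mirrors the note that standby leaves portions of the power supply and some internal circuitry active.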

SAS cables

These disk enclosures use cables with mini-SAS connectors for connections to the controller and

cascaded disk enclosures.

Controller enclosure

For both the P63x0 EVA and P65x0 EVA, a single enclosure contains a management module and

two controllers. Two interconnected controllers ensure that the failure of a controller component

does not disable the system. One controller can fully support an entire system until the defective

controller, or controller component, is repaired. The controllers have an 8 Gb host port capability.

The P63x0 and P65x0 EVA controllers are available in FC, FC-iSCSI, and iSCSI/FCoE versions.

The controller models are HSV340 (for the P63x0) and HSV360 (for the P65x0).

Figure 1 (page 22) shows the bezel of the controller enclosure. Figure 2 (page 22) shows the front

of the controller enclosure with the bezel removed.


Page 22

Figure 1 Controller enclosure (front bezel)

1. Enclosure status LEDs
2. Front UID push button

Figure 2 Controller enclosure (front view with bezel removed)

1. Rack-mounting thumbscrew
2. Enclosure product number (PN) and serial number
3. World Wide Number (WWN)
4. Battery 1
5. Battery normal operation LED
6. Battery fault LED
7. Fan 1
8. Fan 1 normal operation LED
9. Fan 1 fault LED
10. Fan 2
11. Battery 2
12. Enclosure status LEDs
13. Front UID push button

Each P63x0 controller contains two SAS data ports. Each P65x0 controller contains four SAS data

ports (made possible using Y-cables—one cable with two outputs). For both the P63x0 and P65x0

EVA, the FC controller adds four 8 Gb FC ports (Figure 3 (page 23)); the FC-iSCSI controller adds

two 8 Gb FC ports and four 1 GbE iSCSI ports (Figure 4 (page 23)); and the iSCSI/FCoE controller

adds two 8 Gb FC ports and two 10 GbE iSCSI/FCoE ports (Figure 5 (page 24)).
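The per-controller port counts in the preceding paragraph can be tabulated and doubled to get totals for a two-controller enclosure. The sketch below is illustrative only; the class, dictionary, and function names are hypothetical, and the counts are transcribed from the paragraph above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostPorts:
    """Host ports on one controller, by type."""
    fc_8gb: int
    iscsi_1gbe: int = 0
    iscsi_fcoe_10gbe: int = 0


# Host ports per controller for each controller option.
HOST_PORTS = {
    "FC": HostPorts(fc_8gb=4),
    "FC-iSCSI": HostPorts(fc_8gb=2, iscsi_1gbe=4),
    "iSCSI/FCoE": HostPorts(fc_8gb=2, iscsi_fcoe_10gbe=2),
}

# SAS data ports per controller for each array family.
SAS_PORTS = {"P63x0": 2, "P65x0": 4}

CONTROLLERS_PER_ENCLOSURE = 2


def ports_per_enclosure(family, option):
    """Return total port counts for an enclosure with two controllers."""
    host = HOST_PORTS[option]
    n = CONTROLLERS_PER_ENCLOSURE
    return {
        "sas": SAS_PORTS[family] * n,
        "fc_8gb": host.fc_8gb * n,
        "iscsi_1gbe": host.iscsi_1gbe * n,
        "iscsi_fcoe_10gbe": host.iscsi_fcoe_10gbe * n,
    }
```

For example, `ports_per_enclosure("P65x0", "FC-iSCSI")` reports 8 SAS data ports, 4 FC ports, and 8 iSCSI ports across the two controllers.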


Page 23

Figure 3 P6000 EVA FC controller enclosure (rear view)

9. Enclosure power push button1. Power supply 1

10. Power supply 22. Controller 1