HPE FlexNetwork MSR Router Series

Comware 7 MPLS Configuration Guide

Part number: 5998-8833

Software version: CMW710-R0305

Document version: 6PW106-20160308

© Copyright 2016 Hewlett Packard Enterprise Development LP

The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.

Confidential computer software. Valid license from Hewlett Packard Enterprise required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license.

Links to third-party websites take you outside the Hewlett Packard Enterprise website. Hewlett Packard Enterprise has no control over and is not responsible for information outside the Hewlett Packard Enterprise website.

Acknowledgments

Intel®, Itanium®, Pentium®, Intel Inside®, and the Intel Inside logo are trademarks of Intel Corporation in the United States and other countries.

Microsoft® and Windows® are trademarks of the Microsoft group of companies.

Adobe® and Acrobat® are trademarks of Adobe Systems Incorporated.

Java and Oracle are registered trademarks of Oracle and/or its affiliates.

UNIX® is a registered trademark of The Open Group.

Contents

Configuring basic MPLS ················································································· 1

Overview ···························································································································································· 1

Basic concepts ··········································································································································· 1

MPLS network architecture ························································································································ 2

LSP establishment ····································································································································· 3

MPLS forwarding ········································································································································ 4

PHP ···························································································································································· 4

Protocols and standards ···························································································································· 5

Compatibility information ···································································································································· 5

MPLS configuration task list ······························································································································· 5

Enabling MPLS ·················································································································································· 6

Setting MPLS MTU ············································································································································ 6

Specifying the label type advertised by the egress ···························································································· 7

Configuring TTL propagation ····························································································································· 8

Enabling sending of MPLS TTL-expired messages ··························································································· 9

Enabling MPLS forwarding statistics ·················································································································· 9

Enabling FTN forwarding statistics ············································································································· 9

Enabling MPLS label forwarding statistics for LSPs ················································································ 10

Enabling MPLS label forwarding statistics for a VPN instance ································································ 10

Enabling split horizon for MPLS forwarding ····································································································· 10

Enabling SNMP notifications for MPLS ············································································································ 11

Displaying and maintaining MPLS ··················································································································· 11

Configuring a static LSP ··············································································· 13

Overview ·························································································································································· 13

Configuration prerequisites ······························································································································ 13

Configuration procedure ·································································································································· 13

Displaying static LSPs ····································································································································· 14

Static LSP configuration example ···················································································································· 14

Configuring LDP ···························································································· 17

Overview ·························································································································································· 17

Terminology ············································································································································· 17

LDP messages ········································································································································· 17

LDP operation ·········································································································································· 18

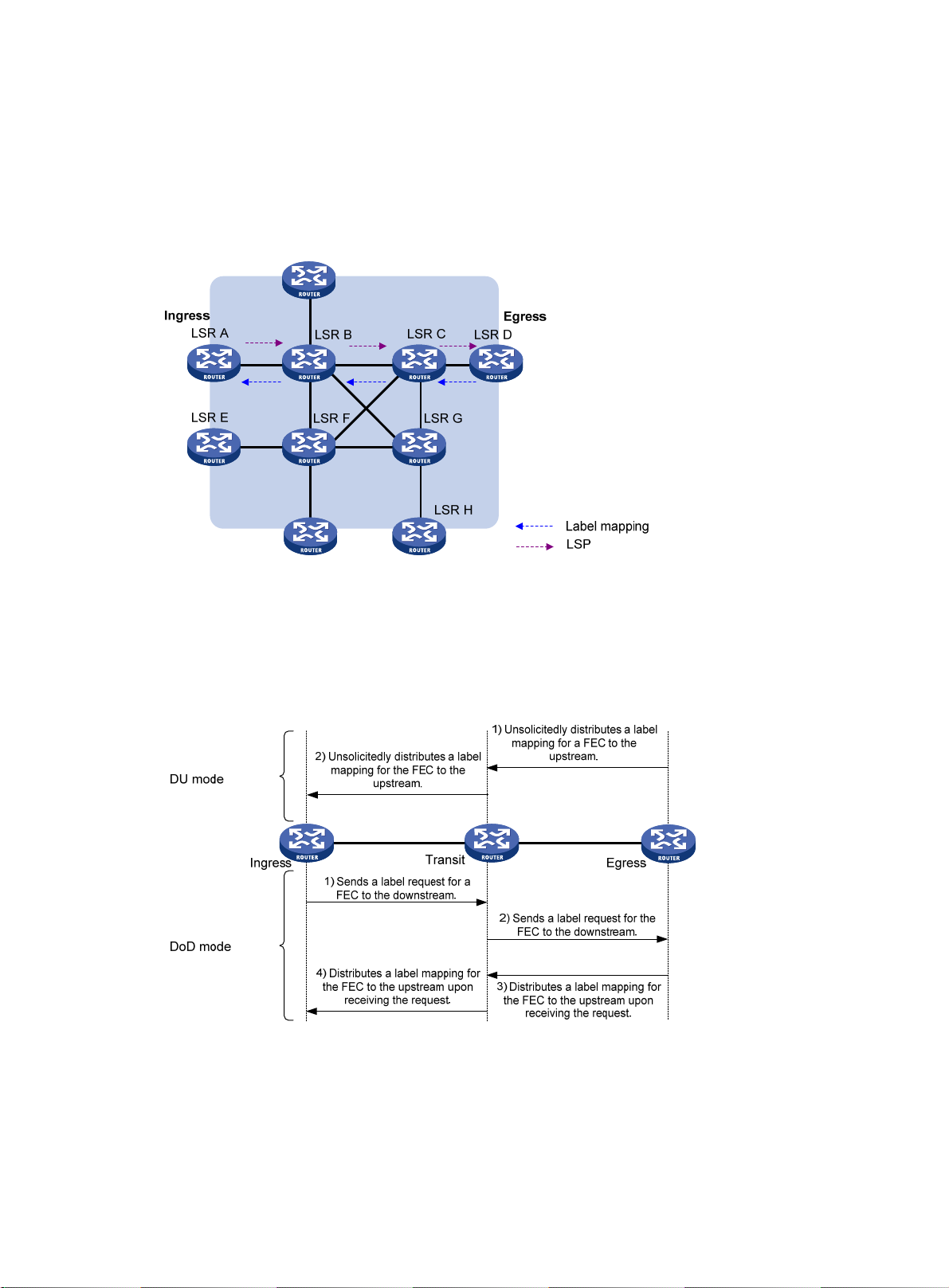

Label distribution and control ··················································································································· 19

LDP GR ···················································································································································· 21

LDP NSR ·················································································································································· 22

LDP-IGP synchronization ························································································································· 23

LDP FRR ·················································································································································· 23

LDP over MPLS TE ·································································································································· 24

Protocols ·················································································································································· 25

Compatibility information ·································································································································· 25

LDP configuration task list ······························································································································· 25

Enabling LDP ··················································································································································· 26

Enabling LDP globally ······························································································································ 26

Enabling LDP on an interface ·················································································································· 26

Configuring Hello parameters ·························································································································· 26

Configuring LDP session parameters ·············································································································· 27

Configuring LDP backoff ·································································································································· 29

Configuring LDP MD5 authentication ··············································································································· 29

Configuring LDP to redistribute BGP unicast routes ························································································ 30

Configuring an LSP generation policy ·············································································································· 30

Configuring the LDP label distribution control mode ························································································ 31

Configuring a label advertisement policy ········································································································· 31

Configuring a label acceptance policy ············································································································· 32

Configuring LDP loop detection ······················································································································· 33

Configuring LDP session protection ················································································································· 34

Configuring LDP GR ········································································································································ 35

Configuring LDP NSR ······································································································································ 35

Configuring LDP-IGP synchronization ············································································································· 35

Configuring LDP-OSPF synchronization ·································································································· 36

Configuring LDP-ISIS synchronization ····································································································· 37

Configuring LDP FRR ······································································································································ 37

Setting a DSCP value for outgoing LDP packets ····························································································· 37

Resetting LDP sessions ··································································································································· 38

Enabling SNMP notifications for LDP ·············································································································· 38

Displaying and maintaining LDP ······················································································································ 38

IPv4 LDP configuration examples ···················································································································· 39

LDP LSP configuration example ·············································································································· 39

Label acceptance control configuration example ····················································································· 43

Label advertisement control configuration example ················································································· 47

LDP FRR configuration example ·············································································································· 52

IPv6 LDP configuration examples ···················································································································· 55

IPv6 LDP LSP configuration example ······································································································ 55

IPv6 label acceptance control configuration example ·············································································· 61

IPv6 label advertisement control configuration example ·········································································· 65

Configuring MPLS TE ··················································································· 71

Overview ·························································································································································· 71

TE and MPLS TE ····································································································································· 71

MPLS TE basic concepts ························································································································· 71

Static CRLSP establishment ···················································································································· 71

Dynamic CRLSP establishment ··············································································································· 71

CRLSP establishment using PCE path calculation ·················································································· 73

Traffic forwarding ····································································································································· 74

Make-before-break ··································································································································· 75

Route pinning ··········································································································································· 76

Tunnel reoptimization ······························································································································· 76

Automatic bandwidth adjustment ············································································································· 76

CRLSP backup ········································································································································· 77

FRR ·························································································································································· 77

DiffServ-aware TE ···································································································································· 78

Bidirectional MPLS TE tunnel ·················································································································· 80

Protocols and standards ·························································································································· 80

MPLS TE configuration task list ······················································································································· 81

Enabling MPLS TE ··········································································································································· 82

Configuring a tunnel interface ·························································································································· 83

Configuring DS-TE ··········································································································································· 83

Configuring an MPLS TE tunnel to use a static CRLSP ·················································································· 84

Configuring an MPLS TE tunnel to use a dynamic CRLSP ············································································· 85

Configuration task list ······························································································································· 85

Configuring MPLS TE attributes for a link ································································································ 85

Advertising link TE attributes by using IGP TE extension ········································································ 86

Configuring MPLS TE tunnel constraints ································································································· 87

Establishing an MPLS TE tunnel by using RSVP-TE ··············································································· 89

Controlling CRLSP path selection ············································································································ 89

Controlling MPLS TE tunnel setup ··········································································································· 91

Configuring an MPLS TE tunnel to use a CRLSP calculated by PCEs ··························································· 93

Configuring a PCE ··································································································································· 93

Discovering PCEs ···································································································································· 94

Establishing a CRLSP by using the path calculated by PCEs ································································· 94

Establishing a backup CRLSP by using the path calculated by PCEs ····················································· 94

Configuring PCEP session parameters ···································································································· 95

Configuring load sharing for an MPLS TE tunnel ····························································································· 95

Configuring traffic forwarding ··························································································································· 96

Configuring static routing to direct traffic to an MPLS TE tunnel or tunnel bundle ··································· 96

Configuring PBR to direct traffic to an MPLS TE tunnel or tunnel bundle ················································ 97

Configuring automatic route advertisement to direct traffic to an MPLS TE tunnel or tunnel bundle ······· 97

Configuring a bidirectional MPLS TE tunnel ···································································································· 98

Configuring CRLSP backup ····························································································································· 99

Configuring MPLS TE FRR ···························································································································· 100

Enabling FRR ········································································································································· 100

Configuring a bypass tunnel on the PLR ································································································ 100

Configuring node fault detection ············································································································ 104

Configuring the optimal bypass tunnel selection interval ······································································· 105

Enabling SNMP notifications for MPLS TE ···································································································· 105

Displaying and maintaining MPLS TE ············································································································ 105

MPLS TE configuration examples ·················································································································· 106

Establishing an MPLS TE tunnel over a static CRLSP ·········································································· 106

Establishing an MPLS TE tunnel with RSVP-TE ···················································································· 111

Establishing an inter-AS MPLS TE tunnel with RSVP-TE ······································································ 117

Establishing an inter-area MPLS TE tunnel over a CRLSP calculated by PCEs ··································· 124

Bidirectional MPLS TE tunnel configuration example ············································································ 128

CRLSP backup configuration example ·································································································· 134

Manual bypass tunnel for FRR configuration example ·········································································· 138

Auto FRR configuration example ··········································································································· 144

IETF DS-TE configuration example ······································································································· 150

Troubleshooting MPLS TE ····························································································································· 157

No TE LSA generated ···························································································································· 157

Configuring a static CRLSP ········································································ 158

Overview ························································································································································ 158

Configuration procedure ································································································································ 158

Displaying static CRLSPs ······························································································································ 159

Static CRLSP configuration example ············································································································· 159

Configuring RSVP ······················································································· 165

Overview ························································································································································ 165

RSVP messages ···································································································································· 165

CRLSP setup procedure ························································································································ 166

RSVP refresh mechanism ······················································································································ 166

RSVP authentication ······························································································································ 167

RSVP GR ··············································································································································· 167

Protocols and standards ························································································································ 168

RSVP configuration task list ··························································································································· 168

Enabling RSVP ·············································································································································· 168

Configuring RSVP refresh ······························································································································ 168

Configuring RSVP Srefresh and reliable RSVP message delivery ································································ 169

Configuring RSVP hello extension ················································································································· 169

Configuring RSVP authentication ·················································································································· 170

Setting a DSCP value for outgoing RSVP packets ························································································ 171

Configuring RSVP GR ··································································································································· 172

Enabling BFD for RSVP ································································································································· 172

Displaying and maintaining RSVP ················································································································· 172

RSVP configuration examples ······················································································································· 173

Establishing an MPLS TE tunnel with RSVP-TE ···················································································· 173

RSVP GR configuration example ··········································································································· 179

Configuring tunnel policies ·········································································· 182

Overview ························································································································································ 182

Configuring a tunnel policy ····························································································································· 182

Configuration guidelines ························································································································· 182

Configuration procedure ························································································································· 183

Displaying tunnel information ························································································································· 184

Tunnel policy configuration examples ············································································································ 184

Preferred tunnel configuration example ································································································· 184

Exclusive tunnel configuration example ································································································· 184

Tunnel selection order configuration example ······················································································· 185

Preferred tunnel and tunnel selection order configuration example ······················································· 186

Configuring MPLS L3VPN ·········································································· 188

Overview ························································································································································ 188

Basic MPLS L3VPN architecture ··········································································································· 188

MPLS L3VPN concepts ·························································································································· 188

MPLS L3VPN route advertisement ········································································································ 190

MPLS L3VPN packet forwarding ············································································································ 191

MPLS L3VPN networking schemes ······································································································· 192

Inter-AS VPN ·········································································································································· 194

Carrier's carrier ······································································································································ 198

Nested VPN ··········································································································································· 200

Multirole host ·········································································································································· 201

HoVPN ··················································································································································· 202

OSPF VPN extension ····························································································································· 204

BGP AS number substitution and SoO attribute ···················································································· 206

MPLS L3VPN FRR ································································································································· 207

Multi-VPN instance CE ··························································································································· 209

Protocols and standards ························································································································ 210

MPLS L3VPN configuration task list ·············································································································· 211

Configuring basic MPLS L3VPN ···················································································································· 211

Configuration prerequisites ···················································································································· 211

Configuring VPN instances ···················································································································· 211

Configuring routing between a PE and a CE ························································································· 213

Configuring routing between PEs ··········································································································· 218

Configuring BGP VPNv4 route control ··································································································· 219

Configuring inter-AS VPN ······························································································································ 220

Configuring inter-AS option A ················································································································· 220

Configuring inter-AS option B ················································································································· 221

Configuring inter-AS option C ················································································································ 222

Configuring nested VPN ································································································································ 224

Configuring multirole host ······························································································································ 225

Configuring and applying PBR ··············································································································· 226

Configuring a static route ······················································································································· 226

Configuring HoVPN ········································································································································ 226

Configuring an OSPF sham link ····················································································································· 227

Configuring a loopback interface ············································································································ 228

Redistributing the loopback interface address ······················································································· 228

Creating a sham link ······························································································································ 228

Configuring routing on an MCE ······················································································································ 229

Configuring routing between an MCE and a VPN site ··········································································· 229

Configuring routing between an MCE and a PE ···················································································· 234

Specifying the VPN label processing mode on the egress PE ······································································ 237

Configuring BGP AS number substitution and SoO attribute ········································································· 238

Configuring MPLS L3VPN FRR ····················································································································· 238

Enabling SNMP notifications for MPLS L3VPN ····························································································· 240

Displaying and maintaining MPLS L3VPN ····································································································· 240

MPLS L3VPN configuration examples ··········································································································· 242

Configuring basic MPLS L3VPN ············································································································ 242

Configuring MPLS L3VPN over a GRE tunnel ······················································································· 247

Configuring a hub-spoke network ·········································································································· 251

Configuring MPLS L3VPN inter-AS option A ························································································· 258

Configuring MPLS L3VPN inter-AS option B ························································································· 262

Configuring MPLS L3VPN inter-AS option C ························································································· 267

Configuring MPLS L3VPN carrier's carrier in the same AS ··································································· 274

Configuring MPLS L3VPN carrier's carrier in different ASs ··································································· 282

Configuring nested VPN ························································································································· 289

Configuring multirole host ······················································································································ 298

Configuring HoVPN ································································································································ 300

Configuring an OSPF sham link ············································································································· 307

Configuring MCE ···································································································································· 311

Configuring BGP AS number substitution ······························································································ 316

Configuring BGP AS number substitution and SoO attribute ································································· 320


Configuring MPLS L3VPN FRR through VPNv4 route backup for a VPNv4 route ································ 323

Configuring MPLS L3VPN FRR through VPNv4 route backup for an IPv4 route ·································· 325

Configuring MPLS L3VPN FRR through IPv4 route backup for a VPNv4 route ···································· 327

Configuring IPv6 MPLS L3VPN ·································································· 330

Overview ························································································································································ 330

IPv6 MPLS L3VPN packet forwarding ··································································································· 330

IPv6 MPLS L3VPN routing information advertisement ·········································································· 331

IPv6 MPLS L3VPN network schemes and functions ············································································· 331

Protocols and standards ························································································································ 332

IPv6 MPLS L3VPN configuration task list ······································································································ 332

Configuring basic IPv6 MPLS L3VPN ············································································································ 332

Configuring VPN instances ···················································································································· 332

Configuring routing between a PE and a CE ························································································· 335

Configuring routing between PEs ··········································································································· 340

Configuring BGP VPNv6 route control ··································································································· 341

Configuring inter-AS IPv6 VPN ······················································································································ 342

Configuring inter-AS IPv6 VPN option A ································································································ 343

Configuring inter-AS IPv6 VPN option C ································································································ 343

Configuring multirole host ······························································································································ 344

Configuring and applying IPv6 PBR ······································································································· 344

Configuring an IPv6 static route ············································································································· 345

Configuring an OSPFv3 sham link ················································································································· 345

Configuring a loopback interface ············································································································ 345

Redistributing the loopback interface address ······················································································· 345

Creating a sham link ······························································································································ 346

Configuring routing on an MCE ······················································································································ 346

Configuring routing between an MCE and a VPN site ··········································································· 346

Configuring routing between an MCE and a PE ···················································································· 351

Configuring BGP AS number substitution and SoO attribute ········································································· 355

Displaying and maintaining IPv6 MPLS L3VPN ····························································································· 355

IPv6 MPLS L3VPN configuration examples ··································································································· 356

Configuring IPv6 MPLS L3VPNs ············································································································ 356

Configuring an IPv6 MPLS L3VPN over a GRE tunnel ·········································································· 363

Configuring IPv6 MPLS L3VPN inter-AS option A ················································································· 366

Configuring IPv6 MPLS L3VPN inter-AS option C ················································································· 371

Configuring IPv6 MPLS L3VPN carrier's carrier in the same AS ··························································· 377

Configuring multirole host ······················································································································ 385

Configuring an OSPFv3 sham link ········································································································· 387

Configuring IPv6 MCE ···························································································································· 391

Configuring BGP AS number substitution ······························································································ 396

Configuring BGP AS number substitution and SoO attribute ································································· 401

Configuring MPLS L2VPN ·········································································· 404

Overview ························································································································································ 404

Basic concepts of MPLS L2VPN ············································································································ 404

MPLS L2VPN network models ··············································································································· 405

Remote connection establishment ········································································································· 406

Local connection establishment ············································································································· 407

PW types ················································································································································ 407

Control word ··········································································································································· 409

MPLS L2VPN interworking ····················································································································· 409

PW redundancy ······································································································································ 410

Multi-segment PW ·································································································································· 410

VCCV ····················································································································································· 412

Compatibility information ································································································································ 412

MPLS L2VPN configuration task list ·············································································································· 412

Enabling L2VPN ············································································································································· 413

Configuring an AC ·········································································································································· 413

Configuring the interface with Ethernet or VLAN encapsulation ···························································· 414

Configuring the interface with PPP encapsulation ················································································· 414

Configuring the interface with HDLC encapsulation ··············································································· 414


Configuring a cross-connect ·························································································································· 415

Configuring a PW ··········································································································································· 415

Configuring a PW class ·························································································································· 415

Configuring a static PW ·························································································································· 415

Configuring an LDP PW ························································································································· 416

Configuring a BGP PW ·························································································································· 416

Configuring a remote CCC connection ·································································································· 418

Binding an AC to a cross-connect ·················································································································· 419

Configuring PW redundancy ·························································································································· 419

Configuring static PW redundancy ········································································································· 420

Configuring LDP PW redundancy ·········································································································· 420

Configuring interworking for a cross-connect ································································································· 421

Enabling SNMP notifications for L2VPN PW ································································································· 422

Displaying and maintaining MPLS L2VPN ····································································································· 422

MPLS L2VPN configuration examples ··········································································································· 423

Configuring local MPLS L2VPN connections ························································································· 423

Configuring IP interworking over local MPLS L2VPN connections ························································ 424

Configuring a static PW ·························································································································· 426

Configuring an LDP PW ························································································································· 429

Configuring IP interworking over an LDP PW ························································································ 433

Configuring a BGP PW ·························································································································· 436

Configuring a remote CCC connection ·································································································· 440

Configuring an intra-domain multi-segment PW ···················································································· 444

Configuring an inter-domain multi-segment PW ···················································································· 447

Configuring VPLS ······················································································· 453

Overview ························································································································································ 453

Basic VPLS architecture ························································································································ 453

VPLS implementation ····························································································································· 454

H-VPLS ·················································································································································· 456

Hub-spoke networking ··························································································································· 458

Compatibility information ································································································································ 458

VPLS configuration task list ··························································································································· 459

Enabling L2VPN ············································································································································· 459

Configuring an AC ·········································································································································· 460

Configuring a VSI ··········································································································································· 460

Configuring a PW ··········································································································································· 461

Configuring a PW class ·························································································································· 461

Configuring a static PW ·························································································································· 461

Configuring an LDP PW ························································································································· 462

Configuring a BGP PW ·························································································································· 462

Configuring a BGP auto-discovery LDP PW ·························································································· 464

Binding an AC to a VSI ·································································································································· 466

Configuring UPE dual homing ························································································································ 466

Configuring static PW redundancy ········································································································· 466

Configuring LDP PW redundancy ·········································································································· 467

Configuring MAC address learning ················································································································ 468

Enabling SNMP notifications for L2VPN PW ································································································· 468

Displaying and maintaining VPLS ·················································································································· 468

VPLS configuration examples ························································································································ 470

Static PW configuration example ··········································································································· 470

LDP PW configuration example ············································································································· 474

BGP PW configuration example ············································································································· 476

BGP auto-discovery LDP PW configuration example ············································································ 480

H-VPLS using MPLS access configuration example ············································································· 485

Hub-spoke VPLS configuration example ······························································································· 489

H-VPLS UPE dual homing configuration example ················································································· 492

Configuring L2VPN access to L3VPN or IP backbone ································ 498

Overview ························································································································································ 498

Conventional L2VPN access to L3VPN or IP backbone ········································································ 498

Improved L2VPN access to L3VPN or IP backbone ·············································································· 499


Configuring conventional L2VPN access to L3VPN or IP backbone ····························································· 500

Configuring improved L2VPN access to L3VPN or IP backbone ··································································· 500

Configuring an L2VE interface ··············································································································· 501

Configuring an L3VE interface ··············································································································· 501

Displaying and maintaining L2VPN access to L3VPN or IP backbone ·························································· 502

Improved L2VPN access to L3VPN or IP backbone configuration examples ················································ 502

Access to MPLS L3VPN through an LDP MPLS L2VPN ······································································· 502

Access to IP backbone through an LDP VPLS ······················································································ 508

Configuring MPLS OAM ·············································································· 513

Overview ························································································································································ 513

MPLS ping ·············································································································································· 513

MPLS tracert ·········································································································································· 513

BFD for MPLS ········································································································································ 513

Periodic MPLS tracert ···························································································································· 514

Protocols and standards ································································································································ 514

Configuring MPLS OAM for LSP tunnels ······································································································· 514

Configuring MPLS ping for LSPs ··········································································································· 514

Configuring MPLS tracert for LSPs ········································································································ 515

Configuring BFD for LSPs ······················································································································ 515

Configuring periodic MPLS tracert for LSPs ·························································································· 516

Configuring MPLS OAM for MPLS TE tunnels ······························································································ 516

Configuring MPLS ping for MPLS TE tunnels ························································································ 516

Configuring MPLS tracert for MPLS TE tunnels ····················································································· 516

Configuring BFD for MPLS TE tunnels ·································································································· 517

Configuring MPLS OAM for a PW ·················································································································· 517

Configuring MPLS ping for a PW ··········································································································· 518

Configuring BFD for a PW ······················································································································ 518

Displaying MPLS OAM ·································································································································· 521

BFD for LSP configuration example ··············································································································· 522

Configuring MPLS protection switching ······················································ 525

Overview ························································································································································ 525

Protection switching triggering modes ··································································································· 525

Protection switching modes ··················································································································· 525

Path switching modes ···························································································································· 526

Protocols and standards ································································································································ 526

Compatibility information ································································································································ 526

MPLS protection switching configuration task list ·························································································· 527

Enabling MPLS protection switching ·············································································································· 527

Creating a protection group ··························································································································· 527

Configuring PS attributes for the protection group ························································································· 529

Configuring command switching for the protection group ·············································································· 530

Configuring the PSC message sending interval ···························································································· 530

Displaying and maintaining MPLS protection switching ················································································· 530

MPLS protection switching configuration example ························································································· 531

Network requirements ···························································································································· 531

Configuration procedure ························································································································· 532

Verifying the configuration ······················································································································ 534

Document conventions and icons ······························································· 535

Conventions ··················································································································································· 535

Network topology icons ·································································································································· 536

Support and other resources ······································································ 537

Accessing Hewlett Packard Enterprise Support ···························································································· 537

Accessing updates ········································································································································· 537

Websites ················································································································································ 538

Customer self repair ······························································································································· 538

Remote support ······································································································································ 538

Documentation feedback ······················································································································· 538


Index ··········································································································· 540


Configuring basic MPLS

Multiprotocol Label Switching (MPLS) provides connection-oriented label switching over

connectionless IP backbone networks. It integrates both the flexibility of IP routing and the simplicity

of Layer 2 switching.

Overview

MPLS has the following features:

• High speed and efficiency—MPLS uses short- and fixed-length labels to forward packets,

avoiding complicated routing table lookups.

• Multiprotocol support—MPLS resides between the link layer and the network layer. It can

work over various link layer protocols (for example, PPP, ATM, frame relay, and Ethernet) to

provide connection-oriented services for various network layer protocols (for example, IPv4,

IPv6, and IPX).

• Good scalability—The connection-oriented switching and multilayer label stack features

enable MPLS to deliver various extended services, such as VPN, traffic engineering, and QoS.

Basic concepts

FEC

MPLS groups packets with the same characteristics (such as packets with the same destination or

service class) into a forwarding equivalence class (FEC). Packets of the same FEC are handled in

the same way on an MPLS network.
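As a rough illustration of FEC classification, the following Python sketch groups packets by destination prefix using longest-prefix match. The prefixes, the FEC names, and the `classify` function are hypothetical and are not part of Comware. A real LSR derives FECs from its routing table or from configured policy.

```python
import ipaddress

# Hypothetical FEC table: destination prefix -> FEC name (illustrative values only).
FEC_TABLE = {
    ipaddress.ip_network("10.1.0.0/16"): "FEC-A",
    ipaddress.ip_network("10.1.2.0/24"): "FEC-B",
}

def classify(dst):
    """Return the FEC name for a destination address, or None if no prefix matches."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in FEC_TABLE if addr in net]
    if not matches:
        return None
    # Longest-prefix match: the most specific prefix decides the FEC.
    best = max(matches, key=lambda net: net.prefixlen)
    return FEC_TABLE[best]
```

All packets that `classify` maps to the same name would be handled identically in this model, which is the defining property of an FEC.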

Label

A label uniquely identifies an FEC and has local significance.

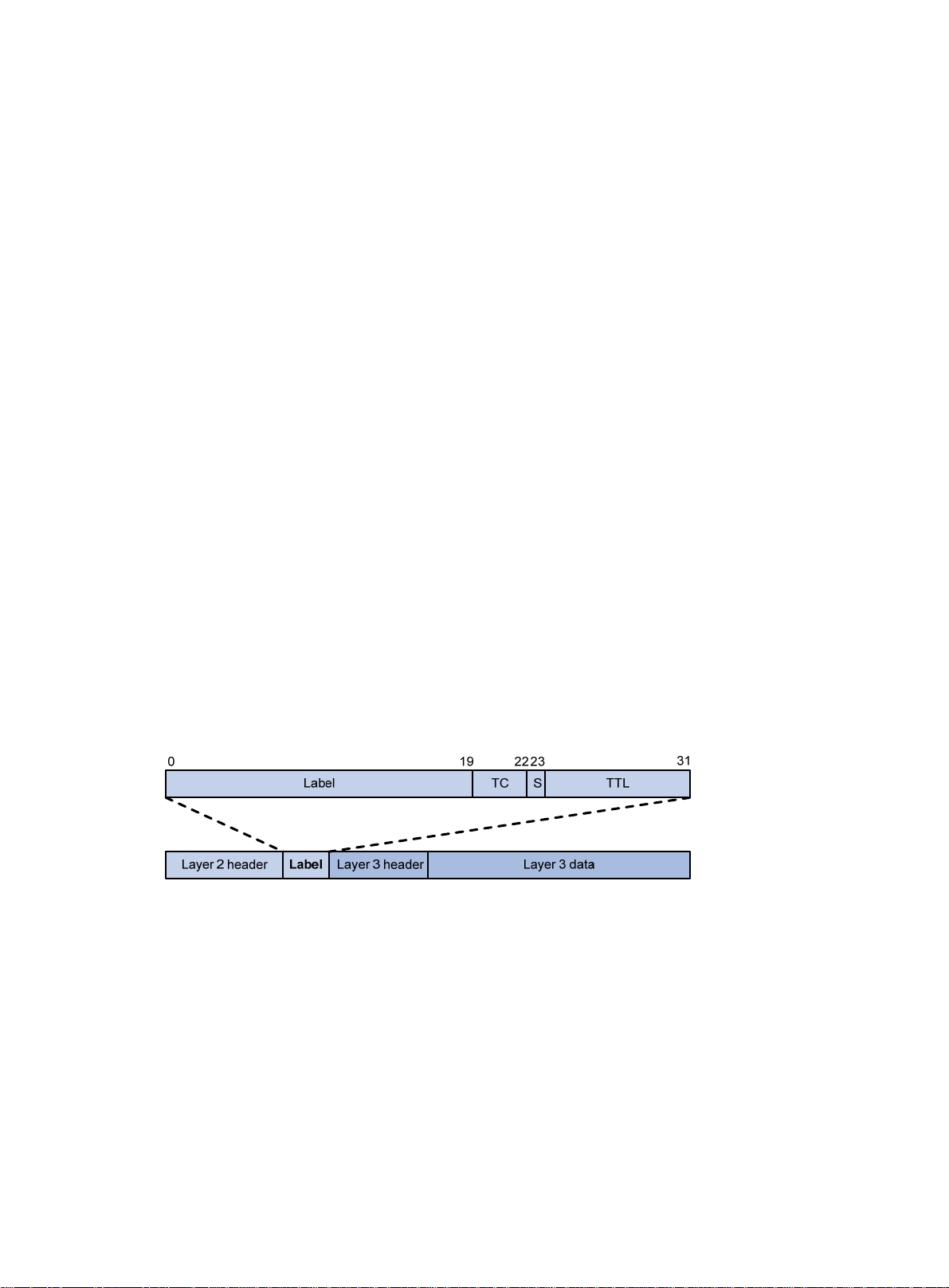

Figure 1 Format of a label

A label is encapsulated between the Layer 2 header and Layer 3 header of a packet. It is four bytes long and consists of the following fields:

• Label—20-bit label value.

• TC—3-bit traffic class, used for QoS. It is also called Exp.

• S—1-bit bottom of stack flag. A label stack can contain multiple labels. The label nearest to the

Layer 2 header is called the top label, and the label nearest to the Layer 3 header is called the

bottom label. The S field is set to 1 if the label is the bottom label and set to 0 if not.

• TTL—8-bit time to live field used for MPLS loop prevention.
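The field layout above can be sketched in Python as a 32-bit word packed in network byte order. The function names `pack_label` and `unpack_label` are illustrative only. The bit positions assume the fields appear in the order listed, with the Label field in the most significant bits.

```python
import struct

def pack_label(label, tc, s, ttl):
    """Pack one 4-byte label stack entry: Label(20) | TC(3) | S(1) | TTL(8)."""
    if not (0 <= label < 2**20 and 0 <= tc < 8 and s in (0, 1) and 0 <= ttl < 256):
        raise ValueError("field out of range")
    word = (label << 12) | (tc << 9) | (s << 8) | ttl
    return struct.pack("!I", word)  # "!I" = unsigned 32-bit, network byte order

def unpack_label(data):
    """Split a 4-byte label stack entry back into its four fields."""
    (word,) = struct.unpack("!I", data)
    return {
        "label": word >> 12,
        "tc": (word >> 9) & 0x7,
        "s": (word >> 8) & 0x1,
        "ttl": word & 0xFF,
    }
```

For example, `pack_label(16, 0, 1, 255)` describes a bottom-of-stack entry with label value 16 and a TTL of 255, and `unpack_label` recovers the same four fields from the resulting bytes.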

LSR

A router that performs MPLS forwarding is a label switching router (LSR).


LSP

LFIB

A label switched path (LSP) is the path along which packets of an FEC travel through an MPLS

network.

An LSP is a unidirectional packet forwarding path. Two neighboring LSRs are called the upstream

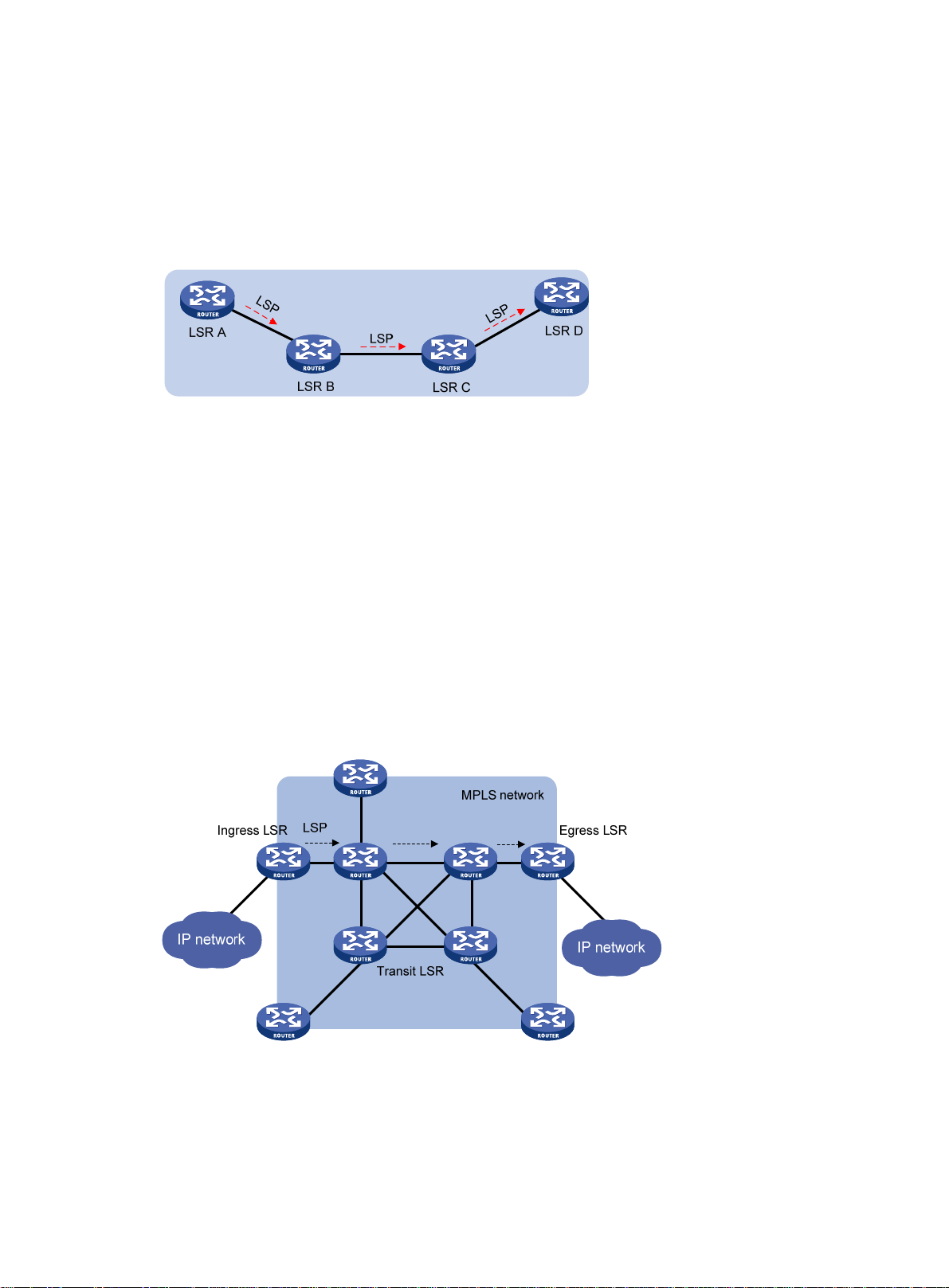

LSR and downstream LSR along the direction of an LSP. As shown in Figure 2, LSR B

downstream LSR of LSR A, and LSR A is the upstream LSR of LSR B.

Figure 2 Label switched path

The Label Forwarding Information Base (LFIB) on an MPLS network functions like the Forwarding

Information Base (FIB) on an IP network. When an LSR receives a labeled packet, it searches the

LFIB to obtain information for forwarding the packet. The information includes the label operation

type, the outgoing label value, and the next hop.

is the

Control plane and forwarding plane

An MPLS node consists of a control plane and a forwarding plane.

• Control plane—Assigns labels, distributes FEC-label mappings to neighbor LSRs, creates the

LFIB, and establishes and removes LSPs.

• Forwarding plane—Forwards packets according to the LFIB.

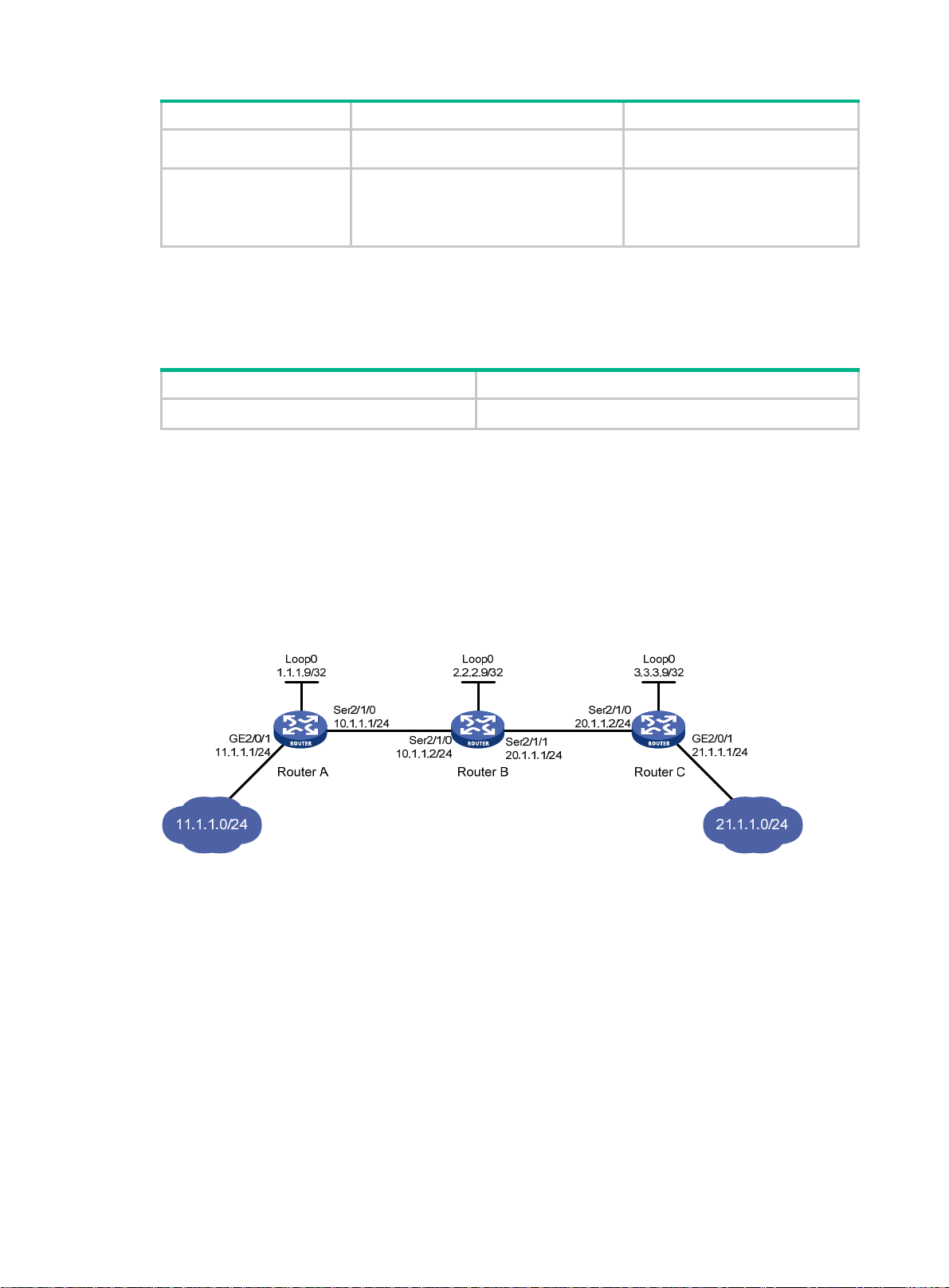

MPLS network architecture

Figure 3 MPLS network architecture

An MPLS network has the following types of LSRs:

• Ingress LSR—Ingress LSR of packets. It labels packets entering into the MPLS network.

• Transit LSR—Intermediate LSRs in the MPLS network. The transit LSRs on an LSP forward

packets to the egress LSR according to labels.


• Egress LSR—Egress LSR of packets. It removes labels from packets and forwards the

packets to their destination networks.

LSP establishment

LSPs include static and dynamic LSPs.

• Static LSP—To establish a static LSP, you must configure an LFIB entry on each LSR along the

LSP. Establishing static LSPs consumes fewer resources than establishing dynamic LSPs, but

static LSPs cannot automatically adapt to network topology changes. Therefore, static LSPs

are suitable for small-scale networks with simple, stable topologies.

• Dynamic LSP—Established by a label distribution protocol (also called an MPLS signaling

protocol). A label distribution protocol classifies FECs, distributes FEC-label mappings, and

establishes and maintains LSPs. Label distribution protocols include protocols designed

specifically for label distribution, such as the Label Distribution Protocol (LDP), and protocols

extended to support label distribution, such as MP-BGP and RSVP-TE.

In this document, the term "label distribution protocols" refers to all protocols for label distribution.

The term "LDP" refers to the RFC 5036 LDP.

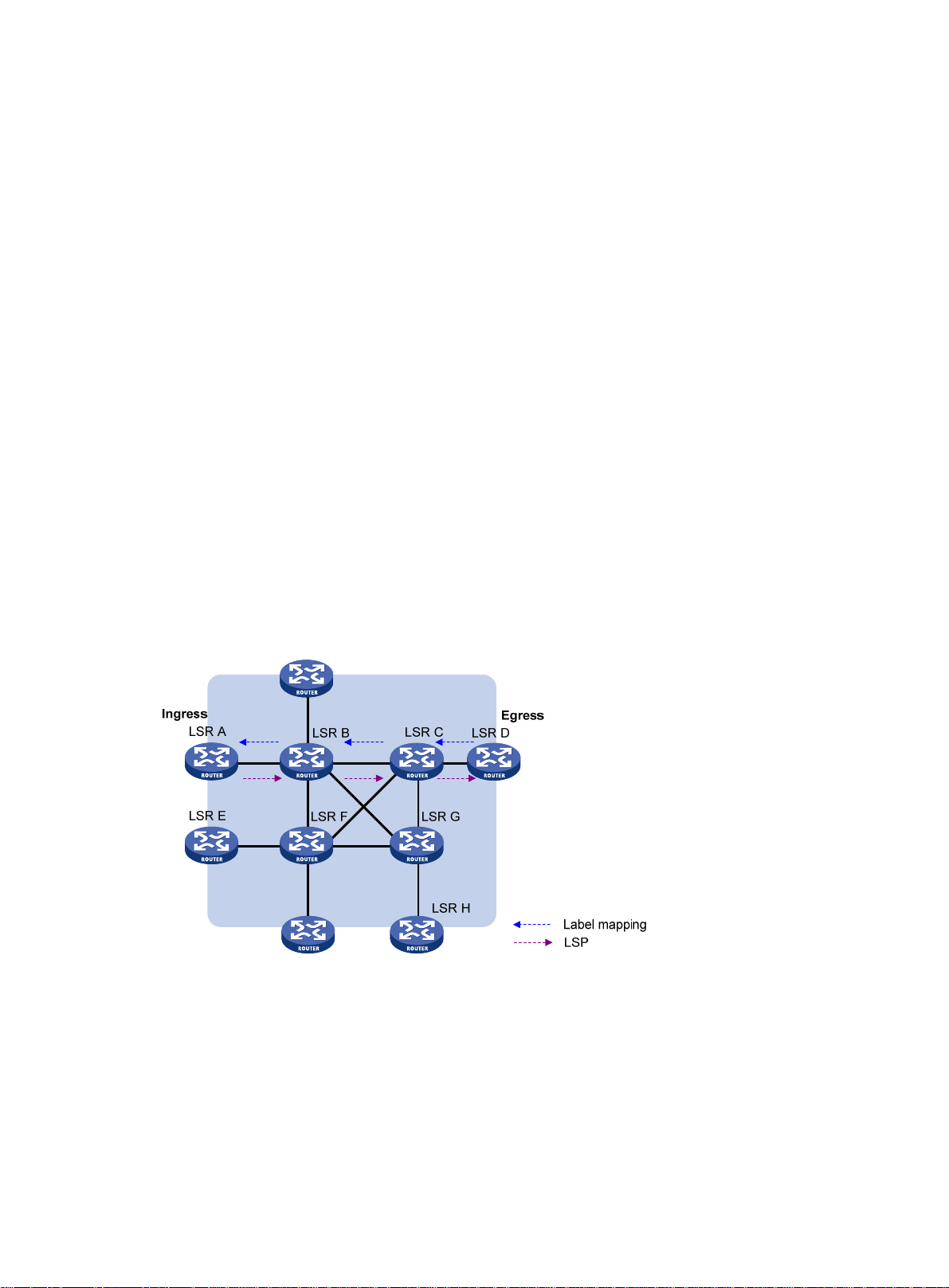

A dynamic LSP is established in the following steps:

1. A downstream LSR classifies FECs according to destination addresses.

2. The downstream LSR assigns a label for each FEC, and distributes the FEC-label binding to its

upstream LSR.

3. The upstream LSR establishes an LFIB entry for the FEC according to the binding inform ation.

After all LSRs along the LSP establish an LFIB entry for the FEC, a dynamic LSP is established for

the packets of this FEC.

Figure 4 Dynamic LSP establishment
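The downstream-to-upstream direction of label distribution can be sketched in a few lines of Python. This is a simplified model, not an implementation of LDP: the function name, the shared label counter, and the starting label value 16 are assumptions made for illustration (a real LSR allocates labels from its own label space).

```python
from itertools import count


def establish_lsp(path, fec, first_label=16):
    """Model dynamic LSP setup for one FEC.

    path lists LSR names from ingress to egress. Returns one forwarding
    entry per LSR. The single shared counter is a simplification.
    """
    allocator = count(first_label)
    entries = {
        name: {"fec": fec, "in_label": None, "out_label": None, "next_hop": None}
        for name in path
    }
    # Walk from the egress toward the ingress, one (upstream, downstream) pair at a time.
    for upstream, downstream in zip(reversed(path[:-1]), reversed(path[1:])):
        label = next(allocator)
        # Steps 1 and 2: the downstream LSR binds a label to the FEC and advertises it.
        entries[downstream]["in_label"] = label
        # Step 3: the upstream LSR installs an entry that uses the advertised label.
        entries[upstream]["out_label"] = label
        entries[upstream]["next_hop"] = downstream
    return entries
```

In this model the ingress has no incoming label and the egress has no outgoing label, which matches the roles of the ingress and egress LSRs described earlier.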

3

Page 14

MPLS forwarding

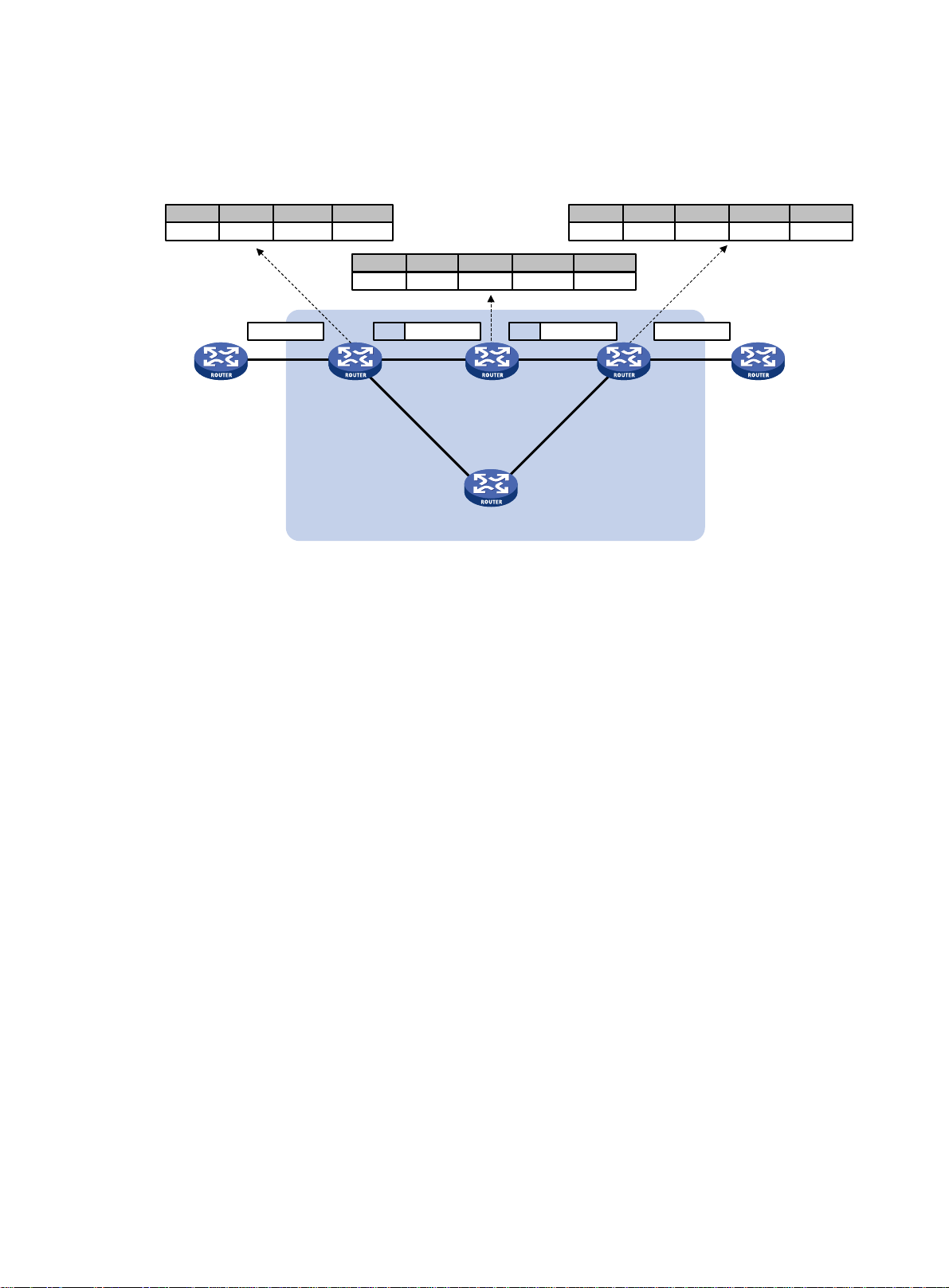

Figure 5 MPLS forwarding

Figure 5 shows a packet destined for 10.1.1.1 crossing the MPLS network from Router B (ingress) through Router C to Router D (egress). The forwarding tables shown in the figure are as follows:

• Router B, FIB table: Dest 10.1.0.0, Out label 40, Nexthop Router C, Out int GE2/0/2.

• Router C, LFIB table: In label 40, Oper Swap, Out label 50, Nexthop Router D, Out int GE2/0/2.

• Router D, LFIB table: In label 50, Oper Pop, Out label --, Nexthop Router E, Out int GE2/0/2.

As shown in Figure 5, a packet is forwarded over the MPLS network as follows:

1. Router B (the ingress LSR) receives a packet with no label. Then, it performs the following

operations:

a. Identifies the FIB entry that matches the destination address of the packet.

b. Adds the outgoing label (40, in this example) to the packet.

c. Forwards the labeled packet out of the interface GigabitEthernet 2/0/2 to the next hop LSR

Router C.

2. When receiving the labeled packet, Router C processes the packet as follows:

a. Identifies the LFIB entry that has an incoming label of 40.

b. Uses the outgoing label 50 of the entry to replace label 40 in the packet.

c. Forwards the labeled packet out of the outgoing interface GigabitEthernet 2/0/2 to the next

hop LSR Router D.

3. When receiving the labeled packet, Router D (the egress LSR) processes the packet as follows:

a. Identifies the LFIB entry that has an incoming label of 50.

b. Removes the label from the packet.

c. Forwards the packet out of the outgoing interface GigabitEthernet 2/0/2 to the next hop LSR

Router E.

If the LFIB entry records no outgoing interface or next hop information, Router D performs the

following operations:

a. Identifies the FIB entry by the IP header.

b. Forwards the packet according to the FIB entry.
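The walk-through above can be reproduced with a small Python model. The table values are taken from the Figure 5 example; the /16 prefix length, the table layout, and the function name are assumptions made for illustration. The FIB lookup is a simple first match, not a full longest-prefix match.

```python
import ipaddress

# Ingress FIB: (prefix, out label, next hop, out interface).
FIB = {"Router B": [("10.1.0.0/16", 40, "Router C", "GE2/0/2")]}

# LFIB per LSR: in label -> (operation, out label, next hop, out interface).
LFIB = {
    "Router C": {40: ("swap", 50, "Router D", "GE2/0/2")},
    "Router D": {50: ("pop", None, "Router E", "GE2/0/2")},
}


def forward(dest_ip):
    """Return a trace of (node, operation, label stack after, next hop)."""
    addr = ipaddress.ip_address(dest_ip)
    # Ingress: match the destination against the FIB and push the out label.
    for prefix, out_label, next_hop, _out_int in FIB["Router B"]:
        if addr in ipaddress.ip_network(prefix):
            break
    else:
        raise LookupError(f"no FIB entry for {dest_ip}")
    labels = [out_label]
    trace = [("Router B", "push", list(labels), next_hop)]
    node = next_hop
    # Transit and egress: look up the top label in the LFIB until the stack is empty.
    while labels:
        oper, out_label, next_hop, _out_int = LFIB[node][labels[0]]
        if oper == "swap":
            labels[0] = out_label
        elif oper == "pop":
            labels.pop(0)
        trace.append((node, oper, list(labels), next_hop))
        node = next_hop
    return trace
```

Calling `forward("10.1.1.1")` yields one trace entry per LSR: a push at Router B, a swap at Router C, and a pop at Router D.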

PHP

An egress node must perform two forwarding table lookups to forward a packet:

• Two LFIB lookups (if the packet has more than one label).


• One LFIB lookup and one FIB lookup (if the packet has only one label).

The penultimate hop popping (PHP) feature can pop the label at the penultimate node, so the egress

node only performs one table lookup.

A PHP-capable egress node sends the penultimate node an implicit null label of 3. This label never

appears in the label stack of packets. If an incoming packet matches an LFIB entry containing the

implicit null label, the penultimate node pops the top label and forwards the packet to the egress

node. The egress node directly forwards the packet.

Sometimes, the egress node must use the TC field in the label to perform QoS. To keep the TC

information, you can configure the egress node to send the penultimate node an explicit null label of

0. If an incoming packet matches an LFIB entry containing the explicit null label, the penultimate hop

replaces the top label value with value 0, and forwards the packet to the egress node. The egress

node gets the TC information, pops the label of the packet, and forwards the packet.

Protocols and standards

• RFC 3031, Multiprotocol Label Switching Architecture

• RFC 3032, MPLS Label Stack Encoding

• RFC 5462, Multiprotocol Label Switching (MPLS) Label Stack Entry: "EXP" Field Renamed to

"Traffic Class" Field

Compatibility information

Commands and descriptions for centralized devices apply to the following routers:

• MSR1002-4/1003-8S.

• MSR2003.

• MSR2004-24/2004-48.

• MSR3012/3024/3044/3064.

Commands and descriptions for distributed devices apply to MSR4060 and MSR4080 routers.

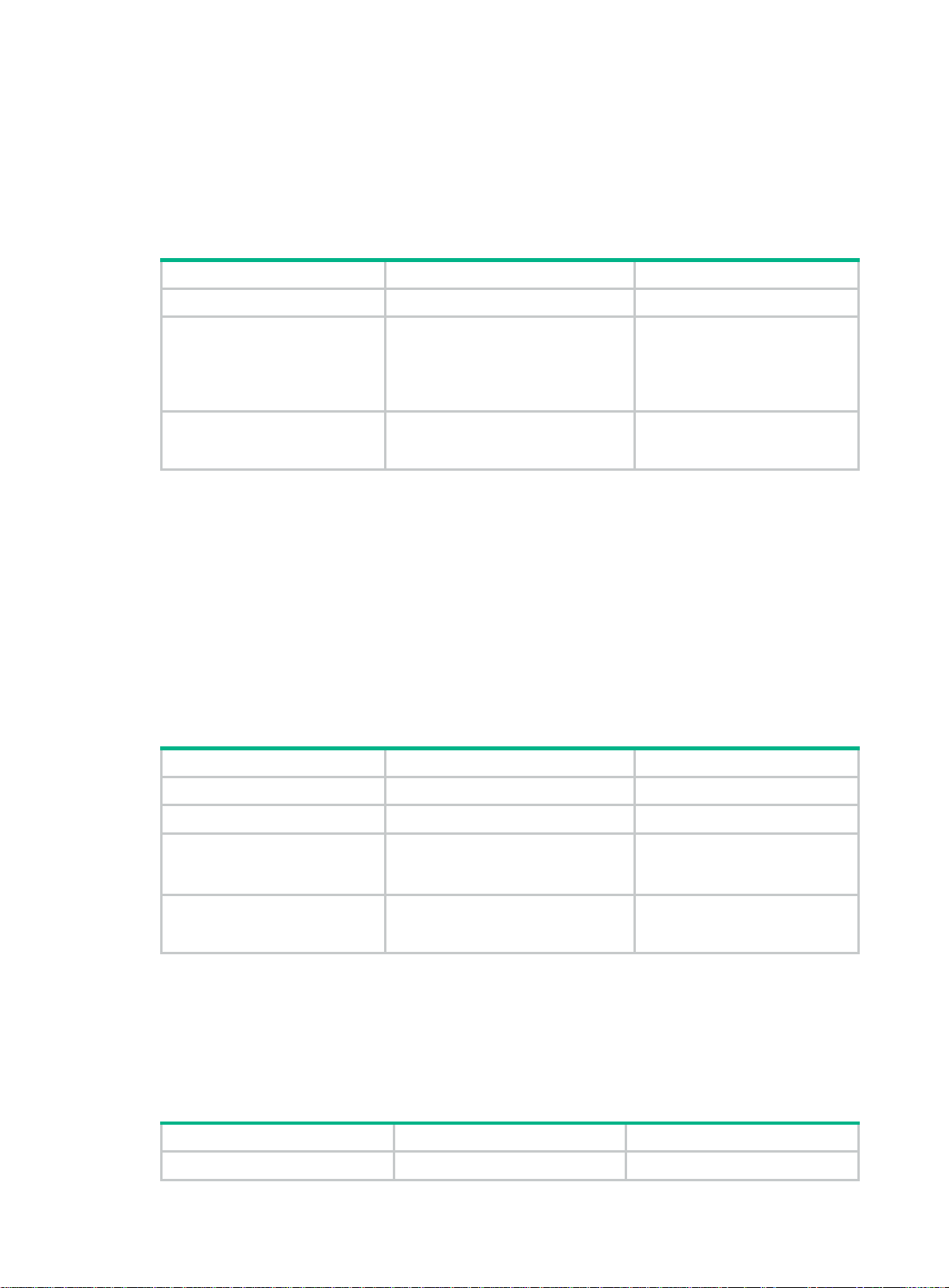

MPLS configuration task list

Tasks at a glance

(Required.) Enabling MPLS

(Optional.) Setting MPLS MTU

(Optional.) Specifying the label type advertised by the egress

(Optional.) Configuring TTL propagation

(Optional.) Enabling sending of MPLS TTL-expired messages

(Optional.) Enabling MPLS forwarding statistics

(Optional.) Enabling split horizon for MPLS forwarding

(Optional.) Enabling SNMP notifications for MPLS


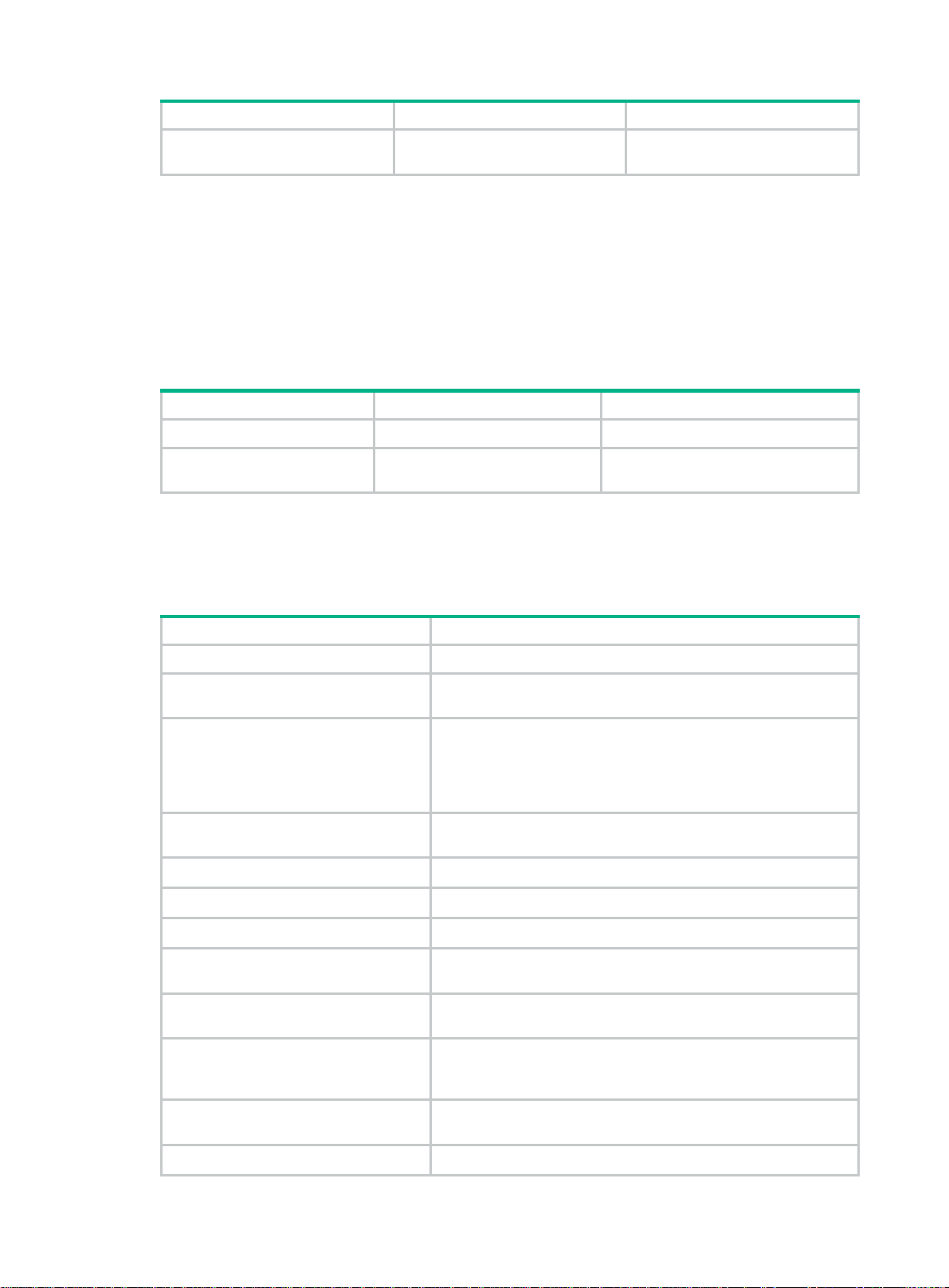

Enabling MPLS

Before you enable MPLS, perform the following tasks:

• Configure link layer protocols to ensure connectivity at the link layer.

• Configure IP addresses for interfaces to ensure IP connectivity between neighboring nodes.

• Configure static routes or an IGP protocol to ensure IP connectivity among LSRs.

To enable MPLS:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Configure an LSR ID for the local node. | mpls lsr-id lsr-id | By default, no LSR ID is configured. An LSR ID must be unique in an MPLS network and in IP address format. As a best practice, use the IP address of a loopback interface as an LSR ID. |
| 3. Enter the view of the interface that needs to perform MPLS forwarding. | interface interface-type interface-number | N/A |
| 4. Enable MPLS on the interface. | mpls enable | By default, MPLS is disabled on the interface. |

Setting MPLS MTU

MPLS adds the label stack between the link layer header and network layer header of each packet. To make sure the size of MPLS labeled packets is smaller than the MTU of an interface, configure an MPLS MTU on the interface.

MPLS compares each MPLS packet against the interface MPLS MTU. When the packet exceeds the MPLS MTU:

• If fragmentation is allowed, MPLS performs the following operations:

a. Removes the label stack from the packet.

b. Fragments the IP packet. The length of a fragment is the MPLS MTU minus the length of the label stack.

c. Adds the label stack to each fragment, and forwards the fragments.

• If fragmentation is not allowed, the LSR drops the packet.

To set an MPLS MTU for an interface:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Enter interface view. | interface interface-type interface-number | N/A |
| 3. Set an MPLS MTU for the interface. | mpls mtu value | By default, no MPLS MTU is set on an interface. |

The following applies when an interface handles MPLS packets:

• MPLS packets carrying L2VPN or IPv6 packets are always forwarded by an interface, even if

the length of the MPLS packets exceeds the MPLS MTU of the interface. Whether the

forwarding can succeed depends on the actual forwarding capacity of the interface.

• If the MPLS MTU of an interface is greater than the MTU of the interface, data forwarding might

fail on the interface.

• If you do not configure the MPLS MTU of an interface, fragmentation of MPLS packets is based

on the MTU of the interface without considering MPLS labels. An MPLS fragment might be

larger than the interface MTU and be dropped.
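The MPLS MTU check and the fragment length rule from this section can be expressed as simple arithmetic. The Python sketch below assumes that the labeled packet length is the IP packet length plus four bytes per label and that the link layer header is not counted. The function and parameter names are hypothetical.

```python
LABEL_ENTRY_BYTES = 4  # each label in the stack is four bytes long


def max_ip_fragment_length(mpls_mtu: int, label_count: int) -> int:
    """Fragment length = MPLS MTU minus the length of the label stack."""
    return mpls_mtu - LABEL_ENTRY_BYTES * label_count


def handle_mpls_packet(ip_length: int, label_count: int, mpls_mtu: int,
                       fragmentation_allowed: bool) -> str:
    """Return the action taken for a labeled packet: forward, fragment, or drop."""
    labeled_length = ip_length + LABEL_ENTRY_BYTES * label_count
    if labeled_length <= mpls_mtu:
        return "forward"
    if not fragmentation_allowed:
        return "drop"
    # Remove the label stack, fragment the IP packet, then re-add the stack.
    return "fragment"
```

For example, with an MPLS MTU of 1500 and a two-label stack, each IP fragment can be at most 1492 bytes so that the fragment plus its eight bytes of labels still fits within the MPLS MTU.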

Specifying the label type advertised by the egress

In an MPLS network, an egress can advertise the following types of labels:

• Implicit null label with a value of 3.

• Explicit null label with a value of 0.

• Non-null label.

For LSPs established by a label distribution protocol, the label advertised by the egress determines

how the penultimate hop processes a labeled packet.

• If the egress advertises an implicit null label, the penultimate hop directly pops the top label of a

matching packet.

• If the egress advertises an explicit null label, the penultimate hop swaps the top label value of a

matching packet with the explicit null label.

• If the egress advertises a non-null label, the penultimate hop swaps the top label of a matching

packet with the label assigned by the egress.
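The three cases above differ only in what the penultimate hop does to the top label. The following Python sketch models a label stack as a list of (label, TC) pairs, top first, to show that the TC value reaches the egress only when a label is kept. The function name and the stack representation are assumptions made for illustration.

```python
IMPLICIT_NULL = 3  # never appears in the label stack of a packet
EXPLICIT_NULL = 0


def penultimate_hop_process(label_stack, advertised_label):
    """Return the label stack sent to the egress.

    label_stack is a list of (label, tc) tuples, top label first.
    advertised_label is the label that the egress advertised for the FEC.
    """
    if not label_stack:
        raise ValueError("packet carries no label")
    _top_label, top_tc = label_stack[0]
    if advertised_label == IMPLICIT_NULL:
        # PHP: pop the top label; its TC value is lost with it.
        return label_stack[1:]
    # Explicit null or non-null: swap the label value and keep the TC value.
    return [(advertised_label, top_tc)] + label_stack[1:]
```

With a single-label stack `[(50, 5)]`, an implicit null advertisement leaves an unlabeled packet, whereas an explicit null advertisement delivers `[(0, 5)]` so that the egress can still read TC 5.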

Configuration guidelines

As a best practice, configure the egress to advertise an implicit null label to the penultimate hop if the

penultimate hop supports PHP. If you want to simplify packet forwarding on the egress but keep

labels to determine QoS policies, configure the egress to advertise an explicit null label to the

penultimate hop. Use non-null labels only in particular scenarios. For example, when OAM is

configured on the egress, the egress can get the OAM function entity status only through non-null

labels.

As a penultimate hop, the device accepts the implicit null label, explicit null label, or normal label

advertised by the egress device.

For LDP LSPs, the mpls label advertise command triggers LDP to delete the LSPs established

before the command is executed and to establish new LSPs.

For BGP LSPs, the mpls label advertise command takes effect only on the BGP LSPs established

after the command is executed. To apply the new setting to BGP LSPs established before the

command is executed, delete the routes corresponding to the BGP LSPs, and then redistribute the

routes.

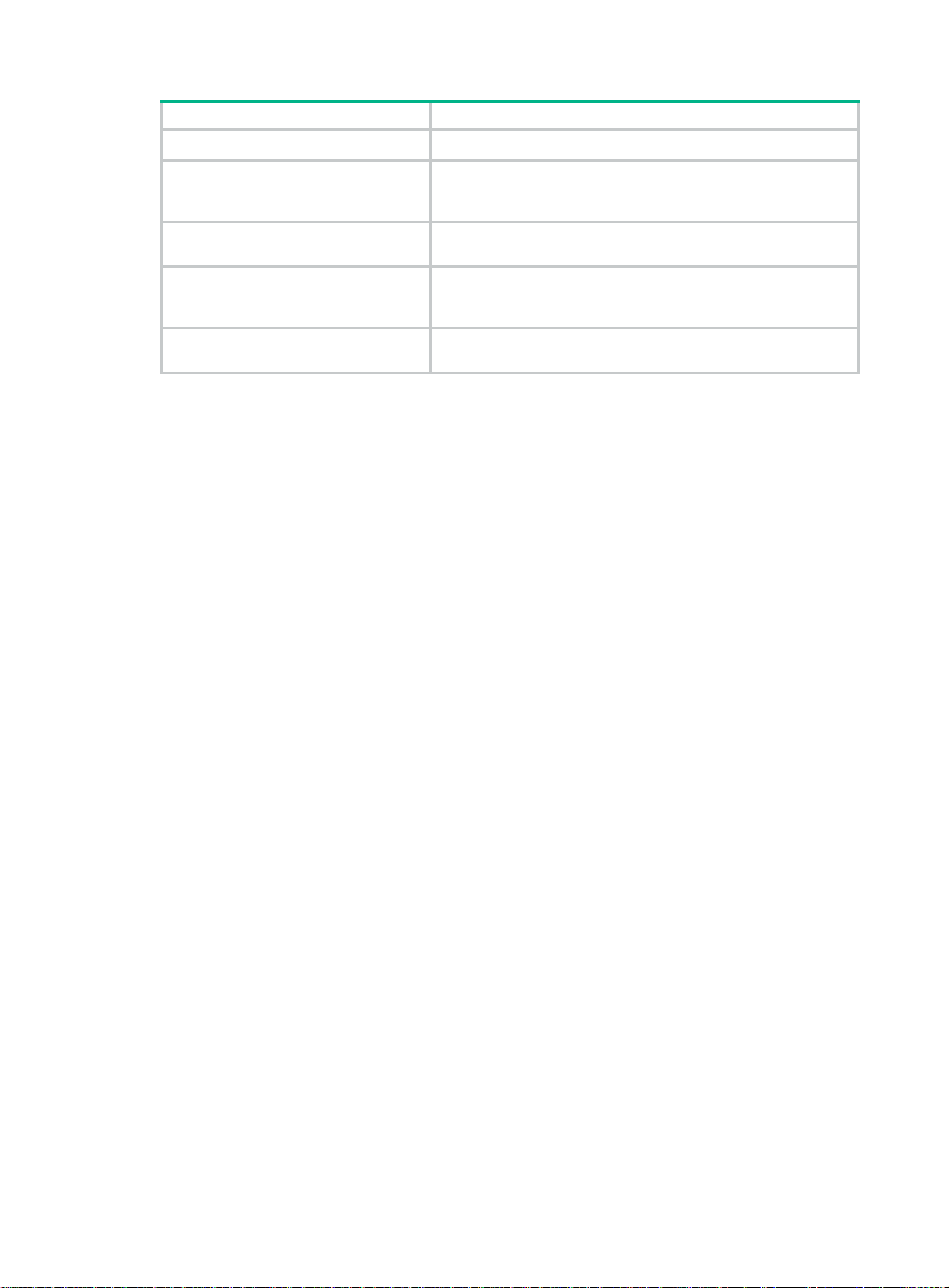

Configuration procedure

To specify the type of label that the egress node will advertise to the penultimate hop:

| Step | Command | Remarks |
|---|---|---|
| 1. Enter system view. | system-view | N/A |
| 2. Specify the label type advertised by the egress to the penultimate hop. | mpls label advertise { explicit-null \| implicit-null \| non-null } | By default, an egress advertises an implicit null label to the penultimate hop. |


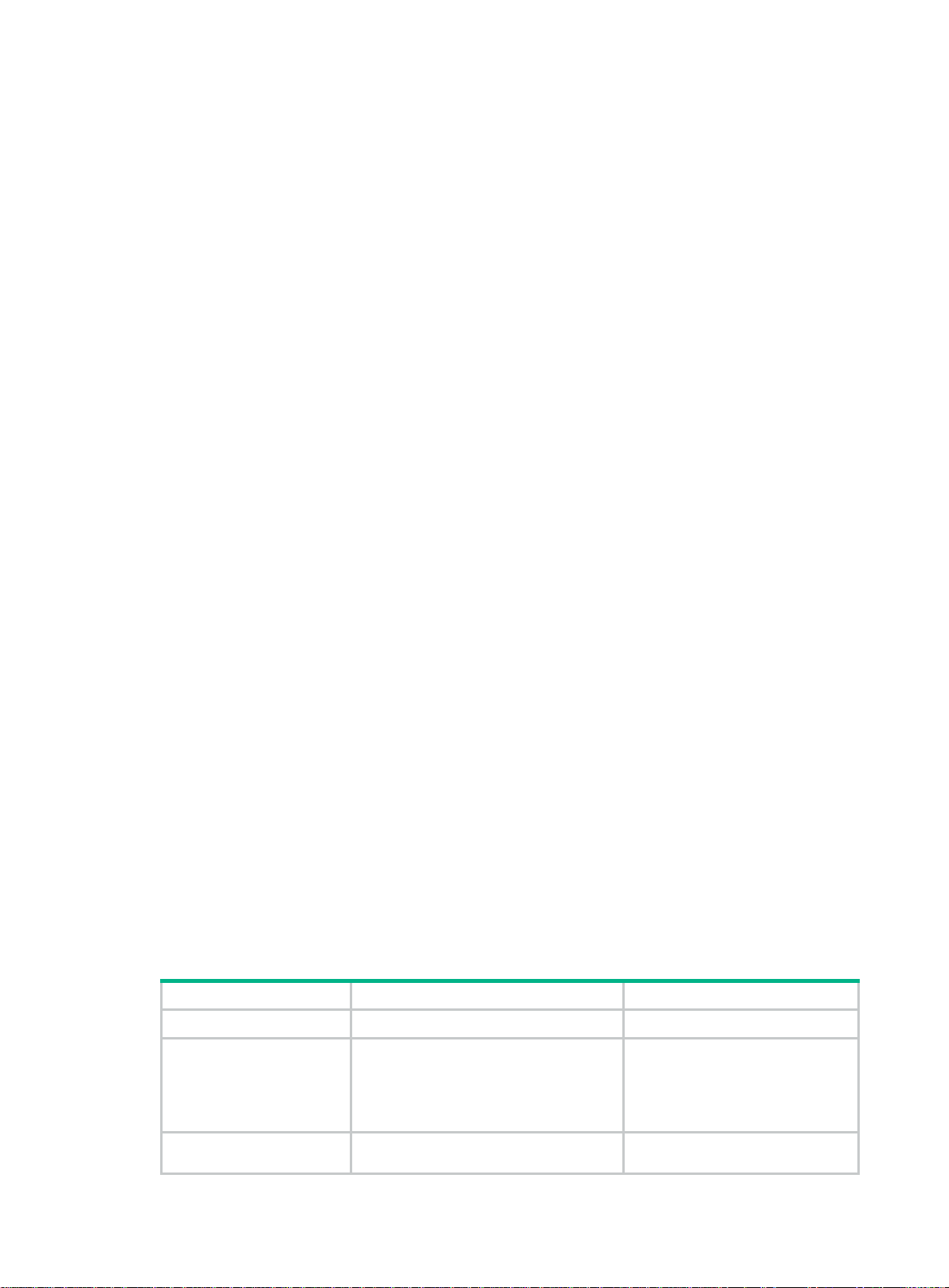

Configuring TTL propagation

When TTL propagation is enabled, the ingress node copies the TTL value of an IP packet to the TTL

field of the label. Each LSR on the LSP decreases the label TTL value by 1. The LSR that pops the

label copies the remaining label TTL value back to the IP TTL of the packet. The IP TTL value can