Dynamic Root Disk A.3.10.* Administrator's Guide

HP-UX 11i v2, HP-UX 11i v3

HP Part Number: 5900-2100

Published: January 2012

Edition: 1.0

© Copyright 2012 Hewlett-Packard Development Company, L.P.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein. UNIX is a registered trademark of The Open Group.

Revision History

Publication Date   Supported Operating Systems    DRD Version   Document Part Number
January 2012       HP-UX 11i v2, HP-UX 11i v3     A.3.10.*      5900-2100

Table of Contents

1 About Dynamic Root Disk
1.1 Conceptual overview
1.2 Terminology
1.3 Commands overview
1.4 Downloading and installing Dynamic Root Disk
2 Cloning the active system image
2.1 The active system image
2.2 Locating disks
2.2.1 Locating disks on HP-UX 11i v2 systems
2.2.2 Locating disks on HP-UX 11i v3 Integrity systems
2.3 Choosing a target disk
2.4 Using other utilities to determine disk availability
2.5 Using DRD for limited disk availability checks
2.6 Using drd clone to analyze disk size
2.7 Creating the clone
2.8 Adding or removing a disk
3 Updating and maintaining software on the clone
3.1 DRD-Safe commands and Packages
3.2 Updating and managing patches with drd runcmd
3.2.1 DRD-Safe patches and the drd_unsafe_patch_list file
3.2.2 Patches with special installation instructions
3.3 Updating and managing products with drd runcmd
3.4 Special considerations for firmware patches
3.5 Restrictions on update-ux and sw* commands invoked by drd runcmd
3.6 Viewing logs
4 Accessing the inactive system image
4.1 Mounting the inactive system image
4.2 Performing administrative tasks on the inactive system image
4.3 Unmounting the inactive system image
5 Synchronizing the inactive clone image with the booted system
5.1 Overview
5.2 Quick start—basic synchronization
5.3 The drd sync command
5.3.1 Determining the list of files in the booted volume group
5.3.2 Trimming the list of files to be synchronized
5.3.3 Copying the files to the inactive clone image
5.4 Using the drd sync preview to determine divergence of the clone from the booted system
5.5 drd sync system shutdown script
6 Activating the inactive system image
6.1 Preparing the inactive system image to activate later
6.2 Undoing activation of the inactive system image

7 Rehosting and unrehosting systems
7.1 Rehosting overview
7.2 Rehosting examples
7.3 Rehosting a mirrored image
7.4 Unrehosting overview
8 Troubleshooting DRD
9 Support and other resources
9.1 Contacting HP
9.2 New and changed information in this edition
9.3 Locating this guide
9.4 Related information
9.5 Typographic conventions
A DRD commands
A.1 DRD command syntax
A.1.1 The drd activate command
A.1.2 The drd clone command
A.1.3 The drd deactivate command
A.1.4 The drd mount command
A.1.5 The drd rehost command
A.1.6 The drd runcmd command
A.1.7 The drd status command
A.1.8 The drd sync command
A.1.9 The drd umount command
A.1.10 The drd unrehost command
Glossary
Index

List of Figures

2-1 Preparing to Clone the Active System Image
2-2 Cloning the Active System Image
2-3 Disk Configurations After Cloning
6-1 Disk Configurations After Activating the Inactive System Image

List of Examples

2-1 The ioscan -fnkC disk command output on an HP-UX 11i v2 PA-RISC system
2-2 The ioscan -fnkC disk command output on an HP-UX 11i v2 Integrity system
2-3 The ioscan -fNnkC disk command output on an HP-UX 11i v3 Integrity system
2-4 The ioscan -m dsf command output on an HP-UX 11i v3 Integrity system
2-5 drd clone preview example on HP-UX 11i v2 or 11i v3
2-6 drd clone preview example on HP-UX 11i v3 (using agile DSF)
2-7 The drd clone command output
2-8 The drd clone command output for SAN disk
2-9 The drd status command output for SAN disk
4-1 The drd mount command output
4-2 The bdf command output
4-3 Checking a warning message
4-4 Creating a patch install file
4-5 Editing symlinked files
4-6 The drd umount command output
5-1 drd sync scenario
6-1 Booting the primary boot disk with an alternate boot disk (HP-UX 11i v2)
6-2 Using drd deactivate after activating — legacy DSF
6-3 Using drd deactivate after activating — agile DSF
7-1 Provisioning a new system
7-2 Updating a system to an existing system's maintenance level
A-1 File system mount points

1 About Dynamic Root Disk

1.1 Conceptual overview

This document describes the Dynamic Root Disk (DRD) toolset, which you can use to perform software maintenance and recovery on an HP-UX operating system with minimal system downtime. DRD lets you clone a system image from a root disk to another disk on the same system and modify the cloned image without shutting down the system. Because the maintenance work is done while the system keeps running, DRD significantly reduces system downtime and lets you perform software maintenance during normal business hours. This document is intended primarily for HP-UX system administrators who apply software maintenance to HP-UX systems, such as installing new software product revisions or updating from an older HP-UX operating environment (OE) to a newer one. Some understanding of HP-UX system administration is assumed.

Hewlett-Packard developed DRD to minimize the maintenance window during which you would normally shut down the system to apply software maintenance. With DRD, the system keeps running while you clone the system image and apply software maintenance to the cloned image. DRD tools can manage the two system images simultaneously. DRD also provides a fail-safe mechanism for returning the system to its original state, if necessary.

Using DRD commands, you can perform software maintenance or make other modifications on the cloned system image without affecting the active system image. When you are ready, you can boot the cloned image on either the original system or a different system. The only downtime this process requires is the time it takes the system to reboot.

Other uses of DRD include using the clone for quick software recovery or using the clone to boot another system, which is referred to as rehosting. For details of rehosting, see Rehosting and unrehosting systems.

1.2 Terminology

In this guide, “root group” refers to the LVM volume group or VxVM disk group that contains the root (“/”) file system. The term “logical volume” refers to an LVM logical volume or a VxVM volume.

IMPORTANT: DRD supports the following LVM root volume group versions:

• DRD A.3.5.* and earlier:

— HP-UX 11i v2: LVM 1.0

— HP-UX 11i v3: LVM 1.0

• DRD A.3.6.* and later:

— HP-UX 11i v2: LVM 1.0

— HP-UX 11i v3: LVM 1.0 and LVM 2.2

1.3 Commands overview

The drd command provides a command-line interface to DRD tools. The drd command has ten major modes of operation:

• activate After you use DRD commands to create (and optionally modify) a clone, drd activate invokes setboot(1M) to set the primary boot path to the clone. If the clone is the currently booted image, drd activate instead invokes setboot(1M) to set the primary boot path to the original system image. In other words, drd activate always sets the primary boot path to the inactive (not booted) system image.

• clone Clones the booted system to an inactive system image. The drd clone mode copies the LVM volume group or VxVM disk group that contains the volume on which the root file system (“/”) is mounted to the target disk specified in the command.

• deactivate If drd activate (which invokes setboot(1M)) has set the clone as the primary boot path but the system has not yet been rebooted, you can use drd deactivate to “undo” the drd activate command; that is, drd deactivate sets the original system image as the primary boot path. The drd deactivate command always sets the primary boot path to the active (currently booted) system image.

• mount Mounts all file systems in an inactive system image. The mount point of the root file system is either /var/opt/drd/mnts/sysimage_000 or /var/opt/drd/mnts/sysimage_001. If the inactive system image was created by the most recent drd clone command, the mount point of the root file system is /var/opt/drd/mnts/sysimage_001. If the inactive system image was the booted system when the most recent drd clone command was run, the mount point of the root file system is /var/opt/drd/mnts/sysimage_000.

• rehost Copies the specified system information file, which contains the hostname, IP address, and other system-specific information, to EFI/HPUX/SYSINFO.TXT on the disk to be rehosted.

• runcmd Runs a command on an inactive system image. Only a select group of commands can be run in runcmd mode: commands that have been verified to have no effect on the booted system when executed by drd runcmd. Such commands are referred to as DRD-safe. The commands kctune, swinstall, swjob, swlist, swmodify, swremove, swverify, update-ux, and view are currently certified DRD-safe. An attempt to execute any other command results in a runcmd error. In addition, not every software package can safely be processed by the sw* and update-ux commands. The DRD-safe update-ux and SW-DIST commands detect that they are running in a DRD session and reject any unsafe packages. For more information about DRD-safe packages, see drd-runcmd(1M).

NOTE: The drd runcmd command suppresses all reboots. The option -x autoreboot is ignored when an swinstall, swremove, or update-ux command is executed by drd runcmd.

• status Displays system-specific status information about the original disk and the clone disk, including which disk is currently booted and which disk is activated (that is, the disk that will be booted when the system is restarted).

• sync Propagates file system changes from the booted original system to the inactive clone image.

• umount Unmounts all file systems in the inactive system image that were previously mounted by a drd mount command.

• unrehost Removes the system information file, EFI/HPUX/SYSINFO.TXT, from a disk that was rehosted, optionally preserving a copy in a file system on the booted system.
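The mount-point rule in the mount entry above can be written as a few lines of POSIX shell. This could be useful in a maintenance script that needs to locate the inactive image after drd mount. It is a minimal sketch: the function name drd_inactive_mountpoint and its target/source argument are hypothetical and not part of DRD, and the function does not query DRD to learn which case applies.

```shell
# Hypothetical helper (not part of DRD): print the root mount point that
# drd mount uses for the inactive system image.
#   target: the inactive image was created by the most recent drd clone
#   source: the inactive image was the booted system when that clone ran
drd_inactive_mountpoint() {
    case "$1" in
        target) echo /var/opt/drd/mnts/sysimage_001 ;;
        source) echo /var/opt/drd/mnts/sysimage_000 ;;
        *)      echo "usage: drd_inactive_mountpoint target|source" >&2
                return 1 ;;
    esac
}
```

For example, immediately after cloning, drd_inactive_mountpoint target prints /var/opt/drd/mnts/sysimage_001.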

For details of DRD command syntax, including all options and extended options, see DRD commands.
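Because drd runcmd rejects any command that is not on its DRD-safe list, a script can check the command name before invoking it. The sketch below hard-codes the list quoted in the runcmd entry above. That list applies to this release, and drd-runcmd(1M) remains the authority, so treat this function (a hypothetical name, not part of DRD) as a convenience only.

```shell
# Hypothetical pre-check (not part of DRD): succeed if the command name is
# one of the DRD-safe commands listed for drd runcmd in this guide.
# drd runcmd performs its own check; this only catches mistakes early.
is_drd_safe() {
    case "$1" in
        kctune|swinstall|swjob|swlist|swmodify|swremove|swverify|update-ux|view)
            return 0 ;;
        *)
            return 1 ;;
    esac
}
```

For example, the following runs swlist through drd runcmd only if the check passes:

# is_drd_safe swlist && /opt/drd/bin/drd runcmd swlist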

1.4 Downloading and installing Dynamic Root Disk

For up-to-date, detailed instructions about downloading and installing the DRD product, see https://h20392.www2.hp.com/portal/swdepot/displayInstallInfo.do?productNumber=DynRootDisk.

NOTE: Because of the system calls DRD depends on, DRD expects legacy Device Special Files (DSFs) to be present and the legacy naming model to be enabled on HP-UX 11i v3 (11.31) servers. HP recommends performing only a partial migration to persistent DSFs. For details of migration to persistent DSFs, see the HP-UX 11i v3 Persistent DSF Migration Guide.

NOTE: On VxVM configurations, DRD expects OS Native Naming (osn) to be enabled. Enclosure Based Naming (ebn) must be turned off.

2 Cloning the active system image

This chapter describes how to use the drd clone command to clone the active system image. It also describes where the cloned image is saved.

NOTE: You must be logged in as root to use any DRD command.

2.1 The active system image

The drd clone command creates a bootable disk that is a copy of the volume group containing the root file system (/). The source of the drd clone command is the LVM volume group or VxVM disk group containing the root (/) and boot (/stand) file systems. For a system with an LVM root, the source does not need to reside on a single physical disk. For a system with a VxVM root, all volumes in the root disk group must reside on every physical disk in the root group; that is, each disk must be a mirror of every other disk. The target must be a single physical disk large enough to hold all volumes in the root group. In addition, a mirror for the target may be specified. For more details, see the Dynamic Root Disk and MirrorDisk/UX white paper, available at http://www.hp.com/go/drd-docs.

Because the drd clone operation clones a single group, the file systems to be patched must not be spread across multiple volume groups. For example, if /stand resides in vg00 and /var resides in vg01, the system is not appropriate for DRD.

For additional information about source and target disks, see the drd-clone(1M) manpage (man drd-clone) and the Dynamic Root Disk: Quick Start & Best Practices white paper, available at http://www.hp.com/go/drd-docs.

NOTE: After creating a DRD clone, your system has two system images: the original and the cloned image. Throughout this document, the system image that is currently in use is called the active system image. The image that is not in use is called the inactive system image.

2.2 Locating disks

The target of a drd clone operation must be a single disk or SAN LUN that is write-accessible to the system and not currently in use. Depending on your HP-UX operating system, refer to one of the following sections:

• Locating disks on HP-UX 11i v2 systems

• Locating disks on HP-UX 11i v3 Integrity systems

2.2.1 Locating disks on HP-UX 11i v2 systems

To help you select a target disk on an HP-UX 11i v2 system, use the ioscan command to list the disks on the system:

# /usr/sbin/ioscan -fnkC disk

The ioscan command displays a list of system disks with identifying information and hardware location. It does not report disk capacity directly; to check a disk's size, use the diskinfo command. On a PA-RISC system, the output looks similar to Example 2-1.

Example 2-1 The ioscan -fnkC disk command output on an HP-UX 11i v2 PA-RISC system

# /usr/sbin/ioscan -fnkC disk
Class     I  H/W Path       Driver  S/W State  H/W Type  Description
=======================================================================
disk      0  10/0/14/0.0.0  sdisk   CLAIMED    DEVICE    TEAC CD-532E-B
                            /dev/dsk/c0t0d0   /dev/rdsk/c0t0d0
disk      1  10/0/15/1.5.0  sdisk   CLAIMED    DEVICE    HP 18.2GMAN3184MC
                            /dev/dsk/c1t2d0   /dev/rdsk/c1t2d0
disk      2  10/0/15/1.6.0  sdisk   CLAIMED    DEVICE    HP 18.2GMAN3184MC
                            /dev/dsk/c2t3d0   /dev/rdsk/c2t3d0

On an Integrity system, the output looks similar to Example 2-2.

Example 2-2 The ioscan -fnkC disk command output on an HP-UX 11i v2 Integrity system

# /usr/sbin/ioscan -fnkC disk
Class     I  H/W Path       Driver  S/W State  H/W Type  Description
============================================================================
disk      0  0/0/2/0.0.0.0  sdisk   CLAIMED    DEVICE    TEAC DV-28E-N
                            /dev/dsk/c0t0d0     /dev/rdsk/c0t0d0
disk      1  0/1/1/0.0.0    sdisk   CLAIMED    DEVICE    HP 36.4GST336754LC
                            /dev/dsk/c2t0d0     /dev/dsk/c2t0d0s3   /dev/rdsk/c2t0d0s2
                            /dev/dsk/c2t0d0s1   /dev/rdsk/c2t0d0    /dev/rdsk/c2t0d0s3
                            /dev/dsk/c2t0d0s2   /dev/rdsk/c2t0d0s1
disk      2  0/1/1/0.1.0    sdisk   CLAIMED    DEVICE    HP 36.4GST336754LC
                            /dev/dsk/c2t1d0     /dev/dsk/c2t1d0s3   /dev/rdsk/c2t1d0s2
                            /dev/dsk/c2t1d0s1   /dev/rdsk/c2t1d0    /dev/rdsk/c2t1d0s3
                            /dev/dsk/c2t1d0s2   /dev/rdsk/c2t1d0s1

IMPORTANT:

The output above includes block device special files ending with s1, s2, or s3. These suffixes indicate idisk partitions on the disk. Do NOT use a partition as a clone target!

The first disk in the list above is a DVD drive, as indicated by DV in the description field. Do NOT use a DVD drive as a clone target!

Device files under /dev/rdsk/, listed after the corresponding block device special files, are raw (character) device files. Do NOT use a raw device file as a clone target!

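If you have recently added a disk to your system, you may need to run ioscan without the -k option to display the new disk. With -k, ioscan reports the kernel's existing I/O data structures instead of scanning the hardware. See the ioscan(1M) manpage for more information about ioscan options.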
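On systems with many disks, you can apply the rules in the IMPORTANT box above mechanically to the ioscan output: skip partitions, raw device files, and DVD drives. The following is a minimal POSIX shell and awk sketch, assuming output laid out as in Examples 2-1 and 2-2. The function name is hypothetical. Recognizing optical drives by CD-, DV-, or DW- in the description is a heuristic based only on these examples. The result still includes disks that are in use, such as /dev/dsk/c2t3d0, the active system disk in Example 2-1, so treat it as a starting list, not a list of safe targets.

```shell
# Hypothetical helper (not part of DRD): print whole-disk block DSFs from
# "ioscan -fnkC disk" output (HP-UX 11i v2) read on standard input.
# Skips raw DSFs (/dev/rdsk/...), idisk partitions (...s1, s2, s3) and
# devices whose description looks like an optical drive (heuristic).
list_candidate_disks() {
    awk '
        $1 == "disk" {
            # The description starts at field 7 of the class line.
            desc = ""
            for (i = 7; i <= NF; i++) desc = desc " " $i
            optical = (desc ~ /CD-|DV-|DW-/)
            next
        }
        {
            # Device lines: keep only /dev/dsk/cXtYdZ with no partition suffix.
            for (i = 1; i <= NF; i++)
                if (!optical && $i ~ /^\/dev\/dsk\/c[0-9]+t[0-9]+d[0-9]+$/)
                    print $i
        }
    '
}
```

For example:

# /usr/sbin/ioscan -fnkC disk | list_candidate_disks

Applied to the output in Example 2-2, this prints /dev/dsk/c2t0d0 and /dev/dsk/c2t1d0.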

2.2.2 Locating disks on HP-UX 11i v3 Integrity systems

To help you select a target disk on an Integrity system running HP-UX 11i v3 (11.31), use the ioscan command to list the disks on the system. The -N option displays the agile view, which lists persistent DSFs:

# /usr/sbin/ioscan -fNnkC disk

The ioscan command displays a list of system disks with identifying information and hardware location. On an Integrity system running HP-UX 11i v3, the output looks similar to Example 2-3.

Example 2-3 The ioscan -fNnkC disk command output on an HP-UX 11i v3 Integrity system

# /usr/sbin/ioscan -fNnkC disk
Class     I  H/W Path          Driver  S/W State  H/W Type  Description
===================================================================
disk      4  64000/0xfa00/0x0  esdisk  CLAIMED    DEVICE    HP 36.4GMAN3367MC
                               /dev/disk/disk4      /dev/rdisk/disk4
                               /dev/disk/disk4_p1   /dev/rdisk/disk4_p1
                               /dev/disk/disk4_p2   /dev/rdisk/disk4_p2
                               /dev/disk/disk4_p3   /dev/rdisk/disk4_p3
disk      5  64000/0xfa00/0x1  esdisk  CLAIMED    DEVICE    HP 36.4GMAN3367MC
                               /dev/disk/disk5      /dev/rdisk/disk5
                               /dev/disk/disk5_p1   /dev/rdisk/disk5_p1
                               /dev/disk/disk5_p2   /dev/rdisk/disk5_p2
                               /dev/disk/disk5_p3   /dev/rdisk/disk5_p3
disk      6  64000/0xfa00/0x2  esdisk  CLAIMED    DEVICE    HP 36.4GMAN3367MC
                               /dev/disk/disk6      /dev/rdisk/disk6
                               /dev/disk/disk6_p1   /dev/rdisk/disk6_p1
                               /dev/disk/disk6_p2   /dev/rdisk/disk6_p2
                               /dev/disk/disk6_p3   /dev/rdisk/disk6_p3
disk      7  64000/0xfa00/0x3  esdisk  CLAIMED    DEVICE    TEAC DW-28E
                               /dev/disk/disk7      /dev/rdisk/disk7

IMPORTANT:

The output above includes block device special files ending with _p1, _p2, or _p3. These suffixes indicate idisk partitions on the disk. Do NOT use a partition as a clone target!

The last disk in the list above is a DVD drive, as indicated by DW in the description field. Do NOT use a DVD drive as a clone target!

Device files under /dev/rdisk/, listed after the corresponding block device special files, are raw (character) device files. Do NOT use a raw device file as a clone target!

Additionally, on HP-UX 11i v3 Integrity systems, you may find the following ioscan command useful for displaying the mapping between persistent and legacy DSFs:

Example 2-4 The ioscan -m dsf command output on an HP-UX 11i v3 Integrity system

# /usr/sbin/ioscan -m dsf
Persistent DSF           Legacy DSF(s)
==========================================
/dev/rdisk/disk4         /dev/rdsk/c2t0d0
/dev/rdisk/disk4_p1      /dev/rdsk/c2t0d0s1
/dev/rdisk/disk4_p2      /dev/rdsk/c2t0d0s2
/dev/rdisk/disk4_p3      /dev/rdsk/c2t0d0s3
/dev/rdisk/disk5         /dev/rdsk/c2t1d0
/dev/rdisk/disk5_p1      /dev/rdsk/c2t1d0s1
/dev/rdisk/disk5_p2      /dev/rdsk/c2t1d0s2
/dev/rdisk/disk5_p3      /dev/rdsk/c2t1d0s3
/dev/rdisk/disk6         /dev/rdsk/c3t2d0
/dev/rdisk/disk6_p1      /dev/rdsk/c3t2d0s1
/dev/rdisk/disk6_p2      /dev/rdsk/c3t2d0s2
/dev/rdisk/disk6_p3      /dev/rdsk/c3t2d0s3
/dev/rdisk/disk7         /dev/rdsk/c0t0d0

For additional information about migrating LVM volume group configurations from the legacy to the agile naming model, see the LVM Migration from Legacy to Agile Naming Model HP-UX 11i v3 white paper.
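DRD expects legacy DSFs to be present on HP-UX 11i v3 (see the note in Downloading and installing Dynamic Root Disk). It can therefore be handy to translate a persistent whole-disk DSF into its legacy equivalent. The minimal sketch below assumes the one-mapping-per-line layout of Example 2-4. It converts the raw names printed by ioscan -m dsf to block names and skips partitions. The function name is hypothetical. For multipathed LUNs, any additional legacy DSFs printed on continuation lines are ignored, and DVD drives are not filtered out.

```shell
# Hypothetical helper (not part of DRD): read "ioscan -m dsf" output on
# standard input and print "persistent-block-DSF legacy-block-DSF" pairs
# for whole disks only (idisk partitions such as disk4_p1 are skipped).
# Only the legacy DSF on the same line as the persistent DSF is used.
map_agile_to_legacy() {
    awk '
        NF >= 2 && $1 ~ /^\/dev\/rdisk\/disk[0-9]+$/ {
            persistent = $1; legacy = $2
            # Convert raw (character) names to block device names.
            sub(/^\/dev\/rdisk\//, "/dev/disk/", persistent)
            sub(/^\/dev\/rdsk\//, "/dev/dsk/", legacy)
            print persistent, legacy
        }
    '
}
```

For example:

# /usr/sbin/ioscan -m dsf | map_agile_to_legacy

For the output in Example 2-4, the first line printed is /dev/disk/disk4 /dev/dsk/c2t0d0.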

2.3 Choosing a target disk

CAUTION: It is the system administrator's responsibility to identify a target disk that is not currently in use! Cloning to a disk removes all current data on that disk.

In Example 2-1, the disk with the active system image is /dev/dsk/c2t3d0. You need to choose a free disk to be the target of the drd clone command. Your system may have many more disks than Example 2-1 shows.

The target disk must:

• Be a block device special file.

• Be writeable by the system.

• Not currently be in use by other applications.

• Be large enough to hold a copy of each logical volume in the root group.

The target disk does not need to be as large as the disk or disks that hold the root group, as long as it has enough space for a copy of each logical volume in the root group. However, the target needs more space than the data actually stored in each logical volume. Each logical volume on the target is created with the number of physical extents currently allocated to the corresponding root group logical volume, not only the extents that hold data.
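As a rough worked example of the extent rule above, you can estimate the minimum space a target needs from the vgdisplay -v figures shown in Using other utilities to determine disk availability. The sketch makes several assumptions:

• The root group sits on a single, unmirrored physical volume, so the extents allocated to its logical volumes equal Total PE minus Free PE.

• LVM metadata and boot areas are ignored, so the result is a lower bound.

• The PE size comes from the PE Size (Mbytes) line of your own vgdisplay output.

The function name is hypothetical. The drd clone preview described in Using drd clone to analyze disk size remains the authoritative check.

```shell
# Hypothetical estimate (not part of DRD): lower bound, in MB, for the
# space a clone target needs.
#   $1 = Total PE, $2 = Free PE  (from the Physical Volumes section)
#   $3 = PE size in MB           (from "PE Size (Mbytes)")
# Assumes the root group sits on one unmirrored physical volume, so
# allocated extents = Total PE - Free PE; LVM metadata is not counted.
min_target_mb() {
    echo $(( ($1 - $2) * $3 ))
}
```

With the sample figures Total PE 4340 and Free PE 428, and an assumed PE size of 4 MB, min_target_mb 4340 428 4 prints 15648. That is 3912 allocated extents of 4 MB each.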

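Example 2-1 shows three devices of class disk: /dev/dsk/c0t0d0 (a CD-ROM drive), /dev/dsk/c1t2d0, and /dev/dsk/c2t3d0 (the disk holding the active system image). Of these, only /dev/dsk/c1t2d0 is a possible target, and you still need to confirm that it is available and large enough.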

2.4 Using other utilities to determine disk availability

You can determine which disks are in use with the LVM (Logical Volume Manager, lvm(7M)) and VxVM (Veritas Volume Manager) commands. For example, to see which disks are in use by LVM, enter this command:

# /usr/sbin/vgdisplay -v | /usr/bin/more

In the output, look for the PV Name entries, which identify physical volumes. They appear under the Physical Volumes heading and look similar to this:

--- Physical Volumes ---
PV Name                     /dev/dsk/c2t3d0
PV Status                   available
Total PE                    4340
Free PE                     428
Autoswitch                  On
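Instead of paging through the output for PV Name entries, you can filter it. The minimal sketch below assumes the layout shown above: the label PV Name followed by the device file. The function name is hypothetical. Any disk it prints is in use by LVM and is not a valid clone target.

```shell
# Hypothetical filter (not part of DRD): print the device file named on each
# "PV Name" line of vgdisplay -v output read on standard input.
lvm_pv_names() {
    awk '$1 == "PV" && $2 == "Name" { print $3 }'
}
```

For example:

# /usr/sbin/vgdisplay -v | lvm_pv_names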

You can use the vxdisk -o alldgs list command to display information about all disks managed by VxVM. Do not specify any disk in use by VxVM as a clone target.

Use the swapinfo command to display information about disks currently used for swap. You can also use the HP System Management Homepage, hpsmh(1M), or the System Administration Manager, sam(1M), to investigate the disks on the system and their current usage.

2.5 Using DRD for limited disk availability checks

You can use drd clone with the -p (preview) option to get minimal availability information about a disk. (See the following section for an example.)

The drd clone command performs the following checks:

• If the disk is currently in use by LVM, the drd clone operation rejects it.

• If the disk is currently in use by VxVM, it is accepted only if both of the following conditions are met:

— The disk is an inactive image managed by DRD.

— The extended option -x overwrite=true is specified on the drd clone command.

• If the disk is not currently in use by LVM or VxVM but contains LVM, VxVM, or boot records, it is accepted as a drd clone target only if -x overwrite=true is specified on the drd clone command.
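The three checks above amount to a small decision table. The sketch below restates them in shell so the combinations are easy to see. It is illustrative only: the function and its arguments are hypothetical, and drd clone makes these decisions itself. An "accept" here does not mean the disk is free, because DRD cannot detect swap or raw-data usage.

```shell
# Hypothetical restatement (not part of DRD) of the drd clone target checks.
#   $1 = current owner of the disk: lvm | vxvm | none
#   $2 = yes if the disk is an inactive image managed by DRD, else no
#   $3 = yes if the disk contains LVM, VxVM, or boot records, else no
#   $4 = value given to -x overwrite (true | false; the default is false)
# Prints "accept" or "reject".
clone_target_verdict() {
    case "$1" in
        lvm)
            echo reject ;;
        vxvm)
            if [ "$2" = yes ] && [ "$4" = true ]; then echo accept; else echo reject; fi ;;
        *)
            if [ "$3" = no ] || [ "$4" = true ]; then echo accept; else echo reject; fi ;;
    esac
}
```

For example, clone_target_verdict none no yes false prints reject: the disk holds old LVM records, and overwrite was left at its default.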

NOTE: The drd clone command does not overwrite a target disk that is part of the root group. However, it does overwrite disks used for swap or raw data, because it does not detect this type of usage. For example, a raw disk in use by a database would be overwritten if it were given as the clone target.

2.6 Using drd clone to analyze disk size

A simple way to determine whether a disk is large enough for a DRD clone is to run drd clone in preview mode:

Example 2-5 drd clone preview example on HP-UX 11i v2 or 11i v3

# /opt/drd/bin/drd clone -p -v -t /dev/dsk/cxtxdx

Example 2-6 drd clone preview example on HP-UX 11i v3 (using agile DSF)

# /opt/drd/bin/drd clone -p -v -t /dev/disk/diskn

The preview operation includes a disk space analysis that shows whether the target disk is large enough. If you prefer to investigate disk sizes before previewing the clone, you can use the diskinfo(1M) command.

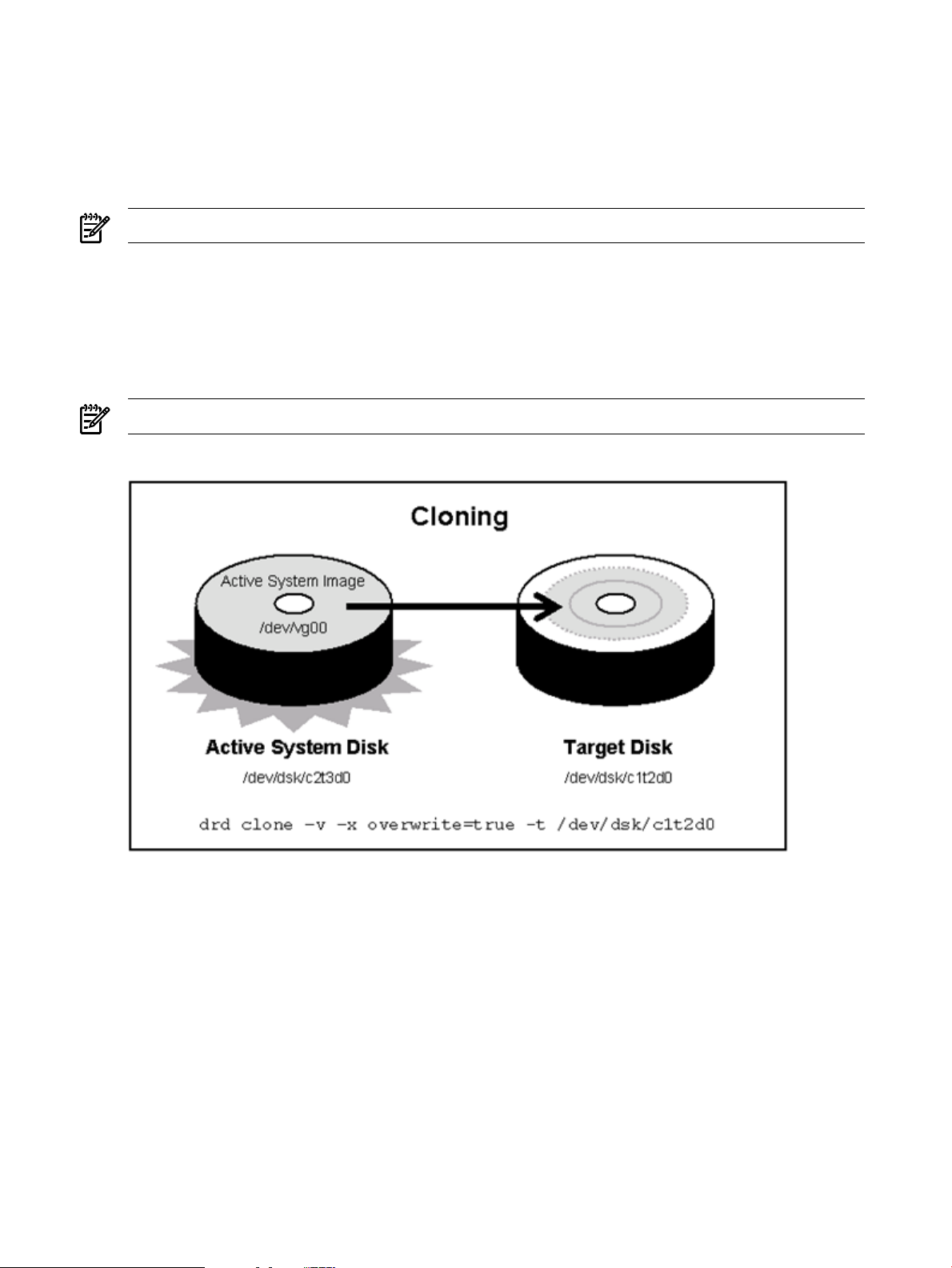

2.7 Creating the clone

After determining that sufficient disk space exists and that the target disk contains no data you

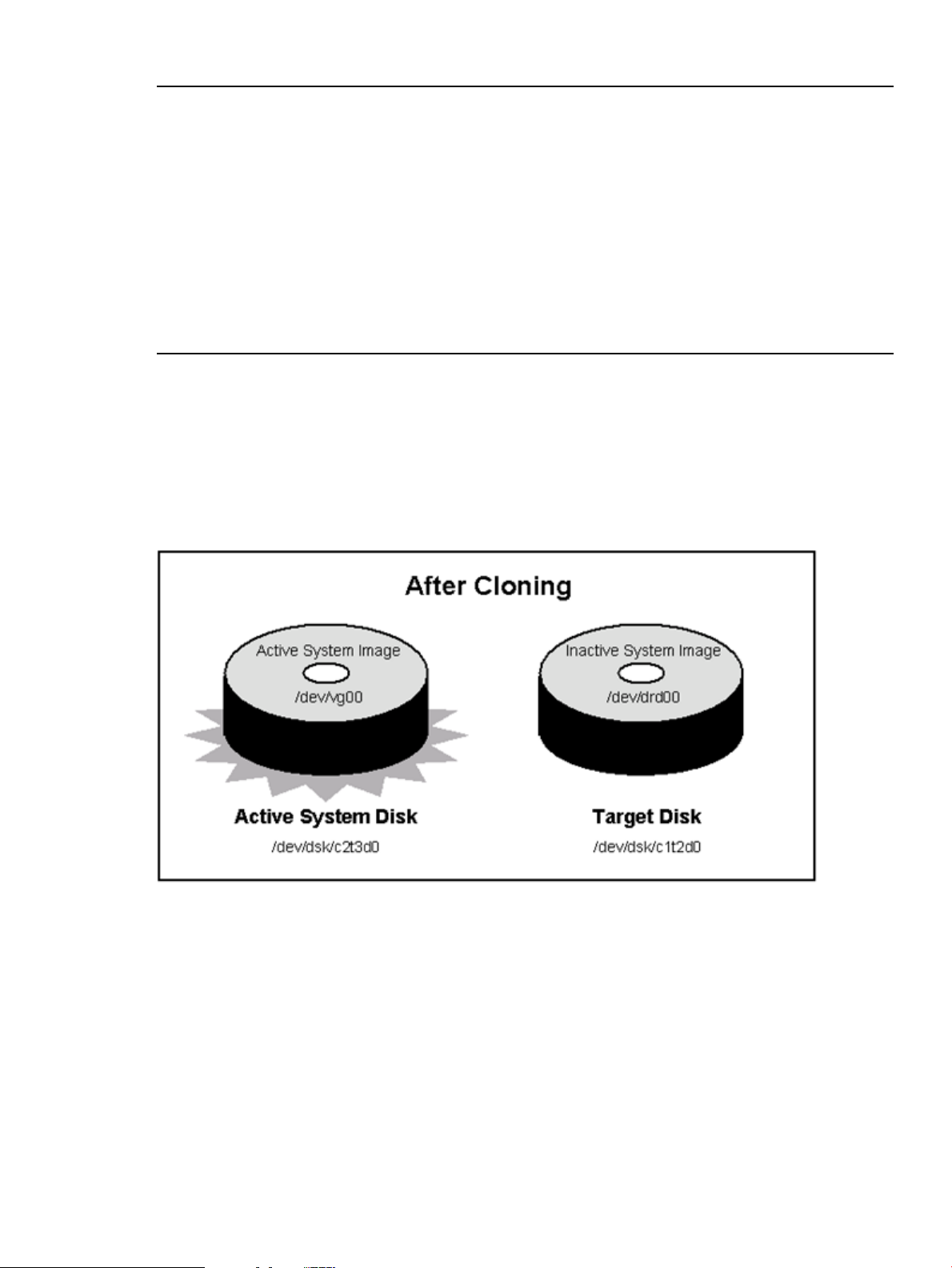

want to keep, you are ready to run the drd clone command. Figure 2-1 illustrates the content

of the active system disk and the clone target disk before cloning happens.

Figure 2-1 Preparing to Clone the Active System Image

Use the following command to clone the system image, substituting your target disk identifier for the one shown in the command:

# /opt/drd/bin/drd clone -v -x overwrite=true -t /dev/dsk/c1t2d0

On HP-UX 11i v3 systems, you can also specify the agile (persistent) device special file for the target disk, again substituting your own target disk identifier:

# /opt/drd/bin/drd clone -v -x overwrite=true -t /dev/disk/disk10

NOTE: For a full description of the drd clone command, see The drd clone command.

The -x overwrite extended option controls whether drd clone may overwrite data on the target disk. The -x overwrite=true option tells the command to overwrite any data on the disk. The -x overwrite=false option tells the command not to write the cloned image if the disk appears to contain LVM, VxVM, or boot records. The default value is false.
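The -x overwrite=true option removes the safety check on existing LVM, VxVM, and boot records. One cautious approach is therefore to run the preview with the same options first and stop if it does not exit cleanly. The minimal sketch below uses only the options shown in this chapter. It assumes, as the drd-clone(1M) manpage should confirm, that the preview accepts the same extended option. The function name and the DRD variable are hypothetical. Any nonzero exit status from the preview stops the wrapper, including the warning value 2. Setting DRD=echo prints the commands instead of running them. The wrapper cannot detect swap or raw-data disks, so confirm that the target is free before using it.

```shell
# Hypothetical wrapper (not part of DRD): preview the clone with the same
# options and run the real clone only if the preview exits 0.
# DRD defaults to the installed binary; set DRD=echo for a dry run.
DRD=${DRD:-/opt/drd/bin/drd}

clone_after_preview() {
    clone_target=$1
    "$DRD" clone -p -v -x overwrite=true -t "$clone_target" || return 1
    "$DRD" clone -v -x overwrite=true -t "$clone_target"
}
```

For example:

# clone_after_preview /dev/dsk/c1t2d0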

In this example, cloning creates an inactive system image on the target disk /dev/dsk/c1t2d0. Figure 2-2 shows the active system image being cloned to the target disk.

NOTE: The drd clone command does not write over a disk that is part of the root volume.

Figure 2-2 Cloning the Active System Image

The output you see as this command runs is similar to Example 2-7.

When you see the message Copying File Systems To New System Image, the active system image is being cloned. This step can take a long time, and no further messages appear until the file systems have been copied.


Example 2-7 The drd clone command output

======= 12/01/06 11:07:28 MST BEGIN Clone System Image (user=root) (jobid=drdtest2)

* Reading Current System Information

* Selecting System Image To Clone

* Selecting Target Disk

* Selecting Volume Manager For New System Image

* Analyzing For System Image Cloning

* Creating New File Systems

* Copying File Systems To New System Image

* Making New System Image Bootable

* Unmounting New System Image Clone

* System image: "sysimage_001" on disk "/dev/dsk/c1t2d0"

======= 12/01/06 11:38:19 MST END Clone System Image succeeded. (user=root) (jobid=drdtest2)

Figure 2-3 shows the two disks after cloning. Both disks contain the system image. The image on the target disk is the inactive system image.

The DRD clone operation has some impact on the booted system's I/O resources, particularly if the source disk is on the same SCSI chain as the target disk. The impact is similar to that of using Ignite-UX to create recovery images, which many system administrators find acceptable.

Figure 2-3 Disk Configurations After Cloning

After running drd clone, you have identical system images on the system disk and the target disk. The image on the system disk is the active system image. The image on the target disk is the inactive system image.

The drd clone command returns the following values:

0 Success

1 Error

2 Warning

For more details, you can examine messages written to the log file at /var/opt/drd/drd.log.
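Scripts that call drd clone can branch on these documented return values. The following is a minimal sketch. drd_rc_text is a hypothetical helper, and the target disk in the usage comment is an example only.

```sh
# Sketch: translate a drd clone return value into text, using the
# documented values 0 (success), 1 (error), and 2 (warning).
drd_rc_text() {
    case "$1" in
        0) echo "success" ;;
        1) echo "error" ;;
        2) echo "warning" ;;
        *) echo "unexpected return value $1" ;;
    esac
}

# Hypothetical usage (the target disk is an example only):
#   /opt/drd/bin/drd clone -x overwrite=false -t /dev/disk/disk10
#   rc=$?
#   echo "drd clone finished: $(drd_rc_text "$rc")"
#   [ "$rc" -ne 0 ] && echo "See /var/opt/drd/drd.log for details."
```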

Here is an example of creating a clone from an HP-UX 11i v3 system to a storage area network (SAN) disk. First, Example 2-8 displays the output of the following drd clone command:

# /opt/drd/bin/drd clone -t /dev/disk/disk14 -x overwrite=true


Example 2-8 The drd clone command output for SAN disk

======= 06/24/08 11:55:58 MDT BEGIN Clone System Image (user=root) (jobid=drdtest14)

* Reading Current System Information

* Selecting System Image To Clone

* Selecting Target Disk

* The disk "/dev/disk/disk14" contains data which will be overwritten.

* Selecting Volume Manager For New System Image

* Analyzing For System Image Cloning

* Creating New File Systems

* Copying File Systems To New System Image

* Making New System Image Bootable

* Unmounting New System Image Clone

======= 06/24/08 12:06:00 MDT END Clone System Image succeeded. (user=root) (jobid=drdtest14)

Next, the drd status command is executed to verify the clone disk and the original disk. Example 2-9 displays the output of the following drd status command:

# /opt/drd/bin/drd status

Example 2-9 The drd status command output for SAN disk

======= 06/24/08 12:09:46 MDT BEGIN Displaying DRD Clone Image Information (user=root) (jobid=drdtest14)

* Clone Disk: /dev/disk/disk14

* Clone EFI Partition: AUTO file present, Boot loader present, SYSINFO.TXT not present

* Clone Creation Date: 06/24/08 11:56:18 MDT

* Clone Mirror Disk: None

* Mirror EFI Partition: None

* Original Disk: /dev/disk/disk15

* Original EFI Partition: AUTO file present, Boot loader present, SYSINFO.TXT not present

* Booted Disk: Original Disk (/dev/disk/disk15)

* Activated Disk: Original Disk (/dev/disk/disk15)

======= 06/24/08 12:10:01 MDT END Displaying DRD Clone Image Information succeeded. (user=root) (jobid=drdtest14)

NOTE: The time needed to create the clone depends on the size of the root disk, regardless of whether the target is a SAN disk or an internal disk.

2.8 Adding or removing a disk

After creating a clone, you can remove it or the original system image from the system. For example, you may need to boot the image on different hardware (see “Rehosting and unrehosting systems”) or need the disk storage for use on another system.

After you remove an image from the system, DRD operations other than drd clone fail. In addition, if a VxVM image is removed from the system without updating the VxVM metadata, the drd clone command also fails. The inactive image's disk group is still present, which prevents it from being re-created, but the disk that belonged to it is missing, so DRD does not recognize it as a disk group in use by DRD.

To remove the disk group and enable future drd clone commands, determine the VxVM disk group of the inactive image as follows:

• If the booted disk group name does NOT begin with the “drd_” prefix, add the “drd_” prefix to the booted group name to obtain the inactive image disk group.

• If the booted disk group name DOES begin with “drd_”, remove the “drd_” prefix to obtain the inactive image disk group.

Then issue the command:

# vxdg deport <inactive image disk group>
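The naming rule above is mechanical, so it can be expressed as a small shell function. This is a sketch: inactive_dg is a hypothetical name, and rootdg in the usage comment is only an example disk group name.

```sh
# Sketch: derive the inactive image's VxVM disk group name from the
# booted root disk group name, following the "drd_" prefix rule.
inactive_dg() {
    case "$1" in
        drd_*) echo "${1#drd_}" ;;   # booted group starts with drd_: strip it
        *)     echo "drd_$1" ;;      # otherwise: add the drd_ prefix
    esac
}

# Hypothetical usage (rootdg is an example booted disk group name):
#   vxdg deport "$(inactive_dg rootdg)"    # deports drd_rootdg
```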


3 Updating and maintaining software on the clone

After cloning the active system image, you can use drd runcmd to run a limited set of commands and to apply patches to the inactive system image. This chapter describes this process.

For details of the drd runcmd command, including available options and extended options, see “The drd runcmd command.”

NOTE: You must be logged in as root to run any DRD command.

3.1 DRD-Safe commands and Packages

The drd runcmd operation runs a command on the inactive system image without making any changes to the booted system, the running kernel, or the process space. This enables a system administrator to change the inactive system image without disrupting the currently booted system.

NOTE: The drd runcmd command suppresses all reboots. The -x autoreboot option is ignored when a swinstall, swremove, or update-ux command is executed by drd runcmd.

Not all commands can safely be executed by the drd runcmd operation. For example, commands that start or stop daemons or change dynamic kernel tunables are disruptive to current processes, so drd runcmd must prevent them from running.

Restrictions on commands executed by drd runcmd are:

• When calling swinstall and update-ux, drd runcmd supports installation from directory depots on the booted system and on remote servers. Installing from serial depots or from depots on the inactive system image is not supported.

• drd runcmd can also run swlist, swverify, swremove, and swmodify against software installed on the inactive image. It cannot be used to list or modify the contents of any depot. To list or manage depots, run these commands directly, outside drd runcmd.

Commands that are not disruptive to the booted system and perform appropriate actions on the inactive system are known as DRD-safe. For this release of DRD, a short list of commands is recognized by the drd runcmd operation as DRD-safe. An attempt to use DRD to run commands that are not DRD-safe terminates with an ERROR return code without executing the command.

Several Software Distributor commands are DRD-safe starting at a sufficient maintenance level of SW-DIST, and the DRD product has a package co-requisite on a minimum release of SW-DIST. (For details of the DRD product dependencies, see the following webpage: https://h20392.www2.hp.com/portal/swdepot/displayInstallInfo.do?productNumber=DynRootDisk#download.)

Similarly, drd runcmd update-ux includes a run-time check for the revision of SWManager (SWM), which provides DRD-safe tools used during the update. This functionality supports updates on the clone from an older version of HP-UX 11i v3 to HP-UX 11i v3 update 4 or later. (For details of the DRD product dependencies, see the following webpage: https://h20392.www2.hp.com/portal/swdepot/displayInstallInfo.do?productNumber=DynRootDisk#download.)

The DRD-safe commands are the following:

• swinstall

• swremove

• swlist

• swmodify

• swverify

• swjob


• kctune

• update-ux

• view

• kcmodule

• kconfig

• mk_kernel

• swm job

See the Software Distributor Administrator's Guide, located at http://www.hp.com/go/sd-docs, as well as swinstall(1M), swremove(1M), swlist(1M), swmodify(1M), swverify(1M), swjob(1M), kctune(1M), update-ux(1M), view(1M), kcmodule(1M), kconfig(1M), mk_kernel(1M), and swm(1M) for additional information about these commands.

The DRD-safe commands may be specified by their base names, such as swinstall or swremove, or by their full paths, such as /usr/sbin/swinstall or /usr/sbin/swremove. However, paths that are symlinks to the DRD-safe commands are not supported.
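A wrapper script might check a command name against the DRD-safe list before passing it to drd runcmd, so that it fails early with a clear message. This is only a sketch. drd runcmd performs its own DRD-safe check, and this helper (is_drd_safe_cmd, a hypothetical name) compares base names only, so it cannot detect a symlinked path, which is not supported.

```sh
# Sketch: succeed if the command (base name or full path, plus the first
# argument for "swm job") is on the DRD-safe list in this section.
# Only names are compared; symlinked paths are NOT detected here.
is_drd_safe_cmd() {
    case "${1##*/}" in
        swinstall|swremove|swlist|swmodify|swverify|swjob) return 0 ;;
        kctune|update-ux|view|kcmodule|kconfig|mk_kernel)  return 0 ;;
        swm) [ "$2" = "job" ] ;;   # only "swm job" is listed
        *)   return 1 ;;
    esac
}

# Hypothetical usage in a wrapper script:
#   if is_drd_safe_cmd "$@"; then
#       /opt/drd/bin/drd runcmd "$@"
#   else
#       echo "not a DRD-safe command: $1" >&2
#   fi
```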

If the inactive system image has not been mounted, the drd runcmd operation mounts it, executes the DRD-safe command, and then unmounts it. If the inactive system image is already mounted, the drd runcmd operation leaves it mounted.

When swinstall, swremove, and update-ux are used to manage software packages, SD control scripts included in the packages, such as pre-install, post-remove, and update-prep scripts, are executed. For management of such packages to be DRD-safe, the control scripts must not take any action that will affect the booted system. (The configure scripts are not executed.) A package satisfying this restriction is also known as DRD-safe. The swlist, swmodify, and swverify commands (without the -F or -x fix=true options) may be invoked by drd runcmd for arbitrary packages. The swlist and swmodify commands do not call control scripts, and verify scripts do not change the system.

When you execute drd runcmd kctune, the kctune command displays the prompt:

==> Update the automatic 'backup' configuration first?

If you type y, the following warning is displayed:

WARNING: The backup behavior 'yes' is not supported in alternate root environments. The behavior 'once' will be used instead.

This message can be ignored.

3.2 Updating and managing patches with drd runcmd

3.2.1 DRD-Safe patches and the drd_unsafe_patch_list file

An HP program was initiated in November 2004 to make all patches DRD-safe. Although most patches delivered after November 2004 are DRD-safe, a few are not. Patches that are not DRD-safe are listed in the file /etc/opt/drd/drd_unsafe_patch_list, which is delivered as a volatile file with the DRD product. The copy of this file on the inactive system image is used to filter patches that are selected for installation with drd runcmd.

IMPORTANT: When invoked by the drd runcmd operation, the swinstall, swremove, and update-ux commands reject any attempt to install or remove a patch included in the drd_unsafe_patch_list file on the inactive system image.

In the rare event that a new patch is determined not to be DRD-safe, a new version of the drd_unsafe_patch_list file is made available on HP’s IT Resource Center website.

To determine whether you need to update the drd_unsafe_patch_list files, see the “Update the drd_unsafe_patch_list File” procedure on the DRD Downloads and Patches page at https://h20392.www2.hp.com/portal/swdepot/displayInstallInfo.do?productNumber=DynRootDisk#download.

During maintenance planning, it is helpful for system administrators to determine which patches, if any, are not DRD-safe and to plan how to handle them. See the DRD-Safe Concepts for HP-UX 11i v2 and Later white paper, located at http://www.hp.com/go/drd-docs, for information about identifying such patches and for alternative ways to manage them without using the drd runcmd operation.

3.2.2 Patches with special installation instructions

Patches may include Special Installation Instructions, or SIIs, which contain specific tasks for the user to perform when installing certain patches. If you install patches with SIIs on an inactive DRD system image, ensure the following:

• You must not stop/kill or restart any processes or daemons. Because the patch is being installed on an inactive DRD system image, these actions are not needed, and in fact could leave the running system in an undesirable state. When the inactive system image is booted, all processes are stopped and restarted.

• Only make kernel changes by executing: drd runcmd kctune

3.3 Updating and managing products with drd runcmd

For non-patch products, a fileset-level packaging attribute, is_drd_safe, has been introduced. The attribute defaults to false, so any package created before the attribute was introduced is rejected by the swinstall, swremove, and update-ux commands when they are invoked by the drd runcmd operation. Because the DRD product was not available at the initial release of HP-UX 11i v2, relatively few non-patch products for 11i v2 have the is_drd_safe attribute set to true. For HP-UX 11i v3, however, most products have the is_drd_safe attribute set to true.

To determine whether a non-patch product can be installed or removed using drd runcmd, execute the command:

# /usr/sbin/swlist -l fileset -a is_drd_safe product_name

and check that all filesets have is_drd_safe set to true.
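Checking every fileset by eye can be tedious for large products. The filter below is a sketch. It assumes that swlist marks its header and product lines with a leading # and ends each fileset line with the attribute value, so verify this against the output on your system. The function name all_filesets_drd_safe is hypothetical.

```sh
# Sketch: read "swlist -l fileset -a is_drd_safe" output on stdin and
# succeed only if every fileset line ends in "true".
# Assumes header and product lines start with "#" and that the attribute
# value is the last field of each fileset line.
all_filesets_drd_safe() {
    awk '
        /^[ \t]*#/ || NF == 0 { next }            # skip headers and blanks
        {
            seen = 1
            if ($NF != "true") { print "not DRD-safe: " $1; bad = 1 }
        }
        END { exit (bad || !seen) }               # fail if none were seen
    '
}

# Usage (product_name as in the command above):
#   /usr/sbin/swlist -l fileset -a is_drd_safe product_name | all_filesets_drd_safe
```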

3.4 Special considerations for firmware patches

A firmware patch changes the firmware the next time the patched image is booted. Because the firmware is shared by both the active and inactive system images, there is no way to keep an unchanged copy of the firmware as a fail-safe mechanism. As a result, DRD cannot provide its benefit as a Hot Recovery mechanism for firmware patches. Firmware patches set the fileset attribute is_drd_safe to false and supply a checkinstall script that prevents installation in a DRD session.

IMPORTANT: System administrators need to be aware that any firmware change cannot be reversed by booting a different system image.

3.5 Restrictions on update-ux and sw* commands invoked by drd runcmd

Options on the Software Distributor commands that can be used with drd runcmd are limited by the need to ensure that operations are DRD-safe. The restrictions include the following:

• The -F and -x fix=true options are not supported for drd runcmd swverify operations. Use of these options could result in changes to the booted system.

• Double quotation marks and wildcard characters (*, ?) on the command line must be escaped with a backslash character (\), as in the following example:

drd runcmd swinstall -s depot_server:/var/opt/patches \*


• Files referenced in the command line must both:

— Reside in the inactive system image

— Be referenced in the DRD-safe command by the path relative to the mount point of the

inactive system image

This applies to files referenced as arguments of the -C, -f, -S, -X, and -x logfile options of an sw command run by drd runcmd, and to the -f option of the update-ux command.

• To use update-ux with a media source or local depot, SWM version A.3.5.1 or later must be present on the clone.

See drd-runcmd(1M) for further information about restrictions on Software Distributor commands invoked by drd runcmd.
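A file created through the mounted image has a path on the booted system that includes the mount point, while drd runcmd needs the path relative to that mount point. The following is a minimal sketch of that conversion. It assumes the default mount point /var/opt/drd/mnts/sysimage_001 (pass /var/opt/drd/mnts/sysimage_000 when the original image is the inactive one). image_path is a hypothetical helper.

```sh
# Sketch: turn a path under the mounted inactive image into the path that
# drd runcmd expects (relative to the image's root mount point).
# $2 defaults to the clone's mount point; pass
# /var/opt/drd/mnts/sysimage_000 if the original image is the inactive one.
image_path() {
    mnt=${2:-/var/opt/drd/mnts/sysimage_001}
    case "$1" in
        "$mnt"/*) printf '%s\n' "${1#"$mnt"}" ;;
        *) echo "not under $mnt: $1" >&2; return 1 ;;
    esac
}

# Hypothetical usage:
#   /opt/drd/bin/drd runcmd swinstall -s patch_server:/var/opt/patch_depot \
#       -f "$(image_path /var/opt/drd/mnts/sysimage_001/var/opt/drd/my_patch_list)"
```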

3.6 Viewing logs

When a drd runcmd operation executes a DRD-safe command, the DRD command runs on the booted system, and the DRD log, /var/opt/drd/drd.log, is created on the booted system. However, the DRD-safe command itself runs on the inactive system image, and its logs (if any) reside on the inactive system image.

Logs can be viewed on the inactive system image by using the drd runcmd view command.

For example, to view the swagent log on the inactive image, execute the following command:

# /opt/drd/bin/drd runcmd view /var/adm/sw/swagent.log

For more information on viewing log files and on maintaining the integrity of system logs, see the Dynamic Root Disk: Quick Start & Best Practices white paper, located at http://www.hp.com/go/drd-docs.


4 Accessing the inactive system image

This chapter describes how to mount and unmount the inactive system image.

IMPORTANT: If you choose to mount the inactive DRD system image, exercise caution to ensure that any actions taken do not impact the running system. You must:

• Not stop/kill or restart any processes or daemons.

• Only make kernel changes by executing: drd runcmd kctune.

For example, to change the value of the maxfiles_lim kernel tunable on the inactive system image to 8192, execute the command:

# /opt/drd/bin/drd runcmd kctune maxfiles_lim=8192

Using drd runcmd to change the value of maxfiles_lim on the inactive system image ensures that its value on the booted system is unchanged.

NOTE: Accessing the inactive system image is not always required; however, you may need to access the inactive system image before activating it.

You can mount DRD-cloned file systems to access them and:

• Check the logs of commands executed by drd runcmd.

• Create files on the inactive system image. In particular, you can create files that will be referenced by swinstall commands executed by the drd runcmd command. (For an example of this type of file creation, see Example 4-4.)

• Verify the integrity of certain files on the inactive system image. If a file is known to have changed during the drd clone operation, you might want to compute a checksum of the copy on the booted system and of the copy on the inactive system image to validate the cloned copy.

NOTE: You must be logged in as root to run any DRD command.

4.1 Mounting the inactive system image

For details of the drd mount command, including available options and extended options, see “The drd mount command.”

To mount the inactive system image, execute the drd mount command:

# /opt/drd/bin/drd mount

The command locates the inactive system image and mounts it.

The output of this command is similar to Example 4-1.


Example 4-1 The drd mount command output

# /opt/drd/bin/drd mount

======= 12/08/06 22:19:31 MST BEGIN Mount Inactive System Image (user=root) (jobid=dlkma1)

* Reading Current System Information

* Locating Inactive System Image

* Mounting Inactive System Image

======= 12/08/06 22:19:52 MST END Mount Inactive System Image succeeded. (user=root) (jobid=dlkma1)

The drd mount command automatically chooses the mount point for the inactive system image. If the image was created by the drd clone command, the mount point is /var/opt/drd/mnts/sysimage_001. If the clone has been booted, drd mount mounts the original system at the mount point /var/opt/drd/mnts/sysimage_000. To see all mounted file systems, including those in the active and inactive system images, execute the following command:

# /usr/bin/bdf

If the drd mount command has been executed, the output of this command should look similar to Example 4-2:

Example 4-2 The bdf command output

# /usr/bin/bdf

file system kbytes used avail %used Mounted on

/dev/vg00/lvol3 1048576 320456 722432 31% /

/dev/vg00/lvol1 505392 43560 411288 10% /stand

/dev/vg00/lvol8 3395584 797064 2580088 24% /var

/dev/vg00/lvol7 4636672 1990752 2625264 43% /usr

/dev/vg00/lvol4 204800 8656 194680 4% /tmp

/dev/vg00/lvol6 3067904 1961048 1098264 64% /opt

/dev/vg00/lvol5 262144 9320 250912 4% /home

/dev/drd00/lvol3 1048576 320504 722392 31% /var/opt/drd/mnts/sysimage_001

/dev/drd00/lvol1 505392 43560 411288 10% /var/opt/drd/mnts/sysimage_001/stand

/dev/drd00/lvol4 204800 8592 194680 4% /var/opt/drd/mnts/sysimage_001/tmp

/dev/drd00/lvol5 262144 9320 250912 4% /var/opt/drd/mnts/sysimage_001/home

/dev/drd00/lvol6 3067904 1962912 1096416 64% /var/opt/drd/mnts/sysimage_001/opt

/dev/drd00/lvol7 4636672 1991336 2624680 43% /var/opt/drd/mnts/sysimage_001/usr

/dev/drd00/lvol8 3395584 788256 2586968 23% /var/opt/drd/mnts/sysimage_001/var

In this output, the file systems identified as /dev/vg00/* are the active system image file systems. Those identified as /dev/drd00/* are the inactive system image file systems.
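To pick the inactive image's file systems out of a long bdf listing, you can filter on the mount point. The following is a sketch. It assumes that each bdf entry occupies a single line (bdf can wrap an entry with a long device name onto a second line, which this filter does not handle). inactive_fs is a hypothetical name.

```sh
# Sketch: print device and mount point of each inactive-image file system
# found in bdf output on stdin (entries mounted under /var/opt/drd/mnts/).
# Assumes one line per entry; wrapped bdf entries are not handled.
inactive_fs() {
    awk '$NF ~ /^\/var\/opt\/drd\/mnts\// { print $1, $NF }'
}

# Usage:
#   /usr/bin/bdf | inactive_fs
```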

4.2 Performing administrative tasks on the inactive system image

The following examples show some tasks you can perform on the inactive system image.


TIP: HP recommends that a clone be deployed shortly after creating and (optionally) modifying it.

Example 4-3 Checking a warning message

You verify software on the inactive system image with drd runcmd swverify and see a warning message.

Task: Find additional information about the message.

To see detailed information about the warning message supplied by the swagent log, execute the following command:

# /opt/drd/bin/drd runcmd view /var/adm/sw/swagent.log

Example 4-4 Creating a patch install file

Task: Create a file containing a list of patches to be applied to the inactive system image. You want to use the file as the argument of a -f option in a swinstall command run by drd runcmd. Follow this procedure:

1. Mount the inactive system image:

# /opt/drd/bin/drd mount

2. Enter the patches into a file such as /var/opt/drd/mnts/sysimage_001/var/opt/drd/my_patch_list with the following commands:

a. # /usr/bin/echo "PHCO_02201" > \
/var/opt/drd/mnts/sysimage_001/var/opt/drd/my_patch_list

b. # /usr/bin/echo "PHCO_12134" >> \
/var/opt/drd/mnts/sysimage_001/var/opt/drd/my_patch_list

c. # /usr/bin/echo "PHCO_56178" >> \
/var/opt/drd/mnts/sysimage_001/var/opt/drd/my_patch_list

NOTE: If the inactive system image is the original system image, and not the clone, the root file system mount point is /var/opt/drd/mnts/sysimage_000.

3. Apply the patches using drd runcmd, identifying the file by its path relative to the mount point of the inactive system image root file system:

# /opt/drd/bin/drd runcmd swinstall -s patch_server:/var/opt/patch_depot \
-f /var/opt/drd/my_patch_list

NOTE: Because the inactive system image was mounted when drd runcmd was executed, it is still mounted after drd runcmd completes. You can unmount it with the following command:

# /opt/drd/bin/drd umount
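Steps 2a through 2c can also be done with a single command. The following sketch assumes that the inactive image is already mounted, and write_patch_list is a hypothetical helper name. Like the > in step 2a, it overwrites the file.

```sh
# Sketch: write one patch ID per line into a list file on the mounted
# inactive image, replacing any previous contents.
write_patch_list() {
    list=$1
    shift
    if [ $# -eq 0 ]; then
        echo "usage: write_patch_list FILE PATCH_ID..." >&2
        return 1
    fi
    # printf reuses its format for each argument: one patch ID per line.
    printf '%s\n' "$@" > "$list"
}

# Hypothetical usage (same file and patches as in step 2):
#   write_patch_list /var/opt/drd/mnts/sysimage_001/var/opt/drd/my_patch_list \
#       PHCO_02201 PHCO_12134 PHCO_56178
```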


Example 4-5 Editing symlinked files

Task: You changed the value of NUM_BK in /opt/VRTS/bin/vxconfigbackup from 5 to 10 by editing the file. You want the change applied to the clone as well.

Execute the following commands:

1. Mount the inactive system image:

# /opt/drd/bin/drd mount

2. Compare vxconfigbackup with the clone copy:

# /usr/bin/diff /opt/VRTS/bin/vxconfigbackup \
/var/opt/drd/mnts/sysimage_001/opt/VRTS/bin/vxconfigbackup

Surprisingly, the files are equal! What happened?

A long listing shows that the files are symlinks:

# /usr/bin/ll /opt/VRTS/bin/vxconfigbackup \
/var/opt/drd/mnts/sysimage_001/opt/VRTS/bin/vxconfigbackup

The listing shows:

lrwxr-xr-x 1 bin bin 32 Apr 3 17:34 /opt/VRTS/bin/vxconfigbackup -> /usr/lib/vxvm/bin/vxconfigbackup

lrwxr-xr-x 1 bin bin 32 Nov 16 12:45 /var/opt/drd/mnts/sysimage_001/opt/VRTS/bin/vxconfigbackup -> /usr/lib/vxvm/bin/vxconfigbackup

When the clone is booted, the target of the symlink on the clone resides on the clone. However, when the clone is mounted under the booted system, the target of the symlink resides on the booted system.

To change the data on the clone, edit the file that will be the target of the symlink when the clone is booted:

# /usr/bin/vi /var/opt/drd/mnts/sysimage_001/usr/lib/vxvm/bin/vxconfigbackup

and change the value of NUM_BK to 10.

CAUTION: Editing a path on the clone that is an absolute symlink changes the file on the booted system!
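A script can apply the same reasoning: read the symlink, and if its target is absolute, re-root the target under the clone's mount point before editing. The following is a sketch under stated assumptions. It follows one level of symlink only, returns relative links unchanged, and parses the "->" part of an ls -ld listing. clone_edit_path is a hypothetical name.

```sh
# Sketch: print the file an edit should target for a path on the mounted
# clone. An absolute symlink target is re-rooted under the mount point;
# any other path (including a relative symlink) is printed unchanged.
# Follows one level of symlink only.
clone_edit_path() {
    path=$1
    mnt=${2:-/var/opt/drd/mnts/sysimage_001}
    if [ -h "$path" ]; then
        target=$(ls -ld "$path" | sed 's/.* -> //')
        case "$target" in
            /*) echo "$mnt$target"; return 0 ;;
        esac
    fi
    echo "$path"
}

# Hypothetical usage:
#   /usr/bin/vi "$(clone_edit_path \
#       /var/opt/drd/mnts/sysimage_001/opt/VRTS/bin/vxconfigbackup)"
```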

4.3 Unmounting the inactive system image

For details of the drd umount command, including available options and extended options, see “The drd umount command.”

To unmount the inactive system image, the command is:

# /opt/drd/bin/drd umount

The output is similar to Example 4-6.

Example 4-6 The drd umount command output

======= 12/08/06 22:09:22 MST BEGIN Unmount Inactive System Image (user=root) (jobid=dlkma1)

* Reading Current System Information

* Locating Inactive System Image

* Unmounting Inactive System Image

======= 12/08/06 22:09:48 MST END Unmount Inactive System Image succeeded. (user=root) (jobid=dlkma1)

The drd umount command:


• Unmounts the file systems in the inactive system image.

• Deactivates the inactive system image's volume group.

• For an LVM-based system, exports the volume group.

If you run the bdf command after the drd umount command, the inactive system image no longer appears in the output.


5 Synchronizing the inactive clone image with the booted system

5.1 Overview

The drd sync command was introduced in release B.11.xx.A.3.5 of Dynamic Root Disk (DRD) to propagate root volume group file system changes from the booted original system to the inactive clone image.

Example 5-1 drd sync scenario

Here is a sample scenario that can be improved by using the drd sync command:

1. A system administrator creates a DRD clone on a Thursday.

2. The administrator applies a collection of software changes to the clone on Friday using the

drd runcmd command.

3. On Friday, several log files are updated on the booted system.

4. On Saturday, the clone is booted.

Prior to DRD release B.11.xx.A.3.5, the system administrator needed to take manual actions to ensure that changes made to log files on the booted system (on Friday) were copied to the clone before or after it was booted. This was particularly important for logs that were audited for security purposes.

With the introduction of the drd sync command, the administrator can run drd sync before booting the clone (preferably as part of a shutdown script) to ensure that changes made to the booted system are propagated to the clone.

The drd sync command does not propagate changes to software installed on the clone. In most cases, software installed on the clone is intentionally different from software installed on the booted system. For example, patches might have been applied to the clone, new revisions of software products installed, or an entire new release of HP-UX installed using drd runcmd. More information on how drd sync handles software changes is provided in “The drd sync command” (page 28).

IMPORTANT: HP recommends that drd sync not be used for clones that have diverged greatly from the booted system over a period of time. In some cases, it is more appropriate to re-create the clone and apply the software changes, or install newer software changes. For ways to determine how much a clone differs from the original booted system, see “Using the drd sync preview to determine divergence of the clone from the booted system” (page 30).

5.2 Quick start—basic synchronization

To propagate the maximum number of file changes from the booted system to the inactive clone image, create a shutdown script on the booted system that does the following:

• Mount the clone:

# /opt/drd/bin/drd mount

• Execute the drd sync command:

# /opt/drd/bin/drd sync

• Remove the copy of the script on the clone:

# /usr/bin/rm /var/opt/drd/mnts/sysimage_001/<rc_location>

• Unmount the clone:

# /opt/drd/bin/drd umount
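The four steps above might be combined into a function called from your shutdown script, as in the following sketch. DRD and CLONE_ROOT default to the paths used in this chapter. sync_clone_at_shutdown is a hypothetical name, and its argument stands for the script's <rc_location>, given relative to /.

```sh
# Sketch of the quick-start steps as a function for a shutdown script.
# DRD and CLONE_ROOT default to the paths used in this chapter.
DRD=${DRD:-/opt/drd/bin/drd}
CLONE_ROOT=${CLONE_ROOT:-/var/opt/drd/mnts/sysimage_001}

sync_clone_at_shutdown() {
    rc_copy=$1                     # <rc_location> of this script, relative to /
    [ -n "$rc_copy" ] || return 1
    # Do nothing further if the clone cannot be mounted.
    "$DRD" mount || return 1
    "$DRD" sync
    sync_status=$?
    # Remove the copy of this script on the clone.
    rm -f "$CLONE_ROOT/$rc_copy"
    "$DRD" umount
    return "$sync_status"
}
```

In this sketch the clone is unmounted even if drd sync fails, and the drd sync exit status is returned so that the caller can log it.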


For a sample shutdown script, see “drd sync system shutdown script” (page 31).

The following sections describe in more detail the comparison between the booted system and the clone (which the drd sync preview option reports), the preparatory actions you can use to control precisely which files are synchronized, and the considerations for running drd sync more than once.

5.3 The drd sync command

The drd sync command:

• Determines the list of files in the booted volume group that are not excluded by the -x exclude_list option

• Trims the list based on criteria described below

• Copies the files to the clone, preserving ownership and permissions

When the drd sync preview (-p) option is used, the file-copy step is suppressed. In either case, the list of files to be copied is produced.

5.3.1 Determining the list of files in the booted volume group

The drd sync command finds each file in the root LVM volume group (or VxVM disk group) on the booted system that is not excluded by the -x exclude_list option, to provide the initial list of files to be synchronized.

Note that files that have been deleted from the booted root volume group since the clone was created are not identified by this process.

5.3.2 Trimming the list of files to be synchronized

The list of files produced above is reduced based on the following criteria:

1. Locations that are not synchronized

• /var/adm/sw/*: Because the appropriate mechanism for managing software changes on the clone is drd runcmd, the directory tree rooted at /var/adm/sw (which contains the Software Distributor Installed Products Database and associated log files) is not copied by drd sync. Instead, files in this location are created and modified by Software Distributor commands (such as swinstall and swremove) executed through the drd runcmd command.

• /tmp/*, /var/tmp/*, /var/opt/drd/tmp/*: These locations contain transient files, so changes to files in these locations are not propagated to the clone.

• /stand/*: Changes to the HP-UX kernel should be applied by using drd runcmd with either a Software Distributor command or the kctune, kcmodule, kconfig, or mk_kernel commands, so changes in this location are not propagated by drd sync.

• /etc/lvmconf/*, /etc/lvmtab*, /etc/lvmpvg*, /etc/vx/*, and /etc/vxvmconf/*: These locations contain information specific to the root LVM volume group (or VxVM disk group) on the booted system and should not be propagated to the clone's system image.

• /etc/fstab*: This location contains information specific to the VxVM disk group mount point for the clone's system image.

• /dev/<clone_group>/*: To prevent errors in the drd mount command, the /dev/<clone_group> directory is not copied to the clone.

The collection of files that are not synchronized, because they reside in locations that are not synchronized, is written to the /var/opt/drd/sync/filtered_out_by_non_synced_location_filter_sync_phase file, which is refreshed each time drd sync is run, even if the command is run with the -p preview option.

2. Files that have changed on the clone

A file residing on the clone might have been changed by a drd runcmd operation, and it may have been updated on the booted system as well. This can occur even if the file is not listed in the Software Distributor Installed Products Database (that is, in the output of swlist -l file). For example, the installation of a product can add a new user to /etc/passwd.

If a file has been updated on the booted system and also updated on the clone by a mechanism other than a previous drd sync, drd sync does not copy the file. This avoids overwriting any changes made by installation (or removal) of software on the clone.

Because administrators might be interested in identifying changed files on the clone that will not be synchronized, the list of such files is written to the /var/opt/drd/filtered_out_by_target_changed_filter_sync_phase file, even if the drd sync command is run with the -p preview option. For any file listed in /var/opt/drd/filtered_out_by_target_changed_filter_sync_phase, the administrator can use a command such as diff to compare the versions of the file on the booted disk and the clone. If the administrator determines that the file should be copied to the clone, the copy on the clone can be erased and the drd sync command executed again.

3. Nonvolatile files in the Software Distributor Installed Products Database (IPD)

Most files delivered in software packages should not be changed by a system administrator. To emphasize this, such files have the attribute is_volatile set to false. Any change to such a file results in an error when the swverify command is run (on the booted system if the file is changed there, or through drd runcmd if the file is changed on the clone).

To keep files delivered by Software Distributor consistent with the information recorded about them in the Software Distributor Installed Products Database (IPD), drd sync does not propagate changes to nonvolatile files in the IPD. (Nonvolatile files are those displayed in the output of the command /usr/sbin/swlist -l file -a is_volatile | grep false.)

The list of files on the booted system that are not propagated by drd sync because they are nonvolatile files in the IPD is written to /var/opt/drd/filtered_out_by_nonvolatile_filter_sync_phase, even if the drd sync command is run with the -p preview option.

4. Volatile files in the Software Distributor Installed Products Database (IPD)

Files delivered by Software Distributor with the file attribute is_volatile set to true may be changed by the system administrator. In many cases, they must be changed. For example, the /etc/rc.config.d/netconf file must be customized on each system to include its network configuration. Typically, such a customization applies to both the booted system and the clone image, so such changes are ordinarily propagated to the clone by the drd sync command.

However, if the clone has been updated to a new release of the operating system (or to a release of a particular software package that changes the format of the file), propagating the changes may be inappropriate. To decide whether to propagate changes to a volatile file, DRD compares the file's configuration template (delivered to a location containing a directory named newconfig) on the booted system with the corresponding template on the clone. If the templates are the same, the change is propagated; otherwise, it is not.

Volatile files that cannot be copied to the clone because their templates differ are listed in the /var/opt/drd/filtered_out_by_volatile_filter_sync_phase file, even if the drd sync command is run with the -p preview option.
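For files listed in filtered_out_by_target_changed_filter_sync_phase (criterion 2 above), the diff comparison can be scripted. The following is a minimal sketch, not part of DRD, assuming that the list file holds one absolute path per line and that the clone has been mounted with drd mount at /var/opt/drd/mnts/sysimage_001 (the mount point used by the script in "drd sync system shutdown script"). The function name compare_clone_changes is hypothetical.

```sh
# compare_clone_changes LIST MOUNT_PT   (hypothetical helper, not part of DRD)
#   LIST     - a DRD list file, assumed to hold one absolute path per line
#   MOUNT_PT - directory where "drd mount" mounted the clone
# Prints SAME, DIFFERS, or MISSING for each listed path.
compare_clone_changes() {
    list=$1
    mnt=$2
    # "|| [ -n ... ]" also handles a last line without a trailing newline
    while IFS= read -r path || [ -n "$path" ]; do
        [ -n "$path" ] || continue              # skip blank lines
        clone_copy="${mnt}${path}"              # same path, under the clone
        if [ ! -f "$path" ] || [ ! -f "$clone_copy" ]; then
            echo "MISSING  $path"
        elif cmp -s "$path" "$clone_copy"; then
            echo "SAME     $path"
        else
            echo "DIFFERS  $path"               # inspect with: diff "$path" "$clone_copy"
        fi
    done < "$list"
}

# Example, after /opt/drd/bin/drd mount:
#   compare_clone_changes \
#       /var/opt/drd/filtered_out_by_target_changed_filter_sync_phase \
#       /var/opt/drd/mnts/sysimage_001
```

Entries marked DIFFERS are the ones worth examining with diff before deciding whether to erase the clone's copy and rerun drd sync.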

After criteria 1 through 4 (above) are applied, the list of files to be copied to the inactive clone during a drd sync operation is written to /var/opt/drd/files_to_be_copied_by_drd_sync, even if the drd sync command is run with the -p preview option.
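When a particular file was not synchronized, the list files named above show which criterion excluded it. The following is a minimal sketch, assuming one absolute path per line in each list file. The directory is passed as an argument because this guide gives both /var/opt/drd and /var/opt/drd/sync as the location of these files, so confirm the location on your system. The function name which_sync_list is hypothetical.

```sh
# which_sync_list DIR FILE   (hypothetical helper, not part of DRD)
#   DIR  - directory holding the drd sync list files
#   FILE - absolute path of the file you are asking about
# Prints the name of each list in DIR that contains FILE as a whole line,
# or "not listed" if none does.
which_sync_list() {
    dir=$1
    target=$2
    found=0
    for f in "$dir"/filtered_out_by_*_sync_phase \
             "$dir"/files_to_be_copied_by_drd_sync; do
        [ -f "$f" ] || continue               # unmatched glob or absent list
        if grep -qxF -- "$target" "$f"; then
            basename "$f"
            found=1
        fi
    done
    [ "$found" -eq 1 ] || echo "not listed"
}

# Example, after /opt/drd/bin/drd sync -p:
#   which_sync_list /var/opt/drd/sync /etc/passwd
```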

5.3.3 Copying the files to the inactive clone image

The drd sync command uses the /usr/bin/pax command to propagate changes from the booted original system to the inactive clone.

IMPORTANT: The original system must be booted when drd sync is run, and changes are always propagated from the original system to the clone.

The following limitations currently apply to the actual propagation of changes:

• If a new hard link is created to a file that existed when the clone was created, the drd sync command does not recognize that the file should be created as a hard link on the inactive clone image; instead, it creates a new copy of the file under the new (hard-linked) name. In rare cases, this could result in an “insufficient space” error on the clone during synchronization.

• If the permissions or ownership of a file on the original booted system are changed, the modification time of the file is not updated, so this change by itself does not cause the file to be copied to the clone. However, the new permissions or ownership are propagated to the clone when the file is copied.

Copying a file to the inactive clone image does not modify any file system access control lists (ACLs) on the clone.
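Because a newly created hard link is copied as a separate file, it can help to review which files on the booted system already have more than one name before running drd sync. The following sketch (the function name list_multilinked_files is hypothetical) uses find to list regular files with a link count greater than 1 within a single file system, grouped by inode number. It cannot tell which links were created after the clone; it only lists candidates for review.

```sh
# list_multilinked_files DIR   (hypothetical helper, not part of DRD)
# Lists regular files under DIR, staying on DIR's file system (-xdev), whose
# link count is greater than 1. Output is "inode path", sorted by inode so
# that names of the same file appear together.
list_multilinked_files() {
    find "$1" -xdev -type f -links +1 -exec ls -i {} + | sort -n
}

# Example:
#   list_multilinked_files /etc
```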

5.4 Using the drd sync preview to determine divergence of the clone from the booted system

If many changes were made to the booted system after the clone was created, it can be preferable to run drd clone rather than use drd sync to update the inactive clone. To determine the extent of the changes to the booted system, run drd sync with the -p preview option:

# /opt/drd/bin/drd sync -p

Next, examine the /var/opt/drd/files_to_be_copied_by_drd_sync file. If it lists a large number of files, consider running drd clone to re-create the clone.

Additional information can be obtained by examining the other files in /var/opt/drd/sync, which are described in “The drd sync command” (page 28).
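The size check described above can be automated. The following is a minimal sketch, assuming the list contains one file per line. The cutoff MAX_FILES is a hypothetical value you choose yourself, since this guide does not define how many files counts as large; the function name suggest_sync_or_clone is also hypothetical.

```sh
# suggest_sync_or_clone LIST MAX_FILES   (hypothetical helper, not part of DRD)
#   LIST      - files_to_be_copied_by_drd_sync written by "drd sync -p"
#   MAX_FILES - your own cutoff; DRD does not define one
suggest_sync_or_clone() {
    list=$1
    max=$2
    if [ ! -f "$list" ]; then
        echo "no list file: $list" >&2
        return 1
    fi
    count=$(grep -c . "$list")        # non-empty lines, assumed one file each
    if [ "$count" -gt "$max" ]; then
        echo "$count files to copy; consider re-creating the clone with drd clone"
    else
        echo "$count files to copy; drd sync looks adequate"
    fi
}

# Example (500 is an arbitrary cutoff):
#   /opt/drd/bin/drd sync -p
#   suggest_sync_or_clone /var/opt/drd/files_to_be_copied_by_drd_sync 500
```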


5.5 drd sync system shutdown script

To run the following script during system shutdown, create the script in the /sbin/init.d directory and create a symbolic link to it from /sbin/rc[n].d/K[mmm]. The system administrator can choose the values of n and mmm so that any file updates made during shutdown occur before the script runs. The script must run before file systems are unmounted, which is generally done by /sbin/rc0.d/K900localmount. See rc(1M) for further information on rc script numbering.

```sh
#!/sbin/sh
#
# Synchronize the source root group with the cloned root group.
# See drd-sync(1M).
#
PATH=/sbin:/usr/sbin:/usr/bin:/opt/drd/bin
export PATH

rval=0
DRD_MOUNT_PT=/var/opt/drd/mnts/sysimage_001   # where drd mount mounts the clone

# set_return STATUS MESSAGE: print MESSAGE and record failure if STATUS is nonzero
set_return() {
    if [ "$1" -ne 0 ]; then
        echo "$2"
        rval=1
    fi
}

case "$1" in
stop_msg)
    echo "Running drd sync to synchronize cloned root file systems"
    ;;
stop)
    # Synchronize the source disk with the cloned system
    drd mount >/dev/null 2>&1    # Ignore errors, may already be mounted.
    drd sync
    sync_ret=$?
    set_return $sync_ret "ERROR: Return code from drd sync is $sync_ret"

    # Remove this script, as invoked ($0), and the same path on the mounted
    # clone, so that it runs only once.
    rm -f "$0" "${DRD_MOUNT_PT}$0" >/dev/null 2>&1
    [ -x "$0" ] && \
        set_return 1 "ERROR: The $0 script (on image being shut down) could not be removed."
    [ -x "${DRD_MOUNT_PT}$0" ] && \
        set_return 1 "ERROR: The ${DRD_MOUNT_PT}$0 script ($0 on clone) could not be removed."

    drd umount >/dev/null 2>&1    # Ignore errors.
    ;;
*)
    echo "usage: $0 { stop_msg | stop }"
    rval=1
    ;;
esac

exit $rval
```
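The installation steps described at the start of this section can be sketched as a small helper. This is an illustration only: the script name (for example, drdsync), the run level n, and the sequence number mmm are your choices, and the optional ROOT argument exists only so that the steps can be tried in a scratch directory first. The only ordering rule it enforces is the one stated above for rc0.d, assuming that rc runs K scripts in ascending numeric order. The function name install_drd_sync_rc is hypothetical.

```sh
# install_drd_sync_rc SCRIPT NAME N MMM [ROOT]   (hypothetical helper)
#   SCRIPT - file containing the shutdown script above
#   NAME   - name to give it in /sbin/init.d (your choice, e.g. drdsync)
#   N, MMM - run level and three-digit kill sequence number (your choice)
#   ROOT   - optional prefix, for trying the steps in a scratch directory
install_drd_sync_rc() {
    script=$1 name=$2 n=$3 mmm=$4 root=${5:-}
    case $mmm in
        [0-9][0-9][0-9]) ;;
        *) echo "MMM must be three digits: $mmm" >&2; return 1 ;;
    esac
    # File systems are generally unmounted by /sbin/rc0.d/K900localmount, so in
    # rc0.d keep the sequence below 900 (assumes K scripts run in ascending order).
    if [ "$n" = 0 ] && [ "$mmm" -ge 900 ]; then
        echo "K$mmm in rc0.d would not run before K900localmount" >&2
        return 1
    fi
    cp "$script" "$root/sbin/init.d/$name" || return 1
    chmod 555 "$root/sbin/init.d/$name" || return 1    # mode 0555 is an assumption
    # The link names the run-time path /sbin/init.d/NAME, never the ROOT prefix.
    ln -s "/sbin/init.d/$name" "$root/sbin/rc$n.d/K$mmm$name"
}

# Example (values are placeholders):
#   install_drd_sync_rc ./drdsync.sh drdsync 0 100
```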


6 Activating the inactive system image

This chapter describes how to set the inactive system image so that it becomes the active system image the next time the system boots.

For details of the drd activate command, including available options and extended options, see “The drd activate command”.

NOTE: You must be logged in as root to run any DRD command.

To make the inactive system image the active system image, run the following command:

# /opt/drd/bin/drd activate -x reboot=true

This command:

1. Modifies stable storage to indicate that the inactive system image should become the active system image when the system boots.

2. Reboots the system.

After the reboot, the formerly inactive system image is the active system image, and the formerly active system image is the inactive system image.

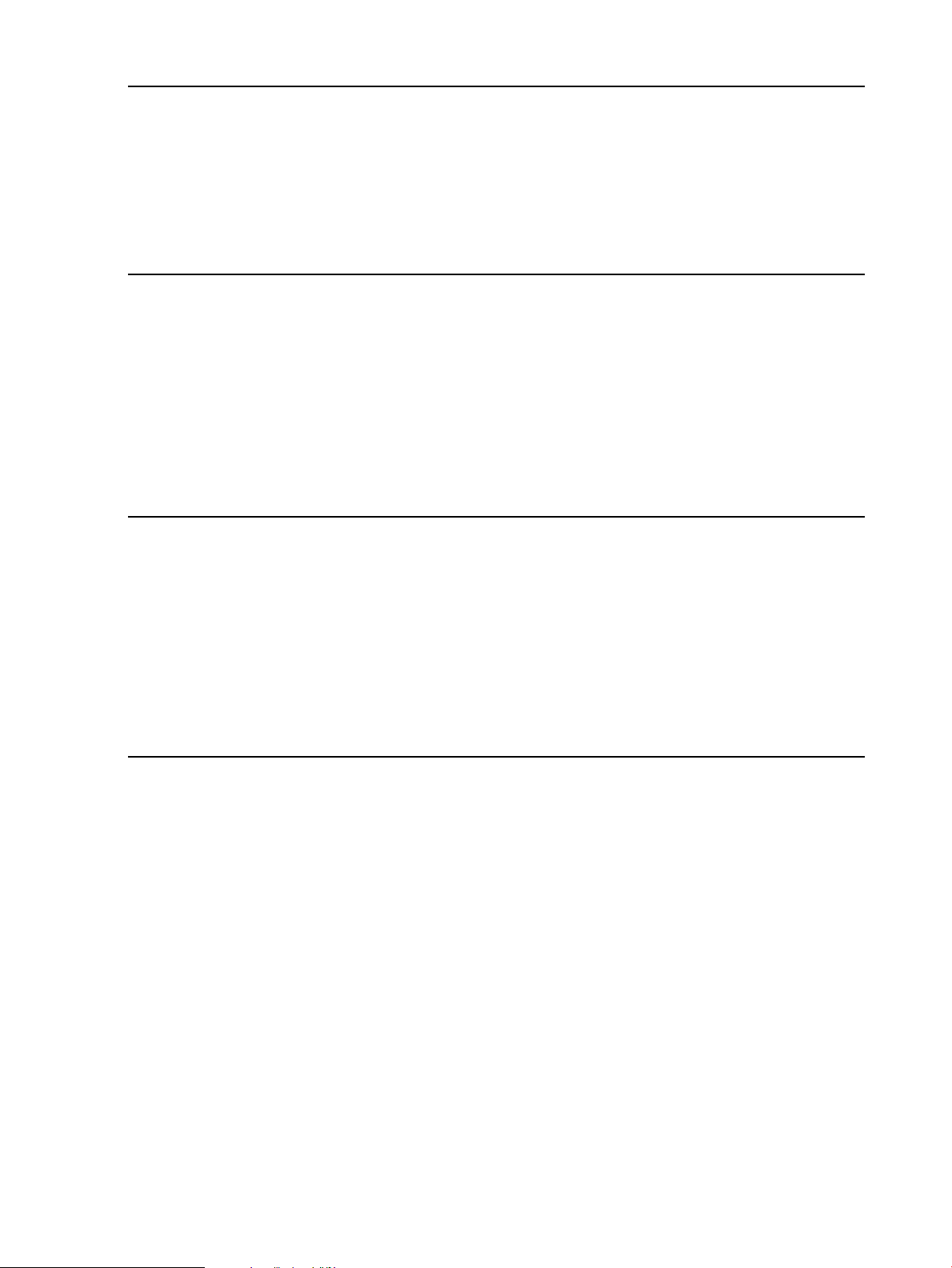

Figure 6-1 shows an example of using the drd activate command with the option -x reboot=true. Initially, /dev/dsk/c2t3d0 was the active system disk, and drd clone was used to create an inactive system disk on /dev/dsk/c1t2d0. After the drd activate command shown above is run, /dev/dsk/c2t3d0 becomes the inactive system disk and /dev/dsk/c1t2d0 becomes the active system disk.

Figure 6-1 Disk Configurations After Activating the Inactive System Image

NOTE: The alternate boot path and the High Availability (HA) alternate boot path are not affected by the drd activate command. In addition, the value of the autoboot flag (set by setboot -b) is not affected by the drd activate command.

6.1 Preparing the inactive system image to activate later

If you do not want to make the inactive system image the active system image right away, you can run the drd activate command so that it does not reboot the system. Because -x reboot=false is the default, the command is simply:


# /opt/drd/bin/drd activate

If you are not certain which system image is set to become the active system image when the system boots, execute the following command:

# /usr/sbin/setboot -v

For additional information, see the setboot(1M) manpage.
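To check the boot paths from a script rather than by eye, the setboot output can be parsed. The following is a minimal sketch, assuming the "Label : value" layout shown in Example 6-1 (for example, Primary bootpath : 0/1/1/0.0.0); the output of setboot -v on your release may differ, so verify the format first. The function name setboot_path is hypothetical.

```sh
# setboot_path LABEL   (hypothetical helper, not part of HP-UX)
# Reads setboot output on standard input and prints the value for LABEL,
# assuming "Label : value" lines such as "Primary bootpath : 0/1/1/0.0.0".
setboot_path() {
    awk -v want="$1" '
        {
            i = index($0, ":")
            if (i == 0) next                      # e.g. "Autoboot is ON (enabled)"
            label = substr($0, 1, i - 1)
            value = substr($0, i + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", label)    # trim surrounding blanks
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            if (label == want) print value
        }'
}

# Example:
#   /usr/sbin/setboot | setboot_path 'Primary bootpath'
```

The printed hardware path can then be compared with the H/W Path column that ioscan -fnkC disk reports for the disk named in /stand/bootconf, which is the comparison Example 6-1 makes by hand.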

In the following example on an HP-UX 11i v2 system, a system administrator uses /stand/bootconf, setboot, and ioscan to determine that the primary boot disk (the one that will be booted on the next reboot) is currently the same as the booted disk. The system administrator is ready to boot the clone but wants to set the alternate boot disk to the current boot disk. (If there is any problem booting the clone, the system will then fall back to booting the alternate disk, which is the current boot disk.)

The system administrator issues the drd activate command shown below and then uses setboot to verify the settings.


NOTE: The following example does not correspond to any of the figures in this guide.

Example 6-1 Booting the primary boot disk with an alternate boot disk (HP-UX 11i v2)

# /usr/bin/more /stand/bootconf

l /dev/dsk/c2t0d0s2

#

# /usr/sbin/setboot

Primary bootpath : 0/1/1/0.0.0

HA Alternate bootpath : 0/1/1/1.2.0

Alternate bootpath : 0/1/1/1.2.0

Autoboot is ON (enabled)

#

# /usr/sbin/ioscan -fnkC disk

Class I H/W Path Driver S/W State H/W Type Description

======================================================================================

disk 0 0/0/2/0.0.0.0 sdisk CLAIMED DEVICE TEAC DV-28E-N

/dev/dsk/c0t0d0 /dev/rdsk/c0t0d0

disk 1 0/1/1/0.0.0 sdisk CLAIMED DEVICE HP 36.4GST336754LC

/dev/dsk/c2t0d0 /dev/dsk/c2t0d0s3 /dev/rdsk/c2t0d0s2

/dev/dsk/c2t0d0s1 /dev/rdsk/c2t0d0 /dev/rdsk/c2t0d0s3

/dev/dsk/c2t0d0s2 /dev/rdsk/c2t0d0s1

disk 2 0/1/1/0.1.0 sdisk CLAIMED DEVICE HP 36.4GST336754LC

/dev/dsk/c2t1d0 /dev/dsk/c2t1d0s3 /dev/rdsk/c2t1d0s2

/dev/dsk/c2t1d0s1 /dev/rdsk/c2t1d0 /dev/rdsk/c2t1d0s3

/dev/dsk/c2t1d0s2 /dev/rdsk/c2t1d0s1

disk 3 0/1/1/1.2.0 sdisk CLAIMED DEVICE HP 36.4GST336706LC