HP Distributed Cloud Networking 3.0.R2

Installation Guide

HP Part Number: 5998‐6919a

Published: November 2014

Edition: 2

© Copyright 2014 Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in

the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an

additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Warranty

WARRANTY STATEMENT: See the warranty information sheet provided in the product box and available online.

Table of Contents

About This Guide

Audience

1 HP DCN: Overview and Infrastructure

HP DCN Overview

HP DCN Infrastructure Requirements and Recommendations

Data Center IP Network

NTP Infrastructure

Domain Name System

Certificate Authority

HP DCN Installation Overview

2 HP DCN Software Installation

HP VSD Hardware and Software Requirements

HP VSD Installation Overview

Installation Types

High Availability

Installation Methods

Notes on Reinstallation: MySQL Root Password

HP VSD Installation Using QCow2 Image

Set Up Appliance VMs

Connect to Appliance VMs

Connect Via VNC

Connect Via virsh Console

Configure Networking

Configure DNS Server

Configure NTP Server

Install HP VSD using qcow2

HP VSD Installation Using ISO Disc Image

Set Up VM for ISO

Extract and Mount ISO Image

Configure Networking, DNS, and NTP

Install HP VSD Using ISO

Import Certificates on the Servers

LDAP Store

Example of Load Balancer Configuration

3 HP VSC Software Installation

HP VSC Installation Notes

HP VSC Software Installation Procedure on KVM

Emulated Disks Notes

Emulated Ethernet NIC Notes

HP VSC Software Installation Procedure on VMware

Installing HP VSC on ESXI Using OVA

HP VSC Basic Configuration

HP VSC Boot Options File Configuration

HP VSC System and Protocol Configuration

System-level HP VSC Configuration

System Name

NTP Servers and Time Zone

XMPP and OpenFlow

In-band and Loopback IP Interfaces

Network Protocols (OSPF and BGP)

Post-install Security Tasks

4 HP VRS and VRS-G Software Installation

VRS and VRS-G Installation Overview

Preparing the Hypervisor

Installing the VRS or VRS-G Software

VRS on RHEL

VRS on Ubuntu 12.04 LTS with Ubuntu 12.04 Cloud Packages

VRS-G on RHEL or Ubuntu 12.04

Installing the VRS Kernel Module for MPLS over GRE

Installing VRS Kernel Module On RHEL

Installing VRS Kernel Module On Ubuntu 12.04

Configuring and Running VRS or VRS-G

5 VMware VRS VM Deployment

Introduction

Prerequisites

Creating the dVSwitch

Verifying the Creation of the dVSwitch

vSphere vSwitch Configurations

vSwitch0

vSwitch1

dVswitch

Deployment of dVRS

Information Needed

Deployment of dVRS on ESXI with OpenStack or CloudStack

Verifying Deployment

DRS Enablement

dVRS Files Downloaded

Deployment of dVRS

Additional Verification

6 VRS Installation on Citrix XenServer 6.2

Clean Install on XenServer

Introduction

Block 1

Installation

Verification

Block 2

Installation

Verification

Upgrade Existing dVRS Installation on XenServer

Block 1

Installation

Verification

Block 2

Installation

Verification

Running and Configuring VRS

Specifying the Active and Standby HP VSCs

7 Support and Other Resources

Gather information before contacting an authorized support

How to contact HP

Software technical support and software updates

Care Packs

Obtaining software updates

Warranty

Related information

Documentation

Product websites

8 Documentation feedback

9 Appendix: Emulated Ethernet NIC Notes

About This Guide

This manual describes the installation process for HP Distributed Cloud

Networking (DCN).

Audience

This manual is intended for system administrators who are responsible for installing and

configuring the HP DCN software.

1 HP DCN: Overview and Infrastructure

This chapter provides an overview of HP Distributed Cloud Networking (DCN) 3.0.R2 and of

the infrastructure required to implement the DCN solution. It also gives a brief overview of the

installation process itself.

Topics in this chapter include:

• HP DCN Overview

• HP DCN Infrastructure Requirements and Recommendations

• Data Center IP Network

• NTP Infrastructure

• Domain Name System

• Certificate Authority

• HP DCN Installation Overview

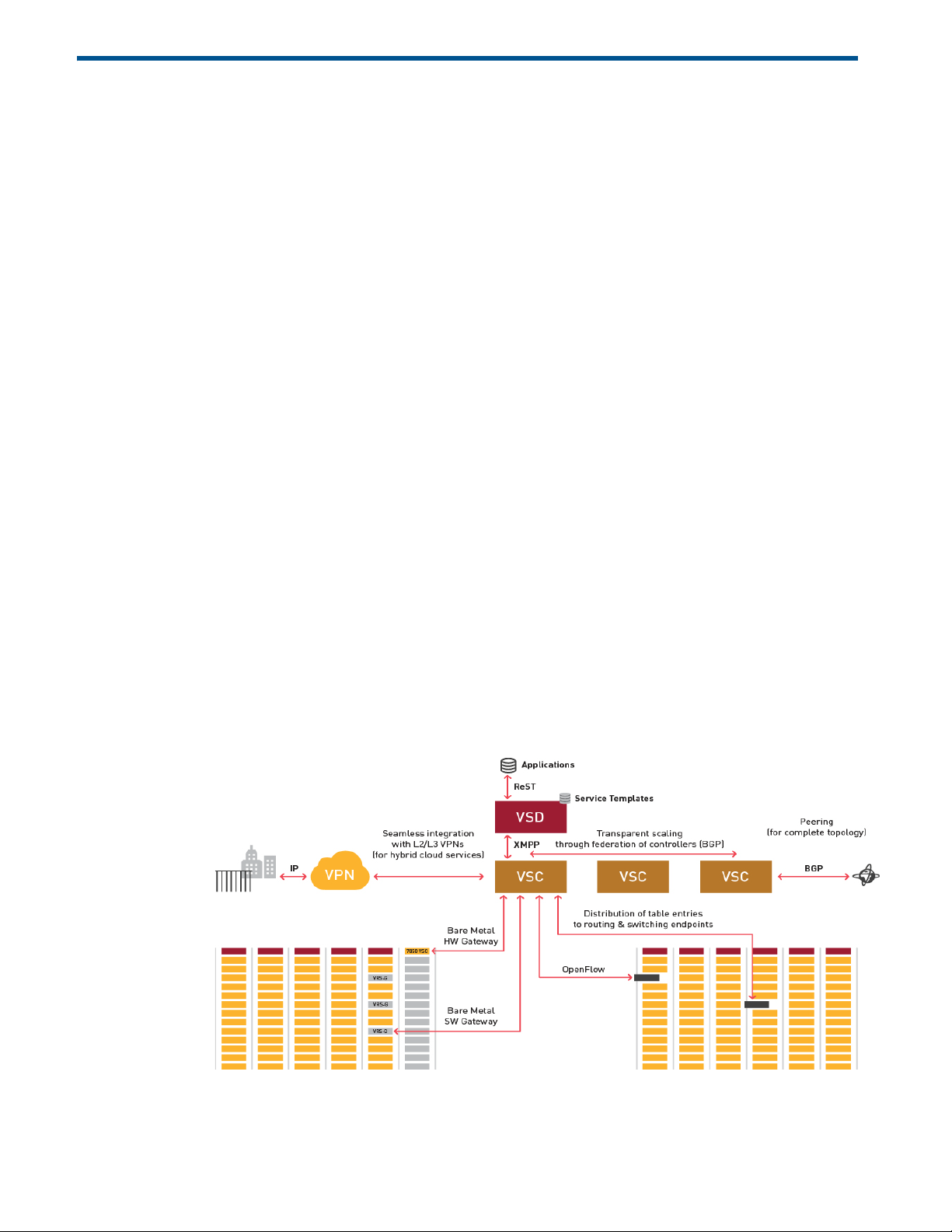

HP DCN Overview

HP DCN is a Software-Defined Networking (SDN) solution that enhances data center (DC)

network virtualization by automatically establishing connectivity between compute resources

upon their creation. Leveraging programmable business logic and a powerful policy engine,

HP DCN provides an open and highly responsive solution that scales to meet the stringent

needs of massive multi-tenant DCs. HP DCN is a software solution that can be deployed over

an existing DC IP network fabric. Figure 1 illustrates the logical architecture of the HP DCN

solution.

Figure 1: HP DCN Architecture and Components

There are three main components in the HP DCN solution: HP Virtualized Services Directory

(HP VSD), HP Virtualized Services Controller (HP VSC) and HP Virtual Routing and Switching

(HP VRS).

HP Virtualized Services Directory

HP VSD is a programmable policy and analytics engine that provides a flexible and

hierarchical network policy framework that enables IT administrators to define and enforce

resource policies.

HP VSD contains a multi-tenant service directory which supports role-based administration of

users, computers, and network resources. It also manages network resource assignments such

as IP and MAC addresses.

HP VSD enables the definition of sophisticated statistics rules such as:

• collection frequencies

• rolling averages and samples

• threshold crossing alerts (TCAs).

When a TCA occurs, it triggers an event that can be exported to external systems

through a generic messaging bus.

Statistics are aggregated over hours, days and months and stored in a Hadoop® analytics

cluster to facilitate data mining and performance reporting.

HP VSD is composed of many components and modules, but all required components can run

on a single Linux server or in a single Linux virtual machine. Redundancy requires multiple

servers or VMs.

To get a license key to activate your HP VSD, contact your HP Sales Representative.

HP Virtualized Services Controller

HP VSC functions as the robust network control plane for DCs, maintaining a full view of per-tenant network and service topologies. Through the HP VSC, virtual routing and switching

constructs are established to program the network forwarding plane, HP VRS, using the

OpenFlow™ protocol.

The HP VSC communicates with the VSD policy engine using Extensible Messaging and

Presence Protocol (XMPP). An ejabberd XMPP server/cluster is used to distribute messages

between the HP VSD and HP VSC entities.

Multiple HP VSC instances can be federated within and across DCs by leveraging MP-BGP.

The HP VSC is based on HP DCN Operating System (DCNOS) and runs in a virtual machine

environment.

HP Virtual Routing and Switching

HP VRS is an enhanced Open vSwitch (OVS) implementation that constitutes the network

forwarding plane. It encapsulates and de-encapsulates user traffic, enforcing L2-L4 traffic

policies as defined by the HP VSD. The HP VRS tracks VM creation, migration and deletion

events in order to dynamically adjust network connectivity.

HP VRS-G

For low-volume deployments, the software-based HP VRS Gateway (VRS-G) module

incorporates bare metal as virtualized extensions to the data center.

HP DCN Infrastructure Requirements and Recommendations

To use HP DCN, the data center environment must meet the key

requirements described in the following sections.

Data Center IP Network

HP VSP can be used in any data center with an IP network. HP VSC actively participates in the

IP routing infrastructure. HP VSCs can run OSPF or IS-IS for the IGP in addition to BGP, but

integration with the IGP is not mandatory.

BGP is used to form a federation of HP VSCs and synchronize the HP VSP network information.

BGP is also used to exchange routing information with the data center provider

edge router.

NTP Infrastructure

Because HP VSP is a distributed system, it is important that the different elements have a

reliable reference clock to ensure the messages exchanged between the elements have

meaningful timestamps. HP VSP relies on each of the elements having clocks synchronized with

NTP.

The HP VSD and HP VRS applications rely on the NTP facilities provided by the host operating

system. The HP VSC, which is based on HP DCN OS, has an NTP client.

HP recommends having at least three NTP reference clocks configured for each system.

Domain Name System

In scaled HP VSP deployments, the HP VSD functional elements can be distributed across

clusters of machines. The failover and load-sharing mechanisms for these clusters rely on

each cluster being referenced as a single DNS entity.

Certificate Authority

The northbound REST API on HP VSD is accessed within an SSL session. The HP VSD is able to

use a self-signed certificate, but having a certificate from a certificate authority will enable

client applications to avoid processing security warnings about unrecognized certificate

authorities.

HP DCN Installation Overview

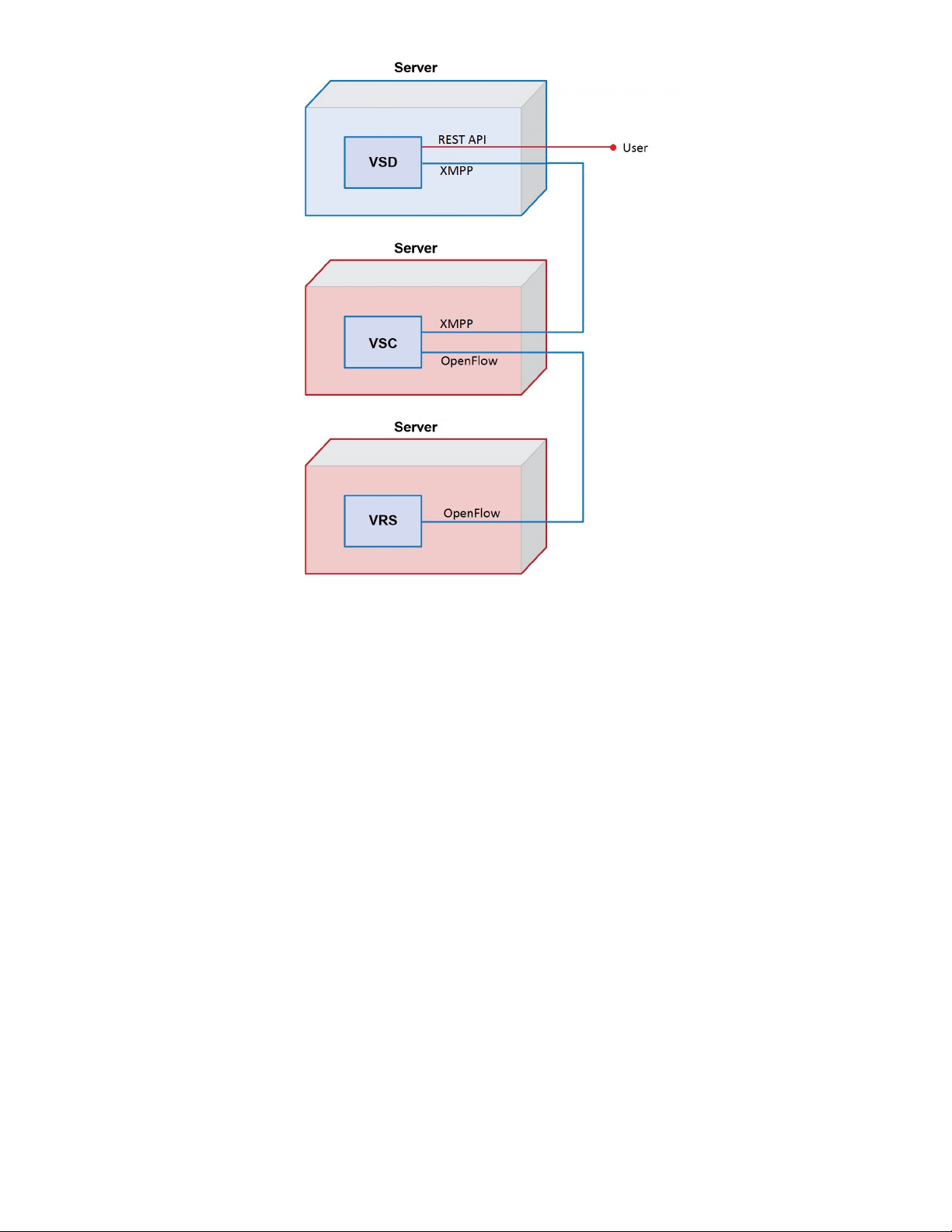

Installing HP DCN consists of installing the three software components (HP VSD, HP VSC, and

HP VRS) and configuring their interfaces to establish connectivity between them.

Figure 2: Installation Setup

Figure 2 diagrams the installation of the HP VSP components and shows how they

communicate with each other. The labeled interfaces are referenced in the installation

instructions. The diagram could be used to map out the topology you plan to use for your own

installation.

The recommended order for installing the software is the order presented in this guide because

each newly installed software component provides the infrastructure to communicate with

the next component on the list.

After installing HP DCN, configure policies in the HP VSD to derive full benefit from the system.

2 HP DCN Software Installation

Topics in this chapter include:

• HP VSD Hardware and Software Requirements

• HP VSD Installation Overview

• HP VSD Installation Using QCow2 Image

• HP VSD Installation Using ISO Disc Image

• Import Certificates on the Servers

• Example of Load Balancer Configuration

HP VSD Hardware and Software Requirements

Installing HP VSD software requires:

• A hypervisor that meets the specifications set out in the Release Notes

• A mechanism to access the graphical console of the HP VSD appliance (e.g. VNC)

• An IP address for each HP VSD appliance, with host name(s) defined in DNS and accessible to

all VSP components.

For a license key to activate HP VSD once installed, contact your HP Sales Representative.

HP VSD Installation Overview

The procedures set out here assume installation on a hypervisor running KVM.

Installation Types

There are two types of installation, standalone and high availability.

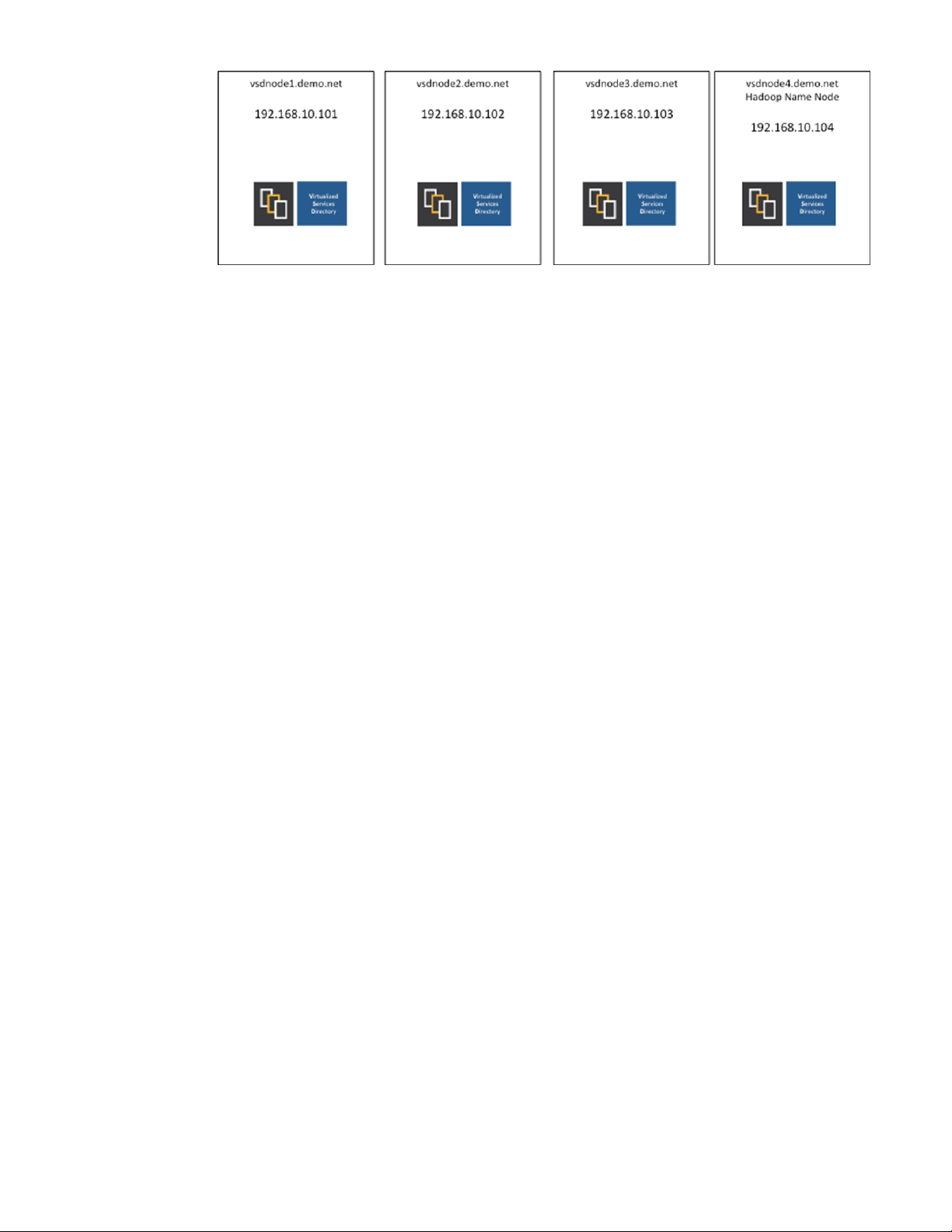

High Availability

HP VSD High Availability is intended to guard against single-failure scenarios. High

availability for HP VSD is implemented as a 3 + 1 node cluster as shown in Figure 3.

For high availability of the HP VSD nodes, it is necessary to ensure each VSD node has

redundant network and power, so that no single failure can cause loss of connectivity to more

than one HP VSD node. Therefore, each HP VSD node should be installed on a different

hypervisor.

Each HP VSD instance and Name node requires an individual network interface. All nodes must

be IP-reachable.

Figure 3: HP VSD 3 + 1 HA Cluster

The cluster consists of three HP VSD nodes and one statistics master node (Name node).

Optionally, a load balancer (not supplied) can distribute REST API requests across the

HP VSD nodes.

Installation Methods

The standard method of installation of HP VSD uses the pre-installed appliance. This appliance

is distributed in four formats:

• a ready-to-use QCow2 VM image for KVM hypervisor deployment (see HP VSD Installation Using QCow2 Image)

• a ready-to-use image for VMware hypervisor deployment

• a ready-to-use image for OVA hypervisor deployment

• an ISO disc image (see HP VSD Installation Using ISO Disc Image)

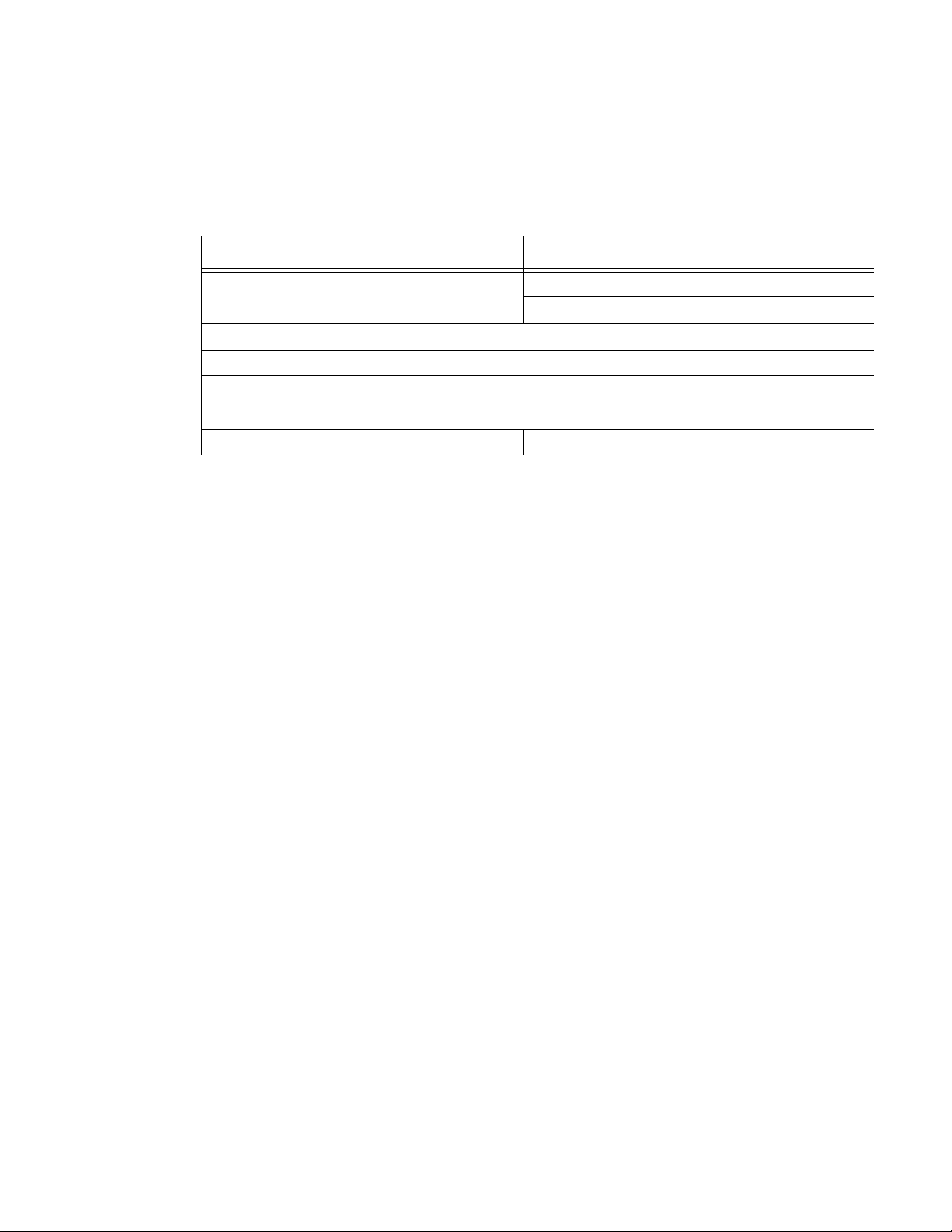

Table 1 provides an overview of the installation tasks with links to each.

Notes on Reinstallation: MySQL Root Password

The password for the MySQL root user is not set after installation, because the HP VSD

installation scripts require that the root user not have a MySQL password.

Reinstalling HP VSD

To reinstall HP VSD:

1. Before uninstalling, clear the MySQL root password (set it to an empty string). On each node, run:

mysql -uroot -p<current password> -e "update mysql.user set password=PASSWORD('') where user='root'; flush privileges;"

2. Uninstall all HP VSD nodes.

3. Install all HP VSD nodes following the procedure specified for your HP VSD version and

installation type.

4. Verify that installation was successful.

5. Set the root password:

To set the root password for the first time, on each node, run:

mysql -e "update mysql.user set password=PASSWORD('<new password>') where user='root'; flush privileges;"

To change the root password, on each node, run:

mysql -uroot -p<current password> -e "update mysql.user set password=PASSWORD('<new password>') where user='root'; flush privileges;"
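The quoting in these statements is easy to get wrong when the password itself contains a quote or a backslash. The following is a minimal sketch, not part of the HP VSD tooling, that builds the statement from step 5 for a given password. The function names sql_escape and root_password_sql are hypothetical, and the escaping assumes MySQL's default string-literal rules.

```shell
#!/bin/sh
# Hypothetical helpers (not shipped with HP VSD): build the SQL used in step 5.

sql_escape() {
    # Double backslashes first, then double single quotes, so the value is
    # safe inside a single-quoted MySQL string literal.
    printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e "s/'/''/g"
}

root_password_sql() {
    # $1 = new root password; pass an empty string to clear the password.
    printf "update mysql.user set password=PASSWORD('%s') where user='root'; flush privileges;" \
        "$(sql_escape "$1")"
}
```

For example, on a node where root currently has no password, mysql -uroot -e "$(root_password_sql 'NewPass')" would apply the generated statement.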

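Table 1: HP VSD Installation Overview

| qcow2 | ISO |
| --- | --- |
| Set Up Appliance VMs | Set Up VM for ISO |
| | Extract and Mount ISO Image |
| Connect to Appliance VMs | Connect to Appliance VMs |
| Configure Networking | Configure Networking |
| Configure DNS Server | Configure DNS Server |
| Configure NTP Server | Configure NTP Server |
| Install HP VSD using qcow2 | Install HP VSD Using ISO |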

HP VSD Installation Using QCow2 Image

The following instructions are for a High Availability installation. For a standalone installation,

use the same instructions to install one HP VSD on a single node.

1. Set Up Appliance VMs

2. Connect to Appliance VMs

3. Configure Networking

4. Configure DNS Server

5. Configure NTP Server

6. Install HP VSD using qcow2

Set Up Appliance VMs

1. Extract all the HP VSD tar files to a temporary location.

2. If virt-install is not already installed on your hypervisor(s), run this command to install it:

yum install virt-install

3. Copy the HP VSD qcow2 image to the KVM hypervisor image location /var/lib/libvirt/images/ on each hypervisor.

4. Create appliance VMs.

In the example below, a VM is created for each of four HP VSD nodes. If you are doing a standalone installation, create only myh1.

Note: "listen=0.0.0.0" results in KVM responding to VNC connection requests on all IP interfaces. Depending on your network configuration, this may be a security issue. Consider removing "listen=0.0.0.0" and using an alternative method (for example, virt-manager or an SSH tunnel) to obtain console access.

hypervisor1server# vsd_name=myh1
hypervisor1server# vsd_disk=/var/lib/libvirt/images/myh1.qcow2
hypervisor1server# virt-install --connect qemu:///system -n $vsd_name -r 24576 \
    --os-type=linux --os-variant=rhel6 \
    --disk path=$vsd_disk,device=disk,bus=virtio,format=qcow2 \
    --vcpus=6 --graphics vnc,listen=0.0.0.0 --noautoconsole --import

hypervisor2server# vsd_name=myh2
hypervisor2server# vsd_disk=/var/lib/libvirt/images/myh2.qcow2
hypervisor2server# virt-install --connect qemu:///system -n $vsd_name -r 24576 \
    --os-type=linux --os-variant=rhel6 \
    --disk path=$vsd_disk,device=disk,bus=virtio,format=qcow2 \
    --vcpus=6 --graphics vnc,listen=0.0.0.0 --noautoconsole --import

hypervisor3server# vsd_name=myh3
hypervisor3server# vsd_disk=/var/lib/libvirt/images/myh3.qcow2
hypervisor3server# virt-install --connect qemu:///system -n $vsd_name -r 24576 \
    --os-type=linux --os-variant=rhel6 \
    --disk path=$vsd_disk,device=disk,bus=virtio,format=qcow2 \
    --vcpus=6 --graphics vnc,listen=0.0.0.0 --noautoconsole --import

hypervisor4server# vsd_name=myname
hypervisor4server# vsd_disk=/var/lib/libvirt/images/myname.qcow2
hypervisor4server# virt-install --connect qemu:///system -n $vsd_name -r 24576 \
    --os-type=linux --os-variant=rhel6 \
    --disk path=$vsd_disk,device=disk,bus=virtio,format=qcow2 \
    --vcpus=6 --graphics vnc,listen=0.0.0.0 --noautoconsole --import

5. Verify the appliance VMs are running:

hypervisor1server# virsh list --all
 Id    Name      State
---------------------------------
 9     myh1      running

hypervisor2server# virsh list --all
 Id    Name      State
---------------------------------
 10    myh2      running

hypervisor3server# virsh list --all
 Id    Name      State
---------------------------------
 11    myh3      running

hypervisor4server# virsh list --all
 Id    Name      State
---------------------------------
 12    myname    running
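The four virt-install invocations in step 4 differ only in the node name, so they can be generated rather than retyped. The sketch below is illustrative only: the function name vsd_virt_install_cmd and the IMAGE_DIR variable are assumptions, while the memory size, vCPU count, and remaining options are copied from the example in step 4. It prints the command instead of running it, so the result can be reviewed (for instance, to remove listen=0.0.0.0) before it is executed on the appropriate hypervisor.

```shell
#!/bin/sh
# Sketch: print the virt-install command from step 4 for one HP VSD node.
# IMAGE_DIR and the function name are illustrative assumptions.
IMAGE_DIR=${IMAGE_DIR:-/var/lib/libvirt/images}

vsd_virt_install_cmd() {
    # $1 = node (VM) name, for example myh1
    vsd_name=$1
    vsd_disk="$IMAGE_DIR/$vsd_name.qcow2"
    # printf reuses the '%s' format for each argument, joining the pieces.
    printf '%s' \
        "virt-install --connect qemu:///system -n $vsd_name -r 24576" \
        " --os-type=linux --os-variant=rhel6" \
        " --disk path=$vsd_disk,device=disk,bus=virtio,format=qcow2" \
        " --vcpus=6 --graphics vnc,listen=0.0.0.0 --noautoconsole --import"
    printf '\n'
}
```

Running vsd_virt_install_cmd myh1 on the first hypervisor prints the command for that node; because each HP VSD node should live on a different hypervisor, the helper would be run once per hypervisor with that node's name.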

Connect to Appliance VMs

The HP VSD appliance VM requires console access for initial configuration. Use either of the following methods:

• Connect Via VNC

• Connect Via virsh Console

Connect Via VNC

Using a VNC client (e.g. RealVNC, TightVNC) or other console access mechanism, connect to

the HP VSD appliance consoles and log in using the default username and password:

login: root

password: default password

Connect Via virsh Console

Using the virsh console domain command, where domain is the name of the VM, connect to the

HP VSD appliance consoles and log in using the default username and password.

[root@kvm ~]# virsh list
 Id    Name    State
----------------------------------------------------
 454   vsd     running

[root@kvm ~]# virsh console vsd
Connected to domain vsd
Escape character is ^]

[root@vsd ~]#

Configure Networking

1. Do not use DHCP. Use a static IP address instead. To do this, modify the file /etc/sysconfig/network-scripts/ifcfg-eth0 to use your static IP address and gateway, replacing the BOOTPROTO value "dhcp" with "static":

BOOTPROTO="static"
IPADDR=192.168.10.101
GATEWAY=192.168.100.1
NETMASK=255.255.255.0

2. Restart networking on the guest:

/etc/init.d/network restart

3. Ping the gateway (in this example, 192.168.100.1):

ping 192.168.100.1

Configure DNS Server

Set up the fully qualified domain names (FQDNs) for all the nodes in the cluster (for a

standalone installation, one FQDN is sufficient). Reverse DNS lookup

for the HP VSD nodes should also be set up.

Note: If the service (SRV) records for the XMPP cluster are not in the Domain Name System (DNS) server, the script will generate them. An administrator must then load them into the DNS server. The XMPP cluster name is typically the host xmpp in the domain, for example, xmpp.example.com. To use a different host name, run install.sh with the -x option.

The DNS server in this example is 10.10.10.100.

Test DNS and reverse DNS from each VSD node (VM).

1. Set up the fully qualified names for the nodes in the DNS server forward named file as per

the following example:

myh1.myd.example.com. 604800 IN A 192.168.10.101

myh2.myd.example.com. 604800 IN A 192.168.10.102

myh3.myd.example.com. 604800 IN A 192.168.10.103

myname.myd.example.com. 604800 IN A 192.168.10.104

The installation script verifies the DNS forward named file records.

2. From the HP VSD node, verify the SRV record as follows:

server# dig +noall +an @10.10.10.100 SRV _xmpp-client._tcp.xmpp.example.com

_xmpp-client._tcp.xmpp.example.com. 604800 IN SRV 10 0 5222 myh1.myd.example.com.

_xmpp-client._tcp.xmpp.example.com. 604800 IN SRV 10 0 5222 myh2.myd.example.com.

_xmpp-client._tcp.xmpp.example.com. 604800 IN SRV 10 0 5222 myh3.myd.example.com.

_xmpp-client._tcp.xmpp.example.com. 604800 IN SRV 10 0 5222 myname.myd.example.com.

3. Set up the fully qualified names for the nodes in the DNS server reverse named file as per the following example:

101.10.168.192.in-addr.arpa. 604800 IN PTR myh1.myd.example.com.

102.10.168.192.in-addr.arpa. 604800 IN PTR myh2.myd.example.com.

103.10.168.192.in-addr.arpa. 604800 IN PTR myh3.myd.example.com.

104.10.168.192.in-addr.arpa. 604800 IN PTR myname.myd.example.com.

4. Verify the DNS reverse named file records as follows:

vsd# dig +noall +an @10.10.10.100 -x 192.168.10.101

vsd# dig +noall +an @10.10.10.100 -x 192.168.10.102

vsd# dig +noall +an @10.10.10.100 -x 192.168.10.103

vsd# dig +noall +an @10.10.10.100 -x 192.168.10.104

5. Set up forward DNS records as follows:

; hosts

myh1 A 192.168.10.101

myh2 A 192.168.10.102

myh3 A 192.168.10.103

myname A 192.168.10.104

; xmpp nodes

xmpp A 192.168.10.101

xmpp A 192.168.10.102

xmpp A 192.168.10.103

; SRV records for xmpp.example.com

_xmpp-client._tcp.xmpp.example.com. IN SRV 10 0 5222 myh1.myd.example.com.

_xmpp-client._tcp.xmpp.example.com. IN SRV 10 0 5222 myh2.myd.example.com.

_xmpp-client._tcp.xmpp.example.com. IN SRV 10 0 5222 myh3.myd.example.com.
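The reverse (PTR) record names in the procedure above are formed by reversing the octets of each node address and appending in-addr.arpa. The following is a minimal sketch of that mapping, not part of the HP VSD tooling; the function name ptr_name is hypothetical, and the loop only prints the dig checks for the example addresses rather than running them.

```shell
#!/bin/sh
# Hypothetical helper (not part of the HP VSD tooling): build the
# in-addr.arpa owner name for an IPv4 address by reversing its octets.
ptr_name() {
    # Split the dotted quad on "." into four positional parameters.
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo "$4.$3.$2.$1.in-addr.arpa."
}

# Print the reverse-lookup check for each example node. 10.10.10.100 is the
# example DNS server used in this section. Commands are printed, not run.
for ip in 192.168.10.101 192.168.10.102 192.168.10.103 192.168.10.104; do
    echo "dig +noall +an @10.10.10.100 -x $ip   # expect $(ptr_name "$ip") PTR"
done
```

Comparing the printed owner names against the reverse named file is a quick way to spot a transposed octet before running the installer.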


Configure NTP Server

Include one or more NTP servers in the /etc/ntp.conf file. For example, edit the NTP file and

add servers as follows, restarting the NTPD service to put these parameters into effect:

server 10.10.0.10

server 192.16.10.10

server 192.16.20.10
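As a sketch of the edit described above, the following adds the example server lines to an ntp.conf-style file without duplicating entries that already exist. The function name add_ntp_servers is hypothetical, and the demonstration writes to a scratch file; the commented commands at the end assume RHEL 6-style init scripts on the VSD node.

```shell
#!/bin/sh
# Hypothetical helper: append "server <ip>" lines to an ntp.conf-style file,
# skipping entries that are already present.
add_ntp_servers() {
    conf=$1
    shift
    for srv in "$@"; do
        # -x: whole-line match, -F: treat the dots literally.
        grep -qxF "server $srv" "$conf" 2>/dev/null || echo "server $srv" >> "$conf"
    done
}

# Demonstrate against a scratch file rather than the live /etc/ntp.conf.
scratch=$(mktemp)
add_ntp_servers "$scratch" 10.10.0.10 192.16.10.10 192.16.20.10
add_ntp_servers "$scratch" 10.10.0.10    # second call adds nothing
cat "$scratch"

# On the VSD node itself (assumption: RHEL 6-style init scripts):
#   add_ntp_servers /etc/ntp.conf 10.10.0.10 192.16.10.10 192.16.20.10
#   service ntpd restart
#   ntpq -p    # lists the peers ntpd is polling
```

Because the helper is idempotent, it can be rerun on each node without accumulating duplicate server lines.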

Install HP VSD using qcow2

The install script is interactive. Node 1 is the master node, and it serves as a template for the

other nodes.

Note: HP VSD consists of several components and providing high availability for each of

these components can be quite complex. It is imperative that the installation and

powering-on of each node be done in the order specified here.

1. Install HP VSD on Node 1.

The install script checks for the XMPP proxy entry in your DNS. Run /opt/vsd/install.sh -x xmpp.myd.example.com, substituting your own XMPP server name.

[root@myh1 ~]# /opt/vsd/install.sh -x xmpp.myd.example.com

----------------------------------------------------

| V I R T U A L S E R V I C E S D I R E C T O R Y |

| (c) 2014 HP Networks |

----------------------------------------------------

VSD supports two configurations:

1) HA, consisting of 2 redundant installs of VSD with an optional statistics server.

2) Standalone, where all services are installed on a single machine.

Is this a redundant (r) or standalone (s) installation [r|s]? (default=s): r

Is this install the first (1), second (2), third (3) or cluster name node (t) [1|2|3|t]: 1

Please enter the fully qualified domain name (fqdn) for this node:

myh1.myd.example.com

Install VSD on the 1st HA node myh1.myd.example.com ...

What is the fully qualified domain name for the 2nd node of VSD:

myh2.myd.example.com

What is the fully qualified domain name for the 3rd node of VSD:

myh3.myd.example.com

What is the fully qualified domain name for the cluster name node of VSD:

myname.myd.example.com

What is the fully qualified domain name for the load balancer (if any) (default=none):

Node 1: myh1.myd.example.com

Node 2: myh2.myd.example.com

Node 3: myh3.myd.example.com

Name Node: myname.myd.example.com

XMPP: xmpp.myd.example.com

Continue [y|n]? (default=y): y

Starting VSD installation. This may take as long as 20 minutes in some situations ...

A self-signed certificate has been generated to get you started using VSD.

You may import one from a certificate authority later.

VSD installed on this host and the services have started.

Please install VSD on myh2.myd.example.com to complete the installation.

2. Install VSD on Node 2:

[root@myh2 ~]# /opt/vsd/install.sh

----------------------------------------------------

| V I R T U A L S E R V I C E S D I R E C T O R Y |

| (c) 2014 HP Networks |

----------------------------------------------------

VSD supports two configurations:

1) HA, consisting of 3 redundant installs of VSD with a cluster name node server.

2) Standalone, where all services are installed on a single machine.

Is this a redundant (r) or standalone (s) installation [r|s]? (default=s): r

Is this install the first (1), second (2), third (3) or cluster name node (t) [1|2|3|t]: 2

Please enter the fully qualified domain name for the 1st node of VSD:

myh1.myd.example.com

Install VSD on the 2nd HA node myh2.myd.example.com ...

Node 2: myh2.myd.example.com

Continue [y|n]? (default=y):

Starting VSD installation. This may take as long as 20 minutes in some situations ...

A self-signed certificate has been generated to get you started using VSD.

You may import one from a certificate authority later.

VSD installed on this host and the services have started.

3. Follow the interactive script to install HP VSD on Node 3.

4. Follow the interactive script to install HP VSD on the Name Node.

5. Verify that your HP VSD(s) are up and running by using the following command:

service vsd status

6. See Import Certificates on the Servers.

HP VSD Installation Using ISO Disc Image

Note: Consult the Release Notes for the ISO installation requirements.

The following instructions are for a High Availability installation. For a standalone installation,

use the same instructions to install one HP VSD on a single node.

1. Set Up VM for ISO

2. Extract and Mount ISO Image

3. Configure Networking

4. Configure DNS Server

5. Configure NTP Server

6. Install HP VSD Using ISO

Set Up VM for ISO

Note: “listen=0.0.0.0” results in KVM responding to VNC connection requests on all IP interfaces. Depending on your network configuration, this may be a security issue. Consider removing “listen=0.0.0.0” and using an alternative method (for example, virt-manager or SSH tunnel) to obtain console access.

1. Bring up a VM named myh1 using 24 GB RAM and 6 logical cores with the following

commands:

# vsd_name=myh1

# vsd_disk=/var/lib/libvirt/images/[xxx].qcow2

# virt-install --connect qemu:///system -n $vsd_name -r 24576 --os-type=linux \

--os-variant=rhel6 \

--disk path=$vsd_disk,device=disk,bus=virtio,format=qcow2 \

--vcpus=6 --graphics vnc,listen=0.0.0.0 --noautoconsole --import

2. Repeat this step for each additional hypervisor, naming the additional vsd instances myh2,

myh3, and myname.
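For the SSH tunnel alternative mentioned in the note at the start of this section, a sketch along the following lines may help. It assumes the guest's VNC server listens only on 127.0.0.1 on the hypervisor (which is what dropping “listen=0.0.0.0” is expected to give), and it relies on the VNC convention that display :N uses TCP port 5900+N. The function name vnc_tunnel_cmd and the host name kvm.example.com are placeholders; the command is printed, not executed.

```shell
#!/bin/sh
# Hypothetical helper: print an ssh port-forward command for a guest's VNC
# display. VNC display :N listens on TCP port 5900+N.
vnc_tunnel_cmd() {
    host=$1       # hypervisor to log in to (placeholder name below)
    display=$2    # VNC display number, e.g. 0 for ":0"
    port=$((5900 + display))
    echo "ssh -N -L ${port}:127.0.0.1:${port} root@${host}"
}

# kvm.example.com is a placeholder hypervisor name.
vnc_tunnel_cmd kvm.example.com 0

# The display number of a running guest can be read on the hypervisor with:
#   virsh vncdisplay myh1
```

With the tunnel open, a VNC viewer on the administrator workstation connects to 127.0.0.1 on the forwarded port instead of reaching the hypervisor's VNC port directly.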

Extract and Mount ISO Image

1. Extract the ISO disc image from the tar file to a temporary location.

2. Mount the ISO disc image from the temporary location to /media/CDROM/ on each node.

Note: Ensure that the ISO is mounted to the same location on each node.

Configure Networking, DNS, and NTP

1. See Configure Networking.

2. See Configure DNS Server.

3. See Configure NTP Server.

Install HP VSD Using ISO

1. Install VSD on Node 1.

The install script checks for the XMPP proxy entry in your DNS. Run /media/CDROM/install.sh -x xmpp.myd.example.com, substituting your own XMPP server name.

[root@myh1 ~]# /media/CDROM/install.sh -x xmpp.myd.example.com

----------------------------------------------------

| V I R T U A L S E R V I C E S D I R E C T O R Y |

| (c) 2014 HP Networks |

----------------------------------------------------

VSD supports two configurations:

1) HA, consisting of 2 redundant installs of VSD with an optional statistics server.

2) Standalone, where all services are installed on a single machine.

Is this a redundant (r) or standalone (s) installation [r|s]? (default=s): r

Is this install the first (1), second (2), third (3) or cluster name node (t) [1|2|3|t]: 1

Please enter the fully qualified domain name (fqdn) for this node:

myh1.myd.example.com

Install VSD on the 1st HA node myh1.myd.example.com ...

What is the fully qualified domain name for the 2nd node of VSD:

myh2.myd.example.com

What is the fully qualified domain name for the 3rd node of VSD:

myh3.myd.example.com

What is the fully qualified domain name for the cluster name node of VSD:

myname.myd.example.com

What is the fully qualified domain name for the load balancer (if any) (default=none):

Node 1: myh1.myd.example.com

Node 2: myh2.myd.example.com

Node 3: myh3.myd.example.com

Name Node: myname.myd.example.com

XMPP: xmpp.myd.example.com

Continue [y|n]? (default=y): y

Starting VSD installation. This may take as long as 20 minutes in some situations ...

A self-signed certificate has been generated to get you started using VSD.

You may import one from a certificate authority later.

VSD installed on this host and the services have started.

Please install VSD on myh2.myd.example.com to complete the installation.

2. Install HP VSD on Node 2:

[root@myh2 ~]# /media/CDROM/install.sh

----------------------------------------------------

| V I R T U A L S E R V I C E S D I R E C T O R Y |

| (c) 2014 HP Networks |

----------------------------------------------------

VSD supports two configurations:

1) HA, consisting of 3 redundant installs of VSD with a cluster name node server.

2) Standalone, where all services are installed on a single machine.

Is this a redundant (r) or standalone (s) installation [r|s]? (default=s): r

Is this install the first (1), second (2), third (3) or cluster name node (t) [1|2|3|t]: 2

Please enter the fully qualified domain name for the 1st node of VSD:

myh1.myd.example.com

Install VSD on the 2nd HA node myh2.myd.example.com ...

Node 2: myh2.myd.example.com

Continue [y|n]? (default=y):

Starting VSD installation. This may take as long as 20 minutes in some

situations ...

A self-signed certificate has been generated to get you started using VSD.

You may import one from a certificate authority later.

VSD installed on this host and the services have started.

3. Follow the interactive script to install VSD on Node 3.

4. Follow the interactive script to install VSD on the Name Node.

5. Verify that your VSD(s) are up and running by using the following command:

service vsd status

Import Certificates on the Servers

On each HP VSD host, installation generates a self-signed certificate. If you want to import an official certificate signed by a certificate authority, use the set-cert.sh script:

• Import a certificate generated by a Certificate Authority:

# ./set-cert.sh -r -i certificateFilename

• Generate and use a self-signed certificate if you do not run a proxy:

# ./set-cert.sh -r

• Generate and use a self-signed certificate if you run a proxy:

# ./set-cert.sh -r -p proxyHostname

Select an option and generate or import the certificate to Node 1. If you are running HA VSD,

import it to Nodes 2 and 3 as well.

LDAP Store

If you are using an LDAP store, see Using an LDAP Store.

Example of Load Balancer Configuration

frontend vsdha *:443

default_backend vsdhaapp

backend vsdhaapp

mode tcp

balance source

server c1 myh1.myd.example.com:8443 check

server c2 myh2.myd.example.com:8443 check

server c3 myh3.myd.example.com:8443 check

frontend main1 *:401

default_backend app1

backend app1

mode tcp

balance source

server c1 myh1.myd.example.com:8443 check

frontend main2 *:402

default_backend app2

backend app2

mode tcp

balance source

server c2 myh2.myd.example.com:8443 check

frontend main3 *:403

default_backend app3

backend app3

mode tcp

balance source

server c3 myh3.myd.example.com:8443 check


3 HP VSC Software Installation

This chapter provides installation instructions and the basic configuration for the HP VSC.

Topics in this chapter include:

• HP VSC Installation Notes

• HP VSC Software Installation Procedure on KVM

• Emulated Disks Notes

• Emulated Ethernet NIC Notes

• HP VSC Software Installation Procedure on VMware

• Installing HP VSC on ESXi Using OVA

• HP VSC Basic Configuration

• HP VSC Boot Options File Configuration

• HP VSC System and Protocol Configuration

• System-level HP VSC Configuration

• In-band and Loopback IP Interfaces

• Post-install Security Tasks

HP VSC Installation Notes

Part of the XML definition of the HP VSC virtual machine is to “pin” the virtual CPUs (vCPUs) to

separate CPU cores on the hypervisor. These settings are required for stable operation of the

HP VSC to ensure internal timers do not experience unacceptable levels of jitter.

Hyperthreading must be disabled to achieve the best use of the physical cores.

For the HP VSC hardware and software requirements, consult the current HP Distributed Cloud

Networking Release Notes.

HP VSC Software Installation Procedure on KVM

This section describes the process of loading the HP VSC software onto the dedicated server. At

the end of the procedure, the HP VSC image will be running on the server, and HP VSC

prompts you to log in.

There are two types of deployment: with a single qcow2 disk or (legacy) with two qcow2 disks (see Emulated Disks Notes).

This installation procedure assumes:

• The Linux server is a clean installation with a minimum of configuration and applications.


• An IP address is already assigned for the management network.

• The user has root access to the console of the Linux server.

• Either one or three NTP servers have been configured and NTP has synchronized with

them.

• The user has a means of copying the HP VSC software files to the server.

• Two independent network interfaces for management and data traffic, connected to two

Linux Bridge interfaces.

Once these requirements have been met, install the required dependencies (the following lines

refer to RHEL; substitute the appropriate Ubuntu references):

yum install kvm libvirt bridge-utils

When you set up a server, you must set up an NTP server for all the components. When you define a VM, it gets a timestamp, which must not deviate by more than 10 seconds.

Note: Intel Extended Page Tables (EPT) must be disabled in the KVM kernel module.

If EPT is enabled, it can be disabled by updating modprobe.d and reloading the kernel module

with:

echo "options kvm_intel ept=0" > /etc/modprobe.d/HP_kvm_intel.conf

rmmod kvm_intel

rmmod kvm

modprobe kvm

modprobe kvm_intel
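Before unloading the modules, it can be useful to check whether EPT is currently enabled. The sketch below reads the ept parameter that a loaded kvm_intel module exposes under /sys/module; the function name ept_state is hypothetical, and the optional argument exists only so the logic can be exercised against a test file.

```shell
#!/bin/sh
# Hypothetical helper: report whether EPT is enabled, based on the module
# parameter file exposed by a loaded kvm_intel module.
ept_state() {
    param=${1:-/sys/module/kvm_intel/parameters/ept}
    if [ ! -r "$param" ]; then
        echo "unknown"          # module not loaded, or not an Intel host
    elif [ "$(cat "$param")" = "Y" ]; then
        echo "enabled"
    else
        echo "disabled"
    fi
}

echo "EPT is $(ept_state)"
```

Running the same check again after the modprobe commands should report "disabled" if the new option file was picked up.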

These instructions assume bridges br0 for management and br1 for data have been created

and attached.

1. Start libvirtd and ensure it is set to start automatically.

Prerequisite: Make sure that libvirt and the bridge packages are installed. For example, with Ubuntu:

apt-get install kvm libvirt-bin bridge-utils

service libvirtd start

chkconfig libvirtd on

2. Copy HP VSC disks for libvirt access:

tar xzvf HP-VSC-*.tar.gz

For single disk deployment use:

cd VSC/QCOW_IMAGE/singledisk

For legacy two disk deployment use:

cd VSC/QCOW_IMAGE/twodisks


3. Enter:

cp vsc*disk.qcow2 /var/lib/libvirt/images/

chown qemu:qemu /var/lib/libvirt/images/*.qcow2

For Ubuntu:

chown libvirt-qemu:kvm /var/lib/libvirt/images/*.qcow2

4. (Optional) Modify the HP VSC XML configuration to rename the VM or the disk files.

5. Define VM:

virsh define vsc.xml

6. Configure VM to autostart:

virsh autostart vsc

7. Start the VM:

virsh start vsc

8. Connect to the HP VSC console using libvirt:

virsh console vsc

HP VSC should boot to a login prompt on the console.

9. From the console, log in and configure the HP VSC. Default login:

login: admin

password: admin

Emulated Disks Notes

There are two types of HP VSC deployment:

• Single disk configuration requires one QEMU emulated disk in the qcow2 format

(vsc_singledisk.qcow2) configured as IDE 0/1 (bus 0, master). This emulated disk is

accessible within the HP VSC as device “CF1:”

• Two disk configuration requires two QEMU emulated disks in the qcow2 format:

• IDE 0/1 (bus 0, master) must be configured as the “user” disk. The HP VSC

configuration, logs and other user data reside on this disk. This emulated disk is

accessible within the HP VSC as device “CF1:”. A minimum of 1GB is recommended

for this disk (a reference user disk is provided).

• IDE 0/2 (bus 0, slave) must be configured as the “image” disk. This disk contains HP

VSC binaries and a default boot options file. This emulated disk is accessible within the

HP VSC as device “CF2:”. The user should treat this disk as “read only” and essentially

dedicated to use by the image file. After the user customizes the boot options file, the

modified file should be stored on the user disk CF1:.

• It is possible to interchangeably boot different HP VSC versions by using the

corresponding image disk qcow2 file via the libvirt XML.

It is highly recommended to host the “user” disk locally (on CompactFlash, SSD or hard drive

storage as available). Likewise, to achieve the best boot times, it is recommended the “image”

disk be hosted locally on the hypervisor as well.


Emulated Ethernet NIC Notes

Two emulated e1000 Ethernet NICs are required. The HP VSC expects the first NIC to be

connected to the management network and the second NIC to be connected to the data

network.

The recommended configuration is to set up two independent bridges (br## devices in Linux)

and attach the emulated NICs and the corresponding physical NICs to each of these bridges.

See Appendix: Emulated Ethernet NIC Notes.

HP VSC Software Installation Procedure on VMware

Starting with VSP 3.0, the HP ESXi implementation will provide a new mode of operation that

enables leveraging the underlying ESXi standard Vswitch or distributed Vswitch. As a result,

multiple VMs on the same ESXi host will be able to communicate directly without bridging over

the HP VRS-VM. This brings a tradeoff between performance, use of the underlying Vswitch

(VMware standard vSwitch or dvS) and flow controls inside the same port-group.

The HP implementation is based on VMware's networking paradigm. That is, when multiple

virtual NICs (VNICs) are put together on the same port-group they are able to communicate

with each other (in much the same way that multiple ports on the same VLAN are able to

exchange frames with each other).

When starting a VM, you choose the port-group in which to place the VNICs. Typically, VMs

are placed in the same port-group when they belong to the same subnet. However, there are

other reasons why VNICS might be put together on the same port-group. In any case,

communication is allowed in the same port-group.

The general user workflow for the standard Vswitch mode is the following:

1. Hypervisor installation

a. A Vswitch is defined with at least one port group.

b. The VRS-VM is installed on the hypervisor and the access VNIC is placed on the

standard Vswitch, on a special port-group configured in trunk mode (VLAN 4095). The

VRS-VM is configured at installation time in standard Vswitch mode.

2. Hypervisor usage

a. A new VM A is defined with one VNIC. The VNIC is put into one of the port-groups of

the standard Vswitch (your choice).

b. The VRS-VM receives an event and knows on which VLAN to receive that VM traffic on

its trunk port.

c. The whole instantiation process continues and the VRS-VM hands out the IP address on that specific VLAN.

d. The VNIC is able to communicate through the VRS-VM in a standard HP fashion and is also able to communicate with any other VNIC on the same port-group.


Installing HP VSC on ESXi Using OVA

Note: It is presumed that vCenter and ESXi are correctly installed.

1. Enable SSH on the ESX hypervisor. You can do this over the ESX screen or from vCenter.

2. Disable firewall on the ESXi. Run the following CLI on the ESXi host that will run the HP

VSC:

esxcli network firewall set --enabled false

3. Select the host:

4. Select Edit > Deploy OVF template:

5. In the Deploy OVF Template window that appears, click Browse and select the source location of the OVF file, and then click Next.

6. Specify a name and location for the deployed template, and then click Next.

7. Select a resource pool within which to deploy the template, and then click Next.

8. Select the format in which to store the virtual disks, and then click Next.

9. Map the networks used in this OVF template to networks in your inventory (select the port groups), and then click Next.

10. Enter the HP VSC configuration information.

Note: You must enter the control IP addresses of the HP VSC peers in the BGP peer fields.

Then click Next. A summary is displayed.

11. To close the summary, click Finish.

12. Before powering on the VM, add a serial port. Connect via Network, Network Backing to

Server, Port URI to telnet://:2500 (this can be any port number).

13. Connect to the serial console of the TIMOS VM using a terminal application, such as PuTTY.

14. (Optional) Select one of the three boot options:

• HP VSC

• Update HP VSC configuration and reboot

• Update HP VSC configuration

If you do not make a choice within 20 seconds, the first option (HP VSC) is automatically selected and the VM boots from the vApp properties that you gave initially.

To boot up the VSC VM implementing the new information, use the second option—Update

HP VSC configuration and reboot.

To make changes inside the VM before booting SROS, use the third option—Update HP

VSC configuration. Instructions for making such changes are beyond the scope of this

document. Do not make such changes unless you know what you are doing.


HP VSC Basic Configuration

This section describes the initial configuration steps necessary to get the HP VSC up and running and able to communicate with other elements in the VSP.

The procedures described include:

• HP VSC Boot Options File Configuration

• HP VSC System and Protocol Configuration

HP VSC Boot Options File Configuration

The HP VSC uses a Boot Options File (BOF) named bof.cfg that is read on system boot and is

used for some basic, low-level system configuration needed to successfully boot the HP VSC.

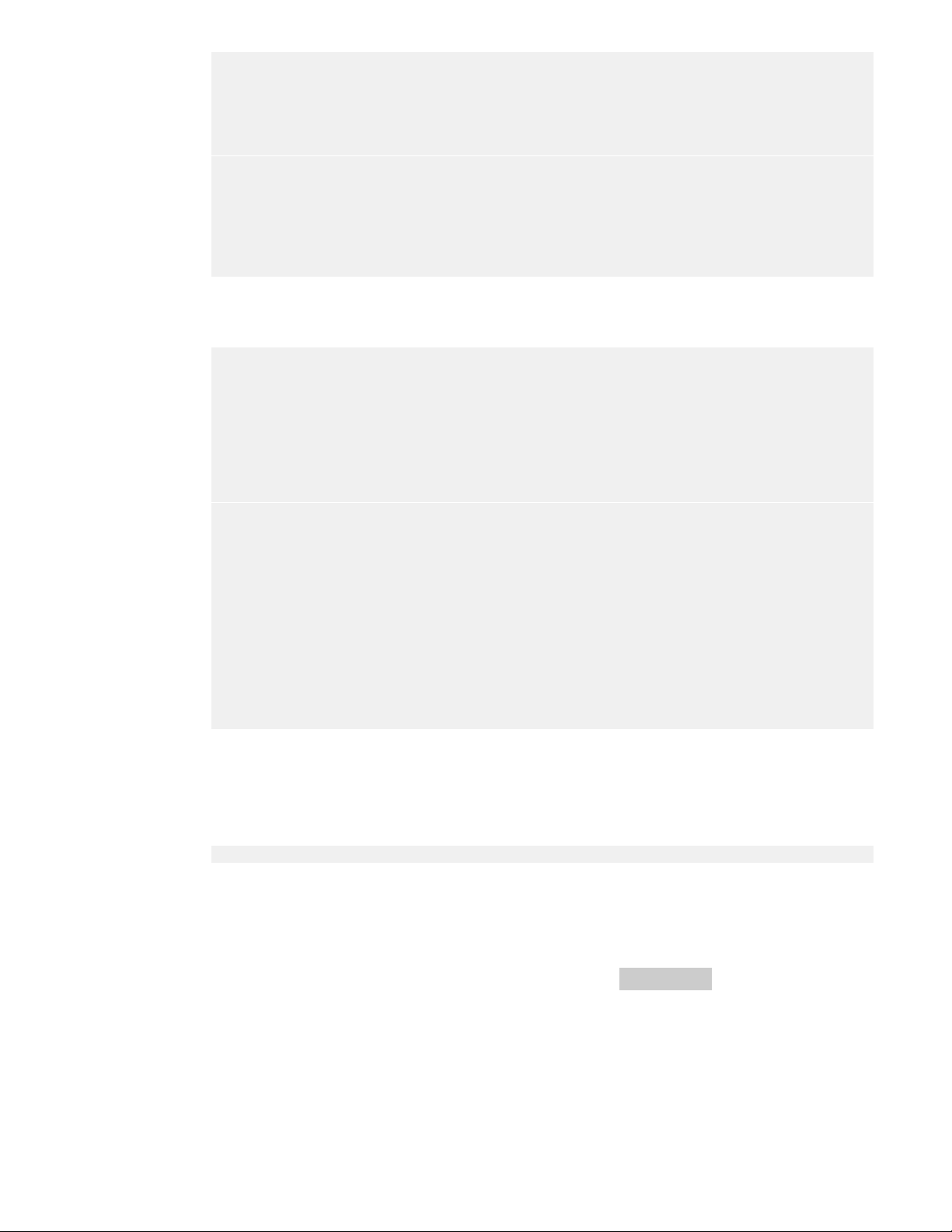

Table 5 lists the configuration parameters that are set in the BOF and are needed for proper operation of the HP VSC.

Table 5: BOF Parameters, Defaults and Descriptions

Parameter | Default Value | Description and Notes

primary-image | cf2:/timos/cpm.tim | The image file from which the system will attempt to boot.

primary-config | cf1:/config.cfg | The primary (first) configuration file the system will attempt to load on boot. There are additional parameters for secondary and tertiary configuration files should the system be unable to locate the specified configuration.

address | no default | The IP address of the Management IP interface (also called the “out-of-band” interface in the HP VSC as this is normally on the data center’s management network).

primary-dns, secondary-dns, tertiary-dns | no default | The IP addresses of the primary, secondary and tertiary DNS servers that the HP VSC will reference for DNS name resolution.

dns-domain | no default | The DNS domain of the HP VSC.

static-route | no default | Configures a static route for subnets reachable through the Management IP interface.

wait | 3 seconds | Configures a pause in seconds at the start of the boot process which allows system initialization to be interrupted at the console. When system initialization is interrupted, the operator is allowed to manually override the parameters defined in the BOF.

persist | off | Specifies whether the system will create a persistency file (.ndx) which will preserve system indexes (for example, the IP interface MIB object index) across a system reboot. This parameter is typically turned on when the HP VSC is managed with SNMP.

ip-address-dhcp | no default | This optional parameter should be configured in the HP VSC bof.cfg to trigger DHCP resolution at bootup. When this command is present, if an address is present it will be ignored on reboot, and the HP VSC will obtain its management IP via a DHCP exchange (assuming there is a properly configured DHCP server on the network).

The following procedure updates the BOF and saves the updated bof.cfg file on the “user” disk CF1:.

Note: The “image” disk CF2: has a default bof.cfg file, but any user-modified bof.cfg should be stored on the “user” disk CF1:.

This installation procedure assumes:

1. The HP VSC software has been successfully installed.

2. The user is at the HP VSC console and waiting to log in for the first time.

The information that is configured in the BOF is the following:

• The IP address of the Management IP interface (192.168.1.254 in the example below).

• As appropriate, the IP addresses of the primary, secondary and tertiary DNS servers

(10.0.0.1, 10.0.0.2 and 10.0.0.3 respectively in the example below).

• The DNS domain of the HP VSC (example.com in the example below).

• The IP next hop of any static routes that are to be reached via the Management IP interface

(one static route to subnet 192.168.100.0/24 via next hop 192.168.1.1 in the example

below).

• [Optional] Index persistence file for SNMP managed HP VSCs

1. Log in to the HP VSC console as administrator

At the “login as:” prompt, use the default administrator username (“admin”) and password

(“admin”) to log into the system and be at the root CLI context:

*A:NSC-vPE-1#

2. Assign the Management IP address

To navigate to the Boot Options File context, enter “bof<Enter>” and the prompt will

indicate a change to the bof context:


*A:VSC-1>bof#

The management IP address is configured using the address command which has a syntax

of:

[no] address ip-prefix/ip-prefix-length [active | standby]

where keywords are in bold, parameters are in italics and optional elements are enclosed in square brackets “[ ]”. Typically, the no form of the command will remove the configured parameter or return it to its default value.

In the input below, the management IP is set to 192.168.1.254/24:

*A:VSC-1>bof# address 192.168.1.254/24

3. Configure DNS servers

The HP VSC allows for up to three DNS servers to be defined that will be contacted in

order: primary, secondary and tertiary. If one DNS is not reachable, the next DNS is

contacted.

The DNS servers are configured with the following command syntax:

primary-dns ip-address

no primary-dns

secondary-dns ip-address

no secondary-dns

tertiary-dns ip-address

no tertiary-dns

The primary, secondary and tertiary DNS servers are configured to 10.0.0.1, 10.0.0.2 and

10.0.0.3, respectively, with the following commands:

*A:VSC-1>bof# primary-dns 10.0.0.1

*A:VSC-1>bof# secondary-dns 10.0.0.2

*A:VSC-1>bof# tertiary-dns 10.0.0.3

4. Configure the DNS domain

The HP VSC DNS domain is set with the dns-domain command which has the following

syntax:

dns-domain dns-name

no dns-domain

The DNS domain is set to example.com with the command below:

*A:VSC-1>bof# dns-domain example.com

5. Configure static routes for the management IP network

A static route is configured for the management IP interface with the static-route command

which has the following syntax:

[no] static-route ip-prefix/ip-prefix-length next-hop ip-address

Multiple static-route commands can be issued for the Management IP interface.

A static route is added for 192.168.100.0/24 with a next hop of 192.168.1.1 with the

command below:

*A:VSC-1>bof# static-route 192.168.100.0/24 next-hop 192.168.1.1

To check connectivity:

ping router "management" <Gateway IP>

6. [Optional] Enable index persistence for SNMP managed HP VSCs

If the HP VSC is going to be managed using SNMP, it is recommended that index

persistence be enabled using the persist command to ensure that MIB objects, like IP

interfaces, retain their index values across a reboot. The .ndx file that saves all of the

indexes in use is saved on the same device as the configuration file whenever a save

command is issued to save the HP VSC configuration.

The persist command has the following syntax:

persist {on | off}

To enable index persistence, the command is:

*A:VSC-1>bof# persist on

7. Save the configuration to cf1:

The BOF file is normally saved in the same directory as the image file for DCNOS, but for the HP VSC, it is recommended that the bof.cfg file be saved to the cf1: “user” emulated disk.

Note: The “image” disk CF2: has a default bof.cfg file, but any user modified bof.cfg

should be stored on the “user” disk CF1:.

The command to save the BOF to cf1: is:

*A:VSC-1>bof# save cf1:

8. Reboot the HP VSC to load the saved boot options

After saving the BOF, the system needs to be rebooted because the bof.cfg is only read on system initialization.

To reboot the HP VSC, issue the following commands:

*A:VSC-1>bof# exit

*A:NSC-vPE-1# admin reboot

WARNING: Configuration and/or Boot options may have changed since the last

save.

Are you sure you want to reboot (y/n)? y

The exit command returns the CLI to the root context so that the admin reboot command

can be issued to reboot the system. Answer in the affirmative to reboot.


After rebooting, the IP management interface for the HP VSC is configured along with

DNS.

HP VSC System and Protocol Configuration

In addition to the (“out-of-band”) Management IP interface, the HP VSC has an (“in-band”)

network interface for the data center’s data network.

In order to utilize the in-band network interface and provide connectivity with the other VSP

elements, the HP VSC requires some additional system-level configuration as well as in-band

data network configuration.

The system-level configuration required includes:

• Assigning a system name.

• Defining NTP servers to be used by the system.

• Configuring the system time zone.

• Configuring the XMPP client and OpenFlow in the HP VSC.

Configuring the IP interfaces and network protocols:

• Creating the in-band IP interface and assigning an IP address (interface name control and

IP address 10.9.0.7/24 in the example configuration below).

• Creating a system loopback IP interface for use by network protocols (interface name

system and IP address 10.0.0.7/32 in the example configuration below).

• Configure network protocols, for example, OSPF, IS-IS and BGP.

The sections below describe the required configuration by highlighting the relevant commands of an HP VSC configuration file. The configuration file contains CLI commands, formatted for readability.

After configuration, use the following command to save the configuration:

vsc# admin save

System-level HP VSC Configuration

Information on the XMPP server and OpenFlow commands on the VRS can be found in the

current HP Distributed Cloud Networking User Guide.

System Name

The config>system>name command is used to configure the system name. In the excerpt below,

the system name is set to NSC-vPE-1.

#--------------------------------------------------

echo "System Configuration"

#--------------------------------------------------

exit all

configure

system

name "NSC-vPE-1"


snmp

shutdown

exit

exit all

NTP Servers and Time Zone

Synchronizing the time of the different VSP elements with NTP is essential so that the
messages passed between the VSD, HP VSC and VRS elements are appropriately timestamped
and processed properly.

One or more (preferably three) NTP servers should be defined, as in the example
below (10.0.0.123, 10.10.10.18 and 10.200.223.10).

The time zone is set with the zone command (PST), and the daylight saving time zone is set
with the dst-zone command (PDT). The dst-zone command automatically fills in the start and
end dates and times, which can be edited if needed.

exit all

configure

system

time

ntp

server 10.0.0.123

server 10.10.10.18

server 10.200.223.10

no shutdown

exit

sntp

shutdown

exit

dst-zone PDT

start second sunday march 02:00

end first sunday november 02:00

exit

zone PST

exit

exit all
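The start and end rules in the dst-zone stanza above ("second sunday march" and "first sunday november") can be turned into calendar dates to check them against the local daylight saving schedule. The following is a minimal Python sketch for that purpose; the function names nth_weekday and dst_window are illustrative and are not part of the HP VSC software.

```python
import calendar
from datetime import date


def nth_weekday(year, month, weekday, n):
    """Return the date of the n-th (1-based) given weekday in a month."""
    # Weeks are lists of day numbers starting on Monday; 0 marks padding days.
    weeks = calendar.Calendar(firstweekday=calendar.MONDAY).monthdayscalendar(year, month)
    days = [week[weekday] for week in weeks if week[weekday] != 0]
    return date(year, month, days[n - 1])


def dst_window(year):
    """Dates matching 'second sunday march' and 'first sunday november'."""
    start = nth_weekday(year, 3, calendar.SUNDAY, 2)
    end = nth_weekday(year, 11, calendar.SUNDAY, 1)
    return start, end


if __name__ == "__main__":
    start, end = dst_window(2014)
    print("DST starts", start, "and ends", end)
```

If the dates computed for the deployment year differ from the local schedule, the start and end lines of the dst-zone stanza are the ones to edit.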

XMPP and OpenFlow

Specify the XMPP server (xmpp.example.com), the username (NSC-vPE-1) and the password
(password). The ejabberd server is configured to auto-create the user on the server with the
supplied username and password.

For OpenFlow, optional subnets can be specified with the auto-peer command, which restricts
inbound OpenFlow connections to those originating from that subnet. If no auto-peer stanza is
configured, OpenFlow sessions will be accepted on all interfaces, both in-band and out-of-band.


#--------------------------------------------------

echo "Virtual Switch Controller Configuration"

#--------------------------------------------------

exit all

configure

vswitch-controller

xmpp-server "NSC-vPE-1:password@xmpp.example.com"

open-flow

auto-peer 10.9.0.0/24

exit

exit

xmpp

exit

exit
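The effect of the auto-peer subnet described above can be reasoned about offline before the configuration is applied. The sketch below is only a model of the behavior as this guide describes it (an empty list stands for "no auto-peer stanza"); it is not the HP VSC implementation, and the function name and the peer addresses used in the example are hypothetical.

```python
import ipaddress


def accepted_by_auto_peer(peer_ip, auto_peer_subnets):
    """Model whether an inbound OpenFlow peer address would be accepted.

    auto_peer_subnets is a list of CIDR strings taken from auto-peer
    commands. An empty list models the case with no auto-peer stanza,
    where sessions are accepted on all interfaces.
    """
    if not auto_peer_subnets:
        return True
    address = ipaddress.ip_address(peer_ip)
    return any(address in ipaddress.ip_network(subnet) for subnet in auto_peer_subnets)


if __name__ == "__main__":
    # 10.9.0.42 and 10.10.0.42 are hypothetical VRS addresses.
    for peer in ("10.9.0.42", "10.10.0.42"):
        print(peer, accepted_by_auto_peer(peer, ["10.9.0.0/24"]))
```

A check like this helps confirm that every hypervisor expected to connect falls inside one of the configured subnets.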

In-band and Loopback IP Interfaces

The excerpt below shows how to configure the in-band IP interface (name control with IP
address 10.9.0.7) as well as the loopback IP interface (name system with IP address
10.0.0.7). The loopback IP is needed if any IGP or BGP routing protocols will be configured. If
using BGP, an autonomous system needs to be configured (65000). Optionally, static routes
can be configured as well (for the in-band routing table).

#--------------------------------------------------

echo "Router (Network Side) Configuration"

#--------------------------------------------------

exit all

configure

router

interface "control"

address 10.9.0.7/24

no shutdown

exit

interface "system"

address 10.0.0.7/32

no shutdown

exit

autonomous-system 65000

#--------------------------------------------------

echo "Static Route Configuration"

#--------------------------------------------------

static-route 1.2.3.4/32 next-hop 10.9.0.100

exit all
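Before loading the interface and static route configuration above, the addressing can be sanity-checked offline. The following Python sketch assumes two common checks that this guide does not state as requirements: that the loopback interface uses a host (/32) address, and that the static route next hop lies on the subnet of the in-band interface. The function names are illustrative.

```python
import ipaddress


def is_host_address(interface_cidr):
    """True if the interface address covers exactly one address (for example /32)."""
    return ipaddress.ip_interface(interface_cidr).network.num_addresses == 1


def next_hop_on_interface_subnet(interface_cidr, next_hop):
    """True if next_hop is on the interface subnet and is not the interface itself."""
    interface = ipaddress.ip_interface(interface_cidr)
    hop = ipaddress.ip_address(next_hop)
    return hop in interface.network and hop != interface.ip


if __name__ == "__main__":
    # Values taken from the example configuration in this section.
    print(is_host_address("10.0.0.7/32"))
    print(next_hop_on_interface_subnet("10.9.0.7/24", "10.9.0.100"))
```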

Network Protocols (OSPF and BGP)

The following sections show the commands to configure OSPF for area 0.0.0.0.


#--------------------------------------------------

echo "OSPFv2 Configuration"

#--------------------------------------------------

exit all

configure

router

ospf

area 0.0.0.0

interface "system"

no shutdown

exit

interface "control"

no shutdown

exit

exit

exit

exit

exit all

BGP needs to be configured if there are multiple HP VSCs that will be operating as a
federation. The following is only a sample configuration and should be adapted to the
existing BGP infrastructure (for example, the use of Route Reflectors, the BGP group
neighbor IP addresses and the family types).


#--------------------------------------------------

echo "BGP Configuration"

#--------------------------------------------------

exit all

configure

router

bgp

connect-retry 2

min-route-advertisement 1

outbound-route-filtering

extended-community

send-orf

exit

exit

group "internal"

type internal

neighbor <ip-address>

family vpn-ipv4

exit

neighbor <ip-address>

family evpn

exit

exit

no shutdown

exit

exit

exit all
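When several federated HP VSCs must be listed as neighbors, the neighbor stanzas of the sample above can be generated from a peer list rather than typed by hand. The Python sketch below only reuses the keywords that appear in the sample (group, type internal, neighbor, family, exit); the function name and the peer addresses are hypothetical, and the output should be reviewed against the existing BGP design before it is pasted into a configuration file.

```python
import ipaddress


def render_bgp_group(neighbors, group="internal"):
    """Render a BGP group stanza from (ip_address, family) pairs."""
    lines = ['group "{}"'.format(group), "    type internal"]
    for ip, family in neighbors:
        ipaddress.ip_address(ip)  # raises ValueError on a malformed address
        lines.append("    neighbor {}".format(ip))
        lines.append("        family {}".format(family))
        lines.append("    exit")
    lines.append("exit")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical loopback addresses of two peer HP VSCs.
    print(render_bgp_group([("10.0.0.8", "vpn-ipv4"), ("10.0.0.9", "evpn")]))
```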

Post-install Security Tasks

After installing the HP VSC software, there are a number of tasks that should be performed to

secure the system. Most of these tasks are obvious, but worth mentioning as a reminder.

• Change HP VSC “admin” password

By default, the HP VSC administrator username and password are “admin”. The default
credentials for most systems and software are not difficult to find, which makes them an
easy security exploit.

• Centralized HP VSC authentication and authorization

The HP VSC software is based on DCNOS and inherits many of the platform and security
features supported in DCNOS. Rather than rely on users defined locally on each HP VSC,
RADIUS and TACACS+ can be used to centralize the authentication and authorization for
HP VSC administrative users.


• Secure Unused TCP/UDP Ports

After installing and configuring the HP VSC, the user should take all steps necessary to

ensure the network security of the HP VSC system through the use of ACLs and/or firewalls

and by disabling any unneeded network services on the node.

Table 6 lists the required and optional UDP/TCP ports for particular services for inbound

connections to the HP VSC.

Table 7 lists required and optional UDP/TCP ports for particular services for outbound

connections from the HP VSC.

Optional ports are only required if the network service is in use on the HP VSC.

Table 6: HP VSC UDP/TCP Inbound/Opened Ports

Port         UDP/TCP  Required/Optional  Protocol Notes
21/22        TCP      Optional           FTP
22           TCP      Optional           SSH
23           TCP      Optional           Telnet
123          UDP      Required           NTP
161/162      UDP      Optional           SNMP - required for SNMP management
179          TCP      Required           BGP - required for federated HP VSCs
6633         TCP      Required           OpenFlow
49152-65535  UDP      Optional           RADIUS for console user authentication
                                         dynamically reserves ports in this range
                                         upon initialization of the HP VSC for
                                         outgoing connections and the resulting
                                         response. The ports used in this range
                                         can be viewed with “show system
                                         connections”.
                                         If RADIUS is not used, no incoming packets
                                         will be forwarded or processed.
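The inbound port list can be captured as data so that an ACL or firewall rule set is derived from the services actually in use. The Python sketch below transcribes the rows of Table 6; the structure and function name are illustrative, and the result is a starting point for review rather than a complete security policy.

```python
# Rows of Table 6: (port, transport, required, service).
INBOUND_PORTS = [
    ("21/22", "TCP", False, "FTP"),
    ("22", "TCP", False, "SSH"),
    ("23", "TCP", False, "Telnet"),
    ("123", "UDP", True, "NTP"),
    ("161/162", "UDP", False, "SNMP"),
    ("179", "TCP", True, "BGP"),
    ("6633", "TCP", True, "OpenFlow"),
    ("49152-65535", "UDP", False, "RADIUS"),
]


def inbound_ports_to_permit(services_in_use=()):
    """Return required ports plus optional ports whose service is in use."""
    wanted = {name.lower() for name in services_in_use}
    return [
        (port, transport, service)
        for port, transport, required, service in INBOUND_PORTS
        if required or service.lower() in wanted
    ]


if __name__ == "__main__":
    for port, transport, service in inbound_ports_to_permit(["SSH"]):
        print(port, transport, service)
```

Optional services that are not listed in services_in_use are left out, which matches the guidance that optional ports are needed only when the corresponding service is in use on the HP VSC.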

Table 7: HP VSC UDP/TCP Outbound/Remote Ports

Port     UDP/TCP  Required/Optional  Protocol Notes
21/22    TCP      Optional           FTP
22       TCP      Optional           SSH
23       TCP      Optional           Telnet
49       TCP      Optional           TACACS+
53       UDP/TCP  Required           DNS
69       UDP      Optional           TFTP
123      UDP      Required           NTP
161/162  UDP      Optional           SNMP - required for SNMP management
179      TCP      Required           BGP - required for federated HP VSCs
514      UDP      Optional           Syslog
6633     TCP      Required           OpenFlow


4 HP VRS and VRS-G Software Installation

This chapter provides installation instructions and the basic configuration for HP Virtual Routing

and Switching (VRS) and HP Virtual Routing and Switching Gateway (VRS-G).

Topics in this chapter include:

• VRS and VRS-G Installation Overview

• Preparing the Hypervisor

• Installing the VRS or VRS-G Software

• Configuring and Running VRS or VRS-G

VRS and VRS-G Installation Overview

VRS—The VRS component is a module that serves as a virtual endpoint for network services.

Through VRS, changes in the compute environment are immediately detected, triggering

instantaneous policy-based responses in network connectivity to ensure that application needs

are met.

VRS is an enhanced Open vSwitch (OVS) implementation that constitutes the network

forwarding plane. It encapsulates and de-encapsulates user traffic, enforcing L2-L4 traffic

policies as defined by the VSD. The VRS includes a Virtual Agent (VA) that tracks VM creation,

migration and deletion events in order to dynamically adjust network connectivity.

VRS‐G—The VRS-G component is a software gateway between the HP DCN networks and

legacy VLAN-based networks. It can be installed either on a bare metal server or within a VM.

For optimum performance, bare metal is recommended.

Operating System and Hardware Requirements: See the Release Notes.

Installation Procedure: Installation is essentially a three (or four) phase operation:

1. Preparing the Hypervisor.

2. Installing the VRS or VRS-G Software: The procedures are slightly different for the two
components and for each supported operating system, therefore each procedure is given
separately.

3. If you need MPLS over GRE: Installing the VRS Kernel Module for MPLS over GRE.

4. Configuring and Running VRS or VRS-G.

Preparing the Hypervisor

Before installation of VRS/VRS-G, the following requirements must be met for all operating

systems:


• The Linux server must be a clean installation with a minimum of configuration and

applications.

• An IP address must already have been assigned to the server.

• DNS must have already been configured and must be operational.

• At least two NTP servers must have been configured and NTP must have been synchronized

with them.

• There must be root access to the console of the Linux server.

• You must have the ability to download and install software from remote archives, or have a

local repository mirror for the required repositories.
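The NTP prerequisite in the list above can be partly checked by counting the servers in the NTP configuration. The Python sketch below assumes an ntpd-style configuration file with "server" directives (commonly /etc/ntp.conf, which is an assumption about the hypervisor and is not stated in this guide). It verifies only that at least two servers are configured, not that the clock is synchronized with them.

```python
def configured_ntp_servers(conf_text):
    """Return the hosts named on 'server' lines of ntp.conf-style text."""
    servers = []
    for raw_line in conf_text.splitlines():
        # Drop comments, then split the directive from its arguments.
        fields = raw_line.split("#", 1)[0].split()
        if len(fields) >= 2 and fields[0] == "server":
            servers.append(fields[1])
    return servers


def has_minimum_ntp_servers(conf_text, minimum=2):
    """True if the configuration names at least `minimum` NTP servers."""
    return len(configured_ntp_servers(conf_text)) >= minimum


if __name__ == "__main__":
    sample = "server 10.0.0.123 iburst\nserver 10.10.10.18\n"
    print(configured_ntp_servers(sample), has_minimum_ntp_servers(sample))
```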