
User Service Guide

HP Integrity Superdome/sx2000 Server

Second Edition

Manufacturing Part Number: A9834-9001B

September 2006


Legal Notices

Copyright 2006 Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice.

The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Intel and Itanium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. Linux is a U.S. registered trademark of Linus Torvalds. Microsoft and Windows are U.S. registered trademarks of Microsoft Corporation. UNIX is a registered trademark of The Open Group.


1. Overview

Server History and Specifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

Server Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

Power System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

AC Power. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

DC Power . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

System Power On Sequence. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Enabling 48 Volts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

Cooling System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

Utilities Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Platform Management . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

IPF Firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

UGUY . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

CLU Functionality . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

PM3 Functionality . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

System Clocks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

Management Processor (SBCH and SBC). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 32

Compact Flash . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

HUCB . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

Backplane (Fabric). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Crossbar Chip - XBC. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Switch Fabrics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

Backplane Monitor and Control. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

I2C Bus Distribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Clock Subsystem. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

Cabinet ID. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Cell ID . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Backplane Power Requirements and Power Distribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

CPUs and Memories . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Cell Controller. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Processor Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Processors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

Cell Memory System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 42

PDC Functional Changes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

Platform Dependent Hardware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Reset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Cell OL*. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

I/O Subsystem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

PCI-X Backplane Functionality. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

New Server Cabling. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

M-Link Cable . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

E-Link Cable. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

Firmware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Server Configurations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

Server Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Protection Domain Access Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Contents


Hardware Corrected Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Global Shared Memory Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

Hardware Uncorrectable Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Fatal Errors. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Blocking Timeout Fatal Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Deadlock Recovery Reset Errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

Error Logging . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

2. System Specifications

Dimensions and Weights. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Component Dimensions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Component Weights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Shipping Dimensions and Weights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Electrical Specifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Grounding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Circuit Breaker. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Power Options. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

System Power Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Component Power Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

I/O Expansion Cabinet Power Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

I/O Expansion Cabinet Power Cords . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

Environmental Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Temperature and Humidity Specifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Power Dissipation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Acoustic Noise Specification. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

Airflow. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

3. Installing the System

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Communications Interference . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Electrostatic Discharge . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Public Telecommunications Network Connection. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

Unpacking and Inspecting the System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Verifying Site Preparation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Checking the Inventory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

Inspecting the Shipping Containers for Damage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

Unpacking and Inspecting Hardware Components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

Unpacking the PDCA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Returning Equipment. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Setting Up the System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Moving the System and Related Equipment to the Installation Site . . . . . . . . . . . . . . . . . . . . . . . . . 91

Unpacking and Installing the Blower Housings and Blowers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Attaching the Side Skins and Blower Side Bezels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

Attaching the Leveling Feet and Leveling the Cabinet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Installing the Front Door Bezels and the Front and Rear Blower Bezels . . . . . . . . . . . . . . . . . . . . . 97

Wiring Check. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101


Installing and Verifying the PDCA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Voltage Check . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Removing the EMI Panels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Connecting the Cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

Routing the I/O Cables. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

Installing the Support Management Station . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Configuring the Event Information Tools. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

Turning On Housekeeping Power. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

Connecting the MP to the Customer LAN . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Connecting the MP to the Network. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Setting the Customer IP Address . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Booting and Verifying the System. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

Connecting to the Management Processor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

Powering On the System 48 V Supply. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

Booting the HP Integrity Superdome/sx2000 to an EFI Shell . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

Verifying the System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Running JET Software . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

Running JUST . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

Power Cycling After Using JET. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

Offline Diagnostic Environment (ODE) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133

Attaching the Rear Kick Plates. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Performing a Visual Inspection and Completing the Installation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

Conducting a Post Installation Check. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138

4. Booting and Shutting Down the Operating System

Operating Systems Supported on Cell-based HP Servers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

System Boot Configuration Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

HP Integrity Boot Configuration Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 141

Booting and Shutting Down HP-UX. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

HP-UX Support for Cell Local Memory. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Adding HP-UX to the Boot Options List . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Booting HP-UX . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

Shutting Down HP-UX. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Booting and Shutting Down HP OpenVMS I64. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

HP OpenVMS I64 Support for Cell Local Memory. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

Adding HP OpenVMS to the Boot Options List . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

Booting HP OpenVMS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

Shutting Down HP OpenVMS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

Booting and Shutting Down Microsoft Windows. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

Microsoft Windows Support for Cell Local Memory. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

Adding Microsoft Windows to the Boot Options List . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

Booting Microsoft Windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

Shutting Down Microsoft Windows. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

Booting and Shutting Down Linux. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

Linux Support for Cell Local Memory. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

Adding Linux to the Boot Options List . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163


Booting Red Hat Enterprise Linux . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

Booting SuSE Linux Enterprise Server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

Shutting Down Linux. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

A. sx2000 LEDs

B. Management Processor Commands

MP Command: BO. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176

MP Command: CA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

MP Command: CC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

MP Command: CP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

MP Command: DATE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 180

MP Command: DC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

MP Command: DF . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 182

MP Command: DI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

MP Command: DL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 184

MP Command: EL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 185

MP Command: HE. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

MP Command: ID. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

MP Command: IO. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 189

MP Command: IT. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

MP Command: LC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

MP Command: LS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192

MP Command: MA. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

MP Command: ND. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 194

MP Command: PD . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

MP Command: PE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

MP Command: PS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 198

MP Command: RE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

MP Command: RL . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201

MP Command: RR . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

MP Command: RS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

MP Command: SA . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 204

MP Command: SO . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 205

MP Command: SYSREV . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 206

MP Command: TC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

MP Command: TE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

MP Command: VM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

MP Command: WHO . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 210

MP Command: XD . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

C. Powering the System On and Off

Shutting Down the System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

Checking System Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

Shutting Down the Operating System . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

Preparing the Partitions for Shutdown. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 218


Powering Off the System Using the pe Command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

Turning On Housekeeping Power. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 222

Powering On the System Using the pe Command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

D. Templates

Templates . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 228

Equipment Footprint Templates . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

Computer Room Layout Plan. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245


Tables

Table 1-1. HSO LED Status Indicator Meaning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

Table 1-2. Supported Processors and Minimum Firmware Version Required . . . . . . . . . . . . . . . . . 42

Table 2-1. Server Component Dimensions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Table 2-2. I/O Expansion Cabinet Component Dimensions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

Table 2-3. System Component Weights. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Table 2-4. I/O Expansion Cabinet Weights. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

Table 2-5. Miscellaneous Dimensions and Weights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

Table 2-6. Available Power Options. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Table 2-7. Option 6 and 7 Specifics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

Table 2-8. Power Requirements (Without Support Management Station). . . . . . . . . . . . . . . . . . . . 65

Table 2-9. Component Power Requirements (Without Support Management Station). . . . . . . . . . 66

Table 2-10. I/O Expansion Cabinet Power Requirements (Without Support Management Station) . . 67

Table 2-11. I/O Expansion Cabinet Component Power Requirements . . . . . . . . . . . . . . . . . . . . . . . 67

Table 2-12. I/O Expansion Cabinet ac Power Cords. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

Table 2-13. Operational Physical Environment Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

Table 2-14. Nonoperational Physical Environment Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . 68

Table 2-15. Typical HP Integrity Superdome/sx2000 for Dual-core CPU Configurations. . . . . . . . 69

Table 2-16. Typical HP Integrity Superdome/sx2000 for Single-core CPU Configurations. . . . . . . 70

Table 2-17. Physical Environmental Specifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

Table 3-1. Available Power Options. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Table 3-2. Power Cord Option 6 and 7 Specifics. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

Table 3-3. 4- and 5-Wire Voltage Ranges . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

Table A-1. Front Panel LEDs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

Table A-2. Power and OL* LEDs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Table A-3. OL* LED States . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Table A-4. PDH Status and Power Good LED States . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173


Figures

Figure 1-1. Superdome Cabinet. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Figure 1-2. UGUY . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

Figure 1-3. Management Processor. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

Figure 1-4. HUCB . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

Figure 1-5. Locations of HSO and RCS. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

Figure 1-6. Backplane Power Supply Module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Figure 1-7. Backplane (Rear View). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

Figure 1-8. Cell Board . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

Figure 1-9. Cell Memory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Figure 1-10. I/O Rope Mapping . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

Figure 1-11. Backplane Cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

Figure 2-1. PDCA Locations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Figure 2-2. Airflow Diagram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

Figure 3-1. Normal Tilt Indicator. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Figure 3-2. Abnormal Tilt Indicator . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Figure 3-3. Front of Cabinet Container. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

Figure 3-4. Cutting Polystrap Bands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Figure 3-5. Removing the Ramps from the Pallet. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Figure 3-6. Location of Power Supply Mounting Screws . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

Figure 3-7. I/O Chassis Mounting Screws . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Figure 3-8. Shipping Strap Location . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

Figure 3-9. Removing the Mounting Brackets. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

Figure 3-10. Positioning the Ramps. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

Figure 3-11. Rolling the Cabinet Down the Ramp . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Figure 3-12. Blower Housing Frame . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

Figure 3-13. Removing Protective Cardboard from the Housing. . . . . . . . . . . . . . . . . . . . . . . . . . . 92

Figure 3-14. Installing the Rear Blower Housing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

Figure 3-15. Installing the Front Blower Housing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

Figure 3-16. Installing the Blowers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

Figure 3-17. Attaching the Rear Side Skin . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

Figure 3-18. Attaching the Front Side Skins. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

Figure 3-19. Attaching the Side Bezels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

Figure 3-20. Attaching the Leveling Feet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Figure 3-21. Installing the Lower Front Door Assembly . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

Figure 3-22. Installing the Upper Front Door Assembly . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

Figure 3-23. Installing the Rear Blower Bezel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

Figure 3-24. Installing the Front Blower Bezel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

Figure 3-25. PDCA Assembly for Options 6 and 7 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

Figure 3-26. A 4-Wire Connector. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

Figure 3-27. A 5-Wire Connector. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

Figure 3-28. Installing the PDCA. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

Figure 3-29. Checking PDCA Test Points (5-Wire). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

Figure 3-30. Wall Receptacle Pinouts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

Page 12

Figure 3-31. Power Supply Indicator LED Detail. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

Figure 3-32. Removing Front EMI Panel Screw . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Figure 3-33. Removing the Back EMI Panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

Figure 3-34. Cable Labeling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

Figure 3-35. Routing I/O Cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

Figure 3-36. Front Panel with Housekeeping (HKP) Power On and Present LEDs . . . . . . . . . . . 118

Figure 3-37. BPS LEDs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

Figure 3-38. MP LAN Connection Location. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Figure 3-39. LAN Configuration Screen . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

Figure 3-40. The ls Command Screen . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

Figure 3-41. Connecting to Host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

Figure 3-42. Main MP Menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

Figure 3-43. MP Command Option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

Figure 3-44. MP Virtual Front Panel. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

Figure 3-45. Example of Partition State—Cabinet Not Powered Up. . . . . . . . . . . . . . . . . . . . . . . 125

Figure 3-46. MP Console Option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 126

Figure 3-47. HP Integrity Superdome/sx2000 EFI Boot Manager . . . . . . . . . . . . . . . . . . . . . . . . . 127

Figure 3-48. EFI Shell Prompt . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

Figure 3-49. HP Integrity Superdome/sx2000 Partitions at System Firmware Console. . . . . . . . 129

Figure 3-50. Power Status First Window. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

Figure 3-51. Power Status Window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

Figure 3-52. Power Status Showing State of UGUY LEDs (and Other Status) . . . . . . . . . . . . . . 130

Figure 3-53. Attaching Rear Kick Plates. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Figure 3-54. Cell Board Ejectors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

Figure 3-55. Front EMI Panel Flange and Cabinet Holes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

Figure 3-56. Reinstalling the Back EMI Panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

Figure A-1. Utilities LEDs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Figure A-2. PDH Status. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

Figure C-1. Connecting to Host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

Figure C-2. Main MP Menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

Figure C-3. Checking for Other Users . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

Figure C-4. Checking Current System Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

Figure C-5. MP Virtual Front Panel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 216

Figure C-6. Example of Partition State . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

Figure C-7. Partition Consoles Menu . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 217

Figure C-8. Issuing an rr Command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 218

Figure C-9. Using the de -s Command. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

Figure C-10. Power Entity Command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 220

Figure C-11. Power Status First Window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 220

Figure C-12. Power Status Second Window. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

Figure C-13. Front Panel Display with Housekeeping (HKP) Power On, and Present LEDs . . . 223

Figure C-14. BPS LEDs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 224

Figure C-15. Power Entity Command . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 225

Page 13

Figure C-16. Power Status First Window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

Figure C-17. Power Status Window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

Figure D-1. Cable Cutouts and Caster Locations. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

Figure D-2. SD16 and SD32 Space Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 230

Figure D-3. SD64 Space Requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

Figure D-4. Computer Floor Template . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Figure D-5. Computer Floor Template . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

Figure D-6. Computer Floor Template . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 235

Figure D-7. Computer Floor Template . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

Figure D-8. Computer Floor Template . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

Figure D-9. SD32 and SD64, and I/O Expansion Cabinet Templates. . . . . . . . . . . . . . . . . . . . . . . 238

Figure D-10. SD32 and SD64, and I/O Expansion Cabinet Templates. . . . . . . . . . . . . . . . . . . . . . 239

Figure D-11. SD32 and SD64, and I/O Expansion Cabinet Templates. . . . . . . . . . . . . . . . . . . . . . 240

Figure D-12. SD32 and SD64, and I/O Expansion Cabinet Templates. . . . . . . . . . . . . . . . . . . . . . 241

Figure D-13. SD32 and SD64, and I/O Expansion Cabinet Templates. . . . . . . . . . . . . . . . . . . . . . 242

Figure D-14. SD32 and SD64, and I/O Expansion Cabinet Templates. . . . . . . . . . . . . . . . . . . . . . 243

Page 14

Page 15

About This Document

This document contains a system overview, system specific parameters, how to install the system, and

operating system specifics for the system.


Page 16

Intended Audience

This document is intended for HP trained Customer Support Consultants.

Document Organization

This document is organized as follows:

Chapter 1 This chapter presents a historical view of the Superdome server family, describes the various server components, and describes how the server components function together.

Chapter 2 This chapter contains the dimensions and weights for the server and various components. Electrical specifications, environmental requirements, and templates are also included.

Chapter 3 This chapter involves unpacking and inspecting the system, setting up the system,

connecting the MP to the customer LAN, and steps to complete the installation.

Chapter 4 This chapter has information for booting and shutting down the server operating system

(OS) for each OS supported.

Appendix A This appendix contains tables that describe the various LED states for the front panel,

power and OL* states, and OL* states for I/O chassis cards.

Appendix B This appendix provides a summary for each management processor (MP) command. Screen output is provided for each command so you can see the results of the command.

Appendix C This appendix provides procedures to power off and power on the system when the removal and replacement of a component requires it.

Appendix D This appendix contains templates for cable cutouts and caster locations; SD16, SD32, SD64, and I/O expansion cabinets; and the computer room floor.


Page 17

Typographic Conventions

The following typographic conventions are used in this publication.

WARNING A warning lists requirements that you must meet to avoid personal injury.

CAUTION A caution provides information required to avoid losing data or avoid losing system functionality.

IMPORTANT Provides essential information to explain a concept or to complete a task.

NOTE A note highlights useful information such as restrictions, recommendations, or important details about HP product features.

• Commands and options are represented using this font.

• Text that you type exactly as shown is represented using this font.

• Text to be replaced with text that you supply is represented using this font.

Example:

“Enter the ls -l filename command” means you must replace filename with your own text.

• Keyboard keys and graphical interface items (such as buttons, tabs, and menu items) are represented using this font.

Examples:

The Control key, the OK button, the General tab, the Options menu.

• Menu -> Submenu represents a menu selection you can perform.

Example:

“Select the Partition -> Create Partition action” means you must select the Create Partition menu item from the Partition menu.

• Example screen output is represented using this font.


Page 18

Related Information

You can find other information on HP server hardware management, Microsoft® Windows®, and diagnostic support tools at the following Web sites.

Web Site for HP Technical Documentation: http://docs.hp.com

This is the main Web site for HP technical documentation. This site offers comprehensive information about

HP products available for free.

Server Hardware Information: http://docs.hp.com/hpux/hw/

This Web site is the systems hardware portion of the docs.hp.com site. It provides HP nPartition server hardware management details, including site preparation, installation, and more.

Diagnostics and Event Monitoring: Hardware Support Tools: http://docs.hp.com/hpux/diag

This site contains complete information about HP hardware support tools, including online and offline diagnostics and event monitoring tools. This site has manuals, tutorials, FAQs, and other reference material.

Web Site for HP Technical Support: http://us-support2.external.hp.com

The HP IT resource center Web site provides comprehensive support information for IT professionals on a wide variety of topics, including software, hardware, and networking.


Page 19

Publishing History

The publishing history of this document includes the following editions. Updates are made to this document on an unscheduled, as-needed basis. The updates consist of a complete replacement manual and pertinent Web-based or CD documentation.

First Edition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . March 2006

Second Edition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . September 2006


Page 20

HP Encourages Your Comments

HP welcomes your feedback on this publication. Address your comments to edit@presskit.rsn.hp.com and note that you will not receive an immediate reply. All comments are appreciated.


Page 21

1 Overview

The HP superscalable sx2000 processor chipset is the new chipset for the Superdome high-end platform. It supports up to 128 PA-RISC or Intel Itanium 2 processors and provides an enterprise server upgrade path for the Superdome line of systems. The sx2000 provides the final major hardware upgrade to the Superdome platform. Modifications include changes to the following components:

- A new chipset

Page 22

- A new cell board

- A new system backplane and its power board

- New I/O backplanes and their power boards

- New I/O-backplane cables

- The addition of a redundant, hot-swappable clock source

Page 23

Server History and Specifications

Superdome was introduced as the new platform architecture for HP high-end servers in 2000-2004. Superdome represented the first collaborative hardware design effort between traditional HP and Convex technologies. Superdome was designed to replace T and V Class servers and to prepare for the transition from PA-RISC to Intel Itanium 2 processors (IA). The new design was to enable running different operating systems on the same server. The design also included several new high availability features.

Initially, Superdome was released with the legacy core electronics complex (CEC), which included a 552 MHz PA-8600 processor. The legacy CEC supported two additional CPU speeds: a 750 MHz PA-8700, followed by an 875 MHz PA-8700 processor.

The HP Integrity server project was actually four projects based around the sx1000 CEC chipset and the Integrity cell boards. The initial release was the sx1000 chipset, Integrity cell boards, IA firmware, and a 1.2 GHz Intel processor. This initial release included PCI-X and PCI I/O mixes. The Integrity systems were compatible with the legacy Superdome IOX.

A second release was still based upon the sx1000 CEC and included Integrity cell boards, but also added PA firmware and a dual-core PA processor. The release also included a 2 GB DIMM and a new HP-UX version. The processors, processor power pods, memory, firmware, and operating system all changed for this release.

A third release, still based upon the sx1000 chipset, included the Integrity cell boards, IA firmware, and a 1.5 GHz IA CPU. The CPU module is composed of a dual-core processor with a new cache controller. The firmware now allowed for mixing cells within a system. All three DIMM sizes were supported. Actual firmware and operating system changes were minor changes from the earlier versions.

Today, the HP superscalable sx2000 processor chipset is the fourth and final Superdome release, based upon a new CEC that supports up to 128 PA-RISC or IA processors. It is targeted to be the last generation of Superdome servers to support the PA-RISC family of processors. Modifications include the new chipset and board changes including cell board, system and I/O backplanes and their associated power boards, interconnect, and the addition of a redundant, hot-swappable clock source.

Page 24

Server Components

A Superdome system consists of the following types of cabinet assemblies:

At least one Superdome left cabinet. The Superdome cabinets contain all of the processors, memory, and core devices of the system. They also house most (usually all) of the system's PCI cards. Systems can include both left and right cabinet assemblies containing a left or right backplane (SD64) respectively.

One or more HP Rack System/E cabinets. These rack cabinets are used to hold the system peripheral devices such as disk drives.

Optionally, one or more I/O expansion cabinets (Rack System/E). An I/O expansion cabinet is required when a customer requires more PCI cards than can be accommodated in the Superdome cabinets.

The width of the cabinet assemblies accommodates moving them through standard-sized doorways. The intake air to the main (cell) card cage is filtered. This filter is removable for cleaning and replacement while the system is fully operational.

A status display is located on the outside of the front and rear doors of each cabinet. You can therefore determine the basic status of each cabinet without opening any cabinet doors.

The Superdome is a cell-based system. Cells communicate with each other via the crossbar on the backplane. Every cell has its own I/O interface, which can be connected to one 12-slot I/O-card cage via two system bus adapter (SBA) link cables. Not all SBA links are connected by default due to a physical limitation of four I/O-card cages per cabinet or node. In addition to these components, each node consists of a power subsystem and a utility subsystem. Three types of Superdome are available: an SD16, an SD32, and an SD64 two-cabinet system (with single-CPU cell board sockets). The SD## represents the maximum number of available CPU sockets.

An SD16 contains the following components:

- Up to four cell boards

- Four I/O card cages

- Five I/O fans

- Four system cooling fans

- Four bulk power supplies (BPS)

- Two Power Distribution Control Assemblies (PDCA)

Two backplane N+1 power supplies provide power for the SD16. The four cell boards are connected to one pair of crossbar chips (XBC). The backplane of an SD16 is the same as the backplane of an SD32, but the SD16 has one set of XBCs and the EEPROM is different. The HUCB utility PCB has a switch that should be set to TYPE= 1.

An SD32 has up to eight cell boards. All eight cell boards are connected to two pairs of crossbar chips (XBCs).

The SD32 backplane is designed to allow for a system upgrade to an SD64. On an SD32, four of the eight

connectors should use U-Turn cables. The U-Turn cables double the number of links and the bandwidth

between the XBCs and are recommended to achieve best performance.

An SD64 has up to 16 cell boards and requires two cabinets. All 16 cell boards are connected to four pairs of XBCs. The SD64 consists of left backplane and right backplane cabinets, which are connected using 12 M-Link cables.

Page 25

When the PA dual-core or the IA dual-core processors are used, the CPU counts are doubled by the use of the dual-die processors, as supported on the Itanium cell boards. Up to 128 processors can be supported.
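The cabinet configurations described above can be summarized in a small lookup table. The following Python sketch is illustrative only: the names `SuperdomeModel`, `MODELS`, `max_sockets`, and `max_cores` are hypothetical, and the figure of four sockets per cell board is inferred from the SD16 description (16 sockets across up to four cell boards) rather than stated explicitly in this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuperdomeModel:
    name: str
    max_cells: int   # maximum number of cell boards
    xbc_pairs: int   # pairs of crossbar chips (XBC)
    cabinets: int    # Superdome cabinets required


# Values taken from the SD16, SD32, and SD64 descriptions in this section.
MODELS = {
    "SD16": SuperdomeModel("SD16", max_cells=4, xbc_pairs=1, cabinets=1),
    "SD32": SuperdomeModel("SD32", max_cells=8, xbc_pairs=2, cabinets=1),
    "SD64": SuperdomeModel("SD64", max_cells=16, xbc_pairs=4, cabinets=2),
}

# Assumption: 16 sockets / 4 cell boards on an SD16 gives 4 sockets per cell.
SOCKETS_PER_CELL = 4


def max_sockets(model: SuperdomeModel) -> int:
    """Maximum CPU sockets, which is what the SD## number represents."""
    return model.max_cells * SOCKETS_PER_CELL


def max_cores(model: SuperdomeModel, dual_core: bool = True) -> int:
    """Dual-core processors double the CPU count per socket."""
    return max_sockets(model) * (2 if dual_core else 1)


for m in MODELS.values():
    print(m.name, max_sockets(m), max_cores(m))
```

Under these assumptions, a fully populated SD64 with dual-core processors reaches the 128-processor maximum quoted in the text.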

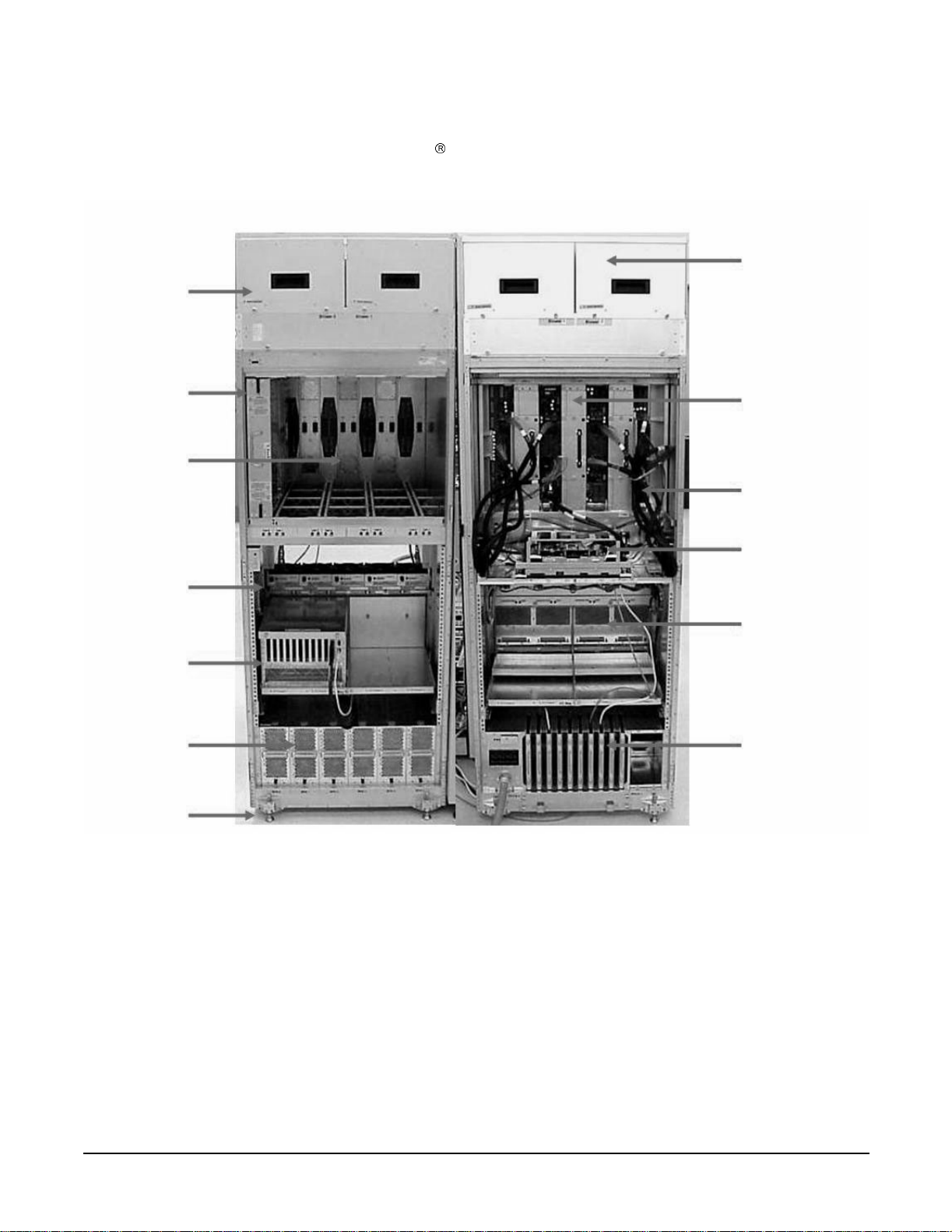

Figure 1-1 Superdome Cabinet

Callouts: Blowers, Cell, Backplane, I/O Fans, I/O Chassis, Power Supplies, Leveling Feet, Backplane Power Cables, Utilities, PDCA, Cable Groomer

Page 26

Power System

The power subsystem consists of the following components:

- One or two Power Distribution Control Assemblies (PDCA)

- One Front End Power Supply (FEPS)

- Up to six Bulk Power Supplies (BPS)

- One power board per cell

- An HIOB power system

- Backplane power bricks

- Power monitor (PM) on the Universal Glob of Utilities (UGUY)

- Local power monitors (LPM) on the cell, the HIOB, and the backplanes

AC Power

The AC power system includes one or two PDCAs and one FEPS.

The FEPS is a modular, 2n+2 shelf assembly power system that can consume up to 17 kVA of power from AC sources. The purpose of the FEPS chassis is to provide interconnect, signal, and voltage busing between the PDCAs and BPSs, between the BPSs and the utility subsystem, and between the BPS and the system power architecture. The FEPS subsystem comprises three distinct modular assemblies: six BPS, two PDCAs, and one FEPS chassis.

At least one 3-phase PDCA per Superdome cabinet is required. For redundancy, a second PDCA per cabinet may be provided. The purpose of the PDCA is to receive a single 3-phase input and output three 1-phase outputs with a voltage range of 200 to 240 volts regardless of the AC source type. The PDCA also provides a convenience disconnect switch/circuit breaker for service, test points, and voltage present LED indicators. The PDCA is offered as a 4-wire or a 5-wire device. Separate PDCAs (PDCA-0 and PDCA-1) may be connected to 4-wire and 5-wire input sources simultaneously as long as the PDCA internal wiring matches the wiring configuration of the AC source.

The 4-wire PDCA is used in a phase-to-phase voltage range of 200 to 240 volts at 50/60 Hz. This PDCA is rated for a maximum input current of 44 Amps per phase. The AC input power line to the PDCA is connected with power plugs or is hardwired. When using power plugs, use a power cord [OLFLEX 190 (PN 6008044) four conductor 6-AWG (16mm), 600 V, 60 Amp, 90°C, UL and CSA approved, conforms to CE directives GN/YW ground wire].

The recommended plugs for the 4-wire PDCA are:

- In-line connector: Mennekes ME 460C9, 3-phase, 4-wire, 60 Amp, 250 V, UL approved, color blue, IEC309-1

grounded at 9:00 o'clock.

- Panel-mount receptacle: Mennekes ME 460R9, 3-phase, 4-wire, 60 Amp, 250 V, UL approved, color blue,

IEC309-1 grounded at 9:00 o'clock.

The 5-wire PDCA is used in a phase-to-neutral voltage range of 200 to 240 Vac at 50/60 Hz. This PDCA is rated for a maximum input current of 24 Amps per phase. The AC input power line to the PDCA is connected with power plugs or is hardwired. When using power plugs, use a power cord [five conductors, 10-AWG (6 mm), 450/475 V, 32 Amps, <HAR> European wire cordage, GN/YW ground wire]. An alternative is for the customer to provide the power plug including the power cord and the receptacle. Recommended plugs:

Page 27

- Inline connector: Mennekes ME532C6-16, 3-phase, 5-wire, 32 Amps, 450/475 V, VDE certified, color red, IEC309-1, IEC309-2, grounded at 6:00 o'clock.

- Panel-mount receptacle: Mennekes ME532R6-1276, 3-phase, 5-wire, 32 Amp, 450/475 V, VDE certified, color red, IEC309-1, IEC309-2, grounded at 6:00 o'clock.

- FUSE per phase: 25 Amp (valid for Germany).
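The 4-wire and 5-wire PDCA descriptions above quote the same 200 to 240 V range, but measured phase-to-phase in one case and phase-to-neutral in the other. The following Python sketch shows what that implies for the source feeding a 5-wire PDCA. It assumes a balanced 3-phase source, which this guide does not state, and the function name `line_to_line` is hypothetical.

```python
import math


def line_to_line(v_line_to_neutral: float) -> float:
    """Line-to-line voltage of a balanced 3-phase source (assumed balanced)."""
    return math.sqrt(3) * v_line_to_neutral


# 5-wire PDCA: 200 to 240 V is measured phase-to-neutral.
low = line_to_line(200.0)
high = line_to_line(240.0)
print(f"5-wire source, line-to-line: {low:.0f} V to {high:.0f} V")
```

Under that assumption, the line-to-line voltage of a 5-wire source is higher than the 200 to 240 V seen by a 4-wire PDCA, which is why the internal wiring must match the AC source configuration.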

DC Power

Each power supply output provides 48 V dc up to 60 A (2.88 kVA) and 5.3 V dc housekeeping. Normally an SD32 Superdome cabinet contains six BPS, regardless of the number of installed cells and I/O. An SD16 normally has four BPS installed.
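The per-BPS rating quoted above is the product of the 48 V output and the 60 A maximum current. The short Python sketch below reproduces that arithmetic and sums it per cabinet. The function names are hypothetical, and the cabinet totals ignore redundancy and conversion losses, so they are an upper bound on rated output rather than a statement of usable power.

```python
BPS_VOLTS = 48.0  # V dc per BPS output (from the text)
BPS_AMPS = 60.0   # A maximum per BPS output (from the text)


def bps_output_watts(volts: float = BPS_VOLTS, amps: float = BPS_AMPS) -> float:
    """Rated 48 V output of one bulk power supply."""
    return volts * amps


def cabinet_output_watts(bps_count: int) -> float:
    """Sum of rated 48 V outputs; ignores redundancy and losses."""
    return bps_count * bps_output_watts()


print(bps_output_watts())       # one BPS
print(cabinet_output_watts(6))  # SD32 cabinet with six BPS
print(cabinet_output_watts(4))  # SD16 cabinet with four BPS
```

The six-BPS total is close to the roughly 17 kVA figure given for the FEPS under AC Power, although the guide does not draw that connection itself.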

System Power On Sequence

The general power-up sequence is as follows:

1. The AC power cord is plugged in and the front end power supply (FEPS) breakers are closed.

2. Housekeeping (HKP) power is applied. Utilities initialization occurs and the complex configuration is checked.

3. The power switch is turned on and the cabinet 48 V power is enabled.

4. The SPU cabinet main backplane is powered on and reset. The main system backplane comes up first and supplies clocks to cells and I/O backplanes. Backplane XBCs must be ready by the time cell controllers initialize.

5. I/O backplanes are powered on.

6. Cell boards are powered on.

7. The SUB queries cells for valid complex profiles. Cells must be powered up with 48 V in addition to HKP. When one valid cell is located, a timer starts, and cell boards that are not ready when the timer expires are not initialized.

8. Early CPU_INIT and cell monarch selections begin.

9. Cell board initialization begins.

10. Partitions seek rendezvous and perform core-cell selections.

11. Partition domains are initialized.

12. IPL is launched.
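The ordering in the list above can be modeled as a simple ordered enumeration. This Python sketch is an illustration only: the step names and the helper functions `next_step` and `may_run` are hypothetical, and it assumes each step strictly follows the previous one, which simplifies steps that the text describes as overlapping (for example, the timer in step 7).

```python
from enum import IntEnum


class PowerOnStep(IntEnum):
    # Names are illustrative labels for the twelve steps listed above.
    AC_APPLIED = 1
    HOUSEKEEPING_POWER = 2
    CABINET_48V_ENABLED = 3
    MAIN_BACKPLANE_ON = 4
    IO_BACKPLANES_ON = 5
    CELL_BOARDS_ON = 6
    COMPLEX_PROFILE_QUERY = 7
    CPU_INIT_AND_MONARCH = 8
    CELL_INIT = 9
    PARTITION_RENDEZVOUS = 10
    PARTITION_DOMAINS_INIT = 11
    IPL_LAUNCH = 12


def next_step(current):
    """Return the step that follows `current`, or None after IPL."""
    if current is PowerOnStep.IPL_LAUNCH:
        return None
    return PowerOnStep(current + 1)


def may_run(step, completed):
    """A step may run only when every earlier step has completed."""
    return all(PowerOnStep(n) in completed for n in range(1, step))


done = {PowerOnStep.AC_APPLIED, PowerOnStep.HOUSEKEEPING_POWER}
print(may_run(PowerOnStep.CABINET_48V_ENABLED, done))
print(may_run(PowerOnStep.CELL_BOARDS_ON, done))
```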

Enabling 48 Volts

The PM is responsible for enabling 48 V, but it must have permission from the MP. To enable 48 V, the cabinet power switch must be moved from OFF to ON. Alternatively, you can use the MP command pe if the power switch is already on. (If the switch is on, the cabinet wakes up from power-on reset.)

If the PM has permission, it sends a PS_CTL_L signal to the FEPS. The BPS then enables the 48 V converters, which send 48 V to the backplane, I/O chassis, HUCB, cells, fans, and blowers. Once the 48 V is enabled, it is cabled to the backplane, cells, and I/O chassis.
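The enabling conditions described above can be expressed as a small predicate. This Python sketch is a reading of the prose, not firmware behavior: the function name and parameters are hypothetical, and it assumes that the pe command has no effect while the power switch is off.

```python
def pm_may_enable_48v(mp_permission: bool,
                      switch_was_on: bool,
                      switch_is_on: bool,
                      pe_command_issued: bool = False) -> bool:
    """Return True if the PM would assert PS_CTL_L under these assumptions."""
    if not mp_permission:
        return False          # the PM needs permission from the MP
    if not switch_is_on:
        return False          # assumption: pe is ignored with the switch off
    off_to_on = not switch_was_on
    return off_to_on or pe_command_issued


# Switch moved from OFF to ON with MP permission.
print(pm_may_enable_48v(True, switch_was_on=False, switch_is_on=True))
# Switch already on, power enabled through the MP pe command.
print(pm_may_enable_48v(True, True, True, pe_command_issued=True))
```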

Page 28

Cooling System

The Superdome has four blowers and five I/O fans per cabinet. These components are all hot-swap devices. All have LEDs indicating the current status. These LEDs are self-explanatory. Temperature monitoring occurs for the following:

- Inlet air for temperature increases above normal

- BPS for temperature increases above normal

- The I/O power board over-temperature signal

The inlet air sensor is on the main cabinet, located near the bottom of cell 1 front. The inlet air sensor and the BPS sensors are monitored by the PM3 (on the UGUY), and the I/O power board sensors are monitored by the cabinet level utilities (CLU) (on the UGUY).

The PM controls and monitors the speed of groups of N+1 redundant fans. In a CPU cabinet, fan Group 0 consists of the four main blowers and fan Group 1 consists of the five I/O fans. In an I/O Expansion (IOX) cabinet, fan Groups 0 through 3 consist of four I/O fans and fan Group 4 consists of two management subsystem fans. All fans are expected to be populated at all times (with the exception of the OLR of a failed fan).

The main blowers feature a variable speed control. The blowers operate at full speed, and there is circuitry available to reduce the normal operating speed. All of the I/O fans and managed fans run at only one speed.

The PM controls fans through the use of the following resources:

- fanReference D/A (for main fans only)

- tachSelect register

- 930 Port 3.5 (T1) and 930 Timer 1

- FAN_SPEED_HIGH and FAN_SPEED_NORMAL message (for main fans only)

- 16 blower/fan present signals

- 2 manageability fan present signals

- 16 blower/fan fail signals

- 2 management fan fail signals

When the PM queries the entities for their maximum power consumption, the cells also send a value describing the desired NORMAL main fan speed. Cells of the same architecture send identical values. If the PM receives differing values, it uses the largest value.

One minute after setting the main blower fanReference to the desired speed or powering on the cabinet, the PM uses the tach select register to cycle through each fan and measure its speed. When a fan is selected, Timer 1 is used in counter mode to count the pulses on port T1 over a period of 1 second. If the frequency does not equal the expected frequency, within some margin of error, the fan is considered to have failed and is subtracted from the working fan count.
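The speed selection and tachometer check described above can be sketched as follows. This Python example is a simplified model: the function names are hypothetical, the margin value is a placeholder because the guide does not give one, and pulses counted over one second are treated directly as a frequency in hertz.

```python
def normal_blower_speed(cell_requests):
    """The PM uses the largest NORMAL speed value reported by the cells."""
    return max(cell_requests)


def fan_ok(pulses_in_one_second, expected_hz, margin_hz):
    """A fan passes if its measured frequency is within the margin."""
    return abs(pulses_in_one_second - expected_hz) <= margin_hz


def working_fan_count(measured_hz, expected_hz, margin_hz):
    """Count the fans whose tach reading is within the allowed margin."""
    return sum(1 for hz in measured_hz if fan_ok(hz, expected_hz, margin_hz))


# Hypothetical readings for four blowers; the third has slowed down.
readings = [100, 98, 60, 103]
print(normal_blower_speed([3, 5, 5]))
print(working_fan_count(readings, expected_hz=100, margin_hz=5))
```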

Page 29

If the failure causes a transition to N- I/O or main fans in a CPU cabinet, the cabinet is immediately powered

off. If the failure causes a transition to N- I/O fans in an IOX cabinet, the I/O backplanes contained in the I/O

Chassis Enclosure (ICE) containing that fan group are immediately powered off.
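The power-off decision above can be sketched as below. This is a hedged illustration only: it assumes that "N-" means fewer than N working fans in an N+1 redundant group, and the function names and returned strings are hypothetical.

```python
def fan_group_state(working, required_n):
    """Classify a fan group relative to its required count N."""
    if working > required_n:
        return "N+"
    if working == required_n:
        return "N"
    return "N-"  # assumption: fewer than N working fans


def action_on_fan_failure(cabinet_type, working, required_n):
    """Return the action the text describes for a transition to N-."""
    if fan_group_state(working, required_n) != "N-":
        return "none"
    if cabinet_type == "CPU":
        return "power off cabinet"
    if cabinet_type == "IOX":
        return "power off I/O backplanes in the affected ICE"
    raise ValueError(f"unknown cabinet type: {cabinet_type}")
```

Under these assumptions, a CPU cabinet whose blower group needs three fans and has only two working would be powered off, while the same loss in an IOX cabinet would affect only the I/O backplanes in the ICE containing that fan group.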

Only inlet temperature increases are monitored by HP-UX; all other high temperature increase chassis

codes do not activate the envd daemon to act as configured in /etc/envd.conf. The PM monitors ambient

(inlet) temperature. The PM polls an analog-to-digital converter to read the current ambient temperature.

The temperature falls into one of four ranges: Normal, OverTempLow, OverTempMid, or OverTempHigh. The

following state machine describes the actions taken based on the various temperature state transitions:

OTL_THRESHOLD = 32C -----> send error code PDC_IPR_OLT

OTM_THRESHOLD = 38C -----> send error code PDC_INT_OTM

OTH_THRESHOLD = 40C -----> shut down 48 V

NOTE In an I/O expansion (IOX) cabinet, the thresholds are set 2 degrees higher to compensate for

the fact that the cabinet sensor is mounted in a hot spot.
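The thresholds and the IOX adjustment can be sketched as a simple classifier. This is an illustration under stated assumptions: the function and constant names are hypothetical, and treating a reading equal to a threshold as belonging to the higher range is an assumption the text does not confirm.

```python
# Thresholds in degrees Celsius, taken from the list above.
OTL_THRESHOLD = 32
OTM_THRESHOLD = 38
OTH_THRESHOLD = 40
IOX_OFFSET = 2  # IOX cabinets use thresholds 2 degrees higher


def classify_temperature(temp_c, is_iox=False):
    """Map an ambient (inlet) reading to one of the four ranges."""
    offset = IOX_OFFSET if is_iox else 0
    # Assumption: a reading at or above a threshold enters that range.
    if temp_c >= OTH_THRESHOLD + offset:
        return "OverTempHigh"
    if temp_c >= OTM_THRESHOLD + offset:
        return "OverTempMid"
    if temp_c >= OTL_THRESHOLD + offset:
        return "OverTempLow"
    return "Normal"
```

With these assumptions, a 33 C reading is OverTempLow in a CPU cabinet but still Normal in an IOX cabinet, where the low threshold becomes 34 C.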

Utilities Subsystem

The Superdome utilities subsystem comprises a number of hardware and firmware components located

throughout the Superdome system.

Platform Management

The sx2000 platform management subsystem consists of a number of hardware and firmware components

located throughout the sx2000 system. The sx2000 uses the sx1000 platform management components, with

firmware changes to support new functionality.

The following list describes the major hardware components of the platform management subsystem and the

changes required for the sx2000:

The PDH microcontroller is located on the cell's PDH daughter-card assembly. It provides communication

between the management firmware, the PDH space, and the USB bus. The microcontroller represents a

change from the prior implementation, the Intel 80C251 processor, to a more powerful 16-bit microcontroller

(ARM). This microcontroller change enables the PDH daughter-card design to be compatible across all three

new CEC platforms. It also enables the extra processing power to be used to move the console UARTs into

PDH memory space located on the cell, eliminating the sx1000 Core I/O (CIO) card.

The Universal Glob of Utilities (UGUY) on Superdome contains the power monitor (PM), the cabinet-level

utilities (CLU), and the system clock source circuitry. No changes are planned for the sx2000, but the entire

clock section on the UGUY assembly is made obsolete by new redundant clock source circuitry.

The CLU circuitry on the UGUY assembly provides cabinet-level cable interconnect for backplane and

I/O card cage utility signal communication and scan support.

The PM circuitry on the UGUY assembly monitors and controls the 48 V dc, the cabinet environment

(ambient temperature and fans), and controls power to the entities (cells, I/O bays).

The Management Processor (MP) is a single-board computer (SBC) that controls the console (local and

remote) and the front panel display and its redirection on the console, maintains logs for the Event IDs,

coordinates messages between devices, and performs other service processor functions.

The SBCH board provides USB hubs into the cabinet from an upstream HUB or the MP. No changes are

planned for the sx2000.

IPF Firmware

- The firmware supports four different operating systems (HP-UX, Linux, Windows, OpenVMS)

- The firmware is compliant with IPF industry standards (SAL, PAL, ACPI, EFI)

- Provides an IPMI (intelligent platform management interface)

- Supports architecture that extends across product line and next generation systems

- Supports a new interface for users (mfg., diag., etc.)

- Supports PCI hot-plug

- Supports cell hot-plug (online add and delete)

- Supports I/O chassis hot-plug (online add and delete)

- Supports Cell-Local memory

- Supports USB for keyboard and mouse at boot

- Supports VGA during boot

- Enables global shared memory (GSM)

- Supports PCI 2.3, PCI-X 1.0, and PCI-X 2.0

UGUY

Every cabinet contains one UGUY. Refer to Figure 1-2. The UGUY plugs into the HUCB. It is not

hot-swappable. Its MP microprocessor controls power monitor functions, executing the Power Monitor 3 (PM3)

firmware and the cabinet-level utility (CLU) firmware.

The UGUY consists of two main components:

- CLU

- PM3

Figure 1-2 UGUY

CLU Functionality

The CLU is responsible for collecting and reporting the configuration information for itself, the main backplane,

I/O backplanes, and the SUB/HUB. Each of these boards is furnished with a configuration EEPROM

containing FRU IDs, revision information, and, for the main backplane and I/O backplanes, maximum power

requirements for that entity in its fully configured, fully loaded state. The power requirement information is

sent to the PM3 automatically when HKP is applied or when a new entity is plugged in. The configuration

information is sent to the SUB in response to a get_config command.

The CLU gathers the following information over its five I2C buses:

- Board revision information is contained in the board's configuration EEPROM for the UGUY board (UGUY),

the SBCH board (SBCH), the main backplane, the main backplane power boards (HBPB), the I/O backplane

(HIOB), and the I/O backplane power boards (IOPB).

- Power requirements from the configuration EEPROM for the main backplane (HLSB or HRSB) and the I/O

backplanes (HIOB). This information is sent to the PM3 processor (via USB) so that it can calculate cabinet

power requirements.

- Power control and status interface. Another function of the UGUY is to use the power_good signals to drive

power on

- Reset control which includes a reset for each I/O backplane, a main backplane cabinet reset, TRST - JTAG

reset for all JTAG scan chains in entire cabinet, a system clock control margin control

- Status LEDs for the SBA cable OL*, the cell OL*, and the I/O backplane OL*
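The cabinet power calculation mentioned in the list above might look like the following sketch. It assumes the PM3 simply sums the maximum power requirement reported for each entity; the text does not state the actual method, and the function name, entity names, and wattages are hypothetical.

```python
def cabinet_power_requirement(entity_max_watts):
    """Sum the maximum power requirement reported for each present entity.

    entity_max_watts maps an entity name to the fully configured, fully
    loaded maximum read from that entity's configuration EEPROM.
    """
    # Assumption: the cabinet requirement is a plain sum of entity maxima.
    return sum(entity_max_watts.values())


# Hypothetical entity names and values, for illustration only.
example = {"main_backplane": 500, "io_backplane_0": 300, "io_backplane_1": 300}
total = cabinet_power_requirement(example)
```

Recomputing the total whenever a new entity is plugged in matches the text's statement that power requirement information is sent automatically in that case.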

PM3 Functionality

The PM3 performs the following functions:

1) FEPS control and monitoring. For each of the BPSs in the FEPS.

Superdome has six BPSs and the UGUY sends 5 V to the BPS for use by the fault collection circuitry.

2) FAN control and monitoring.

In addition to the blowers, there are five I/O system fans (above and between I/O bays). These fans run at full

speed all the time (there is no fan speed signal).

3) Cabinet mode and cabinet number fan out.

A surface-mount DIP switch on the HUCB (UGUY backplane) can be used to configure a Superdome

cabinet for normal use or as an SD16 cabinet. Use the 16-position thumb switch on the UGUY to set the

cabinet number. Numbers 0-7 are for CPU oriented cabinets and numbers 8-15 are for I/O-only cabinets.

4) Local Power Monitor (LPM) interfaces.

Each big board (cell board, I/O backplane, and main backplane) contains logic that controls conversion of 48

V to lower voltages. The PM3 interfaces to the LPM with the board-present input signal to the PM3 and the

power-enable output signal from the PM3.

5) Front and rear panel board controls.
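The cabinet numbering rule in item 3 can be expressed as a small lookup. This is an illustrative sketch only; the function name and return strings are hypothetical.

```python
def cabinet_role(cabinet_number):
    """Map a 16-position thumb switch setting to the cabinet type."""
    if not 0 <= cabinet_number <= 15:
        raise ValueError("cabinet number must be in the range 0-15")
    # Numbers 0-7 are CPU oriented cabinets; 8-15 are I/O-only cabinets.
    return "CPU" if cabinet_number <= 7 else "I/O-only"
```

For instance, a switch setting of 8 identifies the first I/O-only cabinet number.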

System Clocks

The sx2000 clocks are supplied from the backplane to the backplane crossbar ASICs and the cell boards.

There is no distribution of the system clocks to the I/O backplanes. Instead, independent local clock

distribution is provided on the I/O backplane.

Management Processor (SBCH and SBC)

The management processor (MP) comprises two PCBs, the SBC (single-board computer) and the single-board

computer hub (SBCH), which form one functional unit. The MP is a hot-swappable unit powered by +5 V

HKP that holds the MP configuration parameters in compact flash and the error and activity logs and the

complex identification information (complex profile) in battery-backed NVRAM. It also provides the USB

network controller (MP bus). Each complex has one MP. It cannot be set up for redundancy.

However, it is not a single point of failure for the complex because it can be hot-swapped. If the MP fails, the

complex can still boot and function. However, the following utility functionality is lost until the MP can be

replaced:

- The ability to process and store log entries (chassis codes)

- Console functions to every partition

- OL* functions

- Virtual front panel and system alert notification

- The ability to connect to the MP for maintenance, either locally or remotely

- The ability to run diagnostics (ODE and scan)

Figure 1-3 Management Processor (labeled components: SBC, SBCH, UGUY)

The SBCH provides the physical and electrical interface to the SBC, the fanning out of the universal serial

bus (USB) to internal and external subsystems, and a LAN 10/100BT Ethernet connection. It plugs into the

HUCB and is hot-swappable. Every CPU cabinet contains one SBCH board, but only one SBCH contains an

SBC board used as the MP for the complex. The remaining SBCH boards act as USB hubs.

The SBC board is an embedded PC running system utility board (SUB) firmware. It is the core of the MP. It

plugs into the SBCH board through a PC104 interface. The SBC provides three external interfaces to the

utility subsystem:

- LAN (10/100BT Ethernet) for customer console access

- RS232 port, for remote access from the response center through a modem

- RS232 port, for local console access for manufacturing and field support personnel

The modem function is not included on the SBC and must be external to the cabinet.

Compact Flash

The compact flash is a PCMCIA-style memory card that plugs into the SBC board. It stores the MP

firmware and the customer's MP configuration parameters. The parameters stored in the compact flash are:

- The network configurations for both the public and private LANs

- User name and password combinations for logging in to the MP

- Baud rates for the serial ports

- Paging parameters for a specified alert level

HUCB

The HUCB, shown in Figure 1-4, is the backplane of the utility subsystem. It provides cable distribution for

all the utility signals except the clocks. It also provides the customer LAN interface and serial ports. The SMS

connects to the HUCB. The system type switch is located on the HUCB. This board has no active circuits. It is

not hot-swappable.

Figure 1-4 HUCB

Backplane (Fabric)

The system backplane assembly provides the following functionality in an sx2000 system:

- Interfaces the CLU subsystem to the system backplane and cell modules

- Houses the system crossbar switch fabrics and cell modules

- Provides switch fabric interconnect between multiple cabinets

- Generates system clock sources

- Performs redundant system clock source switching

- Distributes the system clock to crossbar chips and cell modules

- Distributes housekeeping power to cell modules

- Terminates I/O cables to cell modules

The backplane supports up to eight cells, interconnected via the crossbar links. A sustained total bandwidth

of 25.5 GB/s is provided to each cell. Each cell connects to three individual XBC ASICs. This connection