Fortinet FortiGate-7000, FortiGate-7040E, FortiGate-7904E, FortiGate-7901E, FortiGate-7030E Handbook


FortiOS™ Handbook - FortiGate-7000


VERSION 5.4.5


FORTINET DOCUMENT LIBRARY

http://docs.fortinet.com

FORTINET VIDEO GUIDE

http://video.fortinet.com

FORTINET BLOG

https://blog.fortinet.com

CUSTOMER SERVICE & SUPPORT

https://support.fortinet.com

http://cookbook.fortinet.com/how-to-work-with-fortinet-support/

FORTIGATE COOKBOOK

http://cookbook.fortinet.com

FORTINET TRAINING SERVICES

http://www.fortinet.com/training

FORTIGUARD CENTER

http://www.fortiguard.com

FORTICAST

http://forticast.fortinet.com

END USER LICENSE AGREEMENT

http://www.fortinet.com/doc/legal/EULA.pdf

FORTINET PRIVACY POLICY

https://www.fortinet.com/corporate/about-us/privacy.html

FEEDBACK

Email: techdocs@fortinet.com

December 20, 2017

FortiOS™ Handbook - FortiGate-7000

01-545-3966550-20171220


TABLE OF CONTENTS

Change Log 7

Introduction 8

What's new for FortiGate-7000 v5.4.5 8

M1 and M2 interfaces can use different VLANs for heartbeat traffic (408386) 8

GTP load balancing 8

FSSO user authentication is synchronized 8

HA Link failure threshold changes (422264) 9

FortiGate-7000s running FortiOS v5.4.5 can be configured as dialup IPsec VPN servers 9

FortiGate-7000 overview 11

Licenses, Device Registration, and Support 11

FortiGate-7060E 12

FortiGate-7060E front panel 12

FortiGate-7060E schematic 13

FortiGate-7040E 14

FortiGate-7040E front panel 14

FortiGate-7040E schematic 15

FortiGate-7030E 15

FortiGate-7030E front panel 16

FortiGate-7030E schematic 16

FIM-7901E interface module 18

FIM-7901E schematic 19

FIM-7904E interface module 20

Splitting the FIM-7904E B1 to B8 interfaces 21

FIM-7904E hardware schematic 21

FIM-7910E interface module 22

Splitting the FIM-7910E C1 to C4 interfaces 23

FIM-7910E hardware schematic 24

FIM-7920E interface module 24

Changing the interface type and splitting the FIM-7920E C1 to C4 interfaces 25

Splitting the C1 to C4 interfaces 26

FIM-7920E hardware schematic 26

FPM-7620E processing module 27

NP6 network processors - offloading load balancing and network traffic 28


Accelerated IPS, SSL VPN, and IPsec VPN (CP9 content processors) 30

Getting started with FortiGate-7000 31

Managing individual modules 32

Managing individual modules from the CLI 33

Connecting to module CLIs using the management module 33

Connecting to the FortiOS CLI of the FIM module in slot 1 34

Default VDOM configuration 35

Default management VDOM 35

Firmware upgrades 35

Restarting the FortiGate-7000 35

Load balancing 36

Traffic that cannot be load balanced 36

Recommended configuration for traffic that cannot be load balanced 37

Configuration synchronization 39

Failover in a standalone FortiGate-7000 39

Replacing a failed FPM or FIM module 39

Replacing a failed module in a standalone FortiGate-7000 chassis 39

Replacing a failed module in a FortiGate-7000 chassis in an HA cluster 40

Installing firmware on an FIM or FPM module from the BIOS using a TFTP server 41

Uploading firmware from a TFTP server to an FIM module 41

Uploading firmware from a TFTP server to an FPM module 43

Operating a FortiGate-7000 45

Failover in a standalone FortiGate-7000 45

Replacing a failed FPM or FIM module 45

Replacing a failed module in a standalone FortiGate-7000 chassis 45

Replacing a failed module in a FortiGate-7000 chassis in an HA cluster 46

Installing firmware on an FIM or FPM module from the BIOS using a TFTP server 46

Uploading firmware from a TFTP server to an FIM module 47

Uploading firmware from a TFTP server to an FPM module 48

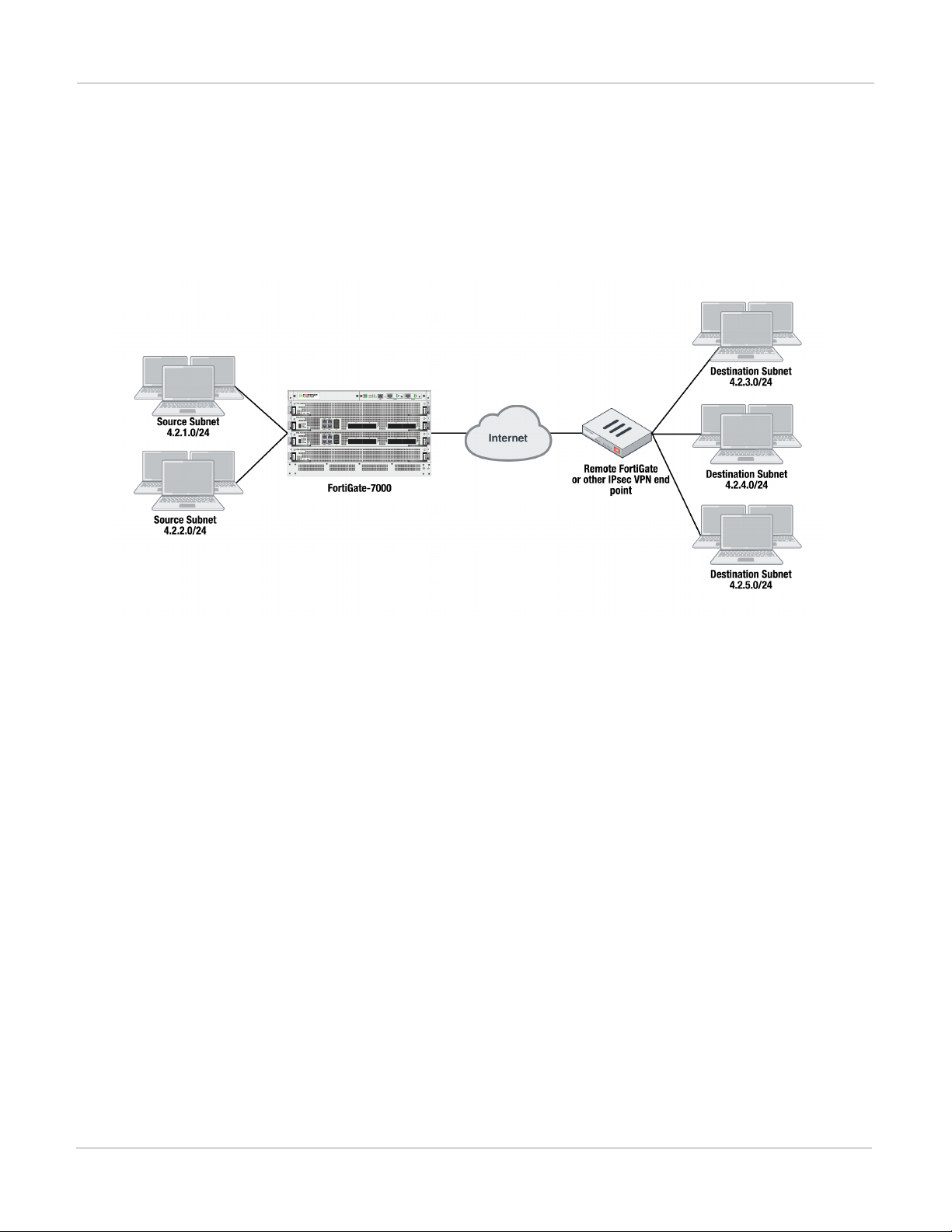

IPsec VPN 51

Adding source and destination subnets to IPsec VPN phase 2 configurations 51

Example basic IPsec VPN Phase 2 configuration 51

Example multiple subnet IPsec VPN Phase 2 configuration 52

Configuring the FortiGate-7000 as a dialup IPsec VPN server 53

Example dialup IPsec VPN configuration 53

Troubleshooting 54

High Availability 57

Before you begin configuring HA 57

Connect the M1 and M2 interfaces for HA heartbeat communication 58

HA configuration 60

Setting up HA on the FIM interface modules in the first FortiGate-7000 (chassis 1) 60

HA management configuration 62


Managing individual modules in HA mode 63

Firmware upgrade 64

Session failover (session-pickup) 64

Enabling session pickup for TCP and UDP 65

If session pickup is disabled 65

Primary unit selection and failover criteria 66

Verifying primary chassis selection 68

How link and module failures affect primary chassis selection 68

FIM module failures 70

Management link failures 70

Link failure threshold and board failover tolerance 70

Link failure threshold 70

Board failover tolerance 70

Priority and primary chassis selection 71

Override and primary chassis selection 71

FortiGate-7000 v5.4.5 special features and limitations 72

Managing the FortiGate-7000 72

Default management VDOM 72

Firewall 72

IP Multicast 72

High Availability 73

Shelf Manager Module 73

FortiOS features that are not supported by FortiGate-7000 v5.4.5 74

IPsec VPN tunnels terminated by the FortiGate-7000 74

SSL VPN 75

Traffic shaping and DDoS policies 75

Sniffer mode (one-arm sniffer) 75

FortiGuard Web Filtering 75

Log messages include a slot field 75

FortiOS Carrier 75

Special notice for new deployment connectivity testing 75

FortiGate-7000 v5.4.3 special features and limitations 77

Managing the FortiGate-7000 77

Default management VDOM 77

Firewall 77

Link monitoring and health checking 77

IP Multicast 78

High Availability 78

Shelf Manager Module 79

FortiOS features that are not supported by FortiGate-7000 v5.4.3 79

IPsec VPN tunnels terminated by the FortiGate-7000 79

More about IPsec VPN routing limitations 80


SSL VPN 80

Authentication 80

Traffic shaping and DDoS policies 81

Sniffer mode (one-arm sniffer) 81

FortiGuard Web Filtering 81

Log messages include a slot field 81

FortiOS Carrier 81

Special notice for new deployment connectivity testing 81

FortiGate-7000 Load balancing commands 82

config load-balance flow-rule 82

Syntax 82

status {disable | enable} 83

src-interface <interface-name> [<interface-name>...] 83

vlan <vlan-id> 83

ether-type {any | arp | ip | ipv4 | ipv6} 83

{src-addr-ipv4 | dst-addr-ipv4 | src-addr-ipv6 | dst-addr-ipv6} <ip-address> <netmask> 83

protocol {any | icmp | tcp | udp | igmp | sctp | gre | esp | ah | ospf | pim | vrrp} 83

{src-l4port | dst-l4port} <start>[-<end>] 83

action {forward | mirror-ingress | mirror-egress | stats | drop} 83

set mirror-interface <interface-name> 84

forward-slot {master | all | load-balance | FPM3 | FPM4 | FPM5 | FPM6} 84

priority <number> 84

comment <text> 84

config load-balance setting 84

gtp-load-balance {disable | enable} 84

max-miss-heartbeats <heartbeats> 85

max-miss-mgmt-heartbeats <heartbeats> 85

weighted-load-balance {disable | enable} 85

dp-load-distribution-method {round-robin | src-ip | dst-ip | src-dst-ip | src-ip-sport | dst-ip-dport | src-dst-ip-sport-dport} 85

config workers 85


Change Log

| Date | Change Description |
|---|---|
| December 20, 2017 | Updated for FortiGate-7000 v5.4.5. New sections include What's new for FortiGate-7000 v5.4.5 on page 8, FortiGate-7000 v5.4.5 special features and limitations on page 72, IPsec VPN on page 51, gtp-load-balance {disable \| enable} on page 84, and Operating a FortiGate-7000 on page 45. Also, changes to High Availability on page 57. New section Recommended configuration for traffic that cannot be load balanced on page 37. Additional changes and fixes throughout the document. |
| November 7, 2017 | Changes to Installing firmware on an FIM or FPM module from the BIOS using a TFTP server on page 41. Also added a note about the MGMT interface being a static aggregate and not an LACP aggregate. |
| November 2, 2017 | Updated with new information throughout the document, including a new HA chapter. |
| August 30, 2017 | Updated with new information throughout the document. |
| December 1, 2016 | Initial release. |

7 FortiGate-7000

Fortinet Technologies Inc.


Introduction

This document describes what you need to know to get started using a FortiGate-7000 product. Also included are

details about CLI commands that are specific to FortiGate-7000 products.

This FortiOS Handbook chapter contains the following sections:

FortiGate-7000 overview provides a quick overview of FortiGate-7000 components.

Getting started with FortiGate-7000 describes how to get started with managing and configuring your FortiGate-

7000 product.

FortiGate-7000 Load balancing commands describes FortiGate-7000 load balancing CLI commands.

What's new for FortiGate-7000 v5.4.5

The following new features have been added to FortiGate-7000 v5.4.5.

M1 and M2 interfaces can use different VLANs for heartbeat traffic (408386)

The M1 and M2 interfaces can be configured to use different VLANs for HA heartbeat traffic.

The following command now configures the VLAN used by the M1 interface (default 999):

```
config system ha
    set hbdev-vlan-id 999
end
```

The following new command configures the VLAN used by the M2 interface (default 1999):

```
config system ha
    set hbdev-second-vlan-id 1999
end
```
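A minimal Python sketch of the configuration above, assuming you generate FortiOS configuration as text from a script. The helper name `ha_heartbeat_vlan_config` is hypothetical and is not a FortiOS API. It emits only the two `config system ha` options shown in this section and rejects identical VLAN IDs, reflecting the handbook's requirement that M1 and M2 be on different broadcast domains; FortiOS itself may validate these values differently.

```python
DEFAULT_M1_VLAN = 999   # default M1 heartbeat VLAN from this handbook
DEFAULT_M2_VLAN = 1999  # default M2 heartbeat VLAN from this handbook


def ha_heartbeat_vlan_config(m1_vlan: int = DEFAULT_M1_VLAN,
                             m2_vlan: int = DEFAULT_M2_VLAN) -> str:
    """Return a CLI fragment setting the M1 and M2 heartbeat VLANs (hypothetical helper)."""
    for vlan in (m1_vlan, m2_vlan):
        # 802.1Q reserves VLAN IDs 0 and 4095, so usable IDs are 1-4094.
        if not 1 <= vlan <= 4094:
            raise ValueError(f"VLAN ID {vlan} is outside 1-4094")
    if m1_vlan == m2_vlan:
        # Assumption: keep M1 and M2 on separate broadcast domains.
        raise ValueError("M1 and M2 should use different heartbeat VLANs")
    return "\n".join([
        "config system ha",
        f"    set hbdev-vlan-id {m1_vlan}",
        f"    set hbdev-second-vlan-id {m2_vlan}",
        "end",
    ])


print(ha_heartbeat_vlan_config())
```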

GTP load balancing

GTP load balancing is supported for FortiGate-7000 configurations licensed for FortiOS Carrier. You can use the following command to enable GTP load balancing. This command is only available after you have licensed the FortiGate-7000 for FortiOS Carrier.

```
config load-balance setting
    set gtp-load-balance enable
end
```

FSSO user authentication is synchronized

FSSO user authentication is synchronized to all FIM and FPM modules. FSSO users are no longer required to reauthenticate when their sessions are processed by a different FIM or FPM module.


HA Link failure threshold changes (422264)

The link failure threshold is now determined based on all of the FIM modules in a chassis. This means that the chassis with the fewest active links becomes the backup chassis.
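The rule above can be illustrated with a simplified Python model. The `Chassis` class and `pick_backup` function are hypothetical. The sketch assumes each chassis reports a count of active monitored links per FIM module, and it ignores the other primary chassis selection criteria (such as priority and override) described in the High Availability chapter; it returns `None` when the totals tie, leaving that case to those other criteria.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Chassis:
    name: str
    # Active monitored links on each FIM module, keyed by chassis slot (1 or 2).
    active_links_per_fim: dict[int, int] = field(default_factory=dict)

    def total_active_links(self) -> int:
        # v5.4.5 evaluates the threshold across all FIM modules together.
        return sum(self.active_links_per_fim.values())


def pick_backup(a: Chassis, b: Chassis) -> Chassis | None:
    """Return the chassis with fewer active links, or None if they tie."""
    links_a, links_b = a.total_active_links(), b.total_active_links()
    if links_a == links_b:
        return None  # decided by criteria this sketch does not model
    return a if links_a < links_b else b


chassis1 = Chassis("chassis-1", {1: 4, 2: 4})
chassis2 = Chassis("chassis-2", {1: 4, 2: 3})
backup = pick_backup(chassis1, chassis2)
print(backup.name if backup else "tie")  # chassis-2
```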

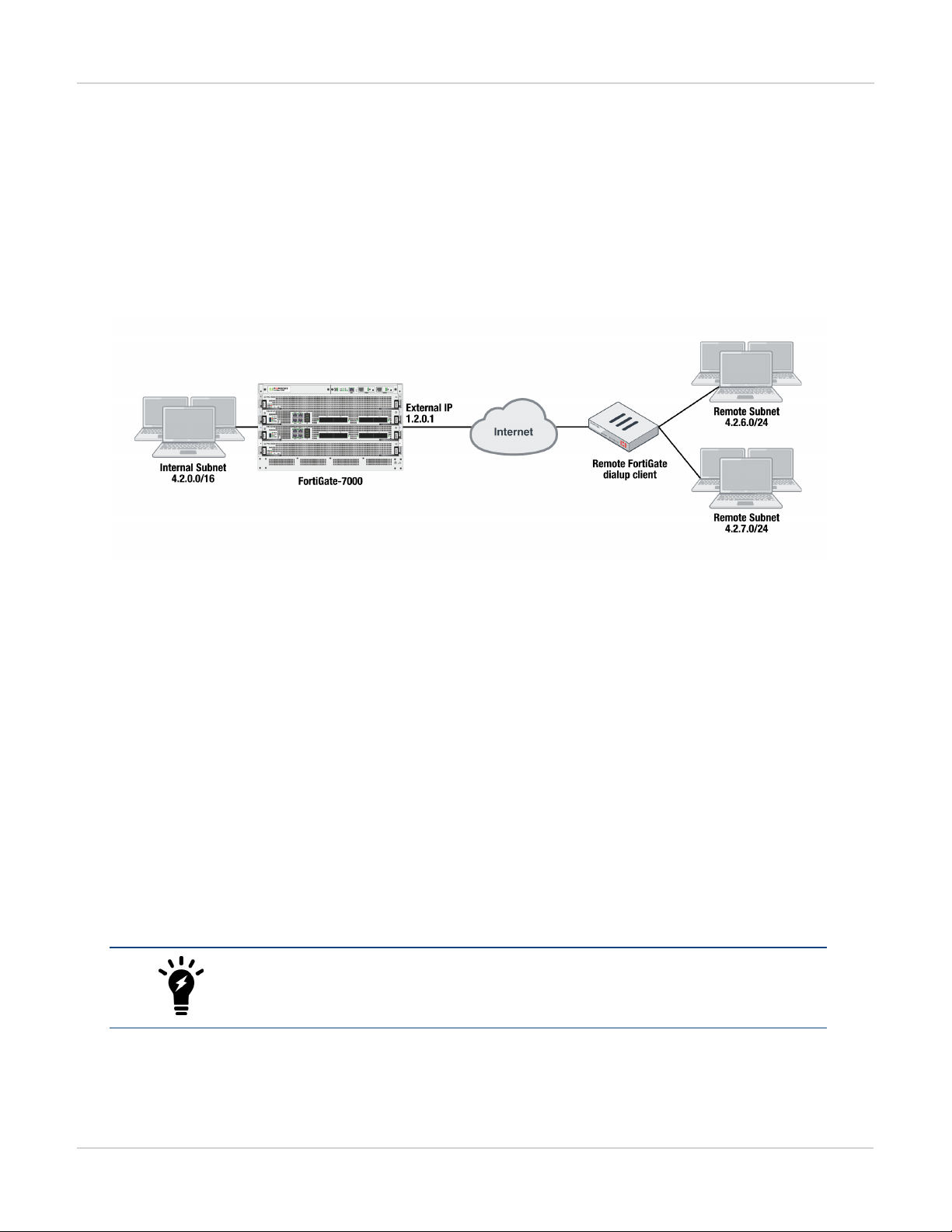

FortiGate-7000s running FortiOS v5.4.5 can be configured as dialup IPsec VPN servers

The following example shows how to set up a dialup IPsec VPN configuration in which a FortiGate-7000 running v5.4.5 acts as the dialup IPsec VPN server.

Configure phase 1 and set type to dynamic:

```
config vpn ipsec phase1-interface
    edit dialup-server
        set type dynamic
        set interface "v0020"
        set peertype any
        set psksecret <password>
    next
end
```

Configure phase 2. To support dialup IPsec VPN, set the destination subnet to 0.0.0.0 0.0.0.0:

```
config vpn ipsec phase2-interface
    edit dialup-server
        set phase1name dialup-server
        set src-subnet 4.2.0.0 255.255.0.0
        set dst-subnet 0.0.0.0 0.0.0.0
    next
end
```

To configure the remote FortiGate as a dialup IPsec VPN client

The dialup IPsec VPN client should advertise its local subnet(s) using the phase 2 src-subnet option.

If there are multiple local subnets, create a phase 2 for each one. Each phase 2 advertises only one local subnet to the dialup IPsec VPN server. If more than one local subnet is added to a phase 2, only the first one is advertised to the server.

Dialup client configuration:

```
config vpn ipsec phase1-interface
    edit "to-fgt7k"
        set interface "v0020"
        set peertype any
        set remote-gw 1.2.0.1
        set psksecret <password>
    next
end

config vpn ipsec phase2-interface
    edit "to-fgt7k"
        set phase1name "to-fgt7k"
        set src-subnet 4.2.6.0 255.255.255.0
        set dst-subnet 4.2.0.0 255.255.0.0
    next
    edit "to-fgt7k-2"
        set phase1name "to-fgt7k"
        set src-subnet 4.2.7.0 255.255.255.0
        set dst-subnet 4.2.0.0 255.255.0.0
    next
end
```
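Because each phase 2 advertises only one local subnet, a client with several local subnets needs one phase 2 entry per subnet. The following Python sketch generates those entries as CLI text from the example values above. The function name and the `-2`, `-3` naming scheme are assumptions modeled on the example, not FortiOS requirements.

```python
from __future__ import annotations

import ipaddress


def dialup_client_phase2(phase1name: str, local_subnets: list[str],
                         server_subnet: str) -> str:
    """Build one phase2-interface entry per local subnet (hypothetical helper)."""
    server = ipaddress.ip_network(server_subnet)
    lines = ["config vpn ipsec phase2-interface"]
    for index, subnet in enumerate(local_subnets, start=1):
        local = ipaddress.ip_network(subnet)
        # Mirror the example naming: "to-fgt7k", then "to-fgt7k-2", ...
        name = phase1name if index == 1 else f"{phase1name}-{index}"
        lines += [
            f'    edit "{name}"',
            f'        set phase1name "{phase1name}"',
            # Exactly one local subnet per phase 2 so the server learns each one.
            f"        set src-subnet {local.network_address} {local.netmask}",
            f"        set dst-subnet {server.network_address} {server.netmask}",
            "    next",
        ]
    lines.append("end")
    return "\n".join(lines)


print(dialup_client_phase2("to-fgt7k", ["4.2.6.0/24", "4.2.7.0/24"], "4.2.0.0/16"))
```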


FortiGate-7000 overview

A FortiGate-7000 product consists of a FortiGate-7000 series chassis (for example, the FortiGate-7040E) with

FortiGate-7000 modules installed in the chassis slots. A FortiGate-7040E chassis comes with two interface

modules (FIM) to be installed in slots 1 and 2 to provide network connections and session-aware load balancing

to two processor modules (FPM) to be installed in slots 3 and 4.

FortiGate-7000 products are sold and licensed as packages that include the chassis as well as the modules to be installed in the chassis. When you receive your FortiGate-7000 series product, you install the chassis in a rack and install the modules in the chassis. Interface modules always go in slots 1 and 2 and processor modules in slots 3 and up.

If your FortiGate-7000 product includes two different interface modules, for optimal configuration you should install the module with the lower model number in slot 1 and the module with the higher model number in slot 2.

For example, if your chassis includes a FIM-7901E and a FIM-7904E, install the FIM-7901E in chassis slot 1 and

the FIM-7904E in chassis slot 2. This applies to any combination of two different interface modules.
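The slot-placement rule can be expressed as a small Python sketch. It assumes interface module names follow the `FIM-<number>E` pattern used in this handbook; the function names are hypothetical.

```python
from __future__ import annotations

import re


def model_number(module: str) -> int:
    """Extract the numeric model from a name such as "FIM-7901E"."""
    match = re.fullmatch(r"FIM-(\d+)E", module)
    if match is None:
        raise ValueError(f"unexpected interface module name: {module}")
    return int(match.group(1))


def fim_slot_assignment(module_a: str, module_b: str) -> dict[int, str]:
    """Lower model number goes in slot 1, higher model number in slot 2."""
    first, second = sorted((module_a, module_b), key=model_number)
    return {1: first, 2: second}


print(fim_slot_assignment("FIM-7904E", "FIM-7901E"))
```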

As an administrator, when you browse to the FortiGate-7000 management IP address, you log in to the interface module in slot 1 (the primary or master interface module or FIM) to view the status of the FortiGate-7000 and make configuration changes. The FortiOS firmware running on each module has the same configuration, and when you make configuration changes to the primary interface module, the configuration changes are synchronized to all modules.

The same FortiOS firmware build runs on each module in the chassis. You can upgrade FortiGate-7000 firmware by logging in to the primary interface module and performing a firmware upgrade as you would for any FortiGate. During the upgrade process, the firmware of all of the modules in the chassis is upgraded in one step. Firmware upgrades should be done during a quiet time because traffic is briefly interrupted during the upgrade process.

Licenses, Device Registration, and Support

A FortiGate-7000 product is made up of a FortiGate-7000 series chassis, one or two FIM interface modules and

two to four FPM processor modules. The entire package is licensed and configured as a single product under the

FortiGate-7000 chassis serial number. When you receive a new FortiGate-7000 product you register it on

https://support.fortinet.com using the chassis serial number. Use the chassis serial number when requesting

support from Fortinet for the product.

All Fortinet licensing, including FortiCare Support, IPS, AntiVirus, Web Filtering, Mobile Malware, FortiClient, FortiCloud, and additional virtual domains (VDOMs), applies to the entire FortiGate-7000 product and not to individual components.

If an individual component, such as a single interface or processor module, fails, you can RMA and replace just that component.


FortiGate-7060E

The FortiGate-7060E is an 8U 19-inch rackmount 6-slot chassis with an 80Gbps fabric backplane and a 1Gbps base backplane designed by Fortinet. The fabric backplane provides network data communication and the base backplane provides management and sync communication among the chassis slots.

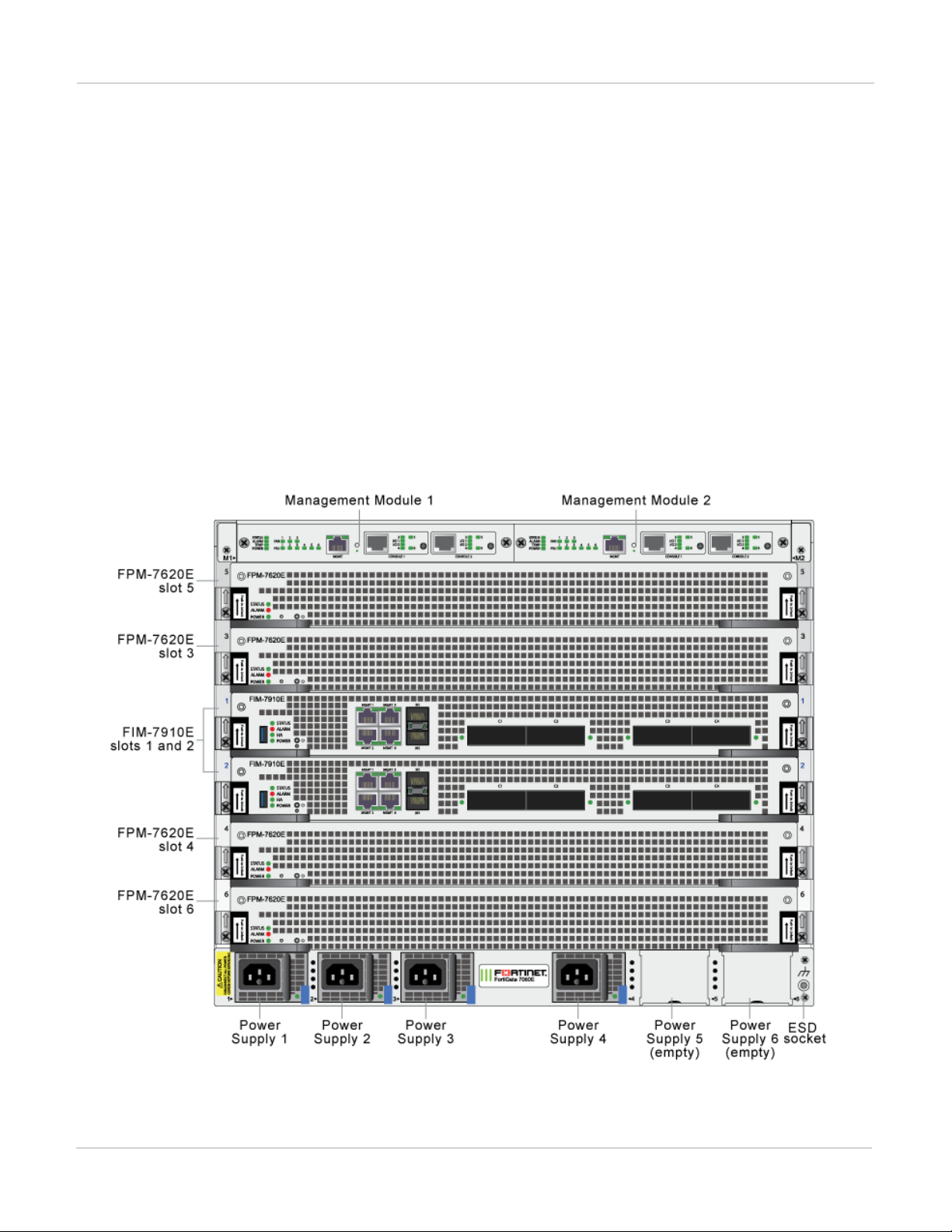

FortiGate-7060E front panel

The chassis is managed by two redundant management modules. Each module includes an Ethernet connection

as well as two switchable console ports that provide console connections to the modules in the chassis slots. The

active management module controls chassis cooling and power management and provides an interface for

managing the modules installed in the chassis.

FortiGate-7060E front panel, (example module configuration)


Power is provided to the chassis using four hot-swappable 3+1 redundant 100-240 VAC, 50-60 Hz power supply units (PSUs). You can optionally install up to six PSUs to provide 3+3 redundancy. The FortiGate-7060E can also be equipped with DC PSUs, allowing you to connect the chassis to -48V DC power.

The standard configuration of the FortiGate-7060E includes two FIM (interface) modules in chassis slots 1 and 2

and up to four FPM (processing) modules in chassis slots 3 to 6.

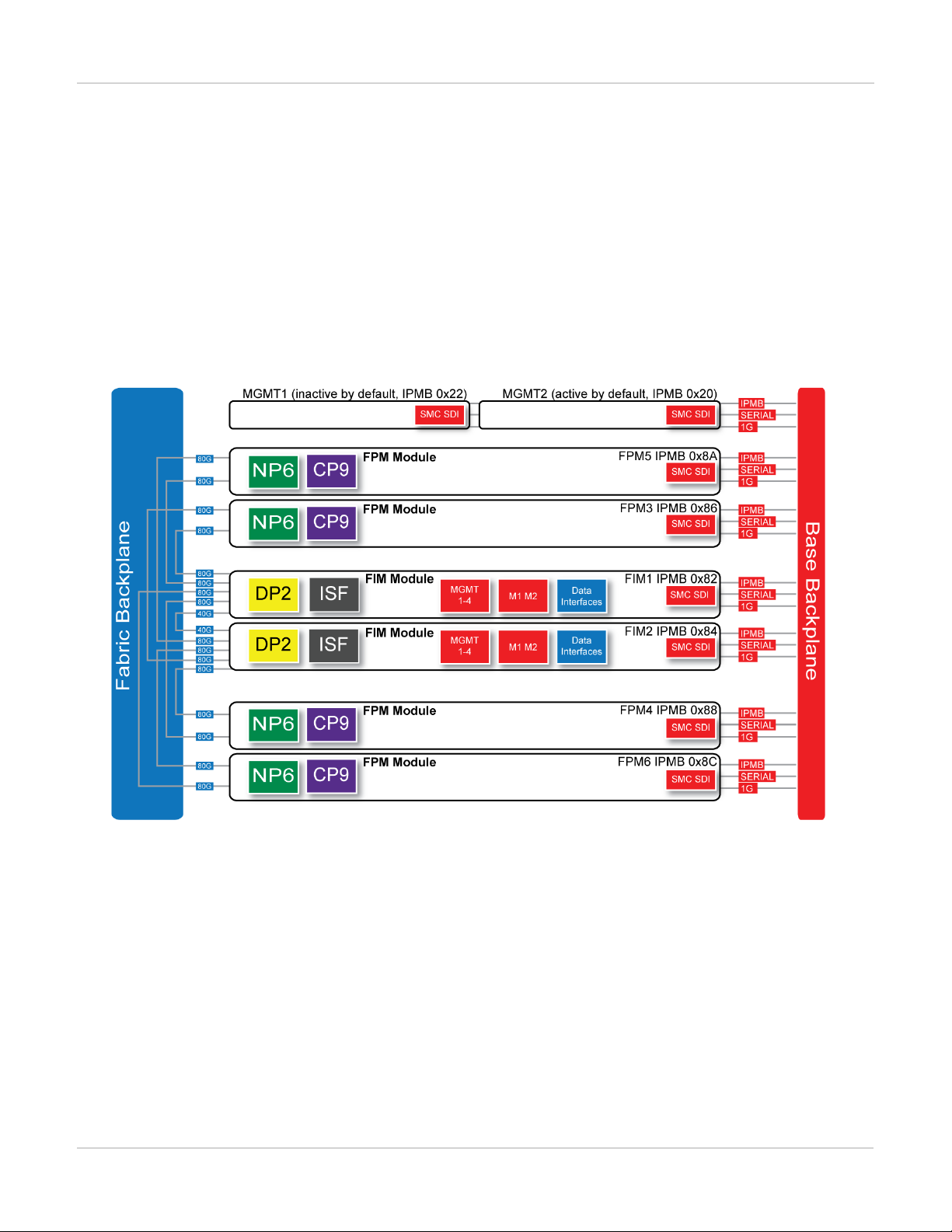

FortiGate-7060E schematic

The FortiGate-7060E chassis schematic below shows the communication channels between chassis components

including the management modules (MGMT), the FIM modules (called FIM1 and FIM2) and the FPM modules

(FPM3, FPM4, FPM5, and FPM6).

By default, MGMT2 is the active management module and MGMT1 is inactive. The active management module always has the IPMB address 0x20 and the inactive management module always has the IPMB address 0x22.

The active management module communicates with all modules in the chassis over the base backplane. Each module, including the management modules, has a Shelf Management Controller (SMC). These SMCs support Intelligent Platform Management Bus (IPMB) communication between the active management module and the FIM and FPM modules for storing and sharing sensor data that the management module uses to control chassis cooling and power distribution. The base backplane also supports serial communications to allow console access from the management module to all modules, and 1Gbps Ethernet communication for management and heartbeat communication between modules.

FIM1 and FIM2 (IPMB addresses 0x82 and 0x84) are the FIM modules in slots 1 and 2. The interfaces of these

modules connect the chassis to data networks and can be used for Ethernet management access to chassis

components. The FIM modules include DP2 processors that distribute sessions over the Integrated Switch Fabric (ISF) to the NP6 processors in the FPM modules. Data sessions are communicated to the FPM modules over the 80Gbps chassis fabric backplane.

FPM3, FPM4, FPM5, and FPM6 (IPMB addresses 0x86, 0x88, 0x8A, and 0x8C) are the FPM processor modules in slots 3 to 6. These worker modules process sessions distributed to them by the FIM modules. FPM modules include NP6 processors to offload sessions from the FPM CPU and CP9 processors that accelerate content processing.
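The IPMB addresses listed for the FIM and FPM modules follow a regular pattern: each module's address is 0x80 plus twice its slot number. The Python sketch below derives the address from that pattern. The pattern is inferred from the addresses in this section rather than documented by Fortinet, the function names are hypothetical, and the sketch covers only the six slots of the FortiGate-7060E.

```python
ACTIVE_MGMT_IPMB = 0x20    # active management module
INACTIVE_MGMT_IPMB = 0x22  # inactive management module (FortiGate-7060E)


def ipmb_address(slot: int) -> int:
    """IPMB address of the FIM or FPM module in chassis slot 1-6."""
    if not 1 <= slot <= 6:
        raise ValueError("slot must be between 1 and 6")
    # Pattern inferred from the listed addresses 0x82, 0x84, ..., 0x8C.
    return 0x80 + 2 * slot


def module_label(slot: int) -> str:
    # Slots 1 and 2 hold FIM modules; slots 3 and up hold FPM modules.
    return f"FIM{slot}" if slot <= 2 else f"FPM{slot}"


for slot in range(1, 7):
    print(f"{module_label(slot)}: {ipmb_address(slot):#04x}")
```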

FortiGate-7040E

The FortiGate-7040E is a 6U 19-inch rackmount 4-slot chassis with an 80Gbps fabric backplane and a 1Gbps base backplane designed by Fortinet. The fabric backplane provides network data communication and the base backplane provides management and sync communication among the chassis slots.

FortiGate-7040E front panel

The FortiGate-7040E chassis is managed by a single management module that includes an Ethernet connection

as well as two switchable console ports that provide console connections to the modules in the chassis slots. The

management module controls chassis cooling and power management and provides an interface for managing

the modules installed in the chassis. The standard configuration of the FortiGate-7040E includes two FIM

(interface) modules in chassis slots 1 and 2 and two FPM (processing) modules in chassis slots 3 and 4.

FortiGate-7040E front panel


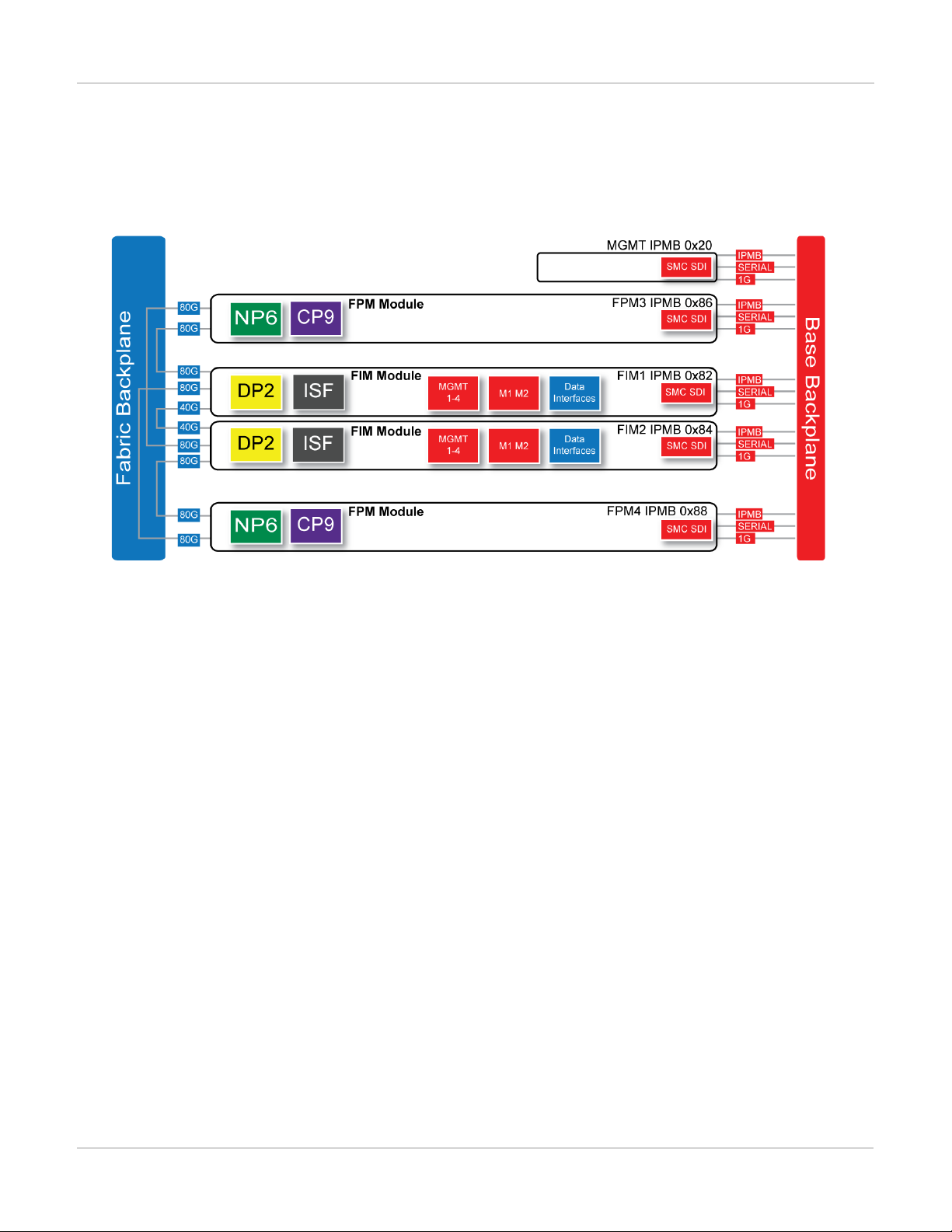

FortiGate-7040E schematic

The FortiGate-7040E chassis schematic below shows the communication channels between chassis components

including the management module (MGMT), the FIM modules (called FIM1 and FIM2) and the FPM modules

(FPM3 and FPM4).

The management module (MGMT, with IPMB address 0x20) communicates with all modules in the chassis over the base backplane. Each module, including the management module, includes a Shelf Management Controller (SMC). These SMCs support Intelligent Platform Management Bus (IPMB) communication between the management module and the FIM and FPM modules for storing and sharing sensor data that the management module uses to control chassis cooling and power distribution. The base backplane also supports serial communications to allow console access from the management module to all modules, and 1Gbps Ethernet communication for management and heartbeat communication between modules.

FIM1 and FIM2 (IPMB addresses 0x82 and 0x84) are the FIM modules in slots 1 and 2. The interfaces of these

modules connect the chassis to data networks and can be used for Ethernet management access to chassis

components. The FIM modules include DP2 processors that distribute sessions over the Integrated Switch Fabric (ISF) to the NP6 processors in the FPM modules. Data sessions are communicated to the FPM modules over the 80Gbps chassis fabric backplane.

FPM3 and FPM4 (IPMB addresses 0x86 and 0x88) are the FPM processor modules in slots 3 and 4. These worker modules process sessions distributed to them by the FIM modules. FPM modules include NP6 processors to offload sessions from the FPM CPU and CP9 processors that accelerate content processing.

FortiGate-7030E

The FortiGate-7030E is a 6U 19-inch rackmount 3-slot chassis with an 80Gbps fabric backplane and a 1Gbps base backplane designed by Fortinet. The fabric backplane provides network data communication and the base backplane provides management and sync communication among the chassis slots.


FortiGate-7030E front panel

The FortiGate-7030E chassis is managed by a single management module that includes an Ethernet connection

as well as two switchable console ports that provide console connections to the modules in the chassis slots. The

management module controls chassis cooling and power management and provides an interface for managing

the modules installed in the chassis. The standard configuration of the FortiGate-7030E includes one FIM

(interface) module in chassis slot 1 and two FPM (processing) modules in chassis slots 3 and 4. The front panel

also includes a sealed blank panel. Breaking the seal or removing the panel voids your FortiGate-7030E warranty.

FortiGate-7030E front panel (example module configuration)


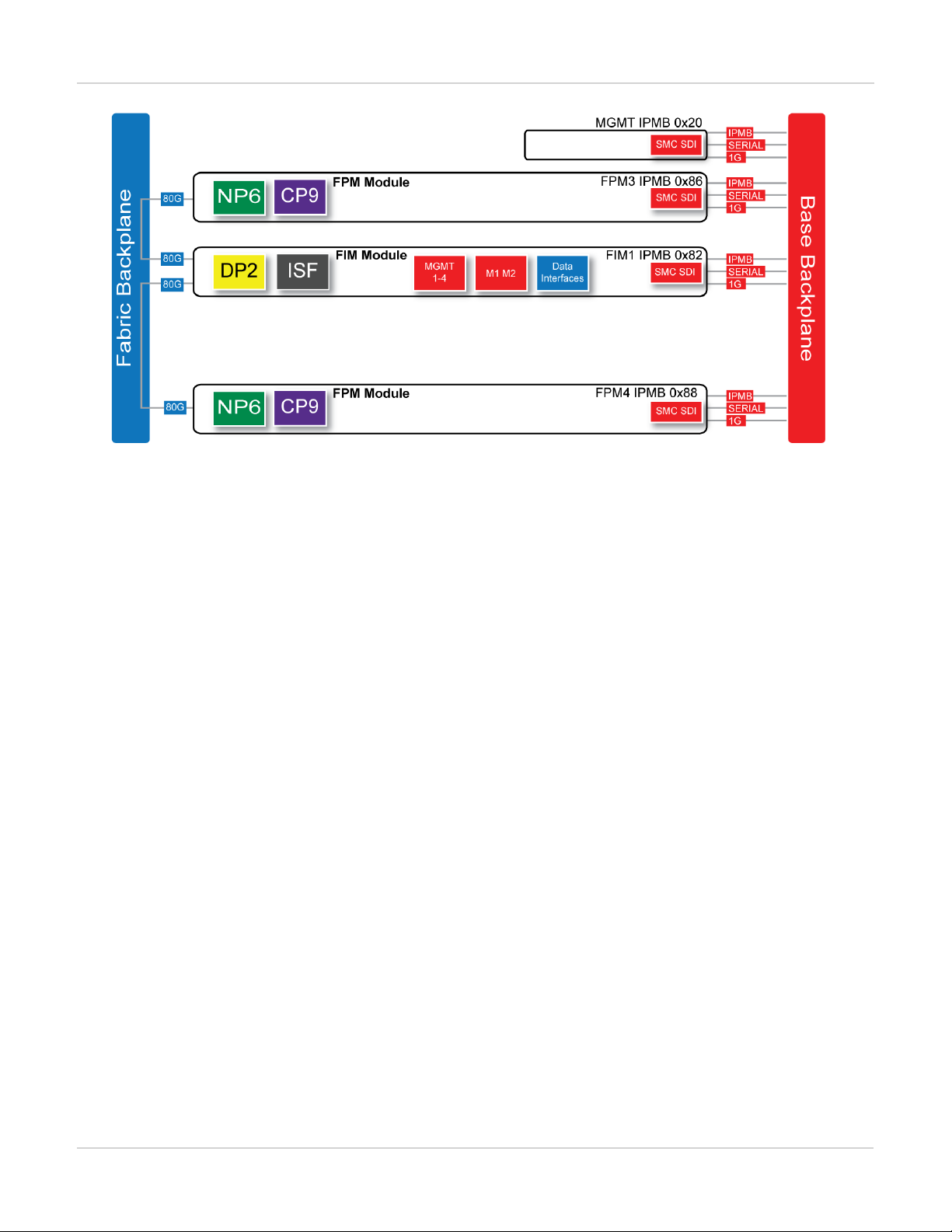

FortiGate-7030E schematic

The FortiGate-7030E chassis schematic below shows the communication channels between chassis components

including the management module (MGMT), the FIM module (called FIM1) and the FPM modules (FPM3 and

FPM4).


The management module (MGMT, with IPMB address 0x20) communicates with all modules in the chassis over the base backplane. Each module, including the management module, includes a Shelf Management Controller (SMC). These SMCs support Intelligent Platform Management Bus (IPMB) communication between the management module and the FIM and FPM modules for storing and sharing sensor data that the management module uses to control chassis cooling and power distribution. The base backplane also supports serial communications to allow console access from the management module to all modules, and 1Gbps Ethernet communication for management and heartbeat communication between modules.

FIM1 (IPMB address 0x82) is the FIM module in slot 1. The interfaces of this module connect the chassis to data networks and can be used for Ethernet management access to chassis components. The FIM module includes DP2 processors that distribute sessions over the Integrated Switch Fabric (ISF) to the NP6 processors in the FPM modules. Data sessions are communicated to the FPM modules over the 80Gbps chassis fabric backplane.

FPM3 and FPM4 (IPMB addresses 0x86 and 0x88) are the FPM processor modules in slots 3 and 4. These worker modules process sessions distributed to them by the FIM module. FPM modules include NP6 processors to offload sessions from the FPM CPU and CP9 processors that accelerate content processing.


FIM-7901E interface module

The FIM-7901E interface module is a hot swappable module that provides data, management and session

sync/heartbeat interfaces, base backplane switching and fabric backplane session-aware load balancing for a

FortiGate-7000 chassis. The FIM-7901E includes an integrated switch fabric and DP2 processors to load balance

millions of data sessions over the chassis fabric backplane to FPM processor modules.

The FIM-7901E can be installed in any FortiGate-7000 series chassis in hub/switch slots 1 and 2. The FIM-7901E provides thirty-two 10GigE small form-factor pluggable plus (SFP+) interfaces for a FortiGate-7000 chassis.

You can also install FIM-7901Es in a second chassis and operate the chassis in HA mode with another set of

processor modules to provide chassis failover protection.

FIM-7901E front panel

The FIM-7901E includes the following hardware features:

- Thirty-two front panel 10GigE SFP+ fabric channel interfaces (A1 to A32). These interfaces are connected to 10Gbps networks to distribute sessions to the FPM processor modules installed in chassis slots 3 and up. These interfaces can also be configured to operate as Gigabit Ethernet interfaces using SFP transceivers. These interfaces also support creating link aggregation groups (LAGs) that can include interfaces from both FIM-7901Es.

- Two front panel 10GigE SFP+ interfaces (M1 and M2) that connect to the base backplane channel. These interfaces are used for heartbeat, session sync, and management communication between FIM-7901Es in different chassis. These interfaces can also be configured to operate as Gigabit Ethernet interfaces using SFP transceivers, but this setting should not normally be changed. If you use switches to connect these interfaces, the switch ports should be able to accept packets with a maximum frame size of at least 1526 bytes. The M1 and M2 interfaces need to be on different broadcast domains. If M1 and M2 are connected to the same switch, Q-in-Q must be enabled on the switch.

- Four 10/100/1000BASE-T out-of-band management Ethernet interfaces (MGMT1 to MGMT4).

- One 80Gbps fabric backplane channel for traffic distribution with each FPM module installed in the same chassis as the FIM-7901E.

- One 1Gbps base backplane channel for base backplane communication with each FPM module installed in the same chassis as the FIM-7901E.

- One 40Gbps fabric backplane channel for fabric backplane communication with the other FIM-7901E in the chassis.


- One 1Gbps base backplane channel for base backplane communication with the other FIM-7901E in the chassis.

- On-board DP2 processors and an integrated switch fabric to provide high-capacity session-aware load balancing.

- One front panel USB port.

- Power button.

- NMI switch (for troubleshooting as recommended by Fortinet Support).

- Mounting hardware.

- LED status indicators.
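One plausible accounting for the 1526-byte minimum frame size required on switches that carry M1 and M2 traffic is shown below. It assumes a standard 1500-byte payload, a 14-byte Ethernet header, a 4-byte frame check sequence, and two 4-byte 802.1Q tags (the heartbeat VLAN tag plus an outer Q-in-Q tag added by the switch). The handbook does not give this breakdown, so treat the Python sketch as an illustration rather than Fortinet's derivation.

```python
PAYLOAD = 1500     # standard Ethernet MTU, in bytes
ETH_HEADER = 14    # destination MAC + source MAC + EtherType
FCS = 4            # frame check sequence
VLAN_TAG = 4       # one 802.1Q tag


def max_frame_size(vlan_tags: int) -> int:
    """Largest frame, in bytes, for a full-size payload carrying N VLAN tags."""
    return PAYLOAD + ETH_HEADER + FCS + vlan_tags * VLAN_TAG


print(max_frame_size(0))  # 1518: untagged
print(max_frame_size(1))  # 1522: heartbeat VLAN tag only
print(max_frame_size(2))  # 1526: heartbeat VLAN tag plus outer Q-in-Q tag
```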

FIM-7901E schematic

The FIM-7901E includes an integrated switch fabric (ISF) that connects the front panel interfaces to the DP2 session-aware load balancers and to the chassis backplanes. The ISF also allows the DP2 processors to distribute sessions among all NP6 processors on the FPM modules in the same chassis.

FIM-7901E schematic


FIM-7904E interface module

The FIM-7904E interface module is a hot swappable module that provides data, management and session

sync/heartbeat interfaces, base backplane switching and fabric backplane session-aware load balancing for a

FortiGate-7000 series chassis. The FIM-7904E includes an integrated switch fabric and DP2 processors to load

balance millions of data sessions over the chassis fabric backplane to FPM processor modules.

The FIM-7904E can be installed in any FortiGate-7000 series chassis in hub/switch slots 1 and 2. The FIM-7904E provides eight Quad Small Form-factor Pluggable plus (QSFP+) interfaces for a FortiGate-7000 chassis. Using a 40GBASE-SR10 multimode QSFP+ transceiver, each QSFP+ interface can also be split into four 10GBASE-SR interfaces.

You can also install FIM-7904Es in a second chassis and operate the chassis in HA mode with another set of

processor modules to provide chassis failover protection.

FIM-7904E front panel

The FIM-7904E includes the following hardware features:

- Eight front panel 40GigE QSFP+ fabric channel interfaces (B1 to B8). These interfaces are connected to 40Gbps networks to distribute sessions to the FPM processor modules installed in chassis slots 3 and up. Using 40GBASE-SR10 multimode QSFP+ transceivers, each QSFP+ interface can also be split into four 10GBASE-SR interfaces. These interfaces also support creating link aggregation groups (LAGs) that can include interfaces from both FIM-7904Es.

- Two front panel 10GigE SFP+ interfaces (M1 and M2) that connect to the base backplane channel. These interfaces are used for heartbeat, session sync, and management communication between FIM-7904Es in different chassis. These interfaces can also be configured to operate as Gigabit Ethernet interfaces using SFP transceivers, but this setting should not normally be changed. If you use switches to connect these interfaces, the switch ports should be able to accept packets with a maximum frame size of at least 1526 bytes. The M1 and M2 interfaces need to be on different broadcast domains. If M1 and M2 are connected to the same switch, Q-in-Q must be enabled on the switch.

- Four 10/100/1000BASE-T out-of-band management Ethernet interfaces (MGMT1 to MGMT4).

- One 80Gbps fabric backplane channel for traffic distribution with each FPM module installed in the same chassis as the FIM-7904E.


- One 1Gbps base backplane channel for base backplane communication with each FPM module installed in the same chassis as the FIM-7904E.

- One 40Gbps fabric backplane channel for fabric backplane communication with the other FIM-7904E in the chassis.

- One 1Gbps base backplane channel for base backplane communication with the other FIM-7904E in the chassis.

- On-board DP2 processors and an integrated switch fabric to provide high-capacity session-aware load balancing.

- One front panel USB port.

- Power button.

- NMI switch (for troubleshooting as recommended by Fortinet Support).

- Mounting hardware.

- LED status indicators.

Splitting the FIM-7904E B1 to B8 interfaces

Each 40GbE interface (B1 to B8) on the FIM-7904Es in slot 1 and slot 2 of a FortiGate-7000 system can be split into four 10GbE interfaces. You split these interfaces after the FIM-7904Es are installed in your FortiGate-7000 system and the system is up and running. You can split the interfaces of the FIM-7904Es in slot 1 and slot 2 at the same time by entering a single CLI command. Splitting the interfaces requires a system reboot, so Fortinet recommends splitting all of the interfaces you require at the same time to minimize traffic disruption.

For example, to split the B1 interface of the FIM-7904E in slot 1 (this interface is named 1-B1) and the B1 and B4 interfaces of the FIM-7904E in slot 2 (these interfaces are named 2-B1 and 2-B4), connect to the CLI of your FortiGate-7000 system using the management IP and enter the following command:

```
config system global
    set split-port 1-B1 2-B1 2-B4
end
```

After you enter the command, the FortiGate-7000 reboots, and when it comes up:

- The 1-B1 interface will no longer be available. Instead, the 1-B1/1, 1-B1/2, 1-B1/3, and 1-B1/4 interfaces will be available.

- The 2-B1 interface will no longer be available. Instead, the 2-B1/1, 2-B1/2, 2-B1/3, and 2-B1/4 interfaces will be available.

- The 2-B4 interface will no longer be available. Instead, the 2-B4/1, 2-B4/2, 2-B4/3, and 2-B4/4 interfaces will be available.

You can now connect breakout cables to these interfaces and configure traffic between them just like any other

FortiGate interface.
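The naming rule above can be captured in a few lines of Python, for example to list the interfaces to expect after the reboot when planning breakout cabling or firewall policies. This is a minimal sketch: `split_interface_names` is a hypothetical helper, not a FortiOS tool, and it only assumes the `<slot>-<port>/<n>` naming shown in the example and that FIMs are installed in slots 1 and 2.

```python
# Hypothetical planning helper (not a FortiOS tool): list the breakout interface
# names expected after "set split-port", using the <slot>-<port>/<n> pattern
# shown above. Only naming is modeled; nothing is sent to the FortiGate.


def split_interface_names(split_ports: list[str], lanes: int = 4) -> dict[str, list[str]]:
    """Map each split port (for example "1-B1") to its breakout interfaces.

    lanes is 4 for a 40GE FIM-7904E port split into 4 x 10GbE interfaces.
    """
    if lanes < 2:
        raise ValueError("a split port must produce at least two interfaces")
    result: dict[str, list[str]] = {}
    for port in split_ports:
        slot, sep, name = port.partition("-")
        # FIMs are installed in slots 1 and 2, so names look like "1-B1" or "2-B4".
        if not sep or slot not in ("1", "2") or not name:
            raise ValueError(f"unexpected interface name: {port!r}")
        result[port] = [f"{port}/{n}" for n in range(1, lanes + 1)]
    return result


if __name__ == "__main__":
    # Same ports as the "set split-port 1-B1 2-B1 2-B4" example above.
    for port, names in split_interface_names(["1-B1", "2-B1", "2-B4"]).items():
        print(port, "->", ", ".join(names))
```

With `lanes=10`, the same helper describes the 10-way split of a FIM-7910E C1 to C4 interface.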

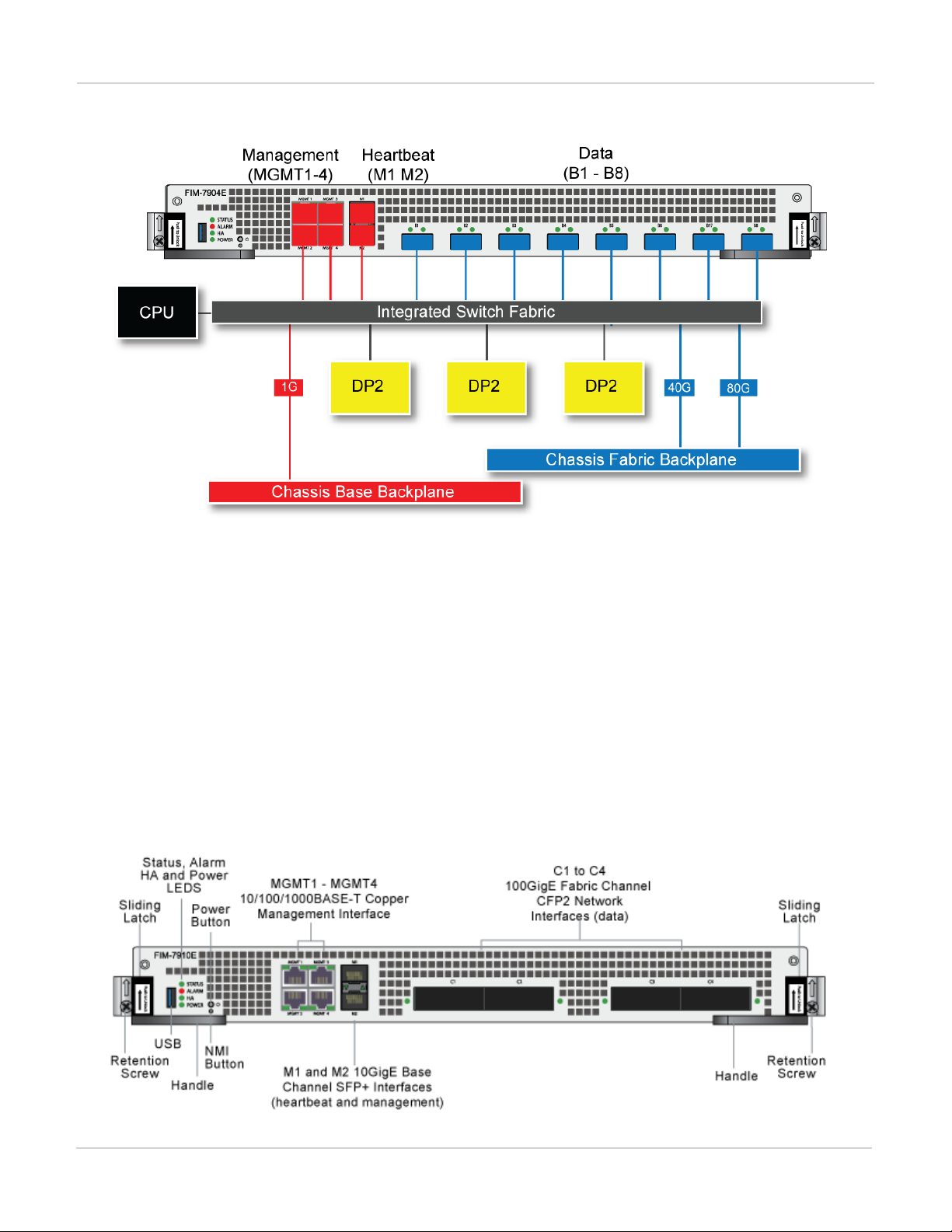

FIM-7904E hardware schematic

The FIM-7904E includes an integrated switch fabric (ISF) that connects the front panel interfaces to the DP2 session-aware load balancers and to the chassis backplanes. The ISF also allows the DP2 processors to distribute sessions among all NP6 processors on the FPM modules in the same chassis.


FIM-7904E hardware architecture

FIM-7910E interface module

The FIM-7910E interface module is a hot swappable module that provides data, management and session

sync/heartbeat interfaces, base backplane switching and fabric backplane session-aware load balancing for a

FortiGate-7000 series chassis. The FIM-7910E includes an integrated switch fabric and DP2 processors to load

balance millions of data sessions over the chassis fabric backplane to FPM processor modules.

The FIM-7910E can be installed in any FortiGate-7000 series chassis in hub/switch slots 1 and 2. The FIM-7910E

provides four C form-factor pluggable 2 (CFP2) interfaces for a FortiGate-7000 chassis. Using a 100GBASE-SR10 multimode CFP2 transceiver, each CFP2 interface can also be split into ten 10GBASE-SR interfaces.

FIM-7910E front panel


The FIM-7910E includes the following hardware features:

l Four front panel 100GigE CFP2 fabric channel interfaces (C1 to C4). These interfaces are connected to 100Gbps

networks to distribute sessions to the FPM processor modules installed in chassis slots 3 and up. Using 100GBASE-SR10 multimode CFP2 transceivers, each CFP2 interface can also be split into ten 10GBASE-SR interfaces. These

interfaces also support creating link aggregation groups (LAGs) that can include interfaces from both FIM-7910Es.

l Two front panel 10GigE SFP+ interfaces (M1 and M2) that connect to the base backplane channel. These

interfaces are used for heartbeat, session sync, and management communication between FIM-7910Es in different

chassis. These interfaces can also be configured to operate as Gigabit Ethernet interfaces using SFP transceivers,

but should not normally be changed. If you use switches to connect these interfaces, the switch ports should be

able to accept packets with a maximum frame size of at least 1526 bytes. The M1 and M2 interfaces need to be on

different broadcast domains. If M1 and M2 are connected to the same switch, Q-in-Q must be enabled on the

switch.

l Four 10/100/1000BASE-T out of band management Ethernet interfaces (MGMT1 to MGMT4).

l One 80Gbps fabric backplane channel for traffic distribution with each FPM module installed in the same chassis as

the FIM-7910E.

l One 1Gbps base backplane channel for base backplane communication with each FPM module installed in the same chassis as the FIM-7910E.

l One 40Gbps fabric backplane channel for fabric backplane communication with the other FIM-7910E in the chassis.

l One 1Gbps base backplane channel for base backplane communication with the other FIM-7910E in the chassis.

l On-board DP2 processors and an integrated switch fabric to provide high-capacity session-aware load balancing.

l One front panel USB port.

l Power button.

l NMI switch (for troubleshooting as recommended by Fortinet Support).

l Mounting hardware.

l LED status indicators.

Splitting the FIM-7910E C1 to C4 interfaces

Each 100GE interface (C1 to C4) on the FIM-7910Es in slot 1 and slot 2 of a FortiGate-7000 system can be split into 10 x 10GbE interfaces. You split these interfaces after the FIM-7910Es are installed in your FortiGate-7000 system and the system is up and running. You can split the interfaces of the FIM-7910Es in slot 1 and slot 2 at the same time by entering a single CLI command. Because splitting interfaces requires a system reboot, Fortinet recommends that you split all of the interfaces you need at the same time to minimize traffic disruption.

For example, to split the C1 interface of the FIM-7910E in slot 1 (this interface is named 1-C1) and the C1 and C4 interfaces of the FIM-7910E in slot 2 (these interfaces are named 2-C1 and 2-C4), connect to the CLI of your FortiGate-7000 system using the management IP address and enter the following command:

config system global

set split-port 1-C1 2-C1 2-C4

end

After you enter the command, the FortiGate-7000 reboots and when it comes up:

l The 1-C1 interface will no longer be available. Instead the 1-C1/1, 1-C1/2, ..., and 1-C1/10 interfaces will be

available.

l The 2-C1 interface will no longer be available. Instead the 2-C1/1, 2-C1/2, ..., and 2-C1/10 interfaces will be

available.


l The 2-C4 interface will no longer be available. Instead the 2-C4/1, 2-C4/2, ..., and 2-C4/10 interfaces will be

available.

You can now connect breakout cables to these interfaces and configure traffic between them just like any other

FortiGate interface.

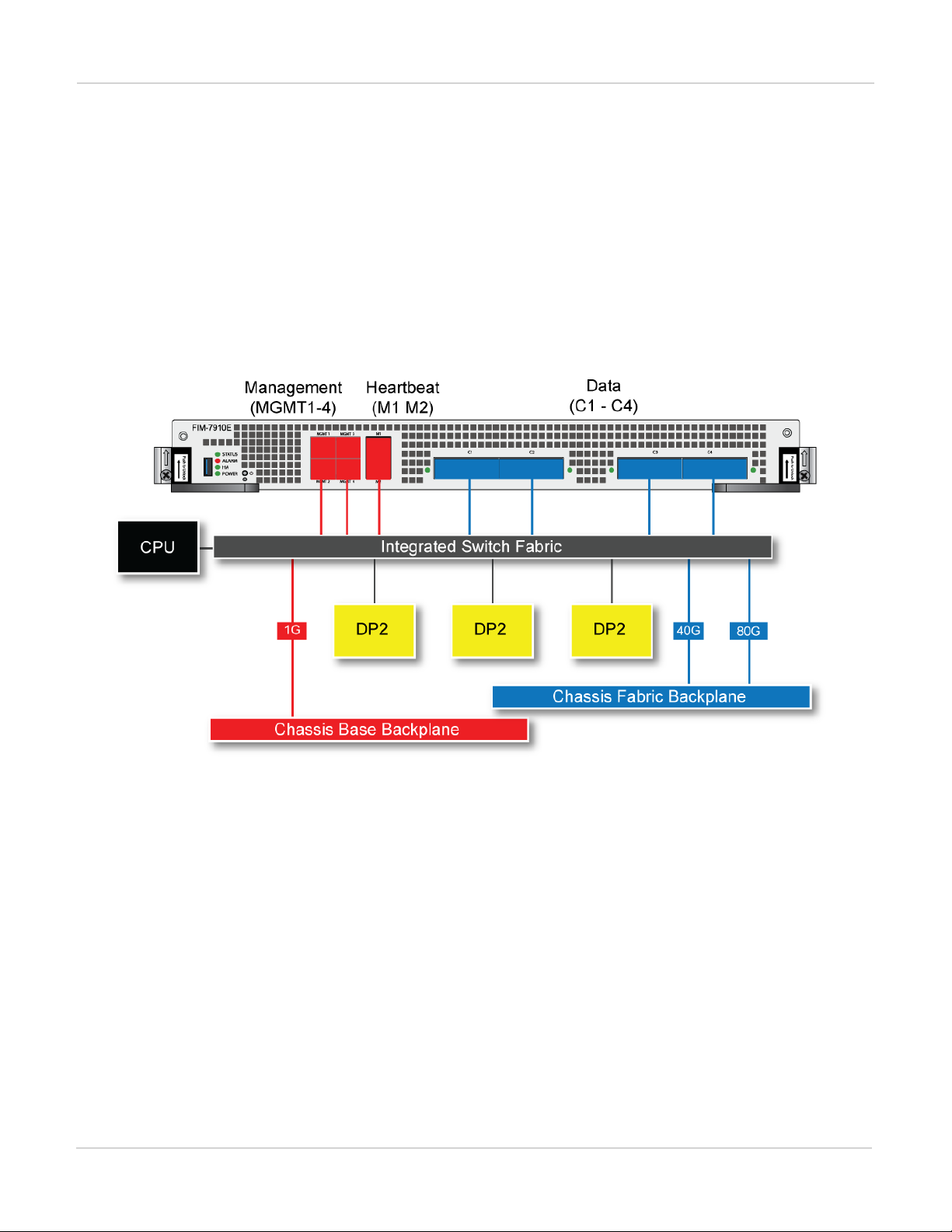

FIM-7910E hardware schematic

The FIM-7910E includes an integrated switch fabric (ISF) that connects the front panel interfaces to the DP2 session-aware load balancers and to the chassis backplanes. The ISF also allows the DP2 processors to distribute sessions among all NP6 processors on the FPM modules in the same chassis.

FIM-7910E hardware schematic

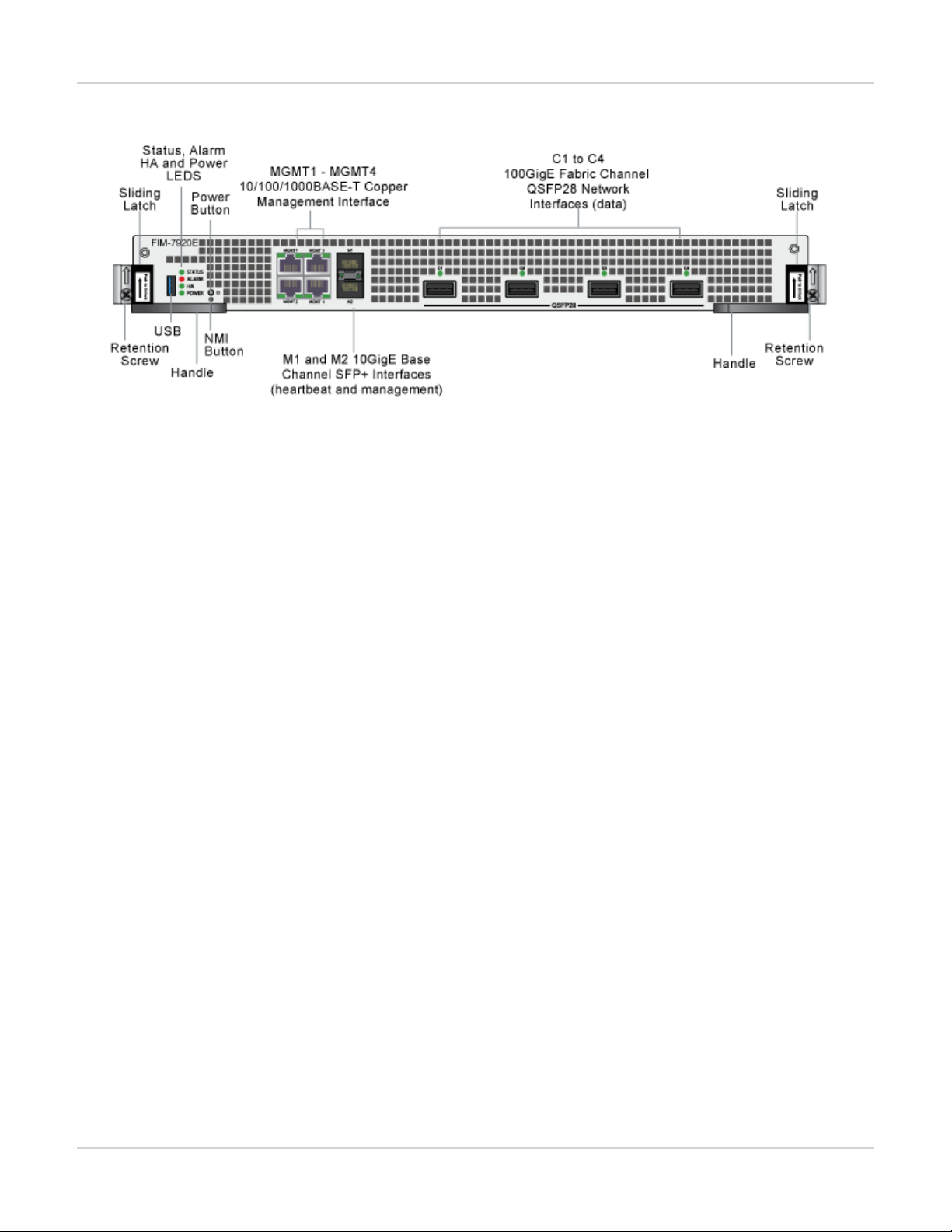

FIM-7920E interface module

The FIM-7920E interface module is a hot swappable module that provides data, management and session

sync/heartbeat interfaces, base backplane switching and fabric backplane session-aware load balancing for a

FortiGate-7000 series chassis. The FIM-7920E includes an integrated switch fabric and DP2 processors to load

balance millions of data sessions over the chassis fabric backplane to FPM processor modules.

The FIM-7920E can be installed in any FortiGate-7000 series chassis in hub/switch slots 1 or 2. The FIM-7920E

provides four Quad Small Form-factor Pluggable 28 (QSFP28) 100GigE interfaces for a FortiGate-7000 chassis.

Using a 100GBASE-SR4 QSFP28 or 40GBASE-SR4 QSFP+ transceiver, each QSFP28 interface can also be split

into four 10GBASE-SR interfaces.

You can also install FIM-7920Es in a second chassis and operate the chassis in HA mode with another set of

processor modules to provide chassis failover protection.


FIM-7920E front panel

The FIM-7920E includes the following hardware features:

l Four front panel 100GigE QSFP28 fabric channel interfaces (C1 to C4). These interfaces are connected to

100Gbps networks to distribute sessions to the FPM processor modules installed in chassis slots 3 and up. Using a

100GBASE-SR4 QSFP28 or 40GBASE-SR4 QSFP+ transceiver, each QSFP28 interface can also be split into four

10GBASE-SR interfaces. These interfaces also support creating link aggregation groups (LAGs) that can include

interfaces from both FIM-7920Es.

l Two front panel 10GigE SFP+ interfaces (M1 and M2) that connect to the base backplane channel. These

interfaces are used for heartbeat, session sync, and management communication between FIM-7920Es in different

chassis. These interfaces can also be configured to operate as Gigabit Ethernet interfaces using SFP transceivers,

but should not normally be changed. If you use switches to connect these interfaces, the switch ports should be

able to accept packets with a maximum frame size of at least 1526 bytes. The M1 and M2 interfaces need to be on

different broadcast domains. If M1 and M2 are connected to the same switch, Q-in-Q must be enabled on the

switch.

l Four 10/100/1000BASE-T out of band management Ethernet interfaces (MGMT1 to MGMT4).

l One 80Gbps fabric backplane channel for traffic distribution with each FPM module installed in the same chassis as

the FIM-7920E.

l One 1Gbps base backplane channel for base backplane communication with each FPM module installed in the same chassis as the FIM-7920E.

l One 40Gbps fabric backplane channel for fabric backplane communication with the other FIM-7920E in the chassis.

l One 1Gbps base backplane channel for base backplane communication with the other FIM-7920E in the chassis.

l On-board DP2 processors and an integrated switch fabric to provide high-capacity session-aware load balancing.

l One front panel USB port.

l Power button.

l NMI switch (for troubleshooting as recommended by Fortinet Support).

l Mounting hardware.

l LED status indicators.

Changing the interface type and splitting the FIM-7920E C1 to C4 interfaces

By default, the FIM-7920E C1 to C4 interfaces are configured as 100GE QSFP28 interfaces. You can use the following command to convert them to 40GE QSFP+ interfaces. Once they are converted, you can use the split-port command described below to split them into four 10GBASE-SR interfaces.

Changing the interface type

For example, to change the interface type of the C1 interface of the FIM-7920E in slot 1 to 40GE QSFP+, connect to the CLI of your FortiGate-7000 system using the management IP address and enter the following command:

config system global

set qsfp28-40g-port 1-C1

end

The FortiGate-7000 system reboots, and when it starts up, interface C1 of the FIM-7920E in slot 1 operates as a 40GE QSFP+ interface.

To change the interface type of the C3 and C4 interfaces of the FIM-7920E in slot 2 to 40GE QSFP+, enter the following command:

config system global

set qsfp28-40g-port 2-C3 2-C4

end

The FortiGate-7000 system reboots, and when it starts up, interfaces C3 and C4 of the FIM-7920E in slot 2 operate as 40GE QSFP+ interfaces.

Splitting the C1 to C4 interfaces

Each 40GE interface (C1 to C4) on the FIM-7920Es in slot 1 and slot 2 of a FortiGate-7000 system can be split into 4 x 10GbE interfaces. You split these interfaces after the FIM-7920Es are installed in your FortiGate-7000 system and the system is up and running. You can split the interfaces of the FIM-7920Es in slot 1 and slot 2 at the same time by entering a single CLI command. Because splitting interfaces requires a system reboot, Fortinet recommends that you split all of the interfaces you need at the same time to minimize traffic disruption.

For example, to split the C1 interface of the FIM-7920E in slot 1 (this interface is named 1-C1) and the C1 and C4 interfaces of the FIM-7920E in slot 2 (these interfaces are named 2-C1 and 2-C4), connect to the CLI of your FortiGate-7000 system using the management IP address and enter the following command:

config system global

set split-port 1-C1 2-C1 2-C4

end

After you enter the command, the FortiGate-7000 reboots and when it comes up:

l The 1-C1 interface will no longer be available. Instead the 1-C1/1, 1-C1/2, 1-C1/3, and 1-C1/4 interfaces will be

available.

l The 2-C1 interface will no longer be available. Instead the 2-C1/1, 2-C1/2, 2-C1/3, and 2-C1/4 interfaces will be

available.

l The 2-C4 interface will no longer be available. Instead the 2-C4/1, 2-C4/2, 2-C4/3, and 2-C4/4 interfaces will be

available.

You can now connect breakout cables to these interfaces and configure traffic between them just like any other

FortiGate interface.
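Because a FIM-7920E split is a two-step procedure here (convert to 40GE QSFP+, then split), each with its own reboot, it can help to check a plan before touching the system. The Python sketch below is hypothetical (`plan_fim7920e_split` is not a Fortinet tool); it follows the order described in this section and only builds the two CLI snippets shown above. It always lists every port that should run as 40GE in `qsfp28-40g-port`, so the list is complete whichever step you are on.

```python
# Hypothetical planning helper (not a FortiOS tool). It assumes, as described
# above, that a FIM-7920E C1 to C4 port must be running as 40GE QSFP+ before it
# is listed in "set split-port". It returns the CLI for the two steps; each
# step reboots the system. Nothing is sent to the FortiGate.

VALID_PORTS = {f"{slot}-C{n}" for slot in (1, 2) for n in range(1, 5)}


def plan_fim7920e_split(ports_40g: list[str], ports_to_split: list[str]) -> tuple[list[str], list[str]]:
    """ports_40g must list every C port that should run as 40GE QSFP+."""
    if not ports_to_split:
        raise ValueError("no ports to split")
    for port in [*ports_40g, *ports_to_split]:
        if port not in VALID_PORTS:
            raise ValueError(f"{port!r} is not a FIM-7920E C1 to C4 interface")
    # Every port to be split must already be (or be about to become) 40GE.
    missing = sorted(set(ports_to_split) - set(ports_40g))
    if missing:
        raise ValueError("convert to 40GE QSFP+ first: " + ", ".join(missing))
    step1 = ["config system global", "    set qsfp28-40g-port " + " ".join(ports_40g), "end"]
    step2 = ["config system global", "    set split-port " + " ".join(ports_to_split), "end"]
    return step1, step2


if __name__ == "__main__":
    convert, split = plan_fim7920e_split(["1-C1", "2-C1", "2-C4"], ["1-C1", "2-C1", "2-C4"])
    print("\n".join(convert))  # first reboot: 100GE QSFP28 -> 40GE QSFP+
    print("\n".join(split))    # second reboot: 40GE -> 4 x 10GbE
```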

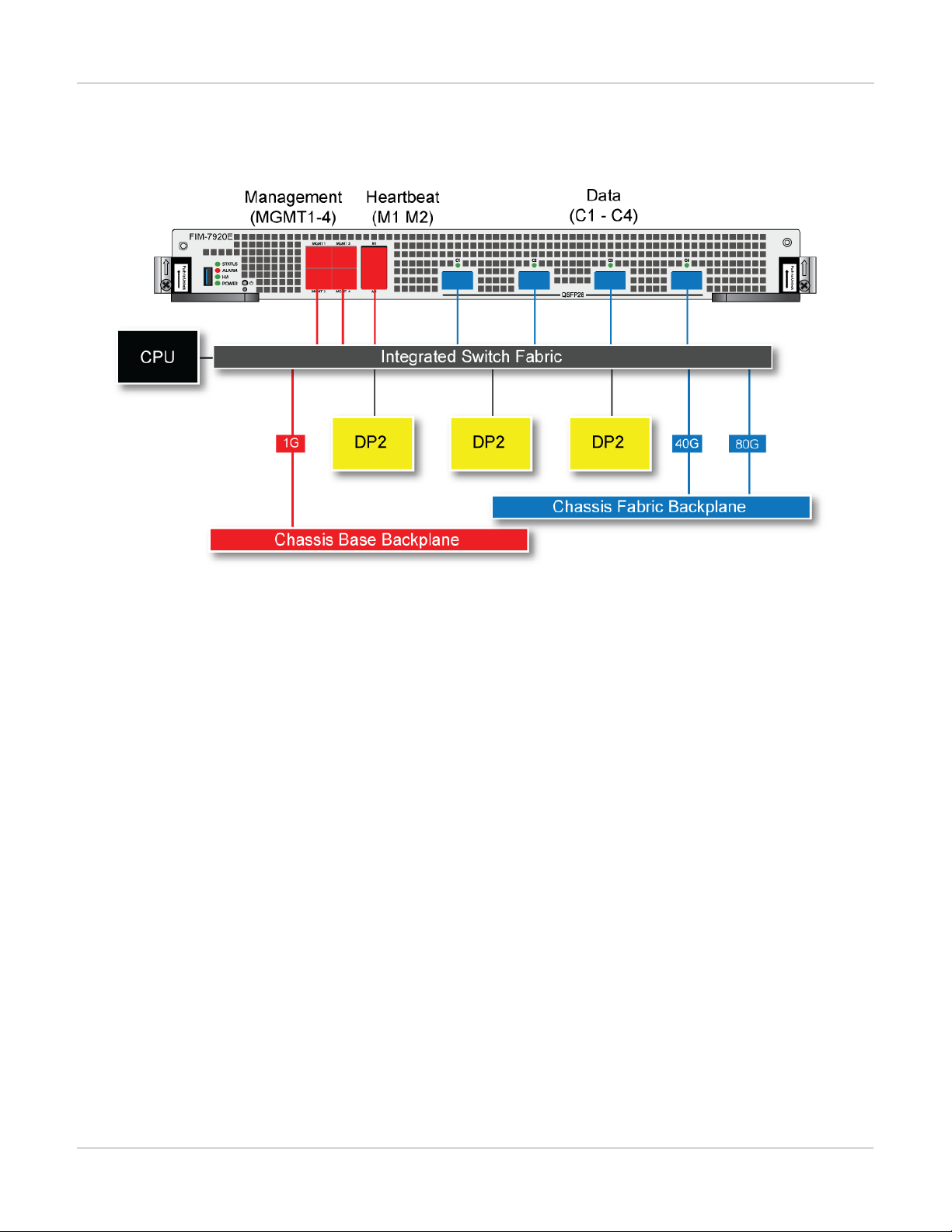

FIM-7920E hardware schematic

The FIM-7920E includes an integrated switch fabric (ISF) that connects the front panel interfaces to the DP2 session-aware load balancers and to the chassis backplanes. The ISF also allows the DP2 processors to distribute sessions among all NP6 processors on the FPM modules in the same chassis.

FIM-7920E hardware schematic

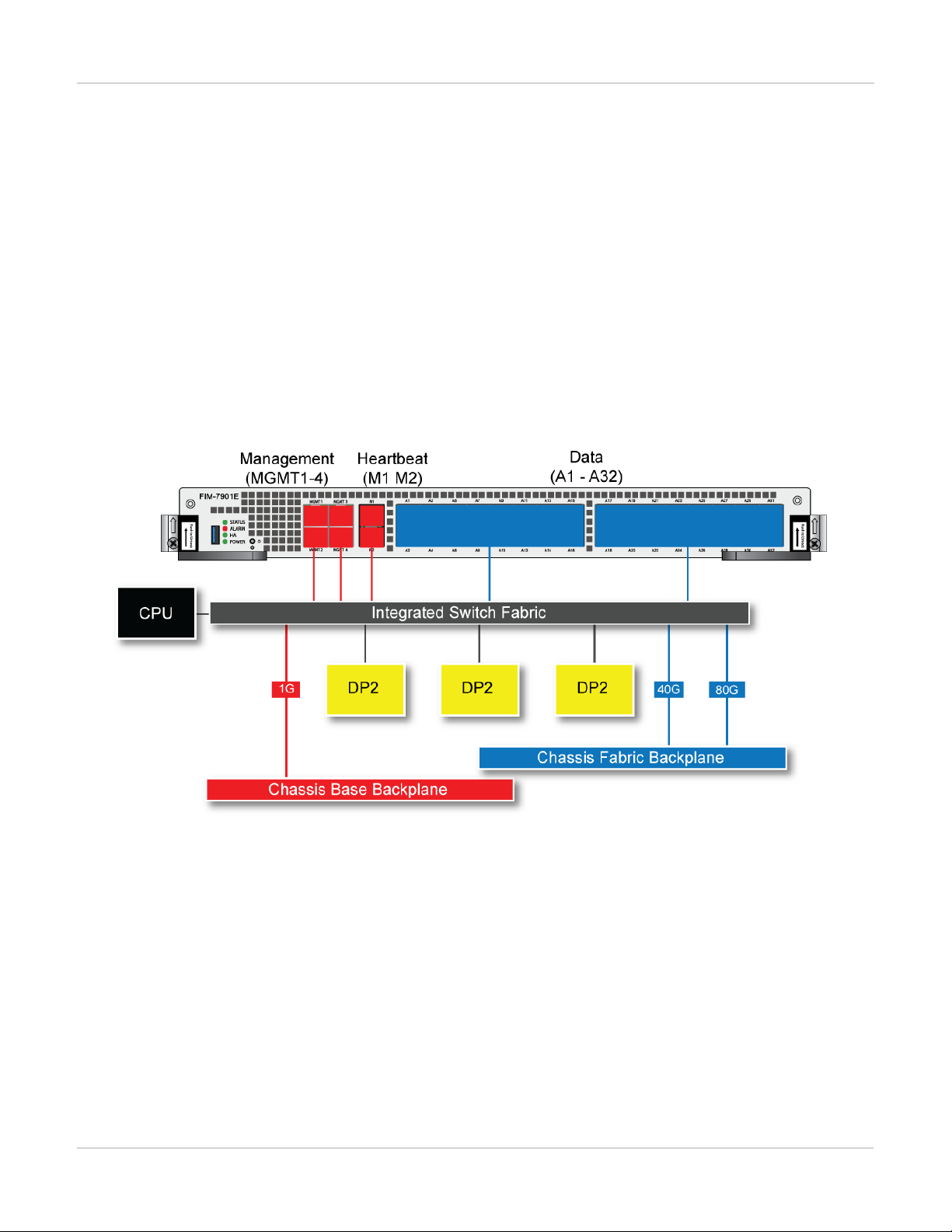

FPM-7620E processing module

The FPM-7620E processing module is a high-performance worker module that processes sessions load balanced

to it by FortiGate-7000 series interface (FIM) modules over the chassis fabric backplane. The FPM-7620E can be

installed in any FortiGate-7000 series chassis in slots 3 and up.

The FPM-7620E includes two 80Gbps connections to the chassis fabric backplane and two 1Gbps connections to

the base backplane. The FPM-7620E processes sessions using a dual CPU configuration, accelerates network

traffic processing with 4 NP6 processors and accelerates content processing with 8 CP9 processors. The NP6

network processors are connected by the FIM switch fabric so all supported traffic types can be fast path

accelerated by the NP6 processors.

The FPM-7620E includes the following hardware features:

l Two 80Gbps fabric backplane channels for load balanced sessions from the FIM modules installed in the chassis.

l Two 1Gbps base backplane channels for management, heartbeat and session sync communication.

l Dual CPUs for high performance operation.

l Four NP6 processors to offload network processing from the CPUs.

l Eight CP9 processors to offload content processing and SSL and IPsec encryption from the CPUs.


FPM-7620E front panel

l Power button.

l NMI switch (for troubleshooting as recommended by Fortinet Support).

l Mounting hardware.

l LED status indicators.

NP6 network processors - offloading load balancing and network traffic

The four FPM-7620E NP6 network processors, combined with the FIM module integrated switch fabric (ISF), provide hardware acceleration by offloading load balancing from the FPM-7620E CPUs. The result is enhanced network performance from the NP6 processors, and the network processing load is removed from the CPUs. The NP6 processors can also handle some CPU-intensive tasks, like IPsec VPN encryption/decryption. Because of the integrated switch fabric, all supported sessions can be fast-pathed and accelerated.


FPM-7620E hardware architecture


Accelerated IPS, SSL VPN, and IPsec VPN (CP9 content processors)

The FPM-7620E includes eight CP9 processors that provide the following performance enhancements:

l Flow-based inspection (IPS, application control etc.) pattern matching acceleration with over 10Gbps throughput

l IPS pre-scan

l IPS signature correlation

l Full match processors

l High performance VPN bulk data engine

l IPsec and SSL/TLS protocol processor

l DES/3DES/AES128/192/256 in accordance with FIPS46-3/FIPS81/FIPS197

l MD5/SHA-1/SHA256/384/512-96/128/192/256 with RFC1321 and FIPS180

l HMAC in accordance with RFC2104/2403/2404 and FIPS198

l ESN mode

l GCM support for NSA "Suite B" (RFC6379/RFC6460) including GCM-128/256; GMAC-128/256

l Key Exchange Processor that supports high performance IKE and RSA computation

l Public key exponentiation engine with hardware CRT support

l Primality checking for RSA key generation

l Handshake accelerator with automatic key material generation

l True Random Number generator

l Elliptic Curve support for NSA "Suite B"

l Sub public key engine (PKCE) to support up to 4096 bit operation directly (4k for DH and 8k for RSA with CRT)

l DLP fingerprint support

l TTTD (Two-Thresholds-Two-Divisors) content chunking

l Two thresholds and two divisors are configurable
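TTTD is a content-defined chunking technique. A rolling hash is computed over the data, and a chunk boundary is placed where the hash passes a divisor test. A minimum and a maximum chunk size (the two thresholds) bound each chunk, and a main divisor plus a backup divisor (the two divisors) decide where to cut. A backup breakpoint is used if the maximum size is reached without a main one. Because boundaries depend on content rather than offsets, inserting bytes early in a file tends to change only nearby chunks, which makes this kind of chunking useful for fingerprinting. The Python sketch below illustrates the general technique only. It is not the CP9 implementation, and the polynomial rolling hash, window size, and example parameter values are assumptions.

```python
import random

# Software sketch of TTTD (Two-Thresholds-Two-Divisors) content-defined chunking.
# It is NOT the CP9 implementation: the polynomial rolling hash, the window
# size, and the parameter values used below are assumptions for illustration.

BASE = 257
MOD = (1 << 61) - 1


def tttd_chunks(data: bytes, t_min: int, t_max: int, d_main: int, d_backup: int,
                window: int = 48) -> list[bytes]:
    """Split data into chunks of at least t_min (except the last) and at most t_max bytes."""
    if not 0 < t_min <= t_max or d_main < 2 or d_backup < 2 or window < 1:
        raise ValueError("invalid TTTD parameters")
    chunks: list[bytes] = []
    start = 0            # index of the first byte of the current chunk
    backup_end = None    # end (exclusive) of the latest backup-divisor breakpoint
    top = pow(BASE, window, MOD)  # weight of the byte leaving the window
    h = 0
    for i, byte in enumerate(data):
        # Rolling hash over the last `window` bytes.
        h = (h * BASE + byte) % MOD
        if i >= window:
            h = (h - data[i - window] * top) % MOD
        length = i - start + 1
        if length < t_min:                    # threshold 1: no breakpoint before t_min
            continue
        if h % d_backup == d_backup - 1:      # divisor 2: remember a fallback breakpoint
            backup_end = i + 1
        if h % d_main == d_main - 1:          # divisor 1: preferred breakpoint
            end = i + 1
        elif length < t_max:
            continue
        else:                                 # threshold 2: must cut at t_max
            end = backup_end if backup_end is not None else i + 1
        chunks.append(data[start:end])
        start, backup_end = end, None
    if start < len(data):
        chunks.append(data[start:])           # final, possibly short, chunk
    return chunks


if __name__ == "__main__":
    sample = random.Random(0).randbytes(20_000)
    parts = tttd_chunks(sample, t_min=460, t_max=2800, d_main=540, d_backup=270)
    print(len(parts), "chunks; first sizes:", [len(p) for p in parts[:5]])
```

The backup divisor is smaller than the main divisor, so it matches more often and gives the algorithm a content-based fallback instead of an arbitrary cut at the maximum size.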


Getting started with FortiGate-7000

Once you have installed your FortiGate-7000 chassis in a rack and installed FIM interface modules and FPM processing modules in it, you can power on the chassis and all modules in the chassis will power up.

Whenever a chassis is first powered on, it takes about 5 minutes for all modules to start up and become completely initialized and synchronized. During this time the chassis will not allow traffic to pass through, and you may not be able to log into the GUI. If you do manage to log in, the session could time out as the FortiGate-7000 continues negotiating.

Review the chassis and module front panel LEDs to verify that everything is operating normally. Wait until the chassis has completely started up and synchronized before making configuration changes. You can use the diagnose system ha status command to confirm that the FortiGate-7000 is completely initialized. If the output of this command has not changed after you check it over a few minutes, you can assume that the system has initialized. You don't normally have to confirm that the system has initialized, but this diagnose command is available if needed.

You can configure and manage the FortiGate-7000 by connecting an Ethernet cable to one of the MGMT1 to MGMT4 interfaces of one of the FIM interface modules in the chassis. By default, the MGMT1 to MGMT4 interfaces of both interface modules have been added to a static 802.3ad aggregate interface called mgmt with a default IP address of 192.168.1.99.

LACP is not supported for the mgmt aggregate interface. The MGMT1 to MGMT4 interfaces are in a static aggregate interface.

You can connect to any of the MGMT1 to MGMT4 interfaces to create a management connection to the FortiGate-7000. You can also set up a switch with a static 802.3ad aggregate interface and connect the switch ports in that aggregate interface to multiple MGMT1 to MGMT4 interfaces to set up redundant management connections to the FortiGate-7000.

Connect to the GUI by browsing to https://192.168.1.99. Log into the GUI using the admin account with no

password. Connect to the CLI by using SSH to connect to 192.168.1.99. You may have to enable SSH

administrative access for the mgmt interface before you can connect to the CLI.

For security reasons you should add a password to the admin account before

connecting the chassis to your network.

Once you have logged into the GUI or CLI you can view and change the configuration of your FortiGate-7000 just

like any FortiGate. For example, all of the interfaces from both interface modules are visible and you can

configure firewall policies between any two interfaces, even if they are physically in different interface modules.

You can also configure aggregate interfaces that include physical interfaces from both interface modules.

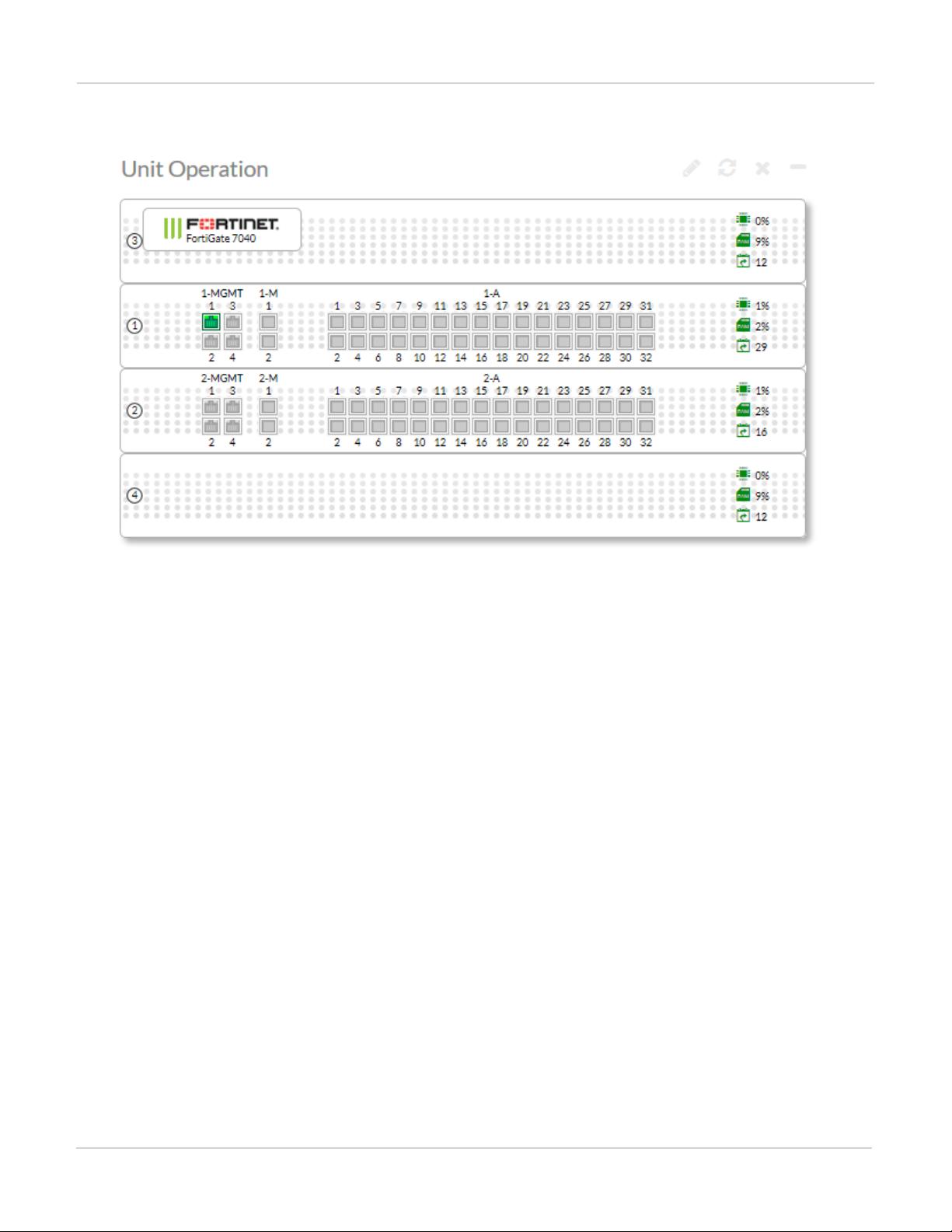

The following example Unit Operation dashboard widget shows a FortiGate-7040E with FIM-7901E modules in

slots 1 and 2 and FPM modules in slots 3 and 4.


Example FortiGate-7040 unit operation widget view

Managing individual modules

When you log into the GUI or CLI using the mgmt interface IP address you are actually connected to the primary

(or master) interface module in slot 1 (the address of slot 1 is FIM01). To verify which module you have logged

into, the GUI header banner or CLI prompt shows the hostname of the module you are logged into plus the slot

address in the format <hostname> (<slot address>).

In some cases you may want to connect to individual modules. For example, you may want to view the traffic being processed by a specific processor module. You can connect to the GUI or CLI of individual modules in the chassis using the system management IP address with a special port number.

For example, if the system management IP address is 192.168.1.99, you can connect to the GUI of the interface module in slot 1 using the system management IP address (for example, by browsing to https://192.168.1.99). You can also use the system management IP address followed by the special port number, for example https://192.168.1.99:44301.

The special port number (in this case, 44301) is a combination of the service port (for HTTPS, the service port is 443) and the two-digit chassis slot number (in this example, 01). The following table lists the special ports to use to connect to each chassis slot using common admin protocols:


FortiGate-7000 special administration port numbers

| Slot number | Module | Slot address | HTTP (80) | HTTPS (443) | Telnet (23) | SSH (22) | SNMP (161) |
|---|---|---|---|---|---|---|---|
| 1 | Primary interface module | FIM01 | 8001 | 44301 | 2301 | 2201 | 16101 |
| 2 | Interface module | FIM02 | 8002 | 44302 | 2302 | 2202 | 16102 |
| 3 | Processor module | FPM03 | 8003 | 44303 | 2303 | 2203 | 16103 |
| 4 | Processor module | FPM04 | 8004 | 44304 | 2304 | 2204 | 16104 |
| 5 | Processor module | FPM05 | 8005 | 44305 | 2305 | 2205 | 16105 |
| 6 | Processor module | FPM06 | 8006 | 44306 | 2306 | 2206 | 16106 |

For example:

l To connect to the GUI of the processor module in slot 3 using HTTPS, browse to https://192.168.1.99:44303.

l To send an SNMP query to the processor module in slot 6, use port number 16106.

The FortiGate-7000 configuration is the same no matter which module you log into. Logging into different modules allows you to use the FortiView or Monitor GUI pages to view the activity on that module. Even though you can log into different modules, you should only make configuration changes from the primary interface module, which is the FIM module in slot 1.
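Every entry in the special port table follows one rule: append the two-digit slot number to the service port, which is the same as multiplying the service port by 100 and adding the slot number. The Python sketch below reproduces the table and builds a per-module GUI URL. The function names are hypothetical, and 192.168.1.99 is only the default management address from this chapter.

```python
# Sketch of the special administration port rule shown in the table: the
# service port followed by the two-digit slot number (443 and slot 01 -> 44301),
# which equals service_port * 100 + slot.

SERVICE_PORTS = {"http": 80, "https": 443, "telnet": 23, "ssh": 22, "snmp": 161}
MAX_SLOT = 6  # slots 1 to 6 appear in the table


def special_port(service: str, slot: int) -> int:
    """Special port for reaching the module in `slot` with `service`."""
    if not 1 <= slot <= MAX_SLOT:
        raise ValueError(f"slot must be between 1 and {MAX_SLOT}")
    return SERVICE_PORTS[service.lower()] * 100 + slot


def gui_url(mgmt_ip: str, slot: int) -> str:
    """HTTPS URL for the GUI of the module in the given slot."""
    return f"https://{mgmt_ip}:{special_port('https', slot)}"


if __name__ == "__main__":
    # Print one table row per slot, in the order of SERVICE_PORTS.
    for slot in range(1, MAX_SLOT + 1):
        print(slot, [special_port(service, slot) for service in SERVICE_PORTS])
    print(gui_url("192.168.1.99", 3))
```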

Managing individual modules from the CLI

From the CLI you can use the following command to switch between chassis slots and perform different

operations on the modules in each slot:

execute load-balance slot {manage | power-off | power-on | reboot} <slot-number>

Use manage to connect to the CLI of a different module. Use power-off, power-on, and reboot to turn off, turn on, or reboot the module in <slot-number>.

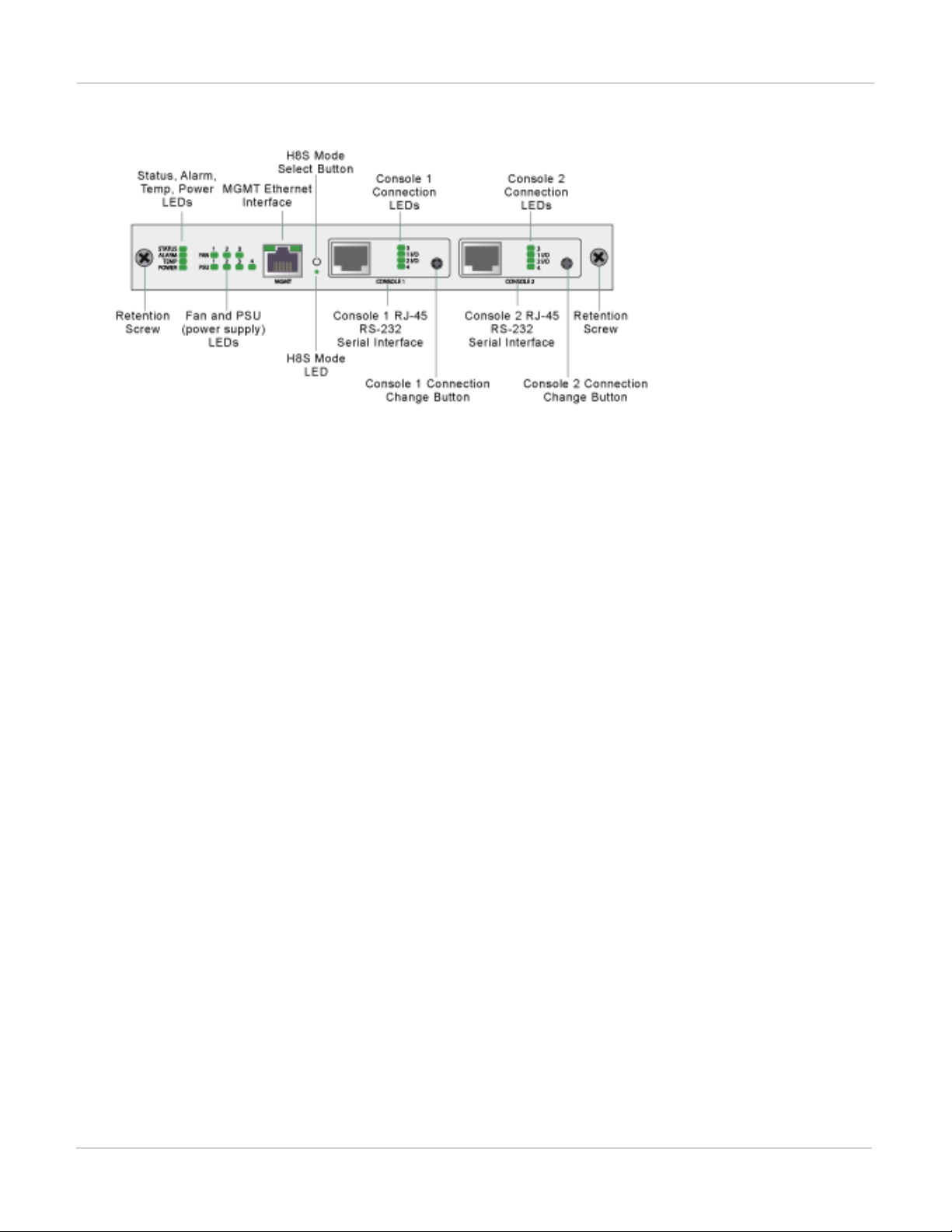

Connecting to module CLIs using the management module

Every FortiGate-7000 chassis includes a front panel management module (also called a shelf manager) on the chassis front panel. See the system guide for your chassis for details about the management module.


FortiGate-7040E management module front panel

The management module includes two console ports named Console 1 and Console 2 that can be used to connect to the CLI of the FIM and FPM modules in the chassis. As described in the system guide, the console ports are also used to connect to the SMC CLIs of the management module and the FIM and FPM modules.

By default, when the chassis first starts up, Console 1 is connected to the FortiOS CLI of the FIM module in slot 1 and Console 2 is disconnected. The default settings for connecting to each console port are:

Baud Rate (bps) 9600, Data bits 8, Parity None, Stop bits 1, and Flow Control None.

You can use the console connection change buttons to select the CLI that each console port is connected to. Press a console's button to cycle it through the FIM and FPM module FortiOS CLIs and to disconnect it. The console's LEDs indicate what it is connected to. If no LED is lit, the console is either connected to the management module SMC SDI console or disconnected. Both console ports cannot be connected to the same CLI at the same time; if a console button press would cause a conflict, that module is skipped. If one of the console ports is disconnected, the other console port can connect to any CLI.

If you connect a PC to one of the management module console ports with a serial cable and open a terminal session, begin by pressing Ctrl-T to enable console switching mode. Press Ctrl-T multiple times to cycle through the FIM and FPM module FortiOS CLIs (the new destination is displayed in the terminal window). If you press Ctrl-T after connecting to the FPM module in the highest slot number, the console is disconnected. Press Ctrl-T again to start over at slot 1.

Once the console port is connected to the CLI that you want to use, press Enter to enable the CLI and log in. The default administrator account for accessing the FortiOS CLIs is admin with no password.

When your session is complete you can press Ctrl-T until the prompt shows you have disconnected from the

console.
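The switching rules above behave like a small state machine. The following Python sketch models them under stated assumptions: modules are visited in slot order, "disconnected" follows the highest slot, and a CLI already in use on the other console port is skipped. The function names are hypothetical, and the SMC SDI console is left out.

```python
from __future__ import annotations

# Hypothetical model of console switching (button press or Ctrl-T) as described
# above: a console moves to the next module CLI in slot order, then to
# "disconnected" (None), then back to slot 1. A CLI in use on the other console
# port is skipped. SMC consoles are not modeled; the order is an assumption.


def module_clis(slots: int) -> list[str]:
    # FIMs are in slots 1 and 2; FPMs are in slots 3 and up.
    return [("FIM" if s <= 2 else "FPM") + f"{s:02d}" for s in range(1, slots + 1)]


def next_console_target(current: str | None, other: str | None, slots: int) -> str | None:
    """Where this console goes after one press, given the other console's target."""
    cycle: list[str | None] = [*module_clis(slots), None]
    idx = cycle.index(current)
    for step in range(1, len(cycle) + 1):
        candidate = cycle[(idx + step) % len(cycle)]
        # "Disconnected" is always allowed; a CLI is allowed unless the other port uses it.
        if candidate is None or candidate != other:
            return candidate
    return None  # not reached: "disconnected" is always in the cycle


if __name__ == "__main__":
    # FortiGate-7040E (4 slots): Console 1 starts on FIM01, Console 2 is disconnected.
    console1, console2 = "FIM01", None
    for _ in range(3):
        console2 = next_console_target(console2, console1, slots=4)
        print("Console 2 ->", console2)
```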

Connecting to the FortiOS CLI of the FIM module in slot 1

Use the following steps to connect to the FortiOS CLI of the FIM module in slot 1:

1.

Connect the console cable supplied with your chassis to Console 1 and to the RS-232 console port of your PC or other device.

2.

Start a terminal emulation program on the management computer. Use these settings:

Baud Rate (bps) 9600, Data bits 8, Parity None, Stop bits 1, and Flow Control None.


3.

Press Ctrl-T to enter console switch mode.

4.

Repeat pressing Ctrl-T until you have connected to slot 1. Example prompt:

<Switching to Console: FIM01 (9600)>

5.

Log in with an administrator name and password.

The default is admin with no password. For security reasons, it is strongly recommended that you change the

password.

6.

When your session is complete, enter the exit command to log out.

Default VDOM configuration

By default, when the FortiGate-7000 first starts up, it operates in multiple VDOM mode. The system has a management VDOM (named dmgmt-vdom) and the root VDOM. All management interfaces are in dmgmt-vdom and all other interfaces are in the root VDOM. You can add more VDOMs and add interfaces to them, or just use the root VDOM.

Default management VDOM

By default, the FortiGate-7000 configuration includes a management VDOM named dmgmt-vdom. For the FortiGate-7000 system to operate normally, you should not change the configuration of this VDOM, and this VDOM should always be the management VDOM. You should also not add interfaces to or remove interfaces from this VDOM.

You have full control over the configurations of other FortiGate-7000 VDOMs.

Firmware upgrades

All of the modules in your FortiGate-7000 run the same firmware image. You upgrade the firmware from the primary interface module GUI or CLI just as you would any FortiGate product. During the upgrade process, the firmware of all of the modules in the chassis is upgraded in one step. Firmware upgrades should be done during a quiet time because traffic will briefly be interrupted during the upgrade process.

If you are operating two FortiGate-7000 chassis in HA mode with uninterruptable-upgrade and

session-pickup enabled, firmware upgrades should only cause a minimal traffic interruption. Use the

following command to enable these settings. These settings are synchronized to all modules in the cluster.

config system ha

set uninterruptable-upgrade enable

set session-pickup enable

end

Restarting the FortiGate-7000

To restart all of the modules in a FortiGate-7000 chassis, connect to the primary FIM module CLI and enter the execute reboot command. When you enter this command, all of the modules in the chassis reboot.


You can restart individual modules by logging into that module's CLI and entering the execute reboot

command.

Load balancing

FortiGate-7000E session-aware load balancing (SLBC) distributes TCP, UDP, and SCTP traffic from the interface

modules to the processor modules. Traffic is load balanced based on the algorithm set by the following

command:

config load-balance setting

set dp-load-distribution-method {round-robin | src-ip | dst-ip | src-dst-ip | src-ip-sport | dst-ip-dport | src-dst-ip-sport-dport}

end

Where:

round-robin directs new requests to the next slot regardless of response time or number of connections.

src-ip distributes the traffic load across all slots according to the source IP address.

dst-ip statically distributes the traffic load across all slots according to the destination IP address.

src-dst-ip distributes the traffic load across all slots according to the source and destination IP addresses.

src-ip-sport distributes the traffic load across all slots according to the source IP address and source port.

dst-ip-dport distributes the traffic load across all slots according to the destination IP address and destination port.

src-dst-ip-sport-dport distributes the traffic load across all slots according to the source and destination IP addresses, source port, and destination port. This is the default load balancing distribution method and represents true session-aware load balancing.
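To make the difference between the methods concrete, the Python sketch below maps each hash-based method to the session fields it uses and picks an FPM slot for each new session. The class names are hypothetical. CRC-32 modulo the number of FPMs stands in for the real distribution function, which this handbook does not describe, so treat the slot numbers it produces as illustrative only.

```python
import itertools
import zlib
from typing import NamedTuple

# Hypothetical model of the dp-load-distribution-method options: pick an FPM slot
# for each new session from the fields each method names. CRC-32 modulo the
# number of FPMs is an assumption for illustration, not the DP2 algorithm.


class Session(NamedTuple):
    src_ip: str
    dst_ip: str
    sport: int
    dport: int


HASH_FIELDS = {
    "src-ip": ("src_ip",),
    "dst-ip": ("dst_ip",),
    "src-dst-ip": ("src_ip", "dst_ip"),
    "src-ip-sport": ("src_ip", "sport"),
    "dst-ip-dport": ("dst_ip", "dport"),
    "src-dst-ip-sport-dport": ("src_ip", "dst_ip", "sport", "dport"),
}


class SlotSelector:
    def __init__(self, method: str, fpm_slots: list[int]) -> None:
        if method != "round-robin" and method not in HASH_FIELDS:
            raise ValueError(f"unknown method: {method}")
        if not fpm_slots:
            raise ValueError("at least one FPM slot is required")
        self.method = method
        self.slots = list(fpm_slots)
        self._next_rr = itertools.cycle(self.slots)

    def slot_for(self, session: Session) -> int:
        """Return the FPM slot for a new session."""
        if self.method == "round-robin":
            return next(self._next_rr)  # ignores the session fields entirely
        key = "|".join(str(getattr(session, name)) for name in HASH_FIELDS[self.method])
        return self.slots[zlib.crc32(key.encode()) % len(self.slots)]


if __name__ == "__main__":
    selector = SlotSelector("src-dst-ip-sport-dport", [3, 4, 5, 6])
    print(selector.slot_for(Session("10.1.1.10", "172.16.0.5", 51514, 443)))
```

The trade-off the sketch shows: methods that hash fewer fields (such as src-ip) keep all sessions from one host on the same FPM, while src-dst-ip-sport-dport spreads individual sessions across FPMs most evenly.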

Traffic that cannot be load balanced

Some traffic types cannot be load balanced. All traffic that cannot be load balanced is processed by the primary FPM module, which is usually the FPM module in slot 3. Internally, this FPM module is designated as the ELBC master. If the FPM module in slot 3 fails or is rebooted, the next FPM module becomes the primary FPM module.

You can configure the FortiGate-7000 to send any type of traffic to the primary FPM or to other specific FPM modules using the config load-balance flow-rule command. By default, traffic that is only sent to the primary FPM module includes IPsec, IKE, GRE, session helper, Kerberos, BGP, RIP, IPv4 and IPv6 DHCP, PPTP, BFD, IPv4 multicast, and IPv6 multicast traffic. You can view the default configuration of the config load-balance flow-rule command to see how this is configured. For example, the following configuration sends all IKE traffic to the primary FPM:

config load-balance flow-rule

edit 1

set status enable

set vlan 0

set ether-type ip

set protocol udp

set src-l4port 500-500

set dst-l4port 500-500

set action forward


set forward-slot master

set priority 5

set comment "ike"

next

edit 2

set status disable

set vlan 0

set ether-type ip

set protocol udp

set src-l4port 4500-4500

set dst-l4port 0-0

set action forward

set forward-slot master

set priority 5

set comment "ike-natt src"

next

edit 3

set status disable

set vlan 0

set ether-type ip

set protocol udp

set src-l4port 0-0

set dst-l4port 4500-4500

set action forward

set forward-slot master

set priority 5

set comment "ike-natt dst"

next

Recommended configuration for traffic that cannot be load balanced

The following flow rules are recommended to handle common forms of traffic that cannot be load balanced.

These flow rules send GPRS (port 2123), SSL VPN, IPv4 and IPv6 IPsec VPN, ICMP and ICMPv6 traffic to the

primary (or master) FPM.

The CLI syntax below just shows the configuration changes. All other options are set to their defaults. For

example, the flow rule option that controls the FPM slot that sessions are sent to is forward-slot and in all

cases below forward-slot is set to its default setting of master. This setting sends matching sessions to the

primary (or master) FPM.

config load-balance flow-rule

edit 20

set status enable

set ether-type ipv4

set protocol udp

set dst-l4port 2123-2123

next

edit 21

set status enable

set ether-type ip

set protocol tcp

set dst-l4port 10443-10443

set comment "ssl vpn to the primary FPM"

next

edit 22


set status enable

set ether-type ipv4

set protocol udp

set src-l4port 500-500

set dst-l4port 500-500

set comment "ipv4 ike"

next

edit 23

set status enable

set ether-type ipv4

set protocol udp

set src-l4port 4500-4500

set comment "ipv4 ike-natt src"

next

edit 24

set status enable

set ether-type ipv4

set protocol udp

set dst-l4port 4500-4500

set comment "ipv4 ike-natt dst"

next

edit 25

set status enable

set ether-type ipv4

set protocol esp

set comment "ipv4 esp"

next

edit 26

set status enable

set ether-type ipv6

set protocol udp

set src-l4port 500-500

set dst-l4port 500-500

set comment "ipv6 ike"

next

edit 27

set status enable

set ether-type ipv6

set protocol udp

set src-l4port 4500-4500

set comment "ipv6 ike-natt src"

next

edit 28

set status enable

set ether-type ipv6

set protocol udp

set dst-l4port 4500-4500

set comment "ipv6 ike-natt dst"

next

edit 29

set status enable

set ether-type ipv6

set protocol esp

set comment "ipv6 esp"

next

edit 30

set ether-type ipv4

FortiGate-7000

Fortinet Technologies Inc.

38

Page 39

Configuration synchronization Getting started with FortiGate-7000

set protocol icmp

set comment "icmp"

next

edit 31

set status enable

set ether-type ipv6

set protocol icmpv6

set comment "icmpv6"

next

edit 32

set ether-type ipv6

set protocol 41

end
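Because the recommended rules differ only in a few fields, you might keep them as data and generate the CLI from that data, for example when preparing the same rules for several chassis. The following Python is a minimal sketch, not a Fortinet tool: the FlowRule type and the render_flow_rules helper are hypothetical, the option names are copied from the CLI above, and it assumes (as described above) that any option left out keeps its default value.

```python
from dataclasses import dataclass
from typing import List, Optional

INDENT = "    "


@dataclass
class FlowRule:
    """One load-balance flow rule (hypothetical type); None means 'keep the default'."""
    rule_id: int
    ether_type: str                   # e.g. "ip", "ipv4", "ipv6"
    protocol: str                     # e.g. "udp", "tcp", "esp", "icmp"
    status: Optional[str] = None      # "enable" or "disable"
    src_l4port: Optional[str] = None  # port range such as "500-500"
    dst_l4port: Optional[str] = None
    comment: Optional[str] = None


def render_flow_rules(rules: List[FlowRule]) -> str:
    """Build a CLI block that sets only the options given for each rule."""
    lines = ["config load-balance flow-rule"]
    for r in rules:
        options = [
            ("status", r.status),
            ("ether-type", r.ether_type),
            ("protocol", r.protocol),
            ("src-l4port", r.src_l4port),
            ("dst-l4port", r.dst_l4port),
            # Comments are quoted because they may contain spaces.
            ("comment", None if r.comment is None else f'"{r.comment}"'),
        ]
        lines.append(f"{INDENT}edit {r.rule_id}")
        for name, value in options:
            if value is not None:  # omitted options stay at their defaults
                lines.append(f"{INDENT * 2}set {name} {value}")
        lines.append(f"{INDENT}next")
    lines.append("end")
    return "\n".join(lines)


# A subset of the recommended rules above.
RULES = [
    FlowRule(22, "ipv4", "udp", status="enable", src_l4port="500-500",
             dst_l4port="500-500", comment="ipv4 ike"),
    FlowRule(25, "ipv4", "esp", status="enable", comment="ipv4 esp"),
]

print(render_flow_rules(RULES))
```

The printed block reproduces entries 22 and 25 above. Whatever the generated text, enter it while logged into the management address (the config-sync master) so that it is synchronized to all modules.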

Configuration synchronization

The FortiGate-7000 synchronizes the configuration to all modules in the chassis. To support this feature, the FIM (interface module) in slot 1 becomes the config-sync master and makes sure that the configurations of all modules are synchronized. Always make configuration changes while logged into the chassis using the management IP address, which logs you into the config-sync master. All configuration changes made on the config-sync master are synchronized to all of the modules in the chassis.

If the FIM module in slot 1 fails or reboots, the FIM module in slot 2 becomes the config-sync master.

Failover in a standalone FortiGate-7000

A FortiGate-7000 continues to operate even if one of its FIM or FPM modules fails or is removed. If an FPM module fails, the sessions it was processing fail, and all sessions are then load balanced to the remaining FPM modules. Sessions that were being processed by the failed module are restarted and load balanced to the remaining FPM modules.

If an FIM module fails, the other FIM module continues to operate and becomes (or remains) the config-sync master. However, traffic received by the failed FIM module is lost.

You can use LACP or redundant interfaces to connect interfaces of both FIMs to the same network. This way, if one of the FIMs fails, traffic continues to be received by the other FIM module.

Replacing a failed FPM or FIM module

This section describes how to remove a failed FPM or FIM module and replace it with a new one. The procedure is slightly different depending on whether you are operating two chassis in HA mode or a standalone chassis.

Replacing a failed module in a standalone FortiGate-7000 chassis

1. Power down the failed module by pressing the front panel power button.

2. Remove the module from the chassis.

3. Insert the replacement module. If the chassis has power, the module should power up when it is inserted.


4. The module's configuration is synchronized and its firmware is upgraded to match the firmware version on the primary module. The new module then reboots.

5. If the module will be running FortiOS Carrier, apply the FortiOS Carrier license to the module. The module reboots.

6. Confirm that the new module is running the correct firmware version, either from the GUI or by using the get system status command.

If required, manually update the module to the correct version by logging into the module and performing a firmware upgrade.

7. Verify that the configuration has been synchronized.

The following command output shows the sync status of the FIM modules in a FortiGate-7000 chassis. The in_sync=1 field indicates that the configurations of the modules are synchronized.

diagnose sys confsync status | grep in_sy
FIM04E3E16000080, Slave, uptime=177426.45, priority=2, slot_id=1:2, idx=0, flag=0x0, in_sync=1
FIM10E3E16000063, Master, uptime=177415.38, priority=1, slot_id=1:1, idx=1, flag=0x0, in_sync=1

If in_sync is not 1, or if a module is missing from the command output, try restarting the modules in the chassis by entering execute reboot from any module CLI. If this does not solve the problem, contact Fortinet support.
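If you check sync status from a script rather than by eye, the following Python sketch parses output in the comma-separated format shown above and reports whether every expected module has in_sync=1. The parse_confsync and all_in_sync helpers and the expected module count are hypothetical. The parser assumes only the layout of the sample; it treats line breaks like commas so that records wrapped across lines (as when copied from a page) still parse.

```python
from typing import Dict, List

ROLES = {"Master", "Slave"}


def parse_confsync(text: str) -> List[Dict[str, str]]:
    """Parse 'diagnose sys confsync status' output into one dict per module.

    Hypothetical helper. Assumption: each record is 'SERIAL, ROLE, key=value, ...'.
    Newlines are treated like commas, so wrapped records still parse.
    """
    tokens = [t.strip() for t in text.replace("\n", ",").split(",")]
    tokens = [t for t in tokens if t]
    modules: List[Dict[str, str]] = []
    for i, tok in enumerate(tokens):
        nxt = tokens[i + 1] if i + 1 < len(tokens) else ""
        if "=" not in tok and nxt in ROLES:
            # A bare token followed by Master/Slave starts a new module record.
            modules.append({"serial": tok})
        elif tok in ROLES and modules:
            modules[-1]["role"] = tok
        elif "=" in tok and modules:
            key, value = tok.split("=", 1)
            modules[-1][key] = value
    return modules


def all_in_sync(modules: List[Dict[str, str]], expected: int) -> bool:
    """True if exactly `expected` modules are listed and each reports in_sync=1."""
    return len(modules) == expected and all(m.get("in_sync") == "1" for m in modules)


# The sample output from this section, wrapped as it might be when copied.
SAMPLE = """FIM04E3E16000080, Slave, uptime=177426.45, priority=2,
slot_id=1:2, idx=0, flag=0x0, in_sync=1
FIM10E3E16000063, Master, uptime=177415.38, priority=1,
slot_id=1:1, idx=1, flag=0x0, in_sync=1
"""

mods = parse_confsync(SAMPLE)
print(all_in_sync(mods, expected=2))  # True
```

Checking the module count as well as the flag covers both failure cases described above: a module reporting in_sync other than 1, and a module missing from the output.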

Replacing a failed module in a FortiGate-7000 chassis in an HA cluster

1. Power down the failed module by pressing the front panel power button.

2. Remove the module from the chassis.

3. Insert the replacement module. If the chassis has power, the module should power up when it is inserted.

4. The module's configuration is synchronized and its firmware is upgraded to match the firmware version on the primary module. The new module then reboots.

5. If the module will be running FortiOS Carrier, apply the FortiOS Carrier license to the module. The module reboots.

6. Confirm that the module is running the correct firmware version.

If required, manually update the module to the correct version by logging into the module and performing a firmware upgrade.

7. Configure the new module for HA operation. For example:

config system ha
    set mode a-p
    set chassis-id 1
    set hbdev m1 m2
    set hbdev-vlan-id 999
    set hbdev-second-vlan-id 990
end

8. Optionally configure the hostname:

config system global
    set hostname <name>
end

The HA configuration and the hostname must be set manually because HA settings and the hostname are not synchronized.

9. Verify that the configuration has been synchronized.

The following command output shows the sync status of the FIM modules in a FortiGate-7000 chassis. The in_sync=1 field indicates that the configurations of the modules are synchronized.

diagnose sys confsync status | grep in_sy
FIM04E3E16000080, Slave, uptime=177426.45, priority=2, slot_id=1:2, idx=0, flag=0x0, in_sync=1
FIM10E3E16000063, Master, uptime=177415.38, priority=1, slot_id=1:1, idx=1, flag=0x0, in_sync=1

If in_sync is not 1, or if a module is missing from the command output, try restarting the modules in the chassis by entering execute reboot from any module CLI. If this does not solve the problem, contact Fortinet support.

Installing firmware on an FIM or FPM module from the BIOS using a TFTP server

Use the procedures in this section to install firmware on an FIM or FPM module from a TFTP server after interrupting the boot-up sequence from the BIOS.

You might want to use this procedure if you need to reset the configuration of a module to factory defaults by installing firmware during a reboot. You can also use this procedure if you have formatted one or more FIM or FPM modules from the BIOS by interrupting the boot process.

This procedure involves creating a connection between a TFTP server and one of the MGMT interfaces of one of the FIM modules, using a chassis console port to connect to the CLI of the module whose firmware you are upgrading, rebooting this module, interrupting the boot from the console session, and installing the firmware.

This section includes two procedures, one for upgrading FIM modules and one for upgrading FPM modules. The two procedures are very similar, but a few details differ, most notably the local VLAN ID setting. If you need to update both FIM and FPM modules, update the FIM modules first, because the FPM modules can only communicate with the TFTP server through FIM module interfaces.

Uploading firmware from a TFTP server to an FIM module

Use the following steps to upload firmware from a TFTP server to an FIM module. This procedure requires

Ethernet connectivity between the TFTP server and one of the FIM module's MGMT interfaces.

During this procedure the FIM module cannot process traffic, so if possible, perform this procedure when the network is not processing any traffic.

If you are operating an HA configuration, you should remove the chassis from the HA configuration before

performing this procedure.

1. Set up a TFTP server and copy the firmware file to be installed into the TFTP server's default folder.

2. Set up your network to allow traffic between the TFTP server and one of the MGMT interfaces of the FIM module to be updated.

If the MGMT interface you are using is one of the MGMT interfaces connected as a LAG to a switch, you must shut down or disconnect all of the other connections in the LAG from the switch. This includes the MGMT interfaces in the other FIM module.

3. Connect the console cable supplied with your chassis to the Console 1 port on your chassis front panel and to your management computer's RS-232 console port.

4. Start a terminal emulation program on the management computer. Use these settings:

Baud Rate (bps) 9600, Data bits 8, Parity None, Stop bits 1, and Flow Control None.
