Page 1

Dell PowerVault MD3200i

and MD3220i Storage Arrays

Owner’s Manual

Regulatory Model: E03J Series and E04J Series

Regulatory Type: E03J001 and E04J001

Page 2

Notes, Cautions, and Warnings

NOTE: A NOTE indicates important information that helps you make better use of your computer.

CAUTION: A CAUTION indicates potential damage to hardware or loss of data if instructions are not followed.

WARNING: A WARNING indicates a potential for property damage, personal injury, or death.

____________________

© 2013 Dell Inc.

Trademarks used in this text: Dell™, the DELL logo, PowerEdge™, PowerVault™, and OpenManage™ are trademarks of Dell Inc. Intel® is a registered trademark of Intel Corporation in the U.S. and other countries. Microsoft®, Windows®, Windows Server®, MS-DOS®, and Internet Explorer® are either trademarks or registered trademarks of Microsoft Corporation in the United States and/or other countries. Red Hat® and Red Hat Enterprise Linux® are registered trademarks of Red Hat, Inc. in the United States and other countries. SUSE® is a registered trademark of Novell, Inc. in the United States and other countries.

2013 - 06 Rev. A02

Page 3

Contents

1 Introduction . . . . . 19
About This Document . . . . . 19
Inside the Box of the Dell PowerVault MD3200i Series Storage Array . . . . . 19
MD3200i Series Storage Array . . . . . 20
Dell PowerVault Modular Disk Storage Manager . . . . . 20
Dell PowerVault Modular Disk Configuration Utility . . . . . 20
Other Information You May Need . . . . . 20

2 Planning: About Your Storage Array . . . . . 23
Overview . . . . . 23
Hardware Features . . . . . 24
Front-Panel Features and Indicators . . . . . 24
Back Panel Features and Indicators . . . . . 27
Hard-Drive Indicator Patterns . . . . . 28
Power Supply and Cooling Fan Features . . . . . 29
Power Indicator Codes and Features . . . . . 30

Page 4

3 Planning: RAID Controller Modules . . . . . 31
RAID Controller Modules . . . . . 31
RAID Controller Module Connectors and Features . . . . . 32
RAID Controller Module—Additional Features . . . . . 34
Battery Backup Unit . . . . . 34
Storage Array Thermal Shutdown . . . . . 34
System Password Reset . . . . . 35
Cache Functions and Features . . . . . 35
Cache Mirroring . . . . . 35
Write-Back Cache . . . . . 35
Write-Through Cache . . . . . 36

4 Planning: MD3200i Series Storage Array Terms and Concepts . . . . . 37
Physical Disks, Virtual Disks, and Disk Groups . . . . . 37
Physical Disks . . . . . 37
Physical Disk States . . . . . 38
Self-Monitoring Analysis and Reporting Technology . . . . . 39
Virtual Disks and Disk Groups . . . . . 39
Virtual Disk States . . . . . 40
RAID Levels . . . . . 40
RAID Level Usage . . . . . 41
Segment Size . . . . . 42
Virtual Disk Operations . . . . . 43
Virtual Disk Initialization . . . . . 43
Background Initialization . . . . . 43

Page 5

Foreground Initialization . . . . . 43
Consistency Check . . . . . 44
Media Verification . . . . . 44
Cycle Time . . . . . 44
Virtual Disk Operations Limit . . . . . 45
Disk Group Operations . . . . . 45
RAID Level Migration . . . . . 45
Segment Size Migration . . . . . 45
Virtual Disk Capacity Expansion . . . . . 46
Disk Group Expansion . . . . . 46
Disk Group Defragmentation . . . . . 46
Disk Group Operations Limit . . . . . 47
RAID Background Operations Priority . . . . . 47
Virtual Disk Migration and Disk Roaming . . . . . 48
Disk Migration . . . . . 48
Disk Roaming . . . . . 50
Advanced Features . . . . . 50
Host Server-to-Virtual Disk Mapping . . . . . 51
Host Types . . . . . 51
Snapshot Virtual Disks . . . . . 51
Snapshot Repository Virtual Disk . . . . . 52
Virtual Disk Copy . . . . . 53
Virtual Disk Recovery . . . . . 54
Using Snapshot and Disk Copy Together . . . . . 54
Multi-Path Software . . . . . 54
Preferred and Alternate Controllers and Paths . . . . . 55
Virtual Disk Ownership . . . . . 55
Load Balancing . . . . . 56

Page 6

Monitoring MD3200i Series System Performance . . . . . 57

5 Configuration: Overview . . . . . 61
User Interface . . . . . 61
Enterprise Management Window . . . . . 62
Array Management Window . . . . . 63

6 Configuration: About Your Storage Array . . . . . 65
Out-of-Band and In-Band Management . . . . . 65
Storage Arrays . . . . . 66
Adding Storage Arrays . . . . . 66
Setting Up Your Storage Array . . . . . 68
Locating Storage Arrays . . . . . 69
Naming or Renaming Storage Arrays . . . . . 70
Setting a Password . . . . . 71
Viewing Storage Array Connections . . . . . 72
Adding/Editing a Comment to an Existing Storage Array . . . . . 73
Removing Storage Arrays . . . . . 73
Enabling Premium Features . . . . . 74
Failover Alert Display . . . . . 74
Changing the Cache Settings on the Storage Array . . . . . 74
Changing Expansion Enclosure ID Numbers . . . . . 75
Changing the Enclosure Order in the Physical Pane . . . . . 75
Configuring Alert Notifications . . . . . 76

Page 7

Configuring E-mail Alerts . . . . . 76
Configuring SNMP Alerts . . . . . 79
Battery Settings . . . . . 80
Setting the Storage Array RAID Controller Module Clocks . . . . . 81

7 Configuration: Using iSCSI . . . . . 83
Changing the iSCSI Target Authentication . . . . . 83
Entering Mutual Authentication Permissions . . . . . 84
Creating CHAP Secrets . . . . . 84
Initiator CHAP Secret . . . . . 85
Target CHAP Secret . . . . . 85
Valid Characters for CHAP Secrets . . . . . 85
Changing the iSCSI Target Identification . . . . . 86
Changing the iSCSI Target Discovery Settings . . . . . 86
Configuring the iSCSI Host Ports . . . . . 87
Advanced iSCSI Host Ports Settings . . . . . 89
Viewing or Ending an iSCSI Session . . . . . 90
Viewing iSCSI Statistics and Setting Baseline Statistics . . . . . 91
Edit, Remove, or Rename Host Topology . . . . . 92

8 Configuration: Event Monitor . . . . . 93
Enabling or Disabling the Event Monitor . . . . . 93
Windows . . . . . 94

Page 8

Linux . . . . . 94

9 Configuration: About Your Host . . . . . 95
Configuring Host Access . . . . . 95
Using the Mappings Tab . . . . . 96
Defining a Host . . . . . 96
Removing Host Access . . . . . 98
Managing Host Groups . . . . . 98
Creating a Host Group . . . . . 98
Moving a Host to a Different Host Group . . . . . 99
Removing a Host Group . . . . . 100
Host Topology . . . . . 100
Starting or Stopping the Host Context Agent . . . . . 101
I/O Data Path Protection . . . . . 102
Managing Host Port Identifiers . . . . . 103

10 Configuration: Disk Groups and Virtual Disks . . . . . 105
Creating Disk Groups and Virtual Disks . . . . . 105
Creating Disk Groups . . . . . 106
Locating a Disk Group . . . . . 108
Creating Virtual Disks . . . . . 108
Changing the Virtual Disk Modification Priority . . . . . 110
Changing the Virtual Disk Cache Settings . . . . . 111
Changing the Segment Size of a Virtual Disk . . . . . 113

Page 9

Changing the I/O Type . . . . . 114
Choosing an Appropriate Physical Disk Type . . . . . 115
Physical Disk Security with Self Encrypting Disk . . . . . 115
Creating a Security Key . . . . . 118
Changing a Security Key . . . . . 119
Saving a Security Key . . . . . 121
Validate Security Key . . . . . 122
Unlocking Secure Physical Disks . . . . . 122
Erasing Secure Physical Disks . . . . . 122
Configuring Hot Spare Physical Disks . . . . . 123
Hot Spares and Rebuild . . . . . 125
Global Hot Spares . . . . . 125
Hot Spare Operation . . . . . 125
Hot Spare Drive Protection . . . . . 126
Enclosure Loss Protection . . . . . 126
Host-to-Virtual Disk Mapping . . . . . 128
Creating Host-to-Virtual Disk Mappings . . . . . 129
Modifying and Removing Host-to-Virtual Disk Mapping . . . . . 130
Changing Controller Ownership of the Virtual Disk . . . . . 131
Removing Host-to-Virtual Disk Mapping . . . . . 132
Changing the RAID Controller Module Ownership of a Disk Group . . . . . 132
Changing the RAID Level of a Disk Group . . . . . 133
Removing a Host-to-Virtual Disk Mapping Using Linux DMMP . . . . . 134
Restricted Mappings . . . . . 136
Changing the RAID Controller Module Ownership of a Virtual Disk or a Disk Group . . . . . 137

Page 10

Changing the RAID Level of a Disk Group . . . . . 139
Storage Partitioning . . . . . 140
Disk Group and Virtual Disk Expansion . . . . . 141
Disk Group Expansion . . . . . 141
Virtual Disk Expansion . . . . . 142
Using Free Capacity . . . . . 142
Using Unconfigured Capacity . . . . . 142
Disk Group Migration . . . . . 143
Export Disk Group . . . . . 143
Exporting a Disk Group . . . . . 143
Import Disk Group . . . . . 144
Importing a Disk Group . . . . . 144
Storage Array Media Scan . . . . . 145
Changing Media Scan Settings . . . . . 146
Suspending the Media Scan . . . . . 147

11 Configuration: Premium Feature—Snapshot Virtual Disks . . . . . 149
Scheduling a Snapshot Virtual Disk . . . . . 150
Common Reasons for Scheduling a Snapshot Virtual Disk . . . . . 150
Guidelines for Creating Snapshot Schedules . . . . . 151
Enabling and Disabling Snapshot Schedules . . . . . 152
Creating a Snapshot Virtual Disk Using the Simple Path . . . . . 152
About the Simple Path . . . . . 153

Page 11

Preparing Host Servers to Create the Snapshot Using the Simple Path . . . . . 153
Creating a Snapshot Virtual Disk Using the Advanced Path . . . . . 156
About the Advanced Path . . . . . 156
Preparing Host Servers to Create the Snapshot Using the Advanced Path . . . . . 157
Creating the Snapshot Using the Advanced Path . . . . . 159
Specifying Snapshot Virtual Disk Names . . . . . 161
Snapshot Repository Capacity . . . . . 162
Disabling a Snapshot Virtual Disk . . . . . 165
Preparing Host Servers to Re-create a Snapshot Virtual Disk . . . . . 166
Re-creating Snapshot Virtual Disks . . . . . 167
Snapshot Rollback . . . . . 167
Rules and Guidelines for Performing a Snapshot Rollback . . . . . 168
Protecting Against a Failed Snapshot Rollback . . . . . 169
Previous Versions of the MD Storage Manager . . . . . 169
Starting a Snapshot Rollback . . . . . 169
Resuming a Snapshot Rollback . . . . . 170
Canceling a Snapshot Rollback . . . . . 171

12 Configuration: Premium Feature—Virtual Disk Copy . . . . . 173
Types of Virtual Disk Copies . . . . . 174
Offline Copy . . . . . 174

Page 12

Online Copy . . . . . 175
Creating a Virtual Disk Copy for an MSCS Shared Disk . . . . . 176
Virtual Disk Read/Write Permissions . . . . . 176
Virtual Disk Copy Restrictions . . . . . 177
Creating a Virtual Disk Copy . . . . . 178
Before you Begin . . . . . 178
Virtual Disk Copy and Modification Operations . . . . . 179
Create Copy Wizard . . . . . 179
Failed Virtual Disk Copy . . . . . 179
Preferred RAID Controller Module Ownership . . . . . 180
Failed RAID Controller Module . . . . . 180
Copy Manager . . . . . 180
Copying the Virtual Disk . . . . . 180
Storage Array Performance During Virtual Disk Copy . . . . . 182
Setting Copy Priority . . . . . 182
Stopping a Virtual Disk Copy . . . . . 183
Recopying a Virtual Disk . . . . . 183
Preparing Host Servers to Recopy a Virtual Disk . . . . . 184
Re-Copying a Virtual Disk . . . . . 185
Removing Copy Pairs . . . . . 186

Page 13

13 Configuration: Premium Feature—Upgrading to High-Performance-Tier . . . . . 187

14 Configuration: Device Mapper Multipath for Linux . . . . . 189
Overview . . . . . 189
Using DM Multipathing Devices . . . . . 190
Prerequisite Steps . . . . . 190
Device Mapper Configuration Steps . . . . . 191
Linux Host Server Reboot Best Practices . . . . . 195
Important Information About Special Partitions . . . . . 196
Limitations and Known Issues . . . . . 197
Troubleshooting . . . . . 198

15 Management: Firmware Downloads . . . . . 201
Downloading RAID Controller and NVSRAM Packages . . . . . 201
Downloading Both RAID Controller and NVSRAM Firmware . . . . . 202
Downloading Only NVSRAM Firmware . . . . . 204
Downloading Physical Disk Firmware . . . . . 206
Downloading MD1200 Series Expansion Module EMM Firmware . . . . . 208
Self-Monitoring Analysis and Reporting Technology (SMART) . . . . . 210

Page 14

Media Errors and Unreadable Sectors . . . . . 210

16 Management: Installing Array Components . . . . . 211
Recommended Tools . . . . . 211
Front Bezel (Optional) . . . . . 212
Removing the Front Bezel . . . . . 212
Installing the Front Bezel . . . . . 212
Hard Drives . . . . . 213
Removing a Hard-Drive Blank . . . . . 213
Installing a Hard-Drive Blank . . . . . 214
Removing a Hard Drive . . . . . 214
Installing a Hard Drive . . . . . 216
Removing a Hard Drive From a Hard-Drive Carrier . . . . . 216
Installing a Hard Drive Into a Hard-Drive Carrier . . . . . 219
RAID Controller Module . . . . . 219
Removing a RAID Controller Module Blank . . . . . 219
Installing a RAID Controller Module Blank . . . . . 220
Removing a RAID Controller Module . . . . . 221
Installing a RAID Controller Module . . . . . 222
Opening the RAID Controller Module . . . . . 222
Closing the RAID Controller Module . . . . . 223
RAID Controller Module Backup Battery Unit . . . . . 224
Removing the RAID Controller Module Backup Battery Unit . . . . . 224
Installing the RAID Controller Module Backup Battery Unit . . . . . 225

Page 15

Power Supply/Cooling Fan Module . . . . . 225
Removing a Power Supply/Cooling Fan Module . . . . . 225
Installing a Power Supply/Cooling Fan Module . . . . . 228
Control Panel . . . . . 229
Removing the Control Panel . . . . . 229
Installing the Control Panel . . . . . 230
Backplane . . . . . 231
Removing the Backplane . . . . . 231
Installing the Backplane . . . . . 234

17 Management: Firmware Inventory . . . . . 235
Viewing the Firmware Inventory . . . . . 235

18 Management: System Interfaces . . . . . 237
Microsoft Services . . . . . 237
Virtual Disk Service . . . . . 237
Volume Shadow-Copy Service . . . . . 237

19 Troubleshooting: Your Storage Array Software . . . . . 239
Start-Up Routine . . . . . 239
Device Health Conditions . . . . . 239
Storage Array Support Data . . . . . 242
Automatically Collect the Support Bundle Data . . . . . 243

Page 16

Collecting the Physical Disk Data . . . . . 244
Event Log . . . . . 244
Recovery Guru . . . . . 245
Storage Array Profile . . . . . 246
Viewing the Logical Associations . . . . . 247
Viewing the Physical Associations . . . . . 248
Finding Nodes . . . . . 248
Using Go To . . . . . 250
Recovering From an Unresponsive Storage Array Condition . . . . . 251
Locating a Physical Disk . . . . . 253
Locating an Expansion Enclosure . . . . . 255
Capturing the State Information . . . . . 256
SMrepassist Utility . . . . . 257
Unidentified Devices . . . . . 258
Recovering From an Unidentified Storage Array . . . . . 258
Starting or Restarting the Host Context Agent Software . . . . . 260

20 Troubleshooting: Your Array . . . . . 263
Safety First—For you and Your Array . . . . . 263
Troubleshooting Storage Array Startup Failure . . . . . 263

Page 17

Troubleshooting Loss of Communication . . . . . 263
Troubleshooting External Connections . . . . . 263
Troubleshooting Power Supply/Cooling Fan Module . . . . . 264
Troubleshooting Array Cooling Problems . . . . . 265
Troubleshooting Expansion Enclosure Management Modules . . . . . 265
Troubleshooting RAID Controller Modules . . . . . 267
Troubleshooting Hard Drives . . . . . 268
Troubleshooting Array and Expansion Enclosure Connections . . . . . 269
Troubleshooting a Wet Storage Array . . . . . 269
Troubleshooting a Damaged Array . . . . . 270
Troubleshooting RAID Controller Modules . . . . . 271
Conditions . . . . . 271
Invalid Storage Array . . . . . 271
ECC Errors . . . . . 271
PCI Errors . . . . . 272
Critical Conditions . . . . . 272
Noncritical Conditions . . . . . 272

21 Getting Help . . . . . 273
Locating Your System Service Tag . . . . . 273
Contacting Dell . . . . . 273
Documentation Feedback . . . . . 274

Page 18

Index . . . . . 275

Page 19

1

Introduction

NOTE: Unless specified, MD3200i Series represents Dell PowerVault MD3200i and Dell PowerVault MD3220i storage arrays.

WARNING: See the Safety, Environmental, and Regulatory Information document for important safety information before following any procedures listed in this document.

About This Document

This guide familiarizes you with the functions of the Dell PowerVault MD3200i Series storage array. The guide is organized according to the tasks that you must complete after receiving your MD3200i Series storage array. The tasks are:

Planning: Information about the storage array and its features.

Configuration: Tasks that must be completed to ensure that your storage array performs optimally.

Management: Tasks that may be performed to ensure that the storage array components are up to date and performing properly, including removal and installation of storage array components.

Troubleshooting: Tasks that you must complete to resolve problems that may occur with the storage array.

Additional information on these and other topics can be found in the Dell PowerVault MD3200i and MD3220i Storage Array Deployment Guide at dell.com/support/manuals.

Inside the Box of the Dell PowerVault MD3200i Series Storage Array

Your MD3200i Series product package includes:

• Dell PowerVault MD3200i Series storage array
• Power cables
• Front bezel (optional)

Page 20

• Mounting rails (2) (optional)
• MD3200i Series resource media
• Rack Installation Instructions
• Getting Started With Your System (provides an overview of enclosure features, setting up your enclosure, and technical specifications)

MD3200i Series Storage Array

The Dell PowerVault MD3200i Series is a 2U rack-mounted external redundant array of independent disks (RAID) storage array capable of accommodating up to twelve 3.5" or twenty-four 2.5" 6.0-Gbps Serial-Attached SCSI (SAS) disks. The MD3200i Series storage arrays can be daisy-chained with MD1200 Series expansion enclosures, providing access to a maximum of 120 disks (or 192 disks with Premium Feature activation) in the entire storage system. Connectivity between the storage array and the host server is provided by a standard Ethernet connection.
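The disk limits quoted above can be turned into a quick planning calculation. The following is a minimal sketch, not a Dell tool: the function and constant names are hypothetical, and it assumes the base array is fully populated, so the result is the number of disks that expansion enclosures could still contribute before the system-wide limit is reached.

```python
# Limits taken from the paragraph above; all names here are illustrative only.
BASE_SLOTS = {"MD3200i": 12, "MD3220i": 24}  # disk slots in the base array
MAX_DISKS_STANDARD = 120                     # system-wide limit without Premium Feature
MAX_DISKS_PREMIUM = 192                      # system-wide limit with Premium Feature


def remaining_disk_capacity(model, premium_feature=False):
    """Return how many disks expansion enclosures may still add.

    Assumes every slot in the base array is already occupied.
    """
    system_limit = MAX_DISKS_PREMIUM if premium_feature else MAX_DISKS_STANDARD
    return system_limit - BASE_SLOTS[model]


if __name__ == "__main__":
    for model in BASE_SLOTS:
        print(model, remaining_disk_capacity(model),
              remaining_disk_capacity(model, premium_feature=True))
```

How many expansion enclosures that corresponds to depends on the enclosure models used, which this section does not specify.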

Dell PowerVault Modular Disk Storage Manager

Dell PowerVault Modular Disk Storage Manager (MDSM) is a graphical user interface (GUI) application used to configure and manage one or more MD3200i Series storage arrays. The MDSM software is located on the MD3200i Series resource media.

Dell PowerVault Modular Disk Configuration Utility

Dell PowerVault Modular Disk Configuration Utility (MDCU) is an iSCSI configuration wizard that can be used in conjunction with MDSM to simplify the configuration of iSCSI connections. The MDCU software is located on the MD3200i Series resource media.

Other Information You May Need

WARNING: See the safety and regulatory information that shipped with your system. Warranty information may be included within this document or as a separate document.

• The Getting Started Guide provides an overview of setting up and cabling your storage array.

Page 21

• The Deployment Guide provides installation and configuration instructions for both software and hardware.
• The Storage Manager CLI Guide provides information about using the command line interface (CLI).
• The Resource media contains all system management tools.
• The Systems Support Matrix provides information on supported software and hardware for MD systems. The document is available at dell.com/support/manuals.
• The Dell PowerEdge Cluster Documentation is available at dell.com/support/manuals.
• Release notes or readme files are included to provide last-minute updates to the enclosure or documentation or advanced technical reference material intended for experienced users or technicians.
• This document as well as the Dell PowerVault MD1200 Series Installation Guide is available at dell.com/support/manuals for users who incorporate MD1200 expansion enclosures.
• The Rack Installation Instructions included with your rack solution describe how to install your enclosure into a rack.

NOTE: Always check for updates on dell.com/support/manuals and read the updates first because they often supersede information in other documents.

Page 22


Page 23

2

Planning: About Your Storage Array

Overview

The MD3200i Series storage array is designed for high availability, offering redundant access to data storage. It supports both single and dual RAID controller configurations.

The Dell PowerVault MD3200i storage array provides 1.0-Gbps 1000BASE-T connectivity to the host server and enables access to 32 physical hosts.

The MD3200i Series storage array includes:

• RAID controller module(s)
• PSU/Fan modules
• Disk drives (also called physical disk drives in this document)
• A front bezel (optional)
• A system enclosure, into which the other components are plugged

Page 24

Hardware Features


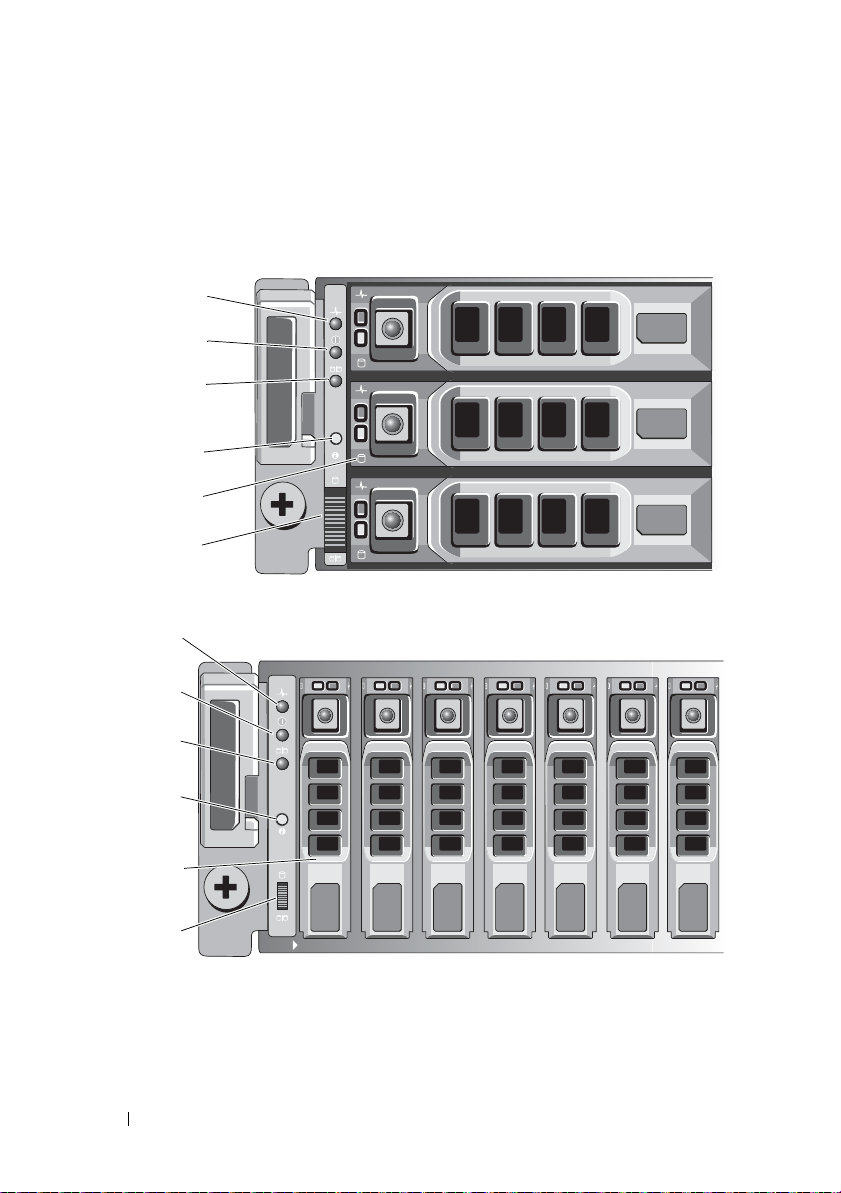

Front-Panel Features and Indicators

Figure 2-1. Front-Panel Features and Indicators—Dell PowerVault MD3200i

Figure 2-2. Front-Panel Features and Indicators—Dell PowerVault MD3220i


Page 25

Figure 2-3. Front-Bezel Features and Indicators

| Item | Indicator, Button, or Connector | Description |
|---|---|---|
| 1 | Enclosure status LED | The enclosure status LED lights when the enclosure power is on. Lights blue during normal operation. Blinks blue when a host server is identifying the enclosure or when the system identification button is pressed. Lights amber as the enclosure boots or is reset. Blinks amber when the enclosure is either in a fault state or the hosts are not using the preferred path to a virtual disk. |
| 2 | Power LED | The power LED lights green when at least one power supply is supplying power to the enclosure. |

Page 26

| Item | Indicator, Button, or Connector | Description |
|---|---|---|
| 3 | Split mode LED | This LED must be unlit as the split mode function is not supported by the MD3200i Series storage arrays. |
| 4 | System identification button | The system identification button on the front control panel can be used to locate a particular enclosure within a rack. When the button is pushed, the system status indicators on the control panel and the RAID controller module(s) blink blue until the button is pushed again. |
| 5 | Hard drives | MD3200i: Up to twelve 3.5" SAS hot-swappable hard drives. MD3220i: Up to twenty-four 2.5" SAS hot-swappable hard drives. |
| 6 | Enclosure mode switch | The function of this switch is not applicable to your storage array. However, if MD1200 Series expansion enclosures are daisy-chained to the storage array, the enclosure mode switches of the MD1200 Series expansion enclosures must be set to the Unified-Mode position. NOTE: This switch must be set before turning on the MD1200 Series expansion enclosure. Changing the switch setting after the expansion enclosure is turned on has no effect on the enclosure configuration until the expansion enclosure goes through a complete power cycle. |

Page 27

Back Panel Features and Indicators


Figure 2-4. Back-Panel Features and Indicators—Dell PowerVault MD3200i Series

1 600 W power supply/cooling fan module
2 RAID Controller Module 0
3 RAID Controller Module 1
4 600 W power supply/cooling fan module

Page 28

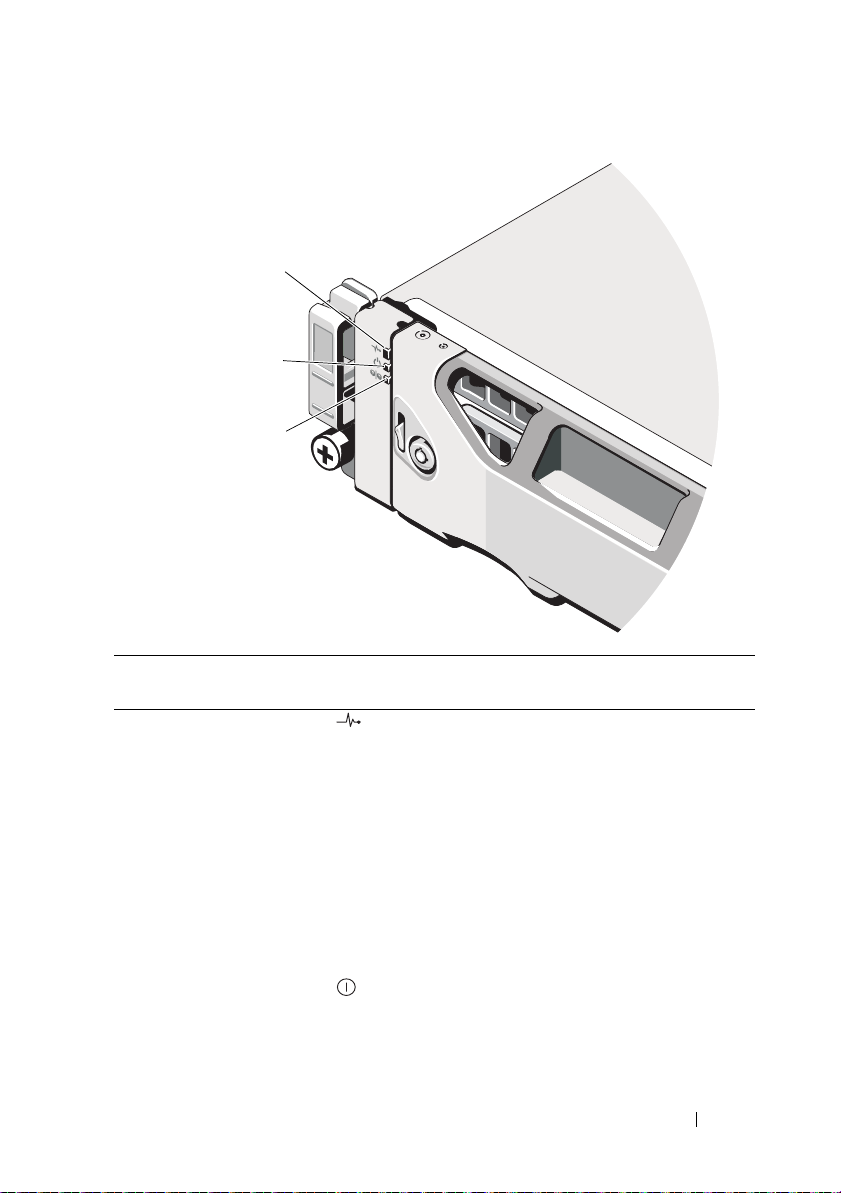

Hard-Drive Indicator Patterns


Figure 2-5. Hard Drive Indicators

1 hard-drive activity indicator (green)
2 hard-drive status indicator (green and amber)

Page 29

| Drive-Status Indicator Pattern | Condition |
|---|---|
| Off | The physical disk is not yet discovered by the host server, is spun down for removal, or is not supported for the RAID controller module or is not in the physical disk slot. NOTE: The drive status indicator remains off until all hard drives are initialized after system power is turned on. Drives are not ready for insertion or removal during this time. |
| Steady green | Physical disk is online |
| Green flashing (On 250 ms, Off 250 ms) | Physical disk is being identified |
| Green flashing (On 400 ms, Off 100 ms) | Physical disk rebuilding |
| Amber flashing (On 150 ms, Off 150 ms) | Physical disk failed |
| Flashing green, amber, and off (green On 500 ms, amber On 500 ms, Off 1000 ms) | Physical disk failure predicted (SMART) |
| Flashing green, amber, and off (green 3 seconds, amber 3 seconds, and Off 3 seconds) | Physical disk rebuild aborted |
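For readers who log or script against observed indicator behavior, the drive-status patterns above can be expressed as a lookup table. This is only an illustrative sketch: the array exposes no such API, and the names `DRIVE_STATUS_PATTERNS` and `describe_drive_status` are hypothetical. Each pattern is written as a sequence of (color, duration in milliseconds) phases, with `None` standing for a steady state.

```python
# Blink patterns transcribed from the drive-status indicator table above.
# Each key is a tuple of (color, duration_ms) phases; None means "steady".
DRIVE_STATUS_PATTERNS = {
    (("off", None),):
        "not discovered, spun down for removal, unsupported, or not in slot",
    (("green", None),): "online",
    (("green", 250), ("off", 250)): "being identified",
    (("green", 400), ("off", 100)): "rebuilding",
    (("amber", 150), ("off", 150)): "failed",
    (("green", 500), ("amber", 500), ("off", 1000)): "failure predicted (SMART)",
    (("green", 3000), ("amber", 3000), ("off", 3000)): "rebuild aborted",
}


def describe_drive_status(phases):
    """Map an observed sequence of (color, duration_ms) phases to a condition."""
    return DRIVE_STATUS_PATTERNS.get(tuple(phases), "unknown pattern")


if __name__ == "__main__":
    print(describe_drive_status([("green", 400), ("off", 100)]))
    print(describe_drive_status([("amber", 150), ("off", 150)]))
```

An exact-match lookup keeps the sketch simple. Real observations would need some timing tolerance, which is left out here.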

Power Supply and Cooling Fan Features

The MD3200i Series storage array includes two integrated, hot-swappable

power supply/cooling fan modules. Both modules must be installed to ensure

proper cooling. The system requires at least one of the cooling fans to

function to avoid overheating.

A power supply/cooling fan module can be replaced without powering down

the system. For information on removing and installing the modules, see

"Power Supply/Cooling Fan Module" on page 225.

CAUTION: A power supply/cooling fan module can be removed from a powered-on

system for a maximum period of 5 minutes. Beyond that time, the system may

automatically shut down to prevent damage.


Page 30

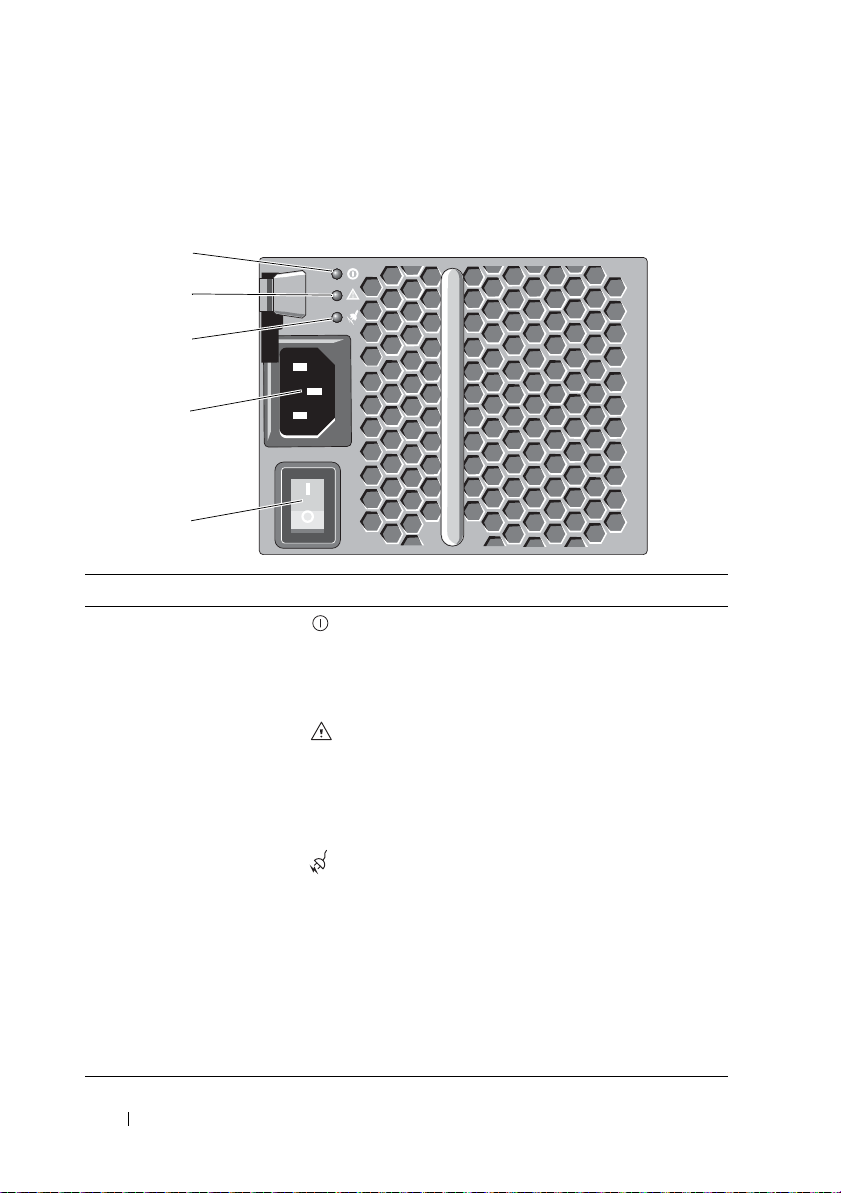

Power Indicator Codes and Features


Figure 2-6. Power Indicator Codes and Features

| Item | LED Type | Description |
|---|---|---|
| 1 | DC power | The LED lights green when the DC output voltage is within the limit. If this LED is off, it indicates that the DC output voltage is not within the limit. |
| 2 | Power supply/cooling fan fault | The LED lights amber when the DC output voltage is not within the limit or a fault with the fan is detected. If this LED is off, it indicates that no fault condition is present. |
| 3 | AC power | The LED lights green when the AC input voltage is within the limit. If this LED is off, it indicates either there is no power or the AC input voltage is not within the limit. |
| 4 | Power connector | Connect the external power supply to this connector. |
| 5 | Power switches (2) | The power switch controls the power supply output to the enclosure. |


Page 31

3

Planning: RAID Controller Modules

RAID Controller Modules

The RAID controller modules provide high-performance, advanced virtual

disk configuration, and fault-tolerant disk subsystem management. Each

RAID controller module contains 2 GB or 4 GB of mirrored cache for high

availability and is protected by a battery powered cache offload mechanism.

NOTE: The 4 GB mirrored cache is an optional feature.

RAID controller modules provide the following data path and enclosure

management functions:

• Monitoring and controlling enclosure environment elements

(temperature, fans, power supplies, and enclosure LEDs)

• Controlling access to the physical disks

• Communicating enclosure attributes and states to the host server and

management station

Each RAID controller module has multiple iSCSI IN-ports for host access.

The ports provide redundant host connections and support a high availability

storage environment. Various configurations can be utilized, in both single

controller (simplex) and dual controller (duplex) modes, to connect the

storage enclosure to hosts depending on specific redundancy needs.

For information on cabling, see the MD3200i and MD3220i Series storage

array’s Deployment Guide at dell.com/support/manuals.


Page 32

RAID Controller Module Connectors and Features


Figure 3-1. MD3200i Series iSCSI RAID Controller Module

| Item | Component | Function |
|---|---|---|
| 1 | SAS OUT port | Provides SAS connection for cabling to an expansion enclosure. |
| 2 | iSCSI IN port 0 | Provides host-to-controller iSCSI 1 Gbps Ethernet connection. |
| 3 | iSCSI IN port 1 | Provides host-to-controller iSCSI 1 Gbps Ethernet connection. |
| 4 | iSCSI IN port 2 | Provides host-to-controller iSCSI 1 Gbps Ethernet connection. |
| 5 | iSCSI IN port 3 | Provides host-to-controller iSCSI 1 Gbps Ethernet connection. |
| 6 | Management port Ethernet connector | Provides a 100/1000 Mbps Ethernet connection for out-of-band management of the enclosure. |
| 7 | Debug port | Dell support only. |


Page 33

| Item | Component | Function |
|---|---|---|
| 8 | SAS OUT port link/fault LED | Lights green when all four links are connected. Lights amber when one to three links are disconnected. Off when all links in the port are disconnected or cable is disconnected. |
| 9 | iSCSI IN port link LED | Lights green when Ethernet connection at 1 Gbps established. Off when there is no link. |
| 10 | Controller power LED | Lights green when controller is turned on. Off when controller is not turned on. |
| 11 | iSCSI IN port activity LED | Lights green when there is no activity on 1 Gbps connection. Blinks green when there is activity on 1 Gbps connection. Off when 1 Gbps link is down. |
| 12 | Controller fault LED | Lights amber when controller fault detected. Off when controller operating normally. |
| 13 | System identification LED | Blinks blue when system identification switch push-button on enclosure front panel is pressed. |
| 14 | Cache active or cache offload LED | Lights green when on-board controller memory contains data. If AC power fails, this LED changes to indicate Cache Offload status. If the password reset function has successfully changed the password, this LED flashes on and off briefly. |
| 15 | Battery fault | Lights amber when battery backup unit or battery has failed. Off when battery backup unit is operating normally. |
| 16 | Password reset switch | Activating this switch deletes the password. |
| 17 | MAC address label | Provides MAC addresses of iSCSI host ports and the management port. |


Page 34

| Item | Component | Function |
|---|---|---|
| 18 | Management port speed LED | Lights green when Ethernet connection is operating at 1000 Mbps. Lights amber when Ethernet connection is operating at 100 Mbps. Off when Ethernet connection is operating at 10 Mbps or is not active. |
| 19 | Management port activity LED | Lights green when Ethernet connection is active. Off when Ethernet connection is not active. |

RAID Controller Module—Additional Features

Battery Backup Unit

Each RAID controller contains a two-cell Lithium ion nanopolymer battery

backup unit (BBU). It provides power to the RAID controller module in the

event of a power outage. For information on removing and installing the BBU,

see "RAID Controller Module Backup Battery Unit" on page 224.

NOTE: For virtual disks, the RAID controller firmware changes the data cache

setting based on the state of the battery. If the battery is missing or does not have

sufficient charge, the controller flushes the cache and sets the write cache

attribute to Write Through for all virtual disks. When the battery is replaced, Write

Back is re-enabled.

Storage Array Thermal Shutdown

The system automatically shuts down when system temperature exceeds the

safe threshold. The battery backup unit protects against data loss by

providing power to offload to non-volatile memory in the event of power loss.

It is not necessary to shut down any MD1200 Series expansion enclosures

attached to the storage array when thermal shutdown occurs.

Temperature threshold values determine the temperature at which shutdown

occurs. These thresholds cannot be changed.


Page 35

Table 3-1. Shutdown Threshold Type

| Threshold Temperature Exceeding | Event Description |
|---|---|
| Nominal failure threshold | A critical event is set |
| Maximum failure threshold | Shutdown of the system power supplies occurs within 3 minutes |
| Shutdown threshold | Shutdown of the system power supplies occurs within 5 seconds |

System Password Reset

The storage array system password can be reset if it is forgotten. To reset the

password, push and hold down the password reset switch for at least 5

seconds. The password is deleted. See Figure 3-1 to locate the password reset

switch.

The RAID controller module allows you to change the password. For more

information about setting your password, see "Setting a Password" on page 71.

NOTE: The reset switch can be accessed by using a small object such as the tip of

a pen.

Cache Functions and Features

Cache Mirroring

The cache mirroring function copies accepted host-write data from the primary

controller to the partner controller. This action ensures that host-write data is

safely mirrored to the partner controller before successful completion status is

returned to the host. If a controller fails, the surviving controller safely retains

all mirrored data. Cache mirroring is enabled by default.

Write-Back Cache

In Write-back Cache, write operations result in a completion signal being

sent to the host operating system as soon as the cache receives the data to be

written. The target physical disk receives the data at a more appropriate time

in order to increase controller performance. In dual-active controller

configurations with Write-back Caching enabled, the write data is always


Page 36

mirrored to the cache of the second controller before completion status is

issued to the host initiator. Write-Back Cache is enabled by default unless

cache mirroring is disabled.

Write-Through Cache

In write-through cache, data is written to the physical disk before completion

status is returned to the host operating system. Write-through cache is

considered more robust than write-back cache, since a power failure is less

likely to cause loss of data. The RAID controller automatically switches to

write-through if cache mirroring is disabled or if the battery is missing or has a

fault condition.
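The rules in the two cache sections above (write-back by default, falling back to write-through when cache mirroring is disabled or the battery is missing, faulted, or insufficiently charged) can be summarized as a small decision function. This is a sketch of the documented behavior only; the function name and parameters are hypothetical and do not correspond to a controller or MDSM interface.

```python
def effective_write_cache_mode(cache_mirroring_enabled, battery_present,
                               battery_healthy):
    """Return the write cache mode implied by the rules in this chapter.

    battery_healthy is an illustrative flag meaning "no fault condition and
    sufficient charge"; it is an assumption, not a firmware field.
    """
    if not cache_mirroring_enabled:
        # Write-back is enabled by default unless cache mirroring is disabled.
        return "write-through"
    if not battery_present or not battery_healthy:
        # A missing, faulted, or undercharged battery forces write-through.
        return "write-through"
    return "write-back"
```

Only the combination of mirroring enabled and a present, healthy battery yields write-back; every other combination yields write-through.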


Page 37

4

Planning: MD3200i Series Storage Array Terms and Concepts

This chapter explains terms and concepts used for configuration and

operation of MD3200i Series storage arrays.

Physical Disks, Virtual Disks, and Disk Groups

Physical disks in your storage array provide the physical storage capacity for

your data. Before you can begin writing data to the storage array, you must

configure the physical storage capacity into logical components, called disk

groups and virtual disks.

A disk group is a set of physical disks upon which multiple virtual disks are

created. The maximum number of physical disks supported in a disk group is

120 disks (or 192 disks with Premium Feature activation) for RAID 0, RAID 1,

and RAID 10, and 30 drives for RAID 5 and RAID 6. You can create disk

groups from unconfigured capacity on your storage array.

A virtual disk is a partition in a disk group that is made up of contiguous data

segments of the physical disks in the disk group. A virtual disk consists of data

segments from all physical disks in the disk group. Virtual disks and disk

groups are set up according to how you plan to organize your data. For

example, you may have one virtual disk for inventory, a second virtual disk for

financial and tax information, and so on.

All virtual disks in a disk group support the same RAID level. The storage

array supports up to 255 virtual disks (minimum size of 10 MB each) that can

be assigned to host servers. Each virtual disk is assigned a Logical Unit

Number (LUN) that is recognized by the host operating system.

Physical Disks

Only Dell supported 6.0-Gbps SAS physical disks are supported in the storage

array. If the storage array detects unsupported physical disks, it marks the disk

as unsupported and the physical disk becomes unavailable for all operations.


Page 38

NOTE: The MD3200i Series storage enclosure must contain at least two physical

disks for proper operation. This is necessary because the physical disks are used to

store configuration information.

Physical Disk States

Table 4-1 describes the various states of the physical disk, which are

recognized by the storage array and reported in the MDSM application.

Table 4-1. RAID Controller Physical Disk States

| Status | Mode | Description | Physical Disk Status LED |
|---|---|---|---|
| Optimal | Assigned | The physical disk in the indicated slot is configured as part of a disk group. | Steady Green |
| Optimal | Unassigned | The physical disk in the indicated slot is unused and available to be configured. | Steady Green |
| Optimal | Hot Spare standby | The physical disk in the indicated slot is configured as a hot spare. | Steady Green |
| Optimal | Hot Spare in use | The physical disk in the indicated slot is in use as a hot spare within a disk group. | Steady Green |
| Failed | Assigned, Unassigned, Hot Spare in use, or Hot Spare standby | The physical disk in the indicated slot has failed because of an unrecoverable error, an incorrect drive type or drive size, or by its operational state being set to failed. | Amber flashing (150 ms) |
| Replaced | Assigned | The physical disk in the indicated slot is replaced and is ready to be, or is actively being configured into a disk group. | Green flashing (On 400 ms, Off 100 ms) |
| Pending Failure | Assigned, Unassigned, Hot Spare in use, or Hot Spare standby | A Self-Monitoring Analysis and Reporting Technology (SMART) error is detected on the physical disk in the indicated slot. | Green flashing (500 ms), Amber (500 ms), and Off (1000 ms) |


Page 39

Table 4-1. RAID Controller Physical Disk States (continued)

| Status | Mode | Description | Physical Disk Status LED |
|---|---|---|---|
| Offline | Not applicable | The physical disk has either been spun down or had a rebuild aborted by user request. | Green flashing (3000 ms), Amber (3000 ms), and Off (3000 ms) |
| Identify | Assigned, Unassigned, Hot Spare in use, or Hot Spare standby | The physical disk is being identified. | Green flashing (250 ms) |
| N/A | N/A | The indicated slot is empty, or the array cannot detect the physical disk. | |

If a disk drive rebuild fails because of a source drive failure or because the

drive is too small, the MDSM reports a failure of the physical disk even

though the LED state on the drive indicates the rebuild was aborted (green

for 3 seconds, amber for 3 seconds, then off for 3 seconds).

Self-Monitoring Analysis and Reporting Technology

SMART monitors the internal performance of all physical disk components to

detect faults indicating the potential for physical disk failure. SMART uses

this information to report whether failure is imminent so that a physical disk

can be replaced before failure occurs. The storage array monitors all attached

drives and notifies you when a predicted failure is reported by a physical disk.

Virtual Disks and Disk Groups

When configuring a storage array, you must:

1. Organize the physical disks into disk groups.

2. Create virtual disks within these disk groups.

3. Provide host server access.

4. Create mappings to associate the virtual disks with the host servers.

NOTE: Host server access must be created before mapping virtual disks.


Page 40

Disk groups are always created in the unconfigured capacity of a storage array.

Unconfigured capacity is the available physical disk space not already

assigned in the storage array.

Virtual disks are created within the free capacity of a disk group. Free capacity

is the space in a disk group that has not been assigned to a virtual disk.

Virtual Disk States

The storage array recognizes the following virtual disk states.

Table 4-2. RAID Controller Virtual Disk States

| State | Description |
|---|---|
| Optimal | The virtual disk contains physical disks that are all online. |
| Degraded | The virtual disk with a redundant RAID level contains an inaccessible physical disk. The system can still work properly, but performance may be affected and additional disk failures may result in data loss. |
| Offline | A virtual disk with one or more member disks is in an inaccessible (failed, missing, or offline) state. Data on the virtual disk is no longer accessible. |
| Force online | The storage array forces a virtual disk that is in an Offline state to an Optimal state. If all the member physical disks are not available, the storage array forces the virtual disk to a Degraded state. The storage array can force a virtual disk to an Online state only when a sufficient number of physical disks are available to support the virtual disk. |

RAID Levels

RAID levels determine the way in which data is written to physical disks.

Different RAID levels provide different levels of accessibility, redundancy, and

capacity.

Using multiple physical disks has the following advantages over using a single

physical disk:

• Placing data on multiple physical disks (striping) allows input/output (I/O)

operations to occur simultaneously and improve performance.

• Storing redundant data on multiple physical disks using mirroring or parity

supports reconstruction of lost data if an error occurs, even if that error is

the failure of a physical disk.


Page 41

Each RAID level provides different performance and protection. You must

select a RAID level based on the type of application, access, fault tolerance,

and data you are storing.

The storage array supports RAID levels 0, 1, 5, 6, and 10. The maximum

number of physical disks that can be used in a disk group depends on the

RAID level:

• 192 for RAID levels 0, 1, and 10

• 30 for RAID levels 5 and 6
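The disk group limits stated in this chapter can be captured in a small check. The sketch below combines the figures given earlier (120 disks for RAID 0, 1, and 10, or 192 with Premium Feature activation; 30 disks for RAID 5 and 6). The names `max_disks_in_group` and `premium_feature` are hypothetical and are not MDSM commands or settings.

```python
# Limits quoted in this chapter; the dictionary layout is illustrative.
BASE_MAX_DISKS = {0: 120, 1: 120, 10: 120, 5: 30, 6: 30}
PREMIUM_MAX_DISKS = 192  # applies to RAID 0, 1, and 10 only


def max_disks_in_group(raid_level, premium_feature=False):
    """Return the maximum number of physical disks in one disk group."""
    if raid_level not in BASE_MAX_DISKS:
        raise ValueError(f"unsupported RAID level: {raid_level}")
    if premium_feature and raid_level in (0, 1, 10):
        return PREMIUM_MAX_DISKS
    return BASE_MAX_DISKS[raid_level]
```

A planning script could call `max_disks_in_group(5)` before proposing a layout and reject any RAID 5 group larger than the returned value.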

RAID Level Usage

To ensure best performance, you must select an optimal RAID level when you

create a system physical disk. The optimal RAID level for your disk array

depends on:

• Number of physical disks in the disk array

• Capacity of the physical disks in the disk array

• Need for redundant access to the data (fault tolerance)

• Disk performance requirements

RAID 0

RAID 0 uses disk striping to provide high data throughput, especially for large

files in an environment that requires no data redundancy. RAID 0 breaks the

data down into segments and writes each segment to a separate physical disk.

I/O performance is greatly improved by spreading the I/O load across many

physical disks. Although it offers the best performance of any RAID level,

RAID 0 lacks data redundancy. Select this option only for non-critical data,

because failure of one physical disk results in the loss of all data. Examples of

RAID 0 applications include video editing, image editing, prepress

applications, or any application requiring high bandwidth.

RAID 1

RAID 1 uses disk mirroring so that data written to one physical disk is

simultaneously written to another physical disk. This RAID level offers fast

performance, the best data availability, and the highest disk overhead. RAID 1


Page 42

is recommended for small databases or other applications that do not require

large capacity, such as accounting, payroll, or financial applications. RAID 1

provides full data redundancy.

RAID 5

RAID 5 stripes data and parity across all physical disks (distributed

parity) to provide high data throughput and data redundancy, especially for

small random access. This is a versatile RAID level and is suited for multi-user

environments where typical I/O size is small and there is a high proportion of

read activity such as file, application, database, web, e-mail, news, and

intranet servers.

RAID 6

RAID 6 is similar to RAID 5 but provides an additional parity disk for better

redundancy. This is the most versatile RAID level and is suited for multi-user

environments where typical I/O size is small and there is a high proportion of

read activity. RAID 6 is recommended when large physical disks are used

or a large number of physical disks are used in a disk group.

RAID 10

RAID 10, a combination of RAID 1 and RAID 0, uses disk striping across

mirrored disks. It provides high data throughput and complete data

redundancy. Utilizing an even number of physical disks (four or more) creates

a RAID level 10 disk group and/or virtual disk. Because RAID levels 1 and 10

use disk mirroring, half of the capacity of the physical disks is utilized for

mirroring. This leaves the remaining half of the physical disk capacity for

actual storage. RAID 10 is automatically used when a RAID level of 1 is

chosen with four or more physical disks. RAID 10 works well for medium-sized databases or any environment that requires high performance and fault

tolerance and moderate-to-medium capacity.
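The capacity trade-off between the RAID levels described above can be estimated with simple arithmetic. The sketch below assumes equal-sized disks and the usual overheads: none for RAID 0, half of the disks for RAID 1 and RAID 10 (as stated above), one disk's worth of parity for RAID 5, and two for RAID 6. The function name is hypothetical, and the result ignores any space the array reserves for metadata.

```python
def usable_capacity_gb(raid_level, disk_count, disk_size_gb):
    """Estimate usable capacity of a disk group built from equal-sized disks."""
    if raid_level == 0:
        data_disks = disk_count
    elif raid_level in (1, 10):
        if disk_count % 2 != 0:
            raise ValueError("mirrored levels need an even number of disks")
        data_disks = disk_count // 2      # half the capacity holds mirrors
    elif raid_level == 5:
        data_disks = disk_count - 1       # one disk's worth of parity
    elif raid_level == 6:
        data_disks = disk_count - 2       # two disks' worth of parity
    else:
        raise ValueError(f"unsupported RAID level: {raid_level}")
    if data_disks < 1:
        raise ValueError("not enough disks for this RAID level")
    return data_disks * disk_size_gb
```

For instance, six 600 GB disks give an estimated 3600 GB at RAID 0, 3000 GB at RAID 5, 2400 GB at RAID 6, and 1800 GB at RAID 10.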

Segment Size

Disk striping enables data to be written across multiple physical disks. Disk

striping enhances performance because striped disks are accessed

simultaneously.


Page 43

The segment size or stripe element size specifies the size of data in a stripe

written to a single disk. The storage array supports stripe element sizes of 8

KB, 16 KB, 32 KB, 64 KB, 128 KB, 256 KB, and 512 KB. The default stripe

element size is 128 KB.

Stripe width, or depth, refers to the number of disks involved in an array

where striping is implemented. For example, a four-disk group with disk

striping has a stripe width of four.

NOTE: Although disk striping delivers excellent performance, striping alone does

not provide data redundancy.
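To make segment size and stripe width concrete, the following sketch maps a logical offset to a disk in a plain round-robin stripe with no parity. The controller's actual on-disk layout is not described in this manual, so the mapping, the function name `locate_block`, and its default values (128 KB segments, a four-disk stripe) are illustrative assumptions.

```python
def locate_block(offset_kb, segment_size_kb=128, stripe_width=4):
    """Map a logical offset to (disk index, stripe row, offset in segment).

    Assumes a simple round-robin layout: segment 0 on disk 0, segment 1 on
    disk 1, and so on, wrapping to the next stripe row after the last disk.
    """
    segment_index = offset_kb // segment_size_kb
    disk = segment_index % stripe_width
    stripe_row = segment_index // stripe_width
    offset_in_segment = offset_kb % segment_size_kb
    return disk, stripe_row, offset_in_segment
```

With the defaults, offset 128 KB lands at the start of the segment on disk 1, and offset 512 KB wraps back to disk 0 in the second stripe row.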

Virtual Disk Operations

Virtual Disk Initialization

Every virtual disk must be initialized. Initialization can be done in the

foreground or the background. A maximum of four virtual disks can be

initialized concurrently on each RAID controller module.

Background Initialization

The storage array executes a background initialization when the virtual disk is

created to establish parity, while allowing full host server access to the virtual

disks. Background initialization does not run on RAID 0 virtual disks. The

background initialization rate is controlled by MDSM. To change the rate of

background initialization, you must stop any existing background

initialization. The rate change is implemented when the background

initialization restarts automatically.

Foreground Initialization

The storage array supports foreground initialization for virtual disks. All

access to the virtual disk is blocked during foreground initialization. During

foreground initialization, zeros (0x00) are written to every sector of the virtual

disk. The virtual disk is available after foreground initialization is completed.


Page 44

Consistency Check

A consistency check verifies the correctness of data in a redundant array

(RAID levels 1, 5, 6, and 10). For example, in a system with parity, checking

consistency involves computing the data on one physical disk and comparing

the results to the contents of the parity physical disk.
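As an illustration of a parity-based check, the sketch below recomputes parity as the byte-wise XOR of the data segments in one stripe and compares it with the stored parity segment. XOR parity is the common single-parity scheme; the manual does not state how the controller computes or verifies parity, so treat this as a conceptual example with hypothetical function names.

```python
from functools import reduce


def xor_parity(segments):
    """Return the byte-wise XOR of equal-length data segments."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*segments))


def stripe_is_consistent(data_segments, parity_segment):
    """Check one stripe by recomputing parity and comparing it."""
    return xor_parity(data_segments) == parity_segment
```

A stripe whose stored parity no longer matches the recomputed value would be reported as inconsistent.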

A consistency check is similar to a background initialization. The difference is

that background initialization cannot be started or stopped manually, while

consistency check can.

NOTE: Dell recommends that you run data consistency checks on a redundant

array at least once a month. This allows detection and automatic replacement of

unreadable sectors. Finding an unreadable sector during a rebuild of a failed

physical disk is a serious problem, because the system does not have the

redundancy to recover the data.

Media Verification

Another background task performed by the storage array is media verification

of all configured physical disks in a disk group. The storage array uses the

Read operation to perform verification on the space configured in virtual

disks and the space reserved for the metadata.

Cycle Time

The media verification operation runs only on selected disk groups,

independent of other disk groups. Cycle time is the time taken to complete

verification of the metadata region of the disk group and all virtual disks in

the disk group for which media verification is configured. The next cycle for a

disk group starts automatically when the current cycle completes. You can set

the cycle time for a media verification operation between 1 and 30 days. The

storage controller throttles the media verification I/O accesses to disks based

on the cycle time.
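The relationship between cycle time and verification load can be estimated as the capacity to verify divided by the cycle duration. This is a rough planning calculation under the assumption of an even scan rate; the controller's real throttling algorithm is not documented here, and the function name is hypothetical.

```python
def required_scan_rate_mb_per_s(capacity_gb, cycle_days):
    """Average read rate needed to verify capacity_gb once per cycle."""
    if not 1 <= cycle_days <= 30:
        raise ValueError("cycle time must be between 1 and 30 days")
    seconds = cycle_days * 24 * 60 * 60
    return capacity_gb * 1024 / seconds
```

A longer cycle time lowers the average rate, which is why extending the cycle reduces the impact of media verification on host I/O.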

The storage array tracks the cycle for each disk group independent of other

disk groups on the controller and creates a checkpoint. If the media

verification operation on a disk group is preempted or blocked by another

operation on the disk group, the storage array resumes after the current cycle.

If the media verification process on a disk group is stopped due to a RAID

controller module restart, the storage array resumes the process from the last

checkpoint.


Page 45

Virtual Disk Operations Limit

The maximum number of active, concurrent virtual disk processes per RAID

controller module installed in the storage array is four. This limit is applied to

the following virtual disk processes:

• Background initialization

• Foreground initialization

• Consistency check

• Rebuild

• Copy back

If a redundant RAID controller module fails with existing virtual disk

processes, the processes on the failed controller are transferred to the peer

controller. A transferred process is placed in a suspended state if there are four

active processes on the peer controller. The suspended processes are resumed

on the peer controller when the number of active processes falls below four.
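The limit and failover behavior described above can be modeled as a bounded active list with a queue of suspended processes. The sketch below is a conceptual model only: the class and function names are hypothetical, and the first-in, first-out resume order is an assumption that the manual does not confirm.

```python
from collections import deque

MAX_ACTIVE = 4  # active virtual disk processes per RAID controller module


class ControllerQueue:
    """Tracks active and suspended virtual disk processes on one controller."""

    def __init__(self):
        self.active = []
        self.suspended = deque()

    def submit(self, process):
        """Run the process if a slot is free; otherwise suspend it."""
        if len(self.active) < MAX_ACTIVE:
            self.active.append(process)
            return "active"
        self.suspended.append(process)
        return "suspended"

    def complete(self, process):
        """Finish a process and resume suspended ones while slots are free."""
        self.active.remove(process)
        while self.suspended and len(self.active) < MAX_ACTIVE:
            self.active.append(self.suspended.popleft())


def fail_over(failed, peer):
    """Transfer every process from a failed controller to its peer."""
    for process in failed.active + list(failed.suspended):
        peer.submit(process)
    failed.active.clear()
    failed.suspended.clear()
```

If the peer already runs three processes and two are transferred, one becomes active and the other waits until an active process completes.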

Disk Group Operations

RAID Level Migration

You can migrate from one RAID level to another depending on your

requirements. For example, fault-tolerant characteristics can be added to a

stripe set (RAID 0) by converting it to a RAID 5 set. MDSM provides

information about RAID attributes to assist you in selecting the appropriate

RAID level. You can perform a RAID level migration while the system is still

running and without rebooting, which maintains data availability.

Segment Size Migration

Segment size refers to the amount of data (in KB) that the storage array

writes on a single physical disk in a virtual disk before writing data on the next

physical disk. Valid values for the segment size are 8 KB, 16 KB, 32 KB, 64 KB,

128 KB, 256 KB, and 512 KB.

Dynamic segment size migration enables the segment size of a given virtual

disk to be changed. A default segment size is set when the virtual disk is

created, based on such factors as the RAID level and expected usage. You can

change the default value if segment size usage does not match your needs.


Page 46

When considering a segment-size change, two scenarios illustrate different

approaches to the limitations:

• If I/O activity stretches beyond the segment size, you can increase it to

reduce the number of disks required for a single I/O. Using a single physical

disk for a single request frees disks to service other requests, especially

when you have multiple users accessing a database or storage environment.

• If you use the virtual disk in a single-user, large I/O environment (such as

for multimedia application storage), performance can be optimized when

a single I/O request is serviced with a single data stripe (the segment size

multiplied by the number of physical disks in the disk group used for data

storage). In this case, multiple disks are used for the same request, but

each disk is only accessed once.
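The two scenarios above can be compared numerically by counting how many data disks one I/O request touches for a given segment size. The sketch assumes a simple round-robin stripe and segment-aligned accounting; the function names are hypothetical and the controller's real layout may differ.

```python
def full_stripe_kb(segment_size_kb, data_disks):
    """Size of one data stripe: segment size times the number of data disks."""
    return segment_size_kb * data_disks


def disks_touched(offset_kb, length_kb, segment_size_kb, data_disks):
    """Count the distinct data disks a single I/O request spans."""
    first_segment = offset_kb // segment_size_kb
    last_segment = (offset_kb + length_kb - 1) // segment_size_kb
    return min(last_segment - first_segment + 1, data_disks)
```

A 256 KB request at offset 0 touches two disks with 128 KB segments but only one with 256 KB segments, which matches the first scenario. A request equal to `full_stripe_kb(...)` touches every data disk exactly once, which matches the second.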

Virtual Disk Capacity Expansion

When you configure a virtual disk, you select a capacity based on the amount

of data you expect to store. However, you may need to increase the virtual disk

capacity for a standard virtual disk by adding free capacity to the disk group.

This creates more unused space for new virtual disks or to expand existing

virtual disks.

Disk Group Expansion

Because the storage array supports hot pluggable physical disks, you can add

two physical disks at a time for each disk group while the storage array

remains online. Data remains accessible on virtual disk groups, virtual disks,

and physical disks throughout the operation. The data and increased unused

free space are dynamically redistributed across the disk group. RAID

characteristics are also reapplied to the disk group as a whole.

Disk Group Defragmentation

Defragmenting consolidates the free capacity in the disk group into one

contiguous area. Defragmentation does not change the way in which the data

is stored on the virtual disks.


Page 47

Disk Group Operations Limit

The maximum number of active, concurrent disk group processes per

installed RAID controller module is one. This limit is applied to the following

disk group processes:

• Virtual disk RAID level migration

• Segment size migration

• Virtual disk capacity expansion

• Disk group expansion

• Disk group defragmentation

If a redundant RAID controller module fails with an existing disk group

process, the process on the failed controller is transferred to the peer

controller. A transferred process is placed in a suspended state if there is an

active disk group process on the peer controller. The suspended processes are

resumed when the active process on the peer controller completes or is

stopped.

NOTE: If you try to start a disk group process on a controller that does not have an

existing active process, the start attempt fails if the first virtual disk in the disk group

is owned by the other controller and there is an active process on the other

controller.

RAID Background Operations Priority

The storage array supports a common configurable priority for the following

RAID operations:

• Background initialization

• Rebuild

• Copy back

• Virtual disk capacity expansion

• RAID level migration

• Segment size migration

• Disk group expansion

• Disk group defragmentation


Page 48

The priority of each of these operations can be changed to address

performance requirements of the environment in which the operations are to

be executed.

NOTE: Setting a high priority level impacts storage array performance. It is not

advisable to set priority levels at the maximum level. Priority must also be assessed

in terms of impact to host server access and time to complete an operation. For

example, the longer a rebuild of a degraded virtual disk takes, the greater the risk

for secondary disk failure.

Virtual Disk Migration and Disk Roaming

Virtual disk migration is moving a virtual disk or a hot spare from one array to

another by detaching the physical disks and re-attaching them to the new

array. Disk roaming is moving a physical disk from one slot to another on the

same array.

Disk Migration

You can move virtual disks from one array to another without taking the

target array offline. However, the disk group being migrated must be offline

before you perform disk migration. If the disk group is not offline prior to

migration, the source array holding the physical and virtual disks within the

disk group marks them as missing. However, the disk groups themselves

migrate to the target array.

An array can import a virtual disk only if it is in an optimal state. You can

move virtual disks that are part of a disk group only if all members of the disk

group are being migrated. The virtual disks automatically become available

after the target array has finished importing all the disks in the disk group.

When you migrate a physical disk or a disk group from one MD3200i array to

another, the MD3200i array you migrate to recognizes any data structures

and/or metadata you had in place on the migrating MD3200i array. However,

if you are migrating from any device other than an MD3200i Series storage

array, the MD3200i array does not recognize the migrating metadata and that

data is lost. In this case, the MD3200i array initializes the physical disks and marks

them as unconfigured capacity.


Page 49

NOTE: Only disk groups and associated virtual disks with all member physical disks

present can be migrated from one storage array to another. It is recommended that

you only migrate disk groups that have all their associated member virtual disks in

an optimal state.

NOTE: The number of physical disks and virtual disks that a storage array supports

limits the scope of the migration.

Use either of the following methods to move disk groups and virtual disks:

• Hot virtual disk migration—Disk migration with the destination storage

array power turned on.

• Cold virtual disk migration—Disk migration with the destination storage

array power turned off.

NOTE: To ensure that the migrating disk groups and virtual disks are correctly

recognized when the target storage array has an existing physical disk, use hot

virtual disk migration.

When attempting virtual disk migration, follow these recommendations:

• Moving physical disks to the destination array for migration—When

inserting drives into the destination storage array during hot virtual disk

migration, wait for the inserted physical disk to be displayed in MDSM

before inserting the next physical disk.

WARNING: Without the delay between drive insertions, the storage array can

become unstable and manageability is temporarily lost.

• Migrating virtual disks from multiple storage arrays into a single storage

array—When migrating virtual disks from multiple or different storage

arrays into a single destination storage array, move all of the physical disks

from the same storage array as a set into the new destination storage array.

Ensure that all of the physical disks from a storage array are migrated to

the destination storage array before starting migration from the next

storage array.

NOTE: If the drive modules are not moved as a set to the destination storage

array, the newly relocated disk groups may not be accessible.

• Migrating virtual disks to a storage array with no existing physical disks—

Turn off the destination storage array when migrating disk groups or a

complete set of physical disks from a storage array to another storage array

that has no existing physical disks. After the destination storage array is

turned on and has successfully recognized the newly migrated physical

disks, migration operations can continue.

NOTE: Disk groups from multiple storage arrays must not be migrated at the

same time to a storage array that has no existing physical disks. Use cold

virtual disk migration for the disk groups from one storage array.

• Enabling premium features before migration—Before migrating disk

groups and virtual disks, enable the required premium features on the

destination storage array. If a disk group is migrated from an MD3200i

storage array that has a premium feature enabled and the destination array

does not have this feature enabled, an Out of Compliance error message

may be generated.
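
Two of the recommendations above lend themselves to a short planning sketch: choosing between hot and cold migration based on whether the destination array already holds physical disks, and keeping the physical disks from each source array together as a set. The function names and the (source array, disk) tuples below are hypothetical and exist only for illustration. The sketch does not model waiting for each inserted disk to be displayed in MDSM, which must still be done by hand during hot migration.

```python
def choose_migration_mode(destination_disk_count: int) -> str:
    """Pick "hot" when the destination already has physical disks, else "cold"."""
    return "hot" if destination_disk_count > 0 else "cold"


def insertion_order(disks):
    """Group disks by source array so each array's disks move as a set.

    disks is a list of (source_array, disk_label) tuples. Arrays are kept in
    the order in which they first appear, and disks keep their original order
    within each array.
    """
    by_array = {}
    for source_array, disk_label in disks:
        by_array.setdefault(source_array, []).append(disk_label)
    return [(array, disk) for array, labels in by_array.items() for disk in labels]


plan = insertion_order([("A", "d1"), ("B", "d1"), ("A", "d2")])
print(choose_migration_mode(0))  # cold
print(plan)  # [('A', 'd1'), ('A', 'd2'), ('B', 'd1')]
```

When the mode is "cold", the plan should contain disks from only one source array, because disk groups from multiple storage arrays must not be migrated at the same time to an array that has no existing physical disks.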

Disk Roaming

You can move physical disks within an array. The RAID controller module

automatically recognizes the relocated physical disks and logically places

them in the proper virtual disks that are part of the disk group. Disk roaming

is permitted when the RAID controller module is either online or powered

off.

NOTE: The disk group must be exported before moving the physical disks.

Advanced Features

The RAID enclosure supports several advanced features:

• Virtual Disk Snapshots

• Virtual Disk Copy

• High Performance Tier

NOTE: Virtual Disk Snapshot, Virtual Disk Copy, and High Performance Tier are

premium features that must be activated separately. If you have purchased these

features, an activation card is supplied that contains instructions for enabling this

functionality.

Host Server-to-Virtual Disk Mapping

The host server attached to a storage array accesses various virtual disks on

the storage array through its host ports. Specific virtual disk-to-LUN

mappings to an individual host server can be defined. In addition, the host

server can be part of a host group that shares access to one or more virtual

disks.

You can manually configure a host server-to-virtual disk mapping. When you

configure host server-to-virtual disk mapping, consider these guidelines:

• You can define one host server-to-virtual disk mapping for each virtual disk

in the storage array.

• Host server-to-virtual disk mappings are shared between RAID controller

modules in the storage array.

• A unique LUN must be used by a host group or host server to access a

virtual disk.

• Not every operating system has the same number of LUNs available for

use.
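
The first and third guidelines above can be expressed as a small consistency check over a planned set of mappings. This Python sketch reflects one reading of those guidelines: each virtual disk has a single mapping, and a LUN is not reused within the same host server or host group. The function name and the tuple layout are hypothetical and are not an MDSM interface.

```python
def mapping_conflicts(mappings):
    """Report guideline violations in a list of planned mappings.

    mappings is a list of (host_or_group, lun, virtual_disk) tuples.
    """
    conflicts = []
    lun_owner = {}    # (host_or_group, lun) -> virtual disk using that LUN
    vdisk_owner = {}  # virtual disk -> host or host group it is mapped to
    for host, lun, vdisk in mappings:
        if (host, lun) in lun_owner:
            conflicts.append(
                f"{host}: LUN {lun} is already used for {lun_owner[(host, lun)]}"
            )
        else:
            lun_owner[(host, lun)] = vdisk
        if vdisk in vdisk_owner:
            conflicts.append(f"{vdisk} is already mapped to {vdisk_owner[vdisk]}")
        else:
            vdisk_owner[vdisk] = host
    return conflicts


planned = [("host1", 0, "vd0"), ("host1", 0, "vd1"), ("group1", 1, "vd2")]
print(mapping_conflicts(planned))  # ['host1: LUN 0 is already used for vd0']
```

The check does not cover the number of LUNs that a given operating system supports, which varies by host type.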

Host Types

A host server is a server that accesses a storage array. Host servers are mapped

to the virtual disks and use one or more iSCSI initiator ports. Host servers

have the following attributes:

• Host name—A name that uniquely identifies the host server.

• Host group (used in Cluster solutions only)—Two or more host servers

associated together to share access to the same virtual disks.

This host group is a logical entity you can create in MDSM. All host servers

in a host group must be running the same operating system.

• Host type—The operating system running on the host server.

Snapshot Virtual Disks

A snapshot is a point-in-time image of a virtual disk. The snapshot provides

an image of the virtual disk at the time the snapshot was created. You create a

snapshot so that an application (for example, a backup application) can

access the snapshot and read the data while the source virtual disk remains

online and user-accessible. When the backup is completed, the snapshot

virtual disk is no longer needed. You can create up to four snapshots per

virtual disk.

Snapshots are used to recover previous versions of files that have changed

since the snapshot was taken. Snapshots are implemented using a

copy-on-write algorithm, which makes a backup copy of data the instant a write

occurs. Data on a virtual disk is copied to the snapshot repository before it is

modified. Snapshots can be created instantaneously or can be scheduled and

take up less overhead than a full physical copy process.
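
The copy-on-write behavior described above can be illustrated with a small in-memory model. This Python sketch is a conceptual illustration only, not the array firmware's implementation: the class names, the list of blocks, and the dictionary used as a repository are all assumptions made for the example. The limit of four snapshots per virtual disk is taken from the text above.

```python
MAX_SNAPSHOTS = 4  # per virtual disk, as stated in this section


class Snapshot:
    """Point-in-time view backed by a repository of preserved blocks."""

    def __init__(self, source):
        self.source = source
        self.repository = {}  # block index -> data as it was at snapshot time

    def preserve(self, index):
        # Copy the original block only the first time it is about to change.
        if index not in self.repository:
            self.repository[index] = self.source.blocks[index]

    def read(self, index):
        # Preserved blocks come from the repository, the rest from the source.
        if index in self.repository:
            return self.repository[index]
        return self.source.blocks[index]


class SourceVirtualDisk:
    """Hypothetical source virtual disk modeled as a list of blocks."""

    def __init__(self, blocks):
        self.blocks = list(blocks)
        self.snapshots = []

    def create_snapshot(self):
        if len(self.snapshots) >= MAX_SNAPSHOTS:
            raise RuntimeError("snapshot limit reached for this virtual disk")
        snapshot = Snapshot(self)
        self.snapshots.append(snapshot)
        return snapshot

    def write(self, index, data):
        # Copy-on-write: save the old data to each repository, then modify.
        for snapshot in self.snapshots:
            snapshot.preserve(index)
        self.blocks[index] = data


disk = SourceVirtualDisk(["a", "b", "c"])
snap = disk.create_snapshot()
disk.write(1, "B")
print(disk.blocks)                       # ['a', 'B', 'c']
print([snap.read(i) for i in range(3)])  # ['a', 'b', 'c']
print(snap.repository)                   # {1: 'b'}
```

Creating the snapshot copies no data, and only the one modified block ends up in the repository. This is why a snapshot takes up less overhead than a full physical copy.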

Snapshot Repository Virtual Disk

When you create a snapshot virtual disk, a snapshot repository virtual disk

is created automatically. A snapshot repository is a virtual disk created in the

storage array as a resource for a snapshot virtual disk. A snapshot repository

virtual disk contains snapshot virtual disk metadata and copy-on-write data

for a particular snapshot virtual disk. The repository supports one snapshot

only.

You cannot select a snapshot repository virtual disk as a source virtual disk or

as a target virtual disk in a virtual disk copy. If you select a snapshot source

virtual disk as the target virtual disk of a virtual disk copy, you must disable all

snapshot virtual disks associated with the source virtual disk.

CAUTION: Before using the Snapshot Virtual Disks Premium Feature in a

Windows Clustered configuration, you must map the snapshot virtual disk to the

cluster node that owns the source virtual disk. This ensures that the cluster nodes

correctly recognize the snapshot virtual disk.

CAUTION: Mapping the snapshot virtual disk to the node that does not own the

source virtual disk before the snapshot enabling process is completed can result

in the operating system misidentifying the snapshot virtual disk. This can result in

data loss or an inaccessible snapshot.

For more information on mapping the snapshot virtual disk to the secondary