Dell™ PowerVault™ NAS Systems SCSI Cluster

Installation and Troubleshooting Guide

Getting Started

Preparing Your Systems for Clustering

Cabling Your Cluster Hardware

Maintaining Your Cluster

Using MSCS

Troubleshooting

Cluster Data Sheet

Abbreviations and Acronyms

Notes, Notices, and Cautions

NOTE: A NOTE indicates important information that helps you make better use of your computer.

NOTICE: A NOTICE indicates either potential damage to hardware or loss of data and tells you how to avoid the

problem.

CAUTION: A CAUTION indicates a potential for property damage, personal injury, or death.

Abbreviations and Acronyms

For a complete list of abbreviations and acronyms, see "Abbreviations and Acronyms."

Information in this document is subject to change without notice.

© 2003 Dell Inc. All rights reserved.

Reproduction in any manner whatsoever without the written permission of Dell Inc. is strictly forbidden.

Trademarks used in this text: Dell, the DELL logo, PowerEdge, PowerVault, and Dell OpenManage are trademarks of Dell Inc.; Microsoft, Windows,

Windows NT, and MS-DOS are registered trademarks of Microsoft Corporation; Intel and Pentium are registered trademarks of Intel Corporation;

Novell and NetWare are registered trademarks of Novell Corporation.

Other trademarks and trade names may be used in this document to refer to either the entities claiming the marks and names or their products.

Dell Inc. disclaims any proprietary interest in trademarks and trade names other than its own.

Initial release: 26 Aug 2003

Getting Started

Intended Audience

Obtaining Technical Assistance

Overview of NAS Clusters

NAS Cluster Features

NAS Cluster Components

Minimum System Requirements

Other Documents You May Need

This guide provides information for installing, configuring, and troubleshooting a Dell™ PowerVault™ network attached storage

(NAS) system's hardware and software components in a cluster configuration and provides information about the

configuration listed in Table 1-1

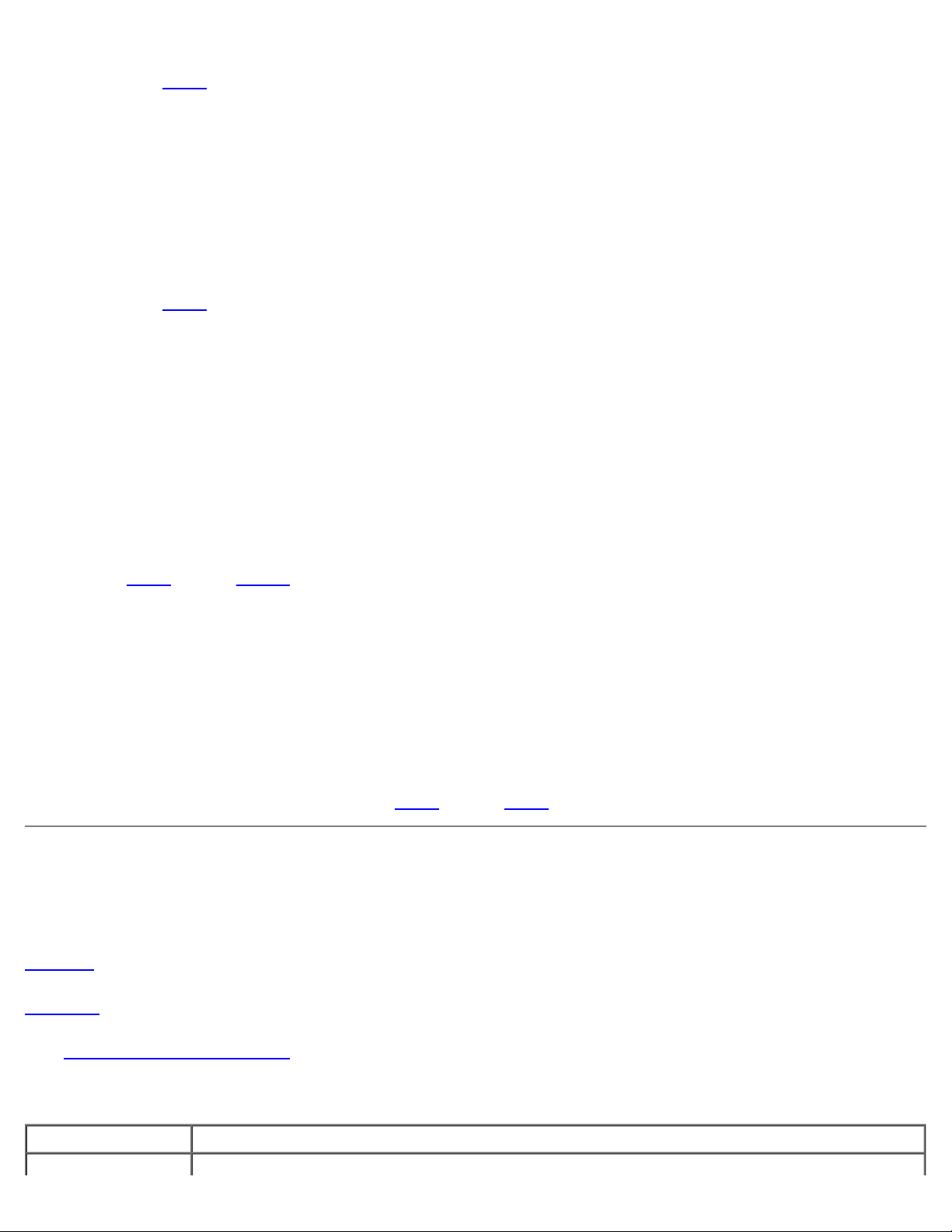

Table 1-1. PowerVault NAS System SCSI Cluster Configuration

.

Systems RAID

Controllers

Two PowerVault NAS

systems

The information in this guide includes:

Basic SCSI cluster installation procedures, which include:

Preparing NAS and storage systems for clustering

Cabling the cluster configuration

Installation procedures for installing the Microsoft® Windows® Storage Server 2003, Enterprise Edition operating

systems in your cluster configuration

Configuring the cluster peripherals, including PERC cards and network adapters

Installation procedures for installing a SCSI cluster configuration in your corporate network

Cluster upgrading and maintenance procedures

Information about the Microsoft Cluster Service (MSCS), the clustering software built into the operating systems

NOTE: Hereafter, Microsoft Cluster Service is also known as MSCS.

PERC 3/DC

PERC 4/DC

Storage Systems Operating System

Up to four PowerVault 21xS or 22xS

storage systems

Microsoft® Windows® Storage Server 2003,

Enterprise Edition

Troubleshooting procedures

Data sheets for recording critical cluster configuration information

See the Dell PowerVault NAS Systems SCSI Cluster Platform Guide for information about supported configurations.

NOTE: Dell and Microsoft support only the specific configurations described in the Platform Guide.

Intended Audience

This guide addresses two audience levels:

Users and system installers who will perform general setup, cabling, and configuration of the PowerVault NAS Cluster

components

Trained service technicians who will perform more extensive installations, such as firmware upgrades and installation of

required expansion cards

Obtaining More Information

See "Obtaining Technical Assistance" and "Overview of NAS Clusters" for a general description of PowerVault NAS SCSI

clusters and clustering technology.

See "Using MSCS" for an overview of the clustering software built into the Windows Storage Server 2003, Enterprise Edition operating system.

Obtaining Technical Assistance

Dell Enterprise Training and Certification is available; see www.dell.com/training for more information. This service may

not be offered in all locations.

Overview of NAS Clusters

The PowerVault NAS SCSI cluster implements clustering technology on PowerVault NAS systems based on the Windows

Storage Server 2003, Enterprise Edition operating system. PowerVault NAS clusters provide the following benefits in meeting

the needs of mission-critical network application programs:

High availability — Clustering technology built into Microsoft Cluster Service (MSCS) ensures that system services

and resources are available to network clients if a cluster node fails for any reason.

Redundant storage — Application data can be stored on a maximum of four PowerVault storage systems.

Cluster share failure recovery — Cluster shares run on virtual servers, which can be failed over to another cluster

node if a node fails for any reason.

Zero impact on network resources — Cluster nodes can be repaired, serviced, upgraded, or replaced without taking

the entire cluster offline.

PowerVault NAS systems provide an easy-to-install solution for ensuring high-availability of your network storage resources

for Windows and UNIX® clients. Novell® NetWare® and Apple resources are also supported. However, if a system running

NetWare or Apple resources fails for any reason, you must manually restart their dependent resources. This procedure does

not corrupt the share data.

A NAS cluster provides a failover solution for the NAS systems, thereby ensuring a higher availability of network resources

than a nonclustered NAS system. The NAS cluster consists of the following components:

PowerVault NAS systems — Two homogeneous (identical) PowerVault NAS systems with the Windows Storage

Server 2003, Enterprise Edition operating system installed on each system

Cluster interconnect cable — An Ethernet crossover cable (cluster interconnect) connected to a network adapter in

both systems

Storage systems — One to four PowerVault 21xS or 22xS storage systems

Each cluster node is configured with software and network resources that enable it to interact with the other node to provide

a mutual redundancy of operation and application program processing. Because the systems interact in this way, they appear

as a single system to the network clients.

As an integrated system, the PowerVault NAS Cluster is designed to dynamically handle most hardware failures and prevent

downtime. In the event that one of the cluster nodes fails for any reason, the processing workload of the failed node switches

over (or fails over) to the remaining node in the cluster. This failover capability enables the cluster system to keep network

resources and application programs up and running on the network while the failed node is taken offline, repaired, and

brought back online. The failover process is transparent and network clients experience only a momentary delay in accessing

their resources. After the failed node is repaired, the network resources can be transferred back to the original node, if

desired.

NOTE: When a cluster node running the Windows Storage Server 2003, Enterprise Edition operating system fails, the

NFS file shares running on the failed node are moved to the remaining node in the cluster and restarted. When a

cluster node with Novell NetWare shares or Apple shares fails, the file shares running on the failed node are converted

to file directories and moved to the remaining node in the cluster. To access the data in the failed-over directories, you

must manually reconfigure the file directories to file shares.

The availability of network services is critical to applications in a client/server environment. Clustering reduces the amount of

downtime caused by unexpected failures, providing maximum uptime of mission critical applications—also known as high

availability—that surpasses the capabilities of a stand-alone system. Using MSCS, clustering ensures that applications on a

failed cluster node continue on the remaining node(s) by migrating and managing the required resource to another node in

the cluster. Clusters that reduce the amount of system downtime are known as high availability clusters.

Configuring Active and Passive Cluster Nodes

Cluster configurations may include both active and passive cluster nodes. Active nodes are nodes that support the cluster

workload by processing application requests and providing client services. Passive nodes are backup nodes that support the

active nodes in the event of a hardware or software failure, thereby ensuring that client applications and services are highly

available.

NOTE: Passive nodes must be configured with the appropriate processing power and storage capacity to support the

resources that are running on the active nodes.

NAS SCSI cluster solutions running Windows are limited to active/active and active/passive configurations because this

solution supports two nodes.

An active/active configuration is a cluster with virtual servers running separate applications or services on each node. When

an application is running on node 1, the remaining cluster node does not have to wait for node 1 to fail. The remaining cluster

node can run its own cluster-aware applications (or another instance of the same application) while providing failover

capabilities for the resources on node 1.

An active/passive configuration is a cluster where the active cluster node is processing requests for a clustered application

while the passive cluster node simply waits for the active node to fail.

Active/passive configurations are more costly in terms of price and performance because one cluster node remains idle all of

the time. This configuration is appropriate for business-critical systems since the application can use all the resources of a

standby cluster node in case one active cluster node fails.
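The difference between the two configurations can be illustrated with a small model. The following sketch is not MSCS code and uses no MSCS interface; the `Node` class, the `fail_over` function, and the virtual server names are hypothetical and exist only to show how workloads move when a node fails.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """Hypothetical stand-in for a cluster node and the groups it hosts."""
    name: str
    online: bool = True
    groups: List[str] = field(default_factory=list)


def fail_over(failed: Node, survivor: Node) -> None:
    """Move every resource group from the failed node to the survivor."""
    if not survivor.online:
        raise RuntimeError("no surviving node is available")
    failed.online = False
    survivor.groups.extend(failed.groups)
    failed.groups.clear()


# Active/active: each node hosts its own virtual server before the failure.
node1 = Node("node1", groups=["VirtualServerA"])
node2 = Node("node2", groups=["VirtualServerB"])
fail_over(node1, node2)
print(node2.groups)  # ['VirtualServerB', 'VirtualServerA']
```

In an active/passive model, `node2` would start with an empty `groups` list and would host only `VirtualServerA` after the failover, which is why the passive node must be sized to carry the full workload of the active node.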

Cluster Node Limitations

The Windows Powered operating system installed on your cluster nodes is dedicated to file server operations. Because your

PowerVault NAS Cluster is a dedicated file server, the cluster nodes cannot be used in the following capacities:

Primary Domain Controller (PDC)

NOTE: If another domain controller is not available on the network, you can configure a NAS cluster node as a domain

controller for the NAS cluster. However, client systems outside of the NAS cluster cannot be included as members of

the NAS cluster domain.

Windows Internet Naming Service (WINS) server

Dynamic Host Configuration Protocol (DHCP) server

Domain Name System (DNS) server

Microsoft Exchange Server

Microsoft Structured Query Language (SQL) server

Network Information Service (NIS) server

NAS Cluster Features

The PowerVault NAS cluster solution provides a high level of availability that is not available in nonclustered PowerVault NAS

systems. Because of the differences between clustered and nonclustered systems, compare the features in the clustered

PowerVault NAS systems to ensure that they meet your specific needs.

Table 1-2 provides a comparison of the features in both clustered and nonclustered PowerVault NAS systems.

Table 1-2. NAS Cluster Features

| Features | Clustered PowerVault NAS Systems | Nonclustered PowerVault NAS Systems |
| --- | --- | --- |
| Failover capability | Yes | No |
| Server Message Block (SMB) | Yes | Yes |
| SMB share failover | Yes | No |
| Dell OpenManage™ Array Manager management | Yes | Yes |
| Monitor and keyboard required | Yes | No |
| Failover SCSI storage | Yes | No |
| Snapshot functionality | Yes | Yes |
| Optional Directory Quotas | Yes | Yes |
| Network File System (NFS) shares failover | Yes | No |
| Failover internal SCSI storage | No | No |
| Novell NetWare share failover | No | No |
| Apple shares failover | No | No |
| Simplified disk and volume management | No | Yes |
| Online volume expansion | Yes | Yes |

NAS Cluster Components

The following subsections describe the components that are common to the PowerVault NAS cluster, as well as the

components that are specific to each cluster system.

Table 1-3 lists the common components that are used in a PowerVault NAS cluster.

Table 1-3. Cluster Components

| Component | Description |
| --- | --- |
| NAS systems | Two identical PowerVault 770N or 775N NAS systems in a homogeneous pair with the Windows Storage Server 2003, Enterprise Edition operating system installed in each system. NOTE: Dell or Microsoft can support only the specific configurations described in the Dell PowerVault NAS SCSI Cluster Platform Guide. |
| Shared storage system | Up to four PowerVault 21xS storage systems with dual SCSI expander management modules (SEMMs) or up to four PowerVault 22xS with dual enclosure management modules (EMMs). |
| Network adapters | Supported network adapters for the public LAN. |

PowerVault NAS-Specific Network Components

Table 1-4 describes the required components for each PowerVault NAS system.

Table 1-4. PowerVault NAS-Specific Network Components

| Hardware Component | Description |
| --- | --- |
| Hot-spare drive support | Support for 1-inch SCSI hot-pluggable spare drives. |
| RAID controller(s) | One of the following PERC RAID controller(s) installed in each PowerVault NAS system for the cluster's shared storage: PERC 3/DC or PERC 4/DC. |
| RAID support | Support for RAID 1, 5, and 1+0 levels. RAID 1+0 is supported in a single enclosure or spanning two enclosures with hot-spare drives. RAID 0 and independent drive configurations can be installed in a PowerVault NAS cluster. Because they do not offer data redundancy if a disk fails, they are not recommended for a high-availability system. NOTE: Dell and Microsoft support only the specific configuration described in the Dell PowerVault NAS SCSI Cluster Platform Guide. |
| Shared storage system(s) | Up to four PowerVault 21xS storage systems with dual SEMMs or up to four PowerVault 22xS with dual EMMs. |
| Network adapters | Two or more network adapters installed in each PowerVault NAS system for the node-to-node cluster interconnect. If two network adapters are not installed in the PowerVault 770N NAS system, you must install an additional network adapter for the private network. PowerVault 775N NAS systems are preconfigured with two onboard network adapters, which meets the minimum requirements. NOTE: The network adapters must be identical on both systems. NOTE: Dell and Microsoft support only the specific configuration described in the Dell PowerVault NAS SCSI Cluster Platform Guide. |
| Crossover cable | One Ethernet crossover cable for the node-to-node cluster interconnect (private network). |
| Keyboard and monitor | A keyboard and monitor are required for troubleshooting the cluster nodes. |

RAID Controllers

Table 1-5 lists the Dell PowerEdge™ Expandable RAID controllers (PERC) that are used to connect the PowerVault 770N and

775N systems to external PowerVault storage systems. See the PERC documentation included with your system for a

complete list of features.

NOTE: Table 1-5 lists the RAID controllers that are connected to the external storage system(s). Your NAS system

also contains an internal RAID controller that is used to manage the system's internal hard drives.

Table 1-5. RAID Controller Features

| Feature | PERC 3/DC | PERC 4/DC |
| --- | --- | --- |
| SCSI channels | 2 | 2 |
| SCSI data transfer rate | Up to 160 MB/s per channel | Up to 320 MB/s per channel |
| Maximum number of drives per channel | 14 | 14 |
| RAID levels | RAID 0, 1, 1+0, 5, and 5+0 | RAID 0, 1, 1+0, 5, and 5+0 |
| Number of supported logical drives and arrays | Up to 14 logical drives and 32 arrays per controller | Up to 14 logical drives and 32 arrays per controller |
| Cache | 128 MB | 128 MB |

NOTE: RAID 0 and independent drives are possible but are not recommended for a high-availability system because they do not offer data redundancy if a disk failure occurs.
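The redundancy trade-off behind the RAID 0 note can be made concrete with simple drive arithmetic. The following is a minimal sketch, not a PERC utility: the function name `raid_summary` is hypothetical, the minimum drive counts for RAID 1 and RAID 5 follow the cluster node requirements in this guide, and the RAID 1+0 rule (an even number of drives, four or more) is general RAID practice assumed here rather than taken from this guide. Hot-spare drives and RAID 5+0 are left out.

```python
from typing import Tuple


def raid_summary(level: str, drives: int) -> Tuple[int, bool]:
    """Return (usable drive count, survives a single drive failure)."""
    if level == "0":
        if drives < 1:
            raise ValueError("RAID 0 needs at least one drive")
        return drives, False          # striping only, no redundancy
    if level == "1":
        if drives != 2:
            raise ValueError("RAID 1 mirrors exactly two drives")
        return 1, True                # one drive holds the mirror copy
    if level == "5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least three drives")
        return drives - 1, True       # one drive's worth of parity
    if level == "1+0":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 1+0 needs an even number of drives, four or more")
        return drives // 2, True      # striped set of mirrored pairs
    raise ValueError(f"unsupported RAID level: {level}")


for level, drives in [("0", 4), ("1", 2), ("5", 4), ("1+0", 4)]:
    usable, redundant = raid_summary(level, drives)
    print(f"RAID {level}: {usable} of {drives} drives usable, redundant={redundant}")
```

RAID 0 is the only level in the sketch that returns `False` for redundancy, which is the reason it is not recommended for a high-availability system.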

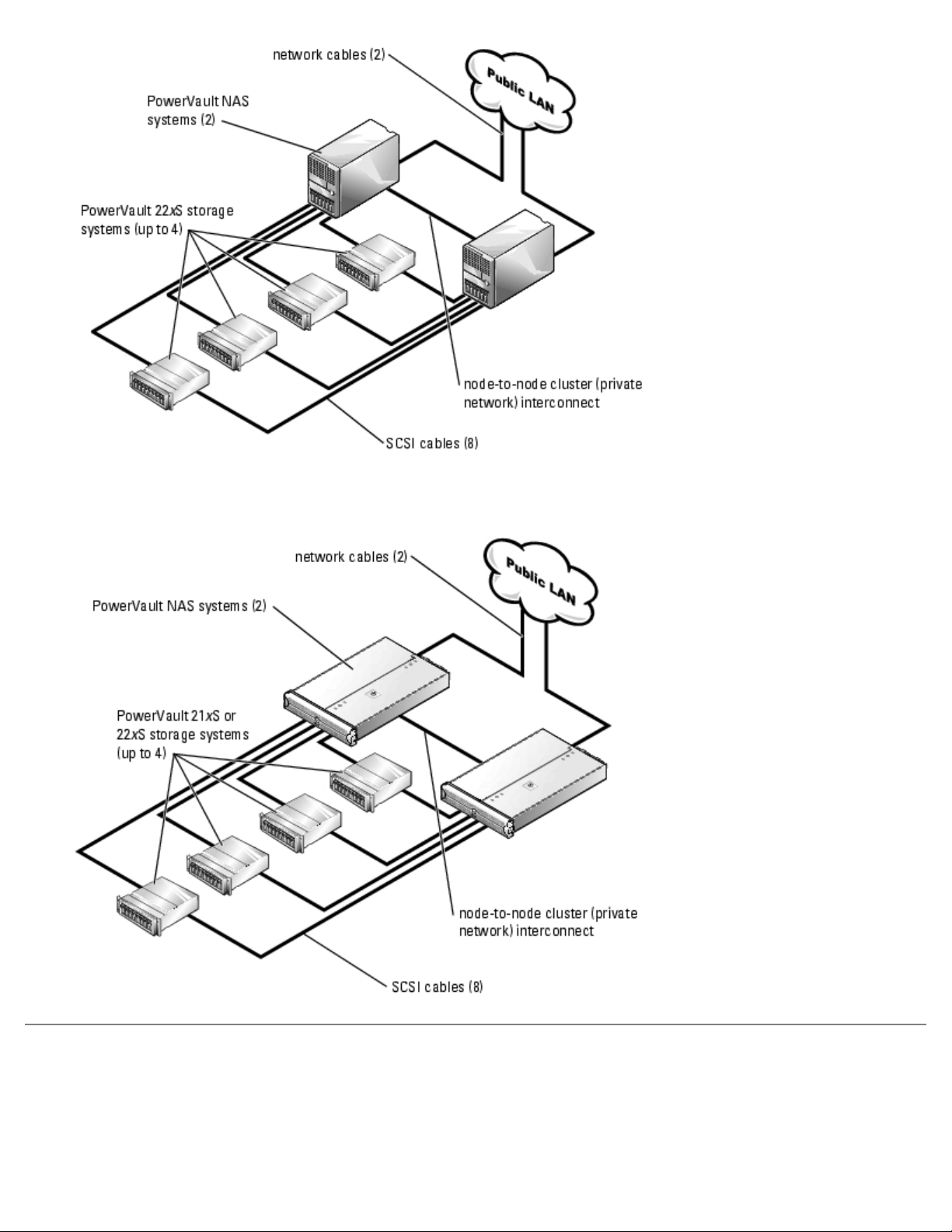

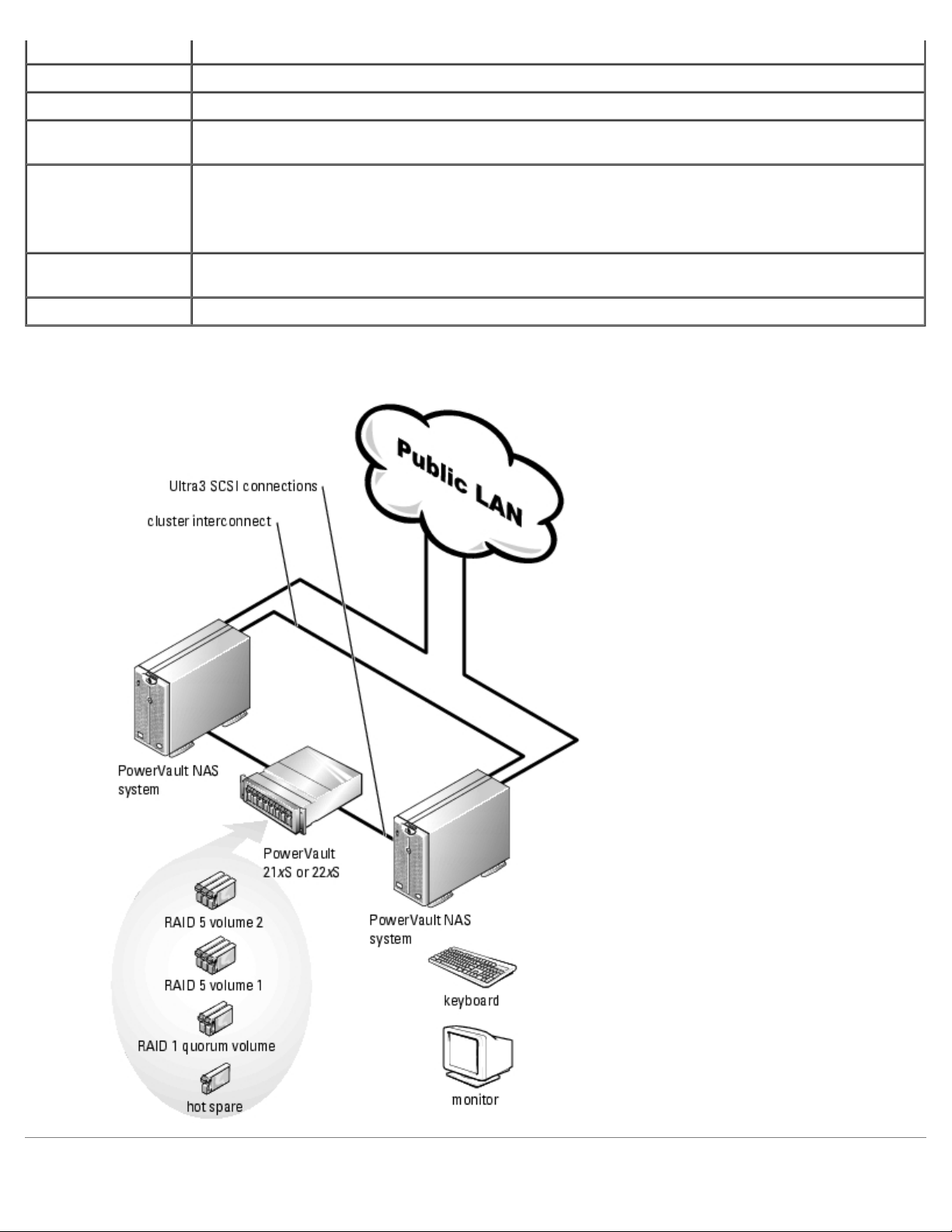

PowerVault NAS System Specific Network Components

Figure 1-1 shows a sample configuration of the PowerVault 770N SCSI cluster components and cabling. Figure 1-2 shows a

similar sample configuration for the PowerVault 775N SCSI cluster.

See the Platform Guide for system-specific configuration information.

Figure 1-1. PowerVault 770N Cluster Solution

Figure 1-2. PowerVault 775N Cluster Solution

Minimum System Requirements

If you are installing a new PowerVault NAS SCSI cluster or upgrading an existing system to a PowerVault NAS SCSI cluster,

review the previous subsections to ensure that your hardware components meet the minimum system requirements listed in

the following section.

PowerVault NAS Cluster Minimum System Requirements

PowerVault NAS SCSI cluster configurations require the following hardware and software components:

Cluster nodes

Cluster storage

Cluster interconnects (private network)

Client network connections (public network)

Operating system and storage management software

Cluster Nodes

Table 1-6 lists the hardware requirements for the cluster nodes.

Table 1-6. Cluster Node Requirements

| Component | Minimum Requirement |
| --- | --- |
| Cluster nodes | Two homogeneous (identical) PowerVault 770N or 775N NAS systems that support clusters in homogeneous pairs. |
| Processors | One or two processors on both cluster nodes. NOTE: Both cluster nodes must be configured with the same number of processors. |
| RAM | At least 512 MB of RAM installed on each cluster node. |
| RAID controllers | One of the following PERC RAID controllers installed in each system for the cluster's shared storage: PERC 3/DC or PERC 4/DC. Up to two PERCs per cluster node may be used for the cluster's shared storage. Two disk drives are required for mirroring (RAID 1) and at least three disk drives are required for disk striping with parity (RAID 5). |
| Network adapters | Two or more network adapters installed in each PowerVault NAS system for the node-to-node cluster interconnect. If two network adapters are not installed in the PowerVault 770N NAS system, you must install an additional network adapter for the private network. PowerVault 775N NAS systems are preconfigured with two onboard network adapters, which meets the minimum requirements. NOTE: The network adapters must be identical on both systems. NOTE: Dell and Microsoft support only the specific configuration described in the Dell PowerVault NAS SCSI Cluster Platform Guide. |
| Private network cables | If you are using Fast Ethernet network adapters for the private network, connect a crossover Ethernet cable between the network adapters in both cluster nodes. If you are using Gigabit Ethernet network adapters for the private network, connect a standard Ethernet cable between the network adapters in both cluster nodes. |
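The node requirements in Table 1-6 can be recorded as a simple pre-installation checklist. The sketch below is illustrative only and does not query any hardware: the `NodeSpec` fields and the `check_pair` function are hypothetical names, and the values must be entered by hand from your own inventory. The Platform Guide remains the authority on supported configurations.

```python
from dataclasses import dataclass
from typing import List, Tuple

SUPPORTED_MODELS = {"770N", "775N"}
SUPPORTED_PERCS = {"PERC 3/DC", "PERC 4/DC"}


@dataclass(frozen=True)
class NodeSpec:
    """Hand-entered description of one cluster node (hypothetical fields)."""
    model: str
    processors: int
    ram_mb: int
    shared_percs: Tuple[str, ...]   # controllers used for shared storage
    network_adapters: int


def check_pair(a: NodeSpec, b: NodeSpec) -> List[str]:
    """Return a list of requirement violations (empty if none are found)."""
    problems = []
    if a.model != b.model:
        problems.append("nodes must be the same PowerVault model")
    if a.processors != b.processors:
        problems.append("nodes must have the same number of processors")
    for label, node in (("node 1", a), ("node 2", b)):
        if node.model not in SUPPORTED_MODELS:
            problems.append(f"{label}: model must be 770N or 775N")
        if node.processors not in (1, 2):
            problems.append(f"{label}: one or two processors required")
        if node.ram_mb < 512:
            problems.append(f"{label}: at least 512 MB of RAM required")
        if not 1 <= len(node.shared_percs) <= 2:
            problems.append(f"{label}: one or two PERCs for shared storage")
        if any(p not in SUPPORTED_PERCS for p in node.shared_percs):
            problems.append(f"{label}: PERC 3/DC or PERC 4/DC required")
        if node.network_adapters < 2:
            problems.append(f"{label}: two or more network adapters required")
    return problems


node = NodeSpec("775N", 2, 1024, ("PERC 4/DC",), 2)
print(check_pair(node, node))  # []
```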

Cluster Storage

Table 1-7 provides the minimum requirements for the shared storage system(s).

Table 1-7. Cluster Shared Storage System Requirements

| Hardware Component | Minimum Requirement |
| --- | --- |
| Shared storage system(s) | Up to four PowerVault 21xS or 22xS enclosures (for the shared disk resource) with the following configuration: two SEMMs for each PowerVault 21xS; two EMMs for each PowerVault 22xS; redundant power supplies connected to separate power sources; at least two SCSI hard drives in each PowerVault 21xS or 22xS enclosure to support hardware-based RAID functionality. Currently, MSCS supports only the Windows NT File System (NTFS) format for the shared storage system. Two volumes are the minimum requirement for an active/active cluster configuration (where the active nodes process requests and provide failover for each other). See "Configuring Active and Passive Cluster Nodes" for more information on active/active and active/passive cluster configurations. Two 1-, 4-, 8-, or 20-m SCSI cables for each PowerVault 21xS or 22xS storage system in the cluster. |

Cluster Interconnects (Private Network)

Table 1-8 provides the minimum requirements for the cluster interconnects (private network).

Table 1-8. Cluster Interconnects (Private Network) Requirements

| Hardware Component | Minimum Requirement |
| --- | --- |
| Network adapters | Any network adapter supported by the system for each cluster node. The network adapters for the private network must be identical and supported by the system. NOTE: Dual-port Fast Ethernet network adapters are not recommended for simultaneous cluster connections to the public and private networks. When you configure the network adapter in MSCS Setup to All Communications, the public network can provide redundancy for node-to-node traffic in the case of a failure in the private network segment. |
| Ethernet switch (optional) | One Ethernet switch for the private network (cluster interconnect). |
| Ethernet cables | One standard or crossover Ethernet cable. A standard Ethernet cable (not included with the Dell Cluster kit) connects two copper Gigabit Ethernet (1000 BASE-T) network adapters. A crossover Ethernet cable connects two Fast Ethernet (100 Mb/s) network adapters. |
| Ethernet switch cabling (optional) | Additional Ethernet cables (not included) may be used to attach to an Ethernet switch for the public network (client connections) and private network (cluster interconnect). |
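The cable rule for a direct node-to-node connection in Table 1-8 reduces to a two-entry lookup. The sketch below is illustrative only; the function name and the adapter type strings are hypothetical labels, not identifiers used by any Dell or Microsoft tool.

```python
def private_network_cable(adapter_type: str) -> str:
    """Pick the cable for a direct node-to-node private network connection."""
    cables = {
        "fast-ethernet": "crossover",     # 100 Mb/s adapters
        "gigabit-ethernet": "standard",   # copper 1000 BASE-T adapters
    }
    try:
        return cables[adapter_type]
    except KeyError:
        raise ValueError(f"unknown adapter type: {adapter_type!r}") from None


print(private_network_cable("fast-ethernet"))     # crossover
print(private_network_cable("gigabit-ethernet"))  # standard
```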

Client Network Connections (Public Network)

The cluster connections to the public network (for client access of cluster resources) require one or more identical network

adapters supported by the system for each cluster node. Configure this network in a mixed mode (All Communications) to

communicate the cluster heartbeat to the cluster nodes if the private network fails for any reason.

Other Documents You May Need

The System Information Guide provides important safety and regulatory information. Warranty information may be

included within this document or as a separate document.

The Platform Guide provides information about the platforms that support the NAS SCSI cluster configuration.

The Rack Installation Guide and Rack Installation Instructions document that was included with your rack solution

describes how to install your system into a rack.

The Getting Started Guide provides an overview of initially setting up your system.

The User's Guide for your PowerVault system describes system features and technical specifications, SCSI drivers, the

System Setup program (if applicable), software support, and the system configuration utility.

The Installation and Troubleshooting Guide for your PowerVault system describes how to troubleshoot the system and

install or replace system components.

The Dell PowerVault 77xN NAS Systems Administrator's Guide provides system configuration, operation, and

management information.

The Dell PowerVault 200S, 201S, 210S, and 211S Storage Systems Installation and Service Guide describes how to

install and troubleshoot the PowerVault 200S, 201S, 210S, and 211S storage systems and install or replace system

components.

The Dell PowerVault 220S and 221S System Installation and Troubleshooting Guide describes how to install and

troubleshoot the PowerVault 220S and 221S storage systems and install or replace system components.

The PERC documentation includes information on the SCSI RAID controller.

The Dell OpenManage™ Array Manager documentation provides instructions for using the array management software

to configure RAID systems.

Documentation for any components you purchased separately provides information to configure and install these

options.

Updates are sometimes included with the system to describe changes to the system, software, and/or documentation.

NOTE: Always read the updates first because they often supersede information in other documents.

Release notes or readme files may be included to provide last-minute updates to the system documentation or

advanced technical reference material intended for experienced users or technicians.

Preparing Your Systems for Clustering

Before You Begin

Installation Overview

Selecting a Domain Model

Configuring Windows Networking

Assigning Static IP Addresses to Your Cluster Resources and Components

Installing a PERC RAID Controller

Installing and Configuring the Shared Storage System

Installing a PowerVault 770N NAS Cluster Minimum Configuration

Installing a PowerVault 775N NAS Cluster Minimum Configuration

Configuring the Shared Disks

Installing and Configuring MSCS

Using Shadow Copies of Shared Folders

Installing the Cluster Management Software

Configuring Cluster Networks Running Windows Storage Server 2003, Enterprise Edition

Configuring and Managing the Cluster Using Cluster Administrator

Managing Directory Quotas (Optional)

Creating a System State Backup

Before You Begin

1. Ensure that your site can handle the power requirements of the cluster equipment.

Contact your sales representative for information about your region's power requirements.

CAUTION: Only trained service technicians are authorized to remove and access any of the components

inside the system. See your System Information Guide for complete information about safety precautions,

working inside the computer, and protecting against electrostatic discharge.

2. Ensure that the following components are installed in each PowerVault NAS system in the cluster:

Network adapters

PERC cards

SCSI hard drives

Any additional peripheral components

HBA drivers

You can download the latest drivers from the Dell Support website at support.dell.com.

NOTE: Both NAS systems and the hardware components in each system must be identical.

3. Ensure that the following components are installed in each Dell™ PowerVault™ 21xS or 22xS system in the cluster:

Two SEMMs (PowerVault 21xS only) or two EMMs (PowerVault 22xS only)

A split-bus module

SCSI hard drives

See "Installing and Configuring the Shared Storage System" for more information.

4. Cable the system hardware for clustering.

See "Cabling Your Cluster Hardware" for more information.

5. Configure the storage system(s) as described in your storage system documentation.

6. Configure the PERC cards as described in your PERC card documentation.

7. To configure RAID for the internal SCSI hard drives, use the controller's BIOS utility or Dell OpenManage™ Array Manager.

Installation Overview

This section provides an overview of the procedures for configuring your cluster running the Microsoft® Windows® Storage Server 2003 operating system.

1. Ensure that your cluster meets the requirements as described in "Before You Begin."

2. Select a domain model that is appropriate for your corporate network and operating system.

See "Selecting a Domain Model" for more information.

3. Reserve static IP addresses for your cluster resources and components.

The resources and components include:

Public network

Private network

Cluster virtual servers

See "Assigning Static IP Addresses to Your Cluster Resources and Components" for more information.

4. Install or update the PERC drivers.

The PERC drivers allow your cluster nodes to communicate with the shared storage systems.

See "Updating the PERC Card Driver" for more information.

5. Configure the hard drives on the shared storage system(s).

See "Configuring and Managing Virtual Disks" for more information.

6. Configure the MSCS software.

The MSCS software is the clustering component of the Windows operating system that provides the failover capabilities for the cluster.

See "Installing and Configuring MSCS" for more information.

7. Verify cluster functionality. Ensure that:

Your cluster components are communicating properly with each other.

MSCS is started.

See "Verifying Cluster Functionality" for more information.

8. Verify cluster resource availability.

Use Cluster Administrator to check the running state of each resource group.

See "Verifying Cluster Resource Availability" for more information.

The following sections provide detailed information for each step in the "Installation Overview" that is specific to your Windows operating system.

NOTE: Dell strongly recommends that you use the "PowerVault SCSI Cluster Solution Data Sheet" during the installation of your cluster to ensure that all installation steps are completed. The data sheets are located in "Cluster Data Sheet."

Selecting a Domain Model

On a cluster running the Windows Storage Server 2003, Enterprise Edition operating system, both cluster nodes must belong

to a common domain or directory model. The following membership configurations are supported:

Both cluster nodes are member systems in a Windows 2000 Active Directory domain.

Both cluster nodes are member systems in a Windows Storage Server 2003 Active Directory domain.

One node is a domain controller and the other node is a member of the domain, without other member systems or

clients in the domain.

If a cluster node cannot contact a domain controller, the node will not be able to authenticate client requests.

Configuring Windows Networking

You must configure the public and private networks in each node before you install MSCS. The following sections introduce

you to some principles and procedures necessary to the networking prerequisites.

Assigning Static IP Addresses to Your Cluster Resources and

Components

A static IP address is an Internet address that a network administrator assigns exclusively to a system or a resource. The

address assignment remains in effect until it is changed by the network administrator.

The IP address assignments for the public LAN segments depend on the configuration of your environment. If the IP assignments are set up correctly, all of the network adapter resources will respond to ping commands and appear online before and after you install MSCS. If the IP assignments are not set up correctly, the cluster nodes may not be able to communicate with the domain. See "Troubleshooting" for more information.

PowerVault NAS SCSI cluster configurations running the Windows operating system require static IP addresses assigned to hardware and software applications in your cluster, as listed in Table 2-1.

Table 2-1. Applications and Hardware Requiring IP Address Assignments

| Application/Hardware | Description |
| --- | --- |
| Cluster IP address | The cluster IP address is used for cluster management and must correspond to the cluster name. Because each server has at least two network adapters, the minimum number of static IP addresses required for a cluster configuration is five (one for each network adapter and one for the cluster). Additional static IP addresses are required when MSCS is configured with application programs that require IP addresses. |
| Cluster-aware applications running on the cluster | For example, these applications may include a network file system (NFS) share, server message block (SMB) file share, or a general purpose file share. |
| Cluster node network adapters | The network adapters are used to connect to the public and private networks. For cluster operation, two network adapters are required: one network adapter for the public network (LAN/WAN) and another network adapter for the private network (sharing heartbeat information between the cluster nodes). See "Cabling Your Cluster Hardware" for more information about cluster interconnect options. NOTE: To ensure cluster operations during a DHCP server failure, Dell recommends using static IP addresses for your cluster. |

Configuring IP Addresses for the Private Network (Cluster

Interconnect)

Having two network adapters connected to separate networks on the cluster provides a contingency solution for cluster

communication failure. If the private network (cluster interconnect) fails, MSCS can default cluster node communications

through the public network, thereby ensuring that failover capabilities are possible in the event of a cluster node failure.

The network adapters installed in each cluster node on the private network (cluster interconnect) must reside on a different IP subnet than the network adapters on the public network. Having a separate IP subnet or a different network ID than the LAN subnet(s) used for client connectivity ensures that the public and private network communications do not interfere with each other.

If you are connecting the cluster node network adapters together using an Ethernet cable, Dell recommends using the static IP address assignments in Table 2-2 for the network adapters that are connected to the private network.

Table 2-2. Sample Static IP Address Assignments for the Private Network

| Cluster Node | IP Address | Subnet Mask |
|---|---|---|
| Node 1 | 10.0.0.1 | 255.255.255.0 |
| Node 2 | 10.0.0.2 | 255.255.255.0 |
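The address scheme in Table 2-2 can be generated for any number of nodes. The following is a minimal sketch in Python, assuming the private network is 10.0.0.0 with subnet mask 255.255.255.0 as in the table; the function name `private_address_plan` is hypothetical and is not part of any Dell or Microsoft tool.

```python
import ipaddress
import itertools


def private_address_plan(node_count, network="10.0.0.0/24"):
    """Return (node name, IP address, subnet mask) rows for the private network."""
    net = ipaddress.ip_network(network)
    # Take the first node_count usable host addresses (10.0.0.1, 10.0.0.2, ...).
    addresses = list(itertools.islice(net.hosts(), node_count))
    if len(addresses) < node_count:
        raise ValueError("the private network is too small for this many nodes")
    return [
        (f"Node {number}", str(address), str(net.netmask))
        for number, address in enumerate(addresses, start=1)
    ]


for row in private_address_plan(2):
    print(*row)
```

Each node receives a unique address on the same private subnet, which is the property the table is meant to illustrate.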

If you are connecting multiple network adapters together for the private network using a network switch, ensure that each network adapter connected to a private network is assigned a unique IP address. For example, you can continue the IP address scheme in Table 2-2 by using 10.0.0.3 and 10.0.0.4 for additional cluster nodes and the network adapters for the private network that are connected to the same switch.

NOTE: The IP address assignments for the public LAN segment(s) depend on the configuration of your environment.

If the IP assignments are set up correctly, all of the network adapter resources will respond to ping commands and will appear online after you install MSCS. If the IP address resources are not set up correctly, the cluster nodes may not be able to communicate with the domain and the Cluster Configuration Wizard may not allow you to configure all of your networks.

See "Troubleshooting" for more information on troubleshooting problems.

NOTE: Additional fault tolerance for the LAN segments can be achieved by using network adapters that support

adapter teaming or by having multiple LAN segments. Do not use fault tolerant network adapters for the cluster

interconnect, as these network adapters require a dedicated link between the cluster nodes.

Creating Separate Subnets for the Public and Private Networks

The network adapters for the public and private networks that are installed in the same cluster node must reside on separate

IP subnetworks. Therefore, the private network used to exchange heartbeat information between the cluster nodes must have

a separate IP subnet or a different network ID than the public network, which is used for client connections.
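The separate-subnet requirement can be checked before the adapters are configured. The following is a minimal sketch in Python, assuming each adapter is described as an address followed by its subnet mask; the function name `on_separate_subnets` and the public address 192.168.1.10 are hypothetical examples.

```python
import ipaddress


def on_separate_subnets(public_adapter, private_adapter):
    """Return True if the two adapters belong to non-overlapping IP subnets.

    Each argument is a string such as "10.0.0.1/255.255.255.0".
    """
    public_net = ipaddress.ip_interface(public_adapter).network
    private_net = ipaddress.ip_interface(private_adapter).network
    return not public_net.overlaps(private_net)


# Hypothetical public address paired with the private address from Table 2-2.
print(on_separate_subnets("192.168.1.10/255.255.255.0", "10.0.0.1/255.255.255.0"))
```

A result of False means both adapters in the node share a subnet, which is the configuration this section warns against.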

Setting the Network Interface Binding Order

1. Click the Start button, select Control Panel, and double-click Network Connections.

2. Click the Advanced menu, and then click Advanced Settings.

The Advanced Settings window appears.

3. In the Adapters and Bindings tab, ensure that the Private and Public connections are at the top of the list.

To change the connection order:

a. Click Public or Private.

b. Click the up-arrow or down-arrow to move the connection to the top or bottom of the Connections box.

c. Click OK.

d. Close the Network Connections window.

Using Dual-Port Network Adapters for the Private Network

Using a dual-port network adapter, you can configure your cluster to use the public network as a failover for private network

communications. However, to ensure high-availability and redundancy in your NAS cluster, configure the public and private

networks on two separate network adapters. For example, you can configure an internal network adapter port for the private

network and a PCI network adapter port for the public network.

NOTE: Configuring the public and private network on a dual-port network adapter is not supported.

Verifying Cluster Network Communications

To ensure proper cluster operations, the cluster nodes must be able to communicate with each other through the private

network (cluster interconnect). This communication involves the exchange of heartbeat messages, whereby the two cluster

nodes inquire about each other's status, or "health," and acknowledge each inquiry.

To verify network communications between the cluster nodes:

1. Open a command prompt on each cluster node.


2. At the prompt, type:

ipconfig /all

3. Press <Enter>.

All known IP addresses for each local server appear on the screen.

4. Issue the ping command from each remote system.

Ensure that each local server responds to the ping command.
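When several addresses must be checked, the ping step can be scripted. The following is a minimal sketch in Python, assuming it runs on a Windows cluster node where `-n` sets the number of echo requests; the function names are hypothetical. The return code is only a rough indicator, so review the ping output if a result looks wrong.

```python
import subprocess


def build_ping_command(address, count=1):
    # "-n" is the echo-request count option of the Windows ping command.
    return ["ping", "-n", str(count), address]


def check_reachability(addresses, run=subprocess.run):
    """Ping each address and map it to True (return code 0) or False."""
    results = {}
    for address in addresses:
        completed = run(build_ping_command(address), capture_output=True)
        # Assumption: a zero return code means a reply was received. Some
        # "unreachable" replies may also return zero on Windows.
        results[address] = completed.returncode == 0
    return results


if __name__ == "__main__":
    # Private network addresses from Table 2-2.
    for address, reachable in check_reachability(["10.0.0.1", "10.0.0.2"]).items():
        print(address, "responded" if reachable else "did not respond")
```

Run the script from each node so that every adapter address reported by ipconfig /all on the other node is tested.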

Installing a PERC RAID Controller

You can install a PERC controller in your PowerVault NAS systems to manage your external storage systems. Some PCI slots on your system are connected to different PCI buses with varying I/O configurations (for example, 32-bit, 33-MHz vs. 64-bit, 33-MHz) that might affect the data transfer rate from your RAID controller to your shared storage system. Install the RAID controller in the recommended PCI slot.

See the Platform Guide for more information about your system's PCI bus configuration.

See "RAID Controllers" for a list of supported RAID controllers.

Updating the PERC Card Driver

See the Dell Support website at support.dell.com to download the latest Windows driver for the PERC card.

To update the default driver to the latest PERC driver:

1. Click the Start button, select Programs, select Administrative Tools, and click Computer Management.

2. Select System Tools, select Device Manager, and click the plus (+) sign to expand SCSI and RAID controllers.

One or more PERC cards are listed.

3. Right-click the PERC card, select Properties, select the Driver tab, and then click Update Driver to start the

Windows Device Driver wizard.

4. Click Next to proceed to the Install Hardware Device Drivers dialog box.

5. Select Display a list of known drivers for this device... and then click Next.

6. Click Have Disk, insert the diskette or the Dell OpenManage Server Assistant CD that contains Dell's updated driver, specify the location of the driver (A:\ or D:\), and then click OK.

7. Select the appropriate RAID controller (PERC card) and click Next.

8. Click Next to begin the installation.

9. When the installation is complete, click Finish to exit the wizard.

10. Click Close to exit the Properties window.

11. Click Yes to restart the system.


12. Repeat this procedure for cluster node 2.

Installing and Configuring the Shared Storage System

Clustering PowerVault Storage Systems

If you are upgrading an existing PowerVault 21xS or 22xS storage system to meet the cluster requirements for the shared

storage system, you may need to install additional hard drives and/or one of the following management modules in the

shared storage system:

SCSI SEMM (PowerVault 21xS only)

EMM (PowerVault 22xS only)

The size and number of drives you add depend on the RAID level you want to use, the number of hard drives installed in your system, and the number of application programs you want to run in your cluster environment.

See the Dell PowerVault 200S, 201S, 210S, and 211S Storage Systems Installation and Service Guide or the Dell PowerVault 220S and 221S Systems Installation and Troubleshooting Guide for information about installing the hard drives in your PowerVault 21xS or 22xS storage system.

NOTE: In cluster mode, the last slot (SCSI ID 15) in the PowerVault 22xS is not used; SCSI ID 15 is used for the

primary EMM.

Configuring the PowerVault 21xS Storage System for Cluster Mode

To ensure that both NAS systems recognize all the drives in the storage system, you must enable forced-joined mode on the SEMMs installed in each storage system that you will share between the two NAS systems for clustering. This mode prevents the storage system from operating in a dual-bus split backplane configuration (2 x 4 or 2 x 6) when two cables are attached.

The SEMMs are identified by a label adjacent to the SCSI connector. Two identical SEMMs installed in each storage system are

required for cluster operation. You cannot use one SEMM.

See the Dell PowerVault 200S, 201S, 210S, and 211S Storage Systems Installation and Service Guide for more information

on installing and configuring the SEMMs.

To configure the SEMMs for forced join mode:

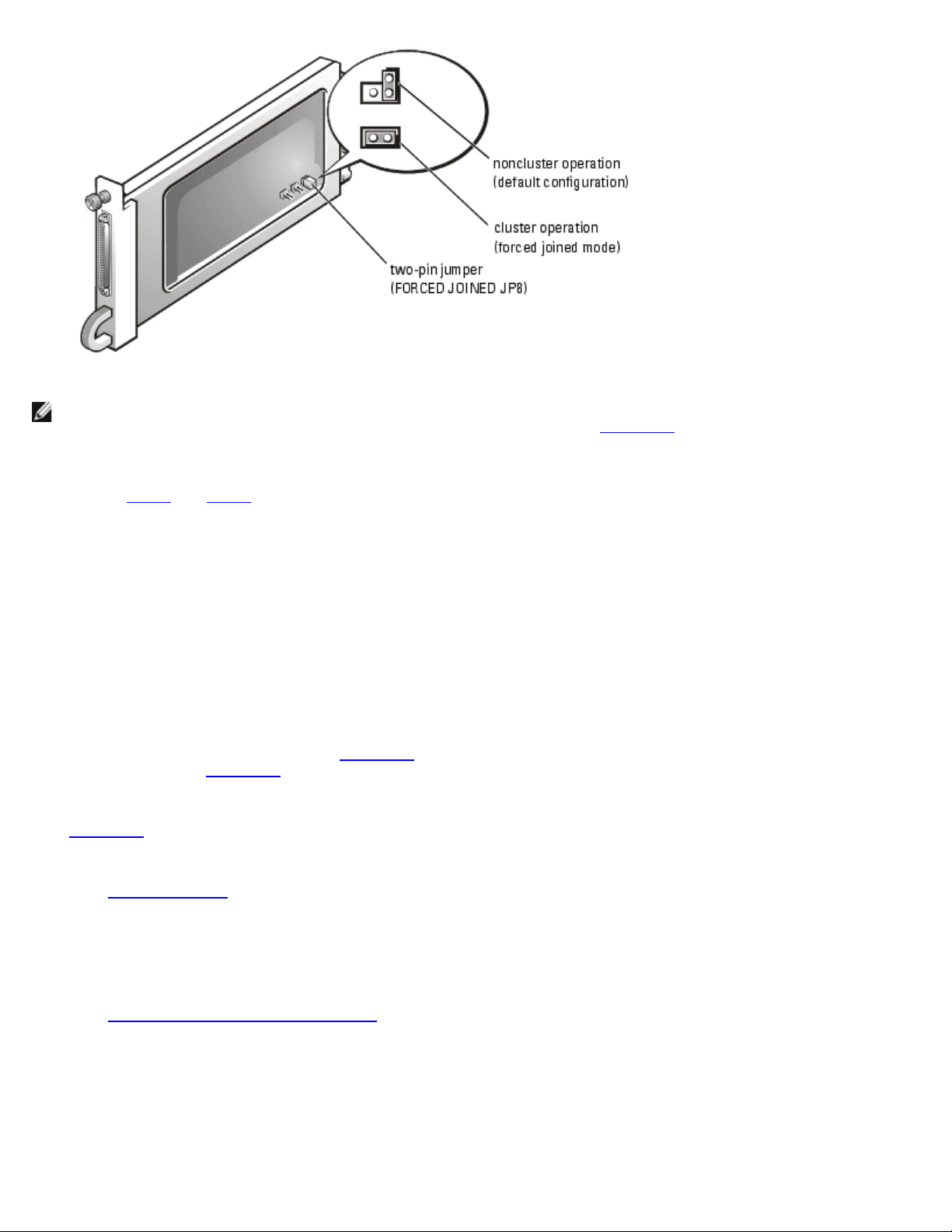

1. Locate the two-pin jumper labeled "FORCED JOINED JP8" on the SEMM, as shown in Figure 2-1

The SEMM is shipped with a jumper plug that is connected to only one jumper pin.

.

Figure 2-1. SEMM Configuration

Page 19

NOTE: Only the FORCED JOINED JP8 jumper contains a jumper plug. The Dell-installed default for jumpers JP1, JP2,

JP6, and JP7 is a noncluster operation (default configuration), as shown in Figure 2-1

2. Move the jumper plug to connect the two pins of the FORCED JOINED JP8 jumper.

3. Repeat step 1 and step 2 for the second SEMM.

4. Install the two SEMMs in the PowerVault 21xS storage system.

.

Configuring the PowerVault 22xS Storage System for Cluster Mode

To ensure that both systems recognize all the drives in the storage system, you must set the split-bus configuration switch to

cluster mode on the PowerVault 22xS storage system before turning on the storage system.

To configure the storage system in cluster mode:

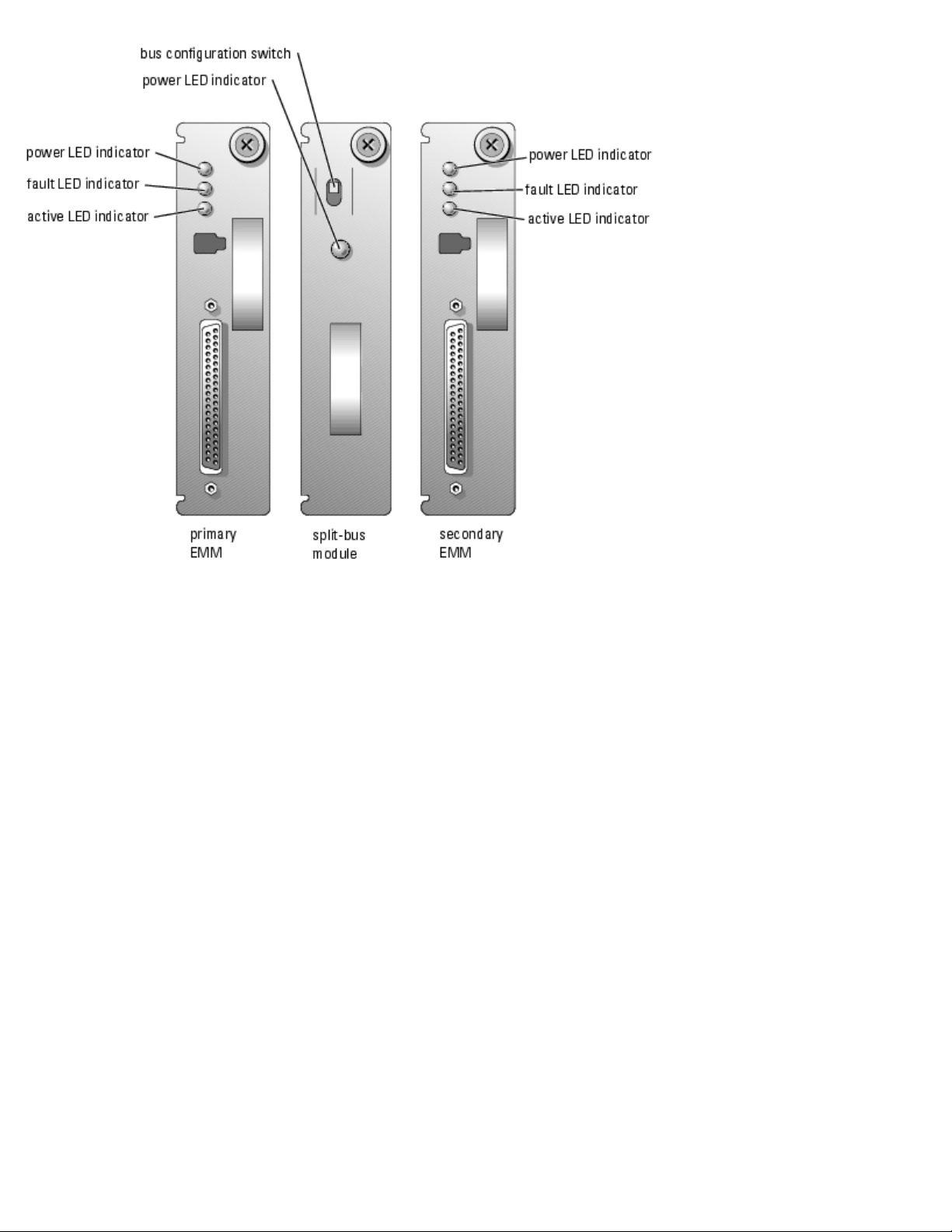

1. Set the bus configuration switch (see Figure 2-2) on the split-bus module to cluster mode (down position). The cluster LED indicator (see Figure 2-3) indicates that the storage system is in cluster mode.

Figure 2-3 illustrates the indicators on the storage system's front panel. See the Dell PowerVault 220S and 221S Systems User's Guide for more information.

See "Split-Bus Module" for more information about the split-bus module.

2. Install the split-bus module in the PowerVault 22xS storage system.

3. Install the two EMMs in the PowerVault 22xS storage system.

See "Enclosure Management Module (EMM)" for basic information about EMMs; see the Dell PowerVault 220S and 221S Systems Installation and Troubleshooting Guide for information about installing EMMs.

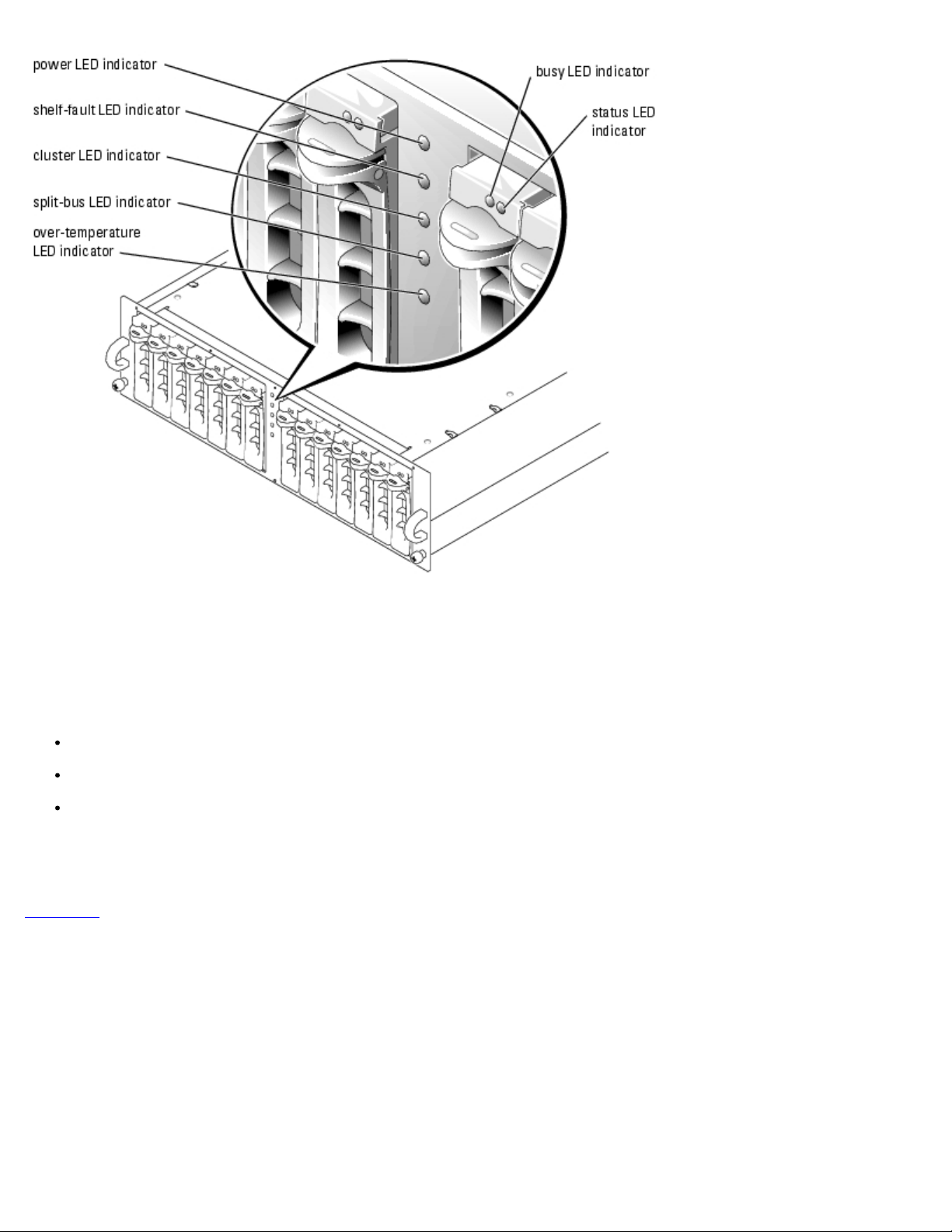

Figure 2-2. Back-Panel Module Features and Indicators


Figure 2-3. Front Panel Features and Indicators


Split-Bus Module

Your system supports three SCSI bus modes controlled by the split-bus module:

Joined-bus mode

Split-bus mode

Cluster mode

These modes are controlled by the position of the bus configuration switch when the system is turned on.

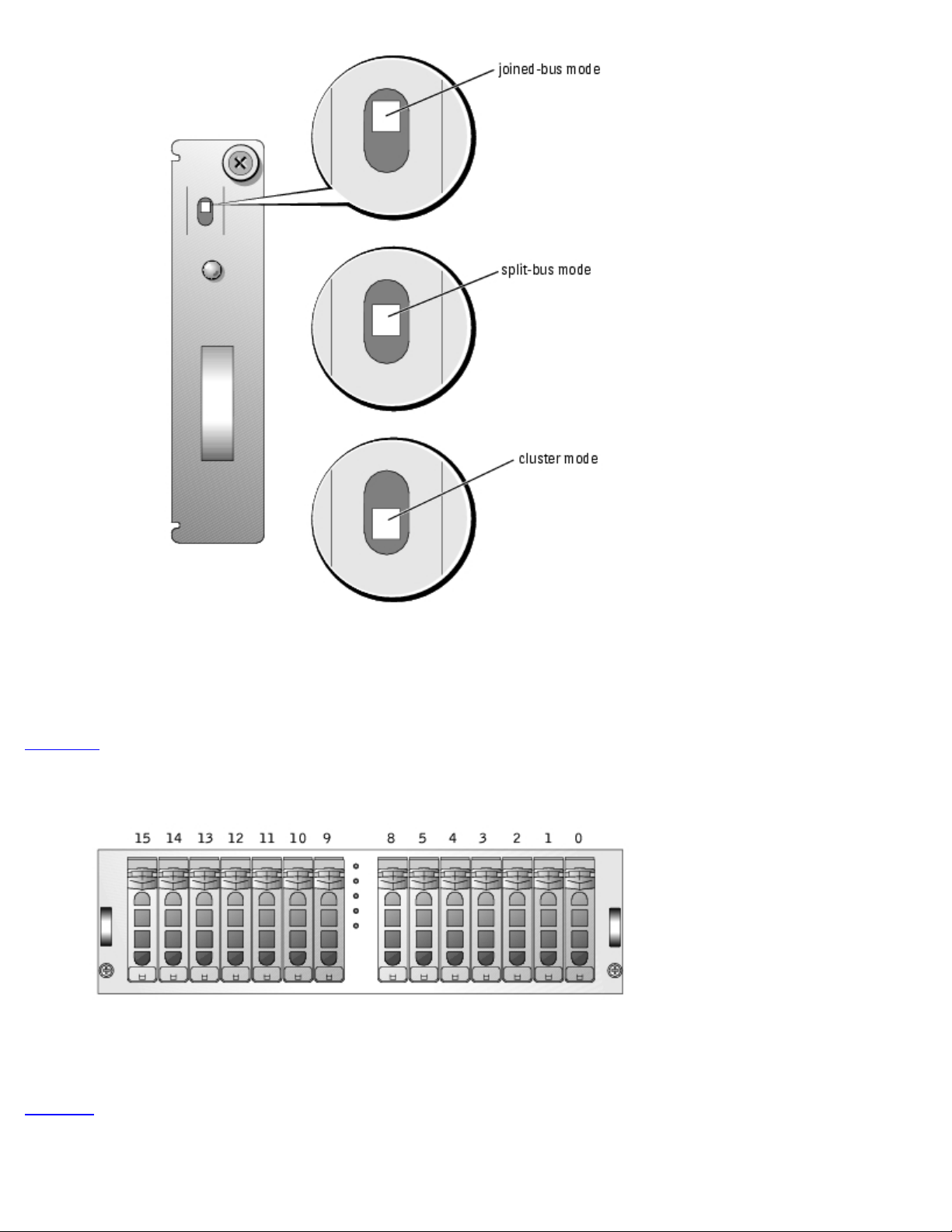

Figure 2-4 illustrates the switch position for each mode.

Figure 2-4. Bus Configuration Switch Modes


The only difference between cluster mode and joined-bus mode is the SCSI ID occupied by the enclosure services processor. When cluster mode is detected, the processor SCSI ID changes from 6 to 15, allowing a second initiator to occupy SCSI ID 6. As a result, SCSI ID 15 is no longer available for a hard drive, leaving 13 available hard drives in cluster mode. You must therefore remove the SCSI ID 15 hard drive from the enclosure when using the enclosure in cluster mode.

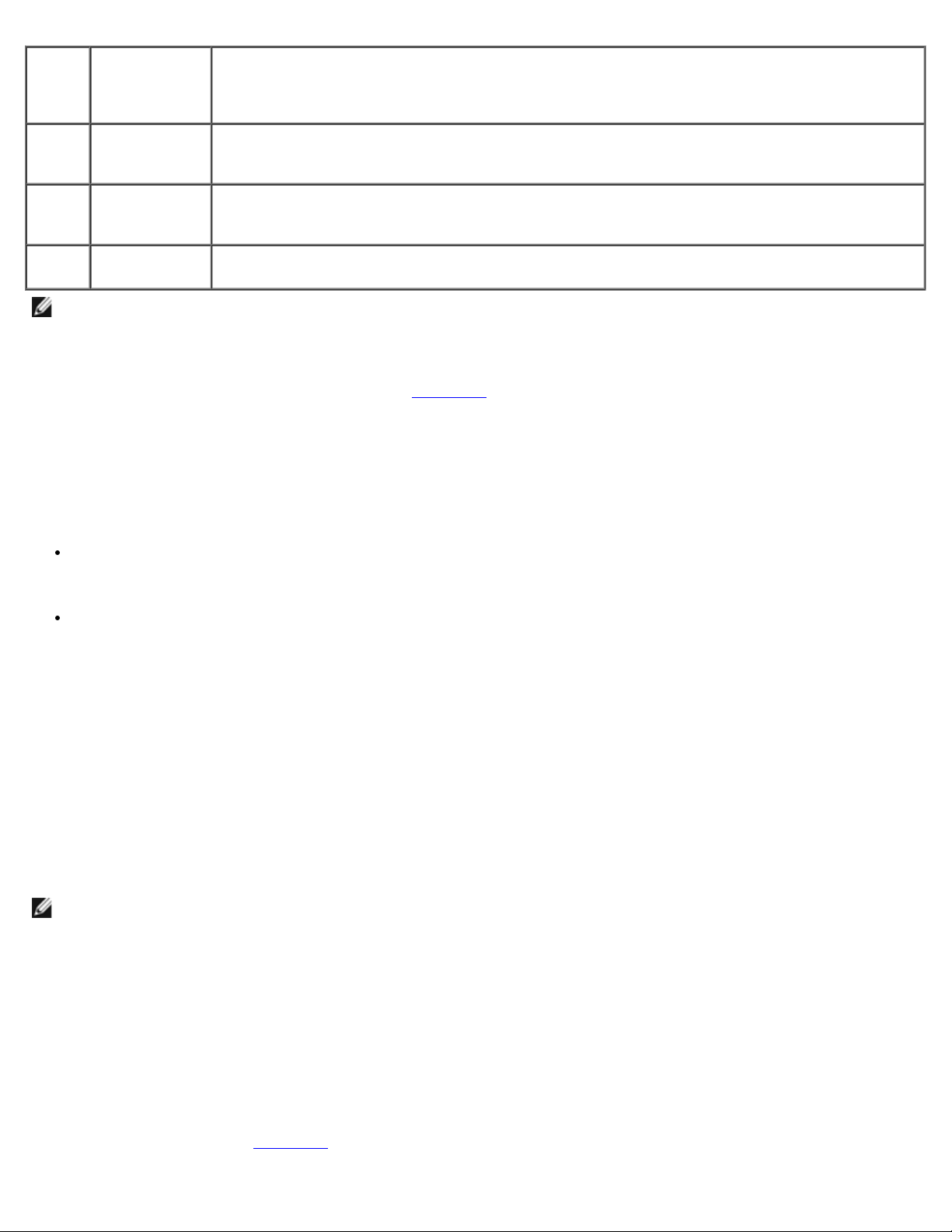

Figure 2-5 illustrates the SCSI IDs and their associated hard drives for the PowerVault 22xS storage system.

Figure 2-5. PowerVault 22xS SCSI ID Numbers and Associated Drives

See your Dell PowerVault 220S and 221S Systems Installation and Troubleshooting Guide for more information about SCSI ID

assignments and cluster mode operation.
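The SCSI ID arithmetic described in this section can be worked through in a few lines. The following is a minimal sketch in Python, assuming a 16-ID bus (IDs 0 through 15) and a single initiator at SCSI ID 7 in joined-bus mode; the function name `scsi_id_usage` is hypothetical, and Figure 2-5 remains the authoritative reference for the ID of each drive slot.

```python
ALL_SCSI_IDS = set(range(16))  # assumption: IDs 0-15 on the shared bus


def scsi_id_usage(cluster_mode):
    """Return which SCSI IDs the initiators, the enclosure processor, and drives use."""
    if cluster_mode:
        initiators = {6, 7}   # one PERC card in each cluster node
        processor = 15        # enclosure services processor moves to ID 15
    else:
        initiators = {7}      # single host adapter (joined-bus mode)
        processor = 6
    drives = sorted(ALL_SCSI_IDS - initiators - {processor})
    return {
        "initiators": sorted(initiators),
        "processor": processor,
        "drives": drives,
    }


print(len(scsi_id_usage(cluster_mode=False)["drives"]))  # joined-bus mode
print(len(scsi_id_usage(cluster_mode=True)["drives"]))   # cluster mode
```

Under these assumptions the sketch leaves 14 drive IDs in joined-bus mode and 13 in cluster mode, which matches the drive count given above.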

Table 2-3 provides a description of the split-bus module modes and functions.

Table 2-3. Split-bus Module Modes and Functions

| Mode | Position of Bus Configuration Switch | Function |
|---|---|---|
| Joined-bus mode | Up | LVD termination on the split-bus module is disabled, electrically joining the two SCSI buses to form one contiguous bus. In this mode, neither the split-bus nor the cluster LED indicators on the front of the enclosure are illuminated. |
| Split-bus mode | Center | LVD termination on the split-bus module is enabled and the two buses are electrically isolated, resulting in two seven-drive SCSI buses. The split-bus LED indicator on the front of the enclosure is illuminated while the system is in split-bus mode. |
| Cluster mode | Down | LVD termination is disabled and the buses are electrically joined. The cluster LED on the front of the enclosure is illuminated while the system is in cluster mode. |

NOTE: To change the SCSI bus mode, you must change the position of the bus configuration switch before turning on the storage system. Using the bus configuration switch while the system is on does not affect system operation. If you change the bus configuration switch while the system is running, the change will not take effect until you perform the following sequence: shut down the nodes, reboot the storage system, and then power up the nodes.

The split-bus module has only one LED indicator (see Figure 2-2 for location), which is illuminated when the module is receiving power.

Enclosure Management Module (EMM)

The EMM serves two primary functions in your storage system:

SCSI bus expansion — Acts as a buffer for the SCSI bus, electrically dividing the bus into two independent segments

while logically allowing all SCSI bus traffic to pass through it transparently. The buffer improves the quality of the SCSI

signals and allows longer cable length connections.

Management functions — Includes SES and SAF-TE reporting to the host initiator, control of all enclosure LED

indicators, and monitoring of all enclosure environmental elements such as temperature sensors, cooling modules, and

power supplies.

A system with redundant enclosure management features two EMMs that are designated as primary and secondary and can

be configured in either a cluster, joined-bus, or split-bus mode. A nonredundant configuration consists of one EMM and one

SCSI terminator card, and can be configured in a joined-bus mode only. In a redundant system, only one EMM per SCSI bus

is active at one time, so only one EMM per SCSI bus can respond to SCSI commands from an initiator.

In joined-bus and cluster modes, if the secondary EMM receives a message that the primary EMM has failed, the fault LED indicator on the primary EMM is illuminated and the condition is reported back to the host initiator. The secondary EMM then becomes active and holds the failed primary in a state of reset until it is replaced. If the primary EMM detects that the secondary has failed, the secondary's fault LED indicator is illuminated and the failed status is reported back to the host initiator.

NOTE: In split-bus mode, each EMM controls half of the enclosure. If one EMM fails in split-bus mode, the second EMM

reports the failure, but does not assume control of the entire SCSI bus.

The primary EMM is always plugged into the slot on the left (viewed from the back of the system). In a redundant joined-bus

configuration, the primary EMM assumes control of all the enclosure functionality. In addition, the primary EMM is the only

module that reports the status of the system to the host initiator through SES and SAF-TE protocols. Because the secondary

EMM must assume the responsibilities of the primary in the event that the primary fails, both the primary and secondary

EMMs are continuously monitoring the status of the system's components.
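The failure behavior described for redundant EMMs can be summarized as a small decision function. The following is a minimal sketch in Python that only restates the rules in this section; the function name `emm_state_after_failure` and the dictionary keys are hypothetical and do not correspond to any EMM firmware interface.

```python
def emm_state_after_failure(bus_mode, failed_emm):
    """Summarize what happens when one of two redundant EMMs fails."""
    if bus_mode not in ("joined-bus", "split-bus", "cluster"):
        raise ValueError(f"unknown bus mode: {bus_mode}")
    if failed_emm not in ("primary", "secondary"):
        raise ValueError(f"unknown EMM: {failed_emm}")
    survivor = "secondary" if failed_emm == "primary" else "primary"
    return {
        # The fault LED lights on the module that failed.
        "fault_led_lit_on": failed_emm,
        # The surviving module reports the failure to the host initiator.
        "failure_reported_by": survivor,
        # In split-bus mode the survivor keeps only its own half of the enclosure.
        "survivor_controls_entire_bus": bus_mode != "split-bus",
    }


print(emm_state_after_failure("cluster", "primary"))
print(emm_state_after_failure("split-bus", "secondary"))
```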

Preparing the PERC Card for Clustering

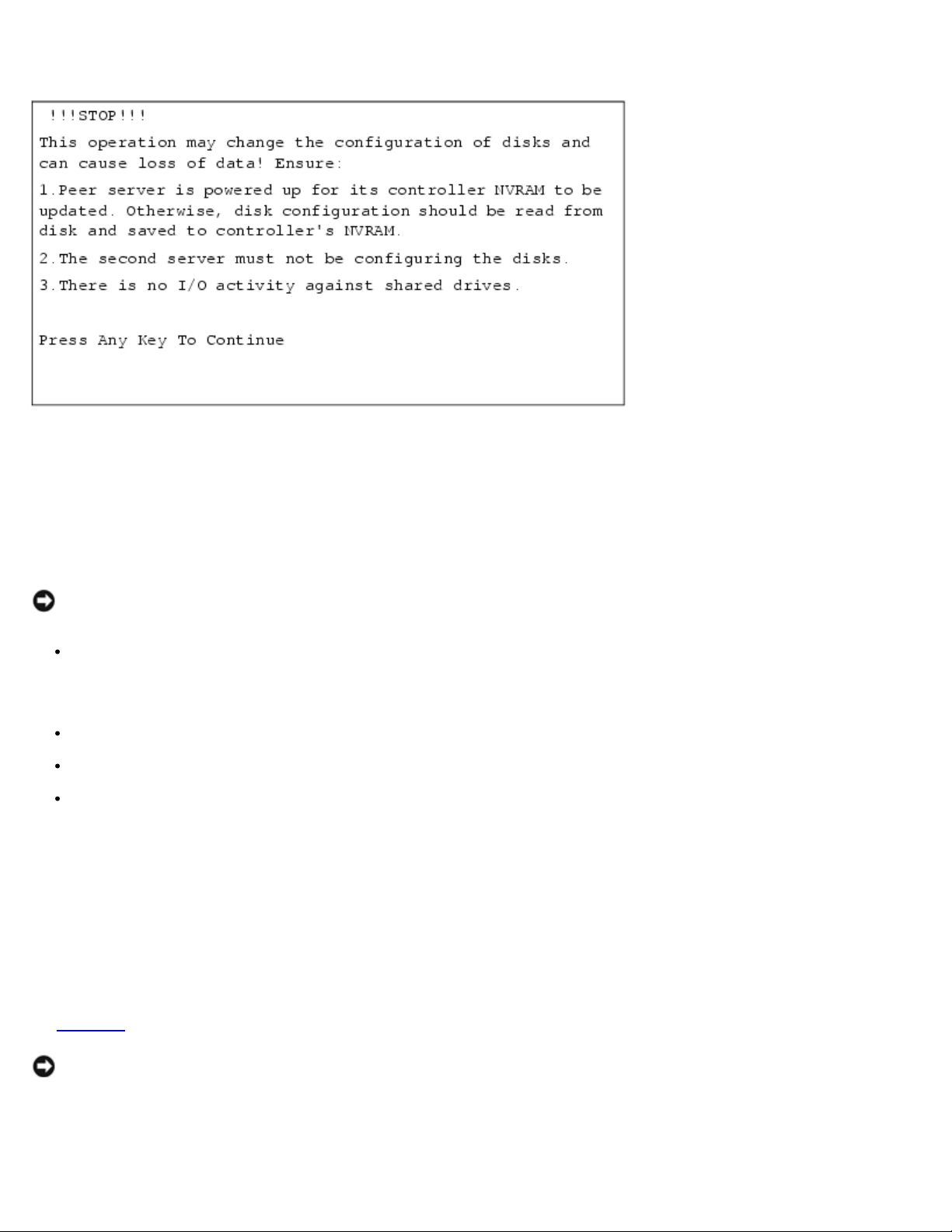

The warning message shown in Figure 2-6 appears on your screen when you attempt to modify the configuration of the

shared storage system on your cluster by using the PERC BIOS configuration utility.


Figure 2-6. Important System Warning

The warning message appears on the screen immediately after activating the PERC BIOS configuration utility by pressing

<Ctrl><m> during the system's POST and when you attempt to perform a data-destructive operation in the Dell™

PowerEdge™ RAID Console utility. Examples of data-destructive operations include clearing the configuration of the logical

drives or changing the RAID level of your shared hard drives.

This warning message alerts you to the possibility of data loss if certain precautions are not taken to protect the integrity of

the data on your cluster.

NOTICE: To prevent data loss, your cluster must meet the conditions in the following bulleted list before you attempt

any data-destructive operation on your shared hard drives.

Ensure that the peer system is turned on during the operation so that the PERC card's NVRAM can be updated with the new configuration information. Alternatively, if the peer system is down, you must save the disk configuration to the shared storage system. When you restart the peer system later, update its NVRAM from the disk configuration saved to the shared storage system.

Ensure that the peer cluster node is not currently configuring the shared storage system.

Ensure that I/O activity does not occur on the shared storage system during the operation.

Ensure that your PERC firmware is the latest version. See your PERC documentation for information on downloading the

latest firmware.

Enabling the Cluster Mode Using the PERC Card

Each PERC card that is used to connect to a shared storage enclosure must have cluster mode enabled using the PERC card's

BIOS configuration utility. Enabling cluster mode implements the additional functionality required for the controller to operate

in a cluster environment.

See Table 2-3 for more information on split-bus module modes.

NOTICE: If you replace your PERC card, ensure that you enable the cluster mode on the replacement PERC card and set the SCSI ID to the appropriate value (6 or 7) before you connect the SCSI cables to the shared storage.

See the appropriate PERC card documentation for more information about enabling cluster mode and the SCSI host adapter.

Setting the SCSI Host Adapter IDs

After you enable cluster mode on the PERC card, you have the option to change the SCSI ID for both of the adapter's

channels. For each shared SCSI bus (a connection from a channel on one system's PERC card to the shared storage enclosure

to a channel on the second system's PERC card), you must have unique SCSI IDs for each controller. The default SCSI ID for

the PERC is ID 7. Thus, the SCSI ID for one of the system's PERC cards must be configured to ID 6.

For cluster configurations with two PERC cards in each node connected to shared storage enclosures, set both controllers in

one system to SCSI ID 6; that is, one node's pair of PERC cards utilizes SCSI ID 7 (default) and the other node's pair of PERC

cards is changed to utilize SCSI ID 6.

See the PERC documentation for more information about setting the SCSI host adapter ID number.

NOTICE: If you replace a PERC card, you must set the appropriate SCSI ID before you connect the SCSI cables to the

shared storage.
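The ID rule for shared SCSI buses can be checked on paper or with a short script before the cables are connected. The following is a minimal sketch in Python, assuming the PERC cards are listed in the same order on both nodes so that card 1 on each node shares bus 1, and so on; the function name `check_initiator_ids` is hypothetical and the IDs must still be read from the PERC BIOS configuration utility.

```python
def check_initiator_ids(node1_ids, node2_ids):
    """Return a list of problems with the PERC SCSI IDs on each shared bus."""
    problems = []
    for bus, (first, second) in enumerate(zip(node1_ids, node2_ids), start=1):
        if first == second:
            problems.append(f"shared bus {bus}: both controllers use SCSI ID {first}")
        for scsi_id in (first, second):
            if scsi_id not in (6, 7):
                problems.append(f"shared bus {bus}: SCSI ID {scsi_id} is not 6 or 7")
    return problems


# Two PERC cards per node: node 1 keeps the default ID 7, node 2 is changed to 6.
print(check_initiator_ids([7, 7], [6, 6]))
# Both nodes left at the default ID 7 on the same bus.
print(check_initiator_ids([7], [7]))
```

An empty list means every shared bus has one controller at SCSI ID 7 and one at SCSI ID 6.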

Configuring and Managing Virtual Disks

The hard drives in the shared storage system must be configured for clustering. Before you configure the virtual disks,

configure the RAID levels that you will be using in your cluster. See the PERC documentation and the Array Manager

documentation for instructions about setting up a RAID array.

All virtual disks, especially if they are used for the quorum resource, should incorporate the appropriate RAID level to ensure high availability. See "Creating the Quorum Resource" for more information on the quorum resource.

NOTE: Dell recommends that you use a RAID level other than RAID 0 (which is commonly called striping). RAID 0

configurations provide very high performance, but do not provide the necessary redundancy that is required for the

quorum resource. See the documentation for your storage system for more information about setting up RAID levels

for the system.

In a cluster configuration, if multiple NTFS partitions are created on a single virtual disk, these partitions will fail over

together. If you plan to run cluster-aware applications on each cluster node, you must create at least two separate virtual

disks to ensure that the applications can fail over independently.

Obtaining More Information

See "Naming and Formatting Drives on the Shared Storage System" for information on how to assign drive letters to the shared hard drives in a cluster installation.

See the appropriate operating system documentation and the PERC documentation for instructions on partitioning and

formatting the shared storage system's hard drives.

Windows Storage Server 2003, Enterprise Edition Dynamic Disks and Volumes

The Windows operating system does not support dynamic disks or volumes as shared cluster storage. If the shared cluster

storage is configured as a dynamic disk, the Cluster Configuration wizard will not be able to discover the disks, which

prevents the cluster and network clients from accessing the disks.

Naming and Formatting Drives on the Shared Storage System


After the virtual disks are created, write the disk signature, assign drive letters to the virtual disks, and then format the

drives as NTFS drives. Format the drives and assign drive letters from only one cluster node.

NOTICE: Accessing the hard drives from multiple cluster nodes may corrupt the file system.

Assigning Drive Letters

NOTICE: If the disk letters are manually assigned from the second node, the shared disks are simultaneously

accessible from both nodes. To ensure file system integrity and prevent possible data loss before you install the MSCS

software, prevent any I/O activity to the shared drives by performing the following procedure on one node at a time,

and ensuring that the other node is shut down.

Before installing MSCS, ensure that both nodes have the same view of the shared storage systems. Because each node has

access to hard drives that are in a common storage array, each node must have identical drive letters assigned to each hard

drive. Up to 22 logical drive letters (E through Z) can be used for the shared storage systems.

NOTE: Drive letters A through D are reserved for the local system.

The number of drive letters required by individual servers in a cluster may vary. Dell recommends that the shared drives be

named in reverse alphabetical order beginning with the letter z.
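The reverse-alphabetical naming recommendation can be planned ahead of time. The following is a minimal sketch in Python, assuming the shared drives are identified by labels of your choosing; the function name `assign_drive_letters` and the labels are hypothetical, and the letters must still be applied in Disk Management as described in the procedure that follows.

```python
import string

# Drive letters E through Z are available for the shared storage systems.
SHARED_LETTERS = string.ascii_uppercase[4:]


def assign_drive_letters(disk_labels):
    """Map each shared disk to a letter, starting at Z and working backward."""
    if len(disk_labels) > len(SHARED_LETTERS):
        raise ValueError("more shared disks than available drive letters")
    return dict(zip(disk_labels, reversed(SHARED_LETTERS)))


print(len(SHARED_LETTERS))
print(assign_drive_letters(["data1", "data2", "data3"]))
```

Recording the plan once and using it on both nodes helps keep the drive letters identical, as required before MSCS is installed.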

To assign drive letters and format drives on the shared storage system:

1. With node 2 shut down, open Disk Management on node 1.

2. Allow Windows to enter a signature on all new physical or logical drives.

NOTE: Do not create dynamic disks on your hard drives.

3. Locate the icon for the first unnamed, unformatted drive on the shared storage system.

4. Right-click the icon and select Create from the submenu.

If the unformatted drives are not visible, verify the following:

The latest version of the PERC driver is installed.

The storage system is properly cabled to the servers.

The split-bus module on the PowerVault 22xS is set to cluster mode.

5. In the dialog box, create a partition the size of the entire drive (the default) and then click OK.

NOTE: The MSCS software allows only one node to access a logical drive at a time. If a logical drive is

partitioned into multiple disks, only one node is able to access all the partitions for that logical drive. If each

node must access a separate disk, two or more logical drives must be present in the storage system.

6. Click Yes to confirm the partition.

7. With the mouse pointer on the same icon, right-click and select Change Drive Letter and Path from the submenu.

8. Assign a drive letter to an NTFS volume or create a mount point.

To assign a drive letter to an NTFS volume:

a. Click Edit and select the letter you want to assign to the drive (for example, z).

b. Click OK.

c. Go to step 9.

To create a mount point:

a. Click Add.

b. Click Mount in the following empty NTFS folder.

c. Type the path to an empty folder on an NTFS volume, or click Browse to locate it.

d. Click OK.

e. Go to step 9.

9. Click Yes to confirm the changes.

10. Right-click the drive icon again and select Format from the submenu.

11. Under Volume Label, enter a descriptive name for the new volume; for example, Disk_Z or Email_Data.

12. In the dialog box, change the file system to NTFS, select Quick Format, and click the Start button.

13. Click OK at the warning.

14. Click OK to acknowledge that the format is complete.

15. Click Close to close the dialog box.

16. Repeat step 3 through step 15 for each remaining drive.

17. Close Disk Management.

18. Shut down node 1.

19. Turn on node 2.

20. On node 2, open Disk Management.

21. Ensure that the drive letters for node 2 are correct.

To modify the drive letters on node 2, repeat step 7 through step 9.

Installing a PowerVault 770N NAS Cluster Minimum Configuration

Table 2-4 provides the hardware requirements for a PowerVault 770N NAS cluster minimum configuration.

Figure 2-7 shows a minimum system configuration for a PowerVault 770N NAS Cluster.

See "Minimum System Requirements" for more information.

Table 2-4. PowerVault 770N NAS Cluster Minimum Configuration Hardware Requirements

| Component | Hardware Requirement |
|---|---|
| PowerVault 770N NAS systems | Two homogeneous (identical) PowerVault 770N NAS systems running the Windows Storage Server 2003, Enterprise Edition operating system |
| Operating system | Windows Storage Server 2003, Enterprise Edition |
| RAID controller | One supported PERC installed in both systems |
| Shared storage systems | One PowerVault 21xS or 22xS storage system with at least nine hard drives reserved for the cluster |
| Private network cabling | One crossover cable (not included) attached to a Fast Ethernet network adapter in both systems OR one standard cable (not included) attached to a Gigabit Ethernet network adapter in both systems |
| Public network cabling | One standard cable attached to a network adapter in both systems for the public network |
| Network adapter | An additional network adapter installed in each NAS system for the private network |

Figure 2-7. Minimum System Configuration of a PowerVault 770N NAS Cluster

Installing a PowerVault 775N NAS Cluster Minimum Configuration

The following cluster components are required for a minimum system cluster configuration using the PowerVault 775N NAS Cluster.

Table 2-5 provides the hardware requirements for a PowerVault 775N NAS cluster minimum configuration.

Figure 2-8 shows a minimum system configuration for a PowerVault 775N NAS Cluster.

See "Minimum System Requirements" for more information.

Table 2-5. PowerVault 775N NAS Cluster Minimum Configuration Hardware Requirements

| Cluster Component | Hardware Requirement |
|---|---|
| PowerVault 775N NAS systems | Two homogeneous (identical) PowerVault 775N NAS systems that support clusters |
| Operating system | Windows Storage Server 2003, Enterprise Edition |
| RAID controllers | One supported PERC installed in both systems for the external storage system(s) |
| Shared storage systems | One PowerVault 21xS or 22xS storage system with at least nine hard drives reserved for the cluster |
| Private network cabling | One Ethernet cable attached to a network adapter in both systems for the private network |
| Public network cabling | One Ethernet cable attached to a network adapter in both systems for the public network |

Figure 2-8. Minimum System Configuration of a PowerVault 775N NAS Cluster

Configuring the Shared Disks

This section provides the steps for performing the following procedures:

Creating the quorum resource

Configuring the shared disk for the quorum disk

Configuring the shared disks for the data disks

Configuring the hot spare

Creating the Quorum Resource

When you install Windows Storage Server 2003, Enterprise Edition in your cluster, the software installation wizard

automatically selects the quorum resource (or quorum disk), which you can modify later using Cluster Administrator.

Additionally, you can assign a specific hard drive for the quorum resource. To prevent quorum resource corruption, Dell and

Microsoft recommend that you do not place data on the resource.


The quorum resource is typically a hard drive in the shared storage system that serves the following purposes in a PowerVault

NAS Cluster configuration:

Acts as an arbiter between the cluster nodes to ensure that the specific data necessary for system recovery is

maintained consistently across the cluster nodes

Logs the recovery data sent by the cluster node

Only one cluster node can control the quorum resource at one time. This node continues to run if the two nodes are unable to

communicate with each other. If the two nodes are unable to communicate through the private network, MSCS automatically

shuts down the node that does not contain the quorum resource.

When one of the cluster nodes fails for any reason, changes to the cluster configuration database are logged to the quorum

resource, ensuring that the healthy node gaining control of the quorum resource has access to an up-to-date version of the

cluster configuration database.

Creating a Partition for the Quorum Resource

Dell recommends creating a separate partition—approximately 1 GB in size—for the quorum resource.

When you create the partition for the quorum resource:

Format the partition with NTFS.

Use the partition exclusively for your quorum logs.

Do not store any application data or user data on the quorum resource partition.

To properly identify the quorum resource, Dell recommends that you assign the drive letter "Q" to the quorum resource

partition.

Dell does not recommend using the remainder of the virtual disk for other cluster resources. If you do use the space for

cluster resources, be aware that when you create two volumes (partitions) on a single virtual disk, they will both fail over

together if a server fails.

Preventing Quorum Resource Failure

Because the quorum resource plays a crucial role in cluster operation, losing a quorum resource causes the entire cluster to

fail. To prevent cluster failure, configure the quorum resource on a RAID volume in the shared storage system.

NOTICE: Dell recommends that you use a RAID level other than RAID 0, which is commonly called striping. RAID 0

configurations provide very high performance, but they do not provide the level of redundancy that is required for the

quorum resource.

Configuring the Shared Disk for the Quorum Resource

1. Open Dell OpenManage Array Manager.

2. Locate two hard drives of the same size in the external storage system(s).

3. Create a RAID 1 virtual disk.

See your Array Manager documentation for information on creating a virtual disk.

NOTE: After you create the virtual disk and the virtual disk is initialized by the PERC 3 controller, you must

reboot the system.

4. Write a signature on the new disk.

5. Using the new disk, create a volume, assign a drive letter, and format the disk in NTFS.

See your Array Manager documentation for information about configuring the shared disk.

Configuring the Shared Disks for the Data Disk(s)

1. Open Array Manager.

2. Locate three or more hard drives of the same size in the external storage system(s).

3. Create a RAID 5 virtual disk using at least three hard drives.

See your Array Manager documentation for information on creating a virtual disk.

NOTE: After you create the virtual disk and the virtual disk is initialized by the PERC 3 controller, you must

reboot the system.

4. Write a signature on the new disk.

5. Using the new disk, create a volume, assign a drive letter, and format the disk in NTFS.

To configure the shared disks for additional data volumes (for example, data volume 2), repeat the preceding steps for each volume.
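When you choose hard drives for the quorum and data disks, it can help to estimate the usable capacity of each virtual disk. The following is a minimal sketch in Python, assuming identical drives and ignoring any space the controller reserves for its own metadata; the function name `usable_capacity_gb` and the 36-GB drive size are hypothetical examples.

```python
def usable_capacity_gb(raid_level, drive_count, drive_size_gb):
    """Estimate usable capacity for the RAID levels used in this section."""
    if raid_level == 1:
        if drive_count != 2:
            raise ValueError("RAID 1 in this guide uses two hard drives")
        # A mirror stores the same data on both drives.
        return drive_size_gb
    if raid_level == 5:
        if drive_count < 3:
            raise ValueError("RAID 5 requires at least three hard drives")
        # The equivalent of one drive is used for parity.
        return (drive_count - 1) * drive_size_gb
    raise ValueError("only RAID 1 and RAID 5 are covered by this sketch")


print(usable_capacity_gb(1, 2, 36))  # quorum disk on two mirrored drives
print(usable_capacity_gb(5, 3, 36))  # data disk on three drives
```

Adding drives to a RAID 5 virtual disk increases usable capacity by one drive's worth for each drive added.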

Configuring the Hot Spare

The hot spare is a failover hard drive for any of the internal hard drives in the external storage system. If one of the hard

drives in the storage system fails, the responsibilities of the failed disk will automatically fail over to the hot spare.

1. Open Dell OpenManage Array Manager.

2. Assign a global hot spare disk.

See your Array Manager documentation for more information.

Configuring Cluster Networks Running Windows Storage Server 2003, Enterprise Edition

When you install and configure a cluster running Windows Storage Server 2003, Enterprise Edition, the software installation

wizard automatically assigns and configures the public and private networks for your cluster. You can rename a network,

allow or disallow the cluster to use a particular network, or modify the network role using Cluster Administrator. Dell

recommends that you configure at least one network for the cluster interconnect (private network) and one network for all

communications. Additionally, Dell recommends that you use a Gigabit Ethernet network adapter for the private network.


Installing and Configuring MSCS

MSCS is an integrated service in the Windows Storage Server 2003, Enterprise Edition operating system. MSCS performs the

basic cluster functionality, which includes membership, communication, and failover management. When MSCS is installed

properly, the service starts on each node and responds automatically if one of the nodes fails or goes offline. To provide

application failover for the cluster, the MSCS software must be installed on both cluster nodes.

See "Using MSCS" for more information.

NOTE: For systems with split backplane modules installed, the cluster installation tries to use the logical drives on the

secondary backplane as cluster disks. Because these drives are not accessible to all nodes in the cluster, ensure that

they are removed from the cluster after the installation is complete.

NOTE: In Windows Storage Server 2003, Enterprise Edition, mapping a network drive to the same drive letter as a

cluster disk resource renders the cluster disk inaccessible from Windows Explorer on the host. Ensure that mapped

network drives and cluster disks are never assigned the same drive letter.

Verifying Cluster Readiness

To ensure that your server and storage systems are ready for MSCS installation, ensure that these systems are functioning

correctly and verify the following:

All cluster servers are able to log on to the same domain.

The shared disks are partitioned and formatted, and the same drive letters that reference logical drives on the shared

storage system are used on each node.

For each attached PowerVault 22xS storage system, the split-bus module is set to cluster mode before power-up.

Cluster mode is enabled on all PERC cards connected to shared storage.

The controller's SCSI IDs (6 or 7) on each node are different.

All peer PERC cards are connected to the same PowerVault system through the same channel number.

Each node can communicate with the other node and with the rest of the network using its assigned IP addresses and

network names. The private IP addresses should not be accessible from the LAN.
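Several items in this checklist are comparisons between the two nodes, so it can help to write the values down side by side before you start the installation. The following Python sketch shows one way to do that. The NodeConfig structure, its field names, and the sample values are all hypothetical, and the values are entered by hand; nothing here queries the PERC cards or the storage system. The sketch also applies the earlier NOTE about mapped network drives and cluster disk drive letters.

```python
from dataclasses import dataclass, field


@dataclass
class NodeConfig:
    """Hand-entered facts about one cluster node (hypothetical structure)."""
    name: str
    domain: str
    scsi_id: int                 # SCSI ID of the controller on the shared bus
    perc_cluster_mode: bool      # True if cluster mode is enabled on the PERC card
    drive_letters: dict = field(default_factory=dict)   # shared logical drive -> letter
    channels: dict = field(default_factory=dict)        # storage system -> PERC channel
    mapped_drives: set = field(default_factory=set)     # letters used by mapped network drives


def readiness_problems(nodes):
    """Return a list of checklist items that the recorded values do not satisfy."""
    problems = []
    if len({n.domain.lower() for n in nodes}) > 1:
        problems.append("nodes are not in the same domain")
    if any(n.drive_letters != nodes[0].drive_letters for n in nodes):
        problems.append("shared-disk drive letters differ between nodes")
    for n in nodes:
        if not n.perc_cluster_mode:
            problems.append(f"{n.name}: cluster mode is not enabled on the PERC card")
        if n.scsi_id not in (6, 7):
            problems.append(f"{n.name}: SCSI ID {n.scsi_id} is not 6 or 7")
        clash = set(n.drive_letters.values()) & n.mapped_drives
        if clash:
            problems.append(f"{n.name}: mapped network drive reuses letter(s) {sorted(clash)}")
    ids = [n.scsi_id for n in nodes]
    if len(set(ids)) != len(ids):
        problems.append("controller SCSI IDs are not unique across nodes")
    if any(n.channels != nodes[0].channels for n in nodes):
        problems.append("peer PERC cards use different channel numbers")
    return problems


# Hypothetical two-node cluster with one shared storage system.
node1 = NodeConfig("NODE1", "example.local", 7, True,
                   {"quorum": "Q", "data1": "G"}, {"storage1": 0})
node2 = NodeConfig("NODE2", "example.local", 6, True,
                   {"quorum": "Q", "data1": "G"}, {"storage1": 0})
print(readiness_problems([node1, node2]))
```

An empty list means that the recorded values are consistent with the checklist. It does not replace checking the split-bus module setting or network connectivity on the hardware itself.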

Configuring Microsoft Windows Storage Server 2003, Enterprise Edition Cluster Service (MSCS)

The cluster setup files are automatically installed on the system disk.

To create a new cluster:

1. From either node, click the Start button, select Programs→ Administrative Tools, and then double-click Cluster

Administrator.

2. From the File menu, select Open Connection.

3. In the Action box of the Open Connection to Cluster window, select Create new cluster.

The New Server Cluster Wizard appears.

4. Click Next to continue.


5. Follow the procedures in the wizard, and then click Finish.

6. Add the second node to the cluster.

a. Turn on the remaining node.

b. Click the Start button, select Programs→ Administrative Tools, and double-click Cluster Administrator.

c. From the File menu, select Open Connection.

d. In the Action box of the Open Connection to Cluster window, select Add nodes to cluster.

e. In the Cluster or server name box, type the name of the cluster or click Browse to select an available cluster

from the list, and then click OK.

The Add Nodes Wizard window appears.

If the Add Nodes Wizard does not generate a cluster feasibility error, go to step f.

If the Add Nodes Wizard generates a cluster feasibility error, go to "Adding Cluster Nodes Using the Advanced

Configuration Option."

f. Click Next to continue.

g. Follow the procedures in the wizard, and then click Finish.


Adding Cluster Nodes Using the Advanced Configuration Option

If you are adding nodes to the cluster using the Add Nodes Wizard and the nodes are not configured with identical

internal storage devices, the wizard may generate one or more errors while checking cluster feasibility in the Analyzing

Configuration menu. If this situation occurs, select Advanced Configuration Option in the Add Nodes Wizard to add the

nodes to the cluster.

To add the nodes using the Advanced Configuration Option:

1. From the File menu in Cluster Administrator, select Open Connection.

2. In the Action box of the Open Connection to Cluster window, select Add nodes to cluster, and click OK.

The Add Nodes Wizard window appears.

3. Click Next.

4. In the Select Computers menu, click Browse.

5. In the Enter the object names to select (examples) box, type the names of one to seven systems to add to the

cluster, with each system name separated by a semicolon.

6. Click Check Names.

The Add Nodes Wizard verifies and underlines each valid system name.

7. Click OK.

8. In the Select Computers menu, click Add.

9. In the Advanced Configuration Options window, click Advanced (minimum) configuration, and then click OK.


10. In the Add Nodes window, click Next.

11. In the Analyzing Configuration menu, Cluster Administrator analyzes the cluster configuration.

If Cluster Administrator discovers a problem with the cluster configuration, a warning icon appears in Checking cluster

feasibility. Click the plus (+) sign to review any warnings, if needed.

12. Click Next to continue.

13. In the Password field of the Cluster Service Account menu, type the password for the account used to run MSCS,

and click Next.

The Proposed Cluster Configuration menu appears with a summary of the configuration settings for your cluster.

14. Click Next to continue.

The new systems (hosts) are added to the cluster. When completed, Tasks completed appears in the Adding Nodes

to the Cluster menu.

NOTE: This process may take several minutes to complete.

15. Click Next to continue.

16. In the Completing the Add Nodes Wizard window, click Finish.
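Step 5 of this procedure expects between one and seven system names separated by semicolons. If you keep your node names in a list, a small helper such as the following Python sketch can prepare the text to type. The function name and the node names are hypothetical, and the sketch only formats the entry; it does not contact the cluster or validate that the systems exist.

```python
MAX_SYSTEMS_PER_ENTRY = 7  # upper limit stated in step 5


def object_names_entry(system_names):
    """Join system names with semicolons for the object names box."""
    names = [name.strip() for name in system_names if name.strip()]
    if not 1 <= len(names) <= MAX_SYSTEMS_PER_ENTRY:
        raise ValueError("enter between one and seven system names")
    return ";".join(names)


# Hypothetical node names.
print(object_names_entry(["NODE2", "NODE3"]))
```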

Verifying Cluster Functionality

To verify cluster functionality, monitor the cluster network communications to ensure that your cluster components are

communicating properly with each other. Also, verify that MSCS is running on the cluster nodes.

Verifying MSCS Operation

After you install MSCS, verify that the service is operating properly.

1. Click the Start button and select Programs→ Administrative Tools, and then select Services.

2. In the Services window, verify the following:

In the Name column, Cluster Service appears.

In the Status column, Cluster Service is set to Started.

In the Startup Type column, Cluster Service is set to Automatic.
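The same two properties can also be read from a command prompt with the Windows sc utility (sc query for the current state and sc qc for the startup type). The following Python sketch parses that output. It assumes that the Cluster Service short name is ClusSvc and that the output uses the usual STATE and START_TYPE lines; the sample text below was written by hand to illustrate the layout, so confirm both assumptions on your own nodes.

```python
import re
import subprocess


def run_sc(*args):
    """Run the Windows sc utility and return its text output (Windows only)."""
    return subprocess.run(["sc", *args], capture_output=True, text=True).stdout


def service_state(sc_query_output):
    """Extract the state keyword (for example RUNNING) from sc query output."""
    match = re.search(r"STATE\s*:\s*\d+\s+(\w+)", sc_query_output)
    return match.group(1) if match else "UNKNOWN"


def start_type(sc_qc_output):
    """Extract the start type keyword (for example AUTO_START) from sc qc output."""
    match = re.search(r"START_TYPE\s*:\s*\d+\s+(\w+)", sc_qc_output)
    return match.group(1) if match else "UNKNOWN"


def cluster_service_ok(sc_query_output, sc_qc_output):
    """True if the service is running and set to start automatically."""
    return (service_state(sc_query_output) == "RUNNING"
            and start_type(sc_qc_output) == "AUTO_START")


# Hand-written samples of the assumed output layout.
SAMPLE_QUERY = """SERVICE_NAME: ClusSvc
        TYPE               : 10  WIN32_OWN_PROCESS
        STATE              : 4  RUNNING
"""
SAMPLE_QC = """SERVICE_NAME: ClusSvc
        TYPE               : 10  WIN32_OWN_PROCESS
        START_TYPE         : 2   AUTO_START
"""
print(cluster_service_ok(SAMPLE_QUERY, SAMPLE_QC))
```

On a cluster node, the live check would be cluster_service_ok(run_sc("query", "ClusSvc"), run_sc("qc", "ClusSvc")), run once on each node.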

Verifying Cluster Resource Availability

In the context of clustering, a resource is a basic unit of failover management. Application programs are made up of

resources that are grouped together for recovery purposes. All recovery groups, and therefore the resources that comprise

the recovery groups, must be online (or in a ready state) for the cluster to function properly.

To verify that the cluster resources are online:


1. Start Cluster Administrator on the monitoring node: click the Start button and select Programs→ Administrative Tools→

Cluster Administrator.

2. Open a connection to the cluster and observe the running state of each resource group. If a group has failed, one or

more of its resources might be offline.
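When a cluster has many groups, it can be convenient to record the states shown in Cluster Administrator and list only the groups that need attention. The following Python sketch works on a hand-entered dictionary; the group names, resource names, and state strings are hypothetical examples, and the sketch does not read anything from the cluster.

```python
def groups_needing_attention(groups):
    """Map each group to its resources that are not in the Online state."""
    report = {}
    for group, resources in groups.items():
        not_online = sorted(name for name, state in resources.items()
                            if state.lower() != "online")
        if not_online:
            report[group] = not_online
    return report


# Hypothetical states copied by hand from Cluster Administrator.
observed = {
    "Cluster Group": {"Cluster IP Address": "Online", "Cluster Name": "Online"},
    "Data Group 1": {"Disk G:": "Online", "File Share": "Offline"},
}
print(groups_needing_attention(observed))
```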

Configuring and Managing the Cluster Using Cluster Administrator

Cluster Administrator is Microsoft's tool for configuring and managing a cluster. The following procedures describe how to run

Cluster Administrator locally on a cluster node and how to install the tool on a remote console.

Launching Cluster Administrator on a Cluster Node

1. Click the Start button and select Programs.

2. Select Administrative Tools.

3. Select Cluster Administrator.

Troubleshooting Failed Resources

Troubleshooting the failed resources is beyond the scope of this document, but examining the properties of each resource and

ensuring that the specified parameters are correct are the first two steps in this process. In general, if a resource is offline, it

can be brought online by right-clicking the resource and selecting Bring Online from the drop-down menu.

Obtaining More Information

See the Windows Storage Server 2003, Enterprise Edition documentation and online help for information about

troubleshooting resource failures.

See Microsoft's online help for configuring MSCS.

See "Using MSCS" for more information about MSCS.

Managing Directory Quotas (Optional)

Directory Quota is an optional tool in the PowerVault NAS Manager that allows you to manage and control disk space

allocation on the server appliance. Using Directory Quota, you can add, delete, monitor, and change space limits for specific

directories on your cluster nodes. The administrator configures the Directory Quota settings in the PowerVault NAS

Manager, and these settings remain available and enforced in a failover scenario.

NOTE: Directory Quota monitors disk space for specific directories and does not monitor disk space for each individual

user. To enable quotas for each user, you must use Disk Quota.

In a cluster configuration, each cluster node can manage and configure Directory Quota for the volume(s) owned by the node.


For example, if a cluster has two volumes and each node owns one of the volumes, a typical scenario in an active/active

configuration (where virtual servers are running on each node) would be:

Node 1 owns Volume G.

Node 2 owns Volume H.

In this configuration, the administrator must use the PowerVault NAS Manager to connect to node 1 to configure the Directory