Cray Urika-GX Administration Manual

Urika®-GX System Administration Guide

(2.2.UP00)

S-3016

Contents


1 About the Urika®-GX System Administration Guide............................................................................................... 7

2 The Urika-GX System.............................................................................................................................................9

2.1 Administrative Components of Urika-GX...................................................................................................9

2.2 Network Components.............................................................................................................................. 10

2.3 File Systems............................................................................................................................................ 11

2.4 System Nodes......................................................................................................................................... 12

2.5 Restrictions on Use................................................................................................................................. 12

3 System Management............................................................................................................................................15

3.1 Check the Current Service Mode............................................................................................................ 15

3.2 Urika-GX Component Naming Conventions............................................................................................15

3.3 System Management Workstation (SMW)...............................................................................................17

3.3.1 Power On the System Management Workstation (SMW).......................................................... 17

3.3.2 About the Integrated Dell Remote Access Controller (iDRAC).................................................. 17

3.3.3 Control System Management Workstation (SMW) Power with the iDRAC8 Web Console........17

3.3.4 Synchronize the System Management Workstation (SMW) to the Site NTP Server..................20

3.3.5 Synchronize Time of Day on System Nodes..............................................................................21

3.3.6 Reboot a Stopped System Management Workstation (SMW)................................................... 22

3.4 Hardware Supervisory System (HSS)..................................................................................................... 22

3.4.1 Hardware Supervisory System (HSS) Architecture Overview....................................................24

3.4.2 The xtdiscover Command.......................................................................................................... 25

3.4.3 Hardware Supervisory System (HSS) Component Location Discovery..................................... 25

3.4.4 Hardware Supervisory System (HSS) Daemons........................................................................26

3.4.5 Hardware Supervisory System (HSS) Administration and Diagnostic Commands Supported on Urika-GX.......27

3.4.6 Hardware Supervisory System (HSS) Environments................................................................. 30

3.4.7 High Speed Network (HSN) Management................................................................................. 32

3.4.8 Create Direct Connection between the System Management Workstation (SMW) and a Compute Node Console.......32

3.4.9 Disable Hardware Components................................................................................................. 33

3.4.10 Enable Hardware Components................................................................................................ 33

3.4.11 Set Hardware Components to EMPTY...................................................................................... 34

3.4.12 Stop Components Using the Hardware Supervisory System (HSS)........................................ 34

3.4.13 Unlock Hardware Components................................................................................................ 35

3.4.14 Capture and Analyze System-level and Node-level Dumps.....................................................35

3.4.15 Collect Debug Information From Hung Nodes Using the xtnmi Command.............................. 36

S3016 2


3.4.16 Find Node Information..............................................................................................................36

3.4.17 Request and Display System Routing......................................................................................37

3.4.18 Initiate a Network Discovery Process.......................................................................................38

3.4.19 Power Up a Rack or Dual Aries Network Card (dANC)............................................................38

3.4.20 Check the Status of System Components................................................................................38

3.4.21 Check Compute Node High Speed Network (HSN) Connection..............................................39

3.4.22 Monitor the Health of PCIe Channels.......................................................................................39

3.4.23 Poll a Response from an HSS Daemon, Manager, or the Event Router..................................39

3.4.24 View Component Alert, Warning, and Location History............................................................40

3.4.25 Display Alerts and Warnings.................................................................................................... 40

3.4.26 Display Error Codes................................................................................................................. 40

3.4.27 Display Component State Information......................................................................................41

3.4.28 Clear Component Flags........................................................................................................... 41

3.4.29 Flash Management on Urika-GX..............................................................................................41

3.4.30 Create and Modify the authorized_keys File Using the xtcc-ssh-keys Command....... 42

3.4.31 Change the Passwords of RC, dANCCs and iSCB using the xtccpasswd Command.......... 42

3.4.32 Gather Troubleshooting Information Using the xtdumpsys Command.....................................43

3.5 Dual Aries Network Card (dANC) Management...................................................................................... 43

3.6 Analyze Node Memory Dump Using the kdump and crash Utilities on a Node.....................................44

3.7 Cray Lightweight Log Management (LLM) System................................................................................. 45

3.8 Urika-GX Node Power Management....................................................................................................... 45

3.9 Power Up the Urika-GX System.............................................................................................................. 46

3.10 Power Down the Urika-GX System....................................................................................................... 49

3.11 Urika-GX CLI Commands for Managing Services................................................................................. 51

3.12 Remote HDFS Remote Access and Multihoming on Urika-GX.............................................................54

3.13 Update the InfluxDB Data Retention Policy...........................................................................................54

3.14 Service to Node Mapping...................................................................................................................... 55

3.15 Image Management with Docker and Kubernetes................................................................................ 59

3.15.1 Execute Spark Jobs on Kubernetes......................................................................................... 60

3.15.2 Multi-tenant Spark Thrift Server on Urika-GX...........................................................................62

4 System Monitoring................................................................................................................................................ 65

4.1 System Monitoring Tools......................................................................................................................... 65

4.2 Monitor Resource Utilization and Node Status Using Nagios................................................................. 66

4.2.1 Configure SSL/TLS for Nagios Core.......................................................................................... 67

4.2.2 Configure the Nagios Server to Send Email Notifications.......................................................... 70

4.2.3 Change the Default Log File Path and Rotation Interval............................................................ 73

4.2.4 Configure Email Alerts................................................................................................................74

4.2.5 Modify Nagios Plug-in Threshold............................................................................................... 75


4.3 Get Started with Using Grafana...............................................................................................................77

4.4 Default Grafana Dashboards...................................................................................................................79

4.5 Update InfluxDB Security Settings.......................................................................................................... 90

4.6 Update the InfluxDB Data Retention Policy.............................................................................................91

4.7 Configuration Settings of Grafana........................................................................................................... 93

4.8 Change the Default Timezone Displayed on Grafana............................................................................. 93

4.9 Create a New Grafana Dashboard.......................................................................................................... 95

4.10 Add a New Graph to the Grafana Dashboard....................................................................................... 97

4.11 Start InfluxDB Before Hadoop Services.............................................................................................. 100

4.12 Monitor Subrack Attributes.................................................................................................................. 101

4.13 Analyze Node Memory Dump Using the kdump and crash Utilities on a Node.................................102

4.14 Retrieve System Status Information Using the urika-check-platform Command.................................103

4.15 iSCB Description................................................................................................................................. 104

4.15.1 Log on to the iSCB................................................................................................................. 104

4.15.2 iSCB Command Reference.................................................................................................... 105

5 Resource Management...................................................................................................................................... 124

5.1 Manage Resources on Urika-GX...........................................................................................................124

5.2 Use Apache Mesos on Urika-GX ..........................................................................................................126

5.2.1 Access the Apache Mesos Web UI.......................................................................................... 128

5.3 Use mrun to Retrieve Information About Marathon and Mesos Frameworks........................................129

5.4 Launch an HPC Job Using mrun........................................................................................................... 133

5.5 Manage Long Running Services Using Marathon................................................................................. 133

5.6 Manage the Spark Thrift Server as a Non-Admin User......................................................................... 136

5.7 Manage Jobs Using the Cray Application Management UI................................................................... 137

5.7.1 Overview of the Cray Application Management UI...................................................................138

6 Cray DVS............................................................................................................................................................140

6.1 Introduction to DVS............................................................................................................................... 140

6.1.1 Use Cray DVS on Urika-GX..................................................................................................... 141

6.1.2 DVS ioctl Interfaces..................................................................................................................141

6.1.3 DVS Client Mount Point Options.............................................................................................. 143

6.1.4 DVS Environment Variables..................................................................................................... 149

6.1.5 Modes.......................................................................................................................................150

6.1.6 Resiliency and Diagnostics...................................................................................................... 154

6.1.7 Caveats.................................................................................................................................... 157

6.1.8 Administrative Tasks.................................................................................................................158

7 Security...............................................................................................................................................................175

7.1 Authentication and Authorization...........................................................................................................175

7.2 Urika-GX Service Modes....................................................................................................................... 177


7.2.1 Modify the Service Mode..........................................................................................................180

7.2.2 User Interface Access in the Secure Service Mode................................................................. 181

7.3 Security Architecture Overview............................................................................................................. 181

7.4 Set up Passwordless SSH.....................................................................................................................182

7.5 Tenancy................................................................................................................................................. 183

7.5.1 Configure a Bridge Port............................................................................................................185

7.5.2 Tenant Management.................................................................................................................189

7.5.3 Tenant Virtual Machine States..................................................................................................195

7.5.4 Tenant Management CLI Commands.......................................................................................196

7.5.5 Execution of Lustre Sub-Commands Inside Tenant VMs......................................................... 198

7.5.6 Get Started with Tenant Management......................................................................................199

7.5.7 Multi-Tenancy........................................................................................................................... 202

7.5.8 Multi-tenant HDFS....................................................................................................................203

7.6 Authorized User Management...............................................................................................................205

7.7 Guidance on LDAP Forwarding.............................................................................................................208

7.8 Authentication Mechanisms...................................................................................................................216

7.9 Change Default Passwords................................................................................................................... 217

7.9.1 Default Urika-GX System Accounts......................................................................................... 219

7.9.2 Change the Default Nagios Password..................................................................................... 220

7.9.3 Change the Default iDRAC8 Password....................................................................................221

7.9.4 Change the Default System Management Workstation (SMW) Passwords.............................223

7.9.5 Change LDAP Password on Urika-GX.....................................................................................224

7.9.6 Reset a Forgotten Password for the Cray Application Management UI...................................224

7.9.7 Reset an Administrator LDAP Password on Systems Using Urika-GX 1.2UP01 and Earlier Releases.......225

7.9.8 Reset an Administrator LDAP Password when the OLC Schema Password is Unknown....... 226

7.9.9 Reset an Administrator LDAP Password when the OLC Schema Password is Known........... 228

7.10 Tableau Authorization and Authentication Mechanisms...................................................................... 229

7.11 Enable SSL..........................................................................................................................................229

7.12 Enable SSL for Spark Thrift Server of a Tenant.................................................................................. 234

7.13 Install a Trusted SSL Certificate on Urika-GX..................................................................................... 235

7.14 Enable LDAP Authentication on Urika-GX ......................................................................................... 236

7.14.1 Enable LDAP for Connecting Tableau to HiveServer2........................................................... 238

7.15 Enable SQL Standard based Authorization for HiveServer2...............................................................239

7.16 File System Permissions..................................................................................................................... 240

7.17 Urika-GX Security Quick Reference Information................................................................................. 240

7.18 Port Assignments................................................................................................................................ 241

8 Troubleshooting.................................................................................................................................................. 245


8.1 System Management Log File Locations.............................................................................................. 245

8.2 Default Log Settings.............................................................................................................................. 246

8.3 Analytic Applications Log File Locations............................................................................................... 248

8.4 Security Related Troubleshooting Information.......................................................................................250

8.4.1 Save and Restore Tenant Information......................................................................................254

8.4.2 LDAP Server Start-up Issues................................................................................................... 256

8.5 Modify the Secret of a Mesos Framework............................................................................................. 256

8.6 Clean Up Log Data................................................................................................................................ 257

8.7 Diagnose and Troubleshoot Orphaned Mesos Tasks............................................................................258

8.8 Troubleshoot Common Analytic and System Management Issues ...................................................... 259

8.9 Troubleshoot mrun Issues.....................................................................................................................268

8.10 Troubleshoot: Application Hangs as a Result of NFS File Locking..................................................... 270

8.11 Troubleshoot: DVS does not Start after Data Store Move...................................................................270

8.12 Troubleshoot: DVS Ignores User Environment Variables....................................................................271

8.13 Clear Leftover hugetlbf Files................................................................................................................271

8.14 Remove Temporary Spark Files from SSDs........................................................................................271


1 About the Urika®-GX System Administration Guide

This publication contains administrative information about using the Cray® Urika®-GX system.


Typographic Conventions

Monospace - Indicates program code, reserved words, library functions, command-line prompts, screen output, file/path names, key strokes (e.g., Enter and Alt-Ctrl-F), and other software constructs.

Monospaced Bold - Indicates commands that must be entered on a command line or in response to an interactive prompt.

Oblique or Italics - Indicates user-supplied values in commands or syntax definitions.

Proportional Bold - Indicates a graphical user interface window or element.

\ (backslash) - At the end of a command line, indicates the Linux® shell line continuation character (lines joined by a backslash are parsed as a single line). Do not type anything after the backslash or the continuation feature will not work correctly.
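The backslash rule above can be modeled in a few lines. The following is a minimal Python sketch, not part of any Urika-GX tool. It approximates what the shell does when it removes a backslash-newline pair: physical lines are joined only when the backslash is the very last character. The function name `join_continuations` and the `mycmd` command are hypothetical, and shell quoting and escaping rules are deliberately not modeled.

```python
from typing import List


def join_continuations(lines: List[str]) -> List[str]:
    """Join physical lines ending in a backslash into single logical lines.

    A backslash counts as a continuation only when it is the very last
    character of the line. Anything after it (even a space) breaks the join.
    Quoting and escaping rules of a real shell are not modeled.
    """
    logical: List[str] = []
    pending = ""
    for line in lines:
        if line.endswith("\\"):
            pending += line[:-1]  # drop the backslash and keep accumulating
        else:
            logical.append(pending + line)
            pending = ""
    if pending:
        logical.append(pending)  # input ended on a dangling continuation
    return logical


if __name__ == "__main__":
    ok = ["mycmd --first-option value \\", "--second-option value"]
    broken = ["mycmd --first-option value \\ ", "--second-option value"]
    print(join_continuations(ok))      # one logical line
    print(join_continuations(broken))  # two lines: a space follows the backslash
```

The second case shows why nothing may be typed after the backslash. A single trailing space turns the backslash into an ordinary character, so the command is split in two.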

Scope and Audience

The audience of this publication is system administrators of the Urika®-GX system. This publication is not

intended to provide detailed information about open source products used in the system. References to online

documentation are included where applicable.

Record of Revision

Date               Addressed Release
September, 2018    2.2UP00
May, 2018          2.1UP00
December, 2017     2.0UP00
April, 2017        1.2UP00
December, 2016     1.1UP00
August, 2016       1.0UP00
March, 2016        0.5UP00

This revision includes updates to Tableau related topics.


Trademarks

The following are trademarks of Cray Inc. and are registered in the United States and other countries: CRAY and

design, SONEXION, Urika-GX, Urika-XA, Urika-GD, and YARCDATA. The following are trademarks of Cray Inc.:

APPRENTICE2, CHAPEL, CLUSTER CONNECT, CRAYDOC, CRAYPAT, CRAYPORT, DATAWARP, ECOPHLEX,

LIBSCI, NODEKARE. The following system family marks, and associated model number marks, are trademarks

of Cray Inc.: CS, CX, XC, XE, XK, XMT, and XT. The registered trademark LINUX is used pursuant to a

sublicense from LMI, the exclusive licensee of Linus Torvalds, owner of the mark on a worldwide basis. Other

trademarks used in this document are the property of their respective owners.


2 The Urika-GX System

The Urika-GX system is a big data analytics platform optimized for analytic workflows. It combines a highly

advanced hardware platform with a comprehensive analytic software stack to help derive optimal business value

from data. The Urika-GX platform provides the tools required for capturing and organizing a wide variety of data

types from different sources and enables analyzing big data and discovering hidden relationships.

The Urika-GX system also features a number of workload management tools as well as an optimized system

administration tool for performing monitoring and management tasks.

For a list of features of the Urika-GX system, see S-3017, "Urika®-GX System Overview".

2.1 Administrative Components of Urika-GX

Urika-GX platforms have been developed by tightly integrating commodity hardware components, open-source software, and Cray proprietary hardware to provide users with a high-performance, scalable, and open compute platform.

Major administrative components of Urika-GX include:

● System Management Workstation (SMW) - The SMW is a server that acts as a single-point interface to a system administrator's environment. It provides an interface for performing administrative and monitoring tasks.

○ Hardware Supervisory System (HSS) - HSS is an integrated system of hardware and software

components that are used for managing and monitoring the system.

○ Cobbler - Cobbler is used on Urika-GX for provisioning and deployment.

● Rack Controller (RC) - The RC monitors the environmental sensors within the rack and manages

communication between the SMW and other physical system components, including the rack, sub-rack and

dANC (Dual Aries Network Card).

● Intelligent Subrack Control Board (iSCB) - The iSCB status command can be used to monitor the physical

attributes of the sub-rack, such as the power supply, amperage, fan status, and temperature.

● Aries Network Card Controller (ANCC) - Each sub-rack chassis of the Urika-GX system contains two

dANCs (dual Aries Network Cards). Each dANC contains 2 Aries chips, an Advanced RISC Machines (ARM)

processor, and a number of environmental sensors to help monitor the system.

● Integrated Dell Remote Access Controller (iDRAC) - The iDRAC is a hardware component that provides advanced, agentless system management functionality for the SMW. It operates independently of the SMW's CPU and operating system. The version of iDRAC used on the Urika-GX system is iDRAC8.

● System Monitoring and Performance Analysis Tools - Urika-GX ships with Grafana and Nagios. These

tools enable monitoring system resources and viewing performance statistics of various system components.

For more information, see S-3015, "Urika®-GX Analytic Applications Guide".


● Data Analytic Components - Urika-GX features a number of data analytic tools that help perform analytic tasks, including managing and monitoring clusters, executing Hadoop and Spark jobs, and performing graph analytics. For more information, see S-3015, "Urika®-GX Analytic Applications Guide" and S-3010, "Cray™ Graph Engine User Guide".

● Security and Tenant Management Tools - Secret files used on the system are managed by the Urika-GX

Secret Manager. Tenancy is implemented through the use of a tenant VM that runs on physical nodes and

provides controlled access to services on the physical nodes through a command proxy mechanism. For

more information, refer to Urika-GX Service Modes on page 177 and Tenancy on page 183.

NOTE: Only Spark and HDFS commands can be executed within a tenant VM in this release. Commands for flexing the cluster, mrun commands, and Cray Graph Engine (CGE) CLI commands cannot be executed within a tenant VM.

In addition, Urika-GX features a number of CLI scripts that facilitate managing and monitoring the system.

2.2 Network Components

Three networks are deployed on the Urika®-GX platform:

● Aries High Speed Network (HSN) - The Aries HSN provides high-speed application and data network connectivity between nodes. This network provides node interconnect via high-bandwidth, low-latency DMA access. The hardware that supports this network consists of an Aries Interface Board (AIB), which is connected to an available PCIe slot on each Urika-GX node and integrated into the node chassis assembly. The AIB is connected to the dANC integrated in the Urika-GX sub-rack. Copper cables provide an all-to-all connection of all dANCs in the system.

● Operational Ethernet network - The operational Ethernet network is used for ingesting user data. This network consists of a single 48-port GigE switch unit that provides dual 1GigE and/or dual 10GigE interfaces to the site network. Urika-GX's login nodes do not route through this switch and need to be directly connected to the site network. The operational network provides external connectivity from Urika-GX nodes to the site network. The Urika-GX compute and I/O nodes are connected to a single managed Brocade ICX 6450-48, 48-port switch with a single power supply. Connectivity of this network to the site network is provided by two available Gigabit Ethernet ports and/or two 10 Gigabit Ethernet ports on the ICX 6450-48 switch.

The operational network can also be used to access data streaming applications and services directly from compute nodes.

● Management Ethernet network - The management Ethernet network is primarily used for system management, not for user data. It consists of two stacked 1U 48-port switches, which are located at the top of the Urika-GX rack and can optionally contain redundant switch power supplies. These switches provide GigE management Ethernet connectivity to every node, the System Management Workstation (SMW), the Rack Controller (RC), the Intelligent Subrack Control Board (iSCB), the Power Distribution Units (PDUs), the Dual Aries Network Cards (dANCs), and the operational network that connects to the nodes.

The Urika-GX system also contains the following subnets:

○ SMW subnet, which provides connectivity to the SMW and the RC.

○ Rack subnet, which provides connectivity to the dANCs and iSCB module.

This network is supported by two managed Brocade ICX 6450-48, 48-port switches stacked together with two 10GigE optical interconnects. Each switch contains a single power supply and can optionally contain redundant switch power supplies. The following VLANs are defined for this network to support management network traffic:

○ VLAN 102 - Uses ports 1-5 on each ICX 6450-48 switch. These are dual-mode ports: untagged traffic on these ports belongs to VLAN 102, and traffic can also be tagged for VLAN 103. The SMW HSS interface, the RC for a given rack, and the PDUs for a given rack are connected to these ports.

○ VLAN 103 - Uses ports 6-12 on each ICX 6450-48 switch. Untagged traffic on these ports belongs to VLAN 103. The iSCBs and dANC cards are connected to these ports.

○ VLAN 104 - Uses ports 13-48 on each ICX 6450-48 switch. Untagged traffic on these ports belongs to VLAN 104. The compute nodes and the SMW node-side network are connected to these ports.

NOTE: The number of ports on this VLAN may be reduced if VLAN 105 is needed for storage, as long as each compute node remains connected to VLAN 104.

○ VLAN 105 Some number of Ports 13-48 on each ICX 6450-48 switch, as needed for storage

management. Untagged traffic on these ports belongs to VLAN 105. The Storage Management Ports are

connected to these ports.

○ VLAN 1 (default) is unused.

Traffic from the SMW to the subcomponents in the rack subnet, and vice versa, is routed through the corresponding RC.

For additional information, see the Urika®-GX Hardware Guide.
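The port-to-VLAN assignments described above can be summarized as a lookup, which may help when auditing cabling. The following is a minimal bash sketch, not a Cray tool: the function name untagged_vlan_for_port is hypothetical, and STORAGE_PORTS (the ports moved from VLAN 104 to VLAN 105) is an assumed example value, since that assignment is site-specific.

```bash
#!/usr/bin/env bash
# Hypothetical example: ports 47 and 48 have been moved to VLAN 105 for
# storage management. The real assignment is site-specific.
STORAGE_PORTS=(47 48)

# Print the VLAN that untagged traffic on a given ICX 6450-48 port belongs to.
# Usage: untagged_vlan_for_port PORT
untagged_vlan_for_port() {
    local port=$1 p
    if (( port >= 1 && port <= 5 )); then
        echo 102    # SMW HSS interface, RC, and PDUs (VLAN 103 may be tagged)
    elif (( port >= 6 && port <= 12 )); then
        echo 103    # iSCBs and dANC cards
    elif (( port >= 13 && port <= 48 )); then
        for p in "${STORAGE_PORTS[@]}"; do
            if (( p == port )); then
                echo 105    # storage management ports
                return 0
            fi
        done
        echo 104    # compute nodes and the SMW node-side network
    else
        echo "port $port is not in the range 1-48" >&2
        return 1
    fi
}
```

For example, untagged_vlan_for_port 20 prints 104, and with the example STORAGE_PORTS value, untagged_vlan_for_port 47 prints 105. Port numbers refer to each switch in the stack individually.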

2.3 File Systems

Supported file system types on Urika-GX include:

● Internal file systems

○ Hadoop Distributed File System (HDFS) - Hadoop uses HDFS for storing data. HDFS is highly fault-tolerant, provides high-throughput access to application data, and is suitable for applications that have large data sets. Urika-GX also features tiered HDFS storage. HDFS data is transferred over the Aries network.

○ Network File System (NFS) - The Urika-GX SMW hosts NFS, which is made available to every node via the management network.

○ /mnt/lustre - This directory hosts Lustre file system data if DAL/Sonexion is used.

CAUTION: Avoid using NFS for high-volume data transfers and/or large writes, as this will cause the network to operate much more slowly or to time out. NFS, as configured for Urika-GX home directories, is not capable of handling large parallel writes from multiple nodes without data loss. Though it is possible to configure NFS to handle parallel writes, doing so would require a hard mount, which would have undesired consequences.

File Locations

● Home directories are mounted on (internal) NFS, with limited space.

● The distributed file system (Lustre), if provisioned, is mounted at /mnt/lustre and is suitable for larger files. Lustre mounts are isolated, with individual tenants having their own mount point.

2.4 System Nodes

Each Urika-GX node is a logical grouping of a processor, memory, and a data routing resource. Nodes can be categorized as compute, I/O, service, and login nodes.

Table 1. Node Types and Descriptions

Node Type | Description
--- | ---
Compute nodes | Compute nodes run application programs.
I/O nodes | I/O nodes facilitate connecting to the supported external storage system.
Login nodes | Users log in to the Urika-GX system via login nodes and virtual machines (VMs). Login nodes store users' local files and facilitate launching jobs from the command line. They also offer the environment for users to build, compile, and monitor analytics applications.
Service nodes | Service nodes handle support functions such as user login and I/O.

All Urika-GX nodes run the CentOS operating system (version 7.3) as well as portions of the Cray Linux Environment (CLE).

2.5 Restrictions on Use

Hardware Considerations

The following items should be kept under consideration when using Urika-GX hardware:

● High-speed network/management network switches must not be modified, as this network is internal to Urika-GX.

● Moving the system from the Cray-supplied rack to customer-provided racks is not supported.

● Sub-rack and SMW hardware configuration must not be changed.

● PCIe devices should not be modified.

● Hardware and drivers installed on the SMW and nodes should not be modified.

● PDUs installed on the system should not be replaced.

Contact Cray Support if nodes need to be swapped between slots. The following options are supported:

● Connecting to the internal PDU power switches.

● Changing the host names of login nodes and the SMW.

● The single top-of-rack switch used for the operational network may be modified to meet site-specific needs. This switch is expected to be used to enable a direct connection from the site network to the compute and I/O nodes to support data ingestion and streaming analytics. This network may be modified to reflect site-specific IP addresses and node names that would be directly exposed to the site network. For information on how to configure the operational network, contact Cray Support.

● The available space in the rack can be used for additional hardware; however, proper power and cooling for that hardware must be ensured.

Contact Cray Support for information related to:

● Optionally switching to higher bandwidth NICs on the login nodes or SMW connections to the site network.

● Changing the internal range of Cray's IP addresses in case there is a conflict.

Software Considerations

The following items should be kept under consideration when using Urika-GX software:

● Spark shells using Kubernetes (i.e., those launched under the secure service mode) are limited to 16 cores and 60 GiB of memory, and these limits cannot be overridden at the command line. This is due to the lack of native Spark shell support in the Spark on Kubernetes project, a limitation for which Cray has provided a workaround in this release.

● Modifying the iSCB firmware is not supported.

● Modifying switch firmware (both Ethernet/InfiniBand) is not supported.

● Modifying node BIOS settings is not supported.

● Modifying the kernel and/or kernel modules is not supported.

● Deleting any factory installed software is not supported.

● Changing the default configurations of Mesos, Marathon, mrun, and Grafana is not supported.

● Launching Docker containers through Docker commands is not supported. Users must use the Marathon interface to launch containers. For more information, refer to S-3015, "Urika®-GX Analytic Applications Guide".

● Building and managing new Docker images is currently not supported on Urika-GX. For more information, contact Cray Support.

Before installing any additional software on the Urika-GX system, open a ticket with Cray Support to verify that the software will have no impact on the system. The following options are supported:

● Adding CentOS 7 packages that do not cause dependency issues with the Cray-installed software. Only Cray-provided Linux updates and YUM repositories should be used.

● Installing additional HDP 2.6.1.0-129 compliant packages and modifying these packages for integration into the existing software stack. This applies in the default service mode for HDP-related items, except Spark. For more information, refer to Urika-GX Service Modes on page 177.

● Tuning Hadoop and Spark configuration parameters listed in section "Tunable Hadoop and Spark Configuration Parameters" of S-3015, "Urika®-GX Analytic Applications Guide".

NOTE: Contact Cray Support if it is required to modify additional software configurations.

Security Considerations

If the Urika-GX system is running in the secure mode in production, Cray does not recommend toggling back to the default mode while in production. In the default mode, the security assurances provided by secure mode are not in place, and the security of data that was protected by secure mode may be compromised while running in the default mode. Cray cannot extend the secure mode security assurances to any system that has run in a production state in the default mode until that system has been fully re-deployed.

The following actions are not supported:

● Changing the default set of Kubernetes and Kerberos configurations without consulting Cray Support, as doing so can adversely affect the functionality of the system in the secure service mode.

● Enabling the PermitUserEnvironment option in sshd_config(5) or passing environment variables beyond those listed on the ssh(1) manual page.

● Changing any settings listed in /etc/environment into login sessions on Urika-GX physical nodes outside of the system through the login mechanism.

● Modifying the list of whitelisted commands. For a list of commands that are part of the whitelist, see Tenancy on page 183.

● Tenant NameNode configuration is managed automatically by the Urika-GX tenant management scripts. Manually altering the configuration of the tenant NameNode is not supported.

● Tenant names may only contain:

○ the letters a-z

○ the numbers 0-9

○ the characters '-' and '.'

NOTE: The name 'default' is reserved for the sample tenant configuration and cannot be used as a tenant name.

● The following items need to be kept in mind while using the ux-tenant-alter-vm command:

○ Number of CPUs: At least 2 CPUs need to remain available when the number of CPUs is changed by this command. That is, if there are N CPUs, a maximum of N-2 CPUs can be assigned to a VM.

○ Amount of memory: At least 50% of the memory must remain available after a VM has been assigned memory using this command.
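The tenant naming rules and the ux-tenant-alter-vm limits above can be checked before a command is run. The following bash sketch is illustrative only: is_valid_tenant_name and check_vm_request are hypothetical helpers, not part of the Urika-GX tenant management scripts. check_vm_request assumes a single VM per node and memory values given in one consistent unit, and the Urika-GX scripts themselves remain the authority on what they accept.

```bash
#!/usr/bin/env bash
# Succeed if the argument is a permitted tenant name: only the characters
# a-z, 0-9, '-' and '.', and not the reserved name "default".
is_valid_tenant_name() {
    local name=$1
    # Listing the letters explicitly avoids locale-dependent [a-z] ranges.
    local re='^[abcdefghijklmnopqrstuvwxyz0123456789.-]+$'
    [[ $name != default ]] || return 1
    [[ $name =~ $re ]]
}

# Succeed if a VM request leaves at least 2 CPUs and at least 50% of memory
# available on the node.
# Usage: check_vm_request TOTAL_CPUS REQ_CPUS TOTAL_MEM REQ_MEM
check_vm_request() {
    local total_cpus=$1 req_cpus=$2 total_mem=$3 req_mem=$4
    # With N CPUs, at most N-2 can be assigned to the VM.
    if (( req_cpus > total_cpus - 2 )); then
        echo "requested $req_cpus CPUs; at most $(( total_cpus - 2 )) allowed" >&2
        return 1
    fi
    # At least half the memory must remain: 2 * requested <= total.
    if (( 2 * req_mem > total_mem )); then
        echo "requested memory leaves less than 50% of $total_mem free" >&2
        return 1
    fi
    return 0
}
```

For example, on a node with 32 CPUs and 256 GiB of memory, check_vm_request 32 30 256 128 succeeds, while check_vm_request 32 31 256 128 fails because at most 30 CPUs may be assigned.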

3 System Management

3.1 Check the Current Service Mode

Prerequisites

This procedure requires root privileges on the SMW.

About this task

Urika-GX supports two service modes, which dictate the list of services available. These modes include:

● Default

● Secure

Use the following instructions to determine the service mode the system is currently running in.

Procedure

1. Log on to the SMW as root.

# ssh root@hostname-smw

2. Display the current service mode by using one of the following options:

● Execute the urika-state command. This displays the current service mode as well as the status of all the services that are supported in that mode.

● Execute the urika-service-mode command.

# urika-service-mode

Current mode is: default

For more information, refer to the urika-service-mode and urika-state man pages.
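Scripts that behave differently in the two modes can read the mode from the command output shown above. A minimal sketch, assuming the output line keeps exactly the format shown in the example; parse_service_mode is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Print the mode name from urika-service-mode output, read from standard
# input. The expected line looks like: "Current mode is: default".
parse_service_mode() {
    sed -n 's/^Current mode is: *//p'
}
```

On the SMW, the result of urika-service-mode | parse_service_mode can then be compared against default or secure in a script. If the output format differs from the example, the helper prints nothing.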

3.2

The following table contains the component naming format for Urika®-GX systems:

Urika-GX Component Naming Conventions

S3016 15

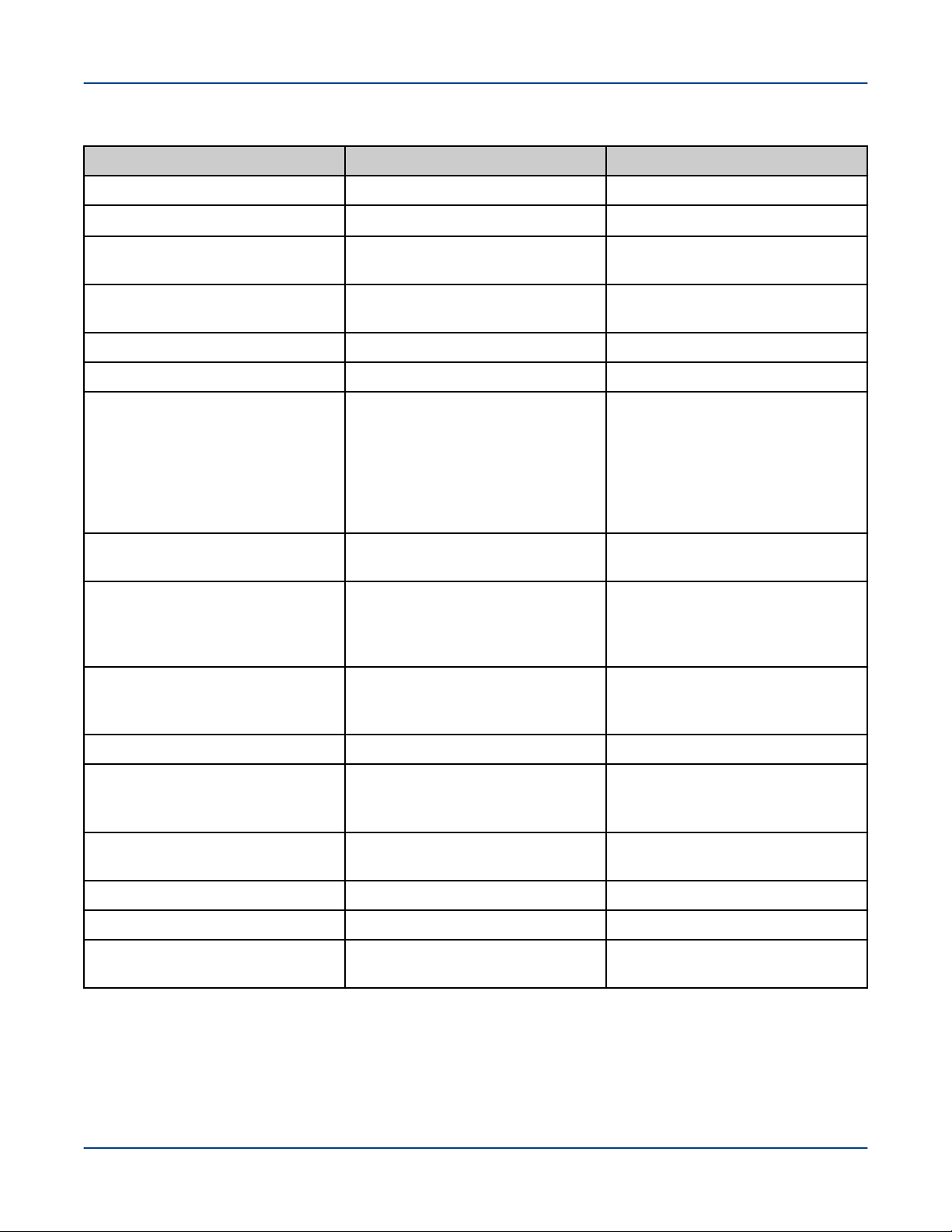

Table 2. Urika-GX Component Naming Conventions

Component/Subject Naming Pattern Range

System Management

SMW

Wild card, similar to s0.

Wild card, which refers to all the

compute nodes

Wild card, which refers to all the

service nodes

Machine partition

Rack

Sub-rack. There are up to 4 subracks per rack. Each sub-rack

contains up to 2 dual Aries Network

Card (dANC) cards, up to 16

compute nodes, and up to 2

Intelligent Subrack Control Boards

(iSCBs)

Intelligent Subrack Control Board

(iSCB)

Dual Aries Network Card (dANC).

There are up to 2 dANCs per subrack, accommodating up to 16

nodes

s0

all

all_comp

all_serv

pP p0

rR R:0 to 161

rRsS S:0 to 3

rRsSiI I:0 to 1

rRsScC C:0 to 1

N/A

N/A

N/A

N/A

High Speed Network (HSN) cable.

The "j" name is visible on the cable

connector face plate

Aries ASIC within a dANC card

Aries link control block within an

Aries ASIC.

Network Interface Controller (NIC)

within an Aries ASIC

Node within a dANC card

Accelerator within a node

Board Management Control (BMC)

within a node

Tenant Naming Conventions

Tenant names may only contain:

● the letters a-z

rRsScCjJ J:0-15

rRsScCaA A:0 to 1

rRsScCaAlRC R:0 to 5

C:0 to 7

rRsScCaAnN N:0 to 3

rRsScCnN N:0 to 7

rRsScCnNaA A:0 to 7

rRsScCnNbB B:0

S3016 16

System Management

● the numbers 0-9

● the characters '-' and '.

NOTE: The name default is reserved for the sample tenant configuration and cannot be used as a

tenant name.
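Because the names in Table 2 are hierarchical, a name can be decoded field by field. The bash sketch below handles only node names (the rRsScCnN pattern) and enforces the ranges listed in the table; parse_node_cname is a hypothetical helper and not an HSS tool.

```bash
#!/usr/bin/env bash
# Split a node name of the form rRsScCnN (Table 2) into its fields.
# Ranges enforced: R 0-161, S 0-3, C 0-1, N 0-7.
# Usage: parse_node_cname NAME
parse_node_cname() {
    local re='^r([0-9]+)s([0-3])c([01])n([0-7])$'
    [[ $1 =~ $re ]] || return 1
    local rack=${BASH_REMATCH[1]} subrack=${BASH_REMATCH[2]}
    local danc=${BASH_REMATCH[3]} node=${BASH_REMATCH[4]}
    # Force base 10 so that a value such as "08" is not read as octal.
    (( 10#$rack <= 161 )) || return 1
    printf 'rack=%s subrack=%s danc=%s node=%s\n' \
        "$rack" "$subrack" "$danc" "$node"
}
```

For example, parse_node_cname r0s1c0n3 prints rack=0 subrack=1 danc=0 node=3, while r0s4c0n3 is rejected because sub-rack numbers only run from 0 to 3.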

3.3 System Management Workstation (SMW)

The System Management Workstation (SMW) is the system administrator's console for managing a Cray system.

The SMW is a server that runs the CentOS (version 7.3) operating system, Cray-developed software, and third-party software. The SMW is also a point of control for the Hardware Supervisory System (HSS). The HSS data is stored on an internal hard drive of the SMW.

The SMW provides shell and web access to authorized users to perform administrative and monitoring tasks. The Nagios Core service also runs on the SMW.

Most system logs are collected and stored on the SMW. The SMW plays no role in computation after the system is booted. From the SMW, an administrator can initiate the boot process, access the database that keeps track of system hardware, analyze log messages, and perform standard administrative tasks.

CAUTION: The SMW is a critical system component, which facilitates the operation of other hardware and software components. Therefore, it is important that all instructions in this publication be followed before making any changes or reconfigurations to the SMW, as well as before restarting the SMW.

3.3.1 Power On the System Management Workstation (SMW)

The SMW can be turned on by:

● Physically turning the SMW on via the power button.

● Using the iDRAC.

CAUTION: The SMW is a critical system component, which facilitates the operation of other hardware and software components. Therefore, it is important that all instructions in this publication be followed before making any changes or reconfigurations to the SMW, as well as before restarting the SMW.

3.3.2 About the Integrated Dell Remote Access Controller (iDRAC)

The iDRAC is a systems management hardware and software solution that provides remote management capabilities, crashed system recovery, and power control functions for the System Management Workstation (SMW). The iDRAC alerts administrators to server issues, helps them perform remote server management, and reduces the need for physical access to the server. The iDRAC also facilitates inventory management and monitoring, deployment, and troubleshooting. To help diagnose the probable cause of a system crash, the iDRAC can log event data and capture an image of the screen when it detects that the system has crashed.

For more information about the iDRAC, refer to the online documentation at http://www.dell.com.

3.3.3 Control System Management Workstation (SMW) Power with the iDRAC8 Web Console

Prerequisites

Ensure that the SMW is up and running.

About this task

Use the iDRAC's web console to start up and shut down the System Management Workstation (SMW).

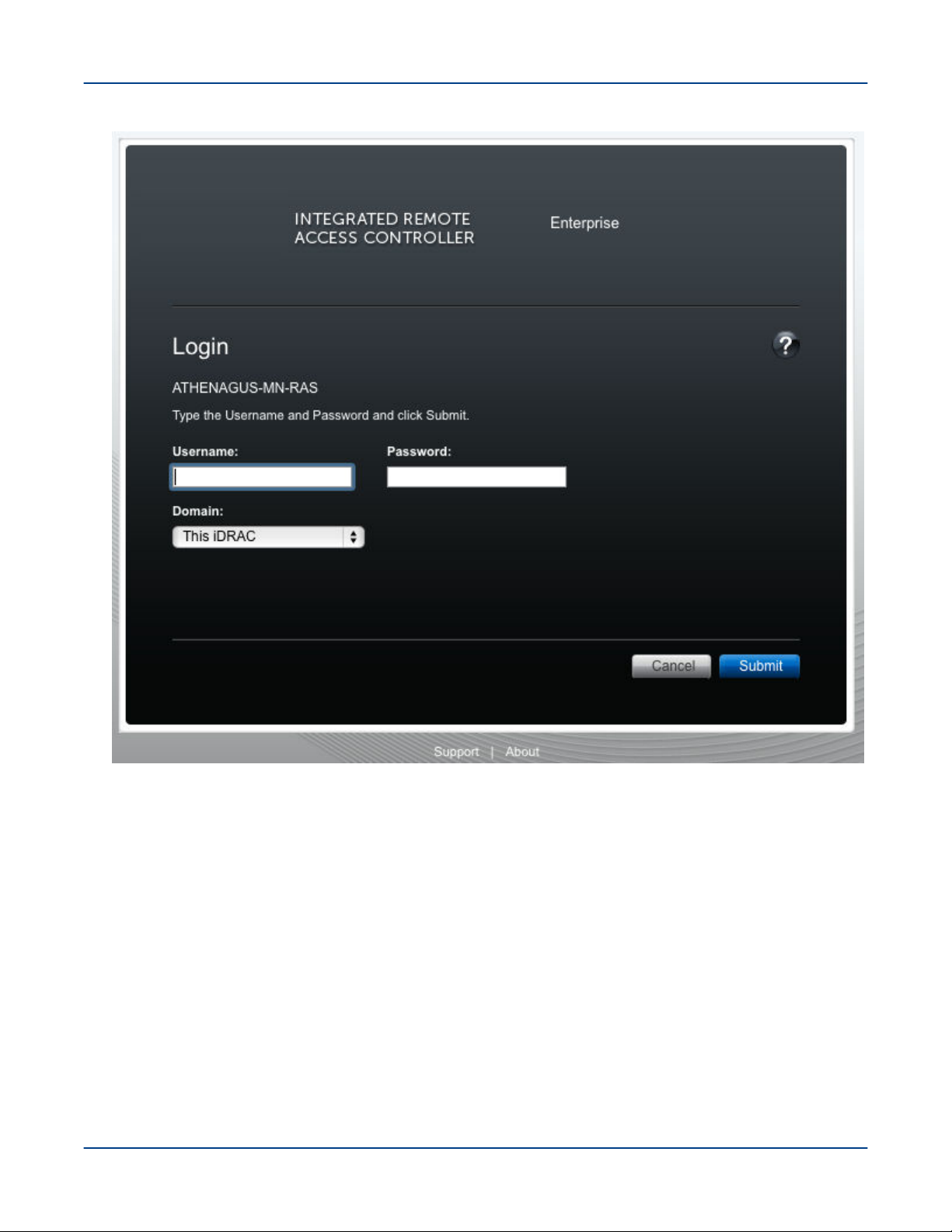

Procedure

1. Point a browser to the site-specific iDRAC IP address, such as https://system-smw-ras

The iDRAC console's login screen appears.

2. Enter root and initial0 in the Username and Password fields respectively. These are the default

credentials that should only be used if the default credentials have not been changed.

S3016 18

Figure 1. iDRAC Login Screen

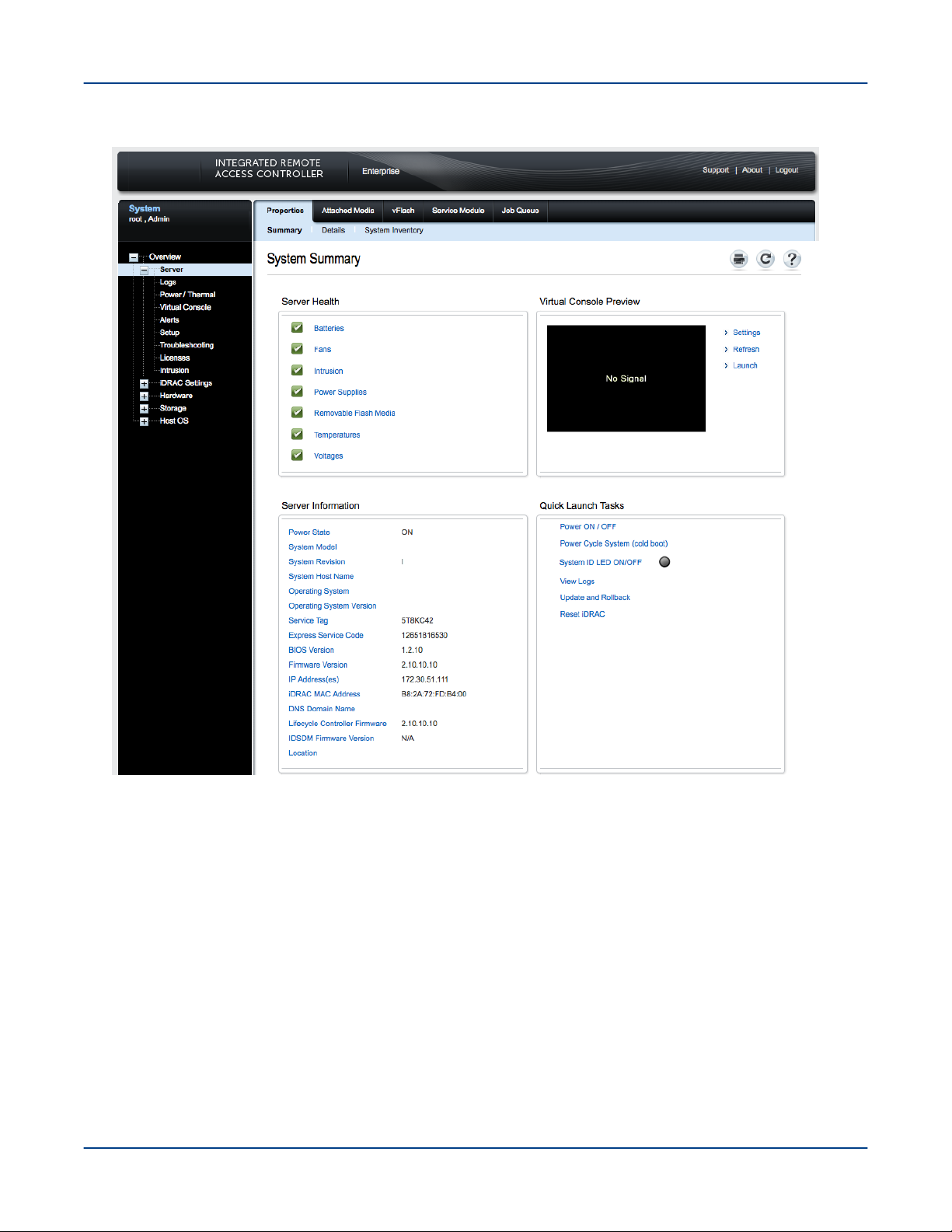

System Management

3. On the Quick Launch Tasks section of the iDRAC UI, click on Power ON/ OFF link to control the SMW's

power.

Figure 2. iDRAC Console

For more information about the iDRAC, visit http://www.dell.com.

3.3.4 Synchronize the System Management Workstation (SMW) to the Site NTP Server

Prerequisites

This procedure requires root privileges.

About this task

The components of the Cray system synchronize time with the System Management Workstation (SMW) through the Network Time Protocol (NTP). By default, the SMW's NTP configuration is stand-alone; however, the SMW can optionally be configured to synchronize with a site NTP server. Follow this procedure to configure the SMW to synchronize to a site NTP server.

Procedure

1. Log on to the SMW as root.

2. Stop the NTP server by issuing the systemctl stop ntpd command.

smw:~ # systemctl stop ntpd

3. Edit the /etc/ntp.conf file on the SMW to point to the new server.

4. Update the clock from the site NTP server (timeserver in the following example):

smw:~ # ntpdate timeserver

5. Restart the NTP server by issuing the systemctl start ntpd command:

smw:~ # systemctl start ntpd

The SMW can continue to update the rest of the system by proxy. By default, the SMW qualifies as a stratum 3 (local) NTP server. For more information about NTP, refer to the Linux documentation.

6. Synchronize the hardware clock:

smw:~ # hwclock --systohc

7. Verify that the ntpq -p output reports a jitter value for the NTP server, which indicates that the SMW is receiving time samples from it:

smw:~ # ntpq -p
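Step 7 can also be checked non-interactively. In ntpq -p output, an asterisk in the first column marks the peer that ntpd has selected for synchronization. A minimal sketch, assuming the standard two-line ntpq header; ntp_has_system_peer is a hypothetical helper, and a freshly restarted ntpd may need several poll intervals before any peer is selected.

```bash
#!/usr/bin/env bash
# Succeed if `ntpq -p` output (read from standard input) lists a selected
# system peer, i.e. a peer line whose tally code in column 1 is '*'.
ntp_has_system_peer() {
    # NR > 2 skips the column header and the "=====" separator line.
    awk 'NR > 2 && substr($0, 1, 1) == "*" { found = 1 } END { exit !found }'
}
```

On the SMW, ntpq -p | ntp_has_system_peer returns success once a peer has been selected, so it can gate later steps in a script.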

3.3.5 Synchronize Time of Day on System Nodes

Prerequisites

This procedure needs to be carried out as root.

About this task

Follow this procedure to configure Urika-GX compute nodes to synchronize to a site NTP server. This procedure is specific to a 48-node system.

Procedure

1. Stop the NTP server on the nodes by issuing the systemctl stop ntpd command:

# pdsh -w nid000[00-47] "systemctl stop ntpd"

2. Edit the /etc/ntp.conf file on the SMW to point to the new server.

3. Update the clocks on the nodes from the SMW:

# pdsh -w nid000[00-47] "ntpdate -s smw"

4. Synchronize the hardware clocks:

# pdsh -w nid000[00-47] "hwclock --systohc"

5. Restart the NTP server on the nodes by issuing the systemctl start ntpd command:

# pdsh -w nid000[00-47] "systemctl start ntpd"
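The procedure above is written for a 48-node system, where the pdsh host list nid000[00-47] expands to nid00000 through nid00047. For other system sizes, the list can be generated rather than edited by hand. A minimal sketch, assuming nodes are numbered contiguously from nid00000; nid_hostnames is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Print the node host names nid00000 .. nid<count-1>, one per line, using
# the five-digit zero-padded format seen in nid000[00-47].
# Usage: nid_hostnames COUNT
nid_hostnames() {
    local count=$1 i
    for (( i = 0; i < count; i++ )); do
        printf 'nid%05d\n' "$i"
    done
}
```

pdsh -w accepts a comma-separated host list, so on a hypothetical 32-node system the first step could read: pdsh -w "$(nid_hostnames 32 | paste -sd, -)" "systemctl stop ntpd".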

3.3.6 Reboot a Stopped System Management Workstation (SMW)

About this task

The SMW is an integral player in monitoring and maintaining optimal High Speed Network (HSN) traffic. If the SMW is down or being rebooted (i.e., not fully working), the Aries Network Card Controllers (ANCCs) automatically throttle the high-speed network because they no longer receive SMW heartbeats. This is done to prevent possible network congestion, because responding to such congestion normally requires the SMW to be up. Once the SMW is up again, the ANCCs unthrottle the network. (No attempt is made to prevent loss of data or to carry out operations that occur when the SMW is offline.) The consequence of throttling is that the network performs much more slowly than normal.

When the SMW comes up, it restarts, establishes communications with all external interfaces, restores the proper state in the state manager, and continues normal operation without user intervention.

For a scheduled or unscheduled shutdown and reboot of the SMW, it is necessary to have a backup of configuration files so that if one or more of the files in use becomes corrupted, a clean set of files is available with which to reboot.

Procedure

1. At the time of a graceful SMW shutdown, ensure that no resiliency actions are taking place (by executing tail -f /var/opt/cray/log/p0-default/nlrd-YYYYMMDD); otherwise, wait until the action is finished.

# tail -f /var/opt/cray/log/p0-default/nlrd-YYYYMMDD

2. Boot the SMW.
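Step 1 refers to the current day's log file, whose name ends in the date as YYYYMMDD. The path can be filled in automatically. A minimal sketch, assuming GNU date (as shipped with CentOS 7); smw_log_path is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Print the dated path of an SMW log such as nlrd-YYYYMMDD in
# /var/opt/cray/log/p0-default. The optional second argument is any date
# string that GNU date -d accepts (default: today).
# Usage: smw_log_path NAME [DATE]
smw_log_path() {
    local name=$1 day
    day=$(date -d "${2:-today}" +%Y%m%d) || return 1
    printf '/var/opt/cray/log/p0-default/%s-%s\n' "$name" "$day"
}
```

On the SMW, the check in step 1 then becomes tail -f "$(smw_log_path nlrd)". Passing a date, as in smw_log_path nlrd 2018-03-05, selects the file for that day.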

3.4 Hardware Supervisory System (HSS)

HSS is an integrated system of hardware and software that monitors the hardware components of the system and proactively manages the health of the system. HSS communicates with nodes and management processors over an internal (private) Ethernet network that operates independently of the Cray Aries High Speed Network (HSN).

HSS includes the following components:

● HSS network

● HSS Command Line Interface (CLI)

● Aries Network Card Controllers (ANCCs)

● Rack controllers

● HSS daemons

● HSS database

● Various logs

HSS performs a number of administrative tasks, such as:

● Monitoring certain hardware system components

● Managing hardware and software failures

● Starting up and shutting down nodes

● Managing the High Speed Network (HSN)

● Maintaining system component states

● Managing the hardware inventory

HSS Command Line Interface

HSS has a command-line interface to manage and view the system from the SMW. For complete usage information, see the xtcli(8) man page.

Dual Aries Network Card (dANC) Controllers and Rack Controllers

A dANC control processor is hierarchically the lowest component of the monitoring system. The dANC Cray network card contains two Aries ASICs and an ANCC. There are 2 dANC cards per sub-rack, and hence 4 Aries ASICs, which support 16 nodes. The dANC monitors the general health of components, including items such as voltages, temperature, and various failure indicators. A version of Linux optimized for embedded controllers runs on each dANC controller.

Each rack has a rack control processor (rack controller) that monitors and controls the rack power and communicates with all dANC controllers in the rack. It sends a periodic heartbeat to the SMW to indicate rack health.

The rack controller connects to the dANC controllers via the Ethernet switch on each blade by an Ethernet cable and routes HSS data to and from the SMW. The RC runs the same version of embedded Linux as the dANCs. The SMW, rack controllers, iSCBs, and ANCCs are all interconnected via Ethernet.

The monitoring system uses periodic heartbeats. Processes send heartbeats within a time interval. If the interval is exceeded, the system monitor generates a fault event that is sent to the state manager. The fault is recorded in the event log, and the state manager sets an alert flag for the component (dANC controller or rack controller) that spawned it.
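The heartbeat rule above can be illustrated in a few lines of bash. This is a conceptual sketch only, not how the HSS daemons are implemented or configured: the function names, the component names used with it, and the interval value are hypothetical, and times are plain epoch seconds.

```bash
#!/usr/bin/env bash
# Conceptual sketch of heartbeat-based fault detection (requires bash 4+
# for associative arrays). Not the HSS implementation.
declare -A last_heartbeat=()    # component name -> time of last heartbeat

# Usage: record_heartbeat COMPONENT EPOCH_SECONDS
record_heartbeat() {
    last_heartbeat[$1]=$2
}

# Print, in sorted order, every component whose last heartbeat is older
# than the allowed interval.
# Usage: faulted_components NOW_EPOCH INTERVAL_SECONDS
faulted_components() {
    local now=$1 interval=$2 c t
    for c in "${!last_heartbeat[@]}"; do
        t=${last_heartbeat[$c]}
        if (( now - t > interval )); then
            echo "$c"    # in HSS, this would become a fault event
        fi
    done | sort
}
```

For example, after record_heartbeat r0s0c1 70, calling faulted_components 110 30 reports r0s0c1, because 40 seconds have elapsed, which exceeds the 30-second interval.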

The rack and dANC controllers use NTP to keep accurate time with the SMW.

HSS Daemons

HSS daemons on the SMW and the controllers act to monitor and control the state of the system, and to respond to incidents such as hardware failures. The data path between the HSS CLI and the various daemons is via HSS events.

Cray System Network Routing Utility

The rtr command performs a variety of routing-related tasks for the High Speed Network (HSN). Tasks include:


● Generating and applying HSN routes

● Verifying that routes can be generated for the current configuration

● Verifying that generated routes are free of cyclic dependencies

● Dumping out a variety of routing-related and link-related information

HSS Database

The HSS database is a MariaDB relational database that contains the state of all the physical system components, including the System Management Workstation (SMW), Rack Controller (RC), Intelligent Subrack Control Board (iSCB), nodes, and the Aries Network Card Controller (ANCC). The state manager reads and writes the system state to the HSS database. The state manager keeps the database up-to-date with the current state of components and retrieves component information from the database when needed.

Log Files

● Event Logs - The event router records events to the event log in the /var/opt/cray/log/event-yyyymmdd file. Log rotation takes place at specific time intervals. By default, one file is generated per day.

● Dump Logs - The xtdumpsys command writes logs into the /var/opt/cray/dump directory by default.

● SMW Logs - SMW logs are stored in /var/opt/cray/log/p0-default on the SMW, and include logs for xtconsole, xtconsumer, xtnlrd, etc.

3.4.1 Hardware Supervisory System (HSS) Architecture Overview

HSS hardware on the Urika-GX system consists of a System Management Workstation (SMW), which is a rack-mounted Intel-based server running CentOS, along with an Ethernet network that connects the SMW to a rack controller (RC) via a switch. The RC connects to one Aries Network Card Controller (ANCC) on each dual Aries Network Card (dANC) and consists of a mini PC running Linux. The ANCC has a 32-bit processor. Each hardware component in the HSS system runs a version of Linux with the relevant HSS software installed. The RC is used to route data downstream from the SMW to the ANCCs and Intelligent Subrack Control Boards (iSCBs), and upstream from the ANCCs and iSCBs to the SMW.

HSS control and monitoring is performed by the SMW over the HSS Ethernet via a stacked managed switch, which uses VLANs to connect the SMW to the ANCCs, RC, and iSCBs.

The Urika-GX system can consist of 1, 2, or 3 sub-racks per rack, and 2 dANCs per sub-rack, resulting in a maximum of 6 dANCs per rack. Each dANC has 2 Aries ASICs, each of which has 4 NICs, and each NIC supports a single node connected by PCIe Gen 3.
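The capacity figures in this paragraph multiply out as sub-racks × 2 dANCs × 2 Aries ASICs × 4 NICs, with one node per NIC: 16 nodes per sub-rack and, with 3 sub-racks, 6 dANCs and 48 nodes per rack. A small bash sketch of the same calculation, using the 1 to 3 sub-racks stated in this paragraph; nodes_per_rack is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Node capacity implied by the figures above:
# sub-racks x 2 dANCs x 2 Aries ASICs x 4 NICs, one node per NIC.
DANCS_PER_SUBRACK=2
ARIES_PER_DANC=2
NICS_PER_ARIES=4

# Usage: nodes_per_rack SUBRACKS   (1, 2, or 3)
nodes_per_rack() {
    local subracks=$1
    (( subracks >= 1 && subracks <= 3 )) || return 1
    echo $(( subracks * DANCS_PER_SUBRACK * ARIES_PER_DANC * NICS_PER_ARIES ))
}
```

With 3 sub-racks, nodes_per_rack 3 prints 48, which matches the 48-node system used in the time synchronization procedure.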

The HSS infrastructure software stack executes on the RC, SMW, and the ANCC to control and monitor the Aries ASIC.

Resiliency Communication Agent (RCA)

RCA is a messaging service that connects compute nodes to the HSS event and messaging system, and allows compute nodes to subscribe to and inject HSS events and messages.

Inventory Management

HSS keeps track of hardware inventory to identify which hardware components are up and running. It uses the xthwinv command to retrieve hardware component information.

Hardware Discovery

HSS plays an integral role in system hardware discovery. HSS components that play a role in this process include the HSS database and the xtdiscover command. For more information, see the xtdiscover(8) man page.

Hardware Supervisory System (HSS) Ethernet/Management Network

The HSS network provides interconnectivity between the System Management Workstation (SMW), Rack Controllers (RCs), and dual Aries Network Cards (dANCs) in a hierarchical fashion.

3.4.2 The xtdiscover Command

The xtdiscover command automatically discovers the hardware components on a Cray system and creates entries in the system database to reflect the current hardware configuration. The xtdiscover command identifies missing or non-responsive cabinets and empty or non-functioning Dual Aries Network Cards (dANCs). The xtdiscover command and the state manager ensure that the system status represents the real state of the hardware. When xtdiscover has finished, a system administrator can use the xtcli command to display the current configuration. No previous configuration of the system is required; the hardware is discovered and made available. Modifications can be made to components after xtdiscover has finished creating entries in the system database.

The xtdiscover interface steps a system administrator through the discovery process.

Prior to performing component discovery, the xtdiscover command needs to make sure that the Hardware Supervisory System (HSS) networking is set up properly, using a user-provided block of IP address space. This information is used to create the /etc/hosts file and DHCP entries for the HSS network. This setup typically only needs to be done once, unless the address block is moved or a new rack is added.

If there are changes to the system hardware, such as populating a previously empty dANC or adding an additional rack, then xtdiscover must be executed again, and it will perform an incremental discovery of the hardware changes. A full-system xtdiscover is not intended to be run while the High Speed Network (HSN) is actively routing traffic. When new blades are added during system operation with xtwarmswap, however, a mini-xtdiscover is automatically run to make the required updates to the database.

TIP: Simply adding an additional rack within an existing address block will not affect the address assignments for the existing racks. If additional racks are to be added in the future, it is better to configure networking for all of them at once. The xtdiscover command will automatically detect whether each rack is presently in the system and will set the system state accordingly.

For more information, see the xtdiscover(8) man page.

3.4.3 Hardware Supervisory System (HSS) Component Location Discovery

Each Urika®-GX system rack is numbered starting at 0. Each sub-rack within a rack has a DIP switch that can set the rack and sub-rack number. The iSCB conveys the rack and sub-rack numbers to the Aries Network Cards (ANCs) via an I2C bus. The Dual Aries Network Card (dANC) blade has a slot-sense bit that tells it which dANC number it is within the sub-rack (0 or 1). The dANC uses the rack, sub-rack, and dANC number to construct its hostname. The Rack Controller (RC) determines its rack number from the location of the Intelligent Subrack Control Board (iSCB), encoded in a DHCP request sent by the iSCB and seen by the RC.
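The naming step can be sketched as follows, assuming the dANC host name follows the rRsScC component name pattern from Table 2 (the text above does not spell out the exact host name format); danc_name is a hypothetical helper, not part of the dANC firmware.

```bash
#!/usr/bin/env bash
# Compose a dANC component name from the rack number (set by the sub-rack
# DIP switch and conveyed by the iSCB), the sub-rack number, and the
# slot-sense dANC number, following the rRsScC pattern of Table 2.
# Usage: danc_name RACK SUBRACK DANC
danc_name() {
    local rack=$1 subrack=$2 danc=$3
    (( rack >= 0 && rack <= 161 )) || return 1
    (( subrack >= 0 && subrack <= 3 )) || return 1
    (( danc == 0 || danc == 1 )) || return 1
    printf 'r%ds%dc%d\n' "$rack" "$subrack" "$danc"
}
```

For example, the second dANC (slot-sense value 1) in sub-rack 2 of rack 0 yields danc_name 0 2 1, which prints r0s2c1.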

3.4.4 Hardware Supervisory System (HSS) Daemons

HSS daemons and applications exchange information with the event router. They are located in /opt/cray/hss/default/bin and are started when the System Management Workstation (SMW) boots. They are managed via systemd and can be stopped and started with systemctl stop hss and systemctl start hss, respectively. HSS daemons are configured dynamically by executing the xtdaemonconfig command.

Key HSS daemons include:

● State manager daemon (state_manager) - Performs HSS system hardware state management.

● Event router daemons (erd and erdh) - Perform HSS message routing.

● Node ID manager daemon (nid_mgr) - Manages node IDs and NIC addresses for every node in the system.

State Manager

HSS maintains the state of all components that it manages. The state manager, state_manager, runs on the SMW and uses a relational database (also referred to as the HSS database) to maintain and store the system state. The state manager keeps the database up-to-date with the current state of components and retrieves component information from the database when needed. Thus, the dynamic system state persists between SMW boots. The state manager uses the Lightweight Log Manager (LLM). The log data from the state manager is written to /var/opt/cray/log/sm-yyyymmdd. The default setting for the state manager is to enable LLM logging. The state manager performs the following functions:

● Updates and maintains component state information

● Monitors events to update component states

● Detects and handles state notification upon failure

● Provides state and configuration information to HSS applications.

The state manager performs the aforementioned tasks on behalf of:

● System nodes

● Aries chips

● Aries HSN Links

● dual Aries Network Card (dANC)

● Rack controller (RC)

● Intelligent Subrack Control Board (iSCB)

In summary, the state manager subscribes to and listens for HSS events, records changes of states, and shares those states with other daemons.

The Event Router (erd)

HSS functions are event-driven. The event router daemon, erd, runs on the SMW, rack controllers, and dANC controllers. HSS commands and daemons subscribe to events and inject events into the HSS system by using the services of the erd. The event router starts as each of the devices (SMW, rack controller, dANC controller) is started.

When the event router on the SMW receives an event from either a connected agent or from another event router in the hierarchy, the event is logged and then processed. HSS CLI commands use events to query and control HSS daemons.
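The subscribe-and-inject pattern described above, including the log-then-process order, can be illustrated with a toy router in bash. This is a conceptual sketch only: the event names, the record_state handler, and the in-memory log are hypothetical and are not the HSS event API, which is accessed through tools such as xtagent.

```bash
#!/usr/bin/env bash
# Toy publish/subscribe router (requires bash 4+). Conceptual only.
declare -A subscribers=()      # event name -> space-separated handler names
declare -A component_state=()  # component -> last recorded state
event_log=()                   # every injected event, in arrival order

# Usage: subscribe EVENT HANDLER
subscribe() {
    subscribers[$1]+="$2 "
}

# Log the event first, then hand it to every subscribed handler.
# Usage: inject_event EVENT COMPONENT
inject_event() {
    local event=$1 component=$2 handler
    event_log+=("$event $component")
    for handler in ${subscribers[$event]:-}; do
        "$handler" "$event" "$component"
    done
}

# A state-manager-like handler that records the component's new state.
record_state() {
    component_state[$2]=$1
}
```

For example, after subscribe node_down record_state, calling inject_event node_down r0s0c0n3 logs the event and sets the recorded state of r0s0c0n3 to node_down. An event with no subscribers is still logged but changes no state.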

Node ID (NID) Manager

The nid_mgr generates a mapping between node logical IDs (NIDs) and physical Network Interface Controller (NIC) IDs and distributes this information to the blade controllers. Along with the ability to assign NIDs automatically, the nid_mgr supports a mechanism that allows an administrator to control the NID assignment; this is useful for handling unique configurations. Administrator-controlled NID assignment is accomplished through the NID assignment file, nids.ini.

CAUTION: The nids.ini file can have a major impact on the functionality of a Cray system and should only be used or modified at the recommendation of Cray support personnel. Setting up this file incorrectly can make the Cray system unroutable.

Typically, after a NID mapping is defined for a system, this mapping is used until some major event occurs, such as a hardware configuration change. This may require the NID mapping to change, depending on the nature of the configuration change. Adding additional racks does not typically result in a new mapping.

Since the operating system always uses NIDs, HSS converts these to NIC IDs when sending them on to the HSS network and converts them to NIDs when forwarding events from the HSS network to a node.
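The two-way translation can be pictured as a pair of lookup tables kept in step. A conceptual bash sketch: the NIC ID values used with it are made up, the helper names are hypothetical, and on a real system the authoritative mapping comes from nid_mgr (nid2nic prints it).

```bash
#!/usr/bin/env bash
# Conceptual sketch: keep the NID <-> NIC ID mapping in both directions so
# that identifiers can be translated either way (requires bash 4+).
declare -A nic_of_nid=()
declare -A nid_of_nic=()

# Usage: add_mapping NID NIC_ID
add_mapping() {
    nic_of_nid[$1]=$2
    nid_of_nic[$2]=$1
}

# Translate a NID to its NIC ID, as when sending to the HSS network.
to_nic() {
    [[ -n ${nic_of_nid[$1]+set} ]] || return 1
    printf '%s\n' "${nic_of_nid[$1]}"
}

# Translate a NIC ID back to a NID, as when forwarding events to a node.
to_nid() {
    [[ -n ${nid_of_nic[$1]+set} ]] || return 1
    printf '%s\n' "${nid_of_nic[$1]}"
}
```

Keeping both tables updated by one function, add_mapping, is a simple way to ensure the two directions never disagree.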

3.4.5 Hardware Supervisory System (HSS) Administration and Diagnostic Commands Supported on Urika-GX

The following HSS commands are supported on Urika®-GX and need to be invoked from the System Management Workstation (SMW) to control HSS operations. Usage information for all of these commands can be viewed using the -h option; man pages are available where noted.

Table 3. HSS Administration Commands

capmc
    Cray advanced power monitoring and control utility. See the capmc(8) man page for more information.

hss_make_default_initrd.athena.rc
    Creates the Rack Controller (RC) image.

hss_make_default_initrd.athena.danc
    Creates the dual Aries Network Card (dANC) image.

hssbootlink
    Links a Linux kernel bzImage file, an initramfs file, and a parameters file so that they can be booted on a controller by using PXE boot on an SMW.

hssclone
    Clones the master image directory.

hssds_init
    Creates the Hardware Supervisory System (HSS) data store; ensures that the proper HSS data store user credentials are created and that the data store is ready for operation.

hsspackage
    Facilitates creation of controller boot images.

make_node_inventory
    Generates an inventory of Urika-GX nodes.

nid2nic
    Prints all NID-to-NIC address mappings. See the nid2nic(8) man page for more information.

rtr
    Performs system routing. See the rtr(8) man page for more information.

xtagent
    Generic agent for accessing the event router.

xtalive
    Checks the liveness of HSS daemons. See the xtalive(8) man page for more information.

xtbounce
    Initializes Aries and HSN links, powering down nodes as needed. See the xtbounce(8) man page for more information.

xtcablecheck
    Compares the link endpoint pairs known to the routing software with the link ID values set in MMRs in each LCB in the Aries ASIC to ensure that the HSN is cabled correctly.

xtccpasswd
    Changes the password for all Rack Controllers (RCs), Dual Aries Network Card Controllers (dANCCs), and Intelligent Subrack Control Boards (iSCBs) on Urika-GX. It must be run as root.

xtcc-ssh-keys
    Creates and modifies the authorized_keys file for Rack Controllers (RCs) and dANCCs.

xtchecklink
    Checks HSN and PCIe link health.

xtclass
    Displays the network topology class for this system.

xtclear
    Clears component flags in the State Manager. See the xtclear(8) man page for more information.

xtcli
    Controls dANC and node power, displays status, and sets administrative component status. See the xtcli(8) man page for more information.

xtcon
    Provides a two-way connection to the console of a node. See the xtcon(8) man page for more information.

xtconsole
    Displays console text from one or more nodes.

xtconsumer
    Displays HSS events.

xtdaemonconfig
    Configures HSS daemons dynamically.

xtdiscover
    Discovers and configures the Cray system hardware. This command is also used to populate the HSS database and set up HSS IP networking. See the xtdiscover(8) man page for more information.

xtdumpsys
    Gathers information when a system node stops responding or fails.

xterrorcode
    Displays event error codes.

xtfileio
    Performs file transfers to and from HSS controllers.

xtfile2mem/xtmem2file
    Reads CPU or Aries memory and saves it in a file. Performs binary file-to-MMR/node memory transfers and vice versa.

xtgenid
    Generates HSS physical IDs.

xtgenevent
    Injects arbitrary HSS events into the event stream.

xthwerrlog
    Displays hardware errors retrieved from xthwerrlogd in a user-friendly format.

xthwerrlogd
    Monitors hardware error log messages sent by the ANCC and by nodes.

xtlogfilter
    Filters information from event router log files.

xtmemio
    Performs reads or writes to Aries MMRs or node memory.

xtnetwatch
    Watches the Cray system interconnection network for link control block (LCB) and router errors.

xtnid2str
    Converts node identification numbers to physical names. See the xtnid2str(8) man page for more information.

xtnlrd
    Responds to fatal Aries and HSN errors by rerouting the system.

xtnmi
    Sends a non-maskable interrupt to target nodes. See the xtnmi(8) man page for more information.

xtpcimon
    Monitors the health of PCIe channels on Urika-GX systems.

rackfw
    Flashes all devices in the Urika-GX system out-of-band (OOB). See the rackfw(8) man page for more information.

xtshow
    Shows components with selected characteristics. See the xtshow(8) man page for more information.

xtsignal
    Sends a signal number or software interrupt to a remote process.

xtwarmswap
    Allows Cray dANC cards or high-speed network cables to be warm swapped. See the xtwarmswap(8) man page for more information.

xthwinv
    Retrieves hardware component information for selected modules.

xtls
    Generates a summary of HSN link errors.

xtpe
    Generates a summary of PCIe link errors.

A number of HSS diagnostic commands are also supported on Urika-GX. These commands must be run from a compute node.

WARNING: All HSS diagnostic commands are intended for use by Cray Service Personnel only. Improper use of these restricted commands can cause serious damage to the system.

Table 4. HSS Diagnostic Commands

xtbte_ata
    Performs a system stress test in which every logical endpoint sends to every other endpoint using BTE put and/or get transactions.

xtbte_ato
    Runs a number of applications individually or collectively as a suite to ensure that a system is ready for executing jobs after hardware or software upgrades or after power cycles.

xtfma_ata
    Ensures that every logical endpoint sends to every other endpoint using FMA put and/or get transactions.

xtfma_ato
    Ensures that all logical endpoints target one endpoint using FMA put and/or get transactions. A round-robin approach is used to step through each endpoint in the configuration.

xtfma_amo
    Checks all atomic memory operations (AMOs) using an all-to-all algorithm. It tests AMOs with PUT (non-fetching) and GET (fetching) attributes.

xtfbc
    Ensures that both the FMA and BTE logic blocks are tested. The test relies on the Generic Network Interface (GNI) API to communicate directly with the Cray network application-specific integrated circuit (ASIC).

xtbte_perf
    Determines the one-hop connections between nodes, performs BTE transfers over these connections, and measures the time taken. The duration is checked against an expected value for the link type, and the transferred data is also verified.
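The all-to-all and round-robin all-to-one traffic patterns named in Table 4 can be illustrated by enumerating which endpoint sends to which. The following Python sketch only lists (source, destination) pairs for assumed integer endpoint IDs; it performs no BTE, FMA, or GNI transfers, the function names are hypothetical and not part of the Cray diagnostic tools, and the actual scheduling used by xtbte_ata, xtfma_ata, xtfma_ato, and xtfma_amo may differ.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

Pair = Tuple[int, int]  # (source endpoint, destination endpoint)


def all_to_all(endpoints: Sequence[int]) -> List[Pair]:
    """Every endpoint sends to every other endpoint (ordered pairs, no self-sends)."""
    return list(permutations(endpoints, 2))


def all_to_one_round_robin(endpoints: Sequence[int]) -> List[List[Pair]]:
    """One round per target: in each round, all other endpoints send to that
    single target, and the target rotates through every endpoint in turn."""
    return [[(src, target) for src in endpoints if src != target]
            for target in endpoints]


if __name__ == "__main__":
    eps = [0, 1, 2, 3]  # hypothetical endpoint IDs
    print(len(all_to_all(eps)))  # 12 ordered pairs
    for rnd in all_to_one_round_robin(eps):
        print(rnd)  # first round: [(1, 0), (2, 0), (3, 0)]
```

Over a full rotation, both patterns cover the same ordered pairs; the difference is that each all-to-one round directs all traffic at a single destination, whereas all-to-all spreads it across every endpoint at once.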

3.4.6 Hardware Supervisory System (HSS) Environments

The HSS infrastructure environment is composed of a number of daemons, processes, and commands that help control and monitor physical system components.

HSS daemons supported on Urika-GX are listed in the following tables:

Table 5. ANCC HSS Daemons

Aries Network Card Controller (ANCC) System Daemon (anccsysd)
    Controls power and state of the dual Aries Network Card (dANC) components, including Aries initialization and health monitoring.

ANCC Router Daemon (anccrtrd)
    Handles requests from rtr on the System Management Workstation (SMW) to stage or install Aries routes.

ANCC Bandwidth Throttle Daemon (anccbwtd)
    Monitors High Speed Network (HSN) traffic, reports congestion indicators, and assists in controlling congestion.
