
Hitachi Freedom Storage™

Lightning 9900™

User and Reference Guide


© 2003 Hitachi Data Systems Corporation, ALL RIGHTS RESERVED

Notice: No part of this publication may be reproduced or transmitted in any form or by any

electronic or mechanical means, including photocopying and recording, or stored in a

database or retrieval system for any purpose, without the express written permission of

Hitachi Data Systems Corporation.

Hitachi Data Systems reserves the right to make changes to this document at any time

without notice and assumes no responsibility for its use. Hitachi Data Systems products and

services can only be ordered under the terms and conditions of Hitachi Data Systems’

applicable agreements, including license agreements. All of the features described in this

document may not be currently available. Refer to the most recent product announcement

or contact your local Hitachi Data Systems sales office for information on feature and

product availability.

This document contains the most current information available at the time of publication.

When new and/or revised information becomes available, this entire document will be

updated and distributed to all registered users.

Trademarks

Hitachi Data Systems is a registered trademark and service mark of Hitachi, Ltd. The Hitachi

Data Systems design mark is a trademark and service mark of Hitachi, Ltd.

Hi-Track is a registered trademark of Hitachi Data Systems Corporation.

Extended Serial Adapter, ExSA, Hitachi Freedom Storage, Hitachi Graph-Track, and Lightning

9900 are trademarks of Hitachi Data Systems Corporation.

APC and Symmetra are trademarks or registered trademarks of American Power Conversion

Corporation.

HARBOR is a registered trademark of BETA Systems Software AG.

AIX, DYNIX/ptx, ESCON, FICON, IBM, MVS, MVS/ESA, VM/ESA, and S/390 are registered

trademarks or trademarks of International Business Machines Corporation.

Microsoft, Windows, and Windows NT are registered trademarks of Microsoft Corporation.

Tantia is a trademark of Tantia Technologies Inc. Tantia Technologies is a wholly owned

subsidiary of BETA Systems Software AG of Berlin.

All other brand or product names are or may be registered trademarks, trademarks or

service marks of and are used to identify products or services of their respective owners.

Notice of Export Controls

Export of technical data contained in this document may require an export license from the

United States government and/or the government of Japan. Contact the Hitachi Data

Systems Legal Department for any export compliance questions.

Hitachi Lightning 9900™ User and Reference Guide iii


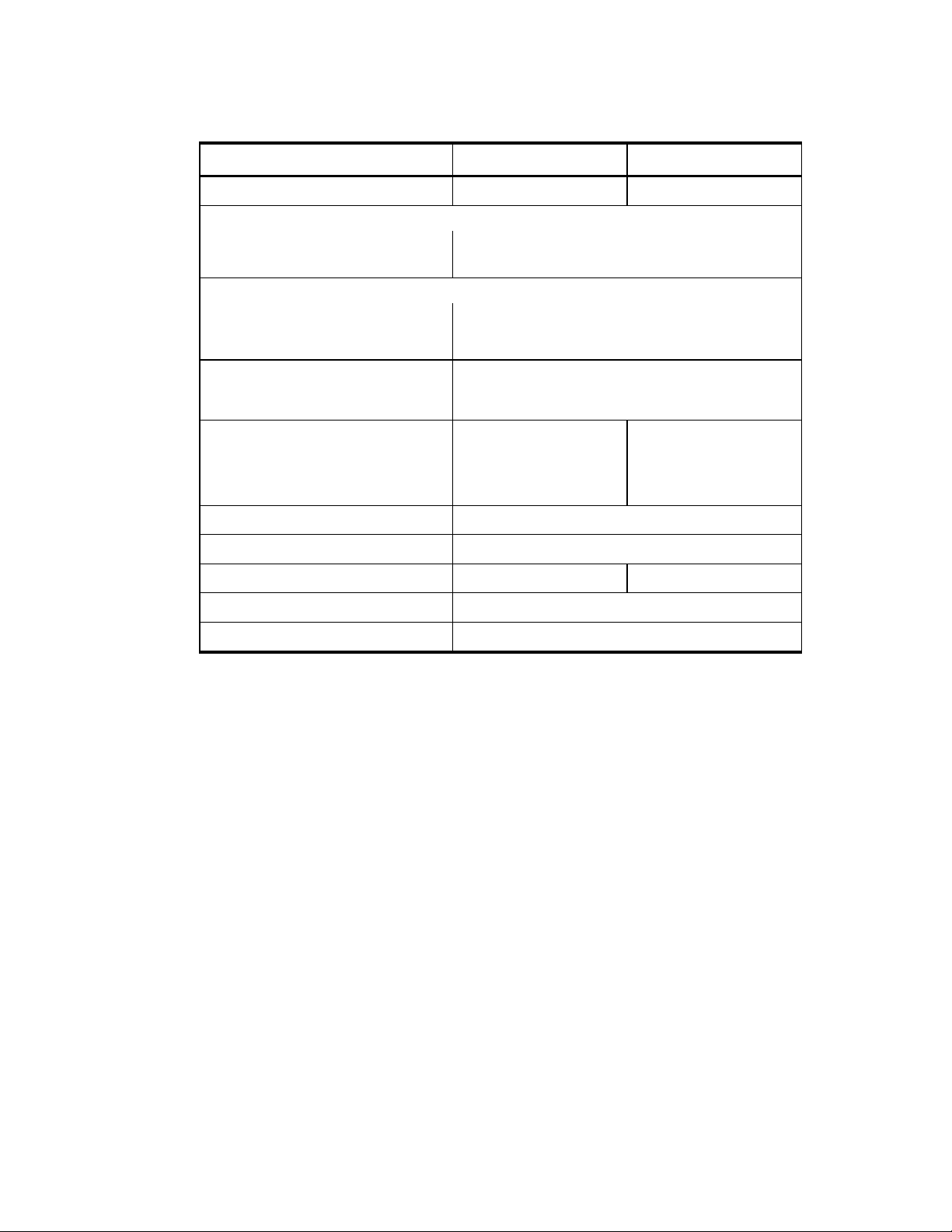

Document Revision Level

Revision Date Description

MK-90RD008-0 July 2000 Initial Release

MK-90RD008-1 November 2000 Revision 1, supersedes and replaces MK-90RD008-0

MK-90RD008-2 March 2001 Revision 2, supersedes and replaces MK-90RD008-1

MK-90RD008-3 June 2001 Revision 3, supersedes and replaces MK-90RD008-2

MK-90RD008-4 January 2002 Revision 4, supersedes and replaces MK-90RD008-3

MK-90RD008-5 February 2002 Revision 5, supersedes and replaces MK-90RD008-4

MK-90RD008-6 May 2002 Revision 6, supersedes and replaces MK-90RD008-5

MK-90RD008-7 October 2003 Revision 7, supersedes and replaces MK-90RD008-6

Source Documents for this Revision

This document revision applies to 9900 microcode versions 01-12-xx.

This document revision applies to 9900 microcode versions 01-13-xx.

This document revision applies to 9900 microcode versions 01-16-xx.

This document revision applies to 9900 microcode versions 01-17-xx.

This document revision applies to 9900 microcode versions 01-17-yy.

This revision applies to 9900 microcode versions 01-18-67 and higher.

DKC410I/405I Disk Subsystem Maintenance Manual, revision 12.1 (August 2003).

RAID400_RAID450_Public_Mode_Rev.2a (April 29, 2003).

Changes in this Revision

Updated description of disk drive and cache upgrades to remove the statement that all

upgrades can be made with minimal impact (section 1.1.7).

Added information on the 146-GB hard disk drive (sections 2.3, 2.4.2, 2.4.3; Table 2.2,

Table 2.3, Table 5.8, Table 5.9, Table 5.18).

Added information on the public system option modes (new section 3.5, new Table 3.2-

Table 3.8).


Preface

This document describes the physical, functional, and operational characteristics of the

Hitachi Lightning 9900™ subsystem, provides general instructions for operating the 9900

subsystem, and provides the installation and configuration planning information for the 9900

subsystem.

This document assumes that:

The user has a background in data processing and understands direct-access storage device (DASD) subsystems and their basic functions,

The user is familiar with the S/390® (mainframe) operating systems and/or open-system platforms supported by the 9900 subsystem, and

The user is familiar with the equipment used to connect RAID disk array subsystems to the supported host systems.

For further information on Hitachi Data Systems products and services, please contact your

Hitachi Data Systems account team, or visit Hitachi Data Systems worldwide web site at

http://www.hds.com. For specific information on supported host systems and platforms for

the Lightning 9900™ subsystem, please refer to the 9900 user documentation for the

platform, or contact the Hitachi Data Systems Support Center.

Note: Unless otherwise noted, the term “9900” refers to the entire Hitachi Lightning 9900™

subsystem family, including all models (e.g., 9960, 9910) and all configurations (e.g., all-mainframe, all-open, multiplatform).

Note: The use of Hitachi Data Systems products is governed by the terms of your license

agreement(s) with Hitachi Data Systems.

Microcode Level

This document revision applies to 9900 microcode versions 01-18-67 and higher.

COMMENTS

Please send us your comments on this document: doc.comments@hds.com.

Make sure to include the document title, number, and revision.

Please refer to specific page(s) and paragraph(s) whenever possible.

(All comments become the property of Hitachi Data Systems Corporation.)

Thank you!


Contents

Chapter 1 Overview of the Lightning 9900™ Subsystem

1.1 Key Features of the Lightning 9900™ Subsystem............................ 1

1.1.1 Continuous Data Availability..................................... 2

1.1.2 Connectivity.................................................. 2

1.1.3 S/390® Compatibility and Functionality............................. 3

1.1.4 Open-Systems Compatibility and Functionality....................... 3

1.1.5 Hitachi Freedom NAS™ and Hitachi Freedom SAN™.................... 4

1.1.6 Program Products and Service Offerings............................ 6

1.1.7 Subsystem Scalability........................................... 8

1.2 Reliability, Availability, and Serviceability................................ 9

Chapter 2 Subsystem Architecture and Components

2.1 Overview ..........................................................11

2.2 Components of the Controller Frame ....................................14

2.2.1 Storage Clusters...............................................14

2.2.2 Nonvolatile Shared Memory......................................15

2.2.3 Nonvolatile Duplex Cache .......................................15

2.2.4 Multiple Data and Control Paths ..................................16

2.2.5 Redundant Power Supplies.......................................16

2.2.6 Client-Host Interface Processors (CHIPs) and Channels.................16

2.2.7 Channels ....................................................18

2.2.8 Array Control Processors (ACPs) ..................................19

2.3 Array Frame........................................................21

2.3.1 Disk Array Groups..............................................23

2.3.2 Sequential Data Striping ........................................24

2.4 Intermix Configurations...............................................25

2.4.1 RAID-1 & RAID-5 Intermix........................................25

2.4.2 Hard Disk Drive Intermix ........................................25

2.4.3 Device Emulation Intermix.......................................26

2.5 Service Processor (SVP)...............................................27

2.6 Remote Console PC ..................................................27

Chapter 3 Functional and Operational Characteristics

3.1 New 9900 Features and Capabilities .....................................29

3.2 I/O Operations......................................................29

3.3 Cache Management ..................................................30

3.3.1 Algorithms for Cache Control.....................................30

3.3.2 Write Pending Rate ............................................30

3.4 Control Unit (CU) Images, LVIs, and LUs..................................31

3.4.1 CU Images ...................................................31

3.4.2 Logical Volume Image (LVIs) .....................................31

3.4.3 Logical Unit (LU) Type..........................................31

3.5 System Option Modes.................................................32


3.6 Open Systems Features and Functions................................... 37

3.6.1 Failover and SNMP Support...................................... 37

3.6.2 Share-Everything Architecture................................... 37

3.6.3 SCSI Extended Copy Command Support ............................ 37

3.7 Data Management Functions .......................................... 38

3.7.1 Hitachi TrueCopy (TC) ......................................... 40

3.7.2 Hitachi TrueCopy – S/390® (TC390) ............................... 40

3.7.3 Hitachi ShadowImage (SI)....................................... 41

3.7.4 Hitachi ShadowImage – S/390® (SI390)............................. 41

3.7.5 Command Control Interface (CCI) ................................ 42

3.7.6 Extended Copy Manager (ECM)................................... 42

3.7.7 Hitachi Extended Remote Copy (HXRC)............................ 43

3.7.8 Hitachi NanoCopy™............................................ 44

3.7.9 Data Migration ............................................... 44

3.7.10 Hitachi RapidXchange (HRX)..................................... 45

3.7.11 Hitachi Multiplatform Backup/Restore (HMBR) ...................... 45

3.7.12 HARBOR® File-Level Backup/Restore.............................. 46

3.7.13 HARBOR® File Transfer......................................... 46

3.7.14 HiCommand™ ................................................ 46

3.7.15 LUN Manager ................................................ 47

3.7.16 LU Size Expansion (LUSE)....................................... 47

3.7.17 Virtual LVI/LUN .............................................. 47

3.7.18 FlashAccess.................................................. 48

3.7.19 Cache Manager............................................... 48

3.7.20 Hitachi SANtinel.............................................. 49

3.7.21 Hitachi SANtinel – S/390®....................................... 49

3.7.22 Prioritized Port and WWN Control (PPC) ........................... 50

3.7.23 Hitachi Parallel Access Volume (HPAV) ............................ 50

3.7.24 Dynamic Link Manager™ (DLM)................................... 50

3.7.25 LDEV Guard.................................................. 50

3.7.26 Hitachi CruiseControl.......................................... 51

3.7.27 Hitachi Graph-Track™.......................................... 52

Chapter 4 Configuring and Using the 9900 Subsystem

4.1 S/390® Configuration ................................................ 53

4.1.1 Subsystem IDs (SSIDs) .......................................... 53

4.2 S/390® Hardware Definition........................................... 54

4.2.1 Hardware Definition Using IOCP (MVS, VM, or VSE)................... 54

4.2.2 Hardware Definition Using HCD (MVS/ESA) ......................... 59

4.2.3 Defining the 9900 to VM/ESA® Systems ............................ 70

4.2.4 Defining the 9900 to TPF....................................... 70

4.3 S/390® Operations .................................................. 71

4.3.1 Initializing the LVIs............................................ 71

4.3.2 Device Operations: ICKDSF...................................... 71

4.3.3 MVS Cache Operations......................................... 73

4.3.4 VM/ESA® Cache Operations ..................................... 75

4.3.5 VSE/ESA Cache Operations...................................... 75


4.4 Open-Systems Configuration ...........................................76

4.4.1 Configuring the Fibre-Channel Ports...............................77

4.4.2 Virtual LVI/LUN Devices.........................................77

4.4.3 LU Size Expansion (LUSE) Devices .................................77

4.5 Open Systems Operations .............................................78

4.5.1 Command Tag Queuing .........................................78

4.5.2 Host/Application Failover Support.................................78

4.5.3 Path Failover Support ..........................................79

4.5.4 Remote SIM (R-SIM) Reporting....................................80

4.5.5 SNMP Remote Subsystem Management .............................80

4.5.6 NAS and SAN Operations ........................................80

Chapter 5 Planning for Installation and Operation

5.1 User Responsibilities .................................................81

5.2 Electrical Specifications and Requirements for Three-Phase Subsystems.........82

5.2.1 Internal Cable Diagram .........................................82

5.2.2 Power Plugs..................................................83

5.2.3 Features.....................................................84

5.2.4 Current Rating, Power Plug, Receptacle, and Connector for Three-Phase (60 Hz only)........................................84

5.2.5 Input Voltage Tolerances........................................85

5.3 Electrical Specifications and Requirements for Single-Phase Subsystems.........86

5.3.1 Internal Cable Diagram .........................................86

5.3.2 Power Plugs..................................................88

5.3.3 Features.....................................................91

5.3.4 Current Rating, Power Plug, Receptacle, and Connector for Single-Phase (60 Hz only)........................................91

5.3.5 Input Voltage Tolerances........................................92

5.4 Dimensions and Weight ...............................................93

5.5 Floor Loading and Cable Routing Requirements ............................96

5.5.1 Service Clearance Requirements..................................96

5.5.2 Minimum Subsystem Disk Configuration............................101

5.5.3 Floor Load Rating.............................................105

5.5.4 Cable Requirements...........................................109

5.6 Channel Specifications and Requirements................................110

5.7 Environmental Specifications and Requirements...........................111

5.7.1 Temperature and Humidity Requirements..........................111

5.7.2 Power Consumption and Heat Output Specifications..................111

5.7.3 Loudness ...................................................113

5.7.4 Air Flow Requirements.........................................113

5.7.5 Vibration and Shock Tolerances..................................114


Chapter 6 Troubleshooting

6.1 Troubleshooting................................................... 115

6.2 Service Information Messages (SIMs) ................................... 116

6.3 Calling the Hitachi Data Systems Support Center ......................... 117

Appendix A Unit Conversions ...................................................... 119

Acronyms and Abbreviations....................................................... 121


List of Figures

Figure 2.1 Lightning 9900™ HiStar Network (HSN) Architecture .....................11

Figure 2.2 9960 Subsystem Frames...........................................13

Figure 2.3 9910 Subsystem Frame ...........................................13

Figure 2.4 Conceptual ACP Array Domain......................................19

Figure 2.5 Sample RAID-1 Layout ............................................23

Figure 2.6 Sample RAID-5 Layout (Data Plus Parity Stripe) ........................24

Figure 2.7 Sample Hard Disk Drive Intermix....................................25

Figure 2.8 Sample Device Emulation Intermix ..................................26

Figure 3.1 Fibre-Channel Device Addressing....................................31

Figure 4.1 IOCP Definition for FICON™ Channels (direct connect and via FICON™ switch) .................................................55

Figure 4.2 IOCP Definition for 1024 LVIs (9900 connected to host CPU(s) via ESCD) .....56

Figure 4.3 IOCP Definition for 1024 LVIs (9900 directly connected to CPU)............57

Figure 4.4 Master MENU (Step 1) ............................................61

Figure 4.5 Basic HCD Panel (Step 2)..........................................62

Figure 4.6 Define, Modify, or View Configuration Data (Step 3) ....................62

Figure 4.7 Control Unit List Panel (Step 4).....................................63

Figure 4.8 Add Control Unit Panel (Step 5) ....................................63

Figure 4.9 Selecting the Operating System (Step 6)..............................64

Figure 4.10 Control Unit Chpid, CUADD, and Device Address Range Addressing (Step 7) . . 64

Figure 4.11 Select Processor / Control Unit Panel (Step 8).........................65

Figure 4.12 Control Unit List (Step 9)..........................................65

Figure 4.13 I/O Device List Panel (Step 10).....................................66

Figure 4.14 Add Device Panel (Step 11)........................................66

Figure 4.15 Device / Processor Definition Panel – Selecting the Processor ID (Step 12) ...67

Figure 4.16 Define Device / Processor Panel (Step 13) ............................67

Figure 4.17 Device / Processor Definition Panel (Step 14) .........................68

Figure 4.18 Define Device to Operating System Configuration (Step 15)...............68

Figure 4.19 Define Device Parameters / Features Panel (Step 16) ...................69

Figure 4.20 Update Serial Number, Description and VOLSER Panel (Step 18) ...........69

Figure 4.21 LVI Initialization for MVS: ICKDSF JCL................................71

Figure 4.22 Displaying Cache Statistics Using MVS DFSMS ..........................73

Figure 4.23 IDCAMS LISTDATA COUNTS (JCL example).............................73

Figure 4.24 Fibre Port-to-LUN Addressing ......................................77

Figure 4.25 Alternate Pathing ...............................................79

Figure 5.1 Diagram of Power Plugs for Three-Phase 9960 Disk Array Unit (Europe)......82

Figure 5.2 Diagram of Power Plugs for Three-Phase 9960 Disk Array Unit (USA) ........83

Figure 5.3 Diagram of Power Plugs for Three-Phase 9960 Disk Array Unit (Europe)......83

Figure 5.4 Internal Cable Diagram of a Single-Phase 9960 Subsystem ................86

Figure 5.5 Internal Cable Diagram of a Single-Phase 9910 Subsystem ................87

Figure 5.6 Power Plugs for Single-Phase 9960 Controller (USA).....................88

Figure 5.7 Power Plugs for Single-Phase 9910 Subsystem (USA).....................88

Figure 5.8 Power Plugs for a Single-Phase 9960 Controller (Europe).................89

Figure 5.9 Power Plugs for a Single-Phase 9910 Subsystem (Europe).................89

Figure 5.10 Power Plugs for Single-Phase 9960 Disk Array Unit (USA) .................90


Figure 5.11 Power Plugs for Single-Phase 9960 Disk Array Unit (Europe).............. 90

Figure 5.15 9960 DKC and DKU Physical Dimensions.............................. 93

Figure 5.16 9910 DKC and DKU Physical Dimensions.............................. 93

Figure 5.17 9960 Controller Frame Service Clearance and Cutouts (millimeters) ....... 97

Figure 5.18 9960 Controller Frame Service Clearance and Cutouts (inches) ........... 98

Figure 5.19 9960 Disk Array Unit Service Clearance and Cutout (millimeters).......... 99

Figure 5.20 9960 Disk Array Unit Service Clearance and Cutout (inches)............. 100

Figure 5.21 9960 Disk Subsystem Minimum Configuration (millimeters).............. 101

Figure 5.22 9960 Disk Subsystem Minimum Configuration (inches).................. 102

Figure 5.23 9910 Disk Subsystem All Configurations (millimeters).................. 103

Figure 5.24 9910 Disk Subsystem All Configurations (inches)...................... 104

Figure 5.25 9960 Disk Subsystem with Controller and 4 Disk Arrays................. 106

Figure 5.26 9960 Disk Subsystem with Maximum Configuration .................... 107

Figure 6.1 Typical 9900 SIM Showing Reference Code and SIM Type................ 116

List of Tables

Table 1.1 Program Products and Service Offerings .............................6-7

Table 2.1 CHIP and Channel Specifications ................................... 17

Table 2.2 ACP Specifications .............................................. 20

Table 2.3 Disk Drive Specifications ......................................... 22

Table 3.1 Capacities of Standard LU Types ................................... 31

Table 3.2 Common System Option Modes .................................... 32

Table 3.3 System Option Modes for Mainframe Connectivity...................... 32

Table 3.4 System Option Modes for Open-System Connectivity.................... 33

Table 3.5 System Option Modes for ShadowImage - S/390® and ShadowImage........ 34

Table 3.6 System Option Modes for TrueCopy - S/390® Sync & Async............. 34-35

Table 3.7 System Option Modes for HXRC .................................... 36

Table 3.8 System Option Modes for Concurrent Copy (CC) ....................... 36

Table 3.9 Data Management Functions for Open-System Users.................... 38

Table 3.10 Data Management Functions for S/390® Users......................... 39

Table 4.1 SSID Requirements.............................................. 53

Table 4.2 Correspondence between Physical Paths and Channel Interface IDs (Cl 1) . . . 58

Table 4.3 Correspondence between Physical Paths and Channel Interface IDs (Cl 2) . . . 58

Table 4.4 HCD Definition for 64 LVIs ........................................ 59

Table 4.5 HCD Definition for 256 LVIs ....................................... 59

Table 4.6 ICKDSF Commands for 9900 Contrasted to RAMAC...................... 72

Table 4.7 9900 Open-System Platforms and Configuration Guides ................. 76

Table 5.1 9960 Three-Phase Features ....................................... 84

Table 5.2 Current Rating, Power Plug, Receptacle, and Connector for 3-Phase 9960. . . 84

Table 5.3 Input Voltage Specifications for Three-Phase Power.................... 85

Table 5.4 9900 Single-Phase Features ....................................... 91

Table 5.5 Current Rating, Power Plug, Receptacle, and Connector for 1-Phase 9900. . . 91


Table 5.6 Input Voltage Specifications for Single-Phase Power.....................92

Table 5.7 9900 Physical Specifications .......................................94

Table 5.8 9960 Frame and Component Weights ................................95

Table 5.9 9960 & 9910 Subsystem Weights ....................................95

Table 5.10 Floor Load Rating for 9910 Subsystem...............................105

Table 5.11 Floor Load Rating for 9960 Controller with 1 Disk Array.................105

Table 5.12 Floor Load Rating for 9960 Controller with 4 Disk Arrays ................107

Table 5.13 Floor Load Rating for 9960 Controller with Maximum Configuration........108

Table 5.14 Cable Requirements.............................................109

Table 5.15 ESCON® and FICON™ Port Information...............................110

Table 5.16 Fibre-Channel Port Information....................................110

Table 5.17 Temperature and Humidity Requirements ...........................111

Table 5.18 9910/9960 Component Power and Heat Output Specifications............112

Table 5.19 9900 Subsystem Power and Heat Output Specifications .................112

Table 5.20 Internal Air Flow ...............................................113

Table 5.21 Vibration and Shock Tolerances....................................114

Table 6.1 Troubleshooting................................................115

Table A.1 Unit Conversions for Standard (U.S.) and Metric Measures ...............119


Chapter 1 Overview of the Lightning 9900™ Subsystem

1.1 Key Features of the Lightning 9900™ Subsystem

The Hitachi Lightning 9900™ subsystem provides high-speed response, continuous data

availability, scalable connectivity, and expandable capacity for both S/390® and open-systems

environments. The 9900 subsystem is designed for use in 7×24 data centers that

demand high-performance, non-stop operation. The 9900 subsystem is compatible with

industry-standard software and supports concurrent attachment to multiple host systems and

platforms. The 9900 subsystem employs and improves upon the key characteristics of

generations of successful Hitachi disk storage subsystems to achieve high performance and

reliability. The advanced components, functions, and features of the Lightning 9900™

subsystem represent an integrated approach to data retrieval and storage management.

The Lightning 9900™ subsystem provides many new benefits and advantages for the user.

The 9900 subsystem can operate with multihost applications and host clusters, and is

designed to handle very large databases as well as data warehousing and data mining

applications that store and retrieve terabytes of data. The Lightning 9900™ provides up to 32

host interface ports and can be configured for all-mainframe, all-open, or multiplatform

operations.

Instant access to data around the clock:

– 100 percent data availability guarantee.

– No single point of failure.

– Highly resilient multi-path fibre architecture.

– Fully redundant, hot-swappable components.

– Global dynamic hot sparing.

– Duplexed write cache with battery backup.

– Hi-Track® “call-home” maintenance system.

– Non-disruptive microcode updates.

– RAID-1 and/or RAID-5 array groups within the same subsystem.

Unmatched performance and capacity:

– Industry’s only internal switched fabric architecture.

– Multiple point-to-point data and control paths.

– Up to 6.4-GB/sec internal system bandwidth.

– Fully addressable 32-GB data cache; separate control cache.

– Extremely fast and intelligent cache algorithms.

– Non-disruptive expansion to over 88 TB raw capacity.

– Simultaneous transfers from up to 32 separate hosts.

– High-throughput 10K RPM fibre-channel, dual-active disk drives.


Extensive connectivity and resource sharing:

– Concurrent operation of UNIX®-based, Windows NT®, Windows® 2000, Linux®, NetWare®, and S/390® host systems.

– Fibre-channel, Fiber Connection (FICON™), and Extended Serial Adapter™ (ESCON®) server connections.

– Optimized for storage-area networks (SANs), fibre-channel switched, fibre-channel

arbitrated loop, and point-to-point configurations.

1.1.1 Continuous Data Availability

The Hitachi Lightning 9900™ is designed for nonstop operation and continuous access to all

user data. To achieve nonstop customer operation, the 9900 subsystem accommodates

online feature upgrades and online software and hardware maintenance. See section 1.2 for

further information on the reliability and availability features of the Lightning 9900™

subsystem.

1.1.2 Connectivity

The Hitachi Lightning 9900™ RAID subsystem supports concurrent attachment to S/390®

mainframe hosts and open-system (UNIX®-based and/or PC-server) platforms. The 9900

subsystem can be configured with FICON™ ports, Extended Serial Adapter™ (ExSA™) ports

(compatible with ESCON® protocol), and/or fibre-channel ports to support all-mainframe,

all-open, and multiplatform configurations.

When FICON™ channel interfaces are used, the 9900 subsystem can provide up to 16 logical

control unit (CU) images and 4,096 logical device (LDEV) addresses. Each physical FICON™

channel interface supports up to 512 logical paths providing a maximum of 8,192 logical paths

per subsystem. FICON™ connection provides transfer rates of up to 100 MB/sec (1 Gbps).

When ExSA™ channel interfaces are used, the 9900 subsystem can provide up to 16 logical

control unit (CU) images and 4,096 logical device (LDEV) addresses. Each physical ExSA™

channel interface supports up to 256 logical paths providing a maximum of 8,192 logical

paths per subsystem. ExSA™ connection provides transfer rates of up to 17 MB/sec.
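
The addressing limits quoted above are related by simple arithmetic. The following sketch is only an illustration and is not part of any 9900 software. It divides the figures stated in this section. The constant and function names are hypothetical, and the even split of LDEV addresses across CU images is an assumption.

```python
# Figures quoted in section 1.1.2; all names here are illustrative only.
CU_IMAGES = 16
LDEV_ADDRESSES = 4096
MAX_LOGICAL_PATHS = 8192  # per subsystem
PATHS_PER_INTERFACE = {"FICON": 512, "ExSA": 256}


def ldevs_per_cu_image(ldev_addresses: int = LDEV_ADDRESSES,
                       cu_images: int = CU_IMAGES) -> int:
    """LDEV addresses per CU image, assuming an even split (assumption)."""
    return ldev_addresses // cu_images


def interfaces_to_reach_maximum(interface_type: str) -> int:
    """Number of fully used interfaces at which the per-interface
    logical path limit adds up to the subsystem maximum."""
    return MAX_LOGICAL_PATHS // PATHS_PER_INTERFACE[interface_type]


if __name__ == "__main__":
    print(ldevs_per_cu_image())                  # 256
    print(interfaces_to_reach_maximum("FICON"))  # 16
    print(interfaces_to_reach_maximum("ExSA"))   # 32
```

The quotients show only where the per-interface limit meets the subsystem maximum. They are not a statement about how many interfaces of each type can be installed.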

When fibre-channel interfaces are used, the 9900 subsystem can provide up to 32 ports for

attachment to UNIX®-based and/or PC-server platforms. The type of host platform

determines the number of logical units (LUs) that may be connected to each port. Fibre-channel connection provides data transfer rates of up to 200 MB/sec (2 Gbps). The 9900

subsystem supports fibre-channel arbitrated loop (FC-AL) and fabric fibre-channel topologies

as well as high-availability (HA) fibre-channel configurations using hubs and switches.
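
The link speeds and transfer rates paired in this section (1 Gbps with 100 MB/sec, 2 Gbps with 200 MB/sec) are consistent with each data byte occupying ten bits on the link, as with 8b/10b encoding. That encoding is an assumption, not a figure stated in this guide, and the function name below is hypothetical. A minimal sketch of the conversion:

```python
def nominal_mb_per_sec(link_gbps: float, line_bits_per_byte: int = 10) -> float:
    """Convert a nominal link speed in Gbps to MB/sec.

    line_bits_per_byte=10 assumes 8b/10b encoding (an assumption,
    not a value taken from this guide).
    """
    bits_per_sec = link_gbps * 1_000_000_000
    bytes_per_sec = bits_per_sec / line_bits_per_byte
    return bytes_per_sec / 1_000_000


if __name__ == "__main__":
    print(nominal_mb_per_sec(1))  # 100.0
    print(nominal_mb_per_sec(2))  # 200.0
```

These are nominal maximums for the link. Delivered throughput depends on the host, the workload, and the configuration.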


1.1.3 S/390® Compatibility and Functionality

The 9900 subsystem supports 3990 and 2105 controller emulations and can be configured

with multiple concurrent logical volume image (LVI) formats, including 3390-1, -2, -3, -3R, -9

and 3380-E, -J, -K. In addition to full System-Managed Storage (SMS) compatibility, the 9900

subsystem also provides the following functionality in the S/390® environment:

Sequential data striping,

Cache fast write (CFW) and DASD fast write (DFW),

Enhanced dynamic cache management,

Multiple Allegiance support,

Concurrent Copy (CC) support,

Enhanced CCW support,

Priority I/O queuing,

Parallel Access Volume (PAV) support, and

Transaction Processing Facility (TPF)/Multi-Path Locking Facility (MPLF) support.

1.1.4 Open-Systems Compatibility and Functionality

The Lightning 9900™ subsystem supports multiple concurrent attachment to a variety of host

operating systems (OS). The 9900 supports the following platforms at this time. The type of

host platform determines the number of logical units (LUs) that may be connected to each

port. Please contact Hitachi Data Systems for the latest information on platform and OS

version support. The 9900 is compatible with most fibre-channel host bus adapters (HBAs).

IBM® AIX® OS

Sun™ Solaris™ OS

HP-UX® OS

Compaq® Tru64™ UNIX® OS

Sequent® DYNIX/ptx® OS

SGI™ IRIX® OS

Microsoft® Windows NT® OS

Microsoft® Windows® 2000 OS

Novell® NetWare® OS

Red Hat® Linux® OS

Compaq® OpenVMS® OS

The 9900 subsystem provides enhanced dynamic cache management and supports command

tag queuing and multi-initiator I/O. Command tag queuing (see section 4.5.1) enables hosts

to issue multiple disk commands to the fibre-channel adapter without having to serialize the

operations. The 9900 subsystem operates with industry-standard middleware products

providing application/host failover capability, I/O path failover support, and logical volume

management. The 9900 subsystem also supports the industry-standard simple network

management protocol (SNMP) for remote management from the open-system host.

The 9900 subsystem can be configured with multiple concurrent logical unit (LU) formats

(e.g., OPEN-3, -8, -9, -K, -E, -L, -M). The user can also configure custom-size volumes using

the Virtual LVI/LUN and LU Size Expansion (LUSE) features of the 9900 subsystem, which are

described in the next section.


1.1.5 Hitachi Freedom NAS™ and Hitachi Freedom SAN™

Hitachi Freedom Data Networks™ (FDN) provide an open architecture that offers

organizations freedom of choice in deploying data access, protection, and sharing

capabilities across the enterprise. Using multiple technologies and solutions such as storage-area networks (SANs) and network-attached storage (NAS), FDN builds, leverages, and

augments storage infrastructures, providing access to any data from any computer, anytime

and anywhere.

Hitachi Freedom NAS™ and Hitachi Freedom SAN™ solutions are the core offerings behind the

FDN approach. They complement the Hitachi Freedom Storage™ subsystems by allowing

more flexibility than ever in heterogeneous environments. While SAN architectures respond

to high bandwidth needs, NAS addresses the need for rapid file access, especially critical for

e-business applications. Hitachi Data Systems offers the best of both.

FDN encompasses storage, switches and hubs, servers/clients, management software,

protocols, services, and networks developed by Hitachi, our alliance partners, and third

party providers. FDN facilitates consolidation of server and storage resources, data sharing

among heterogeneous hosts, centralized resource and data management, superior data

security, and increased connectivity.

Hitachi Freedom SAN™. Hitachi Data Systems’ SAN solutions give you the freedom to locate

storage wherever needed and protect your investment in currently installed components.

Made possible by the advent and proliferation of high-speed fibre-channel technology, SANs

break the traditional server/storage bond and enable total connectivity. As a result, you can

add, remove, or reassign any resource without interfering with ongoing business operations.

The Lightning 9900™ subsystem features unparalleled reliability, a SAN-ready architecture,

and support for S/390®, UNIX®, and Windows NT® platforms. Hitachi adds software and

services to SAN components to provide functionality such as LAN-free backup, remote copy,

and multiplatform data exchange from our Freedom Storage™ software suites.


Hitachi Freedom NAS™. Freedom NAS answers the need for speed with faster file access.

Numerous clients can instantly share data with information available on your NAS file server.

Freedom NAS is an excellent solution for file/web serving, document/record imaging,

streaming media, video design, telco call centers, and manufacturing.

Hitachi Freedom Storage™ subsystems are combined with Network Storage Solutions’ NAS file

servers to provide Freedom NAS solutions. The modular architecture of Hitachi Freedom

Storage™ subsystems provides quick and easy storage expansion. For further information,

please refer to the Hitachi Freedom NAS™ NSS Configuration Guide, MK-91RD053.

Freedom NAS provides the following benefits for the user:

Accelerates response times

Supports rapid deployment of new applications

Satisfies increasing customer demand

Enables expanding operations

Leverages existing storage infrastructure

Improves service levels

Reduces I/O bottlenecks

Minimizes overhead through consolidation and reduced complexity

Increases availability and reliability

Eliminates storage islands

Installs quickly and easily


1.1.6 Program Products and Service Offerings

The Lightning 9900™ subsystem provides many advanced features and functions that increase

data accessibility, enable continuous user data access, and deliver enterprise-wide coverage

of on-line data copy/relocation, data access/protection, and storage resource management.

Hitachi Data Systems’ software solutions provide a full complement of industry-leading copy,

availability, resource management, and exchange software to support business continuity,

database backup/restore, application testing, and data mining.

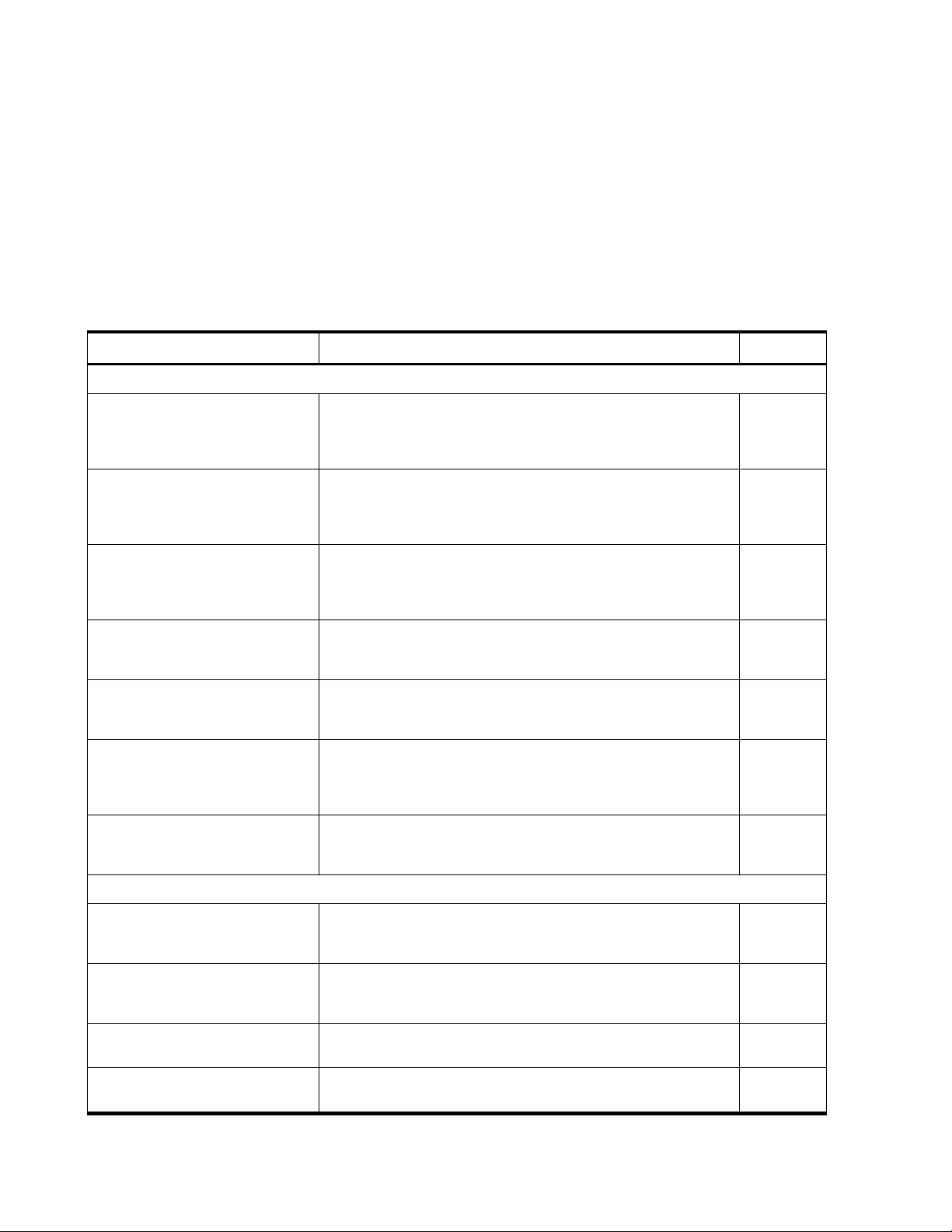

Table 1.1 Program Products and Service Offerings

| Function | Description | See Section: |
| --- | --- | --- |
| Data Replication: | | |
| Hitachi TrueCopy (TC); Hitachi TrueCopy – S/390® (TC390) | Enables the user to perform remote copy operations between 9900 subsystems (and 7700E and 7700) in different locations. Hitachi TrueCopy provides synchronous and asynchronous copy modes for both S/390® and open-system data. (The 7700 subsystem supports only synchronous remote copy operations.) | 3.7.1, 3.7.2 |
| Hitachi ShadowImage (SI); Hitachi ShadowImage – S/390® (SI390) | Allows the user to create internal copies of volumes for a wide variety of purposes including application testing and offline backup. Can be used in conjunction with TrueCopy to maintain multiple copies of critical data at both the primary and secondary sites. | 3.7.3, 3.7.4 |
| Command Control Interface (CCI) | Enables open-system users to perform TrueCopy and ShadowImage operations by issuing commands from the host to the 9900 subsystem. The CCI software supports scripting and provides failover and mutual hot standby functionality in cooperation with host failover products. | 3.7.5 |
| Extended Copy Manager (ECM) | Provides server-free backup solutions between the 9900 and backup devices (e.g., tape, disk) in SAN environments. Supports the SCSI Extended Copy command issued from the host server to the 9900 subsystem. | 3.7.6 |
| Hitachi Extended Remote Copy (HXRC) | Provides compatibility with the IBM® Extended Remote Copy (XRC) S/390® host software function, which performs server-based asynchronous remote copy operations for mainframe LVIs. | 3.7.7 |
| Hitachi NanoCopy™ | Enables S/390® users to make Point-in-Time (PiT) copies of production data, without quiescing the application or causing any disruption to end-user operations, for such uses as application testing, business intelligence, and disaster recovery for business continuance. | 3.7.8 |
| Data migration (service offering only) | Enables the rapid transfer of data from other disk subsystems onto the 9900 subsystem. Data migration operations can be performed while applications are online using the data which is being transferred. | 3.7.9 |
| Backup/Restore and Data Sharing: | | |
| Hitachi RapidXchange (HRX) | Enables users to transfer data between S/390® and open-system platforms using the ExSA™ and/or FICON™ channels, which provides high-speed data transfer without requiring network communication links or tape. | 3.7.10 |
| Hitachi Multiplatform Backup/Restore (HMBR) | Allows users to perform mainframe-based volume-level backup and restore operations on the open-system data stored on the multiplatform 9900 subsystem. | 3.7.11 |
| HARBOR® File-Level Backup/Restore | Enables users to perform mainframe-based file-level backup/restore operations on the open-system data stored on the multiplatform 9900 subsystem. | 3.7.12 |
| HARBOR® File Transfer | Enables users to transfer large data files at ultra-high channel speeds in either direction between open systems and mainframe servers. | 3.7.13 |


Table 1.1 Program Products and Service Offerings (continued)

| Function | Description | See Section: |
| --- | --- | --- |
| Resource Management: | | |
| HiCommand™ | Enables users to manage the 9900 subsystem and perform functions (e.g., LUN Manager, SANtinel) from virtually any location via the HiCommand™ Web Client, command line interface (CLI), and/or third-party application. | 3.7.14 |
| LUN Manager | Enables users to configure the 9900 fibre-channel ports for operational environments (e.g., arbitrated-loop (FC-AL) and fabric topologies, host failover support). | 3.7.15 |
| LU Size Expansion (LUSE) | Enables open-system users to create expanded LUs which can be up to 36 times larger than standard fixed-size LUs. | 3.7.16 |
| Virtual LVI (VLVI); Virtual LUN (VLUN) | Enables users to configure custom-size LVIs and LUs which are smaller than standard-size devices. | 3.7.17 |
| FlashAccess (Flsh) | Enables users to store specific high-usage data directly in cache memory to provide virtually immediate data availability. | 3.7.18 |
| Cache Manager | Enables users to perform FlashAccess operations from the S/390® host system. FlashAccess allows you to place specific data in cache memory to enable virtually immediate access to this data. | 3.7.19 |
| Hitachi SANtinel; Hitachi SANtinel – S/390® | Allows users to restrict host access to data on the Lightning 9900™ subsystem. Open-system users can restrict host access to LUs based on the host’s World Wide Name (WWN). S/390® mainframe users can restrict host access to LVIs based on node IDs and logical partition (LPAR) numbers. | 3.7.20, 3.7.21 |
| Prioritized Port Control (PPC) | Allows open-system users to designate prioritized ports (e.g., for production servers) and non-prioritized ports (e.g., for development servers) and set thresholds and upper limits for the I/O activity of these ports. | 3.7.22 |
| Hitachi Parallel Access Volume (HPAV) | Enables the S/390® host system to issue multiple I/O requests in parallel to single LDEVs in the Lightning 9900™ subsystem. HPAV provides compatibility with the IBM® Workload Manager (WLM) host software function and supports both static and dynamic PAV functionality. | 3.7.23 |
| Dynamic Link Manager™ | Provides automatic load balancing, path failover, and recovery capabilities in the event of a path failure. | 3.7.24 |
| LDEV Guard | Enables the assigning of access permissions (Read/Write, Read-Only, and Protect) to logical volumes in a disk subsystem. 3390-3A, 3390-3B and 3390-3C volumes can be used by both mainframe hosts and open-system hosts. Note: Please check with your Hitachi Data Systems representative for the latest feature availability. | MK-92RD072 |
| Storage Utilities: | | |
| Hitachi CruiseControl | Monitors subsystem and volume activity and performs automatic relocation of volumes to optimize performance. | 3.7.26 |
| Hitachi Graph-Track™ (GT) | Provides detailed information on the I/O activity and hardware performance of the 9900 subsystem. Hitachi Graph-Track™ displays real-time and historical data in graphical format, including I/O statistics, cache statistics, and front-end and back-end microprocessor usage. | 3.7.27 |


1.1.7 Subsystem Scalability

The architecture of the 9900 subsystem accommodates scalability to meet a wide range of

capacity and performance requirements. The 9960 storage capacity can be increased from a

minimum of 54 GB to a maximum of 88 TB of user data. The 9960 nonvolatile cache can be

configured from 1 GB to 32 GB. All disk drive and cache upgrades can be performed without

interrupting user access to data.

The 9900 subsystem can be configured with the desired number and type of front-end client-host interface processors (CHIPs). The CHIPs are installed in pairs, and each CHIP pair offers

up to eight host connections. The 9960 can be configured with four CHIP pairs to provide up

to 32 paths to attached host processors. The 9910 supports up to three CHIP pairs and 24

paths.

The array control processors (ACPs) are the back-end processors which transfer data between the disk drives and cache. Each ACP pair is equipped with eight device paths. The 9960 subsystem can be configured with up to four ACP pairs, providing up to thirty-two concurrent data transfers to and from the disk drives. The 9910 is configured with one ACP pair.
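The path counts quoted in this section follow from simple multiplication, which the short Python sketch below reproduces. It is only an illustration of the arithmetic: the constant and function names are hypothetical, and the per-pair figures (eight host connections per CHIP pair, eight device paths per ACP pair) are taken from the text above.

```python
# Per-pair figures stated in this section.
HOST_PATHS_PER_CHIP_PAIR = 8
DEVICE_PATHS_PER_ACP_PAIR = 8

# Maximum pair counts per model, as stated in this section.
MAX_PAIRS = {
    "9960": {"chip": 4, "acp": 4},
    "9910": {"chip": 3, "acp": 1},
}


def max_paths(model: str) -> dict:
    """Return the maximum host and device path counts for a model."""
    pairs = MAX_PAIRS[model]
    return {
        "host_paths": pairs["chip"] * HOST_PATHS_PER_CHIP_PAIR,
        "device_paths": pairs["acp"] * DEVICE_PATHS_PER_ACP_PAIR,
    }


for model in MAX_PAIRS:
    print(model, max_paths(model))
```

A partially populated subsystem scales the same way; for example, two CHIP pairs would give up to sixteen host paths.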


1.2 Reliability, Availability, and Serviceability

The Lightning 9900™ subsystem is not expected to fail in any way that would interrupt user

access to data. The 9900 can sustain multiple component failures and still continue to

provide full access to all stored user data. Note: While access to user data is never

compromised, the failure of a key component can degrade performance.

The reliability, availability, and serviceability features of the 9900 subsystem include:

Full fault-tolerance. The 9900 subsystem provides full fault-tolerance capability for all

critical components. The disk drives are protected against error and failure by enhanced

RAID technologies and dynamic scrubbing and sparing. The 9900 uses component and

function redundancy to provide full fault-tolerance for all other subsystem components

(microprocessors, control storage, power supplies, etc.). The 9900 has no active single

point of component failure and is designed to provide continuous access to all user data.

Separate power supply systems. Each storage cluster is powered by a separate set of

power supplies. Each set can provide power for the entire subsystem in the unlikely

event of power supply failure. The power supplies of each set can be connected across

power boundaries, so that each set can continue to provide power if a power outage

occurs. The 9900 can sustain the loss of multiple power supplies and still continue

operation.

Dynamic scrubbing and sparing for disk drives. The 9900 uses special diagnostic

techniques and dynamic scrubbing to detect and correct disk errors. Dynamic sparing is

invoked automatically if needed. The 9960 can be configured with up to sixteen spare

disk drives, and any spare disk can back up any other disk of the same capacity, even if

the failed disk and spare disk are in different array domains (attached to different ACP

pairs).

Dynamic duplex cache. The 9900 cache is divided into two equal segments on separate

power boundaries. The 9900 places all write data in both cache segments with one

internal write operation, so the data is always duplicated (duplexed) across power

boundaries. If one copy of write data is defective or lost, the other copy is immediately

destaged to disk. This duplex design ensures full data integrity in the event of a cache or

power failure.

Remote copy features. The Hitachi TrueCopy and Hitachi Extended Remote Copy

(HXRC) data movement features enable the user to set up and maintain duplicate copies

of S/390® and open-system data over extended distances. In the event of a system

failure or site disaster, the secondary copy of data can be invoked rapidly, allowing

applications to be recovered with guaranteed data integrity.


Hi-Track®. The Hi-Track® maintenance support tool monitors the operation of the 9900 subsystem at all times, collects hardware status and error data, and transmits this data via modem to the Hitachi Data Systems Support Center. The Hitachi Data Systems Support Center analyzes the data and implements corrective action when necessary. In the unlikely event of a component failure, Hi-Track® calls the Hitachi Data Systems Support Center immediately to report the failure without requiring any action on the part of the user. Hi-Track® enables most problems to be identified and fixed prior to actual failure, and the advanced redundancy features enable the subsystem to remain operational even if one or more components fail. Note: Hi-Track® does not have access to any user data stored on the 9900 subsystem. The Hi-Track® tool requires a dedicated RJ-11 analog phone line.

Nondisruptive service and upgrades. All hardware upgrades can be performed

nondisruptively during normal subsystem operation. All hardware subassemblies can be

removed, serviced, repaired, and/or replaced nondisruptively during normal subsystem

operation. All microcode upgrades can be performed during normal subsystem

operations using the SVP or the alternate path facilities of the host.

Error Reporting. The Lightning 9900™ subsystem reports service information messages

(SIMs) to notify users of errors and service requirements. SIMs can also report normal

operational changes, such as remote copy pair status change. The SIMs are logged on the

9900 service processor (SVP) and on the Remote Console PC, reported directly to the

mainframe and open-system hosts, and reported to Hitachi Data Systems via Hi-Track®.


Chapter 2 Subsystem Architecture and Components

2.1 Overview

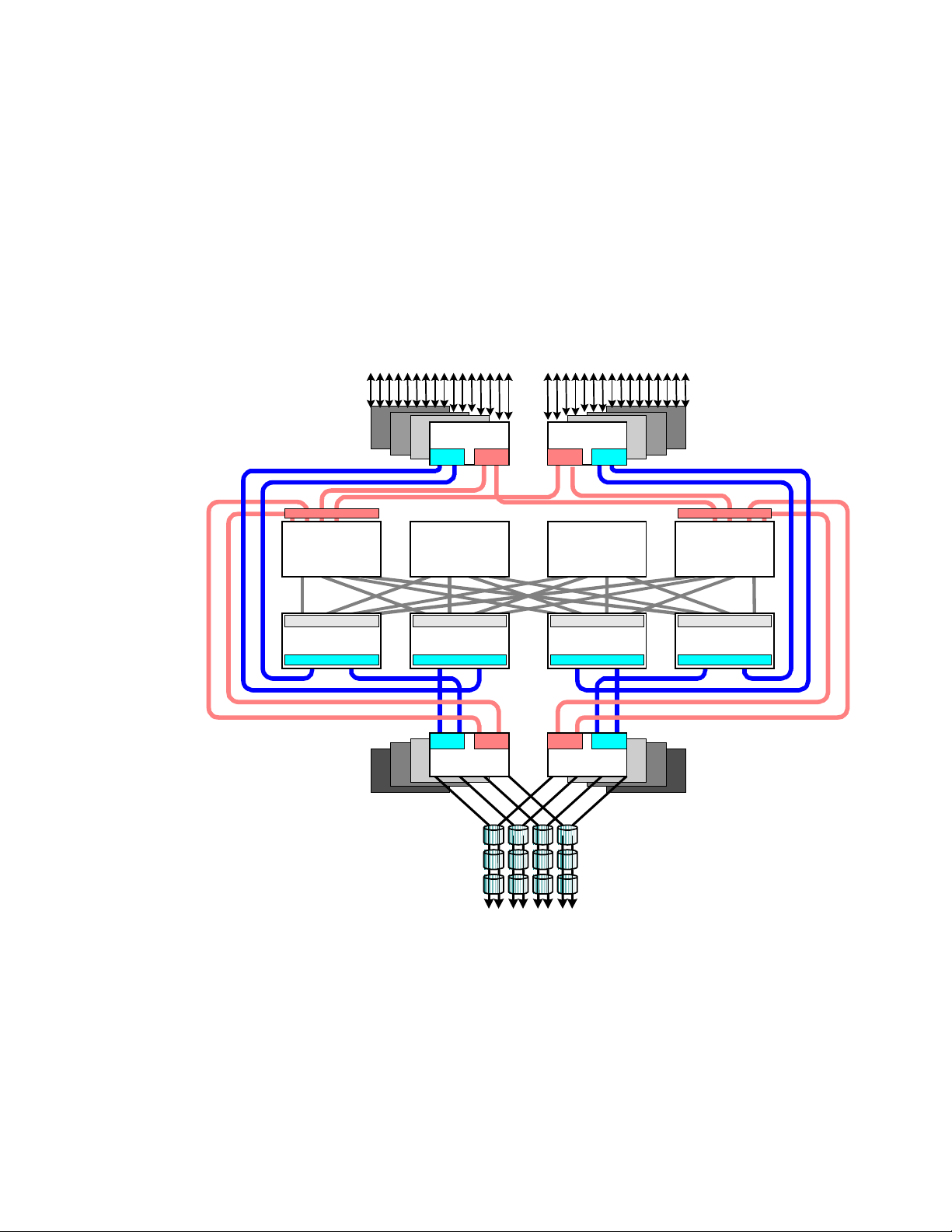

Figure 2.1 shows the Hierarchical Star Network (HiStar or HSN) architecture of the Lightning

9900™ RAID subsystem. The “front end” of the 9900 subsystem includes the hardware and

software that transfers the host data to and from cache memory, and the “back end”

includes the hardware and software that transfers data between cache memory and the disk

drives.

[Diagram labels: CHT and ACP boards, each with DTA and MPA components, on the processor side of the cache switches; control cache and data cache on the cache/data side.]

Figure 2.1 Lightning 9900™ HiStar Network (HSN) Architecture


Front End: The 9900 front end is entirely resident in the 9900 controller frame and includes

the client-host interface processors (CHIPs) that reside on the channel adapter (CHA or CHT)

boards. The CHIPs control the transfer of data to and from the host processors via the fibre-channel, ExSA™, and/or FICON™ channel interfaces and to and from cache memory via

independent high-speed paths through the cache switches (CSWs).

Each channel adapter board (CHA or CHT) can contain two or four CHIPs. The 9960

subsystem supports up to eight CHAs for a maximum of 32 host interfaces, and the 9910

subsystem supports up to six CHAs to provide a maximum of 24 host interfaces.

The 9960 controller contains four cache switch (CSW) cards, and the 9910 controller

contains two CSW cards.

Cache memory in the 9960 resides on two or four cards depending on features, and each

cache card is backed up by a separate battery. The 9910 supports two cache cards.

Shared memory resides on the first two cache cards and is provided with its own power

sources and backup batteries. Shared memory also has independent address and data

paths from the channel adapter and disk adapter boards.

Back End: The 9900 back end is controlled by the array control processors (ACPs) that reside

on the disk adapter boards in the 9900 controller frame. The ACPs control the transfer of

data to and from the disk arrays via high-speed fibre (100 MB/sec or 1 Gbps) and then to and

from cache memory via independent high-speed paths through the CSWs.

The disk adapter board (DKA) contains four ACPs. The 9960 subsystem supports up to

eight DKAs for a maximum of 32 ACPs. The 9910 subsystem supports two DKAs for a

maximum of eight ACPs.

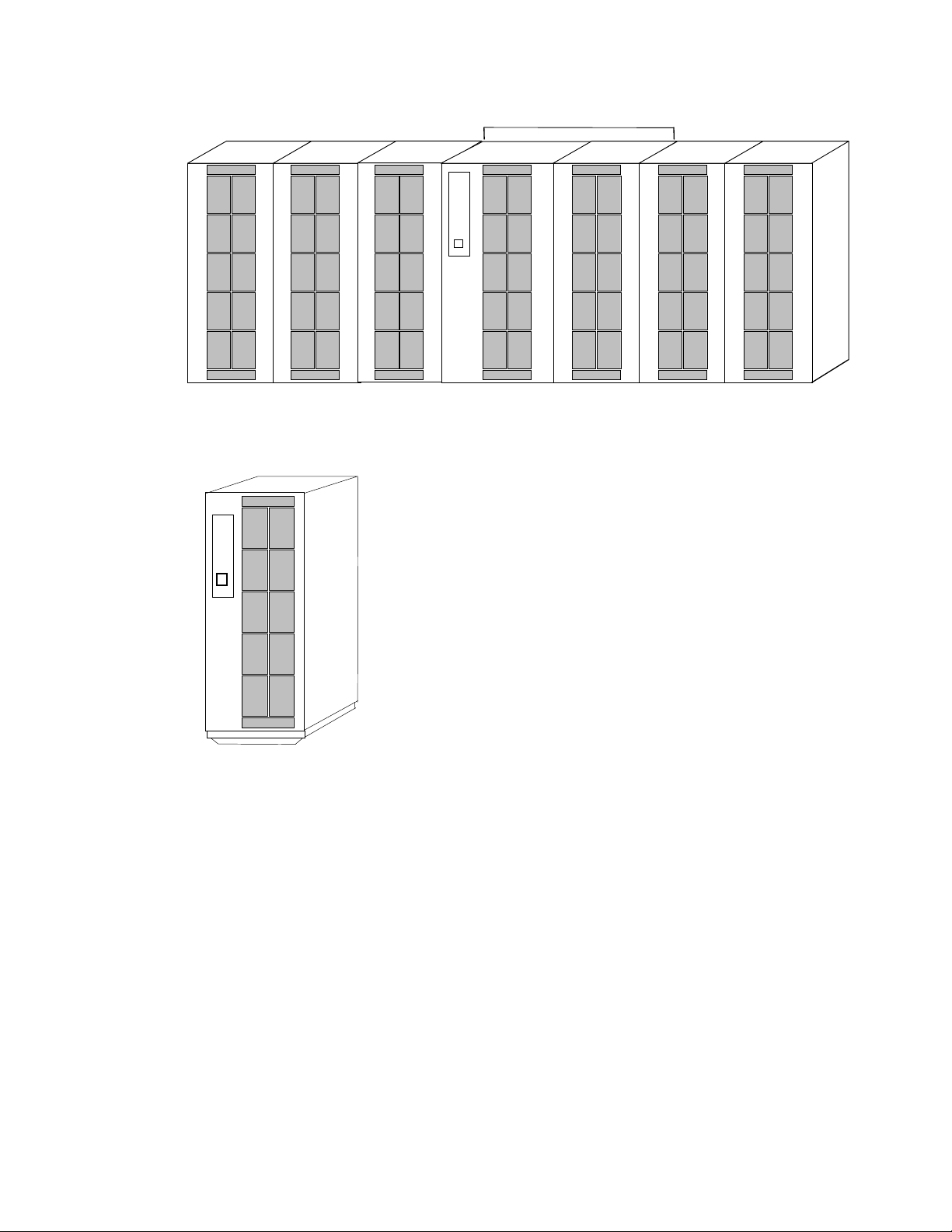

The 9960 subsystem (see Figure 2.2) includes the following major components:

One controller frame containing the control and operational components of the

subsystem.

Up to six disk array frames containing the storage components (disk drive arrays) of the

subsystem.

The service processor (SVP) (see section 2.5). The 9900 SVP is located in the controller

frame and can only be used by authorized Hitachi Data Systems personnel.

The Remote Console PC (see section 2.6). The 9900 Remote Console PC can be attached

to multiple 9960 and/or 9910 subsystems via the 9900-internal local-area network (LAN).

The 9910 subsystem (see Figure 2.3) includes the following major components:

One frame containing the controller and disk components of the subsystem.

The service processor (SVP) (see section 2.5). The 9900 SVP is located in the controller

frame and can only be used by authorized Hitachi Data Systems personnel.

The Remote Console PC (see section 2.6). The 9900 Remote Console PC can be attached

to multiple 9960 and/or 9910 subsystems.


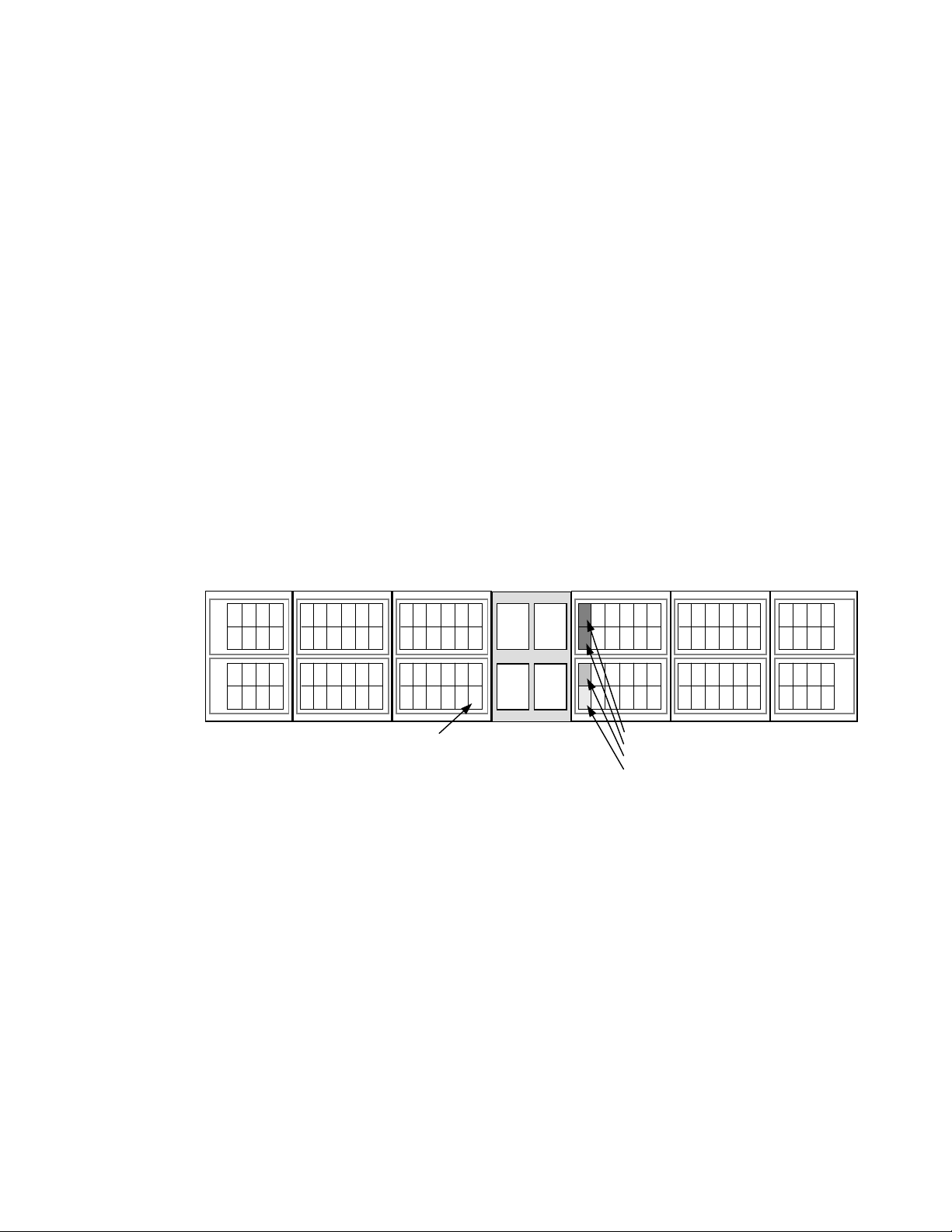

[Diagram labels: "Minimum configuration of 9960 subsystem"; frames shown in a row: Disk Array Unit, Disk Array Unit, Disk Array Unit, 9900 Controller, Disk Array Unit, Disk Array Unit, Disk Array Unit.]

Figure 2.2 9960 Subsystem Frames

Figure 2.3 9910 Subsystem Frame


2.2 Components of the Controller Frame

The 9900 controller frame contains the control and operational components of the

subsystem. For the 9910 subsystem, the controller frame also contains the disk array

components. The 9900 controller is fully redundant and has no active single point of failure.

All controller frame components can be repaired or replaced without interrupting access to

user data. The key features and components of the controller frame are:

Storage clusters (see section 2.2.1),

Nonvolatile duplex shared memory (see section 2.2.2),

Nonvolatile duplex cache memory (see section 2.2.3),

Multiple data and control paths (see section 2.2.4),

Redundant power supplies (see section 2.2.5),

CHIPs and channels (FICON™, ExSA™, and/or fibre-channel) (see section 2.2.6),

ACPs (see section 2.2.8).

2.2.1 Storage Clusters

Each controller frame consists of two redundant controller halves called storage clusters.

Each storage cluster contains all physical and logical elements (e.g., power supplies, CHAs,

CHIPs, ACPs, cache, control storage) needed to sustain processing within the subsystem.

Both storage clusters should be connected to each host using an alternate path scheme, so

that if one storage cluster fails, the other storage cluster can continue processing for the

entire subsystem.
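The alternate path scheme described above can be pictured with a small Python sketch. This is a hypothetical illustration of host-side path selection, not the behavior of any specific failover product: the `Path` class and `select_path` function are invented names, and the model assumes one host path per storage cluster.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One host-to-subsystem path (hypothetical model)."""
    cluster: int          # storage cluster the path terminates in (1 or 2)
    online: bool = True   # False once the path or its cluster has failed


def select_path(paths):
    """Return the first online path, or raise if none remain."""
    for path in paths:
        if path.online:
            return path
    raise RuntimeError("no online path to the subsystem")


# One path into each storage cluster, as the text recommends.
paths = [Path(cluster=1), Path(cluster=2)]
print(select_path(paths).cluster)   # I/O uses the cluster 1 path

paths[0].online = False             # simulate a failure of cluster 1
print(select_path(paths).cluster)   # I/O continues via cluster 2
```

With only a single path configured, the same failure would leave the host without access, which is why both clusters should be connected to each host.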

Each pair of channel adapters is split between clusters to provide full backup for both front-end and back-end microprocessors. Each storage cluster also contains a separate, duplicate

copy of cache and shared memory contents. In addition to the high-level redundancy that

this type of storage clustering provides, many of the individual components within each

storage cluster contain redundant circuits, paths, and/or processors to allow the storage

cluster to remain operational even with multiple component failures. Each storage cluster is

powered by its own set of power supplies, which can provide power for the entire subsystem

in the unlikely event of power supply failure. Because of this redundancy, the Lightning

9900™ subsystem can sustain the loss of multiple power supplies and still continue operation.

Note: The redundancy and backup features of the Lightning 9900™ subsystem eliminate all

active single points of failure, no matter how unlikely, to provide an additional level of

reliability and data availability.


2.2.2 Nonvolatile Shared Memory

The nonvolatile shared memory contains the cache directory and configuration information

for the 9900 subsystem. The path group arrays (e.g., for dynamic path selection) also reside

in the shared memory. The shared memory is duplexed, and each side of the duplex resides

on the first two cache cards, which are in clusters 1 and 2. Even though the shared memory

resides on the cache cards, the shared memory has separate power supplies and separate

battery backup. The basic size of the shared memory is 512 MB, and the maximum size is 1.5

GB (for 9960). The size of the shared memory storage is determined by the total cache size

and the number of logical devices (LDEVs). Any required increase beyond the base size is

automatically shipped and configured during the upgrade process. The shared memory is

protected by battery backup.

2.2.3 Nonvolatile Duplex Cache

The 9960 subsystem can be configured with up to 32 GB of cache, and the 9910 can be

configured with up to 16 GB of cache. All cache memory in the 9900 is nonvolatile, and each

cache card is protected by its own 48-hour battery backup. The cache in the 9900 is divided

into two equal areas (called cache A and cache B) on separate cards. Cache A is in cluster 1,

and cache B is in cluster 2. The 9900 places all read and write data in cache. Write data is

normally written to both cache A and B with one CHIP write operation, so that the data is

always duplicated (duplexed) across logic and power boundaries. If one copy of write data is

defective or lost, the other copy is immediately destaged to disk. This “duplex cache”

design ensures full data integrity in the unlikely event of a cache memory or power-related

failure.
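The duplex write behavior described above can be modeled in a few lines of Python. This is a toy sketch under stated assumptions, not the 9900 microcode: the `DuplexCache` class, its method names, and the dictionary standing in for the disk drives are all hypothetical.

```python
class DuplexCache:
    """Toy model of duplexed write caching (illustrative only)."""

    def __init__(self):
        self.cache_a = {}  # cache A (cluster 1)
        self.cache_b = {}  # cache B (cluster 2)
        self.disk = {}     # stands in for the disk drives

    def write(self, track, data):
        # One write operation places the data in both cache areas.
        self.cache_a[track] = data
        self.cache_b[track] = data

    def lose_area(self, area):
        """Simulate losing cache area 'a' or 'b' and destage the survivor."""
        if area == "a":
            lost, survivor = self.cache_a, self.cache_b
        elif area == "b":
            lost, survivor = self.cache_b, self.cache_a
        else:
            raise ValueError("area must be 'a' or 'b'")
        lost.clear()
        # The surviving copy of each write is destaged to disk at once.
        self.disk.update(survivor)


cache = DuplexCache()
cache.write("track-0", b"payload")
cache.lose_area("a")
print(cache.disk)
```

The point of the model is that a write is never held in only one cache area, so the loss of either area still leaves a copy that can be destaged.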

Note: Mainframe hosts can specify special attributes (e.g., cache fast write (CFW)

command) to write data (typically a sort command) without write duplexing. This data is not

duplexed and is usually given a discard command at the end of the sort, so that the data will

not be destaged to the disk drives. See section 4.3.3 for further information on S/390® cache operations.


2.2.4 Multiple Data and Control Paths

The 9900 subsystem uses a state-of-the-art architecture called the Hierarchical Star (HiStar)

Network (HSN) which utilizes multiple point-to-point data and command paths in order to

provide redundancy and improve performance. Each data and command path is independent.

The individual paths between the channel or disk adapters and cache are steered by high-speed cache switch cards. The 9900 does not have any common buses, thus eliminating the

performance degradation and contention that can occur in a bus architecture. All data

stored on the 9900 subsystem is moved into and out of cache via the redundant high-speed

paths.

2.2.5 Redundant Power Supplies

Each storage cluster is powered by its own set of redundant power supplies, and each power

supply is able to provide power for the entire subsystem, if necessary. Because of this

redundancy, the 9900 subsystem can sustain the loss of multiple power supplies and still

continue operation. To make use of this capability, the 9900 should be connected either to

dual power sources or to different power panels, so if there is a failure on one of the power

sources, the 9900 can continue full operations using power from the alternate source.

2.2.6 Client-Host Interface Processors (CHIPs) and Channels

The CHIPs contain the front-end microprocessors which process the channel commands from the host(s) and manage host access to cache. In the S/390® environment, the CHIPs perform CKD-to-FBA and FBA-to-CKD conversion for the data in cache. The CHIPs are available in pairs. Depending on the configuration, each CHIP in a pair contains either two or four microprocessors and four buffers which allow data to be transferred between the CHIP and cache. Each CHIP pair is composed of the same type of channel interface (FICON™, ExSA™, or fibre-channel). Each ExSA™ or fibre-channel CHIP pair supports either four or eight simultaneous data transfers to and from cache and four or eight physical connections to the host. Each FICON™ CHIP pair supports four physical connections to the host. The 9900 can be configured with multiple CHIP pairs to support various interface configurations. Table 2.1 lists the CHIP specifications and configurations and the number of channel connections for each configuration.

Note: The Hitachi CruiseControl and Graph-Track products (see section 3.7) allow users to collect and view usage statistics for the CHIPs in the 9900 subsystem.


Table 2.1 CHIP and Channel Specifications

| Parameter | Specification for 9960 | Specification for 9910 |
| --- | --- | --- |
| Number of CHIP pairs | 1, 2, 3, or 4 | 1, 2, or 3 |
| Simultaneous data transfers per CHIP pair: | | |
| S/390® | 4 or 8 ExSA™ (serial/ESCON®) | 4 or 8 ExSA™ (serial/ESCON®) |
| Open Systems | 4 or 8 (fibre-channel) | 4 or 8 (fibre-channel) |
| Maximum transfer rate: | | |
| FICON™ | 100 MB/sec (1 Gbps) | 100 MB/sec (1 Gbps) |
| ExSA™ (serial/ESCON®) | 10 or 17 MB/sec | 10 or 17 MB/sec |
| Fibre | 100 or 200 MB/sec (1 or 2 Gbps) | 100 or 200 MB/sec (1 or 2 Gbps) |
| Physical interfaces per CHIP pair | 4 or 8 ExSA™; 4 FICON™; 4 or 8 fibre-channel | 4 or 8 ExSA™; 4 FICON™; 4 or 8 fibre-channel |
| Maximum physical interfaces per subsystem: | 32 | 24 |
| FICON™ | 0, 4, 8, 12, or 16 | 0, 4, 8, or 12 |
| ExSA™ (serial/ESCON®) | 0, 4, 8, 12, 16, 20, 24, 28, or 32 | 0, 4, 8, 12, 16, 20, or 24 |
| Fibre-channel | 0, 4, 8, 12, 16, 20, 24, 28, or 32 | 0, 4, 8, 12, 16, 20, or 24 |
| Logical paths per FICON™ port | 512 | 512 |
| Logical paths per ExSA™ (ESCON®) port | 256 | 256 |
| Maximum logical paths per subsystem | 8,192 | 6,144 |
| Maximum LUs per fibre-channel port | 256 | 256 |
| Maximum LVI/LUs per subsystem | 4,096 | 4,096 |
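The logical path maximums in Table 2.1 are consistent with multiplying the maximum port count by the logical paths per port. The Python sketch below checks that arithmetic. The dictionary and function names are hypothetical, and reading the subsystem maximum as "ports times paths per port" is an inference from the numbers, not a statement from the table.

```python
# Per-port logical path limits from Table 2.1.
LOGICAL_PATHS_PER_PORT = {"FICON": 512, "ExSA": 256}

# Maximum port counts per model from Table 2.1.
MAX_PORTS = {
    "9960": {"FICON": 16, "ExSA": 32},
    "9910": {"FICON": 12, "ExSA": 24},
}


def logical_paths(model: str, channel: str) -> int:
    """Logical paths for a subsystem fully populated with one channel type."""
    return MAX_PORTS[model][channel] * LOGICAL_PATHS_PER_PORT[channel]


for model in MAX_PORTS:
    for channel in LOGICAL_PATHS_PER_PORT:
        print(model, channel, logical_paths(model, channel))
```

Under this reading, a full FICON™ configuration and a full ExSA™ configuration reach the same subsystem total for a given model.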


2.2.7 Channels

The Lightning 9900™ subsystem supports all-mainframe, multiplatform, and all-open system operations and offers the following three types of host channel connections:

Fiber Connection (FICON™). The 9960 subsystem supports up to 16 FICON™-channel ports, and the 9910 supports up to 12 FICON™ ports. The FICON™ ports are capable of data transfer speeds of 100 MB/sec (1 Gbps). The 9900 FICON™-channel cards are available with four ports per CHIP pair. The 9900 supports shortwave and longwave non-OFC (non-open fibre control) optical interface and multimode optical cables as well as high-availability (HA) FICON™-channel configurations using hubs and switches. When configured with shortwave FICON™ cards, the 9900 subsystem can be located up to 500 meters (1,640 feet) from the host(s). When configured with longwave FICON™ cards, the 9900 subsystem can be located up to ten kilometers from the host(s).

Extended Serial Adapter™ (ExSA™). The 9960 subsystem supports a maximum of 32

ExSA™ serial channel interfaces (compatible with ESCON® protocol), and the 9910

supports a maximum of 24 ExSA™ interfaces. The 9900 ExSA™ channel interface cards

provide data transfer speeds of up to 17 MB/sec and are available in four or eight ports

per CHIP pair. Each ExSA™ channel can be connected to a single processor or logical

partition (LPAR) or to serial channel directors. Shared serial channels can be used for

dynamic path switching. The 9900 subsystem also supports the ExSA™ Extended Distance

Feature (XDF).

Fibre-Channel. The 9960 subsystem supports up to 32 fibre-channel ports, and the 9910

supports up to 24 fibre ports. The fibre ports are capable of data transfer speeds of 100

or 200 MB/sec (1 or 2 Gbps). The 9900 fibre-channel cards are available in either four or

eight ports per CHIP pair. The 9900 supports shortwave and longwave non-OFC (non-open

fibre control) optical interface and multimode optical cables as well as high-availability

(HA) fibre-channel configurations using hubs and switches. When configured with

shortwave fibre cards, the 9900 subsystem can be located up to 500 meters (1,640 feet)

from the open-system host(s). When configured with longwave fibre cards, the 9900

subsystem can be located up to ten kilometers from the open-system host(s).


2.2.8 Array Control Processors (ACPs)


The ACPs, which control the transfer of data between the disk drives and cache, are

installed in pairs for redundancy and performance. Figure 2.4 illustrates a conceptual ACP

pair domain. The 9960 can be configured with up to four ACP pairs, and the 9910 has one

ACP pair. All functions, paths, and disk drives controlled by one ACP pair are called an

“array domain.” An array domain can contain a variety of LVI and/or LU configurations.

The disk drives are connected to the ACP pairs by fibre cables using an arbitrated-loop

(FC-AL) topology. Each ACP has four microprocessors and four independent fibre backend

paths. Each 9960 fibre backend path can access up to 32 disk drives (32 drives × 4 paths =

128 disk drives per ACP). Each 9910 fibre backend path can access up to 12 disk drives (12

drives × 4 paths = 48 disk drives per ACP). Each disk drive is dual-ported for performance and

redundancy in case of a backend path failure.

Table 2.2 lists the ACP specifications. Each 9960 ACP pair can support a maximum of 128

physical disk drives (in three array frames), including dynamic spare disk drives. Each ACP

pair contains eight buffers (one per fibre path) that support data transfer to and from

cache. Each disk drive has a dual-port feature and can transfer data via either port. Each of

the two paths shared by the disk drive is connected to a separate ACP in the pair to provide

alternate path capability. Each ACP pair is capable of eight simultaneous data transfers to or

from the disk drives.
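
The drive and path counts above follow from simple multiplication. The Python sketch below reproduces that arithmetic for illustration only; the class, function, and constant names are hypothetical and are not part of any Hitachi tool. It assumes, as stated above, that every drive is dual-ported to both ACPs of a pair, so an ACP pair addresses the same set of drives as a single ACP.

```python
from dataclasses import dataclass

PATHS_PER_ACP = 4   # four independent fibre backend paths per ACP
ACPS_PER_PAIR = 2   # ACPs are installed in pairs


@dataclass(frozen=True)
class Model:
    """Hypothetical container for the per-model limits quoted in the text."""
    name: str
    drives_per_path: int
    max_acp_pairs: int


HDS_9960 = Model("9960", drives_per_path=32, max_acp_pairs=4)
HDS_9910 = Model("9910", drives_per_path=12, max_acp_pairs=1)


def drives_per_acp(model: Model) -> int:
    # Drives reachable by one ACP (and, via dual porting, by its pair).
    return model.drives_per_path * PATHS_PER_ACP


def backend_paths(acp_pairs: int) -> int:
    # Eight backend paths per ACP pair.
    return acp_pairs * ACPS_PER_PAIR * PATHS_PER_ACP


def max_drives(model: Model) -> int:
    # Upper bound on drives behind all ACP pairs, spares included.
    return drives_per_acp(model) * model.max_acp_pairs


if __name__ == "__main__":
    for m in (HDS_9960, HDS_9910):
        print(m.name, drives_per_acp(m), backend_paths(m.max_acp_pairs), max_drives(m))
```

Because each ACP pair handles eight simultaneous transfers (one per backend path), the same `backend_paths` figure also gives the maximum number of concurrent backend operations for a given number of ACP pairs.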

Note: The Hitachi CruiseControl and Graph-Track products (see section 3.7) allow users to

collect and view usage statistics for the ACPs in the 9900 subsystem.

[Figure: ACP Pair 1, ACP Pair 2, ACP Pair 3, and ACP Pair 4. Maximum four ACP pairs; 32 FC-AL loops = 3.2 GB/s.]

Figure 2.4 Conceptual ACP Array Domain

Table 2.2 ACP Specifications

Description                                           Specification for 9960                   Specification for 9910
Number of ACP pairs                                   1, 2, 3 or 4                             1
Backend paths per ACP pair                            8                                        Same as 9960
Backend paths per subsystem                           8, 16, 24 or 32                          8
Array group (or parity group) type per ACP pair       RAID-1 and/or RAID-5                     Same as 9960
Hard disk drive type per ACP pair [1]                 18 GB, 47 GB, 73 GB, 146 GB, 180 GB [2]  Same as 9960
Logical device emulation type within ACP pair [3]     3380-x, 3390-x, and OPEN-x               Same as 9960
Backend array interface type                          Fibre-channel arbitrated loop (FC-AL)    Same as 9960
Backend interface transfer rate (burst rate)          100 MB/sec (1 Gbps)                      Same as 9960
Maximum concurrent backend operations per ACP pair    8                                        Same as 9960
Maximum concurrent backend operations per subsystem   32                                       8
HiStar Network architecture internal bandwidth        1.6 or 3.2 GB/sec                        1.6 GB/sec

Notes:

1. All hard disk drives (HDDs) in an array group (also called parity group) must be the same type. Please contact your Hitachi Data Systems representative for the latest information on available HDD types.

2. The 180-GB HDDs should not be intermixed with other HDD types in the same array domain (behind the same ACP pair). See the notes under Table 2.3 for important information on 9900 subsystems which contain 180-GB HDDs.

3. 3390-3 and 3390-3R LVIs cannot be intermixed in the same 9900 subsystem.

2.3 Array Frame

The 9960 array frames contain the physical disk drives, including the disk array groups and

the dynamic spare disk drives. Each array frame has dual AC power plugs, which should be

attached to two different power sources or power panels. The 9960 can be configured with

up to six array frames to provide a storage capacity of up to 88 TB. The 9910 subsystem

combines the controller and disk array components in one physical frame.

The 9900 subsystem uses three-inch disk drives with fixed-block-architecture (FBA) format.

The currently available disk drives have capacities of 18 GB, 47 GB, 73 GB, 146 GB, and 180

GB. All drives in an array group must have the same capacity. The 18-GB, 47-GB, 73-GB, and

146-GB HDDs can be attached to the same ACP pair. The 180-GB HDDs should not be

intermixed with other HDD types behind the same ACP pair. Table 2.3 provides the disk drive

specifications.

Each disk drive can be replaced nondisruptively on site. The 9900 utilizes diagnostic

techniques and background dynamic scrubbing that detect and correct disk errors. Dynamic

sparing is invoked automatically if needed. For both RAID-5 and RAID-1 array groups, any

spare disk drive can back up any other disk drive of the same capacity anywhere in the

subsystem, even if the failed disk and the spare disk are in different array domains

(attached to different ACP pairs). The 9960 can be configured with a minimum of one and a

maximum of sixteen spare disk drives. The 9910 can be configured with a minimum of one

and a maximum of four spare disk drives. The standard configuration provides one spare

drive for each type of drive installed in the subsystem. The Hi-Track® monitoring and reporting

tool detects disk drive failures and notifies the Hitachi Data Systems Support Center

automatically, and a service representative is sent to replace the disk drive.

Note: The spare disk drives are used only as replacements and are not included in the

storage capacity ratings of the subsystem.
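
The sparing rule described above (any spare can stand in for any failed drive of the same capacity, regardless of array domain) can be expressed as a small selection function. This is a minimal sketch under stated assumptions: the dictionary fields (`capacity_gb`, `acp_pair`, `in_use`) and the first-match policy are hypothetical, and the subsystem's actual selection logic is not described in this guide.

```python
from typing import Optional, Sequence


def pick_spare(failed_capacity_gb: int, spares: Sequence[dict]) -> Optional[dict]:
    """Return the first free spare whose capacity matches the failed drive.

    The ACP pair (array domain) of the spare is deliberately ignored,
    because a spare may back up a drive in a different array domain.
    """
    for spare in spares:
        if spare["capacity_gb"] == failed_capacity_gb and not spare["in_use"]:
            return spare
    return None  # no suitable spare is available


if __name__ == "__main__":
    # Hypothetical spare pool for illustration.
    pool = [
        {"capacity_gb": 18, "acp_pair": 1, "in_use": False},
        {"capacity_gb": 73, "acp_pair": 3, "in_use": False},
    ]
    # A failed 73-GB drive behind ACP pair 1 is covered by the spare behind ACP pair 3.
    print(pick_spare(73, pool))
```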

Table 2.3 Disk Drive Specifications

                                            Disk Drive Type
Parameter                                   73 GB         47 GB         18 GB         146 GB        180 GB*
Formatted capacity (GB)                     72.91         47.19         18.46         143.7         172.55
Platter diameter                            3 inches      3 inches      3 inches      3 inches      3 inches
Physical cylinders (user area)              15,154        12,027        12,027        n/a           24,247
Physical tracks per physical cylinder       24 (DKR1C)    23 (DKR1B)    9 (DKR2B)     10            24
  (number of heads)                         10 (DKR2D)    16 (DKR1C)    6 (DKR2C)
Physical disk platters (user area)          12 (DKR1C)    12 (DKR1B)    5 (DKR2B)     5             12
  (number of disks)                         5 (DKR2D)     8 (DKR1C)     3 (DKR2C)
Sector length (byte)                        520 (512)     520 (512)     520 (512)     520 (512)     520 (512)
Seek time (ms) (Read/Write), MIN.           0.5/0.7       0.5/0.7       0.5/0.7       0.5/0.7       0.8/1.1
Seek time (ms) (Read/Write), AVE.           5.7/6.5       5.7/6.5       5.2/6.0       4.9/5.4       7.4/8.2
Seek time (ms) (Read/Write), MAX.           12.0/13.0     12.0/13.0     12.0/13.0     10.0/11.0     16.0/17.0
Revolution speed (rpm)                      10,025        10,025        10,025        10,025        7,200
Average latency time (ms)                   2.99          2.99          2.99          2.99          4.17
Internal data transfer rate (MB/sec)        33.6 to 56.6  30.2 to 45.6  30.2 to 45.6  57.3 to 99.9  35.3 to 63.5
Max. interface data transfer rate (MB/sec)  100           100           100           100           100

*Caution and notes for the 180-GB HDDs:

Caution: The 180-GB HDDs should not be intermixed with other HDD types in the same

array domain (behind the same ACP pair). If 180-GB HDDs are used, all HDDs in that

array domain should also be 180-GB HDDs.

Note: Subsystems which contain the 180-GB HDDs do not have the same availability or

serviceability as those subsystems which do not contain 180-GB HDDs. Some offline

maintenance may be required.

Note: The 180-GB HDDs are intended for archival use and do not have characteristics

that make them suitable as performance devices.
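
The average latency figures in Table 2.3 are consistent with the usual rule that average rotational latency is the time for half a revolution. The Python sketch below checks that relationship; the function name is hypothetical, and the half-revolution formula is a general disk-drive convention rather than something stated in this guide.

```python
def average_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in milliseconds."""
    ms_per_revolution = 60_000.0 / rpm
    return ms_per_revolution / 2.0


if __name__ == "__main__":
    for rpm in (10_025, 7_200):
        print(f"{rpm} rpm: {average_latency_ms(rpm):.2f} ms")
```

For the two revolution speeds in the table, this gives 2.99 ms at 10,025 rpm and 4.17 ms at 7,200 rpm, matching the listed values.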

2.3.1 Disk Array Groups

The disk array group is the basic unit of storage capacity for the 9900. Each array group is

attached to both ACPs of an ACP pair via eight fibre paths, which enables all disk drives in

the array group to be accessed simultaneously by the ACP pair. All disk drives in an array

group must have the same logical capacity. Each array frame has two canister mounts, and

each canister mount can have up to 48 physical disk drives.

The 9900 supports both RAID-1 and RAID-5 array groups. Figure 2.5 illustrates a sample RAID-1

layout. A RAID-1 array group consists of two pairs of disk drives in a mirrored configuration,

regardless of disk drive capacity. Data is striped to two drives and mirrored to the other two

drives. The stripe consists of two data chunks. The primary and secondary stripes are

toggled back and forth across the physical disk drives for high performance. Each data chunk

consists of either eight logical tracks (S/390®) or 768 logical blocks (open systems). A failure

in a drive causes the corresponding mirrored drive to take over for the failed drive. Although

the RAID-5 implementation is appropriate for many applications, the RAID-1 option on the

all-open 9900 subsystem is ideal for workloads with low cache-hit ratios.

RAID-1 using 2D + 2D and S/390® LDEVs

[Figure: four disk drives. Two drives each hold Track 0 to Track 7, Track 16 to Track 23, Track 32 to Track 39, and Track 48 to Track 55. The other two drives each hold Track 8 to Track 15, Track 24 to Track 31, Track 40 to Track 47, and Track 56 to Track 63.]

Figure 2.5 Sample RAID-1 Layout
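
The layout in Figure 2.5 can be read as a mapping from logical track number to a data drive and a position on that drive. The Python sketch below is illustrative only: the function and constant names are hypothetical, the drive numbering (0 and 1) is arbitrary, and it models only the alternating eight-track chunks of S/390® data, not the mirror copies or the toggling of primary and secondary stripes.

```python
TRACKS_PER_CHUNK = 8   # eight logical tracks per data chunk (S/390 data)
DATA_DRIVES = 2        # 2D + 2D: two data drives, each with a mirror


def locate_track(track: int) -> tuple[int, int]:
    """Return (data_drive, chunk_row) for a logical track number.

    data_drive is 0 or 1; chunk_row counts chunks from the start of that drive.
    The mirrored drive of each data drive holds the same chunk at the same row.
    """
    if track < 0:
        raise ValueError("track number must be non-negative")
    chunk = track // TRACKS_PER_CHUNK
    return chunk % DATA_DRIVES, chunk // DATA_DRIVES


if __name__ == "__main__":
    for t in (0, 7, 8, 16, 31, 56, 63):
        drive, row = locate_track(t)
        print(f"track {t:2d} -> data drive {drive}, chunk row {row}")
```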

A RAID-5 array group consists of four disk drives. The data is written across the four hard

drives in a stripe that has three data chunks and one parity chunk. Each chunk contains

either eight logical tracks (S/390®) or 768 logical blocks (open systems). The enhanced

RAID-5+ implementation in the 9900 subsystem minimizes the write penalty incurred by standard

RAID-5 implementations by keeping write data in cache until an entire stripe can be built

and then writing the entire data stripe to the disk drives.
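
A stripe of three data chunks and one parity chunk can be sketched in a few lines of Python. The example assumes the parity chunk is the bytewise exclusive OR of the three data chunks, which is the standard RAID-5 construction; the function name and the tiny two-byte chunks are hypothetical and chosen only for illustration. It shows how parity is built from a full stripe held in memory and how a lost chunk is rebuilt from the survivors.

```python
from functools import reduce
from typing import Sequence


def xor_chunks(chunks: Sequence[bytes]) -> bytes:
    """Bytewise XOR of equally sized chunks."""
    if len({len(c) for c in chunks}) != 1:
        raise ValueError("all chunks must have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


if __name__ == "__main__":
    # Three data chunks of one stripe (toy sizes; real chunks are far larger).
    data = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]

    # Full-stripe write: parity is computed from cached data, with no disk reads.
    parity = xor_chunks(data)

    # Simulated failure of the drive holding data[1]: rebuild it from the rest.
    rebuilt = xor_chunks([data[0], data[2], parity])
    print(parity.hex(), rebuilt == data[1])
```

Building parity from a complete stripe in cache avoids reading old data and old parity from disk before each write, which is the write penalty the paragraph above refers to.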

Figure 2.6 illustrates RAID-5 data stripes mapped over four physical drives. Data and parity

are striped across each of the disk drives in the array group (hence the term “parity group”).

The logical devices (LDEVs) are evenly dispersed in the array group, so that the performance

of each LDEV within the array group is the same. Figure 2.6 also shows the parity chunks

that are the “Exclusive OR” (EOR) of the data chunks. The parity and data chunks rotate

after each stripe. The total data in each stripe is either 24 logical tracks (eight tracks per

chunk) for S/390® data, or 2304 blocks (768 blocks per chunk) for open-systems data. Each of

these array groups can be configured as either 3380-x, 3390-x, or OPEN-x logical devices. All

LDEVs in the array group must be the same format (3380-x, 3390-x, or OPEN-x). For open