Page 1

Cisco UCS Servers RAID Guide

Updated: February 06, 2018

Americas Headquarters

Cisco Systems, Inc.

170 West Tasman Drive

San Jose, CA 95134-1706

USA

http://www.cisco.com

Tel: 408 526-4000

800 553-NETS (6387)

Fax: 408 527-0883

Text Part Number: OL-26591-01

Page 2

THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL

STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT

WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS.

THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT

SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE

OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY.

The following information is for FCC compliance of Class A devices: This equipment has been tested and found to comply with the limits for a Class A digital device, pursuant

to part 15 of the FCC rules. These limits are designed to provide reasonable protection against harmful interference when the equipment is operated in a commercial

environment. This equipment generates, uses, and can radiate radio-frequency energy and, if not installed and used in accordance with the instruction manual, may cause

harmful interference to radio communications. Operation of this equipment in a residential area is likely to cause harmful interference, in which case users will be required

to correct the interference at their own expense.

The following information is for FCC compliance of Class B devices: This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant

to part 15 of the FCC rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates,

uses and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications.

However, there is no guarantee that interference will not occur in a particular installation. If the equipment causes interference to radio or television reception, which can be

determined by turning the equipment off and on, users are encouraged to try to correct the interference by using one or more of the following measures:

• Reorient or relocate the receiving antenna.

• Increase the separation between the equipment and receiver.

• Connect the equipment into an outlet on a circuit different from that to which the receiver is connected.

• Consult the dealer or an experienced radio/TV technician for help.

Modifications to this product not authorized by Cisco could void the FCC approval and negate your authority to operate the product.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB’s public

domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH

ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT

LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF

DEALING, USAGE, OR TRADE PRACTICE.

IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING,

WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO

OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

Cisco and the Cisco logo are trademarks or registered trademarks of Cisco and/or its affiliates in the U.S. and other countries. To view a list of Cisco trademarks, go to this

URL: www.cisco.com/go/trademarks. Third-party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership

relationship between Cisco and any other company. (1110R)

Any Internet Protocol (IP) addresses and phone numbers used in this document are not intended to be actual addresses and phone numbers. Any examples, command display

output, network topology diagrams, and other figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses or phone numbers in

illustrative content is unintentional and coincidental.

Cisco UCS Servers RAID Guide

© 2015 Cisco Systems, Inc. All rights reserved.

Page 3

CONTENTS

CHAPTER

1 RAID Overview 1-1

Information About RAID 1-1

Drive Group 1-1

Virtual Drive 1-1

Disk Striping 1-2

Disk Mirroring (RAID 1 and RAID 10) 1-2

Parity 1-3

Disk Spanning 1-3

Hot Spares 1-4

Global Hot Spare 1-5

Dedicated Hot Spare 1-5

Disk Rebuilds 1-6

Hot Swap 1-6

Drive States 1-7

Virtual Drive States 1-7

RAID Levels 1-8

RAID Levels Summary 1-8

RAID 0 1-9

RAID 1 1-10

RAID 5 1-10

RAID 6 1-12

RAID 00 1-13

RAID 10 1-14

RAID 50 1-15

RAID 60 1-16

Fault Tolerance 1-17

CHAPTER

OL-26591-01

Generic Drive Replacement Procedure 1-18

Removing a Drive from a Server 1-18

Installing a Drive in a Server 1-19

Platform-Specific RAID and Drive Procedures 1-19

2 Using Cisco Integrated Management Controller and Cisco UCS Server Configuration Utility for

RAID Monitoring and Configuring

2-1

Cisco Integrated Management Controller—Viewing Storage Properties 2-1

Cisco UCS Server Configuration Utility—RAID Configuration 2-2

Cisco UCS Servers RAID Guide

iii

Page 4

Contents

CHAPTER

3 Using Cisco UCS Manager for RAID Configuring and Monitoring 3-1

Cisco UCS Manager Configuration 3-1

Local Disk Configuration Policy 3-1

Guidelines for all Local Disk Configuration Policies 3-2

Guidelines for Local Disk Configuration Policies Configured for RAID 3-3

Creating a Local Disk Configuration Policy 3-4

Changing a Local Disk Configuration Policy 3-6

Deleting a Local Disk Configuration Policy 3-7

Server Disk Drive Monitoring 3-7

Support for Disk Drive Monitoring 3-7

Viewing the Status of a Disk Drive 3-8

Interpreting the Status of a Monitored Disk Drive 3-9

RAID Controllers in UCS Servers 3-10

Determining Which Controller is in Your Server 3-11

RAID Controllers 3-12

Disabling Quiet Boot 3-12

Accessing ROM-Based Controller Utilities 3-13

Documentation About RAID Controllers and LSI Utilities 3-13

Moving a RAID Cluster Using UCS Software Version 1.4(1) 3-13

Moving a RAID Cluster Using UCS Software Version 1.4(2) and Later Releases 3-14

Moving a RAID Cluster Between B200 M3 Servers 3-15

Replacing a Failed Drive in a RAID Cluster 3-16

CHAPTER

iv

4 Configuring the LSI SAS2 Integrated RAID Controller 4-1

Information about LSI Integrated RAID 4-1

Mirrored Volumes 4-3

Operation of Mirrored Volumes 4-3

Mirrored Volume Features 4-6

Mirroring and Mirroring Enhanced Features 4-7

Integrated Striping 4-8

Integrated Striping Features 4-9

Creating Mirrored Volumes 4-10

Launching the LSI SAS2 BIOS Configuration Utility 4-10

Creating Mirrored Volumes 4-11

Creating an Integrated Mirroring Volume 4-11

Creating an Integrated Mirroring Enhanced or Integrated Mirroring and Striping Volume 4-13

Expanding an Integrated Mirroring Volume with OCE 4-14

Managing Hot Spare Disks 4-15

Creating Hot Spare Disks 4-15

Cisco UCS Servers RAID Guide

OL-26591-01

Page 5

Deleting Hot Spare Disks 4-15

Other Configuration Tasks 4-16

Viewing Volume Properties 4-16

Running a Consistency Check 4-16

Activating an Array 4-17

Deleting an Array 4-17

Locating Disk Drives in a Volume 4-18

Choosing a Boot Disk 4-18

Creating Integrated Striping Volumes 4-19

Other Configuration Tasks 4-21

Viewing Volume Properties 4-21

Activating an Array 4-21

Deleting an Array 4-21

Locating Disk Drives in a Volume 4-22

Choosing a Boot Disk 4-23

Contents

CHAPTER

Determining Which Controller is in Your Server 4-23

Disabling Quiet Boot for CIMC Firmware Earlier than Release 1.2(1) 4-24

Launching Option ROM-Based Controller Utilities 4-24

Restoring RAID Configuration After Replacing a RAID Controller 4-25

5 LSI MegaRAID SAS Controller Tasks 5-1

LSI MegaRAID Controller Management Utilities 5-1

LSI WebBIOS Configuration Utility 5-1

MegaRAID Command Tool 5-2

MegaRAID Storage Manager 5-2

LSI WebBIOS CU 5-2

Starting the WebBIOS CU 5-2

WebBIOS CU Main Menu Window Options 5-3

Toolbar 5-4

Menu Options 5-4

Configuring RAID Drive Groups and Virtual Drives 5-5

Choosing the Configuration with the Configuration Wizard 5-5

Using Automatic Configuration 5-5

Using Manual Configuration 5-6

Viewing and Changing Device Properties 5-11

Viewing Controller Properties 5-11

Viewing Virtual Drive Properties, Policies, and Operations 5-13

Viewing Physical Drive Properties and Operations 5-14

Viewing and Changing Battery Backup Unit Information 5-15

OL-26591-01

Cisco UCS Servers RAID Guide

v

Page 6

Contents

Managing RAID 5-16

Expanding a Virtual Drive 5-16

Monitoring Array Health 5-16

Recovery 5-17

Deleting a Virtual Drive 5-18

Migrating an Array to a New Server 5-18

Foreign Configurations in Cable Pull and Drive Removal Scenarios 5-19

Importing Foreign Configurations from Integrated RAID to MegaRAID 5-20

Troubleshooting Information 5-20

Migrating the RAID Level of a Virtual Drive 5-20

Determining Which Controller is in Your Server 5-22

Disabling Quiet Boot for CIMC Firmware Earlier than Release 1.2(1) 5-22

Launching an Option ROM-Based Controller Utility 5-22

LSI MegaRAID Card Beep Codes 5-23

Restoring the RAID Configuration After Replacing a RAID Controller 5-23

CHAPTER

Limitation on Importing Foreign Configuration To a Virtual Disk That is Under Construction 5-25

Limitation 5-25

Design 5-25

Prerequisites For Reconstruction to Start 5-26

6 Configuring the Embedded ICH10R SATA Controller 6-1

Enabling the Integrated Intel ICH10R RAID Controller in the BIOS 6-1

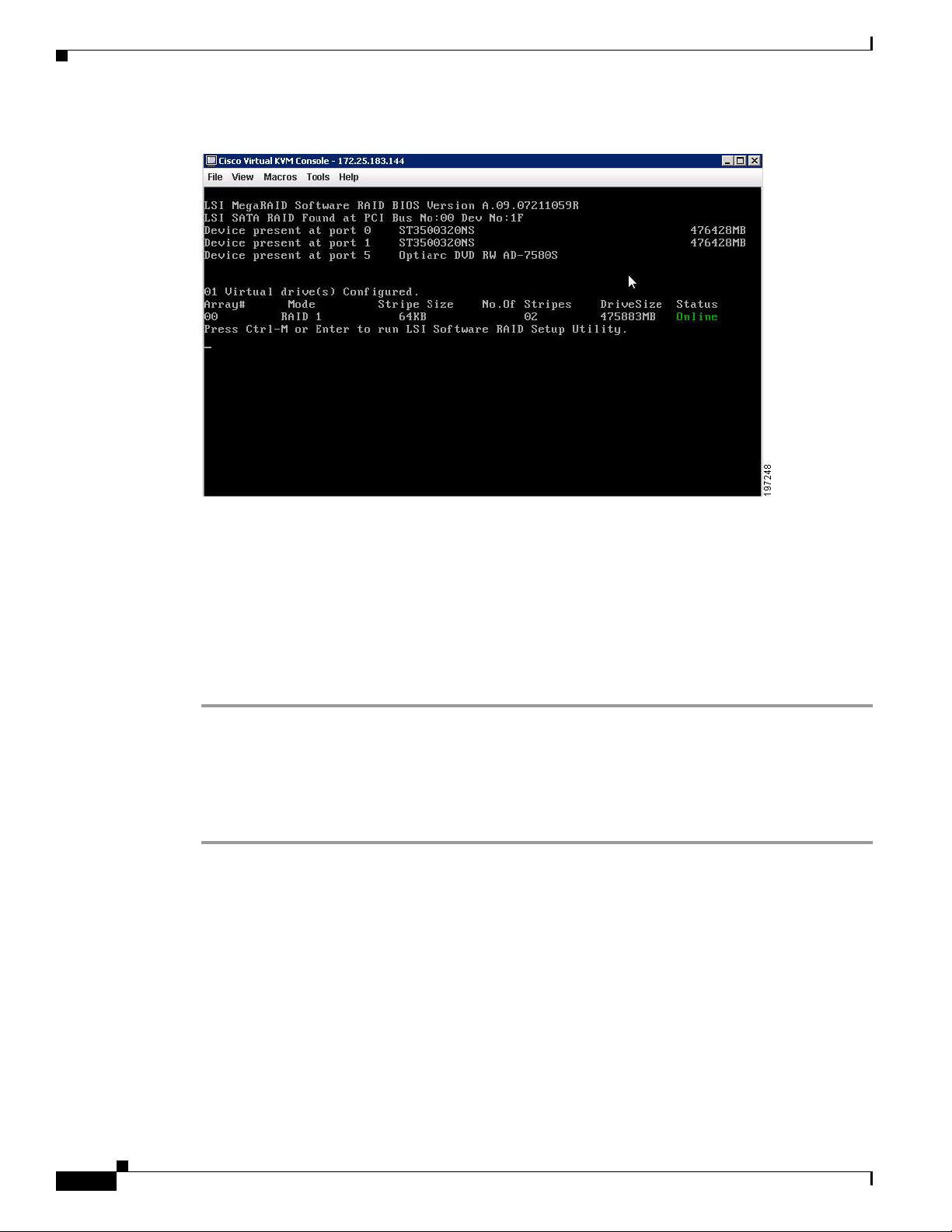

Launching the LSI Software RAID Setup Utility 6-1

Configuring the Onboard Intel ICH10R RAID Controller 6-2

Creating a New RAID Configuration 6-3

Viewing or Changing a RAID Configuration 6-4

vi

Cisco UCS Servers RAID Guide

OL-26591-01

Page 7

RAID Overview

This chapter describes RAID (Redundant Array of Independent Disks), RAID functions and benefits,

RAID components, RAID levels, and configuration strategies.

This chapter contains the following sections:

• Information About RAID, page 1-1

• RAID Levels, page 1-8

• Generic Drive Replacement Procedure, page 1-18

• Platform-Specific RAID and Drive Procedures, page 1-19

Information About RAID

RAID is an array, or group, of multiple independent physical drives that provide high performance and

fault tolerance. A RAID drive group improves input/output (I/O) performance and reliability. The RAID

drive group appears to the host computer as a single storage unit or as multiple virtual units. I/O is

expedited because several drives can be accessed simultaneously.

RAID drive groups improve data storage reliability and fault tolerance compared to single-drive storage

systems. Data loss resulting from a drive failure can be prevented by reconstructing missing data from

the remaining drives. RAID improves I/O performance and increases storage subsystem reliability.

RAID levels describe a system for ensuring the availability and redundancy of data stored on large disk

subsystems. See RAID Levels, page 1-8 for detailed information about RAID levels. The RAID

drive-group components and RAID levels are described in the following sections.

CHA P T ER

1

Drive Group

Virtual Drive

OL-26591-01

A drive group is a group of physical drives. These drives are managed in partitions known as virtual

drives.

A virtual drive is a partition in a drive group that is made up of contiguous data segments on the drives.

A virtual drive can consist of an entire drive group, more than one entire drive group, a part of a drive

group, parts of more than one drive group, or a combination of any two of these conditions.

Cisco UCS Servers RAID Guide

1-1

Page 8

Information About RAID

Segment 1

Segment 5

Segment 9

Segment 2

Segment 6

Segment 10

Segment 3

Segment 7

Segment 11

Segment 4

Segment 8

Segment 12

332084

Disk Striping

Chapter 1 RAID Overview

Disk striping (used in RAID level 0) allows you to write data across multiple drives instead of only one

drive. Disk striping involves partitioning each drive storage space into stripes that can vary in size from

8 KB to 1024 KB. These stripes are interleaved in a repeated sequential manner. The combined storage

space is composed of stripes from each drive. We recommend that you keep stripe sizes the same across

RAID drive groups.

For example, in a four-disk system using only disk striping, segment 1 is written to disk 1, segment 2 is

written to disk 2, and so on (see Figure 1-1). Disk striping enhances performance because multiple drives

are accessed simultaneously, but disk striping does not provide data redundancy

Figure 1-1 Example of Disk Striping (RAID 0)

Stripe width is the number of drives involved in a drive group where striping is implemented. For

example, a four-disk drive group with disk striping has a stripe width of four.

The stripe size is the length of the interleaved data segments that the RAID controller writes across

multiple drives, not including parity drives. For example, consider a stripe that contains 64 KB of disk

space and has 16 KB of data residing on each disk in the stripe. In this case, the stripe size is 64 KB and

the strip size is 16 KB.

The strip size is the portion of a stripe that resides on a single drive.

Disk Mirroring (RAID 1 and RAID 10)

With disk mirroring (used in RAID 1 and RAID 10), data written to one drive is simultaneously written

to another drive. The primary advantage of disk mirroring is that it provides 100 percent data

redundancy. Because the contents of the disk are completely written to a second disk, data is not lost if

one disk fails. In addition, both drives contain the same data at all times, so either disk can act as the

operational disk. If one disk fails, the contents of the other disk can be used to run the system and

reconstruct the failed disk.

Disk mirroring provides 100 percent redundancy but is expensive because each drive in the system must

be duplicated (see Figure 1-2).

1-2

Cisco UCS Servers RAID Guide

OL-26591-01

Page 9

Chapter 1 RAID Overview

Segment 1

Segment 2

Segment 3

Segment 1 Duplicated

Segment 2 Duplicated

Segment 3 Duplicated

Segment 4 Segment 4 Duplicated

Parity

Information About RAID

Figure 1-2 Example of Disk Mirroring (RAID 1)

Parity generates a set of redundancy data from two or more parent data sets. The redundancy data can

be used to reconstruct one of the parent data sets in the event of a drive failure. Parity data does not fully

duplicate the parent data sets, but parity generation can slow the write process. In RAID, this method is

applied to entire drives or stripes across all of the drives in a drive group. There are two types of parity:

• Dedicated parity—The parity data on two or more drives is stored on an additional disk.

Note Parity is distributed across all drives in the drive group.

Disk Spanning

• Distributed parity—The parity data is distributed across more than one drive in the system.

RAID 5 combines distributed parity with disk striping (see Figure 1-3). If a single drive fails, it can be

rebuilt from the parity and the data on the remaining drives. RAID 5 uses parity to provide redundancy

for one drive failure without duplicating the contents of entire drives. RAID 6 uses distributed parity and

disk striping also but adds a second set of parity data so that it can survive up to two drive failures.

Figure 1-3 Example of Distributed Parity (RAID 5)

Segment 1

Segment 7

Segment 13

Segment 19

Segment 25

Parity (26–30)

Segment 2

Segment 8

Segment 14

Segment 20

Parity (21–25)

Segment 26

Segment 3

Segment 9

Segment 15

Parity (16–20)

Segment 21

Segment 4

Segment 10

Parity (11–15)

Segment 16

Segment 22

Segment 28

Segment 5

Parity (6–10)

Segment 11

Segment 17

Segment 23

92 tnemgeS72 tnemgeS

Parity (1–5)

Segment 6

Segment 12

Segment 18

Segment 24

Segment 30

332086

Disk spanning allows multiple drives to function like one big drive. Spanning overcomes lack of disk

space and simplifies storage management by combining existing resources or adding relatively

inexpensive resources. For example, four 20-GB drives can be combined to appear to the operating

system as a single 80-GB drive.

OL-26591-01

Cisco UCS Servers RAID Guide

1-3

Page 10

Information About RAID

60 GB 60 GB

Can be accessed as

one 120-GB drive

60 GB 60 GB

Can be accessed as

one 120-GB drive

332087

Note Make sure that the spans are in different backplanes, so that if one span fails, you do not lose the whole

Chapter 1 RAID Overview

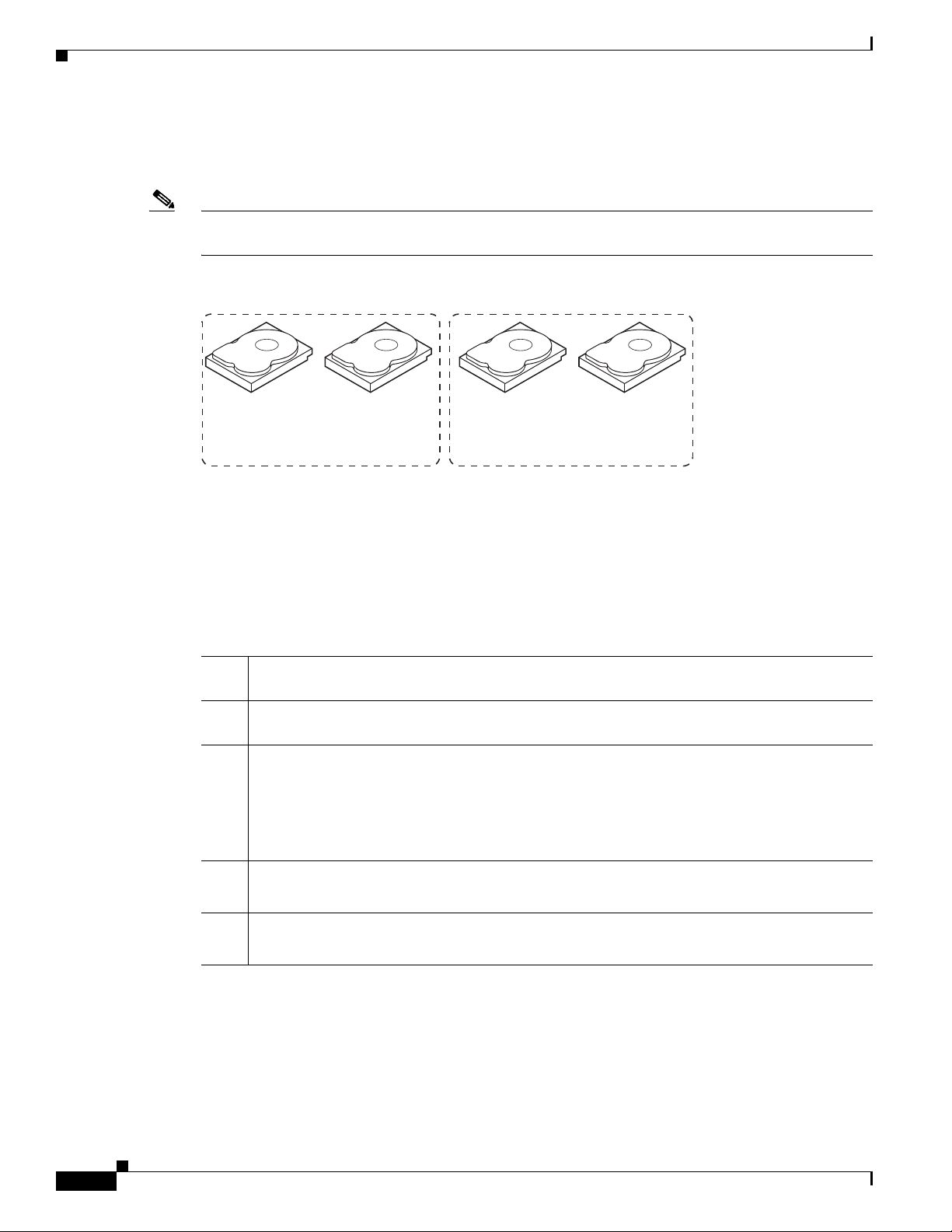

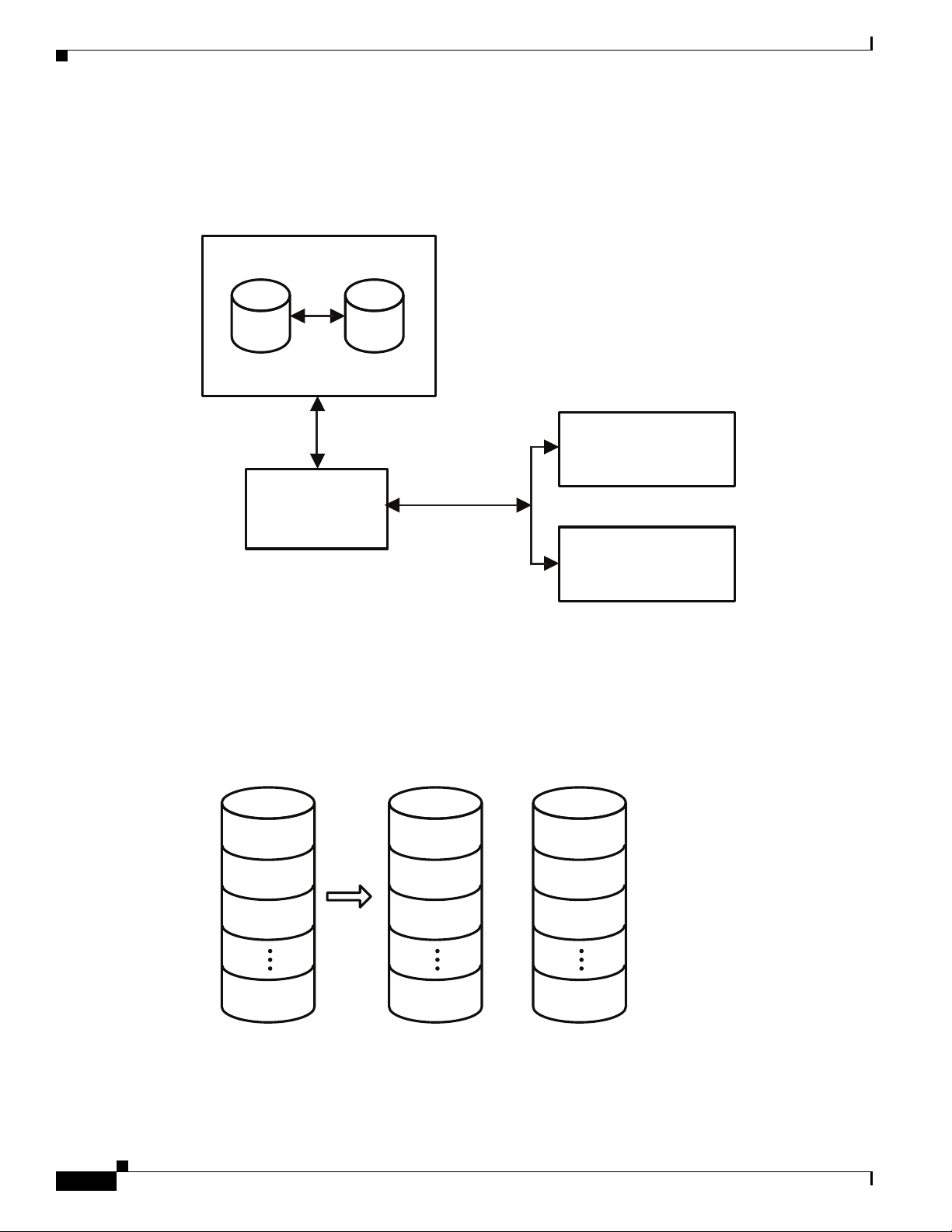

Spanning alone does not provide reliability or performance enhancements. Spanned virtual drives must

have the same stripe size and must be contiguous. In Figure 1-4, RAID 1 drive groups are turned into a

RAID 10 drive group.

drive group.

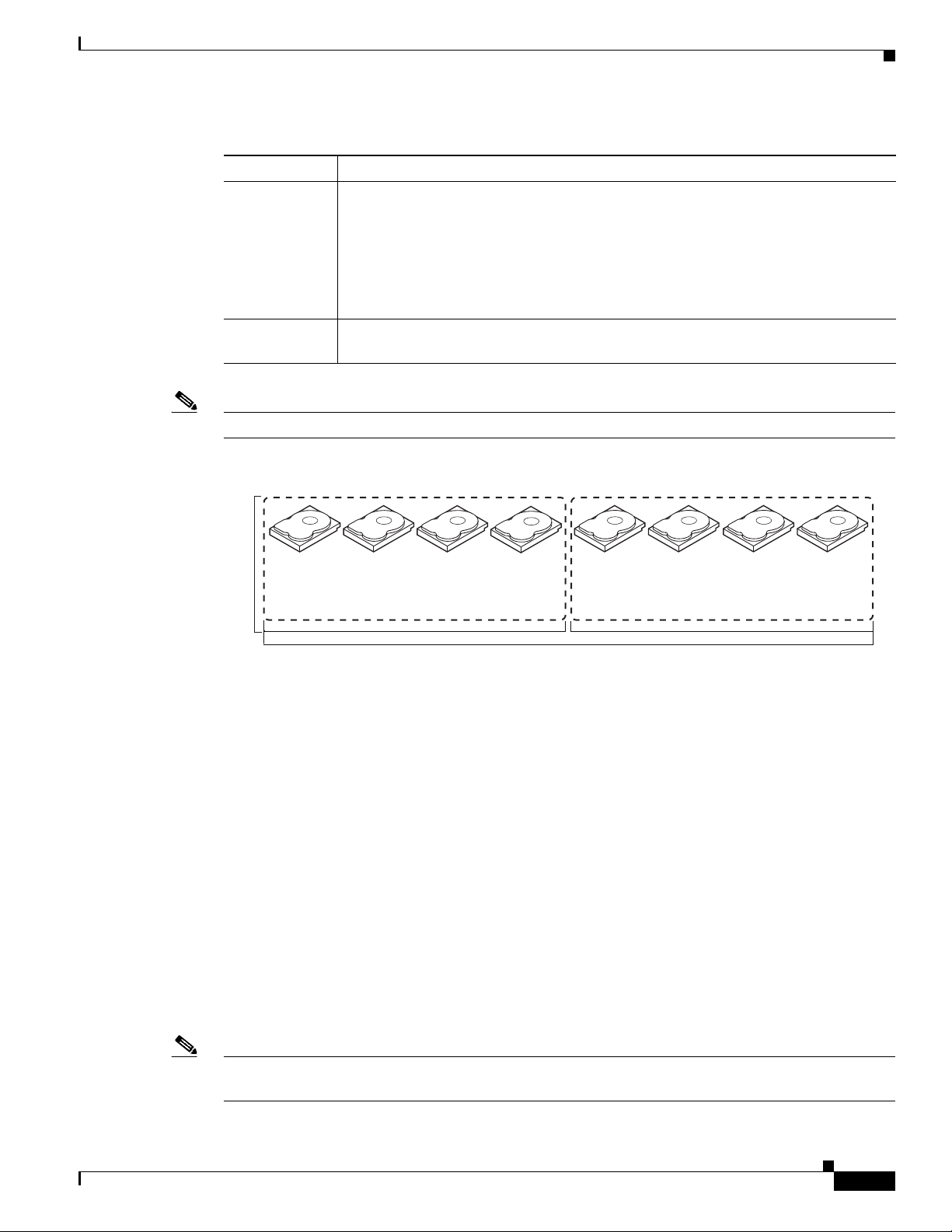

Figure 1-4 Example of Disk Spanning

Spanning two contiguous RAID 0 virtual drives does not produce a new RAID level or add fault

tolerance. It does increase the capacity of the virtual drive and improves performance by doubling the

number of physical disks.

Table 1 -1 describes how to configure RAID 00, RAID 10, RAID 50, and RAID 60 by spanning. The

virtual drives must have the same stripe size and the maximum number of spans is eight. The full drive

capacity is used when you span virtual drives; you cannot specify a smaller drive capacity.

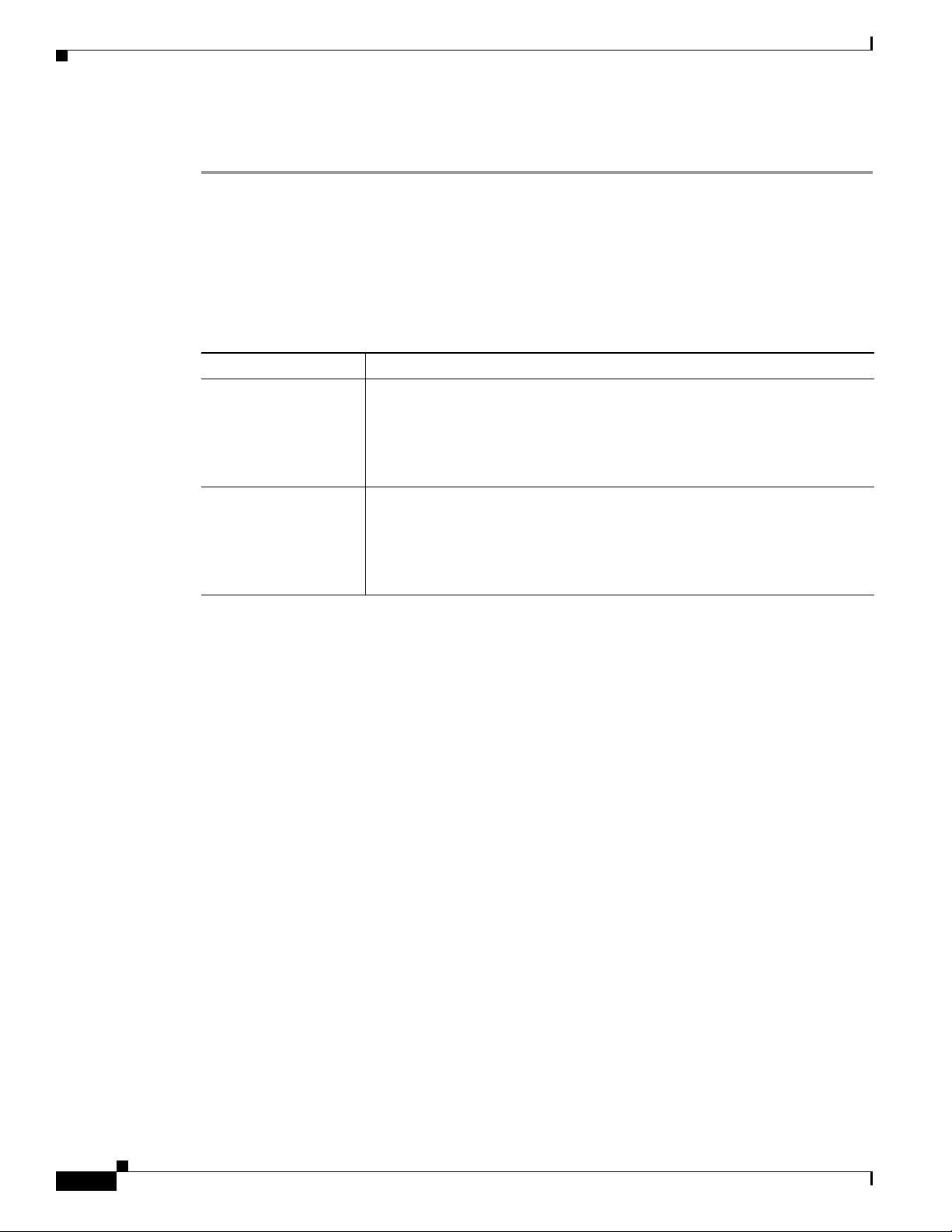

Table 1-1 Spanning for RAID 00, RAID 10, RAID 50, and RAID 60

RAID

Level Description

00 Configure RAID 00 by spanning two contiguous RAID 0 virtual drives, up to the maximum

number of supported devices for the controller.

10 Configure RAID 10 by spanning two contiguous RAID 1 virtual drives, up to the maximum

number of supported devices for the controller.

RAID 10 supports a maximum of eight spans. You must use an even number of drives in each

RAID virtual drive in the span.

The RAID 1 virtual drives must have the same stripe size.

50 Configure RAID 50 by spanning two contiguous RAID 5 virtual drives.

The RAID 5 virtual drives must have the same stripe size.

60 Configure RAID 60 by spanning two contiguous RAID 6 virtual drives.

The RAID 6 virtual drives must have the same stripe size.

Hot Spares

1-4

Cisco UCS Servers RAID Guide

A hot spare is an extra, unused drive that is part of the disk subsystem. It is usually in standby mode,

ready for service if a drive fails. If a drive used in a RAID virtual drive fails, a hot spare automatically

takes its place and the data on the failed drive is rebuilt on the hot spare. Hot spares can be used for RAID

levels 1, 5, 6, 10, 50, and 60.

OL-26591-01

Page 11

Chapter 1 RAID Overview

Note When running RAID 0 and RAID 5 virtual drives on the same set of drives (a sliced configuration), a

Information About RAID

Hot spares permit you to replace failed drives without system shutdown or user intervention. MegaRAID

SAS RAID controllers can implement automatic and transparent rebuilds of failed drives using hot spare

drives, providing a high degree of fault tolerance and zero downtime.

rebuild to a hot spare cannot occur after a drive failure until the RAID 0 virtual drive is deleted.

The LSI RAID management software allows you to specify drives as hot spares. When a hot spare is

needed, the RAID controller assigns the hot spare that has a capacity closest to and at least as great as

that of the failed drive to take the place of the failed drive. The failed drive is removed from the virtual

drive and marked ready awaiting removal once the rebuild to a hot spare begins. You can make hot spares

of the drives that are not in a RAID virtual drive.

You can use the RAID management software to designate the hot spare to have enclosure affinity, which

means that if drive failures are present on a split backplane configuration, the hot spare is used first on

the backplane side that it resides in.

If the hot spare is designated as having enclosure affinity, it attempts to rebuild any failed drives on the

backplane that it resides in before rebuilding any other drives on other backplanes.

Note If a rebuild to a hot spare fails for any reason, the hot spare drive is marked as failed. If the source drive

fails, both the source drive and the hot spare drive is marked as failed.

There are two types of hot spares:

• Global hot spare

• Dedicated hot spare

Global Hot Spare

A global hot spare drive can be used to replace any failed drive in a redundant drive group as long as its

capacity is equal to or larger than the capacity of the failed drive. A global hot spare defined on any

channel should be available to replace a failed drive on both channels.

Dedicated Hot Spare

A dedicated hot spare can be used to replace a failed drive only in a chosen drive group. One or more

drives can be designated as a member of a spare drive pool. The most suitable drive from the pool is

chosen for failover. A dedicated hot spare is used before one from the global hot spare pool.

Hot spare drives can be located on any RAID channel. Standby hot spares (not being used in RAID drive

group) are polled every 60 seconds at a minimum, and their status is made available in the drive group

management software. RAID controllers offer the ability to rebuild with a disk that is in a system, but

not initially set to be a hot spare.

When using hot spares, observe the following guidelines:

OL-26591-01

• Hot spares are used only in drive groups with redundancy, which includes RAID levels 1, 5, 6, 10,

50, and 60.

• A hot spare connected to a specific RAID controller can be used to rebuild a drive that is connected

to the same controller only.

Cisco UCS Servers RAID Guide

1-5

Page 12

Information About RAID

Disk Rebuilds

Chapter 1 RAID Overview

• You must assign the hot spare to one or more drives through the controller BIOS or use drive group

management software to place it in the hot spare pool.

• A hot spare must have free space equal to or greater than the drive it replaces. For example, to

replace an 18-GB drive, the hot spare must be 18 GB or larger.

When a drive in a RAID drive group fails, you can rebuild the drive by recreating the data that was stored

on the drive before it failed. The RAID controller recreates the data using the data stored on the other

drives in the drive group. Rebuilding can be done only in drive groups with data redundancy, which

includes RAID 1, 5, 6, 10, 50, and 60 drive groups.

The RAID controller uses hot spares to rebuild failed drives automatically and transparently, at

user-defined rebuild rates. If a hot spare is available, the rebuild can start automatically when a drive

fails. If a hot spare is not available, the failed drive must be replaced with a new drive so that the data on

the failed drive can be rebuilt.

The failed drive is removed from the virtual drive and marked ready awaiting removal when the rebuild

to a hot spare begins. If the system goes down during a rebuild, the RAID controller automatically

restarts the rebuild after the system reboots.

Hot Swap

Note When the rebuild to a hot spare begins, the failed drive is often removed from the virtual drive before

management applications detect the failed drive. When this situation occurs, the events logs show the

drive rebuilding to the hot spare without showing the failed drive. The formerly failed drive is marked

as ready after a rebuild begins to a hot spare.

Note If a source drive fails during a rebuild to a hot spare, the rebuild fails, and the failed source drive is

marked as offline. In addition, the rebuilding hot spare drive is changed back to a hot spare. After a

rebuild fails because of a source drive failure, the dedicated hot spare is still dedicated and assigned to

the correct drive group, and the global hot spare is still global.

An automatic drive rebuild does not start if you replace a drive during a RAID-level migration. The

rebuild must be started manually after the expansion or migration procedure is complete. (RAID-level

migration changes a virtual drive from one RAID level to another.)

A hot swap is the manual replacement of a defective drive unit while the computer is still running

(performing its normal functions). When a new drive is installed, a rebuild occurs automatically if one

of the following happens:

• The newly inserted drive is the same capacity as or larger than the failed drive.

• It is placed in the same drive bay as the failed drive it is replacing.

The RAID controller can be configured to detect the new drives and rebuild the contents of the drive

automatically. The backplane and enclosure must support hot swap for the functionality to work.

1-6

Cisco UCS Servers RAID Guide

OL-26591-01

Page 13

Chapter 1 RAID Overview

Drive States

Information About RAID

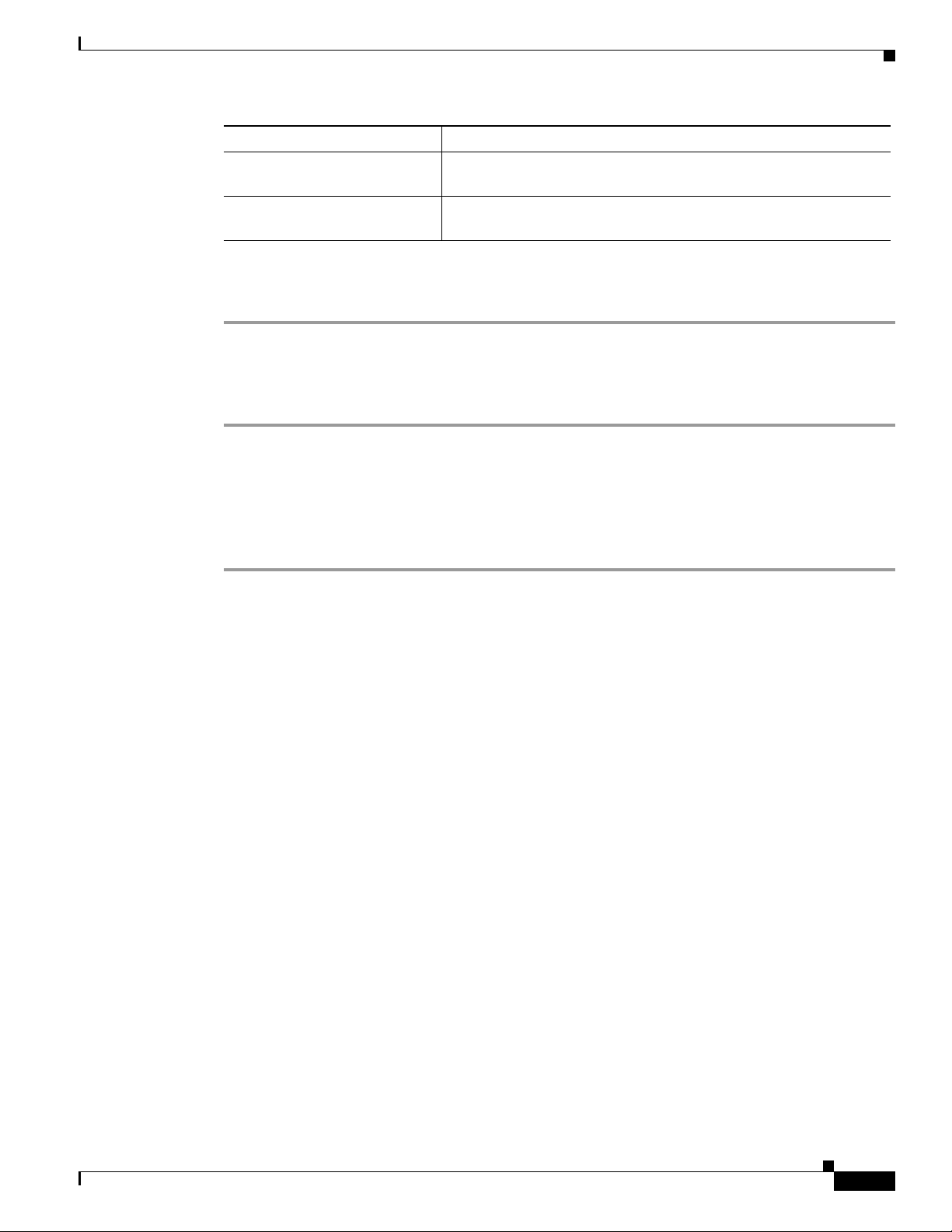

A drive state is a property that indicates the status of the drive. Table 1-2 describes the drive states.

Table 1-2 Drive States

State Description

Online A drive that can be accessed by the RAID controller and is part of the virtual drive.

Unconfigured

Good

Hot Spare A drive that is powered up and ready for use as a spare in case an online drive fails.

Failed A drive that was originally configured as Online or Hot Spare but on which the

Rebuild A drive to which data is being written to restore full redundancy for a virtual drive.

Unconfigured

Bad

Missing A drive that was Online but which has been removed from its location.

Offline A drive that is part of a virtual drive but which has invalid data as far as the RAID

A drive that is functioning normally but is not configured as a part of a virtual drive

or as a hot spare.

firmware detects an unrecoverable error.

A drive on which the firmware detects an unrecoverable error; the drive was

Unconfigured Good or the drive could not be initialized.

configuration is concerned.

When a virtual drive with cached data goes offline, the cache for the virtual drive is

discarded. Because the virtual drive is offline, the cache cannot be saved.

Virtual Drive States

A virtual drive state is a property indicating the status of the virtual drive. Tabl e 1-3 describes the virtual

drive states.

Table 1-3 Virtual Drive States

State Description

Optimal The virtual drive operating condition is good. All configured drives are online.

Degraded The virtual drive operating condition is not optimal. One of the configured drives has

Partial

Degraded

Failed The virtual drive has failed.

Offline The virtual drive is not available to the RAID controller.

failed or is offline.

The operating condition in a RAID 6 virtual drive is not optimal. One of the

configured drives has failed or is offline. RAID 6 can tolerate up to two drive failures.

OL-26591-01

Cisco UCS Servers RAID Guide

1-7

Page 14

RAID Levels

RAID Levels

The MegaRAID controller supports RAID levels 0, 00, 1, 5, 6, 10, 50, and 60. It also supports

independent drives (configured as RAID 0 and RAID 00.) The supported RAID levels are summarized

in the following section.

RAID Levels Summary

• RAID 0 uses striping to provide high data throughput, especially for large files in an environment

that does not require fault tolerance.

• RAID 1 uses mirroring so that data written to one drive is simultaneously written to another drive

which is good for small databases or other applications that require small capacity, but complete data

redundancy.

• RAID 5 uses disk striping and parity data across all drives (distributed parity) to provide high data

throughput, especially for small random access.

• RAID 6 uses distributed parity, with two independent parity blocks per stripe, and disk striping. A

RAID 6 virtual drive can survive the loss of two drives without losing data. A RAID 6 drive group,

which requires a minimum of three drives, is similar to a RAID 5 drive group. Blocks of data and

parity information are written across all drives. The parity information is used to recover the data if

one or two drives fail in the drive group.

• A RAID 00 drive group is a spanned drive group that creates a striped set from a series of RAID 0

drive groups.

• RAID 10, a combination of RAID 0 and RAID 1, consists of striped data across mirrored spans. A

RAID 10 drive group is a spanned drive group that creates a striped set from a series of mirrored

drives. RAID 10 allows a maximum of eight spans. You must use an even number of drives in each

RAID virtual drive in the span. The RAID 1 virtual drives must have the same stripe size. RAID 10

provides high data throughput and complete data redundancy but uses a larger number of spans.

Chapter 1 RAID Overview

1-8

• RAID 50, a combination of RAID 0 and RAID 5, uses distributed parity and disk striping. A

RAID 50 drive group is a spanned drive group in which data is striped across multiple RAID 5 drive

groups. RAID 50 works best with data that requires high reliability, high request rates, high data

transfers, and medium-to-large capacity.

Note You cannot have virtual drives of different RAID levels, such as RAID 0 and RAID 5, in the same drive

group. For example, if an existing RAID 5 virtual drive is created out of partial space in an array, the

next virtual drive in the array has to be RAID 5 only.

• RAID 60, a combination of RAID 0 and RAID 6, uses distributed parity, with two independent

parity blocks per stripe in each RAID set, and disk striping. A RAID 60 virtual drive can survive the

loss of two drives in each of the RAID 6 sets without losing data. It works best with data that

requires high reliability, high request rates, high data transfers, and medium-to-large capacity.

Cisco UCS Servers RAID Guide

OL-26591-01

Page 15

Chapter 1 RAID Overview

RAID 0

Note RAID level 0 is not fault tolerant. If a drive in a RAID 0 drive group fails, the whole virtual drive (all

RAID Levels

RAID 0 provides disk striping across all drives in the RAID drive group. RAID 0 does not provide any

data redundancy but does offer the best performance of any RAID level. RAID 0 breaks up data into

smaller segments and stripes the data segments across each drive in the drive group. The size of each

data segment is determined by the stripe size. RAID 0 offers high bandwidth.

drives associated with the virtual drive) will fail.

By breaking up a large file into smaller segments, the RAID controller can use both SAS drives and

SATA drives to read or write the file faster. RAID 0 involves no parity calculations to complicate the

write operation, which makes RAID 0 ideal for applications that require high bandwidth, but do not

require fault tolerance. Tab le 1-4 provides an overview of RAID 0. Figure 1-5 shows an example of a

RAID 0 drive group advantage.

Table 1-4 RAID 0 Overview

Feature Description

Uses Provides high data throughput, especially for large files. Any environment that does

not require fault tolerance.

Benefits Provides increased data throughput for large files.

No capacity loss penalty for parity.

Limitations Does not provide fault tolerance or high bandwidth.

All data is lost if any drive fails.

Drives 1 to 32.

Figure 1-5 RAID 0 Drive Group Example

Segment 1

Segment 3

Segment 5

Segment 7 Segment 8

Segment 2

Segment 4

Segment 6

332088

OL-26591-01

Cisco UCS Servers RAID Guide

1-9

Page 16

RAID Levels

RAID 1

Chapter 1 RAID Overview

In RAID 1, the RAID controller duplicates all data from one drive to a second drive in the drive group.

RAID 1 supports an even number of drives from 2 to 32 in a single span. RAID 1 provides complete data

redundancy but at the cost of doubling the required data storage capacity. Tab le 1- 5 provides an overview

of RAID 1. Figure 1-6 shows an example of a RAID 1 drive group.

Table 1-5 RAID 1 Overview

Feature Description

Uses Use RAID 1 for small databases or any other environment that requires fault

tolerance, but small capacity.

Benefits Provides complete data redundancy. RAID 1 is ideal for any application that requires

fault tolerance and minimal capacity.

Limitations Requires twice as many drives. Performance is impaired during drive rebuilds.

Drives 2 to 32 (must be an even number of drives).

RAID 5

Figure 1-6 RAID 1 Drive Group Example

Segment 1

Segment 5

...

RAID1

Segment 1

Duplicate

Segment 5

Duplicate

Segment 2

Segment 6

...

RAID1

Segment 2

Duplicate

Segment 6

Duplicate

Segment 3

Segment 7

...

RAID1

Segment 3

Duplicate

Segment 7

Duplicate

Segment 4

Segment 8

...

Segment 4

Duplicate

Segment 8

Duplicate

RAID1

332089

RAID 5 includes disk striping at the block level and parity. Parity is the property of the data of being odd

or even, and parity checking is used to detect errors in the data. In RAID 5, the parity information is

written to all drives. RAID 5 is best suited for networks that perform a lot of small input/output (I/O)

transactions simultaneously. RAID 5 provides data redundancy, high read rates, and good performance

in most environments. It also provides redundancy with the lowest loss of capacity.

In addition, RAID 5 is good for any application that has high read request rates but has low write request

rates.

RAID 5 addresses the congestion issue for random I/O operations. Because each drive contains both data

and parity, numerous writes can take place concurrently.

Table 1 -6 provides an overview of RAID 5. Figure 1-7 shows an example of a RAID 5 drive group.

1-10

Cisco UCS Servers RAID Guide

OL-26591-01

Page 17

Chapter 1 RAID Overview

Segment 1

Segment 7

Segment 2

Segment 8

Segment 3

Segment 9

Segment 4

Segment 10

Segment 5

Parity (6–10)

Parity (11–15)

Parity (1–5)

Segment 6

Segment 12

Segment 15

Segment 11

Segment 14

Segment 13

Segment 19

Segment 25

Segment 20

Segment 23

Segment 18

Segment 21

Segment 16

Segment 22

Segment 17

Parity (21–25)

Parity (26–30)

Parity (16–20)

Segment 24

Segment 30

92 tnemgeS72 tnemgeS

Segment 26

Segment 28

332090

RAID Levels

Table 1-6 RAID 5 Overview

Features Description

Uses Provides high data throughput, especially for large files. Use RAID 5 for transaction

processing applications because each drive can read and write independently. If a

drive fails, the RAID controller uses the parity drive to recreate all missing

information. Use also for office automation and online customer service that requires

fault tolerance. Use for any application that has high read request rates but low write

request rates.

Benefits Provides data redundancy, high read rates, and good performance in most

environments. RAID 5 provides redundancy with the lowest loss of capacity.

Limitations Not well-suited to tasks that require a large number of writes. RAID 5 has problems

if no cache is used (clustering). The drive’s performance is reduced if a drive is being

rebuilt. Environments with few processes do not perform as well because the RAID

overhead is not offset by the performance gains in handling simultaneous processes.

Drives 3 to 32.

Note Parity is distributed across all drives in the drive group.

Figure 1-7 RAID 5 Drive Group Example

OL-26591-01

Cisco UCS Servers RAID Guide

1-11

Page 18

RAID Levels

RAID 6

Chapter 1 RAID Overview

RAID 6 is similar to RAID 5 (disk striping and distributed parity), except that instead of one parity block

per stripe, there are two. With two independent parity blocks, RAID 6 can survive the loss of two drives

in a virtual drive without losing data. RAID 6 provides a high level of data protection through the use of

a second parity block in each stripe. Use RAID 6 for data that requires a very high level of protection

from loss.

RAID 6 is best suited for networks that perform a lot of small input/output (I/O) transactions

simultaneously. It provides data redundancy, high read rates, and good performance in most

environments.

In the case of a failure of one drive or two drives in a virtual drive, the RAID controller uses the parity

blocks to recreate all of the missing information. If two drives in a RAID 6 virtual drive fail, two drive

rebuilds are required, one for each drive. These rebuilds do not occur at the same time. The controller

rebuilds one failed drive and then the other failed drive.

Table 1 -7 provides an overview of a RAID 6 drive group. Figure 1-8 shows a RAID 6 data layout. The

second set of parity drives are denoted by Q. The P drives follow the RAID 5 parity scheme.

Table 1-7 RAID 6 Overview

Features Description

Uses Use for office automation and online customer service that requires fault tolerance.

Use for any application that has high read request rates but low write request rates.

Benefits Provides data redundancy, high read rates, and good performance in most

environments, can survive the loss of two drives or the loss of a drive while another

drive is being rebuilt, and provides the highest level of protection against drive

failures of all of the RAID levels. The read performance is similar to that of RAID 5.

Limitations Not well-suited to tasks that require a large number of writes. A RAID 6 virtual drive

has to generate two sets of parity data for each write operation, which results in a

significant decrease in performance during writes. The drive performance is reduced

during a drive rebuild. Environments with few processes do not perform as well,

because the RAID overhead is not offset by the performance gains in handling

simultaneous processes. RAID 6 costs more because of the extra capacity required

by using two parity blocks per stripe.

Drives 3 to 32.

Note Parity is distributed across all drives in the drive group.

1-12

Cisco UCS Servers RAID Guide

OL-26591-01

Page 19

Chapter 1 RAID Overview

Segment 1

Segment 6

Segment 2

Segment 7

Segment 3

Segment 8

Segment 4

Parity (P5–P8)

Parity (P1–P4)

Parity (Q5–Q8)

Parity (Q9–Q12)

Parity (Q1–Q4)

Segment 5

Segment 10

Parity (P9–P12)

Segment 9

Segment 12

Segment 11

Segment 16

Parity (P17–P20)

Parity (P13–P16)

Segment 19

Segment 15

Segment 17

Segment 13

Segment 18

Segment 14

Parity (Q17–Q20)

Parity (Q13–Q16)

Segment 20

332094

RAID 00

RAID Levels

Figure 1-8 RAID 6 Drive Group Example

A RAID 00 drive group is a spanned drive group that creates a striped set from a series of RAID 0 drive

groups. RAID 00 does not provide any data redundancy, but along with RAID 0, RAID 00 offers the best

performance of any RAID level. RAID 00 breaks up data into smaller segments and stripes the data

segments across each drive in the drive groups. The size of each data segment is determined by the stripe

size. RAID 00 offers high bandwidth.

Note RAID level 00 is not fault tolerant. If a drive in a RAID 0 drive group fails, the whole virtual drive (all

drives associated with the virtual drive) fails.

By breaking up a large file into smaller segments, the RAID controller can use both SAS drives and

SATA drives to read or write the file faster. RAID 00 involves no parity calculations to complicate the

write operation, which makes RAID 00 ideal for applications that require high bandwidth but do not

require fault tolerance. Tab le 1-8 provides an overview of RAID 00. Figure 1-9 shows an example of a

RAID 00 drive group.

Table 1-8 RAID 00 Overview

Features Description

Uses Provides high data throughput, especially for large files. Use RAID 00 in any

environment that does not require fault tolerance.

Benefits Provides increased data throughput for large files. RAID 00 has no capacity loss

penalty for parity.

Limitations Does not provide fault tolerance or high bandwidth. All data is lost if any drive fails.

Drives Two to the maximum number of drives that are supported by the controller.

OL-26591-01

Cisco UCS Servers RAID Guide

1-13

Page 20

RAID Levels

Segment 1

Segment 2Segment

3

Segment 6

Segment

8

Segment

5

Segment 7

Segment

9

Segment

11

Segment

13

Segment 15

Segment

10

Segment

12

Segment

14

Segment

16

Segment

4

...

...

...

...

RAID

0

RAID

0

RAID

0

RAID

0

RAID

00

RAID 0

Segment

17

Segment

18

Segment

19

Segment 20Segment

21

Segment

22

Segment

23

Segment

24

RAID 10

Chapter 1 RAID Overview

Figure 1-9 RAID 00 Drive Group Example Using Two Drives

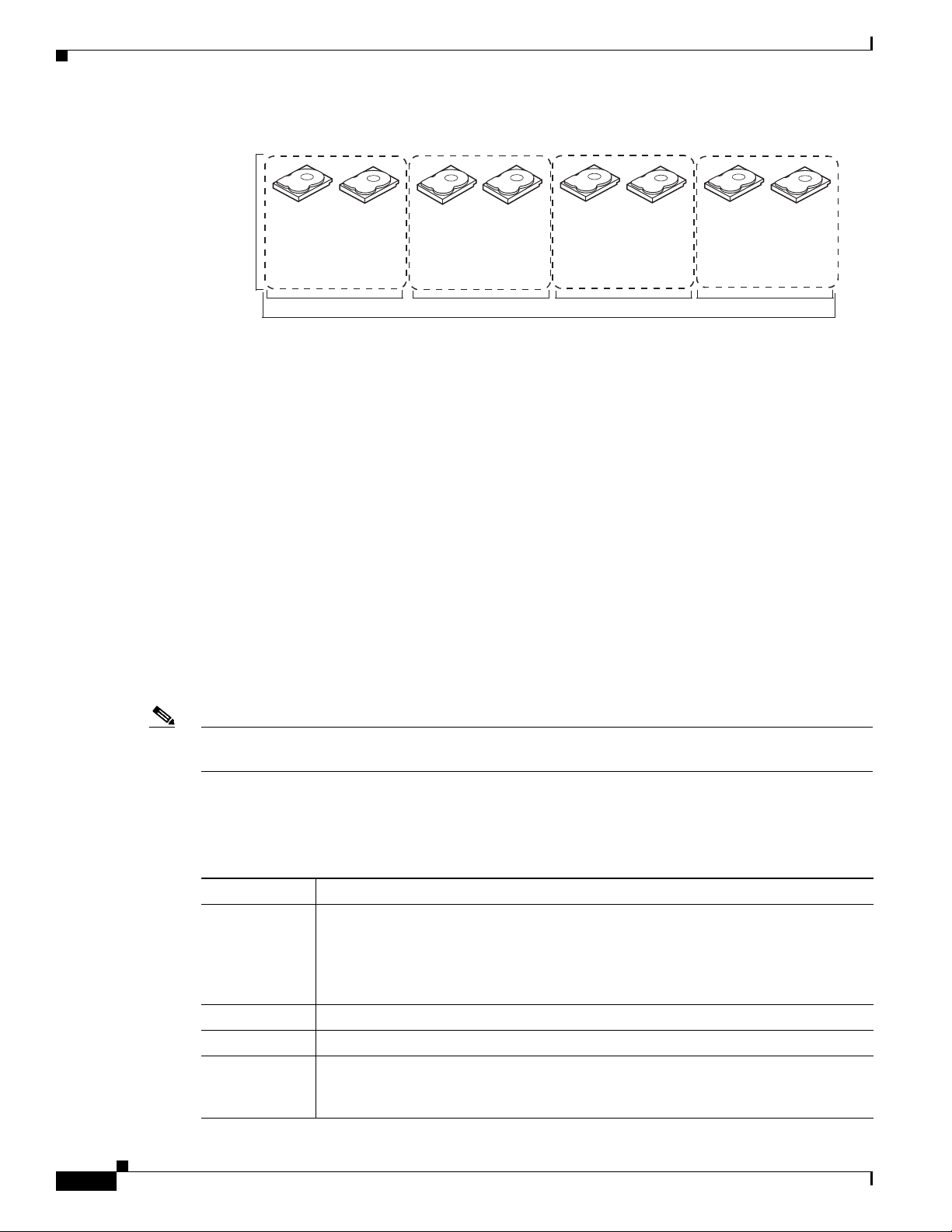

RAID 10 is a combination of RAID 0 and RAID 1 and consists of stripes across mirrored drives.

RAID 10 breaks up data into smaller blocks and mirrors the blocks of data to each RAID 1 drive group.

The first RAID 1 drive in each drive group then duplicates its data to the second drive. The size of each

block is determined by the stripe size parameter, which is set during the creation of the RAID set. The

RAID 1 virtual drives must have the same stripe size.

1-14

Spanning is used because one virtual drive is defined across more than one drive group. Virtual drives

defined across multiple RAID 1 level drive groups are referred to as RAID level 10, (1+0). Data is striped

across drive groups to increase performance by enabling access to multiple drive groups simultaneously.

Each spanned RAID 10 virtual drive can tolerate multiple drive failures, as long as each failure is in a

separate drive group. If there are drive failures, less than the total drive capacity is available.

Configure RAID 10 by spanning two contiguous RAID 1 virtual drives, up to the maximum number of

supported devices for the controller. RAID 10 supports a maximum of eight spans with a maximum of

32 drives per span. You must use an even number of drives in each RAID 10 virtual drive in the span.

Note Other factors, such as the type of controller, can restrict the number of drives supported by RAID 10

virtual drives.

Table 1 -9 provides an overview of RAID 10. In Figure 1-10, virtual drive 0 is created by distributing

data across four RAID 1 drive groups (drive groups 0 through 3).

Table 1-9 RAID 10 Overview

Benefits Description

Uses Appropriate when used with data storage that needs 100 percent redundancy of

mirrored drive groups and that also needs the enhanced I/O performance of RAID 0

(striped drive groups.) RAID 10 works well for medium-sized databases or any

environment that requires a higher degree of fault tolerance and moderate to medium

capacity.

Benefits Provides both high data transfer rates and complete data redundancy.

Limitations Requires twice as many drives as all other RAID levels except RAID 1.

Drives Two to 8 equal spans of RAID 1 drive groups containing 2 to 32 drives each (limited

by the maximum number of devices supported by the controller). You must use an

Cisco UCS Servers RAID Guide

even number of drive spans.

OL-26591-01

Page 21

Chapter 1 RAID Overview

RAID Levels

Figure 1-10 RAID 10 Virtual Drive Example

RAID 50

RAID 10

Segment 1

Segment 5

...

RAID1

Segment 1

Duplicate

Segment 5

Duplicate

Segment 2

Segment 6

...

RAID1

Segment 2

Duplicate

Segment 6

Duplicate

RAID 0

Segment 3

Segment 7

...

RAID1

Segment 3

Duplicate

Segment 7

Duplicate

Segment 4

Segment 8

...

Segment 4

Duplicate

Segment 8

Duplicate

RAID1

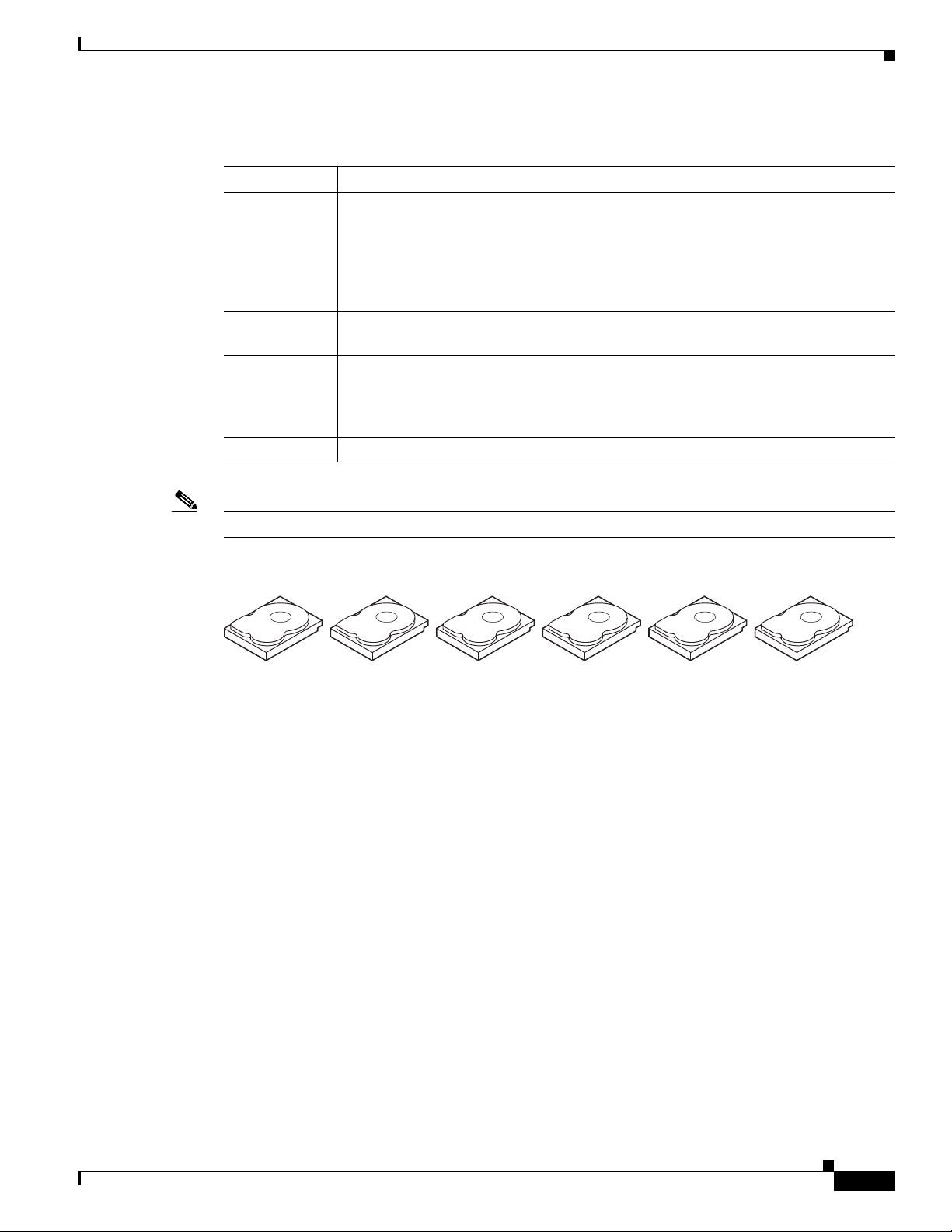

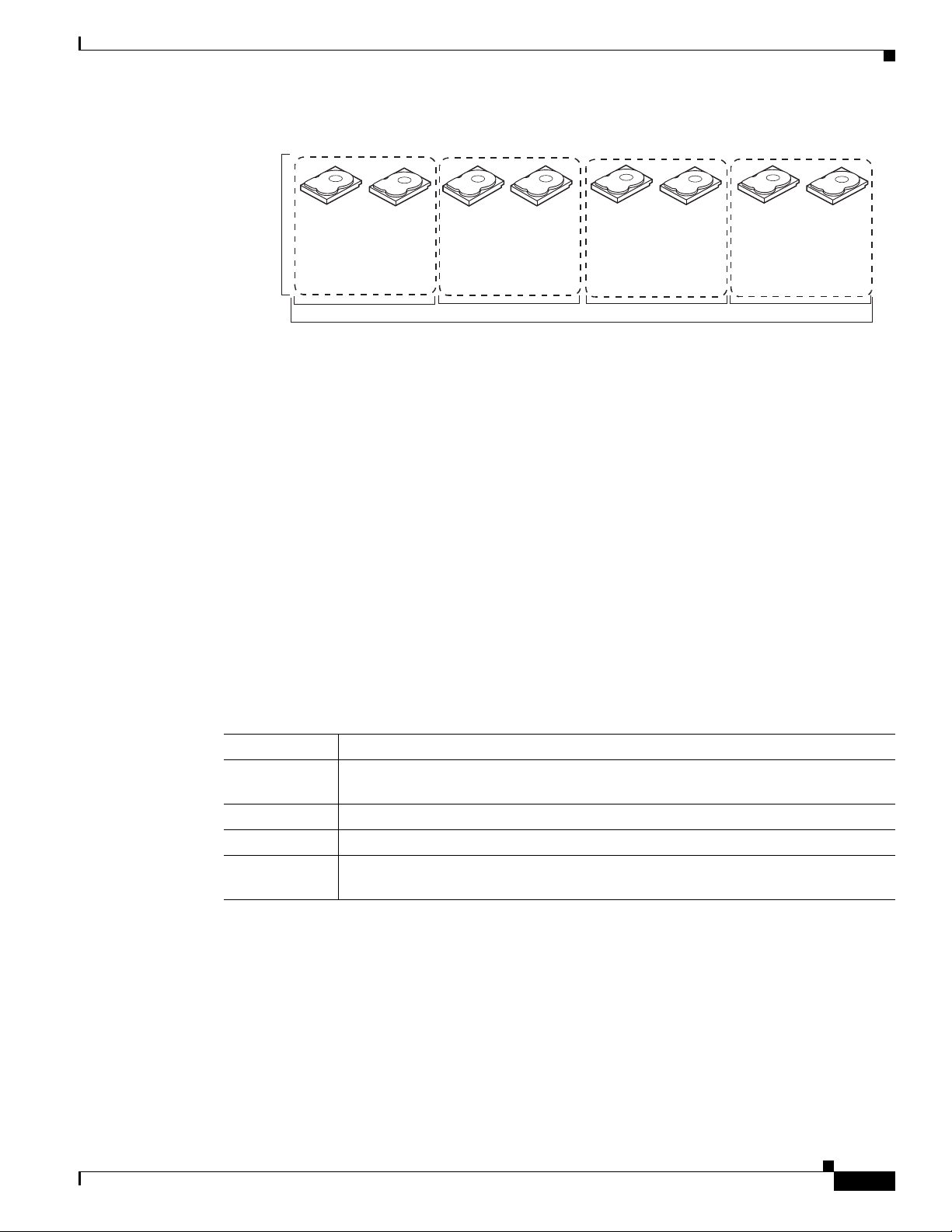

RAID 50 provides the features of both RAID 0 and RAID 5. RAID 50 includes both parity and disk

striping across multiple drive groups. RAID 50 is best implemented on two RAID 5 drive groups with

data striped across both drive groups.

RAID 50 breaks up data into smaller blocks and stripes the blocks of data to each RAID 5 disk set.

RAID 5 breaks up data into smaller blocks, calculates parity, and writes the blocks of data and parity to

each drive in the drive group. The size of each block is determined by the stripe size parameter, which

is set during the creation of the RAID set.

RAID level 50 can support up to eight spans and tolerate up to eight drive failures though less than total

drive capacity is available. Though multiple drive failures can be tolerated, only one drive failure can be

tolerated in each RAID 5 level drive group.

Table 1 -10 provides an overview of RAID 50. In Figure 1-11, virtual drive 0 is created by distributing

data across two RAID 5 drive groups.

332143

Table 1-10 RAID 50 Overview

Features Description

Uses Appropriate when used with data that requires high reliability, high request rates,

high data transfer, and medium to large capacity.

Benefits Provides high data throughput, data redundancy, and very good performance.

Limitations Requires 2 to 8 times as many parity drives as RAID 5.

Drives Two to 8 equal spans of RAID 5 drive groups containing 3 to 32 drives each (limited

by the maximum number of devices supported by the controller.)

OL-26591-01

Cisco UCS Servers RAID Guide

1-15

Page 22

RAID Levels

Segment 1

Segment 2

Segment 5

Segment 6

RAID 0

RAID 50

(Segment 1,2)

Segment 3

Segment 4

Segment 8

Segment 7

Segment 9

Segment 10

Segment 11

Segment 12

(Segment 5,6)

(Segment 9,10)

(Segment 11,12)

(Segment 7,8)

(Segment 3,4)

RAID 5 RAID 5

332097

RAID 60

Chapter 1 RAID Overview

Figure 1-11 RAID 50 Virtual Drive Example

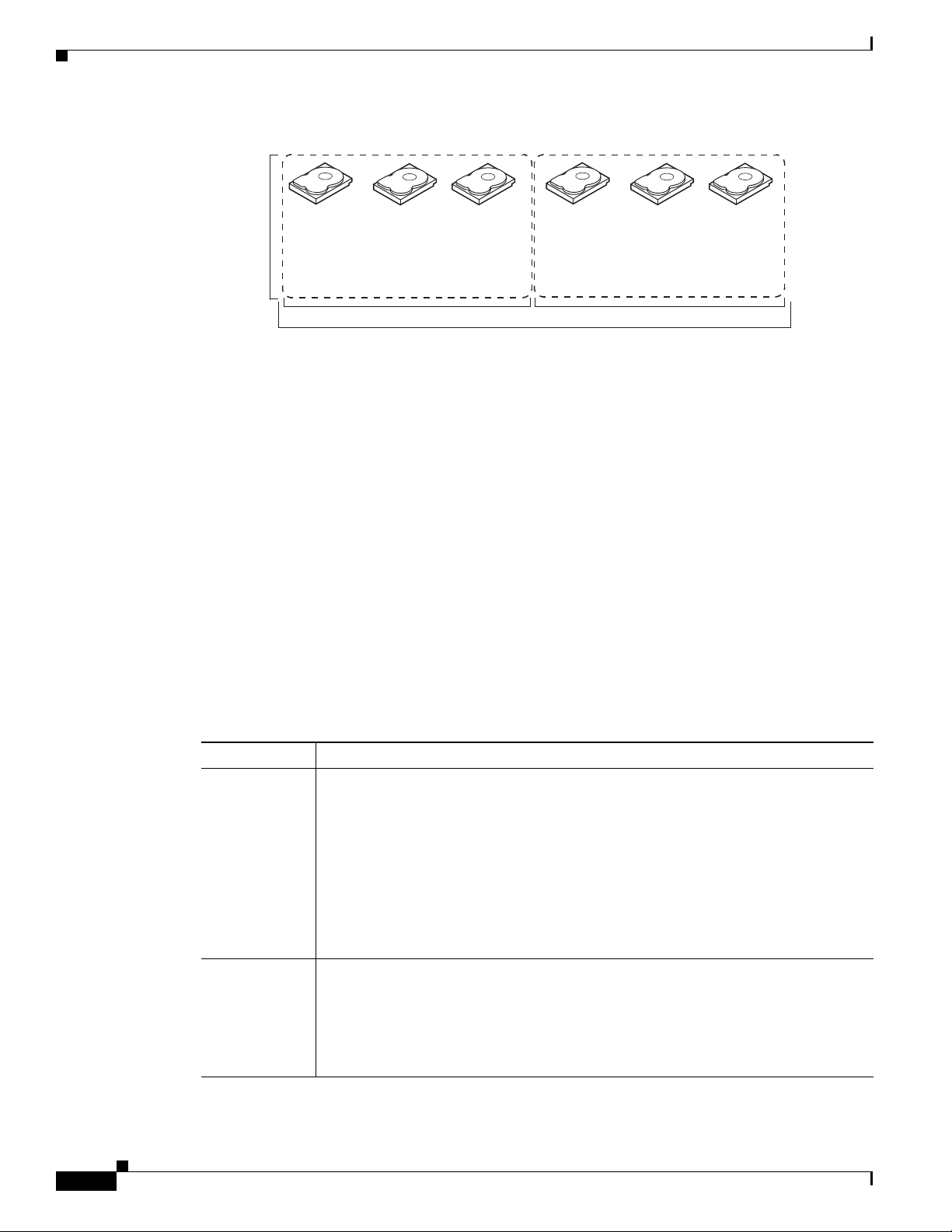

RAID 60 provides the features of both RAID 0 and RAID 6 and includes both parity and disk striping

across multiple drive groups. RAID 6 supports two independent parity blocks per stripe. A RAID 60

virtual drive can survive the loss of two drives in each of the RAID 6 sets without losing data. RAID 60

is best implemented on two RAID 6 drive groups with data striped across both drive groups.

RAID 60 breaks up data into smaller blocks and stripes the blocks of data to each RAID 6 disk set.

RAID 6 breaks up data into smaller blocks, calculates parity, and writes the blocks of data and parity to

each drive in the drive group. The size of each block is determined by the stripe size parameter, which

is set during the creation of the RAID set.

RAID 60 can support up to 8 spans and tolerate up to 16 drive failures though less than total drive

capacity is available. Two drive failures can be tolerated in each RAID 6 level drive group.

Table 1 -11 provides an overview of RAID 60. Figure 1-12 shows a RAID 6 data layout. The second set

of parity drives are denoted by Q. The P drives follow the RAID 5 parity scheme.

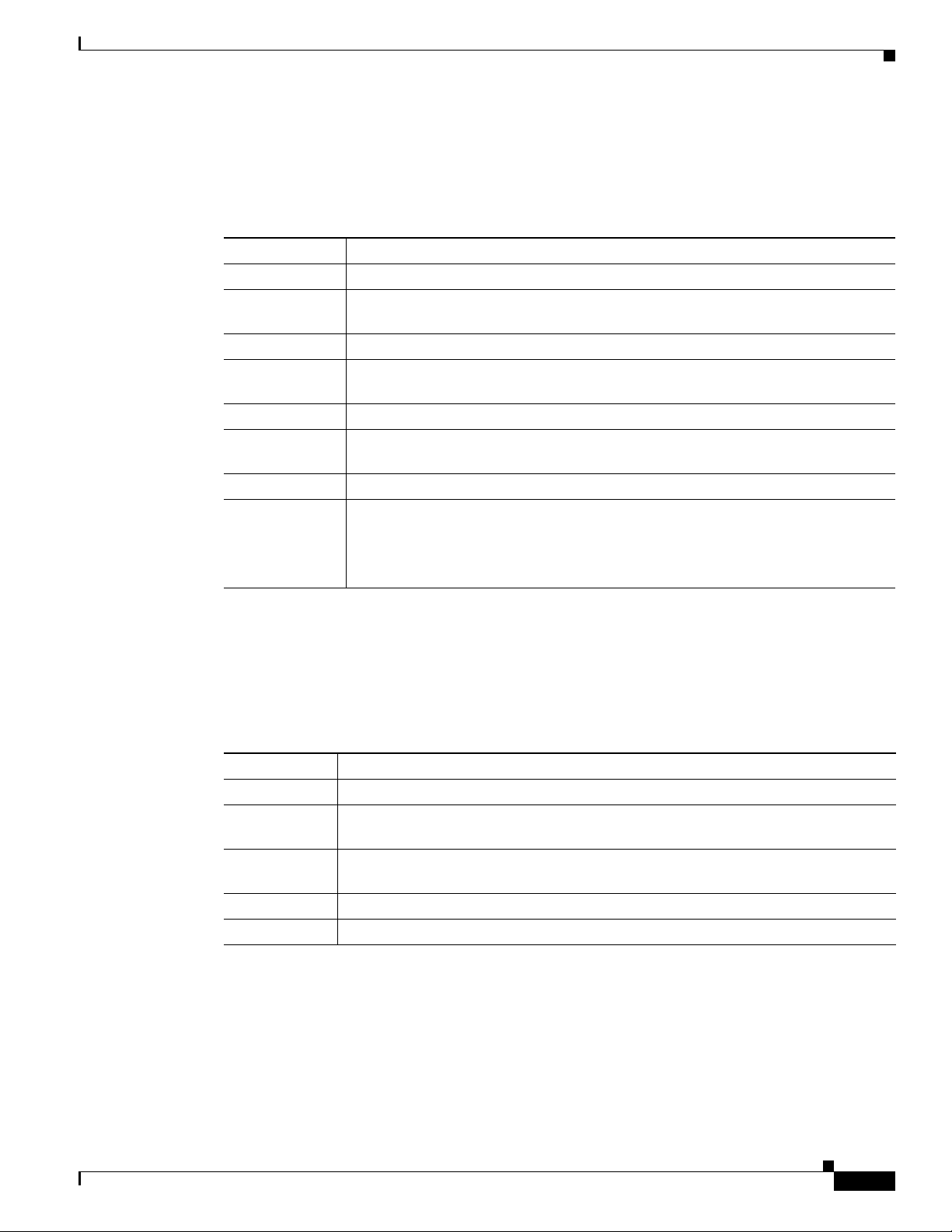

Table 1-11 RAID 60 Overview

Features Description

Uses Provides a high level of data protection through the use of a second parity block in

each stripe. Use RAID 60 for data that requires a very high level of protection from

loss.

In the case of a failure of one drive or two drives in a RAID set in a virtual drive, the

RAID controller uses the parity blocks to recreate all of the missing information. If

two drives in a RAID 6 set in a RAID 60 virtual drive fail, two drive rebuilds are

required, one for each drive. These rebuilds can occur at the same time.

Use for office automation and online customer service that requires fault tolerance.

Use for any application that has high read request rates but low write request rates.

Benefits Provides data redundancy, high read rates, and good performance in most

environments. Each RAID 6 set can survive the loss of two drives or the loss of a

drive while another drive is being rebuilt. RAID 60 provides the highest level of

protection against drive failures of all of the RAID levels. The read performance is

similar to that of RAID 50, though random reads in RAID 60 might be slightly faster

because data is spread across at least one more disk in each RAID 6 set.

1-16

Cisco UCS Servers RAID Guide

OL-26591-01

Page 23

Chapter 1 RAID Overview

Segment 1

Segment 8

Segment 2

Segment 7

Segment 10

Segment 5

Parity (P1–P2)

Parity (Q11–Q12)

Parity (Q1–Q2)

Segment 11

Segment 12

Parity (P15–P16)

Segment 15

Segment 16

Parity (Q15–Q16)

Segment 3

Segment 6

Segment 4

Parity (P9–P10)

Parity (Q9–Q10)Parity (P11–P12)

Segment 9

Parity (P13–P14)

Segment 14

Segment 13

Parity (Q13–Q14)

RAID 6

RAID 6

RAID 0

Parity (Q3–Q4)

Parity (P3–P4)

Parity (Q5–Q6)

Parity (P5–P6)

Parity (P3–P4)

Parity (Q3–Q4)

RAID

60

Note Parity is distributed across all drives in the drive group.

RAID Levels

Table 1-11 RAID 60 Overview (continued)

Features Description

Limitations Not well suited to tasks using many writes. A RAID 60 virtual drive has to generate

two sets of parity data for each write operation, which results in a significant decrease

in performance during writes. Drive performance is reduced during a drive rebuild.

Environments with few processes do not perform as well because the RAID overhead

is not offset by the performance gains in handling simultaneous processes. RAID 6

costs more because of the extra capacity required by using two parity blocks per

stripe.

Drives Two to 8 equal spans of RAID 6 drive groups containing 3 to 32 drives each (limited

by the maximum number of devices supported by the controller.)

Figure 1-12 RAID 60 Virtual Drive Example

Fault Tolerance

OL-26591-01

Fault tolerance is the capability of the subsystem to undergo a drive failure or failures without

compromising data integrity and processing capability. The RAID controller provides this support

through redundant drive groups in RAID levels 1, 5, 6, 10, 50, and 60. The system can operate properly

even with a drive failure in a drive group, although performance might be degraded to some extent.

• A RAID 1 drive group has two drives and can tolerate one drive failure.

• A RAID 5 drive group can tolerate one drive failure in each RAID 5 drive group.

• A RAID 6 drive group can tolerate up to two drive failures.

• Each spanned RAID 10 virtual drive can tolerate multiple drive failures as long as each failure is in

a separate drive group.

• A RAID 50 virtual drive can tolerate two drive failures as long as each failure is in a separate drive

group.

• RAID 60 drive groups can tolerate up to two drive failures in each drive group.

Note RAID level 0 is not fault tolerant. If a drive in a RAID 0 drive group fails, the whole virtual drive (all

drives associated with the virtual drive) fails.

Cisco UCS Servers RAID Guide

1-17

Page 24

Generic Drive Replacement Procedure

Fault tolerance is often associated with system availability because it allows the system to be available

during the failures. However, it is also important for the system to be available during the repair of the

problem.

Hot spares are important in fault tolerance; see Hot Spares, page 1-4 for more information.

Auto-rebuild allows a failed drive to be replaced and the data automatically rebuilt by hot swapping the

drive in the same drive bay. See Hot Swap, page 1-6 for more information. The RAID drive group

continues to handle requests while the rebuild occurs.

Generic Drive Replacement Procedure

Note B-series blade servers are shown but the mechanical features (release button, eject lever) are the same

for most B-series and C-series servers.

Removing a Drive from a Server

Chapter 1 RAID Overview

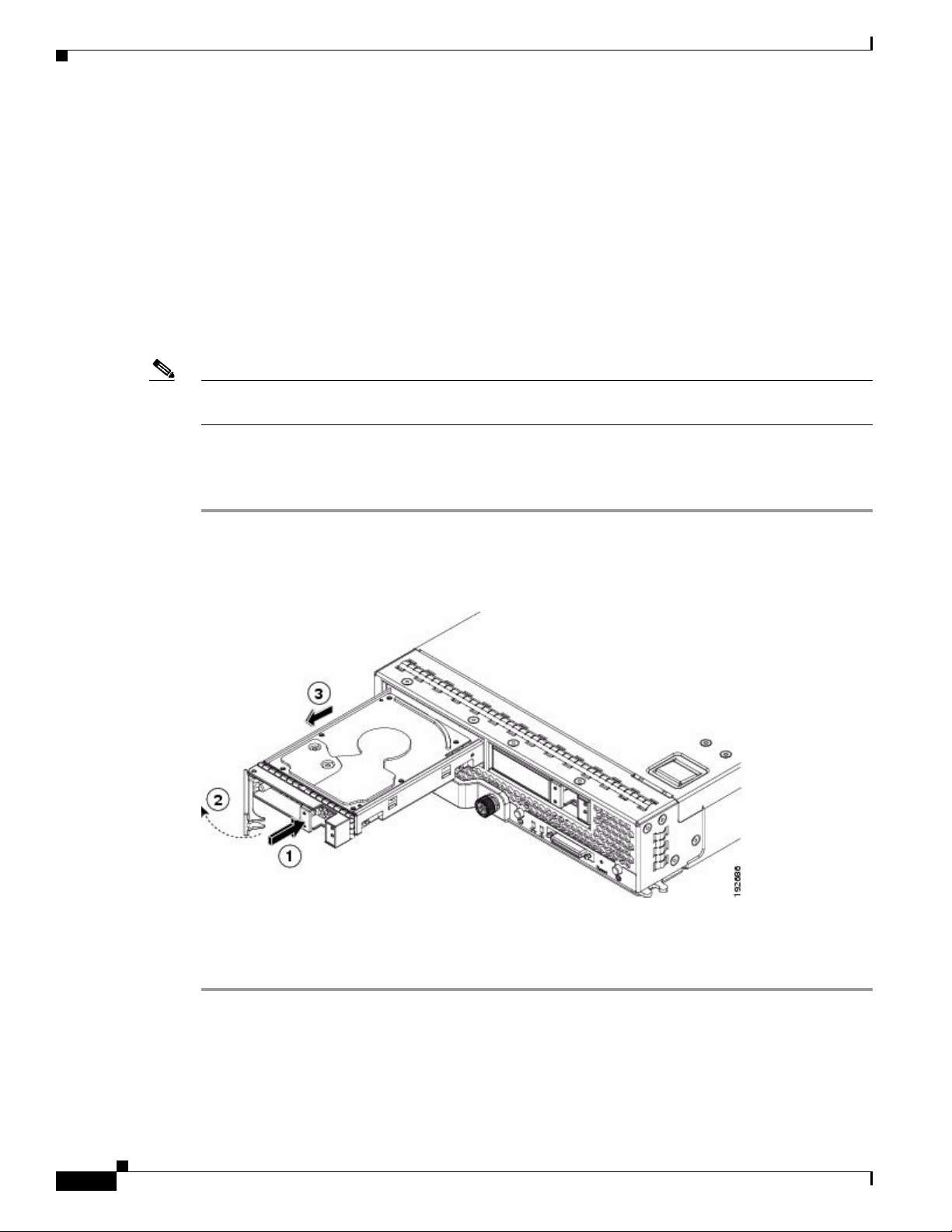

Step 1 Push the button to release the ejector, fully extend the ejection lever and then pull the hard drive from its

slot. See Figure 1-13.

Figure 1-13 Removing the Drive

Step 2

Step 3 Install a blank faceplate (N20-BBLKD) to keep dust out of the server if the slot will remain empty.

Place the hard drive on an antistatic mat or antistatic foam if you are not immediately reinstalling it in

another blade server.

1-18

Cisco UCS Servers RAID Guide

OL-26591-01

Page 25

Chapter 1 RAID Overview

Installing a Drive in a Server

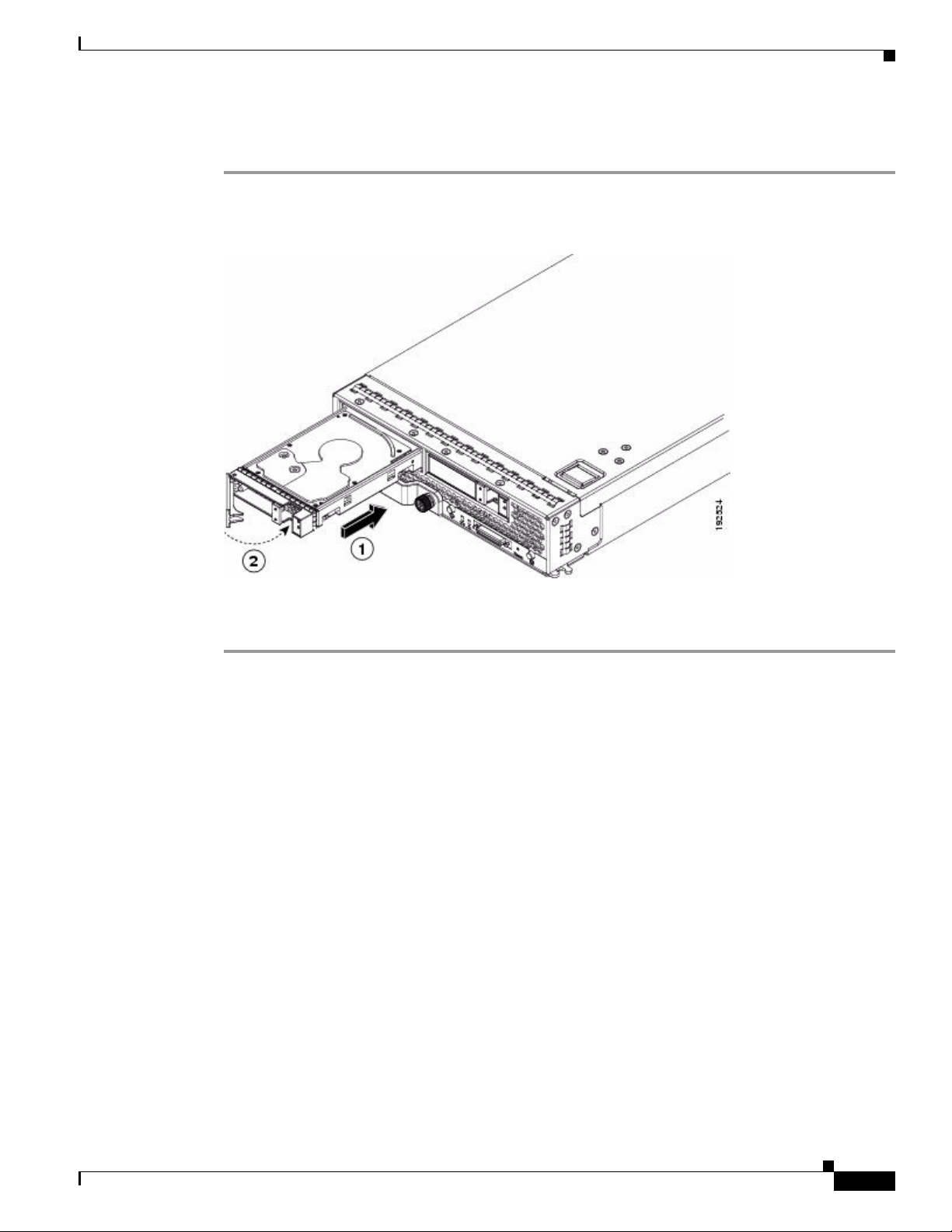

Step 1 Place the hard drive lever into the open position by pushing the release button (see Figure 1-14).

Figure 1-14 Installing a Hard Drive in a Blade Server

Platform-Specific RAID and Drive Procedures

Step 2

Step 3 Push the hard drive lever into the closed position.

Gently slide the hard drive into the opening in the blade server until it seats into place.

If you need to move a RAID cluster, see the Moving a RAID Cluster section of the “Troubleshooting

Server Hardware” chapter of the Cisco UCS Troubleshooting Guide.

Platform-Specific RAID and Drive Procedures

B-series RAID and supported drive information that was previously in the software configuration,

hardware installation and service, and troubleshooting guides is repeated in this guide. B series servers

all have onboard RAID controllers that cannot be removed or upgraded. Only software configuration and

drive operations appropriate for that server’s controller are possible.

Supported RAID controllers for all models are listed in RAID Controllers in UCS Servers, page 3-10.

The C-Series hardware installation guides each have a “RAID Considerations” appendix that provides

information about supported RAID controllers and cables, plus cabling instructions specific to each

server model. See that documentation as needed at:

http://www.cisco.com/en/US/products/ps10493/prod_installation_guides_list.html

OL-26591-01

Cisco UCS Servers RAID Guide

1-19

Page 26

Platform-Specific RAID and Drive Procedures

Chapter 1 RAID Overview

1-20

Cisco UCS Servers RAID Guide

OL-26591-01

Page 27

CHA P T ER

2

Using Cisco Integrated Management Controller and Cisco UCS Server Configuration Utility for RAID Monitoring and Configuring

This chapter provides information about monitoring and configuring your RAID controller in your Cisco

Integrated Management Controller (CIMC) and Cisco UCS Server Configuration Utility. The Cisco

C-Series servers have built-in monitoring and configuration tools for storage, including RAID.

This chapter contains the following sections:

• Cisco Integrated Management Controller—Viewing Storage Properties, page 2-1

• Cisco UCS Server Configuration Utility—RAID Configuration, page 2-2

Note The tools and software referred to in this chapter are used only in C-series rack-mounted servers that are

not integrated with Cisco UCS Manager.

Cisco Integrated Management Controller—Viewing Storage Properties

CIMC is the management service for the C-Series servers and runs within the server.

You can use a web-based GUI or Secure Shell-based CLI to access, configure, administer, and monitor

the server. Almost all tasks can be performed in either interface, and the results of tasks performed in

one interface are displayed in another.

The configuration information for CIMC is located in the Cisco UCS C-Series Rack-Mount Servers

Configuration Guide and the Cisco UCS C-Series Rack-Mount Servers CLI Configuration Guide. For

details, see the guide that applies to the release that you are using.

A complete list of GUI and CLI configuration guides can be found here: Cisco UCS C-Series

Configuration Guides.

The following information is included:

• Storage adapters—Including all MegaRAID and Cisco Flexible Flash controllers.

• Controller information—that include the following:

–

PCI information

–

Manufacturing information

Cisco UCS Servers RAID Guide

OL-26591-01

2-1

Page 28

Chapter 2 Using Cisco Integrated Management Controller and Cisco UCS Server Configuration Utility for RAID

Cisco Integrated Management Controller—Viewing Storage Properties

–

Running and startup firmware image information

–

Virtual and physical drive counts

–

General settings

–

Capabilities

–

Hardware configuration

–

Error counters

• Physical drive information—Including general drive information, identification information, and

drive status.

• Virtual drive information—Including general drive information, RAID information, and physical

drive information.

• Battery backup unit information (does not apply to Cisco Flexible Flash).

Cisco UCS Server Configuration Utility—RAID Configuration

You can use the RAID Configuration section in the Cisco UCS Server Configuration Utility document

to configure your system RAID controllers.

RAID levels supported by SCU are RAID 0, 1, 5, and 6.

The latest documentation can be found here: Cisco UCS Server Configuration Utility, Release 3.0 User

Guide.

If your system has multiple RAID controllers, Cisco UCS Server Configuration Utility displays a list of

all available RAID devices. This feature is described in the Server Configuration section.

Three types of RAID configurations can be set up using Cisco UCS Server Configuration Utility. This

feature is documented in the RAID configuration section.

• Automatic setup with redundancy

• Automatic setup without redundancy

• Create custom or multiple RAID arrays

2-2

Cisco UCS Servers RAID Guide

OL-26591-01

Page 29

CHA P T ER

3

Using Cisco UCS Manager for RAID Configuring and Monitoring

This chapter describes monitoring and configuring your RAID controller using Cisco UCS Manager. The

Cisco B-Series servers have built-in monitoring and configuration tools for storage, including RAID.

This chapter contains the following sections:

• Cisco UCS Manager Configuration, page 3-1

• Server Disk Drive Monitoring, page 3-7

• RAID Controllers in UCS Servers, page 3-10

Note Cisco UCS Manager is used both with B-series blade servers and C-series rack servers that have been

integrated.

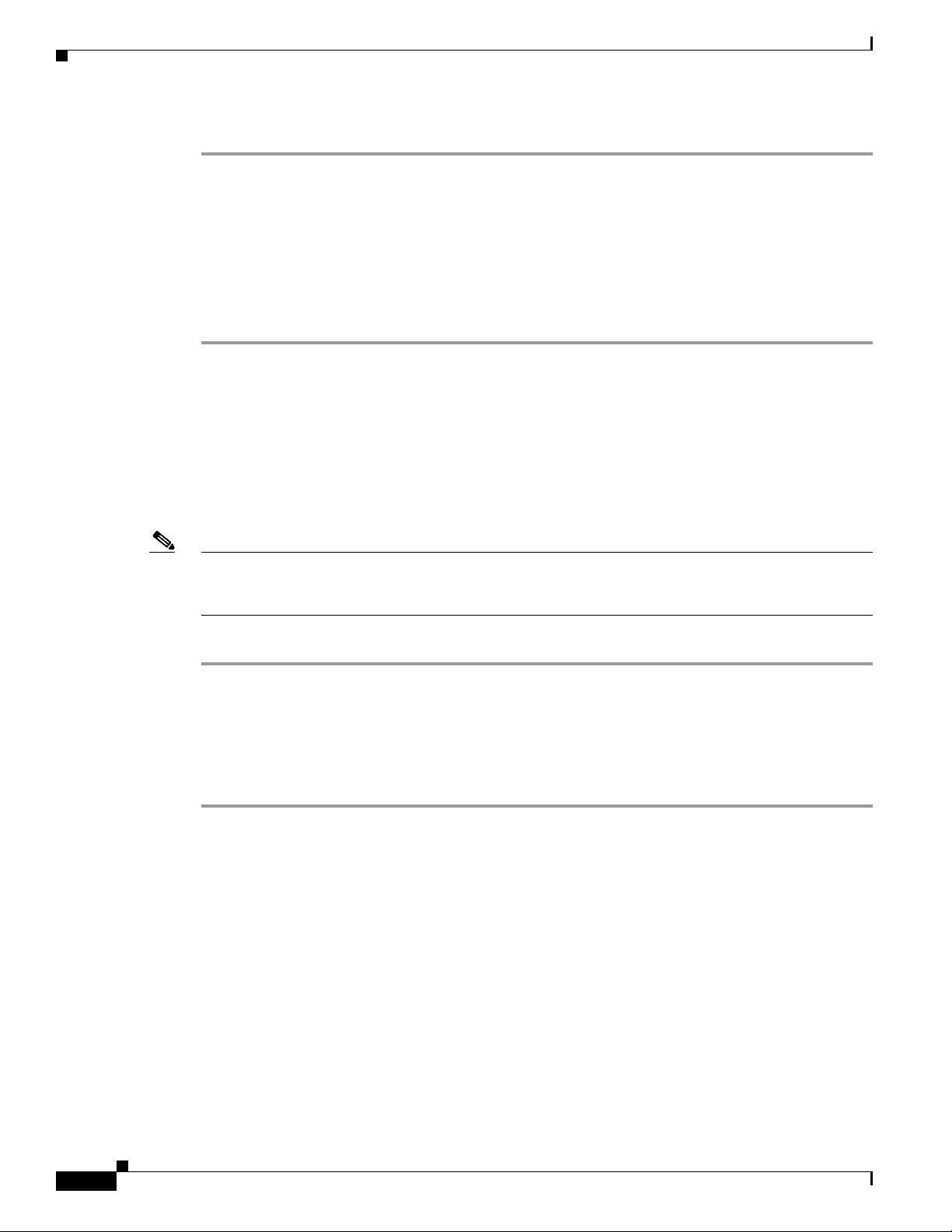

Cisco UCS Manager Configuration

Cisco UCS Manager interfaces with the LSI controllers and software and creates RAID configurations

as part of creating local disk configuration policies, which allow the same configuration steps to be

applied to many servers at once.

Local Disk Configuration Policy

This policy configures any optional SAS local drives that have been installed on a server through the

onboard RAID controller of the local drive. This policy enables you to set a local disk mode for all

servers that are associated with a service profile that includes the local disk configuration policy.

The local disk modes include the following:

• No Local Storage—For a diskless server or a SAN-only configuration. If you select this option, you

cannot associate any service profile that uses this policy with a server that has a local disk.

• RAID 0 Striped—Data is striped across all disks in the array, providing fast throughput. There is no

data redundancy, and all data is lost if any disk fails.

• RAID 1 Mirrored—Data is written to two disks, which provides complete data redundancy if one

disk fails. The maximum array size is equal to the available space on the smaller of the two drives.

• Any Configuration—For a server configuration that carries forward the local disk configuration

without any changes.

OL-26591-01

Cisco UCS Servers RAID Guide

3-1

Page 30

Cisco UCS Manager Configuration

• No RAID—For a server configuration that removes the RAID and leaves the disk MBR and payload

unaltered.

• RAID 5 Striped Parity—Data is striped across all disks in the array. Part of the capacity of each disk

stores parity information that can be used to reconstruct data if a disk fails. RAID 5 provides good

data throughput for applications with high read request rates.

• RAID 6 Striped Dual Parity—Data is striped across all disks in the array, and two parity disks are

used to provide protection against the failure of up to two physical disks. In each row of data blocks,

two sets of parity data are stored.

• RAID10 Mirrored and Striped— RAID 10 uses mirrored pairs of disks to provide complete data

redundancy and high throughput rates.

You must include this policy in a service profile, and that service profile must be associated with a server

for the policy to take effect.

Guidelines for all Local Disk Configuration Policies

Before you create a local disk configuration policy, consider the following guidelines:

• No Mixed HDDs and SSDs

Mixing HDD and SSDs in a single server or RAID configuration is not supported.

Chapter 3 Using Cisco UCS Manager for RAID Configuring and Monitoring

• Do Not Assign a Service Profile with the Default Local Disk Configuration Policy from a B200 M1

or M2 to a B200 M3

Due to the differences in the RAID/JBOD support provided by the storage controllers of B200 M1

and M2 servers and those of the B200 M3 server, you cannot assign or reassign a service profile that

includes the default local disk configuration policy from a B200M1 or M2 server to a B200 M3

server. The default local disk configuration policy includes the Any Configuration or JBOD modes.

• Impact of Upgrade to Release 1.3(1i) or Higher

An upgrade from an earlier Cisco UCS firmware release to release 1.3(1i) or higher has the

following impact on the Protect Configuration property of the local disk configuration policy the

first time servers are associated with service profiles after the upgrade:

• Unassociated Servers

After you upgrade the Cisco UCS domain, the initial server association proceeds without

configuration errors whether or not the local disk configuration policy matches the server hardware.

Even if you enable the Protect Configuration property, Cisco UCS does not protect the user data on

the server if there are configuration mismatches between the local disk configuration policy on the

previous service profile and the policy in the new service profile.

Note If you enable the Protect Configuration property and the local disk configuration policy

encounters mismatches between the previous service profile and the new service profile, all

subsequent service profile associations with the server are blocked.

• Associated Servers

3-2

Any servers that are already associated with service profiles do not reboot after the upgrade. Cisco

UCS Manager does not report any configuration errors if there is a mismatch between the local disk

configuration policy and the server hardware.

Cisco UCS Servers RAID Guide

OL-26591-01

Page 31

Chapter 3 Using Cisco UCS Manager for RAID Configuring and Monitoring

When a service profile is disassociated from a server and a new service profile associated, the setting

for the Protect Configuration property in the new service profile takes precedence and overwrites

the setting in the previous service profile.

Guidelines for Local Disk Configuration Policies Configured for RAID

• No Mixed HDDs and SSDs

Do not include HDDs and SSDs in a single RAID configuration.

• Server May Not Boot After RAID 1 Cluster Migration if Any Configuration Mode Specified in

Service Profile

After RAID 1 clusters are migrated, you must associate a service profile with the server. If the local

disk configuration policy in the service profile is configured with Any Configuration mode rather

than RAID 1, the RAID LUN remains in an “inactive” state during and after association. As a result,

the server cannot boot.

To avoid this issue, ensure that the service profile you associate with the server contains the identical

local disk configuration policy as the original service profile before the migration and does not

include the Any Configuration mode.

• Configure RAID Settings in Local Disk Configuration Policy for Servers with MegaRAID Storage

Controllers

If a blade server or integrated rack-mount server has a MegaRAID controller, you must configure

RAID settings for the drives in the Local Disk Configuration policy included in the service profile

for that server.

Cisco UCS Manager Configuration

If you do not configure your RAID LUNs before installing the OS, disk discovery failures might

occur during the installation and you might see error messages such as “No Device Found.”

• Do Not Use JBOD Mode on Servers with MegaRAID Storage Controllers

Do not configure or use JBOD mode or JBOD operations on any blade server or integrated

rack-mount server with MegaRAID storage controllers. JBOD mode and operations are not

supported on these servers.

• Maximum of One RAID Volume Using RAID 0 or RAID 1 Disk Policy

A rack-mount server that has been integrated with Cisco UCS Manager can have a maximum of one

RAID 1 or RAID 0 volume using the Local Disk Policy irrespective of how many hard drives are

present on the server. If you require multiple volumes you must use the “Any Configuration” local

drive policy and configure the volumes using the LSI tools outside of UCSM.

• Number of Disks Selected in Mirrored RAID Should Not Exceed Two

If the number of disks selected in the Mirrored RAID exceed two, RAID 1 is created as a RAID 10

LUN. This issue can occur with the Cisco UCS B440 M1 and B440 M2 servers.

OL-26591-01

Cisco UCS Servers RAID Guide

3-3

Page 32

Chapter 3 Using Cisco UCS Manager for RAID Configuring and Monitoring

Cisco UCS Manager Configuration

Creating a Local Disk Configuration Policy

Step 1 In the Navigation pane, click the Servers tab.

Step 2 On the Servers tab, expand Servers > Policies.

Step 3 Expand the node for the organization where you want to create the policy.

If the system does not include multi-tenancy, expand the root node.

Step 4 Right-click Local Disk Config Policies and choose Create Local Disk Configuration Policy.

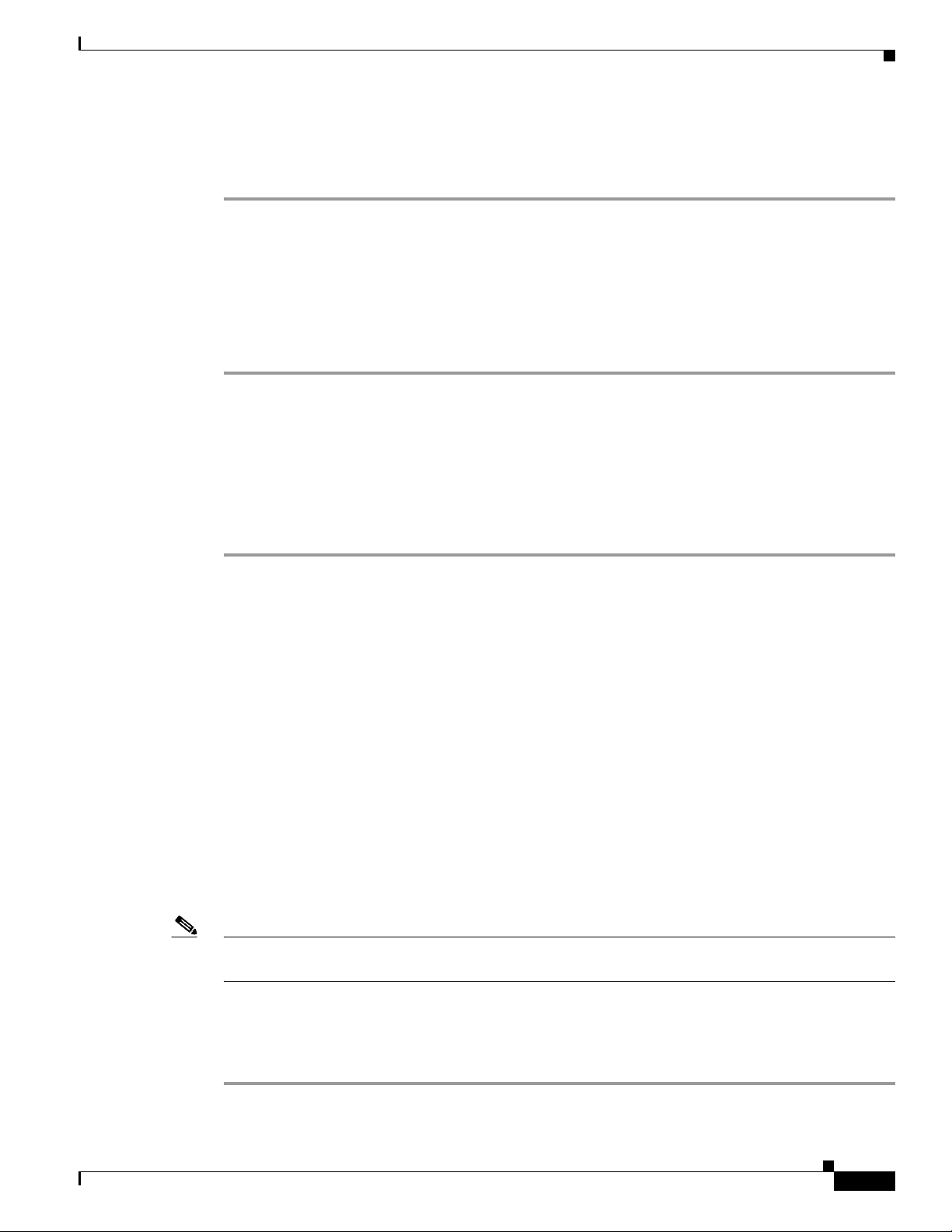

Step 5 In the Create Local Disk Configuration Policy dialog box, complete the following fields:

Name Description

Name field The name of the policy.

This name can be between 1 and 16 alphanumeric characters. You cannot use

spaces or any special characters other than - (hyphen), _ (underscore),

: (colon), and . (period), and you cannot change this name after the object has

been saved.

Description field A description of the policy. We recommend that you include information

about where and when the policy should be used.

Enter up to 256 characters. You can use any characters or spaces except ^

(carat), \ (back slash), > (greater than), < (less than), ' (single quote), " (double

quote), ` (accent mark), or = (equal sign).

3-4

Cisco UCS Servers RAID Guide

OL-26591-01

Page 33

Chapter 3 Using Cisco UCS Manager for RAID Configuring and Monitoring

Name Description

Mode drop-down list This can be one of the following local disk policy modes:

• No Local Storage—For a diskless server or a SAN-only configuration. If

you select this option, you cannot associate any service profile which

uses this policy with a server that has a local disk.

• RAID 0 Striped—Data is striped across all disks in the array, providing

fast throughput. There is no data redundancy, and all data is lost if any

disk fails.

• RAID 1 Mirrored—Data is written to two disks, which provides complete

data redundancy if one disk fails. The maximum array size is equal to the

available space on the smaller of the two drives.

• Any Configuration—For a server configuration that carries forward the

local disk configuration without any changes.

• No RAID—For a server configuration that removes the RAID and leaves

the disk MBR and payload unaltered.

• RAID 5 Striped Parity—Data is striped across all disks in the array. Part

of the capacity of each disk stores parity information that can be used to

reconstruct data if a disk fails. RAID 5 provides good data throughput for

applications with high read request rates.

• RAID 6 Striped Dual Parity—Data is striped across all disks in the array

and two parity disks are used to provide protection against the failure of

up to two physical disks. In each row of data blocks, two sets of parity

data are stored.

• RAID10 Mirrored and Striped— RAID 10 uses mirrored pairs of disks to

provide complete data redundancy and high throughput rates.

Cisco UCS Manager Configuration

Note If you choose No RAID and you apply this policy to a server that

already has an operating system with RAID storage configured, the

system does not remove the disk contents. Therefore, there may be no

visible differences after you apply the No RAID mode.

To make sure that any previous RAID configuration information is removed

from a disk, apply a scrub policy that removes all disk information after you

apply the No RAID configuration mode.

OL-26591-01

Cisco UCS Servers RAID Guide

3-5

Page 34

Cisco UCS Manager Configuration

Name Description

Protect Configuration

check box

Chapter 3 Using Cisco UCS Manager for RAID Configuring and Monitoring

If checked, the server retains the configuration in the local disk configuration

policy even if the server is disassociated from the service profile.

Caution Protect Configuration becomes non functional if one or more disks

in the server are defective or faulty.

This property is checked by default.

When a service profile is disassociated from a server and a new service profile

associated, the setting for the Protect Configuration property in the new

service profile takes precedence and overwrites the setting in the previous

service profile.

Note If you disassociate the server from a service profile with this option

enabled and then associate it with a new service profile that includes

a local disk configuration policy with different properties, the server

returns a configuration mismatch error and the association fails.

Step 6 Click OK.

Changing a Local Disk Configuration Policy

This procedure describes how to change a local disk configuration policy from an associated service

profile. You can also change a local disk configuration policy from the Policies node of the Servers tab.

Step 1 In the Navigation pane, click the Servers tab.

Step 2 On the Servers tab, expand Servers > Service Profiles.

Step 3 Expand the organization that includes the service profile with the local disk configuration policy you

want to change.

If the system does not include multi-tenancy, expand the root node.

Step 4 Click the service profile that contains the local disk configuration policy you want to change.

Step 5 In the Work pane, click the Policies tab.

Step 6 In the Actions area, click Change Local Disk Configuration Policy.

Step 7 In the Change Local Disk Configuration Policy dialog box, choose one of the following options from

the Select the Local Disk Configuration Policy drop-down list.

3-6

Option Description

Use a Disk Policy Select an existing local disk configuration policy from the list below

this option. Cisco UCS Manager assigns this policy to the service

profile.

Cisco UCS Servers RAID Guide

OL-26591-01

Page 35

Chapter 3 Using Cisco UCS Manager for RAID Configuring and Monitoring

Option Description

Create a Local Disk Policy Enables you to create a local disk configuration policy that can only

be accessed by the selected service profile.

No Disk Policy Does not use a local disk configuration policy for the selected

service profile.

Step 8 Click OK.

Step 9 (Optional) Expand the Local Disk Configuration Policy area to confirm that the change has been made.

Deleting a Local Disk Configuration Policy

Step 1 In the Navigation pane, click the Servers tab.

Step 2 On the Servers tab, expand Servers > Policies > Organization_Name.

Server Disk Drive Monitoring

Step 3 Expand the Local Disk Config Policies node.

Step 4 Right-click the policy you want to delete and choose Delete.

Step 5 If the Cisco UCS Manager GUI displays a confirmation dialog box, click Yes .

Server Disk Drive Monitoring

The disk drive monitoring for Cisco UCS provides Cisco UCS Manager with blade-resident disk drive

status for supported blade servers in a Cisco UCS domain. Disk drive monitoring provides a

unidirectional fault signal from the LSI firmware to Cisco UCS Manager to provide status information.

The following server and firmware components gather, send, and aggregate information about the disk

drive status in a server:

• Physical presence sensor—Determines whether the disk drive is inserted in the server drive bay.

• Physical fault sensor—Determines the operability status reported by the LSI storage controller

firmware for the disk drive.

• IPMI disk drive fault and presence sensors—Sends the sensor results to Cisco UCS Manager.

• Disk drive fault LED control and associated IPMI sensors—Controls disk drive fault LED states