Cisco UCS Manager GUI Configuration Guide, Release 2.0

First Published: September 06, 2011

Last Modified: September 04, 2012

Americas Headquarters

Cisco Systems, Inc.

170 West Tasman Drive

San Jose, CA 95134-1706

USA

http://www.cisco.com

Tel: 408 526-4000

800 553-NETS (6387)

Fax: 408 527-0883

Text Part Number: OL-25712-04

THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS.

THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE.

IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

Cisco and the Cisco logo are trademarks or registered trademarks of Cisco and/or its affiliates in the U.S. and other countries. To view a list of Cisco trademarks, go to this URL: http://www.cisco.com/go/trademarks. Third-party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (1110R)

Any Internet Protocol (IP) addresses used in this document are not intended to be actual addresses. Any examples, command display output, and figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses in illustrative content is unintentional and coincidental.

© 2011-2012 Cisco Systems, Inc. All rights reserved.

CONTENTS

Preface xxxiii

Audience xxxiii

Conventions xxxiii

Related Cisco UCS Documentation xxxv

Documentation Feedback xxxv

Obtaining Documentation and Submitting a Service Request xxxv

PART I

Introduction 1

CHAPTER 1

New and Changed Information 3

New and Changed Information for this Release 3

CHAPTER 2

Overview of Cisco Unified Computing System 9

About Cisco Unified Computing System 9

Unified Fabric 10

Fibre Channel over Ethernet 11

Link-Level Flow Control 11

Priority Flow Control 11

Server Architecture and Connectivity 12

Overview of Service Profiles 12

Network Connectivity through Service Profiles 12

Configuration through Service Profiles 12

Service Profiles that Override Server Identity 13

Service Profiles that Inherit Server Identity 14

Service Profile Templates 15

Policies 15

Configuration Policies 15

Boot Policy 15

Chassis Discovery Policy 16

Dynamic vNIC Connection Policy 19

Ethernet and Fibre Channel Adapter Policies 19

Global Cap Policy 20

Host Firmware Package 21

IPMI Access Profile 21

Local Disk Configuration Policy 22

Management Firmware Package 22

Management Interfaces Monitoring Policy 23

Network Control Policy 23

Power Control Policy 24

Power Policy 24

Quality of Service Policy 25

Rack Server Discovery Policy 25

Server Autoconfiguration Policy 25

Server Discovery Policy 25

Server Inheritance Policy 26

Server Pool Policy 26

Server Pool Policy Qualifications 26

vHBA Template 27

VM Lifecycle Policy 27

vNIC Template 27

vNIC/vHBA Placement Policies 28

Operational Policies 28

Fault Collection Policy 28

Flow Control Policy 29

Maintenance Policy 29

Scrub Policy 29

Serial over LAN Policy 30

Statistics Collection Policy 30

Statistics Threshold Policy 30

Pools 31

Server Pools 31

MAC Pools 31

UUID Suffix Pools 32

WWN Pools 32

Management IP Pool 33

Traffic Management 33

Oversubscription 33

Oversubscription Considerations 33

Guidelines for Estimating Oversubscription 34

Pinning 35

Pinning Server Traffic to Server Ports 35

Guidelines for Pinning 36

Quality of Service 37

System Classes 37

Quality of Service Policy 38

Flow Control Policy 38

Opt-In Features 38

Stateless Computing 38

Multi-Tenancy 39

Virtualization in Cisco UCS 40

Overview of Virtualization 40

Overview of Cisco Virtual Machine Fabric Extender 41

Virtualization with Network Interface Cards and Converged Network Adapters 41

Virtualization with a Virtual Interface Card Adapter 41

CHAPTER 3

Overview of Cisco UCS Manager 43

About Cisco UCS Manager 43

Tasks You Can Perform in Cisco UCS Manager 44

Tasks You Cannot Perform in Cisco UCS Manager 46

Cisco UCS Manager in a High Availability Environment 46

CHAPTER 4

Overview of Cisco UCS Manager GUI 47

Overview of Cisco UCS Manager GUI 47

Fault Summary Area 48

Navigation Pane 48

Toolbar 50

Work Pane 50

Status Bar 50

Table Customization 51

LAN Uplinks Manager 52

Internal Fabric Manager 52

Hybrid Display 53

Logging in to Cisco UCS Manager GUI through HTTPS 53

Logging in to Cisco UCS Manager GUI through HTTP 54

Logging Off Cisco UCS Manager GUI 54

Web Session Limits 55

Setting the Web Session Limit for Cisco UCS Manager 55

Pre-Login Banner 56

Creating the Pre-Login Banner 56

Modifying the Pre-Login Banner 56

Deleting the Pre-Login Banner 57

Cisco UCS Manager GUI Properties 57

Configuring the Cisco UCS Manager GUI Session and Log Properties 57

Configuring Properties for Confirmation Messages 58

Configuring Properties for External Applications 59

Customizing the Appearance of Cisco UCS Manager GUI 59

Determining the Acceptable Range of Values for a Field 60

Determining Where a Policy Is Used 60

Determining Where a Pool Is Used 61

Copying the XML 61

PART II

System Configuration 63

CHAPTER 5

Configuring the Fabric Interconnects 65

Initial System Setup 65

Setup Mode 66

System Configuration Type 66

Management Port IP Address 66

Performing an Initial System Setup for a Standalone Configuration 67

Initial System Setup for a Cluster Configuration 69

Performing an Initial System Setup on the First Fabric Interconnect 69

Performing an Initial System Setup on the Second Fabric Interconnect 71

Enabling a Standalone Fabric Interconnect for Cluster Configuration 72

Ethernet Switching Mode 72

Configuring Ethernet Switching Mode 73

Fibre Channel Switching Mode 74

Configuring Fibre Channel Switching Mode 74

Changing the Properties of the Fabric Interconnects 75

Determining the Leadership Role of a Fabric Interconnect 76

CHAPTER 6

Configuring Ports and Port Channels 77

Server and Uplink Ports on the 6100 Series Fabric Interconnect 77

Unified Ports on the 6200 Series Fabric Interconnect 78

Port Modes 78

Port Types 79

Beacon LEDs for Unified Ports 80

Guidelines for Configuring Unified Ports 80

Effect of Port Mode Changes on Data Traffic 81

Configuring Port Modes for a 6248 Fabric Interconnect 82

Configuring Port Modes for a 6296 Fabric Interconnect 83

Configuring the Beacon LEDs for Unified Ports 84

Server Ports 85

Configuring Server Ports 85

Uplink Ethernet Ports 85

Configuring Uplink Ethernet Ports 85

Changing the Properties of an Uplink Ethernet Port 86

Reconfiguring a Port on a Fabric Interconnect 86

Enabling a Port on a Fabric Interconnect 87

Disabling a Port on a Fabric Interconnect 88

Unconfiguring a Port on a Fabric Interconnect 89

Appliance Ports 89

Configuring an Appliance Port 89

Modifying the Properties of an Appliance Port 92

FCoE and Fibre Channel Storage Ports 94

Configuring an FCoE Storage Port 94

Configuring a Fibre Channel Storage Port 94

Restoring an Uplink Fibre Channel Port 95

Default Zoning 95

Enabling Default Zoning 96

Disabling Default Zoning 97

Uplink Ethernet Port Channels 97

Creating an Uplink Ethernet Port Channel 98

Enabling an Uplink Ethernet Port Channel 99

Disabling an Uplink Ethernet Port Channel 99

Adding Ports to and Removing Ports from an Uplink Ethernet Port Channel 99

Deleting an Uplink Ethernet Port Channel 100

Appliance Port Channels 100

Creating an Appliance Port Channel 100

Enabling an Appliance Port Channel 103

Disabling an Appliance Port Channel 103

Adding Ports to and Removing Ports from an Appliance Port Channel 103

Deleting an Appliance Port Channel 104

Fibre Channel Port Channels 104

Creating a Fibre Channel Port Channel 104

Enabling a Fibre Channel Port Channel 105

Disabling a Fibre Channel Port Channel 106

Adding Ports to and Removing Ports from a Fibre Channel Port Channel 106

Modifying the Properties of a Fibre Channel Port Channel 106

Deleting a Fibre Channel Port Channel 107

Adapter Port Channels 108

Viewing Adapter Port Channels 108

Fabric Port Channels 108

Cabling Considerations for Fabric Port Channels 109

Configuring a Fabric Port Channel 109

Viewing Fabric Port Channels 110

Enabling or Disabling a Fabric Port Channel Member Port 110

Configuring Server Ports with the Internal Fabric Manager 111

Internal Fabric Manager 111

Launching the Internal Fabric Manager 111

Configuring a Server Port with the Internal Fabric Manager 111

Unconfiguring a Server Port with the Internal Fabric Manager 112

Enabling a Server Port with the Internal Fabric Manager 112

Disabling a Server Port with the Internal Fabric Manager 112

CHAPTER 7

Configuring Communication Services 113

Communication Services 113

Configuring CIM-XML 114

Configuring HTTP 115

Configuring HTTPS 115

Certificates, Key Rings, and Trusted Points 115

Creating a Key Ring 116

Creating a Certificate Request for a Key Ring 117

Creating a Trusted Point 118

Importing a Certificate into a Key Ring 119

Configuring HTTPS 119

Deleting a Key Ring 121

Deleting a Trusted Point 121

Configuring SNMP 121

Information about SNMP 121

SNMP Functional Overview 121

SNMP Notifications 122

SNMP Security Levels and Privileges 122

Supported Combinations of SNMP Security Models and Levels 123

SNMPv3 Security Features 124

SNMP Support in Cisco UCS 124

Enabling SNMP and Configuring SNMP Properties 125

Creating an SNMP Trap 126

Deleting an SNMP Trap 127

Creating an SNMPv3 User 128

Deleting an SNMPv3 User 129

Enabling Telnet 129

Disabling Communication Services 129

CHAPTER 8

Configuring Authentication 131

Authentication Services 131

Guidelines and Recommendations for Remote Authentication Providers 131

User Attributes in Remote Authentication Providers 132

LDAP Group Rule 134

Configuring LDAP Providers 134

Configuring Properties for LDAP Providers 134

Creating an LDAP Provider 135

Changing the LDAP Group Rule for an LDAP Provider 139

Deleting an LDAP Provider 140

LDAP Group Mapping 140

Creating an LDAP Group Map 141

Deleting an LDAP Group Map 141

Configuring RADIUS Providers 142

Configuring Properties for RADIUS Providers 142

Creating a RADIUS Provider 142

Deleting a RADIUS Provider 144

Configuring TACACS+ Providers 144

Configuring Properties for TACACS+ Providers 144

Creating a TACACS+ Provider 145

Deleting a TACACS+ Provider 146

Configuring Multiple Authentication Systems 146

Multiple Authentication Systems 146

Provider Groups 147

Creating an LDAP Provider Group 147

Deleting an LDAP Provider Group 147

Creating a RADIUS Provider Group 148

Deleting a RADIUS Provider Group 148

Creating a TACACS+ Provider Group 149

Deleting a TACACS+ Provider Group 149

Authentication Domains 150

Creating an Authentication Domain 150

Selecting a Primary Authentication Service 151

Selecting the Console Authentication Service 151

Selecting the Default Authentication Service 152

Role Policy for Remote Users 153

Configuring the Role Policy for Remote Users 154

CHAPTER 9

Configuring Organizations 155

Organizations in a Multi-Tenancy Environment 155

Hierarchical Name Resolution in a Multi-Tenancy Environment 156

Creating an Organization under the Root Organization 157

Creating an Organization under a Sub-Organization 158

Deleting an Organization 158

CHAPTER 10

Configuring Role-Based Access Control 159

Role-Based Access Control 159

User Accounts for Cisco UCS Manager 159

Guidelines for Cisco UCS Manager Usernames 160

Reserved Words: Locally Authenticated User Accounts 161

Guidelines for Cisco UCS Manager Passwords 162

Web Session Limits for User Accounts 162

User Roles 162

Default User Roles 163

Reserved Words: User Roles 164

Privileges 164

User Locales 166

Configuring User Roles 167

Creating a User Role 167

Adding Privileges to a User Role 168

Removing Privileges from a User Role 168

Deleting a User Role 168

Configuring Locales 169

Creating a Locale 169

Assigning an Organization to a Locale 170

Deleting an Organization from a Locale 170

Deleting a Locale 171

Configuring Locally Authenticated User Accounts 171

Creating a User Account 171

Enabling the Password Strength Check for Locally Authenticated Users 174

Setting the Web Session Limits for Cisco UCS Manager GUI Users 174

Changing the Locales Assigned to a Locally Authenticated User Account 175

Changing the Roles Assigned to a Locally Authenticated User Account 175

Enabling a User Account 176

Disabling a User Account 176

Clearing the Password History for a Locally Authenticated User 177

Deleting a Locally Authenticated User Account 177

Password Profile for Locally Authenticated Users 177

Configuring the Maximum Number of Password Changes for a Change Interval 179

Configuring a No Change Interval for Passwords 179

Configuring the Password History Count 180

Monitoring User Sessions 180

CHAPTER 11

Managing Firmware 183

Overview of Firmware 183

Firmware Image Management 184

Firmware Image Headers 185

Firmware Image Catalog 185

Firmware Versions 186

Firmware Upgrades 187

Cautions, Guidelines, and Best Practices for Firmware Upgrades 187

Configuration Changes and Settings that Can Impact Upgrades 188

Hardware-Related Guidelines and Best Practices for Firmware Upgrades 189

Firmware- and Software-Related Best Practices for Upgrades 190

Required Order of Components for Firmware Activation 192

Required Order for Adding Support for Previously Unsupported Servers 193

Direct Firmware Upgrade at Endpoints 194

Stages of a Direct Firmware Upgrade 195

Outage Impacts of Direct Firmware Upgrades 196

Firmware Upgrades through Service Profiles 197

Host Firmware Package 197

Management Firmware Package 198

Stages of a Firmware Upgrade through Service Profiles 198

Firmware Downgrades 199

Completing the Prerequisites for Upgrading the Firmware 199

Prerequisites for Upgrading and Downgrading Firmware 199

Creating an All Configuration Backup File 200

Verifying the Overall Status of the Fabric Interconnects 202

Verifying the High Availability Status and Roles of a Cluster Configuration 202

Verifying the Status of I/O Modules 203

Verifying the Status of Servers 203

Verifying the Status of Adapters on Servers in a Chassis 204

Downloading and Managing Firmware Packages 204

Obtaining Software Bundles from Cisco 204

Downloading Firmware Images to the Fabric Interconnect from a Remote Location 206

Downloading Firmware Images to the Fabric Interconnect from the Local File System 207

Canceling an Image Download 208

Determining the Contents of a Firmware Package 209

Checking the Available Space on a Fabric Interconnect 209

Deleting Firmware Packages from a Fabric Interconnect 209

Deleting Firmware Images from a Fabric Interconnect 210

Directly Upgrading Firmware at Endpoints 210

Updating the Firmware on Multiple Endpoints 210

Updating the Firmware on an Adapter 212

Activating the Firmware on an Adapter 213

Updating the BIOS Firmware on a Server 213

Activating the BIOS Firmware on a Server 214

Updating the CIMC Firmware on a Server 215

Activating the CIMC Firmware on a Server 215

Updating the Firmware on an IOM 216

Activating the Firmware on an IOM 217

Activating the Board Controller Firmware on a Server 218

Activating the Cisco UCS Manager Software 219

Activating the Firmware on a Subordinate Fabric Interconnect 219

Activating the Firmware on a Primary Fabric Interconnect 220

Activating the Firmware on a Standalone Fabric Interconnect 221

Upgrading Firmware through Service Profiles 222

Host Firmware Package 222

Management Firmware Package 223

Effect of Updates to Host Firmware Packages and Management Firmware Packages 223

Creating a Host Firmware Package 226

Updating a Host Firmware Package 227

Creating a Management Firmware Package 228

Updating a Management Firmware Package 228

Adding Firmware Packages to an Existing Service Profile 229

Verifying Firmware Versions on Components 230

Managing the Capability Catalog 230

Capability Catalog 230

Contents of the Capability Catalog 230

Updates to the Capability Catalog 231

Activating a Capability Catalog Update 232

Verifying that the Capability Catalog Is Current 232

Viewing a Capability Catalog Provider 233

Downloading Individual Capability Catalog Updates 233

Obtaining Capability Catalog Updates from Cisco 233

Updating the Capability Catalog from a Remote Location 234

Updating the Capability Catalog from the Local File System 235

Updating Management Extensions 235

Management Extensions 235

Activating a Management Extension 236

CHAPTER 12

Configuring DNS Servers 237

DNS Servers in Cisco UCS 237

Adding a DNS Server 237

Deleting a DNS Server 238

CHAPTER 13

Configuring System-Related Policies 239

Configuring the Chassis Discovery Policy 239

Chassis Discovery Policy 239

Configuring the Chassis Discovery Policy 242

Configuring the Chassis Connectivity Policy 243

Chassis Connectivity Policy 243

Configuring a Chassis Connectivity Policy 243

Configuring the Rack Server Discovery Policy 244

Rack Server Discovery Policy 244

Configuring the Rack Server Discovery Policy 244

Configuring the Aging Time for the MAC Address Table 245

Aging Time for the MAC Address Table 245

Configuring the Aging Time for the MAC Address Table 245

CHAPTER 14

Managing Licenses 247

Licenses 247

Obtaining the Host ID for a Fabric Interconnect 248

Obtaining a License 249

Downloading Licenses to the Fabric Interconnect from the Local File System 250

Downloading Licenses to the Fabric Interconnect from a Remote Location 251

Installing a License 252

Viewing the Licenses Installed on a Fabric Interconnect 253

Determining the Grace Period Available for a Port or Feature 255

Determining the Expiry Date of a License 256

Uninstalling a License 256

CHAPTER 15

Managing Virtual Interfaces 259

Virtual Interfaces 259

Virtual Interface Subscription Management and Error Handling 259

PART III

Network Configuration 261

CHAPTER 16

Using the LAN Uplinks Manager 263

LAN Uplinks Manager 263

Launching the LAN Uplinks Manager 264

Changing the Ethernet Switching Mode with the LAN Uplinks Manager 264

Configuring a Port with the LAN Uplinks Manager 264

Configuring Server Ports 265

Enabling a Server Port with the LAN Uplinks Manager 265

Disabling a Server Port with the LAN Uplinks Manager 266

Unconfiguring a Server Port with the LAN Uplinks Manager 266

Configuring Uplink Ethernet Ports 266

Enabling an Uplink Ethernet Port with the LAN Uplinks Manager 266

Disabling an Uplink Ethernet Port with the LAN Uplinks Manager 267

Unconfiguring an Uplink Ethernet Port with the LAN Uplinks Manager 267

Configuring Uplink Ethernet Port Channels 267

Creating a Port Channel with the LAN Uplinks Manager 267

Enabling a Port Channel with the LAN Uplinks Manager 268

Disabling a Port Channel with the LAN Uplinks Manager 269

Adding Ports to a Port Channel with the LAN Uplinks Manager 269

Removing Ports from a Port Channel with the LAN Uplinks Manager 270

Deleting a Port Channel with the LAN Uplinks Manager 270

Configuring LAN Pin Groups 270

Creating a Pin Group with the LAN Uplinks Manager 270

Deleting a Pin Group with the LAN Uplinks Manager 271

Configuring Named VLANs 271

Creating a Named VLAN with the LAN Uplinks Manager 271

Deleting a Named VLAN with the LAN Uplinks Manager 274

Configuring QoS System Classes with the LAN Uplinks Manager 274

CHAPTER 17

Configuring VLANs 277

Named VLANs 277

Private VLANs 278

VLAN Port Limitations 279

Configuring Named VLANs 280

Creating a Named VLAN 280

Deleting a Named VLAN 284

Configuring Private VLANs 285

Creating a Primary VLAN for a Private VLAN 285

Creating a Secondary VLAN for a Private VLAN 288

Viewing the VLAN Port Count 291

CHAPTER 18

Configuring LAN Pin Groups 293

LAN Pin Groups 293

Creating a LAN Pin Group 293

Deleting a LAN Pin Group 294

CHAPTER 19

Configuring MAC Pools 295

MAC Pools 295

Creating a MAC Pool 295

Deleting a MAC Pool 296

CHAPTER 20

Configuring Quality of Service 297

Quality of Service 297

Configuring System Classes 297

System Classes 297

Configuring QoS System Classes 298

Enabling a QoS System Class 300

Disabling a QoS System Class 300

Configuring Quality of Service Policies 301

Quality of Service Policy 301

Creating a QoS Policy 301

Deleting a QoS Policy 303

Configuring Flow Control Policies 304

Flow Control Policy 304

Creating a Flow Control Policy 304

Deleting a Flow Control Policy 305

CHAPTER 21

Configuring Network-Related Policies 307

Configuring vNIC Templates 307

vNIC Template 307

Creating a vNIC Template 307

Deleting a vNIC Template 311

Binding a vNIC to a vNIC Template 311

Unbinding a vNIC from a vNIC Template 312

Configuring Ethernet Adapter Policies 312

Ethernet and Fibre Channel Adapter Policies 312

Creating an Ethernet Adapter Policy 313

Deleting an Ethernet Adapter Policy 317

Configuring Network Control Policies 317

Network Control Policy 317

Creating a Network Control Policy 318

Deleting a Network Control Policy 320

CHAPTER 22

Configuring Upstream Disjoint Layer-2 Networks 321

Upstream Disjoint Layer-2 Networks 321

Guidelines for Configuring Upstream Disjoint L2 Networks 322

Pinning Considerations for Upstream Disjoint L2 Networks 323

Configuring Cisco UCS for Upstream Disjoint L2 Networks 324

Creating a VLAN for an Upstream Disjoint L2 Network 325

Assigning Ports and Port Channels to VLANs 327

Removing Ports and Port Channels from VLANs 328

Viewing Ports and Port Channels Assigned to VLANs 329

PART IV

Storage Configuration 331

CHAPTER 23

Configuring Named VSANs 333

Named VSANs 333

Fibre Channel Uplink Trunking for Named VSANs 334

Guidelines and Recommendations for VSANs 334

Creating a Named VSAN 335

Creating a Storage VSAN 337

Deleting a VSAN 339

Changing the VLAN ID for the FCoE VLAN for a Storage VSAN 340

Enabling Fibre Channel Uplink Trunking 341

Disabling Fibre Channel Uplink Trunking 341

CHAPTER 24

Configuring SAN Pin Groups 343

SAN Pin Groups 343

Creating a SAN Pin Group 343

Deleting a SAN Pin Group 344

CHAPTER 25

Configuring WWN Pools 345

WWN Pools 345

Configuring WWNN Pools 346

Creating a WWNN Pool 346

Adding a WWN Block to a WWNN Pool 347

Deleting a WWN Block from a WWNN Pool 347

Adding a WWNN Initiator to a WWNN Pool 348

Deleting a WWNN Initiator from a WWNN Pool 349

Deleting a WWNN Pool 349

Configuring WWPN Pools 350

Creating a WWPN Pool 350

Adding a WWN Block to a WWPN Pool 351

Deleting a WWN Block from a WWPN Pool 351

Adding a WWPN Initiator to a WWPN Pool 352

Deleting a WWPN Initiator from a WWPN Pool 353

Deleting a WWPN Pool 353

CHAPTER 26

Configuring Storage-Related Policies 355

Configuring vHBA Templates 355

vHBA Template 355

Creating a vHBA Template 355

Deleting a vHBA Template 357

Binding a vHBA to a vHBA Template 357

Unbinding a vHBA from a vHBA Template 358

Configuring Fibre Channel Adapter Policies 358

Ethernet and Fibre Channel Adapter Policies 358

Creating a Fibre Channel Adapter Policy 359

Deleting a Fibre Channel Adapter Policy 364

PART V

Server Configuration 365

CHAPTER 27

Configuring Server-Related Pools 367

Configuring Server Pools 367

Server Pools 367

Creating a Server Pool 367

Deleting a Server Pool 368

Adding Servers to a Server Pool 369

Removing Servers from a Server Pool 369

Configuring UUID Suffix Pools 369

UUID Suffix Pools 369

Creating a UUID Suffix Pool 370

Deleting a UUID Suffix Pool 371

CHAPTER 28

Setting the Management IP Address 373

Management IP Address 373

Configuring the Management IP Address on a Blade Server 374

Configuring a Blade Server to Use a Static IP Address 374

Configuring a Blade Server to Use the Management IP Pool 374

Configuring the Management IP Address on a Rack Server 375

Configuring a Rack Server to Use a Static IP Address 375

Configuring a Rack Server to Use the Management IP Pool 376

Setting the Management IP Address on a Service Profile 376

Setting the Management IP Address on a Service Profile Template 377

Configuring the Management IP Pool 377

Management IP Pool 377

Creating an IP Address Block in the Management IP Pool 378

Deleting an IP Address Block from the Management IP Pool 379

CHAPTER 29

Configuring Server-Related Policies 381

Configuring BIOS Settings 381

Server BIOS Settings 381

Main BIOS Settings 382

Processor BIOS Settings 384

Intel Directed I/O BIOS Settings 390

RAS Memory BIOS Settings 392

Serial Port BIOS Settings 394

USB BIOS Settings 394

PCI Configuration BIOS Settings 395

Boot Options BIOS Settings 396

Server Management BIOS Settings 397

BIOS Policy 402

Default BIOS Settings 402

Creating a BIOS Policy 403

Modifying the BIOS Defaults 404

Viewing the Actual BIOS Settings for a Server 404

Configuring IPMI Access Profiles 405

IPMI Access Profile 405

Creating an IPMI Access Profile 405

Deleting an IPMI Access Profile 406

Configuring Local Disk Configuration Policies 407

Local Disk Configuration Policy 407

Guidelines for all Local Disk Configuration Policies 407

Guidelines for Local Disk Configuration Policies Configured for RAID 408

Creating a Local Disk Configuration Policy 410

Changing a Local Disk Configuration Policy 412

Deleting a Local Disk Configuration Policy 413

Configuring Scrub Policies 413

Scrub Policy 413

Creating a Scrub Policy 414

Deleting a Scrub Policy 415

Configuring Serial over LAN Policies 415

Serial over LAN Policy 415

Creating a Serial over LAN Policy 415

Deleting a Serial over LAN Policy 416

Configuring Server Autoconfiguration Policies 417

Server Autoconfiguration Policy 417

Creating an Autoconfiguration Policy 417

Deleting an Autoconfiguration Policy 418

Configuring Server Discovery Policies 419

Server Discovery Policy 419

Creating a Server Discovery Policy 419

Deleting a Server Discovery Policy 420

Configuring Server Inheritance Policies 420

Server Inheritance Policy 420

Creating a Server Inheritance Policy 420

Deleting a Server Inheritance Policy 421

Configuring Server Pool Policies 422

Server Pool Policy 422

Creating a Server Pool Policy 422

Deleting a Server Pool Policy 423

Configuring Server Pool Policy Qualifications 423

Server Pool Policy Qualifications 423

Creating Server Pool Policy Qualifications 424

Deleting Server Pool Policy Qualifications 428

Deleting Qualifications from Server Pool Policy Qualifications 428

Configuring vNIC/vHBA Placement Policies 429

vNIC/vHBA Placement Policies 429

vCon to Adapter Placement 430

vNIC/vHBA to vCon Assignment 430

Creating a vNIC/vHBA Placement Policy 433

Deleting a vNIC/vHBA Placement Policy 434

Explicitly Assigning a vNIC to a vCon 434

Explicitly Assigning a vHBA to a vCon 435

CHAPTER 30

Configuring Server Boot 439

Boot Policy 439

Creating a Boot Policy 440

SAN Boot 441

Configuring a SAN Boot for a Boot Policy 441

iSCSI Boot 443

iSCSI Boot Process 444

iSCSI Boot Guidelines and Prerequisites 444

Enabling MPIO on Windows 446

Configuring iSCSI Boot 446

Creating an iSCSI Adapter Policy 447

Deleting an iSCSI Adapter Policy 449

Creating an Authentication Profile 449

Deleting an Authentication Profile 450

Creating an iSCSI Initiator IP Pool 450

Deleting an iSCSI Initiator IP Pool 451

Creating an iSCSI Boot Policy 451

Creating an iSCSI vNIC for a Service Profile 452

Deleting an iSCSI vNIC from a Service Profile 454

Setting iSCSI Boot Parameters 454

Modifying iSCSI Boot Parameters 458

IQN Pools 461

Creating an IQN Pool 461

Adding a Block to an IQN Pool 463

Deleting a Block from an IQN Pool 463

Deleting an IQN Pool 464

LAN Boot 465

Configuring a LAN Boot for a Boot Policy 465

Local Disk Boot 465

Configuring a Local Disk Boot for a Boot Policy 466

Virtual Media Boot 466

Configuring a Virtual Media Boot for a Boot Policy 466

Deleting a Boot Policy 467

CHAPTER 31

Deferring Deployment of Service Profile Updates 469

Deferred Deployment of Service Profiles 469

Deferred Deployment Schedules 470

Maintenance Policy 470

Pending Activities 471

Guidelines and Limitations for Deferred Deployment 471

Configuring Schedules 472

Creating a Schedule 472

Creating a One Time Occurrence for a Schedule 477

Creating a Recurring Occurrence for a Schedule 479

Deleting a One Time Occurrence from a Schedule 481

Deleting a Recurring Occurrence from a Schedule 481

Deleting a Schedule 482

Configuring Maintenance Policies 482

Creating a Maintenance Policy 482

Deleting a Maintenance Policy 484

Managing Pending Activities 484

Viewing Pending Activities 484

Deploying a Service Profile Change Waiting for User Acknowledgement 484

Deploying All Service Profile Changes Waiting for User Acknowledgement 485

Deploying a Scheduled Service Profile Change Immediately 485

Deploying All Scheduled Service Profile Changes Immediately 486

CHAPTER 32

Configuring Service Profiles 487

Service Profiles that Override Server Identity 487

Service Profiles that Inherit Server Identity 488

Service Profile Templates 488

Guidelines and Recommendations for Service Profiles 489


Creating Service Profiles 489

Creating a Service Profile with the Expert Wizard 489

Page 1: Identifying the Service Profile 490

Page 2: Configuring the Storage Options 491

Page 3: Configuring the Networking Options 496

Page 4: Setting the vNIC/vHBA Placement 502

Page 5: Setting the Server Boot Order 504

Page 6: Adding the Maintenance Policy 507

Page 7: Specifying the Server Assignment 509

Page 8: Adding Operational Policies 511

Creating a Service Profile that Inherits Server Identity 513

Creating a Hardware Based Service Profile for a Blade Server 517

Creating a Hardware Based Service Profile for a Rack-Mount Server 517

Working with Service Profile Templates 518

Creating a Service Profile Template 518

Page 1: Identifying the Service Profile Template 519

Page 2: Specifying the Storage Options 520

Page 3: Specifying the Networking Options 524

Page 4: Setting the vNIC/vHBA Placement 530

Page 5: Setting the Server Boot Order 532

Page 6: Adding the Maintenance Policy 535

Page 7: Specifying the Server Assignment Options 537

Page 8: Adding Operational Policies 539

Creating One or More Service Profiles from a Service Profile Template 541

Creating a Template Based Service Profile for a Blade Server 541

Creating a Template Based Service Profile for a Rack-Mount Server 542

Creating a Service Profile Template from a Service Profile 543

Managing Service Profiles 544

Cloning a Service Profile 544

Associating a Service Profile with a Server or Server Pool 544

Disassociating a Service Profile from a Server or Server Pool 545

Associating a Service Profile Template with a Server Pool 546

Disassociating a Service Profile Template from its Server Pool 547

Changing the UUID in a Service Profile 547

Changing the UUID in a Service Profile Template 548


Resetting the UUID Assigned to a Service Profile from a Pool in a Service Profile Template 549

Modifying the Boot Order in a Service Profile 550

Creating a vNIC for a Service Profile 553

Resetting the MAC Address Assigned to a vNIC from a Pool in a Service Profile Template 555

Deleting a vNIC from a Service Profile 556

Creating a vHBA for a Service Profile 556

Changing the WWPN for a vHBA 559

Resetting the WWPN Assigned to a vHBA from a Pool in a Service Profile Template 560

Clearing Persistent Binding for a vHBA 560

Deleting a vHBA from a Service Profile 561

Binding a Service Profile to a Service Profile Template 561

Unbinding a Service Profile from a Service Profile Template 562

Deleting a Service Profile 562

CHAPTER 33

Managing Power in Cisco UCS 563

Power Management in Cisco UCS 563

Rack Server Power Management 563

Power Management Precautions 563

Configuring the Power Policy 564

Power Policy 564

Configuring the Power Policy 564

Configuring the Global Cap Policy 564

Global Cap Policy 564

Configuring the Global Cap Policy 565

Configuring Policy-Driven Chassis Group Power Capping 565

Policy-Driven Chassis Group Power Capping 565

Configuring Power Groups 566

Power Groups 566

Creating a Power Group 566

Adding a Chassis to a Power Group 568

Removing a Chassis from a Power Group 568

Deleting a Power Group 568

Configuring Power Control Policies 569


Power Control Policy 569

Creating a Power Control Policy 569

Deleting a Power Control Policy 570

Configuring Manual Blade-Level Power Capping 570

Manual Blade-Level Power Capping 570

Setting the Blade-Level Power Cap for a Server 571

Viewing the Blade-Level Power Cap 572

PART VI

System Management 573

CHAPTER 34

Managing Time Zones 575

Time Zones 575

Setting the Time Zone 575

Adding an NTP Server 576

Deleting an NTP Server 576

CHAPTER 35

Managing the Chassis 577

Chassis Management in Cisco UCS Manager GUI 577

Guidelines for Removing and Decommissioning Chassis 577

Acknowledging a Chassis 578

Decommissioning a Chassis 579

Removing a Chassis 579

Recommissioning a Single Chassis 579

Recommissioning Multiple Chassis 580

Renumbering a Chassis 581

Toggling the Locator LED 582

Turning on the Locator LED for a Chassis 582

Turning off the Locator LED for a Chassis 582

Viewing the POST Results for a Chassis 582

CHAPTER 36

Managing Blade Servers 585

Blade Server Management 585

Guidelines for Removing and Decommissioning Blade Servers 586

Booting Blade Servers 586

Booting a Blade Server 586


Booting a Server from the Service Profile 587

Determining the Boot Order of a Blade Server 587

Shutting Down Blade Servers 588

Shutting Down a Blade Server 588

Shutting Down a Server from the Service Profile 588

Resetting a Blade Server 589

Avoiding Unexpected Server Power Changes 590

Reacknowledging a Blade Server 591

Removing a Server from a Chassis 591

Decommissioning a Blade Server 592

Recommissioning a Blade Server 593

Reacknowledging a Server Slot in a Chassis 593

Removing a Non-Existent Blade Server from the Configuration Database 594

Turning the Locator LED for a Blade Server On and Off 594

Resetting the CMOS for a Blade Server 594

Resetting the CIMC for a Blade Server 595

Recovering the Corrupt BIOS on a Blade Server 595

Viewing the POST Results for a Blade Server 596

Issuing an NMI from a Blade Server 597

CHAPTER 37

Managing Rack-Mount Servers 599

Rack-Mount Server Management 599

Guidelines for Removing and Decommissioning Rack-Mount Servers 600

Booting Rack-Mount Servers 600

Booting a Rack-Mount Server 600

Booting a Server from the Service Profile 601

Determining the Boot Order of a Rack-Mount Server 601

Shutting Down Rack-Mount Servers 602

Shutting Down a Rack-Mount Server 602

Shutting Down a Server from the Service Profile 602

Resetting a Rack-Mount Server 603

Avoiding Unexpected Server Power Changes 604

Reacknowledging a Rack-Mount Server 605

Decommissioning a Rack-Mount Server 605

Recommissioning a Rack-Mount Server 606


Renumbering a Rack-Mount Server 606

Removing a Non-Existent Rack-Mount Server from the Configuration Database 607

Turning the Locator LED for a Rack-Mount Server On and Off 607

Resetting the CMOS for a Rack-Mount Server 608

Resetting the CIMC for a Rack-Mount Server 608

Recovering the Corrupt BIOS on a Rack-Mount Server 609

Viewing the POST Results for a Rack-Mount Server 610

Issuing an NMI from a Rack-Mount Server 610

CHAPTER 38

Starting the KVM Console 611

KVM Console 611

Virtual KVM Console 612

Starting the KVM Console from a Server 615

Starting the KVM Console from a Service Profile 615

Starting the KVM Console from the KVM Launch Manager 615

CHAPTER 39

Managing the I/O Modules 617

I/O Module Management in Cisco UCS Manager GUI 617

Resetting an I/O Module 617

Viewing the POST Results for an I/O Module 617

CHAPTER 40

Backing Up and Restoring the Configuration 619

Backup and Export Configuration 619

Backup Types 619

Considerations and Recommendations for Backup Operations 620

Import Configuration 621

Import Methods 621

System Restore 621

Required User Role for Backup and Import Operations 621

Backup Operations 622

Creating a Backup Operation 622

Running a Backup Operation 625

Modifying a Backup Operation 625

Deleting One or More Backup Operations 626

Import Operations 626


Creating an Import Operation 626

Running an Import Operation 629

Modifying an Import Operation 630

Deleting One or More Import Operations 630

Restoring the Configuration for a Fabric Interconnect 631

CHAPTER 41

Recovering a Lost Password 633

Recovering a Lost Password 633

Password Recovery for the Admin Account 633

Determining the Leadership Role of a Fabric Interconnect 634

Verifying the Firmware Versions on a Fabric Interconnect 634

Recovering the Admin Account Password in a Standalone Configuration 634

Recovering the Admin Account Password in a Cluster Configuration 636

PART VII

System Monitoring 639

CHAPTER 42

Monitoring Traffic 641

Traffic Monitoring 641

Guidelines and Recommendations for Traffic Monitoring 642

Creating an Ethernet Traffic Monitoring Session 643

Creating a Fibre Channel Traffic Monitoring Session 644

Adding Traffic Sources to a Monitoring Session 645

Activating a Traffic Monitoring Session 646

Deleting a Traffic Monitoring Session 646

CHAPTER 43

Monitoring Hardware 647

Monitoring a Fabric Interconnect 647

Monitoring a Chassis 648

Monitoring a Blade Server 650

Monitoring a Rack-Mount Server 652

Monitoring an I/O Module 654

Monitoring Management Interfaces 655

Management Interfaces Monitoring Policy 655

Configuring the Management Interfaces Monitoring Policy 656

Server Disk Drive Monitoring 658


Support for Disk Drive Monitoring 658

Prerequisites for Disk Drive Monitoring 659

Viewing the Status of a Disk Drive 659

Interpreting the Status of a Monitored Disk Drive 660

CHAPTER 44

Configuring Statistics-Related Policies 663

Configuring Statistics Collection Policies 663

Statistics Collection Policy 663

Modifying a Statistics Collection Policy 664

Configuring Statistics Threshold Policies 666

Statistics Threshold Policy 666

Creating a Server and Server Component Threshold Policy 666

Adding a Threshold Class to an Existing Server and Server Component Threshold Policy 668

Deleting a Server and Server Component Threshold Policy 669

Adding a Threshold Class to the Uplink Ethernet Port Threshold Policy 670

Adding a Threshold Class to the Ethernet Server Port, Chassis, and Fabric Interconnect Threshold Policy 671

Adding a Threshold Class to the Fibre Channel Port Threshold Policy 672

CHAPTER 45

Configuring Call Home 675

Call Home 675

Call Home Considerations and Guidelines 677

Cisco UCS Faults and Call Home Severity Levels 678

Cisco Smart Call Home 679

Configuring Call Home 680

Disabling Call Home 683

Enabling Call Home 683

Configuring System Inventory Messages 684

Configuring System Inventory Messages 684

Sending a System Inventory Message 684

Configuring Call Home Profiles 685

Call Home Profiles 685

Creating a Call Home Profile 686

Deleting a Call Home Profile 688


Configuring Call Home Policies 688

Call Home Policies 688

Configuring a Call Home Policy 688

Disabling a Call Home Policy 689

Enabling a Call Home Policy 690

Deleting a Call Home Policy 690

Example: Configuring Call Home for Smart Call Home 690

Configuring Smart Call Home 690

Configuring the Default Cisco TAC-1 Profile 692

Configuring System Inventory Messages for Smart Call Home 693

Registering Smart Call Home 694

CHAPTER 46

Managing the System Event Log 695

System Event Log 695

Viewing the System Event Log for an Individual Server 696

Viewing the System Event Log for the Servers in a Chassis 696

Configuring the SEL Policy 696

Managing the System Event Log for a Server 698

Copying One or More Entries in the System Event Log 698

Printing the System Event Log 699

Refreshing the System Event Log 699

Manually Backing Up the System Event Log 699

Manually Clearing the System Event Log 699

CHAPTER 47

Configuring Settings for Faults, Events, and Logs 701

Configuring Settings for the Fault Collection Policy 701

Fault Collection Policy 701

Configuring the Fault Collection Policy 702

Configuring Settings for the Core File Exporter 703

Core File Exporter 703

Configuring the Core File Exporter 703

Disabling the Core File Exporter 704

Configuring the Syslog 704


Preface

This preface includes the following sections:

• Audience, page xxxiii

• Conventions, page xxxiii

• Related Cisco UCS Documentation, page xxxv

• Documentation Feedback, page xxxv

• Obtaining Documentation and Submitting a Service Request, page xxxv

Audience

This guide is intended primarily for data center administrators with responsibilities and expertise in one or more of the following:

• Server administration

• Storage administration

• Network administration

• Network security

Conventions

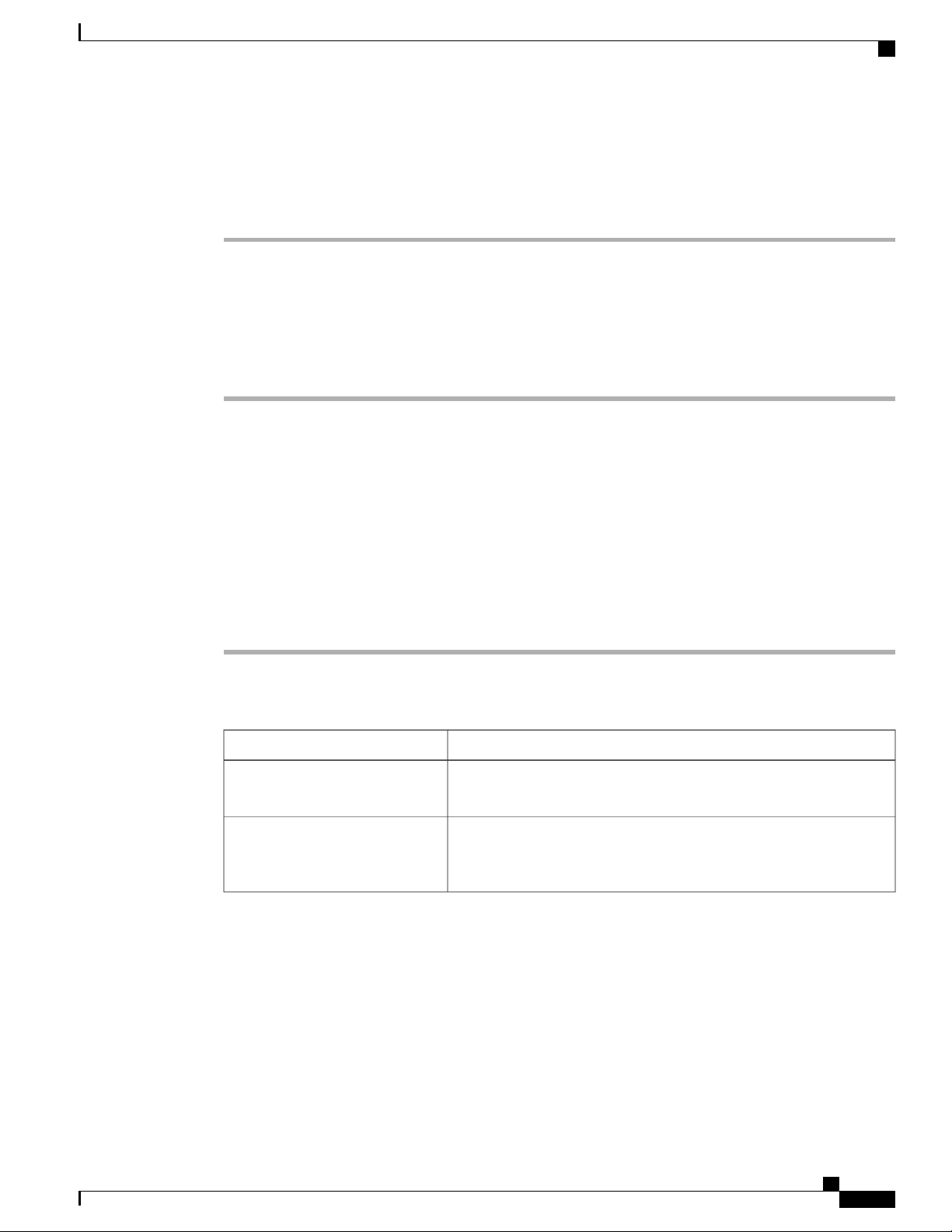

This document uses the following conventions:

| Convention | Indication |
|---|---|
| bold font | Commands, keywords, GUI elements, and user-entered text appear in bold font. |
| italic font | Document titles, new or emphasized terms, and arguments for which you supply values are in italic font. |
| courier font | Terminal sessions and information that the system displays appear in courier font. |
| [ ] | Elements in square brackets are optional. |
| {x \| y \| z} | Required alternative keywords are grouped in braces and separated by vertical bars. |
| [x \| y \| z] | Optional alternative keywords are grouped in brackets and separated by vertical bars. |
| string | A nonquoted set of characters. Do not use quotation marks around the string or the string will include the quotation marks. |
| < > | Nonprinting characters such as passwords are in angle brackets. |
| [ ] | Default responses to system prompts are in square brackets. |
| !, # | An exclamation point (!) or a pound sign (#) at the beginning of a line of code indicates a comment line. |

Note

Means reader take note.

Tip

Means the following information will help you solve a problem.

Caution

Means reader be careful. In this situation, you might perform an action that could result in equipment damage or loss of data.

Timesaver

Means the described action saves time. You can save time by performing the action described in the paragraph.

Warning

Means reader be warned. In this situation, you might perform an action that could result in bodily injury.


Related Cisco UCS Documentation

Documentation Roadmaps

For a complete list of all B-Series documentation, see the Cisco UCS B-Series Servers Documentation Roadmap available at the following URL: http://www.cisco.com/go/unifiedcomputing/b-series-doc.

For a complete list of all C-Series documentation, see the Cisco UCS C-Series Servers Documentation Roadmap available at the following URL: http://www.cisco.com/go/unifiedcomputing/c-series-doc.

Other Documentation Resources

An ISO file containing all B and C-Series documents is available at the following URL: http://www.cisco.com/cisco/software/type.html?mdfid=283853163&flowid=25821. From this page, click Unified Computing System (UCS) Documentation Roadmap Bundle.

The ISO file is updated after every major documentation release.

Follow Cisco UCS Docs on Twitter to receive document update notifications.


Documentation Feedback

To provide technical feedback on this document, or to report an error or omission, please send your comments

to ucs-docfeedback@external.cisco.com. We appreciate your feedback.

Obtaining Documentation and Submitting a Service Request

For information on obtaining documentation, submitting a service request, and gathering additional information,

see the monthly What's New in Cisco Product Documentation, which also lists all new and revised Cisco

technical documentation.

Subscribe to the What's New in Cisco Product Documentation as a Really Simple Syndication (RSS) feed

and set content to be delivered directly to your desktop using a reader application. The RSS feeds are a free

service and Cisco currently supports RSS version 2.0.

Follow Cisco UCS Docs on Twitter to receive document update notifications.


PART I

Introduction

• New and Changed Information, page 3

• Overview of Cisco Unified Computing System, page 9

• Overview of Cisco UCS Manager, page 43

• Overview of Cisco UCS Manager GUI, page 47


CHAPTER 1

New and Changed Information

This chapter includes the following sections:

• New and Changed Information for this Release, page 3

New and Changed Information for this Release

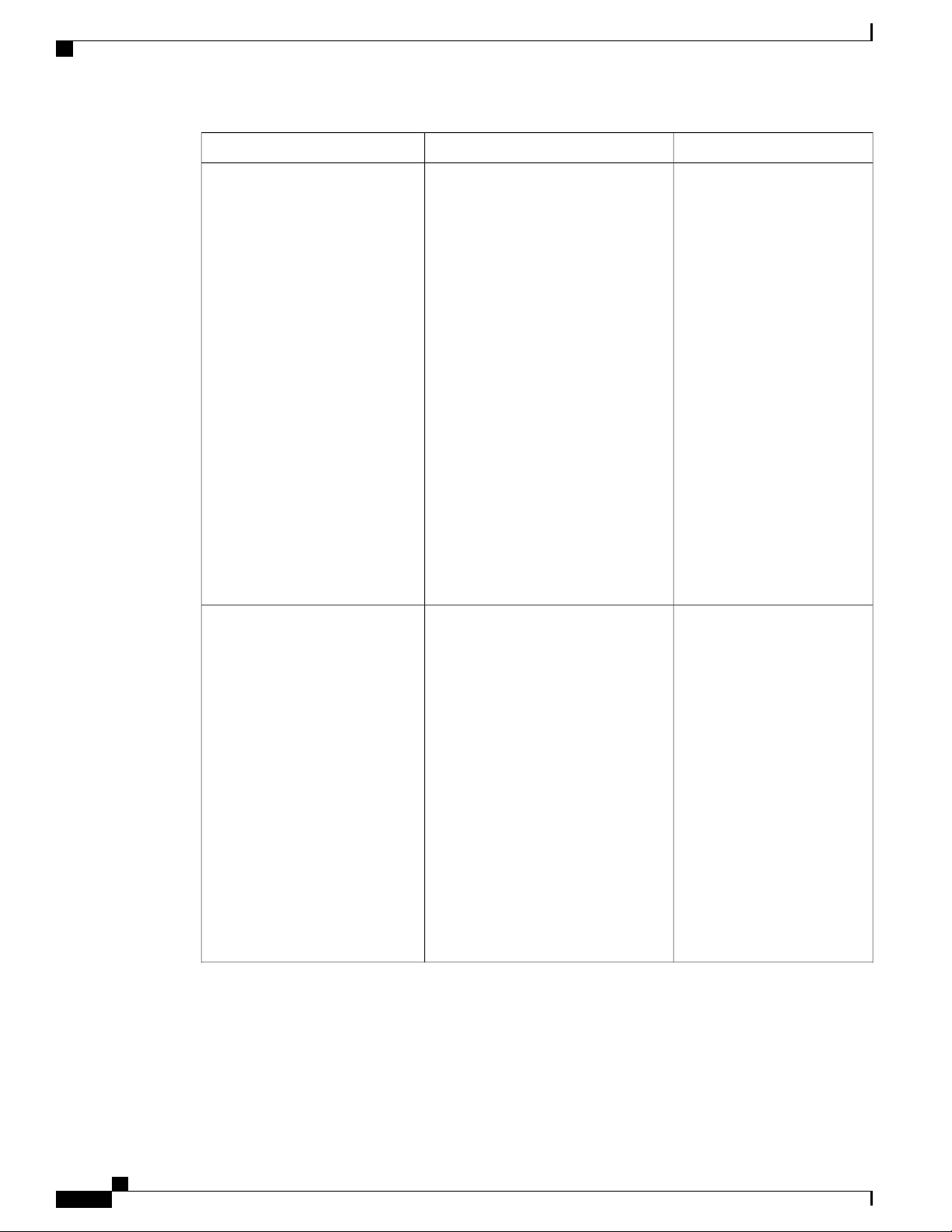

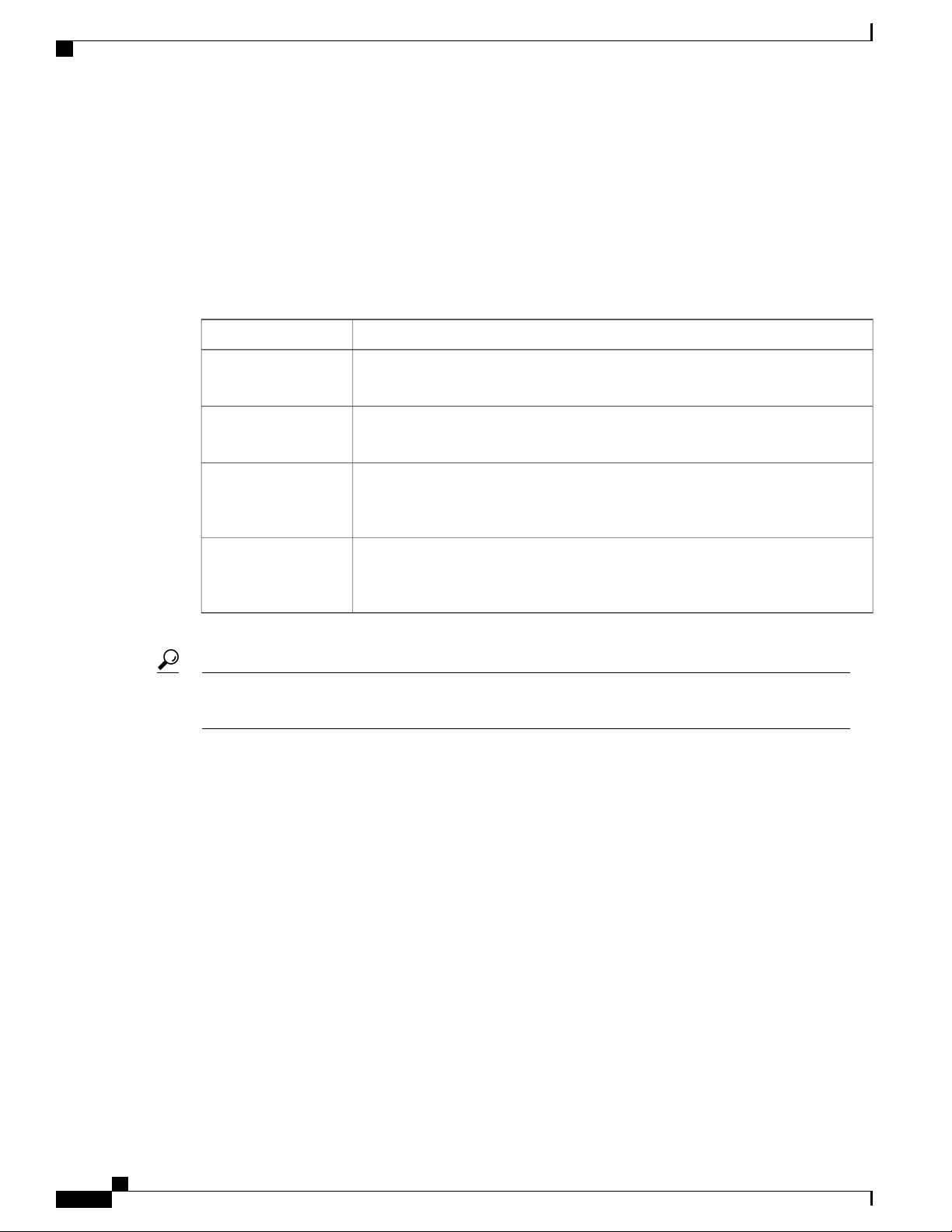

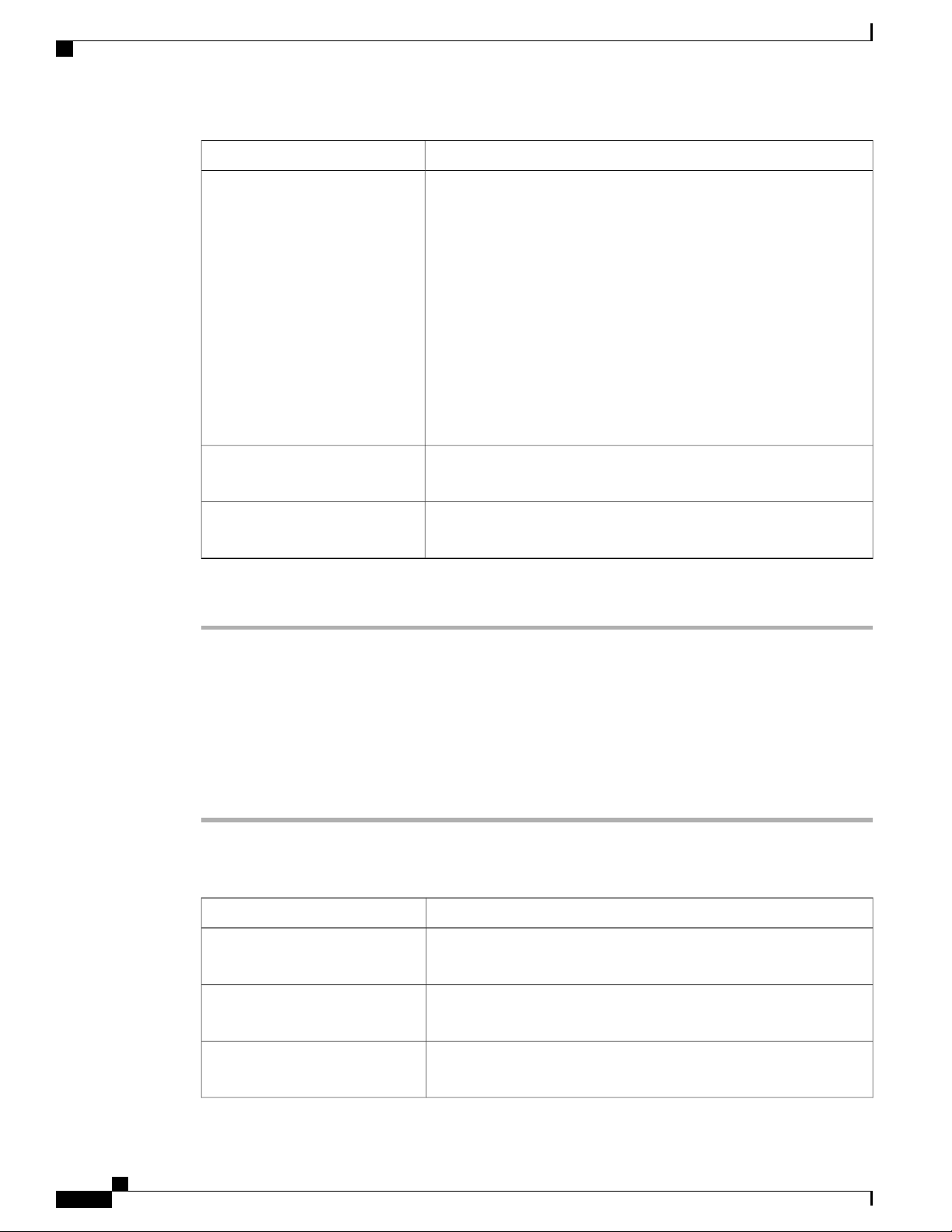

The following table provides an overview of the significant changes to this guide for this current release. The table does not provide an exhaustive list of all changes made to the configuration guides or of the new features in this release. For information about new supported hardware in this release, see the Cisco UCS B-Series Servers Documentation Roadmap available at the following URL: http://www.cisco.com/go/unifiedcomputing/b-series-doc.

Table 1: New Features and Significant Behavioral Changes in Cisco UCS, Release 2.0(3)

| Feature | Description | Where Documented |
|---|---|---|
| Cipher Suite | Adds support for Cipher Suite in HTTPS configuration. | Configuring Communication Services, on page 113 |
| Web Session Refresh | Enables you to configure the web session refresh period and timeout for authentication domains. | Configuring Authentication, on page 131 |
| BIOS Settings | Adds support for new BIOS settings that can be included in BIOS policies and configured from Cisco UCS Manager. | Configuring Server-Related Policies, on page 381 |
| Overview of enabling MPIO | High level information added for how to enable MPIO with iSCSI boot. | Enabling MPIO on Windows, on page 446 |


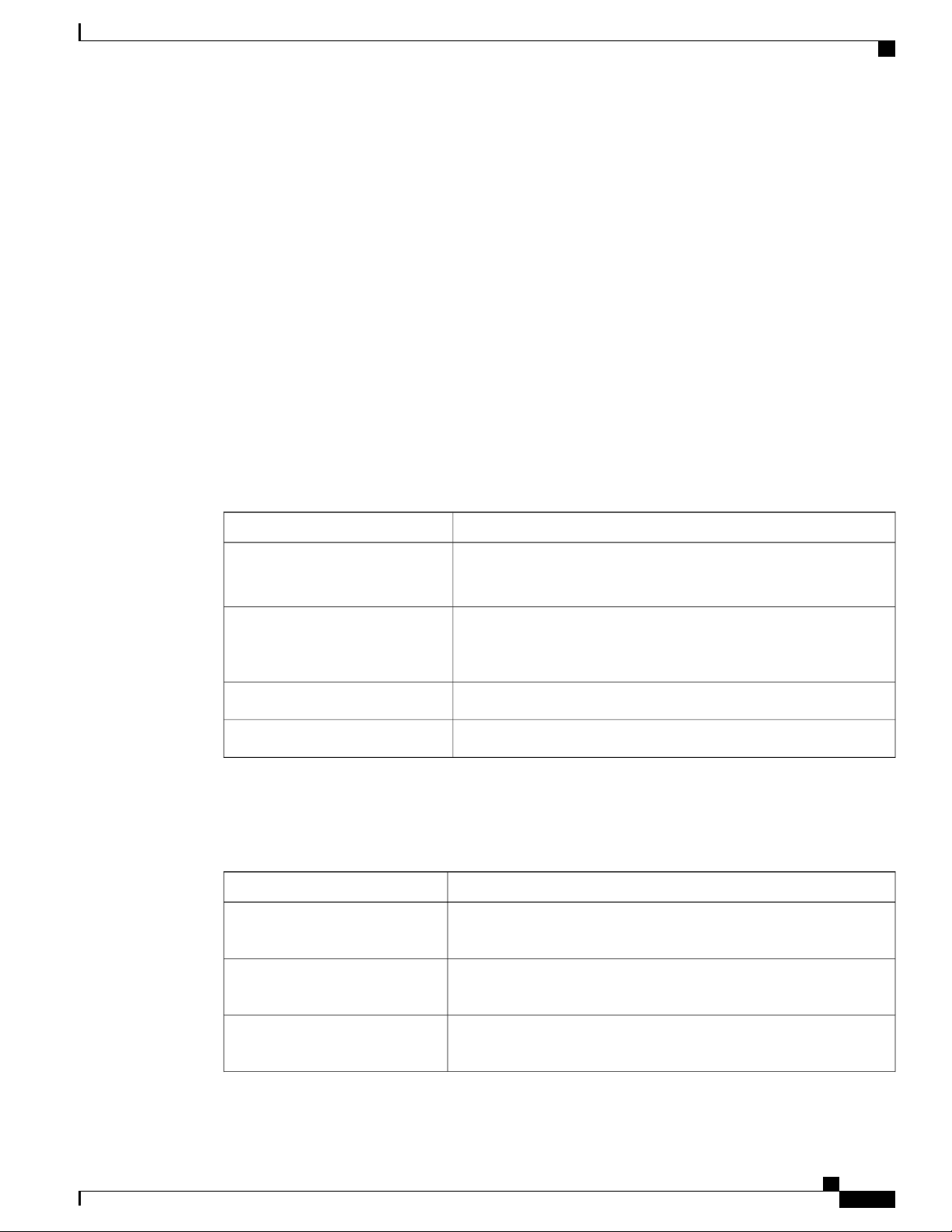

Table 2: New Features and Significant Behavioral Changes in Cisco UCS, Release 2.0(2)

| Feature | Description | Where Documented |
|---|---|---|
| IQN Pools | Adds support for IQN pools in Cisco UCS domains configured for iSCSI boot. | iSCSI Boot, on page 443 |
| Adapter Port Channels | Enables you to group all the physical links from a Cisco UCS Virtual Interface Card (VIC) to an I/O Module into one logical link. (Requires supported hardware.) | Configuring Ports and Port Channels, on page 77 |
| Unified Port Support for 6296 Fabric Interconnect | Enables you to use the Configure Unified Ports wizard to configure ports on a 6296 fabric interconnect. | Unified Ports on the 6200 Series Fabric Interconnect, on page 78 |
| Renumbering for Rack-Mount Servers | Enables you to renumber an integrated rack-mount server. | Managing Rack-Mount Servers, on page 599 |
| Changes to Behavior for Power State Synchronization | Adds information and a caution about power state synchronization, including use of the physical power button or the reset feature on a blade server or an integrated rack-mount server. | Managing Blade Servers, on page 585; Managing Rack-Mount Servers, on page 599 |
| BIOS Settings | Adds support for new BIOS settings that can be included in BIOS policies and configured from Cisco UCS Manager. | Configuring Server-Related Policies, on page 381 |

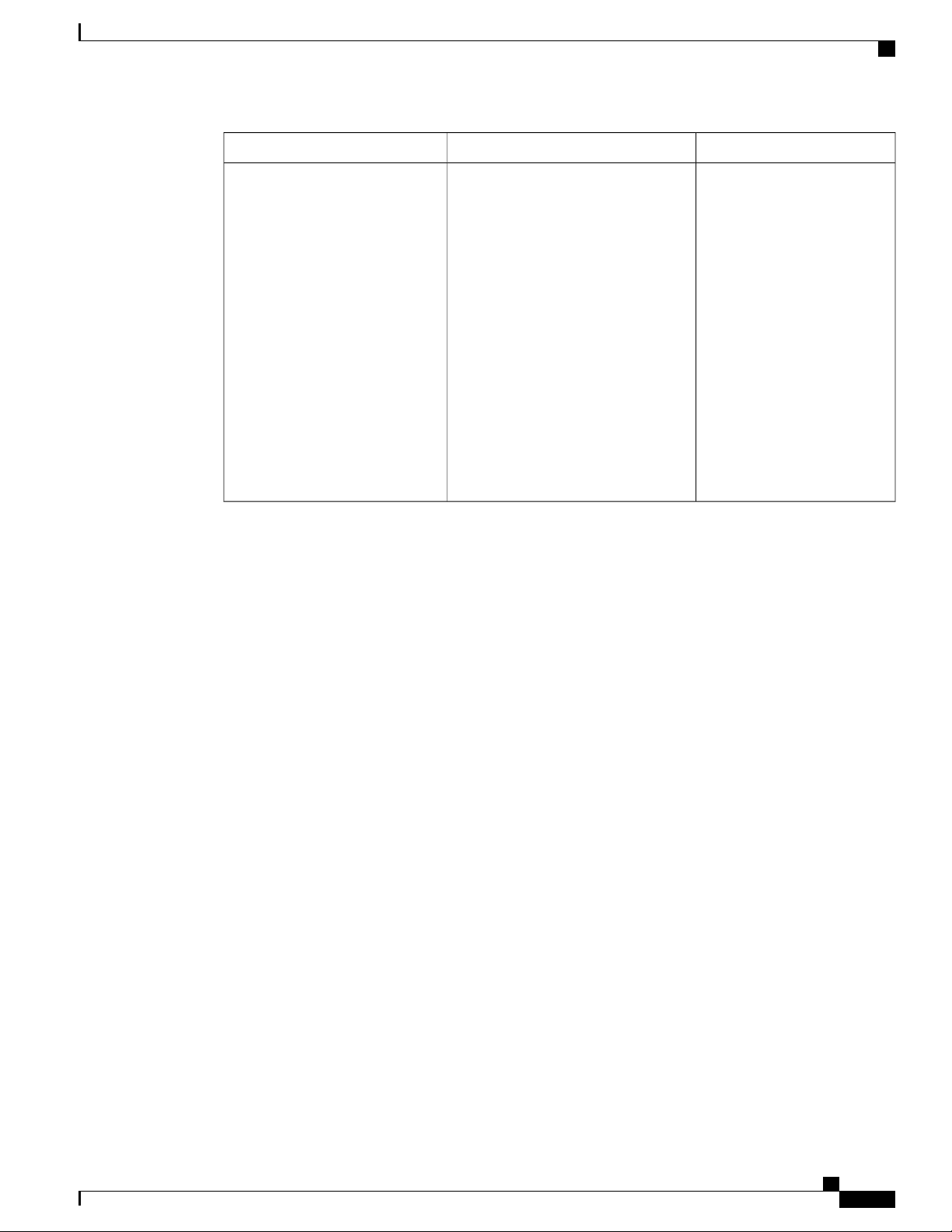

Table 3: New Features in Cisco UCS, Release 2.0(1)

| Feature | Description | Where Documented |
|---|---|---|
| Disk Drive Monitoring Support | Support for disk drive monitoring on certain blade servers and a specific LSI storage controller firmware level. | Monitoring Hardware, on page 647 |
| Fabric Port Channels | Enables you to group several of the physical links from an IOM to a fabric interconnect into one logical link for redundancy and bandwidth sharing. (Requires supported hardware.) | Configuring Ports and Port Channels, on page 77 |
| Firmware Bundle Option | Enables you to select a bundle instead of a version when updating firmware using the Cisco UCS Manager GUI. | Managing Firmware, on page 183 |


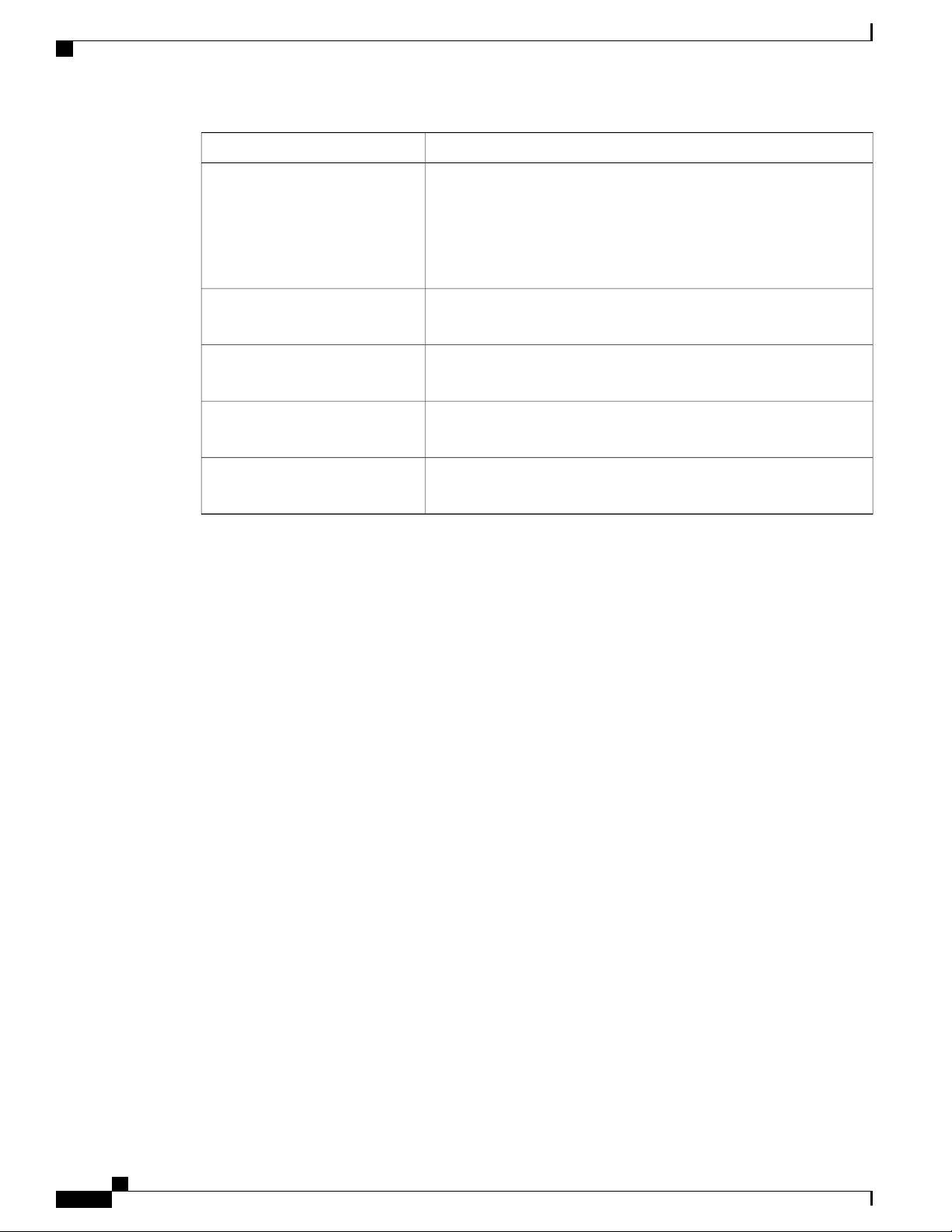

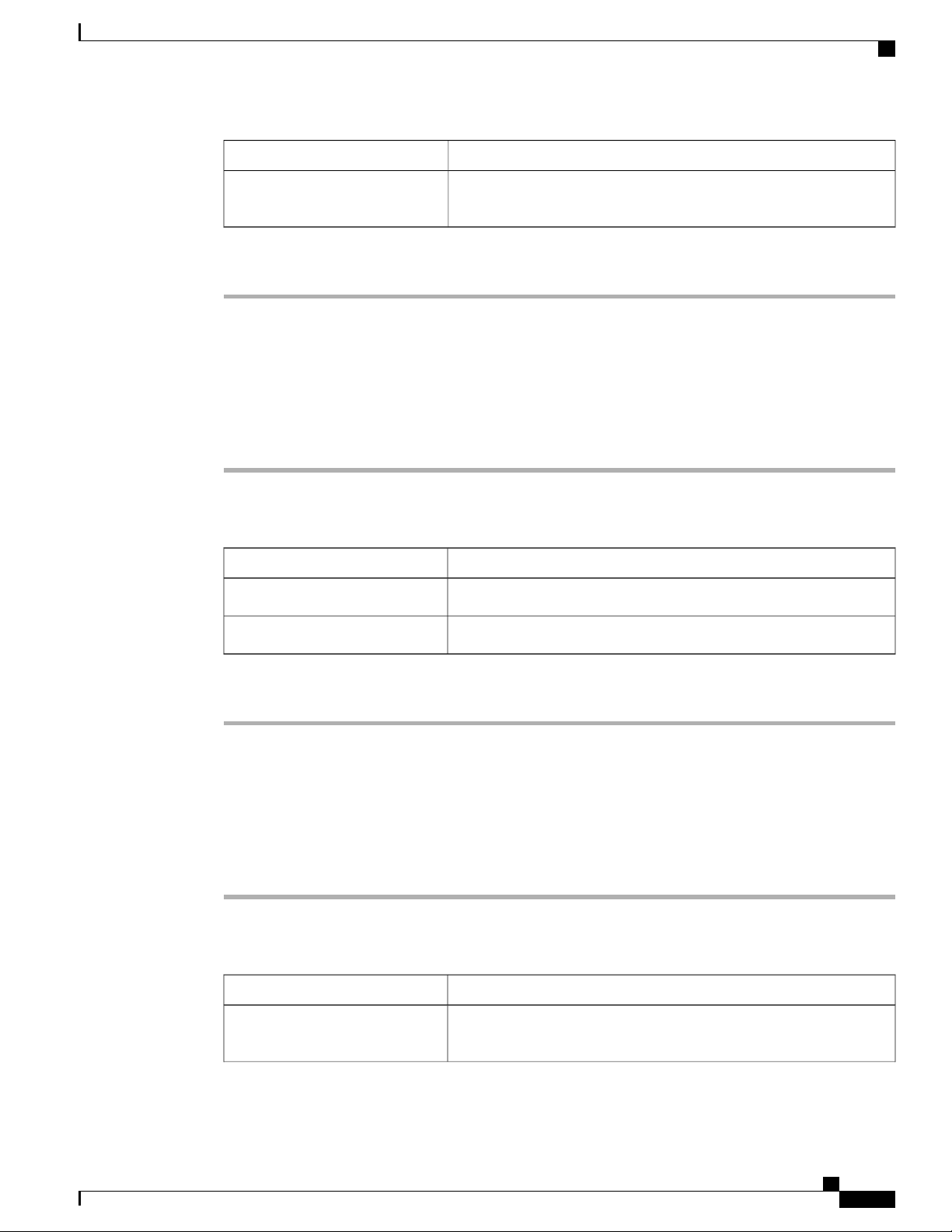


| Feature | Description | Where Documented |
|---|---|---|
| iSCSI Boot | iSCSI boot enables a server to boot its operating system from an iSCSI target machine located remotely over a network. | iSCSI Boot, on page 443 |
| Licensing | Updated information for new UCS hardware. | Licenses, on page 247 |
| Pre-login Banner | Displays user-defined banner text prior to login when a user logs into Cisco UCS Manager using the GUI or CLI. | Pre-Login Banner, on page 56 |
| Unified Ports | Unified ports are ports on the 6200 series fabric interconnect that can be configured to carry either Ethernet or Fibre Channel traffic. | Unified Ports on the 6200 Series Fabric Interconnect, on page 78 |
| Upstream Disjoint Layer-2 Networks | Enables you to configure Cisco UCS to communicate with upstream disjoint layer-2 networks. | Configuring Upstream Disjoint Layer-2 Networks, on page 321 |
| Virtual Interfaces | The number of vNICs and vHBAs configurable for a service profile is determined by adapter capability and the amount of virtual interface (VIF) namespace available on the adapter. | Managing Virtual Interfaces, on page 259 |


| Feature | Description | Where Documented |
|---|---|---|
| Virtual Interface Card Drivers | Cisco UCS Virtual Interface Card (VIC) drivers facilitate communication between supported operating systems and Cisco UCS Virtual Interface Cards (VICs). | This feature is now documented in the following installation guides: Cisco UCS Manager Interface Card Drivers for ESX Installation Guide; Cisco UCS Manager Interface Card Drivers for Linux Installation Guide; Cisco UCS Manager Interface Card Drivers for Windows Installation Guide. The VIC driver installation guides can be found here: http://www.cisco.com/en/US/products/ps10281/prod_installation_guides_list.html |
| VM-FEX Integration for VMware | Cisco Virtual Machine Fabric Extender (VM-FEX) for VMware provides management integration and network communication between Cisco UCS Manager and VMware vCenter. In previous releases, this functionality was known as VN-Link in Hardware. | This feature is now documented in the following configuration guides: Cisco UCS Manager VM-FEX for VMware GUI Configuration Guide; Cisco UCS Manager VM-FEX for VMware CLI Configuration Guide. The VM-FEX configuration guides can be found here: http://www.cisco.com/en/US/products/ps10281/products_installation_and_configuration_guides_list.html |


| Feature | Description | Where Documented |
|---|---|---|
| VM-FEX Integration for KVM (Red Hat Linux) | Cisco Virtual Machine Fabric Extender (VM-FEX) for KVM provides external switching for virtual machines running on a KVM Linux-based hypervisor in a Cisco UCS domain. | This feature is documented in the following configuration guides: Cisco UCS Manager VM-FEX for KVM GUI Configuration Guide; Cisco UCS Manager VM-FEX for KVM CLI Configuration Guide. The VM-FEX configuration guides can be found here: http://www.cisco.com/en/US/products/ps10281/products_installation_and_configuration_guides_list.html |


CHAPTER 2

Overview of Cisco Unified Computing System

This chapter includes the following sections:

• About Cisco Unified Computing System, page 9

• Unified Fabric, page 10

• Server Architecture and Connectivity, page 12

• Traffic Management, page 33

• Opt-In Features, page 38

• Virtualization in Cisco UCS, page 40

About Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) fuses access layer networking and servers. This high-performance, next-generation server system provides a data center with a high degree of workload agility and scalability.

The hardware and software components support Cisco's unified fabric, which runs multiple types of data center traffic over a single converged network adapter.

Architectural Simplification

The simplified architecture of Cisco UCS reduces the number of required devices and centralizes switching

resources. By eliminating switching inside a chassis, network access-layer fragmentation is significantly

reduced.

Cisco UCS implements Cisco unified fabric within racks and groups of racks, supporting Ethernet and Fibre

Channel protocols over 10 Gigabit Cisco Data Center Ethernet and Fibre Channel over Ethernet (FCoE) links.

This radical simplification reduces the number of switches, cables, adapters, and management points by up

to two-thirds. All devices in a Cisco UCS domain remain under a single management domain, which remains

highly available through the use of redundant components.


High Availability

The management and data plane of Cisco UCS is designed for high availability and redundant access layer

fabric interconnects. In addition, Cisco UCS supports existing high availability and disaster recovery solutions

for the data center, such as data replication and application-level clustering technologies.

Scalability

A single Cisco UCS domain supports multiple chassis and their servers, all of which are administered through

one Cisco UCS Manager. For more detailed information about the scalability, speak to your Cisco representative.

Flexibility

A Cisco UCS domain allows you to quickly align computing resources in the data center with rapidly changing

business requirements. This built-in flexibility is determined by whether you choose to fully implement the

stateless computing feature.

Pools of servers and other system resources can be applied as necessary to respond to workload fluctuations,

support new applications, scale existing software and business services, and accommodate both scheduled

and unscheduled downtime. Server identity can be abstracted into a mobile service profile that can be moved

from server to server with minimal downtime and no need for additional network configuration.

With this level of flexibility, you can quickly and easily scale server capacity without having to change the

server identity or reconfigure the server, LAN, or SAN. During a maintenance window, you can quickly do

the following:

• Deploy new servers to meet unexpected workload demand and rebalance resources and traffic.

• Shut down an application, such as a database management system, on one server and then boot it up again on another server with increased I/O capacity and memory resources.

Optimized for Server Virtualization

Cisco UCS has been optimized to implement VM-FEX technology. This technology provides improved support for server virtualization, including better policy-based configuration and security, conformance with a company's operational model, and accommodation for VMware's VMotion.

Unified Fabric

With unified fabric, multiple types of data center traffic can run over a single Data Center Ethernet (DCE)

network. Instead of having a series of different host bus adapters (HBAs) and network interface cards (NICs)

present in a server, unified fabric uses a single converged network adapter. This type of adapter can carry

LAN and SAN traffic on the same cable.

Cisco UCS uses Fibre Channel over Ethernet (FCoE) to carry Fibre Channel and Ethernet traffic on the same

physical Ethernet connection between the fabric interconnect and the server. This connection terminates at a

converged network adapter on the server, and the unified fabric terminates on the uplink ports of the fabric

interconnect. On the core network, the LAN and SAN traffic remains separated. Cisco UCS does not require

that you implement unified fabric across the data center.

The converged network adapter presents an Ethernet interface and Fibre Channel interface to the operating

system. At the server, the operating system is not aware of the FCoE encapsulation because it sees a standard

Fibre Channel HBA.


At the fabric interconnect, the server-facing Ethernet port receives the Ethernet and Fibre Channel traffic. The

fabric interconnect (using Ethertype to differentiate the frames) separates the two traffic types. Ethernet frames

and Fibre Channel frames are switched to their respective uplink interfaces.

Fibre Channel over Ethernet

Cisco UCS uses the Fibre Channel over Ethernet (FCoE) standard protocol to deliver Fibre Channel. The upper Fibre Channel layers are unchanged, so the Fibre Channel operational model is maintained. FCoE network management and configuration are similar to those of a native Fibre Channel network.

FCoE encapsulates Fibre Channel traffic over a physical Ethernet link. FCoE is encapsulated over Ethernet

with the use of a dedicated Ethertype, 0x8906, so that FCoE traffic and standard Ethernet traffic can be carried

on the same link. FCoE has been standardized by the ANSI T11 Standards Committee.
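The Ethertype-based separation described above can be illustrated with a minimal Python sketch. It assumes untagged Ethernet II frames (no 802.1Q tag and no frame check sequence), which is a simplification; the function names and the MAC addresses in the usage note are hypothetical and are not part of Cisco UCS Manager.

```python
import struct

FCOE_ETHERTYPE = 0x8906  # dedicated FCoE Ethertype, as stated in the text
IPV4_ETHERTYPE = 0x0800  # IPv4, used here as an example of standard Ethernet traffic


def build_frame(dst: bytes, src: bytes, ethertype: int, payload: bytes) -> bytes:
    """Build an untagged Ethernet II frame: 6-byte dst, 6-byte src, 2-byte Ethertype."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses must be 6 bytes long")
    return dst + src + struct.pack("!H", ethertype) + payload


def classify(frame: bytes) -> str:
    """Return 'fcoe' or 'ethernet' based on the Ethertype stored in bytes 12-13."""
    if len(frame) < 14:
        raise ValueError("frame is shorter than an Ethernet header")
    (ethertype,) = struct.unpack("!H", frame[12:14])
    return "fcoe" if ethertype == FCOE_ETHERTYPE else "ethernet"
```

For example, `classify(build_frame(bytes(6), bytes(6), FCOE_ETHERTYPE, b""))` yields `"fcoe"`, while the same call with `IPV4_ETHERTYPE` yields `"ethernet"`. A real switch would also have to account for VLAN tags, which shift the Ethertype field.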

Fibre Channel traffic requires a lossless transport layer. Instead of the buffer-to-buffer credit system used by

native Fibre Channel, FCoE depends upon the Ethernet link to implement lossless service.

Ethernet links on the fabric interconnect provide two mechanisms to ensure lossless transport for FCoE traffic:

• Link-level flow control

• Priority flow control


Link-Level Flow Control

IEEE 802.3x link-level flow control allows a congested receiver to signal the endpoint to pause data transmission

for a short time. This link-level flow control pauses all traffic on the link.

The transmit and receive directions are separately configurable. By default, link-level flow control is disabled

for both directions.

On each Ethernet interface, the fabric interconnect can enable either priority flow control or link-level flow

control (but not both).

Priority Flow Control

The priority flow control (PFC) feature applies pause functionality to specific classes of traffic on the Ethernet

link. For example, PFC can provide lossless service for the FCoE traffic, and best-effort service for the standard

Ethernet traffic. PFC can provide different levels of service to specific classes of Ethernet traffic (using IEEE

802.1p traffic classes).

PFC decides whether to apply pause based on the IEEE 802.1p CoS value. When the fabric interconnect

enables PFC, it configures the connected adapter to apply the pause functionality to packets with specific CoS

values.
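The difference between the two pause mechanisms can be sketched as follows. This is an illustrative model only: the choice of CoS 3 as the lossless class is an assumption made for the example, and the function names are hypothetical.

```python
# Assumption for illustration: CoS 3 is the class configured as lossless (for FCoE).
NO_DROP_COS = frozenset({3})


def pfc_pauses(cos: int, receiver_congested: bool, no_drop=NO_DROP_COS) -> bool:
    """With PFC, only traffic in a no-drop CoS class is paused under congestion."""
    if not 0 <= cos <= 7:
        raise ValueError("IEEE 802.1p CoS values range from 0 to 7")
    return receiver_congested and cos in no_drop


def link_level_pauses(receiver_congested: bool) -> bool:
    """With link-level flow control, congestion pauses all traffic on the link."""
    return receiver_congested
```

Under congestion, `pfc_pauses(3, True)` is true and `pfc_pauses(0, True)` is false, so best-effort traffic keeps flowing (and may be dropped) while the lossless class is paused. `link_level_pauses(True)` pauses every class.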

By default, the fabric interconnect negotiates to enable the PFC capability. If the negotiation succeeds, PFC

is enabled and link-level flow control remains disabled (regardless of its configuration settings). If the PFC

negotiation fails, you can either force PFC to be enabled on the interface or you can enable IEEE 802.3x

link-level flow control.
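The negotiation outcome described above can be summarized in a small decision function. This is a sketch under stated assumptions, not the fabric interconnect's actual logic: the parameter names are hypothetical, and giving a forced PFC setting precedence over link-level flow control when both are requested is a choice made for this example.

```python
from enum import Enum


class FlowControl(Enum):
    PFC = "priority flow control"
    LINK_LEVEL = "link-level flow control"
    NONE = "none"


def resolve_flow_control(pfc_negotiated: bool,
                         force_pfc: bool = False,
                         link_level_configured: bool = False) -> FlowControl:
    """Pick the single flow-control mode that is active on one Ethernet interface."""
    # Successful negotiation enables PFC; link-level flow control stays
    # disabled regardless of its configuration settings.
    if pfc_negotiated:
        return FlowControl.PFC
    # Negotiation failed: the administrator may force PFC ...
    if force_pfc:
        return FlowControl.PFC
    # ... or enable link-level flow control instead.
    if link_level_configured:
        return FlowControl.LINK_LEVEL
    return FlowControl.NONE
```

Because the function returns exactly one mode, it also reflects the rule that an interface can use priority flow control or link-level flow control, but not both.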


Server Architecture and Connectivity

Overview of Service Profiles

Service profiles are the central concept of Cisco UCS. Each service profile serves a specific purpose: ensuring

that the associated server hardware has the configuration required to support the applications it will host.

The service profile maintains configuration information about the server hardware, interfaces, fabric

connectivity, and server and network identity. This information is stored in a format that you can manage

through Cisco UCS Manager. All service profiles are centrally managed and stored in a database on the fabric

interconnect.

Every server must be associated with a service profile.

Important

At any given time, each server can be associated with only one service profile. Similarly, each service

profile can be associated with only one server at a time.

After you associate a service profile with a server, the server is ready to have an operating system and

applications installed, and you can use the service profile to review the configuration of the server. If the

server associated with a service profile fails, the service profile does not automatically fail over to another

server.

When a service profile is disassociated from a server, the identity and connectivity information for the server

is reset to factory defaults.
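The one-to-one association rule can be pictured as a pair of lookup tables that must stay in step. The Python sketch below is a conceptual model under that assumption; the class and method names are hypothetical and are not part of Cisco UCS Manager.

```python
class AssociationError(Exception):
    """Raised when an association would break the one-to-one rule."""


class AssociationTable:
    """Tracks which service profile is associated with which server."""

    def __init__(self):
        self._server_for = {}   # profile name -> server name
        self._profile_for = {}  # server name -> profile name

    def associate(self, profile, server):
        if profile in self._server_for:
            raise AssociationError(f"{profile} is already associated with a server")
        if server in self._profile_for:
            raise AssociationError(f"{server} already has a service profile")
        self._server_for[profile] = server
        self._profile_for[server] = profile

    def disassociate(self, profile):
        """Remove the association and return the server that was released."""
        server = self._server_for.pop(profile)
        del self._profile_for[server]
        return server

    def server_for(self, profile):
        return self._server_for.get(profile)


table = AssociationTable()
table.associate("web-profile", "blade-1")
try:
    table.associate("db-profile", "blade-1")
except AssociationError as err:
    print("rejected:", err)

# There is no automatic failover: moving a profile is an explicit
# disassociate step followed by a new associate step.
table.disassociate("web-profile")
table.associate("web-profile", "blade-2")
print(table.server_for("web-profile"))
```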

Network Connectivity through Service Profiles

Each service profile specifies the LAN and SAN network connections for the server through the Cisco UCS

infrastructure and out to the external network. You do not need to manually configure the network connections

for Cisco UCS servers and other components. All network configuration is performed through the service

profile.

When you associate a service profile with a server, the Cisco UCS internal fabric is configured with the

information in the service profile. If the profile was previously associated with a different server, the network

infrastructure reconfigures to support identical network connectivity to the new server.

Configuration through Service Profiles

A service profile can take advantage of resource pools and policies to handle server and connectivity

configuration.

Hardware Components Configured by Service Profiles

When a service profile is associated with a server, the following components are configured according to the

data in the profile:

• Server, including BIOS and CIMC

• Adapters

• Fabric interconnects

You do not need to configure these hardware components directly.

Server Identity Management through Service Profiles

You can use the network and device identities burned into the server hardware at manufacture or you can use

identities that you specify in the associated service profile either directly or through identity pools, such as

MAC, WWN, and UUID.

The following are examples of configuration information that you can include in a service profile:

• Profile name and description

• Unique server identity (UUID)

• LAN connectivity attributes, such as the MAC address

• SAN connectivity attributes, such as the WWN
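As a rough illustration of how profile-specified identities relate to burned-in ones, the following Python sketch models a service profile as a record whose identity fields are optional. The class, the field names, and the sample addresses are placeholders invented for this example; identity pools are left out, and a pool would simply be one more source for a field's value.

```python
from dataclasses import dataclass
from typing import Optional

IDENTITY_FIELDS = ("uuid", "mac", "wwn")


@dataclass
class ServiceProfile:
    name: str
    description: str = ""
    # None means "use the value burned into the server hardware".
    uuid: Optional[str] = None
    mac: Optional[str] = None
    wwn: Optional[str] = None


def effective_identity(profile, burned_in):
    """Return the identity the server presents once the profile is applied.

    burned_in -- dict with the hardware's own "uuid", "mac", and "wwn" values
    """
    result = {}
    for key in IDENTITY_FIELDS:
        specified = getattr(profile, key)
        result[key] = specified if specified is not None else burned_in[key]
    return result


# Placeholder values for illustration only.
hardware = {
    "uuid": "hw-uuid-0001",
    "mac": "AA:AA:AA:00:00:01",
    "wwn": "10:00:AA:AA:AA:00:00:01",
}
profile = ServiceProfile(name="web-profile", mac="BB:BB:BB:00:00:01")

print(effective_identity(profile, hardware))
```

Here only the MAC address is specified in the profile, so the UUID and WWN fall back to the hardware values.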

Operational Aspects Configured by Service Profiles

You can configure some of the operational functions for a server in a service profile, such as the following:

• Firmware packages and versions

• Operating system boot order and configuration

• IPMI and KVM access

vNIC Configuration by Service Profiles

A vNIC is a virtualized network interface that is configured on a physical network adapter and appears to be

a physical NIC to the operating system of the server. The type of adapter in the system determines how many

vNICs you can create. For example, a converged network adapter has two NICs, which means you can create

a maximum of two vNICs for each adapter.

A vNIC communicates over Ethernet and handles LAN traffic. At a minimum, each vNIC must be configured

with a name and with fabric and network connectivity.

vHBA Configuration by Service Profiles

A vHBA is a virtualized host bus adapter that is configured on a physical network adapter and appears to be

a physical HBA to the operating system of the server. The type of adapter in the system determines how many

vHBAs you can create. For example, a converged network adapter has two HBAs, which means you can

create a maximum of two vHBAs for each of those adapters. In contrast, a network interface card does not

have any HBAs, which means you cannot create any vHBAs for those adapters.

A vHBA communicates over FCoE and handles SAN traffic. At a minimum, each vHBA must be configured

with a name and fabric connectivity.
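The adapter-dependent limits on vNICs and vHBAs can be expressed as a simple check. The Python sketch below is a minimal model, not a Cisco UCS Manager interface: the `Adapter` record and the function name are hypothetical, and the NIC count given to the plain network interface card is an assumption, since only its lack of HBAs is stated above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Adapter:
    kind: str
    nics: int  # physical NICs available for vNICs
    hbas: int  # physical HBAs available for vHBAs


def check_virtual_interfaces(adapter, vnic_names, vhba_names):
    """Raise ValueError if the request exceeds what the adapter supports."""
    if len(vnic_names) > adapter.nics:
        raise ValueError(
            f"{adapter.kind} supports at most {adapter.nics} vNICs, "
            f"{len(vnic_names)} requested"
        )
    if len(vhba_names) > adapter.hbas:
        raise ValueError(
            f"{adapter.kind} supports at most {adapter.hbas} vHBAs, "
            f"{len(vhba_names)} requested"
        )


cna = Adapter("converged network adapter", nics=2, hbas=2)
nic = Adapter("network interface card", nics=2, hbas=0)  # NIC count assumed

check_virtual_interfaces(cna, ["eth0", "eth1"], ["fc0", "fc1"])  # accepted
try:
    check_virtual_interfaces(nic, ["eth0"], ["fc0"])
except ValueError as err:
    print("rejected:", err)
```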

Service Profiles that Override Server Identity

This type of service profile provides the maximum amount of flexibility and control. This profile allows you

to override the identity values that are on the server at the time of association and use the resource pools and

policies set up in Cisco UCS Manager to automate some administration tasks.

You can disassociate this service profile from one server and then associate it with another server. This

re-association can be done either manually or through an automated server pool policy. The burned-in settings,

such as UUID and MAC address, on the new server are overwritten with the configuration in the service

profile. As a result, the change in server is transparent to your network. You do not need to reconfigure any

component or application on your network to begin using the new server.

This profile allows you to take advantage of and manage system resources through resource pools and policies,

such as the following:

• Virtualized identity information, including pools of MAC addresses, WWN addresses, and UUIDs

• Ethernet and Fibre Channel adapter profile policies

• Firmware package policies

• Operating system boot order policies

Unless the service profile contains power management policies, a server pool qualification policy, or another

policy that requires a specific hardware configuration, the profile can be used for any type of server in the

Cisco UCS domain.

You can associate these service profiles with either a rack-mount server or a blade server. The ability to

migrate the service profile depends upon whether you choose to restrict migration of the service profile.

Note

If you choose not to restrict migration, Cisco UCS Manager does not perform any compatibility checks

on the new server before migrating the existing service profile. If the hardware of the two servers is not

similar, the association might fail.

Service Profiles that Inherit Server Identity

This hardware-based service profile is the simplest to use and create. This profile uses the default values in