
NetXtreme®-C/NetXtreme-E

User Guide

NetXtreme-UG100

August 23, 2018


Broadcom, the pulse logo, Connecting everything, NetXtreme, Avago Technologies, Avago, and the A logo are among the trademarks of Broadcom and/or its affiliates in the United States, certain other countries, and/or the EU.

Copyright © 2018 Broadcom. All Rights Reserved.

The term “Broadcom” refers to Broadcom Inc. and/or its subsidiaries. For more information, please visit www.broadcom.com.

Broadcom reserves the right to make changes without further notice to any products or data herein to improve reliability, function, or design. Information furnished by Broadcom is believed to be accurate and reliable. However, Broadcom does not assume any liability arising out of the application or use of this information, nor the application or use of any product or circuit described herein, neither does it convey any license under its patent rights nor the rights of others.


NetXtreme-C/NetXtreme-E User Guide

Table of Contents

1 Regulatory and Safety Approvals
1.1 Regulatory
1.2 Safety
1.3 Electromagnetic Compatibility (EMC)
1.4 Electrostatic Discharge (ESD) Compliance
1.5 FCC Statement
2 Functional Description
3 Network Link and Activity Indication
4 Features
4.1 Software and Hardware Features
4.2 Virtualization Features
4.3 VXLAN
4.4 NVGRE/GRE/IP-in-IP/Geneve
4.5 Stateless Offloads
4.5.1 RSS
4.5.2 TPA
4.5.3 Header-Payload Split
4.6 UDP Fragmentation Offload
4.7 Stateless Transport Tunnel Offload
4.8 Multiqueue Support for OS
4.8.1 NDIS VMQ
4.8.2 VMware NetQueue
4.8.3 KVM/Xen Multiqueue
4.9 SR-IOV Configuration Support Matrix
4.10 SR-IOV
4.11 Network Partitioning (NPAR)
4.12 RDMA over Converged Ethernet – RoCE
4.13 Supported Combinations
4.13.1 NPAR, SR-IOV, and RoCE
4.13.2 NPAR, SR-IOV, and DPDK
4.13.3 Unsupported Combinations
5 Installing the Hardware
5.1 Safety Precautions
5.2 System Requirements
5.2.1 Hardware Requirements
5.2.2 Preinstallation Checklist
5.3 Installing the Adapter
5.4 Connecting the Network Cables
5.4.1 Supported Cables and Modules
5.4.1.1 Copper
5.4.1.2 SFP+
5.4.1.3 SFP28
5.4.1.4 QSFP
6 Software Packages and Installation
6.1 Supported Operating Systems
6.2 Installing the Linux Driver
6.2.1 Linux Ethtool Commands
6.3 Installing the VMware Driver
6.4 Installing the Windows Driver
6.4.1 Driver Advanced Properties
6.4.2 Event Log Messages
7 Updating the Firmware
7.1 Linux
7.2 Windows/ESX
8 Teaming
8.1 Windows
8.2 Linux
9 System-Level Configuration
9.1 UEFI HII Menu
9.1.1 Main Configuration Page
9.1.2 Firmware Image Properties
9.1.3 Device-Level Configuration
9.1.4 NIC Configuration
9.1.5 iSCSI Configuration
9.2 Comprehensive Configuration Management
9.2.1 Device Hardware Configuration
9.2.2 MBA Configuration Menu
9.2.3 iSCSI Boot Main Menu
9.3 Auto-Negotiation Configuration
9.3.1 Operational Link Speed
9.3.2 Firmware Link Speed
9.3.3 Auto-negotiation Protocol
9.3.4 Windows Driver Settings
9.3.5 Linux Driver Settings
9.3.6 ESXi Driver Settings
10 iSCSI Boot
10.1 Supported Operating Systems for iSCSI Boot
10.2 Setting up iSCSI Boot
10.2.1 Configuring the iSCSI Target
10.2.2 Configuring iSCSI Boot Parameters
10.2.3 MBA Boot Protocol Configuration
10.2.4 iSCSI Boot Configuration
10.2.4.1 Static iSCSI Boot Configuration
10.2.4.2 Dynamic iSCSI Boot Configuration
10.2.5 Enabling CHAP Authentication
10.3 Configuring the DHCP Server to Support iSCSI Boot
10.3.1 DHCP iSCSI Boot Configurations for IPv4
10.3.1.1 DHCP Option 17, Root Path
10.3.1.2 DHCP Option 43, Vendor-Specific Information
10.3.1.3 Configuring the DHCP Server
10.3.2 DHCP iSCSI Boot Configuration for IPv6
10.3.2.1 DHCPv6 Option 16, Vendor Class Option
10.3.2.2 DHCPv6 Option 17, Vendor-Specific Information
10.3.2.3 Configuring the DHCP Server
11 VXLAN: Configuration and Use Case Examples
12 SR-IOV: Configuration and Use Case Examples
12.1 Linux Use Case Example
12.2 Windows Use Case Example
12.3 VMware SRIOV Use Case Example
13 NPAR – Configuration and Use Case Example
13.1 Features and Requirements
13.2 Limitations
13.3 Configuration
13.4 Notes on Reducing NIC Memory Consumption
14 RoCE – Configuration and Use Case Examples
14.1 Linux Configuration and Use Case Examples
14.1.1 Requirements
14.1.2 BNXT_RE Driver Dependencies
14.1.3 Installation
14.1.4 Limitations
14.1.5 Known Issues
14.2 Windows and Use Case Examples
14.2.1 Kernel Mode
14.2.2 Verifying RDMA
14.2.3 User Mode
14.3 VMware ESX Configuration and Use Case Examples
14.3.1 Limitations
14.3.2 BNXT RoCE Driver Requirements
14.3.3 Installation
14.3.4 Configuring Paravirtualized RDMA Network Adapters
14.3.4.1 Configuring a Virtual Center for PVRDMA
14.3.4.2 Tagging vmknic for PVRDMA on ESX Hosts
14.3.4.3 Setting the Firewall Rule for PVRDMA
14.3.4.4 Adding a PVRDMA Device to the VM
14.3.4.5 Configuring the VM on Linux Guest OS
15 DCBX – Data Center Bridging
15.1 QoS Profile – Default QoS Queue Profile
15.2 DCBX Mode – Enable (IEEE only)
15.3 DCBX Willing Bit
16 DPDK – Configuration and Use Case Examples
16.1 Compiling the Application
16.2 Running the Application
16.3 Testpmd Runtime Functions
16.4 Control Functions
16.5 Display Functions
16.6 Configuration Functions
17 Frequently Asked Questions
Revision History


1 Regulatory and Safety Approvals

The following sections detail the regulatory, safety, electromagnetic compatibility (EMC), and electrostatic discharge (ESD) standard compliance for the NetXtreme®-C/NetXtreme-E Network Interface Card.

1.1 Regulatory

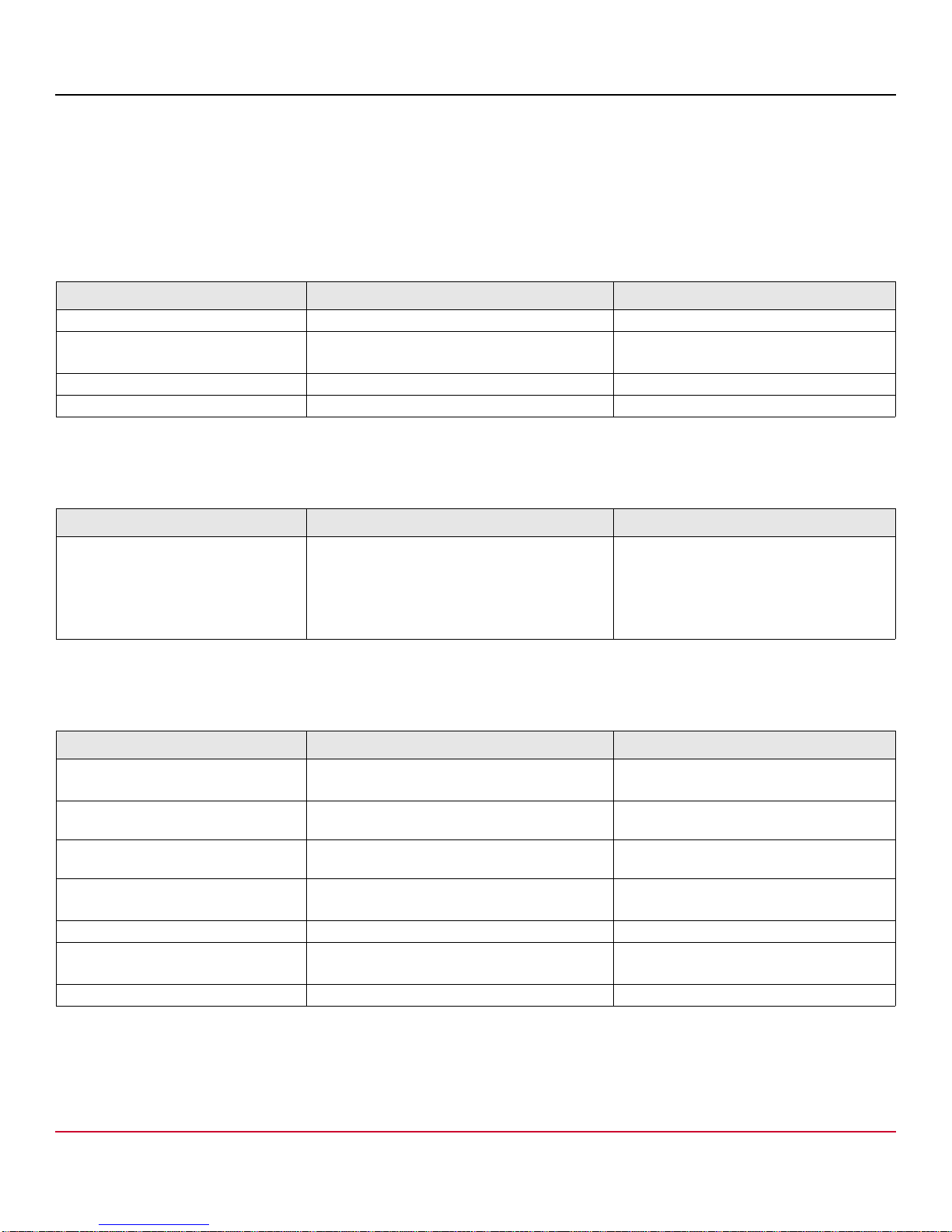

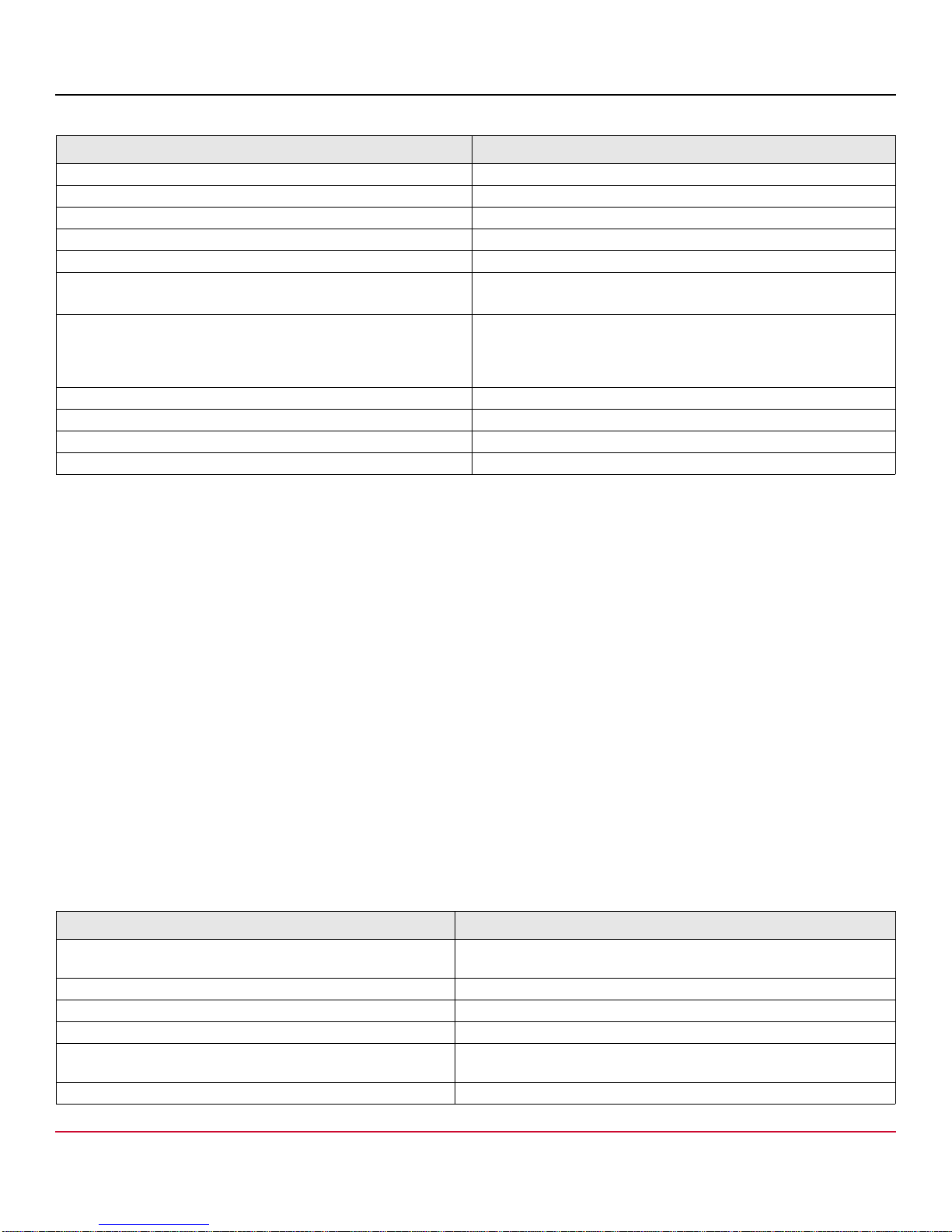

Table 1: Regulatory Approvals

| Item | Applicable Standard | Approval/Certificate |
|---|---|---|
| CE/European Union | EN 60950-1 | CB report and certificate |
| UL/USA | UL 60950-1 | CTUVus UL report and certificate |
| CSA/Canada | CSA 22.2 No. 950 | CSA report and certificate |
| Taiwan | CNS14336 Class B | – |

1.2 Safety

Table 2: Safety Approvals

| Country | Certification Type/Standard | Compliance |
|---|---|---|
| International | CB Scheme; ICES 003 - Digital Device; UL 1977 (connector safety); UL 796 (PCB wiring safety); UL 94 (flammability of parts) | Yes |

1.3 Electromagnetic Compatibility (EMC)

Table 3: Electromagnetic Compatibility

| Standard/Country | Certification Type | Compliance |
|---|---|---|
| CE/EU | EN 55022:2010 + *AC:2011 Class B; EN 55024 Class B | CE report and CE DoC |
| FCC/USA | CFR47, Part 15 Class B | FCC/IC DoC and EMC report referencing FCC and IC standards |
| IC/Canada | ICES-003 Class B | FCC/IC DoC and report referencing FCC and IC standards |
| ACA/Australia, New Zealand | EN 55022:2010 + *AC:2011 | ACA certificate; RCM Mark |
| BSMI/Taiwan | CNS13438 Class B | BSMI certificate |
| MIC/S. Korea | RRL KN22 Class B; KN24 (ESD) | Korea certificate; MSIP Mark |
| VCCI/Japan | V-3/2014/04 | Copy of VCCI on-line certificate |


1.4 Electrostatic Discharge (ESD) Compliance

Table 4: ESD Compliance Summary

| Standard | Certification Type | Compliance |
|---|---|---|
| EN 55024:2010 | Air/Direct discharge | Yes |

1.5 FCC Statement

This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one or more of the following measures:

Reorient or relocate the receiving antenna.

Increase the separation between the equipment and receiver.

Consult the dealer or an experienced radio/TV technician for help.

NOTE: Changes or modifications not expressly approved by the manufacturer responsible for compliance could void the user’s authority to operate the equipment.

2 Functional Description

The Broadcom NetXtreme-C (BCM573XX) and NetXtreme-E (BCM574XX) families of Ethernet controllers are highly integrated, full-featured Ethernet LAN controllers optimized for data center and cloud infrastructures. Adapters support 100G/50G/40G/25G/10G/1G speeds in both single-port and dual-port configurations. On the host side, these devices support a 16-lane (x16) PCIe Gen 3 interface.

The controllers include an extensive set of stateless and virtualization offloads that improve packet-processing efficiency and enable low-overhead, high-speed network communications.

3 Network Link and Activity Indication

For Ethernet connections, the network link state and activity are indicated by the LEDs on the rear connector, as shown in Table 5.

Refer to the individual board data sheets for specific media design.

Table 5: Network Link and Activity Indicated by Port LEDs

| Port LED | LED Appearance | Network State |
|---|---|---|
| Link LED | Off | No link (cable disconnected) |
| Link LED | Continuously illuminated | Link |
| Activity LED | Off | No network activity |
| Activity LED | Blinking | Network activity |


4 Features

Refer to the following sections for device features.

4.1 Software and Hardware Features

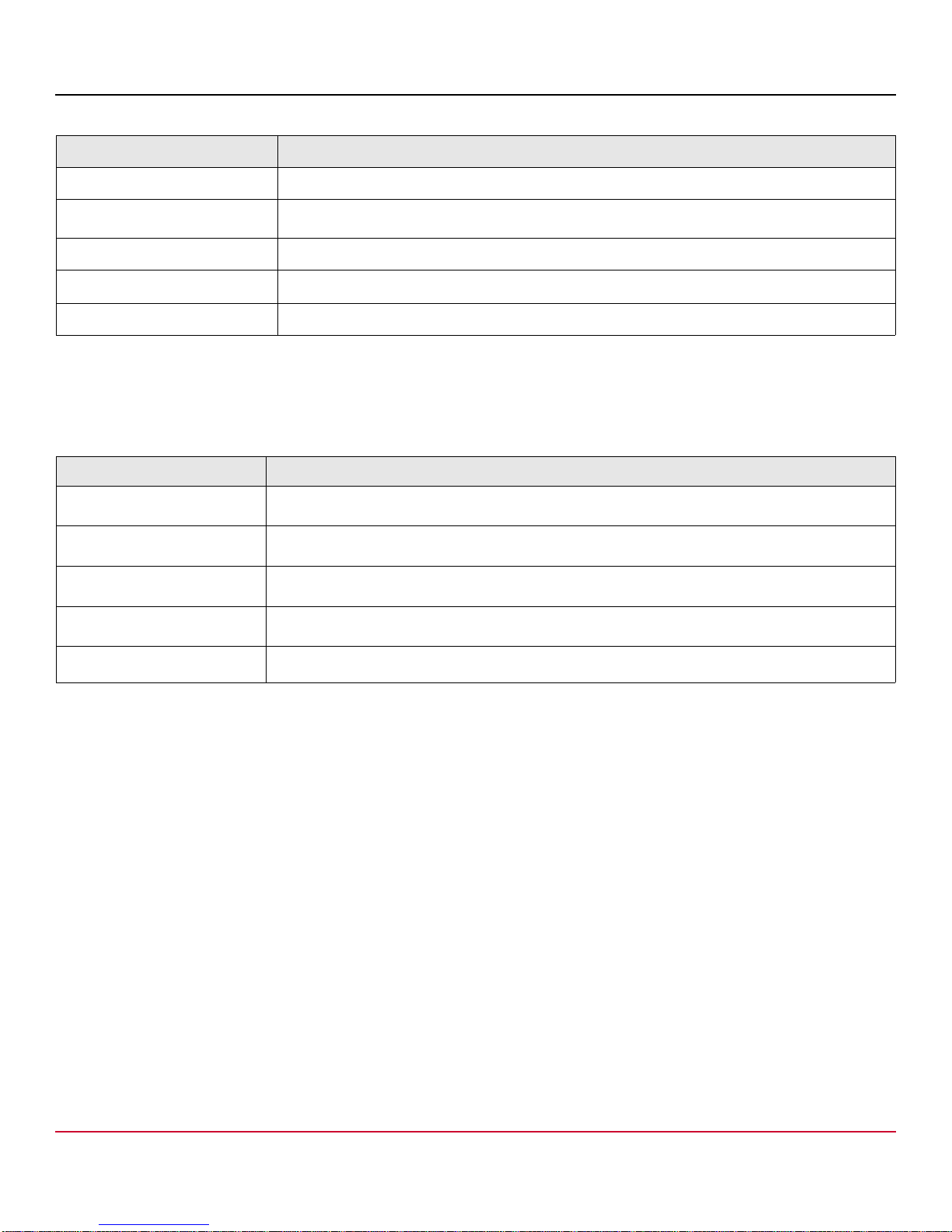

Table 6 provides a list of host interface features.

Table 6: Host Interface Features

| Feature | Details |
|---|---|
| Host Interface | PCIe 3.0 (Gen 3: 8 GT/s; Gen 2: 5 GT/s; Gen 1: 2.5 GT/s). |
| Number of PCIe lanes | PCIe edge connector: x16. |
| Vital Product Data (VPD) | Supported. |
| Alternate Routing ID (ARI) | Supported. |
| Function Level Reset (FLR) | Supported. |
| Advanced Error Reporting | Supported. |
| PCIe ECNs | Support for TLP Processing Hints (TPH), Latency Tolerance Reporting (LTR), and Optimized Buffer Flush/Fill (OBFF). |
| MSI-X interrupt vector per queue | 1 per RSS queue, 1 per NetQueue, 1 per Virtual Machine Queue (VMQ). |
| IP Checksum Offload | Support for transmit and receive side. |
| TCP Checksum Offload | Support for transmit and receive side. |
| UDP Checksum Offload | Support for transmit and receive side. |
| NDIS TCP Large Send Offload | Support for LSOv1 and LSOv2. |
| NDIS Receive Segment Coalescing (RSC) | Support for Windows environments. |
| TCP Segmentation Offload (TSO) | Support for Linux and VMware environments. |
| Large Receive Offload (LRO) | Support for Linux and VMware environments. |
| Generic Receive Offload (GRO) | Support for Linux and VMware environments. |
| Receive Side Scaling (RSS) | Support for Windows, Linux, and VMware environments. Up to 8 queues/port supported for RSS. |
| Header-Payload Split | Enables the software TCP/IP stack to receive TCP/IP packets with header and payload data split into separate buffers. Supports Windows, Linux, and VMware environments. |
| Jumbo Frames | Supported. |
| iSCSI boot | Supported. |
| NIC Partitioning (NPAR) | Supports up to eight Physical Functions (PFs) per port, or up to 16 PFs per silicon. This option is configurable in NVRAM. |
| RDMA over Converged Ethernet (RoCE) | The BCM5741X supports RoCE v1/v2 for Windows, Linux, and VMware. |
| Data Center Bridging (DCB) | The BCM5741X supports DCBX (IEEE and CEE specification), PFC, and AVB. |
| NCSI (Network Controller Sideband Interface) | Supported. |
| Wake on LAN (WOL) | Supported on designs with 10GBASE-T, SFP+, and SFP28 interfaces. |
| PXE boot | Supported. |
| UEFI boot | Supported. |
| Flow Control (Pause) | Supported. |
| Auto-negotiation | Supported. |
| IEEE 802.1Q VLAN | Supported. |
| Interrupt Moderation | Supported. |
| MAC/VLAN filters | Supported. |

4.2 Virtualization Features

Table 7 lists the virtualization features of the NetXtreme-C/NetXtreme-E.

Table 7: Virtualization Features

| Feature | Details |
|---|---|
| Linux KVM Multiqueue | Supported. |
| VMware NetQueue | Supported. |
| NDIS Virtual Machine Queue (VMQ) | Supported. |
| Virtual eXtensible LAN (VXLAN)-aware stateless offloads (IP/UDP/TCP checksum offloads) | Supported. |
| Generic Routing Encapsulation (GRE)-aware stateless offloads (IP/UDP/TCP checksum offloads) | Supported. |
| Network Virtualization using Generic Routing Encapsulation (NVGRE)-aware stateless offloads | Supported. |
| IP-in-IP-aware stateless offloads (IP/UDP/TCP checksum offloads) | Supported. |
| SR-IOV v1.0 | 128 Virtual Functions (VFs) per device for Guest Operating Systems (GOS). The MSI-X vector count per VF is set to 16. |
| MSI-X vectors per port | 74 per port by default (two-port configuration); 16 per VF. Configurable in HII and CCM. |

4.3 VXLAN

A Virtual eXtensible Local Area Network (VXLAN), defined in IETF RFC 7348, is used to address the need for overlay networks within virtualized data centers accommodating multiple tenants. VXLAN is a Layer 2 overlay or tunneling scheme over a Layer 3 network. Only VMs within the same VXLAN segment can communicate with each other.
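To make the segment idea concrete, the following minimal sketch builds and parses the 8-byte VXLAN header from RFC 7348, in which a 24-bit VXLAN Network Identifier (VNI) names the segment. It is illustrative only: the function names are hypothetical, and on the NetXtreme adapter this parsing happens in hardware as part of the VXLAN-aware offloads.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header (hypothetical helper)."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8-bit flags followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Return the VNI carried in a VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8


def same_segment(header_a: bytes, header_b: bytes) -> bool:
    """Only VMs whose traffic carries the same VNI share a VXLAN segment."""
    return parse_vni(header_a) == parse_vni(header_b)


hdr = build_vxlan_header(5001)
print(hdr.hex())       # 0800000000138900
print(parse_vni(hdr))  # 5001
```

The VNI is 24 bits wide, which is what lets a single underlay network carry about 16 million isolated segments instead of the 4094 usable IDs of an 802.1Q VLAN.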

4.4 NVGRE/GRE/IP-in-IP/Geneve

Network Virtualization using GRE (NVGRE), defined in IETF RFC 7637, is similar to VXLAN, but it carries the tenant Layer 2 frame inside a GRE header instead of a UDP header.
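For comparison with VXLAN, the following sketch packs the GRE header that RFC 7637 specifies for NVGRE. The Key Present bit is set, the protocol type is 0x6558 (Transparent Ethernet Bridging), and the 32-bit Key field carries a 24-bit Virtual Subnet ID (VSID) plus an 8-bit FlowID. The helper names are hypothetical. This is an illustration of the encapsulation format, not driver code.

```python
import struct
from typing import Tuple

GRE_FLAG_KEY = 0x2000  # K bit: Key field present (C and S bits clear)
ETH_P_TEB = 0x6558     # Transparent Ethernet Bridging: payload is an Ethernet frame


def build_nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """Pack the 8-byte GRE header used by NVGRE (hypothetical helper)."""
    if not 0 <= vsid < (1 << 24):
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id < (1 << 8):
        raise ValueError("FlowID must fit in 8 bits")
    key = (vsid << 8) | flow_id  # upper 24 bits: VSID, lower 8 bits: FlowID
    return struct.pack("!HHI", GRE_FLAG_KEY, ETH_P_TEB, key)


def parse_nvgre_header(header: bytes) -> Tuple[int, int]:
    """Return (VSID, FlowID) from an NVGRE GRE header."""
    flags, proto, key = struct.unpack("!HHI", header[:8])
    if not flags & GRE_FLAG_KEY or proto != ETH_P_TEB:
        raise ValueError("not an NVGRE header")
    return key >> 8, key & 0xFF


hdr = build_nvgre_header(0x123456, flow_id=7)
print(hdr.hex())  # 2000655812345607
```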

4.5 Stateless Offloads

4.5.1 RSS

Receive Side Scaling (RSS) computes a Toeplitz hash over the 4-tuple (source IP, destination IP, source port, destination port) of each received frame and forwards the frame to a deterministic CPU for processing. This streamlines frame processing and balances CPU utilization. An indirection table maps each hash value, and therefore each stream, to a CPU.

Symmetric RSS maps packets from both directions of a given TCP or UDP flow to the same receive queue.
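The hash-then-lookup steps above can be sketched in software as follows. This is a reference Toeplitz implementation checked against the 40-byte key and first test vector that Microsoft publishes for RSS hash verification. The 128-entry indirection table indexed by the low-order hash bits is an assumption for illustration. The symmetric key (repeating `0x6d5a`) shows one well-known way to obtain symmetric RSS; the guide does not say how the NetXtreme firmware implements it.

```python
import ipaddress
import struct

# 40-byte RSS key used in Microsoft's published RSS hash verification examples.
MS_RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0"
    "d0ca2bcbae7b30b477cb2da38030f20c"
    "6a42b73bbeac01fa"
)

# Repeating 0x6d5a gives the key a 16-bit period, so swapping source and
# destination fields (32-bit addresses, 16-bit ports) leaves the hash unchanged.
SYMMETRIC_RSS_KEY = bytes.fromhex("6d5a" * 20)


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """Toeplitz hash: XOR in the 32-bit key window at every set input bit."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    if key_bits < len(data) * 8 + 32:
        raise ValueError("key is too short for this input")
    result = 0
    for bit_index in range(len(data) * 8):
        if data[bit_index // 8] & (0x80 >> (bit_index % 8)):
            # The 32 key bits that start at position bit_index (MSB first).
            result ^= (key_int >> (key_bits - 32 - bit_index)) & 0xFFFFFFFF
    return result


def ipv4_tcp_input(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """Hash input order: source IP, destination IP, source port, destination port."""
    return (
        ipaddress.IPv4Address(src_ip).packed
        + ipaddress.IPv4Address(dst_ip).packed
        + struct.pack("!HH", src_port, dst_port)
    )


def select_queue(hash_value: int, indirection_table: list) -> int:
    """Assumed lookup: index a power-of-two table with the low-order hash bits."""
    return indirection_table[hash_value & (len(indirection_table) - 1)]


h = toeplitz_hash(MS_RSS_KEY, ipv4_tcp_input("66.9.149.187", "161.142.100.80", 2794, 1766))
print(hex(h))  # 0x51ccc178
print(select_queue(h, [0, 1, 2, 3] * 32))  # 128-entry table spread over 4 CPUs
```

Because the indirection table, not the hash, decides the CPU, a driver can rebalance load by rewriting table entries without changing the key.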

4.5.2 TPA

Transparent Packet Aggregation (TPA) is a technique in which received frames that match the same 4-tuple are aggregated and then indicated to the network stack together. Each entry in the TPA context is identified by the 4-tuple: source IP, destination IP, source TCP port, and destination TCP port. TPA improves system performance by reducing interrupts for network traffic and lessening CPU overhead.
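TPA runs in the adapter, but its core idea can be modeled in a few lines. The Python sketch below keeps one aggregation context per 4-tuple, appends in-sequence TCP payloads to it, and hands a single aggregated unit to the stack when a segment arrives out of sequence, the size limit would be exceeded, or the batch ends. The class and function names, the 64 KB limit, and these flush rules are assumptions for illustration; a real implementation applies further conditions that are not modeled here.

```python
from dataclasses import dataclass, field

@dataclass
class Segment:
    four_tuple: tuple  # (src_ip, dst_ip, src_port, dst_port)
    seq: int           # TCP sequence number of the first payload byte
    payload: bytes

@dataclass
class AggContext:
    four_tuple: tuple
    start_seq: int
    payload: bytearray = field(default_factory=bytearray)

    @property
    def next_seq(self) -> int:
        return self.start_seq + len(self.payload)

def aggregate(segments, max_bytes: int = 65535):
    """Coalesce in-sequence segments per 4-tuple; return (four_tuple, start_seq, payload) units."""
    contexts = {}   # one open aggregation context per 4-tuple
    indicated = []  # aggregated units handed to the network stack, in order

    def flush(key):
        ctx = contexts.pop(key)
        indicated.append((ctx.four_tuple, ctx.start_seq, bytes(ctx.payload)))

    for seg in segments:
        ctx = contexts.get(seg.four_tuple)
        if ctx is not None and (seg.seq != ctx.next_seq
                                or len(ctx.payload) + len(seg.payload) > max_bytes):
            flush(seg.four_tuple)  # gap in sequence or size limit: close this aggregate
            ctx = None
        if ctx is None:
            ctx = contexts[seg.four_tuple] = AggContext(seg.four_tuple, seg.seq)
        ctx.payload += seg.payload
    for key in list(contexts):     # end of batch: indicate whatever is still open
        flush(key)
    return indicated
```

For example, three in-sequence segments of one flow interleaved with a segment of another flow produce two indications instead of four, which is where the interrupt and per-packet CPU savings come from.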

4.5.3 Header-Payload Split

Header-payload split is a feature that enables the software TCP/IP stack to receive TCP/IP packets with header and payload

data split into separate buffers. The support for this feature is available in both Windows and Linux environments. The

following are potential benefits of header-payload split:

The header-payload split enables compact and efficient caching of packet headers into host CPU caches. This can

result in a receive side TCP/IP performance improvement.

Header-payload splitting enables page flipping and zero copy operations by the host TCP/IP stack. This can further

improve the performance of the receive path.
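As a rough software model of what header-payload split produces, the Python sketch below walks the Ethernet, IPv4, and TCP headers of a received frame and returns the headers and the payload as two separate buffers. It assumes an untagged frame and handles IPv4/TCP only; the function name is hypothetical, and the adapter performs the equivalent split in hardware and may handle more cases.

```python
import struct

ETH_HLEN = 14       # untagged Ethernet header
ETH_P_IPV4 = 0x0800
IPPROTO_TCP = 6

def split_header_payload(frame: bytes):
    """Split an untagged Ethernet/IPv4/TCP frame into (header_bytes, payload_bytes).

    Frames that are not IPv4/TCP are returned whole in the header buffer.
    """
    if struct.unpack_from("!H", frame, 12)[0] != ETH_P_IPV4:
        return frame, b""
    ip = ETH_HLEN
    ip_hlen = (frame[ip] & 0x0F) * 4                # IHL is counted in 32-bit words
    ip_total_len = struct.unpack_from("!H", frame, ip + 2)[0]
    if frame[ip + 9] != IPPROTO_TCP:
        return frame, b""
    tcp = ip + ip_hlen
    tcp_hlen = (frame[tcp + 12] >> 4) * 4           # TCP data offset, in 32-bit words
    split = tcp + tcp_hlen
    end = ip + ip_total_len                         # drop Ethernet padding after the IP packet
    return frame[:split], frame[split:end]
```

Keeping only the 54-byte header portion in one buffer is what allows the compact header caching, and page-aligned payload buffers are what make page flipping and zero copy possible.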

4.6 UDP Fragmentation Offload

UDP Fragmentation Offload (UFO) is a feature that enables the software stack to offload fragmentation of UDP/IP datagrams into UDP/IP packets. Support for this feature is only available in the Linux environment. The following is a potential benefit of UFO:

UFO enables the NIC to handle fragmentation of a UDP datagram into UDP/IP packets. This can reduce CPU overhead for transmit-side UDP/IP processing.
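To show the work that UFO moves to the NIC, the Python sketch below computes the IPv4 fragments a UDP datagram is cut into for a given MTU. Every fragment except the last carries a multiple of 8 bytes, the fragment offset is expressed in 8-byte units, and all but the last fragment set the More Fragments (MF) flag. It assumes a 20-byte IPv4 header with no options; the function name is hypothetical.

```python
def ipv4_fragments(udp_payload_len: int, mtu: int = 1500, ip_hlen: int = 20):
    """Return (offset_in_8_byte_units, length, more_fragments) for each IPv4 fragment."""
    datagram_len = 8 + udp_payload_len       # UDP header + payload form the IP payload
    max_chunk = (mtu - ip_hlen) // 8 * 8     # non-final fragments carry a multiple of 8 bytes
    fragments, offset = [], 0
    while offset < datagram_len:
        length = min(max_chunk, datagram_len - offset)
        more = offset + length < datagram_len  # MF flag set on all but the last fragment
        fragments.append((offset // 8, length, more))
        offset += length
    return fragments

for frag in ipv4_fragments(4000):
    print(frag)
# (0, 1480, True)
# (185, 1480, True)
# (370, 1048, False)
```

Only the first fragment carries the UDP header; the receiver reassembles the datagram before UDP processing, so the offload changes only where the transmit-side cutting happens.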

4.7 Stateless Transport Tunnel Offload

Stateless Transport Tunnel Offload (STT) is a tunnel encapsulation that enables overlay networks in virtualized data centers. STT uses IP-based encapsulation with a TCP-like header. There is no TCP connection state associated with the tunnel, which is why STT is stateless. Open vSwitch (OVS) uses STT.

An STT frame contains the STT frame header and payload. The payload of the STT frame is an untagged Ethernet frame. The STT frame header and the encapsulated payload are treated as the payload that follows the TCP-like header. The IP header (IPv4 or IPv6) and Ethernet header are created for each STT segment that is transmitted.

4.8 Multiqueue Support for OS

4.8.1 NDIS VMQ

The NDIS Virtual Machine Queue (VMQ) is a feature that is supported by Microsoft to improve Hyper-V network

performance. The VMQ feature supports packet classification based on the destination MAC address to return received

packets on different completion queues. This packet classification combined with the ability to DMA packets directly into a

virtual machine’s memory allows the scaling of virtual machines across multiple processors.

See Driver Advanced Properties for information on VMQ.

4.8.2 VMware NetQueue

The VMware NetQueue is a feature that is similar to Microsoft’s NDIS VMQ feature. The NetQueue feature supports packet

classification based on the destination MAC address and VLAN to return received packets on different NetQueues. This

packet classification combined with the ability to DMA packets directly into a virtual machine’s memory allows the scaling of

virtual machines across multiple processors.

4.8.3 KVM/Xen Multiqueue

KVM/Xen multiqueue returns frames to different queues of the host stack by classifying each incoming frame on its destination MAC address and/or IEEE 802.1Q VLAN tag. This classification, combined with the ability to DMA the frames directly into a virtual machine's memory, allows scaling of virtual machines across multiple processors.
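The queue selection described for VMQ, NetQueue, and KVM/Xen multiqueue can be modeled as a filter lookup. The Python sketch below extracts the destination MAC address and, if present, the IEEE 802.1Q VLAN ID (the low 12 bits of the tag control information that follows TPID 0x8100). It then looks for a (MAC, VLAN) filter, falls back to a MAC-only filter, and otherwise uses a default queue. The filter table layout, the fallback order, and default queue 0 are assumptions for illustration, not the adapter's classification logic.

```python
import struct

TPID_8021Q = 0x8100
DEFAULT_QUEUE = 0  # assumption: unmatched frames go to queue 0

def dst_mac_and_vlan(frame: bytes):
    """Return (destination MAC, VLAN ID or None) for an Ethernet frame."""
    dst_mac = frame[0:6]
    if struct.unpack_from("!H", frame, 12)[0] == TPID_8021Q:
        tci = struct.unpack_from("!H", frame, 14)[0]
        return dst_mac, tci & 0x0FFF  # VLAN ID is the low 12 bits of the TCI
    return dst_mac, None

def select_queue(frame: bytes, filters: dict) -> int:
    """Look up a (MAC, VLAN) filter, then a MAC-only filter, else the default queue."""
    mac, vlan = dst_mac_and_vlan(frame)
    if (mac, vlan) in filters:
        return filters[(mac, vlan)]
    return filters.get((mac, None), DEFAULT_QUEUE)

vm_mac = bytes.fromhex("001234567890")
filters = {(vm_mac, 100): 2, (vm_mac, None): 1}
tagged = vm_mac + bytes.fromhex("02aabbccddee") + struct.pack("!HH", TPID_8021Q, 100) + b"\x08\x00"
print(select_queue(tagged, filters))  # 2
```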

4.9 SR-IOV Configuration Support Matrix

Windows VF over Windows hypervisor

Windows VF and Linux VF over VMware hypervisor

Linux VF over Linux KVM

4.10 SR-IOV

The PCI-SIG defines optional support for Single-Root IO Virtualization (SR-IOV). SR-IOV is designed to allow a VM to access the device directly through Virtual Functions (VFs). The NIC Physical Function (PF) is divided into multiple virtual functions, and each VF is presented to a VM as if it were a physical function.

SR-IOV uses IOMMU functionality to translate PCIe virtual addresses to physical addresses by using a translation table.

The number of Physical Functions (PFs) and Virtual Functions (VFs) is managed through the UEFI HII menu, the CCM, and through NVRAM configurations. SR-IOV can be supported in combination with NPAR mode.
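On Linux, once SR-IOV is enabled in the adapter and system BIOS, VFs are typically instantiated through the standard PCI sysfs attributes sriov_totalvfs and sriov_numvfs, as in the echo 4 > /sys/bus/pci/devices/0000:82:00.0/sriov_numvfs example in Table 11. The Python sketch below wraps that interface with a range check against sriov_totalvfs and with the kernel's rule that a nonzero VF count must be reset to 0 before a different nonzero count is written. The function name is hypothetical, and writing to sysfs requires root privileges.

```python
from pathlib import Path

def set_num_vfs(pci_dev_dir: Path, num_vfs: int) -> None:
    """Set the number of enabled VFs via the Linux PCI sysfs SR-IOV attributes."""
    total = int((pci_dev_dir / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{num_vfs} VFs requested, device supports at most {total}")
    numvfs_file = pci_dev_dir / "sriov_numvfs"
    current = int(numvfs_file.read_text())
    if current == num_vfs:
        return
    if current != 0 and num_vfs != 0:
        # The kernel rejects changing one nonzero VF count to another; disable VFs first.
        numvfs_file.write_text("0")
    numvfs_file.write_text(str(num_vfs))

# Example (as root): enable four VFs on the device at bus 82, device 0, function 0.
# set_num_vfs(Path("/sys/bus/pci/devices/0000:82:00.0"), 4)
```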

4.11 Network Partitioning (NPAR)

The Network Partitioning (NPAR) feature allows a single physical network interface port to appear to the system as multiple

network device functions. When NPAR mode is enabled, the NetXtreme-E device is enumerated as multiple PCIe physical

functions (PF). Each PF or “partition” is assigned a separate PCIe function ID on initial power on. The original PCIe definition

allowed for eight PFs per device. For Alternative Routing-ID (ARI) capable systems, Broadcom NetXtreme-E adapters

support up to 16 PFs per device. Each partition is assigned its own configuration space, BAR address, and MAC address

allowing it to operate independently. Partitions support direct assignment to VMs, VLANs, and so on, just as any other

physical interface.
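The eight-versus-sixteen PF limit follows from how PCIe encodes the function in the 16-bit Routing ID. Without ARI, the low byte holds a 5-bit device number and a 3-bit function number, so a single device can expose at most eight functions. With ARI, the device number is implied to be 0 and the whole byte becomes an 8-bit function number. The Python sketch below shows the two encodings; the function name is hypothetical.

```python
def routing_id(bus: int, device: int, function: int, ari: bool = False) -> int:
    """Return the 16-bit PCIe Routing ID for a bus/device/function."""
    if not 0 <= bus <= 255:
        raise ValueError("bus must be 0-255")
    if ari:
        # ARI: device number is implied 0; the function number uses all 8 bits (0-255).
        if device != 0 or not 0 <= function <= 255:
            raise ValueError("ARI requires device 0 and function 0-255")
        return (bus << 8) | function
    # Conventional encoding: 5-bit device number and 3-bit function number (0-7).
    if not (0 <= device <= 31 and 0 <= function <= 7):
        raise ValueError("device must be 0-31 and function 0-7")
    return (bus << 8) | (device << 3) | function

print(hex(routing_id(0x82, 0, 7)))             # 0x8207: last of 8 conventional functions
print(hex(routing_id(0x82, 0, 15, ari=True)))  # 0x820f: PF 15, only reachable with ARI
```

The same Routing ID 0x820F reads as device 1, function 7 under the conventional encoding, which is why the system, not only the adapter, must support ARI before more than eight PFs can be used.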

4.12 RDMA over Converged Ethernet – RoCE

Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE) is a complete hardware offload feature in the BCM5741X that allows RDMA functionality over an Ethernet network. RoCE functionality is available in user-mode and kernel-mode applications. RoCE Physical Functions (PF) and SR-IOV Virtual Functions (VF) are available in single-function mode and in multi-function mode (NIC Partitioning mode). Broadcom supports RoCE in Windows, Linux, and VMware.

Refer to the following links for RDMA support for each operating system:

Windows

https://technet.microsoft.com/en-us/library/jj134210(v=ws.11).aspx

Redhat Linux

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/networking_guide/partinfiniband_and_rdma_networking

VMware

https://docs.vmware.com/en/VMware-vSphere/6.7/com.vmware.vsphere.networking.doc/GUID-E4ECDD76-75D6-4974A225-04D5D117A9CF.html

4.13 Supported Combinations

The following sections describe the supported feature combinations for this device.

4.13.1 NPAR, SR-IOV, and RoCE

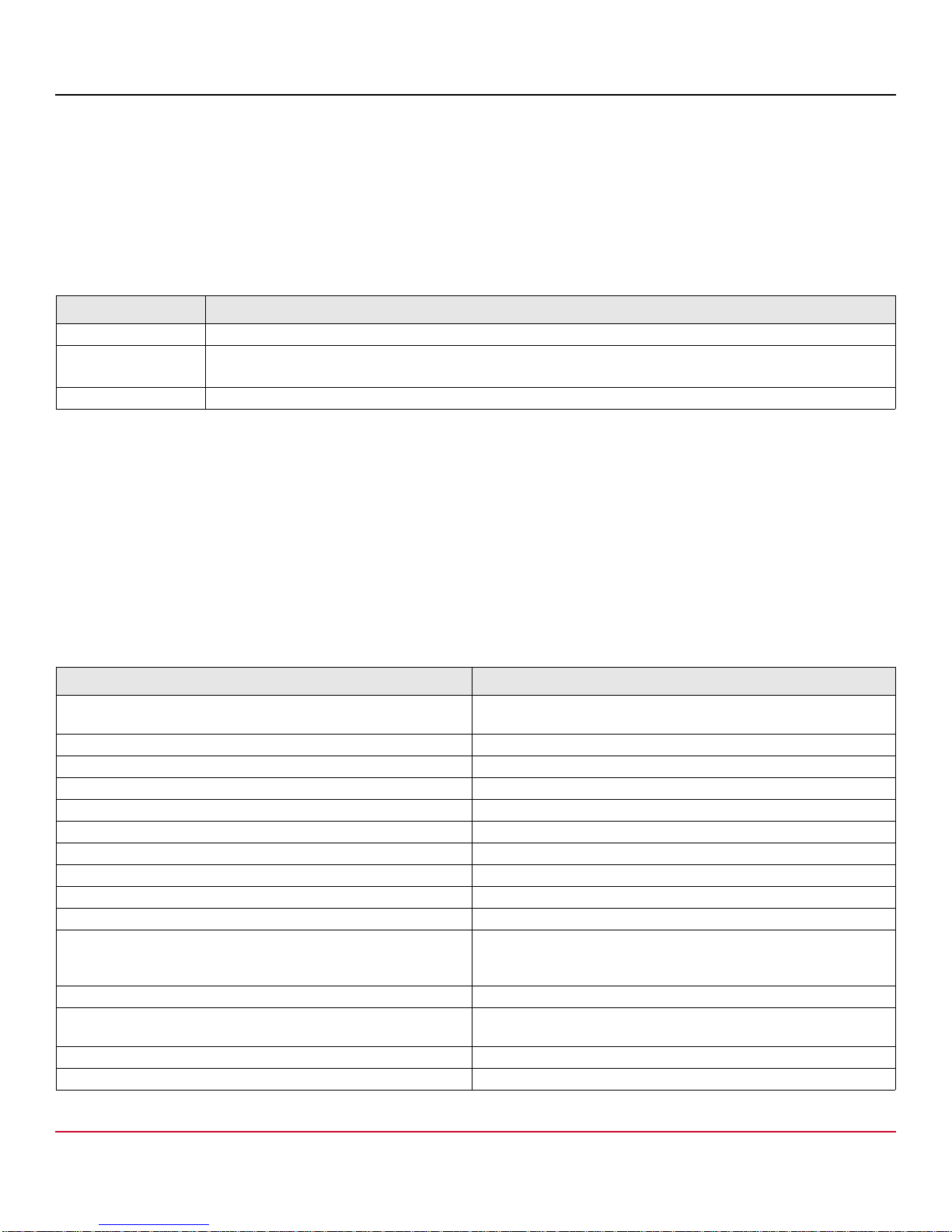

Table 8 provides the supported feature combinations of NPAR, SR-IOV, and RoCE.

Table 8: NPAR, SR-IOV, and RoCE

| SW Feature | Notes |
|---|---|
| NPAR | Up to 8 PFs or 16 PFs |
| SR-IOV | Up to 128 VFs (total per chip) |
| RoCE on PFs | Up to 4 PFs |
| RoCE on VFs | Valid for VFs attached to RoCE-enabled PFs |
| Host OS | Linux, Windows, ESXi (no vRDMA support) |
| Guest OS | Linux and Windows |
| DCB | Up to two COS per port with non-shared reserved memory |

4.13.2 NPAR, SR-IOV, and DPDK

Table 9 provides the supported feature combinations of NPAR, SR-IOV, and DPDK.

Table 9: NPAR, SR-IOV, and DPDK

| SW Feature | Notes |
|---|---|
| NPAR | Up to 8 PFs or 16 PFs |
| SR-IOV | Up to 128 VFs (total per chip) |
| DPDK | Supported only as a VF |
| Host OS | Linux |
| Guest OS | DPDK (Linux) |

4.13.3 Unsupported Combinations

The combination of NPAR, SR-IOV, RoCE, and DPDK is not supported.


5 Installing the Hardware

5.1 Safety Precautions

CAUTION! The adapter is being installed in a system that operates with voltages that can be lethal. Before removing the

cover of the system, observe the following precautions to protect yourself and to prevent damage to the system

components:

Remove any metallic objects or jewelry from your hands and wrists.

Make sure to use only insulated or nonconducting tools.

Verify that the system is powered OFF and unplugged before you touch internal components.

Install or remove adapters in a static-free environment. The use of a properly grounded wrist strap or other

personal antistatic devices and an antistatic mat is strongly recommended.

5.2 System Requirements

Before installing the Broadcom NetXtreme-E Ethernet adapter, verify that the system meets the requirements listed for the

operating system.

5.2.1 Hardware Requirements

Refer to the following list of hardware requirements:

One open PCIe Gen 3 x8 or x16 slot.

4 GB memory or more (32 GB or more is recommended for virtualization applications and nominal network throughput

performance).

5.2.2 Preinstallation Checklist

Refer to the following list before installing the NetXtreme-C/NetXtreme-E device.

1. Verify that the server meets the hardware and software requirements listed in “System Requirements”.

2. Verify that the server is using the latest BIOS.

3. If the system is active, shut it down.

4. When the system shutdown is complete, turn off the power and unplug the power cord.

5. Holding the adapter card by the edges, remove it from its shipping package and place it on an antistatic surface.

6. Check the adapter for visible signs of damage, particularly on the card edge connector. Never attempt to install a damaged adapter.


5.3 Installing the Adapter

The following instructions apply to installing the Broadcom NetXtreme-E Ethernet adapter (add-in NIC) into most servers.

Refer to the manuals that are supplied with the server for details about performing these tasks on this particular server.

1. Review the “Safety Precautions” on page 15 and “Preinstallation Checklist” before installing the adapter. Ensure that the system power is OFF and unplugged from the power outlet, and that proper electrical grounding procedures have been followed.

2. Open the system case and select any empty PCI Express Gen 3 x8 or x16 slot.

3. Remove the blank cover-plate from the slot.

4. Align the adapter connector edge with the connector slot in the system.

5. Secure the adapter with the adapter clip or screw.

6. Close the system case and disconnect any personal antistatic devices.

5.4 Connecting the Network Cables

Broadcom Ethernet switches are productized with SFP+/SFP28/QSFP28 ports that support up to 100 Gb/s. These 100 Gb/s ports can be divided into 4 x 25 Gb/s SFP28 ports. QSFP ports can be connected to SFP28 ports using 4 x 25G SFP28 breakout cables.

5.4.1 Supported Cables and Modules

5.4.1.1 Copper

The BCM957406AXXXX, BCM957416AXXXX, and BCM957416XXXX adapters have two RJ-45 connectors used for

attaching the system to a CAT 6E Ethernet copper-wire segment.

5.4.1.2 SFP+

The BCM957302/402AXXXX, BCM957412AXXXX, and BCM957412MXXXX adapters have two SFP+ connectors used for

attaching the system to a 10 Gb/s Ethernet switch.

5.4.1.3 SFP28

The BCM957404AXXXX, BCM957414XXXX, and BCM957414AXXXX adapters have two SFP28 connectors used for

attaching the system to a 100 Gb/s Ethernet switch.

5.4.1.4 QSFP

The BCM957454XXXXXX, BCM957414AXXXX, and BCM957304XXXXX adapters have single QSFP connectors used for

attaching the system to a 100 Gb/s Ethernet switch.


6 Software Packages and Installation

Refer to the following sections for information on software packages and installation.

6.1 Supported Operating Systems

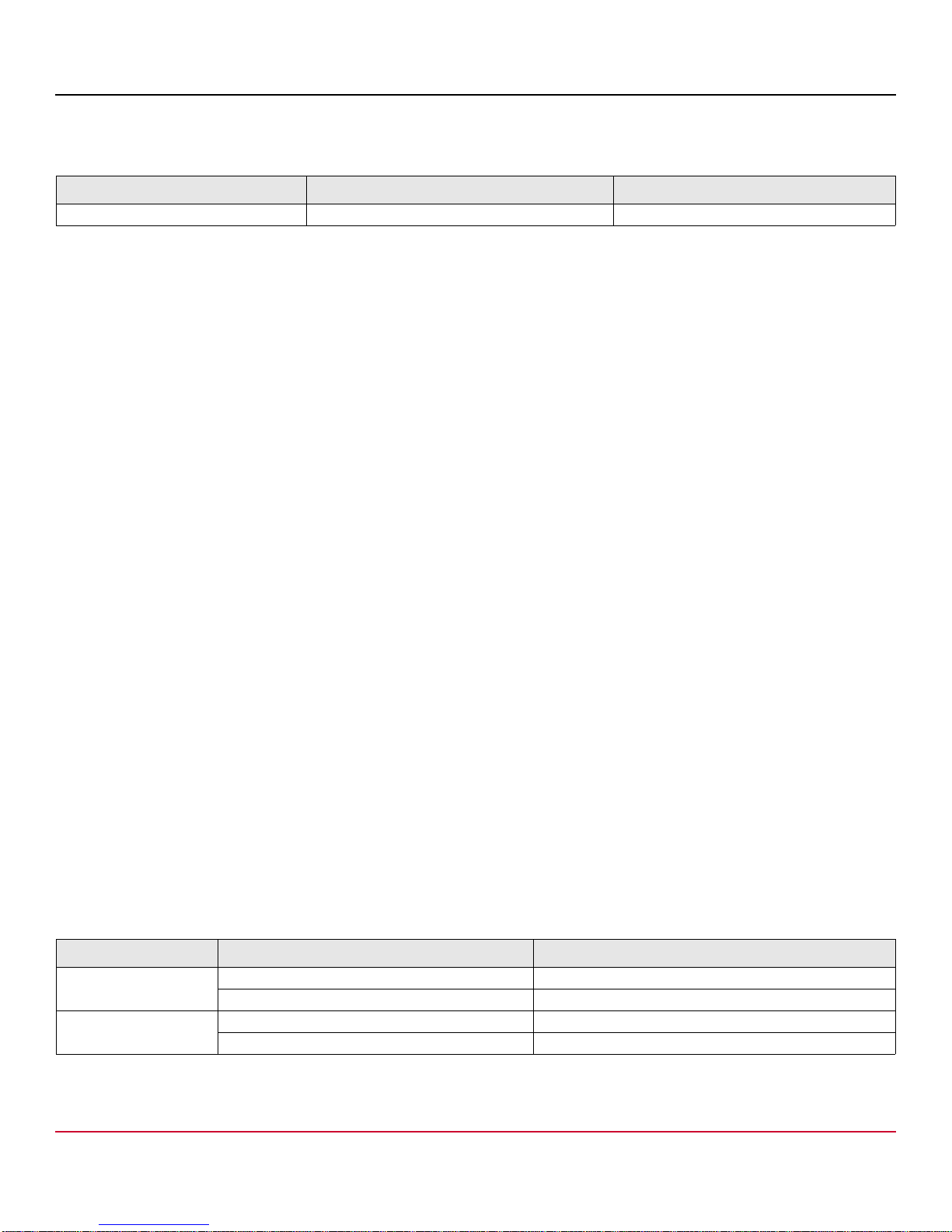

Table 10 provides a list of supported operating systems.

Table 10: Supported Operating System List

| OS Flavor | Distribution |
|---|---|
| Windows | Windows 2012 R2 or above |
| Linux | Red Hat 6.9, Red Hat 7.1 or above |
| Linux | SLES 11 SP4, SLES 12 SP2 or above |
| VMware | ESXi 6.0 U3 or above |

6.2 Installing the Linux Driver

Linux drivers can be downloaded from the Broadcom public website: https://www.broadcom.com/support/download-search/?pg=Ethernet+Connectivity,+Switching,+and+PHYs&pf=Ethernet+Network+Adapters+-+NetXtreme&pa=Driver.

See the package readme.txt files for specific instructions and optional parameters.

6.2.1 Linux Ethtool Commands

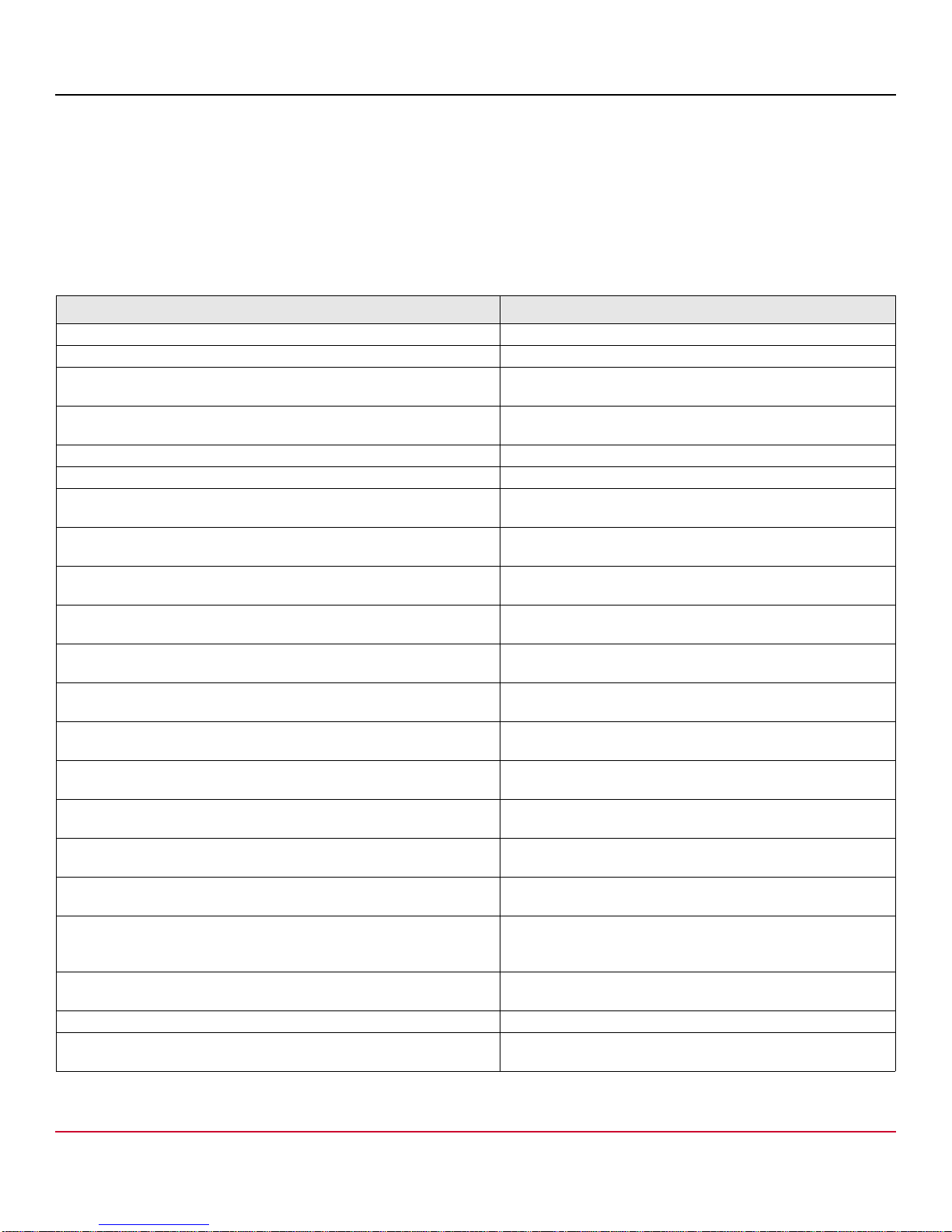

NOTE: In Table 11, ethX should be replaced with the actual interface name.

Table 11: Linux Ethtool Commands

| Command | Description |
|---|---|
| ethtool -s ethX speed 25000 autoneg off | Set the speed. If the link is up on one port, the driver does not allow the other port to be set to an incompatible speed. |
| ethtool -i ethX | Output includes driver, firmware, and package version. |
| ethtool -k ethX | Show offload features. |
| ethtool -K ethX tso off | Turn off TSO. |
| ethtool -K ethX gro off lro off | Turn off GRO/LRO. |
| ethtool -g ethX | Show ring sizes. |
| ethtool -G ethX rx N | Set ring sizes. |
| ethtool -S ethX | Get statistics. |
| ethtool -l ethX | Show number of rings. |
| ethtool -L ethX rx 0 tx 0 combined M | Set number of rings. |
| ethtool -C ethX rx-frames N | Set interrupt coalescing. Other supported parameters are: rx-usecs, rx-frames, rx-usecs-irq, rx-frames-irq, tx-usecs, tx-frames, tx-usecs-irq, tx-frames-irq. |
| ethtool -x ethX | Show RSS flow hash indirection table and RSS key. |
| ethtool -s ethX autoneg on speed 10000 duplex full | Enable auto-negotiation (see “Auto-Negotiation Configuration” on page 24 for more details). |
| ethtool --show-eee ethX | Show EEE state. |
| ethtool --set-eee ethX eee off | Disable EEE. |
| ethtool --set-eee ethX eee on tx-lpi off | Enable EEE, but disable LPI. |
| ethtool -L ethX combined 1 rx 0 tx 0 | Disable RSS. Set the combined channels to 1. |
| ethtool -K ethX ntuple off | Disable Accelerated RFS by disabling ntuple filters. |
| ethtool -K ethX ntuple on | Enable Accelerated RFS. |
| ethtool -t ethX | Perform various diagnostic self-tests. |
| echo 32768 > /proc/sys/net/core/rps_sock_flow_entries; echo 2048 > /sys/class/net/ethX/queues/rx-X/rps_flow_cnt | Enable RFS for ring X. |
| sysctl -w net.core.busy_read=50 | Set the time to busy-read the device's receive ring to 50 usecs. For socket applications waiting for data to arrive, this method can typically decrease latency by 2 or 3 usecs at the expense of higher CPU utilization. |
| echo 4 > /sys/bus/pci/devices/0000:82:00.0/sriov_numvfs | Enable SR-IOV with four VFs on bus 82, device 0, function 0. |
| ip link set ethX vf 0 mac 00:12:34:56:78:9a | Set the VF MAC address. |
| ip link set ethX vf 0 state enable | Set the VF link state for VF 0. |
| ip link set ethX vf 0 vlan 100 | Set VF 0 with VLAN ID 100. |
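The two RFS values in Table 11 are related. The Linux kernel's networking scaling documentation suggests setting each receive queue's rps_flow_cnt to rps_sock_flow_entries / N for a device with N receive queues, so 32768 entries shared by 16 queues gives the 2048 used in the table. The Python sketch below applies that rule to every rx-* queue of an interface. The function name is hypothetical, the procfs and sysfs roots are parameters so that it can be tried against a scratch directory, and on a real system it must run as root.

```python
from pathlib import Path

def configure_rfs(ifname: str, sock_flow_entries: int = 32768,
                  proc_core: Path = Path("/proc/sys/net/core"),
                  sys_net: Path = Path("/sys/class/net")) -> int:
    """Enable RFS on ifname and return the per-queue rps_flow_cnt that was written."""
    rx_queues = sorted((sys_net / ifname / "queues").glob("rx-*"))
    if not rx_queues:
        raise FileNotFoundError(f"no rx queues found for {ifname}")
    per_queue = sock_flow_entries // len(rx_queues)  # rps_sock_flow_entries / N
    # Global socket flow table shared by all queues.
    (proc_core / "rps_sock_flow_entries").write_text(f"{sock_flow_entries}\n")
    # Per-queue flow table for every receive queue.
    for queue in rx_queues:
        (queue / "rps_flow_cnt").write_text(f"{per_queue}\n")
    return per_queue

# Example (as root, on a device with 16 receive queues): configure_rfs("eth0") writes 2048 per queue.
```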

6.3 Installing the VMware Driver

The ESX drivers are provided in VMware standard VIB format and can be downloaded from VMware: https://www.vmware.com/resources/compatibility/search.php?deviceCategory=io&details=1&partner=12&deviceTypes=6&page=1&display_interval=10&sortColumn=Partner&sortOrder=Asc

1. To install the Ethernet and RDMA driver, issue the following commands:

$ esxcli software vib install -v <bnxtnet>-<driver version>.vib

$ esxcli software vib install -v <bnxtroce>-<driver version>.vib

2. A system reboot is required for the new driver to take effect.

Other useful VMware commands are shown in Table 12.

NOTE: In Table 12, vmnicX should be replaced with the actual interface name.

NOTE: After loading the module with vmkload_mod bnxtnet, the following command is required for successful module bring-up:

$ kill -HUP $(cat /var/run/vmware/vmkdevmgr.pid)

Table 12: VMware Commands

| Command | Description |
|---|---|
| esxcli software vib list \| grep bnx | List the installed VIBs to verify that the bnxt driver installed successfully. |
| esxcfg-module -i bnxtnet | Print module info to the screen. |
| esxcli network nic get -n vmnicX | Get vmnicX properties. |
| esxcfg-module -g bnxtnet | Print module parameters. |
| esxcfg-module -s 'multi_rx_filters=2 disable_tap=0 max_vfs=0,0 RSS=0' bnxtnet | Set the module parameters. |
| vmkload_mod -u bnxtnet | Unload the bnxtnet module. |
| vmkload_mod bnxtnet | Load the bnxtnet module. |
| esxcli network nic set -n vmnicX -D full -S 25000 | Set the speed and duplex of vmnicX. |
| esxcli network nic down -n vmnicX | Disable vmnicX. |
| esxcli network nic up -n vmnicX | Enable vmnicX. |
| bnxtnetcli -s -n vmnicX -S "25000" | Set the link speed. bnxtnetcli is needed for older ESX versions to support the 25G speed setting. |

6.4 Installing the Windows Driver

To install the Windows drivers:

1. Download the Windows driver installation package from: https://www.broadcom.com/support/download-search/?pg=Ethernet+Connectivity,+Switching,+and+PHYs&pf=Ethernet+Network+Adapters++NetXtreme&pa=Driver.

2. Unzip the Wiin20xx_2xx.xx.x.zip file.

3. Launch the Device Manager.

4. Right-click on the Broadcom devices under Network Adapters.

5. Select Update Driver.

6. Select Browse My Computer For Driver Software and navigate to the folder where the driver files are located. The driver updates automatically.

7. Reboot the system to ensure that the driver is running.

6.4.1 Driver Advanced Properties

The Windows driver advanced properties are shown in Table 13.


Table 13: Windows Driver Advanced Properties

| Driver Key | Parameters | Description |
|---|---|---|
| Encapsulated Task offload | Enable or Disable | Used for configuring NVGRE encapsulated task offload. |
| Energy Efficient Ethernet | Enable or Disable | EEE is enabled for copper ports and disabled for SFP+ or SFP28 ports. This feature is only enabled for the BCM957406A4060 adapter. |
| Flow control | TX or RX or TX/RX enable | Configure flow control on the RX side, the TX side, or both. |
| Interrupt Moderation | Enable or Disable | Default Enabled. Allows frames to be batch processed, saving CPU time. |
| Jumbo packet | 1514, 4088, or 9014 | Jumbo packet size. |
| Large Send offload V2 (IPv4) | Enable or Disable | LSO for IPv4. |
| Large Send offload V2 (IPv6) | Enable or Disable | LSO for IPv6. |
| Locally Administered Address | User-entered MAC address | Overrides the default hardware MAC address after OS boot. |
| Max Number of RSS Queues | 2, 4, or 8 | Default is 8. Allows the user to configure Receive Side Scaling queues. |
| Priority and VLAN | Priority and VLAN Disable, Priority enabled, VLAN enabled, Priority and VLAN enabled | Default Enabled. Used for configuring IEEE 802.1Q and IEEE 802.1P. |
| Receive Buffer (0=Auto) | Increments of 500 | Default is Auto. |
| Receive Side Scaling | Enable or Disable | Default Enabled. |
| Receive Segment Coalescing (IPv4) | Enable or Disable | Default Enabled. |
| Receive Segment Coalescing (IPv6) | Enable or Disable | Default Enabled. |
| RSS load balancing profile | NUMA scaling static, Closest processor, Closest processor static, conservative scaling, NUMA scaling | Default NUMA scaling static. |
| Speed and Duplex | 1 Gb/s, 10 Gb/s, 25 Gb/s, or Auto Negotiation | 10 Gb/s copper ports can auto-negotiate speeds, whereas 25 Gb/s ports are set to forced speeds. |
| SR-IOV | Enable or Disable | Default Enabled. This parameter works in conjunction with the HW-configured SR-IOV and BIOS-configured SR-IOV settings. |
| TCP/UDP checksum offload IPv4 | TX/RX enabled, TX enabled, RX enabled, or offload disabled | Default RX and TX enabled. |
| TCP/UDP checksum offload IPv6 | TX/RX enabled, TX enabled, RX enabled, or offload disabled | Default RX and TX enabled. |
| Transmit Buffers (0=Auto) | Increments of 50 | Default Auto. |
| Virtual Machine Queue | Enable or Disable | Default Enabled. |
| VLAN ID | User-configurable number | Default 0. |

6.4.2 Event Log Messages

Table 14 provides the Event Log messages logged by the Windows NDIS driver to the event logs.

Table 14: Windows Event Log Messages

| Message ID | Comment |
|---|---|
| 0x0001 | Failed Memory allocation. |
| 0x0002 | Link Down Detected. |
| 0x0003 | Link up detected. |
| 0x0009 | Link 1000 Full. |
| 0x000A | Link 2500 Full. |
| 0x000B | Initialization successful. |
| 0x000C | Miniport Reset. |
| 0x000D | Failed Initialization. |
| 0x000E | Link 10 Gbe successful. |
| 0x000F | Failed Driver Layer Binding. |
| 0x0011 | Failed to set Attributes. |
| 0x0012 | Failed scatter gather DMA. |
| 0x0013 | Failed default Queue initialization. |
| 0x0014 | Incompatible firmware version. |
| 0x0015 | Single interrupt. |

Table 15: Event Log Messages

| Message ID | Comment |
|---|---|
| 0x0016 | Firmware failed to respond within allocated time. |
| 0x0017 | Firmware returned failure status. |
| 0x0018 | Firmware is in unknown state. |
| 0x0019 | Optics Module is not supported. |
| 0x001A | Incompatible speed selection between Port 1 and Port 2. Reported link speeds are correct and might not match Speed and Duplex setting. |
| 0x001B | Incompatible speed selection between Port 1 and Port 2. Link configuration became illegal. |
| 0x001C | Network controller configured for 25 Gb full-duplex link. |
| 0x001D | Network controller configured for 40 Gb full-duplex link. |
| 0x001E | Network controller configured for 50 Gb full-duplex link. |
| 0x001F | Network controller configured for 100 Gb full-duplex link. |
| 0x0020 | RDMA support initialization failed. |
| 0x0021 | Device's RDMA firmware is incompatible with this driver. |
| 0x0022 | Doorbell BAR size is too small for RDMA. |
| 0x0023 | RDMA restart upon device reset failed. |
| 0x0024 | RDMA restart upon system power up failed |
| 0x0025 | RDMA startup failed. Not enough resources. |
| 0x0026 | RDMA not enabled in firmware. |
| 0x0027 | Start failed, a MAC address is not set. |
| 0x0028 | Transmit stall detected. TX flow control is disabled from now on. |

7 Updating the Firmware

7.1 Linux

To update the Linux firmware:

7 Updating the Firmware

7.1 Linux

To update the Linux firmware:

1. Download the firmware upgrade package from: https://www.broadcom.com/support/download-search/?pg=Ethernet+Connectivity,+Switching,+and+PHYs&pf=Ethernet+Network+Adapters++NetXtreme&pn=All&pa=Firmware&po=&dk=

2. Execute the following command:

tar zxvf nxe_fw_upgrade.tgz

3. Execute the following command:

./fw_install.sh

4. Follow the install script steps.

5. Reboot the system for the new firmware to take effect.

7.2 Windows/ESX

The NIC firmware can be upgraded using the NVRAM packages provided at the same link as in the Linux section. Refer to the readme.txt for specific instructions for your adapter.

8 Teaming

8.1 Windows

The Broadcom NetXtreme-C/NetXtreme-E devices can participate in NIC teaming functionality using the Microsoft teaming

solution. Refer to Microsoft public documentation described in the following link:

https://docs.microsoft.com/en-us/windows-server/networking/technologies/nic-teaming/create-a-new-nic-team

Microsoft LBFO is a native teaming driver that can be used in the Windows OS. The teaming driver also provides VLAN

tagging capabilities.

8.2 Linux

Linux bonding is used for teaming under Linux. The concept is to load the bonding driver and add team members to the bond, which load-balances the traffic.

Use the following steps to set up Linux bonding:

1. Execute the following command to create a bond interface:

modprobe bonding mode=balance-alb

2. Add bond clients to the bond interface. An example is shown below:

ifenslave bond0 ethX; ifenslave bond0 ethY

3. Assign an IPv4 address to the bond interface using ifconfig bond0 IPV4Address netmask NetMask up. IPV4Address and NetMask are an IPv4 address and the associated network mask.

NOTE: IPV4Address should be replaced with the actual network IPv4 address, and NetMask should be replaced by the actual IPv4 network mask.

4. Assign an IPv6 address to the bond interface using ifconfig bond0 inet6 add IPV6Address/PrefixLength. IPV6Address and PrefixLength are an IPv6 address and the associated prefix length.

NOTE: IPV6Address should be replaced with the actual network IPv6 address, and PrefixLength should be replaced by the actual IPv6 prefix length (for example, 64).

Refer to the Linux Bonding documentation for advanced configurations.


9 System-Level Configuration

The following sections provide information on system-level NIC configuration.

9.1 UEFI HII Menu

Broadcom NetXtreme-E series controllers can be configured for pre-boot, iSCSI, and advanced configuration such as SR-IOV using the HII (Human Interface Infrastructure) menu.

To configure the settings, during system boot, enter BIOS setup and navigate to the network interface control menus.

9.1.1 Main Configuration Page

This page displays the current network link status, PCIe Bus:Device:Function, MAC address of the adapter and the Ethernet

device.

The 10GBASE-T card allows the user to enable or disable Energy Efficient Ethernet (EEE).

9.1.2 Firmware Image Properties

Main configuration page – The Firmware Image Properties page displays the Family version, which consists of the version numbers of the controller BIOS, Multi Boot Agent (MBA), UEFI, iSCSI, and Comprehensive Configuration Management (CCM).

9.1.3 Device-Level Configuration

Main configuration page – The device level configuration allows the user to enable SR-IOV mode and to set the number of virtual functions per physical function, the number of MSI-X vectors per virtual function, and the maximum number of physical function MSI-X vectors.

9.1.4 NIC Configuration

NIC configuration – Legacy boot protocol is used to select and configure PXE or iSCSI, or to disable legacy boot mode. The boot strap type can be Auto, int18h (interrupt 18h), int19h (interrupt 19h), or BBS.

MBA and iSCSI can also be configured using CCM. Legacy BIOS mode uses CCM for configuration. The hide setup prompt option enables or disables the banner display.

VLAN for PXE can be enabled or disabled and the VLAN ID can be configured by the user. See the Auto-Negotiation

Configuration for details on link speed setting options.

9.1.5 iSCSI Configuration

iSCSI boot configuration can be set through the Main configuration page -> iSCSI configuration. Parameters such as IPv4

or IPv6, iSCSI initiator, or the iSCSI target are set through this page.

Refer to iSCSI Boot for detailed configuration information.


9.2 Comprehensive Configuration Management

For adapters that include CCM firmware (legacy boot only), preboot settings can be configured using the Comprehensive Configuration Management (CCM) menu option. During the system BIOS POST, the Broadcom banner message is displayed with an option to change the parameters through the Control-S menu. When Control-S is pressed, a device list is populated with all the Broadcom network adapters found in the system. Select the desired NIC for configuration.

NOTE: Some adapters may not have CCM firmware in the NVRAM image and must use the HII menu to configure legacy

parameters.

9.2.1 Device Hardware Configuration

Parameters that can be configured using this section are the same as the HII menu Device level configuration.

9.2.2 MBA Configuration Menu

Parameters that can be configured using this section are the same as the HII menu NIC configuration.

9.2.3 iSCSI Boot Main Menu

Parameters that can be configured using this section are the same as the HII menu iSCSI configuration.

9.3 Auto-Negotiation Configuration

NOTE: In NPAR (NIC partitioning) devices where one port is shared by multiple PCI functions, the port speed is

preconfigured and cannot be changed by the driver.

The Broadcom NetXtreme-E controller supports the following auto-negotiation features:

Link speed auto-negotiation

Pause/Flow Control auto-negotiation

NOTE: Regarding link speed AN, when using SFP+ or SFP28 connectors, use DAC or multimode optical transceivers capable of supporting AN. Ensure that the link partner port has been set to the matching auto-negotiation protocol. For example, if the local Broadcom port is set to the IEEE 802.3by AN protocol, the link partner must support AN and must be set to the IEEE 802.3by AN protocol.

NOTE: For dual-port NetXtreme-E network controllers, 10 Gb/s on one port and 25 Gb/s on the other is not a supported combination of link speeds.


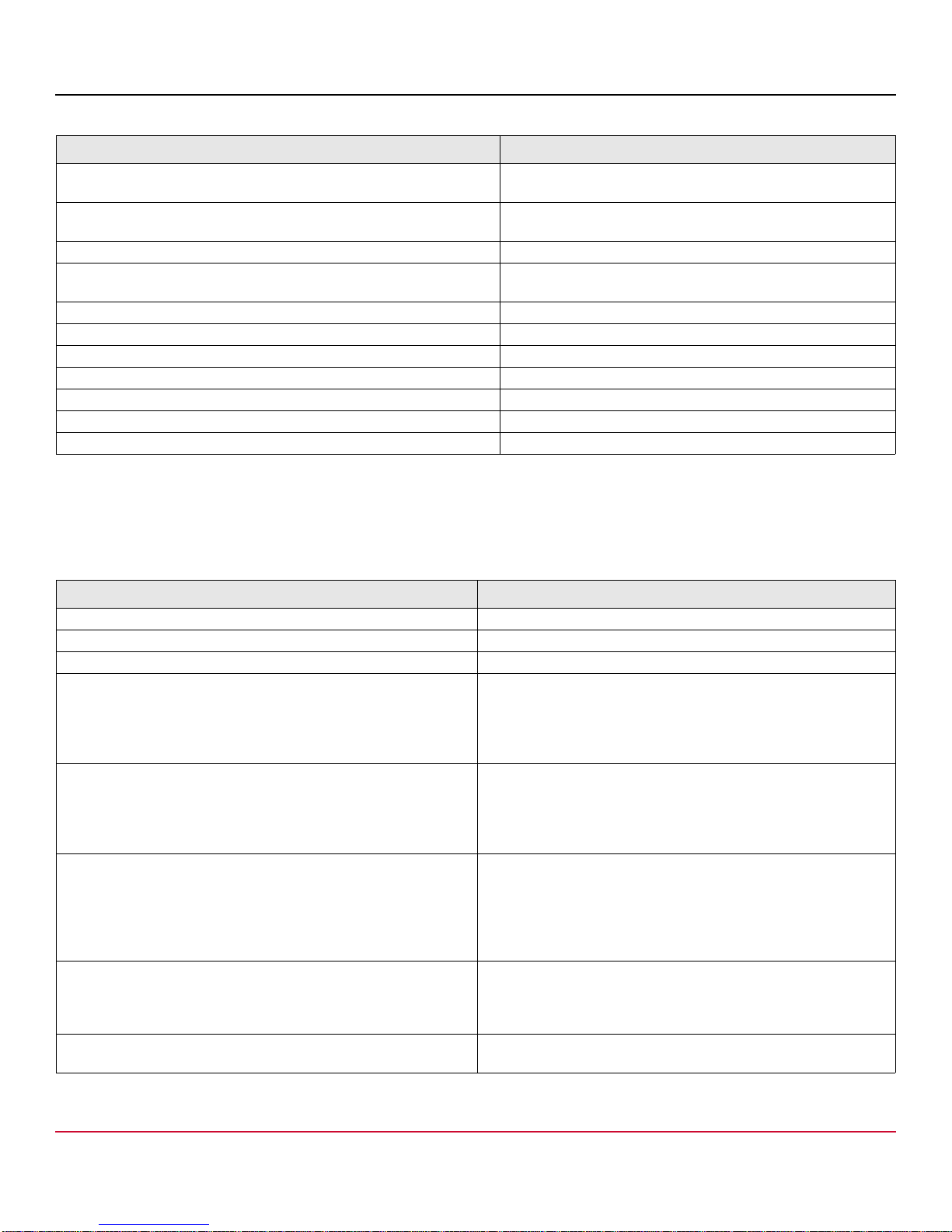

The supported combinations of link speed settings for a two-port NetXtreme-E network controller are shown in Table 16.

NOTE: 1 Gb/s link speed for SFP+/SFP28 is not supported in this release.

P1 – port 1 setting

P2 – port 2 setting

AN – auto-negotiation

No AN – forced speed

{link speed} – expected link speed

AN {link speeds} – advertised supported auto-negotiation link speeds.

Table 16: Supported Combination of Link Speed Settings

| Port 1 Link Speed Setting (rows) / Port 2 Link Setting (columns) | Forced 1G | Forced 10G | Forced 25G | AN Enabled {1G} | AN Enabled {10G} | AN Enabled {25G} | AN Enabled {1/10G} | AN Enabled {1/25G} | AN Enabled {10/25G} | AN Enabled {1/10/25G} |
|---|---|---|---|---|---|---|---|---|---|---|
| Forced 1G | P1: no AN; P2: no AN | P1: no AN; P2: no AN | P1: no AN; P2: no AN | P1: no AN; P2: {1G} | P1: no AN; P2: AN {10G} | P1: no AN; P2: AN {25G} | P1: no AN; P2: AN {1/10G} | P1: no AN; P2: AN {1/25G} | P1: no AN; P2: AN {10/25G} | P1: no AN; P2: AN {1/10/25G} |
| Forced 10G | P1: no AN; P2: no AN | P1: no AN; P2: no AN | Not supported | P1: no AN; P2: {1G} | P1: no AN; P2: {10G} | Not supported | P1: no AN; P2: AN {1/10G} | P1: no AN; P2: AN {1G} | P1: no AN; P2: AN {10G} | P1: no AN; P2: AN {1/10G} |
| Forced 25G | P1: no AN; P2: no AN | Not supported | P1: no AN; P2: no AN | P1: no AN; P2: no AN | P1: no AN; P2: no AN | P1: no AN; P2: no AN | P1: no AN; P2: AN {1G} | P1: no AN; P2: AN {1/25G} | P1: no AN; P2: AN {25G} | P1: no AN; P2: AN {1/25G} |
| AN Enabled {1G} | P1: {1G}; P2: no AN | P1: {1G}; P2: no AN | P1: {1G}; P2: no AN | P1: AN {1G}; P2: AN {1G} | P1: AN {1G}; P2: AN {10G} | P1: AN {1G}; P2: AN {25G} | P1: AN {1G}; P2: AN {1/10G} | P1: AN {1G}; P2: AN {1/25G} | P1: AN {1G}; P2: AN {10/25G} | P1: AN {1G}; P2: AN {1/10/25G} |
| AN Enabled {10G} | P1: AN {10G}; P2: no AN | P1: AN {25G}; P2: no AN | Not supported | P1: AN {10G}; P2: AN {1G} | P1: AN {10G}; P2: AN {10G} | Not supported | P1: AN {25G}; P2: AN {1G} | P1: AN {10G}; P2: AN {1G} | P1: AN {10G}; P2: AN {10G} | P1: AN {10G}; P2: AN {1/10G} |
| AN Enabled {25G} | P1: AN {25G}; P2: no AN | Not supported | P1: AN {25G}; P2: no AN | P1: AN {25G}; P2: AN {1G} | Not supported | P1: AN {25G}; P2: AN {25G} | P1: AN {1/10G}; P2: AN {1/10G} | P1: AN {25G}; P2: AN {1/25G} | P1: AN {25G}; P2: AN {25G} | P1: AN {25G}; P2: AN {1/25G} |
| AN Enabled {1/10G} | P1: AN {1/10G}; P2: no AN | P1: AN {1/10G}; P2: no AN | P1: AN {1G}; P2: no AN | P1: AN {1/10G}; P2: AN {1G} | P1: AN {1/10G}; P2: AN {10G} | P1: AN {1/10G}; P2: AN {25G} | P1: AN {1/25G}; P2: AN {1/10G} | P1: AN {1G}; P2: AN {1G} | P1: AN {1/10G}; P2: AN {10G} | P1: AN {1/10G}; P2: AN {1/10G} |
| AN Enabled {1/25G} | P1: AN {1/25G}; P2: no AN | P1: {1G}; P2: no AN | P1: AN {1/25G}; P2: no AN | P1: AN {1/25G}; P2: AN {1G} | P1: AN {1G}; P2: AN {10G} | P1: AN {1/25G}; P2: AN {25G} | P1: AN {10/25G}; P2: AN {1/10G} | P1: AN {1/25G}; P2: AN {1/25G} | P1: AN {1/25G}; P2: AN {25G} | P1: AN {1/25G}; P2: AN {1/25G} |
| AN Enabled {10/25G} | P1: AN {10/25G}; P2: no AN | P1: {10G}; P2: no AN | P1: AN {25G}; P2: no AN | P1: AN {10/25G}; P2: AN {1G} | P1: AN {10G}; P2: AN {10G} | P1: AN {25G}; P2: AN {25G} | P1: AN {1/10/25G}; P2: AN {1/10G} | P1: AN {25G}; P2: AN {25G} | P1: AN {10/25G}; P2: AN {10/25G} | P1: AN {10/25G}; P2: AN {1/10/25G} |
| AN Enabled {1/10/25G} | P1: AN {1/10/25G}; P2: no AN | P1: {1/10G}; P2: no AN | P1: AN {1/25G}; P2: no AN | P1: AN {1/10/25G}; P2: AN {1G} | P1: AN {1/10G}; P2: AN {10G} | P1: AN {1/25G}; P2: AN {25G} | P1: AN {1/10/25G}; P2: AN {1/10G} | P1: AN {1/25G}; P2: AN {1/25G} | P1: AN {1/10/25G}; P2: AN {10/25G} | P1: AN {1/10/25G}; P2: AN {1/10/25G} |


The expected link speeds based on the local and link partner settings are shown in Table 17.

To enable link speed auto-negotiation, the following options can be enabled in system BIOS HII menu or in CCM:

System BIOS->NetXtreme-E NIC->Device Level Configuration

Table 17: Expected Link Speeds

Rows give the local speed settings; columns give the link partner speed settings.

| Local Speed Settings | Forced 1G | Forced 10G | Forced 25G | AN Enabled {1G} | AN Enabled {10G} | AN Enabled {25G} | AN Enabled {1/10G} | AN Enabled {1/25G} | AN Enabled {10/25G} | AN Enabled {1/10/25G} |
|---|---|---|---|---|---|---|---|---|---|---|
| Forced 1G | 1G | No link | No link | No link | No link | No link | No link | No link | No link | No link |
| Forced 10G | No link | 10G | No link | No link | No link | No link | No link | No link | No link | No link |
| Forced 25G | No link | No link | 25G | No link | No link | No link | No link | No link | No link | No link |
| AN {1G} | No link | No link | No link | 1G | No link | No link | 1G | 1G | No link | 1G |
| AN {10G} | No link | No link | No link | No link | 10G | No link | 10G | No link | 10G | 10G |
| AN {25G} | No link | No link | No link | No link | No link | 25G | No link | 25G | 25G | 25G |
| AN {1/10G} | No link | No link | No link | 1G | 10G | No link | 10G | 1G | 10G | 10G |
| AN {1/25G} | No link | No link | No link | 1G | No link | 25G | 1G | 25G | 25G | 25G |
| AN {10/25G} | No link | No link | No link | No link | 10G | 25G | 10G | 25G | 25G | 25G |
| AN {1/10/25G} | No link | No link | No link | 1G | 10G | 25G | 10G | 25G | 25G | 25G |
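Table 17 follows a simple pattern: a forced port links only with a partner forced to the same speed, a forced port never links with an auto-negotiating port, and two auto-negotiating ports link at the highest speed both sides advertise. The Python sketch below encodes that reading of the table. It models the table's outcomes only, not the firmware's negotiation logic, and the helper names (expected_link_speed, forced, an) are hypothetical.

```python
from typing import Optional, Tuple

# A port setting is (mode, speeds): mode is "forced" or "an";
# speeds is a frozenset of link speeds in Gb/s (a forced port has one).
Setting = Tuple[str, frozenset]


def expected_link_speed(local: Setting, partner: Setting) -> Optional[int]:
    """Return the expected link speed in Gb/s, or None for "No link".

    Hypothetical model of the outcomes listed in Table 17.
    """
    local_mode, local_speeds = local
    partner_mode, partner_speeds = partner
    if local_mode != partner_mode:
        # A forced port and an auto-negotiating port never link.
        return None
    common = local_speeds & partner_speeds
    if not common:
        return None
    if local_mode == "forced":
        # Both ports are forced to the same single speed.
        return next(iter(common))
    # Two AN ports settle on the highest speed both sides advertise.
    return max(common)


def forced(speed: int) -> Setting:
    return ("forced", frozenset({speed}))


def an(*speeds: int) -> Setting:
    return ("an", frozenset(speeds))


if __name__ == "__main__":
    print(expected_link_speed(an(1, 10), an(1, 25)))   # -> 1
    print(expected_link_speed(an(10, 25), an(1, 10)))  # -> 10
    print(expected_link_speed(forced(25), an(25)))     # -> None
```

The same rule explains why mismatched auto-negotiation settings still link when the advertised sets overlap, while any forced/AN combination does not.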


9.3.1 Operational Link Speed

This option configures the link speed used by the OS driver and firmware. When the OS is running (OS-present state), the driver setting overrides this option.

9.3.2 Firmware Link Speed

This option configures the link speed used by the firmware when the device is in D3.

9.3.3 Auto-negotiation Protocol

This option selects the auto-negotiation protocol used to negotiate the link speed with the link partner. It must match the AN protocol setting on the link partner port. The Broadcom NetXtreme-E NIC supports the following auto-negotiation protocols: IEEE 802.3by, 25G/50G consortium, and 25G/50G BAM. By default, this option is set to IEEE 802.3by.

Link speed and Flow Control/Pause must be configured in the host OS driver.

9.3.4 Windows Driver Settings

To access the Windows driver settings:

Open Device Manager -> Broadcom NetXtreme-E Series adapter -> Properties -> Advanced tab

Flow Control = Auto-Negotiation

This enables Flow Control/Pause frame AN.

Speed and Duplex = Auto-Negotiation

This enables link speed AN.


9.3.5 Linux Driver Settings

NOTE: For 10GBASE-T NetXtreme-E network adapters, auto-negotiation must be enabled.

NOTE: 25G and 50G advertisements are newer standards, first defined in the ethtool interface of the 4.7 kernel. To fully support these advertisement speeds for auto-negotiation, a 4.7 or newer kernel and ethtool version 4.8 or newer are required.

ethtool -s eth0 speed 25000 autoneg off – This command turns off auto-negotiation and forces the link speed to

25 Gb/s.

ethtool -s eth0 autoneg on advertise 0x0 – This command enables auto-negotiation and advertises that the device supports all speeds: 1G, 10G, 25G (and 40G, 50G if applicable).

The following advertised speed values are supported:

– 0x020 – 1000BASE-T Full

– 0x1000 – 10000BASE-T Full

– 0x80000000 – 25000BASE-CR Full
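The advertise argument is a bitmask, so several speeds are advertised together by ORing their values. The Python sketch below uses only the three values listed above (0x1000 is 10000BASE-T Full in ethtool's advertise table) and builds, without running, the matching ethtool command line. The dictionary and function names are hypothetical, and eth0 is only an example interface name.

```python
import shlex
from typing import Iterable, List

# Advertise bits for the supported speeds listed above.
ADVERTISE_BITS = {
    "1000BASE-T Full": 0x020,
    "10000BASE-T Full": 0x1000,
    "25000BASE-CR Full": 0x80000000,
}


def advertise_mask(modes: Iterable[str]) -> int:
    """OR together the advertise bits for the requested link modes."""
    mask = 0
    for mode in modes:
        mask |= ADVERTISE_BITS[mode]  # KeyError for an unlisted mode
    return mask


def ethtool_advertise_cmd(iface: str, modes: Iterable[str]) -> List[str]:
    """Build an ethtool command that enables AN and advertises only
    the requested modes. The command is returned, not executed."""
    return ["ethtool", "-s", iface, "autoneg", "on",
            "advertise", hex(advertise_mask(modes))]


if __name__ == "__main__":
    cmd = ethtool_advertise_cmd("eth0", ["1000BASE-T Full", "10000BASE-T Full"])
    print(shlex.join(cmd))  # ethtool -s eth0 autoneg on advertise 0x1020
```

To advertise every supported speed instead, pass advertise 0x0 as described above.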

ethtool -A eth0 autoneg on|off – Use this command to enable/disable pause frame auto-negotiation.

ethtool -a eth0 – Use this command to display the current flow control auto-negotiation setting.

9.3.6 ESXi Driver Settings

NOTE: For 10GBASE-T NetXtreme-E network adapters, auto-negotiation must be enabled. Using a forced speed on a 10GBASE-T adapter causes the esxcli command to fail.

NOTE: VMware ESX 6.0 does not support 25G/50G speeds. In this case, use the BNXTNETCLI utility to set the 25G/50G speed. ESX 6.0 U2 supports 25G/50G speeds.

$ esxcli network nic get -n <iface> – This command shows the current speed, duplex, driver version, firmware version

and link status.

$ esxcli network nic set -S 10000 -D full -n <iface> – This command sets the forced speed to 10 Gb/s.

$ esxcli network nic set -a -n <iface> – This command enables link speed auto-negotiation on interface <iface>.

$ esxcli network nic pauseParams list – Use this command to list the pause parameters.

$ esxcli network nic pauseParams set --auto <1/0> --rx <1/0> --tx <1/0> -n <iface> – Use this command to set pause

parameters.

NOTE: Flow control/pause auto-negotiation can be set only when the interface is configured in link speed auto-negotiation mode.
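The esxcli commands above can be scripted. The Python sketch below only builds the argument lists (it does not call esxcli) and encodes the constraint from the preceding NOTE: it rejects pause auto-negotiation unless link speed auto-negotiation is in use. The helper names and the interface name vmnic4 are hypothetical; on a host you could pass the resulting list to subprocess.run.

```python
from typing import List, Optional


def esxcli_set_speed(iface: str, speed_mbps: Optional[int]) -> List[str]:
    """Force a speed (full duplex), or enable link speed AN when
    speed_mbps is None."""
    if speed_mbps is None:
        return ["esxcli", "network", "nic", "set", "-a", "-n", iface]
    return ["esxcli", "network", "nic", "set",
            "-S", str(speed_mbps), "-D", "full", "-n", iface]


def esxcli_set_pause(iface: str, auto: bool, rx: bool, tx: bool,
                     link_speed_autoneg: bool) -> List[str]:
    """Build the pauseParams command; pause AN requires link speed AN."""
    if auto and not link_speed_autoneg:
        raise ValueError(
            "pause auto-negotiation requires link speed auto-negotiation")
    return ["esxcli", "network", "nic", "pauseParams", "set",
            "--auto", str(int(auto)), "--rx", str(int(rx)),
            "--tx", str(int(tx)), "-n", iface]


if __name__ == "__main__":
    print(" ".join(esxcli_set_speed("vmnic4", None)))
    # esxcli network nic set -a -n vmnic4
    print(" ".join(esxcli_set_pause("vmnic4", True, True, True,
                                    link_speed_autoneg=True)))
    # esxcli network nic pauseParams set --auto 1 --rx 1 --tx 1 -n vmnic4
```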

10 iSCSI Boot

Broadcom NetXtreme-E Ethernet adapters support iSCSI boot, which lets diskless systems boot their operating systems over the network. iSCSI boot allows a Windows, Linux, or VMware operating system to boot from an iSCSI target machine located remotely over a standard IP network.

10.1 Supported Operating Systems for iSCSI Boot

The Broadcom NetXtreme-E Gigabit Ethernet adapters support iSCSI boot on the following operating systems:

Windows Server 2012 and later 64-bit

Linux RHEL 7.1 and later, SLES11 SP4 or later

VMware 6.0 U2


10.2 Setting up iSCSI Boot

Refer to the following sections for information on setting up iSCSI boot.

10.2.1 Configuring the iSCSI Target

The iSCSI target configuration procedure varies by target vendor. For information on configuring the iSCSI target, refer to the documentation provided by the vendor. The general steps include:

1. Create an iSCSI target.

2. Create a virtual disk.

3. Map the virtual disk to the iSCSI target created in Step 1.

4. Associate an iSCSI initiator with the iSCSI target.

5. Record the iSCSI target name, TCP port number, iSCSI Logical Unit Number (LUN), initiator iSCSI Qualified Name (IQN), and CHAP authentication details.

6. After configuring the iSCSI target, obtain the following:

– Target IQN

– Target IP address

– Target TCP port number

– Target LUN

– Initiator IQN

– CHAP ID and secret
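The values gathered in step 6 are easy to mistype when they are carried between the target console and the adapter menus. The Python sketch below records them in one place and performs basic checks. The class name is hypothetical; the IQN check is a simplified form of the iqn.yyyy-mm.reversed-domain[:identifier] naming format and does not cover eui. or naa. names; 3260 is the IANA-registered iSCSI port, used here only as a default.

```python
import ipaddress
import re
from dataclasses import dataclass
from typing import Optional

# Simplified "iqn." name check: iqn.yyyy-mm.reversed-domain[:identifier]
IQN_RE = re.compile(
    r"^iqn\.\d{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:.+)?$")


@dataclass
class IscsiTargetInfo:
    """Hypothetical record of the target details listed in step 6."""
    target_iqn: str
    target_ip: str
    lun: int
    initiator_iqn: str
    target_port: int = 3260           # use the port your target reports
    chap_id: Optional[str] = None     # both None when CHAP is not used
    chap_secret: Optional[str] = None

    def validate(self) -> None:
        """Raise ValueError on the first malformed field."""
        for name in ("target_iqn", "initiator_iqn"):
            if not IQN_RE.match(getattr(self, name)):
                raise ValueError(f"{name} is not an iqn.-format name")
        ipaddress.ip_address(self.target_ip)  # ValueError if malformed
        if not 1 <= self.target_port <= 65535:
            raise ValueError("target_port out of range")
        if self.lun < 0:
            raise ValueError("lun must be non-negative")
        if (self.chap_id is None) != (self.chap_secret is None):
            raise ValueError("set both CHAP ID and secret, or neither")


if __name__ == "__main__":
    info = IscsiTargetInfo(
        target_iqn="iqn.2001-04.com.example:storage.disk1",
        target_ip="192.0.2.10",
        lun=0,
        initiator_iqn="iqn.1991-05.com.example:client1",
    )
    info.validate()  # passes silently; raises ValueError on bad input
```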

10.2.2 Configuring iSCSI Boot Parameters

Configure the Broadcom iSCSI boot software for either static or dynamic configuration. Table 18 lists the configuration options available from the General Parameters menu for both IPv4 and IPv6. Parameters specific to either IPv4 or IPv6 are noted.

Table 18: Configuration Options

| Option | Description |
|---|---|
| TCP/IP parameters via DHCP | This option is specific to IPv4. Controls whether the iSCSI boot host software acquires the IP address information using DHCP (Enabled) or uses a static IP configuration (Disabled). |
| IP Autoconfiguration | This option is specific to IPv6. Controls whether the iSCSI boot host software configures a stateless link-local address and/or a stateful address if DHCPv6 is present and used (Enabled), or uses a static IP configuration (Disabled). Router Solicit packets are sent up to three times, with 4-second intervals between retries. |
| iSCSI parameters via DHCP | Controls whether the iSCSI boot host software acquires its iSCSI target parameters using DHCP (Enabled) or through a static configuration (Disabled). The static information is entered through the iSCSI Initiator Parameters Configuration screen. |
| CHAP Authentication | Controls whether the iSCSI boot host software uses CHAP authentication when connecting to the iSCSI target. If CHAP Authentication is enabled, the CHAP ID and CHAP Secret are entered through the iSCSI Initiator Parameters Configuration screen. |
| DHCP Vendor ID | Controls how the iSCSI boot host software interprets the Vendor Class ID field used during DHCP. If the Vendor Class ID field in the DHCP Offer packet matches the value in this field, the iSCSI boot host software looks in the DHCP Option 43 fields for the required iSCSI boot extensions. If DHCP is disabled, this value does not need to be set. |
| Link Up Delay Time | Controls how long, in seconds, the iSCSI boot host software waits after an Ethernet link is established before sending any data over the network. Valid values are 0 to 255. For example, you may need to set this option if a network protocol such as Spanning Tree is enabled on the switch interface to the client system. |
| Use TCP Timestamp | Controls whether the TCP Timestamp option is enabled or disabled. |
| Target as First HDD | Allows specifying that the iSCSI target drive appears as the first hard drive in the system. |
| LUN Busy Retry Count | Controls the number of connection retries the iSCSI Boot initiator attempts if the iSCSI target LUN is busy. |
| IP Version | This option is specific to IPv6. Toggles between the IPv4 and IPv6 protocols. All IP settings are lost when switching from one protocol version to another. |
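When the same General Parameters are applied to many systems, it can help to keep them as a reviewed record. The Python sketch below models the options in Table 18 as a dataclass and checks the documented Link Up Delay Time range (0 to 255 seconds). It does not communicate with the adapter. The class and field names are hypothetical; the field defaults mirror the static-configuration values given in the procedure that follows, and the IPv4 default for ip_version is an assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GeneralParameters:
    """Hypothetical model of the General Parameters menu (Table 18)."""
    tcpip_via_dhcp: bool = False        # IPv4 only
    ip_autoconfiguration: bool = False  # IPv6 only
    iscsi_via_dhcp: bool = False
    chap_authentication: bool = False
    dhcp_vendor_id: str = "BRCM ISAN"
    link_up_delay_s: int = 0
    use_tcp_timestamp: bool = True
    target_as_first_hdd: bool = False
    lun_busy_retry_count: int = 0
    ip_version: str = "IPv4"            # assumption; set "IPv6" as needed

    def problems(self) -> List[str]:
        """Return human-readable problems; an empty list means none found."""
        issues = []
        if not 0 <= self.link_up_delay_s <= 255:
            issues.append("Link Up Delay Time must be 0 to 255 seconds")
        if self.ip_version not in ("IPv4", "IPv6"):
            issues.append("IP Version must be IPv4 or IPv6")
        if self.lun_busy_retry_count < 0:
            issues.append("LUN Busy Retry Count cannot be negative")
        return issues


if __name__ == "__main__":
    print(GeneralParameters(link_up_delay_s=300).problems())
    # ['Link Up Delay Time must be 0 to 255 seconds']
```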

10.2.3 MBA Boot Protocol Configuration

To configure the boot protocol:

1. Restart the system.

2. When the PXE banner displays, press CTRL+S. The MBA Configuration Menu displays.

3. From the MBA Configuration Menu, use the up arrow or down arrow to move to the Boot Protocol option. Use the left arrow or right arrow to set the Boot Protocol option to iSCSI.

4. Select iSCSI Boot Configuration from the Main Menu.

10.2.4 iSCSI Boot Configuration

There are two ways to configure iSCSI boot:

Static iSCSI Boot Configuration.

Dynamic iSCSI Boot Configuration.

10.2.4.1 Static iSCSI Boot Configuration

In a static configuration, you must enter data for the system's IP address, the system's initiator IQN, and the target

parameters obtained in “Configuring the iSCSI Target” on page 29. For information on configuration options, see Table 18

on page 29.
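In a static configuration the addresses are typed by hand, and the boot firmware does not check the initiator IP address for duplicates or incorrect segment/network assignment. As an optional pre-check, the Python sketch below uses the standard ipaddress module to catch a gateway outside the subnet, or a network or broadcast address used as the host address, before the values are entered. The helper check_static_ip is hypothetical, and it cannot detect duplicate addresses, which requires probing the live network.

```python
import ipaddress
from typing import List


def check_static_ip(address: str, prefix: str, gateway: str) -> List[str]:
    """Return problems found in a static initiator IP configuration.

    prefix is a prefix length ("24", "64") or, for IPv4, a dotted
    subnet mask ("255.255.255.0").
    """
    iface = ipaddress.ip_interface(f"{address}/{prefix}")
    net = iface.network
    gw = ipaddress.ip_address(gateway)
    issues = []
    if gw.version != iface.version:
        issues.append("gateway and address use different IP versions")
    elif gw not in net:
        issues.append(f"gateway {gw} is outside {net}")
    # /31, /32 (and /127, /128) have no separate network address.
    if iface.ip == net.network_address and net.prefixlen < net.max_prefixlen - 1:
        issues.append("address is the network address")
    if (iface.version == 4 and net.prefixlen < 31
            and iface.ip == net.broadcast_address):
        issues.append("address is the broadcast address")
    return issues


if __name__ == "__main__":
    print(check_static_ip("192.0.2.10", "24", "192.0.2.1"))  # []
    print(check_static_ip("192.0.2.10", "255.255.255.0", "198.51.100.1"))
    # ['gateway 198.51.100.1 is outside 192.0.2.0/24']
```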

To configure the iSCSI boot parameters using static configuration:

1. From the General Parameters menu, set the following:

– TCP/IP parameters via DHCP – Disabled. (For IPv4).

– IP Autoconfiguration – Disabled. (For IPv6, non-offload).

– iSCSI parameters via DHCP – Disabled.

– CHAP Authentication – Disabled.

– DHCP Vendor ID – BRCM ISAN.

– Link Up Delay Time – 0.

– Use TCP Timestamp – Enabled (for some targets, it is necessary to enable Use TCP Timestamp).

– Target as First HDD – Disabled.

– LUN Busy Retry Count – 0.

– IP Version – IPv6 (for IPv6, non-offload).


2. Press Esc to return to the Main menu.

3. From the Main menu, select Initiator Parameters.

4. From the Initiator Parameters screen, enter values for the following:

– IP Address (unspecified IPv4 and IPv6 addresses should be "0.0.0.0" and "::", respectively)

– Subnet Mask Prefix

– Default Gateway

– Primary DNS

– Secondary DNS

– iSCSI Name (corresponds to the iSCSI initiator name to be used by the client system)

NOTE: Enter the IP address carefully. No error checking is performed to detect duplicate addresses or incorrect segment/network assignment.

5. Press Esc to return to the Main menu.

6. From the Main menu, select 1st Target Parameters.

NOTE: For the initial setup, configuring a second target is not supported.

7. From the 1st Target Parameters screen, enable Connect to connect to the iSCSI target. Type values for the following