
Integration Guide

AudioCodes Intuitive Human Communications for Chatbot Services

Voice.AI Gateway

Version 1.8

Voice.AI Gateway | Integration Guide

Notice

Information contained in this document is believed to be accurate and reliable at the time of printing. However, due to ongoing product improvements and revisions, AudioCodes cannot guarantee accuracy of printed material after the Date Published nor can it accept responsibility for errors or omissions. Updates to this document can be downloaded from https://www.audiocodes.com/library/technical-documents.

This document is subject to change without notice.

Date Published: August-20-2020

WEEE EU Directive

Pursuant to the WEEE EU Directive, electronic and electrical waste must not be disposed of with unsorted waste. Please contact your local recycling authority for disposal of this product.

Customer Support

Customer technical support and services are provided by AudioCodes or by an authorized AudioCodes Service Partner. For more information on how to buy technical support for AudioCodes products and for contact information, please visit our website at https://www.audiocodes.com/services-support/maintenance-and-support.

Documentation Feedback

AudioCodes continually strives to produce high-quality documentation. If you have any comments (suggestions or errors) regarding this document, please fill out the Documentation Feedback form on our website at https://online.audiocodes.com/documentation-feedback.


Notes and Warnings

OPEN SOURCE SOFTWARE. Portions of the software may be open source software and may be governed by and distributed under open source licenses, such as the terms of the GNU General Public License (GPL), the terms of the Lesser General Public License (LGPL), BSD and LDAP, which terms are located at https://www.audiocodes.com/services-support/open-source/ and all are incorporated herein by reference. If any open source software is provided in object code, and its accompanying license requires that it be provided in source code as well, Buyer may receive such source code by contacting AudioCodes, by following the instructions available on AudioCodes website.

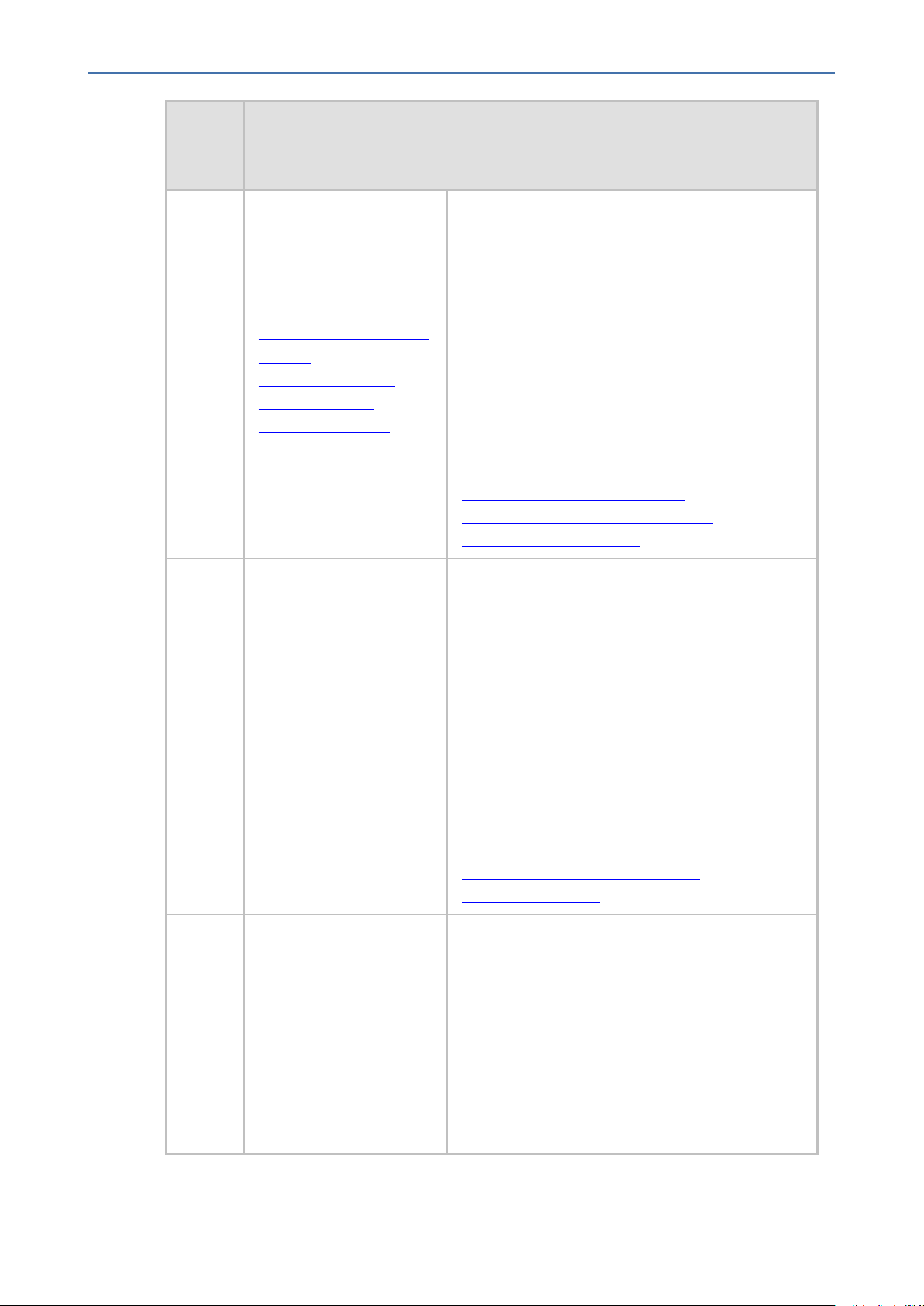

Related Documentation

Document Name

Voice.AI Gateway API Reference Guide

Voice.AI Gateway Product Description

Voice.AI Gateway with One-Click Dialogflow Integration Guide

AudioCodes Phone Number Connector

Document Revision Record

LTRT Description

30920 Initial document release.

30921 Parameters added: sttContextId, sttContextPhrases, sttContextBoost.

30922 "Amazon Lex" and "Google Dialogflow" terms added; AudioCodes API syntax example for initial message sent; hangupReason updated (CDR); handover changed to transfer; event type (e.g., string) added; sttEndpointID (description updated); miscellaneous.

30923 Updated to Ver. 1.4. VOICE_AI_WELCOME event replaced by WELCOME.

30924 Dialogflow text length limitation; typo (Product Notice replaced by Product Description).

30926 Updated to Ver. 1.6; Nuance added for STT/TTS; transferSipHeaders (typo); transferReferredByURL (added); sttContextId (updated).

30927 Bot-controlled parameters added.

30928 Updated to Ver. 1.8. startRecognition and stopRecognition (added to activities); participant (added to initial message); participant, from, and participantUriUser (added to text message); sendMetaData (added to activities).

30929 No User Input Event section added; DTMF Event corrected regarding "value".

- iv -

Page 5

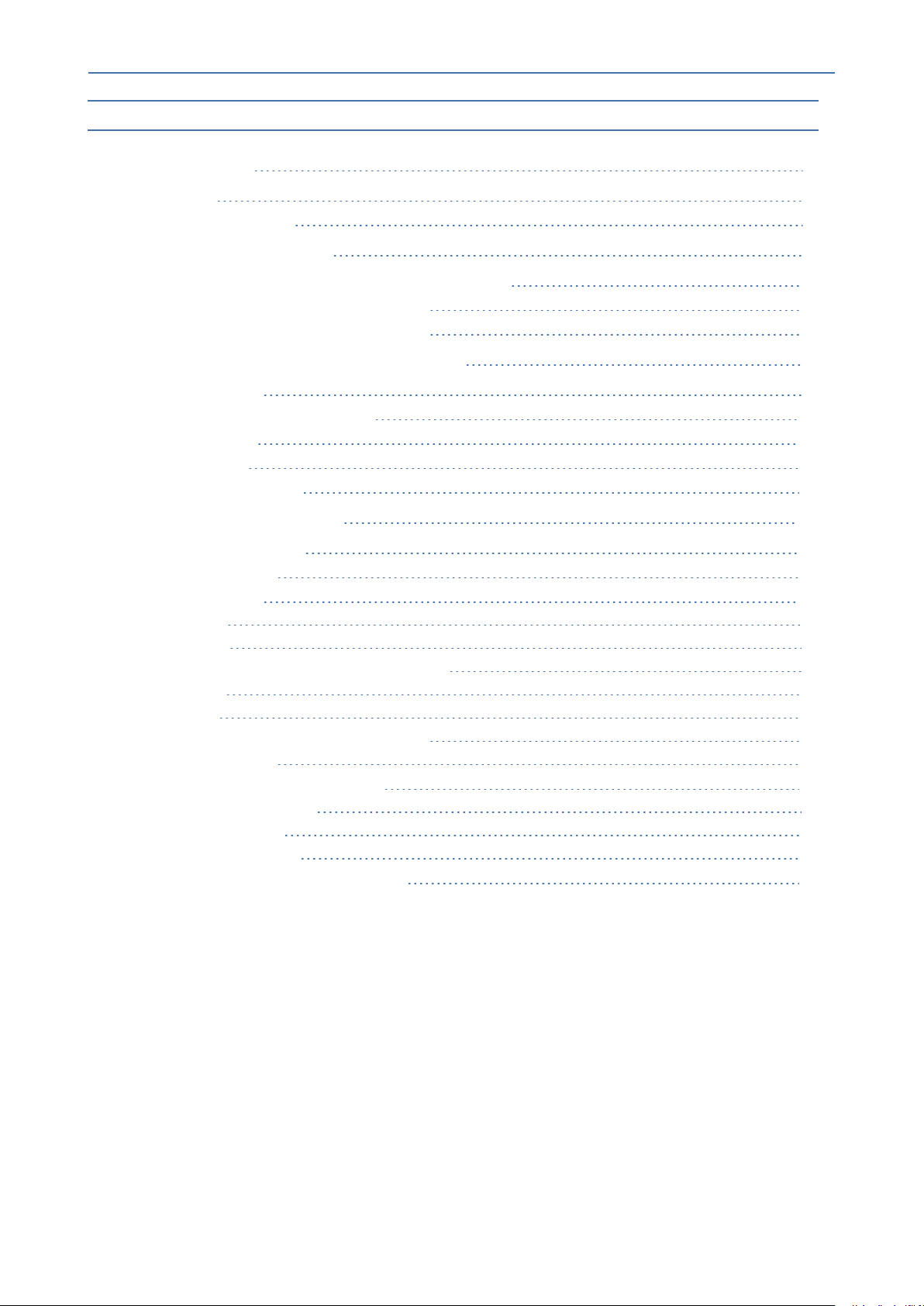

Content

Voice.AI Gateway | Integration Guide

Table of Contents

1 Introduction

Purpose

Targeted Audience

2 Required Information

Required Information of Bot Framework Provider

Required Information of STT Provider

Required Information of TTS Provider

3 Messages Sent by Voice.AI Gateway

Initial Message

End of Conversation Message

Text Message

DTMF Event

No User Input Event

4 Messages Sent by Bot

Basic Activity Syntax

message Activity

event Activities

hangup

transfer

Adding SIP Headers on Call Transfer

playUrl

config

startRecognition and stopRecognition

sendMetaData

Bot Framework Specific Details

AudioCodes Bot API

Microsoft Azure

Google Dialogflow

Parameters Controlled Also by Bot

1 Introduction

AudioCodes Voice.AI Gateway enhances chatbot functionality by allowing humans to communicate with chatbots through voice (voicebot), offering an audio-centric user experience. Integrating the Voice.AI Gateway into your chatbot environment provides you with a single-vendor solution, assisting you in migrating your text-based chatbot experience into a voice-based chatbot.

● Prior to reading this document, it is recommended that you read the Voice.AI Gateway Product Description to familiarize yourself with AudioCodes Voice.AI Gateway architecture and solution.

● Most of the information provided in this document is relevant to all bot frameworks. Where a specific bot framework uses different syntax, a note indicates this.

Purpose

This guide provides the following:

■ Information that you need to supply AudioCodes for connecting the Voice.AI Gateway to the third-party cognitive services used in your chatbot environment: bot framework(s), speech-to-text (STT) engine(s), and text-to-speech (TTS) engine(s).

■ Description of the messages sent by the Voice.AI Gateway to the bot, and of the messages sent by the bot to the Voice.AI Gateway to achieve the desired functionality. These descriptions allow the bot developer to adapt the bot's behavior to the voice and telephony engagement channels.

Targeted Audience

This guide is intended for IT Administrators and Bot Developers who want to integrate AudioCodes Voice.AI Gateway into their bot solution.

2 Required Information

This section lists the information that you need to supply AudioCodes for integrating and

connecting the Voice.AI Gateway to the cognitive services of your chatbot environment. This

includes information of the bot framework, Speech-to-Text (STT) provider, and Text-to-Speech

(TTS) provider used in your environment.

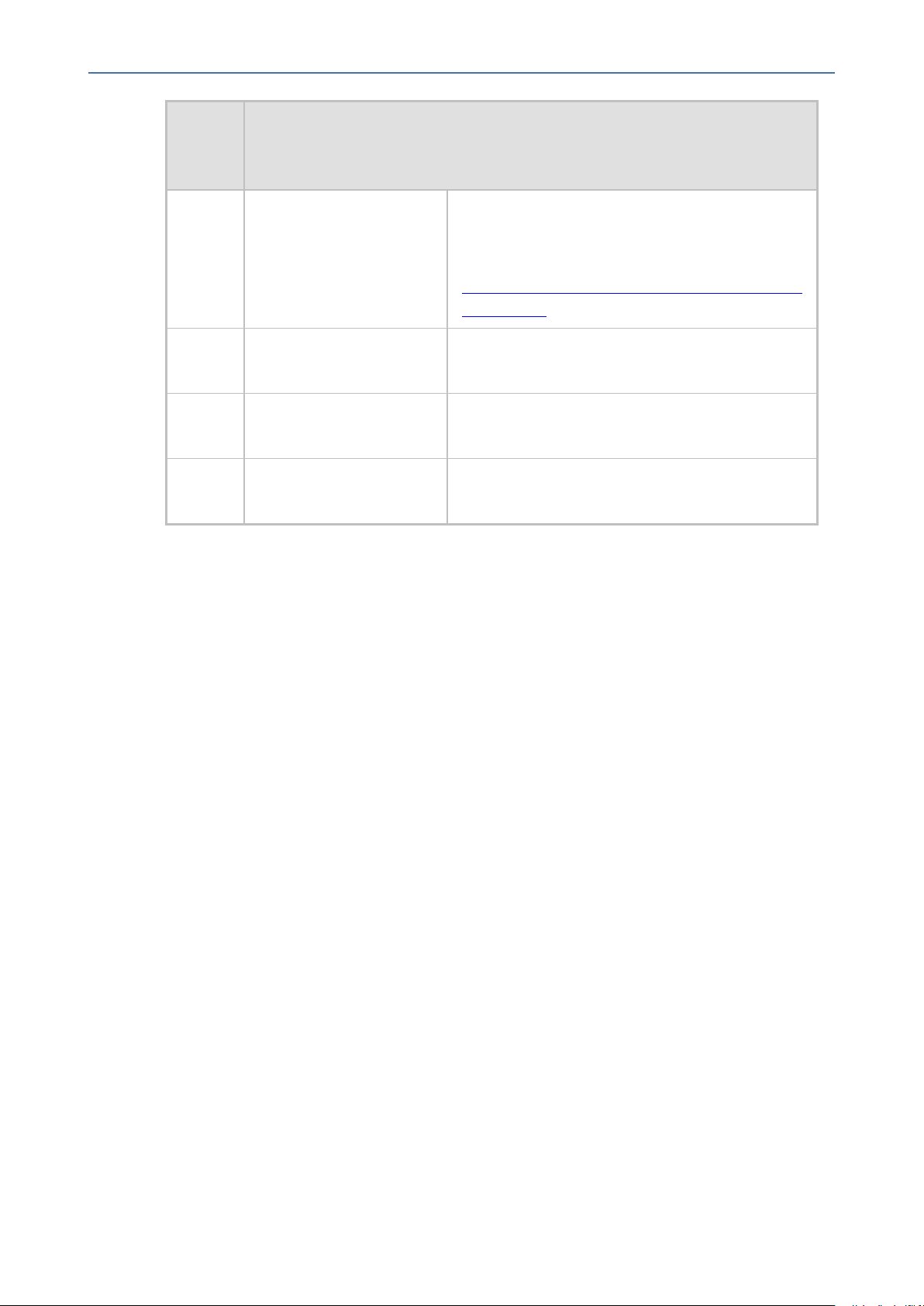

Required Information of Bot Framework Provider

To connect the Voice.AI Gateway to bot frameworks, you need to provide AudioCodes with the bot framework provider's details, as listed in the following table.

Table 2-1: Required Information per Bot Framework

Voice.AI Gateway | Integration Guide

Bot

Framework

Microsoft

Azure

AWS

Required Information

To connect to Microsoft Azure Bot Framework, you need to provide

AudioCodes with the bot's secret key. To obtain this key, refer to Azure's

documentation at https://docs.microsoft.com/en-us/azure/bot-

service/bot-service-channel-connect-directline.

Note: Microsoft Azure Bot Framework Direct Line Version 3.0 must be

used.

To connect to Amazon Lex, you need to provide AudioCodes with the

following:

■ AWS account keys:

✔ Access key

✔ Secret access key

To obtain these keys, refer to the AWS documentation at

https://docs.aws.amazon.com/general/latest/gr/managing-awsaccess-keys.html.

Note: The same keys are used for all Amazon services (STT, TTS and

bot framework).

Google

■ Name of the specific bot

■ AWS Region (e.g., "us-west-2")

To connect to Google Dialogflow, you need to provide AudioCodes with

the following:

■ Private key of the Google service account. For information on how to

create the account key, refer to Google's documentation at

https://cloud.google.com/iam/docs/creating-managing-service-

- 2 -

Page 8

CHAPTER2 Required Information

Voice.AI Gateway | Integration Guide

Bot

Framework

Required Information

account-keys. From the JSON object representing the key, you need to

extract the private key (including the "-----BEGIN PRIVATE KEY-----"

prefix) and the service account email.

■ Client email

■ Project ID (of the bot)

AudioCodes

Bot API

To create the channel between the Voice.AI Gateway's Cognitive Service

component and the bot provider, refer to the document Voice.AI Gateway

API Reference Guide.

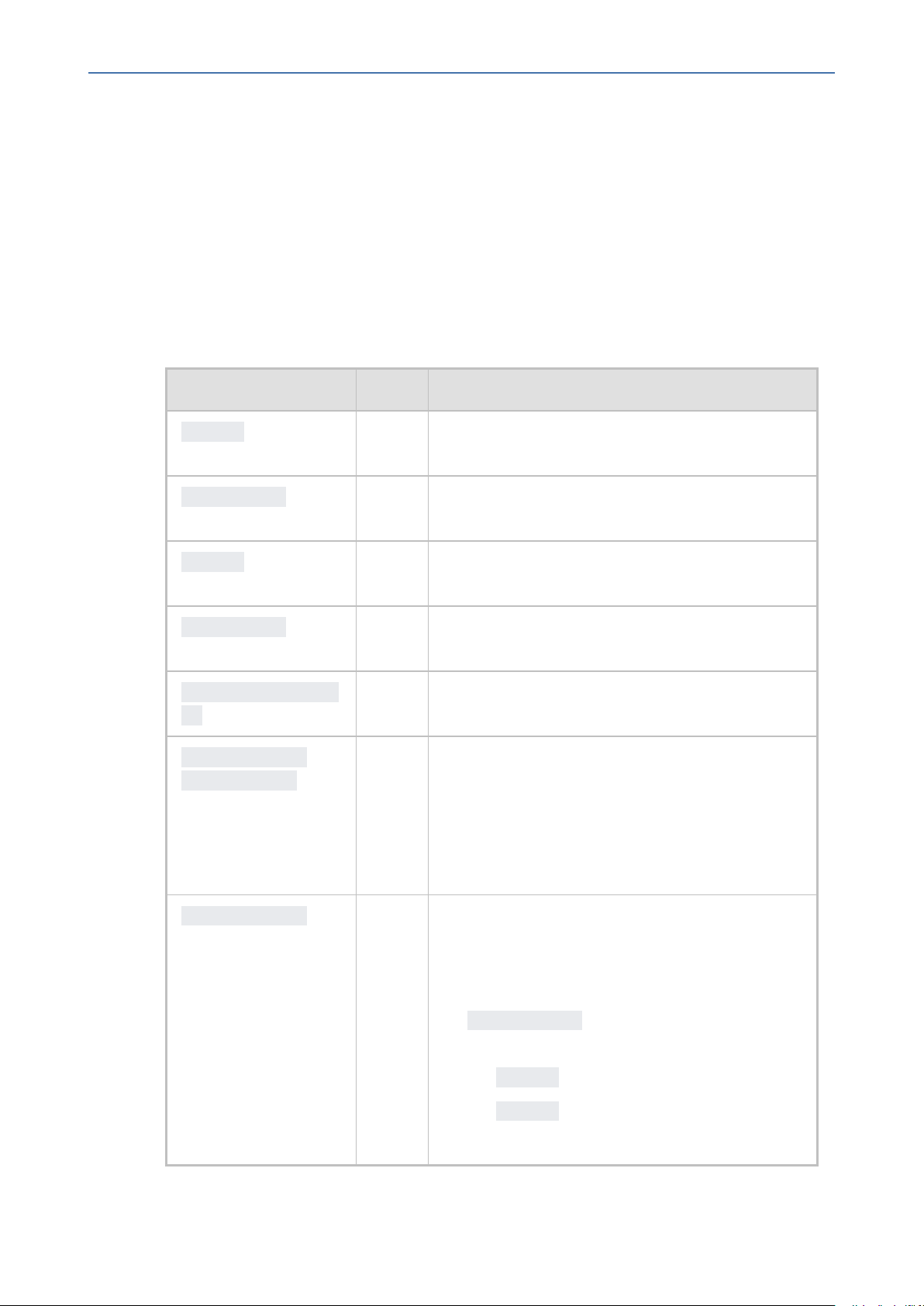
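As an illustration of the Google item above, the following Python sketch reads a downloaded service account key and extracts the values listed. It assumes the standard JSON key format, whose private_key, client_email, and project_id fields hold these values; the function name is hypothetical and not part of any AudioCodes tooling. The key's project_id identifies the project that owns the service account, which is usually, but not necessarily, the bot's project.

```python
import json


def extract_dialogflow_credentials(key_json: str) -> dict:
    """Return the values AudioCodes asks for, taken from a service account key.

    Hypothetical helper. Assumes the standard JSON key file downloaded from
    Google Cloud, with "private_key", "client_email" and "project_id" fields.
    """
    key = json.loads(key_json)
    private_key = key["private_key"]
    # The private key must be supplied including its PEM prefix.
    if not private_key.startswith("-----BEGIN PRIVATE KEY-----"):
        raise ValueError("private_key lacks the '-----BEGIN PRIVATE KEY-----' prefix")
    return {
        "private_key": private_key,
        "client_email": key["client_email"],
        # Project that owns the service account; confirm it is the bot's project.
        "project_id": key["project_id"],
    }


if __name__ == "__main__":
    # Dummy key content for illustration only.
    sample = json.dumps({
        "type": "service_account",
        "project_id": "my-bot-project",
        "private_key": "-----BEGIN PRIVATE KEY-----\nMIIE...\n-----END PRIVATE KEY-----\n",
        "client_email": "voice-ai@my-bot-project.iam.gserviceaccount.com",
    })
    creds = extract_dialogflow_credentials(sample)
    print(creds["client_email"], creds["project_id"])
```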

Required Information of STT Provider

To connect the Voice.AI Gateway to third-party speech-to-text (STT) engines, you need to provide AudioCodes with the STT provider's details, as listed in the following table.

Table 2-2: Required Information per Supported STT Provider

STT

Provider

Microsoft

Azure

Speech

Services

Required Information from STT Provider

Connectivity Language Definition

To connect to Azure's Speech

Service, you need to provide

AudioCodes with your

subscription key for the service.

To obtain the key, see Azure's

documentation at

https://docs.microsoft.com/enus/azure/cognitiveservices/speech-service/getstarted.

Note: The key is only valid for a

specific region.

To connect to Azure Speech Services,

you need to provide AudioCodes with

the following:

■ Relevant value in the 'Locale' column

in Azure's Text-to-Speech table (see

below).

For example, for Italian (Italy), the

'Locale' column value is "it-IT".

For languages supported by Azure's

Speech Services, see the Speech-to-text

table in Azure's documentation at

https://docs.microsoft.com/enus/azure/cognitive-services/speechservice/language-support.

- 3 -

The Voice.AI Gateway can also use

Azure's Custom Speech service. For

more information, see Azure's

documentation at

https://docs.microsoft.com/en-

Page 9

CHAPTER2 Required Information

Voice.AI Gateway | Integration Guide

STT

Provider

Google

Cloud

Speechto-Text

Required Information from STT Provider

To connect to Google Cloud

Speech-to-Text service, see

Required Information of Bot

Framework Provider on page2

for required information.

us/azure/cognitive-services/speechservice/how-to-custom-speech-deploymodel . If you do use this service, you

need to provide AudioCodes with the

custom endpoint details.

To connect to Google Cloud Speech-toText, you need to provide AudioCodes

with the following:

■ Relevant value in the 'languageCode'

column in Google's Cloud Speech-toText table (see below).

For example, for English (South Africa),

the 'Language code' column value is "enZA".

For languages supported by Google

Cloud Speech-to-Text, see Google's

documentation at

https://cloud.google.com/speech-totext/docs/languages.

Yandex

Nuance

Contact AudioCodes for more

information.

Contact AudioCodes for more

information.

Contact AudioCodes for more

information.

Contact AudioCodes for more

information.

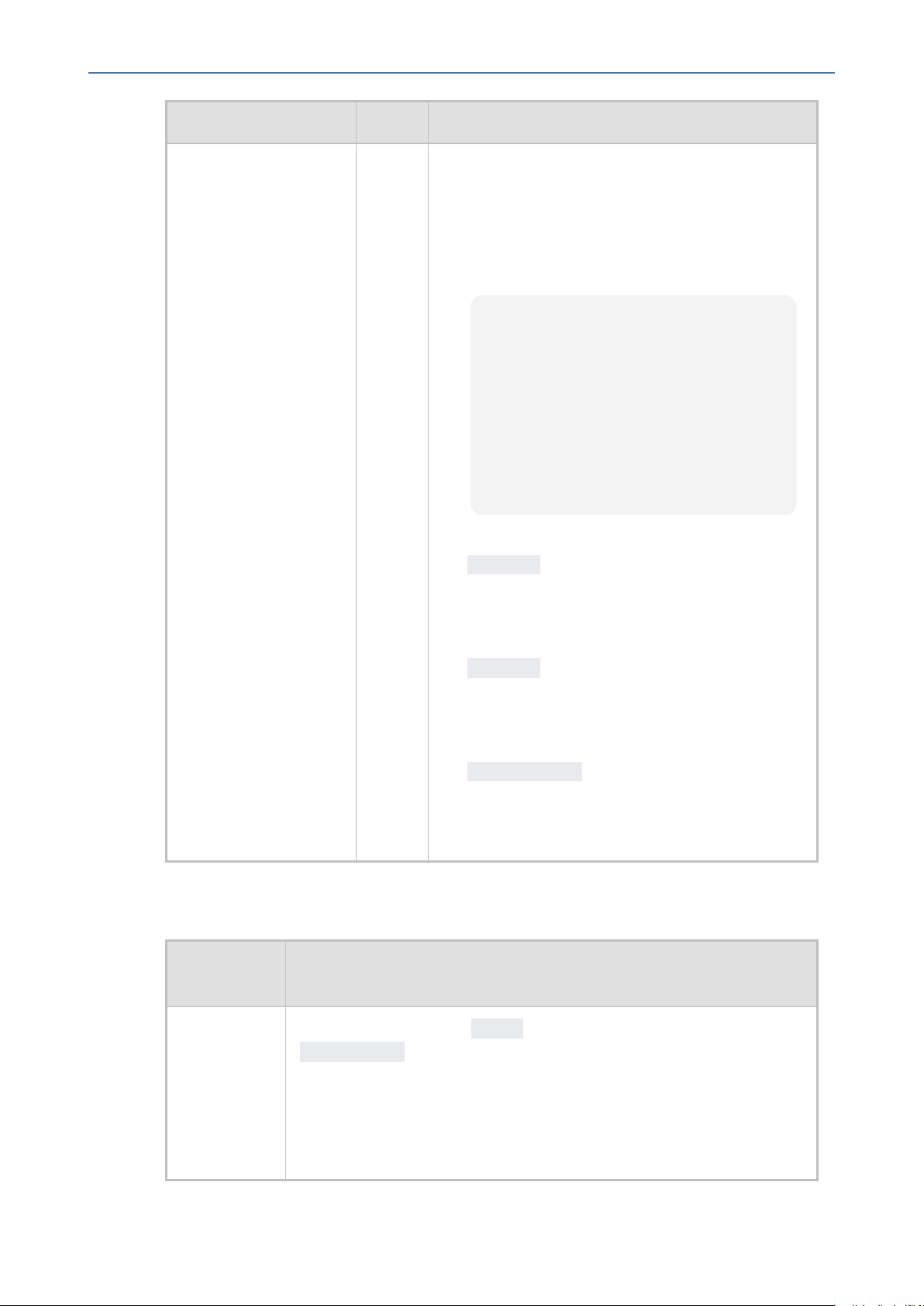

Required Information of TTS Provider

To connect the Voice.AI Gateway to third-party text-to-speech (TTS) engines, you need to provide AudioCodes with the TTS provider's details, as listed in the following table.

Table 2-3: Required Information per Supported TTS Provider

Microsoft Azure Speech Services

Connectivity: To connect to Azure's Speech Service, you need to provide AudioCodes with your subscription key for the service. To obtain the key, see Azure's documentation at https://docs.microsoft.com/en-us/azure/cognitive-services/speech-service/get-started.

Note: The key is valid only for a specific region.

Language Definition: To connect to Azure Speech Services, you need to provide AudioCodes with the following:

■ Relevant value in the 'Locale' column in Azure's Text-to-Speech table (see the link below).

■ Relevant value in the 'Short voice name' column in Azure's Text-to-Speech table (see the link below).

For example, for Italian (Italy), the 'Locale' column value is "it-IT" and the 'Short voice name' column value is "it-IT-ElsaNeural".

For languages supported by Azure's Speech Services, see the Text-to-Speech table in Azure's documentation at https://docs.microsoft.com/en-us/azure/cognitive-services/speech-service/language-support.

Google Cloud Text-to-Speech

Connectivity: To connect to the Google Cloud Text-to-Speech service, see Required Information of Bot Framework Provider for the required information.

Language Definition: To connect to Google Cloud Text-to-Speech, you need to provide AudioCodes with the following:

■ Relevant value in the 'Language code' column in Google's table (see the link below).

■ Relevant value in the 'Voice name' column in Google's table (see the link below).

For example, for English (US), the 'Language code' column value is "en-US" and the 'Voice name' column value is "en-US-Wavenet-A".

For languages supported by Google Cloud Text-to-Speech, see Google's documentation at https://cloud.google.com/text-to-speech/docs/voices.

AWS Amazon Polly

Connectivity: To connect to the Amazon Polly Text-to-Speech service, see Required Information of Bot Framework Provider for the required information.

Language Definition: To connect to the Amazon Polly TTS service, you need to provide AudioCodes with the following:

■ Relevant value in the 'Language' column in the Amazon Polly TTS table (see the link below).

■ Relevant value in the 'Name/ID' column in the Amazon Polly TTS table (see the link below).

For example, for English (US), the 'Language' column value is "English, US (en-US)" and the 'Name/ID' column value is "Matthew".

For languages supported by the Amazon Polly TTS service, see the table at https://docs.aws.amazon.com/polly/latest/dg/voicelist.html.

Yandex

Connectivity and Language Definition: Contact AudioCodes for more information.

Almagu

Connectivity and Language Definition: Contact AudioCodes for more information.

Nuance

Connectivity and Language Definition: Contact AudioCodes for more information.
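In the Azure and Google examples above, the voice name begins with the locale or language code ("it-IT-ElsaNeural", "en-US-Wavenet-A"). Assuming that naming pattern holds for the voices you choose, a quick check like the following Python sketch can catch a mismatched pair before you send the details to AudioCodes. The helper name is hypothetical, and the check does not apply to Amazon Polly voice IDs such as "Matthew".

```python
def voice_matches_locale(locale: str, voice_name: str) -> bool:
    """Hypothetical pre-submission check: True if the voice name starts with the locale.

    Based only on the naming pattern of the Azure and Google examples in
    Table 2-3. Amazon Polly voice IDs (e.g., "Matthew") do not follow it.
    """
    # Compare case-insensitively and require the "-" that separates locale and voice.
    return voice_name.lower().startswith(locale.lower() + "-")


if __name__ == "__main__":
    print(voice_matches_locale("it-IT", "it-IT-ElsaNeural"))  # True
    print(voice_matches_locale("en-US", "en-GB-Wavenet-A"))   # False
```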

3 Messages Sent by Voice.AI Gateway

This section describes the messages that are sent by the Voice.AI Gateway.

Initial Message

When the conversation starts, a message is sent with the details of the call. These details include (when available) the following:

Table 3-1: Description of Initial Message Sent by Voice.AI Gateway

■ callee (String): Dialed phone number. This is typically obtained from the SIP To header.

■ calleeHost (String): Host part of the destination of the call. This is typically obtained from the SIP To header.

■ caller (String): Caller's phone number. This is typically obtained from the SIP From header.

■ callerHost (String): Host part of the source of the call. This is typically obtained from the SIP From header.

■ callerDisplayName (String): Caller's display name. This is typically obtained from the SIP From header.

■ <Additional attributes> (-): Additional attributes, such as values from various SIP headers, which can be added by customization. The Voice.AI Gateway can be configured to extract values from the SIP INVITE message and then send them as additional attributes in the initial message to the bot.

■ participants (Array of Objects): Participants of the conversation when the Voice.AI Gateway is used with the SBC's SIPRec feature (e.g., for the Agent Assist solution). Each object includes the following sub-parameters:

✔ participant (String): Role of the participant, which can be one of the following values: caller, callee, or a user-defined value. The value is obtained from the 'ac:role' element in the SIPRec XML body. The values should be set in the SIPRec XML using the SBC's Message Manipulation functionality, under the <participant> element, as shown in the following example:

    <participant id="+123456789" session="0000-0000-0000-0000b44497aaf9597f7f">
      <nameID aor="+123456789@example.com"></nameID>
      <ac:role>caller</ac:role>
    </participant>

The values must be unique.

✔ uriUser (String): User part of the URI of the participant. The value is obtained from the user part of the 'aor' property of the 'nameID' element in the SIPRec XML body.

✔ uriHost (String): Host part of the URI of the participant. The value is obtained from the host part of the 'aor' property of the 'nameID' element in the SIPRec XML body.

✔ displayName (String): Display name of the participant. The value is obtained from the 'name' sub-element of the 'nameID' element in the SIPRec XML body.
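The mapping from the SIPRec XML to a participants entry can be sketched with Python's standard xml.etree.ElementTree. Two assumptions apply: the namespace URI bound to the "ac" prefix is a placeholder (the example above omits the xmlns declarations that a parser requires), and, as in the example, <participant> and <nameID> are not in a default namespace. A real SIPRec body uses the recording namespace, so the lookups would need to include it. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical URI for the "ac" prefix: the example above omits the xmlns
# declarations that a real SIPRec body carries. This sketch also assumes, as in
# the example, that <participant> and <nameID> are not in a default namespace.
AC_NS = "urn:example:audiocodes"


def parse_participant(xml_text: str) -> dict:
    """Map one SIPRec <participant> element to a 'participants' array entry."""
    elem = ET.fromstring(xml_text)
    role = elem.find(f"{{{AC_NS}}}role")
    name_id = elem.find("nameID")
    aor = name_id.get("aor", "") if name_id is not None else ""
    # Drop an optional "sip:" scheme, then split the user part and host part at "@".
    if aor.startswith("sip:"):
        aor = aor[len("sip:"):]
    uri_user, _, uri_host = aor.partition("@")
    name = name_id.find("name") if name_id is not None else None
    return {
        "participant": role.text if role is not None else None,
        "uriUser": uri_user,
        "uriHost": uri_host,
        "displayName": name.text if name is not None else None,
    }


if __name__ == "__main__":
    sample = (
        f'<participant xmlns:ac="{AC_NS}" id="+123456789" '
        'session="0000-0000-0000-0000b44497aaf9597f7f">'
        '<nameID aor="+123456789@example.com"></nameID>'
        "<ac:role>caller</ac:role>"
        "</participant>"
    )
    print(parse_participant(sample))
```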

The syntax of the initial message depends on the specific bot framework:

Table 3-2: Syntax of Initial Message Sent by Voice.AI Gateway

AudioCodes Bot API

The message is sent as a start event, with the details inside the parameters property.

Example:

    {
      "type": "event",
      "name": "start",
      "parameters": {
        "callee": "12345678",
        "calleeHost": "10.20.30.40",
        "caller": "12345678",
        "callerHost": "10.20.30.40"
      }
    }

Microsoft Azure

The message is sent as a channel event, with the details inside the channelData property.

Example:

    {
      "type": "event",
      "name": "channel",
      "value": "telephony",
      "channelData": {
        "callee": "12345678",
        "calleeHost": "10.20.30.40",
        "caller": "12345678",
        "callerHost": "10.20.30.40"
      },
      "from": {
        "id": "12345678"
      },
      "locale": "en-US"
    }

Google Dialogflow

The message is sent as a WELCOME event, with the details as event parameters.

Example:

    {
      "queryInput": {
        "event": {
          "languageCode": "en-US",
          "name": "WELCOME",
          "parameters": {
            "callee": "12345678",
            "calleeHost": "10.20.30.40",
            "caller": "12345678",
            "callerHost": "10.20.30.40"
          }
        }
      }
    }

Note: These parameters can be used when generating the response text, by using a syntax such as this:

    "#WELCOME.caller"
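Because the call details arrive in a different property depending on the framework, a bot reachable through both the AudioCodes Bot API and Microsoft Azure may want a single place to read them. The following Python sketch assumes the message has already been parsed into a plain dictionary matching the examples above; extract_call_details is a hypothetical name, and Bot Framework SDK bots would typically read the channel data from the SDK's activity object instead. Dialogflow is not covered, since there the parameters are consumed by Dialogflow itself (for example, through "#WELCOME.caller").

```python
def extract_call_details(activity: dict) -> dict:
    """Return the call details carried by an initial message (hypothetical helper).

    Handles the two shapes in Table 3-2 that reach the bot directly:
    AudioCodes Bot API ("start" event, details in "parameters") and
    Microsoft Azure ("channel" event, details in "channelData").
    """
    if activity.get("type") != "event":
        raise ValueError("initial message must be an event")
    if activity.get("name") == "start":
        return dict(activity.get("parameters", {}))
    if activity.get("name") == "channel":
        return dict(activity.get("channelData", {}))
    raise ValueError(f"unexpected event name: {activity.get('name')!r}")


if __name__ == "__main__":
    start = {
        "type": "event",
        "name": "start",
        "parameters": {"callee": "12345678", "caller": "12345678",
                       "callerHost": "10.20.30.40"},
    }
    details = extract_call_details(start)
    # Fields are sent only when available, so read them with .get().
    print(details.get("caller"), details.get("callerDisplayName", "<unknown>"))
```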

End of Conversation Message

The syntax of the end-of-conversation message depends on the specific bot framework:

Table 3-3: Syntax of End-of-Conversation Message Sent by Voice.AI Gateway

AudioCodes Bot API

The conversation is terminated according to the AudioCodes Bot API documentation.

Microsoft Azure

The conversation is terminated by sending an endOfConversation activity, with an optional text property containing a textual reason.

Example:

    {
      "type": "endOfConversation",
      "text": "Client Side"
    }

Google Dialogflow

Currently, no indication is sent for the end of conversation.

Text Message

When the speech-to-text engine detects a user utterance, it is sent as a message to the bot. The message may contain details gathered by the speech-to-text engine. These details include:

Table 3-4: Description of Text Message Sent by Voice.AI Gateway

■ confidence (Number): Numeric value representing the confidence level of the recognition.

■ recognitionOutput (Object): Raw recognition output of the speech-to-text engine (vendor specific).

■ recognitions (Array of Objects): If Continuous ASR mode is enabled, this array contains the separate recognition outputs.

■ participant (String): Indicates the participant ("role") on which the speech recognition occurred.
Note: The parameter is applicable only to Agent Assist calls.

■ participantUriUser (String): URI of the participant.
Note: The parameter is applicable only to Agent Assist calls.

The syntax of the text message depends on the specific bot framework:

Table 3-5: Syntax of Text Message Sent by Voice.AI Gateway

AudioCodes Bot API

The message is sent as a message activity. Additional details are sent in the parameters property.

Example:

    {
      "type": "message",
      "text": "Hi.",
      "parameters": {
        "confidence": 0.6599681
      }
    }

Microsoft Azure

The message is sent as a message activity. Additional details are sent in the channelData property.

Example:

    {
      "type": "message",
      "text": "Hi.",
      "channelData": {
        "confidence": 0.6599681
      }
    }

Google Dialogflow

The message is sent as text input. Currently, additional details are not sent.

Example:

    {
      "queryInput": {
        "text": {
          "languageCode": "en-US",
          "text": "Hi."
        }
      }
    }

Note: Dialogflow supports a maximum text input length of 256 characters. Therefore, if the input received from the speech-to-text engine is longer than 256 characters, the Voice.AI Gateway truncates the message before sending it to Dialogflow.
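The truncation rule can be pictured with the following Python sketch, which builds the text queryInput shown above and keeps only the first 256 characters. It mirrors the documented behavior, not the Voice.AI Gateway's actual code, and build_text_query is a hypothetical name. Python slicing counts Unicode code points, which is an assumption about how the limit is measured.

```python
DIALOGFLOW_MAX_TEXT = 256  # maximum text input length stated above


def build_text_query(text: str, language_code: str = "en-US") -> dict:
    """Build a Dialogflow text queryInput, truncating long utterances (sketch)."""
    return {
        "queryInput": {
            "text": {
                "languageCode": language_code,
                # Anything past the limit is dropped, so intents should not
                # depend on the tail of a very long utterance.
                "text": text[:DIALOGFLOW_MAX_TEXT],
            }
        }
    }


if __name__ == "__main__":
    query = build_text_query("word " * 100)
    print(len(query["queryInput"]["text"]["text"]))
```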

DTMF Event

The syntax for DTMF tone signals (i.e., keys pressed by the user on the phone keypad) depends on the specific bot framework.

Table 3-6: Syntax of DTMF Sent by Voice.AI Gateway

AudioCodes Bot API / Microsoft Azure

This message is sent as a DTMF event with the digits as the value of the event.

Example:

    {
      "type": "event",
      "name": "DTMF",
      "value": "3"
    }

Google Dialogflow

This message is sent as a DTMF event with the digits in the value event parameter.

Example:

    {
      "queryInput": {
        "event": {
          "languageCode": "en-US",
          "name": "DTMF",
          "parameters": {
            "value": "3"
          }
        }
      }
    }

Note: The digits can be used when generating the response text, by using a syntax such as this:

    "#DTMF.value"

No User Input Event

The Voice.AI Gateway can send an event message to the bot if there is no user input for the duration configured by the userNoInputTimeoutMS parameter. The event indicates how many times the timeout has expired (in the 'value' field). The message is sent only if the userNoInputSendEvent parameter is set to true.

Table 3-7: Syntax of No User Input Event Sent by Voice.AI Gateway

AudioCodes Bot API / Microsoft Azure

This message is sent as a noUserInput event with the number of times that the timeout expired as the value of the event.

Example:

    {
      "type": "event",
      "name": "noUserInput",
      "value": "1"
    }

Google Dialogflow

This message is sent as a noUserInput event with the number of times that the timeout expired in the value event parameter.

Example:

    {
      "queryInput": {
        "event": {
          "languageCode": "en-US",
          "name": "noUserInput",
          "parameters": {
            "value": "1"
          }
        }
      }
    }
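As an illustration of how a bot might react to the DTMF and noUserInput events, the following Python sketch collects digits until a PIN of a fixed length is complete, and re-prompts on silence before hanging up. It assumes the AudioCodes Bot API / Microsoft Azure shapes from Tables 3-6 and 3-7 delivered as plain dictionaries; the class name, PIN length, prompts, and re-prompt limit are all hypothetical choices, and the returned activities use the message and hangup formats described in Messages Sent by Bot.

```python
from typing import List


class GatewayEventHandler:
    """Hypothetical bot-side handler for the DTMF and noUserInput events.

    Assumes the AudioCodes Bot API / Microsoft Azure shapes, where both
    events carry their data in the "value" property.
    """

    def __init__(self, pin_length: int = 4, max_prompts: int = 2):
        self.pin_length = pin_length
        self.max_prompts = max_prompts
        self.digits = ""

    def handle(self, event: dict) -> List[dict]:
        """Return the activities to send back for one incoming event."""
        if event.get("type") != "event":
            return []
        if event.get("name") == "DTMF":
            return self._on_dtmf(str(event.get("value", "")))
        if event.get("name") == "noUserInput":
            return self._on_no_input(int(event.get("value", "0")))
        return []

    def _on_dtmf(self, pressed: str) -> List[dict]:
        # One event may carry several digits; collect until the PIN is complete.
        # Digits beyond the PIN length in the same event are discarded.
        self.digits += pressed
        if len(self.digits) < self.pin_length:
            return []
        pin, self.digits = self.digits[: self.pin_length], ""
        return [{"type": "message", "text": f"You entered {' '.join(pin)}."}]

    def _on_no_input(self, count: int) -> List[dict]:
        # "value" counts how many times the no-input timeout has expired.
        if count <= self.max_prompts:
            return [{"type": "message", "text": "Are you still there?"}]
        # Activities are executed in order, so the goodbye plays before the hangup.
        return [
            {"type": "message", "text": "Goodbye."},
            {"type": "event", "name": "hangup",
             "activityParams": {"hangupReason": "noUserInput"}},
        ]
```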

4 Messages Sent by Bot

When the Voice.AI Gateway handles messages from the bot, it treats them as activities. The syntax for sending the activities in the different bot frameworks is described in Bot Framework Specific Details.

Activities sent by the bot contain actions to be performed and parameters. The parameters can affect the current action or change the behavior of the whole conversation. The configurable parameters are described in Parameters Controlled Also by Bot.

The Voice.AI Gateway handles activities sequentially; an activity is not executed before the previous one has finished. For example, when the Voice.AI Gateway receives two activities, one to play text to the user and one to hang up the call, the hangup activity is executed only after the text has finished playing.

Basic Activity Syntax

Each activity is a JSON object that has the following properties:

Table 4-1: Properties of JSON Object Activities

■ type (String): Either message or event.

■ name (String): Name of the event for the event activity. For supported events, see event Activities.

■ text (String): Text to be played for the message activity.

■ activityParams (Params object): Set of parameters that affect the current activity.

■ sessionParams (Params object): Set of parameters that affect the remaining duration of the conversation.

The Params object comprises key-value pairs, where the key is the parameter name and the value is the desired value for the parameter. For a list of the supported parameters, see Parameters Controlled Also by Bot.
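The properties above can be assembled with a small helper. This Python sketch (the function name and its checks are assumptions, not part of the Voice.AI Gateway API) builds an activity dictionary and rejects a type other than message or event, or an event without a name.

```python
from typing import Optional

ALLOWED_TYPES = {"message", "event"}


def make_activity(type_: str, *, name: Optional[str] = None,
                  text: Optional[str] = None,
                  activity_params: Optional[dict] = None,
                  session_params: Optional[dict] = None) -> dict:
    """Build an activity with the properties of Table 4-1 (hypothetical helper)."""
    if type_ not in ALLOWED_TYPES:
        raise ValueError(f"type must be one of {sorted(ALLOWED_TYPES)}")
    if type_ == "event" and not name:
        raise ValueError("an event activity needs a name")
    activity = {"type": type_}
    if name is not None:
        activity["name"] = name
    if text is not None:
        activity["text"] = text
    # Params objects are plain key-value pairs; copy them to avoid aliasing.
    if activity_params:
        activity["activityParams"] = dict(activity_params)
    if session_params:
        activity["sessionParams"] = dict(session_params)
    return activity


if __name__ == "__main__":
    print(make_activity("message", text="I have something sensitive to tell you.",
                        activity_params={"disableTtsCache": True}))
```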

message Activity

The most common activity is the message activity, which instructs the Voice.AI Gateway to play the given text to the user.

Example:

    {
      "type": "message",
      "text": "Hi, how may I assist you?"
    }

A message activity can also contain parameters that affect its handling. For example, to disable caching of the text-to-speech generated voice for the current activity, the following activity can be sent:

    {
      "type": "message",
      "text": "I have something sensitive to tell you.",
      "activityParams": {
        "disableTtsCache": true
      }
    }

The text field can contain Speech Synthesis Markup Language (SSML). The SSML can be one of the following:

■ A full SSML document, for example:

    <speak>
    This is <say-as interpret-as="characters">SSML</say-as>.
    </speak>

■ Text with SSML tags, for example:

    This is <say-as interpret-as="characters">SSML</say-as>.

● The SSML is parsed by the text-to-speech engine. Refer to the TTS provider's documentation for a list of supported features.

● When using SSML, characters that are reserved in XML, for example, the ampersand (&), must be properly escaped (e.g., as &amp;).
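When the text of a message activity is built from dynamic content, such as a name read from a database, the reserved characters must be escaped before the text is placed inside SSML. The following is a minimal Python sketch using the standard library's xml.sax.saxutils.escape, which replaces &, <, and > with entity references; to_ssml is a hypothetical helper. Escape only the plain-text parts, not SSML tags that you add yourself.

```python
from xml.sax.saxutils import escape


def to_ssml(plain_text: str) -> str:
    """Wrap plain text in a <speak> document, escaping XML special characters."""
    # escape() replaces "&", "<" and ">" with "&amp;", "&lt;" and "&gt;".
    return f"<speak>{escape(plain_text)}</speak>"


if __name__ == "__main__":
    activity = {"type": "message", "text": to_ssml("Welcome to Smith & Sons")}
    print(activity["text"])  # <speak>Welcome to Smith &amp; Sons</speak>
```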

event Activities

This section lists the supported events. Each event is shown with a list of associated parameters. These parameters can be set either in the configuration of the bot or by sending them as part of the activityParams (to be used once) or as part of the sessionParams (to be used for the remaining duration of the conversation).

The list only includes parameters that are specific to that event, but other parameters can also be updated by the event. For example, the language parameter can be updated by playUrl, by adding it to the activityParams or sessionParams properties.

hangup

The hangup event disconnects the conversation.

The following table lists the parameters associated with this event.

Table 4-2: Parameters for hangup Event

■ hangupReason (String): Conveys a textual reason for hanging up. This reason appears in the CDR of the call.

Example:

    {
      "type": "event",
      "name": "hangup",
      "activityParams": {
        "hangupReason": "conversationCompleted"
      }
    }

transfer

The transfer event transfers the call to a human agent or to another bot. The handover event is a synonym for the transfer event.

The following table lists the parameters associated with this event.

Table 4-3: Parameters for transfer Event

■ transferTarget (String): URI to which the call must be transferred. Typically, the URI is a "tel" or "sip" URI.

■ handoverReason (String): Conveys a textual reason for the transfer.

■ transferSipHeaders (Array of Objects): Array of objects listing SIP headers that should be sent to the transferee. Each object comprises a name and a value attribute. For more information, see Adding SIP Headers on Call Transfer.

■ transferReferredByURL (String): Defines the party (URL) who initiated the call referral. If this parameter exists, the SBC adds a SIP Referred-By header to the outgoing INVITE or REFER message (according to the 'Remote REFER Mode' parameter). If the SBC handles the REFER locally (termination), the SBC adds the header to a new outgoing INVITE. If the REFER is not handled locally (regular), the SBC adds the header to the forwarded REFER message.

Example:

    {
      "type": "event",
      "name": "transfer",
      "activityParams": {
        "handoverReason": "userRequest",
        "transferTarget": "tel:123456789",
        "transferReferredByURL": "sip:456@ac.com"
      }
    }

Adding SIP Headers on Call Transfer

When the bot performs a call transfer using the transfer event, it can add data to be sent as SIP headers in the generated SIP message (REFER or INVITE). This is done using the transferSipHeaders parameter, which contains an array of JSON objects with the following attributes:

Table 4-4: Attributes of transferSipHeaders Parameter

■ name (String): Name of the SIP header.

■ value (String): Value of the SIP header.

For example, the following transfer event can be used to add the header "X-My-Header" with the value "my_value":

    {
      "type": "event",
      "name": "transfer",
      "activityParams": {
        "transferTarget": "sip:john@host.com",
        "transferSipHeaders": [
          {
            "name": "X-My-Header",
            "value": "my_value"
          }
        ]
      }
    }

If the Voice.AI Gateway is configured to handle transfer by sending a SIP INVITE message, the INVITE contains the header, for example:

    X-My-Header: my_value

If the Voice.AI Gateway is configured to handle transfer by sending a SIP REFER message, the REFER contains the value in the URI of the Refer-To header, for example:

    Refer-To: <sip:john@host.com?X-My-Header=my_value>
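The mapping from transferSipHeaders to the Refer-To URI can be sketched in Python as follows, for example to check in a test harness what a REFER-based transfer is expected to carry. The percent-encoding of header values is an assumption based on the general SIP URI rules (RFC 3261) and is not stated in this guide; refer_to_header is a hypothetical name used only for illustration.

```python
from urllib.parse import quote


def refer_to_header(target: str, sip_headers: list) -> str:
    """Build a Refer-To header line that carries headers in the target URI.

    Each {"name", "value"} object from transferSipHeaders becomes a
    name=value pair after "?", with pairs joined by "&". Percent-encoding
    the value is an assumption, not documented gateway behavior.
    """
    if not sip_headers:
        return f"Refer-To: <{target}>"
    pairs = "&".join(
        f"{h['name']}={quote(h['value'], safe='')}" for h in sip_headers
    )
    return f"Refer-To: <{target}?{pairs}>"


if __name__ == "__main__":
    headers = [{"name": "X-My-Header", "value": "my_value"}]
    print(refer_to_header("sip:john@host.com", headers))
```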

playUrl

The playUrl event plays audio to the user from a given URL.

The format of the file must match the format specified by the playUrlMediaFormat parameter; otherwise, the audio will be played distorted.

The following table lists the parameters associated with this event.

Table 4-5: Parameters for playUrl Event

■ playUrlUrl (String): URL where the audio file is located.

■ playUrlCaching (Boolean): Enables caching of the audio:

✔ true: Enables caching

✔ false: (Default) Disables caching

■ playUrlMediaFormat (String): Defines the format of the audio:

✔ wav/lpcm16 (default)

✔ raw/lpcm16

■ playUrlAltText (String): Defines the text to display in the transcript page of the user interface while the audio is played.

Example:

    {
      "type": "event",
      "name": "playUrl",
      "activityParams": {
        "playUrlUrl": "https://example.com/my-file.wav",
        "playUrlMediaFormat": "wav/lpcm16"
      }
    }
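The following hypothetical Python helper builds the playUrl event and restricts playUrlMediaFormat to the two documented values. It checks only the declared format string; it cannot verify that the file at the URL actually matches that format, which is what determines whether the audio plays correctly.

```python
from typing import Optional

SUPPORTED_FORMATS = {"wav/lpcm16", "raw/lpcm16"}  # values listed in Table 4-5


def play_url_activity(url: str, media_format: str = "wav/lpcm16",
                      caching: bool = False,
                      alt_text: Optional[str] = None) -> dict:
    """Build a playUrl event (hypothetical helper)."""
    if media_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported playUrlMediaFormat: {media_format}")
    params = {"playUrlUrl": url, "playUrlMediaFormat": media_format}
    if caching:  # caching is disabled by default, so only send it when enabled
        params["playUrlCaching"] = True
    if alt_text is not None:
        params["playUrlAltText"] = alt_text
    return {"type": "event", "name": "playUrl", "activityParams": params}


if __name__ == "__main__":
    print(play_url_activity("https://example.com/my-file.wav",
                            alt_text="(hold music)"))
```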

config

The config event updates the session parameters, independently of any other activity.

No parameters are specifically associated with this event; the parameters to update are sent in the sessionParams property.

The following is an example of the config event, enabling the Barge-In feature:

    {
      "type": "event",
      "name": "config",
      "sessionParams": {
        "bargeIn": true
      }
    }

startRecognition and stopRecognition

The startRecognition and stopRecognition activities are used for Agent Assist

calls. The STT engine only starts when a startRecognition activity is received from the

bot and stops when a stopRecognition activity is received from the bot.

The following table lists the parameter associated with this event.

Table 4-6: Parameter for startRecognition and stopRecognition Events

targetParticipant (String)
Defines the participant for which to start or stop speech recognition.

Example:

{
  "type": "event",
  "name": "startRecognition",
  "activityParams": {
    "targetParticipant": "caller"
  }
}

sendMetaData

The sendMetaData event can be used to send data (using SIP INFO messages) to the peer of the conversation. For example, in Agent Assist calls, the bot can send suggestions to the human agent. The bot passes the data in the "value" parameter, which can contain any valid JSON object. When handling the activity, the Voice.AI Gateway sends a SIP INFO request whose body contains the data as JSON.

Example:

{
  "type": "event",
  "name": "sendMetaData",
  "value": {
    "myParamName": "myParamValue"
  }
}
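The Agent Assist activities described above (startRecognition, stopRecognition and sendMetaData) can be sketched in Python as follows. The helper names are hypothetical. The event names, the targetParticipant parameter and the "value" property come from this section, and "caller" is the participant value used in the examples.

```python
import json


def build_recognition_event(start, target_participant):
    """Build a startRecognition or stopRecognition event (hypothetical helper)."""
    return {
        "type": "event",
        "name": "startRecognition" if start else "stopRecognition",
        "activityParams": {"targetParticipant": target_participant},
    }


def build_send_metadata_event(value):
    """Build a sendMetaData event whose "value" is forwarded in a SIP INFO body."""
    if not isinstance(value, dict):
        raise TypeError("value must be a JSON object (a dict)")
    json.dumps(value)  # raises TypeError early if the dict is not JSON-serializable
    return {"type": "event", "name": "sendMetaData", "value": value}


if __name__ == "__main__":
    # Start recognizing the caller, push a suggestion to the agent, then stop.
    for activity in (
        build_recognition_event(True, "caller"),
        build_send_metadata_event({"myParamName": "myParamValue"}),
        build_recognition_event(False, "caller"),
    ):
        print(json.dumps(activity))
```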

Bot Framework Specific Details

This section provides details specific to bot frameworks.

AudioCodes Bot API

For AudioCodes Bot API, the activities can be sent as-is, with the addition of the id and timestamp attributes, as defined in the AudioCodes API Reference Guide.

Microsoft Azure

For Azure bots, the sessionParams and activityParams properties should be placed inside the channelData property.


Example:

{
  "type": "event",
  "name": "transfer",
  "channelData": {
    "activityParams": {
      "handoverReason": "userRequest",
      "transferTarget": "tel:123456789"
    }
  }
}
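For Azure bots, the conversion described above can be sketched as a small Python function that moves sessionParams and activityParams into channelData. The function name to_azure_activity is hypothetical. The sketch assumes that activities are handled as plain dictionaries before they are sent through the Bot Framework.

```python
import copy

# Properties that Azure bots carry inside channelData, per this section.
CHANNEL_DATA_KEYS = ("sessionParams", "activityParams")


def to_azure_activity(activity):
    """Return a copy of `activity` with its parameter properties moved into channelData."""
    result = copy.deepcopy(activity)  # leave the caller's dict untouched
    moved = {key: result.pop(key) for key in CHANNEL_DATA_KEYS if key in result}
    if moved:
        # Merge with any channelData the activity already has.
        result.setdefault("channelData", {}).update(moved)
    return result


if __name__ == "__main__":
    generic = {
        "type": "event",
        "name": "transfer",
        "activityParams": {"handoverReason": "userRequest", "transferTarget": "tel:123456789"},
    }
    print(to_azure_activity(generic))
```

Applied to a generic transfer activity, the function yields the Azure example above. Activities without parameters pass through unchanged.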

Google Dialogflow

For Google Dialogflow, the activities are derived from the intent's response (the "Default" response, which is the response for the PLATFORM_UNSPECIFIED platform).

The response's text is used to construct a message activity for playing the text to the user. To send additional parameters or activities, a Custom Payload must be added to the response (see https://cloud.google.com/dialogflow/docs/intents-rich-messages).

The Custom Payload can contain a JSON object with the following properties:

Table 4-7: Google Dialogflow Custom Payload Properties

activityParams
This is applied when playing the text of the response (i.e., of the message activity).

sessionParams
This is applied when playing the text of the response (i.e., of the message activity).

activities
Array of activities to be executed after playing the text of the response.

For example, if the text response is "I’m going to transfer you to a human agent" and the Custom Payload contains the following JSON object:

{
  "activityParams": {
    "disableTtsCache": true
  },
  "activities": [
    {
      "type": "event",
      "name": "transfer",
      "activityParams": {
        "transferTarget": "tel:123456789"
      }
    }
  ]
}

Then the audio of the text "I’m going to transfer you to a human agent." is played without caching (due to the disableTtsCache parameter). After it has finished playing, the transfer activity is executed.

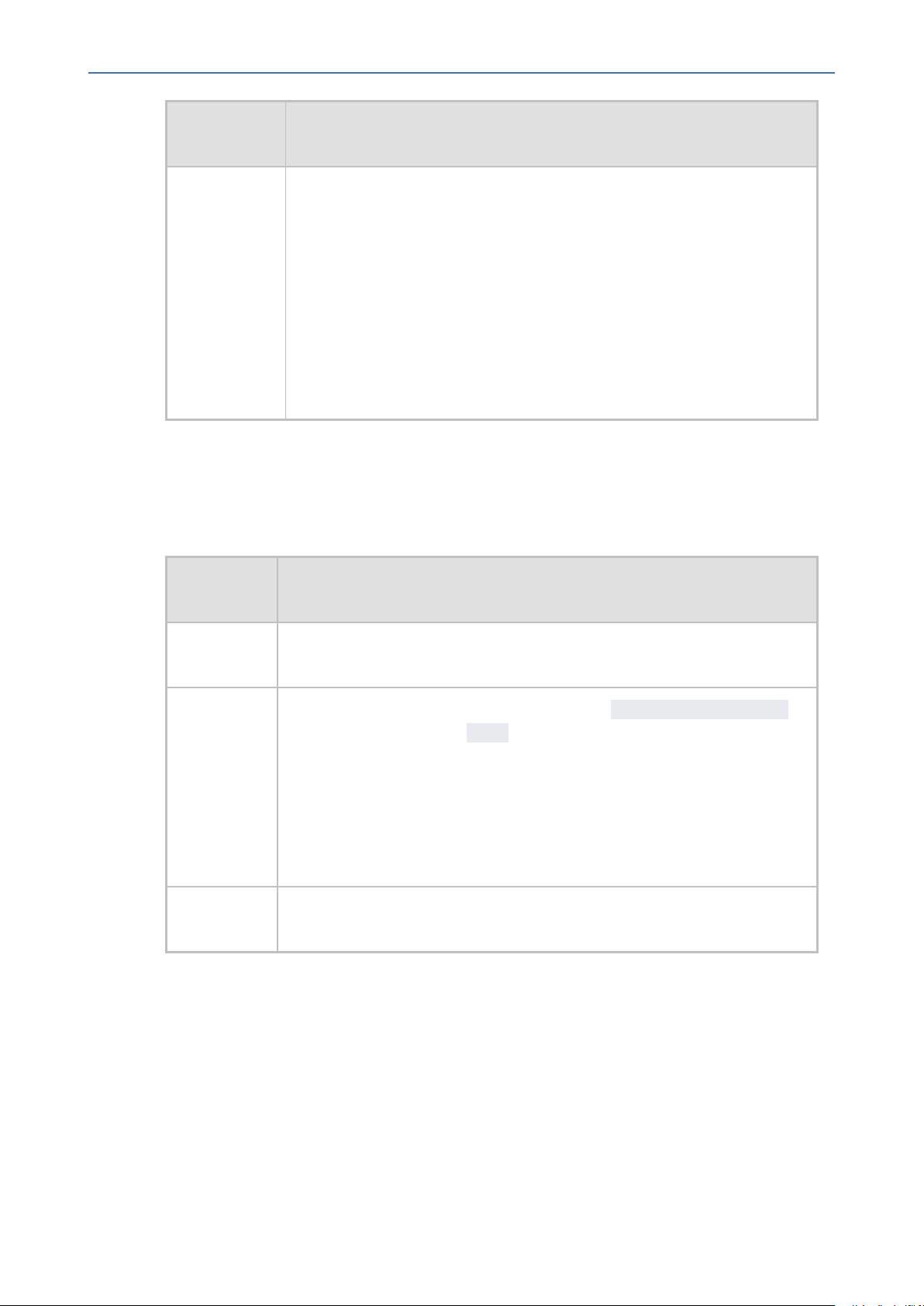

The above example can be configured through the Dialogflow user interface, as follows:

Table 4-8: Custom Payload Configuration Example through Dialogflow User Interface

Voice.AI Gateway | Integration Guide
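The mapping from a Dialogflow response to activities, as described in this section, can be modeled with the following Python sketch. It is a hypothetical illustration of the documented behavior, not the Voice.AI Gateway's implementation. In particular, the shape of the generated message activity ({"type": "message", "text": ...}) is an assumption of the sketch.

```python
def activities_from_dialogflow(text, custom_payload=None):
    """Model how a Dialogflow "Default" response maps to activities (hypothetical)."""
    payload = custom_payload or {}
    # Assumed shape of the message activity that plays the response text.
    message = {"type": "message", "text": text}
    # activityParams and sessionParams apply while the response text is played.
    for key in ("activityParams", "sessionParams"):
        if key in payload:
            message[key] = payload[key]
    # Any additional activities are executed after the text has been played.
    return [message] + list(payload.get("activities", []))


if __name__ == "__main__":
    payload = {
        "activityParams": {"disableTtsCache": True},
        "activities": [
            {"type": "event", "name": "transfer",
             "activityParams": {"transferTarget": "tel:123456789"}},
        ],
    }
    for activity in activities_from_dialogflow(
            "I'm going to transfer you to a human agent.", payload):
        print(activity)
```

With the Custom Payload from the transfer example, the model returns two activities: the message played without TTS caching, followed by the transfer event.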


Parameters Controlled Also by Bot

These parameters can be configured on the Voice.AI Connector, but the bot can also set and update them dynamically. Values sent by the bot take precedence (i.e., override the Voice.AI Connector configuration). Parameters that are specific to a single event type are documented in Section event Activities on page 16. As explained in Section Basic Activity Syntax on page 15, these parameters can be included in the activityParams or the sessionParams of any activity sent by the bot.
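The following hedged Python sketch shows one way the parameter sources could combine. It follows the rule stated above that bot-supplied values override the Voice.AI Connector configuration. It also makes further assumptions, based on the Basic Activity Syntax section rather than on this section: sessionParams persist for the rest of the session, activityParams apply only to the activity that carries them, and activityParams take precedence over sessionParams for that activity. The ParameterState class is hypothetical.

```python
class ParameterState:
    """Hypothetical model of parameter resolution for one call."""

    def __init__(self, connector_config):
        # Values configured on the Voice.AI Connector act as the baseline.
        self.connector_config = dict(connector_config)
        self.session_params = {}

    def params_for(self, activity):
        """Return the parameters in effect while handling `activity`."""
        # Assumed: sessionParams persist for the rest of the session.
        self.session_params.update(activity.get("sessionParams", {}))
        effective = dict(self.connector_config)
        effective.update(self.session_params)  # bot values override the Connector
        # Assumed: activityParams apply to this activity only and win over sessionParams.
        effective.update(activity.get("activityParams", {}))
        return effective


if __name__ == "__main__":
    state = ParameterState({"bargeIn": False, "language": "en-US"})
    print(state.params_for({"type": "event", "name": "config",
                            "sessionParams": {"bargeIn": True}}))
    print(state.params_for({"type": "message", "text": "Guten Tag",
                            "activityParams": {"language": "de-DE"}}))
```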

Table 4-9: Bots Section Parameter Descriptions (Also Controlled by Bot)

azureSpeechRecognitionMode (String)
Defines the Azure STT recognition mode:
■ conversation (default)
■ dictation
■ interactive
Note: The parameter is applicable only to the Microsoft Azure STT service.

bargeIn (Boolean)
Enables the Barge-In feature.
■ true: Enabled. When the bot is playing a response to the user (playback of a bot message), the user can "barge-in" (interrupt) and start speaking. This terminates the bot response, allowing the bot to listen to the new speech input from the user (i.e., the Voice.AI Gateway sends the detected utterance to the bot).
■ false: (Default) Disabled. The Voice.AI Gateway doesn't expect speech input from the user until the bot has finished playing its response to the user. In other words, the user can't "barge-in" until the bot message response has finished playing.

bargeInOnDTMF (Boolean)
Enables the Barge-In on DTMF feature.
■ true: (Default) Enabled. When the bot is playing a response to the user (playback of a bot message), the user can "barge-in" (interrupt) with a DTMF digit. This terminates the bot response, allowing the bot to listen to and process the digits sent from the user.
■ false: Disabled. The Voice.AI Connector doesn't expect DTMF input from the user until the bot has finished playing its response to the user. In other words, the user can't "barge-in" until the bot message response has finished playing.
Note: When this parameter is enabled, you also need to enable sendDTMF.

bargeInMinWordCount (Integer)
Defines the minimum number of words that the user must say for the Voice.AI Gateway to consider it a barge-in. For example, if configured to 4 and the user says only 3 words during the bot's playback response, no barge-in occurs.
The valid range is 1 to 5. The default is 1.

botFailOnErrors (Boolean)
Defines what happens when the Azure bot error "retry" occurs:
■ true: The error is printed to the log and the call is disconnected.
■ false: (Default) The error is printed to the log, but the call is not disconnected.

botNoInputGiveUpTimeoutMS (Integer)
Defines the maximum time (in milliseconds) that the Voice.AI Connector waits for a response from the bot. If no response is received when the timeout expires, the Voice.AI Connector disconnects the call with the SBC.
The default is 0 (i.e., feature disabled).
If the call is disconnected, the SIP BYE message that the SBC sends to the user indicates this failure by prefixing the value in the Reason header with "Bot Err:".
Note: In this scenario (call disconnect), you can also configure the Voice.AI Connector to perform specific activities, for example, playing a prompt to the user or transferring the call (see the generalFailoverActivities parameter).

botNoInputTimeoutMS (Integer)
Defines the maximum time (in milliseconds) that the Voice.AI Connector waits for input from the bot framework.
If no input is received from the bot when this timeout expires, you can configure the Voice.AI Connector to play a textual prompt (see the botNoInputSpeech parameter) or an audio prompt (see the botNoInputUrl parameter) to the user.
The default is 0 (i.e., feature disabled).

botNoInputRetries (Integer)
Defines the maximum number of allowed timeouts (configured by the botNoInputTimeoutMS parameter) for no bot input. If you have configured a prompt to play (see the botNoInputSpeech or botNoInputUrl parameter), the prompt is played to the user each time the timeout expires.
The default is 0 (i.e., only one timeout, no retries).
For more information on the no bot input feature, see the botNoInputTimeoutMS parameter.
Note: If you have configured a prompt to play upon timeout expiry, the timer is triggered only after the prompt has been played to the user.

botNoInputSpeech (String)
Defines the textual prompt to play to the user when the timeout (configured by botNoInputTimeoutMS) expires without any input from the bot framework.
By default, the parameter is not configured.
For example:
{
  "name": "LondonTube",
  "provider": "my_azure",
  "displayName": "London Tube",
  "botNoInputTimeoutMS": 5000,
  "botNoInputSpeech": "Please wait for bot input"
}
For more information on the no bot input feature, see the botNoInputTimeoutMS parameter.
Note: If you have also configured an audio prompt (see the botNoInputUrl parameter), botNoInputSpeech takes precedence.


botNoInputUrl (String)
Defines the URL of the audio prompt to play to the user when the timeout (configured by botNoInputTimeoutMS) expires without any input from the bot.
By default, the parameter is not configured.
For more information on the no bot input feature, see the botNoInputTimeoutMS parameter.
Note: If you have also configured a textual prompt (see the botNoInputSpeech parameter), botNoInputSpeech takes precedence.

userNoInputTimeoutMS (Integer)
Defines the maximum time (in milliseconds) that the Voice.AI Connector waits for input from the user.
If no input is received when this timeout expires, you can configure the Voice.AI Connector to play a textual prompt (see the userNoInputSpeech parameter) or an audio prompt (see the userNoInputUrl parameter) that asks the user to say something. If there is still no input from the user, you can configure the Voice.AI Connector to prompt the user again. The number of times to prompt is configured by the userNoInputRetries parameter.
If the userNoInputSendEvent parameter is configured to true and the timeout expires, the Voice.AI Connector sends an event to the bot indicating how many times the timer has expired.
The default is 0 (i.e., feature disabled).
Note:
■ Any DTMF input is considered user input (in addition to user speech) if the sendDTMF parameter is configured to true.
■ If you have configured a prompt to play when the timeout expires, the timer is triggered only after the prompt has been played to the user.

userNoInputRetries (Integer)
Defines the maximum number of allowed timeouts (configured by the userNoInputTimeoutMS parameter) for no user input. If you have configured a prompt to play (see the userNoInputSpeech or userNoInputUrl parameter), the prompt is played each time the timeout expires.
The default is 0 (i.e., only one timeout).
For more information on the no user input feature, see the userNoInputTimeoutMS parameter.
Note: If you have configured a prompt to play upon timeout expiry, the timer is triggered only after the prompt has been played to the user.

userNoInputSendEvent (Boolean)
Enables the Voice.AI Connector to send an event message to the bot if there is no user input for the duration configured by the userNoInputTimeoutMS parameter. The event indicates how many times the timer has expired ('value' field):
{
  "type": "event",
  "name": "noUserInput",
  "value": 1
}
■ true: Enabled.
■ false: (Default) Disabled.
Note: The feature is applicable only to Azure, Google, and AudioCodes API (ac-api).

userNoInputSpeech (String)
Defines the textual prompt to play to the user when the timeout (configured by userNoInputTimeoutMS) expires without any input from the user.
By default, the parameter is not configured.
For example:
{
  "name": "LondonTube",
  "provider": "my_azure",
  "displayName": "London Tube",
  "userNoInputTimeoutMS": 5000,
  "userNoInputSpeech": "Hi there. Please say something"
}
For more information on the no user input feature, see the userNoInputTimeoutMS parameter.
Note: If you have also configured an audio prompt (see the userNoInputUrl parameter), userNoInputSpeech takes precedence.

userNoInputUrl (String)
Defines the URL of the audio prompt to play to the user when the timeout (configured by userNoInputTimeoutMS) expires without any input from the user.
By default, the parameter is not configured.
For more information on the no user input feature, see the userNoInputTimeoutMS parameter.
Note: If you have also configured a textual prompt (see the userNoInputSpeech parameter), userNoInputSpeech takes precedence.

continuousASR (Boolean)
Enables the Continuous ASR feature. Continuous ASR enables the Voice.AI Gateway to concatenate multiple STT recognitions of the user and then send them as a single textual message to the bot.
■ true: Enabled
■ false: (Default) Disabled
For an overview of the Continuous ASR feature, refer to the Voice.AI Gateway Product Description.

continuousASRDigits (String)
This parameter is applicable when the Continuous ASR feature is enabled.
Defines a special DTMF key that, if pressed, causes the Voice.AI Gateway to immediately send the accumulated recognitions of the user to the bot. For example, if configured to "#" and the user presses the pound key (#) on the phone's keypad, the device concatenates the accumulated recognitions and then sends them as one single textual message to the bot.
The default is "#".
Note: Using this feature incurs an additional delay from the user's perspective, because the speech is not sent to the bot immediately after it has been recognized. To overcome this delay, configure the parameter to a value that is appropriate to your environment.

continuousASRTimeoutInMS (Integer)
This parameter is applicable when the Continuous ASR feature is enabled.
Defines the automatic speech recognition (ASR) timeout (in milliseconds). When the device detects silence from the user for the duration configured by this parameter, it concatenates all the accumulated STT recognitions and sends them as one single textual message to the bot.
The valid value is 2,500 (i.e., 2.5 seconds) to 60,000 (i.e., 1 minute). The default is 3,000.

disableTtsCache (Boolean)
Disables caching of TTS (audio) results from the bot. When caching is in use and the Voice.AI Connector needs to send a TTS request for text that has been requested before, it retrieves the result from its cache instead of requesting it again from the TTS provider.
■ true: TTS caching is disabled.
■ false: (Default) TTS caching is enabled.

googleInteractionType (String)
Defines the Google STT interaction type. For more information, see https://cloud.google.com/speech-to-text/docs/reference/rest/v1p1beta1/RecognitionConfig#InteractionType.

handoverReason (String)
Defines the textual reason when the call is transferred to another party (e.g., another bot or a human agent).
By default, the parameter is not defined.

hangupReason (String)
Conveys a textual reason for hanging up (disconnecting the call). This reason appears in the CDR of the call.
Example message:
{
  "type": "event",
  "name": "hangup",
  "activityParams": {
    "hangupReason": "conversationCompleted"
  }
}

language (String)
Defines the language (e.g., "en-ZA" for South African English) of the bot conversation, which is used for TTS and STT functionality. The value is obtained from the service provider.
■ STT:
✔ Azure: The parameter is configured with the value from the 'Locale' column in Azure's Speech-to-Text table (e.g., "en-GB").
✔ Google: The parameter is configured with the value from the 'languageCode' (BCP-47) column in Google's Cloud Speech-to-Text table (e.g., "nl-NL").
For more information, refer to Section Required Information of STT Provider on page 3.
■ TTS:
✔ Azure: The parameter is configured with the value from the 'Locale' column in Azure's Text-to-Speech table (e.g., "it-IT").
✔ Google: The parameter is configured with the value from the 'Language code' column in Google's Cloud Text-to-Speech table (e.g., "en-US").
✔ AWS: The parameter is configured with the value from the 'Language' column in Amazon's Polly TTS table (e.g., "de-DE").
For more information, refer to Section Required Information of TTS Provider on page 4.
Note: The Customer obtains this string from the TTS or STT service provider and must provide it to AudioCodes. For more information, see the Required Information sections referenced above.

playUrlAltText (String)
Defines the text to display in the transcript page of the user interface while the audio is played.

playUrlCaching (Boolean)
Enables caching of the audio in the TTS cache:
■ true: Enables caching
■ false: (Default) Disables caching

playUrlMediaFormat (String)
Defines the format of the audio:
■ wav/lpcm16 (default)
■ raw/lpcm16

playUrlUrl (String)
Defines the URL of the audio file to play, located on an HTTP-based server. This allows pre-recorded prompts (audio files) to be played to the user from a remote third-party server.

resumeRecognitionTimeoutMS (Integer)
When Barge-In is disabled, speech input is not expected before the bot's response has finished playback. If no reply from the bot arrives within this configured timeout (in milliseconds), the Voice.AI Gateway expects speech input from the user and STT recognition is re-activated.
The valid value is 0 (i.e., no automatic resumption of recognition) to 600,000 (i.e., 10 minutes). The default is 10,000.

sendDTMF (Boolean)
Enables the sending of DTMF events to the bot.
■ true: Enabled
■ false: (Default) Disabled

sttContextBoost (Number)
Defines the boost number for context recognition of the speech context phrases configured by sttContextPhrases. Speech-adaptation boost lets you increase the recognition model bias by assigning more weight to some phrases than to others. For example, when users say "weather" or "whether", you may want the STT to recognize the word as "weather".
For more information, see https://cloud.google.com/speech-to-text/docs/context-strength.
Note:
■ The parameter can be used by all bot providers when the STT engine is Google.
■ When other STT engines are used, the parameter has no effect.

sttContextId (String)
Defines the STT context. This is used for the DNN server, and as a custom context for Azure's STT service.

sttContextPhrases (Array of Strings)
When Google's Cloud STT engine is used, this parameter controls Speech Context phrases. The parameter can list phrases or words that are passed to the STT engine as "hints" for improving the accuracy of speech recognition.
For more information on speech context (speech adaptation), as well as details on the tokens (class tokens) that can be used in phrases, go to https://cloud.google.com/speech-to-text/docs/speech-adaptation.
For example, if speakers frequently say "weather", you want the STT engine to transcribe it as "weather" and not "whether". To do this, you can use the parameter to create a context for this word (and other similar phrases associated with weather):
"sttContextPhrases": ["weather"]
Note:
■ The parameter can be used by all bot providers when the STT engine is Google.
■ When other STT engines are used, the parameter has no effect.

sttDisablePunctuation (Boolean)
Prevents the STT response sent to the bot from including punctuation marks.
■ true: Enabled. Punctuation is excluded.
■ false: (Default) Disabled. Punctuation is included.
Note: This requires support from the STT engine.

sttEndpointID (String)
A synonym for the sttContextId parameter.

targetParticipant (String)
Defines the participant on which to apply the startRecognition and stopRecognition events for starting and stopping (respectively) speech recognition by the STT engine.
Note: The parameter is applicable only to Agent Assist calls.

transferReferredByURL (String)
Defines the party (URL) that initiated the referral. If this parameter exists, the SBC adds a SIP Referred-By header to the outgoing INVITE or REFER message (according to the 'Remote REFER Mode' parameter). If the SBC handles the referral locally (termination), it adds the header to a new outgoing INVITE. If the referral is not handled locally (regular), the SBC adds the header to the forwarded REFER message.

transferSipHeaders (Array of Objects)
Array of objects listing SIP headers that should be sent to the transferee. Each object comprises a name and a value attribute.

transferTarget (String)
Defines the URI to which the call must be transferred. Typically, the URI is a "tel" or "sip" URI.

voiceName (String)
Defines the voice name for the TTS service.
■ Azure: The parameter is configured with the value from the 'Short voice name' column in Azure's Text-to-Speech table (e.g., "it-IT-ElsaNeural").
■ Google: The parameter is configured with the value from the 'Voice name' column in Google's Cloud Text-to-Speech table (e.g., "en-US-Wavenet-A").
■ AWS: The parameter is configured with the value from the 'Name/ID' column in Amazon's Polly TTS table (e.g., "Hans").
■ Almagu: The parameter is configured with the value from the 'Voice' column in Almagu's TTS table (e.g., "Osnat").
Note: The Customer obtains this string from the TTS service provider and must provide it to AudioCodes. For more information, refer to Section Required Information of TTS Provider on page 4.
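The user no-input parameters in Table 4-9 (userNoInputTimeoutMS, userNoInputRetries, userNoInputSendEvent, userNoInputSpeech and userNoInputUrl) interact with each other, and the following hypothetical Python model summarizes how. It encodes the documented rules: a timeout of 0 disables the feature, a retries value of 0 allows one timeout, the noUserInput event carries the expiry count, and a textual prompt takes precedence over an audio URL. The table does not specify what happens after the last allowed timeout, so this sketch assumes that the timer is simply not restarted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserNoInputConfig:
    timeout_ms: int = 0           # userNoInputTimeoutMS (0 = feature disabled)
    retries: int = 0              # userNoInputRetries (0 = only one timeout)
    send_event: bool = False      # userNoInputSendEvent
    speech: Optional[str] = None  # userNoInputSpeech
    url: Optional[str] = None     # userNoInputUrl


def choose_prompt(cfg):
    """The textual prompt takes precedence over the audio prompt."""
    if cfg.speech is not None:
        return ("speech", cfg.speech)
    if cfg.url is not None:
        return ("url", cfg.url)
    return None


def on_user_no_input_timeout(cfg, expiry_count):
    """Return (actions, restart_timer) for the n-th consecutive expiry (n starts at 1)."""
    allowed = cfg.retries + 1
    if cfg.timeout_ms <= 0 or expiry_count > allowed:
        return [], False
    actions = []
    if cfg.send_event:
        # The event's "value" tells the bot how many times the timer has expired.
        actions.append({"type": "event", "name": "noUserInput", "value": expiry_count})
    prompt = choose_prompt(cfg)
    if prompt is not None:
        actions.append(prompt)
    # If restart_timer is True, the caller restarts the timer only after any
    # prompt has finished playing. Assumed: no restart after the last allowed timeout.
    return actions, expiry_count < allowed
```

Remember that the noUserInput event applies only to Azure, Google and AudioCodes API bots, so a real implementation would also check the bot provider.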
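The Continuous ASR parameters in Table 4-9 (continuousASRTimeoutInMS and continuousASRDigits) can be illustrated with a hypothetical accumulator. The 2,500 to 60,000 ms range, the 3,000 ms default and the "#" default key come from the table. Joining recognitions with a single space and measuring silence from the last recognition are assumptions of this sketch, not documented gateway behavior.

```python
class ContinuousAsrBuffer:
    """Hypothetical model of Continuous ASR accumulation (not gateway code)."""

    MIN_TIMEOUT_MS = 2500   # documented lower bound of continuousASRTimeoutInMS
    MAX_TIMEOUT_MS = 60000  # documented upper bound

    def __init__(self, timeout_ms=3000, flush_digit="#"):
        if not self.MIN_TIMEOUT_MS <= timeout_ms <= self.MAX_TIMEOUT_MS:
            raise ValueError("continuousASRTimeoutInMS must be 2,500 to 60,000")
        self.timeout_ms = timeout_ms
        self.flush_digit = flush_digit  # continuousASRDigits
        self._parts = []
        self._last_recognition_ms = None

    def add_recognition(self, text, now_ms):
        """Store one STT recognition instead of sending it to the bot right away."""
        self._parts.append(text)
        self._last_recognition_ms = now_ms

    def on_dtmf(self, digit):
        """The special key sends the accumulated recognitions immediately."""
        return self._flush() if digit == self.flush_digit else None

    def on_tick(self, now_ms):
        """Flush once the user has been silent for timeout_ms since the last recognition."""
        if (self._last_recognition_ms is not None
                and now_ms - self._last_recognition_ms >= self.timeout_ms):
            return self._flush()
        return None

    def _flush(self):
        # Concatenate into one textual message; None if nothing was accumulated.
        message = " ".join(self._parts) or None
        self._parts = []
        self._last_recognition_ms = None
        return message
```

For example, with the default 3,000 ms timeout, recognitions "I want" and "a ticket" received 1 second apart are sent as the single message "I want a ticket" once 3 seconds of silence follow the second one, or immediately if the user presses "#".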


International Headquarters

1 Hayarden Street,

Airport City

Lod 7019900, Israel

Tel: +972-3-976-4000

Fax: +972-3-976-4040

AudioCodes Inc.

200 Cottontail Lane

Suite A101E

Somerset NJ 08873

Tel: +1-732-469-0880

Fax: +1-732-469-2298

Contact us: https://www.audiocodes.com/corporate/offices-worldwide

Website: https://www.audiocodes.com/

Documentation Feedback: https://online.audiocodes.com/documentation-feedback

©2020 AudioCodes Ltd. All rights reserved. AudioCodes, AC, HD VoIP, HD VoIP Sounds Better, IPmedia, Mediant, MediaPack, What’s Inside Matters, OSN, SmartTAP, User Management Pack, VMAS, VoIPerfect, VoIPerfectHD, Your Gateway To VoIP, 3GX, VocaNom, AudioCodes One Voice, AudioCodes Meeting Insights, AudioCodes Room Experience and CloudBond are trademarks or registered trademarks of AudioCodes Limited. All other products or trademarks are property of their respective owners. Product specifications are subject to change without notice.

Document #: LTRT-30929
