Areca ARC-1110, ARC-1120, ARC-1130, ARC-1231ML, ARC-1261ML User Manual

...

SATA RAID Cards

ARC-1110/1120/1130/1160/1170

( 4/8/12/16/24-port PCI-X SATA RAID Controllers )

ARC-1110ML/1120ML/1130ML/1160ML

( 4/8-port Infiniband connector and 12/16-port Multi-lane connector PCI-X SATA RAID Controllers )

ARC-1210/1220/1230/1260/1280

( 4/8/12/16/24-port PCI-Express SATA RAID Controllers )

ARC-1231ML/1261ML/1280ML

(12/16/24-port PCI-Express SATA RAID Controllers)

User Manual

Version: 3.3

Issue Date: November, 2006

Microsoft WHQL Windows Hardware Compatibility Test

ARECA is committed to submitting products to the Microsoft Windows Hardware Quality Labs (WHQL), which is required for participation in the Windows Logo Program. Successful passage of the WHQL tests results in both the “Designed for Windows” logo for qualifying ARECA PCI-X and PCI-Express SATA RAID controllers and a listing on the Microsoft Hardware Compatibility List (HCL).

Copyright and Trademarks

The information about the products in this manual is subject to change without prior notice and does not represent a commitment on the part of the vendor, who assumes no liability or responsibility for any errors that may appear in this manual. All brands and trademarks are the properties of their respective owners. This manual contains materials protected under International Copyright Conventions. All rights reserved. No part of this manual may be reproduced in any form or by any means, electronic or mechanical, including photocopying, without the written permission of the manufacturer and the author. All inquiries should be addressed to ARECA Technology Corp.

FCC STATEMENT

This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to part 15 of the FCC Rules. These limits are designed to provide reasonable protection against interference in a residential installation. This equipment generates, uses, and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation.

Contents

1. Introduction .............................................................. 10

1.1 Overview ....................................................................... 10

1.2 Features ........................................................................12

1.3 RAID Concept ................................................................. 15

1.3.1 RAID Set ................................................................... 15

1.3.2 Volume Set ................................................................ 15

1.3.3 Ease of Use Features ................................................. 16

1.3.3.1 Foreground Availability/Background Initialization ....... 16

1.3.3.2 Array Roaming ..................................................... 16

1.3.3.3 Online Capacity Expansion ..................................... 17

1.3.3.4 Online RAID Level and Stripe Size Migration ............. 19

1.3.3.5 Online Volume Expansion ........................................ 19

1.4 High availability .............................................................. 20

1.4.1 Global Hot Spares ...................................................... 20

1.4.2 Hot-Swap Disk Drive Support ....................................... 21

1.4.3 Auto Declare Hot-Spare .............................................. 21

1.4.4 Auto Rebuilding ......................................................... 21

1.4.5 Adjustable Rebuild Priority ........................................... 22

1.5.1 Hard Drive Failure Prediction ........................................ 23

1.5.2 Auto Reassign Sector .................................................. 23

1.5.3 Consistency Check ...................................................... 24

1.6 Data Protection ............................................................... 24

1.6.1 BATTERY BACKUP ...................................................... 24

1.6.2 RECOVERY ROM ......................................................... 25

1.7 Understanding RAID ........................................................ 25

1.7.1 RAID 0 ...................................................................... 25

1.7.2 RAID 1 ...................................................................... 26

1.7.3 RAID 1E .................................................................... 27

1.7.4 RAID 3 ...................................................................... 27

1.7.5 RAID 5 ...................................................................... 28

1.7.6 RAID 6 ...................................................................... 29

2. Hardware Installation ............................................... 32

2.1 Before You Begin Installation ........................................... 32

2.2 Board Layout .................................................................. 33

2.3 Installation .....................................................................39

3. McBIOS RAID Manager .............................................. 56

3.1 Starting the McBIOS RAID Manager ................................... 56

3.2 McBIOS Configuration Manager ......................................... 57

3.3 Configuring Raid Sets and Volume Sets .............................. 58

3.4 Designating Drives as Hot Spares ...................................... 58

3.5 Using Quick Volume /Raid Setup Configuration .................... 59

3.6 Using RAID Set/Volume Set Function Method ...................... 60

3.7 Main Menu .................................................................... 62

3.7.1 Quick Volume/RAID Setup ........................................... 63

3.7.2 Raid Set Function ....................................................... 66

3.7.2.1 Create Raid Set .................................................... 67

3.7.2.2 Delete Raid Set ..................................................... 68

3.7.2.3 Expand Raid Set .................................................... 68

• Migrating ...................................................................... 69

3.7.2.4 Activate Incomplete Raid Set ................................... 70

3.7.2.5 Create Hot Spare ................................................... 71

3.7.2.6 Delete Hot Spare ................................................... 71

3.7.2.7 Raid Set Information .............................................. 72

3.7.3 Volume Set Function ................................................... 72

3.7.3.1 Create Volume Set ................................................. 73

• Volume Name ................................................................ 75

• Raid Level ..................................................................... 75

• Capacity .......................................................................76

• Strip Size ...................................................................... 77

• SCSI Channel ................................................................78

• SCSI ID ........................................................................ 78

• SCSI LUN ...................................................................... 79

• Cache Mode .................................................................. 79

3.7.3.2 Delete Volume Set ................................................. 80

• Tag Queuing .................................................................. 80

3.7.3.3 Modify Volume Set ................................................. 81

• Volume Growth .............................................................. 82

• Volume Set Migration ...................................................... 83

3.7.3.4 Check Volume Set .................................................. 83

3.7.3.5 Stop Volume Set Check .......................................... 83

3.7.3.6 Display Volume Set Info. ........................................ 84

3.7.4 Physical Drives ........................................................... 85

3.7.4.1 View Drive Information .......................................... 85

3.7.4.2 Create Pass-Through Disk ....................................... 86

3.7.4.3 Modify a Pass-Through Disk ..................................... 86

3.7.4.4 Delete Pass-Through Disk ....................................... 87

3.7.4.5 Identify Selected Drive ........................................... 87

3.7.5 Raid System Function .................................................88

3.7.5.1 Mute The Alert Beeper ........................................... 88

3.7.5.2 Alert Beeper Setting ............................................... 89

3.7.5.3 Change Password .................................................. 89

3.7.5.4 JBOD/RAID Function .............................................. 90

3.7.5.5 Background Task Priority ........................................ 91

3.7.5.6 Maximum SATA Mode ............................................. 91

3.7.5.7 HDD Read Ahead Cache ......................................... 92

3.7.5.8 Stagger Power On .................................................. 92

3.7.5.9 Empty HDD Slot LED .............................................93

3.7.5.10 HDD SMART Status Polling .................................... 94

3.7.5.11 Controller Fan Detection ....................................... 94

3.7.5.12 Disk Write Cache Mode ......................................... 95

3.7.5.13 Capacity Truncation .............................................. 95

3.7.6 Ethernet Configuration (12/16/24-port) ......................... 96

3.7.6.1 DHCP Function ......................................................97

3.7.6.2 Local IP address .................................................... 98

3.7.6.3 Ethernet Address ................................................... 99

3.7.7 View System Events ...................................................99

3.7.8 Clear Events Buffer ................................................... 100

3.7.9 Hardware Monitor ..................................................... 100

3.7.10 System Information ................................................ 100

4. Driver Installation ................................................... 102

4.1 Creating the Driver Diskettes .......................................... 102

4.2 Driver Installation for Windows ....................................... 103

4.2.1 New Storage Device Drivers in Windows Server 2003 .... 103

4.2.2 Install Windows 2000/XP/2003 on a SATA RAID Volume 104

4.2.2.1 Installation procedures ......................................... 104

4.2.2.2 Making Volume Sets Available to Windows System ... 105

4.2.3 Installing controller into an existing Windows 2000/XP/2003

Installation ...................................................................... 106

4.2.3.1 Making Volume Sets Available to Windows System ... 107

4.2.4 Uninstall controller from Windows 2000/XP/2003 .......... 108

4.3 Driver Installation for Linux ............................................ 109

4.4 Driver Installation for FreeBSD ........................................ 109

4.5 Driver Installation for Solaris 10 ...................................... 110

4.6 Driver Installation for Mac 10.x ....................................... 110

4.7 Driver Installation for UnixWare 7.1.4 .............................. 111

4.8 Driver Installation for NetWare 6.5 .................................. 111

5. ArcHttp Proxy Server Installation ........................... 112

5.1 For Windows................................................................. 113

5.2 For Linux ..................................................................... 114

5.3 For FreeBSD ................................................................. 115

5.4 For Solaris 10 x86 ......................................................... 116

5.5 For Mac OS 10.x ........................................................... 116

5.6 ArcHttp Configuration .................................................... 117

6. Web Browser-based Configuration ......................... 121

6.1 Start-up McRAID Storage Manager ................................. 121

• Another method to start-up McRAID Storage Manager from

Windows Local Administration .......................................... 122

6.1.1 Through Ethernet port (Out-of-Band) ......................... 123

6.2 SATA RAID controller McRAID Storage Manager ................. 124

6.3 Main Menu .................................................................. 125

6.4 Quick Function .............................................................. 125

6.5 RaidSet Functions ......................................................... 126

6.5.1 Create Raid Set ....................................................... 126

6.5.2 Delete Raid Set ........................................................ 127

6.5.3 Expand Raid Set ....................................................... 128

6.5.4 Activate Incomplete Raid Set ..................................... 128

6.5.5 Create Hot Spare ..................................................... 129

6.5.6 Delete Hot Spare ...................................................... 129

6.5.7 Rescue Raid Set ....................................................... 129

6.6 Volume Set Functions .................................................... 130

6.6.1 Create Volume Set ................................................... 130

• Volume Name .............................................................. 131

• Raid Level .................................................................. 131

• Capacity ..................................................................... 131

• Greater Two TB Volume Support ..................................... 131

• Initialization Mode ........................................................ 132

• Strip Size .................................................................... 132

• Cache Mode ................................................................ 132

• SCSI Channel/SCSI ID/SCSI Lun .................................... 132

• Tag Queuing ................................................................ 132

6.6.2 Delete Volume Set .................................................... 133

6.6.3 Modify Volume Set .................................................... 133

6.6.3.1 Volume Set Migration ........................................... 134

6.6.4 Check Volume Set .................................................... 135

6.6.5 Stop VolumeSet Check .............................................. 135

6.7 Physical Drive .............................................................. 135

6.7.1 Create Pass-Through Disk .......................................... 136

6.7.2 Modify Pass-Through Disk .......................................... 136

6.7.3 Delete Pass-Through Disk .......................................... 137

6.8 System Controls ........................................................... 138

6.8.1 System Config ......................................................... 138

• System Beeper Setting ................................................. 138

• Background Task Priority ............................................... 138

• JBOD/RAID Configuration .............................................. 138

• Maximum SATA Supported ............................................. 138

• HDD Read Ahead Cache ................................................ 138

• Stagger Power on ........................................................ 139

• Empty HDD Slot LED .................................................... 140

• HDD SMART Status Polling............................................. 140

• Disk Write Cache Mode ................................................. 141

• Disk Capacity Truncation Mode ....................................... 141

6.8.2 Ethernet Configuration (12/16/24-port) ....................... 142

6.8.3 Alert by Mail Configuration (12/16/24-port) ................ 143

6.8.4 SNMP Configuration (12/16/24-port) ........................... 144

• SNMP Trap Configurations ............................................. 145

• SNMP System Configurations ......................................... 145

• SNMP Trap Notification Configurations ............................. 145

6.8.5 NTP Configuration (12/16/24-port) ............................. 145

• NTP Server Address ....................................................... 145

• Time Zone ................................................................... 146

• Automatic Daylight Saving............................................. 146

6.8.6 View Events/Mute Beeper .......................................... 146

6.8.7 Generate Test Event ................................................. 146

6.8.8 Clear Events Buffer ................................................... 147

6.8.9 Modify Password ...................................................... 147

6.8.10 Update Firmware ................................................... 148

6.9 Information .................................................................. 148

6.9.1 RaidSet Hierarchy ..................................................... 148

6.9.2 System Information .................................................. 148

Appendix A ................................................................. 151

Upgrading Flash ROM Update Process .................................... 151

Upgrading Firmware Through McRAID Storage Manager ........... 151

Upgrading Entire Flash ROM Image Through Arcflash DOS Utility 153

Appendix B .................................................................. 156

Battery Backup Module (ARC-6120-BAT) ................................ 156

BBM Components ........................................................... 156

BBM Specifications .......................................................... 156

Installation .................................................................... 157

Battery Backup Capacity .................................................. 157

Operation ...................................................................... 158

Changing the Battery Backup Module ................................ 158

Status of BBM ................................................................ 158

Appendix C .................................................................. 159

SNMP Operation & Definition ................................................ 159

Appendix D .................................................................. 166

Event Notification Configurations ........................................ 166

A. Device Event .............................................................. 166

B. Volume Event ............................................................. 167

C. RAID Set Event .......................................................... 167

D. Hardware Event .......................................................... 168

Appendix E .................................................................. 169

General Troubleshooting Tips ............................................... 169

Appendix F .................................................................. 173

Technical Support ............................................................... 173

Glossary ...................................................................... 174

2TB .............................................................................. 174

Array ............................................................................ 174

ATA .............................................................................. 174

Auto Reassign Sector ..................................................... 174

Battery Backup Module .................................................... 175

BIOS ............................................................................ 175

Cache ........................................................................... 175

Consistency Check .......................................................... 175

Driver ........................................................................... 175

Hot Spare ...................................................................... 176

Hardware RAID versus Software RAID .............................. 176

Hot Swap ...................................................................... 176

NVRAM .......................................................................... 176

Parity ............................................................................ 176

PCI Express .................................................................. 176

PCI-X ........................................................................... 177

RAID ............................................................................ 177

Rebuild ......................................................................... 177

SATA (Serial ATA) ........................................................... 177

SMART .......................................................................... 178

SNMP ............................................................................ 178

Volume Set .................................................................... 178

Write-back ..................................................................... 178

Write-through ................................................................ 178

XOR-Engine ................................................................... 179

INTRODUCTION

1. Introduction

This section presents a brief overview of the SATA RAID Series controllers: ARC-1110/1110ML/1120/1120ML/1130/1130ML/1160/1160ML/1170 (4/8/12/16/24-port PCI-X SATA RAID controllers) and ARC-1210/1220/1230/1231ML/1260/1261ML/1280/1280ML (4/8/12/16/24-port PCI-Express SATA RAID controllers).

1.1 Overview

The ARC-11xx and ARC-12xx Series of high-performance Serial ATA RAID controllers support a maximum of 4, 8, 12, 16, or 24 SATA II peripheral devices (depending on model) on a single controller. The ARC-11xx series is designed for the PCI-X bus and the ARC-12xx series for the PCI-Express bus. When properly configured, these SATA controllers provide non-stop service with a high degree of fault tolerance through the use of RAID technology, and they also provide advanced array management features.

The 4- and 8-port SATA RAID controllers are low-profile PCI cards, ideal for 1U and 2U rack-mount systems. These controllers use the same RAID kernel that has been field-proven in Areca's existing external RAID controllers, allowing Areca to quickly bring stable and reliable RAID controllers to market.

Unparalleled Performance

The SATA RAID controllers provide reliable data protection for desktops, workstations, and servers. These cards set the standard with enhancements that include a high-performance Intel I/O processor, a new memory architecture, and a high-performance PCI bus interconnect. The 8/12/16/24-port controllers have a built-in RAID 6 engine that provides extreme-availability RAID 6 functionality. This engine can compute two parity blocks concurrently, with performance very similar to RAID 5. By default, the controllers come with 256MB of ECC SDRAM. The IOP331/332/333-based 12/16/24-port controllers provide one DDR333 SODIMM socket that allows upgrading to up to 1GB of memory, while the IOP341-based ARC-1231ML/1261ML/1280ML/1280 provide one DDR2-533 DIMM socket that allows upgrading to up to 2GB of memory. The controllers use Marvell 4/8-channel SATA PCI-X controller chips, which can simultaneously communicate with the I/O processor and read or write data on multiple drives.

Unsurpassed Data Availability

As storage capacity requirements continue to increase rapidly, users require greater levels of disk drive fault tolerance that can be implemented without doubling the investment in disk drives. RAID 1 (mirroring) provides high fault tolerance. However, half of the array's drive capacity is lost to mirroring, which makes it too costly for most users to implement on large volume sets because it doubles the number of drives required. Users want the protection of RAID 1 or better with an implementation cost comparable to RAID 5. RAID 6 can offer fault tolerance greater than RAID 1 or RAID 5 but consumes only the capacity of 2 disk drives for distributed parity data. The 8/12/16/24-port RAID controllers provide RAID 6 functionality to meet these demanding requirements.
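To make the capacity trade-off concrete, here is a small Python sketch of usable capacity per RAID level for a volume set spanning N equal disks. It assumes the standard textbook formulas (mirroring keeps half, RAID 3/5 give up one disk's worth of capacity to parity, RAID 6 gives up two) and ignores controller metadata and capacity truncation; `usable_capacity` is a hypothetical helper, not part of any Areca tool.

```python
# Hypothetical helper: textbook usable-capacity formulas, not Areca firmware logic.
PARITY_DISKS = {"3": 1, "5": 1, "6": 2}  # disks' worth of capacity spent on parity


def usable_capacity(level: str, n_disks: int, disk_size: float) -> float:
    """Approximate usable capacity of a volume set spanning n_disks equal disks."""
    if level == "0":
        return n_disks * disk_size            # striping only, no redundancy
    if level in ("1", "1E"):
        return n_disks * disk_size / 2        # every block is stored twice
    if level in PARITY_DISKS:
        parity = PARITY_DISKS[level]
        if n_disks <= parity:
            raise ValueError(f"RAID {level} needs more than {parity} disks")
        return (n_disks - parity) * disk_size
    raise ValueError(f"unsupported RAID level: {level}")


for level in ("0", "1E", "5", "6"):
    print(f"RAID {level}: {usable_capacity(level, 8, 500):g} GB")
```

With eight 500 GB disks this prints 4000, 2000, 3500 and 3000 GB for RAID 0, 1E, 5 and 6: RAID 6 survives any two drive failures while giving up only one disk more than RAID 5.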

The SATA RAID controllers also provide RAID levels 0, 1, 1E, 3 and 5, as well as JBOD configurations. Their high data availability and protection derive from the following capabilities: Online RAID Capacity Expansion, Array Roaming, Online RAID Level / Stripe Size Migration, Dynamic Volume Set Expansion, Global Online Spare, Automatic Drive Failure Detection, Automatic Failed Drive Rebuilding, Disk Hot-Swap, Online Background Rebuilding and Instant Availability/Background Initialization. During a controller firmware flash upgrade, an error can corrupt the controller firmware and leave the device non-functional. With the Redundant Flash Image feature, however, the controller reverts to the last known version of firmware and continues operating. This reduces the risk of system failure due to firmware crashes.
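The Redundant Flash Image behavior can be pictured as a boot-time choice between two stored images. The Python sketch below is purely illustrative: the `FirmwareImage` record, the CRC-32 check and the `select_boot_image` function are assumptions made for the example, not a description of how Areca's firmware actually stores or validates its images.

```python
import zlib
from dataclasses import dataclass


@dataclass
class FirmwareImage:
    """Hypothetical firmware image record with a checksum written at flash time."""
    version: str
    payload: bytes
    crc32: int

    def is_valid(self) -> bool:
        # An interrupted or corrupted flash no longer matches its recorded checksum.
        return zlib.crc32(self.payload) == self.crc32


def select_boot_image(primary: FirmwareImage, backup: FirmwareImage) -> FirmwareImage:
    """Boot the newly flashed image if it verifies; otherwise fall back to the backup."""
    if primary.is_valid():
        return primary
    if backup.is_valid():
        return backup
    raise RuntimeError("no valid firmware image available")


old = b"firmware build A"
new = b"firmware build B"
backup = FirmwareImage("A", old, zlib.crc32(old))
interrupted = FirmwareImage("B", new[:6], zlib.crc32(new))  # flash cut short
print(select_boot_image(interrupted, backup).version)       # prints A
```

Keeping the previous image untouched until the new one verifies is what lets the controller keep running after a failed upgrade.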

Easy RAID Management

The SATA RAID controller uses built-in firmware with an embedded terminal emulation that can be accessed via a hot key at the BIOS boot-up screen. This pre-boot manager utility simplifies the setup and management of the RAID controller. The controller firmware also contains an ArcHttp browser-based program that can be accessed through the ArcHttp proxy server function in Windows, Linux, FreeBSD and other environments. This web browser-based RAID management utility allows both local and remote creation and modification of RAID sets and volume sets, and monitoring of RAID status, from standard web browsers.

1.2 Features

Adapter Architecture

• Intel IOP 331 I/O processor (ARC-11xx series)

• Intel IOP 332/IOP 333 I/O processor (ARC-12xx series)

• Intel IOP341 I/O processor (ARC-12x1ML/ARC-1280ML/1280)

• 64-bit/133MHz PCI-X Bus compatible

• PCI Express X8 compatible

• 256MB on-board DDR333 SDRAM with ECC protection (4/8-port)

• One SODIMM socket with default 256MB of DDR333 SDRAM with ECC protection, upgradeable to 1GB (12, 16 and 24-port cards only)

• One DIMM socket with default 256MB of DDR2-533 SDRAM with ECC protection, upgradeable to 2GB (ARC-12xxML, ARC-1280)

• An ECC or non-ECC SDRAM module using X8 or X16 devices

• Support up to 4/8/12/16/24 SATA II drives

• Write-through or write-back cache support

• Multi-adapter support for large storage requirements

• BIOS boot support for greater fault tolerance

• BIOS PnP (plug and play) and BBS (BIOS boot specification) support

• Supports extreme performance Intel RAID 6 functionality

• NVRAM for RAID event & transaction log

• Battery backup module (BBM) ready (depends on motherboard)

RAID Features

• RAID level 0, 1, 1E, 3, 5, 6 (R6 engine inside) and JBOD

• Multiple RAID selection

• Array roaming

• Online RAID level/stripe size migration

• Online capacity expansion & RAID level migration simultaneously

• Online volume set growth

• Instant availability and background initialization

• Automatic drive insertion / removal detection and rebuilding

• Greater than 2TB per volume set for 64-bit LBA

• Redundant flash image for adapter availability

• Support S.M.A.R.T., NCQ and OOB staggered spin-up capable drives
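The 2TB figure in the list above comes from block-addressing arithmetic. Assuming 512-byte logical sectors (an assumption; drives with larger logical sectors move the limit), a 32-bit logical block address reaches 2^32 × 512 bytes = 2 TiB, while a 64-bit LBA removes that ceiling for any practical volume size. A short Python check:

```python
SECTOR_BYTES = 512  # assumption: classic 512-byte logical sectors
TIB = 2 ** 40


def max_addressable_bytes(lba_bits: int, sector_bytes: int = SECTOR_BYTES) -> int:
    """Largest volume an LBA of the given width can address: 2**lba_bits sectors."""
    return (2 ** lba_bits) * sector_bytes


print(max_addressable_bytes(32))          # 2199023255552 bytes
print(max_addressable_bytes(32) / TIB)    # 2.0 TiB
print(max_addressable_bytes(64) / TIB)    # 8589934592.0 TiB
```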

Monitors/Notification

• System status indication through LED/LCD connector, HDD activity/fault connector, and alarm buzzer

• SMTP support for email notification

• SNMP agent support for remote SNMP manager

• I2C Enclosure Management Ready (IOP331/332/333)

• I2C & SGPIO Enclosure Management Ready (IOP341)

RAID Management

• Field-upgradeable firmware in flash ROM

• Ethernet port support on 12/16/24-port adapters

In-Band Manager

• Hot key boot-up McBIOS RAID manager via BIOS

• Support controller's API library, allowing customers to write their own AP

• Support Command Line Interface (CLI)

• Browser-based management utility via ArcHttp proxy server

• Single Admin Portal (SAP) monitor utility

• Disk Stress Test (DST) utility for production in Windows

Out-of-Band Manager

• Firmware-embedded browser-based RAID manager, SMTP manager, SNMP agent and Telnet function via Ethernet port (for 12/16/24-port adapters)

• Support controller's API library for customers to write their own AP (for 12/16/24-port adapters)

• Push Button and LCD display panel (optional)

Operating System

• Windows 2000/XP/Server 2003

• Red Hat Linux

• SuSE Linux

• FreeBSD

• Novell Netware 6.5

• Solaris 10 X86/X86_64

• SCO Unixware 7.1.4

• Mac OS 10.X (non-bootable)

(For latest supported OS listing visit http://www.areca.com.tw)

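Internal PCI-X RAID Card Comparison (ARC-11XX)

| Model | 1110 | 1120 | 1130 | 1160 | 1170 |
|---|---|---|---|---|---|
| RAID processor | IOP331 | IOP331 | IOP331 | IOP331 | IOP331 |
| Host bus type | PCI-X 133MHz | PCI-X 133MHz | PCI-X 133MHz | PCI-X 133MHz | PCI-X 133MHz |
| RAID 6 support | N/A | YES | YES | YES | YES |
| Cache memory | 256MB | 256MB | One SODIMM | One SODIMM | One SODIMM |
| Drive support | 4 × SATA II | 8 × SATA II | 12 × SATA II | 16 × SATA II | 24 × SATA II |
| Disk connector | SATA | SATA | SATA | SATA | SATA |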

PCI-X RAID Card Comparison (ARC-11XXML)

| Model | 1110ML | 1120ML | 1130ML | 1160ML |
|---|---|---|---|---|
| RAID processor | IOP331 | IOP331 | IOP331 | IOP331 |
| Host bus type | PCI-X 133MHz | PCI-X 133MHz | PCI-X 133MHz | PCI-X 133MHz |
| RAID 6 support | N/A | YES | YES | YES |
| Cache memory | 256MB | 256MB | One SODIMM | One SODIMM |
| Drive support | 4 × SATA II | 8 × SATA II | 12 × SATA II | 16 × SATA II |
| Disk connector | Infiniband | Infiniband | Multi-lane | Multi-lane |

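Internal PCI-Express RAID Card Comparison (ARC-12XX)

| Model | 1210 | 1220 | 1230 | 1260 |
|---|---|---|---|---|
| RAID processor | IOP332/IOP333 | IOP332/IOP333 | IOP332/IOP333 | IOP332/IOP333 |
| Host bus type | PCI-Express X8 | PCI-Express X8 | PCI-Express X8 | PCI-Express X8 |
| RAID 6 support | N/A | YES | YES | YES |
| Cache memory | 256MB | 256MB | One SODIMM | One SODIMM |
| Drive support | 4 × SATA II | 8 × SATA II | 12 × SATA II | 16 × SATA II |
| Disk connector | SATA | SATA | SATA | SATA |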

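Internal PCI-Express RAID Card Comparison (ARC-12XX)

| Model | 1231ML | 1261ML | 1280ML | 1280 |
|---|---|---|---|---|
| RAID processor | IOP341 | IOP341 | IOP341 | IOP341 |
| Host bus type | PCI-Express X8 | PCI-Express X8 | PCI-Express X8 | PCI-Express X8 |
| RAID 6 support | YES | YES | YES | YES |
| Cache memory | One DDR2 DIMM (default 256MB, upgrade to 2GB) | One DDR2 DIMM (default 256MB, upgrade to 2GB) | One DDR2 DIMM (default 256MB, upgrade to 2GB) | One DDR2 DIMM (default 256MB, upgrade to 2GB) |
| Drive support | 12 × SATA II | 16 × SATA II | 24 × SATA II | 24 × SATA II |
| Disk connector | 3 × Mini SAS 4i | 4 × Mini SAS 4i | 6 × Mini SAS 4i | 24 × SATA |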

1.3 RAID Concept

1.3.1 RAID Set

A RAID set is a group of disks connected to a RAID controller. A RAID set contains one or more volume sets. The RAID set itself does not define the RAID level (0, 1, 1E, 3, 5, 6, etc.); the RAID level is defined within each volume set. In other words, volume sets are contained within RAID sets, and each volume set carries its own RAID level. If physical disks of different capacities are grouped together in a RAID set, the capacity of the smallest disk becomes the effective capacity of every disk in the RAID set.
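The smallest-disk rule can be checked with a few lines of arithmetic. This Python sketch computes the raw capacity a RAID set makes available before any RAID-level overhead; `raid_set_capacity` is a hypothetical helper, and the figures ignore controller metadata and capacity truncation.

```python
def raid_set_capacity(disk_sizes_gb):
    """Return (usable raw capacity, capacity left unused) for a RAID set.

    Every member is treated as if it were as large as the smallest disk.
    """
    if not disk_sizes_gb:
        raise ValueError("a RAID set needs at least one disk")
    effective = min(disk_sizes_gb) * len(disk_sizes_gb)
    return effective, sum(disk_sizes_gb) - effective


print(raid_set_capacity([250, 250, 300, 400]))  # (1000, 200)
```

Mixing a 400 GB disk into a set of 250 GB disks therefore wastes 150 GB on that disk; grouping disks of equal size avoids the loss.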

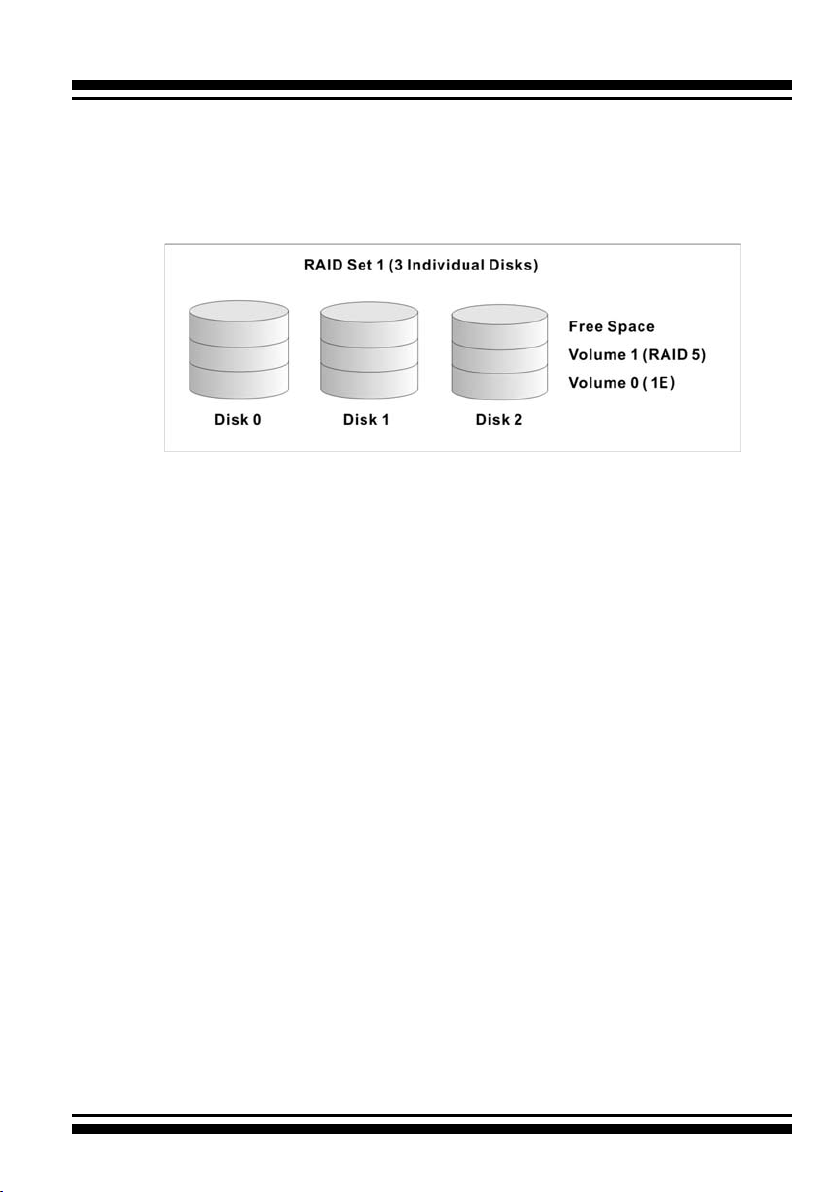

1.3.2 Volume Set

Each volume set is seen by the host system as a single logical device (in other words, a single large virtual hard disk). A volume set uses a specific RAID level, which requires one or more physical disks (depending on the RAID level used). The RAID level determines the performance and data protection of a volume set. A volume set can consume all or a portion of the available disk capacity in a RAID set, and multiple volume sets can exist in a RAID set.

For the SATA RAID controller, a volume set must be created either on an existing RAID set or on a group of available individual disks (disks that are about to become part of a RAID set). If there are pre-existing RAID sets with available capacity and enough disks for the desired RAID level, then the volume set can be created in the existing RAID set of the user’s choice.

In the illustration, volume 1 can be assigned RAID level 5 while volume 0 might be assigned RAID level 1E. Alternatively, the free space can be used to create volume 2, which could then be set to use RAID level 5.

1.3.3 Ease of Use Features

1.3.3.1 Foreground Availability/Background Initialization

RAID 0 and RAID 1 volume sets can be used immediately after creation because they do not create parity data. However, RAID 3, 5 and 6 volume sets must be initialized to generate parity information. In Background Initialization, the initialization proceeds as a background task, and the volume set is fully accessible for system reads and writes. The operating system can instantly access the newly created arrays without requiring a reboot and without waiting for initialization to complete. Furthermore, the volume set is protected against disk failures while initializing. If using Foreground Initialization, the initialization process must be completed before the volume set is ready for system access.

1.3.3.2 Array Roaming

The SATA RAID controllers store RAID configuration information on the disk drives. The controller therefore protects the configuration settings in the event of controller failure. Array roaming gives administrators the ability to move a complete RAID set to another system without losing RAID configuration information or data on that RAID set. Therefore, if a server fails, the RAID set disk drives can be moved to another server with an Areca RAID controller, and the disks can be inserted in any order.
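Inserting disks "in any order" works because each disk carries its own configuration record. The manual does not document that on-disk format, so the `DiskMetadata` fields and the `reassemble` helper below are hypothetical; this minimal Python sketch only shows how member order can be recovered from per-disk records rather than from slot positions. For simplicity it refuses a set with missing members.

```python
from dataclasses import dataclass


@dataclass
class DiskMetadata:
    """Hypothetical per-disk record of the RAID configuration."""
    raid_set_id: str
    member_index: int   # position of this disk within its RAID set
    member_count: int   # total number of members in the RAID set


def reassemble(disks):
    """Recover member order from on-disk metadata, whatever the slot order.

    `disks` is a list of (serial_number, DiskMetadata) tuples in the order
    the controller happens to find them.
    """
    by_set = {}
    for serial, meta in disks:
        by_set.setdefault(meta.raid_set_id, []).append(
            (meta.member_index, serial, meta.member_count))
    result = {}
    for set_id, members in by_set.items():
        members.sort()  # order by recorded member index, not physical slot
        expected = members[0][2]
        if [index for index, _, _ in members] != list(range(expected)):
            raise ValueError(f"RAID set {set_id} has missing or duplicate members")
        result[set_id] = [serial for _, serial, _ in members]
    return result


found = [
    ("SN-C", DiskMetadata("raidset0", 2, 3)),
    ("SN-A", DiskMetadata("raidset0", 0, 3)),
    ("SN-B", DiskMetadata("raidset0", 1, 3)),
]
print(reassemble(found))  # {'raidset0': ['SN-A', 'SN-B', 'SN-C']}
```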

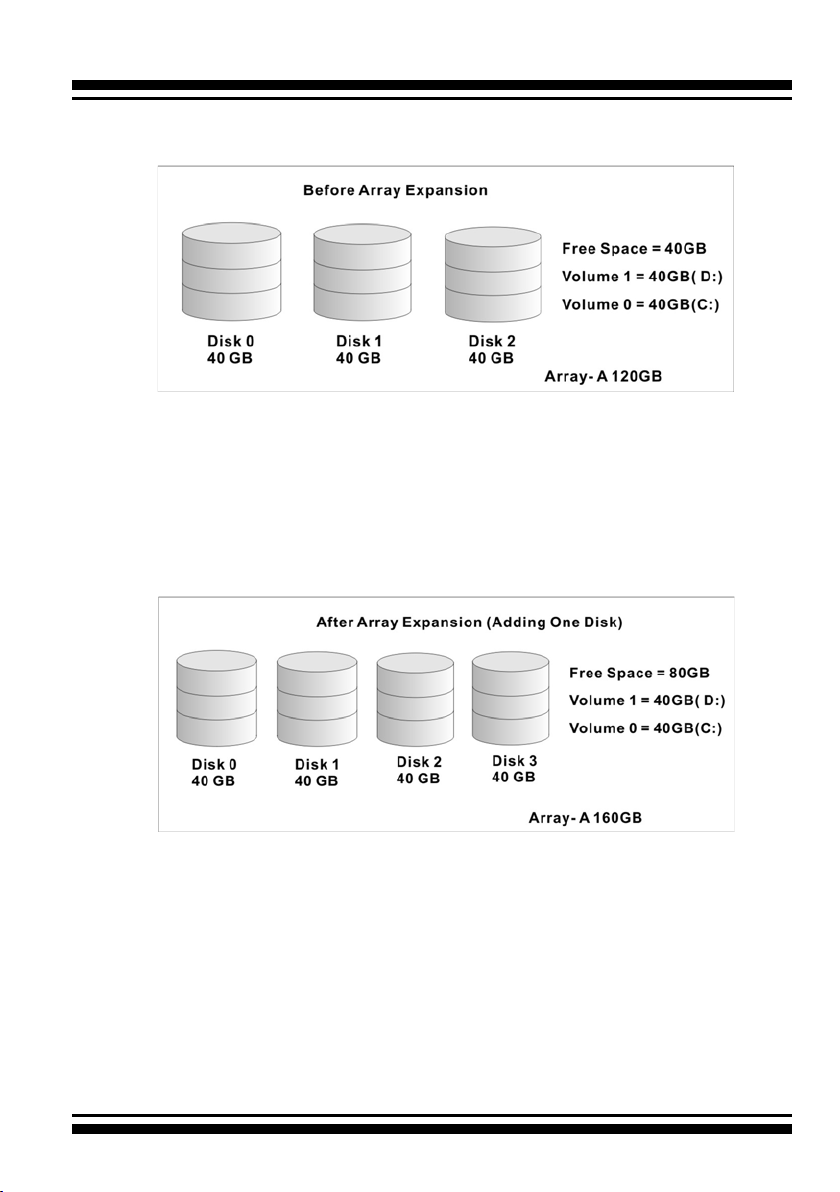

1.3.3.3 Online Capacity Expansion

Online Capacity Expansion makes it possible to add one or more physical drives to a volume set without interrupting server operation, eliminating the need to back up and restore after reconfiguration of the RAID set. When disks are added to a RAID set, unused capacity is added to the end of the RAID set. Then, data on the existing volume sets (residing on the newly expanded RAID set) is redistributed evenly across all the disks. A contiguous block of unused capacity is made available on the RAID set. The unused capacity can be used to create additional volume sets.

A disk to be added to a RAID set must be in normal mode (not failed), must be free (not a spare, not in a RAID set, and not passed through to the host) and must have at least the same capacity as the smallest disk already in the RAID set.
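The three eligibility rules above can be checked mechanically. In this minimal Python sketch the `Disk` class, its role strings, and `can_add_to_raid_set` are hypothetical names chosen for illustration; they are not part of the controller's interface.

```python
from dataclasses import dataclass


@dataclass
class Disk:
    name: str
    capacity_gb: int
    failed: bool = False
    role: str = "free"  # hypothetical roles: "free", "spare", "member", "pass-through"


def can_add_to_raid_set(candidate, members):
    """Apply the expansion rules: normal (not failed), free, and large enough."""
    if candidate.failed:
        return False  # must be in normal mode
    if candidate.role != "free":
        return False  # not a spare, not a member, not passed through to the host
    smallest = min(member.capacity_gb for member in members)
    return candidate.capacity_gb >= smallest


raid_set = [Disk("d0", 500, role="member"), Disk("d1", 400, role="member")]
print(can_add_to_raid_set(Disk("new", 400), raid_set))  # True
print(can_add_to_raid_set(Disk("new", 300), raid_set))  # False
```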

Capacity expansion is only permitted to proceed if all volumes on the RAID set are in the normal status. During the expansion process, the volume sets being expanded can be accessed by the host system. In addition, the volume sets with RAID level 1, 1E, 3, 5 or 6 are protected against data loss in the event of disk failure(s). In the case of disk failure, the volume set transitions from the “migrating” state to the “migrating+degraded” state. When the expansion is completed, the volume set then transitions to “degraded” mode. If a global hot spare is present, it further transitions to the “rebuilding” state.
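The state sequence described above can be modeled as a small transition table. This is a minimal Python sketch: the state names come from the manual, while the event names and the "migrating to normal" transition on a failure-free completion are assumptions made for illustration.

```python
# State names follow the manual; event names are hypothetical.
TRANSITIONS = {
    ("normal", "start_expansion"): "migrating",
    ("migrating", "disk_failed"): "migrating+degraded",
    ("migrating", "expansion_done"): "normal",  # assumed: no failure occurred
    ("migrating+degraded", "expansion_done"): "degraded",
}


def next_state(state, event, global_hot_spare_present):
    """Return the volume set state after `event`; unknown events leave it unchanged."""
    new_state = TRANSITIONS.get((state, event), state)
    if new_state == "degraded" and global_hot_spare_present:
        new_state = "rebuilding"  # the global hot spare takes over
    return new_state


state = next_state("migrating", "disk_failed", True)
print(state)                                        # migrating+degraded
print(next_state(state, "expansion_done", True))    # rebuilding
```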


The expansion process is illustrated in the following figure.

The SATA RAID controller redistributes the original volume set over the original and newly added disks, using the same fault-tolerance configuration. The unused capacity on the expanded RAID set can then be used to create an additional volume set, with a different fault-tolerance setting (if required by the user).


1.3.3.4 Online RAID Level and Stripe Size Migration

For those who wish to upgrade to a different RAID level later, a system with Areca online RAID level/stripe size migration allows a simplified upgrade to any supported RAID level without having to reinstall the operating system.

The SATA RAID controllers can migrate both the RAID level and stripe size of an existing volume set while the server is online and the volume set is in use. Online RAID level/stripe size migration can prove helpful during performance tuning activities as well as when additional physical disks are added to the SATA RAID controller. For example, in a system using two drives in RAID level 1, it is possible to add a single drive to gain capacity while retaining fault tolerance. (Normally, expanding a RAID level 1 array would require the addition of two disks.) A third disk can be added to the existing RAID logical drive, and the volume set can then be migrated from RAID level 1 to 5. The result would be parity fault tolerance and double the available capacity without taking the system down. A fourth disk could be added to migrate to RAID level 6. It is only possible to migrate to a higher RAID level by adding a disk; disks in an existing array can’t be reconfigured for a higher RAID level without adding a disk.
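The "double the available capacity" claim in the RAID 1 to RAID 5 example follows from simple arithmetic on equal-sized disks. This minimal Python sketch computes usable capacity per RAID level; the minimum drive counts follow the summary table at the end of this chapter, and the RAID 6 minimum of four disks is an assumption stated in the code. `usable_capacity_gb` is a hypothetical helper name.

```python
# Minimum drive counts from the summary table; RAID 6 is assumed to need four.
MIN_DRIVES = {"0": 1, "1": 2, "1E": 3, "3": 3, "5": 3, "6": 4}


def usable_capacity_gb(level, n_disks, disk_gb):
    """Usable capacity of a volume set spanning n equal-sized disks."""
    if n_disks < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} disks")
    if level == "0":
        return n_disks * disk_gb            # striping, no redundancy
    if level == "1":
        return disk_gb                      # every member holds a full copy
    if level == "1E":
        return n_disks * disk_gb // 2       # every strip is stored twice
    if level in ("3", "5"):
        return (n_disks - 1) * disk_gb      # one disk's worth of parity
    return (n_disks - 2) * disk_gb          # RAID 6: two disks' worth of parity


print(usable_capacity_gb("1", 2, 500))  # 500
print(usable_capacity_gb("5", 3, 500))  # 1000, double the RAID 1 capacity
print(usable_capacity_gb("6", 4, 500))  # 1000
```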

Online migration is only permitted to begin if all volumes to be migrated are in the normal mode. During the migration process, the volume sets being migrated can be accessed by the host system. In addition, the volume sets with RAID level 1, 1E, 3, 5 or 6 are protected against data loss in the event of disk failure(s). In the case of disk failure, the volume set transitions from the “migrating” state to the “migrating+degraded” state. When the migration is completed, the volume set transitions to “degraded” mode. If a global hot spare is present, it further transitions to the “rebuilding” state.

1.3.3.5 Online Volume Expansion

Performing a volume expansion on the controller is the process of growing only the size of the last volume. A more flexible option is for the array to concatenate an additional drive into the RAID set and then expand the volumes on the fly. This happens transparently while the volumes are online, but at the end of the process, the operating system will detect free space after the existing volume.

Windows, NetWare and other advanced operating systems support volume expansion, which enables you to incorporate the

additional free space within the volume into the operating system partition. The operating system partition is extended to

incorporate the free space so it can be used by the operating

system without creating a new operating system partition.

You can use the Diskpart.exe command line utility, included with

Windows Server 2003 or the Windows 2000 Resource Kit, to extend an existing partition into free space in the dynamic disk.

Third-party software vendors have created utilities that can be used to repartition disks without data loss. Most of these utilities work offline. Partition Magic is one such utility.

1.4 High availability

1.4.1 Global Hot Spares

A Global Hot Spare is an unused, online drive that is ready to replace a failed disk. The Global Hot Spare is one of the most important features that SATA RAID controllers provide to deliver a high degree of fault tolerance. A Global Hot Spare is a spare physical drive that has been marked as a global hot spare and therefore is not a member of any RAID set. If a disk drive used in a volume set fails, then the Global Hot Spare will automatically take its place, and the data previously located on the failed drive is reconstructed on the Global Hot Spare.

For this feature to work properly, the global hot spare must have at least the same capacity as the drive it replaces. Global Hot Spares only work with RAID level 1, 1E, 3, 5, or 6 volume sets. You can configure up to three global hot spares with ARC-11xx/12xx.

The Create Hot Spare option gives you the ability to define a global hot spare disk drive. To effectively use the global hot spare feature, you must always maintain at least one drive that is marked as a global spare.

Important:

The hot spare must have at least the same capacity as the

drive it replaces.
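The hot spare rules in this section (redundant RAID levels only, at least the capacity of the replaced drive, at most three spares) can be combined into one selection check. In this minimal Python sketch, `pick_global_hot_spare` is a hypothetical helper, and preferring the smallest adequate spare is an assumption; the manual does not say which spare the controller chooses.

```python
# Redundant levels and the three-spare limit come from the manual.
REDUNDANT_LEVELS = {"1", "1E", "3", "5", "6"}
MAX_GLOBAL_HOT_SPARES = 3


def pick_global_hot_spare(spare_sizes_gb, failed_disk_gb, raid_level):
    """Return the capacity of the spare to use, or None if no spare qualifies.

    Choosing the smallest spare that is large enough is an assumption of
    this sketch, not documented controller behavior.
    """
    if len(spare_sizes_gb) > MAX_GLOBAL_HOT_SPARES:
        raise ValueError("at most three global hot spares can be configured")
    if raid_level not in REDUNDANT_LEVELS:
        return None  # RAID 0 has no redundancy to rebuild from
    candidates = [size for size in spare_sizes_gb if size >= failed_disk_gb]
    return min(candidates) if candidates else None


print(pick_global_hot_spare([300, 500], 400, "5"))  # 500
print(pick_global_hot_spare([500], 400, "0"))       # None
```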

1.4.2 Hot-Swap Disk Drive Support

The SATA controller chip includes a protection circuit that supports

the replacement of SATA hard disk drives without having to shut

down or reboot the system. A removable hard drive tray can deliver “hot swappable” fault-tolerant RAID solutions at prices much

less than the cost of conventional SCSI hard disk RAID controllers. This feature provides advanced fault tolerant RAID protection

and “online” drive replacement.

1.4.3 Auto Declare Hot-Spare

If a disk drive is brought online into a system operating in degraded mode, the SATA RAID controllers will automatically declare the new disk as a spare and begin rebuilding the degraded volume. The Auto Declare Hot-Spare function requires that the new drive have at least the capacity of the smallest drive contained within the volume set in which the failure occurred.

In the normal status, the newly installed drive will be reconfigured as an online free disk. However, the newly installed drive is automatically assigned as a hot spare if a hot spare disk was previously used for a rebuild and no newly installed drive has yet replaced it. In this condition, the Auto Declare Hot-Spare status will disappear if the RAID subsystem has since been powered off and on.

The Hot-Swap function can be used to rebuild disk drives in arrays

with data redundancy such as RAID level 1, 1E, 3, 5, and 6.
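The Auto Declare Hot-Spare behavior amounts to a small decision rule for each newly inserted drive. This minimal Python sketch uses a hypothetical helper, `classify_new_drive`, and hypothetical result strings. Treating an undersized drive in a degraded system as a free disk is an assumption that the manual does not spell out.

```python
def classify_new_drive(new_gb, degraded_smallest_gb=None, spare_consumed=False):
    """Decide what happens to a newly inserted drive (hypothetical helper).

    degraded_smallest_gb: capacity of the smallest drive in a degraded volume
        set, or None if no volume set is degraded.
    spare_consumed: True if a hot spare was used for a rebuild, has not been
        replaced yet, and the subsystem has not been power-cycled since.
    """
    if degraded_smallest_gb is not None:
        if new_gb >= degraded_smallest_gb:
            return "rebuild"    # declared as a spare and used for the rebuild
        return "free"           # assumed: too small to take part in the rebuild
    if spare_consumed:
        return "hot_spare"      # replaces the hot spare that was used up
    return "free"               # normal status: becomes an online free disk


print(classify_new_drive(500, degraded_smallest_gb=400))  # rebuild
print(classify_new_drive(500, spare_consumed=True))       # hot_spare
```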


1.4.4 Auto Rebuilding

If a hot spare is available, the rebuild starts automatically when a drive fails. The SATA RAID controllers automatically and transparently rebuild failed drives in the background at user-definable rebuild rates.

If a hot spare is not available, the failed disk drive must be replaced with a new disk drive so that the data on the failed drive

can be automatically rebuilt and so that fault tolerance can be

maintained.

If the system is shut down or powered off abnormally during a rebuild, the SATA RAID controllers will automatically restart the rebuild process when the system comes back up.

When a disk is hot swapped, although the system is functionally

operational, the system may no longer be fault tolerant. Fault

tolerance will be lost until the removed drive is replaced and the

rebuild operation is completed.

During the automatic rebuild process, system activity will continue as normal; however, system performance and fault tolerance will be affected.

1.4.5 Adjustable Rebuild Priority

Rebuilding a degraded volume incurs a load on the RAID subsystem. The SATA RAID controllers allow the user to select the

rebuild priority to balance volume access and rebuild tasks appropriately. The Background Task Priority is a relative indication of

how much time the controller devotes to a background operation,

such as rebuilding or migrating.

The SATA RAID controller allows the user to choose the task priority (Ultra Low (5%), Low (20%), Medium (50%), High (80%)) to balance volume set access and background tasks appropriately. For high array performance, specify an Ultra Low value. Like volume initialization, a volume rebuild does not require a system reboot.
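To see how the priority setting trades rebuild time against host performance, here is a minimal Python sketch. It assumes a simple linear model: the rebuild proceeds at a fixed, hypothetical full-speed rate scaled by the priority percentage. Real rebuild times also depend on host load and drive characteristics, so treat the results as rough comparisons, not predictions.

```python
# Share of controller time per priority setting, as listed in the manual.
PRIORITY_SHARE = {"Ultra Low": 0.05, "Low": 0.20, "Medium": 0.50, "High": 0.80}


def estimated_rebuild_hours(disk_gb, full_speed_mb_s, priority):
    """Rough rebuild time under a linear model (an assumption of this sketch).

    full_speed_mb_s is a hypothetical rebuild rate the controller would reach
    if it devoted all of its time to the rebuild.
    """
    effective_mb_s = full_speed_mb_s * PRIORITY_SHARE[priority]
    seconds = disk_gb * 1000 / effective_mb_s
    return seconds / 3600


for level in PRIORITY_SHARE:
    print(f"{level:>9}: {estimated_rebuild_hours(500, 100, level):.1f} h")
```

Under this model, moving from High to Ultra Low makes the rebuild 16 times longer (80% / 5%) in exchange for leaving more controller time to host I/O.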


1.5 High Reliability

1.5.1 Hard Drive Failure Prediction

In an effort to help users avoid data loss, disk manufacturers are now incorporating logic into their drives that acts as an "early warning system" for pending drive problems. This system is called S.M.A.R.T. (Self-Monitoring, Analysis and Reporting Technology). The disk's integrated controller works with multiple sensors to monitor various aspects of the drive's performance, determines from this information whether the drive is behaving normally, and makes status information available to RAID controller firmware that probes the drive.

S.M.A.R.T. can often predict a problem before failure occurs. The controllers will recognize a S.M.A.R.T. error code and notify the administrator of an impending hard drive failure.
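The manual does not describe how the controller evaluates S.M.A.R.T. data. As an illustration only, this minimal Python sketch applies the common convention of comparing each attribute's normalized value with its vendor-defined threshold. The attribute names and readings are made-up examples, and a threshold of 0 is treated here as "never fails".

```python
from dataclasses import dataclass


@dataclass
class SmartAttribute:
    name: str
    value: int      # normalized current value; higher is better
    threshold: int  # vendor-defined failure threshold (0 treated as "never fails")


def failing_attributes(attributes):
    """Names of attributes whose normalized value has fallen to or below threshold."""
    return [a.name for a in attributes if a.threshold > 0 and a.value <= a.threshold]


readings = [
    SmartAttribute("Reallocated_Sector_Ct", value=100, threshold=36),
    SmartAttribute("Spin_Retry_Count", value=97, threshold=97),
]
print(failing_attributes(readings))  # ['Spin_Retry_Count']
```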

1.5.2 Auto Reassign Sector

Under normal operation, even initially defect-free drive media can develop defects. This is a common phenomenon. The bit density and rotational speed of disks are increasing every year, and so is the potential for problems. Usually a drive can internally remap bad sectors without external help, using cyclic redundancy check (CRC) checksums stored at the end of each sector.
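Checksum-based detection of a bad sector can be illustrated with Python's standard `zlib.crc32`. This is a minimal sketch with a simplified, hypothetical sector layout: 512 bytes of payload followed by a 4-byte CRC-32. Real drives use more elaborate error-correcting codes inside the drive firmware. The sketch shows only how a stored checksum exposes corrupted data.

```python
import zlib

SECTOR_SIZE = 512  # bytes of user data per sector (hypothetical layout)


def encode_sector(data: bytes) -> bytes:
    """Pad data to one sector and append a 4-byte CRC-32 of the payload."""
    if len(data) > SECTOR_SIZE:
        raise ValueError("data does not fit in one sector")
    payload = data.ljust(SECTOR_SIZE, b"\x00")
    return payload + zlib.crc32(payload).to_bytes(4, "little")


def sector_is_good(raw: bytes) -> bool:
    """Recompute the CRC over the payload and compare it with the stored value."""
    payload, stored = raw[:SECTOR_SIZE], raw[SECTOR_SIZE:]
    return zlib.crc32(payload).to_bytes(4, "little") == stored


raw = encode_sector(b"hello")
damaged = bytearray(raw)
damaged[0] ^= 0x01  # flip one bit in the payload
print(sector_is_good(raw), sector_is_good(bytes(damaged)))  # True False
```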

SATA drives perform automatic defect reassignment for both read and write errors. Writes are always completed: if a location to be written is found to be defective, the drive will automatically relocate that write command to a new location and map out the defective location. If there is a recoverable read error, the correct data will be transferred to the host, and that location will be tested by the drive to be certain the location is not defective. If it is found to have a defect, data will be automatically relocated, and the defective location is mapped out to prevent future write attempts.

In the event of an unrecoverable read error, the error will be reported to the host and the location flagged as potentially defective. A subsequent write to that location will initiate a sector test and relocation should that location have a defect. Auto Reassign Sector does not affect disk subsystem performance because it runs as a background task. Auto Reassign Sector discontinues when the operating system makes a request.

1.5.3 Consistency Check

A consistency check is a process that verifies the integrity of redundant data. For example, performing a consistency check of a mirrored drive ensures that the data on both drives of the mirrored pair is exactly the same. To verify RAID 3, 5 or 6 redundancy, a consistency check reads all associated data blocks, computes parity, reads parity, and verifies that the computed parity matches the read parity.
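The two checks described above (identical mirror copies, recomputed parity equal to stored parity) can be sketched in Python. This minimal sketch covers the single XOR parity used by RAID 3 and 5 only. RAID 6's second parity needs a different computation and is not shown. The helper names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise exclusive-or of equally sized blocks (the RAID 3/5 parity function)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def stripe_is_consistent(data_blocks, stored_parity):
    """Recompute parity from the data blocks and compare it with the stored parity."""
    return xor_blocks(data_blocks) == stored_parity


def mirror_is_consistent(copy_a, copy_b):
    """For RAID 1, both members of the mirrored pair must be identical."""
    return copy_a == copy_b


data = [b"\x01\x02", b"\x04\x08", b"\x10\x20"]
parity = xor_blocks(data)
print(stripe_is_consistent(data, parity))       # True
print(stripe_is_consistent(data, b"\x00\x00"))  # False
```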

Consistency checks are very important because they detect and correct parity errors or bad disk blocks in the drive. A consistency check forces every block on a volume to be read, and any bad blocks are marked; those blocks are not used again. This is critical because a bad disk block can prevent a disk rebuild from completing. We strongly recommend that you run consistency checks on a regular basis, at least once per week. Note that consistency checks degrade performance, so you should run them when the system load can tolerate it.

1.6 Data Protection

1.6.1 BATTERY BACKUP

The SATA RAID controllers are armed with a Battery Backup Module (BBM). While an Uninterruptible Power Supply (UPS) protects most servers from power fluctuations or failures, a BBM provides an additional level of protection. In the event of a power failure, a BBM supplies power to retain data in the RAID controller’s cache, thereby permitting any potentially dirty data in the cache to be flushed out to secondary storage when power is restored.

The batteries in the BBM are recharged continuously through a

trickle-charging process whenever the system power is on. The

batteries protect data in a failed server for up to three or four

days, depending on the size of the memory module. Under normal operating conditions, the batteries last for three years before

replacement is necessary.


1.6.2 RECOVERY ROM

The SATA RAID controller firmware is stored in flash ROM and is executed by the I/O processor. The firmware can also be updated through the PCI-X/PCIe bus port or Ethernet port (if equipped) without the need to replace any hardware chips. During the controller firmware flash upgrade process, a problem may occur that corrupts the controller firmware. With our Redundant Flash Image feature, the controller will revert to the last known version of firmware and continue operating. This reduces the risk of system failure due to a firmware crash.
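The fallback idea behind a redundant flash image can be sketched as "boot the new image if it verifies, otherwise boot the last known good one". The manual does not describe how the controller validates an image. This minimal Python sketch assumes a stored CRC-32 per image, and the `FirmwareImage` class, `select_boot_image` helper, and version strings are hypothetical.

```python
import zlib
from dataclasses import dataclass


@dataclass
class FirmwareImage:
    version: str
    payload: bytes
    stored_crc: int  # checksum recorded when the image was written (assumed)

    def is_intact(self):
        return zlib.crc32(self.payload) == self.stored_crc


def select_boot_image(new_image, last_known_good):
    """Prefer the newly flashed image; fall back if it is corrupt."""
    if new_image.is_intact():
        return new_image
    if last_known_good.is_intact():
        return last_known_good
    raise RuntimeError("no intact firmware image is available")


old = FirmwareImage("v1", b"old firmware", zlib.crc32(b"old firmware"))
interrupted = FirmwareImage("v2", b"new firm", zlib.crc32(b"new firmware"))
print(select_boot_image(interrupted, old).version)  # v1
```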

1.7 Understanding RAID

RAID is an acronym for Redundant Array of Independent Disks. It is an array of multiple independent hard disk drives that provides high performance and fault tolerance. The SATA RAID controller implements several levels of the Berkeley RAID technology. An appropriate RAID level is selected when the volume sets are defined or created. This decision should be based on the desired disk capacity, data availability (fault tolerance or redundancy), and disk performance. The following section discusses the RAID levels supported by the SATA RAID controller.

The SATA RAID controller makes the RAID implementation and the disks’ physical configuration transparent to the host operating system. This means that the host operating system drivers and software utilities are not affected, regardless of the RAID level selected. Correct installation of the disk array and the controller requires a proper understanding of RAID technology and concepts.

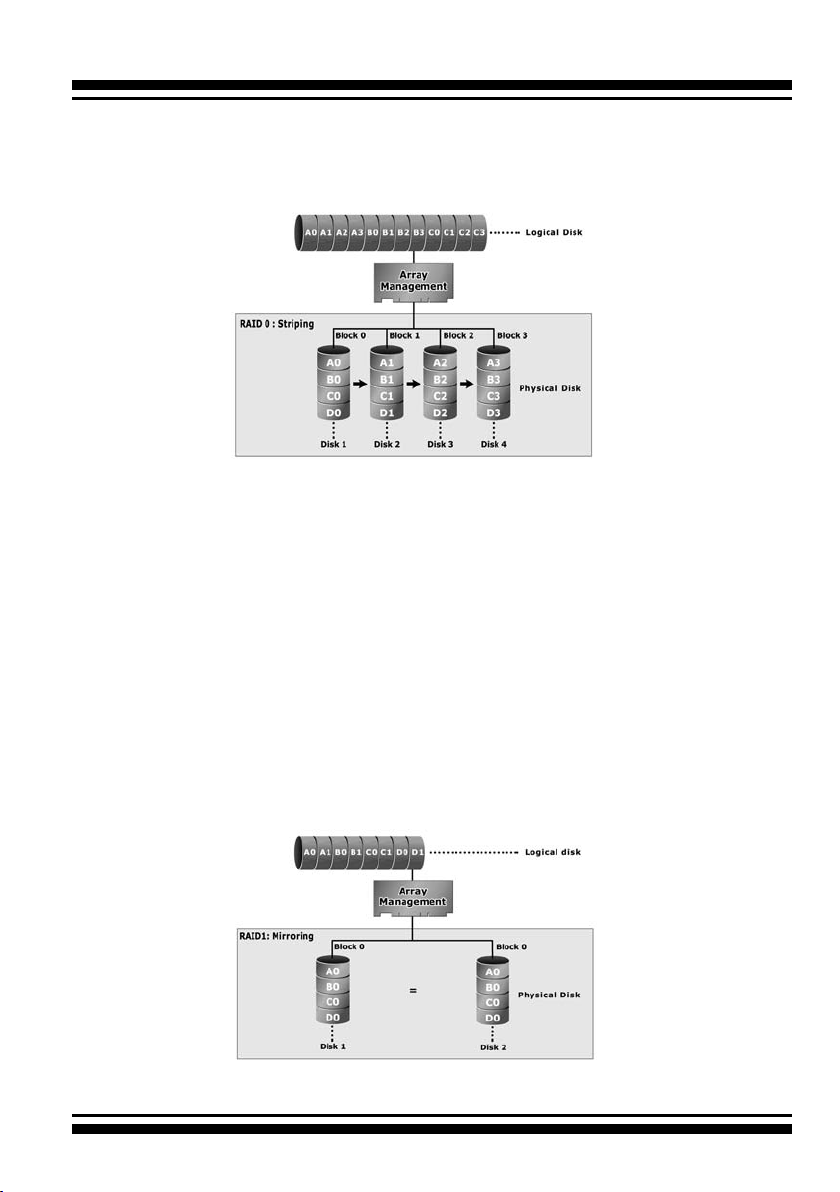

1.7.1 RAID 0

RAID 0, also referred to as striping, writes stripes of data across multiple disk drives instead of just one disk drive. RAID 0 does not provide any data redundancy, but does offer the best high-speed data throughput. RAID 0 breaks up data into smaller blocks and then writes a block to each drive in the array. Disk striping enhances performance because multiple drives are accessed simultaneously; however, the reliability of RAID level 0 is lower because the entire array will fail if any one disk drive fails, due to a lack of redundancy.
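Striping is an address translation from a logical block to a (disk, block) pair. This minimal Python sketch assumes a simple round-robin layout with a fixed stripe unit measured in blocks. `raid0_locate` is a hypothetical helper, and the controller's actual on-disk layout is not documented here.

```python
def raid0_locate(logical_block, n_disks, blocks_per_stripe_unit):
    """Map a logical block to (disk index, physical block) in a round-robin stripe."""
    stripe_unit = logical_block // blocks_per_stripe_unit   # which stripe unit overall
    offset = logical_block % blocks_per_stripe_unit         # position inside that unit
    disk = stripe_unit % n_disks                            # units rotate across disks
    row = stripe_unit // n_disks                            # full stripes before this one
    return disk, row * blocks_per_stripe_unit + offset


# Three disks, stripe unit of 4 blocks: consecutive units land on different disks.
for lb in (0, 5, 11, 13):
    print(lb, raid0_locate(lb, 3, 4))
```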

1.7.2 RAID 1

RAID 1 is also known as “disk mirroring”; data written to one disk drive is simultaneously written to another disk drive. Read performance may be enhanced if the array controller can access both members of a mirrored pair in parallel. During writes, there will be a minor performance penalty when compared to writing to a single disk. If one drive fails, all data (and software applications) are preserved on the other drive. RAID 1 offers extremely high data reliability, but at the cost of doubling the required data storage capacity.
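The mirroring behavior described above can be modeled with a toy in-memory pair. In this minimal Python sketch, the `Mirror` class is hypothetical: every write goes to both members, and reads alternate between healthy members as a simple stand-in for parallel access. A failed member is skipped, so data survives the loss of one drive.

```python
class Mirror:
    """Toy two-disk mirror held in memory (a sketch, not a driver)."""

    def __init__(self, n_blocks):
        self.disks = [[None] * n_blocks, [None] * n_blocks]
        self.failed = [False, False]
        self._next_reader = 0

    def write(self, block, data):
        # Every write goes to each healthy member of the pair.
        for i, disk in enumerate(self.disks):
            if not self.failed[i]:
                disk[block] = data

    def read(self, block):
        # Alternate between members so reads can be spread over both disks;
        # skip a failed member and fall back to its partner.
        for _ in range(len(self.disks)):
            i = self._next_reader
            self._next_reader = (i + 1) % len(self.disks)
            if not self.failed[i]:
                return self.disks[i][block]
        raise IOError("both members of the mirror have failed")


m = Mirror(4)
m.write(0, b"payroll")
m.failed[0] = True          # simulate losing the first drive
print(m.read(0))            # b'payroll'
```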


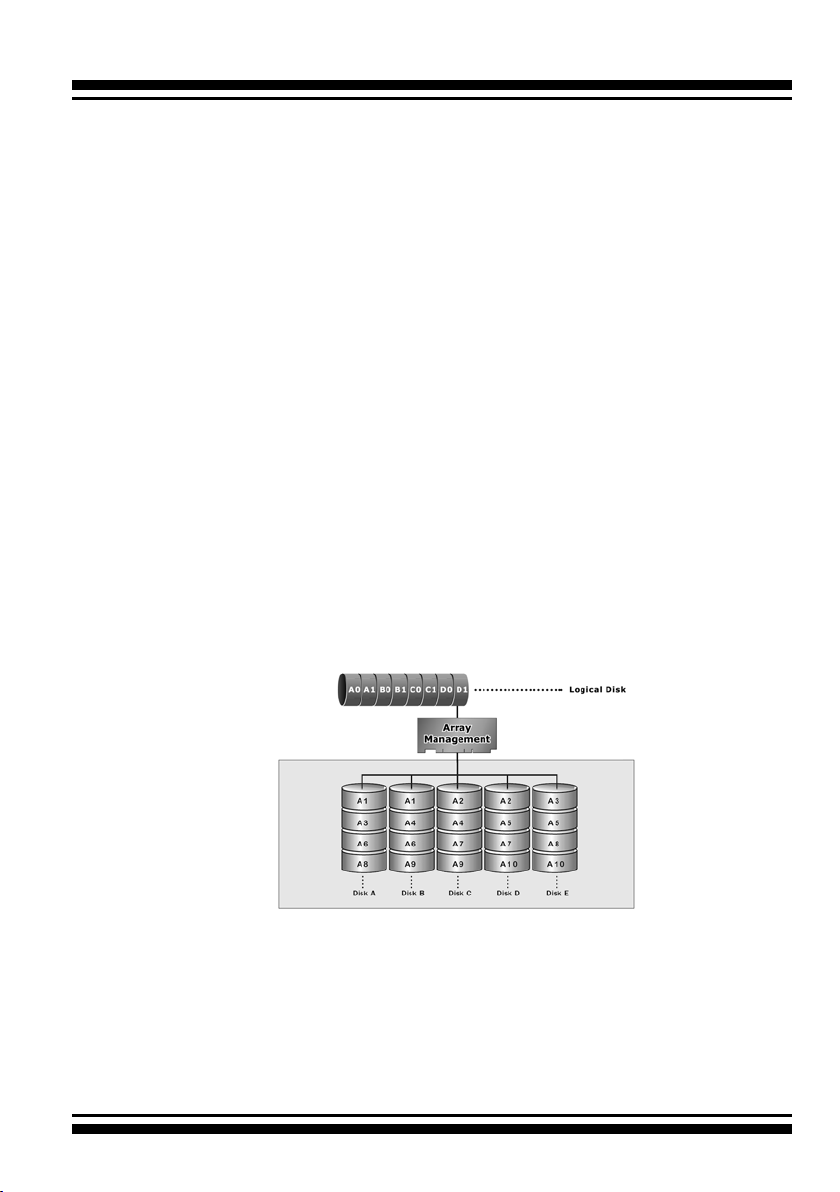

1.7.3 RAID 1E

RAID 1E is a combination of RAID 0 and RAID 1, combining striping with disk mirroring. RAID level 1E combines the fast performance of level 0 with the data redundancy of level 1. In this configuration, data is distributed across several disk drives, similar to level 0, and is then duplicated to another set of drives for data protection. RAID 1E has traditionally been implemented using an even number of disks, but some hybrids can use an odd number of disks as well. The illustration is an example of a hybrid RAID 1E array comprising five disks: A, B, C, D and E. In this configuration, each strip is mirrored on an adjacent disk with wrap-around. In fact, this scheme (or a slightly modified version of it) is often referred to as RAID 1E and was originally proposed by IBM. When the number of disks comprising a RAID 1E is even, the striping pattern is identical to that of a traditional RAID 10, with each disk being mirrored by exactly one other unique disk. Therefore, all the characteristics of a traditional RAID 10 apply to a RAID 1E when the latter has an even number of disks. Areca RAID 1E offers a little more flexibility in choosing the number of disks that can be used to constitute an array. The number can be even or odd.
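One layout that matches the description "each strip is mirrored on an adjacent disk with wrap-around" places each strip and its copy in consecutive slots, filling the disks row by row. This minimal Python sketch uses that layout for illustration only; it is not Areca's documented on-disk format, and `raid1e_locate` is a hypothetical helper. With an even disk count, the same rule pairs disks 0-1, 2-3, and so on, which matches the RAID 10 behavior described above.

```python
def raid1e_locate(strip, n_disks):
    """Return [(disk, row) of the primary copy, (disk, row) of the mirror copy].

    The primary and mirror of each strip occupy consecutive slots, so the
    mirror always lands on the next disk, wrapping from the last disk to disk 0.
    """
    if n_disks < 3:
        raise ValueError("RAID 1E needs at least three disks")
    placements = []
    for copy in (0, 1):
        slot = 2 * strip + copy
        placements.append((slot % n_disks, slot // n_disks))
    return placements


# Five disks (A..E as 0..4): strip 2 -> [(4, 0), (0, 1)], its mirror wraps to disk 0.
for strip in range(5):
    print(strip, raid1e_locate(strip, 5))
```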

1.7.4 RAID 3

RAID 3 provides disk striping and complete data redundancy through a dedicated parity drive. RAID 3 breaks up data into smaller blocks, calculates parity by performing an exclusive-or on the blocks, and then writes the blocks to all but one drive in the array. The parity data created during the exclusive-or is then written to the last drive in the array. If a single drive fails, data is still available by computing the exclusive-or of the corresponding strips on the surviving member disks. RAID 3 is best for applications that require very fast data-transfer rates or long data blocks.
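The recovery step described above works because exclusive-or is its own inverse: XOR-ing the surviving data strips with the parity strip yields the missing strip. This minimal Python sketch uses a hypothetical helper, `xor_strips`, and two-byte strips chosen for readability.

```python
def xor_strips(strips):
    """Byte-wise exclusive-or of equally sized strips."""
    out = bytearray(len(strips[0]))
    for strip in strips:
        for i, value in enumerate(strip):
            out[i] ^= value
    return bytes(out)


data = [b"\x0f\xf0", b"\x33\x33", b"\x55\xaa"]  # strips on three data drives
parity = xor_strips(data)                       # written to the parity drive

lost = 1                                        # suppose data drive 1 fails
survivors = [s for i, s in enumerate(data) if i != lost] + [parity]
rebuilt = xor_strips(survivors)
print(rebuilt == data[lost])  # True
```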

1.7.5 RAID 5

RAID 5 is sometimes called striping with parity at block level. In RAID 5, the parity information is written to all of the drives in the array rather than being concentrated on a dedicated parity disk. If one drive in the system fails, the parity information can be used to reconstruct the data from that drive. All drives in the array can be used for seek operations at the same time, greatly increasing the performance of the RAID system. This relieves the write bottleneck that characterizes RAID 4, and is the primary reason that RAID 5 is more often implemented than RAID 4 in RAID arrays.
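Distributing parity means the parity strip moves to a different disk on each stripe. This minimal Python sketch assumes one common rotation, with parity starting on the last disk and moving one disk to the left per stripe. The rotation order Areca actually uses is not stated in this manual, and both helper names are hypothetical.

```python
def raid5_parity_disk(row, n_disks):
    """Disk holding parity for stripe `row` (assumed left-rotating layout)."""
    return (n_disks - 1 - row) % n_disks


def raid5_layout(n_rows, n_disks):
    """Label each position of the first n_rows stripes as data (D) or parity (P)."""
    rows = []
    for r in range(n_rows):
        p = raid5_parity_disk(r, n_disks)
        rows.append(["P" if d == p else "D" for d in range(n_disks)])
    return rows


for row in raid5_layout(4, 4):
    print(" ".join(row))
# D D D P
# D D P D
# D P D D
# P D D D
```

Over any four consecutive stripes of a four-disk array, each disk holds parity exactly once, so parity writes are spread evenly instead of all landing on one dedicated disk as in RAID 3 or RAID 4.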


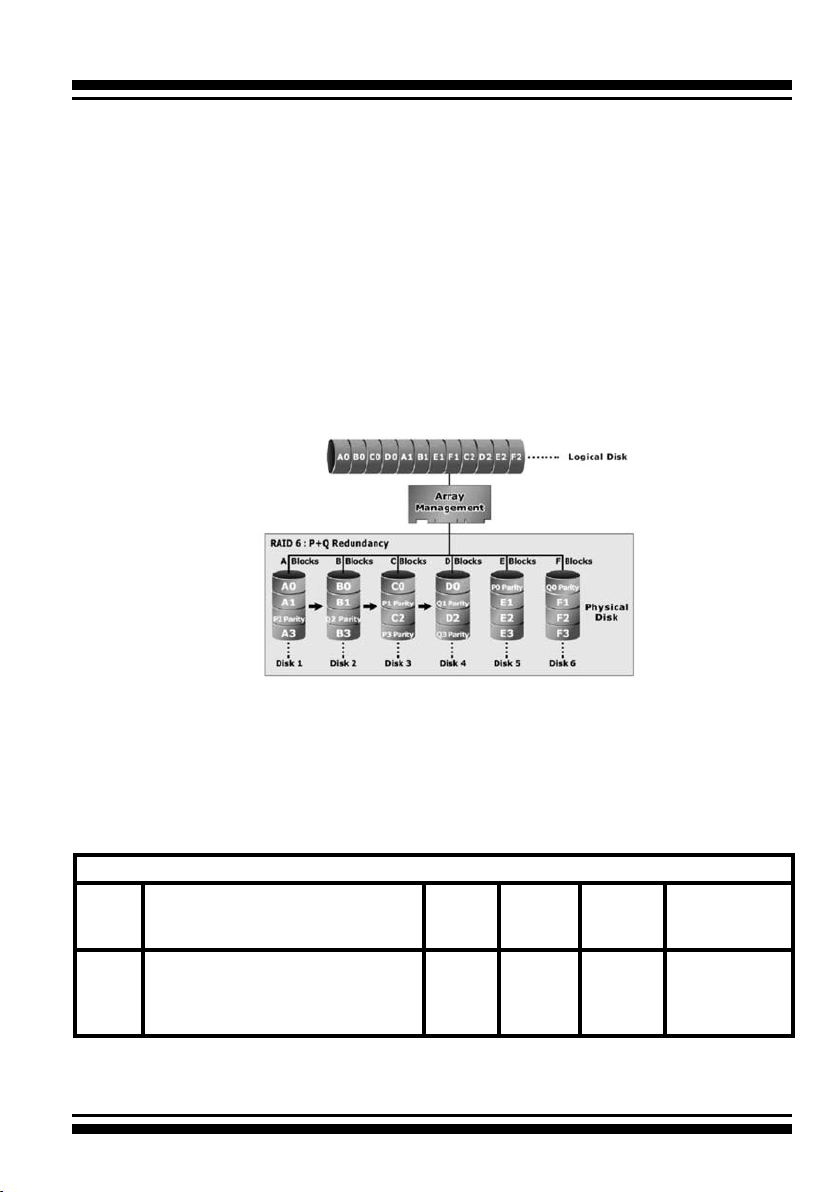

1.7.6 RAID 6

RAID 6 provides the highest reliability, but is not yet widely used.

It is similar to RAID 5, but it performs two different parity computations or the same computation on overlapping subsets of

the data. RAID 6 can offer fault tolerance greater than RAID 1 or

RAID 5 but only consumes the capacity of 2 disk drives for distributed parity data. RAID 6 is an extension of RAID 5 but uses a

second, independent distributed parity scheme. Data is striped on

a block level across a set of drives, and then a second set of parity is calculated and written across all of the drives.
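The manual does not specify which second, independent parity computation Areca uses. As an illustration only, this minimal Python sketch uses the widely documented P+Q scheme. P is the RAID 5 style XOR. Q is a Reed-Solomon syndrome in GF(2^8) with generator 2 and reduction polynomial 0x11D. The sketch works on one byte per data disk and shows how P and Q together recover any two lost data disks.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) using the polynomial x^8 + x^4 + x^3 + x^2 + 1."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11D
        b >>= 1
    return result


def gf_pow(a, n):
    result = 1
    for _ in range(n):
        result = gf_mul(result, a)
    return result


def gf_inv(a):
    """Multiplicative inverse: a^254, since a^255 == 1 for every nonzero a."""
    return gf_pow(a, 254)


def pq_parity(data):
    """P is the plain XOR (as in RAID 5); Q weights data disk i by 2^i."""
    p = q = 0
    for i, d in enumerate(data):
        p ^= d
        q ^= gf_mul(gf_pow(2, i), d)
    return p, q


def recover_two(data, x, y, p, q):
    """Rebuild data[x] and data[y] (x != y) from the surviving disks plus P and Q."""
    pxy, qxy = p, q
    for i, d in enumerate(data):
        if i not in (x, y):
            pxy ^= d                        # leaves dx ^ dy
            qxy ^= gf_mul(gf_pow(2, i), d)  # leaves 2^x*dx ^ 2^y*dy
    gx, gy = gf_pow(2, x), gf_pow(2, y)
    dy = gf_mul(qxy ^ gf_mul(gx, pxy), gf_inv(gx ^ gy))
    return pxy ^ dy, dy


stripe = [0x12, 0x34, 0x56, 0x78]   # one byte from each of four data disks
p, q = pq_parity(stripe)
damaged = [0x12, None, 0x56, None]  # data disks 1 and 3 have failed
print(recover_two(damaged, 1, 3, p, q) == (0x34, 0x78))  # True
```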

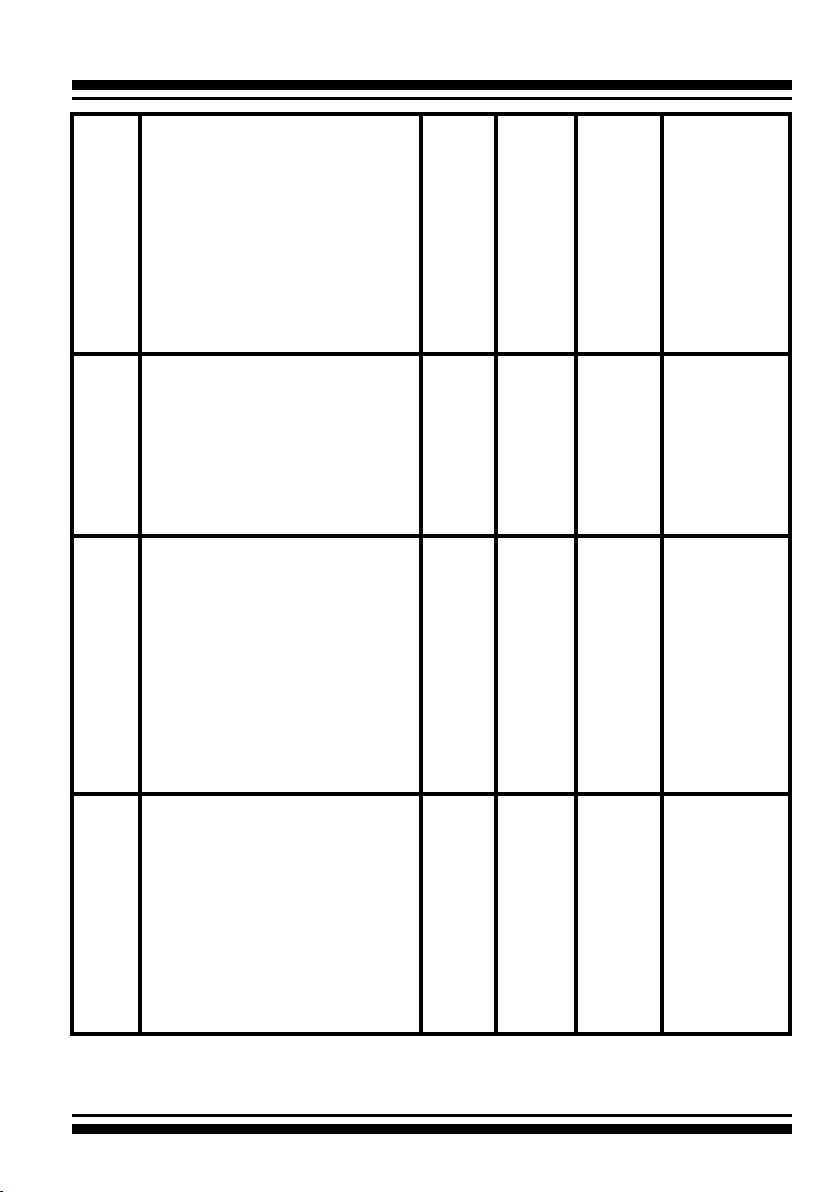

Summary of RAID Levels

The SATA RAID controller supports RAID Level 0, 1, 1E, 3, 5 and 6.

The table below provides a summary of RAID levels.

Features and Performance

| RAID Level | Description | Min. Drives | Data Reliability | Data Transfer Rate | I/O Request Rates |
|---|---|---|---|---|---|
| 0 | Also known as striping. Data distributed across multiple drives in the array. There is no data protection. | 1 | No data protection | Very high | Very high for both reads and writes |
| 1 | Also known as mirroring. All data replicated on N separate disks; N is almost always 2. This is a high-availability solution, but due to the 100% duplication, it is also a costly solution. Half of the drive capacity in the array is devoted to mirroring. | 2 | Lower than RAID 6; higher than RAID 3, 5 | Reads are higher than a single disk; writes are similar to a single disk | Reads are twice as fast as a single disk; writes are similar to a single disk |
| 1E | Combines RAID 0 striping with RAID 1 mirroring; each strip is mirrored on an adjacent disk, and the number of disks can be even or odd. Half of the drive capacity in the array is devoted to mirroring. | 3 | Lower than RAID 6; higher than RAID 3, 5 | Transfer rates are more similar to RAID 1 than RAID 0 | Reads are twice as fast as a single disk; writes are similar to a single disk |
| 3 | Also known as Bit-Interleaved Parity. Data and parity information is subdivided and distributed across all disks. Parity data consumes the capacity of 1 disk drive. Parity information is normally stored on a dedicated parity disk. | 3 | Lower than RAID 1, 1E, 6; higher than a single drive | Reads are similar to RAID 0; writes are slower than a single disk | Reads are close to being twice as fast as a single disk; writes are similar to a single disk |
| 5 | Also known as Block-Interleaved Distributed Parity. Data and parity information is subdivided and distributed across all disks. Parity data consumes the capacity of 1 disk drive. | 3 | Lower than RAID 1, 1E, 6; higher than a single drive | Reads are similar to RAID 0; writes are slower than a single disk | Reads are similar to RAID 0; writes are slower than a single disk |
