ADSP-21000 Family

Application Handbook Volume 1


© 1994 Analog Devices, Inc.

ALL RIGHTS RESERVED

PRODUCT AND DOCUMENTATION NOTICE: Analog Devices reserves the right to change this product and its documentation without prior notice.

Information furnished by Analog Devices is believed to be accurate and reliable. However, no responsibility is assumed by Analog Devices for its use, nor for any infringement of patents or other rights of third parties which may result from its use. No license is granted by implication or otherwise under the patent rights of Analog Devices.

SHARC, EZ-ICE and EZ-LAB are trademarks of Analog Devices, Inc.

MS-DOS and Windows are trademarks of Microsoft Corporation.

PRINTED IN U.S.A.

Printing History

FIRST EDITION 5/94


For marketing information or Applications Engineering assistance, contact your local Analog Devices sales office or authorized distributor.

If you have suggestions for how the ADSP-21000 Family development tools or documentation can better serve your needs, or you need Applications Engineering assistance from Analog Devices, please contact:

Analog Devices, Inc.

DSP Applications Engineering

One Technology Way

Norwood, MA 02062-9106

Tel: (617) 461-3672

Fax: (617) 461-3010

E-mail: dsp_applications@analog.com

The System IC Products Division runs a Bulletin Board Service that can be reached at speeds up to 14,400 baud, no parity, 8 data bits, 1 stop bit, by dialing (617) 461-4258. This BBS supports V.32bis, error correction (V.42 and MNP classes 2, 3, and 4), and data compression (V.42bis and MNP class 5).

The System IC Products Division Applications Group maintains an Internet FTP site. Log in as anonymous, using your e-mail address as your password. From your UNIX prompt, type:

ftp ftp.analog.com (or type: ftp 137.71.23.11)

For additional marketing information, call (617) 461-3881 in Norwood, MA, USA.

Literature

ADSP-21000 FAMILY MANUALS

ADSP-21020 User’s Manual

ADSP-21000 SHARC Preliminary User’s Manual

Complete description of processor architectures and system interfaces.

ADSP-21000 Family Assembler Tools & Simulator Manual

ADSP-21000 Family C Tools Manual

ADSP-21000 Family C Runtime Library Manual

Programmer’s references.

ADSP-21020 EZ-ICE Manual

ADSP-21020 EZ-LAB Manual

User’s manuals for in-circuit emulators and demonstration boards.

SPECIFICATION INFORMATION

ADSP-21020 Data Sheet

ADSP-2106 SHARC Preliminary Data Sheet

ADSP-21000 Family Development Tools Data Sheet

Contents

CHAPTER 1 INTRODUCTION

1.1 USAGE CONVENTIONS ................................................................... 1

1.2 DEVELOPMENT RESOURCES......................................................... 1

1.2.1 Software Development Tools..................................................... 1

1.2.2 Hardware Development Tools .................................................. 2

1.2.2.1 EZ-LAB.................................................................................. 2

1.2.2.2 EZ-ICE ................................................................................... 2

1.2.3 Third Party Support .................................................................... 2

1.2.4 DSPatch ......................................................................................... 3

1.2.5 Applications Engineering Support ........................................... 3

1.2.6 ADSP-21000 Family Classes ....................................................... 4

1.3 ADSP-21000 FAMILY: THE SIGNAL PROCESSING SOLUTION ............................... 4

1.3.1 Why DSP? ..................................................................................... 4

1.3.2 Why Floating-Point?.................................................................... 4

1.3.2.1 Precision ................................................................................ 4

1.3.2.2 Dynamic Range .................................................................... 5

1.3.2.3 Signal-To-Noise Ratio ......................................................... 5

1.3.2.4 Ease-Of-Use .......................................................................... 5

1.3.3 Why ADSP-21000 Family? ......................................................... 5

1.3.3.1 Fast & Flexible Arithmetic .................................................. 6

1.3.3.2 Unconstrained Data Flow................................................... 6

1.3.3.3 Extended IEEE-Floating-Point Support............................ 6

1.3.3.4 Dual Address Generators ................................................... 6

1.3.3.5 Efficient Program Sequencing ........................................... 6

1.4 ADSP-21000 FAMILY ARCHITECTURE OVERVIEW .................. 7

1.4.1 ADSP-21000 Family Base Architecture..................................... 7

1.4.2 ADSP-21020 DSP.......................................................................... 8

1.4.3 ADSP-21060 SHARC ................................................................. 10


CHAPTER 2 TRIGONOMETRIC, MATHEMATICAL & TRANSCENDENTAL FUNCTIONS

2.1 SINE/COSINE APPROXIMATION ............................................... 15

2.1.1 Implementation.......................................................................... 16

2.1.2 Code Listings.............................................................................. 18

2.1.2.1 Sine/Cosine Approximation Subroutine ....................... 18

2.1.2.2 Example Calling Routine .................................................. 21

2.2 TANGENT APPROXIMATION ...................................................... 22

2.2.1 Implementation.......................................................................... 22

2.2.2 Code Listing-Tangent Subroutine ........................................... 24

2.3 ARCTANGENT APPROXIMATION ............................................. 27

2.3.1 Implementation.......................................................................... 27

2.3.2 Listing-Arctangent Subroutine ................................................ 29

2.4 SQUARE ROOT & INVERSE SQUARE ROOT APPROXIMATIONS ............................. 33

2.4.1 Implementation.......................................................................... 34

2.4.2 Code Listings.............................................................................. 35

2.4.2.1 SQRT Approximation Subroutine................................... 36

2.4.2.2 ISQRT Approximation Subroutine ................................. 38

2.4.2.3 SQRTSGL Approximation Subroutine ........................... 40

2.4.2.4 ISQRTSGL Approximation Subroutine .......................... 42

2.5 DIVISION............................................................................................ 44

2.5.1 Implementation.......................................................................... 44

2.5.2 Code Listing-Division Subroutine .......................................... 44

2.6 LOGARITHM APPROXIMATIONS ............................................... 46

2.6.1 Implementation.......................................................................... 47

2.6.2 Code Listing ............................................................................... 49

2.6.2.1 Logarithm Approximation Subroutine .......................... 49

2.7 EXPONENTIAL APPROXIMATION ............................................. 52

2.7.1 Implementation.......................................................................... 53

2.7.2 Code Listings-Exponential Subroutine................................... 55

2.8 POWER APPROXIMATION............................................................ 57

2.8.1 Implementation.......................................................................... 59

2.8.2 Code Listings.............................................................................. 62

2.8.2.1 Power Subroutine .............................................................. 62

2.8.2.2 Global Header File............................................................. 68

2.8.2.3 Header File.......................................................................... 69

2.9 REFERENCES..................................................................................... 69


CHAPTER 3 MATRIX FUNCTIONS

3.1 STORING A MATRIX ....................................................................... 72

3.2 MULTIPLICATION OF A M×N MATRIX BY AN N×1 VECTOR ................................. 73

3.2.1 Implementation.......................................................................... 73

3.2.2 Code Listing—M×N By N×1 Multiplication.......................... 75

3.3 MULTIPLICATION OF A M×N MATRIX BY A N×O MATRIX ................................. 77

3.3.1 Implementation.......................................................................... 77

3.3.2 Code Listing—M×N By N×O Multiplication......................... 79

3.4 MATRIX INVERSION....................................................................... 81

3.4.1 Implementation.......................................................................... 82

3.4.2 Code Listing—Matrix Inversion.............................................. 84

3.5 REFERENCES..................................................................................... 88

CHAPTER 4 FIR & IIR FILTERS

4.1 FIR FILTERS ....................................................................................... 90

4.1.1 Implementation.......................................................................... 91

4.1.2 Code Listings.............................................................................. 96

4.1.2.1 Example Calling Routine .................................................. 96

4.1.2.2 Filter Code .......................................................................... 98

4.2 IIR FILTERS ...................................................................................... 100

4.2.1 Implementation........................................................................ 101

4.2.1.1 Implementation Overview ............................................. 101

4.2.1.2 Implementation Details .................................................. 102

4.2.2 Code Listings............................................................................ 106

4.2.2.1 iirmem.asm ....................................................................... 106

4.2.2.2 cascade.asm ...................................................................... 108

4.3 SUMMARY ....................................................................................... 111

4.4 REFERENCES................................................................................... 111

CHAPTER 5 MULTIRATE FILTERS

5.1 SINGLE-STAGE DECIMATION FILTER..................................... 114

5.1.1 Implementation........................................................................ 114

5.1.2 Code Listings—decimate.asm ............................................... 117

5.2 SINGLE-STAGE INTERPOLATION FILTER.............................. 122

5.2.1 Implementation........................................................................ 122


5.2.2 Code Listing—interpol.asm ................................................... 124

5.3 RATIONAL RATE CHANGER (TIMER-BASED) ...................... 129

5.3.1 Implementation........................................................................ 129

5.3.2 Code Listings—ratiobuf.asm ................................................. 133

5.4 RATIONAL RATE CHANGER (EXTERNAL INTERRUPT-BASED) ............................ 138

5.4.1 Implementation........................................................................ 138

5.4.2 Code Listing—rat_2_int.asm.................................................. 139

5.5 TWO-STAGE DECIMATION FILTER.......................................... 143

5.5.1 Implementation........................................................................ 143

5.5.2 Code Listing—dec2stg.asm .................................................... 145

5.6 TWO-STAGE INTERPOLATION FILTER ................................... 150

5.6.1 Implementation........................................................................ 150

5.6.2 Code Listing—int2stg.asm ..................................................... 151

5.7 REFERENCES................................................................................... 156

CHAPTER 6 ADAPTIVE FILTERS

6.1 INTRODUCTION ............................................................................ 157

6.1.1 Applications Of Adaptive Filters .......................................... 157

6.1.1.1 System Identification....................................................... 158

6.1.1.2 Adaptive Equalization For Data Transmission ........... 159

6.1.1.3 Echo Cancellation For Speech-Band Data Transmission .................... 159

6.1.1.4 Linear Predictive Coding of Speech Signals ................ 160

6.1.1.5 Array Processing.............................................................. 160

6.1.2 FIR Filter Structures ................................................................ 160

6.1.2.1 Transversal Structure ...................................................... 161

6.1.2.2 Symmetric Transversal Structure .................................. 162

6.1.2.3 Lattice Structure ............................................................... 163

6.1.3 Adaptive Filter Algorithms .................................................... 164

6.1.3.1 The LMS Algorithm......................................................... 164

6.1.3.2 The RLS Algorithm.......................................................... 165

6.2 IMPLEMENTATIONS .................................................................... 167

6.2.1 Transversal Filter Implementation........................................ 168

6.2.2 LMS (Transversal FIR Filter Structure)................................. 168

6.2.2.1 Code Listing—lms.asm ................................................... 169

6.2.3 llms.asm—Leaky LMS Algorithm (Transversal) ................ 171

6.2.3.1 Code Listing ..................................................................... 171

6.2.4 Normalized LMS Algorithm (Transversal).......................... 173

6.2.4.1 Code Listing—nlms.asm................................................. 174

6.2.5 Sign-Error LMS (Transversal) ................................................ 176


6.2.5.1 Code Listing—selms.asm ............................................... 177

6.2.6 Sign-Data LMS (Transversal) ................................................. 179

6.2.6.1 Code Listing—sdlms.asm............................................... 180

6.2.7 Sign-Sign LMS (Transversal).................................................. 183

6.2.7.1 Code Listing—sslms.asm ............................................... 183

6.2.8 Symmetric Transversal Filter Implementation LMS .......... 185

6.2.8.1 Code Listing—sylms.asm ............................................... 186

6.2.9 Lattice Filter LMS With Joint Process Estimation ............... 189

6.2.9.1 Code Listing—latlms.asm .............................................. 191

6.2.10 RLS (Transversal Filter) .......................................................... 194

6.2.10.1 Code Listing—rls.asm ..................................................... 195

6.2.11 Testing Shell For Adaptive Filters......................................... 199

6.2.11.1 Code Listing—testafa.asm.............................................. 199

6.3 CONCLUSION................................................................................. 202

6.4 REFERENCES................................................................................... 203

CHAPTER 7 FOURIER TRANSFORMS

7.1 COMPUTATION OF THE DFT..................................................... 206

7.1.1 Derivation Of The Fast Fourier Transform .......................... 207

7.1.2 Butterfly Calculations ............................................................. 208

7.2 ARCHITECTURAL FEATURES FOR FFTS................................. 210

7.3 COMPLEX FFTS .............................................................................. 211

7.3.1 Architecture File Requirements ............................................. 211

7.3.2 The Radix-2 DIT FFT Program .............................................. 212

7.3.3 The Radix-4 DIF FFT Program............................................... 213

7.3.4 FFTs On The ADSP-21060 ...................................................... 214

7.3.5 FFT Twiddle Factor Generation............................................. 214

7.4 INVERSE COMPLEX FFTs............................................................. 215

7.5 BENCHMARKS ............................................................................... 216

7.6 CODE LISTINGS ............................................................................. 217

7.6.1 FFT.ACH—Architecture File ................................................. 217

7.6.2 FFTRAD2.ASM—Complex Radix-2 FFT ............................. 218

7.6.3 FFTRAD4.ASM—Complex Radix-4 FFT.............................. 225

7.6.4 TWIDRAD2.C—Radix-2 Coefficient Generator ................... 232

7.6.5 TWIDRAD4.C—Radix-4 Coefficient Generator ................... 234

7.7 REFERENCES................................................................................... 236


CHAPTER 8 GRAPHICS

8.1 3-D GRAPHICS LINE ACCEPT/REJECT.................................... 237

8.1.1 Implementation........................................................................ 239

8.1.2 Code Listing ............................................................................. 240

8.2 CUBIC BEZIER POLYNOMIAL EVALUATION ....................... 244

8.2.1 Implementation........................................................................ 245

8.2.2 Code Listing ............................................................................. 246

8.3 CUBIC B-SPLINE POLYNOMIAL EVALUATION................... 248

8.3.1 Implementation........................................................................ 248

8.3.2 Code Listing ............................................................................. 250

8.4 BIT BLOCK TRANSFER ................................................................. 253

8.4.1 Implementation........................................................................ 253

8.4.2 Code Listing ............................................................................. 255

8.5 BRESENHAM LINE DRAWING .................................................. 257

8.5.1 Implementation........................................................................ 257

8.5.2 Code Listing ............................................................................. 259

8.6 3-D GRAPHICS TRANSLATION, ROTATION, & SCALING . 262

8.6.1 Implementation........................................................................ 262

8.6.2 Code Listing ............................................................................. 265

8.7 MULTIPLY 4×4 BY 4×1 MATRICES (3D GRAPHICS TRANSFORMATION) .................... 267

8.7.1 Implementation........................................................................ 267

8.7.2 Code Listing ............................................................................. 268

8.8 TABLE LOOKUP WITH INTERPOLATION .............................. 270

8.8.1 Implementation........................................................................ 270

8.8.2 Code Listing ............................................................................. 272

8.9 VECTOR CROSS PRODUCT ......................................................... 274

8.9.1 Implementation........................................................................ 274

8.9.2 Code Listing ............................................................................. 275

8.10 REFERENCES................................................................................... 277


CHAPTER 9 IMAGE PROCESSING

9.1 TWO-DIMENSIONAL CONVOLUTION.................................... 279

9.1.1 Implementation........................................................................ 280

9.1.2 Code Listing ............................................................................. 283

9.2 MEDIAN FILTERING (3×3) ........................................................... 285

9.2.1 Implementation........................................................................ 285

9.2.2 Code Listing ............................................................................. 286

9.3 HISTOGRAM EQUALIZATION................................................... 288


9.3.1 Implementation........................................................................ 289

9.3.2 Code Listing ............................................................................. 290

9.4 ONE-DIMENSIONAL MEDIAN FILTERING ............................ 292

9.4.1 Implementation........................................................................ 292

9.4.2 Code Listings............................................................................ 294

9.5 REFERENCES................................................................................... 298

CHAPTER 10 JTAG DOWNLOADER

10.1 HARDWARE.................................................................................... 300

10.1.1 Details ........................................................................................ 301

10.1.2 Test Access Port Operations................................................... 305

10.1.3 Timing Considerations ........................................................... 308

10.2 SOFTWARE ...................................................................................... 310

10.2.1 TMS & TDI Bit Generation ..................................................... 311

10.2.2 Software Example .................................................................... 313

10.2.3 Summary: How To Make The EPROM ................................ 316

10.3 DETAILED TMS & TDI BEHAVIOR ............................................ 316

10.3.1 Code Listings............................................................................ 320

10.3.2 pub21k.h.................................................................................... 320

10.3.3 pub21k.c .................................................................................... 322

10.3.4 s2c.c ............................................................................................ 324

10.3.5 c2b.c ........................................................................................... 326

10.3.6 b2b.c ........................................................................................... 330

10.3.7 stox.c .......................................................................................... 331

10.3.8 Loader Kernel ........................................................................... 332

10.4 REFERENCE..................................................................................... 336

INDEX ................................................................................................ 337

FIGURES

Figure 1.1 ADSP-21020 Block Diagram....................................................... 9

Figure 1.2 ADSP-21020 System Diagram.................................................... 9

Figure 1.3 ADSP-21060 Block Diagram..................................................... 12

Figure 1.4 ADSP-21060 System Diagram.................................................. 13

Figure 1.5 ADSP-21060 Multiprocessing System Diagram .................... 14

Figure 4.1 Delay Line ................................................................................... 94


Figure 6.1 System Identification Model .................................................. 158

Figure 6.2 Transversal FIR Filter Structure............................................. 161

Figure 6.3 Symmetric Transversal Filter Structure................................ 162

Figure 6.4 One Stage Of Lattice FIR......................................................... 163

Figure 6.5 Generic Adaptive Filter .......................................................... 167

Figure 7.1 Flow Graph Of Butterfly Calculation ................................... 208

Figure 7.2 32-Point Radix-2 DIT FFT ...................................................... 209

Figure 8.1 Cubic Bezier Polynomial ........................................................ 244

Figure 8.2 Register Assignments For Cubic Bezier Polynomial .......... 245

Figure 8.3 Cubic B-Spline Polynomial..................................................... 248

Figure 8.4 Register Assignments For Cubic B-Spline Polynomial ...... 249

Figure 8.5 BitBlt .......................................................................................... 253

Figure 8.6 Register Usage For BitBlt ........................................................ 253

Figure 9.1 3x3 Convolution Matrix .......................................................... 281

Figure 9.2 3x3 Convolution Operation.................................................... 281

Figure 9.3 Histogram Of Dark Picture .................................................... 288

Figure 9.4 Histogram Of Bright Picture .................................................. 289

Figure 9.5 Median Filter Algorithm......................................................... 293

Figure 10.1 System Diagram ....................................................................... 301

Figure 10.2 Block Diagram .......................................................................... 302

Figure 10.3 Prototype Schematic................................................................ 303

Figure 10.4 Prototype Board Layout ......................................................... 304

Figure 10.5 JTAG Test Access Port States ................................................. 305

Figure 10.6 Worst-Case Data Setup To Clock Time ................................ 309

Figure 10.7 TMS & TDI Timing From RESET Through Start Of First Scan Of DR ......... 317

Figure 10.8 TMS & TDI Timing From End Of First Scan To Start Of Second Scan ....... 318

Figure 10.9 Other TMS & TDI Timing....................................................... 319

TABLES

Table 1.1 ADSP-21060 Benchmarks (@ 40 MHz).................................... 11

Table 6.1 Transversal FIR LMS Performance & Memory Benchmarks For Filters Of Order N ...... 202

Table 6.2 LMS Algorithm Benchmarks For Different Filter Structures ..................... 202

Table 6.3 LMS vs. RLS Benchmark Performance ................................. 203


Table 10.1 Parts List.................................................................................... 304

Table 10.2 JTAG States Used By The Downloader ................................ 306

Table 10.3 Downloader Operations ......................................................... 307

Table 10.4 Source Code Description & Usage ........................................ 310

Table 10.5 Bitstream/EPROM Byte Relationship .................................. 311

Table 10.6 TMS Values For State Transitions ......................................... 312

Table 10.7 TDI Values For IRSHIFT & DRSHIFT .................................. 312

Table 10.8 kernel.ach - Architecture File Used With kernel.asm......... 313

Table 10.9 kernel.stk - Stacked-format spl21k Output .......................... 314

Table 10.10 kernel.s0 - pub21k Output Used To Burn Downloader EPROM ................. 315

LISTINGS

Listing 2.1 sin.asm......................................................................................... 20

Listing 2.2 sintest.asm................................................................................... 21

Listing 2.3 tan.asm ........................................................................................ 26

Listing 2.4 atan.asm ...................................................................................... 32

Listing 2.5 sqrt.asm ....................................................................................... 37

Listing 2.6 isqrt.asm ...................................................................................... 39

Listing 2.7 sqrtsgl.asm .................................................................................. 41

Listing 2.8 isqrtsgl.asm ................................................................................. 43

Listing 2.9 Divide.asm .................................................................................. 45

Listing 2.10 logs.asm....................................................................................... 51

Listing 2.11 Exponential Subroutine ............................................................ 57

Listing 2.12 pow.asm ...................................................................................... 67

Listing 2.13 asm_glob.h .................................................................................. 68

Listing 2.14 pow.h ........................................................................................... 69

Listing 3.1 MxNxNx1.asm ........................................................................... 76

Listing 3.2 MxNxNxO.asm .......................................................................... 80

Listing 3.3 matinv.asm ................................................................................. 87

Listing 4.1 firtest.asm.................................................................................... 97

Listing 4.2 fir.asm .......................................................................................... 99

Listing 4.3 Filter Specifications From FDAS ........................................... 102

Listing 4.4 iirmem.asm ............................................................................... 108

Listing 4.5 cascade.asm .............................................................................. 110

Listing 5.1 decimate.asm ............................................................................ 121

Listing 5.2 interpol.asm .............................................................................. 128

Listing 5.3 ratiobuf.asm.............................................................................. 137


Listing 5.4 rat2int.asm ................................................................................ 142

Listing 5.5 dec2stg.asm............................................................................... 149

Listing 5.6 int2stg.asm ................................................................................ 155

Listing 6.1 lms.asm...................................................................................... 170

Listing 6.2 llms.asm .................................................................................... 173

Listing 6.3 nlms.asm ................................................................................... 176

Listing 6.4 selms.asm .................................................................................. 179

Listing 6.5 sdlms.asm ................................................................................. 182

Listing 6.6 sslms.asm .................................................................................. 185

Listing 6.7 sylms.asm.................................................................................. 188

Listing 6.8 latlms.asm ................................................................................. 193

Listing 6.9 rls.asm........................................................................................ 198

Listing 6.10 testafa.asm ................................................................................ 201

Listing 7.1 FFT.ACH ................................................................................... 217

Listing 7.2 fftrad2.asm ................................................................................ 224

Listing 7.3 fftrad4.asm ................................................................................ 231

Listing 7.4 twidrad2.c ................................................................................. 233

Listing 7.5 twidrad4.c ................................................................................. 235

Listing 8.1 accej.asm.................................................................................... 243

Listing 8.2 bezier.asm ................................................................................. 247

Listing 8.3 B-spline.asm.............................................................................. 252

Listing 8.4 bitblt.asm................................................................................... 256

Listing 8.5 bresen.asm ................................................................................ 261

Listing 8.6 transf.asm.................................................................................. 266

Listing 8.7 mul44x41.asm........................................................................... 270

Listing 8.8 tblllkup.asm .............................................................................. 273

Listing 8.9 xprod.asm ................................................................................. 277

Listing 9.1 CONV3x3.ASM ........................................................................ 284

Listing 9.2 med3x3.asm .............................................................................. 287

Listing 9.3 histo.asm ................................................................................... 292

Listing 9.4 Fixed-Point 1-D Median Filter .............................................. 296

Listing 9.5 Floating-Point 1-D Median Filter ......................................... 298

Listing 10.1 pub21k.h.................................................................................... 322

Listing 10.2 pub21k.c .................................................................................... 323

Listing 10.3 s2c.c ............................................................................................ 325

Listing 10.4 c2b.c ........................................................................................... 329

Listing 10.5 b2b.c ........................................................................................... 331

Listing 10.6 stox.c .......................................................................................... 331

Listing 10.7 Loader Kernel........................................................................... 335


Introduction

This applications handbook is intended to help you get a quick start in developing DSP applications with ADSP-21000 Family digital signal processors.

This chapter includes a summary of available resources and an introduction to the ADSP-21000 Family architecture. (Complete architecture and programming details are found in each processor's data sheet, the ADSP-21060 SHARC User's Manual, and the ADSP-21020 User's Manual.) The next eight chapters describe commonly used DSP algorithms and their implementations on ADSP-21000 family DSPs. The last chapter shows you how to build a bootstrap program downloader using the ADSP-21020's built-in JTAG port.

1.1 USAGE CONVENTIONS

• Code listings, assembly language instructions and labels, commands typed on an operating system shell command line, and file names are printed in the Courier font.

• Underlined variables are vectors: V

1.2 DEVELOPMENT RESOURCES

This section discusses resources available from Analog Devices to help you develop applications using ADSP-21000 Family digital signal processors.

1.2.1 Software Development Tools

A full set of software tools supports ADSP-21000 family program development, including an assembler, linker, simulator, PROM splitter, and C compiler. The development tools also include libraries of assembly language modules and C functions. See the ADSP-21000 Family Assembler Tools & Simulator Manual, the ADSP-21000 Family C Tools Manual, and the ADSP-21000 Family C Runtime Library Manual for complete details on the development tools.


1.2.2 Hardware Development Tools

Analog Devices offers several systems that let you test your programs on real hardware without spending time on hardware prototyping, and that help you debug your target system hardware.

1.2.2.1 EZ-LAB

EZ-LAB® evaluation boards are complete ADSP-210xx systems that include memory, an audio codec, an analog interface, and expansion connectors on a single, small printed-circuit board. Several programs are included that demonstrate signal processing algorithms. You can download your own programs to the EZ-LAB from your IBM-PC compatible computer.

EZ-LAB connects with EZ-ICE (described in the next section) and an IBM-PC compatible to form a high-speed, interactive DSP workstation that lets you debug and execute your software without prototype hardware.

EZ-LAB is also available bundled with the software development tools in the EZ-KIT packages. Each member of the ADSP-21000 family is supported by its own EZ-LAB.

1.2.2.2 EZ-ICE

EZ-ICE® in-circuit emulators give you an affordable alternative to large dedicated emulators without sacrificing features. The EZ-ICE software runs on an IBM-PC and gives you a debugging environment very similar to the ADSP-210xx simulator. The EZ-ICE probe connects to the PC with an ISA plug-in card and to the target system through a test connector on the target. EZ-ICE communicates with the target processor through the processor's JTAG test access port. Your software runs on your hardware at full speed in real time, which simplifies hardware and software debugging.


1.2.3 Third Party Support

Several third-party companies also provide products that support ADSP-21000 family development; contact Analog Devices for a complete list. Here are a few of the products available as of this writing:

• Spectron SPOX Real-time Operating System
• Comdisco Signal Processing Worksystem
• Loughborough Sound Images/Spectrum Processing PC Plug-in Board
• Momentum Data Systems Filter Design Software (FDAS)
• Hyperceptions Hypersignal Workstation


1.2.4 DSPatch

DSPatch is Analog Devices' award-winning DSP product support newsletter. Each quarterly issue includes

• applications feature articles
• stories about customers using ADI DSPs in consumer, industrial and military products
• new product announcements
• product upgrade announcements

and features these regular columns:

• Q & A: tricks and tips from the Applications Engineering staff
• C Programming: a popular series of articles about programming DSPs with the C language

1.2.5 Applications Engineering Support

Analog Devices' expert staff of Applications Engineers is available to answer your technical questions.

• To speak to an Applications Engineer, call (617) 461-3672, Monday to Friday, 9am to 5pm EST.
• You can send email to dsp_applications@analog.com.
• Facsimiles may be sent to (617) 461-3010.
• You may log in to the DSP Bulletin Board System [8:1:N:1200/2400/4800/9600/14,400] at (617) 461-4258, 24 hours a day.
• The files on the DSP BBS are also available by anonymous ftp at ftp.analog.com (132.71.32.11), in the directory /pub/dsp.
• Postal mail may be sent to "DSP Applications Engineering, Three Technology Way, PO Box 9106, Norwood, MA, 02062-2106."

Technical support is also available from Analog Devices Authorized Distributors and Field Applications Offices.


1.2.6 ADSP-21000 Family Classes

Applications Engineering regularly offers a course in ADSP-21000 family architecture and programming. Please contact Applications Engineering for a schedule of upcoming courses.

1.3 ADSP-21000 FAMILY: THE SIGNAL PROCESSING SOLUTION

1.3.1 Why DSP?

Digital signal processors are a special class of microprocessors that are optimized for computing the real-time calculations used in signal processing. Although it is possible to use some fast general-purpose microprocessors for signal processing, they are not optimized for that task. The resulting design can be hard to implement and costly to manufacture. In contrast, DSPs have an architecture that simplifies application designs and makes low-cost signal processing a reality.

The kinds of algorithms used in signal processing can be optimized if they are supported by a computer architecture specifically designed for them. In order to handle digital signal processing tasks efficiently, a microprocessor must have the following characteristics:

• fast, flexible computation units
• unconstrained data flow to and from the computation units
• extended precision and dynamic range in the computation units
• dual address generators
• efficient program sequencing and looping mechanisms

1.3.2 Why Floating-Point?

A processor's data format determines its ability to handle signals of differing precision, dynamic range, and signal-to-noise ratios. However, ease-of-use and time-to-market considerations are often equally important.

1.3.2.1 Precision

The precision of converters has been improving and will continue to increase. In the past few years, average precision requirements have risen by several bits, and the trend is for both precision and sampling rates to increase.


1.3.2.2 Dynamic Range

Traditionally, compression and decompression algorithms have operated on signals of known bandwidth. These algorithms were developed to behave regularly, to keep costs down and implementations easy. Increasingly, the trend in algorithm development is to remove constraints on the regularity and dynamic range of intermediate results. Adaptive filtering and imaging are two applications requiring wide dynamic range.

1.3.2.3 Signal-To-Noise Ratio

Radar, sonar, and even commercial applications (like speech recognition) require a wide dynamic range to discern selected signals from noisy environments.

1.3.2.4 Ease-Of-Use

Ideally, floating-point digital signal processors should be easier to use and allow a quicker time-to-market than DSPs that do not support floating-point formats. If the floating-point processor's architecture is designed properly, designers can spend time on algorithm development instead of assembly coding, code paging, and error handling. The following features are hallmarks of a good floating-point DSP architecture:

• consistency with IEEE workstation simulations
• elimination of scaling
• high-level language (C, Ada) programmability
• large address spaces
• wide dynamic range

1.3.3 Why ADSP-21000 Family?

The ADSP-21020 and ADSP-21060 are the first members of Analog Devices' ADSP-21000 family of floating-point digital signal processors (DSPs). The ADSP-21000 family architecture meets the five central requirements for DSPs:

• Fast, flexible arithmetic computation units
• Unconstrained data flow to and from the computation units
• Extended precision and dynamic range in the computation units
• Dual address generators
• Efficient program sequencing


1.3.3.1 Fast & Flexible Arithmetic

The ADSP-210xx can execute all instructions in a single cycle. It provides one of the fastest cycle times available and the most complete set of arithmetic operations, including Seed 1/X, Seed 1/√x, Min, Max, Clip, Shift and Rotate, in addition to the traditional multiplication, addition, subtraction and combined addition/subtraction. It is IEEE floating-point compatible and allows either interrupt on arithmetic exception or latched status exception handling.

1.3.3.2 Unconstrained Data Flow

The ADSP-210xx has a Harvard architecture combined with a 10-port, 16-word data register file. In every cycle, all of these operations can be executed:

• the register file can read or write two operands off-chip
• the ALU can receive two operands
• the multiplier can receive two operands
• the ALU and multiplier can produce two results (three, if the ALU operation is a combined addition/subtraction)

The processors' 48-bit orthogonal instruction word supports parallel data transfer and arithmetic operations in the same instruction.

1.3.3.3 Extended IEEE-Floating-Point Support

All members of the ADSP-21000 family handle 32-bit IEEE floating-point format, 32-bit integer and fractional formats (twos-complement and unsigned), and an extended-precision 40-bit IEEE floating-point format. These processors carry extended precision throughout their computation units, limiting intermediate data truncation errors. The fixed-point formats have an 80-bit accumulator for true 32-bit fixed-point computations.

1.3.3.4 Dual Address Generators

The ADSP-210xx has two data address generators (DAGs) that provide immediate or indirect (pre- and post-modify) addressing. Modulus and bit-reverse operations are supported, without constraints on buffer placement.

1.3.3.5 Efficient Program Sequencing

In addition to zero-overhead loops, the ADSP-210xx supports single-cycle setup and exit for loops. Loops are nestable (six levels in hardware) and interruptible. The processor also supports delayed and non-delayed branches.
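Bit-reverse addressing is what a radix-2 FFT needs to reorder its input or output: element i moves to the index obtained by reversing the low log2(N) bits of i. The DAGs generate these addresses in hardware. The C sketch below computes the same permutation in software so the access pattern is easy to see; the function names are illustrative and are not part of any Analog Devices library.

```c
#include <assert.h>
#include <stddef.h>

/* Reverse the low `bits` bits of i (for bits = 3: 1 = 001b -> 100b = 4). */
static unsigned bit_reverse(unsigned i, unsigned bits)
{
    unsigned r = 0;
    for (unsigned b = 0; b < bits; b++) {
        r = (r << 1) | (i & 1u);   /* shift the lowest bit of i into r */
        i >>= 1;
    }
    return r;
}

/* Reorder an n-point buffer (n = 2^bits) into bit-reversed order in place. */
static void bit_reverse_permute(float *x, size_t n, unsigned bits)
{
    assert(n == ((size_t)1 << bits));
    for (size_t i = 0; i < n; i++) {
        size_t j = bit_reverse((unsigned)i, bits);
        if (j > i) {               /* swap each pair only once */
            float t = x[i];
            x[i] = x[j];
            x[j] = t;
        }
    }
}
```

For an 8-point buffer holding 0 through 7, the permuted order is 0, 4, 2, 6, 1, 5, 3, 7.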


1.4 ADSP-21000 FAMILY ARCHITECTURE OVERVIEW

The following sections summarize the basic features of the ADSP-21020 architecture. These features are also common to the ADSP-21060 SHARC processor; SHARC-specific features are discussed later in this chapter.

1.4.1 ADSP-21000 Family Base Architecture

All members of the ADSP-21000 Family have the same base architecture. The ADSP-21060 has advanced features built onto this base, but retains code compatibility with the ADSP-21020 processor. The key features of the base architecture are:

• Independent, Parallel Computation Units
The arithmetic/logic unit (ALU), multiplier, and shifter perform single-cycle instructions. The three units are arranged in parallel, maximizing computational throughput. Single multifunction instructions execute parallel ALU and multiplier operations. These computation units support IEEE 32-bit single-precision floating-point, extended-precision 40-bit floating-point, and 32-bit fixed-point data formats.

• Data Register File
A general-purpose data register file transfers data between the computation units and the data buses, and stores intermediate results. This 10-port, 32-register (16 primary, 16 secondary) register file, combined with the ADSP-21000 Harvard architecture, allows unconstrained data flow between computation units and memory.

• Single-Cycle Fetch of Instruction & Two Operands
The ADSP-210xx features an enhanced Harvard architecture in which the data memory (DM) bus transfers data and the program memory (PM) bus transfers both instructions and data (see Figure 1.1). With its separate program and data memory buses and on-chip instruction cache, the processor can simultaneously fetch two operands and an instruction (from the cache) in a single cycle.

• Instruction Cache
The ADSP-210xx includes a high-performance instruction cache that enables three-bus operation for fetching an instruction and two data values. The cache is selective: only the instructions whose fetches conflict with PM bus data accesses are cached. This allows full-speed execution of looped operations such as digital filter multiply-accumulates and FFT butterfly processing.
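The 32-bit fixed-point formats include a fractional (1.31, or Q31) format, in which a word represents a value in [−1, 1). The C sketch below computes a Q31 dot product, the core of a filter multiply-accumulate, while keeping the accumulator wider than the 32-bit result so that intermediate sums neither overflow nor lose low-order bits before the final conversion. As an assumption for illustration, the accumulator is modeled with an int64_t holding each 62-bit product scaled down by 2^8, which leaves eight guard bits; the hardware accumulator described in Section 1.3.3.3 is wider than this model.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Q31 fractional value: v = q / 2^31, so INT32_MIN is -1.0 and
   INT32_MAX is just below +1.0. */
typedef int32_t q31;

/* Dot product of two Q31 vectors with a wide accumulator.
   Each exact Q62 product is scaled by 2^-8 (eight guard bits), so up to
   511 terms can be summed in int64_t without overflow. The sum is then
   truncated back to Q31 and saturated to [INT32_MIN, INT32_MAX].
   Assumes >> on negative values is an arithmetic shift, as on mainstream compilers. */
static q31 q31_dot(const q31 *a, const q31 *b, size_t n)
{
    assert(n <= 511);
    int64_t acc = 0;
    for (size_t i = 0; i < n; i++)
        acc += ((int64_t)a[i] * b[i]) >> 8;   /* Q62 product -> Q54 */
    acc >>= 23;                               /* Q54 -> Q31 */
    if (acc > INT32_MAX) return INT32_MAX;    /* saturate instead of wrapping */
    if (acc < INT32_MIN) return INT32_MIN;
    return (q31)acc;
}
```

Saturating only once, at the end, lets intermediate sums exceed ±1.0 temporarily without corrupting the final result, which is the benefit a wide accumulator provides.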


• Data Address Generators with Hardware Circular Buffers
The ADSP-210xx's two data address generators (DAGs) implement circular data buffers in hardware. Circular buffers let delay lines (and other data structures required in digital signal processing) be implemented efficiently; circular buffers are commonly used in digital filters and Fourier transforms. The ADSP-210xx's two DAGs contain sufficient registers for up to 32 circular buffers (16 primary register sets, 16 secondary). The DAGs automatically handle address pointer wraparound, reducing overhead, increasing performance, and simplifying implementation. Circular buffers can start and end at any memory location.

• Flexible Instruction Set
The ADSP-210xx's 48-bit instruction word accommodates a variety of parallel operations, for concise programming. For example, in a single instruction, the ADSP-210xx can conditionally execute a multiply, an add, a subtract and a branch.

• Serial Scan & Emulation Features
The ADSP-210xx supports the IEEE 1149.1 Joint Test Action Group (JTAG) standard for system test. This standard defines a method for serially scanning the I/O status of each component in a system. This serial port also gives access to the ADSP-210xx on-chip emulation features.
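The wraparound that the DAGs perform in hardware can be modeled in a few lines of C. The sketch below is a hypothetical software model: the struct circ_buf and its fields loosely mirror a DAG's base, length, and index registers, but the names are ours. circ_next returns the current element and then post-modifies the index with wraparound, and fir_step uses it to run an FIR delay line without ever moving data.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical software model of one DAG circular buffer. */
typedef struct {
    float  *base;  /* like a base register: start address (any location) */
    size_t  len;   /* like a length register: buffer size in words       */
    size_t  idx;   /* current offset of the index pointer from base      */
} circ_buf;

/* Return the current element, then post-modify the index by m,
   wrapping it back into [0, len) as the DAG hardware does. */
static float *circ_next(circ_buf *c, long m)
{
    assert(c->len > 0);
    float *p = c->base + c->idx;
    long n = (long)c->len;
    long next = ((long)c->idx + m) % n;
    if (next < 0)
        next += n;              /* C's % keeps the sign of the dividend */
    c->idx = (size_t)next;
    return p;
}

/* One output of an N-tap FIR filter, y[n] = sum_k h[k] * x[n-k], where N is
   the buffer length. On entry idx must point at the oldest sample. */
static float fir_step(circ_buf *d, const float *h, float x)
{
    float acc = 0.0f;
    d->base[d->idx] = x;                   /* newest sample overwrites oldest */
    for (size_t k = 0; k < d->len; k++)    /* read x[n], x[n-1], ... (I -= 1) */
        acc += h[k] * *circ_next(d, -1);
    circ_next(d, +1);                      /* back at x[n]; step to the oldest sample */
    return acc;
}
```

Feeding an impulse (1, 0, 0, 0) through a 3-tap filter with h = {1, 2, 3} returns 1, 2, 3, 0: the coefficients come back out because the index, not the data, moves through the delay line.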

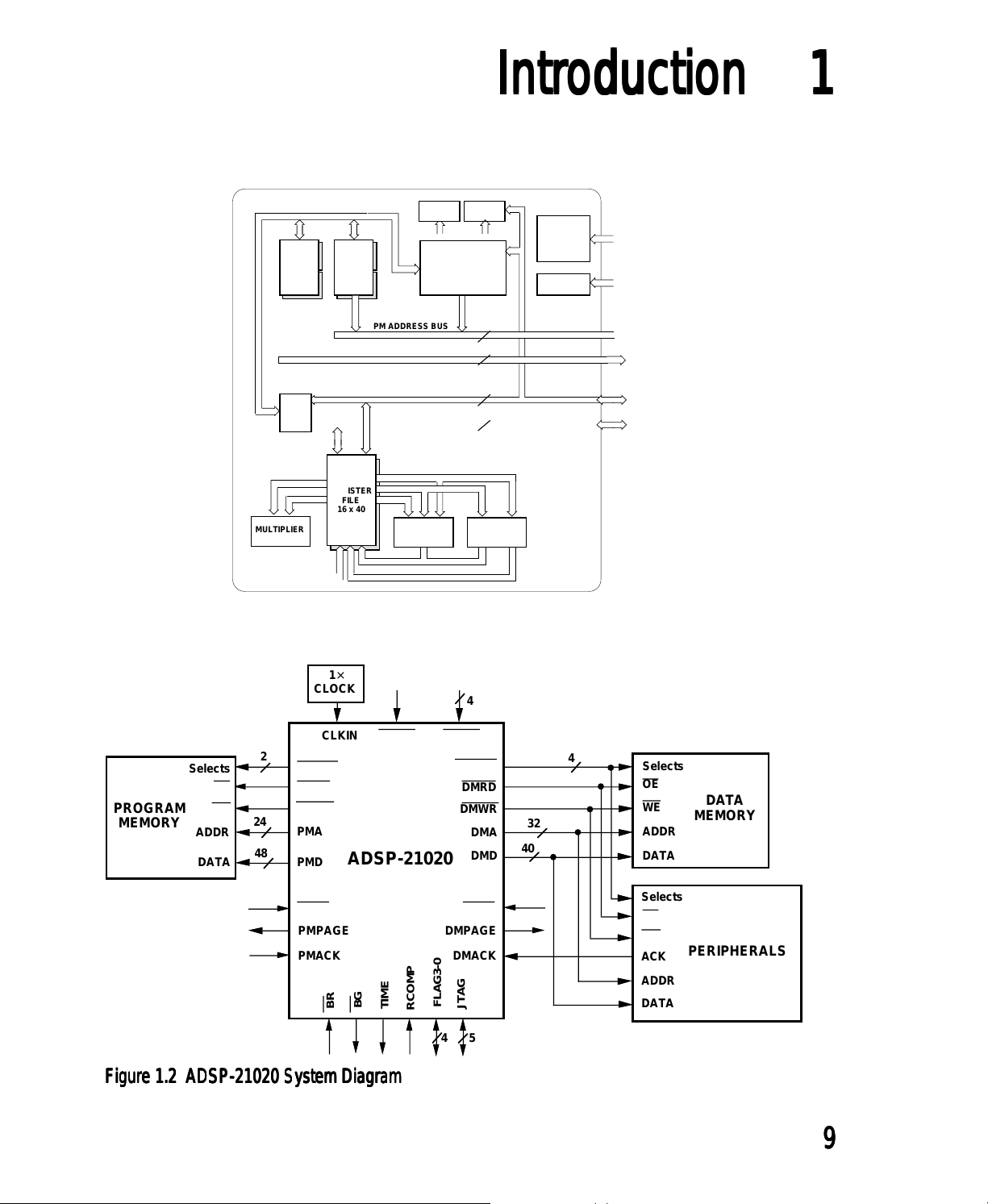

1.4.2 ADSP-21020 DSP

The ADSP-21020 is the first member of the ADSP-21000 family. It is a complete implementation of the family base architecture. Figure 1.1 shows the block diagram of the ADSP-21020 and Figure 1.2 shows a system diagram.

Figure 1.1 ADSP-21020 Block Diagram
[Diagram: program sequencer with 32 x 48 instruction cache and timer; DAG 1 (8 x 4 x 32) and DAG 2 (8 x 4 x 24); PM and DM address and data buses joined by a bus connect; 16 x 40 register file serving the multiplier, barrel shifter, and ALU; JTAG test and emulation; flags.]

Figure 1.2 ADSP-21020 System Diagram
[Diagram: the ADSP-21020 connects to program memory through PMA (24 bits) and PMD (48 bits), and to data memory and peripherals through DMA (32 bits) and DMD (40 bits), with select, read/write, and acknowledge lines; other signals include CLKIN, RESET, IRQ3-0, FLAG3-0, TIMEXP, BR, BG, and JTAG.]


1.4.3 ADSP-21060 SHARC

The ADSP-21060 SHARC (Super Harvard Architecture Computer) is a single-chip 32-bit computer optimized for signal computing applications. The ADSP-21060 SHARC has the following key features:

Four Megabit Configurable On-Chip SRAM
• Dual-Ported for Independent Access by Base Processor and DMA
• Configurable as Maximum 128K Words Data Memory (32-Bit), 80K Words Program Memory (48-Bit), or Combinations of Both Up To 4 Mbits

Off-Chip Memory Interfacing
• 4 Gigawords Addressable (32-bit Address)
• Programmable Wait State Generation, Page-Mode DRAM Support

DMA Controller


Trigonometric, Mathematical & Transcendental Functions

This chapter contains listings and descriptions of several useful trigonometric, mathematical and transcendental functions. The functions are:

Trigonometric
• sine/cosine approximation
• tangent approximation
• arctangent approximation

Mathematical
• square root
• square root with single precision
• inverse square root
• inverse square root with single precision
• division

Transcendental
• logarithm
• exponential
• power

2.1 SINE/COSINE APPROXIMATION

The sine and cosine functions are fundamental operations commonly used in digital signal processing algorithms, such as simple tone generation and calculation of sine tables for FFTs. This section describes how to calculate the sine and cosine functions.

This ADSP-210xx implementation of sin(x) is based on a min-max polynomial approximation algorithm described in [CODY]. Computation of the function sin(x) is reduced to the evaluation of a sine approximation over a small interval that is symmetrical about zero.


Let

$$|x| = N\pi + f, \qquad \text{where } |f| \le \frac{\pi}{2}.$$

Then

$$\sin(x) = \operatorname{sign}(x)\cdot\sin(f)\cdot(-1)^{N}.$$

Once the sign of the input, x, is determined, the value of N can be determined. The next step is to calculate f. In order to maintain the maximum precision, f is calculated as follows, where $X_N$ is the floating-point value of N:

$$f = (|x| - X_N C_1) - X_N C_2$$

The constants $C_1$ and $C_2$ are determined such that $C_1$ is approximately equal to pi (π), and $C_1 + C_2$ represents pi to three or four decimal places beyond the precision of the ADSP-210xx.

For devices that represent floating-point numbers in 32 bits, Cody and Waite suggest a seven-term min-max polynomial of the form $R(g) = g\cdot P(g)$, where $g = f^2$. When expanded, the sine approximation for f is represented as

$$\sin(f) = f + f\cdot R(g) = f + f\cdot\Big(\big(\big(\big(\big(\big(r_7\,g + r_6\big)g + r_5\big)g + r_4\big)g + r_3\big)g + r_2\big)g + r_1\Big)\,g$$

With sin(f) calculated, sin(x) can be constructed. The cosine function is calculated similarly, using the trigonometric identity

$$\cos(x) = \sin\!\left(x + \frac{\pi}{2}\right)$$

2.1.1 Implementation

The two listings illustrate the sine approximation and the calling of the sine approximation. The first listing, sin.asm, is an implementation of the algorithm for calculation of sines and cosines. The second listing, sinetest.asm, is an example of a program that calls the sine approximation.


Implementation of the sine algorithm on ADSP-21000 family processors is straightforward. In the first listing below, sin.asm, two segments are defined. The first segment, defined with the .SEGMENT directive, contains the assembly code for the sine/cosine approximation. The second segment is a data segment that contains the constants necessary to perform this approximation.

The code is structured as a called subroutine, where the parameter x is passed into this routine using register F0. When the subroutine is finished executing, the value sin(x) or cos(x) is returned in the same register, F0. The variables i_reg and l_reg are specified as an index register and a length register, in either data address generator on the ADSP-21000 family. These registers are used in the program to point to elements of the data table, sine_data. Elements of this table are accessed indirectly within this program. Specifically, index registers I0-I7 are used if the data table containing all the constants is put in data memory, and index registers I8-I15 are used if the data table is put in program memory. The variable mem must be defined as program memory, PM, or data memory, DM.

The include file, asm_glob.h, contains definitions of mem, l_reg, and i_reg. You can alter these definitions to suit your needs. The second listing, sinetest.asm, is an example of a routine that calls the cosine and sine routines.

There are two entry points in the subroutine, sin.asm. They are labeled cosine and sine. Code execution begins at these labels. The calling program uses these labels by executing the instruction

call sine (db);

or

call cosine (db);

with the argument x in register F0. These calls are delayed branch calls that efficiently use the instruction pipeline on the ADSP-21000 family. In a delayed branch, the two instructions following the branch instruction are executed before the branch takes effect. This avoids flushing the instruction pipeline before taking a branch.


2.1.2 Code Listings

2.1.2.1 Sine/Cosine Approximation Subroutine

/***************************************************************************
File Name
    SIN.ASM
Version
    0.03 7/4/90
Purpose
    Subroutine to compute the Sine or Cosine values of a floating point input.
Equations Implemented
    Y=SIN(X) or
    Y=COS(X)
Calling Parameters
    F0 = Input Value X=[6E-20, 6E20]
    l_reg=0
Return Values
    F0 = Sine (or Cosine) of input Y=[-1,1]
Registers Affected
    F0, F2, F4, F7, F8, F12
    i_reg
Cycle Count
    38 Cycles
# PM Locations
    34 words
# DM Locations
    11 Words
***************************************************************************/


#include "asm_glob.h"

.SEGMENT/PM Assembly_Library_Code_Space;
.PRECISION=MACHINE_PRECISION;

#define half_PI 1.57079632679489661923
.GLOBAL cosine, sine;

/**** Cosine/Sine approximation program starts here. ****/
/**** Based on algorithm found in Cody and Waite. ****/

cosine:
        i_reg=sine_data;                /*Load pointer to data*/
        F8=ABS F0;                      /*Use absolute value of input*/
        F12=0.5;                        /*Used later after modulo*/
        F2=1.57079632679489661923;      /*Load PI/2 (added to |X| below)*/
        JUMP compute_modulo (DB);       /*Follow sin code from here!*/
        F4=F8+F2, F2=mem(i_reg,1);      /*Delay slot: F4=|X|+PI/2, F2=1/PI*/
        F7=1.0;                         /*Delay slot: sign flag is set to 1*/

sine:
        i_reg=sine_data;                /*Load pointer to data*/
        F7=1.0;                         /*Assume a positive sign*/
        F12=0.0;                        /*Used later after modulo*/
        F8=ABS F0, F2=mem(i_reg,1);     /*F8=|X|, F2=1/PI*/
        F0=PASS F0, F4=F8;              /*Set flags from X, F4=|X|*/
        IF LT F7=-F7;                   /*If input was negative, invert sign*/

compute_modulo:
        F4=F4*F2;                       /*Compute fp modulo value*/
        R2=FIX F4;                      /*Round nearest fractional portion*/
        BTST R2 BY 0;                   /*Test for odd number*/
        IF NOT SZ F7=-F7;               /*Invert sign if odd modulo*/
        F4=FLOAT R2;                    /*Return to fp*/
        F4=F4-F12, F2=mem(i_reg,1);     /*Add cos adjust if necessary, F4=XN*/

compute_f:
        F12=F2*F4, F2=mem(i_reg,1);     /*Compute XN*C1*/
        F2=F2*F4, F12=F8-F12;           /*Compute |X|-XN*C1, and XN*C2*/
        F8=F12-F2, F4=mem(i_reg,1);     /*Compute f=(|X|-XN*C1)-XN*C2*/
        F12=ABS F8;                     /*Need magnitude for test*/
        F4=F12-F4, F12=F8;              /*Check for sin(x)=x*/
        IF LT JUMP compute_sign;        /*If |f|<eps, sin(f)=f: return result in F0*/

compute_R:
        F12=F12*F12, F4=mem(i_reg,1);   /*g=f*f, F4=R7*/


        LCNTR=6, DO compute_poly UNTIL LCE;
            F4=F12*F4, F2=mem(i_reg,1); /*Compute sum*g*/
compute_poly:
            F4=F2+F4;                   /*Compute sum=sum+next r*/
        F4=F12*F4;                      /*Final multiply by g*/
        RTS (DB), F4=F4*F8;             /*Compute f*R*/
        F12=F4+F8;                      /*Compute Result=f+f*R*/
compute_sign:
        F0=F12*F7;                      /*Restore sign of result*/
        RTS;                            /*This return only for sin(eps)=eps path*/
.ENDSEG;

.SEGMENT/SPACE Assembly_Library_Data_Space;
.PRECISION=MEMORY_PRECISION;

.VAR sine_data[11] =
        0.31830988618379067154,         /*1/PI*/
        3.14160156250000000000,         /*C1, almost PI*/
        -8.908910206761537356617E-6,    /*C2, PI=C1+C2*/
        9.536743164E-7,                 /*eps, sin(eps)=eps*/
        -0.737066277507114174E-12,      /*R7*/
        0.160478446323816900E-9,        /*R6*/
        -0.250518708834705760E-7,       /*R5*/
        0.275573164212926457E-5,        /*R4*/
        -0.198412698232225068E-3,       /*R3*/
        0.833333333327592139E-2,        /*R2*/
        -0.166666666666659653;          /*R1*/
.ENDSEG;


Listing 2.1 sin.asm


2.1.2.2 Example Calling Routine

/*********************************************************************************

File Name       SINTEST.ASM

Purpose         Example calling routine for the sine function.

*********************************************************************************/

#include "asm_glob.h"
#include "def21020.h"

#define N 4
#define PIE 3.141592654

.SEGMENT/DM dm_data;                    /* Declare variables in data memory */
.VAR input[N]= PIE/2, PIE/4, PIE*3/4, 12.12345678;          /* test data */
.VAR output[N];                                             /* results here */
.VAR correct[N]=1.0, .707106781, .707106781, -.428573949;   /* correct results */
.ENDSEG;

.SEGMENT/PM pm_rsti;                    /* The reset vector resides in this space */
        DMWAIT=0x21;                    /* Set data memory waitstates to zero */
        PMWAIT=0x21;                    /* Set program memory waitstates to zero */
        JUMP start;
.ENDSEG;

.EXTERN sine;

.SEGMENT/PM pm_code;
start:
        bit set mode2 0x10; nop; read cache 0; bit clr mode2 0x10;
        M1=1;
        B0=input;                       /* writing B0 also loads I0 */
        L0=0;
        I1=output;
        L1=0;
        lcntr=N, do calcit until lce;
            CALL sine (db);
            l_reg=0;                    /* delay slot: l_reg=0 as sine requires */
            f0=dm(i0,m1);               /* delay slot: load next input X into F0 */
calcit:     dm(i1,m1)=f0;               /* store the result returned in F0 */
end:

        IDLE;
.ENDSEG;

Listing 2.2 sintest.asm

2.2 TANGENT APPROXIMATION

The tangent function is one of the fundamental trigonometric signal processing operations. This section shows how to approximate the tangent function in software. The algorithm used is taken from [CODY].

Tan(x) is calculated in three steps:

1. The argument x (which may be any real value) is reduced to a related argument f with a magnitude of at most π/4 (that is, f lies in the interval [-π/4, π/4], a range of width π/2).

2. Tan(f) is computed using a min-max polynomial approximation.

3. Tan(x) is reconstructed from the result for f.

2.2.1 Implementation

The implementation of the tangent approximation algorithm uses 38 instruction cycles and consists of three logical steps.

First, the argument x is reduced to the argument f. This argument reduction is done in the sections labeled compute_modulo and compute_f. The computation needs the factor π/2 to reduce x (which may be any floating-point value) to f (a normalized value in the interval [-π/4, π/4]). To get an accurate result, the constants $C_1$ and $C_2$ are chosen so that $C_1 + C_2$ approximates π/2 to three or four decimal places beyond machine precision. $C_1$ is chosen to be close to π/2. $C_2$ is a small correction that, when added to $C_1$, gives a very accurate representation of π/2.

Notice that in the argument reduction, the assembly instructions are all multifunction instructions. ADSP-21000 family processors can execute a data move or a register move in parallel with a computation. Because multifunction instructions execute in a single cycle, the overhead for the memory move is eliminated.


A special case arises when tan(f) ≈ f. This holds when the absolute value of f is less than a small threshold, epsilon, which is very close to 0. In this case, the routine jumps ahead and calculates the tangent using f and 1 as the operands of the final divide.

The second step is to approximate the tangent function using a min-max polynomial expansion. Two calculations are performed, P(g) and Q(g), where $g = f \cdot f$. Four coefficients are used in the calculation of both P(g) and Q(g). The section labeled compute_P makes this calculation:

$$P(g) = \big((p_3\,g + p_2)\,g + p_1\big)\,g\,f$$

where $g = f \cdot f$ and f is the reduced argument of x. The value $f \cdot P(g)$ is stored in register F8.
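A minimal C rendering of the P(g) equation is shown below. It evaluates the terms in the same nested order as the equation. The function name is hypothetical, and p1, p2 and p3 are passed in because their values do not appear in this section. The nesting is the same multiply-then-add (Horner) pattern used by the compute_poly loop in sin.asm.

```c
/* Right-hand side of the P(g) equation, evaluated in the same nested
   (Horner) order. p1, p2, p3 are parameters because the coefficient
   values are not listed in this section. */
double tan_P_term(double f, double p1, double p2, double p3)
{
    double g = f * f;                        /* g = f*f */
    return ((p3 * g + p2) * g + p1) * g * f; /* ((p3*g + p2)*g + p1)*g*f */
}
```

For example, with f = 0.5 and (p1, p2, p3) = (1, 2, 3), g = 0.25 and the function returns 0.2109375.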


The section labeled compute_Q

Q(g) = ((q

The third step in the calculation of the tangent function is to divide P(g) by Q(g). If the integer multiple of π/2 removed during the argument reduction is even, then the tangent function is

$$\tan(x) = \tan(f) = \frac{f \cdot P(g)}{Q(g)}$$

If that integer is odd, then tan(x) = -cot(f), so the divide is inverted:

$$\tan(x) = -\frac{Q(g)}{f \cdot P(g)}$$