Page 1

Page 2

DISTANT SPEECH

RECOGNITION

Distant Speech Recognition Matthias W¨olfel and John McDonough

© 2009 John Wiley & Sons, Ltd. ISBN: 978-0-470-51704-8

Page 3

DISTANT SPEECH

RECOGNITION

Matthias W¨olfel

Universit¨at Karlsruhe (TH), Germany

and

John McDonough

Universit¨at des Saarlandes, Germany

A John Wiley and Sons, Ltd., Publication

Page 4

This edition first published 2009

© 2009 John Wiley & Sons Ltd

Registered office

John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, United Kingdom

For details of our global editorial offices, for customer services and for information about how to apply for

permission to reuse the copyright material in this book please see our website at www.wiley.com.

The right of the author to be identified as the author of this work has been asserted in accordance with the

Copyright, Designs and Patents Act 1988.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in

any form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by

the UK Copyright, Designs and Patents Act 1988, without the prior permission of the publisher.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print may not be

available in electronic books.

Designations used by companies to distinguish their products are often claimed as trademarks. All brand names

and product names used in this book are trade names, service marks, trademarks or registered trademarks of their

respective owners. The publisher is not associated with any product or vendor mentioned in this book. This

publication is designed to provide accurate and authoritative information in regard to the subject matter covered.

It is sold on the understanding that the publisher is not engaged in rendering professional services. If professional

advice or other expert assistance is required, the services of a competent professional should be sought.

Wiley also publishes its books in a variety of electronic formats. Some content that appears

in print may not be available in electronic books.

Library of Congress Cataloging-in-Publication Data

W¨olfel, Matthias.

Distant speech recognition / Matthias W¨olfel, John McDonough.

p. cm.

Includes bibliographical references and index.

ISBN 978-0-470-51704-8 (cloth)

1. Automatic speech recognition. I. McDonough, John (John W.) II. Title.

TK7882.S65W64 2009

006.4

54–dc22

2008052791

A catalogue record for this book is available from the British Library

ISBN 978-0-470-51704-8 (H/B)

Typeset in 10/12 Times by Laserwords Private Limited, Chennai, India

Printed and bound in Great Britain by Antony Rowe Ltd, Chippenham, Wiltshire

Page 5

Contents

Foreword xiii

Preface xvii

1 Introduction 1

1.1 Research and Applications in Academia and Industry 1

1.1.1 Intelligent Home and Office Environments 2

1.1.2 Humanoid Robots 3

1.1.3 Automobiles 4

1.1.4 Speech-to-Speech Translation 6

1.2 Challenges in Distant Speech Recognition 7

1.3 System Evaluation 9

1.4 Fields of Speech Recognition 10

1.5 Robust Perception 12

1.5.1 A Priori Knowledge 12

1.5.2 Phonemic Restoration and Reliability 12

1.5.3 Binaural Masking Level Difference 14

1.5.4 Multi-Microphone Processing 14

1.5.5 Multiple Sources by Different Modalities 15

1.6 Organizations, Conferences and Journals 16

1.7 Useful Tools, Data Resources and Evaluation Campaigns 18

1.8 Organization of this Book 18

1.9 Principal Symbols used Throughout the Book 23

1.10 Units used Throughout the Book 25

2Acoustics 27

2.1 Physical Aspect of Sound 27

2.1.1 Propagation of Sound in Air 28

2.1.2 The Speed of Sound 29

2.1.3 Wave Equation and Velocity Potential 29

2.1.4 Sound Intensity and Acoustic Power 31

2.1.5 Reflections of Plane Waves 32

2.1.6 Reflections of Spherical Waves 33

Page 6

vi Contents

2.2 Speech Signals 34

2.2.1 Production of Speech Signals 34

2.2.2 Units of Speech Signals 36

2.2.3 Categories of Speech Signals 39

2.2.4 Statistics of Speech Signals 39

2.3 Human Perception of Sound 41

2.3.1 Phase Insensitivity 42

2.3.2 Frequency Range and Spectral Resolution 42

2.3.3 Hearing Level and Speech Intensity 42

2.3.4 Masking 44

2.3.5 Binaural Hearing 45

2.3.6 Weighting Curves 45

2.3.7 Virtual Pitch 46

2.4 The Acoustic Environment 47

2.4.1 Ambient Noise 47

2.4.2 Echo and Reverberation 48

2.4.3 Signal-to-Noise and Signal-to-Reverberation Ratio 51

2.4.4 An Illustrative Comparison between Close and Distant

Recordings 52

2.4.5 The Influence of the Acoustic Environment on Speech Production 53

2.4.6 Coloration 54

2.4.7 Head Orientation and Sound Radiation 55

2.4.8 Expected Distances between the Speaker and the Microphone 57

2.5 Recording Techniques and Sensor Configuration 58

2.5.1 Mechanical C lassification of Microphones 58

2.5.2 Electrical Classification of Microphones 59

2.5.3 Characteristics of Microphones 60

2.5.4 Microphone Placement 60

2.5.5 Microphone Amplification 62

2.6 Summary and Further Reading 62

2.7 Principal Symbols 63

3 Signal Processing and Filtering Techniques 65

3.1 Linear Time-Invariant Systems 65

3.1.1 Time Domain Analysis 66

3.1.2 Frequency Domain Analysis 69

3.1.3 z-Transform Analysis 72

3.1.4 Sampling Continuous-Time Signals 79

3.2 The Discrete Fourier Transform 82

3.2.1 Realizing LTI Systems with the DFT 85

3.2.2 Overlap-Add Method 86

3.2.3 Overlap-Save M ethod 87

3.3 Short-Time Fourier Transform 87

3.4 Summary and Further Reading 90

3.5 Principal Symbols 91

Page 7

Contents vii

4 Bayesian Filters 93

4.1 Sequential Bayesian Estimation 95

4.2 Wiener Filter 98

4.2.1 Time Domain Solution 98

4.2.2 Frequency Domain Solution 99

4.3 Kalman Filter and Variations 101

4.3.1 Kalman Filter 101

4.3.2 Extended Kalman Filter 106

4.3.3 Iterated Extended Kalman Filter 107

4.3.4 Numerical Stability 108

4.3.5 Probabilistic Data Association Filter 110

4.3.6 Joint Probabilistic Data Association Filter 115

4.4 Particle Filters 121

4.4.1 Approximation of Probabilistic Expectations 121

4.4.2 Sequential Monte Carlo Methods 125

4.5 Summary and Further Reading 132

4.6 Principal Symbols 133

5 Speech Feature Extraction 135

5.1 Short-Time Spectral Analysis 136

5.1.1 Speech Windowing and Segmentation 136

5.1.2 The Spectrogram 137

5.2 Perceptually Motivated Representation 138

5.2.1 Spectral Shaping 138

5.2.2 Bark and Mel Filter Banks 139

5.2.3 Warping by Bilinear Transform – Time vs Frequency Domain 142

5.3 Spectral Estimation and Analysis 145

5.3.1 Power Spectrum 145

5.3.2 Spectral Envelopes 146

5.3.3 LP Envelope 147

5.3.4 MVDR Envelope 150

5.3.5 Perceptual LP Envelope 153

5.3.6 Warped LP Envelope 153

5.3.7 Warped MVDR Envelope 156

5.3.8 Warped-Twice MVDR Envelope 157

5.3.9 Comparison of Spectral Estimates 159

5.3.10 Scaling of Envelopes 160

5.4 Cepstral Processing 163

5.4.1 Definition and Characteristics of Cepstral Sequences 163

5.4.2 Homomorphic Deconvolution 166

5.4.3 Calculating Cepstral Coefficients 167

5.5 Comparison between Mel Frequency, Perceptual LP and warped

MVDR Cepstral Coefficient Front-Ends 168

5.6 Feature Augmentation 169

5.6.1 Static and Dynamic Parameter Augmentation 169

Page 8

viii Contents

5.6.2 Feature Augmentation by Temporal Patterns 171

5.7 Feature Reduction 171

5.7.1 Class Separability Measures 172

5.7.2 Linear Discriminant Analysis 173

5.7.3 Heteroscedastic Linear Discriminant Analysis 176

5.8 Feature-Space Minimum Phone Error 178

5.9 Summary and Further Reading 178

5.10 Principal Symbols 179

6 Speech Feature Enhancement 181

6.1 Noise and Reverberation in Various Domains 183

6.1.1 Frequency Domain 183

6.1.2 Power Spectral Domain 185

6.1.3 Logarithmic Spectral Domain 186

6.1.4 Cepstral Domain 187

6.2 Two Principal Approaches 188

6.3 Direct Speech Feature Enhancement 189

6.3.1 Wiener Filter 189

6.3.2 Gaussian and Super-Gaussian MMSE Estimation 191

6.3.3 RASTA Processing 191

6.3.4 Stereo-Based Piecewise Linear Compensation for Environments 192

6.4 Schematics of Indirect Speech Feature Enhancement 193

6.5 Estimating Additive Distortion 194

6.5.1 Voice Activity Detection-Based Noise Estimation 194

6.5.2 Minimum Statistics Noise Estimation 195

6.5.3 Histogram- and Quantile-Based Methods 196

6.5.4 Estimation of the a Posteriori and a Priori Signal-to-Noise Ratio 197

6.6 Estimating Convolutional Distortion 198

6.6.1 Estimating Channel Effects 199

6.6.2 Measuring the Impulse Response 200

6.6.3 Harmful Effects of Room Acoustics 201

6.6.4 Problem in Speech Dereverberation 201

6.6.5 Estimating Late Reflections 202

6.7 Distortion Evolution 204

6.7.1 Random Walk 204

6.7.2 Semi-random Walk by Polyak Averaging and Feedback 205

6.7.3 Predicted Walk by Static Autoregressive Processes 206

6.7.4 Predicted Walk by Dynamic Autoregressive Processes 207

6.7.5 Predicted Walk by Extended Kalman Filters 209

6.7.6 Correlated Prediction Error Covariance Matrix 210

6.8 Distortion Evaluation 211

6.8.1 Likelihood Evaluation 212

6.8.2 Likelihood Evaluation by a Switching Model 213

6.8.3 Incorporating the Phase 214

6.9 Distortion Compensation 215

Page 9

Contents ix

6.9.1 Spectral Subtraction 215

6.9.2 Compensating for Channel Effects 217

6.9.3 Distortion Compensation for Distributions 218

6.10 Joint Estimation of Additive and Convolutional Distortions 222

6.11 Observation Uncertainty 227

6.12 Summary and Further Reading 228

6.13 Principal Symbols 229

7 Search: Finding the Best Word Hypothesis 231

7.1 Fundamentals of Search 233

7.1.1 Hidden Markov Model: Definition 233

7.1.2 Viterbi Algorithm 235

7.1.3 Word Lattice Generation 238

7.1.4 Word Trace Decoding 240

7.2 Weighted Finite-State Transducers 241

7.2.1 Definitions 241

7.2.2 Weighted Composition 244

7.2.3 Weighted Determinization 246

7.2.4 Weight Pushing 249

7.2.5 Weighted Minimization 251

7.2.6 Epsilon Removal 253

7.3 Knowledge Sources 255

7.3.1 Grammar 256

7.3.2 Pronunciation Lexicon 263

7.3.3 Hidden Markov Model 264

7.3.4 Context Dependency Decision Tree 264

7.3.5 Combination of Knowledge Sources 273

7.3.6 Reducing Search Graph Size 274

7.4 Fast On-the-Fly Composition 275

7.5 Word and Lattice Combination 278

7.6 Summary and Further Reading 279

7.7 Principal Symbols 281

8 Hidden Markov Model Parameter Estimation 283

8.1 Maximum Likelihood Parameter Estimation 284

8.1.1 Gaussian Mixture Model Parameter Estimation 286

8.1.2 Forward– Backward Estimation 290

8.1.3 Speaker-Adapted Training 296

8.1.4 Optimal Regression Class Estimation 300

8.1.5 Viterbi and Label Training 301

8.2 Discriminative Parameter Estimation 302

8.2.1 Conventional Maximum Mutual Information Estimation

Formulae 302

8.2.2 Maximum Mutual Information Training on Word Lattices 306

8.2.3 Minimum Word and Phone Error Training 308

Page 10

x Contents

8.2.4 Maximum Mutual Information Speaker-Adapted Training 310

8.3 Summary and Further Reading 313

8.4 Principal Symbols 315

9 Feature and Model Transformation 317

9.1 Feature Transformation Techniques 318

9.1.1 Vocal Tract Length Normalization 318

9.1.2 Constrained Maximum Likelihood Linear Regression 319

9.2 Model Transformation Techniques 320

9.2.1 Maximum Likelihood Linear Regression 321

9.2.2 All-Pass Transform Adaptation 322

9.3 Acoustic Model Combination 332

9.3.1 Combination of Gaussians in the Logarithmic Domain 333

9.4 Summary and Further Reading 334

9.5 Principal Symbols 336

10 Speaker Localization and Tracking 337

10.1 Conventional Techniques 338

10.1.1 Spherical Intersection Estimator 339

10.1.2 Spherical Interpolation Estimator 341

10.1.3 Linear Intersection Estimator 342

10.2 Speaker Tracking with the Kalman Filter 345

10.2.1 Implementation Based on the Cholesky Decomposition 348

10.3 Tracking Multiple Simultaneous Speakers 351

10.4 Audio-Visual Speaker Tracking 352

10.5 Speaker Tracking with the Particle Filter 354

10.5.1 Localization Based on Time Delays of Arrival 356

10.5.2 Localization Based on Steered Beamformer Response Power 356

10.6 Summary and Further Reading 357

10.7 Principal Symbols 358

11 Digital Filter Banks 359

11.1 Uniform Discrete Fourier Transform Filter Banks 360

11.2 Polyphase Implementation 364

11.3 Decimation and Expansion 365

11.4 Noble Identities 368

11.5 Nyquist(M) Filters 369

11.6 Filter Bank Design of De Haan et al. 371

11.6.1 Analysis Prototype Design 372

11.6.2 Synthesis Prototype Design 375

11.7 Filter Bank Design with the Nyquist(M ) Criterion 376

11.7.1 Analysis Prototype Design 376

11.7.2 Synthesis Prototype Design 377

11.7.3 Alternative Design 378

Page 11

Contents xi

11.8 Quality Assessment of Filter Bank Prototypes 379

11.9 Summary and Further Reading 384

11.10 Principal Symbols 384

12 Blind Source Separation 387

12.1 Channel Quality and Selection 388

12.2 Independent Component Analysis 390

12.2.1 Definition of ICA 390

12.2.2 Statistical Independence and its Implications 392

12.2.3 ICA Optimization Criteria 396

12.2.4 Parameter Update Strategies 403

12.3 BSS Algorithms based on Second-Order Statistics 404

12.4 Summary and Further Reading 407

12.5 Principal Symbols 408

13 Beamforming 409

13.1 Beamforming Fundamentals 411

13.1.1 Sound Propagation and Array Geometry 411

13.1.2 Beam Patterns 415

13.1.3 Delay-and-Sum Beamformer 416

13.1.4 Beam Steering 421

13.2 Beamforming Performance Measures 426

13.2.1 Directivity 426

13.2.2 Array Gain 428

13.3 Conventional Beamforming Algorithms 430

13.3.1 Minimum Variance Distortionless Response Beamformer 430

13.3.2 Array Gain of the MVDR Beamformer 433

13.3.3 MVDR Beamformer Performance with Plane Wave

Interference 433

13.3.4 Superdirective Beamformers 437

13.3.5 Minimum Mean Square Error Beamformer 439

13.3.6 Maximum Signal-to-Noise Ratio Beamformer 441

13.3.7 Generalized Sidelobe Canceler 441

13.3.8 Diagonal Loading 445

13.4 Recursive Algorithms 447

13.4.1 Gradient Descent Algorithms 448

13.4.2 Least Mean Square Error Estimation 450

13.4.3 Recursive Least Squares Estimation 455

13.4.4 Square-Root Implementation of the RLS Beamformer 461

13.5 Nonconventional Beamforming Algorithms 465

13.5.1 Maximum Likelihood Beamforming 466

13.5.2 Maximum Negentropy Beamforming 471

13.5.3 Hidden Markov Model Maximum Negentropy Beamforming 477

13.5.4 Minimum Mutual Information Beamforming 480

13.5.5 Geometric Source Separation 487

Page 12

xii Contents

13.6 Array Shape Calibration 488

13.7 Summary and Further Reading 489

13.8 Principal Symbols 491

14 Hands On 493

14.1 Example Room Configurations 494

14.2 Automatic Speech Recognition Engines 496

14.3 Word Error Rate 498

14.4 Single-Channel Feature Enhancement Experiments 499

14.5 Acoustic Speaker-Tracking Experiments 501

14.6 Audio-Video Speaker-Tracking Experiments 503

14.7 Speaker-Tracking Performance vs Word Error Rate 504

14.8 Single-Speaker Beamforming Experiments 505

14.9 Speech Separation Experiments 507

14.10 Filter Bank Experiments 508

14.11 Summary and Further Reading 509

Appendices 511

A List of Abbreviations 513

B Useful Background 517

B.1 Discrete Cosine Transform 517

B.2 Matrix Inversion Lemma 518

B.3 Cholesky Decomposition 519

B.4 Distance Measures 519

B.5 Super-Gaussian Probability Density Functions 521

B.5.1 Generalized Gaussian pdf 521

B.5.2 Super-Gaussian pdfs with the Meier G-function 523

B.6 Entropy 528

B.7 Relative Entropy 529

B.8 Transformation Law of Probabilities 529

B.9 Cascade of Warping Stages 530

B.10 Taylor Series 530

B.11 Correlation and Covariance 531

B.12 Bessel Functions 531

B.13 Proof of the Nyquist–Shannon Sampling Theorem 532

B.14 Proof of Equations (11.31–11.32) 532

B.15 Givens Rotations 534

B.16 Derivatives with Respect to Complex Vectors 537

B.17 Perpendicular Projection Operators 540

Bibliography 541

Index 561

Page 13

Foreword

As the authors of Distant Speech Recognition note, automatic speech recognition is the

key enabling technology that will permit natural interaction between humans and intelligent machines. Core speech recognition technology has developed over the past decade

in domains such as office dictation and interactive voice response systems to the point

that it is now commonplace for customers to encounter automated speech-based intelligent

agents that handle at least the initial part of a user query for airline flight information, technical support, ticketing services, etc. While these limited-domain applications have been

reasonably successful in reducing the costs associated with handling telephone inquiries,

their fragility with respect to acoustical variability is illustrated by the difficulties that

are experienced when users interact with the systems using speakerphone input. As time

goes by, we will come to expect the range of natural human-machine dialog to grow to

include seamless and productive interactions in contexts such as humanoid robotic butlers

in our living rooms, information kiosks in large and reverberant public spaces, as well

as intelligent agents in automobiles while traveling at highway speeds in the presence of

multiple sources of noise. Nevertheless, this vision cannot be fulfilled until we are able

to overcome the shortcomings of present speech recognition technology that are observed

when speech is recorded at a distance from the speaker.

While we have made great progress over the past two decades in core speech recognition

technologies, the failure to develop techniques that overcome the effects of acoustical

variability in homes, classrooms, and public spaces is the major reason why automated

speech technologies are not generally available for use in these venues. Consequently,

much of the current research in speech processing is directed toward improving robustness

to acoustical variability of all types. Two of the major forms of environmental degradation

are produced by additive noise of various forms and the effects of linear convolution.

Research directed toward compensating for these problems has been in progress for more

than three decades, beginning with the pioneering work in the late 1970s of Steven Boll

in noise cancellation and Thomas Stockham in homomorphic deconvolution.

Additive noise arises naturally from sound sources that are present in the environment

in addition to the desired speech source. As the speech-to-noise ratio (SNR) decreases, it is

to be expected that speech recognition will become more difficult. In addition, the impact

of noise on speech recognition accuracy depends as much on the type of noise source as on

the SNR. While a number of statistical techniques are known to be reasonably effective in

dealing with the effects of quasi-stationary broadband additive noise of arbitrary spectral

coloration, compensation becomes much more difficult when the noise is highly transient

Page 14

xiv Foreword

in nature, as is the case with many types of impulsive machine noise on factory floors and

gunshots in military environments. Interference by sources such as background music or

background speech is especially difficult to handle, as it is both highly transient in nature

and easily confused with the desired speech signal.

Reverberation is also a natural part of virtually all acoustical environments indoors, and

it is a factor in many outdoor settings with reflective surfaces as well. The presence of

even a relatively small amount of reverberation destroys the temporal structure of speech

waveforms. This has a very adverse impact on the recognition accuracy that is obtained

from speech systems that are deployed in public spaces, homes, and offices for virtually

any application in which the user does not use a head-mounted microphone. It is presently

more difficult to ameliorate the effects of common room reverberation than it has been

to render speech systems robust to the effects of additive noise, even at fairly low SNRs.

Researchers have begun to make progress on this problem only recently, and the results

of work from groups around the world have not yet congealed into a clear picture of how

to cope with the problem of reverberation effectively and efficiently.

Distant Speech Recognition by Matthias W¨olfel and John McDonough provides an

extraordinarily comprehensive exposition of the most up-to-date techniques that enable

robust distant speech recognition, along with very useful and detailed explanations of

the underlying science and technology upon which these techniques are based. The

book includes substantial discussions of the major sources of difficulties along with

approaches that are taken toward their resolution, summarizing scholarly work and practical experience around the world that has accumulated over decades. Considering both

single-microphone and multiple-microphone techniques, the authors address a broad array

of approaches at all levels of the system, including methods that enhance the waveforms

that are input to the system, methods that increase the effectiveness of features that are

input to speech recognition systems, as well as methods that render the internal models

that are used to characterize speech sounds more robust to environmental variability.

This book will be of great interest to several types of readers. First (and most obviously), readers who are unfamiliar with the field of distant speech recognition can learn in

this volume all of the technical background needed to construct and integrate a complete

distant speech recognition system. In addition, the discussions in this volume are presented

in self-contained chapters that enable technically literate readers in all fields to acquire a

deep level of knowledge about relevant disciplines that are complementary to their own

primary fields of expertise. Computer scientists can profit from the discussions on signal

processing that begin with elementary signal representation and transformation and lead

to advanced topics such as optimal Bayesian filtering, multirate digital signal processing,

blind source separation, and speaker tracking. Classically-trained engineers will benefit

from the detailed discussion of the theory and implementation of computer speech recognition systems including the extraction and enhancement of features representing speech

sounds, statistical modeling of speech and language, along with the optimal search for the

best available match between the incoming utterance and the internally-stored statistical

representations of speech. Both of these groups will benefit from the treatments of physical acoustics, speech production, and auditory perception that are too frequently omitted

from books of this type. Finally, the detailed contemporary exposition will serve to bring

experienced practitioners who have been in the field for some time up to date on the most

current approaches to robust recognition for language spoken from a distance.

Page 15

Foreword xv

Doctors W¨olfel and McDonough have provided a resource to scientists and engineers

that will serve as a valuable tutorial exposition and practical reference for all aspects

associated with robust speech recognition in practical environments as well as for speech

recognition in general. I am very pleased that this information is now available so easily

and conveniently in one location. I fully expect that the publication of Distant Speech

Recognition will serve as a significant accelerant to future work in the field, bringing

us closer to the day in which transparent speech-based human-machine interfaces will

become a practical reality in our daily lives everywhere.

Richard M. Stern

Pittsburgh, PA, USA

Page 16

Preface

Our primary purpose in writing this book has been to cover a broad body of techniques

and diverse disciplines required to enable reliable and natural verbal interaction between

humans and computers. In the early nineties, many claimed that automatic speech recognition (ASR) was a “solved problem” as the word error rate (WER) had dropped below the

5% level for professionally trained speakers such as in the Wall Street Journal (WSJ) corpus. This perception changed, however, when the Switchboard Corpus, the first corpus of

spontaneous speech recorded over a telephone channel, became available. In 1993, the first

reported error rates on Switchboard, obtained largely with ASR systems trained on WSJ

data, were over 60%, which represented a twelve-fold degradation in accuracy. Today the

ASR field stands at the threshold of another radical change. WERs on telephony speech

corpora such as the Switchboard Corpus have dropped below 10%, prompting many to

once more claim that ASR is a solved problem. But such a claim is credible only if one

ignores the fact that such WERs are obtained with close-talking microphones,suchas

those in telephones, and when only a single person is speaking. One of the primary hindrances to the widespread acceptance of ASR as the man-machine interface of first choice

is the necessity of wearing a head-mounted microphone. This necessity is dictated by the

fact that, under the current state of the art, WERs with microphones located a meter or

more away from the speaker’s mouth can catastrophically increase, making most applications impractical. The interest in developing techniques for overcoming such practical

limitations is growing rapidly within the research community. This change, like so many

others in the past, is being driven by the availability of new corpora, namely, speech

corpora recorded with far-field sensors. Examples of such include the meeting corpora

which have been recorded at various sites including the International Computer Science

Institute in Berkeley, California, Carnegie Mellon University in Pittsburgh, Pennsylvania

and the National Institute of Standards and Technologies (NIST) near Washington, D.C.,

USA. In 2005, conversational speech corpora that had been collected with microphone

arrays became available for the first time, after being released by the European Union

projects Computers in the Human Interaction Loop (CHIL) and Augmented Multiparty

Interaction (AMI). Data collected by both projects was subsequently shared with NIST

for use in the semi-annual Rich Transcription evaluations it sponsors. In 2006 Mike Lincoln at Edinburgh University in Scotland collected the first corpus of overlapping speech

captured with microphone arrays. This data collection effort involved real speakers who

read sentences from the 5,000 word WSJ task.

Page 17

xviii Preface

In the view of the current authors, ground breaking progress in the field of distant speech

recognition can only be achieved if the mainstream ASR community adopts methodologies and techniques that have heretofore been confined to the fringes. Such technologies

include speaker tracking for determining a speaker’s position in a room, beamforming for

combining the signals from an array of microphones so as to concentrate on a desired

speaker’s speech and suppress noise and reverberation, and source separation for effective

recognition of overlapping speech. Terms like filter bank, generalized sidelobe canceller,

and diffuse noise field must become household words within the ASR community. At

the same time researchers in the fields of acoustic array processing and source separation

must become more knowledgeable about the current state of the art in the ASR field.

This community must learn to speak the language of word lattices, semi-tied covariance

matrices, and weighted finite-state transducers. For too long, the two research communities have been content to effectively ignore one another. With a few noteable exceptions,

the ASR community has behaved as if a speech signal does not exist before it has been

converted to cepstral coefficients. The array processing community, on the other hand,

continues to publish experimental results obtained on artificial data, with ASR systems

that are nowhere near the state of the art, and on tasks that have long since ceased to

be of any research interest in the mainstream ASR world. It is only if each community

adopts the best practices of the other that they can together meet the challenge posed by

distant speech recognition. We hope with our book to make a step in this direction.

Acknowledgments

We wish to thank the many colleagues who have reviewed parts of this book and provided

very useful feedback for improving its quality and correctness. In particular we would

like to thank the following people: Elisa Barney Smith, Friedrich Faubel, Sadaoki Furui,

Reinhold H¨ab-Umbach, Kenichi Kumatani, Armin Sehr, Antske Fokkens, Richard Stern,

Piergiorgio Svaizer, Helmut W¨olfel, Najib Hadir, Hassan El-soumsoumani, and Barbara

Rauch. Furthermore we would like to thank Tiina Ruonamaa, Sarah Hinton, Anna Smart,

Sarah Tilley, and Brett Wells at Wiley who have supported us in writing this book and

provided useful insights into the process of producing a book, not to mention having

demonstrated the patience of saints through many delays and deadline extensions. We

would also like to thank the university library at Universit¨at Karlsruhe (TH) for providing

us with a great deal of scholarly material, either online or in books.

We would also like to thank the people who have supported us during our careers in

speech recognition. First of all thanks is due to our Ph.D. supervisors Alex Waibel, Bill

Byrne, and Frederick Jelinek who have fostered our interest in the field of automatic

speech recognition. Satoshi Nakamura, Mari Ostendorf, Dietrich Klakow, Mike Savic,

Gerasimos (Makis) Potamianos, and Richard Stern always proved more than willing to

listen to our ideas and scientific interests, for which we are grateful. We would furthermore

like to thank IEEE and ISCA for providing platforms for exchange, publications and for

hosting various conferences. We are indebted to Jim Flanagan and Harry Van Trees, who

were among the great pioneers in the array processing field. We are also much obliged to

the tireless employees at NIST, including Vince Stanford, Jon Fiscus and John Garofolo,

for providing us with our first real microphone array, the Mark III, and hosting the

annual evaluation campaigns which have provided a tremendous impetus for advancing

Page 18

Preface xix

the entire field. Thanks is due also to Cedrick Roch´et for having built the Mark III

while at NIST, and having improved it while at Universit¨at Karlsruhe (TH). In the latter

effort, Maurizio Omologo and his coworkers at ITC-irst in Trento, Italy were particularly

helpful. We would also like to thank Kristian Kroschel at Universit¨at Karlsruhe (TH) for

having fostered our initial interest in microphone arrays and agreeing to collaborate in

teaching a course on the subject. Thanks is due also to Mike Riley and Mehryar Mohri

for inspiring our interest in weighted finite-state transducers. Emilian Stoimenov was an

important contributor to many of the finite-state transducer techniques described here.

And of course, the list of those to whom we are indebted would not be complete if we

failed to mention the undergraduates and graduate students at Universit¨at Karlsruhe (TH)

who helped us to build an instrumented seminar room for the CHIL project, and thereafter

collect the audio and video data used for many of the experiments described in the final

chapter of this work. These include Tobias Gehrig, Uwe Mayer, Fabian Jakobs, Keni

Bernardin, Kai Nickel, Hazim Kemal Ekenel, Florian Kraft, and Sebastian St¨uker. We

are also naturally grateful to the funding agencies who made the research described in

this book possible: the European Commission, the American Defense Advanced Research

Projects Agency, and the Deutsche Forschungsgemeinschaft.

Most important of all, our thanks goes to our families. In particular, we would like

to thank Matthias’ wife Irina W¨olfel, without whose support during the many evenings,

holidays and weekends devoted to writing this book, we would have had to survive

only on cold pizza and Diet Coke. Thanks is also due to Helmut and Doris W¨olfel, John

McDonough, Sr. and Christopher McDonough, without whose support through life’s many

trials, this book would not have been possible. Finally, we fondly remember Kathleen

McDonough.

Matthias W¨olfel

Karlsruhe, Germany

John McDonough

Saarbr¨ucken, Germany

Page 19

1

Introduction

For humans, speech is the quickest and most natural form of communication. Beginning

in the late 19th century, verbal communication has been systematically extended through

technologies such as radio broadcast, telephony, TV, CD and MP3 players, mobile phones

and the Internet by voice over IP. In addition to these examples of one and two way verbal

human–human interaction, in the last decades, a great deal of research has been devoted to

extending our capacity of verbal communication with computers through automatic speech

recognition (ASR) and speech synthesis. The goal of this research effort has been and

remains to enable simple and natural human – computer interaction (HCI). Achieving this

goal is of paramount importance, as verbal communication is not only fast and convenient,

but also the only feasible means of HCI in a broad variety of circumstances. For example,

while driving, it is much safer to simply ask a car navigation system for directions, and

to receive them verbally, than to use a keyboard for tactile input and a screen for visual

feedback. Moreover, hands-free computing is also accessible for disabled users.

1.1 Research and Applications in Academia and Industry

Hands-free computing, much like hands-free speech processing, refers to computer interface configurations which allow an interaction between the human user and computer

without the use of the hands. Specifically, this implies that no close-talking microphone

is required. Hands-free computing is important because it is useful in a broad variety

of applications where the use of other common interface devices, such as a mouse or

keyboard, are impractical or impossible. Examples of some currently available hands-free

computing devices are camera-based head location and orientation-tracking systems, as

well as gesture-tracking systems. Of the various hands-free input modalities, however,

distant speech recognition (DSR) systems provide by far the most flexibility. When used

in combination with other hands-free modalities, they provide for a broad variety of HCI

possibilities. For example, in combination with a pointing gesture system it would become

possible to turn on a particular light in the room by pointing at it while saying, “Turn on

this light.”

The remainder of this section describes a variety of applications where speech recognition technology is currently under development or already available commercially. The

Distant Speech Recognition Matthias W¨olfel and John McDonough

© 2009 John Wiley & Sons, Ltd. ISBN: 978-0-470-51704-8

Page 20

2 Distant Speech Recognition

application areas include intelligent home and office environments, humanoid robots,

automobiles, and speech-to-speech translation.

1.1.1 Intelligent Home and Office Environments

A great deal of research effort is directed towards equipping household and office

devices – such as appliances, entertainment centers, personal digital assistants and

computers, phones or lights – with more user friendly interfaces. These devices should

be unobtrusive and should not require any special attention from the user. Ideally such

devices should know the mental state of the user and act accordingly, gradually relieving

household inhabitants and office workers from the chore of manual control of the

environment. This is possible only through the application of sophisticated algorithms

such as speech and speaker recognition applied to data captured with far-field sensors.

In addition to applications centered on HCI, computers are gradually gaining the capacity of acting as mediators for human – human interaction. The goal of the research in this

area is to build a computer that will serve human users in their interactions with other

human users; instead of requiring that users concentrate on their interactions with the

machine itself, the machine will provide ancillary services enabling users to attend exclusively to their interactions with other people. Based on a detailed understanding of human

perceptual context, intelligent rooms will be able to provide active assistance without any

explicit request from the users, thereby requiring a minimum of attention from and creating no interruptions for their human users. In addition to speech recognition, such services

need qualitative human analysis and human factors, natural scene analysis, multimodal

structure and content analysis, and HCI. All of these capabilities must also be integrated

into a single system.

Such interaction scenarios have been addressed by the recent projects Computers in

the Human Interaction Loop (CHIL), Augmented Multi-party Interaction (AMI), as well

as the successor of the latter Augmented Multi-party Interaction with Distance Access

(AMIDA), all of which were sponsored by the European Union. To provide such services

requires technology that models human users, their activities, and intentions. Automatically recognizing and understanding human speech plays a fundamental role in developing

such technology. Therefore, all of the projects mentioned above have sought to develop

technology for automatic transcription using speech data captured with distant microphones, determining who spoke when and where, and providing other useful services

such as the summarizations of verbal dialogues. Similarly, the Cognitive Assistant that

Learns and Organizes (CALO) project sponsored by the US Defense Advanced Research

Project Agency (DARPA), takes as its goal the extraction of information from audio data

captured during group interactions.

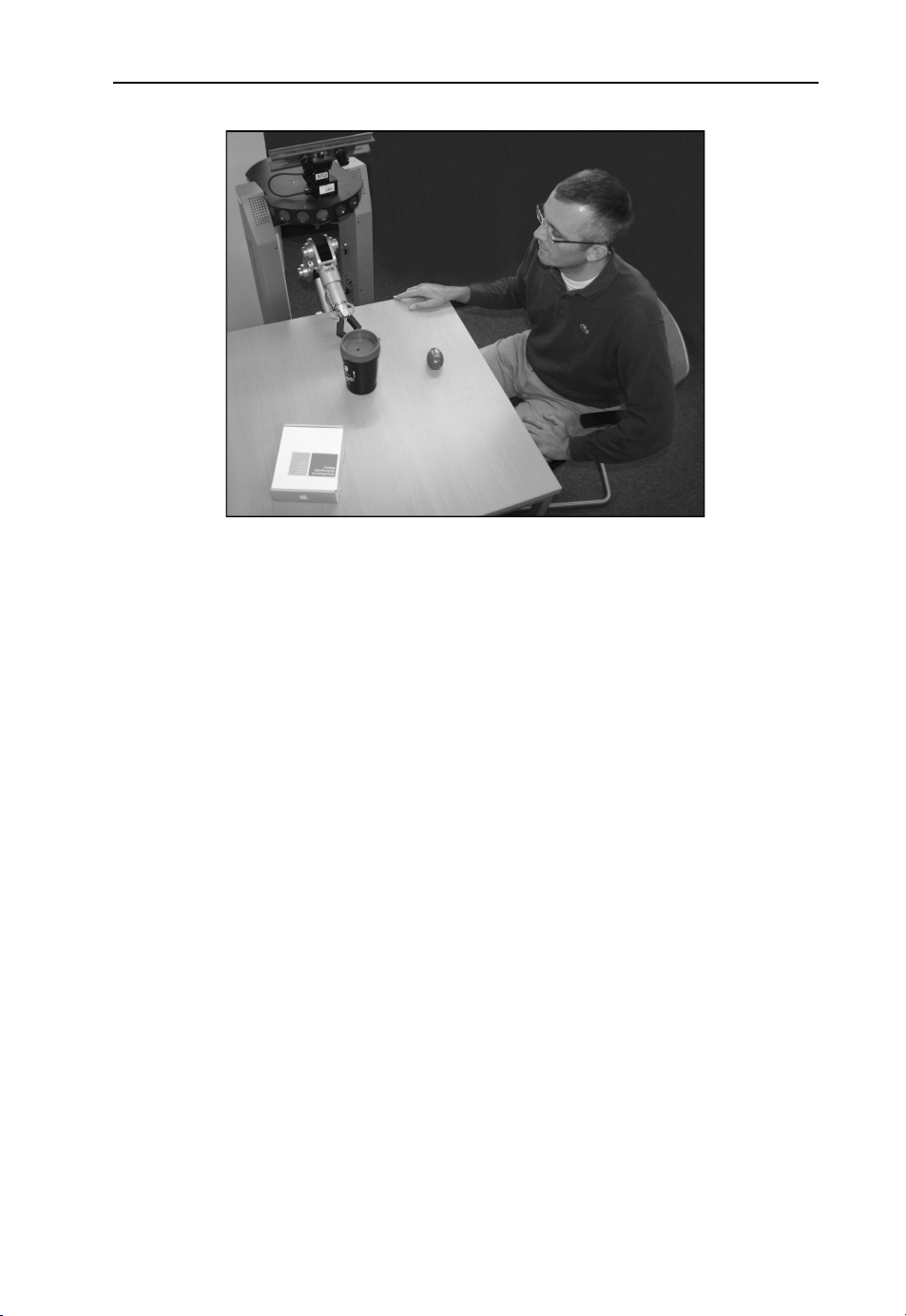

A typical meeting scenario as addressed by the AMIDA project is shown in Figure 1.1.

Note the three microphone arrays placed at various locations on the table, which are

intended to capture far-field speech for speaker tracking, beamforming, and DSR experiments. Although not shown in the photograph, the meeting participants typically also

wear close-talking microphones to provide the best possible sound capture as a reference

against which to judge the performance of the DSR system.

Page 21

Introduction 3

Figure 1.1 A typical AMIDA interaction. (© Photo reproduced by permission of the University

of Edinburgh)

1.1.2 Humanoid Robots

If humanoid robots are ever to be accepted as full ‘partners’ by their human users, they

must eventually develop perceptual capabilities similar to those possessed by humans, as

well as the capacity of performing a diverse collection of tasks, including learning, reasoning, communicating and forming goals through interaction with both users and instructors.

To provide for such capabilities, ASR is essential, because, as mentioned previously, spoken communication is the most common and flexible form of communication between

people. To provide a natural interaction between a human and a humanoid robot requires

not only the development of speech recognition systems capable of functioning reliably

on data captured with far-field sensors, but also natural language capabilities including a

sense of social interrelations and hierarchies.

In recent years, humanoid robots, albeit with very limited capabilities, have become

commonplace. They are, for example, deployed as entertainment or information systems.

Figure 1.2 shows an example of such a robot, namely, the humanoid tour guide robot

TPR-Robina

1

developed by Toyota. The robot is able to escort visitors around the Toyota Kaikan Exhibition Hall and to interact with them through a combination of verbal

communication and gestures.

While humanoid robots programmed for a limited range of tasks are already in

widespread use, such systems lack the capability of learning and adapting to new

environments. The development of such a capacity is essential for humanoid robots to

become helpful in everyday life. The Cognitive Systems for Cognitive Assistants (COSY)

project, financed by the European Union, has the objective to develops two kind of

robots providing such advanced capabilities. The first robot will find its way around a

1

ROBINA stands for ROBot as INtelligent Assistant.

Page 22

4 Distant Speech Recognition

Figure 1.2 Humanoid tour guide robot TPR-Robina by Toyota which escort visitors around Toyota

Kaikan Exhibition Hall in Toyota City, Aichi Prefecture, Japan. (© Photo reproduced by permission

of Toyota Motor Corporation)

complex building, showing others where to go and answering questions about routes

and locations. The second will be able to manipulate structured objects on a table top.

A photograph of the second COSY robot during an interaction session is shown in

Figure 1.3.

1.1.3 Automobiles

There is a growing trend in the automotive industry towards increasing both the number

and the complexity of the features available in high end models. Such features include

entertainment, navigation, and telematics systems, all of which compete for the driver’s

visual and auditory attention, and can increase his cognitive load. ASR in such automobile

environments would promote the “Eyes on the road, hands on the wheel” philosophy. This

would not only provide more convenience for the driver, but would in addition actually

Page 23

Introduction 5

Figure 1.3 Humanoid robot under development for the COSY project. (© Photo reproduced by

permission of DFKI GmbH)

enhance automotive safety. The enhanced safety is provided by hands-free operation of

everything but the car itself and thus would leave the driver free to concentrate on the

road and the traffic. Most luxury cars already have some sort of voice-control system

which are, for example, able to provide

• voice-activated, hands-free calling

Allows anyone in the contact list of the driver’s mobile phone to be called by voice

command.

• voice-activated music

Enables browsing through music using voice commands.

• audible information and text messages

Makes it possible to synthesize information and text messages, and have them read out

loud through speech synthesis.

This and other voice-controlled functionality will become available in the mass market

in the near future. An example of a voice-controlled car navigation system is shown in

Figure 1.4.

While high-end consumer automobiles have ever more features available, all of which

represent potential distractions from the task of driving the car, a police automobile has far

more devices that place demands on the driver’s attention. The goal of Project54 is to measure the cognitive load of New Hampshire state policeman – who are using speech-based

interfaces in their cars – during the course of their duties. Shown in Figure 1.5 is the

car simulator used by Project54 to measure the response times of police officers when

confronted with the task of driving a police cruiser as well as manipulating the several

devices contained therein through a speech interface.

Page 24

6 Distant Speech Recognition

Figure 1.4 Voice-controlled car navigation system by Becker. (© Photo reproduced by permission

of Herman/Becker Automotive Systems GmbH)

Figure 1.5 Automobile simulator at the University of New Hampshire. (© Photo reproduced by

permission of University of New Hampshire)

1.1.4 Speech-to-Speech Translation

Speech-to-speech translation systems provide a platform enabling communication with

others without the requirement of speaking or understanding a common language. Given

the nearly 6,000 different languages presently spoken somewhere on the Earth, and the

ever-increasing rate of globalization and frequency of travel, this is a capacity that will

in future be ever more in demand.

Even though speech-to-speech translation remains a very challenging task, commercial

products are already available that enable meaningful interactions in several scenarios. One

such system from National Telephone and Telegraph (NTT) DoCoMo of Japan works on a

common cell phone, as shown in Figure 1.6, providing voice-activated Japanese–English

and Japanese – Chinese translation. In a typical interaction, the user speaks short Japanese

phrases or sentences into the mobile phone. As the mobile phone does not provide

enough computational power for complete speech-to-text translation, the speech signal

is transformed into enhanced speech features which are transmitted to a server. The

server, operated by ATR-Trek, recognizes the speech and provides statistical translations,

which are then displayed on the screen of the cell-phone. The current system works

for both Japanese–English and Japanese– Chinese language pairs, offering translation in

Page 25

Introduction 7

Between Japanese and English

Between Japanese and Chinese

Figure 1.6 Cell phone, 905i Series by NTT DoCoMo, providing speech translation between

English and Japanese, and Chinese and Japanese developed by ATR and ATR-Trek. This service is

commercially available from NTT DoCoMo. (© Photos reproduced by permission of ATR-Trek)

both directions. For the future, however, preparation is underway to include support for

additional languages.

As the translations appear on the screen of the cell phone in the DoCoMo system, there

is a natural desire by users to hold the phone so that the screen is visible instead of next

to the ear. This would imply that the microphone is no longer only a few centimeters

from the mouth; i.e., we would have once more a distant speech recognition scenario.

Indeed, there is a similar trend in all hand-held devices supporting speech input.

Accurate translation of unrestricted speech is well beyond the capability of today’s

state-of-the-art research systems. Therefore, advances are needed to improve the

technologies for both speech recognition and speech translation. The development of

such technologies are the goals of the Technology and Corpora for Speech-to-Speech

Translation (TC-Star) project, financially supported by European Union, as well as the

Global Autonomous Language Exploitation (GALE) project sponsored by the DARPA.

These projects respectively aim to develop the capability for unconstrained conversational

speech-to-speech translation of English speeches given in the European Parliament, and

of broadcast news in Chinese or Arabic.

1.2 Challenges in Distant Speech Recognition

To guarantee high-quality sound capture, the microphones used in an ASR system should

be located at a fixed position, very close to the sound source, namely, the mouth of

the speaker. Thus body mounted microphones, such as head-sets or lapel microphones,

provide the highest sound quality. Such microphones are not practical in a broad variety

of situations, however, as they must be connected by a wire or radio link to a computer

and attached to the speaker’s body before the HCI can begin. As mentioned previously,

this makes HCI impractical in many situations where it would be most helpful; e.g., when

communicating with humanoid robots, or in intelligent room environments.

Although ASR is already used in several commercially available products, there are still

obstacles to be overcome in making DSR commercially viable. The two major sources

Page 26

8 Distant Speech Recognition

of degradation in DSR are distortions, such as additive noise and reverberation, and a

mismatch between training and test data , such as those introduced by speaking style

or accent. In DSR scenarios, the quality of the speech provided to the recognizer has a

decisive impact on system performance. This implies that speech enhancement techniques

are typically required to achieve the best possible signal quality.

In the last decades, many methods have been proposed to enable ASR systems to

compensate or adapt to mismatch due to interspeaker differences, articulation effects and

microphone characteristics. Today, those systems work well for different users on a broad

variety of applications, but only as long as the speech captured by the microphones is

free of other distortions. This explains the severe performance degradation encountered

in current ASR systems as soon as the microphone is moved away from the speaker’s

mouth. Such situations are known as distant, far-field or hands-free

2

speech recognition.

This dramatic drop in performance occurs mainly due to three different types of distortion:

3

• The first is noise, also known as background noise,

which is any sound other than the

desired speech, such as that from air conditioners, printers, machines in a factory, or

speech from other speakers.

• The second distortion is echo and reverberation, which are reflections of the sound

source arriving some time after the signal on the direct path.

• Other types of distortions are introduced by environmental factors such as room modes,

the orientation of the speaker’s head ,ortheLombard effect .

To limit the degradation in system performance introduced by these distortions, a great

deal of current research is devoted to exploiting several aspects of speech captured with

far-field sensors. In DSR applications, procedures already known from conventional ASR

can be adopted. For instance, confusion network combination is typically used with data

captured with a close-talking microphone to fuse word hypotheses obtained by using

various speech feature extraction schemes or even completely different ASR systems.

For DSR with multiple microphone conditions, confusion network combination can be

used to fuse word hypotheses from different microphones. Speech recognition with distant

sensors also introduces the possibility, however, of making use of techniques that were

either developed in other areas of signal processing, or that are entirely novel. It has

become common in the recent past, for example, to place a microphone array in the

speaker’s vicinity, enabling the speaker’s position to be determined and tracked with

time. Through beamforming techniques, a microphone array can also act as a spatial

filter to emphasize the speech of the desired speaker while suppressing ambient noise

or simultaneous speech from other speakers. Moreover, human speech has temporal,

spectral, and statistical characteristics that are very different from those possessed by

other signals for which conventional beamforming techniques have been used in the past.

Recent research has revealed that these characteristics can be exploited to perform more

effective beamforming for speech enhancement and recognition.

2

The latter term is misleading, inasmuch close-talking microphones are usually not held in the hand, but are

mounted to the head or body of the speaker.

3

This term is also misleading, in that the “background” could well be closer to the microphone than the “fore-

ground” signal of interest.

Page 27

Introduction 9

1.3 System Evaluation

Quantitative measures of the quality or performance of a system are essential for making

fundamental advances in the state-of-the-art. This fact is embodied in the often repeated

statement, “You improve what you measure.” In order to asses system performance, it is

essential to have error metrics or objective functions at hand which are well-suited to the

problem under investigation. Unfortunately, good objective functions do not exist for a

broad variety of problems, on the one hand, or else cannot be directly or automatically

evaluated, on the other.

Since the early 1980s, word error rate (WER) has emerged as the measure of first choice

for determining the quality of automatically-derived speech transcriptions. As typically

defined, an error in a speech transcription is of one of three types, all of which we will

now describe. A deletion occurs when the recognizer fails to hypothesize a word that

was spoken. An insertion occurs when the recognizer hypothesizes a word that was not

spoken. A substitution occurs when the recognizer misrecognizes a word. These three

errors are illustrated in the following partial hypothesis, where they are labeled with D,

I,andS, respectively:

Hyp: BUT ... WILL SELL THE CHAIN ... FOR EACH STORE SEPARATELY

Utt: ... IT WILL SELL THE CHAIN ... OR EACH STORE SEPARATELY

ID S

A more thorough discussion of word error rate is given in Section 14.1.

Even though widely accepted and used, word error rate is not without flaws. It has

been argued that the equal weighting of words should be replaced by a context sensitive

weighting, whereby, for example, information-bearing keywords should be assigned a

higher weight than functional words or articles. Additionally, it has been asserted that word

similarities should be considered. Such approaches, however, have never been widely

adopted as they are more difficult to evaluate and involve subjective judgment. Moreover,

these measures would raise new questions, such as how to measure the distance between

words or which words are important.

Naively it could be assumed that WER would be sufficient in ASR as an objective

measure. While this may be true for the user of an ASR system, it does not hold for the

engineer. In fact a broad variety of additional objective or cost functions are required.

These include:

• The Mahalanobis distance, which is used to evaluate the acoustic model.

• Perplexity, which is used to evaluate the language model as described in Section 7.3.1.

• Class separability , which is used to evaluate the feature extraction component or

front-end.

• Maximum mutual information or minimum phone error, which are used during discrim-

inate estimation of the parameters in a hidden Markov model.

• Maximum likelihood, which is the metric of first choice for the estimation of all system

parameters.

A DSR system requires additional objective functions to cope with problems not encoun-

tered in data captured with close-talking microphones. Among these are:

Page 28

10 Distant Speech Recognition

• Cross-correlation, which is used to estimate time delays of arrival between microphone

pairs as described in Section 10.1.

• Signal-to-noise ratio, which can be used for channel selection in a multiple-microphone

data capture scenario.

• Negentropy, which can be used for combining the signals captured by all sensors of a

microphone array.

Most of the objective functions mentioned above are useful because they show a significant correlation with WER. The performance of a system is optimized by minimizing or

maximizing a suitable objective function. The way in which this optimization is conducted

depends both on the objective function and the nature of the underlying model. In the best

case, a closed-form solution is available, such as in the optimization of the beamforming

weights as discussed in Section 13.3. In other cases, an iterative solution can be adopted,

such as when optimizing the parameters of a hidden Markov model (HMM) as discussed

in Chapter 8. In still other cases, numerical optimization algorithms must be used such

as when optimization the parameters of an all-pass transform for speaker adaptation as

discussedinSection9.2.2.

To chose the appropriate objective function a number of decisions must be made

(H¨ansler and Schmidt 2004, sect. 4):

• What kind of information is available?

• How should the available information be used?

• How should the error be weighted by the objective function?

• Should the objective function be deterministic or stochastic?

Throughout the balance of this text, we will strive to answer these questions whenever

introducing an objective function for a particular application or in a particular context.

When a given objective function is better suited than another for a particular purpose, we

will indicate why. As mentioned above, the reasoning typically centers around the fact

that the better suited objective function is more closely correlated with word error rate.

1.4 Fields of Speech Recognition

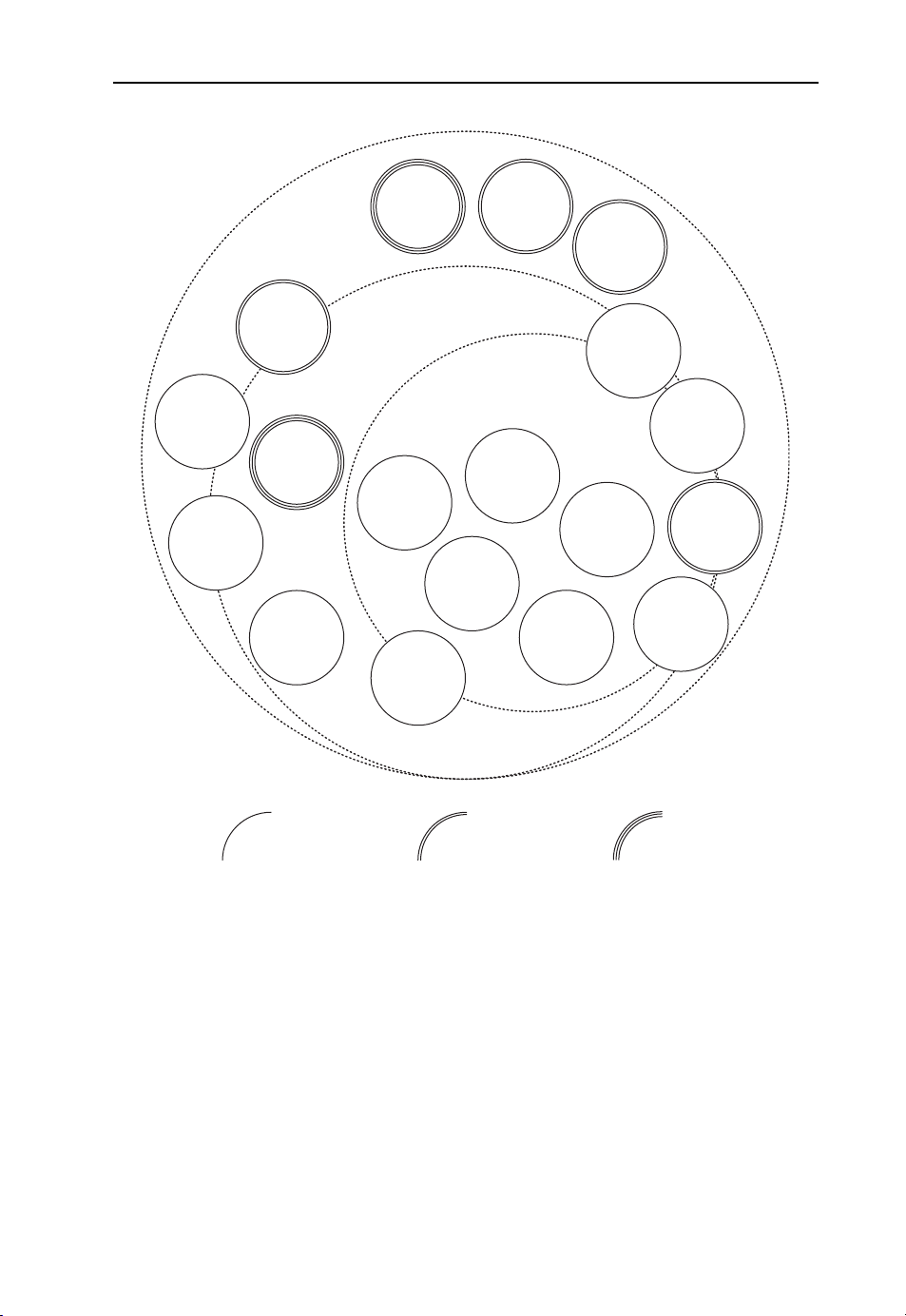

Figure 1.7 presents several subtopics of speech recognition in general which can be

associated with three different fields: automatic, robust and distant speech recognition.

While some topics such as multilingual speech recognition and language modeling can

be clearly assigned to one group (i.e., automatic) other topics such as feature extraction

or adaptation cannot be uniquely assigned to a single group. A second classification of

topics shown in Figure 1.7 depends on the number and type of sensors. Whereas one

microphone is traditionally used for recognition, in distant recognition the traditional

sensor configuration can be augmented by an entire array of microphones with known or

unknown geometry. For specific tasks such as lipreading or speaker localization, additional

sensor types such as video cameras can be used.

Undoubtedly, the construction of optimal DSR systems must draw on concepts from

several fields, including acoustics, signal processing, pattern recognition, speaker tracking

and beamforming. As has been shown in the past, all components can be optimized

Page 29

Introduction 11

dereverberation

feature

enhancement

distant speech

recognition

blind

source

separation

lip reading

missing

features

localization

and

tracking

robust speech

recognition

automatic speech

search

linguistics

adaptation

beamforming

recognition

multi

lingual

modeling

language

modeling

channel

selection

feature

extraction

acoustic

uncertainty

decoding

channel

combination

acoustics

single microphone multi microphone multi sensor

Figure 1.7 Illustration of the different fields of speech recognition: automatic, robust and distant

separately to construct a DSR system. Such an independent treatment, however, does

not allow for optimal performance. Moreover, new techniques have recently emerged

exploiting the complementary effects of the several components of a DSR system. These

include:

• More closely coupling the feature extraction and acoustic models; e.g., by propagating

the uncertainty of the feature extraction into the HMM.

• Feeding the word hypotheses produced by the DSR back to the component located

earlier in the processing chain; e.g. by feature enhancement with particle filters with

models for different phoneme classes.

Page 30

12 Distant Speech Recognition

• Replacing traditional objective functions such as signal-to-noise ratio by objective

functions taking into account the acoustic model of the speech recognition system,

as in maximum likelihood beamforming, or considering the particular characteristics of

human speech, as in maximum negentropy beamforming.

1.5 Robust Perception

In contrast to automatic pattern recognition, human perception is very robust in the

presence of distortions such as noise and reverberation. Therefore, knowledge of the

mechanisms of human perception, in particular with regard to robustness, may also be

useful in the development of automatic systems that must operate in difficult acoustic

environments. It is interesting to note that the cognitive load for humans increases while

listening in noisy environments, even when the speech remains intelligible (Kjellberg

et al. 2007). This section presents some illustrative examples of human perceptual

phenomena and robustness. We also present several technical solutions based on these

phenomena which are known to improve robustness in automatic recognition.

1.5.1 A Priori Knowledge

When confronted with an ambiguous stimulus requiring a single interpretation, the human

brain must rely on apriori knowledge and expectations. What is likely to be one of the

most amazing findings about the robustness and flexibility of human perception and the

use of apriori information is illustrated by the following sentence, which was circulated

in the Internet in September 2003:

Aoccdrnig to rscheearch at Cmabrigde uinervtisy, it deosn’t mttaer waht oredr the

ltteers in a wrod are, the olny ipromoetnt tihng is taht the frist and lsat ltteres are

at the rghit pclae. The rset can be a tatol mses and you can sitll raed it wouthit a

porbelm. Tihs is bcuseae we do not raed ervey lteter by itslef but the wrod as a

wlohe.

The text is easy to read for a human inasmuch as, through reordering, the brain maps

the erroneously presented characters into correct English words.

Apriori knowledge is also widely used in automatic speech processing. Obvious

examples are

• the statistics of speech,

• the limited number of possible phoneme combinations constrained by known words

which might be further constrained by the domain,

• the word sequences follow a particular structure which can be represented as a context

free grammar or the knowledge of successive words, represented as an N-gram .

1.5.2 Phonemic Restoration and Reliability

Most signals of interest, including human speech, are highly redundant. This redundancy

provides for correct recognition or classification even in the event that the signal is partially

Page 31

Introduction 13

Figure 1.8 Adding a mask to the occluded portions of the top image renders the word legible, as

is evident in the lower image

occluded or otherwise distorted, which implies that a significant amount of information is

missing. The sophisticated capabilities of the human brain underlying robust perception

were demonstrated by Fletcher (1953), who found that verbal communication between

humans is possible if either the frequencies below or above 1800 Hz are filtered out.

An illusory phenomenon, which clearly illustrates the robustness of the human auditory

system, is known as the phonemic restoration effect, whereby phonetic information that

is actually missing in a speech signal can be synthesized by the brain and clearly heard

(Miller and Licklider 1950; Warren 1970). Furthermore, the knowledge of which information is distorted or missing can significantly improve perception. For example, knowledge

about the occluded portion of an image can render a word readable, as is apparent upon

considering Figure 1.8. Similarly, the comprehensibility of speech can be improved by

adding noise (Warren et al. 1997).

Several problems in automatic data processing – such as occlusion – which were first

investigated in the context of visual pattern recognition, are now current research topics

in robust speech recognition. One can distinguish between two related approaches for

coping with this problem:

• missing feature theory

In missing feature theory, unreliable information is either ignored, set to some fixed

nominal value, such as the global mean, or interpolated from nearby reliable infor-

mation. In many cases, however, the restoration of missing features by spectral and/or

temporal interpolation is less effective than simply ignoring them. The reason for this is

that no processing can re-create information that has been lost as long as no additional

information, such as an estimate of the noise or its propagation, is available.

• uncertainty processing

In uncertainty processing, unreliable information is assumed to be unaltered, but the

unreliable portion of the data is assigned less weight than the reliable portion.

Page 32

14 Distant Speech Recognition

1.5.3 Binaural Masking Level Difference

Even though the most obvious benefit from binaural hearing lies in source localization,

other interesting effects exist: If the same signal and noise is presented to both ears with

a noise level so high as to mask the signal, the signal is inaudible. Paradoxically, if either

of the two ears is unable to hear the signal, it becomes once more audible. This effect is

known as the binaural masking level difference. The binaural improvements in observing

a signal in noise can be up to 20 dB (Durlach 1972). As discussed in Section 6.9.1, the

binaural masking level difference can be related to spectral subtraction, wherein two input

signals, one containing both the desired signal along with noise, and the second containing

only the noise, are present. A closely related effect is the so-called cocktail party effect

(Handel 1989), which describes the capacity of humans to suppress undesired sounds,

such as the babble during a cocktail party, and concentrate on the desired signal, such as

the voice of a conversation partner.

1.5.4 Multi-Microphone Processing

The use of multiple microphones is motivated by nature, in which two ears have been

shown to enhance speech understanding as well as acoustic source localization. This effect

is even further extended for a group of people, where one person could not understand

some words, a person next to the first might have and together they are able to understand

more than independent of each other.

Similarly, different tiers in a speech recognition system, which are derived either from

different channels (e.g., microphones at different locations or visual observations) or

from the variance in the recognition system itself, produce different recognition results.

An appropriate combination of the different tiers can improve recognition performance.

The degree of success depends on

• the variance of the information provided by the different tiers,

• the quality and reliability of the different tiers and

• the method used to combine the different tiers.

In automatic speech recognition, the different tiers can be combined at various stages of

the recognition system providing different advantages and disadvantages:

• signal combination

Signal-based algorithms, such as beamforming, exploit the spatial diversity resulting

from the fact that the desired and interfering signal sources are in practice located at

different points in space. These approaches assume that the time delays of the signals

between different microphone pairs are known or can be reliably estimated. The spatial

diversity can then be exploited by suppressing signals coming from directions other

than that of the desired source.

• feature combination

These algorithms concatenate features derived by different feature extraction methods

to form a new feature vector. In such an approach, it is a common practice to reduce

the number of features by principal component analysis or linear discriminant analysis.

Page 33

Introduction 15

While such algorithms are simple to implement, they suffer in performance if the

different streams are not perfectly synchronized.

• word and lattice combination

Those algorithms, such as recognizer output voting error reduction (ROVER) and confusion network combination, combine the information of the recognition output which

can be represented as a first best, N-best or lattice word sequence and might be augmented with a confidence score for each word.

In the following we present some examples where different tiers have been successfully combined: Stolcke et al. (2005) used two different front-ends, mel-frequency cepstral

coefficients and features derived from perceptual linear prediction, for cross-adaptation

and system combination via confusion networks. Both of these features are described in

Chapter 5. Yu et al. (2004) demonstrated, on a Chinese ASR system, that two different

kinds of models, one on phonemes, the other on semi-syllables, can be combined to good

effect. Lamel and Gauvain (2005) combined systems trained with different phoneme sets

using ROVER. Siohan et al. (2005) combined randomized decision trees. St¨uker et al.

(2006) showed that a combination of four systems – two different phoneme sets with two

feature extraction strategies – leads to additional improvements over the combination of

two different phoneme sets or two front-ends. St¨uker et al. also found that combining

two systems, where both the phoneme set and front-ends are altered, leads to improved

recognition accuracy compared to changing only the phoneme set or only the front-end.

This fact follows from the increased variance between the two different channels to be

combined. The previous systems have combined different tiers using only a single channel combination technique. W¨olfel et al. (2006) demonstrated that a hybrid approach

combining the different tiers, derived from different microphones, at different stages in a

distant speech recognition system leads to additional improvements over a single combination approach. In particular W¨olfel et al. achieved fewer recognition errors by using a

combination of beamforming and confusion network.

1.5.5 Multiple Sources by Different Modalities

Given that it often happens that no single modality is powerful enough to provide correct

classification, one of the key issues in robust human perception is the efficient merging

of different input modalities, such as audio and vision, to render a stimulus intelligible

(Ernst and B¨ulthoff 2004; Jacobs 2002). An illustrative example demonstrating the multimodality of speech perception is the McGurk effect

which is experienced when contrary audiovisual information is presented to human subjects. To wit, a video presenting a visual /ga/ combined with an audio /ba/ will be

perceived by 98% of adults as the syllable /da/. This effect exists not only for single

syllables, but can alter the perception of entire spoken utterances, as was confirmed by

a study about witness testimony (Wright and Wareham 2005). It is interesting to note

that awareness of the effect does not change the perception. This stands in stark contrast

to certain optical illusions, which are destroyed as soon as the subject is aware of the

deception.

4

This is often referred to as the McGurk – MacDonald effect.

4

(McGurk and MacDonald 1976),

Page 34

16 Distant Speech Recognition

Humans follow two different strategies to combine information:

• maximizing information (sensor combination)

If the different modalities are complementary, the various pieces of information about

an object are combined to maximize the knowledge about the particular observation.

For example, consider a three-dimensional object, the correct recognition of which

is dependent upon the orientation of the object to the observer. Without rotating the

object, vision provides only two-dimensional information about the object, while the

5

haptic

input provides the missing three-dimensional information (Newell 2001).

• reducing variance (sensor integration)

If different modalities overlap, the variance of the information is reduced. Under the

independence and Gaussian assumption of the noise, the estimate with the lowest variance is identical to the maximum likelihood estimate.

One example of the integration of audio and video information for localization supporting the reduction in variance theory is given by Alais and Burr (2004).

Two prominent technical implementations of sensor fusion are audio-visual speaker

tracking, which will be presented in Section 10.4, and audio-visual speech recognition. A

good overview paper of the latter is by Potamianos et al. (2004).

1.6 Organizations, Conferences and Journals

Like all other well-established scientific disciplines, the fields of speech processing and

recognition have founded and fostered an elaborate network of conferences and publications. Such networks are critical for promoting and disseminating scientific progress in

the field. The most important organizations that plan and hold such conferences on speech

processing and publish scholarly journals are listed in Table 1.1.

At conferences and in their associated proceedings the most recent advances in the

state-of-the-art are reported, discussed, and frequently lead to further advances. Several

major conferences take place every year or every other year. These conferences are listed

in Table 1.2. The principal advantage of conferences is that they provide a venue for

Table 1.1 Organizations promoting research in speech processing and recognition

Abbreviation Full Name

IEEE Institute of Electrical and Electronics Engineers

ISCA International Speech Communication Association former

European Speech Communication Association (ESCA)

EURASIP European Association for Signal Processing

ASA Acoustical Society of America

ASJ Acoustical Society of Japan

EAA European Acoustics Association

5

Haptic phenomena pertain to the sense of touch.

Page 35

Introduction 17

Table 1.2 Speech processing and recognition conferences

Abbreviation Full Name

ICASSP International Conference on Acoustics, Speech, and Signal Processing by IEEE

Interspeech ISCA conference; previous Eurospeech and International Conference on

Spoken Language Processing (ICSLP)

ASRU Automatic Speech Recognition and Understanding by IEEE

EUSIPCO European Signal Processing Conference by EURASIP

HSCMA Hands-free Speech Communication and Microphone Arrays

WASPAA Workshop on Applications of Signal Processing to Audio and Acoustics

IWAENC International Workshop on Acoustic Echo and Noise Control

ISCSLP International Symposium on Chinese Spoken Language Processing

ICMI International Conference on Multimodal Interfaces

MLMI Machine Learning for Multimodal Interaction

HLT Human Language Technology

the most recent advances to be reported. The disadvantage of conferences is that the

process of peer review by which the papers to be presented and published are chosen

is on an extremely tight time schedule. Each submission is either accepted or rejected,

with no time allowed for discussion with or clarification from the authors. In addition

to the scientific papers themselves, conferences offer a venue for presentations, expert