Page 1

Page 2

Page 3

CORE AND METRO

NETWORKS

Page 4

WILEY SERIES IN COMMUNICATIONS NETWORKING

& DISTRIBUTED SYSTEMS

Series Editors: David Hutchison, Lancaster University, Lancaster, UK

Serge Fdida, Universite´Pierre et Marie Curie, Paris, France

Joe Sventek, University of Glasgow, Glasgow, UK

The ‘Wiley Series in Communications Networking & Distributed Systems’ is a series of

expert-level, technically detailed books covering cutting-edge research, and brand new

developments as well as tutorial-style treatments in networking, middleware and software

technologies for communications and distributed systems. The books will provide timely and

reliable information about the state-of-the-art to researchers, advanced students and

development engineers in the Telecommunications and the Computing sectors.

Other titles in the series:

Wright: Voice over Pac ket Networks 0-471-49516-6 (February 2001)

Jepsen: Java for Telecommunications 0-471-49826-2 (July 2001)

Sutton: Secure Communications 0-471-49904-8 (December 2001)

Stajano: Security for Ubiquitous Computing 0-470-84493-0 (February 2002)

Martin-Flatin: Web-Based Management of IP Networks and Systems 0-471-48702-3

(September 2002)

Berman, Fox, Hey: Grid Computing. Making the Global Infrastructure a Reality

0-470-85319-0 (March 2003)

Turner, Magill, Marples: Service Provision. Technologies for Next Generation

Communications 0-470-85066-3 (April 2004)

Welzl: Network Congestion Control: Managing Internet Traffic 0-470-02528-X (July 2005)

Raz, Juhola, Serrat-Fernandez, Galis: Fast and Efficient Context-Aware Services

0-470-01668-X (April 2006)

Heckmann: The Competitive Internet Service Provider 0-470-01293-5 (April 2006)

Dressler: Self-Organization in Sensor and Actor Networks 0-470-02820-3 (November 2007)

Berndt: Towards 4G Technologies: Services with Initiative 0-470-0 1031-2 (Ma rch 2008)

Jacquenet, Bourdon, Boucadair: Service Automation and Dynamic Provisioning Techniques in

IP/MPLS Environments 0-470-01829-1 (March 2008)

Minei/Lucek: MPLS-Enabled Applications: Emerging Developments and New Technologies,

Second Edition 0-470-98644-1 (April 2008)

Gurtov: Host Identity Protocol (HIP): Towards the Secure Mobile Internet 0-470-99790-7

(June 2008)

Boucadair: Inter-Asterisk Exchange (IAX): Deployment Scenarios in SIP-enabled Networks

0-470-77072-4 (January 2009)

Fitzek: Mobile Peer to Peer (P2P): A Tutorial Guide 0-470-69992-2 (June 2009)

Shelby: 6LoWPAN: The Wireless Embedded Internet 0-470-74799-4 (November 2009)

Page 5

CORE AND METRO

NETWORKS

Editor

Alexandros Stavdas

University of Peloponnese, Greece

Page 6

This edition first published 2010

Ó 2010 John Wiley & Sons Ltd.,

Except for:

Chapter 1, ‘The Emerging Core and Metropolitan Networks’ Ó 2009 Angel Ferreiro and Telecom Italia S.p.A

Chapter 4, Section 4.5.1–4.5.5 and 4.5.7 Ó 2009 Telecom Italia S.p.A

Chapter 5, Section 5.2–5.6 Ó 2009 Telecom Italia S.p.A

Registered office

John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex, PO19 8SQ, United Kingdom

For details of our global editorial offices, for customer services and for information about how to apply for

permission to reuse the copyright material in this book please see our website at www.wiley.com.

The right of the author to be identified as the author of this work has been asserted in accordance with the

Copyright, Designs and Patents Act 1988.

All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in

any form or by any means, electronic, mechanical, photocopying, recording or otherwise, except as permitted by

the UK Copyright, Designs and Patents Act 1988, without the prior permission of the publisher.

Wiley also publishes its books in a variety of electronic formats. Some content that appears in print may not be

available in electronic books.

Designations used by companies to distinguish their products are often claimed as trademarks. All brand names

and product names used in this book are trade names, service marks, trademarks or registered trademarks of their

respective owners. The publisher is not associated with any product or vendor mentioned in this book. This

publication is designed to provide accurate and authoritative information in regard to the subject matter covered.

It is sold on the understanding that the publisher is not engaged in rendering professional services. If professional

advice or other expert assistance is required, the services of a competent professional should be sought.

Library of Congress Cataloging-in-Publication Data

Core and metro networks / edited by Alexandros Stavdas.

p. cm.

Includes bibliographical references and index.

ISBN 978-0-470-51274-6 (cloth)

1. Metropolitan area networks (Computer networks) I. Stavdas, Alexandros A.

TK5105.85.C678 2010

004.67–dc22

A catalogue record for this book is available from the British Library.

ISBN 9780470512746 (H/B)

Set in 10/12 pt Times Roman by Thomson Digital, Noida, India

Printed and Bound in Singapore by Markono Pte.

2009044665

Page 7

Contents

Preface ix

1 The Emerging Core and Metropolitan Networks 1

Andrea Di Giglio, Angel Ferreiro and Marco Schiano

1.1 Introduction 1

1.1.1 Chapter’s Scope and Objectives 1

1.2 General Characteristics of Transport Networks 1

1.2.1 Circuit- and Packet-Based Network Paradigms 2

1.2.2 Network Layering 3

1.2.3 Data Plane, Control Plane, Management Plane 4

1.2.4 Users’ Applications and Network Services 4

1.2.5 Resilience 5

1.2.6 Quality of Service 7

1.2.7 Traffic Engineering 8

1.2.8 Virtual Private Networks 10

1.2.9 Packet Transport Technologies 11

1.3 Future Networks Challenges 12

1.3.1 Network Evolution Drivers 12

1.3.2 Characteristics of Applications and Related Traffic 12

1.3.3 Network Architectural Requirements 17

1.3.4 Data Plane, Control Plane, and Management Plane Requirements 24

1.4 New Transport Networks Architectures 31

1.4.1 Metropolitan Area Network 33

1.4.2 Core Network 36

1.4.3 Metro and Core Network (Ultra-long-term Scenario) 38

1.5 Transport Networks Economics 39

1.5.1 Capital Expenditure Models 39

1.5.2 Operational Expenditure Models 42

1.5.3 New Business Opportunities 44

Acronyms 52

References 54

Page 8

vi Contents

2 The Advances in Control and Management for Transport Networks 55

Dominique Verchere and Bela Be rde

2.1 Drivers Towards More Uniform Management and Control Networks 55

2.2 Control Plane as Main Enabler to Autonomic Network Integration 58

2.2.1 Generalized Multi-Protocol Label Switching 59

2.2.2 Evolution in Integrated Architectures 71

2.3 Multilayer Interactions and Network Models 74

2.3.1 Introduction 74

2.3.2 Vertical Integration and Models 78

2.3.3 Horizontal Integration and Models 79

2.3.4 Conclusions on UNI Definitions from ITU-T, OIF, IETF,

and OIF UNI: GMPLS UNI Interoperability Issues 104

2.4 Evolution of Connection Services and Special Cases of Optical Networks 105

2.4.1 Evolution in Network Services 105

2.4.2 Virtual Private Networks 106

2.4.3 Layer 1 VPN 109

2.4.4 Layer 2 VPN 118

2.4.5 Layer 3 VPN 122

2.5 Conclusion 123

References 124

3 Elements from Telecommunications Engineering 127

Chris Matrakidis, John Mitchell and Benn Thomsen

3.1 Digital Optical Communication Systems 127

3.1.1 Description of Signals in the Time and Frequency Domains 127

3.1.2 Digital Signal Formats 132

3.2 Performance Estimation 135

3.2.1 Introduction 136

3.2.2 Modeling 141

3.2.3 Comparison of Techniques 146

3.2.4 Standard Experimental Measurement Procedures 149

References 158

4 Enabling Technologies 161

Stefano Santoni, Roberto Cigliutti, Massimo Giltrelli, Pasquale Donadio,

Chris Matrakidis, Andrea Paparella, Tanya Politi, Marcello Potenza,

Erwan Pincemin and Alexandros Stavdas

4.1 Introduction 161

4.2 Transmitters 161

4.2.1 Introduction 161

4.2.2 Overview of Light Sources for Optical Communications 167

4.2.3 Transmitters for High Data-Rate Wavel ength-Division

Multiplexing Systems 178

Page 9

Contents vii

4.3 Receiver 202

4.3.1 Overview of Common Receiver Compone nts 202

4.4 The Optical Fiber 212

4.4.1 Short Introduction to the Waveguide Principle 213

4.4.2 Description of Optical Single-Mode Fibers 216

4.4.3 Special Fiber Types 222

4.5 Optical Amplifiers 223

4.5.1 Introduction to Optical Amplifiers 225

4.5.2 Principle of Operation 229

4.5.3 Gain Saturation 231

4.5.4 Noise 234

4.5.5 Gain Dynamics 235

4.5.6 Optical Fiber and Semiconductor Optical Amplifiers 236

4.5.7 Raman Amplifiers 239

4.5.8 Lasers and Amplifiers 243

4.6 Optical Filters and Multiplexers 245

4.6.1 Introduction 245

4.6.2 Optical (De-)Multiplexing Devices 246

4.6.3 Overall Assessment of (De-)Multiplexing Techniques 256

4.6.4 Optical Filters 257

4.6.5 Tunable Filters 260

References 263

5 Assessing Physical Layer Degradations 267

Andrew Lord, Marcello Potenza, Marco Forzati and Erwan Pincemin

5.1 Introduction and Scope 267

5.2 Optical Power Budgets, Part I 268

5.2.1 Optical Signal-to-Noise Ratio and Q Factor 268

5.2.2 Noise 273

5.2.3 Performance Param eters. Light Path Evaluation Rules 290

5.2.4 Transmission Impairments and Enhancements: Simple

Power Budgets 295

5.3 System Bandwidth 334

5.3.1 System Bandwidth, Signal Distortion, Intersymbol Interference 334

5.3.2 Fiber-Optical Nonlinear Effects 346

5.3.3 Optical Transients 356

5.4 Comments on Budgets for Nonlinear Effects and Optical Transients 362

5.4.1 Compensators/Equalizers 363

5.4.2 CD Equalization 363

5.4.3 PMD Equalization 364

5.4.4 Simultaneous Presence of Distortions, Electronic Equalization,

and Cumulative Filtering 364

5.4.5 General Features of Different Modulation Formats 368

5.5 Semianalytical Models for Penalties 370

5.6 Translucent or Hybrid Networks 370

5.6.1 Design Rules for Hybrid Networks 371

Page 10

viii Contents

5.7 Appendix 372

5.7.1 Dispersion Managed Links 372

5.7.2 Intrachannel Nonlinear Effects 374

References 378

6 Combating Physical Layer Degradations 381

Herbert Haunstein, Harald Rohde, Marco Forzati, Erwan Pincemin,

Jonas Martensson, Anders Djupsj€obacka and Tanya Politi

6.1 Introduction 381

6.2 Dispersion-Compensating Components and Methods for CD and PMD 382

6.2.1 Introduction on Optical CD and PMD Compensator

Technology 382

6.2.2 Optical Compensation Schemes 383

6.2.3 Key Parameters of Optical Compensators 387

6.2.4 Compensators Suitable for Translucent Networks 389

6.2.5 Impact of Group-Delay Ripple in Fiber Gratings 391

6.3 Modulation Formats 396

6.3.1 On–Off Keying Modulation Formats 397

6.3.2 Comparison of Basic OOK Modulation Formats: NRZ,

RZ, and CSRZ for 40 Gbit/s Transmission 400

6.3.3 A Power-Tolerant Modulation Format: APRZ-OOK 408

6.3.4 DPSK Modulation Formats 412

6.3.5 Spectrally Efficient Modulation Formats 414

6.4 Electronic Equalization of Optical Transmission Impairments 416

6.4.1 Electronic Equalization Concepts 416

6.4.2 Static Performance Characterization 420

6.4.3 Dynamic Adaptation of FFE- and DFE-Structures 420

6.4.4 General Remarks 423

6.5 FEC in Lightwave Systems 424

6.5.1 Application of FEC in Lightwave Systems 424

6.5.2 Standards for FEC in Lightwave Systems 425

6.5.3 FEC Performance Characterization 426

6.5.4 FEC Application in System Design 429

6.6 Appendix: Experimental Configuration and Measurement Procedure

for Evaluation and Comparison for Different Modulation Formats

for 40 Gbit/s Transmission 431

6.6.1 Simulation Setup 434

Acknowledgments 435

References 435

Dictionary of Optical Networking 441

Didier Colle, Chris Matrakidis and Josep Sole-Pareta

Acronyms 465

Index 477

Page 11

Preface

It is commonly accepted today that optical fiber communications have revolutionized

telecommunications. Indeed, dramatic changes have been induced in the way we interact

with our relatives, friends, and colleagues: we retrieve information, we entertain and

educate ourselves, we buy and sell, we organize our activities, and so on, in a long list

of activities. Optical fiber systems initially allowed for a significant curb in the cost of

transmission and later on they sparked the process of a major rethinking regarding some,

generation-old, telecommunication concepts like the (OSI)-layer definition, the lack of

cross-layer dependency, the oversegmentation and overfragmentation of telecommunications networks, and so on.

Traditionally, telecommunications are classified based on the physical properties of the

channel; that is, fixed-line/wired-communications and wireless/radio communications.

Following this classification, it can be safely a rgued that today’s core networks and metropolitan area networks (metro networks for simplicity) are almost entirely based on optical

fiber systems. Moreover, the penetration of optical fiber communications in the access segment

is progressing at an astonishing rate, although, quite often, it is the competition between

providers, the quest for higher profits based on the established technological framework, and

the legislative gridlocks that prevent an even faster adoption of this technology. Thus, a fullscale deployment of optical fiber systems in the access networks, through fixed/wireless

convergence, could further reduce the role of wireless technology in transporting bandwidth

over a reasonably long distance. Evidently, optical-fiber-based networks are the dominant

technology, literally the backbone, of the future Internet. The fields of this technology are

diverse and its engineering requires knowledge that extends from layer 1 to layer 3.

Many excellent basic text and specialized books are available today aiming to educate and/or

inform scientists, engineers and technicians on the essentials in the field of optical technology.

However, there is a pressing need for books presenting both comprehensive guidelines for

designing fiber-optic systems and core/metro network architectures and, simultaneously,

illustrating the advances in the state of the art in the respective fields. IST-NOBEL (I and

II) was a large-scale research project funded from the Framework Programme 6 of the

European Commission, incorporating major operators, system vendors and leading European

universities. Employing a large number of experts in several fields, the project decided to

collectively produce such a book as part of the disseminating activities. Thus, a considerable

part of this book is based on the deliverables of IST-NOBEL with significant effort made to

provide the necessary introduction of concepts and notions. The objective was to make it

readable for a non-highly specialized audience, as well as to demystify the necessity behind the

introduction of this or that novelty by clearly stating the underlying “need.” It is left to the

readers to decide whether we have succeeded in our goals.

Page 12

x Preface

The contributors to this book would like to acknowledge the immense help and support of

their colleagues in the IST-NOBEL project that contributed to the preparation of the

respective d eliverables. A separate, specia l, acknowledgment is for the IST-NOBEL I and

II project leaders and colleagues from Telecom Italia, Antonio Manzalini, Marco Schiano,

and Giuseppe Ferraris. Also, the editor is extremely grateful to Andreas Drakos and Penny

Papageorgopoulou, PhD candidates in the University of Peloponnese, for their help in

preparing the final manuscript.

Alexandros Stavdas

Department of Telecommunications Science and Technology

University of Peloponnese, Greece

Page 13

1

The Emerging Core and Metropolitan Networks

Andrea Di Giglio, Angel Ferreiro and Marco Schiano

1.1 Introduction

1.1.1 Chapter’s Scope and Objectives

The study of transport networks is a vast and highly multidisciplinary field in the modern

telecommunication world. The beginner who starts studying this technical subject may remain

astonished by the variety and complexity of network architectures and technologies that have

proliferated in the last decade. Even an expert in the field may get disoriented in the huge variety

of networks’ functions and characteristics.

This introductory chapter is devoted to the definition of transport networks’ fundamentals

representing the very basic “toolbox” of any expert in the field. Furthermore, it investigates

transport network architectural evolution in terms of new network services supporting

emerging users’ applications.

The chapter is structured as follows. Section 1.2 contains the definitions of the basic network

concepts used throughout the book. Sections 1.3 and 1.4 describe the requirements and the

architectural evolution roadmap of transport networks based on emerging users’ applications.

Finally, Section 1.5 shows the economic models and analysis techniques that enable the design

and realization of economically sustainable transport services.

1.2 General Characteristics of Transport Networks

For more than a century, the traditional vision of telecommunication networks has been a smart

combinationof transmissionand switching technologies. Even if transmissionand switchingare

still the basic building blocks of any network, telecommunication networks fundamentals cover

a much broader scope nowadays. This new vision is primarily due to the introduction of digital

Chapter 1, ‘The Emerging Core and Metropolitan Networks’, Ó 2009 Angel Ferreiro and Telecom Italia S.p.A from

Core and Metro Networks, edited by A. Stavdas, 2009

Page 14

2 The Emerging Core and Metropolitan Networks

technologies paving the way to packet-based networks. In contrast to old analog networks,

packet-based digital networks can be either connectionless or connection oriented, can have a

control plane for the automation of some functions, can implement various resilience schemes,

can perform a number of network services supporting users’ applications, and so on.

The essential ideas are explained in this section as a background for the entire chapter.

1.2.1 Circuit- and Packet-Based Network Paradigms

Digital networks can transfer information between nodes by means of two fundamental

paradigms: circuit switching or packet switching.

.

In circuit-switched networks, data are organized in continuous, uninterrupted bit streams.

In this mode of operation, a dedicated physical link between a couple of nodes is established.

Before starting the data transfer on a specific connection, the connection itself must be

“provisioned”; that is, the network switching nodes must be configured to provide the

required physical link. This implies an exclusive allocation of network resources for

the whole duration of the connection. Such a task is usually performed by dedicated

elements belonging to the network control system; network resources are released when

the connection ends.

This is the way that the plain old telephony service (POTS) has been working so far. The

private reservation of network resources prevents other connections from using them while

the first one is working, and may lead to inefficient network use.

.

In packet-switched networks, data are organized in packets of finite length that are processed

one by one in network nodes and forwarded based on the packet header information. In this

network scenario, each packet exploits switching and transmission devices just for the time

of its duration, and these network resources are shared by all packets. This process of packet

forwarding and aggregation is called statistical multiplexing and represents the major benefit

of packet-switched networks with respect to the circuit-switched networks in terms of

network exploitation efficiency.

Typical examples of circuit-switching and packet-switching technologies are synchronous

digital hierarchy (SDH) and Ethernet resp ectively.

Packet-switched networks can, in turn, work in connectionless or connection-oriented

network modes.

.

In the connectionless networ k mode, packets are forwarded hop by hop from source node to

destination node according to packet header information only, and no transfer negotiation

is performed in advance between the network nodes involved in the connection; that is, the

source node, optionally the intermediate node(s) and the destination node.

.

In the connection-oriented network mode, packet transfer from source node to dest ination

node is performed through defined resource negotiation and reservation schemes between the

network nodes; that is, it is preceded by a connection set-up phase and a connection usage

phase, followed by a connection tear-down phase.

Typical examples of packet-switched connectionless and connection-oriented network

protocols are Internet protocol (IP) and asynchronous transfer mode (ATM) respectively.

Page 15

General Characteristics of Transport Networks 3

The main characteristic of the connectionless network mode is that packets are routed

throughout the network solely on the base of the forwarding algorithms working in each

node; hence, packet routes may vary due to the network status. For instance, cable faults

or traffic overloads are possible causes of traffic reroute: in the connectionless network mode,

the new route of a packet connection is not planned in advance and, in general, is

unpredictable.

On the contrary, in the connection-oriented network mode, the route of any connection is

planned in advance and, in the case of faults, traffic is rerouted on a new path that can be

determined in advance.

Since route and rerouting have strong impacts on the quality of a packet connection, the two

network modes are used for different network services depending on the required quality and

the related cost.

1.2.2 Network Layering

The functions of a telecommunication network have become increasingly complex. They

include information transfer, traffic integrity and survivability aspects, and network management and performance monitoring, just to mention the main ones. To keep this growing

complexity under control and to maintain a clear vision of the network structure, layered

network models have been developed. According to these models, network functions are

subdivided into a hierarchical structure of layers. Each layer encompasses a set of homogeneous network functions duly organized for providing defined services to the upper layer, while

using the services provided by the lower layer. For example, in an Ethernet network, the

physical layer provides data transmission services to the data link layer.

To define transport network architectures, it is essential to start from the description of the

lowest three layers [1]: network, data link, and physical layers:

.

Network layer. The main task of the network layer is to provide routing functions. It also

provides fragmentation and reassembly of data at the endpoints. The most common layer 3

technology is the IP. It manages the connectionless transfer of data across a router-based

network.

.

Data-link layer. This provides frames, synchronization, and flow control. The data link

layer also performs transfer of data coming from the network layer. Typical examples of datalink layers are point-to-point protocol and Ethernet MAC (medium/media access control)

(IEEE 802.1xx).

.

Physical layer. The physical layer defines the transmission media used to connect devices

operating at the upper layer (e.g., data link). Physical media can be, for example, copper-wire

pairs, coaxial cables or, more frequently, single-mode or multimode optical fibers. The

physical layer also defines modulation encoding (e.g., Manchester, 8B/10B) or topology

(e.g., ring, mesh) [2]. Most common technologies implementing layer 1 functionalities are

Ethernet (physical layer, IEEE 802.3xx), SDH and optical transport network (OTN).

It is commonly agreed that the Open System Interconnection (OSI) model is an excellent

place to begin the study of network architecture. Nevertheless, the network technologies

commercially available do not map exactly with the levels described in the OSI basic model.

Page 16

4 The Emerging Core and Metropolitan Networks

1.2.3 Data Plane, Control Plane, Management Plane

The layered network models encompass all network functions related to data transfer.

However, modern transport networks are often provided with additional functions devoted

to network management and automatic network control. Hence, the totality of network

functions can be classified into three groups named planes: the data plane, the management

plane and the control plane.

The functions that characterize each plane are summarized below.

.

Data plane. The data plane aims at framing and carrying out the physical transportation of

data blocks to the final destination. This operation includes all transmission and switching

functions.

.

Control plane. The control plane performs the basic functions of signaling, routing and

resource discovery. These are essential operations to introduce automation on high level

network functions such as: connection establishment (i.e., path computation, resource

availability verification and connection signaling set-up and tear-down), reconfiguration

of signaled connections and connection restoration in case of network faults.

.

Management plane. The management plane performs management functions like alarm

reporting, systems configuration and connection provisioning for data and control planes.

The complexity of the management plane depends strongly on the availability of a control

plane. For example, the management plane of traditional circuit-switched public switched

telephone networks is more cumbersome than transport networks with a control plane, since,

in the latter case, certain tasks (e.g., connection provisioning and restoration) are carried out

by the control plane itself.

1.2.4 Users’ Applications and Network Services

The current challenge of evolving telephony-dedicated transport networks towards enhanced

communication architectures is set by two fundamental trends.

First, services offered today to final users are much richer than simple telephony. User

services like video telephony, video on demand, and Web browsing require an advanced

terminal, typically a personal computer with dedicated software; for this reason, they will be

called “user applications” or simpl y “applications” from now on.

Second, to convey these end-user applications, transport networks are relying on “network

services,” which effectively refer to a number of transfer modes.

As an example, a point-to-point unprotected circuit connection at 2 Mbit/s represents a

specific transfer mode. Other examples of network services are connections based on packet

paradigms; for example, IP/multi-protocol label switching (MPLS), ATM or Ethernet. Today,

all modern applications make reference to packet-based network services.

The idea of a transport network able to provide many different services is one of the most

challenging of recent years and it will be analyzed in detail in the following chapt ers.

Network services and user applications can be provided by different actors. Network

operators that own and manage the networks are typical providers of network services. Service

providers sell and support user applications by means of network services supplied by network

operators.

Page 17

General Characteristics of Transport Networks 5

Important user application categories are:

.

multimedia triple play – voice, video and high-speed Internet;

.

data storage for disaster recovery and business continuity;

.

grid computing; that is, computing services delivered from distributed computer

networks.

The last two categories, storage and grid computing, are dedicated to business company

customers and research institutions. On the contrary, multimedia applications address residential customers and the small office, home office.

Examples of network services are:

.

time-division multiplexing (TDM) connections and wavelength connections (e.g., leased

lines);

.

Ethernet point-to-point, point-to-multipoint (p2mp) or rooted multipoint connections;

.

virtual private networks (Section 1.2.8).

Each user application is enabled by a network service characterized by specific attributes. A list

of the most important ones is shown below.

.

Protocols: Ethernet and IP are the most common.

.

Bandwidth: committed peak, committed average bit-rate, excess peak and excess bitrate [3].

.

Quality of service (QoS): regarding transport networks, this is defined by mea ns of the

maximum allowed packet loss rate (PLR), the packet latency (i.e., the packet transmission

delay), and jitter (latency variation); see Section 1.2.6.

.

Resilience: required connection availability (Section 1.2.5).

These service attributes are the main inputs for a network provider to design a multi-service

network, in support of a number of defined applications.

1.2.5 Resilience

One of the most important features of transport networks is their ability to preserve live traffic

even when faults occur. This feature is generally referred to as “resilience.”

In transport networks, resilience is usually achieved by duplication of network resources. For

example, a fiber-optic link between a couple of nodes can be duplicated to assure survivability

to cable breaks. Similarly, the switching matrix of an exchange node can be duplicated to

guarantee service continuity in the case of electronics faults.

The way these extra resources are used depends strongly on networ k topology (rings or

meshed network configurations), equipment technology (packet or circuit switching, network

mode, optical transmission), and traffic protection requirements. However, the following

general definitions help understanding the fundamental resilience schemes.

Page 18

6 The Emerging Core and Metropolitan Networks

1. If the connections for traffic protection are organized in advance, the resilience mechanism

is called “protection.”

a. 1 þ1 protection (also called dedicated protection). The whole traffic of a connection

is duplicated and transmitted through two disjoint paths: the working and the protection

path simultaneously. The receiving node switches between the two signals in the case

of failure. The trigger of 1 þ1 protection is the received signal quality; for example,

the received power level or the bit error rate (BER). Since no complex network protocols

are needed, 1 þ1 protectio n works very quickly, typically within 50 ms. The drawback of

this protection scheme is duplication of network resources.

b. 1: 1 protection (also called protection with extra traffic). The working connection is

protected with one backup connection using a disjoint path. The working traffic is sent

over only one of the connections at a time; this is in contrast to dedicated protection,

where traffic is always bridged onto two connections simultaneously. Under normal

conditions (no network failure) the protecting connection is either idle or is carrying

some extra traffic (typically best-effort traffic). Configuring 1: 1 protection depends

on the control plane’s ability to handle extra traffic, that is, whether it supports the

preemption of network resources for allocating them to the working traffic once it has

been affected by the failure. The ingress node then feeds the working traffic on the

protecting connection in the case of failure. The trigger of 1: 1 protection is the reception

of network failure notification messages. Protection with extra traffic has two main

drawbacks: the need to duplica te working traffic resources onto the protection path and,

in the case of resource contention, the possibility that extra traffic may be interrupted

without effective need.

c. M: N protection (also called shared protection). M working connections are protected

by N backup connections on a disjoint path (N M). The traffic is no longer duplicated

because backup connections can carry traffic initially transported by any one of the

working connections in the case of fault. Thus, switching to backup connections requires

first knowing their availability and then performing traffic switching. Signaling is needed

for failure notification and backup connection activation. Once failure has been repaired,

traffic is reassigned to the working connection and the resources of the backup

connection are available again for protection. In any case, this protection mechanism

allows resource savings with respect to 1 þ1 protection.

Both protection mechanisms, dedicated and shared, are used in rings and meshed network

configurations. The main advantage of protection is its quick operation, since the backup path is

predefined and network resources are pre-allocated.

2. Alternatively to protection, restoration is the resilience mechanism that sets up new backup

connections after failure eventsby discovering, routing, and setting up new links “on the fly”

among the network resources still available after the failure. This is achieved by the

extension of signaling, routing, and discovery para digms typical of IP networks. In fact,

to restore a connection, switching nodes need to discover the network topology not affected

by the failure, thus allowing one to compute a set of candidate routes, then to select a new

route, and to set up the backup connections. Discovery, routing algorithms, and signaling

functions embedded in commercial IP/MPLS routers can quite easily implement restoration. On the other hand, transport network equipment needs a dedicated control plane to

perform such functions.

Page 19

General Characteristics of Transport Networks 7

Table 1.1 Indicative figures for network availability

Availability (%) N-Nines Downtime time (minutes/year)

99 2-Nines 5000

99.9 3-Nines 500

99.99 4-Nines 50

99.999 5-Nines 5

99.9999 6-Nines 0.5

Usually, the resilience level of a network service (e.g., a leased line or an Ethernet

connection, as defined in Section 1.2.4) is made precise through a number of parameters; the

most important are:

.

Mean time to failure (MTTF): the reciprocal of the failure rate, for systems being replaced

after a failure.

.

Mean time to repair (MTTR): this depends on the repair time of a network fault.

.

Mean time between failures (MTBF): this is the sum of MTTF and MTTR and defines the

mean time interval between successive failures of a repairable system ; it is a measure of

network component reliability.

.

Maximum recovery time: this is the maximum delay between a failure injuring a network

service and the restoration of the service over another path; in other words, the maximum

time during which the network service is not available. It accounts for MTTR and all other

possible delays affecting complete system recovery (signaling, rerouting).

The same concept can be given a different flavor, insisting on network status instead of

duration:

.

Unavailability: the probability that the network service is not working at a given time and

under specified conditions; it is the ratio MTTR/MTBF. Some indicative numbers for

network availability are illustrated in Table 1.1.

1.2.6 Quality of Service

Network services are characterized by a set of parameters that define their quality (QoS).

.

BER: this is a physical-layer parameter, manifesting the fraction of erroneous bits over the

total number of transmitted bits. It is closely related to design rules applied to the physical

layer transport network. It is studied in detail in Chapter 3.

.

PLR: in packet-switched services, this is the fraction of data packets lost out of the total

number of transmitted packets. Packets can be dropped due to congestion, or due to

transmission errors or faults.

.

Latency: the time needed for carrying data from the source node to the destination node.

Latency is caused by the combination of signal propagation delay, data processing delays,

and queuing delays at the intermediate nodes on the connection [3].

.

Latency variation: the range of variation of the latency mainly due to variable queuing

delays in network nodes or due to data segmentation and routing of data blocks, via different

physical paths (a feature readily available in next-generation (NG)-synchronous optical

network (SONET)/SDH). Also, queuing delay variations may occur in the case of traffic

Page 20

8 The Emerging Core and Metropolitan Networks

overload in nodes or links. An excess of latency variation can cause quality degradation in

some real-time or interactive applications such as voice over IP (VoIP) and video over IP

(IP television (IPTV)).

.

Service unavailability: this has already been defined in Section 1.2.5.

For connection-oriented network services, the definition of QoS also includes:

.

Blocking probability: the ratio between blocking events (failure of a network to establish a

connection requested by the user, because of lack of resources) and the number of attempts.

.

Set-up time: delay between the user application request time and the network service actual

delivery time.

Current packet-based networks are designed to satisfy the appropriate level of QoS for

different network services. Table 1.2 shows suitable values of QoS parameters for the main

users’ applications. As an example, applications like voice or videoconference need tight

values of latency and latency variation. Video distribution is more tolerant to latency variation,

but it needs low packet loss, since lost packets are not retransmitted. File transfer (e.g., backup)

does not have strong requirements about any QoS parameters, since the only requirement is to

transfer a pre-established amount of data in a fixed time interval.

1.2.7 Traffic Engineering

In complex meshed networks, careful traffic engineering (TE) and resource optimization is a

mandatory requirement providing network management and operation functions at reasonable

capital expenditure (CAPEX) and operational expenditure (OPEX). Towards this end, the use

of conventional algorithms to set up the working and protection (backup) paths and for traffic

routing within the network is insufficient. Toaddress this problem, use is made of TE, which is a

network engineering mechanism allowing for network performance optimization by means of

leveraging traffic allocation in conjunction with the available network resources.

The purpose of TE is to optimize the use of network resources and facilitate reliable network

operations. The latter aspect is pursued with mechanisms enhancing network integrity and

by embracing policies supporting network survivability. The overall operation leads to the

minimizations of network vulnerability, service outages due to errors, and congestions and

failures occurring during daily network operations. TE makes it possible to transport traffic via

reliable network resources, minimizing the risk of losing any fraction of this traffic.

TE leverages on some instruments that are independent of the network layer and technology:

.

A set of policies, objectives, and requirements (which may be context dependent) for

network performance evaluation and performance optimization.

.

A collection of mechanisms and tools for measuring, characterizing, modeling, and

efficiently handling the traffic. These tools allow the allocation and control of network

resources where these are needed and/or the allocation of traffic chunks to the appropriate

resources.

.

A set of administrative control parameters, necessary to manage the connec tions for reactive

reconfigurations.

Page 21

General Characteristics of Transport Networks 9

Table 1.2 QoS characterization of users’ applications

User application QoS

Max.

latency

(ms)

Storage

Backup/restore N.A. N.A. 0.1 min 99.990

Storage on demand 10 1 0.1 s 99.999

Asyncrhonous mirroring 100 10 0.1 s 99.999

Synchronous mirroring 3 1 min 99.999

Grid computing

Compute grid 100 20 0.0 s 99.990

Data grid 500 100 0.1 s 99.990

Utility grid 200 50 0.0 s 99.999

Multimedia

Video on demand (enter-

tainment quality, similar to

DVD)

Video broadcast (IP-TV),

entertainment quality

similar to DVD

Video download 2–20 s 1000 1.0 s 99.990

Video chat (SIF quality, no

real-time coding penalty)

Narrowband voice, data

(VoIP, ...)

Telemedicine (diagnostic) 40–250 5-40 0.5 ms 99.999

Gaming 50–75 10 5.0 s 99.500

Digital distribution, digital

cinema

Video conference (PAL

broadcast quality 2.0

real-time coding penalty)

Note: latency is expressed in milliseconds with the exception of video on demand, video broadcast, and

video download, where seconds are the unit.

2–20 s 50 0.5 s 99.500%

2–20 s 50 0.5 s 99.500

400 10 5.0 s 99.500

100–400 10 0.5 ms 99.999

120 80 0.5 s 99.990

100 10 0.5 99.990

Max. latency

variation (ms)

Packet loss

(layer 3)

(%)

Max.

set-up

time

Min.

availability

(%)

The process of TE can be divided into four phases that may be applied both in core and in

metropolitan area networks, as described by the Internet Engineering Task Force (IETF) in

RFC 2702 [4]:

.

Definition of a relevant control policy that governs network operations (depe nding on many

factors like business model, network cost structure, operating constraints, etc.).

.

Monitoring mechanism, involving the acquisition of measurement data from the actual

network.

.

Evaluation and classification of network status and traffic load. The performance analysis

may be either proactive (i.e., based on estimates and predictions for the traffic load, scenarios

Page 22

10 The Emerging Core and Metropolitan Networks

for the scheduling of network resources in order to prevent network disruptions like

congestion) or reactive (a set of measures to be taken to handle unforese en circumstances;

e.g., in- progress congestion).

.

Performance optimization of the network. The performance optimization phase involves

a decision process, which selects and implements a set of actions from a set of

alternatives.

1.2.8 Virtual Private Networks

Avirtual private network (VPN) is a logical representation of the connections that makes use of

a physical telecommunication infrastructure shared with other VPNs or services, but maintaining privacy through the use of tunneling protocols (Section 1.2.9) and security procedures.

The idea of the VPN is to give a user the same services accessible in a totally independent

network, but at much lower cost, thanks to the use of a shar ed infrastructure, rather than a

dedicated one [5].

In fact, a common VPN application is to segregate the traffic from different user communities over the public Internet, or to separate the traffic of different service providers sharing the

same physical infrastructure of a unique network provider.

VPNs are a hot topic also in the discussion within standardization bodies: different views

exist on what a VPN truly is.

According to ITU-T recommendation Y.1311 [6] a VPN “provides connectivity amongst

a limited and specific subset of the total set of users served by the network provider. A VPN

has the appearance of a network that is dedicated specifically to the users within the subset.”

The restricted group of network users that can exploit the VPN services is called a closed user

group.

The other standardization approach, used by the IETF, is to define a VPN’s components and

related functions (RFC 4026, [7]):

.

Customer edge (CE) device: this is the node that provides access to the VPN service,

physically located at the customer’s premises.

.

Provider edge (PE) device: a device (or set of devices) at the edge of the provider network

that makes available the provider’s view of the customer site. PEs are usually aware of the

VPNs, and do maintain a VPN state.

.

Provider (P) device: a device inside the provider’s core network; it does not directly

interface to any customer endpoint, but it can be used to provide routing for many provideroperated tunnels belonging to different customers’ VPNs.

Standardization bodies specified VPNs for different network layers. For example, a transport

layer based on SDH can be used to provide a layer 1 VPN [8, 9]. Layer 2, (e.g., Ethernet) allows

the possibility to implement L2-VPN, also called virtual LAN (VLAN). Layer 3 VPNs are very

often based on IP, and this is the first and the most common VPN concept.

In some situations, adaptation funct ions between the bit-stream that is provided from the

“source” (of the applications) and the VPN are required. An example of an adaptation data

protocol function is the mapping of Ethernet frames in NG-SDH containers.

Page 23

General Characteristics of Transport Networks 11

1.2.9 Packet Transport Technologies

Packet technologies have been dominating the local area network (LAN) scenario for more

than 25 years, and nowadays they are widely used also in transport networks, where many

network services are based on packet paradigms. The main reason for this success is twofold:

first, the superior efficiency of packet networks in traffic grooming due to the statistical

aggregation of packet-based traffic; second, the inherent flexibility of packet networks that can

support an unlimited variety of users’ applications with a few fundamental network services,

as shown in Section 1.2.4.

However, until now, the transport of packe t traffic has been based on the underlying circuitswitch ed technology already available for telephony. A typical example is represented

by Ethernet transport over NG-SDH networks. This solution is justified by the widespread

availability of SDH equipment in already-installed transport networks, and by the

excellent operation, administration, and maintenance (OAM) features of such technology.

These features are fundamental for provisioning packet network services with the quality

required for most users’ applications, but they are not supported by the LAN packet

technologies.

This situation is changi ng rapidly, because a new generation of packet-based network

technologies is emerging. These new scenarios combine the efficiency and flexibility of packet

networks with the effective network control and management features of circuit-based

networks. These new technologies are referred to as packet transport technologies packe t

transport technology (PTTs).

There are proposals for introducing tunnels

engineering features rendering it into a connection-oriented platform. These developments are

currently under standardization at IEEE and ITU-T where is known as Provider Backbone

Bridge with Traffic Engineering (or simply PBB-TE).

An alternative approach under standardization at the ITU-T and IETF is to evolve the

IP/MPLS protocol suites to integrate OAM functions for carrier-grade packet transport

networks.

This PTT, known as MPLS-TP (MPLS transport profile) includes features traditionally

associated with transport networks, such as protection switching and operation and maintenance (OAM) functions, in order to provide a common operation, control and management

paradigm with other transport technologies (e.g., SDH, optical transport hierarchy (OTH),

wavelength-division multiplexing (WDM)).

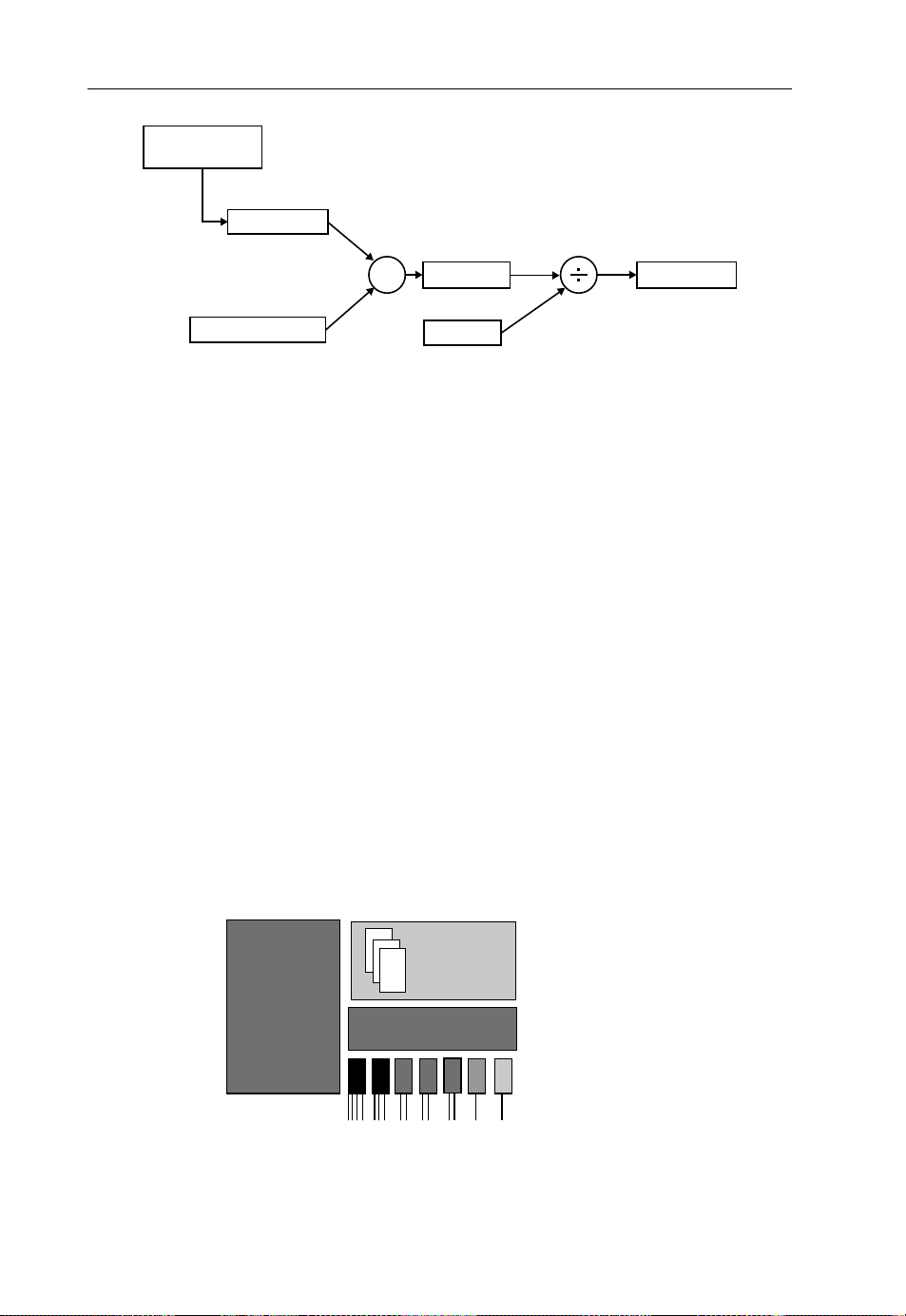

The trend imposed by the dramatic increase of packet traffic and the obvious advantages in

evolving existing circuit-switched networks into advanced packet-switched networks is going

to make PTTs a viable solution to building a unified transport infrastructure, as depicted in

Figure 1.1. Incumbent network operators that have already deployed a versatile NG-SDH

network for aggregated traffic may follow conservative migration guidelines for their core

networks and keep circuit solutions based on optical technologies. These plausible solutions

are discussed in Section 1.4.

1

facilitating to allow Ethernet attaining traffic

1

A tunnel is a method of communication between a couple of network nodes via a channel passing through intermediate

nodes with no changes in its information content.

Page 24

12 The Emerging Core and Metropolitan Networks

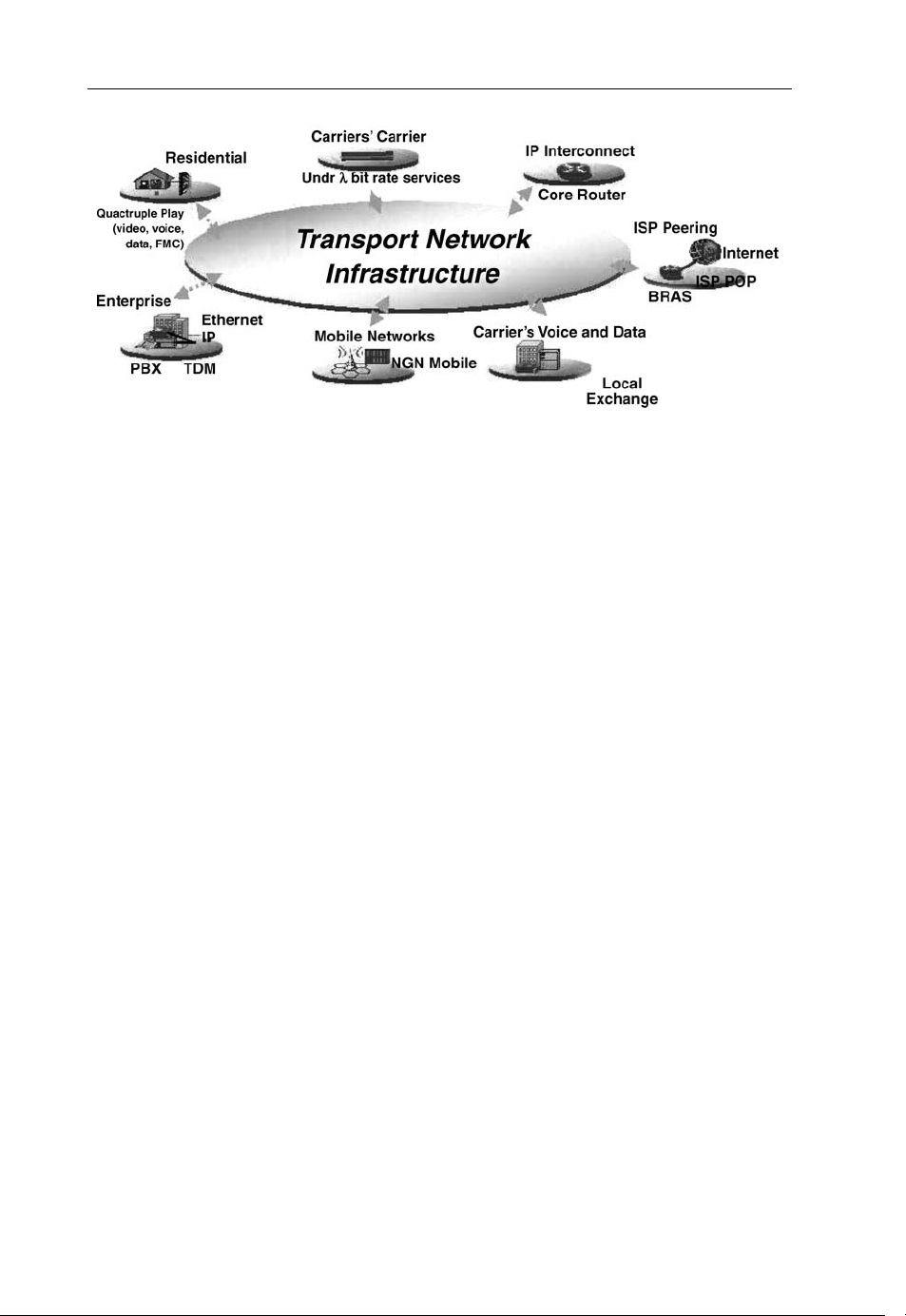

Figure 1.1 Unified transport network

1.3 Future Networks Challenges

1.3.1 Network Evolution Drivers

In the past decade, the proliferation of electronic and fiber-optic technologies has allowed

network services to evolve from the exclusive support of plain telephony to an abundance of

services which are transported based on the IP. These advances have had a major impact on the

drivers for network evolution.

Nowadays, network design and planning is the outcome of the interplay between different

technological, legal, and economic drivers:

.

Introduction of new services. A network operator or a service provider can decide to offer

new services based on customers’ requests or market trends.

.

Traffic growth. The growing penetration and the intensive use of new services increase the

network load.

.

Availability of new technologies. Electronic, optical, and software technologies keep on

offering new advances in transmission, switching, and control of information flows based on

circuits and packets.

.

Degree of standardization and interoperability of new network equipment. Modern

networks are very complex systems, requiring interaction of various kinds of equipment by

means of dedicated protocols. Standardization and interoperability are key requirements for

a proper integration of many different network elements.

.

Laws and regulati ons. National laws and government regulations may set limitations and

opportunities defining new business actors for network deployment and usage.

.

Market potential and amount of investments. The financial resource availability and the

potentialof the telecommunicationmarket are thekeyeconomic drivers for networkdevelopment.

1.3.2 Characteristics of Applications and Related Traffic

In this section, the association between applications and network services is presented. The

starting point of the analysis is the bandwidth requirement (traffic) of the various applications

Page 25

Future Networks Challenges 13

and the subsequent classification of this traffic into classes. Figure 1.2, illustrates a classification of user applications based on the following traffic characteristics:

.

elasticity

.

interactivity

.

degree of resilience (availability)

.

symmetry

.

bandwidth.

Elastic

Traffic Classes

Inelastic

non interactive

interactive

Non interactive

interactive

standard availability

high availability

standard availability

high availability

standard availability

high availability

standard availability

high availability

asymmetrical

symmetrical

asymmetrical

symmetrical

asymmetrical

symmetrical

asymmetrical

symmetrical

asymmetrical

symmetrical

asymmetrical

symmetrical

asymmetrical

symmetrical

downloding

remote backup

P2P file exchange

mail

tele-diagnostics

medical data storage

network supervision

web browsing

compute grid

telnet

data grid

utility grid

gambling

network control

low bandwidth

high bandwidth

low bandwidth

high bandwidth

low bandwidth

high bandwidth

low bandwidth

high bandwidth

radio broadcast

Live radio

video on demand

video broadcast

live TV

asynchronous mirroring

storage on demand

tele-vigilance

voice over IP

video chat

remote surgery

synchronous mirroring

real time compute grid

digital distribution

digital cinema distribution

telephony

IP telephony

gaming

video-conference

paramedic communications

emergency communications

Figure 1.2 Classification of traffic generated by reference applications

Page 26

14 The Emerging Core and Metropolitan Networks

Table 1.3 Qualitative classification of traffic types

Elastic Inelastic

Interactive Transactional Real time

Noninteractive Best effort Streaming

Elasticity refers to the level up to which the original traffic shape can be modified; the two main

categories are as follows:

.

Inelastic traffic (or stream traffic) is generated by applications whose temporal integrity

overwhelms data integrity because they try to emulate virtual presence.

.

Elastic traffic is generated by applications where data integrity overwhelms temporal

integrity, therefore being rather tolerant to delays and being able to adapt their data

generation rate to network conditions.

The term interactivity refers to a mode of operation characterized by constant feedback and

an interrelated traffic exchange between the two endpoints of the connection.

To map users’ applications traffic into the appropriate network services, it is essential to

define a few classes of traffic patterns that share the main characteristics. For this purpose,

Table 1.3 defines four kinds of traffic patterns in terms of QoS requirements.

Another important task is to assign QoS parameters quantitatively to the traffic classes.

Table 1.4 sets the values of QoS parameters used to define four basic classes as:

.

real-time traffic

.

streaming traffic

.

transactional traffic

.

best-effort traffic.

In connection with Table 1.4, the term dynamicity refers to the ability of a user to modify the

parameters of an existing connection. It is the only important parameter not described in

Section 1.2.6, since it is not addressed directly by the classic QoS definition, but it is anyway an

important quantity for application classification. The dynamicity refers to the time variation

of the following connection characteristics:

.

bandwidth (bit-rate);

.

QoS parameters (latency, availability, data integrity);

.

connectivity (the end-points of the connection).

The level of dynamicity is quantified on a three-state base:

– “none” (it is not possible to modify any parameters of an existing connection);

– “bit-rate and QoS” (when only these two parameters can be altered);

– “full” (bit-rate, QoS parameters, and connectivity modifications are allowed).

As seen in connectio n with Table 1.4, four traffic categories are defined based only on QoS

parameters. Table 1.5 shows examples of applications belonging to each one of the four classes

identified above, having different bandwidth requirements.

Page 27

Table 1.4 Quantitative classification of QoS for traffic classes

Blocking

probability (%)

Real time <0.1 >99.995 <1 <50 ms

Streaming <0.1 >99.99 <1 <1s

Transactional <1 >99.9 <3 <1s <200 Bit-rate and QoS <1 E-2

Best effort

Network

availability (%)

Set-up

time (s)

Max.

latency

Mean

latency

(ms)

Max.

latency

variation

Dynamicity Packet loss rate

Bit-rate, QoS and

<5 E-5

connectivity

None <1 E-3

Not applicable

Future Networks Challenges 15

Page 28

16 The Emerging Core and Metropolitan Networks

Table 1.5 Traffic characterization based on bandwidth (BW) and QoS parameters and map of users’

applications

QoS Low BW (tens of kbit/s) Medium BW (<2 Mbit/s) High BW (>2 Mbit/s)

Real time Legacy and IP telephony Gaming Video conference, grid

computing

Streaming UMTS Remote backup, network

supervision

Transactional E-commerce Telnet SAN

Best effort E-mail, domotic, VoIP p2p file exchange, data

acquisition

a

Video on demand.

b

Storage area network.

TV and video broadcast,

a

Vo D

b

p2p file exchange, data

acquisition

Table 1.5 is useful to map most common users’ applications into the four traffic classes (real-

time, streaming, transactional, best-effort), taking also the bandwidth use into account.

Similar to the classification of user applications, network services are classified into five

categories in association with the network services reported in Section 1.2.4. Thus, the network

service map looks as follows:

.

L1 VPN, which provides a physical-layer service between customer sites belonging to the

same closed user group. These VPN connections can be based on physical ports, optical

wavelengths, or TDM timeslots.

.

L2 VPN, which provides a service between customer terminals belonging to the VPN at the

data link layer. Data packet forwarding is based on the information contained in the packets’

data link layer headers (e.g., frame relay data link circuit identifier, ATM virtual circuit

identifier/virtual path identifier, or Ethernet MAC addresses).

.

L3 VPN, which provides a network layer service between customer devices belonging to the

same VPN. Packets are forwarded based on the information contained in the layer 3 headers

(e.g., IPv4 or IPv6 destination address).

.

Public IP, which is considered as the paradigm of best-effort network services. Namely, it is

a generalized L3 VPN without restrictions to the user group, but with a consequently poor

QoS.

.

Business IP, which is included as a higher priority class that, for instance, can efficiently

handle latency

2

in ti me-sensitive applications.

On top of this classification, further “orthogonal” categorizations are often introduced. VPN

services are further subdivided into:

– permanent VPNs, to be provided on a permanent basis by the network service

provider;

– on-demand VPNs, which could be controlled dynamically by the client user/network.

2

See latency and other QoS defining parameters later in this section.

Page 29

Future Networks Challenges 17

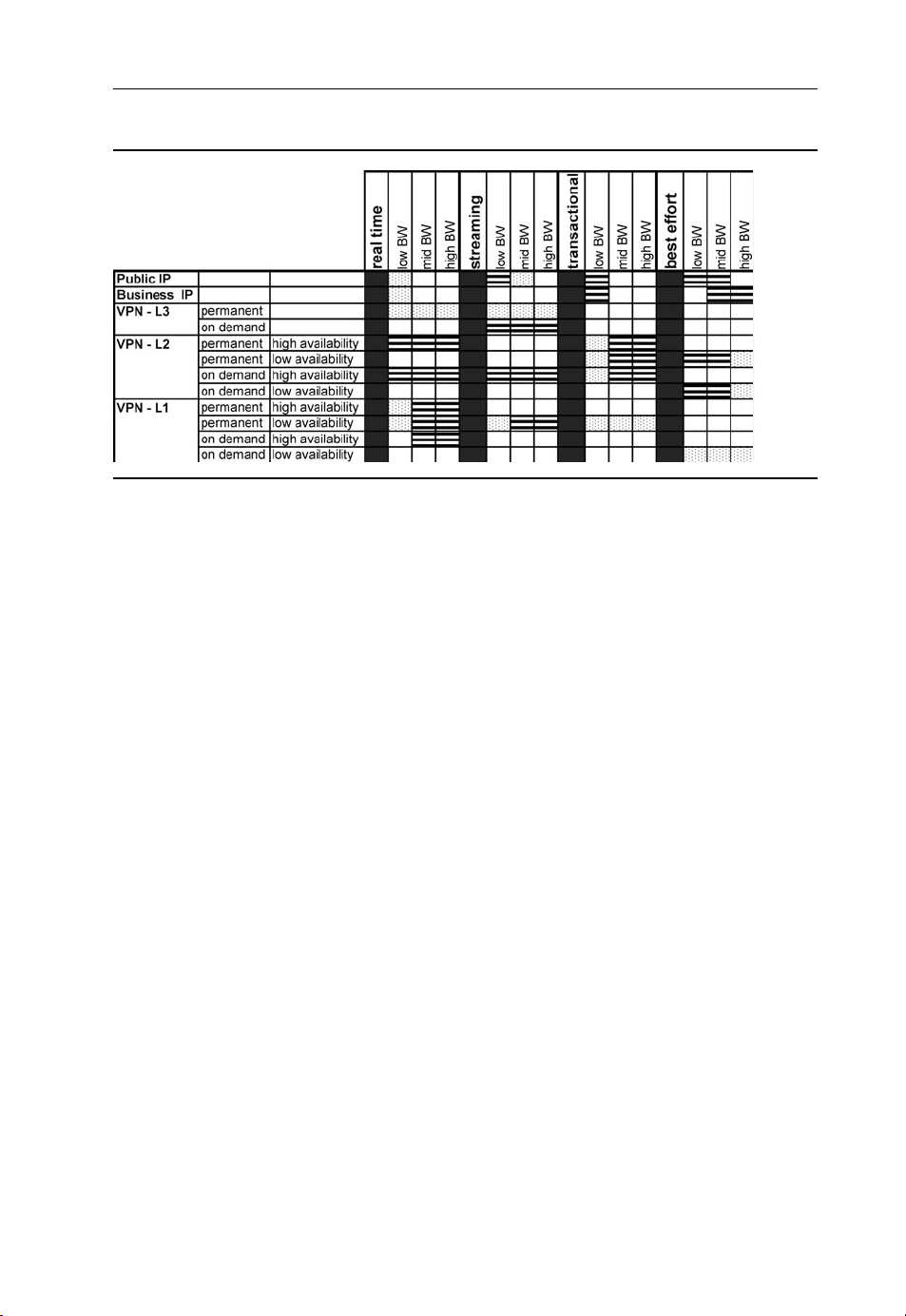

Table 1.6 Mapping network services groups to some applications (BW: bandwidth)

L1 and L2 VPN services are also classified into high- and low-availability services. Table 1.6

provides a mapping between “user applications” and “network services”: in this context,

a stippled box means that that particular application may run over on this network service, but

not very efficiently. The most efficient support to that application is designated with horizontal

rows, whereas a white box should be interpreted as no support at all from this service to that

application.

1.3.3 Network Architectural Requirements

This section gives an overview of the architectural requirements for transport networks

supporting the services described above.

1.3.3.1 Network Functional Requirements

From an architectural point of view, data services have been traditionally transported over a

wide set of protocols and technologies. For example, IP services are transported over the core

network usually relying on SDH, ATM, or Ethernet transmission networks. A widespread

alternative used in current convergent transport networks is to go towards a meshed network of

IP/MPLS routers, interconnected through direct fiber or lambda connections and without any

multilayer interaction.

This “IP-based for everything” approach was proved to be valid for the last decade, but with

current traffic trends it would lead to scalability problems. Currently, backbone nodes need

switching capacities of several terabits per second, and this need is predicted to double every

2 years. Routers are also very expensive and they are not optimized for high-bandwidth traffic

transportation, while transport technologies such as SONET/SDH are not efficient enough for

packet transport, due to a very coarse and not flexible bandwidth granularity.

Page 30

18 The Emerging Core and Metropolitan Networks

On the other hand, a number of emerging services (e.g., new multimedia applications served

over the Internet; i.e., real-time high-bandwidth video services) are imposing new requirements on the current “IP-based for everything” architecture in terms of bandwidth and QoS

(end-to-end delay and availability). Moreover, mobility of users and devices and new traffic

profiles (due to, for example, flash crowds and streaming services) require a network with an

unprecedented dynamicity that is able to support unpredictable traffic patterns.

1.3.3.2 Network Scalability

The term scalability is a feature of a network architecture designating the ability to

accommodate higher traffic load without requiring large-scale redesign and/or major deployment of resources. A typical (negative) example manifesting lack of scalability is an SDH

ring where additional resources and manual configurations are mandatory in order to increase

the capacity between two nodes. Thus, future transport networks should be scalable in order to

support existing or yet-unknown clients and traffic volumes.

The lack of scalability is demonstrated in two distinctive ways. First, by means of an

excessive deployment of network resources to accommodate higher traffic volumes. This

inefficiency is leading to higher CAPEX and OPEX that are mainly attributed to the enduring

very high cost of switching. Solving this issue requires the deployment of technologies able to

transport traffic with a lower cost per bit. Second, it is associated with architectural and/or

control plane scalability restrictions due to the excessive number of network elements to

control (e.g., the number of paths in the network). To address this issue requires the adoption

of layered architectures and aggregation hierarchies.

1.3.3.3 Network Reconfiguration Ability

Network reconfiguration ability refers to the ability of the network to change the status of some

or all of the established connections, to modify the parameters of these connections (e.g.,

modify the amount of allocated bandwidth) or to modify the way the services are provided (for

instance, changing the routing of a given connection to allow more efficient grooming on a

different route or improve spare capacity sharing).

The interest in having a reconfigurable network comes from the fact that traffic profiles

change very frequently, may be fostered by symmetrical traffic patterns, unexpected traffic

growth, possible mobile data/multimedia services, varied geographic connectivity (e.g., home,

work), andemerging services, suchas user-generated content. All these facts make it reasonable

to think in the future about a highly varying traffic profile in a network, thus meaning that

reconfigurability would be a highly advantageous characteristic in data architectures.

1.3.3.4 Cost Effectiveness

Taking into account the fierce competition and the pressure upon network operators in the

telecommunications market, as well as the descending cost per bit charged to the final user, the

only solution for service providers to keep competitive is to reduce traffic transport costs.

Therefore, cost effectiveness is the obvious requirement for any new technology. Basic

Page 31

Future Networks Challenges 19

approaches to achieve this cost reduction are to build networks upon cheap scale-economy

technologies, adapted to the applications’ bursty data traffic and specifically designed to keep

functional complexity to a minimum. To facilitate this cost per bit reduction even in presence

of unp redictable traffic growth, modular solutions are of paramount importance.

1.3.3.5 Standardized Solutions

Standardization of solutions is a key point, because it assures interoperability of equipment

from different manufacturers and, as a consequence, it allows a multi-vendor environment.

This leads to the achievement of economies of scale that lower costs, since a higher number

of suppliers use the same technology. Besides, standardization allows network operators to

deploy networks with components from different suppliers, therefore avoiding dependence on

a single manufacturer, both from a technological and an economical point of view.

1.3.3.6 Quality of Service Differentiation

As specified in Sections 1.2.6 and 1.3.2, a differentiating feature between the various

applications consists in their dissimilar transport network requirements (e.g., minimum/

maximum bandwidth, availability, security, delay, jitter, loss, error rate, priority, and buffering). For this reason, networks have to support QoS differentiation because their main goal is to

assure a proper multi-service delivery to different applications. The intention of QoS

specifications is to utilize network mechanisms for classifying and managing network traffic

or bandwidth reservation, in order to deliver predictable service levels such that service

requirements can be fulfilled.

1.3.3.7 Resilience Mechanisms

As reported in Section 1.2.5, an important aspect that characterizes services offered by

telecommunication networks is service availability. Resilience mechanisms must be present

in order to react to network failures, providing backup solutions to restore the connections

affected by the failure. Typical resilience mechanisms provide full protection against all single

failures; they distinguish in terms of how fast restoration is provided and on the amount of

backup capacity required for protection, to fully suppor t this single-failure event. Resilience

schemes can also be characterized depending on their ability to provide various level of

protection (e.g., full protection against single failures, best effort protection, no-protection, and

preemption in the case of failure) and on their capability to provide very high availability

services (e.g., full protection against multiple failures). For transport network clients, the

important aspect is the resulting service availability, measured in terms of average service

availability over a given period of time (e.g., 1 year) and of maximum service interruption time.

1.3.3.8 Operation and Maintenance

A fundamental requirement is to keep a proper control over the networking infrastructure: easy

monitoring, alarm management, and configuration tools are required. The current trend for

Page 32

20 The Emerging Core and Metropolitan Networks

OPEX reduction and maintenance simplification leads towards automated distributed cont rol

maintenance and operations.

Transport technologies or carrier-grade switching and transmission solutions differ from

other technologies in the OAM features: it is important not only in adm inistrating

and managing the network, but also to provide services and to deal with its customers.

Efficient operation tools and mechanisms must also be implemented within the transport

networks.

Finally, it is important to consider the interoperability between different network layers that

requires mutual layer independence; for this reason, the transport technology needs to be selfsufficient to provide its own OAM, independently of its client and server layers.

1.3.3.9 Traffic Multicast Support

A multicast transfer pattern allows transmission of data to multiple recipients in the network at

the same time over one transmission stream to the switches.

A network with multicast capability must guarantee the communication between a single

sender and multiple receivers on a network by delivering a single stream of information to

multiple recipients, duplicating data only when the multiple path follows different routes. The

network (not the customer devices) has to be able to duplicate data flows. There are only two

degrees for the ability to support multicast transfer: able or unable (multicast is an on/off

property).

Multicast distribution is considered a useful tool for transport technologies when dealing

with IPTV and similar applications. However, it is pointed out that layer 2 multicasting is not

the only solution to distribute IPTV.

1.3.3.10 Multiplicity of Client Signals

Previous sections highlighted that metro-core networks are supporting traffic from many

different applications, such as business data, Web browsing, peer to peer, e-Business, storage

networking, utility computing, and new applications such as video streaming, video conference, VoIP, and tele-medicine applications. The prevalence of multimedia services and the

expansion of triple-play has an important role in traffic load and distribution in metro and core

networks. A strong increase of broadband access penetration, based on a combination of

different fixed and mobile access technologies, is expected for the next years, favoring the

increase of terminal nomadism, which might introduce a more variable and unpredictable

traffic, especially in the metro area. On the other side, corporate VPN services ranging from

MPLS-based VPNs [10] to legacy services cope with the business telecom market.

From a technological standpoint, most services are migrating to packet-based Ethernet

framing. This trend makes it mandatory for Core/Metro networks to support Ethernet client

services. Nevertheless, many legacy networks are still based on other standards, such as SDH

and ATM, and they still need to suppor t these kinds of technology.

A transport infrastructure that can carry traffic generated by both mobile and fixed access is

an important challenge for future transport networks.

Fixed and mobile applications present similar QoS requirements, and can be classified

according to the four classes previously defined in Section 1.2.4. (i.e., best-effort, streaming,

Page 33

Future Networks Challenges 21

real-time, and transactional). However, current bandwidth requirements are lower for mobile

applications than for fixed applications due to limitations in wireless access bandwidth and

terminal screen size and resolution.

1.3.3.11 Transport Network Service Models and Client Interactions

Telecom networks have been upgraded with different network layer technologies, each

providing its own set of service functionality based on its own switching paradigm and

framing architecture. The GMPLS (Generalized Multi-Protocol Label Switching) protocol

architecture paves the way for a convergence between transport and client networks reducing,

thus, the overall control and management complexity. GMPLS can be configured to handle

networks with dissimilar switching paradigms (on data plane) and different network management platforms (on control and management plane). This is made feasible by means of LSPs

(Label Switched Paths) that are established between two end points. i.e. under the GMPLS

protocol architecture the resources of the optical transport network are reserved based on the

connectivity requests from a client packet-switched network.

The Overlay Model

The overlay model refers to a business model in which carriers or optical backbone

(bandwidth) providers lease their network facilities to Internet service providers (ISPs). This

model is based on a client–server relationship with well-defined network interfaces (or

automatic switched optical networ k (ASON) reference points) between the transport network

involved and client networks. The overlay model mandates a complete separation of the data

client network control (that could be IP/MPLS based) and the transport network control plane

(e.g., wavelength-switched optical networks/GMPLS). A controlled amount of signaling and

restricted amount of routing messages may be exchanged; as a consequence, the overlay model

is a very opaque paradigm. The IP/MPLS routing and signaling controllers are independent of

the routing and signaling controllers within the transport domain, enabling the different

networks to operate independently. The independent control planes interact through a userto-network interface (UNI), defining a client–server relationship between the IP/MPLS

data network and the wavelength-switched optical network (WSON)/GMPLS transport

network.

Overlay network service models support different business and administrative classes

(as developed in Section 1.5.3.) and preserve confidentiality between network operators. The

connection services are requested from client networks to the transport network across distinct

UNIs. When a connection is established in the transport network for a given client network,

this connection can be used as a nested LSP or a stitched LSP to support the requirements of the

client network.

The service interface in the overlay network model can be configured according to the level

of trust of the two interacting structures. The interface can be based on a mediation entity such

as an operation service support (OSS) or it can use the northbound interface of the network

management system. Further, the interface between client network (higher layer network) and

transport network (lower layer network) can operate a GMPLS signaling protocol, such

reservation protocol with TE (RSVP-TE).

Page 34

22 The Emerging Core and Metropolitan Networks

Peer Model

Compared with the overlay model, the peer model is built on a unified service representation,

not restricting any control information exchanged between the transport network and the

clients. This model is relevant and represents an optimal solution when a transport network

operator is both an optical bandwidth provider and an ISP. In this case, the operator can

optimally align the virtual topologies of its transport network with the network services

required by its data network. The IP/MPLS control plane acts as a peer of the GMPLS transport

network control plane, implying that a dual instance of the control plane is running over the data

network (say, an IP/MPLS network) and optical network (say, a WSON/GMPLS network).

The peer model entails the tightest coupling between IP/MPLS and WSON/GMPLS components. The differentnodes are distinguished by their switching capabilities; for example, packet

for IP routers interconnected to photonic cross-connects (PXCs).

Integrated Model

Compared with the peer model, the integrated model does not require different service

interfaces between the different networks. The integrated model proposes the full convergence

of data network control plane and transport network control plane. All nodes are label-switched