Page 1

Page 2

Page 3

Page 4

Coding Theory

Page 5

Page 6

Coding Theory

Algorithms, Architectures, and

Applications

Andr´e Neubauer

M¨unster University of Applied Sciences, Germany

J¨urgen Freudenberger

HTWG Konstanz, University of Applied Sciences, Germany

Volker K¨uhn

University of Rostock, Germany

Page 7

Copyright2007JohnWiley&SonsLtd,TheAtrium,SouthernGate,Chichester,

West Sussex PO19 8SQ, England

Telephone (+44) 1243 779777

Email (for orders and customer service enquiries): cs-books@wiley.co.uk

Visit our Home Page on www.wileyeurope.com or www.wiley.com

All Rights Reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted

in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except

under the terms of the Copyright, Designs and Patents Act 1988 or under the terms of a licence issued by the

Copyright Licensing Agency Ltd, 90 Tottenham Court Road, London W1T 4LP, UK, without the permission in

writing of the Publisher. Requests to the Publisher should be addressed to the Permissions Department, John

Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England, or emailed to

permreq@wiley.co.uk, or faxed to (+44) 1243 770620.

Designations used by companies to distinguish their products are often claimed as trademarks. All brand names

and product names used in this book are trade names, service marks, trademarks or registered trademarks of

their respective owners. The Publisher is not associated with any product or vendor mentioned in this book. All

trademarks referred to in the text of this publication are the property of their respective owners.

This publication is designed to provide accurate and authoritative information in regard to the subject matter

covered. It is sold on the understanding that the Publisher is not engaged in rendering professional services. If

professional advice or other expert assistance is required, the services of a competent professional should be

sought.

Other Wiley Editorial Offices

John Wiley & Sons Inc., 111 River Street, Hoboken, NJ 07030, USA

Jossey-Bass, 989 Market Street, San Francisco, CA 94103-1741, USA

Wiley-VCH Verlag GmbH, Boschstr. 12, D-69469 Weinheim, Germany

John Wiley & Sons Australia Ltd, 42 McDougall Street, Milton, Queensland 4064, Australia

John Wiley & Sons (Asia) Pte Ltd, 2 Clementi Loop #02-01, Jin Xing Distripark, Singapore 129809

John Wiley & Sons Canada Ltd, 6045 Freemont Blvd, Mississauga, Ontario, L5R 4J3, Canada

Wiley also publishes its books in a variety of electronic formats. Some content that appears

in print may not be available in electronic books.

Anniversary Logo Design: Richard J. Pacifico

Library of Congress Cataloging-in-Publication Data

Neubauer, Andre.

Coding theory : algorithms, architectures and applications / Andre

Neubauer, J¨urgen Freudenberger, Volker K¨uhn.

p. cm.

ISBN 978-0-470-02861-2 (cloth)

1. Coding theory. I Freudenberger, Jrgen. II. K¨uhn, Volker. III.

Title

QA268.N48 2007

003

.54–dc22

British Library Cataloguing in Publication Data

A catalogue record for this book is available from the British Library

ISBN 978-0-470-02861-2 (HB)

Typeset in 10/12pt Times by Laserwords Private Limited, Chennai, India

Printed and bound in Great Britain by Antony Rowe Ltd, Chippenham, Wiltshire

This book is printed on acid-free paper responsibly manufactured from sustainable forestry

in which at least two trees are planted for each one used for paper production.

Page 8

Contents

Preface ix

1 Introduction 1

1.1 Communication Systems . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.2 Information Theory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2.1 Entropy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.2.2 Channel Capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.2.3 Binary Symmetric Channel . . . . . . . . . . . . . . . . . . . . . . 5

1.2.4 AWGN Channel . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.3 A Simple Channel Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2 Algebraic Coding Theory 13

2.1 Fundamentals of Block Codes . . . . . . . . . . . . . . . . . . . . . . . . . 14

2.1.1 Code Parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

2.1.2 Maximum Likelihood Decoding . . . . . . . . . . . . . . . . . . . . 19

2.1.3 Binary Symmetric Channel . . . . . . . . . . . . . . . . . . . . . . 23

2.1.4 Error Detection and Error Correction . . . . . . . . . . . . . . . . . 25

2.2 Linear Block Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.2.1 Definition of Linear Block Codes . . . . . . . . . . . . . . . . . . . 27

2.2.2 Generator Matrix . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.2.3 Parity-Check Matrix . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.2.4 Syndrome and Cosets . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.2.5 Dual Code . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

2.2.6 Bounds for Linear Block Codes . . . . . . . . . . . . . . . . . . . . 37

2.2.7 Code Constructions . . . . . . . . . . . . . . . . . . . . . . . . . . 41

2.2.8 Examples of Linear Block Codes . . . . . . . . . . . . . . . . . . . 46

2.3 Cyclic Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

2.3.1 Definition of Cyclic Codes . . . . . . . . . . . . . . . . . . . . . . 62

2.3.2 Generator Polynomial . . . . . . . . . . . . . . . . . . . . . . . . . 63

2.3.3 Parity-Check Polynomial . . . . . . . . . . . . . . . . . . . . . . . . 67

2.3.4 Dual Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

2.3.5 Linear Feedback Shift Registers . . . . . . . . . . . . . . . . . . . . 71

2.3.6 BCH Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

2.3.7 Reed–Solomon Codes . . . . . . . . . . . . . . . . . . . . . . . . . 81

Page 9

vi CONTENTS

2.3.8 Algebraic Decoding Algorithm . . . . . . . . . . . . . . . . . . . . 84

2.4 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

3 Convolutional Codes 97

3.1 Encoding of Convolutional Codes . . . . . . . . . . . . . . . . . . . . . . . 98

3.1.1 Convolutional Encoder . . . . . . . . . . . . . . . . . . . . . . . . . 98

3.1.2 Generator Matrix in the Time Domain . . . . . . . . . . . . . . . . 101

3.1.3 State Diagram of a Convolutional Encoder . . . . . . . . . . . . . . 103

3.1.4 Code Termination . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

3.1.5 Puncturing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

3.1.6 Generator Matrix in the D-Domain . . . . . . . . . . . . . . . . . . 108

3.1.7 Encoder Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

3.2 Trellis Diagram and the Viterbi Algorithm . . . . . . . . . . . . . . . . . . 112

3.2.1 Minimum Distance Decoding . . . . . . . . . . . . . . . . . . . . . 113

3.2.2 Trellises . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

3.2.3 Viterbi Algorithm . . . . . . . . . . . . . . . . . . . . . . . . . . . 116

3.3 Distance Properties and Error Bounds . . . . . . . . . . . . . . . . . . . . . 121

3.3.1 Free Distance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121

3.3.2 Active Distances . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

3.3.3 Weight Enumerators for Terminated Codes . . . . . . . . . . . . . . 126

3.3.4 Path Enumerators . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

3.3.5 Pairwise Error Probability . . . . . . . . . . . . . . . . . . . . . . . 131

3.3.6 Viterbi Bound . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

3.4 Soft-input Decoding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

3.4.1 Euclidean Metric . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

3.4.2 Support of Punctured Codes . . . . . . . . . . . . . . . . . . . . . . 137

3.4.3 Implementation Issues . . . . . . . . . . . . . . . . . . . . . . . . . 138

3.5 Soft-output Decoding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

3.5.1 Derivation of APP Decoding . . . . . . . . . . . . . . . . . . . . . 141

3.5.2 APP Decoding in the Log Domain . . . . . . . . . . . . . . . . . . 145

3.6 Convolutional Coding in Mobile Communications . . . . . . . . . . . . . . 147

3.6.1 Coding of Speech Data . . . . . . . . . . . . . . . . . . . . . . . . 147

3.6.2 Hybrid ARQ . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 150

3.6.3 EGPRS Modulation and Coding . . . . . . . . . . . . . . . . . . . . 152

3.6.4 Retransmission Mechanism . . . . . . . . . . . . . . . . . . . . . . 155

3.6.5 Link Adaptation . . . . . . . . . . . . . . . . . . . . . . . . . . . . 156

3.6.6 Incremental Redundancy . . . . . . . . . . . . . . . . . . . . . . . . 157

3.7 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

4 Turbo Codes 163

4.1 LDPC Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

4.1.1 Codes Based on Sparse Graphs . . . . . . . . . . . . . . . . . . . . 165

4.1.2 Decoding for the Binary Erasure Channel . . . . . . . . . . . . . . 168

4.1.3 Log-Likelihood Algebra . . . . . . . . . . . . . . . . . . . . . . . . 169

4.1.4 Belief Propagation . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

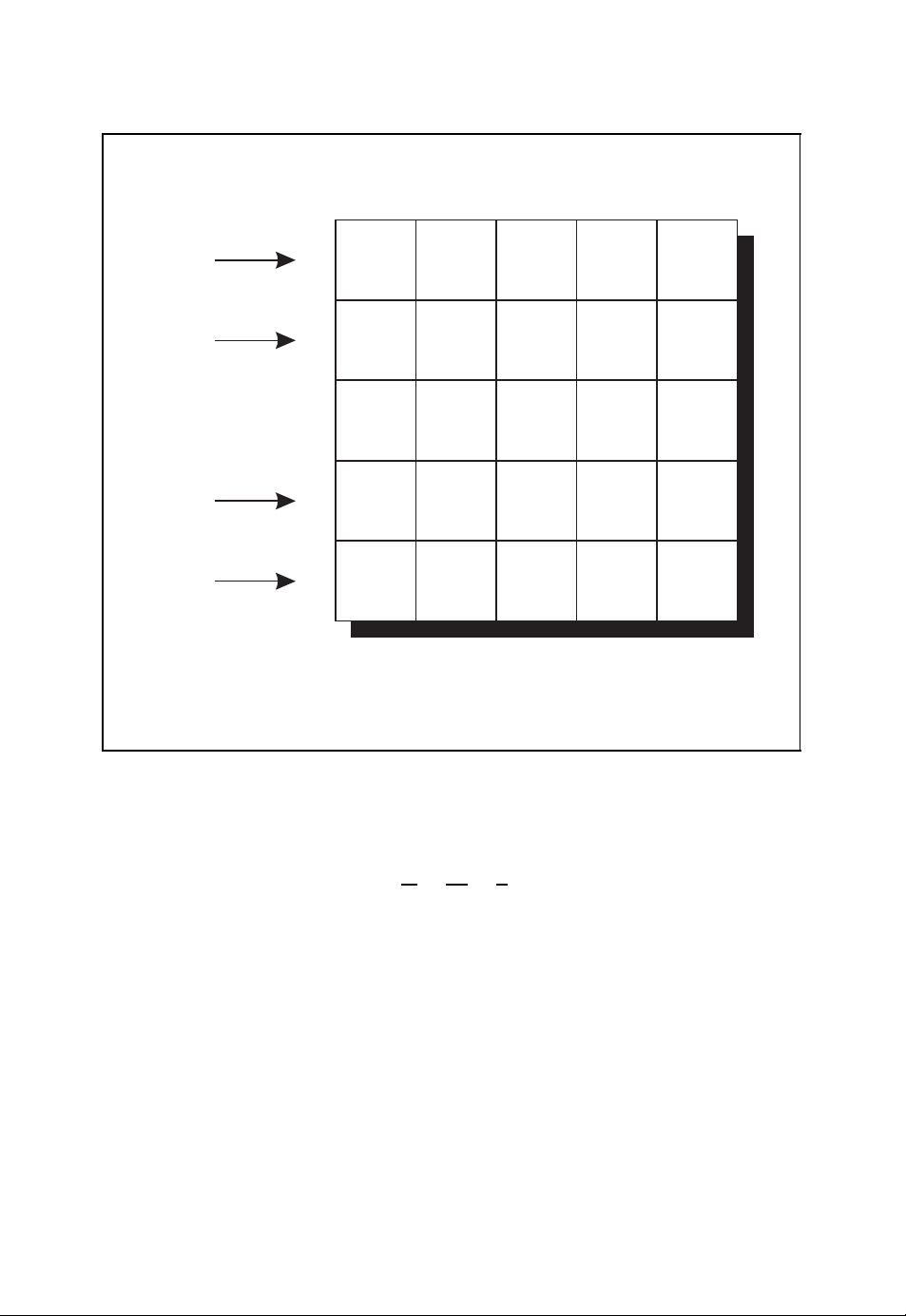

4.2 A First Encounter with Code Concatenation . . . . . . . . . . . . . . . . . 177

4.2.1 Product Codes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

Page 10

CONTENTS vii

4.2.2 Iterative Decoding of Product Codes . . . . . . . . . . . . . . . . . 180

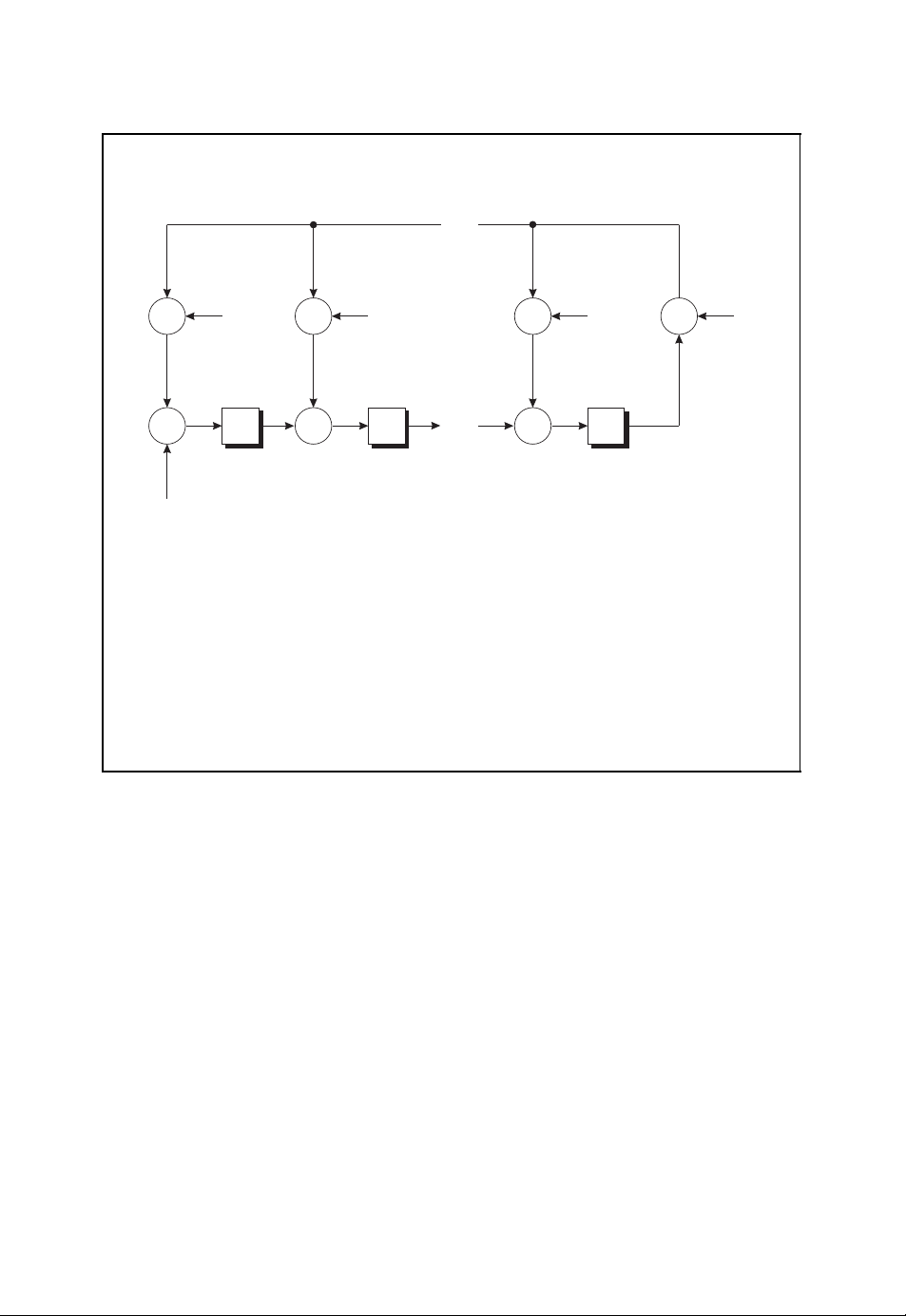

4.3 Concatenated Convolutional Codes . . . . . . . . . . . . . . . . . . . . . . 182

4.3.1 Parallel Concatenation . . . . . . . . . . . . . . . . . . . . . . . . . 182

4.3.2 The UMTS Turbo Code . . . . . . . . . . . . . . . . . . . . . . . . 183

4.3.3 Serial Concatenation . . . . . . . . . . . . . . . . . . . . . . . . . . 184

4.3.4 Partial Concatenation . . . . . . . . . . . . . . . . . . . . . . . . . . 185

4.3.5 Turbo Decoding . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

4.4 EXIT Charts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

4.4.1 Calculating an EXIT Chart . . . . . . . . . . . . . . . . . . . . . . 189

4.4.2 Interpretation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

4.5 Weight Distribution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

4.5.1 Partial Weights . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 196

4.5.2 Expected Weight Distribution . . . . . . . . . . . . . . . . . . . . . 197

4.6 Woven Convolutional Codes . . . . . . . . . . . . . . . . . . . . . . . . . . 198

4.6.1 Encoding Schemes . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

4.6.2 Distance Properties of Woven Codes . . . . . . . . . . . . . . . . . 202

4.6.3 Woven Turbo Codes . . . . . . . . . . . . . . . . . . . . . . . . . . 205

4.6.4 Interleaver Design . . . . . . . . . . . . . . . . . . . . . . . . . . . 208

4.7 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

5 Space–Time Codes 215

5.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 215

5.1.1 Digital Modulation Schemes . . . . . . . . . . . . . . . . . . . . . . 216

5.1.2 Diversity . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 223

5.2 Spatial Channels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

5.2.1 Basic Description . . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

5.2.2 Spatial Channel Models . . . . . . . . . . . . . . . . . . . . . . . . 234

5.2.3 Channel Estimation . . . . . . . . . . . . . . . . . . . . . . . . . . 239

5.3 Performance Measures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

5.3.1 Channel Capacity . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

5.3.2 Outage Probability and Outage Capacity . . . . . . . . . . . . . . . 250

5.3.3 Ergodic Error Probability . . . . . . . . . . . . . . . . . . . . . . . 252

5.4 Orthogonal Space – Time Block Codes . . . . . . . . . . . . . . . . . . . . . 257

5.4.1 Alamouti’s Scheme . . . . . . . . . . . . . . . . . . . . . . . . . . . 257

5.4.2 Extension to More than Two Transmit Antennas . . . . . . . . . . . 260

5.4.3 Simulation Results . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

5.5 Spatial Multiplexing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

5.5.1 General Concept . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

5.5.2 Iterative APP Preprocessing and Per-layer Decoding . . . . . . . . . 267

5.5.3 Linear Multilayer Detection . . . . . . . . . . . . . . . . . . . . . . 272

5.5.4 Original BLAST Detection . . . . . . . . . . . . . . . . . . . . . . 275

5.5.5 QL Decomposition and Interference Cancellation . . . . . . . . . . 278

5.5.6 Performance of Multi-Layer Detection Schemes . . . . . . . . . . . 287

5.5.7 Unified Description by Linear Dispersion Codes . . . . . . . . . . . 291

5.6 Summary . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 294

Page 11

viii CONTENTS

A Algebraic Structures 295

A.1 Groups, Rings and Finite Fields . . . . . . . . . . . . . . . . . . . . . . . . 295

A.1.1 Groups . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 295

A.1.2 Rings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 296

A.1.3 Finite Fields . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 298

A.2 Vector Spaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 299

A.3 Polynomials and Extension Fields . . . . . . . . . . . . . . . . . . . . . . . 300

A.4 Discrete Fourier Transform . . . . . . . . . . . . . . . . . . . . . . . . . . . 305

B Linear Algebra 311

C Acronyms 319

Bibliography 325

Index 335

Page 12

Preface

Modern information and communication systems are based on the reliable and efficient

transmission of information. Channels encountered in practical applications are usually

disturbed regardless of whether they correspond to information transmission over noisy

and time-variant mobile radio channels or to information transmission on optical discs that

might be damaged by scratches. Owing to these disturbances, appropriate channel coding

schemes have to be employed such that errors within the transmitted information can be

detected or even corrected. To this end, channel coding theory provides suitable coding

schemes for error detection and error correction. Besides good code characteristics with

respect to the number of errors that can be detected or corrected, the complexity of the

architectures used for implementing the encoding and decoding algorithms is important for

practical applications.

The present book provides a concise overview of channel coding theory and practice

as well as the accompanying algorithms, architectures and applications. The selection of

the topics presented in this book is oriented towards those subjects that are relevant for

information and communication systems in use today or in the near future. The focus

is on those aspects of coding theory that are important for the understanding of these

systems. This book places emphasis on the algorithms for encoding and decoding and their

architectures, as well as the applications of the corresponding coding schemes in a unified

framework.

The idea for this book originated from a two-day seminar on coding theory in the

industrial context. We have tried to keep this seminar style in the book by highlighting the

most important facts within the figures and by restricting the scope to the most important

topics with respect to the applications of coding theory, especially within communication

systems. This also means that many important and interesting topics could not be covered

in order to be as concise as possible.

The target audience for the book are students of communication and information engi-

neering as well as computer science at universities and also applied mathematicians who are

interested in a presentation that subsumes theory and practice of coding theory without sacrificing exactness or relevance with regard to real-world practical applications. Therefore,

this book is well suited for engineers in industry who want to know about the theoretical

basics of coding theory and their application in currently relevant communication systems.

The book is organised as follows. In Chapter 1 a brief overview of the principle

architecture of a communication system is given and the information theory fundamentals

underlying coding theory are summarised. The most important concepts of information theory, such as entropy and channel capacity as well as simple channel models, are described.

Page 13

x PREFACE

Chapter 2 presents the classical, i.e. algebraic, coding theory. The fundamentals of the

encoding and decoding of block codes are explained, and the maximum likelihood decoding

rule is derived as the optimum decoding strategy for minimising the word error probability

after decoding a received word. Linear block codes and their definition based on generator

and parity-check matrices are discussed. General performance measures and bounds relating

important code characteristics such as the minimum Hamming distance and the code rate

are presented, illustrating the compromises necessary between error detection and error

correction capabilities and transmission efficiency. It is explained how new codes can be

constructed from already known codes. Repetition codes, parity-check-codes, Hamming

codes, simplex codes and Reed – Muller codes are presented as examples. Since the task

of decoding linear block codes is difficult in general, the algebraic properties of cyclic

codes are exploited for efficient decoding algorithms. These cyclic codes, together with

their generator and parity-check polynomials, are discussed, as well as efficient encoding

and decoding architectures based on linear feedback shift registers. Important cyclic codes

such as BCH codes and Reed – Solomon codes are presented, and an efficient algebraic

decoding algorithm for the decoding of these cyclic codes is derived.

Chapter 3 deals with the fundamentals of convolutional coding. Convolutional codes

can be found in many applications, for instance in dial-up modems, satellite communications

and digital cellular systems. The major reason for this popularity is the existence of efficient

decoding algorithms that can utilise soft input values from the demodulator. This so-called

soft-input decoding leads to significant performance gains. Two famous examples for a

soft-input decoding algorithm are the Viterbi algorithm and the Bahl, Cocke, Jelinek, Raviv

(BCJR) algorithm which also provides a reliability output. Both algorithms are based on

the trellis representation of the convolutional code. This highly repetitive structure makes

trellis-based decoding very suitable for hardware implementations.

We start our discussion with the encoding of convolutional codes and some of their

basic properties. It follows a presentation of the Viterbi algorithm and an analysis of

the error correction performance with this maximum likelihood decoding procedure. The

concept of soft-output decoding and the BCJR algorithm are considered in Section 3.5. Softoutput decoding is a prerequisite for the iterative decoding of concatenated convolutional

codes as introduced in Chapter 4. Finally, we consider an application of convolutional

codes for mobile communication channels as defined in the Global System for Mobile

communications (GSM) standard. In particular, the considered hybrid ARQ protocols are

excellent examples of the adaptive coding systems that are required for strongly time-variant

mobile channels.

As mentioned above, Chapter 4 is dedicated to the construction of long powerful codes

based on the concatenation of simple convolutional component codes. These concatenated

convolutional codes, for example the famous turbo codes, are capable of achieving low

bit error rates at signal-to-noise ratios close to the theoretical Shannon limit. The term

turbo reflects a property of the employed iterative decoding algorithm, where the decoder

output of one iteration is used as the decoder input of the next iteration. This concept

of iterative decoding was first introduced for the class of low-density parity-check codes.

Therefore, we first introduce low-density parity-check codes in Section 4.1 and discuss

the relation between these codes and concatenated code constructions. Then, we introduce

some popular encoding schemes for concatenated convolutional codes and present three

methods to analyse the performance of the corresponding codes. The EXIT chart method

Page 14

PREFACE xi

in Section 4.4 makes it possible to predict the behaviour of the iterative decoder by looking

at the input/output relations of the individual constituent soft-output decoders. Next, we

present a common approach in coding theory. We estimate the code performance with

maximum likelihood decoding for an ensemble of concatenated codes. This method explains

why many concatenated code constructions lead to a low minimum Hamming distance and

therefore to a relatively poor performance for high signal-to-noise ratios. In Section 4.6 we

consider code designs that lead to a higher minimum Hamming distance owing to a special

encoder construction, called the woven encoder, or the application of designed interleavers.

The fifth chapter addresses space – time coding concepts, a still rather new topic in the

area of radio communications. Although these techniques do not represent error-correcting

codes in the classical sense, they can also be used to improve the reliability of a data

link. Since space –time coding became popular only a decade ago, only a few concepts

have found their way into current standards hitherto. However, many other approaches are

currently being discussed. As already mentioned before, we restrict this book to the most

important and promising concepts.

While classical encoders and decoders are separated from the physical channel by

modulators, equalisers, etc., and experience rather simple hyperchannels, this is not true

for space–time coding schemes. They directly work on the physical channel. Therefore,

Chapter 5 starts with a short survey of linear modulation schemes and explains the principle of diversity. Next, spatial channel models are described and different performance

measures for their quantitative evaluation are discussed. Sections 5.4 and 5.5 introduce

two space – time coding concepts with the highest practical relevance, namely orthogonal

space–time block codes increasing the diversity degree and spatial multiplexing techniques

boosting the achievable data rate. For the latter approach, sophisticated signal processing

algorithms are required at the receiver in order to separate superimposed data streams again.

In the appendices a brief summary of algebraic structures such as finite fields and poly-

nomial rings is given, which are needed for the treatment especially of classical algebraic

codes, and the basics of linear algebra are briefly reviewed.

Finally, we would like to thank the Wiley team, especially Sarah Hinton as the respon-

sible editor, for their support during the completion of the manuscript. We also thank

Dr. rer. nat. Jens Schlembach who was involved from the beginning of this book project

and who gave encouragement when needed. Last but not least, we would like to give special thanks to our families – Fabian, Heike, Jana and Erik, Claudia, Hannah and Jakob and

Christiane – for their emotional support during the writing of the manuscript.

Andr´e Neubauer

M¨unster University of Applied Sciences

J¨urgen Freudenberger

HTWG Konstanz University of Applied Sciences

Volker K¨uhn

University of Rostock

Page 15

Page 16

1

Introduction

The reliable transmission of information over noisy channels is one of the basic requirements of digital information and communication systems. Here, transmission is understood

both as transmission in space, e.g. over mobile radio channels, and as transmission in time

by storing information in appropriate storage media. Because of this requirement, modern

communication systems rely heavily on powerful channel coding methodologies. For practical applications these coding schemes do not only need to have good coding characteristics

with respect to the capability of detecting or correcting errors introduced on the channel.

They also have to be efficiently implementable, e.g. in digital hardware within integrated

circuits. Practical applications of channel codes include space and satellite communications, data transmission, digital audio and video broadcasting and mobile communications,

as well as storage systems such as computer memories or the compact disc (Costello et al.,

1998).

In this introductory chapter we will give a brief introduction into the field of channel

coding. To this end, we will describe the information theory fundamentals of channel

coding. Simple channel models will be presented that will be used throughout the text.

Furthermore, we will present the binary triple repetition code as an illustrative example of

a simple channel code.

1.1 Communication Systems

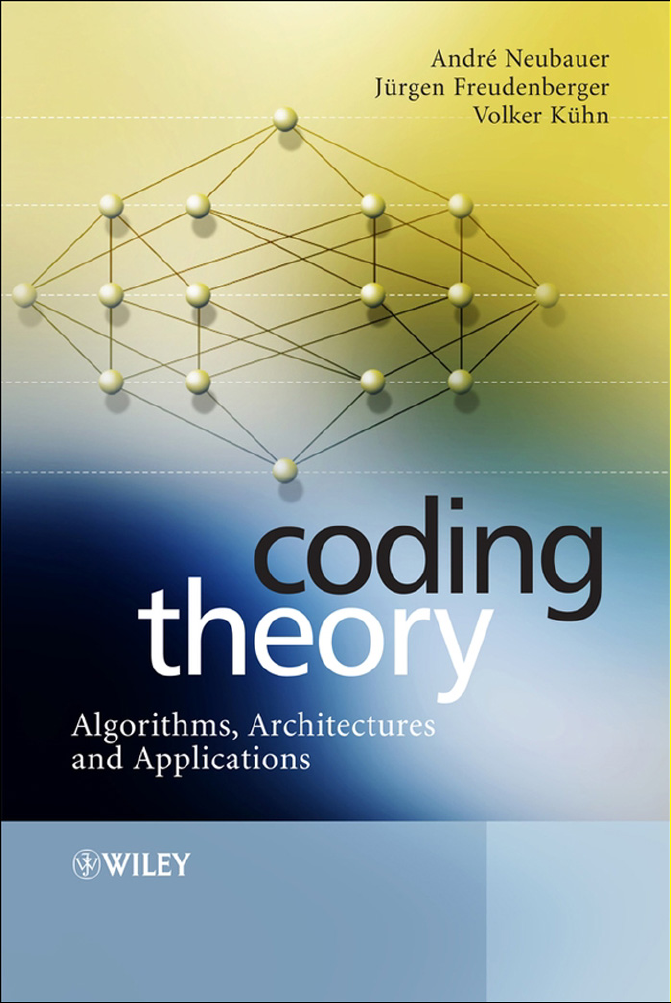

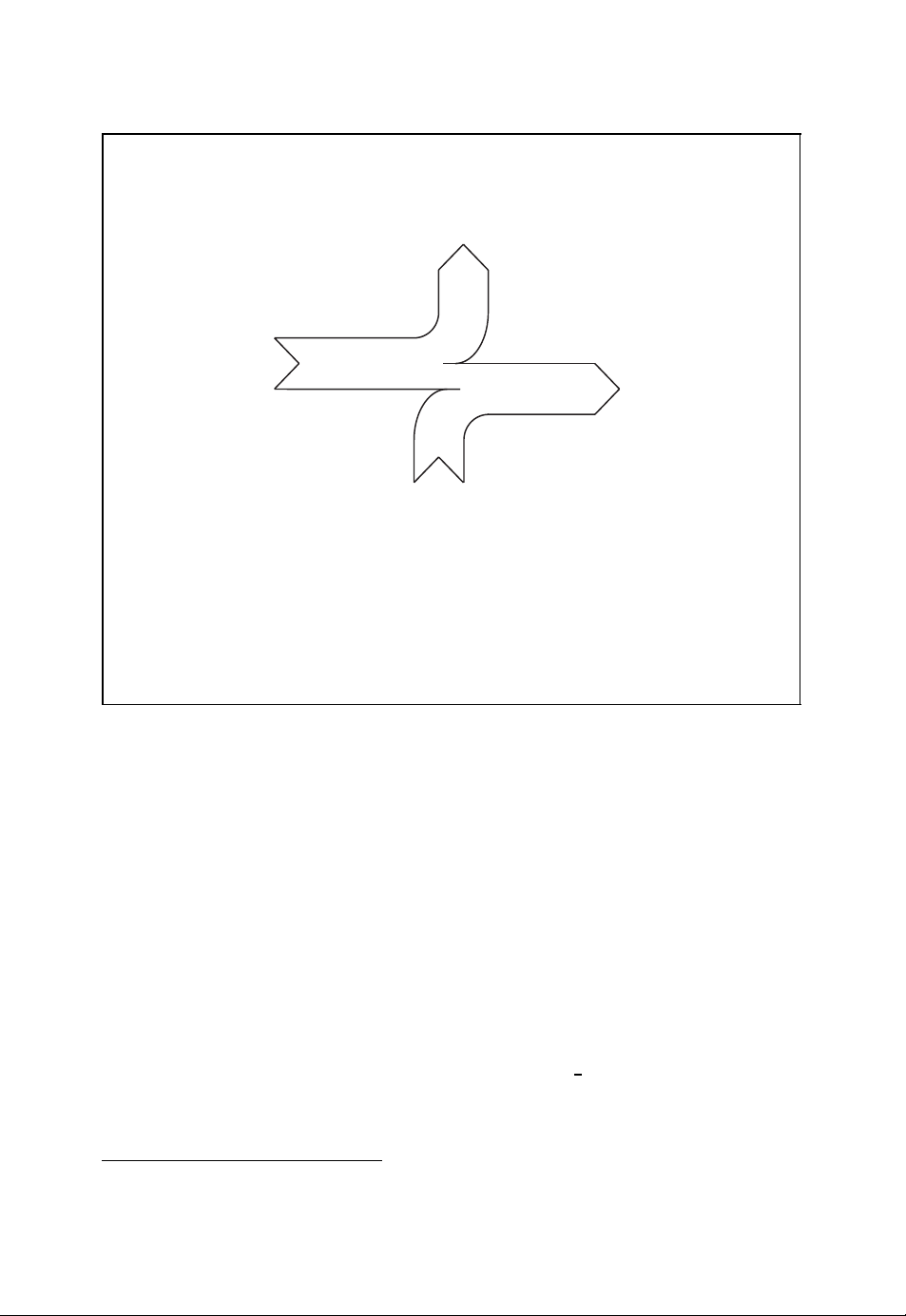

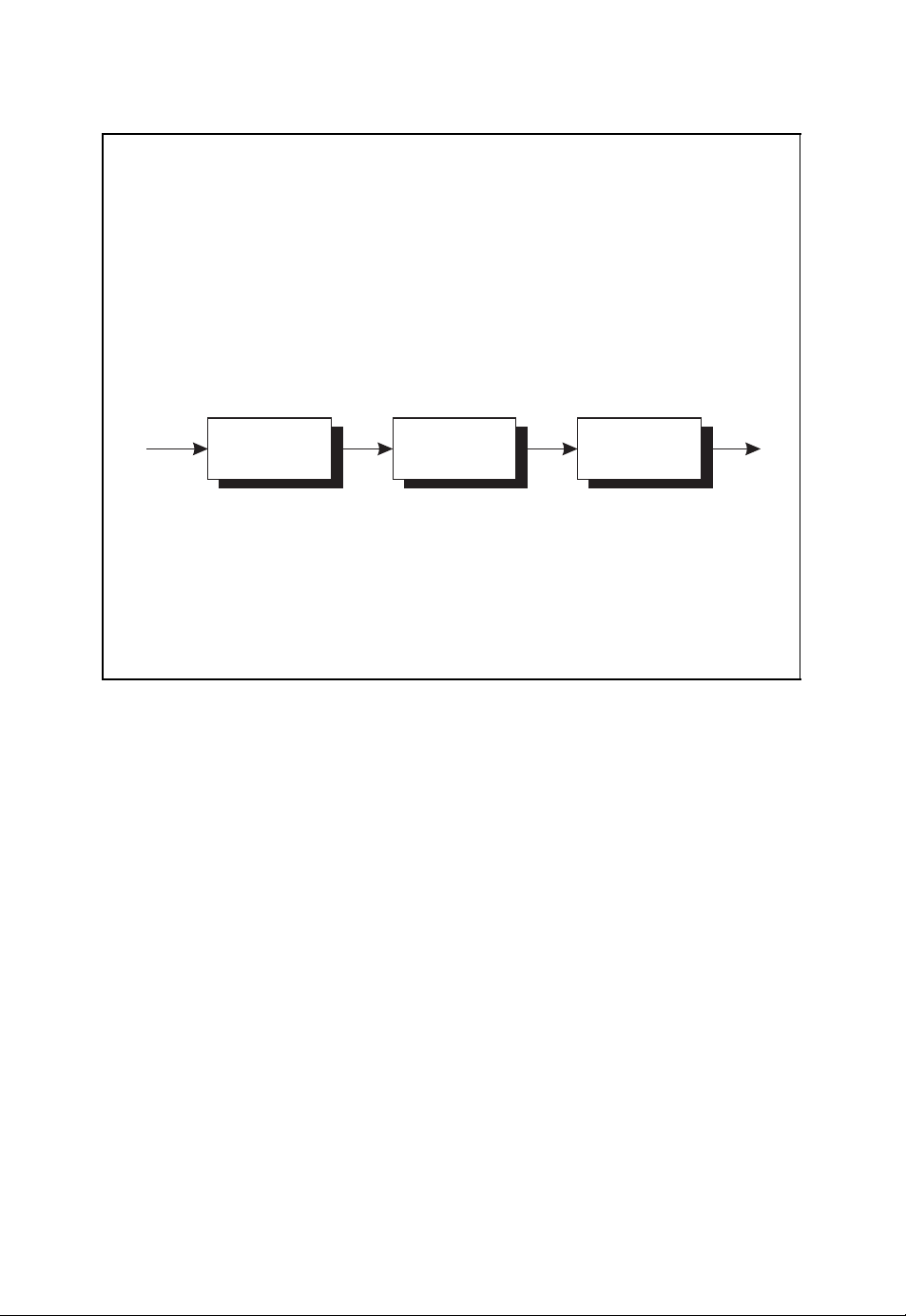

In Figure 1.1 the basic structure of a digital communication system is shown which represents the architecture of the communication systems in use today. Within the transmitter

of such a communication system the following tasks are carried out:

● source encoding,

● channel encoding,

● modulation.

Coding Theory – Algorithms, Architectures, and Applications Andr´e Neubauer, J¨urgen Freudenberger, Volker K¨uhn

2007 John Wiley & Sons, Ltd

Page 17

2 INTRODUCTION

Principal structure of digital communication systems

u

u

ˆ

channel

FEC

encoder

encoder

channel

FEC

decoder

encoder

source

source

FEC

encoder

encoder

encoder

source

FEC

decoder

encoder

■ The sequence of information symbols u is encoded into the sequence of

b

modu-

FEC

lator

encoder

FEC

channel

encoder

r

demo-

FEC

dulator

encoder

code symbols b which are transmitted across the channel after modulation.

■ The sequence of received symbols r is decoded into the sequence of

information symbolsˆu which are estimates of the originally transmitted

information symbols.

Figure 1.1: Basic structure of digital communication systems

In the receiver the corresponding inverse operations are implemented:

● demodulation,

● channel decoding,

● source decoding.

According to Figure 1.1 the modulator generates the signal that is used to transmit the

sequence of symbols b across the channel (Benedetto and Biglieri, 1999; Neubauer, 2007;

Proakis, 2001). Due to the noisy nature of the channel, the transmitted signal is disturbed.

The noisy received signal is demodulated by the demodulator in the receiver, leading to the

sequence of received symbols r. Since the received symbol sequence r usually differs from

the transmitted symbol sequence b,achannel code is used such that the receiver is able to

detect or even correct errors (Bossert, 1999; Lin and Costello, 2004; Neubauer, 2006b). To

this end, the channel encoder introduces redundancy into the information sequence u. This

redundancy can be exploited by the channel decoder for error detection or error correction

by estimating the transmitted symbol sequenceˆu.

In his fundamental work, Shannon showed that it is theoretically possible to realise an

information transmission system with as small an error probability as required (Shannon,

1948). The prerequisite for this is that the information rate of the information source

be smaller than the so-called channel capacity. In order to reduce the information rate,

source coding schemes are used which are implemented by the source encoder in the

transmitter and the source decoder in the receiver (McEliece, 2002; Neubauer, 2006a).

Page 18

INTRODUCTION 3

Further information about source coding can be found elsewhere (Gibson et al., 1998;

Sayood, 2000, 2003).

In order better to understand the theoretical basics of information transmission as well

as channel coding, we now give a brief overview of information theory as introduced by

Shannon in his seminal paper (Shannon, 1948). In this context we will also introduce the

simple channel models that will be used throughout the text.

1.2 Information Theory

An important result of information theory is the finding that error-free transmission across a

noisy channel is theoretically possible – as long as the information rate does not exceed the

so-called channel capacity. In order to quantify this result, we need to measure information.

Within Shannon’s information theory this is done by considering the statistics of symbols

emitted by information sources.

1.2.1 Entropy

Let us consider the discrete memoryless information source shown in Figure 1.2. At a given

time instant, this discrete information source emits the random discrete symbol X = x

which assumes one out of M possible symbol values x1, x2, ..., xM. The rate at which these

symbol values appear are given by the probabilities P

(xi) = Pr{X = xi}.

P

X

(x1), PX(x2), ..., PX(xM) with

X

i

Discrete information source

Information

X

source

■ The discrete information source emits the random discrete symbol X .

■ The symbol values x

..., P

■ Entropy

(xM).

X

Figure 1.2: Discrete information source emitting discrete symbols X

, x2, ..., xMappear with probabilities PX(x1), PX(x2),

1

M

I(X ) =−

PX(xi) · log2(PX(xi)) (1.1)

i=1

Page 19

4 INTRODUCTION

The average information associated with the random discrete symbol X is given by the

so-called entropy measured in the unit ‘bit’

M

I(X ) =−

PX(xi) · log

i=1

(xi)).

P

(

X

2

For a binary information source that emits the binary symbols X = 0 and X = 1 with

probabilities Pr{X = 0}=p

and Pr{X = 1}=1 −Pr{X = 0}=1 − p0, the entropy is

0

given by the so-called Shannon function or binary entropy function

I(X ) =−p

log2(p0) − (1 −p0) log2(1 −p0).

0

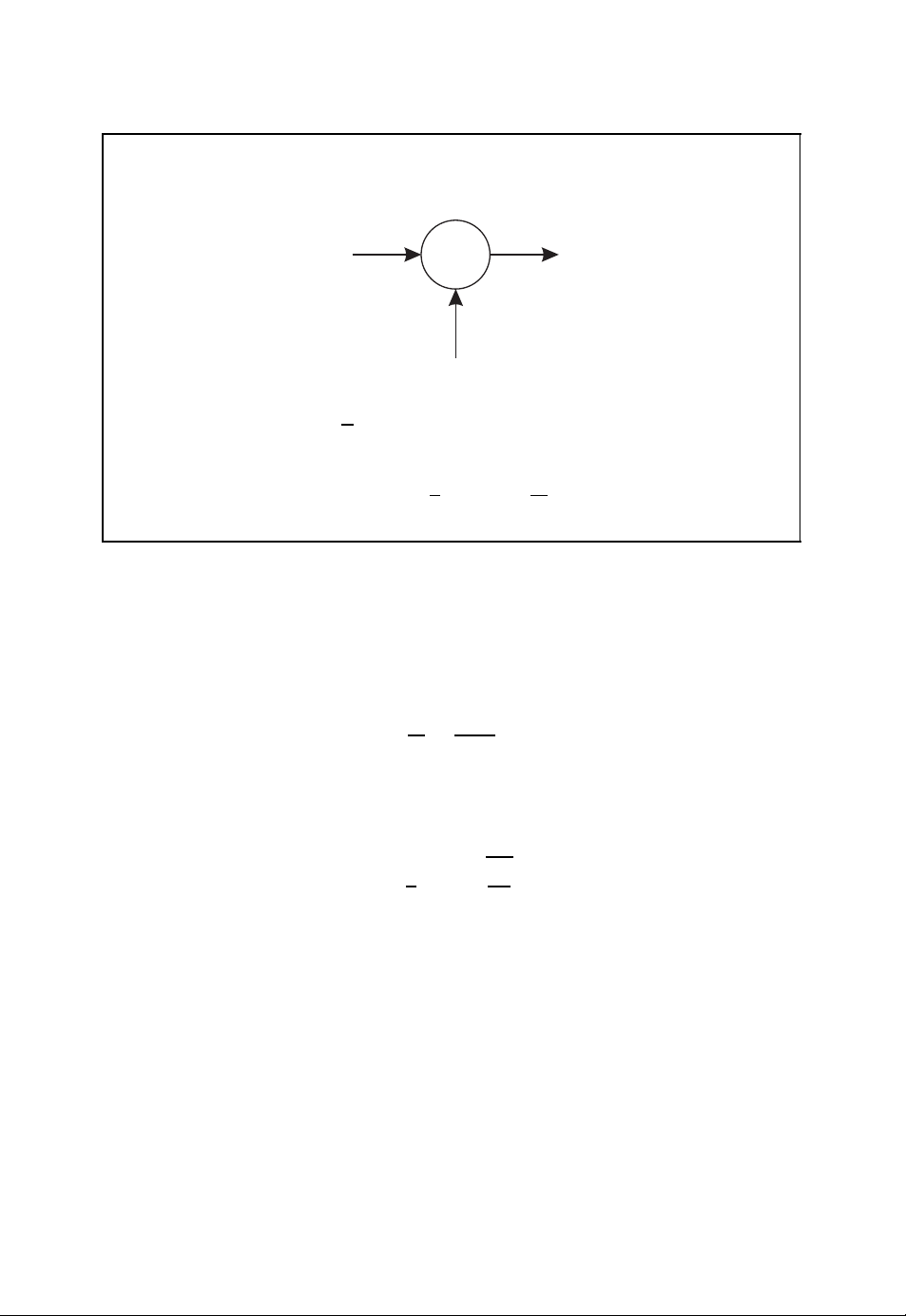

1.2.2 Channel Capacity

With the help of the entropy concept we can model a channel according to Berger’s channel

diagram shown in Figure 1.3 (Neubauer, 2006a). Here, X refers to the input symbol and

R denotes the output symbol or received symbol. We now assume that M input symbol

values x

help of the conditional probabilities

and

the conditional entropies are given by

and

With these conditional probabilities the mutual information

can be derived which measures the amount of information that is transmitted across the

channel from the input to the output for a given information source.

I(X ;R) with respect to the statistical properties of the input X , i.e. by appropriately

choosing the probabilities {P

If the input entropy I(X ) is smaller than the channel capacity C

, x2, ..., xMand N output symbol values r1, r2, ..., rNare possible. With the

1

(xi|rj) = Pr{X = xi|R = rj}

P

X |R

P

(rj|xi) = Pr{R = rj|X = xi}

R|X

M

N

I(X |R) =−

I(R|X ) =−

i=1

M

i=1

j=1

N

j=1

P

(xi,rj) · log

X ,R

P

(xi,rj) · log2(P

X ,R

P

X |R

2

R|X

(xi|rj)

(rj|xi)).

I(X ;R) = I(X ) − I(X |R) = I(R) − I(R|X )

The so-called channel capacity C is obtained by maximising the mutual information

(xi)}

X

C = max

. This leads to

1≤i≤M

{PX(xi)}

I(X )

1≤i≤M

!

<C,

I(X ;R).

then information can be transmitted across the noisy channel with arbitrarily small error

probability. Thus, the channel capacity C in fact quantifies the information transmission

capacity of the channel.

Page 20

INTRODUCTION 5

Berger’s channel diagram

I(X |R)

I(X )

I(X ;R)

I(R)

I(R|X )

■ Mutual information

I(X ;R) = I(X ) − I(X |R) = I(R) − I(R|X ) (1.2)

■ Channel capacity

C = max

{PX(xi)}

I(X ;R) (1.3)

1≤i≤M

Figure 1.3: Berger’s channel diagram

1.2.3 Binary Symmetric Channel

As an important example of a memoryless channel we turn to the binary symmetric channel

or BSC. Figure 1.4 shows the channel diagram of the binary symmetric channel with bit

error probability ε. This channel transmits the binary symbol X = 0orX = 1 correctly

with probability 1 −ε, whereas the incorrect binary symbol R = 1orR = 0 is emitted

with probability ε.

By maximising the mutual information I(X ;R), the channel capacity of a binary

symmetric channel is obtained according to

C = 1 + ε log

This channel capacity is equal to 1 if ε = 0orε = 1; for ε =

(ε) + (1 − ε) log2(1 −ε).

2

1

2

the channel capacity is 0. In

contrast to the binary symmetric channel, which has discrete input and output symbols taken

from binary alphabets, the so-called AWGN channel is defined on the basis of continuous

real-valued random variables.

1

In Chapter 5 we will also consider complex-valued random variables.

1

Page 21

6 INTRODUCTION

Binary symmetric channel

1 −ε

X = 0

ε

ε

X = 1

1 −ε

■ Bit error probability ε

■ Channel capacity

R = 0

R = 1

C = 1 + ε log

(ε) + (1 − ε) log2(1 −ε) (1.4)

2

Figure 1.4: Binary symmetric channel with bit error probability ε

1.2.4 AWGN Channel

Up to now we have exclusively considered discrete-valued symbols. The concept of entropy

can be transferred to continuous real-valued random variables by introducing the so-called

differential entropy. It turns out that a channel with real-valued input and output symbols can

again be characterised with the help of the mutual information I(X ;R) and its maximum,

the channel capacity C. In Figure 1.5 the so-called AWGN channel is illustrated which is

described by the additive white Gaussian noise term Z .

With the help of the signal power

S = EX

and the noise power

N = EZ

the channel capacity of the AWGN channel is given by

1

C =

log

2

2

2

2

1 +

S

.

N

The channel capacity exclusively depends on the signal-to-noise ratio S/N.

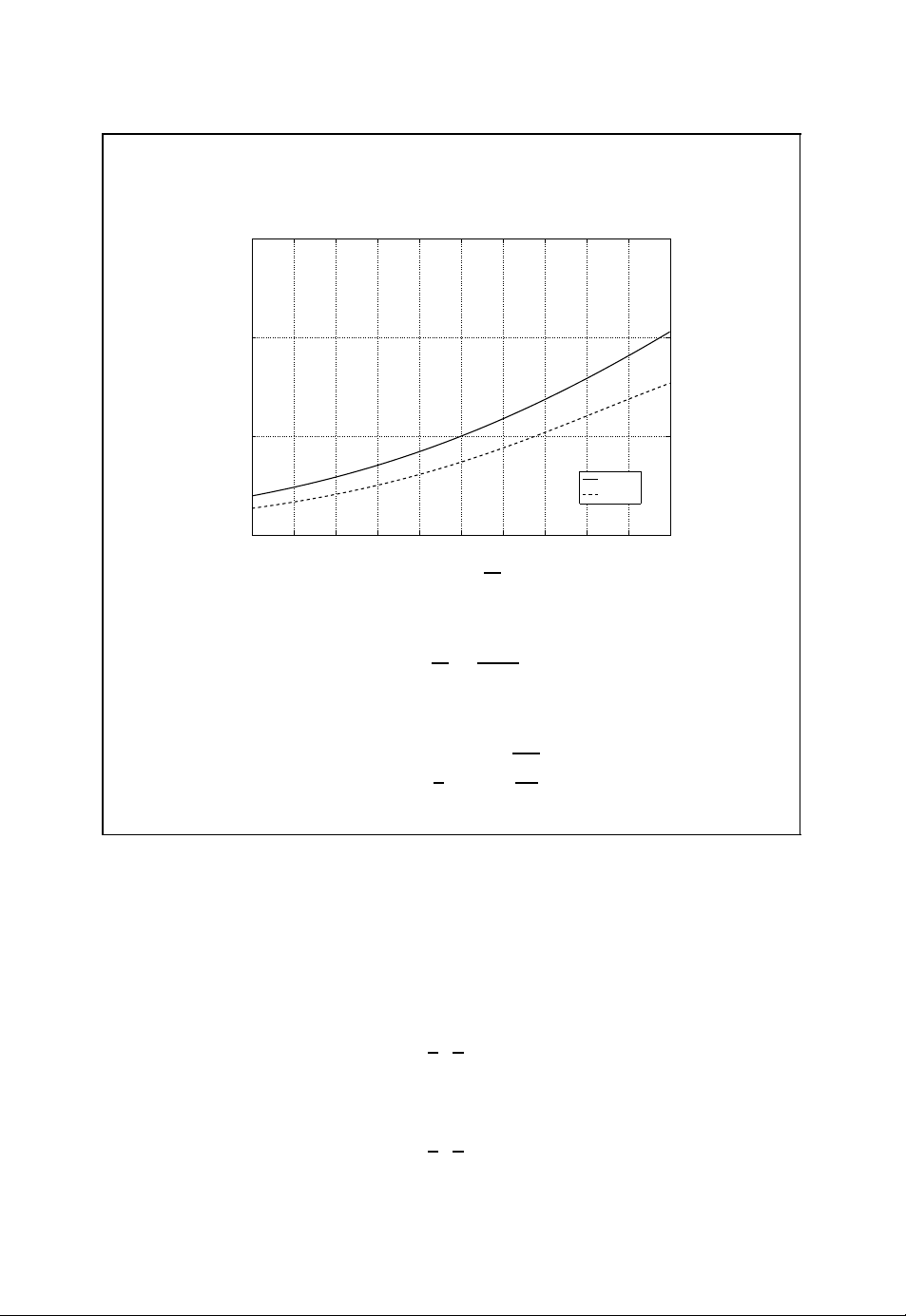

In order to compare the channel capacities of the binary symmetric channel and the

AWGN channel, we assume a digital transmission scheme using binary phase shift keying

(BPSK) and optimal reception with the help of a matched filter (Benedetto and Biglieri,

1999; Neubauer, 2007; Proakis, 2001). The signal-to-noise ratio of the real-valued output

Page 22

INTRODUCTION 7

AWGN channel

X

+

R

Z

■ Signal-to-noise ratio

■ Channel capacity

S

N

C =

1

log

2

2

1 +

S

N

(1.5)

Figure 1.5: AWGN channel with signal-to-noise ratio S/N

R of the matched filter is then given by

S

E

b

=

N

N0/2

with bit energy E

and noise power spectral density N0. If the output R of the matched

b

filter is compared with the threshold 0, we obtain the binary symmetric channel with bit

error probability

ε =

1

erfc

2

E

b

.

N

0

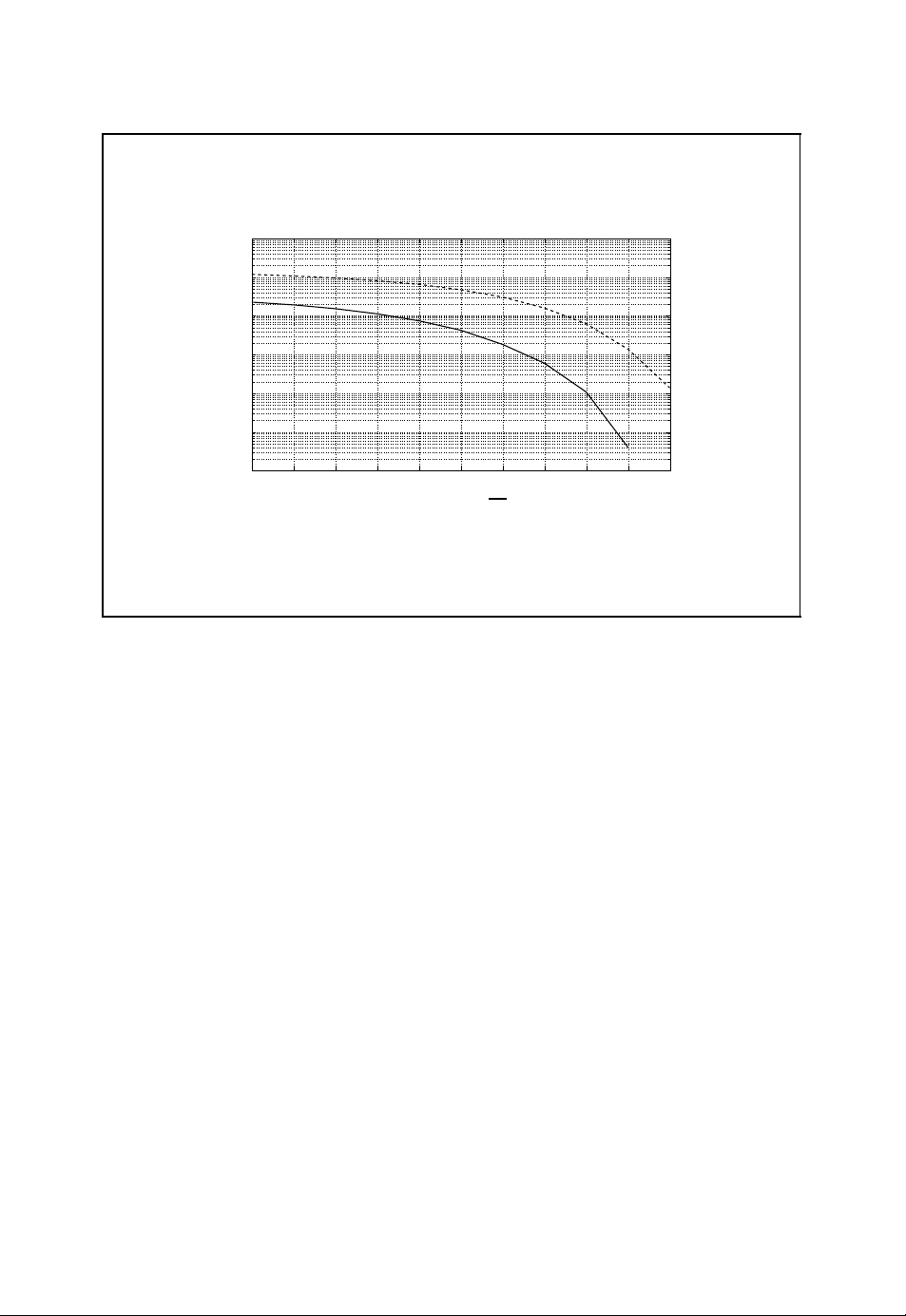

Here, erfc(·) denotes the complementary error function. In Figure 1.6 the channel capacities of the binary symmetric channel and the AWGN channel are compared as a function

of E

. The signal-to-noise ratio S/N or the ratio Eb/N0must be higher for the binary

b/N0

symmetric channel compared with the AWGN channel in order to achieve the same channel

capacity. This gain also translates to the coding gain achievable by soft-decision decoding

as opposed to hard-decision decoding of channel codes, as we will see later (e.g. in

Section 2.2.8).

Although information theory tells us that it is theoretically possible to find a channel

code that for a given channel leads to as small an error probability as required, the design

of good channel codes is generally difficult. Therefore, in the next chapters several classes

of channel codes will be described. Here, we start with a simple example.

Page 23

8 INTRODUCTION

Channel capacity of BSC vs AWGN channel

1.5

1

C

0.5

AWGN

BSC

0

Ŧ5 Ŧ4 Ŧ3 Ŧ2 Ŧ1 0 1 2 3 4 5

10 log

■ Signal-to-noise ratio of AWGN channel

E

b

10

N

0

E

S

N

=

b

N0/2

(1.6)

■ Bit error probability of binary symmetric channel

ε =

1

erfc

2

E

b

N

0

(1.7)

Figure 1.6: Channel capacity of the binary symmetric channel vs the channel capacity of

the AWGN channel

1.3 A Simple Channel Code

As an introductory example of a simple channel code we consider the transmission of the

binary information sequence

01110

001

over a binary symmetric channel with bit error probability ε = 0.25 (Neubauer, 2006b).

On average, every fourth binary symbol will be received incorrectly. In this example we

assume that the binary sequence

00110

000

is received at the output of the binary symmetric channel (see Figure 1.7).

Page 24

INTRODUCTION 9

Channel transmission

00101110

■ Binary symmetric channel with bit error probability ε = 0.25

■ Transmission w/o channel code

BSC

00000110

Figure 1.7: Channel transmission without channel code

Encoder

00101110

■ Binary information symbols 0 and 1

■ Binary code words 000 and 111

■ Binary triple repetition code {000, 111}

Encoder

000000111000111111111000

Figure 1.8: Encoder of a triple repetition code

In order to implement a simple error correction scheme we make use of the so-called

binary triple repetition code. This simple channel code is used for the encoding of binary

data. If the binary symbol 0 is to be transmitted, the encoder emits the code word 000.

Alternatively, the code word 111 is issued by the encoder when the binary symbol 1 is to

be transmitted. The encoder of a triple repetition code is illustrated in Figure 1.8.

For the binary information sequence given above we obtain the binary code sequence

000 000 111 000 111111 111 000

at the output of the encoder. If we again assume that on average every fourth binary

symbol is incorrectly transmitted by the binary symmetric channel, we may obtain the

received sequence

0 000 011 010 111010 111 010.

01

This is illustrated in Figure 1.9.

Page 25

10 INTRODUCTION

Channel transmission

000000111000111111111000

■ Binary symmetric channel with bit error probability ε = 0.25

■ Transmission with binary triple repetition code

BSC

010000011010111010111010

Figure 1.9: Channel transmission of a binary triple repetition code

Decoder

010000011010111010111010

■ Decoding of triple repetition code by majority decision

Decoder

000 → 000

001 → 000

010 → 000

00101010

011 → 111

.

.

.

110 → 111

111 → 111

Figure 1.10: Decoder of a triple repetition code

The decoder in Figure 1.10 tries to estimate the original information sequence with the

help of a majority decision. If the number of 0s within a received 3-bit word is larger than

the number of 1s, the decoder emits the binary symbol 0; otherwise a 1 is decoded. With

this decoding algorithm we obtain the decoded information sequence

001010

10.

Page 26

INTRODUCTION 11

As can be seen from this example, the binary triple repetition code is able to correct a single

error within a code word. More errors cannot be corrected. With the help of this simple

channel code we are able to reduce the number of errors. Compared with the unprotected

transmission without a channel code, the number of errors has been reduced from two to

one. However, this is achieved by a significant reduction in the transmission bandwidth

because, for a given symbol rate on the channel, it takes 3 times longer to transmit an

information symbol with the help of the triple repetition code. It is one of the main topics

of the following chapters to present more efficient coding schemes.

Page 27

Page 28

2

Algebraic Coding Theory

In this chapter we will introduce the basic concepts of algebraic coding theory. To this end,

the fundamental properties of block codes are first discussed. We will define important code

parameters and describe how these codes can be used for the purpose of error detection

and error correction. The optimal maximum likelihood decoding strategy will be derived

and applied to the binary symmetric channel.

With these fundamentals at hand we will then introduce linear block codes. These

channel codes can be generated with the help of so-called generator matrices owing to

their special algebraic properties. Based on the closely related parity-check matrix and the

syndrome, the decoding of linear block codes can be carried out. We will also introduce

dual codes and several techniques for the construction of new block codes based on known

ones, as well as bounds for the respective code parameters and the accompanying code

characteristics. As examples of linear block codes we will treat the repetition code, paritycheck code, Hamming code, simplex code and Reed –Muller code.

Although code generation can be carried out efficiently for linear block codes, the

decoding problem for general linear block codes is difficult to solve. By introducing further

algebraic structures, cyclic codes can be derived as a subclass of linear block codes for

which efficient algebraic decoding algorithms exist. Similar to general linear block codes,

which are defined using the generator matrix or the parity-check matrix, cyclic codes

are defined with the help of the so-called generator polynomial or parity-check polynomial.

Based on linear feedback shift registers, the respective encoding and decoding architectures

for cyclic codes can be efficiently implemented. As important examples of cyclic codes

we will discuss BCH codes and Reed – Solomon codes. Furthermore, an algebraic decoding

algorithm is presented that can be used for the decoding of BCH and Reed–Solomon

codes.

In this chapter the classical algebraic coding theory is presented. In particular, we

will follow work (Berlekamp, 1984; Bossert, 1999; Hamming, 1986; Jungnickel, 1995;

Lin and Costello, 2004; Ling and Xing, 2004; MacWilliams and Sloane, 1998; McEliece,

2002; Neubauer, 2006b; van Lint, 1999) that contains further details about algebraic coding

theory.

Coding Theory – Algorithms, Architectures, and Applications Andr´e Neubauer, J¨urgen Freudenberger, Volker K¨uhn

2007 John Wiley & Sons, Ltd

Page 29

14 ALGEBRAIC CODING THEORY

2.1 Fundamentals of Block Codes

In Section 1.3, the binary triple repetition code was given as an introductory example of a

simple channel code. This specific channel code consists of two code words 000 and 111 of

length n = 3, which represent k = 1 binary information symbol 0 or 1 respectively. Each

symbol of a binary information sequence is encoded separately. The respective code word

of length 3 is then transmitted across the channel. The potentially erroneously received word

is finally decoded into a valid code word 000 or 111 – or equivalently into the respective

information symbol 0 or 1. As we have seen, this simple code is merely able to correct

one error by transmitting three code symbols instead of just one information symbol across

the channel.

In order to generalise this simple channel coding scheme and to come up with more efficient and powerful channel codes, we now consider an information sequence u

u5u6u7... of discrete information symbols ui. This information sequence is grouped into

blocks of length k according to

u

···u

0u1

k−1

block

u

kuk+1

···u

block

u

2k−1

2ku2k+1

block

···u

3k−1

···.

In so-called q-nary (n, k) block codes the information words

u

0u1

u

kuk+1

u

2ku2k+1

.

.

.

···u

k−1

···u

···u

,

2k−1

3k−1

,

,

0u1u2u3u4

of length k with u

∈{0, 1,...,q−1} are encoded separately from each other into the

i

corresponding code words

b

of length n with b

···b

0b1

b

nbn+1

b

2nb2n+1

.

.

.

∈{0, 1,...,q−1} (see Figure 2.1).1These code words are trans-

i

n−1

···b

···b

,

2n−1

3n−1

,

,

mitted across the channel and the received words are appropriately decoded, as shown

in Figure 2.2. In the following, we will write the information word u

the code word b

0b1

···b

respectively. Accordingly, the received word is denoted by r = (r

1

For q = 2 we obtain the important class of binary channel codes.

as vectors u = (u0,u1,...,u

n−1

) and b = (b0,b1,...,b

k−1

,...,r

0,r1

0u1

···u

n−1

and

k−1

n−1

), whereas

)

Page 30

ALGEBRAIC CODING THEORY 15

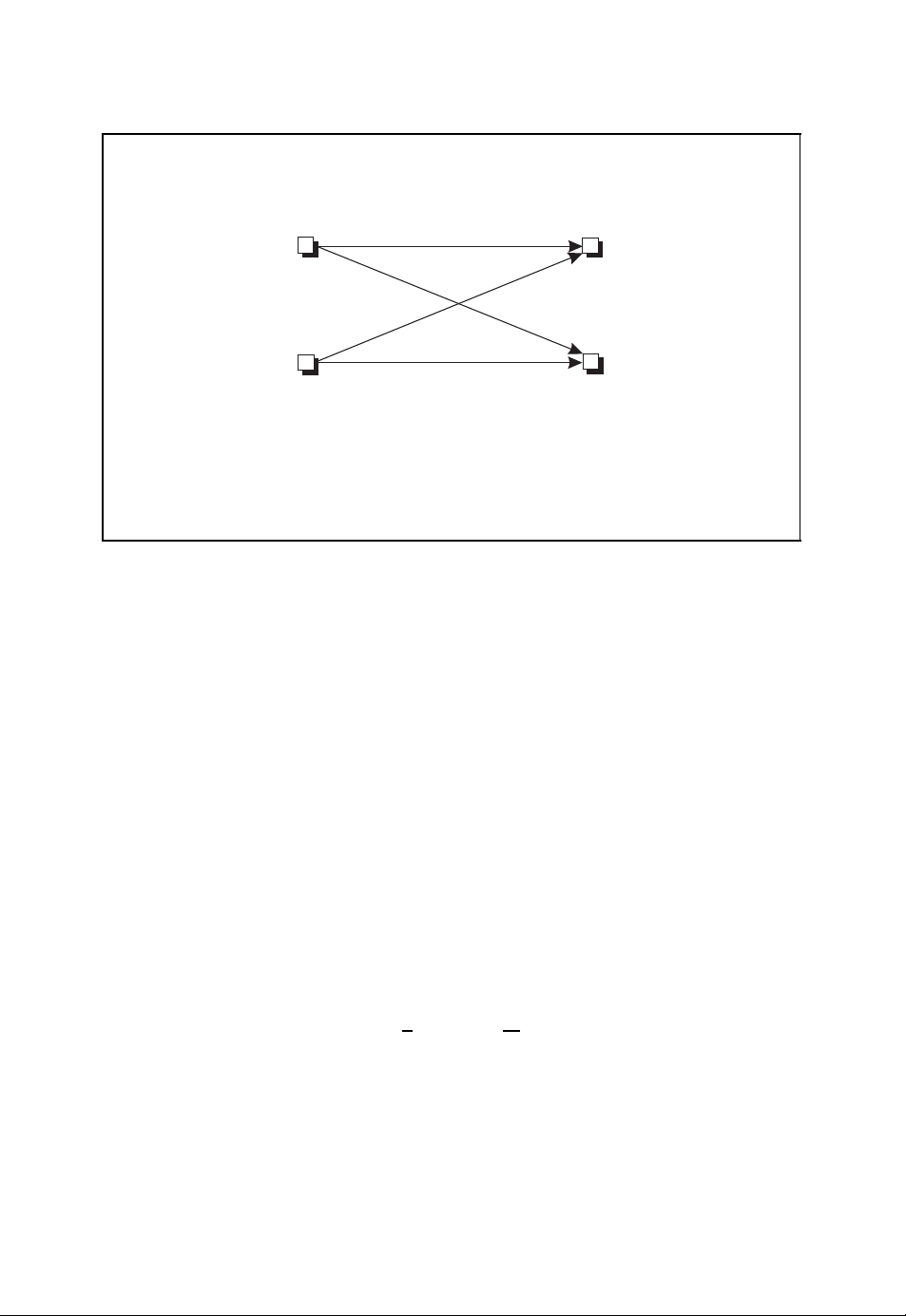

Encoding of an (n, k) block code

(u0,u1,...,u

■ The sequence of information symbols is grouped into words (or blocks) of

k−1

)

Encoder

(b

0,b1

,...,b

equal length k which are independently encoded.

■ Each information word (u

onto a code word (b

0,b1

0,u1

,...,b

,...,u

k−1

) of length n.

n−1

) of length k is uniquely mapped

Figure 2.1: Encoding of an (n, k) block code

Decoding of an (n, k) block code

(r0,r1,...,r

■ The received word (r

decoded into the code word (ˆb

wordˆu = ( ˆu

n−1

)

, ˆu1,..., ˆu

0

Decoder

,...,r

0,r1

) of length k respectively.

k−1

) of length n at the output of the channel is

n−1

,ˆb1,...,ˆb

0

,ˆb1,...,ˆb

(ˆb

0

) of length n or the information

n−1

n−1

n−1

)

)

Figure 2.2: Decoding of an (n, k) block code

the decoded code word and decoded information word are given byˆb = (ˆb

andˆu = ( ˆu

, ˆu1,..., ˆu

0

) respectively. In general, we obtain the transmission scheme for

k−1

,ˆb1,...,ˆb

0

n−1

an (n, k) block code as shown in Figure 2.3.

Without further algebraic properties of the (n, k) block code, the encoding can be carried

out by a table look-up procedure. The information word u to be encoded is used to address

a table that for each information word u contains the corresponding code word b at the

respective address. If each information symbol can assume one out of q possible values,

)

Page 31

16 ALGEBRAIC CODING THEORY

Encoding, transmission and decoding of an (n, k) block code

■ Information word u = (u

■ Code word b = (b

■ Received word r = (r

■ Decoded code word

■ Decoded information word

u

■ The information word u is encoded into the code word b.

■ The code word b is transmitted across the channel which emits the received

Encoder Channel Decoder

0,b1

0,r1

ˆ

b = (ˆb0,ˆb1,...,ˆb

,...,u

0,u1

,...,b

) of length n

n−1

,...,r

n−1

ˆ

u = ( ˆu0, ˆu1,..., ˆu

b

) of length k

k−1

) of length n

) of length n

n−1

k−1

) of length k

r

word r.

■ Based on the received word r,thecodeword

ˆ

b (or equivalently the

information wordˆu) is decoded.

Figure 2.3: Encoding, transmission and decoding of an (n, k) block code

ˆ

b

k

the number of code words is given by q

total size of the table is nq

k

. The size of the table grows exponentially with increasing

. Since each entry carries nq-nary symbols, the

information word length k. For codes with a large number of code words – corresponding to

a large information word length k – a coding scheme based on a table look-up procedure

is inefficient owing to the large memory size needed. For that reason, further algebraic

properties are introduced in order to allow for a more efficient encoder architecture of an

(n, k) block code. This is the idea lying behind the so-called linear block codes, which we

will encounter in Section 2.2.

2.1.1 Code Parameters

Channel codes are characterised by so-called code parameters. The most important code

parameters of a general (n, k) block code that are introduced in the following are the code

rate and the minimum Hamming distance (Bossert, 1999; Lin and Costello, 2004; Ling and

Xing, 2004). With the help of these code parameters, the efficiency of the encoding process

and the error detection and error correction capabilities can be evaluated for a given (n, k)

block code.

Page 32

ALGEBRAIC CODING THEORY 17

Code Rate

Under the assumption that each information symbol u

q values, the number of possible information words and code words is given by

of the (n, k) block code can assume

i

2

M = qk.

Since the code word length n is larger than the information word length k, the rate at which

information is transmitted across the channel is reduced by the so-called code rate

(M)

log

R =

For the simple binary triple repetition code with k = 1 and n = 3, the code rate is R =

k

1

=

≈ 0,3333.

n

3

q

n

k

=

.

n

Weight and Hamming Distance

Each code word b = (b

as the number of non-zero components b

0,b1

,...,b

) can be assigned the weight wt(b) which is defined

n−1

= 0 (Bossert, 1999), i.e.

i

3

wt(b) =|{i : bi= 0, 0 ≤ i<n}|.

Accordingly, the distance between two code words b = (b

b

1

The Hamming distance dist(b, b

b

consisting of M code words b

,...,b

) is given by the so-called Hamming distance (Bossert, 1999)

n−1

dist(b, b

i : b

) =

) provides the number of different components of b and

i

= b

, 0 ≤ i<n

i

and thus measures how close the code words b and bare to each other. For a code B

, b2, ..., bM, the minimum Hamming distance is given by

1

0,b1

,...,b

.

) and b= (b

n−1

,

0

d = min

We will denote the (n, k) block code B =

∀b=b

{

b

dist(b, b).

, b2,...,b

1

}

with M = q

M

k

q-nary code words

of length n and minimum Hamming distance d by B(n,k,d). The minimum weight of the

block code B is defined as min

wt(b). The code parameters of B(n,k,d) are summarised

∀b=0

in Figure 2.4.

Weight Distribution

The so-called weight distribution W(x) of an (n, k) block code B ={b

, b2,...,b

1

M

describes how many code words exist with a specific weight. Denoting the number of

code words with weight i by

w

=|{b ∈ B :wt(b) = i

i

2

In view of the linear block codes introduced in the following, we assume here that all possible information

words u = (u

3

|B| denotes the cardinality of the set B.

0,u1

,...,u

) are encoded.

k−1

}|

}

Page 33

18 ALGEBRAIC CODING THEORY

Code parameters of (n, k) block codes B(n, k, d)

■ Code rate

■ Minimum weight

■ Minimum Hamming distance

d = min

∀b=b

k

R =

n

wt(b) (2.2)

min

∀b=0

dist(b, b) (2.3)

(2.1)

Figure 2.4: Code parameters of (n, k) block codes B(n,k,d)

with 0 ≤ i ≤ n and 0 ≤ w

(Bossert, 1999)

≤ M , the weight distribution is defined by the polynomial

i

n

W(x)=

wixi.

i=0

The minimum weight of the block code B can then be obtained from the weight distribution

according to

wt(b) = min

min

∀b=0

i>0

wi.

Code Space

A q-nary block code B(n,k,d) with code word length n can be illustrated as a subset of

the so-called code space F

q-nary words or vectors.

n

. Such a code space is a graphical illustration of all possible

q

4

The total number of vectors of length n with weight w and

q-nary components is given by

n

w

with the binomial coefficients

The total number of vectors within F

|F

4

In Section 2.2 we will identify the code space with the finite vector space F

algebraic structures such as finite fields and vector spaces the reader is referred to Appendix A.

(q −1)

n

w

n

|=

q

w

=

=

n

is then obtained from

q

n

w=0

n!

w! (n −w)!

n!

w! (n −w)!

n

(q −1)

w

(q −1)

.

w

= qn.

w

n

. For a brief overview of

q

Page 34

ALGEBRAIC CODING THEORY 19

Four-dimensional binary code space F

0000

1111

4

0

0100

1001

1101

4

1

1000

4

2

1100

4

3

4

4

■ The four-dimensional binary code space F

1010

1110

vectors of length 4.

4

2

0010

0110

1011

4

2

0001

0101

0111

consists of 24= 16 binary

0011

wt = 0

wt = 1

wt = 2

wt = 3

wt = 4

Figure 2.5: Four-dimensional binary code space F

4

. Reproduced by permission of

2

J. Schlembach Fachverlag

The four-dimensional binary code space F

4

with q = 2 and n = 4 is illustrated in Figure 2.5

2

(Neubauer, 2006b).

2.1.2 Maximum Likelihood Decoding

Channel codes are used in order to decrease the probability of incorrectly received code

words or symbols. In this section we will derive a widely used decoding strategy. To this

end, we will consider a decoding strategy to be optimal if the corresponding word error

probability

= Pr{ˆu = u}=Pr{ˆb = b}

p

err

is minimal (Bossert, 1999). The word error probability has to be distinguished from the

symbol error probability

k−1

sym

1

=

k

i=0

Pr{ˆui= ui}

p

Page 35

20 ALGEBRAIC CODING THEORY

Optimal decoder

r

■ The received word r is decoded into the code word

Decoder

ˆ

b(r)

ˆ

b(r) such that the word

error probability

= Pr{ˆu = u}=Pr{ˆb = b} (2.4)

p

err

is minimal.

Figure 2.6: Optimal decoder with minimal word error probability

which denotes the probability of an incorrectly decoded information symbol u

. In general,

i

the symbol error probability is harder to derive analytically than the word error probability.

However, it can be bounded by the following inequality (Bossert, 1999)

1

p

≤ p

err

k

In the following, a q -nary channel code B ∈ F

n

the code space F

is considered. Let bjbe the transmitted code word. Owing to the noisy

q

channel, the received word r may differ from the transmitted code word b

≤ p

sym

.

err

n

with M code words b1, b2, ..., bMin

q

. The task of the

j

decoder in Figure 2.6 is to decode the transmitted code word based on the sole knowledge

of r with minimal word error probability p

err

.

This decoding step can be written according to the decoding rule r →ˆb =ˆb(r). For

hard-decision decoding the received word r is an element of the discrete code space F

To each code word b

so-called decision region. These non-overlapping decision regions create the whole code

space F

n

, i.e.

q

the received word r lies within the decision region D

the code word b

ˆ

b(r) = b

is equivalent to the event r ∈ Di. By properly choosing the decision regions Di,

i

we assign a corresponding subspace Djof the code space F

j

M

Dj= F

j=1

. That is, the decoding of the code word biaccording to the decision rule

i

n

and Di∩ Dj=∅for i = j as illustrated in Figure 2.7. If

q

, the decoder decides in favour of

i

n

, the

q

the decoder can be designed. For an optimal decoder the decision regions are chosen such

that the word error probability p

The probability of the event that the code word b = b

wordˆb(r) = b

is decoded is given by

i

is minimal.

err

is transmitted and the code

j

n

.

q

Pr{(ˆb(r) = b

) ∧ (b = bj)}=Pr{(r ∈ Di) ∧ (b = bj)}.

i

We obtain the word error probability p

the transmitted code word b = b

is decoded into a different code wordˆb(r) = biwith

j

by averaging over all possible events for which

err

Page 36

ALGEBRAIC CODING THEORY 21

Decision regions

n

F

q

D

1

D

i

■ Decision regions D

j

D

with

...

D

j

M

M

j=1

Dj= F

n

and Di∩ Dj=∅for i = j

q

Figure 2.7: Non-overlapping decision regions Djin the code space F

i = j . This leads to (Neubauer, 2006b)

= Pr{ˆb(r) = b}

p

err

M

=

i=1

=

i=1

Pr{(ˆb(r) = bi) ∧ (b = bj)}

j=i

M

Pr{(r ∈ Di) ∧ (b = bj)}

j=i

M

=

j=i

i=1

r∈D

i

With the help of Bayes’ rule Pr{r ∧ (b = b

order of summation, we obtain

M

r∈D

i

j=i

p

=

err

i=1

M

=

r∈D

j=i

i

i=1

n

q

Pr{r ∧ (b = bj)}.

)}=Pr{b = bj|r}Pr{r} and by changing the

j

Pr{r ∧ (b = bj)}

Pr{b = bj|r}Pr{r}

Page 37

22 ALGEBRAIC CODING THEORY

=

M

i=1r∈D

Pr{r}

i

Pr{b = bj|r}.

j=i

The inner sum can be simplified by observing the normalisation condition

M

Pr{b = bj|r}=Pr{b = bi|r}+

j=1

Pr{b = bj|r}=1.

j=i

This leads to the word error probability

M

p

=

err

i=1r∈D

In order to minimise the word error probability p

Pr{r}(1 −Pr{b = bi|r}).

i

, we define the decision regions Diby

err

assigning each possible received word r to one particular decision region. If r is assigned

to the particular decision region D

smallest, the word error probability p

for which the inner term Pr{r}(1 −Pr{b = bi|r})is

i

will be minimal. Therefore, the decision regions

err

are obtained from the following assignment

r ∈ D

⇔ Pr{r}1 −Pr{b = bj|r}= min

j

Pr{r}(1 −Pr{b = bi|r}).

1≤i≤M

Since the probability Pr{r} does not change with index i, this is equivalent to

r ∈ D

⇔ Pr{b = bj|r}= max

j

1≤i≤M

Pr{b = bi|r}.

Finally, we obtain the optimal decoding rule according to

ˆ

b(r) = b

The optimal decoder with minimal word error probability p

for which the a-posteriori probability Pr{b = b

⇔ Pr{b = bj|r}= max

j

Pr{b = bi|r}.

1≤i≤M

emits the code wordˆb =ˆb(r)

err

|r}=Pr{bi|r} is maximal. This decoding

i

strategy

ˆ

b(r) = argmax

Pr{b|r}

b∈B

is called MED (minimum error probability decoding) or MAP (maximum a-posteriori)

decoding (Bossert, 1999).

For this MAP decoding strategy the a-posteriori probabilities Pr{b|r} have to be determined for all code words b ∈ B and received words r. With the help of Bayes’ rule

Pr{b|r}=

Pr{r|b}Pr{b}

Pr{r}

and by omitting the term Pr{r} which does not depend on the specific code word b, the

decoding rule

ˆ

b(r) = argmax

Pr{r|b}Pr{b}

b∈B

Page 38

ALGEBRAIC CODING THEORY 23

Optimal decoding strategies

r

■ For Minimum Error Probability Decoding (MED) or Maximum A-Posteriori

Decoder

ˆ

b(r)

(MAP) decoding the decoder rule is

ˆ

b(r) = argmax

■ For Maximum Likelihood Decoding (MLD) the decoder rule is

ˆ

b(r) = argmax

■ MLD is identical to MED if all code words are equally likely, i.e. Pr{b}=

Pr{b|r} (2.5)

b∈B

Pr{r|b} (2.6)

b∈B

1

.

M

Figure 2.8: Optimal decoding strategies

follows. For MAP decoding, the conditional probabilities Pr{r|b} as well as the a-priori

probabilities Pr{b} have to be known. If all M code words b appear with equal probability Pr{b}=1/M , we obtain the so-called MLD (maximum likelihood decoding) strategy

(Bossert, 1999)

ˆ

b(r) = argmax

Pr{r|b}.

b∈B

These decoding strategies are summarised in Figure 2.8. In the following, we will assume

that all code words are equally likely, so that maximum likelihood decoding can be used

as the optimal decoding rule. In order to apply the maximum likelihood decoding rule, the

conditional probabilities Pr{r|b} must be available. We illustrate how this decoding rule

can be further simplified by considering the binary symmetric channel.

2.1.3 Binary Symmetric Channel

In Section 1.2.3 we defined the binary symmetric channel as a memoryless channel with

the conditional probabilities

Pr{r

}=

i|bi

ε, ri= b

with channel bit error probability ε. Since the binary symmetric channel is assumed to

be memoryless, the conditional probability Pr{r|b} can be calculated for code word b =

1 −ε, r

i

= b

i

i

Page 39

24 ALGEBRAIC CODING THEORY

(b

0,b1

,...,b

) and received word r = (r0,r1,...,r

n−1

n−1

Pr{r|b}=

i=0

Pr{ri|bi}.

) according to

n−1

If the words r and b differ in dist(r, b) symbols, this yields

Pr{r|b}=(1 −ε)

Taking into account 0 ≤ ε<

ˆ

b(r) = argmax

Pr{r|b}=argmax

b∈B

n−dist(r,b)

1

and therefore

2

b∈B

dist

ε

(1 −ε)

(r,b)

= (1 − ε)

ε

< 1, the MLD rule is given by

1−ε

n

n

(r,b)

dist

ε

1 −ε

ε

1 −ε

= argmin

dist

b∈B

(r,b)

.

dist(r, b),

i.e. for the binary symmetric channel the optimal maximum likelihood decoder (Bossert,

1999)

ˆ

b(r) = argmin

dist(r, b)

b∈B

emits that particular code word which differs in the smallest number of components from

the received word r, i.e. which has the smallest Hamming distance to the received word r

(see Figure 2.9). This decoding rule is called minimum distance decoding. This minimum

distance decoding rule is also optimal for a q -nary symmetric channel (Neubauer, 2006b).

We now turn to the error probabilities for the binary symmetric channel during transmission

before decoding. The probability of w errors at w given positions within the n-dimensional

binary received word r is given by ε

w

(1 −ε)

n−w

. Since there are

n

different possibilities

w

Minimum distance decoding for the binary symmetric channel

r

■ The optimal maximum likelihood decoding rule for the binary symmetric

Decoder

channel is given by the minimum distance decoding rule

ˆ

b(r) = argmin

dist(r, b) (2.7)

b∈B

Figure 2.9: Minimum distance decoding for the binary symmetric channel

ˆ

b(r)

Page 40

ALGEBRAIC CODING THEORY 25

of choosing w out of n positions, the probability of w errors at arbitrary positions within

an n-dimensional binary received word follows the binomial distribution

n

Pr{w errors}=

w

ε

w

(1 −ε)

n−w

with mean nε. Because of the condition ε<

1

, the probability Pr{w errors} decreases with

2

increasing number of errors w, i.e. few errors are more likely than many errors.

n

The probability of error-free transmission is Pr{0 errors}=(1 − ε)

, whereas the prob-

ability of a disturbed transmission with r = b is given by

n

n

Pr{r = b}=

w=1

w

ε

w

(1 −ε)

n−w

= 1 − (1 − ε)n.

2.1.4 Error Detection and Error Correction

Based on the minimum distance decoding rule and the code space concept, we can assess

the error detection and error correction capabilities of a given channel code. To this end, let

b and b

words shall be equal to the minimum Hamming distance, i.e. dist(b, b

detect errors as long as the erroneously received word r is not equal to a code word different

from the transmitted code word. This error detection capability is guaranteed as long as

the number of errors is smaller than the minimum Hamming distance d, because another

code word (e.g. b

least d components. For an (n, k) block code B(n,k,d) with minimum Hamming distance

d, the number of detectable errors is therefore given by (Bossert, 1999; Lin and Costello,

2004; Ling and Xing, 2004; van Lint, 1999)

For the analysis of the error correction capabilities of the (n, k) block code B(n,k,d) we

define for each code word b the corresponding correction ball of radius as the subset

of all words that are closer to the code word b than to any other code word b

block code B(n,k,d) (see Figure 2.10). As we have seen in the last section, for minimum

distance decoding, all received words within a particular correction ball are decoded into

the respective code word b. According to the radius of the correction balls, besides the

code word b, all words that differ in 1, 2,..., components from b are elements of the

corresponding correction ball. We can uniquely decode all elements of a correction ball

into the corresponding code word b as long as the correction balls do not intersect. This

condition is true if <

code B(n,k,d) with minimum Hamming distance d is given by (Bossert, 1999; Lin and

Costello, 2004; Ling and Xing, 2004; van Lint, 1999)

be two code words of an (n, k) block code B (n,k,d). The distance of these code

) can be reached from a given code word (e.g. b) merely by changing at

= d − 1.

e

det

d

holds. Therefore, the number of correctable errors of a block

2

5

d −1

2

.

e

=

cor

) = d. We are able to

of the

5

The term z denotes the largest integer number that is not larger than z.

Page 41

26 ALGEBRAIC CODING THEORY

Error detection and error correction

correction

balls

b

b

d

■ If the minimum Hamming distance between two arbitrary code words is d

thecodeisabletodetectupto

e

= d − 1 (2.8)

det

errors.

■ If the minimum Hamming distance between two arbitrary code words is d

thecodeisabletocorrectupto

=

e

cor

d −1

2

errors.

Figure 2.10: Error detection and error correction

For the binary symmetric channel the number of errors w within the n-dimensional

transmitted code word is binomially distributed according to Pr{w errors}=

n−w

ε)

. Since an e

-error detecting code is able to detect w ≤ e

det

= d − 1 errors, the

det

n

w

remaining detection error probability is bounded by

(2.9)

w

ε

(1 −

If an e

+1

n

w

ε

(1 −ε)

w

n

p

det

-error correcting code is used with e

cor

≤

w=e

det

n−w

= 1 −

=(d −1)/2, the word error probability

cor

e

det

w=0

for a binary symmetric channel can be similarly bounded by

+1

n

w

ε

(1 −ε)

w

n−w

= 1 −

n

err

≤

w=e

cor

p

e

cor

w=0

n

w

ε

w

n

w

ε

w

(1 −ε)

(1 −ε)

n−w

n−w

.

.

Page 42

ALGEBRAIC CODING THEORY 27

2.2 Linear Block Codes

In the foregoing discussion of the fundamentals of general block codes we exclusively

focused on the distance properties between code words and received words in the code

space F

taking into account the respective algebraic properties of vector spaces, we gain efficiency

especially with regard to the encoding scheme.

2.2.1 Definition of Linear Block Codes

n

. If we consider the code words to be vectors in the finite vector space F

q

6

n

,

q

The (n, k) block code B(n,k,d) with minimum Hamming distance d over the finite field

F

is called linear,ifB(n,k,d)is a subspace of the vector space F

q

n

of dimension k (Lin

q

and Costello, 2004; Ling and Xing, 2004). The number of code words is then given by

k

M = q

according to the code rate

k

.

R =

n

Because of the linearity property, an arbitrary superposition of code words again leads to

a valid code word of the linear block code B(n,k,d), i.e.

α

+ α2b2+···+αlbl∈ B(n,k,d)

2b1

with α

,...,αl∈ Fqand b1, b2,...,bl∈ B(n,k,d). Owing to the linearity, the n-

1,α2

dimensional zero row vector 0 = (0, 0,...,0) consisting of n zeros is always a valid

code word. It can be shown that the minimum Hamming distance of a linear block code

B(n,k,d) is equal to the minimum weight of all non-zero code words, i.e.

d = min

∀b=b

dist(b, b) = min

∀b=0

wt(b).

These properties are summarised in Figure 2.11. As a simple example of a linear block

code, the binary parity-check code is described in Figure 2.12 (Bossert, 1999).

For each linear block code an equivalent code can be found by rearranging the code

7

word symbols.

This equivalent code is characterised by the same code parameters as the

original code, i.e. the equivalent code has the same dimension k and the same minimum

Hamming distance d.

2.2.2 Generator Matrix

The linearity property of a linear block code B(n,k,d) can be exploited for efficiently

encoding a given information word u = (u

} of the subspace spanned by the linear block code is chosen, consisting of k linearly

g

k−1

0,u1

,...,u

independent n-dimensional vectors

= (g

g

i

6

Finite fields and vector spaces are briefly reviewed in Sections A.1 and A.2 in Appendix A.

7

In general, an equivalent code is obtained by suitable operations on the rows and columns of the generator

matrix G which is defined in Section 2.2.2.

i,0,gi,1

, ··· ,g

). To this end, a basis {g0, g1,...,

k−1

)

i,n−1

Page 43

28 ALGEBRAIC CODING THEORY

Linear block codes

■ A linear q-nary (n, k) block code B(n,k,d)is defined as a subspace of the

vector space F

■ The number of code words is given by M = q

■ The minimum Hamming distance d is given by the minimum weight

wt(b) = d.

min

∀b=0

n

.

q

k

.

Figure 2.11: Linear block codes B(n,k,d)

Binary parity-check code

■ The binary parity-check code is a linear block code over the finite field F

■ This code takes a k-dimensional information word u = (u

and generates the code word b = (b

0,b1

,...,b

) with bi= uifor

k−1,bk

0 ≤ i ≤ k −1 and

k−1

= u0+ u1+···+u

b

k

k−1

=

u

i

i=0

0,u1

,...,u

2

k−1

(2.10)

.

)

■ The bit b

is called the parity bit; it is chosen such that the resulting code

k

word is of even parity.

Figure 2.12: Binary parity-check code

with 0 ≤ i ≤ k − 1. The corresponding code word b = (b

b = u

+ u1g1+···+u

0g0

k−1gk−1

0,b1

,...,b

) is then given by

n−1

with the q-nary information symbols ui∈ Fq. If we define the k × n matrix

G =

g

0

g

1

.

.

.

g

k−1

=

g

g

g

k−1,0gk−1,1

0,0

1,0

.

.

.

g

··· g

0,1

g

··· g

1,1

.

.

.

.

.

.

··· g

k−1,n−1

0,n−1

1,n−1

.

.

.

Page 44

ALGEBRAIC CODING THEORY 29

Generator matrix

■ The generator matrix G of a linear block code is constructed by a suitable

set of k linearly independent basis vectors g

G =

■ The k-dimensional information word u is encoded into the n-dimensional

g

0

g

1

.

.

.

g

k−1

g

=

0,0

g

1,0

.

.

.

g

k−1,0gk−1,1

code word b by the encoding rule

b = uG (2.12)

Figure 2.13: Generator matrix G of a linear block code B (n,k,d)

according to

i

g

0,1

g

1,1

.

.

.

··· g

··· g

.

.

.

··· g

0,n−1

1,n−1

.

.

.

k−1,n−1

(2.11)

the information word u = (u

0,u1

,...,u

) is encoded according to the matrix–vector

k−1

multiplication

b = uG.

k

Since all M = q

code words b ∈ B(n,k,d) can be generated by this rule, the matrix G

is called the generator matrix of the linear block code B(n,k,d) (see Figure 2.13). Owing

to this property, the linear block code B(n,k,d) is completely defined with the help of the

generator matrix G (Bossert, 1999; Lin and Costello, 2004; Ling and Xing, 2004).