A Guide to MPEG Fundamentals

and Protocol Analysis

(Including DVB and ATSC)

MPEG Tutorial

www.tektronix.com/video_audio

A Guide to MPEG Fundamentals and Protocol Analysis

Primer

Section 1 – Introduction to MPEG
1.1 Convergence
1.2 Why Compression Is Needed
1.3 Principles of Compression
1.4 Compression in Television Applications
1.5 Introduction to Digital Video Compression
1.6 Introduction to Audio Compression
1.7 MPEG Streams
1.8 Need for Monitoring and Analysis
1.9 Pitfalls of Compression

Section 2 – Compression in Video
2.1 Spatial or Temporal Coding?
2.2 Spatial Coding
2.3 Weighting
2.4 Scanning
2.5 Entropy Coding
2.6 A Spatial Coder
2.7 Temporal Coding
2.8 Motion Compensation
2.9 Bidirectional Coding
2.10 I-, P- and B-pictures
2.11 An MPEG Compressor
2.12 Preprocessing
2.13 Wavelets

Section 3 – Audio Compression
3.1 The Hearing Mechanism
3.2 Subband Coding
3.3 MPEG Layer 1
3.4 MPEG Layer 2
3.5 Transform Coding
3.6 MPEG Layer 3
3.7 MPEG-2 Audio
3.8 MPEG-4 Audio
3.9 AC-3

Section 4 – The MPEG Standards
4.1 What is MPEG
4.2 MPEG-1
4.3 MPEG-2
4.3.1 Profiles and Levels in MPEG-2
4.4 MPEG-4
4.4.1 MPEG-4 Standards Documents
4.4.2 Object Coding
4.4.3 Video and Audio Coding
4.4.4 Scalability
4.4.5 Other Aspects of MPEG-4
4.4.6 The Future of MPEG-4
4.5 MPEG-7
4.6 MPEG-21

Section 5 – Elementary Streams
5.1 Video Elementary Stream Syntax
5.2 Audio Elementary Streams

Section 6 – Packetized Elementary Streams (PES)
6.1 PES Packets
6.2 Time Stamps
6.3 PTS/DTS

Section 7 – Program Streams
7.1 Recording vs. Transmission
7.2 Introduction to Program Streams

Section 8 – Transport Streams
8.1 The Job of a Transport Stream
8.2 Packets
8.3 Program Clock Reference (PCR)
8.4 Packet Identification (PID)
8.5 Program Specific Information (PSI)

Section 9 – Digital Modulation
9.1 Principles of Modulation
9.2 Analog Modulation
9.3 Quadrature Modulation
9.4 Simple Digital Modulation Systems
9.5 Phase Shift Keying
9.6 Quadrature Amplitude Modulation - QAM
9.7 Vestigial Sideband Modulation - VSB
9.8 Coded Orthogonal Frequency Division Multiplex - COFDM
9.9 Integrated Services Data Broadcasting (ISDB)
9.9.1 ISDB-S Satellite System
9.9.2 ISDB-C Cable System
9.9.3 ISDB-T Terrestrial Modulation
9.9.4 ISDB in Summary

Section 10 – Introduction to DVB & ATSC
10.1 An Overall View
10.2 Remultiplexing
10.3 Service Information (SI)
10.4 Error Correction
10.5 Channel Coding
10.6 Inner Coding
10.7 Transmitting Digits

Section 11 – Data Broadcast
11.1 Applications
11.1.1 Program Related Data
11.1.2 Opportunistic Data
11.1.3 Network Data
11.1.4 Enhanced TV
11.1.5 Interactive TV
11.2 Content Encapsulation
11.2.1 MPEG Data Encapsulation
11.2.1.1 Data Piping
11.2.1.2 Data Streaming
11.2.1.3 DSMCC – Digital Storage Medium Command and Control
11.2.1.4 MPE – Multi-protocol Encapsulation
11.2.1.5 Carousels
11.2.1.6 Data Carousels
11.2.1.7 Object Carousels
11.2.1.8 How Object Carousels Are Broadcast
11.2.1.9 MPEG-2 Data Synchronization
11.2.2 DVB Data Encapsulation
11.2.3 ATSC A/90 Data Encapsulation
11.2.4 ARIB Data Encapsulation
11.3 Data Content Transmission
11.3.1 DVB Announcement
11.3.2 ATSC Announcement
11.4 Content Presentation
11.4.1 Set Top Box Middleware
11.4.2 The DVB Multimedia Home Platform (MHP)
11.4.3 ATVEF DASE
11.4.4 DASE

Section 12 – MPEG Testing
12.1 Testing Requirements
12.2 Analyzing a Transport Stream
12.3 Hierarchic View
12.4 Interpreted View
12.5 Syntax and CRC Analysis
12.6 Filtering
12.7 Timing Analysis
12.8 Elementary Stream Testing
12.9 Sarnoff® Compliant Bit Streams
12.10 Elementary Stream Analysis
12.11 Creating a Transport Stream
12.12 PCR Inaccuracy Generation

Glossary

Section 1 – Introduction to MPEG

MPEG is one of the most popular audio/video compression techniques because it is not just a single standard. Instead, it is a range of standards suitable for different applications but based on similar principles. MPEG is an acronym for the Moving Picture Experts Group, part of the Joint Technical Committee, JTC1, established by the ISO (International Organization for Standardization) and IEC (International Electrotechnical Commission). JTC1 is responsible for Information Technology; within JTC1, Subcommittee SC29 is responsible for “Coding of Audio, Picture, and Multimedia and Hypermedia Information.” There are a number of working groups within SC29, including JPEG (Joint Photographic Experts Group), and Working Group 11 for compression of moving pictures. ISO/IEC JTC1/SC29/WG11 is MPEG.

MPEG can be described as the interaction of acronyms. As ETSI stated, “The CAT is a pointer to enable the IRD to find the EMMs associated with the CA system(s) that it uses.” If you can understand that sentence you don’t need this book!

1.1 Convergence

Digital techniques have made rapid progress in audio and video for a number of reasons. Digital information is more robust and can be coded to substantially eliminate error. This means that generation losses in recording and losses in transmission may be eliminated. The compact disc (CD) was the first consumer product to demonstrate this.

While the CD has an improved sound quality with respect to its vinyl predecessor, comparison of quality alone misses the point. The real point is that digital recording and transmission techniques allow content manipulation to a degree that is impossible with analog. Once audio or video is digitized, the content is in the form of data. Such data can be handled in the same way as any other kind of data; therefore, digital video and audio become the province of computer technology.

The convergence of computers and audio/video is an inevitable consequence of the key inventions of computing and pulse code modulation (PCM). Digital media can store any type of information, so it is easy to use a computer storage device for digital video. The nonlinear workstation was the first example of an application of convergent technology that did not have an analog forerunner. Another example, multimedia, combines the storage of audio, video, graphics, text and data on the same medium. Multimedia has no equivalent in the analog domain.

1.2 Why Compression Is Needed

The initial success of digital video was in post-production applications, where the high cost of digital video was offset by its limitless layering and effects capability. However, production-standard digital video generates over 200 megabits per second of data, and this bit rate requires extensive capacity for storage and wide bandwidth for transmission. Digital video could only be used in wider applications if the storage and bandwidth requirements could be eased; this is the purpose of compression.
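As a rough check on the "over 200 megabits per second" figure, the sketch below works out the total bit rate of standard-definition component video. It assumes ITU-R BT.601 4:2:2 sampling (13.5 MHz for luminance and 6.75 MHz for each color difference signal), which the text does not name; the function name is illustrative only, and the totals include blanking intervals.

```python
# Assumed sampling parameters (ITU-R BT.601 4:2:2); not stated in the text.
LUMA_RATE_HZ = 13_500_000      # luminance samples per second
CHROMA_RATE_HZ = 6_750_000     # samples per second for each color difference signal


def component_bit_rate(bits_per_sample: int) -> int:
    """Return the total bit rate in bits per second for 4:2:2 component video."""
    samples_per_second = LUMA_RATE_HZ + 2 * CHROMA_RATE_HZ
    return samples_per_second * bits_per_sample


if __name__ == "__main__":
    for bits in (8, 10):
        rate = component_bit_rate(bits)
        print(f"{bits}-bit 4:2:2: {rate / 1e6:.0f} Mbit/s")
    # Storage for one hour of 10-bit material, taking 1 GB as 1e9 bytes.
    hour_gb = component_bit_rate(10) * 3600 / 8 / 1e9
    print(f"One hour at 10 bits: {hour_gb:.1f} GB")
```

Under these assumptions an hour of uncompressed material needs well over 100 GB, which gives a feel for why storage and bandwidth were the limiting factors.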

Compression is a way of expressing digital audio and video by using less data. Compression has the following advantages:

- A smaller amount of storage is needed for a given amount of source material.
- When working in real time, compression reduces the bandwidth needed.
- Additionally, compression allows faster-than-real-time transfer between media, for example, between tape and disk.
- A compressed recording format can use a lower recording density and this can make the recorder less sensitive to environmental factors and maintenance.

1.3 Principles of Compression

There are two fundamentally different techniques that may be used to reduce the quantity of data used to convey information content. In practical compression systems, these are usually combined, often in very complex ways.

The first technique is to improve coding efficiency. There are many ways of coding any given information, and most simple data representations of video and audio contain a substantial amount of redundancy. The concept of entropy is discussed below.

Many coding tricks can be used to reduce or eliminate redundancy; examples include run-length coding and variable-length coding systems such as Huffman codes. When properly used, these techniques are completely reversible so that after decompression the data is identical to that at the input of the system. This type of compression is known as lossless. Computer archiving programs such as PKZip employ lossless compression.
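Run-length coding is the simplest of these techniques to demonstrate. The following is a minimal sketch, not the scheme used by any particular standard: the function names and the sample scan line are hypothetical, and the round-trip check at the end illustrates what "lossless" means in practice.

```python
from itertools import groupby


def rle_encode(data: bytes) -> list[tuple[int, int]]:
    """Collapse each run of identical byte values into a (value, run_length) pair."""
    return [(value, len(list(run))) for value, run in groupby(data)]


def rle_decode(pairs: list[tuple[int, int]]) -> bytes:
    """Expand (value, run_length) pairs back into the original bytes."""
    return b"".join(bytes([value]) * length for value, length in pairs)


if __name__ == "__main__":
    # Hypothetical scan line: a plain area interrupted by one short detail.
    line = bytes([16] * 12 + [235] * 3 + [16] * 9)
    pairs = rle_encode(line)
    print(f"{len(line)} samples coded as {len(pairs)} pairs: {pairs}")
    # Lossless: decoding reproduces the input exactly.
    assert rle_decode(pairs) == line
```

Run-length coding only helps when the data contains long runs; on noisy data the list of pairs can be larger than the input, which is one reason practical systems combine several techniques.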

Obviously, lossless compression is ideal, but unfortunately it does not usually provide the degree of data reduction needed for video and audio applications. However, because it is lossless, it can be applied at any point in the system and is often used on the data output of lossy compressors.

If the elimination of redundancy does not reduce the data as much as needed, some information will have to be discarded. Lossy compression systems achieve data reduction by removing information that is irrelevant, or of lesser relevance. These are not general techniques that can be applied to any data stream; the assessment of relevance can only be made in the context of the application, understanding what the data represents and how it will be used. In the case of television, the application is the presentation of images and sound to the human visual and hearing systems, and the human factors must be well understood to design an effective compression system.

Some information in video signals cannot be perceived by the human visual system and is, therefore, truly irrelevant in this context. A compression system that discards only irrelevant image information is known as visually lossless.

1.4 Compression in Television Applications

Television signals, analog or digital, have always represented a great deal of information, and bandwidth reduction techniques have been used from a very early stage. Probably the earliest example is interlace. For a given number of lines, and a given rate of picture refresh, interlace offers a 2:1 reduction in bandwidth requirement. The process is lossy; interlace generates artifacts caused by interference between vertical and temporal information, and reduces the usable vertical resolution of the image. Nevertheless, most of what is given up is largely irrelevant, so interlace represented a simple and very valuable trade-off in its time. Unfortunately, interlace and the artifacts it generates are very disruptive to more sophisticated digital compression schemes. Much of the complexity of MPEG-2 results from the need to handle interlaced signals, and there is still a significant loss in coding efficiency when compared to progressive signals.

The next major steps came with the advent of color. Color cameras produce GBR signals, so nominally there is three times the information of a monochrome signal, but there was a requirement to transmit color signals in the same channels used for monochrome.

The first part of the solution was to transform the signals from GBR to a brightness signal (normally designated Y) plus two color difference signals, U and V, or I and Q. Generation of a brightness signal went a long way towards solving the problem of compatibility with monochrome receivers, but the important step for bandwidth minimization came from the color difference signals.

It turns out that the human visual system uses sensors that are sensitive to brightness, and that can “see” a very high-resolution image. Other sensors capture color information, but at much lower resolution. The net result is that, within certain limits, a sharp monochrome image representing scene brightness overlaid with fuzzy (low-bandwidth) color information will appear as a sharp color picture. It is not possible to take advantage of this when dealing with GBR signals, as each signal contains both brightness and color information. However, in YUV space, most of the brightness information is carried in the Y signal, and very little in the color difference signals. So, it is possible to filter the color difference signals and drastically reduce the information to be transmitted.
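The idea can be sketched numerically. The example below converts a few pixels to a brightness signal plus two unscaled color difference signals (B-Y and R-Y), then halves the resolution of the color difference signals only. The luminance weights are those of ITU-R BT.601, which is an assumption since the text gives no coefficients; the pair-averaging filter and all names are purely illustrative, and real systems also scale the color difference signals.

```python
# Luminance weights from ITU-R BT.601 (an assumption; the text does not give them).
KR, KG, KB = 0.299, 0.587, 0.114


def to_luma_and_color_difference(r: float, g: float, b: float):
    """Return (Y, B-Y, R-Y) for one pixel whose components lie in 0..1."""
    y = KR * r + KG * g + KB * b
    return y, b - y, r - y


def subsample_pairs(values):
    """Crude low-pass filter: replace each pair of samples by its average."""
    return [(values[i] + values[i + 1]) / 2 for i in range(0, len(values) - 1, 2)]


if __name__ == "__main__":
    # Hypothetical row of four pixels: two mid-gray, then two saturated red.
    row = [(0.5, 0.5, 0.5), (0.5, 0.5, 0.5), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    y, u, v = zip(*(to_luma_and_color_difference(*pixel) for pixel in row))
    print("Y   (full resolution):", [round(s, 3) for s in y])
    print("B-Y (half resolution):", [round(s, 3) for s in subsample_pairs(u)])
    print("R-Y (half resolution):", [round(s, 3) for s in subsample_pairs(v)])
```

The brightness signal keeps every sample, so edges stay sharp, while each color difference signal is carried with half as many samples.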

This is an example of eliminating (mostly) irrelevant information. Under the design viewing conditions, the human visual system does not respond significantly to the high frequency information in the color difference signals, so it may be discarded. NTSC television transmissions carry only about 500 kHz in each color difference signal, but the pictures are adequately sharp for many applications.

The final step in the bandwidth reduction process of NTSC and PAL was to “hide” the color difference signals in unused parts of the spectrum of the monochrome signal. Although the process is not strictly lossless, this can be thought of as increasing the coding efficiency of the signal.

Some of the techniques in the digital world are quite different, but similar principles apply. For example, MPEG transforms signals into a different domain to permit the isolation of irrelevant information. The transform to color-difference space is still employed, but digital techniques permit filtering of the color difference signal to reduce vertical resolution for further savings.
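The saving from reducing color difference resolution can be counted directly. The sketch below assumes a hypothetical 720 x 576 frame and simply counts samples; halving the color difference signals horizontally corresponds to what is commonly called 4:2:2 sampling, and halving them vertically as well corresponds to 4:2:0.

```python
def samples_per_frame(width: int, height: int, h_factor: int, v_factor: int) -> int:
    """Count samples when each color difference signal is reduced by
    h_factor horizontally and v_factor vertically; brightness is untouched."""
    luma = width * height
    chroma = (width // h_factor) * (height // v_factor)
    return luma + 2 * chroma


if __name__ == "__main__":
    width, height = 720, 576  # hypothetical frame size
    full = samples_per_frame(width, height, 1, 1)
    cases = (
        ("full-resolution color", 1, 1),
        ("halved horizontally", 2, 1),
        ("halved horizontally and vertically", 2, 2),
    )
    for name, h, v in cases:
        n = samples_per_frame(width, height, h, v)
        print(f"{name}: {n} samples ({n / full:.0%} of full)")
```

Vertical filtering therefore removes a further quarter of the samples that remain after horizontal filtering, before any other compression tool is applied.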

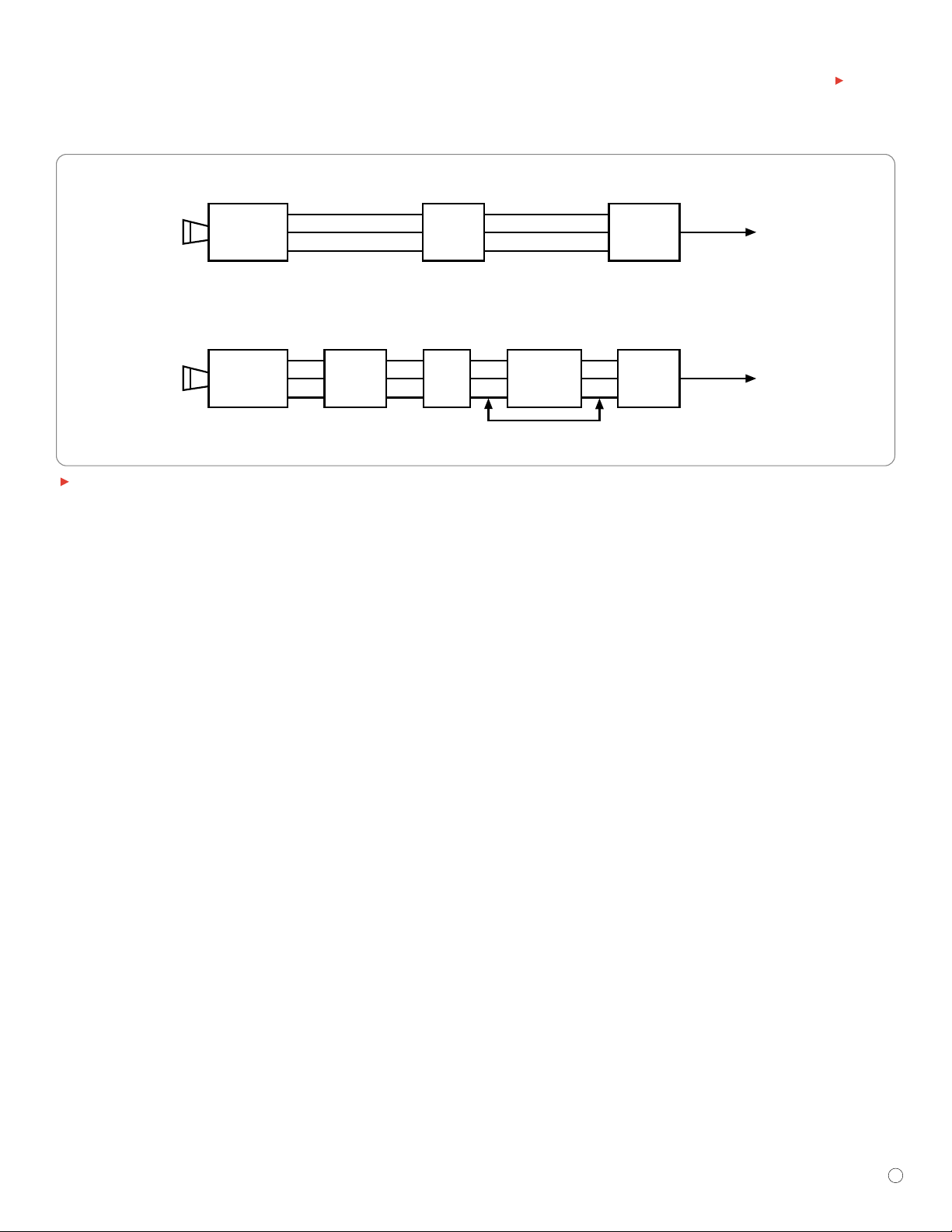

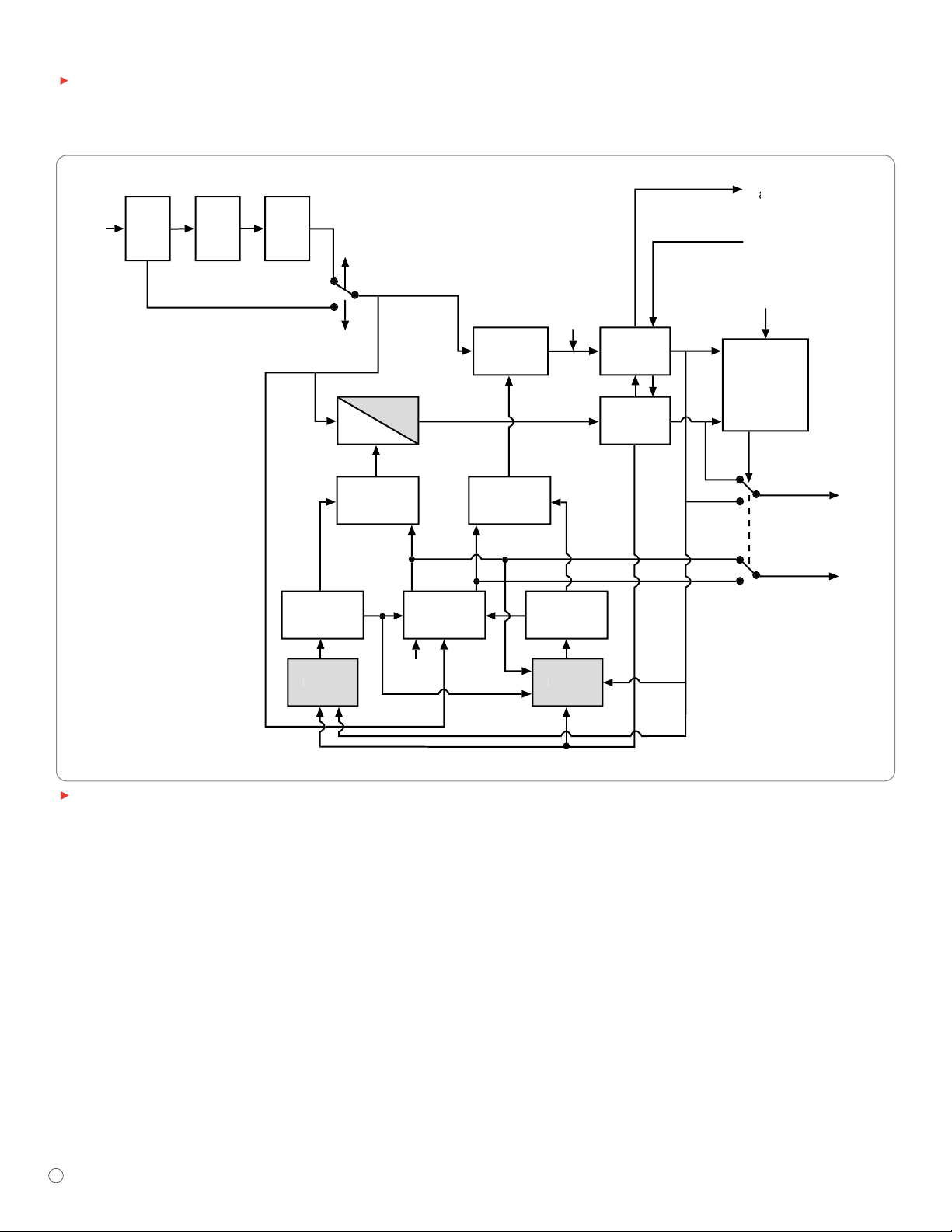

Figure 1-1a shows that in traditional television systems, the GBR camera signal is converted to Y, Pb, Pr components for production and encoded into analog composite for transmission. Figure 1-1b shows the modern equivalent. The Y, Pb, Pr signals are digitized and carried as Y, Cb, Cr signals in SDI form through the production process prior to being encoded with MPEG for transmission. Clearly, MPEG can be considered by the broadcaster as a more efficient replacement for composite video. In addition, MPEG has greater flexibility because the bit rate required can be adjusted to suit the application. At lower bit rates and resolutions, MPEG can be used for video conferencing and video telephones.

Digital Video Broadcasting (DVB) and Advanced Television Systems Committee (ATSC) (the European- and American-originated digital-television broadcasting standards) would not be viable without compression because the bandwidth required would be too great. Compression extends the playing time of DVD (digital video/versatile disk), allowing full-length movies on a single disk. Compression also reduces the cost of ENG (electronic news gathering) and other contributions to television production. DVB, ATSC and DVD are all based on MPEG-2 compression.

In tape recording, mild compression eases tolerances and adds reliability in Digital Betacam and Digital-S, whereas in SX, DVC, DVCPRO and DVCAM, the goal is miniaturization. In disk-based video servers, compression lowers storage cost. Compression also lowers bandwidth, which allows more users to access a given server. This characteristic is also important for VOD (video on demand) applications.

1.5 Introduction to Digital Video Compression

In all real program material, there are two types of components of the signal: those that are novel and unpredictable and those that can be anticipated. The novel component is called entropy and is the true information in the signal. The remainder is called redundancy because it is not essential. Redundancy may be spatial, as it is in large plain areas of picture where adjacent pixels have almost the same value. Redundancy can also be temporal, as it is where similarities between successive pictures can be exploited. All compression systems work by separating entropy from redundancy in the encoder. Only the entropy is recorded or transmitted and the decoder computes the redundancy from the transmitted signal. Figure 1-2a shows this concept.

An ideal encoder would extract all the entropy and only this would be transmitted to the decoder. An ideal decoder would then reproduce the original

signal. In practice, this ideal cannot be reached. An ideal coder would be

complex and cause a very long delay in order to use temporal redundancy.

In certain applications, such as recording or broadcasting, some delay is

acceptable, but in videoconferencing it is not. In some cases, a very complex coder would be too expensive. It follows that there is no one ideal

compression system.

Figure 1-1. a) Camera (R, G, B) to matrix (Y, Pr, Pb) to composite encoder, giving analog composite out (PAL, NTSC or SECAM). b) Camera (R, G, B) to matrix (Y, Pr, Pb) to ADC (Y, Cr, Cb) to production process (SDI) to MPEG coder, giving digital compressed out.

In practice, a range of coders is needed which have a range of processing

delays and complexities. The power of MPEG is that it is not a single compression format, but a range of standardized coding tools that can be

combined flexibly to suit a range of applications. The way in which coding

has been performed is included in the compressed data so that the

decoder can automatically handle whatever the coder decided to do.

In MPEG-2 and MPEG-4, coding is divided into several profiles that have

different complexity, and each profile can be implemented at a different

level depending on the resolution of the input picture. Section 4 considers

profiles and levels in detail.

There are many different digital video formats and each has a different bit

rate. For example, a high definition system might have six times the bit rate

of a standard definition system. Consequently, just knowing the bit rate out

of the coder is not very useful. What matters is the compression factor,

which is the ratio of the input bit rate to the compressed bit rate, for

example 2:1, 5:1 and so on.
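As a small worked example of this definition, the sketch below computes a compression factor from two bit rates. The function name and the bit rates used (270 Mbit/s in, 6 Mbit/s out) are assumptions chosen for illustration and are not figures taken from this guide.

```python
def compression_factor(input_bit_rate: float, compressed_bit_rate: float) -> float:
    """Return the ratio of the input bit rate to the compressed bit rate."""
    if input_bit_rate <= 0 or compressed_bit_rate <= 0:
        raise ValueError("bit rates must be positive")
    return input_bit_rate / compressed_bit_rate


# Illustrative numbers only (assumed for this example).
factor = compression_factor(270e6, 6e6)
print(f"{factor:.0f}:1")
```

The same output bit rate would correspond to a much higher compression factor for a high definition input, which is why the ratio is more informative than the output bit rate alone.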

Unfortunately,the number of variables involved makes it very difficult to

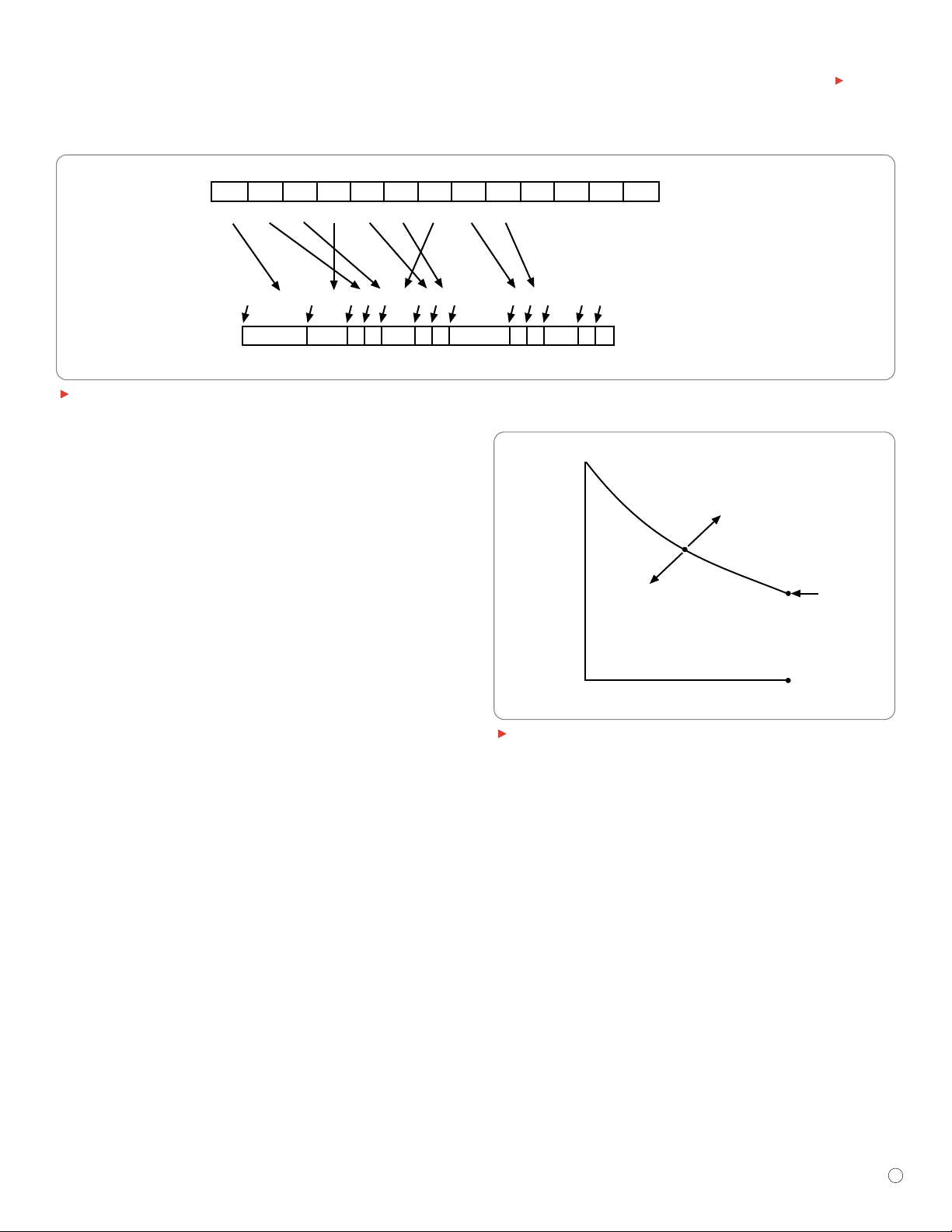

determine a suitable compression factor.Figure 1-2a shows that for an

ideal coder,if all of the entropy is sent, the quality is good. However, if

the compression factor is increased in order to reduce the bit rate, not all of

the entropy is sent and the quality falls. Note that in a compressed system

when the quality loss occurs, it is steep (Figure 1-2b). If the available bit

rate is inadequate, it is better to avoid this area by reducing the entropy

of the input picture. This can be done by filtering. The loss of resolution

caused by the filtering is subjectively more acceptable than the

compression artifacts.

To identify the entropy perfectly, an ideal compressor would have to be

extremely complex. A practical compressor may be less complex for

economic reasons and must send more data to be sure of carrying all of

the entropy. Figure 1-2b shows the relationship between coder complexity

and performance. The higher the compression factor required, the more

complex the encoder has to be.

Figure 1-2. a) PCM video contains the entropy: an ideal coder sends only the entropy, a non-ideal coder has to send more, and a short-delay coder has to send even more. b) Compression factor versus complexity, from worse to better quality. c) Compression factor versus latency, from worse to better quality.

The entropy in video signals varies. A recording of an announcer delivering

the news has much redundancy and is easy to compress. In contrast, it is

more difficult to compress a recording with leaves blowing in the wind or

one of a football crowd that is constantly moving and therefore has less

redundancy (more information or entropy). In either case, if all the entropy

is not sent, there will be quality loss. Thus, we may choose between a

constant bit-rate channel with variable quality or a constant quality channel

with variable bit rate. Telecommunications network operators tend to prefer

a constant bit rate for practical purposes, but a buffer memory can be

used to average out entropy variations if the resulting increase in delay is

acceptable. In recording, a variable bit rate may be easier to handle and

DVD uses variable bit rate, using buffering so that the average bit rate

remains within the capabilities of the disk system.

Intra-coding (intra = within) is a technique that exploits spatial redundancy,

or redundancy within the picture; inter-coding (inter = between) is a

technique that exploits temporal redundancy. Intra-coding may be used alone,

as in the JPEG standard for still pictures, or combined with inter-coding as

in MPEG.

Intra-coding relies on two characteristics of typical images. First, not all

spatial frequencies are simultaneously present, and second, the higher the

spatial frequency, the lower the amplitude is likely to be. Intra-coding

requires analysis of the spatial frequencies in an image. This analysis is the

purpose of transforms such as wavelets and DCT (discrete cosine transform).

Transforms produce coefficients that describe the magnitude of each spatial

frequency. Typically, many coefficients will be zero, or nearly zero, and these

coefficients can be omitted, resulting in a reduction in bit rate.

Inter-coding relies on finding similarities between successive pictures. If a

given picture is available at the decoder, the next picture can be created by

sending only the picture differences. The picture differences will

increase when objects move, but this increase can be offset by

using motion compensation, since a moving object does not generally

change its appearance very much from one picture to the next. If the

motion can be measured, a closer approximation to the current picture can

be created by shifting part of the previous picture to a new location. The

shifting process is controlled by a pair of horizontal and vertical displacement

values (collectively known as the motion vector) that is transmitted to the

decoder. The motion vector transmission requires less data than sending

the picture-difference data.
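The measurement of motion described above can be sketched as an exhaustive block-matching search. This is a minimal illustration and not the method of any particular MPEG encoder: the block size, search range, sum-of-absolute-differences cost and the `make_picture` test data are all assumptions made for the example.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between a block in `cur` and a shifted block in `ref`."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
    return total


def find_motion_vector(cur, ref, bx, by, size=4, search=2):
    """Try every displacement within +/- `search` pixels and keep the cheapest one."""
    height, width = len(ref), len(ref[0])
    best, best_cost = (0, 0), sad(cur, ref, bx, by, 0, 0, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidates that would read outside the reference picture.
            if by + dy < 0 or by + dy + size > height:
                continue
            if bx + dx < 0 or bx + dx + size > width:
                continue
            cost = sad(cur, ref, bx, by, dx, dy, size)
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best, best_cost


def make_picture(ox, oy, size=12):
    """Hypothetical test picture: a 4x4 textured object on a black background."""
    pic = [[0] * size for _ in range(size)]
    for y in range(4):
        for x in range(4):
            pic[oy + y][ox + x] = 50 + 10 * y + x
    return pic


previous = make_picture(2, 3)
current = make_picture(4, 4)  # the same object moved 2 pixels right and 1 down
vector, cost = find_motion_vector(current, previous, bx=4, by=4)
print(vector, cost)
```

Here the vector points from the block in the current picture back to its match in the previous picture, so the search reports (-2, -1) with a residual of zero. In real material the residual is rarely zero, and it is this remaining difference that still has to be sent.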

MPEG can handle both interlaced and non-interlaced images. An image at

some point on the time axis is called a “picture,” whether it is a field or a

frame. Interlace is not ideal as a source for digital compression because

it is in itself a compression technique. Temporal coding is made more

complex because pixels in one field are in a different position to those in

the next.

Motion compensation minimizes but does not eliminate the differences

between successive pictures. The picture difference is itself a spatial

image and can be compressed using transform-based intra-coding as

previously described. Motion compensation simply reduces the amount

of data in the difference image.

The efficiency of a temporal coder rises with the time span over which it

can act. Figure 1-2c shows that if a high compression factor is required,

a longer time span in the input must be considered and thus a longer

coding delay will be experienced. Clearly, temporally coded signals are

difficult to edit because the content of a given output picture may be

based on image data which was transmitted some time earlier. Production

systems will have to limit the degree of temporal coding to allow editing

and this limitation will in turn limit the available compression factor.

1.6 Introduction to Audio Compression

The bit rate of a PCM digital audio channel is only about 1.5 megabits

per second, which is about 0.5% of 4:2:2 digital video. With mild video

compression schemes, such as Digital Betacam, audio compression is

unnecessary. But, as the video compression factor is raised, it becomes

important to compress the audio as well.

Audio compression takes advantage of two facts. First, in typical audio

signals, not all frequencies are simultaneously present. Second, because

of the phenomenon of masking, human hearing cannot discern every detail

of an audio signal. Audio compression splits the audio spectrum into bands

by filtering or transforms, and includes less data when describing bands in

which the level is low. Where masking prevents or reduces audibility of a

particular band, even less data needs to be sent.

Audio compression is not as easy to achieve as video compression

because of the acuity of hearing. Masking only works properly when the

masking and the masked sounds coincide spatially. Spatial coincidence is

always the case in mono recordings but not in stereo recordings, where

low-level signals can still be heard if they are in a different part of the

sound stage. Consequently, in stereo and surround sound systems, a lower

compression factor is allowable for a given quality. Another factor complicating audio compression is that delayed resonances in poor loudspeakers

actually mask compression artifacts. Testing a compressor with poor

speakers gives a false result, and signals that are apparently satisfactory

may be disappointing when heard on good equipment.

1.7 MPEG Streams

The output of a single MPEG audio or video coder is called an elementary

stream. An elementary stream is an endless near real-time signal. For

convenience, the elementary stream may be broken into data blocks of

manageable size, forming a packetized elementary stream (PES). These

data blocks need header information to identify the start of the packets

and must include time stamps because packetizing disrupts the time axis.

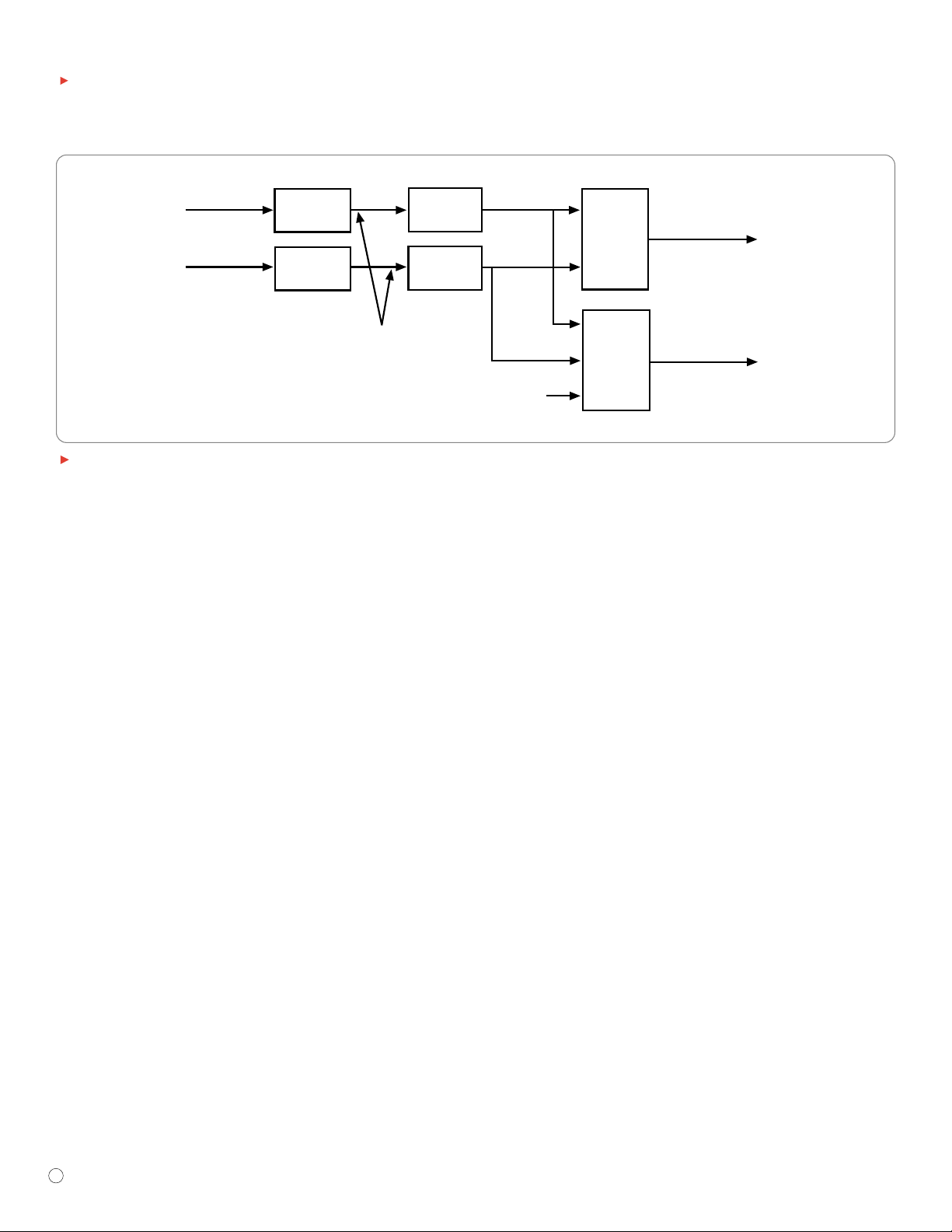

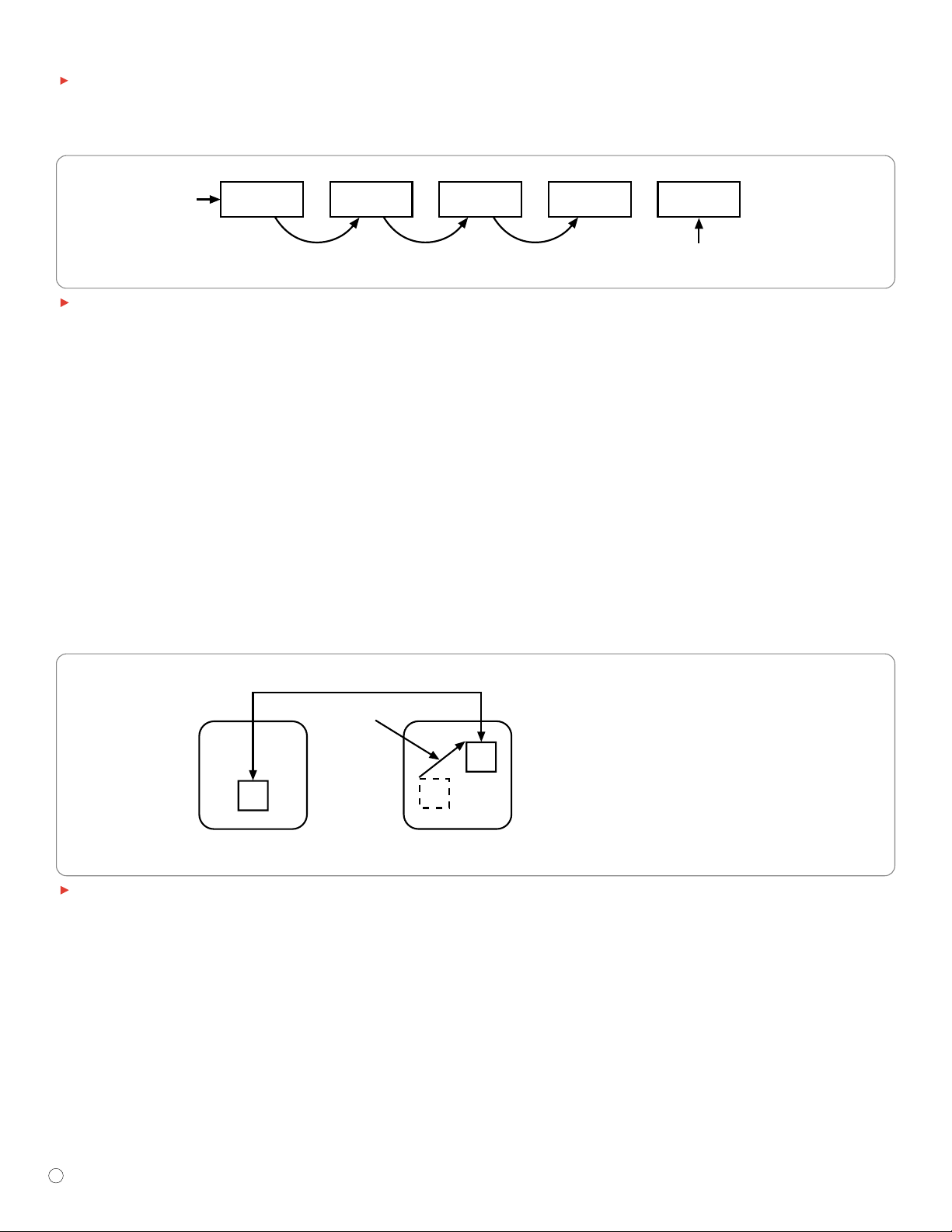

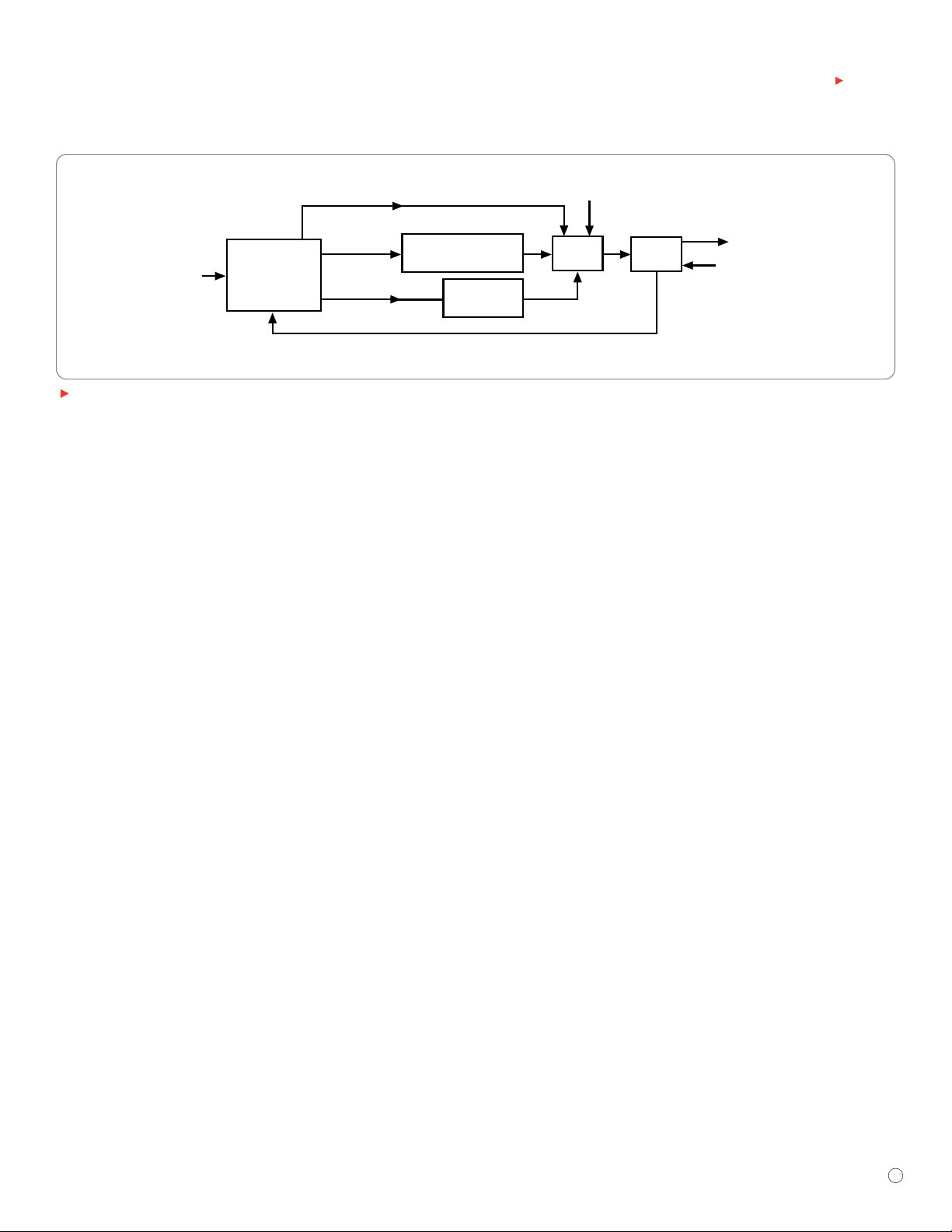

Figure 1-3 shows that one video PES and a number of audio PES can be

combined to form a program stream, provided that all of the coders are

locked to a common clock. Time stamps in each PES can be used to ensure

lip-sync between the video and audio. Program streams have variable-length

packets with headers. They find use in data transfers to and from optical

and hard disks, which are essentially error free, and in which files of

arbitrary sizes are expected. DVD uses program streams.

For transmission and digital broadcasting, several programs and their

associated PES can be multiplexed into a single transport stream. A

transport stream differs from a program stream in that the PES packets

are further subdivided into short fixed-size packets and in that multiple

programs encoded with different clocks can be carried. This is possible

because a transport stream has a program clock reference (PCR) mechanism

that allows transmission of multiple clocks, one of which is selected and

regenerated at the decoder. A single program transport stream (SPTS) is

also possible and this may be found between a coder and a multiplexer.

Since a transport stream can genlock the decoder clock to the encoder

clock, the SPTS is more common than the Program Stream.

A transport stream is more than just a multiplex of audio and video PES.

In addition to the compressed audio, video and data, a transport stream

includes metadata describing the bit stream. This includes the program

association table (PAT) that lists every program in the transport stream.

Each entry in the PAT points to a program map table (PMT) that lists the

elementary streams making up each program. Some programs will be open,

but some programs may be subject to conditional access (encryption) and

this information is also carried in the metadata.
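The relationship between the two tables can be pictured as a two-level lookup. The sketch below assumes the tables have already been parsed; the program numbers, PID values and the `pids_for_program` helper are hypothetical, and real tables carry more fields than are shown here.

```python
# Hypothetical, already-parsed tables. All numbers are made up for illustration.
pat = {1: 0x0100, 2: 0x0200}  # program number -> PID carrying that program's PMT

pmts = {
    0x0100: [("video", 0x0101), ("audio", 0x0102)],
    0x0200: [("video", 0x0201), ("audio", 0x0202), ("audio", 0x0203)],
}


def pids_for_program(program_number):
    """Follow PAT -> PMT and return the elementary-stream PIDs of one program."""
    pmt_pid = pat[program_number]
    return [pid for _kind, pid in pmts[pmt_pid]]


print([hex(pid) for pid in pids_for_program(2)])
```

A decoder that wants program 2 would then accept only packets carrying the listed PIDs and discard the rest of the multiplex.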

The transport stream consists of fixed-size data packets, each containing

188 bytes. Each packet carries a packet identifier (PID). Packets in

the same elementary stream all have the same PID, so that the decoder

(or a demultiplexer) can select the elementary stream(s) it wants and reject

the remainder. Packet continuity counts ensure that every packet that is

needed to decode a stream is received. An effective synchronization system

is needed so that decoders can correctly identify the beginning of each

packet and deserialize the bit stream into words.
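The PID selection and continuity checking described above can be sketched as follows. The header layout used (a sync byte of 0x47, a 13-bit PID and a 4-bit continuity counter) comes from the MPEG-2 systems standard rather than from this guide. The sketch also makes simplifying assumptions that are not true of every stream: the input is already packet-aligned, every packet carries payload, and there are no duplicated packets. `make_packet` is a hypothetical helper that builds dummy packets for the demonstration.

```python
PACKET_SIZE = 188
SYNC_BYTE = 0x47  # fixed first byte of every transport packet


def parse_header(packet: bytes):
    """Return (pid, continuity_counter) from one 188-byte transport packet."""
    if len(packet) != PACKET_SIZE:
        raise ValueError("transport packets are 188 bytes long")
    if packet[0] != SYNC_BYTE:
        raise ValueError("sync byte not found at start of packet")
    pid = ((packet[1] & 0x1F) << 8) | packet[2]  # 13-bit PID
    continuity_counter = packet[3] & 0x0F        # 4-bit count, wraps at 16
    return pid, continuity_counter


def select_pid(stream: bytes, wanted_pid: int):
    """Keep the packets of one PID and count breaks in their continuity count."""
    selected, gaps, last = [], 0, None
    for offset in range(0, len(stream) - PACKET_SIZE + 1, PACKET_SIZE):
        packet = stream[offset:offset + PACKET_SIZE]
        pid, counter = parse_header(packet)
        if pid != wanted_pid:
            continue  # reject packets belonging to other streams
        if last is not None and counter != (last + 1) % 16:
            gaps += 1  # at least one packet of this PID went missing
        last = counter
        selected.append(packet)
    return selected, gaps


def make_packet(pid: int, counter: int) -> bytes:
    """Build a dummy payload-only packet (zero-filled) for the demonstration."""
    header = bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10 | (counter & 0x0F)])
    return header + bytes(PACKET_SIZE - 4)


stream = (make_packet(0x101, 0) + make_packet(0x102, 0)
          + make_packet(0x101, 1) + make_packet(0x101, 3))
packets, gaps = select_pid(stream, 0x101)
print(len(packets), gaps)
```

In the demonstration stream the counter for PID 0x101 jumps from 1 to 3, so one discontinuity is reported. Note that the counter can only reveal that a packet is missing; it cannot recover it.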

Figure 1-3. Video data feeds a video encoder and audio data feeds an audio encoder; each elementary stream passes through a packetizer to give a video PES and an audio PES. The PES and data feed a program stream MUX, giving a program stream (DVD), and a transport stream MUX, giving a single program transport stream.

1.8 Need for Monitoring and Analysis

The MPEG transport stream is an extremely complex structure using

interlinked tables and coded identifiers to separate the programs and the

elementary streams within the programs. Within each elementary stream,

there is a complex structure, allowing a decoder to distinguish between,

for example, vectors, coefficients and quantization tables.

Failures can be divided into two broad categories. In the first category, the

transport system correctly delivers information from an encoder/multiplexer

to a decoder with no bit errors or added jitter, but the encoder/multiplexer

or the decoder has a fault. In the second category, the encoder/multiplexer

and decoder are fine, but the transport of data from one to the other is

defective. It is very important to know whether the fault lies in the

encoder/multiplexer, the transport or the decoder if a prompt solution is

to be found.

Synchronizing problems, such as loss or corruption of sync patterns, may

prevent reception of the entire transport stream. Transport stream protocol

defects may prevent the decoder from finding all of the data for a program,

perhaps delivering picture but not sound. Correct delivery of the data but

with excessive jitter can cause decoder timing problems.

If a system using an MPEG transport stream fails, the fault could be in the

encoder, the multiplexer or in the decoder. How can this fault be isolated?

First, verify that a transport stream is compliant with the MPEG-coding

standards. If the stream is not compliant, a decoder can hardly be

blamed for having difficulty. If the stream is compliant, the decoder may

need attention.

Traditional video testing tools, the signal generator, the waveform monitor

and vectorscope, are not appropriate in analyzing MPEG systems, except

to ensure that the video signals entering and leaving an MPEG system are

of suitable quality. Instead, a reliable source of valid MPEG test signals is

essential for testing receiving equipment and decoders. With a suitable

analyzer, the performance of encoders, transmission systems, multiplexers

and remultiplexers can be assessed with a high degree of confidence. As

a long standing supplier of high grade test equipment to the video industry,

Tektronix continues to provide test and measurement solutions as the

technology evolves, giving the MPEG user the confidence that complex

compressed systems are correctly functioning and allowing rapid diagnosis

when they are not.

1.9 Pitfalls of Compression

MPEG compression is lossy in that what is decoded is not identical to the

original. The entropy of the source varies, and when entropy is high, the

compression system may leave visible artifacts when decoded. In temporal

compression, redundancy between successive pictures is assumed. When

this is not the case, the system may fail. An example is video from a press

conference where flashguns are firing. Individual pictures containing the

flash are totally different from their neighbors, and coding artifacts may

become obvious.

Irregular motion or several independently moving objects on screen require

a lot of vector bandwidth and this requirement may only be met by reducing

the bandwidth available for picture-data. Again, visible artifacts may occur

whose level varies and depends on the motion. This problem often occurs

in sports-coverage video.

Coarse quantizing results in luminance contouring and posterized color.

These can be seen as blotchy shadows and blocking on large areas

of plain color. Subjectively, compression artifacts are more annoying than

the relatively constant impairments resulting from analog television

transmission systems.

The only solution to these problems is to reduce the compression factor.

Consequently, the compression user has to make a value judgment between

the economy of a high compression factor and the level of artifacts.

In addition to extending the encoding and decoding delay, temporal coding

also causes difficulty in editing. In fact, an MPEG bit stream cannot be

arbitrarily edited. This restriction occurs because, in temporal coding, the

decoding of one picture may require the contents of an earlier picture and

the contents may not be available following an edit. The fact that pictures

may be sent out of sequence also complicates editing.

If suitable coding has been used, edits can take place, but only at splice

points that are relatively widely spaced. If arbitrary editing is required, the

MPEG stream must undergo a decode-modify-recode process, which will

result in generation loss.

Section 2 – Compression in Video

This section shows how video compression is based on the perception of

the eye. Important enabling techniques, such as transforms and motion

compensation, are considered as an introduction to the structure of an

MPEG coder.

2.1 Spatial or Temporal Coding?

As was seen in Section 1, video compression can take advantage of both

spatial and temporal redundancy. In MPEG, temporal redundancy is reduced

first by using similarities between successive pictures. As much as possible

of the current picture is created or “predicted” by using information from

pictures already sent. When this technique is used, it is only necessary to

send a difference picture, which eliminates the differences between the

actual picture and the prediction. The difference picture is then subject

to spatial compression. As a practical matter it is easier to explain spatial

compression prior to explaining temporal compression.

Spatial compression relies on similarities between adjacent pixels in plain

areas of picture and on dominant spatial frequencies in areas of patterning.

The JPEG system uses spatial compression only, since it is designed to

transmit individual still pictures. However, JPEG may be used to code a

succession of individual pictures for video. In the so-called “Motion JPEG”

application, the compression factor will not be as good as if temporal coding

were used, but the bit stream will be freely editable on a picture-by-picture basis.

2.2 Spatial Coding

The first step in spatial coding is to perform an analysis of spatial frequencies

using a transform. A transform is simply a way of expressing a waveform

in a different domain, in this case, the frequency domain. The output of a

transform is a set of coefficients that describe how much of a given frequency

is present. An inverse transform reproduces the original waveform. If the

coefficients are handled with sufficient accuracy, the output of the inverse

transform is identical to the original waveform.

The most well known transform is the Fourier transform.This transform finds

each frequency in the input signal. It finds each frequency by multiplying the

input waveform by a sample of a target frequency,called a basis function,

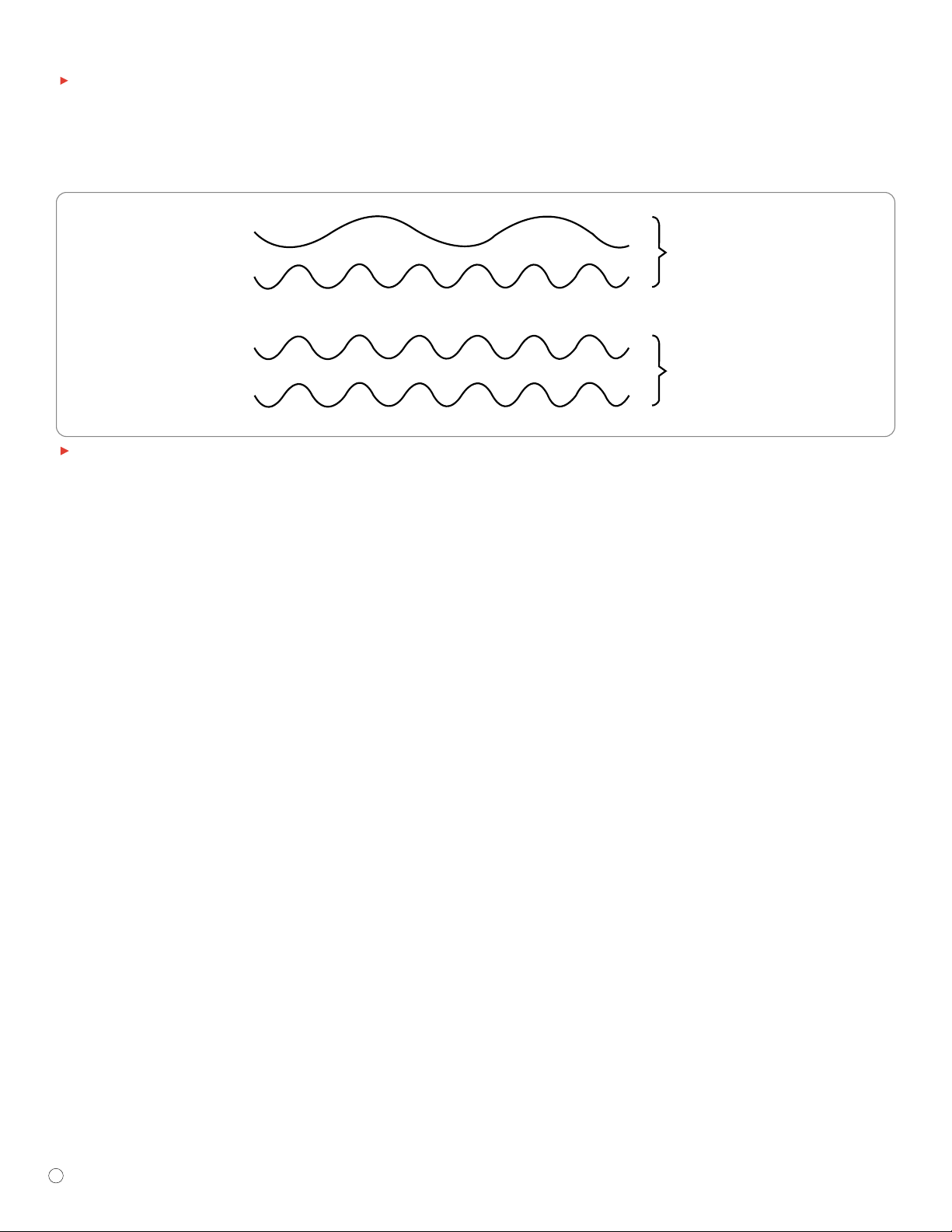

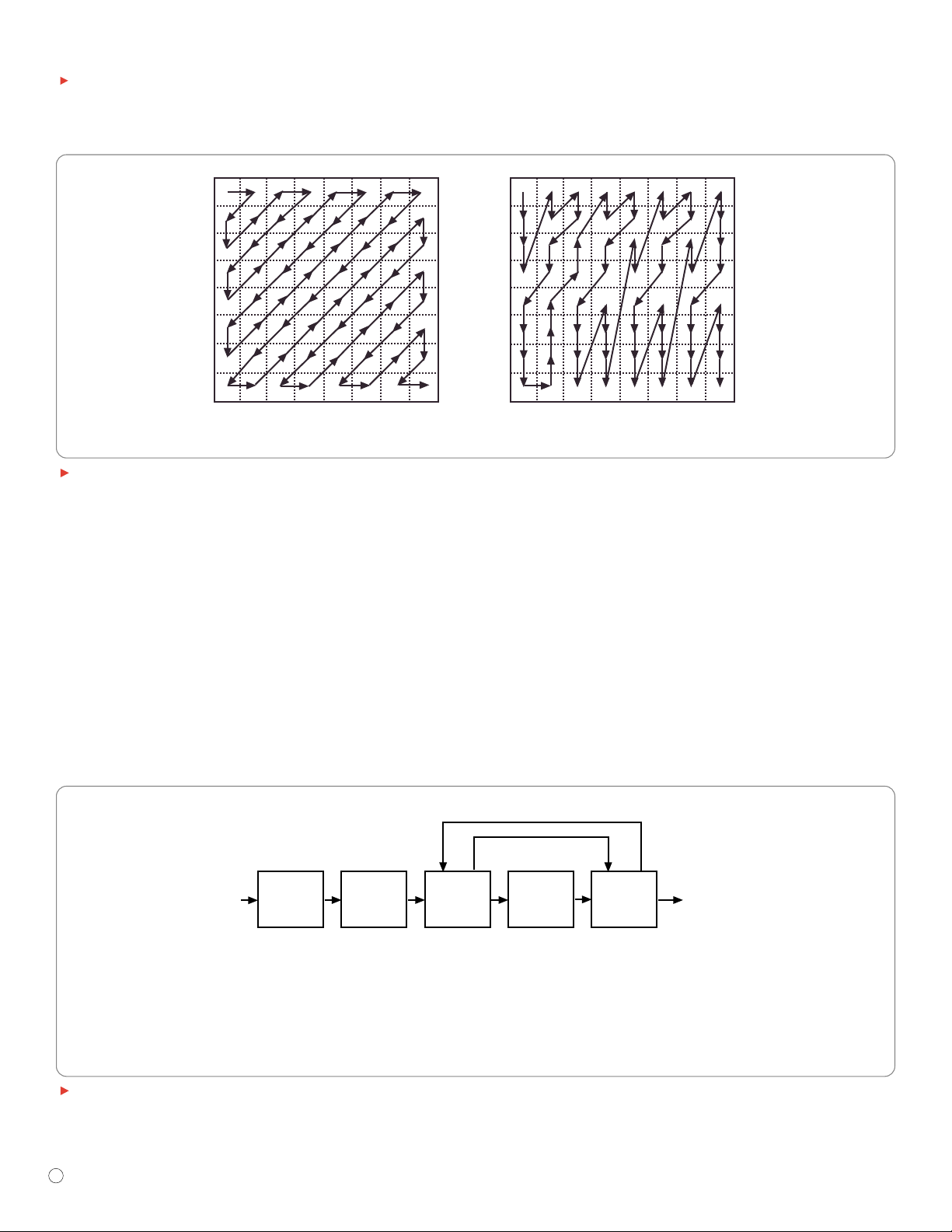

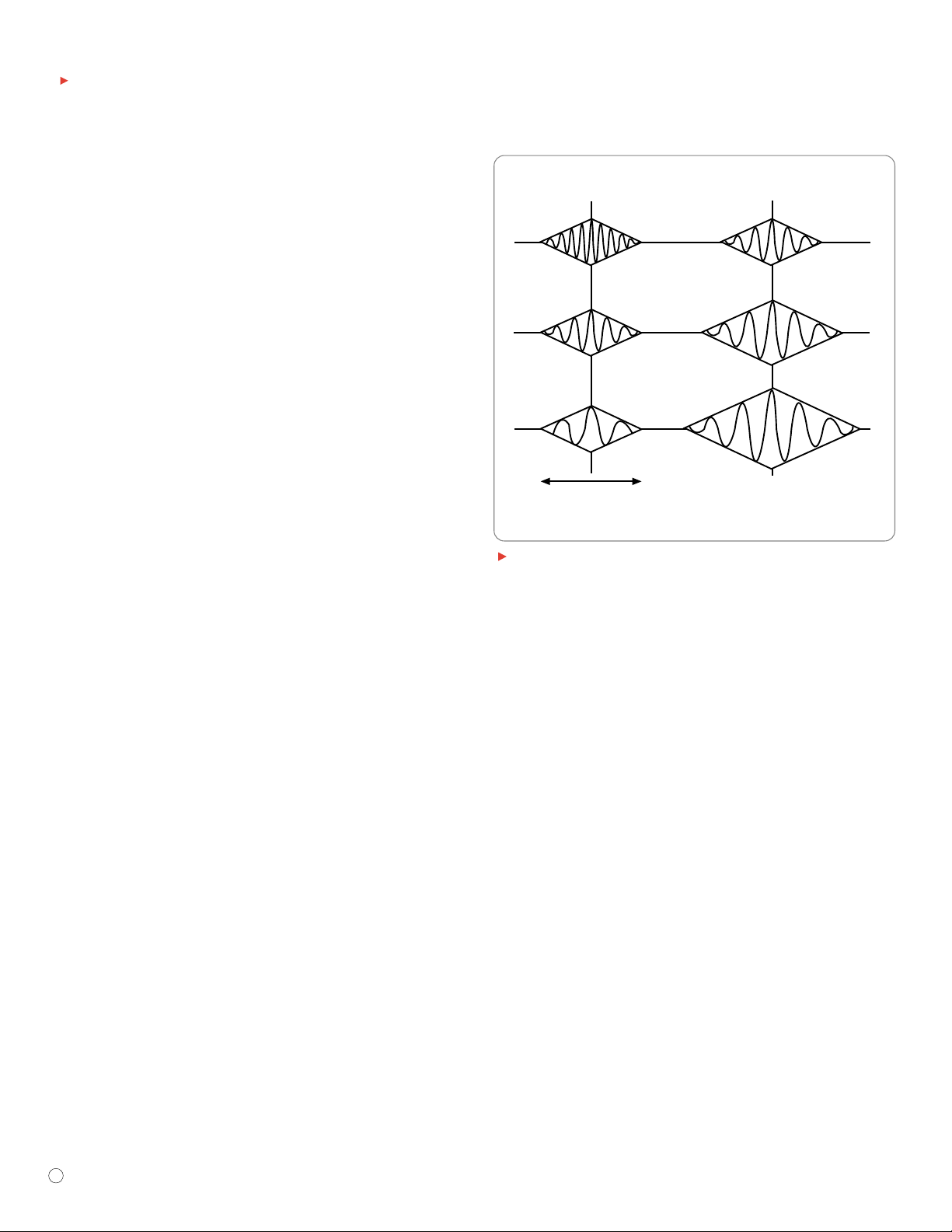

and integrating the product. Figure 2-1 shows that when the input waveform does not contain the target frequency,the integral will be zero, but

when it does, the integral will be a coefficient describing the amplitude of

that component frequency.

The results will be as described if the frequency component is in phase with

the basis function. However, if the frequency component is in quadrature

with the basis function, the integral will still be zero. Therefore, it is necessary to perform two searches for each frequency, with the basis functions

in quadrature with one another so that every phase of the input will

be detected.
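The need for two searches can be checked numerically. The sketch below replaces the integral with a sum over one analysis window; the window length `N`, the target frequency `k`, the amplitude 0.8 and the `correlate` helper are assumptions chosen for the illustration.

```python
import math


def correlate(signal, basis):
    """Discrete stand-in for 'multiply by the basis function and integrate'."""
    return sum(s * b for s, b in zip(signal, basis)) * 2 / len(signal)


N = 64  # samples in the analysis window (assumed)
k = 3   # target frequency in cycles per window (assumed)
t = [n / N for n in range(N)]

cos_basis = [math.cos(2 * math.pi * k * x) for x in t]
sin_basis = [math.sin(2 * math.pi * k * x) for x in t]  # in quadrature with cos_basis

in_phase = [0.8 * math.cos(2 * math.pi * k * x) for x in t]
quadrature = [0.8 * math.sin(2 * math.pi * k * x) for x in t]
other = [0.8 * math.cos(2 * math.pi * 5 * x) for x in t]  # a different frequency

print(round(correlate(in_phase, cos_basis), 6))    # amplitude is recovered
print(round(correlate(quadrature, cos_basis), 6))  # missed by the cosine search
print(round(correlate(quadrature, sin_basis), 6))  # found by the sine search
print(round(correlate(other, cos_basis), 6))       # different frequency: no correlation
```

A component of arbitrary phase shows up partly in each search, which is why both results are needed to describe it.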

Figure 2-1. Input multiplied by a basis function: no correlation if the frequency is different; high correlation if the frequency is the same.

The Fourier transform has the disadvantage of requiring coefficients for

both sine and cosine components of each frequency. In the cosine

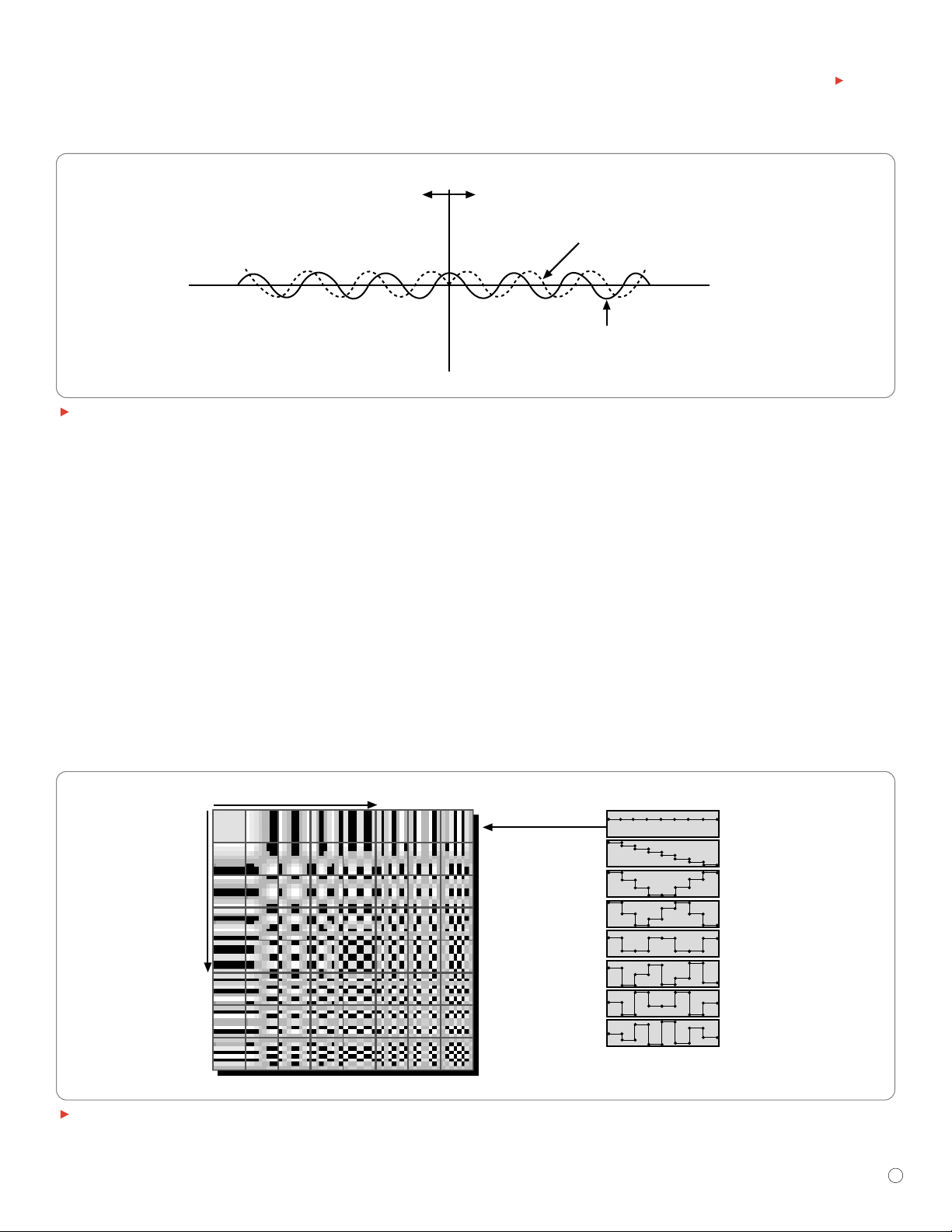

transform, the input waveform is time-mirrored with itself prior to multiplication by the basis functions. Figure 2-2 shows that this mirroring cancels

out all sine components and doubles all of the cosine components. The

sine basis function is unnecessary and only one coefficient is needed for

each frequency.

The discrete cosine transform (DCT) is the sampled version of the cosine

transform and is used extensively in two-dimensional form in MPEG. A

block of 8x8 pixels is transformed to become a block of 8x8 coefficients.

Since the transform requires multiplication by fractions, there is wordlength

extension, resulting in coefficients that have longer wordlength than the

pixel values. Typically an 8-bit pixel block results in an 11-bit coefficient

block. Thus, a DCT does not result in any compression; in fact it results in

the opposite. However, the DCT converts the source pixels into a form

where compression is easier.
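A direct, unoptimized 8x8 DCT can be written in a few lines. The sketch below uses the orthonormal DCT-II scaling, which is an assumption about normalization made for this example; practical coders use fast algorithms and fixed-point arithmetic rather than this form.

```python
import math

N = 8


def dct_1d(v):
    """Orthonormal DCT-II of a length-8 sequence."""
    out = []
    for u in range(N):
        scale = math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N)
        out.append(scale * sum(v[x] * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                               for x in range(N)))
    return out


def dct_2d(block):
    """Apply the 1-D transform to every row, then to every column."""
    rows = [dct_1d(r) for r in block]
    cols = [dct_1d([rows[y][u] for y in range(N)]) for u in range(N)]
    # cols[u][v] holds horizontal frequency u and vertical frequency v;
    # transpose so the result is indexed [vertical][horizontal].
    return [[cols[u][v] for u in range(N)] for v in range(N)]


flat = [[100] * N for _ in range(N)]  # a plain area of picture
coefficients = dct_2d(flat)
print(round(coefficients[0][0], 6))   # DC term: 8 times the average pixel value
```

With this scaling a flat block produces a single non-zero coefficient, the DC term, equal to 8 times the pixel value. For an 8-bit pixel value of 255 that is 2040, which illustrates why the coefficients need a longer wordlength than the pixels.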

Figure 2-3 shows the results of an inverse transform of each of the individual coefficients of an 8x8 DCT. In the case of the luminance signal,

the top-left coefficient is the average brightness or DC component of the

whole block. Moving across the top row, horizontal spatial frequency

increases. Moving down the left column, vertical spatial frequency increases.

In real pictures, different vertical and horizontal spatial frequencies may

occur simultaneously and a coefficient at some point within the block will

represent all possible horizontal and vertical combinations.

Figure 2-2. Mirroring the input: the cosine component is coherent through the mirror; the sine component inverts at the mirror and cancels.

Figure 2-3. 8x8 DCT coefficient patterns arranged by horizontal (H) and vertical (V) frequency, with the horizontal spatial frequency waveforms.

Figure 2-3 also shows 8 coefficients as one-dimensional horizontal

waveforms. Combining these waveforms with various amplitudes and

either polarity can reproduce any combination of 8 pixels. Thus combining

the 64 coefficients of the 2-D DCT will result in the original 8x8 pixel

block. Clearly, for color pictures, the color difference samples will also

need to be handled. Y, Cb and Cr data are assembled into separate 8x8

arrays and are transformed individually.

In much real program material, many of the coefficients will have zero or

near-zero values and, therefore, will not be transmitted. This fact results in

significant compression that is virtually lossless. If a higher compression

factor is needed, then the wordlength of the non-zero coefficients must be

reduced. This reduction will reduce accuracy of these coefficients and will

introduce losses into the process. With care, the losses can be introduced

in a way that is least visible to the viewer.

2.3 Weighting

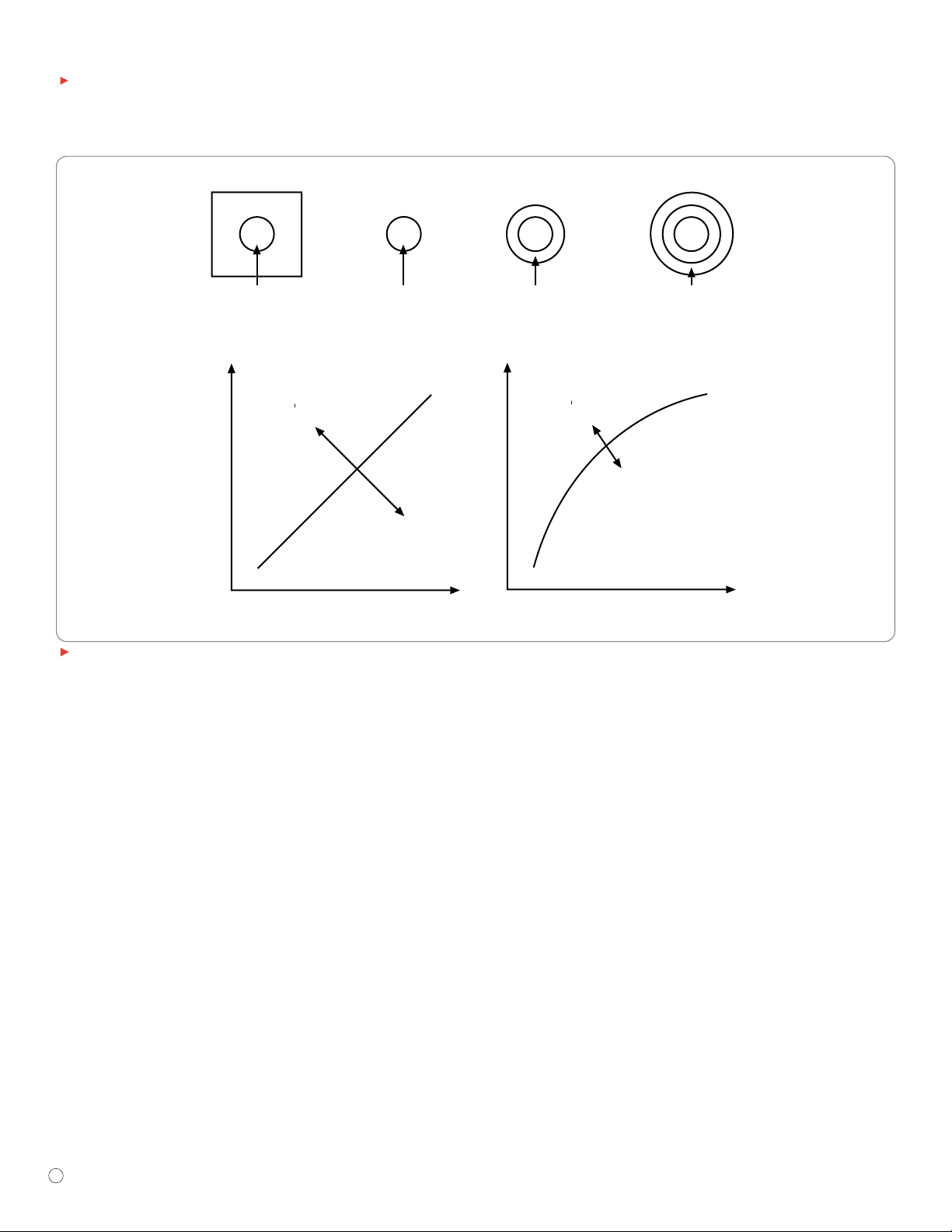

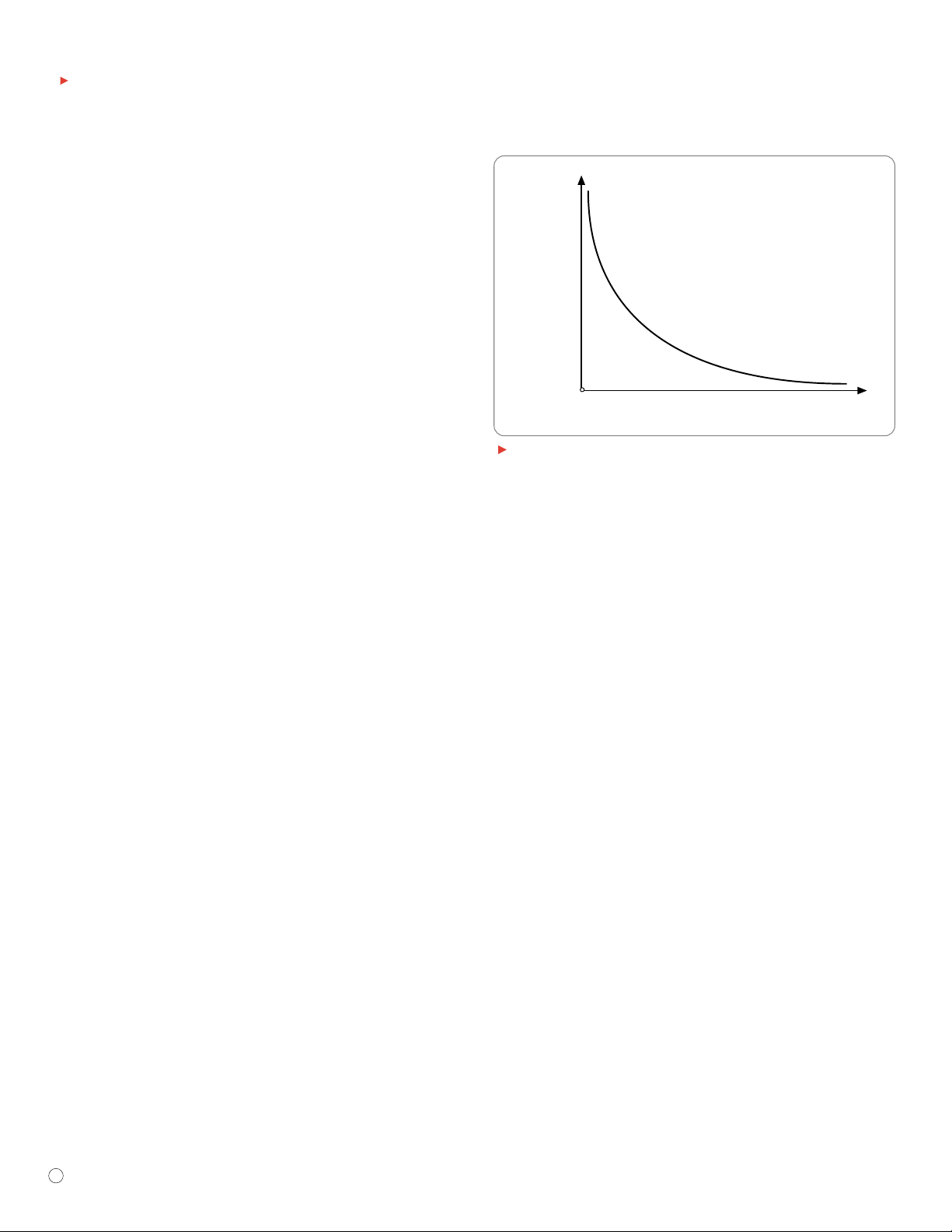

Figure 2-4 shows that the human perception of noise in pictures is not

uniform but is a function of the spatial frequency. More noise can be

tolerated at high spatial frequencies. Also, video noise is effectively

masked by fine detail in the picture, whereas in plain areas it is highly

visible. The reader will be aware that traditional noise measurements are

frequently weighted so that technical measurements relate more closely

to the subjective result.

Compression reduces the accuracy of coefficients and has a similar effect

to using shorter wordlength samples in PCM; that is, the noise level rises.

In PCM, the result of shortening the word-length is that the noise level

rises equally at all frequencies. As the DCT splits the signal into different

frequencies, it becomes possible to control the spectrum of the noise.

Effectively, low-frequency coefficients are rendered more accurately than

high-frequency coefficients by a process of weighting.

Figure 2-5 shows that, in the weighting process, the coefficients from the

DCT are divided by constants that are a function of two-dimensional frequency. Low-frequency coefficients will be divided by small numbers, and

high-frequency coefficients will be divided by large numbers. Following the

division, the result is truncated to the nearest integer. This truncation is

a form of requantizing. In the absence of weighting, this requantizing

would have the effect of uniformly increasing the size of the quantizing

step, but with weighting, it increases the step size according to the

division factor.

As a result, coefficients representing low spatial frequencies are requantized

with relatively small steps and suffer little increased noise. Coefficients

representing higher spatial frequencies are requantized with large steps

and suffer more noise. However, fewer steps means that fewer bits are

needed to identify the step and compression is obtained.

In the decoder, low-order zeros will be added to return the weighted

coefficients to their correct magnitude. They will then be multiplied by

inverse weighting factors. Clearly, at high frequencies the multiplication

factors will be larger, so the requantizing noise will be greater. Following

inverse weighting, the coefficients will have their original DCT output values,

plus requantizing error, which will be greater at high frequency than at

low frequency.
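The weighting and inverse weighting steps can be followed with a short numerical sketch. The sample values are what appear to be the first rows of the input coefficients and of the quant matrix in Figure 2-5. The arithmetic is deliberately simplified (plain division and truncation, with no separate quant scale) and is not the normative MPEG arithmetic; the function names are assumptions.

```python
def weight_and_truncate(coefficients, weights):
    """Encoder side: divide each coefficient by its weight and drop the fraction."""
    return [int(c / w) for c, w in zip(coefficients, weights)]


def inverse_weight(levels, weights):
    """Decoder side: multiply each received value by the same weight."""
    return [q * w for q, w in zip(levels, weights)]


coefficients = [7842, 199, 448, 362, 342, 112, 31, 22]  # low to high frequency
weights = [8, 16, 19, 22, 26, 27, 29, 34]               # small to large divisors

levels = weight_and_truncate(coefficients, weights)
restored = inverse_weight(levels, weights)
errors = [c - r for c, r in zip(coefficients, restored)]

print(levels)    # values that would be sent
print(restored)  # values recovered by the decoder
print(errors)    # requantizing error, always smaller than the weight used
```

The transmitted values are much smaller than the original coefficients, so fewer bits are needed. The price is an error that can approach the size of the divisor, which is why the large divisors are reserved for the high frequencies where noise is less visible.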

As an alternative to truncation, weighted coefficients may be nonlinearly

requantized so that the quantizing step size increases with the magnitude

of the coefficient. This technique allows higher compression factors but

worse levels of artifacts.

Clearly, the degree of compression obtained and, in turn, the output bit rate

obtained, is a function of the severity of the requantizing process. Different

bit rates will require different weighting tables. In MPEG, it is possible to use

various different weighting tables and the table in use can be transmitted to

the decoder, so that correct decoding is ensured.

Figure 2-4. Human noise sensitivity versus spatial frequency.

2.4 Scanning

In typical program material, the most significant DCT coefficients are

generally found in or near the top-left corner of the matrix. After weighting,

low-value coefficients might be truncated to zero. More efficient transmission

can be obtained if all of the non-zero coefficients are sent first, followed

by a code indicating that the remainder are all zero. Scanning is a technique

that increases the probability of achieving this result, because it sends

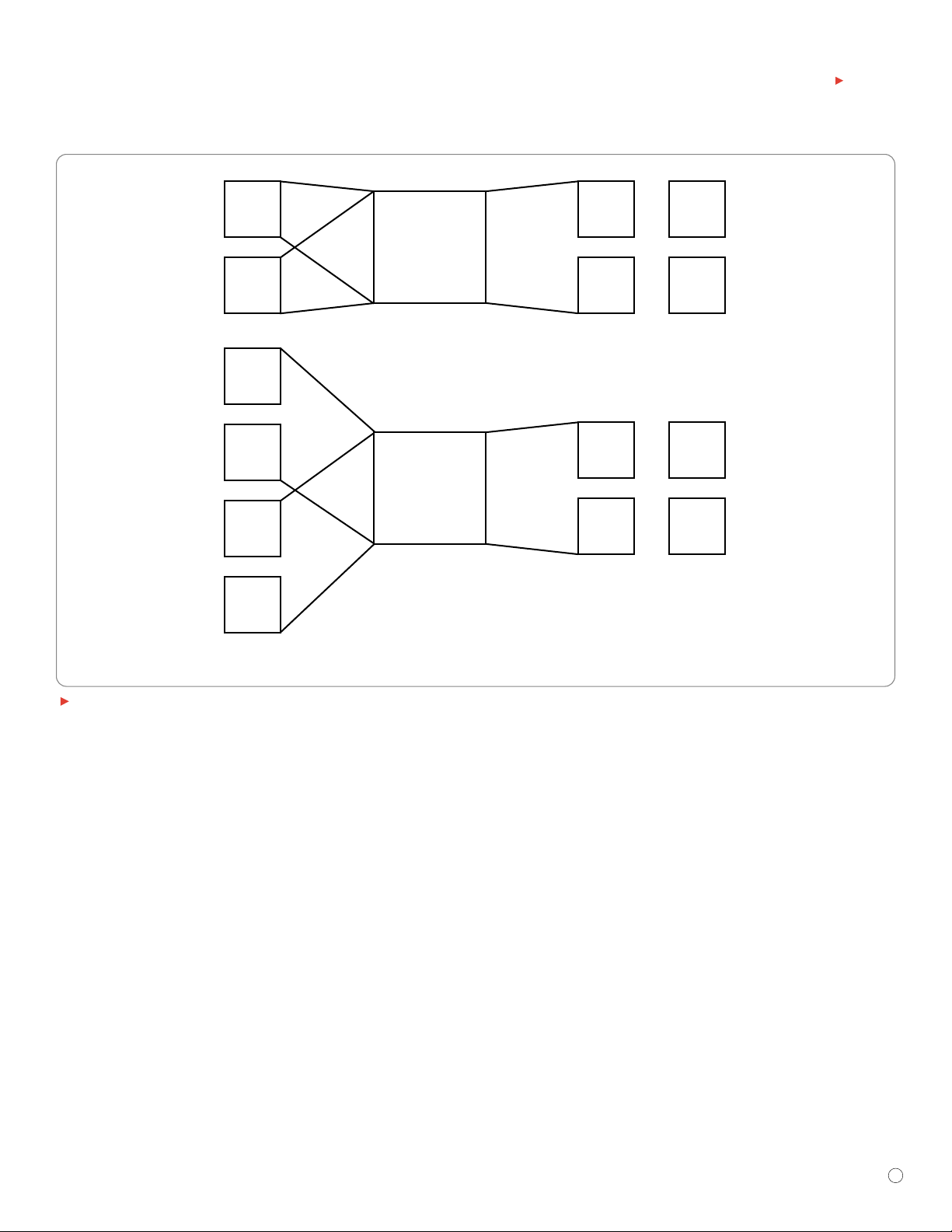

coefficients in descending order of magnitude probability.Figure 2-6a (see

next page) shows that in a non-interlaced system, the probability of a coefficient

having a high value is highest in the top-left corner and lowest in the bottomright corner.A 45 degree diagonal zigzag scan is the best sequence to use here.

In Figure 2-6b, an alternative scan pattern is shown that may be used for

interlaced sources. In an interlaced picture, an 8x8 DCT block from one

field extends over twice the vertical screen area, so that for a given picture

detail, vertical frequencies will appear to be twice as great as horizontal

frequencies. Thus, the ideal scan for an interlaced picture will be on a

diagonal that is twice as steep. Figure 2-6b shows that a given vertical

spatial frequency is scanned before scanning the same horizontal spatial

frequency.
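The 45-degree zigzag order for non-interlaced pictures can be generated by walking the diagonals of the block in alternating directions. The sketch below covers only that classic scan, not the alternate scan for interlaced sources, and the function names are assumptions.

```python
def zigzag_order(n=8):
    """Return (row, column) positions of an n x n block in 45-degree zigzag order."""
    order = []
    for d in range(2 * n - 1):  # d = row + column, constant along each diagonal
        rows = range(max(0, d - n + 1), min(d, n - 1) + 1)
        # Even diagonals are walked upward (row decreasing), odd ones downward.
        for r in (reversed(rows) if d % 2 == 0 else rows):
            order.append((r, d - r))
    return order


def scan(block):
    """Read a square block out in zigzag order as a flat list."""
    return [block[r][c] for r, c in zigzag_order(len(block))]


print(zigzag_order()[:6])
```

The scan starts at the top-left (DC) position and ends at the bottom-right, so coefficients that are likely to be large come first and the trailing positions are the ones most likely to hold zeros.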

Figure 2-5. Input DCT coefficients (a more complex block) are divided by the quant matrix values (the value used corresponds to the coefficient location) and by the quant scale value (one value used for the complete 8x8 block) to give the output DCT coefficients (values for display only, not actual results). First rows as extracted: input 7842 199 448 362 342 112 31 22; quant matrix 8 16 19 22 26 27 29 34; output 980 12 23 16 13 4 1 0. Quant scale values (not all code values are shown):

Code                     1    8   16   20   24   28   31

Linear quant scale       2   16   32   40   48   56   62

Non-linear quant scale   1    8   24   40   56   88  112

2.5 Entropy Coding

In real video, not all spatial frequencies are present simultaneously; therefore, the DCT coefficient matrix will have zero terms in it. Requantization

will increase the number of zeros by eliminating small values. Despite the

use of scanning, zero coefficients will still appear between the significant

values. Run length coding (RLC) allows these coefficients to be handled

more efficiently. Where repeating values, such as a string of zeros, are

present, RLC simply transmits the number of zeros rather than each

individual zero.

The probability of occurrence of particular coefficient values in real video

can be studied. In practice, some values occur very often; others occur less

often. This statistical information can be used to achieve further compression using variable length coding (VLC). Frequently occurring values are

converted to short code words, and infrequent values are converted to long

code words. To aid decoding, no code word can be the prefix of another.
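Run-length and variable-length coding can be sketched together. In the example below each non-zero coefficient is paired with the number of zeros that precede it, and the pairs are then mapped to code words. The `CODE` table is a small hypothetical table invented for this illustration; it is not the MPEG table, it ignores sign bits, and it covers only the few symbols used here.

```python
def run_level_pairs(scanned):
    """Turn a scanned coefficient list into (zero_run, value) pairs plus an end marker."""
    last = max((i for i, v in enumerate(scanned) if v != 0), default=-1)
    pairs, run = [], 0
    for v in scanned[:last + 1]:
        if v == 0:
            run += 1
        else:
            pairs.append((run, v))
            run = 0
    pairs.append("EOB")  # everything after the last non-zero value is zero
    return pairs


# Hypothetical code table: shorter words for symbols assumed to be more frequent.
CODE = {"EOB": "10", (0, 1): "11", (1, 1): "011", (0, 2): "0100", (2, 1): "0101"}


def is_prefix_free(words):
    """True if no code word is the prefix of another (words must be distinct)."""
    words = sorted(words)
    return not any(b.startswith(a) for a, b in zip(words, words[1:]))


def encode(symbols):
    return "".join(CODE[s] for s in symbols)


def decode(bits):
    lookup = {word: symbol for symbol, word in CODE.items()}
    symbols, word = [], ""
    for bit in bits:
        word += bit
        if word in lookup:  # unambiguous because no word is a prefix of another
            symbols.append(lookup[word])
            word = ""
    return symbols


scanned = [1, 0, 1, 2, 0, 0, 1] + [0] * 57  # 64 coefficients after scanning
symbols = run_level_pairs(scanned)
bits = encode(symbols)
print(symbols)
print(len(bits), "bits instead of 64 coefficients")
```

Because no code word is the prefix of another, the decoder can split the bit stream back into symbols without any separators between the words.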

2.6 A Spatial Coder

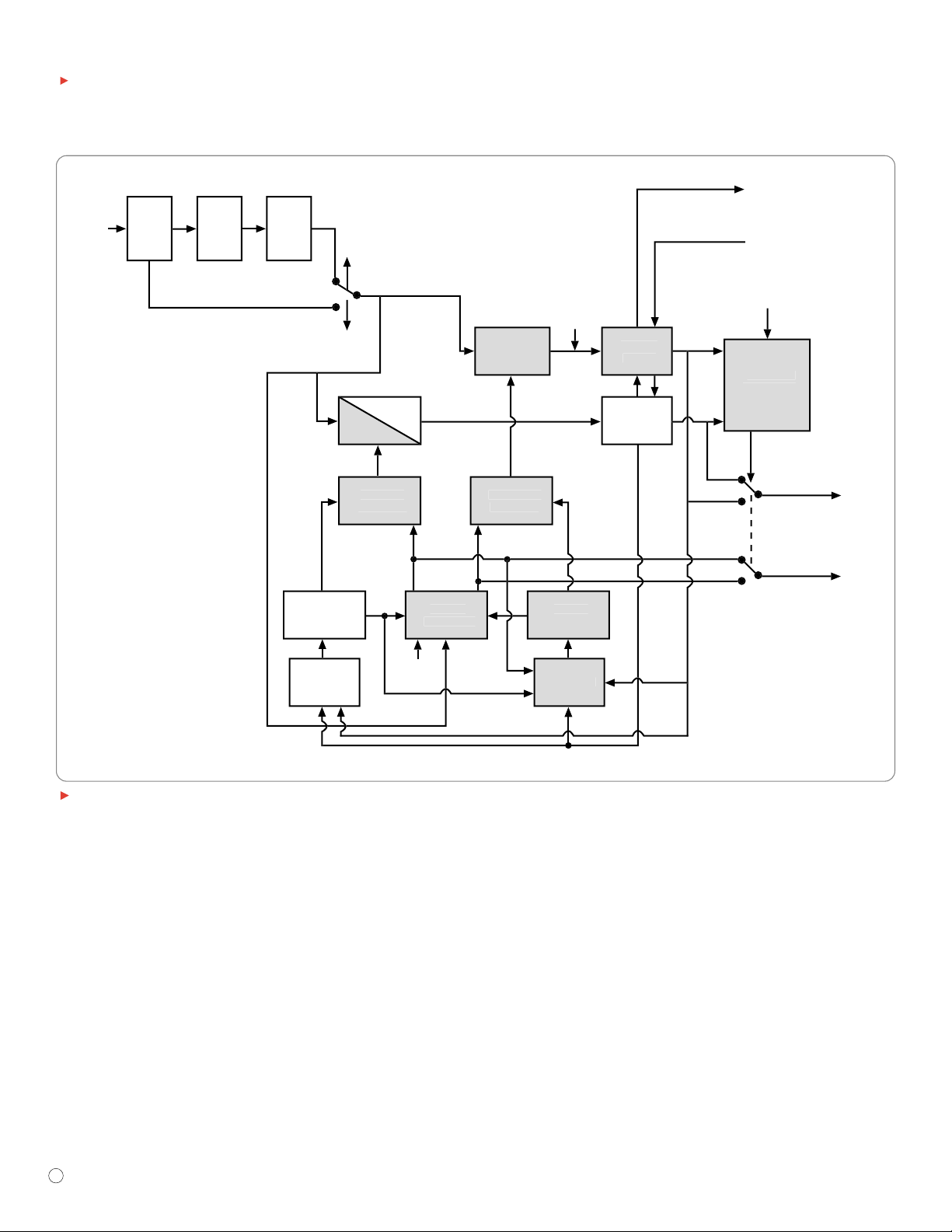

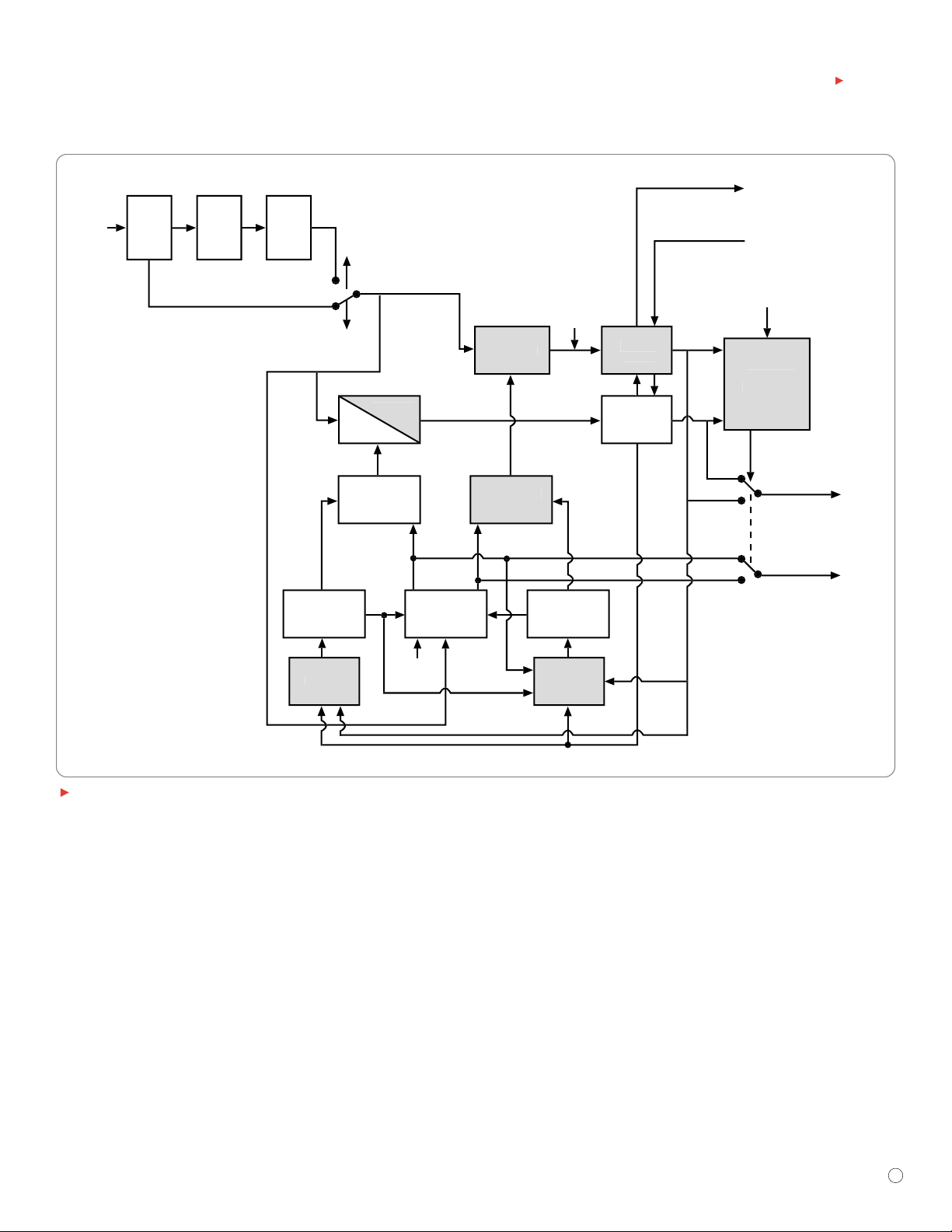

Figure 2-7 ties together all of the preceding spatial coding concepts. The

input signal is assumed to be 4:2:2 SDI (Serial Digital Interface), which may

have 8- or 10-bit wordlength. MPEG uses only 8-bit resolution; therefore, a

rounding stage will be needed when the SDI signal contains 10-bit words.

Most MPEG profiles operate with 4:2:0 sampling; therefore, a vertical low-pass

filter/interpolation stage will be needed. Rounding and color subsampling

introduce a small irreversible loss of information and a proportional

reduction in bit rate. The raster scanned input format will need to be

stored so that it can be converted to 8x8 pixel blocks.

Figure 2-6. a) Zigzag or classic scan (nominally for frames). b) Alternate scan (nominally for fields).

Figure 2-7. Spatial coder: full bit rate 10-bit data; convert 4:2:2 to 8-bit 4:2:0 (information lost, data reduced); DCT (no loss, no data reduced); quantize (data reduced, information lost); entropy coding (data reduced, no loss); buffer; compressed data. Rate control feeds quantizing data back from the buffer. Quantizing: reduce the number of bits for each coefficient, giving preference to certain coefficients; the reduction can differ for each coefficient. Entropy coding: variable length coding uses short words for the most frequent values (like Morse code); run length coding sends a unique code word instead of strings of zeros.

The DCT stage transforms the picture information to the frequency

domain. The DCT itself does not achieve any compression. Following DCT,

the coefficients are weighted and truncated, providing the first significant

compression. The coefficients are then zigzag scanned to increase the

probability that the significant coefficients occur early in the scan. After

the last non-zero coefficient, an EOB (end of block) code is generated.

Coefficient data are further compressed by run-length and variable-length

coding. In a variable bit-rate system, the quantizing may be fixed, but in a

fixed bit-rate system, a buffer memory is used to absorb variations in

coding difficulty. Highly detailed pictures will tend to fill the buffer, whereas

plain pictures will allow it to empty. If the buffer is in danger of overflowing,

the requantizing steps will have to be made larger, so that the compression

factor is raised.
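The chain of DCT, weighting, zigzag scan, and run-length coding with an EOB marker can be sketched as follows. This is a simplified illustration under stated assumptions, not the MPEG algorithm: the weighting in `quantize` is a made-up rule in which the step size grows with frequency (it is not the MPEG default matrix), the DC coefficient is treated like any other, and the run-length output is left as (zero run, value) pairs rather than being mapped to variable-length code words. All function names are hypothetical.

```python
import math

N = 8  # DCT block size


def dct_2d(block):
    """Orthonormal two-dimensional DCT-II of an 8x8 list-of-lists block."""
    def c(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

    out = [[0.0] * N for _ in range(N)]
    for v in range(N):
        for u in range(N):
            total = 0.0
            for y in range(N):
                for x in range(N):
                    total += (block[y][x]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[v][u] = c(u) * c(v) * total
    return out


def quantize(coeffs, scale):
    """Weight and truncate. Hypothetical rule: coarser steps at higher frequency."""
    return [[round(coeffs[v][u] / (scale * (1 + u + v))) for u in range(N)]
            for v in range(N)]


def zigzag_order():
    """(row, column) positions in classic zigzag order."""
    order = []
    for s in range(2 * N - 1):
        diagonal = [(r, s - r) for r in range(N) if 0 <= s - r < N]
        # Even diagonals run up and to the right, odd ones down and to the left.
        order.extend(reversed(diagonal) if s % 2 == 0 else diagonal)
    return order


def run_length(scanned):
    """Emit (zero run, value) pairs up to the last non-zero value, then EOB."""
    last = max((i for i, v in enumerate(scanned) if v != 0), default=-1)
    pairs, run = [], 0
    for value in scanned[:last + 1]:
        if value == 0:
            run += 1
        else:
            pairs.append((run, value))
            run = 0
    pairs.append("EOB")
    return pairs


def encode_block(block, scale):
    quantized = quantize(dct_2d(block), scale)
    scanned = [quantized[r][c] for r, c in zigzag_order()]
    return run_length(scanned)
```

Raising `scale` plays the part of the rate control described above: larger quantizing steps turn more coefficients into zeros, so the block is described by fewer symbols at the cost of accuracy.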

In the decoder, the bit stream is deserialized and the entropy coding is

reversed to reproduce the weighted coefficients. The coefficients are placed

in the matrix according to the zigzag scan, and inverse weighting is applied

to recreate the block of DCT coefficients. Following an inverse transform,

the 8x8 pixel block is recreated. To obtain a raster-scanned output, the blocks

are stored in RAM, which is read a line at a time. To obtain a 4:2:2 output

from 4:2:0 data, a vertical interpolation process will be needed as shown

in Figure 2-8.

The chroma samples in 4:2:0 are positioned halfway between luminance samples in the vertical axis so that they are evenly spaced when an interlaced source is used.

Figure 2-8. Sampling structures for 4:2:2 (Rec. 601), 4:1:1, and 4:2:0 on lines N to N+3. Legend: one luminance sample Y; two chrominance samples Cb, Cr.

2.7 Temporal Coding

Temporal redundancy can be exploited by inter-coding, that is, by transmitting only the differences between pictures. Figure 2-9 shows that a one-picture delay combined with a subtracter can compute the picture differences. The picture difference is an image in its own right and can be further compressed by the spatial coder as was previously described. The decoder reverses the spatial coding and adds the difference picture to the previous picture to obtain the next picture.

Figure 2-9. A picture delay supplies the previous picture, which is subtracted from this picture to produce the picture difference.

There are some disadvantages to this simple system. First, as only differences are sent, it is impossible to begin decoding after the start of the

transmission. This limitation makes it difficult for a decoder to provide pictures following a switch from one bit stream to another (as occurs when the

viewer changes channels). Second, if any part of the difference data is

incorrect, the error in the picture will propagate indefinitely.

The solution to these problems is to use a system that is not completely

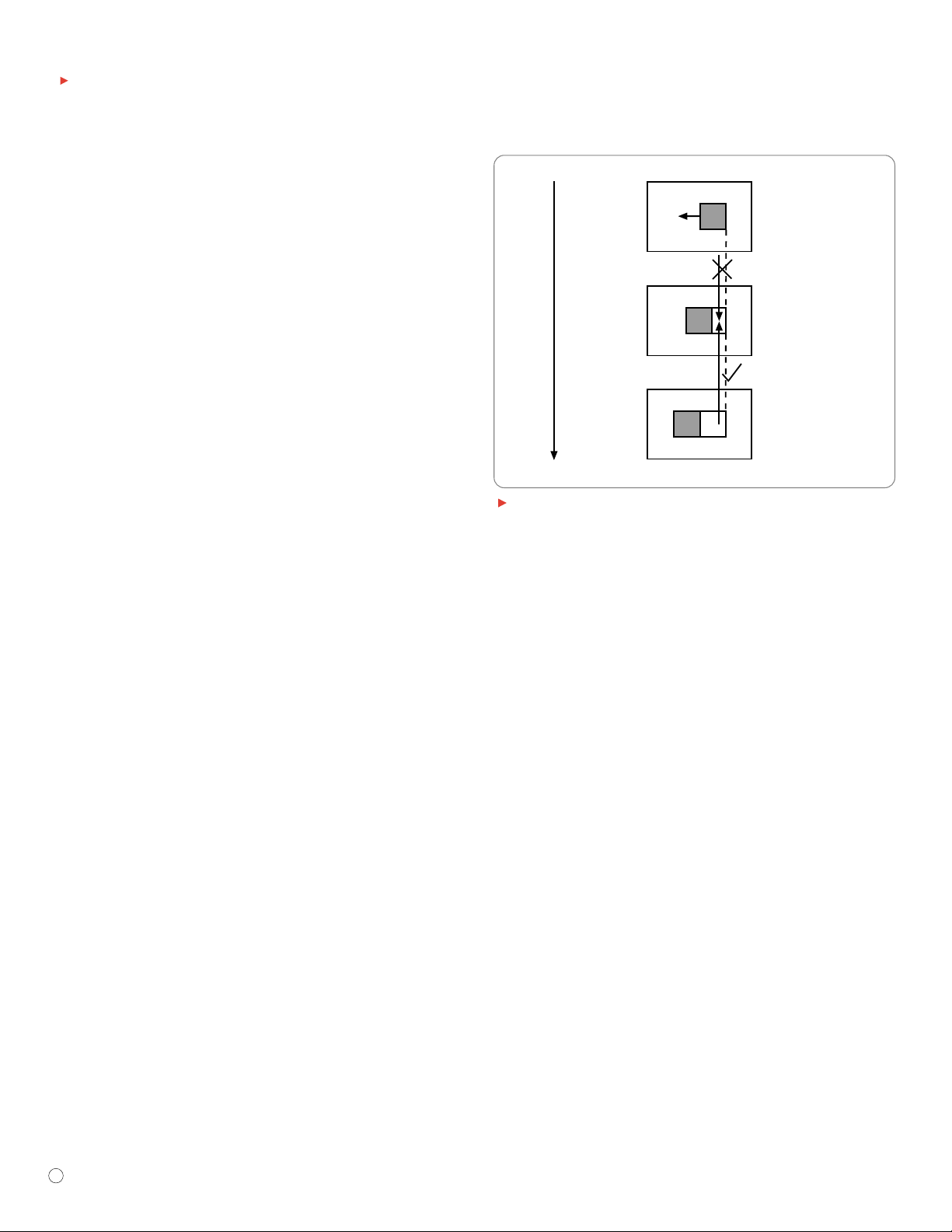

differential. Figure 2-10 shows that periodically complete pictures are

sent. These are called Intra-coded pictures (or I-pictures), and they are

obtained by spatial compression only. If an error or a channel switch

occurs, it will be possible to resume correct decoding at the next I-picture.
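A toy model makes the behavior of differential coding with periodic intra pictures concrete. It is a sketch under simplifying assumptions: pictures are short lists of sample values, the differences are sent without any spatial coding, and `intra_period` is a hypothetical parameter for how often a complete picture is sent.

```python
def encode(pictures, intra_period):
    """Send a complete picture every intra_period pictures, differences otherwise."""
    stream, previous = [], None
    for n, picture in enumerate(pictures):
        if n % intra_period == 0:
            stream.append(("I", list(picture)))
        else:
            stream.append(("D", [a - b for a, b in zip(picture, previous)]))
        previous = picture
    return stream


def decode(stream):
    """Rebuild pictures; None marks pictures that cannot be decoded yet."""
    pictures, previous = [], None
    for kind, data in stream:
        if kind == "I":
            current = list(data)
        elif previous is None:
            # A difference is useless without a reference picture.
            pictures.append(None)
            continue
        else:
            current = [p + d for p, d in zip(previous, data)]
        pictures.append(current)
        previous = current
    return pictures
```

Decoding a stream joined part way through yields nothing until the first intra picture arrives, and a corrupted difference damages every following picture only up to the next intra picture.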

2.8 Motion Compensation

Motion reduces the similarities between pictures and increases the data

needed to create the difference picture. Motion compensation is used to

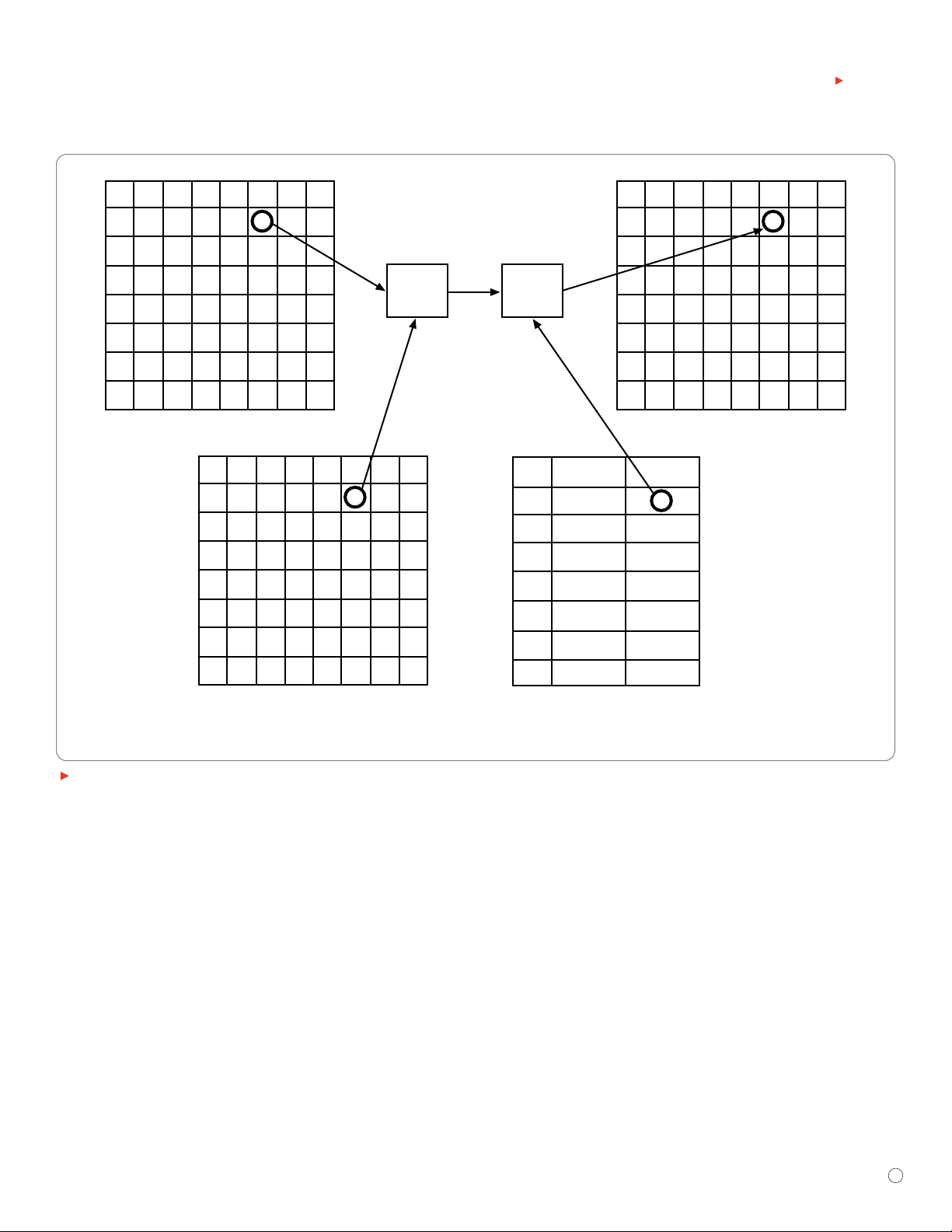

increase the similarity.Figure 2-11 shows the principle. When an object

moves across the TV screen, it may appear in a different place in each

picture, but it does not change in appearance very much. The picture difference can be reduced by measuring the motion at the encoder.This is

sent to the decoder as a vector.The decoder uses the vector to shift part

of the previous picture to a more appropriate place in the new picture.

Figure 2-10. Intra-coded pictures at the start and at each restart, with temporal difference pictures sent between them.

Figure 2-11. Part of a moving object in Picture N and Picture N+1, with the motion vector between the two positions. Actions:

1. Compute motion vector.

2. Shift data from Picture N using the vector to make predicted Picture N+1.

3. Compare actual picture with predicted picture.

4. Send vector and prediction error.


One vector controls the shifting of an entire area of the picture that is

known as a macroblock. The size of the macroblock is determined by the

DCT coding and the color subsampling structure. Figure 2-12a shows that,

with a 4:2:0 system, the vertical and horizontal spacing of color samples

is exactly twice the spacing of luminance. A single 8x8 DCT block of color

samples extends over the same area as four 8x8 luminance blocks; therefore, this is the minimum picture area that can be shifted by a vector. One 4:2:0 macroblock contains four luminance blocks, one Cb block, and one Cr block.

In the 4:2:2 profile, color is only subsampled in the horizontal axis. Figure

2-12b shows that in 4:2:2, a single 8x8 DCT block of color samples

extends over two luminance blocks. A 4:2:2 macroblock contains four luminance blocks, two Cb blocks, and two Cr blocks.

The motion estimator works by comparing the luminance data from two

successive pictures. A macroblock in the first picture is used as a reference.

The correlation between the reference and the next picture is measured at

all possible displacements with a resolution of half a pixel over the entire

search range. When the greatest correlation is found, this correlation is

assumed to represent the correct motion.
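An exhaustive block-matching search of this kind can be sketched as below. Two substitutions are made for brevity and should be read as assumptions: the match is scored by the sum of absolute differences (lowest is best) instead of a correlation measure, and displacements are tested at whole-pixel steps rather than half-pixel resolution. The names `sad` and `find_vector` and the `search` range are hypothetical.

```python
def sad(reference, target, ry, rx, ty, tx, size):
    """Sum of absolute luminance differences between two size x size blocks."""
    return sum(abs(reference[ry + j][rx + i] - target[ty + j][tx + i])
               for j in range(size) for i in range(size))


def find_vector(reference, target, ry, rx, size=16, search=7):
    """Find where the reference block at (ry, rx) has moved to in target.

    Returns ((dy, dx), cost) for the displacement with the lowest cost.
    """
    height, width = len(target), len(target[0])
    best, best_cost = (0, 0), None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            ty, tx = ry + dy, rx + dx
            # Skip displacements that would read outside the picture.
            if not (0 <= ty <= height - size and 0 <= tx <= width - size):
                continue
            cost = sad(reference, target, ry, rx, ty, tx, size)
            if best_cost is None or cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best, best_cost
```

The returned vector has a vertical and a horizontal component, and the remaining cost indicates how much difference data would still be needed after compensation.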

The motion vector has a vertical and horizontal component. In typical program

material, a moving object may extend over a number of macroblocks.

A greater compression factor is obtained if the vectors are transmitted differentially. When a large object moves, adjacent macroblocks have the same

vectors and the vector differential becomes zero.
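Differential transmission of vectors can be shown in a few lines. This sketch assumes the predictor is simply the previous macroblock's vector, starting from (0, 0); the reset rules of a real bit stream are not modeled, and the function names are hypothetical.

```python
def differential_vectors(vectors):
    """Replace each (dy, dx) vector by its change from the previous vector."""
    previous = (0, 0)
    differences = []
    for dy, dx in vectors:
        differences.append((dy - previous[0], dx - previous[1]))
        previous = (dy, dx)
    return differences


def restore_vectors(differences):
    """Inverse of differential_vectors: accumulate the changes."""
    previous = (0, 0)
    vectors = []
    for ddy, ddx in differences:
        previous = (previous[0] + ddy, previous[1] + ddx)
        vectors.append(previous)
    return vectors
```

For three adjacent macroblocks sharing the vector (2, 3), only the first produces a non-zero difference, and zero is the value a variable-length code can represent most cheaply.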

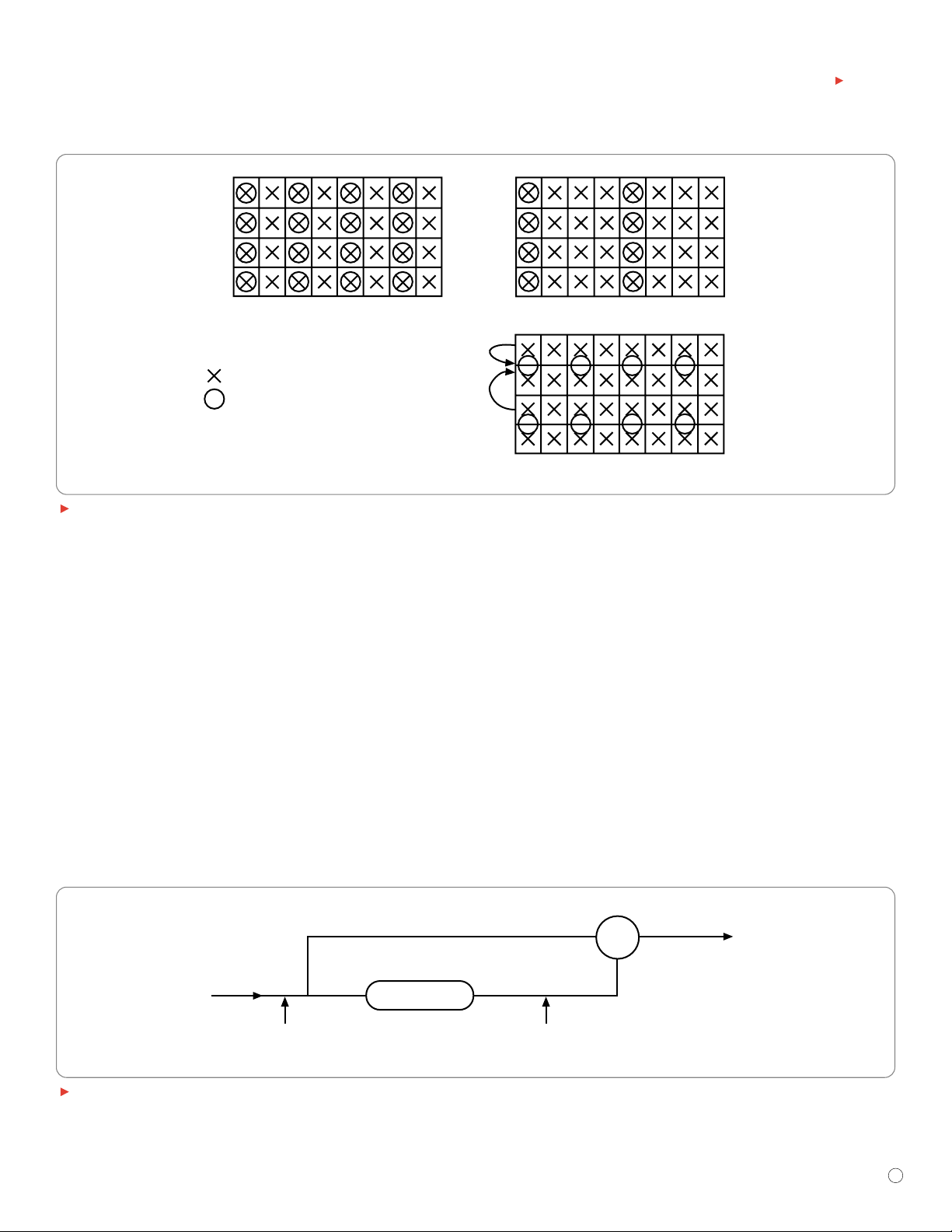

Figure 2-12. Macroblock structure. a) 4:2:0 has 1/4 as many chroma sampling points as Y: a 16x16 luminance area (4 x Y, each block 8x8) with one 8x8 Cr block and one 8x8 Cb block. b) 4:2:2 has twice as much chroma data as 4:2:0: a 16x16 luminance area (4 x Y, each block 8x8) with 2 x Cr and 2 x Cb blocks, each 8x8.


Motion vectors are associated with macroblocks, not with real objects in the image, and there will be occasions where part of the macroblock moves and part of it does not. In this case, it is impossible to compensate properly. If the motion of the moving part is compensated by transmitting a vector, the stationary part will be incorrectly shifted, and it will need difference data to be corrected. If no vector is sent, the stationary part will be correct, but difference data will be needed to correct the moving part. A practical compressor might attempt both strategies and select the one that requires the least data.

2.9 Bidirectional Coding

When an object moves, it conceals the background at its leading edge

and reveals the background at its trailing edge. The revealed background

requires new data to be transmitted because the area of background was

previously concealed and no information can be obtained from a previous

picture. A similar problem occurs if the camera pans; new areas come into

view and nothing is known about them. MPEG helps to minimize this problem by using bidirectional coding, which allows information to be taken

from pictures before and after the current picture. If a background is being

revealed, it will be present in a later picture, and the information can be

moved backwards in time to create part of an earlier picture.

Figure 2-13 shows the concept of bidirectional coding. On an individual

macroblock basis, a bidirectionally-coded picture can obtain motion-compensated data from an earlier or later picture, or even use an average

of earlier and later data. Bidirectional coding significantly reduces the

amount of difference data needed by improving the degree of prediction

possible. MPEG does not specify how an encoder should be built, only

what constitutes a compliant bit stream. However, an intelligent compressor

could try all three coding strategies and select the one that results in the

least data to be transmitted.
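The choice among the three strategies can be sketched for a single macroblock. This is an illustration under assumptions, not a description of any particular encoder: blocks are flat lists of samples that have already been motion compensated, the sum of absolute differences stands in for "least data to be transmitted", and the average is rounded to the nearest integer with halves rounded up. The function name `best_prediction` is hypothetical.

```python
def best_prediction(block, earlier, later):
    """Choose forward, backward, or averaged prediction for one macroblock.

    Returns (mode, residual), where residual is the difference data that
    would still have to be coded after prediction.
    """
    candidates = {
        "forward": earlier,
        "backward": later,
        "average": [(a + b + 1) // 2 for a, b in zip(earlier, later)],
    }

    def cost(prediction):
        # Proxy for the amount of difference data: sum of absolute errors.
        return sum(abs(x - p) for x, p in zip(block, prediction))

    mode = min(candidates, key=lambda name: cost(candidates[name]))
    residual = [x - p for x, p in zip(block, candidates[mode])]
    return mode, residual
```

A block of newly revealed background is predicted best from the later picture, whereas a block that changes gradually between the two pictures is predicted best by the average.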

2.10 I-, P- and B-pictures

In MPEG, three different types of pictures are needed to support differential

and bidirectional coding while minimizing error propagation:

I-pictures are intra-coded pictures that need no additional information for

decoding. They require a lot of data compared to other picture types, and

therefore they are not transmitted any more frequently than necessary. They

consist primarily of transform coefficients and have no vectors. I-pictures are

decoded without reference to any other pictures, so they allow the viewer to

switch channels, and they arrest error propagation.

P-pictures are forward predicted from an earlier picture, which could be

an I-picture or a P-picture. P-picture data consists of vectors describing

where, in the previous picture, each macroblock should be taken from,

and transform coefficients that describe the correction or difference data

that must be added to that macroblock. Where no suitable match for a

macroblock could be found by the motion compensation search, intra data