
Sun StorEdge™ T3 and T3+ Array

Configuration Guide

Sun Microsystems, Inc.

901 San Antonio Road

Palo Alto, CA 94303-4900 U.S.A.

650-960-1300

Part No. 816-0777-10

August 2001, Revision A

Send comments about this document to: docfeedback@sun.com


Copyright 2001 Sun Microsystems, Inc., 901 San Antonio Road, Palo Alto, CA 94303-4900 U.S.A. All rights reserved.

This product or document is distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers.

Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd.

Sun, Sun Microsystems, the Sun logo, AnswerBook2, Solstice DiskSuite, docs.sun.com, OpenBoot, SunSolve, JumpStart, StorTools, Sun Enterprise, Sun StorEdge, Sun Ultra, Sun Fire, Sun Blade, Solstice Backup, Netra, NFS, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc.

The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements.

Federal Acquisitions: Commercial Software - Government Users Subject to Standard License Terms and Conditions.

DOCUMENTATION IS PROVIDED “AS IS” AND ALL EXPRESS OR IMPLIED CONDITIONS, REPRESENTATIONS AND WARRANTIES, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE OR NON-INFRINGEMENT, ARE DISCLAIMED, EXCEPT TO THE EXTENT THAT SUCH DISCLAIMERS ARE HELD TO BE LEGALLY INVALID.



Contents

Preface ix

1. Array Configuration Overview 1

Product Description 1

Controller Card 2

Interconnect Cards 4

Array Configurations 6

Configuration Guidelines and Restrictions 8

Configuration Recommendations 9

Supported Platforms 9

Supported Software 10

Sun Cluster Support 10

2. Configuring Global Parameters 13

Cache 13

Configuring Cache for Performance and Redundancy 14

Configuring Data Block Size 15

Selecting a Data Block Size 15

Enabling Mirrored Cache 16

Configuring Cache Allocation 16


Logical Volumes 16

Guidelines for Configuring Logical Volumes 17

Determining How Many Logical Volumes You Need 17

Determining Which RAID Level You Need 18

Determining Whether You Need a Hot Spare 18

Creating and Labeling a Logical Volume 19

Setting the LUN Reconstruction Rate 19

Using RAID Levels to Configure Redundancy 20

RAID 0 21

RAID 1 21

RAID 5 21

Configuring RAID Levels 22

3. Configuring Partner Groups 23

Understanding Partner Groups 23

How Partner Groups Work 25

Creating Partner Groups 26

4. Configuration Examples 27

Direct Host Connection 27

Single Host With One Controller Unit 28

Single Host With Two Controller Units Configured as a Partner Group 29

Host Multipathing Management Software 30

Single Host With Four Controller Units Configured as Two Partner Groups 31

Single Host With Eight Controller Units Configured as Four Partner Groups 32

Hub Host Connection 34

Single Host With Two Hubs and Four Controller Units Configured as Two Partner Groups 34

Single Host With Two Hubs and Eight Controller Units Configured as Four Partner Groups 36

Dual Hosts With Two Hubs and Four Controller Units 38

Dual Hosts With Two Hubs and Eight Controller Units 40

Dual Hosts With Two Hubs and Four Controller Units Configured as Two Partner Groups 42

Dual Hosts With Two Hubs and Eight Controller Units Configured as Four Partner Groups 44

Switch Host Connection 46

Dual Hosts With Two Switches and Two Controller Units 46

Dual Hosts With Two Switches and Eight Controller Units 48

5. Host Connections 51

Sun Enterprise SBus+ and Graphics+

I/O Boards 52

System Requirements 52

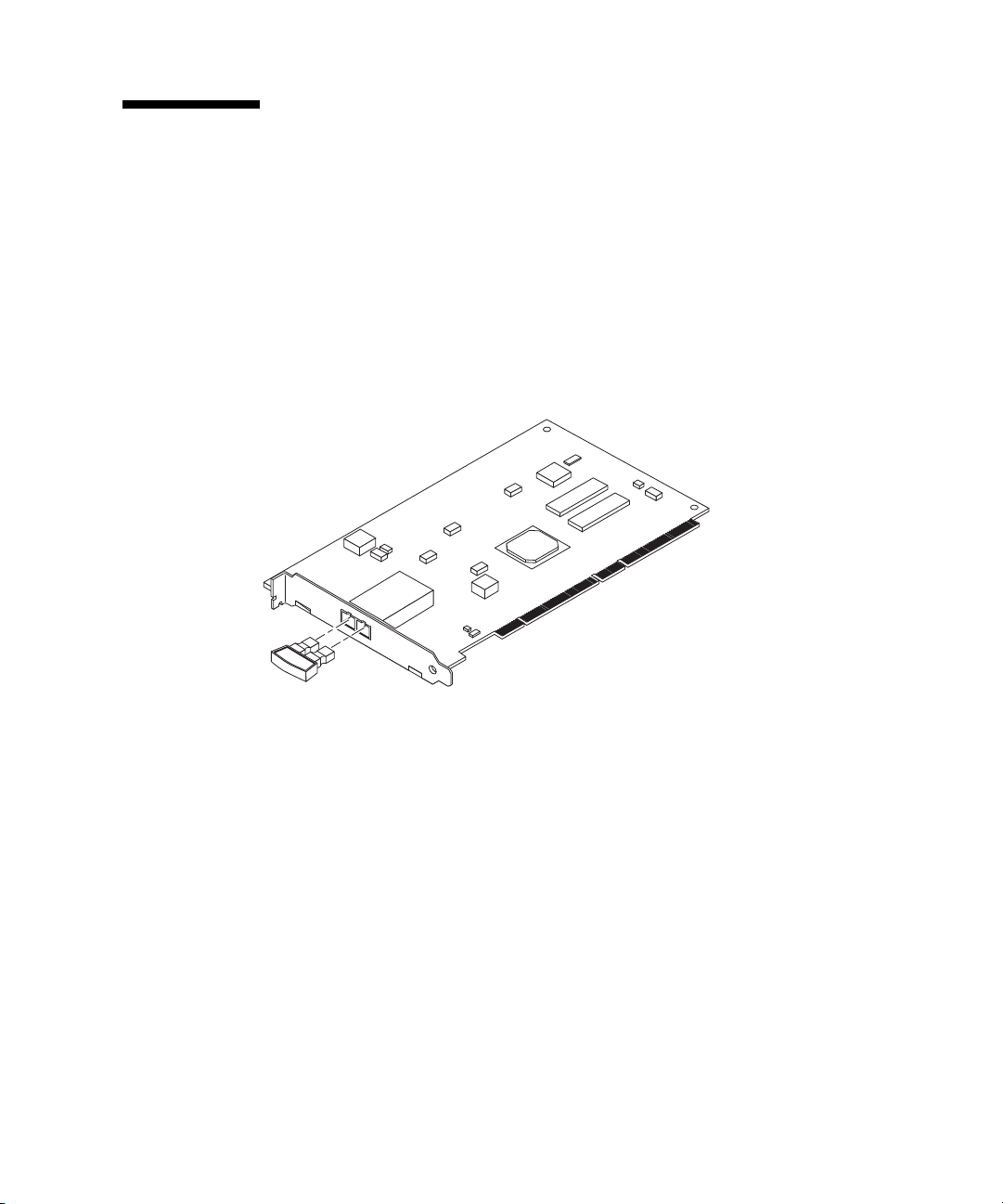

Sun StorEdge PCI FC-100 Host Bus Adapter 53

System Requirements 53

Sun StorEdge SBus FC-100 Host Bus Adapter 54

System Requirements 54

Sun StorEdge PCI Single Fibre Channel Network Adapter 55

System Requirements 55

Sun StorEdge PCI Dual Fibre Channel Network Adapter 56

System Requirements 56

Sun StorEdge CompactPCI Dual Fibre Channel Network Adapter 57

System Requirements 57

6. Array Cabling 59

Overview of Array Cabling 59

Data Path 59


Administration Path 60

Connecting Partner Groups 60

Workgroup Configurations 62

Enterprise Configurations 63

Glossary 65


Figures

FIGURE 1-1 Sun StorEdge T3 Array Controller Card and Ports 3

FIGURE 1-2 Sun StorEdge T3+ Array Controller Card and Ports 4

FIGURE 1-3 Interconnect Card and Ports 5

FIGURE 1-4 Workgroup Configuration 6

FIGURE 1-5 Enterprise Configuration 7

FIGURE 3-1 Sun StorEdge T3 Array Partner Group 24

FIGURE 4-1 Single Host Connected to One Controller Unit 28

FIGURE 4-2 Single Host With Two Controller Units Configured as a Partner Group 29

FIGURE 4-3 Failover Configuration 30

FIGURE 4-4 Single Host With Four Controller Units Configured as Two Partner Groups 31

FIGURE 4-5 Single Host With Eight Controller Units Configured as Four Partner Groups 33

FIGURE 4-6 Single Host With Two Hubs and Four Controller Units Configured as Two Partner Groups 35

FIGURE 4-7 Single Host With Two Hubs Configured and Eight Controller Units as Four Partner Groups 37

FIGURE 4-8 Dual Hosts With Two Hubs and Four Controller Units 39

FIGURE 4-9 Dual Hosts With Two Hubs and Eight Controller Units 41

FIGURE 4-10 Dual Hosts With Two Hubs and Four Controller Units Configured as Two Partner Groups 43

FIGURE 4-11 Dual Hosts With Two Hubs and Eight Controller Units Configured as Four Partner Groups 45

FIGURE 4-12 Dual Hosts With Two Switches and Two Controller Units 47

FIGURE 4-13 Dual Hosts With Two Switches and Eight Controller Units 49


FIGURE 5-1 Sun Enterprise 6x00/5x00/4x00/3x00 SBus+ I/O Board 52

FIGURE 5-2 Sun StorEdge PCI FC-100 Host Bus Adapter 53

FIGURE 5-3 Sun StorEdge SBus FC-100 Host Bus Adapter 54

FIGURE 5-4 Sun StorEdge PCI Single Fibre Channel Network Adapter 55

FIGURE 5-5 Sun StorEdge PCI Dual Fibre Channel Network Adapter 56

FIGURE 5-6 Sun StorEdge CompactPCI Dual Fibre Channel Network Adapter 57

FIGURE 6-1 Sun StorEdge T3 Array Controller Card and Interconnect Cards 61

FIGURE 6-2 Sun StorEdge T3+ Array Controller Card and Interconnect Cards 61

FIGURE 6-3 Array Workgroup Configuration 62

FIGURE 6-4 Enterprise Configuration 63


Preface

The Sun StorEdge T3 and T3+ Array Configuration Guide describes the recommended

configurations for Sun StorEdge T3 and T3+ arrays for high availability, maximum

performance, and maximum storage capability. This guide is intended for Sun™

field sales and technical support personnel.

Before You Read This Book

Read the Sun StorEdge T3 and T3+ Array Installation, Operation, and Service Manual for

product overview information.

How This Book Is Organized

Chapter 1 describes the connection ports and Fibre Channel loops for the Sun

StorEdge T3 and T3+ array. It also describes basic rules and recommendations for

configuring the array.

Chapter 2 describes how to configure the array’s global parameters.

Chapter 3 describes how to configure arrays into partner groups to form redundant

storage systems.

Chapter 4 provides reference configuration examples.

Chapter 5 describes host connections for the array.

Chapter 6 describes array cabling.


Using UNIX Commands

This document contains some information on basic UNIX® commands and

procedures such as booting the devices. For further information, see one or more of

the following:

■ AnswerBook2™ online documentation for the Solaris™ software environment

■ Other software documentation that you received with your system

Typographic Conventions

Typeface | Meaning | Examples
AaBbCc123 (monospace) | The names of commands, files, and directories; on-screen computer output | Edit your .login file. Use ls -a to list all files. % You have mail.
AaBbCc123 (bold monospace) | What you type, when contrasted with on-screen computer output | % su Password:
AaBbCc123 (italic) | Book titles, new words or terms, words to be emphasized | Read Chapter 6 in the User’s Guide. These are called class options. You must be superuser to do this.
AaBbCc123 (italic) | Command-line variable; replace with a real name or value | To delete a file, type rm filename.


Shell Prompts

Shell | Prompt
C shell | machine_name%
C shell superuser | machine_name#
Bourne shell and Korn shell | $
Bourne shell and Korn shell superuser | #
Sun StorEdge T3 and T3+ array | :/:

Related Documentation

Application | Title | Part Number
Latest array updates | Sun StorEdge T3 and T3+ Array Release Notes | 816-1983
Installation overview | Sun StorEdge T3 and T3+ Array Start Here | 816-0772
Safety procedures | Sun StorEdge T3 and T3+ Array Regulatory and Safety Compliance Manual | 816-0774
Site preparation | Sun StorEdge T3 and T3+ Array Site Preparation Guide | 816-0778
Installation and Service | Sun StorEdge T3 and T3+ Array Installation, Operation, and Service Manual | 816-0773
Administration | Sun StorEdge T3 and T3+ Array Administrator’s Guide | 816-0776
Cabinet installation | Sun StorEdge T3 Array Cabinet Installation Guide | 806-7979
Disk drive specifications | 18 Gbyte, 1-inch, 10K rpm Disk Drive Specifications | 806-1493
Disk drive specifications | 36 Gbyte, 10K rpm Disk Drive Specifications | 806-6383
Disk drive specifications | 73 Gbyte, 10K rpm, 1.6 Inch Disk Drive Specifications | 806-4800


Application | Title | Part Number
Sun StorEdge Component Manager installation | Sun StorEdge Component Manager Installation Guide - Solaris | 806-6645
Sun StorEdge Component Manager installation | Sun StorEdge Component Manager Installation Guide - Windows NT | 806-6646
Using Sun StorEdge Component Manager software | Sun StorEdge Component Manager User’s Guide | 806-6647
Latest Sun StorEdge Component Manager Updates | Sun StorEdge Component Manager Release Notes | 806-6648

Accessing Sun Documentation Online

You can find the Sun StorEdge T3 and T3+ array documentation and other select

product documentation for Network Storage Solutions at:

http://www.sun.com/products-n-solutions/hardware/docs/Network_Storage_Solutions

Sun Welcomes Your Comments

Sun is interested in improving its documentation and welcomes your comments and

suggestions. You can email your comments to Sun at:

docfeedback@sun.com

Please include the part number (816-0777-10) of your document in the subject line of

your email.


CHAPTER 1

Array Configuration Overview

This chapter describes the Sun StorEdge T3 and T3+ arrays, the connection ports,

and Fibre Channel connections. It also describes basic rules and recommendations

for configuring the array, and it lists supported hardware and software platforms.

Note – For installation and cabling information, refer to the Sun StorEdge T3 and T3+

Array Installation, Operation, and Service Manual. For software configuration

information, refer to the Sun StorEdge T3 and T3+ Array Administrator’s Guide.

This chapter is organized as follows:

■ “Product Description” on page 1

■ “Configuration Guidelines and Restrictions” on page 8

■ “Configuration Recommendations” on page 9

■ “Supported Platforms” on page 9

■ “Sun Cluster Support” on page 10.

Product Description

The Sun StorEdge T3 array is a high-performance, modular, scalable storage device that contains an internal RAID controller and nine disk drives with Fibre Channel connectivity to the data host. Extensive reliability, availability, and serviceability (RAS) features include redundant components, notification of failed components, and the ability to replace components while the unit is online. The Sun StorEdge T3+ array provides the same features as the Sun StorEdge T3 array, and includes an updated controller card with direct fiber-optic connectivity and additional memory for data cache. The controller cards of both array models are described in more detail later in this chapter.

The array can be used either as a standalone storage unit or as a building block,

interconnected with other arrays of the same type and configured in various ways to

provide a storage solution optimized to the host application. The array can be placed

on a table top or rackmounted in a server cabinet or expansion cabinet.

The array is sometimes called a controller unit, which refers to the internal RAID

controller on the controller card. Arrays without the controller card are called

expansion units. When connected to a controller unit, the expansion unit enables you

to increase your storage capacity without the cost of an additional controller. An

expansion unit must be connected to a controller unit to operate because it does not

have its own controller.

In this document, the Sun StorEdge T3 array and Sun StorEdge T3+ array are

referred to as the array, except when necessary to distinguish between models.

Note – The Sun StorEdge T3 and T3+ arrays are similar in appearance. In this

document, all illustrations labeled Sun StorEdge T3 array also apply to the Sun

StorEdge T3+ array, except when necessary to distinguish specific model features.

In these instances, the array model is specified.

Refer to the Sun StorEdge T3 and T3+ Array Installation, Operation, and Service Manual

for an illustrated breakdown of the array and its component parts.

Controller Card

There are two controller card versions that are specific to the array model. Both

controller cards provide the connection ports to cable the array to data and

management hosts, but the type of connector varies between models.

The Sun StorEdge T3 array controller card contains:

■ One Fibre Channel-Arbitrated Loop (FC-AL) port, which provides data path

connectivity to the application host system. This connector on the Sun StorEdge

T3 array requires a media interface adapter (MIA) to connect a fiber-optic cable.

■ One 10BASE-T Ethernet host interface port (RJ-45). This port provides the

interface between the controller card and the management host system. An

unshielded twisted-pair Ethernet cable (category 3) connects the controller to the

site’s network hub. This interface enables the administration and management of

the array via the Sun StorEdge Component Manager software or the command-line

interface (CLI).

■ One RJ-11 serial port. This serial port is reserved for diagnostic procedures that

can only be performed by qualified service personnel.

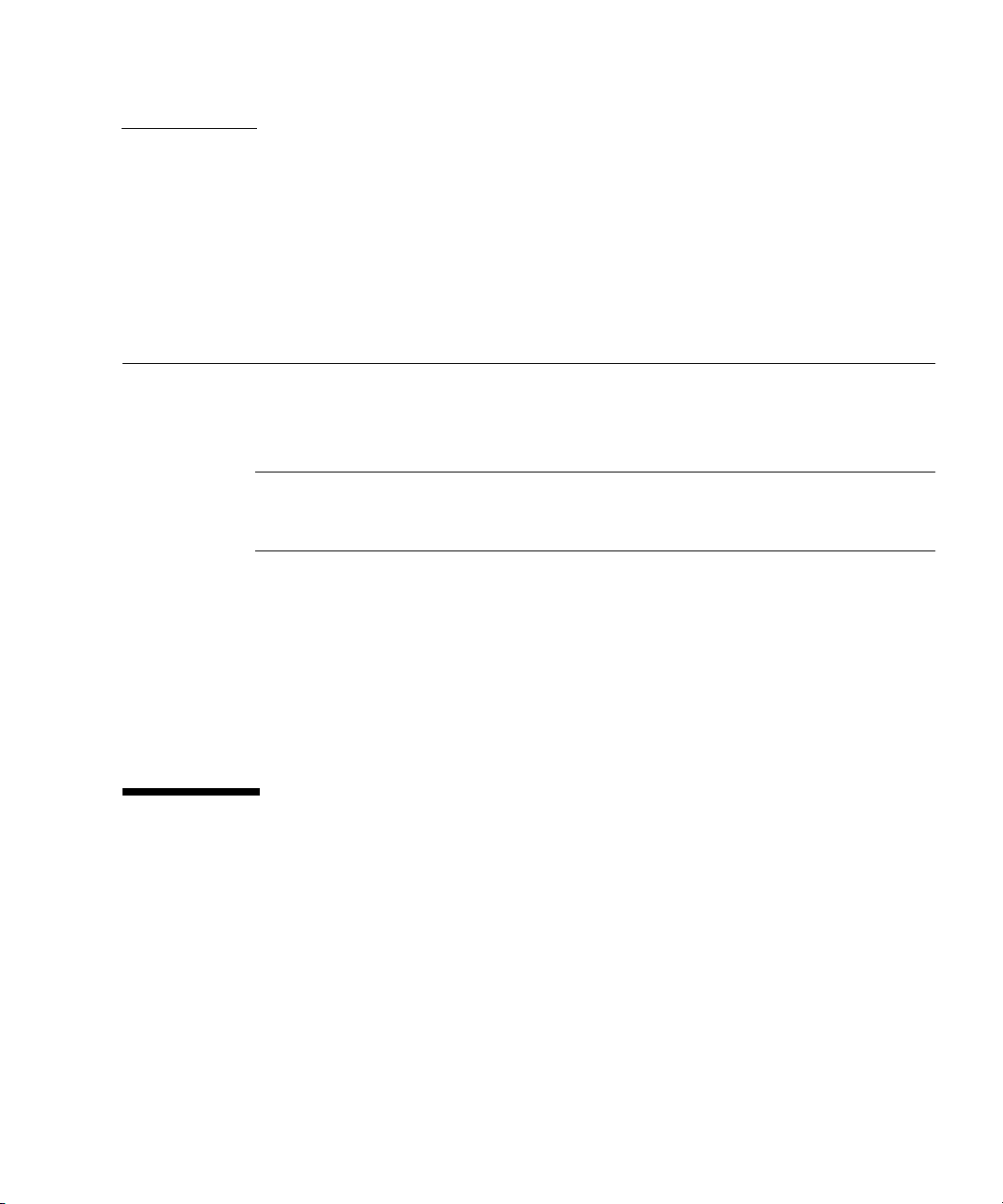

FIGURE 1-1 shows the location of the controller card and the connector ports on the

Sun StorEdge T3 array.

FIGURE 1-1 Sun StorEdge T3 Array Controller Card and Ports (callouts: serial port (RJ-11); 10BASE-T Ethernet port (RJ-45); FC-AL data connection port. Note: the FC-AL port requires an MIA for cable connection.)

The Sun StorEdge T3+ array controller card contains:

■ One Fibre Channel-Arbitrated Loop (FC-AL) port using an LC small-form factor

(SFF) connector. The fiber-optic cable that provides data channel connectivity to

the array has an LC-SFF connector that attaches directly to the port on the

controller card. The other end of the fiber-optic cable has a standard connector

(SC) that attaches to a host bus adapter (HBA), hub, or switch.

■ One 10/100BASE-T Ethernet host interface port (RJ-45). This port provides the

interface between the controller card and the management host system. A

shielded Ethernet cable (category 5) connects the controller to the site’s network

hub. This interface enables the administration and management of the array via

the Sun StorEdge Component Manager software or the command-line interface

(CLI).

■ One RJ-45 serial port. This serial port is reserved for diagnostic procedures that

can only be performed by qualified service personnel.

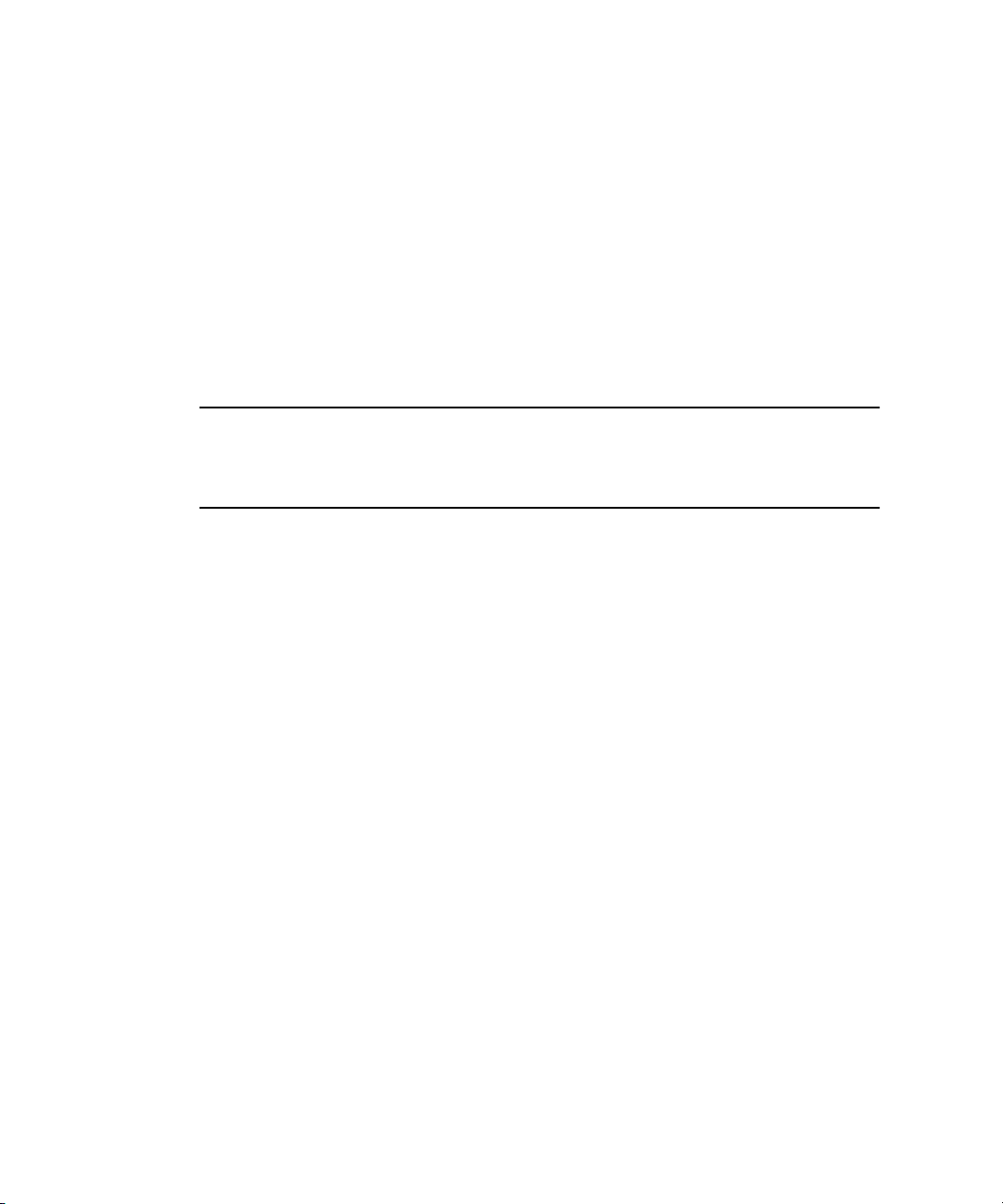

FIGURE 1-2 shows the Sun StorEdge T3+ array controller card and connector ports.

Chapter 1 Array Configuration Overview 3

Page 16

Serial port (RJ-45)

10/100BASE-T Ethernet port (RJ-45)

FC-AL data connection port

(LC-SFF)

FIGURE 1-2 Sun StorEdge T3+ Array Controller Card and Ports

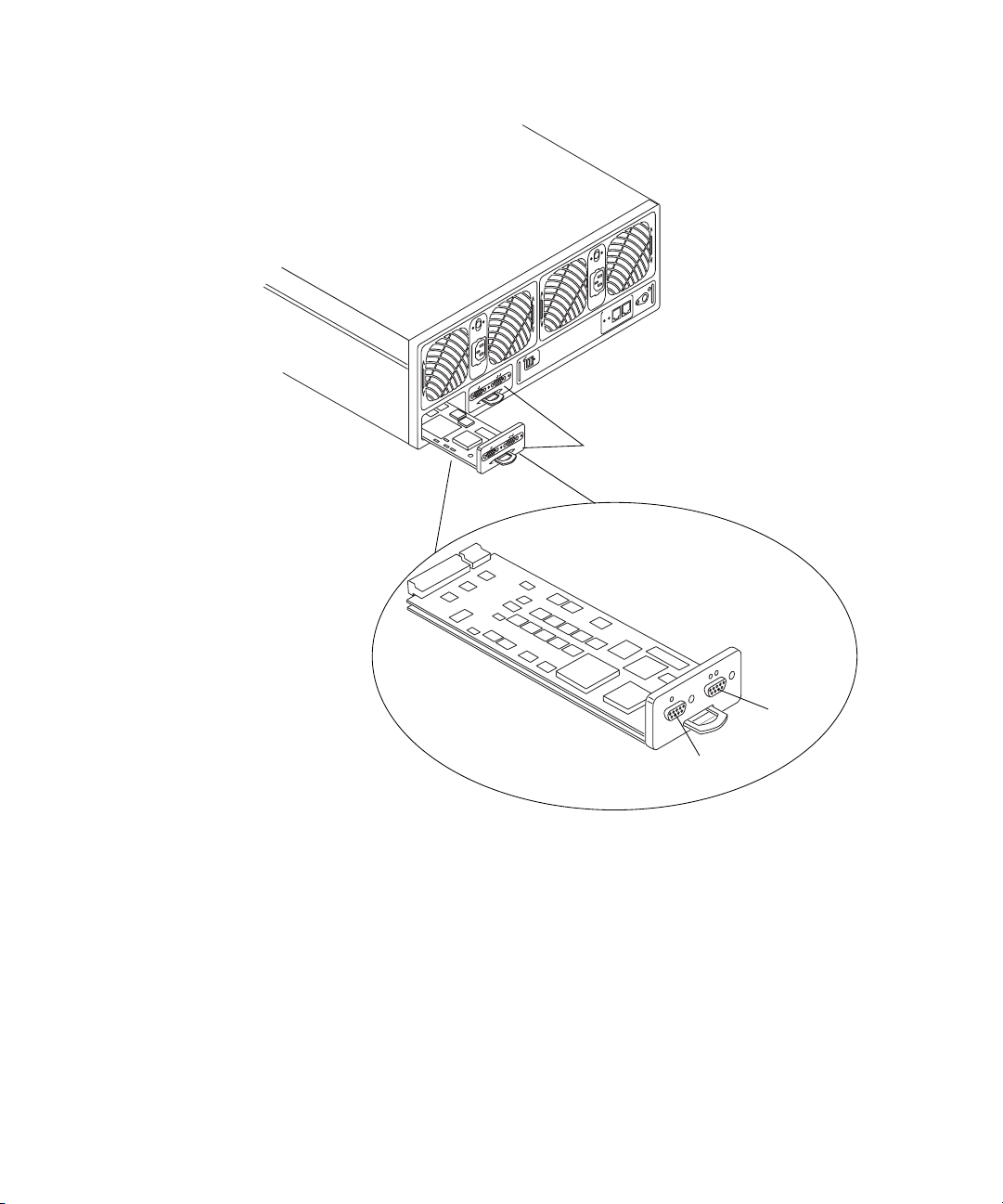

Interconnect Cards

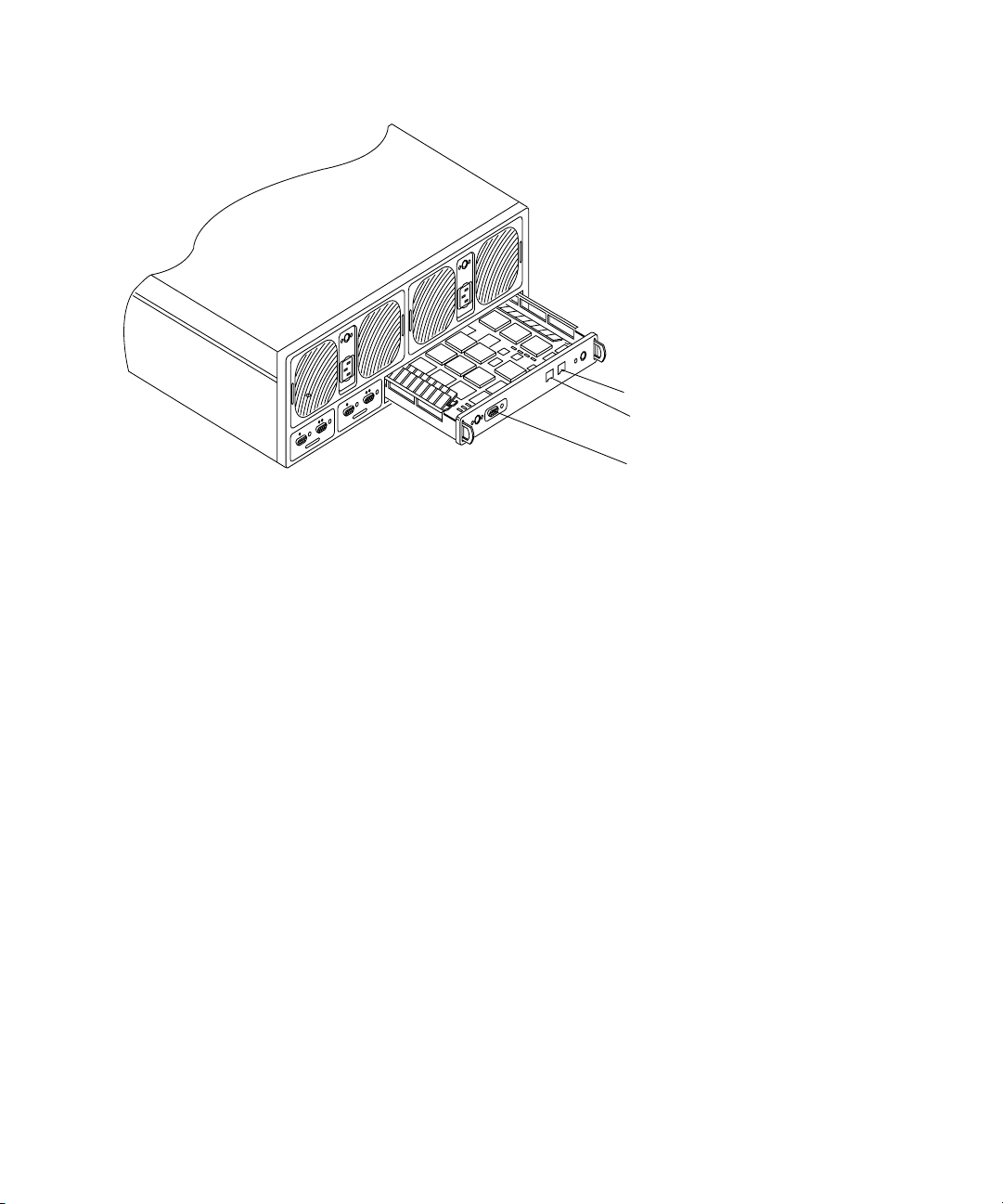

The interconnect cards are alike on both array models. There are two interconnect

ports on each card: one input and one output for interconnecting multiple arrays.

The interconnect card provides switch and failover capabilities, as well as an

environmental monitor for the array. Each array contains two interconnect cards for

redundancy (thus providing a total of four interconnect ports).

FIGURE 1-3 shows the interconnect cards in a Sun StorEdge T3+ array.

4 Sun StorEdge T3 and T3+ Array Configuration Guide • August 2001

Page 17

FIGURE 1-3 Interconnect Card and Ports

Interconnect cards

Output

Input

Chapter 1 Array Configuration Overview 5

Page 18

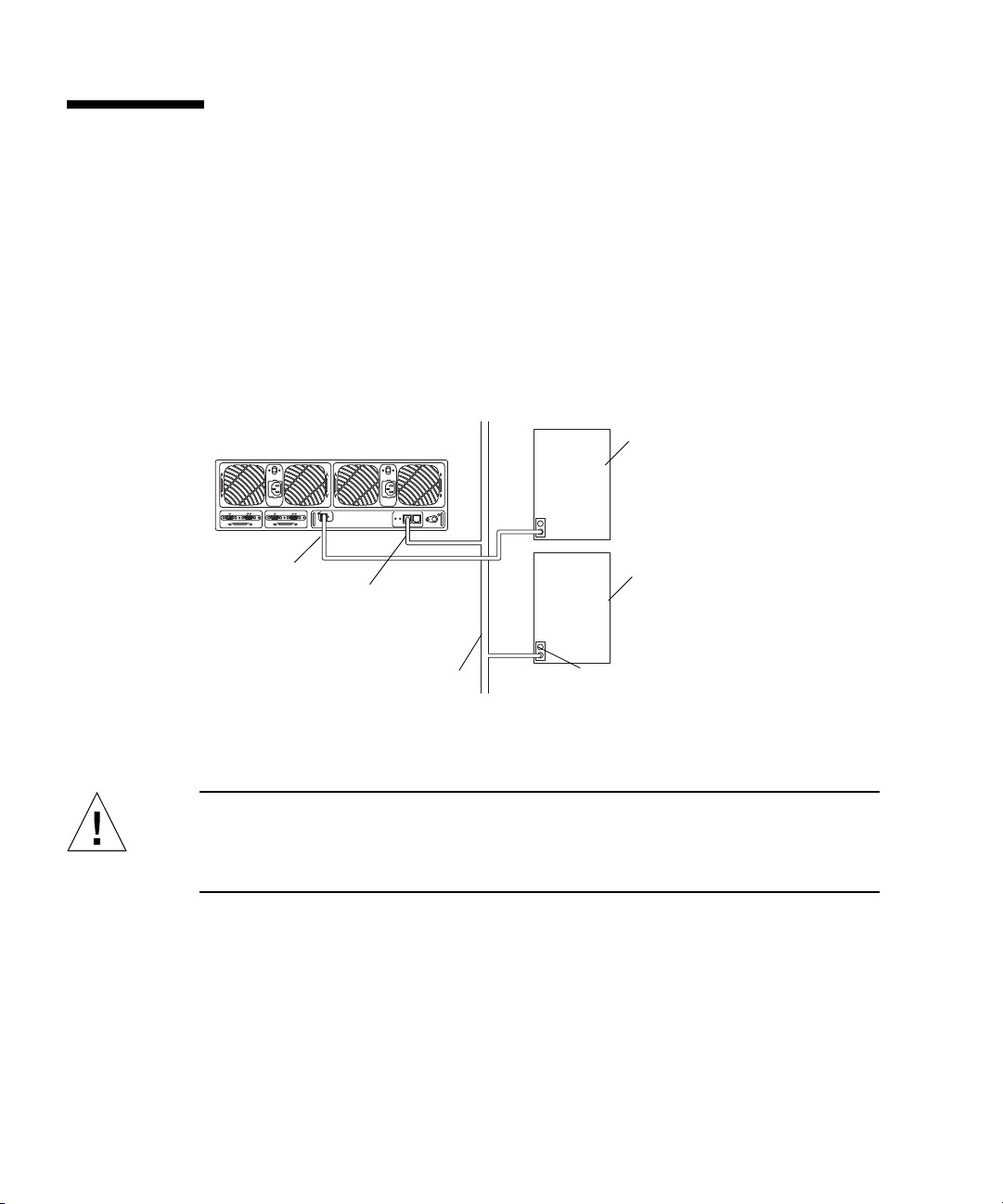

Array Configurations

Each array uses Fibre Channel-Arbitrated Loop (FC-AL) connections to connect to

the application host. An FC-AL connection is a 100-Mbyte/second serial channel

that enables multiple devices, such as disk drives and controllers, to be connected.

Two array configurations are supported:

■ Workgroup. This standalone array is a high-performance, high-RAS configuration

with a single hardware RAID cached controller. The unit is fully populated with

redundant hot-swap components and nine disk drives (FIGURE 1-4).

FIGURE 1-4 Workgroup Configuration (callouts: application host; FC-AL connection; Ethernet connection; LAN; management host; Ethernet port)

Caution – In a workgroup configuration, use a host-based mirroring solution to

protect data. This configuration does not offer the redundancy to provide cache

mirroring, and operating without a host-based mirroring solution could lead to data

loss in the event of a controller failure.

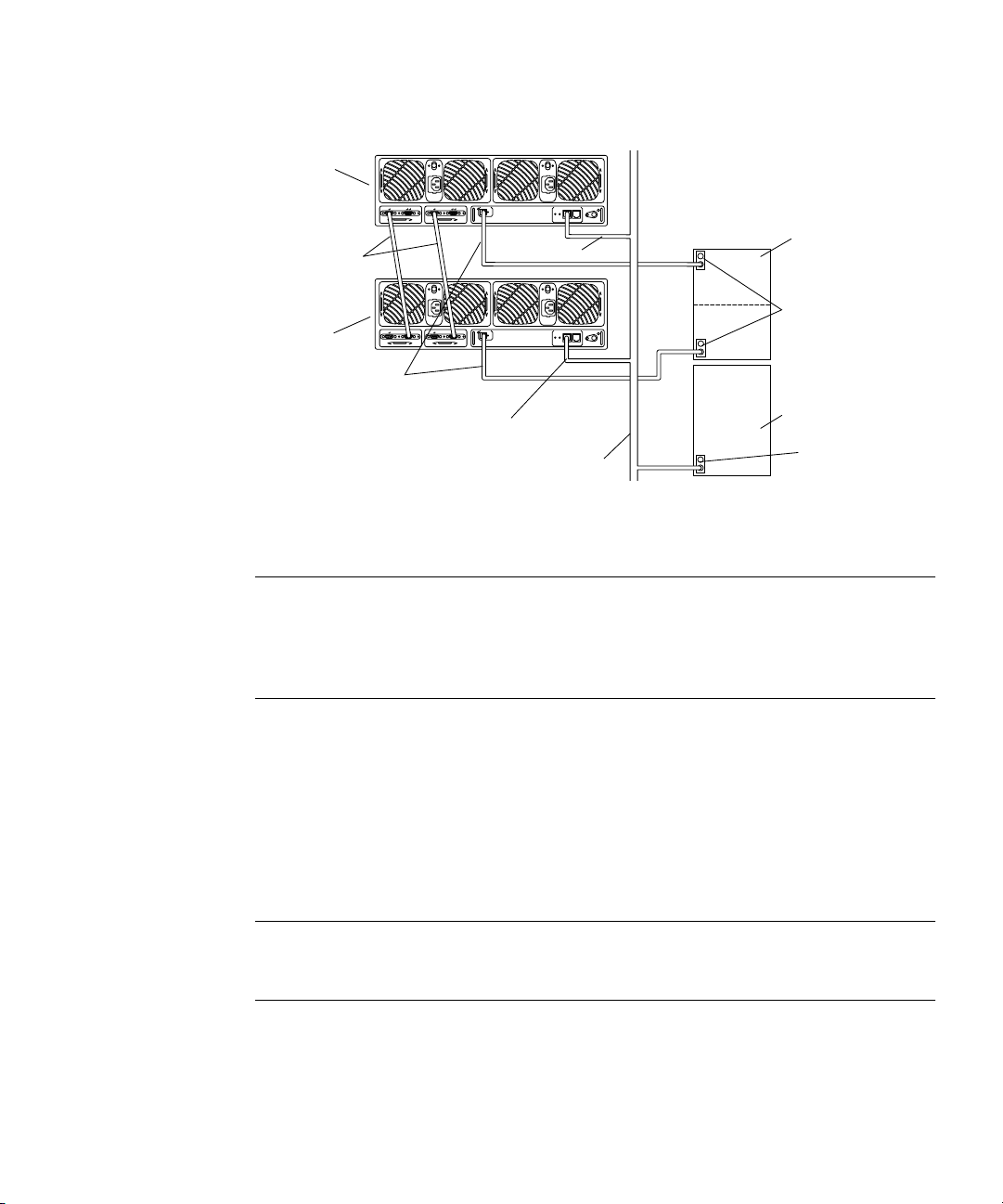

■ Enterprise. Also called a partner group, this is a configuration of two controller

units paired using interconnect cables for back-end data and administrative

connections. The enterprise configuration provides all the RAS of single controller

units, plus redundant hardware RAID controllers with mirrored caches, and

redundant host channels for continuous data availability for host applications.

In this document, the terms enterprise configuration and partner group are used

interchangeably and refer to the type of configuration shown in FIGURE 1-5.

FIGURE 1-5 Enterprise Configuration (callouts: alternate master controller unit; master controller unit; interconnect cables; FC-AL connection; Ethernet connections; LAN; application host; host-bus adapters; management host; Ethernet port)

Note – Sun StorEdge T3 array workgroup and enterprise configurations require a

media-interface adapter (MIA) connected to the Fibre Channel port to connect the

fiber-optic cable. Sun StorEdge T3+ array configurations support direct FC-AL

connections. Refer to the Sun StorEdge T3 and T3+ Array Installation, Operation, and

Service Manual for specific information on cabling the arrays.

In an enterprise configuration, there is a master controller unit and an alternate master

controller unit. In all default enterprise configurations, the master controller unit is

the array positioned at the bottom of an array stack in either a rackmounted or

tabletop installation. The alternate master controller unit is positioned on top of the

master controller unit. The positioning of the master and alternate master controller

units is important for cabling the units together correctly, understanding IP address

assignments, interpreting array command-line screen output, and determining

controller failover and failback conditions.

Note – In an enterprise configuration, you can only interconnect array models of the

same type. For example, you can connect a Sun StorEdge T3+ array to another Sun

StorEdge T3+ array, but you cannot connect it to a Sun StorEdge T3 array.


Configuration Guidelines and Restrictions

Workgroup Configurations

■ The media access control (MAC) address is required to assign an IP address to the

controller unit. The MAC address uniquely identifies each node of a network. The

MAC address is available on the pull-out tab on the front left side of the array.

■ A host-based mirroring solution is necessary to protect data in cache.

■ Sun StorEdge T3 array workgroup configurations are supported in Sun Cluster

2.2 environments. Sun StorEdge T3 and T3+ array workgroup configurations are

supported in Sun Cluster 3.0 environments.

Enterprise Configurations

■ Partner groups can be connected to more than one host only if the following

conditions exist:

■ The partner group must be connected to the hosts through a hub.

■ The configuration must be using Sun StorEdge Traffic Manager software for

multipathing support.

■ The configuration must be a cluster configuration using Sun Cluster 3.0

software.

■ You cannot use a daisy-chain configuration to link more than two controller units

together.

■ You can only connect arrays of the same model type in a partner group.

■ In a cluster configuration, partner groups are supported using only Sun Cluster

3.0 software. They are not supported with Sun Cluster 2.2 software.

Caution – In an enterprise configuration, make sure that you use the MAC address of

the master controller unit.
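The multiple-host conditions listed above for partner groups can be expressed as a simple planning check. The following Python sketch is illustrative only: the `PartnerGroupPlan` class, its field names, and the `multi_host_violations` function are hypothetical and are not part of any Sun software. It encodes only the three conditions stated in this section.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PartnerGroupPlan:
    """Hypothetical description of a planned partner group (enterprise configuration)."""
    host_count: int
    connected_through_hub: bool
    uses_traffic_manager: bool
    sun_cluster_version: Optional[str] = None  # for example "3.0"; None if not clustered


def multi_host_violations(plan: PartnerGroupPlan) -> List[str]:
    """Return the multiple-host conditions from this guide that the plan does not meet."""
    if plan.host_count <= 1:
        return []  # The conditions apply only when more than one host is connected.
    problems = []
    if not plan.connected_through_hub:
        problems.append("partner group must be connected to the hosts through a hub")
    if not plan.uses_traffic_manager:
        problems.append("Sun StorEdge Traffic Manager is required for multipathing")
    if plan.sun_cluster_version != "3.0":
        problems.append("configuration must be a Sun Cluster 3.0 cluster")
    return problems


plan = PartnerGroupPlan(host_count=2, connected_through_hub=True,
                        uses_traffic_manager=False, sun_cluster_version="3.0")
for problem in multi_host_violations(plan):
    print("Not supported:", problem)
```

An empty list means that the plan meets the multiple-host conditions; it does not check the other restrictions in this section, such as model matching within a partner group.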


Configuration Recommendations

■ Use enterprise configurations for controller redundancy.

■ Use host-based software such as VERITAS Volume Manager (VxVM), Sun

Enterprise™ Server Alternate Pathing (AP) software, or Sun StorEdge Traffic

Manager for multipathing support.

■ Connect redundant paths to separate host adapters, I/O cards, and system buses.

■ Configure active paths over separate system buses to maximize bandwidth.

Caution – The array and its global parameters must be tailored to match the I/O

workload for optimum performance. Within a partner group, both units will share

the same volume configuration, block size, and cache mode. That is, all cache

parameter settings are common to both units within a partner group.

Supported Platforms

Sun StorEdge T3 and T3+ arrays are supported on the following host platforms:

■ Sun Ultra™ 60 and Ultra 80 workstations

■ Sun Blade™ 1000 workstation

■ Sun Enterprise 10000, 6x00, 5x00, 4x00, and 3x00 servers

■ Sun Workgroup 450, 420R, 250, and 220R servers

■ Sun Fire™ F6x00, F4x10, F4x00, F3x00, and F280R servers

■ Netra™ t 1405 server

Tip – For the latest information on supported platforms, refer to the storage

solutions web site at http://www.sun.com/storage and look for details on the

Sun StorEdge T3 array product family.


Supported Software

The following software is supported on Sun StorEdge T3 and T3+ arrays:

■ Solaris 2.6, Solaris 7, and Solaris 8 operating environments

■ VERITAS Volume Manager 3.04 and later with DMP

■ Sun Enterprise Server Alternate Pathing (AP) 2.3.1

■ Sun StorEdge Component Manager 2.1 and later

■ StorTools™ 3.3 Diagnostics

■ Sun Cluster 2.2 and 3.0 software (see “Sun Cluster Support” on page 10)

■ Sun StorEdge Data Management Center 3.0

■ Sun StorEdge Instant Image 2.0

■ Sun StorEdge Network Data Replicator (SNDR) 2.0

■ Solstice Backup™ 5.5.1

■ Solstice DiskSuite™ 4.2 and 4.2.1

Tip – For the latest information on supported software, refer to the storage solutions

web site at http://www.sun.com/storage and look for details on the Sun

StorEdge T3 array product family.

Sun Cluster Support

Sun StorEdge T3 and T3+ arrays are supported in Sun Cluster configurations with

the following restrictions:

■ Array controller firmware version 1.17b or later is required on each Sun StorEdge

T3 array.

■ Array controller firmware version 2.0 or later is required on each Sun StorEdge

T3+ array.

■ Workgroup configurations are supported in Sun Cluster 2.2 for the Sun StorEdge

T3 array only. Sun Cluster 3.0 environments support both Sun StorEdge T3 and

T3+ array models.

■ Enterprise configurations are supported only in Sun Cluster 3.0 environments.

■ Partner groups in a Sun Cluster environment must use Sun StorEdge Traffic

Manager software for multipathing support.


■ Switches are not supported.

■ Hubs must be used.

■ The Sun StorEdge SBus FC-100 (SOC+) HBA and the onboard SOC+ interface in

Sun Fire™ systems are supported.

■ On Sun Enterprise 6x00/5x00/4x00/3x00 systems, a maximum of 64 arrays are

supported per cluster.

■ On Sun Enterprise 10000 systems, a maximum of 256 arrays are supported per

cluster.

■ To ensure full redundancy, host-based mirroring software such as Solstice

DiskSuite (SDS) 4.2 or SDS 4.2.1 must be used.

■ Solaris 2.6 and Solaris 8 are the only supported operating systems.

Note – Refer to the latest Sun Cluster documentation for more information on Sun

Cluster supported array configurations and restrictions.
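Some of the Sun Cluster restrictions above reduce to a lookup table. The following Python sketch shows one way to record them for planning purposes. The names `SUPPORTED_MODELS`, `MAX_ARRAYS_PER_CLUSTER`, `is_cluster_supported`, and `within_array_limit` are hypothetical, and the sketch covers only the model, configuration, and array-count restrictions. It does not cover firmware, HBA, hub, or operating system requirements.

```python
# Array models supported for each (configuration, Sun Cluster version) pair,
# transcribed from the restrictions listed in this section.
SUPPORTED_MODELS = {
    ("workgroup", "2.2"): {"T3"},
    ("workgroup", "3.0"): {"T3", "T3+"},
    # Assumption: the guide does not name models for enterprise configurations
    # in Sun Cluster 3.0, so both models are listed here.
    ("enterprise", "3.0"): {"T3", "T3+"},
}

# Maximum number of arrays per cluster, by host platform.
MAX_ARRAYS_PER_CLUSTER = {
    "Enterprise 6x00/5x00/4x00/3x00": 64,
    "Enterprise 10000": 256,
}


def is_cluster_supported(configuration: str, cluster_version: str, model: str) -> bool:
    """Check a model against the support table; unknown combinations are unsupported."""
    return model in SUPPORTED_MODELS.get((configuration, cluster_version), set())


def within_array_limit(platform: str, array_count: int) -> bool:
    """Check an array count against the per-cluster maximum for the platform."""
    return array_count <= MAX_ARRAYS_PER_CLUSTER[platform]


print(is_cluster_supported("workgroup", "2.2", "T3+"))
print(within_array_limit("Enterprise 10000", 128))
```

Always confirm a planned configuration against the latest Sun Cluster documentation, because a table like this one reflects only the restrictions listed in this guide.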


CHAPTER 2

Configuring Global Parameters

When an array is shipped, the global parameters are set to default values. This

chapter describes how to reconfigure your array by changing these default values.

Caution – If you are planning an enterprise configuration using new factory units,

be sure to install and set up the units as a partner group before you power them on,

change any parameters, or create or change any logical volumes. Refer to the Sun

StorEdge T3 and T3+ Array Installation, Operation, and Service Manual for more

information.

Note – For more information on changing array global parameters, refer to the Sun

StorEdge T3 and T3+ Array Administrator’s Guide.

The following parameters are described in this chapter:

■ “Cache” on page 13

■ “Logical Volumes” on page 16

■ “Using RAID Levels to Configure Redundancy” on page 20

Cache

Each Sun StorEdge T3 array controller unit has 256 Mbytes of data cache; each Sun

StorEdge T3+ array controller unit has 1 Gbyte of data cache. Writing to cache

improves write performance by staging data in cache, assembling the data into data

stripes, and then destaging the data from cache to disk, when appropriate. This

method frees the data host for other operations while cache data is being destaged,

and it eliminates the read-modify-write delays seen in non-cache systems. Read cache

improves performance by determining which data will be requested for the next

read operation and prestaging this data into cache. RAID 5 performance is also

improved by coalescing writes.


Configuring Cache for Performance and Redundancy

Cache mode can be set to the following values:

■ Auto. The cache mode is determined as either write-behind or write-through,

based on the I/O profile. If the array has full redundancy available, then caching

operates in write-behind mode. If any array component is non-redundant, the

caching mode is set to write-through. Read caching is always performed. Auto

caching mode provides the best performance while retaining full redundancy

protection.

Auto is the default cache mode for Sun StorEdge T3 and T3+ arrays.

■ Write-behind. All read and write operations are written to cache. An algorithm

determines when the data is destaged or moved from cache to disk. Write-behind

cache improves performance, because a write to a high-speed cache is faster than

a write to a normal disk.

Use write-behind cache mode with a workgroup configuration when you want to

force write-behind caching to be used.

Caution – In a workgroup configuration, use a host-based mirroring solution to

protect data. This configuration does not offer the redundancy to provide cache

mirroring, and operating without a host-based mirroring solution could lead to data

loss in the event of a controller failure.

■ Write-through. This cache mode forces write-through caching to be used. In

write-through cache mode, data is written through cache in a serial manner and is

then written to the disk. Write-through caching does not improve write

performance. However, if a subsequent read operation needs the same data, the

read performance is improved, because the data is already in cache.

■ None. No reads or writes are cached.

Note – For full redundancy in an enterprise configuration, set the cache mode and

the mirror variable to Auto. This ensures that the cache is mirrored between

controllers and that write-behind cache mode is in effect. If a failure occurs, the data

is synchronized to disk, and then write-through mode takes effect. Once the problem

has been corrected and all internal components are again optimal, the system will

revert to operating in write-behind cache mode.
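The Auto behavior described above can be sketched in a few lines of Python. This is an illustrative model only, not array firmware or CLI syntax. The `CacheMode` names and the `fully_redundant` flag are assumptions introduced for the example.

```python
from enum import Enum


class CacheMode(Enum):
    # Hypothetical labels for the four cache modes listed above.
    AUTO = "auto"
    WRITE_BEHIND = "write-behind"
    WRITE_THROUGH = "write-through"
    NONE = "none"


def effective_write_policy(mode: CacheMode, fully_redundant: bool) -> CacheMode:
    """Return the write policy in effect for a configured cache mode.

    In Auto mode the array uses write-behind only while every component
    is redundant; otherwise it falls back to write-through. The other
    modes are forced and ignore the redundancy state.
    """
    if mode is CacheMode.AUTO:
        if fully_redundant:
            return CacheMode.WRITE_BEHIND
        return CacheMode.WRITE_THROUGH
    return mode
```

For example, `effective_write_policy(CacheMode.AUTO, False)` models the state after a component failure, when write-through takes effect until the problem is corrected.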


Configuring Data Block Size

The data block size is the amount of data written to each drive when striping data

across drives. (The block size is also known as the stripe unit size.) The block size

can be changed only when there are no volumes defined. The block size can be

configured as 16 Kbytes, 32 Kbytes, or 64 Kbytes. The default block size is 64 Kbytes.

A cache segment is the amount of data being read into cache. A cache segment is

1/8 of a data block. Therefore, cache segments can be 2 Kbytes, 4 Kbytes, or

8 Kbytes. Because the default block size is 64 Kbytes, the default cache segment size

is 8 Kbytes.

Note – The array data block size is independent of I/O block size. Alignment of the

two is not required.

Selecting a Data Block Size

If the I/O initiated from the host is 4 Kbytes, a data block size of 64 Kbytes would

force 8 Kbytes of internal disk I/O, wasting 4 Kbytes of the cache segment.

Therefore, it would be best to configure 32-Kbyte block sizes, causing 4-Kbyte

physical I/O from the disk. If sequential activity occurs, full block writes (32 Kbytes)

will take place. For 8-Kbyte I/O or greater from the host, use 64-Kbyte blocks.
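The arithmetic behind this recommendation can be checked with a short Python sketch. It assumes only what is stated above: a cache segment is 1/8 of the data block, and internal disk I/O happens in whole cache segments. The function names are hypothetical.

```python
VALID_BLOCK_SIZES_KB = (16, 32, 64)


def cache_segment_kb(block_size_kb: int) -> int:
    """A cache segment is 1/8 of the configured data block size."""
    if block_size_kb not in VALID_BLOCK_SIZES_KB:
        raise ValueError(f"block size must be one of {VALID_BLOCK_SIZES_KB}")
    return block_size_kb // 8


def wasted_segment_kb(host_io_kb: int, block_size_kb: int) -> int:
    """Kbytes of cache segment space left unused by one host I/O.

    The host I/O is rounded up to a whole number of cache segments.
    """
    segment = cache_segment_kb(block_size_kb)
    segments_needed = -(-host_io_kb // segment)  # ceiling division
    return segments_needed * segment - host_io_kb


for block in VALID_BLOCK_SIZES_KB:
    print(block, cache_segment_kb(block), wasted_segment_kb(4, block))
```

With 4-Kbyte host I/O, the 64-Kbyte block size leaves 4 Kbytes of each 8-Kbyte segment unused, while the 32-Kbyte block size leaves none.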

Applications benefit from the following data block or stripe unit sizes:

■ 16-Kbyte data block size

■ Online Transaction Processing (OLTP)

■ Internet service provider (ISP)

■ Enterprise Resource Planning (ERP)

■ 32-Kbyte data block size

■ NFS™ file system, version 2

■ Attribute-intensive NFS file system, version 3

■ 64-Kbyte data block size

■ Data-intensive NFS file system, version 3

■ Decision Support Systems (DSS)

■ Data Warehouse (DW)

■ High Performance Computing (HPC)


Note – The data block size must be configured before any logical volumes are

created on the units. Remember, this block size is used for every logical volume

created on the unit. Therefore it is important to have similar application data

configured per unit.

Data block size is universal throughout a partner group. Therefore, you cannot

change it after you have created a volume. To change the data block size, you must

first delete the volume(s), change the data block size, and then create new volume(s).

Caution – Unless you back up and restore the data on these volumes, it will be lost.

Enabling Mirrored Cache

By enabling mirrored cache, you can safeguard cached data if a controller fails.

Note – Mirrored cache is possible only in a redundant enterprise configuration.

Configuring Cache Allocation

Cache is allocated based on the read/write mix and it is dynamically adjusted by the

controller firmware, based on the I/O profile of the application. If the application

profile is configured for a 100% read environment, then 100% of the cache is used for

reads. If the application profile has a high number of writes, then the upper limit for

writes is set to 80%.

Logical Volumes

Also called a logical unit number (LUN), a logical volume is one or more disk drives

that are grouped together to form a single unit. Each logical volume is represented to

the host as a logical unit number. Using the format utility on the application host,

you can view the logical volumes presented by the array. You can use this disk space

as you would any physical disk, for example, to perform the following operations:

■ Install a file system

■ Use the device as a raw device (without any file system structure)

■ Partition the device

Note – Individual physical disk drives are not visible from the application host.

Refer to the Sun StorEdge T3 and T3+ Array Administrator’s Guide for more

information on creating logical volumes.

Guidelines for Configuring Logical Volumes

Use the following guidelines when configuring logical volumes:

■ The array’s native volume management can support a maximum of two volumes

per array unit.

■ The minimum number of drives is based on the RAID level, as follows:

■ RAID 0 and RAID 1 require a minimum of two drives.

■ RAID 5 requires a minimum of three drives.

■ Drive number 9 can be designated as a hot spare. If designated, drive number 9

will be the hot spare for all volumes in the array.

■ A partial drive configuration is not allowed.

■ Volumes cannot span array units.
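Some of these guidelines lend themselves to a simple pre-flight check. The following Python sketch is one way to express them. The data layout (a list of dictionaries with `raid` and `drives` keys) and the function name are assumptions made for illustration, and the rule about partial drive configurations is not modeled.

```python
MAX_VOLUMES_PER_UNIT = 2
MIN_DRIVES = {0: 2, 1: 2, 5: 3}  # RAID level -> minimum number of drives
HOT_SPARE_DRIVE = 9


def check_unit_layout(volumes, hot_spare=False):
    """Return a list of guideline violations for one array unit.

    volumes   -- list of dicts such as {"raid": 5, "drives": [1, 2, 3]}
    hot_spare -- True if drive 9 is designated as the hot spare
    """
    problems = []
    if len(volumes) > MAX_VOLUMES_PER_UNIT:
        problems.append(f"{len(volumes)} volumes defined; the maximum is 2")
    for index, volume in enumerate(volumes):
        raid, drives = volume["raid"], volume["drives"]
        if raid not in MIN_DRIVES:
            problems.append(f"volume {index}: unsupported RAID level {raid}")
            continue
        if len(drives) < MIN_DRIVES[raid]:
            problems.append(
                f"volume {index}: RAID {raid} needs at least "
                f"{MIN_DRIVES[raid]} drives"
            )
        if hot_spare and HOT_SPARE_DRIVE in drives:
            problems.append(f"volume {index}: drive 9 is reserved as hot spare")
    return problems
```

An empty list means the proposed layout passes the checks modeled here. It does not guarantee that the array will accept the configuration.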

Consider the following questions when configuring logical volumes:

■ How many logical volumes do you need (one or two)?

■ What RAID level do you require?

■ Do you need a hot spare?

Determining How Many Logical Volumes You Need

You can configure a volume into seven partitions (also known as slices) using the

format(1M) utility. Alternatively, you can configure a large number of

partitions (also known as subdisks) using VERITAS Volume Manager. Because the host

can subdivide a volume either way, arrays are best configured as one large volume.

Applications benefit from the following logical volume or LUN configurations:

■ Two LUNs per array

■ OLTP

■ ISP

■ ERP

■ NFS, version 2

■ Attribute-intensive NFS, version 3

■ One LUN per array


■ Data-intensive NFS, version 3

■ DSS

■ DW

■ HPC
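The LUN recommendations above, together with the data block sizes recommended under "Selecting a Data Block Size", can be kept in a small lookup table. The Python sketch below simply restates those two lists. The dictionary keys and the `recommend` function are hypothetical names chosen for the example.

```python
# workload -> (data block size in Kbytes, LUNs per array)
RECOMMENDATIONS = {
    "OLTP": (16, 2),
    "ISP": (16, 2),
    "ERP": (16, 2),
    "NFS v2": (32, 2),
    "NFS v3 attribute-intensive": (32, 2),
    "NFS v3 data-intensive": (64, 1),
    "DSS": (64, 1),
    "DW": (64, 1),
    "HPC": (64, 1),
}


def recommend(workload: str) -> tuple:
    """Return (block_size_kb, luns_per_array) for a known workload type."""
    try:
        return RECOMMENDATIONS[workload]
    except KeyError:
        raise ValueError(f"no recommendation recorded for {workload!r}") from None


block_kb, luns = recommend("OLTP")
print(f"OLTP: {block_kb}-Kbyte blocks, {luns} LUNs per array")
```

These values are starting points taken from the lists in this chapter. Measure the actual I/O profile of the application before committing to a block size, because changing it later requires deleting the volumes.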

Note – If you are creating new volumes or changing the volume configuration, you

must first manually rewrite the label of the previous volume using the autoconfigure

option of the

format(1M) UNIX host command. For more information on this

procedure, refer to the Sun StorEdge T3 and T3+ Array Administrator’s Guide.

Caution – Removing and reconfiguring the volume will destroy all data previously

stored there.

Determining Which RAID Level You Need

For a new array installation, the default configuration is 8+1 RAID 5, without a hot

spare.

In general, RAID 5 is efficiently managed by the RAID controller hardware. This

efficiency is apparent when compared to RAID 5 software solutions such as

VERITAS Volume Manager.

The following applications benefit most from the RAID controller hardware of the

array:

■ Data-intensive NFS file system, version 3

■ DSS

■ DW

■ HPC

Note – For more information about RAID levels, see “Using RAID Levels to

Configure Redundancy” later in this chapter.

Determining Whether You Need a Hot Spare

If you choose to include a hot-spare disk drive in your configuration, you must

specify it when you create the first volume in the array. If you want to add a hot

spare at a later date, you must remove the existing volume(s) and recreate the

configuration.


Note – Only one hot spare is allowed per array and it is only usable for the array in

which it is configured. The hot spare must be configured as drive 9.

Drive 9 will be the hot spare in the unit. So, for example, should a drive failure occur

on drive 7, drive 9 is synchronized automatically with the entire LUN to reflect the

data on drive 7. Once the failed drive (7) is replaced, the controller unit will

automatically copy the data from drive 9 to the new drive, and drive 9 will become

a hot spare again.

Tip – Although they are not required, hot spares are always recommended for

mission-critical configurations because they allow the controller unit to reconstruct

the data from the RAID group and only take a performance hit while the

reconstruction is taking place. If a hot spare is not used, the controller unit remains

in write-through cache mode until the failed drive is replaced and reconstruction is

complete (which could take an extended period of time). During this time, the array

is operating in degraded mode.

If there is no hot spare, the reconstruction of the data will begin when the failed

drive is replaced, provided RAID 1 or RAID 5 is used.

Creating and Labeling a Logical Volume

You must set the RAID level and the hot-spare disk when creating a logical volume.

For the Solaris operating system to recognize a volume, it must be labeled with the

format or fmthard command.

Caution – Removing and reconfiguring a logical volume will destroy all data

previously stored there.

Setting the LUN Reconstruction Rate

Note – When a failed drive is disabled, the volume is operating without further

redundancy protection, so the failed drive needs to be replaced as soon as possible.


If the volume has a hot spare configured and that drive is available, the data on the

disabled drive is reconstructed on the hot-spare drive. When this operation is

complete, the volume is operating with full redundancy protection, so another drive

in the volume may fail without loss of data.

After a drive has been replaced, the original data is automatically reconstructed on

the new drive. If no hot spare was used, the data is regenerated using the RAID

redundancy data in the volume. If the failed drive data has been reconstructed onto

a hot spare, once the reconstruction has completed, a copy-back operation begins

where the hot spare data is copied to the newly replaced drive.

You can also configure the rate at which data is reconstructed, so as not to interfere

with application performance. Reconstruction rate values are low, medium, and high

as follows:

■ Low is the slowest and has the lowest impact on performance

■ Medium is the default

■ High is the fastest and has the highest impact on performance

Note – Reconstruction rates can be changed while a reconstruction operation is in

process. However, the changes don’t take effect until the current reconstruction has

completed.

Using RAID Levels to Configure Redundancy

The RAID level determines how the controller reads and writes data and parity on

the drives. The Sun StorEdge T3 and T3+ arrays can be configured with RAID level

0, RAID level 1 (1+0) or RAID level 5. The factory-configured LUN is a RAID 5 LUN.

Note – The default RAID level (5) can result in very large volumes; for example, 128

Gbytes in a configuration of single 7+1 RAID 5 LUN plus hot spare, with 18 Gbyte

drives. Some applications cannot use such large volumes effectively. The following

two solutions can be used separately or in combination:

■ First, use the partitioning utility available on the data host’s operating system. In

the Solaris environment, use the format utility, which can create up to seven

distinct partitions per volume. Note that in the case of the configuration described

above, if each partition is equal in size, this will result in 18-Gbyte partitions,

which still may be too large to be used efficiently by legacy applications.

■ Second, you can use third-party software on the host system to create as many

partitions as desired from a given volume. In the Solaris environment, you can

use VERITAS Volume Manager or Solaris Logical Volume Management (SLVM)

formerly known as Solstice DiskSuite (SDS) for this purpose.

Note – For information on using the format utility, refer to the format(1M) man

page. For more information on third-party software or VERITAS Volume Manager,

refer to the documentation for that product.

RAID 0

Data blocks in a RAID 0 volume are striped across all the drives in the volume in

order. There is no parity data, so RAID 0 uses the full capacity of the drives. There is,

however, no redundancy. If a single drive fails, all data on the volume is lost.

RAID 1

Each data block in a RAID 1 volume is mirrored on two drives. If one of the

mirrored pair fails, the data from the other drive is used. Because the data is

mirrored in a RAID 1 configuration, the volume has only half the capacity of the

assigned drives. For example, if you create a 4-drive RAID 1+0 volume with

18-Gbyte drives, the resulting data capacity is 4 x 18 / 2 = 36 Gbytes.

RAID 5

In a RAID 5 configuration, data is striped across the drives in the volumes in

segments, with parity information being striped across the drives, as well. Because

of this parity, if a single drive fails, data can be recovered from the remaining drives.

Two drive failures cause all data to be lost. A RAID 5 volume has the data capacity

of all the drives in the logical unit, less one. For example, a 5-drive RAID 5 volume

with 18-Gbyte drives has a capacity of (5 - 1) x 18 = 72 Gbytes.
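The capacity rules for the three RAID levels can be collected in one small function. The Python sketch below reproduces the two worked examples above. The function name is hypothetical, and the figures are nominal capacities that ignore any formatting overhead.

```python
def usable_capacity_gb(raid_level: int, drive_count: int, drive_gb: float) -> float:
    """Nominal data capacity of a volume, in Gbytes."""
    if raid_level == 0:
        # Striping only: the full capacity of every drive is used.
        return drive_count * drive_gb
    if raid_level == 1:
        # Mirroring: half of the assigned capacity holds copies.
        return drive_count * drive_gb / 2
    if raid_level == 5:
        # One drive's worth of capacity holds parity.
        return (drive_count - 1) * drive_gb
    raise ValueError(f"unsupported RAID level: {raid_level}")


print(usable_capacity_gb(1, 4, 18))  # 4-drive RAID 1+0 example
print(usable_capacity_gb(5, 5, 18))  # 5-drive RAID 5 example
```

The two calls correspond to the 36-Gbyte and 72-Gbyte examples in the text.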


Configuring RAID Levels

The Sun StorEdge T3 and T3+ arrays are preconfigured at the factory with a single

LUN, RAID level 5 redundancy and no hot spare. Once a volume has been

configured, you cannot reconfigure it to change its size, RAID level, or hot spare

configuration. You must first delete the volume and create a new one with the

configuration values you want.


CHAPTER 3

Configuring Partner Groups

Sun StorEdge T3 and T3+ arrays can be interconnected in partner groups to form a

redundant and larger storage system.

Note – The terms partner group and enterprise configuration refer to the same type of

configuration and are used interchangeably in this document.

Note – Partner groups are not supported in Sun Cluster 2.2 configurations.

This chapter describes how to configure array partner groups, and it includes the

following sections:

■ “Understanding Partner Groups” on page 23

■ “How Partner Groups Work” on page 25

■ “Creating Partner Groups” on page 26

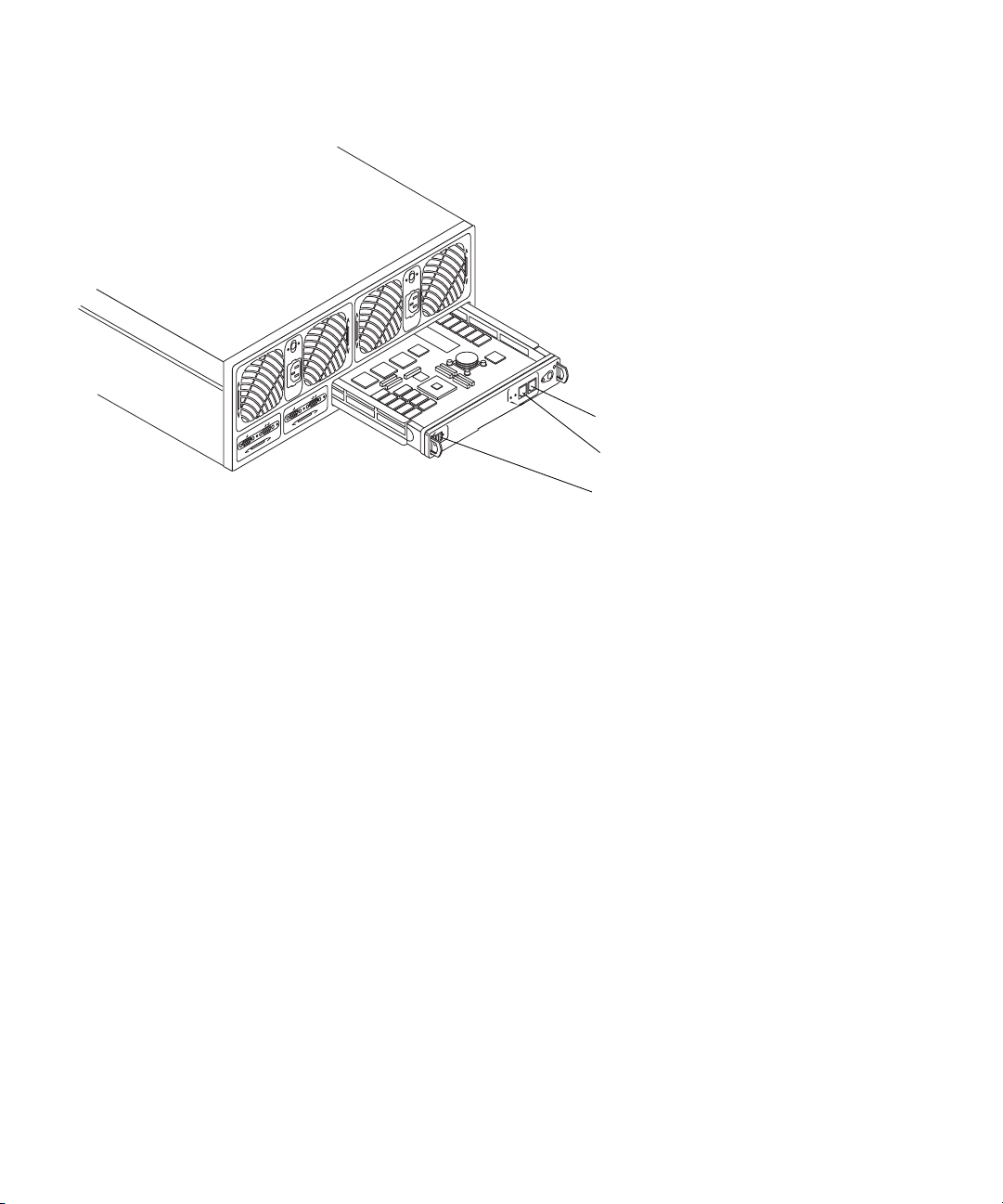

Understanding Partner Groups

In a partner group, there is a master controller unit and an alternate master controller

unit. The master controller unit is the array positioned at the bottom of an array

stack in either a rackmounted or tabletop installation. The alternate master controller

unit is positioned on top of the master controller unit. Array units are connected

using the interconnect cards and interconnect cables. A partner group is shown in

FIGURE 3-1.


FIGURE 3-1 Sun StorEdge T3 Array Partner Group

[Diagram labels: alternate master controller unit, interconnect cables, master controller unit, FC-AL connection, Ethernet connections, LAN, application host, host-bus adapters, management host, Ethernet port]

Note – Sun StorEdge T3 arrays require a media-interface adapter (MIA) connected

to the Fibre Channel port on the controller card to connect the fiber-optic cable. Sun

StorEdge T3+ array configurations support direct FC-AL connections.

When two units are connected together, they form a redundant partner group. This

group provides controller redundancy. Because the controller is a single point of

failure in a standalone configuration, this redundancy allows an application host to

access data even if a controller fails. This configuration offers multipath and LUN

failover features.

The partner group connection also allows for a single point of control. The bottom

unit will assume the role of the master, and from its Ethernet connections, it will be

used to monitor and administer the unit installed above it.

The master controller unit will set the global variables within this storage system,

including cache block size, cache mode, and cache mirroring.

Note – For information about setting or changing these parameters, refer to the Sun

StorEdge T3 and T3+ Array Administrator’s Guide.


Any controller unit will boot from the master controller unit’s drives. All

configuration data, including syslog information, is located on the master

controller unit’s drives.

How Partner Groups Work

If the master controller unit fails and the “heartbeat” between it and the alternate

master stops, this failure causes a controller failover, where the alternate master

assumes the role of the master controller unit. The new master (formerly the

alternate master) takes the IP address and the MAC address from the old master and

begins to function as the administrator of the storage system. It will also be able to

access the former master controller unit’s drives. The former master controller unit’s

drives will still be used to store syslog information, system configuration

information, and bootcode. Should it become necessary to reboot the storage system

while the master controller unit is inactive, the alternate master will use the former

master controller unit’s drives to boot.

Note – After the failed master controller is back online, it remains the alternate

master controller and, as a result, the original configuration has been modified from

its original state.
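The role change described above can be modeled as a small state sketch in Python. This is a conceptual illustration only, not how the controller firmware is implemented. The class names are hypothetical, and the IP and MAC addresses are placeholder values from documentation ranges.

```python
from dataclasses import dataclass


@dataclass
class ControllerUnit:
    name: str
    online: bool = True


@dataclass
class PartnerGroup:
    master: ControllerUnit
    alternate: ControllerUnit
    admin_ip: str   # follows the master role, not the physical unit
    admin_mac: str  # likewise taken over by the new master

    def master_failed(self) -> None:
        """Heartbeat lost: the alternate master assumes the master role."""
        self.master.online = False
        self.master, self.alternate = self.alternate, self.master

    def controller_repaired(self) -> None:
        """The repaired unit rejoins as alternate master; roles do not swap back."""
        self.alternate.online = True


group = PartnerGroup(
    master=ControllerUnit("bottom"),
    alternate=ControllerUnit("top"),
    admin_ip="192.0.2.10",           # placeholder address
    admin_mac="00:00:5e:00:53:01",   # placeholder address
)
group.master_failed()
group.controller_repaired()
print(group.master.name, group.alternate.name, group.admin_ip)
```

After the failover and repair, the unit named `top` holds the master role, the unit named `bottom` is the alternate master, and the administrative addresses are unchanged.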

In a redundant partner group configuration, the units can be set to do a path failover

operation. Normally the volumes or LUNs that are controlled by one unit are not

accessible to the controller of the other. The units can be set so that if a failure in one

controller occurs, the remaining one will accept I/O for the devices that were

running on the failed controller. To enable this controller failover operation,

multipathing software, such as VERITAS Volume Manager, Sun StorEdge Traffic

Manager software, or Solaris Alternate Pathing (AP) software must be installed on

the data application host.

Note – For a feature such as VERITAS DMP to access a LUN through both

controllers in a redundant partner group, the mp_support parameter must be set to

rw. If you are using Sun StorEdge Traffic Manager, the

mp_support parameter must be set to mpxio. For information on setting the

mp_support parameter and options, refer to the Sun StorEdge T3 and T3+ Array

Administrator’s Guide.

Creating Partner Groups

Partner groups can be created in two ways:

■ From new units

■ From existing standalone units

Instructions for installing new array units and connecting them to create partner

groups can be found in the Sun StorEdge T3 and T3+ Array Installation, Operation, and

Service Manual.

To configure existing standalone arrays with data into a partner group, you must go

through a qualified service provider. Contact your SunService representative for

more information.

Caution – The procedure to reconfigure the arrays into a partner group involves

deleting all data from the array disks and restoring the data after completing the

reconfiguration. There is a risk of data loss or data corruption if the

procedure is not performed properly.


CHAPTER 4

Configuration Examples

This chapter includes sample reference configurations for Sun StorEdge T3 and T3+

arrays. Although there are many supported configurations, these reference

configurations provide the best solution for many installations:

■ “Direct Host Connection” on page 27

■ “Hub Host Connection” on page 34

■ “Switch Host Connection” on page 46

Direct Host Connection

This section contains examples of the following configurations:

■ “Single Host With One Controller Unit” on page 28

■ “Single Host With Two Controller Units Configured as a Partner Group” on

page 29

■ “Single Host With Four Controller Units Configured as Two Partner Groups” on

page 31

■ “Single Host With Eight Controller Units Configured as Four Partner Groups” on

page 32


Single Host With One Controller Unit

FIGURE 4-1 shows one application host connected through an FC-AL cable to one

array controller unit. The Ethernet cable connects the controller to a management

host via a LAN on a public or separate network, and requires an IP address.

Note – This configuration is not recommended for RAS functionality because the

controller is a single point of failure. In this type of configuration, use a host-based

mirroring solution to protect data in cache.

FIGURE 4-1 Single Host Connected to One Controller Unit

[Diagram labels: controller unit, application host, HBA, FC-AL connection, Ethernet connection, management host, Ethernet port, LAN]

Note – For the Sun StorEdge T3 array, you must insert a media interface adapter

(MIA) into the FC-AL connection port on the array controller card to connect the

fiber-optic cable. This is detailed in the Sun StorEdge T3 and T3+ Array Installation,

Operation, and Service Manual.

Single Host With Two Controller Units Configured as a Partner Group

FIGURE 4-2 shows one application host connected through FC-AL cables to one array

partner group, which consists of two Sun StorEdge T3+ arrays. The Ethernet

connection from the master controller unit is on a public or separate network and

requires an IP address for the partner group. In the event of a failover, the alternate

master controller unit will use the master controller unit’s IP address and MAC

address.

FIGURE 4-2 Single Host With Two Controller Units Configured as a Partner Group

[Diagram labels: alternate master controller unit, interconnect cables, master controller unit, FC-AL connection, Ethernet connections, LAN, application host, HBAs, management host, Ethernet port]

This configuration is a recommended enterprise configuration for RAS functionality

because there is no single point of failure. This configuration supports Dynamic

Multi-Pathing (DMP) by VERITAS Volume Manager, the Alternate Pathing (AP)

software in the Solaris operating environment, or Sun StorEdge Traffic Manager

software for failover only.

The following three global parameters must be set on the master controller unit, as

follows:

■ mp_support = rw or mpxio

■ cache mode = auto

■ cache mirroring = auto

For information on setting these parameters, refer to the Sun StorEdge T3 and T3+

Array Administrator’s Guide.
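A configuration audit script can compare recorded settings against these three requirements. The Python sketch below is a minimal illustration. The dictionary keys `cache` and `mirror` are assumed names for the cache mode and cache mirroring settings, and the function does not query the array; the values must be collected by other means.

```python
# Allowed values for a redundant partner group, as listed above.
REQUIRED_SETTINGS = {
    "mp_support": {"rw", "mpxio"},
    "cache": {"auto"},    # cache mode (key name is an assumption)
    "mirror": {"auto"},   # cache mirroring (key name is an assumption)
}


def check_partner_group_settings(settings: dict) -> dict:
    """Return the settings that are missing or have a disallowed value.

    The result maps each offending key to the value found (None if absent).
    An empty dict means all three requirements are met.
    """
    return {
        key: settings.get(key)
        for key, allowed in REQUIRED_SETTINGS.items()
        if settings.get(key) not in allowed
    }


print(check_partner_group_settings(
    {"mp_support": "none", "cache": "auto", "mirror": "auto"}
))
```

In this example only `mp_support` is reported, because its value is neither `rw` nor `mpxio`.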


Host Multipathing Management Software

While Sun StorEdge T3 and T3+ arrays are redundant devices that automatically

reconfigure whenever a failure occurs on any internal component, a host-based

solution is needed for a redundant data path. Supported multipathing solutions

include:

■ The DMP feature in VERITAS Volume Manager

■ Sun Enterprise Server Alternate Pathing software

■ Sun StorEdge Traffic Manager software

During normal operation, I/O moves on the host channel connected to the controller

that owns the LUNs. This path is a primary path. During failover operation, the

multipathing software directs all I/O to the alternate channel’s controller. This path

is the failover path.

When a controller in the master controller unit fails, the alternate master controller

unit becomes the master. When the failed controller is repaired, the new controller

immediately boots, goes online and becomes the alternate master controller unit. The

former alternate master controller unit remains the master controller unit.

Note – The multipathing software solution must be installed on the application host

to achieve a fully redundant configuration.

FIGURE 4-3 shows a failover configuration.

FIGURE 4-3 Failover Configuration

[Diagram labels: alternate master controller unit (LUN 1), interconnect cables, master controller unit (LUN 0), FC-AL connection, Ethernet connections, LAN, application host with two HBAs, management host, Ethernet port. Path labels: primary LUN 1 and failover LUN 0; primary LUN 0 and failover LUN 1]

Single Host With Four Controller Units Configured as Two Partner Groups

FIGURE 4-4 shows one application host connected through FC-AL cables to four

arrays configured as two separate partner groups. This configuration can be used for

capacity and I/O throughput requirements. Host-based Alternate Pathing software

is required for this configuration.

Note – This configuration is a recommended enterprise configuration for RAS

functionality because the controller is not a single point of failure.

The following three parameters must be set on the master controller unit, as follows:

■ mp_support = rw or mpxio

■ cache mode = auto

■ cache mirroring = auto

For information on setting these parameters, refer to the Sun StorEdge T3 and T3+

Array Administrator’s Guide.

FIGURE 4-4 Single Host With Four Controller Units Configured as Two Partner Groups

[Diagram labels: alternate master controller unit, interconnect cables, master controller unit, FC-AL, Ethernet, application host with four HBAs, Ethernet port, LAN, management host]

Single Host With Eight Controller Units Configured as Four Partner Groups

FIGURE 4-5 shows one application host connected through FC-AL cables to eight Sun

StorEdge T3+ arrays, forming four partner groups. This configuration is the

maximum allowed in a 72-inch cabinet. This configuration can be used for footprint

and I/O throughput.

Note – This configuration is a recommended enterprise configuration for RAS

functionality because the controller is not a single point of failure.

The following three parameters must be set on the master controller unit, as follows:

■ mp_support = rw or mpxio

■ cache mode = auto

■ cache mirroring = auto

Note – For information on setting these parameters, refer to the Sun StorEdge T3 and

T3+ Array Administrator’s Guide.

Host-based multipathing software is required for this configuration.

FIGURE 4-5 Single Host With Eight Controller Units Configured as Four Partner Groups

[Diagram labels: alternate master controller unit, interconnect cables, master controller unit, FC-AL, Ethernet, application host with eight HBAs, Ethernet port, LAN, management host]

Hub Host Connection

The following sample configurations are included in this section:

■ “Single Host With Two Hubs and Four Controller Units Configured as Two

Partner Groups” on page 34

■ “Single Host With Two Hubs and Eight Controller Units Configured as Four

Partner Groups” on page 36

■ “Dual Hosts With Two Hubs and Four Controller Units” on page 38

■ “Dual Hosts With Two Hubs and Eight Controller Units” on page 40

■ “Dual Hosts With Two Hubs and Four Controller Units Configured as Two

Partner Groups” on page 42

■ “Dual Hosts With Two Hubs and Eight Controller Units Configured as Four

Partner Groups” on page 44

Single Host With Two Hubs and Four Controller Units Configured as Two Partner Groups

FIGURE 4-6 shows one application host connected through FC-AL cables to two hubs

and two array partner groups. The Ethernet connection on the master controller unit

is on a public or separate network and requires an IP address for the partner group.

In the event of a failover, the alternate master controller unit will use the master

controller unit’s IP address and MAC address.

Note – This configuration is a recommended enterprise configuration for RAS

functionality because the controller is not a single point of failure.

Note – There are no hub port position dependencies when connecting arrays to a

hub. Arrays can be connected to any available port on the hub.

Each array needs to be assigned a unique target address using the port set

command. These target addresses can be any number between 1 and 125. At the

factory, the array target addresses are set starting with target address 1 for the

bottom array and continuing to the top array. Use the port list command to

verify that all arrays have a unique target address. Refer to Appendix A of the Sun

StorEdge T3 and T3+ Array Administrator’s Guide for further details.
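When many arrays share a loop, it can help to check a planned address assignment before applying it. The Python sketch below validates a mapping of array names to target addresses. It treats the range 1 to 125 as inclusive, which is an assumption, and the function and array names are hypothetical. It does not run the port commands on the array.

```python
from collections import defaultdict

MIN_TARGET, MAX_TARGET = 1, 125  # assumed inclusive


def check_target_addresses(addresses: dict) -> list:
    """Return a list of problems found in {array_name: target_address}."""
    problems = []
    users = defaultdict(list)
    for name, target in addresses.items():
        if not MIN_TARGET <= target <= MAX_TARGET:
            problems.append(f"{name}: target {target} is outside 1-125")
        users[target].append(name)
    for target, names in sorted(users.items()):
        if len(names) > 1:
            problems.append(f"target {target} is shared by {', '.join(names)}")
    return problems


plan = {"array1": 1, "array2": 2, "array3": 2, "array4": 126}
for problem in check_target_addresses(plan):
    print(problem)
```

Here the plan is rejected twice: `array4` is out of range, and `array2` and `array3` share target address 2.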


The following three parameters must be set on the master controller unit, as follows:

■ mp_support = rw or mpxio

■ cache mode = auto

■ cache mirroring = auto

Note – For information on setting these parameters, refer to the Sun StorEdge T3 and

T3+ Array Administrator’s Guide.

Host-based multipathing software is required for this configuration.

FIGURE 4-6 Single Host With Two Hubs and Four Controller Units Configured as Two Partner Groups

[Diagram labels: application host with two HBAs, two hubs, alternate master controller unit, interconnect cables, master controller unit, FC-AL, Ethernet, Ethernet port, management host, LAN]

Single Host With Two Hubs and Eight Controller Units Configured as Four Partner Groups

FIGURE 4-7 shows one application host connected through FC-AL cables to two hubs

and to eight Sun StorEdge T3+ arrays, forming four partner groups. This

configuration is the maximum allowed in a 72-inch cabinet. This configuration can

be used for footprint and I/O throughput.

Note – This configuration is a recommended enterprise configuration for RAS

functionality because the controller is not a single point of failure.

Note – There are no hub port position dependencies when connecting arrays to a

hub. An array can be connected to any available port on the hub.

Each array needs to be assigned a unique target address using the port set

command. These target addresses can be any number between 1 and 125. At the

factory, the array target addresses are set starting with target address 1 for the

bottom array and continuing to the top array. Use the port list command to

verify that all arrays have a unique target address. Refer to Appendix A of the Sun

StorEdge T3 and T3+ Array Administrator’s Guide for further details.

The following three parameters must be set on the master controller unit, as follows:

■ mp_support = rw or mpxio

■ cache mode = auto

■ cache mirroring = auto

Note – For information on setting these parameters, refer to the Sun StorEdge T3 and

T3+ Array Administrator’s Guide.

Host-based multipathing software is required for this configuration.

FIGURE 4-7 Single Host With Two Hubs and Eight Controller Units Configured as Four Partner Groups

[Diagram labels: application host with two HBAs, two hubs, alternate master controller unit, interconnect cables, master controller unit, FC-AL, Ethernet, LAN, Ethernet port, management host]

Dual Hosts With Two Hubs and Four Controller Units

FIGURE 4-8 shows two application hosts connected through FC-AL cables to two hubs

and four Sun StorEdge T3+ arrays. This configuration, also known as a multi-initiator

configuration, can be used for footprint and I/O throughput. The following

limitations should be evaluated when proceeding with this configuration:

■ Avoid the risk caused by any array or data path single point of failure using host-

based mirroring software such as VERITAS Volume Manager or Solaris Volume

Manager.

■ When configuring more than a single array to share a single FC-AL loop, as with

a hub, array target addresses need to be set to unique values.

This configuration is not recommended for RAS functionality because the

controller is a single point of failure.

Note – There are no hub port position dependencies when connecting arrays to a

hub. An array can be connected to any available port on the hub.

Each array needs to be assigned a unique target address using the port set

command. These target addresses can be any number between 1 and 125. At the

factory, the array target addresses are set starting with target address 1 for the

bottom array and continuing to the top array. Use the port list command to

verify that all arrays have a unique target address. Refer to Appendix A of the Sun

StorEdge T3 and T3+ Array Administrator’s Guide for further details.
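Before changing any addresses, it can help to check a planned address assignment on paper. The following Python sketch is an illustration only: it does not communicate with an array, and the function name and array labels are hypothetical. It checks the two rules stated above for arrays that share one FC-AL loop: each target address lies between 1 and 125, and no two arrays use the same address.

```python
from collections import Counter

MIN_TARGET, MAX_TARGET = 1, 125  # address range stated in the text


def check_target_addresses(loop):
    """Return a list of problems found in one loop's address plan.

    `loop` maps a hypothetical array label to its planned target address.
    """
    problems = []
    # Rule 1: every address must fall inside the permitted range.
    for name, addr in loop.items():
        if not MIN_TARGET <= addr <= MAX_TARGET:
            problems.append(
                f"{name}: address {addr} is outside {MIN_TARGET}-{MAX_TARGET}"
            )
    # Rule 2: no address may be used by more than one array on the loop.
    counts = Counter(loop.values())
    for addr, n in sorted(counts.items()):
        if n > 1:
            shared = sorted(name for name, a in loop.items() if a == addr)
            problems.append(f"address {addr} is shared by {', '.join(shared)}")
    return problems


# Example plan with one duplicate and one out-of-range address.
plan = {"array1": 1, "array2": 2, "array3": 2, "array4": 130}
for problem in check_target_addresses(plan):
    print(problem)
```

An empty list means the plan satisfies both rules. The addresses still have to be applied with the port set command and confirmed with the port list command.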

The following two parameters must be set on the master controller unit, as follows:

■ cache mode = auto

■ cache mirroring = auto

Note – For information on setting these parameters, refer to the Sun StorEdge T3 and

T3+ Array Administrator’s Guide.


[Figure labels: Application host 1, Application host 2, HBA, Hub, FC-AL, Controller unit, Ethernet, Ethernet port, LAN, Management host]

FIGURE 4-8 Dual Hosts With Two Hubs and Four Controller Units


Dual Hosts With Two Hubs and Eight Controller Units

FIGURE 4-9 shows two application hosts connected through FC-AL cables to two hubs

and eight Sun StorEdge T3+ arrays. This configuration, also known as a multi-initiator

configuration, can be used for footprint and I/O throughput. Evaluate the following

limitations before proceeding with this configuration:

■ Avoid the risk caused by any array or data path single point of failure by using host-based mirroring software such as VERITAS Volume Manager or Solaris Volume Manager.

Note – When this configuration runs host-based mirroring features from VERITAS Volume Manager or Solaris Volume Manager, the data on four arrays is mirrored to the other four arrays.

■ When configuring more than a single array to share a single FC-AL loop, as with

a hub, array target addresses need to be set to unique values.

This configuration is not recommended for RAS functionality because the controller is a single point of failure.

Note – There are no hub port position dependencies when connecting arrays to a

hub. An array can be connected to any available port on the hub.

Each array needs to be assigned a unique target address using the port set

command. These target addresses can be any number between 1 and 125. At the

factory, the array target addresses are set starting with target address 1 for the

bottom array and continuing to the top array. Use the port list command to

verify that all arrays have a unique target address. Refer to Appendix A of the Sun

StorEdge T3 and T3+ Array Administrator’s Guide for further details.
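Duplicate addresses can occur when arrays that were numbered separately end up on the same loop, for instance two sets of arrays that each start at target address 1. The following Python sketch is a hypothetical planning aid, not an array command: given the current addresses listed from the bottom array to the top array, it keeps the first use of each address and moves each later duplicate to the lowest unused address between 1 and 125. The function name and the reassignment policy are assumptions chosen for illustration.

```python
def resolve_duplicates(addresses, low=1, high=125):
    """Return a new bottom-to-top address list with duplicates reassigned.

    The first array to use an address keeps it. Each later duplicate
    receives the lowest address in low..high that no array currently uses.
    Addresses outside low..high are left unchanged and must be fixed by hand.
    """
    in_use = set(addresses)
    # Lazily yields unused addresses in ascending order.
    free = (a for a in range(low, high + 1) if a not in in_use)
    seen = set()
    result = []
    for addr in addresses:
        if addr in seen:
            addr = next(free)  # raises StopIteration if no address is left
        seen.add(addr)
        result.append(addr)
    return result


# Eight arrays where the second set of four repeats addresses 1 through 4.
print(resolve_duplicates([1, 2, 3, 4, 1, 2, 3, 4]))
```

The result is only a proposal. Each changed address still has to be set on the corresponding array with the port set command and verified with the port list command.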

The following two parameters must be set on the master controller unit, as follows:

■ cache mode = auto

■ cache mirroring = auto

Note – For information on setting these parameters, refer to the Sun StorEdge T3 and

T3+ Array Administrator’s Guide.


[Figure labels: Application host 1, Application host 2, HBA, Hub, FC-AL, Controller unit, Ethernet, Ethernet port, LAN, Management host]

FIGURE 4-9 Dual Hosts With Two Hubs and Eight Controller Units


Dual Hosts With Two Hubs and Four Controller Units Configured as Two Partner Groups

FIGURE 4-10 shows two application hosts connected through FC-AL cables to two hubs

and four Sun StorEdge T3+ arrays forming two partner groups. This multi-initiator

configuration can be used for footprint and I/O throughput.

Note – This configuration is a recommended enterprise configuration for RAS

functionality because the controller is not a single point of failure.

Note – There are no hub port position dependencies when connecting arrays to a

hub. An array can be connected to any available port on the hub.

Each array needs to be assigned a unique target address using the port set

command. These target addresses can be any number between 1 and 125. At the

factory, the array target addresses are set starting with target address 1 for the

bottom array and continuing to the top array. Use the port list command to

verify that all arrays have a unique target address. Refer to Appendix A of the Sun

StorEdge T3 and T3+ Array Administrator’s Guide for further details.

The following three parameters must be set on the master controller unit, as follows:

■ mp_support = rw or mpxio

■ cache mode = auto

■ cache mirroring = auto

Note – For information on setting these parameters, refer to the Sun StorEdge T3 and

T3+ Array Administrator’s Guide.

Host-based multipathing software is required for this configuration.
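When several partner groups are being prepared, the required settings can be compared against values noted down from each master controller unit. The following Python sketch is a minimal illustration under stated assumptions: the dictionary keys simply repeat the parameter names from the list above and are not array command syntax, the function name is hypothetical, and the recorded values are made-up sample data.

```python
# Required values taken from the parameter list in the text.
# Keys are descriptive labels only, not array CLI syntax.
REQUIRED_PARTNER_GROUP = {
    "mp_support": {"rw", "mpxio"},
    "cache mode": {"auto"},
    "cache mirroring": {"auto"},
}


def settings_mismatches(recorded, required=REQUIRED_PARTNER_GROUP):
    """Compare recorded master-unit settings with the required values.

    Returns one message per parameter that is missing or has a value
    outside the allowed set.
    """
    mismatches = []
    for param, allowed in required.items():
        value = recorded.get(param)
        if value not in allowed:
            expected = " or ".join(sorted(allowed))
            mismatches.append(f"{param}: found {value!r}, expected {expected}")
    return mismatches


# Hypothetical values noted down from one master controller unit.
recorded = {"mp_support": "none", "cache mode": "auto", "cache mirroring": "auto"}
print(settings_mismatches(recorded))
```

An empty list means the recorded values match the requirements for a partner group. For configurations without partner groups, only the two cache parameters apply, so a smaller dictionary could be passed as `required`.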


[Figure labels: Application host 1, Application host 2, HBA, Hub, FC-AL, Master controller unit, Alternate master controller unit, Interconnect cables, Ethernet, Ethernet port, LAN, Management host]

FIGURE 4-10 Dual Hosts With Two Hubs and Four Controller Units Configured as Two Partner Groups


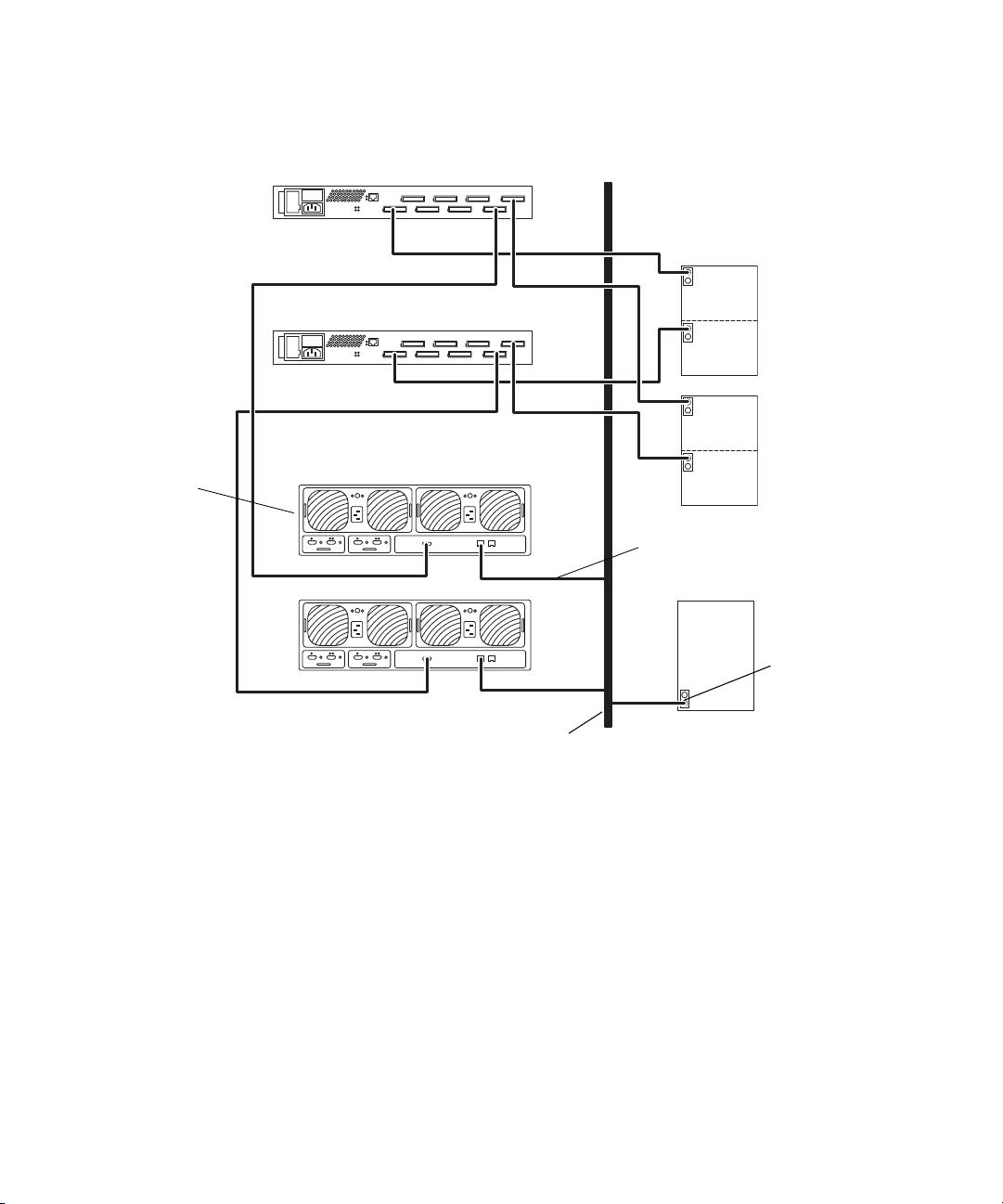

Dual Hosts With Two Hubs and Eight Controller Units Configured as Four Partner Groups

FIGURE 4-11 shows two application hosts connected through FC-AL cables to two hubs

and eight Sun StorEdge T3+ arrays forming four partner groups. This multi-initiator

configuration can be used for footprint and I/O throughput.

This configuration is a recommended enterprise configuration for RAS functionality

because the controller is not a single point of failure.

Note – There are no hub port position dependencies when connecting Sun StorEdge

T3 and T3+ arrays to a hub. An array can be connected to any available port on the

hub.

When configuring more than one partner group or a single array to share a single

FC-AL loop, as with a hub, array target addresses need to be set to unique values.

Assign the array target address using the port set command. These target

addresses can be any number between 1 and 125. At the factory, the array target

addresses are set starting with target address 1 for the bottom array and continuing

to the top array. Use the port list command to verify that all arrays have a

unique target address. Refer to Appendix A of the Sun StorEdge T3 and T3+ Array

Administrator’s Guide for further details.

The following three parameters must be set on the master controller unit, as follows:

■ mp_support = rw or mpxio

■ cache mode = auto

■ cache mirroring = auto

Note – For information on setting these parameters, refer to the Sun StorEdge T3 and

T3+ Array Administrator’s Guide.

Host-based multipathing software is required for this configuration.


[Figure labels: Application host 1, Application host 2, HBA, Hub, FC-AL, Master controller unit, Alternate master controller unit, Interconnect cables, Ethernet, Ethernet port, LAN, Management host]

FIGURE 4-11 Dual Hosts With Two Hubs and Eight Controller Units Configured as Four Partner Groups


Switch Host Connection

This section contains the following example configurations:

■ “Dual Hosts With Two Switches and Two Controller Units” on page 46

■ “Dual Hosts With Two Switches and Eight Controller Units” on page 48

Dual Hosts With Two Switches and Two Controller Units

FIGURE 4-12 shows two application hosts connected through FC-AL cables to two

switches and two Sun StorEdge T3+ arrays. This multi-initiator configuration can be

used for footprint and I/O throughput.

Note – This configuration is not recommended for RAS functionality because the controller is a single point of failure.

Evaluate the following limitations before proceeding with this configuration:

■ Avoid the risk caused by any array or data path single point of failure by using host-based mirroring software such as VERITAS Volume Manager or Solaris Volume Manager.

■ When configuring more than a single array to share a single FC-AL loop, as with

a hub, array target addresses need to be set to unique values.

Each array needs to be assigned a unique target address using the port set

command. These target addresses can be any number between 1 and 125. At the

factory, the array target addresses are set starting with target address 1 for the