Page 1

Sun GlassFish Enterprise Server

2.1 Performance Tuning Guide

Sun Microsystems, Inc.

4150 Network Circle

Santa Clara, CA 95054

U.S.A.

Part No: 820–4343–10

January 2009

Page 2

Copyright 2009 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved.

Sun Microsystems, Inc. has intellectual property rights relating to technology embodied in the product that is described in this document. In particular, and without

limitation, these intellectual property rights may include one or more U.S. patents or pending patent applications in the U.S. and in other countries.

U.S. Government Rights – Commercial software. Government users are subject to the Sun Microsystems, Inc. standard license agreement and applicable provisions

of the FAR and its supplements.

This distribution may include materials developed by third parties.

Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other

countries, exclusively licensed through X/Open Company, Ltd.

Sun, Sun Microsystems, the Sun logo, the Solaris logo, the Java Coffee Cup logo, docs.sun.com, OpenSolaris, Java, and Solaris are trademarks or registered

trademarks of Sun Microsystems, Inc. or its subsidiaries in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or

registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed

by Sun Microsystems, Inc.

The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun's licensees who implement OPEN LOOK GUIs and otherwise comply with Sun's written license agreements.

Products covered by and information contained in this publication are controlled by U.S. Export Control laws and may be subject to the export or import laws in other countries. Nuclear, missile, chemical or biological weapons or nuclear maritime end uses or end users, whether direct or indirect, are strictly prohibited. Export or reexport to countries subject to U.S. embargo or to entities identified on U.S. export exclusion lists, including, but not limited to, the denied persons and specially designated nationals lists is strictly prohibited.

DOCUMENTATION IS PROVIDED “AS IS” AND ALL EXPRESS OR IMPLIED CONDITIONS, REPRESENTATIONS AND WARRANTIES, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE OR NON-INFRINGEMENT, ARE DISCLAIMED, EXCEPT TO THE EXTENT THAT SUCH DISCLAIMERS ARE HELD TO BE LEGALLY INVALID.



Page 3

Contents

Preface ...................................................................................................................................................13

1 Overview of Enterprise Server Performance Tuning ..................................................................... 17

Process Overview ................................................................................................................................ 17

▼ Performance Tuning Sequence .................................................................................................. 18

Understanding Operational Requirements ..................................................................................... 19

Application Architecture ............................................................................................................ 19

Security Requirements ................................................................................................................ 21

Hardware Resources .................................................................................................................... 22

Administration ............................................................................................................................. 23

General Tuning Concepts .................................................................................................................. 23

Capacity Planning ........................................................................................................................ 24

User Expectations ........................................................................................................................ 25

Further Information ............................................................................................................................ 26

2 Tuning Your Application .....................................................................................................................27

Java Programming Guidelines ........................................................................................................... 27

Avoid Serialization and Deserialization .................................................................................... 27

Java Server Page and Servlet Tuning ................................................................................................. 29

Suggested Coding Practices ........................................................................................................30

EJB Performance Tuning .................................................................................................................... 32

Goals .............................................................................................................................................. 32

Monitoring EJB Components .................................................................................................... 32

General Guidelines ...................................................................................................................... 35

Using Local and Remote Interfaces ........................................................................................... 36

Improving Performance of EJB Transactions .......................................................................... 38

Using Special Techniques ........................................................................................................... 39

Page 4

Tuning Tips for Specific Types of EJB Components ............................................................... 42

JDBC and Database Access ......................................................................................................... 46

Tuning Message-Driven Beans .................................................................................................. 47

3 Tuning the Enterprise Server ............................................................................................................. 49

Deployment Settings ........................................................................................................................... 49

Disable Auto-deployment ........................................................................................................... 50

Use Pre-compiled JavaServer Pages ........................................................................................... 50

Disable Dynamic Application Reloading .................................................................................. 50

Logger Settings ..................................................................................................................................... 50

General Settings ........................................................................................................................... 51

Log Levels ...................................................................................................................................... 51

Web Container Settings ...................................................................................................................... 51

Session Properties: Session Timeout ......................................................................................... 51

Manager Properties: Reap Interval ............................................................................................ 52

Disable Dynamic JSP Reloading ................................................................................................ 52

EJB Container Settings ........................................................................................................................ 53

Monitoring the EJB Container ................................................................................................... 53

Tuning the EJB Container ...........................................................................................................53

Java Message Service Settings ............................................................................................................. 58

Transaction Service Settings .............................................................................................................. 58

Monitoring the Transaction Service .......................................................................................... 58

Tuning the Transaction Service ................................................................................................. 59

HTTP Service Settings ........................................................................................................................ 60

Monitoring the HTTP Service .................................................................................................... 60

Connection Queue ....................................................................................................................... 64

Tuning the HTTP Service ........................................................................................................... 64

Tuning HTTP Listener Settings ................................................................................................. 69

ORB Settings ........................................................................................................................................ 70

Overview ....................................................................................................................................... 70

How a Client Connects to the ORB ............................................................................................ 70

Monitoring the ORB .................................................................................................................... 70

Tuning the ORB ........................................................................................................................... 71

Thread Pool Sizing ....................................................................................................................... 74

Examining IIOP Messages .......................................................................................................... 74

Sun GlassFish Enterprise Server 2.1 Performance Tuning Guide • January 2009

Page 5

Improving ORB Performance with Java Serialization ............................................................. 75

Thread Pool Settings ........................................................................................................................... 76

Tuning Thread Pools (Unix/Linux only) ................................................................................. 76

Resources .............................................................................................................................................. 77

JDBC Connection Pool Settings ................................................................................................. 77

Connector Connection Pool Settings ........................................................................................ 80

4 Tuning the Java Runtime System ......................................................................................................83

Java Virtual Machine Settings ............................................................................................................ 83

Managing Memory and Garbage Collection .................................................................................... 84

Tuning the Garbage Collector .................................................................................................... 84

Tracing Garbage Collection ........................................................................................................86

Other Garbage Collector Settings .............................................................................................. 86

Tuning the Java Heap .................................................................................................................. 87

Rebasing DLLs on Windows ...................................................................................................... 89

Further Information ............................................................................................................................ 91

5 Tuning the Operating System and Platform ................................................................................... 93

Server Scaling ....................................................................................................................................... 93

Processors ..................................................................................................................................... 93

Memory ......................................................................................................................................... 94

Disk Space ..................................................................................................................................... 94

Networking ................................................................................................................................... 94

Solaris 10 Platform-Specific Tuning Information ........................................................................... 95

Tuning for the Solaris OS ................................................................................................................... 95

Tuning Parameters ...................................................................................................................... 95

File Descriptor Setting ................................................................................................................. 97

Linux Configuration ........................................................................................................................... 97

Tuning for Solaris on x86 ................................................................................................................... 98

File Descriptors ............................................................................................................................ 99

IP Stack Settings ........................................................................................................................... 99

Tuning for Linux platforms ............................................................................................................. 100

File Descriptors .......................................................................................................................... 100

Virtual Memory ......................................................................................................................... 101

Network Interface ...................................................................................................................... 102

Page 6

Disk I/O Settings ........................................................................................................................ 102

TCP/IP Settings .......................................................................................................................... 102

Tuning UltraSPARC T1–Based Systems ........................................................................................ 103

Tuning Operating System and TCP Settings .......................................................................... 103

Disk Configuration .................................................................................................................... 105

Network Configuration ............................................................................................................. 105

Start Options ............................................................................................................................... 105

6 Tuning for High-Availability ............................................................................................................107

Tuning HADB .................................................................................................................................... 107

Disk Use ...................................................................................................................................... 107

Memory Allocation .................................................................................................................... 109

Performance ............................................................................................................................... 110

Operating System Configuration ............................................................................................. 116

Tuning the Enterprise Server for High-Availability ...................................................................... 116

Tuning Session Persistence Frequency .................................................................................... 117

Session Persistence Scope .......................................................................................................... 118

Session Size ................................................................................................................................. 118

Checkpointing Stateful Session Beans ..................................................................................... 119

Configuring the JDBC Connection Pool ................................................................................. 119

Configuring the Load Balancer ........................................................................................................ 120

Enabling the Health Checker .................................................................................................... 120

Index ................................................................................................................................................... 123


Page 7

Figures

FIGURE 1–1 Java EE Application Model ....................................................................................... 20

Page 8

Page 9

Tables

TABLE 1–1 Performance Tuning Roadmap ............................................................................... 17

TABLE 1–2 Factors That Affect Performance ............................................................................. 24

TABLE 3–1 Bean Type Pooling or Caching ................................................................................ 53

TABLE 3–2 EJB Cache and Pool Settings .................................................................................... 56

TABLE 3–3 Tunable ORB Settings ...............................................................................................71

TABLE 3–4 Connection Pool Sizing ............................................................................................ 78

TABLE 4–1 Maximum Address Space Per Process .................................................................... 87

TABLE 5–1 Tuning Parameters for Solaris .................................................................................95

TABLE 5–2 Tuning 64–bit Systems for Performance Benchmarking ...................................104

Page 10

Page 11

Examples

EXAMPLE 4–1 Heap Configuration on Solaris ................................................................................89

EXAMPLE 4–2 Heap Configuration on Windows ........................................................................... 90

Page 12

Page 13

Preface

The Performance Tuning Guide describes how to get the best performance with Enterprise

Server.

This preface contains information about and conventions for the entire Sun GlassFish™ Enterprise Server documentation set.

Sun GlassFish Enterprise Server Documentation Set

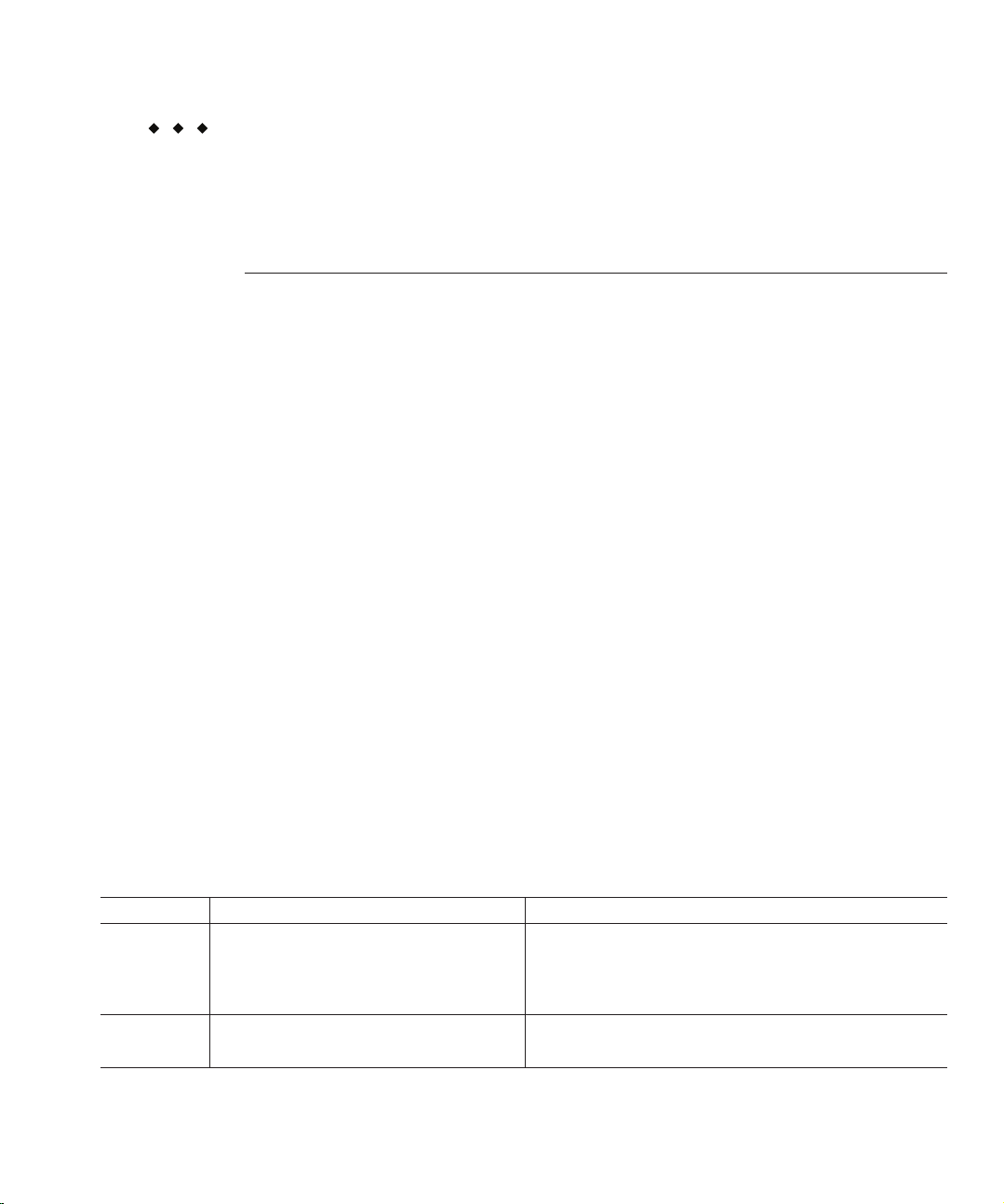

TABLE P–1 Books in the Enterprise Server Documentation Set

| Book Title | Description |
|---|---|
| Documentation Center | Enterprise Server documentation topics organized by task and subject. |
| Release Notes | Late-breaking information about the software and the documentation. Includes a comprehensive, table-based summary of the supported hardware, operating system, Java Development Kit (JDK™), and database drivers. |
| Quick Start Guide | How to get started with the Enterprise Server product. |
| Installation Guide | Installing the software and its components. |
| Application Deployment Guide | Deployment of applications and application components to the Enterprise Server. Includes information about deployment descriptors. |
| Developer’s Guide | Creating and implementing Java Platform, Enterprise Edition (Java EE platform) applications intended to run on the Enterprise Server that follow the open Java standards model for Java EE components and APIs. Includes information about developer tools, security, debugging, and creating lifecycle modules. |
| Java EE 5 Tutorial | Using Java EE 5 platform technologies and APIs to develop Java EE applications. |
| Java WSIT Tutorial | Developing web applications using the Web Service Interoperability Technologies (WSIT). Describes how, when, and why to use the WSIT technologies and the features and options that each technology supports. |
| Administration Guide | System administration for the Enterprise Server, including configuration, monitoring, security, resource management, and web services management. |

Page 14

TABLE P–1 Books in the Enterprise Server Documentation Set (Continued)

| Book Title | Description |
|---|---|
| High Availability Administration Guide | Setting up clusters, working with node agents, and using load balancers. |
| Administration Reference | Editing the Enterprise Server configuration file, domain.xml. |
| Performance Tuning Guide | Tuning the Enterprise Server to improve performance. |
| Reference Manual | Utility commands available with the Enterprise Server; written in man page style. Includes the asadmin command line interface. |

Default Paths and File Names

The following table describes the default paths and file names that are used in this book.

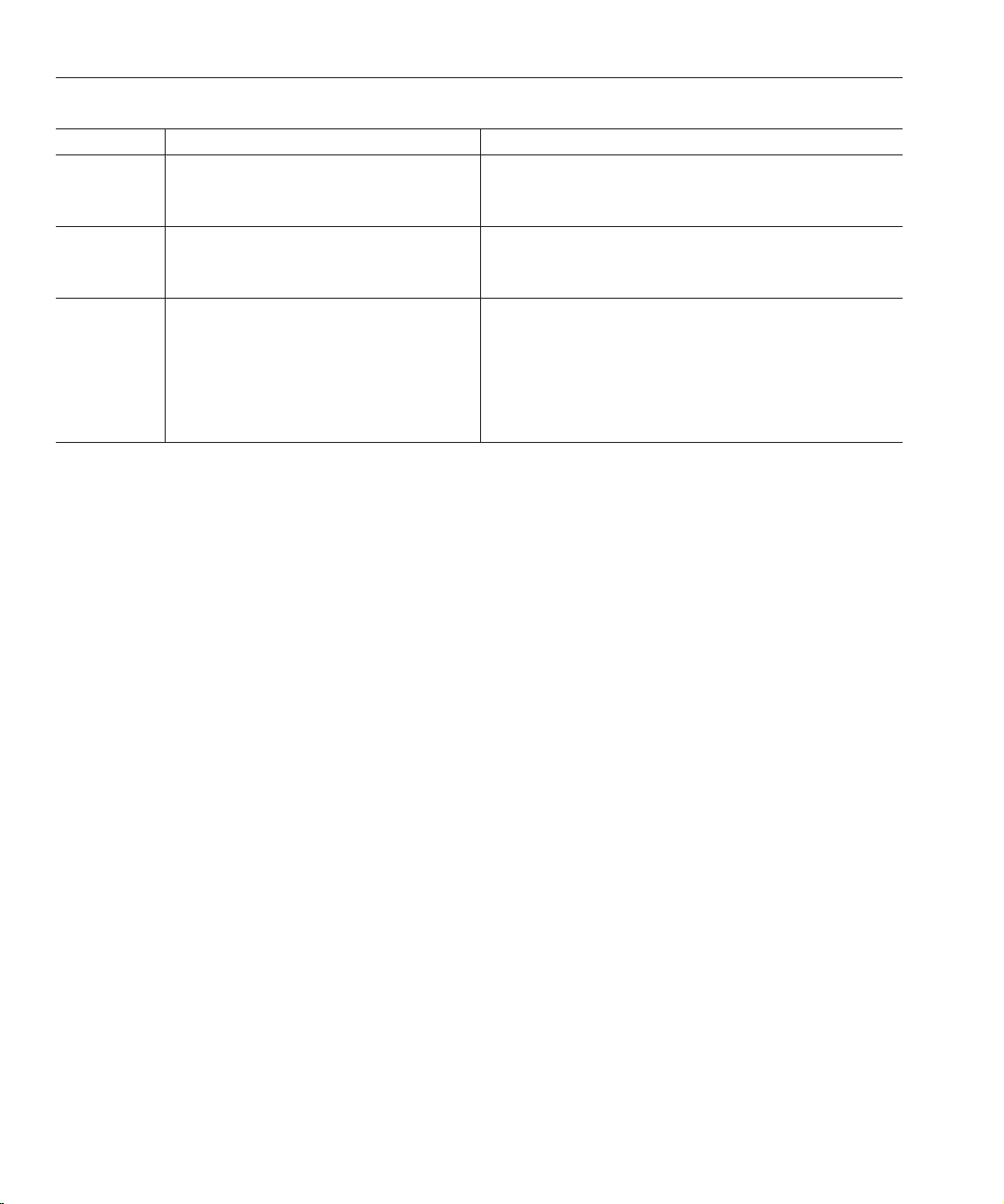

TABLE P–2 Default Paths and File Names

| Placeholder | Description | Default Value |
|---|---|---|
| as-install | Represents the base installation directory for Enterprise Server. | Solaris™ and Linux installations, non-root user: user’s-home-directory/SUNWappserver; Solaris and Linux installations, root user: /opt/SUNWappserver; Windows, all installations: SystemDrive:\Sun\AppServer |
| domain-root-dir | Represents the directory containing all domains. | All installations: as-install/domains/ |
| domain-dir | Represents the directory for a domain. In configuration files, you might see domain-dir represented as follows: ${com.sun.aas.instanceRoot} | domain-root-dir/domain-dir |
| instance-dir | Represents the directory for a server instance. | domain-dir/instance-dir |
| samples-dir | Represents the directory containing sample applications. | as-install/samples |
| docs-dir | Represents the directory containing documentation. | as-install/docs |

Page 15

Typographic Conventions

The following table describes the typographic changes that are used in this book.

TABLE P–3 Typographic Conventions

| Typeface | Meaning | Example |
|---|---|---|
| AaBbCc123 | The names of commands, files, and directories, and onscreen computer output | Edit your .login file. Use ls -a to list all files. machine_name% you have mail. |
| AaBbCc123 | What you type, contrasted with onscreen computer output | machine_name% su Password: |
| AaBbCc123 | A placeholder to be replaced with a real name or value | The command to remove a file is rm filename. |
| AaBbCc123 | Book titles, new terms, and terms to be emphasized (note that some emphasized items appear bold online) | Read Chapter 6 in the User's Guide. A cache is a copy that is stored locally. Do not save the file. |

Symbol Conventions

The following table explains symbols that might be used in this book.

TABLE P–4 Symbol Conventions

| Symbol | Description | Example | Meaning |
|---|---|---|---|
| [ ] | Contains optional arguments and command options. | ls [-l] | The -l option is not required. |
| { \| } | Contains a set of choices for a required command option. | -d {y\|n} | The -d option requires that you use either the y argument or the n argument. |
| ${ } | Indicates a variable reference. | ${com.sun.javaRoot} | References the value of the com.sun.javaRoot variable. |
| - | Joins simultaneous multiple keystrokes. | Control-A | Press the Control key while you press the A key. |
| + | Joins consecutive multiple keystrokes. | Ctrl+A+N | Press the Control key, release it, and then press the subsequent keys. |

Page 16

TABLE P–4 Symbol Conventions (Continued)

| Symbol | Description | Example | Meaning |
|---|---|---|---|
| → | Indicates menu item selection in a graphical user interface. | File → New → Templates | From the File menu, choose New. From the New submenu, choose Templates. |

Documentation, Support, and Training

The Sun web site provides information about the following additional resources:

■ Documentation (http://www.sun.com/documentation/)

■ Support (http://www.sun.com/support/)

■ Training (http://www.sun.com/training/)

Third-Party Web Site References

Third-party URLs are referenced in this document and provide additional, related information.

Note – Sun is not responsible for the availability of third-party web sites mentioned in this document. Sun does not endorse and is not responsible or liable for any content, advertising, products, or other materials that are available on or through such sites or resources. Sun will not be responsible or liable for any actual or alleged damage or loss caused or alleged to be caused by or in connection with use of or reliance on any such content, goods, or services that are available on or through such sites or resources.

Sun Welcomes Your Comments

Sun is interested in improving its documentation and welcomes your comments and suggestions.

To share your comments, go to http://docs.sun.com and click Feedback. In the online form, provide the document title and part number. The part number is a seven-digit or nine-digit number that can be found on the title page of the book or at the top of the document.

Page 17

CHAPTER 1


Overview of Enterprise Server Performance Tuning

You can significantly improve performance of the Sun GlassFish Enterprise Server and of applications deployed to it by adjusting a few deployment and server configuration settings. However, it is important to understand the environment and performance goals. An optimal configuration for a production environment might not be optimal for a development environment.

This chapter discusses the following topics:

■ “Process Overview” on page 17

■ “Understanding Operational Requirements” on page 19

■ “General Tuning Concepts” on page 23

■ “Further Information” on page 26

Process Overview

The following table outlines the overall administration process, and shows where performance tuning fits in the sequence.

TABLE 1–1 Performance Tuning Roadmap

| Step | Description of Task | Location of Instructions |
|---|---|---|
| 1 | Design: Decide on the high-availability topology and set up the Application Server and, if you are using HADB for session persistence, high-availability database (HADB) systems. | Deployment Planning Guide |
| 2 | Capacity Planning: Make sure the systems have sufficient resources to perform well. | Deployment Planning Guide |
| 3 | Installation: If you are using HADB for session persistence, ensure that the HADB software is installed. | Installation Guide |
| 4 | Deployment: Install and run your applications. Familiarize yourself with how to configure and administer the Enterprise Server. | Application Deployment Guide; Administration Guide |
| 5 | Tuning: Tune the following items: applications; Enterprise Server; Java Runtime System; operating system and platform; high availability features. | The following chapters: Chapter 2, “Tuning Your Application”; Chapter 3, “Tuning the Enterprise Server”; Chapter 4, “Tuning the Java Runtime System”; Chapter 5, “Tuning the Operating System and Platform”; Chapter 6, “Tuning for High-Availability” |

▼ Performance Tuning Sequence

Application developers should tune applications prior to production use. Tuning applications often produces dramatic performance improvements. System administrators perform the remaining steps in the following list after tuning the application, or when application tuning has to wait and you want to improve performance as much as possible in the meantime.

Ideally, follow this sequence of steps when you are tuning performance:

1. Tune your application, described in Chapter 2, “Tuning Your Application”
2. Tune the server, described in Chapter 3, “Tuning the Enterprise Server”
3. Tune the high availability database, described in Chapter 6, “Tuning for High-Availability”
4. Tune the Java runtime system, described in Chapter 4, “Tuning the Java Runtime System”
5. Tune the operating system, described in Chapter 5, “Tuning the Operating System and Platform”


Understanding Operational Requirements

Before you begin to deploy and tune your application on the Application Server, it is important to clearly define the operational environment. The operational environment is determined by high-level constraints and requirements such as:

■ “Application Architecture” on page 19
■ “Security Requirements” on page 21
■ “Hardware Resources” on page 22

Application Architecture

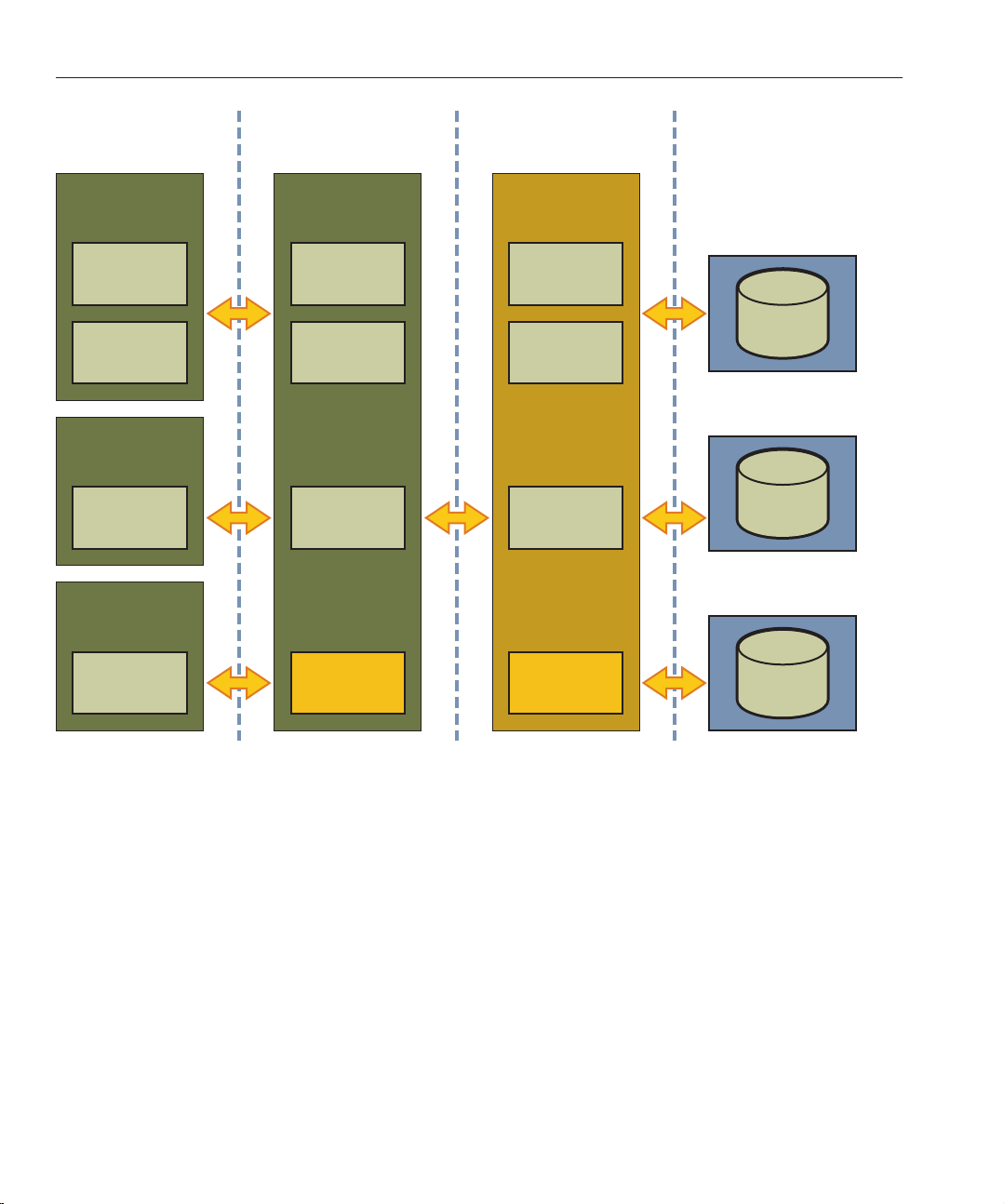

The Java EE application model, as shown in the following figure, is very flexible: it allows the application architect to split application logic functionally into many tiers. The presentation layer is typically implemented using servlets and JSP technology and executes in the web container.


[Figure placeholder. Tiers shown: Client-Side Presentation (browser with pure HTML or Java applet, desktop Java application, other device), Server-Side Presentation (web server with JSP and Java servlets), Server-Side Business Logic (EJB container with EJB components), and Enterprise Information System. The figure also labels a J2EE client and the J2EE platform.]

FIGURE 1–1 Java EE Application Model

Moderately complex enterprise applications can be developed entirely using servlets and JSP technology. More complex business applications often use Enterprise JavaBeans (EJB) components. The Application Server integrates the web and EJB containers in a single process. Local access to EJB components from servlets is very efficient. However, some application deployments may require EJB components to execute in a separate process and to be accessible from standalone client applications as well as servlets. Based on the application architecture, the server administrator can employ the Application Server in multiple tiers, or simply host both the presentation and business logic on a single tier.

It is important to understand the application architecture before designing a new Application

Server deployment, and when deploying a new business application to an existing application

server deployment.


Security Requirements

Most business applications require security. This section discusses security considerations and

decisions.

User Authentication and Authorization

Application users must be authenticated. The Application Server provides three different choices for user authentication: file-based, LDAP, and Solaris.

The default file-based security realm is suitable for developer environments, where new applications are developed and tested. At deployment time, the server administrator can choose between the Lightweight Directory Access Protocol (LDAP) and Solaris security realms. Many large enterprises use LDAP-based directory servers to maintain employee and customer profiles. Small to medium enterprises that do not already use a directory server may find it advantageous to leverage investment in Solaris security infrastructure.

For more information on security realms, see Chapter 9, “Configuring Security,” in Sun GlassFish Enterprise Server 2.1 Administration Guide.

The type of authentication mechanism chosen may require additional hardware for the deployment. Typically a directory server executes on a separate server, and may also require a backup for replication and high availability. Refer to Sun Java System Directory Server documentation for more information on deployment, sizing, and availability guidelines.

An authenticated user’s access to application functions may also need authorization checks. If the application uses the role-based Java EE authorization checks, the application server performs some additional checking, which incurs additional overhead. When you perform capacity planning, you must take this additional overhead into account.

Encryption

For security reasons, sensitive user inputs and application output must be encrypted. Most business-oriented web applications encrypt all or some of the communication flow between the browser and Application Server. Online shopping applications encrypt traffic when the user is completing a purchase or supplying private data. Portal applications such as news and media typically do not employ encryption. Secure Sockets Layer (SSL) is the most common security framework, and is supported by many browsers and application servers.

The Application Server supports SSL 2.0 and 3.0 and contains software support for various

cipher suites. It also supports integration of hardware encryption cards for even higher

performance. Security considerations, particularly when using the integrated software

encryption, will impact hardware sizing and capacity planning.

Consider the following when assessing the encryption needs for a deployment:


■ What is the nature of the applications with respect to security? Do they encrypt all or only a part of the application inputs and output? What percentage of the information needs to be securely transmitted?
■ Are the applications going to be deployed on an application server that is directly connected to the Internet? Will a web server exist in a demilitarized zone (DMZ) separate from the application server tier and backend enterprise systems?
  A DMZ-style deployment is recommended for high security. It is also useful when the application has a significant amount of static text and image content and some business logic that executes on the Application Server, behind the most secure firewall. Application Server provides secure reverse proxy plugins to enable integration with popular web servers. The Application Server can also be deployed and used as a web server in the DMZ.
■ Is encryption required between the web servers in the DMZ and application servers in the next tier? The reverse proxy plugins supplied with Application Server support SSL encryption between the web server and application server tier. If SSL is enabled, hardware capacity planning must take into account the encryption policy and mechanisms.
■ If software encryption is to be employed:
  ■ What is the expected performance overhead for every tier in the system, given the security requirements?
  ■ What are the performance and throughput characteristics of various choices?

For information on how to encrypt the communication between web servers and Application Server, please refer to Chapter 9, “Configuring Security,” in Sun GlassFish Enterprise Server 2.1 Administration Guide.

Hardware Resources

The type and quantity of hardware resources available greatly influence performance tuning and site planning.

The Application Server provides excellent vertical scalability. It can scale to efficiently utilize multiple high-performance CPUs, using just one application server process. A smaller number of application server instances makes maintenance easier and administration less expensive. Also, deploying several related applications on fewer application servers can improve performance, due to better data locality, and reuse of cached data between co-located applications. Such servers must also contain large amounts of memory, disk space, and network capacity to cope with increased load.

The Application Server can also be deployed on large “farms” of relatively modest hardware units. Business applications can be partitioned across various server instances. Using one or more external load balancers can efficiently spread user access across all the application server instances. A horizontal scaling approach may improve availability and lower hardware costs, and is suitable for some types of applications. However, this approach requires administration of more application server instances and hardware nodes.


Administration

A single Application Server installation on a server can encompass multiple instances. A group of one or more instances that are administered by a single Administration Server is called a domain. Grouping server instances into domains permits different people to independently administer the groups.

You can use a single-instance domain to create a “sandbox” for a particular developer and

environment. In this scenario, each developer administers his or her own application server,

without interfering with other application server domains. A small development group may

choose to create multiple instances in a shared administrative domain for collaborative

development.

In a deployment environment, an administrator can create domains based on application and business function. For example, internal Human Resources applications may be hosted on one or more servers in one administrative domain, while external customer applications are hosted on several administrative domains in a server farm.

The Application Server supports virtual server capability for web applications. For example, a web application hosting service provider can host different URL domains on a single Application Server process for efficient administration.

For detailed information on administration, see Sun GlassFish Enterprise Server 2.1 Administration Guide.

General Tuning Concepts

Some key concepts that affect performance tuning are:

■ User load
■ Application scalability
■ Margins of safety

The following table describes these concepts, and how they are measured in practice. The leftmost column describes the general concept, the second column gives the practical ramifications of the concept, the third column describes the measurements, and the rightmost column describes the value sources.

TABLE 1–2 Factors That Affect Performance

| Concept | In practice | Measurement | Value sources |
|---|---|---|---|
| User Load | Concurrent sessions at peak load | Transactions Per Minute (TPM); Web Interactions Per Second (WIPS) | (Max. number of concurrent users) * (expected response time) / (time between clicks). Example: (100 users * 2 sec) / 10 sec = 20 |
| Application Scalability | Transaction rate measured on one CPU | TPM or WIPS | Measured from workload benchmark. Perform at each tier. |
| Vertical scalability | Increase in performance from additional CPUs | Percentage gain per additional CPU | Based on curve fitting from benchmark. Perform tests while gradually increasing the number of CPUs. Identify the “knee” of the curve, where additional CPUs are providing uneconomical gains in performance. Requires tuning as described in this guide. Perform at each tier and iterate if necessary. Stop here if this meets performance requirements. |
| Horizontal scalability | Increase in performance from additional servers | Percentage gain per additional server process and/or hardware node | Use a well-tuned single application server instance, as in previous step. Measure how much each additional server instance and hardware node improves performance. |
| Safety Margins | High availability requirements | If the system must cope with failures, size the system to meet performance requirements assuming that one or more application server instances are non-functional | Different equations used if high availability is required. |
| Safety Margins | Excess capacity for unexpected peaks | It is desirable to operate a server at less than its benchmarked peak, for some safety margin | 80% system capacity utilization at peak loads may work for most installations. Measure your deployment under real and simulated peak loads. |
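As a worked illustration of the user load formula in Table 1–2, the following minimal Java sketch computes the concurrent load from the three inputs and then the capacity needed to stay at a target utilization. The class and method names are hypothetical and are not part of the Enterprise Server. The 80% figure is the rule of thumb from the table, not a guarantee for any particular deployment.

```java
/** Hypothetical helper illustrating the user load estimate from Table 1-2. */
final class UserLoadEstimate {

    /**
     * Concurrent load = (max. concurrent users * expected response time) / time between clicks.
     * Both times must be expressed in the same unit (seconds here).
     */
    static double concurrentRequests(int maxConcurrentUsers,
                                     double responseTimeSeconds,
                                     double timeBetweenClicksSeconds) {
        if (timeBetweenClicksSeconds <= 0) {
            throw new IllegalArgumentException("time between clicks must be positive");
        }
        return maxConcurrentUsers * responseTimeSeconds / timeBetweenClicksSeconds;
    }

    /** Capacity to provision so that the peak load uses only the given fraction (for example 0.8). */
    static double requiredCapacity(double peakLoad, double targetUtilization) {
        if (targetUtilization <= 0 || targetUtilization > 1) {
            throw new IllegalArgumentException("utilization must be in (0, 1]");
        }
        return peakLoad / targetUtilization;
    }

    public static void main(String[] args) {
        // The example from the table: 100 users, 2 s response time, 10 s between clicks.
        double load = concurrentRequests(100, 2.0, 10.0);
        System.out.println("Concurrent requests at peak: " + load);
        System.out.println("Capacity for 80% utilization: " + requiredCapacity(load, 0.8));
    }
}
```

The second method only restates the safety margin idea arithmetically. Real sizing should still be confirmed by measuring the deployment under real and simulated peak loads.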

Capacity Planning

The previous discussion guides you towards defining a deployment architecture. However, you determine the actual size of the deployment by a process called capacity planning. Capacity planning enables you to predict:

■ The performance capacity of a particular hardware configuration.
■ The hardware resources required to sustain specified application load and performance.

You can estimate these values through careful performance benchmarking, using an application with realistic data sets and workloads.


▼ To Determine Capacity

1. Determine performance on a single CPU.

   First determine the largest load that a single processor can sustain. You can obtain this figure by measuring the performance of the application on a single-processor machine. Either leverage the performance numbers of an existing application with similar processing characteristics or, ideally, use the actual application and workload in a testing environment. Make sure that the application and data resources are tiered exactly as they would be in the final deployment.

2. Determine vertical scalability.

   Determine how much additional performance you gain when you add processors. That is, you are indirectly measuring the amount of shared resource contention that occurs on the server for a specific workload. Either obtain this information based on additional load testing of the application on a multiprocessor system, or leverage existing information from a similar application that has already been load tested.

   Running a series of performance tests on one to eight CPUs, in incremental steps, generally provides a sense of the vertical scalability characteristics of the system. Be sure to properly tune the application, Application Server, backend database resources, and operating system so that they do not skew the results.

3. Determine horizontal scalability.

   If sufficiently powerful hardware resources are available, a single hardware node may meet the performance requirements. However, for better availability, you can cluster two or more systems. Employing external load balancers and workload simulation, determine the performance benefits of replicating one well-tuned application server node, as determined in step (2).
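The vertical scalability step can be sketched in code. The following Java example, offered only as an illustration, takes benchmark throughput measured with 1, 2, 3, ... CPUs, computes the percentage gain contributed by each additional CPU, and reports the last CPU count whose gain still meets a chosen threshold (the “knee” of the curve). The class name, the threshold, and the sample numbers are assumptions made for the example, not measurements from this guide.

```java
/** Hypothetical sketch: per-CPU gains from benchmark throughput, and the "knee" of the curve. */
final class ScalabilityCurve {

    /**
     * throughputByCpuCount[i] is the measured throughput with (i + 1) CPUs.
     * Returns the percentage gain of each measurement over the previous one.
     */
    static double[] percentGains(double[] throughputByCpuCount) {
        if (throughputByCpuCount.length == 0) {
            throw new IllegalArgumentException("at least one measurement is required");
        }
        double[] gains = new double[throughputByCpuCount.length - 1];
        for (int i = 1; i < throughputByCpuCount.length; i++) {
            double previous = throughputByCpuCount[i - 1];
            gains[i - 1] = 100.0 * (throughputByCpuCount[i] - previous) / previous;
        }
        return gains;
    }

    /** Returns the largest CPU count reached before a gain drops below minGainPercent. */
    static int knee(double[] throughputByCpuCount, double minGainPercent) {
        int cpus = 1;
        for (double gain : percentGains(throughputByCpuCount)) {
            if (gain < minGainPercent) {
                break; // the next CPU is no longer an economical addition
            }
            cpus++;
        }
        return cpus;
    }

    public static void main(String[] args) {
        // Made-up throughput figures (for example, TPM) for 1, 2, 3 and 4 CPUs.
        double[] measured = {1000.0, 1900.0, 2650.0, 2800.0};
        System.out.println("Knee at " + knee(measured, 10.0) + " CPUs");
    }
}
```

What counts as an uneconomical gain is a business decision. The threshold is therefore a parameter rather than a fixed value.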

User Expectations

Application end-users generally have some performance expectations. Often you can

numerically quantify them. To ensure that customer needs are met, you must understand these

expectations clearly, and use them in capacity planning.

Consider the following questions regarding performance expectations:

■ What do users expect the average response times to be for various interactions with the application? What are the most frequent interactions? Are there any extremely time-critical interactions? What is the length of each transaction, including think time? In many cases, you may need to perform empirical user studies to get good estimates.
■ What are the anticipated steady-state and peak user loads? Are there any particular times of the day, week, or year when you observe or expect to observe load peaks? While there may be several million registered customers for an online business, at any one time only a fraction of them are logged in and performing business transactions. A common mistake during capacity planning is to use the total size of customer population as the basis and not the average and peak numbers for concurrent users. The number of concurrent users also may exhibit patterns over time.
■ What is the average and peak amount of data transferred per request? This value is also application-specific. Good estimates for content size, combined with other usage patterns, will help you anticipate network capacity needs.
■ What is the expected growth in user load over the next year? Planning ahead for the future will help avoid crisis situations and system downtimes for upgrades.

Further Information

■ For more information on Java performance, see Java Performance Documentation and Java Performance BluePrints.
■ For details on optimizing EJB components, see Seven Rules for Optimizing Entity Beans.
■ For details on profiling, see “Profiling Tools” in Sun GlassFish Enterprise Server 2.1 Developer’s Guide.
■ For more details on the domain.xml file, see Sun GlassFish Enterprise Server 2.1 Administration Reference.


CHAPTER 2

Tuning Your Application

This chapter provides information on tuning applications for maximum performance. A

complete guide to writing high performance Java and Java EE applications is beyond the scope

of this document.

This chapter discusses the following topics:

■ “Java Programming Guidelines” on page 27
■ “Java Server Page and Servlet Tuning” on page 29
■ “EJB Performance Tuning” on page 32

Java Programming Guidelines

This section covers issues related to Java coding and performance. The guidelines outlined are not specific to Enterprise Server, but are general rules that are useful in many situations. For a complete discussion of Java coding best practices, see the Java Blueprints.

Avoid Serialization and Deserialization

Serialization and deserialization of objects is a CPU-intensive procedure and is likely to slow down your application. Use the transient keyword to reduce the amount of data serialized. Additionally, customized readObject() and writeObject() methods may be beneficial in some cases.

Use StringBuffer to Concatenate Strings

To improve performance, instead of using string concatenation, use StringBuffer.append().

String objects are immutable: they never change after creation. For example, consider the following code:

String str = "testing";
str = str + "abc";

The compiler translates this code into the equivalent of:

String str = "testing";
StringBuffer tmp = new StringBuffer(str);
tmp.append("abc");
str = tmp.toString();

Each concatenation therefore allocates a temporary buffer and copies the string contents into it. This copying is inherently expensive, and overusing it can reduce performance significantly.
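The difference matters most inside loops. The following sketch, with hypothetical class and method names, contrasts repeated concatenation with a single StringBuffer that is converted to a String once at the end.

```java
/** Hypothetical example contrasting string concatenation with StringBuffer.append(). */
final class ConcatExample {

    /** Repeated concatenation: every += creates a new String and copies all characters so far. */
    static String joinWithConcat(String[] parts) {
        String result = "";
        for (String part : parts) {
            result += part;
        }
        return result;
    }

    /** One buffer for the whole loop, converted to a String only once. */
    static String joinWithBuffer(String[] parts) {
        StringBuffer buffer = new StringBuffer();
        for (String part : parts) {
            buffer.append(part);
        }
        return buffer.toString();
    }

    public static void main(String[] args) {
        String[] parts = {"testing", "abc"};
        // Both approaches produce the same text; only the amount of copying differs.
        System.out.println(joinWithConcat(parts).equals(joinWithBuffer(parts)));
    }
}
```

On Java 5 and later, java.lang.StringBuilder offers the same append() methods without the synchronization of StringBuffer, so it may be a better fit when the buffer is used by a single thread.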

Assign null to Variables That Are No Longer Needed

Explicitly assigning a null value to variables that are no longer needed helps the garbage collector to identify the parts of memory that can be safely reclaimed. Although Java provides memory management, it does not prevent memory leaks or using excessive amounts of memory.

An application may induce memory leaks by not releasing object references. Holding on to such references prevents the Java garbage collector from reclaiming those objects, and results in increasing amounts of memory being used. Explicitly nullifying references to variables after their use allows the garbage collector to reclaim memory.

One way to detect memory leaks is to employ profiling tools and take memory snapshots after each transaction. A leak-free application in steady state will show a steady active heap memory after garbage collections.
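A common place where a reference is retained by accident is a long-lived container that manages its own array. The following sketch is a hypothetical stack, written only to illustrate the guideline, that clears the slot of each popped element so that the object does not stay reachable through the array.

```java
import java.util.Arrays;

/** Hypothetical array-backed stack that releases references to popped elements. */
final class SimpleStack {
    private Object[] elements = new Object[16];
    private int size = 0;

    void push(Object item) {
        if (size == elements.length) {
            elements = Arrays.copyOf(elements, 2 * size); // grow when full
        }
        elements[size++] = item;
    }

    Object pop() {
        if (size == 0) {
            throw new IllegalStateException("stack is empty");
        }
        Object item = elements[--size];
        // Without this assignment the array would keep the popped object reachable,
        // and the garbage collector could not reclaim it.
        elements[size] = null;
        return item;
    }

    int size() {
        return size;
    }

    /** Exposed only for the illustration: true if the slot no longer references an object. */
    boolean slotIsCleared(int index) {
        return elements[index] == null;
    }
}
```

Local variables that go out of scope quickly usually do not need this treatment. The guideline is most useful for fields and collections that outlive the objects they reference.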

Declare Methods as final Only If Necessary

Modern optimizing dynamic compilers can perform inlining and other inter-procedural optimizations, even if Java methods are not declared final. Use the keyword final as it was originally intended: for program architecture reasons and maintainability.

Use the final keyword only if you are absolutely certain that a method must not be overridden.

Declare Constants as static final

The dynamic compiler can perform some constant folding optimizations easily, when you

declare constants as static final variables.

Avoid Finalizers

Adding finalizers to code makes garbage collection more expensive and unpredictable. The virtual machine does not guarantee the time at which finalizers are run. Finalizers may not always be executed before the program exits. Releasing critical resources in finalize() methods may lead to unpredictable application behavior.
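One alternative to a finalizer is to release the resource explicitly in a finally block, so that cleanup happens at a known point regardless of how the method exits. The sketch below uses a hypothetical TrackedResource class as a stand-in for a connection, file, or similar resource.

```java
import java.io.Closeable;

/** Hypothetical example of deterministic cleanup instead of relying on finalize(). */
final class ExplicitRelease {

    /** Stand-in for a critical resource; it records whether close() has been called. */
    static final class TrackedResource implements Closeable {
        private boolean closed;

        String read() {
            if (closed) {
                throw new IllegalStateException("resource already closed");
            }
            return "data";
        }

        @Override
        public void close() {
            closed = true;
        }

        boolean isClosed() {
            return closed;
        }
    }

    /** Uses the resource and always releases it, even if read() throws. */
    static String useAndRelease(TrackedResource resource) {
        try {
            return resource.read();
        } finally {
            resource.close(); // runs at a known time, unlike a finalizer
        }
    }
}
```

On Java 7 and later, a try-with-resources statement expresses the same pattern more compactly for any class that implements AutoCloseable.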


Declare Method Arguments final

Declare method arguments final if they are not modified in the method. In general, declare all variables final if they are not modified after being initialized or set to some value.

Synchronize Only When Necessary

Do not synchronize code blocks or methods unless synchronization is required. Keep synchronized blocks or methods as short as possible to avoid scalability bottlenecks. Use the Java Collections Framework for unsynchronized data structures instead of more expensive alternatives such as java.util.Hashtable.
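The two points above can be illustrated with a short sketch. The class and method names are hypothetical. The first method keeps its map confined to one thread and therefore uses an unsynchronized java.util.HashMap. The second shares a map between threads and holds the lock only for the map update, not for the computation that precedes it.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical example: unsynchronized collections and short synchronized blocks. */
final class SyncExample {

    /** The map never leaves this method's thread, so a plain HashMap needs no locking. */
    static Map<String, Integer> countWords(String[] words) {
        Map<String, Integer> counts = new HashMap<>();
        for (String word : words) {
            Integer old = counts.get(word);
            counts.put(word, old == null ? 1 : old + 1);
        }
        return counts;
    }

    private final Map<String, Integer> shared = new HashMap<>();
    private final Object lock = new Object();

    /** Does the expensive work outside the lock and synchronizes only the shared update. */
    void record(String key, int input) {
        int value = expensiveComputation(input);
        synchronized (lock) {
            shared.put(key, value);
        }
    }

    Integer lookup(String key) {
        synchronized (lock) {
            return shared.get(key);
        }
    }

    /** Placeholder for work that does not touch shared state. */
    private static int expensiveComputation(int input) {
        return input * input;
    }
}
```

Whether a lock is needed at all depends on whether the data is really shared. When it is, java.util.concurrent classes such as ConcurrentHashMap may be worth evaluating as an alternative to hand-written locking.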

Use DataHandlers for SOAP Attachments

Using a javax.activation.DataHandler for a SOAP attachment will improve performance.

JAX-RPC specifies:

■ A mapping of certain MIME types to Java types.
■ Any MIME type is mappable to a javax.activation.DataHandler.

As a result, send an attachment (.gif or XML document) as a SOAP attachment to an RPC style web service by utilizing the Java type mappings. When passing in any of the mandated Java type mappings (appropriate for the attachment’s MIME type) as an argument for the web service, the JAX-RPC runtime handles these as SOAP attachments.

For example, to send out an image/gif attachment, use java.awt.Image, or create a DataHandler wrapper over your image. The advantages of using the wrapper are:

■ Reduced coding: You can reuse generic attachment code to handle the attachments because the DataHandler determines the content type of the contained data automatically. This feature is especially useful when using a document style service. Since the content is known at runtime, there is no need to make calls to attachment.setContent(stringContent, "image/gif"), for example.
■ Improved performance: Informal tests have shown that using DataHandler wrappers doubles throughput for image/gif MIME types, and multiplies throughput by approximately 1.5 for text/xml or java.awt.Image for image/* types.

Java Server Page and Servlet Tuning

Many applications running on the Enterprise Server use servlets or JavaServer Pages (JSP) technology in the presentation tier. This section describes how to improve performance of such applications, both through coding practices and through deployment and configuration settings.


Suggested Coding Practices

This section provides some tips on coding practices that improve servlet and JSP application

performance.

General Guidelines

Follow these general guidelines to increase performance of the presentation tier:

■ Minimize Java synchronization in servlets.
■ Don’t use the single thread model for servlets.
■ Use the servlet’s init() method to perform expensive one-time initialization.
■ Avoid using System.out.println() calls.

Avoid Shared Modified Class Variables

In the servlet multithread model (the default), a single instance of a servlet is created for each application server instance. All requests for a servlet on that application server instance share the same servlet instance. This can lead to thread contention if there are synchronization blocks in the servlet code. So, avoid using shared modified class variables, since they create the need for synchronization.

HTTP Session Handling

Follow these guidelines when using HTTP sessions:

■ Create sessions sparingly. Session creation is not free. If a session is not required, do not create one.
■ Use javax.servlet.http.HttpSession.invalidate() to release sessions when they are no longer needed.
■ Keep session size small, to reduce response times. If possible, keep session size below seven KB.
■ Use the directive <%@ page session="false" %> in JSP files to prevent the Enterprise Server from automatically creating sessions when they are not necessary.
■ Avoid large object graphs in an HttpSession. They force serialization and add computational overhead. Generally, do not store large objects as HttpSession variables.
■ Don’t cache transaction data in HttpSession. Access to data in an HttpSession is not transactional. Do not use it as a cache of transactional data, which is better kept in the database and accessed using entity beans. Transactions will roll back upon failures to their original state. However, stale and inaccurate data may remain in HttpSession objects. The Enterprise Server provides “read-only” bean-managed persistence entity beans for cached access to read-only data.


Configuration and Deployment Tips

Follow these configuration tips to improve performance. These tips are intended for production environments, not development environments.

■ To improve class loading time, avoid having excessive directories in the server CLASSPATH. Put application-related classes into JAR files.
■ HTTP response times are dependent on how the keep-alive subsystem and the HTTP server are tuned in general. For more information, see “HTTP Service Settings” on page 60.
■ Cache servlet results when possible. For more information, see Chapter 8, “Developing Web and SIP Applications,” in Sun GlassFish Enterprise Server 2.1 Developer’s Guide.
■ If an application does not contain any EJB components, deploy the application as a WAR file, not an EAR file.

Optimize SSL

Optimize SSL by using routines in the appropriate operating system library for concurrent access to heap space. The library to use depends on the version of the Solaris™ Operating System (Solaris OS) that you are using. To ensure that you use the correct library, set the LD_PRELOAD environment variable to specify the correct library file. For more information, see the following table.

| Solaris OS Version | Library | Setting of LD_PRELOAD Environment Variable |
|---|---|---|
| 10 | libumem(3LIB) | /usr/lib/libumem.so |
| 9 | libmtmalloc(3LIB) | /usr/lib/libmtmalloc.so |

To set the LD_PRELOAD environment variable, edit the entry for this environment variable in the startserv script. The startserv script is located in the bin directory of your domain.

The exact syntax to define an environment variable depends on the shell that you are using.

Disable Security Manager

The security manager is expensive because calls to required resources must call the doPrivileged() method and must also check the resource with the server.policy file. If you are sure that no malicious code will be run on the server and you do not use authentication within your application, then you can disable the security manager.

To disable use of the server.policy file, use the Admin Console. Under Configurations > config-name > JVM Settings (JVM Options), delete the option that contains the following text:

-Djava.security.manager


EJB Performance Tuning

The Enterprise Server’s high-performance EJB container has numerous parameters that affect performance. Individual EJB components also have parameters that affect performance. The value of an individual EJB component’s parameter overrides the value of the same parameter for the EJB container. The default values are designed for a single-processor computer system. Change them to optimize for other system configurations.

This section covers the following topics:

■ “Goals” on page 32
■ “Monitoring EJB Components” on page 32
■ “General Guidelines” on page 35
■ “Using Local and Remote Interfaces” on page 36
■ “Improving Performance of EJB Transactions” on page 38
■ “Using Special Techniques” on page 39
■ “Tuning Tips for Specific Types of EJB Components” on page 42
■ “JDBC and Database Access” on page 46
■ “Tuning Message-Driven Beans” on page 47

Goals

The goals of EJB performance tuning are:

■ Increased speed - Cache as many beans in the EJB caches as possible to increase speed (equivalently, decrease response time). Caching eliminates CPU-intensive operations. However, since memory is finite, as the caches become larger, housekeeping for them (including garbage collection) takes longer.
■ Decreased memory consumption - Beans in the pools or caches consume memory from the Java virtual machine heap. Very large pools and caches degrade performance because they require longer and more frequent garbage collection cycles.
■ Improved functional properties - Functional properties such as user time-out, commit options, security, and transaction options, are mostly related to the functionality and configuration of the application. Generally, they do not compromise functionality for performance. In some cases, you might be forced to make a “trade-off” decision between functionality and performance. This section offers suggestions in such cases.

Monitoring EJB Components

When the EJB container has monitoring enabled, you can examine statistics for individual

beans based on the bean pool and cache settings.

For example, the monitoring command below gives the Bean Cache statistics for a stateful

session bean.


asadmin get --user admin --host e4800-241-a --port 4848 \
    -m specjcmp.application.SPECjAppServer.ejb-module.supplier_jar.stateful-session-bean.BuyerSes.bean-cache.*

The following is a sample of the monitoring output:

resize-quantity = -1

cache-misses = 0

idle-timeout-in-seconds = 0

num-passivations = 0

cache-hits = 59

num-passivation-errors = 0

total-beans-in-cache = 59

num-expired-sessions-removed = 0

max-beans-in-cache = 4096

num-passivation-success = 0

The monitoring command below gives the bean pool statistics for an entity bean:

asadmin get --user admin --host e4800-241-a --port 4848 \
    -m specjcmp.application.SPECjAppServer.ejb-module.supplier_jar.stateful-entity-bean.ItemEnt.bean-pool.*

idle-timeout-in-seconds = 0

steady-pool-size = 0

total-beans-destroyed = 0

num-threads-waiting = 0

num-beans-in-pool = 54

max-pool-size = 2147483647

pool-resize-quantity = 0

total-beans-created = 255

The monitoring command below gives the bean pool statistics for a stateless bean.

asadmin get --user admin --host e4800-241-a --port 4848 \
    -m test.application.testEjbMon.ejb-module.slsb.stateless-session-bean.slsb.bean-pool.*

idle-timeout-in-seconds = 200

steady-pool-size = 32

total-beans-destroyed = 12

num-threads-waiting = 0

num-beans-in-pool = 4

max-pool-size = 1024

pool-resize-quantity = 12

total-beans-created = 42

Tuning the bean involves charting the behavior of the cache and pool for the bean in question

over a period of time.
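
One way to chart this behavior is to capture the output of the monitoring command at intervals and derive a few figures from it. The following is a minimal sketch, assuming the name = value output format shown in the samples above. The class BeanCacheStats and its methods are hypothetical helpers, not part of the Enterprise Server.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: parses "name = value" monitoring lines and derives a cache hit ratio. */
final class BeanCacheStats {

    /** Parses lines of the form "name = value", skipping blank, malformed, or non-numeric lines. */
    static Map<String, Long> parse(String output) {
        Map<String, Long> stats = new LinkedHashMap<>();
        for (String line : output.split("\\R")) {
            int eq = line.indexOf('=');
            if (eq < 0) {
                continue; // not a name = value line
            }
            String name = line.substring(0, eq).trim();
            String value = line.substring(eq + 1).trim();
            try {
                stats.put(name, Long.parseLong(value));
            } catch (NumberFormatException e) {
                // ignore attributes whose value is not an integer
            }
        }
        return stats;
    }

    /** Returns hits / (hits + misses), or 0.0 when the cache has not been accessed. */
    static double hitRatio(Map<String, Long> stats) {
        long hits = stats.getOrDefault("cache-hits", 0L);
        long misses = stats.getOrDefault("cache-misses", 0L);
        long total = hits + misses;
        return total == 0 ? 0.0 : (double) hits / total;
    }

    public static void main(String[] args) {
        // A few lines taken from the stateful session bean sample output.
        String sample = String.join("\n",
            "cache-misses = 0",
            "num-passivations = 0",
            "cache-hits = 59",
            "max-beans-in-cache = 4096");
        Map<String, Long> stats = parse(sample);
        System.out.println("hit ratio = " + hitRatio(stats));
        System.out.println("passivations = " + stats.get("num-passivations"));
    }
}
```

Recording the hit ratio and the passivation count from successive samples gives the trend over time that the tuning advice in this section relies on.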

If too many passivations are happening and the JVM heap remains fairly small, then the

max-cache-size or the cache-idle-timeout-in-seconds can be increased. If garbage

collection is happening too frequently, and the pool size is growing, but the cache hit rate is

small, then the pool-idle-timeout-in-seconds can be reduced to destroy the instances.

Note – Specifying a max-pool-size of zero (0) means that the pool is unbounded. The pooled

beans remain in memory unless they are removed by specifying a small interval for

pool-idle-timeout-in-seconds. For production systems, specifying the pool as unbounded is

NOT recommended.

Monitoring Individual EJB Components

To gather method invocation statistics for all methods in a bean, use this command:

asadmin get -m monitorableObject.*

where monitorableObject is a fully qualified identifier from the hierarchy of objects that can be

monitored, shown below.

serverInstance.application.applicationName.ejb-module.moduleName

where moduleName is x_jar for module x.jar.

■

.stateless-session-bean.beanName

.bean-pool

.bean-method.methodName

■

.stateful-session-bean.beanName

.bean-cache

.bean-method.methodName

■

.entity-bean.beanName

.bean-cache

.bean-pool

.bean-method.methodName

■

.message-driven-bean.beanName

.bean-pool

.bean-method.methodName (methodName = onMessage)

For standalone beans, use this pattern:

serverInstance.application.applicationName.standalone-ejb-module.moduleName

The possible identifiers are the same as for ejb-module.

For example, to get statistics for a method in an entity bean, use this command:

asadmin get -m serverInstance.application.appName.ejb-module.moduleName.entity-bean.beanName.bean-method.methodName.*
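
The dotted name can also be assembled programmatically when many beans are monitored from a script or tool. The sketch below follows the hierarchy described above. The class MonitorName is a hypothetical helper, all names passed to it are placeholders, and the conversion of x.jar to x_jar is the only module-name rule taken from this guide. Other file names are passed through unchanged, which is an assumption.

```java
/** Hypothetical helper that assembles dotted names for "asadmin get -m". */
final class MonitorName {

    /** Converts a module file name such as "x.jar" to the monitoring form "x_jar". */
    static String moduleName(String jarFile) {
        return jarFile.endsWith(".jar")
            ? jarFile.substring(0, jarFile.length() - 4) + "_jar"
            : jarFile; // assumption: other names are used as-is
    }

    /** Builds the dotted name that selects all statistics of one bean method. */
    static String beanMethod(String serverInstance, String appName, String jarFile,
                             String beanType, String beanName, String methodName) {
        return String.join(".",
            serverInstance, "application", appName,
            "ejb-module", moduleName(jarFile),
            beanType, beanName,
            "bean-method", methodName, "*");
    }

    public static void main(String[] args) {
        // Placeholder instance, application, module, bean, and method names.
        System.out.println("asadmin get -m " + beanMethod(
            "server", "MyApp", "orders.jar", "entity-bean", "OrderEnt", "getTotal"));
    }
}
```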

To find the possible objects (applications, modules, beans, and methods) and object attributes

that can be monitored, use the Admin Console. For more information, see Chapter 18,

“Monitoring Components and Services,” in Sun GlassFish Enterprise Server 2.1 Administration

Guide. Alternatively, use the asadmin list command. For more information, see list(1).

For statistics on stateful session bean passivations, use this command:

asadmin get -m serverInstance.application.appName.ejb-module.moduleName.stateful-session-bean.beanName.bean-cache.*

From the attribute values that are returned, examine the following:

num-passivations

num-passivation-errors

num-passivation-success

General Guidelines

The following guidelines can improve performance of EJB components. Keep in mind that

decomposing an application into many EJB components creates overhead and can degrade

performance. EJB components are not simply Java objects. They are components with

semantics for remote call interfaces, security, and transactions, as well as properties and

methods.

Use High Performance Beans

Use high-performance beans as much as possible to improve the overall performance of your

application. For more information, see “Tuning Tips for Specific Types of EJB Components” on

page 42.

The types of EJB components are listed below, from the highest performance to the lowest:

1. Stateless Session Beans and Message Driven Beans

2. Stateful Session Beans

3. Container Managed Persistence (CMP) entity beans configured as read-only

4. Bean Managed Persistence (BMP) entity beans configured as read-only

5. CMP beans

6. BMP beans

Use Caching

Caching can greatly improve performance when used wisely. For example:

■

Cache EJB references: To avoid a JNDI lookup for every request, cache EJB references in

servlets.

■

Cache home interfaces: Since repeated lookups to a home interface can be expensive, cache

references to EJBHomes in the init() methods of servlets.

■

Cache EJB resources: Use setSessionContext() or ejbCreate() to cache bean resources.

This is again an example of using bean lifecycle methods to perform application actions only

once where possible. Remember to release acquired resources in the ejbRemove() method.
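
The caching tips above share one idea: perform an expensive lookup once and reuse the result. The following is a minimal sketch of that idea. The class CachingLocator is hypothetical, and the lookup is passed in as a function so that the example runs without a server. In a servlet, that function would typically wrap a JNDI call such as new InitialContext().lookup(name) and its NamingException handling.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical locator that performs each lookup once and reuses the result. */
final class CachingLocator {
    private final Map<String, Object> cache = new ConcurrentHashMap<>();
    private final Function<String, Object> lookup;

    /** @param lookup the expensive lookup to run on a cache miss (for example, a wrapped JNDI lookup) */
    CachingLocator(Function<String, Object> lookup) {
        this.lookup = lookup;
    }

    /** Returns the cached reference, running the lookup only the first time a name is requested. */
    Object get(String name) {
        return cache.computeIfAbsent(name, lookup);
    }

    public static void main(String[] args) {
        // Stand-in for a JNDI lookup; the JNDI name is a placeholder.
        CachingLocator locator = new CachingLocator(name -> {
            System.out.println("looking up " + name);
            return new Object();
        });
        Object first = locator.get("java:comp/env/ejb/Cart");
        Object second = locator.get("java:comp/env/ejb/Cart");
        System.out.println("same reference: " + (first == second));
    }
}
```

A servlet could create such a locator in its init() method and call get() while handling each request, so that the lookup cost is paid only once per name.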

Use the Appropriate Stubs

The stub classes needed by EJB applications are generated dynamically at runtime when an EJB

client needs them. This means that it is not necessary to generate the stubs or retrieve the client

JAR file when deploying an application with remote EJB components. When deploying an

application, it is no longer necessary to specify the --retrieve option, which can speed up

deployment.

If you have a legacy rich-client application that directly uses the CosNaming service (not a

recommended configuration), then you must generate the stubs for your application explicitly

using RMIC. For more information, see Sun GlassFish Enterprise Server 2.1 Troubleshooting

Guide.

Remove Unneeded Stateful Session Beans

Removing unneeded stateful session beans avoids passivating them, which requires disk

operations.

Cache and Pool Tuning Tips

Follow these tips when using the EJB cache and pools to improve performance:

■

Explicitly call remove(): Allow stateful session EJB components to be removed from the

container cache by explicitly calling the remove() method in the client.

■

Tune the entity EJB component’s pool size: Entity Beans use both the EJB pool and cache

settings. Tune the entity EJB component’s pool size to minimize the creation and

destruction of beans. Populating the pool with a non-zero steady size beforehand is useful

for getting better response for initial requests.

■

Cache bean-specific resources: Use the setEntityContext() method to cache bean-specific

resources and release them using the unsetEntityContext() method.

■

Load related data efficiently for container-managed relationships (CMRs). For more

information, see “Pre-fetching Container Managed Relationship (CMR) Beans” on page 44.

■

Identify read-only beans: Configure read-only entity beans for read-only operations. For

more information, see “Read-Only Entity Beans” on page 43.

Using Local and Remote Interfaces

This section describes some considerations when EJB components are used by local and remote

clients.

Prefer Local Interfaces

An EJB component can have remote and local interfaces. Clients not located in the same

application server instance as the bean (remote clients) use the remote interface to access the

bean. Calls to the remote interface require marshalling arguments, transportation of the

marshalled data over the network, un-marshalling the arguments, and dispatch at the receiving

end. Thus, using the remote interface entails significant overhead.

If an EJB component has a local interface, then local clients in the same application server

instance can use it instead of the remote interface. Using the local interface is more efficient,

since it does not require argument marshalling, transportation, and un-marshalling.

If a bean is to be used only by local clients then it makes sense to provide only the local interface.

If, on the other hand, the bean is to be location-independent, then you should provide both the

remote and local interfaces so that remote clients use the remote interface and local clients can

use the local interface for efficiency.

Using Pass-By-Reference Semantics

By default, the Enterprise Server uses pass-by-value semantics for calling the remote interface of

a bean, even if it is co-located. This can be expensive, since clients using pass-by-value

semantics must copy arguments before passing them to the EJB component.

However, local clients can use pass-by-reference semantics and thus the local and remote

interfaces can share the passed objects. But this means that the argument objects must be

implemented properly, so that they are shareable. In general, it is more efficient to use

pass-by-reference semantics when possible.
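
The difference between the two semantics can be illustrated with plain Java objects. The sketch below is an illustration only: the class PassingDemo is hypothetical, and Java serialization is used as a stand-in for the argument copy made under pass-by-value semantics. This guide does not state how the Enterprise Server copies arguments.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

/** Illustration only: contrasts a copied argument with a shared one. */
final class PassingDemo {

    /** Deep-copies a serializable object, standing in for the copy made under pass-by-value. */
    @SuppressWarnings("unchecked")
    static <T extends Serializable> T copy(T original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(original);
            }
            try (ObjectInputStream in =
                     new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (T) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("copy failed", e);
        }
    }

    /** A callee that modifies its argument, as a bean method that is not share-safe might. */
    static void addItem(List<String> order) {
        order.add("added by callee");
    }

    public static void main(String[] args) {
        ArrayList<String> order = new ArrayList<>();
        order.add("widget");

        addItem(copy(order)); // by value: the callee changes a copy, at the cost of copying
        System.out.println("size after by-value call: " + order.size());

        addItem(order);       // by reference: no copy, but the caller sees the change
        System.out.println("size after by-reference call: " + order.size());
    }
}
```

The by-reference call avoids the copy, but the caller's object is modified. This is why argument objects must be written to be shareable before pass-by-reference semantics are used.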

Using the remote and local interfaces appropriately means that clients can access EJB

components efficiently. That is, local clients use the local interface with pass-by-reference

semantics, while remote clients use the remote interface with pass-by-value semantics.

However, in some instances it might not be possible to use the local interface, for example

when:

■

The application predates the EJB 2.0 specification and was written without any local

interfaces.

■

There are bean-to-bean calls and the client beans are written without making any

co-location assumptions about the called beans.

For these cases, the Enterprise Server provides a pass-by-reference option that clients can use to

pass arguments by reference to the remote interface of a co-located EJB component.

You can specify the pass-by-reference option for an entire application or a single EJB

component. When specified at the application level, all beans in the application use

pass-by-reference semantics when passing arguments to their remote interfaces. When

specified at the bean level, all calls to the remote interface of the bean use pass-by-reference

semantics. See “Value Added Features” in Sun GlassFish Enterprise Server 2.1 Developer’s Guide

for more details about the pass-by-reference flag.