Sun StorEdge™ 3900 and 6900 Series Troubleshooting Guide

Sun Microsystems, Inc.

4150 Network Circle

Santa Clara, CA 95054 U.S.A.

650-960-1300

Part No. 816-4290-11

March 2002, Revision A

Send comments about this document to: docfeedback@sun.com

Copyright 2002 Sun Microsystems, Inc., 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved.

This product or document is distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers.

Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd.

Sun, Sun Microsystems, the Sun logo, AnswerBook2, Sun StorEdge, StorTools, docs.sun.com, Sun Enterprise, Sun Fire, SunOS, Netra, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc.

The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements.

Federal Acquisitions: Commercial Software—Government Users Subject to Standard License Terms and Conditions.

DOCUMENTATION IS PROVIDED “AS IS” AND ALL EXPRESS OR IMPLIED CONDITIONS, REPRESENTATIONS AND WARRANTIES, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE OR NON-INFRINGEMENT, ARE DISCLAIMED, EXCEPT TO THE EXTENT THAT SUCH DISCLAIMERS ARE HELD TO BE LEGALLY INVALID.

Contents

1. Introduction
Predictive Failure Analysis Capabilities

2. General Troubleshooting Procedures
Troubleshooting Overview Tasks
Multipathing Options in the Sun StorEdge 6900 Series
Alternatives to Sun StorEdge Traffic Manager
▼ To Quiesce the I/O
▼ To Unconfigure the c2 Path
▼ To Suspend the I/O
▼ To Return the Path to Production
▼ To View the VxDisk Properties
▼ To Quiesce the I/O on the A3/B3 Link
▼ To Suspend the I/O on the A3/B3 Link
▼ To Return the Path to Production
Fibre Channel Links
Fibre Channel Link Diagrams
Host Side Troubleshooting
Storage Service Processor Side Troubleshooting

For Internal Use Only

Command Line Test Examples
qlctest(1M)
switchtest(1M)
Storage Automated Diagnostic Environment Event Grid
▼ To Customize an Event Report

3. Troubleshooting the Fibre Channel Links
A1/B1 Fibre Channel (FC) Link
▼ To Verify the Data Host
FRU Tests Available for A1/B1 FC Link Segment
▼ To Isolate the A1/B1 FC Link
A2/B2 Fibre Channel (FC) Link
▼ To Verify the Host Side
▼ To Verify the A2/B2 FC Link
▼ To Isolate the A2/B2 FC Link
A3/B3 Fibre Channel (FC) Link
▼ To Verify the Host Side
▼ To Verify the Storage Service Processor
FRU Tests Available for the A3/B3 FC Link Segment
▼ To Isolate the A3/B3 FC Link
A4/B4 Fibre Channel (FC) Link
▼ To Verify the Data Host
Sun StorEdge 3900 Series
Sun StorEdge 6900 Series
FRU Tests Available for the A4/B4 FC Link Segment
▼ To Isolate the A4/B4 FC Link

4. Configuration Settings
Verifying Configuration Settings

▼ To Verify Configuration Settings
▼ To Clear the Lock File

5. Troubleshooting Host Devices
Host Event Grid
▼ Using the Host Event Grid
Replacing the Master, Alternate Master, and Slave Monitoring Host
▼ To Replace the Master Host
▼ To Replace the Alternate Master or Slave Monitoring Host
Conclusion

6. Troubleshooting Sun StorEdge FC Switch-8 and Switch-16 Devices
Sun StorEdge Network FC Switch-8 and Switch-16 Switch Description
▼ To Diagnose and Troubleshoot Switch Hardware
Switch Event Grid
▼ Using the Switch Event Grid
Replacing the Master Midplane
▼ To Replace the Master Midplane
Conclusion

7. Troubleshooting Virtualization Engine Devices
Virtualization Engine Description
Virtualization Engine Diagnostics
Service Request Numbers
Service and Diagnostic Codes
▼ To Retrieve Service Information
CLI Interface
▼ To Display Log Files and Retrieve SRNs
▼ To Clear the Log

Virtualization Engine LEDs
Power LED Codes
Interpreting LED Service and Diagnostic Codes
Back Panel Features
Ethernet Port LEDs
Fibre Channel Link Error Status Report
▼ To Check Fibre Channel Link Error Status Manually
Translating Host Device Names
▼ To Display the VLUN Serial Number
Devices That Are Not Sun StorEdge Traffic Manager-Enabled
Sun StorEdge Traffic Manager-Enabled Devices
▼ To View the Virtualization Engine Map
▼ To Failback the Virtualization Engine
▼ To Replace a Failed Virtualization Engine
▼ To Manually Clear the SAN Database
▼ To Reset the SAN Database on Both Virtualization Engines
▼ To Reset the SAN Database on a Single Virtualization Engine
Stopping and Restarting the SLIC Daemon
▼ To Restart the SLIC Daemon
Sun StorEdge 6900 Series Multipathing Example
One Sun StorEdge T3+ array partner pair with 1 500GB RAID 5 LUN per brick (2 LUNs total)
Virtualization Engine Event Grid
▼ Using the Virtualization Engine Event Grid

8. Troubleshooting the Sun StorEdge T3+ Array Devices
Explorer Data Collection Utility
▼ To Install Explorer Data Collection Utility on the Storage Service Processor

Troubleshooting the T1/T2 Data Path
Notes
T1/T2 Notification Events
Sun StorEdge T3+ Array Storage Service Processor Verification
T1/T2 FRU Tests Available
Notes
T1/T2 Isolation Procedures
Sun StorEdge T3+ Array Event Grid
▼ Using the Sun StorEdge T3+ Array Event Grid
Replacing the Master Midplane
▼ To Replace the Master Midplane
Conclusion

9. Troubleshooting Ethernet Hubs
setupswitch Exit Values

List of Figures

FIGURE 2-1 Sun StorEdge 3900 Series Fibre Channel Link Diagram
FIGURE 2-2 Sun StorEdge 6900 Series Fibre Channel Link Diagram
FIGURE 3-1 Data Host Notification of Intermittent Problems
FIGURE 3-2 Data Host Notification of Severe Link Error
FIGURE 3-3 Storage Service Processor Notification
FIGURE 3-4 A2/B2 FC Link Host Side Event
FIGURE 3-5 A2/B2 FC Link Storage Service Processor Side Event
FIGURE 3-6 A3/B3 FC Link Host-Side Event
FIGURE 3-7 A3/B3 FC Link Storage Service Processor-Side Event
FIGURE 3-8 A3/B3 FC Link Storage Service Processor-Side Event
FIGURE 3-9 A4/B4 FC Link Data Host Notification
FIGURE 3-10 Storage Service Processor Notification
FIGURE 5-1 Host Event Grid
FIGURE 6-1 Switch Event Grid
FIGURE 7-1 Virtualization Engine Front Panel LEDs
FIGURE 7-2 Sun StorEdge 6900 Series Logical View
FIGURE 7-3 Primary Data Paths to the Alternate Master
FIGURE 7-4 Primary Data Paths to the Master Sun StorEdge T3+ Array
FIGURE 7-5 Path Failure—Before the Second Tier of Switches

FIGURE 7-6 Path Failure—I/O Routed through Both HBAs
FIGURE 7-7 Virtualization Engine Event Grid
FIGURE 8-1 Storage Service Processor Event
FIGURE 8-2 Virtualization Engine Alert
FIGURE 8-3 Manage Configuration Files Menu
FIGURE 8-4 Example Link Test Text Output from the Storage Automated Diagnostic Environment
FIGURE 8-5 Sun StorEdge T3+ array Event Grid

Preface

The Sun StorEdge 3900 and 6900 Series Troubleshooting Guide provides guidelines for isolating problems in supported configurations of the Sun StorEdge™ 3900 and 6900 series. For detailed configuration information, refer to the Sun StorEdge 3900 and 6900 Series Reference Manual.

The scope of this troubleshooting guide is limited to information pertaining to the components of the Sun StorEdge 3900 and 6900 series, including the Storage Service Processor and the virtualization engines in the Sun StorEdge 6900 series. This guide is written for Sun personnel who have been fully trained on all the components in the configuration.

How This Book Is Organized

This book contains the following topics:

Chapter 1 introduces the Sun StorEdge 3900 and 6900 series storage subsystems.

Chapter 2 offers general troubleshooting guidelines, such as quiescing the I/O, and

tools you can use to isolate and troubleshoot problems.

Chapter 3 provides Fibre Channel link troubleshooting procedures.

Chapter 4 presents information about configuration settings, specific to the Sun

StorEdge 3900 and 6900 series. It also provides a procedure for how to clear the lock

file.

Chapter 5 provides information on host device troubleshooting.

Chapter 6 provides information on Sun StorEdge network FC switch-8 and switch-16 switch device troubleshooting.

Chapter 7 provides detailed information for troubleshooting the virtualization

engines.

Chapter 8 describes how to troubleshoot the Sun StorEdge T3+ array devices. Also

included in this chapter is information about the Explorer Data Collection Utility.

Chapter 9 discusses Ethernet hub troubleshooting. Because the 3Com Ethernet hubs are third-party products, this guide provides only limited information about them.

Appendix A provides virtualization engine references, including SRN and SNMP

Reference, an SRN/SNMP single point of failure table, and port communication and

service code tables.

Appendix B provides a list of SUNWsecfg Error Messages and recommendations for

corrective action.

Using UNIX Commands

This document may not contain information on basic UNIX® commands and

procedures such as shutting down the system, booting the system, and configuring

devices.

See one or more of the following for this information:

■ Solaris Handbook for Sun Peripherals

■ AnswerBook2™ online documentation for the Solaris™ operating environment

■ Other software documentation that you received with your system

Typographic Conventions

Typeface: AaBbCc123 (monospace)
Meaning: The names of commands, files, and directories; on-screen computer output
Examples: Edit your .login file. Use ls -a to list all files. % You have mail.

Typeface: AaBbCc123 (bold monospace)
Meaning: What you type, when contrasted with on-screen computer output
Examples: % su
Password:

Typeface: AaBbCc123 (italic)
Meaning: Book titles, new words or terms, words to be emphasized; command-line variable, replace with a real name or value
Examples: Read Chapter 6 in the User’s Guide. These are called class options. You must be superuser to do this. To delete a file, type rm filename.

Shell Prompts

Shell Prompt

C shell machine_name%

C shell superuser machine_name#

Bourne shell and Korn shell $

Bourne shell and Korn shell superuser #

Preface xiii

Page 14

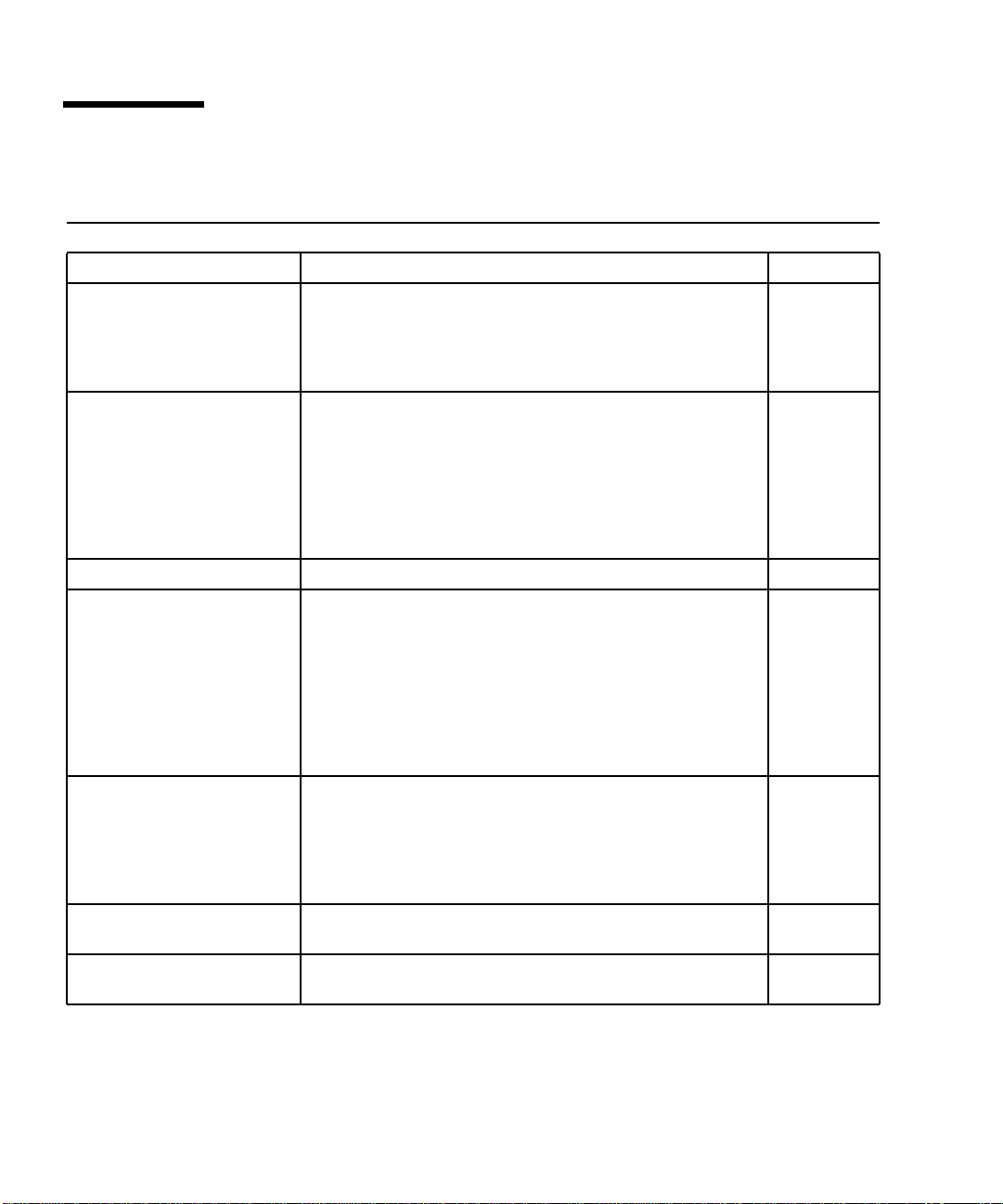

Related Documentation

Product • Title Part Number

Late-breaking News
• Sun StorEdge 3900 and 6900 Series Release Notes 816-3247

Sun StorEdge 3900 and 6900 series hardware information
• Sun StorEdge 3900 and 6900 Series Site Preparation Guide 816-3242
• Sun StorEdge 3900 and 6900 Series Regulatory and Safety Compliance Manual 816-3243
• Sun StorEdge 3900 and 6900 Series Hardware Installation and Service Manual 816-3244

Sun StorEdge T3 and T3+ array
• Sun StorEdge T3 and T3+ Array Start Here 816-0772
• Sun StorEdge T3 and T3+ Array Installation, Operation, and Service Manual 816-0773
• Sun StorEdge T3 and T3+ Array Administrator’s Guide 816-0776
• Sun StorEdge T3 and T3+ Array Configuration Guide 816-0777
• Sun StorEdge T3 and T3+ Array Site Preparation Guide 816-0778
• Sun StorEdge T3 and T3+ Field Service Manual 816-0779
• Sun StorEdge T3 and T3+ Array Release Notes 816-0781

Diagnostics
• Storage Automated Diagnostics Environment User’s Guide 816-3142

Sun StorEdge network FC switch-8 and switch-16
• Sun StorEdge Network FC Switch-8 and Switch-16 Release Notes 816-0842
• Sun StorEdge Network FC Switch-8 and Switch-16 Installation and Configuration Guide 816-0830
• Sun StorEdge Network FC Switch-8 and Switch-16 Best Practices Manual 816-2688
• Sun StorEdge Network FC Switch-8 and Switch-16 Operations Guide 816-1986
• Sun StorEdge Network FC Switch-8 and Switch-16 Field Troubleshooting Guide 816-1701

SANbox switch management using SANsurfer
• SANbox 8/16 Segmented Loop Switch Management User’s Manual 875-3060
• SANbox-8 Segmented Loop Fibre Channel Switch Installer’s/User’s Manual 875-1881
• SANbox-16 Segmented Loop Fibre Channel Switch Installer’s/User’s Manual 875-3059

Expansion cabinet
• Sun StorEdge Expansion Cabinet Installation and Service Manual 805-3067

Storage server processor
• Netra X1 Server User’s Guide 806-5980
• Netra X1 Server Hard Disk Drive Installation Guide 806-7670

Accessing Sun Documentation Online

A broad selection of Sun system documentation is located at:

http://www.sun.com/products-n-solutions/hardware/docs

A complete set of Solaris documentation and many other titles are located at:

http://docs.sun.com

Sun Welcomes Your Comments

Sun is interested in improving its documentation and welcomes your comments and

suggestions. You can email your comments to Sun at:

docfeedback@sun.com

Please include the part number (816-4290-11) of your document in the subject line of

your email.

CHAPTER 1

Introduction

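The Sun StorEdge 3900 and 6900 series storage subsystems are complete preconfigured storage solutions. The configurations for each of the storage subsystems are shown in TABLE 1-1.

TABLE 1-1

Series: Sun StorEdge 3900 series
System: Sun StorEdge 3910 system
Sun StorEdge Fibre Channel Switch Supported: Two 8-port switches
Sun StorEdge T3+ Array Partner Groups Supported: 1 to 4
Additional Array Partner Groups Supported with Optional Additional Expansion Cabinet: Not applicable

Series: Sun StorEdge 3900 series
System: Sun StorEdge 3960 system
Sun StorEdge Fibre Channel Switch Supported: Two 16-port switches
Sun StorEdge T3+ Array Partner Groups Supported: 1 to 4
Additional Array Partner Groups Supported with Optional Additional Expansion Cabinet: 1 to 5

Series: Sun StorEdge 6900 series
System: Sun StorEdge 6910 system
Sun StorEdge Fibre Channel Switch Supported: Two 8-port switches
Sun StorEdge T3+ Array Partner Groups Supported: 1 to 3

Series: Sun StorEdge 6900 series
System: Sun StorEdge 6960 system
Sun StorEdge Fibre Channel Switch Supported: Two 16-port switches
Sun StorEdge T3+ Array Partner Groups Supported: 1 to 3
Additional Array Partner Groups Supported with Optional Additional Expansion Cabinet: 1 to 4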

Predictive Failure Analysis Capabilities

The Storage Automated Diagnostic Environment software provides the health and

monitoring functions for the Sun StorEdge 3900 and 6900 series systems. This

software provides the following predictive failure analysis (PFA) capabilities.

■ FC links—Fibre Channel links are monitored at all end points using the FC-ELS link counters. When link errors surpass the threshold values, an alert is sent. This enables Sun personnel to replace components that are experiencing high transient fault levels before a hard fault occurs.

■ Enclosure status—Many devices, such as the Sun StorEdge network FC switch-8 and switch-16 switch and the Sun StorEdge T3+ array, cause Storage Automated Diagnostic Environment alerts to be sent if temperature thresholds are exceeded. This enables Sun-trained personnel to address the problem before the component or enclosure fails.

■ SPOF notification—Storage Automated Diagnostic Environment notification of path failures and failovers (that is, Sun StorEdge Traffic Manager software failover) can be considered PFA, because Sun-trained personnel are notified and can repair the primary path. This reduces the time of exposure to single points of failure and helps to preserve customer availability during the repair process.

PFA is not always effective in detecting or isolating failures. The remainder of this

document provides guidelines that can be used to troubleshoot problems that occur

in supported components of the Sun StorEdge 3900 and 6900 series.

CHAPTER 2

General Troubleshooting Procedures

This chapter contains the following sections:

■ “Troubleshooting Overview Tasks”

■ “Multipathing Options in the Sun StorEdge 6900 Series”

■ “Fibre Channel Links”

■ “Storage Automated Diagnostic Environment Event Grid”

Troubleshooting Overview Tasks

This section lists the high-level steps to isolate and troubleshoot problems in the Sun

StorEdge 3900 and 6900 series. It offers a methodical approach and lists the tools and

resources available at each step.

Note – A single problem can cause various errors throughout the SAN. A good

practice is to begin by investigating the devices that have experienced “Loss of

Communication” events in the Storage Automated Diagnostic Environment. These

errors usually indicate more serious problems.

A “Loss of Communication” error on a switch, for example, could cause multiple

ports and HBAs to go offline. Concentrating on the switch and fixing that failure can

help bring the ports and HBAs back online.

1. Discover the error by checking one or more of the following messages or files:

■ Storage Automated Diagnostic Environment alerts or email messages

■ /var/adm/messages

■ Sun StorEdge T3+ array syslog file

■ Storage Service Processor messages

■ /var/adm/messages.t3 messages

■ /var/adm/log/SEcfglog file

2. Determine the extent of the problem by using one or more of the following methods:

■ Storage Automated Diagnostic Environment Topology view

■ Storage Automated Diagnostic Environment Revision Checking (a manual check of whether a given patch or package is installed)

■ Verify the functionality using one of the following:

■ checkdefaultconfig(1M)

■ checkt3config(1M)

■ cfgadm -al output

■ luxadm(1M) output

■ Check the multipathing status using the Sun StorEdge Traffic Manager software

or VxDMP.

3. Check the status of a Sun StorEdge T3+ array by using one or more of the following methods:

■ Storage Automated Diagnostic Environment device monitoring reports

■ Run the SEcfg script, which displays the Sun StorEdge T3+ array configuration

■ Manually open a telnet session to the Sun StorEdge T3+ array

■ luxadm(1M) display output

■ LED status on the Sun StorEdge T3+ array

■ Explorer Data Collection Utility output (located on the Storage Service Processor)

4. Check the status of the Sun StorEdge network FC switch-8 and switch-16 switches using the following tools:

■ Storage Automated Diagnostic Environment device monitoring reports

■ Run the SEcfg script, which displays the Sun StorEdge T3+ array configuration

■ LED Status (online/offline, POST error codes found in the Sun StorEdge network

FC switch-8 and switch-16 switch Installation and Configuration Guide)

■ Explorer Data Collection Utility output (located on the Storage Service Processor)

■ SANsurfer GUI

Note – To run the SANsurfer GUI from the Storage Service Processor, you must export the X display.

5. Check the status of the virtualization engine using one or more of the following methods:

■ Storage Automated Diagnostic Environment device monitoring reports

■ Run the SEcfg script, which displays the virtualization engine configuration

■ Refer to the LED status blink codes in Chapter 7.

6. Quiesce the I/O along the path to be tested as follows:

■ For installations using VERITAS VxDMP, disable the path with vxdmpadm.

■ For installations using the Sun StorEdge Traffic Manager software, unconfigure

the Fabric device.

■ Refer to “To Quiesce the I/O”.

■ Halt the application.

7. Test and isolate the FRUs using the following tools:

■ Storage Automated Diagnostic Environment diagnostic tests (this might require

the use of a loopback cable for isolation)

■ Sun StorEdge T3+ array tests, including t3test(1M), t3ofdg(1M), and

t3volverify(1M), which can be found in the Storage Automated Diagnostic

Environment User’s Guide.

Note – These tests isolate the problem to a FRU that must be replaced. Follow the

instructions in the Sun StorEdge 3900 and 6900 Series Reference Manual and the Sun

StorEdge 3900 and 6900 Installation and Service Manual for proper FRU replacement

procedures.

8. Verify the fix using the following tools:

■ Storage Automated Diagnostic Environment GUI Topology View and Diagnostic

Tests

■ /var/adm/messages on the data host

9. Return the path to service by using one of the following methods:

■ Multipathing software

■ Restarting the application
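
The log files named in Step 1 can be scanned in one pass before moving on to the later steps. The following is a minimal sketch and is not part of the Storage Automated Diagnostic Environment. The scan_logs function name and the keyword list are assumptions chosen for illustration, and a POSIX awk is assumed (nawk on older Solaris releases).

```shell
#!/bin/sh
# scan_logs FILE...
# Print lines that mention a likely fault keyword, prefixed with the file name.
# The keyword list is an illustrative assumption; adjust it for your site.
scan_logs() {
    for f in "$@"; do
        # Skip log files that are absent or unreadable on this host.
        [ -r "$f" ] || continue
        awk -v name="$f" '
            tolower($0) ~ /offline|error|fail|warning/ { print name ": " $0 }
        ' "$f"
    done
}
```

For example, scan_logs /var/adm/messages /var/adm/messages.t3 /var/adm/log/SEcfglog checks whichever of the three files exist on the current host and silently skips the rest.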

Multipathing Options in the Sun StorEdge 6900 Series

Using the virtualization engines presents several challenges in how multipathing is

handled in the Sun StorEdge 6900 series.

Unlike Sun StorEdge T3+ array and Sun StorEdge network FC switch-8 and switch-16 switch installations, which present primary and secondary pathing options, the

virtualization engines present only primary pathing options to the data host. The

virtualization engines handle all failover and failback operations and mask those

operations from the multipathing software on the data host.

The following example illustrates a Sun StorEdge Traffic Manager problem on a Sun

StorEdge 6900 series system.

# luxadm display /dev/rdsk/c6t29000060220041F96257354230303052d0s2

DEVICE PROPERTIES for disk: /dev/rdsk/c6t29000060220041F96257354230303052d0s2

Status(Port A): O.K.

Status(Port B): O.K.

Vendor: SUN

Product ID: SESS01

WWN(Node): 2a000060220041f4

WWN(Port A): 2b000060220041f4

WWN(Port B): 2b000060220041f9

Revision: 080C

Serial Num: Unsupported

Unformatted capacity: 102400.000 MBytes

Write Cache: Enabled

Read Cache: Enabled

Minimum prefetch: 0x0

Maximum prefetch: 0x0

Device Type: Disk device

Path(s):

/dev/rdsk/c6t29000060220041F96257354230303052d0s2

/devices/scsi_vhci/ssd@g29000060220041f96257354230303052:c,raw

Controller /devices/pci@6,4000/SUNW,qlc@2/fp@0,0

Device Address 2b000060220041f4,0

Class primary

State ONLINE

Controller /devices/pci@6,4000/SUNW,qlc@3/fp@0,0

Device Address 2b000060220041f9,0

Class primary

State ONLINE

Note that in the Class and State fields, the virtualization engines are presented as

two primary/ONLINE devices. The current Sun StorEdge Traffic Manager design

does not enable you to manually halt the I/O (that is, you cannot perform a failover

to the secondary path) when only primary devices are present.
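
The Class and State fields can be pulled out of the luxadm display output so that each path appears on one line. This is a minimal sketch under stated assumptions: path_summary is a hypothetical helper name, and the field layout (Controller, Class, and State lines in that order for each path) is taken from the example output above.

```shell
#!/bin/sh
# path_summary
# Read `luxadm display` output on stdin and print one line per path:
#   <controller> <class> <state>
# Assumes each path block lists Controller, then Class, then State,
# as in the example output in this section.
path_summary() {
    awk '
        $1 == "Controller" { ctlr = $2 }
        $1 == "Class"      { class = $2 }
        $1 == "State"      { print ctlr, class, $2 }
    '
}
```

Piping the luxadm display output for the example device through path_summary would show both controllers as primary and ONLINE, which is the condition described above.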

Alternatives to Sun StorEdge Traffic Manager

As an alternative to using Sun StorEdge Traffic Manager, you can manually halt the I/O using one of two methods: quiesce the I/O or unconfigure the c2 path. These methods are explained below.

▼ To Quiesce the I/O

1. Determine the path you want to disable.

2. Type:

# cfgadm -c unconfigure device

▼ To Unconfigure the c2 Path

1. Type:

# cfgadm -al

Ap_Id Type Receptacle Occupant Condition

c0 scsi-bus connected configured unknown

c0::dsk/c0t0d0 disk connected configured unknown

c0::dsk/c0t1d0 disk connected configured unknown

c1 scsi-bus connected configured unknown

c1::dsk/c1t6d0 CD-ROM connected configured unknown

c2 fc-fabric connected configured unknown

c2::210100e08b23fa25 unknown connected unconfigured unknown

c2::2b000060220041f4 disk connected configured unknown

c3 fc-fabric connected configured unknown

c3::210100e08b230926 unknown connected unconfigured unknown

c3::2b000060220041f9 disk connected configured unknown

c4 fc-private connected unconfigured unknown

c5 fc connected unconfigured unknown

2. Using Storage Automated Diagnostic Environment Topology GUI, determine

which virtualization engine is in the path you need to disable.

3. Use the world wide name (WWN) of that virtualization engine in the unconfigure command, as follows:

# cfgadm -c unconfigure c2::2b000060220041f4

# cfgadm -al

Ap_Id Type Receptacle Occupant Condition

c0 scsi-bus connected configured unknown

c0::dsk/c0t0d0 disk connected configured unknown

c0::dsk/c0t1d0 disk connected configured unknown

c1 scsi-bus connected configured unknown

c1::dsk/c1t6d0 CD-ROM connected configured unknown

c2 fc-fabric connected unconfigured unknown

c2::210100e08b23fa25 unknown connected unconfigured unknown

c2::2b000060220041f4 disk connected unconfigured unknown

c3 fc-fabric connected configured unknown

c3::210100e08b230926 unknown connected unconfigured unknown

c3::2b000060220041f9 disk connected configured unknown

c4 fc-private connected unconfigured unknown

c5 fc connected unconfigured unknown

4. Verify that I/O has halted.

This halts the I/O only up to the A3/B3 link (see FIGURE 2-2). I/O continues to move over the T1 and T2 paths, as well as the A4/B4 links to the Sun StorEdge T3+ array.
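
To confirm the result of the unconfigure command without reading the whole listing, the Occupant column for a single attachment point can be extracted from the cfgadm -al output. This is a sketch that assumes the five-column layout shown above; occupant_state is a hypothetical helper name.

```shell
#!/bin/sh
# occupant_state AP_ID
# Read `cfgadm -al` output on stdin and print the Occupant column
# (fourth field) of the line whose Ap_Id matches AP_ID exactly.
occupant_state() {
    awk -v id="$1" '$1 == id { print $4 }'
}
```

With the listing from Step 3 as input, occupant_state c2::2b000060220041f4 prints unconfigured, while the c3 path still reports configured.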

▼ To Suspend the I/O

Use one of the following methods to suspend the I/O while the failover occurs:

1. Stop all customer applications that are accessing the Sun StorEdge T3+ array.

2. Manually pull the link from the Sun StorEdge T3+ array to the switch and wait

for a Sun StorEdge T3+ array LUN failover.

■ After the failover occurs, replace the cable and proceed with testing and FRU

isolation.

■ After testing and any FRU replacement is finished, return the Controller state back to the default by using virtualization engine failback. Refer to “Virtualization Engine Failback”.

Note – To confirm that a failover is occurring, open a telnet session to the Sun

StorEdge T3+ array and check the output of port listmap.

Another, but slower, method is to run the runsecfg script and verify the

virtualization engine maps by polling them against a live system.

Caution – During the failover, SCSI errors will occur on the data host and a brief

suspension of I/O will occur.

▼ To Return the Path to Production

1. Type cfgadm -c configure device.

# cfgadm -c configure c2::2b000060220041f4

2. Verify that I/O has resumed on all paths.
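
As a first check before looking at the I/O itself, the cfgadm -al listing can be filtered for any disk attachment point that is still unconfigured. This sketch only inspects configuration state and does not measure I/O. The unconfigured_disks name is hypothetical, and the column layout is assumed to match the listings shown earlier.

```shell
#!/bin/sh
# unconfigured_disks
# Read `cfgadm -al` output on stdin and print the Ap_Id of every entry
# of Type "disk" whose Occupant column still reads "unconfigured".
unconfigured_disks() {
    awk '$2 == "disk" && $4 == "unconfigured" { print $1 }'
}
```

An empty result from cfgadm -al | unconfigured_disks suggests that every disk path has been returned to the configured state.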

▼ To View the VxDisk Properties

1. Type the following:

# vxdisk list Disk_1

Device: Disk_1

devicetag: Disk_1

type: sliced

hostid: diag.xxxxx.xxx.COM

disk: name=t3dg02 id=1010283311.1163.diag.xxxxx.xxx.com

group: name=t3dg id=1010283312.1166.diag.xxxxx.xxx.com

flags: online ready private autoconfig nohotuse autoimport imported

pubpaths: block=/dev/vx/dmp/Disk_1s4 char=/dev/vx/rdmp/Disk_1s4

privpaths: block=/dev/vx/dmp/Disk_1s3 char=/dev/vx/rdmp/Disk_1s3

version: 2.2

iosize: min=512 (bytes) max=2048 (blocks)

public: slice=4 offset=0 len=209698816

private: slice=3 offset=1 len=4095

update: time=1010434311 seqno=0.6

headers: 0 248

configs: count=1 len=3004

logs: count=1 len=455

Defined regions:

config priv 000017-000247[000231]: copy=01 offset=000000 enabled

config priv 000249-003021[002773]: copy=01 offset=000231 enabled

log priv 003022-003476[000455]: copy=01 offset=000000 enabled

Multipathing information:

numpaths: 2

c20t2B000060220041F4d0s2 state=enabled

c23t2B000060220041F9d0s2 state=enabled

# vxdmpadm listctlr all

CTLR-NAME ENCLR-TYPE STATE ENCLR-NAME

=====================================================

c0 OTHER_DISKS ENABLED OTHER_DISKS

c2 SENA ENABLED SENA0

c3 SENA ENABLED SENA0

c20 Disk ENABLED Disk

c23 Disk ENABLED Disk

From the VxDisk output, notice that there are two physical paths to the LUN:

■ c20t2B000060220041F4d0s2

■ c23t2B000060220041F9d0s2

Both of these paths are currently enabled with VxDMP.
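
The path names and their VxDMP states can be extracted from the Multipathing information section of the vxdisk list output. This is a minimal sketch, assuming the output format shown above; vx_paths is a hypothetical helper name.

```shell
#!/bin/sh
# vx_paths
# Read `vxdisk list <disk>` output on stdin and print "<path> <state>"
# for each entry that follows the "Multipathing information:" line.
vx_paths() {
    awk '
        $1 == "Multipathing" { in_mp = 1; next }
        # Path lines look like: c20t...d0s2 state=enabled
        in_mp && $2 ~ /^state=/ { sub(/^state=/, "", $2); print $1, $2 }
    '
}
```

For the example above, vxdisk list Disk_1 | vx_paths would list both paths with the state enabled.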

2. Use the luxadm(1M) command to display further information about the underlying LUN.

# luxadm display /dev/rdsk/c20t2B000060220041F4d0s2

DEVICE PROPERTIES for disk: /dev/rdsk/c20t2B000060220041F4d0s2

Status(Port A): O.K.

Vendor: SUN

Product ID: SESS01

WWN(Node): 2a000060220041f4

WWN(Port A): 2b000060220041f4

Revision: 080C

Serial Num: Unsupported

Unformatted capacity: 102400.000 MBytes

Write Cache: Enabled

Read Cache: Enabled

Minimum prefetch: 0x0

Maximum prefetch: 0x0

Device Type: Disk device

Path(s):

/dev/rdsk/c20t2B000060220041F4d0s2

/devices/pci@a,2000/pci@2/SUNW,qlc@4/fp@0,0/ssd@w2b000060220041f4,0:c,raw

# luxadm display /dev/rdsk/c23t2B000060220041F9d0s2

DEVICE PROPERTIES for disk: /dev/rdsk/c23t2B000060220041F9d0s2

Status(Port A): O.K.

Vendor: SUN

Product ID: SESS01

WWN(Node): 2a000060220041f9

WWN(Port A): 2b000060220041f9

Revision: 080C

Serial Num: Unsupported

Unformatted capacity: 102400.000 MBytes

Write Cache: Enabled

Read Cache: Enabled

Minimum prefetch: 0x0

Maximum prefetch: 0x0

Device Type: Disk device

Path(s):

/dev/rdsk/c23t2B000060220041F9d0s2

/devices/pci@e,2000/pci@2/SUNW,qlc@4/fp@0,0/

ssd@w2b000060220041f9,0:c,raw


▼ To Quiesce the I/O on the A3/B3 Link

1. Determine the path you want to disable.

2. Disable the path by typing the following:

# vxdmpadm disable ctlr=<c#>

3. Verify that the path is disabled:

# vxdmpadm listctlr all

Steps 1 and 2 halt I/O only up to the A3/B3 link. I/O will continue to move over the

T1 & T2 paths, as well as the A4/B4 links to the Sun StorEdge T3+ array.
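The verification in step 3 can also be scripted. The following is a minimal sketch in Python that reads saved vxdmpadm listctlr all output, in the column layout shown earlier in this chapter, and reports the state of each controller. The function names are hypothetical, and only the ENABLED state string appears in this guide, so any other state is simply treated as not enabled.

```python
# Sample output copied from the vxdmpadm listctlr all listing in this chapter.
SAMPLE_LISTCTLR = """\
CTLR-NAME ENCLR-TYPE STATE ENCLR-NAME
=====================================================
c0 OTHER_DISKS ENABLED OTHER_DISKS
c2 SENA ENABLED SENA0
c3 SENA ENABLED SENA0
c20 Disk ENABLED Disk
c23 Disk ENABLED Disk
"""


def parse_listctlr(output):
    """Map each controller name (for example "c20") to its STATE column."""
    states = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows have four columns and a name such as c20; this skips
        # the header row and the ===== separator.
        if len(fields) == 4 and fields[0][:1] == "c" and fields[0][1:].isdigit():
            states[fields[0]] = fields[2]
    return states


def controller_is_enabled(output, ctlr):
    """Return True only if the controller is listed with state ENABLED."""
    return parse_listctlr(output).get(ctlr) == "ENABLED"
```

After disabling a path, controller_is_enabled(output, "c20") should return False for the controller you disabled and True for the remaining path.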

▼ To Suspend the I/O on the A3/B3 Link

Use one of the following methods to suspend I/O while the failover occurs:

1. Stop all customer applications that are accessing the Sun StorEdge T3+ array.

2. Manually pull the link from the Sun StorEdge T3+ array to the switch and wait

for a Sun StorEdge T3+ array LUN failover.

a. After the failover occurs, replace the cable and proceed with testing and FRU

isolation.

b. After testing is complete and any FRU replacement is finished, return the

controller state back to the default by using the virtualization engine failback

command.

Caution – This action will cause SCSI errors on the data host and a brief suspension

of I/O while the failover occurs.


▼ To Return the Path to Production

1. Type:

# vxdmpadm enable ctlr=<c#>

2. Verify that the path has been re-enabled by typing:

# vxdmpadm listctlr all


Fibre Channel Links

The following sections provide troubleshooting information for the basic components and Fibre Channel links, listed in TABLE 2-1.

TABLE 2-1

Link        Provides Fibre Channel Link Between These Components
A1 to B1    Data host, sw1a, and sw1b
A2          sw1a and v1a*
B2          sw1b and v1b*
A3          v1a and sw2a*
B3          v1b and sw2b*
A4          Master Sun StorEdge T3+ array and the “A” path switch
B4          AltMaster Sun StorEdge T3+ array and the “B” path switch
T1 to T2    sw2a and sw2b*

* Sun StorEdge 6900 series only

Note – In an actual Sun StorEdge 3900 or 6900 series configuration, there could be more Sun StorEdge T3+ arrays than are shown in FIGURE 2-1 and FIGURE 2-2.

By using the Storage Automated Diagnostic Environment, you should be able to

isolate the problem to one particular segment of the configuration.

The information found in this section is based on the assumption that the Storage

Automated Diagnostic Environment is running on the data host, and that it is

configured to monitor host errors. If the Storage Automated Diagnostic Environment

is not installed on the data host, there will be areas of limited monitoring, diagnosis

and isolation.

The following diagrams provide troubleshooting information for the basic components and Fibre Channel links specific to the Sun StorEdge 3900 series, shown in FIGURE 2-1, and the Sun StorEdge 6900 series, shown in FIGURE 2-2.


Fibre Channel Link Diagrams

FIGURE 2-1 shows the basic components and the Fibre Channel links for a Sun

StorEdge 3900 series system:

■ A1 to B1: HBA to Sun StorEdge network FC switch-8 and switch-16 switch link

■ A4 to B4: Sun StorEdge network FC switch-8 and switch-16 switch to Sun StorEdge T3+ array link

[Diagram labels: HOST, HBA-A, HBA-B, A1, B1, Sw1a, Sw1b, A4, B4, T3 Master, T3 Alt-Master]

FIGURE 2-1 Sun StorEdge 3900 Series Fibre Channel Link Diagram


FIGURE 2-2 shows the basic components and the Fibre Channel links for a Sun

StorEdge 6900 series system:

■ A1 to B1: HBA to Sun StorEdge network FC switch-8 and switch-16 switch link

■ A2 to B2: Sun StorEdge network FC switch-8 and switch-16 switch to virtualization engine link on the host side

■ A3 to B3: Sun StorEdge network FC switch-8 and switch-16 switch to virtualization engine link on the device side

■ A4 to B4: Sun StorEdge network FC switch-8 and switch-16 switch to Sun StorEdge T3+ array link

■ T1 to T2: T Port switch-to-switch link

[Diagram labels: HOST, HBA-A, HBA-B, A1, B1, Sw1a, Sw1b, A2, B2, V1a, V1b, A3, B3, Sw2a, Sw2b, T1, T2, A4, B4, T3 Master, T3 Alt-Master]

FIGURE 2-2 Sun StorEdge 6900 Series Fibre Channel Link Diagram


Host Side Troubleshooting

Host-side troubleshooting refers to the messages and errors the data host detects.

Usually, these messages appear in the /var/adm/messages file.

Storage Service Processor Side Troubleshooting

Storage Service Processor-side troubleshooting refers to messages, alerts, and errors

that the Storage Automated Diagnostic Environment, running on the Storage Service

Processor, detects. You can find these messages by monitoring the following Sun

StorEdge 3900 series and the Sun StorEdge 6900 series components:

■ Sun StorEdge network FC switch-8 and switch-16 switches

■ Virtualization engine

■ Sun StorEdge T3+ array

Combining the host-side messages and errors and the Storage Service Processor-side

messages, alerts, and errors into a meaningful context is essential for proper

troubleshooting.


Command Line Test Examples

To run a single Sun StorEdge diagnostic test from the command line rather than through the Storage Automated Diagnostic Environment interface, you must log in to the appropriate Host or Slave for testing the components. The following two tests, qlctest(1M) and switchtest(1M), are provided as examples.

qlctest(1M)

qlctest(1M) comprises several subtests that test the functions of the Sun StorEdge PCI dual Fibre Channel (FC) host adapter board. This board is an HBA that has diagnostic support. This diagnostic test is not scalable.

CODE EXAMPLE 2-1 qlctest(1M)

# /opt/SUNWstade/Diags/bin/qlctest -v -o "dev=/devices/pci@6,4000/SUNW,qlc@3/fp@0,0:devctl|run_connect=Yes|mbox=Disable|ilb=Disable|ilb_10=Disable|elb=Enable"

"qlctest: called with options: dev=/devices/pci@6,4000/SUNW,qlc@3/fp@0,0:devctl|run_connect=Yes|mbox=Disable|ilb=Disable|ilb_10=Disable|elb=Enable"

"qlctest: Started."

"Program Version is 4.0.1"

"Testing qlc0 device at /devices/pci@6,4000/SUNW,qlc@3/fp@0,0:devctl."

"QLC Adapter Chip Revision = 1, Risc Revision = 3,

Frame Buffer Revision = 1029, Riscrom Revision = 4,

Driver Revision = 5.a-2-1.15 "

"Running ECHO command test with pattern 0x7e7e7e7e"

"Running ECHO command test with pattern 0x1e1e1e1e"

"Running ECHO command test with pattern 0xf1f1f1f1"

<snip>

"Running ECHO command test with pattern 0x4a4a4a4a"

"Running ECHO command test with pattern 0x78787878"

"Running ECHO command test with pattern 0x25252525"

"FCODE revision is ISP2200 FC-AL Host Adapter Driver: 1.12 01/01/16"

"Firmware revision is 2.1.7f"

"Running CHECKSUM check"

"Running diag selftest"

"qlctest: Stopped successfully."
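The argument to -o in CODE EXAMPLE 2-1 is a list of name=value pairs separated by the | character. The following minimal Python sketch builds and splits such a string, which can help avoid quoting mistakes when the command is assembled by a script. The function names are hypothetical; the option names and values are copied from the example above and are not a complete list of qlctest options.

```python
def build_options(options):
    """Join option names and values as name=value pairs separated by "|"."""
    return "|".join(f"{name}={value}" for name, value in options.items())


def parse_options(option_string):
    """Split a name=value|name=value string back into a dictionary."""
    parsed = {}
    for pair in option_string.split("|"):
        # Split on the first "=" only, so values may contain "=" themselves.
        name, _, value = pair.partition("=")
        parsed[name] = value
    return parsed


# Option values copied from CODE EXAMPLE 2-1.
QLCTEST_OPTIONS = {
    "dev": "/devices/pci@6,4000/SUNW,qlc@3/fp@0,0:devctl",
    "run_connect": "Yes",
    "mbox": "Disable",
    "ilb": "Disable",
    "ilb_10": "Disable",
    "elb": "Enable",
}
```

The string returned by build_options(QLCTEST_OPTIONS) is the value to place inside the double quotes after -o.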


switchtest(1M)

switchtest(1M) is used to diagnose the Sun StorEdge network FC switch-8 and

switch-16 switch devices. The switchtest process also provides command line

access to switch diagnostics. switchtest supports testing on local and remote

switches.

switchtest runs the port diagnostic on connected switch ports. While

switchtest is running, the port statistics are monitored for errors, and the chassis

status is checked.

CODE EXAMPLE 2-2 switchtest(1M)

# /opt/SUNWstade/Diags/bin/switchtest -v -o "dev=2:192.168.0.30:0x0|xfersize=200"

"switchtest: called with options: dev=2:192.168.0.30:0x0|xfersize=200"

"switchtest: Started."

"Testing port: 2"

"Using ip_addr: 192.168.0.30, fcaddr: 0x0 to access this port."

"Chassis Status for Device: Switch Power: OK Temp: OK 23.0c Fan 1: OK Fan

2: OK"

"Testing Device: Switch Port: 2 Pattern: 0x7e7e7e7e"

"Testing Device: Switch Port: 2 Pattern: 0x1e1e1e1e"

"Testing Device: Switch Port: 2 Pattern: 0xf1f1f1f1"

"Testing Device: Switch Port: 2 Pattern: 0xb5b5b5b5"

"Testing Device: Switch Port: 2 Pattern: 0x4a4a4a4a"

"Testing Device: Switch Port: 2 Pattern: 0x78787878"

"Testing Device: Switch Port: 2 Pattern: 0xe7e7e7e7"

"Testing Device: Switch Port: 2 Pattern: 0xaa55aa55"

"Testing Device: Switch Port: 2 Pattern: 0x7f7f7f7f"

"Testing Device: Switch Port: 2 Pattern: 0x0f0f0f0f"

"Testing Device: Switch Port: 2 Pattern: 0x00ff00ff"

"Testing Device: Switch Port: 2 Pattern: 0x25252525"

"Port: 2 passed all tests on Switch"

"switchtest: Stopped successfully."

All Storage Automated Diagnostic Environment diagnostic tests are located in

/opt/SUNWstade/Diags/bin. Refer to the Storage Automated Diagnostic

Environment User’s Guide for a complete list of tests, subtests, options, and

restrictions.
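When switchtest output such as CODE EXAMPLE 2-2 is captured to a file, a short script can summarize it. The following Python sketch is a minimal example that collects the test patterns and checks for the two success lines shown in that example. The function name is hypothetical, and because this guide does not show the text printed on failure, the sketch recognizes only the success messages.

```python
import re

# Abbreviated output copied from CODE EXAMPLE 2-2.
SAMPLE_SWITCHTEST = '''\
"switchtest: Started."
"Testing port: 2"
"Testing Device: Switch Port: 2 Pattern: 0x7e7e7e7e"
"Testing Device: Switch Port: 2 Pattern: 0x1e1e1e1e"
"Port: 2 passed all tests on Switch"
"switchtest: Stopped successfully."
'''


def summarize_switchtest(output):
    """Return the patterns tested and whether the success lines are present."""
    return {
        "patterns": re.findall(r"Pattern: (0x[0-9a-fA-F]+)", output),
        "passed": "passed all tests" in output,
        "stopped_cleanly": "switchtest: Stopped successfully." in output,
    }
```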


Storage Automated Diagnostic Environment Event Grid

The Storage Automated Diagnostic Environment generates component-specific event grids that give the severity of an event, whether action is required, a description of the event, and the recommended action. Refer to Chapters 5 through 9 of this troubleshooting guide for component-specific event grids.

▼ To Customize an Event Report

1. Click the Event Grid link on the Storage Automated Diagnostic Environment Help menu.

2. Select the criteria from the Storage Automated Diagnostic Environment event grid, like the one shown in TABLE 2-2.

TABLE 2-2 Event Grid Sorting Criteria

Category
• All (Default)
• Sun StorEdge A3500FC array
• Sun StorEdge A5000 array
• Agent
• Host
• Message
• Sun Switch
• Sun StorEdge T3+ array
• Tape
• Virtualization engine

Component
• All (Default)
• Backplane
• Controller
• Disk
• Interface
• LUN
• Port
• Power

Event Type
• Agent Deinstall
• Agent Install
• Alarm
• Alternate Master +
• Alternate Master -
• Audit
• CommunicationEstablished
• CommunicationLost
• Discovery
• Heartbeat
• Insert Component
• Location Change
• Patch Info
• Quiesce End
• Quiesce Start
• Removal
• Remove Component
• State Change + (from offline to online)
• State Change - (from online to offline)
• Statistics
• Backup

Severity
• Red: Critical (Error)
• Yellow: Alert (Warning)
• Down: System Down

Action
• Y: This event is actionable and is sent to RSS/SRS
• N: This event is not actionable


CHAPTER 3

Troubleshooting the Fibre Channel Links

A1/B1 Fibre Channel (FC) Link

If a problem occurs with the A1/B1 FC link:

■ In a Sun StorEdge 3900 series system, the Sun StorEdge T3+ array will fail over.

■ In a Sun StorEdge 6900 series system, no Sun StorEdge T3+ array will fail over,

but a severe problem can cause a path to go offline.

FIGURE 3-1, FIGURE 3-2, and FIGURE 3-3 are examples of A1/B1 Fibre Channel Link

Notification Events.

Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : Message Key: message:diag.xxxxx.xxx.com

EventType: LogEvent.driver.LOOP_OFFLINE

EventTime: 01/08/2002 14:34:45

Found 1 ’driver.LOOP_OFFLINE’ error(s) in logfile: /var/adm/messages on

diag.xxxxx.xxx.com (id=80fee746):

info: Loop Offline

Jan 8 14:34:25 WWN: Received 2 ’Loop Offline’ message(s) [threshold is 1

in 5mins] Last-Message: ’diag.xxxxx.xxx.com qlc: [ID 686697 kern.info] NOTICE:

Qlogic qlc(0): Loop OFFLINE ’

FIGURE 3-1 Data Host Notification of Intermittent Problems


Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : Message Key: message:diag.xxxxx.xxx.com

EventType: LogEvent.driver.MPXIO_offline

EventTime: 01/08/2002 14:48:02

Found 2 ’driver.MPXIO_offline’ warning(s) in logfile: /var/adm/messages on

diag.xxxxx.xxx.com (id=80fee746):

Jan 8 14:47:07 WWN:2b000060220041f9 diag.xxxxx.xxx.com mpxio: [ID

779286 kern.info] /scsi_vhci/ssd@g29000060220041f96257354230303053

(ssd19) multipath status: degraded, path /pci@6,4000/SUNW,qlc@3/fp@0,0

(fp1) to target address: 2b000060220041f9,1 is offline

Jan 8 14:47:07 WWN:2b000060220041f9 diag.xxxxx.xxx.com mpxio: [ID

779286 kern.info] /scsi_vhci/ssd@g29000060220041f96257354230303052

(ssd18) multipath status: degraded, path /pci@6,4000/SUNW,qlc@3/fp@0,0

(fp1) to target address: 2b000060220041f9,0 is offline

FIGURE 3-2 Data Host Notification of Severe Link Error
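The mpxio messages in FIGURE 3-2 carry the failing HBA path and target address, which is the information needed to decide which link to test. The following Python sketch is a minimal example of pulling those fields out of /var/adm/messages text. The function name is hypothetical, the message layout is assumed to match the figure exactly, and the number after the comma in the target address is assumed to be the LUN.

```python
import re

# Message text copied from FIGURE 3-2, including its line wrapping.
SAMPLE_MPXIO_LOG = """\
Jan 8 14:47:07 WWN:2b000060220041f9 diag.xxxxx.xxx.com mpxio: [ID
779286 kern.info] /scsi_vhci/ssd@g29000060220041f96257354230303053
(ssd19) multipath status: degraded, path /pci@6,4000/SUNW,qlc@3/fp@0,0
(fp1) to target address: 2b000060220041f9,1 is offline
Jan 8 14:47:07 WWN:2b000060220041f9 diag.xxxxx.xxx.com mpxio: [ID
779286 kern.info] /scsi_vhci/ssd@g29000060220041f96257354230303052
(ssd18) multipath status: degraded, path /pci@6,4000/SUNW,qlc@3/fp@0,0
(fp1) to target address: 2b000060220041f9,0 is offline
"""

_MPXIO_RE = re.compile(
    r"\((ssd\d+)\) multipath status: (\w+), path (\S+) \((fp\d+)\) "
    r"to target address: ([0-9a-fA-F]+),(\d+) is (\w+)"
)


def parse_mpxio_events(log_text):
    """Return one dictionary per mpxio path-status message found in the text."""
    # Collapse line wrapping so that a message split across lines still matches.
    text = " ".join(log_text.split())
    events = []
    for m in _MPXIO_RE.finditer(text):
        events.append({
            "device": m.group(1),
            "status": m.group(2),
            "path": m.group(3),
            "port": m.group(4),
            "target_wwn": m.group(5),
            "lun": int(m.group(6)),  # assumed to be the LUN number
            "state": m.group(7),
        })
    return events
```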

Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : Switch Key: switch:100000c0dd0057bd

EventType: StateChangeEvent.X.port.6

EventTime: 01/08/2002 14:54:20

’port.6’ in SWITCH diag-sw1a (ip=192.168.0.30) is now Unknown (status-state changed from ’Online’ to ’Admin’):

FIGURE 3-3 Storage Service Processor Notification

Note – An A1/B1 FC link error can cause a port in sw1a or sw1b to change state.
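Each notification event in these figures starts with the same labeled header fields. The following Python sketch is a minimal example of reading that header into a dictionary, so that host-side and Storage Service Processor-side events can be compared by EventType and EventTime. The function name is hypothetical, and the list of field labels is taken only from the figures in this chapter, so it may be incomplete.

```python
import re

# Field labels as they appear in the notification figures in this chapter.
FIELD_NAMES = ("Site", "Source", "Severity", "Category", "Key",
               "DeviceId", "EventType", "EventTime")
_FIELD_RE = re.compile(r"\b(" + "|".join(FIELD_NAMES) + r")\s*:\s*")

# Header copied from FIGURE 3-3.
SAMPLE_EVENT = """\
Site : FSDE LAB Broomfield CO
Source : diag.xxxxx.xxx.com
Severity : Normal
Category : Switch Key: switch:100000c0dd0057bd
EventType: StateChangeEvent.X.port.6
EventTime: 01/08/2002 14:54:20
"""


def parse_event_header(text):
    """Return the labeled header fields of one notification event."""
    fields = {}
    for line in text.splitlines():
        matches = list(_FIELD_RE.finditer(line))
        # Only treat the line as a header line if it begins with a label.
        if not matches or line[:matches[0].start()].strip():
            continue
        for i, m in enumerate(matches):
            # A value runs up to the next label on the same line, if any.
            end = matches[i + 1].start() if i + 1 < len(matches) else len(line)
            fields.setdefault(m.group(1), line[m.end():end].strip())
    return fields
```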


▼ To Verify the Data Host

An error in the A1/B1 FC link can cause a path to go offline in the multipathing

software.

CODE EXAMPLE 3-1 luxadm(1M) Display

# luxadm display /dev/rdsk/c6t29000060220041F96257354230303052d0s2

DEVICE PROPERTIES for disk: /dev/rdsk/c6t29000060220041F96257354230303052d0s2

Status(Port A): O.K.

Status(Port B): O.K.

Vendor: SUN

Product ID: SESS01

WWN(Node): 2a000060220041f4

WWN(Port A): 2b000060220041f4

WWN(Port B): 2b000060220041f9

Revision: 080C

Serial Num: Unsupported

Unformatted capacity: 102400.000 MBytes

Write Cache: Enabled

Read Cache: Enabled

Minimum prefetch: 0x0

Maximum prefetch: 0x0

Device Type: Disk device

Path(s):

/dev/rdsk/c6t29000060220041F96257354230303052d0s2

/devices/scsi_vhci/ssd@g29000060220041f96257354230303052:c,raw

Controller /devices/pci@6,4000/SUNW,qlc@3/fp@0,0

Device Address 2b000060220041f9,0

Class primary

State OFFLINE

Controller /devices/pci@6,4000/SUNW,qlc@2/fp@0,0

Device Address 2b000060220041f4,0

Class primary

State ONLINE

...
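The Controller and State lines at the end of the luxadm display output identify which HBA path is offline. The following Python sketch is a minimal example of extracting those entries from saved output. The function names are hypothetical, and the layout is assumed to match CODE EXAMPLE 3-1.

```python
# Path section copied from CODE EXAMPLE 3-1.
SAMPLE_LUXADM = """\
Path(s):
/dev/rdsk/c6t29000060220041F96257354230303052d0s2
/devices/scsi_vhci/ssd@g29000060220041f96257354230303052:c,raw
Controller /devices/pci@6,4000/SUNW,qlc@3/fp@0,0
Device Address 2b000060220041f9,0
Class primary
State OFFLINE
Controller /devices/pci@6,4000/SUNW,qlc@2/fp@0,0
Device Address 2b000060220041f4,0
Class primary
State ONLINE
"""

_LABELS = (("Device Address", "device_address"),
           ("Class", "class"),
           ("State", "state"))


def parse_luxadm_paths(output):
    """Return one dictionary per Controller entry in luxadm display output."""
    paths = []
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("Controller"):
            paths.append({"controller": line[len("Controller"):].strip()})
        elif paths:
            # Attach the following labeled lines to the most recent controller.
            for label, key in _LABELS:
                if line.startswith(label):
                    paths[-1][key] = line[len(label):].strip()
    return paths


def offline_controllers(output):
    """Return the controller paths whose State is OFFLINE."""
    return [p["controller"] for p in parse_luxadm_paths(output)
            if p.get("state") == "OFFLINE"]
```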


An error in the A1/B1 FC link can also cause a device to enter the “unusable” state

in cfgadm. In this case, the output for luxadm -e port will show that a device that

was “connected” changed to an “unconnected” state.

CODE EXAMPLE 3-2 cfgadm -al Display

...

# cfgadm -al

Ap_Id Type Receptacle Occupant Condition

c0 scsi-bus connected configured unknown

c0::dsk/c0t0d0 disk connected configured unknown

c0::dsk/c0t1d0 disk connected configured unknown

c1 scsi-bus connected configured unknown

c1::dsk/c1t6d0 CD-ROM connected configured unknown

c2 fc-fabric connected configured unknown

c2::210100e08b23fa25 unknown connected unconfigured unknown

c2::2b000060220041f4 disk connected configured unknown

c3 fc-fabric connected configured unknown

c3::2b000060220041f9 disk connected configured unusable

c4 fc-private connected unconfigured unknown

c5 fc connected unconfigured unknown
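On a host with many attachment points, the “unusable” entry is easy to miss. The following Python sketch is a minimal example that scans saved cfgadm -al output for devices in that condition. The function names are hypothetical, and the sketch assumes the five whitespace-separated columns shown in CODE EXAMPLE 3-2.

```python
COLUMNS = ("ap_id", "type", "receptacle", "occupant", "condition")

# Rows copied from CODE EXAMPLE 3-2.
SAMPLE_CFGADM = """\
Ap_Id Type Receptacle Occupant Condition
c0 scsi-bus connected configured unknown
c2 fc-fabric connected configured unknown
c2::210100e08b23fa25 unknown connected unconfigured unknown
c2::2b000060220041f4 disk connected configured unknown
c3 fc-fabric connected configured unknown
c3::2b000060220041f9 disk connected configured unusable
c4 fc-private connected unconfigured unknown
"""


def parse_cfgadm(output):
    """Return one dictionary per attachment point row, skipping the header."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) == len(COLUMNS) and fields[0] != "Ap_Id":
            rows.append(dict(zip(COLUMNS, fields)))
    return rows


def unusable_devices(output):
    """Return the Ap_Id of every row whose Condition is unusable."""
    return [row["ap_id"] for row in parse_cfgadm(output)
            if row["condition"] == "unusable"]
```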

FRU Tests Available for A1/B1 FC Link Segment

■ HBA: qlctest(1M)

   ■ Available only if the Storage Automated Diagnostic Environment is installed on a data host

   ■ Causes the HBA to go “offline” and “online” during tests

■ Switch: switchtest(1M)

   ■ Can be run while the link is still cabled and online (connected to the HBA)

   ■ You must specify a payload of 200 bytes or less when testing the A1/B1 FC link while the link is connected to the HBA (a limitation in the HBA ASIC).

   ■ Can be run only from the Storage Service Processor

   ■ The dev option to switchtest is in the following format: Port:IP-Address:FCAddress. The FCAddress can be set to 0x0.


CODE EXAMPLE 3-3 switchtest(1M) called with options

# ./switchtest -v -o "dev=2:192.168.0.30:0"

"switchtest: called with options: dev=2:192.168.0.30:0"

"switchtest: Started."

"Testing port: 2"

"Using ip_addr: 192.168.0.30, fcaddr: 0x0 to access this port."

"Chassis Status for Device: Switch Power: OK Temp: OK 23.0c Fan 1: OK

Fan 2: OK "

02/06/02 15:09:45 diag Storage Automated Diagnostic Environment MSGID 4001

switchtest.WARNING

switch0: "Maximum transfer size for a FABRIC port is 200. Changing

transfer size 2000 to 200"

"Testing Device: Switch Port: 2 Pattern: 0x7e7e7e7e"

"Testing Device: Switch Port: 2 Pattern: 0x1e1e1e1e"

Note – The Storage Automated Diagnostic Environment automatically resets the

transfer size if it notes that it is about to test a switch to HBA connection. This is

done both in the Storage Automated Diagnostic Environment GUI and from the

command-line interface (CLI).
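The dev format and the transfer size limit described in this section can be expressed in a few lines. The following Python sketch is a minimal example that formats the dev value as Port:IP-Address:FCAddress and applies the 200-byte limit reported by the switchtest warning in CODE EXAMPLE 3-3. The function names are hypothetical, and the sketch only mirrors the adjustment; it is not the logic used by the Storage Automated Diagnostic Environment itself.

```python
# Limit taken from the switchtest warning in CODE EXAMPLE 3-3.
MAX_FABRIC_TRANSFER_SIZE = 200


def format_dev(port, ip_address, fc_address=0x0):
    """Build the dev value in Port:IP-Address:FCAddress form."""
    return f"{port}:{ip_address}:{fc_address:#x}"


def effective_transfer_size(requested, fabric_port=True):
    """Return the transfer size to use, capped for a switch-to-HBA link."""
    if fabric_port and requested > MAX_FABRIC_TRANSFER_SIZE:
        return MAX_FABRIC_TRANSFER_SIZE
    return requested
```

For example, format_dev(2, "192.168.0.30") gives the dev value used in CODE EXAMPLE 2-2, and effective_transfer_size(2000) gives the reduced size reported in the warning.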


▼ To Isolate the A1/B1 FC Link

1. Quiesce the I/O on the A1/B1 FC link path.

2. Run switchtest or qlctest to test the entire link.

3. Break the connection by uncabling the link.

4. Insert a loopback connector into the switch port.

5. Rerun switchtest.

a. If switchtest fails, replace the GBIC and rerun switchtest.

b. If switchtest fails again, replace the switch.

6. Insert a loopback connector into the HBA.

7. Run qlctest.

■ If the test fails, replace the HBA.

■ If the test passes, replace the cable.

8. Recable the entire link.

9. Run switchtest or qlctest to validate the fix.

10. Return the path to production.
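The decision points in steps 5 through 7 can be summarized as a small function. The following Python sketch is a minimal illustration only; the function name and arguments are hypothetical, and it assumes that a switchtest failure with the loopback connector ends the search on the switch side, which the procedure implies but does not state.

```python
def suspect_fru(switchtest_passed, switchtest_passed_after_gbic=None,
                qlctest_passed=None):
    """Return the FRU to replace, based on the loopback test results.

    switchtest_passed: result of step 5 (loopback in the switch port).
    switchtest_passed_after_gbic: result of the rerun in step 5a.
    qlctest_passed: result of step 7 (loopback in the HBA).
    """
    if not switchtest_passed:
        if switchtest_passed_after_gbic is None:
            raise ValueError("replace the GBIC and rerun switchtest first")
        # Step 5a cleared the fault, or step 5b applies.
        return "GBIC" if switchtest_passed_after_gbic else "switch"
    if qlctest_passed is None:
        raise ValueError("run qlctest with a loopback connector in the HBA first")
    # Step 7: a failing HBA test points at the HBA, otherwise at the cable.
    return "cable" if qlctest_passed else "HBA"
```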


A2/B2 Fibre Channel (FC) Link

If a problem occurs with the A2/B2 FC link:

■ In a Sun StorEdge 3900 series system, the Sun StorEdge T3+ array will fail over.

■ In a Sun StorEdge 6900 series system, no Sun StorEdge T3+ array will fail over,

but a severe problem can cause a path to go offline.

FIGURE 3-4 and FIGURE 3-5 are examples of A2/B2 FC Link Notification Events.

From root Tue Jan 8 18:39:48 2002

Date: Tue, 8 Jan 2002 18:39:47 -0700 (MST)

Message-Id: <200201090139.g091dlg07015@diag.xxxxx.xxx.com>

From: Storage Automated Diagnostic Environment.Agent

Subject: Message from ’diag.xxxxx.xxx.com’ (2.0.B2.002)

Content-Length: 2742

You requested the following events be forwarded to you from

’diag.xxxxx.xxx.com’.

Site : FSDE LAB Broomfield CO

Source : diag226.xxxxx.xxx.com

Severity : Normal

Category : Message Key: message:diag.xxxxx.xxx.com

EventType: LogEvent.driver.Fabric_Warning

EventTime: 01/08/2002 17:34:47

Found 1 ’driver.Fabric_Warning’ warning(s) in logfile: /var/adm/messages

on diag.xxxxx.xxx.com (id=80fee746):

Info: Fabric warning

Jan 8 17:34:36 WWN:2b000060220041f4 diag.xxxxx.xxx.com fp: [ID 517869

kern.warning] WARNING: fp(0): N_x Port with D_ID=108000,

PWWN=2b000060220041f4 disappeared from fabric

<snip>

multipath status: degraded, path /pci@6,4000/SUNW,qlc@2/fp@0,0 (fp0) to

target address: 2b000060220041f4,1 is offline

Jan 8 17:34:55 WWN:2b000060220041f4 diag.xxxxx.xxx.com

mpxio: [ID 779286 kern.info] /scsi_vhci/

ssd@g29000060220041f96257354230303052 (ssd18)

multipath status: degraded, path /pci@6,4000/SUNW,qlc@2/fp@0,0 (fp0) to

target address: 2b000060220041f4,0 is offline

FIGURE 3-4 A2/B2 FC Link Host Side Event


Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : Switch Key: switch:100000c0dd0061bb

EventType: StateChangeEvent.X.port.1

EventTime: 01/08/2002 17:38:32

’port.1’ in SWITCH diag-sw1b (ip=192.168.0.31) is now Unknown (status-state changed from ’Online’ to ’Admin’):

----------------------------------------------------------------

Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : San Key: switch:100000c0dd0061bb:1

EventType: LinkEvent.ITW.switch|ve

EventTime: 01/08/2002 17:39:47

ITW-ERROR (765 in 11 mins): Origin: port 1 on ’switch ’sw1b/192.168.0.31’.

Destination: port 1 on ve ’diag-v1b/29000060220041f4’:

Info:

An invalid transmission word (ITW) was detected between two components.

This could indicate a potential problem.

Cause:

Likely Causes are: GBIC, FC Cable and device optical connections.

Action:

To isolate further please run the Storage Automated Diagnostic Environment

tests associated with this link segment.

FIGURE 3-5 A2/B2 FC Link Storage Service Processor Side Event
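The ITW-ERROR line in FIGURE 3-5 reports a count and a time window, which can be turned into a rate when comparing link segments. The following Python sketch is a minimal example; the function names are hypothetical, and the message layout is assumed to match the figure.

```python
import re

_ITW_RE = re.compile(r"ITW-ERROR \((\d+) in (\d+) mins\)")


def parse_itw(line):
    """Return (count, minutes) from an ITW-ERROR line, or None if absent."""
    m = _ITW_RE.search(line)
    if m is None:
        return None
    return int(m.group(1)), int(m.group(2))


def itw_per_minute(line):
    """Return the average ITW count per minute, or None if it cannot be computed."""
    parsed = parse_itw(line)
    if parsed is None or parsed[1] == 0:
        return None
    count, minutes = parsed
    return count / minutes
```

For the event in FIGURE 3-5, parse_itw returns the pair (765, 11).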


▼ To Verify the Host Side

An error in the A2/B2 FC link can cause cfgadm to list a device in the “unusable” state, although luxadm output lists no HBAs in the “unconnected” state. The multipathing software will note an OFFLINE path.


CODE EXAMPLE 3-4 cfgadm -al

# cfgadm -al

Ap_Id Type Receptacle Occupant Condition

c0 scsi-bus connected configured unknown

<snip>

# luxadm -e port

Found path to 2 HBA ports

/devices/pci@6,4000/SUNW,qlc@2/fp@0,0:devctl CONNECTED

/devices/pci@6,4000/SUNW,qlc@3/fp@0,0:devctl CONNECTED

# luxadm display /dev/rdsk/c6t29000060220041F96257354230303052d0s2

DEVICE PROPERTIES for disk: /dev/rdsk/

c6t29000060220041F96257354230303052d0s2

Status(Port A): O.K.

Status(Port B): O.K.

Vendor: SUN

Product ID: SESS01

WWN(Node): 2a000060220041f9

WWN(Port A): 2b000060220041f9

WWN(Port B): 2b000060220041f4

Revision: 080C

Serial Num: Unsupported

Unformatted capacity: 102400.000 MBytes

Write Cache: Enabled

Read Cache: Enabled

Minimum prefetch: 0x0

Maximum prefetch: 0x0

Device Type: Disk device

Path(s):

/dev/rdsk/c6t29000060220041F96257354230303052d0s2

/devices/scsi_vhci/ssd@g29000060220041f96257354230303052:c,raw

Controller /devices/pci@6,4000/SUNW,qlc@3/fp@0,0

Device Address 2b000060220041f9,0

Class primary

State ONLINE

Controller /devices/pci@6,4000/SUNW,qlc@2/fp@0,0

Device Address 2b000060220041f4,0

Class primary

State OFFLINE


Note – You can find procedures for restoring virtualization engine settings in the

Sun StorEdge 3900 and 6900 Series Reference Manual.

▼ To Verify the A2/B2 FC Link

You can check the A2/B2 FC link using the Storage Automated Diagnostic

Environment, Diagnose—Test from Topology functionality. The Storage Automated

Diagnostic Environment’s implementation of diagnostic tests verifies the operation

of user-selected components. Using the Topology view, you can select specific tests,

subtests, and test options.

Refer to Chapter 5 of the Storage Automated Diagnostic Environment User’s Guide for

more information.

FRU Tests Available for A2/B2 FC Link Segment

■ The linktest is not available.

■ Switch and/or GBIC: switchtest

   ■ Can be used only in conjunction with the loopback connector.

   ■ The switch port cannot be cabled to the virtualization engine while switchtest runs.

■ No virtualization engine tests are available at this time.

▼ To Isolate the A2/B2 FC Link

1. Quiesce the I/O on the A2/B2 FC link path.

2. Break the connection by uncabling the link.

3. Insert the loopback connector into the switch port.

4. Run switchtest:

a. If the test fails, replace the GBIC and rerun switchtest.

b. If the test fails again, replace the switch.


5. If neither the switch nor the GBIC shows errors, replace the remaining components in the following order:

a. Replace the virtualization engine-side GBIC, recable the link, and monitor the link for errors.

b. Replace the cable, recable the link, and monitor the link for errors.

c. Replace the virtualization engine, restore the virtualization engine settings, recable the link, and monitor the link for errors.

6. Return the path to production.

The procedures for restoring virtualization engine settings are in the Sun StorEdge

3900 and 6900 Series Reference Manual.


A3/B3 Fibre Channel (FC) Link

If a problem occurs with the A3/B3 FC link:

■ In a Sun StorEdge 3900 series system, the Sun StorEdge T3+ array will fail over.

■ In a Sun StorEdge 6900 series system, no Sun StorEdge T3+ array will fail over,

but a severe problem can cause a path to go offline.

FIGURE 3-6, FIGURE 3-7, and FIGURE 3-8 are examples of A3/B3 FC link Notification

Events.

Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : Message Key: message:diag.xxxxx.xxx.com

EventType: LogEvent.driver.MPXIO_offline

EventTime: 01/08/2002 18:25:18

Found 2 ’driver.MPXIO_offline’ warning(s) in logfile: /var/adm/messages on

diag.xxxxx.xxx.com (id=80fee746):

Jan 8 18:24:24 WWN:2b000060220041f9 diag.xxxxx.xxx.com mpxio: [ID

779286 kern.info] /scsi_vhci/ssd@g29000060220041f96257354230303053

(ssd19) multipath status: degraded, path /pci@6,4000/SUNW,qlc@3/fp@0,0

(fp1) to target address: 2b000060220041f9,1 is offline

Jan 8 18:24:24 WWN:2b000060220041f9 diag.xxxxx.xxx.com mpxio: [ID

779286 kern.info] /scsi_vhci/ssd@g29000060220041f96257354230303052

(ssd18) multipath status: degraded, path /pci@6,4000/SUNW,qlc@3/fp@0,0

(fp1) to target address: 2b000060220041f9,0 is offline

---------------------------------------------------------------

Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : Message Key: message:diag.xxxxx.xxx.com

EventType: LogEvent.driver.Fabric_Warning

EventTime: 01/08/2002 18:25:18

Found 1 ’driver.Fabric_Warning’ warning(s) in logfile: /var/adm/messages

on diag.xxxxx.xxx.com (id=80fee746):

Info:

Fabric warning

Jan 8 18:24:04 WWN:2b000060220041f9 diag.xxxxx.xxx.com fp: [ID 517869

kern.warning] WARNING: fp(1): N_x Port with D_ID=104000,

PWWN=2b000060220041f9 disappeared from fabric

FIGURE 3-6 A3/B3 FC Link Host-Side Event


Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : Switch Key: switch:100000c0dd0057bd

EventType: StateChangeEvent.M.port.1

EventTime: 01/08/2002 18:28:38

’port.1’ in SWITCH diag-sw1a (ip=192.168.0.30) is now Not-Available

(status-state changed from ’Online’ to ’Offline’):

Info:

A port on the switch has logged out of the fabric and gone offline

Action:

1. Verify cables, GBICs and connections along Fibre Channel path

2. Check Storage Automated Diagnostic Environment SAN Topology GUI to

identify failing segment of the data path

3. Verify correct FC switch configuration

FIGURE 3-7 A3/B3 FC Link Storage Service Processor-Side Event

Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Normal

Category : Switch Key: switch:100000c0dd00cbfe

EventType: StateChangeEvent.M.port.1

EventTime: 01/08/2002 18:28:40

’port.1’ in SWITCH diag-sw2a (ip=192.168.0.32) is now Not-Available

(status-state changed from ’Online’ to ’Offline’):

Info:

A port on the switch has logged out of the fabric and gone offline

Action:

1. Verify cables, GBICs and connections along Fibre Channel path

2. Check Storage Automated Diagnostic Environment SAN Topology GUI to

identify failing segment of the data path

3. Verify correct FC switch configuration

FIGURE 3-8 A3/B3 FC Link Storage Service Processor-Side Event


▼ To Verify the Host Side

An error in the A3/B3 FC link causes cfgadm to list a device in the “unusable” state, although luxadm output lists no HBAs in the “unconnected” state. The multipathing software will note an “offline” path.

CODE EXAMPLE 3-5 Devices in the “connected” state

# cfgadm -al

Ap_Id Type Receptacle Occupant Condition

c0 scsi-bus connected configured unknown

c0::dsk/c0t0d0 disk connected configured unknown

c0::dsk/c0t1d0 disk connected configured unknown

c1 scsi-bus connected configured unknown

c1::dsk/c1t6d0 CD-ROM connected configured unknown

c2 fc-fabric connected configured unknown

c2::210100e08b23fa25 unknown connected unconfigured unknown

c2::2b000060220041f4 disk connected configured unknown

c3 fc-fabric connected configured unknown

c3::2b000060220041f9 disk connected configured unusable

c3::210100e08b230926 unknown connected unconfigured unknown

c4 fc-private connected unconfigured unknown

c5 fc connected unconfigured unknown

# luxadm -e port

Found path to 2 HBA ports

/devices/pci@6,4000/SUNW,qlc@2/fp@0,0:devctl CONNECTED

/devices/pci@6,4000/SUNW,qlc@3/fp@0,0:devctl CONNECTED

# luxadm display /dev/rdsk/c6t29000060220041F96257354230303052d0s2

DEVICE PROPERTIES for disk: /dev/rdsk/c6t29000060220041F96257354230303052d0s2

<snip>

/devices/scsi_vhci/ssd@g29000060220041f96257354230303052:c,raw

Controller /devices/pci@6,4000/SUNW,qlc@3/fp@0,0

Device Address 2b000060220041f9,0

Class primary

State OFFLINE

Controller /devices/pci@6,4000/SUNW,qlc@2/fp@0,0

Device Address 2b000060220041f4,0

Class primary

State ONLINE


CODE EXAMPLE 3-6 VxDMP Error Message

Jan 8 18:26:38 diag.xxxxx.xxx.com vxdmp: [ID 619769 kern.notice] NOTICE:

vxdmp: Path failure on 118/0x1f8

Jan 8 18:26:38 diag.xxxxx.xxx.com vxdmp: [ID 997040 kern.notice] NOTICE:

vxvm:vxdmp: disabled path 118/0x1f8 belonging to the dmpnode 231/0xd0
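The VxDMP notices in CODE EXAMPLE 3-6 name the failed path and the DMP node it belongs to. The following Python sketch is a minimal example of extracting those identifiers from /var/adm/messages text. The function name is hypothetical, and the identifiers are kept as opaque strings because this guide does not explain their format.

```python
import re

# Message text copied from CODE EXAMPLE 3-6, including its line wrapping.
SAMPLE_VXDMP_LOG = """\
Jan 8 18:26:38 diag.xxxxx.xxx.com vxdmp: [ID 619769 kern.notice] NOTICE:
vxdmp: Path failure on 118/0x1f8
Jan 8 18:26:38 diag.xxxxx.xxx.com vxdmp: [ID 997040 kern.notice] NOTICE:
vxvm:vxdmp: disabled path 118/0x1f8 belonging to the dmpnode 231/0xd0
"""

_FAILURE_RE = re.compile(r"vxdmp: Path failure on (\S+)")
_DISABLED_RE = re.compile(
    r"vxdmp: disabled path (\S+) belonging to the dmpnode (\S+)")


def parse_vxdmp(log_text):
    """Return the failed paths and the (path, dmpnode) pairs that were disabled."""
    # Collapse line wrapping so that a message split across lines still matches.
    text = " ".join(log_text.split())
    return {
        "failed": _FAILURE_RE.findall(text),
        "disabled": _DISABLED_RE.findall(text),
    }
```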

▼ To Verify the Storage Service Processor

You can check the A3/B3 FC link using the Storage Automated Diagnostic

Environment, Diagnose—Test from Topology functionality. Storage Automated

Diagnostic Environment’s implementation of diagnostic tests verifies the operation of

user-selected components. Using the Topology view, you can select specific tests,

subtests, and test options.

Refer to the Storage Automated Diagnostic Environment User’s Guide for more

information.

FRU Tests Available for the A3/B3 FC Link Segment

■ The linktest is not available.

■ Switch and/or GBIC: switchtest

   ■ Can be used only in conjunction with the loopback connector.

   ■ The switch port cannot be cabled to the virtualization engine while switchtest runs.

■ No virtualization engine tests are available at this time.


▼ To Isolate the A3/B3 FC Link

1. Quiesce the I/O on the A3/B3 FC link path.

2. Break the connection by uncabling the link.

3. Insert the loopback connector into the switch port.

4. Run switchtest:

a. If the test fails, replace the GBIC and rerun switchtest.

b. If the test fails again, replace the switch.

5. If neither the switch nor the GBIC shows errors, replace the remaining components in the following order:

a. Replace the virtualization engine-side GBIC, recable the link, and monitor the link for errors.

b. Replace the cable, recable the link, and monitor the link for errors.

c. Replace the virtualization engine, restore the virtualization engine settings, recable the link, and monitor the link for errors.

6. Return the path to production.

The procedures for restoring virtualization engine settings are in the Sun StorEdge

3900 and 6900 Series Reference Manual.


A4/B4 Fibre Channel (FC) Link

If a problem occurs with the A4/B4 FC link:

■ In a Sun StorEdge 3900 series system, the Sun StorEdge T3+ array will fail over.

■ In a Sun StorEdge 6900 series system, no Sun StorEdge T3+ array will fail over,

but a severe problem can cause a path to go offline.

FIGURE 3-9 and FIGURE 3-10 are examples of A4/B4 Link Notification Events.

Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Warning

Category : Message

DeviceId : message:diag.xxxxx.xxx.com

EventType: LogEvent.driver.MPXIO_offline

EventTime: 01/29/2002 14:28:06

Found 2 ’driver.MPXIO_offline’ warning(s) in logfile: /var/adm/messages on

diag.xxxxx.xxx.com (id=80e4aa60):

<snip>

---------------------------------------------------------------------

Site : FSDE LAB Broomfield CO

Source : diag.xxxxx.xxx.com

Severity : Warning

Category : Message

DeviceId : message:diag.xxxxx.xxx.com

EventType: LogEvent.driver.Fabric_Warning

EventTime: 01/29/2002 14:28:06

Found 1 ’driver.Fabric_Warning’ warning(s) in logfile: /var/adm/messages on

diag.xxxxx.xxx.com (id=80e4aa60):

INFORMATION:

Fabric warning

<snip>

status of hba /devices/pci@a,2000/pci@2/SUNW,qlc@5/fp@0,0:devctl on

diag.xxxxx.xxx.com changed from CONNECTED to NOT CONNECTED

INFORMATION:

monitors changes in the output of luxadm -e port

Found path to 20 HBA ports

/devices/sbus@2,0/SUNW,socal@d,10000:0 NOT CONNECTED

FIGURE 3-9 A4/B4 FC Link Data Host Notification
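When many notification emails have to be triaged, the header fields shown in the figure can be pulled out mechanically. The following is a minimal sketch, assuming the header keeps the "Name : value" layout shown above. The function name parse_event_header is hypothetical, and the sample text is copied from the figure.

```python
import re

# Field names as they appear in the notification header in the figure.
FIELD_RE = re.compile(
    r"^(Site|Source|Severity|Category|DeviceId|EventType|EventTime)\s*:\s*(.*)$"
)

SAMPLE = """\
Site : FSDE LAB Broomfield CO
Source : diag.xxxxx.xxx.com
Severity : Warning
Category : Message
DeviceId : message:diag.xxxxx.xxx.com
EventType: LogEvent.driver.MPXIO_offline
EventTime: 01/29/2002 14:28:06
"""


def parse_event_header(text):
    """Return a dict of the header fields found in one notification."""
    fields = {}
    for line in text.splitlines():
        match = FIELD_RE.match(line.strip())
        if match:
            # Only the first colon separates name and value, so values such
            # as "message:diag.xxxxx.xxx.com" are kept whole.
            fields[match.group(1)] = match.group(2).strip()
    return fields


header = parse_event_header(SAMPLE)
print(header["Severity"], header["EventType"])
```

Lines that are not header fields, such as the log excerpts that follow the header, are ignored by this sketch.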


Site : FSDE LAB Broomfield CO

Source : diag

Severity : Warning

Category : Switch

DeviceId : switch:100000c0dd0061bb

EventType: LogEvent.MessageLog

EventTime: 01/29/2002 14:25:05

Change in Port Statistics on switch diag-sw1b (ip=192.168.0.31):

Port-1: Received 16289 ’InvalidTxWds’ in 0 mins (value=365972 )

---------------------------------------------------------------------

Site : FSDE LAB Broomfield CO

Source : diag

Severity : Warning

Category : T3message

DeviceId : t3message:83060c0c

EventType: LogEvent.MessageLog

EventTime: 01/29/2002 14:25:06

Warning(s) found in logfile: /var/adm/messages.t3 on diag (id=83060c0c):

Jan 29 14:12:58 t3b0 ISR1[2]: W: u2ctr ISP2100[2] Received LOOP DOWN async

event

Jan 29 14:13:32 t3b0 MNXT[1]: W: u1ctr starting lun 1 failover

---------------------------------------------------------------------

Site : FSDE LAB Broomfield CO

Source : diag

Severity : Warning

Category : T3message

DeviceId : t3message:83060c0c

EventType: LogEvent.MessageLog

EventTime: 01/29/2002 14:11:14

Warning(s) found in logfile: /var/adm/messages.t3 on diag (id=83060c0c):

Jan 29 14:05:18 t3b0 ISR1[1]: W: u2d4 SVD_PATH_FAILOVER: path_id = 0

Jan 29 14:05:18 t3b0 ISR1[1]: W: u2d5 SVD_PATH_FAILOVER: path_id = 0

Jan 29 14:05:18 t3b0 ISR1[1]: W: u2d6 SVD_PATH_FAILOVER: path_id = 0

Jan 29 14:05:18 t3b0 ISR1[1]: W: u2d7 SVD_PATH_FAILOVER: path_id = 0

Jan 29 14:05:18 t3b0 ISR1[1]: W: u2d8 SVD_PATH_FAILOVER: path_id = 0

Jan 29 14:05:18 t3b0 ISR1[1]: W: u2d9 SVD_PATH_FAILOVER: path_id = 0

FIGURE 3-10 Storage Service Processor Notification
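The Sun StorEdge T3+ array messages in the figure share a regular layout, so the lines that indicate a loop-down or failover condition can be filtered out of a longer log. The sketch below assumes that layout (timestamp, host, task, level, unit, text) and uses a hypothetical function name, failover_events. The marker strings are taken from the sample messages and are not an exhaustive list.

```python
import re

# Layout assumed from the sample lines: "Jan 29 14:05:18 t3b0 ISR1[1]: W: u2d4 ..."
T3_LINE_RE = re.compile(
    r"^(?P<stamp>\w{3} +\d+ \d\d:\d\d:\d\d) (?P<host>\S+) (?P<task>\S+): "
    r"(?P<level>[A-Z]): (?P<unit>\S+) (?P<text>.*)$"
)

# Substrings seen in the figure; matched case-insensitively.
MARKERS = ("LOOP DOWN", "FAILOVER")

SAMPLE = """\
Jan 29 14:12:58 t3b0 ISR1[2]: W: u2ctr ISP2100[2] Received LOOP DOWN async event
Jan 29 14:13:32 t3b0 MNXT[1]: W: u1ctr starting lun 1 failover
Jan 29 14:05:18 t3b0 ISR1[1]: W: u2d4 SVD_PATH_FAILOVER: path_id = 0
"""


def failover_events(log_text):
    """Return (timestamp, unit, text) for lines that mention a marker."""
    events = []
    for line in log_text.splitlines():
        match = T3_LINE_RE.match(line.strip())
        if match and any(m in match.group("text").upper() for m in MARKERS):
            events.append(
                (match.group("stamp"), match.group("unit"), match.group("text"))
            )
    return events


for stamp, unit, text in failover_events(SAMPLE):
    print(stamp, unit, text)
```

Wrapped lines, such as the one ending in "async event" in the figure, need to be rejoined before they are passed to this sketch.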


▼ To Verify the Data Host

A problem in the A4/B4 FC Link appears differently on the data host, depending on

whether the array is a Sun StorEdge 3900 series or a Sun StorEdge 6900 series device.

Sun StorEdge 3900 Series

In a Sun StorEdge 3900 series device, the data host multipathing software is

responsible for initiating the failover and reports it in /var/adm/messages, with

messages such as those shown in the Storage Automated Diagnostic Environment

email notifications.

The luxadm failover command is used to fail the Sun StorEdge T3+ array LUNs

back to the proper configuration after the failing FRU is replaced. This command is

issued from the data host.

Sun StorEdge 6900 Series

In a Sun StorEdge 6900 series device, the virtualization engine pairs handle the

failover and the failover is not noted on the data host. All paths remain

ONLINE and ACTIVE.

The mpdrive failback command is used to fail back, and is issued from the Storage

Service Processor.

Note – In the event of a complete sw1b or sw2b failure in a Sun StorEdge 6900

series configuration, the virtualization engine pairs handle the failover. In addition,

the multipathing software notes a path failure on the data host, Sun StorEdge Traffic

Manager or VxDMP takes the entire path that was connected to the failed switch

offline, and the ISL ports on the surviving switch go offline as well.


To verify the failover, use luxadm display. The failed path is marked OFFLINE, as

shown in CODE EXAMPLE 3-7.

CODE EXAMPLE 3-7 Failed Path marked OFFLINE

# luxadm display /dev/rdsk/c26t60020F200000644>

DEVICE PROPERTIES for disk: /dev/rdsk/c26t60020F20000064433C3352A60003E82Fd0s2

Status(Port A): O.K.

Status(Port B): O.K.

Vendor: SUN

Product ID: T300

WWN(Node): 50020f2000006443

WWN(Port A): 50020f2300006355

WWN(Port B): 50020f2300006443

Revision: 0118

Serial Num: Unsupported

Unformatted capacity: 488642.000 MBytes

Write Cache: Enabled

Read Cache: Enabled

Minimum prefetch: 0x0

Maximum prefetch: 0x0

Device Type: Disk device

Path(s):

/dev/rdsk/c26t60020F20000064433C3352A60003E82Fd0s2

/devices/scsi_vhci/ssd@g60020f20000064433c3352a60003e82f:c,raw

Controller /devices/pci@a,2000/pci@2/SUNW,qlc@5/fp@0,0

Device Address 50020f2300006355,1

Class primary

State OFFLINE

Controller /devices/pci@e,2000/pci@2/SUNW,qlc@5/fp@0,0

Device Address 50020f2300006443,1

Class secondary

State ONLINE


Note – This type of error may also cause the device to show up "unusable" in

cfgadm, as shown in CODE EXAMPLE 3-8.
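The Path(s) section of the luxadm display output in CODE EXAMPLE 3-7 can be scanned for paths whose State is OFFLINE. The sketch below assumes the four-line Controller, Device Address, Class, State layout shown in that example. The function names parse_paths and offline_paths are hypothetical, and the sample text is an excerpt of the example output.

```python
SAMPLE = """\
Path(s):
/dev/rdsk/c26t60020F20000064433C3352A60003E82Fd0s2
/devices/scsi_vhci/ssd@g60020f20000064433c3352a60003e82f:c,raw
Controller /devices/pci@a,2000/pci@2/SUNW,qlc@5/fp@0,0
Device Address 50020f2300006355,1
Class primary
State OFFLINE
Controller /devices/pci@e,2000/pci@2/SUNW,qlc@5/fp@0,0
Device Address 50020f2300006443,1
Class secondary
State ONLINE
"""


def parse_paths(text):
    """Group the Controller/Device Address/Class/State lines into dicts."""
    paths = []
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("Controller "):
            # A Controller line opens a new path entry.
            current = {"controller": line.split(None, 1)[1]}
            paths.append(current)
        elif current is not None:
            for key in ("Device Address", "Class", "State"):
                if line.startswith(key + " "):
                    name = key.lower().replace(" ", "_")
                    current[name] = line[len(key):].strip()
    return paths


def offline_paths(text):
    """Return only the paths whose State is OFFLINE."""
    return [p for p in parse_paths(text) if p.get("state") == "OFFLINE"]


for path in offline_paths(SAMPLE):
    print(path["class"], path["controller"], path["device_address"])
```

On the sample, the primary path through the first controller is the one reported, which matches the path marked OFFLINE in the example.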


CODE EXAMPLE 3-8 Failed Path marked “unusable”

# cfgadm -al

Ap_Id Type Receptacle Occupant Condition

ac0:bank0 memory connected configured ok

ac0:bank1 memory empty unconfigured unknown

c1 scsi-bus connected configured unknown

c16 scsi-bus connected unconfigured unknown

c18 scsi-bus connected unconfigured unknown

c19 scsi-bus connected unconfigured unknown

c1::dsk/c1t6d0 CD-ROM connected configured unknown

c20 fc-private connected unconfigured unknown

c21 fc-fabric connected configured unknown

c21::50020f2300006355 disk connected configured unusable
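The cfgadm -al listing above can be filtered for attachment points whose Condition column reads unusable. This is a minimal sketch, assuming each row has exactly the five whitespace-separated columns shown in the header (Ap_Id, Type, Receptacle, Occupant, Condition). The function name unusable_attachment_points is hypothetical, and the sample rows are copied from the listing.

```python
SAMPLE = """\
Ap_Id Type Receptacle Occupant Condition
c20 fc-private connected unconfigured unknown
c21 fc-fabric connected configured unknown
c21::50020f2300006355 disk connected configured unusable
"""


def unusable_attachment_points(text):
    """Return the Ap_Id of every row whose Condition is 'unusable'."""
    result = []
    for line in text.splitlines():
        fields = line.split()
        # Skip the header row and anything that is not a five-column row.
        if len(fields) != 5 or fields[0] == "Ap_Id":
            continue
        if fields[4] == "unusable":
            result.append(fields[0])
    return result


print(unusable_attachment_points(SAMPLE))
```

The part of the Ap_Id after the double colon is the port WWN of the affected device, which can be compared with the Device Address reported for the OFFLINE path by luxadm display.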

FRU Tests Available for the A4/B4 FC Link Segment

■ The switchtest can be run only from the Storage Service Processor.

■ The linktest can isolate the switch and the GBIC on the switch. It

cannot isolate the cable or the Sun StorEdge T3+ array controller.

▼ To Isolate the A4/B4 FC Link

1. Quiesce the I/O on the A4/B4 FC link path.

2. Run linktest from the Storage Automated Diagnostic Environment GUI to

isolate suspected failing components.

Alternatively, follow these steps:

1. Quiesce the I/O on the A4/B4 FC link path.

2. Run switchtest to test the entire link (re-create the problem).

3. Break the connection by uncabling the link.

4. Insert the loopback connector into the switch port.


5. Rerun switchtest.

a. If switchtest fails, replace the GBIC and rerun switchtest.

b. If the test fails again, replace the switch.