Solarflare Server Adapter

Solarflare® Server Adapter User Guide

• Introduction...Page 1

• Installation...Page 18

• Solarflare Adapters on Linux...Page 39

• Solarflare Adapters on Windows...Page 109

• Solarflare Adapters on VMware...Page 236

• Solarflare Adapters on Solaris...Page 263

• SR-IOV Virtualization Using KVM...Page 307

• SR-IOV Virtualization for XenServer...Page 324

• Solarflare Adapters on Mac OS X...Page 334

• Solarflare Boot ROM Agent...Page 344

User Guide

Information in this document is subject to change without notice.

© 2008-2014 Solarflare Communications Inc. All rights reserved.

Trademarks used in this text are registered trademarks of Solarflare® Communications Inc; Adobe is

a trademark of Adobe Systems. Microsoft® and Windows® are registered trademarks of Microsoft

Corporation.

Linux® is the registered trademark of Linus Torvalds in the U.S. and other countries.

Other trademarks and trade names may be used in this document to refer to either the entities

claiming the marks and names or their products. Solarflare Communications Inc. disclaims any

proprietary interest in trademarks and trade names other than its own.

SF-103837-CD

Last revised: July 2014

Issue 11

Issue 11 © Solarflare Communications 2014 i

Table of Contents

Table of Contents. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . ii

Chapter 1: Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.1 Virtual NIC Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.2 Product Specifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.3 Software Driver Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.4 Solarflare AppFlex™ Technology Licensing.. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.5 Open Source Licenses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

1.6 Support and Download . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.7 Regulatory Information. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.8 Regulatory Approval . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Chapter 2: Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

2.1 Solarflare Network Adapter Products . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

2.2 Fitting a Full Height Bracket (optional) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

2.3 Inserting the Adapter in a PCI Express (PCIe) Slot . . . . . . . . . . . . . . . . . . . . . . . . . . 21

2.4 Attaching a Cable (RJ-45) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

2.5 Attaching a Cable (SFP+) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

2.6 Supported SFP+ Cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

2.7 Supported SFP+ 10G SR Optical Transceivers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

2.8 Supported SFP+ 10G LR Optical Transceivers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.9 QSFP+ Transceivers and Cables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

2.10 Supported SFP 1000BASE-T Transceivers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

2.11 Supported 1G Optical Transceivers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.12 Supported Speed and Mode. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

2.13 Configure QSFP+ Adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

2.14 LED States. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

2.15 Solarflare Mezzanine Adapters: SFN5812H and SFN5814H. . . . . . . . . . . . . . . . . . 34

2.16 Solarflare Mezzanine Adapter SFN6832F-C61 . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

2.17 Solarflare Mezzanine Adapter SFN6832F-C62 . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

2.18 Solarflare Precision Time Synchronization Adapters . . . . . . . . . . . . . . . . . . . . . . . 38

2.19 Solarflare SFA6902F ApplicationOnload™ Engine . . . . . . . . . . . . . . . . . . . . . . . . . 38

Chapter 3: Solarflare Adapters on Linux . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

3.1 System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

3.2 Linux Platform Feature Set . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

3.3 Solarflare RPMs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

3.4 Installing Solarflare Drivers and Utilities on Linux . . . . . . . . . . . . . . . . . . . . . . . . . . 43

3.5 Red Hat Enterprise Linux Distributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

3.6 SUSE Linux Enterprise Server Distributions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

3.7 Unattended Installations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

3.8 Unattended Installation - Red Hat Enterprise Linux . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.9 Unattended Installation - SUSE Linux Enterprise Server . . . . . . . . . . . . . . . . . . . . . 48

3.10 Hardware Timestamps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

3.11 Configuring the Solarflare Adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

3.12 Setting Up VLANs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

3.13 Setting Up Teams. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

3.14 Running Adapter Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

3.15 Running Cable Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

3.16 Linux Utilities RPM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

3.17 Configuring the Boot ROM with sfboot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

3.18 Upgrading Adapter Firmware with Sfupdate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

3.19 License Install with sfkey . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

3.20 Performance Tuning on Linux. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

3.21 Module Parameters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

3.22 Linux ethtool Statistics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

Chapter 4: Solarflare Adapters on Windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

4.1 System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

4.2 Windows Feature Set . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

4.3 Installing the Solarflare Driver Package on Windows. . . . . . . . . . . . . . . . . . . . . . . 112

4.4 Adapter Drivers Only Installation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113

4.5 Full Solarflare Package Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

4.6 Install Drivers and Options From a Windows Command Prompt . . . . . . . . . . . . . 119

4.7 Unattended Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

4.8 Managing Adapters with SAM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 128

4.9 Managing Adapters Remotely with SAM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

4.10 Using SAM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 130

4.11 Using SAM to Configure Adapter Features . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 135

4.12 Segmentation Offload. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 142

4.13 Using SAM to Configure Teams and VLANs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

4.14 Using SAM to View Statistics and State Information . . . . . . . . . . . . . . . . . . . . . . 153

4.15 Using SAM to Run Adapter and Cable Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . 154

4.16 Using SAM for Boot ROM Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

4.17 Managing Firmware with SAM. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 166

4.18 Configuring Network Adapter Properties in Windows. . . . . . . . . . . . . . . . . . . . . 167

4.19 Windows Command Line Tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

4.20 Sfboot: Boot ROM Configuration Tool. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

4.21 Sfupdate: Firmware Update Tool. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 189

4.22 Sfteam: Adapter Teaming and VLAN Tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193

4.23 Sfcable: Cable Diagnostics Tool . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

4.24 Sfnet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 203

4.25 Completion codes (%errorlevel%) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

4.26 Teaming and VLANs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

4.27 Performance Tuning on Windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

4.28 Windows Event Log Error Messages . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Chapter 5: Solarflare Adapters on VMware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

5.1 System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 236

5.2 VMware Feature Set . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 237

5.3 Installing Solarflare Drivers and Utilities on VMware. . . . . . . . . . . . . . . . . . . . . . . 238

5.4 Configuring Teams. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

5.5 Configuring VLANs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

5.6 Running Adapter Diagnostics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 241

5.7 Configuring the Boot ROM with Sfboot. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 242

5.8 Upgrading Adapter Firmware with Sfupdate . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 252

5.9 Performance Tuning on VMware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 255

Chapter 6: Solarflare Adapters on Solaris . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

6.1 System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

6.2 Solaris Platform Feature Set . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 264

6.3 Installing Solarflare Drivers. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 265

6.4 Unattended Installation Solaris 10. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 266

6.5 Unattended Installation Solaris 11. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 267

6.6 Configuring the Solarflare Adapter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 268

6.7 Setting Up VLANs. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 270

6.8 Solaris Utilities Package . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 270

6.9 Configuring the Boot ROM with sfboot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 270

6.10 Upgrading Adapter Firmware with Sfupdate . . . . . . . . . . . . . . . . . . . . . . . . . . . . 281

6.11 Performance Tuning on Solaris . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

6.12 Module Parameters. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 292

6.13 Kernel and Network Adapter Statistics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 294

Chapter 7: SR-IOV Virtualization Using KVM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 307

7.1 Supported Platforms and Adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 307

7.2 Linux KVM SR-IOV . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

7.3 Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 311

7.4 Configuration Red Hat 6.1 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 314

7.5 Configuration Red Hat 6.2 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 317

7.6 Performance Tuning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 320

7.7 Migration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 322

Chapter 8: SR-IOV Virtualization for XenServer. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 324

8.1 Supported Platforms and Adapters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 324

8.2 XenServer6 SR-IOV . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 325

8.3 Installation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 328

8.4 Configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 329

8.5 Performance Tuning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 330

Chapter 9: Solarflare Adapters on Mac OS X . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 334

9.1 System Requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 334

9.2 Supported Hardware Platforms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 334

9.3 Mac OS X Platform Feature Set. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

9.4 Thunderbolt . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

9.5 Driver Install. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 335

9.6 Interface Configuration. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 338

9.7 Tuning. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 339

9.8 Driver Properties via sysctl . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 339

9.9 Firmware Update. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 340

9.10 Performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 342

Chapter 10: Solarflare Boot ROM Agent . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 344

10.1 Configuring the Solarflare Boot ROM Agent . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 344

10.2 PXE Support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 345

10.3 iSCSI Boot . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

10.4 Configuring the iSCSI Target . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

10.5 Configuring the Boot ROM . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 349

10.6 DHCP Server Setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 356

10.7 Installing an Operating System to an iSCSI target. . . . . . . . . . . . . . . . . . . . . . . . . 358

10.8 Default Adapter Settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 367

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 368

Chapter 1: Introduction

This is the User Guide for Solarflare® Server Adapters. This chapter covers the following topics:

• Virtual NIC Interface...Page 1

• Advanced Features and Benefits...Page 2

• Product Specifications...Page 4

• Software Driver Support...Page 12

• Solarflare AppFlex™ Technology Licensing...Page 12

• Open Source Licenses...Page 13

• Support and Download...Page 14

• Regulatory Information...Page 14

• Regulatory Approval...Page 15

NOTE: Throughout this guide the term Onload refers to both OpenOnload® and EnterpriseOnload® unless otherwise stated. Users of Onload should refer to the Onload User Guide, SF-104474-CD, which describes how to download and install the Onload distribution, and how to accelerate and tune applications with Onload to achieve minimum latency and maximum throughput.

1.1 Virtual NIC Interface

Solarflare’s VNIC architecture provides the key to efficient server I/O and is flexible enough to be

applied to multiple server deployment scenarios. These deployment scenarios include:

• Kernel Driver – This deployment uses an instance of a VNIC per CPU core for standard operating

system drivers. This allows network processing to continue over multiple CPU cores in parallel.

The virtual interface provides a performance-optimized path for the kernel TCP/IP stack and

contention-free access from the driver, resulting in extremely low latency and reduced CPU

utilization.

• Accelerated Virtual I/O – The second deployment scenario greatly improves I/O for virtualized

platforms. The VNIC architecture can provide a VNIC per Virtual Machine, giving over a

thousand protected interfaces to the host system, granting any virtualized (guest) operating

system direct access to the network hardware. Solarflare's hybrid SR-IOV technology, unique to

Solarflare Ethernet controllers, is the only way to provide bare-metal I/O performance to

virtualized guest operating systems whilst retaining the ability to live migrate virtual machines.

• OpenOnload™ – The third deployment scenario aims to leverage the host CPU(s) to full capacity, minimizing software overheads by using a VNIC per application to provide a kernel bypass solution. Solarflare has created both an open-source and an Enterprise-class high-performance application accelerator that delivers lower and more predictable latency and higher message rates for TCP and UDP-based applications, all with no need to modify applications or change the network infrastructure. To learn more about the open source OpenOnload project or EnterpriseOnload, download the Onload user guide (SF-104474-CD) or contact your reseller.

Advanced Features and Benefits

Virtual NIC support The core of Solarflare technology. Protected VNIC interfaces can

be instantiated for each running guest operating system or

application, giving it a direct pipeline to the Ethernet network.

This architecture provides the most efficient way to maximize

network and CPU efficiency. The Solarflare Ethernet controller

supports up to 1024 vNIC interfaces per port.

On IBM System p servers equipped with Solarflare adapters,

each adapter is assigned to a single Logical Partition (LPAR)

where all VNICs are available to the LPAR.

PCI Express Implements PCI Express 3.0.

High Performance Support for 40G Ethernet interfaces and a new internal

datapath micro architecture.

Hardware Switch Fabric Full hardware switch fabric in silicon, capable of steering any flow based on Layer 2, Layer 3 or application-level protocols between physical and virtual interfaces. Supports an open software-defined network control plane with full PCI-IOV virtualization acceleration for high-performance guest operating systems and virtual applications.

Improved flow processing Dedicated parsing, filtering, traffic shaping and flow steering engines operate flexibly, combining a full hardware data plane with a software-based control plane.

TX PIO Transmit programmed input/output (TX PIO) is the direct transfer of data to the adapter by the CPU, without a DMA transaction. As an alternative to the usual bus master DMA method, TX PIO improves latency and is especially useful for smaller packets.

Multicast Replication Received multicast packets are replicated in hardware and

delivered to multiple receive queues.

Sideband management NCSI RMII interface for base board management integration.

SMBus interface for legacy base board management integration.

PCI Single-Root-IOV (SR-IOV) capable 127 virtual functions per port.

Flexible deployment of 1024 channels between Virtual and Physical Functions.

Supports Alternative Routing-ID Interpretation (ARI).

SR-IOV is not supported for Solarflare adapters on IBM System p servers.

10-gigabit Ethernet Supports the ability to design a cost effective, high performance

10 Gigabit Ethernet solution.

Receive Side Scaling (RSS) IPv4 and IPv6 RSS raises the utilization levels of multi-core

servers dramatically by distributing I/O load across all CPUs and

cores.

Stateless offloads Hardware-based TCP segmentation and reassembly offloads, plus VLAN, VXLAN and FCoE offloads.

Transmit rate pacing (per queue) Provides a mechanism for enforcing bandwidth quotas across all guest operating systems. Software re-programmable on the fly to allow for adjustment as congestion increases on the network.

Jumbo frame support Support for up to 9216 byte jumbo frames.

MSI-X support Support for 1024 MSI-X interrupts enables higher levels of performance.

Can also work with MSI or legacy line-based interrupts.

Ultra low latency Cut-through architecture. < 7µs end-to-end latency with standard kernel drivers, < 3µs with Onload drivers.

Remote boot Support for PXE boot 2.1 and iSCSI Boot provides flexibility in

cluster design and diskless servers (see Solarflare Boot ROM

Agent on page 344).

Network boot is not supported for Solarflare adapters on IBM

System p servers.

MAC address filtering Enables the hardware to steer packets based on the MAC

address to a VNIC.

Hardware timestamps The Solarflare Flareon™ SFN7000 series adapters can support

hardware timestamping for all received network packets including PTP.

The SFN5322F and SFN6322F adapters can generate hardware

timestamps of PTP packets.
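
The Receive Side Scaling (RSS) entry in the table above describes distributing I/O load across CPUs and cores. As a rough illustration only, the Python sketch below shows how an RSS-style scheme can map a flow to a receive queue using a Toeplitz hash and an indirection table. This guide does not state the hash function, key or table size used by the adapter, so the key (EXAMPLE_KEY), the 128-entry table and the function names are assumptions made for this example and are not the Solarflare implementation.

```python
import socket
import struct

# Arbitrary 40-byte key chosen for illustration only (an assumption, not a
# key used by any real adapter or driver).
EXAMPLE_KEY = bytes(range(1, 41))


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """Return the 32-bit Toeplitz hash of data under key.

    For every set bit in data (most significant bit first), XOR in the
    32-bit window of the key that starts at the same bit offset.
    The key must be at least len(data) + 4 bytes long.
    """
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    result = 0
    for i, byte in enumerate(data):
        for bit in range(8):
            if byte & (0x80 >> bit):
                shift = key_bits - 32 - (i * 8 + bit)
                result ^= (key_int >> shift) & 0xFFFFFFFF
    return result


def flow_to_queue(src_ip, dst_ip, src_port, dst_port, num_queues,
                  key=EXAMPLE_KEY):
    """Map an IPv4 TCP/UDP flow to a receive queue index (hypothetical helper)."""
    # Hash input: source address, destination address, source port,
    # destination port, all in network byte order.
    data = (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
            + struct.pack("!HH", src_port, dst_port))
    # Simple round-robin indirection table; the table size is an assumption.
    table = [i % num_queues for i in range(128)]
    return table[toeplitz_hash(key, data) & 0x7F]


if __name__ == "__main__":
    queue = flow_to_queue("10.0.0.1", "10.0.0.2", 40000, 80, num_queues=8)
    print("flow is steered to receive queue", queue)
```

Because the hash depends only on the flow's addresses and ports, every packet of a given flow lands on the same queue, which preserves packet ordering within the flow while different flows spread across cores.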

1.2 Product Specifications

Solarflare Flareon™ Network Adapters

Solarflare Flareon™ Ultra SFN7142Q Dual-Port 40GbE QSFP+ PCIe 3.0 Server I/O Adapter

Part number SFN7142Q

Controller silicon SFC9140

Power 13W typical

PCI Express 8 lanes Gen 3 (8.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes (factory enabled)

PTP and hardware timestamps Enabled by installing AppFlex license.

1PPS Optional bracket and cable assembly - not factory installed.

SR-IOV Yes

Network ports 2 x QSFP+ (40G/10G)

Solarflare Flareon™ Ultra SFN7322F Dual-Port 10GbE PCIe 3.0 Server I/O Adapter

Part number SFN7322F

Controller silicon SFC9120

Power 5.9W typical

PCI Express 8 lanes Gen 3 (8.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes (factory enabled)

PTP and hardware timestamps Yes (factory enabled)

1PPS Optional bracket and cable assembly - not factory installed.

SR-IOV Yes

Network ports 2 x SFP+ (10G/1G)

Solarflare Flareon™ Ultra SFN7122F Dual-Port 10GbE PCIe 3.0 Server I/O Adapter

Part number SFN7122F

Controller silicon SFC9120

Power 5.9W typical

PCI Express 8 lanes Gen 3 (8.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes (factory enabled)

PTP and hardware timestamps AppFlex™ license required

1PPS Optional bracket and cable assembly - not factory installed.

SR-IOV Yes

Network ports 2 x SFP+ (10G/1G)

Solarflare Flareon™ SFN7002F Dual-Port 10GbE PCIe 3.0 Server I/O Adapter

Part number SFN7002F

Controller silicon SFC9120

Power 5.9W typical

PCI Express 8 lanes Gen 3 (8.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload AppFlex™ license required

PTP and hardware timestamps AppFlex™ license required

1PPS Optional bracket and cable assembly - not factory installed.

SR-IOV Yes

Network ports 2 x SFP+ (10G/1G)

Solarflare Onload Network Adapters

Solarflare SFN5121T Dual-Port 10GBASE-T Server Adapter

Part number SFN5121T

Controller silicon SFL9021

Power 12.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes

Network ports 2 x 10GBASE-T (10G/1G/100M)

Solarflare SFN5122F Dual-Port 10G SFP+ Server Adapter

Part number SFN5122F

Controller silicon SFC9020

Power 4.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes

Network ports 2 x SFP+ (10G/1G)

Solarflare SFN6122F Dual-Port 10GbE SFP+ Server Adapter

Part number SFN6122F

Controller silicon SFC9020

Power 5.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes¹

Network ports 2 x SFP+ (10G/1G)

Regulatory Product Code S6102

1. SR-IOV is not supported for Solarflare adapters on IBM System p servers.

Solarflare SFN6322F Dual-Port 10GbE SFP+ Server Adapter

Part number SFN6322F

Controller silicon SFC9020

Power 5.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes

Network ports 2 x SFP+ (10G/1G)

Solarflare SFA6902F Dual-Port 10GbE SFP+ ApplicationOnload™ Engine

Part number SFA6902F

Controller silicon SFC9020

Power 25W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes

Network ports 2 x SFP+ (10G/1G)

Solarflare Performant Network Adapters

Solarflare SFN5161T Dual-Port 10GBASE-T Server Adapter

Part number SFN5161T

Controller silicon SFL9021

Power 12.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s)

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload No

SR-IOV Yes

Network ports 2 x 10GBASE-T (10G/1G/100M)

Solarflare SFN5162F Dual-Port 10G SFP+ Server Adapter

Part number SFN5162F

Controller silicon SFC9020

Power 4.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s)

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload No

SR-IOV Yes¹

Network ports 2 x SFP+ (10G/1G)

1. SR-IOV is not supported for Solarflare adapters on IBM System p servers.

Solarflare Mezzanine Adapters

Solarflare SFN5812H Dual-Port 10G Ethernet Mezzanine Adapter

Part number SFN5812H

Controller silicon SFC9020

Power 3.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes

Ports 2 x 10GBASE-KX4 backplane transmission

Solarflare SFN5814H Quad-Port 10G Ethernet Mezzanine Adapter

Part number SFN5814H

Controller silicon 2 x SFC9020

Power 7.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes

Ports 4 x 10GBASE-KX4 backplane transmission

Solarflare SFN6832F Dual-Port 10GbE SFP+ Mezzanine Adapter

Part number SFN6832F-C61 for DELL PowerEdge C6100 series

SFN6832F-C62 for DELL PowerEdge C6200 series

Controller silicon SFC9020

Power 5.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes

Ports 2 x SFP+ (10G/1G)

Regulatory Product Code S6930

Solarflare SFN6822F Dual-Port 10GbE SFP+ FlexibleLOM Onload Server Adapter

Part number SFN6822F

Controller silicon SFC9020

Power 5.9W typical

PCI Express 8 lanes Gen2 (5.0GT/s), 127 SR-IOV virtual functions per port

Virtual NIC support 1024 vNIC interfaces per port

Supports OpenOnload Yes

SR-IOV Yes

Ports 2 x SFP+ (10G/1G)

1.3 Software Driver Support

• Windows 7.

• Windows 8 and 8.1.

• Windows® Server 2008 R2 release.

• Windows® Server 2012 - including R2 release.

• Microsoft® Hyper-V™ Server 2008 R2.

• Linux® 2.6 and 3.x kernels (32 bit and 64 bit) for the following distributions: RHEL 5, 6, 7 and MRG; SLES 10, 11 and SLERT.

• VMware® ESX™ 5.0 and ESXi™ 5.1, vSphere™ 4.0 and vSphere™ 4.1.

• Citrix XenServer™ 5.6, 6.0 and Direct Guest Access.

• Linux® KVM.

• Solaris™ 10 updates 8, 9 and 10 and Solaris™ 11 (GLDv3).


• Mac OS X Snow Leopard 10.6.8 (32 bit and 64 bit), OS X Lion 10.7.0 and later releases, OS X

Mountain Lion 10.8.0 and later, OS X Mavericks 10.9.

Solarflare SFN5162F and SFN6122F adapters are supported on the IBM POWER architecture (PPC64)

running RHEL 6.4 on IBM System p servers.

The Solarflare accelerated network middleware, OpenOnload and EnterpriseOnload, is supported

on all Linux variants listed above, and is available for all Solarflare Onload network adapters.

Solarflare is not aware of any issues preventing OpenOnload installation on other Linux variants such as Ubuntu, Gentoo, Fedora and Debian.
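The Linux entry in the list above names the 2.6 and 3.x kernel series. A minimal sketch of a pre-installation check against that statement is shown below. It inspects only the kernel release string, not the distribution, and the helper name is hypothetical rather than part of any Solarflare package.

```python
import platform

# Kernel series named in the supported list: Linux 2.6 and 3.x.
SUPPORTED_KERNEL_PREFIXES = ("2.6.", "3.")


def kernel_in_supported_series(release):
    """Return True if a kernel release string belongs to the 2.6 or 3.x series."""
    return release.startswith(SUPPORTED_KERNEL_PREFIXES)


if __name__ == "__main__":
    release = platform.release()
    print(release, "->", kernel_in_supported_series(release))
```

A passing check does not confirm that the distribution itself is on the supported list; it only filters out kernel series the guide does not mention.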

1.4 Solarflare AppFlex™ Technology Licensing.

Solarflare AppFlex technology allows Solarflare server adapters to be selectively configured to

enable on-board applications. AppFlex licenses are required to enable selected functionality on the

Solarflare Flareon™ adapters and the AOE ApplicationOnload™ Engine.

Customers can obtain access to AppFlex applications via their Solarflare sales channel by obtaining

the corresponding AppFlex authorization code. The authorization code allows the customer to

generate licenses at the MyAppFlex page at https://support.solarflare.com/myappflex.

The sfkey utility application is used to install the generated license key file on selected adapters. For

detailed instructions for sfkey and license installation refer to License Install with sfkey on page 75.


1.5 Open Source Licenses

1.5.1 Solarflare Boot Manager

The Solarflare Boot Manager is installed in the adapter's flash memory. This program is free

software; you can redistribute it and/or modify it under the terms of the GNU General Public License

as published by the Free Software Foundation; either version 2 of the License, or (at your option)

any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without

even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

GNU General Public License for more details.

The latest source code for the Solarflare Boot Manager can be downloaded from https://support.solarflare.com/. If you require an earlier version of the source code, please e-mail support@solarflare.com.

1.5.2 Controller Firmware

The firmware running on the SFC9xxx controller includes a modified version of libcoroutine. This

software is free software published under a BSD license reproduced below:

Copyright (c) 2002, 2003 Steve Dekorte

All rights reserved.

Redistribution and use in source and binary forms, with or without modification, are permitted

provided that the following conditions are met:

Redistributions of source code must retain the above copyright notice, this list of conditions and the

following disclaimer.

Redistributions in binary form must reproduce the above copyright notice, this list of conditions and

the following disclaimer in the documentation and/or other materials provided with the

distribution.

Neither the name of the author nor the names of other contributors may be used to endorse or

promote products derived from this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY

EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES

OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT

SHALL THE REGENTS OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO,

PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR

BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY

WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.


1.6 Support and Download

Solarflare network drivers, RPM packages and documentation are available for download from

https://support.solarflare.com/.

Software and documentation for OpenOnload are available from www.openonload.org.

1.7 Regulatory Information

Warnings

Do not install the Solarflare network adapter in hazardous areas where highly combustible or

explosive products are stored or used without taking additional safety precautions. Do not expose

the Solarflare network adapter to rain or moisture.

The Solarflare network adapter is a Class III SELV product intended only to be powered by a certified

limited power source.

The equipment has been tested and found to comply with the limits for a Class B digital device,

pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection

against harmful interference in a residential installation. The equipment generates, uses and can

radiate radio frequency energy and, if not installed and used in accordance with the instructions,

may cause harmful interference to radio communications. However, there is no guarantee that

interference will not occur in a particular installation.


If the equipment does cause harmful interference to radio or television reception, which can be

determined by turning the equipment off and on, the user is encouraged to try to correct the

interference by one or more of the following measures:

• Reorient or relocate the receiving antenna.

• Increase the separation between the equipment and receiver.

• Connect the equipment into an outlet on a circuit different from that to which the receiver is

connected.

• Consult the dealer or an experienced radio/TV technician for help.

Changes or modifications not expressly approved by Solarflare Communications, the party

responsible for FCC compliance, could void the user's authority to operate the equipment.

This Class B digital apparatus complies with Canadian ICES-003.

Cet appareil numérique de la classe B est conforme à la norme NMB-003 du Canada.

Underwriters Laboratories Inc. ('UL') has not tested the performance or reliability of the security or signaling aspects of this product. UL has only tested for fire, shock or casualty hazards as outlined in UL's Standard for Safety UL 60950-1. UL Certification does not cover the performance or

reliability of the security or signaling aspects of this product. UL makes no representations,

warranties or certifications whatsoever regarding the performance or reliability of any security or

signaling related functions of this product.


Laser Devices

The laser safety of the equipment has been verified using the following certified laser device module

(LDM):

| Manufacturer | Model | CDRH Accession No | Mark of conformity | File No |
| --- | --- | --- | --- | --- |
| Avago Technologies | AFBR-703SDZ | 9720151-072 | TUV | R72071411 |
| Finisar Corporation | FTLX8571D3BCL | 9210176-094 | TUV | R72080250 |

When installed in a 10Gb Ethernet network interface card from the Solarflare SFN5000, SFN6000 or SFN7000 series, the laser emission levels remain under Class I limits as specified in the FDA regulations for lasers, 21 CFR Part 1040.

The decision on which LDMs to use is made by the installer. For example, equipment may use one of several different LDMs depending on the path length of the laser communication signal. This equipment is not basic consumer ITE.

The equipment is installed and maintained by qualified staff from the end user communications company or subcontractor of the end user organization. The end product user and/or installer are solely responsible for ensuring that the correct devices are utilized in the equipment and that the equipment with LDMs installed complies with applicable laser safety requirements.

1.8 Regulatory Approval

The information in this section is applicable to SFN5121T and SFN5162F Solarflare network adapters:

| Category | Specification | Details |
| --- | --- | --- |
| EMC | Europe | BS EN 55022:2006; BS EN 55024:1998 +A1:2001 +A2:2003 |
| EMC | US | FCC Part 15 Class B |
| EMC | Canada | ICES 003/NMB-003 Class B |
| Safety¹ | Europe | BS EN 60950-1:2006 +A11:2009 |
| Safety¹ | US | UL 60950-1 2nd Ed. |
| Safety¹ | Canada | CSA C22.2 60950-1-07 2nd Ed. |
| Safety¹ | CB | IEC 60950-1:2005 2nd Ed. |
| RoHS | Europe | Complies with EU directive 2002/95/EC |

1. The safety assessment has been concluded on this product as a component/sub-assembly only.

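Additional Regulatory Information for SFN5122F, SFN6122F, SFN6322F, SFA6902F, SFN7002F, SFN7122F, SFN7322F and SFN7142Q adapters.

Japan (VCCI): This is a Class A information technology product based on the standard of the Voluntary Control Council for Interference by Information Technology Equipment (VCCI). If this equipment is used in a domestic environment, radio interference may occur. When such interference occurs, the user may be required to take appropriate corrective action.

Taiwan: Warning to users: This is a Class A information product. When used in a residential environment it may cause radio frequency interference, in which case the user may be required to take appropriate countermeasures.

South Korea: Class A equipment (broadcasting and communication equipment for business use). This equipment has been registered for electromagnetic compatibility as business-use (Class A) equipment. Sellers and users should take note of this; the equipment is intended for use outside the home.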

| Category | Specification | Details |
| --- | --- | --- |
| EMC | Europe | BS EN 55022:2010 + A1:2007; BS EN 55024:1998 +A1:2001 +A2:2003 |
| EMC | US | FCC Part 15 Class B |
| EMC | Canada | ICES 003/NMB-003 Class B |
| EMC | Taiwan | CNS 13438:2006 Class B |
| EMC | Japan | VCCI Regulations V-3:2010 Class B |
| EMC | South Korea | KCC KN-22, KN-24 |
| EMC | Australia | AS/NZS CISPR 22:2009 |
| Safety¹ | Europe | BS EN 60950-1:2006 +A11:2009 |
| Safety¹ | US | UL 60950-1 2nd Ed. |
| Safety¹ | Canada | CSA C22.2 60950-1-07 2nd Ed. |
| Safety¹ | CB | IEC 60950-1:2005 2nd Ed. |
| RoHS | Europe | Complies with EU directive 2011/65/EU |

1. The safety assessment has been concluded on this product as a component/sub-assembly only.

Additional Regulatory Information for SFN5812H, SFN5814H and SFN6832F adapters.

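Japan (VCCI): This is a Class A information technology product based on the standard of the Voluntary Control Council for Interference by Information Technology Equipment (VCCI). If this equipment is used in a domestic environment, radio interference may occur. When such interference occurs, the user may be required to take appropriate corrective action.

Taiwan: Warning to users: This is a Class A information product. When used in a residential environment it may cause radio frequency interference, in which case the user may be required to take appropriate countermeasures.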


| Category | Specification | Details |
| --- | --- | --- |
| EMC | Europe | BS EN 55022:2006; BS EN 55024:1998 +A1:2001 +A2:2003 |
| EMC | US | FCC Part 15 Class B |
| EMC | Canada | ICES 003/NMB-003 Class B |
| EMC | Taiwan | CNS 13438:2006 Class A |
| EMC | Japan | VCCI Regulations V-3:2010 Class A |
| EMC | Australia | AS/NZS CISPR 22:2009 |
| Safety¹ | Europe | BS EN 60950-1:2006 +A11:2009 |
| Safety¹ | US | UL 60950-1 2nd Ed. |
| Safety¹ | Canada | CSA C22.2 60950-1-07 2nd Ed. |
| Safety¹ | CB | IEC 60950-1:2005 2nd Ed. |
| RoHS | Europe | Complies with EU directive 2002/95/EC |

1. The safety assessment has been concluded on this product as a component/sub-assembly only.

Chapter 2: Installation

This chapter covers the following topics:

• Solarflare Network Adapter Products...Page 19

• Fitting a Full Height Bracket (optional)...Page 20

• Inserting the Adapter in a PCI Express (PCIe) Slot...Page 21

• Attaching a Cable (RJ-45)...Page 22

• Attaching a Cable (SFP+)...Page 23

• Supported SFP+ Cables...Page 25

• Supported SFP+ 10G SR Optical Transceivers...Page 26

• Supported SFP+ 10G LR Optical Transceivers...Page 27

• Supported SFP 1000BASE-T Transceivers...Page 29

• Supported 1G Optical Transceivers...Page 30


• Supported Speed and Mode...Page 30

• Configure QSFP+ Adapter...Page 31

• LED States...Page 33

• Solarflare Mezzanine Adapters: SFN5812H and SFN5814H...Page 34

• Solarflare Mezzanine Adapter SFN6832F-C61...Page 35

• Solarflare Mezzanine Adapter SFN6832F-C62...Page 37

• Solarflare Precision Time Synchronization Adapters...Page 38

• Solarflare SFA6902F ApplicationOnload™ Engine...Page 38

CAUTION: Servers contain high voltage electrical components. Before removing the server cover,

disconnect the mains power supply to avoid the risk of electrocution.

Static electricity can damage computer components. Before handling computer components,

discharge static electricity from yourself by touching a metal surface, or wear a correctly fitted antistatic wrist band.


2.1 Solarflare Network Adapter Products

Solarflare Flareon™ adapters

- Solarflare Flareon Ultra SFN7142Q Dual-Port 40GbE PCIe 3.0 QSFP+ Server Adapter

- Solarflare Flareon Ultra SFN7322F Dual-Port 10GbE PCIe 3.0 Server I/O Adapter

- Solarflare Flareon Ultra SFN7122F Dual-Port 10GbE PCIe 3.0 Server I/O Adapter

- Solarflare Flareon SFN7002F Dual-Port 10GbE PCIe 3.0 Server I/O Adapter

Solarflare Onload adapters

- Solarflare SFN6322F Dual-Port 10GbE Precision Time Stamping Server Adapter

- Solarflare SFN6122F Dual-Port 10GbE SFP+ Server Adapter

- Solarflare SFA6902F Dual-Port 10GbE ApplicationOnload™ Engine

- Solarflare SFN5122F Dual-Port 10G SFP+ Server Adapter


- Solarflare SFN5121T Dual-Port 10GBASE-T Server Adapter

Solarflare Performant network adapters

- Solarflare SFN5161T Dual-Port 10GBASE-T Server Adapter

- Solarflare SFN5162F Dual-Port 10G SFP+ Server Adapter

Solarflare Mezzanine adapters

- Solarflare SFN5812H Dual-Port 10G Ethernet Mezzanine Adapter for IBM BladeCenter

- Solarflare SFN5814H Quad-Port 10G Ethernet Mezzanine Adapter for IBM BladeCenter

- Solarflare SFN6832F-C61 Dual-Port 10GbE SFP+ Mezzanine Adapter for DELL PowerEdge

C6100 series servers.

- Solarflare SFN6832F-C62 Dual-Port 10GbE SFP+ Mezzanine Adapter for DELL PowerEdge

C6200 series servers.

- Solarflare SFN6822F Dual-Port 10GbE SFP+ FlexibleLOM Onload Server Adapter

Solarflare network adapters can be installed on Intel/AMD x86 based 32 bit or 64 bit servers. The network adapter must be inserted into a PCIe x8 or PCIe x16 slot for maximum performance. Refer to PCI Express Lane Configurations on page 226 for details.

Solarflare SFN5162F and SFN6122F adapters are supported on the IBM POWER architecture (PPC64)

running RHEL 6.4 on IBM System p servers.


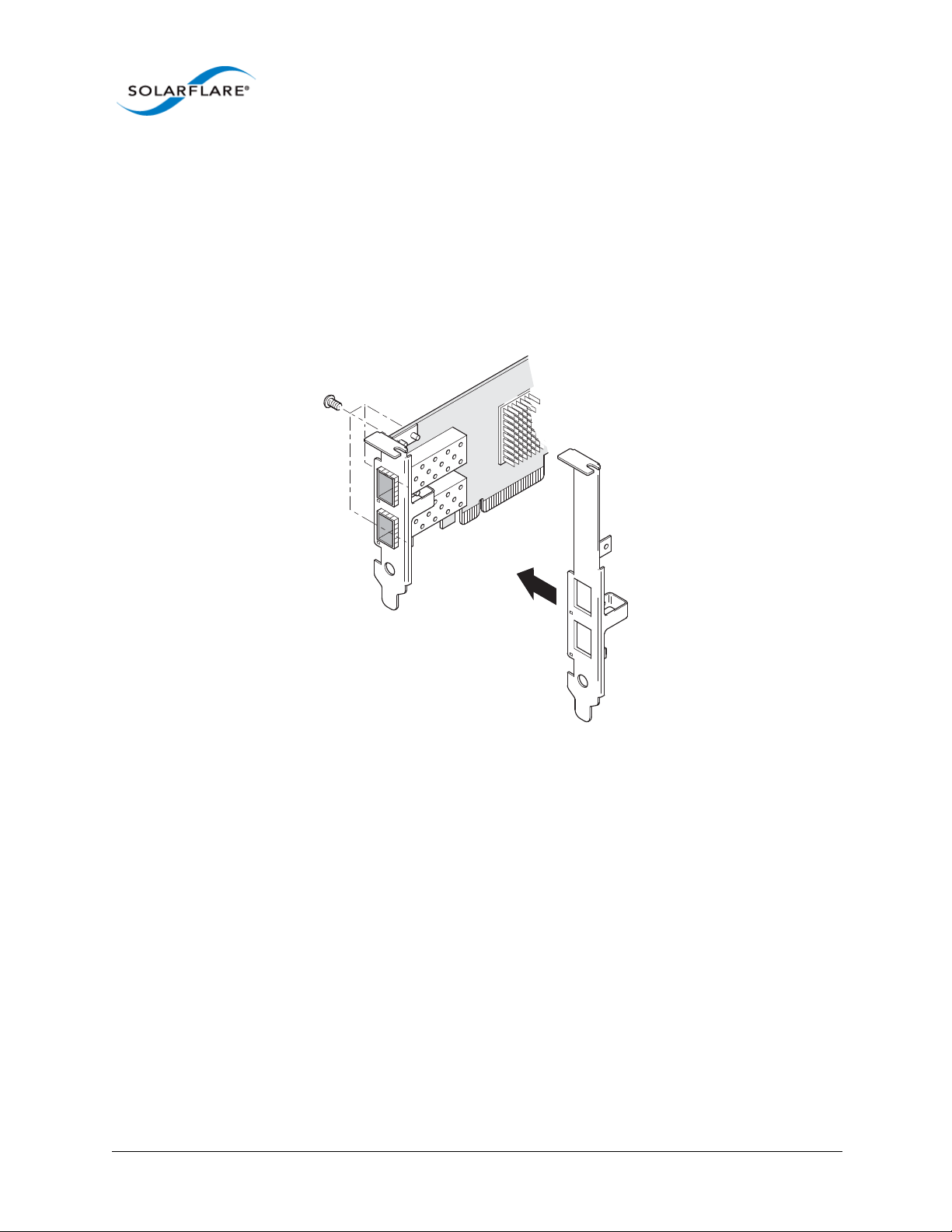

2.2 Fitting a Full Height Bracket (optional)

Solarflare adapters are supplied with a low-profile bracket fitted to the adapter. A full height bracket is also supplied for PCIe slots that require this type of bracket.

To fit a full height bracket to the Solarflare adapter:

1 From the back of the adapter, remove the screws securing the bracket.

2 Slide the bracket away from the adapter.

3 Taking care not to overtighten the screws, attach the full height bracket to the adapter.

2.3 Inserting the Adapter in a PCI Express (PCIe) Slot

1 Shut down the server and unplug it from the mains. Remove the server cover to access the

PCIe slots in the server.

2 Locate an 8-lane or 16-lane PCIe slot (refer to the server manual if necessary) and insert the

Solarflare card.

3 Secure the adapter bracket in the slot.

4 Replace the cover and restart the server.


5 After restarting the server, the host operating system may prompt you to install drivers for the

new hardware. Click Cancel or abort the installation and refer to the relevant chapter in this

manual for how to install the Solarflare adapter drivers for your operating system.


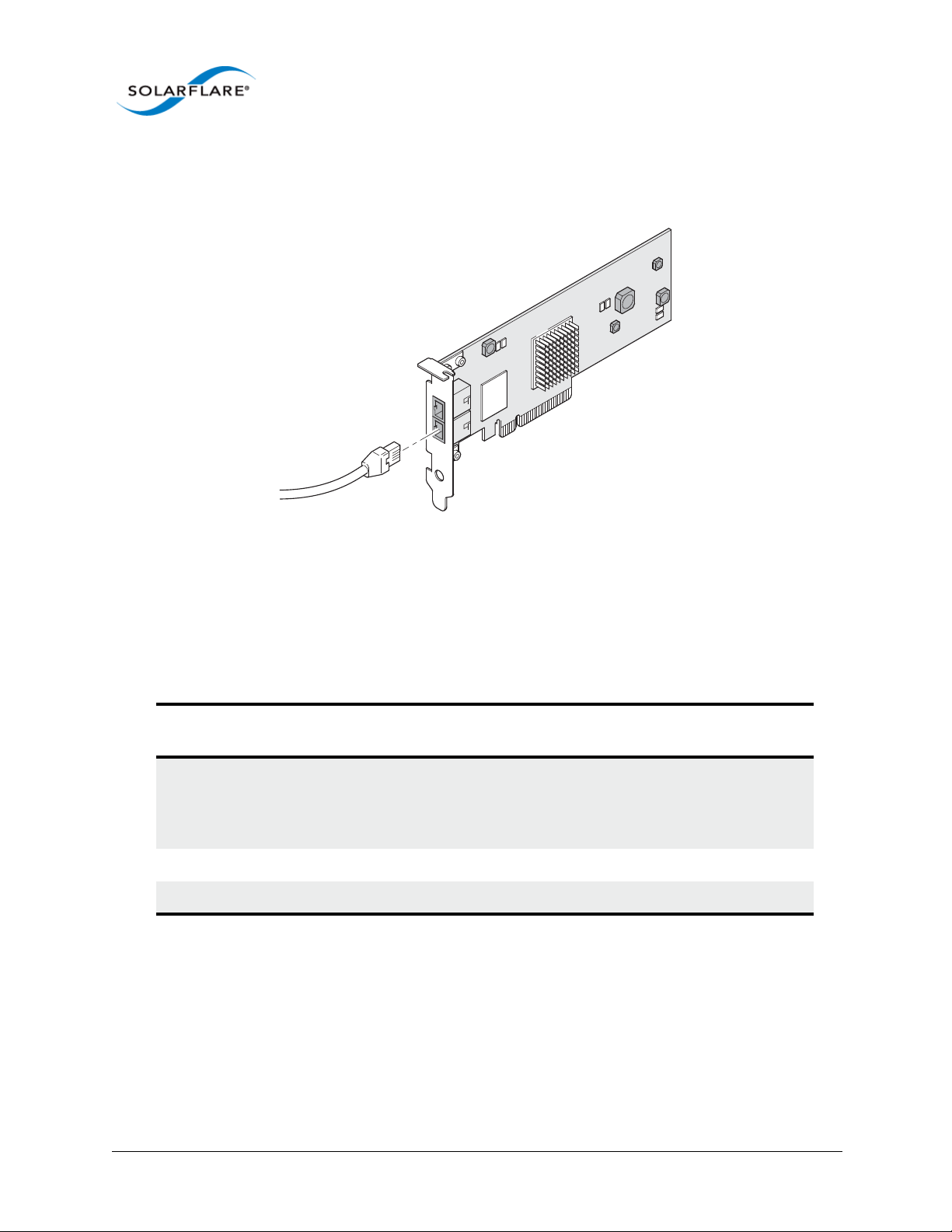

2.4 Attaching a Cable (RJ-45)

Solarflare 10GBASE-T Server Adapters connect to the Ethernet network using a copper cable fitted

with an RJ-45 connector (shown below).


RJ-45 Cable Specifications

Table 1 below lists the recommended cable specifications for various Ethernet port types.

Depending on the intended use, attach a suitable cable. For example, to achieve 10 Gb/s performance, use a Category 6 cable. To achieve the desired performance, the adapter must be connected to a compliant link partner, such as an IEEE 802.3an-compliant 10 Gigabit switch.

Table 1: RJ-45 Cable Specification

| Port type | Connector | Media Type | Maximum Distance |
| --- | --- | --- | --- |
| 10GBASE-T | RJ-45 | Category 6A | 100m (328 ft.) |
| 10GBASE-T | RJ-45 | Category 6 unshielded twisted pairs (UTP) | 55m (180 ft.) |
| 10GBASE-T | RJ-45 | Category 5E | 55m (180 ft.) |
| 1000BASE-T | RJ-45 | Category 5E, 6, 6A UTP | 100m (328 ft.) |
| 100BASE-TX | RJ-45 | Category 5E, 6, 6A UTP | 100m (328 ft.) |
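When planning cable runs, the values in Table 1 can be held in a small lookup. The sketch below is illustrative only: the dictionary simply transcribes the table, and the function and variable names are hypothetical.

```python
# Maximum cable distance in metres, transcribed from Table 1.
# Keys are (port type, cable category).
MAX_DISTANCE_M = {
    ("10GBASE-T", "6A"): 100,
    ("10GBASE-T", "6"): 55,
    ("10GBASE-T", "5E"): 55,
    ("1000BASE-T", "5E"): 100,
    ("1000BASE-T", "6"): 100,
    ("1000BASE-T", "6A"): 100,
    ("100BASE-TX", "5E"): 100,
    ("100BASE-TX", "6"): 100,
    ("100BASE-TX", "6A"): 100,
}


def max_cable_length_m(port_type, category):
    """Return the maximum distance in metres, or None if the pair is not in Table 1."""
    return MAX_DISTANCE_M.get((port_type.upper(), category.upper()))


def run_is_within_spec(port_type, category, length_m):
    """Return True if a cable run of length_m fits within the Table 1 limit."""
    limit = max_cable_length_m(port_type, category)
    return limit is not None and length_m <= limit
```

For example, `run_is_within_spec("10GBASE-T", "6", 70)` is false because Table 1 limits Category 6 to 55m at 10GBASE-T, while the same run on Category 6A is within the limit.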


2.5 Attaching a Cable (SFP+)

Solarflare SFP+ Server Adapters can be connected to the network using either an SFP+ Direct Attach

cable or a fiber optic cable.

Attaching the SFP+ Direct Attach Cable:

1 Turn the cable so that the connector retention tab and gold fingers are on the same side as the

network adapter retention clip.

2 Push the cable connector straight into the adapter socket until it clicks into place.

Removing the SFP+ Direct Attach Cable:

1 Pull straight back on the release ring to release the cable retention tab. Alternatively, you can

lift the retention clip on the adapter to free the cable if necessary.

2 Slide the cable free from the adapter socket.

Attaching a fiber optic cable:

WARNING

Do not look directly into the fiber transceiver or cables

as the laser beams can damage your eyesight.

1 Remove and save the fiber optic connector cover.

2 Insert a fiber optic cable into the ports on the network adapter bracket as shown. Most

connectors and ports are keyed for proper orientation. If the cable you are using is not keyed,


check to be sure the connector is oriented properly (transmit port connected to receive port

on the link partner, and vice versa).

Removing a fiber optic cable:


WARNING

Do not look directly into the fiber transceiver or cables

as the laser beams can damage your eyesight.

1 Remove the cable from the adapter bracket and replace the fiber optic connector cover.

2 Pull the plastic or wire tab to release the adapter bracket.

3 Hold the main body of the adapter bracket and remove it from the adapter.


2.6 Supported SFP+ Cables

Table 2 is a list of supported SFP+ cables that have been tested by Solarflare. Solarflare is not aware

of any issues preventing the use of other brands of SFP+ cables (of up to 5m in length) with Solarflare

network adapters. However, only cables in the table below have been fully verified and are therefore

supported.

Table 2: Supported SFP+ Direct Attach Cables

Manufacturer Product Code Cable Length Notes

Arista CAB-SFP-SFP-1M 1m

Arista CAB-SFP-SFP-3M 3m

Cisco SFP-H10GB-CU1M 1m

Cisco SFP-H10GB-CU3M 3m

Cisco SFP-H10GB-CU5M 5m


HP J9283A/B Procurve 3m

Juniper EX-SFP-10GE-DAC-1m 1m

Juniper EX-SFP-10GE-DAC-3m 3m

Molex 74752-1101 1m

Molex 74752-2301 3m

Molex 74752-3501 5m

Molex 74752-9093 1m 37-0960-01 / 0K585N

Molex 74752-9094 3m 37-0961-01 / 0J564N

Molex 74752-9096 5m 37-0962-01 / 0H603N

Panduit PSF1PXA1M 1m

Panduit PSF1PXA3M 3m

Panduit PSF1PXD5MBU 5m

Siemon SFPP30-01 1m

Siemon SFPP30-02 2m

Siemon SFPP30-03 3m

Siemon SFPP24-05 5m

Tyco 2032237-2 D 1m

Tyco 2032237-4 3m


The Solarflare SFA6902F adapter has been tested and certified with direct attach cables up to 3m in

length.
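A deployment script might check a cable against the verified list before installation. The sketch below is a minimal illustration under stated assumptions: the dictionary holds only an excerpt of Table 2 (the Cisco and Siemon rows), the 3m limit for the SFA6902F is taken from the statement above, and all names are hypothetical.

```python
# Excerpt of Table 2: product code -> cable length in metres.
VERIFIED_SFP_PLUS_DAC = {
    "SFP-H10GB-CU1M": 1,
    "SFP-H10GB-CU3M": 3,
    "SFP-H10GB-CU5M": 5,
    "SFPP30-01": 1,
    "SFPP30-02": 2,
    "SFPP30-03": 3,
    "SFPP24-05": 5,
}

# Per-adapter length limit in metres. Only the SFA6902F limit is stated
# in the guide; adapters not listed here are treated as having no extra limit.
MAX_LENGTH_M = {"SFA6902F": 3}


def is_verified_cable(product_code, adapter=None):
    """Return True if the cable is in the excerpt and within the adapter's limit."""
    length = VERIFIED_SFP_PLUS_DAC.get(product_code)
    if length is None:
        return False
    limit = MAX_LENGTH_M.get(adapter)
    return limit is None or length <= limit
```

A cable missing from the list is not necessarily incompatible; as the guide notes, it has simply not been verified by Solarflare.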

2.7 Supported SFP+ 10G SR Optical Transceivers

Table 3 is a list of supported SFP+ 10G SR optical transceivers that have been tested by Solarflare.

Solarflare is not aware of any issues preventing the use of other brands of 10G SR transceivers with

Solarflare network adapters. However, only transceivers in the table below have been fully verified

and are therefore supported.

Table 3: Supported SFP+ 10G Optical SR Transceivers

Manufacturer Product Code Notes

Avago AFBR-703SDZ 10G

Avago AFBR-703SDDZ Dual speed 1G/10G optic.

Avago AFBR-703SMZ 10G


Arista SFP-10G-SR 10G

Finisar FTLX8571D3BCL 10G

Finisar FTLX8571D3BCV Dual speed 1G/10G optic.

HP 456096-001 Also labelled as 455883-B21 and 455885-001

Intel AFBR-703SDZ 10G

JDSU PLRXPL-SC-S43-22-N 10G

Juniper AFBR-700SDZ-JU1 10G

MergeOptics TRX10GVP2010 10G

Solarflare SFM-10G-SR 10G


2.8 Supported SFP+ 10G LR Optical Transceivers

Table 4 is a list of supported SFP+ 10G LR optical transceivers that have been tested by Solarflare.

Solarflare is not aware of any issues preventing the use of other brands of 10G LR transceivers with

Solarflare network adapters. However, only transceivers in the table below have been fully verified

and are therefore supported.

Table 4: Supported SFP+ 10G LR Optical Transceivers

Manufacturer Product Code Notes

Avago AFCT-701SDZ 10G single mode fiber

Finisar FTLX1471D3BCL 10G single mode fiber

2.9 QSFP+ Transceivers and Cables

The following tables identify QSFP+ transceiver modules and cables tested by Solarflare with the SFN7000 QSFP+ adapters. Solarflare is not aware of any issues preventing the use of other brands of QSFP+ 40G transceivers and cables with Solarflare SFN7000 QSFP+ adapters. However, only products listed in the tables below have been fully verified and are therefore supported.

Supported QSFP+ 40GBASE-SR4 Transceivers

The Solarflare Flareon Ultra SFN7142Q adapter has been tested with the following QSFP+ 40GBASE-SR4 optical transceiver modules.

Table 5: Supported QSFP+ SR4 Transceivers

Manufacturer Product Code Notes

Arista AFBR-79E4Z

Avago AFBR-79EADZ

Avago AFBR-79EIDZ

Avago AFBR-79EQDZ

Avago AFBR-79EQPZ

Standard 100m (OM3 Multimode fiber) range.

Finisar FTL410QE2C

JDSU JQP-04SWAA1

JDSU JDSU-04SRAB1

Solarflare SFM-40G-SR4


Supported QSFP+ 40G Active Optical Cables (AOC)

The Solarflare Flareon Ultra SFN7142Q adapter has been tested with the following QSFP+ Active

Optical Cables (AOC).

Table 6: Supported QSFP+ Active Optical Cables

Manufacturer Product Code Notes

Avago AFBR-7QER05Z

Finisar FCBG410QB1C03

Finisar FCBN410QB1C05

Supported QSFP+ 40G Direct Attach Cables

The Solarflare Flareon Ultra SFN7142Q adapter has been tested with the following QSFP+ Direct

Attach Cables (DAC).


Table 7: Supported QSFP+ Direct Attach Cables

Manufacturer Product Code Notes

Arista CAB-Q-Q-3M 3m

Arista CAB-Q-Q-5M 5m

FCI 10093084-3030LF 3m

Molex 74757-1101 1m

Molex 74757-2301 3m

Siemon QSFP30-01 1m

Siemon QSFP30-03 3m

Siemon QSFP30-05 5m

Supported QSFP+ to SFP+ Breakout Cables

Solarflare QSFP+ to SFP+ breakout cables enable a Solarflare SFN7142Q dual-port QSFP+ server I/O adapter to work as a quad-port SFP+ server I/O adapter. The breakout cables offer a cost-effective option to support connectivity flexibility in high-speed data center applications.

These high performance direct-attach assemblies support 2 lanes of 10 Gb/s per QSFP+ port and are available in lengths of 1 meter and 3 meters. The SOLR-QSFP2SFP-1M and SOLR-QSFP2SFP-3M copper DAC cables are fully tested and compatible with the Solarflare SFN7142Q server I/O adapter. These cables are compliant with the SFF-8431, SFF-8432, SFF-8436, SFF-8472 and IBTA Volume 2 Revision 1.3 specifications.


Table 8: Supported QSFP+ to SFP+ Breakout Cables

Manufacturer Product Code Notes

Solarflare SOLR-QSFP2SFP-1M

Solarflare SOLR-QSFP2SFP-3M

2.10 Supported SFP 1000BASE-T Transceivers

Table 9 is a list of supported SFP 1000BASE-T transceivers that have been tested by Solarflare.

Solarflare is not aware of any issues preventing the use of other brands of 1000BASE-T transceivers

with the Solarflare network adapters. However, only transceivers in the table below have been fully

verified and are therefore supported.

Table 9: Supported SFP 1000BASE-T Transceivers


Manufacturer Product Code

Arista SFP-1G-BT

Avago ABCU-5710RZ

Cisco 30-1410-03

Dell FCMJ-8521-3-(DL)

Finisar FCLF-8521-3

Finisar FCMJ-8521-3

HP 453156-001, 453154-B21

3COM 3CSFP93


2.11 Supported 1G Optical Transceivers

Table 10 is a list of supported 1G transceivers that have been tested by Solarflare. Solarflare is not

aware of any issues preventing the use of other brands of 1G transceivers with Solarflare network

adapters. However, only transceivers in the table below have been fully verified and are therefore

supported.

Table 10: Supported 1G Transceivers

Manufacturer Product Code Type

Avago AFBR-5710PZ 1000Base-SX

Cisco GLC-LH-SM 1000Base-LX/LH

Finisar FTLF8519P2BCL 1000Base-SX

Finisar FTLF8519P3BNL 1000Base-SX

Finisar FTLF1318P2BCL 1000Base-LX

Finisar FTLF1318P3BTL 1000Base-LX

HP 453153-001, 453151-B21 1000Base-SX

2.12 Supported Speed and Mode

Solarflare network adapters support the QSFP+, SFP, SFP+ or Base-T standards.

On Base-T adapters three speeds are supported: 100Mbps, 1Gbps and 10Gbps. The adapters use auto-negotiation to automatically select the highest speed supported in common with the link partner.

On SFP+ adapters the currently inserted SFP module (transceiver) determines the supported speeds; typically, SFP modules support only a single speed. Some Solarflare SFP+ adapters support dual-speed optical modules that can operate at either 1Gbps or 10Gbps. However, these modules do not auto-negotiate link speed and operate at the maximum (10G) link speed unless explicitly configured to operate at a lower speed (1G).
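The SFP+ rule described above (no auto-negotiation, maximum speed unless a lower one is explicitly configured) can be modelled in a few lines. This is a sketch of the stated behaviour only, not driver code; the function name and argument shapes are assumptions made for illustration.

```python
def sfp_link_speed_gbps(module_speeds, configured=None):
    """Return the link speed an SFP/SFP+ module would run at, in Gb/s.

    module_speeds: speeds the inserted module supports, e.g. (10,) or (1, 10).
    configured:    an explicitly configured speed, or None for the default.
    """
    if not module_speeds:
        raise ValueError("module supports no speeds")
    if configured is None:
        # No auto-negotiation: the module runs at its maximum speed.
        return max(module_speeds)
    if configured not in module_speeds:
        raise ValueError(f"module does not support {configured}G")
    return configured
```

A dual-speed module therefore links at 10G by default, and drops to 1G only when `configured=1` is passed.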


2.13 Configure QSFP+ Adapter

QSFP+ adapters can operate as 2 x 10Gbps per QSFP+ port or as 1 x 40Gbps per QSFP+ port. A mixed configuration, with one port operating as 1 x 40G and the other as 2 x 10G, is not supported.

Figure 1: QSFP+ Port Configuration

The Solarflare 40G breakout cables have only 2 physical cables - for details refer to Supported QSFP+

to SFP+ Breakout Cables on page 28. Breakout cables from other suppliers may have 4 physical

cables. When connecting a third party breakout cable into the Solarflare 40G QSFP+ cage (in 10G

mode), only cables 1 and 3 will be active.
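The port-mode rules in this section can be expressed as a small validation helper. The sketch below assumes that "not supported" means both QSFP+ ports must run in the same mode; the names are hypothetical and the code models the rule only, without touching any hardware.

```python
# Logical network ports exposed per physical QSFP+ port in each mode.
LOGICAL_PORTS_PER_QSFP = {"40G": 1, "10G": 2}


def logical_port_count(port_modes):
    """Return the total logical ports for a list of per-QSFP+ port modes.

    Raises ValueError for an unknown mode or for a mix of 40G and 10G
    ports on the same adapter.
    """
    if not port_modes:
        raise ValueError("no ports given")
    for mode in port_modes:
        if mode not in LOGICAL_PORTS_PER_QSFP:
            raise ValueError(f"unknown port mode: {mode!r}")
    if len(set(port_modes)) != 1:
        raise ValueError("mixed 40G and 10G port modes are not supported")
    return LOGICAL_PORTS_PER_QSFP[port_modes[0]] * len(port_modes)
```

For a dual-port adapter this gives four 10G ports in 10G mode and two 40G ports in 40G mode, matching the quad-port behaviour described for the breakout cables.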

The sfboot utility from the Solarflare Linux Utilities package (SF-107601-LS) can be used to configure

the adapter for 10G or 40G operation.

# sfboot port-mode=40G
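When the port mode is set from a provisioning script, it can help to build the command line in one place. The sketch below only constructs the argument list and does not run anything. The `port-mode=40G` form is taken from the example above; `port-mode=10G` is an assumption by analogy and should be checked against the sfboot documentation before use.

```python
def sfboot_port_mode_command(mode):
    """Build the sfboot argument list for a port mode.

    "40G" matches the example in this guide; "10G" is an assumed value.
    """
    if mode not in ("10G", "40G"):
        raise ValueError(f"unsupported port mode: {mode!r}")
    return ["sfboot", f"port-mode={mode}"]


if __name__ == "__main__":
    # Print the command rather than executing it.
    print(" ".join(sfboot_port_mode_command("40G")))
```

The list form is suitable for passing to `subprocess.run` on a host where the Solarflare Linux Utilities package is installed and the script runs with root privileges.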


The tables below summarize the speeds supported by Solarflare network adapters.

Table 11: SFN5xxx, SFN6xxx and SFN7xxx SFP+ and QSFP+ Adapters

| Supported Modes | Auto neg speed | Speed | Comment |
| --- | --- | --- | --- |
| QSFP+ direct attach cables | No | 10G or 40G | SFN7142Q |
| QSFP+ optical cables | No | 10G or 40G | SFN7142Q |
| SFP+ direct attach cable | No | 10G | |
| SFP+ optical module (10G) | No | 10G | |
| SFP optical module (1G) | No | 1G | |
| SFP+ optical module (10G/1G) | No | 10G or 1G | Dual speed modules run at the maximum speed (10G) unless explicitly configured to the lower speed (1G) |
| SFP 1000BASE-T module | No | 1G | These modules support only 1G and will not link up at 100Mbps |

Table 12: SFN5121T, SFN5151T, SFN5161T 10GBASE-T Adapters

| Supported Modes | Auto neg speed | Speed |
| --- | --- | --- |
| 100Base-T | Yes | 100Mbps |
| 1000Base-TX | Yes | 1Gbps |
| 10GBase-T | Yes | 10Gbps |

Comment (all three modes): Typically the interface is set to auto negotiation speed and automatically selects the highest speed supported in common with its link partner. If the link partner is set to 100Mbps, with no autoneg, the adapter will use "parallel detection" to detect and select 100Mbps speed. If needed, any of the three speeds can be explicitly configured.

100Base-T in a Solarflare adapter back-to-back (no intervening switch) configuration will not work

and is not supported.
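The speed selection described for the 10GBASE-T adapters amounts to choosing the highest speed both ends support. The sketch below models that outcome only; it does not implement the IEEE auto-negotiation protocol, and the names are illustrative.

```python
# Speeds supported by the Base-T adapters, in Mb/s.
ADAPTER_SPEEDS_MBPS = (100, 1000, 10000)


def negotiated_speed_mbps(partner_speeds):
    """Return the highest speed common to adapter and link partner, or None.

    A partner fixed at 100Mbps with auto-negotiation disabled can be
    modelled as partner_speeds=[100]; the guide states that the adapter
    then selects 100Mbps by parallel detection.
    """
    common = set(ADAPTER_SPEEDS_MBPS) & set(partner_speeds)
    return max(common) if common else None
```

A partner advertising 100Mbps and 1Gbps yields 1000, and a partner with no speed in common yields `None`, meaning no link.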


2.14 LED States

There are two LEDs on the Solarflare network adapter transceiver module. LED states are as follows:

Table 13: LED States

| Adapter Type | LED Description | State |
| --- | --- | --- |
| QSFP+, SFP/SFP+ | Link | Green (solid) at all speeds |
| QSFP+, SFP/SFP+ | Activity | Flashing green when network traffic is present. LEDs are OFF when there is no link present |
| BASE-T | Speed | Green (solid) 10Gbps. Yellow (solid) 100/1000Mbps |
| BASE-T | Activity | Flashing green when network traffic is present. LEDs are OFF when there is no link present |
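For remote-hands checklists or monitoring notes, the BASE-T speed LED states in Table 13 can be turned into a lookup. The mapping below transcribes the table; the function name and the lowercase colour keys are assumptions made for this sketch.

```python
# BASE-T speed LED, transcribed from Table 13.
BASE_T_SPEED_LED = {
    "green": "10Gbps",
    "yellow": "100/1000Mbps",
    "off": "no link",
}


def describe_base_t_speed_led(colour):
    """Translate an observed BASE-T speed LED colour into a link state."""
    try:
        return BASE_T_SPEED_LED[colour.lower()]
    except KeyError:
        raise ValueError(f"unexpected LED colour: {colour!r}") from None
```

On QSFP+ and SFP/SFP+ adapters the link LED is green at all speeds, so colour alone does not indicate link speed there.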


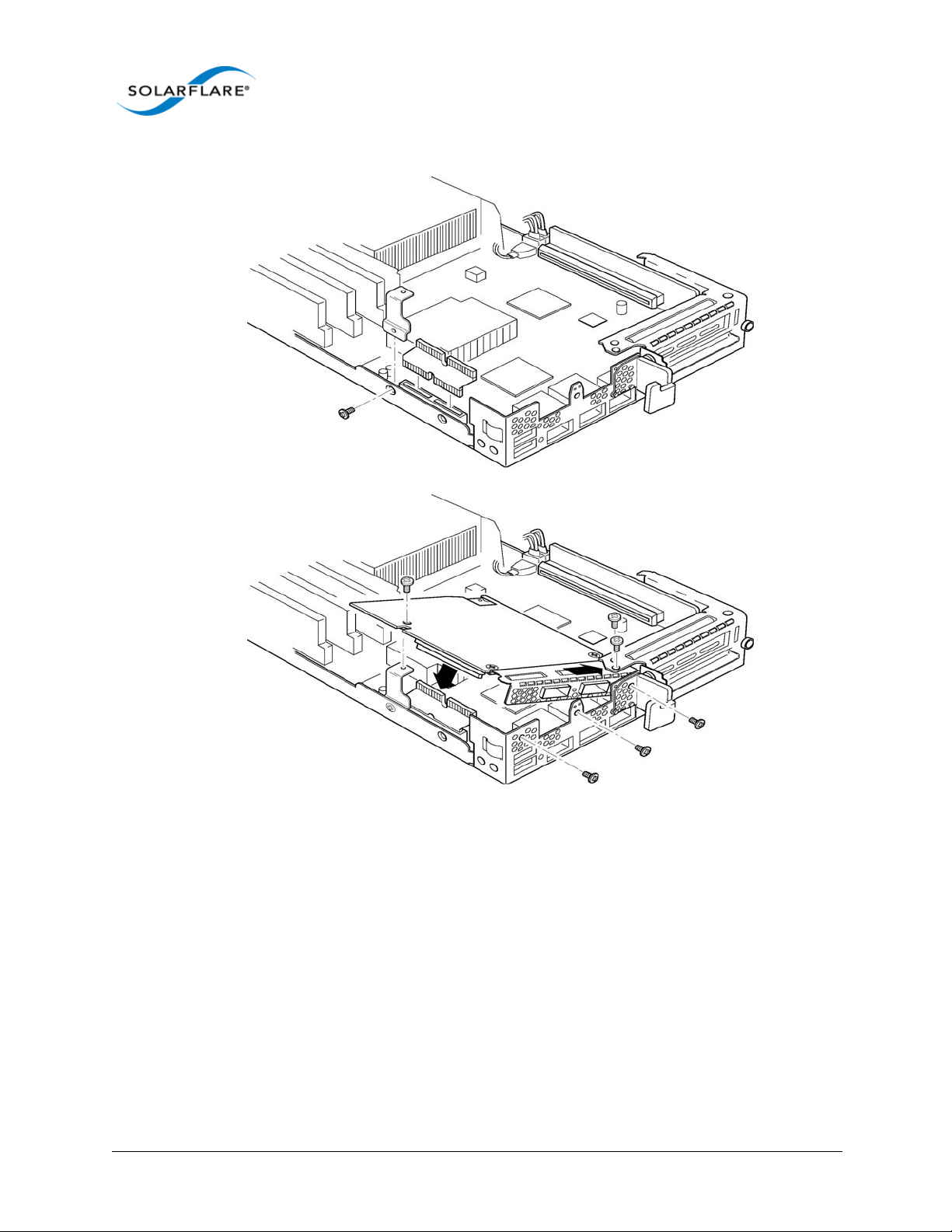

2.15 Solarflare Mezzanine Adapters: SFN5812H and SFN5814H

The Solarflare SFN5812H Dual-Port and SFN5814H Quad-Port are 10G Ethernet Mezzanine Adapters

for the IBM BladeCenter.

Solarflare mezzanine adapters are supported on the IBM BladeCenter E, H and S chassis, HS22,

HS22V and HX5 servers. The IBM BladeCenter blade supports a single Solarflare mezzanine adapter.

1 The blade should be extracted from the BladeCenter in order to install the mezzanine adapter.

2 Remove the blade top cover and locate the two retaining posts towards the rear of the blade -

(Figure 2). Refer to the BladeCenter manual if necessary.


Figure 2: Installing the Mezzanine Adapter

3 Hinge the adapter under the retaining posts, as illustrated, and align the mezzanine port

connector with the backplane connector block.


4 Lower the adapter, taking care to align the side positioning/retaining posts with the recesses in

the adapter. See Figure 3.

Figure 3: In position mezzanine adapter

5 Press the port connector gently into the connector block ensuring that the adapter is firmly

and correctly seated in the connector block.

6 Replace the blade top cover.

7 When removing the adapter raise the release handle (shown on Figure 3) to ease the adapter

upwards until it can be freed from the connector block.

2.16 Solarflare Mezzanine Adapter SFN6832F-C61

The Solarflare SFN6832F-C61 is a Dual-Port SFP+ 10GbE Mezzanine Adapter for the DELL PowerEdge C6100 series rack server. Each DELL PowerEdge node supports a single Solarflare mezzanine adapter.

1 The node should be extracted from the rack server in order to install the mezzanine adapter.

Refer to the PowerEdge rack server manual if necessary.


Figure 4: SFN6832F-C61 - Installing into the rack server node

2 Secure the side retaining bracket as shown in Figure 5 (top diagram)

3 Fit riser PCB card into the slot as shown in Figure 5 (top diagram). Note that the riser card only

fits one way.

4 Offer the adapter to the node and ensure it lies underneath the chassis cover.

5 Lower the adapter into position making sure to connect the adapter slot with the top of the PCB riser card.

6 Secure the adapter using the supplied screws at the positions shown in the diagram.


2.17 Solarflare Mezzanine Adapter SFN6832F-C62

The Solarflare SFN6832F-C62 is a Dual-Port SFP+ 10GbE Mezzanine Adapter for the DELL PowerEdge C6200 series rack server. Each DELL PowerEdge node supports a single Solarflare mezzanine adapter.

1 The node should be extracted from the rack server in order to install the mezzanine adapter.

Refer to the PowerEdge rack server manual if necessary.


Figure 5: SFN6832F-C62 - Installing into the rack server node

2 Fit the PCB riser card to the underside connector on the adapter.

3 Offer the adapter to the rack server node ensuring it lies underneath the chassis cover.

4 Lower the adapter to connect the riser PCB card into the slot in the node.

5 Secure the adapter with the supplied screws at the points shown in the diagram.


2.18 Solarflare Precision Time Synchronization Adapters

The Solarflare SFN7142Q¹, SFN7122F¹, SFN7322F and SFN6322F adapters can generate hardware

timestamps for PTP packets in support of a network precision time protocol deployment compliant

with the IEEE 1588-2008 specification.

Customers requiring configuration instructions for these adapters and Solarflare PTP in a PTP

deployment should refer to the Solarflare Enhanced PTP User Guide SF-109110-CD.

1. Requires an AppFlex™ license - refer to Solarflare AppFlex™ Technology Licensing on page 12.

2.19 Solarflare SFA6902F ApplicationOnload™ Engine

The ApplicationOnload™ Engine (AOE) SFA6902F is a full length PCIe form factor adapter that

combines an ultra-low latency adapter with a tightly coupled ’bump-in-the-wire’ FPGA.

For details of installation and configuring applications that run on the AOE refer to the Solarflare AOE

User’s Guide (SF-108389-CD). For details on developing custom applications to run on the FPGA refer

to the AOE Firmware Development Kit User Guide (SF-108390-CD).


Chapter 3: Solarflare Adapters on Linux

This chapter covers the following topics on the Linux® platform:

• System Requirements...Page 39

• Linux Platform Feature Set...Page 40

• Solarflare RPMs...Page 41

• Installing Solarflare Drivers and Utilities on Linux...Page 43

• Red Hat Enterprise Linux Distributions...Page 43

• SUSE Linux Enterprise Server Distributions...Page 44

• Unattended Installations...Page 45

• Unattended Installation - Red Hat Enterprise Linux...Page 47

• Unattended Installation - SUSE Linux Enterprise Server...Page 48

• Hardware Timestamps...Page 49

• Configuring the Solarflare Adapter...Page 49

• Configuring Receive/Transmit Ring Buffer Size...Page 50

• Setting Up VLANs...Page 51

• Setting Up Teams...Page 52

• Running Adapter Diagnostics...Page 53

• Running Cable Diagnostics...Page 54

• Transmit Packet Steering...Page 89

• Configuring the Boot ROM with sfboot...Page 56

• Upgrading Adapter Firmware with Sfupdate...Page 70

• License Install with sfkey...Page 75

• Performance Tuning on Linux...Page 79

• Module Parameters...Page 99

• Linux ethtool Statistics...Page 101

3.1 System Requirements

Refer to Software Driver Support on page 12 for supported Linux Distributions.

NOTE: SUSE Linux Enterprise Server 11 includes a version of the Solarflare network adapter driver.

This driver does not support the SFN512x family of adapters. To update the supplied driver, see

SUSE Linux Enterprise Server Distributions on page 44.


NOTE: Red Hat Enterprise Linux versions 5.5 and 6.0 include a version of the Solarflare adapter

driver. This driver does not support the SFN512x family of adapters. Red Hat Enterprise Linux 5.6

and 6.1 include a version of the Solarflare network driver for the SFN512x family of adapters. To

update the supplied driver, see Installing Solarflare Drivers and Utilities on Linux on page 43.

3.2 Linux Platform Feature Set

Table 14 lists the features supported by Solarflare adapters on Red Hat and SUSE Linux distributions.

Table 14: Linux Feature Set

Fault diagnostics Support for comprehensive adapter and cable fault diagnostics

and system reports.

• See Running Adapter Diagnostics on page 53

Firmware updates Support for Boot ROM, Phy transceiver and adapter firmware

upgrades.

• See Upgrading Adapter Firmware with Sfupdate on page 70

Hardware Timestamps

Solarflare Flareon SFN7122F¹, SFN7142Q¹ and SFN7322F

adapters support the hardware timestamping of all received

packets - including PTP packets.

The Linux kernel must support the SO_TIMESTAMPING socket

option (2.6.30+) to allow the driver to support hardware packet

timestamping. Therefore hardware packet timestamping is not

available in RHEL 5.

1. Requires an AppFlex license - for details refer to Solarflare AppFlex™

Technology Licensing on page 12.

Jumbo frames Support for MTUs (Maximum Transmission Units) from

1500 bytes to 9216 bytes.

• See Configuring Jumbo Frames on page 51

PXE and iSCSI booting Support for diskless booting to a target operating system via

PXE or iSCSI boot.

• See Configuring the Boot ROM with sfboot on page 56

• See Solarflare Boot ROM Agent on page 344

PXE and iSCSI boot are not supported for Solarflare adapters on

IBM System p servers.

Receive Side Scaling (RSS) Support for RSS multi-core load distribution technology.

• See Receive Side Scaling (RSS) on page 85


Receive Flow Steering (RFS) Improve latency and reduce jitter by steering packets to the

core where a receiving application is running.

• See Receive Flow Steering (RFS) on page 87

SR-IOV Support for XenServer6 PCIe Single Root-IO Virtualization and

Linux KVM SR-IOV.

• See SR-IOV Virtualization for XenServer on page 324

• See SR-IOV Virtualization Using KVM on page 307

SR-IOV is not supported for Solarflare adapters on IBM System

p servers.

Standby and Power Management

Solarflare adapters support Wake On LAN on Linux. These

settings are only available if the adapter has auxiliary power

supplied by a separate cable.

Task offloads Support for TCP Segmentation Offload (TSO), Large Receive

Offload (LRO), and TCP/UDP/IP checksum offload for improved

adapter performance and reduced CPU processing

requirements.

• See Configuring Task Offloading on page 50

Teaming Improve server reliability and bandwidth by combining physical

ports, from one or more Solarflare adapters, into a team,

having a single MAC address and which function as a single port

providing redundancy against a single point of failure.

• See Setting Up Teams on page 52

Virtual LANs (VLANs) Support for multiple VLANs per adapter.

• See Setting Up VLANs on page 51

Transmit Packet Steering (XPS)

Supported on Linux 2.6.38 and later kernels. Selects the

transmit queue when transmitting on multi-queue devices.

Refer to Transmit Packet Steering on page 89 for details.
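
The kernel version requirements in Table 14 (2.6.30 or later for the SO_TIMESTAMPING socket option, 2.6.38 or later for XPS) can be checked from a shell before relying on either feature. The following is a minimal sketch and is not part of the Solarflare utilities. The function name kernel_at_least is hypothetical, and the comparison only considers the numeric fields before the first hyphen of the uname -r output. Distribution kernels may backport features, so the result should be treated as a guide only.

```shell
# kernel_at_least REQUIRED RELEASE
# Hypothetical helper: returns 0 when RELEASE (for example the output of
# `uname -r`) is at or above REQUIRED, comparing dotted numeric fields.
kernel_at_least() {
    # "${2%%-*}" drops any distribution suffix: 2.6.32-431.el6 -> 2.6.32
    printf '%s %s\n' "$1" "${2%%-*}" | awk '{
        n = split($1, req, ".")
        split($2, act, ".")
        for (i = 1; i <= n; i++) {
            a = act[i] + 0      # missing fields count as 0
            r = req[i] + 0
            if (a > r) exit 0   # newer than required
            if (a < r) exit 1   # older than required
        }
        exit 0                  # all compared fields are equal
    }'
}

release=$(uname -r)

if kernel_at_least 2.6.30 "$release"; then
    echo "Kernel $release: new enough for SO_TIMESTAMPING (2.6.30+)"
else
    echo "Kernel $release: older than 2.6.30, no hardware packet timestamping"
fi

if kernel_at_least 2.6.38 "$release"; then
    echo "Kernel $release: new enough for Transmit Packet Steering (2.6.38+)"
else
    echo "Kernel $release: older than 2.6.38, XPS not available"
fi
```

For example, a RHEL 5 kernel release string beginning 2.6.18 fails both checks, which is consistent with the statement above that hardware packet timestamping is not available in RHEL 5.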

3.3 Solarflare RPMs

Solarflare supply RPM packages in the following formats:

• DKMS

• Source RPM


DKMS RPM

Dynamic Kernel Module Support (DKMS) is a framework where device driver source can reside

outside the kernel source tree. It provides an easy method to rebuild modules when kernels are

upgraded.

Execute the command dkms --version to determine whether DKMS is installed.

To install the Solarflare driver DKMS package execute the following command:

rpm -i sfc-dkms-<version>.noarch.rpm
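
The DKMS check and the choice of package format described above can be combined in a short script. This is a sketch under stated assumptions rather than a Solarflare-supplied tool: the function name choose_sfc_package_format is hypothetical, and the script only reports which route to take; it does not run rpm itself.

```shell
# Hypothetical helper: prints "dkms" when the DKMS framework responds to
# `dkms --version`, otherwise prints "source-rpm".
choose_sfc_package_format() {
    if command -v dkms >/dev/null 2>&1 && dkms --version >/dev/null 2>&1; then
        echo "dkms"
    else
        echo "source-rpm"
    fi
}

format=$(choose_sfc_package_format)

case $format in
    dkms)
        # <version> is left as a placeholder, as in the command above.
        echo "DKMS is installed. Install the driver with:"
        echo "  rpm -i sfc-dkms-<version>.noarch.rpm"
        ;;
    source-rpm)
        echo "DKMS is not installed. Build a binary RPM from the source RPM instead."
        ;;
esac
```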

Building the Source RPM

These instructions may be used to build a source RPM package for use with Linux distributions or

kernel versions where DKMS or KMP packages are not suitable.

NOTE: RPMs can be installed for multiple kernel versions.

1 First, the kernel headers for the running kernel must be installed at

/lib/modules/<kernel-version>/build. On Red Hat systems, install the appropriate

kernel-smp-devel or kernel-devel package. On SUSE systems, install the kernel-source package.

2 To build a binary RPM for the running kernel version from the source RPM, enter the following

at the command-line:

rpmbuild --rebuild <package_name>

Where package_name is the full path to the source RPM (see the note below).

3 To build for a different kernel to the running system, enter the following command:

rpmbuild --define 'kernel <kernel version>' --rebuild <package_name>

4 Install the resulting RPM binary package, as described in Installing Solarflare Drivers and

Utilities on Linux.

NOTE: The location of the generated RPM is dependent on the distribution and often the version

of the distribution and the RPM build tools.

The RPM build process should print out the location of the RPM towards the end of the build

process, but it can be hard to find amongst the other output.

Typically the RPM will be placed in /usr/src/<dir>/RPMS/<arch>/, where <dir> is

distribution specific. Possible folders include redhat, packages or extra. The RPM file will be

named using the same convention as the Solarflare provided pre-built binary RPMs.

The command:

find /usr/src -name "*sfc*.rpm"

will list the locations of all Solarflare RPMs.
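
The build steps and the search for the resulting package can be wrapped in a small script. The following is a sketch only: the function names have_kernel_headers, build_sfc_rpm and list_sfc_rpms are hypothetical, and the script assumes that rpmbuild is installed and that it is run with the path to the source RPM as its first argument. The search directory is a parameter because, as noted above, the output location varies between distributions.

```shell
# have_kernel_headers [KERNEL_VERSION]
# Succeeds when the build directory for the given kernel (default: the
# running kernel) is present under /lib/modules.
have_kernel_headers() {
    [ -d "/lib/modules/${1:-$(uname -r)}/build" ]
}

# build_sfc_rpm SOURCE_RPM [KERNEL_VERSION]
# Rebuilds the source RPM for the running kernel, or for KERNEL_VERSION
# when one is given, using the rpmbuild commands from the steps above.
build_sfc_rpm() {
    srpm=$1
    kernel=${2:-}
    if [ -n "$kernel" ]; then
        rpmbuild --define "kernel $kernel" --rebuild "$srpm"
    else
        rpmbuild --rebuild "$srpm"
    fi
}

# list_sfc_rpms [DIR]
# Lists Solarflare RPMs found under DIR (default: /usr/src).
list_sfc_rpms() {
    find "${1:-/usr/src}" -name '*sfc*.rpm' 2>/dev/null
}

# Only build when a source RPM path was supplied on the command line.
if [ "$#" -ge 1 ]; then
    if ! have_kernel_headers "${2:-}"; then
        echo "Kernel headers not found; install the kernel development package first." >&2
        exit 1
    fi
    build_sfc_rpm "$@" && list_sfc_rpms
fi
```

If the build succeeds but nothing is listed, pass the directory reported near the end of the rpmbuild output to list_sfc_rpms.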


3.4 Installing Solarflare Drivers and Utilities on Linux

• Red Hat Enterprise Linux Distributions...Page 43

• SUSE Linux Enterprise Server Distributions...Page 44

• Building the Source RPM...Page 42

Linux drivers for Solarflare are available in DKMS and source RPM packages. The source RPM can be

used to build binary RPMs for a wide selection of distributions and kernel variants. This section

details how to install the resultant binary RPM.

Solarflare recommend using DKMS RPMs if the DKMS framework is available. See DKMS RPM on

page 42 for more details.

NOTE: The Solarflare adapter should be physically installed in the host computer before installing

the driver. The user must have root permissions to install the adapter drivers.

3.5 Red Hat Enterprise Linux Distributions
