SGI InfiniteStorage 4000 Series and 5000 Series

Failover Drivers Guide for SANtricity ES

(ISSM 10.86)

007-5886-002 April 2013

The information in this document supports the SGI InfiniteStorage 4000 series and 5000 series storage

systems (ISSM 10.86). Refer to the table below to match your specific SGI InfiniteStorage product

with the model numbers used in this document.

| SGI Model # | NetApp Model |
| --- | --- |
| TP9600H | 6091 |
| TP9700F | 6091 |
| IS4500F | 6091 |
| TP9600F | 3994 and 3992 |
| IS4000H | 3994 |
| IS350 | 3992 |
| IS220 | 1932, 1333, DE1300 |
| IS4100 | 4900 |
| IS-DMODULE16-Z | FC4600 |
| IS-DMODULE60 | DE6900 |
| IS4600 | 7091 |
| IS-DMODULE12 & IS2212 (JBOD) | DE1600 |
| IS-DMODULE24 & IS2224 (JBOD) | DE5600 |
| IS-DMODULE60-SAS | DE6600 |
| IS5012 | E2600 |
| IS5024 | E2600 |
| IS5060 | E2600 |
| IS5512 | E5400 |
| IS5524 | E5400 |
| IS5560 | E5400 |
| IS5600 | E5500 |

Copyright information

Copyright © 1994–2012 NetApp, Inc. All rights reserved. Printed in the U.S.A.

No part of this document covered by copyright may be reproduced in any form or by any means—

graphic, electronic, or mechanical, including photocopying, recording, taping, or storage in an

electronic retrieval system—without prior written permission of the copyright owner.

Software derived from copyrighted NetApp material is subject to the following license and disclaimer:

THIS SOFTWARE IS PROVIDED BY NETAPP "AS IS" AND WITHOUT ANY EXPRESS OR

IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES

OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE, WHICH ARE

HEREBY DISCLAIMED. IN NO EVENT SHALL NETAPP BE LIABLE FOR ANY DIRECT,

INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES

(INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT

LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY

OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH

DAMAGE.

NetApp reserves the right to change any products described herein at any time, and without notice.

NetApp assumes no responsibility or liability arising from the use of products described herein, except

as expressly agreed to in writing by NetApp. The use or purchase of this product does not convey a

license under any patent rights, trademark rights, or any other intellectual property rights of NetApp.

RESTRICTED RIGHTS LEGEND: Use, duplication, or disclosure by the government is subject to

restrictions as set forth in subparagraph (c)(1)(ii) of the Rights in Technical Data and Computer

Software clause at DFARS 252.277-7103 (October 1988) and FAR 52-227-19 (June 1987).

Trademark information

NetApp, the NetApp logo, Network Appliance, the Network Appliance logo, Akorri, ApplianceWatch,

ASUP, AutoSupport, BalancePoint, BalancePoint Predictor, Bycast, Campaign Express,

ComplianceClock, Cryptainer, CryptoShred, Data ONTAP, DataFabric, DataFort, Decru, Decru

DataFort, DenseStak, Engenio, Engenio logo, E-Stack, FAServer, FastStak, FilerView, FlexCache,

FlexClone, FlexPod, FlexScale, FlexShare, FlexSuite, FlexVol, FPolicy, GetSuccessful, gFiler, Go

further, faster, Imagine Virtually Anything, Lifetime Key Management, LockVault, Manage ONTAP,

MetroCluster, MultiStore, NearStore, NetCache, NOW (NetApp on the Web), Onaro, OnCommand,

ONTAPI, OpenKey, PerformanceStak, RAID-DP, ReplicatorX, SANscreen, SANshare, SANtricity,

SecureAdmin, SecureShare, Select, Service Builder, Shadow Tape, Simplicity, Simulate ONTAP,

SnapCopy, SnapDirector, SnapDrive, SnapFilter, SnapLock, SnapManager, SnapMigrator,

SnapMirror, SnapMover, SnapProtect, SnapRestore, Snapshot, SnapSuite, SnapValidator, SnapVault,

StorageGRID, StoreVault, the StoreVault logo, SyncMirror, Tech OnTap, The evolution of storage,

Topio, vFiler, VFM, Virtual File Manager, VPolicy, WAFL, Web Filer, and XBB are trademarks or

registered trademarks of NetApp, Inc. in the United States, other countries, or both.

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business

Machines Corporation in the United States, other countries, or both. A complete and current list of

other IBM trademarks is available on the Web at www.ibm.com/legal/copytrade.shtml.

Apple is a registered trademark and QuickTime is a trademark of Apple, Inc. in the U.S.A. and/or other

countries. Microsoft is a registered trademark and Windows Media is a trademark of Microsoft

Corporation in the U.S.A. and/or other countries. RealAudio, RealNetworks, RealPlayer, RealSystem,

RealText, and RealVideo are registered trademarks and RealMedia, RealProxy, and SureStream are

trademarks of RealNetworks, Inc. in the U.S.A. and/or other countries.

All other brands or products are trademarks or registered trademarks of their respective holders and

should be treated as such.

NetApp, Inc. is a licensee of the CompactFlash and CF Logo trademarks.

NetApp, Inc. NetCache is certified RealSystem compatible.


Table of Contents

Chapter 1 Overview of Failover Drivers
  Failover Driver Setup Considerations
  Supported Failover Drivers Matrix
  I/O Coexistence
  Failover Configuration Diagrams
    Single-Host Configuration
    Host Clustering Configurations
    Supporting Redundant Controllers
  How a Failover Driver Responds to a Data Path Failure
  User Responses to a Data Path Failure
  Load-Balancing Policies
    Least Queue Depth
    Round Robin with Subset I/O
    Weighted Paths
  Dividing I/O Activity Between Two RAID Controllers to Obtain the Best Performance
  Changing the Preferred Path Online Without Stopping the Applications

Chapter 2 Failover Drivers for the Windows Operating System
  Microsoft Multipath Input/Output
  Device Specific Module for the Microsoft MPIO Solution
  Windows OS Restrictions
  Per-Protocol I/O Timeout Values
  Selective LUN Transfer
  Windows Failover Cluster
  I/O Shipping Feature for Asymmetric Logical Unit Access (ALUA)
    Verifying that the I/O Shipping Feature for ALUA Support is Installed on the Windows OS
    Disabling the I/O Shipping Feature for ALUA Support on the Windows OS
  Reduced Failover Timing
  Path Congestion Detection and Online/Offline Path States
  Configuration Settings for the Windows DSM and the Linux RDAC
  Wait Time Settings
  Configuration Settings for Path Congestion Detection and Online/Offline Path States
  Example Configuration Settings for the Path Congestion Detection Feature
  Running the DSM Failover Driver in a Hyper-V Guest with Pass-Through Disks
  dsmUtil Utility
  Device Manager
    Determining if a Path Has Failed
    Installing or Upgrading SANtricity ES and DSM on the Windows OS
    Removing SANtricity ES Storage Manager and the DSM Failover Driver from the Windows OS
    WinObj
      Device Specific Module Driver Directory Structures
  Frequently Asked Questions about Windows Failover Drivers

Chapter 3 Failover Drivers for the Linux Operating System
  Linux OS Restrictions
  Features of the RDAC Failover Driver from NetApp
  Prerequisites for Installing RDAC on the Linux OS
  Installing SANtricity ES Storage Manager and RDAC on the Linux OS
    Installing RDAC Manually on the Linux OS
    Making Sure that RDAC Is Installed Correctly on the Linux OS
  Configuring Failover Drivers for the Linux OS
  Compatibility and Migration
  mppUtil Utility
  Frequently Asked Questions about Linux Failover Drivers

Chapter 4 Device Mapper Multipath for the Linux Operating System
  Device Mapper Features
  Known Limitations and Issues of the Device Mapper
  Device Mapper Operating Systems Support
    I/O Shipping Feature for Asymmetric Logical Unit Access (ALUA) with Linux Operating Systems
    Installing the Device Mapper Multi-Path for the SUSE Linux Enterprise Server Version 11 Service Pack 2
    Installing the Device Mapper Multi-Path for the Red Hat Enterprise Linux Operating System Version 6.3
  Verifying that the I/O Shipping Feature for ALUA Support is Installed on the Linux OS
  Setting Up the multipath.conf File
    Updating the Blacklist Section
    Updating the Devices Section of the multipath.conf File
  Setting Up DM-MP for Large I/O Blocks
  Using the Device Mapper Devices
  Troubleshooting the Device Mapper

Chapter 5 Failover Drivers for the Solaris Operating System
  Solaris OS Restrictions
  Prerequisites for Installing MPxIO on the Solaris OS for the First Time
  Prerequisites for Installing MPxIO on a Solaris OS that Previously Ran RDAC
  Installing MPxIO on the Solaris 9 OS
  Enabling MPxIO on the Solaris 10 OS
  Enabling MPxIO on the Solaris 11 OS
  Configuring Failover Drivers for the Solaris OS
  Frequently Asked Questions about Solaris Failover Drivers

Chapter 6 Installing ALUA Support for VMware Versions ESX4.1U3, ESXi5.0U1, and Subsequent Versions

Chapter 1: Overview of Failover Drivers

Failover drivers provide redundant path management for storage devices and cables in the data path from the host bus adapter to the controller. For example, you can connect two host bus adapters in the system to the redundant controller pair in a storage array, with different buses for each controller. If one host bus adapter, one bus cable, or one controller fails, the failover driver automatically reroutes input/output (I/O) to the good path, which permits the storage array to continue operating without interruption.

Failover drivers provide these functions:

- They automatically identify redundant I/O paths.
- They automatically reroute I/O to an alternate controller when a controller fails or all of the data paths to a controller fail.
- They check the state of known paths to the storage array.
- They provide status information on the controller and the bus.
- They check to see if the Service mode is enabled and if the modes have switched between Redundant Dual Active Controller (RDAC) and Auto-Volume Transfer (AVT).
- They check to see if Service mode is enabled on a controller and if the AVT or asymmetric logical unit access (ALUA) mode of operation has changed.

Failover Driver Setup Considerations

Most storage arrays contain two controllers that are set up as redundant controllers. If

one controller fails, the other controller in the pair takes over the functions of the

failed controller, and the storage array continues to process data. You can then replace

the failed controller and resume normal operation. You do not need to shut down the

storage array to perform this task.

The redundant controller feature is managed by the failover driver software, which

controls data flow to the controller pairs independent of the operating system (OS).

This software tracks the current status of the connections and can perform the

switch-over without any changes in the OS.

Whether your storage arrays have the redundant controller feature depends on a

number of items:

- Whether the hardware supports it. Refer to the hardware documentation for your storage arrays to determine whether the hardware supports redundant controllers.
- Whether your OS supports certain failover drivers. Refer to the installation and support guide for your OS to determine if your OS supports redundant controllers.
- How the storage arrays are connected. The storage array must have two controllers installed in a redundant configuration. Drive trays must be cabled correctly to support redundant paths to all drives.


Supported

Failover Drivers

Matrix

2012, Windows 2008,

The I/O Shipping feature implements support for ALUA. With the I/O Shipping

feature, a storage array can service I/O requests through either controller in a duplex

configuration. However, I/O shipping alone does not guarantee that I/O is routed to

the optimized path. With Windows, Linux and VMWare, your storage array supports

an extension to ALUA to address this problem so that volumes are accessed through

the optimized path unless that path fails. With SANtricity ES 10.86 and subsequent

releases, Windows and Linux DM-MP have I/O shipping enabled by default.

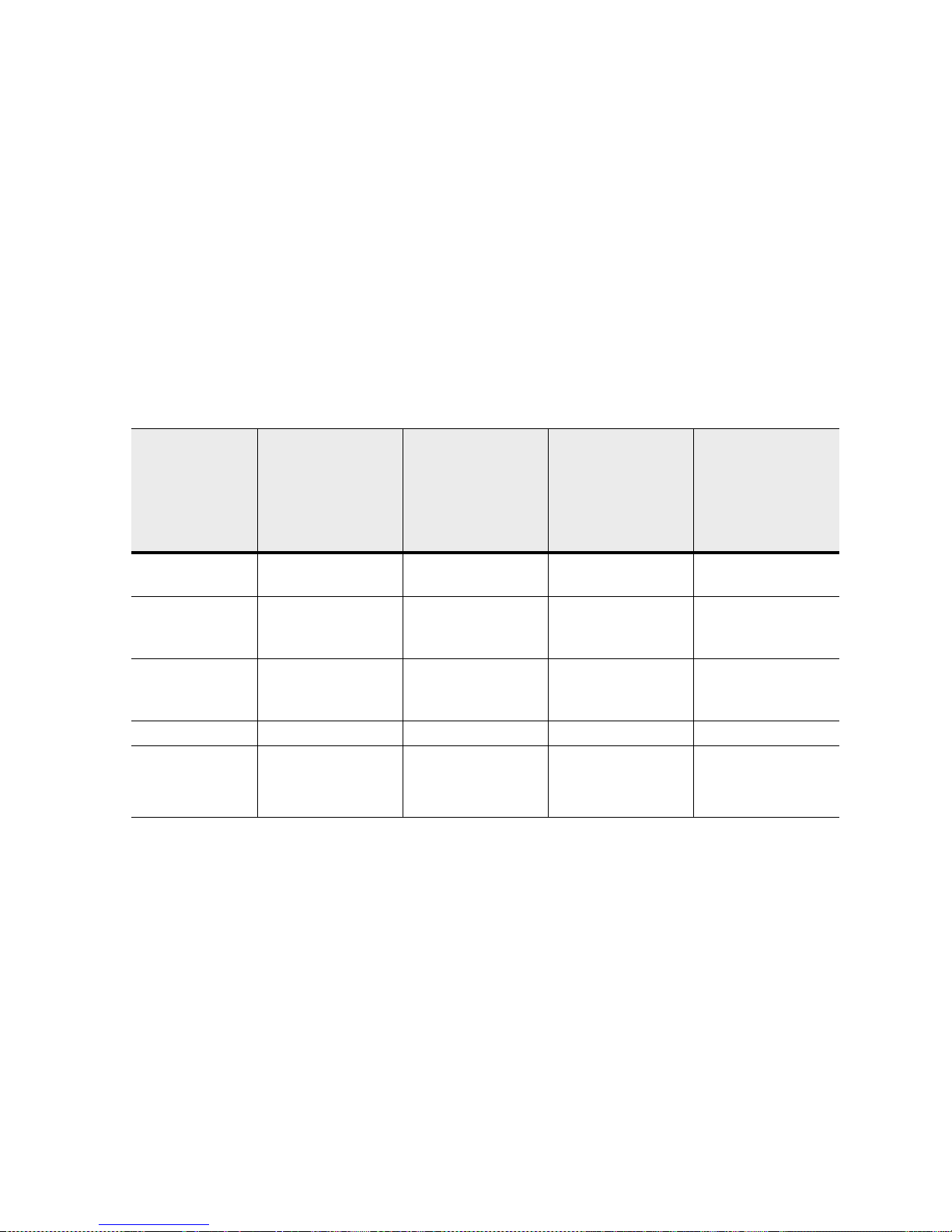

Table 1 Matrix of Supported Failover Drivers by Operating System (OS)

Red Hat Enterprise

Windows Server

Vista and Hyper-V

Linux (RHEL) 5.8

and 5.9 OSs and

SUSE Linux

Enterprise (SLES)

10.4 OS

RHEL 6.3 OS and

SUSE Linux

Enterprise 11.2

Solaris 10 U10,

Solaris 10 u11

Failover driver

type

Storage array

mode

Number of

volumes supported

per partition

Cluster support? Yes Yes Yes Yes

Controller-drive

trays supported

MPIO with NetApp

DSM-ALUA

Mode Select, or

ALUA

255 256 256 255

E2600 and E5400

E5500 (Windows

2012 only)

RDAC DMMP (with E5500)

or RDAC

Either Mode Select or

AV T

E2600 and E5400 E2600, E5400, and

Mode Select. Mode Select or

E5500

MPxIO (non-TPGS)

Asymmetric Logical

Unit Access (ALUA)

E2600 and E5400

2 Supported Failover Drivers Matrix

Table 2 Matrix of Supported Failover Drivers by Operating System (OS)

| | Solaris 11, Solaris 11.1 | HPUX 11.31 | VMware 4.1 u3, 5.1, and 5.0 u2 | Mac OS 10.6 and 10.7 |
| --- | --- | --- | --- | --- |
| Failover driver type | MPxIO (TPGS/ALUA) | TPGS/ALUA | VMware native - SATP/ALUA | ATTO driver with TPGS/ALUA |
| Storage array mode | Mode Select or ALUA | | | |
| Number of volumes per partition | 255 | | | |
| Cluster support? | No | No | Yes | NA |
| Controller-drive trays supported | E2600, E5400, and E5500 | E2600 and E5400 | E2600 and E5400 | E2600 and E5400 |

I/O Coexistence

A host can have connections to more than one storage array. The connected storage arrays can have different controller firmware levels, provided that they share common components (HBAs, switches, and operating system versions) and common failover drivers.

Failover Configuration Diagrams

You can configure failover in several ways. Each configuration has its own advantages and disadvantages. This section describes these configurations:

- Single-host configuration
- Multi-host configuration

This section also describes how the storage management software supports redundant

controllers.

NOTE For best results, use the multi-host configuration. It provides the fullest failover

protection and functionality in the event that a problem exists with the connection.

Single-Host Configuration

In a single-host configuration, the host system contains two host bus adapters

(HBAs), with each HBA connected to one of the controllers in the storage array. The

storage management software is installed on the host. The two connections are

required for maximum failover support for redundant controllers.

Although you can have a single controller in a storage array or a host that has only

one HBA port, you do not have complete failover data path protection with either of

those configurations. The cable and the HBA become a single point of failure, and

any data path failure could result in unpredictable effects on the host system. For the

greatest level of I/O protection, provide each controller in a storage array with its own

connection to a separate HBA in the host system.

Figure 1 Single-Host-to-Storage Array Configuration

1. Host System with Two Fibre Channel Host Bus Adapters

2. Fibre Channel Connection – Fibre Channel Connection Might Contain One or More Switches

3. Storage Array with Two Fibre Channel Controllers

Host Clustering Configurations

In a clustering configuration, two host systems are each connected by two

connections to both of the controllers in a storage array. SANtricity ES Storage

Manager, including failover driver support, is installed on each host.

Not every operating system supports this configuration. Consult the restrictions in the

installation and support guide specific to your operating system for more information.

Also, the host systems must be able to handle the multi-host configuration. Refer to

the applicable hardware documentation.


Both hosts have complete visibility of both controllers, all data connections, and all

configured volumes in a storage array, plus failover support for the redundant

controllers. However, in this configuration, you must use caution when you perform

storage management tasks (especially deleting and creating volumes) to make sure

that the two hosts do not send conflicting commands to the controllers in the storage

arrays.

The following items apply to these clustering configurations:

- Both hosts must have the same operating system version and SANtricity ES Storage Manager version installed.
- The failover driver configuration might require tuning.
- Both host systems must have the same volumes-per-host bus adapter capacity. This capacity is important for failover situations so that each controller can take over for the other and show all of the configured volume groups and volumes.
- If the operating system on the host system can create reservations, the storage management software honors them. Each host might have reservations to specified volume groups and volumes, and only the software on that host can perform operations on the reserved volume group and volume. Without reservations, the software on either host system is able to start any operation. Therefore, you must use caution when you perform certain tasks that need exclusive access. Especially when you create and delete volumes, make sure that you have only one configuration session open at a time (from only one host), or the operations that you perform could fail.


Figure 2 Multi-Host-to-Storage Array Configuration

1. Two Host Systems, Each with Two Fibre Channel Host Bus Adapters

2. Fibre Channel Connections with Two Switches (Might Contain Different Switch Configurations)

3. Storage Array with Two Fibre Channel Controllers

Supporting Redundant Controllers

The following figure shows how failover drivers provide redundancy when the host

application generates a request for I/O to controller A, but controller A fails. Use the

numbered information to trace the I/O data path.

Figure 3 Example of Failover I/O Data Path Redundancy


1. Host Application

2. I/O Request

3. Failover Driver

4. Host Bus Adapters

5. Controller A Failure

6. Controller B

7. Initial Request to the HBA

8. Initial Request to the Controller Failed

9. Request Returns to the Failover Driver

10. Failover Occurs and I/O Transfers to Another Controller

11. I/O Request Re-sent to Controller B
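The numbered data path in Figure 3 can be sketched in a few lines of Python. This is a minimal illustration only, not the driver's implementation: the `Controller`, `ControllerFailure`, and `FailoverDriver` names are hypothetical, and a real failover driver works at the SCSI layer rather than with Python objects.

```python
class ControllerFailure(Exception):
    """Hypothetical error raised when a controller cannot service a request."""


class Controller:
    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy

    def submit(self, request):
        if not self.healthy:
            raise ControllerFailure(self.name)
        return f"{request} completed by controller {self.name}"


class FailoverDriver:
    def __init__(self, owner, alternate):
        self.owner = owner          # controller that currently services the I/O
        self.alternate = alternate  # redundant controller in the pair

    def send(self, request):
        try:
            # Items 7 and 8: the initial request goes to the owning controller.
            return self.owner.submit(request)
        except ControllerFailure:
            # Items 9 and 10: the request returns to the driver, which fails over.
            self.owner, self.alternate = self.alternate, self.owner
            # Item 11: the request is re-sent to the other controller.
            return self.owner.submit(request)


driver = FailoverDriver(Controller("A", healthy=False), Controller("B"))
result = driver.send("read block 42")
print(result)
```

The host application sees only the final result; the retry on controller B happens inside the driver, which is the point of the figure.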

How a Failover Driver Responds to a Data Path Failure

One of the primary functions of the failover feature is to provide path management. Failover drivers monitor the data path for devices that are not working correctly or for multiple link errors. If a failover driver detects either of these conditions, the failover driver automatically performs these steps:

- The failover driver checks the pair table for the redundant controller.
- The failover driver forces volumes to the other controller and routes all I/O to the remaining active controller.
- The failover driver performs a path failure if alternate paths to the same controller are available. If all of the paths to a controller fail, RDAC performs a controller failure. The failover driver provides notification of an error through the OS error log facility.

When a drive and a controller fail simultaneously, the event is considered to be a double failure. The storage management software provides data integrity as long as all drive failures and controller failures are detected and fixed before more failures occur.

User Responses to a Data Path Failure

Use the Major Event Log (MEL) to respond to a data path failure. The information in the MEL provides the answers to these questions:

- What is the source of the error?
- What is required to fix the error, such as replacement parts or diagnostics?

Under most circumstances, contact your Technical Support Representative any time a path fails and the storage array notifies you of the failure. Use the Recovery Guru in the storage management software to diagnose and fix the problem, if possible. If your controller has failed and your storage array has customer-replaceable controllers, replace the failed controller. Follow the manufacturer’s instructions for how to replace a failed controller.

Load-Balancing Policies

Load balancing is the redistribution of read/write requests to maximize throughput

between the server and the storage array. Load balancing is very important in high

workload settings or other settings where consistent service levels are critical. The

multi-path driver transparently balances I/O workload without administrator

intervention. Without multi-path software, a server sending I/O requests down several

paths might operate with very heavy workloads on some paths, while other paths are

not used efficiently.

The multi-path driver determines which paths to a device are in an active state and can

be used for load balancing. The load-balancing policy uses one of three algorithms:

round robin, least queue depth, or least path weight. Multiple options for setting the

load-balancing policies let you optimize I/O performance when mixed host interfaces

are configured. The load-balancing policies that you can choose depend on your

operating system. Load balancing is performed on multiple paths to the same

controller but not across both controllers.

Table 3 Load-Balancing Policies That Are Supported by the Operating Systems

| Operating System | Multi-Path Driver | Load-Balancing Policy |
| --- | --- | --- |
| Windows | MPIO DSM | Round robin with subset, least queue depth, weighted paths |
| Red Hat Enterprise Linux (RHEL) | RDAC | Round robin with subset, least queue depth |
| | DMMP | Round robin |
| SUSE Linux Enterprise (SLES) | RDAC | Round robin with subset, least queue depth |
| | DMMP | Round robin |
| Solaris | MPxIO | Round robin with subset |

Least Queue Depth

The least queue depth policy is also known as the least I/Os policy or the least

requests policy. Among the data paths to the controller that owns the volume, this policy

routes the next I/O request to the path that has the fewest outstanding I/O requests queued.

For this policy, an I/O request is simply a command in the queue. The type of

command or the number of blocks that are associated with the command is not

considered. The least queue depth policy treats large block requests and small block

requests equally. The data path selected is one of the paths in the path group of the

controller that owns the volume.
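As a rough illustration of the selection rule described above, the following Python sketch picks a path by queue depth alone. The `Path` record, its field names, and the path names are assumptions made for the example; they are not part of any driver interface.

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str        # hypothetical path label
    controller: str  # controller this path leads to
    queued: int      # outstanding I/O requests, regardless of type or size


def least_queue_depth(paths, owner):
    """Return the path to the owning controller with the fewest queued requests."""
    candidates = [p for p in paths if p.controller == owner]
    if not candidates:
        raise ValueError(f"no path to owning controller {owner}")
    return min(candidates, key=lambda p: p.queued)


paths = [
    Path("hba0-A", "A", queued=7),
    Path("hba1-A", "A", queued=3),
    Path("hba0-B", "B", queued=0),  # idle, but B does not own the volume
]
chosen = least_queue_depth(paths, owner="A")
print(chosen.name)
```

Note that the idle path to controller B is never considered, because only paths in the owning controller's path group are candidates.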

Round Robin with Subset I/O

The round robin with subset I/O load-balancing policy routes I/O requests, in rotation,

to each available data path to the controller that owns the volumes. This policy treats

all paths to the controller that owns the volume equally for I/O activity. Paths to the

secondary controller are ignored until ownership changes. The basic assumption for

the round robin with subset I/O policy is that the data paths are equal. With mixed

host support, the data paths might have different bandwidths or different data transfer

speeds.
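The rotation can be sketched as follows. This is an assumption-laden illustration in Python: the path names and the tuple layout are invented for the example, and the sketch ignores path state changes.

```python
from itertools import cycle


def round_robin_subset(paths, owner):
    """Rotate over the paths that lead to the owning controller only.

    paths is a list of (path_name, controller) tuples (hypothetical layout).
    """
    subset = [name for name, controller in paths if controller == owner]
    if not subset:
        raise ValueError(f"no path to owning controller {owner}")
    return cycle(subset)


paths = [("hba0-A", "A"), ("hba0-B", "B"), ("hba1-A", "A")]
rotation = round_robin_subset(paths, owner="A")
sequence = [next(rotation) for _ in range(4)]
print(sequence)
```

Every path in the subset receives the same share of requests, which is why paths with different bandwidths can make this policy a poor fit.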

Weighted Paths

The weighted paths policy assigns a weight factor to each data path to a volume. An

I/O request is routed to the path with the lowest weight value to the controller that

owns the volume. If more than one data path to the volume has the same weight value,

the round-robin with subset path selection policy is used to route I/O requests

between the paths with the same weight value.
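A minimal Python sketch of this rule, under the assumption that each path is described by a name, a controller, and a numeric weight (all hypothetical values chosen for the example):

```python
from itertools import cycle


def weighted_path_selector(paths, owner):
    """Rotate over the lowest-weight paths to the owning controller.

    paths is a list of (path_name, controller, weight) tuples (hypothetical layout).
    """
    owned = [p for p in paths if p[1] == owner]
    if not owned:
        raise ValueError(f"no path to owning controller {owner}")
    lowest = min(weight for _, _, weight in owned)
    # Paths that tie on the lowest weight share the I/O in rotation.
    tied = [name for name, _, weight in owned if weight == lowest]
    return cycle(tied)


paths = [
    ("hba0-A", "A", 10),
    ("hba1-A", "A", 10),
    ("hba2-A", "A", 50),  # higher weight: not used while lower-weight paths exist
    ("hba0-B", "B", 1),   # lowest weight overall, but B does not own the volume
]
selector = weighted_path_selector(paths, owner="A")
picks = [next(selector) for _ in range(3)]
print(picks)
```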


Dividing I/O Activity Between Two RAID Controllers to Obtain the Best Performance

For the best performance of a redundant controller system, use the storage

management software to divide I/O activity between the two RAID controllers in the

storage array. You can use either the graphical user interface (GUI) or the command

line interface (CLI).

To use the GUI to divide I/O activity between two RAID controllers, perform one of these steps:

- Specify the owner of the preferred controller of an existing volume – Select Volume >> Change >> Ownership/Preferred Path in the Array Management Window.

  NOTE You also can use this method to change the preferred path and ownership of all volumes in a volume group at the same time.

- Specify the owner of the preferred controller of a volume when you are creating the volume – Select Volume >> Create in the Array Management Window.

To use the CLI, go to the "Create RAID Volume (Free Extent Based Select)" online

help topic for the command syntax and description.

Changing the Preferred Path Online Without Stopping the Applications

You can change the preferred path setting for a volume or a set of volumes online

without stopping the applications. However, the host multipath driver might not

immediately recognize that the preferred path has changed.

During the interval when the old path is still used, the driver might trigger a "volume not on preferred path" Needs Attention condition in the storage

management software. This condition is removed as soon as the state change monitor

is run. A MEL event and an associated alert notification are delivered for the

condition. You can configure the AVT alert delay period with the storage management

software. The Needs Attention reporting is postponed until the failover driver failback

task has had a chance to run.

If the ALUA mode is enabled, the failover driver will continue to use the same data

path or paths. The storage array will, however, use its I/O Shipping feature to service

I/O requests even if they are directed to the non-owning controller.

NOTE The newer versions of the RDAC failover driver and the DSM failover driver

do not recognize any AVT or ALUA status change (enabled or disabled) until the next

cycle of the state change monitor.


Chapter 2: Failover Drivers for the Windows Operating System

The failover driver for hosts with Microsoft Windows operating systems is Microsoft Multipath I/O (MPIO) with a Device Specific Module (DSM) for SANtricity ES Storage Manager.

Microsoft Multipath Input/Output

Microsoft Multipath I/O (MPIO) provides an infrastructure to build highly available solutions for Windows operating systems (OSs). MPIO uses Device Specific Modules (DSMs) to provide I/O routing decisions, error analysis, and failover.

NOTE You can use MPIO for all controllers that run controller firmware version 6.19 or later. MPIO is not supported for any earlier versions of the controller firmware, and MPIO cannot coexist on a server with RDAC. If you have legacy systems that run controller firmware versions earlier than 6.19, you must use RDAC for your failover driver. For SANtricity ES Storage Manager Version 10.10 and later and all versions of SANtricity Storage Manager, the Windows OS supports only MPIO.

Device Specific Module for the Microsoft MPIO Solution

The DSM driver is the hardware-specific part of Microsoft’s MPIO solution. This release supports the Microsoft Windows Server 2008 OS, the Windows Server 2008 R2 OS, and the Windows Server 2012 OS. The Hyper-V role is also supported when running the DSM within the parent partition. SANtricity ES Storage Manager Version 10.86 works with the DSM to implement supported features.

Windows OS Restrictions

The MPIO DSM failover driver comes in these versions:

- 32-bit (x86)
- 64-bit AMD/EM64T (x64)

These versions are not compatible with each other. Because multiple copies of the driver cannot run on the same system, each subsequent release is backward compatible.

Per-Protocol I/O Timeout Values

The timeout value associated with non-passthrough I/O requests, such as read/write

requests, is based on the Microsoft disk driver's TimeOutValue parameter, as

defined in the Registry. A feature within the DSM allows a customized timeout value

to be applied based on the protocol (such as Fibre Channel, SAS, or iSCSI) that a path

uses. Per-protocol timeout values provide these benefits:

Without per-protocol timeout values, the TimeOutValue setting is global and

affects all storage.

The TimeOutValue is typically reset when an HBA driver is upgraded.

For information about the configurable parameters for the customized timeout

feature, go to Configuration Settings for the Windows DSM and the Linux

RDAC.

The per-protocol timeout values feature slightly modifies the way in which the

SynchTimeout parameter is evaluated. The SynchTimeout parameter

determines the I/O timeout for synchronous requests generated by the DSM driver.

Examples include the native handling of persistent reservation requests and inquiry

commands used during device discovery. It is important that the timeout value for the

requests from the DSM driver be at least as large as the per-protocol I/O timeout

value. When a host boots, the DSM driver performs these actions:

If the value of the SynchTimeout parameter is defined in the Registry key of

the DSM driver, record the current value.

If the value of the TimeOutValue parameter of the Microsoft disk driver is

defined in the Registry, record the current value.

Use the higher of the two values as the initial value of the SynchTimeout

parameter.

If neither value is defined, use a default value of 10 seconds.

For each synchronous I/O request, the higher value of either the per-protocol I/O

timeout or the SynchTimeout parameter is used. For example:

— If the value of the SynchTimeout parameter is 120 seconds, and the value

of the TimeOutValue parameter is 60 seconds, 120 seconds is used for the

initial value.

— If the value of the SynchTimeout parameter is 120 seconds, and the value

of the TimeOutValue parameter is 180 seconds, 180 seconds is used for

the initial value of the synchronous I/O requests for the DSM driver.

— If the I/O timeout value for a different protocol (for example, SAS) is 60

seconds and the initial value is 120 seconds, the I/O is sent using a

120-second timeout.
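The selection rules above can be sketched in a few lines. This is a minimal illustration of the described behavior, not the DSM driver's code; the function names `initial_synch_timeout` and `request_timeout` are hypothetical, and `None` is assumed to stand for a value that is not defined in the Registry.

```python
from typing import Optional

# Default stated in the text for the case where neither Registry value is defined.
DEFAULT_SYNCH_TIMEOUT = 10  # seconds


def initial_synch_timeout(synch_timeout: Optional[int],
                          disk_timeout_value: Optional[int]) -> int:
    """Pick the initial SynchTimeout at host boot (hypothetical helper).

    Uses the higher of SynchTimeout and the disk driver's TimeOutValue,
    considering only the values that are actually defined.
    """
    defined = [v for v in (synch_timeout, disk_timeout_value) if v is not None]
    return max(defined) if defined else DEFAULT_SYNCH_TIMEOUT


def request_timeout(initial: int, per_protocol_timeout: int) -> int:
    """Timeout applied to one synchronous request: the higher of the two values."""
    return max(initial, per_protocol_timeout)


# The three examples from the text.
first = initial_synch_timeout(120, 60)
second = initial_synch_timeout(120, 180)
third = request_timeout(120, 60)
```

With the values from the examples, `first` is 120, `second` is 180, and `third` is 120.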

Selective LUN Transfer

This feature limits the conditions under which the DSM driver moves a LUN to the alternative controller to three cases:

1. When a DSM driver with a path to only one controller, the non-preferred path, discovers a path to the alternate controller.

2. When an I/O request is directed to a LUN that is owned by the preferred path, but the DSM driver is attached to only the non-preferred path.

3. When an I/O request is directed to a LUN that is owned by the non-preferred path, but the DSM driver is attached to only the preferred path.

Case 2 and case 3 have these user-configurable parameters that can be set to tune the behavior of this feature.

The maximum number of times that the LUN transfer is issued. This parameter

setting prevents a continual ownership thrashing condition from occurring in

cases where the controller tray or the controller-drive tray is attached to another

host that requires the LUN be owned by the current controller.

A time delay before LUN transfers are attempted. This parameter is used to

de-bounce intermittent I/O path link errors. During the time delay, I/O requests

will be retried on the current controller to take advantage of the possibility that

another host might transition the LUN to the current controller.

For further information about these two parameters, go to Configuration Settings for

the Windows DSM and the Linux RDAC.

In the case where the host system is connected to both controllers and an I/O is

returned with a 94/01 status, in which the LUN is not owned and can be owned, the

DSM driver modifies its internal data on which controller to use for that LUN and

reissues the command to the other controller. To avoid interfering with other hosts that

might be attached to a controller tray or the controller-drive tray, the DSM driver does

not issue a LUN transfer command to that controller tray or controller-drive tray.

When the DSM detects that a volume transfer operation is required, the DSM does not

immediately issue the failover/failback command. The DSM will, with the default

settings, delay for three seconds before sending the command to the storage array.

This delay provides time to batch together as many volume transfer operations for

other LUNs as possible. The controller single-threads volume transfer operations and

therefore rejects additional transfer commands until the controller has completed the

operation that it is currently working on. This action results in a period during which

I/Os are not successfully serviced by the storage array. By introducing a delay during

which volume transfer operations can be aggregated into a batch operation, the DSM

reduces the likelihood that a volume transfer operation will exceed the retry limit. In

large system configurations, you might need to increase the default three-second

delay value because, with more hosts in the configuration, more volume transfer

commands might be sent.

The delay value is located at:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\<DSM_Driver>\Parameters\LunFailoverDelay
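The batching effect of the delay can be pictured with a short sketch. It is only an illustration under stated assumptions, not the DSM implementation: the function name `batch_transfers`, the `(time, lun)` tuples, and the rule that a request arriving exactly when a batch is sent starts a new batch are all hypothetical.

```python
from typing import List, Tuple


def batch_transfers(requests: List[Tuple[float, int]],
                    delay: float = 3.0) -> List[List[int]]:
    """Group (arrival_time_seconds, lun) transfer requests into batches.

    A batch opens when its first request arrives and is sent `delay`
    seconds later; requests that arrive before then join the same batch.
    """
    batches: List[List[int]] = []
    current: List[int] = []
    send_at = None  # time at which the open batch is sent
    for arrival, lun in sorted(requests):
        if send_at is None or arrival >= send_at:
            if current:
                batches.append(current)
            current = [lun]
            send_at = arrival + delay
        else:
            current.append(lun)
    if current:
        batches.append(current)
    return batches


# Three requests inside the first three-second window, one after it.
example = batch_transfers([(0.0, 1), (1.0, 2), (2.9, 3), (3.5, 4)])
```

In this example the first three LUNs share one transfer command and the fourth is sent separately, which is why a longer delay can reduce the number of commands the controller must process one at a time.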

This feature is enabled when these conditions exist:

The controller tray or the controller-drive tray does not have AVT enabled.

The DSM driver configurable parameter ClassicModeFailover is set to 1.

The DSM driver configurable parameter DisableLunRebalance is set to 4.
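The three conditions above amount to a single Boolean test. The sketch below restates them; the function name `selective_lun_transfer_enabled` and its argument names are illustrative assumptions, and the values would come from the array configuration and the DSM Registry parameters.

```python
def selective_lun_transfer_enabled(avt_enabled: bool,
                                   classic_mode_failover: int,
                                   disable_lun_rebalance: int) -> bool:
    """Return True when all three conditions listed in the text hold."""
    return (not avt_enabled
            and classic_mode_failover == 1
            and disable_lun_rebalance == 4)


# AVT off, LUN-level failover, DisableLunRebalance set to 4.
enabled = selective_lun_transfer_enabled(False, 1, 4)
# Same Registry values, but AVT is enabled on the tray.
disabled = selective_lun_transfer_enabled(True, 1, 4)
```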

Windows Failover Cluster

Clustering for Windows Server 2008 Operating Systems and subsequent releases of

Windows uses SCSI-3 persistent reservations natively. As a result, you can use one of

the previously mentioned load-balancing policies across all controller paths. If you

are operating in a clustered or shared LUN environment and are not using the I/O


Shipping feature of the CFW or Selective LUN Transfer feature of the DSM, set the

DisableLunRebalance parameter to 3. For information about this parameter, go

to Configuration Settings for the Windows DSM and the Linux RDAC.

I/O Shipping Feature for Asymmetric Logical Unit Access (ALUA)

The I/O Shipping feature implements support for ALUA. With earlier releases of the controller firmware (CFW), the device specific module (DSM) had to send input/output (I/O) requests for a particular volume to the controller that owned that volume. A controller would reject requests it received for a volume that it did not own. This behavior was necessary in order for the storage array to maintain data consistency within the volume. This same behavior, however, was responsible for several areas of contention during system boot and during multi-host/path failure conditions.

With the I/O Shipping feature, a storage array can service I/O requests through either controller in a duplex configuration. There is a performance penalty when the non-owning controller accesses a volume. To maintain the best I/O subsystem performance, the DSM interacts with the CFW to ensure that I/O requests are sent to the owning controller if that controller is available.

Verifying that the I/O Shipping Feature for ALUA Support is Installed on the Windows OS

When you install or update host software to SANtricity ES version 10.83 or later and install or update controller firmware to CFW version 7.83 or later on the Windows OS, support for ALUA is enabled by default. Perform the following steps to verify that ALUA support is installed.

1. From the Start menu, select Command Prompt.

2. At the command prompt, type dsmUtil -g <target_id>.

The appearance of ALUAEnabled properties in the output indicates that the

failover driver is upgraded.

3. At the command prompt, type SMdevices.

The appearance of active optimized or active non-optimized

(rather than passive or unowned) in the output indicates that the host can see

the LUNs that are mapped to it.

Disabling the I/O Shipping Feature for ALUA Support on the Windows OS

When you install or update the DSM, by default, the Selective LUN Transfer (SLT)

feature is enabled to support I/O Shipping. Some registry values are modified during

the DSM update if the previous version did not enable SLT. To prevent enabling SLT

so that your storage array operates without the I/O Shipping feature, edit the registry

to have the following settings.

1. From the Start menu, select Command Prompt.

2. At the command prompt, type the following command: Regedit.exe

3. In the Registry Editor, expand the path to

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\<

DSM_Driver>\Parameters. The expression <DSM_Driver> is the name of

the DSM driver used in your storage array.

4. Set the parameter DisableLunRebalance.

5. Set the parameter ClassicModeFailover.

6. Close the Registry Editor.

Reduced Failover Timing

Settings related to drive I/O timeout and HBA connection loss timeout are adjusted in

the host operating system so that failover does not occur when a controller is

restarted. These settings provide protection from exception conditions that might

occur when both controllers in a controller tray or a controller-drive tray are restarted

at the same time, but they have the unfortunate side-effect of causing longer failover

times than might be tolerated by some application or clustered environments. Support

for the reduced failover timing feature includes support for reduced timeout settings,

which result in faster failover response times.

The following restrictions apply to this feature:

Only the Windows Server 2008 OS and subsequent releases of the Windows OS

support this feature.

Non-enterprise products attached to a host must use controller firmware release

7.35 or higher. Enterprise products attached to a host must use controller

firmware release 7.6 or higher. For configurations where a mix of earlier releases

is installed, older versions are not supported.

When this feature is used with Windows Server Failover Cluster (WSFC) on the

Windows Server 2008 OS, MPIO HotFix 970525 is required. The required

HotFix is a standard feature for the Windows Server 2008 R2 OS.

Additional restrictions apply to storage array brownout conditions. Depending on

how long the brownout condition lasts, PR registration information for volumes might

be lost. By design, WSFC periodically polls the cluster storage to determine the

overall health and availability of the resources. One action performed during this

polling is a PRIN READ_KEYS request, which returns registration information.

Because a brownout condition can cause blank information to be returned, WSFC

interprets this as a loss of access to the drive resource and attempts recovery by first

failing that drive resource, and then performing a new arbitration.


NOTE Any condition that causes blank registration information to be returned, where

previous requests returned valid registration information, can cause the drive resource

to fail. If the arbitration succeeds, the resource is brought online. Otherwise, the

resource remains in a failed state. One reason for an arbitration failure is the

combination of brownout condition and plug-and-play (PnP) timing issues if the HBA

timeout period expires. When the timeout period expires, the OS is notified of an HBA

change and must re-enumerate the HBAs to determine which devices no longer exist

or, in the case where a connection is re-established, what devices are now present.

The arbitration recovery process happens almost immediately after the resource is

failed. This situation, along with the PnP timing issue, might result in a failed

recovery attempt. Fortunately, you can modify the timing of the recovery process by

using the cluster.exe command-line tool. Microsoft recommends changing the

following, where resource_name is a cluster disk resource, such as Cluster Disk 1:

cluster.exe resource "resource_name" /prop RestartDelay=4000

cluster.exe resource "resource_name" /prop RestartThreshold=5

The previous example changes the disk-online delay to four seconds and the number

of online restarts to five. The changes must be repeated for each drive resource. The

changes persist across reboots. To display either the current settings or the changed

settings, run the following command:

cluster.exe resource "resource_name" /prop
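Because the change must be repeated for each drive resource, it can help to generate the command lines rather than type them. The following is a minimal sketch that only builds the command strings shown above and does not run them; the function name `restart_tuning_commands` and the resource names in the example are hypothetical.

```python
from typing import Iterable, List


def restart_tuning_commands(resource_names: Iterable[str],
                            restart_delay_ms: int = 4000,
                            restart_threshold: int = 5) -> List[str]:
    """Build the cluster.exe command lines for each cluster disk resource."""
    commands = []
    for name in resource_names:
        for prop, value in (("RestartDelay", restart_delay_ms),
                            ("RestartThreshold", restart_threshold)):
            commands.append(f'cluster.exe resource "{name}" /prop {prop}={value}')
    return commands


# Hypothetical resource names; substitute the names used in your cluster.
for line in restart_tuning_commands(["Cluster Disk 1", "Cluster Disk 2"]):
    print(line)
```

Review the printed lines before running them on a cluster node.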

Another option exists to prevent the storage array from returning blank registration

information. This option takes advantage of the Active Persist Through Power Loss

(APTPL) feature found in Persistent Reservations, which ensures that the registration

information persists through brownout or other conditions related to a power failure.

APTPL is enabled when a registration is initially made to the drive resource. WSFC

does not use the APTPL feature, but an option is provided in the DSM to set this

feature when a registration request is made.

NOTE Because the APTPL feature is not supported in WSFC, Microsoft does not

recommend its use. The APTPL feature should be considered as an option of last resort

when the cluster.exe options cannot meet the tolerances needed. If a cluster setup

cannot be brought online successfully after this option is used, the controller shell or

SYMbol commands might be required to clear existing persistent reservations.


NOTE The APTPL feature within the DSM driver is enabled using the DSM utility

with the -o (feature) option by setting the SetAPTPLForPR option to 1. According

to the SCSI specification, you must set this option before PR registration occurs. If you

set this option after a PR registration occurs, take the disk resource offline, and then

bring the disk resource back online. If the DSM driver has set the APTPL option during

registration, an internal flag is set, and the DSM utility output from the -g option

indicates this condition. The SCSI specification does not provide a means for the

initiator to query the storage array to determine the current APTPL setting. As a result,

the -g output from one node might show the option set, but another node might not.

Interpret output from the -g option with caution. By default, the DSM driver is

released without this option enabled.

Path Congestion Detection and Online/Offline Path States

The path congestion detection feature allows the DSM driver to place a path offline based on the path I/O latency. The DSM automatically sets a path offline when I/O response times exceed user-definable congestion criteria. An administrator can manually place a path into the Admin Offline state. When a path is set offline, either by the DSM driver or by an administrator, I/O is routed to a different path. The offline or admin offline path is not used for I/O until the system administrator sets the path online.

For more information on path congestion configurable parameters, go to Configuration Settings for the Windows DSM and the Linux RDAC.

Configuration Settings for the Windows DSM and the Linux RDAC

This topic applies to both the Windows OS and the Linux OS. The failover driver that

is provided with the storage management software contains configuration settings that

can modify the behavior of the driver.

For the Linux OS, the configuration settings are in the /etc/mpp.conf file.

For the Windows OS, the configuration settings are in the

HKEY_LOCAL_MACHINE\System\CurrentControlSet\

Services\<DSM_Driver>\Parameters registry key, where

<DSM_Driver> is the name of the OEM-specific driver.

The default Windows failover driver is mppdsm.sys. Any changes to the settings

take effect on the next reboot of the host.

The default values listed in the following table apply to both the Windows OS and the

Linux OS unless the OS is specified in parentheses. Many of these values are

overridden by the failover installer for the Linux OS or the Windows OS.

ATTENTION Possible loss of data access – If you change these settings from their

configured values, you might lose access to the storage array.


Table 1 Configuration Settings for the Windows DSM and the Linux RDAC

Parameter Name Default Value (Operating System) Description

MaxPathsPerController 4 The maximum number of paths (logical endpoints)

that are supported per controller. The total number

of paths to the storage array is the

MaxPathsPerController value multiplied

by the number of controllers. The allowed values

range from 0x1 (1) to 0x20 (32) for the Windows

OS, and from 0x1 (1) to 0xFF (255) for Linux

RDAC.

For use by Technical Support Representatives only.

ScanInterval 1 (Windows), 60 (Linux) The interval at which the failover driver checks for these conditions:

A change in preferred ownership for a LUN

An attempt to rebalance LUNs to their preferred paths

A change in AVT enabled status or AVT disabled status

A change in ALUA enabled status or ALUA disabled status

For the Windows OS, the allowed values range from 0x1 to 0xFFFFFFFF and must be specified in minutes.

For the Linux OSs, the allowed values range from 0x1 to 0xFFFFFFFF and must be specified in seconds.

For use by Technical Support Representatives only.

ErrorLevel 3 This setting determines which errors to log. These

values are valid:

0 – Display all errors

1 – Display path failover errors, controller

failover errors, re-triable errors, fatal errors,

and recovered errors

2 – Display path failover errors, controller

failover errors, re-triable errors, and fatal

errors

3 – Display path failover errors, controller

failover errors, and fatal errors

4 – Display controller failover errors, and fatal

errors

For use by Technical Support Representatives only.

SelectionTimeoutRetryCount 0 The number of times a selection timeout is retried

for an I/O request before the path fails. If another

path to the same controller exists, the I/O is retried.

If no other path to the same controller exists, a

failover takes place. If no valid paths exist to the

alternate controller, the I/O is failed.

The allowed values range from 0x0 to

0xFFFFFFFF.

For use by Technical Support Representatives only.

CommandTimeoutRetryCount 1 The number of times a command timeout is retried

for an I/O request before the path fails. If another

path to the same controller exists, the I/O is retried.

If another path to the same controller does not

exist, a failover takes place. If no valid paths exist

to the alternate controller, the I/O is failed.

The allowed values range from 0x0 to 0xa (10)

for the Windows OS, and from 0x0 to

0xFFFFFFFF for Linux RDAC.

For use by Technical Support Representatives only.


UaRetryCount 10 The number of times a Unit Attention (UA) status

from a LUN is retried. This parameter does not

apply to UA conditions due to Quiescence In

Progress.

The allowed values range from 0x0 to 0x64 (100)

for the Windows OS, and from 0x0 to

0xFFFFFFFF for Linux RDAC.

For use by Technical Support Representatives only.

SynchTimeout 120 The timeout, in seconds, for synchronous I/O

requests that are generated internally by the

failover driver. Examples of internal requests

include those related to rebalancing, path

validation, and issuing of failover commands.

The allowed values range from 0x1 to

0xFFFFFFFF.

For use by Technical Support Representatives only.

DisableLunRebalance 0 This parameter provides control over the LUN

failback behavior of rebalancing LUNs to their

preferred paths. These values are possible:

0 – LUN rebalance is enabled for both AVT

and non-AVT modes.

1 – LUN rebalance is disabled for AVT mode

and enabled for non-AVT mode.

2 – LUN rebalance is enabled for AVT mode

and disabled for non-AVT mode.

3 – LUN rebalance is disabled for both AVT

and non-AVT modes.

4 – The selective LUN Transfer feature is

enabled if AVT mode is off and

ClassicModeFailover is set to LUN

level 1.

S2ToS3Key Unique key This value is the SCSI-3 reservation key generated

during failover driver installation.

For use by Technical Support Representatives only.


LoadBalancePolicy 1 This parameter determines the load-balancing

policy used by all volumes managed by the

Windows DSM and Linux RDAC failover drivers.

These values are valid:

0 – Round robin with subset.

1 – Least queue depth with subset.

2 – Least path weight with subset (Windows

OS only)

ClassicModeFailover 0 This parameter provides control over how the DSM

handles failover situations. These values are valid:

0 – Perform controller-level failover (all LUNs

are moved to the alternate controller).

1 – Perform LUN-level failover (only the

LUNs indicating errors are transferred to the

alternate controller).

SelectiveTransferMaxTransferAttempts 3 This parameter sets the maximum number of times

that a host transfers the ownership of a LUN to the

alternate controller when the Selective LUN

Transfer mode is enabled. This setting prevents

multiple hosts from continually transferring LUNs

between controllers.

SelectiveTransferMinIOWaitTime 3 This parameter sets the minimum wait time (in

seconds) that the DSM waits before it transfers a

LUN to the alternate controller when the Selective

LUN Transfer mode is enabled. This parameter is

used to limit excessive LUN transfers due to

intermittent link errors.
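The DisableLunRebalance values 0 through 3 in Table 1 can be read as a small lookup table. The sketch below encodes only what the table states; the names `REBALANCE_MODES` and `lun_rebalance_enabled` are hypothetical, and value 4 is deliberately not modeled because the table describes it in terms of the Selective LUN Transfer feature rather than rebalancing.

```python
# DisableLunRebalance value -> whether rebalance is enabled per mode (from Table 1).
REBALANCE_MODES = {
    0: {"avt": True, "non_avt": True},
    1: {"avt": False, "non_avt": True},
    2: {"avt": True, "non_avt": False},
    3: {"avt": False, "non_avt": False},
}


def lun_rebalance_enabled(disable_lun_rebalance: int, avt_mode: bool) -> bool:
    """Return whether LUN rebalance is enabled for the given setting and mode."""
    if disable_lun_rebalance not in REBALANCE_MODES:
        # Value 4 selects Selective LUN Transfer and is not covered by this sketch.
        raise ValueError(f"value {disable_lun_rebalance} is not modeled here")
    key = "avt" if avt_mode else "non_avt"
    return REBALANCE_MODES[disable_lun_rebalance][key]


# Setting 3, recommended in the text for clustered environments that do not
# use I/O Shipping or Selective LUN Transfer, disables rebalance in both modes.
clustered = (lun_rebalance_enabled(3, True), lun_rebalance_enabled(3, False))
```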

Wait Time Settings

When the failover driver receives an I/O request for the first time, the failover driver

logs timestamp information for the request. If a request returns an error and the

failover driver decides to retry the request, the current time is compared with the

original timestamp information. Depending on the error and the amount of time that

has elapsed, the request is retried to the current owning controller for the LUN, or a

failover is performed and the request sent to the alternate controller. This process is


known as a wait time. If the NotReadyWaitTime value, the BusyWaitTime

value, and the QuiescenceWaitTime value are greater than the

ControllerIoWaitTime value, they will have no effect.
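A quick check for the situation described above might look like the following sketch. It assumes the rule applies to each of the three parameters individually, which the text does not state explicitly; the function name `ineffective_wait_times` and the example values are hypothetical.

```python
from typing import Dict, List

PER_CONDITION_SETTINGS = ("NotReadyWaitTime", "BusyWaitTime", "QuiescenceWaitTime")


def ineffective_wait_times(settings: Dict[str, int]) -> List[str]:
    """List the per-condition wait times that exceed ControllerIoWaitTime.

    According to the text, such values have no effect.
    """
    limit = settings["ControllerIoWaitTime"]
    return [name for name in PER_CONDITION_SETTINGS
            if settings.get(name, 0) > limit]


# Hypothetical values, in seconds.
flagged = ineffective_wait_times({
    "NotReadyWaitTime": 300,
    "BusyWaitTime": 60,
    "QuiescenceWaitTime": 120,
    "ControllerIoWaitTime": 120,
})
```

Here only NotReadyWaitTime is flagged, because 300 seconds is greater than the 120-second controller limit.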

For the Linux OS, the configuration settings can be found in the /etc/mpp.conf

file. For the Windows OS, the configuration settings can be found in the Registry

under:

HKEY_LOCAL_MACHINE\System\CurrentControlSet\

Services\<DSM_Driver>

In the preceding setting, <DSM_Driver> is the name of the OEM-specific driver.

The default Windows driver is named mppdsm.sys. Any changes to the settings

take effect the next time the host is restarted.

ATTENTION Possible loss of data access – If you change these settings from their

configured values, you might lose access to the storage array.

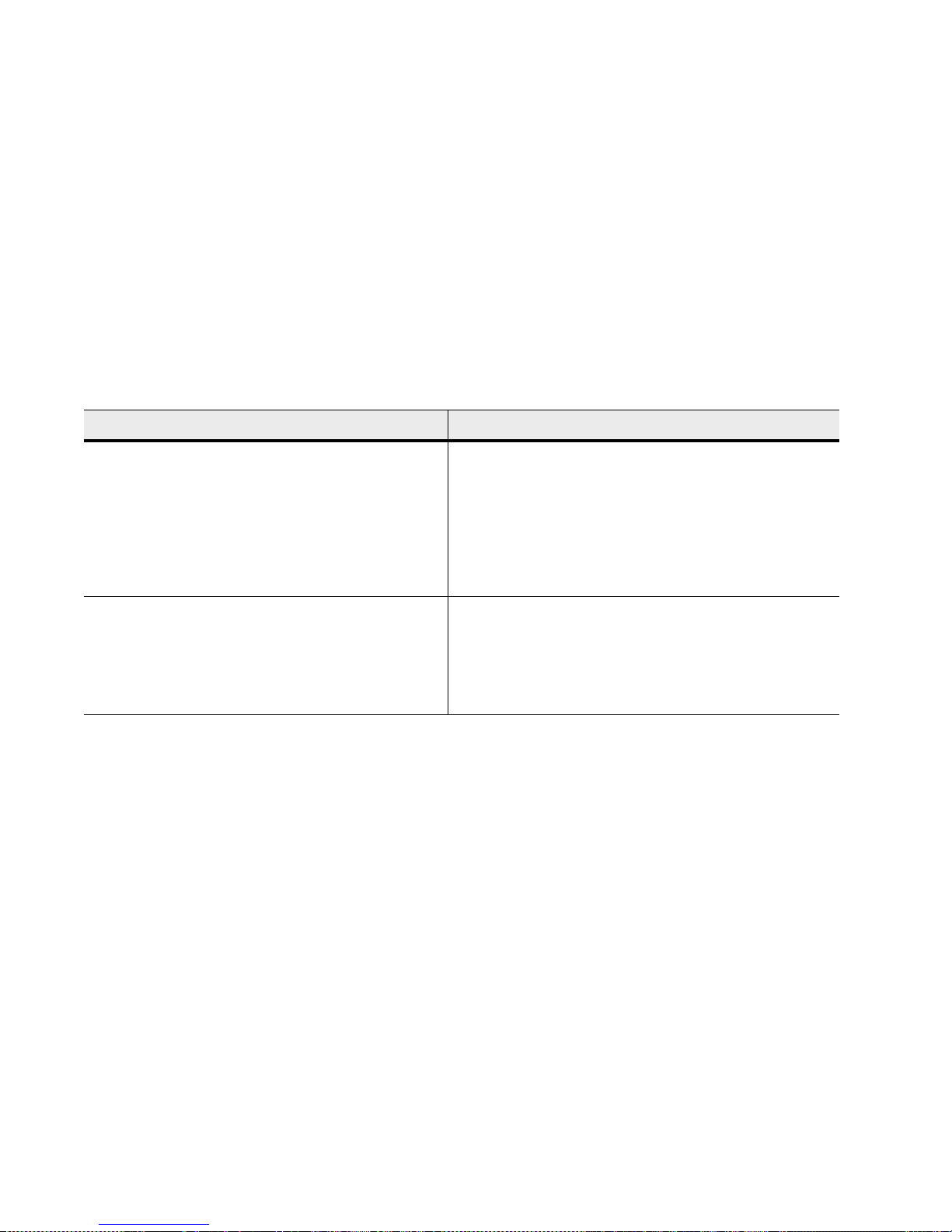

Table 2 Configuration Settings for the Wait Time Settings

Parameter Name Default Values Description

NotReadyWaitTime Linux: 270, Windows: NA The time, in seconds, a Not Ready condition (SK 0x06, ASC/ASCQ 0x04/0x01) is allowed before failover is performed. Valid values range from 0x1 to 0xFFFFFFFF.

BusyWaitTime Linux: 270, Windows: NA The time, in seconds, a Busy condition is allowed before a failover is performed. Valid values range from 0x1 to 0xFFFFFFFF.

QuiescenceWaitTime Linux: 270, Windows: NA The time, in seconds, a Quiescence condition is allowed before a failover is performed. Valid values range from 0x1 to 0xFFFFFFFF.

ControllerIoWaitTime Linux: 120, Windows: NA Provides an upper-bound limit, in seconds, that an I/O is retried on a controller regardless of the retry status before a failover is performed. If the limit is exceeded on the alternate controller, the I/O is again attempted on the original controller. This process continues until the value of the ArrayIoWaitTime limit is reached. Valid values range from 0x1 to 0xFFFFFFFF.

ArrayIoWaitTime Linux RDAC: 600, Windows DSM: 600 Provides an upper-bound limit, in seconds, that an I/O is retried to the storage array regardless of the controller to which the request is attempted. After this limit is exceeded, the I/O is returned with a failure status. Valid values range from 0x1 to 0xFFFFFFFF.

Configuration Settings for Path Congestion Detection and Online/Offline Path States

The following configuration settings are applied using the utility mppUtil -o

option parameter.

Table 3 Configuration Settings for the Path Congestion Detection Feature

Parameter Name Default Value Description

CongestionDetectionEnabled 0x0 A Boolean value that indicates whether the path congestion detection is enabled. If this parameter is not defined or is set to 0x0, the value is false, the path congestion feature is disabled, and all of the other parameters are ignored. If set to 0x1, the path congestion feature is enabled. Valid values are 0x0 or 0x1.

CongestionResponseTime 0x0 If CongestionIoCount is 0x0 or not defined, this parameter represents an average response time in seconds allowed for an I/O request. If the value of the CongestionIoCount parameter is nonzero, this parameter is the absolute time allowed for an I/O request. Valid values range from 0x1 to 0x10000 (approximately 18 hours).

CongestionIoCount 0x0 The number of I/O requests that have exceeded the value of the CongestionResponseTime parameter within the value of the CongestionTimeFrame parameter. Valid values range from 0x0 to 0x10000 (approximately 4000 requests).

CongestionTimeFrame 0x0 A sliding window that defines the time period that is evaluated in seconds. If this parameter is not defined or is set to 0x0, the path congestion feature is disabled because no time frame has been defined. Valid values range from 0x1 to 0x1C20 (approximately two hours).

CongestionSamplingInterval 0x0 The number of I/O requests that must be sent to a path before the nth request is used in the average response time calculation. For example, if this parameter is set to 100, every 100th request sent to a path is used in the average response time calculation. If this parameter is set to 0x0 or not defined, the path congestion feature is disabled for performance reasons, because every I/O request would otherwise incur a calculation. Valid values range from 0x1 to 0xFFFFFFFF (approximately 4 billion requests).

CongestionMinPopulationSize 0x0 The number of sampled I/O requests that must be collected before the average response time is calculated. Valid values range from 0x1 to 0xFFFFFFFF (approximately 4 billion requests).

CongestionTakeLastPathOffline 0x0 A Boolean value that indicates whether the DSM driver takes the last path available to the storage array offline if the congestion thresholds have been exceeded. If this parameter is not defined or is set to 0x0, the value is false. Valid values are 0x0 or 0x1.

NOTE Setting a path offline with the dsmUtil utility succeeds regardless of the setting of this value.
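The way the sampling parameters interact in average-response-time mode (CongestionIoCount set to 0x0) can be sketched as follows. This is a simplified illustration and not the driver's algorithm: it ignores the CongestionTimeFrame sliding window, and the function name `path_is_congested` and the sample data are hypothetical.

```python
from typing import Sequence


def path_is_congested(response_times: Sequence[float],
                      sampling_interval: int,
                      min_population: int,
                      response_time_limit: float) -> bool:
    """Decide congestion from the average of the sampled response times.

    response_times      -- per-request response times, in seconds, for one path
    sampling_interval   -- CongestionSamplingInterval (every nth request is sampled)
    min_population      -- CongestionMinPopulationSize (samples needed before averaging)
    response_time_limit -- CongestionResponseTime (allowed average, in seconds)
    """
    if sampling_interval < 1:
        return False  # a value of 0x0 disables the feature
    # Keep every nth request: the nth, 2nth, 3nth, and so on.
    samples = response_times[sampling_interval - 1::sampling_interval]
    if len(samples) < min_population:
        return False  # not enough samples collected yet
    return sum(samples) / len(samples) > response_time_limit


# Every third request is sampled, so the samples are 20 and 30 seconds.
times = [1, 1, 20, 1, 1, 30]
congested = path_is_congested(times, sampling_interval=3,
                              min_population=2, response_time_limit=10)
```

With a minimum population of 2 the average of the two samples (25 seconds) exceeds the 10-second limit; with a minimum population of 3 no decision would be made yet.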

Example Configuration Settings for the Path Congestion Detection Feature

NOTE Before path congestion detection can be enabled, you must set the CongestionResponseTime, CongestionTimeFrame, and CongestionSamplingInterval parameters to valid values.

To set the path congestion I/O response time to 10 seconds:

dsmUtil -o CongestionResponseTime=10,SaveSettings

To set the path congestion sampling interval to 60 I/O requests (every 60th request sent to a path is sampled):

dsmUtil -o CongestionSamplingInterval=60,SaveSettings

To enable path congestion detection:

dsmUtil -o CongestionDetectionEnabled=0x1,SaveSettings

To use the dsmUtil command to set a path to Admin Offline:

dsmUtil -o SetPathOffline=0x77070001

NOTE The path ID (in this example 0x77070001) is found using the dsmUtil -g

command.

To use the dsmUtil command to set a path to online:

dsmUtil -o SetPathOnline=0x77070001
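The prerequisite check from the NOTE in this section can be combined with the command syntax shown above. The sketch below validates the three required parameters against the ranges in Table 3 and builds the dsmUtil command lines without running them; the names `REQUIRED_RANGES`, `missing_prerequisites`, and `enable_commands` are hypothetical, as are the example values.

```python
from typing import Dict, List

# Valid ranges from Table 3 for the parameters that must be set before enabling.
REQUIRED_RANGES = {
    "CongestionResponseTime": (0x1, 0x10000),
    "CongestionTimeFrame": (0x1, 0x1C20),
    "CongestionSamplingInterval": (0x1, 0xFFFFFFFF),
}


def missing_prerequisites(settings: Dict[str, int]) -> List[str]:
    """Return the required parameters that are undefined or out of range."""
    problems = []
    for name, (low, high) in REQUIRED_RANGES.items():
        value = settings.get(name, 0)  # an undefined value is treated as 0x0
        if not low <= value <= high:
            problems.append(name)
    return problems


def enable_commands(settings: Dict[str, int]) -> List[str]:
    """Build the dsmUtil command lines that set the values and enable detection."""
    problems = missing_prerequisites(settings)
    if problems:
        raise ValueError("set these parameters first: " + ", ".join(problems))
    commands = [f"dsmUtil -o {name}={settings[name]},SaveSettings"
                for name in REQUIRED_RANGES]
    commands.append("dsmUtil -o CongestionDetectionEnabled=0x1,SaveSettings")
    return commands


# Hypothetical values: 10-second response time, 300-second window, every 60th request.
plan = enable_commands({"CongestionResponseTime": 10,
                        "CongestionTimeFrame": 300,
                        "CongestionSamplingInterval": 60})
```

The enable command is always emitted last, so detection is switched on only after the three prerequisite values are in place.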

Running the DSM Failover Driver in a Hyper-V Guest with Pass-Through Disks

Consider a scenario where you map storage to a Windows Server 2008 R2 (or later)

parent partition. You then use the Settings -> SCSI Controller -> Add Hard Drive

command to attach that storage as a pass-through disk to the SCSI controller of a

Hyper-V guest running Windows Server 2008. By default, some SCSI commands are

filtered by Hyper-V, so the DSM Failover driver will fail to run properly.

To work around this issue, SCSI command filtering must be disabled. Run the

following PowerShell script in the Parent partition to determine if SCSI pass-through

filtering is enabled or disabled:

# PowerShell Script: Get_SCSI_Passthrough.ps1
# Usage: Get_SCSI_Passthrough.ps1 <virtual_machine_name>
$TargetHost=$args[0]
# Find the virtual machine whose name matches the argument.
foreach ($Child in Get-WmiObject -Namespace root\virtualization Msvm_ComputerSystem -Filter "ElementName='$TargetHost'")
{
    # Read the global settings of the virtual machine.
    $vmData=Get-WmiObject -Namespace root\virtualization -Query "Associators of {$Child} Where ResultClass=Msvm_VirtualSystemGlobalSettingData AssocClass=Msvm_ElementSettingData"
    Write-Host "Virtual Machine:" $vmData.ElementName
    Write-Host "Currently Bypassing SCSI Filtering:" $vmData.AllowFullSCSICommandSet
}

If necessary, run the following PowerShell script in the parent partition to disable the SCSI filtering:

# PowerShell Script: Set_SCSI_Passthrough.ps1
# Usage: Set_SCSI_Passthrough.ps1 <virtual_machine_name>
$TargetHost=$args[0]
$vsManagementService=gwmi MSVM_VirtualSystemManagementService -namespace "root\virtualization"
# Find the virtual machine whose name matches the argument.
foreach ($Child in Get-WmiObject -Namespace root\virtualization Msvm_ComputerSystem -Filter "ElementName='$TargetHost'")
{
    # Read the global settings, allow the full SCSI command set, and save the change.
    $vmData=Get-WmiObject -Namespace root\virtualization -Query "Associators of {$Child} Where ResultClass=Msvm_VirtualSystemGlobalSettingData AssocClass=Msvm_ElementSettingData"
    $vmData.AllowFullSCSICommandSet=$true
    $vsManagementService.ModifyVirtualSystem($Child,$vmData.PSBase.GetText(1))|out-null
}

dsmUtil Utility

The dsmUtil utility is a command-line driven utility that works only with the

Multipath I/O (MPIO) Device Specific Module (DSM) solution. The utility is used

primarily as a way to instruct the DSM driver to perform various maintenance tasks,

but the utility can also serve as a troubleshooting tool when necessary.

To use the dsmUtil utility, type this command, and press Enter:

dsmUtil [[-a [target_id]]
        [-c array_name | missing]
        [-d debug_level] [-e error_level] [-g virtual_target_id]
        [-o [[feature_action_name[=value]] | [feature_variable_name=value]][, SaveSettings]]
        [-M]
        [-P [GetMpioParameters | MpioParameter=value | ...]]
        [-R]
        [-S]
        [-s "failback" | "avt" | "busscan" | "forcerebalance"]
        [-w target_wwn, controller_index]]

NOTE You must enter the quotation marks as shown around parameter names.

Typing dsmUtil without any parameters shows the usage information.

The following table shows the dsmUtil parameters.


Table 4 dsmUtil Parameters

Parameter Description

-a [target_id] Shows a summary of all storage arrays seen by the DSM. The summary shows the

target_id, the storage array WWID, and the storage array name. If target_id is

specified, DSM point-in-time state information appears for the storage array. On UNIX

operating systems, the virtual HBA specifies unique target IDs for each storage array. The

Windows MPIO virtual HBA driver does not use target IDs. The parameter for this option

can be viewed as an offset into the DSM information structures, with each offset

representing a different storage array.

For use by Technical Support Representatives only.

-c array_name | missing Clears the WWN file entries. This file is located in the Program

Files\DSMDrivers\mppdsm\WWN_FILES directory with the extension .wwn. If

the array_name keyword is specified, the WWN file for the specific storage array is

deleted. If the missing keyword is used, all WWN files for previously attached storage

arrays are deleted. If neither keyword is used, all of the WWN files, for both currently

attached and previously attached storage arrays, are deleted.

-d debug_level Sets the current debug reporting level. This option only works if the RDAC driver has

been compiled with debugging enabled.

For use by Technical Support Representatives only.

-e error_level Sets the current error reporting level to error_level, which can have one of these

values:

0 – Show all errors.

1 – Show path failover, controller failover, retryable, fatal, and recovered errors.

2 – Show path failover, controller failover, retryable, and fatal errors.

3 – Show path failover, controller failover, and fatal errors. This is the default setting.

4 – Show controller failover and fatal errors.

5 – Show fatal errors.

For use by Technical Support Representatives only.

-g target_id Displays detailed information about the state of each controller, path, and LUN for the

specified storage array. You can find the target_id by running the dsmUtil -a

command.

-M Shows the MPIO disk-to-drive mappings for the DSM. The output is similar to that found

with the SMdevices utility.

For use by Technical Support Representatives only.


-o [[feature_action_name[=value]] | [feature_variable_name=value]][, SaveSettings]

Troubleshoots a feature or changes a configuration setting. Without the SaveSettings

keyword, the changes only affect the in-memory state of the variable. The

SaveSettings keyword changes both the in-memory state and the persistent state.

Some example commands are:

dsmUtil -o – Displays all the available feature action names.

dsmUtil -o DisableLunRebalance=0x3 – Turns off the DSM-initiated

storage array LUN rebalance (affects only the in-memory state).

-P [GetMpioParameters | MpioParameter=value | ...] Displays and sets MPIO parameters.

For use by Technical Support Representatives only.

-R Removes the load-balancing policy settings for inactive devices.

-S Reports the Up state or the Down state of the controllers and paths for each LUN in real

time.

-s ["failback" | "avt" | "busscan" | "forcerebalance"]

Manually initiates one of the DSM driver’s scan tasks. A “failback” scan causes the DSM

driver to reattempt communications with any failed controllers. An “avt” scan causes the

DSM driver to check whether AVT has been enabled or disabled for an entire storage

array. A “busscan” scan causes the DSM driver to go through its unconfigured devices list

to see if any of them have become configured. A “forcerebalance” scan causes the DSM

driver to move storage array volumes to their preferred controller and ignores the value of

the DisableLunRebalance configuration parameter of the DSM driver.

-w target_wwn, controller_index For use by Technical Support Representatives only.
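The scan names accepted by the -s parameter can be validated before dsmUtil is called. The following PowerShell function is a minimal sketch and is not part of the DSM package: the function name New-DsmScanArguments and its parameter name are hypothetical, and only the -s option and the four scan names come from the table above. PowerShell strips the quotation marks before it passes the argument to a program; whether dsmUtil accepts the argument in that form is an assumption to verify on your host.

```powershell
# Hypothetical helper (not shipped with the DSM): validates a scan name and
# returns the argument list for "dsmUtil -s".
function New-DsmScanArguments {
    param(
        # Scan task names taken from the dsmUtil parameter table.
        [Parameter(Mandatory = $true)]
        [ValidateSet('failback', 'avt', 'busscan', 'forcerebalance')]
        [string]$Scan
    )

    return @('-s', $Scan)
}

# Build the argument list and show the command line that would be run.
$scanArgs = New-DsmScanArguments -Scan forcerebalance
Write-Host "dsmUtil $($scanArgs -join ' ')"

# On a host where the DSM is installed and dsmUtil is on the PATH,
# the scan could then be started with:
# & dsmUtil @scanArgs
```

A misspelled scan name is rejected by PowerShell parameter validation before dsmUtil is ever started, which avoids sending an unintended request to the DSM driver.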

Device Manager

Device Manager is part of the Windows operating system. Select Control Panel from

the Start menu. Then select Administrative Tools >> Computer Management >>

Device Manager.

Scroll down to System Devices to view information about the DSM driver itself. This

name might be different based on your network configuration.

The Drives section shows both the drives identified with the HBA drivers and the

volumes created by MPIO. Select one of the MPIO volumes, and right-click it. Select

Properties to open the Multi-Path Disk Device Properties window.

This properties window shows if the device is working correctly. Select the Driver

tab to view the driver information.


Determining if a Path Has Failed

If a controller supports multiple data paths and one of those paths fails, the failover

driver logs the path failure in the OS system log file. In the storage management

software, the storage array shows a Degraded status.

If all of the paths to a controller fail, the failover driver makes entries in the OS

system log that indicate a path failure and failover. In the storage management

software, the storage array shows a Needs Attention condition of Volume not on

preferred path. The failover event is also written to the Major Event Log (MEL). In

addition, if the administrator has configured alert notifications, email messages, or

SNMP traps, messages are posted for this condition. The Recovery Guru in the

storage management software provides more information about the path failure, along

with instructions about how to correct the problem.

NOTE Alert reporting for the Volume not on preferred path condition can be delayed

if you set the failover alert delay parameter through the storage management software.

When you set this parameter, it imposes a delay on the setting of the Needs Attention

condition in the storage management software.
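Because the failover driver writes path failures to the OS system log, the Windows System event log is one place to look for them. The following PowerShell function is a minimal sketch: the function name Get-RecentPathEvents and the default message pattern are assumptions, not values documented for the DSM, so adjust the pattern to the wording that your failover driver actually logs.

```powershell
# Sketch only: lists recent error and warning entries in the Windows System
# log whose message matches a pattern. The default pattern is an assumption.
function Get-RecentPathEvents {
    param(
        # How far back to search, in hours.
        [int]$Hours = 24,

        # Regular expression applied to the event message (hypothetical default).
        [string]$Pattern = 'path|failover'
    )

    $filter = @{
        LogName   = 'System'
        Level     = 2, 3    # 2 = Error, 3 = Warning
        StartTime = (Get-Date).AddHours(-$Hours)
    }

    # SilentlyContinue suppresses the error that Get-WinEvent raises
    # when no events match the filter.
    Get-WinEvent -FilterHashtable $filter -ErrorAction SilentlyContinue |
        Where-Object { $_.Message -match $Pattern } |
        Select-Object TimeCreated, ProviderName, Id, Message
}

# Example: show matching entries from the last 48 hours.
Get-RecentPathEvents -Hours 48 | Format-List
```

The ProviderName column in the output shows which driver or service logged each entry, which helps separate failover driver messages from unrelated events that happen to match the pattern.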

Installing or Upgrading SANtricity ES and DSM on the Windows OS

Perform the steps in this task to install SANtricity ES Storage Manager and the DSM

or to upgrade from an earlier release of SANtricity ES Storage Manager and the DSM

on a system with a Windows OS. For a clustered system, perform these steps on each

node of the system, one node at a time. See I/O Shipping Feature for Asymmetric

Logical Unit Access (ALUA) for the procedure to verify or disable ALUA with the

Windows OS.

1. Open the SANtricity ES Storage Manager SMIA installation program, which is

available from either the SANtricity ES DVD or from your storage vendor's

website.

2. Click Next.

3. Accept the terms of the license agreement, and click Next.

4. Select Custom, and click Next.

5. Select the applications that you want to install.

a. Click the name of an application to see its description.

b. Select the check box next to an application to install it.

6. Click Next.

If you have a previous version of the software installed, you receive a warning

message:

Existing versions of the following software already reside on this computer ...

If you choose to continue, the existing versions will be overwritten with new versions ....


7. If you receive this warning and want to update SANtricity ES Storage Manager,

click OK.

8. Select whether to automatically start the Event Monitor. Click Next.

— Start the Event Monitor for the one I/O host on which you want to receive

alert notifications.

— Do not start the Event Monitor for all other I/O hosts attached to the storage

array or for computers that you use to manage the storage array.

9. Click Next.

10. If you receive a warning about antivirus or backup software that is installed, click

Continue.

11. Read the pre-installation summary, and click Install.

12. Wait for installation to complete, and click Done.

Removing SANtricity ES Storage Manager and the DSM Failover Driver from the Windows OS

NOTE To prevent loss of data, the host from which you are removing SANtricity ES

Storage Manager and the DSM must have only one path to the storage array.

Reconfigure the connections between the host and the storage array to remove any

redundant connections before you uninstall SANtricity ES Storage Manager and the

DSM failover driver.

1. From the Windows Start menu, select Control Panel.

The Control Panel window appears.