Page 1

Design of Experiments

Guide

Page 2

Get the Most from JMP

Whether you are a first-time or a long-time user, there is always something to learn about JMP.

Visit JMP.com and to find the following:

• live and recorded Webcasts about how to get started with JMP

• video demos and Webcasts of new features and advanced techniques

• schedules for seminars being held in your area

• success stories showing how others use JMP

• a blog with tips, tricks, and stories from JMP staff

• a forum to discuss JMP with other users

®

http://www.jmp.com/getstarted/

Page 3

Release 9

Design of Experiments

Guide

“The real voyage of discovery consists not in seeking new

landscapes, but in having new eyes.”

Marcel Proust

JMP, A Business Unit of SAS

SAS Campus Drive

Cary, NC 27513

Page 4

The correct bibliographic citation for this manual is as follows: SAS Institute Inc. 2009. JMP® 9 Design

of Experiments Guide, Second Edition. Cary, NC: SAS Institute Inc.

®

JMP

9 Design of Experiments Guide, Second Edition

Copyright © 2010, SAS Institute Inc., Cary, NC, USA

ISBN 978-1-60764-597-9

All rights reserved. Produced in the United States of America.

For a hard-copy book: No part of this publication may be reproduced, stored in a retrieval system, or

transmitted, in any form or by any means, electronic, mechanical, photocopying, or otherwise, without

the prior written permission of the publisher, SAS Institute Inc.

For a Web download or e-book: Your use of this publication shall be governed by the terms established

by the vendor at the time you acquire this publication.

U.S. Government Restricted Rights Notice: Use, duplication, or disclosure of this software and related

documentation by the U.S. government is subject to the Agreement with SAS Institute and the

restrictions set forth in FAR 52.227-19, Commercial Computer Software-Restricted Rights (June 1987).

SAS Institute Inc., SAS Campus Drive, Cary, North Carolina 27513.

1st printing, September 2010

®

JMP

, SAS® and all other SAS Institute Inc. product or service names are registered trademarks or

trademarks of SAS Institute Inc. in the USA and other countries. ® indicates USA registration.

Other brand and product names are registered trademarks or trademarks of their respective companies.

Page 5

1 Introduction to Designing Experiments

A Beginner’s Tutorial . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

About Designing Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

My First Experiment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

The Situation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Step 1: Design the Experiment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

Step 2: Define Factor Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

Step 3: Add Interaction Terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

Step 4: Determine the Number of Runs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Step 5: Check the Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Step 6: Gather and Enter the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Step 7: Analyze the Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 10

2 Examples Using the Custom Designer

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

Creating Screening Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Creating a Main-Effects-Only Screening Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

Creating a Screening Design to Fit All Two-Factor Interactions . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

A Compromise Design Between Main Effects Only and All Interactions . . . . . . . . . . . . . . . . . . . . 20

Creating ‘Super’ Screening Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Screening Designs with Flexible Block Sizes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Checking for Curvature Using One Extra Run . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Contents

Design of Experiments

Creating Response Surface Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

Exploring the Prediction Variance Surface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

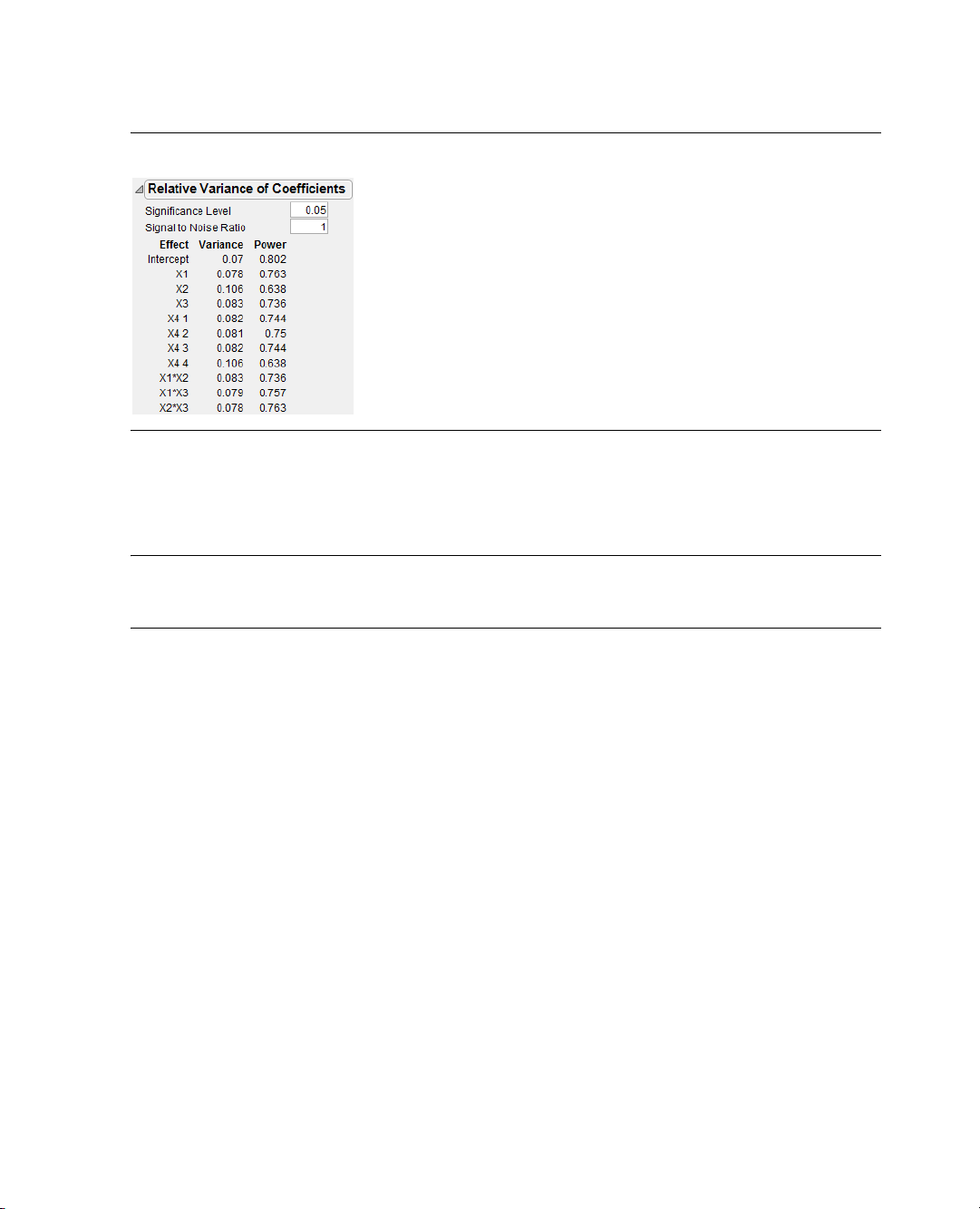

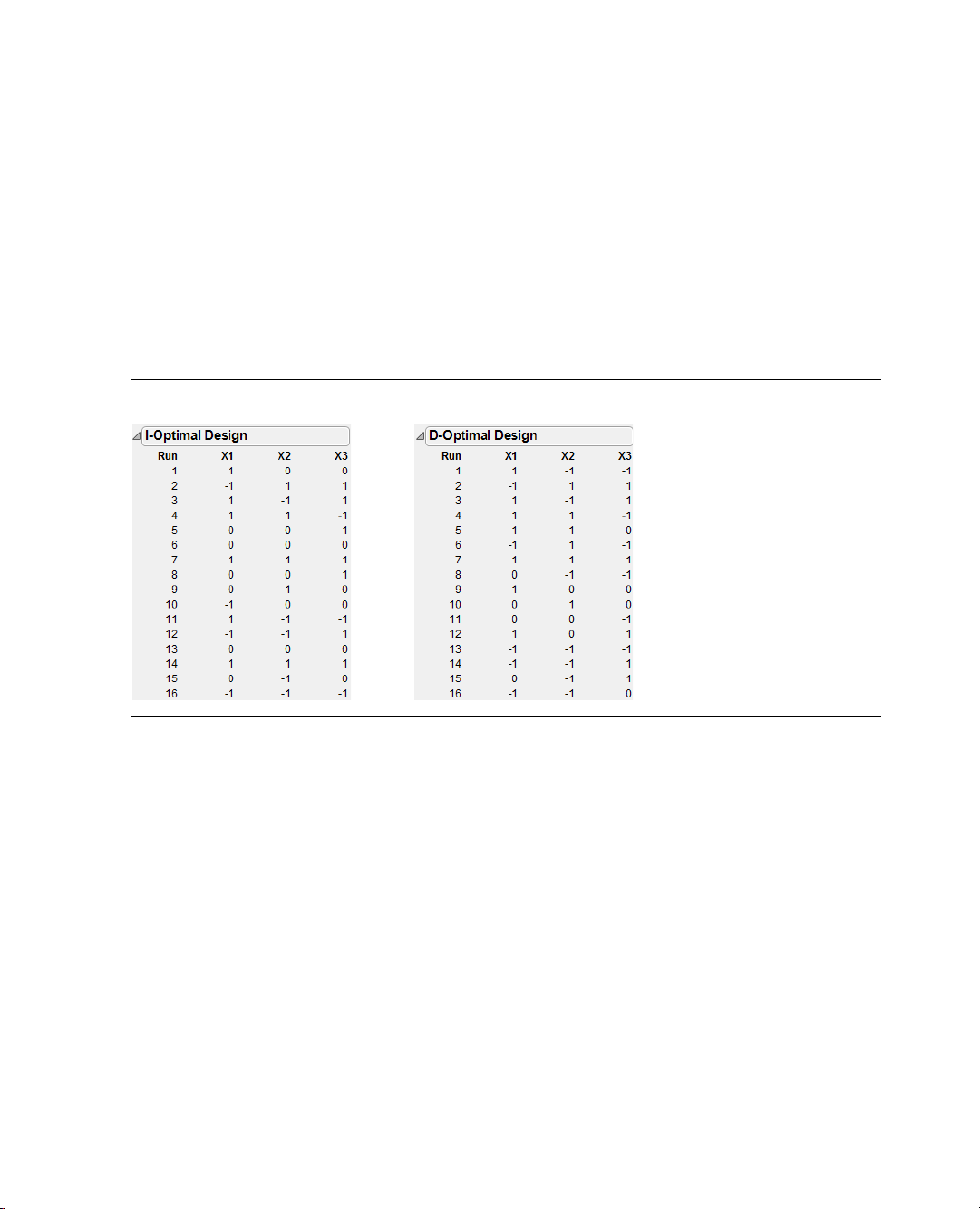

Introducing I-Optimal Designs for Response Surface Modeling . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

A Three-Factor Response Surface Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

Response Surface with a Blocking Factor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Creating Mixture Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Mixtures Having Nonmixture Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

Experiments that are Mixtures of Mixtures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Page 6

ii

Special-Purpose Uses of the Custom Designer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

Designing Experiments with Fixed Covariate Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

Creating a Design with Two Hard-to-Change Factors: Split Plot . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

Technical Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

3 Building Custom Designs

The Basic Steps . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

Creating a Custom Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Enter Responses and Factors into the Custom Designer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

Describe the Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

Specifying Alias Terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

Select the Number of Runs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

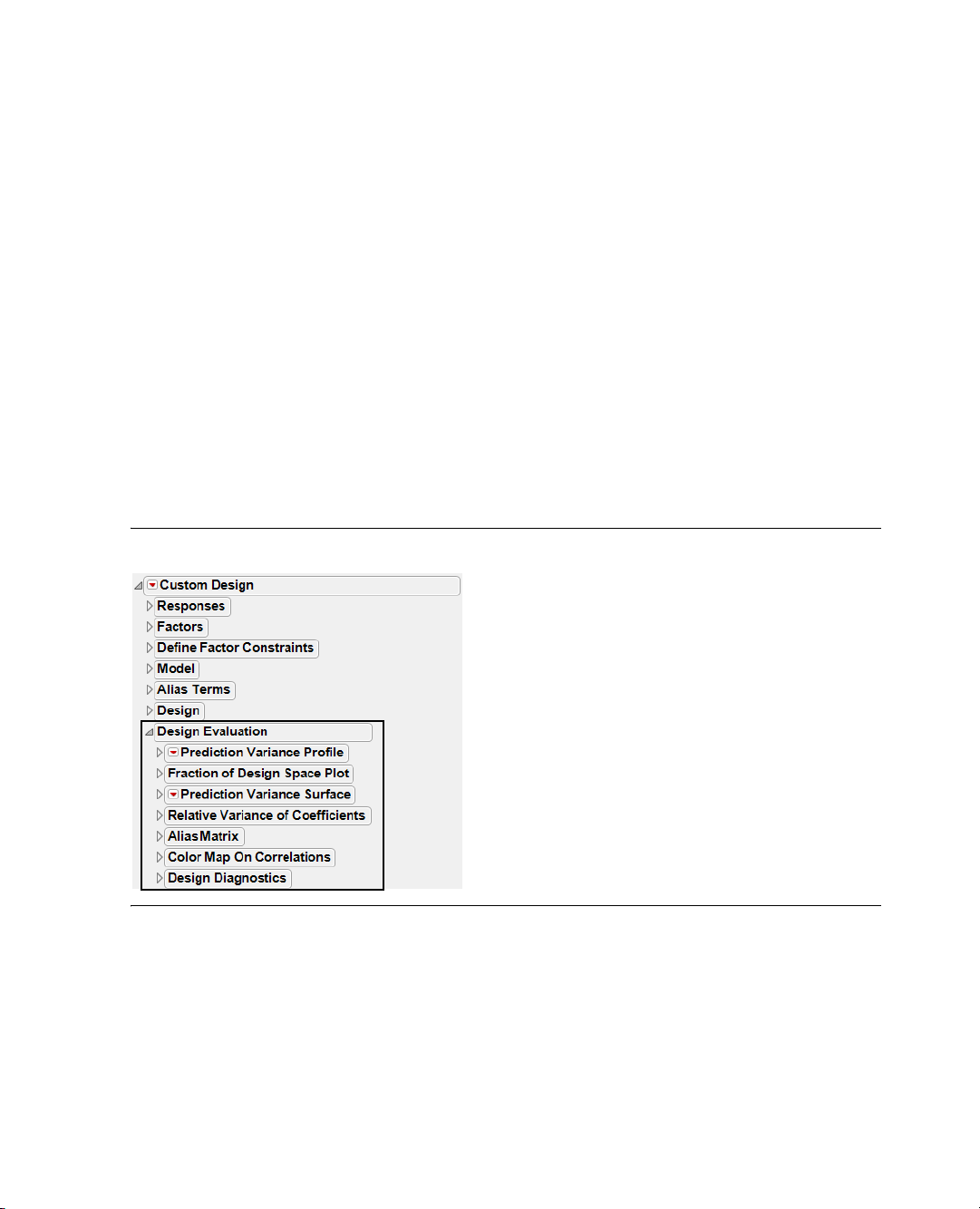

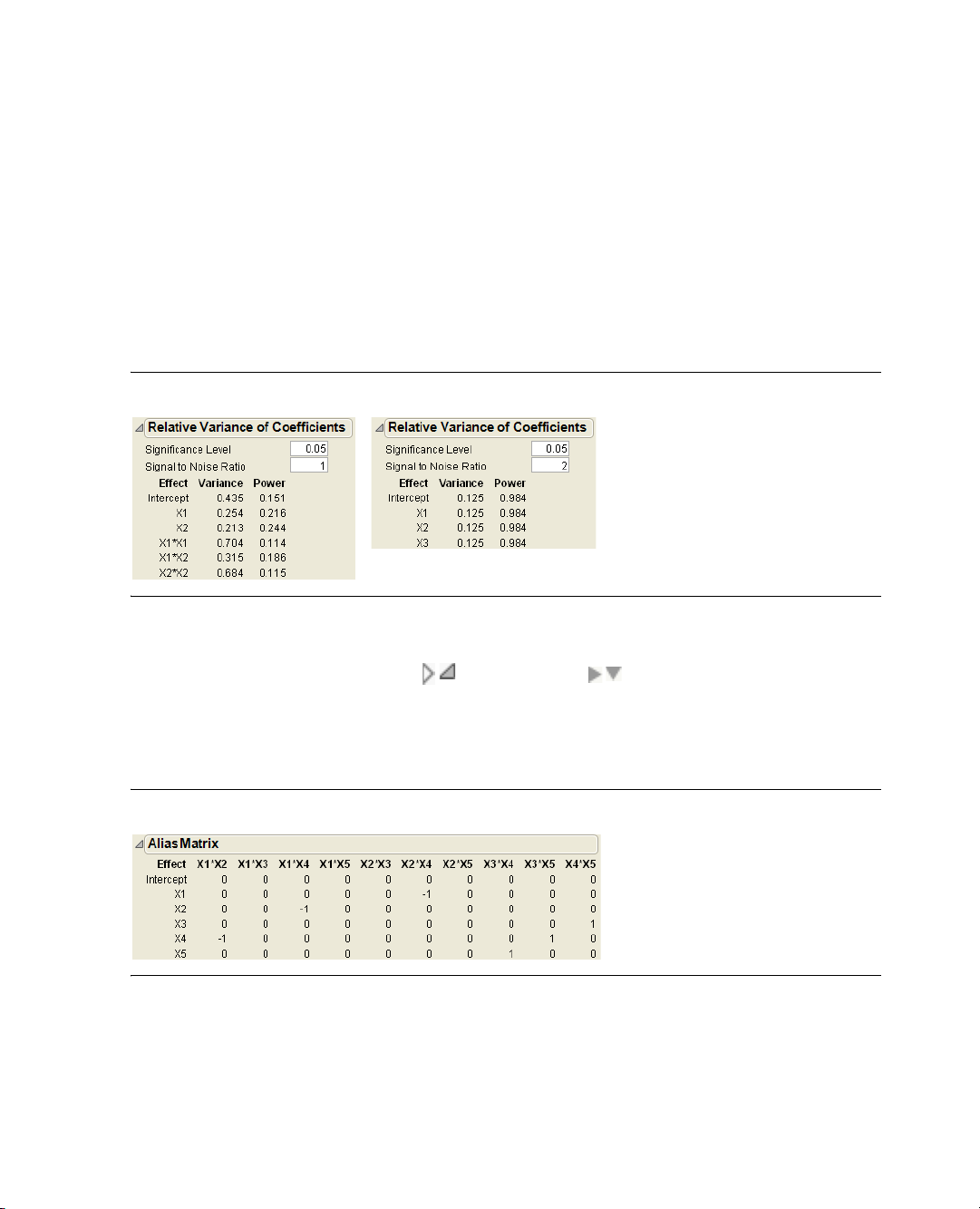

Understanding Design Evaluation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

Specify Output Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

Make the JMP Design Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

Creating Random Block Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

Creating Split Plot Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

Creating Split-Split Plot Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

Creating Strip Plot Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

Special Custom Design Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Save Responses and Save Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

Load Responses and Load Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Save Constraints and Load Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Set Random Seed: Setting the Number Generator . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

Simulate Responses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

Save X Matrix: Viewing the Number of Rows in the Moments Matrix and the Design Matrix (X) in the

Log . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

Optimality Criterion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Number of Starts: Changing the Number of Random Starts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

Sphere Radius: Constraining a Design to a Hypersphere . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

Disallowed Combinations: Accounting for Factor Level Restrictions . . . . . . . . . . . . . . . . . . . . . . . 90

Advanced Options for the Custom Designer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

Save Script to Script Window . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

Assigning Column Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

Define Low and High Values (DOE Coding) for Columns . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

Set Columns as Factors for Mixture Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

Define Response Column Values . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

Page 7

Assign Columns a Design Role . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

Identify Factor Changes Column Property . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 98

How Custom Designs Work: Behind the Scenes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

4 Screening Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

Screening Design Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Using Two Continuous Factors and One Categorical Factor . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

Using Five Continuous Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

Creating a Screening Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

Enter Responses . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

Enter Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

Choose a Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

Display and Modify a Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 115

Specify Output Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 119

View the Design Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

Create a Plackett-Burman design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 120

iii

Analysis of Screening Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 122

Using the Screening Analysis Platform . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

Using the Fit Model Platform . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 124

5 Response Surface Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

A Box-Behnken Design: The Tennis Ball Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 129

The Prediction Profiler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

A Response Surface Plot (Contour Profiler) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 134

Geometry of a Box-Behnken Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

Creating a Response Surface Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 136

Enter Responses and Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

Choose a Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 137

Specify Output Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 139

View the Design Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 140

6 Full Factorial Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143

The Five-Factor Reactor Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 145

Analyze the Reactor Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146

Page 8

iv

Creating a Factorial Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Enter Responses and Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 151

Select Output Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

Make the Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 152

7 Mixture Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 155

Mixture Design Types . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

The Optimal Mixture Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 157

The Simplex Centroid Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 158

Creating the Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 159

Simplex Centroid Design Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160

The Simplex Lattice Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 161

The Extreme Vertices Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 163

Creating the Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 164

An Extreme Vertices Example with Range Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

An Extreme Vertices Example with Linear Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

Extreme Vertices Method: How It Works . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 168

The ABCD Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 168

Creating Ternary Plots . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 169

Fitting Mixture Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 170

Whole Model Tests and Analysis of Variance Reports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Understanding Response Surface Reports . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

A Chemical Mixture Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 171

Create the Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 172

Analyze the Mixture Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 174

The Prediction Profiler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 175

The Mixture Profiler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 177

A Ternary Plot of the Mixture Response Surface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 178

8 Discrete Choice Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 179

Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 181

Create an Example Choice Experiment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

Analyze the Example Choice Experiment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 186

Design a Choice Experiment Using Prior Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 189

Page 9

Administer the Survey and Analyze Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

Initial Choice Platform Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

Find Unit Cost and Trade Off Costs with the Profiler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 192

9 Space-Filling Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 195

Introduction to Space-Filling Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

Sphere-Packing Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

Creating a Sphere-Packing Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 197

Visualizing the Sphere-Packing Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 199

Latin Hypercube Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

Creating a Latin Hypercube Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 200

Visualizing the Latin Hypercube Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 202

Uniform Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 204

Comparing Sphere-Packing, Latin Hypercube, and Uniform Methods . . . . . . . . . . . . . . . . . . . . . . . . 206

Minimum Potential Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 207

Maximum Entropy Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 209

v

Gaussian Process IMSE Optimal Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 211

Borehole Model: A Sphere-Packing Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

Create the Sphere-Packing Design for the Borehole Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 212

Guidelines for the Analysis of Deterministic Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

Results of the Borehole Experiment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 214

10 Accelerated Life Test Designs

Designing Experiments for Accelerated Life Tests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 219

Overview of Accelerated Life Test Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

Using the ALT Design Platform . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221

Platform Options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 226

11 Nonlinear Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

Examples of Nonlinear Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Using Nonlinear Fit to Find Prior Parameter Estimates . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 233

Creating a Nonlinear Design with No Prior Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

Page 10

vi

Creating a Nonlinear Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

Identify the Response and Factor Column with Formula . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 243

Set Up Factors and Parameters in the Nonlinear Design Dialog . . . . . . . . . . . . . . . . . . . . . . . . . . 244

Enter the Number of Runs and Preview the Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 245

Make Table or Augment the Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 246

Advanced Options for the Nonlinear Designer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 247

12 Taguchi Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 249

The Taguchi Design Approach . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

Taguchi Design Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 251

Analyze the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 254

Creating a Taguchi Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 256

Detail the Response and Add Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 256

Choose Inner and Outer Array Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 257

Display Coded Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 258

Make the Design Table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 259

13 Augmented Designs

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 261

A D-Optimal Augmentation of the Reactor Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 263

Analyze the Augmented Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 266

Creating an Augmented Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 274

Replicate a Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 274

Add Center Points . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 277

Creating a Foldover Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 278

Adding Axial Points . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 279

Adding New Runs and Terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 280

Special Augment Design Commands . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 283

Save the Design (X) Matrix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

Modify the Design Criterion (D- or I- Optimality) . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 284

Select the Number of Random Starts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 285

Specify the Sphere Radius Value . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 285

Disallow Factor Combinations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 285

Page 11

14 Prospective Sample Size and Power

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 287

Launching the Sample Size and Power Platform . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 289

One-Sample and Two-Sample Means . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 289

Single-Sample Mean . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 291

Sample Size and Power Animation for One Mean . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 294

Two-Sample Means . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 295

k-Sample Means . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 296

One Sample Standard Deviation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 298

One Sample Standard Deviation Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 299

One-Sample and Two-Sample Proportions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 300

One Sample Proportion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 300

Two Sample Proportions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 302

Counts per Unit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 305

Counts per Unit Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 306

Sigma Quality Level . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 307

Sigma Quality Level Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 307

Number of Defects Computation Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

vii

Reliability Test Plan and Demonstration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

Reliability Test Plan . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

Reliability Demonstration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 311

Index

Design of Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 319

Page 12

viii

Page 13

Credits and Acknowledgments

Origin

JMP was developed by SAS Institute Inc., Cary, NC. JMP is not a part of the SAS System, though portions

of JMP were adapted from routines in the SAS System, particularly for linear algebra and probability

calculations. Version 1 of JMP went into production in October 1989.

Credits

JMP was conceived and started by John Sall. Design and development were done by John Sall, Chung-Wei

Ng, Michael Hecht, Richard Potter, Brian Corcoran, Annie Dudley Zangi, Bradley Jones, Craige Hales,

Chris Gotwalt, Paul Nelson, Xan Gregg, Jianfeng Ding, Eric Hill, John Schroedl, Laura Lancaster, Scott

McQuiggan, Melinda Thielbar, Clay Barker, Peng Liu, Dave Barbour, Jeff Polzin, John Ponte, and Steve

Amerige.

In the SAS Institute Technical Support division, Duane Hayes, Wendy Murphrey, Rosemary Lucas, Win

LeDinh, Bobby Riggs, Glen Grimme, Sue Walsh, Mike Stockstill, Kathleen Kiernan, and Liz Edwards

provide technical support.

Nicole Jones, Kyoko Keener, Hui Di, Joseph Morgan, Wenjun Bao, Fang Chen, Susan Shao, Yusuke Ono,

Michael Crotty, Jong-Seok Lee, Tonya Mauldin, Audrey Ventura, Ani Eloyan, Bo Meng, and Sequola

McNeill provide ongoing quality assurance. Additional testing and technical support are provided by Noriki

Inoue, Kyoko Takenaka, and Masakazu Okada from SAS Japan.

Bob Hickey and Jim Borek are the release engineers.

The JMP books were written by Ann Lehman, Lee Creighton, John Sall, Bradley Jones, Erin Vang, Melanie

Drake, Meredith Blackwelder, Diane Perhac, Jonathan Gatlin, Susan Conaghan, and Sheila Loring, with

contributions from Annie Dudley Zangi and Brian Corcoran. Creative services and production was done by

SAS Publications. Melanie Drake implemented the Help system.

Jon Weisz and Jeff Perkinson provided project management. Also thanks to Lou Valente, Ian Cox, Mark

Bailey, and Malcolm Moore for technical advice.

Thanks also to Georges Guirguis, Warren Sarle, Gordon Johnston, Duane Hayes, Russell Wolfinger,

Randall Tobias, Robert N. Rodriguez, Ying So, Warren Kuhfeld, George MacKensie, Bob Lucas, Warren

Kuhfeld, Mike Leonard, and Padraic Neville for statistical R&D support. Thanks are also due to Doug

Melzer, Bryan Wolfe, Vincent DelGobbo, Biff Beers, Russell Gonsalves, Mitchel Soltys, Dave Mackie, and

Stephanie Smith, who helped us get started with SAS Foundation Services from JMP.

Acknowledgments

We owe special gratitude to the people that encouraged us to start JMP, to the alpha and beta testers of

JMP, and to the reviewers of the documentation. In particular we thank Michael Benson, Howard

Page 14

x

Yetter (d), Andy Mauromoustakos, Al Best, Stan Young, Robert Muenchen, Lenore Herzenberg, Ramon

Leon, Tom Lange, Homer Hegedus, Skip Weed, Michael Emptage, Pat Spagan, Paul Wenz, Mike Bowen,

Lori Gates, Georgia Morgan, David Tanaka, Zoe Jewell, Sky Alibhai, David Coleman, Linda Blazek,

Michael Friendly, Joe Hockman, Frank Shen, J.H. Goodman, David Iklé, Barry Hembree, Dan Obermiller,

Jeff Sweeney, Lynn Vanatta, and Kris Ghosh.

Also, we thank Dick DeVeaux, Gray McQuarrie, Robert Stine, George Fraction, Avigdor Cahaner, José

Ramirez, Gudmunder Axelsson, Al Fulmer, Cary Tuckfield, Ron Thisted, Nancy McDermott, Veronica

Czitrom, Tom Johnson, Cy Wegman, Paul Dwyer, DaRon Huffaker, Kevin Norwood, Mike Thompson,

Jack Reese, Francois Mainville, and John Wass.

We also thank the following individuals for expert advice in their statistical specialties: R. Hocking and P.

Spector for advice on effective hypotheses; Robert Mee for screening design generators; Roselinde Kessels

for advice on choice experiments; Greg Piepel, Peter Goos, J. Stuart Hunter, Dennis Lin, Doug

Montgomery, and Chris Nachtsheim for advice on design of experiments; Jason Hsu for advice on multiple

comparisons methods (not all of which we were able to incorporate in JMP); Ralph O’Brien for advice on

homogeneity of variance tests; Ralph O’Brien and S. Paul Wright for advice on statistical power; Keith

Muller for advice in multivariate methods, Harry Martz, Wayne Nelson, Ramon Leon, Dave Trindade, Paul

Tobias, and William Q. Meeker for advice on reliability plots; Lijian Yang and J.S. Marron for bivariate

smoothing design; George Milliken and Yurii Bulavski for development of mixed models; Will Potts and

Cathy Maahs-Fladung for data mining; Clay Thompson for advice on contour plotting algorithms; and

Tom Little, Damon Stoddard, Blanton Godfrey, Tim Clapp, and Joe Ficalora for advice in the area of Six

Sigma; and Josef Schmee and Alan Bowman for advice on simulation and tolerance design.

For sample data, thanks to Patrice Strahle for Pareto examples, the Texas air control board for the pollution

data, and David Coleman for the pollen (eureka) data.

Translations

Trish O'Grady coordinates localization. Special thanks to Noriki Inoue, Kyoko Takenaka, Masakazu Okada,

Naohiro Masukawa and Yusuke Ono (SAS Japan); and Professor Toshiro Haga (retired, Tokyo University of

Science) and Professor Hirohiko Asano (Tokyo Metropolitan University) for reviewing our Japanese

translation; Professors Fengshan Bai, Xuan Lu, and Jianguo Li at Tsinghua University in Beijing, and their

assistants Rui Guo, Shan Jiang, Zhicheng Wan, and Qiang Zhao; and William Zhou (SAS China) and

Zhongguo Zheng, professor at Peking University, for reviewing the Simplified Chinese translation; Jacques

Goupy (consultant, ReConFor) and Olivier Nuñez (professor, Universidad Carlos III de Madrid) for

reviewing the French translation; Dr. Byung Chun Kim (professor, Korea Advanced Institute of Science and

Technology) and Duk-Hyun Ko (SAS Korea) for reviewing the Korean translation; Bertram Schäfer and

David Meintrup (consultants, StatCon) for reviewing the German translation; Patrizia Omodei, Maria

Scaccabarozzi, and Letizia Bazzani (SAS Italy) for reviewing the Italian translation. Finally, thanks to all the

members of our outstanding translation teams.

Past Support

Many people were important in the evolution of JMP. Special thanks to David DeLong, Mary Cole, Kristin

Nauta, Aaron Walker, Ike Walker, Eric Gjertsen, Dave Tilley, Ruth Lee, Annette Sanders, Tim Christensen,

Eric Wasserman, Charles Soper, Wenjie Bao, and Junji Kishimoto. Thanks to SAS Institute quality

assurance by Jeanne Martin, Fouad Younan, and Frank Lassiter. Additional testing for Versions 3 and 4 was

done by Li Yang, Brenda Sun, Katrina Hauser, and Andrea Ritter.

Page 15

Also thanks to Jenny Kendall, John Hansen, Eddie Routten, David Schlotzhauer, and James Mulherin.

Thanks to Steve Shack, Greg Weier, and Maura Stokes for testing JMP Version 1.

Thanks for support from Charles Shipp, Harold Gugel (d), Jim Winters, Matthew Lay, Tim Rey, Rubin

Gabriel, Brian Ruff, William Lisowski, David Morganstein, Tom Esposito, Susan West, Chris Fehily, Dan

Chilko, Jim Shook, Ken Bodner, Rick Blahunka, Dana C. Aultman, and William Fehlner.

Technology License Notices

xi

Scintilla is Copyright 1998-2003 by Neil Hodgson <neilh@scintilla.org>.

WARRANTIES WITH REGARD TO THIS SOFTWARE, INCLUDING ALL IMPLIED WARRANTIES OF

MERCHANTABILITY AND FITNESS, IN NO EVENT SHALL NEIL HODGSON BE LIABLE FOR ANY SPECIAL,

INDIRECT OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF

USE, DATA OR PROFITS, WHETHER IN AN ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS

ACTION, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.

XRender is Copyright © 2002 Keith Packard.

TO THIS SOFTWARE, INCLUDING ALL IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS, IN NO

EVENT SHALL KEITH PACKARD BE LIABLE FOR ANY SPECIAL, INDIRECT OR CONSEQUENTIAL DAMAGES OR

ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER IN AN ACTION

OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION, ARISING OUT OF OR IN CONNECTION WITH

THE USE OR PERFORMANCE OF THIS SOFTWARE.

KEITH PACKARD DISCLAIMS ALL WARRANTIES WITH REGARD

NEIL HODGSON DISCLAIMS ALL

ImageMagick software is Copyright © 1999-2010 ImageMagick Studio LLC, a non-profit organization

dedicated to making software imaging solutions freely available.

bzlib software is Copyright © 1991-2009, Thomas G. Lane, Guido Vollbeding. All Rights Reserved.

FreeType software is Copyright © 1996-2002 The FreeType Project (www.freetype.org). All rights reserved.

Page 16

xii

Page 17

Chapter 1

Introduction to Designing Experiments

A Beginner’s Tutorial

This tutorial chapter introduces you to the design of experiments (DOE) using JMP’s custom designer. It

gives a general understanding of how to design an experiment using JMP. Refer to subsequent chapters in

this book for more examples and procedures on how to design an experiment for your specific project.

Page 18

Contents

About Designing Experiments. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

My First Experiment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

The Situation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

Step 1: Design the Experiment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

Step 2: Define Factor Constraints . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

Step 3: Add Interaction Terms . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

Step 4: Determine the Number of Runs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

Step 5: Check the Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

Step 6: Gather and Enter the Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

Step 7: Analyze the Results . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .10

Page 19

Chapter 1 Introduction to Designing Experiments 3

About Designing Experiments

About Designing Experiments

Increasing productivity and improving quality are important goals in any business. The methods for

determining how to increase productivity and improve quality are evolving. They have changed from costly

and time-consuming trial-and-error searches to the powerful, elegant, and cost-effective statistical methods

that JMP provides.

Designing experiments in JMP is centered around factors, responses, a model, and runs. JMP helps you

determine if and how a factor affects a response.

My First Experiment

If you have never used JMP to design an experiment, this section shows you how to design the experiment

and how to understand JMP’s output.

Tip: The recommended way to create an experiment is to use the custom designer. JMP also provides

classical designs for use in textbook situations.

The Situation

Your goal is to find the best way to microwave a bag of popcorn. Because you have some experience with

this, it is easy to decide on reasonable ranges for the important factors:

• how long to cook the popcorn (between 3 and 5 minutes)

• what level of power to use on the microwave oven (between settings 5 and 10)

• which brand of popcorn to use (Top Secret or Wilbur)

When a bag of popcorn is popped, most of the kernels pop, but some remain unpopped. You prefer to have

all (or nearly all) of the kernels popped and no (or very few) unpopped kernels. Therefore, you define “the

best popped bag” based on the ratio of popped kernels to the total number of kernels.

A good way to improve any procedure is to conduct an experiment. For each experimental run, JMP’s

custom designer determines which brand to use, how long to cook each bag in the microwave and what

power setting to use. Each run involves popping one bag of corn. After popping a bag, enter the total

number of kernels and the number of popped kernels into the appropriate row of a JMP data table. After

doing all the experimental runs, use JMP’s model fitting capabilities to do the data analysis. Then, you can

use JMP’s profiling tools to determine the optimal settings of popping time, power level, and brand.

Page 20

4 Introduction to Designing Experiments Chapter 1

My First Experiment

Step 1: Design the Experiment

The first step is to select DOE > Custom Design. Then, define the responses and factors.

Define the Responses: Popped Kernels and Total Kernels

There are two responses in this experiment:

• the number of popped kernels

• the total number of kernels in the bag. After popping the bag add the number of unpopped kernels to

the number of popped kernels to get the total number of kernels in the bag.

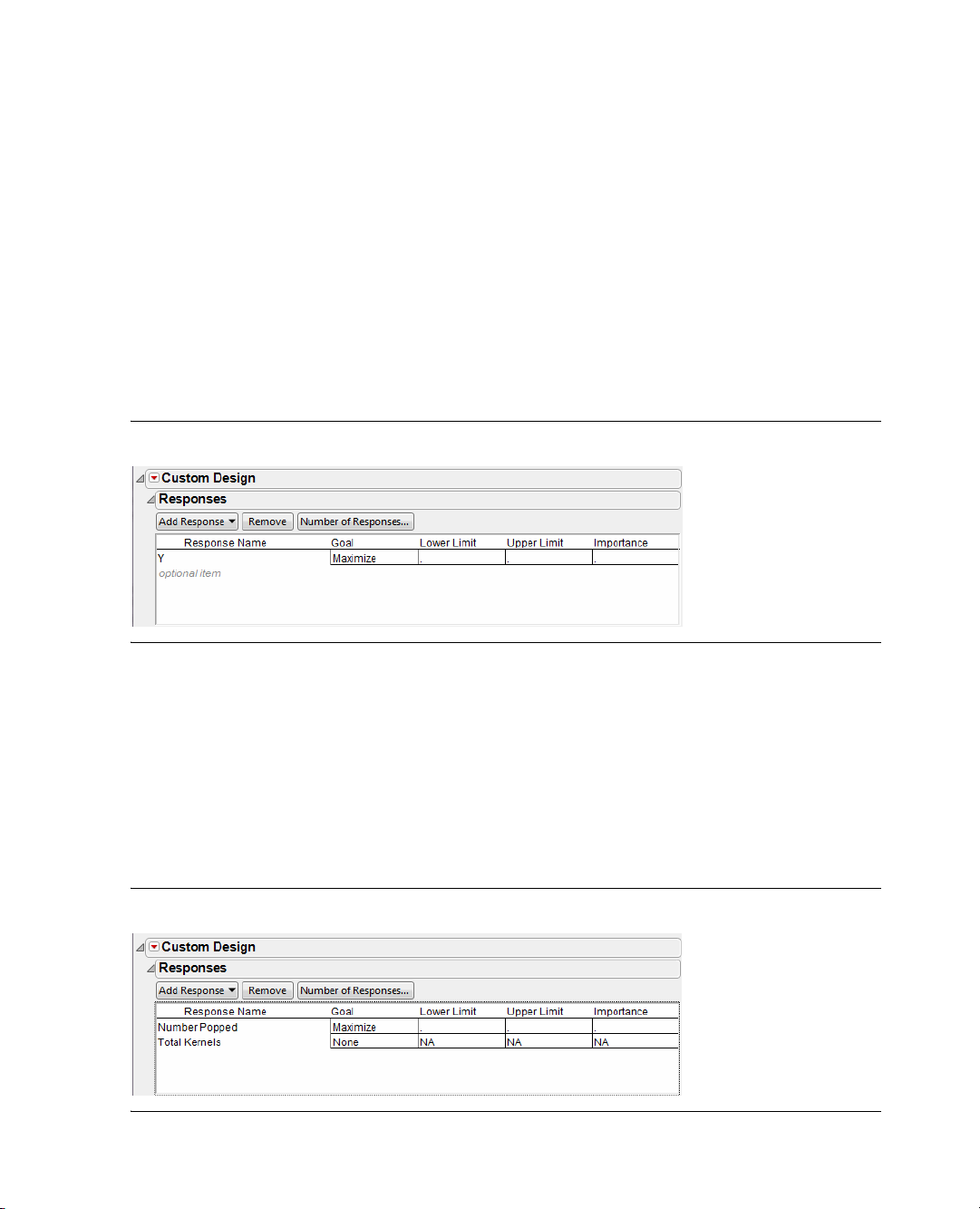

By default, the custom designer contains one response labeled

Figure 1.1 Custom Design Responses Panel

Y (Figure 1.1).

You want to add a second response to the Responses panel and change the names to be more descriptive:

1. To rename the

increase the number of popped kernels, leave the goal at

2. To add the second response (total number of kernels), click

menu that appears. JMP labels this response

3. Double-click

Y response, double-click the name and type “Number Popped.” Since you want to

Maximize.

Add Response and choose None from the

Y2 by default.

Y2 and type “Total Kernels” to rename it.

The completed Responses panel looks like Figure 1.2.

Figure 1.2 Renamed Responses with Specified Goals

Page 21

Chapter 1 Introduction to Designing Experiments 5

My First Experiment

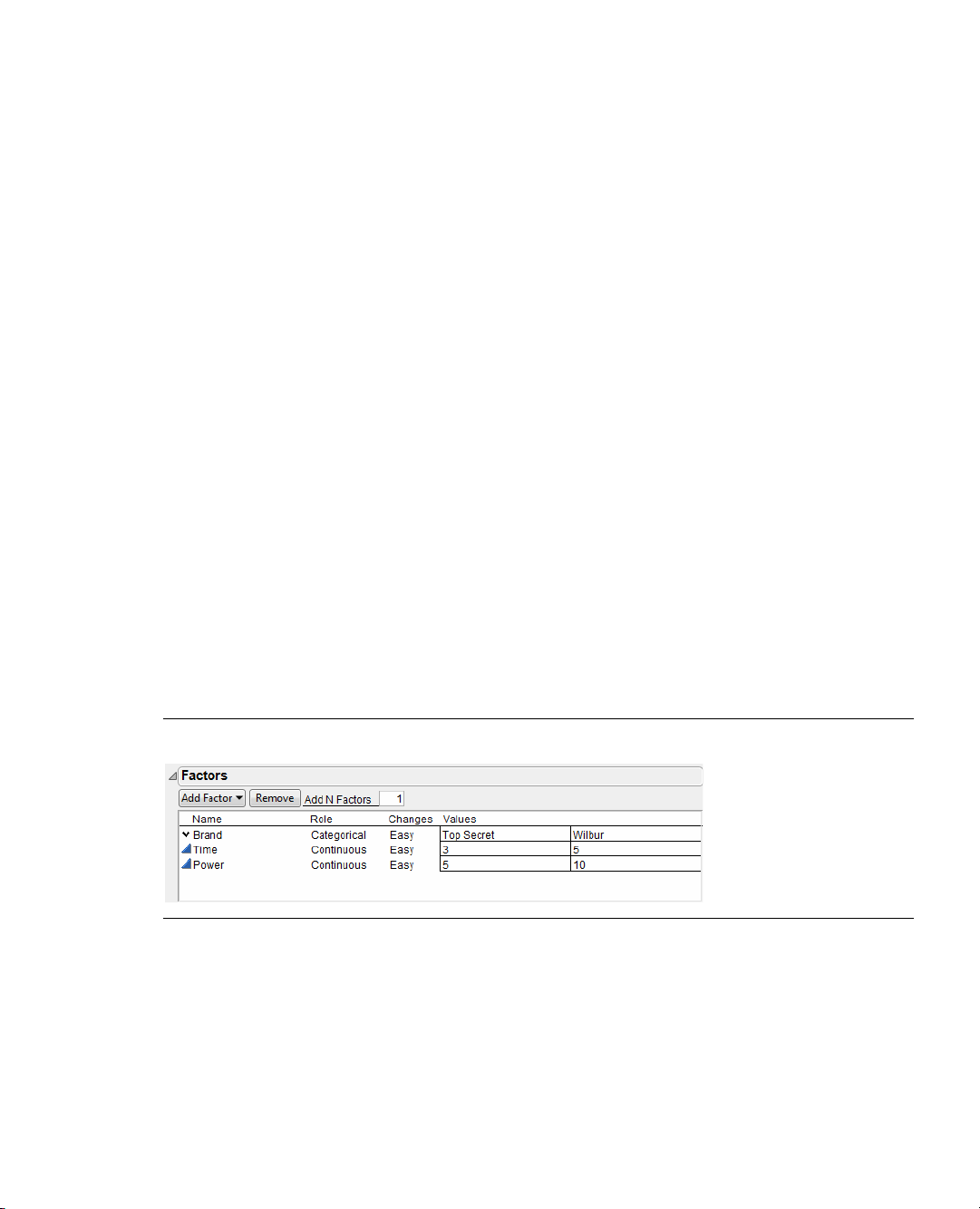

Define the Factors: Time, Power, and Brand

In this experiment, the factors are:

• brand of popcorn (Top Secret or Wilbur)

• cooking time for the popcorn (3 or 5 minutes)

• microwave oven power level (setting 5 or 10)

In the Factors panel, add

1. Click

Add Factor and select Categorical > 2 Level.

Brand as a two-level categorical factor:

2. To change the name of the factor (currently named

3. To rename the default levels (

Add

Time as a two-level continuous factor:

4. Click

Add Factor and select Continuous.

5. Change the default name of the factor (

6. Likewise, to rename the default levels (

L1 and L2), click the level names and type Top S ec r e t and Wilbur.

X2) by double-clicking it and typing Time.

–1 and 1) as 3 and 5, click the current level name and type in the

new value.

Add

Power as a two-level continuous factor:

7. Click

8. Change the name of the factor (currently named

9. Rename the default levels (currently named

Add Factor and select Continuous.

X3) by double-clicking it and typing Power.

-1 and 1) as 5 and 10 by clicking the current name and

typing. The completed Factors panel looks like Figure 1.3.

Figure 1.3 Renamed Factors with Specified Values

X1), double-click on its name and type Brand.

10. Click Continue.

Page 22

6 Introduction to Designing Experiments Chapter 1

My First Experiment

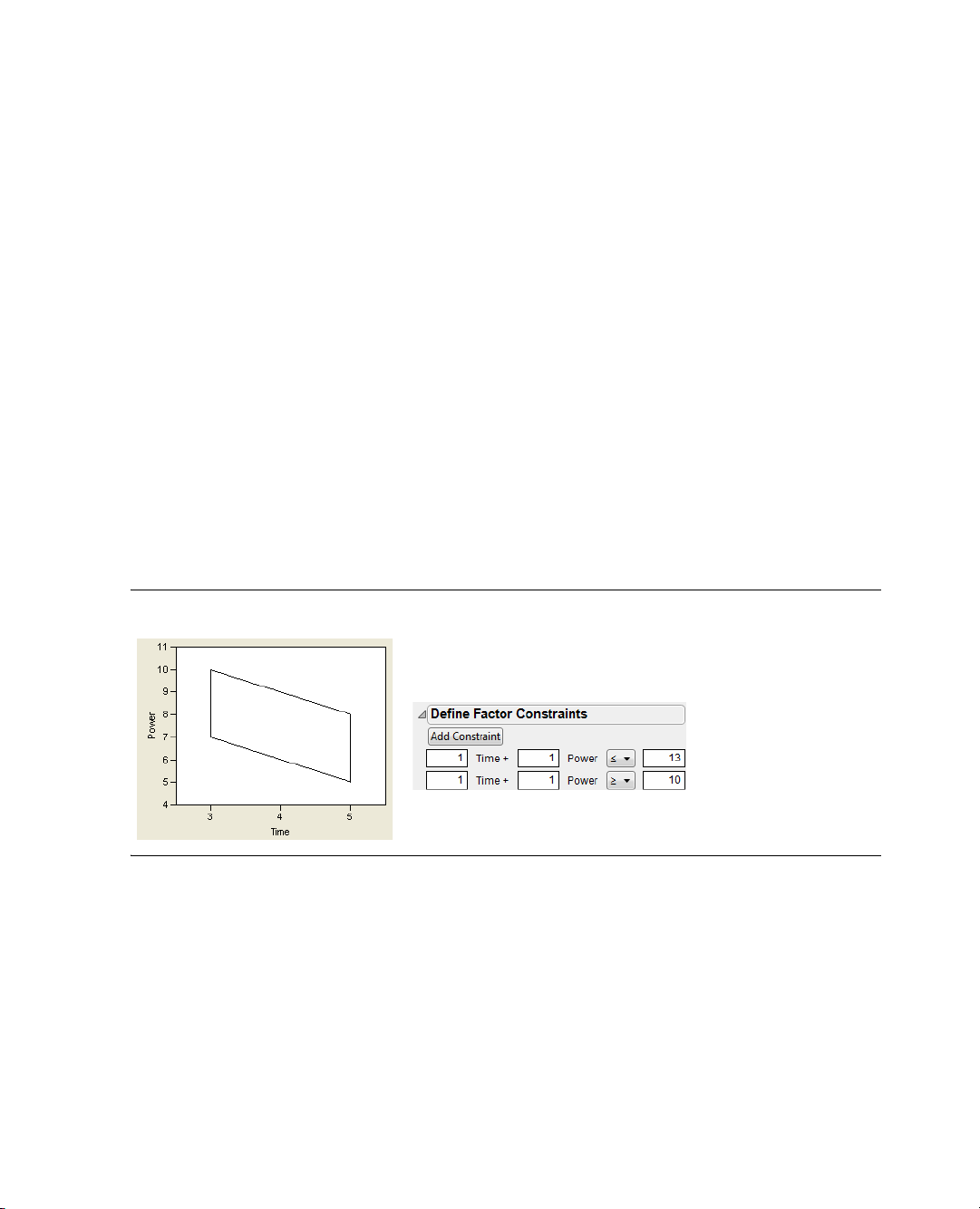

Step 2: Define Factor Constraints

The popping time for this experiment is either 3 or 5 minutes, and the power settings on the microwave are

5 and 10. From experience, you know that

• popping corn for a long time on a high setting tends to scorch kernels.

• not many kernels pop when the popping time is brief and the power setting is low.

You want to constrain the combined popping time and power settings to be less than or equal to 13, but

greater than or equal to 10. To define these limits:

1. Open the Constraints panel by clicking the disclosure button beside the

Define Factor Constraints title

bar (see Figure 1.4).

2. Click the

Add Constraint button twice, once for each of the known constraints.

3. Complete the information, as shown to the right in Figure 1.4. These constraints tell the Custom

Designer to avoid combinations of

to change

<= to >= in the second constraint.

Power and Time that sum to less than 10 and more than 13. Be sure

The area inside the parallelogram, illustrated on the left in Figure 1.4, is the allowable region for the runs.

You can see that popping for 5 minutes at a power of 10 is not allowed and neither is popping for 3 minutes

at a power of 5.

Figure 1.4 Defining Factor Constraints

Step 3: Add Interaction Terms

You are interested in the possibility that the effect of any factor on the proportion of popped kernels may

depend on the value of some other factor. For example, the effect of a change in popping time for the

Wilbur popcorn brand could be larger than the same change in time for the Top Secret brand. This kind of

synergistic effect of factors acting in concert is called a two-factor interaction. You can examine all possible

two-factor interactions in your a priori model of the popcorn popping process.

1. Click

Interactions in the Model panel and select 2nd. JMP adds two-factor interactions to the model as

shown to the left in Figure 1.5.

Page 23

Chapter 1 Introduction to Designing Experiments 7

My First Experiment

In addition, you suspect the graph of the relationship between any factor and any response might be curved.

You can see whether this kind of curvature exists with a quadratic model formed by adding the second order

powers of effects to the model, as follows.

2. Click

Powers and select 2nd to add quadratic effects of the continuous factors, Power and Time.

The completed Model should look like the one to the right in Figure 1.5.

Figure 1.5 Add Interaction and Power Terms to the Model

Step 4: Determine the Number of Runs

The Design Generation panel in Figure 1.6 shows the minimum number of runs needed to perform the

experiment with the effects you’ve added to the model. You can use that minimum or the default number of

runs, or you can specify your own number of runs as long as that number is more than the minimum. JMP

has no restrictions on the number of runs you request. For this example, use the default number of runs, 16.

Click

Make Design to continue.

Figure 1.6 Model and Design Generation Panels

Page 24

8 Introduction to Designing Experiments Chapter 1

My First Experiment

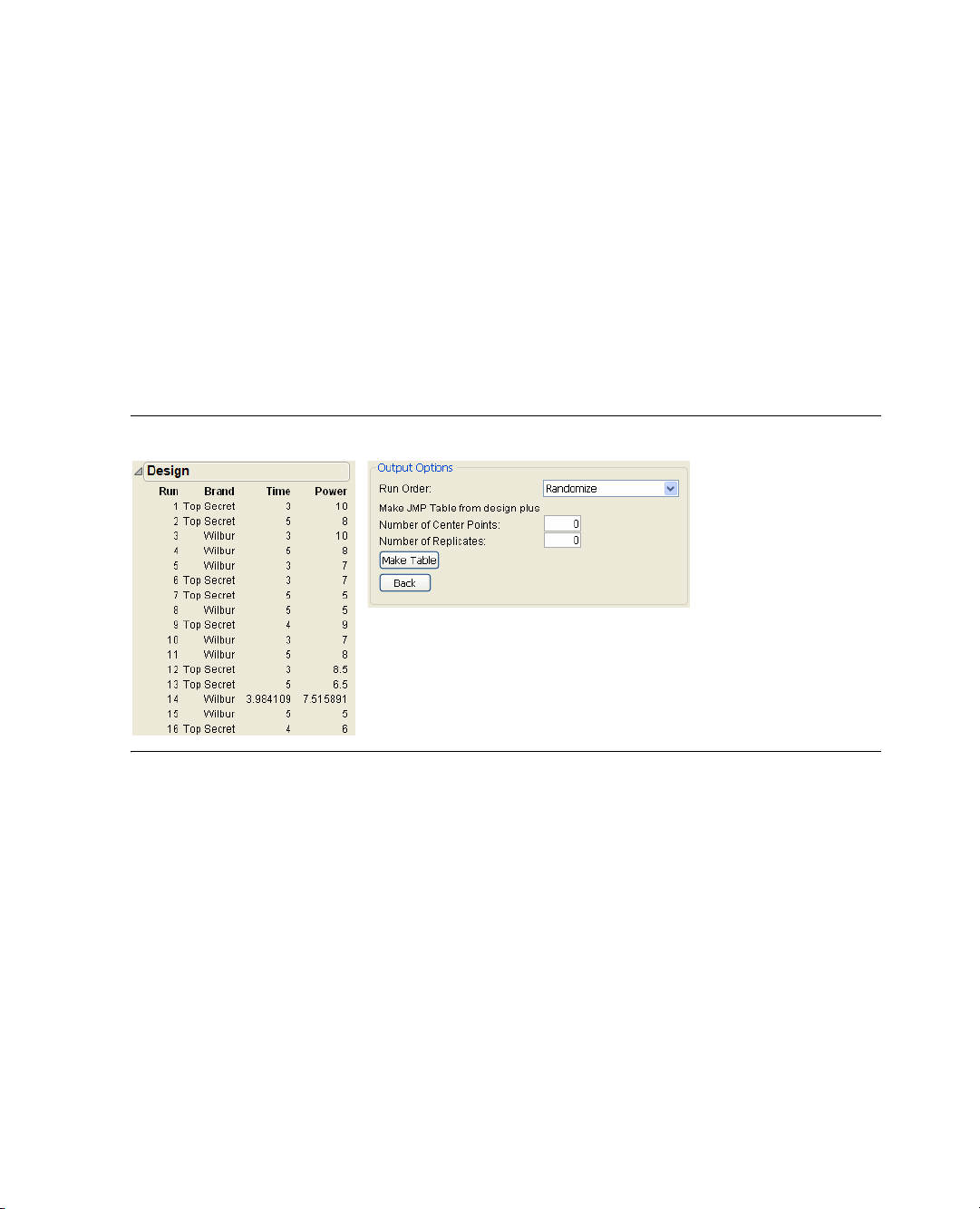

Step 5: Check the Design

When you click Make Design, JMP generates and displays a design, as shown on the left in Figure 1.7.

Note that because JMP uses a random seed to generate custom designs and there is no unique optimal

design for this problem, your table may be different than the one shown here. You can see in the table that

the custom design requires 8 runs using each brand of popcorn.

Scroll to the bottom of the Custom Design window and look at the Output Options area (shown to the

right in Figure 1.7. The

data table when it is created. Keep the selection at

in a random order.

Run Order option lets you designate the order you want the runs to appear in the

Randomize so the rows (runs) in the output table appear

Now click

Figure 1.7 Design and Output Options Section of Custom Designer

Make Table in the Output Options section.

The resulting data table (Figure 1.8) shows the order in which you should do the experimental runs and

provides columns for you to enter the number of popped and total kernels.

You do not have fractional control over the power and time settings on a microwave oven, so you should

round the power and time settings, as shown in the data table. Although this altered design is slightly less

optimal than the one the custom designer suggested, the difference is negligible.

Tip: Note that optionally, before clicking

Right

in the Run Order menu to have JMP present the results in the data table according to the brand. We

have conducted this experiment for you and placed the results, called

Sample Data folder installed with JMP. These results have the columns sorted from left to right.

Make Table in the Output Options, you could select Sort Left to

Popcorn DOE Results.jmp, in the

Page 25

Chapter 1 Introduction to Designing Experiments 9

results from

experiment

scripts to

analyze data

My First Experiment

Figure 1.8 JMP Data Table of Design Runs Generated by Custom Designer

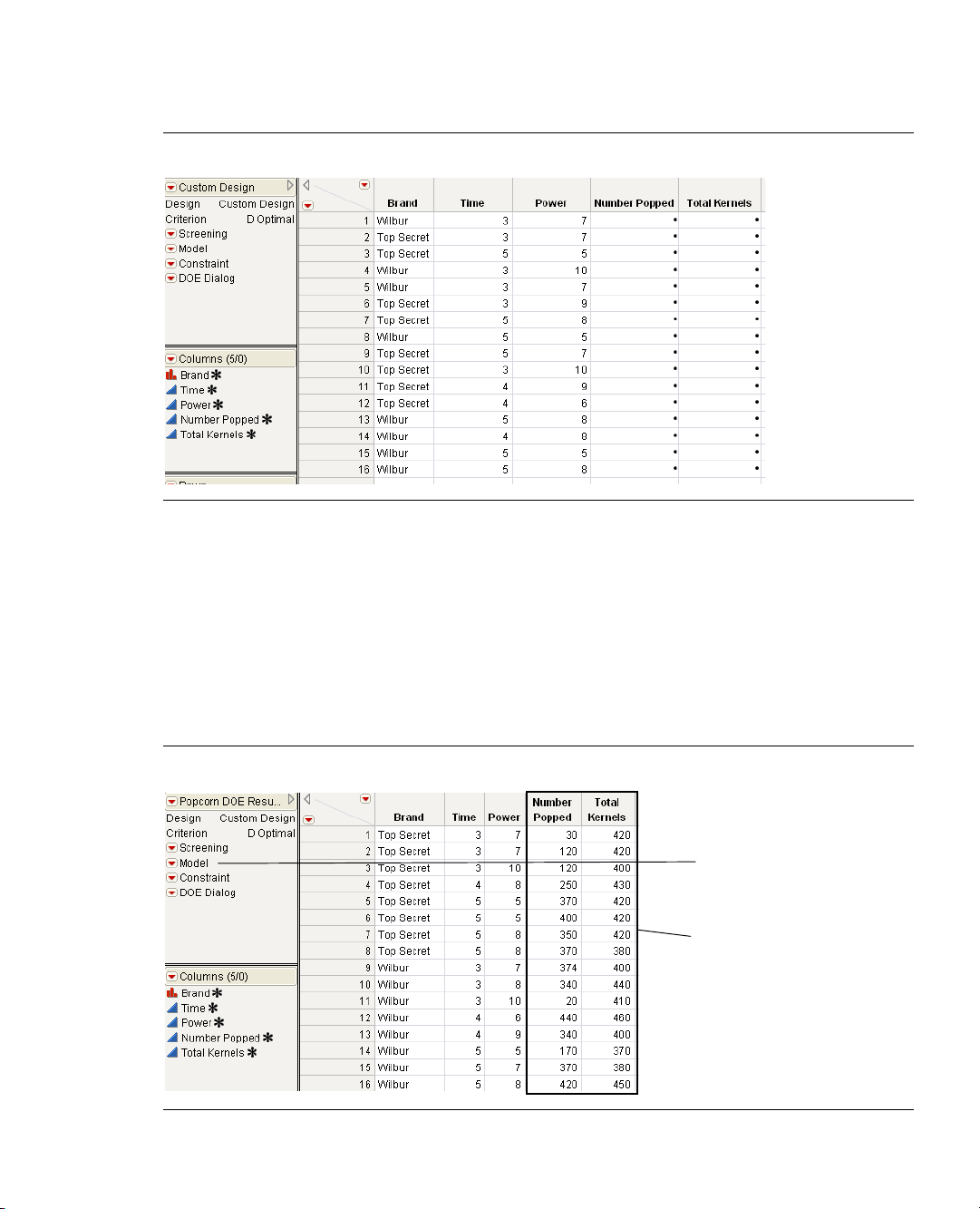

Step 6: Gather and Enter the Data

Pop the popcorn according to the design JMP provided. Then, count the number of popped and unpopped

kernels left in each bag. Finally, enter the numbers shown below into the appropriate columns of the data

table.

We have conducted this experiment for you and placed the results in the

JMP. To see the results, open

data.

The data table is shown in Figure 1.9.

Figure 1.9 Results of the Popcorn DOE Experiment

Popcorn DOE Results.jmp from the Design Experiment folder in the sample

Sample Data folder installed with

Page 26

10 Introduction to Designing Experiments Chapter 1

My First Experiment

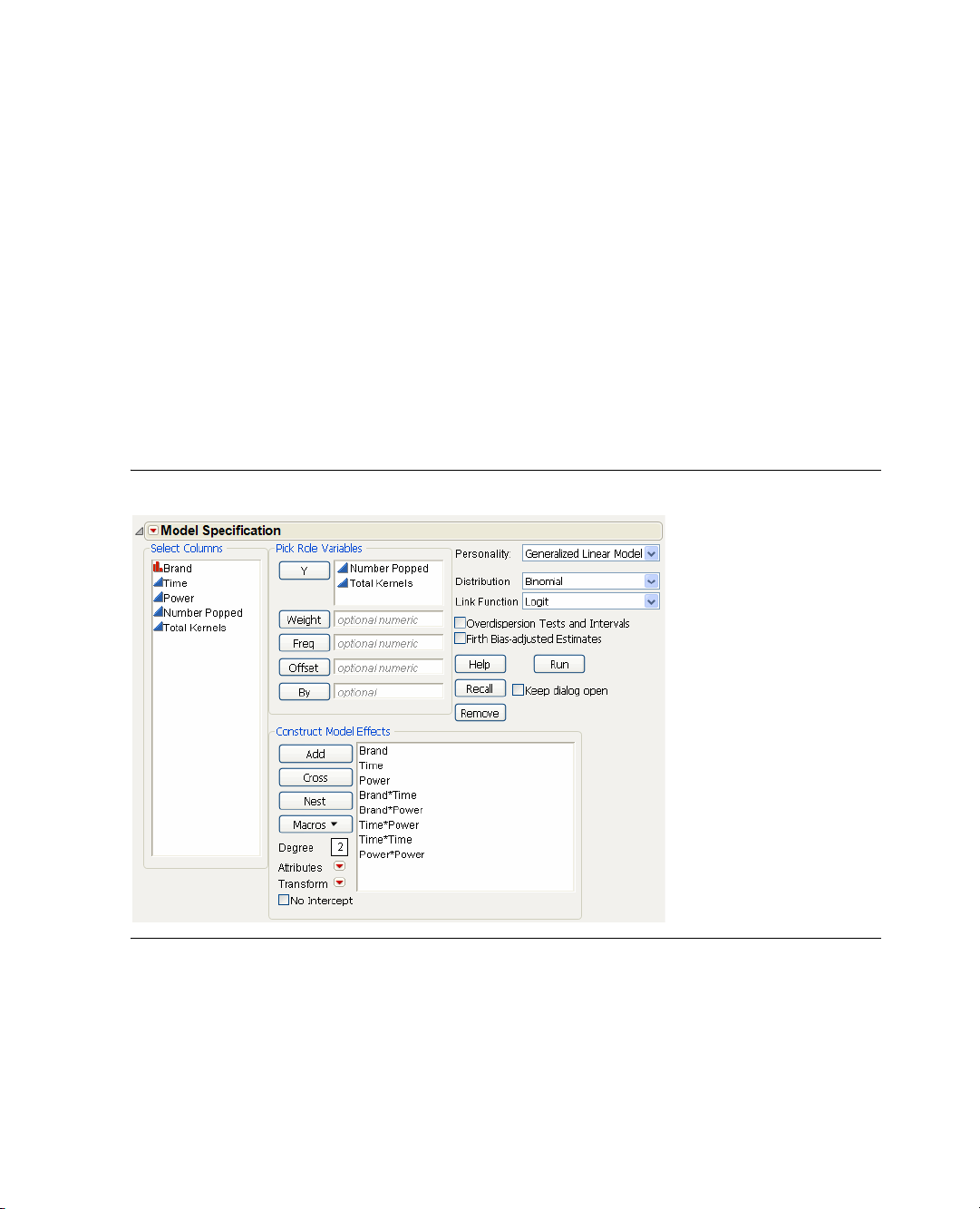

Step 7: Analyze the Results

After the experiment is finished and the number of popped kernels and total kernels have been entered into

the data table, it is time to analyze the data. The design data table has a script, labeled

the top left panel of the table. When you created the design, a standard least squares analysis was stored in

the

Model script with the data table.

Model, that shows in

1. Click the red triangle for

The default fitting personality in the model dialog is

Model and select Run Script.

Standard Least Squares. One assumption of

standard least squares is that your responses are normally distributed. But because you are modeling the

proportion of popped kernels it is more appropriate to assume that your responses come from a binomial

distribution. You can use this assumption by changing to a generalized linear model.

2. Change the Personality to

Logit, as shown in Figure 1.10.

Figure 1.10 Fitting the Model

Generalized Linear Model, Distribution to Binomial, and Link Function to

3. Click Run.

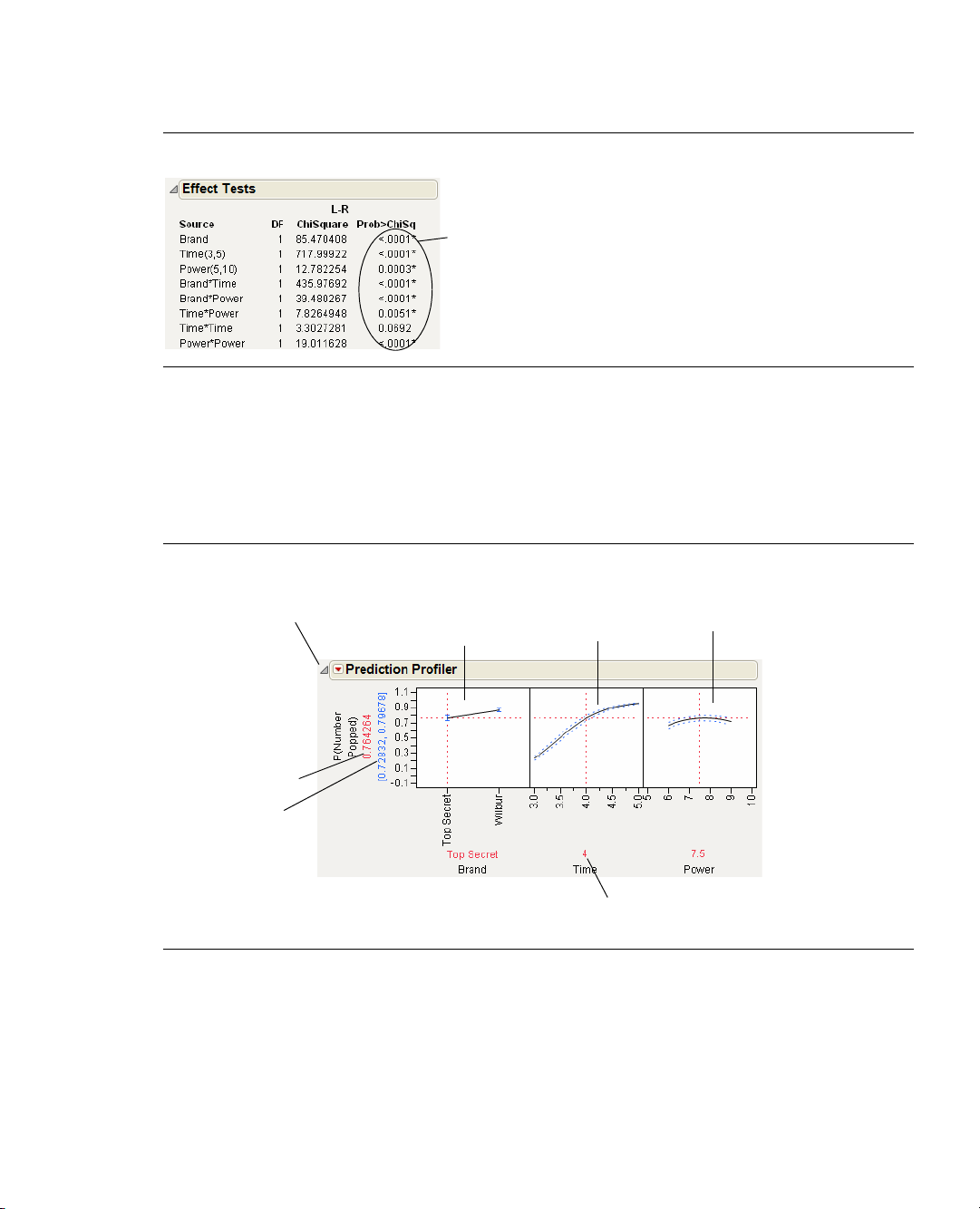

4. Scroll down to view the Effect Tests table (Figure 1.11) and look in the column labeled Prob>Chisq.

This column lists p-values. A low p-value (a value less than 0.05) indicates that results are statistically

significant. There are asterisks that identify the low p-values. You can therefore conclude that, in this

experiment, all the model effects except for

there is a strong relationship between popping time (

(

Brand), and the proportion of popped kernels.

Time*Time are highly significant. You have confirmed that

Time), microwave setting (Power), popcorn brand

Page 27

Chapter 1 Introduction to Designing Experiments 11

p-values indicate significance.

Values with * beside them are

p-values that indicate the results

are statistically significant.

Prediction trace

for

Brand

predicted value

of the response

95% confidence

interval on the mean

response

Factor values (here, time = 4)

Prediction trace

for

Time

Prediction trace

for

Power

Disclosure icon to

open or close the

Prediction Profiler

My First Experiment

Figure 1.11 Investigating p-Values

To further investigate, use the Prediction Profiler to see how changes in the factor settings affect the

numbers of popped and unpopped kernels:

1. Choose

Profilers > Profiler from the red triangle menu on the Generalized Linear Model Fit title bar.

The Prediction Profiler is shown at the bottom of the report. Figure 1.12 shows the Prediction Profiler

for the popcorn experiment. Prediction traces are displayed for each factor.

Figure 1.12 The Prediction Profiler

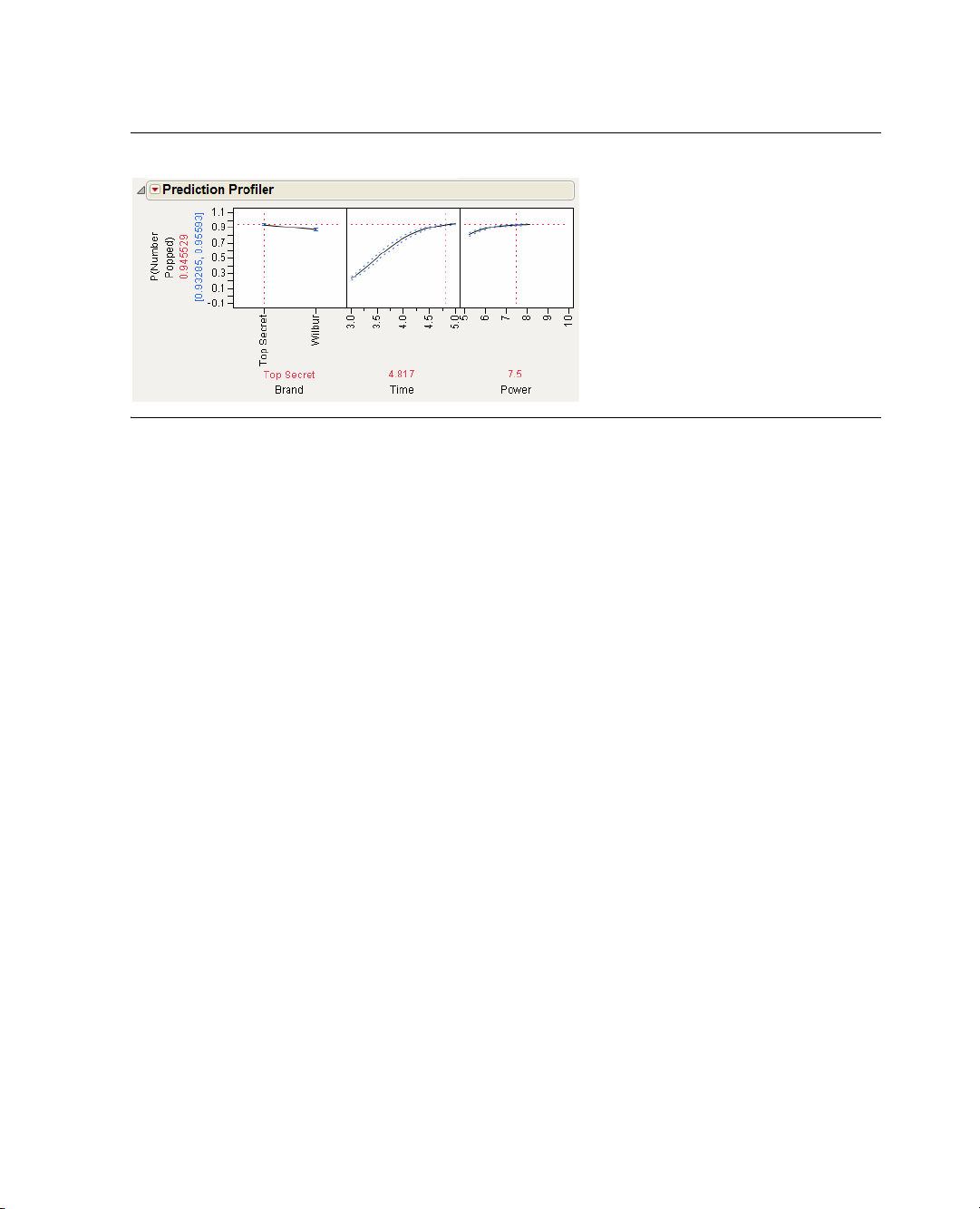

2. Move the vertical red dotted lines to see the effect that changing a factor value has on the response. For

example, drag the red line in the

Time graph to the right and left (Figure 1.13).

Page 28

12 Introduction to Designing Experiments Chapter 1

My First Experiment

Figure 1.13 Moving the Time Value from 4 to Near 5

As Time increases and decreases, the curved Time and Power prediction traces shift their slope and

maximum/minimum values. The substantial slope shift tells you there is an interaction (synergistic effect)

involving

Time and Power.

Furthermore, the steepness of a prediction trace reveals a factor’s importance. Because the prediction trace

for

Time is steeper than that for Brand or Power (see Figure 1.13), you can see that cooking time is more

important than the brand of popcorn or the microwave power setting.

Now for the final steps.

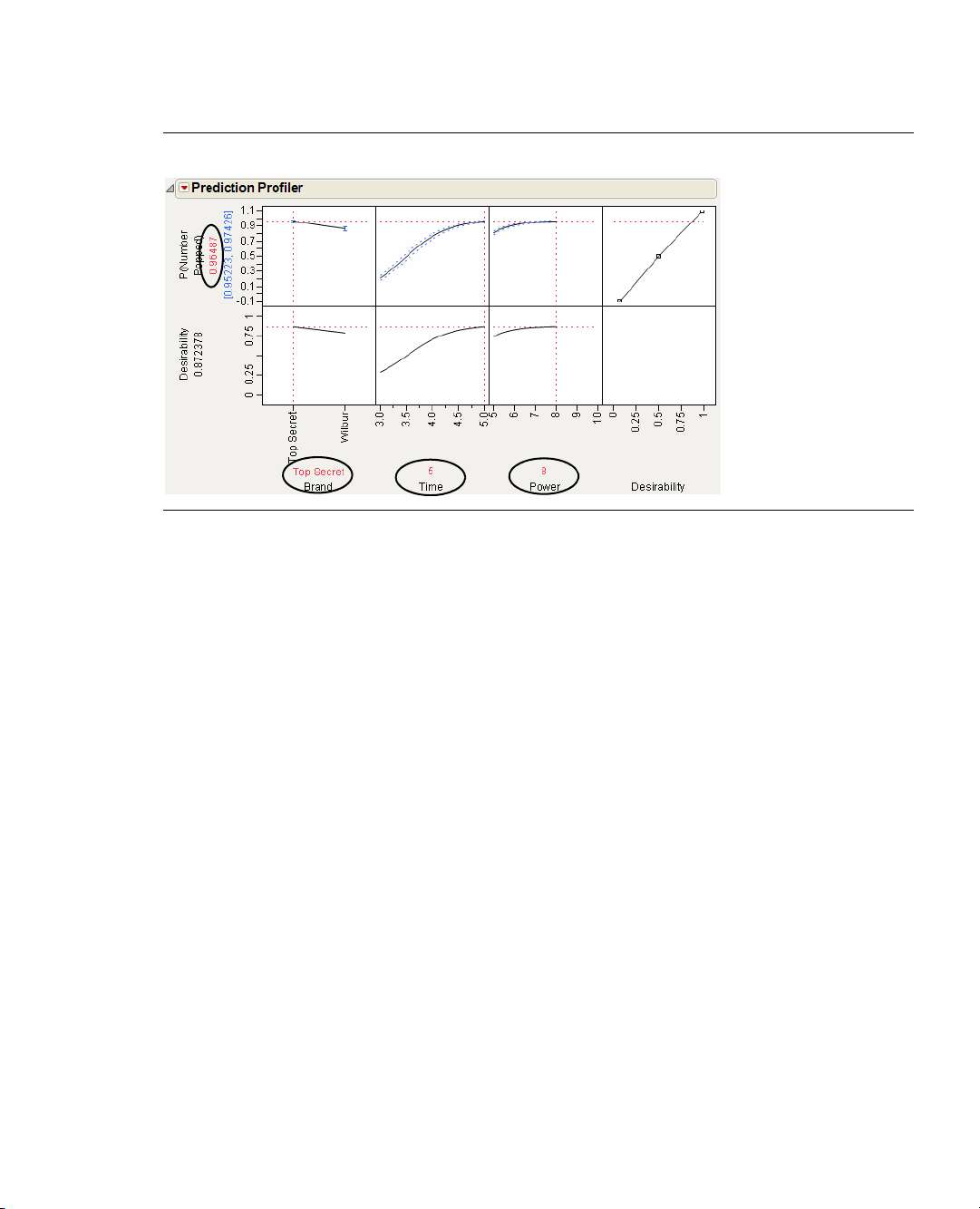

3. Click the red triangle icon in the Prediction Profiler title bar and select

4. Click the red triangle icon in the Prediction Profiler title bar and select

Desirability Functions.

Maximize Desirability. JMP

automatically adjusts the graph to display the optimal settings at which the most kernels will be popped

(Figure 1.14).

Our experiment found how to cook the bag of popcorn with the greatest proportion of popped kernels: use

Top Secret, cook for five minutes, and use a power level of 8. The experiment predicts that cooking at these

settings will yield greater than 96.5% popped kernels.

Page 29

Chapter 1 Introduction to Designing Experiments 13

My First Experiment

Figure 1.14 The Most Desirable Settings

The best settings are the Top Secret brand, cooking time at 5, and power set at 8.

Page 30

14 Introduction to Designing Experiments Chapter 1

My First Experiment

Page 31

Chapter 2

Describe Design Collect Fit Predict

Key mathematical steps: appropriate

computer-based tools are empowering.

Key engineering steps: process knowledge and

engineering judgement are important.

Identify factors

and responses.

Compute design

for maximum

information from

runs.

Use design to set

factors: measure

response for each

run.

Compute best fit

of mathematical

model to data

from test runs.

Use model to find

best factor settings

for on-target

responses and

minimum variability.

Examples Using the Custom Designer

The use of statistical methods in industry is increasing. Arguably, the most cost-beneficial of these methods

for quality and productivity improvement is statistical design of experiments. A trial-and -error search for

the vital few factors that most affect quality is costly and time-consuming. The purpose of experimental

design is to characterize, predict, and then improve the behavior of any system or process. Designed

experiments are a cost-effective way to accomplish these goals.

JMP’s custom designer is the recommended way to describe your process and create a design that works for

your situation. To use the custom designer, you first enter the process variables and constraints, then JMP

tailors a design to suit your unique case. This approach is more general and requires less experience and

expertise than previous tools supporting the statistical design of experiments.

Custom designs accommodate any number of factors of any type. You can also control the number of

experimental runs. This makes custom design more flexible and more cost effective than alternative

approaches.

This chapter presents several examples showing the use of custom designs. It shows how to drive its interface

to build a design using this easy step-by-step approach:

Figure 2.1 Approach to Experimental Design

Page 32

Contents

Creating Screening Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .17

Creating a Main-Effects-Only Screening Design . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .17

Creating a Screening Design to Fit All Two-Factor Interactions. . . . . . . . . . . . . . . . . . . . . . . . . . . . . .19

A Compromise Design Between Main Effects Only and All Interactions. . . . . . . . . . . . . . . . . . . . . . 20

Creating ‘Super’ Screening Designs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

Screening Designs with Flexible Block Sizes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

Checking for Curvature Using One Extra Run . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

Creating Response Surface Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .33

Exploring the Prediction Variance Surface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .33

Introducing I-Optimal Designs for Response Surface Modeling . . . . . . . . . . . . . . . . . . . . . . . . . . . . .36

A Three-Factor Response Surface Design. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .37

Response Surface with a Blocking Factor . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .39

Creating Mixture Experiments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .43

Mixtures Having Nonmixture Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .43

Experiments that are Mixtures of Mixtures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

Special-Purpose Uses of the Custom Designer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

Designing Experiments with Fixed Covariate Factors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

Creating a Design with Two Hard-to-Change Factors: Split Plot. . . . . . . . . . . . . . . . . . . . . . . . . . . . .54

Technical Discussion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .59

Page 33

Chapter 2 Examples Using the Custom Designer 17

Creating Screening Experiments

Creating Screening Experiments

You can use the screening designer in JMP to create screening designs, but the custom designer is more

flexible and general. The straightforward screening examples described below show that ‘custom’ does not

mean ‘exotic.’ The custom designer is a general purpose design environment that can create screening

designs.

Creating a Main-Effects-Only Screening Design

To create a main-effects-only screening design using the custom designer:

1. Select

2. Enter six continuous factors into the Factors panel (see “Step 1: Design the Experiment,” p. 4, for

3. Click

4. Using the default of eight runs, click

Note to DOE experts: The result is a resolution-three screening design. All the main effects are

estimable, but they are confounded with two factor interactions.

DOE > Custom Design.

details). Figure 2.2 shows the six factors.

Continue. The default model contains only the main effects.

Make Design. Click the disclosure button ( on Windows and

on the Macintosh) to open the

Design Evaluation outline node.

Page 34

18 Examples Using the Custom Designer Chapter 2

Creating Screening Experiments

Figure 2.2 A Main-Effects-Only Screening Design

5. Click the disclosure buttons beside Design Evaluation and then beside Alias Matrix ( on Windows

and on the Macintosh) to open the Alias Matrix. Figure 2.3 shows the Alias Matrix, which is a

table of zeros, ones, and negative ones.

The Alias Matrix shows how the coefficients of the constant and main effect terms in the model are biased

by any active two-factor interaction effects not already added to the model. The column labels identify

interactions. For example, the columns labeled X2*X6 and X3*X4 in the table have a 1 and -1 in the row

for

X1. This means that the expected value of the main effect of X1 is actually the sum of the main effect of

X1 and X2*X6, minus the effect of X3*X4. You are assuming that these interactions are negligible in size

compared to the effect of

Figure 2.3 The Alias Matrix

X1.

Page 35

Chapter 2 Examples Using the Custom Designer 19

Open

outline

nodes

Creating Screening Experiments

Note to DOE experts: The Alias matrix is a generalization of the confounding pattern in fractional

factorial designs.

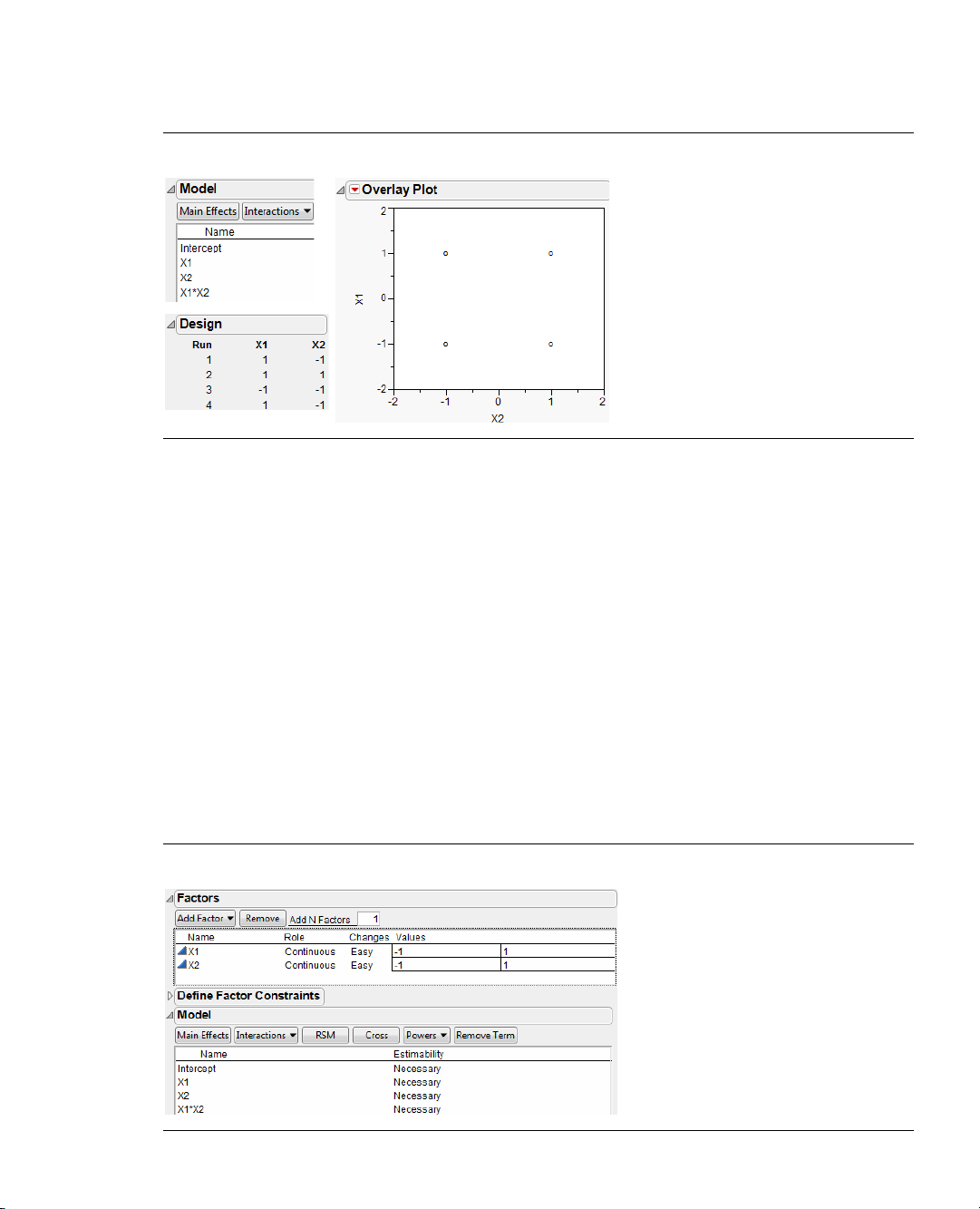

Creating a Screening Design to Fit All Two-Factor Interactions

There is risk involved in designs for main effects only. The risk is that two-factor interactions, if they are

strong, can confuse the results of such experiments. To avoid this risk, you can create experiments resolving

all the two-factor interactions.

Note to DOE experts: The result in this example is a resolution-five screening design. Two-factor

interactions are estimable but are confounded with three-factor interactions.

1. Select

DOE > Custom Design.

2. Enter five continuous factors into the Factors panel (see “Step 1: Design the Experiment,” p. 4 in the

“Introduction to Designing Experiments” chapter for details).

3. Click

4. In the Model panel, select

5. In the Design Generation Panel choose

Continue.

Interactions > 2nd.

Minimum for Number of Runs and click Make Design.

Figure 2.4 shows the runs of the two-factor design with all interactions. The sample size, 16 (a power of

two) is large enough to fit all the terms in the model. The values in your table may be different from those

shown below.

Figure 2.4 All Two-Factor Interactions

Page 36

20 Examples Using the Custom Designer Chapter 2

Creating Screening Experiments

6. Click the disclosure button ( on Windows and on the Macintosh) and to open the Design

Evaluation

The columns labels identify an interaction. For example, the column labelled

interaction of the first and second effect, the column labelled

outlines, then open Alias Matrix. Figure 2.5 shows the alias matrix table of zeros and ones.

1 2 refers to the

2 3 refers to the interaction between the

second and third effect, and so forth.

Look at the column labelled

occurs in the row labelled

expected value of the two-factor interaction

the row labelled

X1*X2 contain only zeros, which means that the Intercept and main effect terms are not

1 2. There is only one value of 1 in that column. All others are 0. The 1

X1*X2. All the other rows and columns are similar. This means that the

X1*X2 is not biased by any other terms. All the rows above

biased by any two-factor interactions.

Figure 2.5 Alias Matrix Showing all Two-Factor Interactions Clear of all Main Effects

A Compromise Design Between Main Effects Only and All Interactions

In a screening situation, suppose there are six continuous factors and resources for n =16 runs. The first

example in this section showed an eight-run design that fit all the main effects. With six factors, there are 15

possible two-factor interactions. The minimum number of runs that could fit the constant, six main effects

and 15 two-factor interactions is 22. This is more than the resource budget of 16 runs. It would be good to

find a compromise between the main-effects only design and a design capable of fitting all the two-factor

interactions.

This example shows how to obtain such a design compromise using the custom designer.

1. Select

2. Define six continuous factors (X1 - X6).

3. Click

4. Click the

DOE > Custom Design.

Continue. The model includes the main effect terms by default. The default estimability of these

terms is

Necessary.

Interactions button and choose 2nd to add all the two-factor interactions.

Page 37

Chapter 2 Examples Using the Custom Designer 21

Creating Screening Experiments

5. Select all the interaction terms and click the current estimability (Necessary) to reveal a menu. Change

Necessary to If Possible, as shown in Figure 2.6.

Figure 2.6 Model for Six-Variable Design with Two-Factor Interactions Designated If Possible

6. Type 16 in the User Specified edit box in the Number of Runs section, as shown. Although the desired

number of runs (16) is less than the total number of model terms, the custom designer builds a design to

estimate as many two-factor interactions as possible.

7. Click

After the custom designer creates the design, click the disclosure button beside

Make Design.

Design Evaluation to open

the Alias Matrix (Figure 2.7). The values in your table may be different from those shown below, but with a

similar pattern.

Page 38

22 Examples Using the Custom Designer Chapter 2

Creating Screening Experiments

Figure 2.7 Alias Matrix

All the rows above the row labelled X1*X2 contain only zeros, which means that the Intercept and main

effect terms are not biased by any two-factor interactions. The row labelled

1 2 column and the same value in the 3 6 column. That means the expected value of the estimate for X1*X2

is actually the sum of

X1*X2 and any real effect due to X3*X6.

Note to DOE experts: The result in this particular example is a resolution-four screening design.

Two-factor interactions are estimable but are aliased with other two-factor interactions.

Creating ‘Super’ Screening Designs

This section shows how to use the technique shown in the previous example to create ‘super’

(supersaturated) screening designs. Supersaturated designs have fewer runs than factors, which makes them

attractive for factor screening when there are many factors and experimental runs are expensive.

In a saturated design, the number of runs equals the number of model terms. In a supersaturated design, as

the name suggests, the number of model terms exceeds the number of runs (Lin, 1993). A supersaturated

design can examine dozens of factors using fewer than half as many runs as factors.

The Need for Supersaturated Designs

7–4

The 2

and the 2

with respect to a main effects model. In the analysis of a saturated design, you can (barely) fit the model, but

there are no degrees of freedom for error or for lack of fit. Until recently, saturated designs represented the

limit of efficiency in designs for screening.

15–11

fractional factorial designs available using the screening designer are both saturated

X1*X2 has the value 0.333 in the

Page 39

Chapter 2 Examples Using the Custom Designer 23

Creating Screening Experiments

Factor screening relies on the sparsity principle. The experimenter expects that only a few of the factors in a

screening experiment are active. The problem is not knowing which are the vital few factors and which are

the trivial many. It is common for brainstorming sessions to turn up dozens of factors. Yet, in practice,

screening experiments rarely involve more than ten factors. What happens to winnow the list from dozens to

ten or so?

If the experimenter is limited to designs that have more runs than factors, then dozens of factors translate

into dozens of runs. Often, this is not economically feasible. The result is that the factor list is reduced

without the benefit of data. In a supersaturated design, the number of model terms exceeds the number of

runs, and you can examine dozens of factors using less than half as many runs.

There are drawbacks:

• If the number of active factors approaches the number of runs in the experiment, then it is likely that

these factors will be impossible to identify. A rule of thumb is that the number of runs should be at least

four times larger than the number of active factors. If you expect that there might be as many as five

active factors, you should have at least 20 runs.

• Analysis of supersaturated designs cannot yet be reduced to an automatic procedure. However, using

forward stepwise regression is reasonable and the Screening platform (

Screening) offers a more streamlined analysis.

Analyze > Modeling >

Example: Twelve Factors in Eight Runs

As an example, consider a supersaturated design with twelve factors. Using model terms designated

Possible

In the last example, two-factor interaction terms were specified

terms—including main effects—are

provides the software machinery for creating a supersaturated design.

If Possible. In a supersaturated design, all

If Possible. Note in Figure 2.8, the only primary term is the intercept.

To see an example of a supersaturated design with twelve factors in eight runs:

1. Select

2. Add 12 continuous factors and click

3. Highlight all terms except the Intercept and click the current estimability (

DOE > Custom Design.

menu. Change

Necessary to If Possible, as shown in Figure 2.8.

Continue.

Necessary) to reveal the

If

Page 40

24 Examples Using the Custom Designer Chapter 2

Creating Screening Experiments

Figure 2.8 Changing the Estimability

4. The desired number of runs is eight so type 8 in the User Specified edit box in the Number of Runs

section.

5. Click the red triangle on the Custom Design title bar and select

6. Click

Make Design, then click Make Table. A window named Simulate Responses and a design table

appear, similar to the one in Figure 2.9. The

Y column values are controlled by the coefficients of the

Simulate Responses.

model in the Simulate Responses window. The values in your table may be different from those shown

below.

Figure 2.9 Simulated Responses and Design Table

Page 41

Chapter 2 Examples Using the Custom Designer 25

Creating Screening Experiments

7. Change the default settings of the coefficients in the Simulate Responses dialog to match those in

Figure 2.10 and click

Apply. The numbers in the Y column change. Because you have set X2 and X10 as

active factors in the simulation, the analysis should be able to identify the same two factors.

Note that random noise is added to the

Y column formula, so the numbers you see might not necessarily

match those in the figure. The values in your table may be different from those shown below.

Figure 2.10 Give Values to Two Main Effects and Specify the Standard Error as 0.5

To identify active factors using stepwise regression:

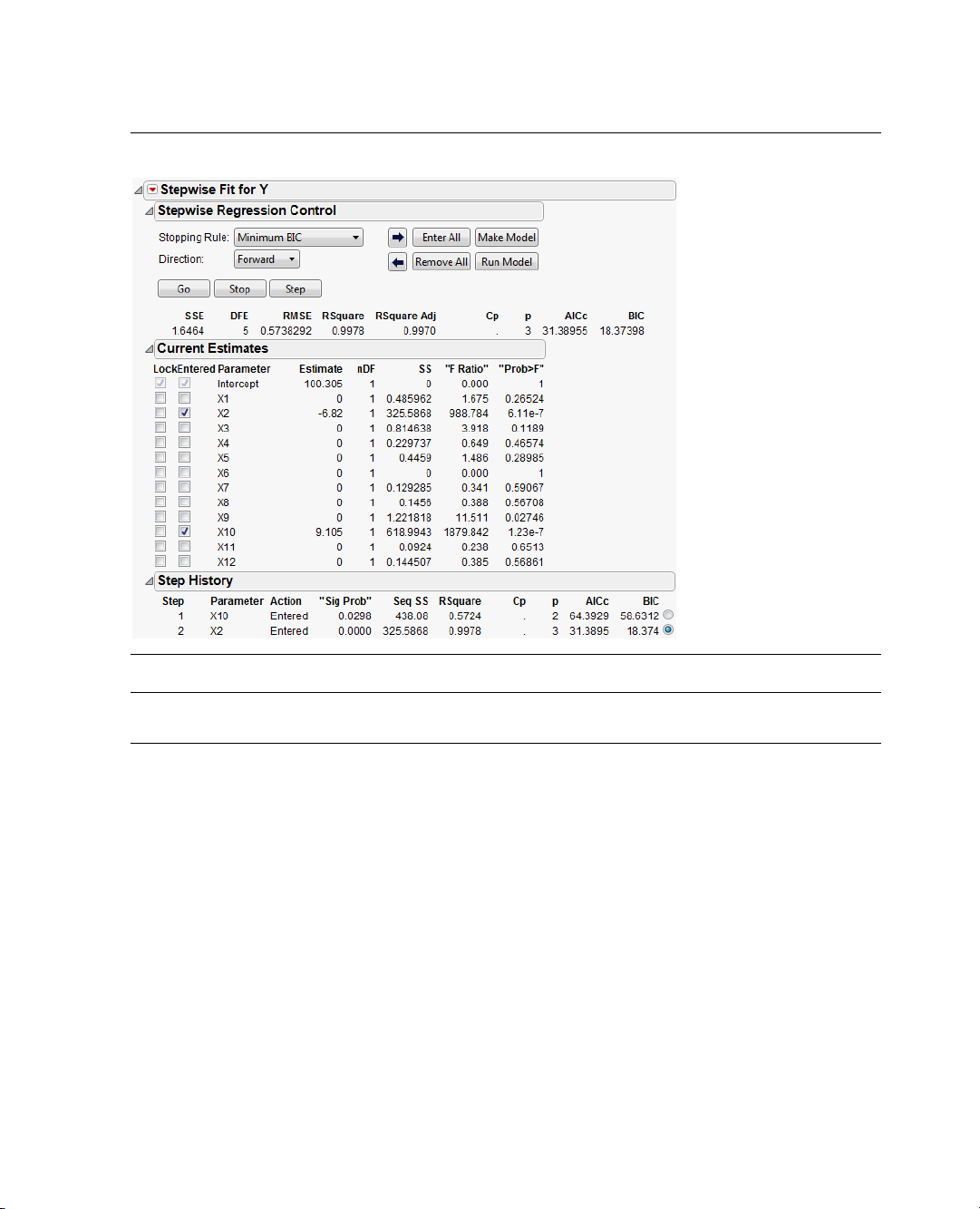

1. To run the

2. Change the

Stepwise.

3. Click

4. In the resulting display click the

Model script in the design table, click the red triangle beside Model and select Run Script.

Personality in the Model Specification window from Standard Least Squares to

Run on the Fit Model dialog.

Step button two times. JMP enters the factors with the largest effects.

From the report that appears, you should identify two active factors, X2 and X10, as shown in

Figure 2.11. The step history appears in the bottom part of the report. Because random noise is added,

your estimates will be slightly different from those shown below.

Page 42

26 Examples Using the Custom Designer Chapter 2

Creating Screening Experiments

Figure 2.11 Stepwise Regression Identifies Active Factors

Note: This example defines two large main effects and sets the rest to zero. In real-world situations, it may

be less likely to have such clearly differentiated effects.

Screening Designs with Flexible Block Sizes

When you create a design using the Screening designer (DOE > Screening), the available block sizes for the

listed designs are a power of two. However, custom designs in JMP can have blocks of any size. The

blocking example shown in this section is flexible because it is using three runs per block, instead of a power

of two.

After you select

Values section of the Factors panel because the sample size is unknown at this point. After you complete the

design, JMP shows the appropriate number of blocks, which is calculated as the sample size divided by the

number of runs per block.

DOE > Custom Design and enter factors, the blocking factor shows only one level in the

Page 43

Chapter 2 Examples Using the Custom Designer 27

Creating Screening Experiments

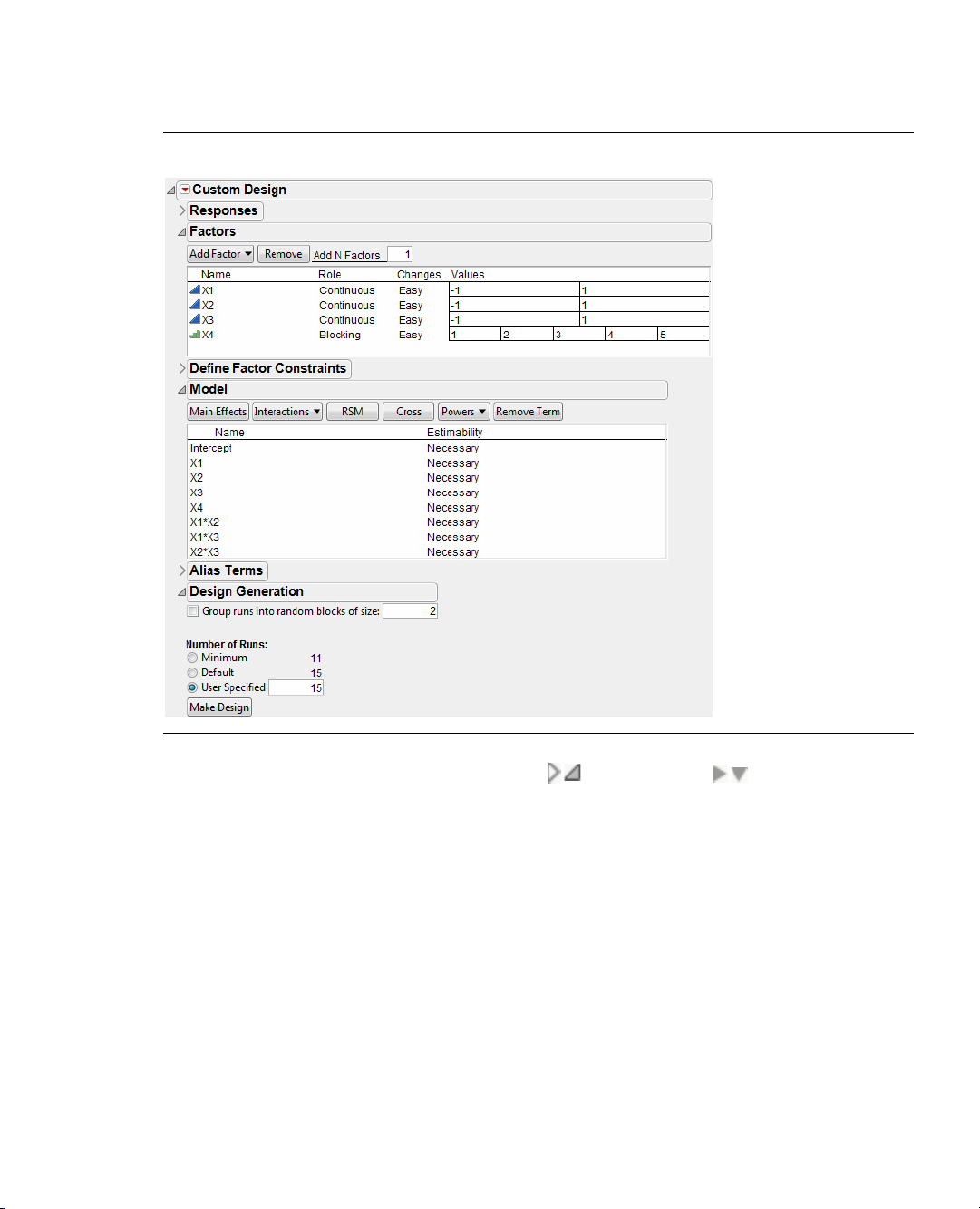

For example, Figure 2.12 shows that when you enter three continuous factors and one blocking factor with

three runs per block, only one block appears in the Factors panel.

Figure 2.12 One Block Appears in the Factors Panel

The default sample size of nine requires three blocks. After you click Continue, there are three blocks in the

Factors panel (Figure 2.13). This is because the default sample size is nine, which requires three blocks with

three runs each.