Data Services Migration Considerations

BusinessObjects Data Services XI 3.0 (12.0.0)

Copyright

© 2008 Business Objects. All rights reserved. Business Objects owns the following U.S. patents, which may cover products that are offered and licensed by Business Objects: 5,555,403; 5,857,205; 6,289,352; 6,247,008; 6,490,593; 6,578,027; 6,831,668; 6,768,986; 6,772,409; 6,882,998; 7,139,766; 7,299,419; 7,194,465; 7,222,130; 7,181,440 and 7,181,435. Business Objects and the Business Objects logo, BusinessObjects, Business Objects Crystal Vision, Business Process On Demand, BusinessQuery, Crystal Analysis, Crystal Applications, Crystal Decisions, Crystal Enterprise, Crystal Insider, Crystal Reports, Desktop Intelligence, Inxight, the Inxight Logo, LinguistX, Star Tree, Table Lens, ThingFinder, Timewall, Let there be light, Metify, NSite, Rapid Marts, RapidMarts, the Spectrum Design, Web Intelligence, Workmail and Xcelsius are trademarks or registered trademarks in the United States and/or other countries of Business Objects and/or affiliated companies. All other names mentioned herein may be trademarks of their respective owners.

Third-party Contributors

Business Objects products in this release may contain redistributions of software licensed from third-party contributors. Some of these individual components may also be available under alternative licenses. A partial listing of third-party contributors that have requested or permitted acknowledgments, as well as required notices, can be found at: http://www.businessobjects.com/thirdparty

2008-03-16

Contents

Chapter 1: Data Quality to Data Services Migration Guide
  Introduction
    Welcome to Data Services
    Overview of migration
  Using the migration tool
    Overview of the migration utility
    Migration checklist
    Connection information
    Running the dqmigration utility
    dqmigration utility syntax and options
    Migration report
  How Data Quality repository contents migrate
    How projects and folders migrate
    How connections migrate
    How substitution files and variables migrate
    How data types migrate
    How Data Quality attributes migrate
  How transforms migrate
    Overview of migrated transforms
    Address cleansing transforms
    Reader and Writer transforms
    How Data Quality integrated batch Readers and Writers migrate
    How Data Quality transactional Readers and Writers migrate
    Matching transforms
    UDT-based transforms
    Other transforms
    Suggestion Lists options
  Post-migration tasks
    Further cleanup
    Improving performance
    Troubleshooting

Chapter 2: Migration Considerations Guide
  Introduction
  Behavior changes in version 12.0.0
    Case transform enhancement
    Data Quality projects in Data Integrator jobs
    Data Services web address
    Large object data type enhancements
    License keycodes
    Locale selection
    ODBC bigint data type
    Persistent and pageable cache enhancements
    Row delimiter for flat files
  Behavior changes in version 11.7.3
    Data flow cache type
    Job Server enhancement
    Logs in the Designer
    Pageable cache for memory-intensive data flows
  Behavior changes in version 11.7.2
    Embedded data flows
    Oracle Repository upgrade
    Solaris and AIX platforms
  Behavior changes in version 11.7.0
    Data Quality
    Distributed data flows
    JMS Adapter interface
    XML Schema enhancement
    Password management
    Web applications
    Web services
  Behavior changes in version 11.6.0
    Netezza bulk loading
    Conversion between different data types
  Behavior changes in version 11.5.1.5
  Behavior changes in version 11.5.1
  Behavior changes in version 11.5.0.0
    Web Services Adapter
    Varchar behavior
    Central Repository
  Behavior changes in version 11.0.2.5
    Teradata named pipe support
  Behavior changes in version 11.0.2
  Behavior changes in version 11.0.1.1
    Statistics repository tables
  Behavior changes in version 11.0.1
    Crystal Enterprise adapters
  Behavior changes in version 11.0.0
    Changes to code page names
    Data Cleansing
    License files and remote access software
  Behavior changes in version 6.5.1
  Behavior changes in version 6.5.0.1
    Web services support
    Sybase bulk loader library on UNIX
  Behavior changes in version 6.5.0.0
    Browsers must support applets and have Java enabled
    Execution of to_date and to_char functions
    Changes to Designer licensing
    License files and remote access software
    Administrator Repository Login
    Administrator Users
  Business Objects information resources
    Documentation

Index

Chapter 1: Data Quality to Data Services Migration Guide

Introduction

Welcome to Data Services

Welcome

Data Services XI Release 3 provides data integration and data quality processes in one runtime environment, delivering enterprise performance and scalability.

The data integration processes of Data Services allow organizations to easily explore, extract, transform, and deliver any type of data anywhere across the enterprise.

The data quality processes of Data Services allow organizations to easily standardize, cleanse, and consolidate data anywhere, ensuring that end users are always working with information that's readily available, accurate, and trusted.

Documentation set for Data Services

You should become familiar with all the pieces of documentation that relate to your Data Services product.

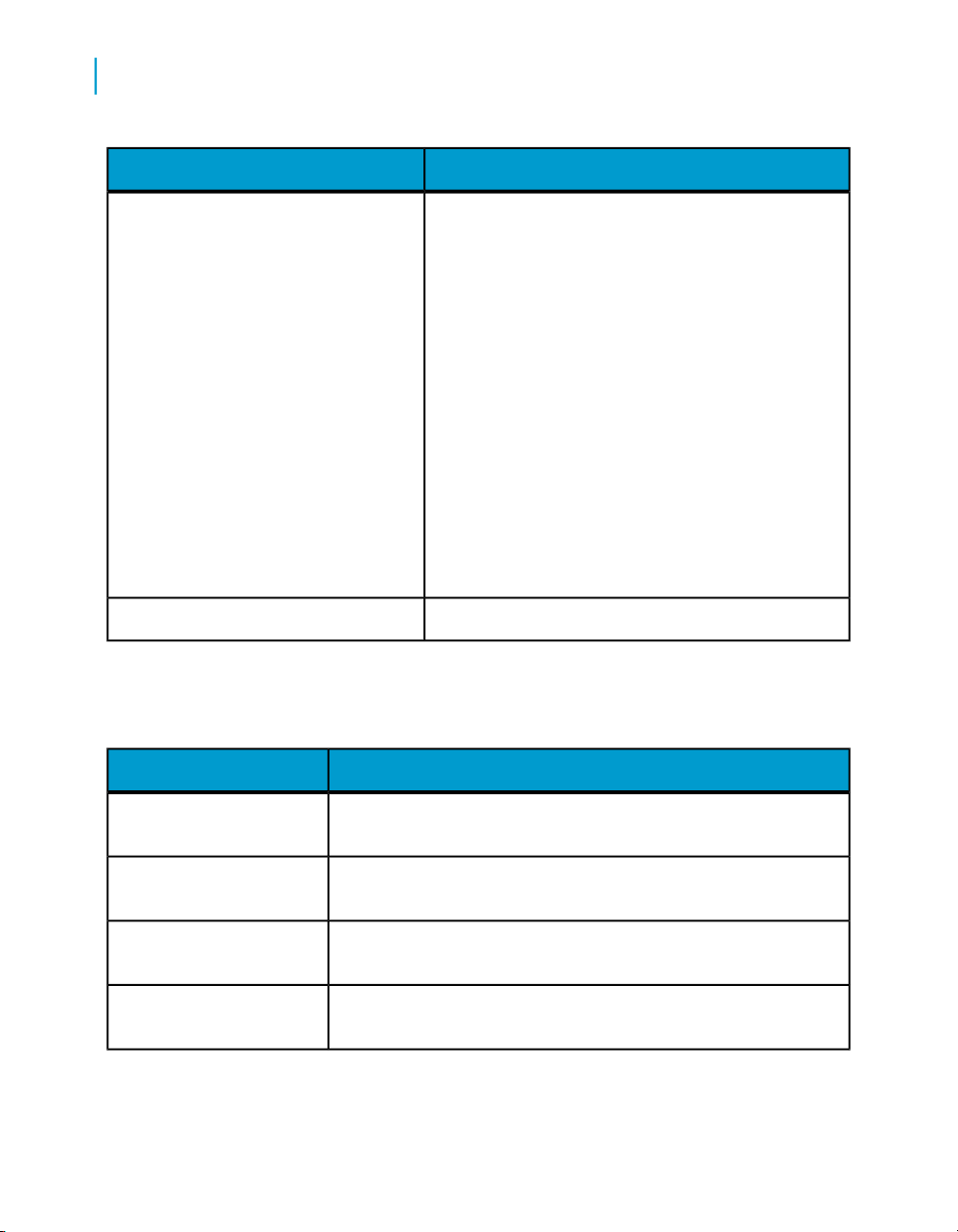

Document | What this document provides
Documentation Map | Information about available Data Services books, languages, and locations
Release Summary | Highlights of key features in this Data Services release
Release Notes | Important information you need before installing and deploying this version of Data Services
Getting Started Guide | An introduction to Data Services
Installation Guide | Information about and procedures for installing Data Services
Advanced Development Guide | Guidelines and options for migrating applications, including information on multi-user functionality and the use of the central repository for version control
Designer Guide | Information about how to use Data Services Designer
Integrator's Guide | Information for third-party developers to access Data Services functionality
Management Console: Administrator Guide | Information about how to use Data Services Administrator
Management Console: Metadata Reports Guide | Information about how to use Data Services Metadata Reports
Migration Considerations | Release-specific product behavior changes from earlier versions of Data Services to the latest release
Migration Guide | Information about how to migrate from Data Quality to Data Services
Performance Optimization Guide | Information about how to improve the performance of Data Services
Reference Guide | Detailed reference material for Data Services Designer
Technical Manuals | A compiled “master” PDF of core Data Services books containing a searchable master table of contents and index:
  • Installation Guide
  • Getting Started Guide
  • Designer Guide
  • Reference Guide
  • Management Console: Metadata Reports Guide
  • Management Console: Administrator Guide
  • Performance Optimization Guide
  • Advanced Development Guide
  • Supplement for J.D. Edwards
  • Supplement for Oracle Applications
  • Supplement for PeopleSoft
  • Supplement for Siebel
  • Supplement for SAP
Tutorial | A step-by-step introduction to using Data Services

In addition, you may need to refer to several Adapter Guides and Supplemental Guides.

Document | What this document provides
JMS Adapter Interface | Information about how to install, configure, and use the Data Services Adapter for JMS
Salesforce.com Adapter Interface | Information about how to install, configure, and use the Data Services Salesforce.com Adapter Interface
Supplement for J.D. Edwards | Information about license-controlled interfaces between Data Services and J.D. Edwards World and J.D. Edwards OneWorld
Supplement for Oracle Applications | Information about the license-controlled interface between Data Services and Oracle Applications
Supplement for PeopleSoft | Information about license-controlled interfaces between Data Services and PeopleSoft
Supplement for SAP | Information about license-controlled interfaces between Data Services, SAP ERP and R/3, and SAP BI/BW
Supplement for Siebel | Information about the license-controlled interface between Data Services and Siebel

Accessing documentation

You can access the complete documentation set for Data Services in several places.

Note: For the latest tips and tricks on Data Services, access our Knowledge Base on the Customer Support site at http://technicalsupport.businessobjects.com. We have posted valuable tips for getting the most out of your Data Services product.

Accessing documentation on Windows

After you install Data Services, you can access the documentation from the Start menu.

1. Choose Start > Programs > BusinessObjects XI 3.0 > BusinessObjects Data Services > Data Services Documentation.

Note: Only a subset of the documentation is available from the Start menu. The documentation set for this release is available in LINK_DIR\Doc\Books\en.

2. Click the appropriate shortcut for the document that you want to view.

Accessing documentation on UNIX

After you install Data Services, you can access the online documentation by going to the directory where the printable PDF files were installed.

1. Go to LINK_DIR/doc/book/en/.

2. Using Adobe Reader, open the PDF file of the document that you want to view.

Accessing documentation from the Web

You can access the complete documentation set for Data Services from the Business Objects Customer Support site.

1. Go to www.businessobjects.com.

2. From the "Support" pull-down menu, choose Documentation.

3. On the "Documentation" screen, choose Product Guides and navigate to the document that you want to view.

You can view the PDFs online or save them to your computer.

Business Objects information resources

Customer support, consulting, and training

A global network of Business technology experts provides customer support, education, and consulting to ensure maximum business intelligence benefit to your business.

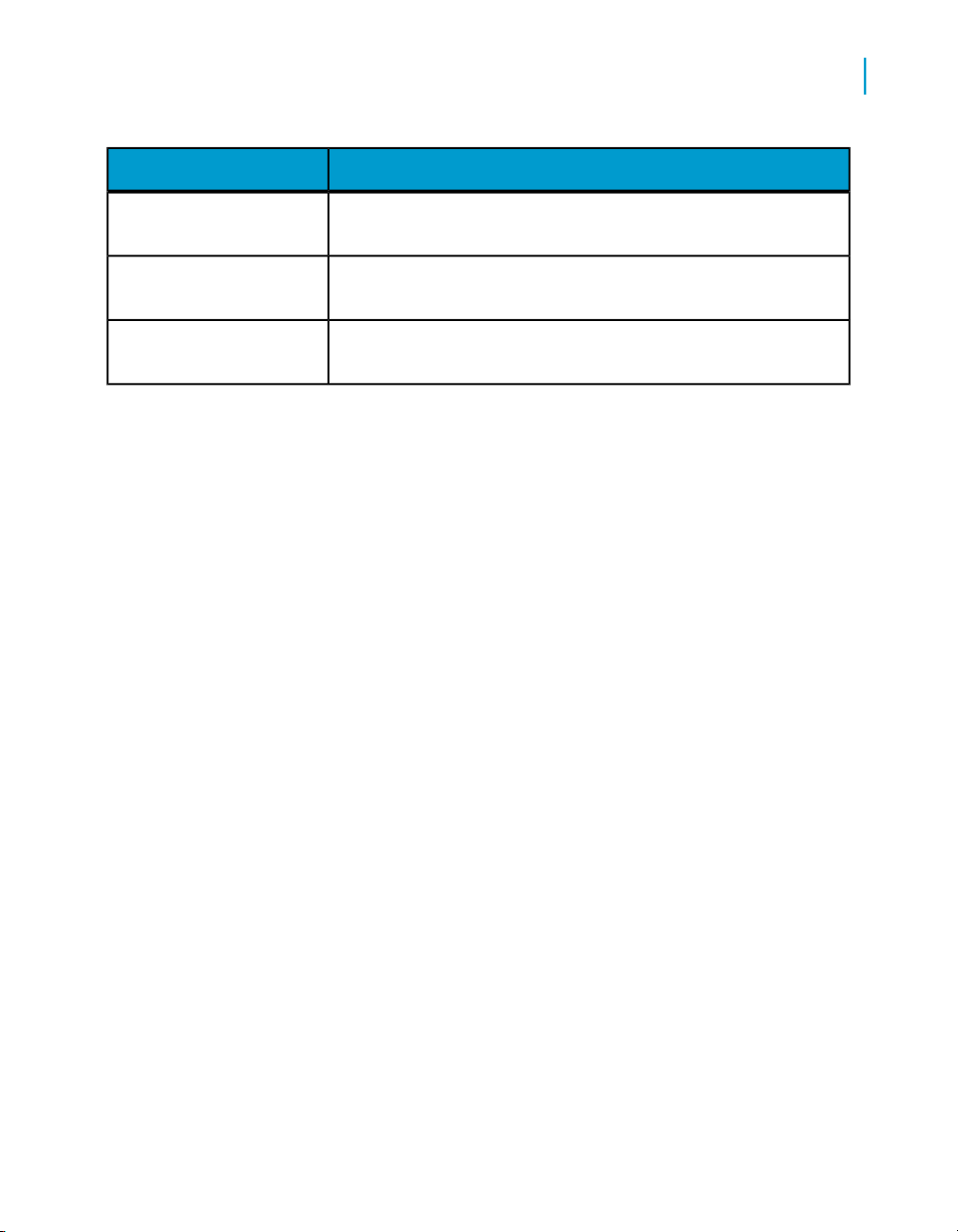

Useful addresses at a glance

Resource | Address | Content
Product information | http://www.businessobjects.com | Information about the full range of Business Objects products.
Product documentation | http://www.businessobjects.com/support | Business Objects product documentation, including the Business Objects Documentation Roadmap.
Documentation mailbox | documentation@businessobjects.com | Send us feedback or questions about your Business Objects documentation.
Online Customer Support | http://www.businessobjects.com/support | Information on Customer Support programs, as well as links to technical articles, downloads, and online forums.
Online Developer Community | http://diamond.businessobjects.com/ | An online resource for sharing and learning about Data Services with your developer colleagues.
Consulting services | http://www.businessobjects.com/services/consulting/ | Information about how Business Objects can help maximize your business intelligence investment.
Education services | http://www.businessobjects.com/services/training | Information on Business Objects training options and modules.

Online Customer Support

The Business Objects Customer Support web site contains information about Customer Support programs and services. It also has links to a wide range of technical information, including Knowledge Base articles, downloads, and support forums: http://www.businessobjects.com/support

Looking for training options?

From traditional classroom learning to targeted e-learning seminars, Business Objects can offer a training package to suit your learning needs and preferred learning style. Find more information on the Business Objects Education web site: http://www.businessobjects.com/services/training

Send us your feedback

Do you have a suggestion on how we can improve our documentation? Is there something that you particularly like or have found useful? Drop us a line, and we will do our best to ensure that your suggestion is considered for the next release of our documentation: documentation@businessobjects.com.

Note: If your issue concerns a Business Objects product and not the documentation, please contact our Customer Support experts.

Overview of migration

About this guide

The Data Quality Migration Guide provides information about:

• migrating your Data Quality Projects into Data Services

• understanding some of the benefits of using Data Services

• seeing the differences between previous versions of Data Quality and Data Services

• using best practices during migration

• learning how to troubleshoot during migration

Who should migrate?

Anyone who is using Data Quality XI and Data Integrator as standalone applications should migrate to Data Services.

The migration utility works with these versions of software:

• Data Integrator 11.7.x

• Data Quality XI 11.7.x and 11.6.x

• Data Integrator XI R2

• Data Quality XI R2 11.5 and newer

• Firstlogic IQ8 8.05c and newer

Those who are using the Firstlogic Data Quality Suite (Job file, RAPID, Library and/or eDataQuality) cannot use the migration utility to convert their existing projects into Data Services. The only option is to re-create the projects in Data Services.

Note: Migrating from some of the versions listed above requires some manual steps.

Why migrate?

You may have seen some literature that includes a comprehensive list of reasons to migrate. Here are a handful of the main reasons why you should migrate.

Performance

The new platform combines the past Data Integrator features with the improved Data Quality features in one user interface.

Data profiling of source and target

You can monitor, analyze, and report on the quality of information contained in the data marts, data warehouses, and any other data stored in databases. You can test business rules for validity and prioritize data quality issues so that investments can be made in the high-impact areas.

Improved multi-user support

With Data Services, you have access to both a central repository (if purchased) for multi-user storage and a local repository for each user. Version control for the repository objects keeps you in control by labeling and comparing objects. This version includes top-notch security with authentication to access the central repository, authorization for group-based permissions to objects, and auditing for changes to each object.

Powerful debugging capabilities

You can set breakpoints, view the data before and after each transform, set filters when previewing data, and save and print the preview data.

Repository management

You can easily manage your fully relational repository across systems and import repository objects from a file. You can also import source and target metadata for faster UI response times. With datastore configurations, you can define varying connection options for similar datastores between environments, use different relational database technology between environments without changing your jobs, and use different database owners between systems without making changes during migration to each environment. With system configurations, you can associate a substitution parameter configuration with each system configuration, so that substitution parameter values can differ by environment.

Import and export metadata

You can import and export metadata with Common Warehouse Metamodel (CWM) 1.0/1.1 support and ERWIN (Computer Associates) 4.x XML. You can also export metadata using Meta Integration Model Bridge (MiMB), if you have it installed.

Auditing

You can audit your projects with statistics collection, rule definitions, email notification, and audit reporting.

Reports and dashboards

With the reporting tool, you can view daily and historical execution results and duration statistics. With the data validation dashboard, you can view the results of validation rules, organize the validation rules across jobs into functional areas, and drill down from statistics to a functional area's validation rules. You can also define high-level business rules based on the results of validation rules.

With impact and lineage analysis, you can understand the cost and impact on other areas when a data source is modified. You can view, analyze, and print job, work flow, and data flow details, view table and file usage based on the source and target, view a summary of job variables and parameters, generate PDF or Word documents on a job-by-job basis, and view a summary of transform options and field mappings.

Introduction to the interface

The Data Services user interface is different from the Data Quality user interface. It has similar elements, but in a different presentation.

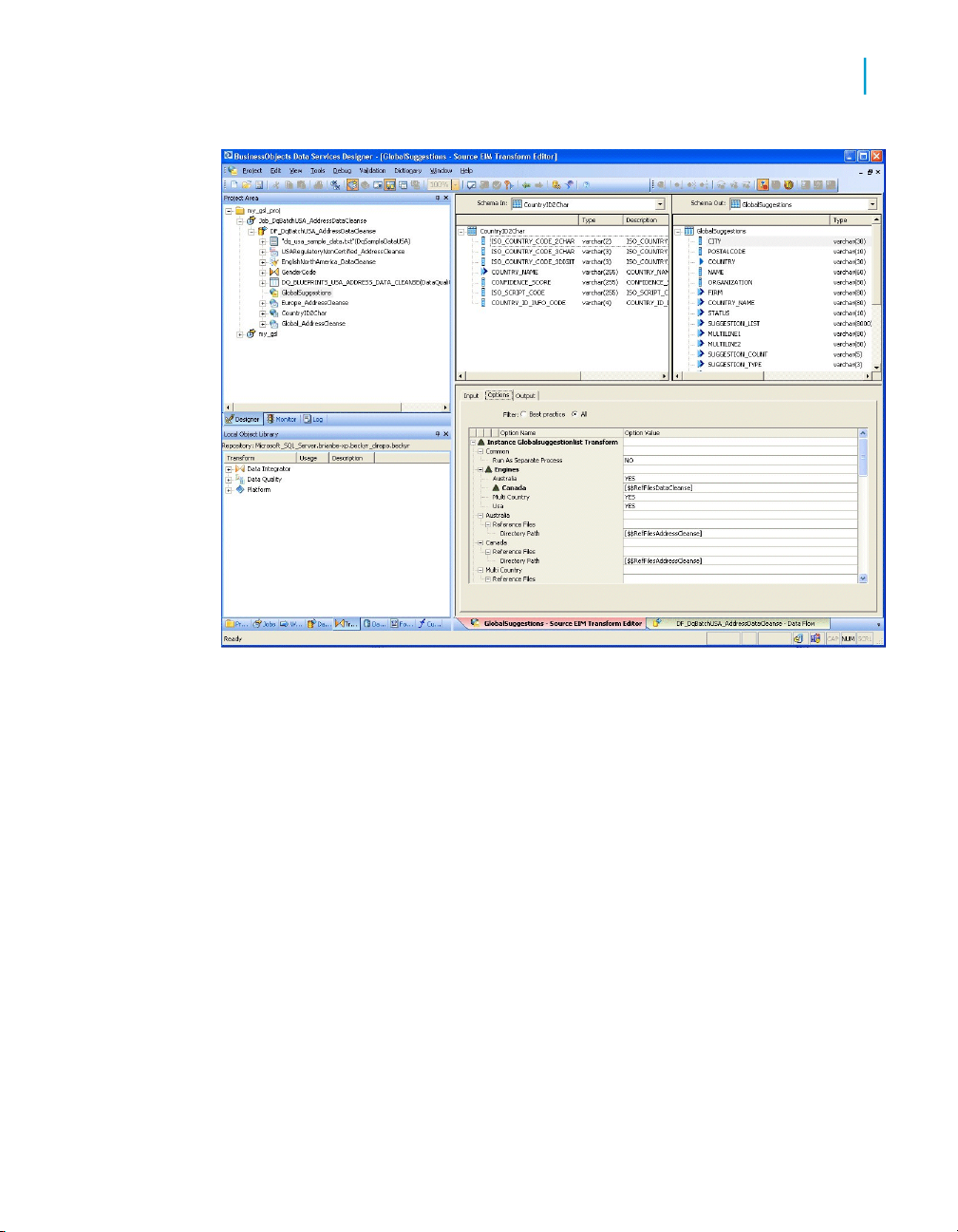

Note: The window shows a project named my_gsl_proj open on the left portion of the screen. The right portion shows the GlobalSuggestions transform input and output fields and the Option groups.

In the upper left corner of the Data Services UI, you can see the Project Area, which shows your project folders and any jobs that you have. It is a hierarchical view of the objects used in each project.

Below the Project Area is the Local Object Library, where you have access to all of the reusable objects in your jobs. It is a view into your repository, so that you do not need to access the repository directly. Tabs at the bottom let you view projects, jobs, work flows, data flows, transforms, data sources, file formats, and functions.

The right side of the window is the workspace. The information presented here differs based on the objects you have selected. For example, when you first open Data Services, you see a Business Objects banner followed by Getting Started options, Resources, and Recent Projects. In the example, the workspace has the GlobalSuggestions transform open in the object editor.

The editor displays the input and output schemas for the object, and the panel below lists the options for the object.

See the Data Services Designer Guide: Designer User Interface for information.

Related Topics

• Introduction to the interface on page 16

Downloading blueprints and other content objects

We’ve identified a number of common scenarios that you are likely to perform with Data Services. Instead of creating your own job from scratch, look through the blueprints. If you find one that is closely related to your particular business problem, you can simply use the blueprint and tweak the settings in the transforms for your specific needs.

For each scenario, we’ve included a blueprint that is already set up to solve the business problem in that scenario. Each blueprint contains the necessary Data Services project, jobs, data flows, file formats, sample data, template tables, and custom functions to run the data flows in your environment with only a few modifications.

You can download all of the blueprints, or only the blueprints and other content that you think you will find useful, from the Business Objects Diamond Developer website. On the Diamond website, we periodically post new and updated blueprints, custom functions, best practices, white papers, and other Data Services content. You can refer to this site frequently for updated content and use the forums to send us any questions or requests you may have. We've also provided the ability for you to upload and share any content you've developed with the rest of the Data Services development community.

Instructions for downloading and installing the content objects are also located on the Diamond website.

1. To access the Business Objects Diamond Developer website, go to http://diamond.businessobjects.com/dataservices/blueprints in your web browser.

2. Log in to your Diamond account using your username and password, or create a new account.

3. Open the Content Objects User's Guide to view a list of all of the available blueprints and content objects and their descriptions, and instructions for downloading and setting up the blueprints.

4. Use the filters at the top of the Data Services Blueprints page to search for the blueprint or content objects that you want to download.

5. Select the blueprint that you want to download. To download all blueprints, select Data Quality Blueprints - All.

6. Follow the instructions in the user's guide to download the files to the appropriate location and make the necessary modifications in Data Services to run the blueprints.

Introduction to the migration utility

The Data Quality Migration Utility is a Windows-based command-line utility that migrates your Data Quality repository to the Data Services repository. The utility is in the LINK_DIR\DQMigration folder. It uses an XML-based configuration file.

You can set options on this utility to migrate the entire repository (recommended) or to migrate on a project-by-project or transform-by-transform basis. You can also run the utility in Analyze Mode, where the utility identifies errors and warnings during migration so that you can fix them in Data Quality before fully migrating.

After running the utility, you can optionally view the Migration Report in a web browser for details of possible errors and warnings. We highly recommend that you fix these before trying to run the job in Data Services.

In addition, if your Data Quality jobs were published as Web services, after running the utility you can publish the migrated jobs as Data Services Web services. For information on publishing jobs as Web services, see the Data Services Integrator's Guide.

Related Topics

• Running the dqmigration utility on page 29

• dqmigration utility syntax and options on page 31

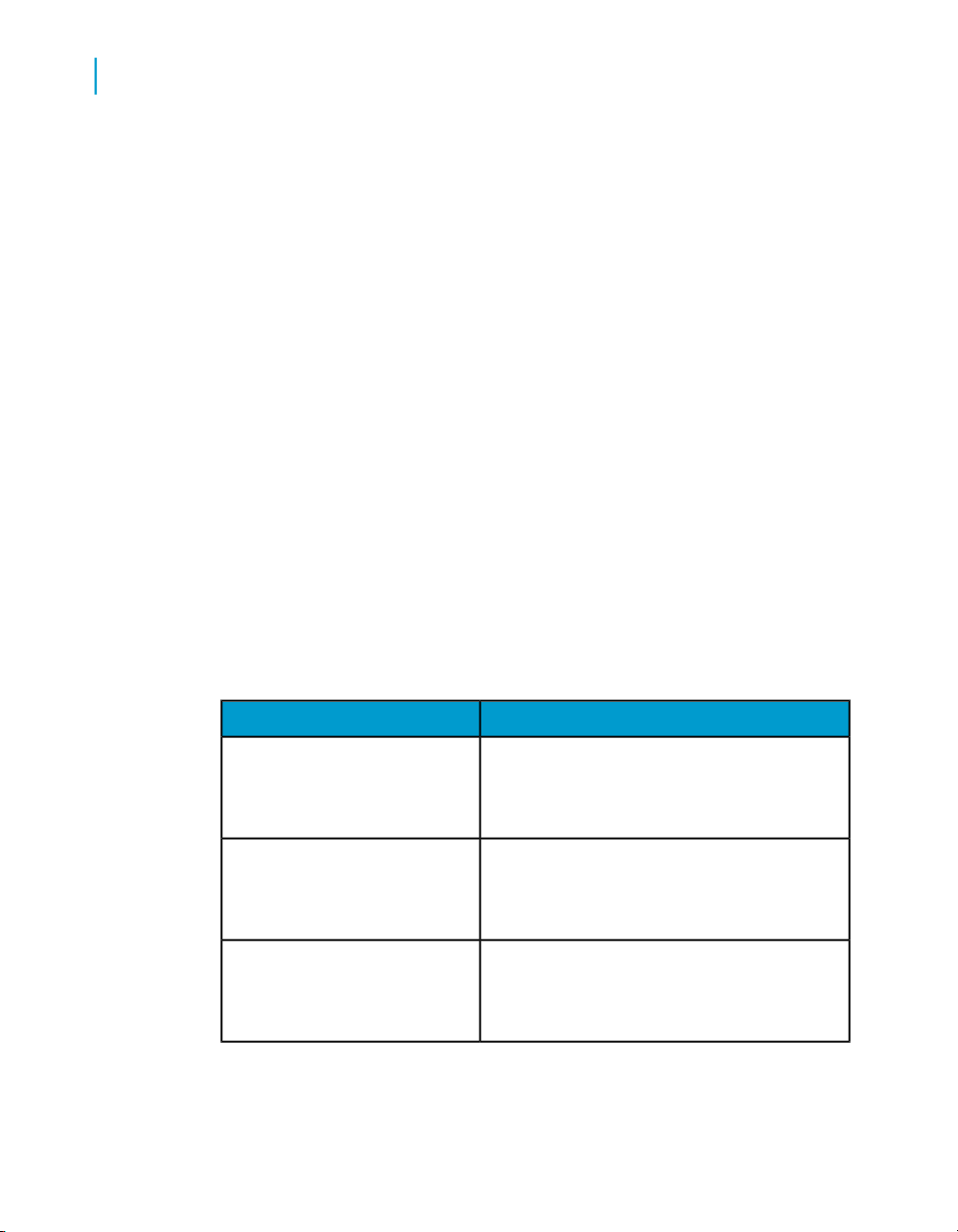

Terminology in Data Quality and Data Services

Several terms are different between Data Quality and Data Services.

Data Quality | Data Services | Description
folder | project | The terms are different, but both hold the project or job that runs.
project | job | In Data Quality, a project is able to run. In Data Services, a project is a level higher. The project contains the job, and the job is able to run.
canvas | workspace | In Data Quality, you dragged a transform onto a canvas. In Data Services, you drag a transform onto a workspace.
dataflow | data flow | In Data Quality, a dataflow is a series of transforms hooked together that may or may not run. In Data Services, the data flow includes everything that will extract, transform, and load data.
option explorer/option editor | object editor | The terms are different, but you do the same thing: set your options.
transactional | real time | The terms are different, but they mean the same thing: processing one or many records at a time, usually through a web service.
reader | source | The terms are different, but they mean the same thing: a place where the incoming data is held.
writer | target | The terms are different, but they mean the same thing: a place where the output data is held.
substitution variables | substitution parameters | The terms are different, but they mean the same thing: a text string alias.

In Data Quality, you had a few basic layers: a folder, a project, and a dataflow that contains a series of transforms which may or may not run.

In Data Services, the top layer is called a project. The next layer is a job that runs. The job may hold a work flow, which is where you can set up conditional processing. The work flow, if you use one, contains the data flow that contains a series of transforms.

See the Data Services Designer Guide: Designer User Interface for information.
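The following minimal Python sketch models the Data Services layering described above as plain data classes. It is an illustration of the structure only. It is not the Data Services repository schema or any Data Services API. The class names, the simplification that a job holds at most one work flow, and the example object names (my_project, DQM_first_job, wf_main, and the transform labels) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DataFlow:
    """Holds a series of transforms that extract, transform, and load data."""
    name: str
    transforms: List[str] = field(default_factory=list)


@dataclass
class WorkFlow:
    """Optional layer where conditional processing can be set up."""
    name: str
    data_flows: List[DataFlow] = field(default_factory=list)


@dataclass
class Job:
    """The runnable unit in Data Services (a Data Quality project corresponds to this)."""
    name: str
    work_flow: Optional[WorkFlow] = None  # simplification: at most one work flow
    data_flows: List[DataFlow] = field(default_factory=list)  # data flows placed directly in the job

    def all_data_flows(self) -> List[DataFlow]:
        # Data flows nested in the work flow come first, then any placed directly in the job.
        nested = self.work_flow.data_flows if self.work_flow is not None else []
        return [*nested, *self.data_flows]


@dataclass
class Project:
    """Top layer in Data Services; it contains jobs but does not run by itself."""
    name: str
    jobs: List[Job] = field(default_factory=list)


# Hypothetical example: one project -> one job -> one work flow -> one data flow.
cleanse = DataFlow("DQM_first", transforms=["reader", "address_cleanse", "writer"])
job = Job("DQM_first_job", work_flow=WorkFlow("wf_main", data_flows=[cleanse]))
project = Project("my_project", jobs=[job])
print([df.name for j in project.jobs for df in j.all_data_flows()])
# ['DQM_first']
```

The model makes the key difference visible. In Data Services, only the job runs. The project above it is a container, and the work flow between the job and its data flows is optional.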

Naming conventions

Object names

When migrated, your objects will have the prefix DQM_. If the name of an object is longer than 64 characters, a numeric suffix (for example, _001) is added to keep the names unique.

Data Quality to Data Services Migration Guide

Introduction

1

Object: Pre-migrated Data Quality name → Migrated Data Services name

• Substitution variable configuration: dqserver1_substitutions.xml → DQM_dqserver1_substitutions.xml

• Datastores created from named_connections: first_conn → DQM_first_conn

• Datastores created from Reader/Writer settings: project first.xml with a Reader called My_DB_Reader → DQM_first_My_DB_Reader

• File formats created from named_connections: CRM_usa → DQM_CRM_usa

• File formats created from Reader/Writer settings: project first.xml with a Reader called Cust_Detect → DQM_first_Cust_Detect

• SDK transform configurations: my_usa_reg_addr_cleanse → DQM_my_usa_reg_addr_cleanse

• Data Integrator data flows and jobs: first.xml → DQM_first
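The naming rule described above can be sketched in Python. This is a minimal illustration, not the dqmigration utility's actual code. It rests on two assumptions the guide does not state: the 64-character limit applies to the prefixed name, and over-long names are truncated to make room for a three-digit suffix. The function name migrated_name is hypothetical.

```python
PREFIX = "DQM_"
MAX_LEN = 64  # name-length limit stated in the guide


def migrated_name(dq_name, used):
    """Return a DQM_-prefixed name, adding a _001-style suffix to over-long names.

    Assumptions (not from the guide): the limit applies to the prefixed name,
    and over-long names are truncated to leave room for the suffix.
    """
    name = PREFIX + dq_name
    if len(name) <= MAX_LEN:
        used.add(name)
        return name
    stem = name[: MAX_LEN - 4]  # leave room for "_" plus three digits
    counter = 1
    while f"{stem}_{counter:03d}" in used:  # keep truncated names unique
        counter += 1
    candidate = f"{stem}_{counter:03d}"
    used.add(candidate)
    return candidate
```

For example, migrated_name("first_conn", set()) yields DQM_first_conn, which matches the datastore example above. Two different 70-character names that share their first 56 characters would end in _001 and _002.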


Input and output field names

Data Quality input and output fields have a period in their names. However, Data Services does not allow a period (.) in column names. Therefore, the dqmigration utility replaces the period with an underscore (_).

For example, suppose your Data Quality Reader transform has input field names input.field1, input.field2, and input.field3. After migration, these field names become input_field1, input_field2, and input_field3.
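The field-name rule amounts to a single character substitution. The Python sketch below uses a hypothetical function name. It also reports names that would collide after the replacement, for example when a repository already uses both input.field1 and input_field1. The guide does not say whether the utility detects such collisions, so treat the check as an optional pre-migration review.

```python
from collections import Counter


def migrate_field_names(dq_fields):
    """Map Data Quality field names to Data Services column names.

    Periods become underscores, as the guide describes. Names that would map
    to the same column are returned separately so they can be reviewed.
    """
    mapped = {field: field.replace(".", "_") for field in dq_fields}
    counts = Counter(mapped.values())
    clashes = sorted(name for name, n in counts.items() if n > 1)
    return mapped, clashes
```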


Differences between Data Quality and Data Services

This list is not exhaustive, but it covers the major differences that you will see between Data Quality and Data Services.

There are also changes to transform options and option groups. See each

transform section in this document for details.

• Web Services

The .Net deployment is deprecated.

• Match transforms

The Aggregator, Sorter, Candidate Selector, Match or Associate, Group Statistics, Unique ID, and Best Record transforms are now combined into one Match transform. The Match and UDT transforms also perform better.

Note: The Sorter transform becomes part of the Match transform only when it is migrated in a specific transform order, such as Sorter, Aggregator, and then Match.

• GAC transform

Supports the Australia, Canada, Global Address, EMEA, Japan, and USA engines. There is also a new Suggestion List option group.

• URAC transform

You can output only one Suggestion Lists output field within URAC.

• Search/Replace transform

The Search/Replace transform is replaced by a Query transform that calls the new search_replace() function.


• Data Cleanse transform

New dictionary connection management window.

• Global Suggestion Lists transform

New Suggestion List Option group.

• Phonetic Key transform

The Phonetic Key transform is replaced by a Query transform with either a double_metaphone() or soundex() function call.

• Substitution variables and files

Substitution variables are now referred to as Substitution parameters.

• Python changes

Several Python methods have been deprecated.

Deprecated objects

The improved technology in Data Services requires the deprecation of certain aspects of Data Quality.

• Compound Transforms

• Shared options

• Candidate Selector's Unique ID record stem support

• Some options and behaviors related to pre/post SQL operations in the database Reader and Writer transforms

• UDT's per dataflow mode (use a workflow and scripts as a workaround)

• Disabled transforms

Note: Disabled transforms in Data Quality projects are enabled after migration. If you don't want a transform to be enabled, remove it before migration.

• Flat Files

• Binary file type support in fixed-width files in versions of Data Quality

earlier than 11.7

• Logical and packed field support in versions of Data Quality earlier

than 11.7.

• Data collections

• Thread and watermark settings on a per-transform basis

• Observer transform


• Progress Service transform

• Integrated batch API

• Admin methods in real-time API

• Netscape and Firefox browsers

• JIS_Encoding for flat files

• Some less popular code page support (most used code pages are

supported)

• Several Python methods

• Web Services .Net deployment

• Several Web Services functions

• Sun Java application server for web tier

Related Topics

• Introduction to the interface on page 16

• Overview of migrated transforms on page 55

Premigration checklist

To ensure a smooth migration, make sure you have completed the following

tasks.

• Upgrade to Data Quality XI 11.7, if possible.

Being on the most current version ensures that the latest options and functionality are properly installed and named, so the options map more easily to the Data Services options. Upgrade the repository using RepoMan.

• Verify that you have permissions to both the Data Quality and Data

Services repositories.

If you don't have a connection to the repositories or permissions to access

the repositories, then you will not be able to migrate to Data Services.

• Back up your Data Quality and Data Services repositories.

Your projects will look different in Data Services. You may want to keep a backup copy of your repositories so that you can compare your original setup with the migrated setup in Data Services.

• Clean your repository.


Delete any unused projects or projects that have verification errors.

Projects that do not run on Data Quality XI will not migrate well and will

not run on Data Services without making some changes in Data Services.

Projects that have verification errors due to input or output fields will not

migrate. Remove any custom transforms, compound transforms and

projects that you do not use anymore from the file system repository.

• Verify that your support files are accessible.

If you have a flat file reader or writer, ensure that the corresponding FMT

or DMT file is in the same directory location as the flat file reader or writer.

• Install Data Services.

Follow the installation instructions in the BusinessObjects Data Services

XI 3.0 Installation Guide.

Using the migration tool

Overview of the migration utility


You invoke the Data Quality migration utility with the dqmigration command

and specify a migration configuration file name. The utility is available only on Windows. If your repository is on UNIX, you must either access the repository through a shared file system or FTP the repository files to a Windows system before running the utility.

The configuration file specifies the Data Quality repository to migrate from,

the Data Services repository to migrate to, and processing options for the

migration utility. Data Services provides a default migration configuration file

named dqmig.xml in the directory LINK_DIR\DQMigration. You can either

edit this file or copy it to customize it. For details, see Running the dqmigration

utility on page 29.

The value of the LINK_DIR system variable is the path name of the directory

in which you installed Data Services.

The dqmigration utility creates the following files:

• Migration report in LINK_DIR\DQMigration\mylogpath


This migration report provides the status of each object that was migrated

and displays the informational, warning, and error messages from the

migration utility. After the dqmigration utility completes, it displays a

prompt that asks you if you want to view the migration report. Always

open the file in Internet Explorer.

• Work files

The dqmigration utility creates a directory LINK_DIR\DQMigration\work_files that contains the following files. Use these files if you need to troubleshoot any errors in the migration report.

• Directories configuration_rules_1 and configuration_rules_2 – These directories contain a copy of all the intermediate XML created during migration.

• .atl files – These files contain the internal language that Data Services

uses to define objects.

The last step of the dqmigration utility imports the .atl files and creates the

equivalent jobs, data flows, and connections in the Data Services repository.

Related Topics

• Running the dqmigration utility on page 29

• dqmigration utility syntax and options on page 31

• Migration report on page 35

Migration checklist

During migration, follow these steps.

• Complete the steps in the premigration checklist.

• Run the migration utility on your entire repository (recommended) or on

your project that has all verification errors fixed.

• Follow the utility prompts to complete the migration steps.

• Review the migration report by choosing to view it at the end of the migration.

• If you have errors or warnings that can be fixed in Data Quality 11.7, then

fix them and run the utility again. The files for the repository (or project,

as the case may be) will be overwritten in Data Services when the utility

is rerun.


• Fix any other errors or warnings in Data Services.

• Follow the recommendations in each transform section to optimize

performance.

• Test the jobs in Data Services and compare the results with Data Quality

results.

• Make changes in Data Services, as appropriate.

After you have your jobs migrated to Data Services, you should set aside

some time to fully analyze and test your jobs in a pre-production environment.

Related Topics

• Premigration checklist on page 24

Connection information

The database connection options may be confusing, especially <DATABASE_SERVER_NAME>. Depending on the database type, it may hold the name of the server, the name of the database, or an ODBC data source name. To set your database connection information, open the dqmig.xml file in the directory LINK_DIR\DQMigration.

Locate the <DI_REPOSITORY_OPTIONS> section.

Based on your database type, enter information similar to the following.

Example: DB2

<DATABASE_TYPE>DB2</DATABASE_TYPE> <!-- Note: here the server name is actually the database name -->
<DATABASE_SERVER_NAME>REPORTS1</DATABASE_SERVER_NAME>
<DATABASE_NAME>REPORTS1</DATABASE_NAME>
<WINDOWS_AUTHENTICATION>NO</WINDOWS_AUTHENTICATION>

Example: MS SQL

<DATABASE_TYPE>Microsoft_SQL_Server</DATABASE_TYPE> <!-- Note: here the server name is the actual machine name if you use the default instance -->
<DATABASE_SERVER_NAME>MACHINE-XP2</DATABASE_SERVER_NAME> <!-- If you have a separate (named) instance, specify something like IQ8TEST-XP2\MSSQL2000 -->
<DATABASE_NAME>REPORTS_MDR</DATABASE_NAME>
<WINDOWS_AUTHENTICATION>NO</WINDOWS_AUTHENTICATION>

Example: MySQL

<DATABASE_TYPE>MySQL</DATABASE_TYPE> <!-- Note: here the server name is actually the ODBC DSN name created by the installer -->
<DATABASE_SERVER_NAME>BusinessObjectsEIM</DATABASE_SERVER_NAME>
<DATABASE_NAME>BusinessObjectsEIM</DATABASE_NAME>
<WINDOWS_AUTHENTICATION>NO</WINDOWS_AUTHENTICATION>

Example: Oracle

<DATABASE_TYPE>Oracle</DATABASE_TYPE> <!-- Note: here the server name is actually the database name -->
<DATABASE_SERVER_NAME>DSORA103.COMPANY.NET</DATABASE_SERVER_NAME>
<DATABASE_NAME>DSORA103.COMPANY.NET</DATABASE_NAME>
<WINDOWS_AUTHENTICATION>NO</WINDOWS_AUTHENTICATION>

Example: Sybase

<DATABASE_TYPE>Sybase</DATABASE_TYPE> <!-- Note: here the server name is actually the machine name -->
<DATABASE_SERVER_NAME>sjds003</DATABASE_SERVER_NAME>
<DATABASE_NAME>test_proj</DATABASE_NAME>
<WINDOWS_AUTHENTICATION>NO</WINDOWS_AUTHENTICATION>
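If you prepare configuration files for several repositories, you can capture the conventions in these examples in a small script. The Python sketch below builds a <DI_REPOSITORY_OPTIONS> section with the standard library's xml.etree.ElementTree. Note the following assumptions:

• The helper name di_repository_options is hypothetical.
• The SERVER_NAME_MEANING table is a summary of the comments in the examples above and covers only the database types shown there.
• The Windows Authentication check follows the option table, which describes that option for Microsoft SQL Server only.

```python
import xml.etree.ElementTree as ET

# What <DATABASE_SERVER_NAME> holds for each database type in the examples above.
SERVER_NAME_MEANING = {
    "DB2": "database name",
    "Microsoft_SQL_Server": r"machine name (MACHINE\INSTANCE for a named instance)",
    "MySQL": "ODBC DSN name created by the installer",
    "Oracle": "database name",
    "Sybase": "machine name",
}


def di_repository_options(db_type, server_name, database_name, windows_auth=False):
    """Build a <DI_REPOSITORY_OPTIONS> element shaped like the examples above."""
    if db_type not in SERVER_NAME_MEANING:
        raise ValueError(f"no example covers database type {db_type!r}")
    # Per the option table, Windows Authentication applies to Microsoft SQL Server.
    if windows_auth and db_type != "Microsoft_SQL_Server":
        raise ValueError("Windows Authentication applies only to Microsoft_SQL_Server")
    section = ET.Element("DI_REPOSITORY_OPTIONS")
    for tag, value in (
        ("DATABASE_TYPE", db_type),
        ("DATABASE_SERVER_NAME", server_name),
        ("DATABASE_NAME", database_name),
        ("WINDOWS_AUTHENTICATION", "YES" if windows_auth else "NO"),
    ):
        # Strip accidental leading or trailing spaces from copied values.
        ET.SubElement(section, tag).text = value.strip()
    return section


if __name__ == "__main__":
    section = di_repository_options("Sybase", "sjds003", "test_proj")
    print("DATABASE_SERVER_NAME means:", SERVER_NAME_MEANING["Sybase"])
    print(ET.tostring(section, encoding="unicode"))
```

The printed fragment can be pasted into your copy of dqmig.xml in place of the existing <DI_REPOSITORY_OPTIONS> element.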


Running the dqmigration utility

When you run the dqmigration utility, you can specify the options in one of

the following ways:

• Run the dqmigration utility and specify all options on the command line.

• Specify all options in a configuration file and run the dqmigration utility with only the option that references that file on the command line.

• Specify default values for the options in the configuration file and run the

dqmigration utility with some options specified on the command line.

The command line options override the values specified in the

configuration file.

To run the dqmigration utility:

1. Make sure the PATH system environment variable contains %LINK_DIR%\bin. The utility will not run if it is not there.

2. Set up the configuration file that contains the options for the dqmigration

utility.

For example, create a customized configuration file named dqmig_repo.xml and copy the contents of dqmig.xml into it.


3. Specify the information for the Data Quality repository that you want to

migrate.

a. In the DQ_REPOSITORY_OPTIONS section of the configuration file,

specify the following options.

• Absolute path name of your Data Quality repository configuration

file in the <CONFIGURATION_RULES_PATH> option.

• Path name, relative to the absolute path name, of your Data Quality

substitution file in the <SUBSTITUTION_FILE_NAME> option.

Note: Business Objects recommends that the first time you run the dqmigration utility, you migrate the entire Data Quality repository instead of an individual project file. This ensures that all dependent files are also migrated. Therefore, do not specify a value in the <FILE_OR_PATH> option the first time you run the utility. However, after you migrate the entire repository and fix errors in the resulting Data Services jobs, you might find an error that is easier to fix in Data Quality. In this case, you can fix the project in Data Quality and then run the migration utility on just that project.


b. Specify the information for the Data Services repository to which you

want to migrate.

For example, change the options in the dqmig_repo.xml configuration file to migrate the Data Quality repository at location D:\dqxi\11_7\repository\configuration_rules to the Data Services repository repo.

<?xml version="1.0" encoding="UTF-16"?>
<REPOSITORY_DOCUMENT FileType = "MigrationConfiguration">
  <MIGRATION_OPTIONS>
    <PROCESSING_OPTIONS>
      <LOG_PATH>mylogpath</LOG_PATH>
      <ANALYZE_ONLY_MODE>YES</ANALYZE_ONLY_MODE>
    </PROCESSING_OPTIONS>
    <DQ_REPOSITORY_OPTIONS>
      <CONFIGURATION_RULES_PATH>D:\dqxi\11_7\repository\configuration_rules</CONFIGURATION_RULES_PATH>
      <SUBSTITUTION_FILE_NAME>dqxiserver1_substitutions.xml</SUBSTITUTION_FILE_NAME>
      <FILE_OR_PATH></FILE_OR_PATH>
    </DQ_REPOSITORY_OPTIONS>
    <DI_REPOSITORY_OPTIONS>
      <DATABASE_TYPE>Microsoft_SQL_Server</DATABASE_TYPE>
      <DATABASE_SERVER_NAME>my_computer</DATABASE_SERVER_NAME>
      <DATABASE_NAME>repo</DATABASE_NAME>
      <WINDOWS_AUTHENTICATION>NO</WINDOWS_AUTHENTICATION>
      <USER_NAME>repo</USER_NAME>
      <PASSWORD>repo</PASSWORD>
    </DI_REPOSITORY_OPTIONS>
  </MIGRATION_OPTIONS>
</REPOSITORY_DOCUMENT>

4. Open a command window and change directory to LINK_DIR\DQMigration

where the dqmigration executable is located.

5. Run the dqmigration utility in analyze mode first to determine the status

of objects within the Data Quality repository.

Specify the value YES for <ANALYZE_ONLY_MODE> in the configuration

file, or use the option -a when you run the dqmigration utility. For example,

type the following command and options in a command window:

dqmigration -cdqmig_repo.xml -aYES

Note: There is no space between the option -a and the value YES.

This step does not update the Data Services repository.

6. When the migration utility displays the prompt that asks if you want to

view the migration report, reply y.

7. In the migration report, review any messages that have type Error.


• You should migrate a production Data Quality repository where all of the projects have executed successfully. If your Data Quality repository contains unverifiable or non-runnable projects, then they will likely not migrate successfully. Delete these types of projects before you run the dqmigration utility with <ANALYZE_ONLY_MODE> set to NO.

Note: To delete a project in Data Quality, you delete its .xml file from

the directory \repository\configuration_rules\projects.

• If your Data Quality repository contains objects that the migration utility currently does not migrate (for example, dBase3 data sources), you need to take some actions before you migrate. For details, see Troubleshooting on page 166.

Other errors, such as a Reader or Writer that contains a connection string,

can be fixed in Data Services after you run the migration utility with

<ANALYZE_ONLY_MODE> set to NO.

For information about how to fix errors listed in the migration report, see

Troubleshooting on page 166.

8. After you have fixed the serious errors that pertain to the Data Quality

projects that you want to migrate, run the dqmigration utility with

<ANALYZE_ONLY_MODE> set to NO.

This step imports the migrated objects into the Data Services repository.
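Steps 1, 5, and 8 lend themselves to a small helper script. The Python sketch below uses hypothetical helper names and does two things:

• It checks whether LINK_DIR\bin is on PATH. It uses the standard ntpath module so that Windows path rules apply on any operating system.
• It builds the two dqmigration command lines, an analyze pass followed by an import pass, in the no-space -aYES style shown in step 5.

It deliberately does not launch the utility, because the utility prompts interactively about the migration report.

```python
import ntpath  # Windows path rules, available on every platform


def link_dir_bin_on_path(env):
    """Step 1: return True if %LINK_DIR%\\bin appears in the PATH of env."""
    link_dir = env.get("LINK_DIR")
    if not link_dir:
        return False
    wanted = ntpath.normcase(ntpath.normpath(ntpath.join(link_dir, "bin")))
    # Windows separates PATH entries with ";"; normcase folds case and slashes.
    return any(
        ntpath.normcase(ntpath.normpath(entry)) == wanted
        for entry in env.get("PATH", "").split(";")
        if entry
    )


def dqmigration_command(config_file, analyze_only):
    """Steps 5 and 8: build the command line; no space between option and value."""
    mode = "YES" if analyze_only else "NO"
    return ["dqmigration", f"-c{config_file}", f"-a{mode}"]


if __name__ == "__main__":
    import os

    print("LINK_DIR\\bin on PATH:", link_dir_bin_on_path(os.environ))
    for analyze in (True, False):  # analyze first, then import
        print(" ".join(dqmigration_command("dqmig_repo.xml", analyze)))
```

Running the printed commands from a command window in LINK_DIR\DQMigration corresponds to steps 5 and 8. Fix the errors from the analyze pass before you run the second command.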


Some of the Data Services jobs and data flows that result from the migration

from Data Quality might require clean-up tasks before they can execute. For

details, see Further cleanup on page 154.

Other migrated jobs or data flows might require changes to improve their

performance. For details, see Improving performance on page 161.

dqmigration utility syntax and options

Use the dqmigration utility to migrate the contents of a Data Quality

repository to a Data Services repository. You can also specify an individual

project file or folder.

Note: Business Objects recommends that the first time you run the utility, you migrate the entire Data Quality repository instead of an individual project file. This ensures that all dependent files are also migrated. If you migrate individual project files instead, you need to obtain the names of their dependent files (click View > Referenced File Explorer in the Project Architect). You then run the migration utility multiple times, once for each file.

If you specify an option in the command line, it overrides the value in the

configuration file.

Note: If your configuration file is invalid, the command line options will not

be processed.

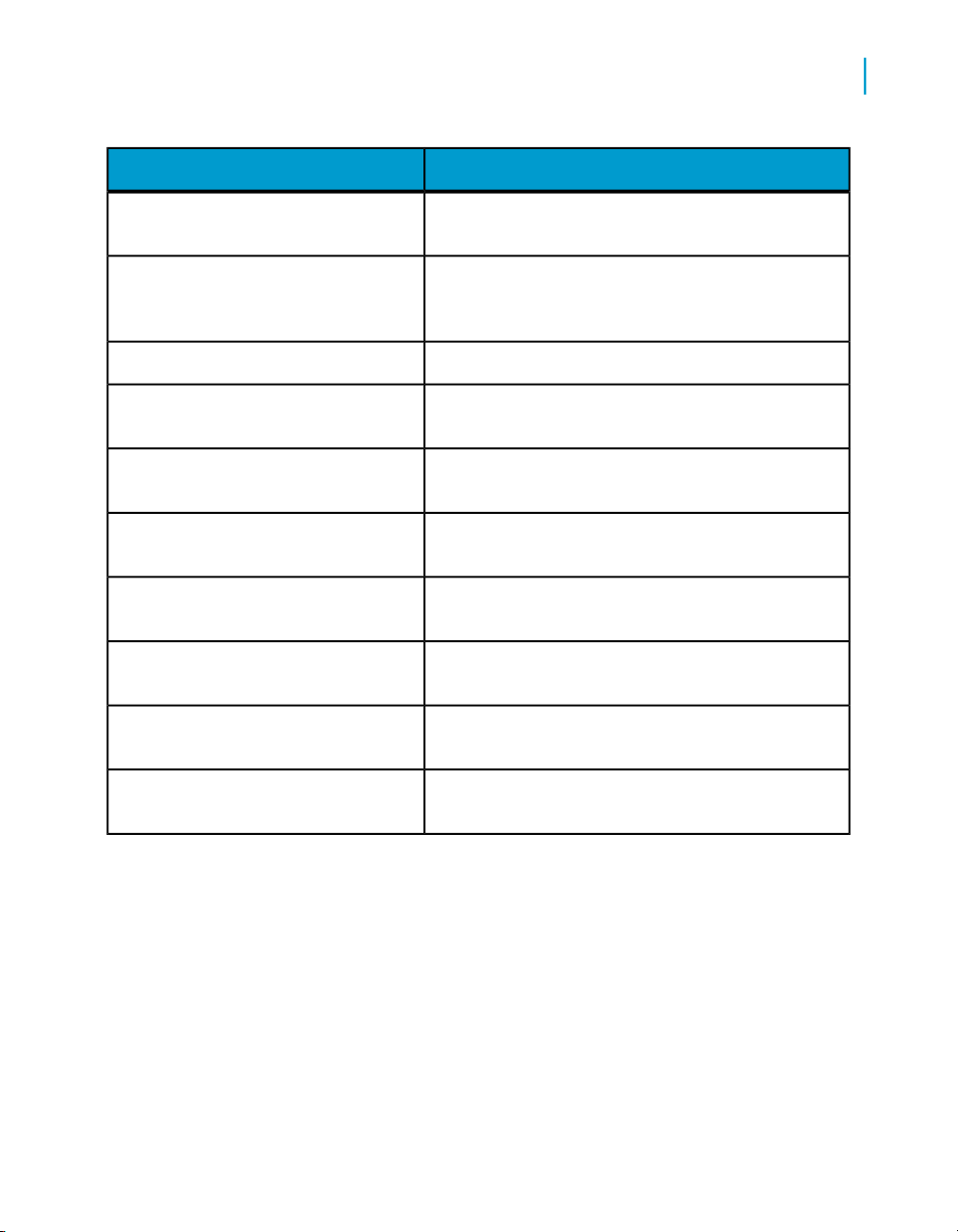

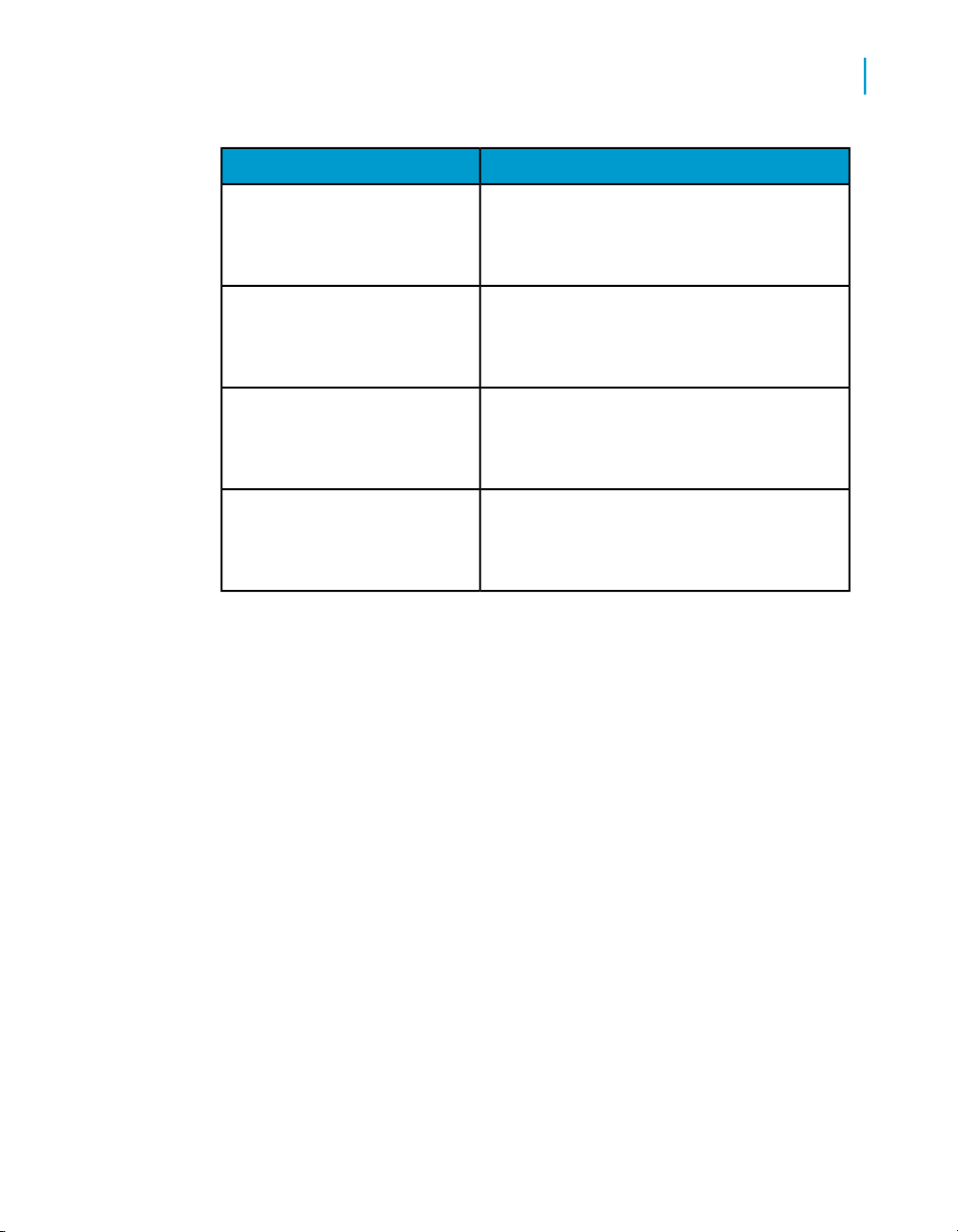

The following list describes the dqmigration utility options and the corresponding XML tag in the dqmig configuration file.

• -h or /h or /? (XML tag: none): Prints the options available for this utility.

• -cmig_conffile_pathname (XML tag: none): Name of the configuration file for this utility. Default value is dqmig.xml, which is in the LINK_DIR\DQMigration directory.

• -lmig_logfile_path (XML tag: <LOG_PATH>mig_logfile_path</LOG_PATH>): Path for the log file that this dqmigration utility generates. Default value is migration_logs, which is in the current directory from which you run this utility.

• -amode (XML tag: <ANALYZE_ONLY_MODE>mode</ANALYZE_ONLY_MODE>): Analyze mode. Specify YES to analyze the Data Quality repository without updating the Data Services repository. The utility then provides warning and error messages for objects that will require manual steps to complete the migration. Specify NO to also update the Data Services repository with the migrated objects. Default value is NO.

• -ttimeout_in_seconds (XML tag: <IMPORT_TIMEOUT>timeout_in_seconds</IMPORT_TIMEOUT>): Amount of time that the migration utility waits to import one Data Quality project into the Data Services repository. If one project times out, the migration utility continues with the next project. If a timeout occurs, the migration log indicates which object was being processed. Default value is 300 seconds.

• -ridq_input_repos_path (XML tag: <CONFIGURATION_RULES_PATH>dq_input_repos_path</CONFIGURATION_RULES_PATH>): Path of the Data Quality repository files. Default value is blank, but the sample has the value dq_directory\repository\configuration_rules, where dq_directory is the directory in which you installed Data Quality.

• -rsdq_substitution_file (XML tag: <SUBSTITUTION_FILE_NAME>dq_substitution_file</SUBSTITUTION_FILE_NAME>): Name of the substitution file to use during migration. Default value is blank, but the sample has the value dqxiserver1_substitutions.xml.

• -fidq_input_repos_file (XML tag: <FILE_OR_PATH>dq_input_repos_file</FILE_OR_PATH>): Optional. Name of the XML file or folder to migrate. If you specify a file, only that file migrates. If you specify a folder, all the contents of the folder and its subfolders migrate. Default value is NULL, which means migrate the entire Data Quality repository.

• -dtds_repo_db_type (XML tag: <DATABASE_TYPE>ds_repo_db_type</DATABASE_TYPE>): Database type for the Data Services repository. Values can be one of the following: ODBC, Oracle, Microsoft_SQL_Server, Sybase, MySQL, or DB2.

• -dsdi_repo_db_server (XML tag: <DATABASE_SERVER_NAME>di_repo_db_server</DATABASE_SERVER_NAME>): Database server name for a Data Services repository that is Microsoft SQL Server or Sybase ASE.

• -dndi_repo_db_name (XML tag: <DATABASE_NAME>di_repo_db_name</DATABASE_NAME>): The meaning of this value depends on the repository's database type:
  - Oracle: connection name
  - DB2 or MySQL: data source name
  - Microsoft SQL Server or Sybase ASE: database name

• -dwwin_auth (XML tag: <WINDOWS_AUTHENTICATION>win_auth</WINDOWS_AUTHENTICATION>): Whether or not to use Windows Authentication (instead of SQL Server authentication) for Microsoft SQL Server. Values can be Yes or No. The default is No.

• -dudi_repo_user_name (XML tag: <USER_NAME>di_repo_user_name</USER_NAME>): User name of a user authorized to access the Data Services repository.

• -dpdi_repo_password (XML tag: <PASSWORD>di_repo_password</PASSWORD>): Password for the user authorized to access the Data Services repository.
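The override rule (command-line options take precedence over the configuration file) can be modeled as a simple merge. The Python sketch below maps each option prefix from the list above to its XML tag. It reads values from a parsed configuration file and then applies arguments of the form -aNO on top. Note the following about the sketch:

• The helper name effective_options is hypothetical.
• The -c and -h options are not mapped because they have no XML tag.
• Defaults from the list, such as the 300-second timeout, are not filled in.

Load your file with ET.parse("dqmig_repo.xml").getroot() before calling the helper. A malformed file raises ParseError. This loosely mirrors the earlier note that an invalid configuration file stops command-line options from being processed.

```python
import xml.etree.ElementTree as ET

# Command-line option prefixes from the list above and the XML tags they override.
OPTION_TAGS = {
    "-l": "LOG_PATH",
    "-a": "ANALYZE_ONLY_MODE",
    "-t": "IMPORT_TIMEOUT",
    "-ri": "CONFIGURATION_RULES_PATH",
    "-rs": "SUBSTITUTION_FILE_NAME",
    "-fi": "FILE_OR_PATH",
    "-dt": "DATABASE_TYPE",
    "-ds": "DATABASE_SERVER_NAME",
    "-dn": "DATABASE_NAME",
    "-dw": "WINDOWS_AUTHENTICATION",
    "-du": "USER_NAME",
    "-dp": "PASSWORD",
}
# Check longer prefixes first as a guard against overlapping option names.
PREFIXES = sorted(OPTION_TAGS, key=len, reverse=True)


def effective_options(config_root, argv):
    """Return tag -> value after applying command-line overrides to the config."""
    values = {}
    for tag in OPTION_TAGS.values():
        node = config_root.find(f".//{tag}")
        if node is not None:
            values[tag] = (node.text or "").strip()
    for arg in argv:
        for prefix in PREFIXES:
            if arg.startswith(prefix):
                # The value follows the option letters directly, e.g. -aNO.
                values[OPTION_TAGS[prefix]] = arg[len(prefix):]
                break
    return values
```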

Migration report

When you run the dqmigration utility, it generates a migration report in your

LINK_DIR\DQMigration\mylogpath directory.

The dqmigration utility displays the name of the migration report and a

prompt that asks if you want to view it. The dqmigration log file has a name

with the following format:

dqmig_log_processID_threadID_yyyymmdd_hhmmssmmm.xml

For example: dqmig_log_005340_007448_20080619_092451642.xml
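The log file name encodes the process ID, thread ID, and timestamp, so a script can locate the most recent report in the log directory. The Python sketch below parses names in the documented dqmig_log_processID_threadID_yyyymmdd_hhmmssmmm.xml format. The function names are hypothetical.

```python
import re
from datetime import datetime

LOG_NAME = re.compile(r"^dqmig_log_(\d+)_(\d+)_(\d{8})_(\d{9})\.xml$")


def parse_log_name(name):
    """Split a migration log file name into its parts, or return None."""
    m = LOG_NAME.match(name)
    if m is None:
        return None
    process_id, thread_id, day, clock = m.groups()
    # hhmmssmmm: the last three digits are milliseconds; %f pads them to microseconds.
    stamp = datetime.strptime(day + clock, "%Y%m%d%H%M%S%f")
    return {"process_id": process_id, "thread_id": thread_id, "timestamp": stamp}


def latest_log(names):
    """Return the newest migration log name among names, ignoring other files."""
    parsed = [(info["timestamp"], n) for n in names if (info := parse_log_name(n))]
    return max(parsed)[1] if parsed else None
```

For example, latest_log(os.listdir(log_dir)) returns the newest report name, where log_dir is your log path.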

The migration report consists of the following sections:


• Migration Summary

• Migration Settings – Lists the configuration option values used for this

migration run.

• General Processing Messages – Lists the status message of every

.xml file processed in the Data Quality repository. Possible message

types are:

• Info when the .xml file was processed successfully.

• Error when the .xml file was not processed successfully, and you

will need to take corrective action.

• Warning when the .xml file was processed and migrated, but you

might need to take additional actions after migration to make the

resulting data flow run successfully in Data Services. For example,

a substitution variable is not migrated and you would need to enter

the actual value in the Data Services object after migration.

The dqmigration utility executes in the following three stages, and

this General Processing section displays a different color background

for the messages that are issued by each stage.

• Stage 1 — Determines the version of the source Data Quality

repository. If the Data Quality repository is a version prior to 11.7,

this stage migrates it to version 11.7. The background color of

these stage 1 messages is beige.

• Stage 2 — Migrates Global Address Cleansing and Match family

transforms. The background color of these stage 2 messages is

light pink.

• Stage 3 — Migrates all other Data Quality objects and creates the

.atl file to import into the Data Services repository. The background

color of these stage 3 messages is the background color set for

your Web browser.

• General Objects – Lists the status of substitution files and connection

files.

• Transform Configurations – Lists the status of each transform

configuration that was migrated.

• Jobs – Lists the status of each job that was migrated.

• Migration Details

This section provides detailed migration status for each of the following

objects:

• Each substitution file


• Each named connection in the named_connections.xml file.

• Each transform configuration

• Each job (project)

How Data Quality repository contents migrate

This section describes how the dqmigration utility migrates the following Data Quality repository components, which consist mainly of XML files and folders:

• Projects and folders

• Substitution files and variables

• Named connections

• Data types

• Content type


Related Topics

• Deprecated objects on page 22

How projects and folders migrate

In Data Quality, folders contain objects, and the folder hierarchy can be

multiple levels. After migration to Data Services, the utility prefixes each

object with DQM_, and then they appear together in the Local Object Library.

The "Properties" of the migrated object indicates its source Data Quality

project.

Note: Not all files are migrated. For example, the contents of the blueprints folder and the sample transforms that are automatically installed in Data Quality are not migrated. If you want to migrate a file from that folder, move it to another folder that is migrated.

In Data Quality, you can create three types of projects:


• Batch – A batch project executes at a specific time and ends after all data

in the specified source is processed. See How batch projects migrate on

page 38.

• Transactional – A transactional project receives data from a calling

application (such as Web services or SDK calls) and processes data as

it arrives. It remains active and ready for new requests. See How

transactional projects migrate on page 40.

• Integrated Batch -- A project run using batch processing with an Integrated

Batch Reader and an Integrated Batch Writer transform. This type of

project can be used to pass data to and from an integrated application,

including Data Integrator XI Release 2 Accelerated (11.7). See How

integrated batch projects migrate on page 41.

Related Topics

• How database Reader transforms migrate on page 73

• How database Writer transforms migrate on page 83

• How flat file Reader transforms migrate on page 93

• How flat file Writer transforms migrate on page 101

• How transactional Readers and Writers migrate on page 116

How batch projects migrate

The dqmigration utility migrates a Data Quality Batch project to the following

two Data Services objects:

• Data Flow -- A Data Services data flow is the equivalent of a Data Quality project. Everything having to do with data, including reading sources, transforming data, and loading targets, occurs inside a data flow.

• Job -- A job is the only object you can execute in Data Services. Therefore, the migration utility creates a job to encompass the migrated data flow.

The dqmigration utility also creates additional objects, such as a datastore

or file format, as sections How database Reader transforms migrate on page

73 and How flat file Reader transforms migrate on page 93 describe.

For example, suppose you have the following Data Quality

my_address_cleanse_usa project.


The dqmigration utility migrates this batch project to a Data Services job

named DQM_my_address_cleanse_usa which contains a data flow with the

same name. The dqmigration utility adds a DQM_ prefix to your project name

from Data Quality to form the Data Services job and data flow name.


The Project Area shows that the data flow DQM_my_address_cleanse_usa

contains the following migrated transforms:

• The Data Quality Reader transform has become the following Data

Services objects:

• Source object named Reader_Reader, which reads data from file

format DQM_my_address_cleanse_usa_Reader_Reader. The file

format is visible on the Formats tab of the Local Object Library.

• Query transform named Reader_Reader_Query, which maps fields

from the file format to the field names used by the rest of the data flow.

• The Data Quality UsaRegAddressCleanseCass transform has become

the Data Services UsaRegAddressCleanseCass_UsaRegAddressCleanse

transform.

• The Data Quality Writer transform has become the following Data Services

objects:

• Query transform named Writer_Writer_Query

• Target object named Writer_Writer, which maps fields from field names used in the data flow to the file format DQM_my_address_cleanse_usa_Writer_Writer (visible in the Local Object Library).
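The object names in this example follow a visible pattern. The Python sketch below reproduces that pattern so that you can list the objects to look for after migrating a batch project. It is an illustration under assumptions, not a statement of the utility's naming rules:

• The helper name expected_batch_names is hypothetical.
• The sketch assumes that doubled names such as Reader_Reader follow a <transform name>_<transform type> pattern, as UsaRegAddressCleanseCass_UsaRegAddressCleanse suggests.

```python
def expected_batch_names(project, reader="Reader", writer="Writer",
                         reader_type="Reader", writer_type="Writer"):
    """Object names the example shows for a migrated batch project.

    Assumption: doubled names such as Reader_Reader follow the pattern
    <transform name>_<transform type>.
    """
    job = f"DQM_{project}"
    source = f"{reader}_{reader_type}"
    target = f"{writer}_{writer_type}"
    return {
        "job": job,
        "data_flow": job,  # the data flow has the same name as the job
        "source": source,
        "source_query": f"{source}_Query",
        "source_file_format": f"{job}_{source}",
        "target_query": f"{target}_Query",
        "target": target,
        "target_file_format": f"{job}_{target}",
    }
```

After migration, you can compare this dictionary against the Project Area and the Local Object Library to confirm that each expected object was created.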

When the dqmigration utility creates the Data Services objects, it updates

each object's description to indicate its source Data Quality project and

transform. The description also contains the original Data Quality description.

For example, in the Data Services Designer, the Formats tab in the Local

Object Library shows the following descriptions.

To view the full description, select a name in the Format list, right-click, and

select Properties.

Related Topics

• How database Reader transforms migrate on page 73

• How database Writer transforms migrate on page 83

• How flat file Reader transforms migrate on page 93

• How flat file Writer transforms migrate on page 101

How transactional projects migrate

The dqmigration utility migrates a Data Quality transactional project to a

Data Services real-time job that consists of the following objects:

• Initialization — Starts the real-time process. The initialization component

can be a script, work flow, data flow, or a combination of objects. It runs

only when a real-time service starts.

• A data flow which contains the resulting Data Services objects from the

Data Quality project:

• A single real-time source — XML message


• A single real-time target — XML message

• Clean-up -- Ends the real-time process. The clean-up component (optional)

can be a script, work flow, data flow, or a combination of objects. It runs

only when a real-time service is shut down.

For example, suppose you have the following Data Quality project

my_trans_address_suggestions_usa.xml.

The dqmigration utility migrates this transactional project

my_trans_address_suggestions_usa to a Data Services real-time job named

DQM_my_trans_address_suggestions_usa which contains a data flow with

the same name.


Related Topics

• How transactional Readers and Writers migrate on page 116

• Post-migration tasks for transactional Readers and Writers on page 120

How integrated batch projects migrate

In Data Integrator XI Release 2 Accelerated (version 11.7), a Data Quality integrated batch project passed data from a job in Data Integrator, cleansed the data in Data Quality, and passed the data back to the Data Integrator job. These Data Quality projects were imported into Data Integrator 11.7 through a Data Quality datastore, and the imported Data Quality project was used as a cleansing transform within a Data Integrator data flow.

The Data Quality transforms are now integrated into Data Services, and you can use them just like the regular Data Integrator transforms in a data flow. You no longer need to create a Data Quality datastore and import the integrated batch projects.

If you used imported Data Quality projects in Data Integrator 11.7, you will see them in Data Services.

• Data Quality datastores are still visible, but you cannot edit them and you cannot create new ones. You can delete the Data Quality datastores.

• You cannot connect to the Data Quality Server and browse the integrated batch projects.

• The previously imported Data Quality projects are still visible:

  • under each Data Quality datastore, but you cannot drag and drop them into data flows. You can use the option View Where Used to see which existing data flows use each imported Data Quality project.

  • as a Data Quality transform within the data flow, and you can open this transform to view the field mappings and options.

To use your existing Data Integrator 11.7 integrated batch projects in Data Services, you must modify them in one of the following ways:

• If your Data Integrator 11.7 data flow contains a small number of imported Data Quality transforms, modify your data flow in Data Services to use the new built-in Data Quality transforms.

• If your Data Integrator 11.7 data flow contains a large number of Data Quality transforms, migrate the Data Quality integrated batch project and replace the resulting placeholder Readers and Writers with the appropriate sources and targets. A large number of Data Quality transforms can exist as either:

  • An imported Data Quality project that contains a large number of Data Quality transforms.

  • Multiple imported Data Quality projects that each contain a few Data Quality transforms.

Related Topics

• Modifying Data Integrator 11.7 Data Quality projects on page 112

• Migrating Data Quality integrated batch projects on page 109

• How batch projects migrate on page 38


How connections migrate

The dqmigration utility migrates Data Quality connections to one of the following Data Services objects, depending on whether the data source is a file or a database:

• Datastores when the connection is a database -- Datastores represent connection configurations between Data Services and databases or applications.

• File Formats when the connection is a flat file -- A file format is a set of properties describing the structure of a flat file (ASCII). File formats describe the metadata structure, which can be fixed or delimited. You can use one file format to access multiple files if they all have the same metadata structure.

For a named connection, the dqmigration utility generates the name of the resulting datastore or file format as follows:

DQM_connectionname

For connections that are not named (the Reader or Writer transform provides all of the connection information instead of the file named_connections.xml), the resulting name of the datastore or file format is:

DQM_projectname_readername_Reader

DQM_projectname_writername_Writer
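The two naming rules above can be sketched as a small Python function, for example to predict object names before running dqmigration. The function migrated_connection_name and its parameters are hypothetical illustrations of the documented patterns, not an interface of the utility; the project and transform names in the usage lines are also made up.

```python
from typing import Optional


def migrated_connection_name(
    project: str,
    transform: str,
    role: str,
    connection: Optional[str] = None,
) -> str:
    """Predict the datastore or file format name produced for a connection.

    Hypothetical helper: role is "Reader" or "Writer"; connection is the
    named connection from named_connections.xml, or None when the Reader or
    Writer transform supplies all of the connection information itself.
    """
    if role not in ("Reader", "Writer"):
        raise ValueError("role must be 'Reader' or 'Writer'")
    if connection:
        # Named connection: DQM_connectionname
        return f"DQM_{connection}"
    # Unnamed connection: DQM_projectname_readername_Reader (or ..._Writer)
    return f"DQM_{project}_{transform}_{role}"


print(migrated_connection_name("my_project", "my_reader", "Reader", "namedoutff"))  # DQM_namedoutff
print(migrated_connection_name("my_project", "my_writer", "Writer"))  # DQM_my_project_my_writer_Writer
```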

The following table shows the Data Services object to which each Driver_Name value is migrated.

Table 1-4: Mapping of Data Quality Driver_Name options

Value of Data Quality connection    Data Services object
Driver_Name option

FLDB_DBASE3                         Placeholder Fixed width File Format
FLDB_STD_DB2                        Datastore
FLDB_DELIMITED                      File Format for a flat file
FLDB_FIXED_ASCII                    File Format for a flat file
FLDB_STD_MSSQL                      ODBC Datastore
FLDB_STD_MYSQL                      Placeholder ODBC Datastore
FLDB_STD_ODBC                       Datastore
FLDB_STD_ORACLE9                    Datastore
FLDB_STD_ORACLE10                   Datastore

Rather than leaving a Data Quality XML file unmigrated, the dqmigration utility creates a placeholder file format or placeholder datastore, which you can fix after migration, in situations that include the following:

• The flat file type or database type (such as dBase3) is not supported in Data Services.

• A substitution variable was used in the Data_Source_Name and the file that the substitution variable refers to was not found.

For information about how to change the placeholder objects, see Table 1-51 on page 167 in Troubleshooting on page 166.
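If you want to know before migration which connections will become placeholders, Table 1-4 can be transcribed into a lookup, as in the following Python sketch. The inventory of Driver_Name values passed to the hypothetical needs_cleanup function is assumed to come from your own review of the Data Quality connections; the sketch does not parse named_connections.xml, and it covers only the unsupported-type case, not the case where a substitution variable refers to a missing file.

```python
from typing import Iterable

# Transcription of Table 1-4: Driver_Name -> (Data Services object, placeholder?).
DRIVER_NAME_MAPPING = {
    "FLDB_DBASE3": ("Fixed width File Format", True),
    "FLDB_STD_DB2": ("Datastore", False),
    "FLDB_DELIMITED": ("File Format for a flat file", False),
    "FLDB_FIXED_ASCII": ("File Format for a flat file", False),
    "FLDB_STD_MSSQL": ("ODBC Datastore", False),
    "FLDB_STD_MYSQL": ("ODBC Datastore", True),
    "FLDB_STD_ODBC": ("Datastore", False),
    "FLDB_STD_ORACLE9": ("Datastore", False),
    "FLDB_STD_ORACLE10": ("Datastore", False),
}


def needs_cleanup(driver_names: Iterable[str]) -> list:
    """Return the distinct Driver_Name values that migrate to placeholders.

    Raises KeyError for a value that does not appear in Table 1-4.
    """
    return sorted(
        name for name in set(driver_names) if DRIVER_NAME_MAPPING[name][1]
    )


inventory = ["FLDB_STD_ORACLE10", "FLDB_DBASE3", "FLDB_STD_MYSQL", "FLDB_DELIMITED"]
print(needs_cleanup(inventory))  # ['FLDB_DBASE3', 'FLDB_STD_MYSQL']
```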

The following table shows how the Data Quality connection options are mapped to the Data Services datastore options.

Note: If the dqmigration utility cannot determine the database type from a named connection (for example, the connection name specified in the Data Quality Reader does not exist), then the utility creates an Oracle 9 datastore.
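The fallback in this note can be sketched in Python as follows. The named_connections argument is a hypothetical dictionary from connection name to Driver_Name value that you would build yourself, and resolve_datastore_type is a hypothetical name; the database types and versions are transcribed from the Driver_Name rows of Table 1-5. The sketch illustrates the documented outcome only, not how dqmigration is implemented.

```python
from typing import Mapping, Optional, Tuple

# Database type and version per Driver_Name (Driver_Name rows of Table 1-5).
DATASTORE_TYPE = {
    "FLDB_STD_DB2": ("DB2", "DB2 UDB 8.x"),
    "FLDB_STD_MSSQL": ("ODBC", None),
    "FLDB_STD_MYSQL": ("ODBC", None),  # placeholder datastore; cleanup required
    "FLDB_STD_ODBC": ("ODBC", None),
    "FLDB_STD_ORACLE9": ("Oracle", "Oracle 9i"),
    "FLDB_STD_ORACLE10": ("Oracle", "Oracle 10g"),
}

# Datastore created when the database type cannot be determined.
ORACLE9_FALLBACK = ("Oracle", "Oracle 9i")


def resolve_datastore_type(
    connection_name: str,
    named_connections: Mapping[str, str],
) -> Tuple[str, Optional[str]]:
    driver = named_connections.get(connection_name)
    if driver is None:
        # The connection name used by the Reader does not exist.
        return ORACLE9_FALLBACK
    if driver not in DATASTORE_TYPE:
        # Flat-file drivers such as FLDB_DELIMITED become file formats instead.
        raise ValueError(f"{driver} is not a database Driver_Name in Table 1-5")
    return DATASTORE_TYPE[driver]


connections = {"my_db2": "FLDB_STD_DB2"}  # hypothetical named connection
print(resolve_datastore_type("my_db2", connections))   # ('DB2', 'DB2 UDB 8.x')
print(resolve_datastore_type("missing", connections))  # ('Oracle', 'Oracle 9i')
```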

Table 1-5: Connection_Options mapping

Data Quality                                   Data Services datastore option
Connection_Options option

Named_Connection                               Datastore name
Data_Source_Name          FLDB_STD_DB2         Data Source
                          FLDB_STD_MSSQL       Data Source
                          FLDB_STD_MYSQL       Cleanup required. See Fixing invalid
                                               datastores on page 154.
                          FLDB_STD_ODBC        Data Source
                          FLDB_STD_ORACLE9     Connection name
                          FLDB_STD_ORACLE10    Connection name
                          Connection string    Cleanup required. See Fixing invalid
                                               datastores on page 154.
Driver_Name               FLDB_STD_DB2         Database type: DB2
                                               Database version: DB2 UDB 8.x
                          FLDB_STD_MSSQL       Database type: ODBC
                          FLDB_STD_MYSQL       A placeholder ODBC datastore is created.
                                               Cleanup required. See Fixing invalid
                                               datastores on page 154.
                          FLDB_STD_ODBC        Database type: ODBC
                                               Driver_name in ODBC Data Source
                                               Administration
                          FLDB_STD_ORACLE9     Database type: Oracle
                                               Database version: Oracle 9i
                          FLDB_STD_ORACLE10    Database type: Oracle
                                               Database version: Oracle 10g
Host_Name                                      Ignored, but the dqmigration log
                                               displays a warning.
PORT_NUMBER                                    Ignored, but the dqmigration log
                                               displays a warning.
User_Name                                      In Edit Datastore, User name
Password                                       In Edit Datastore, Password

For example, suppose your Data Quality projects use the following named connections:

In the Data Services Designer, the Formats tab of the local object library shows:

• The Data Quality named connection namedoutff is now the Data Services file format DQM_namedoutff.

  Note: A Data Quality named connection can have two contexts: Client and Server. The dqmigration utility migrates the Server named connection and ignores the Client one.

  For a named connection, the description of the resulting file format refers to named_connections.xml.

• Other Data Quality connections that migrated to file formats in addition to the namedoutff flat file connection. These other Readers and Writers do not use named connections; therefore, the description indicates the name of the Data Quality project from which each file format was created.

To view the connection information, select a name in the Format list, right-click, and select Edit.