Page 1

Tutorial

■ SAP BusinessObjects Data Services XI 4.0 (14.0.0)

2010-12-02

Page 2

Copyright

© 2010 SAP AG. All rights reserved.SAP, R/3, SAP NetWeaver, Duet, PartnerEdge, ByDesign, SAP

Business ByDesign, and other SAP products and services mentioned herein as well as their respective

logos are trademarks or registered trademarks of SAP AG in Germany and other countries. Business

Objects and the Business Objects logo, BusinessObjects, Crystal Reports, Crystal Decisions, Web

Intelligence, Xcelsius, and other Business Objects products and services mentioned herein as well

as their respective logos are trademarks or registered trademarks of Business Objects S.A. in the

United States and in other countries. Business Objects is an SAP company.All other product and

service names mentioned are the trademarks of their respective companies. Data contained in this

document serves informational purposes only. National product specifications may vary.These materials

are subject to change without notice. These materials are provided by SAP AG and its affiliated

companies ("SAP Group") for informational purposes only, without representation or warranty of any

kind, and SAP Group shall not be liable for errors or omissions with respect to the materials. The

only warranties for SAP Group products and services are those that are set forth in the express

warranty statements accompanying such products and services, if any. Nothing herein should be

construed as constituting an additional warranty.

2010-12-02

Page 3

Contents

Introduction.............................................................................................................................9Chapter 1

1.1

1.2

1.3

1.4

1.4.1

1.4.2

1.4.3

1.5

1.6

1.6.1

1.6.2

2.1

2.2

2.3

2.4

2.4.1

2.4.2

2.5

2.6

2.6.1

2.6.2

2.7

2.8

Audience and assumptions.......................................................................................................9

SAP BusinessObjects information resources...........................................................................9

Tutorial objectives..................................................................................................................10

Tutorial prerequisites..............................................................................................................11

Preparation for this tutorial.....................................................................................................11

Environment required.............................................................................................................12

Tutorial setup.........................................................................................................................12

Tutorial structure....................................................................................................................19

Exiting the tutorial...................................................................................................................20

To exit the tutorial..................................................................................................................20

To resume the tutorial............................................................................................................21

Product Overview .................................................................................................................23Chapter 2

Product features....................................................................................................................23

Product components..............................................................................................................24

Using the product..................................................................................................................25

System configurations............................................................................................................26

Windows implementation.......................................................................................................27

UNIX implementation.............................................................................................................27

The Designer window.............................................................................................................27

SAP BusinessObjects Data Services objects.........................................................................28

Object hierarchy.....................................................................................................................29

Object-naming conventions....................................................................................................32

New terms.............................................................................................................................32

Section summary and what to do next....................................................................................34

3.1

3.2

3.2.1

3.2.2

Defining Source and Target Metadata .................................................................................35Chapter 3

Logging in to the Designer.....................................................................................................35

Defining a datastore...............................................................................................................36

To define a datastore for the source (ODS) database............................................................36

To define a datastore for the target database.........................................................................38

2010-12-023

Page 4

Contents

3.3

3.3.1

3.3.2

3.4

3.4.1

3.5

3.6

4.1

4.2

4.2.1

4.3

4.3.1

4.4

4.4.1

4.5

4.5.1

4.5.2

4.5.3

4.5.4

4.6

4.7

4.8

4.9

4.10

Importing metadata................................................................................................................38

To import metadata for ODS source tables ...........................................................................38

To import metadata for target tables......................................................................................39

Defining a file format..............................................................................................................39

To define a file format.............................................................................................................40

New terms.............................................................................................................................41

Summary and what to do next................................................................................................42

Populating the SalesOrg Dimension from a Flat File............................................................43Chapter 4

Objects and their hierarchical relationships.............................................................................43

Adding a new project..............................................................................................................44

To add a new project..............................................................................................................44

Adding a job...........................................................................................................................44

To add a new batch job..........................................................................................................44

Adding a work flow.................................................................................................................45

To add a work flow.................................................................................................................46

About data flows....................................................................................................................47

Adding a data flow..................................................................................................................47

Defining the data flow............................................................................................................48

Validating the data flow..........................................................................................................51

Addressing errors..................................................................................................................52

Saving the project..................................................................................................................52

To execute the job.................................................................................................................53

About deleting objects...........................................................................................................55

New terms.............................................................................................................................55

Summary and what to do next................................................................................................56

5.1

5.1.1

5.2

5.2.1

5.3

5.3.1

5.3.2

5.3.3

5.3.4

5.4

5.5

Populating the Time Dimension Using a Transform..............................................................57Chapter 5

Retrieving the project.............................................................................................................57

To open the Class_Exercises project.....................................................................................58

Adding the job and data flow..................................................................................................58

To add the job and data flow..................................................................................................58

Defining the time dimension data flow....................................................................................58

To specify the components of the time data flow...................................................................59

To define the flow of data.......................................................................................................59

To define the output of the Date_Generation transform.........................................................59

To define the output of the query...........................................................................................60

Saving and executing the job..................................................................................................62

Summary and what to do next................................................................................................62

2010-12-024

Page 5

Contents

Populating the Customer Dimension from a Relational Table..............................................63Chapter 6

6.1

6.1.1

6.1.2

6.2

6.2.1

6.3

6.3.1

6.3.2

6.4

6.4.1

6.5

6.6

6.6.1

6.6.2

6.6.3

6.7

7.1

7.1.1

7.2

7.2.1

7.3

7.3.1

7.3.2

7.4

7.4.1

7.5

7.5.1

7.6

7.6.1

7.6.2

7.7

Adding the CustDim job and work flow...................................................................................63

To add the CustDim job.........................................................................................................63

To add the CustDim work flow...............................................................................................64

Adding the CustDim data flow................................................................................................64

To add the CustDim data flow object.....................................................................................64

Defining the CustDim data flow..............................................................................................64

To bring the objects into the data flow....................................................................................64

To define the query................................................................................................................65

Validating the CustDim data flow...........................................................................................66

To verify that the data flow has been constructed properly.....................................................66

Executing the CustDim job.....................................................................................................66

Using the interactive debugger...............................................................................................67

To set a breakpoint in a data flow...........................................................................................67

To use the interactive debugger.............................................................................................68

To set a breakpoint condition.................................................................................................69

Summary and what to do next................................................................................................70

Populating the Material Dimension from an XML File..........................................................71Chapter 7

Adding MtrlDim job, work and data flows...............................................................................71

To add the MtrlDim job objects..............................................................................................72

Importing a document type definition......................................................................................72

To import the mtrl.dtd.............................................................................................................72

Defining the MtrlDim data flow...............................................................................................72

To add the objects to the data flow........................................................................................73

To define the details of qryunnest..........................................................................................73

Validating the MtrlDim data flow.............................................................................................75

To verify that the data flow has been constructed properly.....................................................75

Executing the MtrlDim job......................................................................................................75

To execute the job.................................................................................................................75

Leveraging the XML_Pipeline ................................................................................................76

To setup a job and data flow that uses the XML_Pipeline transform.......................................76

To define the details of XML_Pipeline and Query_Pipeline......................................................77

Summary and what to do next................................................................................................79

8.1

8.2

8.2.1

Populating the Sales Fact Table from Multiple Relational Tables.........................................81Chapter 8

Exercise overview..................................................................................................................82

Adding the SalesFact job, work flow, and data flow................................................................82

To add the SalesFact job objects...........................................................................................82

2010-12-025

Page 6

Contents

8.3

8.3.1

8.4

8.4.1

8.5

8.5.1

8.6

8.7

8.8

8.8.1

8.8.2

8.9

9.1

9.2

9.2.1

9.2.2

9.3

9.3.1

9.3.2

9.3.3

9.3.4

9.4

9.4.1

9.4.2

9.4.3

9.5

Defining the SalesFact data flow............................................................................................82

To define the data flow that will generate the sales fact table.................................................83

Defining the details of the Query transform............................................................................83

To define the details of the query, including the join between source tables...........................83

Defining the details of the lookup_ext function.......................................................................84

To use a lookup_ext function for order status.........................................................................85

Validating the SalesFact data flow..........................................................................................86

Executing the SalesFact job...................................................................................................87

Using metadata reports..........................................................................................................87

Enabling metadata reporting...................................................................................................87

Viewing Impact and Lineage Analysis.....................................................................................88

Summary and what to do next................................................................................................91

Changed-Data Capture.........................................................................................................93Chapter 9

Exercise overview..................................................................................................................93

Adding and defining the initial-load job....................................................................................94

Adding the job and defining global variables...........................................................................94

Adding and defining the work flow..........................................................................................95

Adding and defining the delta-load job....................................................................................97

To add the delta-load data flow...............................................................................................97

To add the job and define the global variables........................................................................98

To add and define the work flow.............................................................................................98

To define the scripts...............................................................................................................99

Executing the jobs..................................................................................................................99

To execute the initial-load job.................................................................................................99

To change the source data...................................................................................................100

To execute the delta-load job...............................................................................................100

Summary and what to do next..............................................................................................101

10.1

10.1.1

10.2

10.2.1

10.2.2

10.3

10.4

10.4.1

10.5

Data Assessment................................................................................................................103Chapter 10

Viewing profile statistics ......................................................................................................103

To obtain profile statistics....................................................................................................104

Defining a validation transform based on data profile statistics.............................................105

To set up the job and data flow that will validate the data format..........................................105

To define the details of the Validation transform to replace incorrectly formatted data ........106

To audit a data flow..............................................................................................................107

Viewing audit details in Operational Dashboard reports........................................................110

To view audit details in the Operational Dashboard..............................................................110

Summary and what to do next..............................................................................................111

2010-12-026

Page 7

Contents

Recovery Mechanisms........................................................................................................113Chapter 11

11.1

11.2

11.2.1

11.3

11.3.1

11.3.2

11.3.3

11.3.4

11.4

11.5

11.5.1

11.6

11.7

12.1

12.2

12.3

12.3.1

12.3.2

12.3.3

12.3.4

12.4

12.4.1

12.4.2

12.4.3

12.4.4

12.4.5

12.4.6

12.4.7

12.4.8

12.4.9

12.4.10

12.4.11

12.5

Creating a recoverable work flow manually...........................................................................113

Adding the job and defining local variables...........................................................................113

To add the job and define local variables..............................................................................114

Specifying a recoverable job................................................................................................114

Creating the script that determines the status......................................................................115

Defining the recoverable data flow with a conditional............................................................116

Adding the script that updates the status.............................................................................118

Specifying job execution order.............................................................................................119

Status of the exercise..........................................................................................................119

Executing the job..................................................................................................................119

To execute the job...............................................................................................................119

Data Services automated recovery properties......................................................................120

Summary and what to do next..............................................................................................120

Multiuser Development.......................................................................................................121Chapter 12

Introduction..........................................................................................................................121

Exercise overview................................................................................................................122

Preparation..........................................................................................................................122

To create a central repository...............................................................................................123

Creating two local repositories.............................................................................................124

Associating repositories to your job server..........................................................................124

Defining connections to the central repository.....................................................................125

Working in a multiuser environment......................................................................................126

To import objects into your local repository .........................................................................126

Activating a connection to the central repository..................................................................127

Adding objects to the central repository...............................................................................128

Checking out objects from the central repository.................................................................130

Checking in objects to the central repository........................................................................131

Undoing check out...............................................................................................................132

Comparing objects...............................................................................................................133

Checking out without replacement.......................................................................................135

Getting objects....................................................................................................................137

Using filtering.......................................................................................................................138

Deleting objects...................................................................................................................139

Summary and what to do next..............................................................................................140

13.1

Extracting SAP Application Data.........................................................................................141Chapter 13

Defining an SAP Applications datastore...............................................................................141

2010-12-027

Page 8

Contents

13.2

13.3

13.3.1

13.3.2

13.3.3

13.3.4

13.3.5

13.3.6

13.4

13.4.1

13.4.2

13.4.3

13.4.4

13.5

13.5.1

13.5.2

13.5.3

13.5.4

13.6

13.7

To import metadata for individual SAP application source tables..........................................142

Repopulating the customer dimension table.........................................................................143

Adding the SAP_CustDim job, work flow, and data flow.......................................................143

Defining the SAP_CustDim data flow...................................................................................144

Defining the ABAP data flow................................................................................................145

Validating the SAP_CustDim data flow.................................................................................149

Executing the SAP_CustDim job..........................................................................................149

About ABAP job execution errors.........................................................................................150

Repopulating the material dimension table............................................................................150

Adding the SAP_MtrlDim job, work flow, and data flow........................................................151

Defining the DF_SAP_MtrlDim data flow..............................................................................151

Defining the data flow..........................................................................................................152

Executing the SAP_MtrlDim job...........................................................................................155

Repopulating the SalesFact table.........................................................................................156

Adding the SAP_SalesFact job, work flow, and data flow.....................................................156

Defining the DF_SAP_SalesFact data flow...........................................................................156

Defining the ABAP data flow................................................................................................157

Validating the SAP_SalesFact data flow and executing the job.............................................163

New Terms..........................................................................................................................163

Summary.............................................................................................................................164

Running a Real-time Job in Test Mode...............................................................................165Chapter 14

14.1

Index 167

Exercise...............................................................................................................................165

2010-12-028

Page 9

Introduction

Introduction

Welcome to the

The software is a component of the SAP BusinessObjects information management solutions and

allows you to extract and integrate data for analytical reporting and e-business.

Exercises in this tutorial introduce concepts and techniques to extract, transform, and load batch data

from flat-file and relational database sources for use in a data warehouse. Additionally, you can use

the software for real-time data extraction and integration. Use this tutorial to gain practical experience

using software components including the Designer, repositories, and Job Servers.

SAP BusinessObjects information management solutions also provide a number of Rapid Marts

packages, which are predefined data models with built-in jobs for use with business intelligence (BI)

and online analytical processing (OLAP) tools. Contact your sales representative for more information

about Rapid Marts.

Tutorial

. This tutorial introduces core features of SAP BusinessObjects Data Services.

1.1 Audience and assumptions

This tutorial assumes that:

• You are an application developer or database administrator working on data extraction, data

warehousing, data integration, or data quality.

• You understand your source data systems, DBMS, business intelligence, and e-business messaging

concepts.

• You understand your organization's data needs.

• You are familiar with SQL (Structured Query Language).

• You are familiar with Microsoft Windows.

1.2 SAP BusinessObjects information resources

A global network of SAP BusinessObjects technology experts provides customer support, education,

and consulting to ensure maximum information management benefit to your business.

Useful addresses at a glance:

2010-12-029

Page 10

Introduction

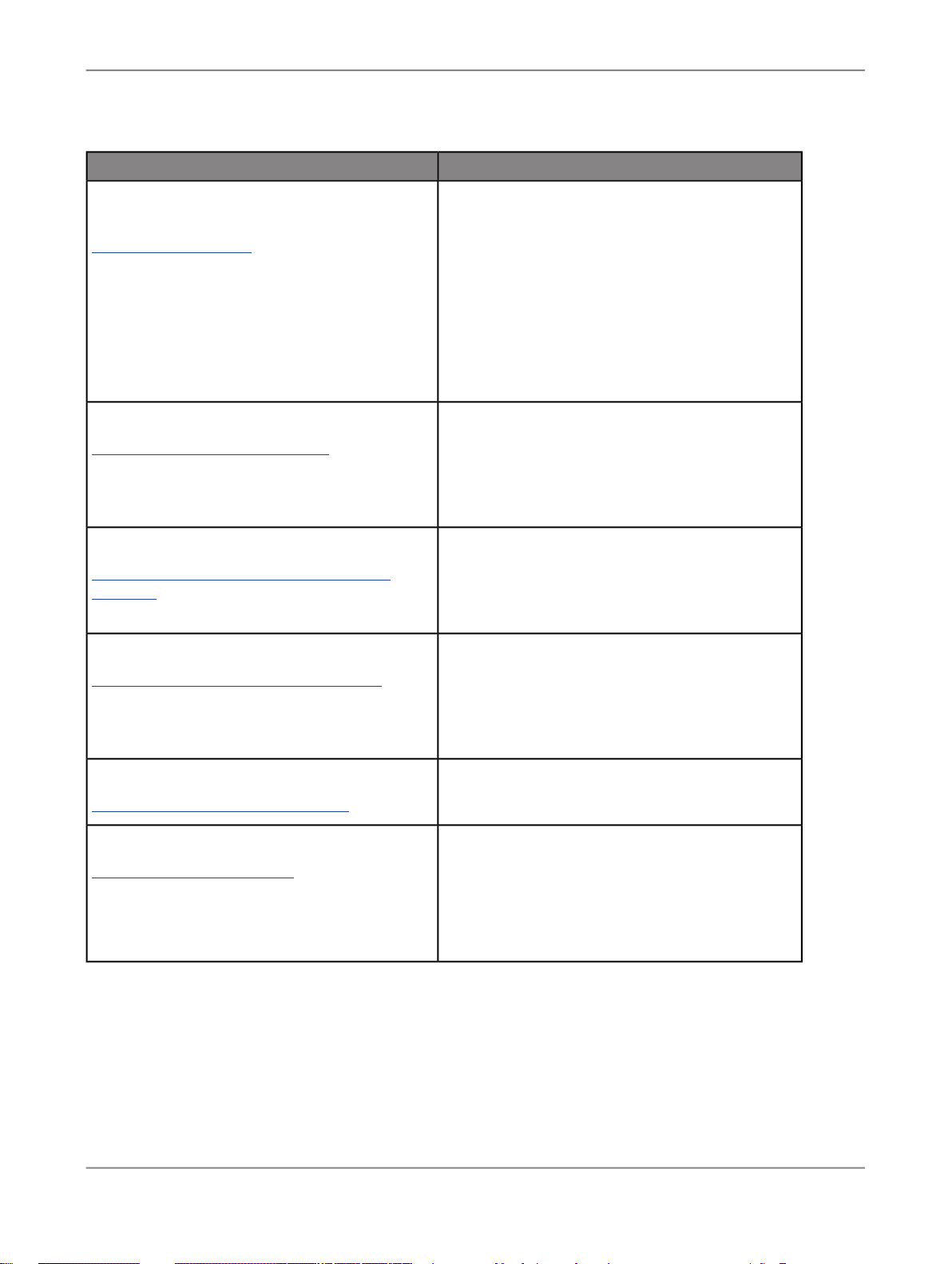

ContentAddress

Customer Support, Consulting, and Education

services

http://service.sap.com/

SAP BusinessObjects Data Services Community

http://www.sdn.sap.com/irj/sdn/ds

Forums on SCN (SAP Community Network )

http://forums.sdn.sap.com/forum.jspa?foru

mID=305

Blueprints

http://www.sdn.sap.com/irj/boc/blueprints

Information about SAP Business User Support

programs, as well as links to technical articles,

downloads, and online forums. Consulting services

can provide you with information about how SAP

BusinessObjects can help maximize your information management investment. Education services

can provide information about training options and

modules. From traditional classroom learning to

targeted e-learning seminars, SAP BusinessObjects

can offer a training package to suit your learning

needs and preferred learning style.

Get online and timely information about SAP BusinessObjects Data Services, including tips and tricks,

additional downloads, samples, and much more.

All content is to and from the community, so feel

free to join in and contact us if you have a submission.

Search the SAP BusinessObjects forums on the

SAP Community Network to learn from other SAP

BusinessObjects Data Services users and start

posting questions or share your knowledge with the

community.

Blueprints for you to download and modify to fit your

needs. Each blueprint contains the necessary SAP

BusinessObjects Data Services project, jobs, data

flows, file formats, sample data, template tables,

and custom functions to run the data flows in your

environment with only a few modifications.

http://help.sap.com/businessobjects/

Supported Platforms (Product Availability Matrix)

https://service.sap.com/PAM

1.3 Tutorial objectives

SAP BusinessObjects product documentation.Product documentation

Get information about supported platforms for SAP

BusinessObjects Data Services.

Use the search function to search for Data Services.

Click the link for the version of Data Services you

are searching for.

2010-12-0210

Page 11

Introduction

The intent of this tutorial is to introduce core Designer functionality.

After completing this tutorial you should be able to:

• Describe the process for extracting, transforming, and loading data using SAP BusinessObjects

Data Services

• Identify Data Services objects

• Define Data Services objects to:

• Extract flat-file, XML, and relational data from your sources

• Transform the data to suit your needs

• Load the data to your targets

• Use Data Services features and functions to:

• Recover from run-time errors

• Capture changed data

• Verify and improve the quality of your source data

• Run a real-time job

• View and print metadata reports

• Examine data throughout a job using the debugger

• Set up a multiuser development environment

1.4 Tutorial prerequisites

This section provides a high-level description of the steps you need to complete before you begin the

tutorial exercises.

1.4.1 Preparation for this tutorial

Read the sections on logging in to the Designer and the Designer user interface in the

BusinessObjects Data Services Designer Guide

including terms and concepts relevant to this tutorial.

SAP

to get an overview of the Designer user interface

This tutorial also provides a high-level summary in the next section,

Product Overview

.

2010-12-0211

Page 12

Introduction

1.4.2 Environment required

To use this tutorial, you must have Data Services running on a supported version of Windows XP or

Windows Server 2003 and a supported RDBMS (such as Oracle, IBM DB2, Microsoft SQL Server, or

Sybase ASE).

You can install Data Services product components (Designer, Administrator, Job Server, Access Server)

on a single computer or distribute them across multiple computers. In the simplest case, all components

in the following diagram can reside on the same computer.

1.4.3 Tutorial setup

Ensure you have access to a RDBMS; contact your system administrator for assistance.

To set up your computer for this tutorial you must do the following tasks:

• Create repository, source, and target databases on an existing RDBMS

• Install SAP BusinessObjects Data Services

• Run the provided SQL scripts to create sample source and target tables

The following sections describe each of these tasks.

1.4.3.1 Create repository, source, and target databases on an existing RDBMS

2010-12-0212

Page 13

Introduction

1.

Log in to your RDBMS.

2.

(Oracle only). Optionally create a service name alias.

Set the protocol to TCP/IP and enter a service name; for example, training.sap. This can act as your

connection name.

3.

Create three databases—for your repository, source operational data store (ODS), and target. For

each, you must create a user account and password.

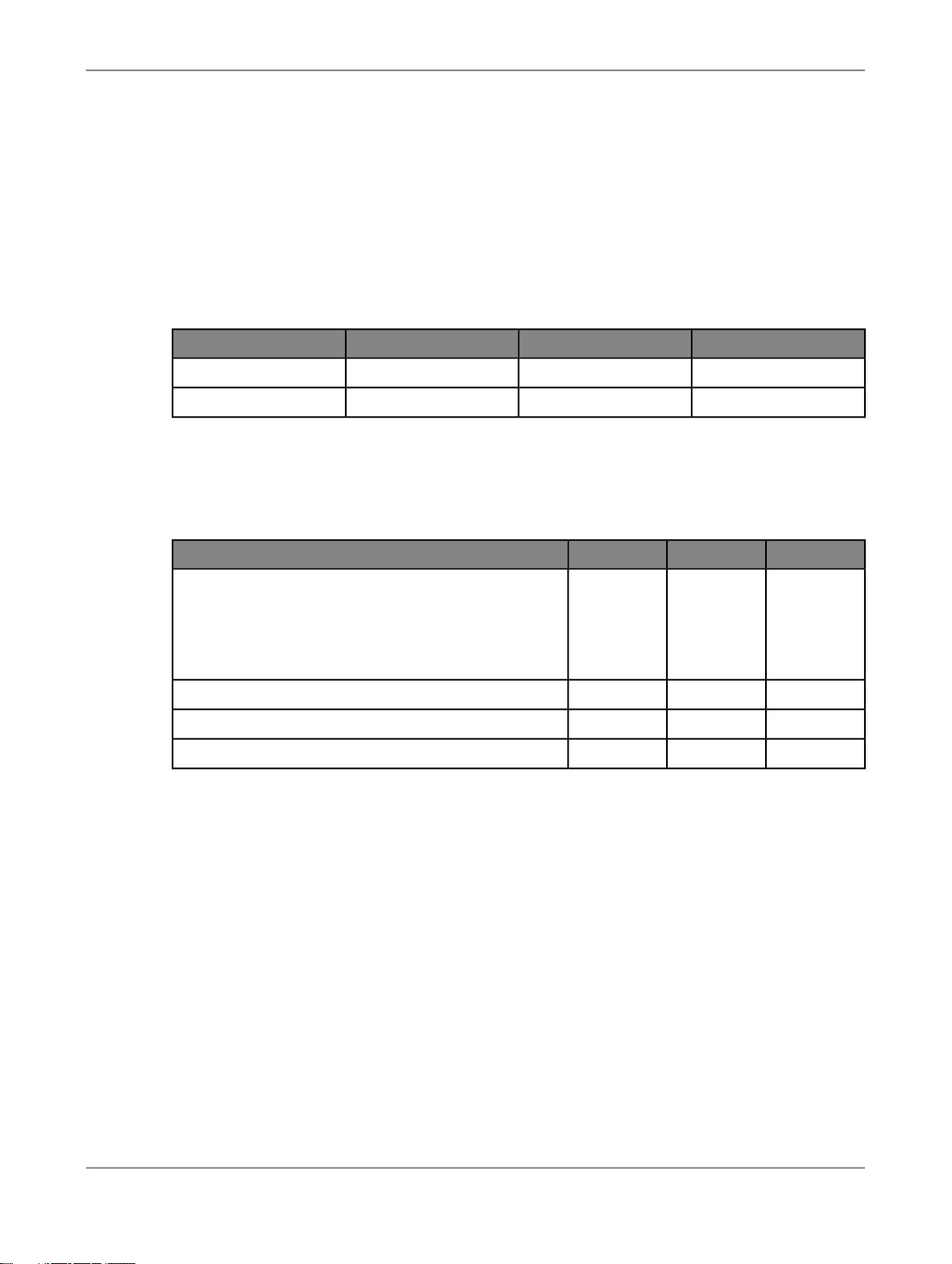

The recommended values used in the tutorial SQL scripts are:

TargetSourceRepository

targetodsrepoUser name

targetodsrepoPassword

4.

Grant access privileges for the user account. For example for Oracle, grant CONNECT and

RESOURCE roles.

5.

Make a note of the connection names, database versions, user names and passwords in the following

table. You will be asked to refer to this information throughout the tutorial.

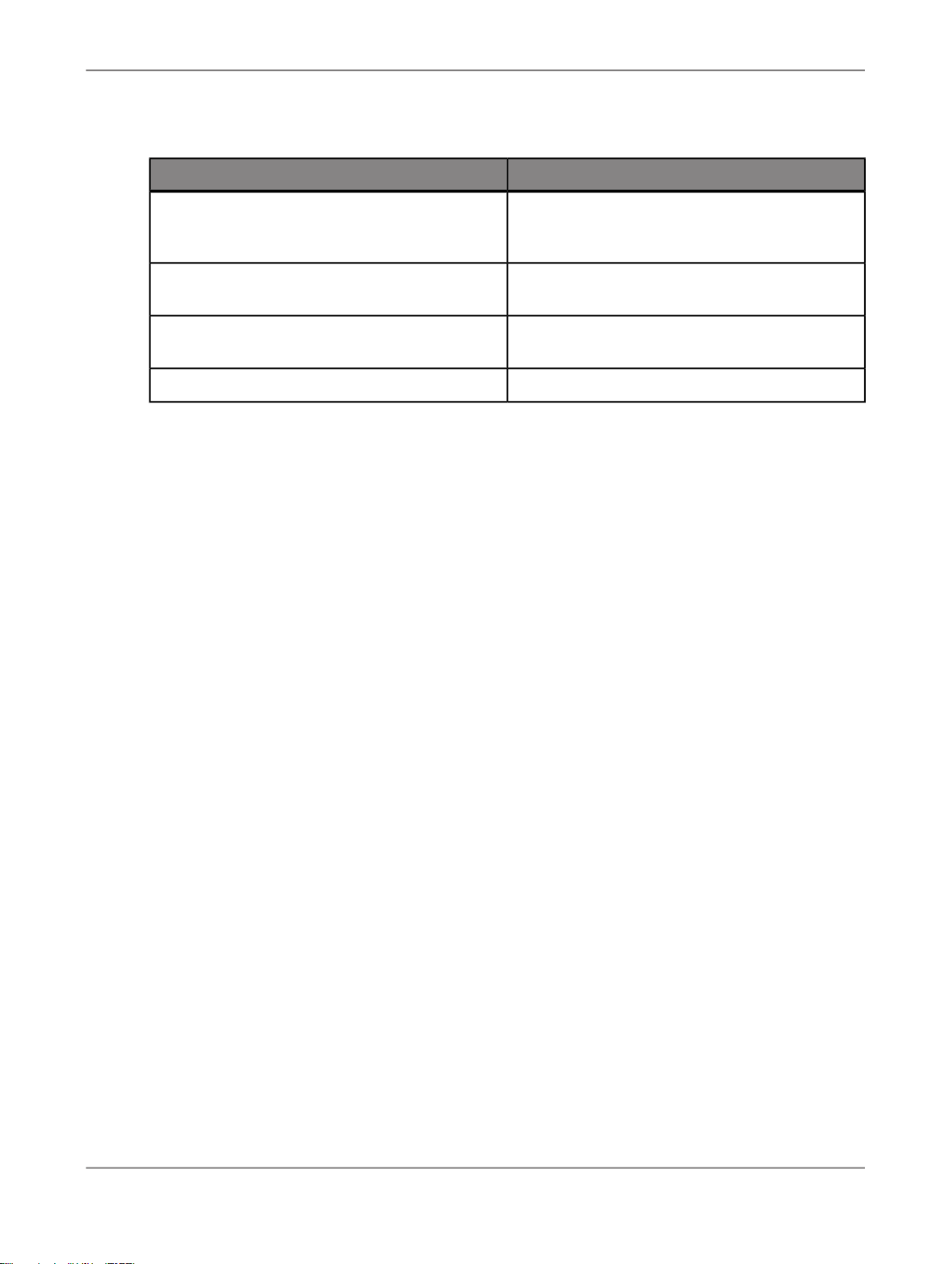

TargetSourceRepositoryValue

Database connection name (Oracle) OR

Database server name AND

Database name (MS-SQL Server)

Database version

User name

Password

1.4.3.2 Install a Central Management Server (CMS)

SAP BusinessObjects Data Services requires a Central Managment Server (CMS) provided by SAP

BusinessObjects Enterprise or Information platform services 4.0.

For detailed information about system requirements, configuration, and installation for Information

platform services 4.0, see the “Installing Information platform services 4.0” section of the

Guide for Windows

.

Installation

For detailed information about system requirements, configuration, and installation for SAP

BusinessObjects Enterprise, see the

SAP BusinessObjects Enterprise Installation Guide

.

2010-12-0213

Page 14

Introduction

Note:

During installation, make a note of the administrator user name and password for the SAP

BusinessObjects Enterprise or Information platform services 4.0 system. You will be asked to enter it

to complete the setup of the tutorial.

1.4.3.2.1 To log in to the Central Management Console

To configure user accounts and define Data Services repositories, log in to the Central Management

Console (CMC).

1.

Navigate to http://<hostname>:8080/BOE/CMC/, where <hostname> is the name of the

machine where you installed SAP BusinessObjects Enterprise or Information platform services 4.0.

2.

Enter the username, password, and authentication type for your CMS user.

3.

Click Log On.

The Central Management Console main screen is displayed.

1.4.3.3 Install SAP BusinessObjects Data Services

For detailed information about system requirements, configuration, and installing on Windows or UNIX,

See the

Be prepared to enter the following information when installing the software:

• Your Windows domain and user name

• Your Windows password

• Your Windows computer name and host ID

• Product keycode

• Connection information for the local repository and Job Server

When you install the software, it configures a Windows service for the Job Server. To verify that the

service is enabled, open the Services Control Panel and ensure that all Data Services services are

configured for a Status of Started and Startup Type Automatic.

The default installation creates the following entries in the Start > Programs > SAP BusinessObjects

Data Services XI 4.0 menu:

Data ServicesLocale Selector

Installation Guide for WindowsorInstallation Guide for UNIX

FunctionCommand

Opens the Designer.Data ServicesDesigner

Allows you to specify the language, territory, and

code page to use for the repository connection

for Designer and to process job data

.

2010-12-0214

Page 15

Introduction

Data ServicesManagement Console

FunctionCommand

Opens a launch page for the Data Services web

applications including the Administrator and

metadata and data quality reporting tools.

Data ServicesRepository Manager

Data ServicesServer Manager

Opens a dialog box that you can use to update

repository connection information.

Opens a dialog box that you can use to configure

Job Servers and Access Servers.

Displays license information.SAP BusinessObjectsLicense Manager

1.4.3.3.1 To create a local repository

1.

Open the Repository Manager.

2.

Choose Local as the repository type.

3.

Enter the connection information for the local repository database that you created.

4.

Type repo for both User and Password.

5.

Click Create.

After creating the repository, you need to define a Job Server and associate the repository with it. You

can also optionally create an Access Server if you want to use web-based batch job administration.

1.4.3.3.2 To define a job server and associate your repository

1.

Open the Server Manager.

2.

In the Job Server tab of the Server Manager window, click Configuration Editor.

3.

In the "Job Server Configuration Editor" window, click Add to add a Job Server.

4.

In the "Job Server Properties" window:

a. Enter a unique name in Job Server name.

b. For Job Server port, enter a port number that is not used by another process on the computer.

If you are unsure of which port number to use, increment the default port number.

c. You do not need to complete the other job server properties to run the exercises in this Tutorial.

5.

Under Associated Repositories, enter the local repository to associate with this Job Server. Every

Job Server must be associated with at least one local repository.

a. Click Add to associate a new local or profiler repository with this Job Server.

b. Under Repository information enter the connection information for the local repository database

that you created.

c. Type repo for both Username and Password.

d. Select the Default repository check box if this is the default repository for this Job Server. You

cannot specify more than one default repository.

e. Click Apply to save your entries. You should see <database_server>_repo_repo in the list

of Associated Repositories.

2010-12-0215

Page 16

Introduction

6.

Click OK to close the "Job Server Properties" window.

7.

Click OK to close the "Job Server Configuration Editor" window.

8.

Click Close and Restart in the Server Manager.

9.

Click OK to confirm that you want to restart the Data Services Service.

1.4.3.3.3 To create a new Data Services user account

Before you can log in to the Designer, you need to create a user account on the Central Management

Server (CMS).

1.

Log in to the Central Management Console (CMC) using the Administrator account you created

during installation.

2.

Click Users and Groups.

The user management screen is displayed.

3.

Click Manage > New > New User.

The "New User" screen is displayed.

4.

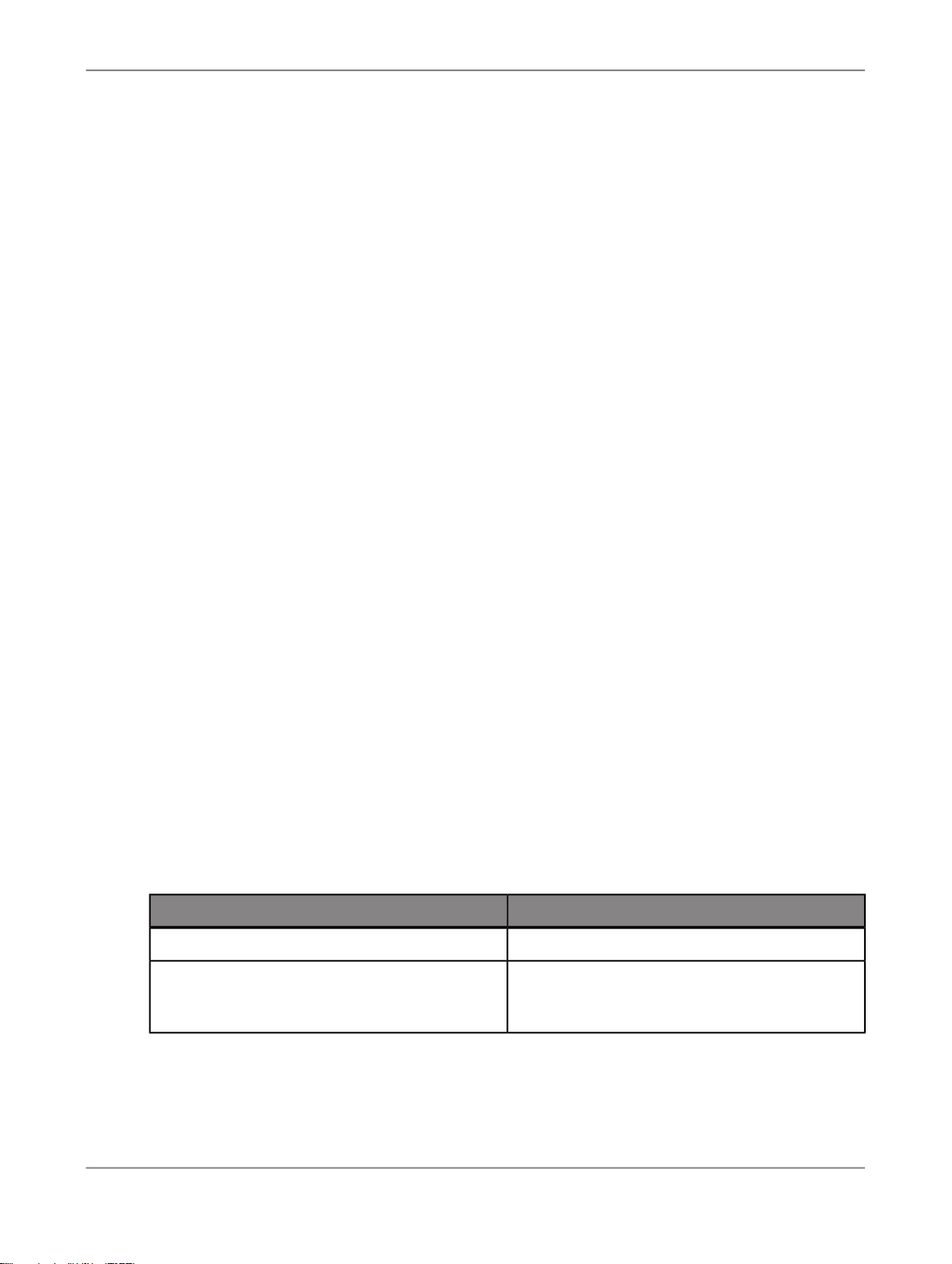

Enter user details for the new user account:

ValueField

EnterpriseAuthentication Type

tutorial_userAccount Name

Tutorial UserFull Name

User created for the Data Services tutorial.Description

tutorial_passPassword

CheckedPassword never expires

UncheckedUser must change password at next logon

5.

Click Create & Close.

The user account is created and the "New User" screen is closed.

6.

Add your user to the necessary Data Services user groups:

a. Click User List.

b. Select tutorial_user in the list of users.

c. Choose Actions > Join Group.

The "Join Group" screen is displayed.

d. Select all the Data Services groups and click >.

Caution:

This simplifies the process for the purposes of the tutorial. In a production environment, you

should plan user access more carefully. For more information about user security, see the

Administrator's Guide

.

The Data Services groups are moved to the Destination Groups area.

2010-12-0216

Page 17

Introduction

e. Click OK.

The "Join Group" screen is closed.

7.

Click Log Off to exit the Central Management Console.

Related Topics

• To log in to the Central Management Console

1.4.3.3.4 To configure the local repository in the CMC

Before you can grant repository access to your user, you need to configure the repository in the Central

Management Console (CMC).

1.

Log in to the Central Management Console using the tutorial user you created.

2.

Click Data Services.

The "Data Services" management screen is displayed.

3.

Click Manage > Configure Repository.

The "Add Data Services Repository" screen is displayed.

4.

Enter a name for the repository.

For example, Tutorial Repository.

5.

Enter the connection information for the database you created for the local repository.

6.

Click Test Connection.

A dialog appears indicating whether or not the connection to the repository database was successful.

Click OK. If the connection failed, verify your database connection information and re-test the

connection.

7.

Click Save.

The "Add Data Services Repository" screen closes.

8.

Click the Repositories folder.

The list of configured repositories is displayed. Verify that the new repository is shown.

9.

Click Log Off to exit the Central Management Console.

Related Topics

• To log in to the Central Management Console

1.4.3.4 Run the provided SQL scripts to create sample source and target tables

2010-12-0217

Page 18

Introduction

Data Services installation includes a batch file (CreateTables_databasetype.bat) for each

supported RDBMS. The batch files run SQL scripts that create and populate tables on your source

database and create the target schema on the target database.

1.

Using Windows Explorer, locate the CreateTables batch file for your RDBMS in your Data Services

installation directory in <LINK_DIR>\Tutorial Files\Scripts.

2.

Open the appropriate script file and edit the pertinent connection information (and user names and

passwords if you are not using ods/ods and target/target).

The Oracle batch file contains commands of the form:

sqlplus username/password@connection @scriptfile.sql > outputfile.out

The Microsoft SQL Server batch file contains commands of the form:

isql /e /n /U username /S servername

/d databasename /P password

/i scriptfile.sql /o outputfile.out

Note:

For Microsoft SQL Server 2008, use CreateTables_MSSQL2005.bat.

The output files provide logs that you can examine for success or error messages.

3.

Double-click on the batch file to run the SQL scripts.

4.

Use an RDBMS query tool to check your source ODS database.

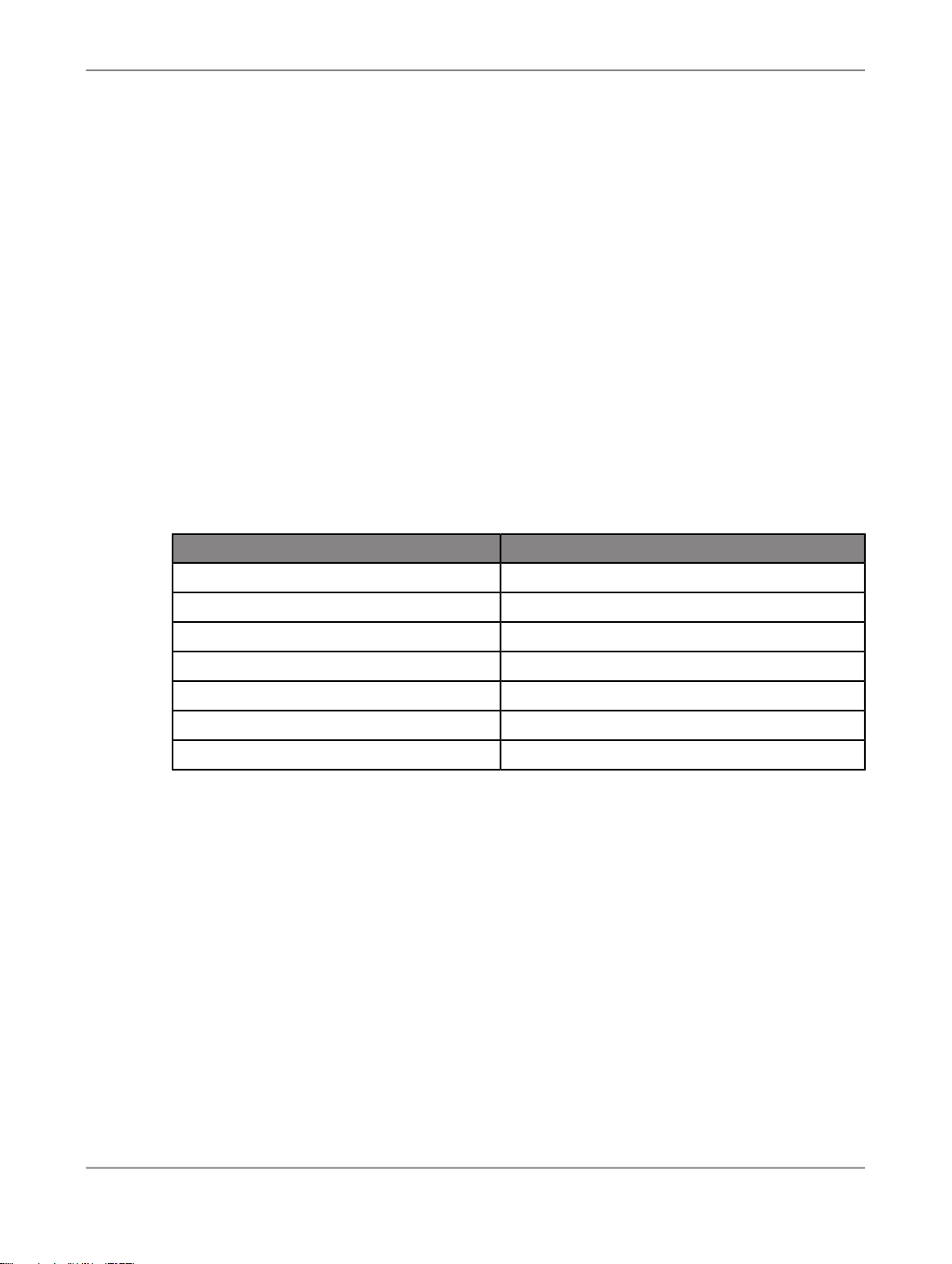

The following tables should exist on your source database after you run the script. These tables

should include a few rows of sample data.

Table name in databaseDescriptive name

ods_customerCustomer

ods_materialMaterial

ods_salesorderSales Order Header

ods_salesitemSales Order Line Item

ods_deliverySales Delivery

ods_employeeEmployee

ods_regionRegion

5.

Use an RDBMS query tool to check your target data warehouse.

The following tables should exist on your target database after you run the script.

Table name in databaseDescriptive name

salesorg_dimSales Org Dimension

cust_dimCustomer Dimension

mtrl_dimMaterial Dimension

time_dimTime Dimension

2010-12-0218

Page 19

Introduction

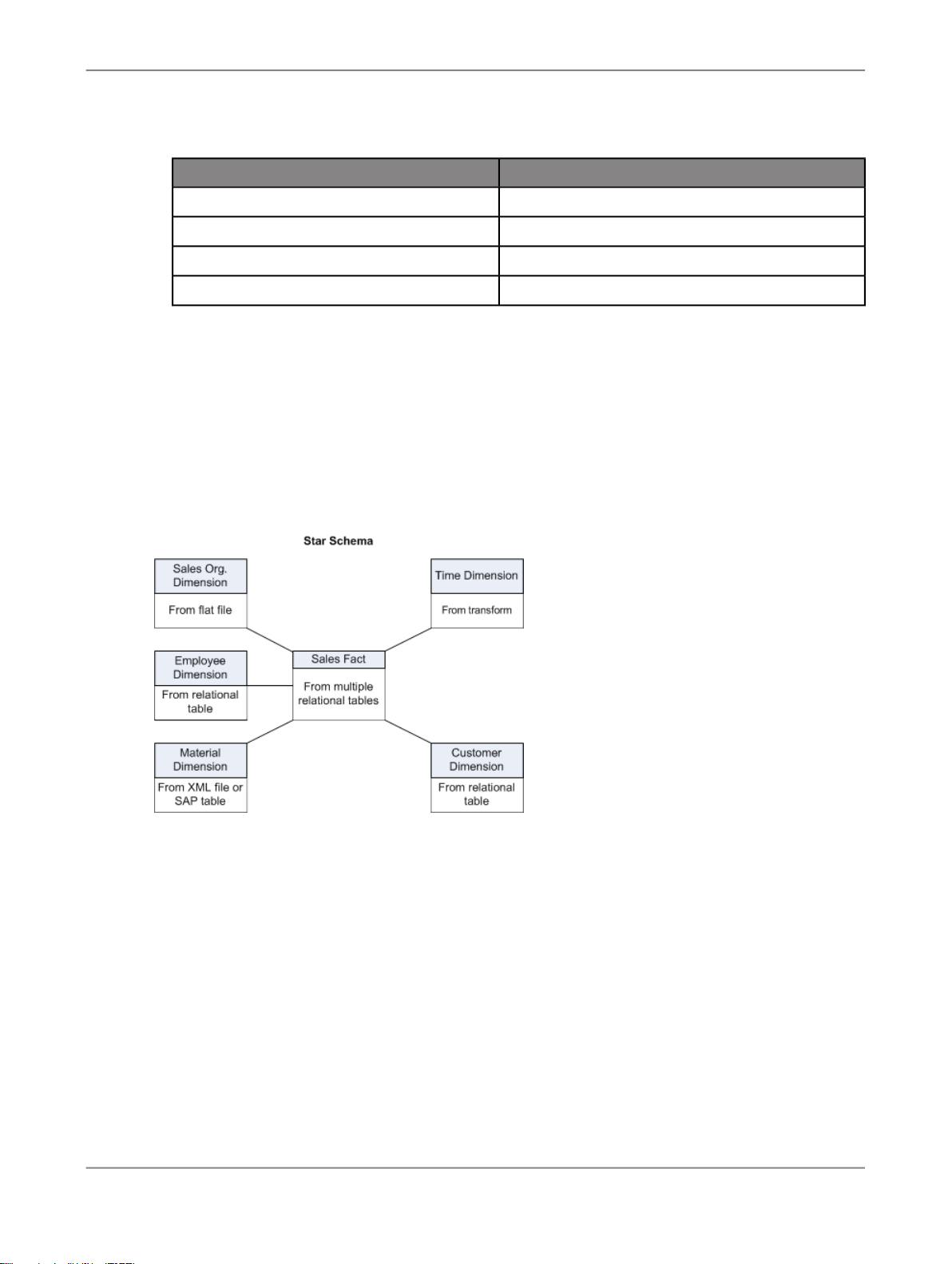

1.5 Tutorial structure

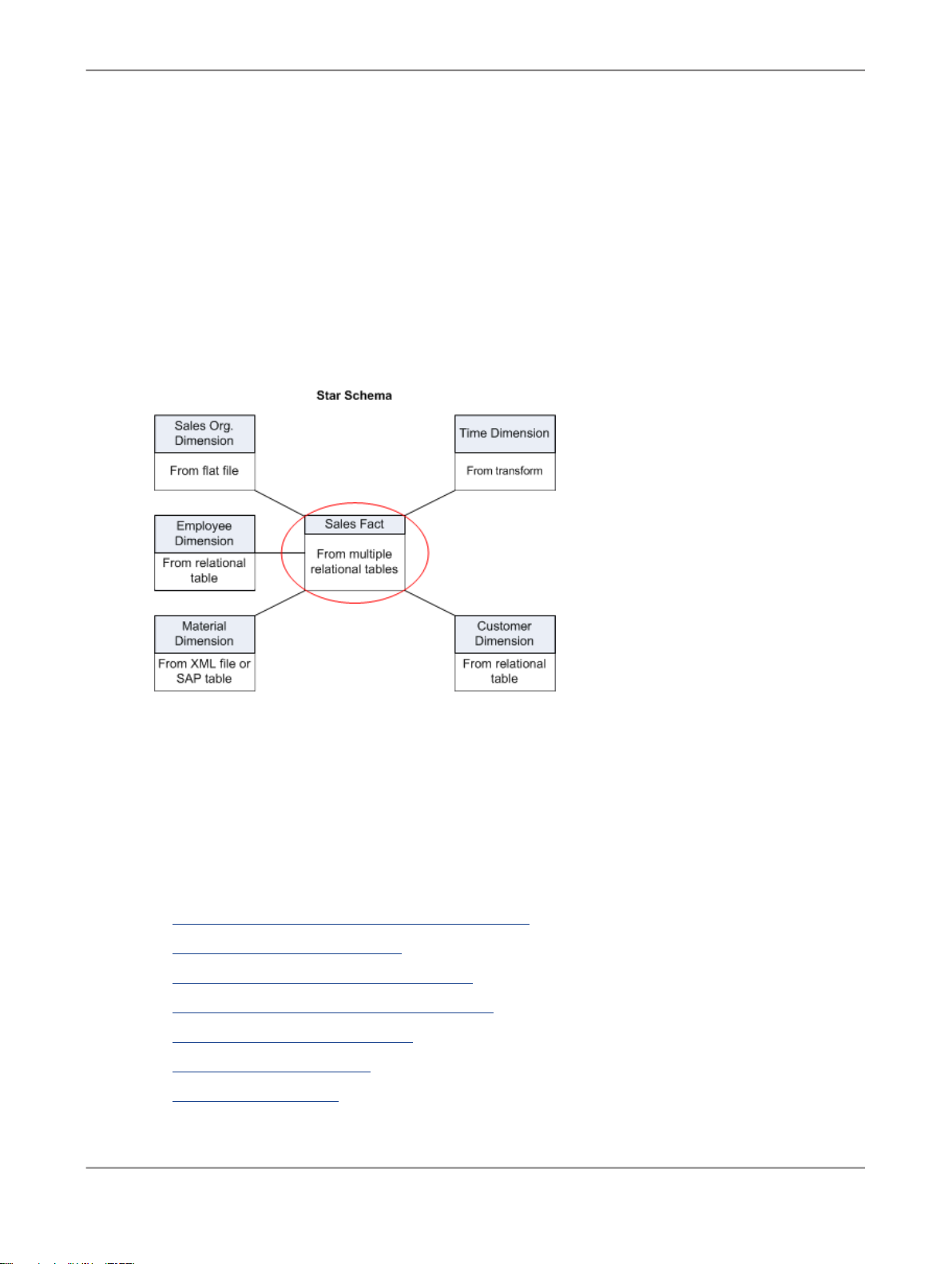

The goal of the tutorial exercises is to demonstrate SAP BusinessObjects Data Services features using

a simplified data model. The model is a sales data warehouse with a star schema that contains one

fact table and some dimension tables.

Table name in databaseDescriptive name

employee_dimEmployee Dimension

sales_factSales Fact

status_tableRecovery Status

CDC_timeCDC Status

Sections build on jobs you create and skills learned in previous sections. You must complete each

exercise to begin the next.

Note:

The screens in this guide are for illustrative purposes. On some screens, the available options depend

on the database type and version in the environment.

This tutorial is organized as follows.

Product Overview

Defining Source and Target Metadata

introduces the basic architecture and the user interface for Data Services.

introduces working with the Designer. Use the Designer to define

a datastore and a file format, then import metadata to the object library. After completing this section,

you will have completed the preliminary work required to define data movement specifications for flat-file

data.

2010-12-0219

Page 20

Introduction

Populating the SalesOrg Dimension from a Flat File

source and target tables. The exercise populates the sales organization dimension table from flat-file

data.

Populating the Time Dimension Using a Transform

creates a data flow for populating the time dimension table.

Populating the Customer Dimension from a Relational Table

tables. This exercise defines a job that populates the customer dimension table.

Populating the Material Dimension from an XML File

This exercise defines a job that populates the material dimension table.

Populating the Sales Fact Table from Multiple Relational Tables

tables and introduces joins and the lookup function. The exercise populates the sales fact table.

Changed-Data Capture

variables, parameters, functions, and scripts.

Data Assessment

exercise uses profile statistics, the validation transform, and the audit data flow feature.

Recovery Mechanisms

Multiuser Development

environment.

Extracting SAP Application Data

sources.

introduces a basic approach to changed-data capture. The exercise uses

introduces features to ensure and improve the validity of your source data. The

presents techniques for recovering from incomplete data loads.

presents the use of a central repository for setting up a multiuser development

provides optional exercises on extracting data from SAP application

introduces basic data flows, query transforms, and

introduces Data Services functions. This exercise

introduces data extraction from relational

introduces data extraction from nested sources.

continues data extraction from relational

Running a Real-time Job in Test Mode

mode.

1.6 Exiting the tutorial

You can exit the tutorial at any point after creating a sample project (see Adding a new project).

1.6.1 To exit the tutorial

1.

From the Project menu, click Exit.

If any work has not been saved, you are prompted to save your work.

2.

Click Yes or No.

provides optional exercises on running a real-time job in test

2010-12-0220

Page 21

Introduction

1.6.2 To resume the tutorial

1.

Log in to the Designer and select the repository in which you saved your work.

The Designer window opens.

2.

From the Project menu, click Open.

3.

Click the name of the project you want to work with, then click Open.

The Designer window opens with the project and the objects within it displayed in the project area.

2010-12-0221

Page 22

Introduction

2010-12-0222

Page 23

Product Overview

Product Overview

This section provides an overview of SAP BusinessObjects Data Services. It introduces the product

architecture and the Designer.

2.1 Product features

SAP BusinessObjects Data Services combines industry-leading data quality and integration into one

platform. With Data Services, your organization can transform and improve data anywhere. You can

have a single environment for development, runtime, management, security and data connectivity.

One of the fundamental capabilities of Data Services is extracting, transforming, and loading (ETL) data

from heterogeneous sources into a target database or data warehouse. You create applications (jobs)

that specify data mappings and transformations by using the Designer.

Use any type of data, including structured or unstructured data from databases or flat files to process

and cleanse and remove duplicate entries. You can create and deliver projects more quickly with a

single user interface and performance improvement with parallelization and grid computing.

Data Services RealTime interfaces provide additional support for real-time data movement and access.

Data Services RealTime reacts immediately to messages as they are sent, performing predefined

operations with message content. Data Services RealTime components provide services to web

applications and other client applications.

Data Services features

• Instant traceability with impact analysis and data lineage capabilities that include the data quality

process

• Data validation with dashboards and process auditing

• Work flow design with exception handling (Try/Catch) and Recovery features

• Multi-user support (check-in/check-out) and versioning via a central repository

• Administration tool with scheduling capabilities and monitoring/dashboards

• Transform management for defining best practices

• Comprehensive administration and reporting tools

• Scalable scripting language with a rich set of built-in functions

• Interoperability and flexibility with Web services-based applications

• High performance parallel transformations and grid computing

• Debugging and built-in profiling and viewing data

• Broad source and target support

2010-12-0223

Page 24

Product Overview

• applications (for example, SAP)

• databases with bulk loading and CDC changes data capture

• files: comma delimited, fixed width, COBOL, XML, Excel

For details about all the features in Data Services, see the

2.2 Product components

The Data Services product consists of several components including:

• Designer

The Designer allows you to create, test, and execute jobs that populate a data warehouse. It is a

development tool with a unique graphical user interface. It enables developers to create objects,

then drag, drop, and configure them by selecting icons in a source-to-target flow diagram. It allows

you to define data mappings, transformations, and control logic. Use the Designer to create

applications specifying work flows (job execution definitions) and data flows (data transformation

definitions).

• Job Server

The Job Server is an application that launches the Data Services processing engine and serves as

an interface to the engine and other components in the Data Services suite.

• Engine

The Data Services engine executes individual jobs defined in the application you create using the

Designer. When you start your application, the Data Services Job Server launches enough engines

to effectively accomplish the defined tasks.

Reference Guide.

• Repository

The repository is a database that stores Designer predefined system objects and user-defined

objects including source and target metadata and transformation rules. In addition to the local

repository used by the Designer and Job Server, you can optionally establish a central repository

for object sharing and version control.

The Designer handles all repository transactions. Direct manipulation of the repository is unnecessary

except for:

• Setup before installing Data Services

You must create space for a repository within your RDBMS before installing Data Services.

• Security administration

Data Services uses your security at the network and RDBMS levels.

• Backup and recovery

2010-12-0224

Page 25

Product Overview

• Access Server

• Administrator

You can export your repository to a file. Additionally, you should regularly back up the database

where the repository is stored.

The Access Server passes messages between web applications and the Data Services Job Server

and engines. It provides a reliable and scalable interface for request-response processing.

The Web Administrator provides browser-based administration of Data Services resources, including:

• Scheduling, monitoring, and executing batch jobs

• Configuring, starting, and stopping real-time services

• Configuring Job Server, Access Server, and repository usage

• Configuring and managing adapters

• Managing users

• Publishing batch jobs and real-time services via Web services

The following diagram illustrates Data Services product components and relationships.

2.3 Using the product

You use Data Services to design, produce, and run data movement applications.

2010-12-0225

Page 26

Product Overview

Using the Designer, you can build work flows and data flows that cleanse your data and specify data

extraction, transformation, and loading processes. In Data Services RealTime, you have the added

capability to build real-time data flows that support e-business transactions.

You create jobs to contain, organize, and run your flows. You create projects to organize the jobs.

Refine and build on your design until you have created a well-tested, production-quality application. In

Data Services, you can set applications to run in test mode or on a specific schedule. Using Data

Services RealTime, you can run applications in real time so they immediately respond to web-based

client requests.

2.4 System configurations

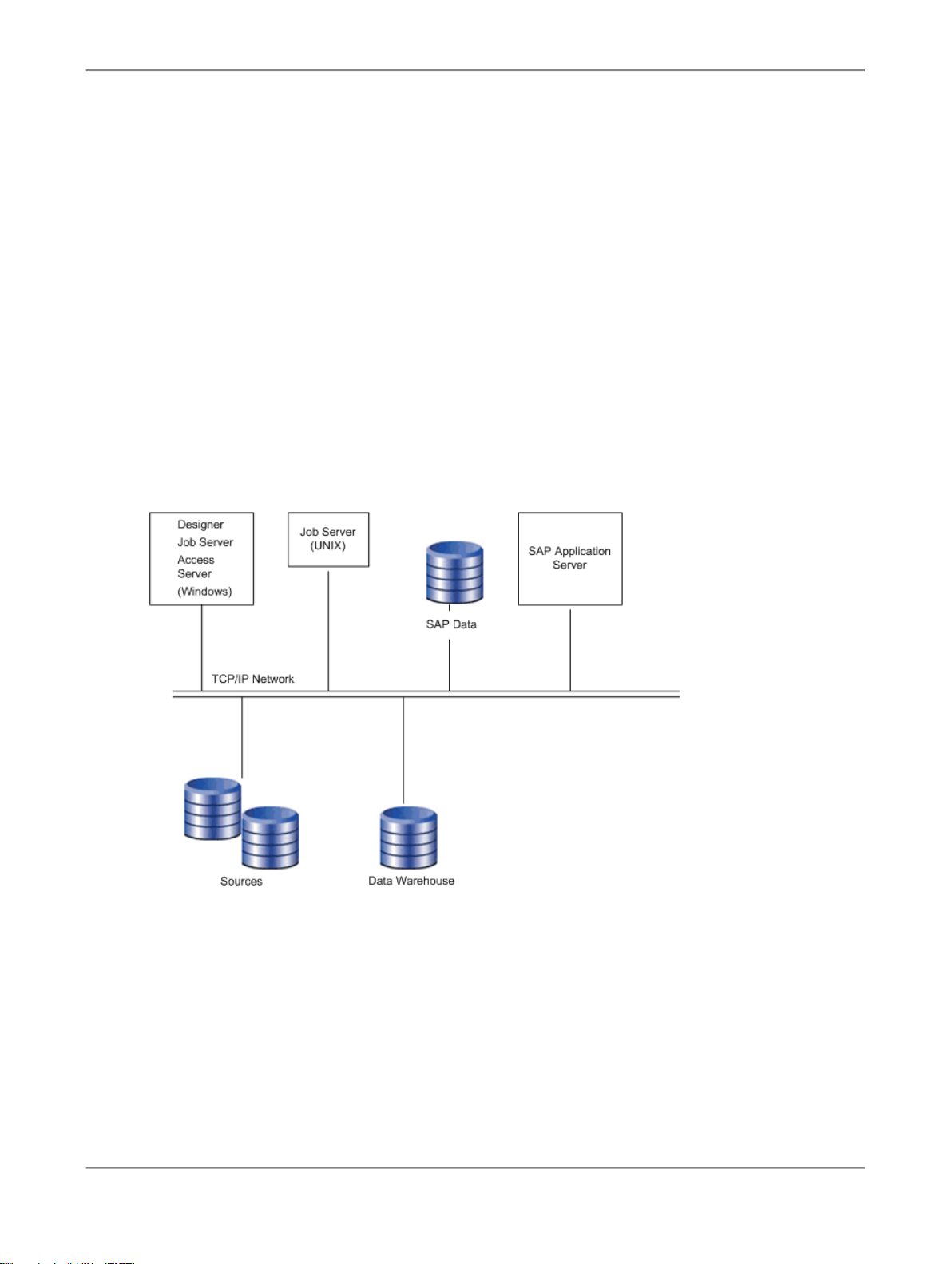

You can configure SAP BusinessObjects Data Services in various ways. The following diagram illustrates

one possible system configuration.

When integrating Data Services into your existing environment, consider:

• The servers shown in the diagram can be separate physical computers, or they can be installed on

a single computer.

• For peak performance, install and create the Data Services local repository on either the same

computer as the Data Services Job Server or on the same computer as the target data warehouse.

In either of the previous configurations, the computer should be on the same LAN segment as the

rest of the Data Services components.

2010-12-0226

Page 27

Product Overview

As shown in the diagram, most Data Services components—the Designer, Job Server, and Access

Server—can run on the same Windows system, or you can install the Job Server on a UNIX system

running Hewlett Packard HP-UX, Sun Solaris, or IBM AIX.

2.4.1 Windows implementation

You can configure a Windows system as either a server or a workstation. A large-memory, multiprocessor

system is ideal because the multithreading, pipelining, and parallel work flow execution features in Data

Services take full advantage of such a system.

You can create your target data warehouse on a database server that may or may not be a separate

physical computer.

You can use a shared disk or FTP to transfer data between your source system and the Data Services

Job Server.

2.4.2 UNIX implementation

You can install the Data Services Job Server on a UNIX system. You can also configure the Job Server

to start automatically when you restart the computer.

2.5 The Designer window

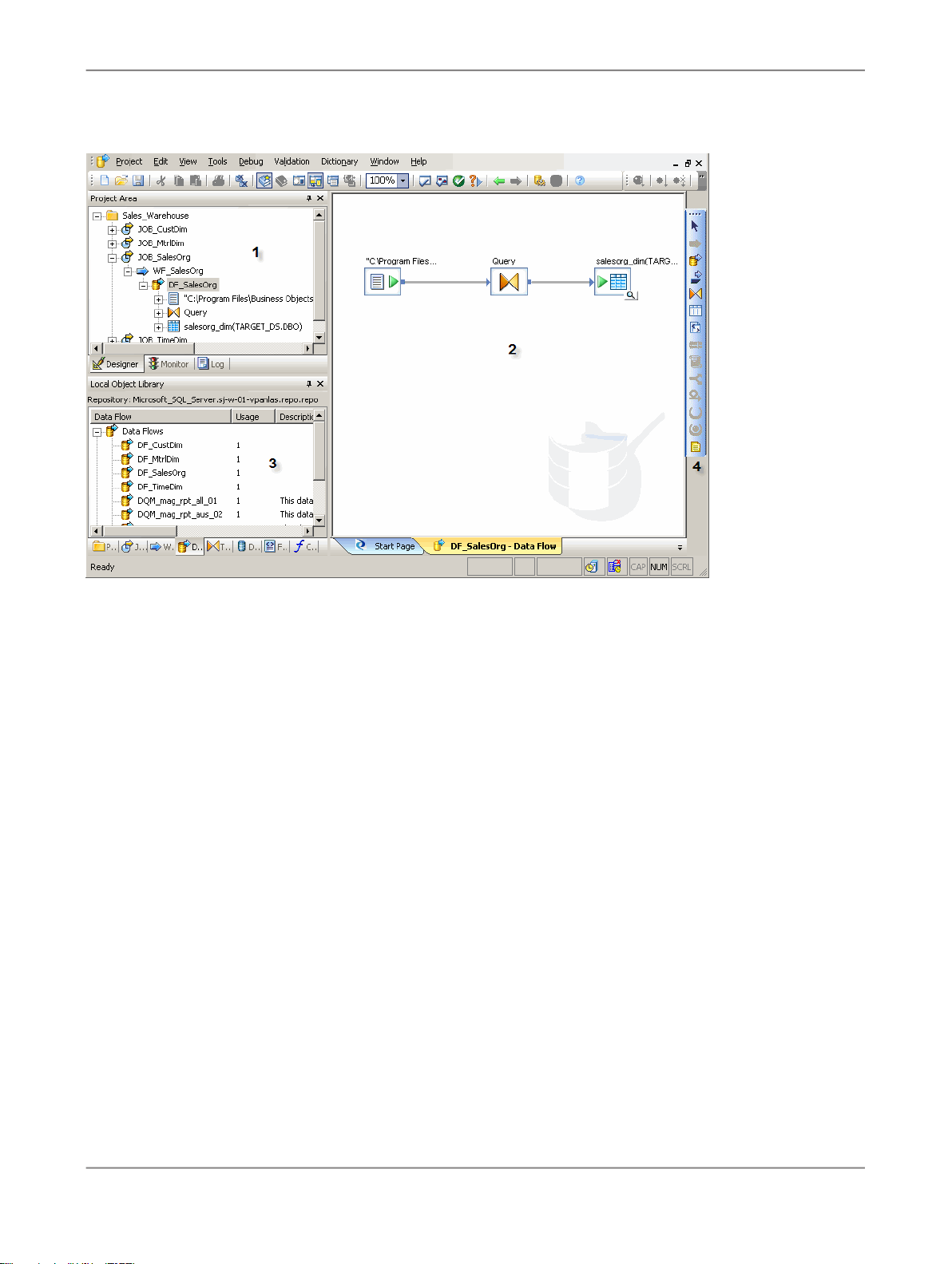

The following illustration shows the key areas of the Designer window.

2010-12-0227

Page 28

Product Overview

The key areas of the Data Services application window are:

1.

Project area — Contains the current project (and the job(s) and other objects within it) available to

you at a given time. In Data Services, all entities you create, modify, or work with are objects.

2.

Workspace — The area of the application window in which you define, display, and modify objects.

3.

Local object library — Provides access to local repository objects including built-in system objects,

such as transforms and transform configurations, and the objects you build and save, such as jobs

and data flows.

4.

Tool palette — Buttons on the tool palette enable you to add new objects to the workspace.

2.6 SAP BusinessObjects Data Services objects

In SAP BusinessObjects Data Services, all entities you add, define, modify, or work with are objects.

Objects have:

• Options that control the object. For example, to set up a connection to a database, defining the

database name would be an option for the connection.

• Properties that describe the object. For example, the name and creation date. Attributes are properties

used to locate and organize objects.

2010-12-0228

Page 29

Product Overview

• Classes that determine how you create and retrieve the object. You can copy reusable objects from

The following diagram shows transform objects in the Data Services object library.

the object library. You cannot copy single-use objects.

When you widen the object library, the name of each object is visible next to its icon. To resize the

object library area, click and drag its border until you see the text you want, then release.

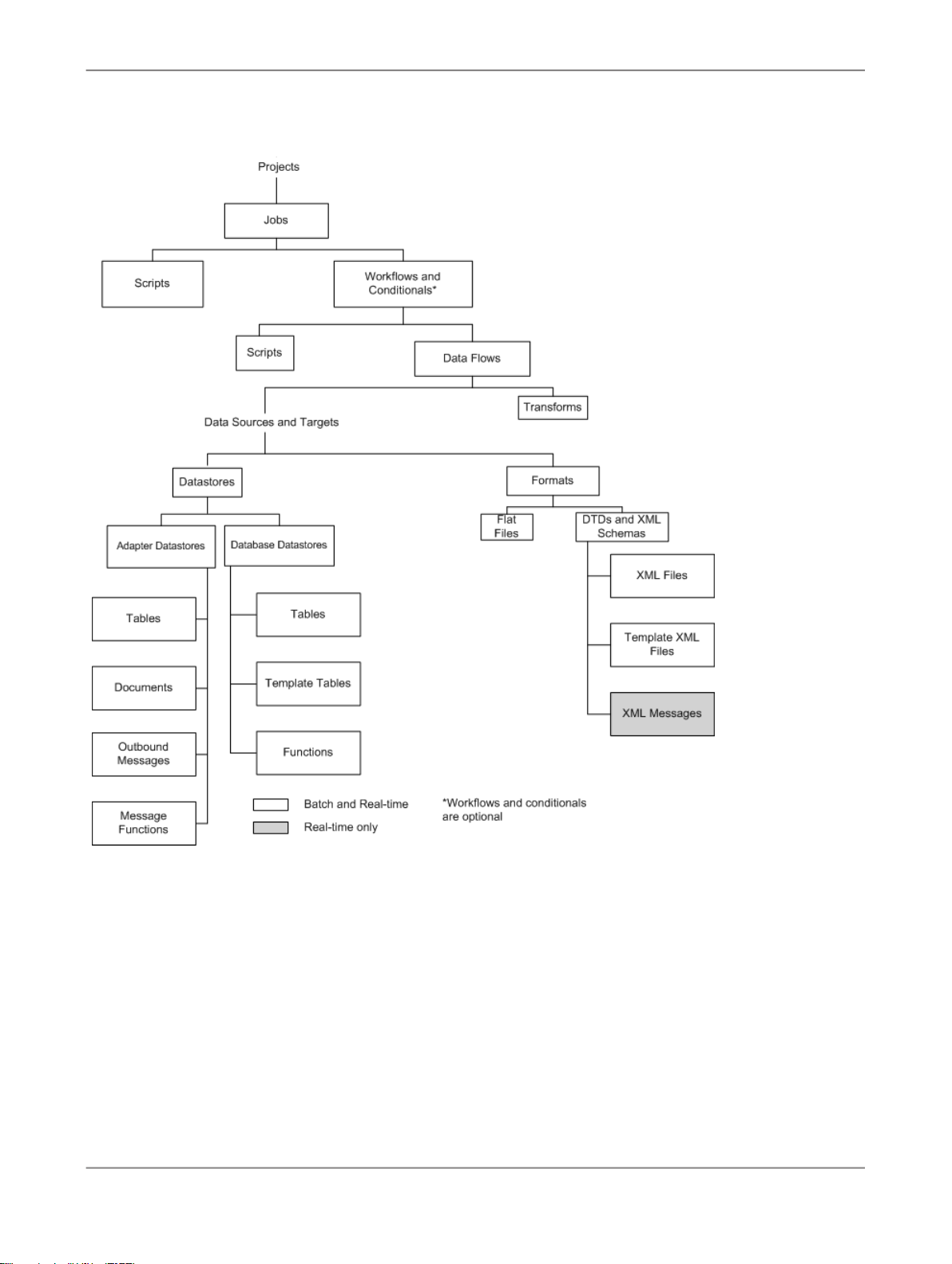

2.6.1 Object hierarchy

The following illustration shows the hierarchical relationships for the key object types within Data

Services.

2010-12-0229

Page 30

Product Overview

In the repository, the Designer groups objects hierarchically from a project, to jobs, to optional work

flows, to data flows. In jobs:

• Work flows define a sequence of processing steps. Work flows and conditionals are optional. A

conditional contains work flows, and you can embed a work flow within another work flow.

• Data flows transform data from source(s) to target(s). You can embed a data flow within a work flow

or within another data flow.

2010-12-0230

Page 31

Product Overview

2.6.1.1 Projects and jobs

A project is the highest-level object in the Designer window. Projects provide you with a way to organize

the other objects you create in Data Services. Only one project is open at a time (where "open" means

"visible in the project area").

A “job” is the smallest unit of work that you can schedule independently for execution.

2.6.1.2 Work flows and data flows

Jobs are composed of work flows and/or data flows:

• A “work flow” is the incorporation of several data flows into a coherent flow of work for an entire job.

• A “data flow” is the process by which source data is transformed into target data.

A work flow orders data flows and operations that support them; a work flow also defines the

interdependencies between data flows. For example, if one target table depends on values from other

tables, use the work flow to specify the order in which you want Data Services to populate the tables.

Also use work flows to define strategies for handling errors that occur during project execution. You

can also use work flows to define conditions for running sections of a project.

The following diagram illustrates a typical work flow.

A data flow defines the basic task that Data Services accomplishes, which involves moving data from

one or more sources to one or more target tables or files. You define data flows by identifying the

sources from which to extract data, the transformations that the data should undergo, and targets.

2010-12-0231

Page 32

Product Overview

Blueprints

We have identified a number of common scenarios that you are likely to handle with Data Services.

Instead of creating your own job from scratch, look through the blueprints. If you find one that is closely

related to your particular business problem, you can simply use the blueprint and tweak the settings in

the transforms for your specific needs.

For each scenario, we have included a blueprint that is already set up to solve the business problem

in that scenario. Each blueprint contains the necessary Data Services project, jobs, data flows, file

formats, sample data, template tables, and custom functions to run the data flows in your environment

with only a few modifications.

You can download all of the blueprints or only the blueprints and other content that you find useful from

the SAP BusinessObjects Community Network. Here, we periodically post new and updated blueprints,

custom functions, best practices, white papers, and other Data Services content. You can refer to this

site frequently for updated content and use the forums to provide us with any questions or requests

you may have. We have also provided the ability for you to upload and share any content that you have

developed with the rest of the Data Services development community.

Instructions for downloading and installing the content objects are also located on the SAP

BusinessObjects Community Network at http://www.sdn.sap.com/irj/boc/blueprints.

2.6.2 Object-naming conventions

Data Services recommends that you follow a consistent naming convention to facilitate object

identification. Here are some examples:

2.7 New terms

ExampleObjectSuffixPrefix

JOB_SalesOrgJobJOB

WF_SalesOrgWork flowWF

DF_CurrencyData flowDF

ODS_DSDatastoreDS

2010-12-0232

Page 33

Product Overview

DescriptionTerm

Property that can be used as a constraint for locating objects.Attribute

Data flow

Job

Object

Object library

Contains steps to define how source data becomes target data. Called by a work

flow or job.

Logical channel that connects Data Services to source and target databases.Datastore

The smallest unit of work that you can schedule independently for execution. A job

is a special work flow that cannot be called by another work flow or job.

Data that describes the objects maintained by Data Services.Metadata

Any project, job, work flow, data flow, datastore, file format, message, custom function,

transform, or transform configurations created, modified, or used in Data Services.

Part of the Designer interface that represents a "window" into the local repository

and provides access to reusable objects.

A choice in a dialog box that controls how an object functions.Option

Logical grouping of related jobs. The Designer can open only one project at a time.Project

Property

Repository

Work flow

Characteristic used to define the state, appearance, or value of an object; for example,

the name of the object or the date it was created.

A database that stores Designer predefined system objects and user-defined objects

including source and target metadata and transformation rules. Can be local or

central (shared).

Table, file, or legacy system from which Data Services reads data.Source

Table or file to which Data Services loads data.Target

Contains steps to define the order of job execution. Calls a data flow to manipulate

data.

2010-12-0233

Page 34

Product Overview

2.8 Section summary and what to do next

This section has given you a short overview of the Data Services product and terminology. For more

information about these topics, see the

Administrator Guide

and the

Designer Guide

.

2010-12-0234

Page 35

Defining Source and Target Metadata

Defining Source and Target Metadata

In this section you will set up logical connections between Data Services, a flat-file source, and a target

data warehouse. You will also create and import objects into the local repository. Storing connection

metadata in the repository enables you to work within Data Services to manage tables that are stored

in various environments.

3.1 Logging in to the Designer

When you log in to the Designer, you must log in as a user defined in the Central Management Server

(CMS).

1.

From the Start menu, click Programs > SAP BusinessObjects Data Services XI 4.0 > Data

Services Designer.

As Data Services starts, a login screen appears.

2.

Enter your user credentials for the CMS.

• System

Specify the server name and optionally the port for the CMS.

• User name

Specify the user name to use to log into CMS.

• Password

Specify the password to use to log into the CMS.

• Authentication

Specify the authentication type used by the CMS.

3.

Click Log On.

The software attempts to connect to the CMS using the specified information. When you log in

successfully, the list of local repositories that are available to you is displayed.

4.

Select the repository you want to use.

5.

Click OK to log in using the selected repository.

In the next section you will define datastores (connections) for your source and target.

2010-12-0235

Page 36

Defining Source and Target Metadata

3.2 Defining a datastore

Datastores:

• Provide a logical channel (connection) to a database

• Must be specified for each source and target database

• Are used to import metadata for source and target databases into the repository.

• Are used by Data Services to read data from source tables and load data to target tables

The databases to which Data Services datastores can connect include:

• Oracle

• IBM DB2

• Microsoft SQL Server

• Sybase ASE

• Sybase IQ

• ODBC

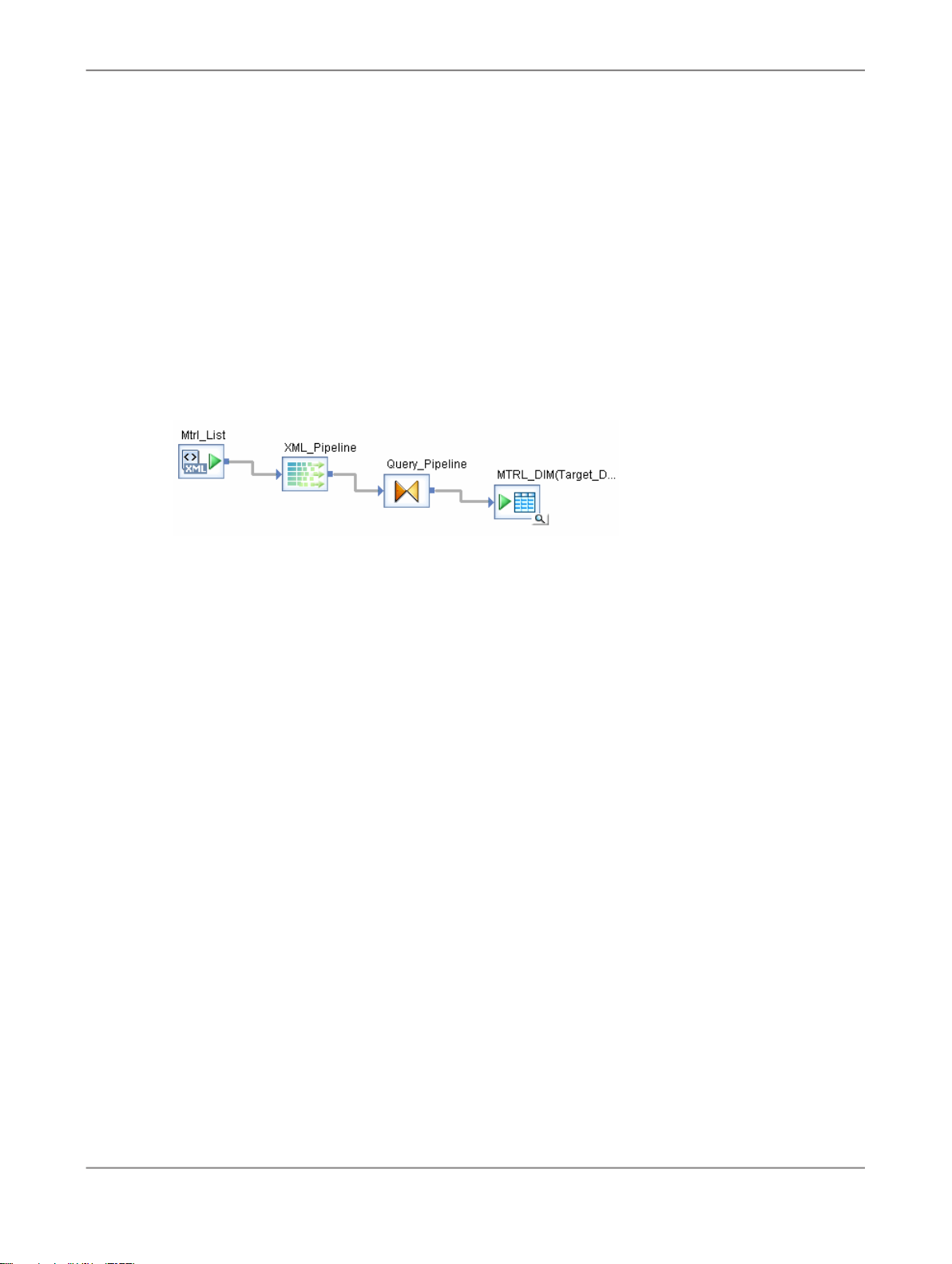

Metadata consists of:

• Database tables

• Table name

• Column names

• Column data types

• Primary key columns

• Table attributes

• RDBMS functions

• Application-specific data structures

Connection metadata is defined in the object library as datastores (for tables) and file formats (for flat

files).

The next task describes how to define datastores using the Designer. Note that while you are designating

the datastores as sources or targets, datastores only function as connections. You will define the actual

source and target objects when you define data flows later in the tutorial.

3.2.1 To define a datastore for the source (ODS) database

1.

From the Datastores tab of the object library, right-click in the blank area and click New.

The "Create New Datastore" window opens. A example for the Oracle environment appears as

follows:

2010-12-0236

Page 37

Defining Source and Target Metadata

2.

In the Datastore name box, type ODS_DS.

This datastore name labels the connection to the database you will use as a source. The datastore

name will appear in the local repository. When you create your own projects/applications, remember

to give your objects meaningful names.

3.

In the Datastore type box, click Database.

4.

In the Database type box, click the option that corresponds to the database software being used

to store the source data.

The remainder of the boxes on the "Create New Datastore" window depend on the Database type

you selected.

The following table lists the minimum options to configure for some common Database types. Enter

the information you recorded in Create repository, source, and target databases on an existing

RDBMS.

Sybase ASEMS SQL ServerDB2Oracle

Database versionEnable CDCEnable CDCEnable CDC

Database server nameDatabase versionDatabase versionDatabase version

Database nameDatabase server nameData sourceConnection name

User nameDatabase nameUser nameUser name

PasswordUser namePasswordPassword

Password

5.

Click OK.

Data Services saves a datastore for your source in the repository.

2010-12-0237

Page 38

Defining Source and Target Metadata

3.2.2 To define a datastore for the target database

Define a datastore for the target database using the same procedure as for the source (ODS) database.

1.

Use Target_DS for the datastore name.

2.

Use the information you recorded in Create repository, source, and target databases on an existing

RDBMS.

3.3 Importing metadata

With Data Services, you can import metadata for individual tables using a datastore. You can import

metadata by:

• Browsing

• Name

• Searching

The following procedure describes how to import by browsing.

3.3.1 To import metadata for ODS source tables

1.

In the Datastores tab, right-click the ODS_DS datastore and click Open.

The names of all the tables in the database defined by the datastore named ODS_DS display in a

window in the workspace.

2.

Move the cursor over the right edge of the Metadata column heading until it changes to a resize

cursor.

3.

Double-click the column separator to automatically resize the column.

4.

Import the following tables by right-clicking each table name and clicking Import. Alternatively,

because the tables are grouped together, click the first name, Shift-click the last, and import them

together. (Use Ctrl-click for nonconsecutive entries.)

• ods.ods_customer

• ods.ods_material

• ods.ods_salesorder

• ods.ods_salesitem

• ods.ods_delivery

2010-12-0238

Page 39

Defining Source and Target Metadata

• ods.ods_employee

• ods.ods_region

Data Services imports the metadata for each table into the local repository.

Note:

In Microsoft SQL Server, the owner prefix might be dbo instead of ods.

5.

In the object library on the Datastores tab, under ODS_DS expand the Tables node and verify the

tables have been imported into the repository.

3.3.2 To import metadata for target tables

1.

Open the Target_DS datastore.

2.

Import the following tables by right-clicking each table name and clicking Import. Alternatively, use

Ctrl-click and import them together

• target.status_table

• target.cust_dim

• target.employee_dim

• target.mtrl_dim

• target.sales_fact

• target.salesorg_dim

• target.time_dim

• target.CDC_time

Data Services imports the metadata for each table into the local repository.

Note:

In Microsoft SQL Server, the owner prefix might be dbo instead of target.

3.

In the object library on the Datastores tab, under Target_DS expand the Tables node and verify

the tables have been imported into the repository.

3.4 Defining a file format

If the source or target RDBMS includes data stored in flat files, you must define file formats in Data

Services. File formats are a set of properties that describe the structure of a flat file.

Data Services includes a file format editor. Use it to define flat file formats. The editor supports delimited

and fixed-width formats.

2010-12-0239

Page 40

Defining Source and Target Metadata

You can specify file formats for one file or a group of files. You can define flat files from scratch or by

importing and modifying an existing flat file. Either way, Data Services saves a connection to the file or

file group.

Note:

Data Services also includes a file format (Transport_Format) that you can use to read flat files in SAP

applications.

In the next section, you will use a flat file as your source data. Therefore, you must create a file format

and connection to the file now.

3.4.1 To define a file format

1.

In the object library, click the Formats tab, right-click in a blank area of the object library, and click

New > File Format.

The file format editor opens.

2.

Under General, leave Type as Delimited. Change the Name to Format_SalesOrg.

3.

Under Data File(s), beside File name(s), click the Files folder icon and navigate in your Data Services

install directory to %LINK_DIR%\Tutorial Files\sales_org.txt. Click Open.

Note:

The file format editor initially displays default values for the file schema. When you select a file, a

prompt asks you to verify that you want to overwrite the current schema with the schema from the

file you selected. Click Yes.

The file format editor displays sample data from the sales_org.txt file in the (lower right) Data Preview

pane.

4.

Under Default Format, change Date to ddmmyyyy.