Page 1

Data Services Performance

Optimization Guide

BusinessObjects Data Services XI 3.1 (12.1.1)

Page 2

Copyright

© 2008 Business Objects, an SAP company. All rights reserved. Business Objects

owns the following U.S. patents, which may cover products that are offered and

licensed by Business Objects: 5,295,243; 5,339,390; 5,555,403; 5,590,250;

5,619,632; 5,632,009; 5,857,205; 5,880,742; 5,883,635; 6,085,202; 6,108,698;

6,247,008; 6,289,352; 6,300,957; 6,377,259; 6,490,593; 6,578,027; 6,581,068;

6,628,312; 6,654,761; 6,768,986; 6,772,409; 6,831,668; 6,882,998; 6,892,189;

6,901,555; 7,089,238; 7,107,266; 7,139,766; 7,178,099; 7,181,435; 7,181,440;

7,194,465; 7,222,130; 7,299,419; 7,320,122 and 7,356,779. Business Objects and

its logos, BusinessObjects, Business Objects Crystal Vision, Business Process

On Demand, BusinessQuery, Cartesis, Crystal Analysis, Crystal Applications,

Crystal Decisions, Crystal Enterprise, Crystal Insider, Crystal Reports, Crystal

Vision, Desktop Intelligence, Inxight and its logos , LinguistX, Star Tree, Table

Lens, ThingFinder, Timewall, Let There Be Light, Metify, NSite, Rapid Marts,

RapidMarts, the Spectrum Design, Web Intelligence, Workmail and Xcelsius are

trademarks or registered trademarks in the United States and/or other countries

of Business Objects and/or affiliated companies. SAP is the trademark or registered

trademark of SAP AG in Germany and in several other countries. All other names

mentioned herein may be trademarks of their respective owners.

Third-party

Contributors

Business Objects products in this release may contain redistributions of software

licensed from third-party contributors. Some of these individual components may

also be available under alternative licenses. A partial listing of third-party

contributors that have requested or permitted acknowledgments, as well as required

notices, can be found at: http://www.businessobjects.com/thirdparty

2008-11-28

Page 3

Contents

Welcome to Data Services 9Chapter 1

Welcome....................................................................................................10

Documentation set for Data Services........................................................10

Accessing documentation..........................................................................13

Business Objects information resources...................................................14

Environment Test Strategy 17Chapter 2

The source OS and database server.........................................................18

The target OS and database server..........................................................19

The network...............................................................................................20

Data Services Job Server OS and job options..........................................20

Accessing documentation on Windows................................................13

Accessing documentation on UNIX......................................................13

Accessing documentation from the Web..............................................14

Operating system.................................................................................18

Database..............................................................................................18

Operating system.................................................................................19

Database..............................................................................................19

Operating system.................................................................................20

Data Services jobs...............................................................................21

Measuring Data Services Performance 23Chapter 3

Data Services processes and threads.......................................................24

Data Services processes .....................................................................24

Data Services threads..........................................................................25

Measuring performance of Data Services jobs..........................................25

Data Services Performance Optimization Guide 3

Page 4

Contents

Checking system utilization..................................................................26

Analyzing log files for task duration......................................................30

Reading the Monitor Log for execution statistics ................................31

Reading the Performance Monitor for execution statistics...................32

To view the Performance Monitor.........................................................33

Reading Operational Dashboards for execution statistics...................34

To compare execution times for the same job over time......................34

Tuning Overview 37Chapter 4

Strategies to execute Data Services jobs..................................................38

Maximizing push-down operations to the database server..................38

Improving Data Services throughput....................................................39

Using advanced Data Services tuning options.....................................40

Maximizing Push-Down Operations 41Chapter 5

Push-down operations...............................................................................42

Full push-down operations...................................................................42

Partial push-down operations...............................................................43

Operations that cannot be pushed down.............................................44

Push-down examples................................................................................45

Scenario 1: Combine transforms to push down ..................................45

Scenario 2: Full push down from the source to the target....................46

Scenario 3: Full push down for auto correct load to the target ............46

Scenario 4: Partial push down to the source .......................................47

To view SQL...............................................................................................47

Data_Transfer transform for push-down operations..................................50

Scenario 1: Push down an operation after a blocking operation..........50

Scenario 2: Using Data_Transfer tables to speed up auto correct

loads.....................................................................................................53

Database link support for push-down operations across datastores.........57

4 Data Services Performance Optimization Guide

Page 5

Contents

Software support..................................................................................58

To take advantage of linked datastores................................................58

Example of push-down with linked datastores.....................................59

Generated SQL statements..................................................................60

Tuning performance at the data flow or Job Server level.....................61

Using Caches 63Chapter 6

Caching data..............................................................................................64

Caching sources...................................................................................65

Caching joins........................................................................................66

To change the cache type for a data flow.............................................66

Caching lookups...................................................................................67

Caching table comparisons..................................................................68

Specifying a pageable cache directory................................................69

Using persistent cache..............................................................................70

Using persistent cache tables as sources ...........................................70

Monitoring and tuning caches....................................................................71

Using statistics for cache self-tuning ...................................................71

To have Data Services automatically choose the cache type..............71

To monitor and tune in-memory and pageable caches........................71

Using parallel Execution 77Chapter 7

Parallel data flows and work flows.............................................................78

Parallel execution in data flows.................................................................79

Table partitioning..................................................................................79

Degree of parallelism ..........................................................................85

Combining table partitioning and a degree of parallelism....................93

File multi-threading...............................................................................96

Data Services Performance Optimization Guide 5

Page 6

Contents

Distributing Data Flow Execution 101Chapter 8

Splitting a data flow into sub data flows...................................................102

Run as a separate process option ....................................................103

Examples of multiple processes for a data flow.................................103

Data_Transfer transform....................................................................110

Examples of multiple processes with Data_Transfer.........................110

Using grid computing to distribute data flow execution...........................116

Server Group......................................................................................116

Distribution levels for data flow execution..........................................116

Using Bulk Loading 123Chapter 9

Bulk loading in Oracle..............................................................................124

Bulk-loading methods.........................................................................124

Bulk-loading modes............................................................................125

Bulk-loading parallel-execution options..............................................125

Bulk-loading scenarios.......................................................................126

Using bulk-loading options.................................................................127

Bulk loading in Microsoft SQL Server......................................................129

To use the SQL Server ODBC bulk copy API....................................130

Network packet size option................................................................130

Maximum rejects option.....................................................................131

Bulk loading in HP Neoview.....................................................................131

Using the UPSERT bulk operation.....................................................132

Bulk loading in Informix............................................................................132

To set Informix server variables..........................................................133

Bulk loading in DB2 Universal Database.................................................133

When to use each DB2 bulk-loading method.....................................133

Using the DB2 CLI load method.........................................................136

To configure your system to use the CLI load method.......................136

6 Data Services Performance Optimization Guide

Page 7

Contents

To use the CLI load method in a Data Services job...........................136

Using the DB2 bulk load utility............................................................137

To configure your system to use the load utility.................................137

To use the load utility in a Data Services job......................................140

Using the import utility .......................................................................142

Bulk loading in Netezza...........................................................................142

Netezza bulk-loading process............................................................142

Options overview................................................................................143

Netezza log files.................................................................................144

Configuring bulk loading for Netezza ................................................144

To configure the target table...............................................................145

Bulk loading in Sybase ASE....................................................................146

Bulk loading in Sybase IQ........................................................................146

Bulk loading in Teradata..........................................................................147

When to use each Teradata bulk load method...................................147

How Data Services and Teradata use the file options to load............154

Using the UPSERT bulk operation.....................................................156

Parallel Transporter method...............................................................157

Teradata standalone utilities ..............................................................161

Other Tuning Techniques 179Chapter 10

Source-based performance options........................................................181

Join ordering.......................................................................................181

Minimizing extracted data...................................................................184

Using array fetch size.........................................................................185

Target-based performance options..........................................................186

Loading method .................................................................................186

Rows per commit................................................................................187

Job design performance options.............................................................188

Loading only changed data ...............................................................188

Minimizing data type conversion........................................................189

Data Services Performance Optimization Guide 7

Page 8

Contents

Minimizing locale conversion..............................................................189

Precision in operations ......................................................................189

Index 191

8 Data Services Performance Optimization Guide

Page 9

Welcome to Data Services

1

Page 10

Welcome to Data Services

1

Welcome

Welcome

Data Services XI Release 3 provides data integration and data quality

processes in one runtime environment, delivering enterprise performance

and scalability.

The data integration processes of Data Services allow organizations to easily

explore, extract, transform, and deliver any type of data anywhere across

the enterprise.

The data quality processes of Data Services allow organizations to easily

standardize, cleanse, and consolidate data anywhere, ensuring that end-users

are always working with information that's readily available, accurate, and

trusted.

Documentation set for Data Services

You should become familiar with all the pieces of documentation that relate

to your Data Services product.

What this document providesDocument

Documentation Map

Release Summary

Release Notes

Getting Started Guide

Installation Guide for Windows

Installation Guide for UNIX

10 Data Services Performance Optimization Guide

Information about available Data Services books,

languages, and locations

Highlights of key features in this Data Services release

Important information you need before installing and

deploying this version of Data Services

An introduction to Data Services

Information about and procedures for installing Data

Services in a Windows environment.

Information about and procedures for installing Data

Services in a UNIX environment.

Page 11

Advanced Development Guide

Welcome to Data Services

Documentation set for Data Services

What this document providesDocument

Guidelines and options for migrating applications including information on multi-user functionality and

the use of the central repository for version control

1

Designer Guide

Integrator's Guide

Management Console: Administrator

Guide

Management Console: Metadata Reports Guide

Migration Considerations Guide

Performance Optimization Guide

Reference Guide

Information about how to use Data Services Designer

Information for third-party developers to access Data

Services functionality. Also provides information about

how to install, configure, and use the Data Services

Adapter for JMS.

Information about how to use Data Services Administrator

Information about how to use Data Services Metadata

Reports

Information about:

• Release-specific product behavior changes from

earlier versions of Data Services to the latest release

• How to migrate from Data Quality to Data Services

Information about how to improve the performance

of Data Services

Detailed reference material for Data Services Designer

Data Services Performance Optimization Guide 11

Page 12

Welcome to Data Services

1

Documentation set for Data Services

Technical Manuals

What this document providesDocument

A compiled “master” PDF of core Data Services books

containing a searchable master table of contents and

index:

•

Getting Started Guide

•

Installation Guide for Windows

•

Installation Guide for UNIX

•

Designer Guide

•

Reference Guide

•

Management Console: Metadata Reports Guide

•

Management Console: Administrator Guide

•

Performance Optimization Guide

•

Advanced Development Guide

•

Supplement for J.D. Edwards

•

Supplement for Oracle Applications

•

Supplement for PeopleSoft

•

Supplement for Siebel

•

Supplement for SAP

Tutorial

A step-by-step introduction to using Data Services

In addition, you may need to refer to several Adapter Guides and

Supplemental Guides.

What this document providesDocument

Salesforce.com Adapter

Interface

Supplement for J.D. Edwards

Supplement for Oracle Applications

Supplement for PeopleSoft

12 Data Services Performance Optimization Guide

Information about how to install, configure, and use the Data

Services Salesforce.com Adapter Interface

Information about license-controlled interfaces between Data

Services and J.D. Edwards World and J.D. Edwards OneWorld

Information about the license-controlled interface between Data

Services and Oracle Applications

Information about license-controlled interfaces between Data

Services and PeopleSoft

Page 13

Welcome to Data Services

Accessing documentation

What this document providesDocument

1

Supplement for SAP

Supplement for Siebel

Information about license-controlled interfaces between Data

Services, SAP ERP, and SAP BI/BW

Information about the license-controlled interface between Data

Services and Siebel

Accessing documentation

You can access the complete documentation set for Data Services in several

places.

Accessing documentation on Windows

After you install Data Services, you can access the documentation from the

Start menu.

1. Choose Start > Programs > BusinessObjects XI 3.1 >

BusinessObjects Data Services > Data Services Documentation.

Note:

Only a subset of the documentation is available from the Start menu. The

documentation set for this release is available in LINK_DIR\Doc\Books\en.

2. Click the appropriate shortcut for the document that you want to view.

Accessing documentation on UNIX

After you install Data Services, you can access the online documentation by

going to the directory where the printable PDF files were installed.

1. Go to LINK_DIR/doc/book/en/.

2. Using Adobe Reader, open the PDF file of the document that you want

to view.

Data Services Performance Optimization Guide 13

Page 14

Welcome to Data Services

1

Business Objects information resources

Accessing documentation from the Web

You can access the complete documentation set for Data Services from the

Business Objects Customer Support site.

1.

Go to http://help.sap.com.

2. Cick Business Objects at the top of the page.

You can view the PDFs online or save them to your computer.

Business Objects information resources

A global network of Business Objects technology experts provides customer

support, education, and consulting to ensure maximum business intelligence

benefit to your business.

Useful addresses at a glance:

ContentAddress

14 Data Services Performance Optimization Guide

Page 15

Welcome to Data Services

Business Objects information resources

ContentAddress

1

Customer Support, Consulting, and Education

services

http://service.sap.com/

Data Services Community

https://www.sdn.sap.com/irj/sdn/businessob

jects-ds

Forums on SCN (SAP Community Network)

https://www.sdn.sap.com/irj/sdn/businessob

jects-forums

Blueprints

http://www.sdn.sap.com/irj/boc/blueprints

Information about Customer Support programs,

as well as links to technical articles, downloads,

and online forums. Consulting services can

provide you with information about how Business Objects can help maximize your business

intelligence investment. Education services can

provide information about training options and

modules. From traditional classroom learning

to targeted e-learning seminars, Business Objects can offer a training package to suit your

learning needs and preferred learning style.

Get online and timely information about Data

Services, including tips and tricks, additional

downloads, samples, and much more. All content is to and from the community, so feel free

to join in and contact us if you have a submission.

Search the Business Objects forums on the

SAP Community Network to learn from other

Data Services users and start posting questions

or share your knowledge with the community.

Blueprints for you to download and modify to fit

your needs. Each blueprint contains the necessary Data Services project, jobs, data flows, file

formats, sample data, template tables, and

custom functions to run the data flows in your

environment with only a few modifications.

Data Services Performance Optimization Guide 15

Page 16

Welcome to Data Services

1

Business Objects information resources

http://help.sap.com/

ContentAddress

Business Objects product documentation.Product documentation

Documentation mailbox

documentation@businessobjects.com

Supported platforms documentation

https://service.sap.com/bosap-support

Send us feedback or questions about your

Business Objects documentation. Do you have

a suggestion on how we can improve our documentation? Is there something that you particularly like or have found useful? Let us know,

and we will do our best to ensure that your

suggestion is considered for the next release

of our documentation.

Note:

If your issue concerns a Business Objects

product and not the documentation, please

contact our Customer Support experts.

Get information about supported platforms for

Data Services.

In the left panel of the window, navigate to

Documentation > Supported Platforms >

BusinessObjects XI 3.1. Click the BusinessObjects Data Services link in the main window.

16 Data Services Performance Optimization Guide

Page 17

Environment Test Strategy

2

Page 18

Environment Test Strategy

2

The source OS and database server

This section covers suggested methods of tuning source and target database

applications, their operating systems, and the network used by your Data

Services environment. It also introduces key Data Services job execution

options.

This section contains the following topics:

•

The source OS and database server on page 18

•

The target OS and database server on page 19

•

The network on page 20

•

Data Services Job Server OS and job options on page 20

To test and tune Data Services jobs, work with all four of these components

in the order shown above.

In addition to the information in this section, you can use your UNIX or

Windows operating system and database server documentation for specific

techniques, commands, and utilities that can help you measure and tune the

Data Services environment.

The source OS and database server

Tune the source operating system and database to quickly read data from

disks.

Operating system

Make the input and output (I/O) operations as fast as possible. The

read-ahead protocol, offered by most operating systems, can greatly improve

performance. This protocol allows you to set the size of each I/O operation.

Usually its default value is 4 to 8 kilobytes which is too small. Set it to at least

64K on most platforms.

Database

Tune your database on the source side to perform SELECTs as quickly as

possible.

18 Data Services Performance Optimization Guide

Page 19

Environment Test Strategy

The target OS and database server

In the database layer, you can improve the performance of SELECTs in

several ways, such as the following:

• Create indexes on appropriate columns, based on your Data Services

data flows.

• Increase the size of each I/O from the database server to match the OS

read-ahead I/O size.

• Increase the size of the shared buffer to allow more data to be cached in

the database server.

• Cache tables that are small enough to fit in the shared buffer. For example,

if jobs access the same piece of data on a database server, then cache

that data. Caching data on database servers will reduce the number of

I/O operations and speed up access to database tables.

See your database server documentation for more information about

techniques, commands, and utilities that can help you measure and tune the

the source databases in your Data Services jobs.

The target OS and database server

2

Tune the target operating system and database to quickly write data to disks.

Operating system

Make the input and output operations as fast as possible. For example, the

asynchronous I/O, offered by most operating systems, can greatly improve

performance. Turn on the asynchronous I/O.

Database

Tune your database on the target side to perform INSERTs and UPDATES

as quickly as possible.

In the database layer, there are several ways to improve the performance

of these operations.

Here are some examples from Oracle:

Data Services Performance Optimization Guide 19

Page 20

Environment Test Strategy

2

The network

• Turn off archive logging

• Turn off redo logging for all tables

• Tune rollback segments for better performance

• Place redo log files and data files on a raw device if possible

• Increase the size of the shared buffer

See your database server documentation for more information about

techniques, commands, and utilities that can help you measure and tune the

the target databases in your Data Services jobs.

The network

When reading and writing data involves going through your network, its ability

to efficiently move large amounts of data with minimal overhead is very

important. Do not underestimate the importance of network tuning (even if

you have a very fast network with lots of bandwidth).

Set network buffers to reduce the number of round trips to the database

servers across the network. For example, adjust the size of the network

buffer in the database client so that each client request completely fills a

small number of network packets.

Data Services Job Server OS and job options

Tune the Job Server operating system and set job execution options to

improve performance and take advantage of self-tuning features of Data

Services.

Operating system

Data Services jobs are multi-threaded applications. Typically a single data

flow in a job initiates one al_engine process that in turn initiates at least 4

threads.

For maximum performance benefits:

20 Data Services Performance Optimization Guide

Page 21

• Consider a design that will run one al_engine process per CPU at a

time.

• Tune the Job Server OS so that Data Services threads spread to all

available CPUs.

For more information, see Checking system utilization on page 26.

Data Services jobs

You can tune Data Services job execution options after:

• Tuning the database and operating system on the source and the target

computers

• Adjusting the size of the network buffer

• Your data flow design seems optimal

You can tune the following execution options to improve the performance of

Data Services jobs:

• Monitor sample rate

Environment Test Strategy

Data Services Job Server OS and job options

2

• Collect statistics for optimization and Use collected statistics

Setting Monitor sample rate

During job execution, Data Services writes information to the monitor log file

and updates job events after processing the number of rows specified in

Monitor sample rate. Default value is 1000. Increase Monitor sample rate

to reduce the number of calls to the operating system to write to the log file.

When setting Monitor sample rate, you must evaluate performance

improvements gained by making fewer calls to the operating system against

your ability to view more detailed statistics during job execution. With a higher

Monitor sample rate, Data Services collects more data before calling the

operating system to open the file, and performance improves. However, with

a higher monitor rate, more time passes before you can view statistics during

job execution.

Data Services Performance Optimization Guide 21

Page 22

Environment Test Strategy

2

Data Services Job Server OS and job options

In production environments when your jobs transfer large volumes of data,

Business Objects recommends that you increase Monitor sample rate to

50,000.

Note:

If you use a virus scanner on your files, exclude the Data Services log from

the virus scan. Otherwise, the virus scan analyzes the Data Services log

repeated during the job execution, which causes a performance degradation.

Collecting statistics for self-tuning

Data Services provides a self-tuning feature to determine the optimal cache

type (in-memory or pageable) to use for a data flow.

To take advantage of this self-tuning feature

1. When you first execute a job, select the option Collect statistics for

optimization to collect statistics which include number of rows and width

of each row. Ensure that you collect statistics with data volumes that

represent your production environment. This option is not selected by

default.

2. The next time you execute the job, this option is selected by default.

3. When changes occur in data volumes, re-run your job with Collect

statistics for optimization to ensure that Data Services has the most

current statistics to optimize cache types.

For more information about these caches, see .

Related Topics

• Using Caches on page 63

22 Data Services Performance Optimization Guide

Page 23

Measuring Data Services Performance

3

Page 24

Measuring Data Services Performance

3

Data Services processes and threads

This section contains the following topics:

•

Data Services processes and threads on page 24

•

Measuring performance of Data Services jobs on page 25

Data Services processes and threads

Data Services uses processes and threads to execute jobs that extract data

from sources, transform the data, and load data into a data warehouse. The

number of concurrently executing processes and threads affects the

performance of Data Services jobs.

Data Services processes

The processes Data Services uses to run jobs are:

• al_jobserver

The al_jobserver initiates one process for each Job Server configured on

a computer. This process does not use much CPU power because it is

only responsible for launching each job and monitoring the job's execution.

• al_engine

For batch jobs, an al_engine process runs when a job starts and for each

of its data flows. Real-time jobs run as a single process.

The number of processes a batch job initiates also depends upon the

number of:

• parallel work flows

• parallel data flows

• sub data flows

For an example of the Data Services monitor log that displays the processes,

see Analyzing log files for task duration on page 30.

24 Data Services Performance Optimization Guide

Page 25

Data Services threads

A data flow typically initiates one al_engine process, which creates one

thread per data flow object. A data flow object can be a source, transform,

or target. For example, two sources, a query, and a target could initiate four

threads.

If you are using parallel objects in data flows, the thread count will increase

to approximately one thread for each source or target table partition. If you

set the Degree of parallelism (DOP) option for your data flow to a value

greater than one, the thread count per transform will increase. For example,

a DOP of 5 allows five concurrent threads for a Query transform. To run

objects within data flows in parallel, use the following Data Services features:

• Table partitioning

• File multithreading

• Degree of parallelism for data flows

Measuring Data Services Performance

Measuring performance of Data Services jobs

3

Related Topics

• Using parallel Execution on page 77

Measuring performance of Data Services

jobs

You can use several techniques to measure performance of Data Services

jobs:

•

Checking system utilization on page 26

•

Analyzing log files for task duration on page 30

•

Reading the Monitor Log for execution statistics on page 31

•

Reading the Performance Monitor for execution statistics on page 32

•

Reading Operational Dashboards for execution statistics on page 34

Data Services Performance Optimization Guide 25

Page 26

Measuring Data Services Performance

3

Measuring performance of Data Services jobs

Checking system utilization

The number of Data Services processes and threads concurrently executing

affects the utilization of system resources (see Data Services processes and

threads on page 24).

Check the utilization of the following system resources:

• CPU

• Memory

• Disk

• Network

To monitor these system resources, use the following tools:

For UNIX:

• top or a third party utility (such as glance for HPUX)

For Windows:

• Performance tab on the Task Manager

Depending on the performance of your jobs and the utilization of system

resources, you might want to adjust the number of Data Services processes

and threads. The following sections describe different situations and suggests

Data Services features to adjust the number of processes and threads for

each situation.

CPU utilization

Data Services is designed to maximize the use of CPUs and memory

available to run the job.

The total number of concurrent threads a job can run depends upon job

design and environment. Test your job while watching multi-threaded Data

Services processes to see how much CPU and memory the job requires.

Make needed adjustments to your job design and environment and test again

to confirm improvements.

26 Data Services Performance Optimization Guide

Page 27

Measuring Data Services Performance

Measuring performance of Data Services jobs

For example, if you run a job and see that the CPU utilization is very high,

you might decrease the DOP value or run less parallel jobs or data flows.

Otherwise, CPU thrashing might occur.

For another example, if you run a job and see that only half a CPU is being

used, or if you run eight jobs on an eight-way computer and CPU usage is

only 50%, you can be interpret this CPU utilization in several ways:

• One interpretation might be that Data Services is able to push most of

the processing down to source and/or target databases.

• Another interpretation might be that there are bottlenecks in the database

server or the network connection. Bottlenecks on database servers do

not allow readers or loaders in jobs to use Job Server CPUs efficiently.

To determine bottlenecks, examine:

• Disk service time on database server computers

Disk service time typically should be below 15 milliseconds. Consult

your server documentation for methods of improving performance.

For example, having a fast disk controller, moving database server

log files to a raw device, and increasing log size could improve disk

service time.

3

• Number of threads per process allowed on each database server

operating system. For example:

• On HPUX, the number of kernel threads per process is configurable.

The CPU to thread ratio defaults to one-to-one. Business Objects

recommends setting the number of kernel threads per CPU to

between 512 and 1024.

• On Solaris and AIX, the number of threads per process is not

configurable. The number of threads per process depends on

system resources. If a process terminates with a message like

"Cannot create threads," you should consider tuning the job.

For example, use the Run as a separate process option to split

a data flow or use the Data_Transfer transform to create two sub

data flows to execute sequentially. Since each sub data flow is

executed by a different Data Services al_engine process, the

number of threads needed for each will be 50% less than in your

previous job design.

Data Services Performance Optimization Guide 27

Page 28

Measuring Data Services Performance

3

Measuring performance of Data Services jobs

If you are using the Degree of parallelism option in your data flow,

reduce the number for this option in the data flow Properties

window.

• Network connection speed

Determine the rate that your data is being transferred across your

network.

• If the network is a bottle neck, you might change your job execution

distribution level from sub data flow to data flow or job to execute

the entire data flow on the local Job Server.

• If the capacity of your network is much larger, you might retrieve

multiple rows from source databases using fewer requests.

• Yet another interpretation might be that the system is under-utilized. In

this case, you might increase the value for the Degree of parallelism

option and increase the number of parallel jobs and data flows.

Related Topics

• Using parallel Execution on page 77

• Using grid computing to distribute data flow execution on page 116

• Using array fetch size on page 185

Data Services memory

For memory utilization, you might have one of the following different cases:

• Low amount of physical memory.

In this case, you might take one of the following actions:

• Add more memory to the Job Server.

• Redesign your data flow to run memory-consuming operations in

separate sub data flows that each use a smaller amount of memory,

and distribute the sub data flows over different Job Servers to access

memory on multiple machines. For more information, see Splitting a

data flow into sub data flows on page 102.

• Redesign your data flow to push down memory-consuming operations

to the database. For more information, see Push-down operations on

page 42.

28 Data Services Performance Optimization Guide

Page 29

Measuring Data Services Performance

Measuring performance of Data Services jobs

For example, if your data flow reads data from a table, joins it to a file,

and then groups it to calculate an average, the group by operation might

be occurring in memory. If you stage the data after the join and before

the group by into a database on a different computer, then when a sub

data flow reads the staged data and continues with the group processing,

it can utilize memory from the database server on a different computer.

This situation optimizes your system as a whole.

For information about how to stage your data, see Data_Transfer transform

on page 110. For more information about distributing sub data flows to

different computers, see Using grid computing to distribute data flow

execution on page 116.

• Large amount of memory but it is under-utilized.

In this case, you might cache more data. Caching data can improve the

performance of data transformations because it reduces the number of

times the system must access the database.

Data Services provides two types of caches: in-memory and pageable.

For more information, see Caching data on page 64.

3

• Paging occurs.

Pageable cache is the default cache type for data flows. On Windows

and Linux, the virtual memory available to the al_engine process is 1.5

gigabytes (500 megabytes of virtual memory is reserved for other engine

operations, totaling 2GB). On UNIX, Data Services limits the virtual

memory for the al_engine process to 3.5 gigabytes (500MB is reserved

for other engine operations, totaling 4GB). If more memory is needed

than these virtual memory limits, Data Services starts paging to continue

executing the data flow.

If your job or data flow requires more memory than these limits, you can

take advantage of one of the following Data Services features to avoid

paging:

• Split the data flow into sub data flows that can each use the amount

of memory set by the virtual memory limits.

Each data flow or each memory-intensive operation within a data flow

can run as a separate process that uses separate memory from each

other to improve performance and throughput. For more information,

see Splitting a data flow into sub data flows on page 102.

Data Services Performance Optimization Guide 29

Page 30

Measuring Data Services Performance

3

Measuring performance of Data Services jobs

• Push-down memory-intensive operations to the database server so

that less memory is used on the Job Server computer. For more

information, see Push-down operations on page 42.

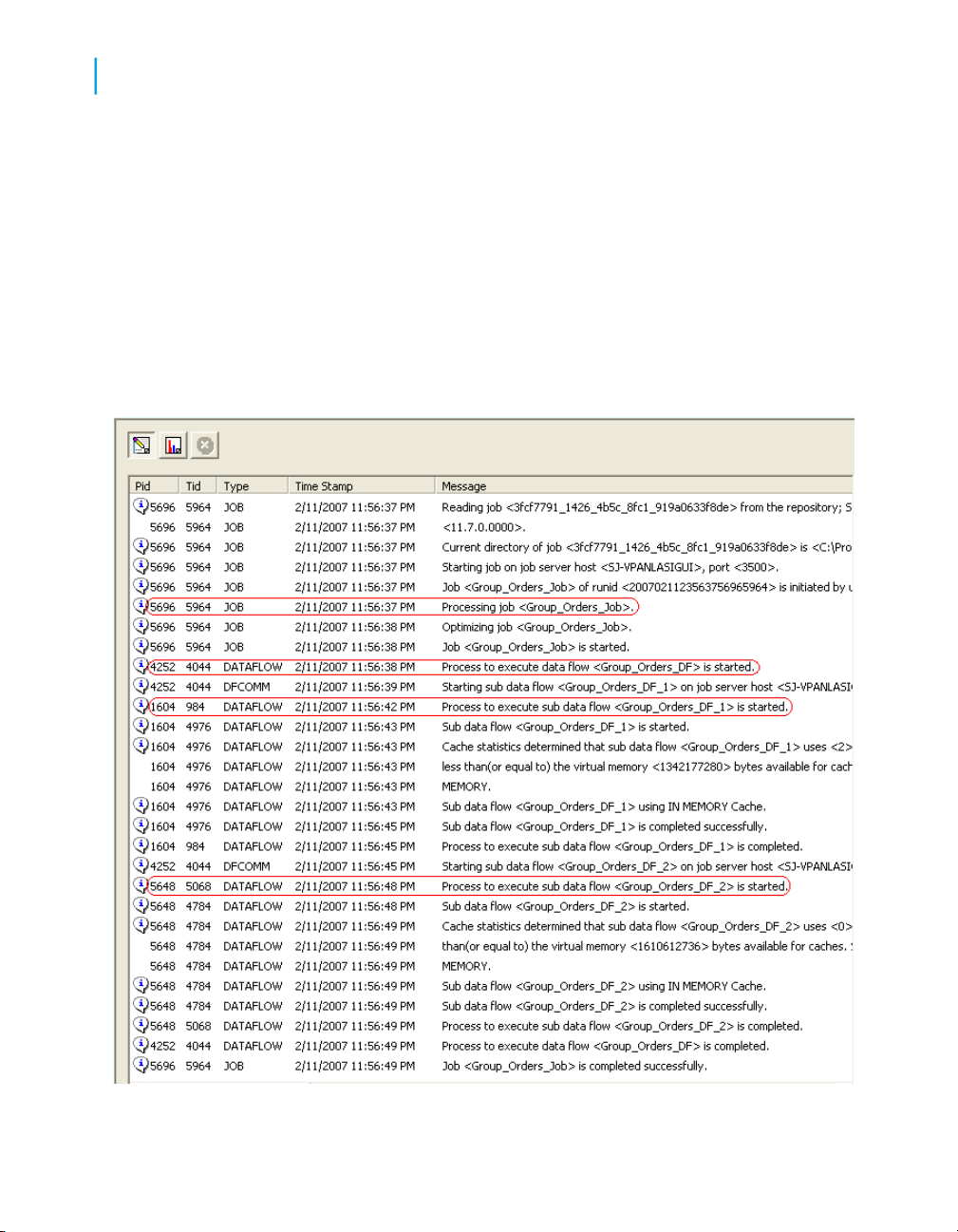

Analyzing log files for task duration

The trace log shows the progress of an execution through each component

(object) of a job. The following sample Trace log shows a separate Process

ID (Pid) for the Job, data flow, and each of the two sub data flows.

30 Data Services Performance Optimization Guide

Page 31

Measuring Data Services Performance

Measuring performance of Data Services jobs

This sample log contains messages about sub data flows, caches, and

statistics.

Related Topics

• Splitting a data flow into sub data flows on page 102

• Caching data on page 64

• Reference Guide: Data Services Objects, Log

Reading the Monitor Log for execution statistics

The Monitor log file indicates how many rows Data Services produces or

loads for a job. By viewing this log during job execution, you can observe

the progress of row-counts to determine the location of bottlenecks. You can

use the Monitor log to answer questions such as the following:

• What transform is running at any moment?

• How many rows have been processed so far?

The frequency that the Monitor log refreshes the statistics is based on

Monitor sample rate.

3

• How long does it take to build the cache for a lookup or comparison table?

How long does it take to process the cache?

If take long time to build the cache, use persistent cache.

• How long does it take to sort?

If take long time to sort, you can redesign your data flow to push down

the sort operation to the database.

• How much time elapses before a blocking operation sends out the first

row?

If your data flow contains resource-intensive operations after the blocking

operation, you can add Data_Transfer transforms to push-down the

resource-intensive operations.

You can view the Monitor log from the following tools:

• The Designer, as the job executes, when you click the Monitor icon.

• The Administrator of the Management Console, when you click the Monitor

link for a job from the Batch Job Status page.

Data Services Performance Optimization Guide 31

Page 32

Measuring Data Services Performance

3

Measuring performance of Data Services jobs

The following sample Monitor log in the Designer shows the path for each

object in the job, the number of rows processed and the elapsed time for

each object. The Absolute time is the total time from the start of the job to

when Data Services completes the execution of the data flow object.

Related Topics

• Setting Monitor sample rate on page 21

• Using persistent cache on page 70

• Push-down operations on page 42

• Data_Transfer transform for push-down operations on page 50

• Reference Guide: Data Services Objects, Log

Reading the Performance Monitor for execution statistics

The Performance Monitor displays execution information for each work flow,

data flow, and sub data flow within a job. You can display the execution times

in a graph or a table format. You can use the Performance Monitor to answer

questions such as the following:

• Which data flows might be bottlenecks?

• How much time did a a data flow or sub data flow take to execute?

• How many rows did the data flow or sub data flow process?

• How much memory did a specific data flow use?

32 Data Services Performance Optimization Guide

Page 33

Measuring Data Services Performance

Measuring performance of Data Services jobs

Note:

Memory statistics (Cache Size column) display in the Performance Monitor

only if you select the Collect statistics for monitoring option when you

execute the job.

The following sample Performance Monitor shows the following information:

• The Query_Lookup transform used 110 kilobytes of memory.

• The first sub data flow processed 830 rows, and the second sub data

flow processed 35 rows.

3

To view the Performance Monitor

1. Access the Management Console with one of the following methods:

• In the Designer top menu bar, click Tools and select Data Services

Management Console.

• Click Start > Programs > BusinessObjects Data Services >

Management Console.

2. On the launch page, click Administrator.

3. Select Batch > repository

4. On the Batch Job Statuspage, find a job execution instance.

5. Under Job Information for an instance, click Performance > Monitor.

Data Services Performance Optimization Guide 33

Page 34

Measuring Data Services Performance

3

Measuring performance of Data Services jobs

Related Topics

• To monitor and tune in-memory and pageable caches on page 71

Reading Operational Dashboards for execution statistics

Operational dashboard reports contain job and data flow execution information

for one or more repositories over a given time period (for example the last

day or week). You can use operational statistics reports to answer some of

the following questions:

• Are jobs executing within the allotted time frames?

• How many jobs succeeded or failed over a given execution period?

• How is the execution time for a job evolving over time?

• How many rows did the data flow process?

To compare execution times for the same job over time

1. Access the metadata reporting tool with one of the following methods:

• In the Designer top menu bar, click Tools and select Data Services

Management Console.

• In the Windows Start menu, click Programs > BusinessObjectsData

Services> Data Services Management Console.

2. On the launch page, click Dashboards.

3. Look at the graphs in Job Execution Statistic History or Job Execution

Duration History to see if performance is increasing or decreasing.

4. On the Job Execution Duration History, if there is a specific day that looks

high or low compared to the other execution times, click that point on the

graph to view the Job Execution Duration graph for all jobs that ran that

day.

34 Data Services Performance Optimization Guide

Page 35

Measuring Data Services Performance

Measuring performance of Data Services jobs

5. Click View all history to compare different executions of a specific job

or data flow.

6. On the Job Execution History tab, you can select a specific job and number

of days.

3

7. On the Data Flow Execution History tab, you can select a specific job and

number of days, as well as search for a specific data flow.

Data Services Performance Optimization Guide 35

Page 36

Measuring Data Services Performance

3

Measuring performance of Data Services jobs

Related Topics

• Management Console Metadata Reports Guide: Operational Dashboard

Reports

36 Data Services Performance Optimization Guide

Page 37

Tuning Overview

4

Page 38

Tuning Overview

4

Strategies to execute Data Services jobs

This section presents an overview of the different Data Services tuning

options, with cross-references to subsequent chapters for more details.

Strategies to execute Data Services jobs

To maximize performance of your Data Services jobs, Business Objects

recommends that you use the following tuning strategies:

•

Maximizing push-down operations to the database server on page 38

•

Improving Data Services throughput on page 39

•

Using advanced Data Services tuning options on page 40

Maximizing push-down operations to the database server

Data Services generates SQL SELECT statements to retrieve the data from

source databases. Data Services automatically distributes the processing

workload by pushing down as much as possible to the source database

server.

Pushing down operations provides the following advantages:

• Use the power of the database server to execute SELECT operations

(such as joins, Group By, and common functions such as decode and

string functions). Often the database is optimized for these operations.

• Minimize the amount of data sent over the network. Less rows can be

retrieved when the SQL statements include filters or aggregations.

You can also do a full push down from the source to the target, which means

Data Services sends SQL INSERT INTO... SELECT statements to the target

database. The following features enable a full push down:

• Data_Transfer transform

• Database links and linked datastores

Related Topics

• Maximizing Push-Down Operations on page 41

38 Data Services Performance Optimization Guide

Page 39

Strategies to execute Data Services jobs

Improving Data Services throughput

Use the following Data Services features to improve throughput:

• Using caches for faster access to data

You can improve the performance of data transformations by caching as

much data as possible. By caching data in memory, you limit the number

of times the system must access the database.

• Bulk loading to the target

Data Services supports database bulk loading engines including the

Oracle bulk load API. You can have multiple bulk load processes running

in parallel.

• Other tuning techniques

• Source-based performance options

• Join ordering

• Minimizing extracted data

Tuning Overview

4

• Using array fetch size

• Target-based performance options

• Loading method

• Rows per commit

• Job design performance options

• Loading only changed data

• Minimizing data type conversion

• Minimizing locale conversion

• Precision in operations

Related Topics

• Using Caches on page 63

• Using Bulk Loading on page 123

• Other Tuning Techniques on page 179

Data Services Performance Optimization Guide 39

Page 40

Tuning Overview

4

Strategies to execute Data Services jobs

• Join ordering on page 181

• Minimizing extracted data on page 184

• Using array fetch size on page 185

• Loading method on page 186

• Rows per commit on page 187

• Loading only changed data on page 188

• Minimizing data type conversion on page 189

• Minimizing locale conversion on page 189

• Precision in operations on page 189

Using advanced Data Services tuning options

If your jobs have CPU-intensive and memory-intensive operations, you can

use the following advanced tuning features to improve performance:

• Parallel processes - Individual work flows and data flows can execute in

parallel if you do not connect them in the Designer workspace.

• Parallel threads - Data Services supports partitioned source tables,

partitioned target tables, and degree of parallelism. These options allow

you to control the number of instances for a source, target, and transform

that can run in parallel within a data flow. Each instance runs as a separate

thread and can run on a separate CPU.

• Server groups and distribution levels - You can group Job Servers on

different computers into a logical Data Services component called a server

group. A server group automatically measures resource availability on

each Job Server in the group and distributes scheduled batch jobs to the

computer with the lightest load at runtime. This functionality also provides

a hot backup method. If one Job Server in a server group is down, another

Job Server in the group processes the job.

You can distribute the execution of data flows or sub data flows within a

batch job across multiple Job Servers within a Server Group to better

balance resource-intensive operations.

Related Topics

• Using parallel Execution on page 77

• Management Console Administrator Guide: Server Groups

• Using grid computing to distribute data flow execution on page 116

40 Data Services Performance Optimization Guide

Page 41

Maximizing Push-Down Operations

5

Page 42

Maximizing Push-Down Operations

5

Push-down operations

For SQL sources and targets, Data Services creates database-specific SQL

statements based on the data flow diagrams in a job. Data Services generates

SQL SELECT statements to retrieve the data from source databases. To

optimize performance, Data Services pushes down as many SELECT

operations as possible to the source database and combines as many

operations as possible into one request to the database. Data Services can

push down SELECT operations such as joins, Group By, and common

functions such as decode and string functions.

Data flow design influences the number of operations that Data Services

can push to the database. Before running a job, you can view the SQL that

Data Services generates and adjust your design to maximize the SQL that

is pushed down to improve performance.

You can use database links and the Data_Transfer transform to pushdown

more operations.

This section discusses:

• Push-down operations

• Push-down examples

• Viewing SQL

• Data_Transfer transform for push-down operations

• Database link support for push-down operations across datastores

Push-down operations

By pushing down operations to the source database, Data Services reduces

the number of rows and operations that the engine must retrieve and process,

which improves performance. When determining which operations to push

to the database, Data Services examines the database and its environment.

Full push-down operations

The Data Services optimizer always first tries to do a full push-down operation.

A full push-down operation is when all Data Services transform operations

can be pushed down to the databases and the data streams directly from

the source database to the target database. Data Services sends SQL

42 Data Services Performance Optimization Guide

Page 43

Maximizing Push-Down Operations

Push-down operations

INSERT INTO... SELECT statements to the target database where the

SELECT retrieves data from the source.

Data Services does a full push-down operation to the source and target

databases when the following conditions are met:

• All of the operations between the source table and target table can be

pushed down

• The source and target tables are from the same datastore or they are in

datastores that have a database link defined between them.

You can also use the following features to enable a full push-down from the

source to the target:

• Data_Transfer transform

• Database links

When all other operations in the data flow can be pushed down to the source

database, the auto-correct loading operation is also pushed down for a full

push-down operation to the target. Data Services sends an SQL MERGE

INTO target statement that implements the Ignore columns with value

and Ignore columns with null options.

5

Partial push-down operations

When a full push-down operation is not possible, Data Services still pushes

down the SELECT statement to the source database. Operations within the

SELECT statement that Data Services can push to the database include:

• Aggregations — Aggregate functions, typically used with a Group by

statement, always produce a data set smaller than or the same size as

the original data set.

• Distinct rows — When you select Distinct rows from the Select tab in

the query editor, Data Services will only output unique rows.

• Filtering — Filtering can produce a data set smaller than or equal to the

original data set.

• Joins — Joins typically produce a data set smaller than or similar in size

to the original tables. Data Services can push down joins when either of

the following conditions exist:

Data Services Performance Optimization Guide 43

Page 44

Maximizing Push-Down Operations

5

Push-down operations

• The source tables are in the same datastore

• The source tables are in datastores that have a database link defined

between them

• Ordering — Ordering does not affect data-set size. Data Services can

efficiently sort data sets that fit in memory. Business Objects recommends

that you push down the Order By for very large data sets.

• Projection — Projection is the subset of columns that you map on the

Mapping tab in the query editor. Projection normally produces a smaller

data set because it only returns columns needed by subsequent operations

in a data flow.

• Functions — Most Data Services functions that have equivalents in the

underlying database are appropriately translated. These functions include

decode, aggregation, and string functions.

Operations that cannot be pushed down

Data Services cannot push some transform operations to the database. For

example:

• Expressions that include Data Services functions that do not have

database correspondents

• Load operations that contain triggers

• Transforms other than Query

• Joins between sources that are on different database servers that do not

have database links defined between them.

Similarly, Data Services cannot always combine operations into single

requests. For example, when a stored procedure contains a COMMIT

statement or does not return a value, Data Services cannot combine the

stored procedure SQL with the SQL for other operations in a query.

Data Services can only push operations supported by the DBMS down to

that DBMS. Therefore, for best performance, try not to intersperse Data

Services transforms among operations that can be pushed down to the

database.

44 Data Services Performance Optimization Guide

Page 45

Maximizing Push-Down Operations

Push-down examples

Push-down examples

The following are typical push-down scenarios.

Scenario 1: Combine transforms to push down

When determining how to push operations to the database, Data Services

first collapses all the transforms into the minimum set of transformations

expressed in terms of the source table columns. Next, Data Services pushes

all possible operations on tables of the same database down to that DBMS.

For example, the following data flow extracts rows from a single source table.

5

The first query selects only the rows in the source where column A contains

a value greater than 100. The second query refines the extraction further,

reducing the number of columns returned and further reducing the qualifying

rows.

Data Services collapses the two queries into a single command for the DBMS

to execute. The following command uses AND to combine the WHERE

clauses from the two queries:

SELECT A, MAX(B), C

Data Services can push down all the operations in this SELECT statement

to the source DBMS.

FROM source

WHERE A > 100 AND B = C

GROUP BY A, C

Data Services Performance Optimization Guide 45

Page 46

Maximizing Push-Down Operations

5

Push-down examples

Scenario 2: Full push down from the source to the target

If the source and target are in the same datastore, Data Services can do a

full push-down operation where the INSERT into the target uses a SELECT

from the source. In the sample data flow in scenario 1, a full push down

passes the following statement to the database:

INSERT INTO target (A, B, C)

SELECT A, MAX(B), C

FROM source

WHERE A > 100 AND B = C

GROUP BY A, C

If the source and target are not in the same datastore, Data Services can

also do a full push-down operation if you use one of the following features:

• Add a Data _Transfer transform before the target.

• Define a database link between the two datastores.

Scenario 3: Full push down for auto correct load to the target

For an Oracle target, if you specify the Auto correct load option, Data

Services can do a full push-down operation where the SQL statement is a

MERGE into the target with a SELECT from the source. In the sample data

flow in scenario 1, a full push down passes the following statement to the

database when the source and target are in the same datastore:

MERGE INTO target s

USING

(SELECT A, MAX(B), C

ON ((s.A = n.A))

WHEN MATCHED THEN

UPDATE SET s.A = n.A,

WHEN NOT MATCHED THEN

46 Data Services Performance Optimization Guide

FROM source) n

s.MAX(B) = n.MAX(B)

s.C = n.C

Page 47

Maximizing Push-Down Operations

INSERT (s.A, s.Max(B), s.C)

VALUES (n.A, n.MAX(B), n.C)

Scenario 4: Partial push down to the source

If the data flow contains operations that cannot be passed to the DBMS,

Data Services optimizes the transformation differently than the previous two

scenarios. For example, if Query1 called func(A) > 100, where func is a

Data Services custom function, then Data Services generates two commands:

• The first query becomes the following command which the source DBMS

executes:

SELECT A, B, C

FROM source

WHERE B = C

• The second query becomes the following command which Data Services

executes because func cannot be pushed to the database:

To view SQL

5

SELECT A, MAX(B), C

FROM Query1

WHERE func(A) > 100

GROUP BY A, C

To view SQL

Before running a job, you can view the SQL code that Data Services

generates for table sources in data flows. By examining the SQL code, you

can verify that Data Services generates the commands you expect. If

necessary, you can alter your design to improve the data flow.

Note:

This option is not available for R/3 data flows.

1. Validate and save data flows.

2. Open a data flow in the workspace.

3. Select Display Optimized SQL from the Validation menu.

Alternately, you can right-click a data flow in the object library and select

Display Optimized SQL.

Data Services Performance Optimization Guide 47

Page 48

Maximizing Push-Down Operations

5

To view SQL

The Optimize SQL window opens and shows a list of datastores and the

optimized SQL code for the selected datastore. By default, the Optimize

SQL window selects the first datastore, as the following example shows:

Data Services only shows the SELECT generated for table sources and

INSERT INTO... SELECT for targets. Data Services does not show the

SQL generated for SQL sources that are not table sources, such as:

• Lookup function

• Key_generation function

• Key_Generation transform

• Table_Comparison transform

4. Select a name from the list of datastores on the left to view the SQL that

this data flow applies against the corresponding database or application.

The following example shows the optimized SQL for the second datastore

which illustrates a full push-down operation (INSERT INTO... SELECT).

This data flows uses a Data_Transfer transform to create a transfer table

that Data Services loads directly into the target. For more information,

see "Data_Transfer transform for push-down operations" on page 1667

48 Data Services Performance Optimization Guide

Page 49

Maximizing Push-Down Operations

To view SQL

In the Optimized SQL window you can:

• Use the Find button to perform a search on the SQL displayed.

• Use the Save As button to save the text as a .sql file.

5

If you try to use the Display Optimized SQL command when there are no

SQL sources in your data flow, Data Services alerts you. Examples of

non-SQL sources include:

• R/3 data flows

• Message sources

• File sources

• IDoc sources

If a data flow is not valid when you click the Display Optimized SQL option,

Data Services alerts you.

Note:

The Optimized SQL window displays the existing SQL statement in the

repository. If you changed your data flow, save it so that the Optimized SQL

window displays your current SQL statement.

Data Services Performance Optimization Guide 49

Page 50

Maximizing Push-Down Operations

5

Data_Transfer transform for push-down operations

Data_Transfer transform for push-down

operations

Use the Data_Transfer transform to move data from a source or from another

transform into the target datastore and enable a full push-down operation

(INSERT INTO... SELECT) to the target. You can use the Data_Transfer

transform to pushdown resource-intensive operations that occur anywhere

within a data flow to the database. Resource-intensive operations include

joins, GROUP BY, ORDER BY, and DISTINCT.

Scenario 1: Push down an operation after a blocking operation

You can place a Data_Transfer transform after a blocking operation to enable

Data Services to push down a subsequent operation. A blocking operation

is an operation that Data Services cannot push down to the database, and

prevents ("blocks") operations after it from being pushed down.

For example, you might have a data flow that groups sales order records by

country and region, and sums the sales amounts to find which regions are

generating the most revenue. The following diagram shows that the data

flow contains a Pivot transform to obtain orders by Customer ID, a Query

transform that contains a lookup_ext function to obtain sales subtotals, and

another Query transform to group the results by country and region.

Because the Pivot transform and the lookup_ext function are before the

query with the GROUP BY clause, Data Services cannot push down the

GROUP BY operation. The following Optimize SQL window shows the

SELECT statement that Data Services pushes down to the source database:

50 Data Services Performance Optimization Guide

Page 51

Maximizing Push-Down Operations

Data_Transfer transform for push-down operations

However, if you add a Data_Transfer transform before the second Query

transform and specify a transfer table in the same datastore as the target

table, Data Services can push down the GROUP BY operation.

5

The following Data_Transfer Editor window shows that the transfer type is

table and the transfer table is in the same datastore as the target table.

Data Services Performance Optimization Guide 51

Page 52

Maximizing Push-Down Operations

5

Data_Transfer transform for push-down operations

The following Optimize SQL window shows that Data Services pushed down

the GROUP BY to the transfer table TRANS2.

52 Data Services Performance Optimization Guide

Page 53

Maximizing Push-Down Operations

Data_Transfer transform for push-down operations

Related Topics

• Operations that cannot be pushed down on page 44

• Reference Guide: Transforms, Data_Transfer

5

Scenario 2: Using Data_Transfer tables to speed up auto correct loads

Auto correct loading ensures that the same row is not duplicated in a target

table, which is useful for data recovery operations. However, an auto correct

load prevents a full push-down operation from the source to the target when

the source and target are in different datastores.

For large loads where auto-correct is required, you can put a Data_Transfer

transform before the target to enable a full push down from the source to the

target. Data Services generates an SQL MERGE INTO target statement

that implements the Ignore columns with value and Ignore columns with

null options if they are selected on the target editor.

For example, the following data flow loads sales orders into a target table

which is in a different datastore from the source.

Data Services Performance Optimization Guide 53

Page 54

Maximizing Push-Down Operations

5

Data_Transfer transform for push-down operations

The following target editor shows that the Auto correct load option is

selected. The Ignore columns with null and Use merge options are also

selected in this example.

The following Optimize SQL window shows the SELECT statement that Data

Services pushes down to the source database.

54 Data Services Performance Optimization Guide

Page 55

Maximizing Push-Down Operations

Data_Transfer transform for push-down operations

When you add a Data_Transfer transform before the target and specify a

transfer table in the same datastore as the target, Data Services can push

down the auto correct operation.

5

Data Services Performance Optimization Guide 55

Page 56

Maximizing Push-Down Operations

5

Data_Transfer transform for push-down operations

The following Optimize SQL window shows the MERGE statement that Data

Services will push down to the target.

56 Data Services Performance Optimization Guide

Page 57

Maximizing Push-Down Operations

Database link support for push-down operations across datastores

Database link support for push-down

operations across datastores

5

Various database vendors support one-way communication paths from one

database server to another. Data Services refers to communication paths

between databases as database links. The datastores in a database link

relationship are called linked datastores.

Data Services uses linked datastores to enhance its performance by pushing

down operations to a target database using a target datastore. Pushing down

operations to a database not only reduces the amount of information that

needs to be transferred between the databases and Data Services but also

allows Data Services to take advantage of the various DMBS capabilities,

such as various join algorithms.

With support for database links, Data Services pushes processing down from

different datastores, which can also refer to the same or different database

type. Linked datastores allow a one-way path for data. For example, if you

import a database link from target database B and link datastore B to

datastore A, Data Services pushes the load operation down to database B,

not to database A.

This section contains the following topics:

• Software support

Data Services Performance Optimization Guide 57

Page 58

Maximizing Push-Down Operations

5

Database link support for push-down operations across datastores

• Example of push-down with linked datastores

• Generated SQL statements

• Tuning performance at the data flow or Job Server level

Related Topics

• Designer Guide: Datastores, Linked datastores

Software support

Data Services supports push-down operations using linked datastores on

all Windows and Unix platforms. It supports DB2, Oracle, and MS SQL server

databases.

To take advantage of linked datastores

1. Create a database link on a database server that you intend to use as a

target in a Data Services job.

The following database software is required. See the Supported Platforms

document for specific version numbers.

• For DB2, use the DB2 Information Services (previously known as

Relational Connect) software and make sure that the database user

has privileges to create and drop a nickname.

To end users and client applications, data sources appear as a single

collective database in DB2. Users and applications interface with the

database managed by the information server. Therefore, configure an

information server and then add the external data sources. DB2 uses

nicknames to identify remote tables and views.

See the DB2 database manuals for more information about how to

create links for DB2 and non-DB2 servers.

• For Oracle, use the Transparent Gateway for DB2 and MS SQL Server.

See the Oracle database manuals for more information about how to

create database links for Oracle and non-Oracle servers.

• For MS SQL Server, no special software is required.

58 Data Services Performance Optimization Guide

Page 59

Maximizing Push-Down Operations

Database link support for push-down operations across datastores