Data Services Reference Guide

BusinessObjects Data Services XI 3.1 (12.1.1)

Copyright

© 2008 Business Objects, an SAP company. All rights reserved. Business Objects

owns the following U.S. patents, which may cover products that are offered and

licensed by Business Objects: 5,295,243; 5,339,390; 5,555,403; 5,590,250;

5,619,632; 5,632,009; 5,857,205; 5,880,742; 5,883,635; 6,085,202; 6,108,698;

6,247,008; 6,289,352; 6,300,957; 6,377,259; 6,490,593; 6,578,027; 6,581,068;

6,628,312; 6,654,761; 6,768,986; 6,772,409; 6,831,668; 6,882,998; 6,892,189;

6,901,555; 7,089,238; 7,107,266; 7,139,766; 7,178,099; 7,181,435; 7,181,440;

7,194,465; 7,222,130; 7,299,419; 7,320,122 and 7,356,779. Business Objects and

its logos, BusinessObjects, Business Objects Crystal Vision, Business Process

On Demand, BusinessQuery, Cartesis, Crystal Analysis, Crystal Applications,

Crystal Decisions, Crystal Enterprise, Crystal Insider, Crystal Reports, Crystal

Vision, Desktop Intelligence, Inxight and its logos , LinguistX, Star Tree, Table

Lens, ThingFinder, Timewall, Let There Be Light, Metify, NSite, Rapid Marts,

RapidMarts, the Spectrum Design, Web Intelligence, Workmail and Xcelsius are

trademarks or registered trademarks in the United States and/or other countries

of Business Objects and/or affiliated companies. SAP is the trademark or registered

trademark of SAP AG in Germany and in several other countries. All other names

mentioned herein may be trademarks of their respective owners.

Third-party

Contributors

Business Objects products in this release may contain redistributions of software

licensed from third-party contributors. Some of these individual components may

also be available under alternative licenses. A partial listing of third-party

contributors that have requested or permitted acknowledgments, as well as required

notices, can be found at: http://www.businessobjects.com/thirdparty

2008-11-28

Contents

Introduction 17Chapter 1

Welcome to Data Services........................................................................18

Overview of this guide...............................................................................24

Data Services Objects 27Chapter 2

Characteristics of objects...........................................................................28

Descriptions of objects ..............................................................................31

Welcome..............................................................................................18

Documentation set for Data Services...................................................18

Accessing documentation....................................................................21

Business Objects information resources..............................................22

About this guide....................................................................................24

Who should read this guide..................................................................25

Object classes .....................................................................................28

Object options, properties, and attributes............................................30

Annotation............................................................................................35

Batch Job.............................................................................................36

Catch ...................................................................................................49

COBOL copybook file format ...............................................................53

Conditional ..........................................................................................63

Data flow .............................................................................................65

Datastore..............................................................................................68

Document...........................................................................................146

DTD....................................................................................................146

Excel workbook format ......................................................................161

File format..........................................................................................170

Data Services Reference Guide 3

Contents

Function..............................................................................................186

Log.....................................................................................................187

Message function...............................................................................197

Outbound message............................................................................198

Project................................................................................................198

Query transform.................................................................................199

Real-time job......................................................................................200

Script..................................................................................................205

Source................................................................................................206

Table...................................................................................................214

Target.................................................................................................220

Target Writer migrated from Data Quality ..........................................269

Template table....................................................................................269

Transform...........................................................................................273

Try......................................................................................................274

While loop...........................................................................................275

Work flow............................................................................................276

XML file..............................................................................................279

XML message....................................................................................284

XML schema......................................................................................286

XML template.....................................................................................306

Smart editor 309Chapter 3

Accessing the smart editor......................................................................310

Smart editor options.................................................................................312

Smart editor toolbar............................................................................312

Editor Library pane.............................................................................312

Editor pane.........................................................................................314

To browse for a function...........................................................................318

To search for a function...........................................................................318

4 Data Services Reference Guide

Contents

Data types 321Chapter 4

Descriptions of data types.......................................................................322

date....................................................................................................323

datetime..............................................................................................325

decimal...............................................................................................326

double ................................................................................................327

int........................................................................................................327

interval................................................................................................328

Large object data types......................................................................328

numeric...............................................................................................335

real.....................................................................................................336

time.....................................................................................................336

timestamp...........................................................................................338

varchar...............................................................................................339

Data type processing...............................................................................341

Date arithmetic...................................................................................341

Type conversion.................................................................................343

Transforms 373Chapter 5

Operation codes......................................................................................374

Descriptions of transforms.......................................................................375

Data Integrator transforms.................................................................379

Data Quality transforms......................................................................464

Platform transforms............................................................................665

Functions and Procedures 719Chapter 6

About functions........................................................................................720

Functions compared with transforms.................................................720

Operation of a function.......................................................................720

Data Services Reference Guide 5

Contents

Arithmetic in date functions................................................................721

Including functions in expressions.....................................................721

Kinds of functions you can use in Data Services...............................725

Descriptions of built-in functions..............................................................730

abs......................................................................................................743

add_months........................................................................................744

ascii....................................................................................................745

avg......................................................................................................746

cast.....................................................................................................747

ceil......................................................................................................750

chr......................................................................................................751

base64_decode..................................................................................752

base64_encode..................................................................................753

concat_date_time ..............................................................................754

count ..................................................................................................754

count_distinct.....................................................................................755

current_configuration .........................................................................757

current_system_configuration............................................................758

dataflow_name ..................................................................................758

datastore_field_value.........................................................................759

date_diff..............................................................................................760

date_part ...........................................................................................761

day_in_month.....................................................................................763

day_in_week......................................................................................764

day_in_year........................................................................................765

db_type...............................................................................................766

db_version..........................................................................................768

db_database_name............................................................................770

db_owner............................................................................................771

decode................................................................................................772

double_metaphone.............................................................................775

6 Data Services Reference Guide

Contents

exec....................................................................................................777

extract_from_xml................................................................................784

file_exists ...........................................................................................787

fiscal_day...........................................................................................788

floor....................................................................................................789

gen_row_num_by_group....................................................................790

gen_row_num ....................................................................................793

get_domain_description.....................................................................794

get_env...............................................................................................795

get_error_filename ............................................................................796

get_file_attribute.................................................................................797

get_monitor_filename ........................................................................798

get_trace_filename ............................................................................799

greatest..............................................................................................800

host_name .........................................................................................802

ifthenelse............................................................................................802

index...................................................................................................804

init_cap...............................................................................................805

interval_to_char..................................................................................807

is_group_changed..............................................................................808

is_set_env..........................................................................................809

is_valid_date......................................................................................810

is_valid_datetime................................................................................812

is_valid_decimal.................................................................................813

is_valid_double...................................................................................815

is_valid_int..........................................................................................816

is_valid_real.......................................................................................817

is_valid_time.......................................................................................819

isempty ..............................................................................................820

isweekend .........................................................................................822

job_name ...........................................................................................823

Data Services Reference Guide 7

Contents

julian...................................................................................................823

julian_to_date.....................................................................................824

key_generation...................................................................................825

last_date ............................................................................................826

least....................................................................................................827

length .................................................................................................829

literal...................................................................................................830

ln.........................................................................................................832

load_to_xml........................................................................................833

log.......................................................................................................838

long_to_varchar..................................................................................839

lookup ................................................................................................840

lookup_ext..........................................................................................846

lookup_seq.........................................................................................857

lower ..................................................................................................863

lpad ....................................................................................................864

lpad_ext..............................................................................................865

ltrim ....................................................................................................868

ltrim_blanks .......................................................................................869

ltrim_blanks_ext ................................................................................870

mail_to................................................................................................871

match_pattern....................................................................................875

match_regex ......................................................................................879

match_simple.....................................................................................891

max.....................................................................................................893

min......................................................................................................894

mod....................................................................................................895

month.................................................................................................896

num_to_interval..................................................................................897

nvl.......................................................................................................899

power..................................................................................................900

8 Data Services Reference Guide

Contents

previous_row_value...........................................................................901

print ...................................................................................................902

pushdown_sql ...................................................................................904

quarter................................................................................................906

raise_exception .................................................................................907

raise_exception_ext ..........................................................................908

rand....................................................................................................909

rand_ext.............................................................................................910

replace_substr ...................................................................................911

replace_substr_ext.............................................................................912

repository_name ................................................................................916

round..................................................................................................917

rpad....................................................................................................918

rpad_ext.............................................................................................919

rtrim ...................................................................................................922

rtrim_blanks .......................................................................................923

rtrim_blanks_ext ................................................................................924

search_replace...................................................................................925

set_env ..............................................................................................934

sleep ..................................................................................................935

soundex..............................................................................................936

sql ......................................................................................................937

sqrt.....................................................................................................941

smtp_to...............................................................................................941

substr..................................................................................................946

sum.....................................................................................................948

sysdate...............................................................................................949

system_user_name ...........................................................................950

systime...............................................................................................951

table_attribute ....................................................................................952

to_char...............................................................................................953

Data Services Reference Guide 9

Contents

to_date...............................................................................................957

to_decimal .........................................................................................958

to_decimal_ext ..................................................................................960

total_rows...........................................................................................961

trunc...................................................................................................963

truncate_table.....................................................................................964

upper..................................................................................................966

varchar_to_long..................................................................................967

wait_for_file........................................................................................968

week_in_month..................................................................................970

week_in_year.....................................................................................971

WL_GetKeyValue ..............................................................................973

word....................................................................................................974

word_ext ............................................................................................976

workflow_name .................................................................................978

year....................................................................................................979

About procedures....................................................................................980

Overview............................................................................................980

Requirements.....................................................................................981

Creating stored procedures in a database.........................................982

Importing metadata for stored procedures ........................................986

Structure of a stored procedure..........................................................987

Calling stored procedures..................................................................989

Checking execution status ................................................................995

Data Services Scripting Language 997Chapter 7

To use Data Services scripting language................................................998

Language syntax.....................................................................................998

Syntax for statements in scripts.........................................................998

Syntax for column and table references in expressions.....................999

Strings..............................................................................................1000

10 Data Services Reference Guide

Contents

Variables...........................................................................................1001

Variable interpolation........................................................................1002

Functions and stored procedures.....................................................1003

Operators.........................................................................................1003

NULL values.....................................................................................1007

Debugging and Validation................................................................1011

Keywords..........................................................................................1013

Sample scripts.......................................................................................1015

Square function................................................................................1016

RepeatString function.......................................................................1016

Metadata in Repository Tables and Views 1019Chapter 8

Auditing metadata..................................................................................1020

AL_AUDIT........................................................................................1020

AL_AUDIT_INFO..............................................................................1022

Imported metadata.................................................................................1024

AL_INDEX........................................................................................1024

AL_PCOLUMN.................................................................................1025

AL_PKEY.........................................................................................1026

ALVW_COLUMNATTR.....................................................................1027

ALVW_COLUMNINFO.....................................................................1028

ALVW_FKREL..................................................................................1030

ALVW_MAPPING.............................................................................1031

ALVW_TABLEATTR.........................................................................1042

ALVW_TABLEINFO..........................................................................1044

Internal metadata...................................................................................1044

AL_LANG.........................................................................................1045

AL_LANGXMLTEXT.........................................................................1046

AL_ATTR..........................................................................................1047

AL_SETOPTIONS............................................................................1048

AL_USAGE......................................................................................1049

Data Services Reference Guide 11

Contents

ALVW_FUNCINFO...........................................................................1053

ALVW_PARENT_CHILD..................................................................1054

Metadata Integrator tables.....................................................................1056

AL_CMS_BV....................................................................................1056

AL_CMS_BV_FIELDS......................................................................1058

AL_CMS_REPORTS........................................................................1059

AL_CMS_REPORTUSAGE.............................................................1060

AL_CMS_FOLDER..........................................................................1062

AL_CMS_UNV.................................................................................1063

AL_CMS_UNV_OBJ........................................................................1064

Operational metadata............................................................................1065

AL_HISTORY...................................................................................1065

ALVW_FLOW_STAT........................................................................1067

Locales and Multi-byte Functionality 1069Chapter 9

Locale support.......................................................................................1070

Locale selection................................................................................1072

Code page support...........................................................................1075

Guidelines for setting locales...........................................................1078

Multi-byte support..................................................................................1084

Multi-byte string functions.................................................................1084

Numeric data types: assigning constant values...............................1084

Byte Order Mark characters.............................................................1086

Round-trip conversion......................................................................1086

Column sizing...................................................................................1087

Limitations of multi-byte support............................................................1087

Definitions..............................................................................................1088

Supported locales and encodings.........................................................1090

Supported languages.......................................................................1091

Supported territories.........................................................................1091

Supported code pages.....................................................................1093

12 Data Services Reference Guide

Contents

Data Quality Fields 1097Chapter 10

Data Quality fields..................................................................................1098

Content types...................................................................................1098

Associate output fields...........................................................................1100

Country ID fields....................................................................................1101

Input Fields.......................................................................................1101

Output fields.....................................................................................1102

Data Cleanse fields................................................................................1103

Input fields........................................................................................1103

Output fields.....................................................................................1105

Geocoder fields......................................................................................1111

Input fields........................................................................................1112

Output fields.....................................................................................1113

Global Address Cleanse fields..............................................................1115

Field category columns in Output tab...............................................1115

Input fields........................................................................................1120

Output Fields....................................................................................1123

Global Suggestion Lists fields...............................................................1141

Input fields........................................................................................1141

Output fields.....................................................................................1143

Match fields............................................................................................1148

Match transform output fields...........................................................1148

Prioritization fields............................................................................1152

USA Regulatory Address Cleanse fields...............................................1152

Field category columns in Output tab...............................................1153

Input fields........................................................................................1157

Output fields.....................................................................................1160

Data Services Reference Guide 13

Contents

Python 1191Chapter 11

Python in Data Services........................................................................1192

About Python in Data Services ........................................................1192

Create an expression with the Python Expression editor.................1196

Built-in objects..................................................................................1200

Data Services-defined classes and methods...................................1202

FlDataCollection class......................................................................1203

FlDataManager class.......................................................................1208

FlDataRecord class..........................................................................1210

FlProperties class.............................................................................1213

FlPythonString class.........................................................................1214

Data Services Python examples......................................................1217

Reserved Words 1227Chapter 12

About Reserved Words..........................................................................1228

Data Quality Appendix 1233Chapter 13

Address Cleanse reference...................................................................1234

Country ISO codes and assignment engines...................................1234

Information codes (Global Address Cleanse)..................................1260

Status codes (Global Address Cleanse)..........................................1263

Quality codes (Global Address Cleanse).........................................1269

Status Codes (USA Regulatory Address Cleanse)..........................1271

USA Regulatory Address Cleanse transform fault codes................1275

About ShowA and ShowL (USA and Canada).................................1278

Data Cleanse reference.........................................................................1289

Data parsing details..........................................................................1289

User-defined pattern matching (UDPM)...........................................1297

Overview of UDPM...........................................................................1298

14 Data Services Reference Guide

Contents

Working with the pattern file.............................................................1299

Regular expressions.........................................................................1301

Creating regular expressions...........................................................1306

Define a pattern................................................................................1308

Alternate expressions.......................................................................1311

Modify the rule file............................................................................1312

What is the rule file?.........................................................................1312

Rule file organization........................................................................1313

Rule example...................................................................................1315

Definition section of a parsing rule...................................................1316

Action section of a parsing rule........................................................1320

Data Cleanse migration tools...........................................................1326

Index 1335

Data Services Reference Guide 15

Contents

16 Data Services Reference Guide

Introduction

1

Introduction

1

Welcome to Data Services

Welcome to Data Services

Welcome

Data Services XI Release 3 provides data integration and data quality

processes in one runtime environment, delivering enterprise performance

and scalability.

The data integration processes of Data Services allow organizations to easily

explore, extract, transform, and deliver any type of data anywhere across

the enterprise.

The data quality processes of Data Services allow organizations to easily

standardize, cleanse, and consolidate data anywhere, ensuring that end-users

are always working with information that's readily available, accurate, and

trusted.

Documentation set for Data Services

You should become familiar with all the pieces of documentation that relate

to your Data Services product.

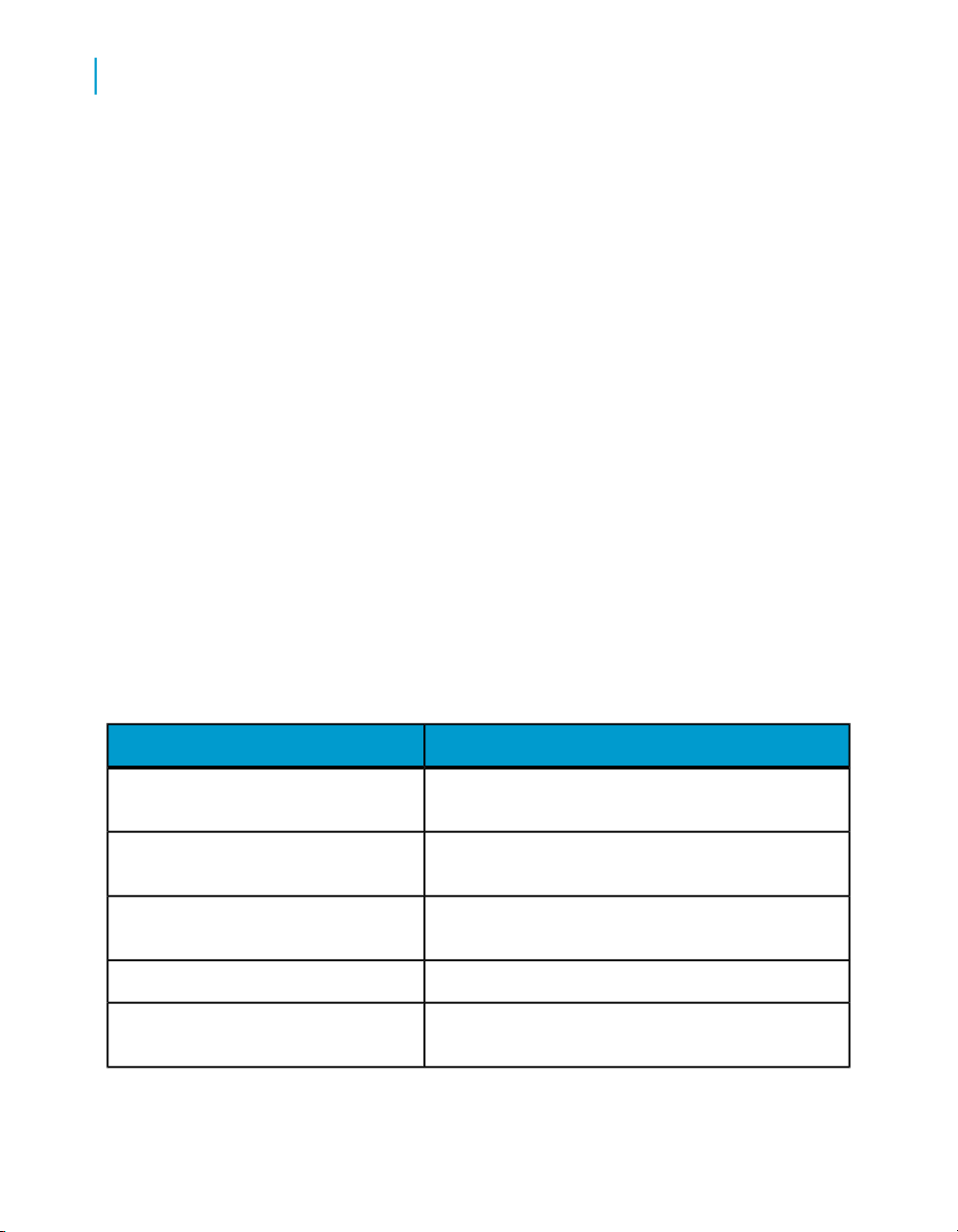

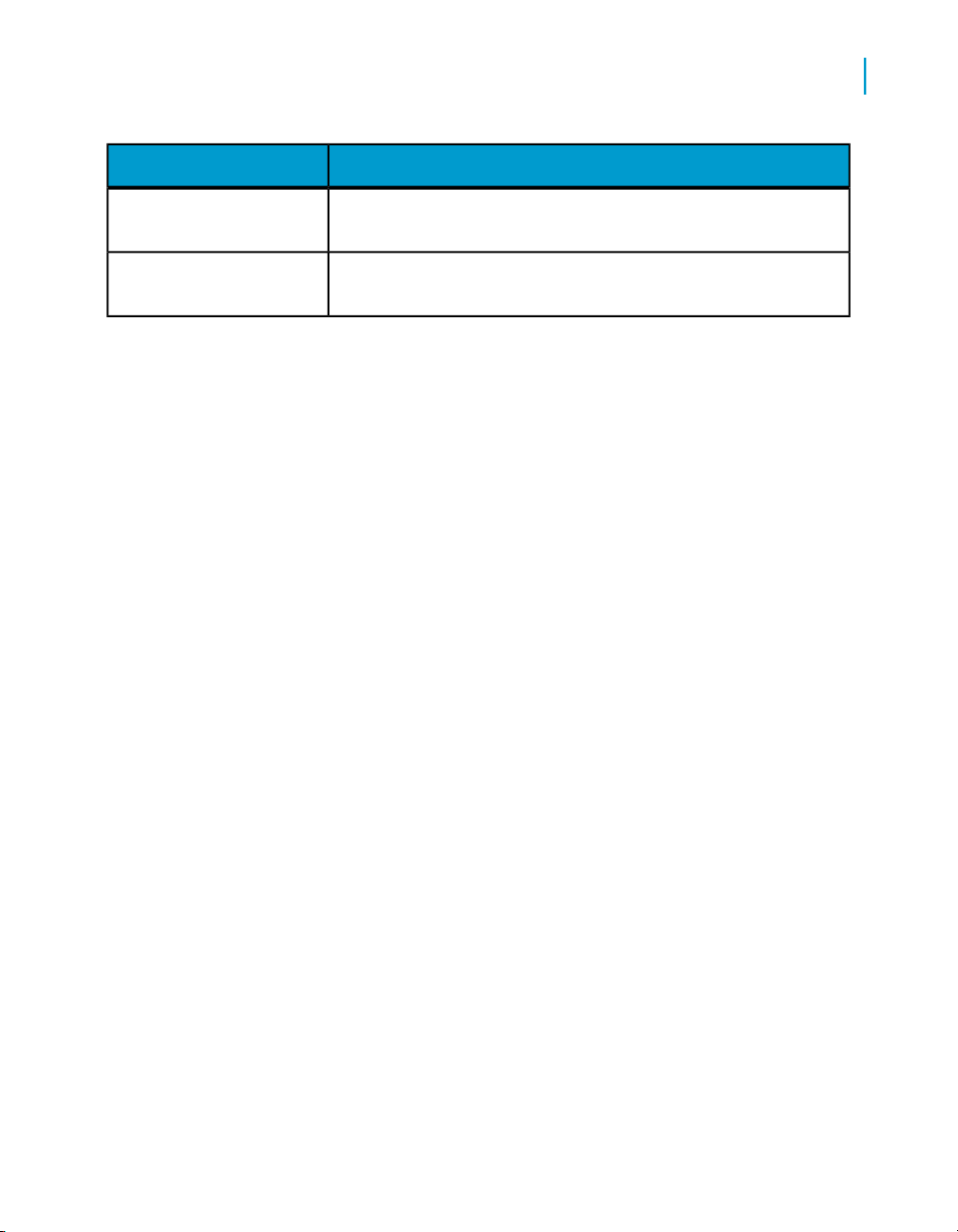

What this document providesDocument

Documentation Map

Release Summary

Release Notes

Getting Started Guide

Installation Guide for Windows

18 Data Services Reference Guide

Information about available Data Services books,

languages, and locations

Highlights of key features in this Data Services release

Important information you need before installing and

deploying this version of Data Services

An introduction to Data Services

Information about and procedures for installing Data

Services in a Windows environment.

Introduction

Welcome to Data Services

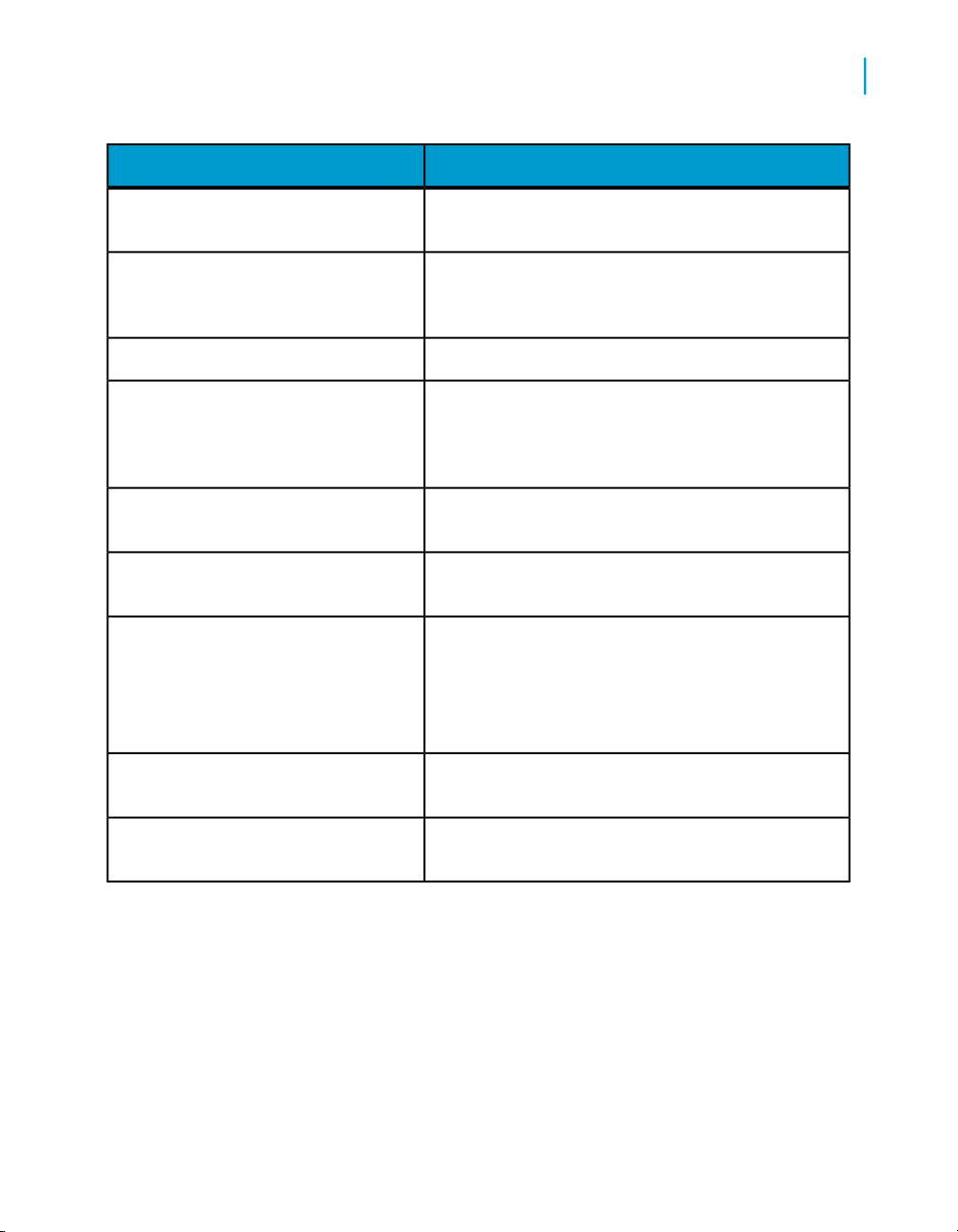

What this document providesDocument

1

Installation Guide for UNIX

Advanced Development Guide

Designer Guide

Integrator's Guide

Management Console: Administrator

Guide

Management Console: Metadata Reports Guide

Migration Considerations Guide

Information about and procedures for installing Data

Services in a UNIX environment.

Guidelines and options for migrating applications including information on multi-user functionality and

the use of the central repository for version control

Information about how to use Data Services Designer

Information for third-party developers to access Data

Services functionality. Also provides information about

how to install, configure, and use the Data Services

Adapter for JMS.

Information about how to use Data Services Administrator

Information about how to use Data Services Metadata

Reports

Information about:

• Release-specific product behavior changes from

earlier versions of Data Services to the latest release

• How to migrate from Data Quality to Data Services

Performance Optimization Guide

Reference Guide

Information about how to improve the performance

of Data Services

Detailed reference material for Data Services Designer

Data Services Reference Guide 19

Introduction

1

Welcome to Data Services

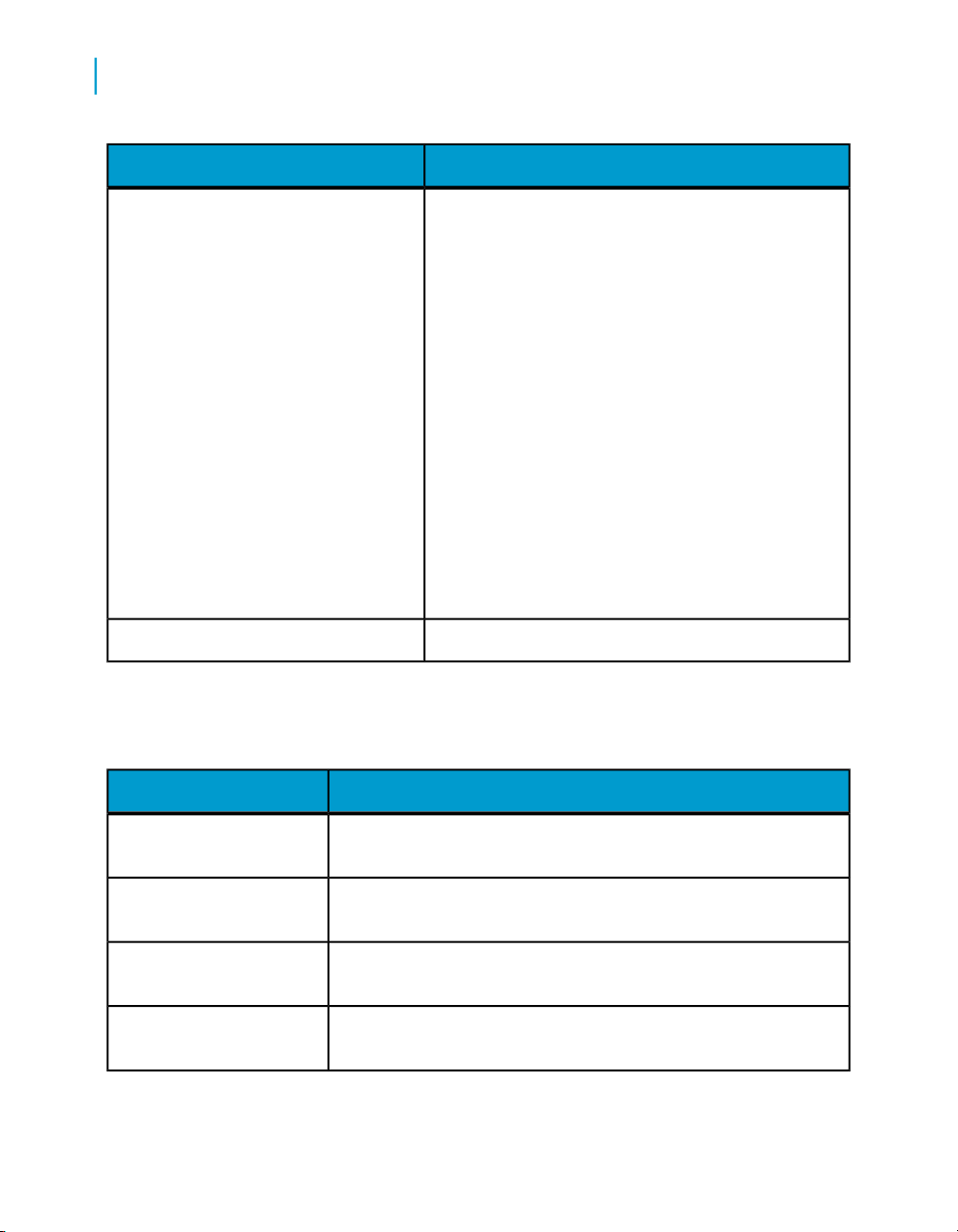

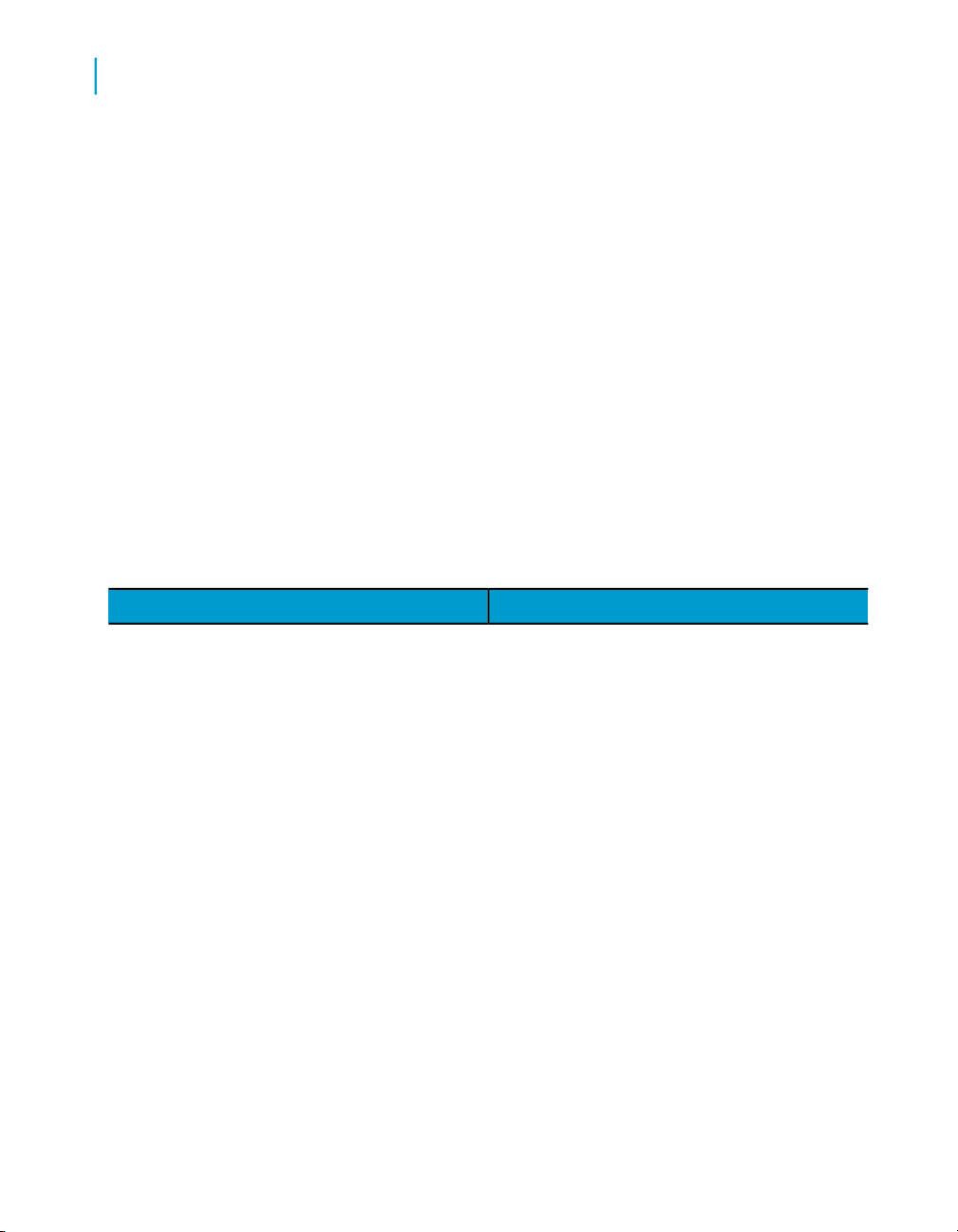

Technical Manuals

What this document providesDocument

A compiled “master” PDF of core Data Services books

containing a searchable master table of contents and

index:

•

Getting Started Guide

•

Installation Guide for Windows

•

Installation Guide for UNIX

•

Designer Guide

•

Reference Guide

•

Management Console: Metadata Reports Guide

•

Management Console: Administrator Guide

•

Performance Optimization Guide

•

Advanced Development Guide

•

Supplement for J.D. Edwards

•

Supplement for Oracle Applications

•

Supplement for PeopleSoft

•

Supplement for Siebel

•

Supplement for SAP

Tutorial

In addition, you may need to refer to several Adapter Guides and

Supplemental Guides.

What this document providesDocument

Salesforce.com Adapter

Interface

Supplement for J.D. Edwards

Supplement for Oracle Applications

Supplement for PeopleSoft

20 Data Services Reference Guide

Information about how to install, configure, and use the Data

Services Salesforce.com Adapter Interface

Information about license-controlled interfaces between Data

Services and J.D. Edwards World and J.D. Edwards OneWorld

Information about the license-controlled interface between Data

Services and Oracle Applications

Information about license-controlled interfaces between Data

Services and PeopleSoft

A step-by-step introduction to using Data Services

Introduction

Welcome to Data Services

What this document providesDocument

1

Supplement for SAP

Supplement for Siebel

Information about license-controlled interfaces between Data

Services, SAP ERP, and SAP BI/BW

Information about the license-controlled interface between Data

Services and Siebel

Accessing documentation

You can access the complete documentation set for Data Services in several

places.

Accessing documentation on Windows

After you install Data Services, you can access the documentation from the

Start menu.

1. Choose Start > Programs > BusinessObjects XI 3.1 >

BusinessObjects Data Services > Data Services Documentation.

Note:

Only a subset of the documentation is available from the Start menu. The

documentation set for this release is available in LINK_DIR\Doc\Books\en.

2. Click the appropriate shortcut for the document that you want to view.

Accessing documentation on UNIX

After you install Data Services, you can access the online documentation by

going to the directory where the printable PDF files were installed.

1. Go to LINK_DIR/doc/book/en/.

2. Using Adobe Reader, open the PDF file of the document that you want

to view.

Data Services Reference Guide 21

Introduction

1

Welcome to Data Services

Accessing documentation from the Web

You can access the complete documentation set for Data Services from the

Business Objects Customer Support site.

1.

Go to http://help.sap.com.

2. Cick Business Objects at the top of the page.

You can view the PDFs online or save them to your computer.

Business Objects information resources

A global network of Business Objects technology experts provides customer

support, education, and consulting to ensure maximum business intelligence

benefit to your business.

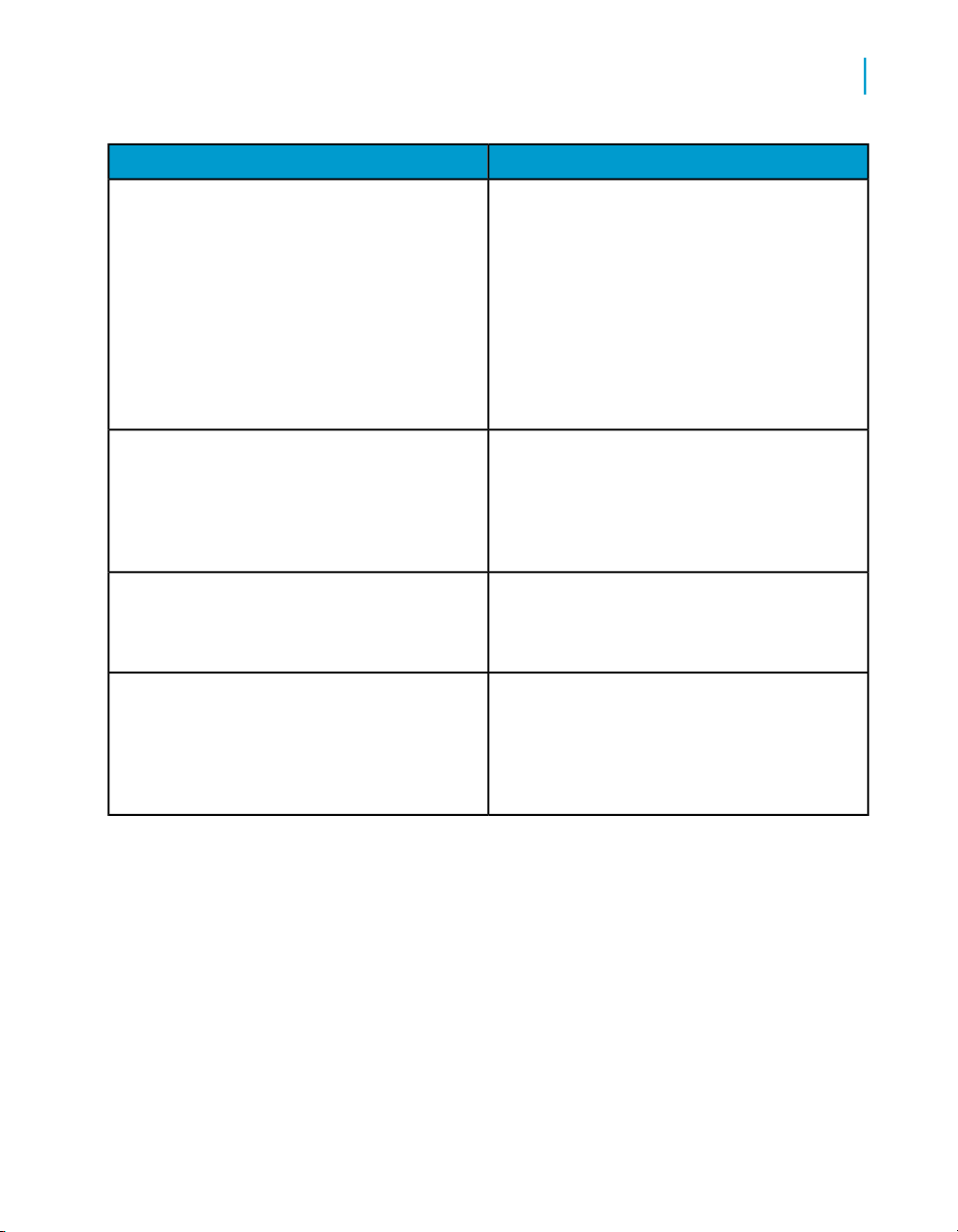

Useful addresses at a glance:

ContentAddress

22 Data Services Reference Guide

Introduction

Welcome to Data Services

ContentAddress

1

Customer Support, Consulting, and Education

services

http://service.sap.com/

Data Services Community

https://www.sdn.sap.com/irj/sdn/businessob

jects-ds

Forums on SCN (SAP Community Network)

https://www.sdn.sap.com/irj/sdn/businessob

jects-forums

Blueprints

http://www.sdn.sap.com/irj/boc/blueprints

Information about Customer Support programs,

as well as links to technical articles, downloads,

and online forums. Consulting services can

provide you with information about how Business Objects can help maximize your business

intelligence investment. Education services can

provide information about training options and

modules. From traditional classroom learning

to targeted e-learning seminars, Business Objects can offer a training package to suit your

learning needs and preferred learning style.

Get online and timely information about Data

Services, including tips and tricks, additional

downloads, samples, and much more. All content is to and from the community, so feel free

to join in and contact us if you have a submission.

Search the Business Objects forums on the

SAP Community Network to learn from other

Data Services users and start posting questions

or share your knowledge with the community.

Blueprints for you to download and modify to fit

your needs.Each blueprint contains the necessary Data Services project, jobs, data flows, file

formats, sample data, template tables, and

custom functions to run the data flows in your

environment with only a few modifications.

Data Services Reference Guide 23

Introduction

1

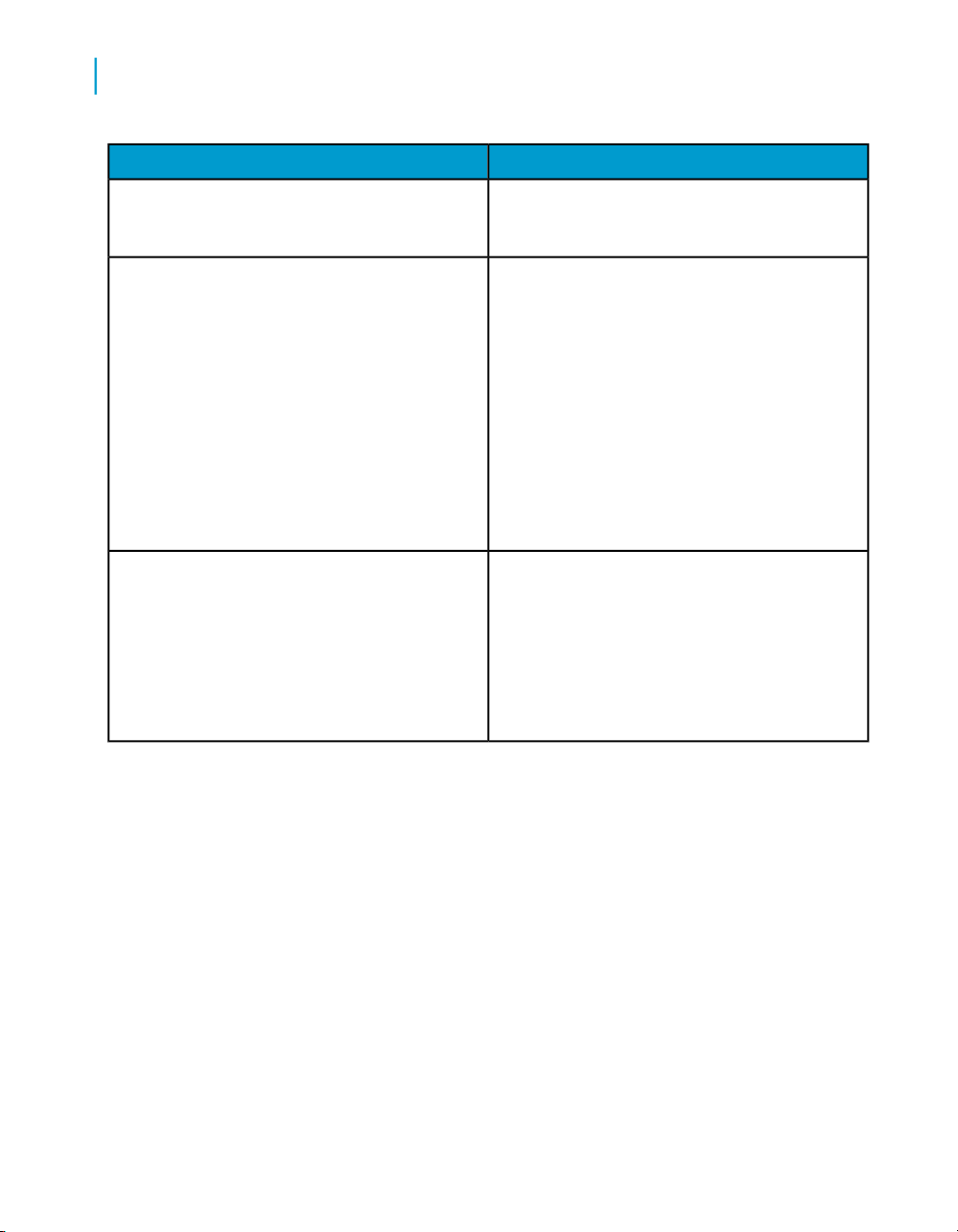

Overview of this guide

http://help.sap.com/

ContentAddress

Business Objects product documentation.Product documentation

Documentation mailbox

documentation@businessobjects.com

Supported platforms documentation

https://service.sap.com/bosap-support

Send us feedback or questions about your

Business Objects documentation. Do you have

a suggestion on how we can improve our documentation? Is there something that you particularly like or have found useful? Let us know,

and we will do our best to ensure that your

suggestion is considered for the next release

of our documentation.

Note:

If your issue concerns a Business Objects

product and not the documentation, please

contact our Customer Support experts.

Get information about supported platforms for

Data Services.

In the left panel of the window, navigate to

Documentation > Supported Platforms >

BusinessObjects XI 3.1. Click the BusinessObjects Data Services link in the main window.

Overview of this guide

About this guide

The Data Services Reference Guide provides a detailed information about

the objects, data types, transforms, and functions in the Data Services

Designer.

For source-specific information, such as information pertaining to a particular

back-office application, refer to the supplement for that application.

24 Data Services Reference Guide

Who should read this guide

This and other Data Services product documentation assume the following:

• You are an application developer, consultant, or database administrator

working on data extraction, data warehousing, data integration, or data

quality.

• You understand your source and target data systems, DBMS, legacy

systems, business intelligence, and messaging concepts.

• You understand your organization's data needs.

• You are familiar with SQL (Structured Query Language).

• If you are interested in using this product to design real-time processing,

you are familiar with:

• DTD and XML Schema formats for XML files

• Publishing Web Services (WSDL, HTTP/S and SOAP protocols, and

so on.)

Introduction

Overview of this guide

1

• You are familiar with Data Services installation environments: Microsoft

Windows or UNIX.

Data Services Reference Guide 25

Introduction

Overview of this guide

1

26 Data Services Reference Guide

Data Services Objects

2

Data Services Objects

2

Characteristics of objects

This section provides a reference of detailed information about the objects,

data types, transforms, and functions in the Data Services Designer.

Note:

For information about source-specific objects, consult the reference chapter

of the Data Services supplement document for that source.

Related Topics

• Characteristics of objects on page 28

• Descriptions of objects on page 31

Characteristics of objects

This section discusses common characteristics of all Data Services objects.

Related Topics

• Object classes on page 28

• Object options, properties, and attributes on page 30

Object classes

An object's class determines how you create and retrieve the object. There

are two classes of objects:

• Reusable objects

• Single-use objects

Related Topics

• Reusable objects on page 28

• Single-use objects on page 30

Reusable objects

After you define and save a reusable object, Data Services stores the

definition in the repository. You can then reuse the definition as often as

necessary by creating calls to the definition.

28 Data Services Reference Guide

Data Services Objects

Characteristics of objects

Most objects created in Data Services are available for reuse. You access

reusable objects through the object library.

A reusable object has a single definition; all calls to the object refer to that

definition. If you change the definition of the object in one place, and then

save the object, the change is reflected to all other calls to the object.

A data flow, for example, is a reusable object. Multiple jobs, such as a weekly

load job and a daily load job, can call the same data flow. If the data flow is

changed, both jobs call the new version of the data flow.

When you drag and drop an object from the object library, you are creating

a new reference (or call) to the existing object definition.

You can edit reusable objects at any time independent of the current open

project. For example, if you open a new project, you can go to the object

library, open a data flow, and edit it. The object will remain "dirty" (that is,

your edited changes will not be saved) until you explicitly save it.

Functions are reusable objects that are not available in the object library.

Data Services provides access to these objects through the function wizard

wherever they can be used.

2

Some objects in the object library are not reusable in all instances:

• Datastores are in the object library because they are a method for

categorizing and accessing external metadata.

• Built-in transforms are "reusable" in that every time you drop a transform,

a new instance of the transform is created.

"Saving" a reusable object in Data Services means storing the language that

describes the object to the repository. The description of a reusable object

includes these components:

• Properties of the object

• Options for the object

• Calls this object makes to other objects

• Definition of single-use objects called by this object

If an object contains a call to another reusable object, only the call to the

second object is saved, not changes to that object's definition.

Data Services stores the description even if the object does not validate.

Data Services Reference Guide 29

Data Services Objects

2

Characteristics of objects

Data Services saves objects without prompting you:

• When you import an object into the repository.

• When you finish editing:

You can explicitly save the reusable object currently open in the workspace

by choosing Save from the Project menu. If a single-use object is open in

the workspace, the Save command is not available.

To save all objects in the repository that have changes, choose Save All

from the Project menu.

Data Services also prompts you to save all objects that have changes when

you execute a job and when you exit the Designer.

Single-use objects

• Datastores

• Flat file formats

• XML Schema or DTD formats

Single-use objects appear only as components of other objects. They operate

only in the context in which they were created.

"Saving" a single-use object in Data Services means storing the language

that describes the object to the repository. The description of a single-use

object can only be saved as part of the reusable object that calls the

single-use object.

Data Services stores the description even if the object does not validate.

Object options, properties, and attributes

Each object is associated with a set of options, properties, and attributes:

• Options control the operation of an object. For example, in a datastore,

an option is the name of the database to which the datastore connects.

• Properties document an object. For example, properties include the

name, description of an object, and the date on which it was created.

30 Data Services Reference Guide

Properties merely describe an object; they do not affect an object's

operation.

To view properties, right-click an object and select Properties.

• Attributes provide additional information about an object. Attribute

values may also affect an object's behavior.

To view attributes, double-click an object from an editor and click the

Attributes tab.

Descriptions of objects

This section describes each Data Services object and tells you how to access

that object.

The following table lists the names and descriptions of objects available in

Data Services:

DescriptionClassObject

Data Services Objects

Descriptions of objects

2

COBOL copybook

file format

Single-useAnnotation

Single-useCatch

Reusable

Single-useConditional

Describes a flow, part of a flow, or a diagram in the

workspace.

Specifies the steps to execute if anerror occurs in a

given exception group while a job is running.

Defines the format for a COBOL copybook file

source.

Specifies the steps to execute based on the result

of a condition.

Data Services Reference Guide 31

Data Services Objects

2

Descriptions of objects

DescriptionClassObject

Defines activities that Data Services executes at a

given time including error, monitor and trace messages.

ReusableBatch Job

Jobs can be dropped only in the project tree. The

object created is a direct reference to the object in

the object library. Only one reference to a job can

exist in the project tree at one time.

Excel workbook

format

ReusableData flow

Single-useDatastore

ReusableDocument

ReusableDTD

ReusableFile format

Specifies the requirements for extracting, transforming, and loading data from sources to targets.

Specifies the connection information Data Services

needs to access a database or other data source.

Cannot be dropped.

Available in certain adapter datastores, documents

are data structures that can support complicated

nested schemas.

A description of an XML file or message. Indicates

the format an XML document reads or writes.

Defines the format for an Excel workbook source.Reusable

Indicates how flat file data is arranged in a source

or target file.

32 Data Services Reference Guide

Returns a value.ReusableFunction

Data Services Objects

Descriptions of objects

DescriptionClassObject

2

Outbound message

Single-useLog

ReusableMessage function

Reusable

Single-useQuery transform

ReusableReal-time job

Records information about a particular execution of

a single job.

Available in certain adapter datastores, message

functions can accommodate XML messages when

properly configured.

Available in certain adapter datastores, outbound

messages are XML-based, hierarchical communications that real-time jobs can publish to adapters.

Groups jobs for convenient access.Single-useProject

Retrieves a data set that satisfies conditions that you

specify.

Defines activities that Data Services executes ondemand.

Real-time jobs are created in the Designer, then

configured and run as services associated with an

Access Server in the Administrator. Real-time jobs

are designed according to data flow model rules and

run as a request-response system.

Source

Single-useScript

Single-use

Evaluates expressions, calls functions, and assigns

values to variables.

An object from which Data Services reads data in a

data flow.

Data Services Reference Guide 33

Data Services Objects

2

Descriptions of objects

DescriptionClassObject

Indicates an external DBMS table for which metadata

has been imported into Data Services, or the target

table into which data is or has been placed.

ReusableTable

A table is associated with its datastore; it does not

exist independently of a datastore connection. A table retrieves or stores data based on the schema of

the table definition from which it was created.

Single-useTarget

ReusableTemplate table

ReusableTransform

Single-useWhile loop

An object in which Data Services loads extracted

and transformed data in a data flow.

A new table you want added to a database.

All datastores except SAP ERP or R/3 datastores

have a default template that you can use to create

any number of tables in the datastore.

Data Services creates the schema for each instance

of a template table at runtime. The created schema

is based on the data loaded into the template table.

Performs operations on data sets.

Requires zero or more data sets; produces zero or

one data set (which may be split).

Introduces a try/catch block.Single-useTry

Repeats a sequence of steps as long as a condition

is true.

ReusableWork flow

34 Data Services Reference Guide

Orders data flows and operations supporting data

flows.

Data Services Objects

Descriptions of objects

DescriptionClassObject

A batch or real-time source or target. As a source,

an XML file translates incoming XML-formatted data

Single-useXML file

Single-useXML message

into data that Data Services can process. As a target,

an XML file translates the data produced by a data

flow, including nested data, into an XML-formatted

file.

A real-time source or target. As sources, XML messages translate incoming XML-formatted requests

into data that a real-time job can process. As targets,

XML messages translate the result of the real-time

job, including hierarchical data, into an XML-formatted response and sends the messages to the Access

Server.

2

Annotation

Class

Single-use

ReusableXML Schema

Single-useXML template

A description of an XML file or message. Indicates

the format an XML document reads or writes.

A target that creates an XML file that matches a

particular input schema. No DTD or XML Schema

is required.

Data Services Reference Guide 35

Data Services Objects

2

Descriptions of objects

Batch Job

Access

Click the annotation icon in the tool palette, then click in the workspace.

Description

Annotations describe a flow, part of a flow, or a diagram in a workspace. An

annotation is associated with the job., work flow, or data flow where it appears.

When you import or export that job, work flow, or data flow, you import or

export associated annotations.

Note:

An annotation has no options or properties.

Related Topics

• Designer Guide: Creating annotations

Class

Reusable

Access

• In the object library, click the Jobs tab.

• In the project area, select a project and right-click Batch Job.

Description

A batch job is a set of objects that you can schedule and execute together.

For Data Services to execute the steps of any object, the object must be part

of a job.

A batch job can contain the following objects:

• Data flows

• Sources

36 Data Services Reference Guide

Data Services Objects

Descriptions of objects

• Transforms

• Targets

• Work flows

• Scripts

• Conditionals

• Try/catch blocks

• While Loops

You can run batch jobs such that you can automatically recover from jobs

that do not execute successfully. During automatic recovery, Data Services

retrieves the results from steps that were successfully completed in the

previous run and executes all other steps. Specifically, Data Services retrieves

results from the following types of steps:

• Work flows

• Data flows

• Script statements

2

• Custom functions (stateless type only)

• SQL function

• EXEC function

• get_env function

• rand function

• sysdate function

• systime function

Batch jobs have the following built-in attributes:

DescriptionAttribute

Name

The name of the object. This name appears on the object

in the object library and in the calls to the object.

Data Services Reference Guide 37

Data Services Objects

2

Descriptions of objects

DescriptionAttribute

Your description of the job.Description

The date when the object was created.Date created

Batch and real-time jobs have properties that determine what information

Data Services collects and logs when running the job. You can set the default

properties that apply each time you run the job or you can set execution

(run-time) properties that apply for a particular run. Execution properties

override default properties.

To set default properties, select the job in the project area or the object library,

right-click, and choose Properties to open the Properties window.

Execution properties are set as you run a job. To set execution properties,

right-click the job in the project area and choose Execute. The Designer

validates the job and opens the Execution Properties window.

You can set three types of execution properties:

• Parameters

• Trace properties

• Global variables

Related Topics

• Designer Guide: Setting global variable values

Parameters

Use parameter options to help capture and diagnose errors using log,

auditing, statistics collection, or recovery options.

Data Services writes log information to one of three files (in the

$LINK_DIR\log\ Job Server name\repository name directory):

• Monitor log file

38 Data Services Reference Guide

• Trace log file

• Error log file

You can also select a system configuration and a Job Server or server group

from the Parameters tab of the Execution Properties window.

Select the Parameters tab to set the following options.

Monitor sample

rate

(# of rows)

Data Services Objects

Descriptions of objects

DescriptionOptions

Enter the number of rows processed before Data Services writes information to the monitor log file and updates job events. Data Services

writes information about the status of each source, target, or transform.

For example, if you enter 1000, Data Services updates the logs after

processing 1,000 rows.

The default is 1000. When setting the value, you must evaluate performance improvements gained by making fewer calls to the operating

system against your ability to find errors quickly. With a higher monitor

sample rate, Data Services collects more data before calling the operating system to open the file: performance improves. However, with

a higher monitor rate, more time passes before you are able to see

any errors.

2

Print all trace

messages

Note:

If you use a virus scanner on your files, exclude the Data Services log

from the virus scan. Otherwise, the virus scan analyzes the Data Services

log repeated during the job execution, which causes a performance

degradation.

Select this check box to print all trace messages to the trace log file

for the current Job Server.

Selecting this option overrides the trace properties set on the Trace

tab.

Data Services Reference Guide 39

Data Services Objects

2

Descriptions of objects

DescriptionOptions

Select this check box if you do not want to collect data validation

statistics for any validation transforms in this job. (The default is

cleared.)

Disable data validation statistics

collection

Enable auditing

Enable recovery

For more information about data validation statistics, see Data Validation dashboards Settings control panel" in the Data Services Manage-

ment Console: Metadata Reports Guide.

For more information about data validation statistics, see the Data

Validation Dashboard Reports chapter in the Data Services Manage-

ment Console: Metadata Reports Guide.

Clear this check box if you do not want to collect audit statistics for

this specific job execution. (The default is selected.)

For more information about auditing, see Using Auditing in the Data

Services Designer Guide.

For more information about auditing, see the Data Assessment

chapter in the Data Services Designer Guide.

(Batch jobs only) Select this check box to enable the automatic recovery feature. When enabled, Data Services saves the results from

completed steps and allows you to resume failed jobs. You cannot

enable the automatic recovery feature when executing a job in data

scan mode.

See Automatically recovering jobs in the Data Services Designer

Guide for information about the recovery options.

See the Recovery Mechanisms chapter in the Data Services Designer

Guide for information about the recovery options.

This property is only available as a run-time property. It is not available

as a default property.

40 Data Services Reference Guide

Recover from last

failed execution

Collect statistics

for optimization

Data Services Objects

Descriptions of objects

DescriptionOptions

(Batch Job only) Select this check box to resume a failed job. Data

Services retrieves the results from any steps that were previously

executed successfully and re-executes any other steps.

This option is a run-time property. This option is not available when

a job has not yet been executed or when recovery mode was disabled

during the previous run.

Select this check box if you want to collect statistics that the Data

Services optimizer will use to choose an optimal cache type (inmemory or pageable). This option is not selected by default.

For more information, see Caching sources in the Data Services

Performance Optimization Guide.

For more information, see the Using Caches chapter in the Data

Services Performance Optimization Guide.

2

Collect statistics

for monitoring

Select this check box if you want to display cache statistics in the

Performance Monitor in the Administrator. (The default is cleared.)

Note:

Use this option only if you want to look at the cache size.

For more information, see Monitoring and tuning caches in the Data

Services Performance Optimization Guide.

For more information, see the Using Caches chapter in the Data

Services Performance Optimization Guide.

Data Services Reference Guide 41

Data Services Objects

2

Descriptions of objects

Use collected

statistics

DescriptionOptions

Select this check box if you want the Data Services optimizer to use

the cache statistics collected on a previous execution of the job. (The

default is selected.)

For more information, see Monitoring and tuning caches in the Data

Services Performance Optimization Guide.

For more information, see Chapter 6: Using Caches in the Data Ser-

vices Performance Optimization Guide.

Select the level within a job that you want to distribute to multiple job

servers for processing:

•

Job - The whole job will execute on an available Job Server.

•

Data flow - Each data flow within the job can execute on an available Job Server.

•

Sub data flow - Each sub data flow (can be a separate transform

Distribution level

42 Data Services Reference Guide

or function) within a data flow can execute on an available Job

Server.

For more information, see Using grid computing to distribute data

flows execution in the Data Services Performance Optimization

Guide.

For more information, see the Distributing Data Flow Execution

chapter in the Data Services Performance Optimization Guide.

System configuration

Data Services Objects

Descriptions of objects

DescriptionOptions

Select the system configuration to use when executing this job. A

system configuration defines a set of datastore configurations, which

define the datastore connections.

For more information, see Creating and managing multiple datastore

configurations Data Services Designer Guide.

For more information, see the Datastores chapter in the Data Services

Designer Guide.

If a system configuration is not specified, Data Services uses the default datastore configuration for each datastore.

This option is a run-time property. This option is only available if there

are system configurations defined in the repository.

Select the Job Server or server group to execute this job. A Job

Server is defined by a host name and port while a server group is

defined by its name. The list contains Job Servers and server groups

linked to the job's repository.

2

Job Server or

server group

For an introduction to server groups, see Server group architecture

in the Data Services Management Console: Administrator Guide.

For an introduction to server groups, see the Using Server Groups

chapter in the Data Services Management Console: Administrator

Guide.

When selecting a Job Server or server group, remember that many

objects in the Designer have options set relative to the Job Server's

location. For example:

•

Directory and file names for source and target files

•

Bulk load directories

Data Services Reference Guide 43

Data Services Objects

2

Descriptions of objects

Trace properties

Use trace properties to select the information that Data Services monitors

and writes to the trace log file during a job. Data Services writes trace

messages to the trace log associated with the current Job Server and writes

error messages to the error log associated with the current Job Server.

To set trace properties, click the Trace tab. To turn a trace on, select the

trace, click Yes in the Value list, and click OK. To turn a trace off, select the

trace, click No in the Value list, and click OK.

You can turn several traces on and off.

DescriptionTrace

Writes a message when a transform imports or exports a row.Row

Session

Work Flow

Data Flow

Trace ABAP

Writes a message when the job description is read from the repository, when the job is optimized, and when the job runs.

Writes a message when the work flow description is read from the

repository, when the work flow is optimized, when the work flow runs,

and when the work flow ends.

Writes a message when the data flow starts, when the data flow

successfully finishes, or when the data flow terminates due to error.

This trace also reports when the bulk loader starts, any bulk loader

warnings occur, and when the bulk loader successfully completes.

Writes a message when a transform starts, completes, or terminates.Transform

Writes a message when an ABAP dataflow starts or stops, and to

report the ABAP job status.

44 Data Services Reference Guide

SQL Functions

SQL Transforms

Data Services Objects

Descriptions of objects

DescriptionTrace

Writes data retrieved before SQL functions:

•

Every row retrieved by the named query before the SQL is submitted in the key_generation function

•

Every row retrieved by the named query before the SQL is submitted in the lookup function (but only if PRE_LOAD_CACHE is

not specified).

•

When mail is sent using the mail_to function.

Writes a message (using the Table_Comparison transform) about

whether a row exists in the target table that corresponds to an input

row from the source table.

The trace message occurs before submitting the query against the

target and for every row retrieved when the named query is submitted

(but only if caching is not turned on).

2

SQL Readers

Writes the SQL query block that a script, query transform, or SQL

function submits to the system. Also writes the SQL results.

Data Services Reference Guide 45

Data Services Objects

2

Descriptions of objects

DescriptionTrace

Writes a message when the bulk loader:

•

Starts

•

Submits a warning message

•

Completes successfully

•

Completes unsuccessfully, if the Clean up bulk loader directory

after load option is selected

Additionally, for Microsoft SQL Server and Sybase ASE, writes

when the SQL Server bulk loader:

•

Completes a successful row submission

•

Encounters an error

SQL Loaders

This instance reports all SQL that Data Services submits to the

target database, including:

•

When a truncate table command executes if the Delete data

from table before loading option is selected.

•

Any parameters included in PRE-LOAD SQL commands

•

Before a batch of SQL statements is submitted

•

When a template table is created (and also dropped, if the

Drop/Create option is turned on)

•

When a delete from table command executes if auto correct

is turned on (Informix environment only).

This trace also writes all rows that Data Services loads into the

target.

Writes a message for every row retrieved from the memory table.Memory Source

Writes a message for every row inserted into the memory table.Memory Target

46 Data Services Reference Guide

SQL Only

Tables

Scripts and Script

Functions

Data Services Objects

Descriptions of objects

DescriptionTrace

Use in conjunction with Trace the SQL Transforms option, the Trace

SQL Readers option, or the Trace SQL Loaders option to stop the writing