Data Services Migration

Considerations Guide

BusinessObjects Data Services XI 3.1 (12.1.1)

Copyright

© 2008 Business Objects, an SAP company. All rights reserved. Business Objects

owns the following U.S. patents, which may cover products that are offered and

licensed by Business Objects: 5,295,243; 5,339,390; 5,555,403; 5,590,250;

5,619,632; 5,632,009; 5,857,205; 5,880,742; 5,883,635; 6,085,202; 6,108,698;

6,247,008; 6,289,352; 6,300,957; 6,377,259; 6,490,593; 6,578,027; 6,581,068;

6,628,312; 6,654,761; 6,768,986; 6,772,409; 6,831,668; 6,882,998; 6,892,189;

6,901,555; 7,089,238; 7,107,266; 7,139,766; 7,178,099; 7,181,435; 7,181,440;

7,194,465; 7,222,130; 7,299,419; 7,320,122 and 7,356,779. Business Objects and

its logos, BusinessObjects, Business Objects Crystal Vision, Business Process

On Demand, BusinessQuery, Cartesis, Crystal Analysis, Crystal Applications,

Crystal Decisions, Crystal Enterprise, Crystal Insider, Crystal Reports, Crystal

Vision, Desktop Intelligence, Inxight and its logos, LinguistX, Star Tree, Table

Lens, ThingFinder, Timewall, Let There Be Light, Metify, NSite, Rapid Marts,

RapidMarts, the Spectrum Design, Web Intelligence, Workmail and Xcelsius are

trademarks or registered trademarks in the United States and/or other countries

of Business Objects and/or affiliated companies. SAP is the trademark or registered

trademark of SAP AG in Germany and in several other countries. All other names

mentioned herein may be trademarks of their respective owners.

Third-party Contributors

Business Objects products in this release may contain redistributions of software

licensed from third-party contributors. Some of these individual components may

also be available under alternative licenses. A partial listing of third-party

contributors that have requested or permitted acknowledgments, as well as required

notices, can be found at: http://www.businessobjects.com/thirdparty

2008-11-28

Contents

Chapter 1: Introduction........................................................................9

Welcome to Data Services........................................................................10

Welcome..............................................................................................10

Documentation set for Data Services...................................................10

Accessing documentation....................................................................13

Business Objects information resources..............................................14

Chapter 2: Data Services Migration Considerations..............................17

Behavior changes in version 12.1.1..........................................................18

Blob data type enhancements..............................................................19

Neoview bulk loading...........................................................................20

Behavior changes in version 12.1.0..........................................................20

Cleansing package changes................................................................21

DTD-to-XSD conversion.......................................................................21

Minimum requirements for international addressing directories...........22

Try/catch exception groups..................................................................22

Upgrading from version 12.0.0 to version 12.1.0.................................25

Behavior changes in version 12.0.0..........................................................26

Case transform enhancement..............................................................26

Data Quality projects in Data Integrator jobs.......................................27

Data Services web address..................................................................27

Large object data type enhancements.................................................28

License keycodes.................................................................................31

Locale selection....................................................................................31

ODBC bigint data type..........................................................................33

Persistent and pageable cache enhancements...................................33

Row delimiter for flat files.....................................................................33

Behavior changes in version 11.7.3...........................................................34

Data flow cache type............................................................................35

Job Server enhancement.....................................................................35

Logs in the Designer............................................................................35

Pageable cache for memory-intensive data flows................................35

Adapter SDK........................................................................................36

PeopleSoft 8.........................................................................................36

Behavior changes in version 11.7.2...........................................................36

Embedded data flows...........................................................................37

Oracle Repository upgrade..................................................................37

Solaris and AIX platforms.....................................................................39

Behavior changes in version 11.7.0...........................................................39

Data Quality..........................................................................................40

Distributed data flows...........................................................................42

JMS Adapter interface..........................................................................43

XML Schema enhancement.................................................................43

Password management........................................................................43

Repository size.....................................................................................44

Web applications..................................................................................44

Web services........................................................................................44

Behavior changes in version 11.6.0...........................................................45

Netezza bulk loading............................................................................45

Conversion between different data types.............................................46

Behavior changes in version 11.5.1.5........................................................46

Behavior changes in version 11.5.1...........................................................46

Behavior changes in version 11.5.0.0........................................................47

Web Services Adapter..........................................................................47

Varchar behavior..................................................................................47

Central Repository................................................................................48

Behavior changes in version 11.0.2.5........................................................48

Teradata named pipe support...............................................................48

Behavior changes in version 11.0.2...........................................................48

Behavior changes in version 11.0.1.1........................................................49

Statistics repository tables....................................................................49

Behavior changes in version 11.0.1...........................................................49

Crystal Enterprise adapters..................................................................50

Behavior changes in version 11.0.0...........................................................50

Changes to code page names.............................................................51

Data Cleansing.....................................................................................52

License files and remote access software...........................................53

Behavior changes in version 6.5.1............................................................53

Behavior changes in version 6.5.0.1.........................................................54

Web services support...........................................................................54

Sybase bulk loader library on UNIX.....................................................55

Behavior changes in version 6.5.0.0.........................................................55

Browsers must support applets and have Java enabled......................55

Execution of to_date and to_char functions.........................................56

Changes to Designer licensing............................................................57

License files and remote access software...........................................57

Administrator Repository Login............................................................58

Administrator Users..............................................................................59

Chapter 3: Data Quality to Data Services Migration..............................61

Overview of migration................................................................................62

Who should migrate?...........................................................................62

Why migrate?.......................................................................................62

Introduction to the interface..................................................................64

Downloading blueprints and other content objects..............................66

Introduction to the migration utility........................................................67

Terminology in Data Quality and Data Services...................................67

Naming conventions.............................................................................69

Deprecated objects..............................................................................70

Premigration checklist..........................................................................72

Using the migration tool.............................................................................73

Overview of the migration utility...........................................................73

Migration checklist................................................................................74

Connection information........................................................................75

Running the dqmigration utility ............................................................76

dqmigration utility syntax and options..................................................79

Migration report ...................................................................................83

How Data Quality repository contents migrate..........................................85

How projects and folders migrate.........................................................85

How connections migrate.....................................................................91

How substitution files and variables migrate........................................97

How data types migrate......................................................................102

How Data Quality attributes migrate..................................................103

How transforms migrate...........................................................................103

Overview of migrated transforms.......................................................103

Address cleansing transforms............................................................111

Reader and Writer transforms............................................................121

How Data Quality integrated batch Readers and Writers migrate.....159

How Data Quality transactional Readers and Writers migrate...........165

Matching transforms...........................................................................169

UDT-based transforms.......................................................................178

Other transforms................................................................................188

Suggestion Lists options....................................................................202

Post-migration tasks................................................................................203

Further cleanup .................................................................................203

Improving performance .....................................................................210

Troubleshooting..................................................................................215

Index 223

Introduction

This document contains the following migration topics:

• Migration considerations for behavior changes associated with each

version of the Data Integrator and Data Services products.

• Migration of your Data Quality Projects into Data Services.

Welcome to Data Services

Welcome

Data Services XI Release 3 provides data integration and data quality

processes in one runtime environment, delivering enterprise performance

and scalability.

The data integration processes of Data Services allow organizations to easily

explore, extract, transform, and deliver any type of data anywhere across

the enterprise.

The data quality processes of Data Services allow organizations to easily

standardize, cleanse, and consolidate data anywhere, ensuring that end-users

are always working with information that's readily available, accurate, and

trusted.

Documentation set for Data Services

You should become familiar with all the pieces of documentation that relate

to your Data Services product.

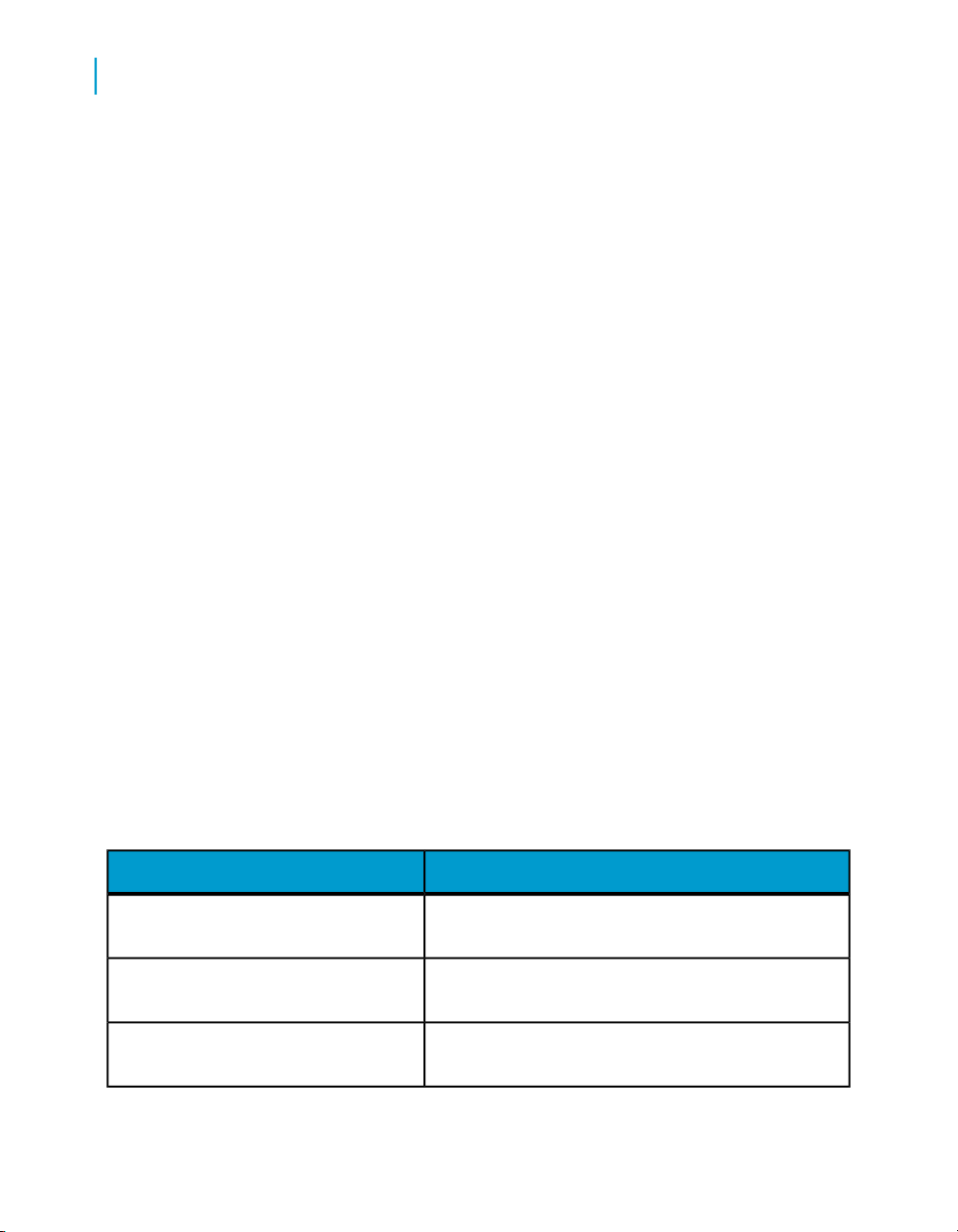

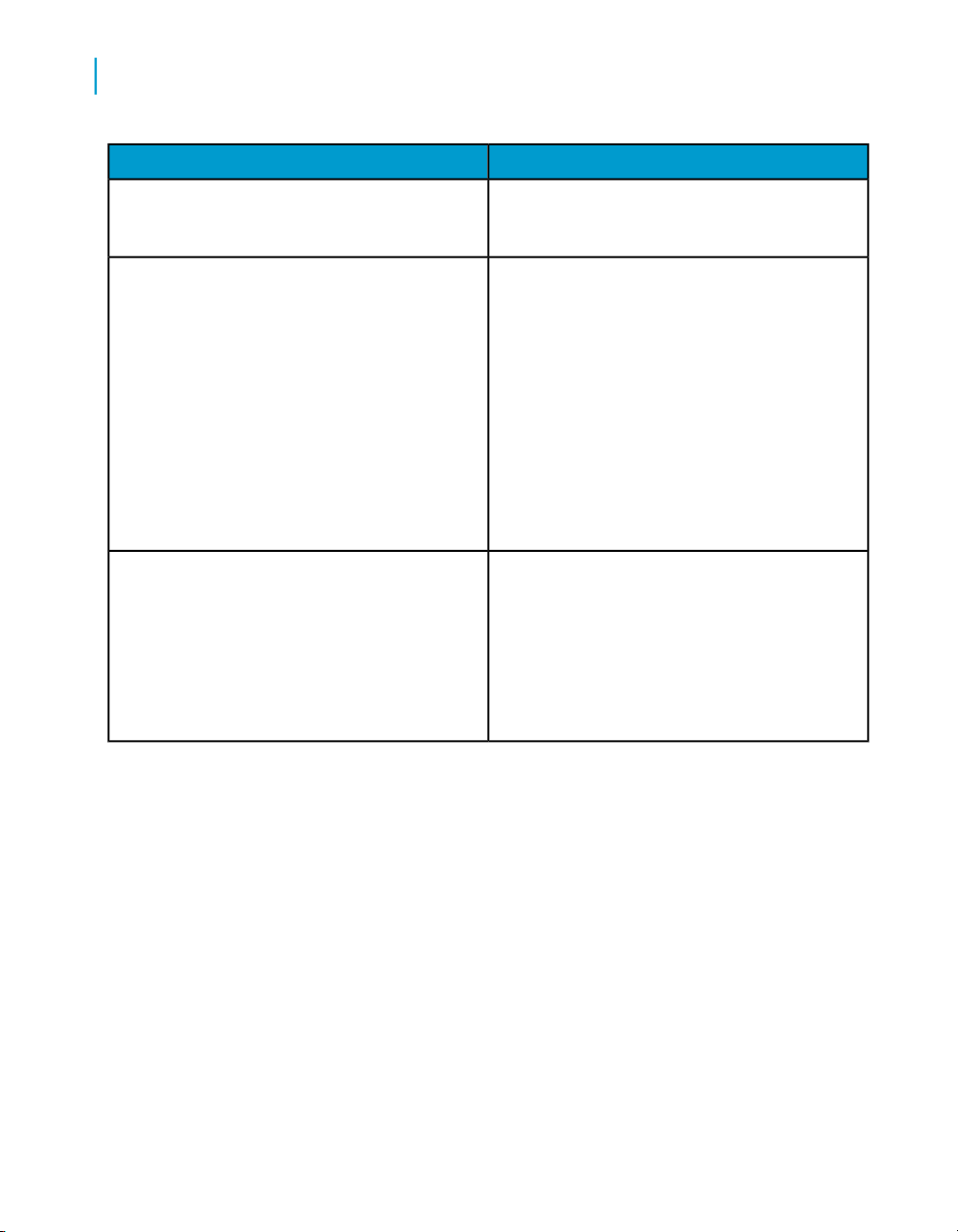

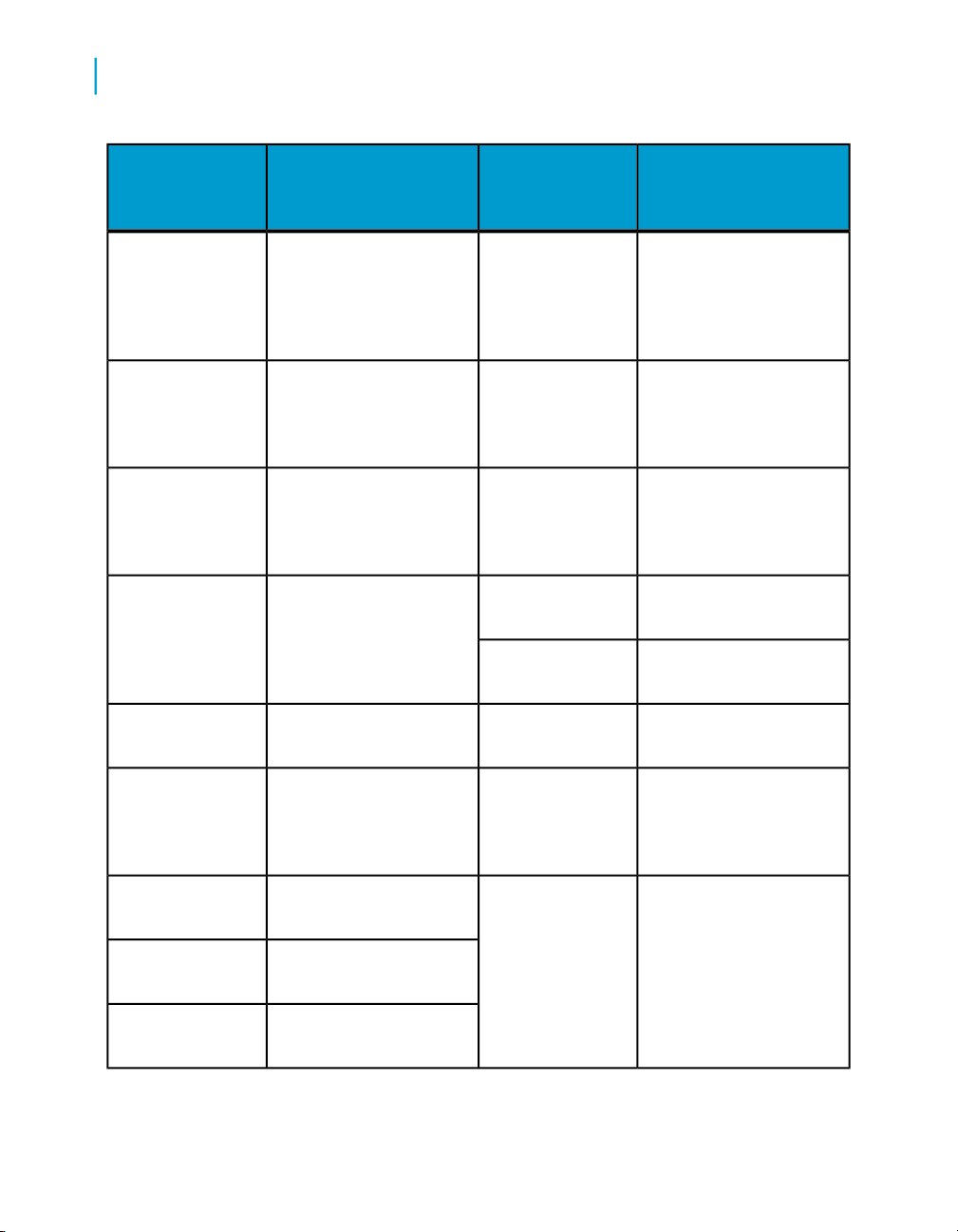

| Document | What this document provides |
| --- | --- |
| Documentation Map | Information about available Data Services books, languages, and locations |
| Release Summary | Highlights of key features in this Data Services release |
| Release Notes | Important information you need before installing and deploying this version of Data Services |
| Getting Started Guide | An introduction to Data Services |
| Installation Guide for Windows | Information about and procedures for installing Data Services in a Windows environment. |
| Installation Guide for UNIX | Information about and procedures for installing Data Services in a UNIX environment. |
| Advanced Development Guide | Guidelines and options for migrating applications including information on multi-user functionality and the use of the central repository for version control |
| Designer Guide | Information about how to use Data Services Designer |
| Integrator's Guide | Information for third-party developers to access Data Services functionality. Also provides information about how to install, configure, and use the Data Services Adapter for JMS. |
| Management Console: Administrator Guide | Information about how to use Data Services Administrator |
| Management Console: Metadata Reports Guide | Information about how to use Data Services Metadata Reports |
| Migration Considerations Guide | Information about: (1) release-specific product behavior changes from earlier versions of Data Services to the latest release; (2) how to migrate from Data Quality to Data Services |
| Performance Optimization Guide | Information about how to improve the performance of Data Services |
| Reference Guide | Detailed reference material for Data Services Designer |

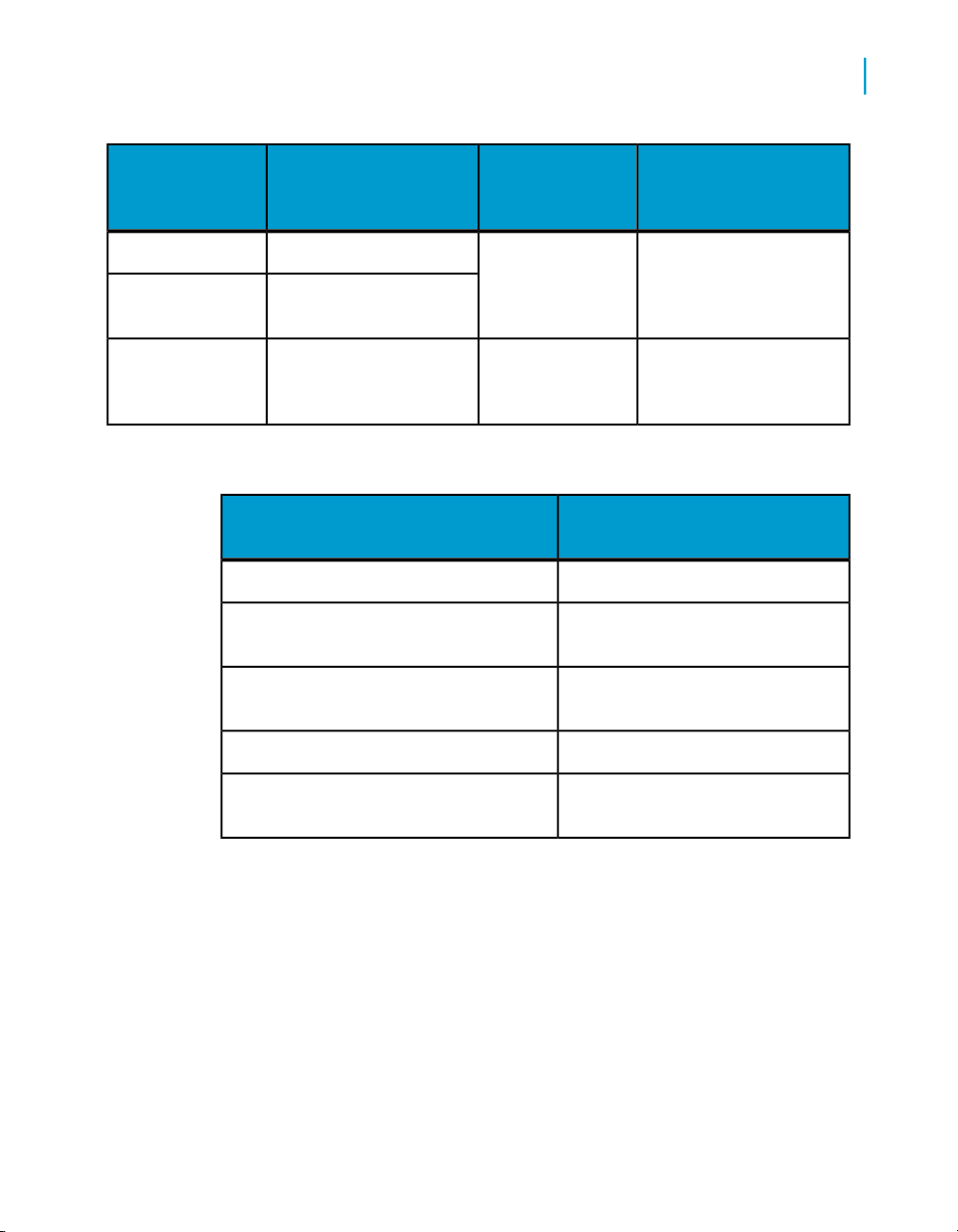

| Document | What this document provides |
| --- | --- |
| Technical Manuals | A compiled “master” PDF of core Data Services books containing a searchable master table of contents and index: Getting Started Guide; Installation Guide for Windows; Installation Guide for UNIX; Designer Guide; Reference Guide; Management Console: Metadata Reports Guide; Management Console: Administrator Guide; Performance Optimization Guide; Advanced Development Guide; Supplement for J.D. Edwards; Supplement for Oracle Applications; Supplement for PeopleSoft; Supplement for Siebel; Supplement for SAP |
| Tutorial | A step-by-step introduction to using Data Services |

In addition, you may need to refer to several Adapter Guides and

Supplemental Guides.

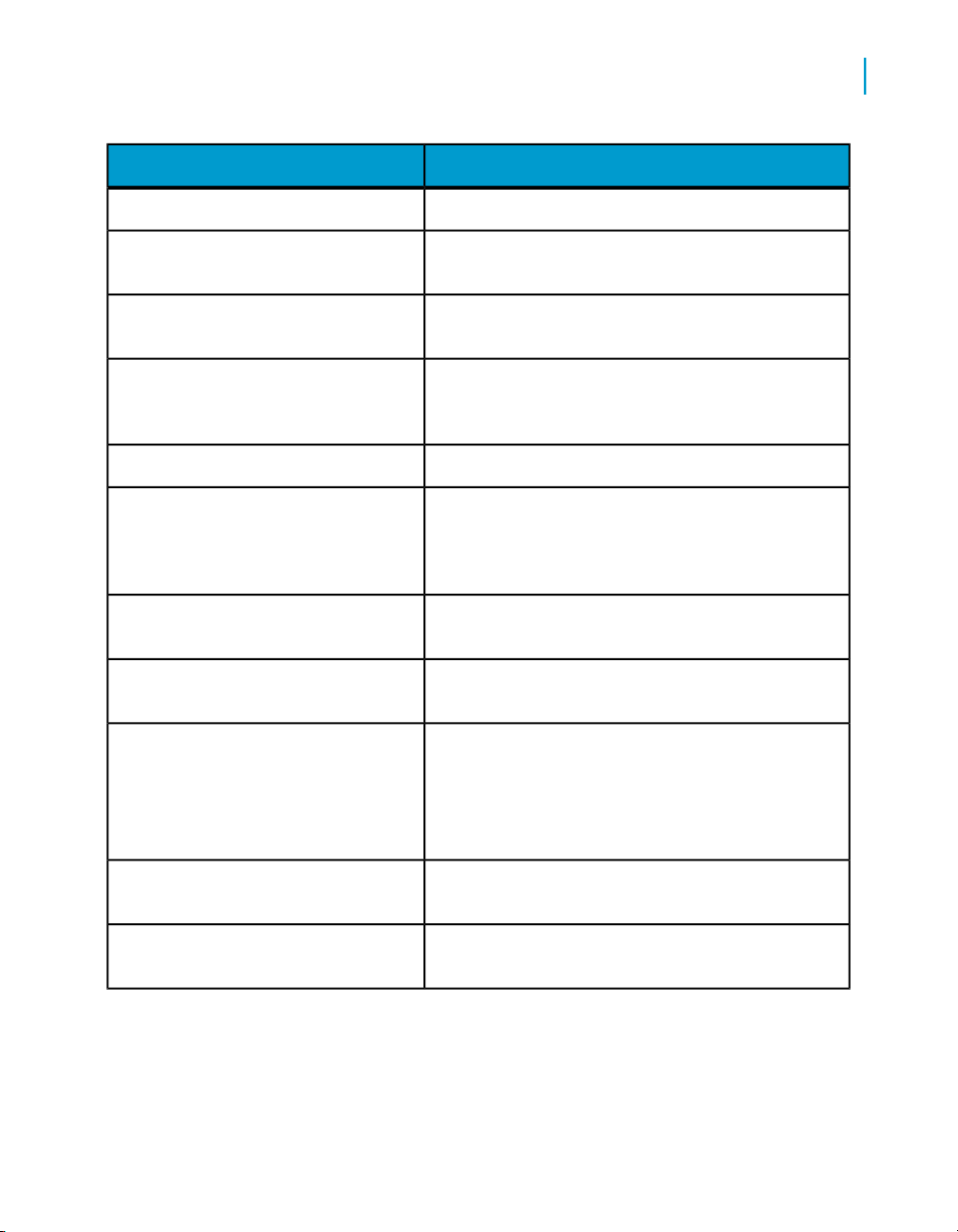

| Document | What this document provides |
| --- | --- |
| Salesforce.com Adapter Interface | Information about how to install, configure, and use the Data Services Salesforce.com Adapter Interface |
| Supplement for J.D. Edwards | Information about license-controlled interfaces between Data Services and J.D. Edwards World and J.D. Edwards OneWorld |
| Supplement for Oracle Applications | Information about the license-controlled interface between Data Services and Oracle Applications |
| Supplement for PeopleSoft | Information about license-controlled interfaces between Data Services and PeopleSoft |
| Supplement for SAP | Information about license-controlled interfaces between Data Services, SAP ERP, and SAP BI/BW |
| Supplement for Siebel | Information about the license-controlled interface between Data Services and Siebel |

Accessing documentation

You can access the complete documentation set for Data Services in several

places.

Accessing documentation on Windows

After you install Data Services, you can access the documentation from the

Start menu.

1. Choose Start > Programs > BusinessObjects XI 3.1 >

BusinessObjects Data Services > Data Services Documentation.

Note:

Only a subset of the documentation is available from the Start menu. The

documentation set for this release is available in LINK_DIR\Doc\Books\en.

2. Click the appropriate shortcut for the document that you want to view.

Accessing documentation on UNIX

After you install Data Services, you can access the online documentation by

going to the directory where the printable PDF files were installed.

1. Go to LINK_DIR/doc/book/en/.

2. Using Adobe Reader, open the PDF file of the document that you want

to view.

Accessing documentation from the Web

You can access the complete documentation set for Data Services from the

Business Objects Customer Support site.

1. Go to http://help.sap.com.

2. Click Business Objects at the top of the page.

You can view the PDFs online or save them to your computer.

Business Objects information resources

A global network of Business Objects technology experts provides customer

support, education, and consulting to ensure maximum business intelligence

benefit to your business.

Useful addresses at a glance:

| Address | Content |
| --- | --- |
| Customer Support, Consulting, and Education services: http://service.sap.com/ | Information about Customer Support programs, as well as links to technical articles, downloads, and online forums. Consulting services can provide you with information about how Business Objects can help maximize your business intelligence investment. Education services can provide information about training options and modules. From traditional classroom learning to targeted e-learning seminars, Business Objects can offer a training package to suit your learning needs and preferred learning style. |
| Data Services Community: https://www.sdn.sap.com/irj/sdn/businessobjects-ds | Get online and timely information about Data Services, including tips and tricks, additional downloads, samples, and much more. All content is to and from the community, so feel free to join in and contact us if you have a submission. |
| Forums on SCN (SAP Community Network): https://www.sdn.sap.com/irj/sdn/businessobjects-forums | Search the Business Objects forums on the SAP Community Network to learn from other Data Services users and start posting questions or share your knowledge with the community. |
| Blueprints: http://www.sdn.sap.com/irj/boc/blueprints | Blueprints for you to download and modify to fit your needs. Each blueprint contains the necessary Data Services project, jobs, data flows, file formats, sample data, template tables, and custom functions to run the data flows in your environment with only a few modifications. |
| Product documentation: http://help.sap.com/ | Business Objects product documentation. |
| Documentation mailbox: documentation@businessobjects.com | Send us feedback or questions about your Business Objects documentation. Do you have a suggestion on how we can improve our documentation? Is there something that you particularly like or have found useful? Let us know, and we will do our best to ensure that your suggestion is considered for the next release of our documentation. Note: If your issue concerns a Business Objects product and not the documentation, please contact our Customer Support experts. |
| Supported platforms documentation: https://service.sap.com/bosap-support | Get information about supported platforms for Data Services. In the left panel of the window, navigate to Documentation > Supported Platforms > BusinessObjects XI 3.1. Click the BusinessObjects Data Services link in the main window. |

Data Services Migration Considerations

This chapter describes behavior changes associated with the Data Integrator product since version 6.5 and with Data Services since version 12.0.0, including Data Quality functionality. Each behavior change is listed under the version number in which the behavior originated.

For information about how to migrate your Data Quality Projects into Data

Services, see Data Quality to Data Services Migration on page 61.

For the latest Data Services technical documentation, consult the Data

Services Technical Manuals included with your product.

This Migration Considerations document contains the following sections:

• Behavior changes in version 12.1.1 on page 18

• Behavior changes in version 12.1.0 on page 20

• Behavior changes in version 12.0.0 on page 26

• Behavior changes in version 11.7.3 on page 34

• Behavior changes in version 11.7.2 on page 36

• Behavior changes in version 11.7.0 on page 39

• Behavior changes in version 11.6.0 on page 45

• Behavior changes in version 11.5.1 on page 46

• Behavior changes in version 11.5.0.0 on page 47

• Behavior changes in version 11.0.2.5 on page 48

• Behavior changes in version 11.0.2 on page 48

• Behavior changes in version 11.0.1.1 on page 49

• Behavior changes in version 11.0.1 on page 49

• Behavior changes in version 11.0.0 on page 50

• Behavior changes in version 6.5.1 on page 53

• Behavior changes in version 6.5.0.1 on page 54

• Behavior changes in version 6.5.0.0 on page 55

To read or download Data Integrator and Data Services documentation for

previous releases (including Release Summaries and Release Notes), see

the SAP Business Objects Help Portal at http://help.sap.com/.

Behavior changes in version 12.1.1

The following sections describe changes in the behavior of Data Services

12.1.1 from previous releases of Data Services and Data Integrator. In most

cases, the new version avoids changes that would cause existing applications

to modify their results. However, under some circumstances a change has

been deemed worthwhile or unavoidable.

If you are migrating from Data Quality to Data Services, see Data Quality to

Data Services Migration on page 61.

This section includes migration-specific information associated with the

following features:

• Blob data type enhancements on page 19

• Neoview bulk loading on page 20

Blob data type enhancements

Data Services 12.1.1 provides the following enhancements for binary large

object (blob) data types:

• You can now define blob data type columns in a fixed-width file format,

and you can read from and load to blob columns in fixed-width files.

• The dqmigration utility now migrates Data Quality binary data types in

fixed-width flat files to Data Services blob (instead of varchar) data types

in fixed-width file formats. You no longer need to change the data type

from varchar to blob after migration.

In a fixed-width file, the blob data is always inline with the rest of the data in

the file. The term "inline" means the data itself appears at the location where

a specific column is expected.

The 12.1.0 release of Data Services introduced support for blob data types

in a delimited file. In a delimited file, the blob data always references an

external file at the location where the column is expected. Data Services

automatically generates the file name.

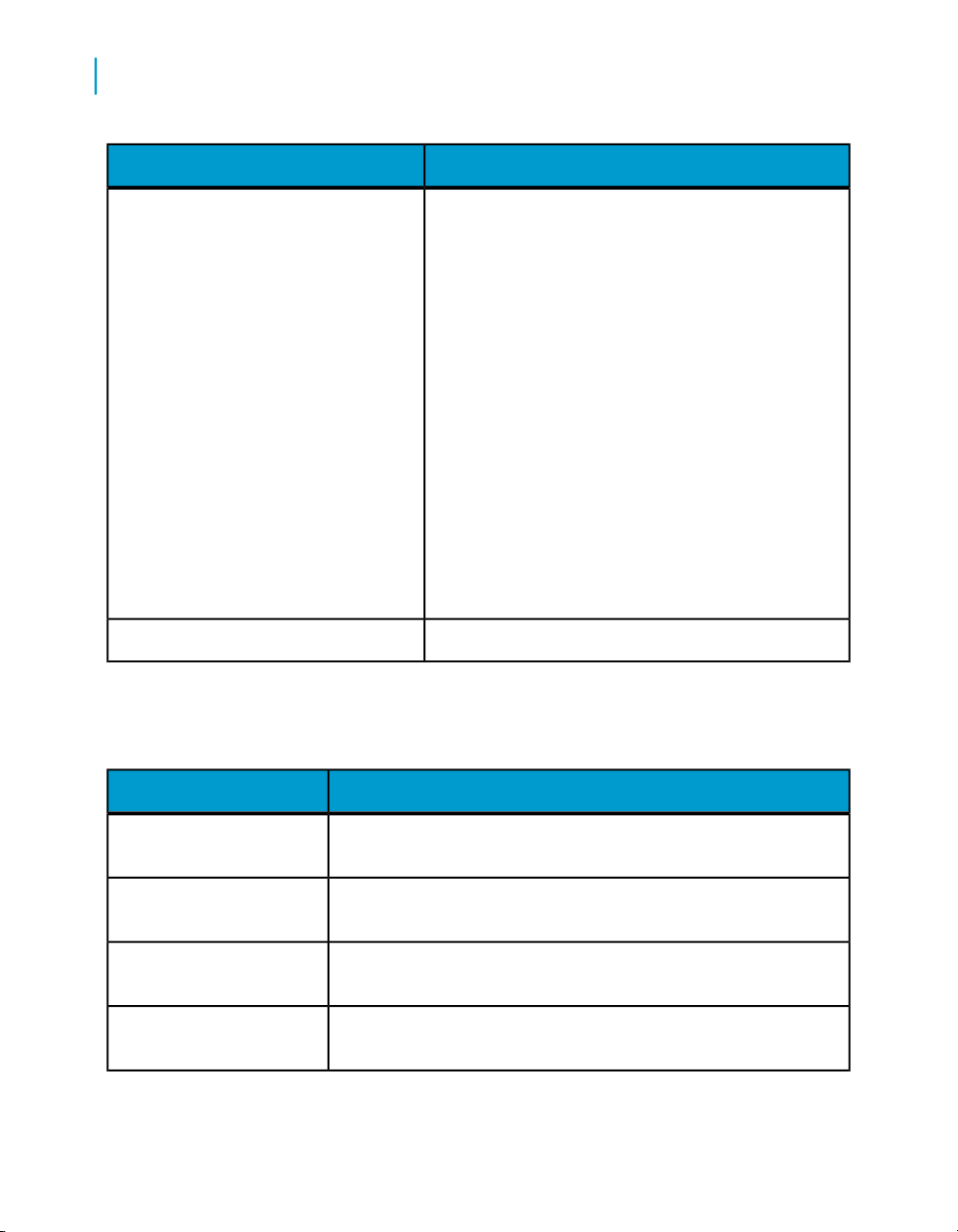

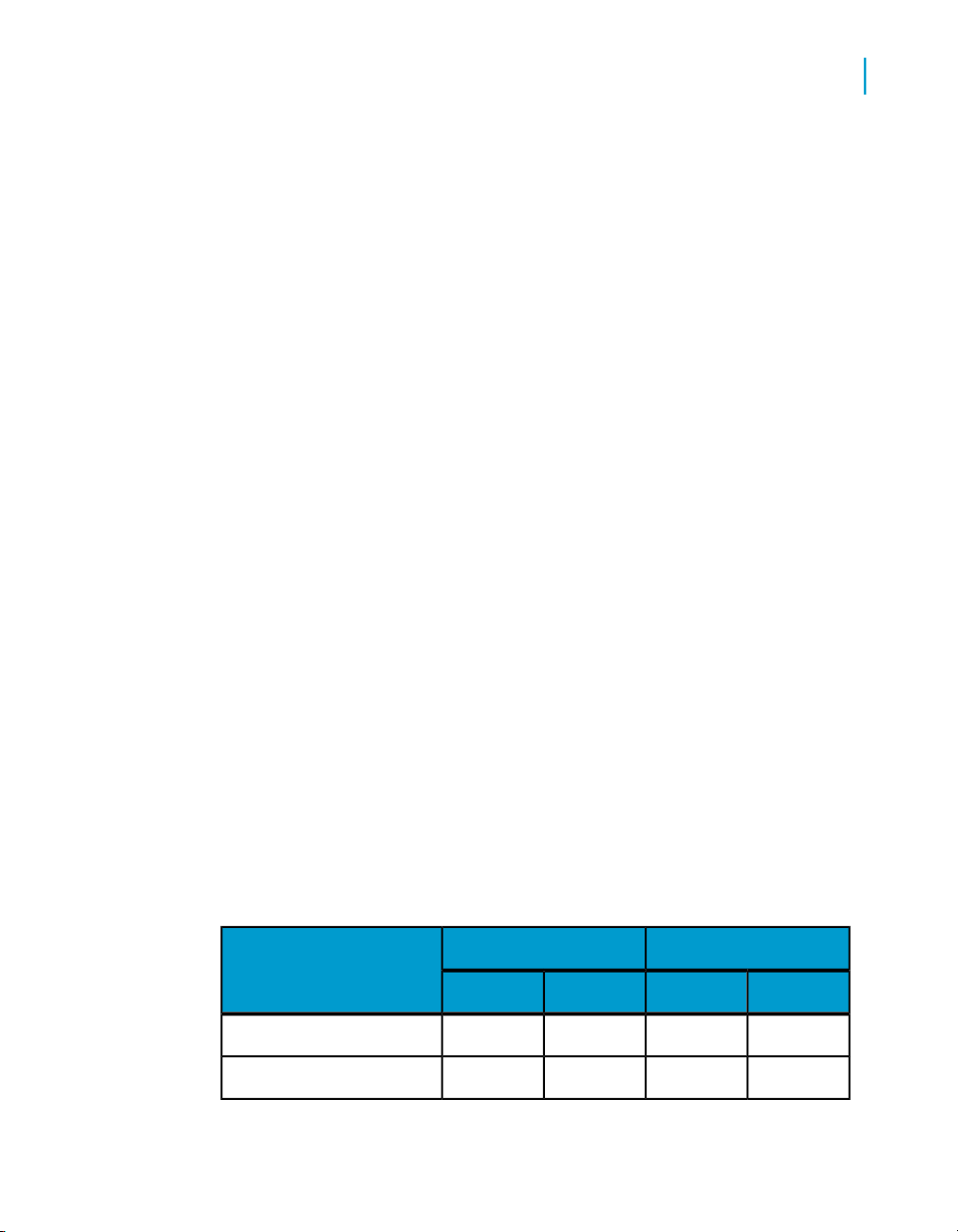

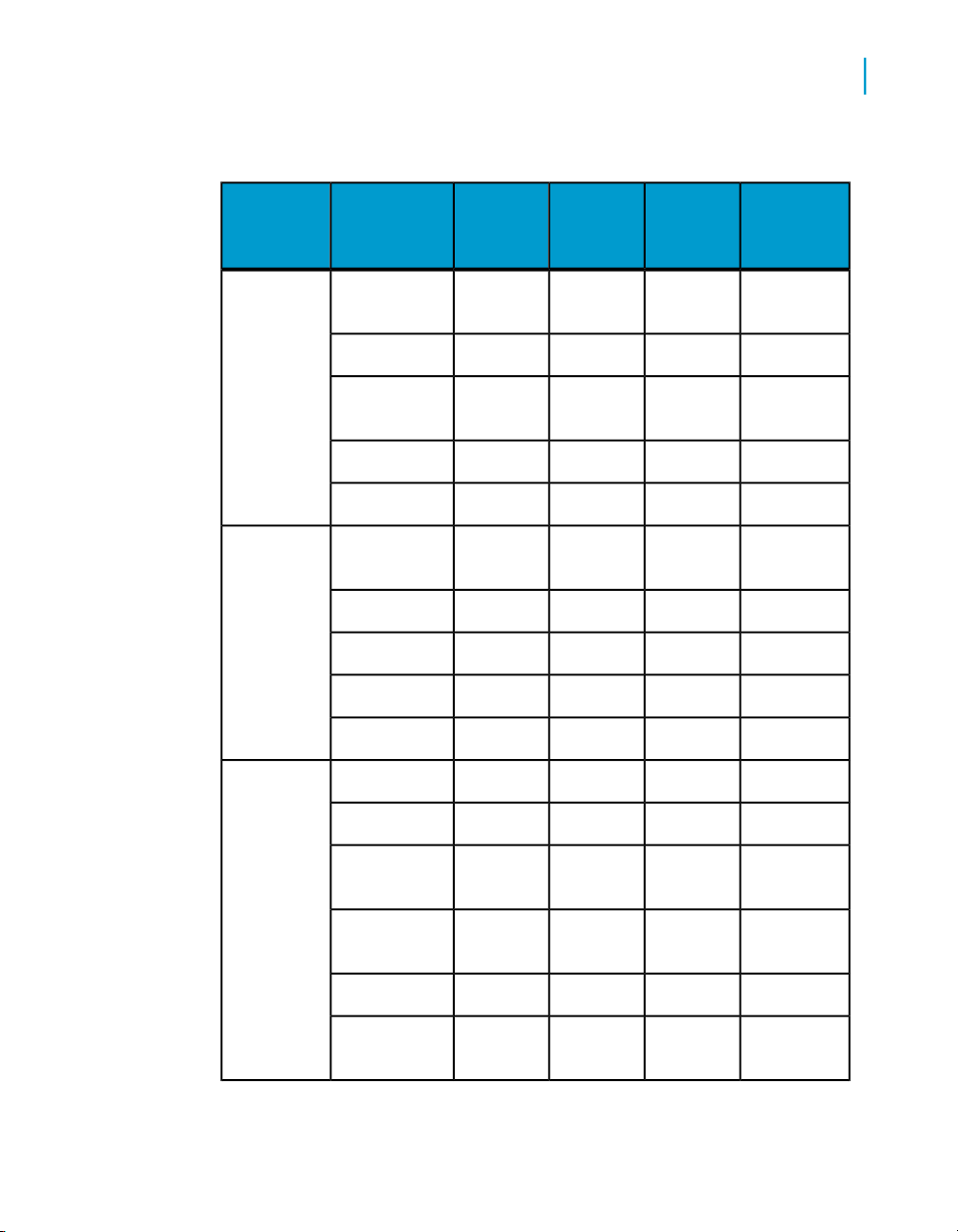

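The following table summarizes the capabilities that each release provides for blob data types:

| File Type | Inline (12.1.0) | Inline (12.1.1) | <<Filename>> (12.1.0) | <<Filename>> (12.1.1) |
| --- | --- | --- | --- | --- |
| blob in delimited file | No | No | Yes | Yes |
| blob in fixed-width file | No | Yes | No | No |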

These capabilities help customers migrate their existing Data Quality projects

that handle binary data in flat files to Data Services fixed-width file formats.

The Data Services blob data type now supports blob data types from Data

Quality XI R2 and legacy Firstlogic products.
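The difference between inline and file-referenced blob data can be sketched in Python. This is an illustration only, not Data Services code. The record layout (a 4-byte ID followed by an 8-byte blob), the comma delimiter, the file name `blob_0001.dat`, and the `<<name>>` reference syntax in the delimited row are all assumptions made for the example. The `<<Filename>>` column heading in the table above suggests such a marker, but check the Reference Guide for the actual syntax.

```python
import os
import tempfile

# Hypothetical fixed-width layout: 4-byte ASCII id, then an 8-byte inline blob.
ID_WIDTH = 4
BLOB_WIDTH = 8


def read_fixed_width_record(record: bytes):
    """Fixed-width: the blob bytes sit inline, exactly where the column is expected."""
    row_id = record[:ID_WIDTH].decode("ascii").strip()
    blob = record[ID_WIDTH:ID_WIDTH + BLOB_WIDTH]
    return row_id, blob


def read_delimited_record(line: str, base_dir: str):
    """Delimited: the blob column holds a reference to an external file."""
    row_id, blob_ref = line.rstrip("\n").split(",")
    # Assumed reference syntax: <<file name>>
    if not (blob_ref.startswith("<<") and blob_ref.endswith(">>")):
        raise ValueError(f"expected a <<filename>> reference, got {blob_ref!r}")
    file_name = blob_ref[2:-2]
    with open(os.path.join(base_dir, file_name), "rb") as handle:
        return row_id, handle.read()


payload = bytes([0, 1, 2, 3, 252, 253, 254, 255])

# Fixed-width record: id padded to 4 bytes, blob follows immediately.
fixed_id, fixed_blob = read_fixed_width_record(b"42  " + payload)

# Delimited record: the blob lives in a separate file next to the data file.
with tempfile.TemporaryDirectory() as work_dir:
    with open(os.path.join(work_dir, "blob_0001.dat"), "wb") as handle:
        handle.write(payload)
    delim_id, delim_blob = read_delimited_record("42,<<blob_0001.dat>>\n", work_dir)

print(fixed_id, fixed_blob == payload)
print(delim_id, delim_blob == payload)
```

Both readers return the same ID and the same bytes. Only the place where the bytes are stored differs, which is why a fixed-width format needs a declared blob width while a delimited format does not.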

Related Topics

• Reference Guide: Data Types, blobs

Neoview bulk loading

If you plan to bulk load data to a Neoview database, we recommend that

you set Timeout to 1000 in your Neoview target table.

• If you create a new repository in version 12.1.1, you do not need to set

Timeout because its default value is 1000.

• If you use a 12.1.0 repository when you install version 12.1.1, the default

value for Timeout is 60. Therefore, increase Timeout to 1000 for new

data flows that bulk load into a Neoview database.
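As a small illustration of the rule above, the following Python sketch decides whether Timeout needs to be raised, given the version in which the repository was created. The function and dictionary names are hypothetical. In practice you set Timeout in the Neoview target table options, not through code.

```python
RECOMMENDED_TIMEOUT = 1000

# Default Timeout values stated above, keyed by the version that created the repository.
DEFAULT_TIMEOUT = {"12.1.0": 60, "12.1.1": 1000}


def timeout_to_set(repository_version: str):
    """Return the value to set manually, or None if the default already suffices."""
    if DEFAULT_TIMEOUT[repository_version] >= RECOMMENDED_TIMEOUT:
        return None
    return RECOMMENDED_TIMEOUT


for version in ("12.1.0", "12.1.1"):
    print(version, "->", timeout_to_set(version))
```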

Related Topics

• Reference Guide: Data Services Objects, HP Neoview target table options

Behavior changes in version 12.1.0

The following sections describe changes in the behavior of Data Services

12.1.0 from previous releases of Data Services and Data Integrator. In most

cases, the new version avoids changes that would cause existing applications

to modify their results. However, under some circumstances a change has

been deemed worthwhile or unavoidable.

If you are migrating from Data Quality to Data Services, see the Data Quality

to Data Services Migration Guide.

This section includes migration-specific information associated with the

following features:

• Cleansing package changes on page 21

• DTD-to-XSD conversion on page 21

• Minimum requirements for international addressing directories on page 22

• Try/catch exception groups on page 22

• Upgrading from version 12.0.0 to version 12.1.0 on page 25

Cleansing package changes

Global Parsing Options have been renamed cleansing packages.

You can no longer use the Global Parsing Options installer to install cleansing

packages directly into the repository. You must now use a combination of

the cleansing package installer and the Repository Manager instead.

If you have made any changes to your existing cleansing package

dictionaries, you must do the following:

1. Export the changes using Export Dictionary Changes in the Dictionary

menu of the Data Services Designer.

2. Install the latest cleansing package.

3. Use the Repository Manager to load the cleansing package into the data

cleanse repository.

4. Import the changes into the new cleansing package using Bulk Load in

the Dictionary menu in the Designer.

Related Topics

• Designer Guide: Data Quality, To export dictionary changes

• Installation Guide for Windows: To create or upgrade repositories

• Designer Guide: Data Quality, To import dictionary changes

DTD-to-XSD conversion

Data Services no longer supports publishing a real-time job as a Web service if the job uses a DTD to define the input and output messages.

If you migrate from Data Services 12.0.0 to version 12.1.0, you do not need

to do anything unless you change the DTD. If you change the DTD, reimport

it to the repository and publish the Web service as in the following procedure.

If you migrate from Data Integrator 11.7 or earlier versions to Data Services

12.1.0 and publish a DTD-based real-time job as a Web service, you must

reimport the Web service adapter function because the Web address changed

for the Management Console in version 12.0.0. Therefore, you must do the

following after you upgrade your repository to version 12.1.0:

1. Use any DTD-to-XSD conversion tool to convert the DTD to XSD.

2. Use the Designer to import the XSD to the Data Services repository.

3. Open the original data flow that uses the DTD and replace the DTD with the XSD.

4. Publish the real-time job as a Web service.

5. Reimport the service as a function in the Web Service datastore.

Related Topics

• Data Services web address on page 27

Minimum requirements for international addressing directories

Due to additional country support and modified database structures (for

performance tuning), the minimum disk space requirement for the international

addressing directories (All World) has increased as follows:

• For the Global Address Cleanse transform (ga_country.dir,

ga_loc12_gen.dir, ga_loc12_gen_nogit.dir, ga_loc34_gen.dir,

ga_region_gen.dir), the minimum requirement has increased from 647

MB to 2.71 GB.

• If you purchase all countries, the disk space requirement has increased from 6.1 GB to 9.34 GB.
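Before you upgrade, you can check whether the volume that holds the directories has enough free space. The following Python sketch uses the standard `shutil.disk_usage` call. The package names, the use of the current directory (`"."`) as a stand-in for your directory location, and the decision to treat 1 GB as 2^30 bytes are assumptions for the example.

```python
import shutil

GIB = 1024 ** 3

# Minimum sizes quoted in this section, in GB (dictionary keys are illustrative).
REQUIRED_GB = {
    "global_address_cleanse": 2.71,
    "all_countries": 9.34,
}


def has_enough_space(free_bytes: int, required_gb: float) -> bool:
    """Compare free bytes with a requirement expressed in GB."""
    return free_bytes >= required_gb * GIB


def check_directory_volume(path: str, package: str) -> bool:
    """Check the volume containing `path` against the requirement for `package`."""
    free_bytes = shutil.disk_usage(path).free
    return has_enough_space(free_bytes, REQUIRED_GB[package])


# "." stands in for the folder where the addressing directories are installed.
for package in REQUIRED_GB:
    ok = check_directory_volume(".", package)
    print(f"{package}: {'enough space' if ok else 'NOT enough space'}")
```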

Try/catch exception groups

This version of Data Services provides better defined exception groups of

errors, new exception groups, and an enhanced catch editor that allows you

to select multiple exception groups in one catch to consolidate actions.

After you upgrade your repository to version 12.1, your try/catch blocks

created in prior versions contain the 12.1 exception group names and

numbers. Be aware of the following situations and additional actions that

you might need to take after you upgrade to version 12.1:

• The repository upgrade will map Parser errors (1) and Resolver errors (2) to Pre-execution errors (1000) and will map Email errors (16) to System Resource errors (1008). You need to re-evaluate all the actions that are already defined in all the catch blocks and modify them as appropriate, based on the new catch exception group definitions. See the tables below for the mapping of exception groups from version 12.0 to version 12.1 and for the definitions of new exception groups.

• All recoverable jobs in a pre-12.1 system lose their recoverable state

when you upgrade. After you upgrade to version 12.1, you need to run

the job from the beginning.

• If you upgrade a central repository, only the latest version of a work flow, data flow audit script, and user function contains the 12.1 exception group names. Older versions of these objects contain the pre-12.1 exception group names.

• In version 12.1, if you have a sequence of catch blocks in a work flow and one catch block catches an exception, the subsequent catch blocks will not be executed. For example, if your work flow has the following sequence and Catch1 catches an exception, then Catch2 and CatchAll will not execute. In prior versions, both Catch1 and CatchAll would execute.

Try > DataFlow1 > Catch1 > Catch2 > CatchAll
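
The difference between the two behaviors can be sketched in a few lines of Python. This is only a model of the rule described above, not Data Services code: the function name, the `stop_after_first_match` flag, and the group numbers assigned to Catch1 and Catch2 are assumptions made for illustration.

```python
def run_catches(raised_group, catches, stop_after_first_match):
    """Return the names of the catch blocks that execute, in order.

    catches is a list of (name, groups) pairs, where groups is a set of
    exception group numbers, or None for a catch-all block.
    """
    executed = []
    for name, groups in catches:
        if groups is None or raised_group in groups:
            executed.append(name)
            if stop_after_first_match:
                break  # version 12.1: later catch blocks are skipped
    return executed


# Hypothetical sequence: Try > DataFlow1 > Catch1 > Catch2 > CatchAll
sequence = [("Catch1", {1002}), ("Catch2", {1005}), ("CatchAll", None)]

print(run_catches(1002, sequence, stop_after_first_match=True))   # 12.1 behavior
print(run_catches(1002, sequence, stop_after_first_match=False))  # prior behavior
```

If a work flow relied on CatchAll running after a more specific catch, the actions in CatchAll may need to be copied into the specific catch after the upgrade.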

Note:

If you import pre-12.1 ATL files, any catch objects will not contain the new

exception group names and numbers. Only a repository upgrade converts

the pre-12.1 exception groups to the 12.1 exception group names and

numbers.


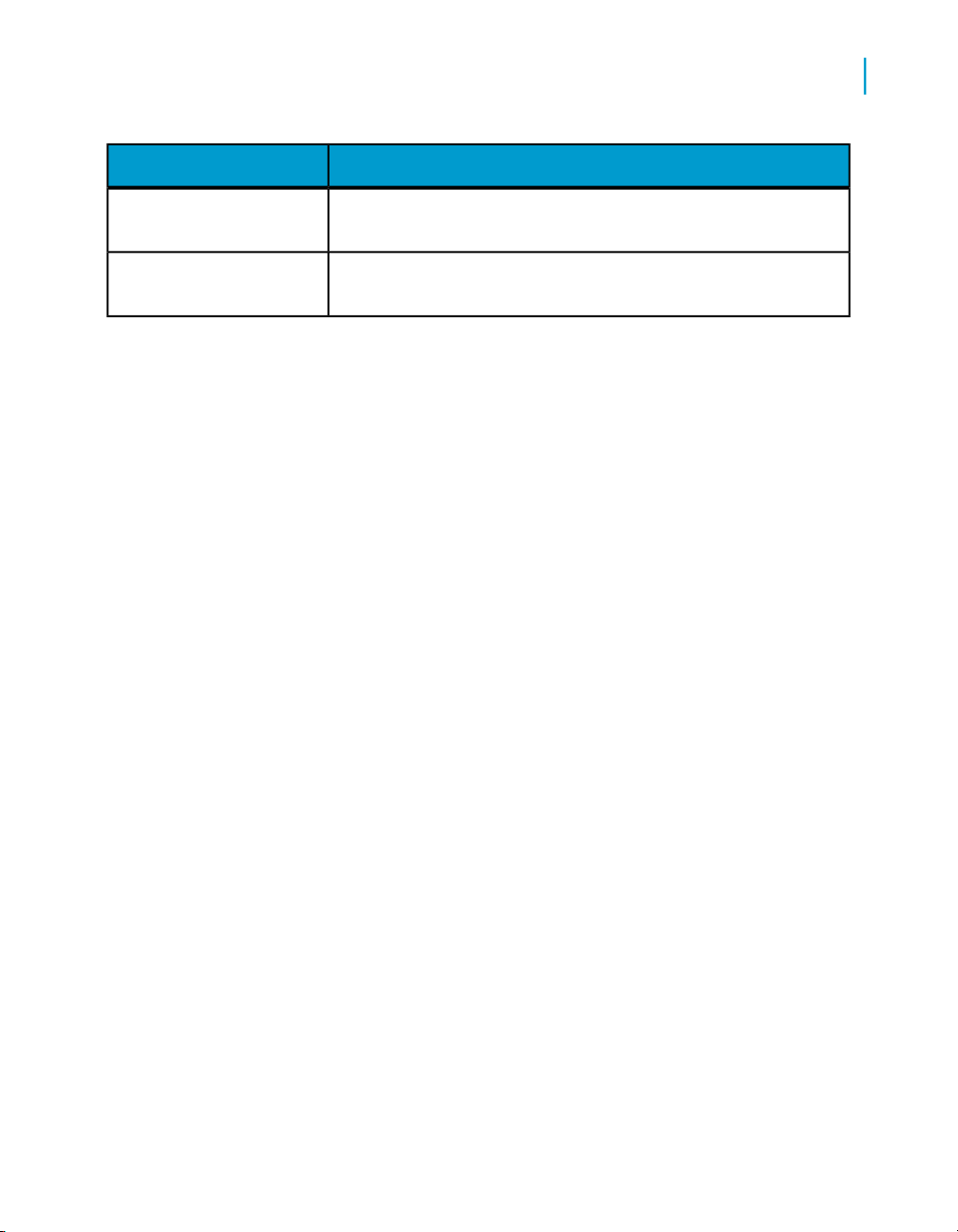

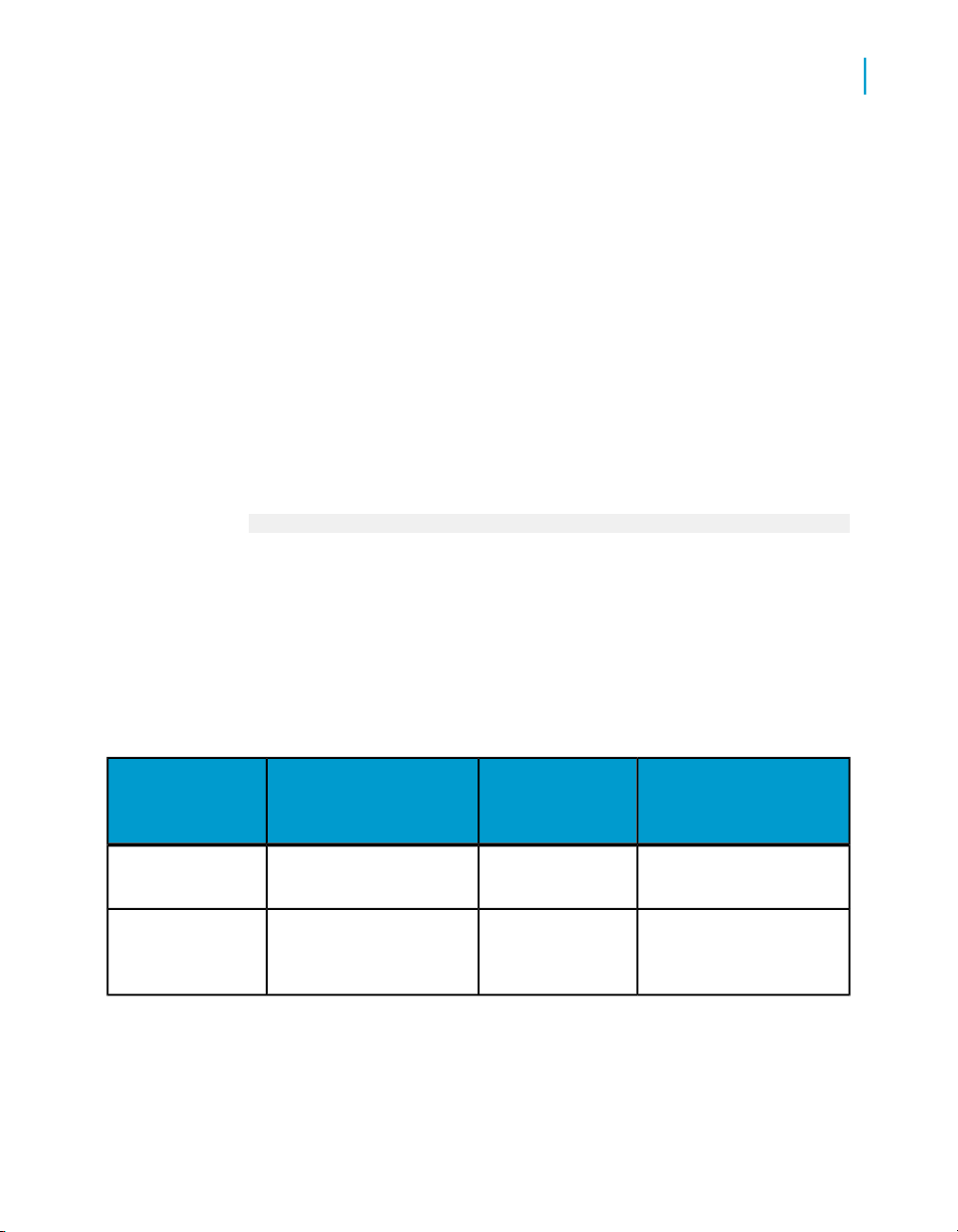

The following table shows how the exception groups in version 12.0 map to the exception groups in version 12.1:

| 12.0 Exception group (group number) | 12.0 Description | 12.1 Exception group (group number) | 12.1 Description |
|---|---|---|---|
| Catch All Exceptions | All errors | All exceptions | All errors |
| Parser Errors (1) | Errors encountered while parsing the language | Pre-execution errors (1000) | Parser errors are not caught because parsing occurs prior to execution. |
| Resolver Errors (2) | Errors encountered while validating the semantics of Data Services objects which have recommended resolutions | Pre-execution errors (1000) | Resolver errors are not caught because parsing occurs prior to execution. |
| Execution Errors (5) | Internal errors that occur during the execution of a data movement specification | Execution errors (1001) | Errors from the Data Services job server or transforms |
| Database Access Errors (7) | Generic Database Access Errors | Database Access Errors (1002) | Errors from the database server while reading data, writing data, or bulk loading to tables |
| File Access Errors (8) | Errors accessing files through file formats | Flat file processing errors (1004) | Errors processing flat files |
| File Access Errors (8) | Errors accessing files through file formats | File Access Errors (1005) | Errors accessing local and FTP files |
| Repository Access Errors (10) | Errors accessing the Data Services repository | Repository access errors (1006) | Errors accessing the Data Services repository |
| Connection and bulk loader errors (12) | Errors connecting to database servers and bulk loading to tables on them | Database Connection errors (1003) | Errors connecting to database servers |
| Predefined Transforms Errors (13) | Predefined transform errors | R/3 system errors (1007) | Errors while generating ABAP programs, during ABAP generated user transforms, or while accessing R/3 system using its API |
| ABAP Generation Errors (14) | ABAP generation errors | R/3 system errors (1007) | Errors while generating ABAP programs, during ABAP generated user transforms, or while accessing R/3 system using its API |
| R/3 Execution Errors (15) | R/3 execution errors | R/3 system errors (1007) | Errors while generating ABAP programs, during ABAP generated user transforms, or while accessing R/3 system using its API |
| Email Errors (16) | Email errors | System Resource errors (1008) | Errors while accessing or using operating system resources, or while sending emails |
| System Exception Errors (17) | System exception errors | System Resource errors (1008) | Errors while accessing or using operating system resources, or while sending emails |
| Engine Abort Errors (20) | Engine abort errors | Execution errors (1001) | Errors from the Data Services job server or transforms |

The following table shows the new exception groups in version 12.1:

| New 12.1 Exception group (group number) | Description |
|---|---|
| SAP BW execution errors (1009) | Errors from the SAP BW system. |
| XML processing errors (1010) | Errors processing XML files and messages |
| COBOL copybook errors (1011) | Errors processing COBOL copybook files |
| Excel book errors (1012) | Errors processing Excel books |
| Data Quality transform errors (1013) | Errors processing Data Quality transforms |
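
When reviewing many catch blocks after an upgrade, it can help to hold the 12.0 to 12.1 mapping as a lookup table. The following Python sketch transcribes the group numbers from the mapping table above. The function name is hypothetical, and the sketch assumes that where the table shows a single 12.1 group beside several 12.0 groups (13, 14, 15 and 16, 17), each of those 12.0 groups maps to it.

```python
# 12.0 group number -> 12.1 group numbers, transcribed from the mapping table.
# Assumption: groups 13/14/15 all map to 1007 and groups 16/17 both map to 1008.
GROUP_MAP_12_0_TO_12_1 = {
    1: [1000],
    2: [1000],
    5: [1001],
    7: [1002],
    8: [1004, 1005],
    10: [1006],
    12: [1003],
    13: [1007],
    14: [1007],
    15: [1007],
    16: [1008],
    17: [1008],
    20: [1001],
}


def review_catch(old_groups):
    """Return (new_groups, notes) for a catch that listed 12.0 group numbers."""
    new_groups = set()
    notes = []
    for old in sorted(old_groups):
        targets = GROUP_MAP_12_0_TO_12_1.get(old)
        if targets is None:
            notes.append(f"group {old}: not in the 12.0 table")
            continue
        new_groups.update(targets)
        if len(targets) > 1:
            # One 12.0 group became several 12.1 groups; check the catch actions.
            notes.append(f"group {old}: split into {targets}")
    return sorted(new_groups), notes


print(review_catch({1, 8, 16}))
```

The notes flag the cases that most likely need a manual look, such as File Access Errors (8), which the table splits into two 12.1 groups.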

Upgrading from version 12.0.0 to version 12.1.0

If you are installing version 12.1.0 and the installer detects a previous

installation of version 12.0, you will be prompted to first uninstall version

12.0. The installer will maintain your configuration settings if you install in

the same directory.


If you are installing version 12.1.0 on top of version 11.x, you do not need

to uninstall the previous version.

Behavior changes in version 12.0.0

The following sections describe changes in the behavior of Data Services

12.0.0 from previous releases of Data Integrator. In most cases, the new

version avoids changes that would cause existing applications to modify their

results. However, under some circumstances a change has been deemed

worthwhile or unavoidable.

If you are migrating from Data Quality to Data Services, see the Data Quality

to Data Services Migration Guide.

This section includes migration-specific information associated with the

following features:

• Case transform enhancement

• Data Quality projects in Data Integrator jobs

• Data Services web address

• Large object data type enhancements

• License keycodes

• Locale selection

• ODBC bigint data type

• Persistent and pageable cache enhancements

• Row delimiter for flat files

Case transform enhancement

In this version, you can choose the order of Case expression processing to

improve performance by processing the less CPU-intensive expressions

first. When the Preserve case expression order option is not selected in

the Case transform, Data Services determines the order to process the case

expressions. The Preserve case expression order option is available only

when the Row can be TRUE for one case only option is selected.

By default, the Row can be TRUE for one case only option is selected and

the Preserve case expression order option is not selected. Therefore,

when you migrate to this version, Data Services will choose the order to

process your Case expressions by default.


However, the reordering of expressions can change your results because

there is no way to guarantee which expression will evaluate to TRUE first.

If your results changed in this version and you want to obtain the same results

as prior versions, select the Preserve case expression order option.
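
To see why reordering can change results, consider a row that satisfies more than one Case expression while only the first TRUE expression wins. The Python sketch below models that rule only; the labels, predicates, and sample row are invented for illustration and do not come from Data Services.

```python
def first_true_label(row, cases):
    """Return the label of the first case whose expression is TRUE for the row."""
    for label, predicate in cases:
        if predicate(row):
            return label
    return "default"


# Two hypothetical, overlapping Case expressions.
cases = [
    ("high", lambda r: r["amount"] > 100),
    ("big_or_vip", lambda r: r["amount"] > 50 or r["vip"]),
]
row = {"amount": 150, "vip": False}

print(first_true_label(row, cases))                  # original order
print(first_true_label(row, list(reversed(cases))))  # reordered
```

The same row is routed to a different label once the order changes. If your Case expressions are mutually exclusive, the order cannot affect the result and you can leave the option cleared.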

Data Quality projects in Data Integrator jobs

To do data cleansing in Data Integrator version 11.7, you created a Data Quality datastore and imported integrated batch projects as Data Quality transforms. When these imported Data Quality transforms were used in an 11.7 job, the data was passed to Data Quality for cleansing, and then passed back to the Data Integrator job.

In Data Services 12, the Data Quality transforms are built in. Therefore, if

you used imported Data Quality transforms in Data Integrator 11.7, you must

replace them in Data Services with the new built-in Data Quality transforms.

Related Topics

• Modifying Data Integrator 11.7 Data Quality projects on page 161

• Migrating Data Quality integrated batch projects on page 159

• How integrated batch projects migrate on page 89


Data Services web address

In this release, Data Integrator has become part of Data Services. Therefore,

the Web address has changed for the Management Console. In previous

releases, the Web address used "diAdmin" as the following format shows:

http://computername:port/diAdmin

In Data Services, the Web address uses DataServices:

http://computername:port/DataServices

Therefore, when you migrate to Data Services you must make changes in

the following situations:


• If you created a bookmark that points to the Management Console in a

previous release, you must update the bookmark to the changed Web

address.

• If you generated a Web Service Definition Language (WSDL) file in a

previous version of Data Integrator, you must regenerate it to use the

changed Web address of the Administrator.
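
If you keep Management Console addresses in scripts or configuration files, a small helper can rewrite them. The following Python sketch is one possible approach, not a tool shipped with Data Services; the function name, host, and port are hypothetical. It replaces only a leading `diAdmin` path segment and leaves everything else untouched.

```python
from urllib.parse import urlsplit, urlunsplit


def to_data_services_url(url):
    """Rewrite http://host:port/diAdmin... to http://host:port/DataServices..."""
    parts = urlsplit(url)
    segments = parts.path.split("/")
    # segments[0] is "" because the path starts with "/".
    if len(segments) > 1 and segments[1] == "diAdmin":
        segments[1] = "DataServices"
    return urlunsplit(parts._replace(path="/".join(segments)))


# Hypothetical host and port.
print(to_data_services_url("http://computername:8080/diAdmin"))
```

A rewritten address does not replace the need to regenerate WSDL files, which embed the address in generated content.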

Large object data type enhancements

Data Services 12.0 extends the support of large objects as follows:

• Adds support for binary large object (blob) data types from the currently

supported database systems (Oracle, DB2, Microsoft SQL Server, and

so on).

• Extends support for character large object (clob) and national character large object (nclob) data types to other databases.

Prior versions treat the clob and nclob data types as long data types, and

this version continues to treat them as long data types.

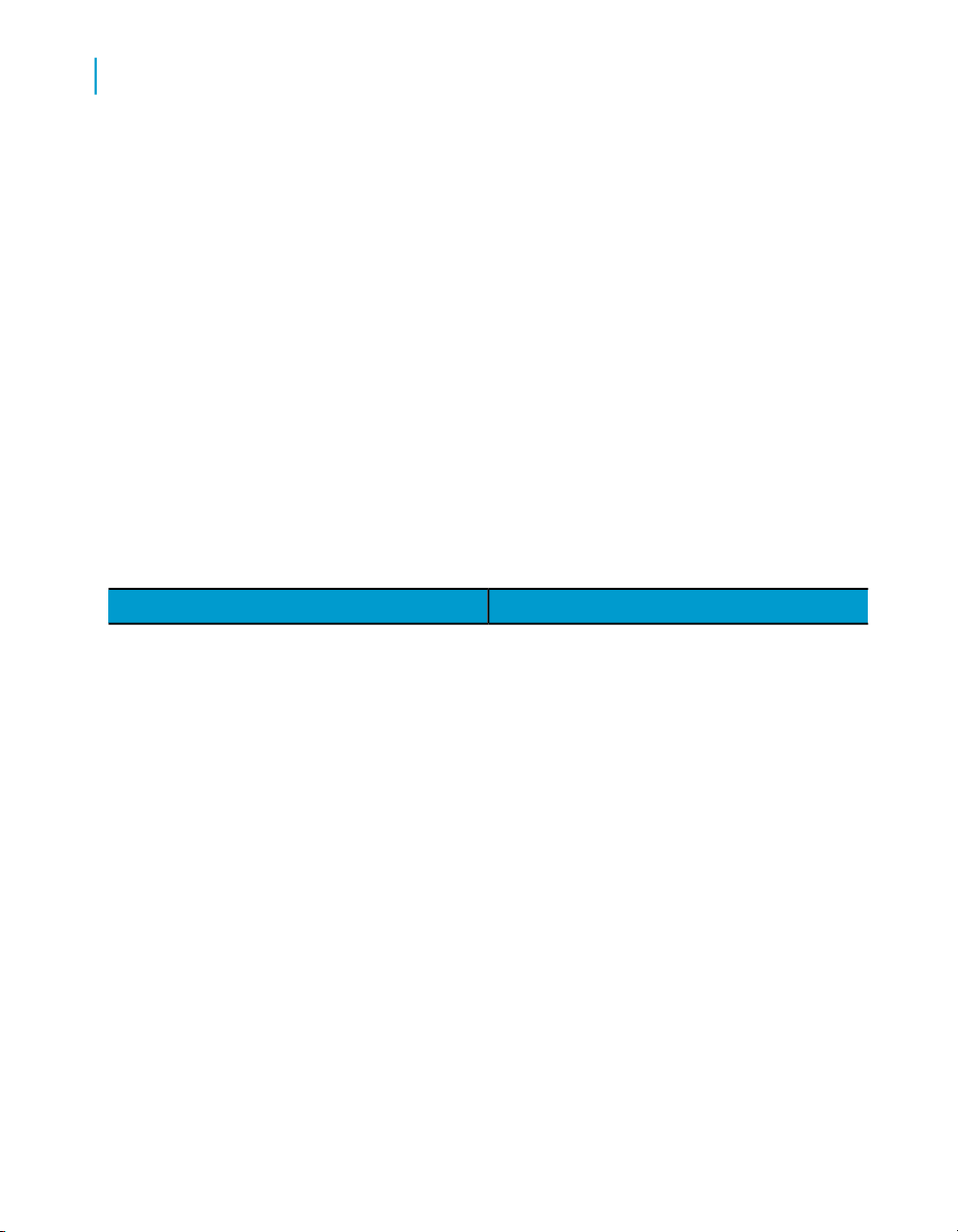

The following table shows the large data types that version 11.7 supports

as long data types and the additional large data types that version 12 now

supports. If your pre-version 12 jobs have sources that contain these

previously unsupported large data types and you now want to use them in

version 12, you must re-import the source tables and modify your existing

jobs to select these newly supported data types.

28 Data Services Migration Considerations Guide

Data Services Migration Considerations

Table 2-4: Database large object data types supported

Behavior changes in version 12.0.0

2

Database

DB2

Informix

Database data type

LONG VARCHAR

LONG VARGRAPHIC

LVARCHAR

Category

VAR

CHAR

Version

11.7 supports

Version

12.0 supports

Version

12.0 data

type

LONGYesYesCLOB

LONGYesYesCLOBCLOB

LONGYesNoNCLOB

LONGYesNoNCLOBDBCLOB

BLOBYesNoBLOBBLOB

VARCHARYesYes

LONGYesYesCLOBTEXT

BLOBYesNoBLOBBYTE

LONGYesYesCLOBCLOB

BLOBYesNoBLOBBLOB

Microsoft

SQL Server

VARCHAR

(max)

NVARCHAR

(max)

VARBINA

RY(max)

LONGYesYesCLOBTEXT

LONGYesNoNCLOBNTEXT

LONGYesNoCLOB

LONGYesNoNCLOB

BLOBYesNoBLOBIMAGE

BLOBYesNoBLOB

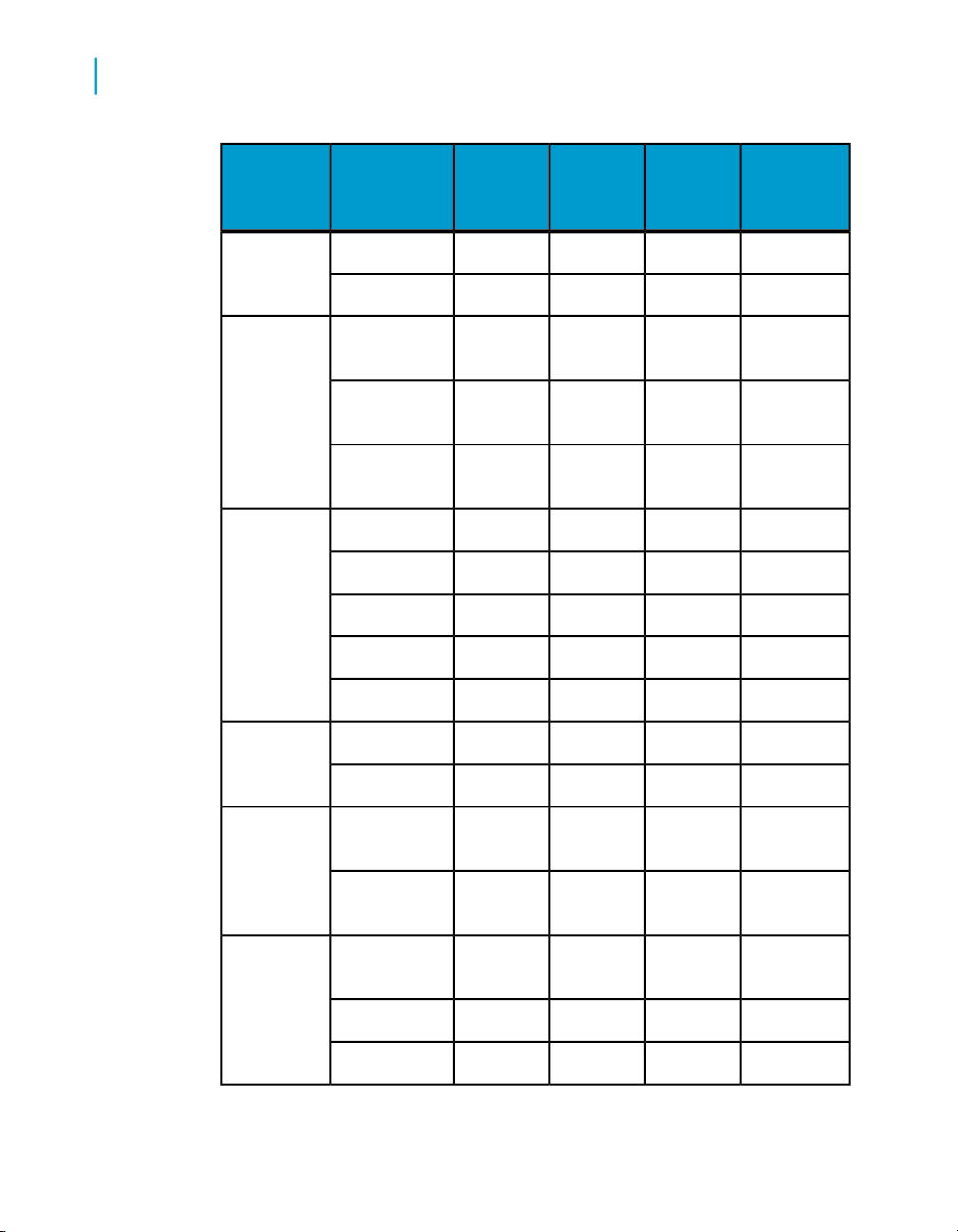

Data Services Migration Considerations Guide 29

Data Services Migration Considerations

2

Behavior changes in version 12.0.0

Database

MySQL

ODBC LONGYesNoNCLOB

Oracle

Database data type

SQL_LONG

VARCHAR

SQL_WLONG

VARCHAR

SQL_LONG

VARBINARY

Category

Version

11.7 supports

Version

12.0 supports

Version

12.0 data

type

LONGYesYesCLOBTEXT

BLOBYesNoBLOBBLOB

LONGYesYesCLOB

BLOBYesNoBLOB

LONGYesYesCLOBLONG

BLOBYesNoBLOBLONGRAW

LONGYesYesCLOBCLOB

LONGYesYesNCLOBNCLOB

BLOBYesNoBLOBBLOB

Sybase ASE

LONG VAR-

Sybase IQ

12.6 or later

Teradata

30 Data Services Migration Considerations Guide

CHAR

LONG BINARY

LONG VARCHAR

LONGYesNoCLOBTEXT

BLOBYesNoBLOBIMAGE

LONGYesYesCLOB

BLOBYesNoBLOB

LONGYesYesCLOB

LONGYesYesCLOBCLOB

BLOBYesNoBLOBBLOB

License keycodes

In this version, Data Services incorporates the BusinessObjects Enterprise

installation technology and uses keycodes to manage the licenses for the

different features. Therefore, Data Services does not use .lic license files

anymore but manages keycodes in the License Manager.

Locale selection

In this version, you no longer set the locale of the Job Server when you install

Data Services. After installation, the locale of the Job Server is set to

<default> which enables Data Services to automatically set the locale for

the repository connection (for the Designer) and to process job data (for the

Job Server) according to the locale of the datastore or operating system.

This capability enables Data Services to automatically change the locale for

better performance (for example, set the locale to non-UTF-8 if the datastore

is non-Unicode data).


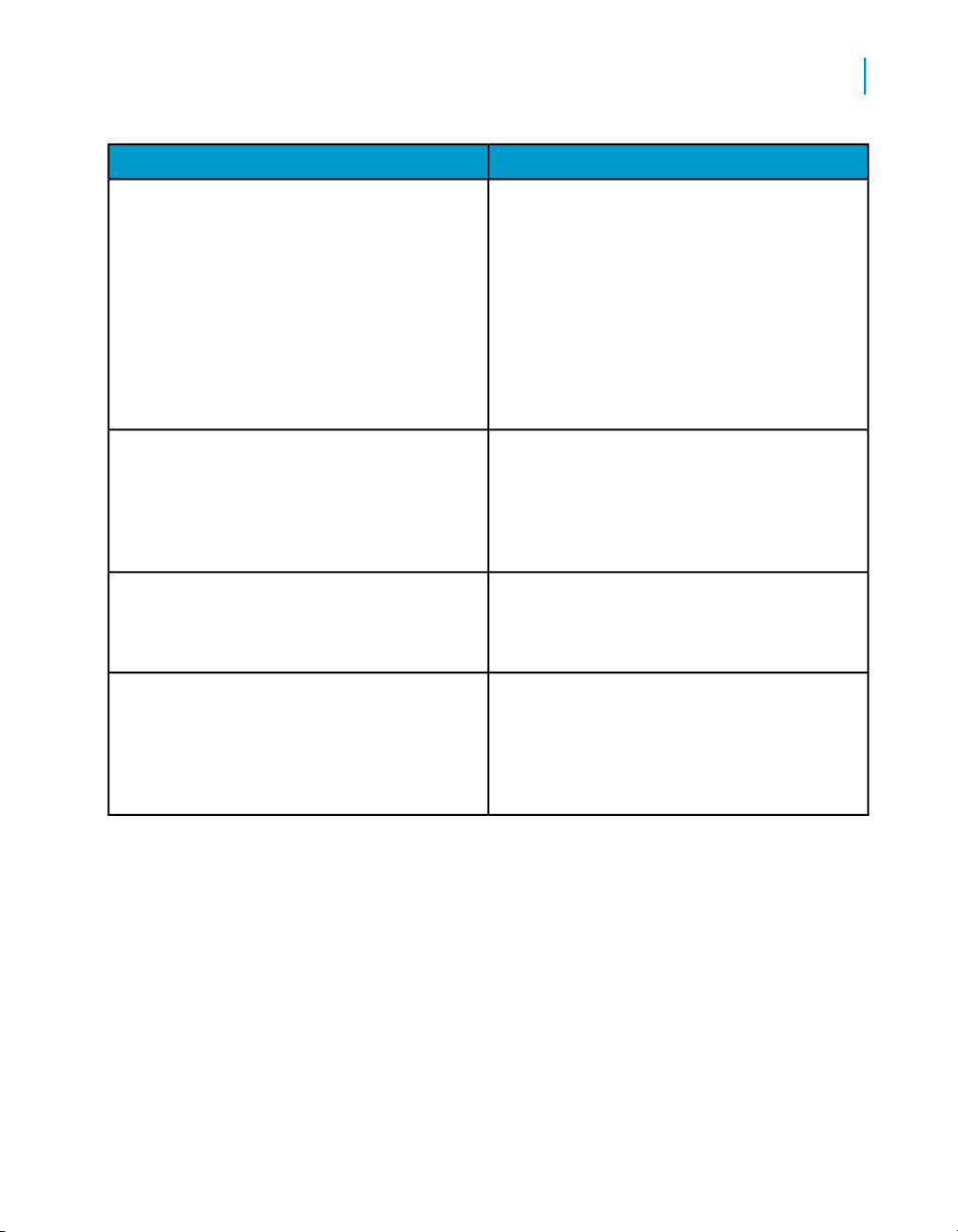

The following table shows different datastores and Job Server locale settings, the resulting locale that prior versions set, and the new locale that version 12.0 sets for the data flow. In this table, the Job Server locale is set to <default> and derives its value from the operating system.

| Datastore 1 locale | Datastore 2 locale | Job Server locale | Data flow locale in prior version | Data flow locale in version 12.0 |
|---|---|---|---|---|
| Single-byte code page | Multi-byte code page | Single-byte code page or Multi-byte code page | Same locale as Job Server | Unicode |
| Multi-byte code page | Multi-byte code page | Single-byte code page | Single-byte code page | Unicode |
| Multi-byte code page | Multi-byte code page | Multi-byte code page | Unicode | Unicode |
| Single-byte code page 1 | Single-byte code page 2 | Single-byte code page 3 | Single-byte code page 3 | Unicode |
| Single-byte code page 1 | Single-byte code page 2 | Multi-byte code page | Unicode | Unicode |
| Single-byte code page 3 | Single-byte code page 3 | Single-byte code page 1 | Single-byte code page 1 | Single-byte code page 3 |
| Single-byte code page 3 | Single-byte code page 3 | Multi-byte code page | Unicode | Unicode |

The following table summarizes the locale that Data Services now sets for each data flow when the locale of the Job Server is set to <default>. Different data flows in the same job can run in either single-byte or Unicode.

| Locale of datastores in data flow | Job Server locale | Locale that Data Services sets |
|---|---|---|
| One datastore has multi-byte locale | Single-byte or Multi-byte | Unicode |
| Different single-byte locales | Single-byte or Multi-byte | Unicode |
| Same single-byte locale | Single-byte | Single-byte |
| Same single-byte locale | Multi-byte | Unicode |

You can override the default locale for the Job Server by using the Data Services Locale Selector utility. From the Windows Start menu, select Programs > BusinessObjects XI 3.1 > BusinessObjects Data Services > Data Services Locale Selector.
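
The summary rules for the <default> Job Server locale can be written as a short decision function. This Python sketch only restates the summary table; it is not how Data Services is implemented, and the function name and code page labels are assumptions.

```python
def data_flow_locale(datastore_locales, job_server_is_multibyte):
    """Return the locale chosen for a data flow under the summary rules.

    datastore_locales is a list of (code_page_name, is_multibyte) pairs,
    one per datastore used in the data flow.
    """
    if not datastore_locales:
        raise ValueError("at least one datastore locale is required")
    # Any multi-byte datastore forces Unicode.
    if any(is_multibyte for _, is_multibyte in datastore_locales):
        return "Unicode"
    names = {name for name, _ in datastore_locales}
    # Different single-byte locales also force Unicode.
    if len(names) > 1:
        return "Unicode"
    # Same single-byte locale: the result depends on the Job Server locale.
    if job_server_is_multibyte:
        return "Unicode"
    return names.pop()


# Hypothetical code page names.
print(data_flow_locale([("cp1252", False), ("cp1252", False)], False))
print(data_flow_locale([("cp1252", False), ("cp1250", False)], False))
```

Only the case where every datastore shares one single-byte locale and the Job Server is also single-byte stays in single-byte; every other combination runs in Unicode.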


ODBC bigint data type

For an ODBC datastore, Data Services now imports a bigint data type as

decimal. In prior releases of Data Integrator, the bigint data type was imported

as a double data type. If your pre-version 12 jobs have sources that contain

bigint data types, you must re-import the source tables and modify your

existing jobs to handle them as decimal data types.
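
This guide does not state why the mapping changed, but one general property of the two types is worth keeping in mind when you review affected jobs: a double cannot represent every 64-bit integer exactly, while a decimal with enough precision can. The Python sketch below illustrates that property with standard library types; it does not use any Data Services API.

```python
from decimal import Decimal

# 2**53 + 1 fits in a 64-bit bigint but is not representable as a double.
big = 9007199254740993

as_double = float(big)
as_decimal = Decimal(big)

print(int(as_double) == big)   # False: the double rounds to a neighboring value
print(int(as_decimal) == big)  # True: the decimal keeps every digit
```

Jobs that compared or joined on large bigint keys through a double column may therefore return different, more exact, results once the column is handled as decimal.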

Persistent and pageable cache enhancements

This release of Data Services provides performance enhancements for the

persistent and pageable caches. Decimal data types now use only half the

memory used in prior versions.

However, persistent cache tables created in prior versions are not compatible

with Data Services. You must recreate them by rerunning the jobs that

originally created and loaded the target persistent cache tables.


Row delimiter for flat files

In Data Services 12, you can now specify the following values as row

delimiters for flat files:

• {new line}

If you specify this value for the row delimiter, Data Services writes the

appropriate characters for the operating system on which the Job Server

is running:

• CRLF (\r\n) in Windows

• LF (\n) in UNIX

• any character sequence

In this case, Data Services writes the characters you entered.

• {UNIX new line}

In this case, Data Services writes the characters LF (\n) regardless of the

operating system.


• {Windows new line}

In this case, Data Services writes the characters CRLF (\r\n) regardless

of the operating system.

In previous releases, you could only specify the following values as row

delimiters for flat files, and the behavior is the same as in the new release:

• {new line}

• any character sequence

If your target appends to an existing file that was generated in a prior release,

Data Services is not backward compatible for the following situations:

• Your Job Server runs on a Windows platform and you choose {UNIX new

line} for the row delimiter.

• Your Job Server runs on a UNIX system and you choose {Windows new

line} for the row delimiter.

In these situations, you must define a new file format, load data from the

existing file into the new file specifying the new row delimiter, and then append

new data to the new file with the new row delimiter.
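
The delimiter rules in this section can be summarized in code. The following Python sketch models them under stated assumptions: the function names and the "windows" and "unix" labels are chosen for this example and are not Data Services identifiers.

```python
def delimiter_written(setting, job_server_os):
    """Return the characters written for a row delimiter setting.

    job_server_os is "windows" or "unix" (labels chosen for this sketch).
    """
    if setting == "{new line}":
        return "\r\n" if job_server_os == "windows" else "\n"
    if setting == "{UNIX new line}":
        return "\n"
    if setting == "{Windows new line}":
        return "\r\n"
    return setting  # any other character sequence is written as entered


def append_needs_new_file_format(setting, job_server_os):
    """True for the two combinations listed as not backward compatible."""
    return (setting, job_server_os) in {
        ("{UNIX new line}", "windows"),
        ("{Windows new line}", "unix"),
    }


print(repr(delimiter_written("{new line}", "windows")))
print(append_needs_new_file_format("{UNIX new line}", "windows"))
```

In both incompatible combinations the new setting writes a different delimiter than {new line} would on the same platform, so appended rows would not match the existing rows in the file.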

Behavior changes in version 11.7.3

The following sections describe changes in the behavior of Data Integrator 11.7.3 from previous releases. In most cases, the new version avoids changes that would cause existing applications to modify their results. However, under some circumstances a change has been deemed worthwhile or unavoidable.

This section includes migration-specific information associated with the

following features:

• Data flow cache type

• Job Server enhancement

• Logs in the Designer

• Pageable cache for memory-intensive data flows

Data flow cache type

When upgrading your repository from versions earlier than 11.7 to an 11.7

repository using version 11.7.3.0, all of the data flows will have a default

Cache type value of pageable. This is different from the behavior in 11.7.2.0,

where the upgraded data flows have a default Cache type value of in-

memory.

Job Server enhancement

Using multithreaded processing for incoming requests, each Data Integrator

Job Server can now accommodate up to 50 Designer clients simultaneously

with no compromise in response time. (To accommodate more than 50

Designers at a time, create more Job Servers.)

In addition, the Job Server now generates a Job Server log file for each day.

You can retain the Job Server logs for a fixed number of days using a new

setting on the Administrator Log retention period page.


Logs in the Designer

In Data Integrator 11.7.3, you will only see the logs (trace, error, monitor) for

jobs that started from the Designer, not for jobs started via other methods

(command line, real-time, scheduled jobs, or Web services). To access these

other log files, use the Administrator in the Data Integrator Management

Console.

Pageable cache for memory-intensive data flows

As a result of multibyte metadata support, Data Integrator might consume more memory when processing and running jobs. If the memory consumption of some of your jobs was near the 2-gigabyte virtual memory limit in a prior version, the same jobs could now run out of virtual memory. If your jobs run out of memory, take the following actions:

• Set the data flow Cache type value to pageable.


• Specify a pageable cache directory that:

• Contains enough disk space for your data. To estimate the amount of

space required for pageable cache, consider factors such as the

number of concurrently running jobs or data flows and the amount of

pageable cache required for each concurrent data flow

• Exists on a separate disk or file system from the Data Integrator system

and operating system (such as the C: drive on Windows or the root

file system on UNIX).
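
A rough sizing check for the pageable cache directory might look like the following Python sketch. It is an estimate only: the per-data-flow figures must come from your own measurements, and the function names and the 20 percent headroom factor are assumptions rather than product guidance.

```python
import shutil


def pageable_cache_bytes_needed(per_data_flow_bytes):
    """Sum the cache estimates of all data flows expected to run concurrently."""
    return sum(per_data_flow_bytes)


def directory_has_room(cache_dir, per_data_flow_bytes, headroom=1.2):
    """Return True if cache_dir's file system can hold the estimate plus headroom."""
    needed = pageable_cache_bytes_needed(per_data_flow_bytes) * headroom
    return shutil.disk_usage(cache_dir).free >= needed


# Hypothetical estimates: three concurrent data flows needing 2, 4, and 1 GB.
estimates = [2 * 10**9, 4 * 10**9, 1 * 10**9]
print(pageable_cache_bytes_needed(estimates))
print(directory_has_room(".", estimates))
```

Replace "." with the pageable cache directory you plan to use, ideally on a disk separate from the Data Integrator and operating system files as recommended above.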

Adapter SDK

The Adapter SDK no longer supports native SQL or partial SQL.

PeopleSoft 8

PeopleSoft 8 support is implemented for Oracle only.

Data Integrator jobs that ran against previous versions of PeopleSoft are not

guaranteed to work with PeopleSoft 8. You must update the jobs to reflect

metadata or schema differences between PeopleSoft 8 and previous versions.

Behavior changes in version 11.7.2

The following sections describe changes in the behavior of Data Integrator

11.7.2 from previous releases. In most cases, the new version avoids changes

that would cause existing applications to modify their results. However, under

some circumstances a change has been deemed worthwhile or unavoidable.

This section includes migration-specific information associated with the

following features:

• Embedded data flows

• Oracle Repository upgrade

• Solaris and AIX platforms

Embedded data flows

In this version of Data Integrator, you cannot create embedded data flows

which have both an input port and an output port. You can create a new

embedded data flow only at the beginning or at the end of a data flow with

at most one port, which can be either an input or an output port.

However, after upgrading to Data Integrator version 11.7.2, embedded data

flows created in previous versions will continue to run.

Oracle Repository upgrade

If you previously upgraded your repository to Data Integrator 11.7.0 and open the "Object State Report" on the central repository from the Web Administrator, you may see the error "ORA-04063 view ALVW_OBJ_CINOUT has errors". This occurs if you had a pre-11.7.0 Oracle central repository and upgraded the central repository to 11.7.0.

Note:

If you upgraded from a pre-11.7.0.0 version of Data Integrator to version

11.7.0.0 and you are now upgrading to version 11.7.2.0, this issue may occur,

and you must follow the instructions below. Alternatively, if you upgraded

from a pre-11.7.0.0 version of Data Integrator to 11.7.2.0 without upgrading

to version 11.7.0.0, this issue will not occur and has been fixed in 11.7.2.0.

To fix this error, manually drop and recreate the view ALVW_OBJ_CINOUT using an Oracle SQL editor, such as SQL*Plus.

Use the following SQL statements to perform the upgrade:

DROP VIEW ALVW_OBJ_CINOUT;

CREATE VIEW ALVW_OBJ_CINOUT (OBJECT_TYPE, NAME, TYPE, NORMNAME,
  VERSION, DATASTORE, OWNER, STATE, CHECKOUT_DT, CHECKOUT_REPO,
  CHECKIN_DT, CHECKIN_REPO, LABEL, LABEL_DT, COMMENTS, SEC_USER,
  SEC_USER_COUT)
AS
(
select OBJECT_TYPE*1000+TYPE, NAME, N'AL_LANG', NORMNAME, VERSION,
  DATASTORE, OWNER, STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,
  CHECKIN_REPO, LABEL, LABEL_DT, COMMENTS, SEC_USER, SEC_USER_COUT
from AL_LANG L1 where NORMNAME NOT IN ( N'CD_DS_D0CAFAE2',
  N'XML_TEMPLATE_FORMAT', N'CD_JOB_D0CAFAE2', N'CD_DF_D0CAFAE2',
  N'DI_JOB_AL_MACH_INFO', N'DI_DF_AL_MACH_INFO',
  N'DI_FF_AL_MACH_INFO' )
union
select 20001, NAME, FUNC_TYPE, NORMNAME, VERSION, DATASTORE,
  OWNER, STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,
  CHECKIN_REPO, LABEL, LABEL_DT, COMMENTS, SEC_USER, SEC_USER_COUT
from AL_FUNCINFO F1 where FUNC_TYPE = N'User_Script_Function'
  OR OWNER <> N'acta_owner'
union
select 30001, NAME, N'PROJECT', NORMNAME, VERSION, N'', N'',
  STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,
  CHECKIN_REPO, LABEL, LABEL_DT, COMMENTS, SEC_USER, SEC_USER_COUT
from AL_PROJECTS P1
union
select 40001, NAME, TABLE_TYPE, NORMNAME, VERSION, DATASTORE,
  OWNER, STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,
  CHECKIN_REPO, LABEL, LABEL_DT, COMMENTS, SEC_USER, SEC_USER_COUT
from AL_SCHEMA DS1 where DATASTORE <> N'CD_DS_d0cafae2'
union
select 50001, NAME, N'DOMAIN', NORMNAME, VERSION, DATASTORE,
  N'', STATE, CHECKOUT_DT, CHECKOUT_REPO, CHECKIN_DT,
  CHECKIN_REPO, N'', to_date( N'01/01/1970', N'MM/DD/YYYY' ),
  N'', SEC_USER, SEC_USER_COUT
from AL_DOMAIN_INFO D1
);

Solaris and AIX platforms

Data Integrator 11.7.2 on Solaris and AIX platforms is a 64-bit application

and requires 64-bit versions of the middleware client software (such as Oracle

and SAP) for effective connectivity. If you are upgrading to Data Integrator

11.7.2 from a previous version, you must also upgrade all associated

middleware client software to the 64-bit version of that client. You must also

update all library paths to ensure that Data Integrator uses the correct 64-bit

library paths.

Behavior changes in version 11.7.0

The following sections describe changes in the behavior of Data Integrator 11.7.0 from previous releases. In most cases, the new version avoids changes that would cause existing applications to modify their results. However, under some circumstances a change has been deemed worthwhile or unavoidable.

This section includes migration-specific information associated with the

following features:

• Data Quality

• Distributed data flows

• JMS Adapter interface

• XML Schema enhancement

• Password management

• Repository size

• Web applications

• Web services

Data Quality

Data Integrator 11.7.0 integrates the BusinessObjects Data Quality XI

application for your data quality (formerly known as Data Cleansing) needs,

which replaces Firstlogic's RAPID technology.

Note:

The following changes are obsolete with Data Services version 12.0 because

the Data Quality transforms are built into Data Services, and you can use

them just like the regular Data Integrator transforms in a data flow.

The following changes to data cleansing occurred in Data Integrator 11.7.0:

• Depending on the Firstlogic products you owned, you previously had up

to three separate transforms that represented data quality functionality:

Address_Enhancement, Match_Merge, and Name_Parsing.

Now, the data quality process takes place through a Data Quality Project.

To upgrade existing data cleansing data flows in Data Integrator, replace

each of the cleansing transforms with an imported Data Quality Project

using the Designer.

You must identify all of the data flows that contain any data cleansing

transforms and replace them with a new Data Quality Project that connects

to a Data Quality blueprint or custom project.

• Data Quality includes many example blueprints - sample projects that

are ready to run or can serve as a starting point when creating your own

customized projects. If the existing blueprints do not completely suit your

needs, just save any blueprint as a project and edit it. You can also create

a project from scratch.

• You must use the Project Architect (Data Quality's graphical user interface)

to edit projects or create new ones. Business Objects strongly

recommends that you do not attempt to manually edit the XML of a project

or blueprint.

• Each imported Data Quality project in Data Integrator represents a

reference to a project or blueprint on the data quality server. The Data

Integrator Data Quality projects allow field mapping.


To migrate your data flow to use the new Data Quality transforms

Note:

The following procedure is now obsolete with Data Services version 12.0

because the Data Quality transforms are now built into Data Services and

you can use them just like the regular Data Integrator transforms in a data

flow. If you performed this procedure in Data Integrator version 11.7, you

will need to migrate these data flows to Data Services. See Data Quality

projects in Data Integrator jobs on page 27.

1. Install Data Quality XI, configure and start the server. For installation

instructions, see your Data Quality XI documentation.

Note:

You must start the server before using Data Quality XI with Data Integrator.

2. In the Data Integrator Designer, create a new Business Objects Data

Quality datastore and connect to your Data Quality server.

3. Import the Data Quality projects that represent the data quality transformations you want to use. Each project appears as a Data Quality project in your datastore. For the most common data quality transformations, you can use existing blueprints (sample projects) in the Data Quality repository.

4. Replace each occurrence of the old data cleansing transforms in your

data flows with one of the imported Data Quality transforms. Reconnect

the input and output schemas with the sources and targets used in the

data flow.


Note:

If you open a data flow containing old data cleansing transforms

(address_enhancement, name_parsing, match_merge), Data Integrator

displays the old transforms (even though they no longer appear in the object

library). You can even open the properties and see the details for each old

transform.

If you attempt to validate a data flow that contains an old data cleansing

transform, Data Integrator throws an error. For example:

[Custom Transform:Address_Enhancement] BODI-1116074: First Logic

support is obsolete. Please use the new Data Quality feature.

If you attempt to execute a job that contains data flows using the old data cleansing transforms, Data Integrator throws the same type of error.

If you need help migrating your data cleansing data flows to the new Data

Quality transforms, contact the SAP Business Objects Help Portal at

http://help.sap.com/.

Distributed data flows

After upgrading to this version of Data Integrator, existing jobs have the

following default values and behaviors:

• Job distribution level: Job.

All data flows within a job will be run on the same job server.

• The cache type for all data flows: In-memory type

Uses STL map and applies to all join caches, table comparison caches

and lookup caches, and so forth.

• Default for Collect statistics for optimization and Collect statistics for monitoring: deselected.

• Default for Use collected statistics: selected.

Since no statistics are initially collected, Data Integrator will not initially

use statistics.

• Every data flow is run as a process (not as a sub data flow process).

New jobs and data flows you create using this version of Data Integrator

have the following default values and behaviors:

• Job distribution level: Job.

• The cache type for all data flows: Pageable.

• Collect statistics for optimization and Collect statistics for

monitoring: deselected.

• Use collected statistics: selected.

If you want Data Integrator to use statistics, you must collect statistics for

optimization first.


• Every data flow is run as a single process. To run a data flow as multiple

sub data flow processes, you must use the Data_Transfer transform or

select the Run as a separate process option in transforms or functions.

• All temporary cache files are created under the LINK_DIR\Log\PCache

directory. You can change this option from the Server Manager.

JMS Adapter interface

A new license key may be required to install the JMS Adapter interface. If

you have a license key issued prior to Data Integrator XI R2 version 11.5.1,

send a request to licensing@businessobjects.com with "Data Integrator

License Keys" as the subject line.

XML Schema enhancement

Data Integrator 11.7 adds the new Include schema location option for XML

target objects. This option is selected by default.

Data Integrator 11.5.2 provided the key

XML_Namespace_No_SchemaLocation for section AL_Engine in the

Designer option Tools > Options > Job Server > General, and the default

value, FALSE, indicates that the schema location is included. If you upgrade

from 11.5.2 and had set XML_Namespace_No_SchemaLocation to TRUE

(indicates that the schema location is NOT included), you must open the

XML target in all data flows and clear the Include schema location option

to keep the old behavior for your XML target objects.
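The relationship between the 11.5.2 key and the 11.7 option is an inversion, which can be sketched as follows. This is an illustrative Python sketch only; the function names are hypothetical, and the booleans simply model the key value and the check box state described above.

```python
def include_schema_location(xml_namespace_no_schemalocation=False):
    """Old 11.5.2 key -> whether the schema location was included.

    The key's default, FALSE, means the schema location IS included.
    """
    return not xml_namespace_no_schemalocation


def needs_manual_change(old_key_value, new_option_default=True):
    """True when the 11.7 default (option selected) differs from the old behavior."""
    return include_schema_location(old_key_value) != new_option_default


# Key left at its default (FALSE): the 11.7 default already matches.
print(needs_manual_change(False))
# Key had been set to TRUE: clear the option on each XML target.
print(needs_manual_change(True))
```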

Password management

Data Integrator now encrypts all password fields using the Twofish algorithm.

To simplify updating new passwords for the repository database, Data

Integrator includes a password file feature. If you do not have a requirement

to change the password to the database that hosts the repository, you may

not need to use this optional feature.

However, if you must change the password (for example, security

requirements stipulate that you must change your password every 90 days),

then Business Objects recommends that you migrate your scheduled or

external job command files to use this feature.

Migration requires that every job command file be regenerated to use the

password file. After migration, when you update the repository password,

you need only regenerate the password file. If you do not migrate using the

password file feature, then you must regenerate every job command file

every time you change the associated password.
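The trade-off can be made concrete with a small count. The sketch below is a rough model, assuming each regeneration counts as one operation; the function name and the figures (40 job command files, 4 password changes) are illustrative only.

```python
def regenerations(job_command_files, password_changes, use_password_file):
    """Count regeneration operations over a number of password changes."""
    if use_password_file:
        # One-time migration of every job command file,
        # then one password file regeneration per password change.
        return job_command_files + password_changes
    # Without the password file, every job command file is
    # regenerated on every password change.
    return job_command_files * password_changes


print(regenerations(40, 4, use_password_file=False))  # 160
print(regenerations(40, 4, use_password_file=True))   # 44
```

With a 90-day rotation policy (about four changes per year), the one-time migration cost is recovered after the second password change in this example.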

Repository size

Due to the multi-byte metadata support, the size of the Data Integrator

repository is about two times larger for all database types except Sybase.

Web applications

• The Data Integrator Administrator (formerly called the Web Administrator)

and Metadata Reports interfaces have been combined into the new

Management Console in Data Integrator 11.7. Now, you can start any

Data Integrator Web application from the Management Console launch

pad (home page). If you have created a bookmark or favorite that points

to the previous Administrator URL, you must update the bookmark to

point to http://computername:port/diAdmin.

• If in a previous version of Data Integrator you generated WSDL for Web

service calls, you must regenerate the WSDL because the URL to the

Administrator has been changed in Data Integrator 11.7.
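Updating a saved bookmark amounts to keeping the host and port and replacing the path with /diAdmin. A minimal Python sketch follows; the function name, the host name, the port, and the old path in the example are hypothetical, since this guide does not state the previous URL.

```python
from urllib.parse import urlsplit, urlunsplit


def management_console_url(old_bookmark):
    """Keep scheme, host, and port; point the path at the Management Console."""
    parts = urlsplit(old_bookmark)
    return urlunsplit((parts.scheme or "http", parts.netloc, "/diAdmin", "", ""))


# The old path below is a placeholder, not the actual previous Administrator URL.
print(management_console_url("http://etlhost:28080/oldAdminPath/index.jsp"))
```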

Web services

Data Integrator now uses the Xerces2 library. If you upgrade to 11.7 or

above and had configured the Web Services adapter to use the xsdPath

parameter in the Web Service configuration file, delete the old Web Services

adapter and create a new one. It is no longer necessary to configure the

xsdPath parameter.

Behavior changes in version 11.6.0

Data Integrator version 11.6.0.0 will only support automated upgrade from

ActaWorks and Data Integrator versions 5.2.0 and above to version 11.5.

For customers running versions prior to ActaWorks 5.2.0 the recommended

migration path is to first upgrade to Data Integrator version 6.5.1.
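The version rule above can be expressed as a simple check. This is a hedged Python sketch: the function names are hypothetical, and it assumes that an installation brought to 6.5.1 then qualifies for the automated upgrade, which the text implies but does not state outright.

```python
MIN_AUTOMATED = (5, 2, 0)   # oldest version with automated upgrade support
INTERMEDIATE = "6.5.1"      # recommended stepping stone for older versions


def parse_version(text):
    """Turn '5.2' or '11.0.0' into a tuple padded to three components."""
    parts = [int(p) for p in text.split(".")]
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def upgrade_path(current_version):
    """Return the ordered steps for an installation at current_version."""
    if parse_version(current_version) >= MIN_AUTOMATED:
        return ["automated upgrade"]
    return ["upgrade to Data Integrator " + INTERMEDIATE, "automated upgrade"]


print(upgrade_path("5.1.3"))
print(upgrade_path("11.0.0"))
```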

If you are upgrading to 11.6.0.0, to take advantage of the new Netezza

bulk-loading functionality, you must upgrade your repository.

If you are upgrading from Data Integrator versions 11.0.0 or older to Data

Integrator 11.6.0.0, you must upgrade your repository. If upgrading from

11.5.0 to 11.6.0.0, you must upgrade your repository to take advantage of

the new:

• Data Quality Dashboards feature

• Preserving database case feature

• Salesforce.com Adapter Interface (delivered with version 11.5 SP1 Adapter

Interfaces)

The following sections describe changes in the behavior of Data Integrator

from previous releases. In most cases, the new version avoids changes that

would cause existing applications to modify their results. However, under

some circumstances a change has been deemed worthwhile or unavoidable.

This section includes:

• Netezza bulk loading

• Conversion between different data types

Netezza bulk loading

If you are upgrading to 11.6.0.0, to take advantage of the new Netezza

bulk-loading functionality, follow this procedure:

Conversion between different data types

For this release, there is a change in behavior for data conversion between

different data types. Previously, if an error occurred during data conversion

(for example, when converting a varchar string that contains non-digits to

an integer), the result was random. Now, the return value is NULL for any

unsuccessful conversion.

Previously Data Integrator returned random data for the result of a varchar

to datetime conversion when the varchar string contains an illegal date format.

Now, the return value will be NULL.
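The new behavior resembles a conversion that returns a null value instead of an unpredictable one. The Python sketch below only illustrates that pattern: the function names and the date format string are assumptions, and Python's own parsing rules (for example, its tolerance of surrounding whitespace) are not those of Data Integrator.

```python
from datetime import datetime
from typing import Optional


def to_int(value: str) -> Optional[int]:
    """Return the integer, or None (standing in for NULL) if conversion fails."""
    try:
        return int(value)
    except ValueError:
        return None


def to_datetime(value: str, fmt: str = "%Y.%m.%d %H:%M:%S") -> Optional[datetime]:
    """Return the datetime, or None if the string does not match fmt."""
    try:
        return datetime.strptime(value, fmt)
    except ValueError:
        return None


print(to_int("123"))               # 123
print(to_int("12a"))               # None
print(to_datetime("28/11/2008"))   # None
```

Downstream logic that previously tolerated arbitrary values from failed conversions may need an explicit NULL check after upgrading.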

Behavior changes in version 11.5.1.5

Data Integrator version 11.5.1.5 supports only automated upgrade from

ActaWorks and Data Integrator versions 5.2.0 and above to version 11.5.

For customers running versions prior to ActaWorks 5.2.0 the recommended

migration path is to first upgrade to Data Integrator version 6.5.1.

If you are upgrading to 11.5.1.5, to take advantage of the new Netezza

bulk-loading functionality, you must upgrade your repository.

If you are upgrading from Data Integrator versions 11.0.0 or older to Data

Integrator 11.5.1.5, you must upgrade your repository. If upgrading from

11.5.0 to 11.5.1.5, you must upgrade your repository to take advantage of

these features:

• Data Quality Dashboards

• Preserving database case

• Salesforce.com Adapter Interface (delivered with version 11.5 SP1 Adapter

Interfaces)

Behavior changes in version 11.5.1

No new behavior changes in this release.

Behavior changes in version 11.5.0.0

Data Integrator version 11.5 will only support automated upgrade from

ActaWorks and Data Integrator versions 5.2.0 and above to version 11.5.

For customers running versions prior to ActaWorks 5.2.0 the recommended

migration path is to first upgrade to Data Integrator version 6.5.1.

If you are upgrading from Data Integrator version 11.0.0 to Data Integrator

version 11.5.0.0, you must upgrade your repository.

The following sections describe changes in the behavior of Data Integrator

from previous releases. In most cases, the new version avoids changes that

would cause existing applications to modify their results. However, under

some circumstances a change has been deemed worthwhile or unavoidable.

Web Services Adapter

Newer versions of Data Integrator will overwrite the XSD that defines the

input and output message files. Data Integrator stores those XSDs in a

Tomcat directory and during Metadata import passes to the engine a URL

to the XSDs. The engine expects the XSDs to be available at run time.

To avoid this issue, prior to installing Data Integrator version 11.5, save a

copy of your LINK_DIR\ext\webservice contents. After installing the new

version of Data Integrator, copy the directory contents back in place. If you

do not follow this procedure, the web service operations will require re-import

from the web service datastore after the installation completes.
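The save-and-restore procedure can be scripted. The following Python sketch assumes Python 3.8 or later and that the installation directory is passed in as a path; the function names and the backup folder name are hypothetical. Run the first function before installing and the second one afterward.

```python
import shutil
from pathlib import Path


def backup_webservice_dir(link_dir: Path, backup_root: Path) -> Path:
    """Copy <link_dir>/ext/webservice to a new folder under backup_root."""
    src = link_dir / "ext" / "webservice"
    dst = backup_root / "webservice_backup"  # hypothetical folder name
    shutil.copytree(src, dst)  # fails if dst already exists, protecting old backups
    return dst


def restore_webservice_dir(link_dir: Path, backup: Path) -> None:
    """Copy the saved contents back, overwriting files the installer replaced."""
    dst = link_dir / "ext" / "webservice"
    shutil.copytree(backup, dst, dirs_exist_ok=True)
```

Copying back with dirs_exist_ok=True overwrites same-named files while leaving any additional files from the new installation in place; whether that merge is appropriate for a given environment is a judgment call.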

Varchar behavior

Data Integrator 11.5.0 and newer (including Data Services) conforms to ANSI