Data Integrator Reference Guide

Data Integrator 11.0.1

Windows and UNIX operating systems

Copyright

If you find any problems with this documentation, please report them to Business Objects S.A. in writing at documentation@businessobjects.com.

Copyright © Business Objects S.A. 2004. All rights reserved.

Trademarks

Business Objects, the Business Objects logo, Crystal Reports, and Crystal Enterprise are trademarks or registered trademarks of Business Objects SA or its affiliated companies in the United States and other countries. All other names mentioned herein may be trademarks of their respective owners.

Patents

Business Objects owns the following U.S. patents, which may cover products that are offered and sold by Business Objects: 5,555,403, 6,247,008 B1, 6,578,027 B2, 6,490,593 and 6,289,352.

Contents

Chapter 1 Introduction
Who should read this guide
Business Objects information resources

Chapter 2 Data Integrator Objects
Object classes
Reusable objects
Single-use objects
Object options, properties, and attributes
Descriptions of objects
Annotation
Batch Job
Catch
Conditional
COBOL copybook file format
Data flow
Datastore
Document
DTD
File format
Function
Log
Message function
Outbound message
Project
Query transform
Real-time job
Script
Source
Table
Target
Template table
Transform
Try
While loop
Work flow
XML file
XML message
XML Schema
XML template

Chapter 3 Smart Editor
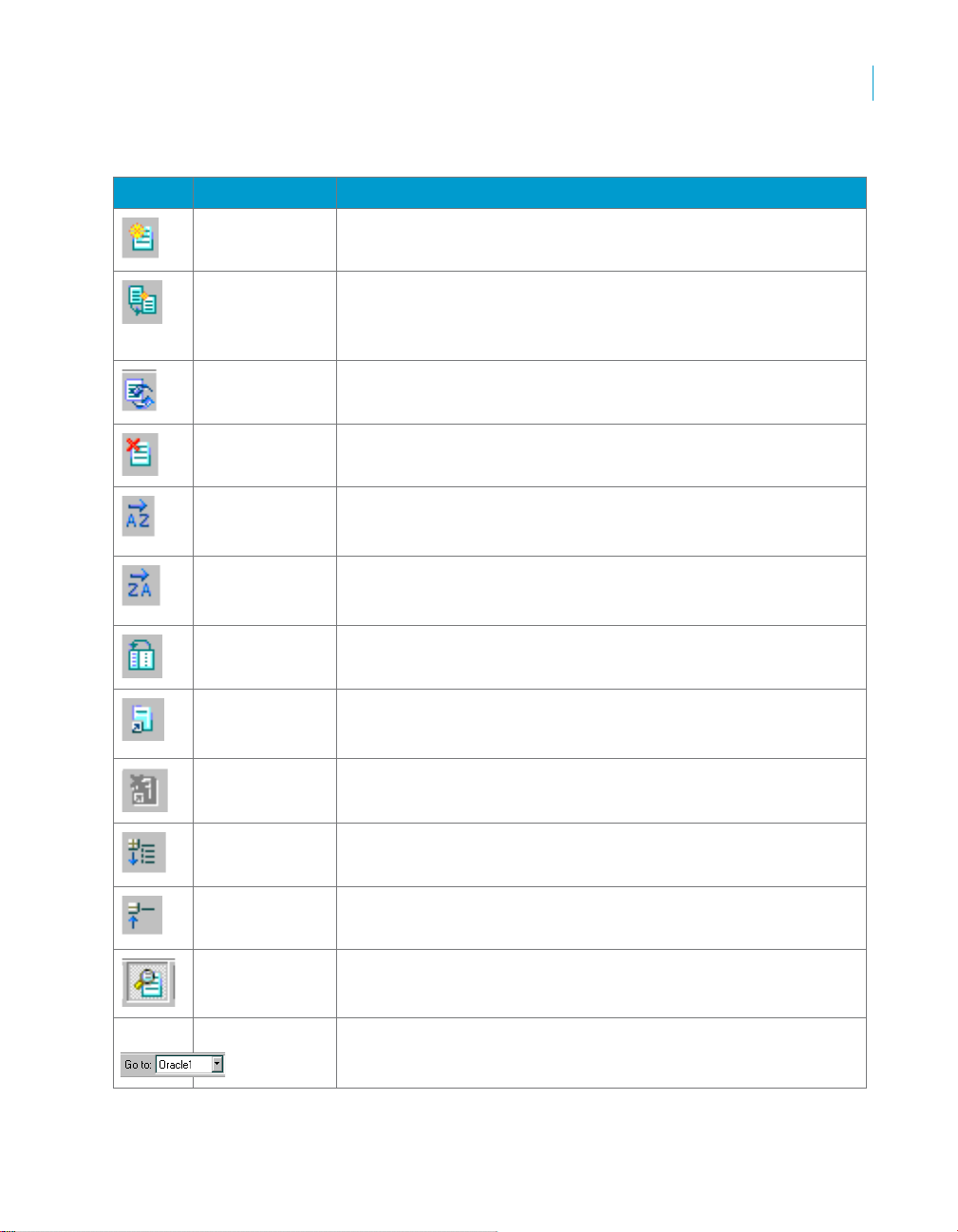

Smart editor toolbar
Editor Library pane
Tabs
Find option
Editor pane
Syntax coloring
Selection list and tool tips
Right-click menu and toolbar
Validation

Chapter 4 Data Types
date
datetime
decimal
double
int
interval
long
numeric
real
time
timestamp
varchar
Data type processing
Date arithmetic
Type conversion
Conversion to/from Data Integrator internal data types
Conversion of data types within expressions
Conversion among number data types
Conversion between explicit data types

Chapter 5 Transforms
Operation codes
Descriptions of transforms
Address_Enhancement
Case
Date_Generation
Effective_Date
Hierarchy_Flattening
History_Preserving
Key_Generation
Map_CDC_Operation
Map_Operation
Match_Merge
Merge
Name_Parsing
Pivot (Columns to Rows)
Reverse Pivot (Rows to Columns)
Query
Row_Generation
SQL
Table_Comparison
Validation

Chapter 6 Functions and Procedures
Functions compared with transforms
Operation of a function
Arithmetic in date functions
Including functions in expressions
Kinds of functions you can use in Data Integrator
Custom functions
Database and application functions
Descriptions of built-in functions
abs
add_months
avg
ceil
concat_date_time
count
current_configuration
current_system_configuration
dataflow_name
datastore_field_value
date_diff
date_part
day_in_month
day_in_week
day_in_year
db_type
db_version
db_database_name
db_owner
decode
exec
extract_from_xml
file_exists
fiscal_day
floor
gen_row_num
get_domain_description
get_env
get_error_filename
get_file_attribute
get_monitor_filename
get_trace_filename
host_name
ifthenelse
index
init_cap
interval_to_char
is_set_env
is_valid_date
is_valid_datetime
is_valid_decimal
is_valid_double
is_valid_int
is_valid_real
is_valid_time
isempty
isweekend
job_name
julian
julian_to_date
key_generation
last_date
length
literal
load_to_xml
long_to_varchar
lookup
lookup_ext
lookup_seq
lower
lpad
lpad_ext
ltrim
ltrim_blanks
ltrim_blanks_ext
mail_to
match_pattern
match_regex
max
min
month
num_to_interval
nvl
print
pushdown_sql
quarter
raise_exception
raise_exception_ext
rand
replace_substr
replace_substr_ext
repository_name
round
rpad
rpad_ext
rtrim
rtrim_blanks
rtrim_blanks_ext
set_env
sleep
sql
smtp_to
substr
sum
sysdate
system_user_name
systime
table_attribute
to_char
to_date
to_decimal
total_rows
trunc
truncate_table
upper
varchar_to_long
week_in_month
week_in_year
WL_GetKeyValue
word
word_ext
workflow_name
year
About procedures
Overview
Requirements
Creating stored procedures in a database
Creating stored procedures in Oracle
Creating stored procedures in MS SQL Server or Sybase ASE
Creating stored procedure in DB2
Importing metadata for stored procedures
Structure of a stored procedure
Calling stored procedures
In general
From queries
Without the function wizard
Checking execution status

Chapter 7 Data Integrator Scripting Language
Language syntax
Syntax for statements in scripts
Syntax for column and table references in expressions
Strings
Quotation marks
Escape characters
Trailing blanks
Variables
Variable interpolation
Functions and stored procedures
Operators
NULL values
NULL values and empty strings
Debugging and Validation
Keywords
BEGIN
CATCH
ELSE
END
IF
RETURN
TRY
WHILE
Sample scripts
Square function
RepeatString function

Chapter 8 Metadata in repository tables and views
AL_AUDIT
AL_AUDIT_INFO
Imported metadata
AL_INDEX
AL_PCOLUMN
AL_PKEY
ALVW_COLUMNATTR
ALVW_COLUMNINFO
ALVW_FKREL
ALVW_MAPPING
Example use case
Mapping types
How mappings are computed
Mapping complexities
Storing nested column-mapping data
ALVW_TABLEATTR
ALVW_TABLEINFO
Internal metadata
AL_LANG
AL_ATTR
AL_USAGE
Example use cases
ALVW_FUNCINFO
ALVW_PARENT_CHILD
Metadata Integrator tables
AL_CMS_BV
AL_CMS_BV_FIELDS
AL_CMS_REPORTS
AL_CMS_REPORTUSAGE
AL_CMS_FOLDER
AL_CMS_UNV
AL_CMS_UNV_OBJ
Operational metadata
AL_HISTORY
ALVW_FLOW_STAT

Chapter 9 Locales and Multi-Byte Functionality
Locale support
Code page support
Processing with and without UTF-16 Unicode
Minimizing transcoding in Data Integrator
Guidelines for setting locales
Job Server locale
Database, database client, and datastore locales
File format locales
XML encodings
Locales Data Integrator automatically sets
Exporting and importing ATLs
Exporting to other repositories
Multi-byte support
Multi-byte string functions supported in Data Integrator
Numeric data types: assigning constant values
Assigning a value as a numeric directly
Assigning a value in string format
BOM Characters
Round-trip conversion
Column Sizing
Sorting
List of supported locales and encodings
Languages
Territories
Code pages
XML encodings
Supported sorting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 630

Limitations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 630

Chapter 10 Reserved Words 633

Chapter 1 Introduction

About this guide

Welcome to the Data Integrator Reference Guide. This guide provides

detailed information about the objects, data types, transforms, and functions

in the Data Integrator Designer.

This book contains the following chapters:

• Chapter 2: Data Integrator Objects describes options, properties, and attributes for objects, such as data flows and work flows.

• Chapter 3: Smart Editor describes the editor that can be used to create scripts, expressions, and custom functions.

• Chapter 4: Data Types describes the data types used in Data Integrator, and how Data Integrator handles data type conversions.

• Chapter 5: Transforms describes the transforms included with Data Integrator and how to use these transforms.

• Chapter 6: Functions and Procedures describes the functions included with Data Integrator and how to use these functions.

• Chapter 7: Data Integrator Scripting Language describes the Data Integrator scripting language and how you can use this language to create scripts, expressions, and custom functions.

• Chapter 8: Metadata in repository tables and views describes the repository’s reporting tables and views that you can use to analyze a Data Integrator application.

• Chapter 9: Locales and Multi-Byte Functionality describes how Data Integrator supports the setting of locales and multi-byte code pages for the Designer, Job Server, and Access Server.

• Chapter 10: Reserved Words lists words that have special meaning in Data Integrator. You cannot use these words in names that you create, such as names of data flows.

For source-specific information, such as information pertaining to a particular back-office application, consult the supplement for that application.

Who should read this guide

This and other Data Integrator product documentation assumes the following:

• You are an application developer, consultant or database administrator

working on data extraction, data warehousing, or data integration.

• You understand your source and target data systems, DBMS, legacy

systems, business intelligence, and messaging concepts.

• You understand your organization’s data needs.

• You are familiar with SQL (Structured Query Language).

• If you are interested in using this product to design real-time processing, you are familiar with:

  • DTD and XML Schema formats for XML files

  • Publishing Web Services (WSDL, HTTP/S and SOAP protocols, etc.)

• You are familiar with Data Integrator installation environments: Microsoft

Windows or UNIX.

Business Objects information resources

Consult the Data Integrator Getting Started Guide for:

• An overview of Data Integrator products and architecture

• Data Integrator installation and configuration information

• A list of product documentation and a suggested reading path

After you install Data Integrator, you can view technical documentation from

many locations. To view documentation in PDF format, you can:

• Select Start > Programs > Data Integrator version > Data Integrator Documentation and choose:

  • Release Notes

  • Release Summary

  • Technical Manuals

• Select one of the following from the Designer’s Help menu:

  • Release Notes

  • Release Summary

  • Technical Manuals

• Select Help from the Data Integrator Administrator

You can also view and download PDF documentation by visiting Business Objects Customer Support online. To access this Web site, you must have a valid user name and password. To obtain your user name and password, go to http://www.techsupport.businessobjects.com and click Register.

Chapter 2 Data Integrator Objects

This chapter contains reference information about general Data Integrator

objects, such as data flows, jobs, and work flows. Topics include:

• Characteristics of objects

• Descriptions of objects

Note: For information about source-specific objects, consult the reference

chapter of the Data Integrator supplement document for that source.


Characteristics of objects

This section discusses common characteristics of all Data Integrator objects.

Specifically, this section discusses:

• Object classes

• Object options, properties, and attributes

Object classes

An object’s class determines how you create and retrieve the object. There

are two classes of objects:

• Reusable objects

• Single-use objects

Reusable objects

After you define and save a reusable object, Data Integrator stores the

definition in the repository. You can then reuse the definition as often as

necessary by creating calls to the definition.

Most objects created in Data Integrator are available for reuse. You access

reusable objects through the object library.

A reusable object has a single definition; all calls to the object refer to that

definition. If you change the definition of the object in one place, and then

save the object, the change is reflected in all other calls to the object.

A data flow, for example, is a reusable object. Multiple jobs, such as a weekly

load job and a daily load job, can call the same data flow. If the data flow is

changed, both jobs call the new version of the data flow.

When you drag and drop an object from the object library, you are creating a

new reference (or call) to the existing object definition.
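The Python sketch below illustrates this reference behavior: two jobs hold calls to the same stored definition. It is only a conceptual model, not a description of how Data Integrator stores objects internally. The class names, the `resolve` method, and the `DF_Load_Sales` data flow are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DataFlowDefinition:
    """The single stored definition of a reusable object (hypothetical model)."""
    name: str
    steps: List[str] = field(default_factory=list)


@dataclass
class Call:
    """A call records only the name of the definition it refers to."""
    target: str

    def resolve(self, repository: Dict[str, DataFlowDefinition]) -> DataFlowDefinition:
        # Every call looks up the same definition object in the repository.
        return repository[self.target]


# One definition in the repository, called by two different jobs.
repository: Dict[str, DataFlowDefinition] = {
    "DF_Load_Sales": DataFlowDefinition("DF_Load_Sales", ["read source", "load target"]),
}
weekly_load_job = [Call("DF_Load_Sales")]
daily_load_job = [Call("DF_Load_Sales")]

# Editing the definition once changes what both jobs call.
repository["DF_Load_Sales"].steps.append("validate rows")
```

After the edit, both `weekly_load_job[0]` and `daily_load_job[0]` resolve to the same object. Each therefore sees the added "validate rows" step, which matches the weekly/daily load example above.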

You can edit reusable objects at any time independent of the current open

project. For example, if you open a new project, you can go to the object

library, open a data flow, and edit it. The object will remain “dirty” (that is, your

edited changes will not be saved) until you explicitly save it.

Functions are reusable objects that are not available in the object library. Data

Integrator provides access to these objects through the function wizard

wherever they can be used.

Some objects in the object library are not reusable in all instances:

• Datastores are in the object library because they are a method for

categorizing and accessing external metadata.


• Built-in transforms are “reusable” in that every time you drop a transform,

a new instance of the transform is created.

Saving reusable objects

“Saving” a reusable object in Data Integrator means storing the language that

describes the object to the repository. The description of a reusable object

includes these components:

• Properties of the object

• Options for the object

• Calls this object makes to other objects

• Definition of single-use objects called by this object

If an object contains a call to another reusable object, only the call to the

second object is saved, not changes to that object’s definition.

Data Integrator stores the description even if the object does not validate.
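The Python sketch below shows what such a saved description might contain. The JSON layout, the field names, and the `WF_Nightly`, `DF_Load_Sales`, and `SC_Init` objects are hypothetical; this guide does not document the repository's storage format. The sketch follows the rules stated above: properties, options, and embedded single-use objects are stored in full, while a call to another reusable object is stored by name only.

```python
import json
from typing import Any, Dict, List


def describe_reusable_object(
    name: str,
    properties: Dict[str, Any],
    options: Dict[str, Any],
    calls: List[str],
    single_use_objects: List[Dict[str, Any]],
) -> str:
    """Build a hypothetical saved description of a reusable object.

    Calls to other reusable objects are recorded by name only, so the called
    objects' own definitions are not part of this description. Single-use
    objects are embedded in full because they exist only inside their caller.
    """
    description = {
        "name": name,
        "properties": properties,
        "options": options,
        "calls": calls,
        "single_use_objects": single_use_objects,
    }
    return json.dumps(description, indent=2, sort_keys=True)


# Hypothetical work flow that calls one data flow and owns one script.
saved = describe_reusable_object(
    name="WF_Nightly",
    properties={"description": "Nightly load"},
    options={},
    calls=["DF_Load_Sales"],
    single_use_objects=[{"type": "script", "name": "SC_Init"}],
)
```

Because only the name `DF_Load_Sales` is saved, later edits to that data flow are never copied into the work flow's description.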

Data Integrator saves objects without prompting you:

• When you import an object into the repository.

• When you finish editing:

• Datastores

• Flat file formats

• XML Schema or DTD formats

You can explicitly save the reusable object currently open in the workspace by choosing Save from the Project menu. If a single-use object is open in the workspace, the Save command is not available.

To save all objects in the repository that have changes, choose Save All from the Project menu.

Data Integrator also prompts you to save all objects that have changes when you execute a job and when you exit the Designer.

Single-use objects

Single-use objects appear only as components of other objects. They operate

only in the context in which they were created.

Saving single-use objects

“Saving” a single-use object in Data Integrator means storing the language

that describes the object to the repository. The description of a single-use

object can only be saved as part of the reusable object that calls the single-use object.

Data Integrator stores the description even if the object does not validate.


Object options, properties, and attributes

Each object is associated with a set of options, properties, and attributes:

• Options control the operation of an object. For example, in a datastore,

an option is the name of the database to which the datastore connects.

• Properties document an object. For example, properties include the

name and description of an object and the date on which it was created.

Properties merely describe an object; they do not affect an object’s

operation.

To view properties, right-click an object and select

• Attributes provide additional information about an object. Attribute values

may also affect an object’s behavior.

To view attributes, double-click an object from an editor and click the

Attributes tab.

Descriptions of objects

This section describes each Data Integrator object and tells you how to

access that object.

The following table lists the names and descriptions of objects available in

Data Integrator:

Data Integrator Objects

Descriptions of objects

Properties.

2

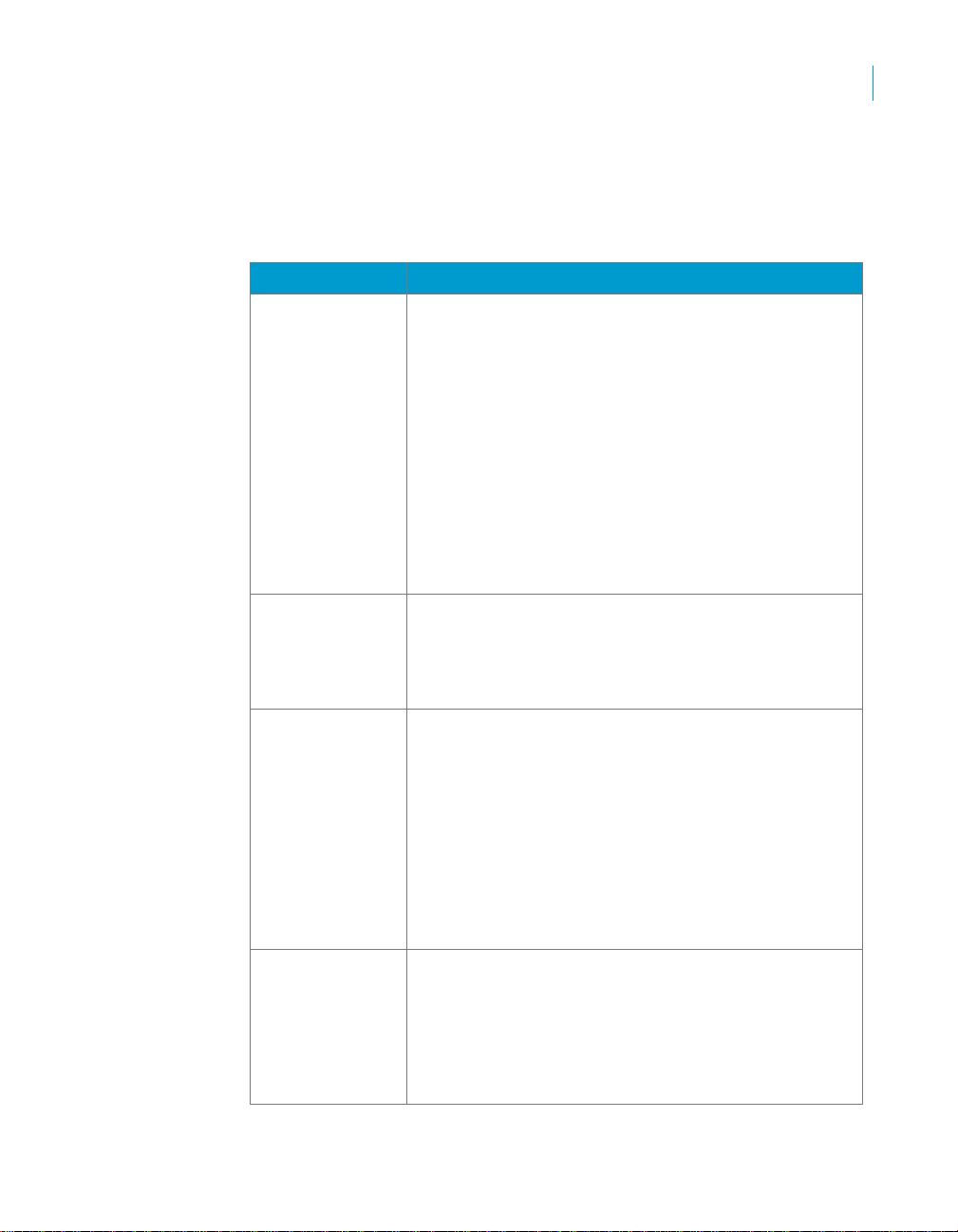

Object Class Description

Annotation Single-use Describes a flow, part of a flow, or a diagram in the workspace.

Batch Job Reusable Defines activities that Data Integrator executes at a given time, including error, monitor, and trace messages. Jobs can be dropped only in the project tree. The object created is a direct reference to the object in the object library. Only one reference to a job can exist in the project tree at one time.

Catch Single-use Specifies the steps to execute if a given error occurs while a job is running.

COBOL copybook file format Reusable Describes the structure defined in a COBOL copybook file.

Conditional Single-use Specifies the steps to execute based on the result of a condition.

Data flow Reusable Specifies the requirements for extracting, transforming, and loading data from sources to targets.

Datastore Single-use Specifies the connection information Data Integrator needs to access a database or other data source. Cannot be dropped.

Document Reusable Available in certain adapter datastores, documents are data structures that can support complicated nested schemas.

DTD Reusable A description of an XML file or message. Indicates the format an XML document reads or writes. See also: XML Schema

File format Reusable Indicates how flat file data is arranged in a source or target file.

Function Reusable Returns a value.

Log Single-use Records information about a particular execution of a single job.

Message function Reusable Available in certain adapter datastores, message functions can accommodate XML messages when properly configured.

Outbound message Reusable Available in certain adapter datastores, outbound messages are XML-based, hierarchical communications that real-time jobs can publish to adapters.

Project Single-use Groups jobs for convenient access.

Query transform Single-use Retrieves a data set that satisfies conditions that you specify.

Real-time job Reusable Defines activities that Data Integrator executes on demand. Real-time jobs are created in the Designer, then configured and run as services associated with an Access Server in the Administrator. Real-time jobs are designed according to data flow model rules and run as a request-response system.

Script Single-use Evaluates expressions, calls functions, and assigns values to variables.

Source Single-use An object from which Data Integrator reads data in a data flow.

Table Reusable Indicates an external DBMS table for which metadata has been imported into Data Integrator, or the target table into which data is or has been placed. A table is associated with its datastore; it does not exist independently of a datastore connection. A table retrieves or stores data based on the schema of the table definition from which it was created.

Target Single-use An object into which Data Integrator loads extracted and transformed data in a data flow.

Template table Reusable A new table you want added to a database. All datastores except SAP R/3 datastores have a default template that you can use to create any number of tables in the datastore. Data Integrator creates the schema for each instance of a template table at runtime. The created schema is based on the data loaded into the template table.

Transform Reusable Performs operations on data sets. Requires zero or more data sets; produces zero or one data set (which may be split).

Try Single-use Introduces a try/catch block.

While loop Single-use Repeats a sequence of steps as long as a condition is true.

Work flow Reusable Orders data flows and operations supporting data flows.

XML file Single-use A batch or real-time source or target. As a source, an XML file translates incoming XML-formatted data into data that Data Integrator can process. As a target, an XML file translates the data produced by a data flow, including nested data, into an XML-formatted file.

XML message Single-use A real-time source or target. As sources, XML messages translate incoming XML-formatted requests into data that a real-time job can process. As targets, XML messages translate the result of the real-time job, including hierarchical data, into an XML-formatted response and send the messages to the Access Server.

XML Schema Reusable A description of an XML file or message. Indicates the format an XML document reads or writes. See also: DTD

XML template Single-use A target that creates an XML file that matches a particular input schema. No DTD or XML Schema is required.

Annotation

Class

Single-use

Access

Click the annotation icon in the tool palette, then click in the workspace.

Description

Annotations describe a flow, part of a flow, or a diagram in a workspace. An

annotation is associated with the job, work flow, or data flow where it

appears. When you import or export that job, work flow, or data flow, you

import or export associated annotations.

For more information, see “Creating annotations” on page 59 of the Data

Integrator Designer Guide.

Note: An annotation has no options or properties.


Batch Job

Class

Reusable

Access

• In the object library, click the Jobs tab.

• In the project area, select a project and right-click Batch Job.

Description

Note: For information specific to SAP R/3, see Data Integrator Supplement

for SAP.

A batch job is a set of objects that you can schedule and execute together.

For Data Integrator to execute the steps of any object, the object must be part

of a job.

A batch job can contain the following objects:

• Data flows

• Sources

• Transforms

• Targets

• Work flows

• Scripts

• Conditionals

• Try/catch blocks

• While Loops

You can run batch jobs so that you can automatically recover from jobs that

do not execute successfully. During automatic recovery, Data Integrator

retrieves the results from steps that were successfully completed in the

previous run and executes all other steps. Specifically, Data Integrator

retrieves results from the following types of steps:

• Work flows

• Data flows

• Script statements

• Custom functions (stateless type only)

• SQL function

• EXEC function


• get_env function

• rand function

• sysdate function

• systime function
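To make the recovery behavior concrete, the minimal Python sketch below records each successfully completed step. A later run then retrieves those recorded results and executes only the remaining steps. This is a conceptual model under stated assumptions, not Data Integrator's implementation. The `checkpoint` dictionary, the step names, and the simulated `load` failure are hypothetical. A random value stands in for a non-deterministic call such as `rand` or `sysdate`. In the sketch, such a value is replayed from the first run rather than drawn again.

```python
import random
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[], Any]]


def run_with_recovery(steps: List[Step], checkpoint: Dict[str, Any]) -> Dict[str, Any]:
    """Run steps in order, reusing any result recorded by an earlier run.

    `checkpoint` maps a step name to the result of a step that completed
    successfully. It is updated in place, so if a step raises, the results of
    the steps before it are kept for the next (recovery) run.
    """
    results: Dict[str, Any] = {}
    for name, step in steps:
        if name in checkpoint:
            # Completed in a previous run: retrieve the saved result instead of re-executing.
            results[name] = checkpoint[name]
            continue
        value = step()  # may raise; nothing is recorded for a failed step
        checkpoint[name] = value
        results[name] = value
    return results


# Demo: the first run fails at "load"; the recovery run replays "start_value".
attempts = {"load": 0}


def load() -> str:
    attempts["load"] += 1
    if attempts["load"] == 1:
        raise RuntimeError("target unavailable")  # simulated failure on the first run
    return "loaded"


checkpoint: Dict[str, Any] = {}
steps: List[Step] = [("start_value", random.random), ("load", load)]
try:
    run_with_recovery(steps, checkpoint)
except RuntimeError:
    pass  # job failed; checkpoint still holds "start_value"
first_value = checkpoint["start_value"]
recovered = run_with_recovery(steps, checkpoint)
```

In the recovery run, `recovered["start_value"]` equals the value drawn in the failed run, and only `load` executes again.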

Batch jobs have the following built-in attributes:

Attribute Description

Name The name of the object. This name appears on the

object in the object library and in the calls to the

object.

Description Your description of the job.

Date created The date when the object was created.

Batch and real-time jobs have properties that determine what information

Data Integrator collects and logs when running the job. You can set the

default properties that apply each time you run the job or you can set

execution (run-time) properties that apply for a particular run. Execution

properties override default properties.
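The override rule can be sketched as a dictionary merge in Python. The property names `monitor_sample_rate` and `trace_sql_readers` are hypothetical keys, not Data Integrator identifiers. The value 1000 is the documented default monitor sample rate.

```python
from typing import Any, Dict


def effective_properties(defaults: Dict[str, Any], execution: Dict[str, Any]) -> Dict[str, Any]:
    """Combine job properties for one run; execution values override defaults."""
    merged = dict(defaults)   # copy, so the stored default properties are unchanged
    merged.update(execution)  # run-time settings win for this run only
    return merged


defaults = {"monitor_sample_rate": 1000, "trace_sql_readers": False}
this_run = effective_properties(defaults, {"trace_sql_readers": True})
```

Copying before updating reflects the scope of execution properties: they apply to a particular run and leave the default properties untouched.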

To set default properties, select the job in the project area or the object library, right-click, and choose Properties to open the Properties window.

Execution properties are set as you run a job. To set execution properties, right-click the job in the project area and choose Execute. The Designer validates the job and opens the Execution Properties window.

You can set three types of execution properties:

• Parameters

• Trace properties

• Global variables

For an introduction to using global variables as job properties and

selecting them at runtime, see “Setting global variable values” on

page 286 of the Data Integrator Designer Guide.

Parameters

Use parameter options to help capture and diagnose errors using log, View

Data, or recovery options.

Data Integrator writes log information to one of three files (in the

$LINK_DIR\log\Job Server name\repository name directory):

• Monitor log file

• Trace log file


• Error log file

You can also select a system configuration and a Job Server or server group from the Parameters tab of the Execution Properties window.

Select the Parameters tab to set the following options.

Options Description

Monitor sample rate (# of rows) Enter the number of rows processed before Data Integrator writes information to the monitor log file and updates job events. Data Integrator writes information about the status of each source, target, or transform. For example, if you enter 1000, Data Integrator updates the logs after processing 1,000 rows.

The default is 1000. When setting the value, you must weigh the performance improvements gained by making fewer calls to the operating system against your ability to find errors quickly. With a higher monitor sample rate, Data Integrator collects more data before calling the operating system to open the file, so performance improves. However, with a higher monitor sample rate, more time passes before you are able to see any errors.

Print all trace messages Select this check box to print all trace messages to the trace log file for the current Job Server. (For more information on log files, see “Log” on page 120.) Selecting this option overrides the trace properties set on the Trace tab.

Enable recovery (Batch jobs only) Select this check box to enable the automatic recovery feature. When enabled, Data Integrator saves the results from completed steps and allows you to resume failed jobs. You cannot enable the automatic recovery feature when executing a job in data scan mode.

See “Automatically recovering jobs” on page 437 of the Data Integrator Designer Guide for information about the recovery options.

This property is only available as a run-time property. It is not available as a default property.

Recover from last failed execution (Batch jobs only) Select this check box to resume a failed job. Data Integrator retrieves the results from any steps that were previously executed successfully and re-executes any other steps.

This option is a run-time property. This option is not available when a job has not yet been executed or when recovery mode was disabled during the previous run.

System configuration Select the system configuration to use when executing this job. A system configuration defines a set of datastore configurations, which define the datastore connections. For more information, see “Creating and managing multiple datastore configurations” on page 112 of the Data Integrator Designer Guide.

If a system configuration is not specified, Data Integrator uses the default datastore configuration for each datastore.

This option is a run-time property. This option is only available if there are system configurations defined in the repository.

Job Server or server group Select the Job Server or server group to execute this job. A Job Server is defined by a host name and port while a server group is defined by its name. The list contains Job Servers and server groups linked to the job’s repository.

For an introduction to server groups, see “Using Server Groups” on page 41 of the Data Integrator Administrator Guide.

When selecting a Job Server or server group, remember that many objects in the Designer have options set relative to the Job Server’s location. For example:

• Directory and file names for source and target files

• Bulk load directories
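The Python sketch below illustrates the trade-off behind the Monitor sample rate option described above. It counts how many monitor updates a run would produce at a given rate. The model assumes one update after every `sample_rate` rows, as in the 1000-row example, and ignores any other updates Data Integrator may write. The function name and the row source are hypothetical.

```python
from typing import Iterable, List


def monitor_updates(rows: Iterable[object], sample_rate: int = 1000) -> List[int]:
    """Return the row counts at which a monitor-log update would be written.

    Hypothetical model: one update after every `sample_rate` rows.
    """
    if sample_rate <= 0:
        raise ValueError("sample_rate must be a positive number of rows")
    updates: List[int] = []
    processed = 0
    for _row in rows:
        processed += 1  # stand-in for the real per-row work
        if processed % sample_rate == 0:
            updates.append(processed)  # point where the monitor log would be updated
    return updates
```

For 3,500 rows, `monitor_updates(range(3500))` returns `[1000, 2000, 3000]`. A rate of 100 produces 35 updates for the same rows. The lower rate means more calls to the operating system, but problems surface sooner.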

Trace properties

Use trace properties to select the information that Data Integrator monitors

and writes to the trace log file during a job. Data Integrator writes trace

messages to the trace log associated with the current Job Server and writes

error messages to the error log associated with the current Job Server.

To set trace properties, click the Trace tab. To turn a trace on, select the trace, click Yes in the Value list, and click OK. To turn a trace off, select the trace, click No in the Value list, and click OK.

You can turn several traces on and off.

Trace Description

Row Writes a message when a transform imports or exports a

row.

Session Writes a message when the job description is read from

the repository, when the job is optimized, and when the

job runs.

Work Flow Writes a message when the work flow description is read

from the repository, when the work flow is optimized,

when the work flow runs, and when the work flow ends.

Data Flow Writes a message when the data flow starts, when the

data flow successfully finishes, or when the data flow

terminates due to error.

This trace also reports when the bulk loader starts, any

bulk loader warnings occur, and when the bulk loader

successfully completes.

Transform Writes a message when a transform starts, completes, or

terminates.

Custom Transform Writes a message when a custom transform starts and

completes successfully.


Custom Function Writes a message for all user invocations of the

AE_LogMessage function from custom C code.

SQL Functions Writes data retrieved before SQL functions:

• Every row retrieved by the named query before the

SQL is submitted in the key_generation function

• Every row retrieved by the named query before the

SQL is submitted in the lookup function (but only if

PRE_LOAD_CACHE is not specified).

• When mail is sent using the mail_to function.

SQL Transforms Writes a message (using the Table_Comparison

transform) about whether a row exists in the target table

that corresponds to an input row from the source table.

The trace message occurs before submitting the query

against the target and for every row retrieved when the

named query is submitted (but only if caching is not

turned on).

SQL Readers Writes the SQL query block that a script, query transform,

or SQL function submits to the system. Also writes the

SQL results.


SQL Loaders Writes a message when the bulk loader:

• Starts

• Submits a warning message

• Completes successfully

• Completes unsuccessfully, if the Clean up bulk loader directory after load option is selected

Additionally, for Microsoft SQL Server and Sybase ASE, writes when the SQL Server bulk loader:

• Completes a successful row submission

• Encounters an error

This trace also reports all SQL that Data Integrator submits to the target database, including:

• When a truncate stm command executes if the Delete data from table before loading option is selected

• Any parameters included in PRE-LOAD SQL commands

• Before a batch of SQL statements is submitted

• When a template table is created (and also dropped, if the Drop/Create option is turned on)

• When a delete stm command executes if auto correct is turned on (Informix environment only)

Optimized Dataflow For Business Objects consulting and technical support use.

Tables Writes a message when a table is created or dropped. The message indicates the datastore to which the created table belongs and the SQL statement used to create the table.

Scripts and Script Functions Writes a message when Data Integrator runs a script or invokes a script function. Specifically, this trace writes a message when:

• The script is called. Scripts can be started at any level from the job level down to the data flow level. Additional (and separate) notation is made when a script is called from within another script.

• A function is called by the script.

• The script successfully completes.


Access Server Communication Writes messages exchanged between the Access Server

and a service provider, including:

• The registration message, which tells the Access

Server that the service provider is ready

• The request the Access Server sends to the service

to execute

• The response from the service to the Access Server

• Any request from the Access Server to shut down

Trace Parallel Execution Writes messages describing how data in a data flow is

processed in parallel.


Catch

Class

Single-use

Access

With a work flow diagram in the workspace, click the catch icon in the tool

palette.

Description

A catch is part of a serial sequence called a try/catch block. The try/catch

block allows you to specify alternative work flows if errors occur while Data

Integrator is executing a job. Try/catch blocks “catch” groups of errors, apply

solutions that you provide, and continue execution.

For each catch in the try/catch block, specify the following:

• One exception or group of exceptions that the catch handles.

To handle more than one exception or group of exceptions, add more

catches to the try/catch block.

• The work flow to execute if the indicated exception occurs.

Use an existing work flow or define a work flow in the catch editor.

If an exception is thrown during the execution of a try/catch block, and if no

catch is looking for that exception, then the exception is handled by normal

error logic.

Do not reference output variables from a try/catch block in any subsequent

steps if you are using (for batch jobs only) the automatic recovery feature.

Referencing such variables could alter the results during automatic recovery.

Also, try/catch blocks can be used within any real-time job component.

However, try/catch blocks cannot straddle a real-time processing loop and the

initialization or clean up component of a real-time job.
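The catch-matching logic described above can be sketched in Python using exception and group numbers like those in the exception table in this section. The sketch rests on several assumptions. First, it infers from the table that a detailed number's group is the number with its last four digits dropped (for example, 50101 belongs to group 5 and 120203 to group 12); the guide does not state this rule. Second, it assumes the first matching catch handles the exception. Third, `JobError`, `Catch`, `CATCH_ALL`, and the handler callables are hypothetical stand-ins for Data Integrator exceptions and catch work flows. If no catch matches, the exception is re-raised to model normal error logic.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Union

CATCH_ALL = "all"


class JobError(Exception):
    """Hypothetical error carrying a Data Integrator-style exception number."""

    def __init__(self, number: int) -> None:
        super().__init__(f"exception {number}")
        self.number = number


@dataclass
class Catch:
    handles: Union[int, str]         # exception number, group number, or CATCH_ALL
    work_flow: Callable[[int], Any]  # stand-in for the work flow the catch runs


def group_of(number: int) -> int:
    # Assumption inferred from the exception table: dropping the last four
    # digits gives the group (50101 -> 5, 70102 -> 7, 120203 -> 12).
    return number // 10000


def catch_matches(catch: Catch, number: int) -> bool:
    if catch.handles == CATCH_ALL:
        return True
    return catch.handles in (number, group_of(number))


def run_try_catch(try_body: Callable[[], Any], catches: List[Catch]) -> Any:
    try:
        return try_body()
    except JobError as err:
        for catch in catches:  # assumption: the first matching catch wins
            if catch_matches(catch, err.number):
                return catch.work_flow(err.number)
        raise  # no catch is looking for this exception: normal error handling
```

For example, a broken database connection (70102) is handled by a catch for group 7 (Database Access Errors). The same exception passes through a block whose only catch is for group 8 (File Access Errors).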

Catches have the following attribute:


Attribute Description

Name The name of the object. This name appears on the

object in the diagram.


The following table describes exception groups and errors passed as

exceptions.

Exception Number Description

Catch All Exceptions All Catch All Exceptions

Parser Errors 1 Parser errors

Resolver Errors 2 Resolver errors

Execution Errors 5 Internal errors that occur during the

execution of a data movement

specification

Job initialization 50101 Initialization for a job failed

Job cleanup 50102 Job cleanup failed

Job unknown 50103 Unknown job error

Job failure 50104 Session failure

Work flow initialization 50201 Initialization for a work flow failed

Work flow cleanup 50202 Work flow cleanup failed

Work flow unknown 50203 Unknown work flow error

Work flow failure 50204 Work flow failure

Function initialization 50301 Initialization of the function failed

Function cleanup 50302 Function cleanup failed

Function unknown 50303 Unknown function error

Function failure 50304 Function failure

Step failure 50305 Step failure

System function failure 50306 System function execution failure

(function returned an error status)

System function initialization 50307 System function startup or launch failure

Data flow initialization 50401 Initialization for a data flow failed

Dataflow open 50402 Cannot open data flow

Dataflow close 50403 Data flow close failed

Dataflow cleanup 50404 Data flow cleanup failed

Data flow unknown 50405 Data flow unknown

Dataflow failure 50406 Data flow failure

Message bad 50407 Bad message error

Transform initialization 50501 Initialization for transform failed


Transform open 50502 Cannot open transform

Transform close 50503 Cannot close transform

Transform cleanup 50504 Cannot clean up transform

Transform unknown 50505 Unknown transform error

Transform failure 50506 Transform failure

OS unknown 50601 Unknown OS error

OS GetPipe 50602 OS GetPipe error

OS ReadPipe 50603 OS ReadPipe error

OS WritePipe 50604 OS WritePipe error

OS ClosePipe 50605 OS ClosePipe error

OS CreatePipe 50606 OS CreatePipe error

OS RedirectPipe 50607 OS RedirectPipe error

OS DuplicatePipe 50608 OS DuplicatePipe error

OS Stdin/stdout restore 50609 OS stdin/stdout restore error

OS CloseProcess 50611 OS CloseProcess error

OS CreateProcess 50612 OS CreateProcess error

OS ResumeProcess 50613 OS ResumeProcess error

OS SuspendProcess 50614 OS Suspend process error

OS TerminateProcess 50615 OS TerminateProcess error

Internal thread initialization 50701 Internal error: thread initialization

Internal thread join 50702 Internal error: thread join

Internal thread start 50703 Internal error: thread start

Internal thread resume 50704 Internal error: thread resume

Internal thread suspend 50705 Internal error: thread suspend

Internal mutex acquire 50711 Internal error: acquire mutex

Internal mutex release 50712 Internal error: release mutex

Internal mutex recursive acquire 50713 Internal error: acquire recursive mutex

Internal mutex recursive release 50714 Internal error: mutex recursive release

Internal trap get error 50721 Internal error: trap get

Internal trap set 50722 Internal error: trap set

Internal trap make 50723 Internal error: trap make


Internal condition wait 50731 Internal error: condition wait

Internal condition signal 50732 Internal error: condition signal

Internal queue read 50741 Internal error: queue read

Internal queue write 50742 Internal error: queue write

Internal queue create 50743 Internal error: queue create

Internal queue delete 50744 Internal error: queue delete

String conversion overflow 50800 String conversion overflow

Validation not done 50801 Validation not complete

Conversion error 50802 Conversion error

Invalid data values 51001 Invalid data values

Database Access Errors 7 Generic Database Access Errors

Unsupported expression 70101 Unsupported expression

Connection broken 70102 Connection broken

Column mismatch 70103 Column mismatch

Microsoft SQL Server 70201 Microsoft SQL Server error

Oracle server 70301 Oracle server error

ODBC 70401 ODBC error

Sybase ASE server 70601 Sybase ASE SQL error

Sybase ASE server operation 70602 Sybase ASE operation error

File Access Errors 8 Errors accessing files through file formats

Open 80101 Cannot open file

Read NULL row 80102 Null row is encountered during read

Premature end of row 80103 Premature end of row

Position column mismatch 80104 Mismatch of the position of column

LSEEK BEGIN 80105 Cannot move file pointer to the beginning of the file

Repository Access Errors 10 Errors accessing the Data Integrator repository

Repository internal error 100101 Repository internal error

Repository ODBC error 100102 Repository ODBC error


Microsoft Connection Errors 12 Errors connecting to the Microsoft SQL Server

MSSQL Server Initialization 120201 Initialization for a Microsoft SQL Server failed

MSSQL Login Allocation 120202 Initialization for a Microsoft SQL Server failed

MSSQL Login Connection 120203 Connection to Microsoft SQL Server failed

MSSQL Database Context 120204 Failed to switch context to a Microsoft SQL Server database

ODBC Allocate environment 120301 ODBC Allocate environment error

Oracle connection 120302 Oracle connection failed

ODBC connection 120401 ODBC connection failed

Sybase ASE server context allocation 120501 Sybase ASE server failed to allocate global context

Sybase ASE server initialization 120502 Sybase ASE server failed to initialize CTLIB

Sybase ASE user data configuration 120503 Sybase ASE server failed to configure user data

Sybase ASE connection allocation 120504 Sybase ASE server failed to allocate connection structure

Sybase ASE login connection 120505 Sybase ASE connection failed

Predefined Transforms Errors 13 Predefined Transforms Errors

Column list is not bound 130101 Primary column information list is not bound

Key generation 130102 Transform Key_Generation error

Options not defined 130103 Transform options are not defined

ABAP Generation Errors 14 ABAP generation errors

ABAP syntax error 140101 ABAP syntax error

R/3 Execution Errors 15 R/3 execution errors

R/3 RFC open failure 150101 R/3 RFC connection failure

R/3 file open failure 150201 R/3 file open failure

R/3 file read failure 150202 R/3 file read failure

R/3 file close failure 150203 R/3 file close failure


R/3 file open failure 150301 R/3 file open failure

R/3 file read failure 150302 R/3 file read failure

R/3 file close failure 150303 R/3 file close failure

R/3 connection open failure 150401 R/3 connection open failure

R/3 system exception 150402 R/3 system exception

R/3 connection broken 150403 R/3 connection broken

R/3 connection retry 150404 R/3 connection retry

R/3 connection tranid missing 150405 R/3 connection transaction ID missing

R/3 connection has been executed 150406 R/3 connection has been executed

R/3 connection memory low 150407 R/3 connection memory low

R/3 connection version mismatch 150408 R/3 connection version mismatch

R/3 connection call not supported 150409 R/3 connection call not supported

Email Errors 16 Email errors

System Exception Errors 17 System exception errors

Engine Abort Errors 20 Engine abort errors
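The numbers in this table appear to follow a simple pattern: each specific exception number begins with the number of its category, followed by four digits (for example, 70102 falls under Database Access Errors, category 7, and 150403 under R/3 Execution Errors, category 15). The following Python sketch assumes that pattern holds, so that the category is the exception number integer-divided by 10000. The names `CATEGORIES`, `exception_category`, and `category_name` are hypothetical, and the dictionary lists only the categories that appear in this part of the table.

```python
# Category numbers and names as listed in this part of the exception table.
# Categories not shown here (for example, 5) map to "unknown".
CATEGORIES = {
    7: "Database Access Errors",
    8: "File Access Errors",
    10: "Repository Access Errors",
    12: "Microsoft Connection Errors",
    13: "Predefined Transforms Errors",
    14: "ABAP Generation Errors",
    15: "R/3 Execution Errors",
    16: "Email Errors",
    17: "System Exception Errors",
    20: "Engine Abort Errors",
}


def exception_category(number: int) -> int:
    """Return the category number for an exception number.

    Assumption: a specific exception number is its category number
    followed by four digits (e.g. 70102 -> 7, 120401 -> 12).
    """
    if number < 10000:
        # Already a category number such as 7 or 15.
        return number
    return number // 10000


def category_name(number: int) -> str:
    """Look up the category name, or 'unknown' if it is not listed."""
    return CATEGORIES.get(exception_category(number), "unknown")


if __name__ == "__main__":
    for code in (70102, 80103, 100102, 120401, 150403):
        print(code, exception_category(code), category_name(code))
```

Under this assumption, code that reacts to any file-access failure can compare the category (8) instead of listing 80101 through 80105 one by one.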


Conditional

Class

Single-use

Access

With a work flow diagram in the workspace, click the conditional icon in the tool palette.

Description

A conditional implements if/then/else logic in a work flow.

For each conditional, specify the following:

• If: A Boolean expression defining the condition to evaluate. The expression evaluates to TRUE or FALSE. You can use constants, functions, variables, parameters, and standard operators to construct the expression. For information about expressions, see Chapter 3: Smart Editor.

Note: Do not put a semicolon (;) at the end of your expression in the If box.

• Then: A work flow to execute if the condition is TRUE.

• Else: A work flow to execute if the condition is FALSE. This branch is optional.

The Then and Else branches of the conditional can be any steps valid in a work flow, including a call to an existing work flow.

Conditionals have the following attribute:

Name: The name of the object. This name appears on the object in the diagram.
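To make the evaluation order concrete, the following Python sketch models a conditional: the If expression is evaluated once, the Then branch runs when it is TRUE, and the optional Else branch runs when it is FALSE. It only illustrates the control flow and is not how Data Integrator executes work flows; the `Conditional` class, its fields, the `$row_count` expression text, and the use of Python callables in place of work flows and Data Integrator expressions are all hypothetical. The sketch also rejects an If expression that ends with a semicolon, mirroring the note above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Conditional:
    """Hypothetical model of a conditional step in a work flow."""
    name: str                          # appears on the object in the diagram
    if_expression: str                 # expression text as typed in the If box
    evaluate: Callable[[], bool]       # stands in for evaluating that expression
    then_branch: Callable[[], None]    # work flow to run when the condition is TRUE
    else_branch: Optional[Callable[[], None]] = None  # optional, runs when FALSE

    def validate(self) -> None:
        # The If expression must not end with a semicolon.
        if self.if_expression.rstrip().endswith(";"):
            raise ValueError(f"{self.name}: remove the trailing ';' from the If expression")

    def run(self) -> bool:
        self.validate()
        result = bool(self.evaluate())
        if result:
            self.then_branch()
        elif self.else_branch is not None:
            self.else_branch()
        # With no Else branch, a FALSE condition runs nothing.
        return result


if __name__ == "__main__":
    log: List[str] = []
    row_count = 0  # hypothetical value the expression depends on
    check = Conditional(
        name="CheckRows",
        if_expression="$row_count > 0",
        evaluate=lambda: row_count > 0,
        then_branch=lambda: log.append("load"),
        else_branch=lambda: log.append("notify"),
    )
    check.run()
    print(log)
```

Keeping the Else branch optional in the model matches the description: a conditional without an Else simply does nothing when the condition is FALSE.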


COBOL copybook file format

Class

Reusable

Access

In the object library, click the Formats tab.

Description

A COBOL copybook file format describes the structure defined in a COBOL copybook file (usually denoted with a .cpy extension). You store templates for file formats in the object library. You use the templates to define the file format of a particular source in a data flow.

The following tables describe the Import (or Edit) COBOL copybook dialog box options and the Source COBOL copybook Editor options.

Import or Edit COBOL copybook format options

The Import (or Edit) COBOL copybook format dialog boxes include options on the following tabs:

• Format

• Data File

• Data Access


Format

The Format tab defines the parameters of the COBOL copybook format.

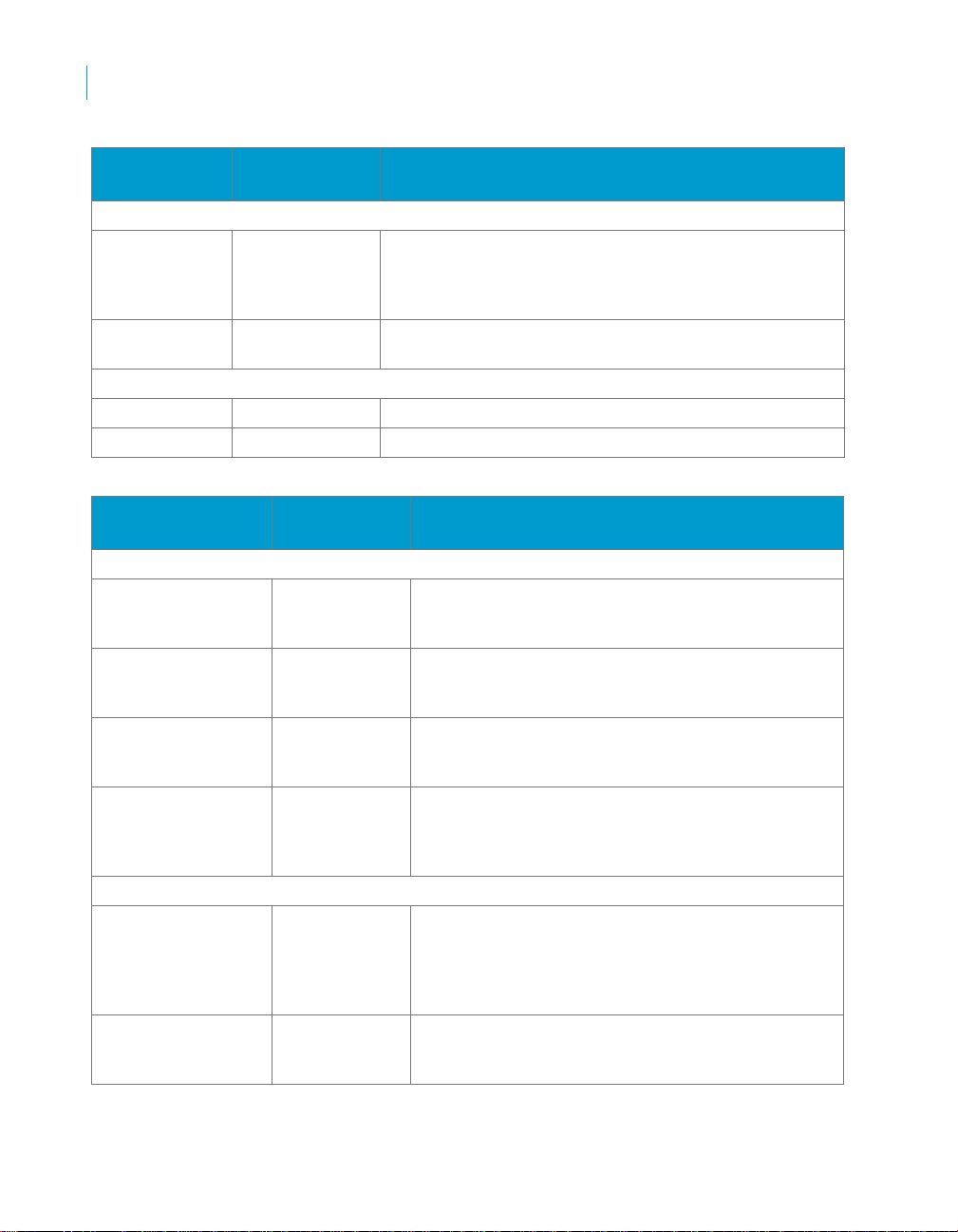

Table 2-1: Format tab

File name: Type or browse to the COBOL copybook file name (usually has a .cpy extension). This file contains the schema definition.

Expand occurs: Specifies the way to handle OCCURS groups. These groups can be imported either:

• with each field within an OCCURS group getting a sequential suffix for each repetition: fieldname_1, fieldname_2, etc. (unrolled view), or

• with each field within an OCCURS group appearing only once in the copybook’s schema (collapsed view). For a collapsed view, the output schema matches the OCCURS group definition, and for each input record there will be several output records.

If a copybook contains more than one OCCURS group, you must check this box.

Ignore redefines: Determines whether or not to ignore REDEFINES clauses.

Source format: The format of the copybook source code. Options include:

• Free: All characters on the line can contain COBOL source code.

• Smart mode: Data Integrator tries to determine whether the source code is in Standard or Free format. If this does not produce the desired result, choose the appropriate source format (Standard or Free) manually and reimport.

• Standard: The traditional (IBM mainframe) COBOL source format, where each line of code is divided into the following five areas: sequence number (columns 1-6), indicator area (7), area A (8-11), area B (12-72), and comments (73-80).

Source codes [start]: Defines the start column of the copybook source file to use during the import. The typical value is 7 for IBM mainframe copybooks (standard source format) and 0 for free format.

Source codes [end]: Defines the end column of the copybook source file to use during the import. The typical value is 72 for IBM mainframe copybooks (standard source format) and 9999 for free format.
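The Standard source format and the Source codes [start] and [end] options both describe which columns of each copybook line carry COBOL code. The following Python sketch splits a Standard-format line into the five areas listed in Table 2-1 and extracts the column window an import would read. The function names are hypothetical, and treating the start and end values as 1-based, inclusive column numbers (with a start of 0 treated like 1) is an assumption; the table does not say exactly how Data Integrator interprets these values.

```python
# Column layout of the Standard (IBM mainframe) COBOL source format,
# as 1-based inclusive column ranges taken from Table 2-1.
STANDARD_AREAS = {
    "sequence": (1, 6),
    "indicator": (7, 7),
    "area_a": (8, 11),
    "area_b": (12, 72),
    "comments": (73, 80),
}


def split_standard_line(line: str) -> dict:
    """Split one Standard-format copybook line into its five areas."""
    padded = line.ljust(80)  # short lines are padded with blanks to 80 columns
    return {name: padded[start - 1:end] for name, (start, end) in STANDARD_AREAS.items()}


def source_window(line: str, start: int, end: int) -> str:
    """Return the columns from start to end (inclusive) of a line.

    Assumption: start and end are 1-based column numbers, and a start of 0
    is treated like 1 so that free-format values (0, 9999) keep the whole line.
    """
    return line[max(start, 1) - 1:end]


if __name__ == "__main__":
    # Hypothetical copybook line: sequence number, level 01 in area A,
    # data name in area B, and an identifier in the comment columns.
    line = "000100 01  CUSTOMER-REC.".ljust(72) + "CUST0001"
    areas = split_standard_line(line)
    print(repr(areas["area_a"]), repr(areas["area_b"].strip()), repr(areas["comments"]))
    print(repr(source_window(line, 7, 72).strip()))
```

With the typical mainframe values (7 and 72), the window keeps the indicator area and areas A and B while dropping the sequence numbers and the comment columns.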
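The difference between the unrolled and collapsed views described under Expand occurs can be illustrated with a small Python sketch. It takes one input record whose OCCURS group repeats a field (here a hypothetical PHONE field occurring three times) and produces either a single row with suffixed columns (PHONE_1, PHONE_2, PHONE_3) or one output row per repetition. The record layout, the field names, and copying the non-repeating fields into every collapsed row are assumptions made for illustration; Table 2-1 states only that the collapsed view yields several output records for each input record.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def unrolled_view(record: Record, group: str) -> Record:
    """One output row; each repetition of the OCCURS field gets a suffix _1, _2, ..."""
    row = {k: v for k, v in record.items() if k != group}
    for i, value in enumerate(record[group], start=1):
        row[f"{group}_{i}"] = value
    return row


def collapsed_view(record: Record, group: str) -> List[Record]:
    """One output row per repetition; the OCCURS field appears once in the schema.

    Assumption: the non-repeating fields are copied into every output row.
    """
    base = {k: v for k, v in record.items() if k != group}
    return [{**base, group: value} for value in record[group]]


if __name__ == "__main__":
    # Hypothetical input record: CUST_ID plus PHONE OCCURS 3 TIMES.
    rec = {"CUST_ID": 42, "PHONE": ["555-0100", "555-0101", "555-0102"]}
    print(unrolled_view(rec, "PHONE"))
    for row in collapsed_view(rec, "PHONE"):
        print(row)
```

The unrolled view widens the schema by one column per repetition, while the collapsed view keeps the schema narrow and multiplies the rows instead.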


Data File

The Data File tab defines the parameters of the data file.

Table 2-2: Data File tab

Directory: Type or browse to the directory that contains the COBOL copybook data file to import. If you include a directory path here, then enter only the file name in the File name field.

File name: Type or browse to the COBOL copybook data file name. You can use variables or wild cards (* or ?). If you leave Directory blank, then type a full path and file name here.

Type: Specifies the record format (fixed, variable, or undefined):

• Fixed(F)

• Fixed-Blocked(FB)

• Variable(V)

• Variable-Blocked(VB)